Fast Algorithms for Mining Association Rules Rakesh Agrawal


- Slides: 57

Fast Algorithms for Mining Association Rules
Rakesh Agrawal, Ramakrishnan Srikant

Data Mining Seminar 2003 ©Ofer Pasternak

Outline
- Introduction
- Formal statement
- Apriori Algorithm
- AprioriTid Algorithm
- Comparison
- AprioriHybrid Algorithm
- Conclusions

Introduction
- Bar-code technology
- Mining association rules over basket data (1993)
- Tires ∧ accessories ⇒ automotive service
- Cross-marketing, attached mailing.
- Very large databases.

Notation
- Items: I = {i_1, i_2, …, i_m}
- Transaction: a set of items
  - Items are sorted lexicographically
- TID: unique identifier for each transaction

Notation
- Association rule: X ⇒ Y

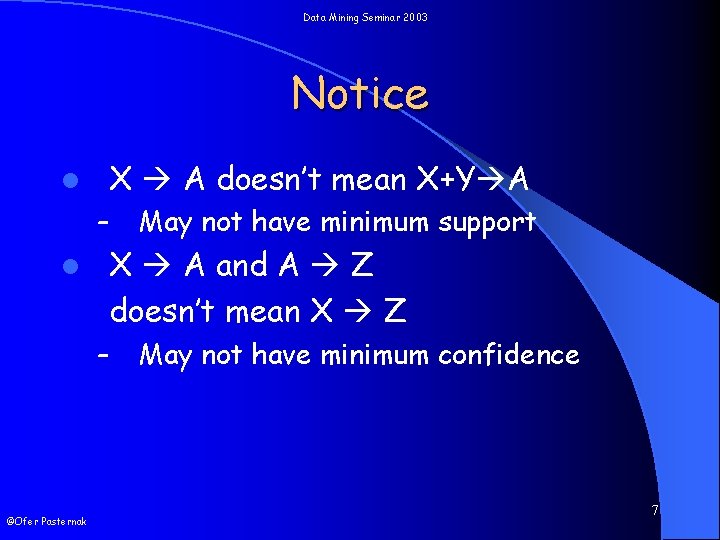

Confidence and Support
- Association rule X ⇒ Y has confidence c if c% of the transactions in D that contain X also contain Y.
- Association rule X ⇒ Y has support s if s% of the transactions in D contain both X and Y.

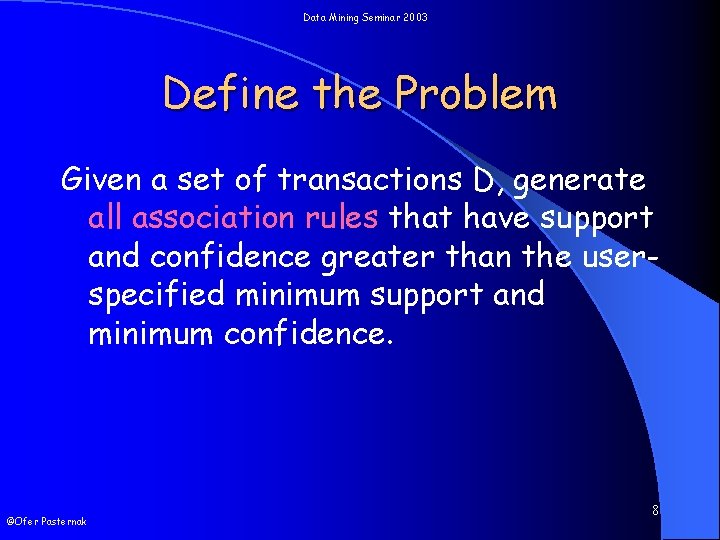

Notice
- X ⇒ A doesn't mean X+Y ⇒ A
  - May not have minimum support
- X ⇒ A and A ⇒ Z doesn't mean X ⇒ Z
  - May not have minimum confidence

Define the Problem
Given a set of transactions D, generate all association rules that have support and confidence greater than the user-specified minimum support and minimum confidence.

Previous Algorithms
- AIS
- SETM
- Knowledge discovery
- Induction of classification rules
- Discovery of causal rules
- Fitting of functions to data
- KID3 (machine learning)

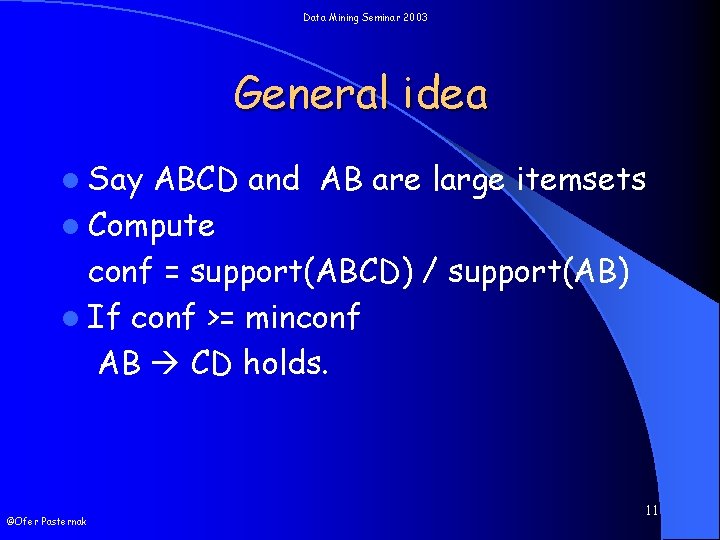

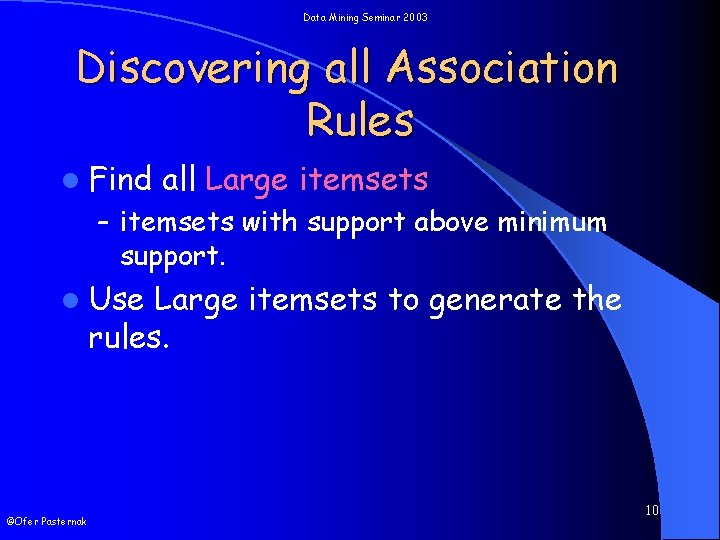

Discovering all Association Rules
- Find all large itemsets: itemsets with support above the minimum support.
- Use the large itemsets to generate the rules.

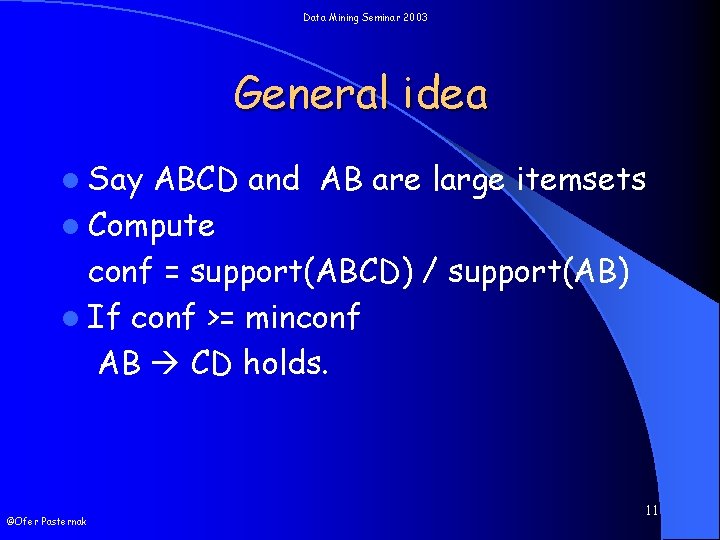

General idea
- Say ABCD and AB are large itemsets
- Compute conf = support(ABCD) / support(AB)
- If conf >= minconf, the rule AB ⇒ CD holds.
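The confidence computation above can be sketched in a few lines of Python. This is only an illustration: the function names `support` and `confidence` and the four toy transactions are assumptions made for this example, not part of the slides.

```python
def support(itemset, transactions):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = frozenset(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)


def confidence(antecedent, consequent, transactions):
    """conf(X => Y) = support(X union Y) / support(X)."""
    whole = frozenset(antecedent) | frozenset(consequent)
    return support(whole, transactions) / support(antecedent, transactions)


# Hypothetical toy data: ABCD occurs in 2 of 4 transactions, AB in 3 of 4.
transactions = [
    frozenset({"A", "B", "C", "D"}),
    frozenset({"A", "B", "C", "D"}),
    frozenset({"A", "B"}),
    frozenset({"A", "C"}),
]

minconf = 0.6
conf = confidence({"A", "B"}, {"C", "D"}, transactions)
rule_holds = conf >= minconf  # AB => CD holds when conf reaches minconf
```

Here support is expressed as a fraction; using absolute counts instead gives the same confidence, since the number of transactions cancels in the ratio.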

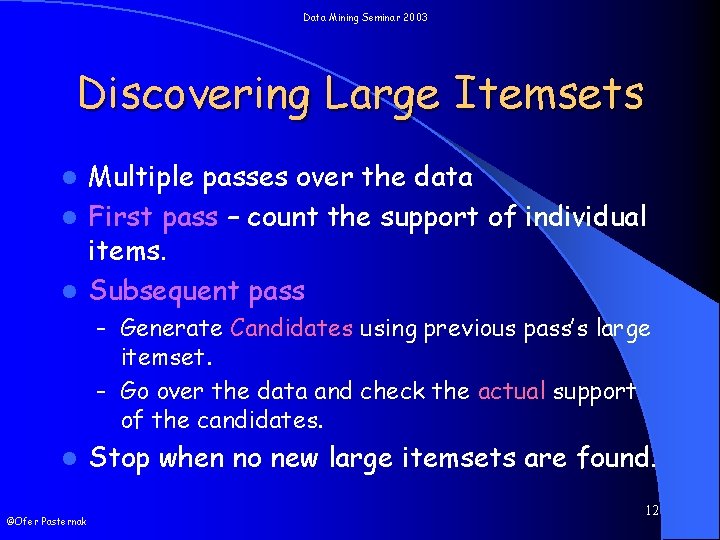

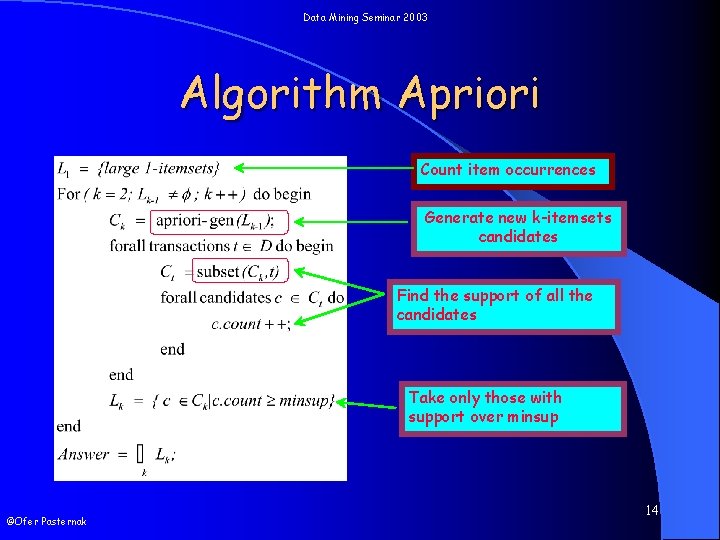

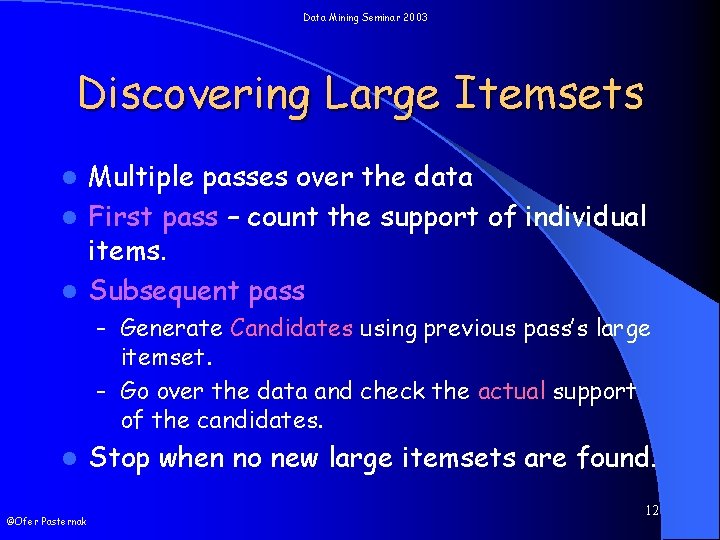

Discovering Large Itemsets
- Multiple passes over the data
- First pass: count the support of individual items.
- Subsequent passes:
  - Generate candidates using the previous pass's large itemsets.
  - Go over the data and check the actual support of the candidates.
- Stop when no new large itemsets are found.

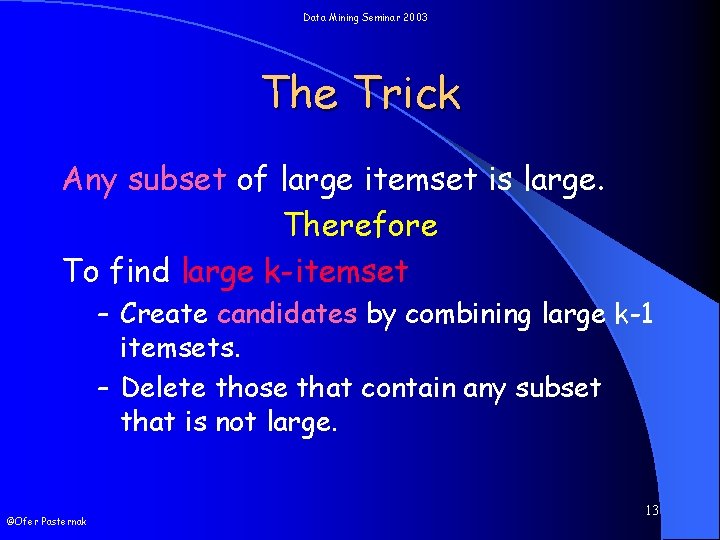

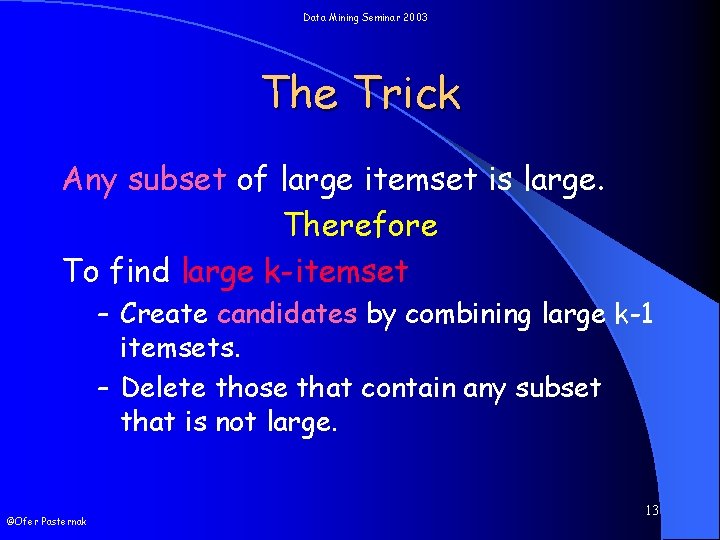

The Trick
Any subset of a large itemset is large. Therefore, to find the large k-itemsets:
- Create candidates by combining large (k-1)-itemsets.
- Delete those that contain any subset that is not large.

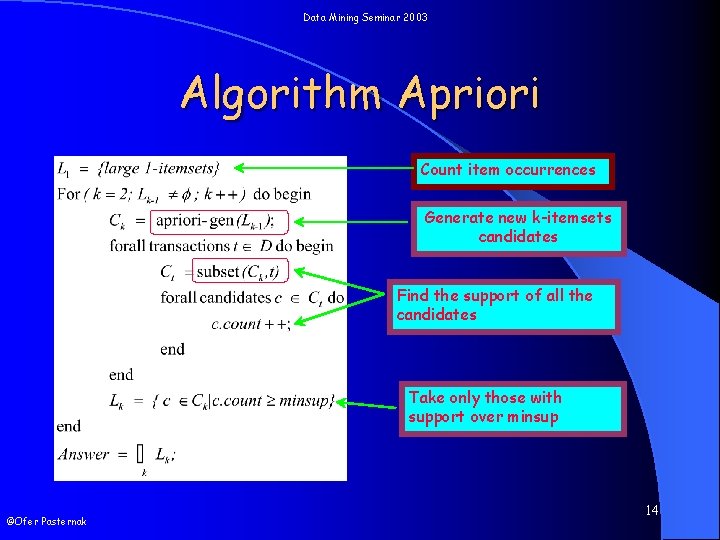

Algorithm Apriori
- Count item occurrences
- Generate new k-itemset candidates
- Find the support of all the candidates
- Take only those with support over minsup
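The four steps above can be sketched as a small Python function. This is a minimal sketch under stated assumptions, not the authors' implementation: `minsup_count` is taken to be an absolute transaction count compared with `>=`, candidates are counted with a plain subset test rather than a hash-tree, and the name `apriori` and its signature are chosen for this example.

```python
from itertools import combinations


def apriori(transactions, minsup_count):
    """Return {itemset (frozenset): support count} for all large itemsets."""
    transactions = [frozenset(t) for t in transactions]

    # First pass: count item occurrences.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    large = {s: c for s, c in counts.items() if c >= minsup_count}
    result = dict(large)

    k = 2
    while large:
        prev = set(large)
        # Generate new k-itemset candidates: unions of two large
        # (k-1)-itemsets whose (k-1)-subsets are all large.
        candidates = set()
        for a in prev:
            for b in prev:
                union = a | b
                if len(union) == k and all(
                    frozenset(s) in prev for s in combinations(union, k - 1)
                ):
                    candidates.add(union)

        # Find the support of all the candidates (one pass over the data).
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1

        # Take only those with enough support.
        large = {s: c for s, c in counts.items() if c >= minsup_count}
        result.update(large)
        k += 1
    return result


# Hypothetical usage on a four-transaction database.
database = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]
large_itemsets = apriori(database, minsup_count=2)
```

The loop stops as soon as a pass produces no large itemsets, which matches the stopping rule of the multi-pass scheme.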

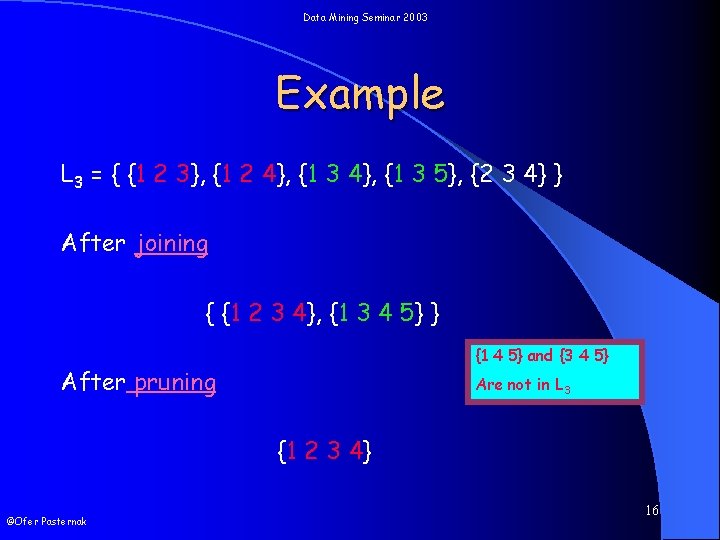

Candidate generation
- Join step: p and q are two large (k-1)-itemsets that are identical in their first k-2 items. Join them by adding the last item of q to p.
- Prune step: check all the (k-1)-subsets of each candidate and remove any candidate that has a "small" subset.

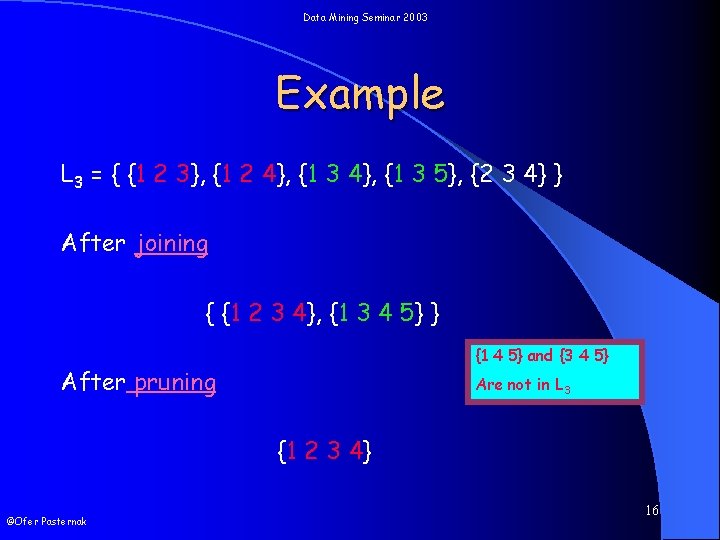

Example
- L_3 = { {1 2 3}, {1 2 4}, {1 3 4}, {1 3 5}, {2 3 4} }
- After joining: { {1 2 3 4}, {1 3 4 5} }
- After pruning: { {1 2 3 4} }, because {1 4 5} and {3 4 5} are not in L_3.
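The join and prune steps can be written compactly in Python. The sketch below assumes that itemsets are represented as sorted tuples; the helper names `join`, `prune` and `apriori_gen` are chosen for this illustration.

```python
from itertools import combinations


def join(large_prev):
    """Join step: combine p and q that agree on all but their last item."""
    return {
        p + (q[-1],)
        for p in large_prev
        for q in large_prev
        if p[:-1] == q[:-1] and p[-1] < q[-1]  # ordering avoids duplicates
    }


def prune(candidates, large_prev):
    """Prune step: drop candidates that have a (k-1)-subset that is not large."""
    return {
        c
        for c in candidates
        if all(s in large_prev for s in combinations(c, len(c) - 1))
    }


def apriori_gen(large_prev):
    """Candidate k-itemsets generated from the large (k-1)-itemsets."""
    large_prev = set(large_prev)
    return prune(join(large_prev), large_prev)


# A set of large 3-itemsets, each written as a sorted tuple.
L3 = {(1, 2, 3), (1, 2, 4), (1, 3, 4), (1, 3, 5), (2, 3, 4)}
joined = join(L3)
candidates = apriori_gen(L3)
```

With this `L3`, the join yields (1, 2, 3, 4) and (1, 3, 4, 5), and pruning removes (1, 3, 4, 5) because (1, 4, 5) is not in `L3`.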

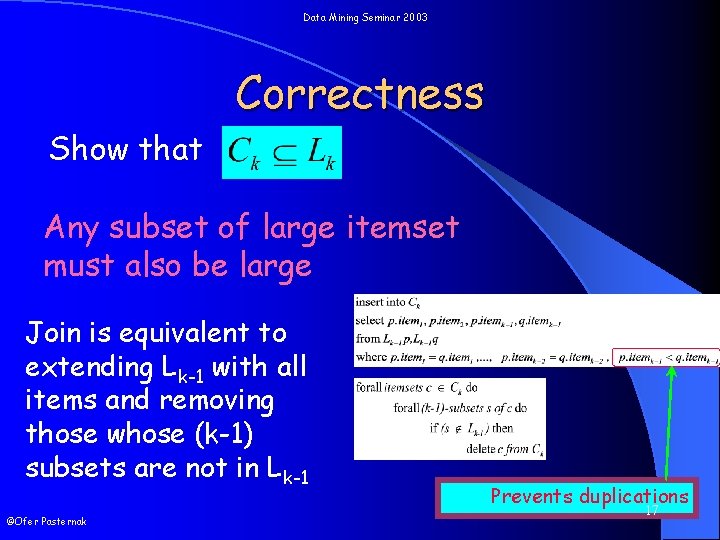

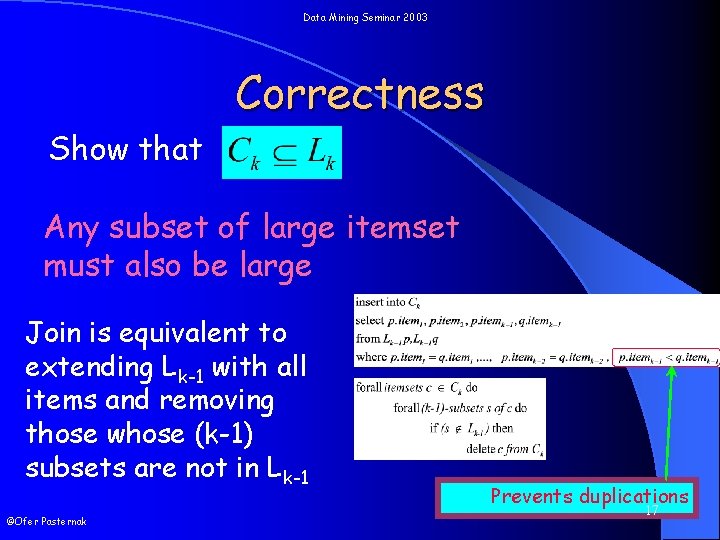

Correctness
- Show that C_k ⊇ L_k.
- Any subset of a large itemset must also be large.
- The join is equivalent to extending L_{k-1} with every item and then removing those itemsets whose (k-1)-subsets are not in L_{k-1}.
- The ordering condition in the join prevents duplicates.

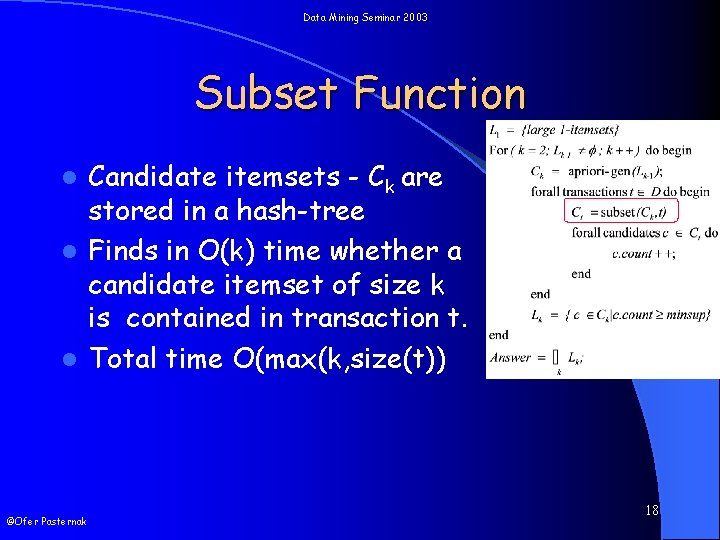

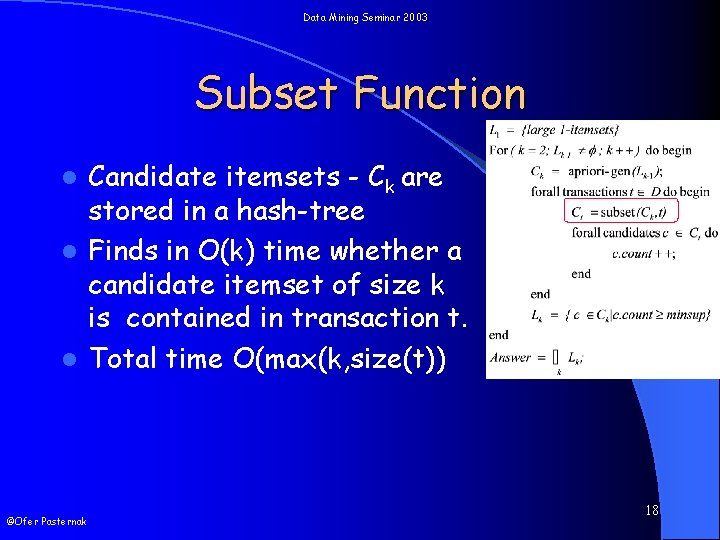

Subset Function
- Candidate itemsets C_k are stored in a hash-tree.
- Finds in O(k) time whether a candidate itemset of size k is contained in transaction t.
- Total time: O(max(k, size(t)))

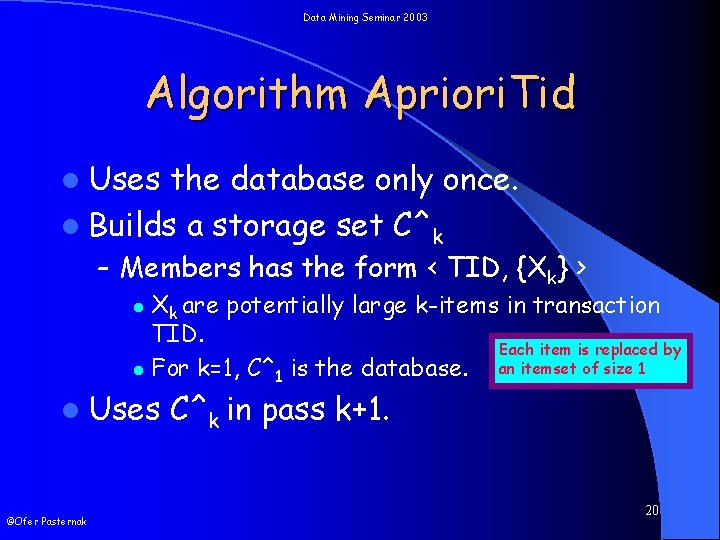

Problem?
- Every pass goes over the whole data.

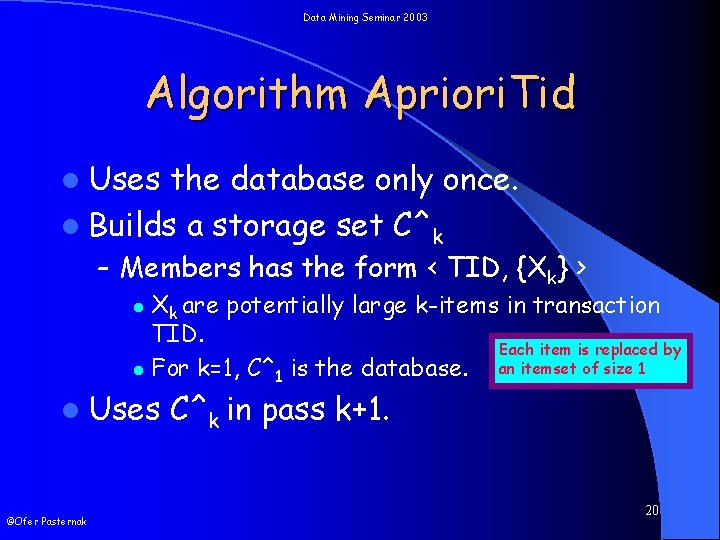

Algorithm AprioriTid
- Uses the database only once.
- Builds a storage set C^k
  - Members have the form <TID, {X_k}>, where the X_k are the potentially large k-itemsets present in transaction TID.
- For k=1, C^1 is the database, with each item replaced by an itemset of size 1.
- Uses C^k in pass k+1.

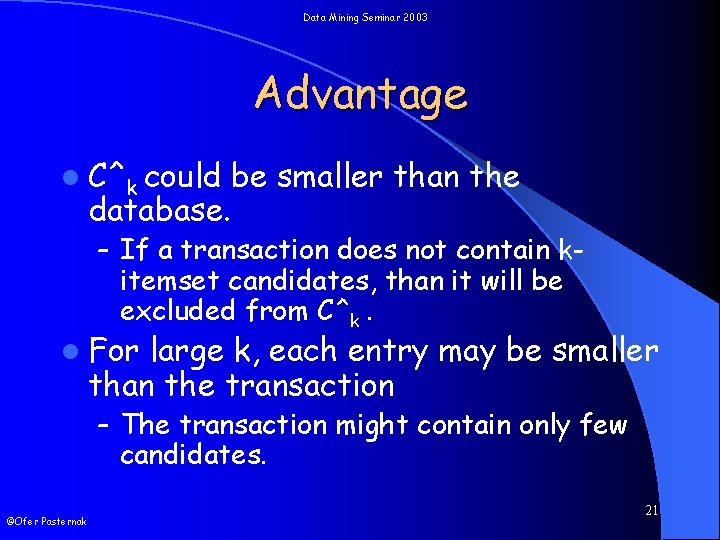

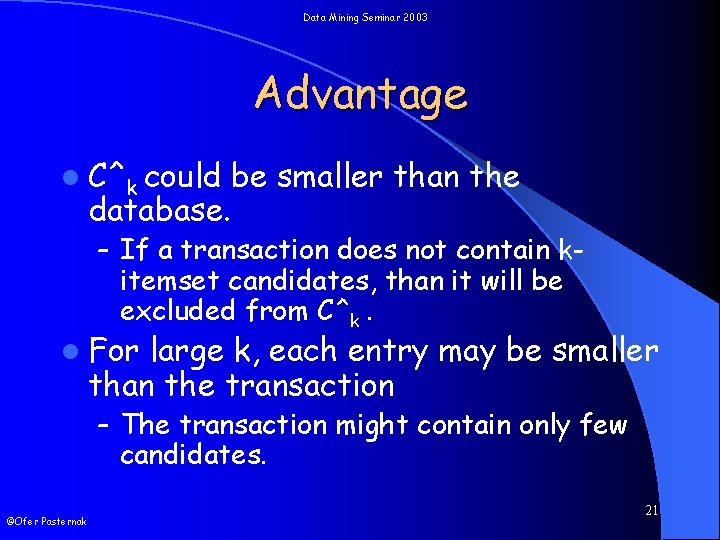

Advantage
- C^k could be smaller than the database.
  - If a transaction does not contain any candidate k-itemsets, then it is excluded from C^k.
- For large k, each entry may be smaller than the transaction.
  - The transaction might contain only a few candidates.

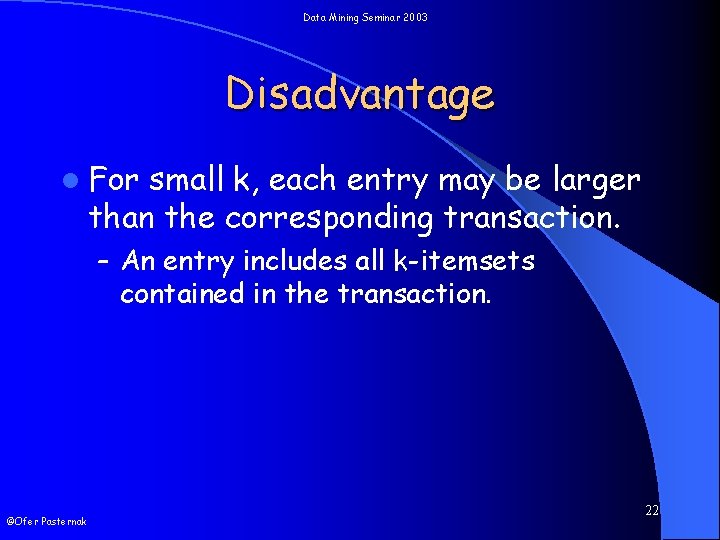

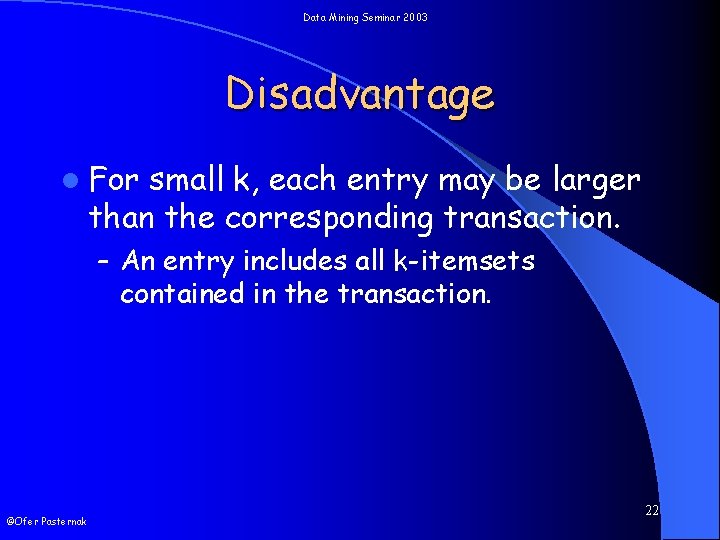

Disadvantage
- For small k, each entry may be larger than the corresponding transaction.
  - An entry includes all k-itemsets contained in the transaction.

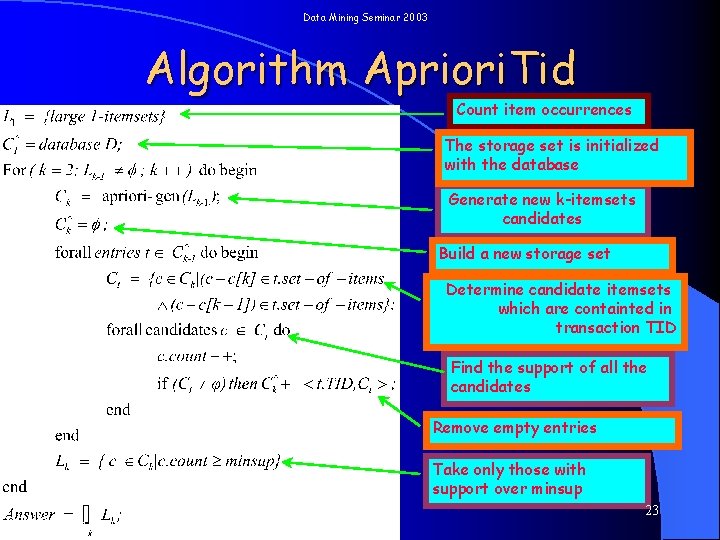

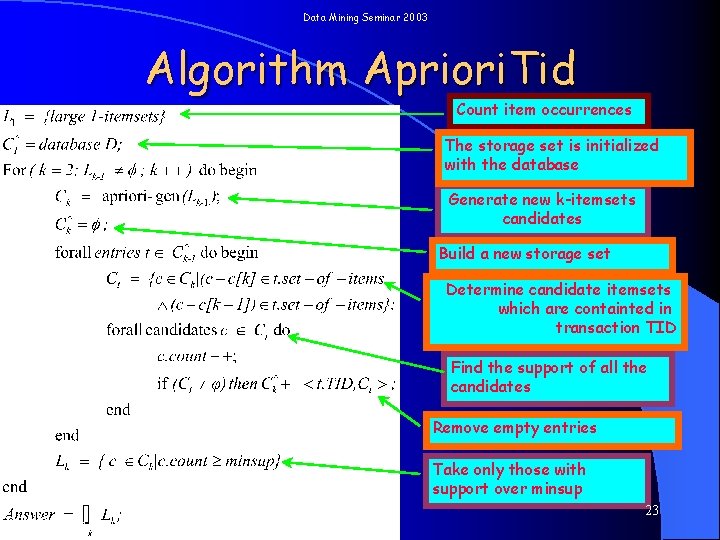

Algorithm AprioriTid
- Count item occurrences
- The storage set is initialized with the database
- Generate new k-itemset candidates
- Build a new storage set
- Determine the candidate itemsets which are contained in transaction TID
- Find the support of all the candidates
- Remove empty entries
- Take only those with support over minsup
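The annotated steps above can be sketched in Python as follows. This is a hedged illustration, not the authors' code: itemsets are sorted tuples, `minsup_count` is an absolute count compared with `>=`, and the names `apriori_tid`, `storage` and `apriori_gen` are assumptions of this example. The key point is that after the first pass only the storage set is scanned, never the database.

```python
from itertools import combinations


def apriori_gen(large_prev):
    """Join large (k-1)-itemsets (sorted tuples), then prune by subsets."""
    large_prev = set(large_prev)
    joined = {
        p + (q[-1],)
        for p in large_prev
        for q in large_prev
        if p[:-1] == q[:-1] and p[-1] < q[-1]
    }
    return {
        c for c in joined
        if all(s in large_prev for s in combinations(c, len(c) - 1))
    }


def apriori_tid(database, minsup_count):
    """database: {TID: iterable of items}. Returns {itemset tuple: count}."""
    # The storage set is initialized with the database:
    # each item is replaced by an itemset of size 1.
    storage = {tid: {(item,) for item in items} for tid, items in database.items()}

    # Count item occurrences.
    counts = {}
    for itemsets in storage.values():
        for s in itemsets:
            counts[s] = counts.get(s, 0) + 1
    large = {s: c for s, c in counts.items() if c >= minsup_count}
    result = dict(large)

    while large:
        candidates = apriori_gen(large)  # new k-itemset candidates
        counts = {c: 0 for c in candidates}
        new_storage = {}
        for tid, itemsets in storage.items():
            # A candidate c is in the transaction iff both of its generators,
            # c minus its last item and c minus its second-to-last item,
            # are in this entry of the previous storage set.
            present = {
                c for c in candidates
                if c[:-1] in itemsets and c[:-2] + c[-1:] in itemsets
            }
            for c in present:
                counts[c] += 1
            if present:  # empty entries are removed
                new_storage[tid] = present
        storage = new_storage
        large = {s: c for s, c in counts.items() if c >= minsup_count}
        result.update(large)
    return result


# Hypothetical usage on a four-transaction database.
database = {100: [1, 3, 4], 200: [2, 3, 5], 300: [1, 2, 3, 5], 400: [2, 5]}
large_itemsets = apriori_tid(database, minsup_count=2)
```

Entries shrink or disappear from `storage` as k grows, which is the advantage described earlier; for small k an entry can hold more itemsets than the transaction has items.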

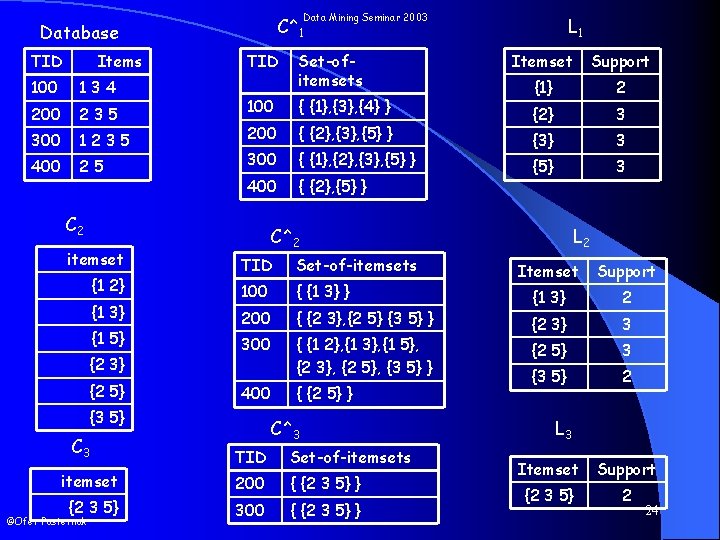

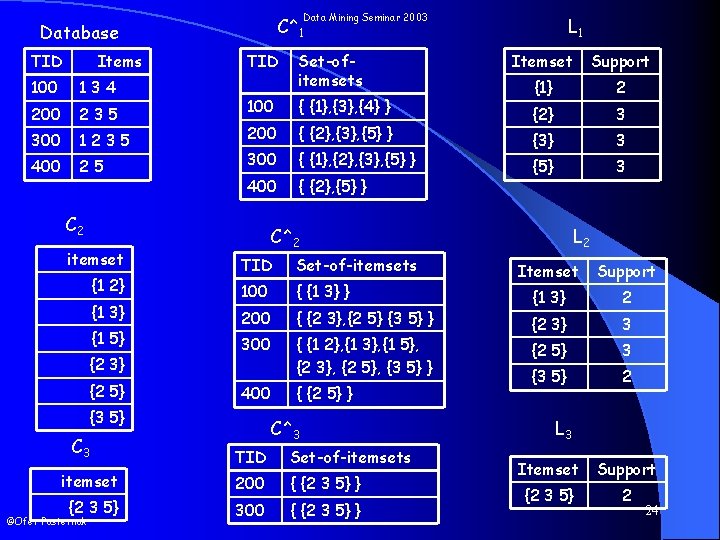

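With a minimum support of 2 transactions:

Database

| TID | Items |
|---|---|
| 100 | 1 3 4 |
| 200 | 2 3 5 |
| 300 | 1 2 3 5 |
| 400 | 2 5 |

C^1

| TID | Set-of-itemsets |
|---|---|
| 100 | { {1}, {3}, {4} } |
| 200 | { {2}, {3}, {5} } |
| 300 | { {1}, {2}, {3}, {5} } |
| 400 | { {2}, {5} } |

L_1

| Itemset | Support |
|---|---|
| {1} | 2 |
| {2} | 3 |
| {3} | 3 |
| {5} | 3 |

C_2

| Itemset |
|---|
| {1 2} |
| {1 3} |
| {1 5} |
| {2 3} |
| {2 5} |
| {3 5} |

C^2

| TID | Set-of-itemsets |
|---|---|
| 100 | { {1 3} } |
| 200 | { {2 3}, {2 5}, {3 5} } |
| 300 | { {1 2}, {1 3}, {1 5}, {2 3}, {2 5}, {3 5} } |
| 400 | { {2 5} } |

L_2

| Itemset | Support |
|---|---|
| {1 3} | 2 |
| {2 3} | 2 |
| {2 5} | 3 |
| {3 5} | 2 |

C_3

| Itemset |
|---|
| {2 3 5} |

C^3

| TID | Set-of-itemsets |
|---|---|
| 200 | { {2 3 5} } |
| 300 | { {2 3 5} } |

L_3

| Itemset | Support |
|---|---|
| {2 3 5} | 2 |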

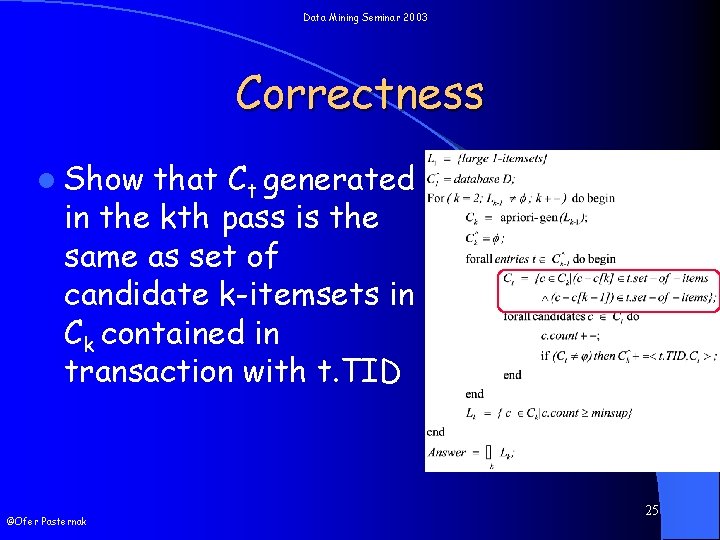

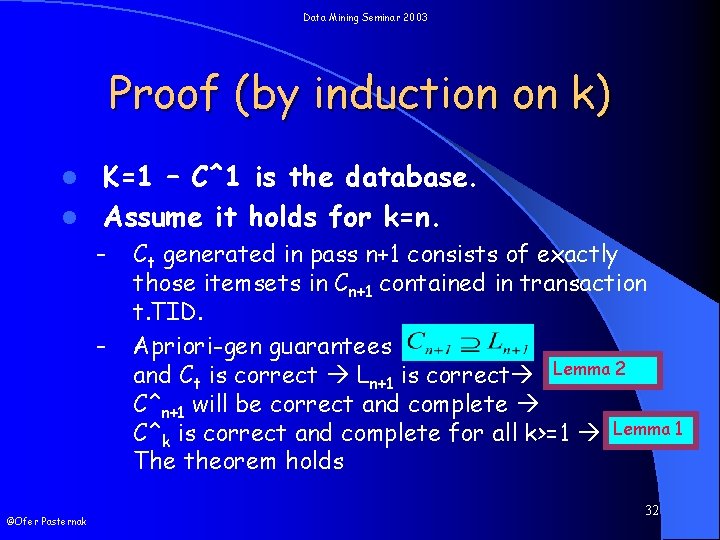

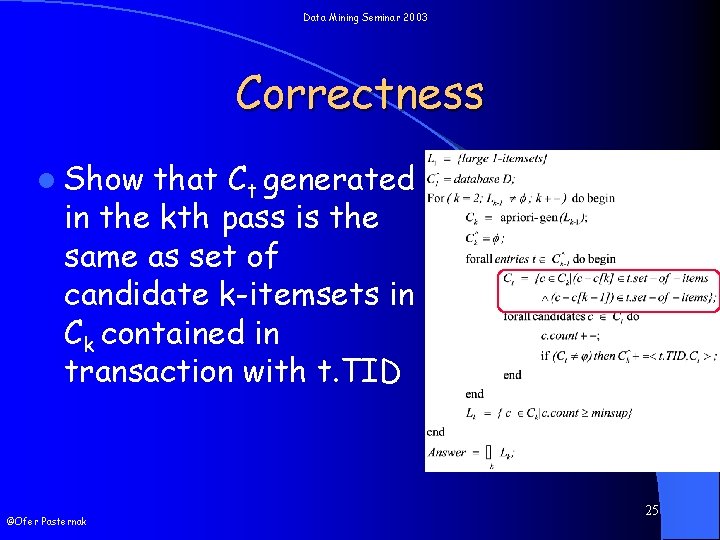

Correctness
- Show that C_t generated in the kth pass is the same as the set of candidate k-itemsets in C_k contained in the transaction with identifier t.TID.

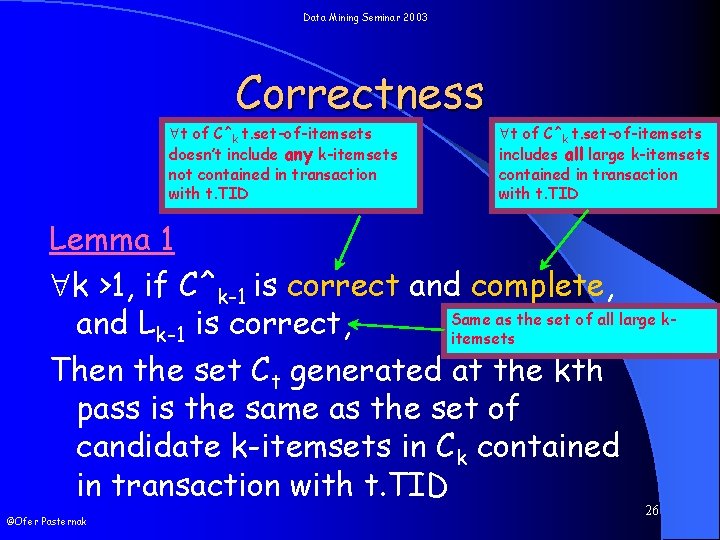

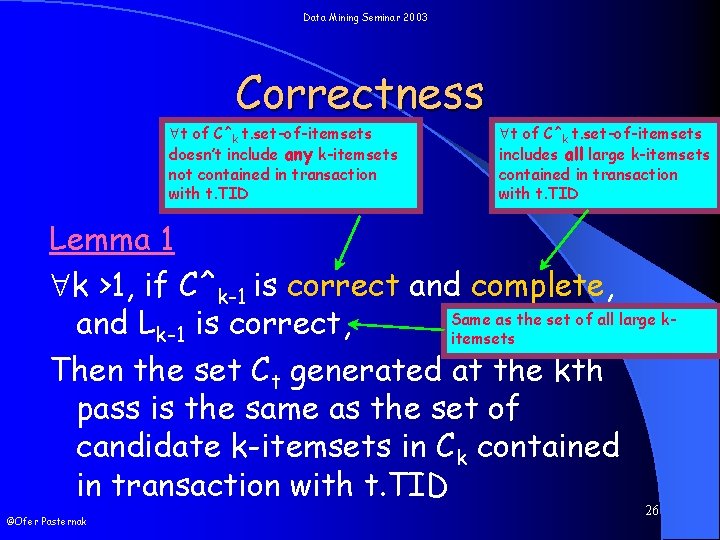

Correctness
- C^k is correct if, for every entry t of C^k, t.set-of-itemsets doesn't include any k-itemset not contained in the transaction with identifier t.TID.
- C^k is complete if, for every entry t of C^k, t.set-of-itemsets includes all large k-itemsets contained in the transaction with identifier t.TID.
- L_k is correct if it is the same as the set of all large k-itemsets.

Lemma 1: For all k > 1, if C^{k-1} is correct and complete, and L_{k-1} is correct, then the set C_t generated at the kth pass is the same as the set of candidate k-itemsets in C_k contained in the transaction with identifier t.TID.

![Data Mining Seminar 2003 Proof Suppose a candidate itemset c c1c2ck is in Data Mining Seminar 2003 Proof Suppose a candidate itemset c = c[1]c[2]…c[k] is in](https://slidetodoc.com/presentation_image_h/8c1d53cd710a4cfffe08095d2c4562a2/image-27.jpg)

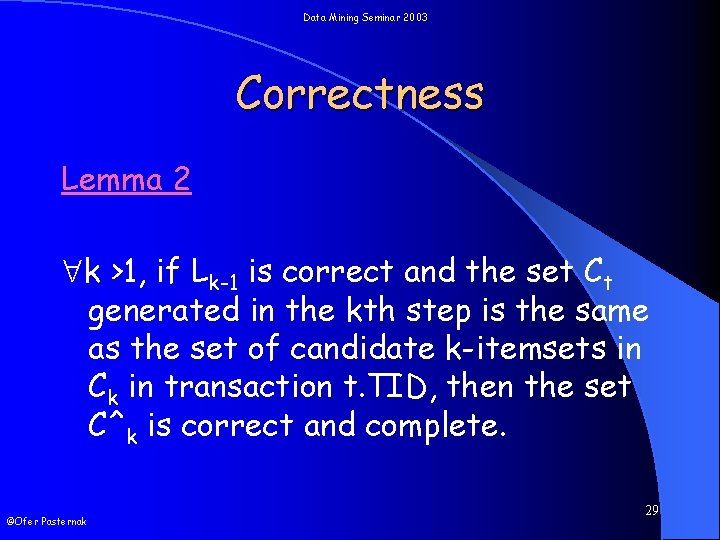

Proof
- Suppose a candidate itemset c = c[1]c[2]…c[k] is in transaction t.TID.
- ⇒ c1 = (c - c[k]) and c2 = (c - c[k-1]) were in transaction t.TID.
- C_k was built using apriori-gen(L_{k-1}) ⇒ all subsets of c ∈ C_k must be large ⇒ c1 and c2 must be large.
- C^{k-1} is complete ⇒ c1 and c2 were members of t.set-of-itemsets.
- ⇒ c will be a member of C_t.

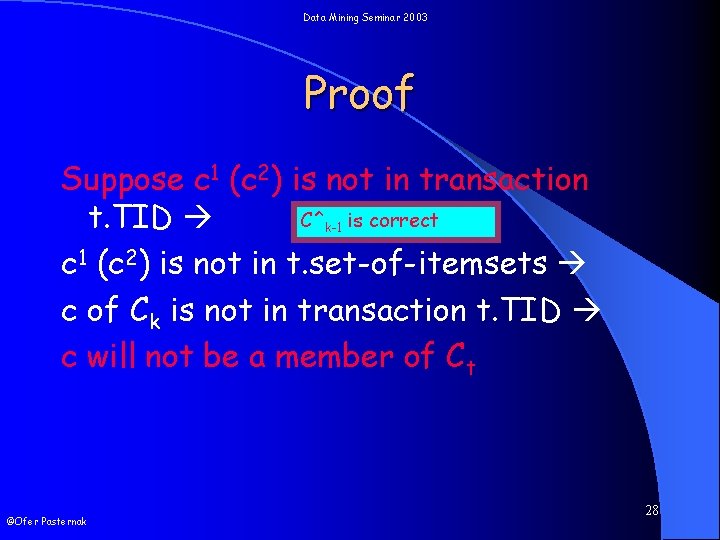

Proof
- Suppose c1 (or c2) is not in transaction t.TID. Then c ∈ C_k is not in transaction t.TID.
- C^{k-1} is correct ⇒ c1 (or c2) is not in t.set-of-itemsets.
- ⇒ c will not be a member of C_t.

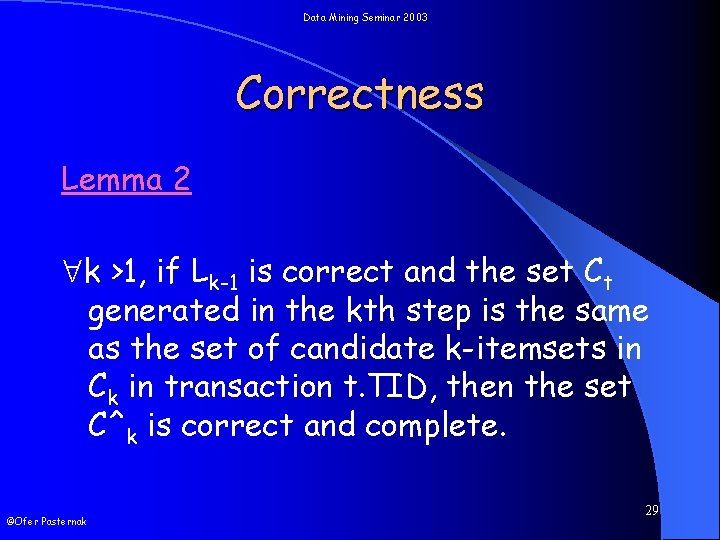

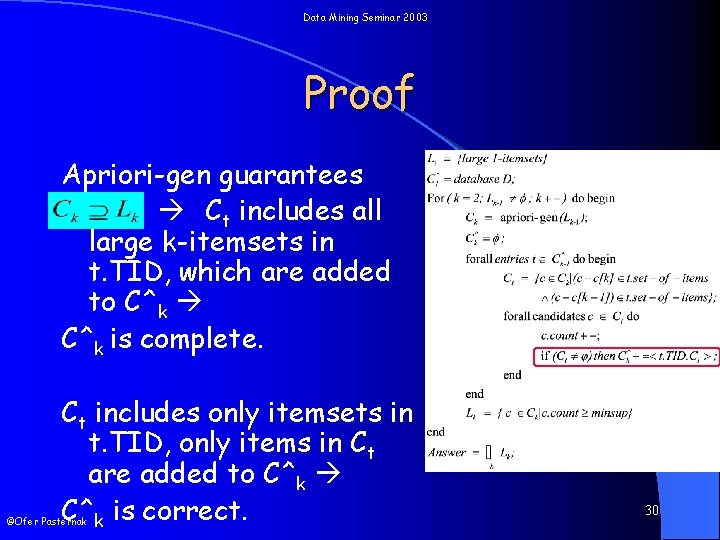

Correctness
Lemma 2: For all k > 1, if L_{k-1} is correct and the set C_t generated in the kth step is the same as the set of candidate k-itemsets in C_k contained in transaction t.TID, then the set C^k is correct and complete.

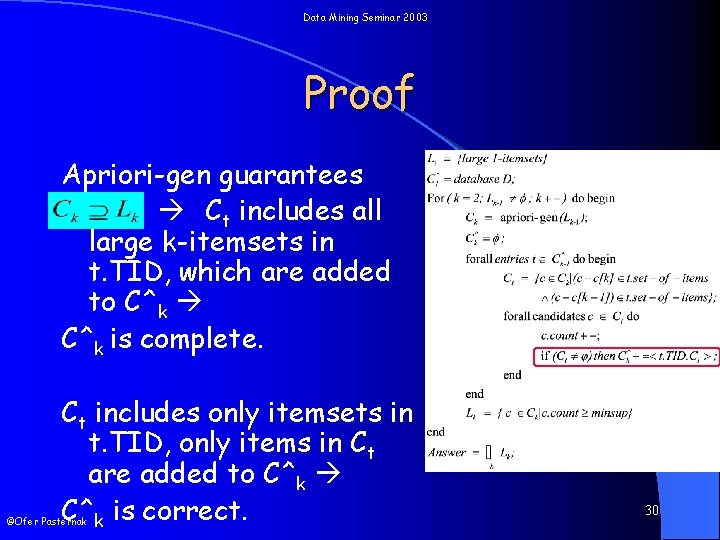

Proof
- Apriori-gen guarantees that C_t includes all large k-itemsets in t.TID, and these are added to C^k ⇒ C^k is complete.
- C_t includes only itemsets in t.TID, and only itemsets in C_t are added to C^k ⇒ C^k is correct.

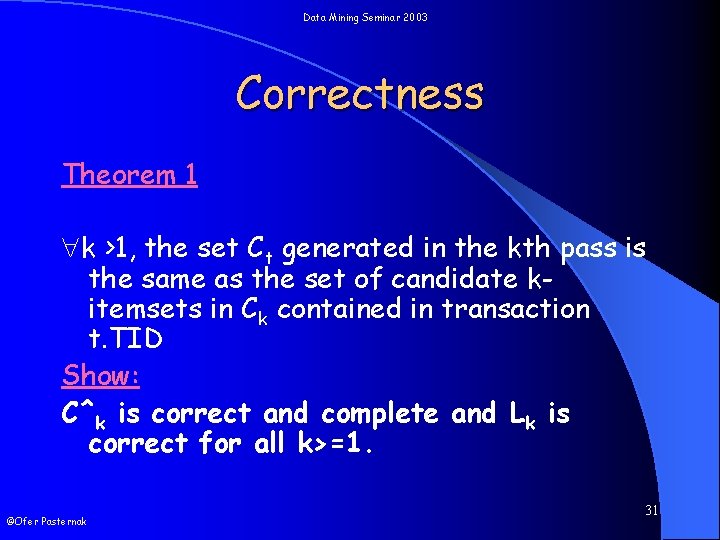

Correctness
Theorem 1: For all k > 1, the set C_t generated in the kth pass is the same as the set of candidate k-itemsets in C_k contained in transaction t.TID.

Show: C^k is correct and complete, and L_k is correct, for all k >= 1.

Proof (by induction on k)
- k = 1: C^1 is the database.
- Assume it holds for k = n.
  - By Lemma 1, C_t generated in pass n+1 consists of exactly those itemsets in C_{n+1} contained in transaction t.TID.
  - Apriori-gen guarantees C_{n+1} ⊇ L_{n+1}, and C_t is correct ⇒ L_{n+1} is correct.
  - By Lemma 2, C^{n+1} will be correct and complete.
- ⇒ C^k is correct and complete for all k >= 1.
- By Lemma 1, the theorem holds.

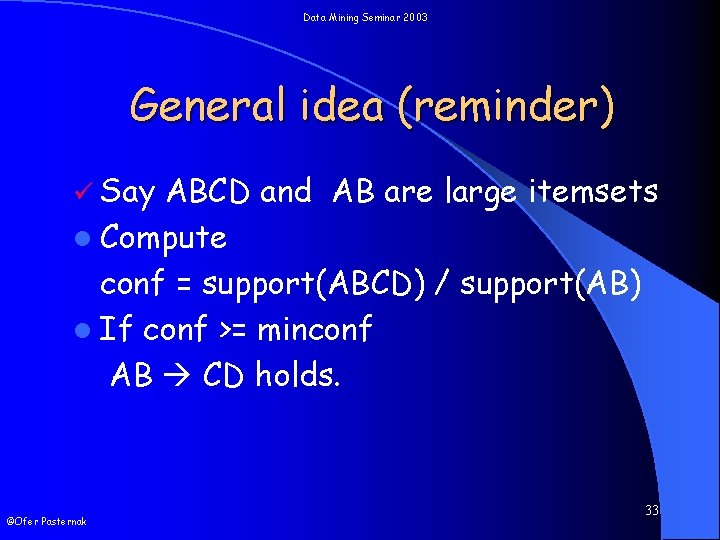

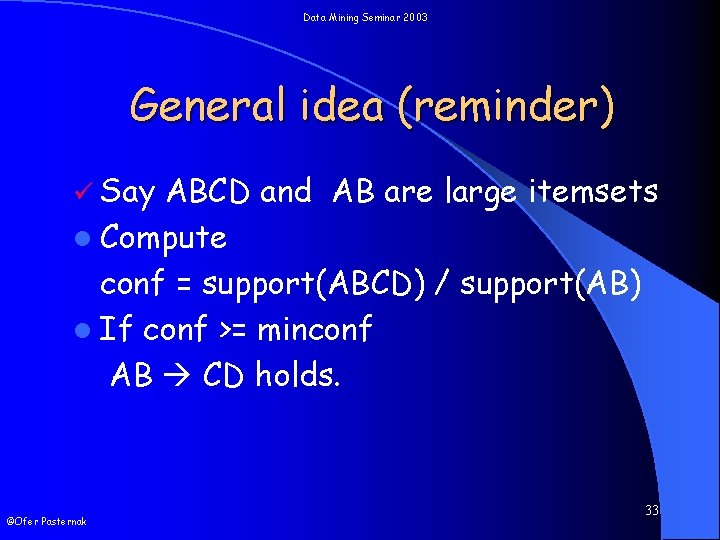

General idea (reminder)
- Say ABCD and AB are large itemsets
- Compute conf = support(ABCD) / support(AB)
- If conf >= minconf, the rule AB ⇒ CD holds.

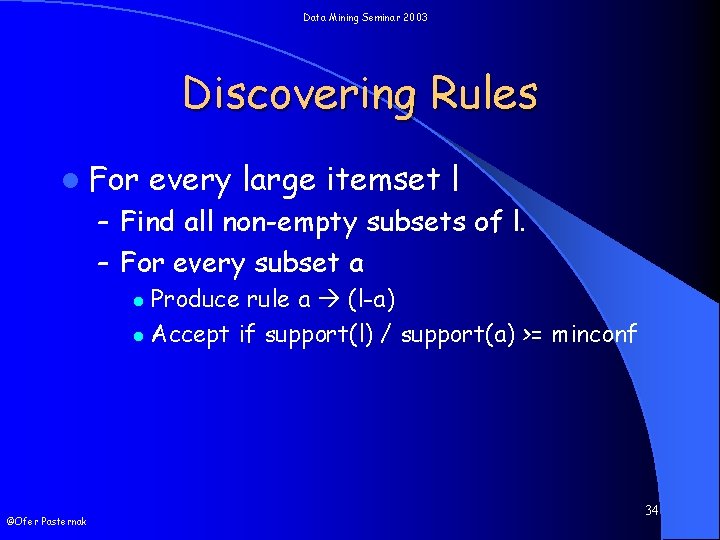

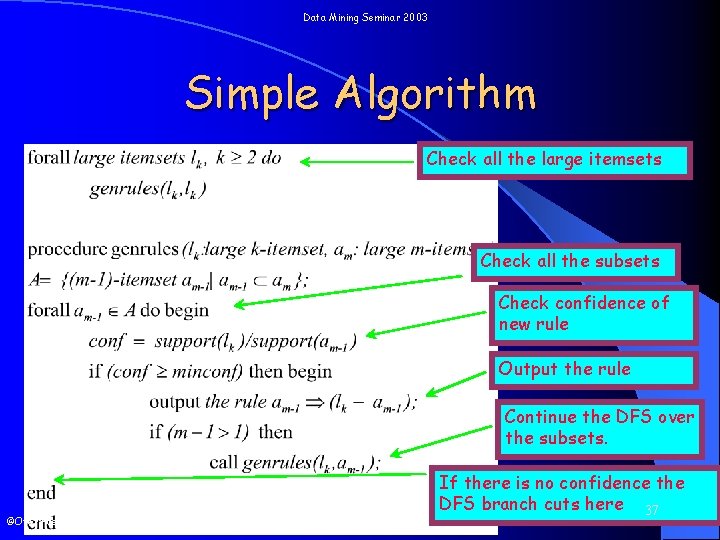

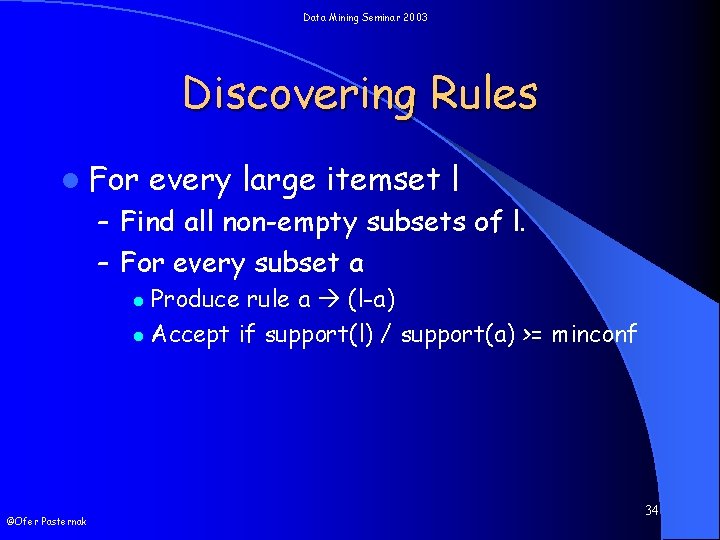

Discovering Rules
- For every large itemset l:
  - Find all non-empty subsets of l.
  - For every subset a:
    - Produce the rule a ⇒ (l - a)
    - Accept it if support(l) / support(a) >= minconf

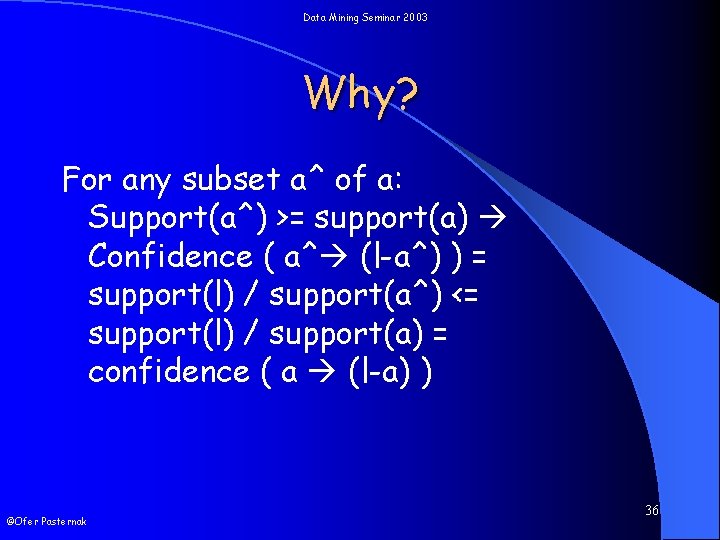

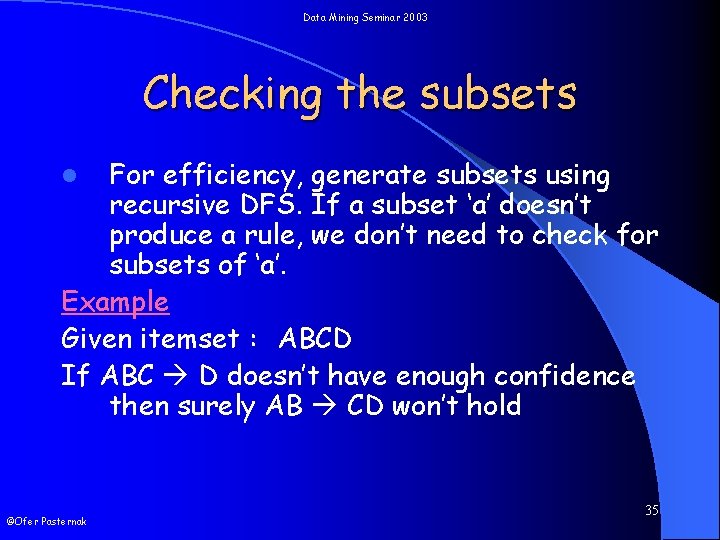

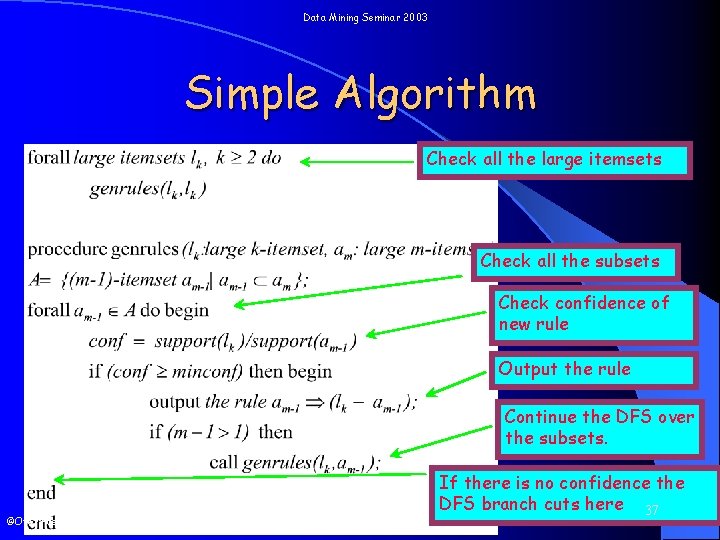

Checking the subsets
- For efficiency, generate the subsets using a recursive DFS. If a subset a doesn't produce a rule, we don't need to check the subsets of a.
- Example: given the itemset ABCD, if ABC ⇒ D doesn't have enough confidence, then surely AB ⇒ CD won't hold.

Why?
- For any subset a^ of a: support(a^) >= support(a)
- confidence(a^ ⇒ (l - a^)) = support(l) / support(a^) <= support(l) / support(a) = confidence(a ⇒ (l - a))

Simple Algorithm
- Check all the large itemsets
- Check all the subsets
- Check the confidence of the new rule
- Output the rule
- Continue the DFS over the subsets.
- If the rule does not reach minimum confidence, the DFS branch is cut here.
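The recursive search annotated above might look like the following in Python. This is a sketch under assumptions: `support` is a dictionary from each large itemset (a frozenset) to its support count, as a large-itemset pass would produce, and the names `genrules` and `simple_rules` are chosen for this illustration.

```python
from itertools import combinations


def genrules(support, l, a, minconf, rules):
    """Try every (m-1)-subset of antecedent `a` as a new antecedent for `l`."""
    for sub in combinations(sorted(a), len(a) - 1):
        sub = frozenset(sub)
        conf = support[l] / support[sub]  # check confidence of the new rule
        if conf >= minconf:
            rules[(sub, l - sub)] = conf  # output the rule sub => (l - sub)
            if len(sub) > 1:
                # Continue the DFS over the subsets of this antecedent.
                genrules(support, l, sub, minconf, rules)
        # Otherwise the branch is cut: no subset of `sub` can do better.


def simple_rules(support, minconf):
    """Return {(antecedent, consequent): confidence} over all large itemsets."""
    rules = {}
    for l in support:  # check all the large itemsets
        if len(l) >= 2:
            genrules(support, l, l, minconf, rules)
    return rules


# Hypothetical support counts for the large itemsets of a small database.
support = {
    frozenset({1}): 2, frozenset({2}): 3, frozenset({3}): 3, frozenset({5}): 3,
    frozenset({1, 3}): 2, frozenset({2, 3}): 2,
    frozenset({2, 5}): 3, frozenset({3, 5}): 2,
    frozenset({2, 3, 5}): 2,
}
rules = simple_rules(support, minconf=1.0)
```

The same antecedent can be reached along several DFS paths, so the rules are stored in a dictionary to avoid reporting duplicates.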

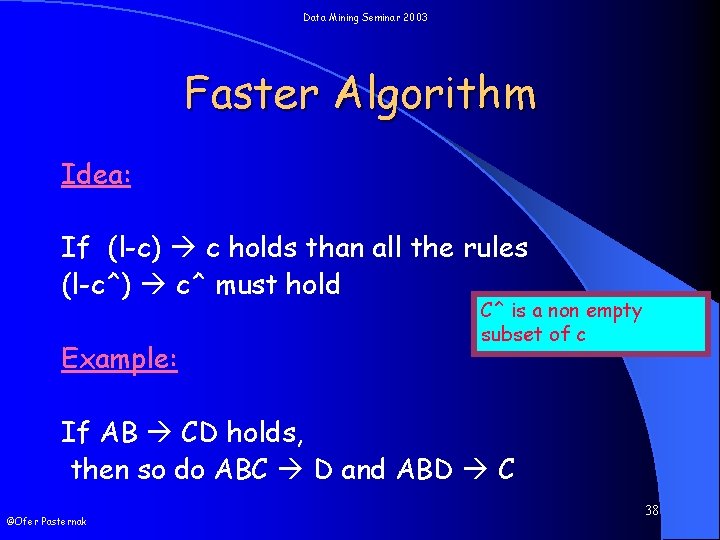

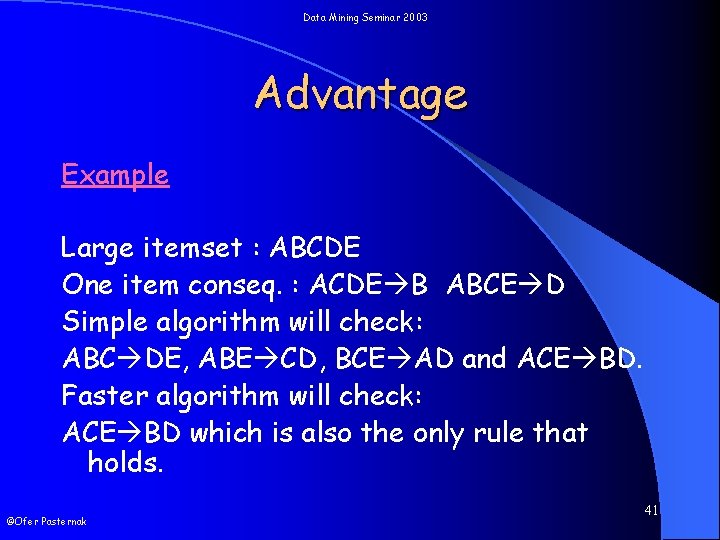

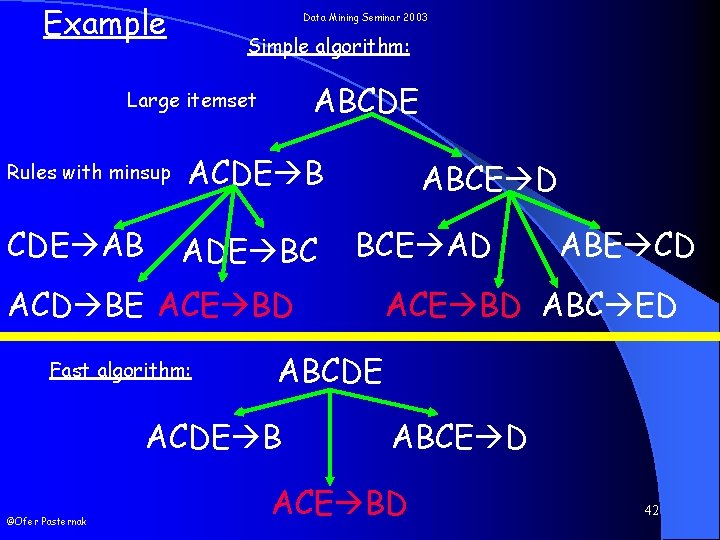

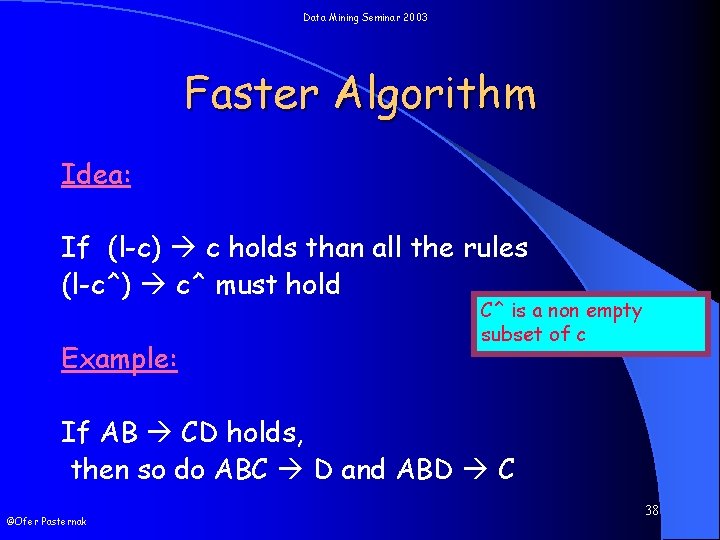

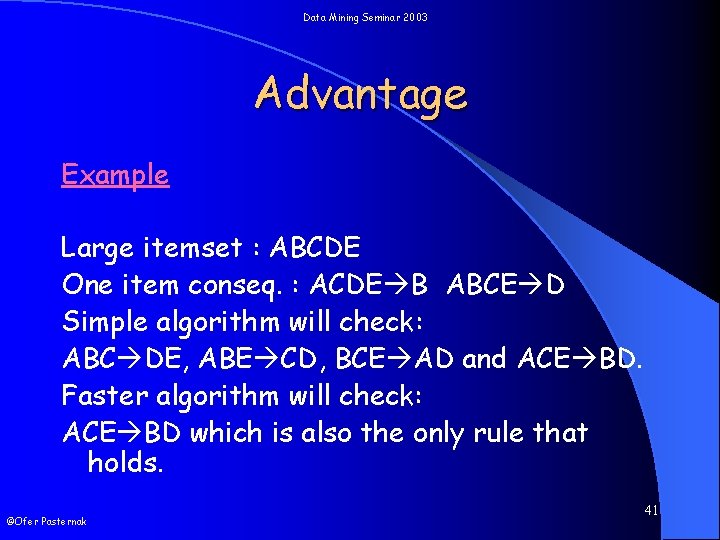

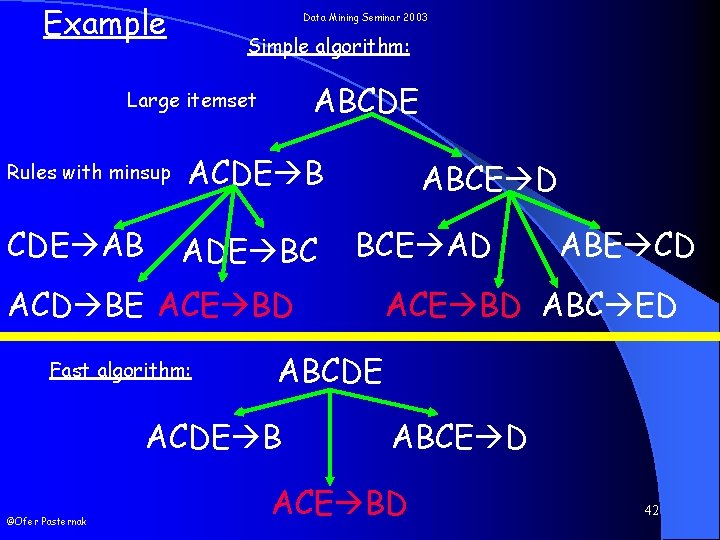

Faster Algorithm
- Idea: if (l - c) ⇒ c holds, then all the rules (l - c^) ⇒ c^ must hold, where c^ is a non-empty subset of c.
- Example: if AB ⇒ CD holds, then so do ABC ⇒ D and ABD ⇒ C.

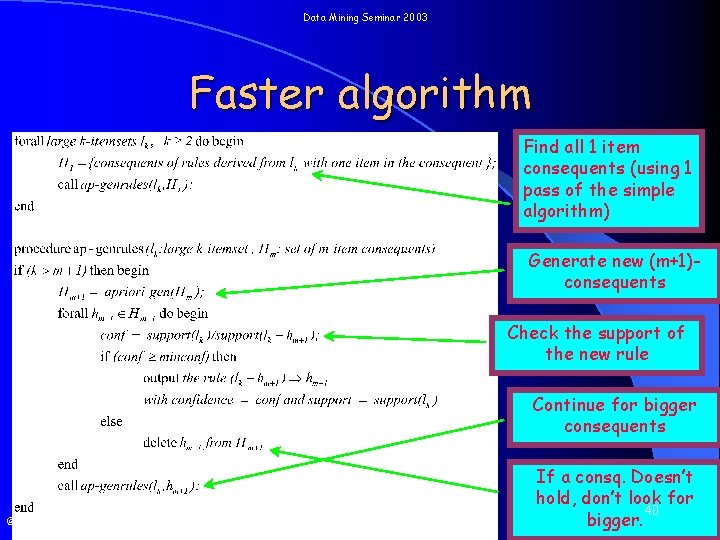

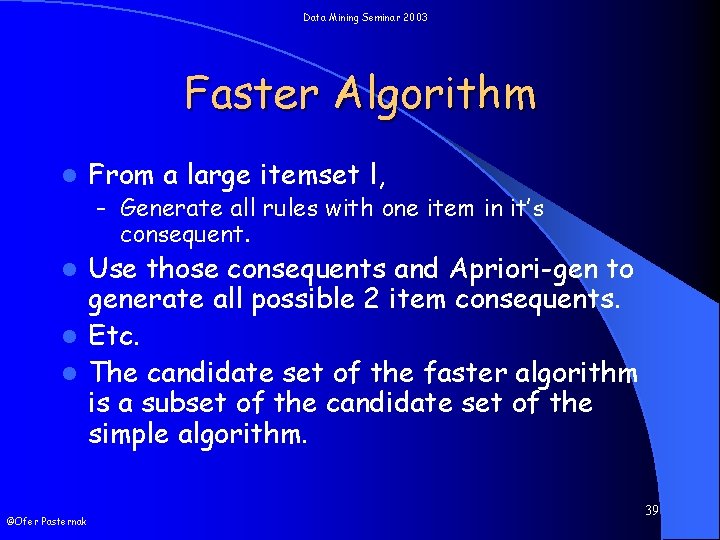

Faster Algorithm
- From a large itemset l:
  - Generate all rules with one item in their consequent.
  - Use those consequents and apriori-gen to generate all possible 2-item consequents.
  - Etc.
- The candidate set of the faster algorithm is a subset of the candidate set of the simple algorithm.

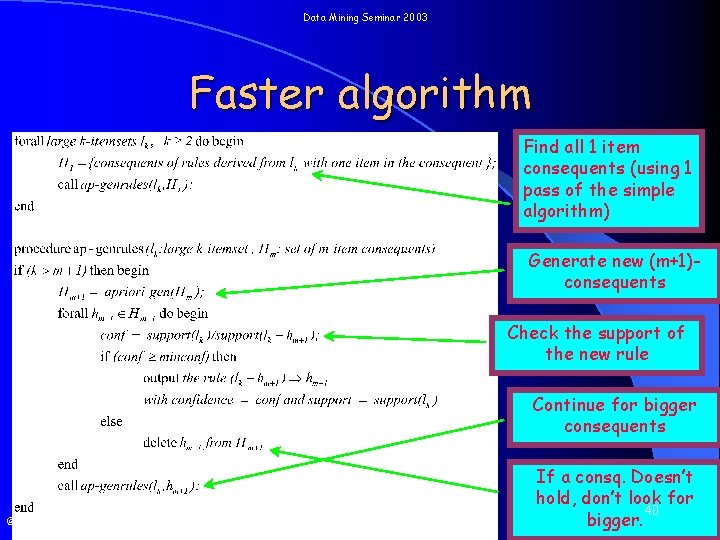

Faster algorithm
- Find all 1-item consequents (using one pass of the simple algorithm)
- Generate new (m+1)-item consequents
- Check the confidence of the new rule (computed from the supports)
- Continue for bigger consequents
- If a consequent doesn't hold, don't look for bigger ones.
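A possible Python rendering of these steps is shown below. It is a sketch, not the authors' code: itemsets are sorted tuples, `support` maps every large itemset to its support count, and the names `fast_rules` and `apriori_gen` are assumptions of this example. Consequents grow one item at a time, and only consequents whose rules held are combined further.

```python
from itertools import combinations


def apriori_gen(prev):
    """Join m-item consequents (sorted tuples), then prune by subsets."""
    prev = set(prev)
    joined = {
        p + (q[-1],)
        for p in prev
        for q in prev
        if p[:-1] == q[:-1] and p[-1] < q[-1]
    }
    return {
        c for c in joined
        if all(s in prev for s in combinations(c, len(c) - 1))
    }


def fast_rules(support, minconf):
    """Return {(antecedent, consequent): confidence}, keys as sorted tuples."""
    rules = {}
    for l in support:
        if len(l) < 2:
            continue
        items = set(l)
        consequents = {(i,) for i in l}  # start with 1-item consequents
        while consequents:
            holding = set()
            for h in consequents:
                antecedent = tuple(sorted(items - set(h)))
                conf = support[l] / support[antecedent]
                if conf >= minconf:
                    rules[(antecedent, h)] = conf
                    holding.add(h)
                # A consequent that fails is dropped and never extended.
            # Stop when nothing held or the antecedent would become empty.
            if not holding or len(next(iter(holding))) + 1 >= len(l):
                break
            consequents = apriori_gen(holding)  # (m+1)-item consequents
    return rules


# Hypothetical support counts for the large itemsets of a small database.
support = {
    (1,): 2, (2,): 3, (3,): 3, (5,): 3,
    (1, 3): 2, (2, 3): 2, (2, 5): 3, (3, 5): 2,
    (2, 3, 5): 2,
}
rules = fast_rules(support, minconf=1.0)
```

For the itemset (2, 3, 5) with `minconf=1.0`, only the consequents (2,) and (5,) hold, so the single 2-item consequent tested is (2, 5).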

Advantage
- Example large itemset: ABCDE
- One-item consequent rules: ACDE ⇒ B, ABCE ⇒ D
- The simple algorithm will check: ABC ⇒ DE, ABE ⇒ CD, BCE ⇒ AD and ACE ⇒ BD.
- The faster algorithm will check only ACE ⇒ BD, which is also the only rule that holds.

Example
- Simple algorithm, large itemset ABCDE:
  - From ACDE ⇒ B: CDE ⇒ AB, ADE ⇒ BC, ACD ⇒ BE, ACE ⇒ BD
  - From ABCE ⇒ D: BCE ⇒ AD, ACE ⇒ BD, ABC ⇒ ED, ABE ⇒ CD
- Fast algorithm, large itemset ABCDE:
  - From ACDE ⇒ B and ABCE ⇒ D: ACE ⇒ BD

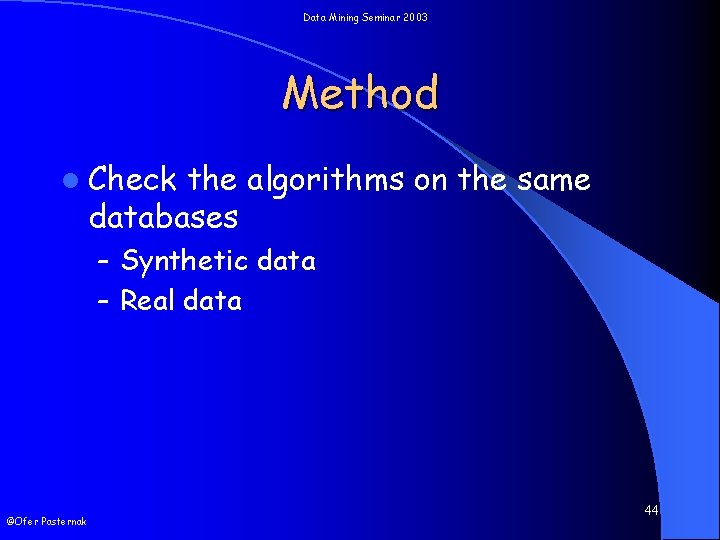

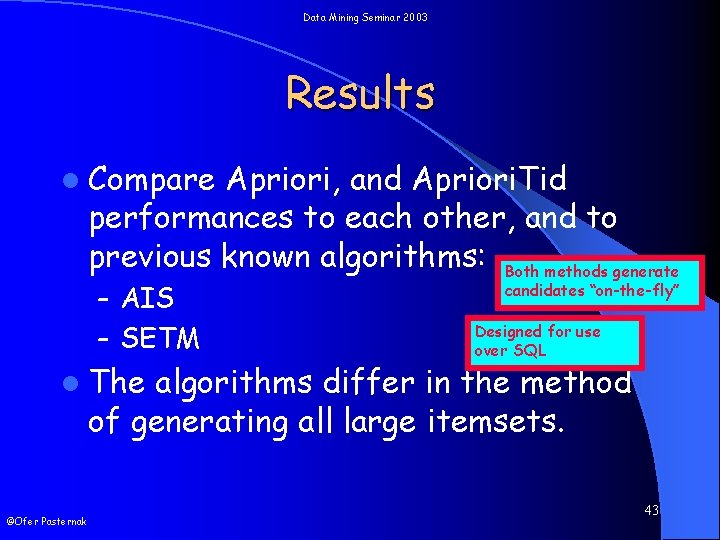

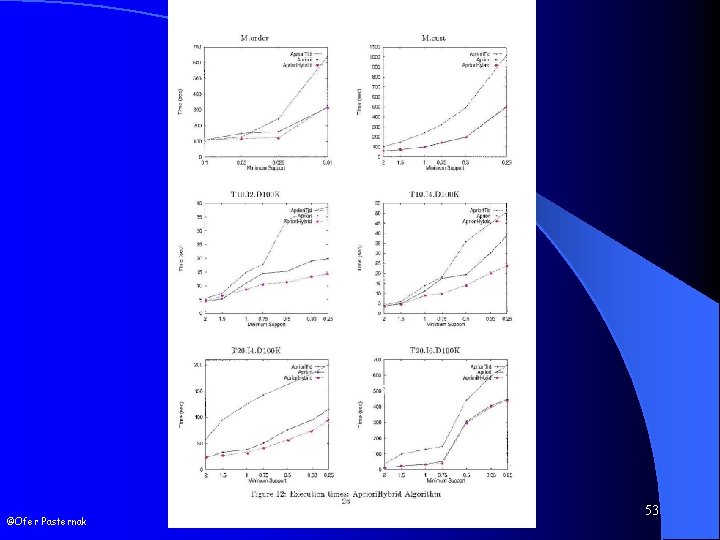

Results
- Compare the performance of Apriori and AprioriTid to each other and to the previously known algorithms:
  - AIS
  - SETM (designed for use over SQL)
  - Both methods generate the candidates "on-the-fly".
- The algorithms differ in the method of generating all large itemsets.

Method
- Check the algorithms on the same databases
  - Synthetic data
  - Real data

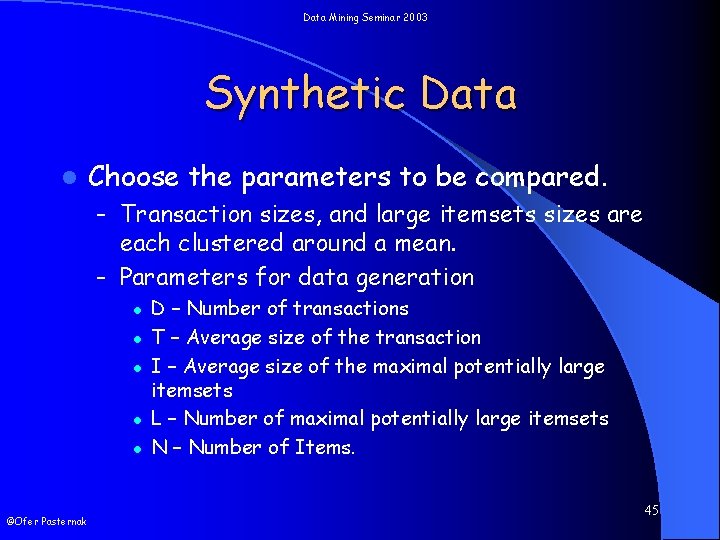

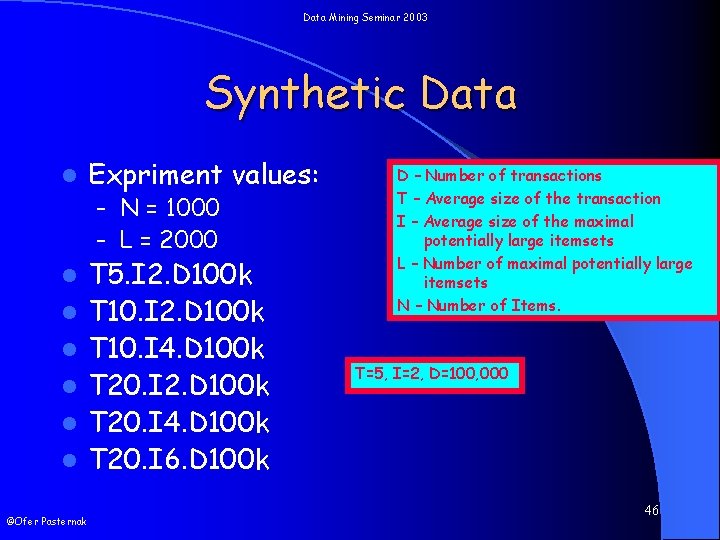

Synthetic Data
- Choose the parameters to be compared.
  - Transaction sizes and large itemset sizes are each clustered around a mean.
- Parameters for data generation:
  - D: number of transactions
  - T: average size of the transactions
  - I: average size of the maximal potentially large itemsets
  - L: number of maximal potentially large itemsets
  - N: number of items

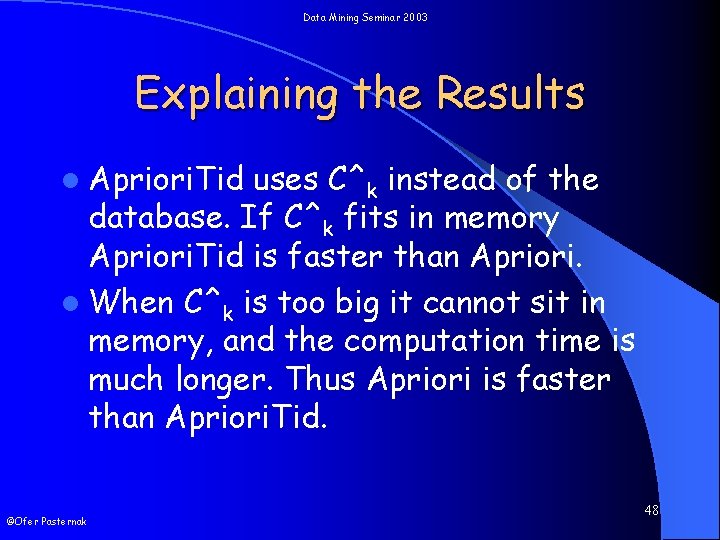

Synthetic Data
- Experiment values:
  - N = 1000
  - L = 2000
- Data sets:
  - T5.I2.D100K
  - T10.I4.D100K
  - T20.I2.D100K
  - T20.I4.D100K
  - T20.I6.D100K
- Naming: T5.I2.D100K means T=5, I=2, D=100,000.

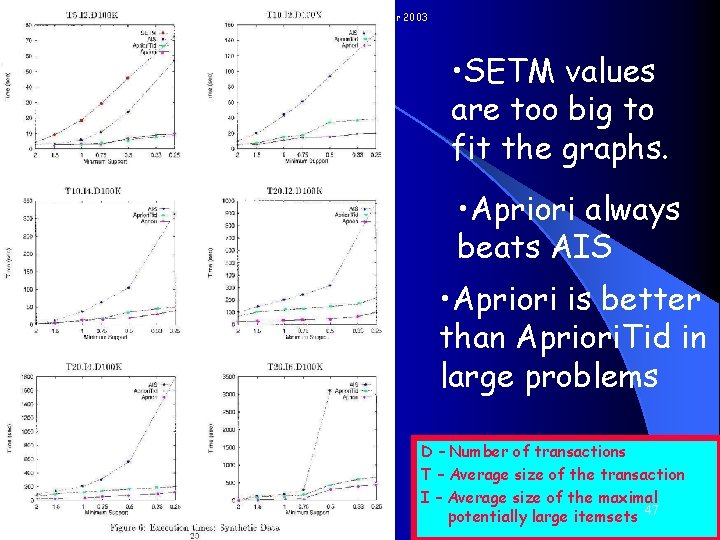

- SETM values are too big to fit the graphs.
- Apriori always beats AIS.
- Apriori is better than AprioriTid in large problems.

Explaining the Results
- AprioriTid uses C^k instead of the database. If C^k fits in memory, AprioriTid is faster than Apriori.
- When C^k is too big to fit in memory, the computation time is much longer. In that case Apriori is faster than AprioriTid.

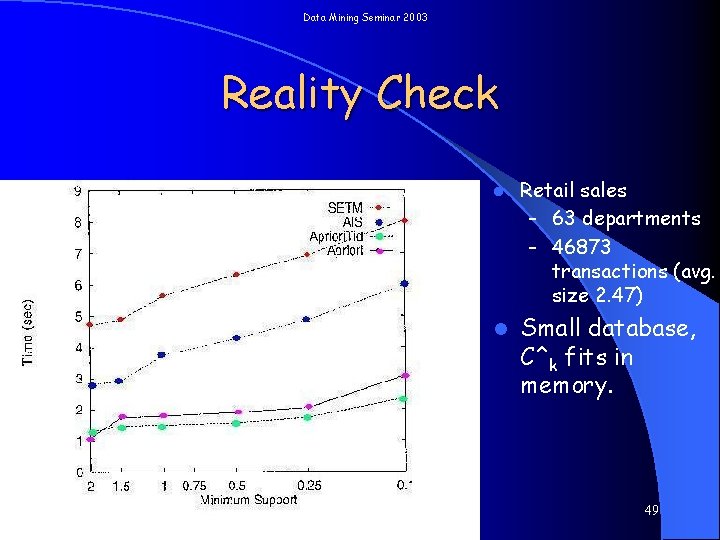

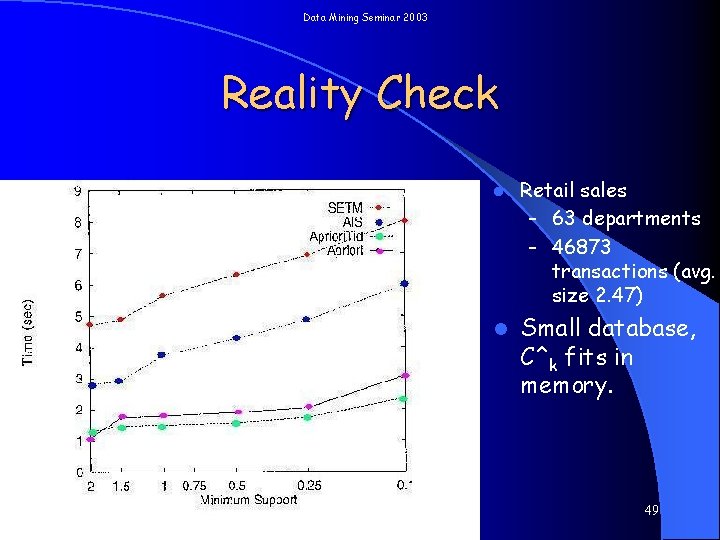

Reality Check
- Retail sales
  - 63 departments
  - 46,873 transactions (avg. size 2.47)
- Small database, C^k fits in memory.

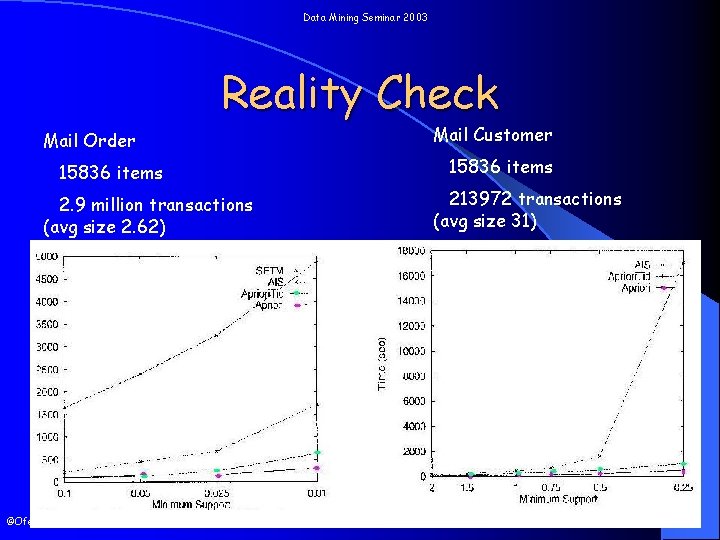

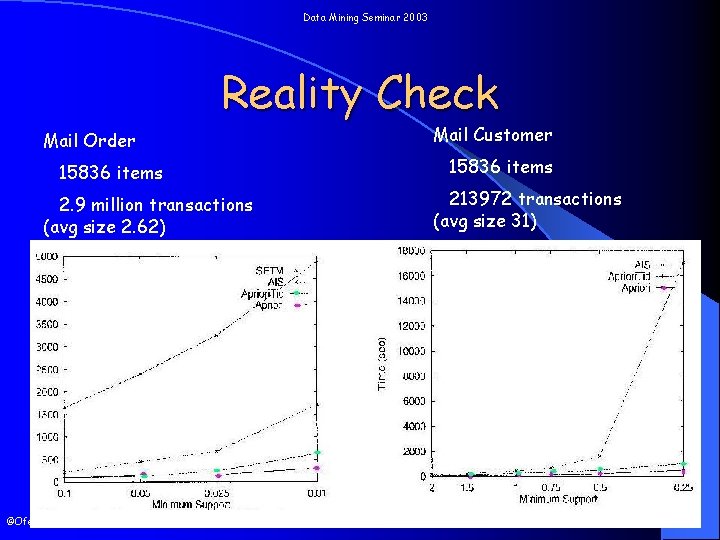

Reality Check
- Mail Order
  - 15,836 items
  - 2.9 million transactions (avg. size 2.62)
- Mail Customer
  - 15,836 items
  - 213,972 transactions (avg. size 31)

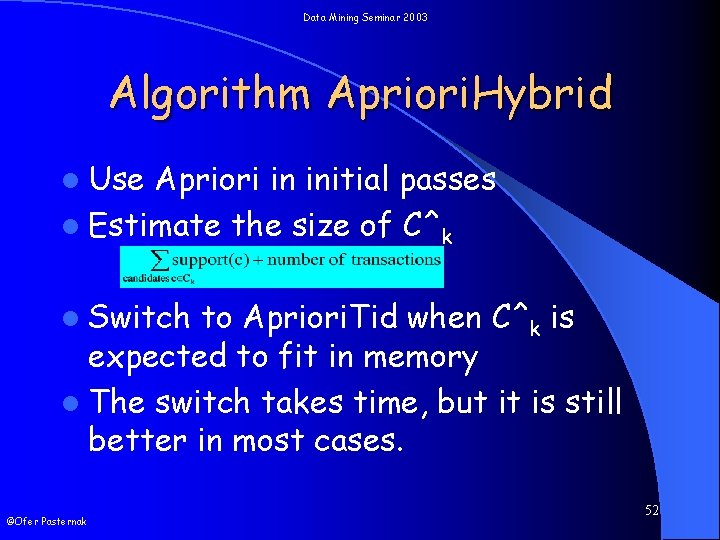

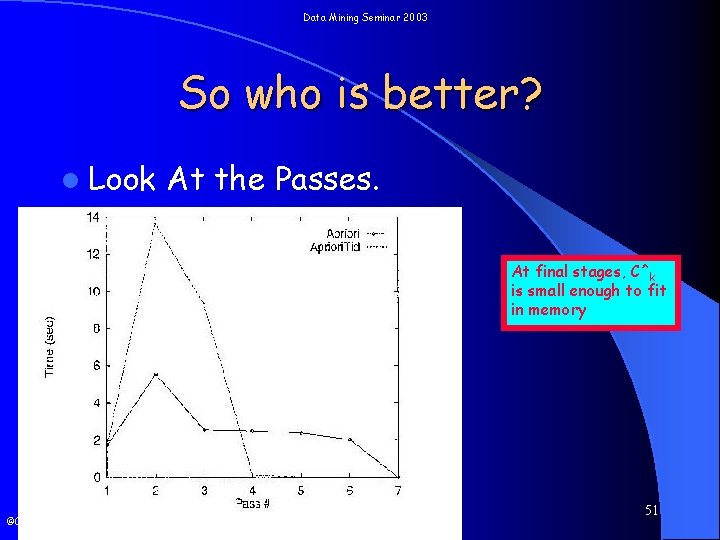

So who is better?
- Look at the passes.
- At the final stages, C^k is small enough to fit in memory.

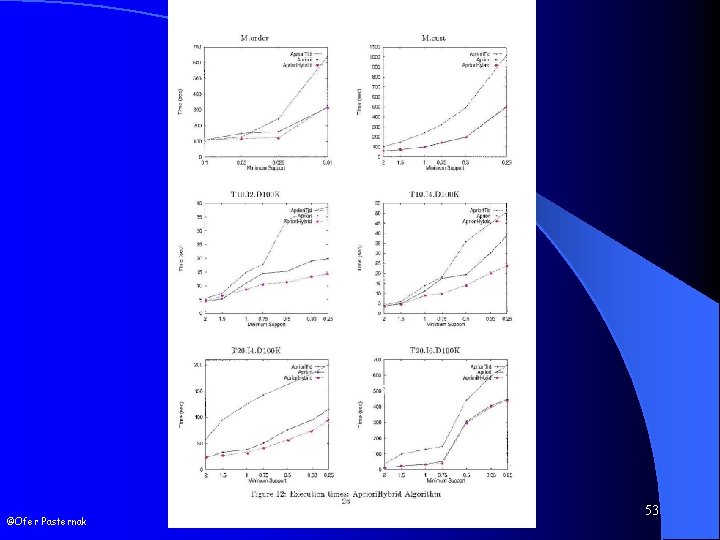

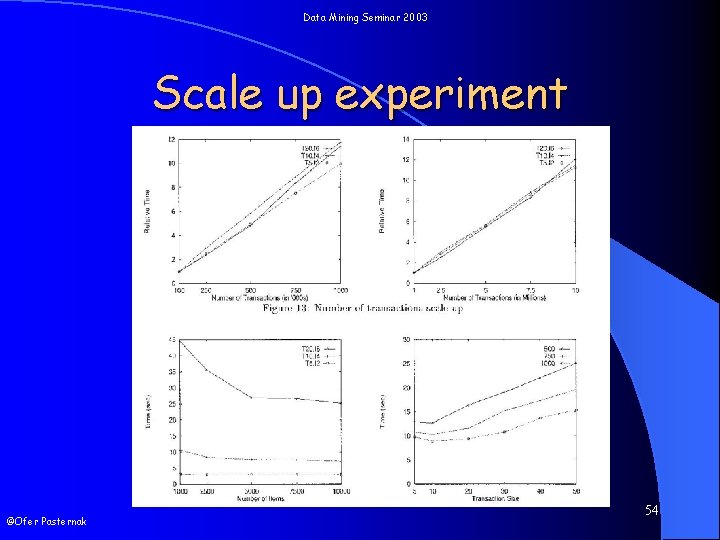

Algorithm AprioriHybrid
- Use Apriori in the initial passes
- Estimate the size of C^k
- Switch to AprioriTid when C^k is expected to fit in memory
- The switch takes time, but it is still better in most cases.
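The switching decision can be sketched as a small predicate. The slide does not say how the size of C^k is estimated, so everything specific here is an assumption of this sketch: the estimate (sum of the candidate support counts plus the number of transactions), the extra condition that the number of large candidates has started to fall, and the names `should_switch_to_tid` and `memory_budget`.

```python
def should_switch_to_tid(candidate_counts, num_transactions, memory_budget,
                         prev_num_large, minsup_count):
    """Decide, at the end of an Apriori pass, whether to continue with AprioriTid.

    candidate_counts: {candidate itemset: support count} from the current pass.
    memory_budget: available memory, in the same units as the estimate
                   (roughly one unit per stored itemset or TID; hypothetical).
    prev_num_large: number of large itemsets found in the previous pass.
    """
    # Assumed estimate of the size C^k would have had if it had been built:
    # one slot per (transaction, contained candidate) pair, plus one TID each.
    estimated_size = sum(candidate_counts.values()) + num_transactions

    # Assumed extra condition: the set of large itemsets is shrinking,
    # so later storage sets are unlikely to outgrow memory.
    num_large = sum(1 for c in candidate_counts.values() if c >= minsup_count)

    return estimated_size <= memory_budget and num_large < prev_num_large


# Hypothetical numbers: two candidates counted over four transactions.
switch = should_switch_to_tid(
    candidate_counts={("a", "b"): 3, ("a", "c"): 1},
    num_transactions=4,
    memory_budget=10,
    prev_num_large=3,
    minsup_count=2,
)
```

Because the estimate uses only counts that Apriori already has at the end of a pass, checking it adds no extra pass over the data.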


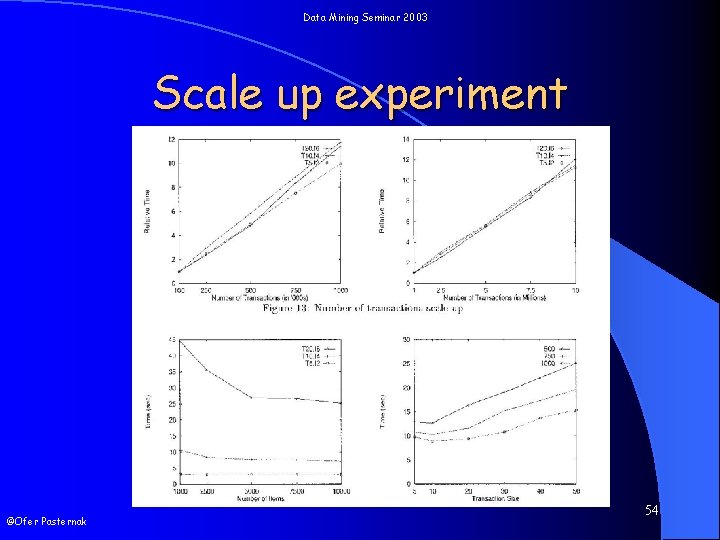

Scale-up experiment

Conclusions
- The Apriori algorithms are better than the previous algorithms.
  - For small problems, by factors
  - For large problems, by orders of magnitude
- The algorithms are best combined.
- The algorithm shows good results in scale-up experiments.

Summary
- Association rules are an important tool in analyzing databases.
- We've seen an algorithm which finds all association rules in a database.
- The algorithm has better running times than previous algorithms.
- The algorithm maintains its performance for large databases.

End