Fast Algorithms for Mining Association Rules Rakesh Agrawal

- Slides: 21

Fast Algorithms for Mining Association Rules • Rakesh Agrawal, Ramakrishnan Srikant • Modified from slides by: Dan Li • Presenter: Jimmy Jiang • Discussion Lead: Leo Li

Origin of Problem • Basket data: a new possibility for customized, data-driven strategies for retail companies • Simple example: beer and diapers • Famous case for market basket analysis • Customers who buy diapers tend to also buy beer

Usage of Data Mining (general) • Clustering, predictive modeling, dependency modeling, data summarization, change and deviation detection • Association rules belong to dependency modeling • Applications: market basket analysis, direct/interactive marketing, fraud detection, science, sports stats, etc. • Some sources from: http://redbook.cs.berkeley.edu/redbook3/lec29.html

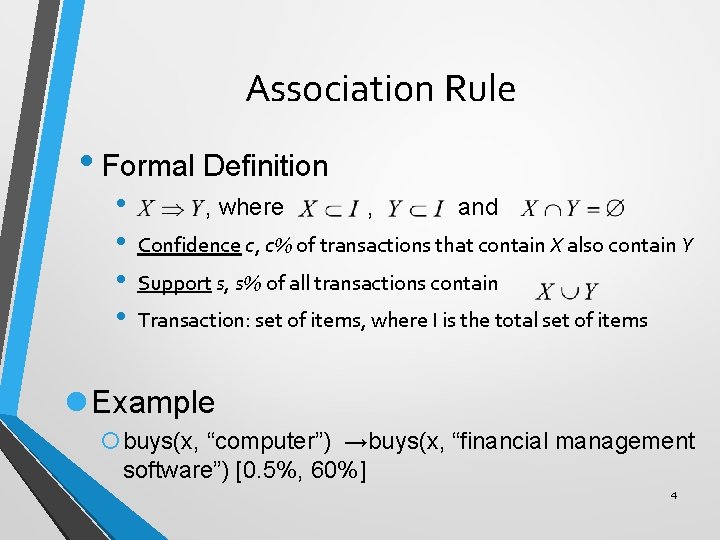

Association Rule • Formal Definition • $X \Rightarrow Y$, where $X \subset I$, $Y \subset I$, and $X \cap Y = \emptyset$ • Confidence c: c% of transactions that contain X also contain Y • Support s: s% of all transactions contain $X \cup Y$ • Transaction: a set of items $T \subseteq I$, where I is the total set of items • Example • buys(x, "computer") → buys(x, "financial management software") [support 0.5%, confidence 60%]

Mining Association Rules • The problem of finding association rules falls within the purview of database mining. • Eg. 1: Find the proportion (confidence) of transactions purchasing diapers that also purchase beer. • Eg. 2: Find the proportion (support) of all transactions that purchase both diapers and beer. • Finding association rules is valuable for • Cross-marketing • Catalog design • Add-on sales • Store layout, and so on
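The two example questions above can be answered by direct counting. The following is a minimal sketch on a made-up basket dataset; the `transactions` list and the helper names `support` and `confidence` are illustrative assumptions, not part of the original slides.

```python
# Hypothetical toy basket data (not from the paper or the slides).
transactions = [
    frozenset({"diapers", "beer", "milk"}),
    frozenset({"diapers", "beer"}),
    frozenset({"diapers", "bread"}),
    frozenset({"beer", "chips"}),
    frozenset({"milk", "bread"}),
]


def count_containing(itemset, transactions):
    """Number of transactions that contain every item in `itemset`."""
    itemset = frozenset(itemset)
    return sum(1 for t in transactions if itemset <= t)


def support(itemset, transactions):
    """Fraction of all transactions that contain `itemset`."""
    return count_containing(itemset, transactions) / len(transactions)


def confidence(x, y, transactions):
    """Fraction of transactions containing X that also contain Y."""
    x_count = count_containing(x, transactions)
    if x_count == 0:
        return 0.0  # rule is undefined when X never occurs; 0.0 is a convention here
    return count_containing(set(x) | set(y), transactions) / x_count


# Eg. 2: support of {diapers, beer} is 2 of 5 transactions.
print(support({"diapers", "beer"}, transactions))                 # 0.4
# Eg. 1: of the 3 transactions with diapers, 2 also contain beer.
print(round(confidence({"diapers"}, {"beer"}, transactions), 3))  # 0.667
```

Note that support is measured over transactions containing both X and Y, which is why the rule diapers → beer uses the itemset {diapers, beer} in the numerator of both measures.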

Problem Decomposition • Find all sets of items (itemsets) that have transaction support above the minimum support (the large itemsets): Apriori and AprioriTid • Use the large itemsets to generate the desired rules: not discussed

Discovering Large Itemsets • Intuition: any subset of a large itemset must be large. • Algorithms for discovering large itemsets make multiple passes over the data. • In the first pass, determine which individual items are large. • In each subsequent pass: • Large itemsets from the previous pass (seed sets) are used to generate candidate itemsets. • Count the actual support for the candidate itemsets. • Determine which candidates are actually large; these become the seed for the next pass. • This process continues until no new large itemsets are found.
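The multi-pass procedure described above can be sketched as follows. This is a simplified illustration, not the authors' implementation: candidates are formed by unioning pairs of large (k-1)-itemsets rather than by the ordered join of apriori-gen, support is an absolute count, and the toy database `db` is hypothetical.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: count} for every itemset whose count >= min_support."""
    transactions = [frozenset(t) for t in transactions]

    # Pass 1: count individual items and keep the large ones.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    large = {s: n for s, n in counts.items() if n >= min_support}
    result = dict(large)

    k = 2
    while large:
        prev = set(large)
        # Generate candidate k-itemsets from the previous large itemsets,
        # keeping only those whose (k-1)-subsets are all large.
        candidates = set()
        for a, b in combinations(prev, 2):
            union = a | b
            if len(union) == k and all(
                frozenset(sub) in prev for sub in combinations(union, k - 1)
            ):
                candidates.add(union)

        # Count actual support for the candidates with one pass over the data.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1

        # Keep the candidates that are actually large; they seed the next pass.
        large = {s: n for s, n in counts.items() if n >= min_support}
        result.update(large)
        k += 1
    return result


# Hypothetical toy database of four transactions, minimum support count 2.
db = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]
large_itemsets = apriori(db, min_support=2)
print(large_itemsets[frozenset({2, 3, 5})])  # 2
```

The loop stops as soon as a pass produces no large itemsets, which matches the termination condition stated on the slide.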

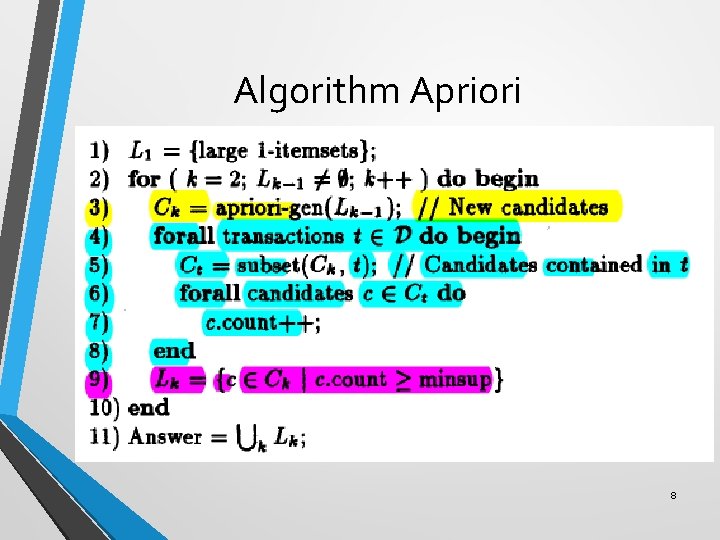

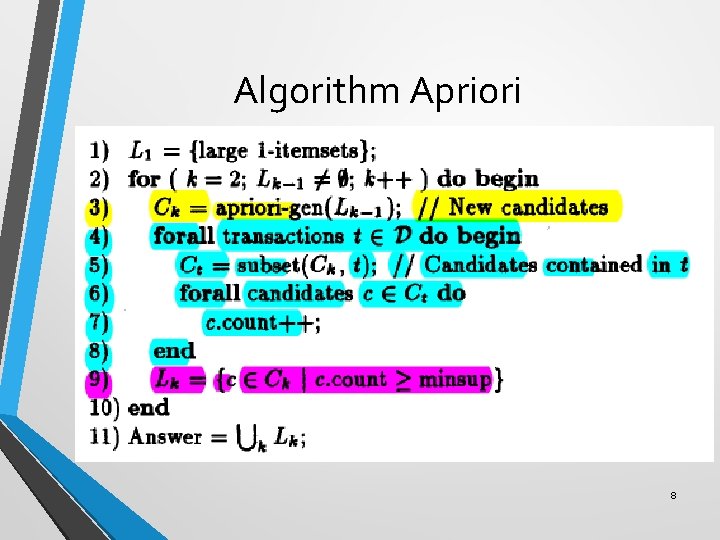

Algorithm Apriori

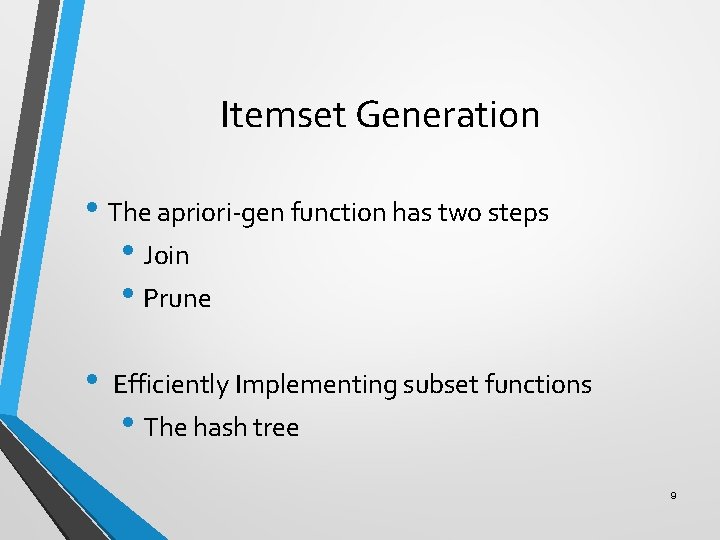

Itemset Generation • The apriori-gen function has two steps • Join • Prune • Efficiently implementing the subset function • The hash tree
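The join and prune steps can be sketched as below, assuming itemsets are stored as lexicographically sorted tuples. The function name `apriori_gen` mirrors the slide; the representation and the sample input are illustrative choices, and the hash tree used for fast subset lookup is not shown.

```python
from itertools import combinations


def apriori_gen(large_prev):
    """Generate candidate k-itemsets from the large (k-1)-itemsets.

    `large_prev` is a collection of sorted tuples, all of length k-1.
    """
    large_prev = set(large_prev)
    ordered = sorted(large_prev)

    # Join step: combine two itemsets that agree on their first k-2 items.
    # Because `ordered` is sorted, the last item of p is smaller than that of q.
    joined = set()
    for i, p in enumerate(ordered):
        for q in ordered[i + 1:]:
            if p[:-1] == q[:-1]:
                joined.add(p + (q[-1],))

    # Prune step: drop any candidate with a (k-1)-subset that is not large.
    return {
        c for c in joined
        if all(sub in large_prev for sub in combinations(c, len(c) - 1))
    }


# Sample large 3-itemsets (illustrative input).
l3 = {(1, 2, 3), (1, 2, 4), (1, 3, 4), (1, 3, 5), (2, 3, 4)}
print(apriori_gen(l3))  # {(1, 2, 3, 4)}
```

In this run the join produces (1, 2, 3, 4) and (1, 3, 4, 5); the prune step removes (1, 3, 4, 5) because its subset (1, 4, 5) is not among the large 3-itemsets.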

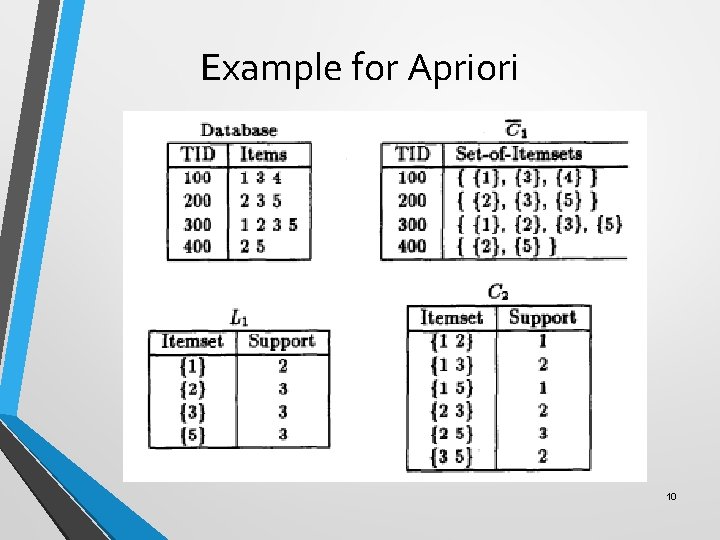

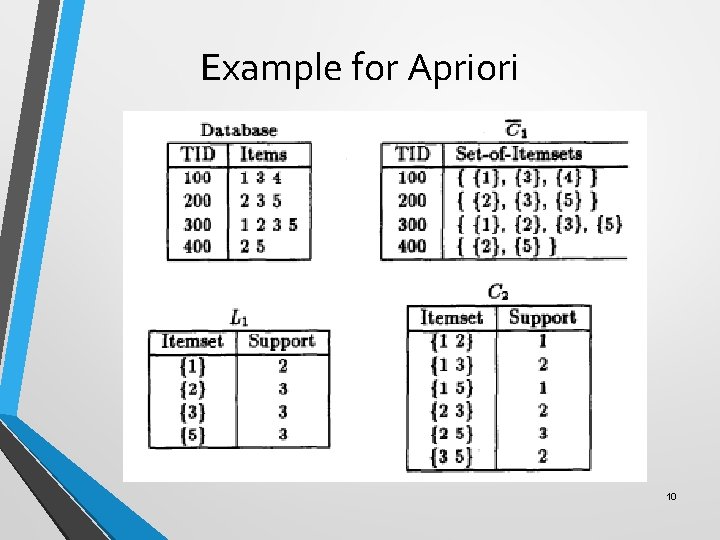

Example for Apriori

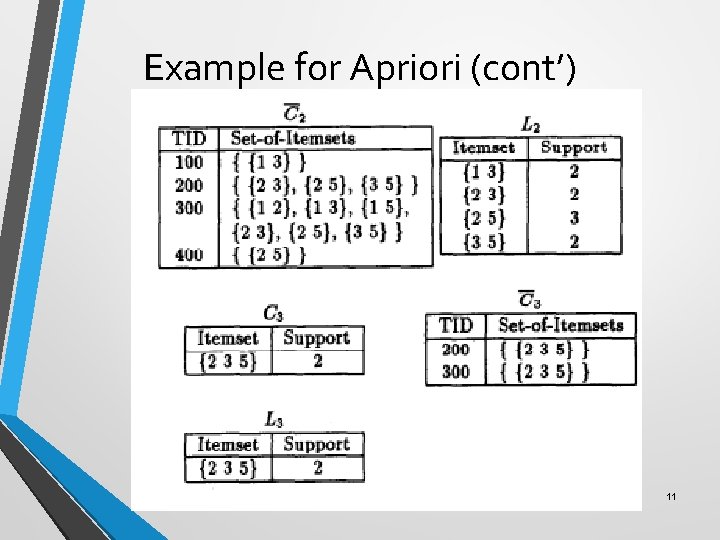

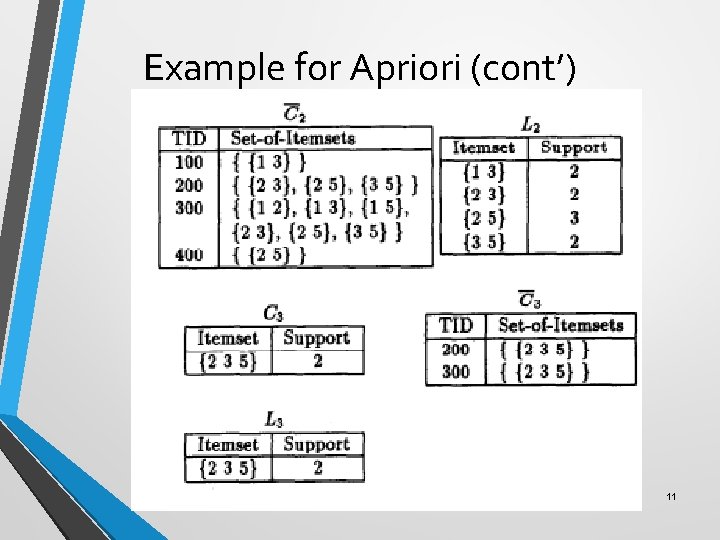

Example for Apriori (cont'd)

AprioriTid • Candidate generation is the same as in Apriori, but the database is used for counting support only in the first pass. • More memory is needed: a set kept in memory stores, for each transaction, the potentially large (candidate) itemsets that the transaction contains.
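A minimal sketch of this idea follows, assuming itemsets are sorted tuples and the per-transaction set is a plain dictionary keyed by transaction ID. The names `apriori_tid`, `c_bar`, and the toy database `db` are illustrative assumptions; only the first pass reads the raw transactions.

```python
from itertools import combinations


def apriori_gen(large_prev):
    """Join large (k-1)-itemsets sharing a prefix, then prune by subsets."""
    large_prev = set(large_prev)
    ordered = sorted(large_prev)
    joined = {
        p + (q[-1],)
        for i, p in enumerate(ordered)
        for q in ordered[i + 1:]
        if p[:-1] == q[:-1]
    }
    return {
        c for c in joined
        if all(sub in large_prev for sub in combinations(c, len(c) - 1))
    }


def apriori_tid(transactions, min_support):
    """`transactions` maps a TID to a set of items; returns {itemset: count}."""
    # Pass 1: the only scan of the raw data. Each transaction is replaced
    # by the set of 1-itemsets it contains.
    c_bar = {tid: {(item,) for item in items} for tid, items in transactions.items()}
    counts = {}
    for itemsets in c_bar.values():
        for s in itemsets:
            counts[s] = counts.get(s, 0) + 1
    large = {s: n for s, n in counts.items() if n >= min_support}
    result = dict(large)

    while large:
        candidates = apriori_gen(large)
        counts = {c: 0 for c in candidates}
        next_c_bar = {}
        for tid, itemsets in c_bar.items():
            # A candidate is in the transaction if both (k-1)-itemsets that
            # generated it were found in the transaction on the previous pass.
            present = {
                c for c in candidates
                if c[:-1] in itemsets and c[:-2] + c[-1:] in itemsets
            }
            for c in present:
                counts[c] += 1
            if present:  # transactions with no candidates drop out entirely
                next_c_bar[tid] = present
        c_bar = next_c_bar
        large = {s: n for s, n in counts.items() if n >= min_support}
        result.update(large)
    return result


# Hypothetical toy database keyed by TID, minimum support count 2.
db = {100: {1, 3, 4}, 200: {2, 3, 5}, 300: {1, 2, 3, 5}, 400: {2, 5}}
tid_result = apriori_tid(db, min_support=2)
print(tid_result[(2, 3, 5)])  # 2
```

The per-transaction set can shrink quickly in later passes because transactions containing no candidates are dropped, but in early passes it may be larger than the database itself, which is the memory cost noted above.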

Performance • Average size of transactions: 5~20 • Average size of maximal potentially large itemsets: 2~6 • Dataset sizes: 2.4~8.4 MB • Experiments ran on an IBM RS/6000 530H workstation with a CPU clock rate of 33 MHz, 64 MB of main memory, running AIX 3.2. The data resided in the AIX file system and was stored on a 2 GB SCSI 3.5-inch drive, with measured sequential throughput of about 2 MB/second.

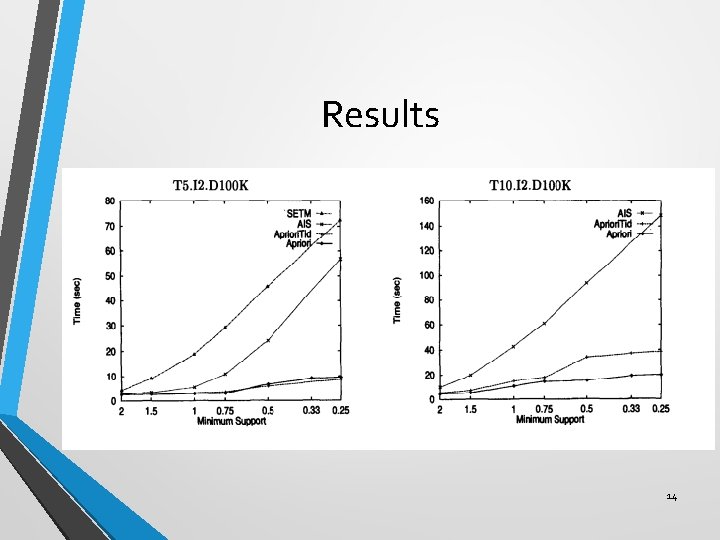

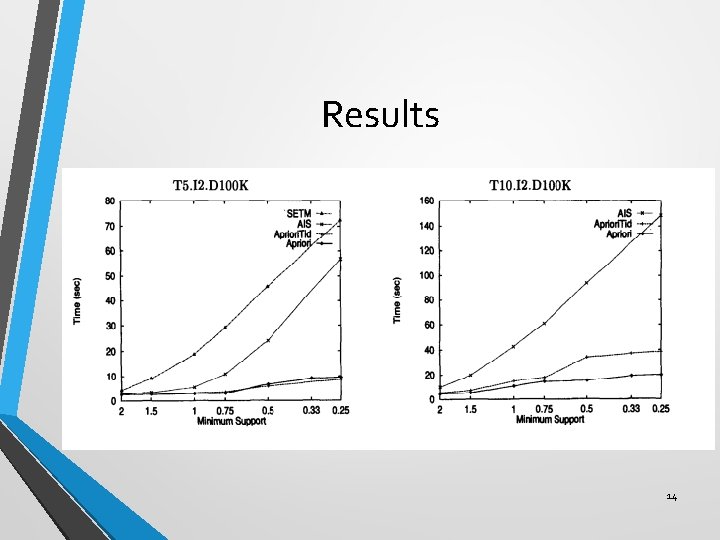

Results

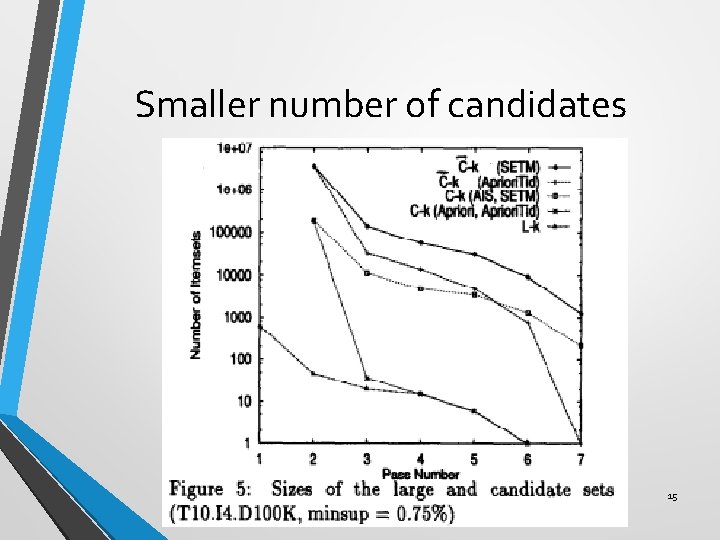

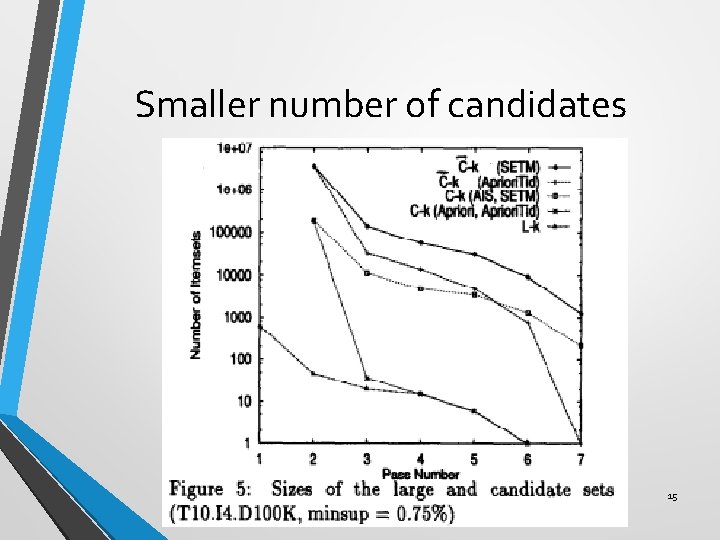

Smaller number of candidates

Discussion: • In 1994, the authors noted that the datasets used in the scale-up experiment were not even 1 GB in size. • Today this seems insufficient for a scale-up study (as in previous discussions, Hive and Dremel can handle TB- and even PB-scale data). • Do you think the Apriori algorithm is still suitable today? Are there limitations or issues exposed by the increased data size?

Improving the Efficiency of Apriori • AprioriHybrid: • Use Apriori in the initial passes • (Heuristic) Estimate the size of C^k • Switch to AprioriTid when C^k is expected to fit in memory
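One plausible reading of the switching heuristic is sketched below. It assumes the size of the per-transaction set is estimated as the sum of the candidate support counts plus the number of transactions, and that the switch is made only when the number of large itemsets is also shrinking; both the estimate and the `memory_budget` parameter (measured in entries, not bytes) are assumptions of this sketch rather than details given on the slide.

```python
def estimated_tid_set_size(candidate_counts, num_transactions):
    """Estimate how many entries the per-transaction set would hold.

    Assumption: each occurrence of a candidate in a transaction costs one
    entry, plus one entry per transaction for its identifier.
    """
    return sum(candidate_counts.values()) + num_transactions


def should_switch_to_tid(candidate_counts, num_transactions, memory_budget,
                         num_large_now, num_large_prev):
    """Decide at the end of a pass whether to continue with AprioriTid."""
    fits = estimated_tid_set_size(candidate_counts, num_transactions) <= memory_budget
    # Assumption: also require fewer large itemsets than in the previous pass,
    # so the set is unlikely to outgrow memory on the next pass.
    shrinking = num_large_now < num_large_prev
    return fits and shrinking


# Hypothetical counts for the candidate 2-itemsets of a 4-transaction database.
c2_counts = {(1, 2): 1, (1, 3): 2, (1, 5): 1, (2, 3): 2, (2, 5): 3, (3, 5): 2}
print(estimated_tid_set_size(c2_counts, num_transactions=4))  # 15
```

Switching has a cost of its own, since the per-transaction set must be built during the pass in which the switch happens, so a conservative test of this kind avoids switching when the benefit is unclear.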

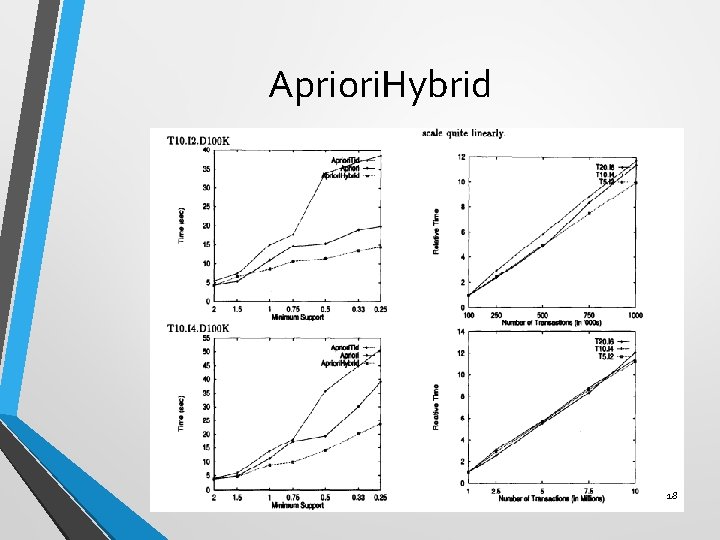

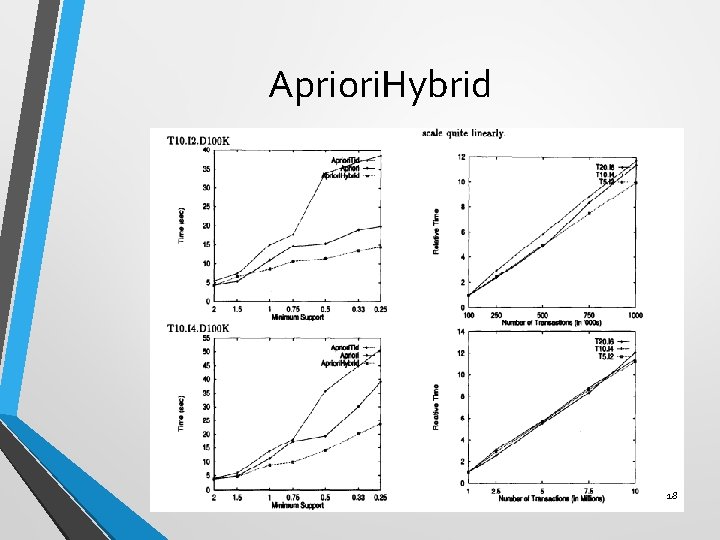

AprioriHybrid

Later Work • Parallel versions • Quantitative association rules, e.g., "10% of married people between age 50 and 60 have at least 2 cars." • Online association rules

Conclusion • Two new algorithms, Apriori and AprioriTid, are discussed. • These algorithms outperform AIS and SETM. • Apriori and AprioriTid can be combined into AprioriHybrid. • AprioriHybrid matches Apriori and AprioriTid when either one wins.

Discussion • This paper has spun off more similar algorithms in the database world than any other data mining algorithm. • Why do you think this is the case? Is it the algorithm? The problem? The approach? Or something else?