Association Rule Mining (Data Mining and Knowledge Discovery)

- Slides: 11

Prof. Carolina Ruiz and Weiyang Lin, Department of Computer Science, Worcester Polytechnic Institute

Sample Applications
- Sample commercial applications
  - Market basket analysis
  - Cross-marketing
  - Attached mailing
  - Store layout, catalog design
  - Customer segmentation based on buying patterns
  - …
- Sample scientific applications
  - Genetic analysis
  - Analysis of medical data
  - …

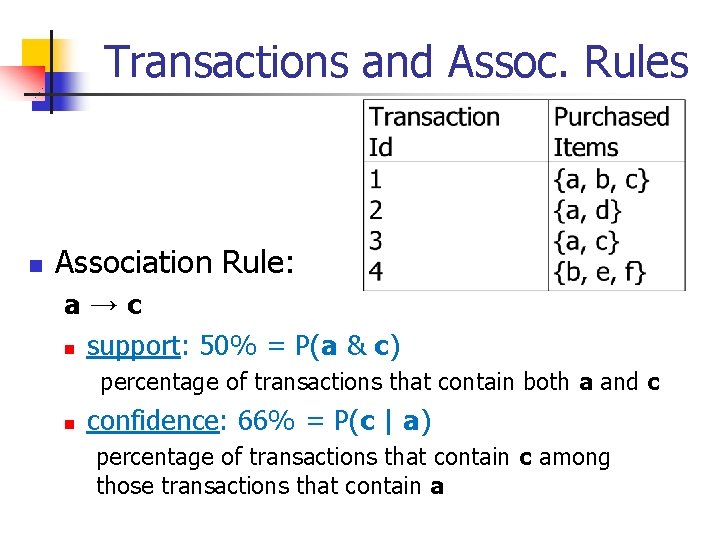

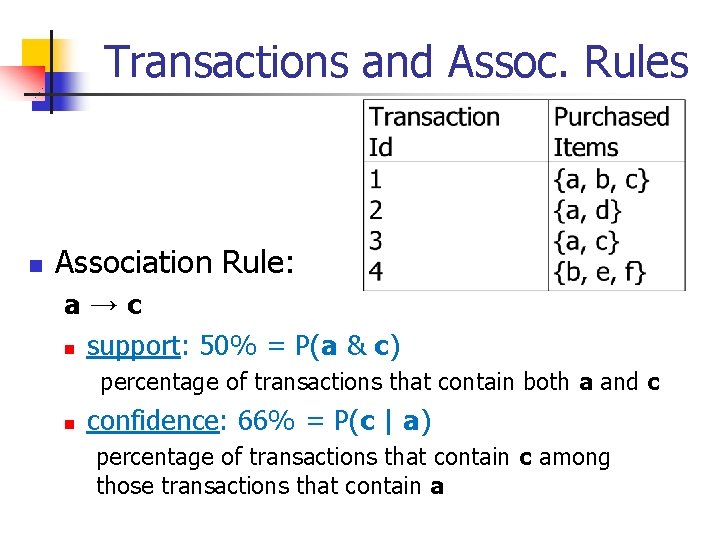

Transactions and Association Rules
- Association rule: a → c
  - support: 50% = P(a & c), the percentage of transactions that contain both a and c
  - confidence: 66% = P(c | a), the percentage of transactions that contain c among those transactions that contain a
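To make the two definitions concrete, here is a small Python sketch. The four transactions are an assumed, hypothetical dataset chosen so that a → c has 50% support and roughly 66% (exactly 2/3) confidence; they are not necessarily the table shown on the slide. `support` and `confidence` are illustrative helper names, not a library API.

```python
# Hypothetical example data (not necessarily the slide's table):
# four transactions, each a set of items.
transactions = [
    {"a", "b", "c"},
    {"a", "c"},
    {"a", "d"},
    {"b", "e", "f"},
]


def support(itemset, db):
    """Fraction of transactions in db that contain every item of itemset."""
    itemset = set(itemset)
    return sum(1 for t in db if itemset <= t) / len(db)


def confidence(antecedent, consequent, db):
    """Estimate P(consequent | antecedent) as
    support(antecedent ∪ consequent) / support(antecedent).
    Raises ZeroDivisionError if the antecedent never occurs."""
    both = set(antecedent) | set(consequent)
    return support(both, db) / support(antecedent, db)


print(support({"a", "c"}, transactions))                 # 0.5
print(round(confidence({"a"}, {"c"}, transactions), 3))  # 0.667
```

Here a occurs in 3 of the 4 transactions and a together with c in 2, so the confidence is (2/4) / (3/4) = 2/3.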

Association Rules: Intuition
- Given a set of transactions, where each transaction is a set of items, find all rules X → Y that relate the presence of one set of items X with the presence of another set of items Y.
  - Example: 98% of people who purchase diapers and baby food also buy beer (the rule {diapers, baby food} → {beer} with 98% confidence).
  - A rule may have any number of items in the antecedent and in the consequent.
  - It is possible to specify constraints on rules.

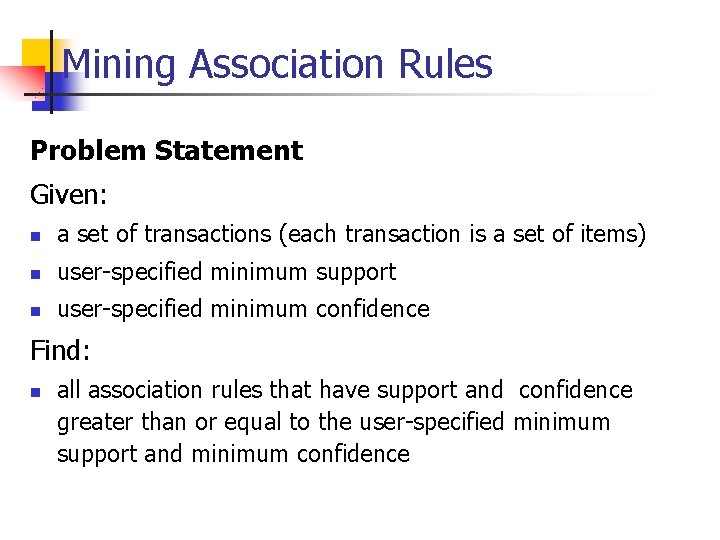

Mining Association Rules: Problem Statement
Given:
- a set of transactions (each transaction is a set of items)
- a user-specified minimum support
- a user-specified minimum confidence

Find:
- all association rules whose support and confidence are greater than or equal to the user-specified minimum support and minimum confidence, respectively

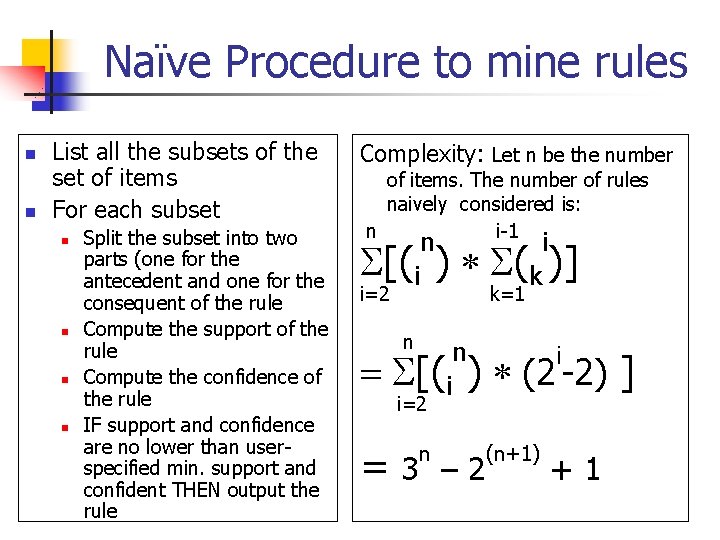

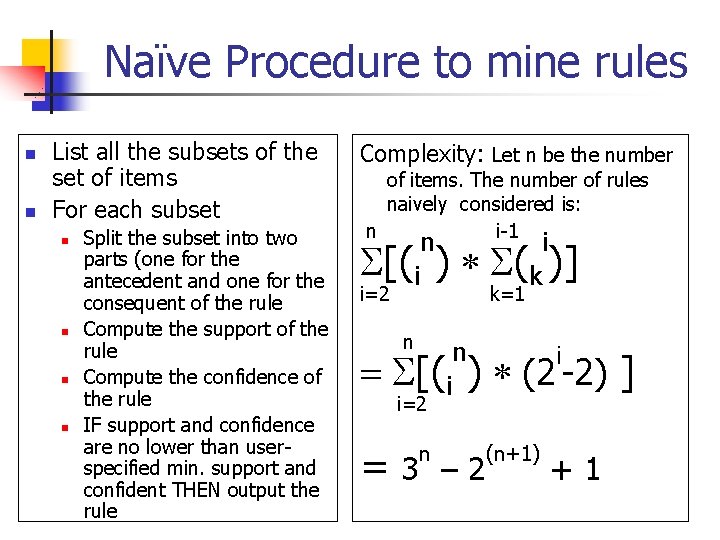

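Naïve Procedure to Mine Rules
- List all the subsets of the set of items.
- For each subset, and for each way of splitting it into two nonempty parts (one for the antecedent and one for the consequent of the rule):
  - Compute the support of the rule.
  - Compute the confidence of the rule.
  - IF the support and confidence are no lower than the user-specified min. support and min. confidence, THEN output the rule.

Complexity: let n be the number of items. A rule is obtained by choosing a subset of i ≥ 2 items ($\binom{n}{i}$ ways) and then a nonempty proper subset of it, of size k with 1 ≤ k ≤ i − 1, as the antecedent ($\binom{i}{k}$ ways). The number of rules naively considered is therefore

$$\sum_{i=2}^{n}\left[\binom{n}{i}\sum_{k=1}^{i-1}\binom{i}{k}\right] = \sum_{i=2}^{n}\left[\binom{n}{i}\left(2^{i}-2\right)\right] = 3^{n} - 2^{n+1} + 1,$$

where the last step uses the binomial theorem ($\sum_{i=0}^{n}\binom{n}{i}2^{i} = 3^{n}$ and $\sum_{i=0}^{n}\binom{n}{i} = 2^{n}$) after removing the i = 0 and i = 1 terms, which equal −1 and 0.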
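The naïve procedure can be sketched in Python as a brute-force loop, assuming transactions are Python sets of hashable, mutually comparable items; `naive_rules` is a hypothetical helper name and the transactions are assumed example data. Besides the rules, it returns how many rules it considered, so that count can be checked against the closed form 3^n − 2^(n+1) + 1.

```python
from itertools import combinations


def naive_rules(items, transactions, min_sup, min_conf):
    """Brute-force rule mining: every itemset of size >= 2 is split in every
    possible way into a nonempty antecedent X and a nonempty consequent Y.
    Returns (rules, number_of_rules_considered)."""
    n_tx = len(transactions)

    def sup(itemset):
        # Fraction of transactions containing the whole itemset.
        return sum(1 for t in transactions if itemset <= t) / n_tx

    rules, considered = [], 0
    for i in range(2, len(items) + 1):               # subset size i
        for subset in combinations(sorted(items), i):
            subset = frozenset(subset)
            s = sup(subset)                           # support of X -> Y
            for k in range(1, i):                     # antecedent size k
                for ante in combinations(sorted(subset), k):
                    ante = frozenset(ante)
                    considered += 1
                    s_ante = sup(ante)
                    conf = s / s_ante if s_ante > 0 else 0.0
                    if s >= min_sup and conf >= min_conf:
                        rules.append((ante, subset - ante, s, conf))
    return rules, considered


# Hypothetical transactions, for illustration only.
transactions = [{"a", "b", "c"}, {"a", "c"}, {"a", "d"}, {"b", "e", "f"}]
items = set().union(*transactions)
rules, considered = naive_rules(items, transactions, min_sup=0.5, min_conf=0.5)
n = len(items)
print(considered, 3**n - 2**(n + 1) + 1)  # 602 602
for ante, cons, s, conf in rules:
    print(sorted(ante), "->", sorted(cons), s, round(conf, 3))
```

With only six distinct items, 602 candidate rules are examined to find two (a → c and c → a) on this tiny assumed dataset, and every candidate needs its own support computation; this exponential growth is why the naïve procedure does not scale.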

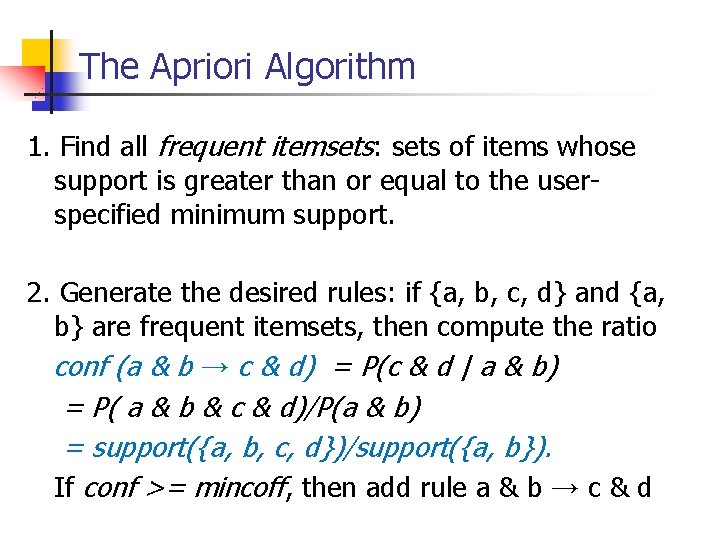

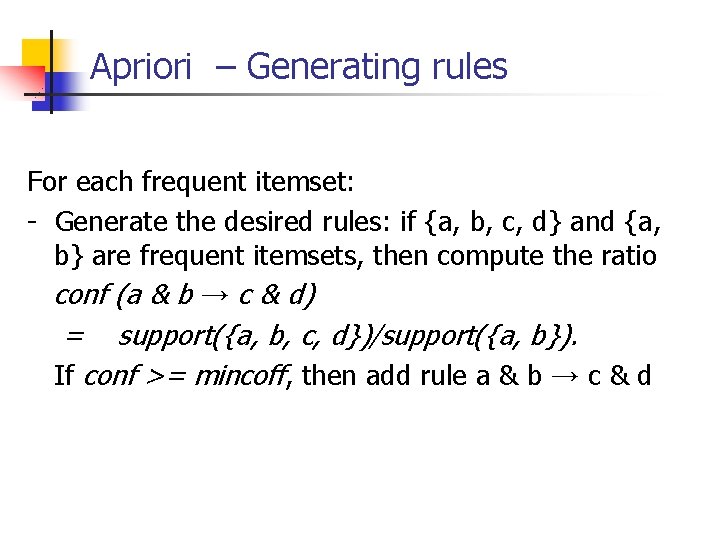

The Apriori Algorithm
1. Find all frequent itemsets: sets of items whose support is greater than or equal to the user-specified minimum support.
2. Generate the desired rules: if {a, b, c, d} and {a, b} are frequent itemsets, then compute the ratio conf(a & b → c & d) = P(c & d | a & b) = P(a & b & c & d) / P(a & b) = support({a, b, c, d}) / support({a, b}). If conf ≥ minconf (the user-specified minimum confidence), then add the rule a & b → c & d.

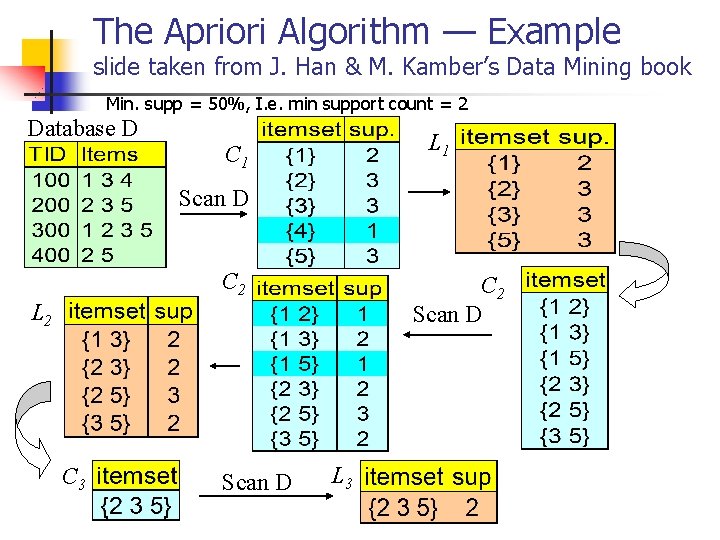

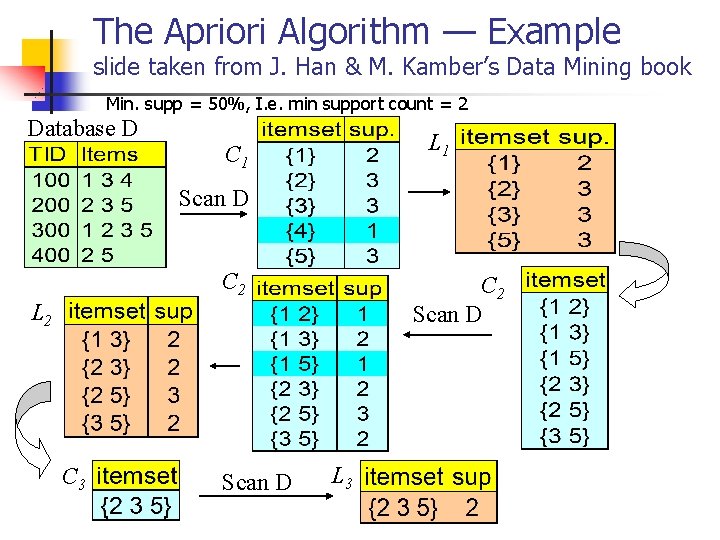

The Apriori Algorithm: Example (slide taken from J. Han & M. Kamber’s Data Mining book)
Min. support = 50%, i.e., min. support count = 2.
[Figure: tables for database D, C1, L1, C2, L2, C3, and L3, with a scan of D to count the candidates at each level]

Apriori Principle
- Key observation: every subset of a frequent itemset is also a frequent itemset.
- This follows from a more basic fact: the support of an itemset is greater than or equal to the support of any superset of the itemset.
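A short derivation of why the principle holds, writing D for the transaction database and support(X) for the fraction of transactions in D that contain X (notation assumed here for the sketch):

```latex
\begin{aligned}
X \subseteq Y
&\;\Longrightarrow\; \{\,T \in D : Y \subseteq T\,\} \subseteq \{\,T \in D : X \subseteq T\,\} \\
&\;\Longrightarrow\; \operatorname{support}(Y) = \frac{|\{\,T \in D : Y \subseteq T\,\}|}{|D|}
\;\le\; \frac{|\{\,T \in D : X \subseteq T\,\}|}{|D|} = \operatorname{support}(X).
\end{aligned}
```

So if Y meets the minimum support, every subset X of Y does too. Read contrapositively, a candidate itemset with even one infrequent subset cannot be frequent and can be discarded without counting its support, which is the pruning Apriori relies on.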

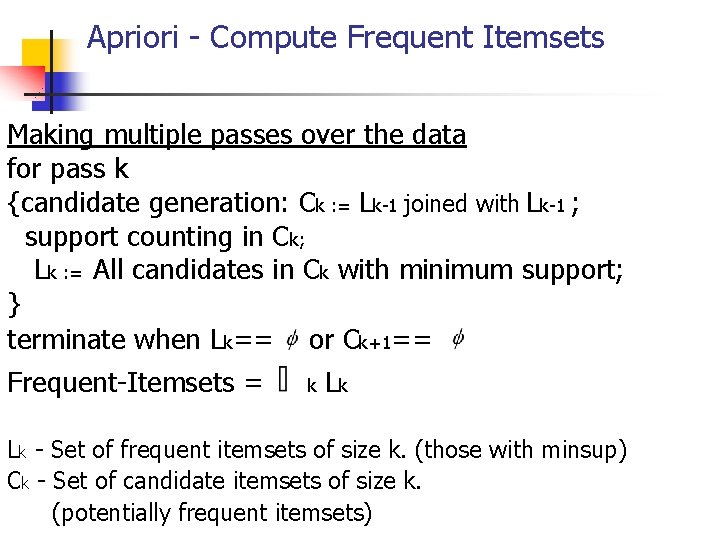

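Apriori: Compute Frequent Itemsets
Make multiple passes over the data. Pass 1 counts the individual items (the candidates $C_1$) to obtain $L_1$; then, for pass k = 2, 3, ...:
- Candidate generation: $C_k := L_{k-1}$ joined with $L_{k-1}$
- Support counting: count the support of each candidate in $C_k$
- $L_k :=$ all candidates in $C_k$ with minimum support

Terminate when $L_k = \emptyset$ or $C_{k+1} = \emptyset$. The result is Frequent-Itemsets $= \bigcup_k L_k$, where
- $L_k$ is the set of frequent itemsets of size k (those with minimum support)
- $C_k$ is the set of candidate itemsets of size k (potentially frequent itemsets)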
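A minimal Python sketch of the level-wise loop, assuming an in-memory list of transactions and an absolute minimum support count; `apriori` and `count_and_filter` are hypothetical names, and the database D in the usage lines is assumed example data. The join is written as "take the union of any two frequent (k−1)-itemsets that yields exactly k items", a simpler stand-in for the sorted-prefix join of the original Apriori candidate generation. Combined with the prune step (drop any candidate with an infrequent (k−1)-subset, per the Apriori principle) it yields the same candidate set, just less efficiently.

```python
from itertools import combinations


def apriori(transactions, min_count):
    """Level-wise frequent-itemset mining.
    Returns a dict mapping each frequent itemset (frozenset) to its support count."""
    db = [frozenset(t) for t in transactions]

    def count_and_filter(candidates):
        # One pass over the data ("Scan D"): count every candidate and keep
        # those reaching the minimum support count.
        counts = dict.fromkeys(candidates, 0)
        for t in db:
            for c in candidates:
                if c <= t:
                    counts[c] += 1
        return {c: n for c, n in counts.items() if n >= min_count}

    # Pass 1: the candidates C1 are the individual items.
    L = count_and_filter({frozenset([item]) for t in db for item in t})
    frequent = dict(L)
    k = 2
    while L:                                   # stop when L_{k-1} is empty
        prev = set(L)
        # Join: unions of two frequent (k-1)-itemsets with exactly k items.
        C = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune (Apriori principle): every (k-1)-subset must be frequent.
        C = {c for c in C
             if all(frozenset(s) in prev for s in combinations(c, k - 1))}
        if not C:                              # stop when C_k is empty
            break
        L = count_and_filter(C)
        frequent.update(L)
        k += 1
    return frequent


# Hypothetical database D; min support count 2 = 50% of 4 transactions.
D = [{1, 3, 4}, {2, 3, 5}, {1, 2, 3, 5}, {2, 5}]
result = apriori(D, min_count=2)
for itemset, n in sorted(result.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), n)
```

On this assumed database the sketch finds four frequent 1-itemsets, four frequent 2-itemsets, and L3 = {{2, 3, 5}} with support count 2; the joined candidates {1, 2, 3} and {1, 3, 5} are pruned from C3 without being counted because {1, 2} and {1, 5} are infrequent.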

Apriori: Generating Rules
For each frequent itemset, generate the desired rules: if {a, b, c, d} and {a, b} are frequent itemsets, then compute the ratio conf(a & b → c & d) = support({a, b, c, d}) / support({a, b}). If conf ≥ minconf, then add the rule a & b → c & d.
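A minimal Python sketch of this step, assuming the frequent itemsets arrive as a dict from frozenset to support count (for example, the output of a frequent-itemset miner). Since confidence is a ratio of supports, raw counts work just as well as percentages. `generate_rules` and the counts in the usage lines are hypothetical.

```python
from itertools import combinations


def generate_rules(frequent, min_conf):
    """frequent: dict mapping frozenset -> support count. It must contain every
    subset of each frequent itemset (true for a complete Apriori result, by the
    Apriori principle). Returns (antecedent, consequent, confidence) triples."""
    rules = []
    for itemset, count in frequent.items():
        # Every nonempty proper subset of the itemset is a candidate antecedent.
        for k in range(1, len(itemset)):
            for ante in combinations(sorted(itemset), k):
                ante = frozenset(ante)
                # conf(ante -> rest) = support(itemset) / support(ante)
                conf = count / frequent[ante]
                if conf >= min_conf:
                    rules.append((ante, itemset - ante, conf))
    return rules


# Hypothetical support counts, closed under subsets.
frequent = {
    frozenset({2}): 3, frozenset({3}): 3, frozenset({5}): 3,
    frozenset({2, 3}): 2, frozenset({2, 5}): 3, frozenset({3, 5}): 2,
    frozenset({2, 3, 5}): 2,
}
for ante, cons, conf in generate_rules(frequent, min_conf=0.7):
    print(sorted(ante), "->", sorted(cons), round(conf, 3))
```

Because every antecedent is a subset of a frequent itemset, it is itself frequent by the Apriori principle, so its support is already known and no further pass over the data is needed.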