Chapter 04 Software Testing Strategies and Methods

- Slides: 70

• Software testing is the process of executing a program with the intention of finding errors.
• A software engineer should design and develop software so that it is easy to test.

Objectives of software testing
1. Find errors and fix them.
2. Uncover errors that have not yet been discovered; a good test is able to do this.
3. Confirm that the software product fulfills the customer's requirements.
4. Enforce standards while developing the software product.
5. Support software quality assurance.
6. Check the operability, flexibility, and reliability of the software product.

Characteristics / attributes of testable software:
1. Operability
2. Observability
3. Controllability
4. Simplicity
5. Stability
6. Understandability
7. Reliability
8. Safety and security

Operability
• Operability is the ability of the software to be operated easily.
• The better the software operates, the more efficiently it can be tested.
• It makes sure that:
  – The system has few bugs.
  – Bugs do not block the execution of tests.

Observability
• What you can see is what you can test.
• Points observed are:
  – A distinct output is generated for each input.
  – System states and variables, and their values, can be checked.
  – Every factor that affects the output is clearly visible.
  – Wrong output and internal errors can be found easily.

Concept of Good Test
• A good test has a high probability of finding an error.
• A good test is not redundant; every test should have a unique purpose.
• A good test should be best of breed (the best test selected from a group of similar tests).
• A good test should be neither too simple nor too complex.

Concept of Successful Test
• If testing is conducted successfully, i.e. by following all of the objectives, it will uncover almost all errors in the software.
• The benefit of successful testing is that the software functions appear to be working according to the design and specification of the software.
• It leads to an increase in the reliability and quality of the software.

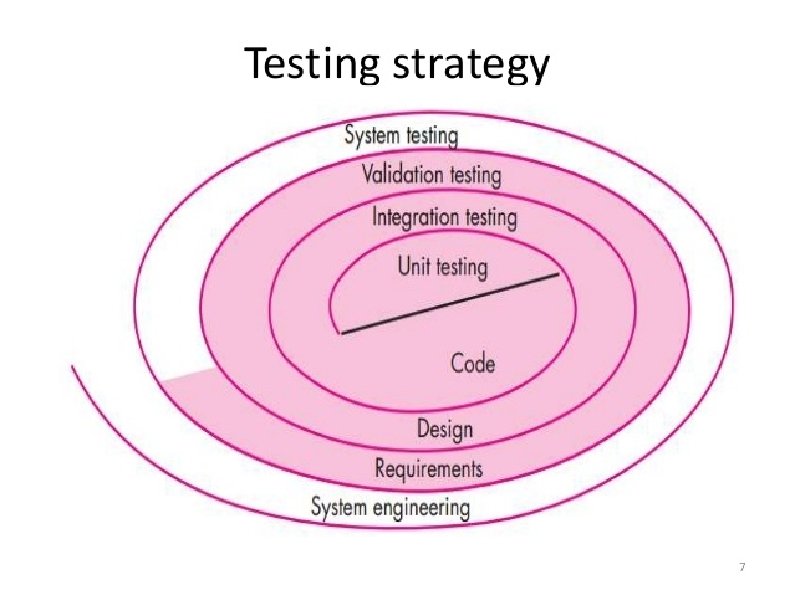

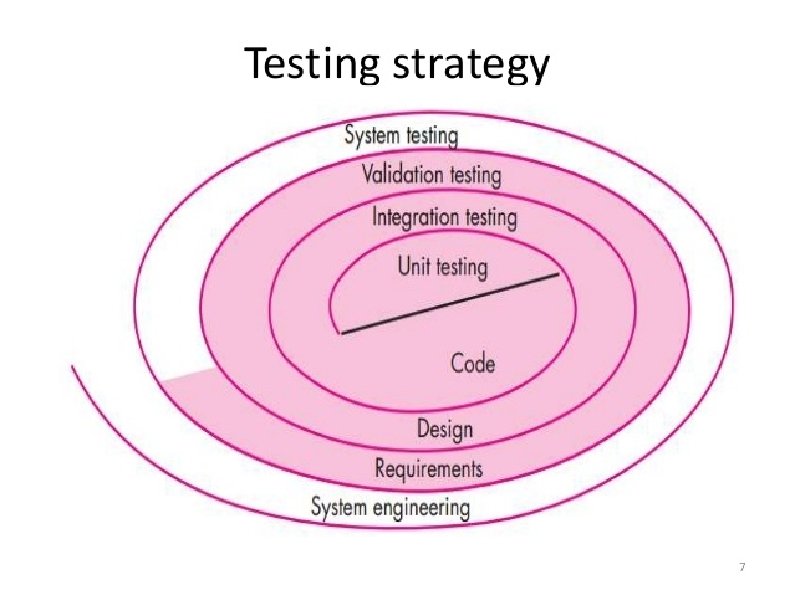

Testing strategies
• A testing strategy is the way or method by which testing is done.
• The strategy plays a very important role because it is a major contributor to SQA activities.
• The testing strategy is designed and implemented according to the type and scope of the software.
• In a testing strategy we define the test plan, test cases, and test data.

Test plan
• A software test plan is a document that contains the strategy used to verify that the software product or system adheres to its design specification and other requirements.
• A test plan may contain the following tests:
  1. Design verification test
  2. Development or production test
  3. Acceptance or commissioning test
  4. Service and repair test
  5. Regression test

• The test plan format varies from organization to organization.
• A test plan should contain three important elements: test coverage, test methods, and test responsibilities.

Test cases
• A test case is a set of conditions or variables under which a testing team determines whether an application or software system is working correctly.
• Test cases are classified into three categories:
  1. Formal test case (the input is known and the output is expected)
  2. Informal test case (multiple steps, not written down)
  3. Typical written test case (contains the test case data in detail)

Test data
• Test data are data that have been specifically identified for use in tests.
• Test data may serve different purposes:
  – They can be used as input to the tests to produce the expected output.
  – They can check the ability of a software program to deal with unexpected, unusual, extreme, and exceptional input.
  – They may include data that describes the testing strategy, input, and output in general.
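As a rough illustration of a formal test case and its test data, the sketch below pairs known inputs with expected outputs and adds exceptional inputs that should be rejected. The function `percentage` and all the values are hypothetical, chosen only for the example.

```python
def percentage(marks_obtained, max_marks):
    """Hypothetical unit under test: marks as a percentage of the maximum."""
    if max_marks <= 0:
        raise ValueError("max_marks must be positive")
    if not 0 <= marks_obtained <= max_marks:
        raise ValueError("marks_obtained out of range")
    return 100.0 * marks_obtained / max_marks


# Test data for formal test cases: (input, expected output) pairs,
# covering a normal value and the two extremes of the valid range.
VALID_CASES = [
    ((45, 50), 90.0),
    ((0, 50), 0.0),
    ((50, 50), 100.0),
]

# Exceptional inputs that the unit is expected to reject.
INVALID_INPUTS = [(-1, 50), (51, 50), (10, 0)]


def run_test_cases():
    """Return a list of (input, passed) results for every test case."""
    results = []
    for args, expected in VALID_CASES:
        results.append((args, percentage(*args) == expected))
    for args in INVALID_INPUTS:
        try:
            percentage(*args)
            results.append((args, False))  # should have been rejected
        except ValueError:
            results.append((args, True))
    return results
```

Keeping the test data in separate lists makes it easy to add new inputs without touching the test logic.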

Characteristics of testing strategies
• Specify the product requirements in a quantifiable, measurable way before the testing strategy is defined.
• State the testing objectives explicitly in the testing strategy.
• Understand the users of the software and develop a profile for each category of user.
• Develop a testing plan that emphasizes "rapid cycle testing".
• Build robust software that is designed to test itself.
• Conduct formal technical reviews to assess the test strategy and the test cases.
• Apply a continuous improvement approach to the testing process.

Software Verification and Validation (V&V)
• Verification refers to the set of activities that ensure that the software correctly implements a specific function.
• Validation refers to a different set of activities that ensure that the software that has been built is traceable to customer requirements.
• Verification: Are we building the product right?
• Validation: Are we building the right product?

• V&V encompasses a wide array of SQA activities, including formal technical reviews, quality audits, performance monitoring, simulation, feasibility study, usability testing, database review, algorithm analysis, development testing, qualification testing, and installation testing.

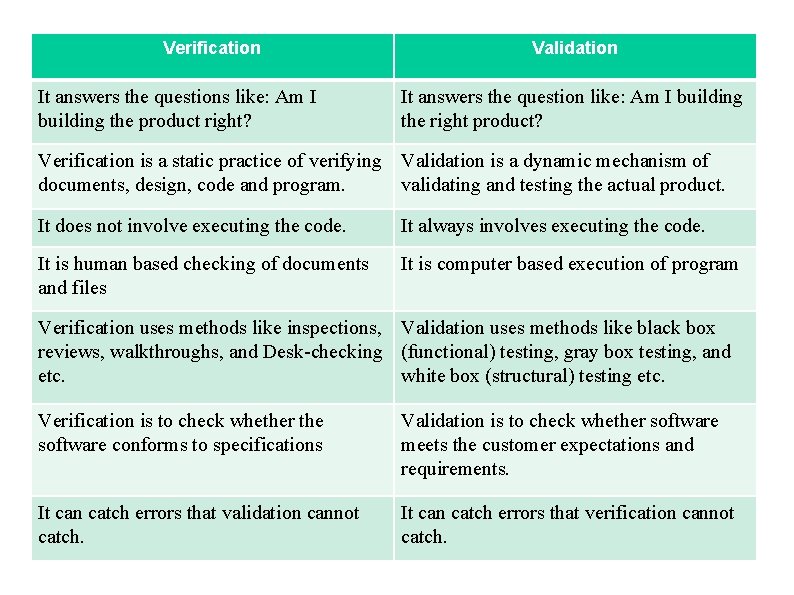

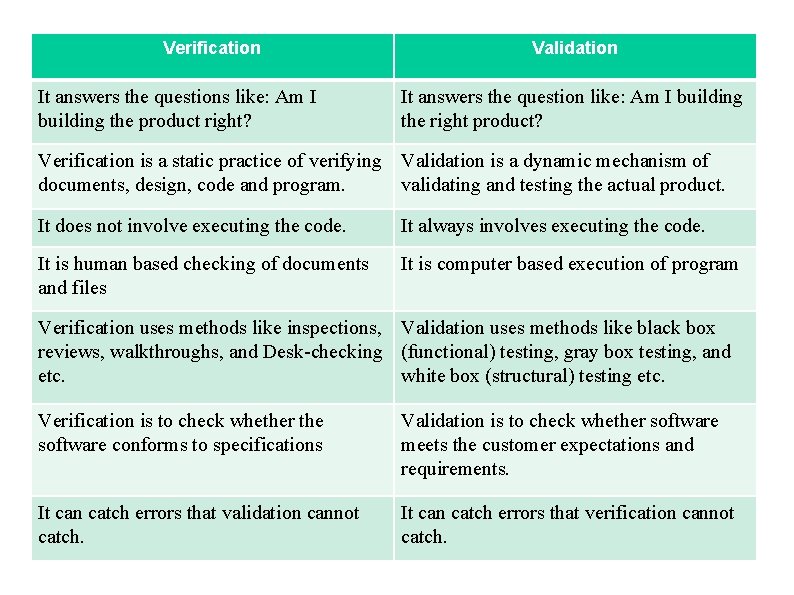

| Verification | Validation |
| --- | --- |
| Answers the question: Am I building the product right? | Answers the question: Am I building the right product? |
| A static practice of verifying documents, design, code, and program. | A dynamic mechanism of validating and testing the actual product. |
| Does not involve executing the code. | Always involves executing the code. |
| Human-based checking of documents and files. | Computer-based execution of the program. |
| Uses methods like inspections, reviews, walkthroughs, and desk-checking. | Uses methods like black box (functional) testing, gray box testing, and white box (structural) testing. |
| Checks whether the software conforms to specifications. | Checks whether the software meets customer expectations and requirements. |
| Can catch errors that validation cannot catch. | Can catch errors that verification cannot catch. |

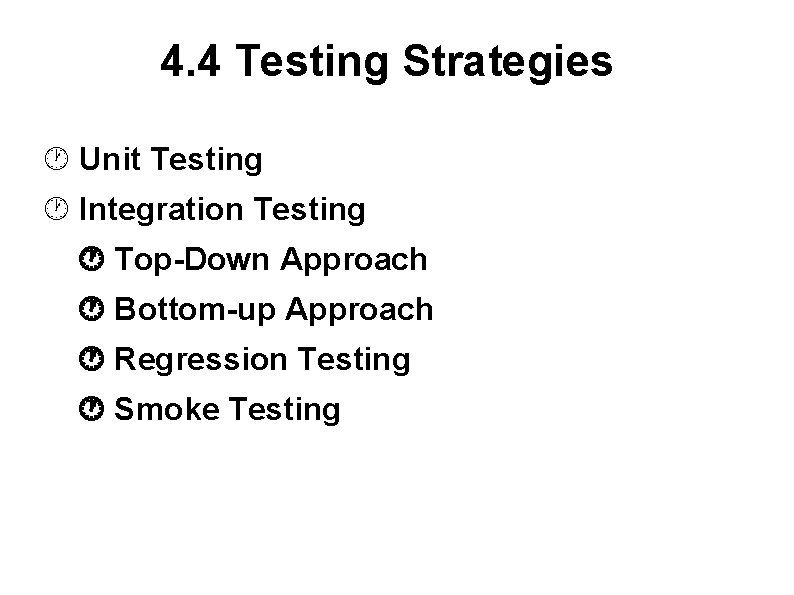

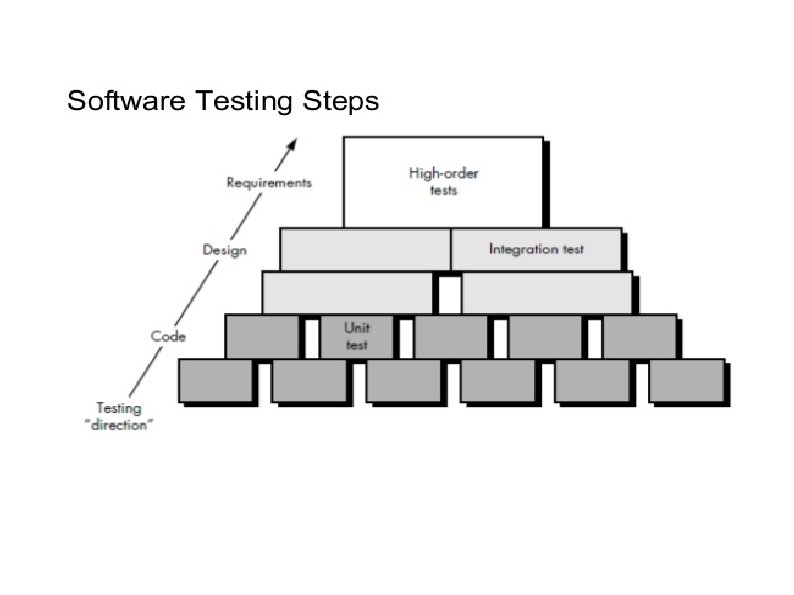

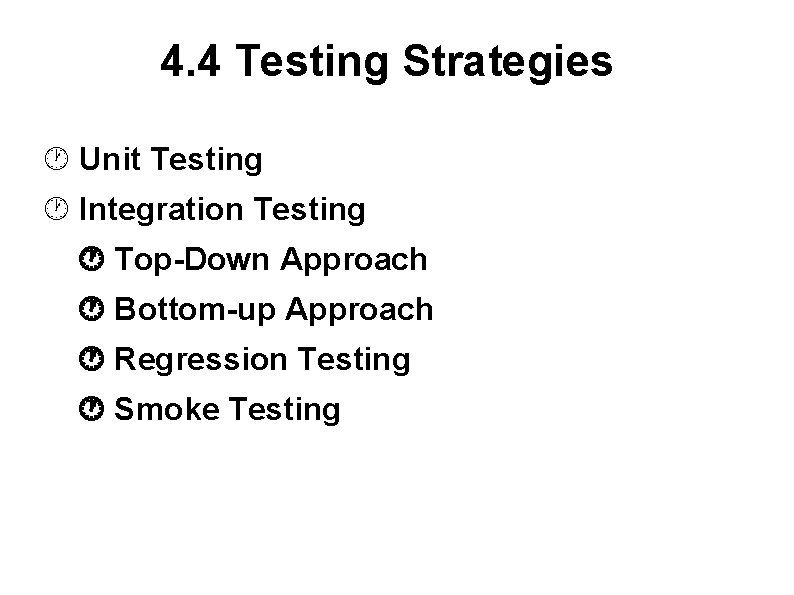

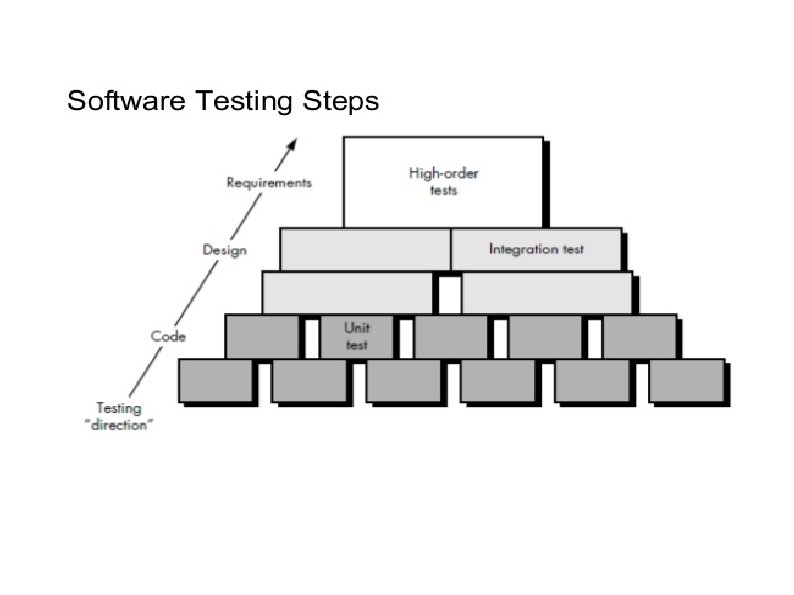

4.4 Testing Strategies
• Unit Testing
• Integration Testing
  – Top-Down Approach
  – Bottom-Up Approach
• Regression Testing
• Smoke Testing

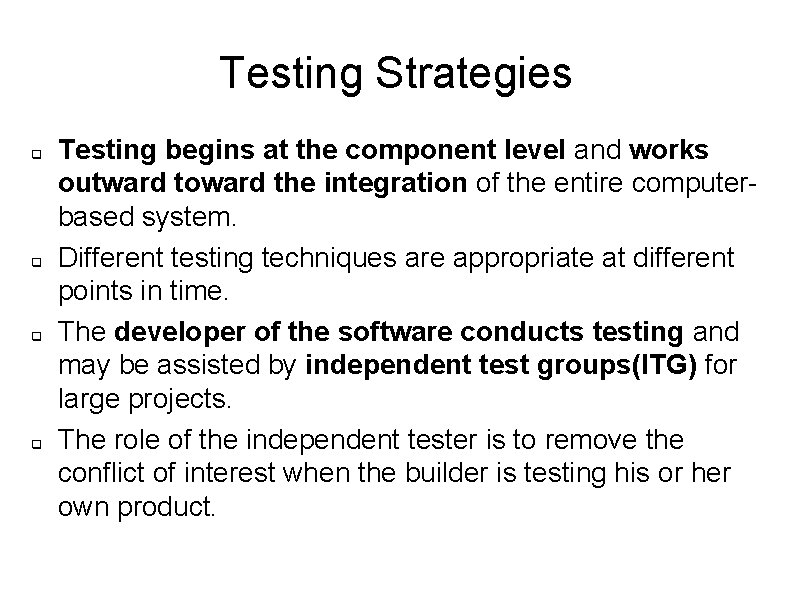

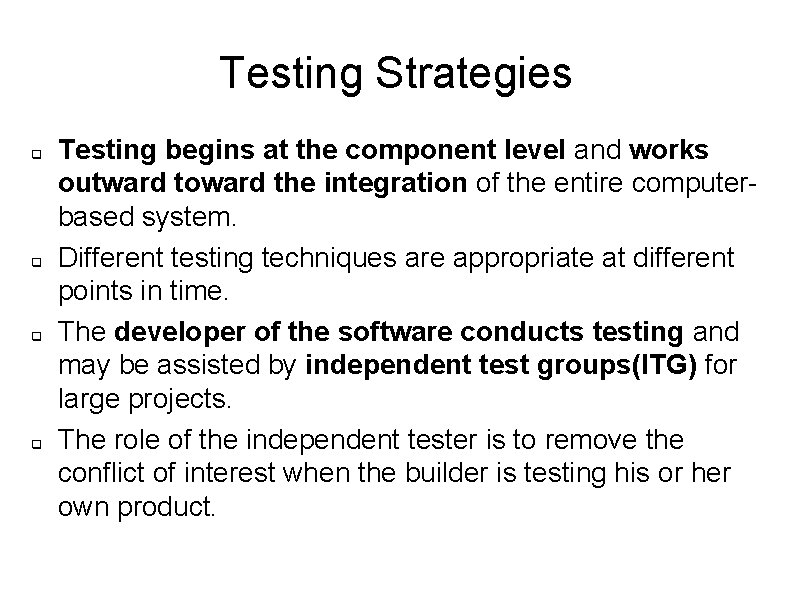

Testing Strategies
• Testing begins at the component level and works outward toward the integration of the entire computer-based system.
• Different testing techniques are appropriate at different points in time.
• The developer of the software conducts testing and, for large projects, may be assisted by an independent test group (ITG).
• The role of the independent tester is to remove the conflict of interest that arises when builders test their own product.

Unit Testing
• Unit testing is a level of the software testing process where individual units/components of a software system are tested.
• The purpose is to validate that each unit of the software performs as designed.
• A unit is the smallest testable part of software.
• It usually has one or a few inputs and usually a single output.
• In procedural programming, a unit may be an individual program, function, procedure, etc.
• In OOP, the smallest unit is a method, which may belong to a base/super class, abstract class, or derived/child class.

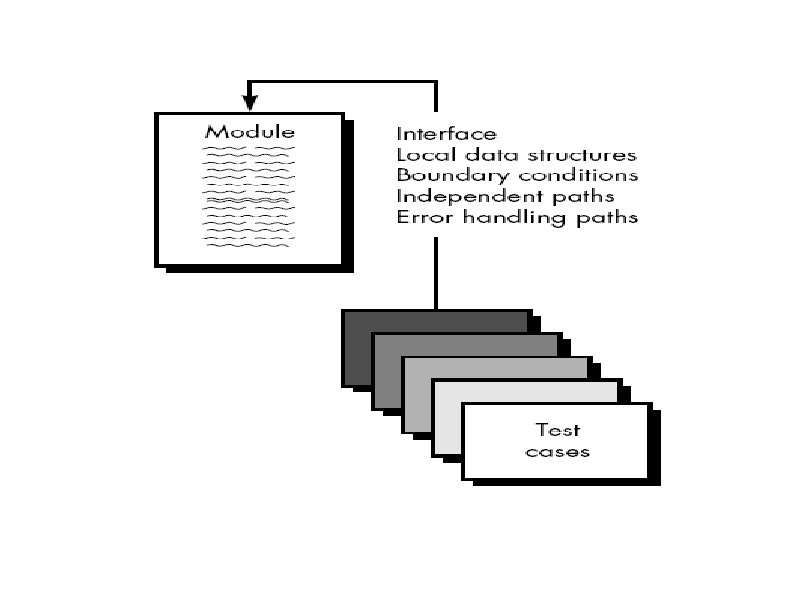

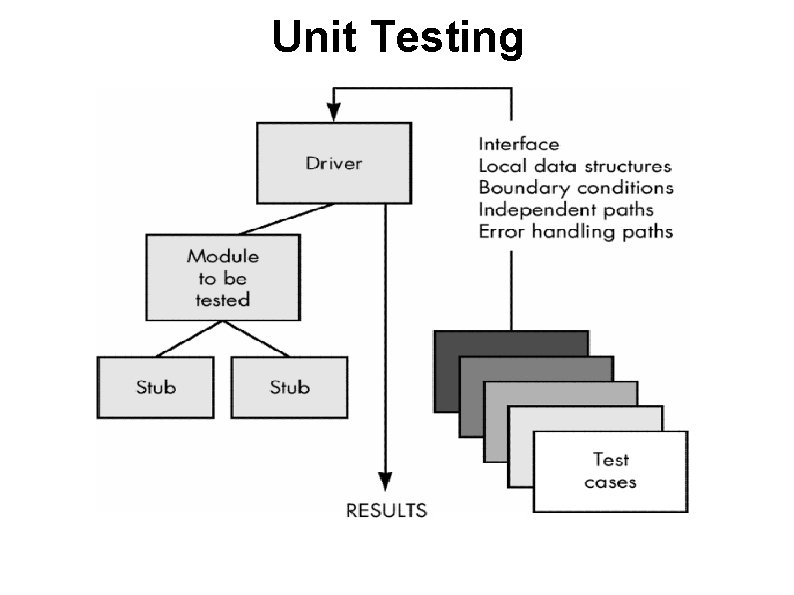

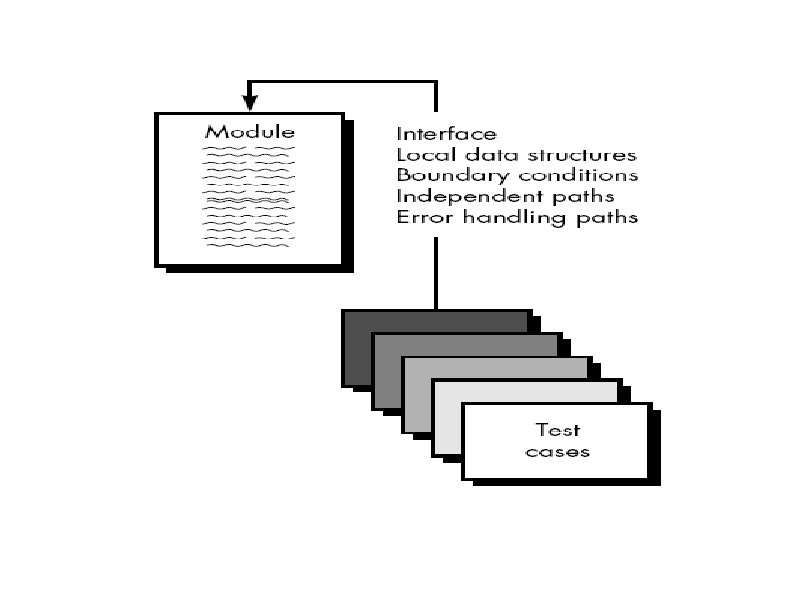

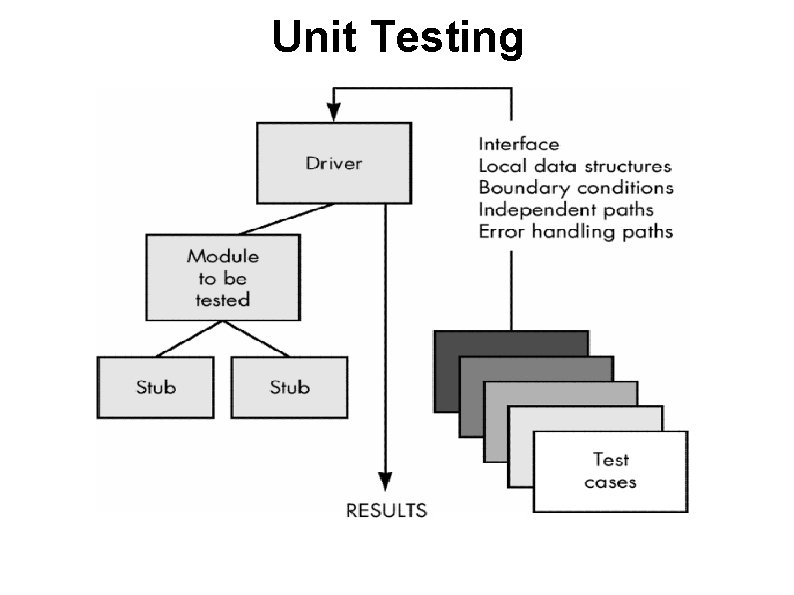

• The module interface is tested to ensure that information flows properly into and out of the program unit under test.
• Local data structures are examined to ensure that data stored temporarily maintains its integrity during execution.
• All independent paths are exercised to ensure that all statements in a module have been executed at least once.
• Boundary conditions are tested to ensure that the module operates properly at the boundaries established to limit or restrict processing.
• Finally, all error-handling paths are tested.

Unit test procedures
• In most applications a driver is nothing more than a main program that accepts test case data, passes that data to the component to be tested, and prints the results.
• Stubs serve to replace modules that are called by the component to be tested.
• A stub, or dummy subprogram, uses the subordinate module's interface, may do minimal data manipulation, provides verification, and returns control to the module undergoing testing.
• Drivers and stubs are kept simple so that the actual overhead stays low.

Example of stubs and drivers:
• Suppose we have three modules: Login, Home, and User.
• The Login module is ready and needs to be tested, but it calls functions from Home and User, which are not ready.
• To test Login in isolation, we write a short piece of dummy code that simulates Home and User and returns values to Login. This dummy code is called a stub, and it is used in top-down integration.

• Considering the same example:
• Suppose the Home and User modules are ready but the Login module is not, and we need to test Home and User.
• Home and User are normally called by Login, so we write a short piece of dummy code that stands in for Login, calls Home and User, and passes them the values they need. This dummy code is called a driver, and it is used in bottom-up integration.
• Conclusion: it is clear from the above example that stubs are "called" functions in top-down integration, and drivers are "calling" functions in bottom-up integration.

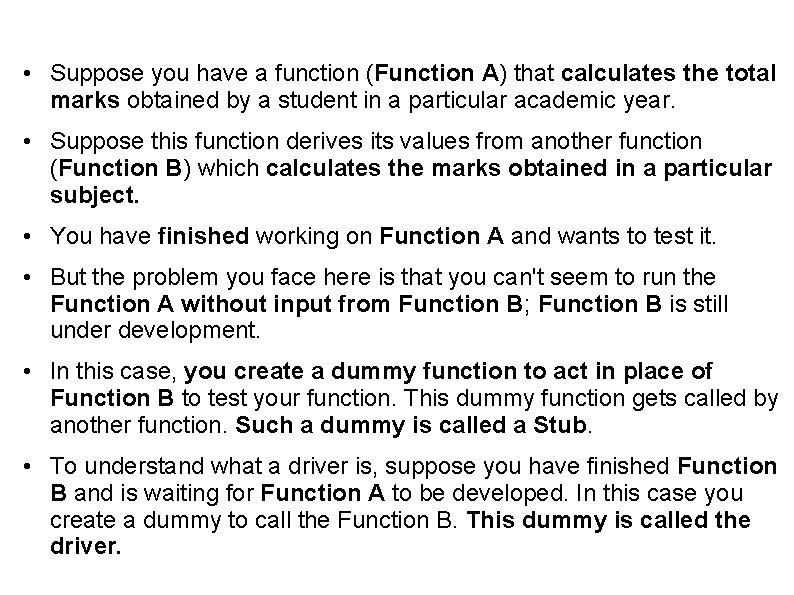

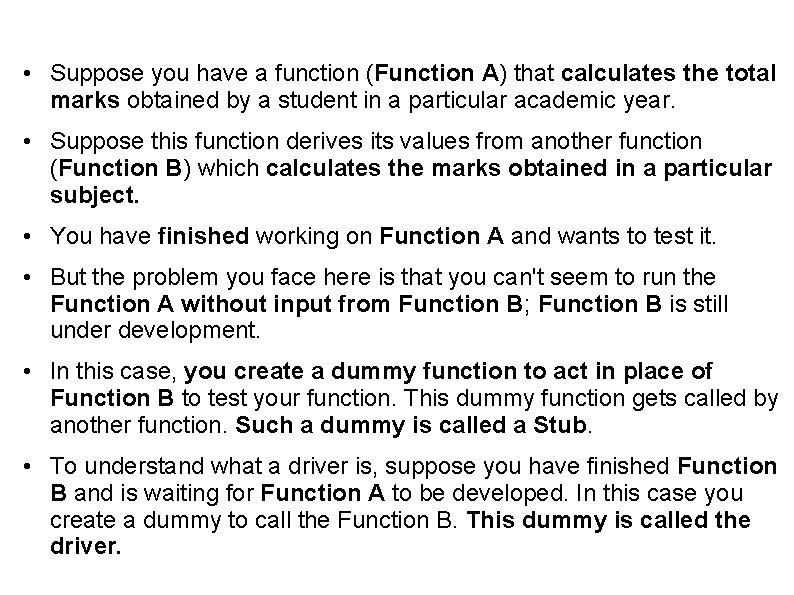

• Suppose you have a function (Function A) that calculates the total marks obtained by a student in a particular academic year.
• Suppose this function derives its values from another function (Function B), which calculates the marks obtained in a particular subject.
• You have finished working on Function A and want to test it.
• The problem is that you cannot run Function A without input from Function B, and Function B is still under development.
• In this case, you create a dummy function to act in place of Function B so that you can test Function A. This dummy function gets called by another function. Such a dummy is called a stub.
• To understand what a driver is, suppose you have finished Function B and are waiting for Function A to be developed. In this case you create a dummy to call Function B. This dummy is called a driver.
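The Function A / Function B example can be sketched in code roughly as follows. All names, the canned marks, and the `RECORDS` table are hypothetical; the point is only which side calls and which side is called.

```python
# Scenario 1: Function A is finished, Function B is not.
def subject_marks_stub(student_id, subject):
    """Stub standing in for the unfinished Function B: returns canned marks."""
    canned = {"math": 80, "physics": 70, "english": 90}
    return canned[subject]


def total_marks(student_id, subjects, get_subject_marks):
    """Function A: sums the marks that Function B reports for each subject."""
    return sum(get_subject_marks(student_id, s) for s in subjects)


# Scenario 2: Function B is finished, Function A is not.
RECORDS = {(1, "math"): 55, (1, "physics"): 65}  # hypothetical stored marks


def subject_marks(student_id, subject):
    """Function B: looks up the marks for one subject."""
    return RECORDS[(student_id, subject)]


def driver_for_subject_marks():
    """Driver: calls Function B with test data and collects the results."""
    return [subject_marks(1, "math"), subject_marks(1, "physics")]
```

Here `total_marks(1, ["math", "physics", "english"], subject_marks_stub)` exercises Function A against the stub, while `driver_for_subject_marks()` exercises Function B without needing Function A at all.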

Integration Testing
• Integration testing is a systematic technique for constructing the software architecture while at the same time conducting tests to uncover errors associated with interfacing.
• The objective is to take unit-tested components and build the program structure that has been dictated by the design.
• The entire program is tested as a whole.

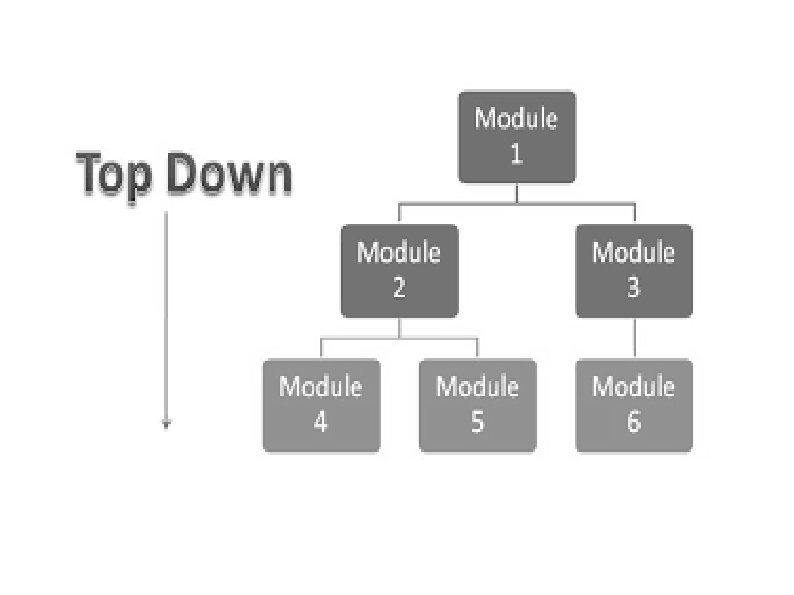

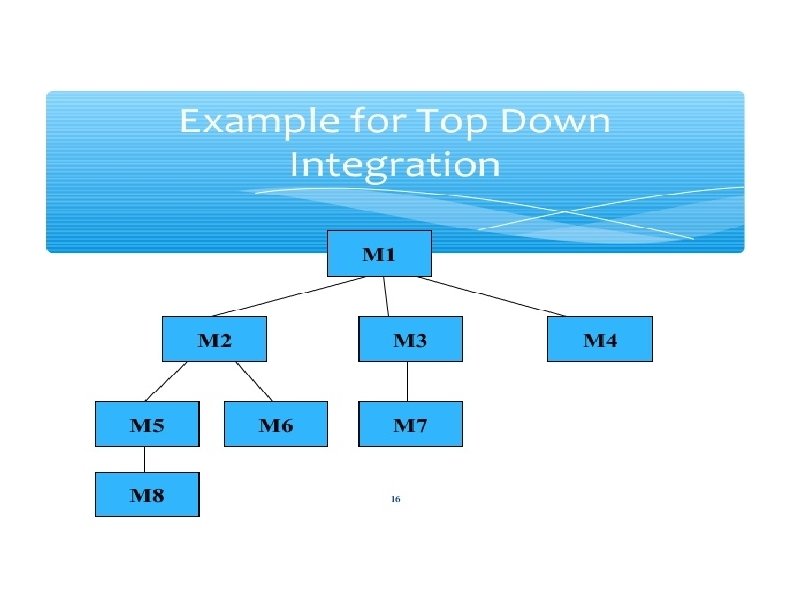

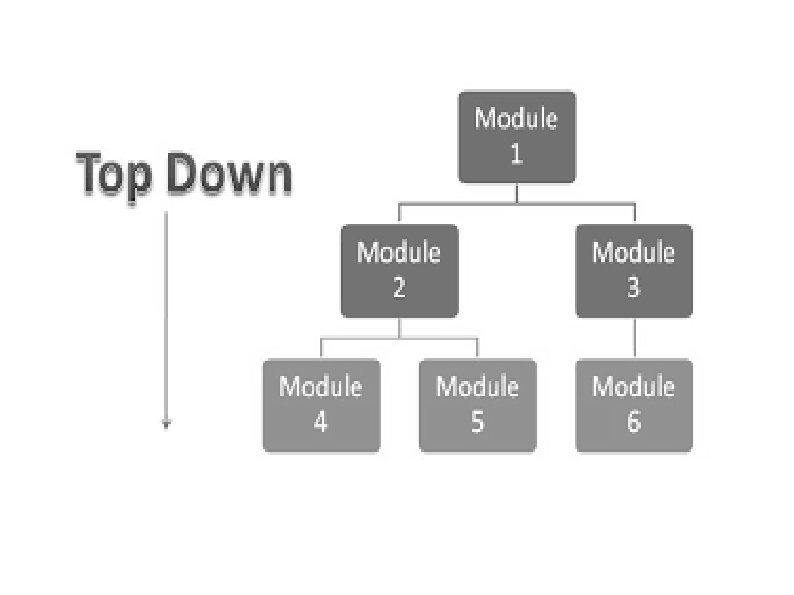

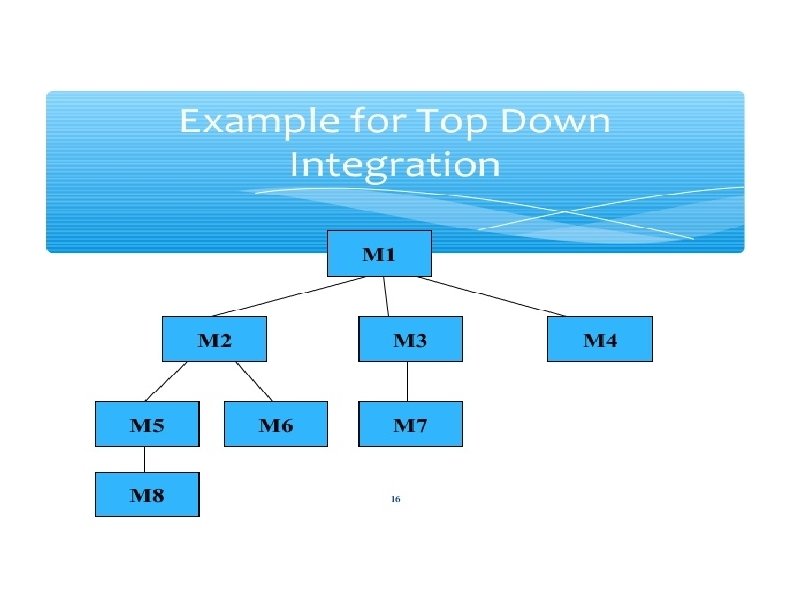

Top-down integration
• It is an incremental approach to the construction of the software architecture.
• Modules are integrated by moving downward, beginning with the main control module (main program).
• Modules subordinate to the main control module are incorporated into the structure in either a depth-first or breadth-first manner.

Steps to perform top-down integration:
• The main control module is used as a test driver, and stubs are substituted for all components directly subordinate to the main module.
• Subordinate stubs are replaced one at a time with actual components, and tests are conducted as each component is integrated.
• On completion of each set of tests, another stub is replaced with the real component.
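The top-down steps can be pictured with a small sketch. The module names and canned data below are assumptions made for illustration: a main control module starts out with stubs for both subordinates, and each stub is then replaced by its real component, with a test run after every replacement.

```python
def read_input_stub():
    """Stub for the input component: returns canned data."""
    return [3, 1, 2]


def process_stub(values):
    """Stub for the processing component: does no real work."""
    return values


def read_input():
    """Hypothetical real input component."""
    return [5, 4, 6]


def process(values):
    """Hypothetical real processing component."""
    return sorted(values)


def main_module(read=read_input_stub, transform=process_stub):
    """Main control module; its subordinate components are passed in."""
    return transform(read())


def integrate_top_down():
    """Replace stubs one at a time, testing after each replacement."""
    results = []
    results.append(main_module())                          # all stubs
    results.append(main_module(transform=process))         # real process
    results.append(main_module(read=read_input, transform=process))  # all real
    return results
```

Each entry in the returned list is the output of the main module at one integration step, so a failure can be tied to the component that was just integrated.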

Bottom-up integration
• As its name implies, bottom-up integration begins construction and testing with the lowest-level components in the program structure.
• Because components are integrated from the bottom up, the need for stubs is eliminated.

Steps for bottom-up integration:
• Low-level components are combined into clusters (also called builds) that perform a specific software subfunction.
• A driver is written to coordinate test case input and output.
• The cluster is tested.
• Drivers are removed and clusters are combined, moving upward in the program structure.

• Components are combined to form clusters.
• Each cluster is tested using a driver, shown as a dashed block. The components in clusters 1 and 2 are subordinate to Ma.
• Drivers D1 and D2 are removed and the clusters are interfaced directly to Ma.
• As integration moves upward, the need for separate test drivers lessens.
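A minimal sketch of the bottom-up steps, assuming a hypothetical cluster of two low-level components: a driver first feeds test input to the cluster, and later the driver is dropped and the cluster is called directly by the higher-level module (named `module_ma` here after Ma in the figure).

```python
# Low-level components that form one cluster (hypothetical examples).
def parse_amount(text):
    """Convert a text field to an integer amount."""
    return int(text.strip())


def apply_discount(amount, percent):
    """Reduce an amount by a whole-number percentage."""
    return amount - amount * percent // 100


def cluster_driver(test_cases):
    """Driver D1: feeds test input to the cluster and collects its output."""
    return [apply_discount(parse_amount(text), pct) for text, pct in test_cases]


def module_ma(text, percent):
    """Higher-level module Ma: once it exists, it calls the cluster directly
    and the driver is no longer needed."""
    return "Total: %d" % apply_discount(parse_amount(text), percent)
```

The driver and `module_ma` call the cluster in the same way, which is why the driver can be discarded once the real caller is available.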

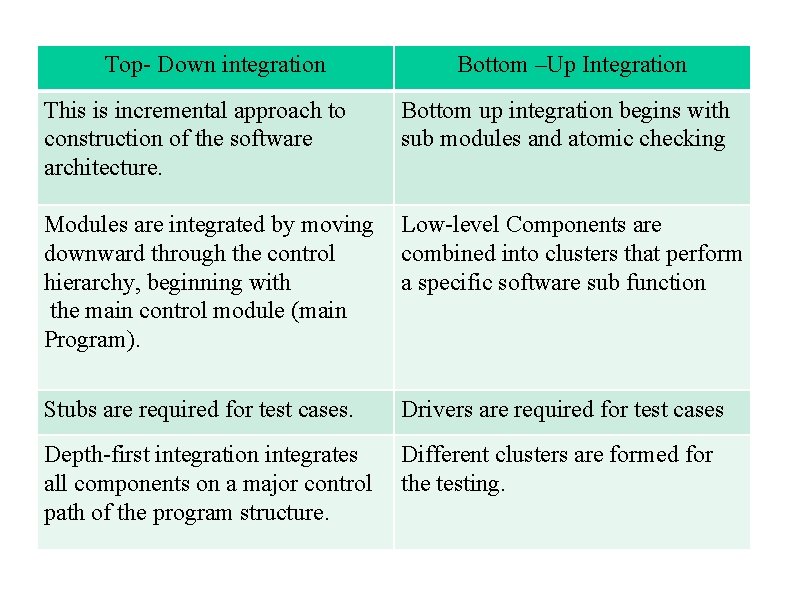

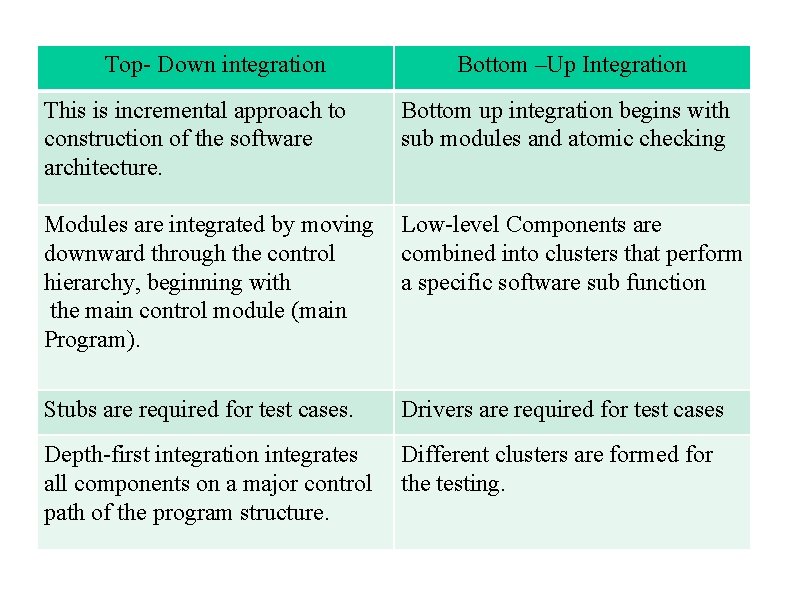

| Top-Down Integration | Bottom-Up Integration |
| --- | --- |
| An incremental approach to the construction of the software architecture. | Integration begins with submodules and the checking of atomic (lowest-level) modules. |
| Modules are integrated by moving downward through the control hierarchy, beginning with the main control module (main program). | Low-level components are combined into clusters that perform a specific software subfunction. |
| Stubs are required for test cases. | Drivers are required for test cases. |
| Depth-first integration integrates all components on a major control path of the program structure. | Different clusters are formed for the testing. |

Regression testing
• Each time a new module is added as part of integration testing, the software changes.
• New data flow paths are established, new I/O may occur, and new control logic is invoked. These changes may cause problems with functions that previously worked flawlessly.
• Regression testing is the re-execution of some subset of tests that have already been conducted, to ensure that changes have not propagated unintended side effects.

• It is the activity that helps to ensure that changes do not introduce unintended behavior or additional errors.
• Regression testing may be conducted manually or by using automated tools.
• As integration testing proceeds, the number of regression tests can grow quite large.
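One way to picture "re-execution of some subset of tests" is sketched below. The components, the tests, and the tagging scheme are all assumptions for the example: each existing test is tagged with the components it exercises, and after a change only the tests that touch the changed components are selected and run again.

```python
def add(a, b):
    return a + b


def scale(x, factor):
    return x * factor


# Tests that have already been conducted; each returns True on success.
def test_add():
    return add(2, 3) == 5


def test_scale():
    return scale(4, 2) == 8


def test_add_then_scale():
    return scale(add(1, 1), 3) == 6


# Each test is tagged with the components it exercises.
REGRESSION_SUITE = {
    test_add: {"add"},
    test_scale: {"scale"},
    test_add_then_scale: {"add", "scale"},
}


def select_regression_tests(changed_components):
    """Pick the subset of tests that touch any changed component."""
    changed = set(changed_components)
    return [t for t, deps in REGRESSION_SUITE.items() if deps & changed]


def run_regression(changed_components):
    """Re-execute the selected tests and report the result of each by name."""
    return {t.__name__: t() for t in select_regression_tests(changed_components)}
```

Selecting by component keeps the re-run small even as the full suite grows during integration.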

Smoke testing
• Smoke testing is an integration testing approach that is commonly used while software products are being developed.
• It is a mechanism designed for time-critical projects, allowing the software team to assess its project on a frequent basis.
• Software components that have been translated into code are integrated into a "build". A build includes all data files, libraries, reusable modules, and engineered components that are required to implement one or more product functions.
• A series of tests is designed to expose errors that will keep the build from performing its function.

• The build is integrated with other builds and the entire product is smoke tested daily.
• The smoke test does not have to be exhaustive, but it should be capable of exposing major problems.
• If the build passes, you can assume that it is stable enough to be tested more thoroughly.
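A smoke test can be as small as a handful of checks that answer "does the build perform its function at all?". The sketch below is one possible shape; the build structure and the three checks are hypothetical.

```python
def build_product():
    """Hypothetical daily build: a mapping of product functions."""
    return {
        "login": lambda user: user == "admin",
        "version": lambda: "1.0",
    }


# A short, non-exhaustive list of checks aimed at major problems only.
SMOKE_CHECKS = [
    ("login function is present", lambda b: "login" in b),
    ("known user can log in", lambda b: b["login"]("admin") is True),
    ("version is reported", lambda b: bool(b["version"]())),
]


def smoke_test(build):
    """Return the names of failed checks; an empty list means the build passes."""
    failures = []
    for name, check in SMOKE_CHECKS:
        try:
            ok = check(build)
        except Exception:
            ok = False  # a crash counts as a failed check
        if not ok:
            failures.append(name)
    return failures
```

If `smoke_test(build_product())` returns an empty list, the build is considered stable enough for more thorough testing.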

Benefits of smoke testing:
1. Integration risk is minimized.
2. The quality of the end product is improved.
3. Error diagnosis and correction are simplified.
4. Progress is easier to assess.

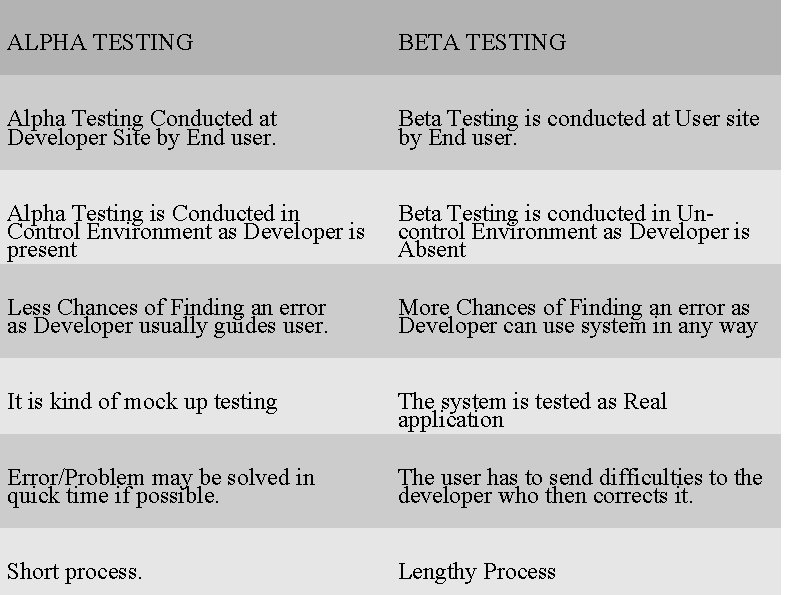

Validation Testing

Alpha testing
• Alpha testing takes place at the developer's site. Developers observe the users and note problems.
• It is performed when development of the application is nearing completion.
• It is typically performed by a group that is independent of the design team but still within the company, e.g. in-house software test engineers or software QA engineers.

• Alpha testing is the last testing done in-house before the software is released to users outside the company.
• It has two phases:
  1. In the first phase, the software is tested by in-house developers. The goal is to catch bugs quickly.
  2. In the second phase, the software is handed over to the software QA staff for additional testing in an environment that is similar to the intended use.

Beta Testing
• Beta testing is also known as field testing. It takes place at the customer's site: the system is sent to users, who install it and use it under real-world working conditions.
• A beta test is the second phase of software testing, in which a sample of the intended audience tries the product out. (Beta is the second letter of the Greek alphabet.)
• Originally, the term alpha test meant the first phase of testing in a software development process.
• The first phase includes unit testing, component testing, and system testing.
• Beta testing can be considered "pre-release testing".

The goal of beta testing is to place your application in the hands of real users outside of your own engineering team to discover any flaws or issues from the user’s perspective that you would not want to have in your final, released version of the application.

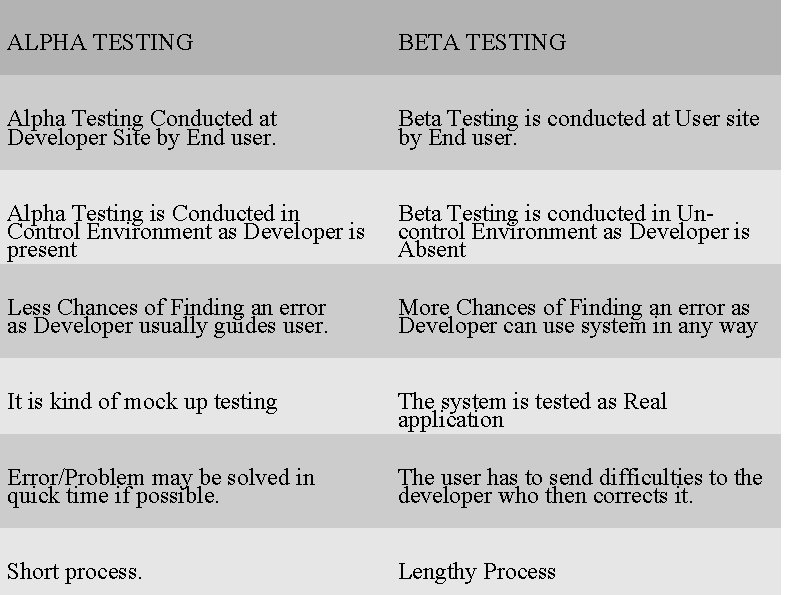

| Alpha Testing | Beta Testing |
| --- | --- |
| Conducted at the developer's site by end users. | Conducted at the user's site by end users. |
| Conducted in a controlled environment, as the developer is present. | Conducted in an uncontrolled environment, as the developer is absent. |
| Less chance of finding an error, as the developer usually guides the user. | More chance of finding an error, as the user can use the system in any way. |
| It is a kind of mock-up testing. | The system is tested as a real application. |
| Errors/problems may be solved quickly, if possible. | The user has to report difficulties to the developer, who then corrects them. |
| Short process. | Lengthy process. |

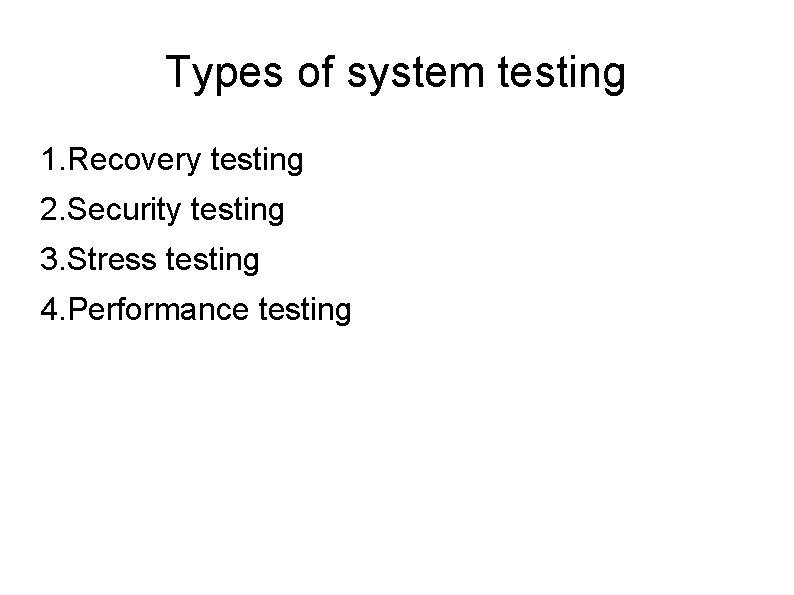

System testing is actually a series of different tests whose primary purpose is to fully exercise the computer-based system. Although each test has a different purpose, all work to verify that system elements have been properly integrated and perform their allocated functions.

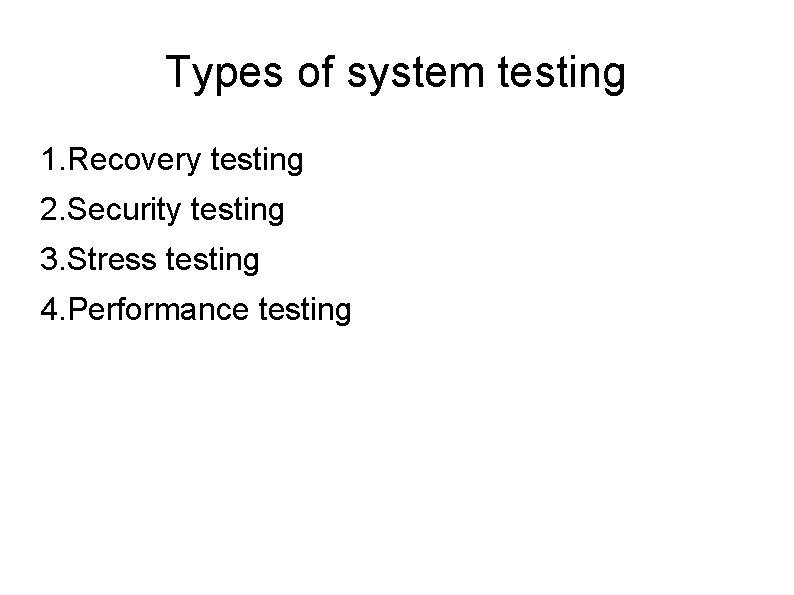

Types of system testing
1. Recovery testing
2. Security testing
3. Stress testing
4. Performance testing

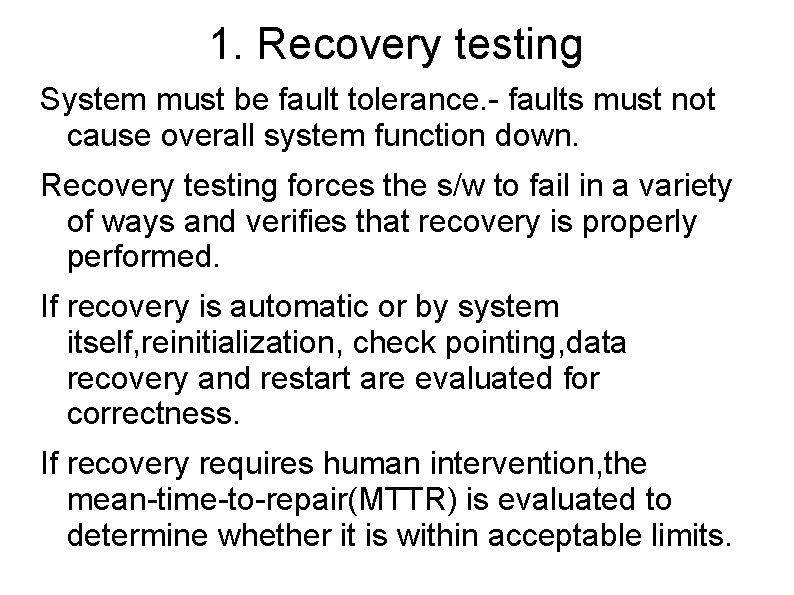

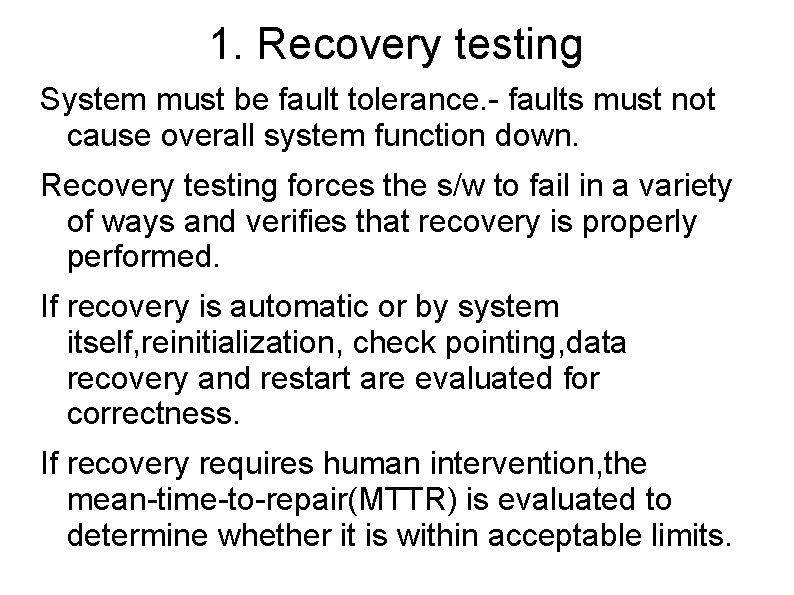

Recovery testing
• The system must be fault tolerant: faults must not cause the overall system function to cease.
• Recovery testing forces the software to fail in a variety of ways and verifies that recovery is properly performed.
• If recovery is automatic (performed by the system itself), reinitialization, checkpointing, data recovery, and restart are evaluated for correctness.
• If recovery requires human intervention, the mean time to repair (MTTR) is evaluated to determine whether it is within acceptable limits.
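The MTTR check can be written down as a small calculation. In this sketch MTTR is taken as the arithmetic mean of the observed repair times; the repair times and the acceptable limit are made-up values.

```python
def mean_time_to_repair(repair_minutes):
    """MTTR as the average of the observed repair times (in minutes)."""
    if not repair_minutes:
        raise ValueError("at least one repair time is required")
    return sum(repair_minutes) / len(repair_minutes)


def recovery_acceptable(repair_minutes, limit_minutes):
    """True if the MTTR is within the acceptable limit."""
    return mean_time_to_repair(repair_minutes) <= limit_minutes


# Hypothetical repair times recorded during three forced failures.
observed = [12, 18, 30]
```

With these values the MTTR is 20 minutes, so a limit of 25 minutes would be met and a limit of 15 minutes would not.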

Security testing
• Security testing is concerned with the overall security of a computer-based system, which can be targeted by unauthorized persons for misuse, for gaining sensitive information, or for actions that can improperly harm individuals.
• Security testing verifies that the protection mechanisms built into a system will in fact protect it from improper penetration.

• During security testing, the tester plays the role of an individual who wants to penetrate the system.
• The tester may attempt to acquire passwords, may attack the system, may purposely cause errors in the hope of penetrating during recovery, or may browse through insecure data in the hope of finding the key to system entry.

Stress testing
• Stress tests are designed to confront programs with abnormal situations.
• A tester who performs stress testing asks, "How high can we crank this up before it fails?"
• Stress testing executes a system in a manner that demands resources in abnormal quantity, frequency, or volume.

Example: generate 10-15 interrupts/sec when the average rate is 1-2/sec.
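The interrupt example can be explored with a simple simulation rather than real hardware. The model below is an assumption made for illustration: interrupts arrive at a fixed rate, the system handles a fixed number per second, and it "fails" when the backlog exceeds a queue limit.

```python
def simulate_load(arrivals_per_sec, capacity_per_sec, seconds, queue_limit):
    """Return the second at which the backlog overflows, or None if it never does."""
    backlog = 0
    for second in range(1, seconds + 1):
        backlog += arrivals_per_sec
        backlog = max(0, backlog - capacity_per_sec)
        if backlog > queue_limit:
            return second
    return None


def find_breaking_rate(capacity_per_sec, seconds, queue_limit, max_rate):
    """Crank the arrival rate up until the simulated system fails."""
    for rate in range(1, max_rate + 1):
        if simulate_load(rate, capacity_per_sec, seconds, queue_limit) is not None:
            return rate
    return None
```

For a hypothetical system that handles 5 interrupts/sec with a queue limit of 20, `simulate_load(2, 5, 60, 20)` models the normal rate and `simulate_load(15, 5, 60, 20)` models the stress rate from the example.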

Performance Testing
• Software in real-time and embedded systems must conform to performance requirements, not only provide the required function.
• Performance testing is designed to test the run-time performance of software within the context of an integrated system.
• Performance testing occurs throughout all steps in the testing process.
• Even at the unit level, the performance of an individual module may be assessed as tests are conducted.

White Box Testing
• White box testing is also known as glass box testing or clear box testing.
• In white box testing the code itself is tested, and it is done by software developers.
• In this testing, test cases are written to check:
  1. All independent paths, at least once
  2. All logical decisions (true and false)
  3. All loops (at their boundaries)
  4. All internal data structures

• White box testing helps to remove logical errors and errors related to logical paths.
• The types of white box testing are:
  – Statement testing
  – Condition coverage
  – Decision coverage
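The difference between statement coverage and decision coverage shows up even in a tiny function. In the hypothetical example below, a single test with `is_premium=True` executes every statement, yet it exercises only the true outcome of the decision; a second test is needed to cover the false outcome. The helper that records outcomes is hand-written for this one function, not a general coverage tool.

```python
def apply_fee(balance, is_premium):
    """Hypothetical unit: premium accounts pay no fee, others pay 10."""
    fee = 10
    if is_premium:      # the only decision in the function
        fee = 0
    return balance - fee


def decision_outcomes(test_inputs):
    """Which outcomes of the `if is_premium` decision do these inputs exercise?"""
    return {bool(is_premium) for _, is_premium in test_inputs}


statement_tests = [(100, True)]                # runs every statement
decision_tests = [(100, True), (100, False)]   # also takes the false branch
```

The second test matters because the false branch is where the default fee of 10 is actually applied.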

Black box testing
• Black box testing ignores the internal mechanism of the system or component and focuses only on the output generated.
• The system is treated as a black box.
• Inputs are given and outputs are compared against the specification.
• Test cases are based on the requirements specification.
• Testers do not require knowledge of the code in which the system is developed.
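A black box test suite is written from the specification alone, so the same cases can be run against any implementation. The sketch below assumes a made-up specification ("a password is valid if it is 8 to 16 characters long and contains at least one digit") and two hypothetical implementations, one of which is deliberately faulty.

```python
# Test cases derived only from the specification: (input, expected output).
SPEC_CASES = [
    ("abc12345", True),        # 8 characters, contains digits
    ("abc1234", False),        # 7 characters: too short
    ("a" * 15 + "1", True),    # 16 characters: longest valid length
    ("a" * 16 + "1", False),   # 17 characters: too long
    ("abcdefgh", False),       # no digit
]


def black_box_test(is_valid_password):
    """Return the cases whose output differs from the specification."""
    return [(text, expected) for text, expected in SPEC_CASES
            if is_valid_password(text) != expected]


def implementation_a(text):
    return 8 <= len(text) <= 16 and any(ch.isdigit() for ch in text)


def implementation_b(text):
    # Deliberately faulty: the upper bound is off by one.
    return 8 <= len(text) <= 17 and any(ch.isdigit() for ch in text)
```

`black_box_test` never looks inside the implementation; it only compares outputs with what the specification requires.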

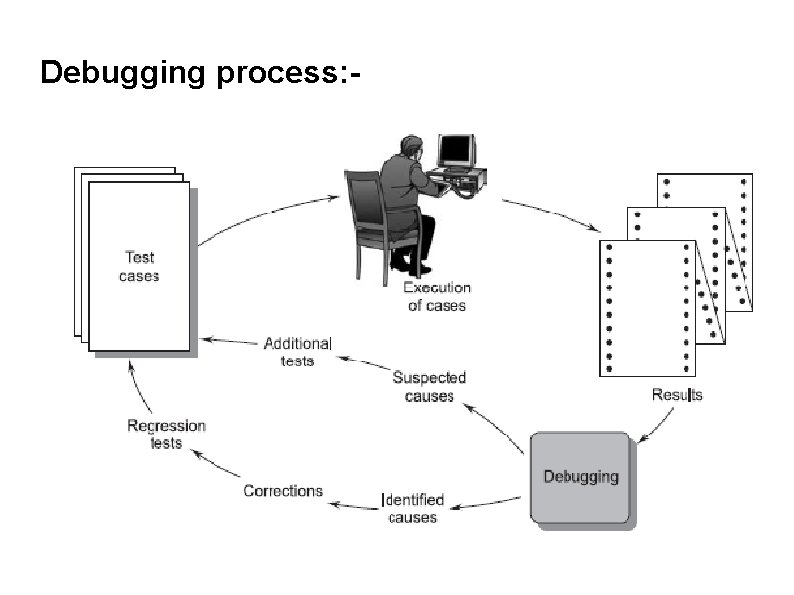

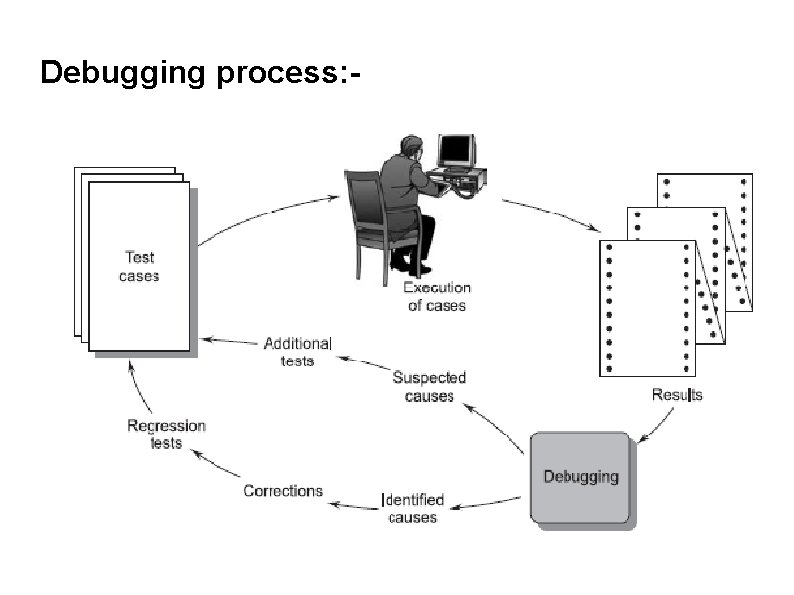

Concept and need of debugging
• A testing strategy is planned, test cases are designed, and the results are evaluated by comparing them with prescribed expectations.
• Successful testing, i.e. testing that uncovers errors, leads to the process called debugging.
• When a test case uncovers an error but not its cause, debugging is used to locate and remove the cause.

Debugging process:

• Debugging is not testing, but it always occurs as an outcome of testing.
• As shown in the figure above, the process begins with the execution of test cases, and the results are assessed.
• In many cases it is difficult to match a symptom to its cause in order to fix the error.
• The debugging process connects the symptom to its cause and results in error correction.

Characteristics of bugs:
1. The symptom may appear in one part of a program while the cause is actually located at a site that is far removed. Highly coupled components make this situation worse.
2. The symptom may disappear temporarily when another error is corrected.
3. The symptom may actually be caused by non-errors (e.g. round-off inaccuracies).
4. The symptom may be caused by human error that is not easily traced.
5. The symptom may be the result of a timing problem rather than a processing problem.
6. It may be difficult to reproduce the input conditions accurately.
7. The symptom may be intermittent. This is particularly common in embedded systems that combine software and hardware.
8. The symptom may be caused by a number of tasks running on different processors at the same time.

Debugging strategies:
• Debugging aims to find and correct the cause of a software error by locating the source of the problem.
• There are three debugging strategies:
  1. Brute force
  2. Backtracking
  3. Cause elimination

1. Brute Force:
• This category of debugging is probably the most common and least efficient method for isolating the cause of a software error.
• Brute force debugging methods are applied when all else fails.
• Using a "let the computer find the error" philosophy, memory dumps are taken, run-time traces are invoked, and the program is loaded with WRITE statements. The hope is that somewhere in the morass of information produced, a clue will be found that leads to the cause of the error.
• Although the mass of information produced may ultimately lead to success, it more frequently leads to wasted effort and time. Thought must be expended first.

2. Backtracking:
• It is a fairly common debugging strategy that can be used successfully in small programs.
• Beginning at the site where a symptom has been uncovered, the source code is traced backward (manually) until the site of the cause is found.
• Unfortunately, as the number of source lines increases, the number of potential backward paths may become unmanageably large.

3. Cause Elimination:
• It is manifested by induction or deduction and introduces the concept of binary partitioning.
• Data related to the error occurrence are organized to isolate potential causes.
• A "cause hypothesis" is devised and the aforementioned data are used to prove or disprove the hypothesis.
• Alternatively, a list of all possible causes is developed and tests are conducted to eliminate each.
• If initial tests indicate that a particular cause hypothesis shows promise, the data are refined in an attempt to isolate the bug.
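Binary partitioning can be illustrated with a small sketch. It assumes a hypothetical program that fails on a batch of input records and that exactly one record is the cause; the hypothesis "the cause is in the first half" is tested, and whichever half is eliminated is discarded, until a single record remains.

```python
def process(records):
    """Hypothetical program being debugged: fails on a negative record."""
    for r in records:
        if r < 0:
            raise ValueError("bad record")
    return sum(records)


def fails(records):
    """True if the program fails on this subset of the input."""
    try:
        process(records)
        return False
    except ValueError:
        return True


def isolate_failing_record(records):
    """Binary partitioning: return the index of the single failing record.

    Assumes the full input fails and exactly one record is responsible.
    """
    lo, hi = 0, len(records)
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if fails(records[lo:mid]):   # hypothesis: cause is in the first half
            hi = mid
        else:                        # first half eliminated
            lo = mid
    return lo
```

Each test halves the remaining candidates, so the cause is isolated in roughly log2(n) runs instead of checking every record in turn.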