Software Testing and Integration Testing

- Slides: 74

Software Testing

Definitions

- Testing: the execution of a program to find its faults. While more time is spent on testing than in any other phase of software development, there is considerable confusion about its purpose. Many software professionals believe that tests are run to show that the program works rather than to learn about its faults.
- Verification: the process of proving the program's correctness (executes the program in a test or simulated environment).
- Validation: the process of finding errors by executing the program in a real environment.
- Debugging: diagnosing the error and correcting it.

VERIFICATION & VALIDATION

- Verification and validation are sometimes confused. They are, in fact, different activities. The difference between them is concisely summarized by Boehm:
  - Validation: "Are we building the right product?"
  - Verification: "Are we building the product right?"
- Verification typically involves reviews and meetings to evaluate documents, plans, code, requirements, and specifications. This can be done with checklists, issues lists, walkthroughs, and inspection meetings.
- Validation typically involves actual testing and takes place after verification is completed.
- The verification and validation process continues in a cycle until the software is free of known defects.

Software Testing Objectives

- A common view of testing is that all untested code has a roughly equal probability of containing defects.
- DeMarco asserts that the incidence of defects in untested code varies widely and that no amount of testing can remove more than 50 percent of them.
- However, there are data showing that properly run unit tests are potentially capable of detecting as many as 70 percent of the defects in a program.
- The objective should therefore be to remove as many defects as possible before testing, since the quality improvement potential of testing is limited.

Types of Software Tests

- Unit Testing (White Box)
- Integration Testing
- Function Testing (Black Box)
- Regression Testing
- System Test
- Acceptance and Installation Tests

Unit Testing (White Box)

- Individual components are tested.
- It is a path test.
- The idea is to focus on a relatively small segment of code and aim to exercise a high percentage of the internal paths.
  - The simplest approach is to ensure that every statement is exercised at least once. A more stringent criterion is to require coverage of every path within a program.
- It is simplified when a module is designed with high cohesion, which:
  - reduces the number of test cases;
  - allows errors to be more easily predicted and uncovered.
- Disadvantages: the tester may be biased by previous experience, and the test values may not cover all possible values.
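A minimal sketch of white-box unit testing in Python, using the standard `unittest` module. The function `classify_triangle` and the test class are hypothetical, not taken from the slides. Each test is chosen by reading the code, so that together the tests execute every statement and drive every `if` to both its true and false outcome.

```python
import unittest


def classify_triangle(a, b, c):
    """Hypothetical unit under test: classify a triangle by its side lengths."""
    if a <= 0 or b <= 0 or c <= 0:
        raise ValueError("sides must be positive")
    if a + b <= c or a + c <= b or b + c <= a:
        return "invalid"
    if a == b == c:
        return "equilateral"
    if a == b or b == c or a == c:
        return "isosceles"
    return "scalene"


class ClassifyTriangleWhiteBoxTest(unittest.TestCase):
    # One test per path through the function, chosen by reading its code.

    def test_non_positive_side_raises(self):
        with self.assertRaises(ValueError):
            classify_triangle(0, 1, 1)

    def test_violates_triangle_inequality(self):
        self.assertEqual(classify_triangle(1, 2, 3), "invalid")

    def test_equilateral(self):
        self.assertEqual(classify_triangle(2, 2, 2), "equilateral")

    def test_isosceles(self):
        self.assertEqual(classify_triangle(2, 2, 3), "isosceles")

    def test_scalene(self):
        self.assertEqual(classify_triangle(3, 4, 5), "scalene")
```

Run it with `python -m unittest` on the module. Because the function contains no loops, these five tests also exercise every path through its `if` statements; a stricter criterion such as condition coverage would add cases for each clause of the compound `or` conditions.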

Integration Testing

- Top-down integration test
- Bottom-up integration test
- Sandwich integration (explained later)

Function Testing (Black Box)

Functional testing examines the functions the program is to perform and devises a sequence of inputs to test them.

- Designed to meet specifications.
- Disadvantages:
  1. It needs explicitly stated requirements.
  2. It covers only a small portion of the possible test conditions, since exhaustive black-box testing is generally impossible.
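By contrast, a black-box test is written from the specification alone. The sketch below assumes a hypothetical specification: `shipping_fee(weight_kg)` charges 5 for weights in (0, 1], 8 for weights in (1, 5], and rejects anything else. The cases are boundary values plus one representative per input class; the implementation is included only so the example runs.

```python
def shipping_fee(weight_kg: float) -> int:
    """Hypothetical implementation; the black-box tests below never look at it."""
    if weight_kg <= 0 or weight_kg > 5:
        raise ValueError("weight out of range")
    return 5 if weight_kg <= 1 else 8


# Cases derived only from the (hypothetical) specification.
SPEC_CASES = [
    (0.5, 5),   # representative of the first class
    (1.0, 5),   # upper boundary of the first class
    (1.01, 8),  # just above that boundary
    (5.0, 8),   # upper boundary of the valid range
]
INVALID_INPUTS = [0, -1, 5.01]  # outside the valid range: must be rejected


def run_black_box_tests():
    """Return a list of (input, expected, actual) tuples for every failed case."""
    failures = []
    for weight, expected in SPEC_CASES:
        actual = shipping_fee(weight)
        if actual != expected:
            failures.append((weight, expected, actual))
    for weight in INVALID_INPUTS:
        try:
            shipping_fee(weight)
            failures.append((weight, "ValueError", "no error"))
        except ValueError:
            pass
    return failures


print(run_black_box_tests())  # prints [] when every case passes
```

Seven cases stand in for an unbounded input space, which is exactly the "small portion of the possible test conditions" limitation.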

Regression Testing

- The progressive phase introduces and tests new functions, uncovering problems in the newly added or modified modules and in their interfaces with the previously integrated modules.
- The regressive phase concerns the effect of the newly introduced changes on all the previously integrated code.
- Problems arise when errors made in incorporating new functions affect previously tested functions.

Regression Testing

- Each new addition or change to baselined software may cause problems with functions that previously worked flawlessly.
- Regression testing re-executes a small subset of tests that have already been conducted:
  - it ensures that changes have not propagated unintended side effects;
  - it helps to ensure that changes do not introduce unintended behavior or additional errors.
- The regression test suite contains three different classes of test cases:
  - a representative sample of tests that will exercise all software functions;
  - additional tests that focus on software functions that are likely to be affected by the change;
  - tests that focus on the actual software components that have been changed.
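The three classes of regression test cases can be turned into a simple selection rule. This sketch assumes that each test is tagged with the components it exercises and that a map from each component to its direct dependents is available; all test and component names are hypothetical.

```python
from typing import Dict, List, Set


def select_regression_suite(
    tests: Dict[str, Set[str]],       # test name -> components it exercises
    representative: Set[str],         # sample chosen to exercise all functions
    changed: Set[str],                # components modified by this change
    dependents: Dict[str, Set[str]],  # component -> components that use it
) -> List[str]:
    """Pick the three classes of regression tests for one change."""
    # Components likely to be affected: direct dependents of a changed one
    # (one level only; a fuller tool might follow the graph transitively).
    affected: Set[str] = set()
    for component in changed:
        affected |= dependents.get(component, set())

    # Class 1: the representative sample is always included.
    selected = set(representative)
    for name, exercised in tests.items():
        # Class 3: tests of the changed components themselves.
        # Class 2: tests of functions likely to be affected by the change.
        if exercised & changed or exercised & affected:
            selected.add(name)
    return sorted(selected)


tests = {
    "t_login": {"auth"},
    "t_cart_totals": {"cart"},
    "t_checkout": {"payment"},
    "t_report": {"reporting"},
}
suite = select_regression_suite(
    tests,
    representative={"t_login"},
    changed={"payment"},
    dependents={"payment": {"cart"}},
)
print(suite)  # ['t_cart_totals', 't_checkout', 't_login']
```

Here `t_report` is skipped because nothing it exercises was changed or depends on the change.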

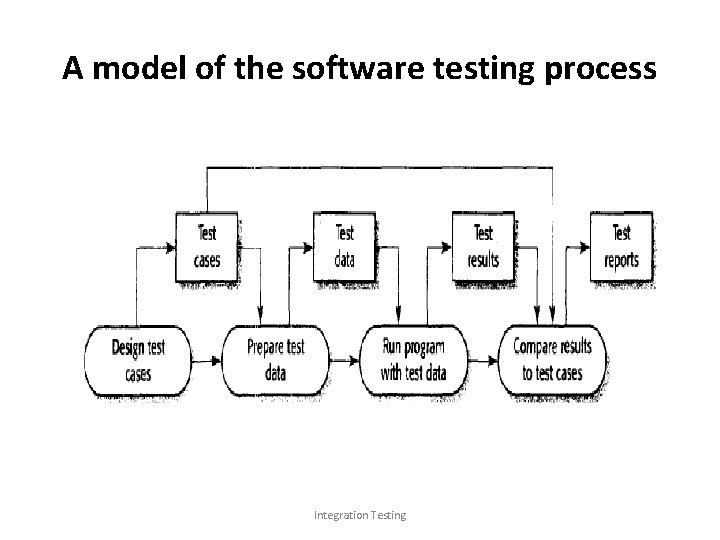

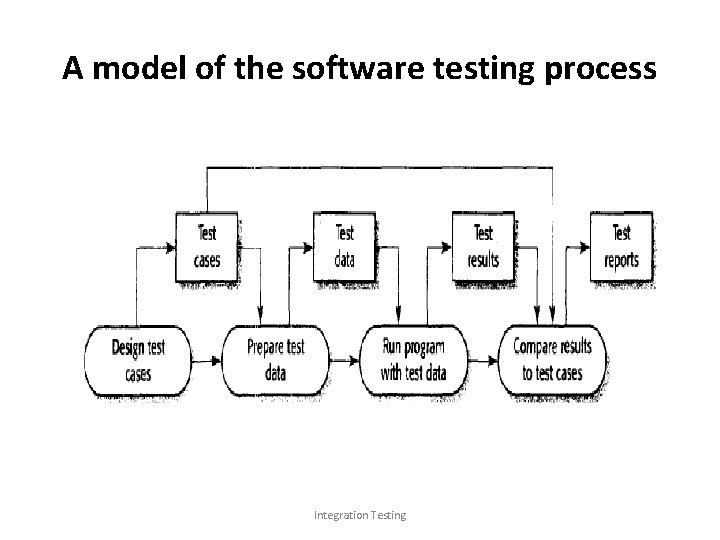

A model of the software testing process

What is Test Planning?

- Test planning defines the functions, roles and methods for all test phases.
- Test planning usually starts during the requirements phase.
- Major test plan elements are:
  1. Objectives for each test phase
  2. Schedules and responsibilities for each test activity
  3. Availability of tools, facilities and test libraries
  4. Criteria for test completion

Testing phases in the software process

Testing phases in the software process

- Normally, component development and testing are interleaved.
- Programmers make up their own test data. This is an economically sensible approach, as the programmer knows the component best and is therefore the best person to generate test cases.
- In extreme programming, tests are developed along with the requirements before development starts.
- Later stages of testing involve integrating work from a number of programmers and must be planned in advance.
- An independent team of testers should work from preformulated test plans that are developed from the system specification and design.
- The previous slide shows how test plans are the link between testing and development activities.

Test Execution & Reporting

- Testing should be treated like an experiment, carefully controlled and recorded so that it can be reproduced.
- Testing requires that all irregular behavior be noted and investigated.
- Large companies keep a special library with copies of all test reports, incident forms, and test plans.

When is Testing Complete?

- There is no definitive answer to this question.
- Every time a user executes the software, the program is being tested.
- Sadly, testing usually stops when a project is running out of time, money, or both.
- One approach is to divide the test results into various severity levels.
  - Then consider testing to be complete when certain levels of errors no longer occur or have been repaired or eliminated.

Ensuring a Successful Software Test Strategy

- Specify product requirements in a quantifiable manner long before testing commences.
- State testing objectives explicitly.
- Understand the users of the software (through use cases) and develop a profile for each user category.
- Use effective formal technical reviews as a filter prior to testing to reduce the amount of testing required.
- Conduct formal technical reviews to assess the test strategy and test cases themselves.
- Develop a continuous improvement approach for the testing process.

Validation Testing

Background

- Validation testing follows integration testing.
- It focuses on user-visible actions and user-recognizable output from the system.
- It demonstrates conformity with requirements.
- It is designed to ensure that:
  - all functional requirements are satisfied;
  - all behavioral characteristics are achieved;
  - all performance requirements are attained;
  - documentation is correct;
  - usability and other requirements are met (e.g., compatibility, error recovery, maintainability).
- After each validation test, one of two outcomes applies:
  - the function or performance characteristic conforms to specification and is accepted; or
  - a deviation from specification is uncovered and a deficiency list is created.
- A configuration review or audit ensures that all elements of the software configuration have been properly developed, catalogued, and have the necessary detail for entering the maintenance phase of the software life cycle.

Alpha and Beta Testing

- Alpha testing:
  - conducted at the developer's site by end users;
  - software is used in a natural setting with developers watching intently;
  - testing is conducted in a controlled environment.
- Beta testing:
  - conducted at end-user sites;
  - the developer is generally not present;
  - it serves as a live application of the software in an environment that cannot be controlled by the developer;
  - the end user records all problems that are encountered and reports them to the developers at regular intervals.
- After beta testing is complete, software engineers make software modifications and prepare for release of the software product to the entire customer base.

The Art of Debugging

Debugging Process

- Debugging occurs as a consequence of successful testing.
- It is still very much an art rather than a science.
- Good debugging ability may be an innate human trait.
- Large variances in debugging ability exist.
- The debugging process begins with the execution of a test case.
- Results are assessed, and a difference between expected and actual performance is encountered.
- This difference is a symptom of an underlying cause that lies hidden.
- The debugging process attempts to match symptom with cause, thereby leading to error correction.

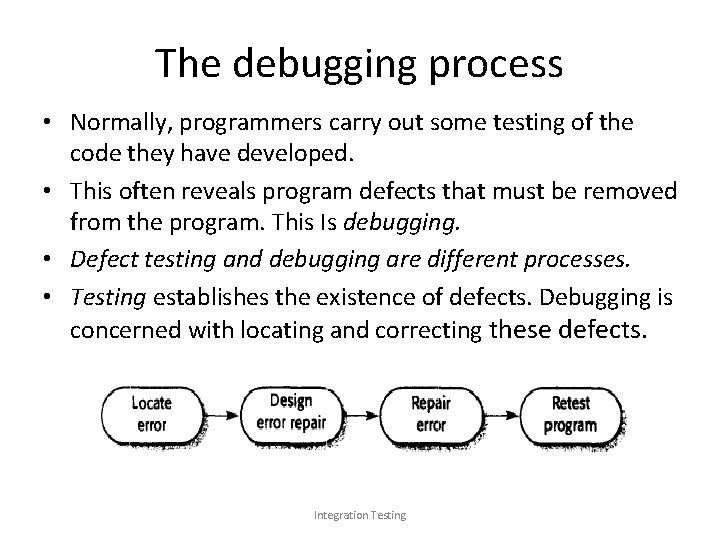

The debugging process

- Normally, programmers carry out some testing of the code they have developed.
- This often reveals program defects that must be removed from the program. This is debugging.
- Defect testing and debugging are different processes.
- Testing establishes the existence of defects. Debugging is concerned with locating and correcting these defects.

Why is Debugging So Difficult?

- The symptom and the cause may be geographically remote.
- The symptom may disappear (temporarily) when another error is corrected.
- The symptom may actually be caused by nonerrors (e.g., round-off inaccuracies).
- The symptom may be caused by human error that is not easily traced.
- The symptom may be a result of timing problems rather than processing problems.
- The symptom may be intermittent, involving both hardware and software.

Debugging Strategies

- The objective of debugging is to find and correct the cause of a software error.
- Bugs are found by a combination of systematic evaluation, intuition, and luck.
- Debugging methods and tools are not a substitute for careful evaluation based on a complete design model and clear source code.
- Some debugging strategies are:
  - brute force;
  - backtracking.

Strategy #1: Brute Force

- Most commonly used and least efficient method.
- Used when all else fails.
- Involves the use of memory dumps, run-time traces, and output statements.
- Often leads to wasted effort and time.
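As an illustration of a run-time trace, the sketch below uses Python's standard `sys.settrace` hook to log every Python-level call and return while a hypothetical `average` function runs. The slides do not name a tracing tool; this is only one possible choice.

```python
import sys


def make_tracer(log):
    """Build a trace function that records every Python-level call and return."""
    def tracer(frame, event, arg):
        name = frame.f_code.co_name
        if event == "call":
            # At a 'call' event the frame's locals are just the arguments.
            log.append(f"call {name} with {dict(frame.f_locals)}")
        elif event == "return":
            # At a 'return' event, arg is the value being returned.
            log.append(f"return {name} -> {arg!r}")
        return tracer  # keep tracing inside the called frame
    return tracer


def average(values):
    # Hypothetical function being debugged.
    return sum(values) / len(values)


log = []
sys.settrace(make_tracer(log))
try:
    average([2, 4])
finally:
    sys.settrace(None)  # always switch tracing off again
print("\n".join(log))
```

In a real program a trace like this quickly grows to thousands of lines, which is part of why brute force so often wastes effort.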

Strategy #2: Backtracking

- Can be used successfully in small programs.
- The method starts at the location where a symptom has been uncovered.
- The source code is then traced backward (manually) until the location of the cause is found.
- In large programs, the number of potential backward paths may become unmanageably large.

Three Questions to Ask Before Correcting the Error

- Is the cause of the bug reproduced in another part of the program?
  - Similar errors may be occurring in other parts of the program.
- What next bug might be introduced by the fix that I'm about to make?
  - The source code (and even the design) should be studied to assess the coupling of logic and data structures related to the fix.
- What could we have done to prevent this bug in the first place?
  - This is the first step toward software quality assurance.
  - By correcting the process as well as the product, the bug will be removed from the current program and may be eliminated from all future programs.

Integration Testing

What is Integration?

The process of combining individually developed components into a system.

(Figure: components A and B are combined into AB, which is then combined with C into the system ABC.)

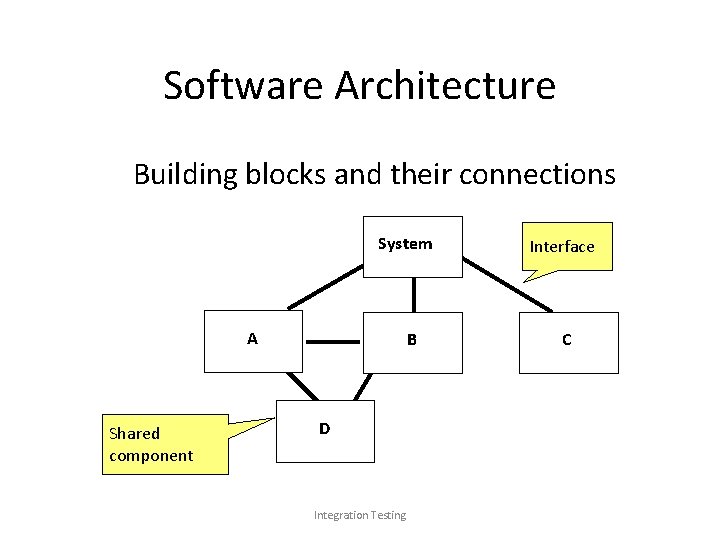

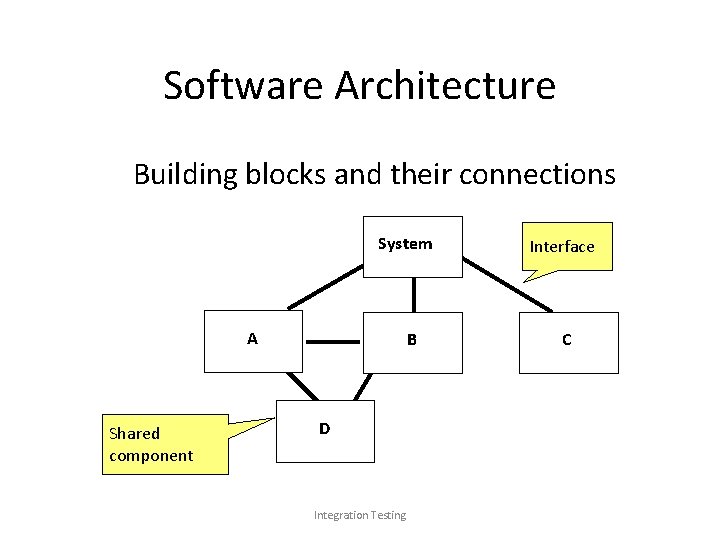

Software Architecture

Building blocks and their connections.

(Figure: a system made of components A, B, C and D, with a shared component and an interface between components labeled.)

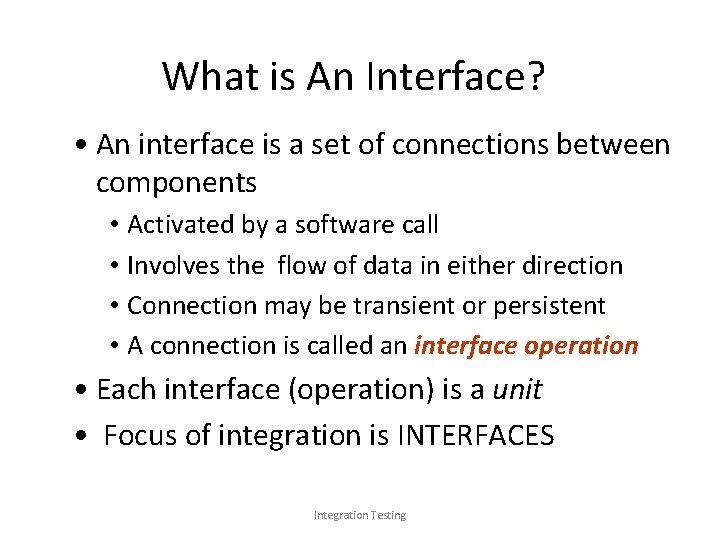

What is an Interface?

- An interface is a set of connections between components.
- It is activated by a software call.
- It involves the flow of data in either direction.
- A connection may be transient or persistent.
- A connection is called an interface operation.
- Each interface (operation) is a unit.
- The focus of integration is INTERFACES.

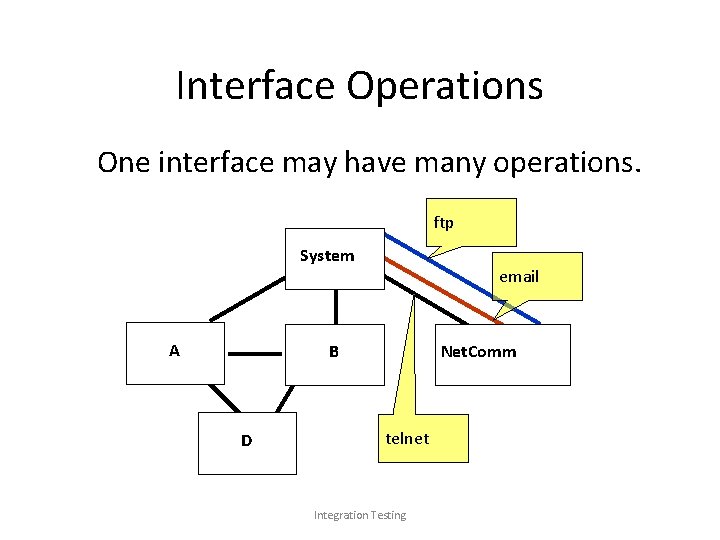

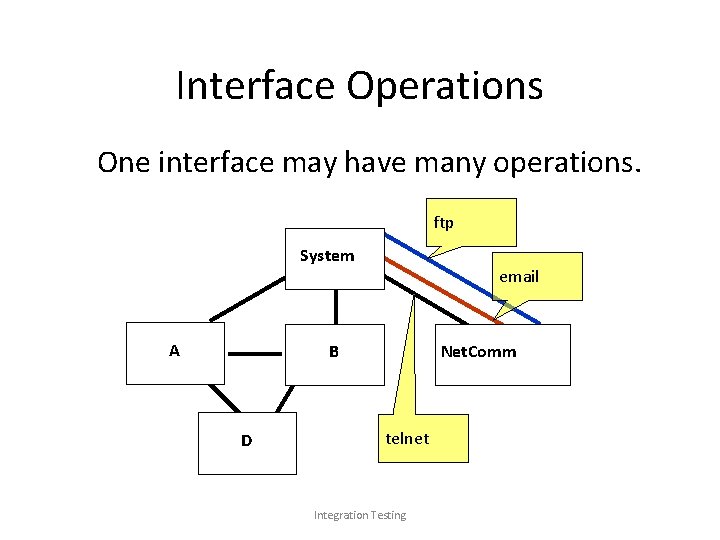

Interface Operations

One interface may have many operations.

(Figure: a system with components A, B and D connected through a NetComm interface that offers the operations ftp, email and telnet.)

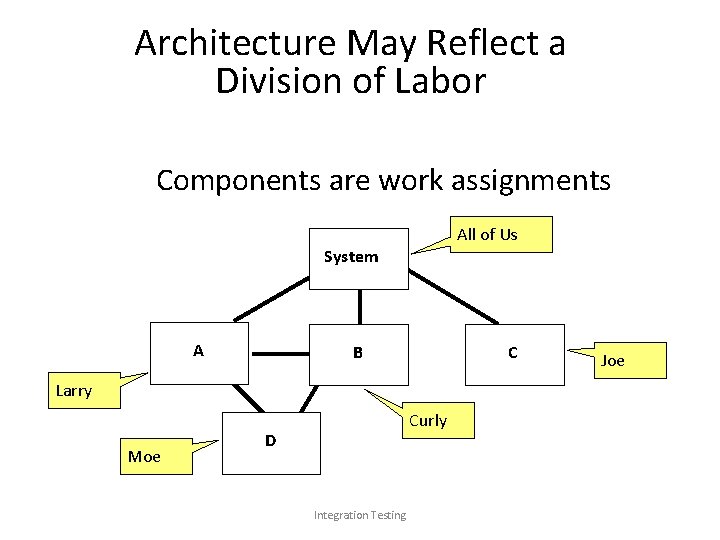

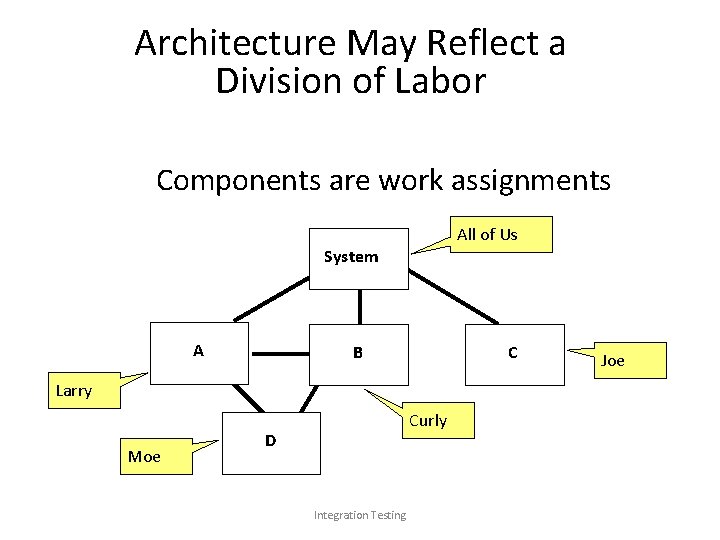

Architecture May Reflect a Division of Labor

Components are work assignments.

(Figure: the whole system is assigned to "All of Us", while components A, B, C and D are assigned to individual developers: Larry, Moe, Curly and Joe.)

What is Integration Testing?

- It is defined as a systematic technique for constructing the software architecture.
  - At the same time integration is occurring, tests are conducted to uncover errors associated with interfaces.
- The objective is to take unit-tested modules and build a program structure based on the prescribed design.
- The program is constructed and tested in small increments.
- Errors are easier to isolate and correct.
- Interfaces are more likely to be tested completely.
- A systematic test approach is applied.
- Software integration test harness: test stubs and test drivers.

Test Drivers and Test Stubs

- What are software test drivers?
  - Software test drivers are programs that simulate the behavior of the software components (or modules) that control the module under test.
  - An example may be a simple main program that accepts test case data, passes such data to the component being tested, and prints the returned results.
- What are software test stubs?
  - Software test stubs are programs that simulate the behavior of the software components (or modules) on which the module under test depends.
  - They replace modules that are subordinate to (called by) the component to be tested.
  - A stub uses the replaced module's exact interface, may do minimal data manipulation, provides verification of entry, and returns control to the module undergoing testing.
- Drivers and stubs both represent overhead:
  - both must be written, but neither is part of the installed software product.
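A minimal sketch of a driver and a stub around one module under test. `PriceConverter`, `ExchangeRateStub` and `driver` are hypothetical names: the driver plays the role of the missing control module, and the stub replaces the missing subordinate rate service with a canned exchange rate.

```python
class ExchangeRateStub:
    """Stub for a subordinate module that is not ready yet (hypothetical).

    It offers the same get_rate() interface as the real module, records each
    call so the entry can be verified, and returns a canned value.
    """

    def __init__(self, canned_rate: float) -> None:
        self.canned_rate = canned_rate
        self.calls = []

    def get_rate(self, from_currency: str, to_currency: str) -> float:
        self.calls.append((from_currency, to_currency))
        return self.canned_rate


class PriceConverter:
    """Module under test: converts prices using its subordinate rate service."""

    def __init__(self, rate_service) -> None:
        self.rate_service = rate_service

    def convert(self, amount: float, from_currency: str, to_currency: str) -> float:
        rate = self.rate_service.get_rate(from_currency, to_currency)
        return round(amount * rate, 2)


def driver(test_cases):
    """Driver standing in for the missing control module: it feeds test data
    to the module under test and prints the returned results."""
    stub = ExchangeRateStub(canned_rate=0.5)
    converter = PriceConverter(stub)
    verdicts = []
    for amount, src, dst, expected in test_cases:
        actual = converter.convert(amount, src, dst)
        verdict = "PASS" if actual == expected else "FAIL"
        print(f"{verdict}: convert({amount}, {src!r}, {dst!r}) = {actual}, expected {expected}")
        verdicts.append(verdict)
    return verdicts, stub.calls


verdicts, calls = driver([(10, "USD", "GBP", 5.0), (4, "EUR", "GBP", 2.0)])
```

Both the stub and the driver are throwaway code, which is the overhead the slide mentions; when the real rate service is integrated, `PriceConverter` is constructed with it instead of the stub.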

Traditional Software Integration Strategy

- There are two groups of software integration strategies:
  - non-incremental software integration;
  - incremental software integration.
- Non-incremental software integration:
  - the big bang integration approach.
- Incremental software integration adds unit-tested program modules or components one by one and tests each resulting combination.
- Types of incremental integration:
  - top-down software integration;
  - bottom-up software integration;
  - sandwich integration: a combination of top-down and bottom-up.

Non-incremental Integration

- Big bang: combine (or integrate) all parts at once.
- Advantages: simple.
- Disadvantages:
  - hard to debug, not easy to isolate errors;
  - not easy to validate test results;
  - impossible to form an integrated system.

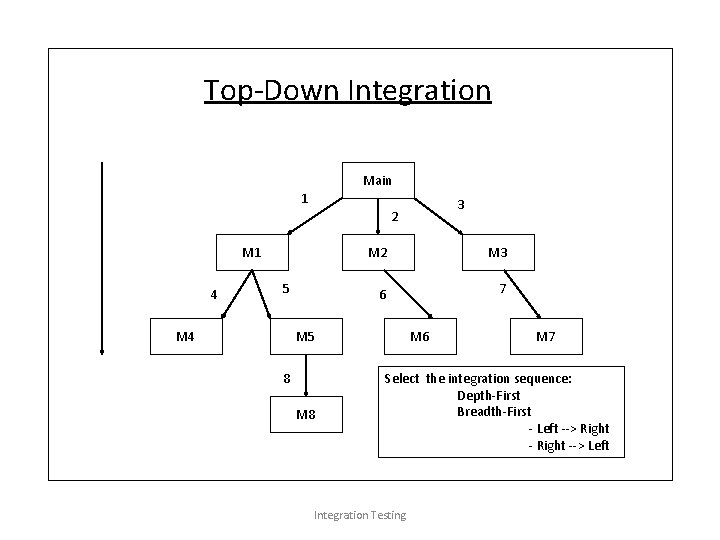

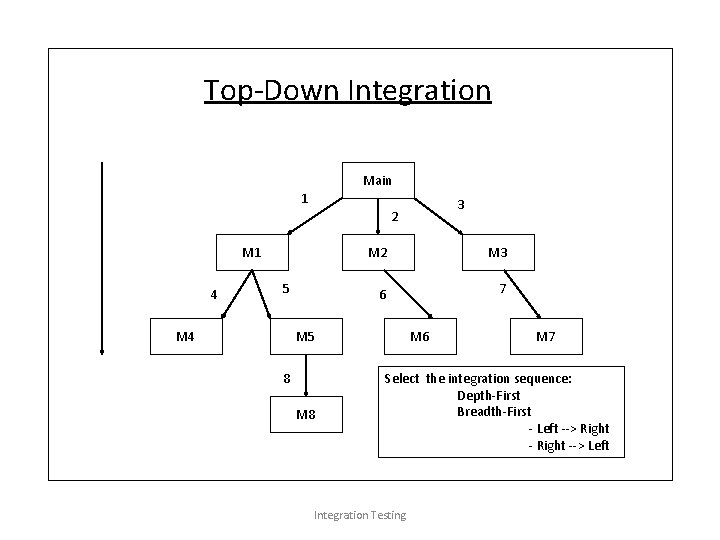

Top-down Integration

- Idea: modules are integrated by moving downward through the control hierarchy, beginning with the main module.
- Modules subordinate to the main control module are incorporated into the system in either a depth-first or breadth-first fashion:
  - DF: all modules on a major control path are integrated;
  - BF: all modules directly subordinate at each level are integrated.
- Integration process (five steps):
  1. The main control module is used as a test driver, and stubs are substituted for all modules directly subordinate to the main control module.
  2. Subordinate stubs are replaced one at a time with actual modules.
  3. Tests are conducted as each module is integrated.
  4. On completion of each set of tests, another stub is replaced with the real module.
  5. Regression testing may be conducted.

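Top-Down Integration

(Figure: control hierarchy in which Main calls M1, M2 and M3; M1 calls M4 and M5; M2 calls M6; M3 calls M7; and M5 calls M8. The connections are numbered 1 to 8.)

Select the integration sequence: depth-first or breadth-first, and left to right or right to left.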

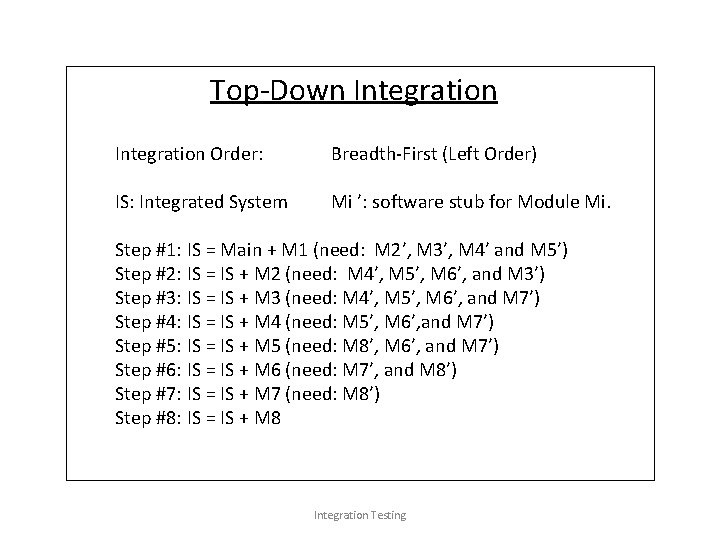

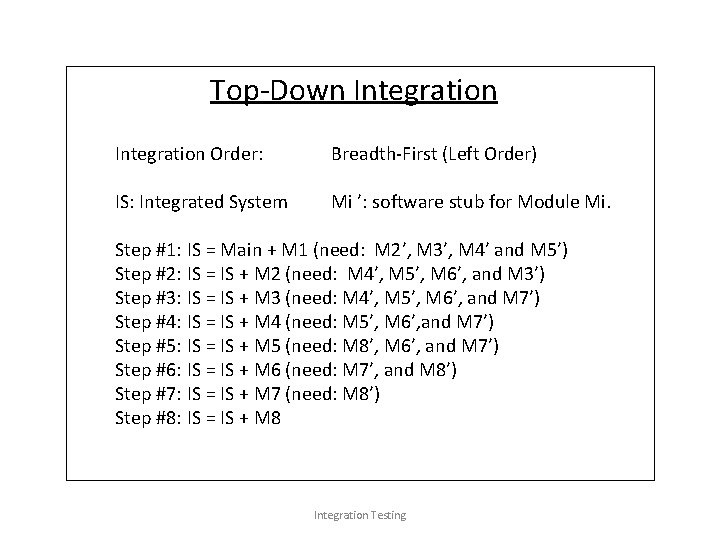

Top-Down Integration Order: Breadth-First (Left Order)

IS: integrated system. Mi': software stub for module Mi.

- Step #1: IS = Main + M1 (need: M2', M3', M4' and M5')
- Step #2: IS = IS + M2 (need: M4', M5', M6' and M3')
- Step #3: IS = IS + M3 (need: M4', M5', M6' and M7')
- Step #4: IS = IS + M4 (need: M5', M6' and M7')
- Step #5: IS = IS + M5 (need: M8', M6' and M7')
- Step #6: IS = IS + M6 (need: M7' and M8')
- Step #7: IS = IS + M7 (need: M8')
- Step #8: IS = IS + M8
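The breadth-first sequence and the stubs needed at each step follow mechanically from the control hierarchy. This sketch assumes the hierarchy implied by the step list (Main calls M1, M2 and M3; M1 calls M4 and M5; M2 calls M6; M3 calls M7; M5 calls M8); `top_down_plan` is a hypothetical helper that also supports the depth-first order.

```python
from collections import deque
from typing import Dict, List, Tuple

# Assumed control hierarchy of the example: caller -> called modules, left to right.
HIERARCHY: Dict[str, List[str]] = {
    "Main": ["M1", "M2", "M3"],
    "M1": ["M4", "M5"],
    "M2": ["M6"],
    "M3": ["M7"],
    "M5": ["M8"],
}


def top_down_plan(hierarchy, root, breadth_first=True) -> List[Tuple[str, List[str]]]:
    """Return (module integrated, stubs still needed) for each top-down step."""
    order: List[str] = []
    pending = deque([root])
    while pending:
        # Breadth-first takes the oldest pending module (queue);
        # depth-first takes the newest (stack).
        module = pending.popleft() if breadth_first else pending.pop()
        order.append(module)
        children = hierarchy.get(module, [])
        # Reverse for the stack so depth-first still visits left to right.
        pending.extend(children if breadth_first else reversed(children))

    plan = []
    integrated = set()
    for module in order:
        integrated.add(module)
        # A stub is needed for every module called by an integrated module
        # that has not itself been integrated yet.
        stubs = sorted(
            child
            for parent in integrated
            for child in hierarchy.get(parent, [])
            if child not in integrated
        )
        plan.append((module, stubs))
    return plan


for module, stubs in top_down_plan(HIERARCHY, "Main"):
    print(f"integrate {module:<4} stubs needed: {', '.join(stubs) or 'none'}")
```

The first row (Main alone, with stubs for M1, M2 and M3) is the starting point of step 1 on the slide; from the second row onward, each stub list matches the corresponding step above. Passing `breadth_first=False` gives the depth-first order Main, M1, M4, M5, M8, M2, M6, M3, M7.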

Top-down Integration

- Advantages:
  - this approach verifies major control or decision points early in the test process.
- Disadvantages:
  - stubs need to be created to substitute for modules that have not been built or tested yet, and this code is later discarded;
  - stub construction cost.

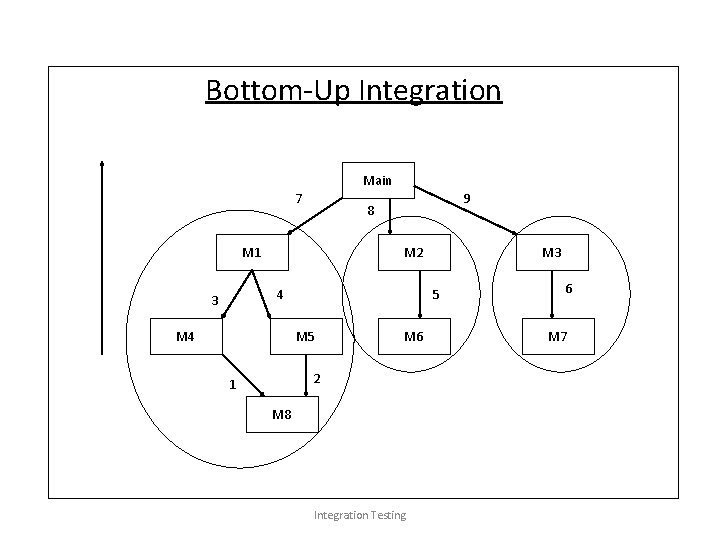

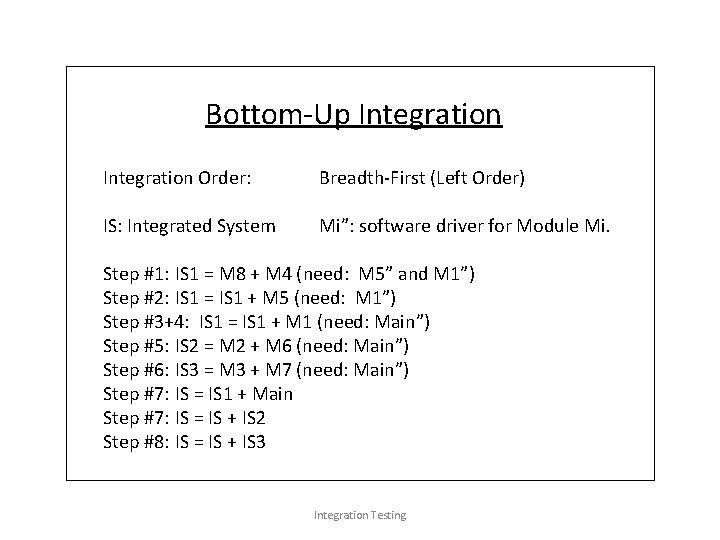

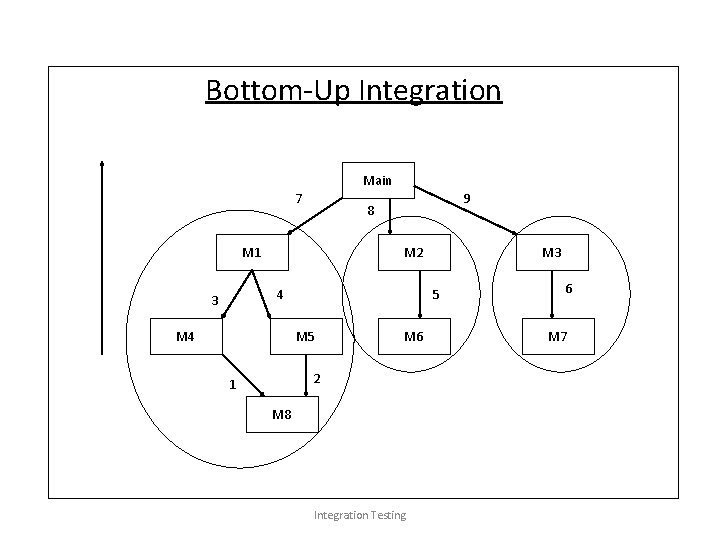

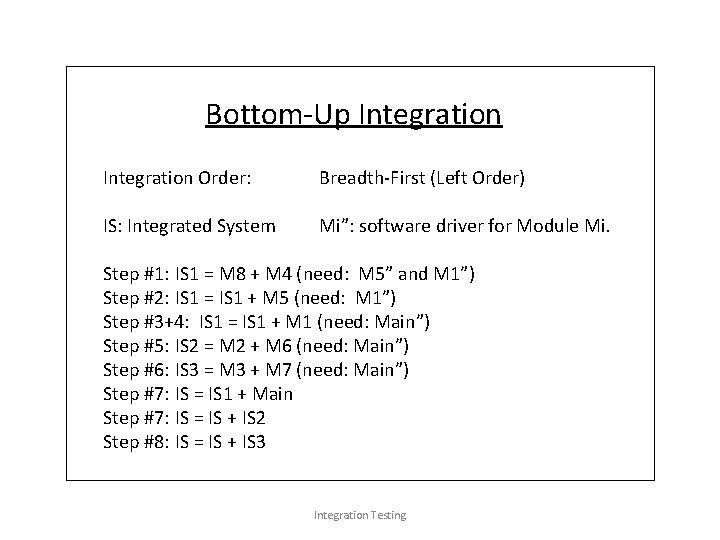

Bottom-up Integration

- Integration and testing start with the most atomic modules in the control hierarchy.
- Idea: modules at the lowest levels are integrated first, and integration then moves upward through the control structure.
- Integration process (four steps):
  1. Low-level modules are combined into clusters that perform a specific software sub-function.
  2. A driver is written to coordinate test case input and output.
  3. The cluster is tested.
  4. Drivers are removed and clusters are combined, moving upward in the program structure.

Bottom-Up Integration

(Figure: the control hierarchy in which Main calls M1, M2 and M3; M1 calls M4 and M5; M2 calls M6; M3 calls M7; and M5 calls M8, annotated with step numbers 1 to 9.)

Bottom-Up Integration Order: Breadth-First (Left Order)

IS: integrated system. Mi'': software driver for module Mi.

- Step #1: IS1 = M8 + M4 (need: M5'' and M1'')
- Step #2: IS1 = IS1 + M5 (need: M1'')
- Steps #3 and #4: IS1 = IS1 + M1 (need: Main'')
- Step #5: IS2 = M2 + M6 (need: Main'')
- Step #6: IS3 = M3 + M7 (need: Main'')
- Step #7: IS = IS1 + Main
- Step #8: IS = IS + IS2
- Step #9: IS = IS + IS3
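A similar sketch for bottom-up integration, again assuming the hierarchy implied by the step lists (Main calls M1, M2 and M3; M1 calls M4 and M5; M2 calls M6; M3 calls M7; M5 calls M8). The hypothetical helper `bottom_up_plan` integrates one module at a time in post-order (each module after all of its subordinates) and lists the drivers still needed after each step.

```python
from typing import Dict, List, Tuple

# Assumed control hierarchy of the example: caller -> called modules, left to right.
HIERARCHY: Dict[str, List[str]] = {
    "Main": ["M1", "M2", "M3"],
    "M1": ["M4", "M5"],
    "M2": ["M6"],
    "M3": ["M7"],
    "M5": ["M8"],
}


def bottom_up_plan(hierarchy, root) -> List[Tuple[str, List[str]]]:
    """Return (module integrated, drivers still needed) for each bottom-up step."""
    parent_of = {
        child: parent for parent, children in hierarchy.items() for child in children
    }

    order: List[str] = []

    def visit(module: str) -> None:
        # Post-order: a module joins only after all of its subordinates.
        for child in hierarchy.get(module, []):
            visit(child)
        order.append(module)

    visit(root)

    plan = []
    integrated = set()
    for module in order:
        integrated.add(module)
        # A driver stands in for the caller of any integrated module
        # whose caller has not been integrated yet.
        drivers = sorted({
            parent_of[m]
            for m in integrated
            if m in parent_of and parent_of[m] not in integrated
        })
        plan.append((module, drivers))
    return plan


for module, drivers in bottom_up_plan(HIERARCHY, "Main"):
    print(f"integrate {module:<4} drivers needed: {', '.join(drivers) or 'none'}")
```

After M4 and M8 the drivers needed are M1'' and M5'', matching step 1 on the slide; the slide groups modules into clusters, while this sketch adds them singly, but the rule for when a driver is needed is the same.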

Bottom-up Integration

- Advantages:
  - this approach verifies low-level data processing early in the testing process;
  - the need for stubs is eliminated, so there is no stub cost.
- Disadvantages:
  - driver modules need to be built to test the lower-level modules; this code is later discarded or expanded into a full-featured version;
  - there is no controllable system until the last step.

TEST PLAN Objectives

- To create a set of testing tasks.
- To assign resources to each testing task.
- To estimate completion time for each testing task.
- To document testing standards.

- A document that describes the scope, approach, resources, schedule … of intended test activities.
- It identifies the test items, the features to be tested, the testing tasks, task allotment, and risks requiring contingency planning.

Purpose of Preparing a Test Plan

- Validate the acceptability of a software product.
- Help people outside the test group to understand the "why" and "how" of product validation.
- A test plan should be:
  - thorough enough (overall coverage of the tests to be conducted);
  - useful and understandable by people inside and outside the test group.

Scope

- The areas to be tested by the QA team.
- The areas that are out of scope (screens, database, mainframe processes, etc.).

Test Approach

- Details on how the testing is to be performed.
- Any specific strategy to be followed for testing.

Entry Criteria

Various steps to be performed before the start of a test, i.e. prerequisites. For example:

- timely environment setup;
- starting the web server/app server;
- successful implementation of the latest build, etc.

Resources

List of the people involved in the project, their designations, etc.

Tasks/Responsibilities

Tasks to be performed and responsibilities assigned to the various team members.

Exit Criteria

Contains tasks such as:

- bringing down the system/server;
- restoring the system to the pre-test environment;
- database refresh, etc.

Schedule / Milestones

Deals with the final delivery date and the various milestone dates.

Hardware / Software Requirements

- Details of PCs/servers required to install the application or perform the testing.
- Specific software needed to get the application running, connect to the database, etc.

Risks & Mitigation Plans

- List the possible risks during testing.
- Mitigation plans to implement in case a risk actually becomes a reality.

Deliverables

- Various deliverables due to the client at various points in time, e.g. daily, weekly, at the start of the project, at the end of the project.
- These include test plans, test procedures, status reports, test scripts, etc.

Good Test Plans

- Developed and reviewed early.
- Clear, complete and specific.
- Specify tangible deliverables that can be inspected.
- Staff knows what to expect and when to expect it.
- Realistic quality levels for goals.
- Include time for planning.
- Can be monitored and updated.
- Include user responsibilities.
- Based on past experience.
- Recognize learning curves.

TEST CASES

A test case is defined as:

- a set of test inputs, execution conditions and expected results, developed for a particular objective;
- documentation specifying inputs, predicted results and a set of execution conditions for a test item.

Test Case Contents

- Test plan reference id
- Test case
- Test condition
- Expected behavior
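A test case with the contents listed can be represented as a small record and executed against the unit it targets. `CaseSpec`, `execute` and `apply_discount` are hypothetical names for this sketch, and the expected behavior is simplified to a single return value.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass(frozen=True)
class CaseSpec:
    plan_ref: str            # test plan reference id
    case_id: str             # test case identifier
    condition: str           # test condition being checked
    inputs: Tuple[Any, ...]  # execution inputs
    expected: Any            # expected behavior, as a comparable value


def execute(case: CaseSpec, unit: Callable[..., Any]) -> bool:
    """Run one test case and print a PASS/FAIL line that can be logged."""
    actual = unit(*case.inputs)
    passed = actual == case.expected
    verdict = "PASS" if passed else "FAIL"
    print(f"[{case.plan_ref}/{case.case_id}] {case.condition}: {verdict} "
          f"(expected {case.expected!r}, got {actual!r})")
    return passed


def apply_discount(total: int) -> int:
    """Hypothetical unit under test: 10 off orders of 100 or more."""
    return total - 10 if total >= 100 else total


cases = [
    CaseSpec("TP-01", "TC-001", "discount applied at threshold", (100,), 90),
    CaseSpec("TP-01", "TC-002", "no discount below threshold", (99,), 99),
]
results = [execute(case, apply_discount) for case in cases]
```

Keeping the plan reference on each case is what makes a result traceable back to the test plan.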

Good Test Cases Find Defects

- Have a high probability of finding a new defect.
- Produce an unambiguous, tangible result that can be inspected.
- Are repeatable and predictable.
- Are traceable to requirements or design documents.
- Push the system to its limits.
- Allow execution and tracking to be automated.
- Do not mislead.
- Are feasible.

Defect Life Cycle

What is a defect? A defect is a variance from a desired product attribute. The two categories of defects are:

- variance from product specifications;
- variance from customer/user expectations.

Defect categories:

- Wrong: the specifications have been implemented incorrectly.
- Missing: a specified requirement is not in the built product.
- Extra: a requirement incorporated into the product that was not specified.

Defect Log

1. Defect ID number
2. Descriptive defect name and type
3. Source of defect: test case or other source
4. Defect severity
5. Defect priority
6. Defect status (e.g. new, open, fixed, closed, reopen, reject)

Defect Log

7. Date and time tracking for each change in the status
8. Detailed description, including the steps necessary to reproduce the defect
9. Component or program where the defect was found
10. Screen prints, logs, etc. that will aid the developer in the resolution process
11. Person assigned to research and/or correct the defect
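The defect log fields map naturally onto a record type. This is a minimal sketch with hypothetical names: the status values come from item 6, the severity levels from the severity list in this deck, and the numeric priority scale (1 = fix first) and the `triage_order` helper are assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import List, Optional, Tuple


class Status(Enum):
    NEW = "new"
    OPEN = "open"
    FIXED = "fixed"
    CLOSED = "closed"
    REOPEN = "reopen"
    REJECT = "reject"


class Severity(Enum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3
    COSMETIC = 4


@dataclass
class Defect:
    defect_id: str                  # 1. defect ID number
    name: str                       # 2. descriptive name ...
    category: str                   # 2. ... and type (e.g. wrong, missing, extra)
    source: str                     # 3. test case or other source
    severity: Severity              # 4. how bad the defect is
    priority: int                   # 5. assumed scale: 1 = fix first
    description: str                # 8. including steps to reproduce
    component: str                  # 9. where the defect was found
    attachments: List[str] = field(default_factory=list)  # 10. screen prints, logs
    assignee: Optional[str] = None  # 11. person researching / correcting it
    status: Status = Status.NEW     # 6. current status
    history: List[Tuple[datetime, Status]] = field(default_factory=list)  # 7.

    def __post_init__(self) -> None:
        # 7. time-stamp the initial status as well as every later change.
        self.history.append((datetime.now(), self.status))

    def set_status(self, new_status: Status) -> None:
        self.status = new_status
        self.history.append((datetime.now(), new_status))


def triage_order(defects: List[Defect]) -> List[Defect]:
    """Order for fixing: by priority first, then by severity (critical first)."""
    return sorted(defects, key=lambda d: (d.priority, d.severity.value))


bug = Defect("D-17", "Checkout total ignores discount", "wrong", "TC-001",
             Severity.MAJOR, 1, "Add a 100 item, apply a discount, check the total.", "cart")
bug.set_status(Status.OPEN)
bug.set_status(Status.FIXED)
typo = Defect("D-18", "Typo on help page", "wrong", "review",
              Severity.COSMETIC, 3, "Open the help page and read the title.", "help")
print([d.defect_id for d in triage_order([typo, bug])])  # ['D-17', 'D-18']
```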

Severity vs. Priority

- Severity: a factor that shows how bad the defect is and the impact it has on the product.
- Priority: based on input from users regarding which defects are most important to them and should be fixed first.

Severity levels:

- Critical
- Major / High
- Minor / Low
- Cosmetic defects

Severity Level – Critical

- An installation process that does not load a component.
- A missing menu option.
- Security permission required to access a function under test.
- Functionality that does not permit further testing.
- Runtime errors such as JavaScript errors.
- Functionality missed out or incorrectly implemented (major deviation from requirements).
- Performance issues (if specified by the client).
- Browser incompatibility and operating system incompatibility issues.

Severity Level – Major / High

- The system must be rebooted.
- The wrong field is updated.
- An update operation fails to complete.
- Performance issues (if not specified by the client).
- Mandatory validations for mandatory fields.
- Functionality incorrectly implemented (minor deviation from requirements).
- Images or graphics missing in a way that hinders functionality.
- Front-end / home page alignment issues.

Severity Level – Minor / Low

- Misspelled or ungrammatical text
- Inappropriate or incorrect formatting (such as text font, size, alignment, color, etc.)
- Screen layout issues
- Spelling mistakes / grammatical mistakes
- Documentation errors
- Page titles missing
- Background color for pages other than the home page
- Default value missing for required fields
- Cursor set focus and tab flow on the page
- Images or graphics missing in a way that does not hinder functionality

Test Reports

Six interim reports:

1. Functional Testing Status
2. Functions Working Timeline
3. Expected vs. Actual Defects Detected Timeline
4. Defects Detected vs. Corrected Gap Timeline
5. Defect/Relative Defect Distribution
6. Testing Action

Functional Testing Status

The report shows the percentage of functions that are:

- fully tested;
- tested with open defects;
- not tested.

Functions Working Timeline

The report shows the planned timeline for having all functions working versus the current status of the functions working. A line graph is an ideal format.
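The functional testing status report reduces to one percentage per state. This sketch assumes each function is tagged with exactly one of the three states; the function names are hypothetical.

```python
from collections import Counter
from typing import Dict

STATES = ("fully tested", "tested with open defects", "not tested")


def functional_status(functions: Dict[str, str]) -> Dict[str, float]:
    """Percentage of functions in each testing state, rounded to one decimal."""
    counts = Counter(functions.values())
    total = len(functions)
    return {state: round(100 * counts[state] / total, 1) for state in STATES}


report = functional_status({
    "login": "fully tested",
    "search": "fully tested",
    "checkout": "tested with open defects",
    "export": "not tested",
})
print(report)  # {'fully tested': 50.0, 'tested with open defects': 25.0, 'not tested': 25.0}
```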

Expected vs. Actual Defects Detected

- Compares the number of defects being detected with the number of defects expected from the planning stage.

Defects Detected vs. Corrected Gap

A line graph that shows the number of defects uncovered versus the number of defects corrected and accepted by the testing group.
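The detected-versus-corrected gap is the difference between two running totals. In this sketch the helper name `detected_vs_corrected` is hypothetical and the weekly counts are invented for illustration only.

```python
from itertools import accumulate
from typing import List, Tuple


def detected_vs_corrected(detected: List[int], corrected: List[int]) -> List[Tuple[int, int, int]]:
    """Per period: cumulative detected, cumulative corrected, and the open gap."""
    rows = []
    for total_found, total_fixed in zip(accumulate(detected), accumulate(corrected)):
        rows.append((total_found, total_fixed, total_found - total_fixed))
    return rows


# Hypothetical weekly counts, for illustration only.
rows = detected_vs_corrected([12, 9, 7, 3], [4, 8, 9, 6])
for week, (found, fixed, gap) in enumerate(rows, start=1):
    print(f"week {week}: detected {found:>3}  corrected {fixed:>3}  gap {gap:>3}")
```

Plotting the first two columns as lines gives the report described above; a shrinking third column means corrections are catching up with detections.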

Defect/Relative Defect Distribution

Shows the defect distribution by function or module and the number of tests completed.

Testing Action

The report shows:

- possible shortfalls in testing;
- the number of severity-1 defects;
- the priority of defects;
- recurring defects;
- tests behind schedule;
- … and other information that presents an accurate testing picture.

TESTING STANDARDS

- External standards: familiarity with and adoption of industry test standards from organizations.
- Internal standards: development and enforcement of the test standards that testers must meet.
- IEEE standards: the Institute of Electrical and Electronics Engineers has designed an entire set of software standards to be followed by testers.

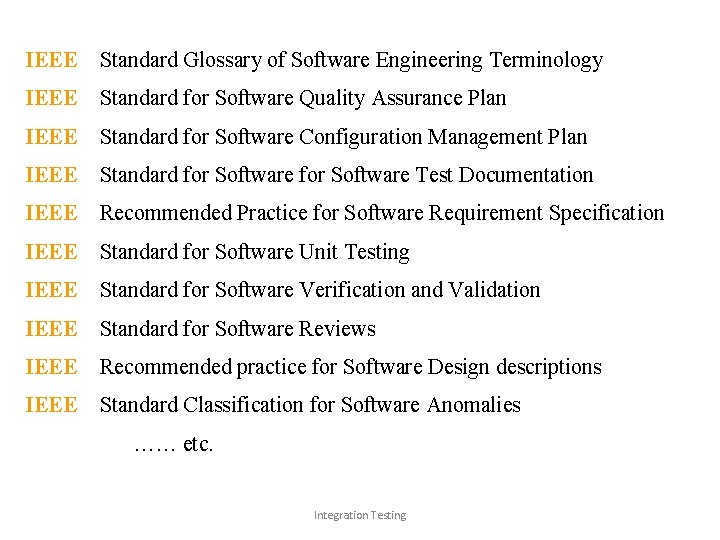

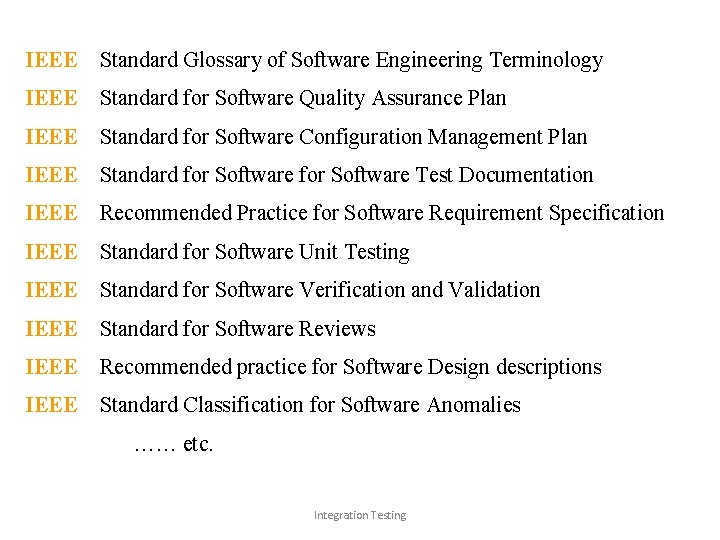

- IEEE – Standard Glossary of Software Engineering Terminology
- IEEE – Standard for Software Quality Assurance Plan
- IEEE – Standard for Software Configuration Management Plan
- IEEE – Standard for Software Test Documentation
- IEEE – Recommended Practice for Software Requirement Specification
- IEEE – Standard for Software Unit Testing
- IEEE – Standard for Software Verification and Validation
- IEEE – Standard for Software Reviews
- IEEE – Recommended Practice for Software Design Descriptions
- IEEE – Standard Classification for Software Anomalies
- … etc.

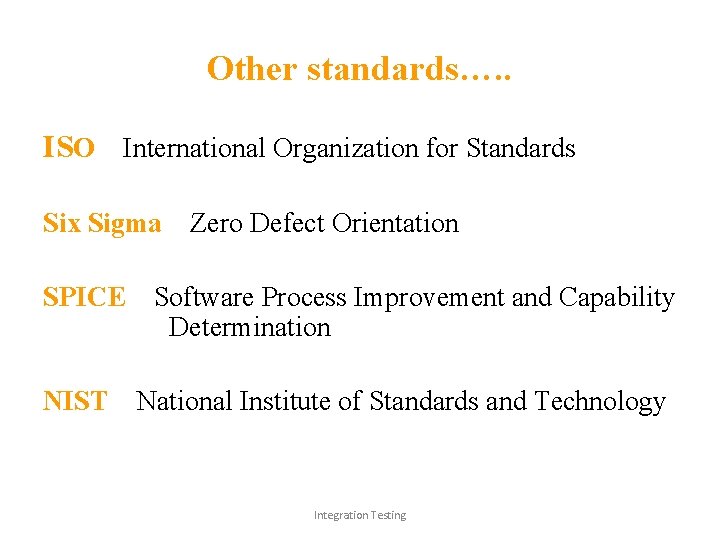

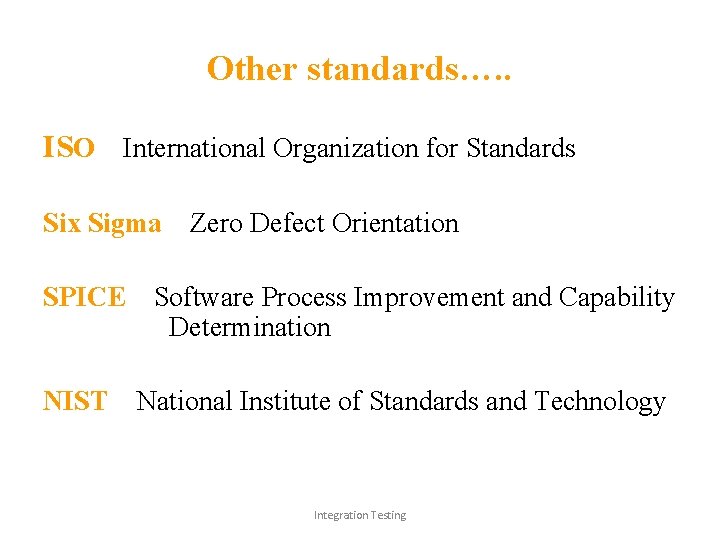

Other Standards

- ISO: International Organization for Standardization
- Six Sigma: zero-defect orientation
- SPICE: Software Process Improvement and Capability Determination
- NIST: National Institute of Standards and Technology

Summary

- The key to integration testing is the use of unit-tested components.
- The architecture of the system drives the sequence of integration and test activities.
- With proper planning, integration testing leverages unit testing, resulting in a smooth and efficient integration test effort.