Reinforcement learning 2: action selection Peter Dayan (thanks to Nathaniel Daw)

Global plan

- Reinforcement learning I (Wednesday):
  - prediction
  - classical conditioning
  - dopamine
- Reinforcement learning II:
  - dynamic programming; action selection
  - sequential sensory decisions
  - vigor
  - Pavlovian misbehaviour
- Chapter 9 of Theoretical Neuroscience

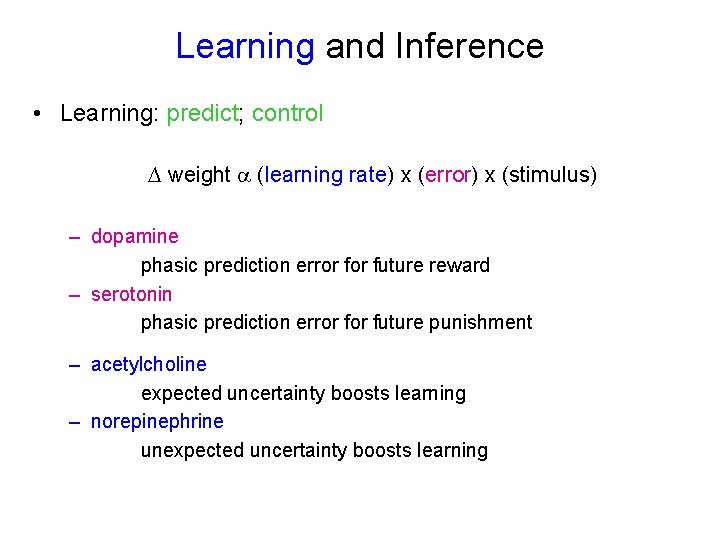

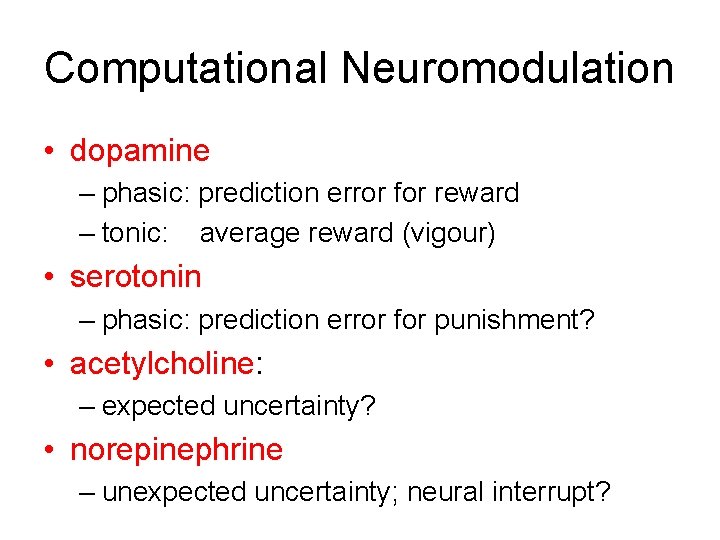

Learning and Inference

- Learning: predict; control
  - Δ weight ∝ (learning rate) × (error) × (stimulus)
  - dopamine: phasic prediction error for future reward
  - serotonin: phasic prediction error for future punishment
  - acetylcholine: expected uncertainty boosts learning
  - norepinephrine: unexpected uncertainty boosts learning

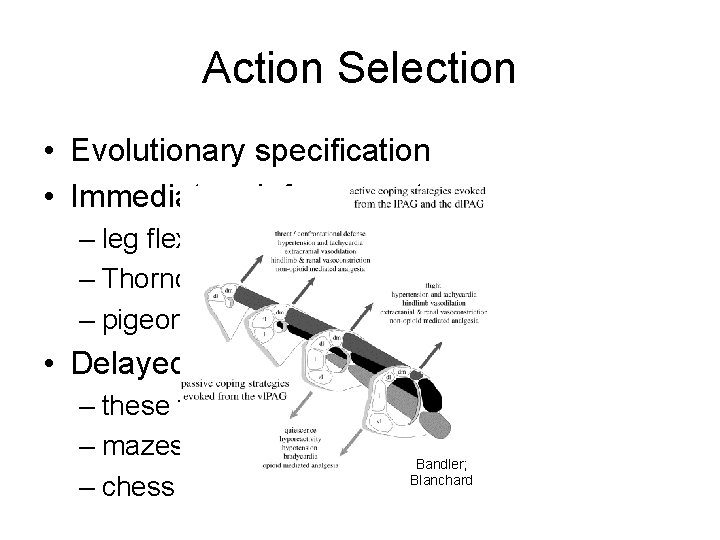

Action Selection

- Evolutionary specification
- Immediate reinforcement:
  - leg flexion
  - Thorndike puzzle box
  - pigeon; rat; human matching
- Delayed reinforcement:
  - these tasks
  - mazes
  - chess

(Figure credits: Bandler; Blanchard)

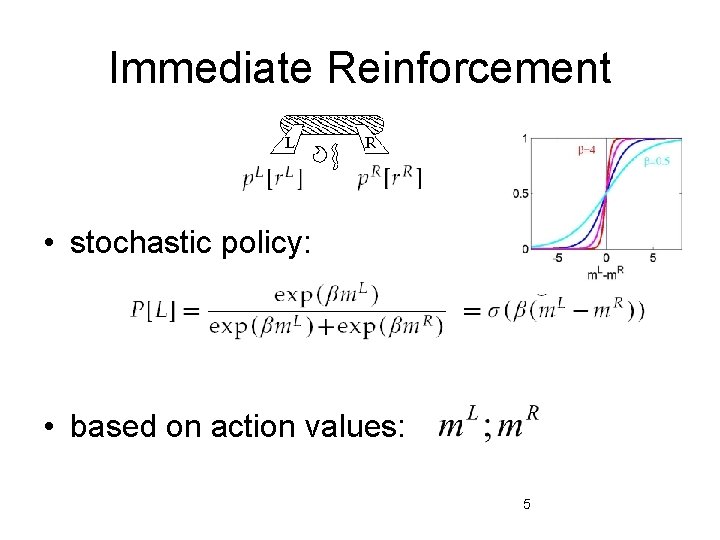

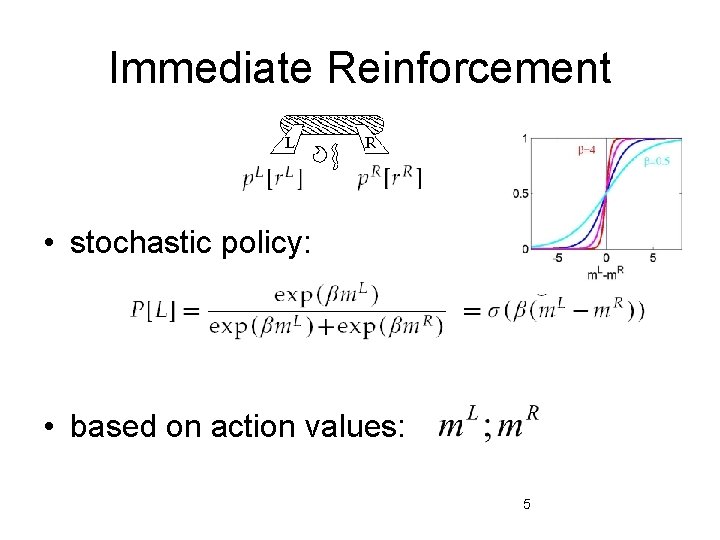

Immediate Reinforcement

- stochastic policy:
- based on action values:

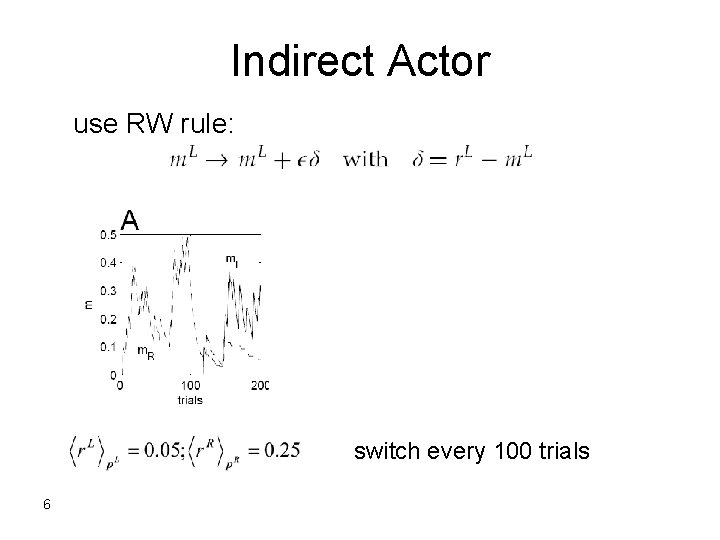

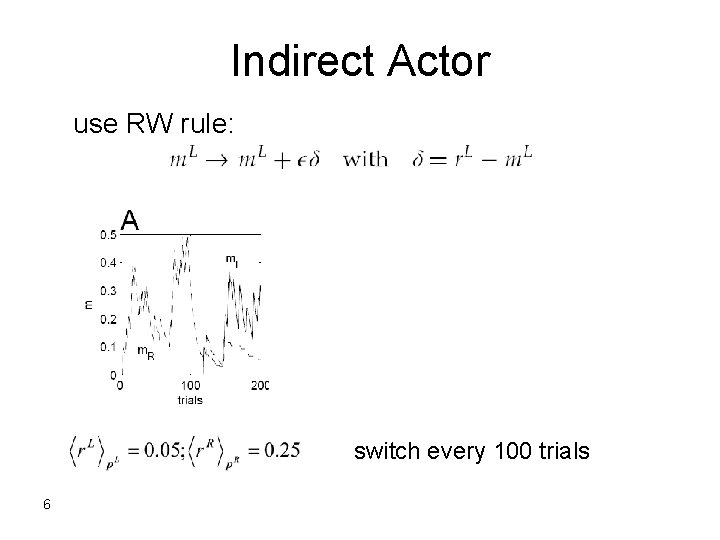

Indirect Actor

- use Rescorla-Wagner (RW) rule:
- switch every 100 trials
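The indirect actor can be sketched as follows. This is a minimal illustration, assuming a two-option task with deterministic rewards, a softmax policy with inverse temperature `beta`, and a learning rate `epsilon`; the reward magnitudes and all parameter values are hypothetical, since the slide's equations and figures are not recoverable from the text.

```python
import math
import random

def softmax_choice_prob(m_a, m_b, beta):
    """Probability of choosing option a under a softmax over two action values."""
    return 1.0 / (1.0 + math.exp(-beta * (m_a - m_b)))

def run_indirect_actor(rewards_by_block, trials_per_block=100,
                       epsilon=0.1, beta=1.0, seed=0):
    """Two-option task; action values are learned with a Rescorla-Wagner rule."""
    rng = random.Random(seed)
    m = [0.0, 0.0]                           # action values for options 0 and 1
    choices = []
    for block_rewards in rewards_by_block:   # contingencies switch every block
        for _ in range(trials_per_block):
            p0 = softmax_choice_prob(m[0], m[1], beta)
            a = 0 if rng.random() < p0 else 1
            r = block_rewards[a]             # deterministic reward (assumption)
            m[a] += epsilon * (r - m[a])     # RW update for the chosen action only
            choices.append(a)
    return m, choices

# Hypothetical contingencies: option 1 is better in block 1, option 0 in block 2.
m, choices = run_indirect_actor([(1.0, 2.0), (2.0, 1.0)])
print(m, sum(choices[:100]) / 100, sum(choices[100:]) / 100)
```

The policy is "indirect" in the sense that choices are driven only by the learned action values; nothing about the policy itself is stored or updated.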

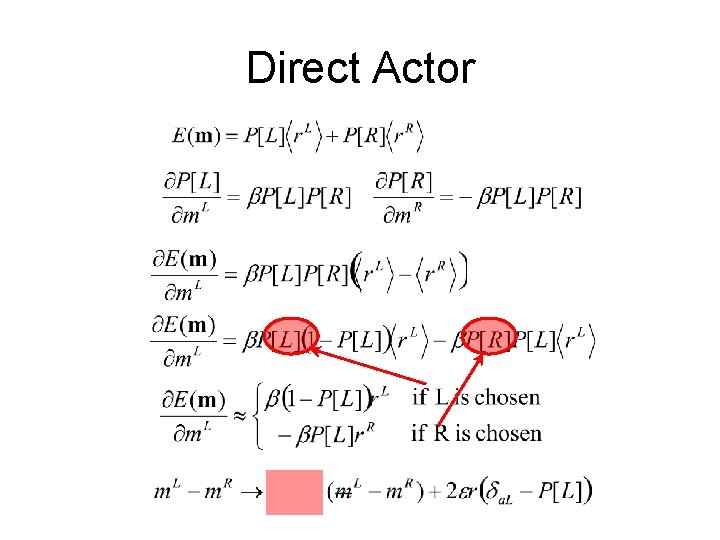

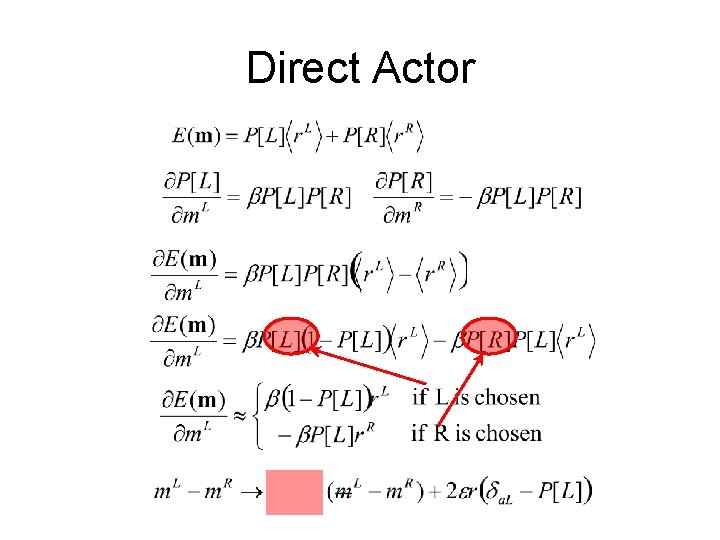

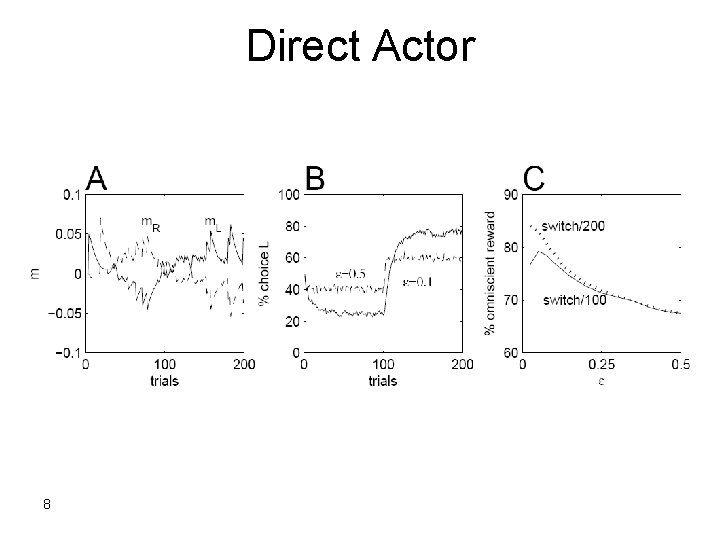

Direct Actor

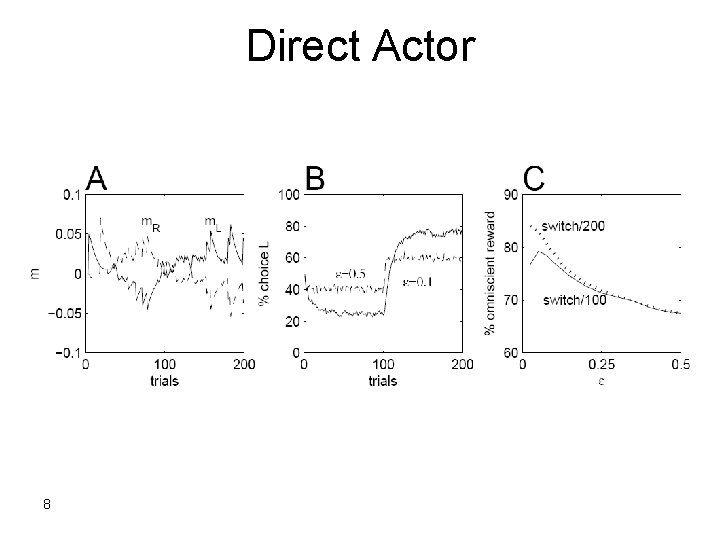

Direct Actor
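A direct actor adjusts action propensities without learning action values. The sketch below assumes the standard form of the rule from Chapter 9 of Theoretical Neuroscience, in which the chosen action's propensity moves with (reward minus a baseline) and the others move the opposite way; the function names and parameter values are illustrative.

```python
import math

def softmax(ms, beta=1.0):
    """Softmax probabilities over a list of action propensities."""
    mx = max(ms)
    exps = [math.exp(beta * (m - mx)) for m in ms]
    z = sum(exps)
    return [e / z for e in exps]

def direct_actor_step(m, chosen, reward, baseline, epsilon=0.1, beta=1.0):
    """m_a <- m_a + epsilon * (delta_{a,chosen} - P[a]) * (reward - baseline)."""
    p = softmax(m, beta)
    return [m_a + epsilon * ((1.0 if a == chosen else 0.0) - p[a]) * (reward - baseline)
            for a, m_a in enumerate(m)]

# One update from equal propensities: the chosen action was better than baseline.
new_m = direct_actor_step([0.0, 0.0], chosen=1, reward=2.0, baseline=1.0)
print(new_m)
```

The baseline (for example a running average of reward) does not change the expected update but affects its variance, which is one reason it appears in the rule.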

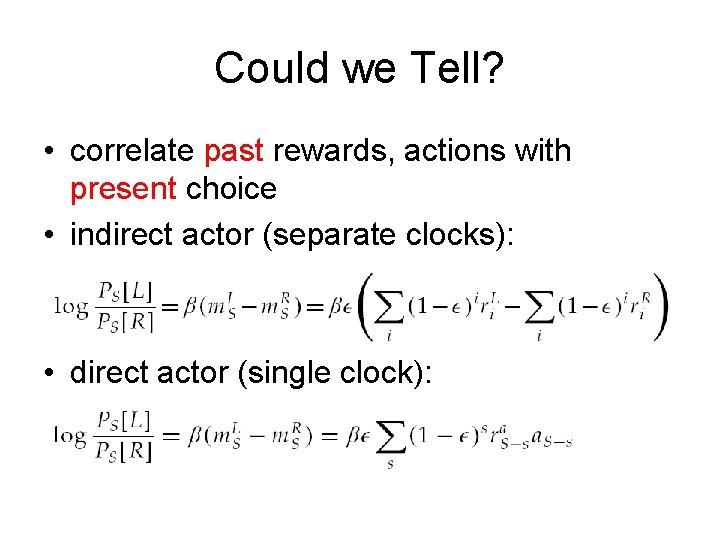

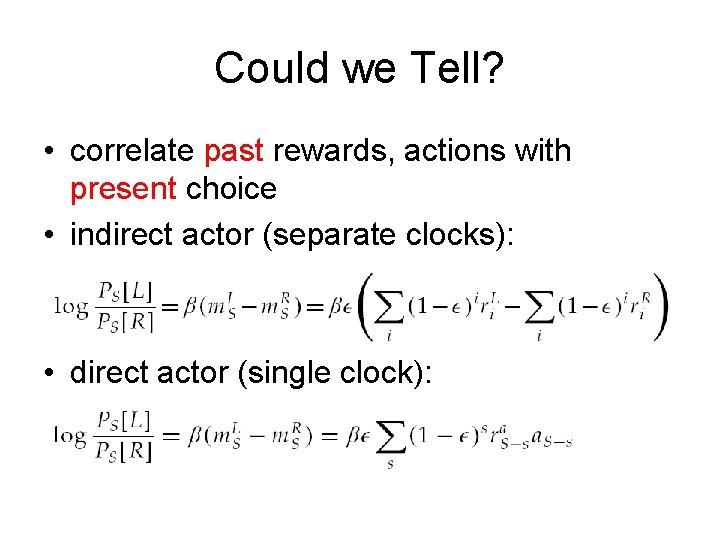

Could we Tell?

- correlate past rewards, actions with present choice
- indirect actor (separate clocks):
- direct actor (single clock):

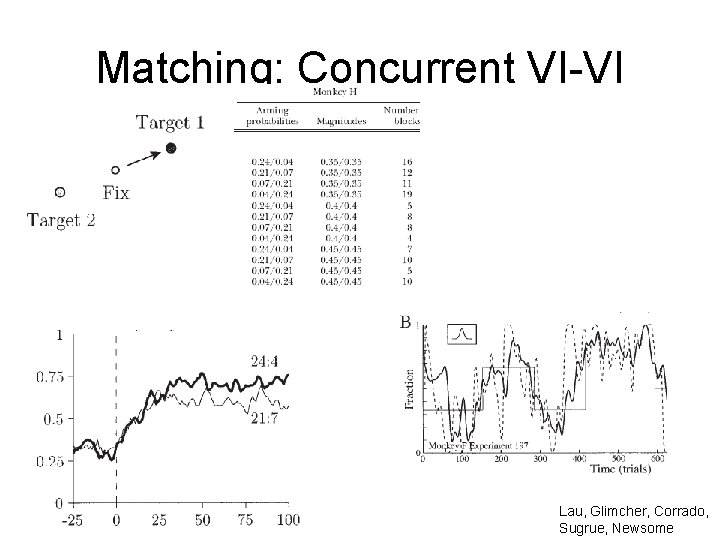

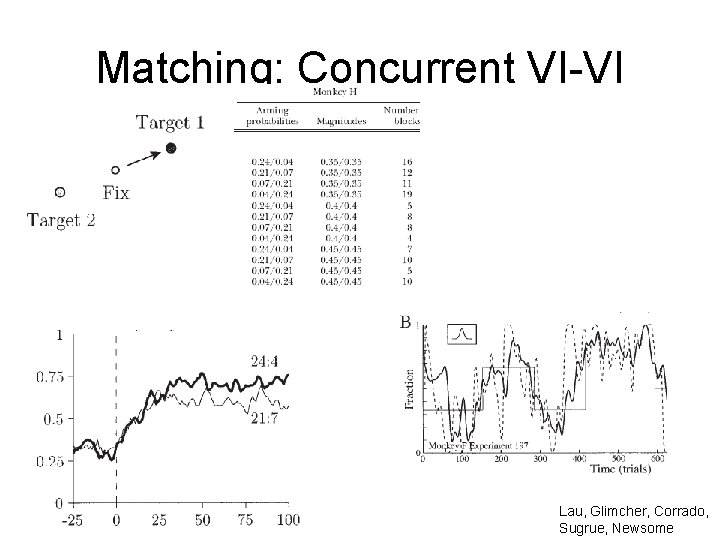

Matching: Concurrent VI-VI

(Lau, Glimcher; Corrado, Sugrue, Newsome)

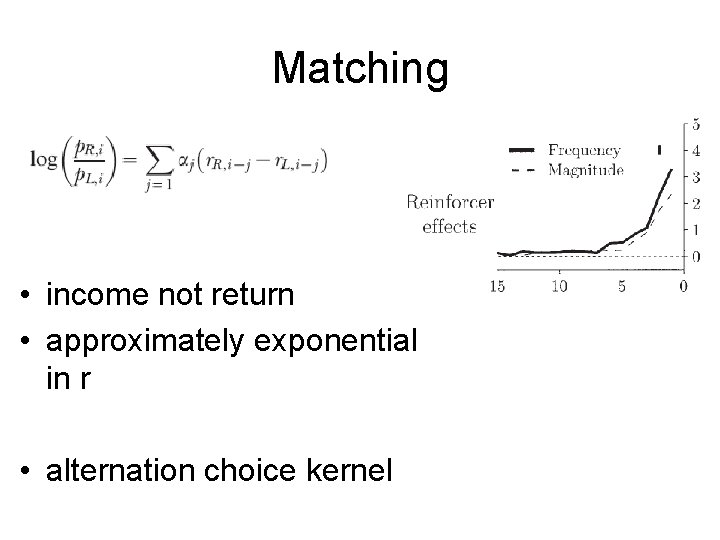

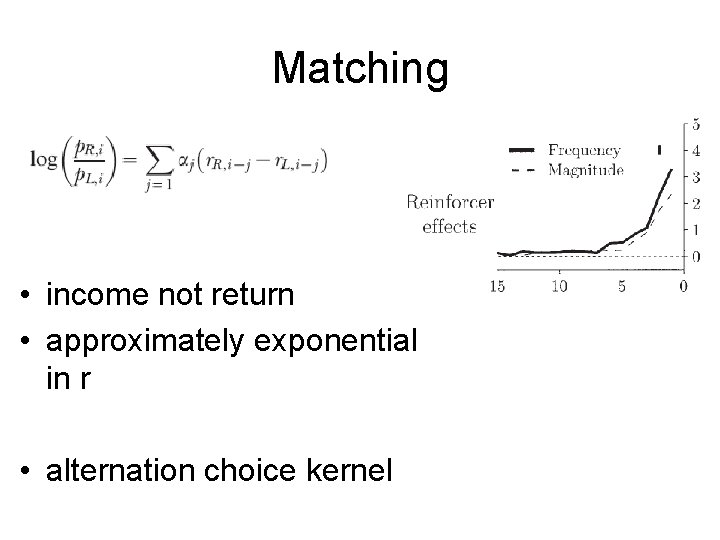

Matching

- income not return
- approximately exponential in r
- alternation choice kernel

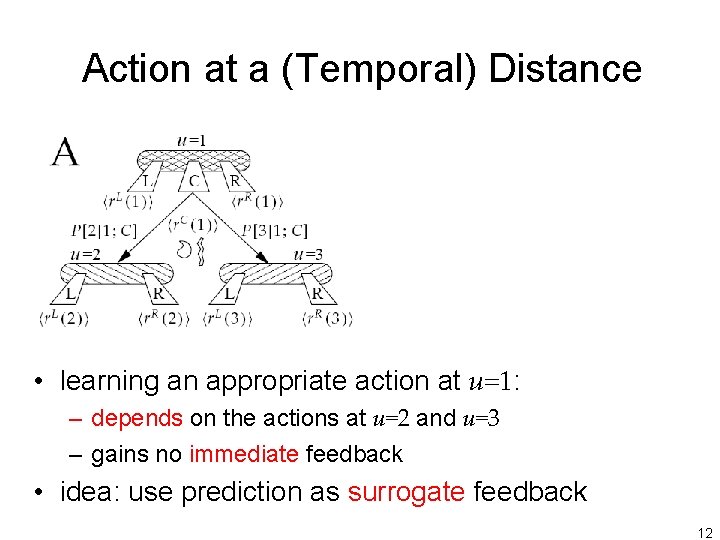

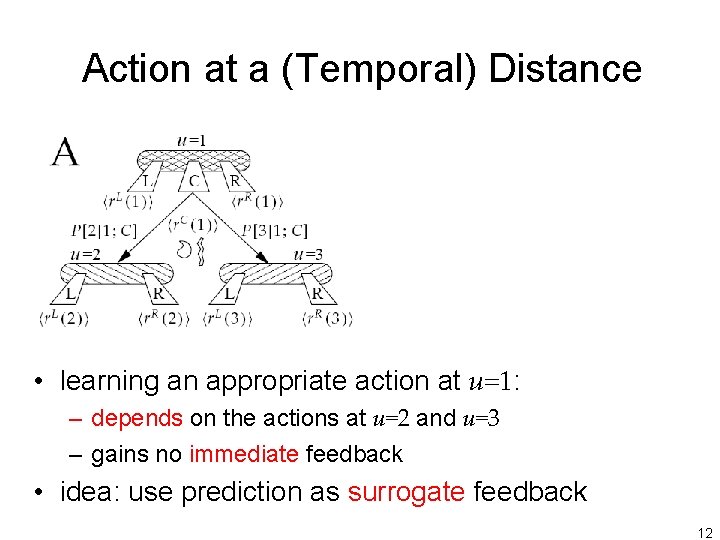

Action at a (Temporal) Distance

- learning an appropriate action at u=1:
  - depends on the actions at u=2 and u=3
  - gains no immediate feedback
- idea: use prediction as surrogate feedback

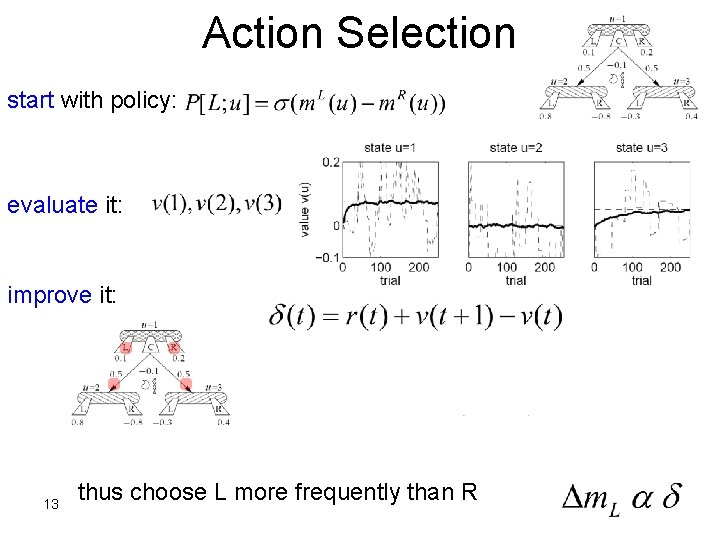

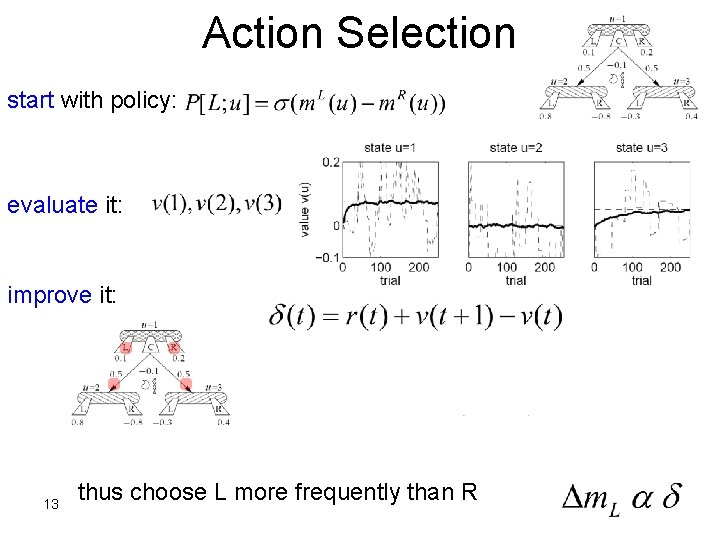

Action Selection

- start with policy:
- evaluate it:
- improve it:
- thus choose L more frequently than R

(Values shown in the slide figure: 0.025, -0.175, -0.125)
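The evaluate-then-improve cycle can be sketched on a small maze. The layout and reward values below are assumptions for illustration (the slide's maze is an image and is not recoverable from the text): u=1 leads to u=2 (L) or u=3 (R) without reward, and the terminal arms pay 0, 5, 2 and 0.

```python
# Hypothetical maze: state -> action -> (reward, next state or None if terminal).
MAZE = {
    1: {"L": (0.0, 2), "R": (0.0, 3)},
    2: {"L": (0.0, None), "R": (5.0, None)},
    3: {"L": (2.0, None), "R": (0.0, None)},
}

def evaluate_policy(maze, policy, sweeps=50):
    """Iterate the Bellman evaluation equation for a fixed stochastic policy."""
    v = {u: 0.0 for u in maze}
    for _ in range(sweeps):
        for u, actions in maze.items():
            v[u] = sum(policy[u][a] * (r + (v[nxt] if nxt is not None else 0.0))
                       for a, (r, nxt) in actions.items())
    return v

def prediction_errors(maze, v, u):
    """delta = r + v(next) - v(u) for each action: the surrogate feedback."""
    return {a: r + (v[nxt] if nxt is not None else 0.0) - v[u]
            for a, (r, nxt) in maze[u].items()}

random_policy = {u: {"L": 0.5, "R": 0.5} for u in MAZE}
v = evaluate_policy(MAZE, random_policy)
print(v)                             # values under the random policy
print(prediction_errors(MAZE, v, 1)) # positive for L, negative for R at u=1
```

Under these assumed rewards the prediction error at u=1 is positive for L and negative for R, so an improvement step would shift the policy towards L even though no reward is delivered at u=1 itself.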

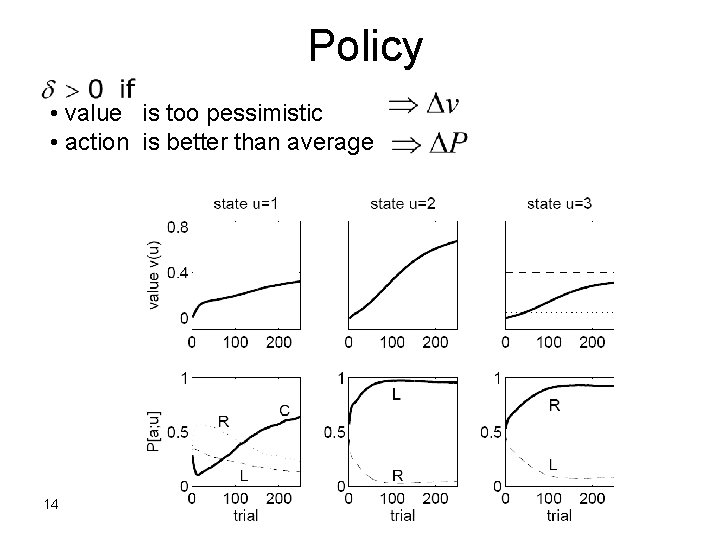

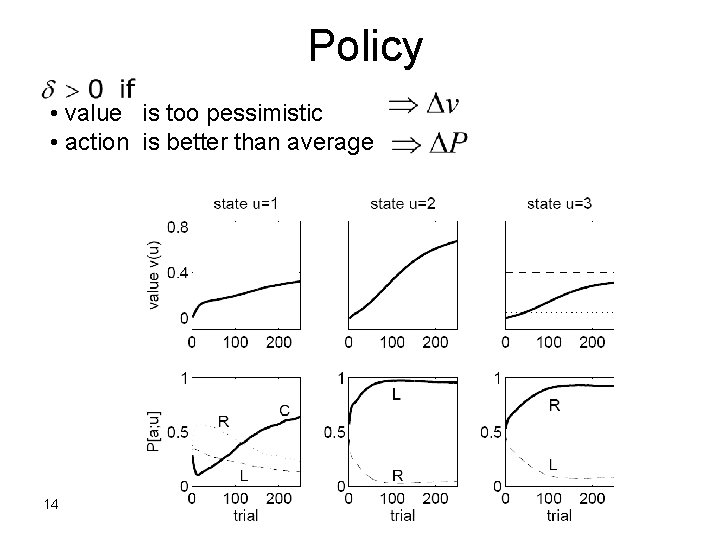

Policy

- value is too pessimistic
- action is better than average

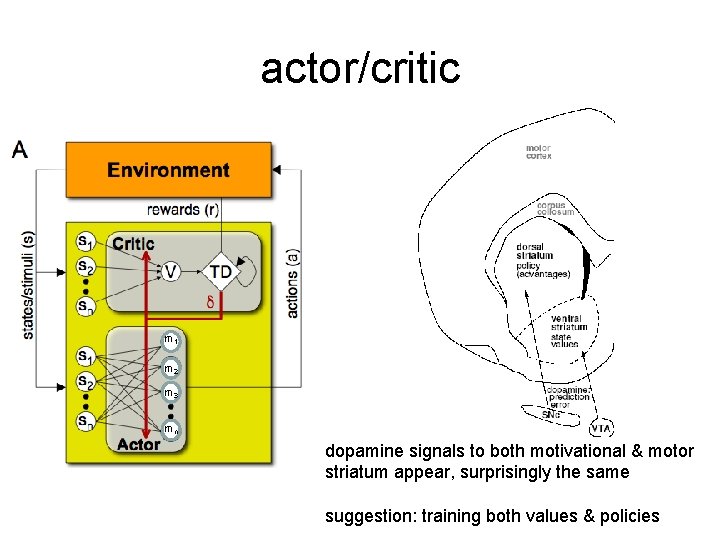

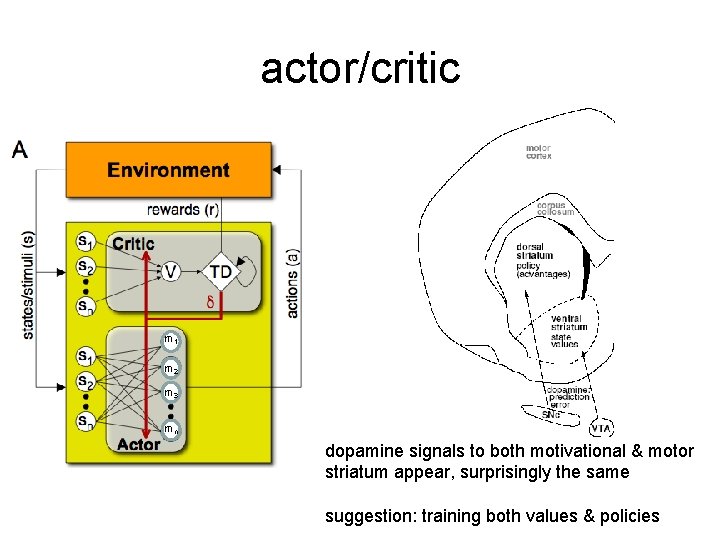

actor/critic

(Figure labels: m_1, m_2, m_3, ..., m_n)

- dopamine signals to both motivational & motor striatum appear, surprisingly, the same
- suggestion: training both values & policies

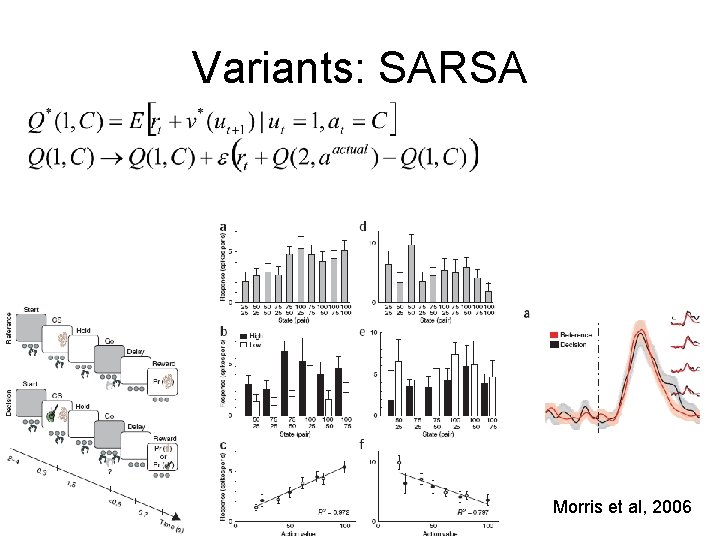

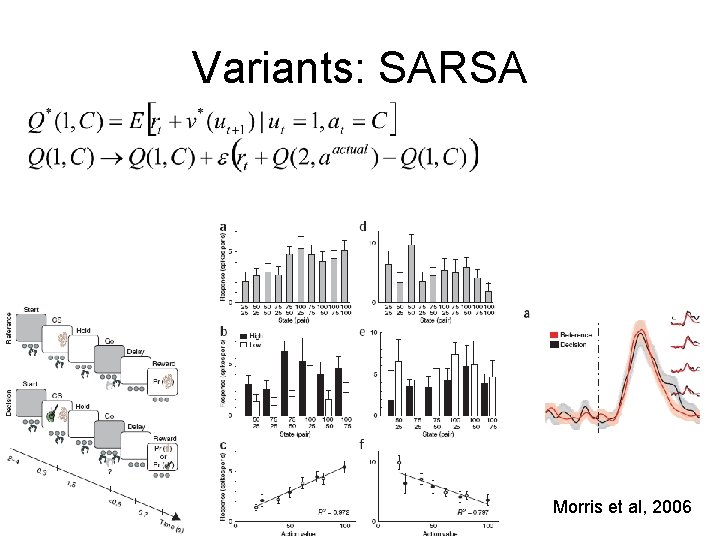

Variants: SARSA (Morris et al, 2006)

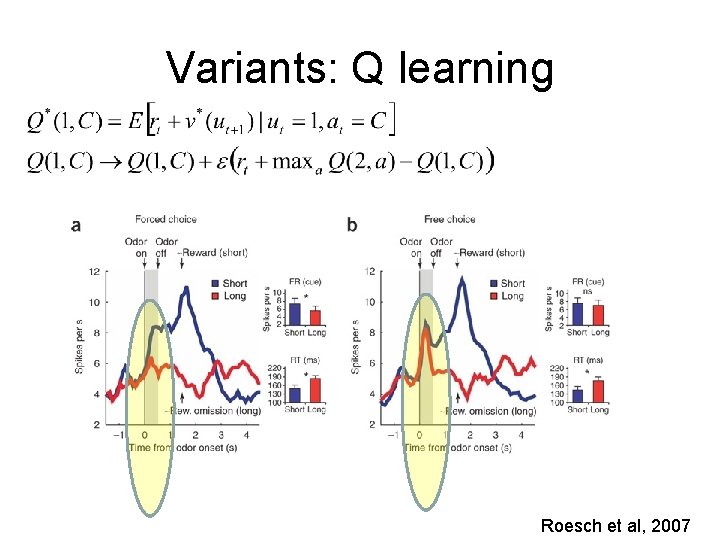

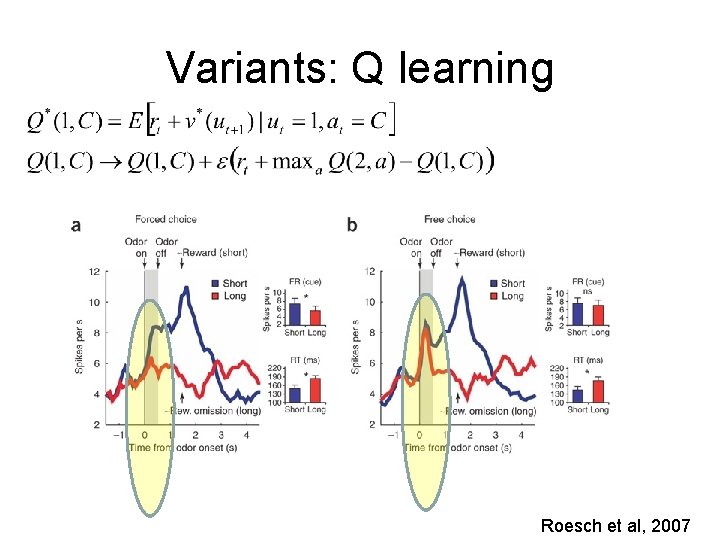

Variants: Q learning (Roesch et al, 2007)
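The two variants differ only in the target used for the prediction error. The sketch below shows the textbook forms of the two targets; the function names, the dictionary representation of Q values, and the parameter values are illustrative choices, not taken from the slides.

```python
def sarsa_target(reward, q_next, next_action, gamma=1.0):
    """SARSA: bootstrap from the value of the action actually taken next."""
    return reward + gamma * q_next[next_action]

def q_learning_target(reward, q_next, gamma=1.0):
    """Q learning: bootstrap from the best available next action."""
    return reward + gamma * max(q_next.values())

def td_update(q, state, action, target, epsilon=0.1):
    """Move Q(state, action) a fraction epsilon towards the target."""
    q[state][action] += epsilon * (target - q[state][action])
    return q

q = {"s": {"L": 0.0, "R": 0.0}, "s2": {"L": 1.0, "R": 3.0}}
print(sarsa_target(0.0, q["s2"], "L"))   # uses Q(s2, L)
print(q_learning_target(0.0, q["s2"]))   # uses max over actions at s2
```

The targets coincide whenever the next action is the greedy one, so the two rules only make different predictions on trials with exploratory choices.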

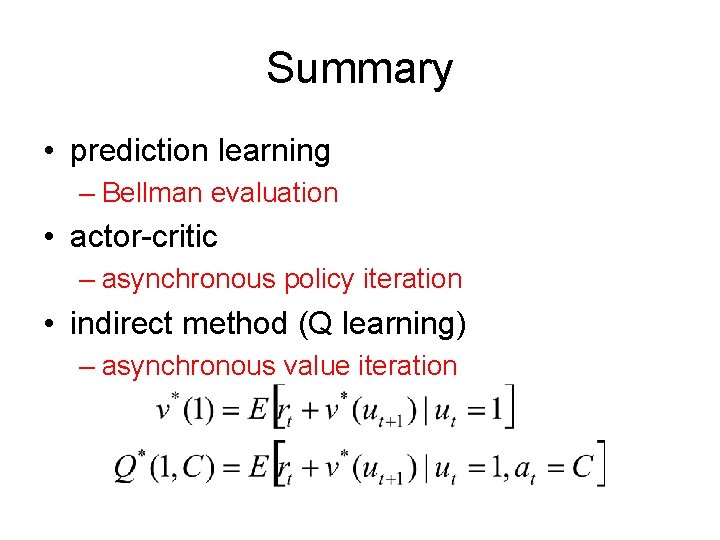

Summary

- prediction learning
  - Bellman evaluation
- actor-critic
  - asynchronous policy iteration
- indirect method (Q learning)
  - asynchronous value iteration

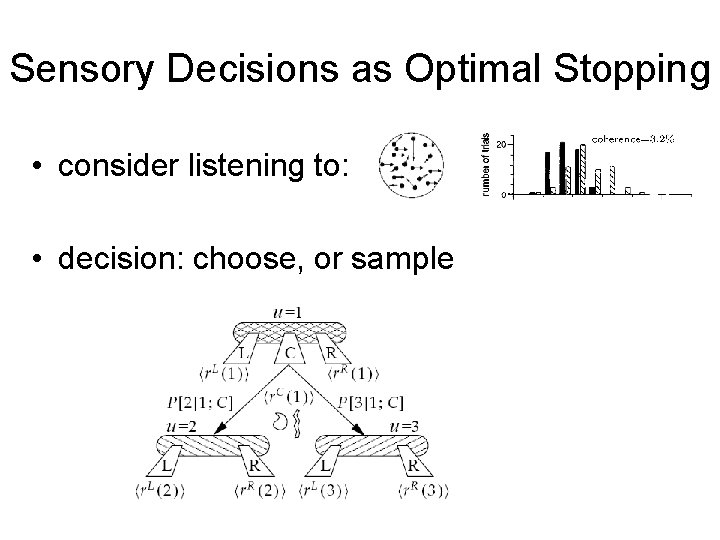

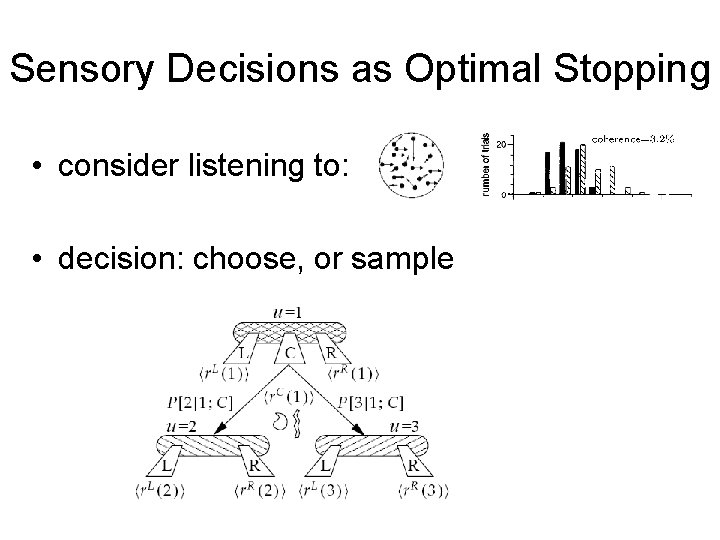

Sensory Decisions as Optimal Stopping

- consider listening to:
- decision: choose, or sample

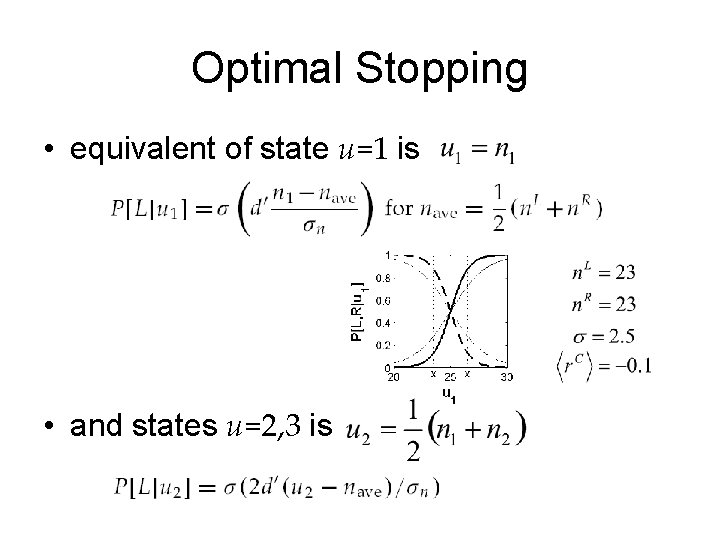

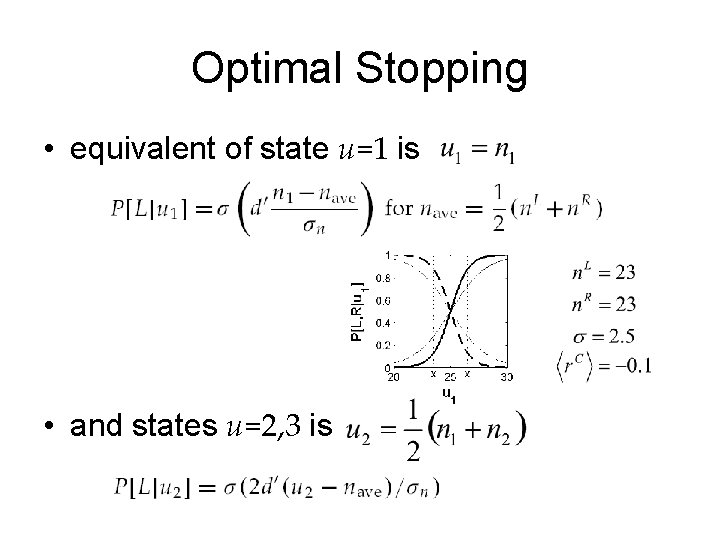

Optimal Stopping

- equivalent of state u=1 is
- and states u=2, 3 is

Transition Probabilities

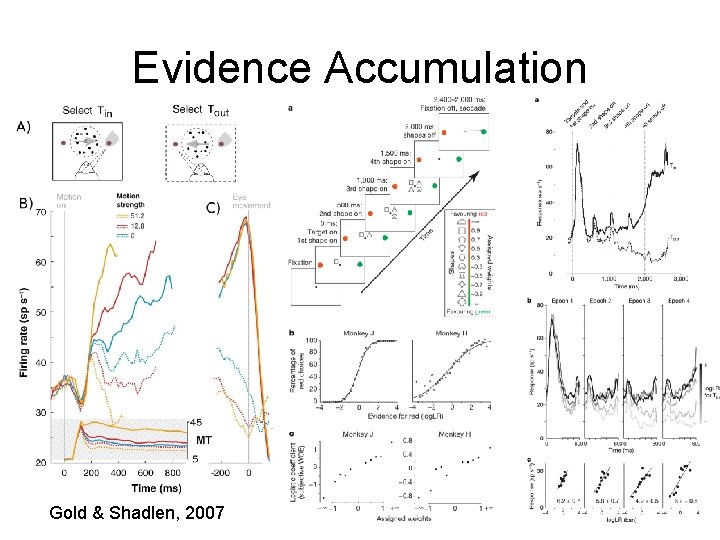

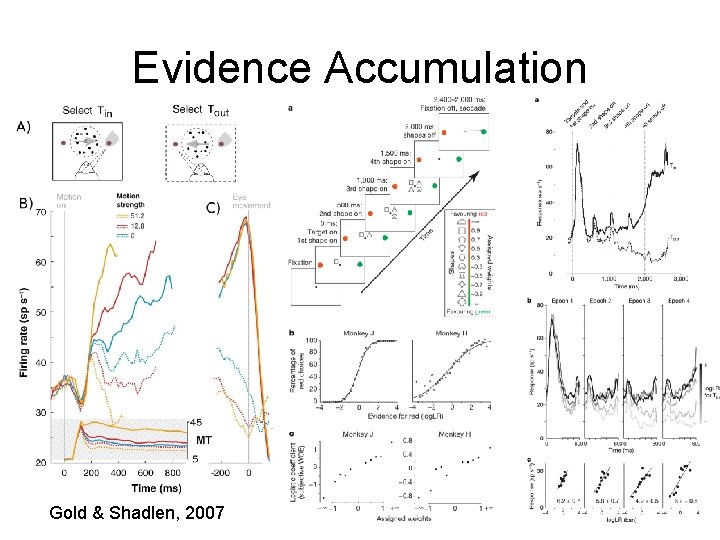

Evidence Accumulation (Gold & Shadlen, 2007)
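The choose-or-sample decision can be illustrated with a simple accumulate-to-bound rule. This is a sketch under stated assumptions, not the dynamic-programming solution from the slides: two hypotheses with Gaussian observations of mean +mu or -mu, a fixed posterior threshold, and hypothetical parameter values.

```python
import math

def accumulate_evidence(samples, mu=0.5, sigma=1.0, threshold=0.95, prior=0.5):
    """Sample until the posterior for either hypothesis reaches the threshold.

    Returns (choice, number of samples used); choice is None if the samples
    run out before a bound is reached.
    """
    log_odds = math.log(prior / (1.0 - prior))
    bound = math.log(threshold / (1.0 - threshold))
    for n, x in enumerate(samples, start=1):
        # Log-likelihood ratio of N(+mu, sigma^2) against N(-mu, sigma^2).
        log_odds += 2.0 * mu * x / sigma ** 2
        if log_odds >= bound:
            return "+", n
        if log_odds <= -bound:
            return "-", n
    return None, len(samples)

print(accumulate_evidence([1.0] * 10))
print(accumulate_evidence([0.1, -0.1]))
```

Each sample plays the role of a "sample" action that moves the belief state, and crossing a bound is the "choose" action that ends the trial.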

Current Topics

- Vigour & tonic dopamine
- Priors over decision problems (LH)
- Pavlovian-instrumental interactions
  - impulsivity
  - behavioural inhibition
  - framing
- Model-based, model-free and episodic control
- Exploration vs exploitation
- Game theoretic interactions (inequity aversion)

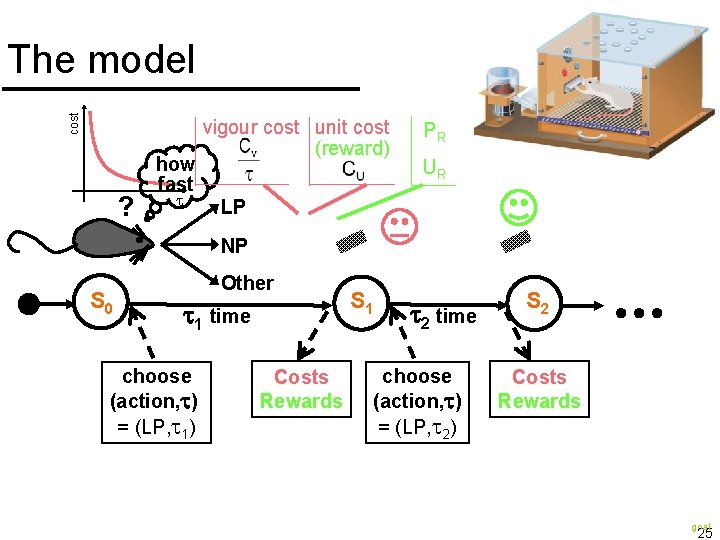

Vigour

- Two components to choice:
  - what:
    - lever pressing
    - direction to run
    - meal to choose
  - when/how fast/how vigorous
- free operant tasks
- real-valued DP

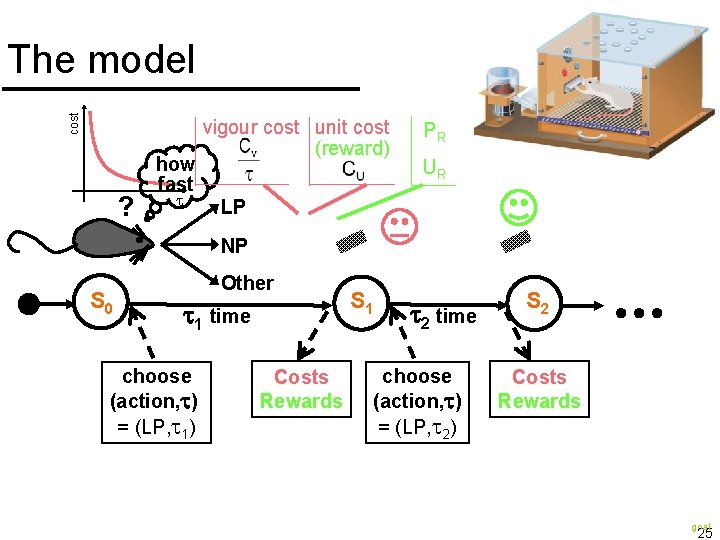

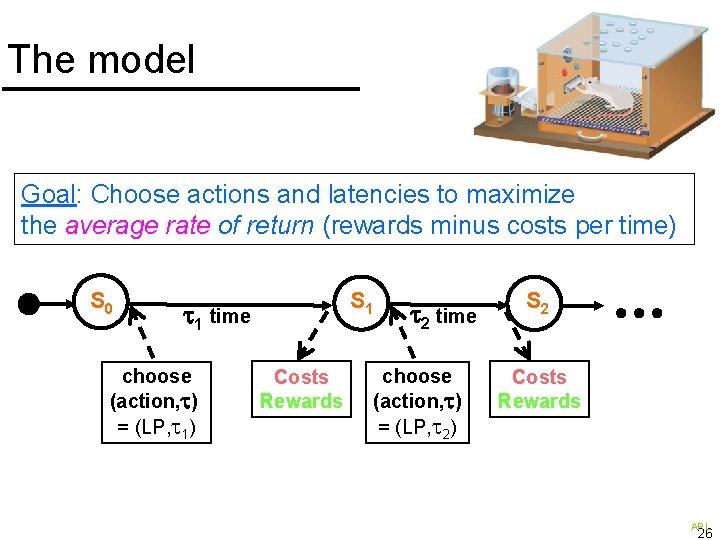

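The model

(Figure: from state S0 the agent chooses (action, τ) = (LP, τ1), incurs costs (a unit cost plus a vigour cost that depends on how fast it acts), may receive rewards (UR, PR), and moves to S1; it then chooses (action, τ) = (LP, τ2) and moves to S2. Available actions: LP, NP, Other.)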

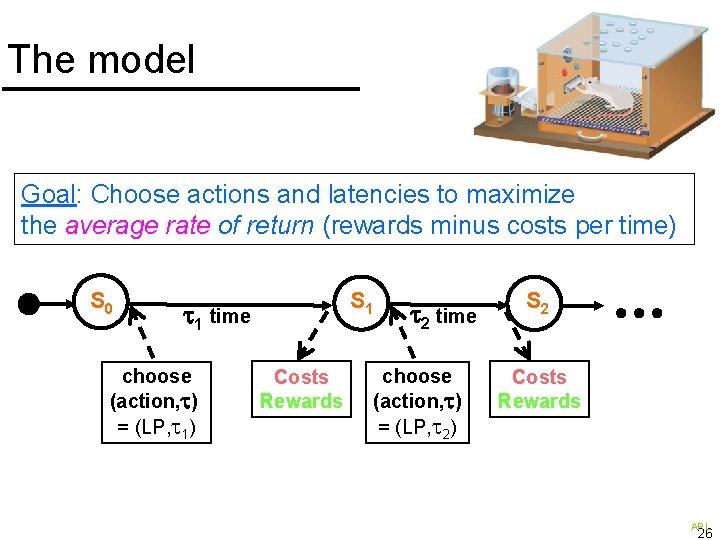

The model

Goal: choose actions and latencies to maximize the average rate of return (rewards minus costs per time), i.e. average-reward RL (ARL).

(Figure: state sequence S0, S1, S2 with choices (action, τ) = (LP, τ1) and (LP, τ2); each step incurs costs and may yield rewards.)

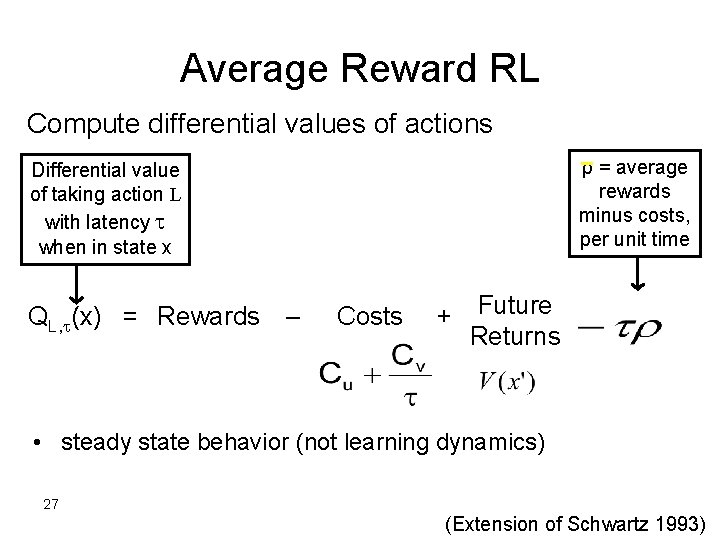

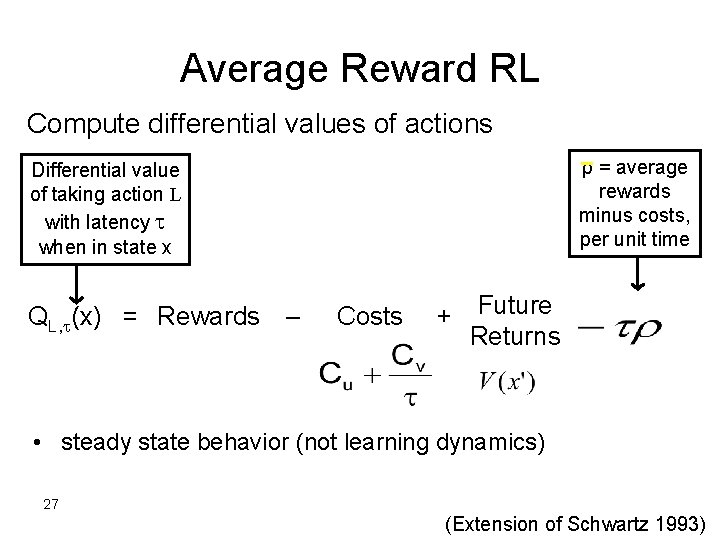

Average Reward RL

Compute differential values of actions.

- ρ = average rewards minus costs, per unit time
- differential value of taking action L with latency τ when in state x:
  Q_{L,τ}(x) = Rewards - Costs + Future Returns
- steady state behavior (not learning dynamics)

(Extension of Schwartz 1993)

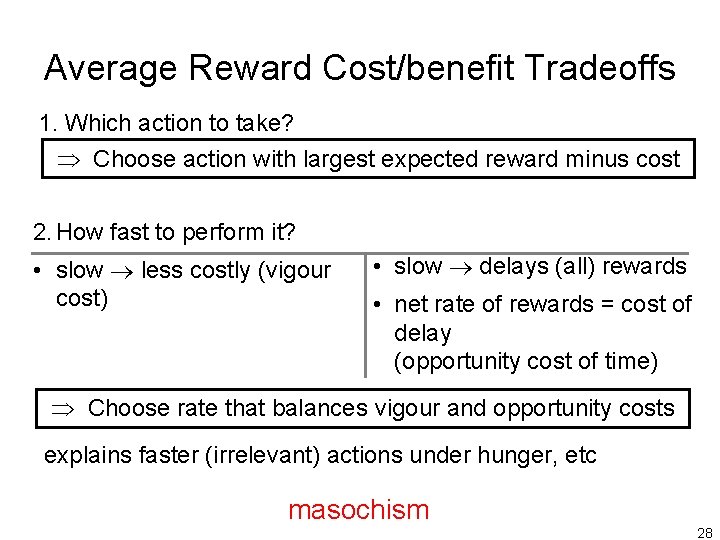

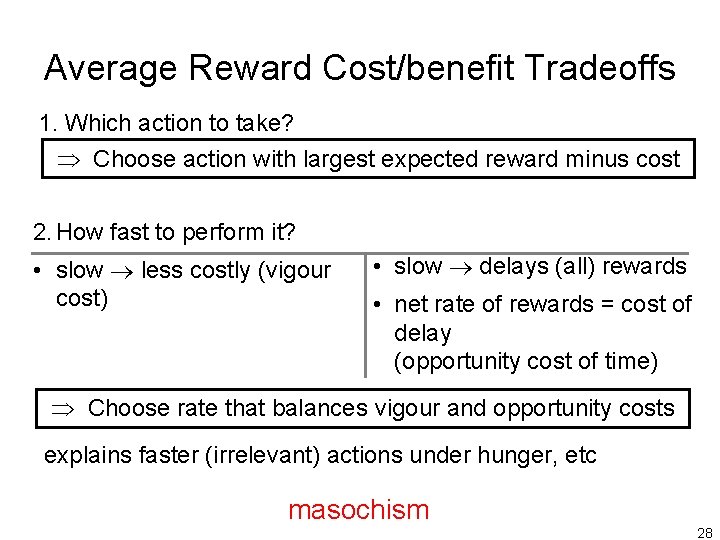

Average Reward Cost/benefit Tradeoffs

1. Which action to take?
   ⇒ Choose action with largest expected reward minus cost
2. How fast to perform it?
   - slow: less costly (vigour cost)
   - slow: delays (all) rewards
   - net rate of rewards = cost of delay (opportunity cost of time)
   ⇒ Choose rate that balances vigour and opportunity costs

explains faster (irrelevant) actions under hunger, etc

masochism
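The balance between vigour cost and opportunity cost can be made concrete with a small worked example. It assumes a hyperbolic vigour cost C_v/τ for acting with latency τ, so that the latency-dependent cost is C_v/τ + ρτ, minimized at τ* = sqrt(C_v/ρ); the cost form and the numbers used are assumptions for illustration.

```python
import math

def total_cost(tau, vigour_cost, avg_reward_rate):
    """Vigour cost of acting at latency tau plus the opportunity cost of the delay."""
    return vigour_cost / tau + avg_reward_rate * tau

def optimal_latency(vigour_cost, avg_reward_rate):
    """Latency minimizing total_cost: setting the derivative to zero gives sqrt(C_v / rho)."""
    return math.sqrt(vigour_cost / avg_reward_rate)

tau_low = optimal_latency(vigour_cost=4.0, avg_reward_rate=1.0)   # low average reward rate
tau_high = optimal_latency(vigour_cost=4.0, avg_reward_rate=4.0)  # high average reward rate
print(tau_low, tau_high)
```

Because ρ multiplies the latency of every action, a higher average reward rate shortens all latencies, including those of actions that do not themselves lead to reward.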

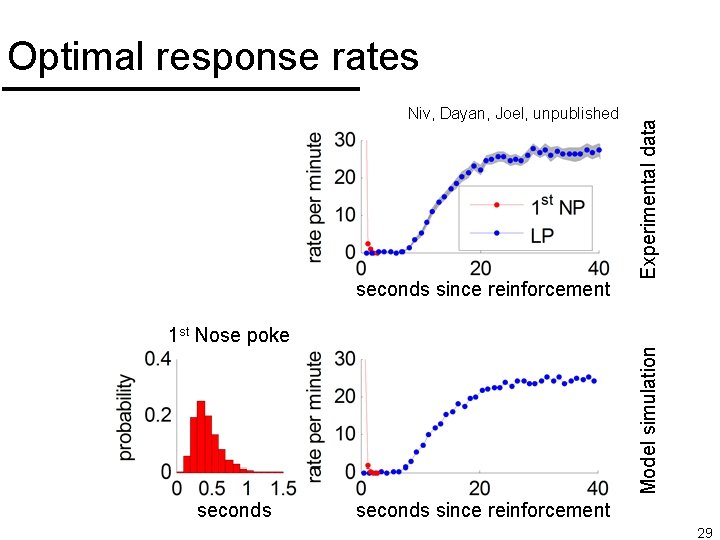

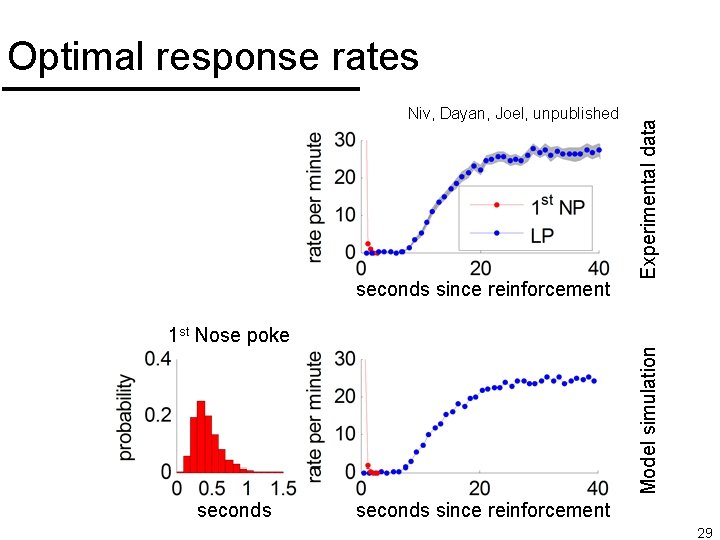

Optimal response rates

(Figure: experimental data (Niv, Dayan, Joel, unpublished) and model simulation; panels show the 1st nose poke and response rates against seconds since reinforcement.)

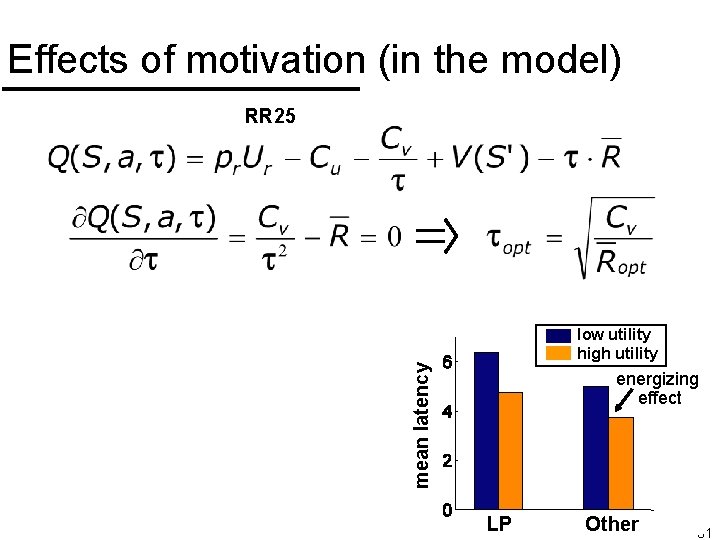

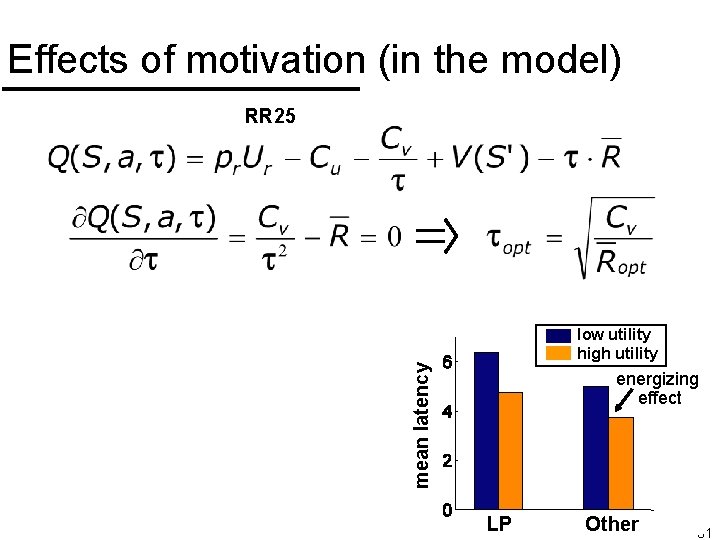

Effects of motivation (in the model)

(Figure: RR25 schedule; mean latency of LP and Other under low and high utility, showing the energizing effect.)

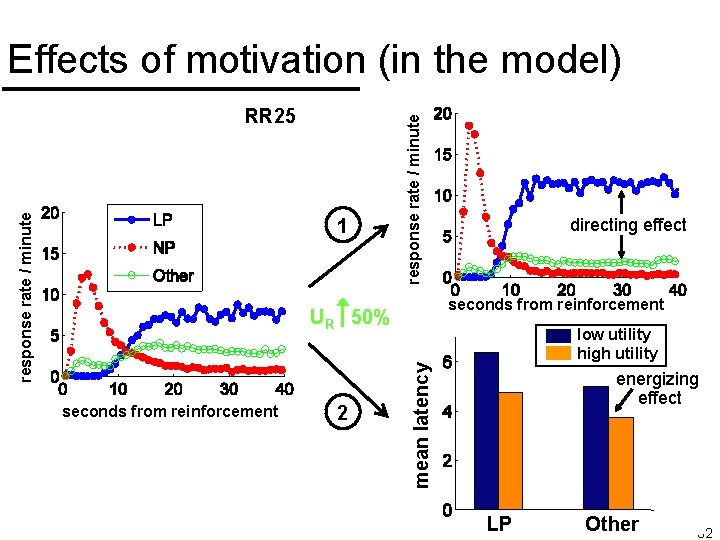

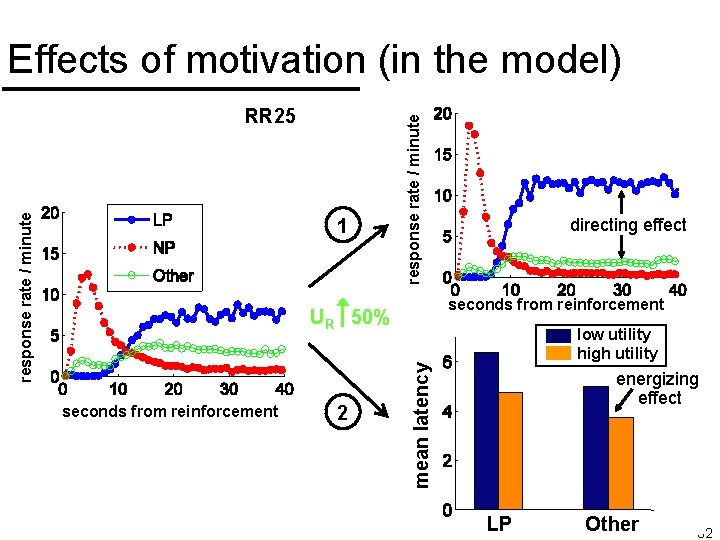

Effects of motivation (in the model)

(Figure, two panels (1: RR25; 2: UR 50%): response rate per minute against seconds from reinforcement, and mean latency of LP and Other under low and high utility; annotated with the directing effect and the energizing effect.)

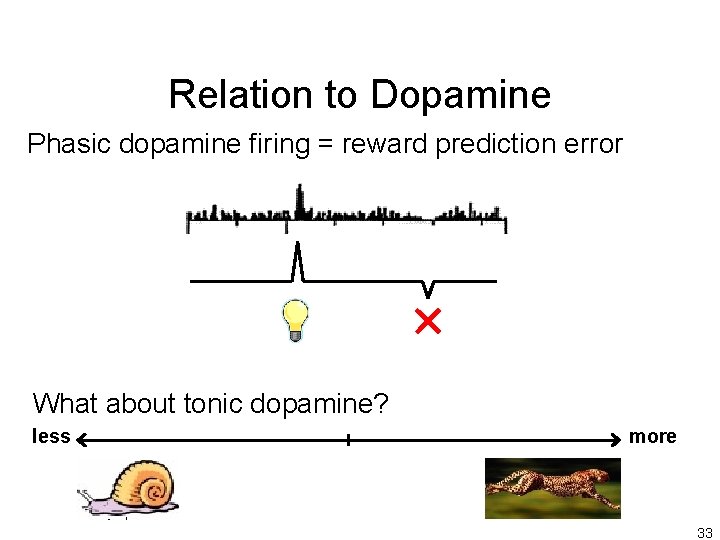

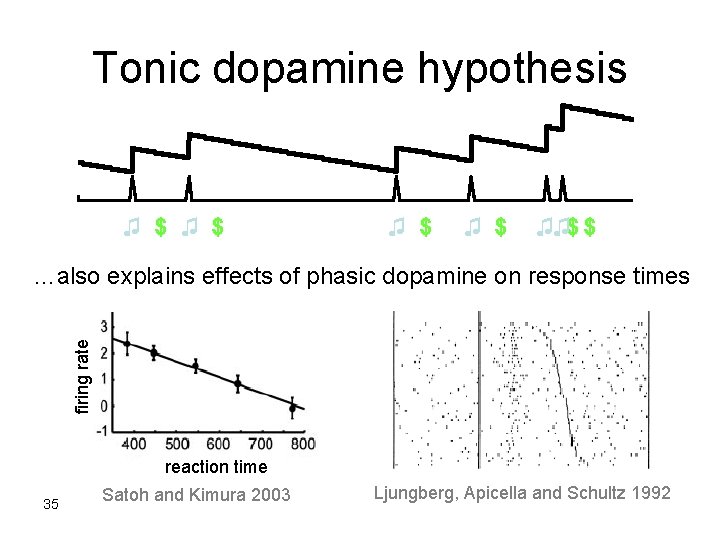

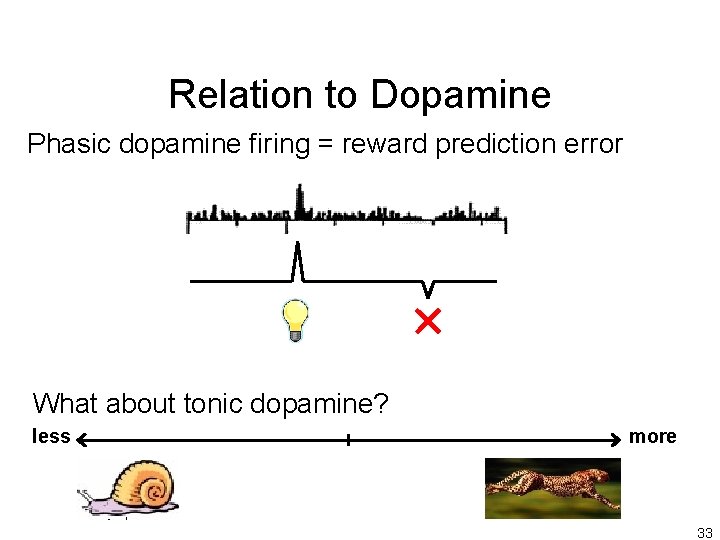

Relation to Dopamine

Phasic dopamine firing = reward prediction error

What about tonic dopamine?

(Figure labels: less, more)

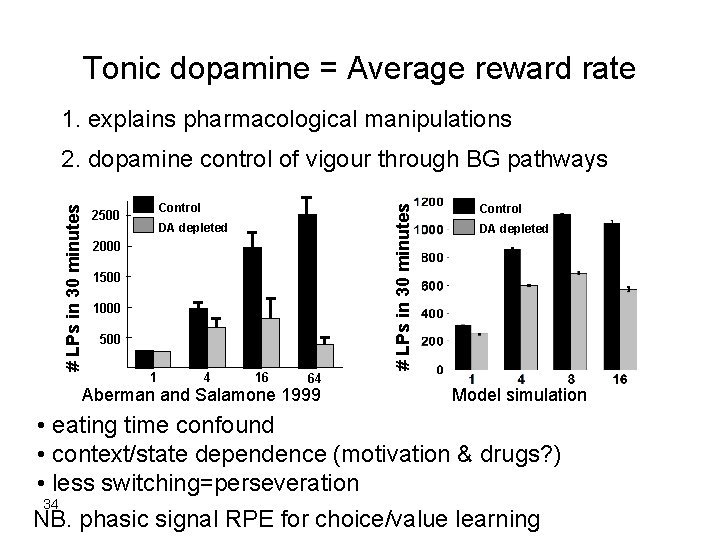

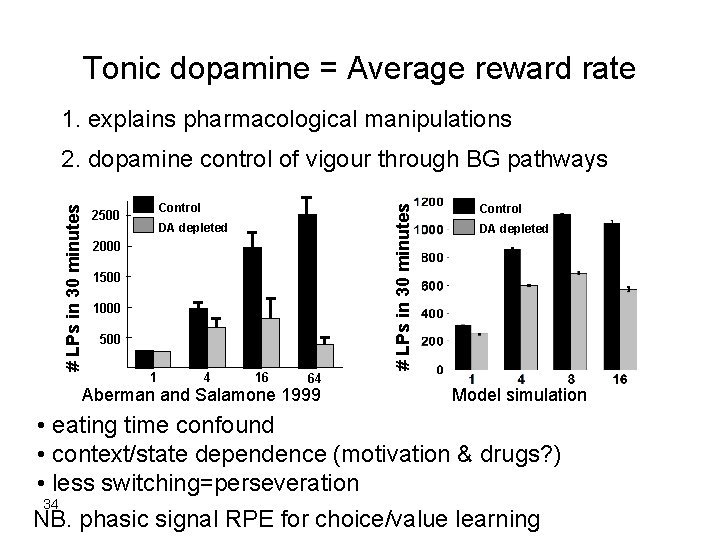

Tonic dopamine = Average reward rate

1. explains pharmacological manipulations
2. dopamine control of vigour through BG pathways

(Figure: number of LPs in 30 minutes (axis 500 to 2500) for control and DA-depleted animals, at x-axis values 1, 4, 16, 64; data from Aberman and Salamone 1999 alongside model simulation.)

- eating time confound
- context/state dependence (motivation & drugs?)
- less switching = perseveration

NB. phasic signal RPE for choice/value learning

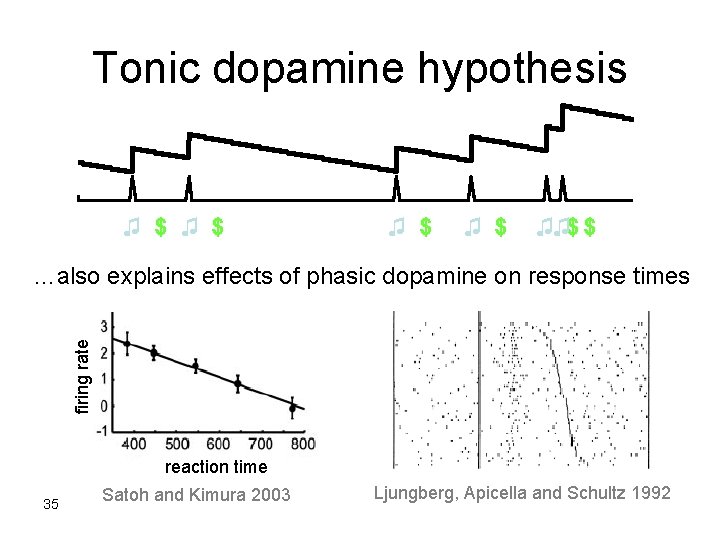

Tonic dopamine hypothesis

…also explains effects of phasic dopamine on response times

(Figure: firing rate and reaction time; Satoh and Kimura 2003; Ljungberg, Apicella and Schultz 1992.)

Pavlovian & Instrumental Conditioning

- Pavlovian
  - learning values and predictions
  - using TD error
- Instrumental
  - learning actions:
    - by reinforcement (leg flexion)
    - by (TD) critic
  - (actually different forms: goal directed & habitual)

Pavlovian-Instrumental Interactions

- synergistic
  - conditioned reinforcement
  - Pavlovian-instrumental transfer
    - Pavlovian cue predicts the instrumental outcome
  - behavioural inhibition to avoid aversive outcomes
- neutral
  - Pavlovian-instrumental transfer
    - Pavlovian cue predicts outcome with same motivational valence
- opponent
  - Pavlovian-instrumental transfer
    - Pavlovian cue predicts opposite motivational valence
  - negative automaintenance

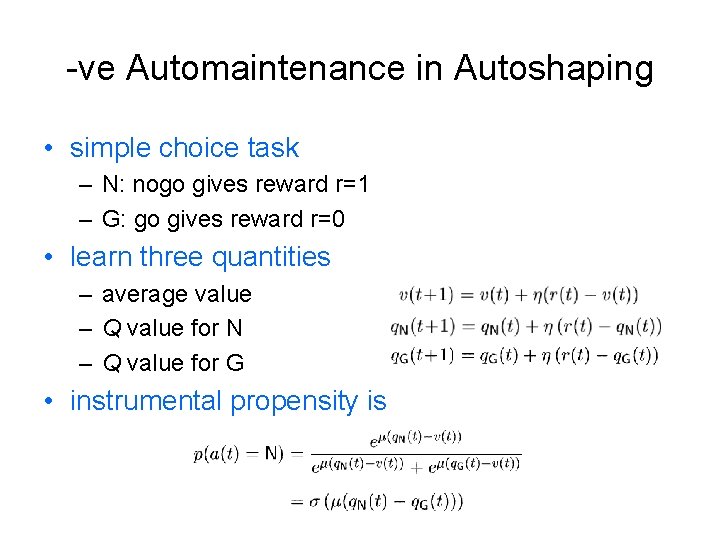

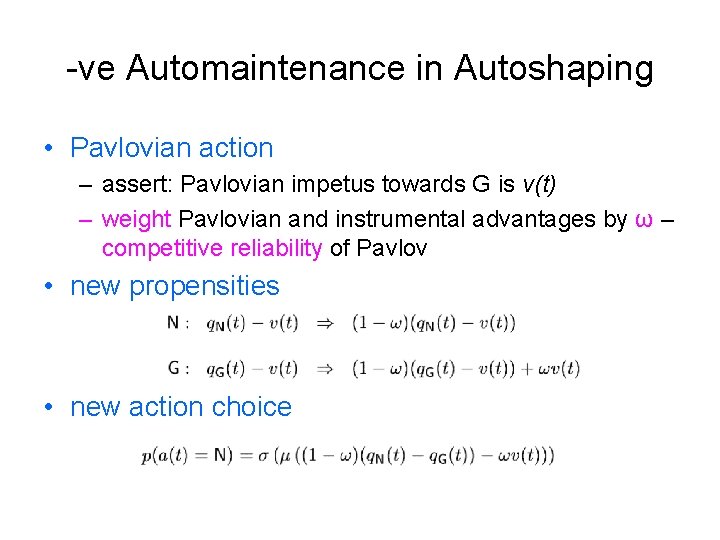

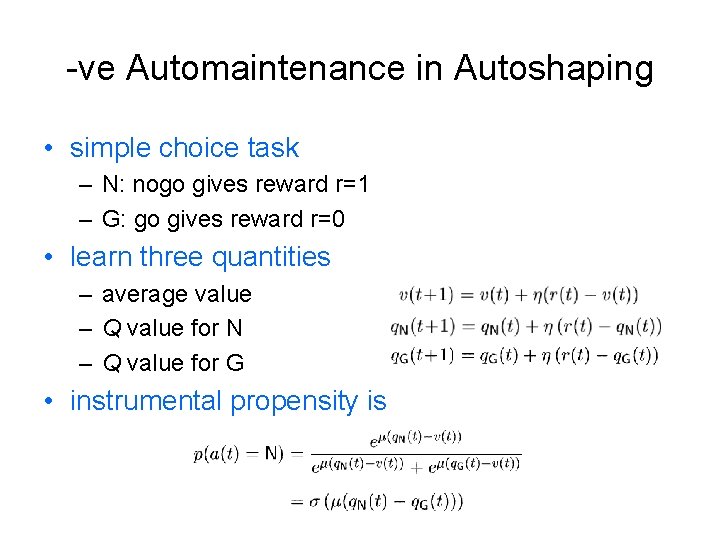

-ve Automaintenance in Autoshaping

- simple choice task
  - N: nogo gives reward r=1
  - G: go gives reward r=0
- learn three quantities
  - average value
  - Q value for N
  - Q value for G
- instrumental propensity is

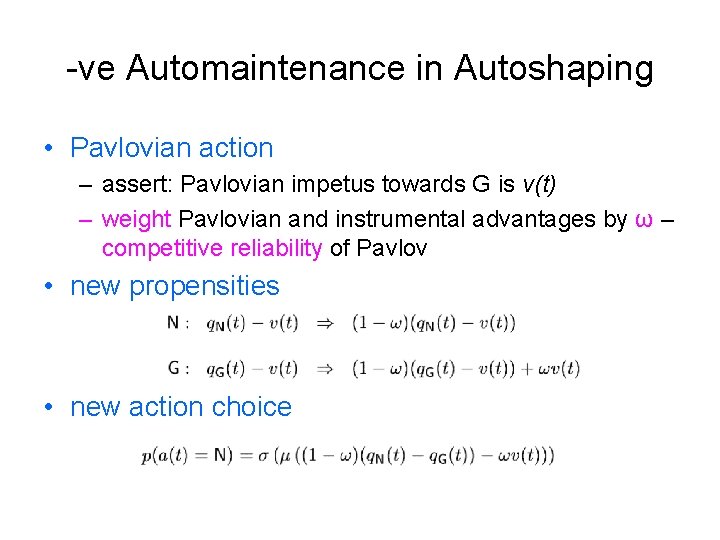

-ve Automaintenance in Autoshaping

- Pavlovian action
  - assert: Pavlovian impetus towards G is v(t)
  - weight Pavlovian and instrumental advantages by ω
  - competitive reliability of Pavlov
- new propensities
- new action choice

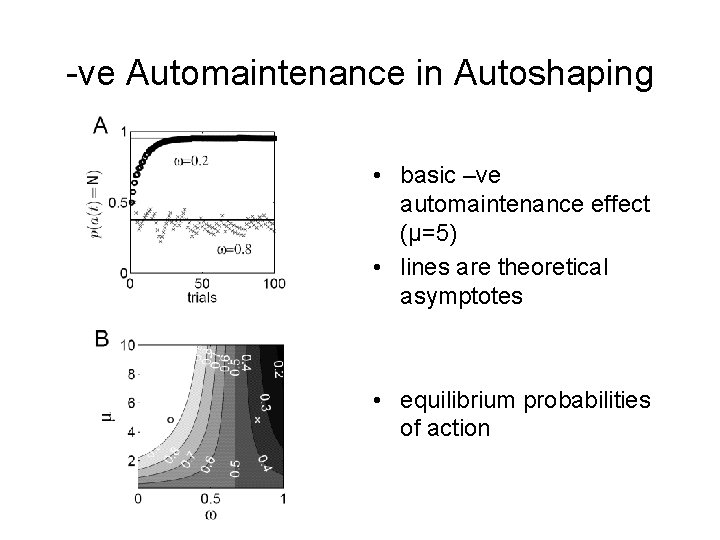

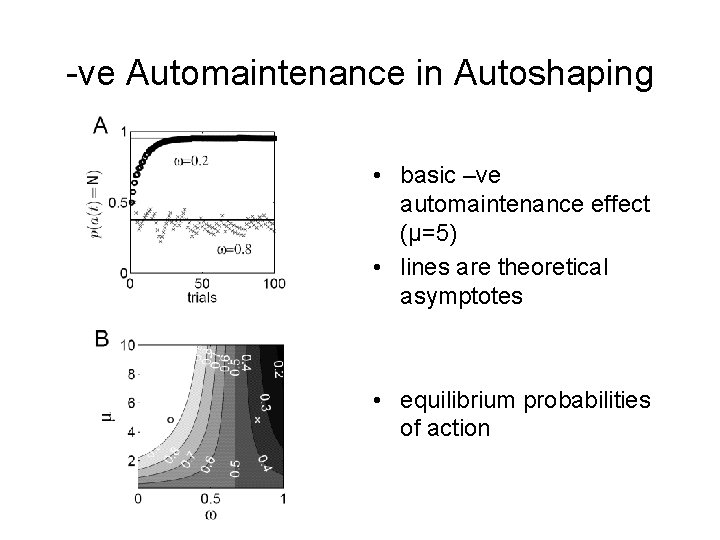

-ve Automaintenance in Autoshaping

- basic -ve automaintenance effect (μ=5)
- lines are theoretical asymptotes
- equilibrium probabilities of action
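The equilibrium probability of going can be sketched numerically. The propensity equations on these slides are images, so the forms below are an assumption chosen to be consistent with the bullets: instrumental propensities are μ times the advantages (q - v), the Pavlovian impetus v is added to G with weight ω, and choice is a sigmoid of the propensity difference. At equilibrium q_N = 1, q_G = 0 and v = 1 - P(G).

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def equilibrium_go_probability(omega, mu=5.0, tol=1e-10):
    """Solve P(G) = sigmoid(m_G - m_N) self-consistently by bisection."""
    def excess(p):
        v = 1.0 - p                      # average value: only nogo is rewarded
        q_n, q_g = 1.0, 0.0              # asymptotic Q values (assumed form)
        m_g = mu * ((1.0 - omega) * (q_g - v) + omega * v)
        m_n = mu * (1.0 - omega) * (q_n - v)
        return p - sigmoid(m_g - m_n)    # increasing in p, so the root is unique
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if excess(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

for omega in (0.0, 0.5, 1.0):
    print(omega, equilibrium_go_probability(omega))
```

With ω = 0 the agent almost never goes; as ω grows, the Pavlovian impetus keeps P(G) above zero even though going is never rewarded, which is the negative automaintenance effect.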

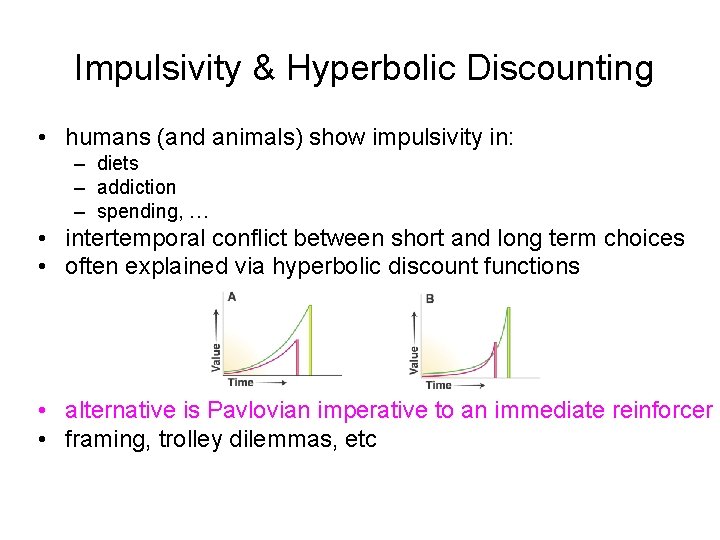

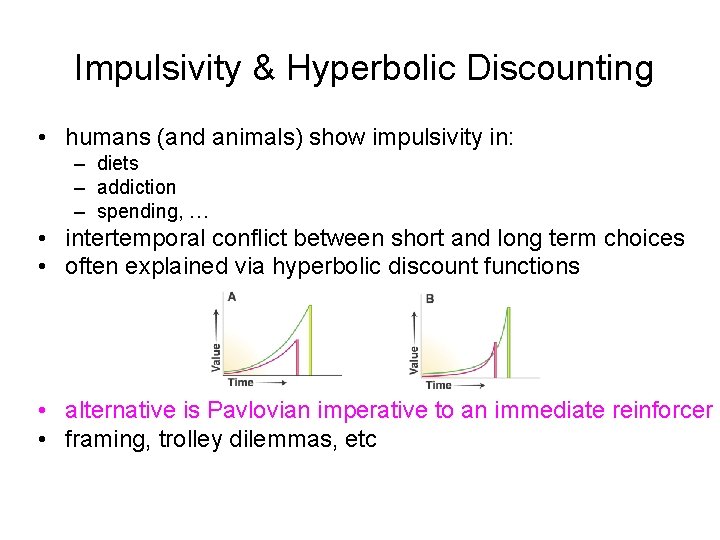

Impulsivity & Hyperbolic Discounting

- humans (and animals) show impulsivity in:
  - diets
  - addiction
  - spending, …
- intertemporal conflict between short and long term choices
- often explained via hyperbolic discount functions
- alternative is Pavlovian imperative to an immediate reinforcer
- framing, trolley dilemmas, etc
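The intertemporal conflict attributed to hyperbolic discounting is a preference reversal, which the following sketch illustrates. The discount forms are the standard ones (amount / (1 + k·delay) and amount · γ^delay); the amounts, delays and parameters are hypothetical.

```python
def hyperbolic_value(amount, delay, k=1.0):
    """Hyperbolically discounted value."""
    return amount / (1.0 + k * delay)

def exponential_value(amount, delay, gamma=0.9):
    """Exponentially discounted value."""
    return amount * gamma ** delay

def prefers_small_soon(value_fn, wait):
    """Small reward (5) after `wait` versus large reward (10) five steps later."""
    return value_fn(5.0, wait) > value_fn(10.0, wait + 5)

for wait in (0, 10):
    print(wait, prefers_small_soon(hyperbolic_value, wait),
          prefers_small_soon(exponential_value, wait))
```

Under hyperbolic discounting the small reward wins when it is immediate but loses when both options are pushed into the future; under exponential discounting the preference does not depend on the common delay.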

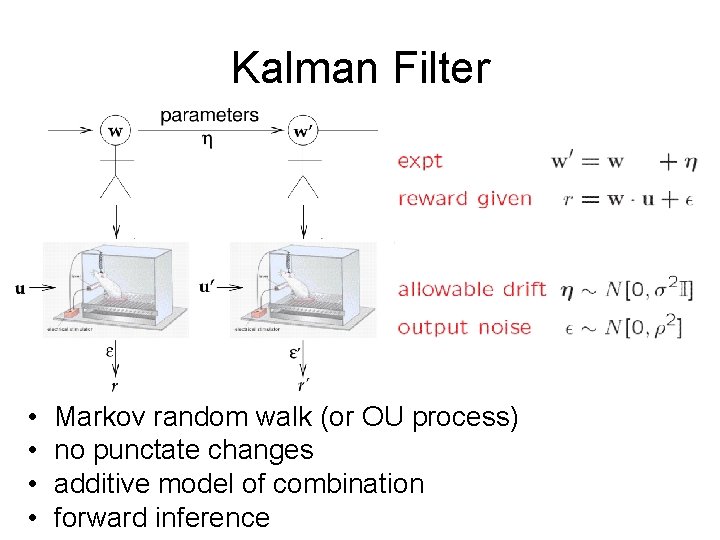

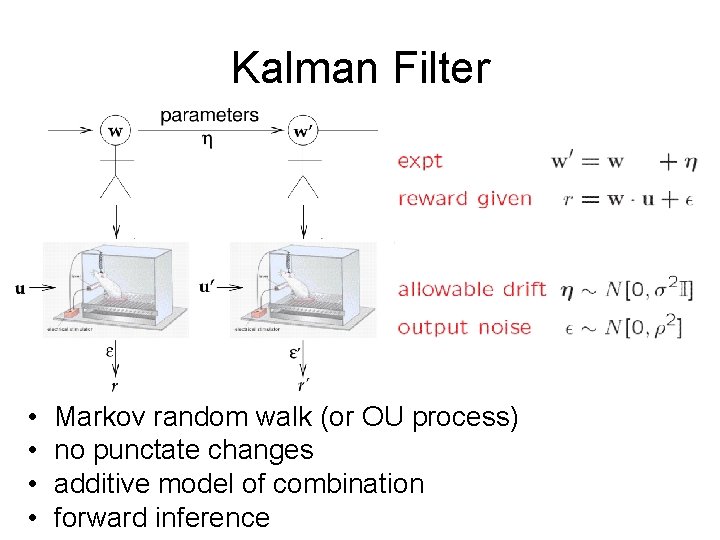

Kalman Filter

- Markov random walk (or OU process)
- no punctate changes
- additive model of combination
- forward inference

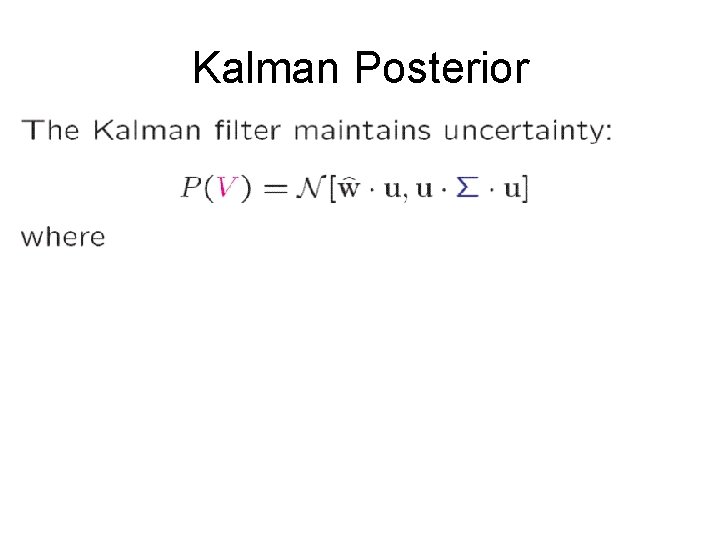

Kalman Posterior

(Figure label: ε̂)

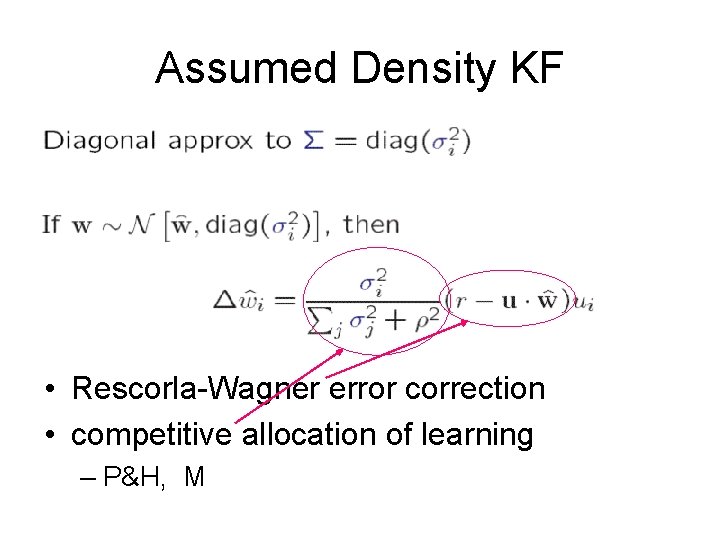

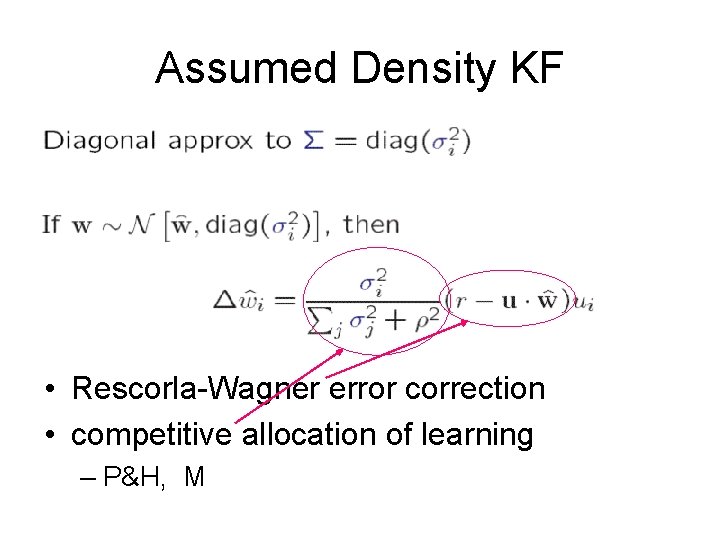

Assumed Density KF

- Rescorla-Wagner error correction
- competitive allocation of learning
  - Pearce & Hall (P&H), Mackintosh (M)

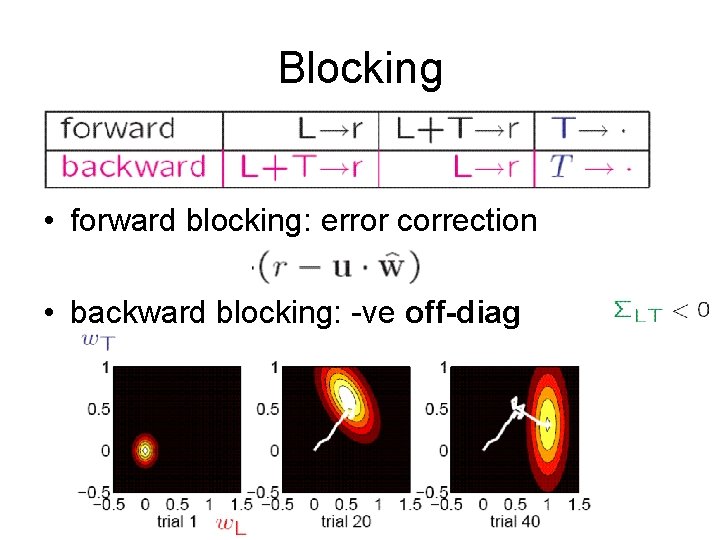

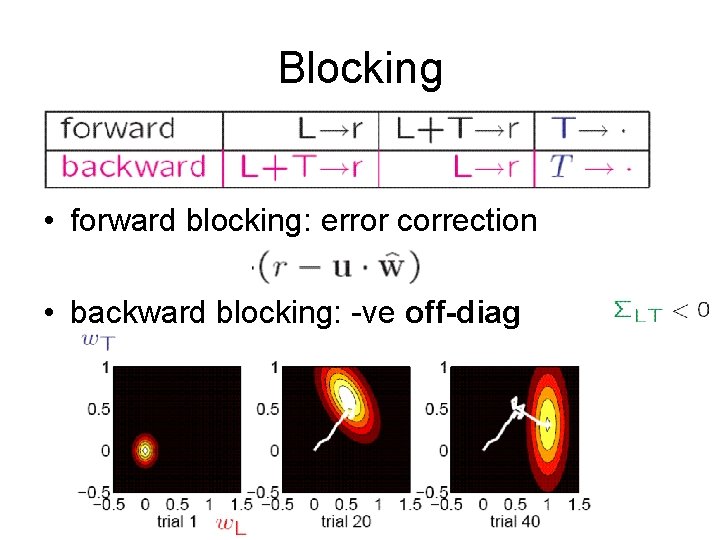

Blocking

- forward blocking: error correction
- backward blocking: negative off-diagonal terms in the posterior covariance
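Backward blocking through negative off-diagonal covariance can be sketched with a two-stimulus Kalman filter. This is a minimal illustration of the standard Kalman update for a linear, additive prediction r = w·x; the noise variances, trial counts and stimulus coding (first entry L, second entry T) are assumptions.

```python
def kalman_step(w, cov, x, r, obs_var=0.1, drift_var=0.0):
    """One Kalman filter update of the weight estimate w and its covariance."""
    n = len(w)
    # Random-walk prior: weights may drift, so uncertainty grows on the diagonal.
    cov = [[cov[i][j] + (drift_var if i == j else 0.0) for j in range(n)]
           for i in range(n)]
    cov_x = [sum(cov[i][j] * x[j] for j in range(n)) for i in range(n)]
    s = sum(x[i] * cov_x[i] for i in range(n)) + obs_var   # predicted variance of r
    gain = [c / s for c in cov_x]                          # per-stimulus learning rates
    err = r - sum(w[i] * x[i] for i in range(n))           # prediction error
    w = [w[i] + gain[i] * err for i in range(n)]
    cov = [[cov[i][j] - gain[i] * cov_x[j] for j in range(n)] for i in range(n)]
    return w, cov

w, cov = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
for _ in range(10):                       # phase 1: L+T -> r
    w, cov = kalman_step(w, cov, x=[1.0, 1.0], r=1.0)
w_phase1, cov_phase1 = w, cov
for _ in range(10):                       # phase 2: L -> r
    w, cov = kalman_step(w, cov, x=[1.0, 0.0], r=1.0)
print(w_phase1, cov_phase1[0][1], w)
```

After compound training the filter knows the sum of the two weights but not how it is split, so their covariance is negative; learning in phase 2 that L alone predicts the reward then lowers the weight of the absent stimulus T.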

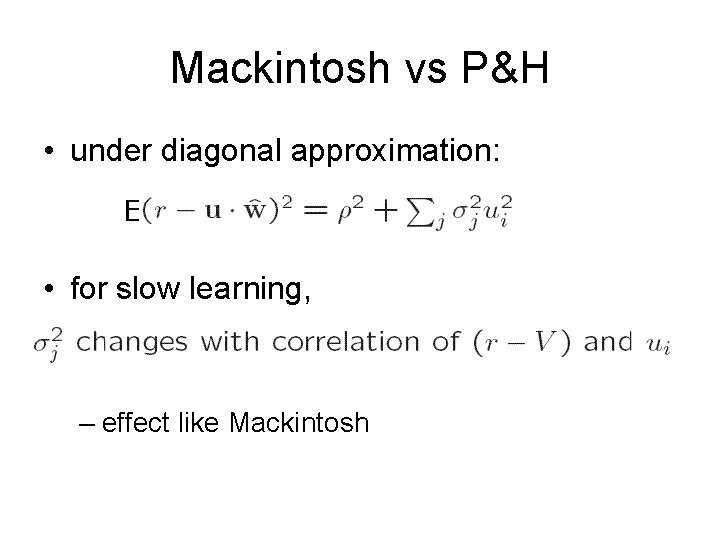

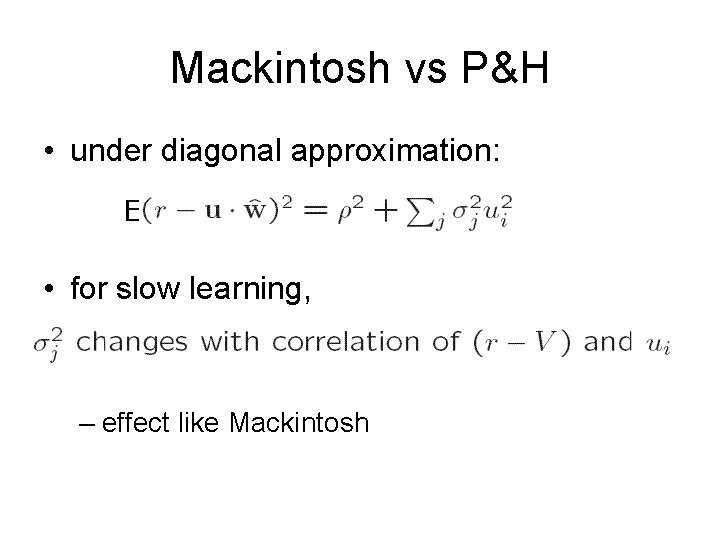

Mackintosh vs P&H

- under diagonal approximation:
- for slow learning,
  - effect like Mackintosh

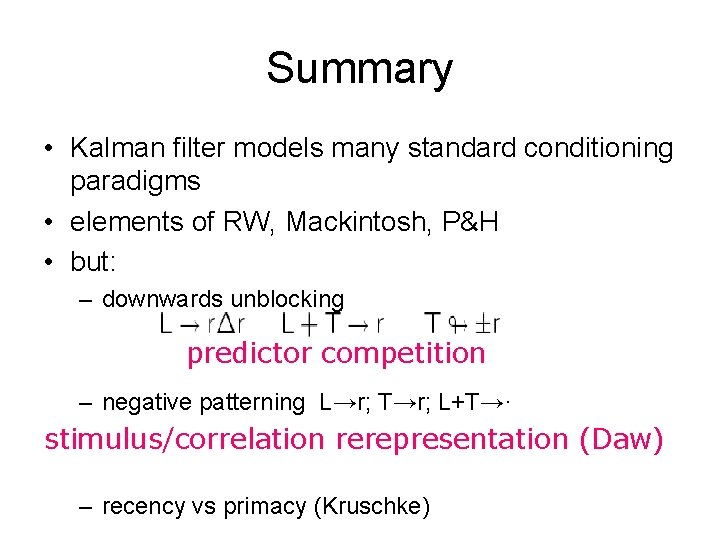

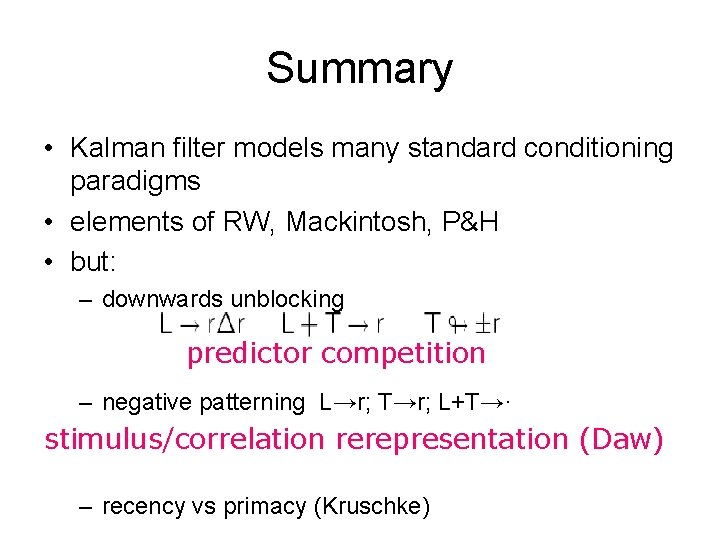

Summary

- Kalman filter models many standard conditioning paradigms
- elements of RW, Mackintosh, P&H
- but:
  - downwards unblocking: predictor competition
  - negative patterning (L→r; T→r; L+T→·): stimulus/correlation rerepresentation (Daw)
  - recency vs primacy (Kruschke)

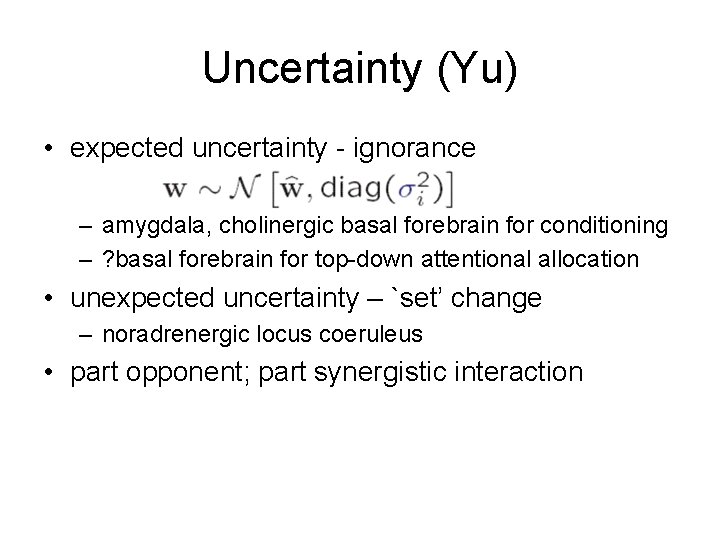

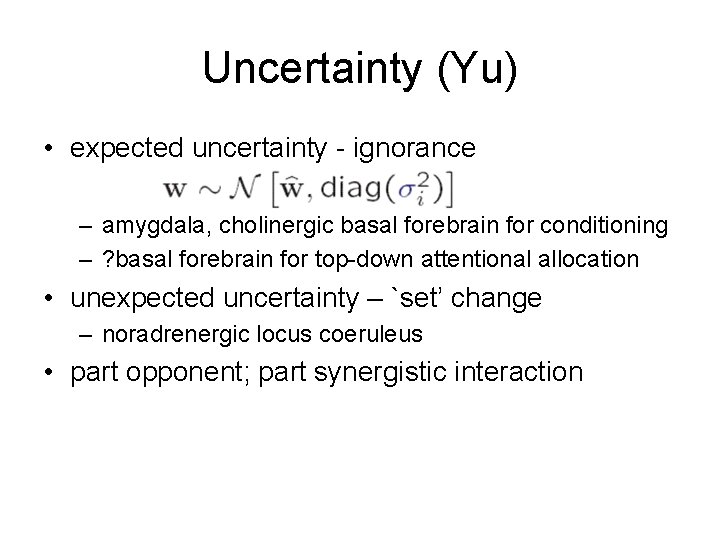

Uncertainty (Yu)

- expected uncertainty: ignorance
  - amygdala, cholinergic basal forebrain for conditioning
  - ? basal forebrain for top-down attentional allocation
- unexpected uncertainty: 'set' change
  - noradrenergic locus coeruleus
- part opponent; part synergistic interaction

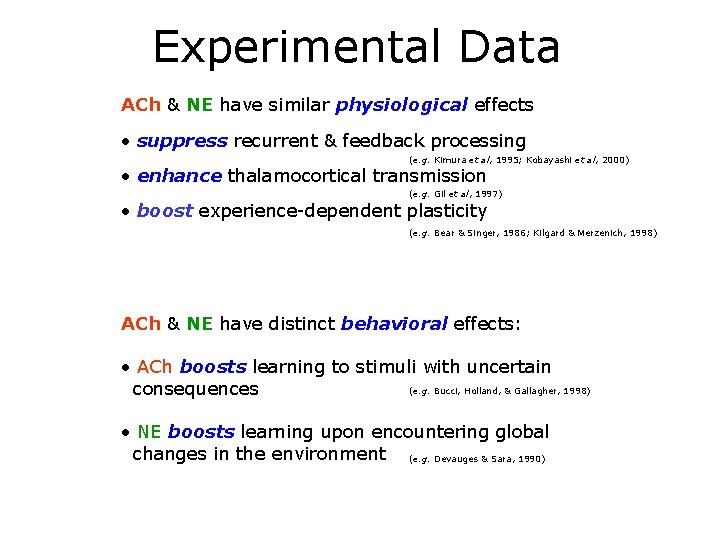

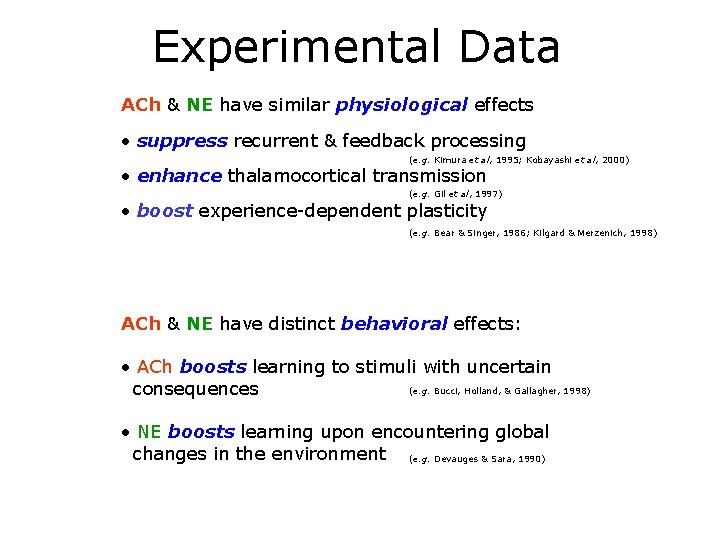

Experimental Data

ACh & NE have similar physiological effects:

- suppress recurrent & feedback processing (e.g. Kimura et al, 1995; Kobayashi et al, 2000)
- enhance thalamocortical transmission (e.g. Gil et al, 1997)
- boost experience-dependent plasticity (e.g. Bear & Singer, 1986; Kilgard & Merzenich, 1998)

ACh & NE have distinct behavioral effects:

- ACh boosts learning to stimuli with uncertain consequences (e.g. Bucci, Holland, & Gallagher, 1998)
- NE boosts learning upon encountering global changes in the environment (e.g. Devauges & Sara, 1990)

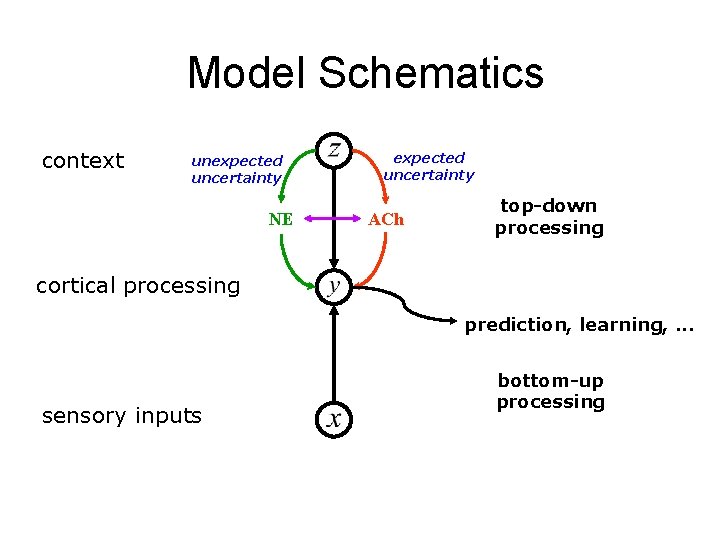

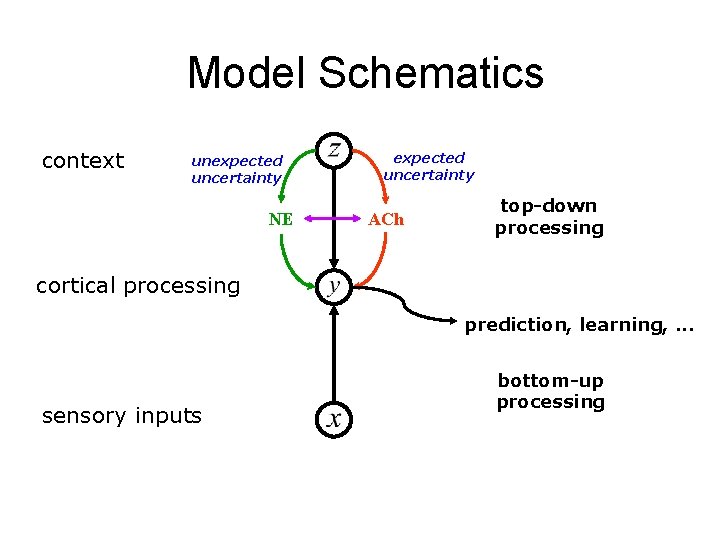

Model Schematics

(Figure: context drives top-down processing and sensory inputs drive bottom-up processing into cortical processing (prediction, learning, ...); NE signals unexpected uncertainty and ACh signals expected uncertainty.)

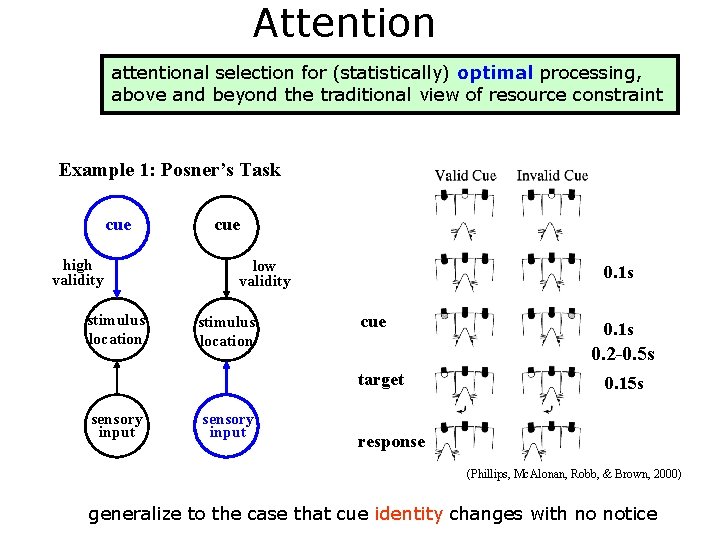

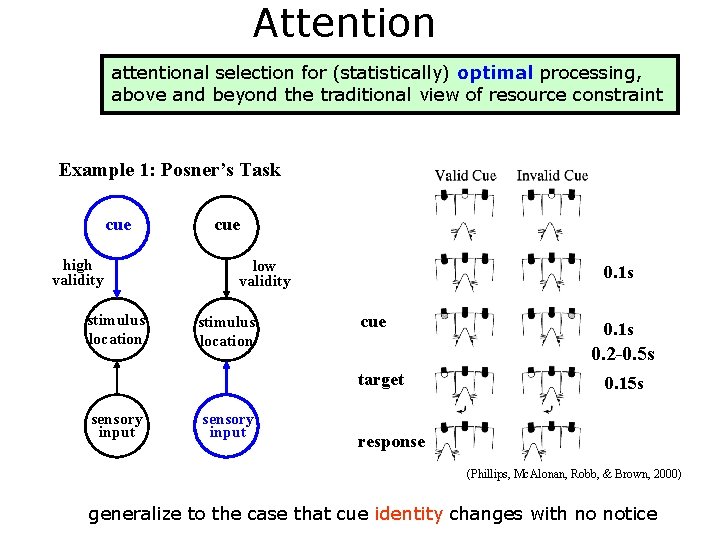

Attention

attentional selection for (statistically) optimal processing, above and beyond the traditional view of resource constraint

Example 1: Posner's Task (Phillips, McAlonan, Robb, & Brown, 2000)

(Figure: trial timeline of cue, target and response with sensory input, with durations 0.1 s, 0.2-0.5 s, 0.1 s and 0.15 s; panels for high-validity and low-validity cues of stimulus location.)

generalize to the case that cue identity changes with no notice

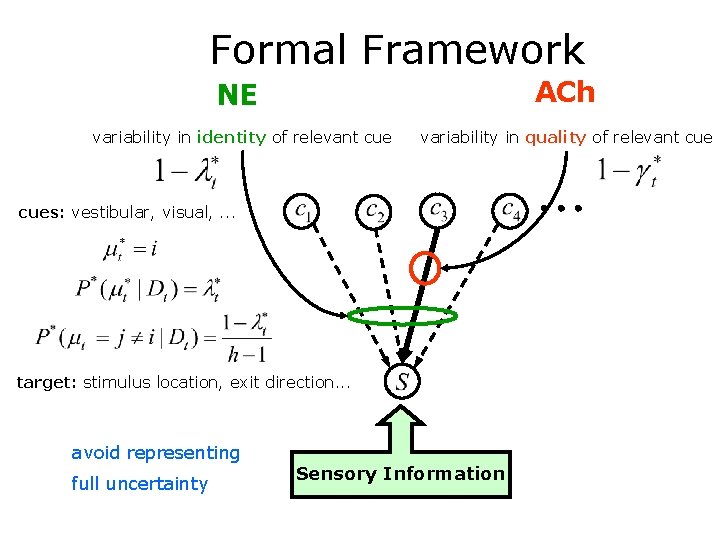

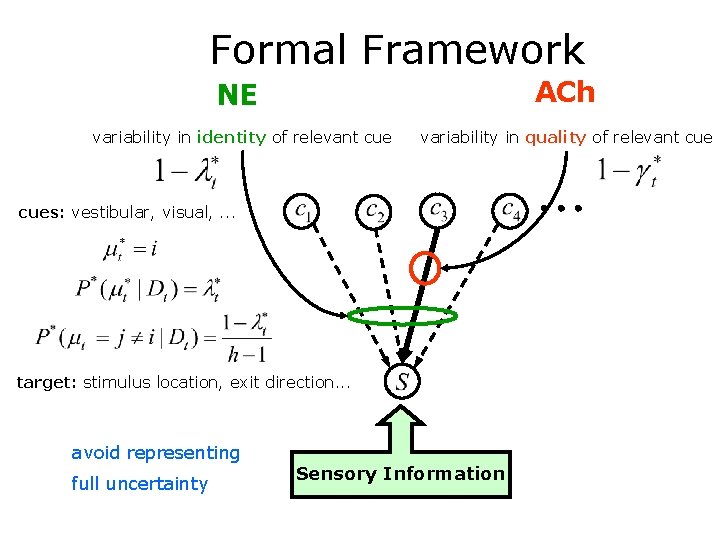

Formal Framework

(Figure labels:)

- NE: variability in identity of relevant cue
- ACh: variability in quality of relevant cue
- cues: vestibular, visual, ...
- target: stimulus location, exit direction, ...
- sensory information
- avoid representing full uncertainty

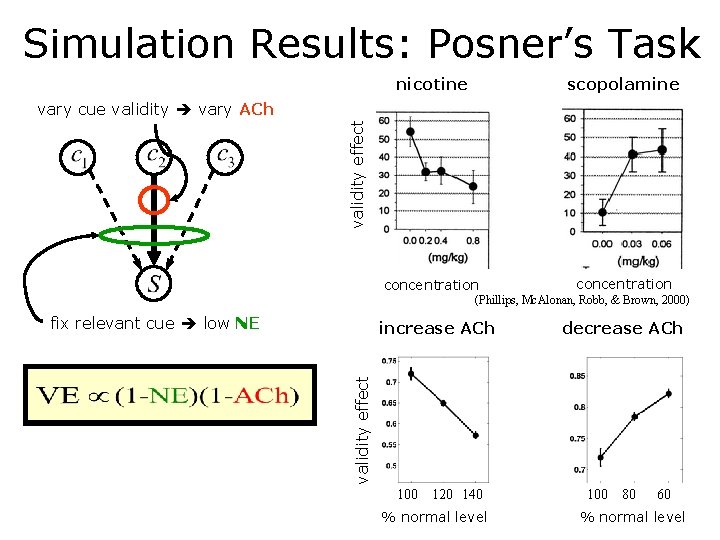

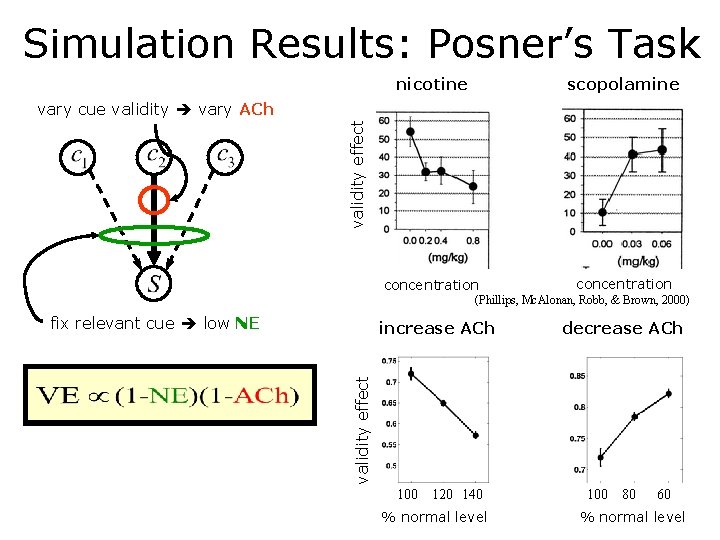

Simulation Results: Posner's Task

- vary cue validity → vary ACh
- fix relevant cue → low NE

(Figure: validity effect against drug concentration for nicotine and scopolamine (Phillips, McAlonan, Robb, & Brown, 2000), and in the model for increased ACh (100, 120, 140 % of normal level) and decreased ACh (100, 80, 60 % of normal level).)

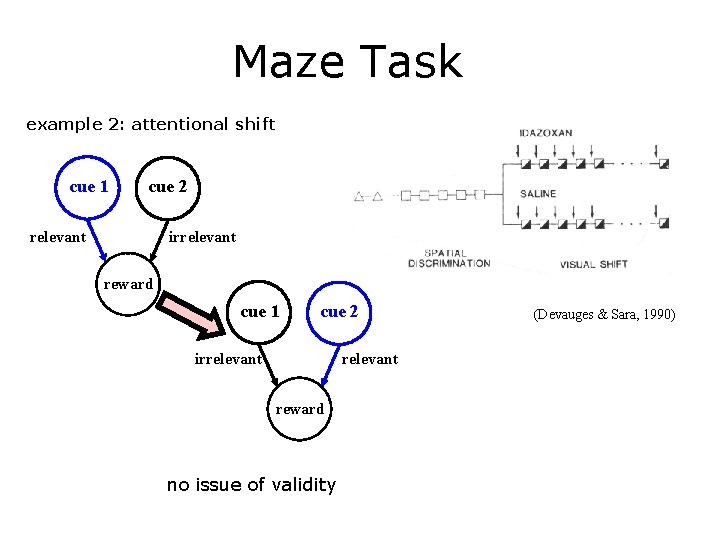

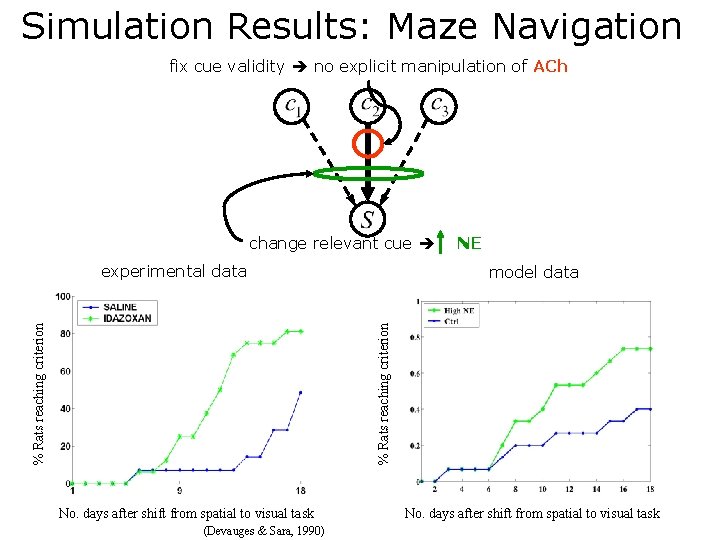

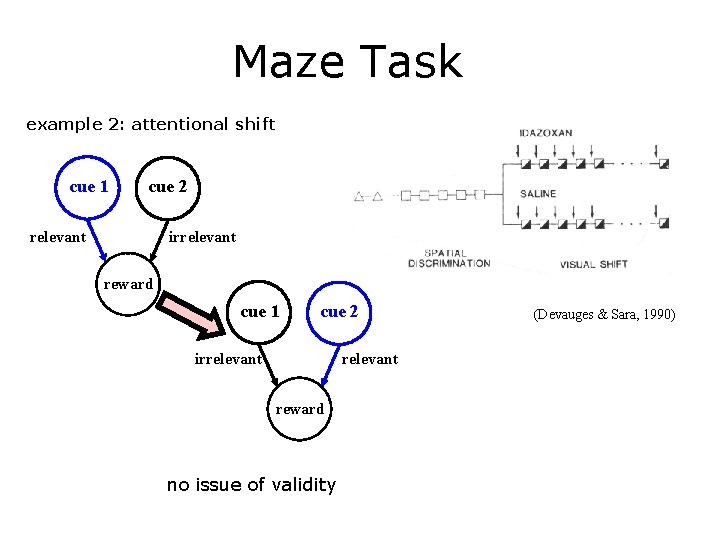

Maze Task

Example 2: attentional shift (Devauges & Sara, 1990)

(Figure: two maze panels, each with cue 1, cue 2 and reward; the cues are labelled relevant or irrelevant, and the labelling shifts between panels.)

no issue of validity

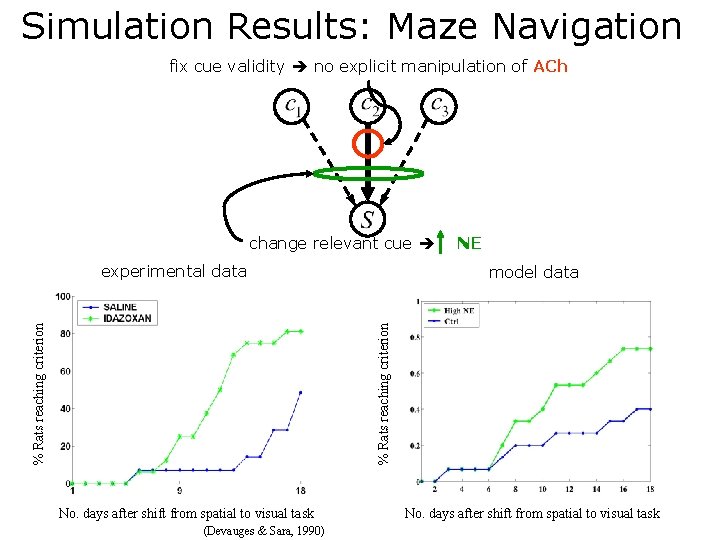

Simulation Results: Maze Navigation

- fix cue validity → no explicit manipulation of ACh
- change relevant cue → NE

(Figure: experimental data (Devauges & Sara, 1990) and model data; % rats reaching criterion against no. days after shift from spatial to visual task.)

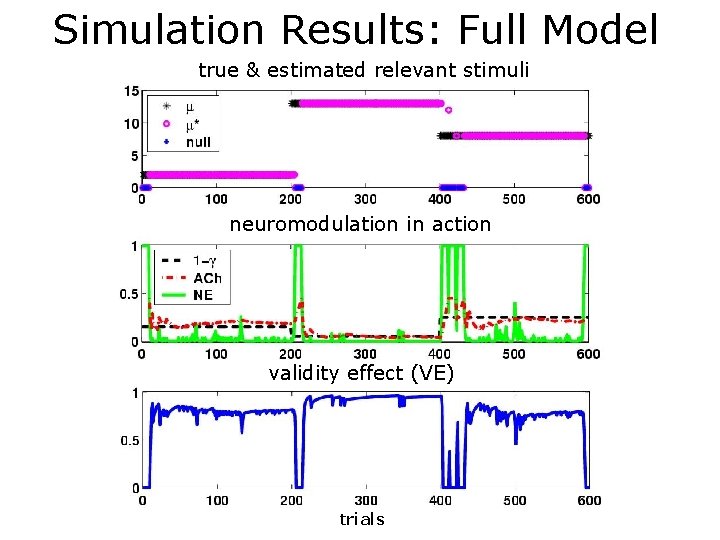

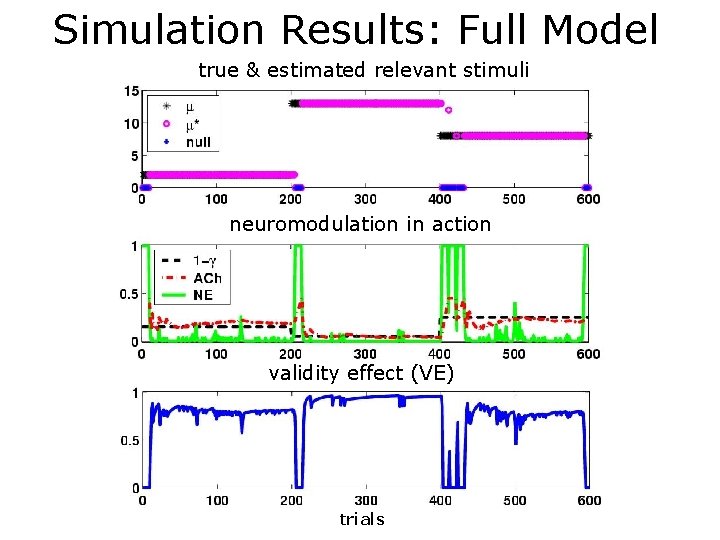

Simulation Results: Full Model

(Figure panels: true & estimated relevant stimuli; neuromodulation in action; validity effect (VE); x-axis: trials.)

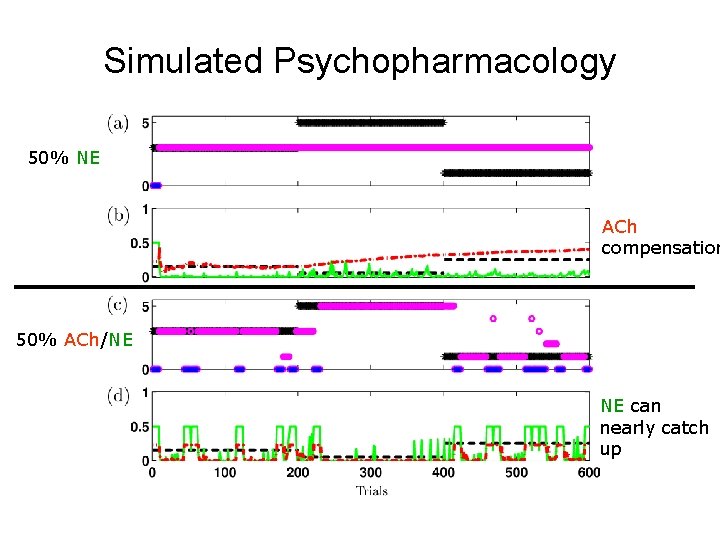

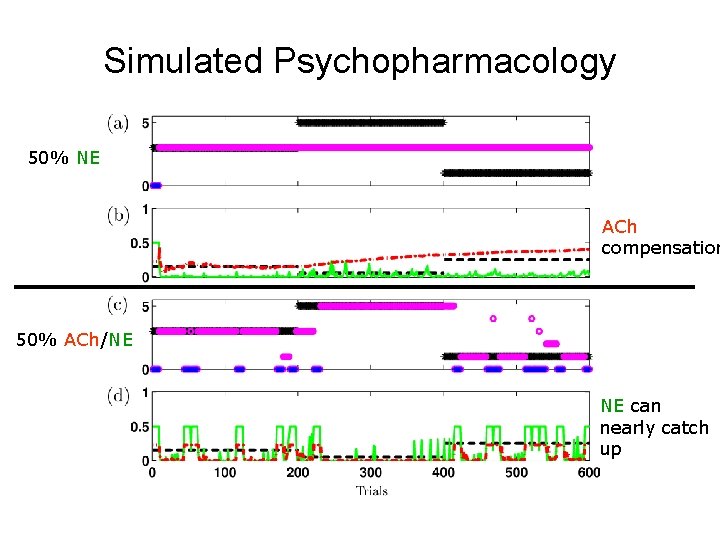

Simulated Psychopharmacology

(Figure labels:)

- 50% NE
- ACh compensation
- 50% ACh/NE
- NE can nearly catch up

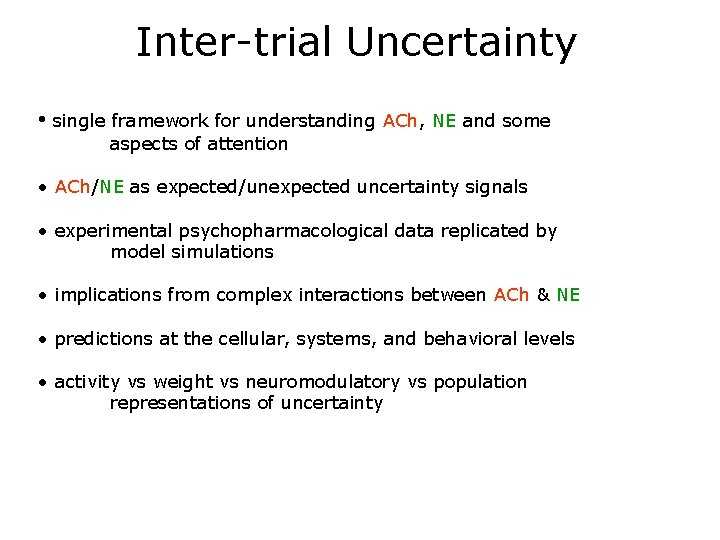

Inter-trial Uncertainty

- single framework for understanding ACh, NE and some aspects of attention
- ACh/NE as expected/unexpected uncertainty signals
- experimental psychopharmacological data replicated by model simulations
- implications from complex interactions between ACh & NE
- predictions at the cellular, systems, and behavioral levels
- activity vs weight vs neuromodulatory vs population representations of uncertainty

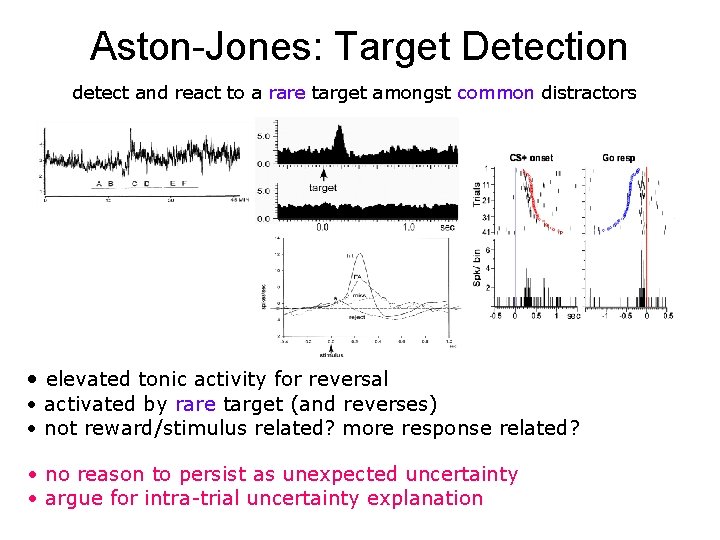

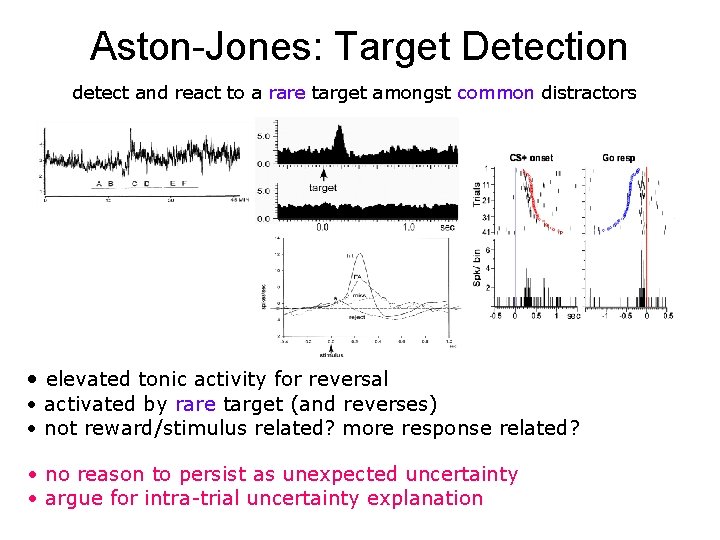

Aston-Jones: Target Detection

detect and react to a rare target amongst common distractors

- elevated tonic activity for reversal
- activated by rare target (and reverses)
- not reward/stimulus related? more response related?
- no reason to persist as unexpected uncertainty
- argue for intra-trial uncertainty explanation

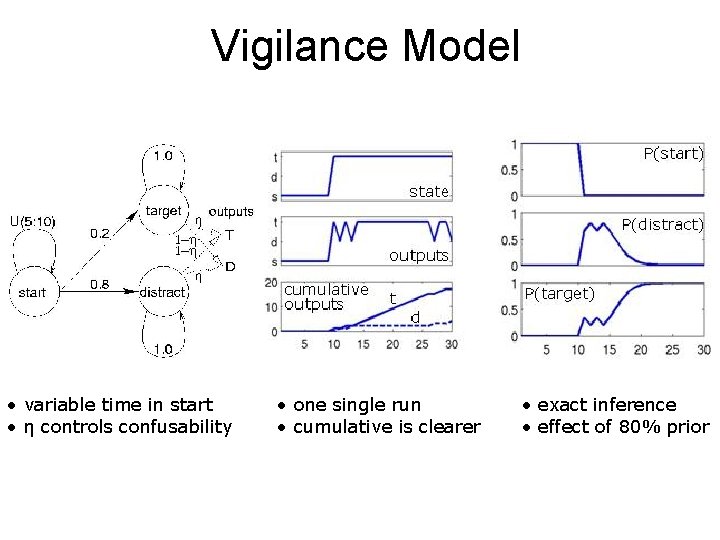

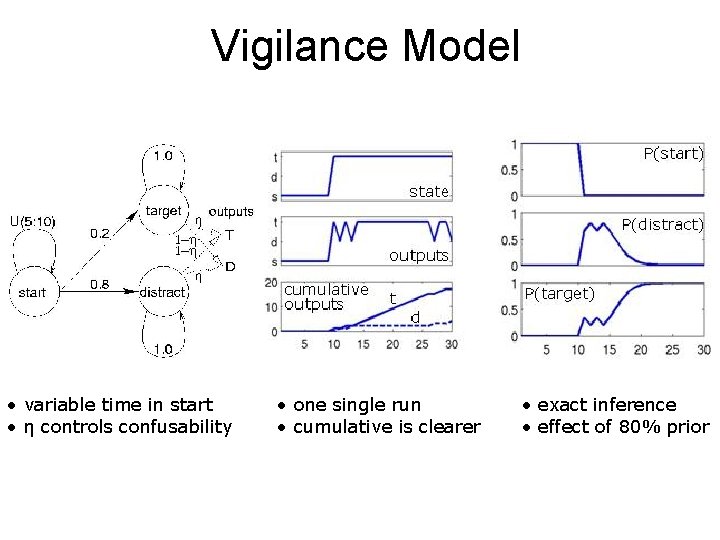

Vigilance Model

- variable time in start
- η controls confusability
- one single run
- cumulative is clearer
- exact inference
- effect of 80% prior

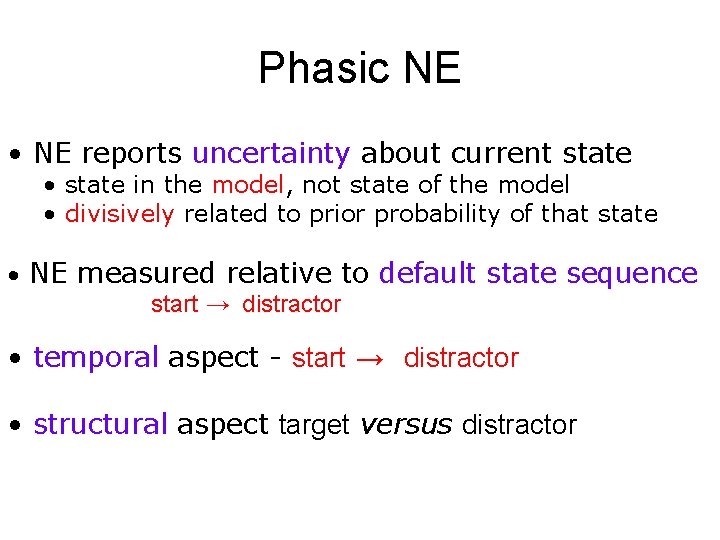

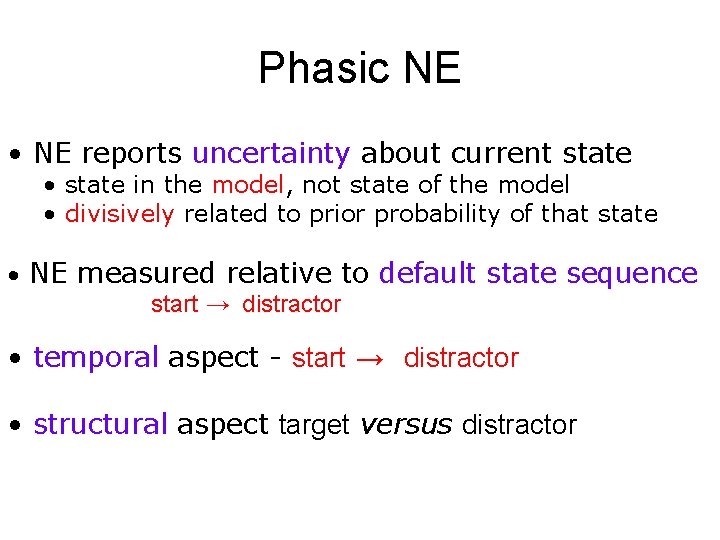

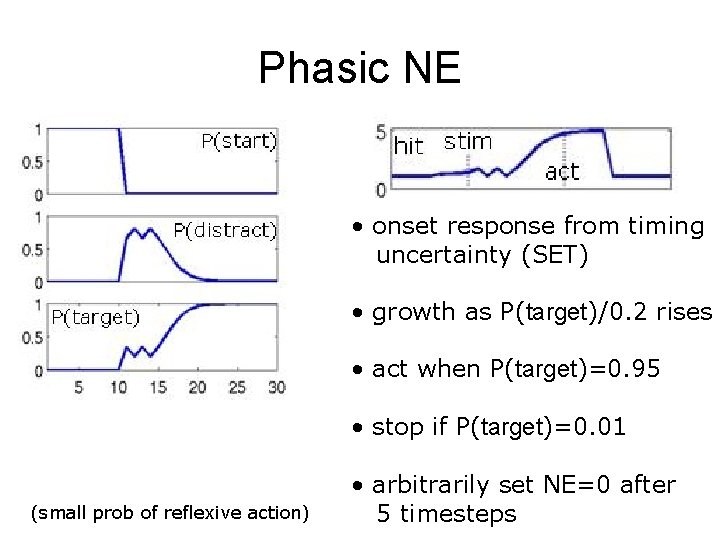

Phasic NE

- NE reports uncertainty about current state
- state in the model, not state of the model
- divisively related to prior probability of that state
- NE measured relative to default state sequence start → distractor
- temporal aspect: start → distractor
- structural aspect: target versus distractor

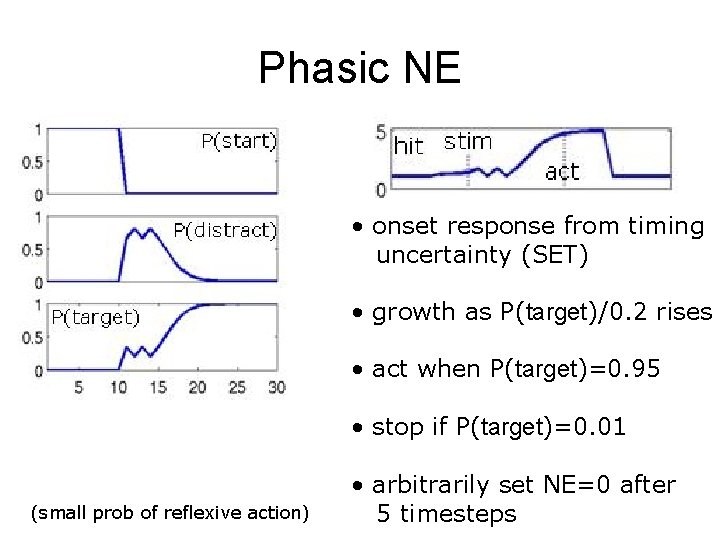

Phasic NE

- onset response from timing uncertainty (SET)
- growth as P(target)/0.2 rises
- act when P(target)=0.95
- stop if P(target)=0.01 (small prob of reflexive action)
- arbitrarily set NE=0 after 5 timesteps

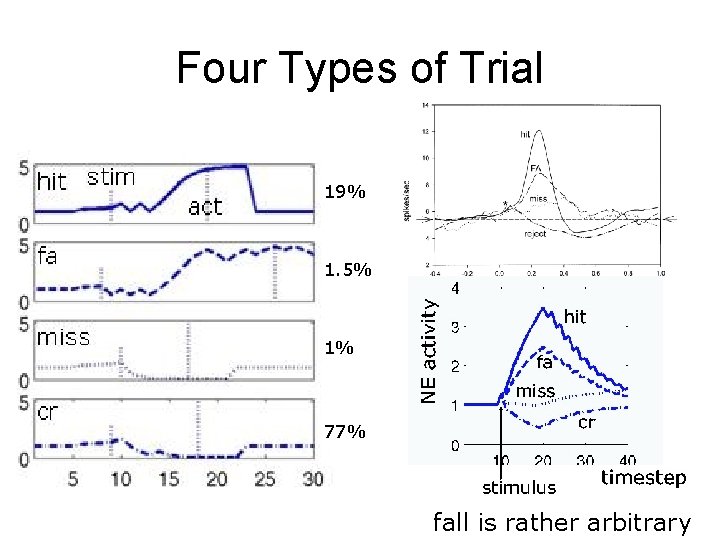

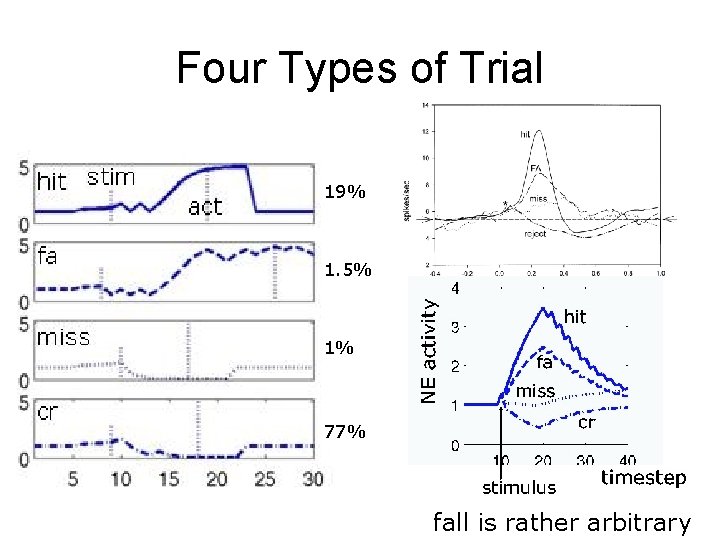

Four Types of Trial

(Figure panel labels: 19%, 1.5%, 1%, 77%)

fall is rather arbitrary

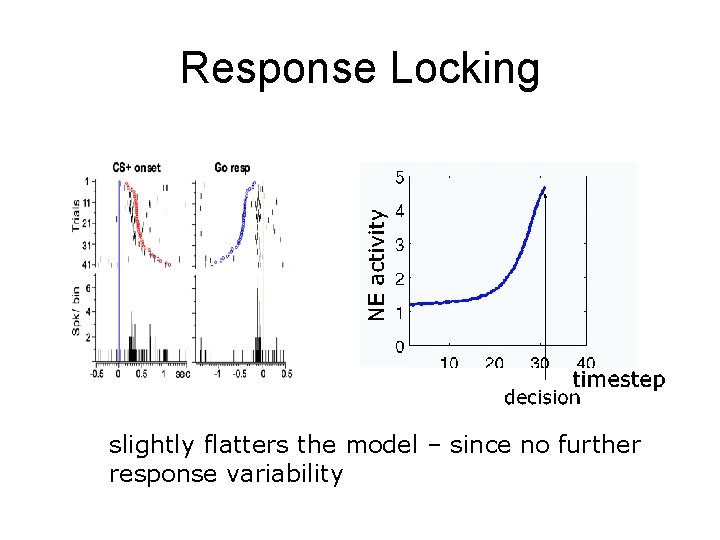

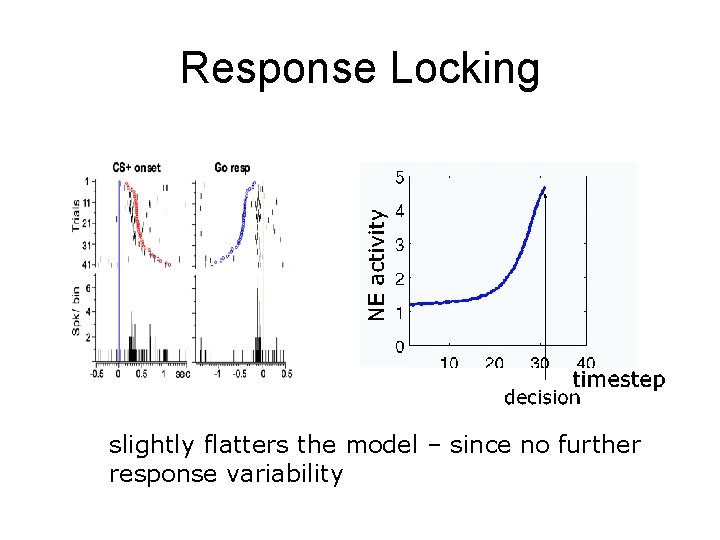

Response Locking slightly flatters the model – since no further response variability

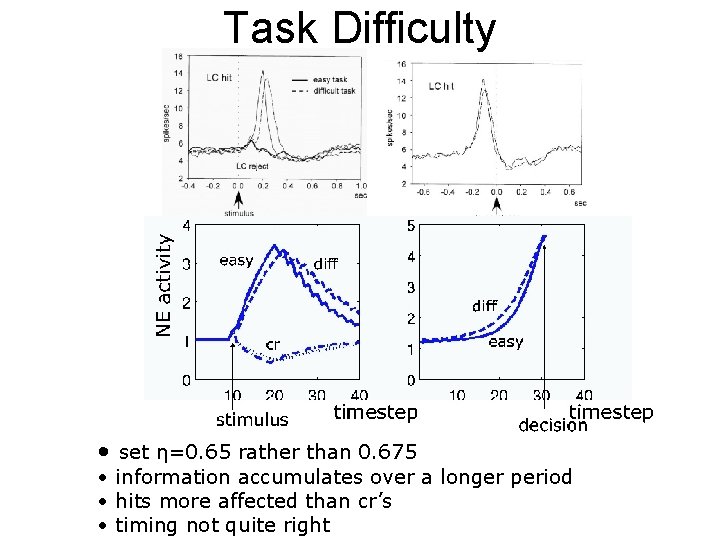

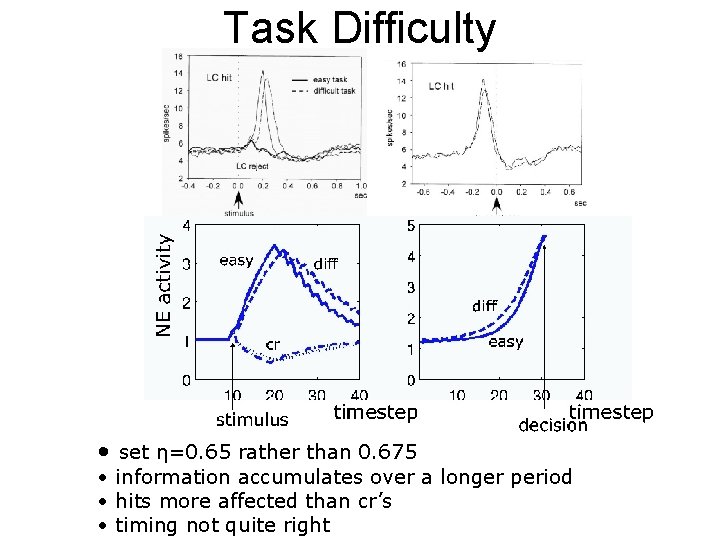

Task Difficulty

- set η=0.65 rather than 0.675
- information accumulates over a longer period
- hits more affected than cr's
- timing not quite right

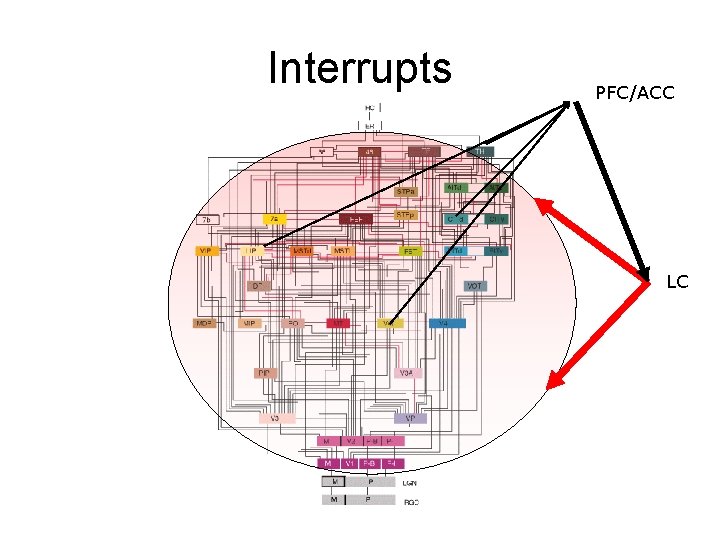

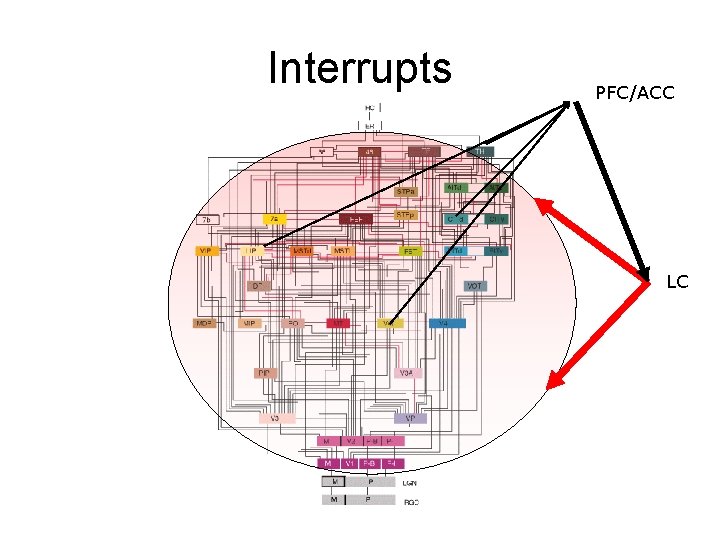

Interrupts

(Figure labels: PFC/ACC, LC)

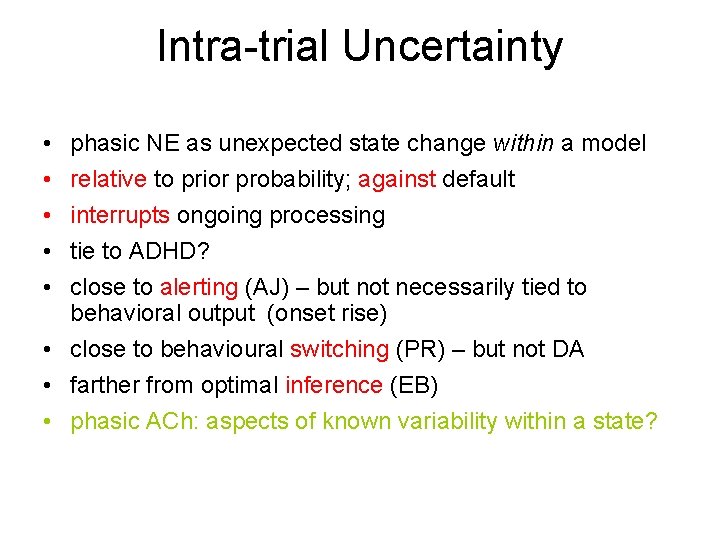

Intra-trial Uncertainty

- phasic NE as unexpected state change within a model
- relative to prior probability; against default
- interrupts ongoing processing
- tie to ADHD?
- close to alerting (AJ)
  - but not necessarily tied to behavioral output (onset rise)
- close to behavioural switching (PR)
  - but not DA
- farther from optimal inference (EB)
- phasic ACh: aspects of known variability within a state?

Learning and Inference

- Learning: predict; control
  - Δ weight ∝ (learning rate) × (error) × (stimulus)
  - dopamine: phasic prediction error for future reward
  - serotonin: phasic prediction error for future punishment
  - acetylcholine: expected uncertainty boosts learning
  - norepinephrine: unexpected uncertainty boosts learning

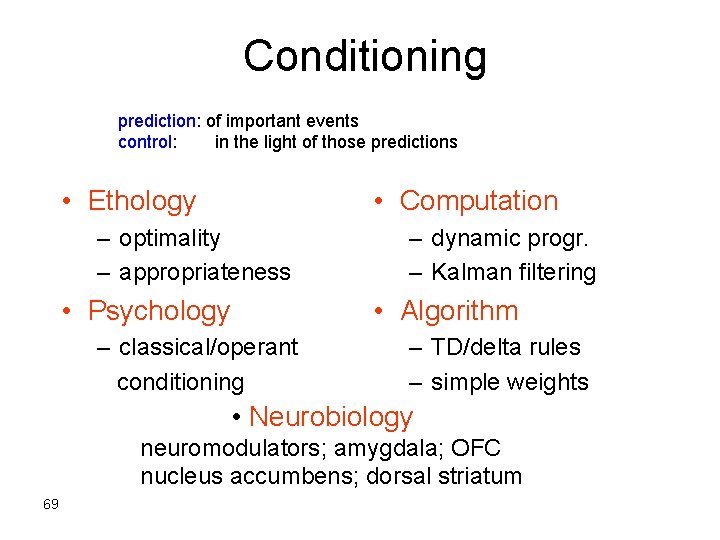

Conditioning

- prediction: of important events
- control: in the light of those predictions

Levels of analysis:

- Ethology
  - optimality
  - appropriateness
- Psychology
  - classical/operant conditioning
- Computation
  - dynamic progr.
  - Kalman filtering
- Algorithm
  - TD/delta rules
  - simple weights
- Neurobiology
  - neuromodulators; amygdala; OFC; nucleus accumbens; dorsal striatum

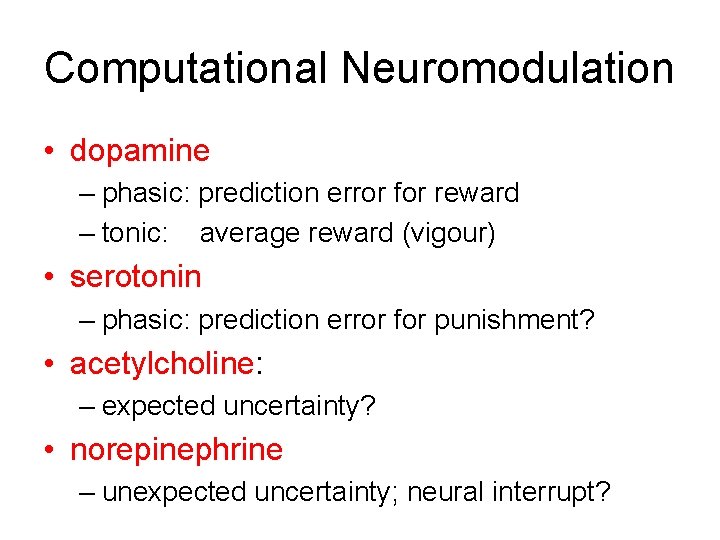

Computational Neuromodulation

- dopamine
  - phasic: prediction error for reward
  - tonic: average reward (vigour)
- serotonin
  - phasic: prediction error for punishment?
- acetylcholine
  - expected uncertainty?
- norepinephrine
  - unexpected uncertainty; neural interrupt?

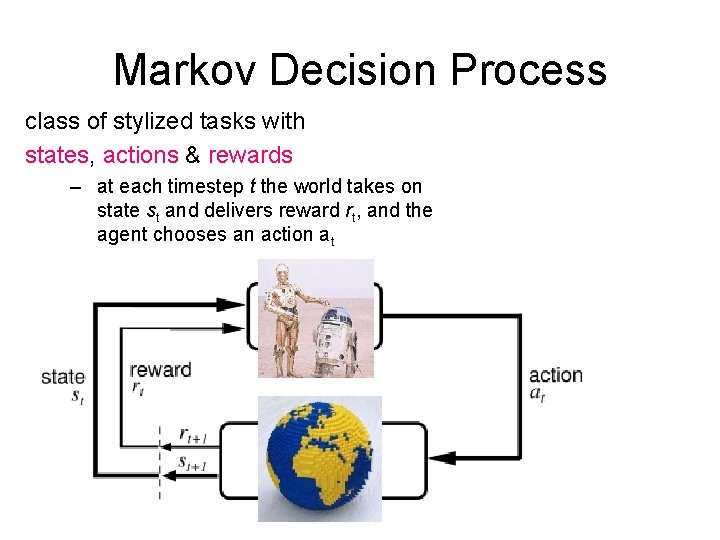

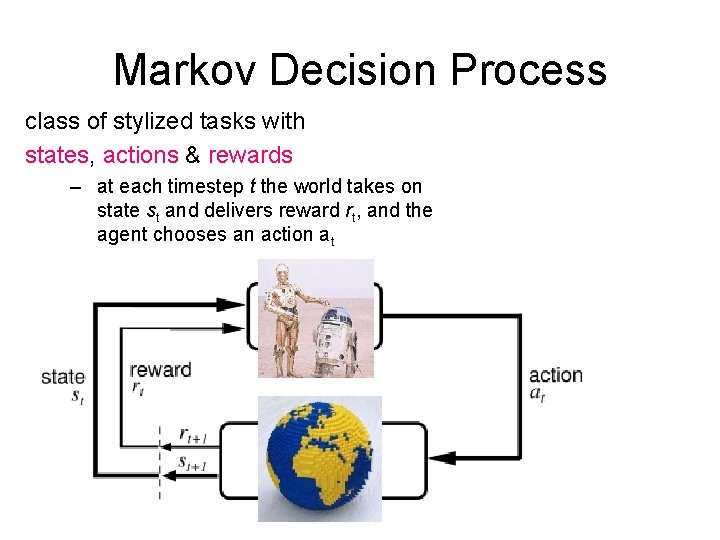

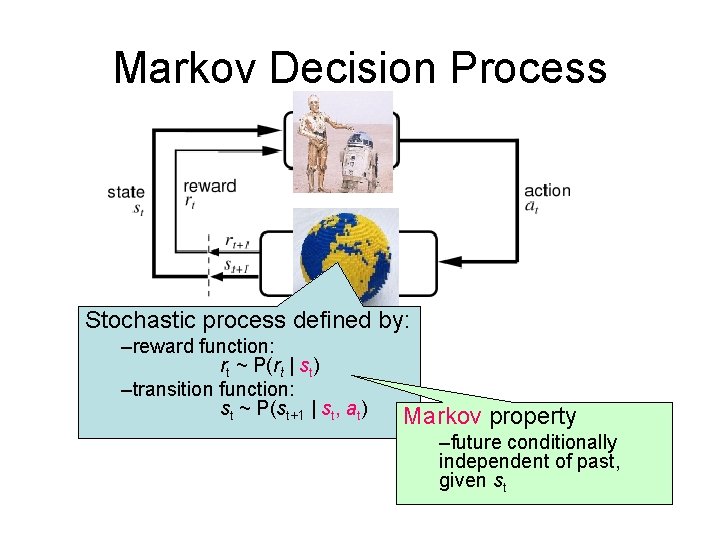

Markov Decision Process

class of stylized tasks with states, actions & rewards

- at each timestep t the world takes on state s_t and delivers reward r_t, and the agent chooses an action a_t

Markov Decision Process

World: You are in state 34. Your immediate reward is 3. You have 3 actions.

Robot: I'll take action 2.

World: You are in state 77. Your immediate reward is -7. You have 2 actions.

Robot: I'll take action 1.

World: You're in state 34 (again). Your immediate reward is 3. You have 3 actions.

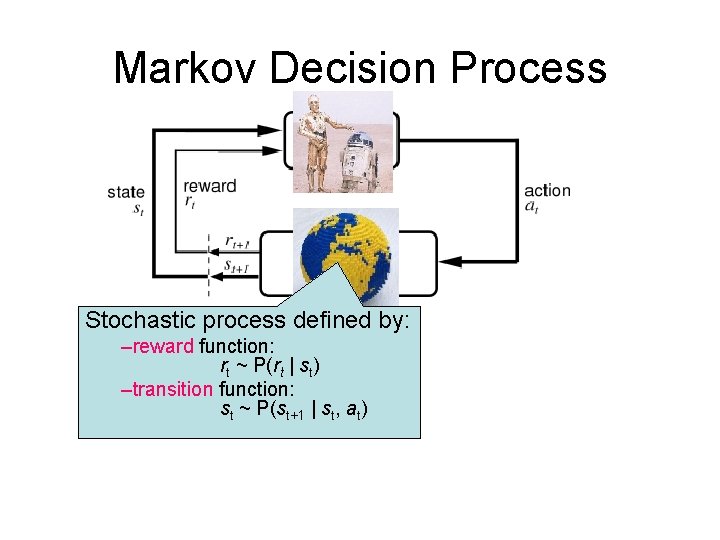

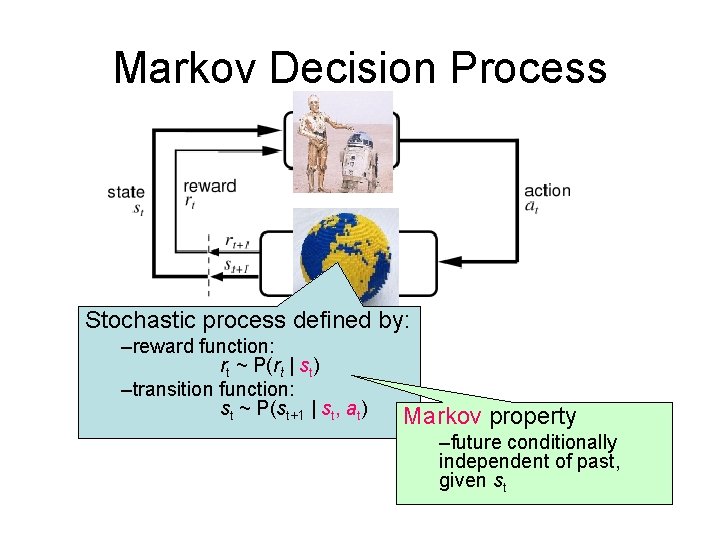

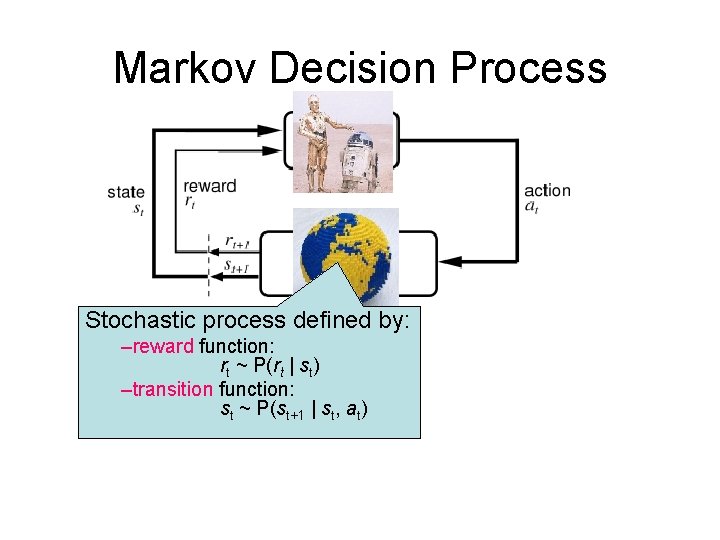

Markov Decision Process

Stochastic process defined by:

- reward function: r_t ~ P(r_t | s_t)
- transition function: s_{t+1} ~ P(s_{t+1} | s_t, a_t)

Markov property

- future conditionally independent of past, given s_t

The optimal policy

Definition: a policy such that at every state, its expected value is better than (or equal to) that of all other policies

Theorem: For every MDP there exists (at least) one deterministic optimal policy.

By the way, why is the optimal policy just a mapping from states to actions? Couldn't you earn more reward by choosing a different action depending on the last 2 states?
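A deterministic optimal policy can be computed by value iteration, sketched below on a toy problem. The two-state MDP, the discount factor `gamma` and the number of sweeps are assumptions for illustration. Because of the Markov property, the future depends on the past only through the current state, so the greedy action computed here needs no memory of earlier states.

```python
def value_iteration(states, actions, transition, reward, gamma=0.9, sweeps=200):
    """Return optimal values and a greedy deterministic policy.

    transition[s][a] maps next states to probabilities; reward[s] is the
    (expected) immediate reward in state s.
    """
    def expected_next(v, s, a):
        return sum(p * v[s2] for s2, p in transition[s][a].items())

    v = {s: 0.0 for s in states}
    for _ in range(sweeps):
        v = {s: reward[s] + gamma * max(expected_next(v, s, a) for a in actions[s])
             for s in states}
    policy = {s: max(actions[s], key=lambda a: expected_next(v, s, a))
              for s in states}
    return v, policy

# Hypothetical two-state world: only state B is rewarded.
states = ["A", "B"]
actions = {"A": ["stay", "go"], "B": ["stay", "go"]}
transition = {
    "A": {"stay": {"A": 1.0}, "go": {"B": 1.0}},
    "B": {"stay": {"B": 1.0}, "go": {"A": 1.0}},
}
reward = {"A": 0.0, "B": 1.0}
v, policy = value_iteration(states, actions, transition, reward)
print(v, policy)
```

In this toy world the policy is to go from A to B and then stay, and the same choice is optimal in a state however the agent arrived there.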