Learning to Trade via Direct Reinforcement John Moody


- Slides: 37

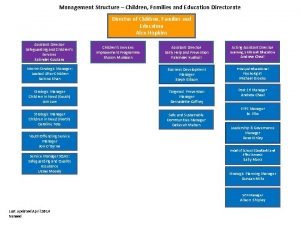

Learning to Trade via Direct Reinforcement

John Moody
International Computer Science Institute, Berkeley & J E Moody & Company LLC, Portland
Moody@ICSI.Berkeley.Edu, John@JEMoody.Com

Global Derivatives Trading & Risk Management, Paris, May 2008

What is Reinforcement Learning?

RL Considers:
- A Goal-Directed “Learning” Agent
- interacting with an Uncertain Environment
- that attempts to maximize Reward / Utility

RL is an Active Paradigm:
- Agent “Learns” by “Trial & Error” Discovery
- Actions result in Reinforcement

RL Paradigms:
- Value Function Learning (Dynamic Programming)
- Direct Reinforcement (Adaptive Control)

I. Why Direct Reinforcement?

Direct Reinforcement Learning:
- Finds predictive structure in financial data
- Integrates Forecasting w/ Decision Making
- Balances Risk vs. Reward
- Incorporates Transaction Costs

Discover Trading Strategies!

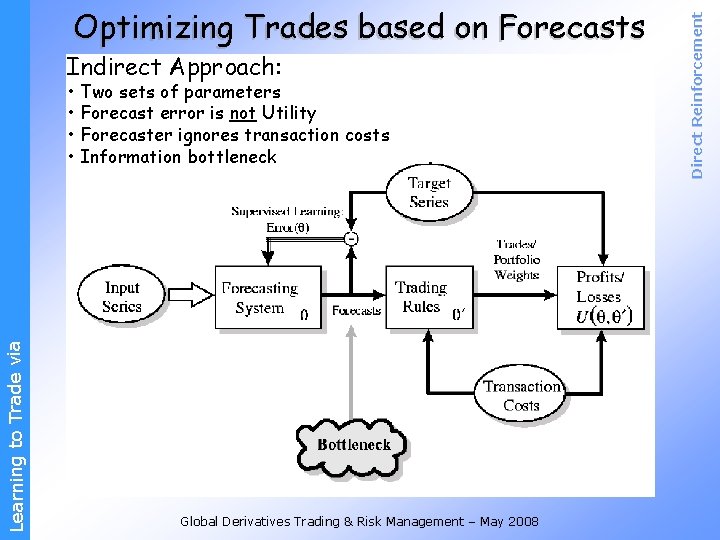

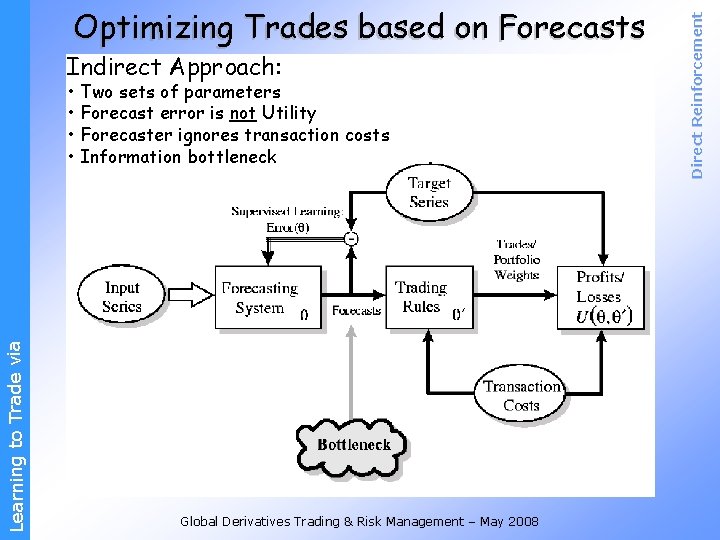

Optimizing Trades based on Forecasts

Indirect Approach:
- Two sets of parameters
- Forecast error is not Utility
- Forecaster ignores transaction costs
- Information bottleneck

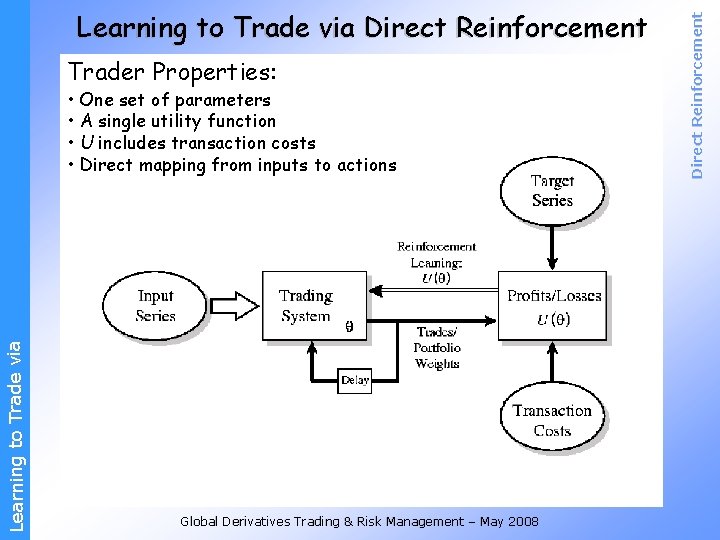

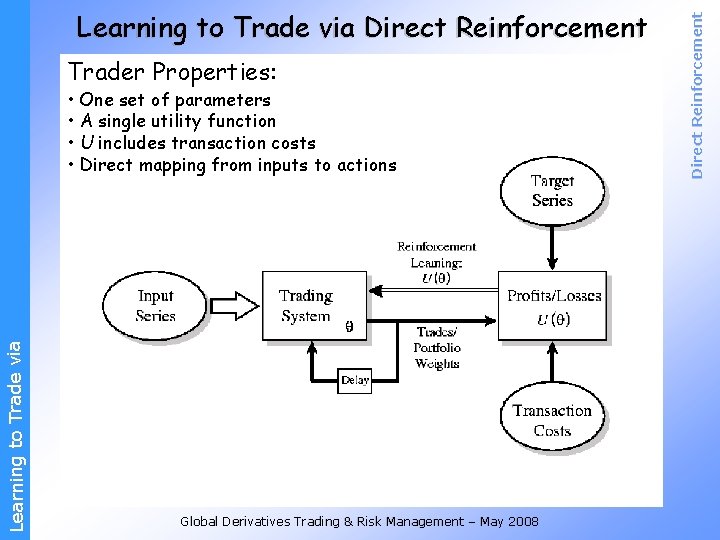

Learning to Trade via Direct Reinforcement

Trader Properties:
- One set of parameters
- A single utility function
- U includes transaction costs
- Direct mapping from inputs to actions

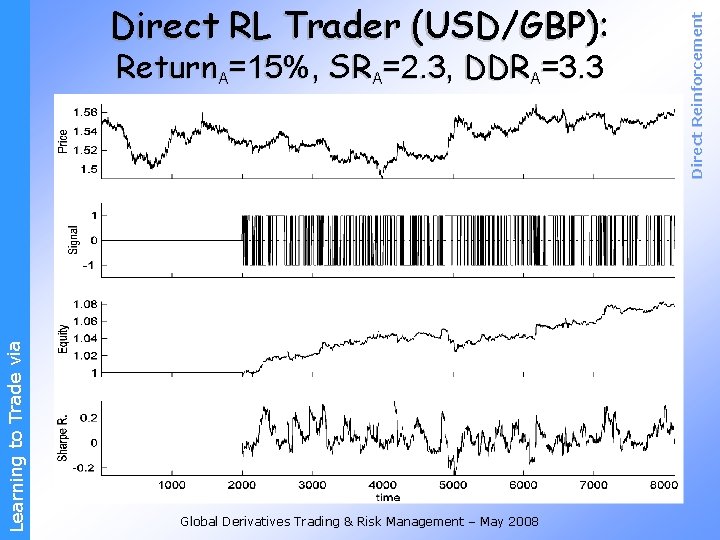

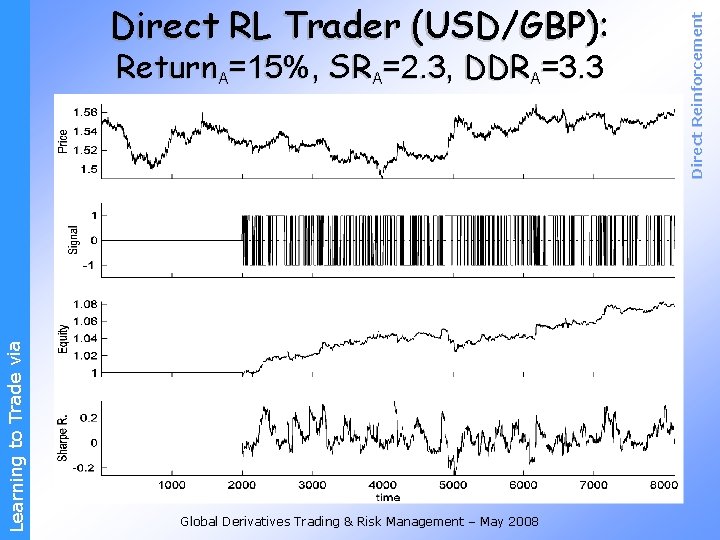

Direct RL Trader (USD/GBP): Return A = 15%, SR A = 2.3, DDR A = 3.3

II. Direct Reinforcement: Algorithms & Illustrations

Algorithms:
- Recurrent Reinforcement Learning (RRL)
- Stochastic Direct Reinforcement (SDR)

Illustrations:
- Sensitivity to Transaction Costs
- Risk-Averse Reinforcement

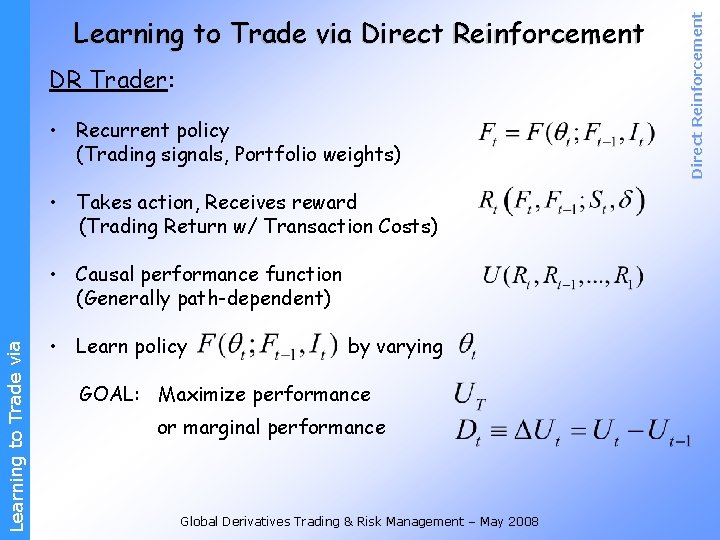

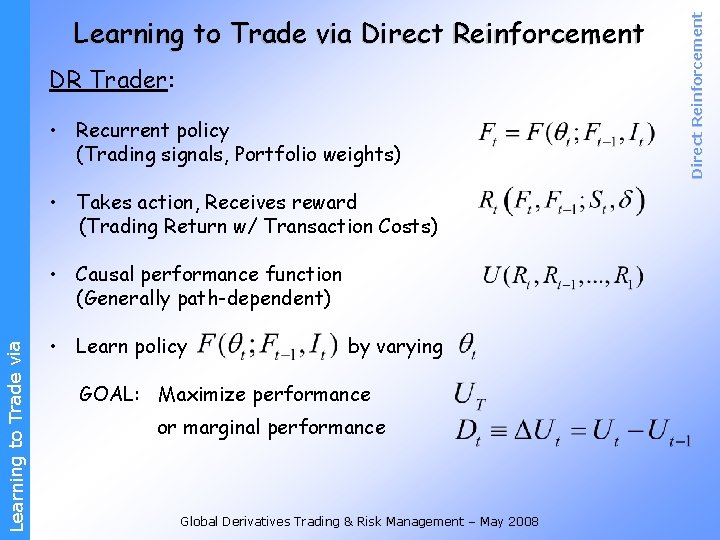

Learning to Trade via Direct Reinforcement

DR Trader:
- Recurrent policy (Trading signals, Portfolio weights)
- Takes action, Receives reward (Trading Return w/ Transaction Costs)
- Causal performance function (Generally path-dependent)
- Learn policy by varying its parameters

GOAL: Maximize performance or marginal performance

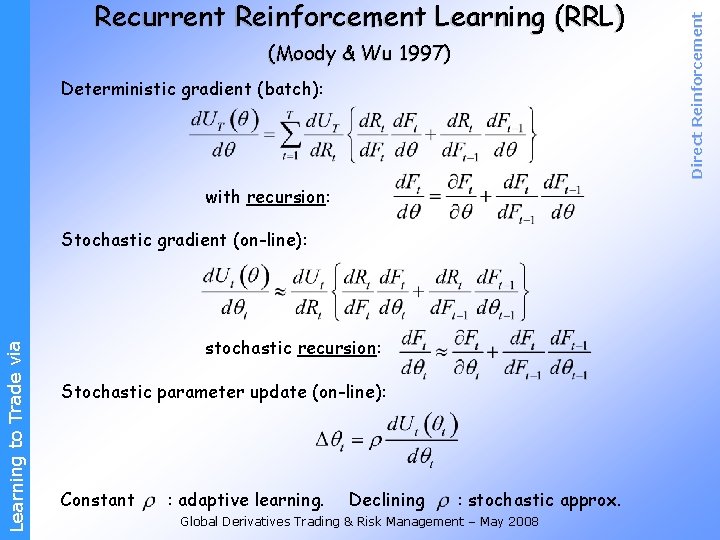

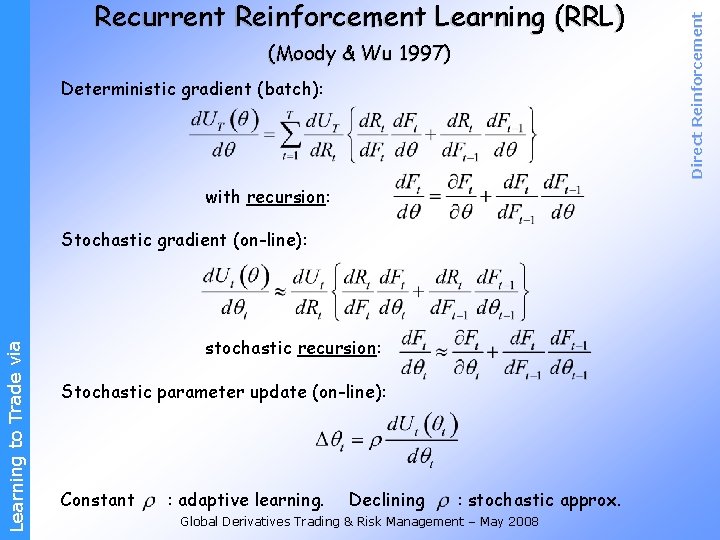

Recurrent Reinforcement Learning (RRL) (Moody & Wu 1997)

- Deterministic gradient (batch), with recursion
- Stochastic gradient (on-line), with stochastic recursion
- Stochastic parameter update (on-line)

Constant learning rate: adaptive learning. Declining learning rate: stochastic approximation.
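The batch gradient with recursion can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and not taken from the slides: the single-input tanh policy, the parameter names `u`, `v`, `w`, the additive-profit performance function, and the function name `rrl_profit_and_gradient`. The point is only that the total derivative of the current position carries a term from the previous position, which is what makes the gradient recurrent.

```python
import numpy as np

def rrl_profit_and_gradient(theta, returns, cost_rate):
    """Additive profit of a tanh trader and its gradient w.r.t. theta.

    Hypothetical policy: F_t = tanh(u * F_{t-1} + v * r_t + w), theta = (u, v, w).
    Trading return:      R_t = F_{t-1} * r_t - cost_rate * |F_t - F_{t-1}|.
    """
    u, v, w = theta
    F_prev = 0.0              # flat before trading starts
    dF_prev = np.zeros(3)     # total derivative dF_{t-1}/dtheta
    profit = 0.0
    grad = np.zeros(3)
    for r_t in returns:
        F = np.tanh(u * F_prev + v * r_t + w)
        # Recursion: dF_t/dtheta = dF_t/dtheta (explicit) + dF_t/dF_{t-1} * dF_{t-1}/dtheta
        dF = (1.0 - F ** 2) * (np.array([F_prev, r_t, 1.0]) + u * dF_prev)
        turn = np.sign(F - F_prev)
        profit += F_prev * r_t - cost_rate * abs(F - F_prev)
        # dR_t/dF_t * dF_t/dtheta + dR_t/dF_{t-1} * dF_{t-1}/dtheta
        grad += (-cost_rate * turn) * dF + (r_t + cost_rate * turn) * dF_prev
        F_prev, dF_prev = F, dF
    return profit, grad


# Batch gradient ascent with a constant learning rate on synthetic returns.
rng = np.random.default_rng(0)
returns = 0.01 * rng.standard_normal(200)
theta = np.array([0.5, 10.0, 0.0])
for _ in range(100):
    profit, grad = rrl_profit_and_gradient(theta, returns, cost_rate=0.002)
    theta = theta + 0.1 * grad
```

An on-line variant would apply the same per-step term as a parameter update at every time step instead of summing over the whole series first.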

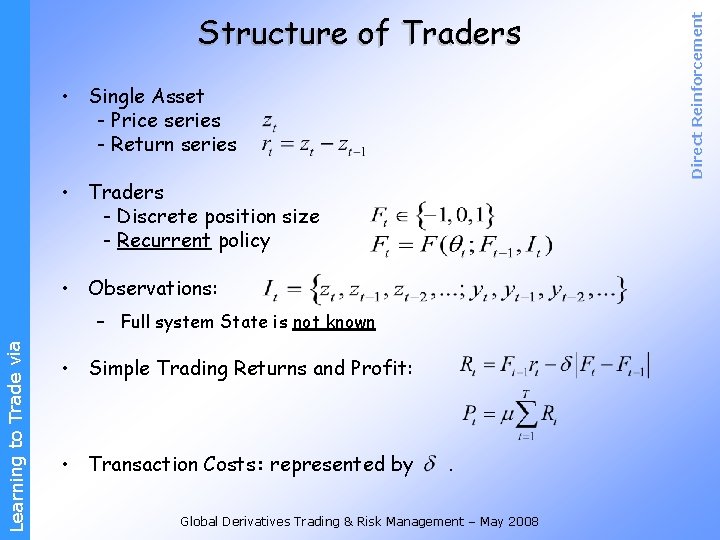

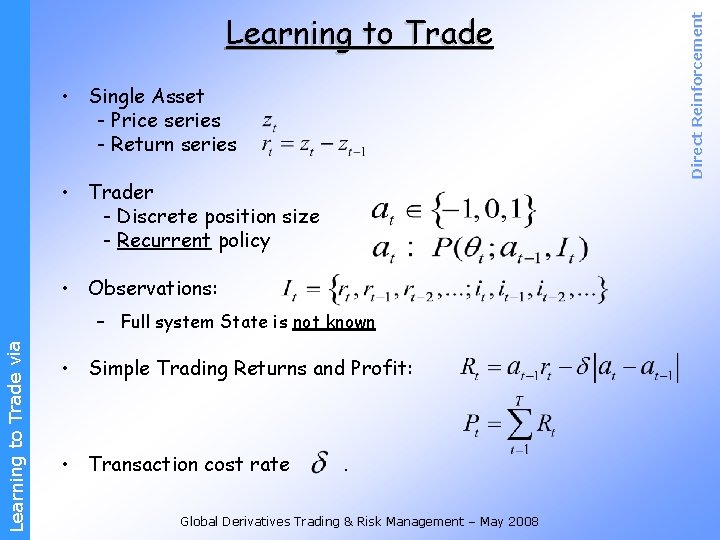

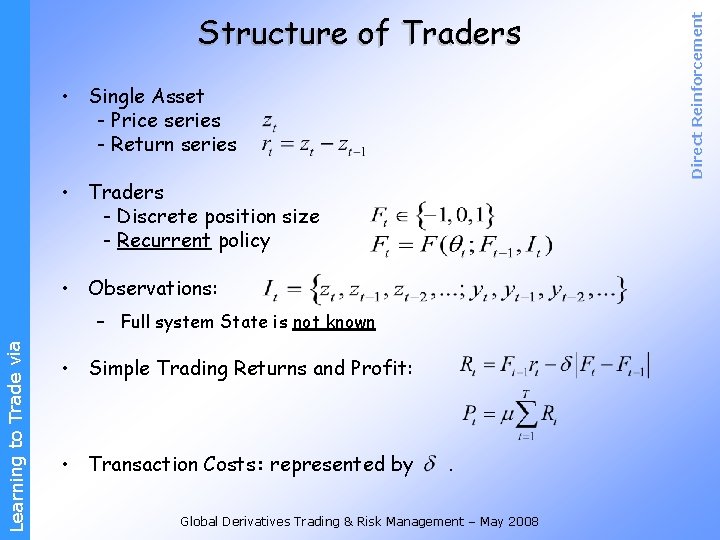

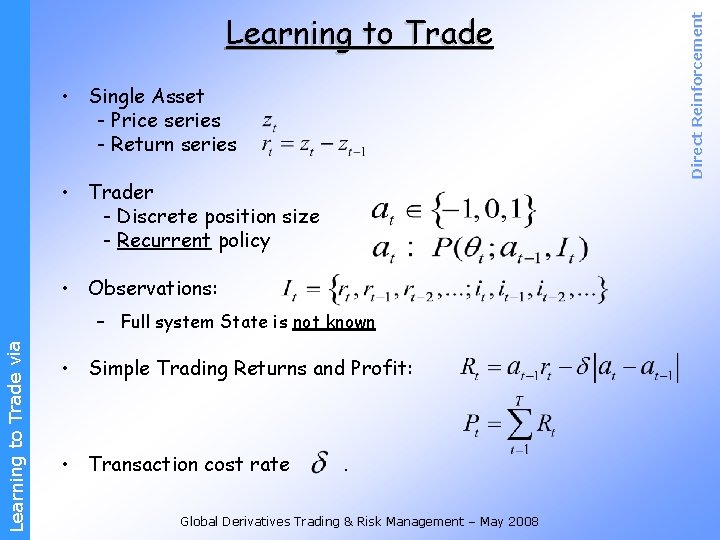

Structure of Traders

- Single Asset: price series, return series
- Traders: discrete position size, recurrent policy
- Observations: full system State is not known
- Simple Trading Returns and Profit
- Transaction Costs: represented by a cost rate
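A short sketch of simple trading returns with transaction costs, under the convention used in the direct reinforcement literature: the position held over a period earns that period's return, and each change of position is charged at the cost rate. The function name, argument names, and the assumption that the trader starts flat are illustrative choices, not taken from the slide.

```python
import numpy as np

def trading_returns(positions, returns, cost_rate, size=1.0):
    """Per-period trading returns R_t = size * (F_{t-1} * r_t - cost_rate * |F_t - F_{t-1}|).

    positions: F_1..F_T, e.g. values in {-1, 0, 1} for a long/neutral/short trader
    returns:   r_1..r_T, the asset's per-period returns
    The trader is assumed to hold no position before the first period.
    """
    positions = np.asarray(positions, dtype=float)
    returns = np.asarray(returns, dtype=float)
    previous = np.concatenate(([0.0], positions[:-1]))
    return size * (previous * returns - cost_rate * np.abs(positions - previous))


R = trading_returns([1, 1, -1], [0.01, 0.02, -0.01], cost_rate=0.002)
cumulative_profit = R.sum()
```

Switching from long to short changes the position by two units, so that step pays twice the cost rate; this is why higher costs push a learned policy toward trading less often.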

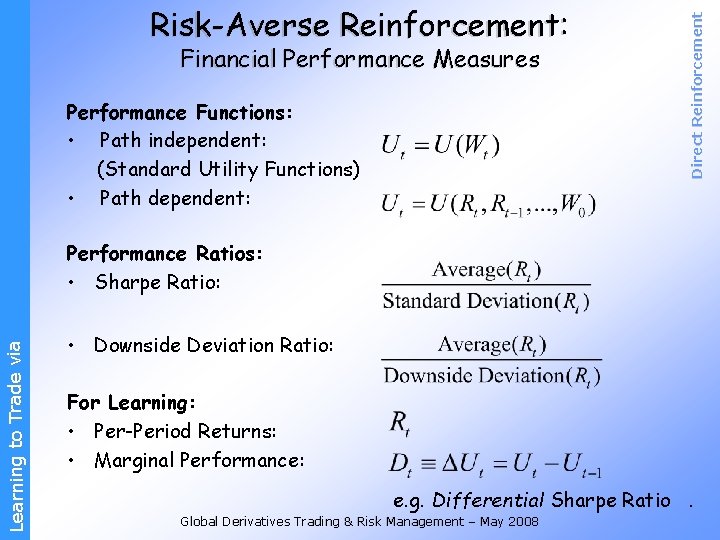

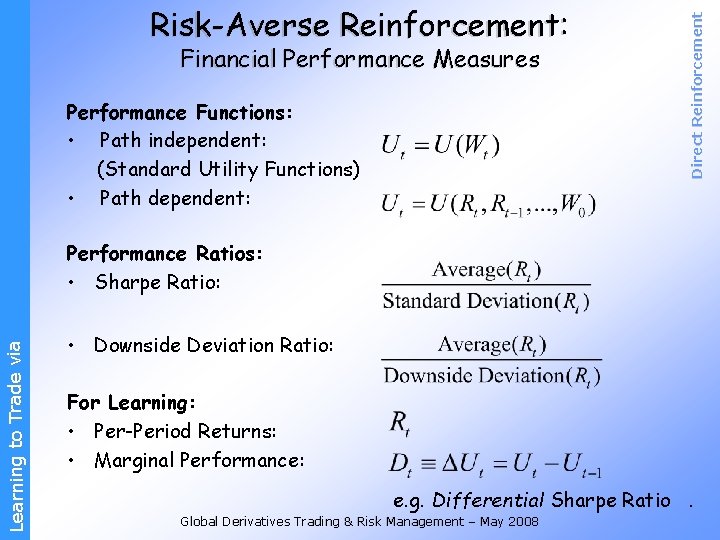

Risk-Averse Reinforcement: Financial Performance Measures

Performance Functions:
- Path independent (Standard Utility Functions)
- Path dependent

Performance Ratios:
- Sharpe Ratio
- Downside Deviation Ratio

For Learning:
- Per-Period Returns
- Marginal Performance, e.g. Differential Sharpe Ratio
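The two performance ratios can be sketched as follows. The definitions assumed here are the common ones: mean return over the standard deviation of returns for the Sharpe ratio, and mean return over the root mean square of the negative returns for the downside deviation ratio. Function names are illustrative, and no annualization or risk-free rate is applied.

```python
import numpy as np

def sharpe_ratio(trading_returns):
    """Mean per-period return divided by the (population) standard deviation."""
    R = np.asarray(trading_returns, dtype=float)
    return R.mean() / R.std()

def downside_deviation_ratio(trading_returns):
    """Mean per-period return divided by the downside deviation.

    The downside deviation is the root mean square of min(R_t, 0), so only
    losses are penalized. It is zero if there are no losses at all.
    """
    R = np.asarray(trading_returns, dtype=float)
    downside = np.sqrt(np.mean(np.minimum(R, 0.0) ** 2))
    return R.mean() / downside


R = [0.02, -0.01, 0.03, -0.02]
sr = sharpe_ratio(R)
ddr = downside_deviation_ratio(R)
```

Because the downside deviation ignores upside variability, the two ratios rank return series differently whenever gains and losses are asymmetric.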

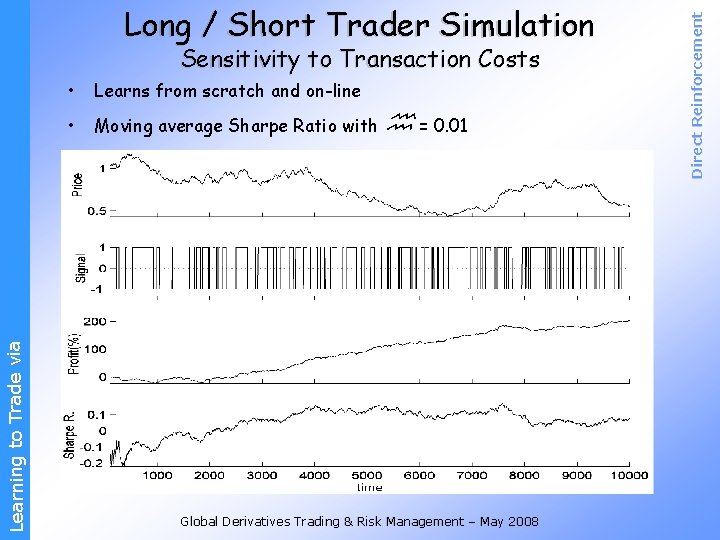

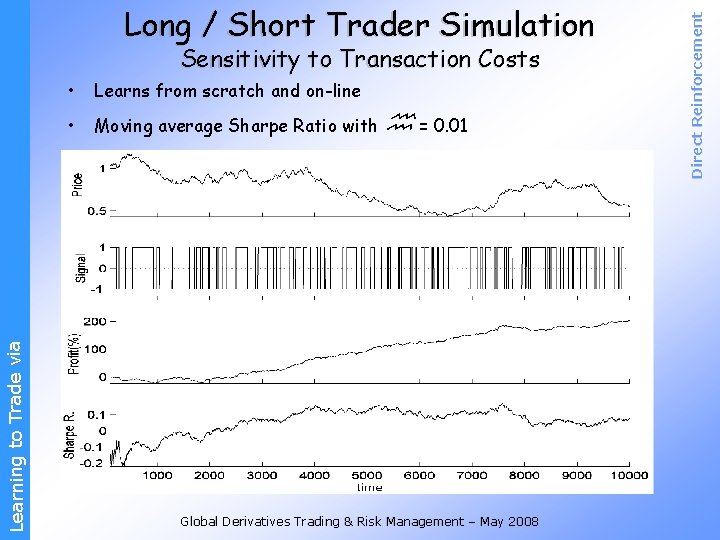

Long / Short Trader Simulation: Sensitivity to Transaction Costs

- Learns from scratch and on-line
- Moving average Sharpe Ratio with adaptation rate η = 0.01

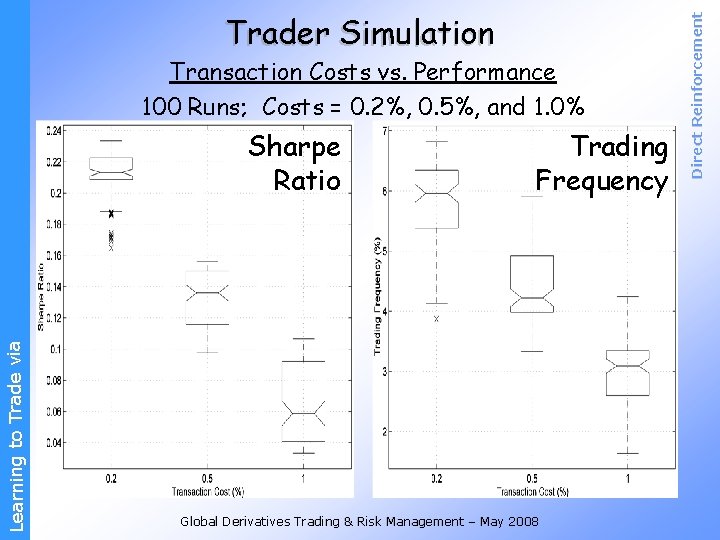

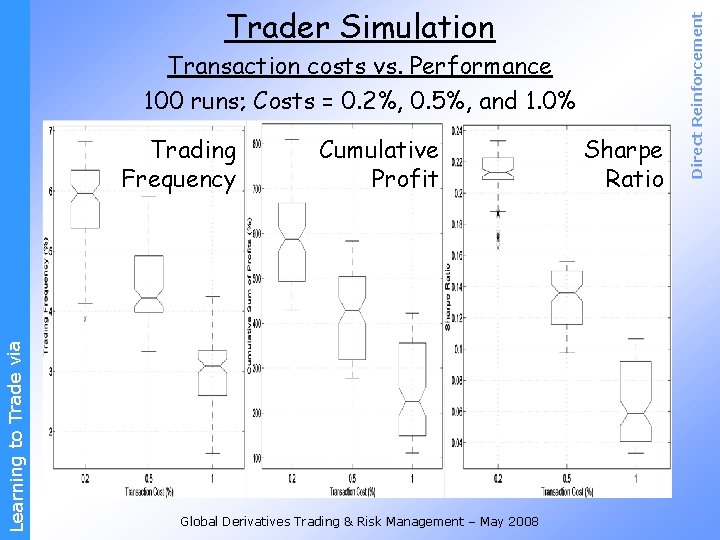

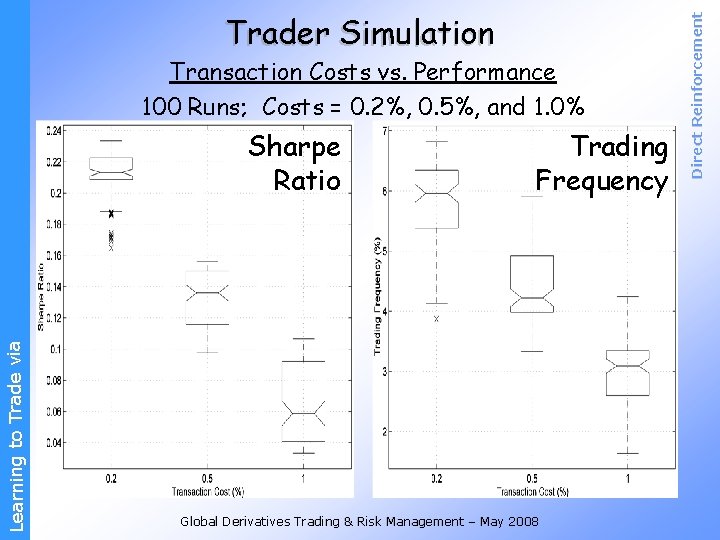

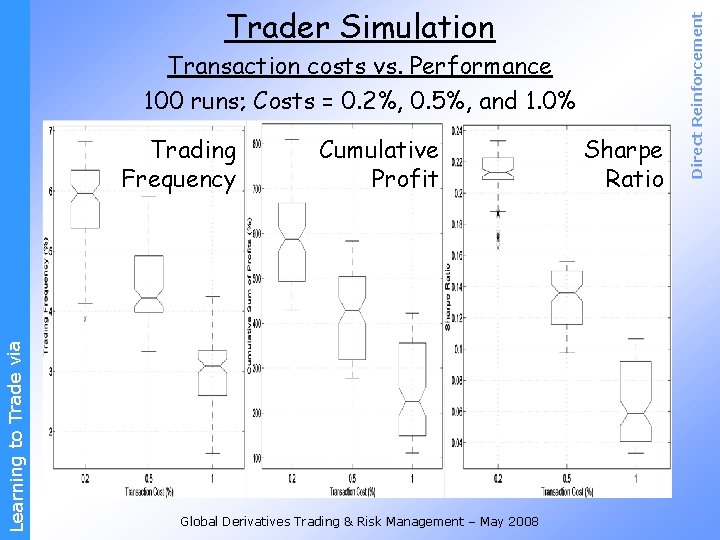

Trader Simulation: Transaction Costs vs. Performance

100 Runs; Costs = 0.2%, 0.5%, and 1.0%

Panels: Sharpe Ratio, Trading Frequency

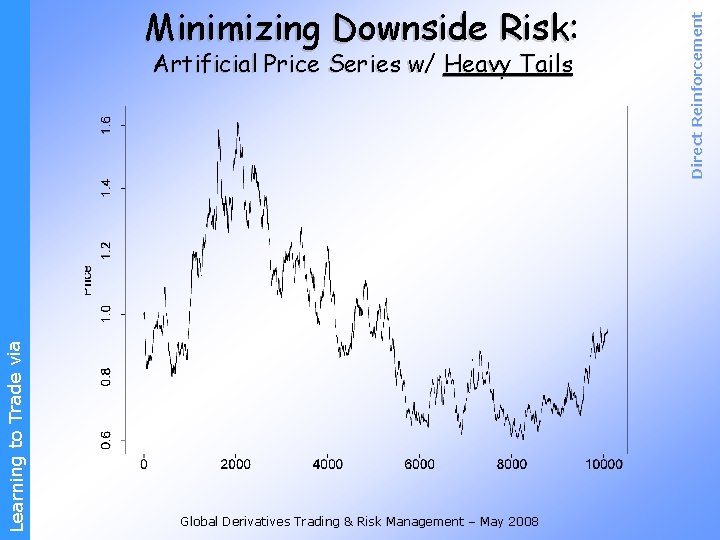

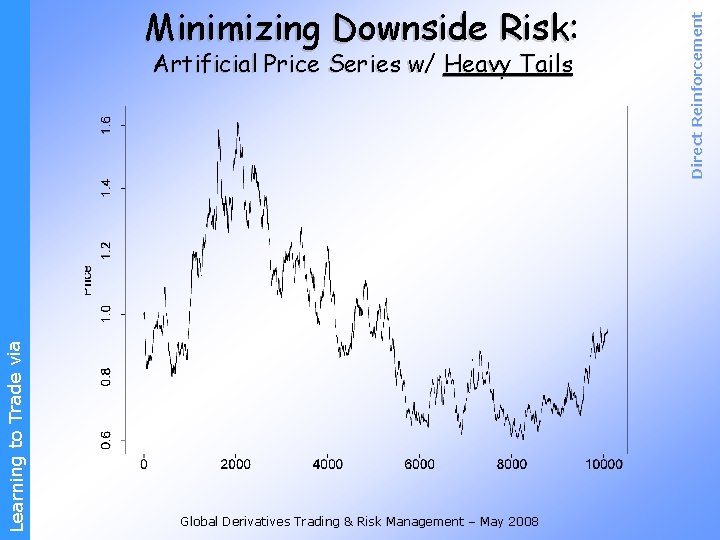

Minimizing Downside Risk: Artificial Price Series w/ Heavy Tails

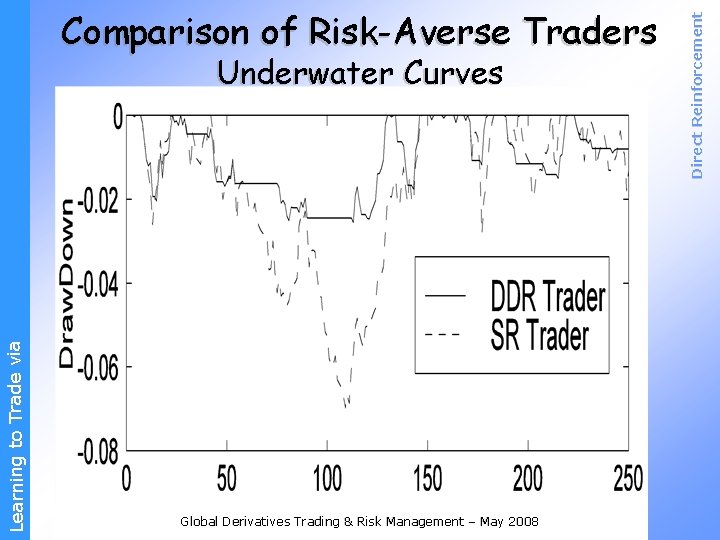

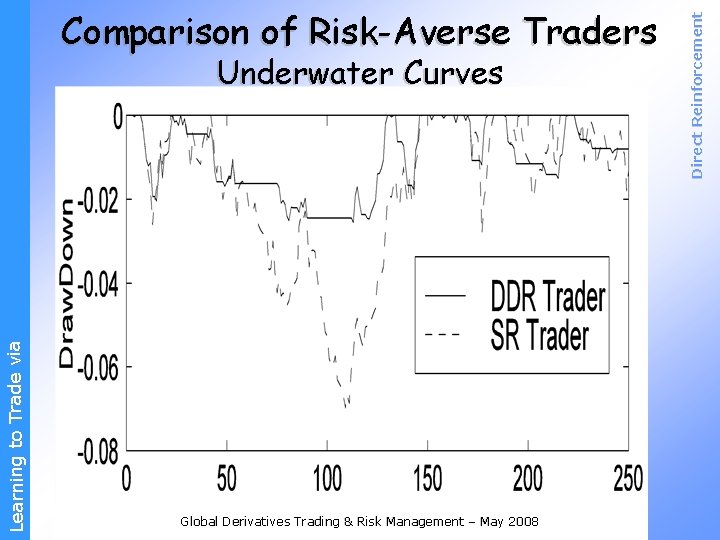

Comparison of Risk-Averse Traders: Underwater Curves

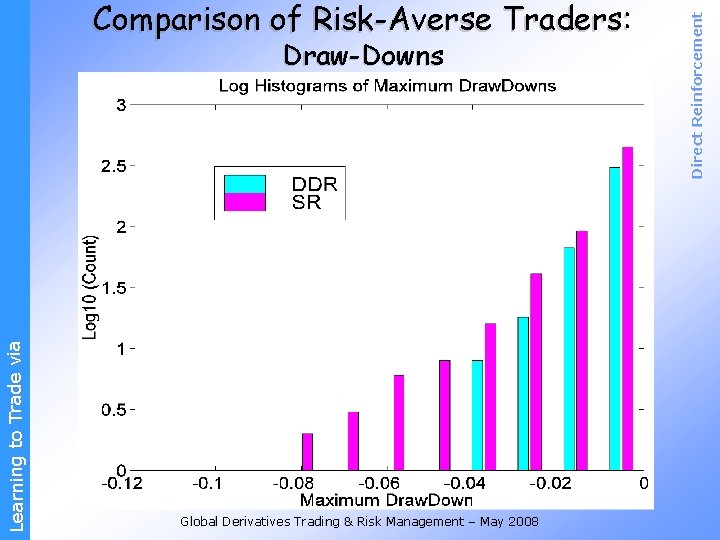

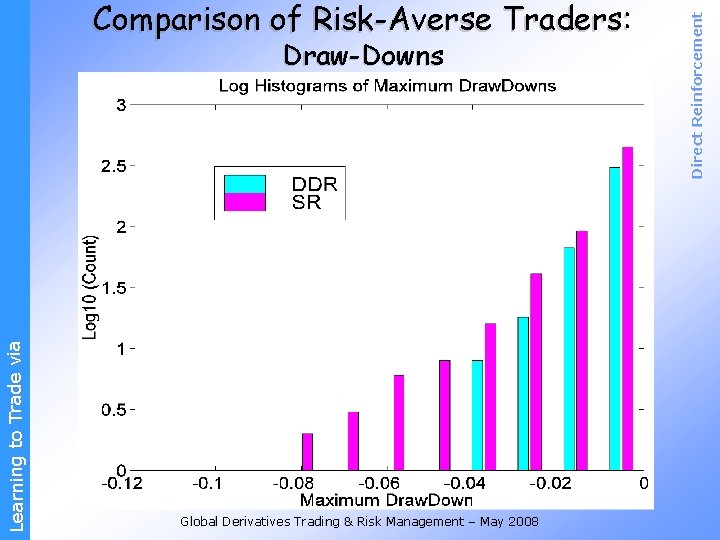

Comparison of Risk-Averse Traders: Draw-Downs
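For readers unfamiliar with the terms, a minimal sketch of an underwater curve and maximum draw-down computed from per-period trading returns. It assumes additive cumulative profit starting from zero; the function names are illustrative and the slides do not specify how their curves were computed.

```python
import numpy as np

def underwater_curve(trading_returns):
    """Cumulative profit minus its running peak (always <= 0).

    The peak starts at zero, i.e. the level before any trading.
    """
    profit = np.cumsum(np.asarray(trading_returns, dtype=float))
    peak = np.maximum.accumulate(np.maximum(profit, 0.0))
    return profit - peak

def max_drawdown(trading_returns):
    """Largest peak-to-trough loss in cumulative profit, as a positive number."""
    return float(-underwater_curve(trading_returns).min())


curve = underwater_curve([0.02, -0.01, -0.03, 0.05])
worst = max_drawdown([0.02, -0.01, -0.03, 0.05])
```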

III. Direct Reinforcement vs. Dynamic Programming

Algorithms:
- Value Function Method (Q-Learning)
- Direct Reinforcement Learning (RRL)

Illustration:
- Asset Allocation: S&P 500 & T-Bills
- RRL vs. Q-Learning

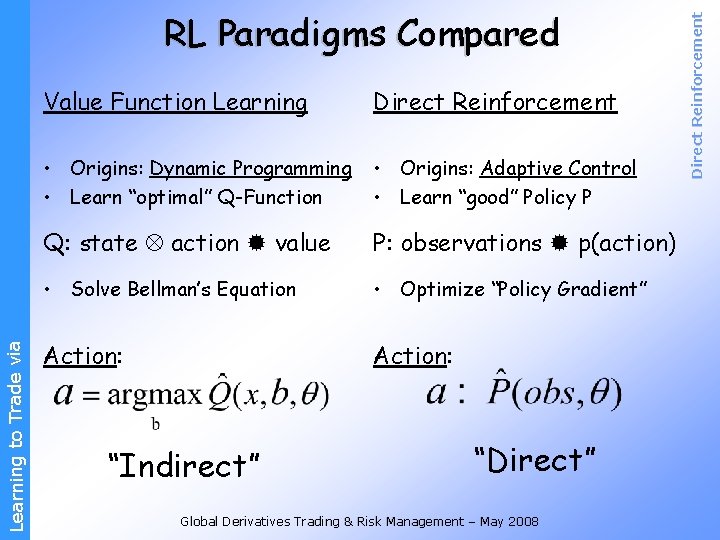

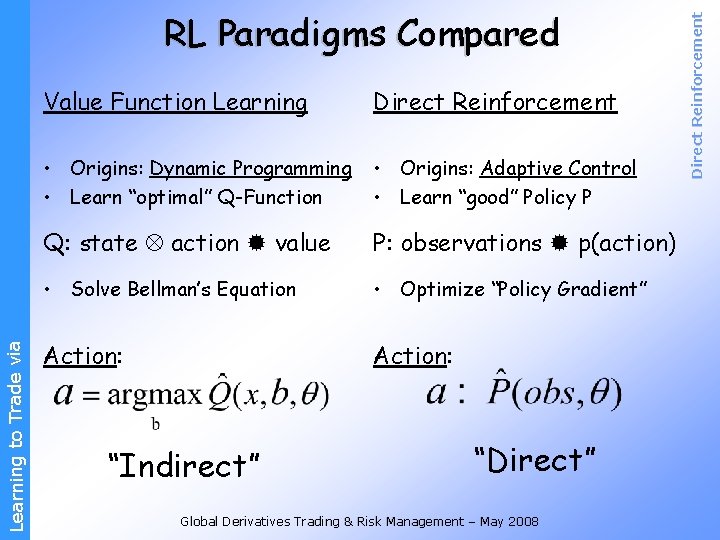

RL Paradigms Compared

| | Value Function Learning | Direct Reinforcement |
|---|---|---|
| Origins | Dynamic Programming | Adaptive Control |
| Learns | “optimal” Q-Function | “good” Policy P |
| Mapping | Q: state × action → value | P: observations → p(action) |
| Method | Solve Bellman’s Equation | Optimize “Policy Gradient” |
| Action | “Indirect” | “Direct” |

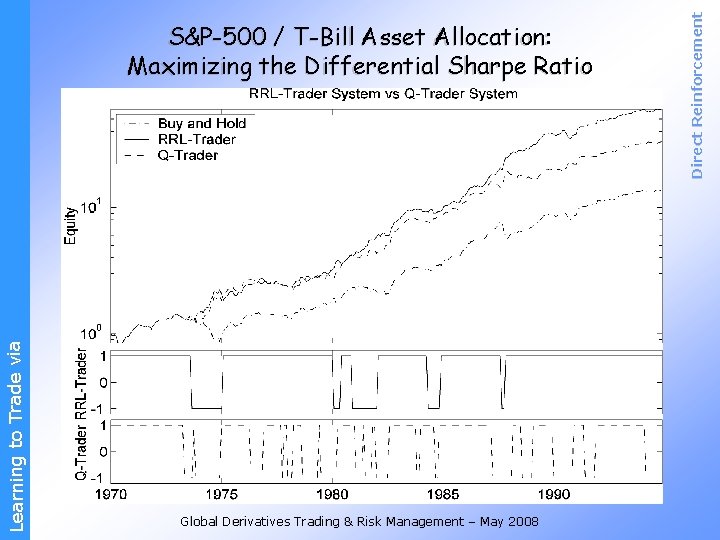

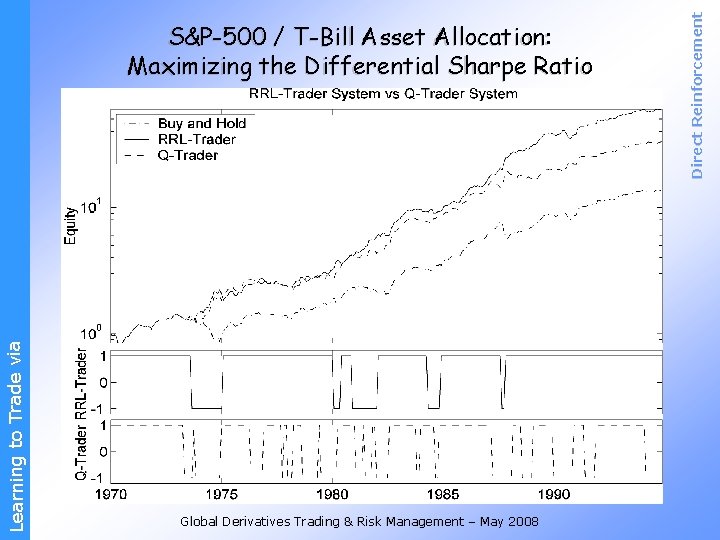

S&P-500 / T-Bill Asset Allocation: Maximizing the Differential Sharpe Ratio

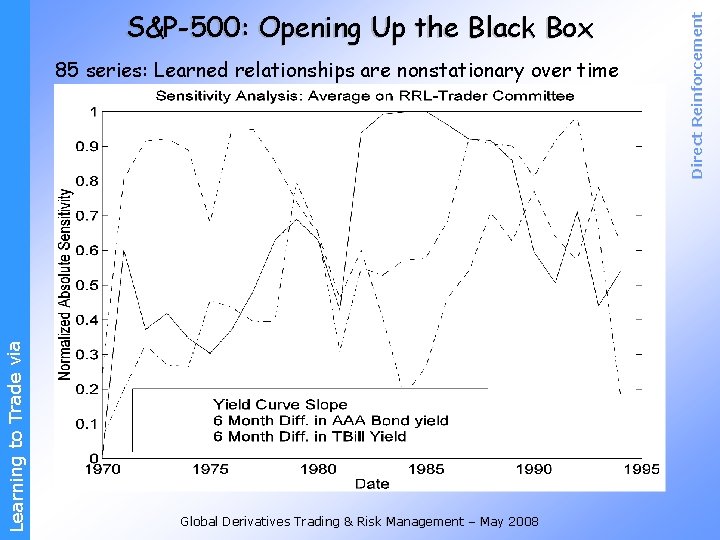

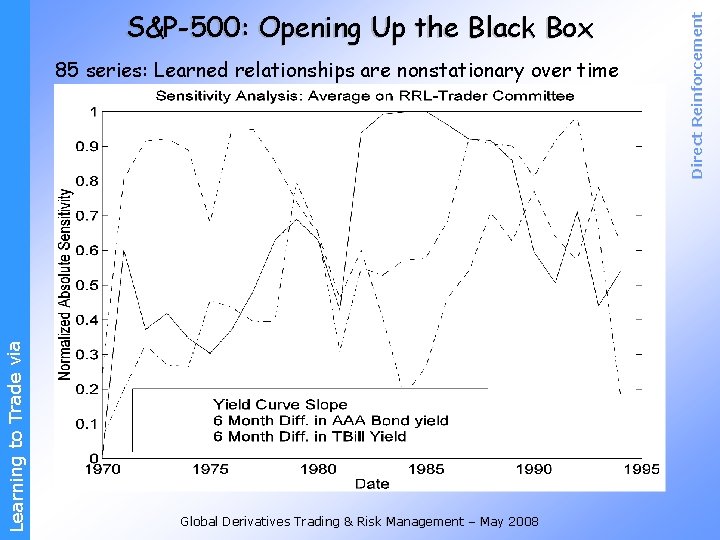

S&P-500: Opening Up the Black Box

85 series: Learned relationships are nonstationary over time

Closing Remarks

- Direct Reinforcement Learning:
  - Discovers Trading Opportunities in Markets
  - Integrates Forecasting w/ Trading
  - Maximizes Risk-Adjusted Returns
  - Optimizes Trading w/ Transaction Costs
- Direct Reinforcement Offers Advantages Over:
  - Trading based on Forecasts (Supervised Learning)
  - Dynamic Programming RL (Value Function Methods)
- Illustrations:
  - Controlled Simulations
  - FX Currency Trader
  - Asset Allocation: S&P 500 vs. Cash

Moody@ICSI.Berkeley.Edu & John@JEMoody.Com

![1 John Moody and Lizhong Wu Optimization of trading systems and portfolios Decision Technologies [1] John Moody and Lizhong Wu. Optimization of trading systems and portfolios. Decision Technologies](https://slidetodoc.com/presentation_image_h/ce218b9467476720b4406a7e7708d399/image-22.jpg)

Selected References:

[1] John Moody and Lizhong Wu. Optimization of trading systems and portfolios. Decision Technologies for Financial Engineering, 1997.

[2] John Moody, Lizhong Wu, Yuansong Liao, and Matthew Saffell. Performance functions and reinforcement learning for trading systems and portfolios. Journal of Forecasting, 17: 441-470, 1998.

[3] Jonathan Baxter and Peter L. Bartlett. Direct gradient-based reinforcement learning: Gradient estimation algorithms. 2001.

[4] John Moody and Matthew Saffell. Learning to trade via direct reinforcement. IEEE Transactions on Neural Networks, 12(4): 875-889, July 2001.

[5] Carl Gold. FX Trading via Recurrent Reinforcement Learning. Proceedings of IEEE CIFEr Conference, Hong Kong, 2003.

[6] John Moody, Y. Liu, M. Saffell and K. J. Youn. Stochastic Direct Reinforcement: Application to Simple Games with Recurrence. In Artificial Multiagent Learning, Sean Luke et al. eds, AAAI Press, 2004.

Supplemental Slides

- Differential Sharpe Ratio
- Portfolio Optimization
- Stochastic Direct Reinforcement (SDR)

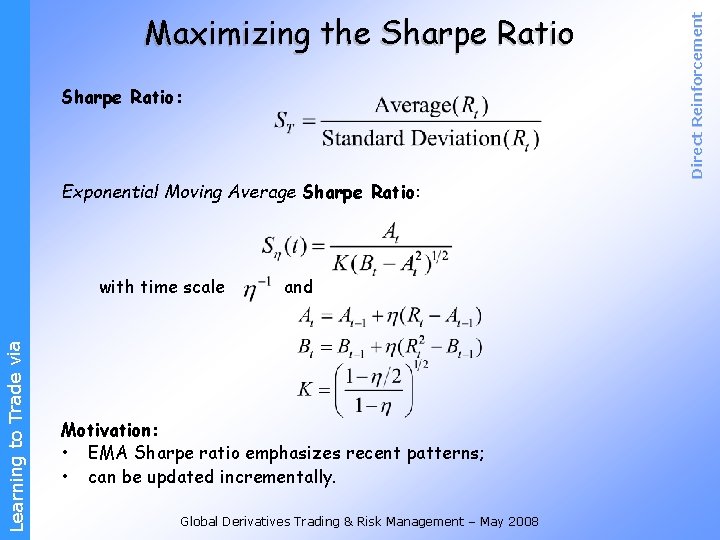

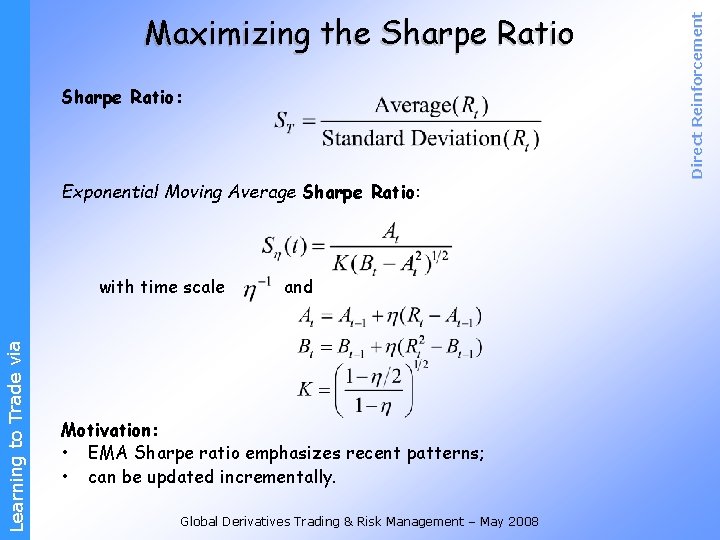

Maximizing the Sharpe Ratio

- Sharpe Ratio
- Exponential Moving Average (EMA) Sharpe Ratio, with an adjustable time scale

Motivation:
- EMA Sharpe ratio emphasizes recent patterns;
- can be updated incrementally.
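The incremental update can be sketched as below. This is a simplified illustration: it tracks exponential moving averages of the returns and squared returns with an adaptation rate `eta` (an assumed name), starts both averages at zero, and omits any normalization constant that a full definition may include.

```python
import math

def ema_sharpe_series(returns, eta):
    """Exponential moving average Sharpe ratio after each new return.

    A tracks the moving average of R_t, B the moving average of R_t**2.
    Both are updated in O(1) per step, so no return history is stored.
    """
    A = 0.0
    B = 0.0
    series = []
    for R in returns:
        A += eta * (R - A)
        B += eta * (R * R - B)
        variance = B - A * A
        series.append(A / math.sqrt(variance) if variance > 0.0 else float("nan"))
    return series


sharpe_path = ema_sharpe_series([0.01, -0.02, 0.015], eta=0.01)
```

A small `eta` corresponds to a long averaging time scale, roughly `1 / eta` periods, which is what lets the measure emphasize recent patterns.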

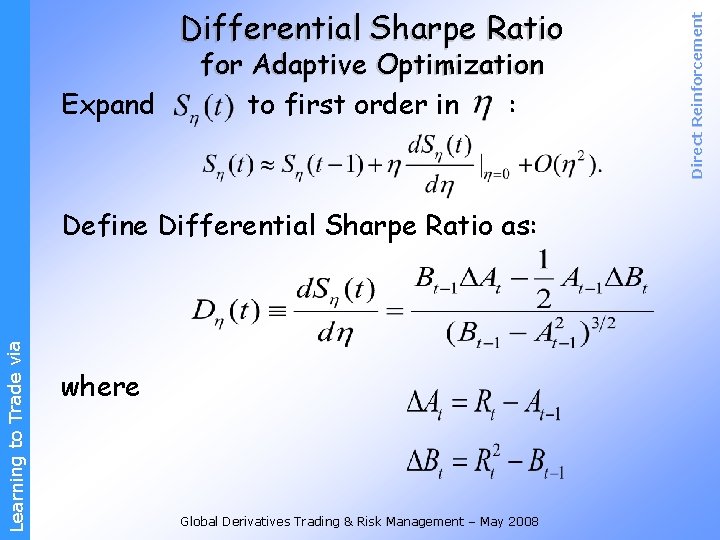

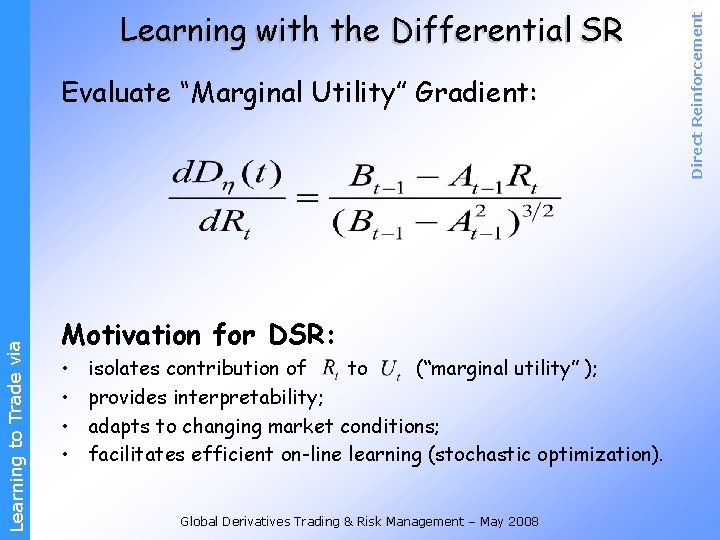

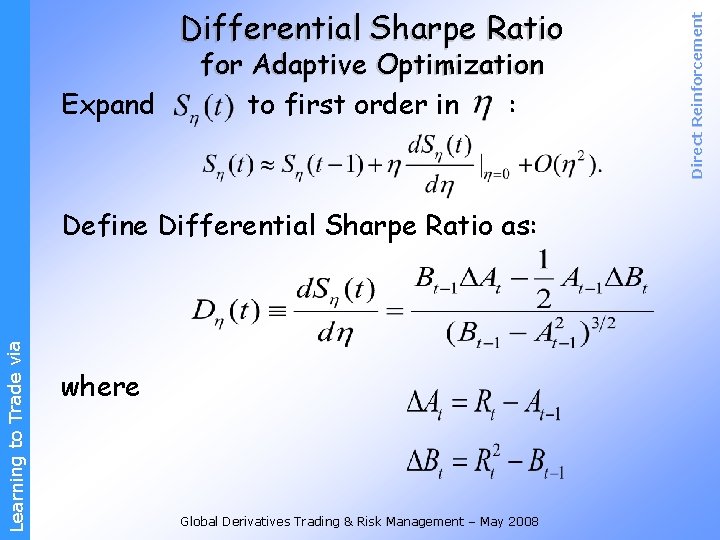

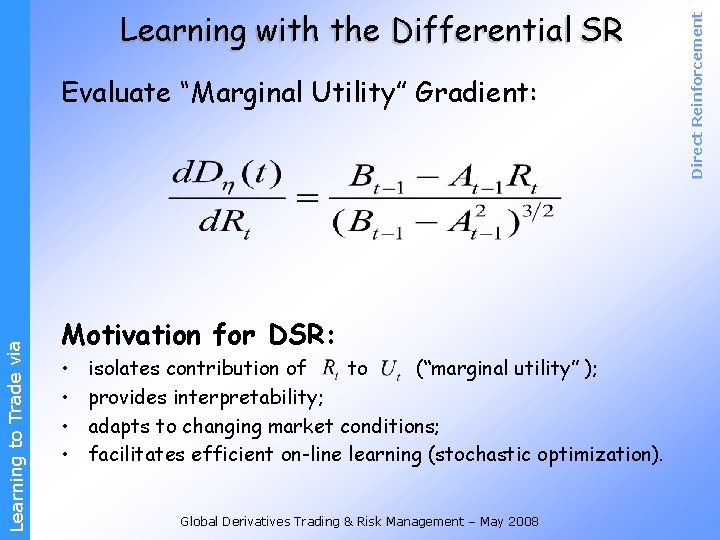

Differential Sharpe Ratio

For adaptive optimization, expand the moving average Sharpe ratio to first order in the adaptation rate, and define the Differential Sharpe Ratio from the first-order term.
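A minimal sketch of the differential Sharpe ratio, following the form given in Moody & Saffell [4] as best it can be restated here: with moving averages `A_prev` and `B_prev` of the returns and squared returns, the quantity is the derivative of the moving average Sharpe ratio with respect to the adaptation rate, evaluated at zero. The function and argument names are assumptions, and any normalization constant is left out.

```python
def differential_sharpe(A_prev, B_prev, R):
    """First-order sensitivity of the moving average Sharpe ratio to a new return R.

    A_prev: moving average of past returns
    B_prev: moving average of past squared returns (requires B_prev > A_prev**2)
    """
    delta_A = R - A_prev
    delta_B = R * R - B_prev
    return (B_prev * delta_A - 0.5 * A_prev * delta_B) / (B_prev - A_prev ** 2) ** 1.5


D = differential_sharpe(A_prev=0.001, B_prev=0.0002, R=0.01)
```

Only the previous moving averages and the newest return are needed, so the value can serve as a per-step reward signal during on-line learning.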

Learning with the Differential SR

Evaluate the “Marginal Utility” gradient.

Motivation for DSR:
- isolates the contribution of the current return to performance (“marginal utility”);
- provides interpretability;
- adapts to changing market conditions;
- facilitates efficient on-line learning (stochastic optimization).
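The marginal utility gradient can be written out as a worked equation. The notation is an assumption based on Moody & Saffell [4], since the slide's formulas did not survive extraction: $A_{t-1}$ and $B_{t-1}$ are moving averages of the returns and squared returns, $R_t$ is the newest trading return, and $D_t$ is the differential Sharpe ratio.

```latex
\Delta A_t = R_t - A_{t-1}, \qquad \Delta B_t = R_t^2 - B_{t-1}

D_t = \frac{B_{t-1}\,\Delta A_t - \tfrac{1}{2} A_{t-1}\,\Delta B_t}{\left(B_{t-1} - A_{t-1}^2\right)^{3/2}}

\frac{dD_t}{dR_t} = \frac{B_{t-1} - A_{t-1} R_t}{\left(B_{t-1} - A_{t-1}^2\right)^{3/2}}
```

The last line follows from $d\,\Delta A_t / dR_t = 1$ and $d\,\Delta B_t / dR_t = 2R_t$. It is the factor that multiplies the derivative of the trading return with respect to the policy parameters in an on-line update.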

Trader Simulation: Transaction Costs vs. Performance

100 runs; Costs = 0.2%, 0.5%, and 1.0%

Panels: Trading Frequency, Cumulative Profit, Sharpe Ratio

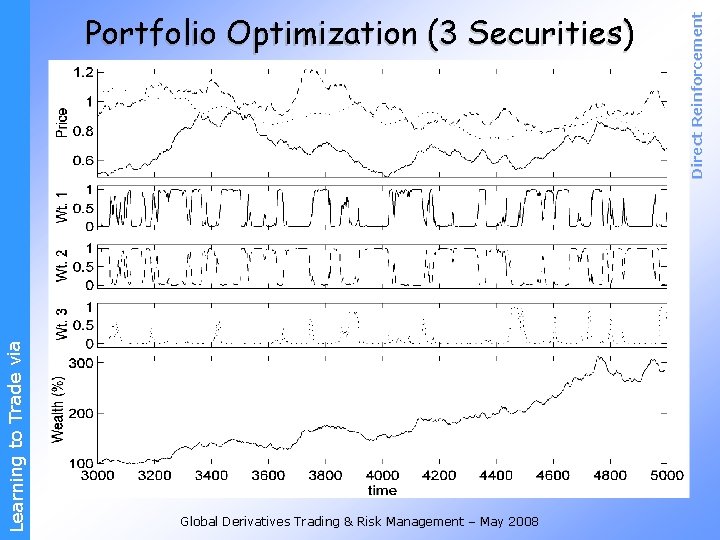

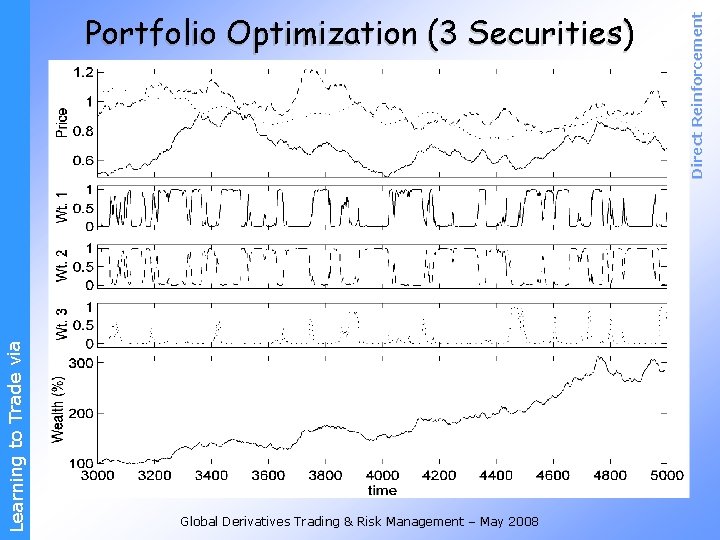

Portfolio Optimization (3 Securities)

Stochastic Direct Reinforcement: Probabilistic Policies

Learning to Trade

- Single Asset: price series, return series
- Trader: discrete position size, recurrent policy
- Observations: full system State is not known
- Simple Trading Returns and Profit
- Transaction cost rate

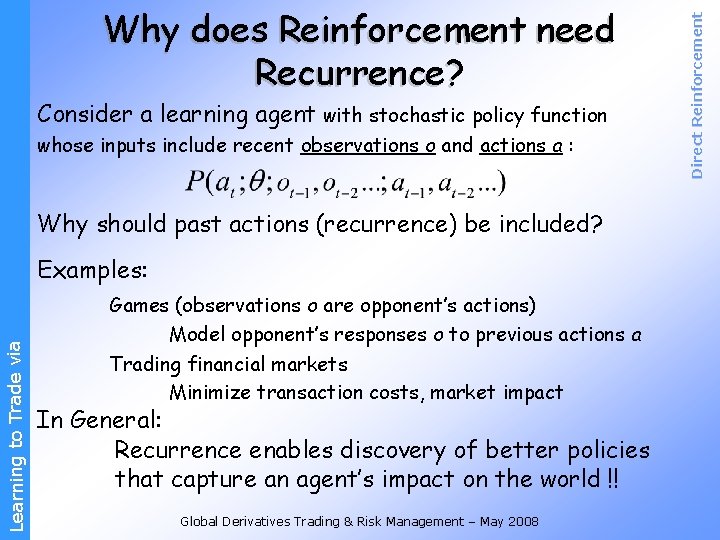

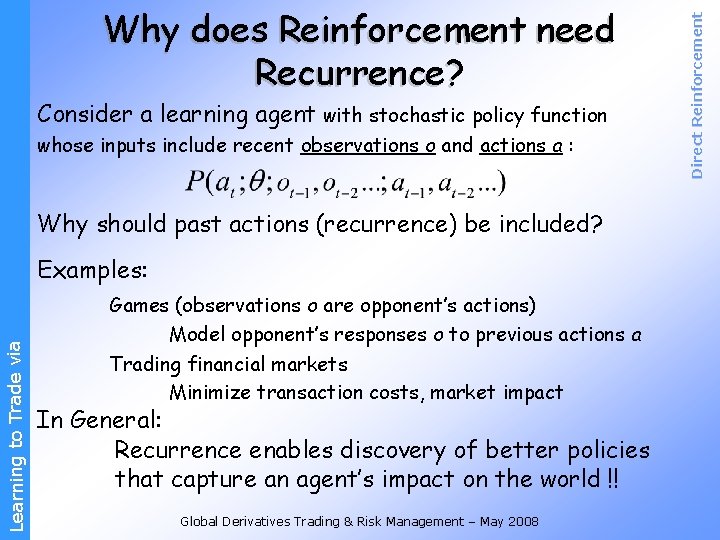

Why does Reinforcement need Recurrence?

Consider a learning agent with a stochastic policy function whose inputs include recent observations o and actions a.

Why should past actions (recurrence) be included? Examples:
- Games (observations o are opponent’s actions): model opponent’s responses o to previous actions a
- Trading financial markets: minimize transaction costs, market impact

In general: recurrence enables discovery of better policies that capture an agent’s impact on the world!

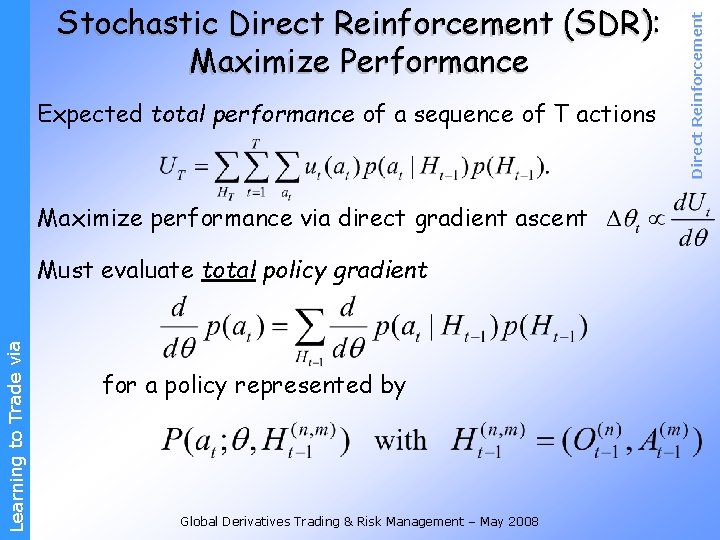

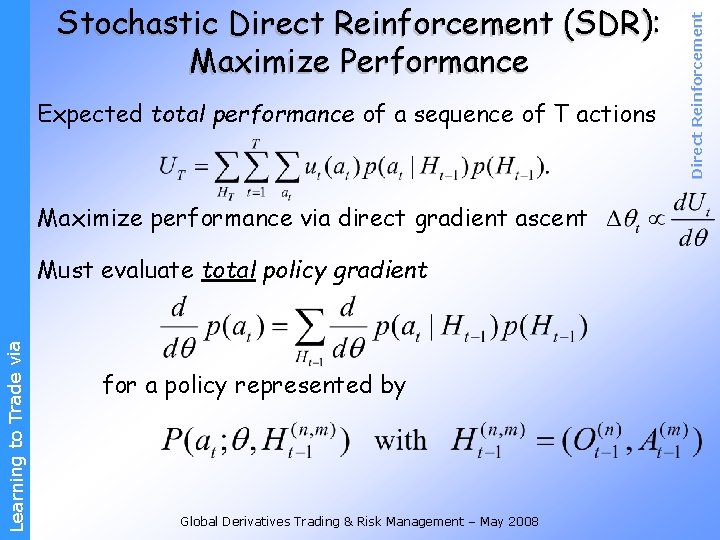

Stochastic Direct Reinforcement (SDR): Maximize Performance

- Expected total performance of a sequence of T actions
- Maximize performance via direct gradient ascent
- Must evaluate the total policy gradient for a policy represented by conditional action probabilities

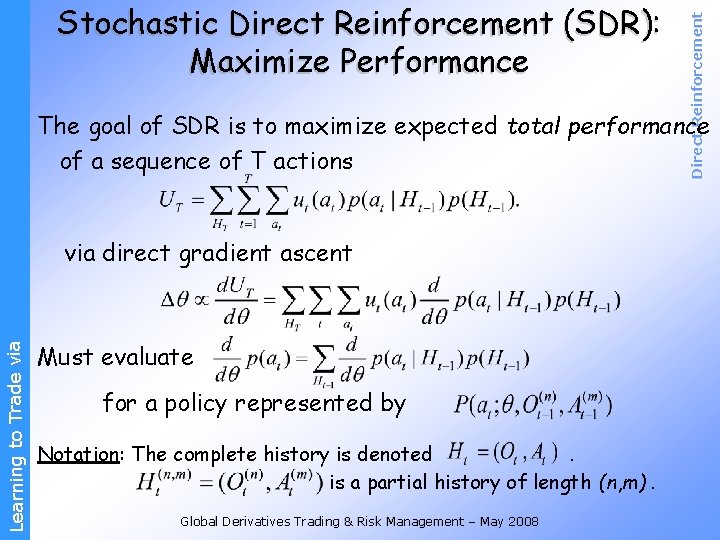

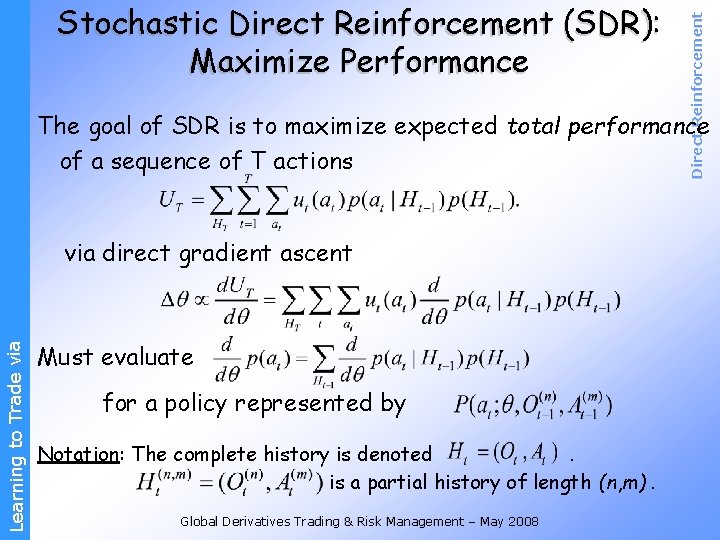

Stochastic Direct Reinforcement (SDR): Maximize Performance

The goal of SDR is to maximize the expected total performance of a sequence of T actions via direct gradient ascent. This requires evaluating the total policy gradient for a policy represented by conditional action probabilities.

Notation: the policy may condition on the complete history or on a partial history of length (n, m), i.e. the n most recent observations and the m most recent actions.

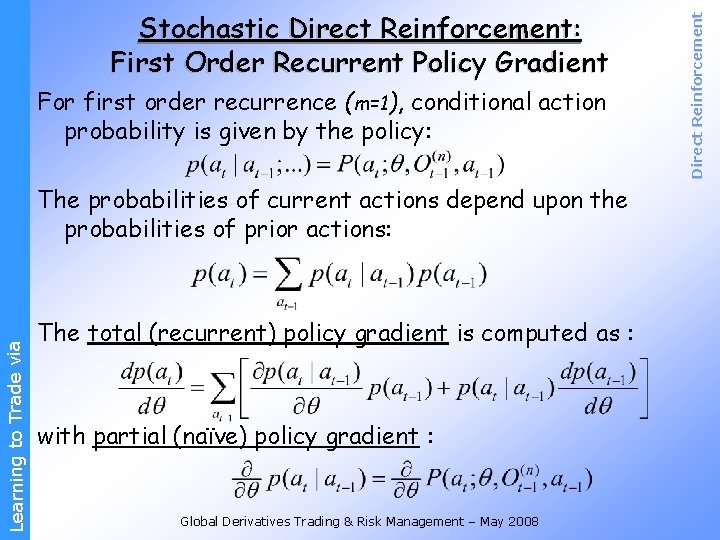

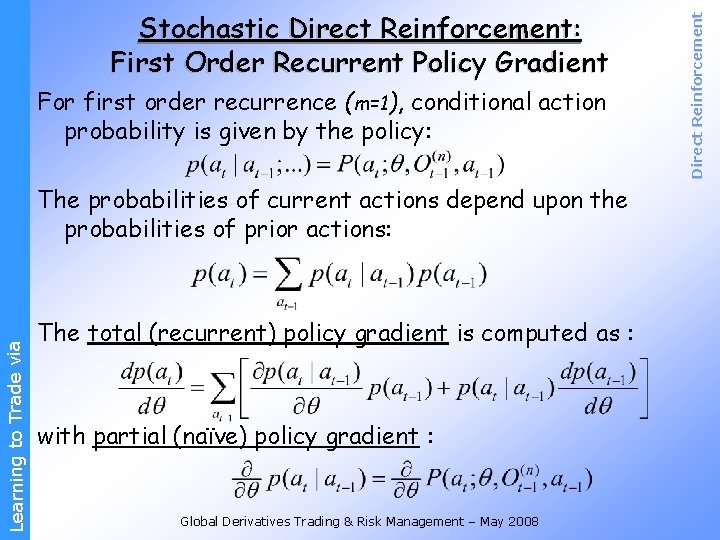

Stochastic Direct Reinforcement: First Order Recurrent Policy Gradient

- For first order recurrence (m=1), the conditional action probability is given by the policy.
- The probabilities of current actions depend upon the probabilities of prior actions.
- The total (recurrent) policy gradient is computed using the partial (naïve) policy gradient.
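The structure of the first order recursion can be sketched in simplified notation. The symbols are assumptions for illustration and may differ from those in the original slides and in [6]: $P_\theta(a \mid o_{t-1}, a')$ is the policy's conditional probability of action $a$ given recent observations $o_{t-1}$ and previous action $a'$, and $p_t(a)$ is the resulting probability of taking action $a$ at time $t$.

```latex
p_t(a) = \sum_{a'} P_\theta(a \mid o_{t-1}, a')\; p_{t-1}(a')

\frac{d\,p_t(a)}{d\theta} = \sum_{a'} \left[
  \frac{\partial P_\theta(a \mid o_{t-1}, a')}{\partial \theta}\; p_{t-1}(a')
  \;+\; P_\theta(a \mid o_{t-1}, a')\; \frac{d\,p_{t-1}(a')}{d\theta}
\right]
```

The first term inside the brackets is the partial (naïve) policy gradient. The second term carries the dependence of earlier action probabilities on the parameters forward in time, and dropping it gives the non-recurrent approximation.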

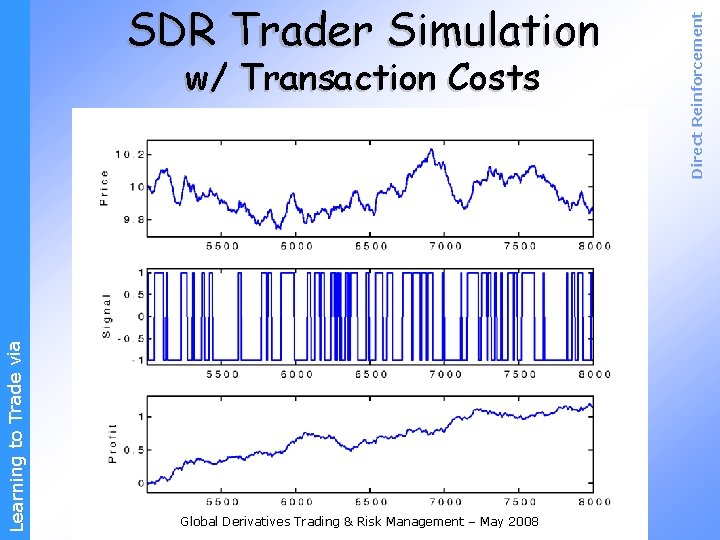

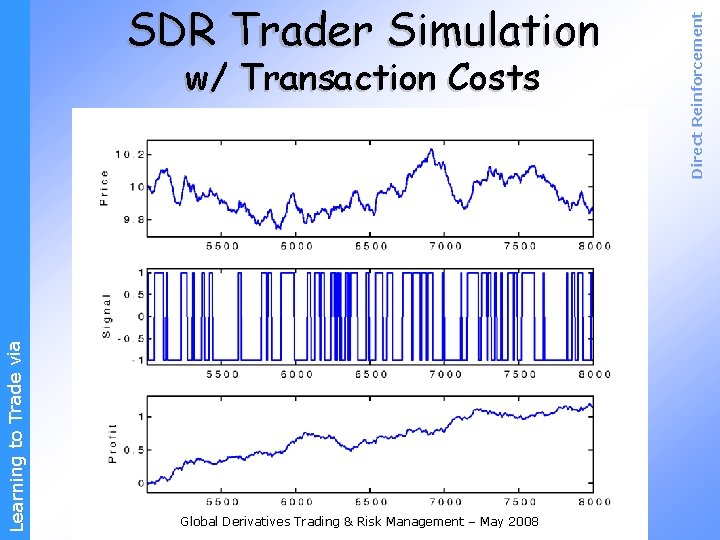

SDR Trader Simulation w/ Transaction Costs

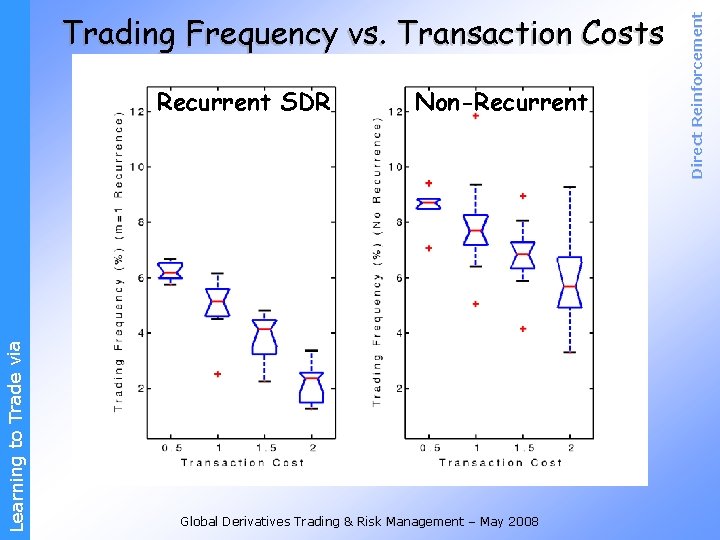

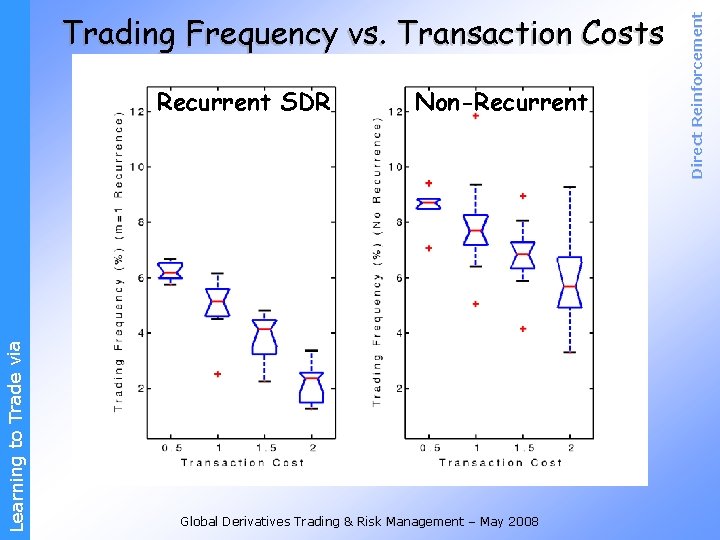

Trading Frequency vs. Transaction Costs: Recurrent SDR vs. Non-Recurrent

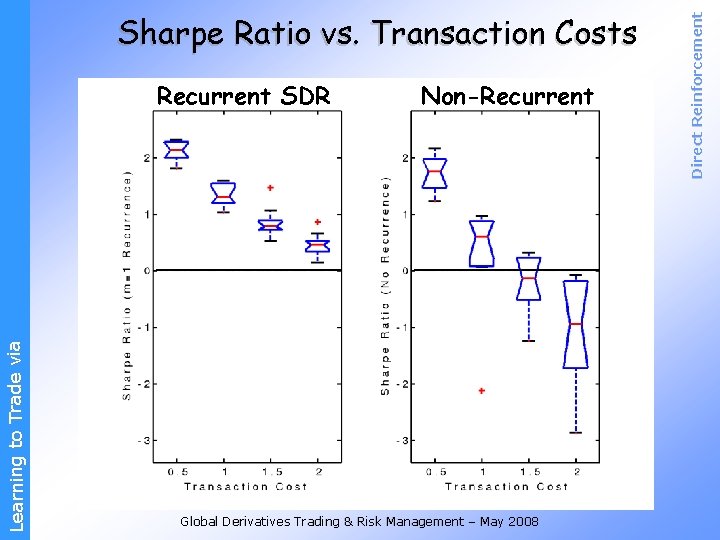

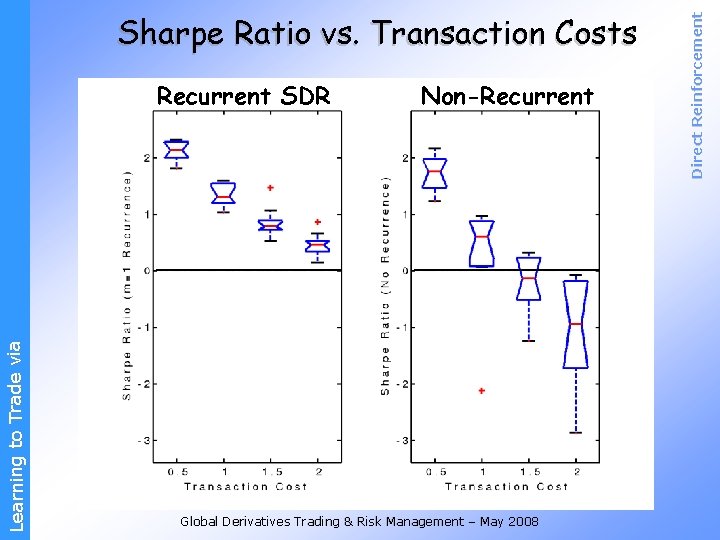

Sharpe Ratio vs. Transaction Costs: Recurrent SDR vs. Non-Recurrent
