
MDPs and Reinforcement Learning

Overview
- MDPs
- Reinforcement learning

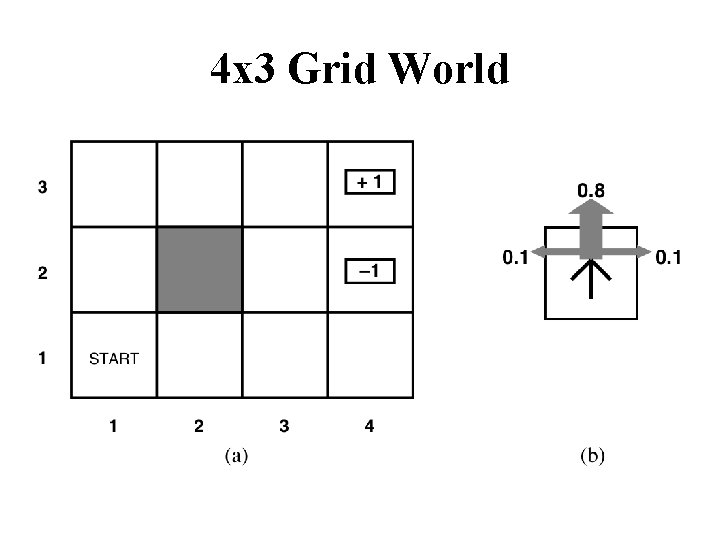

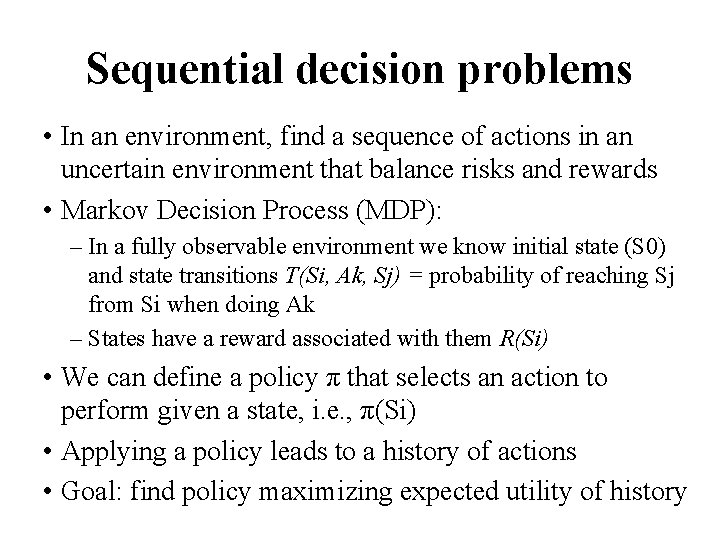

Sequential decision problems
- Find a sequence of actions in an uncertain environment that balances risks and rewards
- Markov Decision Process (MDP):
  - In a fully observable environment, we know the initial state $S_0$ and the state transitions $T(S_i, A_k, S_j)$, the probability of reaching $S_j$ from $S_i$ when doing $A_k$
  - Each state $S_i$ has a reward $R(S_i)$ associated with it
- We can define a policy $\pi$ that selects an action to perform in a given state, i.e., $\pi(S_i)$
- Applying a policy leads to a history: the sequence of states visited and actions taken
- Goal: find the policy that maximizes the expected utility of the history
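To make these pieces concrete, here is a minimal Python sketch of an MDP stored as plain dictionaries: `T` holds $T(S_i, A_k, S_j)$, `R` holds $R(S_i)$, and `pi` is a deterministic policy. The states, actions, probabilities and the helper `sample_history` are all hypothetical, chosen only to show how following a policy in an uncertain environment produces a history.

```python
import random

# Hypothetical MDP stored as dictionaries.
# T[s][a] maps each successor s' to T(s, a, s'), the probability of reaching s' from s via a.
T = {
    "s0": {"stay": {"s0": 1.0}, "go": {"s1": 0.8, "s0": 0.2}},
    "s1": {"stay": {"s1": 1.0}, "go": {"goal": 0.9, "s0": 0.1}},
}
R = {"s0": -0.1, "s1": -0.1, "goal": 1.0}  # R(s): reward associated with each state
TERMINALS = {"goal"}

# Deterministic policy: pi[s] is the action chosen in state s.
pi = {"s0": "go", "s1": "go"}


def sample_history(start, policy, rng, max_steps=100):
    """Follow `policy` from `start`; return the states visited and the actions taken."""
    states, actions = [start], []
    s = start
    while s not in TERMINALS and len(actions) < max_steps:
        a = policy[s]
        successors = T[s][a]
        # Outcomes are uncertain: draw s' with probability T(s, a, s').
        s = rng.choices(list(successors), weights=list(successors.values()))[0]
        actions.append(a)
        states.append(s)
    return states, actions


rng = random.Random(0)
states, actions = sample_history("s0", pi, rng)
print("states: ", states)
print("actions:", actions)
print("sum of state rewards:", sum(R[s] for s in states))
```

Running it with a different seed gives a different history for the same policy, which is why the goal is stated in terms of *expected* utility.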

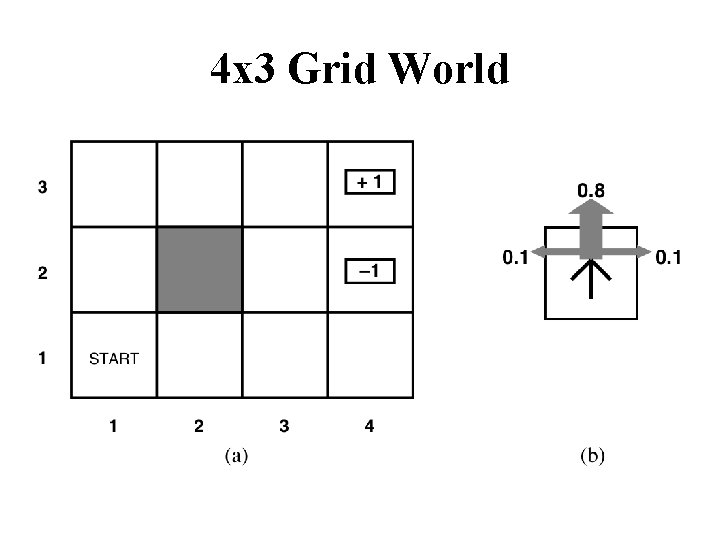

4 x 3 Grid World

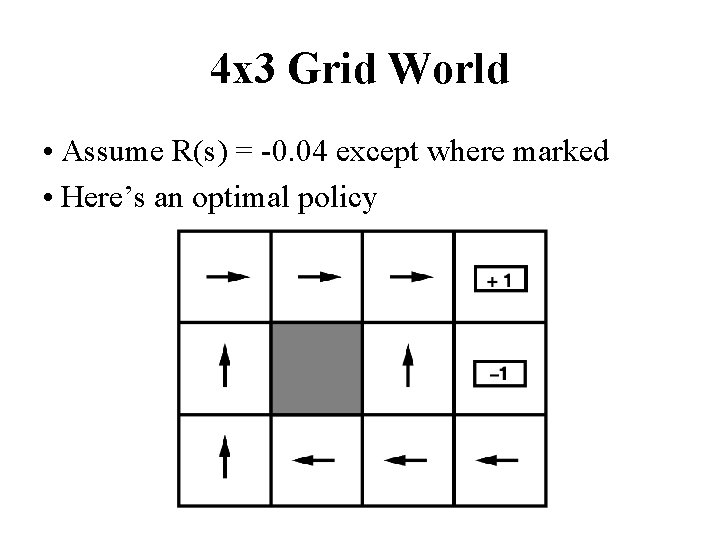

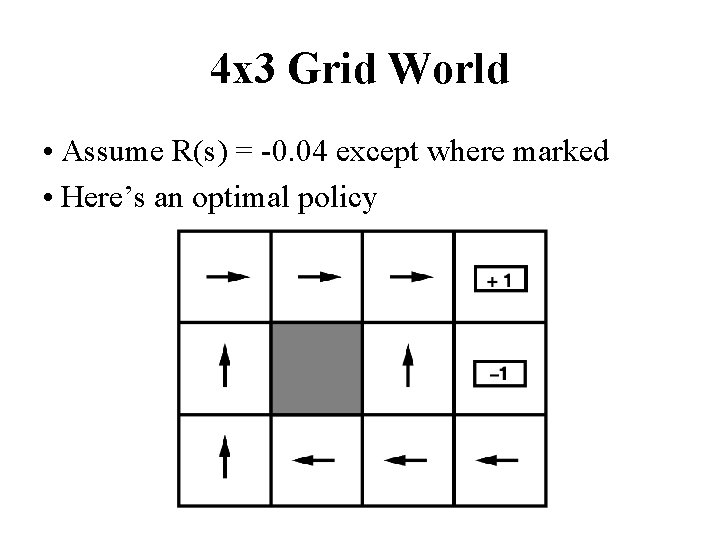

4 x 3 Grid World
- Assume R(s) = -0.04 except where marked
- Here's an optimal policy
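A minimal Python sketch of this world, under assumptions the slide does not state: the usual textbook layout (wall at (2, 2), +1 exit at (4, 3), -1 exit at (4, 2), cells written as (column, row) from the bottom left) and the usual noise model (the intended move succeeds with probability 0.8, otherwise the agent slips to one of the two perpendicular directions with probability 0.1 each). To recover an optimal policy it uses value iteration with γ = 1, a standard solution method that these slides do not cover; it is swapped in here only for illustration.

```python
# Hypothetical 4x3 grid world under the usual textbook conventions (assumed):
# cells are (column, row) with (1, 1) at the bottom left, (2, 2) is a wall,
# (4, 3) is the +1 exit and (4, 2) is the -1 exit.
COLS, ROWS = 4, 3
WALL = {(2, 2)}
EXITS = {(4, 3): 1.0, (4, 2): -1.0}
STATES = {(c, r) for c in range(1, COLS + 1) for r in range(1, ROWS + 1)} - WALL
MOVES = {"Up": (0, 1), "Down": (0, -1), "Left": (-1, 0), "Right": (1, 0)}
# Assumed noise model: the intended move happens with probability 0.8,
# and each of the two perpendicular moves with probability 0.1.
SIDEWAYS = {"Up": ("Left", "Right"), "Down": ("Left", "Right"),
            "Left": ("Up", "Down"), "Right": ("Up", "Down")}


def step(s, move):
    """Deterministic outcome of `move`; bumping into the wall or the edge leaves s unchanged."""
    dc, dr = MOVES[move]
    nxt = (s[0] + dc, s[1] + dr)
    return nxt if nxt in STATES else s


def transitions(s, a):
    """List of (probability, next_state) pairs, i.e. T(s, a, s')."""
    side1, side2 = SIDEWAYS[a]
    return [(0.8, step(s, a)), (0.1, step(s, side1)), (0.1, step(s, side2))]


def expected_utility(s, a, U):
    """Sum over s' of T(s, a, s') * U(s')."""
    return sum(p * U[s2] for p, s2 in transitions(s, a))


def value_iteration(living_reward=-0.04, gamma=1.0, tol=1e-10, max_iter=100_000):
    """Repeat U(s) = R(s) + gamma * max_a sum_s' T(s, a, s') U(s') until it stops changing."""
    U = {s: 0.0 for s in STATES}
    for _ in range(max_iter):
        new_U = {s: EXITS[s] if s in EXITS
                 else living_reward + gamma * max(expected_utility(s, a, U) for a in MOVES)
                 for s in STATES}
        delta = max(abs(new_U[s] - U[s]) for s in STATES)
        U = new_U
        if delta < tol:
            break
    return U


def greedy_policy(U):
    """In each nonterminal state, choose the action with the highest expected utility."""
    return {s: max(MOVES, key=lambda a: expected_utility(s, a, U))
            for s in STATES if s not in EXITS}


policy = greedy_policy(value_iteration())
for r in range(ROWS, 0, -1):
    cells = []
    for c in range(1, COLS + 1):
        if (c, r) in WALL:
            cells.append("#")
        elif (c, r) in EXITS:
            cells.append(f"{EXITS[(c, r)]:+.0f}")
        else:
            cells.append(policy[(c, r)])
    print(" ".join(f"{cell:>6}" for cell in cells))
```

Calling `greedy_policy(value_iteration(living_reward=...))` with other default rewards is one way to explore how the optimal policy changes with the reward. For positive living rewards, pass `gamma < 1`: with γ = 1 the utility of never exiting would grow without bound.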

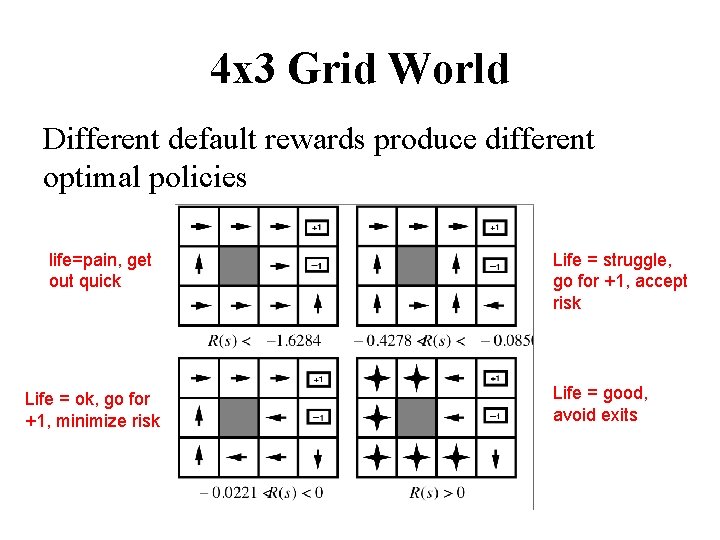

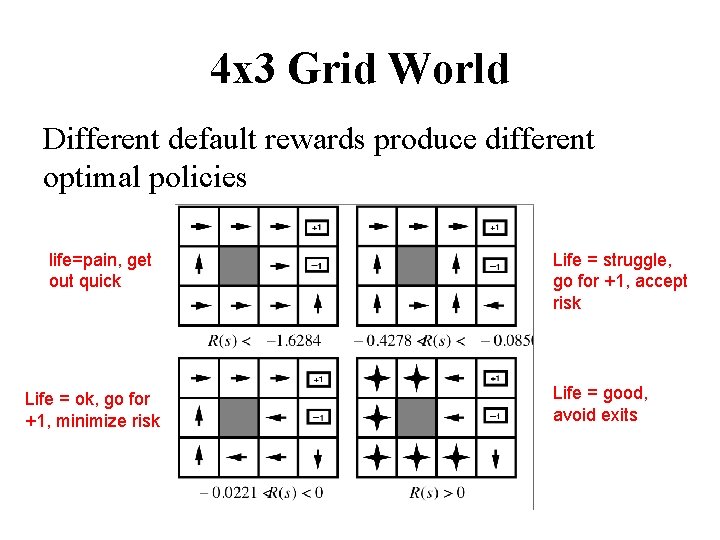

4 x 3 Grid World

Different default rewards produce different optimal policies:
- Life = pain: get out quick
- Life = ok: go for +1, minimize risk
- Life = struggle: go for +1, accept risk
- Life = good: avoid exits

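Finite and infinite horizons
- Finite horizon
  - There's a fixed time $N$ after which the game is over
  - $U([s_1, \ldots, s_N]) = U([s_1, \ldots, s_N, \ldots, s_k])$ for any $k > N$: states after time $N$ add nothing
  - Find a policy that takes that into account
- Infinite horizon
  - The game goes on forever
- With a finite horizon, the best action in a given state can change over time (it depends on how many steps remain), which makes the problem more complicated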

![Rewards • The utility of a sequence is usually additive](https://slidetodoc.com/presentation_image_h/c79ab16761c0461b3260f4ea0edd3c3c/image-8.jpg)

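Rewards
- The utility of a sequence is usually additive:
  $$U([s_0, \ldots, s_n]) = R(s_0) + R(s_1) + \cdots + R(s_n)$$
- But future rewards might be discounted by a factor $\gamma$, with $0 \le \gamma \le 1$:
  $$U([s_0, \ldots, s_n]) = R(s_0) + \gamma R(s_1) + \gamma^2 R(s_2) + \cdots + \gamma^n R(s_n)$$
- Using discounted rewards
  - solves some technical difficulties with very long or infinite sequences (with $\gamma < 1$ and bounded rewards, even an infinite sequence has finite utility), and
  - is psychologically realistic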
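Both utility definitions translate directly into code. A small Python sketch; the reward sequence is hypothetical:

```python
def additive_utility(rewards):
    """U([s_0, ..., s_n]) = R(s_0) + R(s_1) + ... + R(s_n)."""
    return sum(rewards)


def discounted_utility(rewards, gamma):
    """U([s_0, ..., s_n]) = R(s_0) + gamma*R(s_1) + gamma**2*R(s_2) + ... + gamma**n*R(s_n)."""
    return sum(gamma ** t * r for t, r in enumerate(rewards))


rewards = [-0.04, -0.04, -0.04, 1.0]  # hypothetical R(s_0), ..., R(s_3)
print(additive_utility(rewards))
print(discounted_utility(rewards, 0.9))

# With gamma < 1 a long run of bounded rewards stays bounded:
# a constant reward of 1 approaches 1 / (1 - gamma) = 10 for gamma = 0.9.
print(discounted_utility([1.0] * 1000, 0.9))
```

Setting `gamma = 1.0` makes `discounted_utility` coincide with `additive_utility`, so the additive case is just the undiscounted special case.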

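Value Functions
- The value of a state is the expected return starting from that state; it depends on the agent's policy $\pi$:
  $$V^\pi(s) = E_\pi\left[R_t \mid s_t = s\right] = E_\pi\left[\sum_{k=0}^{\infty} \gamma^k r_{t+k+1} \mid s_t = s\right]$$
- The value of taking action $a$ in state $s$ under policy $\pi$ is the expected return starting from that state, taking that action, and thereafter following $\pi$:
  $$Q^\pi(s, a) = E_\pi\left[R_t \mid s_t = s, a_t = a\right] = E_\pi\left[\sum_{k=0}^{\infty} \gamma^k r_{t+k+1} \mid s_t = s, a_t = a\right]$$
- Here $R_t$ is the return (the discounted sum of future rewards) from time $t$, and $r_{t+1}$ is the reward received after acting at time $t$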
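Since a value is an expected return, one way to approximate it is to average many sampled returns (a Monte Carlo estimate). The sketch below assumes a hypothetical one-state task: action `"continue"` pays 1 and then ends the episode with probability 0.5, action `"quit"` pays 1.5 and ends it, and the policy being evaluated always continues. With γ = 0.9 the exact values are $V^\pi(A) = 1 + 0.9 \cdot 0.5 \cdot V^\pi(A)$, i.e. $1/0.55$, and $Q^\pi(A, \text{quit}) = 1.5$, so the estimates can be checked.

```python
import random

GAMMA = 0.9


def step(state, action, rng):
    """Hypothetical one-state task. Returns (reward, next_state); None means the episode ended."""
    if action == "quit":
        return 1.5, None  # collect 1.5 and stop
    # "continue": collect 1, then the episode ends with probability 0.5
    return 1.0, (None if rng.random() < 0.5 else state)


def policy(state):
    """The fixed policy pi being evaluated: always continue."""
    return "continue"


def sample_return(state, rng, first_action=None):
    """One sampled return r_{t+1} + gamma*r_{t+2} + ...; optionally force the first action."""
    total, discount = 0.0, 1.0
    action = first_action if first_action is not None else policy(state)
    while state is not None:
        reward, state = step(state, action, rng)
        total += discount * reward
        discount *= GAMMA
        if state is not None:
            action = policy(state)  # after the first step, follow pi
    return total


def mc_estimate(state, rng, n=20_000, first_action=None):
    """Monte Carlo estimate of V(state), or of Q(state, first_action): average of n returns."""
    return sum(sample_return(state, rng, first_action) for _ in range(n)) / n


rng = random.Random(42)
print("V(A)       ~", mc_estimate("A", rng))
print("Q(A, quit) ~", mc_estimate("A", rng, first_action="quit"))
```

Forcing only the first action and then following $\pi$ is exactly the difference between $Q^\pi$ and $V^\pi$ in the definitions above.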

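Bellman Equation for a Policy π
- The basic idea: the return can be written recursively,
  $$R_t = r_{t+1} + \gamma r_{t+2} + \gamma^2 r_{t+3} + \cdots = r_{t+1} + \gamma R_{t+1}$$
- So:
  $$V^\pi(s) = E_\pi\left[r_{t+1} + \gamma V^\pi(s_{t+1}) \mid s_t = s\right]$$
- Or, without the expectation operator:
  $$V^\pi(s) = \sum_a \pi(s, a) \sum_{s'} T(s, a, s')\left[r(s, a, s') + \gamma V^\pi(s')\right]$$
  where $\pi(s, a)$ is the probability that the policy chooses $a$ in $s$, and $r(s, a, s')$ is the expected immediate reward for that transition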
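The last form of the equation can be applied repeatedly as an update rule until the values stop changing, which is known as iterative policy evaluation. A minimal sketch on a hypothetical two-state MDP: the states, actions, transition table `T`, reward function `r` and stochastic policy `PI` are all invented for illustration.

```python
# Hypothetical two-state MDP. T[s][a] maps s' -> T(s, a, s').
T = {
    "A": {"x": {"A": 0.5, "B": 0.5}, "y": {"B": 1.0}},
    "B": {"x": {"A": 1.0}, "y": {"B": 1.0}},
}


def r(s, a, s_next):
    """Expected immediate reward for a transition (hypothetical: 1 for landing in B)."""
    return 1.0 if s_next == "B" else 0.0


# Stochastic policy: PI[s][a] = pi(s, a), the probability of choosing a in s.
PI = {"A": {"x": 0.5, "y": 0.5}, "B": {"x": 0.2, "y": 0.8}}


def bellman_backup(V, s, pi, gamma):
    """Right-hand side: sum_a pi(s,a) sum_s' T(s,a,s') [r(s,a,s') + gamma V(s')]."""
    return sum(p_a * sum(p * (r(s, a, s2) + gamma * V[s2]) for s2, p in T[s][a].items())
               for a, p_a in pi[s].items())


def evaluate_policy(pi, gamma=0.9, tol=1e-12):
    """Iterative policy evaluation: apply the backup to every state until V stops changing."""
    V = {s: 0.0 for s in T}
    while True:
        delta = 0.0
        for s in T:
            v_new = bellman_backup(V, s, pi, gamma)
            delta = max(delta, abs(v_new - V[s]))
            V[s] = v_new  # in-place update: later states in this sweep see the new value
        if delta < tol:
            return V


V = evaluate_policy(PI)
print(V)
```

For γ < 1 the update is a contraction, so the loop converges; at the end, every `V[s]` satisfies the Bellman equation up to the tolerance. For a model this small one could equally solve the two linear equations directly.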

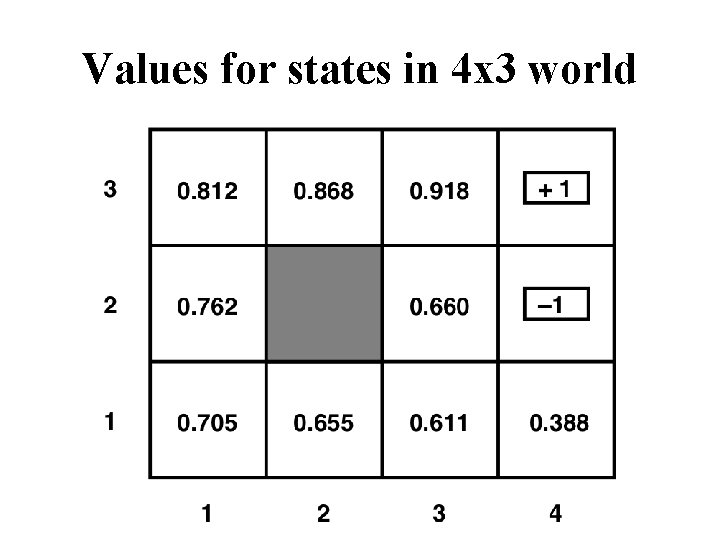

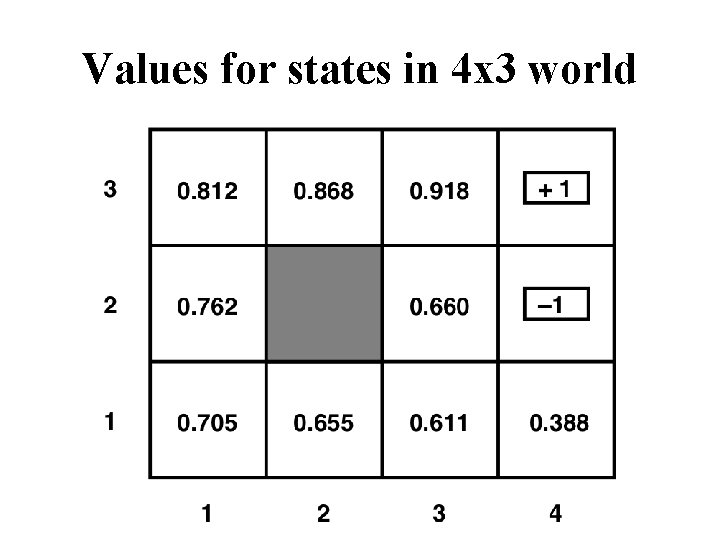

Values for states in 4 x 3 world
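Values like these can be computed from the Bellman equation for a fixed policy. With rewards attached to states, as in the grid world slides, it reads $V^\pi(s) = R(s) + \gamma \sum_{s'} T(s, \pi(s), s') V^\pi(s')$. The sketch assumes the usual textbook conventions (wall at (2, 2), exits at (4, 3) and (4, 2), 0.8 / 0.1 / 0.1 movement noise, γ = 1, R(s) = -0.04) and a hypothetical fixed policy `POLICY`, the one commonly shown as optimal for this reward; all of these are assumptions, not taken from the slide.

```python
# Hypothetical 4x3 grid world (assumed textbook conventions): (column, row) cells,
# (1, 1) bottom left, wall at (2, 2), exits +1 at (4, 3) and -1 at (4, 2).
COLS, ROWS = 4, 3
WALL = {(2, 2)}
EXITS = {(4, 3): 1.0, (4, 2): -1.0}
STATES = {(c, r) for c in range(1, COLS + 1) for r in range(1, ROWS + 1)} - WALL
MOVES = {"U": (0, 1), "D": (0, -1), "L": (-1, 0), "R": (1, 0)}
SIDEWAYS = {"U": "LR", "D": "LR", "L": "UD", "R": "UD"}  # perpendicular slips

# Assumed fixed policy pi(s) for every nonterminal cell.
POLICY = {(1, 3): "R", (2, 3): "R", (3, 3): "R",
          (1, 2): "U", (3, 2): "U",
          (1, 1): "U", (2, 1): "L", (3, 1): "L", (4, 1): "L"}


def step(s, move):
    """Deterministic outcome of a move; hitting the wall or the edge means staying put."""
    nxt = (s[0] + MOVES[move][0], s[1] + MOVES[move][1])
    return nxt if nxt in STATES else s


def transitions(s, a):
    """(probability, next_state) pairs under the assumed 0.8 / 0.1 / 0.1 noise model."""
    return [(0.8, step(s, a)), (0.1, step(s, SIDEWAYS[a][0])), (0.1, step(s, SIDEWAYS[a][1]))]


def evaluate(policy, living_reward=-0.04, gamma=1.0, tol=1e-10, max_iter=100_000):
    """Iterate V(s) = R(s) + gamma * sum_s' T(s, pi(s), s') V(s') until it settles."""
    V = {s: 0.0 for s in STATES}
    for _ in range(max_iter):
        delta = 0.0
        for s in STATES:
            if s in EXITS:
                new = EXITS[s]  # an exit's value is its own reward
            else:
                new = living_reward + gamma * sum(p * V[s2] for p, s2 in transitions(s, policy[s]))
            delta = max(delta, abs(new - V[s]))
            V[s] = new
        if delta < tol:
            break
    return V


V = evaluate(POLICY)
for r in range(ROWS, 0, -1):
    print(" ".join("  wall" if (c, r) in WALL else f"{V[(c, r)]:+.3f}" for c in range(1, COLS + 1)))
```

Because every path under this policy eventually reaches an exit, the sweep converges even with γ = 1; evaluating a different `POLICY` shows how much value a worse policy gives up.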