Pipelining and Vector Processing

- Slides: 79

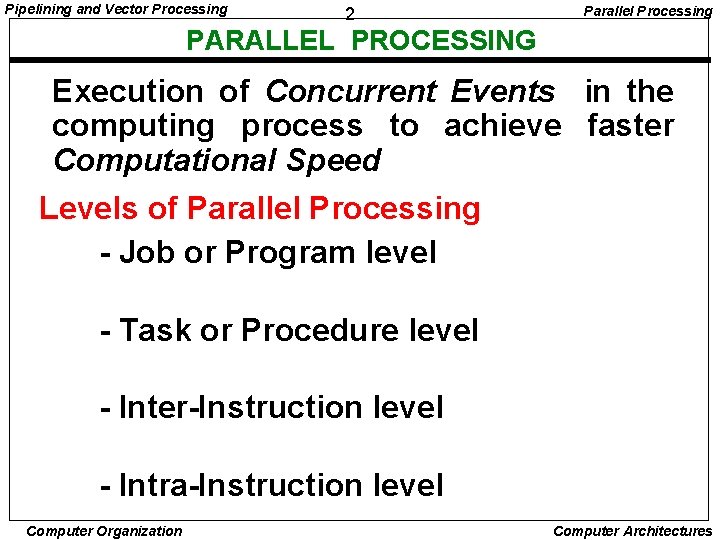

PIPELINING AND VECTOR PROCESSING

- Parallel Processing
- Pipelining
- Arithmetic Pipeline
- Instruction Pipeline
- RISC Pipeline
- Vector Processing
- Array Processors

Computer Organization Computer Architectures

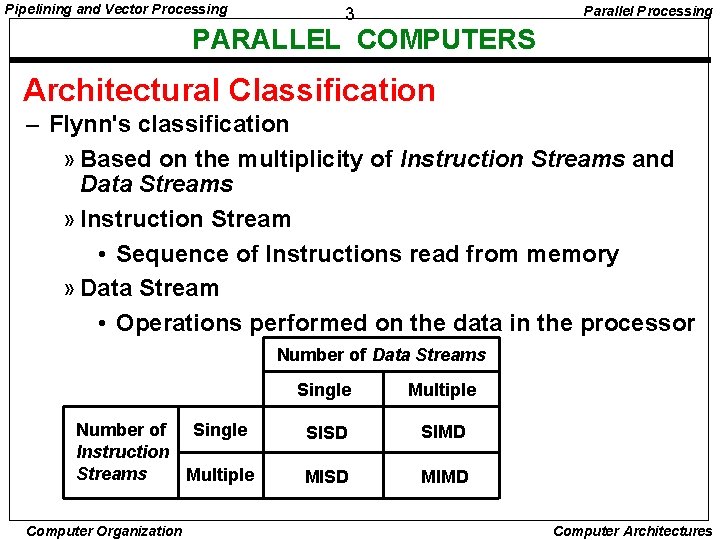

PARALLEL PROCESSING

Execution of concurrent events in the computing process to achieve faster computational speed.

Levels of parallel processing:

- Job or program level
- Task or procedure level
- Inter-instruction level
- Intra-instruction level

PARALLEL COMPUTERS

Architectural classification: Flynn's classification

- Based on the multiplicity of instruction streams and data streams
- Instruction stream: sequence of instructions read from memory
- Data stream: operations performed on the data in the processor

| | Single data stream | Multiple data streams |
|---|---|---|
| Single instruction stream | SISD | SIMD |
| Multiple instruction streams | MISD | MIMD |

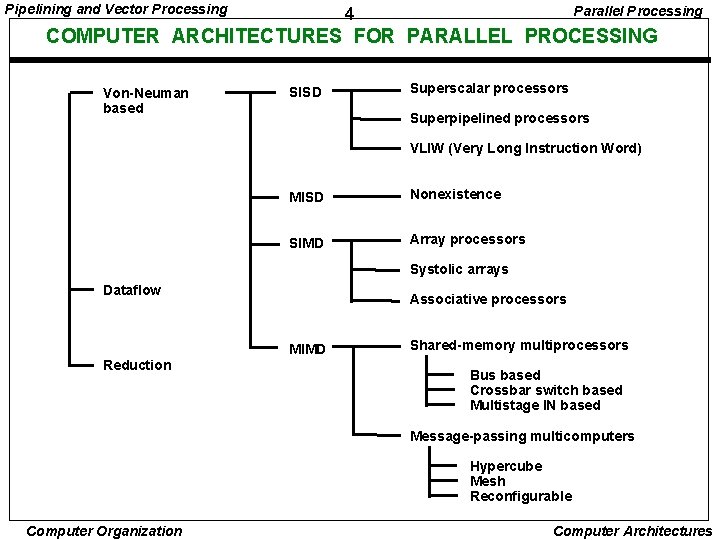

COMPUTER ARCHITECTURES FOR PARALLEL PROCESSING

- Von Neumann based
  - SISD
    - Superscalar processors
    - Superpipelined processors
    - VLIW (Very Long Instruction Word)
  - MISD
    - Nonexistent
  - SIMD
    - Array processors
    - Systolic arrays
    - Associative processors
  - MIMD
    - Shared-memory multiprocessors
      - Bus based
      - Crossbar switch based
      - Multistage IN based
    - Message-passing multicomputers
      - Hypercube
      - Mesh
      - Reconfigurable
- Dataflow
- Reduction

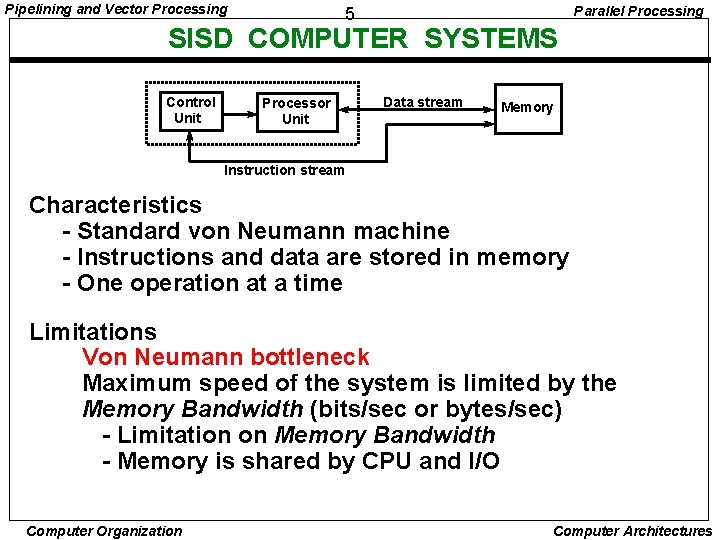

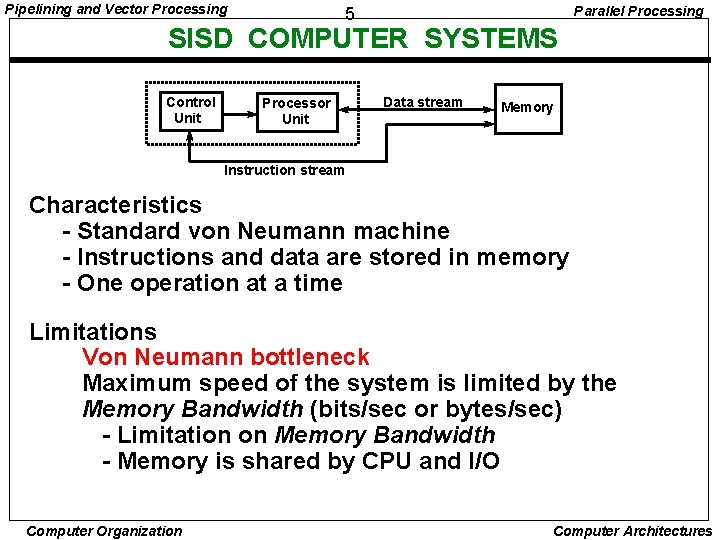

SISD COMPUTER SYSTEMS

Block diagram: Control Unit, Processor Unit, and Memory, connected by an instruction stream and a data stream.

Characteristics:

- Standard von Neumann machine
- Instructions and data are stored in memory
- One operation at a time

Limitations:

- Von Neumann bottleneck: the maximum speed of the system is limited by the memory bandwidth (bits/sec or bytes/sec)
  - Limitation on memory bandwidth
  - Memory is shared by CPU and I/O

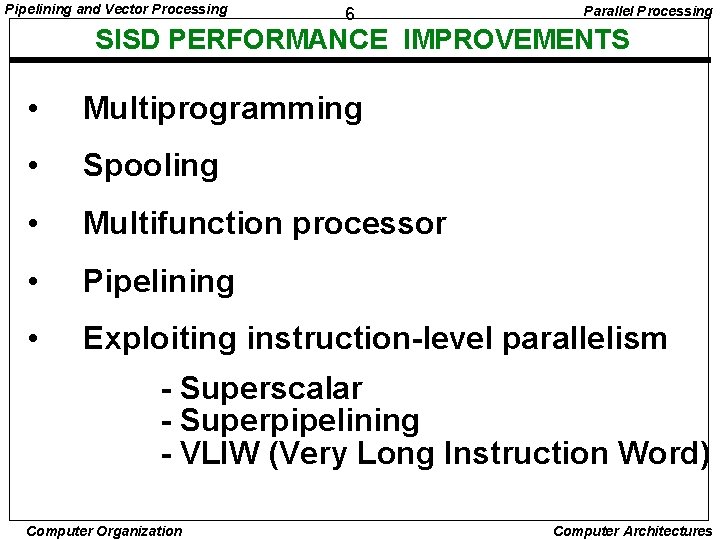

SISD PERFORMANCE IMPROVEMENTS

- Multiprogramming
- Spooling
- Multifunction processor
- Pipelining
- Exploiting instruction-level parallelism
  - Superscalar
  - Superpipelining
  - VLIW (Very Long Instruction Word)

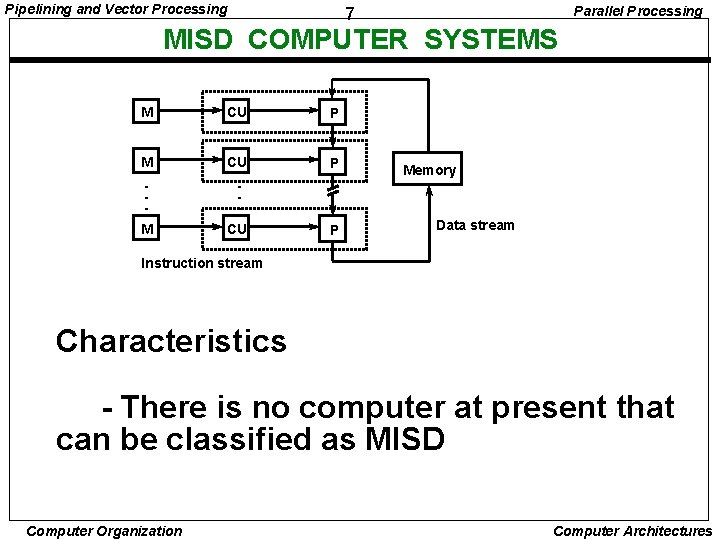

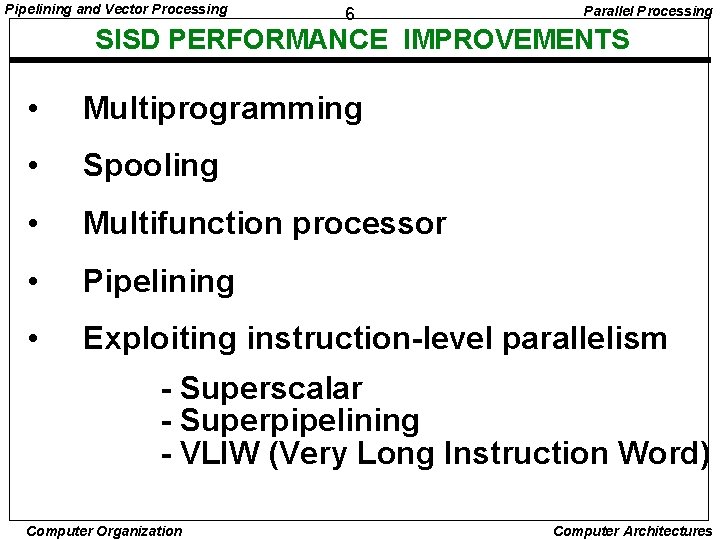

MISD COMPUTER SYSTEMS

Block diagram: several memory (M), control unit (CU), and processor (P) chains, each with its own instruction stream, operating on a single data stream from memory.

Characteristics:

- There is no computer at present that can be classified as MISD

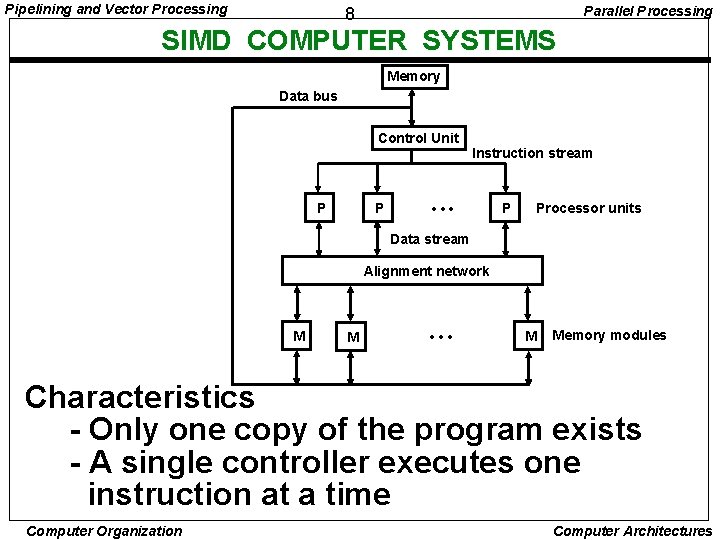

SIMD COMPUTER SYSTEMS

Block diagram: memory and a single control unit that sends one instruction stream over a data bus to the processor units (P); the processor units exchange data streams with the memory modules (M) through an alignment network.

Characteristics:

- Only one copy of the program exists
- A single controller executes one instruction at a time

TYPES OF SIMD COMPUTERS

Array processors:

- The control unit broadcasts instructions to all processor units, and all active processor units execute the same instructions
- Examples: ILLIAC IV, GF-11, Connection Machine, DAP, MPP

Systolic arrays:

- Regular arrangement of a large number of very simple processors constructed on VLSI circuits
- Examples: CMU Warp, Purdue CHiP

Associative processors:

- Content addressing
- Data transformation operations over many sets of arguments with a single instruction
- Examples: STARAN, PEPE

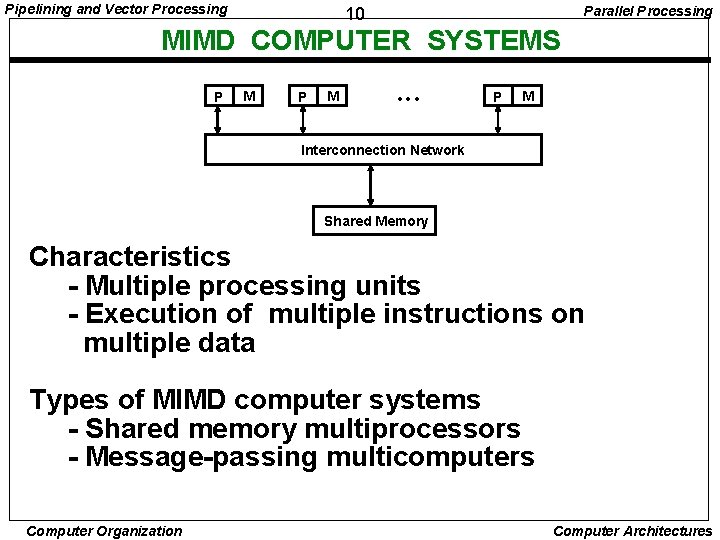

MIMD COMPUTER SYSTEMS

Block diagram: processor and memory pairs (P, M) connected through an interconnection network to shared memory.

Characteristics:

- Multiple processing units
- Execution of multiple instructions on multiple data

Types of MIMD computer systems:

- Shared-memory multiprocessors
- Message-passing multicomputers

SHARED MEMORY MULTIPROCESSORS

Block diagram: memory modules (M) connected to processors (P) through an interconnection network (IN): buses, multistage IN, or crossbar switch.

Characteristics:

- All processors have equally direct access to one large memory address space

Example systems:

- Bus and cache-based systems: Sequent Balance, Encore Multimax
- Multistage IN-based systems: Ultracomputer, Butterfly, RP3, HEP
- Crossbar switch-based systems: C.mmp, Alliant FX/8

Limitations:

- Memory access latency
- Hot spot problem

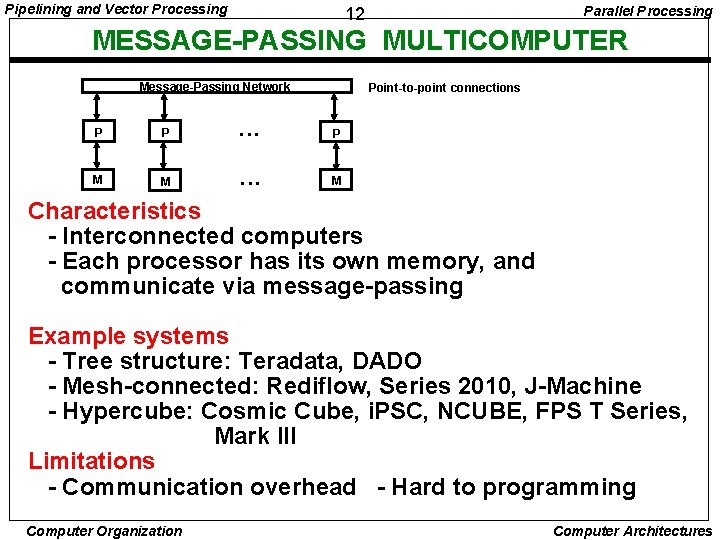

MESSAGE-PASSING MULTICOMPUTER

Block diagram: processors (P), each with its own memory (M), joined by point-to-point connections in a message-passing network.

Characteristics:

- Interconnected computers
- Each processor has its own memory, and the processors communicate via message passing

Example systems:

- Tree structure: Teradata, DADO
- Mesh-connected: Rediflow, Series 2010, J-Machine
- Hypercube: Cosmic Cube, iPSC, NCUBE, FPS T Series, Mark III

Limitations:

- Communication overhead
- Hard to program

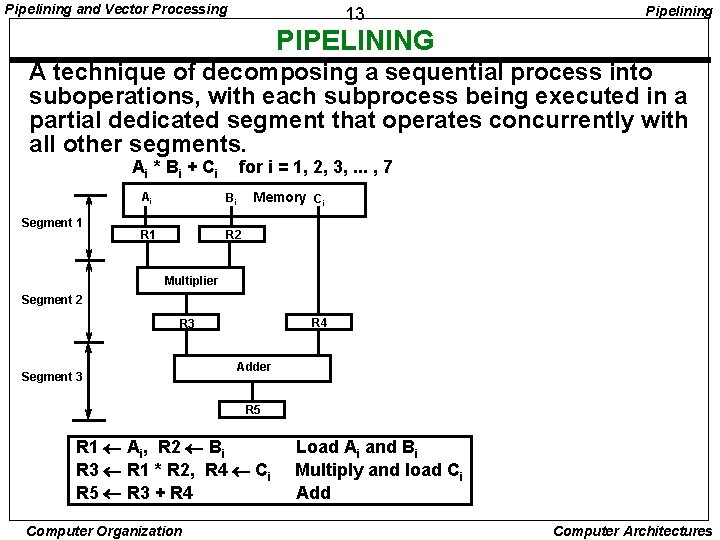

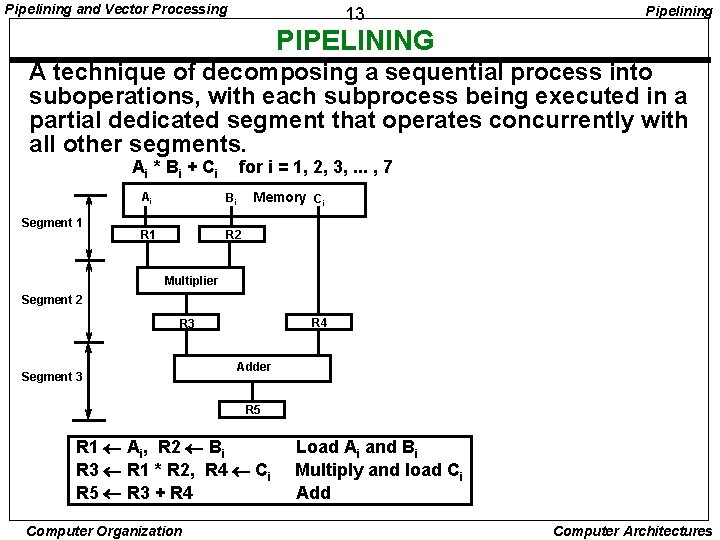

PIPELINING

A technique of decomposing a sequential process into suboperations, with each subprocess being executed in a special dedicated segment that operates concurrently with all other segments.

Example: compute Ai * Bi + Ci for i = 1, 2, 3, ..., 7

Block diagram: Ai and Bi are loaded from memory into R1 and R2 (Segment 1); a multiplier feeds R3 while Ci is loaded into R4 (Segment 2); an adder feeds R5 (Segment 3).

| Segment | Register transfers | Operation |
|---|---|---|
| 1 | R1 ← Ai, R2 ← Bi | Load Ai and Bi |
| 2 | R3 ← R1 * R2, R4 ← Ci | Multiply and load Ci |
| 3 | R5 ← R3 + R4 | Add |

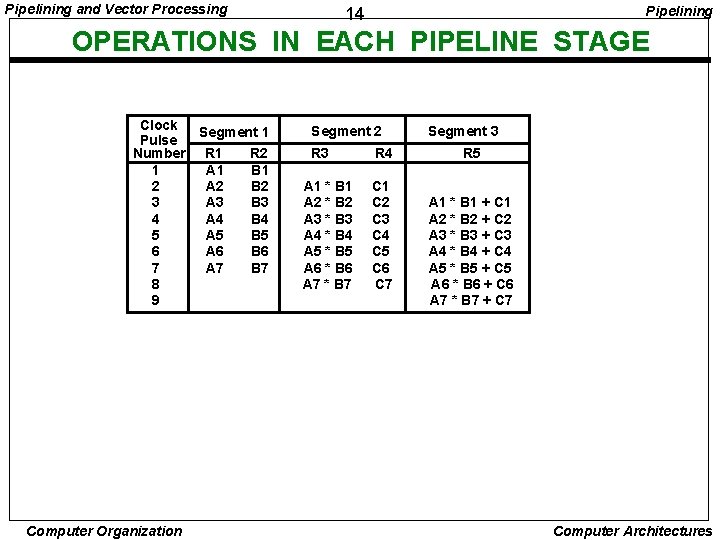

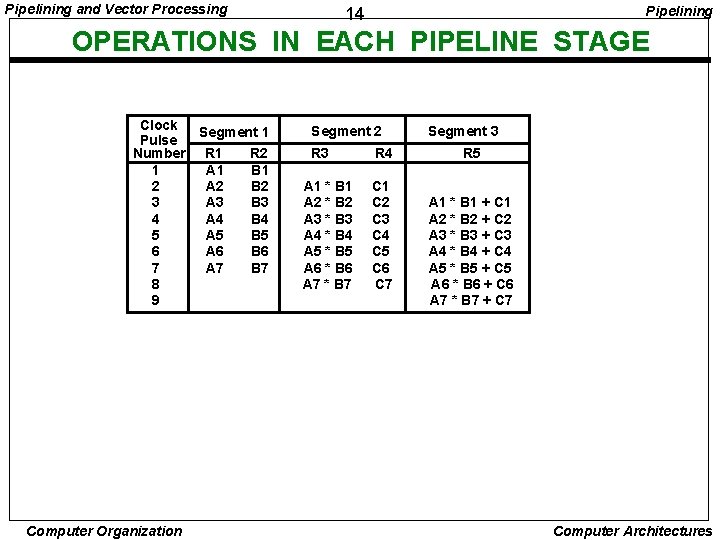

OPERATIONS IN EACH PIPELINE STAGE

| Clock pulse | Segment 1: R1 | Segment 1: R2 | Segment 2: R3 | Segment 2: R4 | Segment 3: R5 |
|---|---|---|---|---|---|
| 1 | A1 | B1 | | | |
| 2 | A2 | B2 | A1 * B1 | C1 | |
| 3 | A3 | B3 | A2 * B2 | C2 | A1 * B1 + C1 |
| 4 | A4 | B4 | A3 * B3 | C3 | A2 * B2 + C2 |
| 5 | A5 | B5 | A4 * B4 | C4 | A3 * B3 + C3 |
| 6 | A6 | B6 | A5 * B5 | C5 | A4 * B4 + C4 |
| 7 | A7 | B7 | A6 * B6 | C6 | A5 * B5 + C5 |
| 8 | | | A7 * B7 | C7 | A6 * B6 + C6 |
| 9 | | | | | A7 * B7 + C7 |
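The register contents above can be reproduced with a small simulation. The following is a minimal sketch, not part of the original slides; the function name `run_pipeline` and the use of `None` for an empty register are assumptions made for illustration.

```python
def run_pipeline(A, B, C):
    """Simulate the three-segment pipeline that computes A[i] * B[i] + C[i]."""
    n = len(A)
    R1 = R2 = R3 = R4 = R5 = None          # None marks an empty register
    results = []
    clocks = n + 2                          # k + n - 1 pulses with k = 3 segments
    for clock in range(1, clocks + 1):
        # Update back to front so each segment reads the values latched
        # on the previous clock pulse.
        R5 = R3 + R4 if R3 is not None else None      # segment 3: add
        if R1 is not None:                             # segment 2: multiply, load Ci
            R3, R4 = R1 * R2, C[clock - 2]
        else:
            R3 = R4 = None
        if clock <= n:                                 # segment 1: load Ai and Bi
            R1, R2 = A[clock - 1], B[clock - 1]
        else:
            R1 = R2 = None
        if R5 is not None:
            results.append(R5)
    return results, clocks


results, clocks = run_pipeline([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(results, clocks)
```

With seven input triples the loop runs for nine clock pulses, and the first result appears in R5 on the third pulse.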

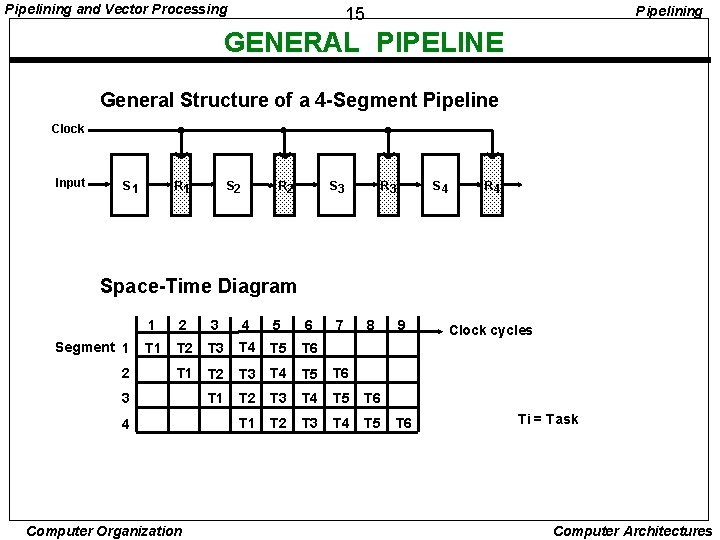

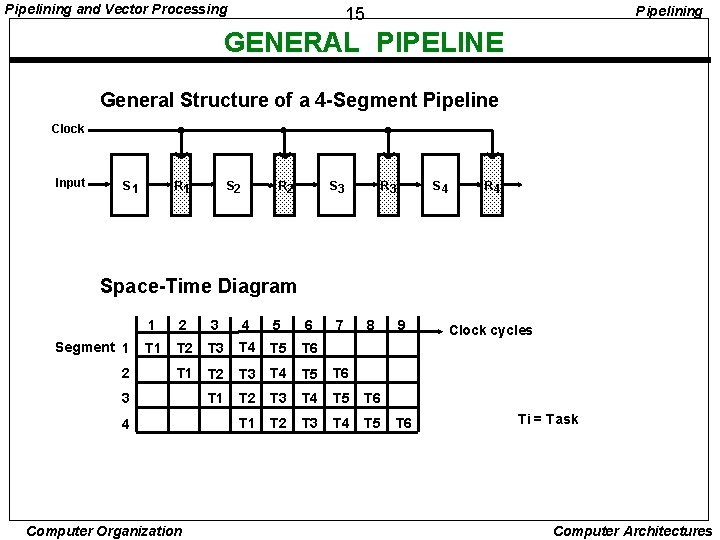

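GENERAL PIPELINE

General structure of a 4-segment pipeline: the input passes through combinational segments S1, S2, S3, S4, each followed by a register (R1, R2, R3, R4), all driven by a common clock.

Space-time diagram (Ti = task i):

| Segment | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | T1 | T2 | T3 | T4 | T5 | T6 | | | |
| 2 | | T1 | T2 | T3 | T4 | T5 | T6 | | |
| 3 | | | T1 | T2 | T3 | T4 | T5 | T6 | |
| 4 | | | | T1 | T2 | T3 | T4 | T5 | T6 |

Columns are clock cycles.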
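A space-time diagram of this kind follows a simple rule: task Ti occupies segment s during clock cycle i + s - 1. The sketch below generates the rows for any number of segments and tasks; the function name `space_time` is hypothetical and chosen only for this illustration.

```python
def space_time(k, n):
    """Return one row per segment for a k-segment pipeline running n tasks.

    Each row has k + n - 1 entries (one per clock cycle); an empty string
    means the segment is idle in that cycle.
    """
    cycles = k + n - 1
    rows = []
    for seg in range(1, k + 1):
        row = []
        for cycle in range(1, cycles + 1):
            task = cycle - seg + 1          # task in this segment at this cycle
            row.append(f"T{task}" if 1 <= task <= n else "")
        rows.append(row)
    return rows


for seg, row in enumerate(space_time(4, 6), start=1):
    print(seg, " ".join(f"{cell:>2}" for cell in row))
```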

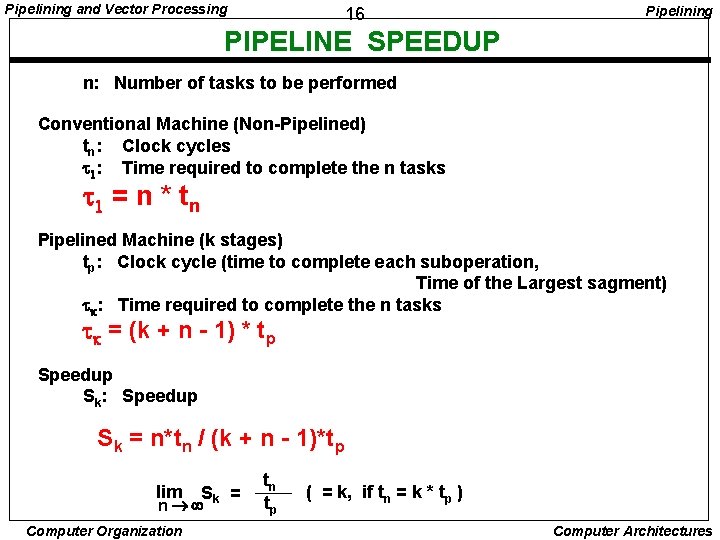

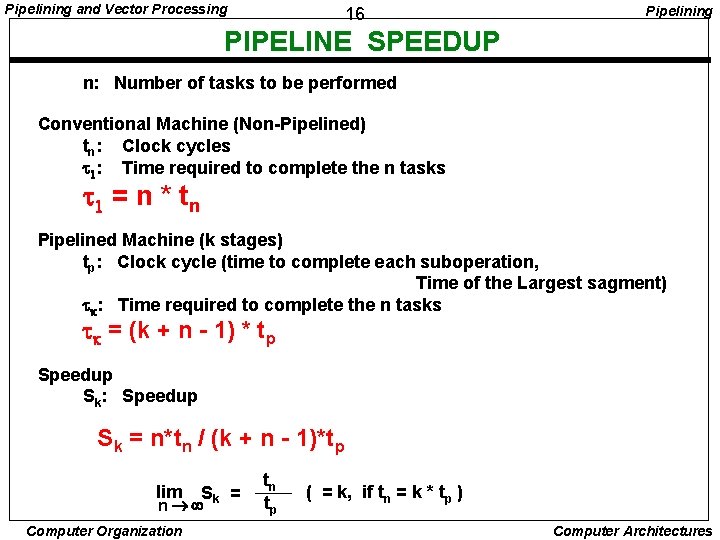

PIPELINE SPEEDUP

- $n$: number of tasks to be performed

Conventional machine (non-pipelined):

- $t_n$: clock cycle (time to complete one task)
- $t_1$: time required to complete the $n$ tasks, $t_1 = n \cdot t_n$

Pipelined machine ($k$ stages):

- $t_p$: clock cycle (time to complete each suboperation; the time of the longest segment)
- $t_k$: time required to complete the $n$ tasks, $t_k = (k + n - 1) \cdot t_p$

Speedup:

$$S_k = \frac{n \, t_n}{(k + n - 1) \, t_p}$$

$$\lim_{n \to \infty} S_k = \frac{t_n}{t_p} \quad (= k \text{ if } t_n = k \cdot t_p)$$

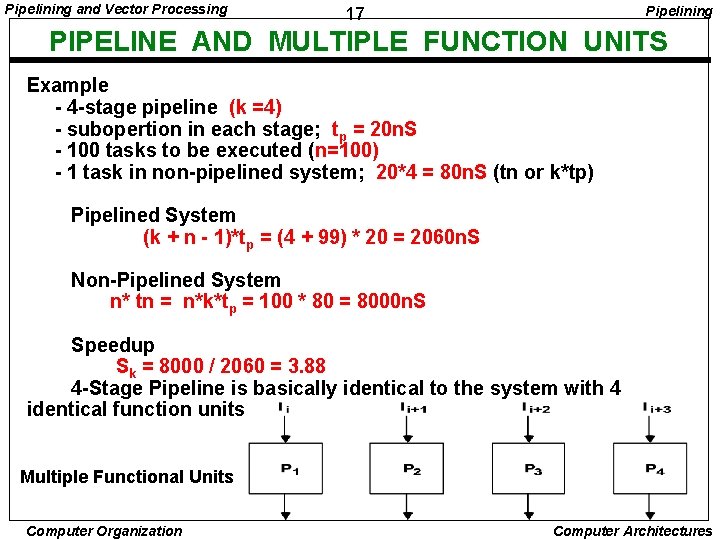

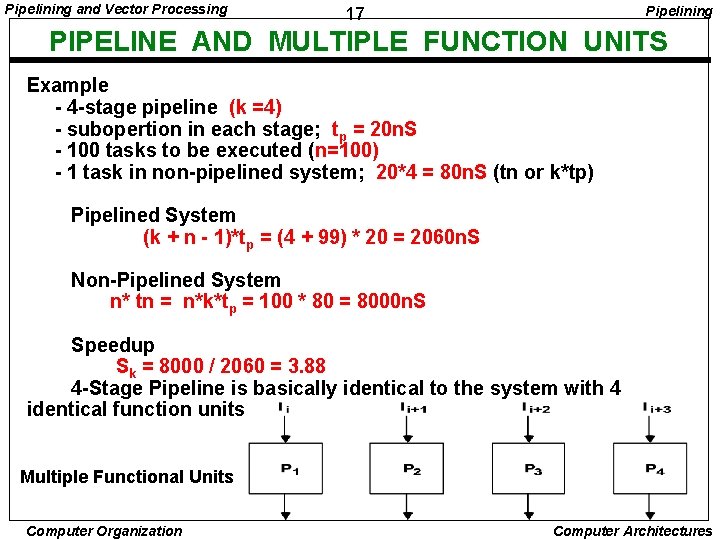

PIPELINE AND MULTIPLE FUNCTION UNITS

Example:

- 4-stage pipeline (k = 4)
- Suboperation in each stage: tp = 20 ns
- 100 tasks to be executed (n = 100)
- 1 task in the non-pipelined system: 20 * 4 = 80 ns (tn = k * tp)

Pipelined system: (k + n - 1) * tp = (4 + 99) * 20 = 2060 ns

Non-pipelined system: n * tn = n * k * tp = 100 * 80 = 8000 ns

Speedup: Sk = 8000 / 2060 = 3.88

A 4-stage pipeline is basically equivalent to a system with 4 identical function units working in parallel (multiple functional units).
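The arithmetic in this example is easy to check in code. The helper below is a small sketch (the name `pipeline_speedup` is an assumption, not something from the slides); when `tn` is omitted it assumes tn = k * tp, as the example does.

```python
def pipeline_speedup(k, n, tp, tn=None):
    """Return (non-pipelined time, pipelined time, speedup) for n tasks."""
    if tn is None:
        tn = k * tp                  # assumption: one task takes k suboperations
    t_nonpipelined = n * tn
    t_pipelined = (k + n - 1) * tp
    return t_nonpipelined, t_pipelined, t_nonpipelined / t_pipelined


t1, tk, sk = pipeline_speedup(k=4, n=100, tp=20)
print(t1, tk, round(sk, 2))
```

As n grows, the speedup returned by this function approaches k, which matches the limit of the speedup formula when tn = k * tp.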

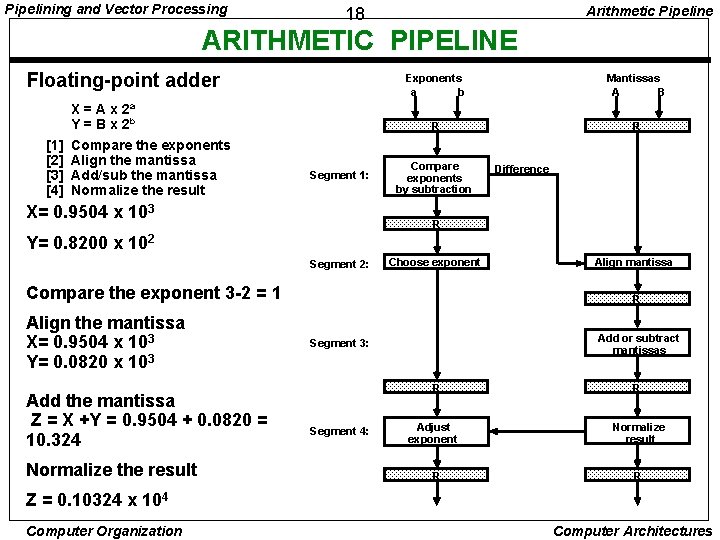

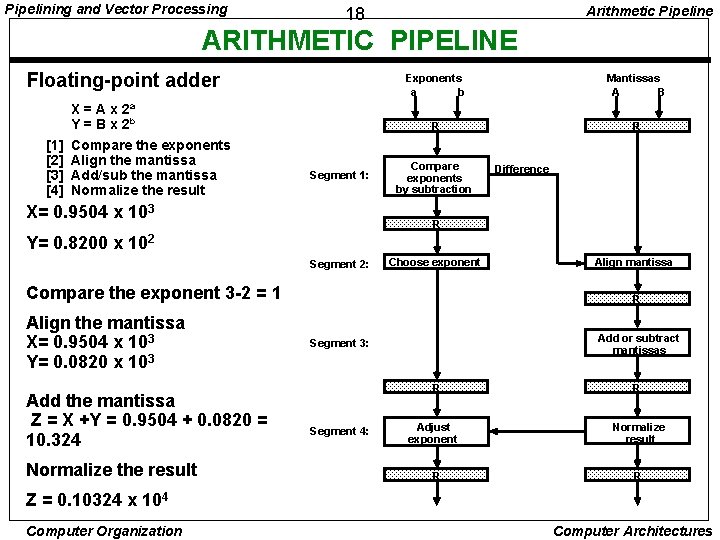

ARITHMETIC PIPELINE

Floating-point adder for X = A x 2^a and Y = B x 2^b:

1. Compare the exponents
2. Align the mantissa
3. Add/subtract the mantissas
4. Normalize the result

Block diagram: exponents a, b and mantissas A, B enter through registers (R). Segment 1 compares the exponents by subtraction and produces the difference; Segment 2 chooses the exponent and aligns the mantissa; Segment 3 adds or subtracts the mantissas; Segment 4 normalizes the result and adjusts the exponent.

Worked example (decimal):

- X = 0.9504 x 10^3, Y = 0.8200 x 10^2
- Compare the exponents: 3 - 2 = 1
- Align the mantissa: X = 0.9504 x 10^3, Y = 0.0820 x 10^3
- Add the mantissas: Z = X + Y = (0.9504 + 0.0820) x 10^3 = 1.0324 x 10^3
- Normalize the result: Z = 0.10324 x 10^4
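The four segments can be traced in code using the decimal example from this slide. This is a sketch under stated assumptions: it works in base 10 with Python's `decimal` module to avoid binary rounding, it runs the segments one after another rather than overlapped, and the function name `fp_add` is hypothetical.

```python
from decimal import Decimal


def fp_add(ma, ea, mb, eb):
    """Add ma x 10^ea and mb x 10^eb; return a normalized (mantissa, exponent)."""
    # Segment 1: compare the exponents by subtraction.
    diff = ea - eb
    # Segment 2: choose the larger exponent and align the other mantissa.
    if diff >= 0:
        exp = ea
        mb = mb / (Decimal(10) ** diff)
    else:
        exp = eb
        ma = ma / (Decimal(10) ** (-diff))
    # Segment 3: add the mantissas.
    m = ma + mb
    # Segment 4: normalize so that 0.1 <= |m| < 1, adjusting the exponent.
    while abs(m) >= 1:
        m = m / 10
        exp += 1
    while m != 0 and abs(m) < Decimal("0.1"):
        m = m * 10
        exp -= 1
    return m, exp


m, e = fp_add(Decimal("0.9504"), 3, Decimal("0.8200"), 2)
print(m, e)
```

In a hardware pipeline each of these four steps is a separate segment with registers in between, so a new pair of operands can enter Segment 1 while earlier pairs are still being aligned, added, or normalized.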

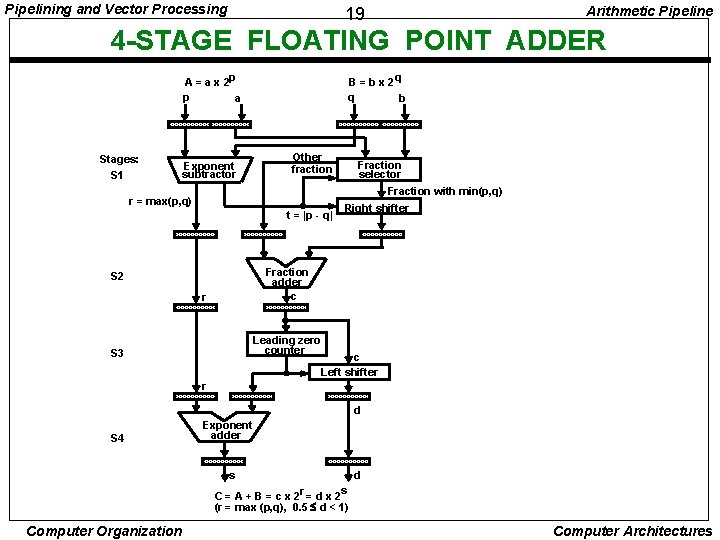

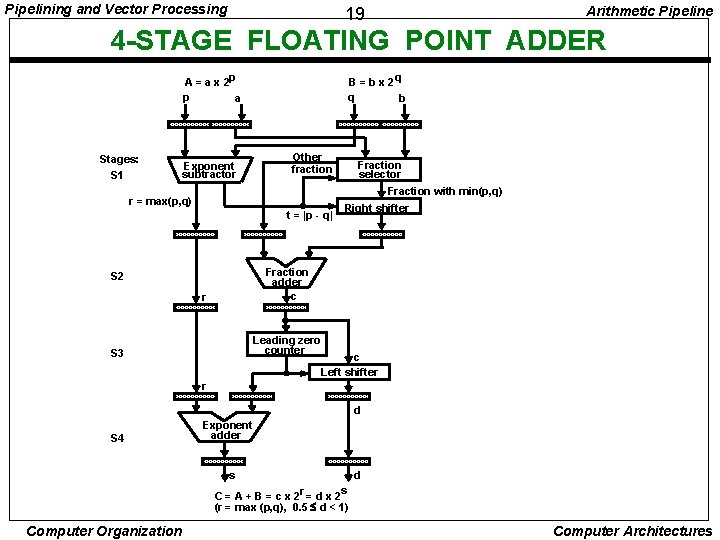

4-STAGE FLOATING POINT ADDER

Inputs: A = a x 2^p, B = b x 2^q

Stages:

- S1: exponent subtractor and fraction selector; r = max(p, q), t = |p - q|; the fraction with min(p, q) goes to the right shifter, the other fraction passes through
- S2: fraction adder, producing c
- S3: leading zero counter and left shifter, producing d
- S4: exponent adder, producing s

Result: C = A + B = c x 2^r = d x 2^s, where r = max(p, q) and 0.5 ≤ d < 1

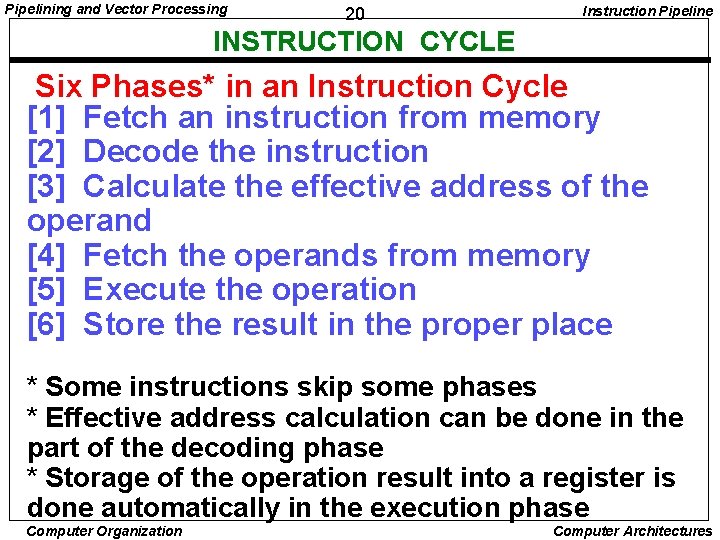

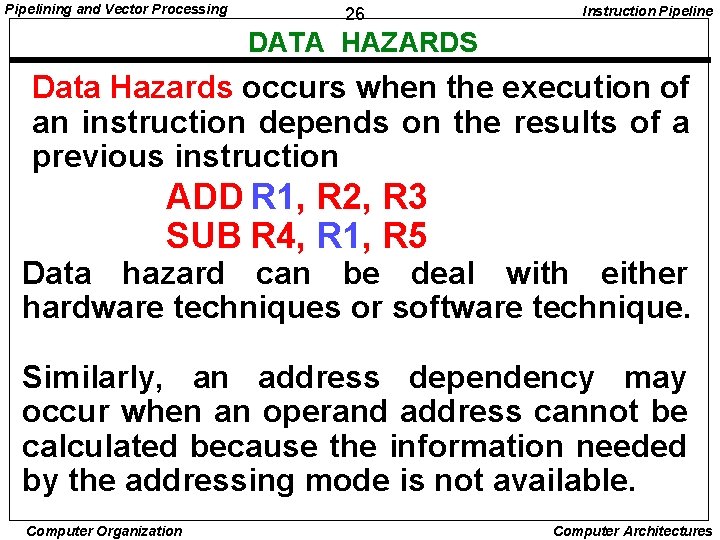

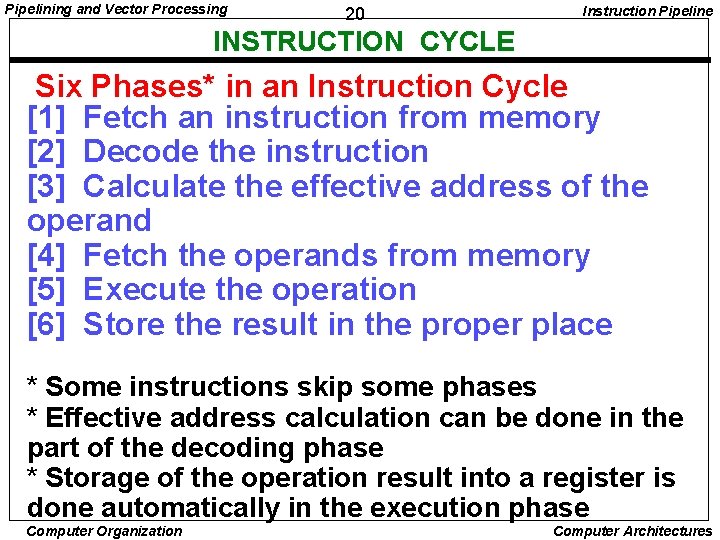

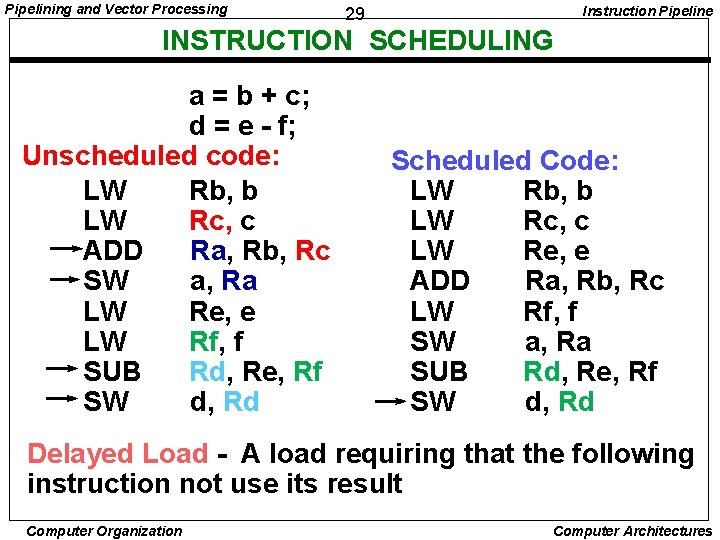

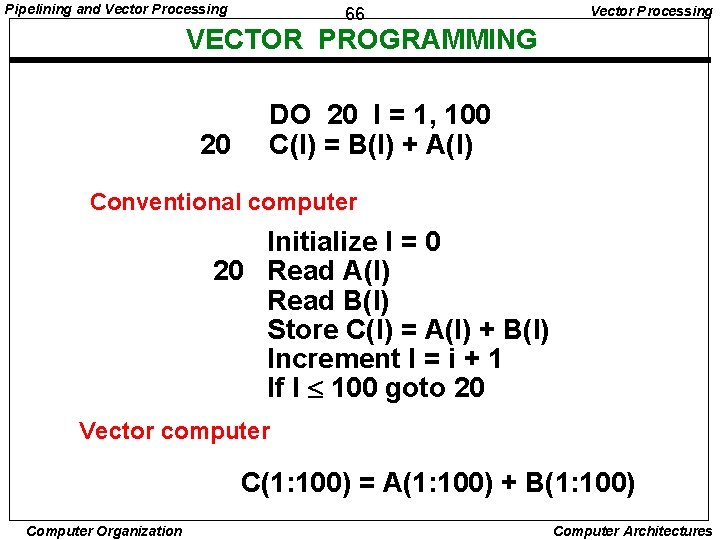

INSTRUCTION CYCLE

Six phases* in an instruction cycle:

1. Fetch an instruction from memory
2. Decode the instruction
3. Calculate the effective address of the operand
4. Fetch the operands from memory
5. Execute the operation
6. Store the result in the proper place

\* Notes:

- Some instructions skip some phases
- Effective address calculation can be done as part of the decoding phase
- Storage of the operation result into a register is done automatically in the execution phase

![Pipelining and Vector Processing 21 Instruction Pipeline INSTRUCTION CYCLE 4 Stage Pipeline 1 Pipelining and Vector Processing 21 Instruction Pipeline INSTRUCTION CYCLE ==> 4 -Stage Pipeline [1]](https://slidetodoc.com/presentation_image_h/19659ca89361e6fad5787658a04b6d75/image-21.jpg)

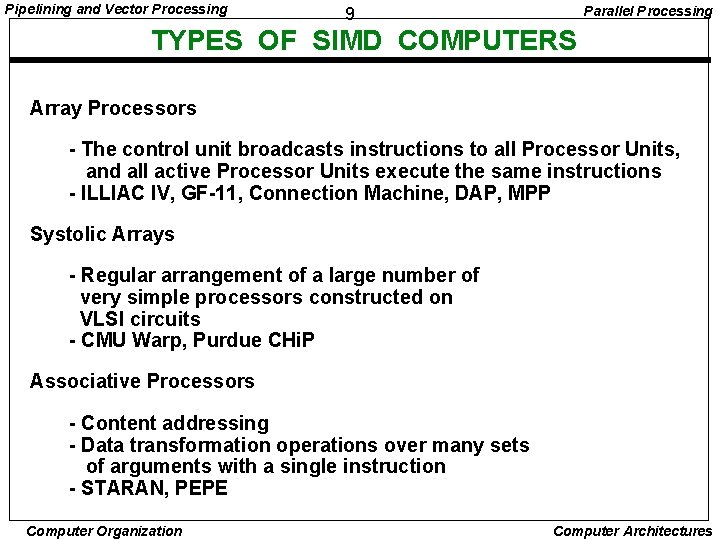

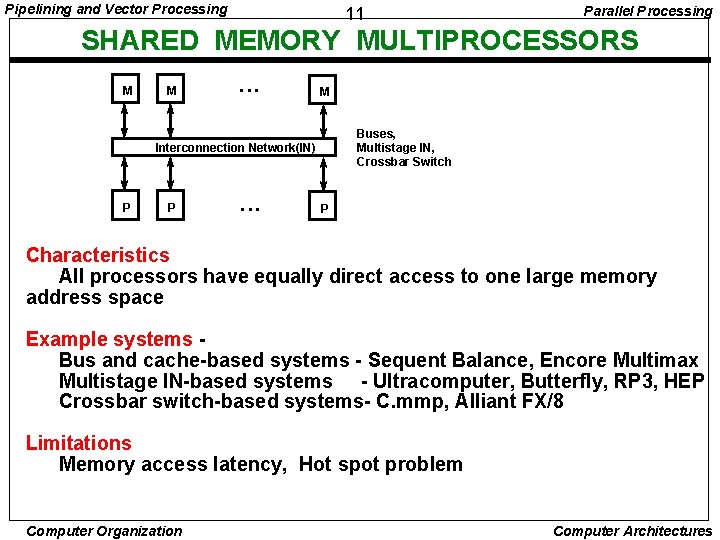

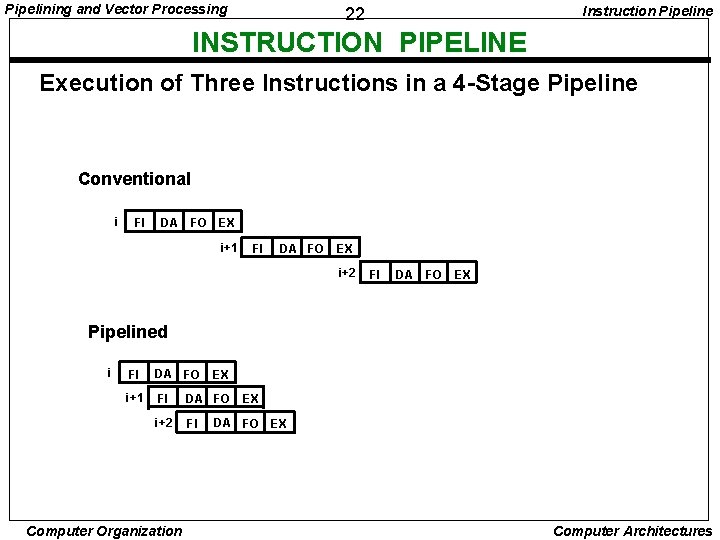

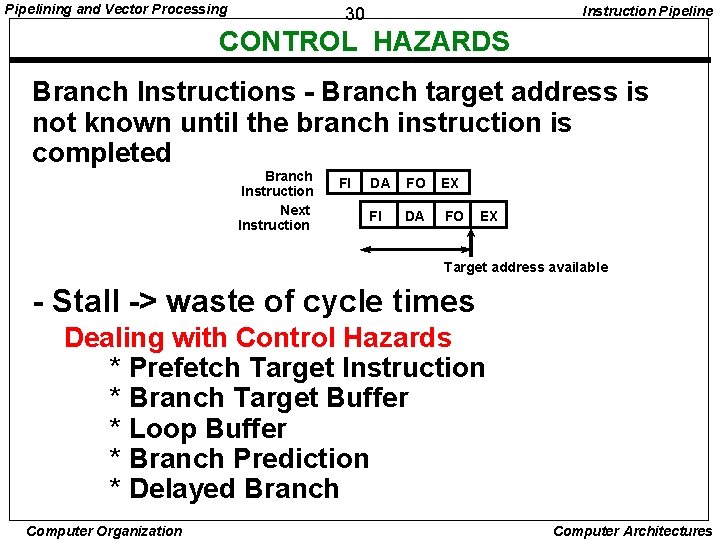

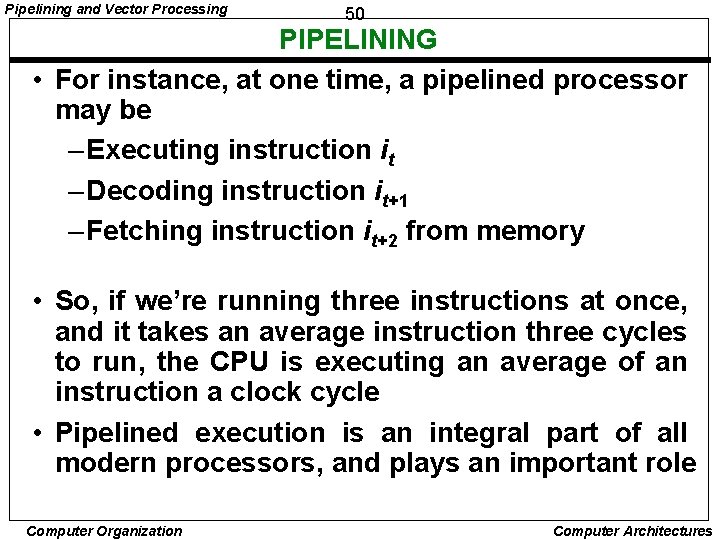

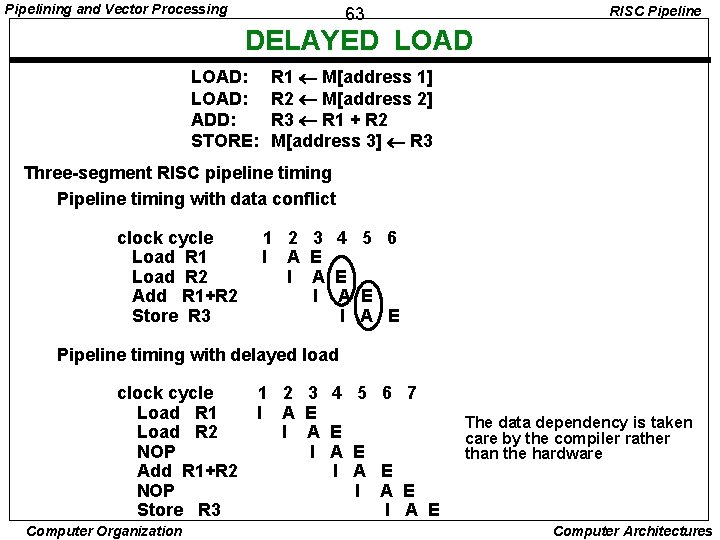

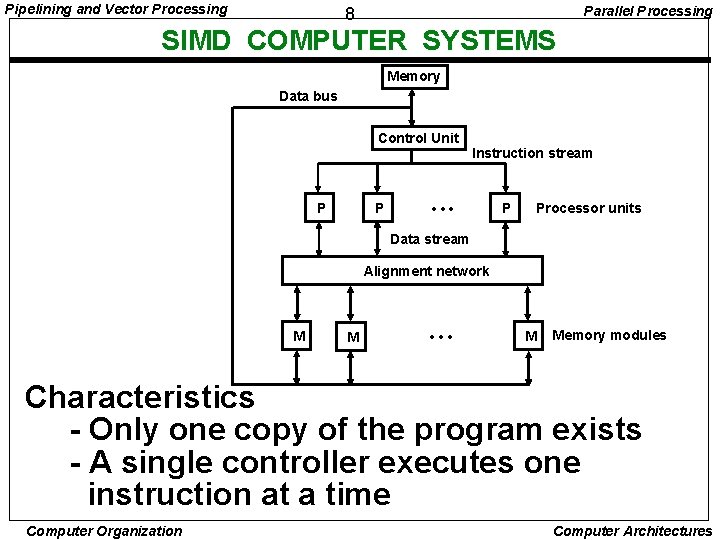

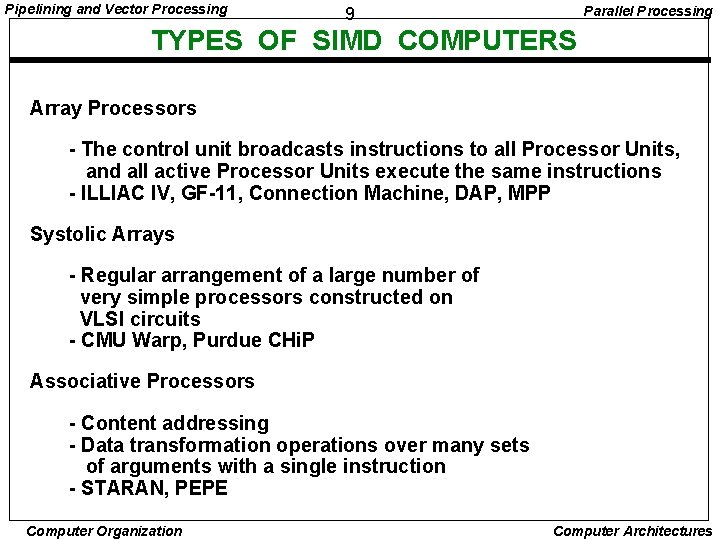

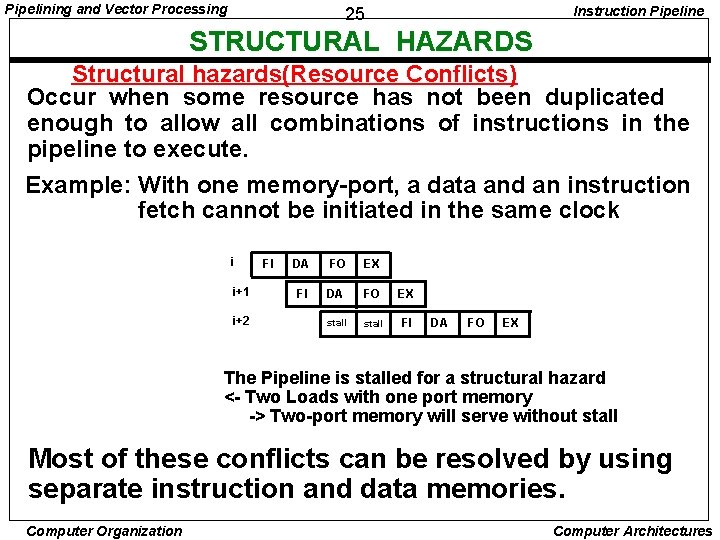

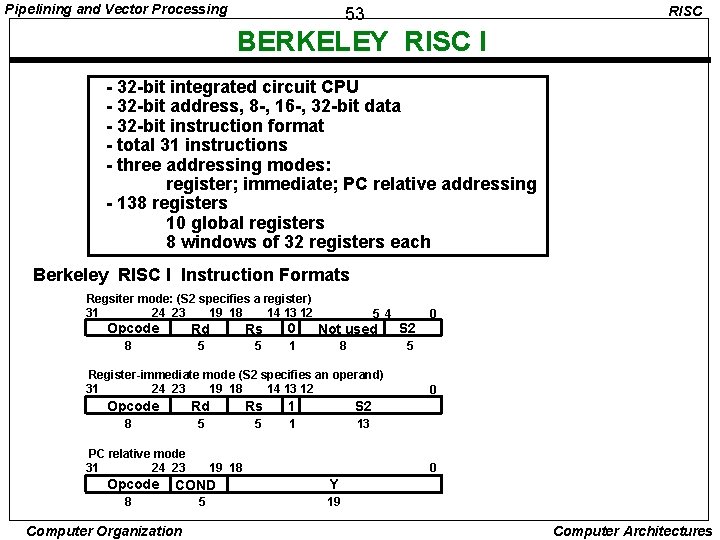

INSTRUCTION CYCLE ==> 4-Stage Pipeline

1. FI: Fetch an instruction from memory
2. DA: Decode the instruction and calculate the effective address of the operand
3. FO: Fetch the operand
4. EX: Execute the operation

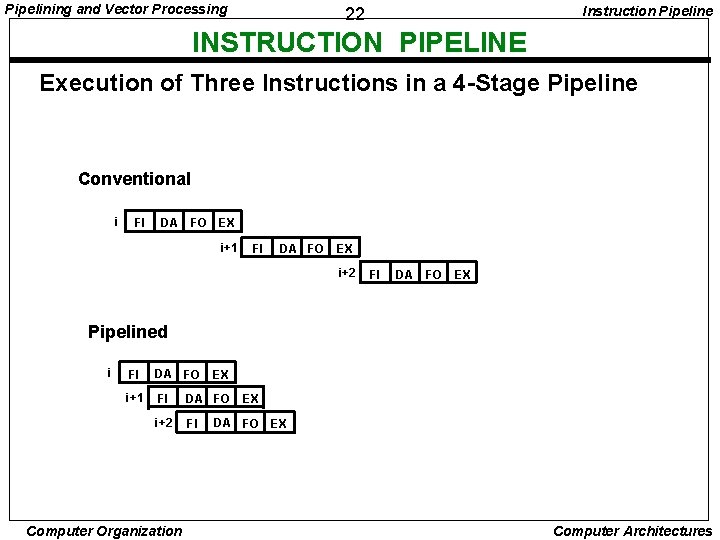

INSTRUCTION PIPELINE

Execution of three instructions in a 4-stage pipeline

Conventional: instructions i, i+1, and i+2 each run FI, DA, FO, EX to completion before the next one starts (12 steps in total).

Pipelined:

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| i | FI | DA | FO | EX | | |
| i+1 | | FI | DA | FO | EX | |
| i+2 | | | FI | DA | FO | EX |

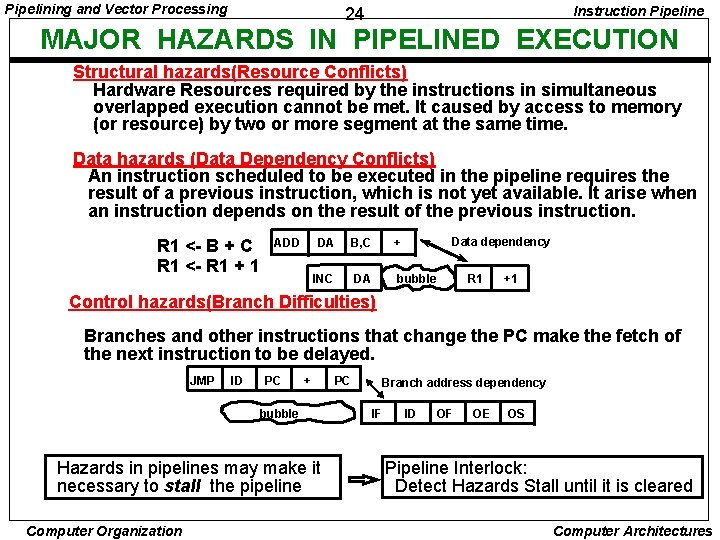

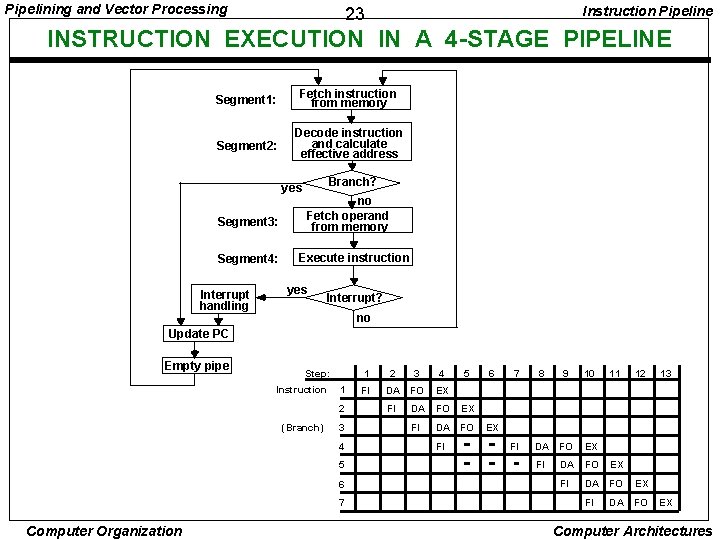

INSTRUCTION EXECUTION IN A 4-STAGE PIPELINE

Flowchart:

- Segment 1: Fetch instruction from memory
- Segment 2: Decode instruction and calculate effective address
  - Branch? If yes: update PC and empty the pipe. If no: continue to Segment 3.
- Segment 3: Fetch operand from memory
- Segment 4: Execute instruction
  - Interrupt? If yes: interrupt handling, then update PC and empty the pipe. If no: continue with the next instruction.

Timing diagram: instructions 1 to 7 (instruction 2 is a branch) passing through FI, DA, FO, EX over steps 1 to 13.

MAJOR HAZARDS IN PIPELINED EXECUTION

Structural hazards (resource conflicts):

- The hardware resources required by the instructions in simultaneous overlapped execution cannot be met.
- Caused by two or more segments accessing memory (or another resource) at the same time.

Data hazards (data dependency conflicts):

- An instruction scheduled to be executed in the pipeline requires the result of a previous instruction, which is not yet available.
- Example: R1 <- B + C (ADD) followed by R1 <- R1 + 1 (INC). The INC must wait (a bubble) until the ADD has produced R1.

Control hazards (branch difficulties):

- Branches and other instructions that change the PC delay the fetch of the next instruction.
- Example: after a JMP, the fetch of the next instruction waits (a bubble) until the branch address has been written to the PC.

Hazards in pipelines may make it necessary to stall the pipeline.

Pipeline interlock: detect hazards and stall until they are cleared.

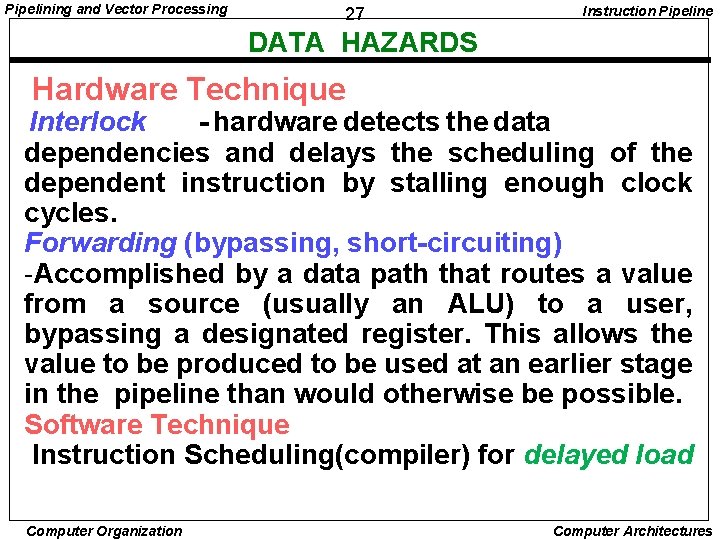

STRUCTURAL HAZARDS

Structural hazards (resource conflicts) occur when some resource has not been duplicated enough to allow all combinations of instructions in the pipeline to execute.

Example: with one memory port, a data fetch and an instruction fetch cannot be initiated in the same clock.

Timing diagram: instructions i, i+1, i+2 in the FI, DA, FO, EX pipeline; the fetch of i+2 is stalled because of the structural hazard.

- Two loads with a one-port memory: the pipeline is stalled
- A two-port memory will serve both without a stall

Most of these conflicts can be resolved by using separate instruction and data memories.

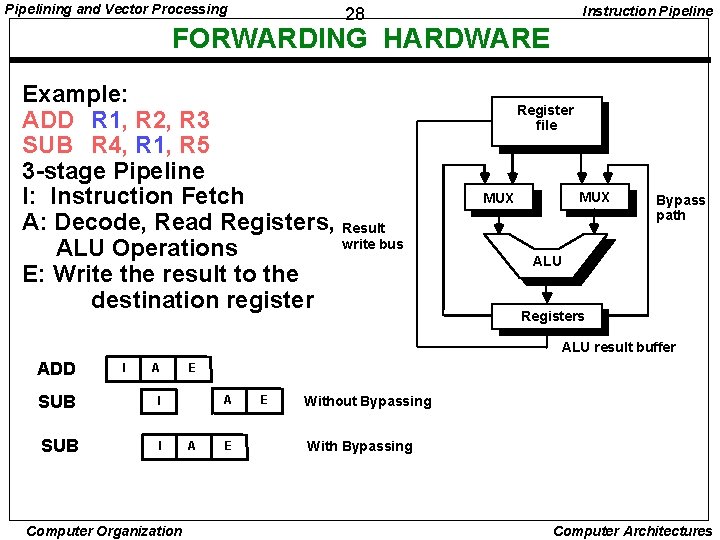

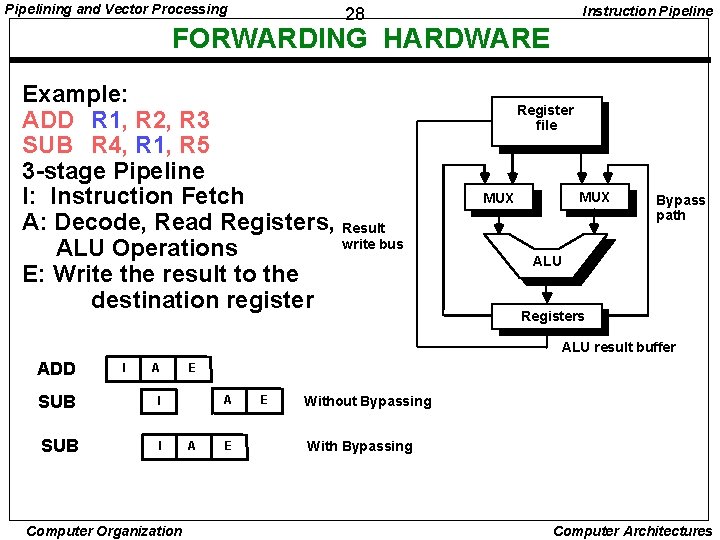

DATA HAZARDS

A data hazard occurs when the execution of an instruction depends on the result of a previous instruction:

- ADD R1, R2, R3
- SUB R4, R1, R5

Data hazards can be dealt with by either hardware or software techniques.

Similarly, an address dependency may occur when an operand address cannot be calculated because the information needed by the addressing mode is not available.
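The dependency in the ADD/SUB pair is a read-after-write conflict: SUB reads R1 before ADD has written it. A minimal detection sketch follows. It assumes the destination register is the first operand, as in the example above; the function name `raw_hazards` and the `window` parameter (how many following instructions are still close enough to conflict) are hypothetical.

```python
def raw_hazards(program, window=1):
    """Find read-after-write dependencies between nearby instructions.

    program: list of (opcode, dest, src1, src2) tuples.
    Returns (producer index, consumer index, register) triples.
    """
    hazards = []
    for i, instr in enumerate(program):
        dest = instr[1]
        last = min(i + 1 + window, len(program))
        for j in range(i + 1, last):
            if dest in program[j][2:]:      # a later instruction reads dest
                hazards.append((i, j, dest))
    return hazards


program = [
    ("ADD", "R1", "R2", "R3"),
    ("SUB", "R4", "R1", "R5"),
]
print(raw_hazards(program))
```

An interlock would use a check like this to decide when to stall; a compiler would use it to decide which instructions to reorder.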

DATA HAZARDS

Hardware techniques:

- Interlock: hardware detects the data dependencies and delays the scheduling of the dependent instruction by stalling enough clock cycles.
- Forwarding (bypassing, short-circuiting): accomplished by a data path that routes a value from a source (usually an ALU) to a user, bypassing a designated register. This allows the value to be used at an earlier stage in the pipeline than would otherwise be possible.

Software technique:

- Instruction scheduling (by the compiler) for delayed load

FORWARDING HARDWARE

Example:

- ADD R1, R2, R3
- SUB R4, R1, R5

3-stage pipeline:

- I: Instruction fetch
- A: Decode, read registers, ALU operations
- E: Write the result to the destination register

Block diagram: register file, MUX, ALU, and ALU result buffer (R4) on the result write bus, with a bypass path from the ALU result buffer back to the MUX.

Timing: ADD runs I, A, E. Without bypassing, the A stage of SUB is delayed until ADD has written R1; with bypassing, SUB runs I, A, E immediately behind ADD.

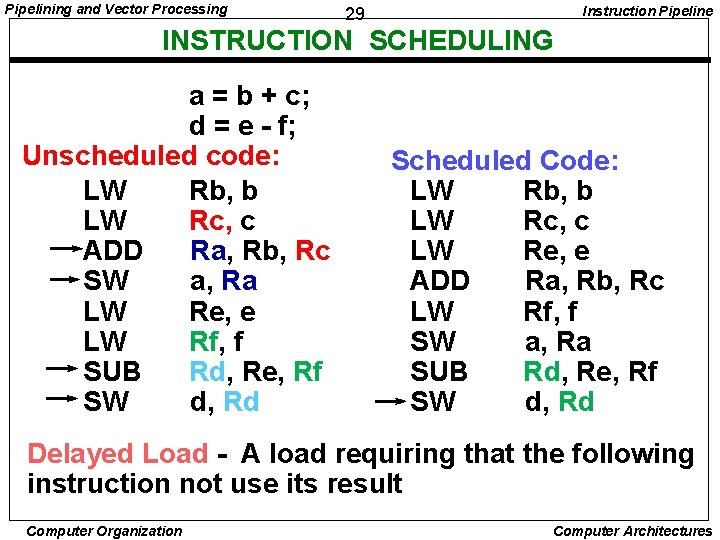

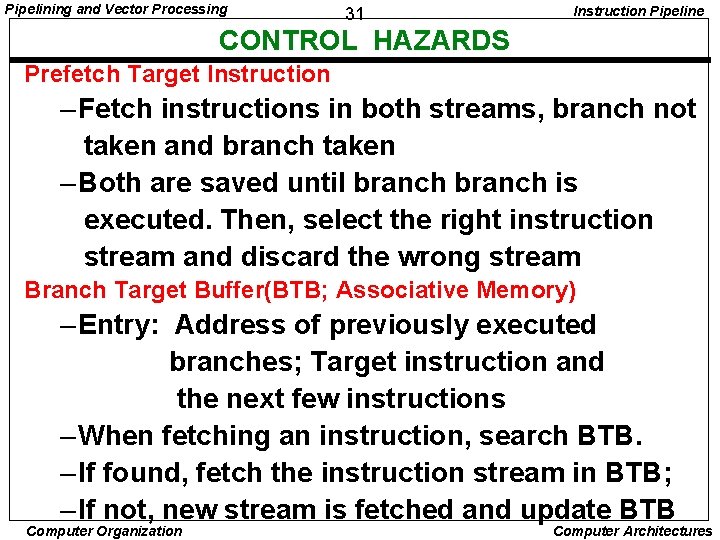

INSTRUCTION SCHEDULING

Source statements: a = b + c; d = e - f;

| Unscheduled code | Scheduled code |
|---|---|
| LW Rb, b | LW Rb, b |
| LW Rc, c | LW Rc, c |
| ADD Ra, Rb, Rc | LW Re, e |
| SW a, Ra | ADD Ra, Rb, Rc |
| LW Re, e | LW Rf, f |
| LW Rf, f | SW a, Ra |
| SUB Rd, Re, Rf | SUB Rd, Re, Rf |
| SW d, Rd | SW d, Rd |

Delayed load: a load requiring that the following instruction not use its result.
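The benefit of scheduling can be checked mechanically: under the delayed-load rule, an instruction conflicts if it reads a register loaded by the instruction immediately before it. The sketch below counts such conflicts in both listings. The tuple encoding and the function name `load_use_conflicts` are assumptions made for this illustration.

```python
def load_use_conflicts(code):
    """Return indices of instructions that read the result of the LW just before them.

    Each instruction is a tuple: (opcode, first operand, remaining operands...).
    For LW the first operand is the loaded register; every operand after the
    first is treated as a source.
    """
    conflicts = []
    for i in range(1, len(code)):
        prev, cur = code[i - 1], code[i]
        if prev[0] == "LW" and prev[1] in cur[2:]:
            conflicts.append(i)
    return conflicts


unscheduled = [
    ("LW", "Rb", "b"), ("LW", "Rc", "c"), ("ADD", "Ra", "Rb", "Rc"),
    ("SW", "a", "Ra"), ("LW", "Re", "e"), ("LW", "Rf", "f"),
    ("SUB", "Rd", "Re", "Rf"), ("SW", "d", "Rd"),
]
scheduled = [
    ("LW", "Rb", "b"), ("LW", "Rc", "c"), ("LW", "Re", "e"),
    ("ADD", "Ra", "Rb", "Rc"), ("LW", "Rf", "f"), ("SW", "a", "Ra"),
    ("SUB", "Rd", "Re", "Rf"), ("SW", "d", "Rd"),
]
print(load_use_conflicts(unscheduled), load_use_conflicts(scheduled))
```

In the unscheduled code, ADD and SUB each follow the load of one of their operands; the scheduled code separates every load from its first use by at least one instruction.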

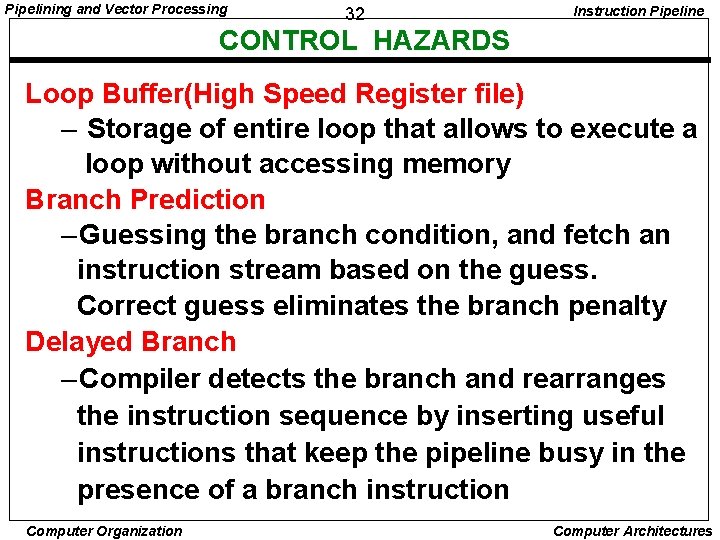

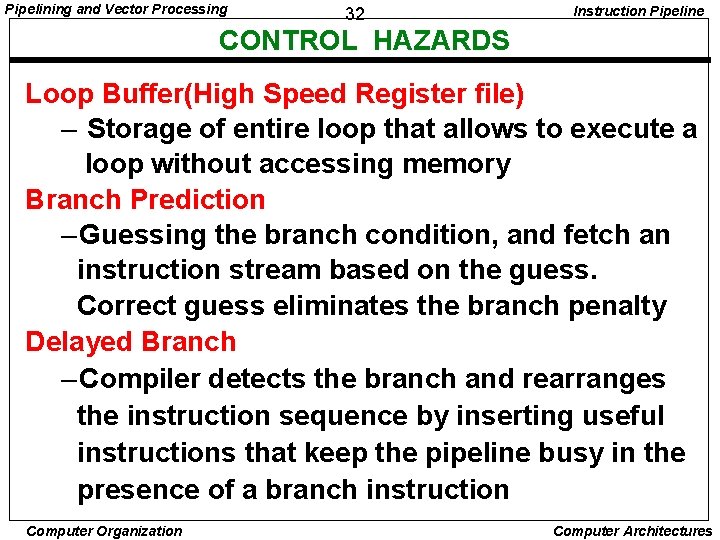

CONTROL HAZARDS

Branch instructions:

- The branch target address is not known until the branch instruction is completed
- Timing: the branch instruction passes through FI, DA, FO, EX; the target address is available only after EX, so the fetch of the next instruction must wait
- Stall -> waste of cycle times

Dealing with control hazards:

- Prefetch target instruction
- Branch target buffer
- Loop buffer
- Branch prediction
- Delayed branch

CONTROL HAZARDS

Prefetch target instruction:

- Fetch instructions in both streams: branch not taken and branch taken
- Both are saved until the branch is executed; then the right instruction stream is selected and the wrong one is discarded

Branch target buffer (BTB; associative memory):

- Entry: address of a previously executed branch, its target instruction, and the next few instructions
- When fetching an instruction, search the BTB
- If found, fetch the instruction stream from the BTB
- If not found, fetch the new stream and update the BTB
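The BTB lookup described above behaves like a small associative cache keyed by branch address. The sketch below models it with a dictionary. The class name, the `memory_fetch` callback, the capacity, and the first-in-first-out replacement are all assumptions for illustration; the slides do not specify a replacement policy.

```python
class BranchTargetBuffer:
    """Toy branch target buffer: branch address -> saved target instruction stream."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.entries = {}               # dicts keep insertion order

    def fetch(self, address, memory_fetch):
        """Return (instruction stream, hit flag) for the branch at `address`."""
        if address in self.entries:     # found: use the stream held in the BTB
            return self.entries[address], True
        stream = memory_fetch(address)  # not found: fetch the new stream
        if len(self.entries) >= self.capacity:
            oldest = next(iter(self.entries))
            del self.entries[oldest]    # assumed FIFO replacement
        self.entries[address] = stream  # update the BTB
        return stream, False


def memory_fetch(address):
    # Hypothetical stand-in for reading the target and the next few instructions.
    return [f"instr@{address + offset}" for offset in range(3)]


btb = BranchTargetBuffer(capacity=2)
print(btb.fetch(100, memory_fetch)[1], btb.fetch(100, memory_fetch)[1])
```

The first fetch of a branch misses and goes to memory; a repeated fetch of the same branch is served from the buffer.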

CONTROL HAZARDS

Loop buffer (high-speed register file):

- Stores an entire loop so that the loop can be executed without accessing memory

Branch prediction:

- Guess the branch condition and fetch an instruction stream based on the guess; a correct guess eliminates the branch penalty

Delayed branch:

- The compiler detects the branch and rearranges the instruction sequence by inserting useful instructions that keep the pipeline busy in the presence of a branch instruction
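The slides do not say how the guess is made. One widely used scheme, shown here purely as an illustration and not as the method the slides have in mind, is a 2-bit saturating counter per branch: it predicts "taken" in its upper two states and moves one state per actual outcome. The class name and the initial state are assumptions.

```python
class TwoBitPredictor:
    """2-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self, state=2):
        self.state = state              # assumed start: weakly taken

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


# A loop branch that is taken nine times and then falls through.
outcomes = [True] * 9 + [False]
predictor = TwoBitPredictor()
correct = 0
for taken in outcomes:
    if predictor.predict() == taken:
        correct += 1
    predictor.update(taken)
print(correct, "of", len(outcomes))
```

Only the final loop exit is mispredicted here, and because the counter drops just one state, the predictor still guesses "taken" when the loop is entered again.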

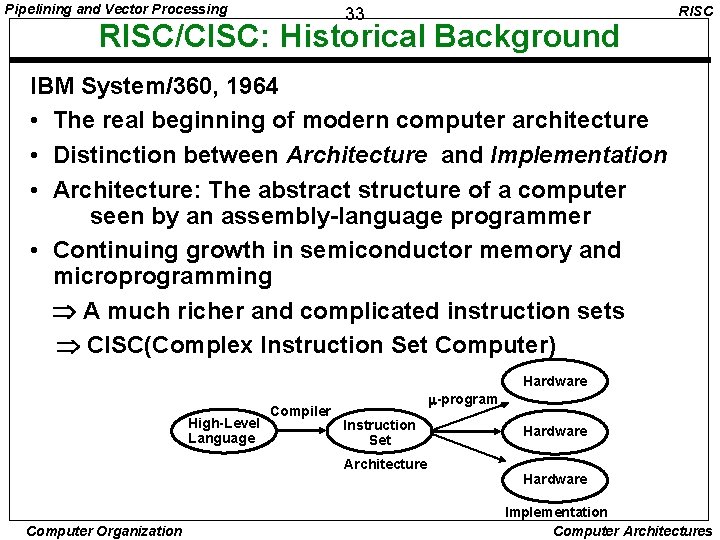

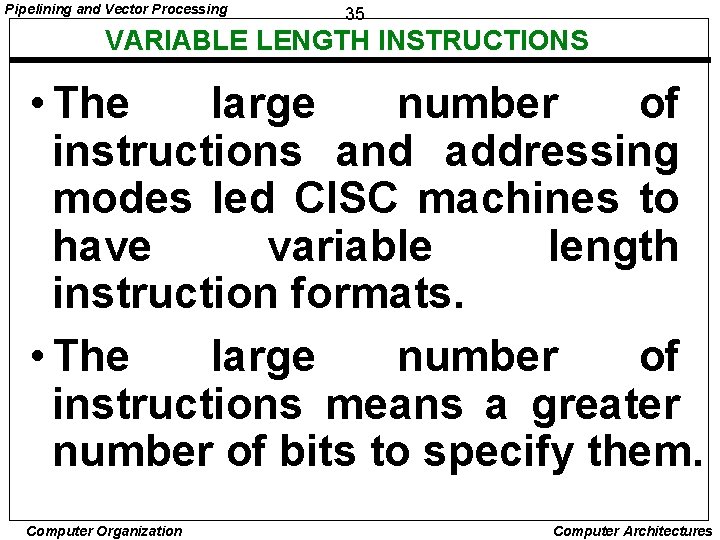

RISC/CISC: HISTORICAL BACKGROUND

IBM System/360, 1964:

- The real beginning of modern computer architecture
- Distinction between architecture and implementation
- Architecture: the abstract structure of a computer as seen by an assembly-language programmer

Figure labels: High-Level Language, Compiler, Instruction Set (Architecture), μ-program, Hardware (Implementation).

Continuing growth in semiconductor memory and microprogramming led to much richer and more complicated instruction sets: CISC (Complex Instruction Set Computer).

COMPLEX INSTRUCTION SET COMPUTER (CISC)

- These computers with many instructions and addressing modes came to be known as Complex Instruction Set Computers (CISC).
- One goal for CISC machines was to have a machine language instruction to match each high-level language statement type.

VARIABLE LENGTH INSTRUCTIONS

- The large number of instructions and addressing modes led CISC machines to have variable length instruction formats.
- The large number of instructions means a greater number of bits to specify them.
- In order to manage this large number of opcodes efficiently, they were encoded with different lengths:
  - More frequently used instructions were encoded using short opcodes.
  - Less frequently used ones were assigned longer opcodes.
- Also, multiple-operand instructions could specify different addressing modes for each operand. For example:
  - Operand 1 could be a directly addressed register,
  - Operand 2 could be an indirectly addressed memory location,
  - Operand 3 (the destination) could be an indirectly addressed register.
- All of this led to the need for instructions of different lengths in different situations, depending on the opcode and operands used.
- For example, an instruction that only specifies register operands may be only two bytes long:
  - One byte to specify the instruction and addressing mode
  - One byte to specify the source and destination registers
- An instruction that specifies memory addresses for operands may need five bytes:
  - One byte to specify the instruction and addressing mode
  - Two bytes to specify each memory address (maybe more if there is a large amount of memory)
- Variable length instructions greatly complicate the fetch and decode problem for a processor.
- The circuitry to recognize the various instructions and to properly fetch the required number of bytes for operands is very complex.

COMPLEX INSTRUCTION SET COMPUTER

- Another property of CISC computers is that they have instructions that act directly on memory addresses.
  - For example, ADD L1, L2, L3 takes the contents of M[L1], adds it to the contents of M[L2], and stores the result in location M[L3].
- An instruction like this takes three memory access cycles to execute.
- That makes for a potentially very long instruction execution cycle.

COMPLEX INSTRUCTION SET COMPUTER

The problems with CISC computers are:

- The complexity of the design may slow down the processor,
- The complexity of the design may result in costly errors in the processor design and implementation,
- Many of the instructions and addressing modes are used rarely, if ever.

SUMMARY: CRITICISMS ON CISC

CISC aims at high-performance, general-purpose instructions. The criticisms:

- Complex instructions
  - → Format, length, addressing modes
  - → Complicated instruction cycle control due to the complex decoding hardware and decoding process
- Multiple memory cycle instructions
  - → Operations on memory data
  - → Multiple memory accesses per instruction
- Microprogrammed control is a necessity
  - → Microprogram control storage takes a substantial portion of the CPU chip area
  - → The semantic gap between machine instruction and microinstruction is large
- A general-purpose instruction set includes all the features required by individually different applications
  - → When any one application is running, all the features required by the other applications are an extra burden to that application

REDUCED INSTRUCTION SET COMPUTERS

- In the late '70s and early '80s there was a reaction to the shortcomings of the CISC style of processors.
- Reduced Instruction Set Computers (RISC) were proposed as an alternative.
- The idea behind RISC processors is to simplify the instruction set and reduce instruction execution time.
- RISC processors often feature:
  - Few instructions
  - Few addressing modes
  - Only load and store instructions access memory
  - All other operations are done using on-processor registers
  - Fixed length instructions
  - Single cycle execution of instructions
  - The control unit is hardwired, not microprogrammed
- Since all but the load and store instructions use only registers for operands, only a few addressing modes are needed.
- By having all instructions the same length, reading them in is easy and fast.
- The fetch and decode stages are simple, looking much more like Mano's Basic Computer than a CISC machine.
- The instruction and address formats are designed to be easy to decode.
- Unlike the variable length CISC instructions, the opcode and register fields of RISC instructions can be decoded simultaneously.
- The control logic of a RISC processor is designed to be simple and fast.
- The control logic is simple because of the small number of instructions and the simple addressing modes.
- The control logic is hardwired, rather than microprogrammed, because hardwired control is faster.

REGISTERS in RISC

- By simplifying the instructions and addressing modes, there is space available on the chip or board of a RISC CPU for more circuits than with a CISC processor.
- This extra capacity is used to:
  - Pipeline instruction execution to speed up instruction execution
  - Add a large number of registers to the CPU

PIPELINING in RISC

- A very important feature of many RISC processors is the ability to execute an instruction each clock cycle.
- This may seem nonsensical, since it takes at least one clock cycle each to fetch, decode, and execute an instruction.
- It is however possible, because of a technique known as pipelining.
- Pipelining is the use of the processor to work on different phases of multiple instructions in parallel.

PIPELINING

- For instance, at one time, a pipelined processor may be:
  - Executing instruction i(t)
  - Decoding instruction i(t+1)
  - Fetching instruction i(t+2) from memory
- So, if three instructions are in flight at once and an average instruction takes three cycles to run, the CPU completes an average of one instruction per clock cycle.
- Pipelined execution is an integral part of all modern processors and plays an important role in their performance.

REGISTERS

- By having a large number of general purpose registers, a processor can minimize the number of times it needs to access memory to load or store a value.
- This results in a significant speedup, since memory accesses are much slower than register accesses.
- Register accesses are fast, since they just use the bus on the CPU itself, and any transfer can be done in one clock cycle.
- To go off-processor to memory requires using the much slower memory (or system) bus.
- It may take many clock cycles to read or write to memory across the memory bus:
  - The memory bus hardware is usually slower than the processor.
  - There may even be competition for access to the memory bus by other devices in the computer (e.g. disk drives).
- So, for this reason alone, a RISC processor may have an advantage over a comparable CISC processor, since it only needs to access memory:
  - for its instructions, and
  - occasionally to load or store a memory value.

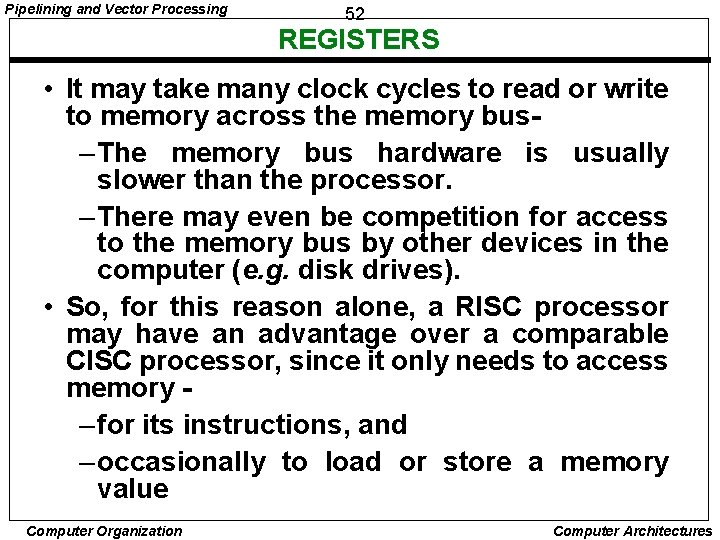

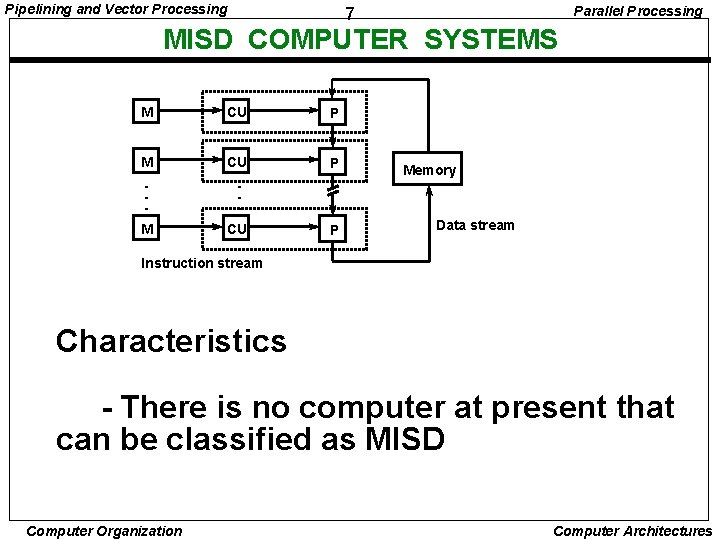

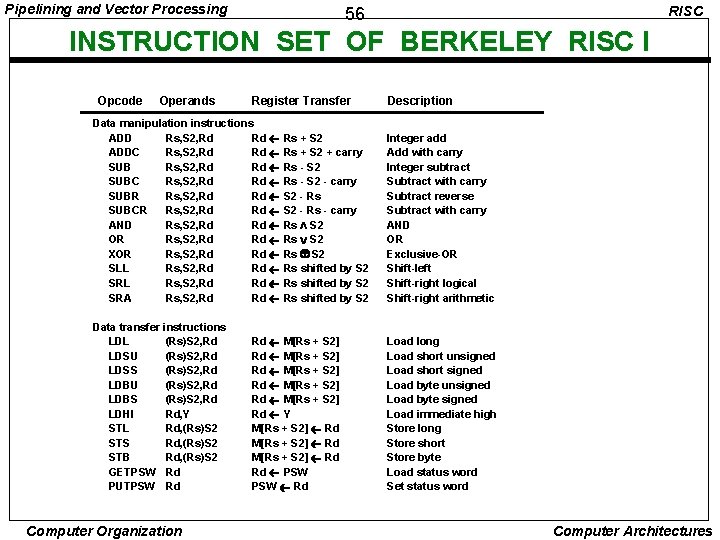

BERKELEY RISC I

- 32-bit integrated circuit CPU
- 32-bit address; 8-, 16-, 32-bit data
- 32-bit instruction format
- Total of 31 instructions
- Three addressing modes: register; immediate; PC relative addressing
- 138 registers: 10 global registers, 8 windows of 32 registers each

Berkeley RISC I instruction formats

Register mode (S2 specifies a register):

| Bits | 31-24 | 23-19 | 18-14 | 13 | 12-5 | 4-0 |
|---|---|---|---|---|---|---|
| Field | Opcode | Rd | Rs | 0 | Not used | S2 |
| Width | 8 | 5 | 5 | 1 | 8 | 5 |

Register-immediate mode (S2 specifies an operand):

| Bits | 31-24 | 23-19 | 18-14 | 13 | 12-0 |
|---|---|---|---|---|---|
| Field | Opcode | Rd | Rs | 1 | S2 |
| Width | 8 | 5 | 5 | 1 | 13 |

PC relative mode:

| Bits | 31-24 | 23-19 | 18-0 |
|---|---|---|---|
| Field | Opcode | COND | Y |
| Width | 8 | 5 | 19 |
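Because every field sits at a fixed bit position, packing and unpacking an instruction word takes only shifts and masks. The sketch below follows the register and register-immediate layouts listed on this slide (8-bit opcode, 5-bit Rd, 5-bit Rs, 1 mode bit, 13-bit S2). The function names are hypothetical, and the opcode value used in the example is made up for illustration; it is not a real RISC I opcode.

```python
def encode(opcode, rd, rs, immediate, s2):
    """Pack the fields into a 32-bit word; `immediate` is the mode bit (bit 13)."""
    return (
        ((opcode & 0xFF) << 24)
        | ((rd & 0x1F) << 19)
        | ((rs & 0x1F) << 14)
        | ((1 if immediate else 0) << 13)
        | (s2 & 0x1FFF)
    )


def decode(word):
    """Unpack a 32-bit word into its fields."""
    fields = {
        "opcode": (word >> 24) & 0xFF,
        "rd": (word >> 19) & 0x1F,
        "rs": (word >> 14) & 0x1F,
        "immediate": bool((word >> 13) & 1),
    }
    if fields["immediate"]:
        fields["s2"] = word & 0x1FFF    # 13-bit operand
    else:
        fields["s2"] = word & 0x1F      # register number in bits 4-0
    return fields


word = encode(opcode=0x01, rd=3, rs=7, immediate=True, s2=100)  # 0x01 is made up
print(hex(word), decode(word))
```

All fields can be extracted at the same time because none of their positions depends on the value of another field, which is the property that makes fixed-length formats easy to decode.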

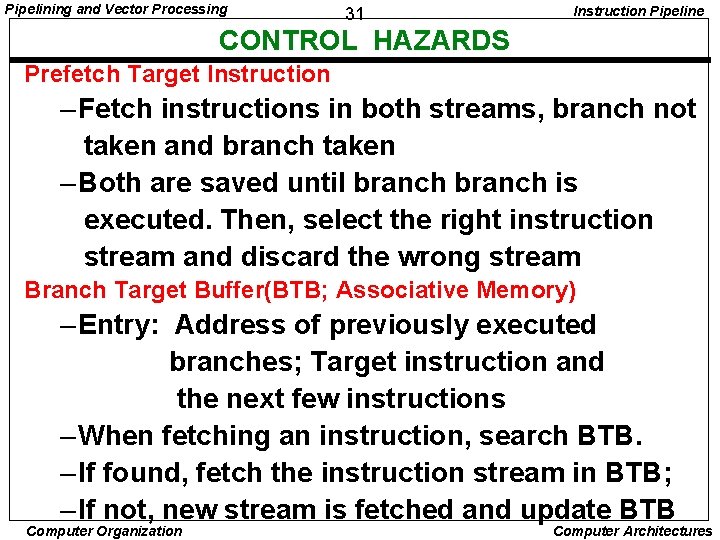

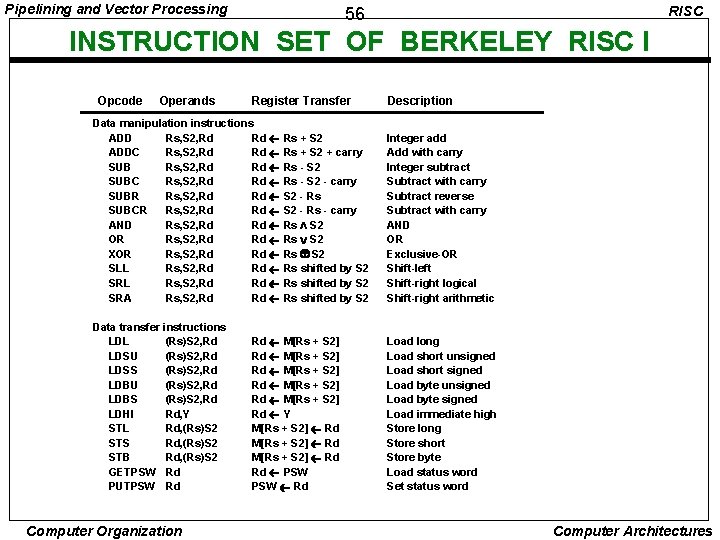

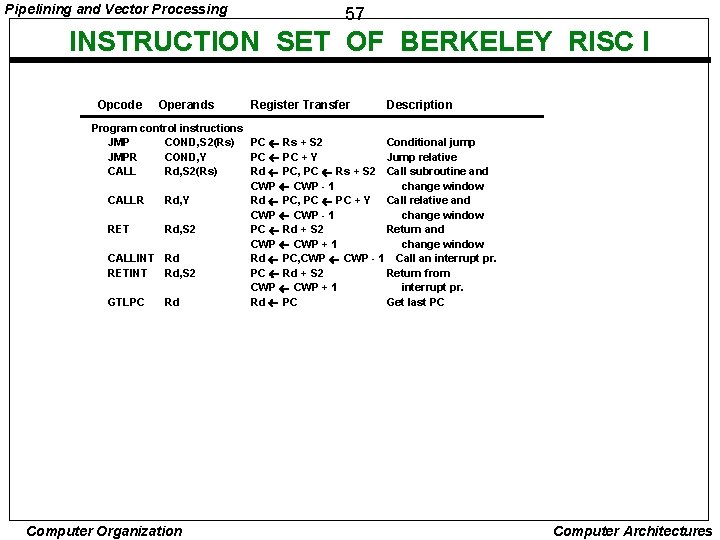

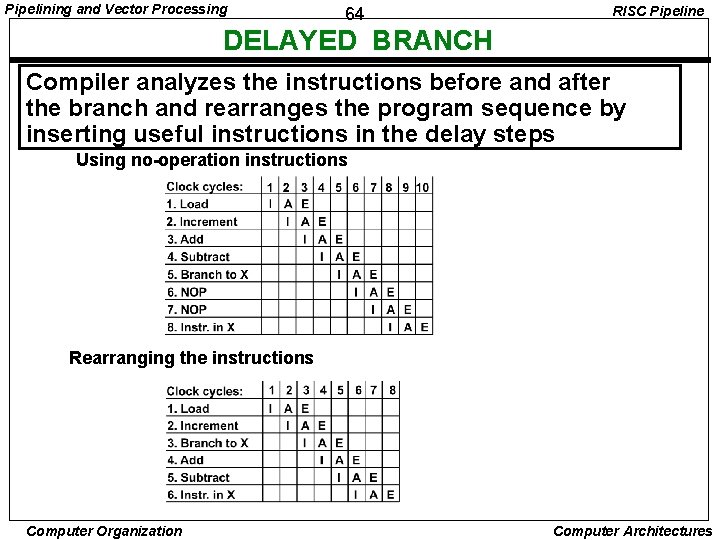

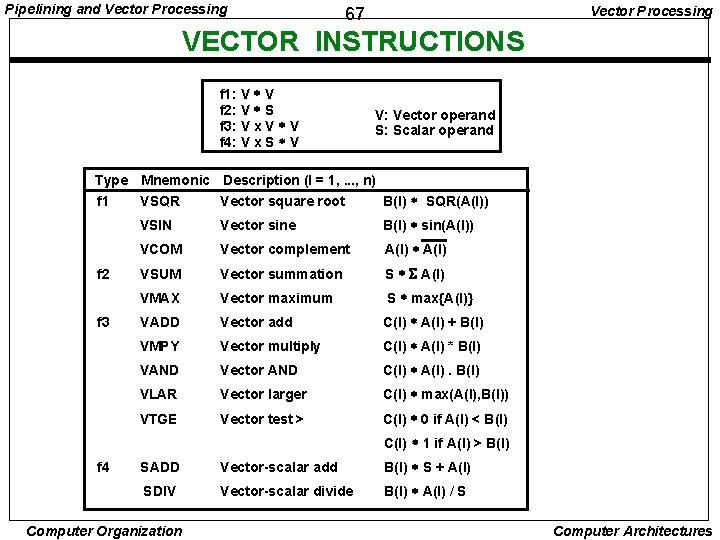

BERKELEY RISC I

- Register 0 was hard-wired to a value of 0.
- There are eight memory access instructions:
  - Five load-from-memory instructions
  - Three store-to-memory instructions
- The load instructions (long is 32 bits, short is 16 bits, and a byte is 8 bits):
  - LDL: load long
  - LDSU: load short unsigned
  - LDSS: load short signed
  - LDBU: load byte unsigned
  - LDBS: load byte signed
- The store instructions:
  - STL: store long
  - STS: store short
  - STB: store byte

![Pipelining and Vector Processing 55 Berkeley RISC I LDL Rd MRs S 2 Pipelining and Vector Processing 55 Berkeley RISC I LDL Rd M[(Rs) + S 2]](https://slidetodoc.com/presentation_image_h/19659ca89361e6fad5787658a04b6d75/image-55.jpg)

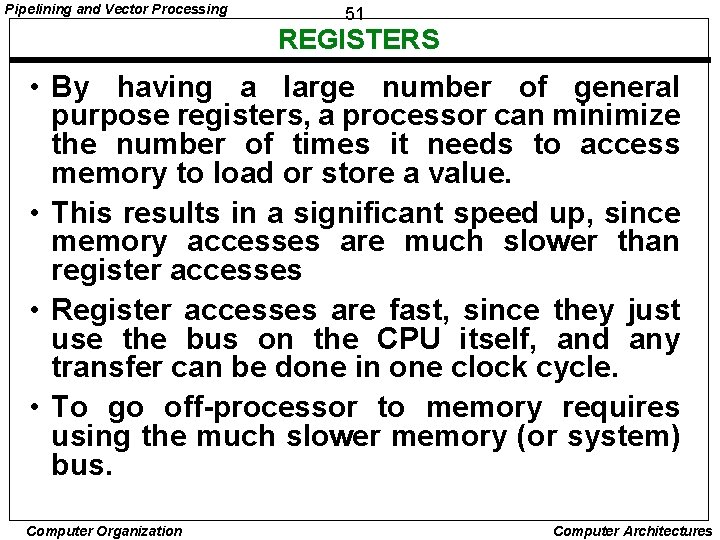

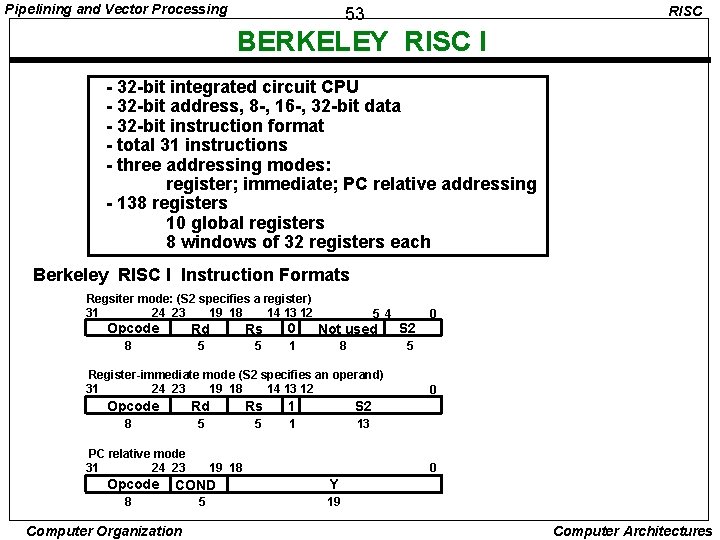

Berkeley RISC I

| Instruction | Register transfer | Description |
|---|---|---|
| LDL | Rd ← M[(Rs) + S2] | load long |
| LDSU | Rd ← M[(Rs) + S2] | load short unsigned |
| LDSS | Rd ← M[(Rs) + S2] | load short signed |
| LDBU | Rd ← M[(Rs) + S2] | load byte unsigned |
| LDBS | Rd ← M[(Rs) + S2] | load byte signed |
| STL | M[(Rs) + S2] ← Rd | store long |
| STS | M[(Rs) + S2] ← Rd | store short |
| STB | M[(Rs) + S2] ← Rd | store byte |

Here the difference between the lengths is:

- A long is simply loaded, since it is the same size as the register (32 bits).
- A short or a byte can be loaded into a register:
  - Unsigned, in which case the upper bits of the register are loaded with 0's.
  - Signed, in which case the upper bits of the register are loaded with the sign bit of the short or byte loaded.
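The difference between the unsigned and signed loads is zero extension versus sign extension. A minimal sketch follows, assuming a 32-bit register; the helper names are hypothetical.

```python
def zero_extend(value, bits):
    """Unsigned load: keep the low `bits` bits, upper register bits become 0."""
    return value & ((1 << bits) - 1)


def sign_extend(value, bits, width=32):
    """Signed load: copy the sign bit of the `bits`-bit value into the upper bits."""
    value &= (1 << bits) - 1
    if value & (1 << (bits - 1)):       # sign bit set: value is negative
        value -= 1 << bits
    return value & ((1 << width) - 1)   # register contents as an unsigned pattern


print(hex(zero_extend(0x80, 8)), hex(sign_extend(0x80, 8)))
print(hex(zero_extend(0x8000, 16)), hex(sign_extend(0x8000, 16)))
```

Under this model, LDBU and LDSU correspond to `zero_extend` with 8 and 16 bits, and LDBS and LDSS correspond to `sign_extend` with 8 and 16 bits.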

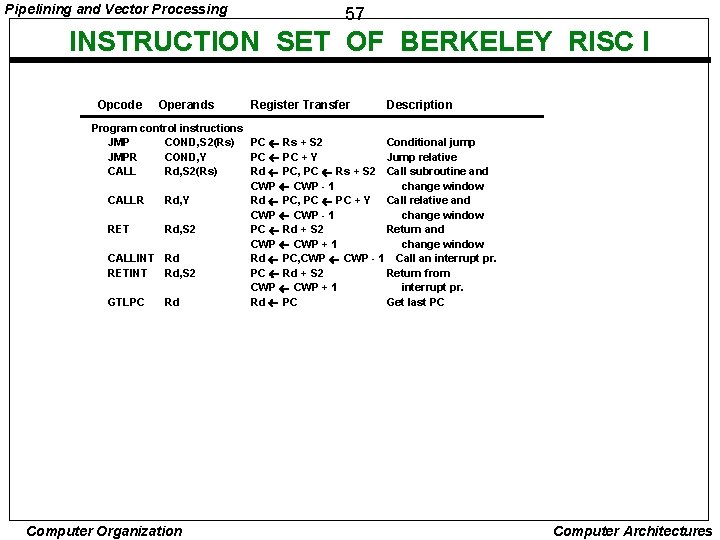

INSTRUCTION SET OF BERKELEY RISC I

Data manipulation instructions:

| Opcode | Operands | Register transfer | Description |
|---|---|---|---|
| ADD | Rs, S2, Rd | Rd ← Rs + S2 | Integer add |
| ADDC | Rs, S2, Rd | Rd ← Rs + S2 + carry | Add with carry |
| SUB | Rs, S2, Rd | Rd ← Rs - S2 | Integer subtract |
| SUBC | Rs, S2, Rd | Rd ← Rs - S2 - carry | Subtract with carry |
| SUBR | Rs, S2, Rd | Rd ← S2 - Rs | Subtract reverse |
| SUBCR | Rs, S2, Rd | Rd ← S2 - Rs - carry | Subtract with carry |
| AND | Rs, S2, Rd | Rd ← Rs ∧ S2 | AND |
| OR | Rs, S2, Rd | Rd ← Rs ∨ S2 | OR |
| XOR | Rs, S2, Rd | Rd ← Rs ⊕ S2 | Exclusive-OR |
| SLL | Rs, S2, Rd | Rd ← Rs shifted by S2 | Shift-left |
| SRL | Rs, S2, Rd | Rd ← Rs shifted by S2 | Shift-right logical |
| SRA | Rs, S2, Rd | Rd ← Rs shifted by S2 | Shift-right arithmetic |

Data transfer instructions:

| Opcode | Operands | Register transfer | Description |
|---|---|---|---|
| LDL | (Rs)S2, Rd | Rd ← M[Rs + S2] | Load long |
| LDSU | (Rs)S2, Rd | Rd ← M[Rs + S2] | Load short unsigned |
| LDSS | (Rs)S2, Rd | Rd ← M[Rs + S2] | Load short signed |
| LDBU | (Rs)S2, Rd | Rd ← M[Rs + S2] | Load byte unsigned |
| LDBS | (Rs)S2, Rd | Rd ← M[Rs + S2] | Load byte signed |
| LDHI | Rd, Y | Rd ← Y | Load immediate high |
| STL | Rd, (Rs)S2 | M[Rs + S2] ← Rd | Store long |
| STS | Rd, (Rs)S2 | M[Rs + S2] ← Rd | Store short |
| STB | Rd, (Rs)S2 | M[Rs + S2] ← Rd | Store byte |
| GETPSW | Rd | Rd ← PSW | Load status word |
| PUTPSW | Rd | PSW ← Rd | Set status word |

INSTRUCTION SET OF BERKELEY RISC I

Program control instructions:

| Opcode | Operands | Register transfer | Description |
|---|---|---|---|
| JMP | COND, S2(Rs) | PC ← Rs + S2 | Conditional jump |
| JMPR | COND, Y | PC ← PC + Y | Jump relative |
| CALL | Rd, S2(Rs) | Rd ← PC, PC ← Rs + S2, CWP ← CWP - 1 | Call subroutine and change window |
| CALLR | Rd, Y | Rd ← PC, PC ← PC + Y, CWP ← CWP - 1 | Call relative and change window |
| RET | Rd, S2 | PC ← Rd + S2, CWP ← CWP + 1 | Return and change window |
| CALLINT | Rd | Rd ← PC, CWP ← CWP - 1 | Call an interrupt procedure |
| RETINT | Rd, S2 | PC ← Rd + S2, CWP ← CWP + 1 | Return from interrupt procedure |
| GTLPC | Rd | Rd ← PC | Get last PC |

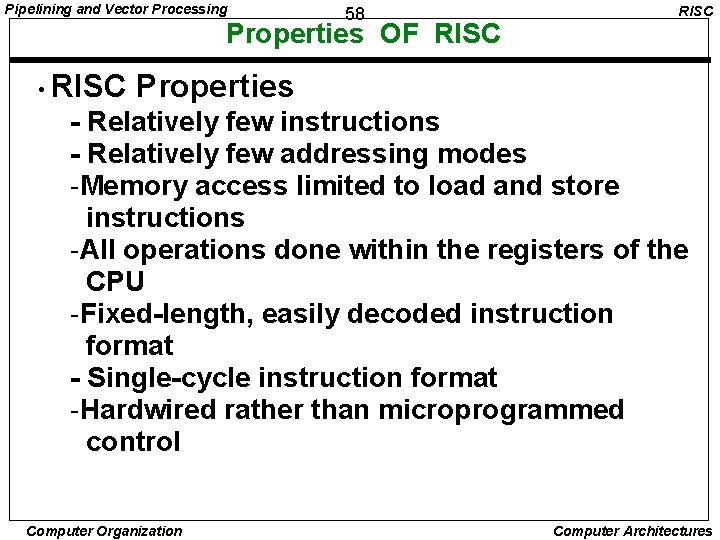

PROPERTIES OF RISC

- Relatively few instructions
- Relatively few addressing modes
- Memory access limited to load and store instructions
- All operations done within the registers of the CPU
- Fixed-length, easily decoded instruction format
- Single-cycle instruction execution
- Hardwired rather than microprogrammed control

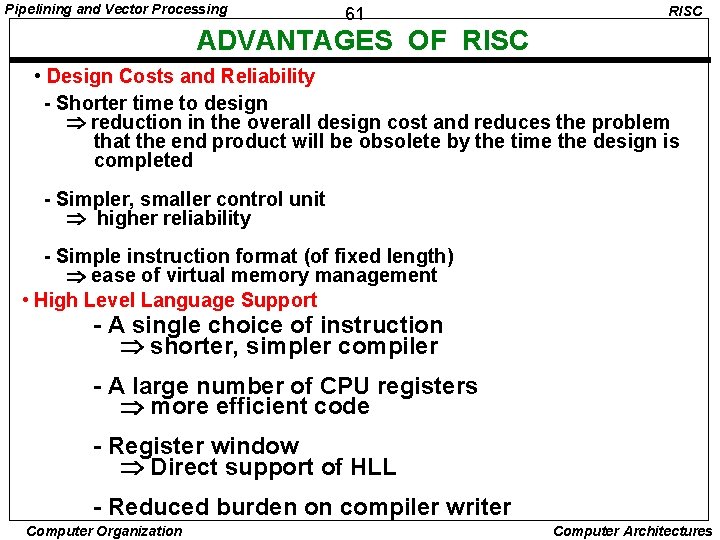

ADVANTAGES OF RISC

- VLSI realization
- Computing speed
- Design costs and reliability
- High-level language support

ADVANTAGES OF RISC

- VLSI realization
  - Control area is considerably reduced. Example: RISC I: 6%, RISC II: 10%, MC68020: 68%, general CISCs: ~50%
  - RISC chips allow a large number of registers on the chip
    - Enhancement of performance and HLL support
    - Higher regularization factor and lower VLSI design cost
  - GaAs VLSI chip realization is possible
- Computing speed
  - Simpler, smaller control unit → faster
  - Simpler instruction set, addressing modes, and instruction format → faster decoding
  - Register operations are faster than memory operations
  - Register windows enhance the overall speed of execution
  - Identical instruction length and one-cycle instruction execution → suitable for pipelining → faster

ADVANTAGES OF RISC

- Design costs and reliability
  - Shorter design time → lower overall design cost, and less risk that the end product will be obsolete by the time the design is completed
  - Simpler, smaller control unit → higher reliability
  - Simple instruction format (of fixed length) → ease of virtual memory management
- High-level language support
  - A single choice of instruction → shorter, simpler compiler
  - A large number of CPU registers → more efficient code
  - Register window → direct support of HLL
  - Reduced burden on the compiler writer

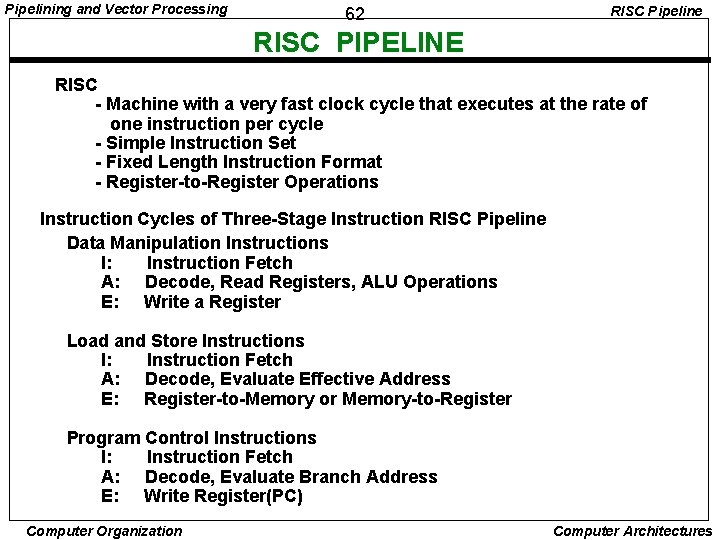

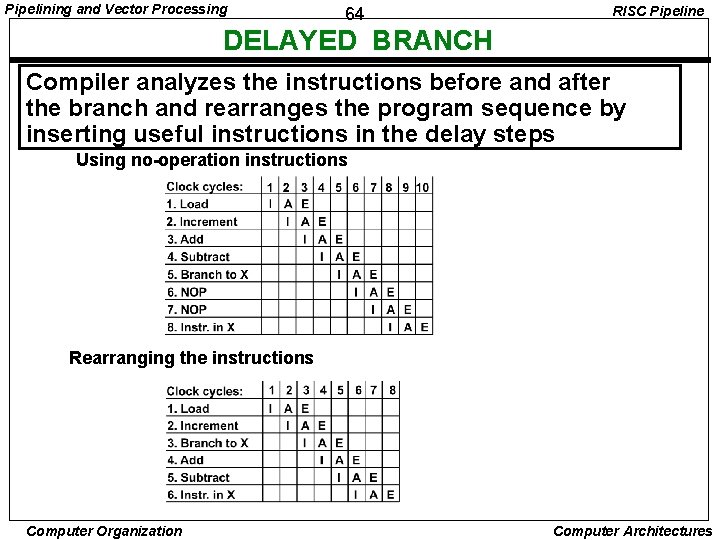

RISC PIPELINE

RISC: a machine with a very fast clock cycle that executes at the rate of one instruction per cycle

- Simple instruction set
- Fixed-length instruction format
- Register-to-register operations

Instruction cycles of a three-stage RISC instruction pipeline

Data manipulation instructions

- I: Instruction fetch
- A: Decode, read registers, ALU operation
- E: Write a register

Load and store instructions

- I: Instruction fetch
- A: Decode, evaluate effective address
- E: Register-to-memory or memory-to-register transfer

Program control instructions

- I: Instruction fetch
- A: Decode, evaluate branch address
- E: Write register (PC)

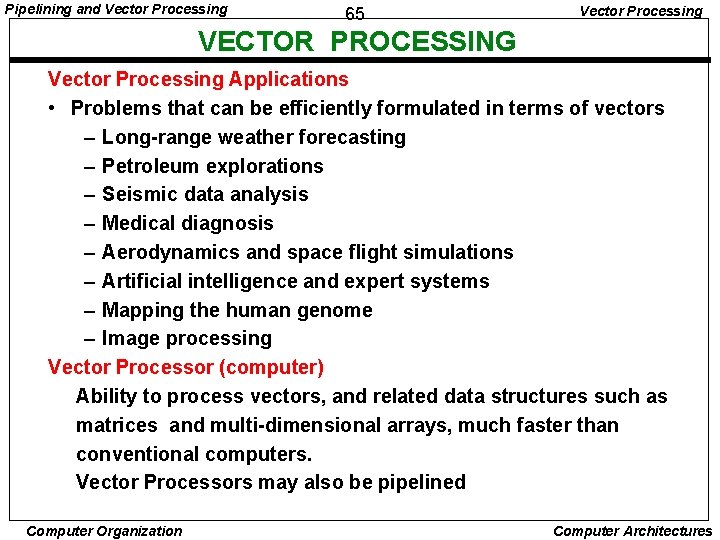

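DELAYED LOAD

- LOAD: R1 ← M[address 1]
- LOAD: R2 ← M[address 2]
- ADD: R3 ← R1 + R2
- STORE: M[address 3] ← R3

Three-segment RISC pipeline timing

Pipeline timing with data conflict

| Clock cycle | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Load R1 | I | A | E | | | |
| 2. Load R2 | | I | A | E | | |
| 3. Add R1+R2 | | | I | A | E | |
| 4. Store R3 | | | | I | A | E |

The Add instruction needs R2 in its A stage during cycle 4, but Load R2 is still transferring the operand from memory in its E stage during that same cycle.

Pipeline timing with delayed load

| Clock cycle | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1. Load R1 | I | A | E | | | | |
| 2. Load R2 | | I | A | E | | | |
| 3. NOP | | | I | A | E | | |
| 4. Add R1+R2 | | | | I | A | E | |
| 5. Store R3 | | | | | I | A | E |

The data dependency is taken care of by the compiler rather than the hardware.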
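The compiler's role in a delayed load can be sketched as a small pass over the instruction list. The `Instr` record, the function names, and the rule "insert one NOP when an instruction reads, in its A stage, the register loaded by the instruction just before it" are assumptions chosen to match the three-stage I/A/E pipeline of this slide; a real compiler would first try to fill the slot with a useful instruction.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Instr:
    op: str                      # "LOAD", "ADD", "STORE", or "NOP"
    dest: Optional[str] = None   # register written in the E stage, if any
    srcs: Tuple[str, ...] = ()   # registers read in the A stage


def insert_delay_slots(program):
    """Insert a NOP after each load whose result is read by the next instruction."""
    out = []
    for ins in program:
        prev = out[-1] if out else None
        if prev is not None and prev.op == "LOAD" and prev.dest in ins.srcs:
            out.append(Instr("NOP"))
        out.append(ins)
    return out


def total_cycles(program, stages=3):
    """Cycles needed when one instruction enters the pipeline per clock."""
    return len(program) + stages - 1 if program else 0


program = [
    Instr("LOAD", dest="R1"),
    Instr("LOAD", dest="R2"),
    Instr("ADD", dest="R3", srcs=("R1", "R2")),
    Instr("STORE"),  # the store reads R3 in its E stage, not in A
]
fixed = insert_delay_slots(program)
print([ins.op for ins in fixed], total_cycles(fixed))
```

Only the Load R2 / Add pair triggers a NOP here: Load R1 completes its E stage one cycle before the Add reaches its A stage, so no delay is needed for R1.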

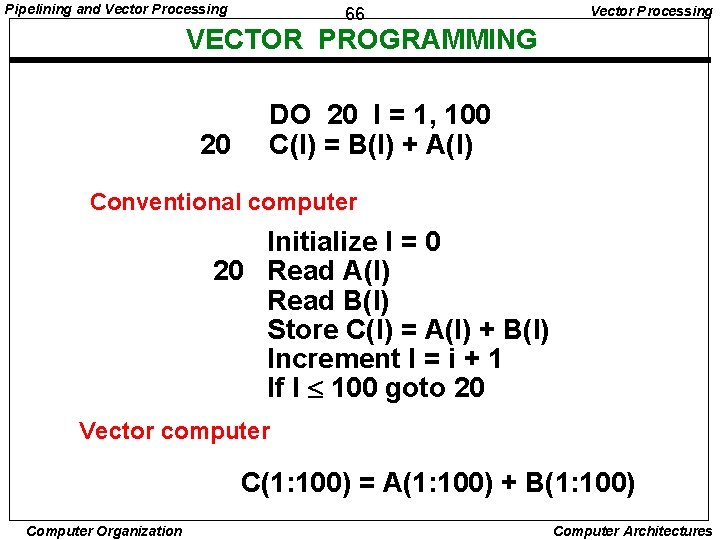

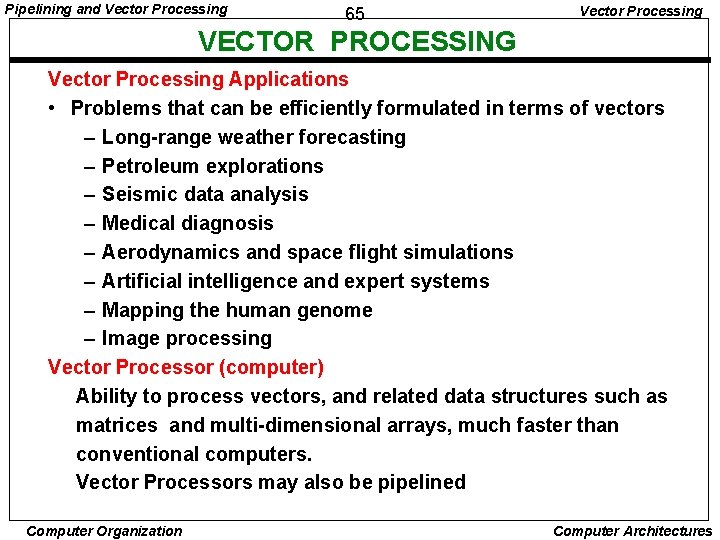

DELAYED BRANCH

The compiler analyzes the instructions before and after the branch and rearranges the program sequence by inserting useful instructions in the delay steps.

Figures: (a) using no-operation instructions; (b) rearranging the instructions.

VECTOR PROCESSING

Vector processing applications

- Problems that can be efficiently formulated in terms of vectors
  - Long-range weather forecasting
  - Petroleum exploration
  - Seismic data analysis
  - Medical diagnosis
  - Aerodynamics and space flight simulations
  - Artificial intelligence and expert systems
  - Mapping the human genome
  - Image processing

Vector processor (computer)

- Able to process vectors, and related data structures such as matrices and multi-dimensional arrays, much faster than conventional computers
- Vector processors may also be pipelined

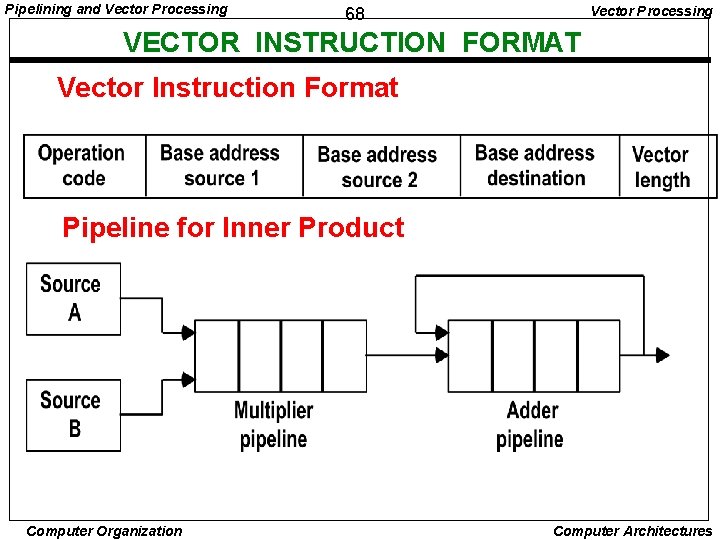

VECTOR PROGRAMMING

Fortran loop

          DO 20 I = 1, 100
    20    C(I) = B(I) + A(I)

Conventional computer

          Initialize I = 1
    20    Read A(I)
          Read B(I)
          Store C(I) = A(I) + B(I)
          Increment I = I + 1
          If I ≤ 100 goto 20

Vector computer

          C(1:100) = A(1:100) + B(1:100)
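The difference between the two programs can be made concrete by counting the steps each one issues. The sketch below is illustrative only: the function names and the `steps` counter are assumptions, Python lists are 0-based rather than 1-based, and the list comprehension merely stands in for a single hardware vector instruction.

```python
def scalar_add(a, b):
    """Element-by-element loop, mirroring the conventional-computer program."""
    n = len(a)
    c = [0] * n
    steps = 0
    i = 0                       # Initialize I (0-based here)
    while i < n:
        x = a[i]; steps += 1    # Read A(I)
        y = b[i]; steps += 1    # Read B(I)
        c[i] = x + y; steps += 1  # Store C(I) = A(I) + B(I)
        i += 1; steps += 1      # Increment I
        steps += 1              # Test I and branch back to label 20
    return c, steps


def vector_add(a, b):
    """One whole-vector operation, standing in for C(1:100) = A(1:100) + B(1:100)."""
    return [x + y for x, y in zip(a, b)], 1


a = list(range(1, 101))
b = list(range(101, 201))
c_scalar, scalar_steps = scalar_add(a, b)
c_vector, vector_steps = vector_add(a, b)
print(scalar_steps, vector_steps)
```

Both versions produce the same result; the vector form simply removes the per-element fetch, increment, and branch overhead from the instruction stream.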

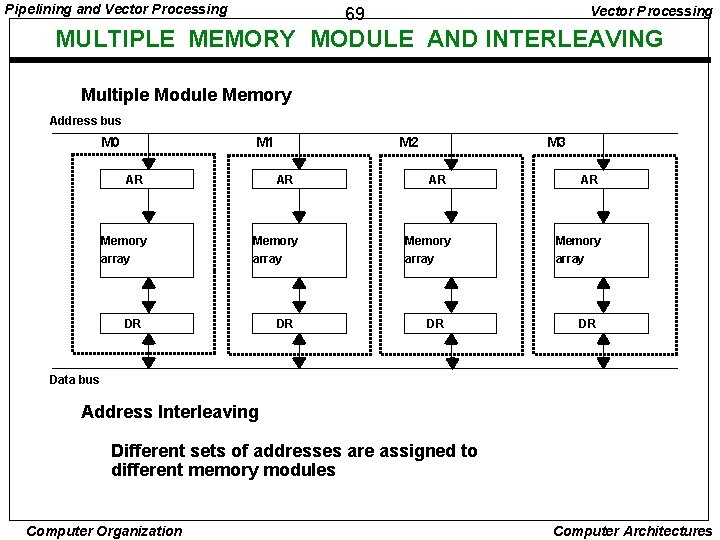

VECTOR INSTRUCTIONS

- f1: V → V
- f2: V → S
- f3: V × V → V
- f4: V × S → V

V: vector operand, S: scalar operand

| Type | Mnemonic | Description | Operation (I = 1, ..., n) |
|---|---|---|---|
| f1 | VSQR | Vector square root | B(I) ← SQR(A(I)) |
| f1 | VSIN | Vector sine | B(I) ← sin(A(I)) |
| f1 | VCOM | Vector complement | A(I) ← ¬A(I) |
| f2 | VSUM | Vector summation | S ← Σ A(I) |
| f2 | VMAX | Vector maximum | S ← max{A(I)} |
| f3 | VADD | Vector add | C(I) ← A(I) + B(I) |
| f3 | VMPY | Vector multiply | C(I) ← A(I) * B(I) |
| f3 | VAND | Vector AND | C(I) ← A(I) . B(I) |
| f3 | VLAR | Vector larger | C(I) ← max(A(I), B(I)) |
| f3 | VTGE | Vector test > | C(I) ← 0 if A(I) < B(I); C(I) ← 1 if A(I) > B(I) |
| f4 | SADD | Vector-scalar add | B(I) ← S + A(I) |
| f4 | SDIV | Vector-scalar divide | B(I) ← A(I) / S |
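The four instruction types differ only in the shape of their operands and result, which a few Python functions can illustrate. The lowercase function names echo the mnemonics but are otherwise hypothetical, and plain lists stand in for vector registers; this is a sketch of the semantics, not of any real vector instruction set.

```python
import math


# f1: V -> V (one vector in, one vector out)
def vsqr(a):
    return [math.sqrt(x) for x in a]


# f2: V -> S (one vector in, one scalar out)
def vsum(a):
    return sum(a)


def vmax(a):
    return max(a)


# f3: V x V -> V (two vectors in, one vector out)
def vadd(a, b):
    return [x + y for x, y in zip(a, b)]


def vmpy(a, b):
    return [x * y for x, y in zip(a, b)]


def vlar(a, b):
    return [max(x, y) for x, y in zip(a, b)]


# f4: V x S -> V (one vector and one scalar in, one vector out)
def sadd(s, a):
    return [s + x for x in a]


def sdiv(a, s):
    return [x / s for x in a]


a = [4.0, 9.0, 16.0]
b = [1.0, 10.0, 2.0]
print(vsqr(a), vsum(a), vlar(a, b), sdiv(a, 2.0))
```

An inner product, for instance, is an f3 operation (`vmpy`) followed by an f2 reduction (`vsum`).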

VECTOR INSTRUCTION FORMAT

Figures: vector instruction format; pipeline for inner product.

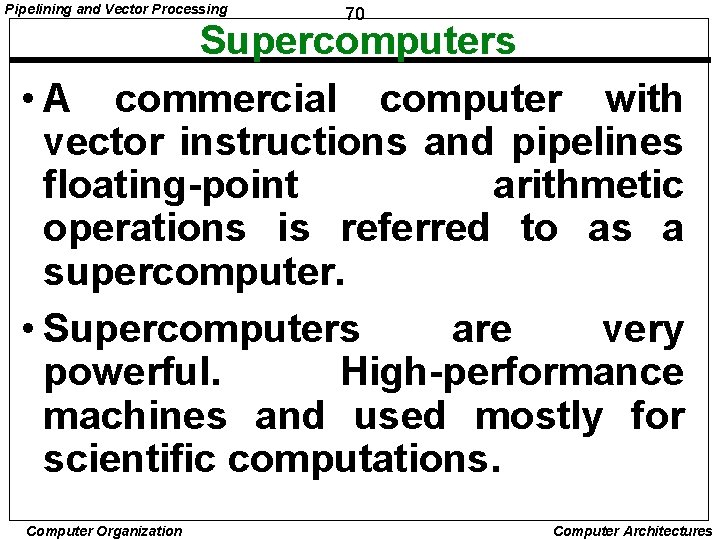

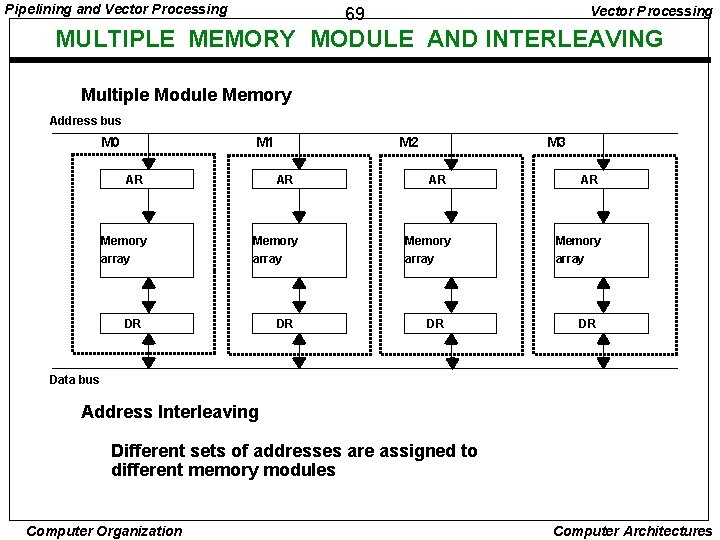

MULTIPLE MEMORY MODULE AND INTERLEAVING

Multiple module memory (figure): four memory modules M0, M1, M2, and M3, each with its own address register (AR), memory array, and data register (DR), connected to a common address bus and data bus.

Address interleaving: different sets of addresses are assigned to different memory modules.
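One common way to assign addresses to modules is low-order interleaving, in which the least significant address bits select the module. The slide does not say which scheme is meant, so the mapping below, the function name `interleave`, and the default of four modules (M0 to M3) are assumptions made for illustration.

```python
def interleave(address, num_modules=4):
    """Low-order interleaving: return (module index, word offset inside the module)."""
    return address % num_modules, address // num_modules


# Consecutive addresses fall in consecutive modules, so a vector stored at
# addresses 0..7 can be fetched from all four modules in overlapped fashion.
for address in range(8):
    module, offset = interleave(address)
    print(f"address {address} -> M{module}, word {offset}")
```

With this mapping, module M0 holds addresses 0, 4, 8, ..., module M1 holds 1, 5, 9, ..., and so on, which is what lets successive vector elements be accessed from different modules at the same time.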

Supercomputers

- A commercial computer with vector instructions and pipelined floating-point arithmetic operations is referred to as a supercomputer.
- Supercomputers are very powerful, high-performance machines used mostly for scientific computations.

Supercomputers

- To speed up operation, the components are packed tightly together to minimize the distance that the electronic signals have to travel.
- Supercomputers also use special techniques for removing heat from the circuits to prevent them from burning up because of their close proximity.

Supercomputers

- The instruction set of a supercomputer contains the standard data transfer, data manipulation, and program control instructions.
- A supercomputer is a computer system best known for its high computational speed, fast and large memory systems, and extensive use of parallel processing.

Supercomputers

- The measure used to evaluate computers by the number of floating-point operations they can perform per second is referred to as flops.
- The term megaflops denotes million flops, and gigaflops denotes billion flops.

Supercomputers

- A typical supercomputer has a basic cycle time of 4 to 20 ns.
- If the processor can complete one floating-point operation through a pipeline every cycle, it can perform 50 to 250 megaflops.
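The 50 to 250 megaflops range follows directly from the cycle time: one result per cycle means the peak rate is the reciprocal of the cycle time. The helper below is a minimal sketch of that arithmetic; the function name and the `ops_per_cycle` parameter are assumptions introduced for the example.

```python
def peak_megaflops(cycle_time_ns, ops_per_cycle=1):
    """Peak rate in megaflops when ops_per_cycle results complete every cycle.

    1 / (cycle_time_ns * 1e-9 s) operations per second, divided by 1e6,
    simplifies to 1000 / cycle_time_ns.
    """
    return ops_per_cycle * 1000.0 / cycle_time_ns


for cycle_ns in (4, 20):
    print(f"{cycle_ns} ns cycle -> {peak_megaflops(cycle_ns):.0f} megaflops")
```

This is a peak figure: it assumes the pipeline stays full and delivers a result on every clock, which real programs only approach on long vector operations.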

Supercomputers

- The first supercomputer, developed in 1976, was the Cray-1.
  - It uses vector processing with 12 distinct functional units operating in parallel.
  - Each functional unit is segmented to process the data through a pipeline.
  - A floating-point operation can be performed on two sets of 64-bit operands during one clock cycle of 12.5 ns.
  - This gives a rate of 80 megaflops.

Supercomputers

- The Cray-1 (continued)
  - It has a memory capacity of 4 million 64-bit words.
  - The memory is divided into 16 banks, with each bank having a 50-ns access time.
  - This means that when all 16 banks are accessed simultaneously, the memory transfer rate is 320 million words per second.
  - Later versions are the Cray X-MP, Cray Y-MP, and Cray-2 (12 times more powerful than the Cray-1).
- Other supercomputers include the Fujitsu VP-200 and VP-2600 and the PARAM computers.
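The 320 million words per second figure can be checked with the same kind of arithmetic: each bank delivers one word per access time, and the banks work in parallel. The function name and the unit choice (million words per second) below are assumptions made for this sketch.

```python
def transfer_rate_mwords(num_banks, access_time_ns):
    """Peak memory transfer rate in million words per second.

    Each bank delivers one word every access_time_ns nanoseconds, i.e.
    1000 / access_time_ns million words per second, and all banks are
    assumed to be accessed simultaneously.
    """
    return num_banks * 1000.0 / access_time_ns


print(transfer_rate_mwords(16, 50))  # 16 banks with 50-ns access time
```

A single bank on its own would supply only 20 million words per second, so the 16-way division is what brings the memory rate up to the level needed to feed the pipelined functional units.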

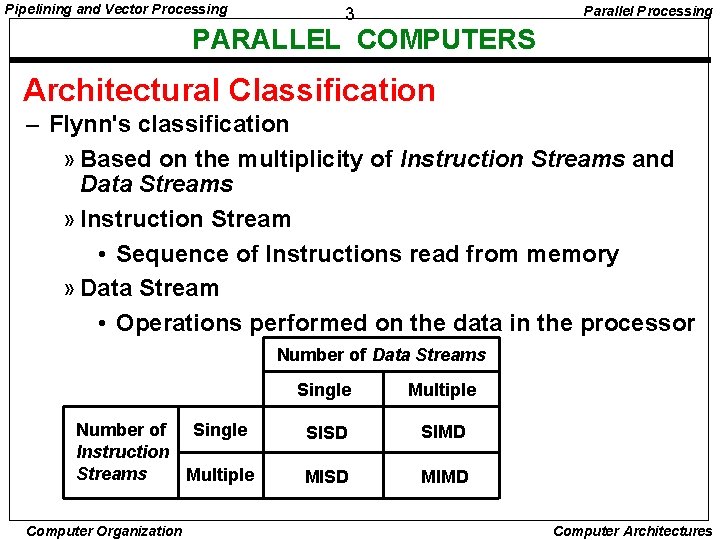

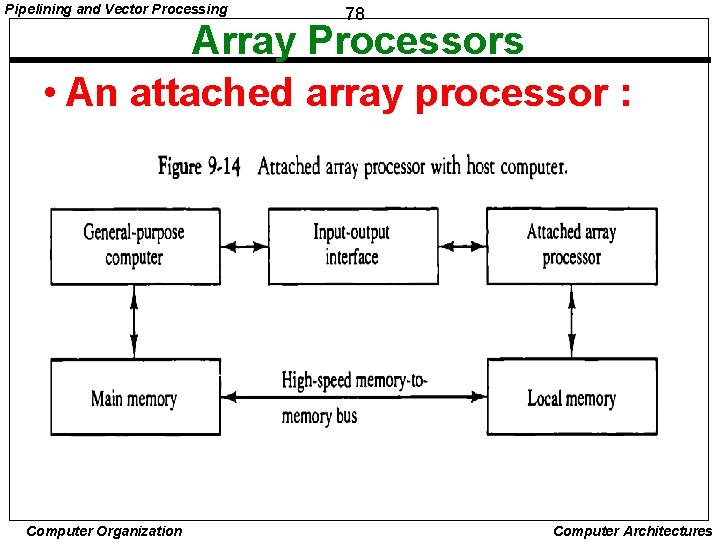

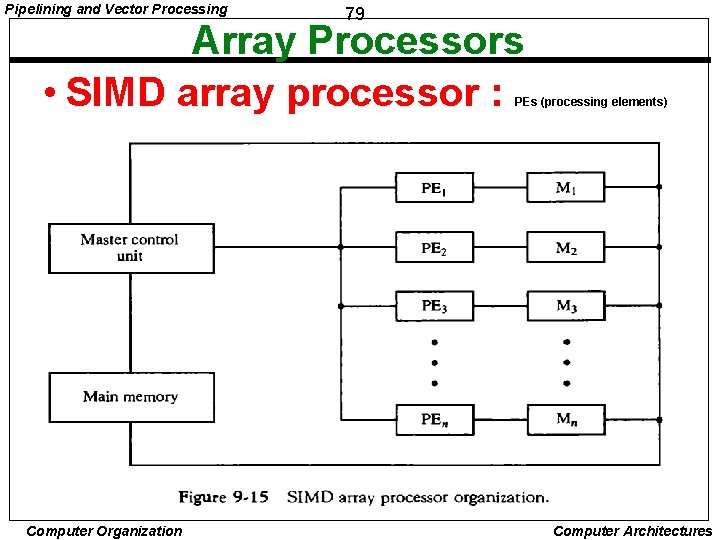

Array Processors

- An array processor is a processor that performs computations on large arrays of data.
- The term is used to refer to two different types of processors:
  - An attached array processor
  - An SIMD array processor

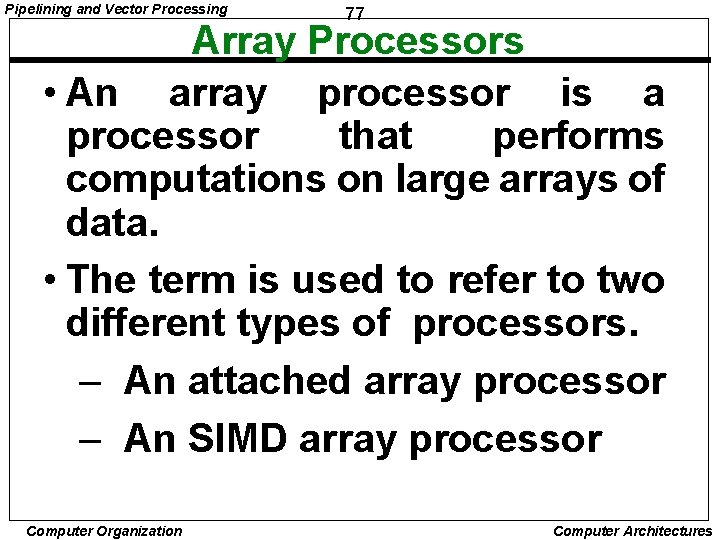

Array Processors

- An attached array processor (figure)

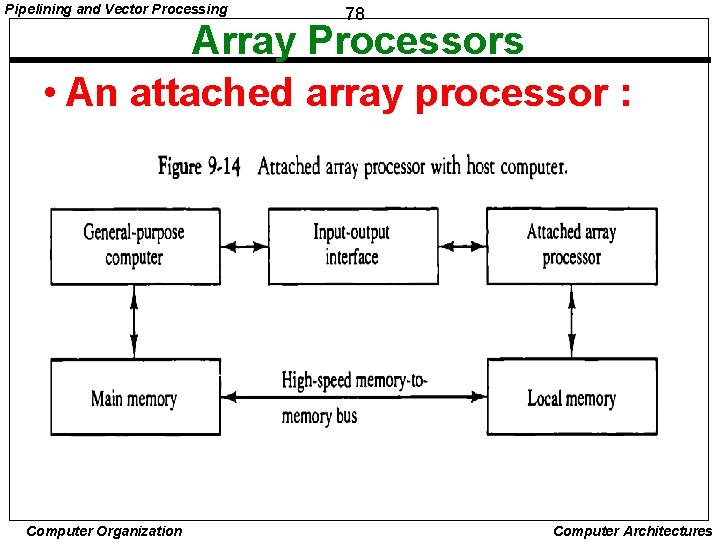

Array Processors

- SIMD array processor (figure); PEs: processing elements
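The SIMD idea, a single instruction stream applied to multiple data streams, can be sketched with a few processing elements that each hold their own data and all execute the same broadcast instruction. The class and function names, and the representation of an instruction as a Python callable, are hypothetical choices for this illustration; the loop only simulates what the PEs would do simultaneously in hardware.

```python
class ProcessingElement:
    """One PE with its own local memory (here a short list of words)."""

    def __init__(self, local_memory):
        self.local_memory = list(local_memory)
        self.result = None

    def execute(self, instruction):
        # Every PE runs the same instruction on its own local data.
        self.result = instruction(self.local_memory)


def broadcast(pes, instruction):
    """Send one instruction to all PEs and collect their results."""
    for pe in pes:  # sequential here; conceptually simultaneous
        pe.execute(instruction)
    return [pe.result for pe in pes]


# Vector add C = A + B with one pair of elements stored in each PE.
a = [1, 2, 3, 4]
b = [10, 20, 30, 40]
pes = [ProcessingElement([x, y]) for x, y in zip(a, b)]
c = broadcast(pes, lambda memory: memory[0] + memory[1])
print(c)
```

The control flow lives entirely in `broadcast`, which plays the part of the single control unit; the PEs themselves never decide what to execute.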