Fine Grain Incremental Rescheduling Via Architectural Retiming (Soha Hassoun)

- Slides: 41

Fine Grain Incremental Rescheduling Via Architectural Retiming. Soha Hassoun, Tufts University, Medford, MA. Thanks to: Carl Ebeling, University of Washington, Seattle, WA.

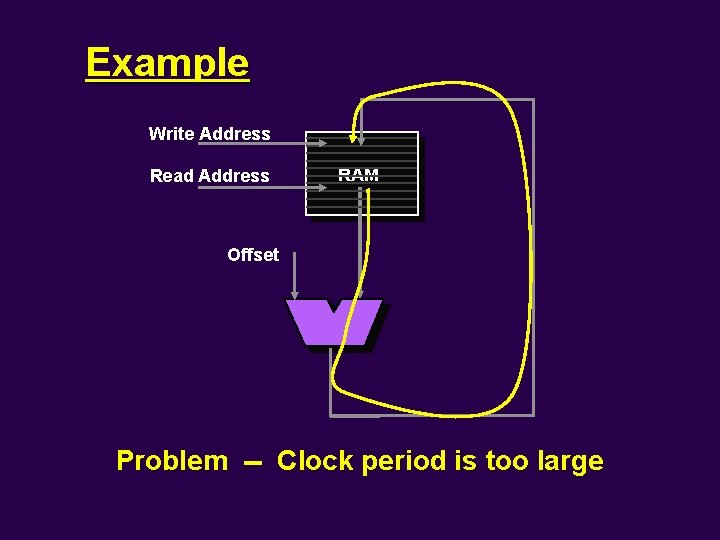

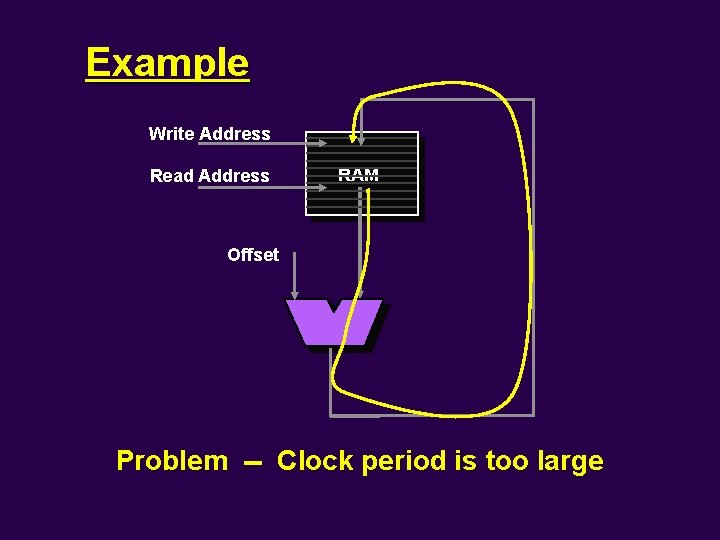

Example (figure: RAM with Write Address, Read Address, and Offset). Problem: the clock period is too large.

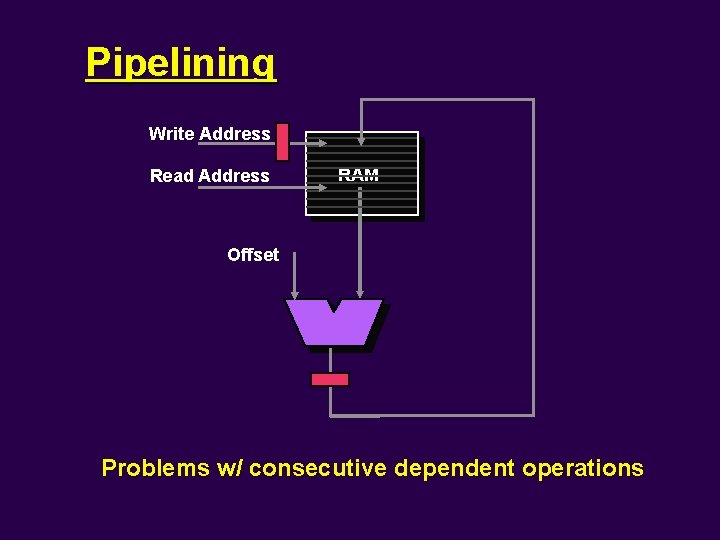

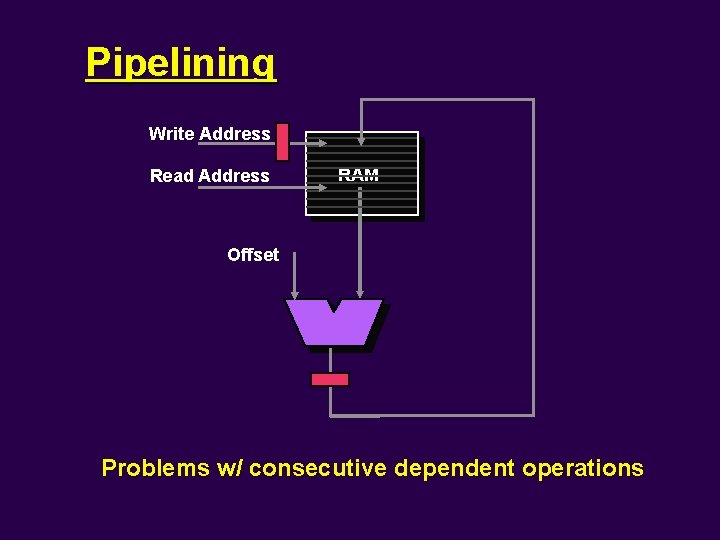

Pipelining (figure: RAM with Write Address, Read Address, and Offset). Problems with consecutive dependent operations.

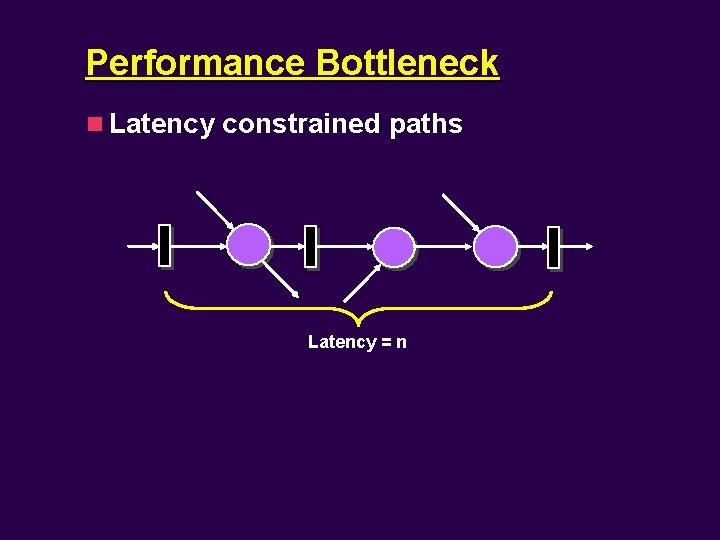

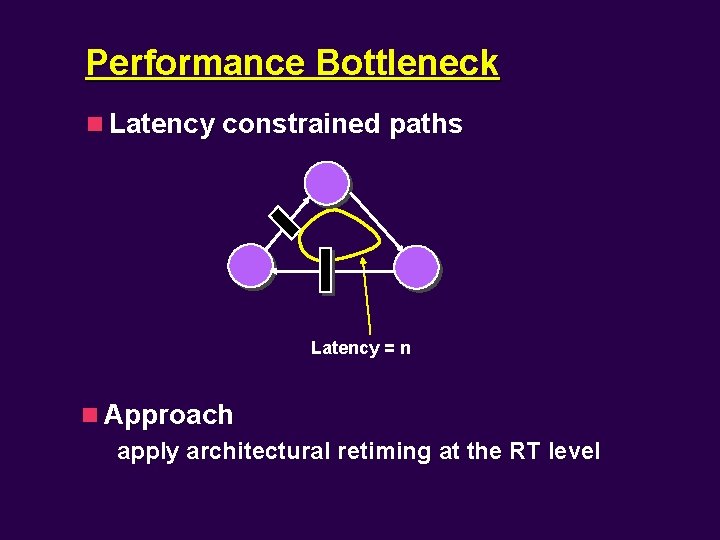

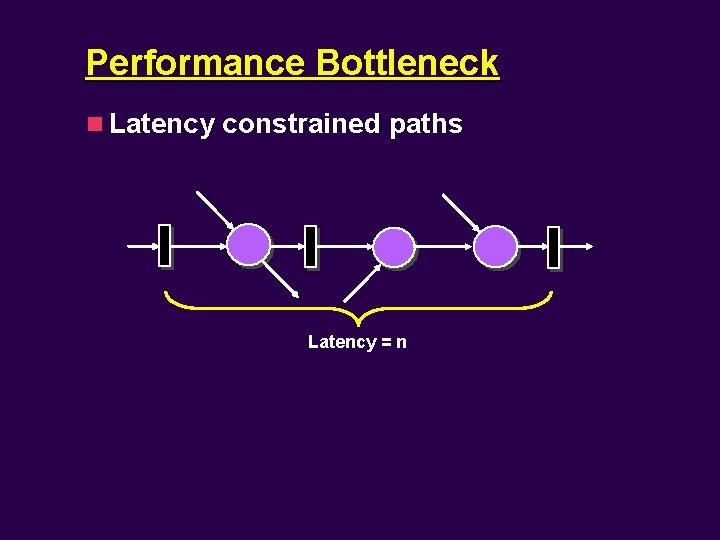


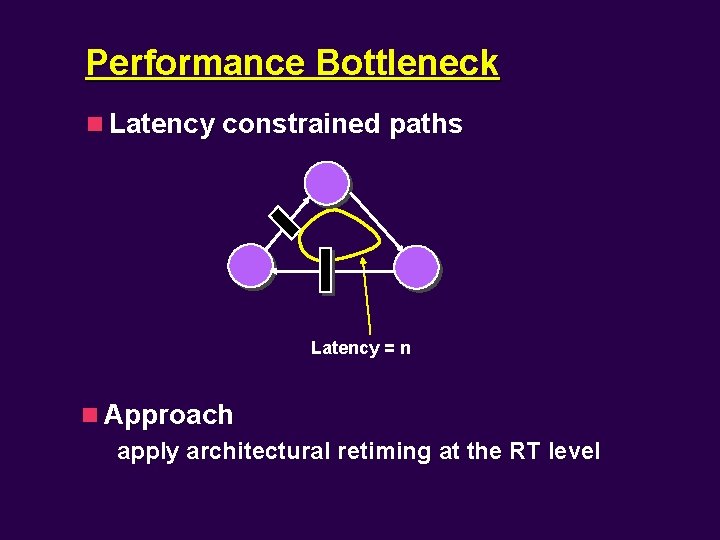

Performance Bottleneck
- Latency-constrained paths (Latency = n)
- Approach: apply architectural retiming at the register-transfer (RT) level

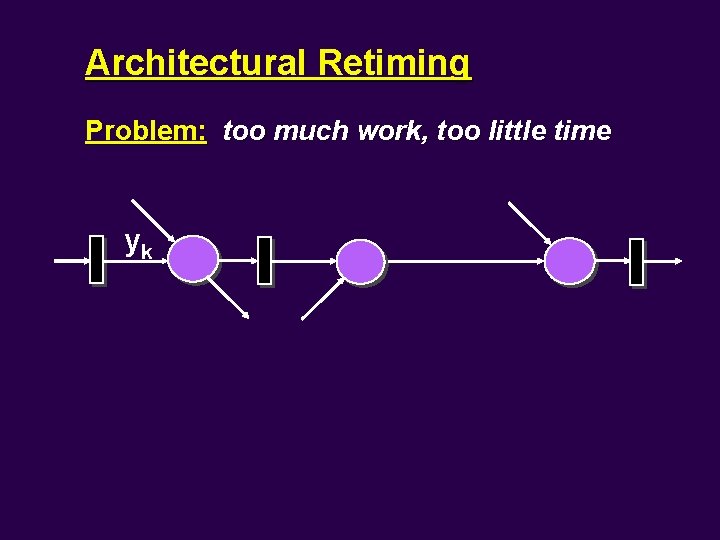

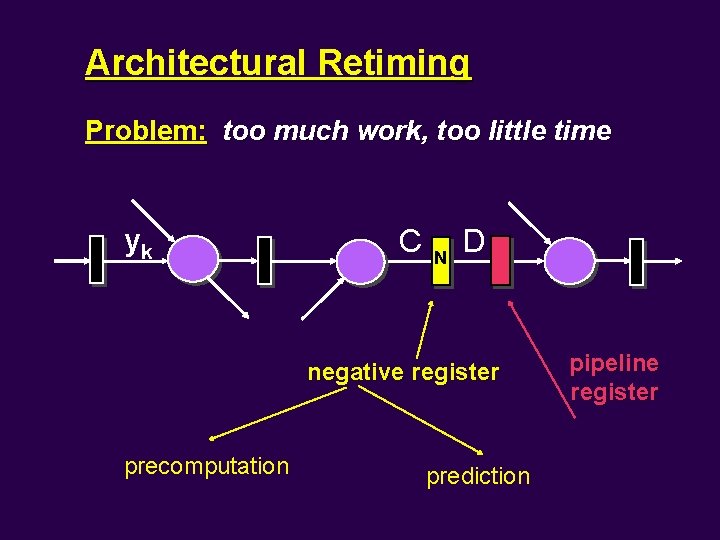

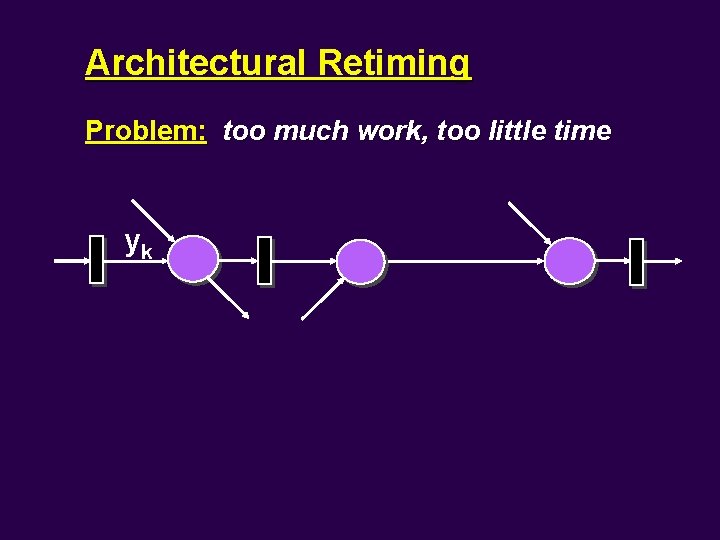

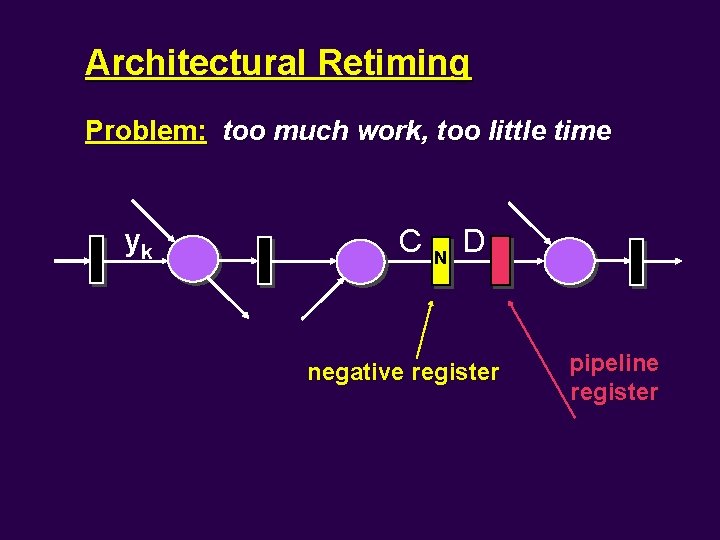

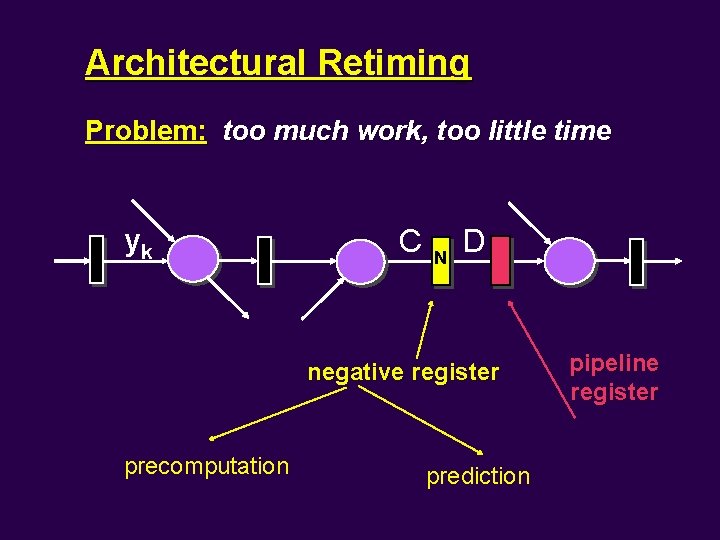


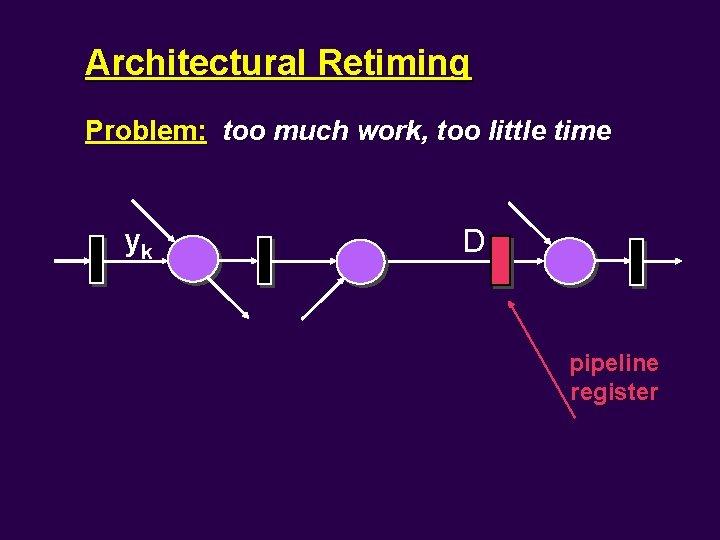


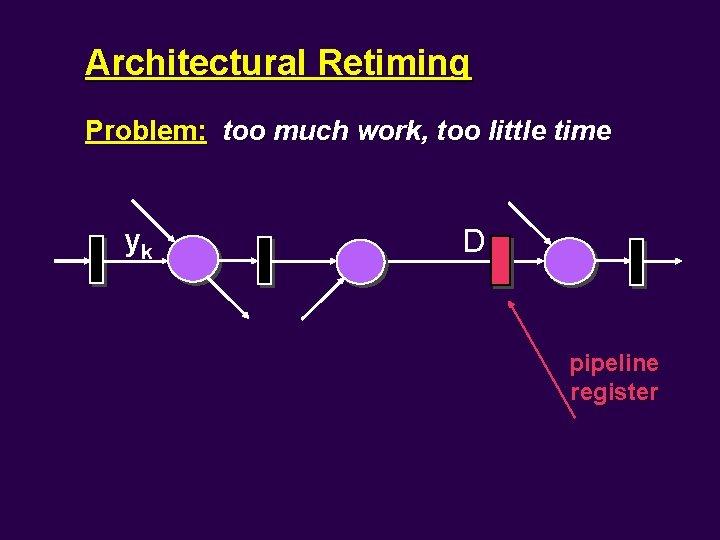

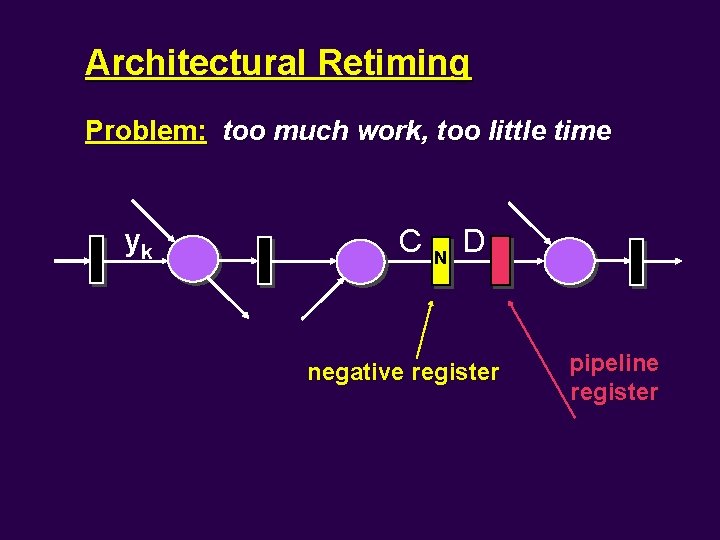


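Architectural Retiming (figure: y_k, C, negative register N, pipeline register D)
- Problem: too much work, too little time
- Add a pipeline register D together with a negative register N; a negative register's output at cycle t equals its input at cycle t+1, so the pair leaves the circuit's function unchanged
- Implement the negative register by precomputation or prediction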
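The claim that a negative register followed by a pipeline register acts like a wire can be checked on a finite trace. The sketch below is a simulation-only model under stated assumptions: the names `negative_register`, `pipeline_register`, and `n_then_d` are hypothetical, and the "negative register" simply reads ahead in a known input trace, which real hardware cannot do (hence precomputation or prediction).

```python
from typing import List, Optional


def negative_register(inp: List[int]) -> List[Optional[int]]:
    """Ideal negative register: output at cycle t equals input at cycle t+1.

    Only realizable in simulation over a known trace; the last cycle has no
    future input, so it yields None.
    """
    return [inp[t + 1] if t + 1 < len(inp) else None for t in range(len(inp))]


def pipeline_register(inp: List[Optional[int]],
                      reset: Optional[int] = None) -> List[Optional[int]]:
    """Ordinary register: output at cycle t equals input at cycle t-1."""
    return [reset] + inp[:-1]


def n_then_d(inp: List[int]) -> List[Optional[int]]:
    """Negative register N feeding pipeline register D."""
    return pipeline_register(negative_register(inp))


trace = [5, 7, 9, 11]
out = n_then_d(trace)
# From cycle 1 on, the N-D pair reproduces its input; cycle 0 depends on reset.
print(out[1:] == trace[1:])
```

Cycle 0 is excluded from the comparison because its value depends on how the pipeline register is initialized, which this sketch does not model.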

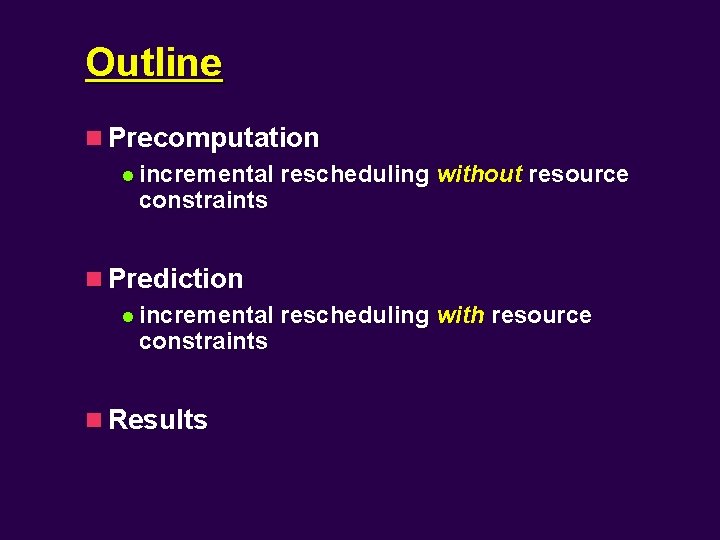

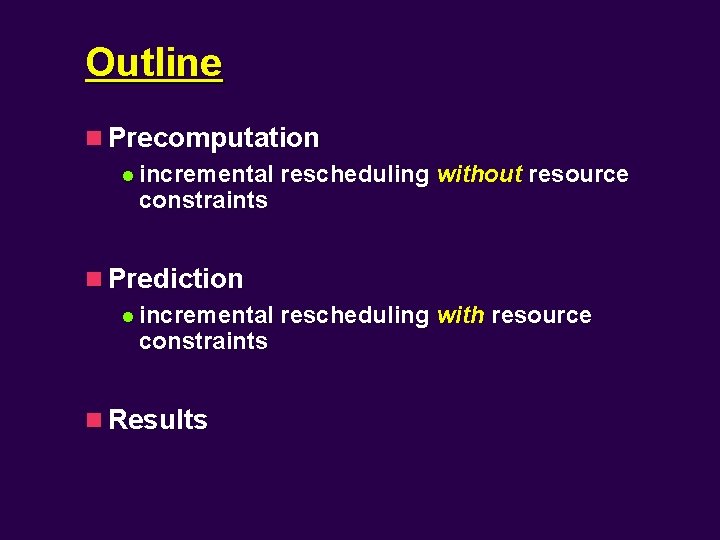

Outline
- Precomputation
  - incremental rescheduling without resource constraints
- Prediction
  - incremental rescheduling with resource constraints
- Results

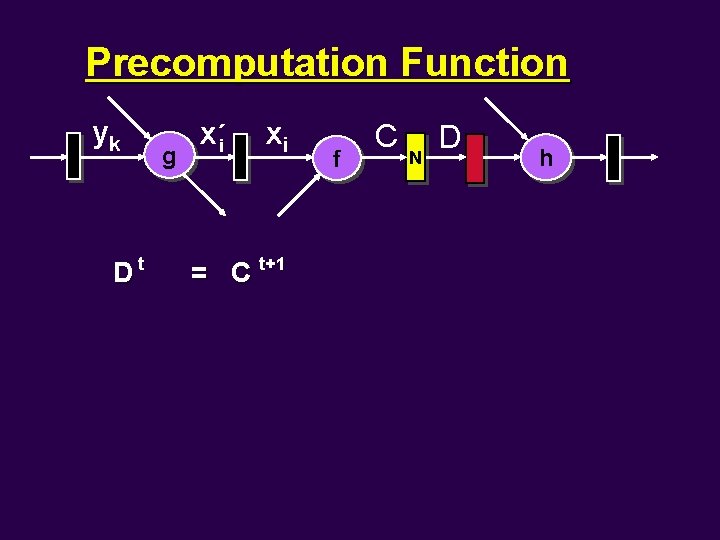

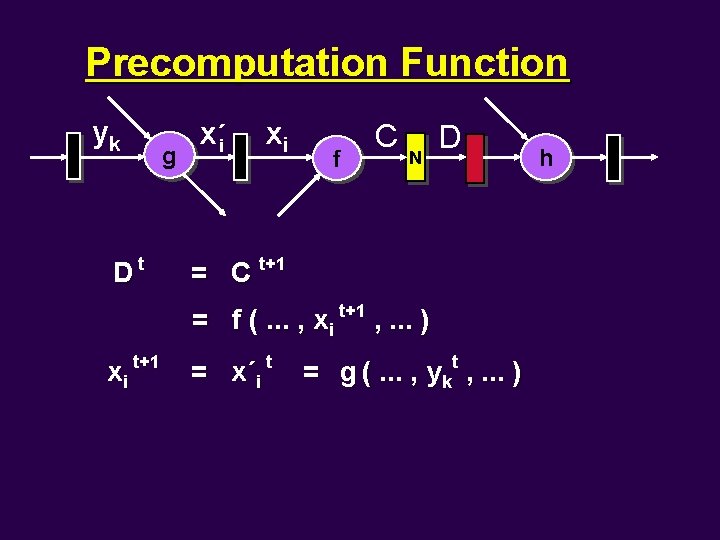

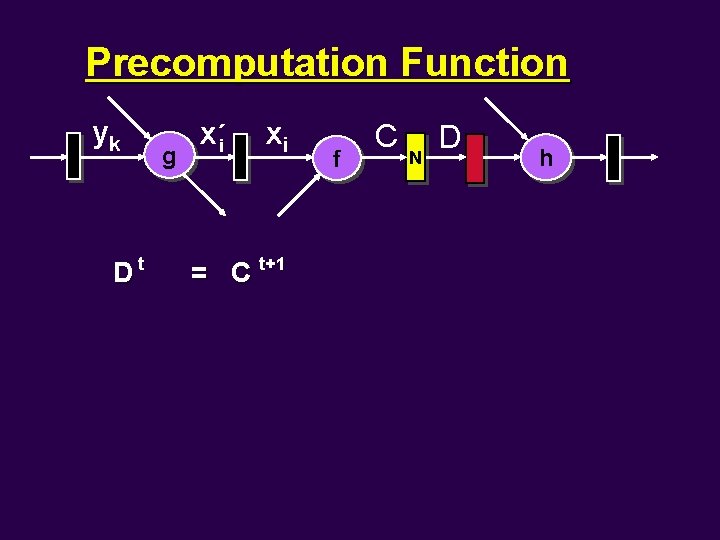

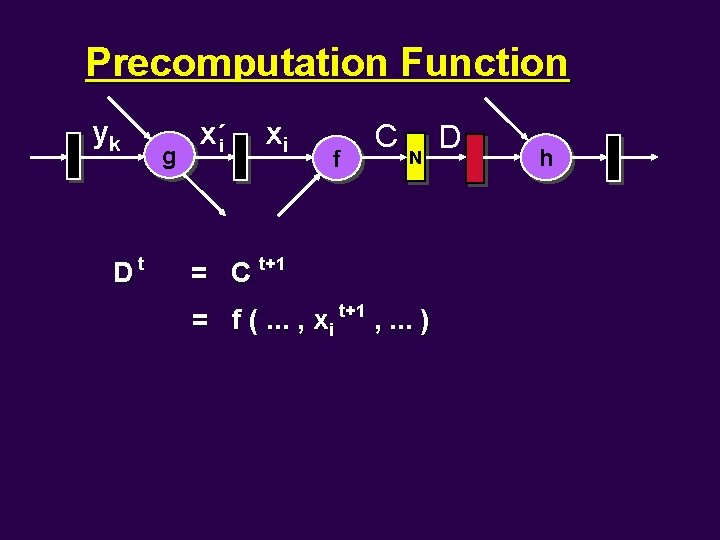



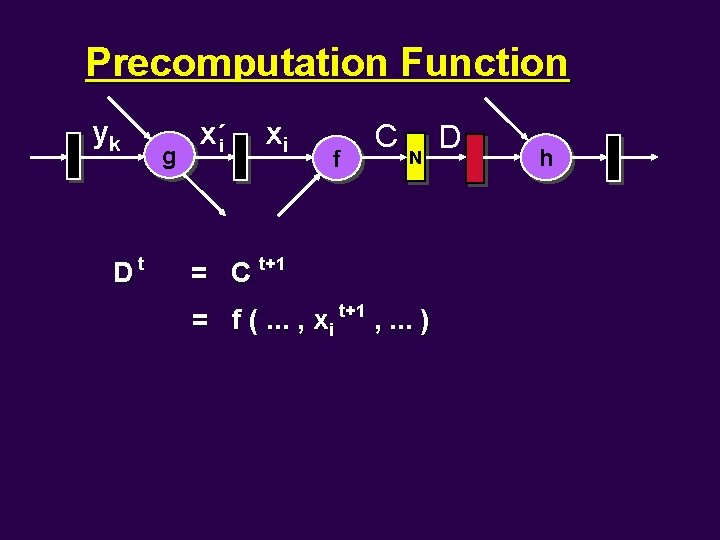

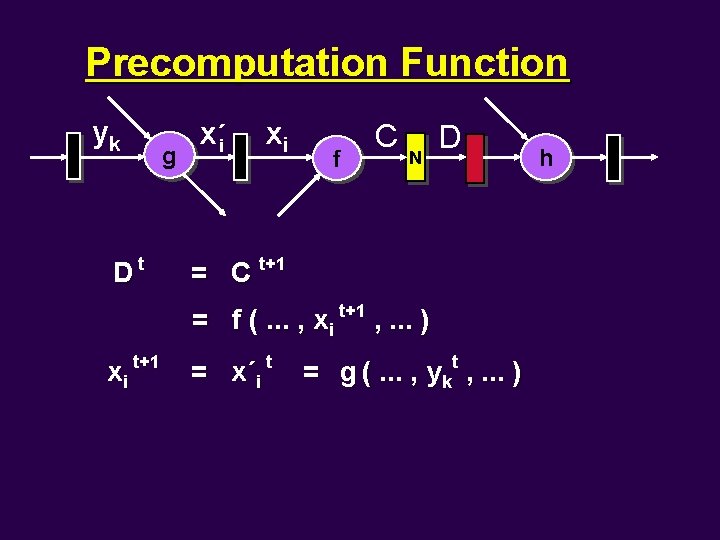


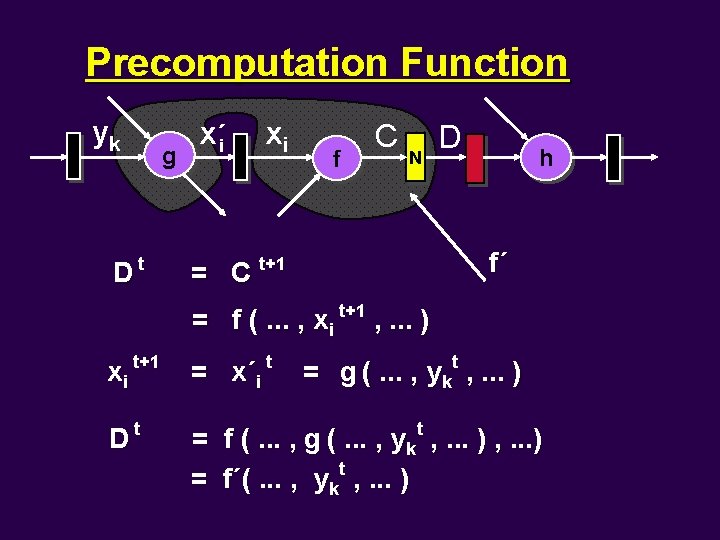

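Precomputation Function (figure: y_k, g, x′_i, x_i, f, C, N, D, h, f′)

$$D^{t} = C^{t+1} = f(\ldots, x_i^{t+1}, \ldots)$$

$$x_i^{t+1} = x_i'^{\,t} = g(\ldots, y_k^{t}, \ldots)$$

$$D^{t} = f(\ldots, g(\ldots, y_k^{t}, \ldots), \ldots) = f'(\ldots, y_k^{t}, \ldots)$$

Because the register gives x_i^{t+1} = x′_i^t, the value C^{t+1} can be computed at time t from y_k^t by the precomputation function f′.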
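The substitution above can be checked numerically. This is a minimal sketch assuming single-input functions: `g(y) = y + 3` and `f(x) = 2 * x` are hypothetical stand-ins for the slide's logic blocks, and `simulate_original` models register x_i capturing g(y_k) at each clock edge. The check confirms that f′(y_k^t) equals C^{t+1}.

```python
from typing import List


def g(y: int) -> int:
    # Hypothetical logic feeding register x_i: x'_i = g(y_k).
    return y + 3


def f(x: int) -> int:
    # Hypothetical logic consuming register output x_i to produce C.
    return 2 * x


def f_prime(y: int) -> int:
    # Precomputation function: f' = f composed with g, evaluated on y_k^t.
    return f(g(y))


def simulate_original(ys: List[int], x_init: int) -> List[int]:
    """Return C^t = f(x_i^t), where x_i^{t+1} = x'_i^t = g(y_k^t)."""
    x = x_init
    cs = []
    for y in ys:
        cs.append(f(x))  # C at cycle t uses the current register value
        x = g(y)         # register x_i captures x'_i at the clock edge
    return cs


ys = [1, 4, 0, 10]
cs = simulate_original(ys, x_init=0)
# f'(y_k^t) delivers C^{t+1} one cycle early.
print(all(f_prime(ys[t]) == cs[t + 1] for t in range(len(ys) - 1)))
```

With multi-input f and g, the same composition applies per input; the sketch keeps one input each for readability.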

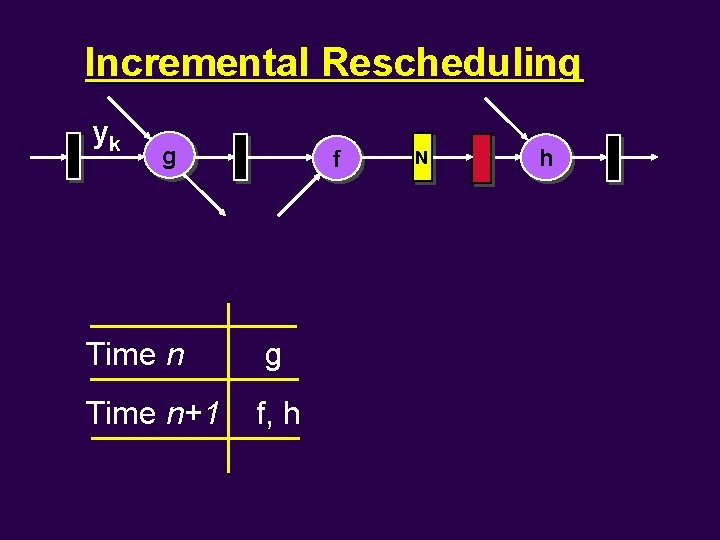

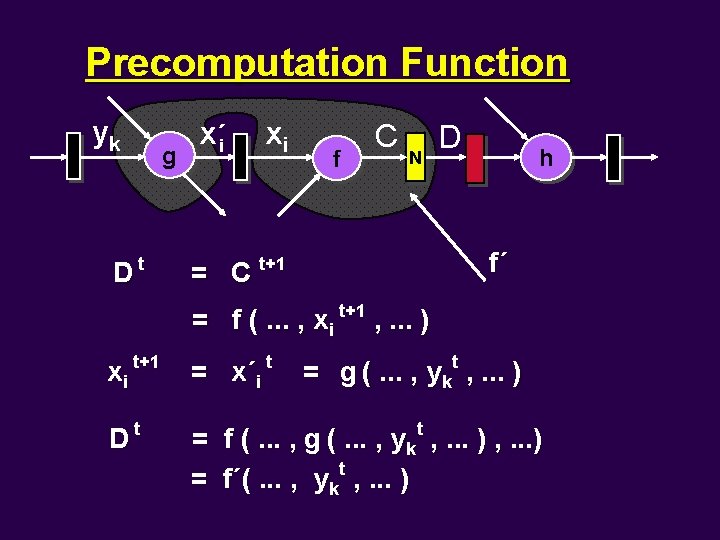

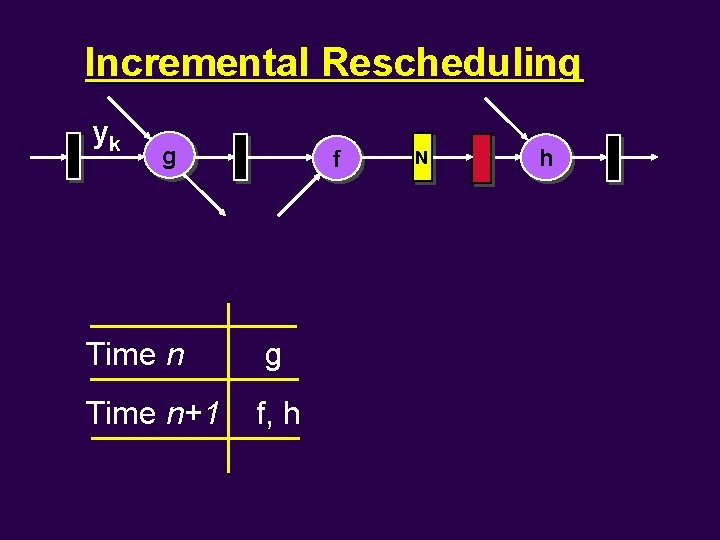

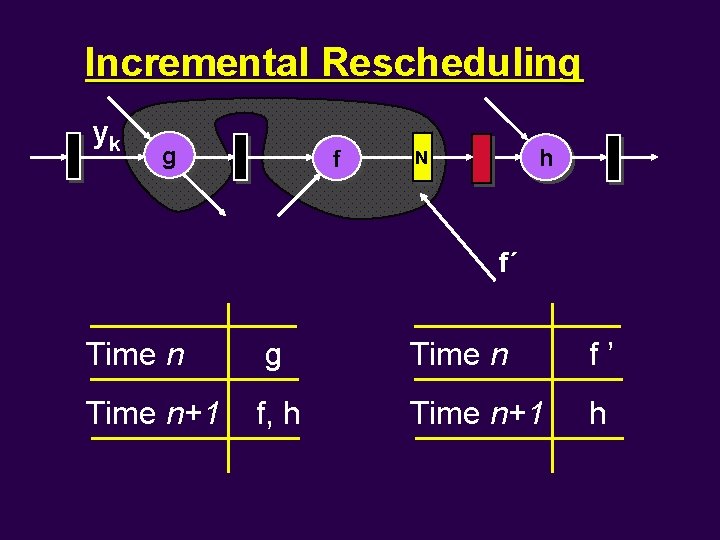


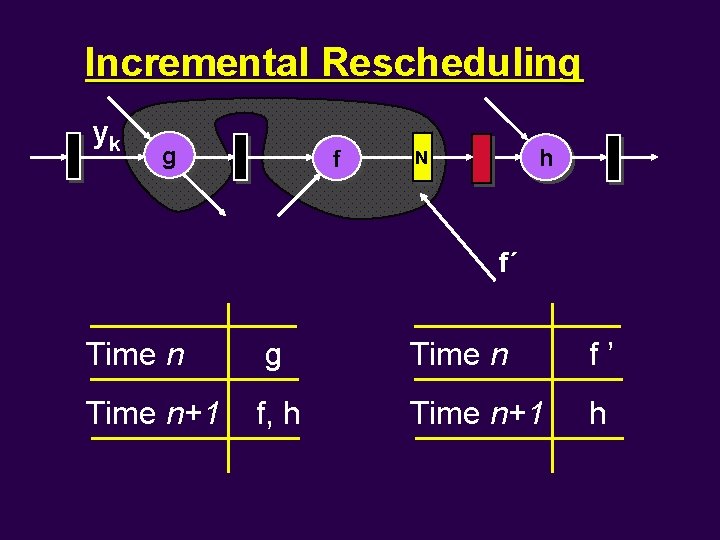

Incremental Rescheduling (figure: y_k, g, f, h, N, f′)
- Original schedule: time n: g; time n+1: f, h
- With precomputation: time n: g, f′; time n+1: h
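One way to see why moving f into time n helps is to compute the clock period each schedule needs. The sketch below assumes hypothetical delays (g = 2, f = 3, h = 4, in arbitrary units), assumes f feeds h within cycle n+1 in the original, and assumes f′ is built by composing g and f directly; none of these numbers come from the slides.

```python
from typing import Dict, List

# Hypothetical combinational delays (arbitrary units); not from the slides.
DELAY: Dict[str, int] = {"g": 2, "f": 3, "h": 4}
# Assumption: f' = f(g(.)) is implemented by composing g and f directly.
DELAY["f'"] = DELAY["g"] + DELAY["f"]

# A schedule is a list of cycles; each cycle holds parallel chains of ops,
# and the ops in one chain execute back to back within the cycle.
Schedule = List[List[List[str]]]


def clock_period(schedule: Schedule) -> int:
    """Longest chain delay over all cycles, i.e. the required clock period."""
    return max(sum(DELAY[op] for op in chain)
               for cycle in schedule for chain in cycle)


original: Schedule = [
    [["g"]],         # time n
    [["f", "h"]],    # time n+1: f then h in the same cycle
]
rescheduled: Schedule = [
    [["g"], ["f'"]],  # time n: g and the precomputed f' side by side
    [["h"]],          # time n+1: only h remains
]

print(clock_period(original), clock_period(rescheduled))
```

Under these assumed delays the critical cycle shrinks from 7 to 5 units; with other delays the benefit can be smaller, which is why f′ may need further logic optimization.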

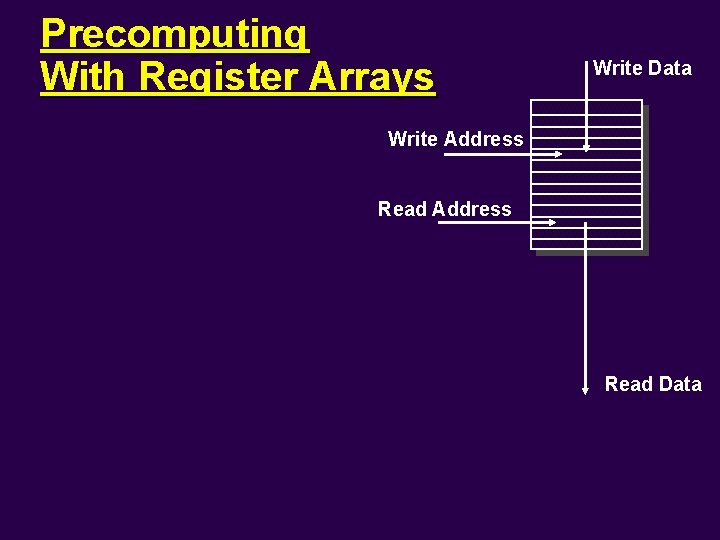

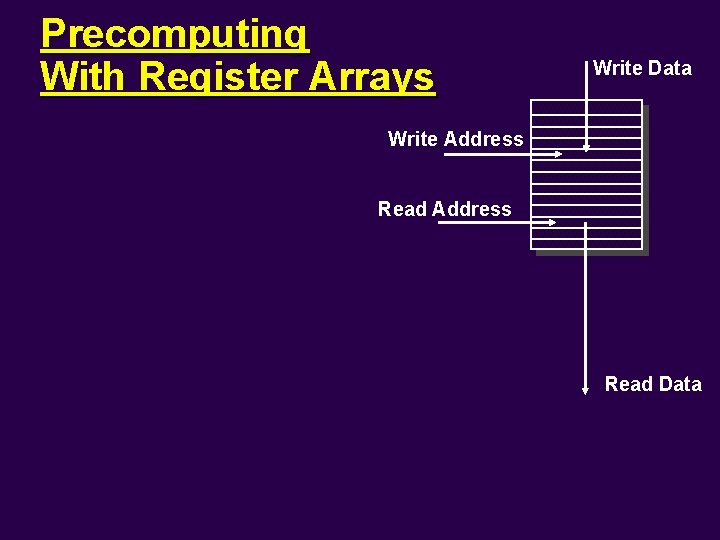

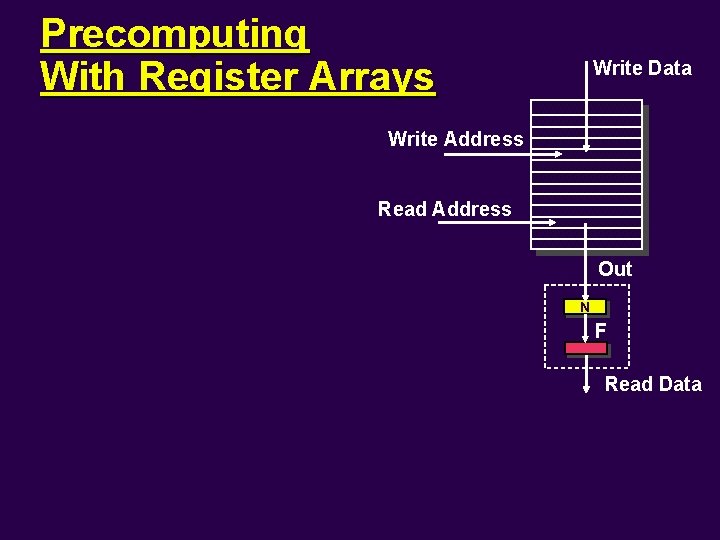

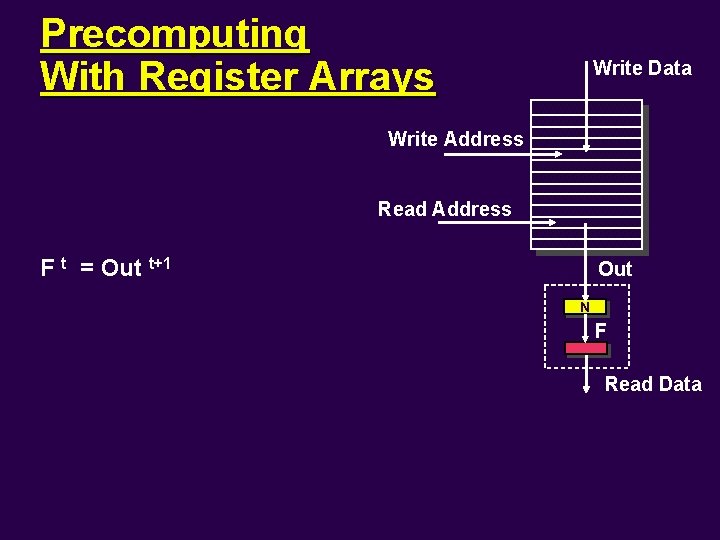

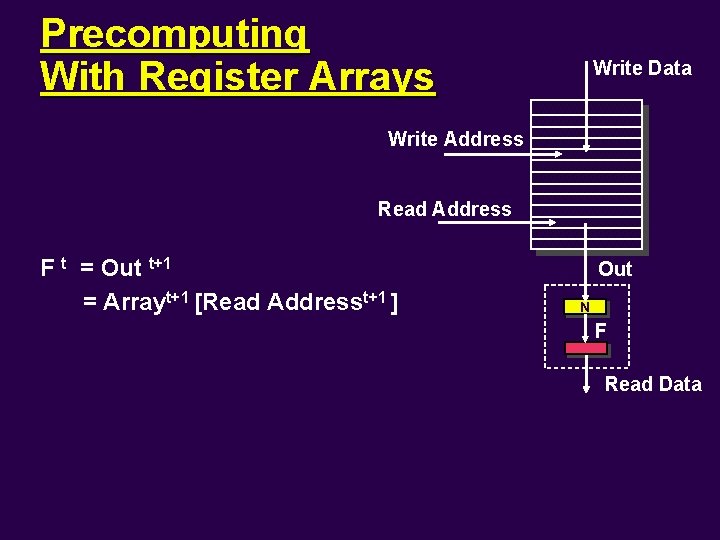


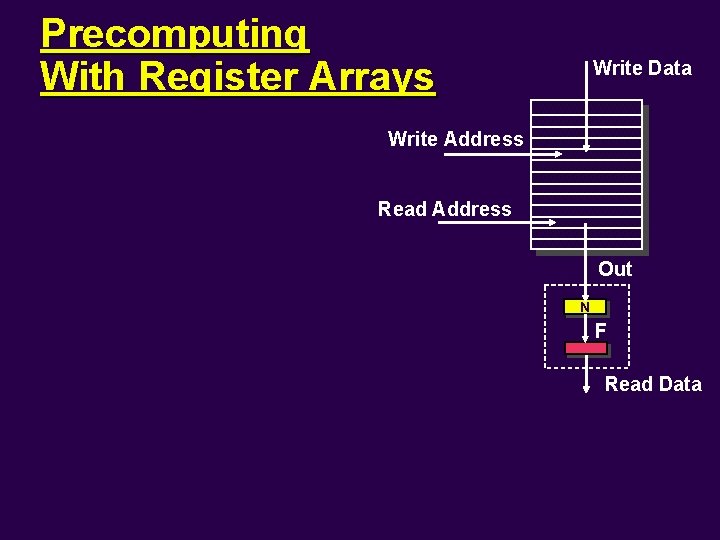


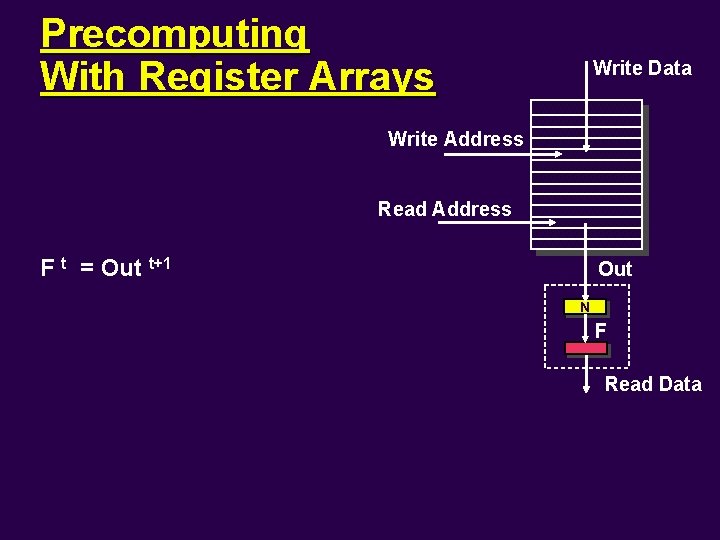


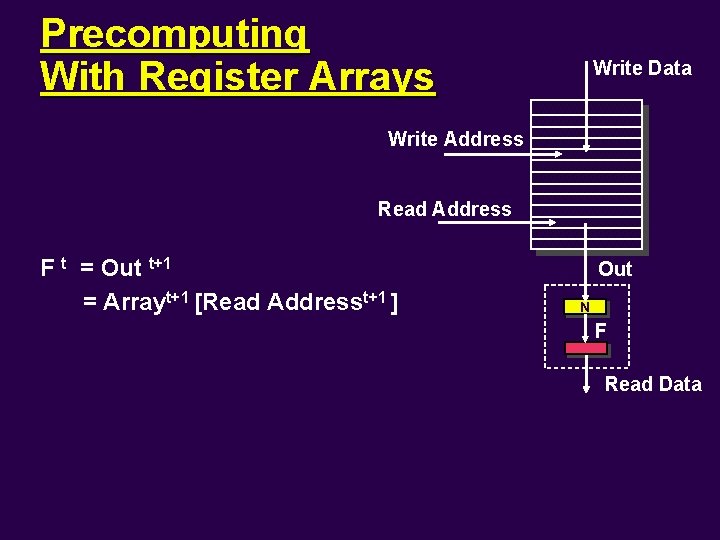

Precomputing With Register Arrays (figure: Write Data, Write Address, Read Address, Out, N, F, Read Data)

$$F^{t} = \mathit{Out}^{t+1} = \mathit{Array}^{t+1}[\mathit{ReadAddress}^{t+1}]$$

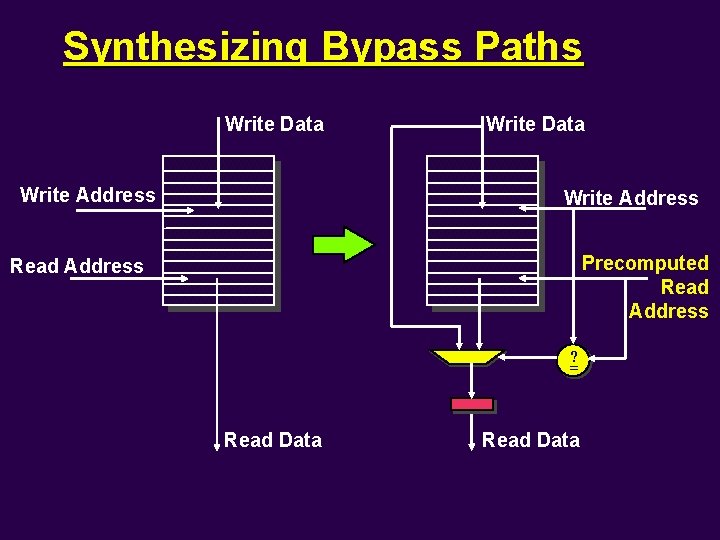

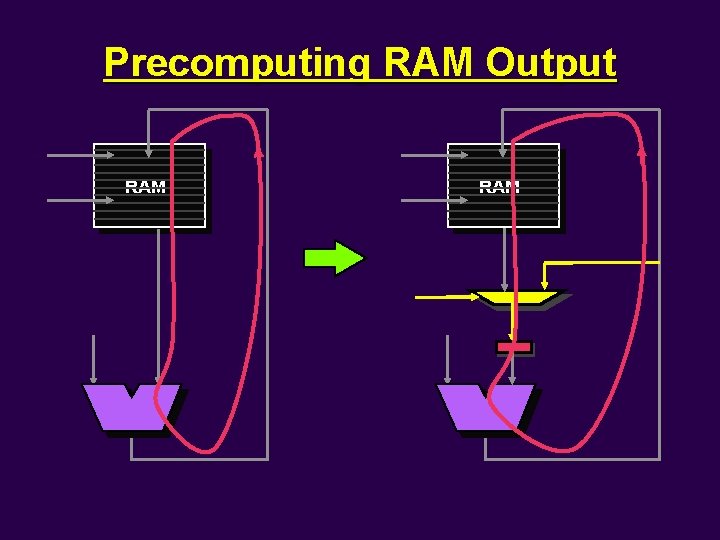

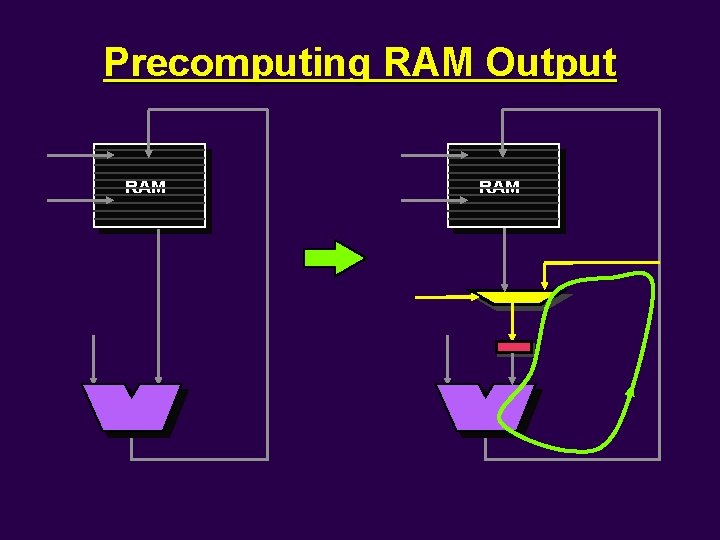

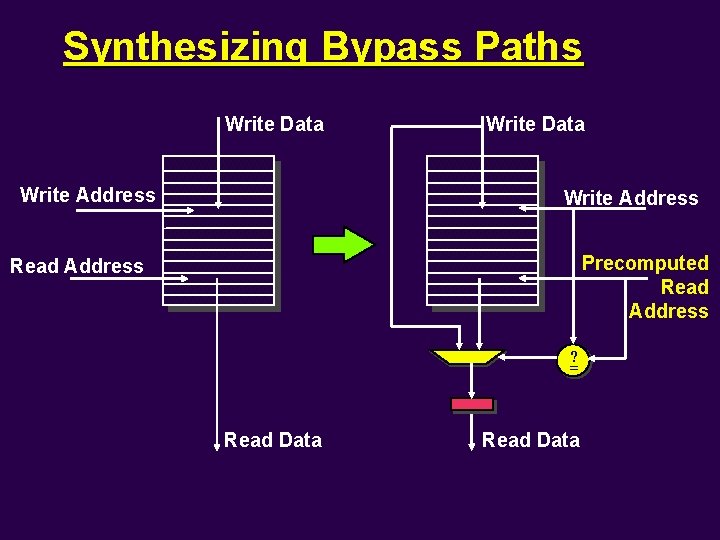

Synthesizing Bypass Paths (figure: Write Data, Write Address, precomputed Read Address, equality comparator ?=, Read Data)
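Since Array^{t+1} differs from Array^t only at the address written in cycle t, F^t can be formed in cycle t by comparing the precomputed read address with the write address and forwarding the write data on a match. The sketch below is a behavioral model under the assumption of one write port whose write takes effect at the clock edge; `step` and `precomputed_read` are hypothetical names.

```python
from typing import List, Optional, Tuple

Write = Optional[Tuple[int, int]]  # (address, data), or None when no write occurs


def step(array: List[int], write: Write) -> List[int]:
    """Array^{t+1}: apply the write issued in cycle t at the clock edge."""
    nxt = array.copy()
    if write is not None:
        addr, data = write
        nxt[addr] = data
    return nxt


def precomputed_read(array: List[int], write: Write, next_read_addr: int) -> int:
    """F^t = Array^{t+1}[ReadAddress^{t+1}], computed in cycle t with a bypass.

    If the precomputed read address matches this cycle's write address, the
    write data is forwarded; otherwise the current array contents are read.
    """
    if write is not None and write[0] == next_read_addr:
        return write[1]           # bypass path
    return array[next_read_addr]  # normal read of the current contents


arr = [10, 20, 30, 40]
print(precomputed_read(arr, (2, 99), 2))  # write to the address being read
print(precomputed_read(arr, (2, 99), 1))  # unrelated address
```

The first call takes the bypass and returns the write data; the second reads the unchanged entry. This is the same forwarding idea used for read-after-write hazards in pipelined processors.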

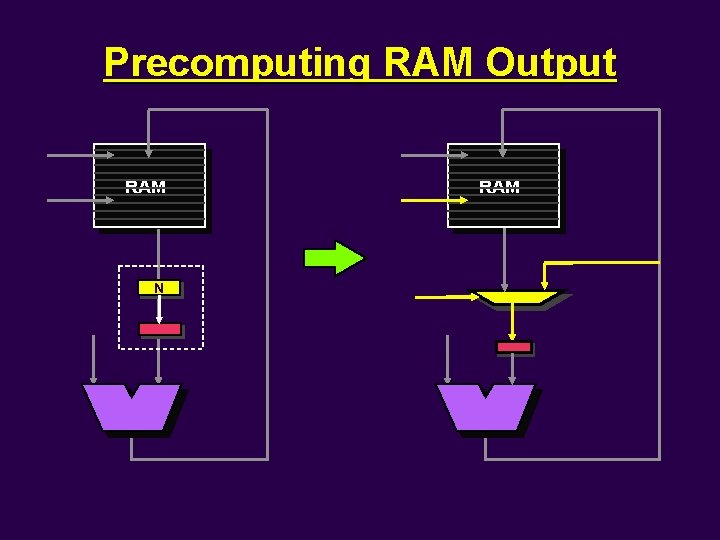

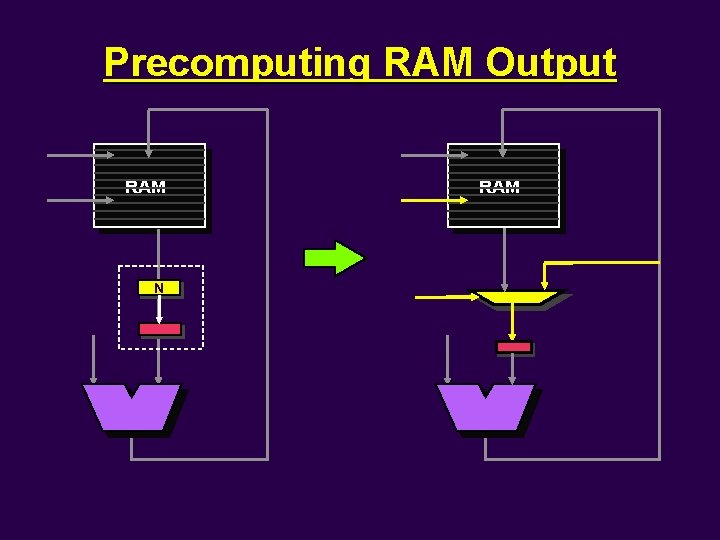

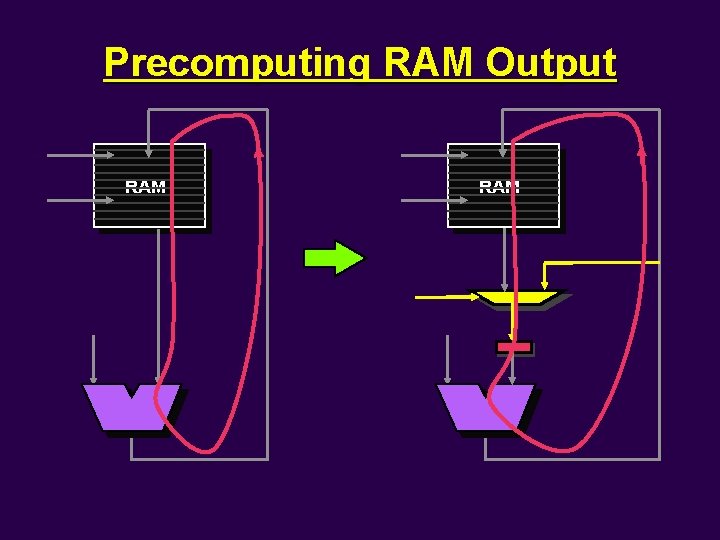

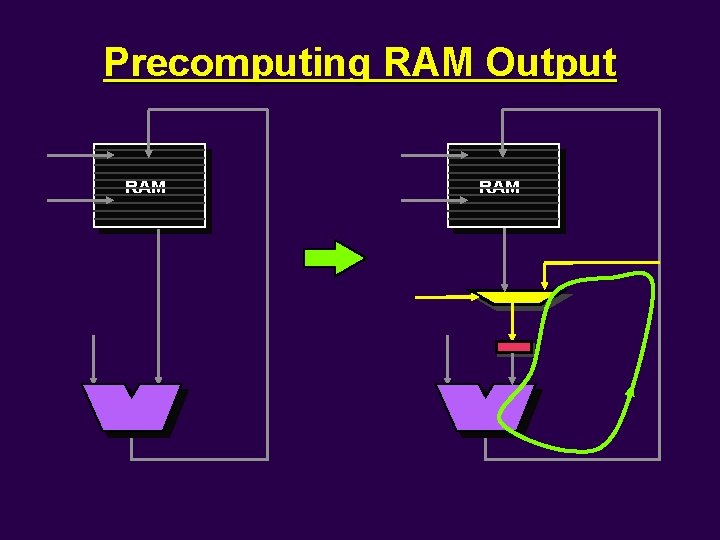

Precomputing RAM Output (figure: RAM, negative register N, RAM)

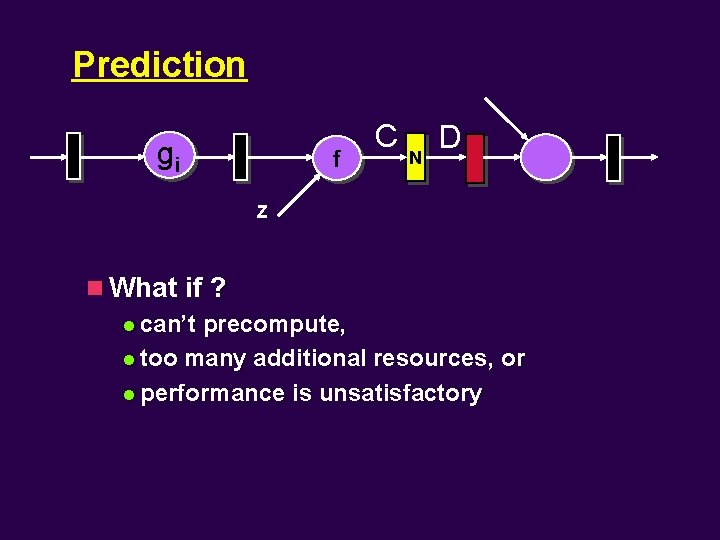

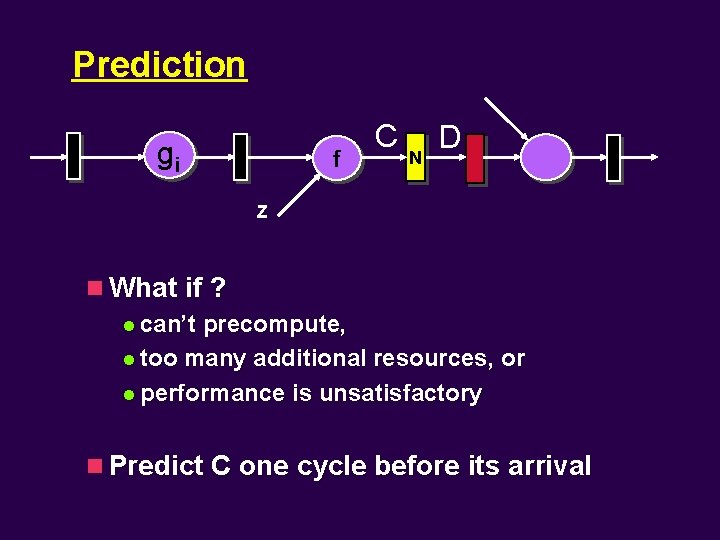

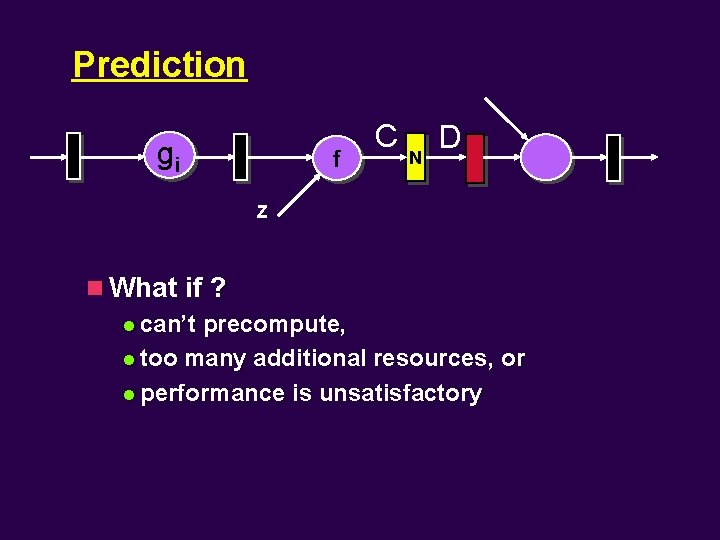

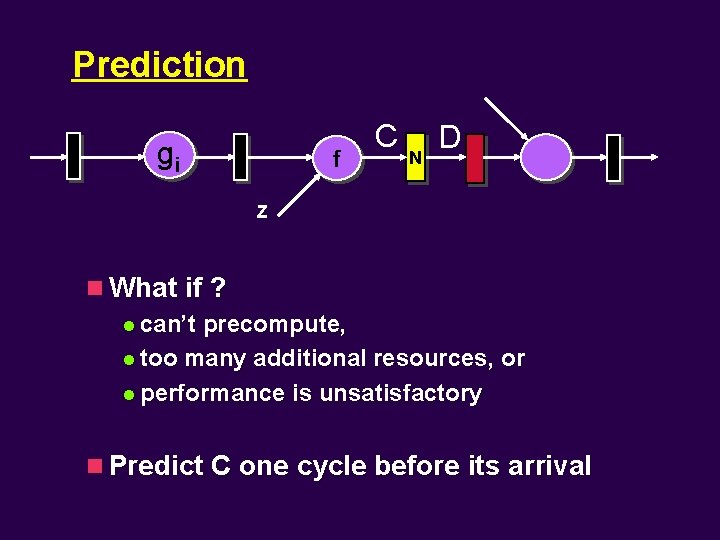


Prediction (figure: g_i, f, C, N, D, Z)
- What if we:
  - can't precompute,
  - would need too many additional resources, or
  - get unsatisfactory performance?
- Predict C one cycle before its arrival

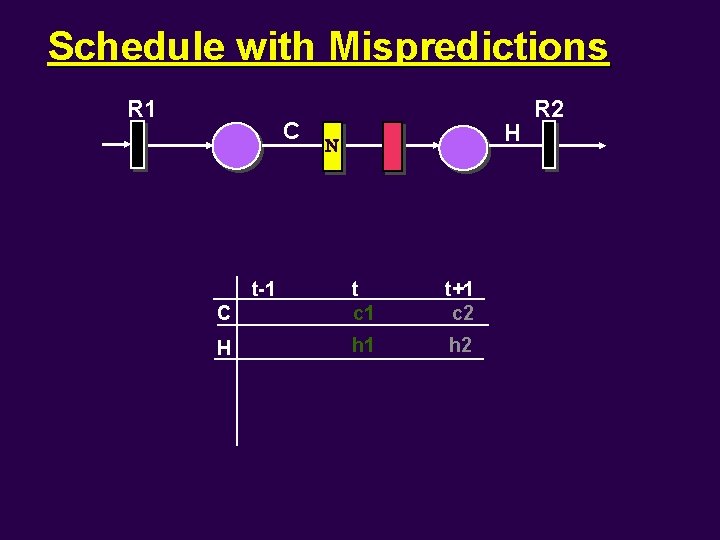

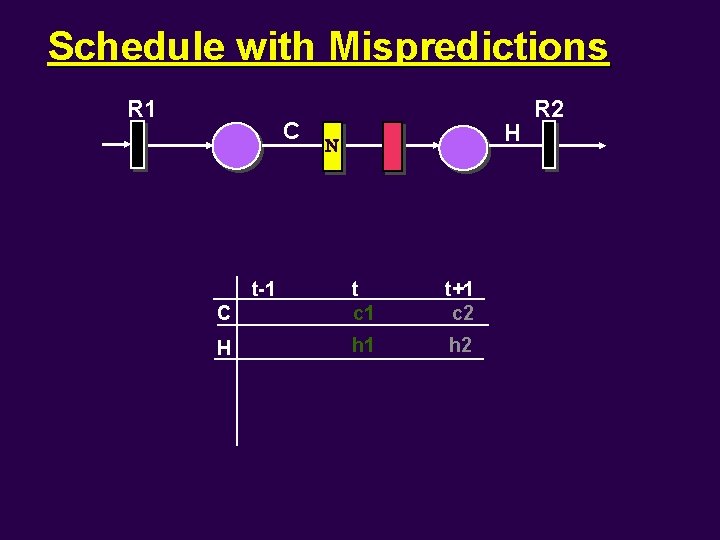

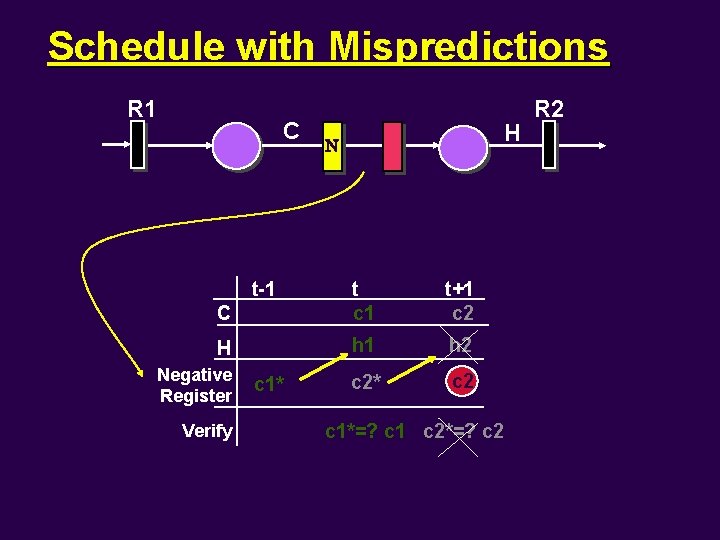


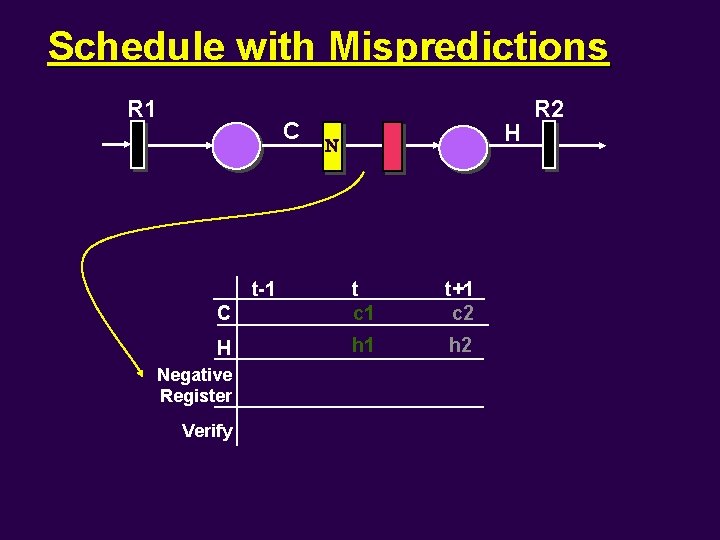

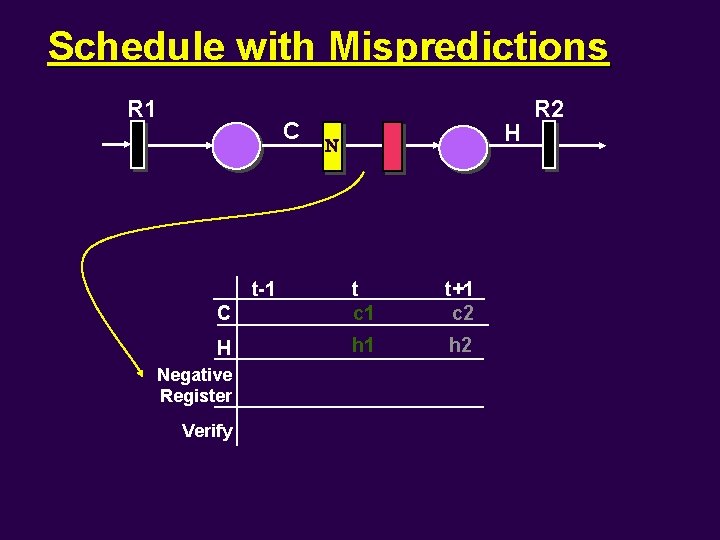


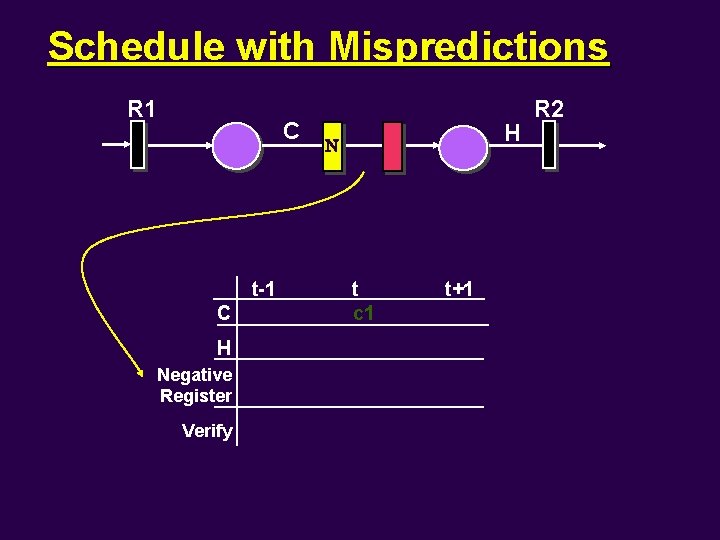


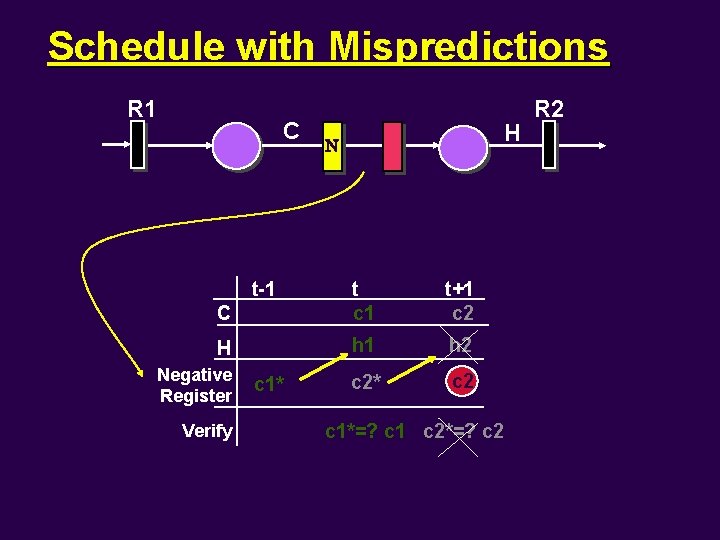

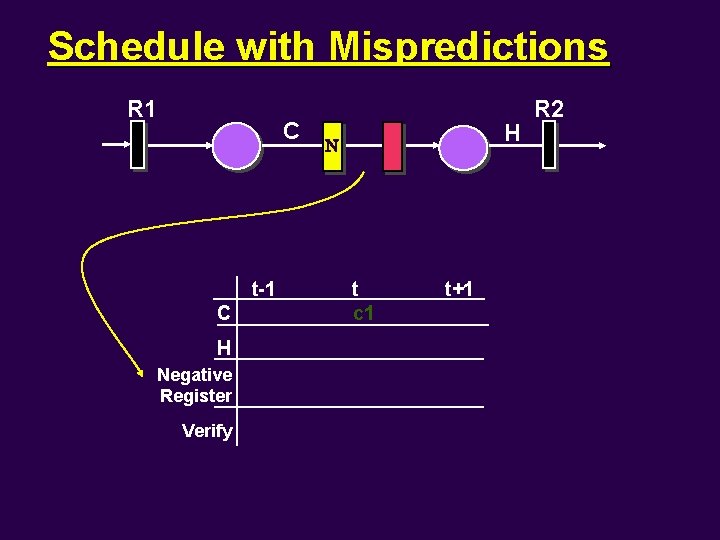

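Schedule with Mispredictions (figure: registers R1 and R2, logic blocks C and H, negative register, verify logic)
- Cycles t-1, t, t+1: C produces c1 and c2; H produces h1 and h2
- The negative register supplies the predictions c1* and c2*
- Verify: c1* =? c1, c2* =? c2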
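The verify step can be modeled as speculation with replay: H runs on the prediction c*, and when the actual c arrives and differs, the speculative result is discarded and H is recomputed. The sketch below assumes a fixed one-cycle penalty per misprediction and a caller-supplied predictor; `run_speculative` is a hypothetical name, and this simple replay is not the ASAP or ALAP correction strategy named in the slides.

```python
from typing import Callable, List, Tuple


def run_speculative(cs: List[int], predict: Callable[[int], int],
                    H: Callable[[int], int], c_init: int) -> Tuple[List[int], int]:
    """Prediction with verification and one-cycle replay.

    For each actual value c, H runs speculatively on the prediction c* made
    from the previous actual value. If verification (c* == c) fails, the
    speculative result is discarded and H is re-run on c.
    Returns (committed results of H, total cycles).
    """
    results: List[int] = []
    cycles = 0
    prev = c_init
    for c in cs:
        c_star = predict(prev)     # negative register output: the prediction
        h_spec = H(c_star)         # speculative execution of H
        cycles += 1
        if c_star == c:            # verify: c* =? c
            results.append(h_spec)
        else:
            results.append(H(c))   # nullify the misprediction and recompute
            cycles += 1            # assumed one-cycle penalty
        prev = c
    return results, cycles


# Last-value predictor: guess that C repeats its previous value.
res, n = run_speculative([1, 1, 1, 2, 2], predict=lambda p: p,
                         H=lambda c: 10 * c, c_init=1)
print(res, n)
```

The committed results always match a non-speculative run; only the cycle count depends on prediction accuracy, here one extra cycle for the single misprediction.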

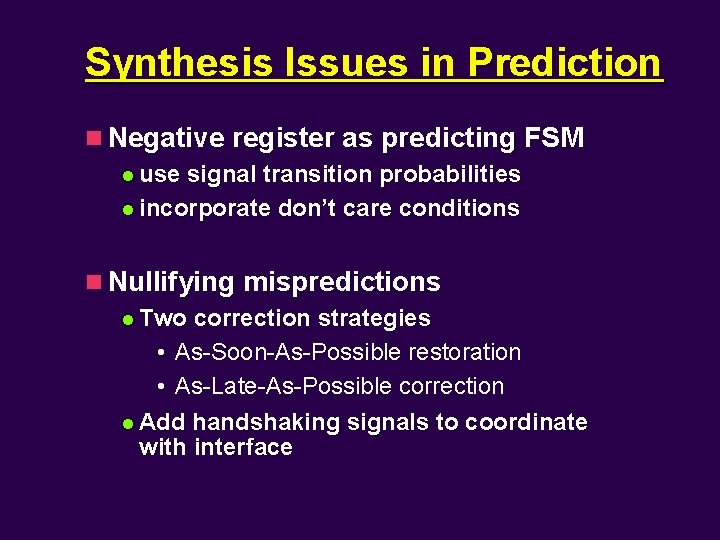

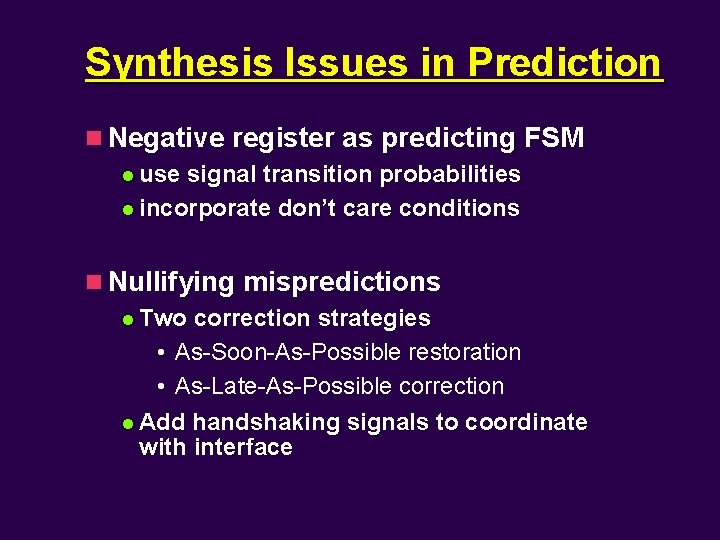

Synthesis Issues in Prediction
- Negative register as a predicting FSM
  - use signal transition probabilities
  - incorporate don't-care conditions
- Nullifying mispredictions
  - two correction strategies:
    - As-Soon-As-Possible restoration
    - As-Late-As-Possible correction
  - add handshaking signals to coordinate with the interface
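A predicting FSM driven by transition probabilities can be sketched as a lookup table that maps the current value to its most likely successor, estimated from a profiling trace. This is an illustrative assumption rather than the synthesis procedure from the talk: `build_predictor` and `prediction_accuracy` are hypothetical, and don't-care conditions are not modeled.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, List


def build_predictor(trace: List[Hashable]) -> Dict[Hashable, Hashable]:
    """Map each observed value to its most frequent successor in the trace."""
    successors: Dict[Hashable, Counter] = defaultdict(Counter)
    for cur, nxt in zip(trace, trace[1:]):
        successors[cur][nxt] += 1
    # most_common(1) returns [(value, count)]; ties go to the first seen.
    return {cur: cnt.most_common(1)[0][0] for cur, cnt in successors.items()}


def prediction_accuracy(table: Dict[Hashable, Hashable],
                        trace: List[Hashable], default: Hashable) -> float:
    """Fraction of transitions in the trace that the table predicts correctly."""
    hits = sum(1 for cur, nxt in zip(trace, trace[1:])
               if table.get(cur, default) == nxt)
    return hits / (len(trace) - 1)


trace = [0, 1, 0, 1, 0, 0, 1]
table = build_predictor(trace)
print(table, prediction_accuracy(table, trace, default=0))
```

Each table entry corresponds to one FSM state and its predicted next output; a hardware version would encode this table as next-state logic for the negative register.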

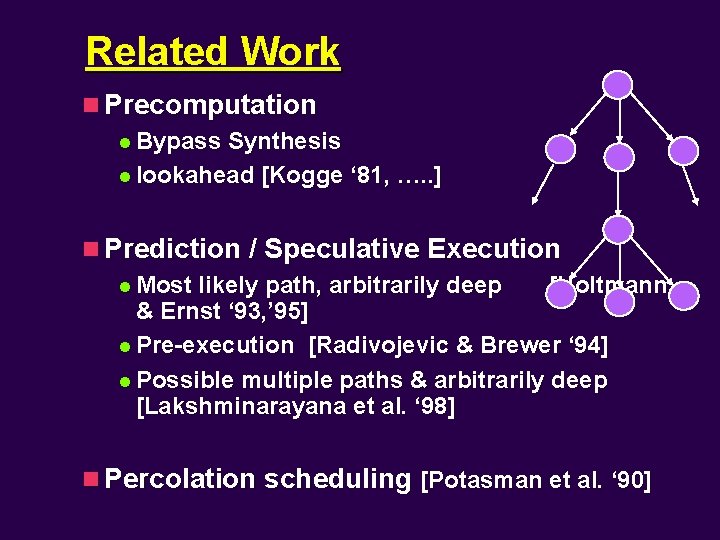

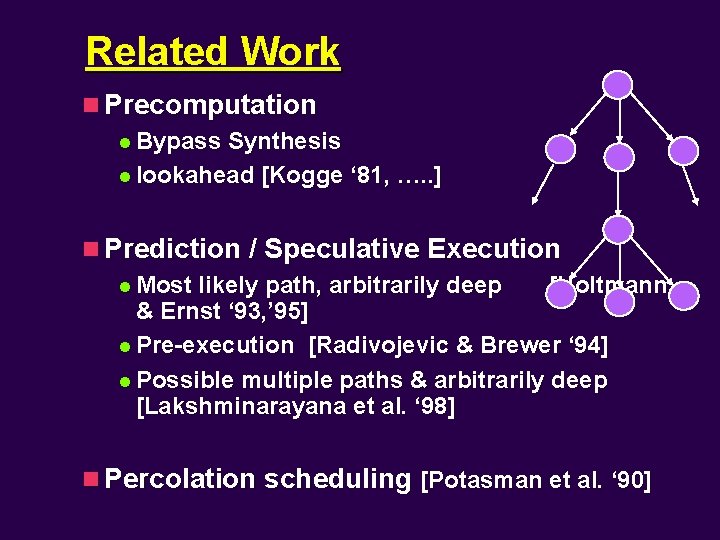

Related Work
- Precomputation
  - Bypass synthesis
  - Lookahead [Kogge '81, …]
- Prediction / speculative execution
  - Most likely path, arbitrarily deep [Holtmann & Ernst '93, '95]
  - Pre-execution [Radivojevic & Brewer '94]
  - Possibly multiple paths, arbitrarily deep [Lakshminarayana et al. '98]
- Percolation scheduling [Potasman et al. '90]

Results

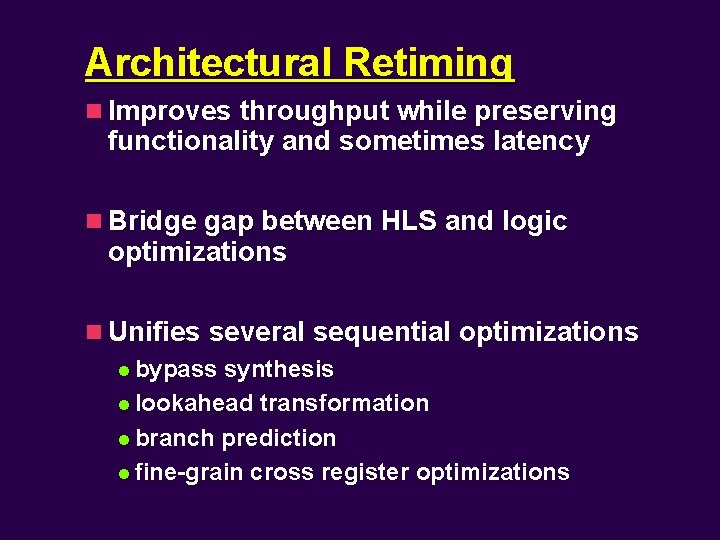

Architectural Retiming
- Improves throughput while preserving functionality and sometimes latency
- Bridges the gap between high-level synthesis (HLS) and logic optimizations
- Unifies several sequential optimizations:
  - bypass synthesis
  - lookahead transformation
  - branch prediction
  - fine-grain cross-register optimizations

Ph.D. Forum at DAC '99
- Goal: increase interaction between academia and industry
- Format: students present their work at a poster session at DAC; researchers give feedback
- Who's eligible? Students within 1 or 2 years of finishing their Ph.D. thesis
- www.cs.washington.edu/homes/soha/forum

The End

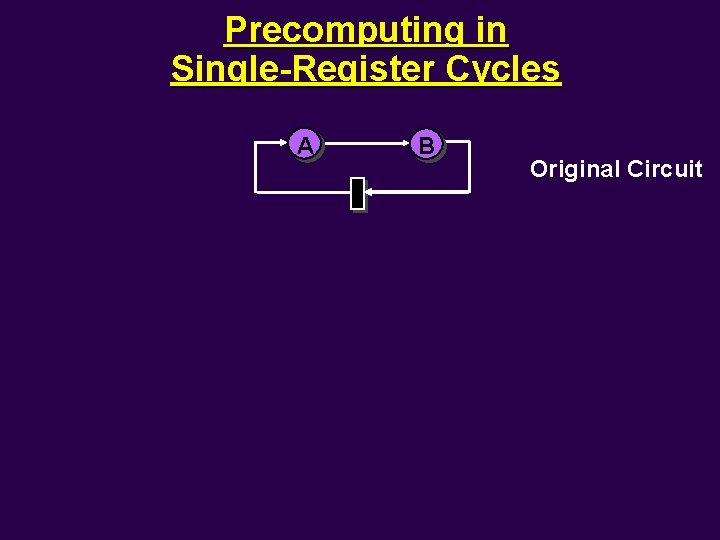

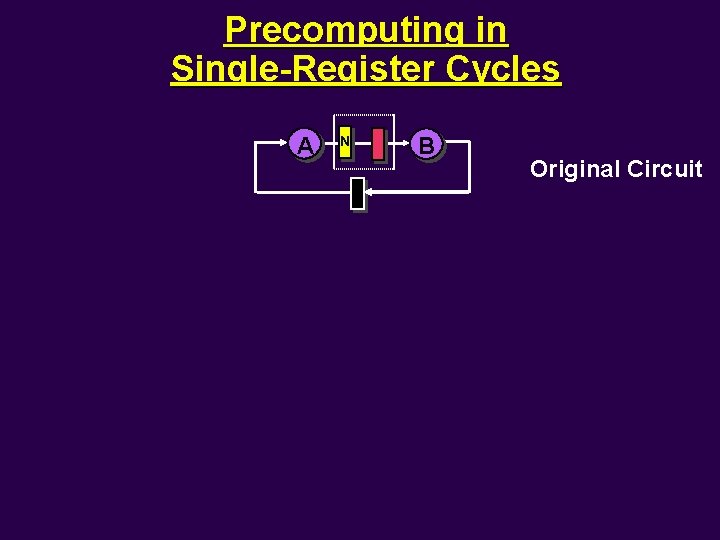

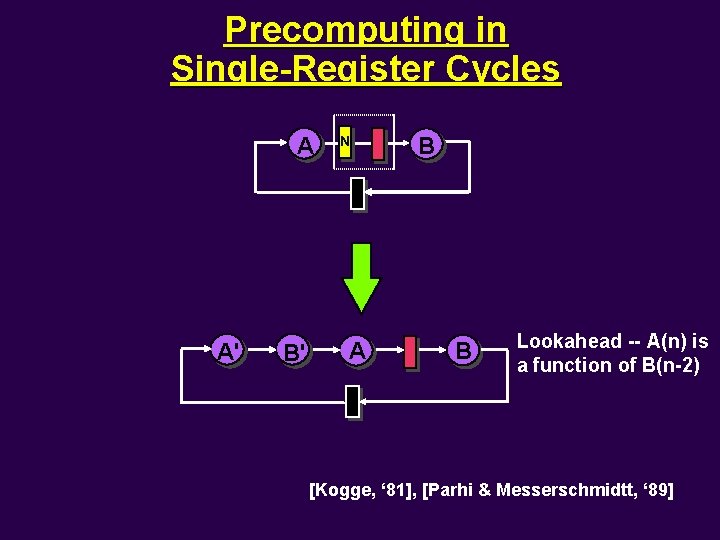

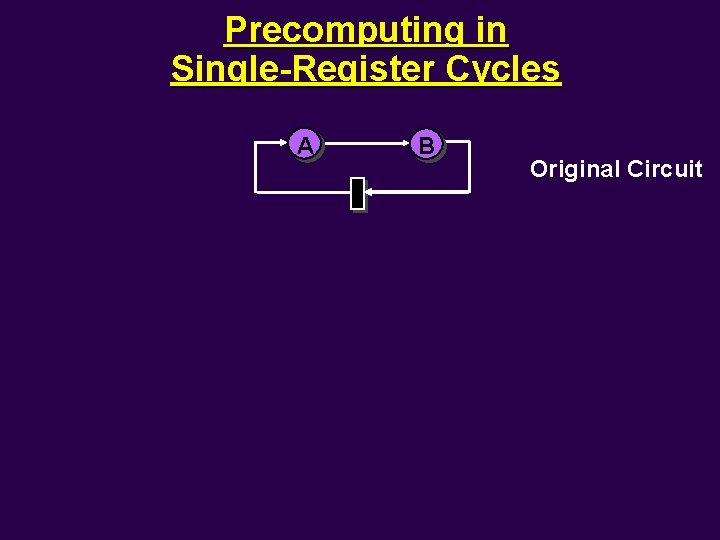

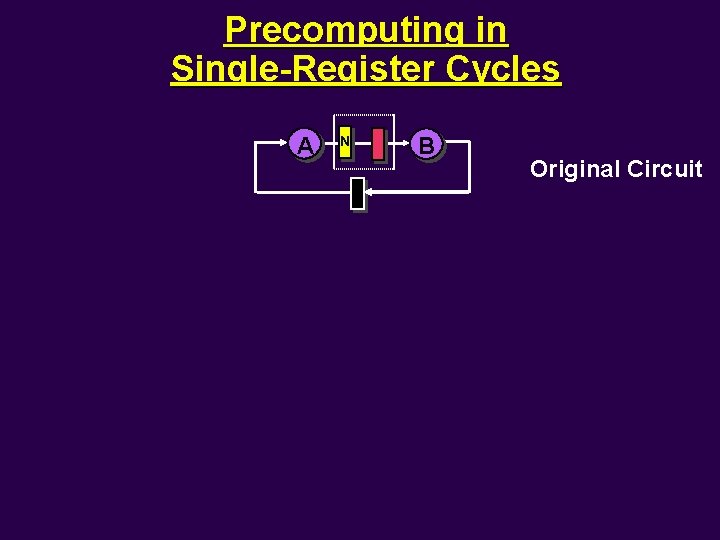


Precomputing in Single-Register Cycles (figure: original circuit with blocks A and B and negative register N)

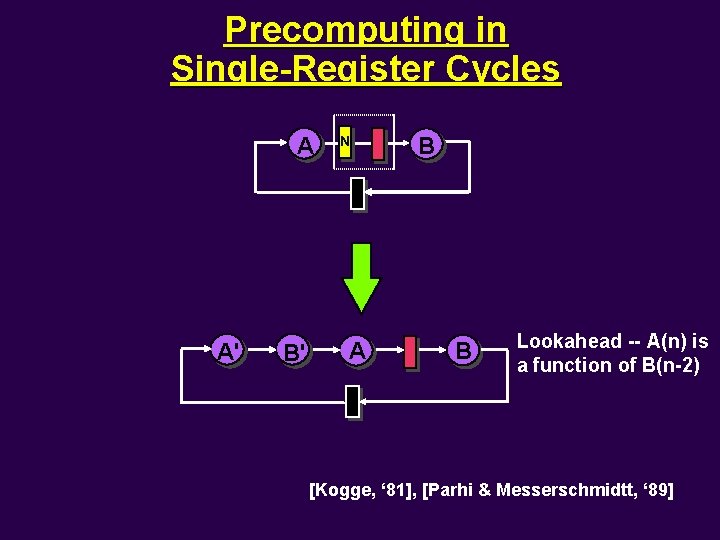

Precomputing in Single-Register Cycles (figure: transformed circuit with A, A′, B, B′, and N). Lookahead: A(n) is a function of B(n-2) [Kogge '81], [Parhi & Messerschmitt '89].
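The lookahead idea can be illustrated on a generic first-order linear recurrence rather than the slide's circuit: unrolling x[n] = a·x[n-1] + u[n] once gives x[n] = a²·x[n-2] + a·u[n-1] + u[n], so each value depends on the one two steps back and the feedback loop spans two registers. The functions `recurrence` and `lookahead` are hypothetical names used only for this sketch.

```python
from typing import List


def recurrence(a: float, u: List[float], x_init: float = 0.0) -> List[float]:
    """Original loop: x[n] = a * x[n-1] + u[n] (one register in the cycle)."""
    xs, prev = [], x_init
    for un in u:
        prev = a * prev + un
        xs.append(prev)
    return xs


def lookahead(a: float, u: List[float], x_init: float = 0.0) -> List[float]:
    """One-step lookahead: x[n] = a^2 * x[n-2] + a * u[n-1] + u[n].

    The feedback now spans two registers, so the loop can be pipelined.
    """
    xs: List[float] = []
    for n, un in enumerate(u):
        if n < 2:
            # Start-up: use the original recurrence until x[n-2] exists.
            prev = xs[-1] if xs else x_init
            xs.append(a * prev + un)
        else:
            xs.append(a * a * xs[n - 2] + a * u[n - 1] + un)
    return xs


u = [1, 2, 3, 4, 5]
print(recurrence(2, u), lookahead(2, u))
```

Both functions produce the same sequence; the price of lookahead is the extra logic computing a² and a·u[n-1].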

Precomputing RAM Output (figure: RAM)


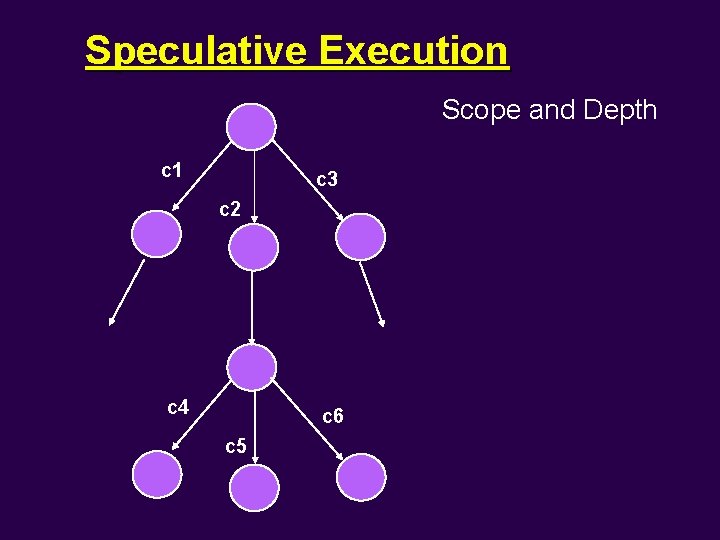

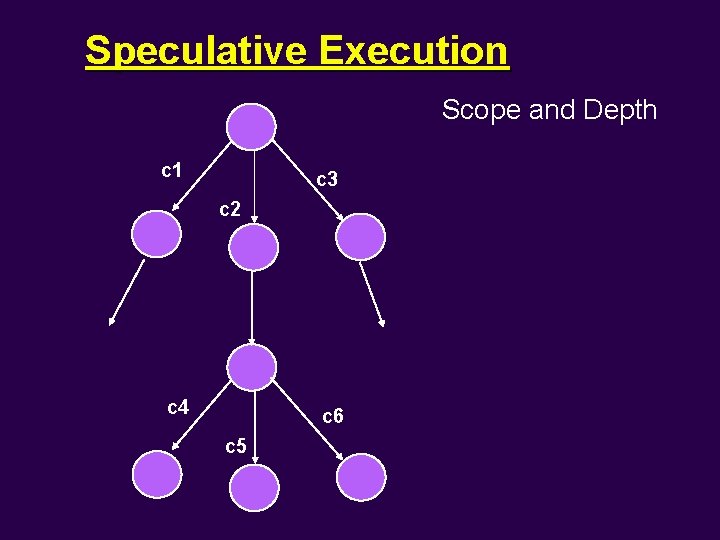

Speculative Execution Scope and Depth (figure: conditions c1 through c6)
