15-744 Computer Networking L-16 P2P/DHT

- Slides: 69

15-744: Computer Networking L-16 P2P/DHT

Overview • P2P Lookup Overview • Centralized/Flooded Lookups • BitTorrent • Routed Lookups – Chord • Comparison of DHTs

Peer-to-Peer Networks • Typically each member stores/provides access to content • Basically a replication system for files • Always a tradeoff between possible location of files and searching difficulty • Peer-to-peer systems allow files to be anywhere, so searching is the challenge • Dynamic member list makes it more difficult • What other systems have similar goals? • Routing, DNS

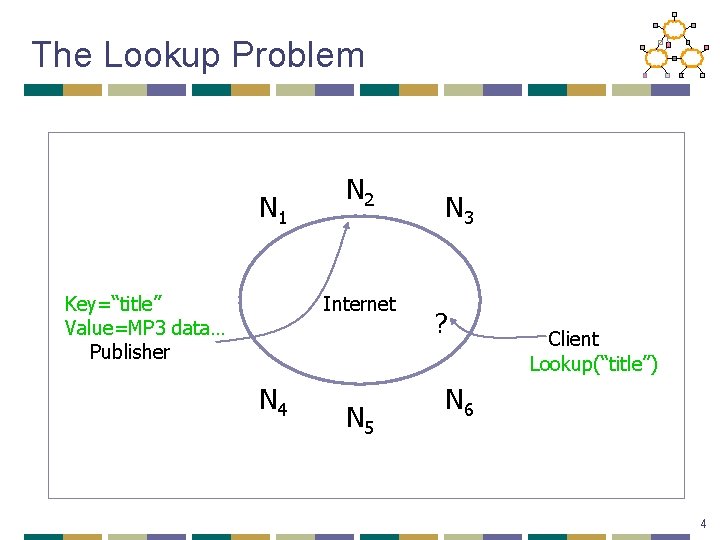

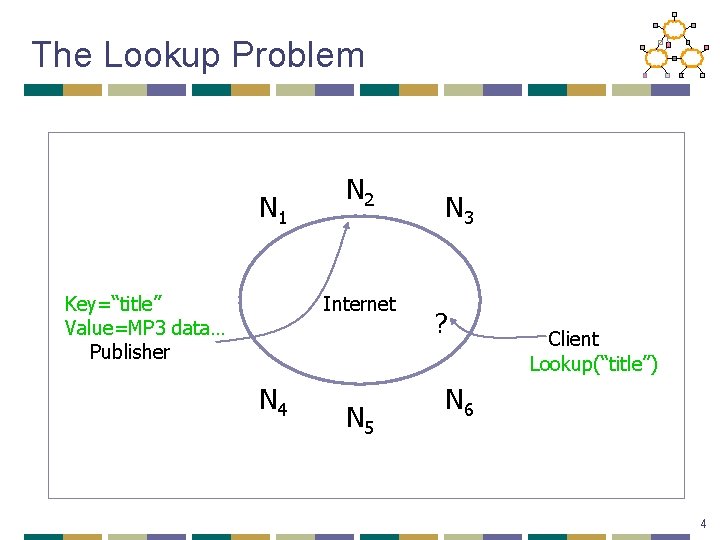

The Lookup Problem • Figure: nodes N1–N6 connected through the Internet; a publisher holds Key=“title”, Value=MP3 data…; a client issues Lookup(“title”) and does not know which node holds the key

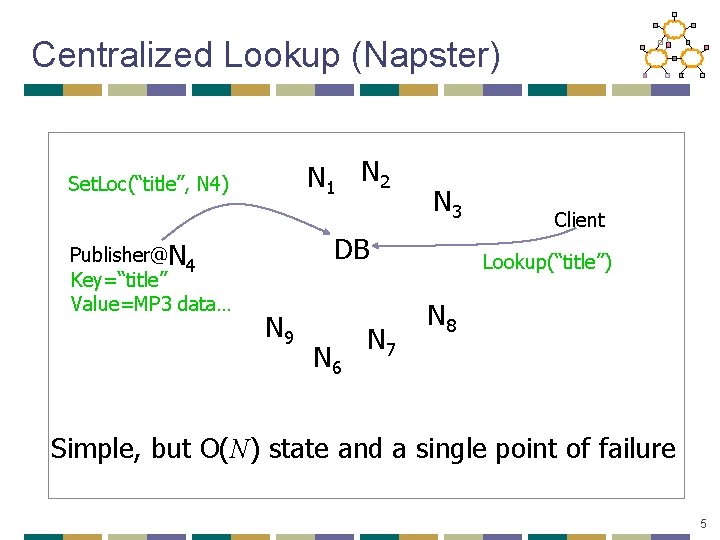

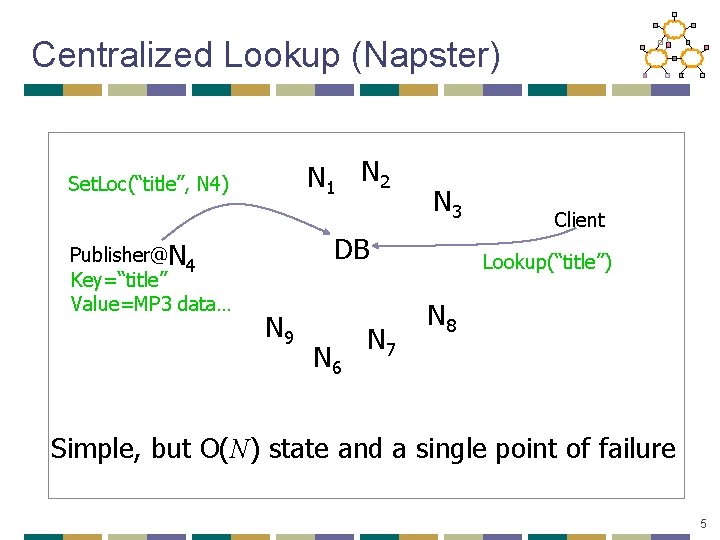

Centralized Lookup (Napster) • Figure: the publisher at N4 (Key=“title”, Value=MP3 data…) registers SetLoc(“title”, N4) with a central DB; the client sends Lookup(“title”) to the DB • Simple, but O(N) state and a single point of failure

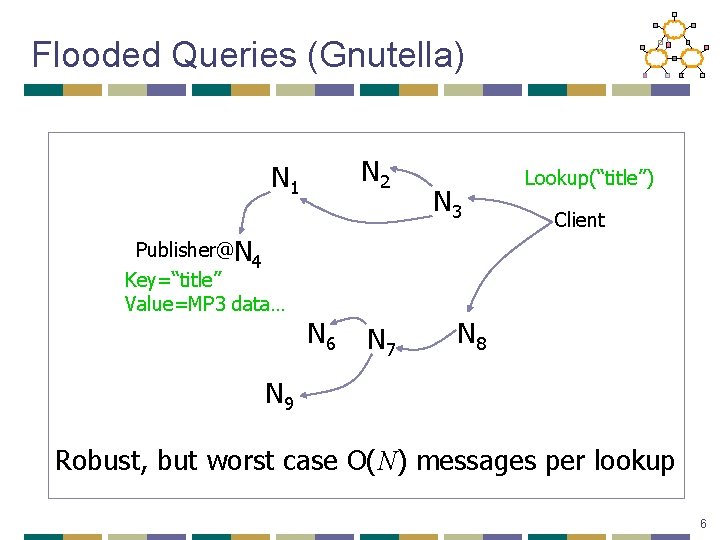

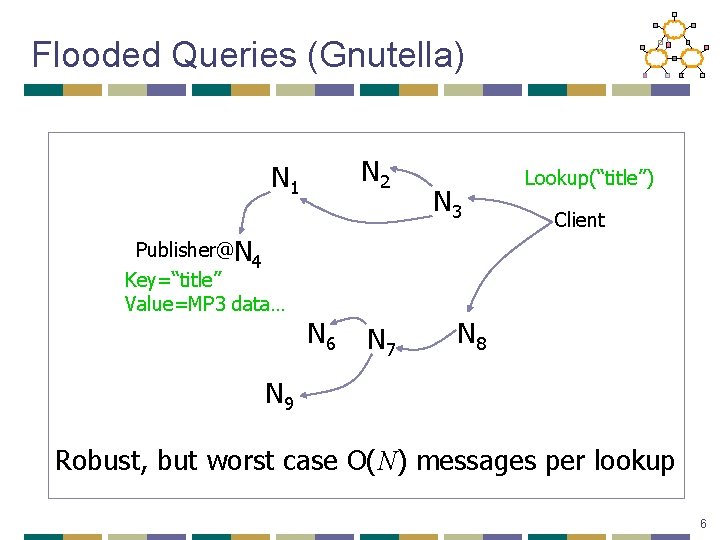

Flooded Queries (Gnutella) • Figure: the client's Lookup(“title”) is flooded from neighbor to neighbor until it reaches the publisher at N4 (Key=“title”, Value=MP3 data…) • Robust, but worst case O(N) messages per lookup

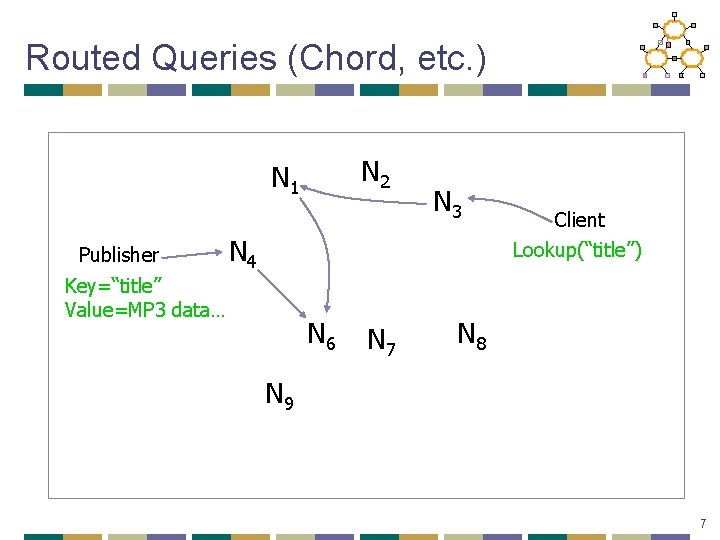

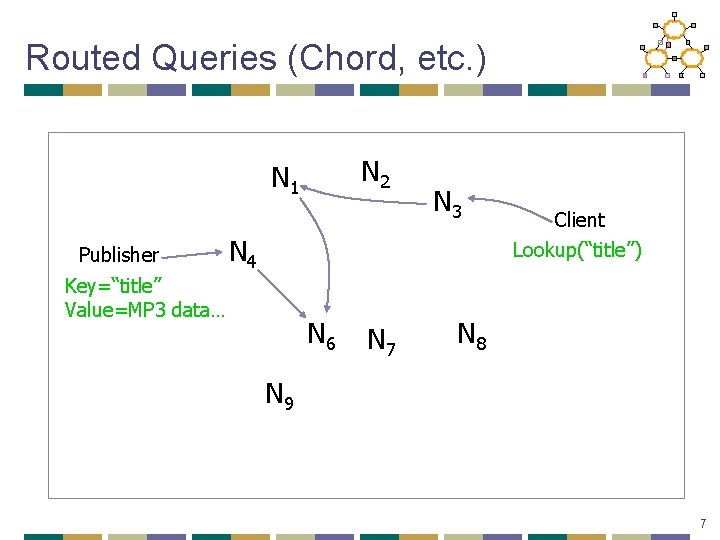

Routed Queries (Chord, etc.) • Figure: the client's Lookup(“title”) is routed hop by hop through nodes N1–N9 toward the node responsible for Key=“title”, Value=MP3 data…

Centralized: Napster • Simple centralized scheme motivated by ability to sell/control • How to find a file: • On startup, client contacts central server and reports list of files • Query the index system, which returns a machine that stores the required file • Ideally this is the closest/least-loaded machine • Fetch the file directly from peer

Centralized: Napster • Advantages: • Simple • Easy to implement sophisticated search engines on top of the index system • Disadvantages: • Robustness, scalability • Easy to sue!

Flooding: Old Gnutella • On startup, client contacts any servent (server + client) in network • Servent interconnections are used to forward control messages (queries, hits, etc.) • Idea: broadcast the request • How to find a file: • Send request to all neighbors • Neighbors recursively forward the request • Eventually a machine that has the file receives the request, and it sends back the answer • Transfers are done with HTTP between peers

Flooding: Old Gnutella • Advantages: • Totally decentralized, highly robust • Disadvantages: • Not scalable; the entire network can be swamped with requests (to alleviate this problem, each request has a TTL) • Especially hard on slow clients • At some point broadcast traffic on Gnutella exceeded 56 kbps – what happened? • Modem users were effectively cut off!

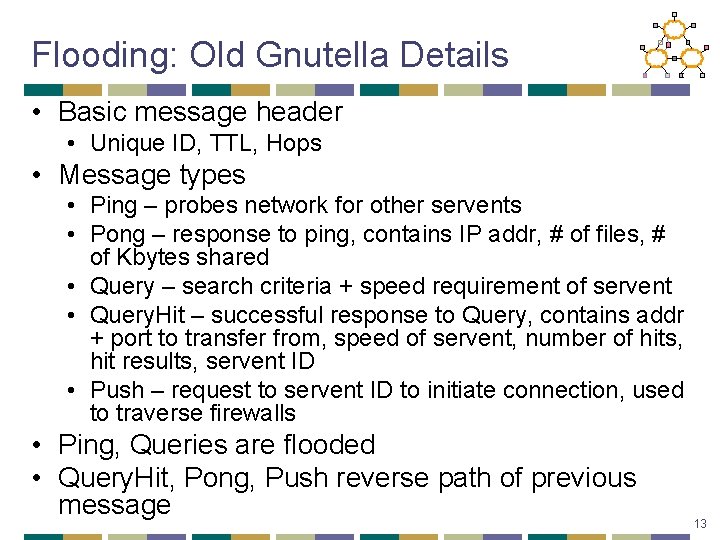

Flooding: Old Gnutella Details • Basic message header • Unique ID, TTL, Hops • Message types • Ping – probes network for other servents • Pong – response to Ping, contains IP addr, # of files, # of Kbytes shared • Query – search criteria + speed requirement of servent • QueryHit – successful response to Query, contains addr + port to transfer from, speed of servent, number of hits, hit results, servent ID • Push – request to servent ID to initiate connection, used to traverse firewalls • Ping and Query messages are flooded • QueryHit, Pong, and Push messages follow the reverse path of the previous message

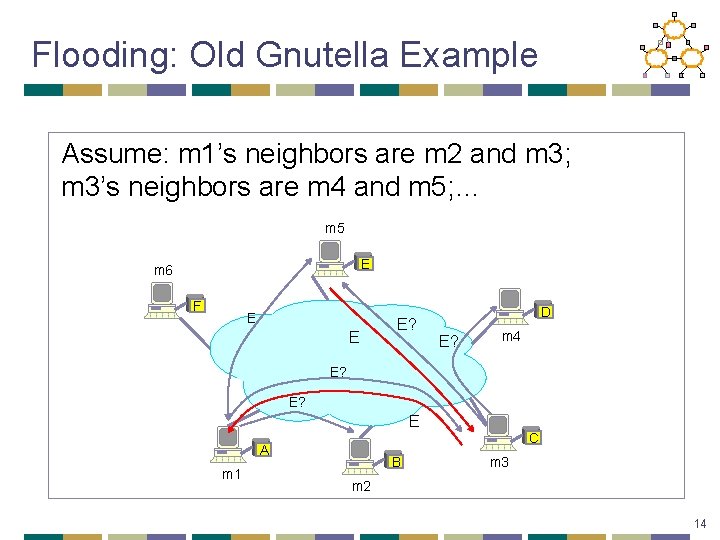

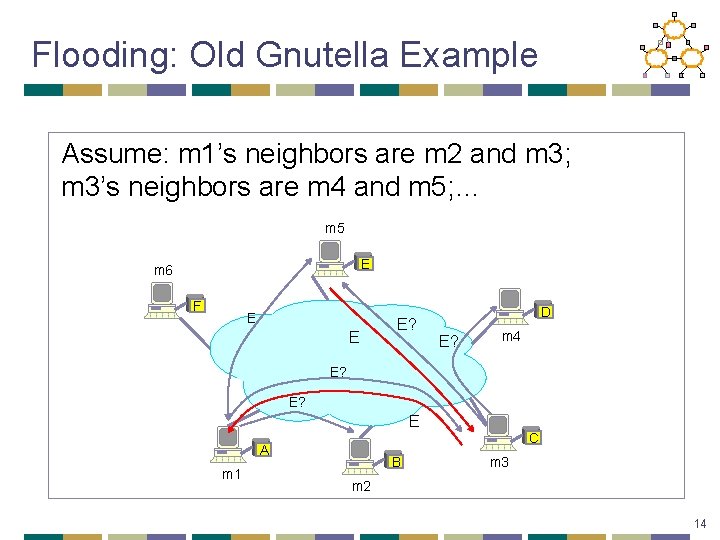

Flooding: Old Gnutella Example • Assume: m1’s neighbors are m2 and m3; m3’s neighbors are m4 and m5; … • Figure: six machines m1–m6 holding files A–F; the query “E?” floods outward from m1, and E is returned along the reverse path
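The flooding behavior described on the Gnutella slides can be sketched as a breadth-first forward with a TTL and duplicate suppression. This is a minimal illustration, not the Gnutella wire protocol: `flood_query`, `GRAPH`, and the m5–m6 link are assumptions made for the example (the slides only give the neighbors of m1 and m3).

```python
from collections import deque

def flood_query(graph, start, holders, ttl):
    """Flood a query from `start`; return (nodes that answered, messages sent)."""
    seen = {start}                      # nodes that already saw this query ID
    hits, messages = set(), 0
    frontier = deque([(start, None, ttl)])
    while frontier:
        node, came_from, remaining = frontier.popleft()
        if node in holders:
            hits.add(node)              # would send a QueryHit back along the reverse path
        if remaining == 0:
            continue                    # TTL expired: do not forward further
        for neighbor in graph[node]:
            if neighbor == came_from:
                continue                # never echo the query back to the sender
            messages += 1
            if neighbor not in seen:    # duplicates are dropped by message ID
                seen.add(neighbor)
                frontier.append((neighbor, node, remaining - 1))
    return hits, messages

# Hypothetical topology: m1-m2, m1-m3, m3-m4, m3-m5 from the slide; m5-m6 is assumed.
GRAPH = {
    "m1": ["m2", "m3"],
    "m2": ["m1"],
    "m3": ["m1", "m4", "m5"],
    "m4": ["m3"],
    "m5": ["m3", "m6"],
    "m6": ["m5"],
}

print(flood_query(GRAPH, "m1", {"m5"}, ttl=2))  # ({'m5'}, 4)
print(flood_query(GRAPH, "m1", {"m5"}, ttl=1))  # (set(), 2)
```

With a TTL of 1 the query never reaches m5, which shows the tradeoff: a small TTL limits traffic but can miss content that exists in the network.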

Flooding: Gnutella, Kazaa • Modifies the Gnutella protocol into a two-level hierarchy • Hybrid of Gnutella and Napster • Supernodes • Nodes that have better connection to Internet • Act as temporary indexing servers for other nodes • Help improve the stability of the network • Standard nodes • Connect to supernodes and report list of files • Allows slower nodes to participate • Search • Broadcast (Gnutella-style) search across supernodes • Disadvantages • Kept a centralized registration, which allowed for lawsuits

Peer-to-Peer Networks: BitTorrent • BitTorrent history and motivation • 2002: B. Cohen debuted BitTorrent • Key motivation: popular content • Popularity exhibits temporal locality (Flash Crowds) • E.g., Slashdot/Digg effect, CNN Web site on 9/11, release of a new movie or game • Focused on efficient fetching, not searching • Distribute same file to many peers • Single publisher, many downloaders • Preventing free-loading

BitTorrent: Simultaneous Downloading • Divide large file into many pieces • Replicate different pieces on different peers • A peer with a complete piece can trade with other peers • Peer can (hopefully) assemble the entire file • Allows simultaneous downloading • Retrieving different parts of the file from different peers at the same time • And uploading parts of the file to peers • Important for very large files

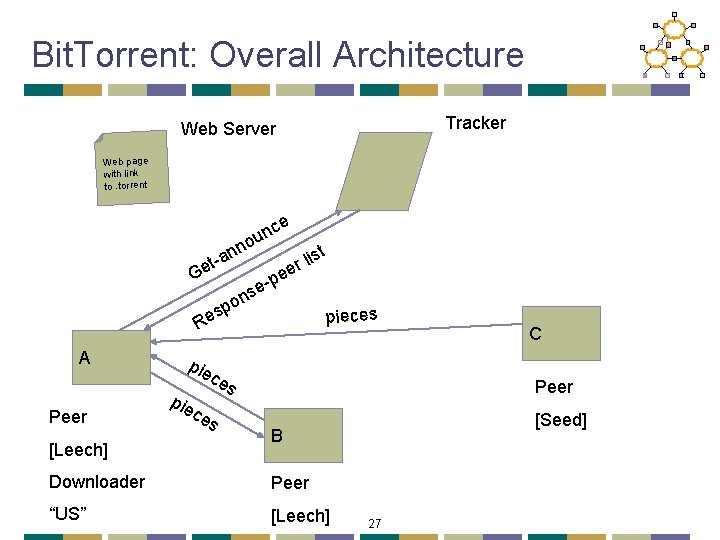

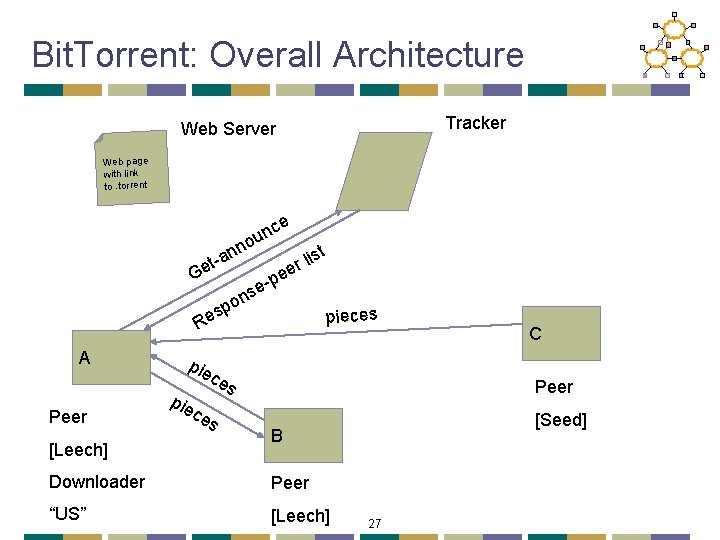

BitTorrent: Tracker • Infrastructure node • Keeps track of peers participating in the torrent • Peers register with the tracker • Peer registers when it arrives • Peer periodically informs tracker it is still there • Tracker selects peers for downloading • Returns a random set of peers • Including their IP addresses • So the new peer knows who to contact for data • Can have “trackerless” system using DHT

BitTorrent: Chunks • Large file divided into smaller pieces • Fixed-sized chunks • Typical chunk size of 256 Kbytes • Allows simultaneous transfers • Downloading chunks from different neighbors • Uploading chunks to other neighbors • Learning what chunks your neighbors have • Periodically asking them for a list • File done when all chunks are downloaded
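As a small illustration of fixed-size chunking, the sketch below computes the byte range of every chunk and checks completion. The names `chunk_ranges` and `is_complete` are hypothetical helpers for this example, not part of any BitTorrent client.

```python
CHUNK_SIZE = 256 * 1024  # 256 Kbytes, the typical size quoted on the slide

def chunk_ranges(file_size, chunk_size=CHUNK_SIZE):
    """Return (offset, length) for each chunk; the last chunk may be shorter."""
    return [(offset, min(chunk_size, file_size - offset))
            for offset in range(0, file_size, chunk_size)]

def is_complete(have, num_chunks):
    """`have` is the set of chunk indices downloaded so far."""
    return have == set(range(num_chunks))

ranges = chunk_ranges(600 * 1024)       # a 600-Kbyte file
print(ranges)                           # [(0, 262144), (262144, 262144), (524288, 90112)]
print(is_complete({0, 2}, len(ranges))) # False: chunk 1 is still missing
```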

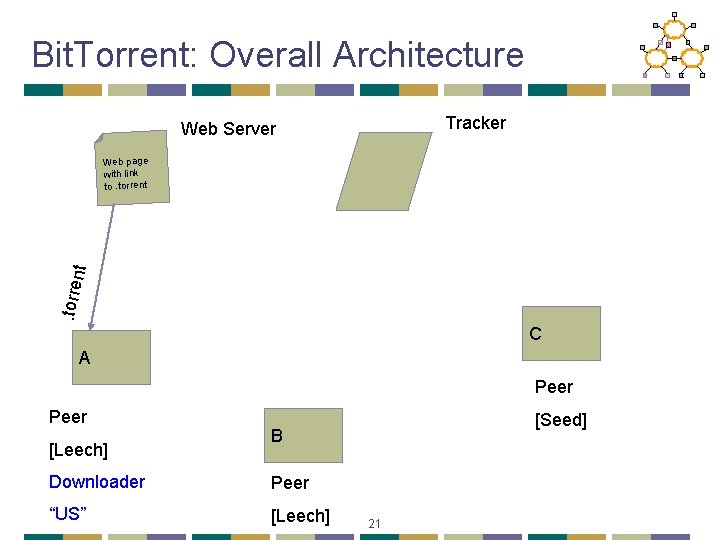

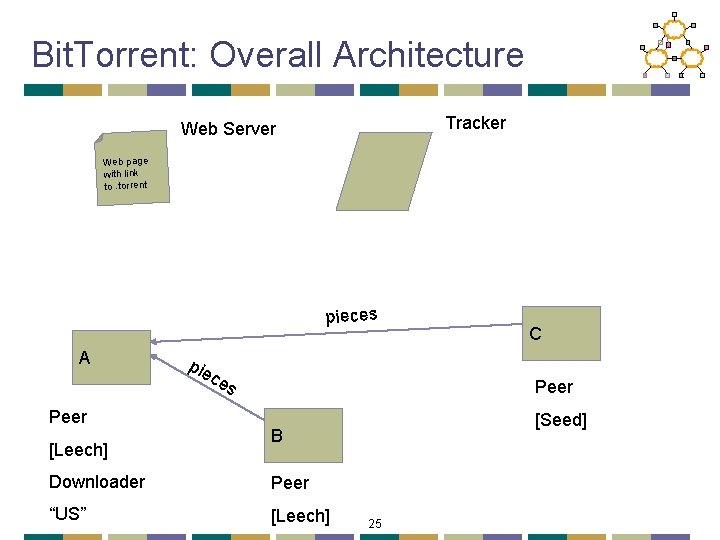

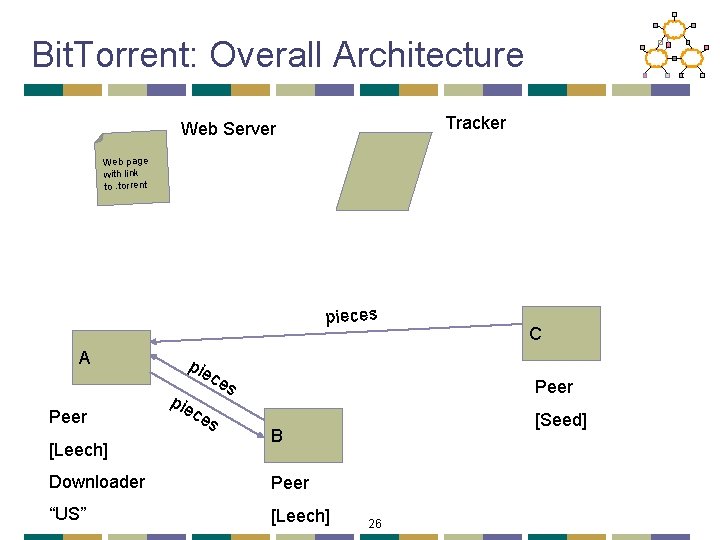

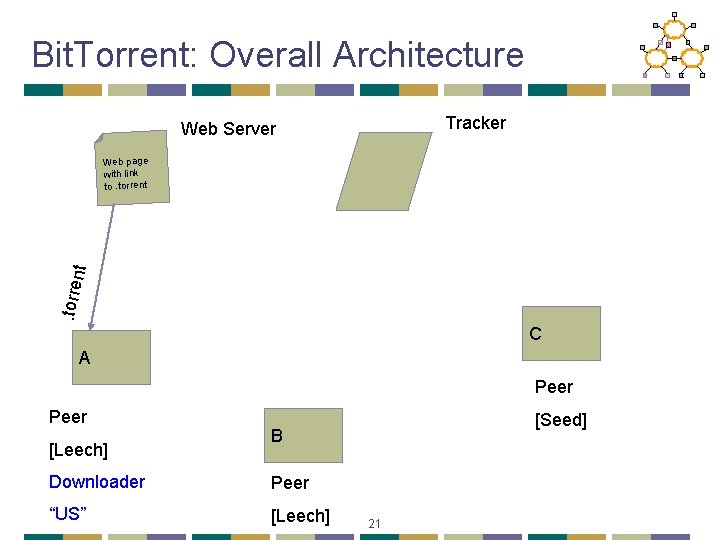

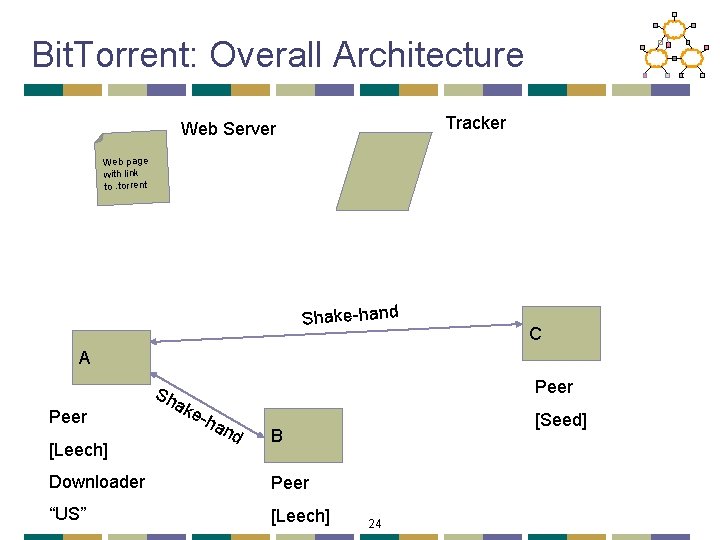

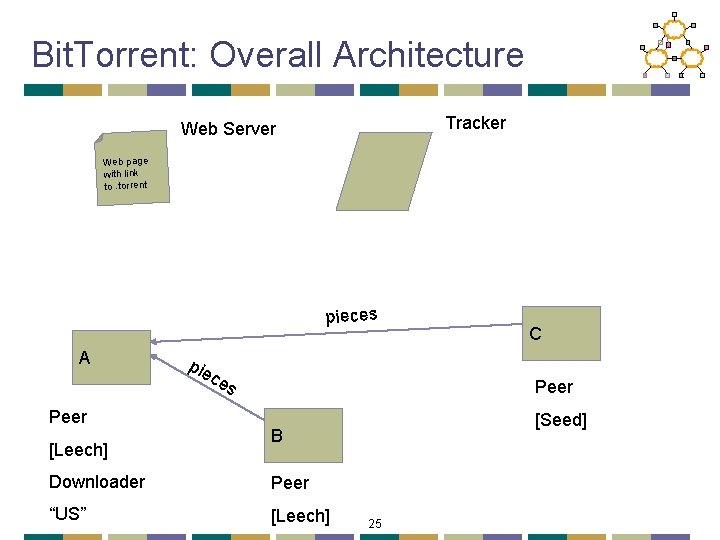

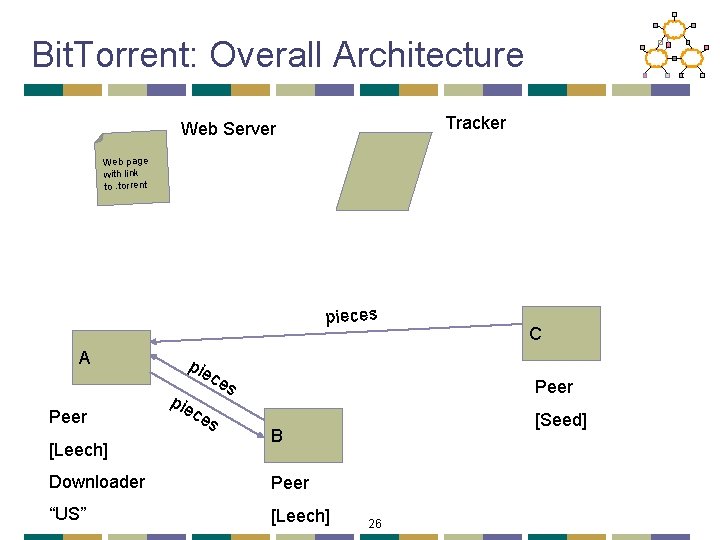

Bit. Torrent: Overall Architecture Tracker Web Server . torre nt Web page with link to. torrent C A Peer [Leech] [Seed] B Downloader Peer “US” [Leech] 21

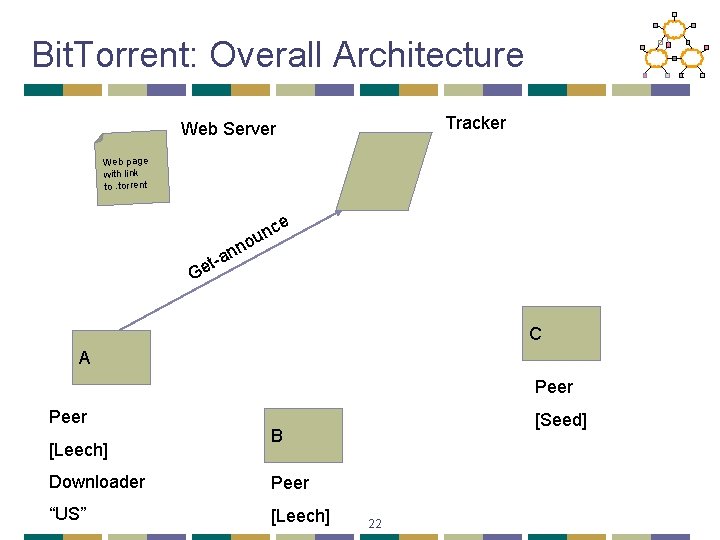

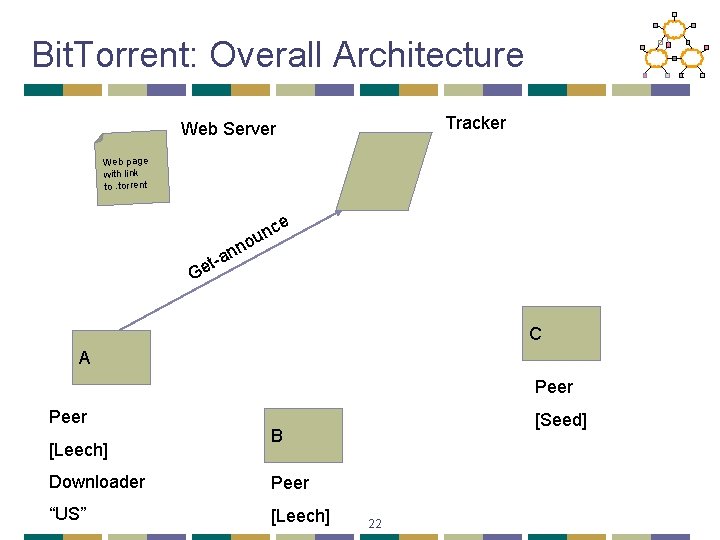

Bit. Torrent: Overall Architecture Tracker Web Server Web page with link to. torrent ce un o n n a et- G C A Peer [Leech] [Seed] B Downloader Peer “US” [Leech] 22

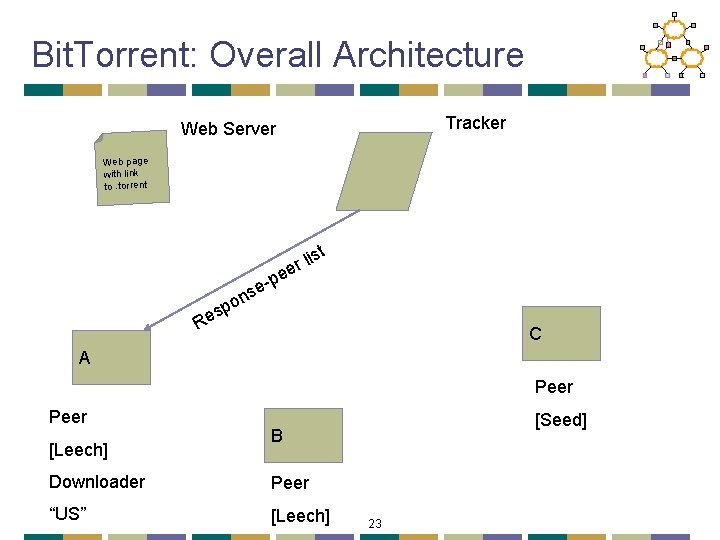

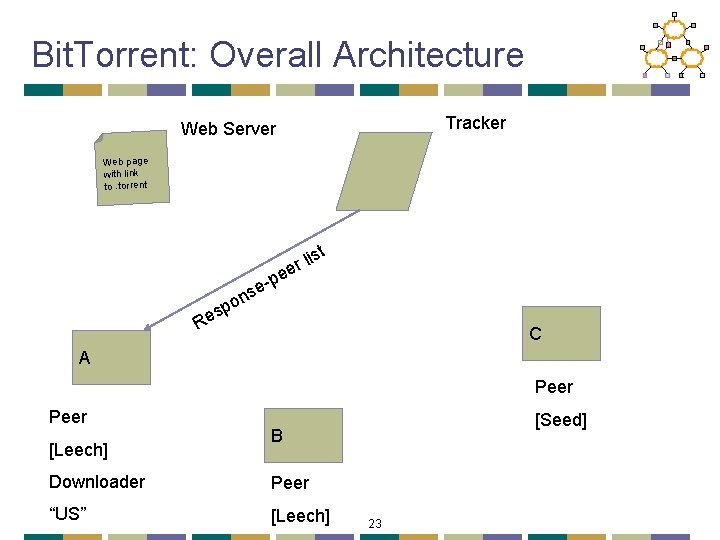

Bit. Torrent: Overall Architecture Tracker Web Server Web page with link to. torrent st -p e ns o sp e R li r ee C A Peer [Leech] [Seed] B Downloader Peer “US” [Leech] 23

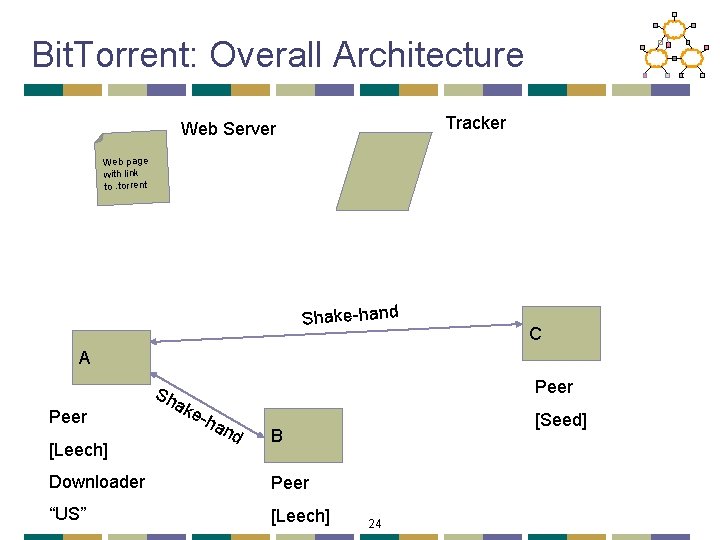

Bit. Torrent: Overall Architecture Tracker Web Server Web page with link to. torrent Shake-hand C A Peer [Leech] Peer Sh ak e-h an d [Seed] B Downloader Peer “US” [Leech] 24

Bit. Torrent: Overall Architecture Tracker Web Server Web page with link to. torrent pieces A Peer [Leech] pie ce s C Peer [Seed] B Downloader Peer “US” [Leech] 25

Bit. Torrent: Overall Architecture Tracker Web Server Web page with link to. torrent pieces A Peer [Leech] pie c ce s es C Peer [Seed] B Downloader Peer “US” [Leech] 26

Bit. Torrent: Overall Architecture Tracker Web Server Web page with link to. torrent e nc u no n ist a l t r ee Ge p se n o sp e pieces R A Peer [Leech] pie c ce s es C Peer [Seed] B Downloader Peer “US” [Leech] 27

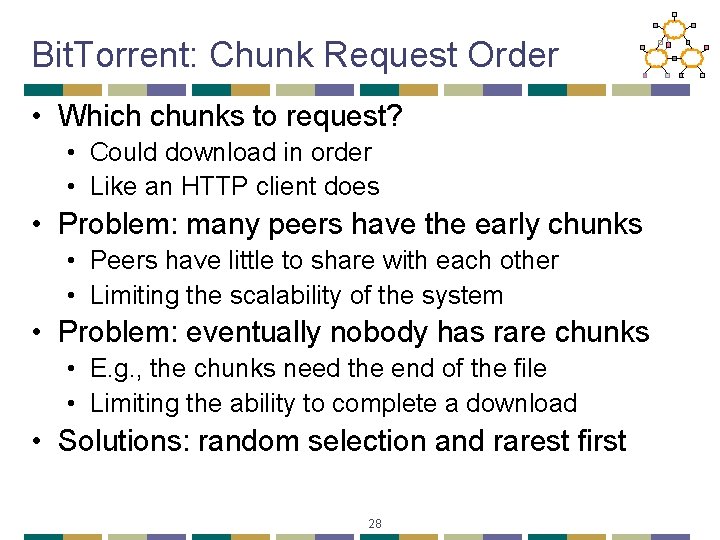

BitTorrent: Chunk Request Order • Which chunks to request? • Could download in order • Like an HTTP client does • Problem: many peers have the early chunks • Peers have little to share with each other • Limiting the scalability of the system • Problem: eventually nobody has rare chunks • E.g., the chunks near the end of the file • Limiting the ability to complete a download • Solutions: random selection and rarest first

BitTorrent: Rarest Chunk First • Which chunks to request first? • The chunk with the fewest available copies • I.e., the rarest chunk first • Benefits to the peer • Avoid starvation when some peers depart • Benefits to the system • Avoid starvation across all peers wanting a file • Balance load by equalizing # of copies of chunks
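Rarest-first selection can be sketched as counting how many neighbors advertise each chunk and picking the least-replicated one that is still needed. This is a minimal sketch: `rarest_first` and the neighbor maps are hypothetical, and real clients mix in random selection as the previous slide notes.

```python
import random
from collections import Counter

def rarest_first(my_chunks, neighbor_chunks, rng=random):
    """Pick the needed chunk held by the fewest neighbors (ties broken at random)."""
    counts = Counter()
    for chunks in neighbor_chunks.values():
        counts.update(chunks)           # one vote per neighbor holding the chunk
    needed = {c: n for c, n in counts.items() if c not in my_chunks}
    if not needed:
        return None                     # nothing useful is on offer
    fewest = min(needed.values())
    return rng.choice(sorted(c for c, n in needed.items() if n == fewest))

# Hypothetical neighborhood: chunk 1 has three copies, chunk 2 only one.
neighbors = {"A": {0, 1, 2}, "B": {0, 1}, "C": {0, 1}}
print(rarest_first({0}, neighbors))     # 2
```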

Free-Riding Problem in P2P Networks • Vast majority of users are free-riders • Most share no files and answer no queries • Others limit # of connections or upload speed • A few “peers” essentially act as servers • A few individuals contributing to the public good • Making them hubs that basically act as a server • BitTorrent prevents free riding • Allow the fastest peers to download from you • Occasionally let some free loaders download

BitTorrent: Preventing Free-Riding • Peer has limited upload bandwidth • And must share it among multiple peers • Prioritizing the upload bandwidth: tit for tat • Favor neighbors that are uploading at highest rate • Rewarding the top few (e.g., four) peers • Measure download bit rates from each neighbor • Reciprocate by sending to the top few peers • Recompute and reallocate every 10 seconds • Optimistic unchoking • Randomly try a new neighbor every 30 seconds • To find a better partner and help new nodes start up
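One round of the tit-for-tat choice can be sketched as follows: rank neighbors by the rate at which they upload to us, reciprocate to the top few, and pick one other neighbor at random as the optimistic unchoke. The function name and the sample rates are illustrative assumptions; the 10-second and 30-second timers are not modeled.

```python
import random

def choose_unchoked(download_rates, top_n=4, rng=random):
    """Return (regular, optimistic): the top-N uploaders to us plus one random other neighbor."""
    ranked = sorted(download_rates, key=download_rates.get, reverse=True)
    regular = ranked[:top_n]            # reciprocate to the fastest uploaders
    others = ranked[top_n:]
    optimistic = rng.choice(others) if others else None
    return regular, optimistic

# Hypothetical measured rates (e.g. KB/s) from six neighbors.
rates = {"a": 15, "b": 12, "c": 10, "d": 9, "e": 8, "f": 3}
regular, optimistic = choose_unchoked(rates)
print(regular)      # ['a', 'b', 'c', 'd']
print(optimistic)   # 'e' or 'f', chosen at random
```

The optimistic slot is what gives a newly arrived peer, which has nothing to upload yet, a chance to receive its first chunks.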

BitTyrant: Gaming BitTorrent • Lots of altruistic contributors • High contributors take a long time to find good partners • Active sets are statically sized • Peer uploads to top N peers at rate 1/N • E.g., if N=4 and peers upload at 15, 12, 10, 9, 8, 3 • … then peer uploading at rate 9 gets treated quite well

BitTyrant: Gaming BitTorrent • Best to be the Nth peer in the list, rather than 1st • Distribution of BW suggests 14 KB/s is enough • Dynamically probe for this value • Use saved bandwidth to expand peer set • Choose clients that maximize download/upload ratio • Discussion • Is “progressive tax” so bad? • What if everyone does this?

Routing: Structured Approaches • Goal: make sure that an identified item (file) is always found in a reasonable # of steps • Abstraction: a distributed hash-table (DHT) data structure • insert(id, item); • item = query(id); • Note: item can be anything: a data object, document, file, pointer to a file… • Proposals • CAN (ICIR/Berkeley) • Chord (MIT/Berkeley) • Pastry (Rice) • Tapestry (Berkeley) • …

Routing: Chord • Associate to each node and item a unique id in a one-dimensional space • Properties • Routing table size O(log(N)), where N is the total number of nodes • Guarantees that a file is found in O(log(N)) steps

Aside: Hashing • Advantages • Let nodes be numbered 1..m • Client uses a good hash function to map a URL to 1..m • Say hash(url) = x, so client fetches content from node x • No duplication, so not fault tolerant • One hop access • Any problems? • What happens if a node goes down? • What happens if a node comes back up? • What if different nodes have different views?

Robust hashing • Suppose 90 documents are spread over nodes 1..9, and node 10, which was dead, comes back alive • % of documents in the wrong node? • 10, 19–20, 28–30, 37–40, 46–50, 55–60, 64–70, 73–80, 82–90 • Disruption coefficient = ½ • Unacceptable, use consistent hashing – idea behind Akamai!
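The ½ figure can be checked directly. The document ranges listed on the slide match an even range partition (documents 1–10 on node 1 with nine nodes, documents 1–9 on node 1 with ten nodes); that partitioning rule is inferred from the listed ranges, and `node_for` is a hypothetical helper.

```python
def node_for(doc, docs_total, nodes):
    """Range partitioning: documents 1..docs_total split evenly over nodes 1..nodes."""
    per_node = docs_total // nodes
    return (doc - 1) // per_node + 1

# Documents whose node changes when the 10th node rejoins.
moved = [d for d in range(1, 91) if node_for(d, 90, 9) != node_for(d, 90, 10)]
print(moved[:6])                      # [10, 19, 20, 28, 29, 30]
print(len(moved), len(moved) / 90)    # 45 0.5
```

Adding a single node relocates 45 of the 90 documents, which is the disruption that consistent hashing is designed to avoid.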

Consistent Hash • “view” = subset of all hash buckets that are visible • Desired features • Balanced – in any one view, load is equal across buckets • Smoothness – little impact on hash bucket contents when buckets are added/removed • Spread – small set of hash buckets that may hold an object regardless of views • Load – across all views # of objects assigned to hash bucket is small

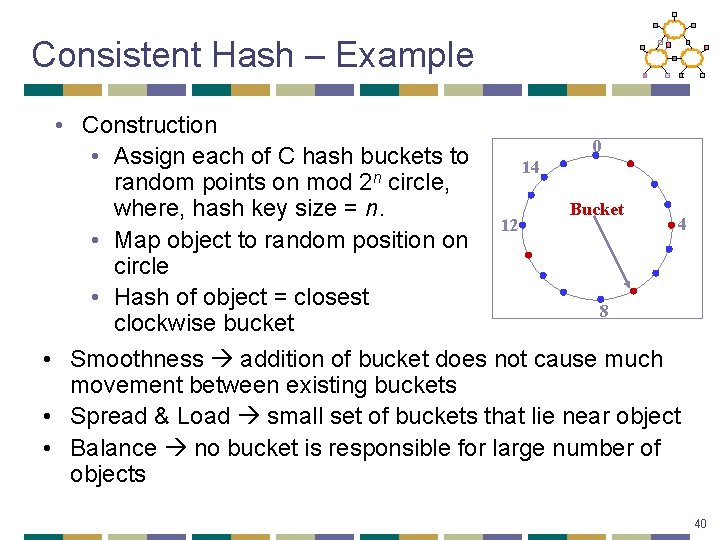

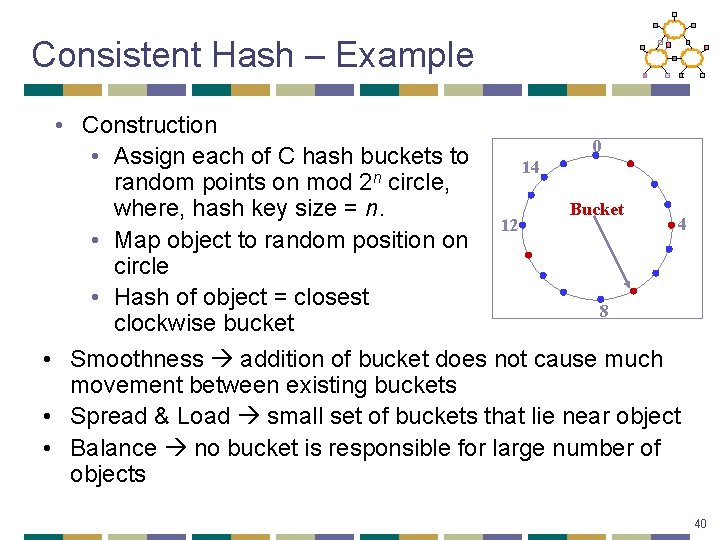

Consistent Hash – Example • Construction • Assign each of C hash buckets to random points on a mod 2^n circle, where hash key size = n • Map object to random position on circle • Hash of object = closest clockwise bucket • Smoothness: addition of a bucket does not cause much movement between existing buckets • Spread & Load: small set of buckets that lie near object • Balance: no bucket is responsible for a large number of objects • Figure: circle marked with points 0, 4, 8, 12, and 14, with buckets placed on it
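The construction above can be sketched with a sorted list of bucket points and a binary search for the closest clockwise bucket. This is a simplified sketch under stated assumptions: each bucket gets a single point rather than several, SHA-1 stands in for the "random" placement, and `point`, `Ring`, and the bucket and key names are hypothetical.

```python
import bisect
import hashlib

def point(name, bits=32):
    """Map a name to a point on the mod 2^bits circle (SHA-1 is an arbitrary choice)."""
    digest = hashlib.sha1(name.encode()).digest()
    return int.from_bytes(digest, "big") % (1 << bits)

class Ring:
    def __init__(self, buckets):
        self.points = sorted((point(b), b) for b in buckets)

    def add(self, bucket):
        bisect.insort(self.points, (point(bucket), bucket))

    def lookup(self, key):
        """Return the first bucket at or clockwise after the key's point."""
        i = bisect.bisect_left(self.points, (point(key), ""))
        return self.points[i % len(self.points)][1]   # wrap around past the top

ring = Ring([f"bucket-{i}" for i in range(9)])
keys = [f"doc-{i}" for i in range(90)]
before = {k: ring.lookup(k) for k in keys}

ring.add("bucket-9")
moved = [k for k in keys if ring.lookup(k) != before[k]]
# Smoothness: every key that moved went to the new bucket, never between old buckets.
print(all(ring.lookup(k) == "bucket-9" for k in moved))   # True
```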

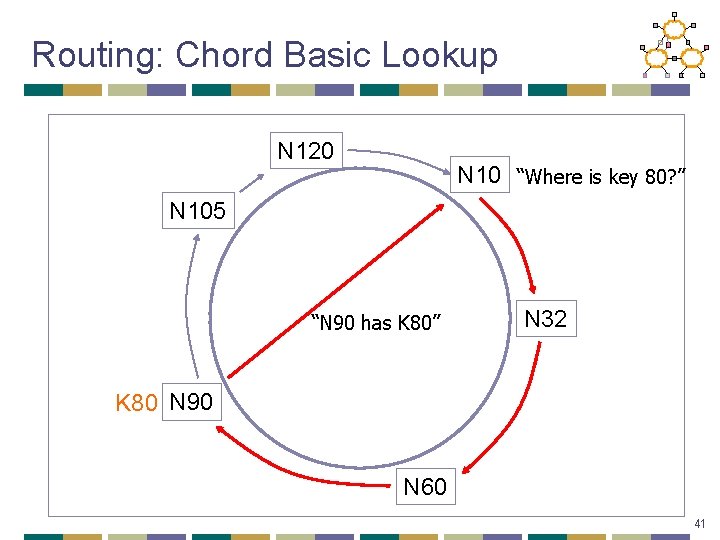

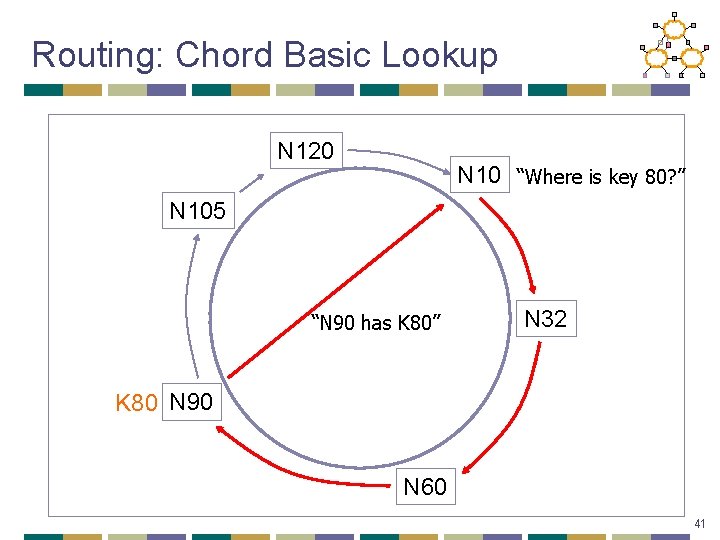

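Routing: Chord Basic Lookup • Figure: ring with nodes N10, N32, N60, N90, N105, N120; key K80 is stored at N90; the question “Where is key 80?” is passed around the ring and the answer “N90 has K80” is returned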

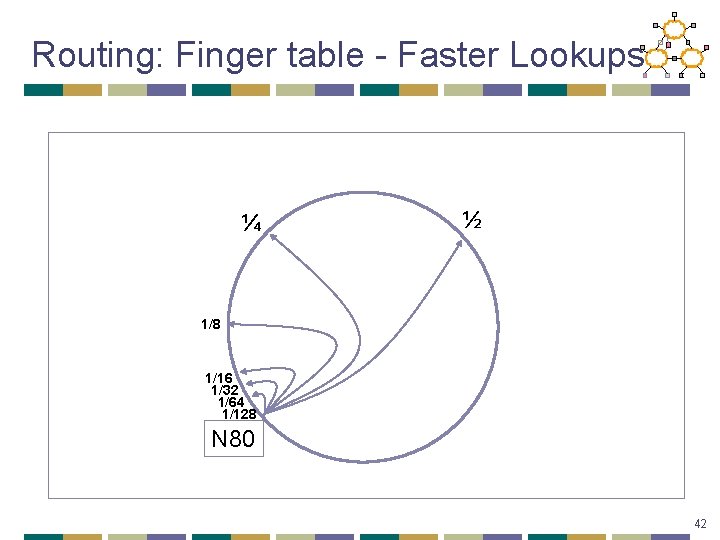

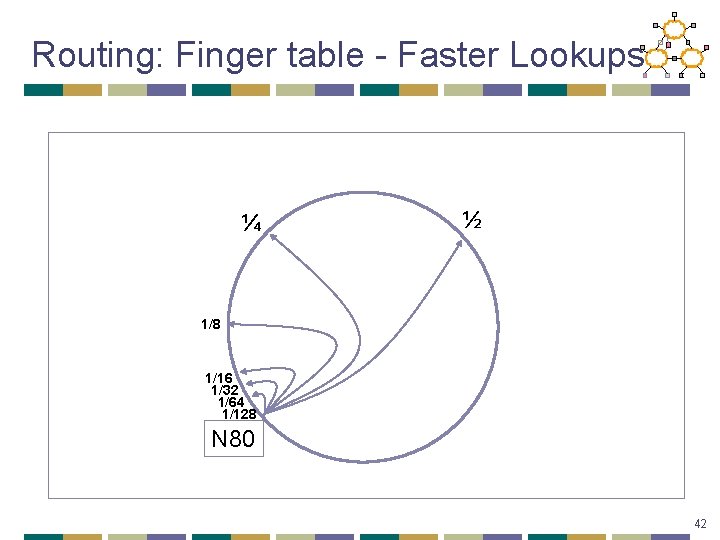

Routing: Finger table - Faster Lookups • Figure: node N80 keeps fingers spanning ½, ¼, 1/8, 1/16, 1/32, 1/64, and 1/128 of the ring

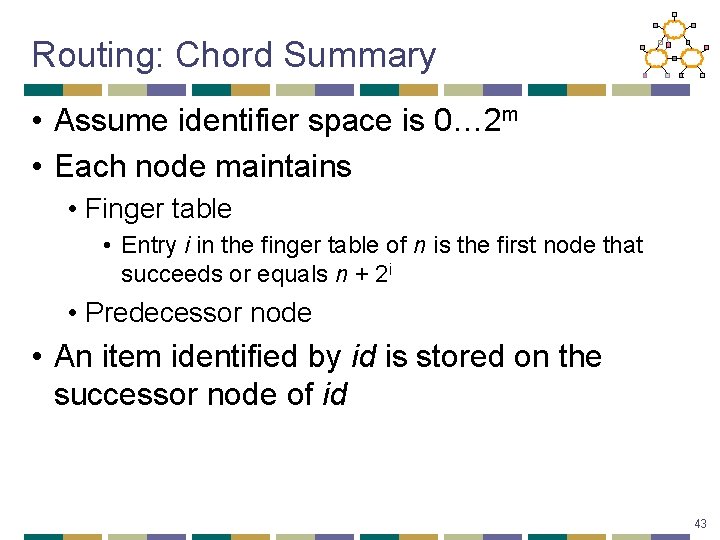

Routing: Chord Summary • Assume identifier space is 0…2^m − 1 • Each node maintains • Finger table • Entry i in the finger table of n is the first node that succeeds or equals n + 2^i • Predecessor node • An item identified by id is stored on the successor node of id
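The finger-table rule can be written down in a few lines. The sketch below computes the table from a global list of node IDs, which a real Chord node would not have; it is only meant to show what each entry contains. The helper names and the node set {0, 1, 2, 6} with m = 3 are chosen for illustration.

```python
def successor(ident, nodes, m):
    """First node whose ID equals or follows `ident` on the 0..2^m - 1 ring."""
    ident %= 2 ** m
    ring = sorted(nodes)
    return next((n for n in ring if n >= ident), ring[0])   # wrap to the smallest ID

def finger_table(n, nodes, m):
    """Rows (i, (n + 2^i) mod 2^m, successor of that target) for i = 0..m-1."""
    return [(i, (n + 2 ** i) % 2 ** m, successor(n + 2 ** i, nodes, m))
            for i in range(m)]

nodes = [0, 1, 2, 6]
print(finger_table(1, nodes, 3))   # [(0, 2, 2), (1, 3, 6), (2, 5, 6)]
print(finger_table(6, nodes, 3))   # [(0, 7, 0), (1, 0, 0), (2, 2, 2)]
print(successor(7, nodes, 3))      # 0: an item with id 7 is stored on node 0
```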

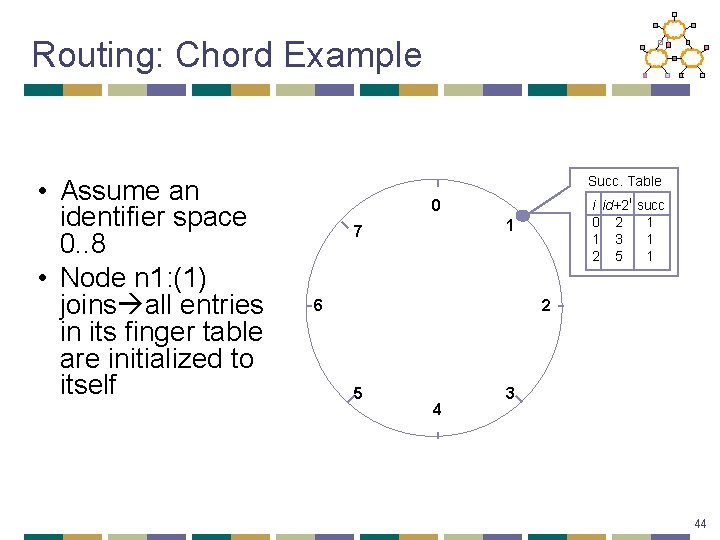

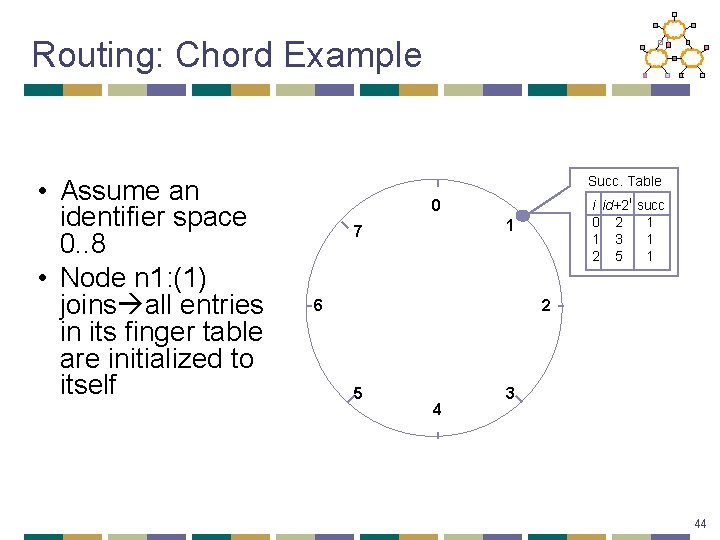

Routing: Chord Example • Assume an identifier space 0..7 (eight identifiers) • Node n1: (1) joins; all entries in its finger table are initialized to itself • Succ. Table of node 1 (i: id+2^i → succ): 0: 2 → 1; 1: 3 → 1; 2: 5 → 1 • Figure: ring with positions 0–7

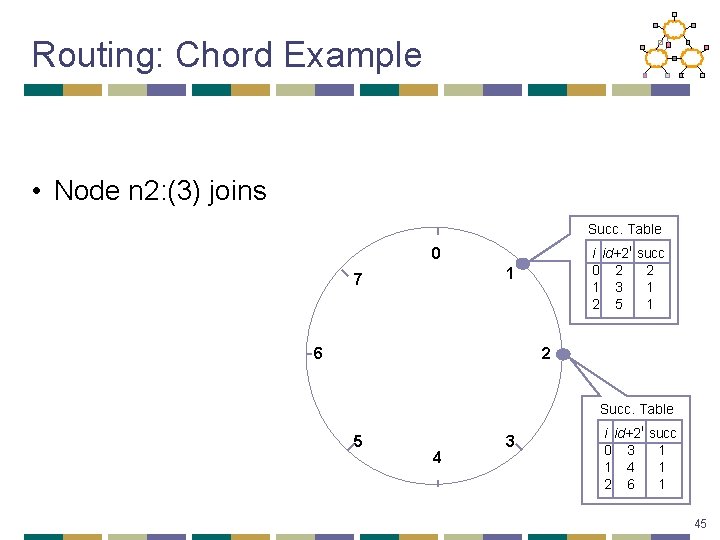

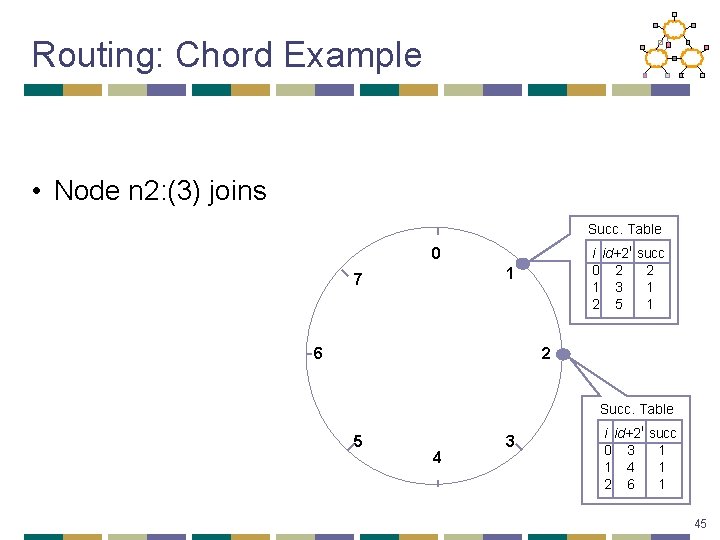

Routing: Chord Example • Node n2: (2) joins • Succ. Table of node 1 (i: id+2^i → succ): 0: 2 → 2; 1: 3 → 1; 2: 5 → 1 • Succ. Table of node 2 (i: id+2^i → succ): 0: 3 → 1; 1: 4 → 1; 2: 6 → 1 • Figure: ring with positions 0–7

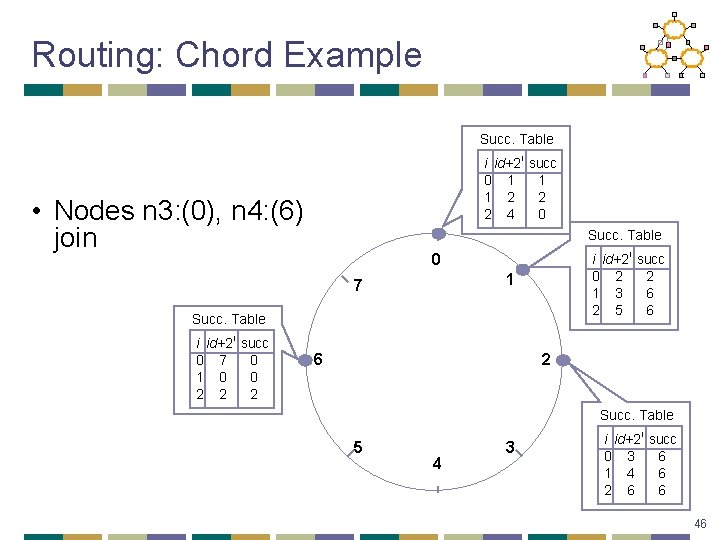

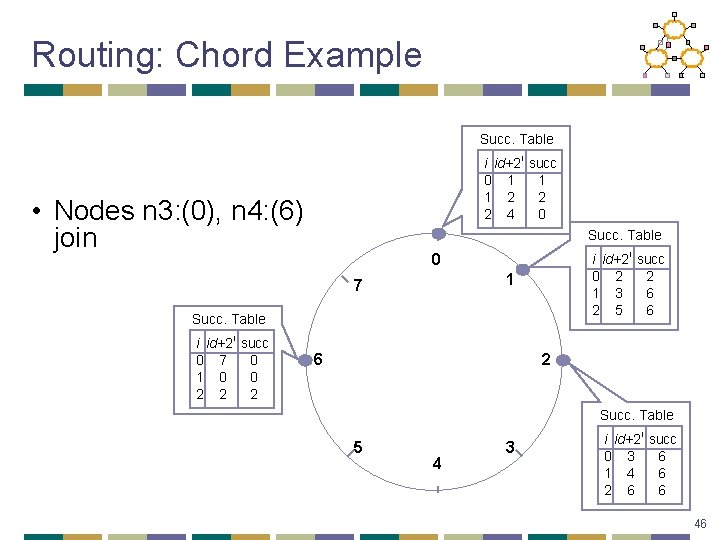

Routing: Chord Example • Nodes n3: (0), n4: (6) join • Succ. Table of node 0 (i: id+2^i → succ): 0: 1 → 1; 1: 2 → 2; 2: 4 → 6 • Succ. Table of node 1: 0: 2 → 2; 1: 3 → 6; 2: 5 → 6 • Succ. Table of node 6: 0: 7 → 0; 1: 0 → 0; 2: 2 → 2 • Succ. Table of node 2: 0: 3 → 6; 1: 4 → 6; 2: 6 → 6 • Figure: ring with positions 0–7

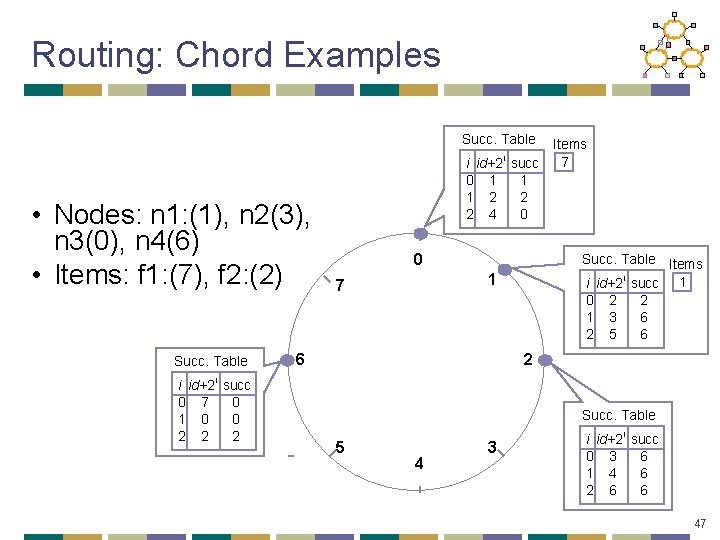

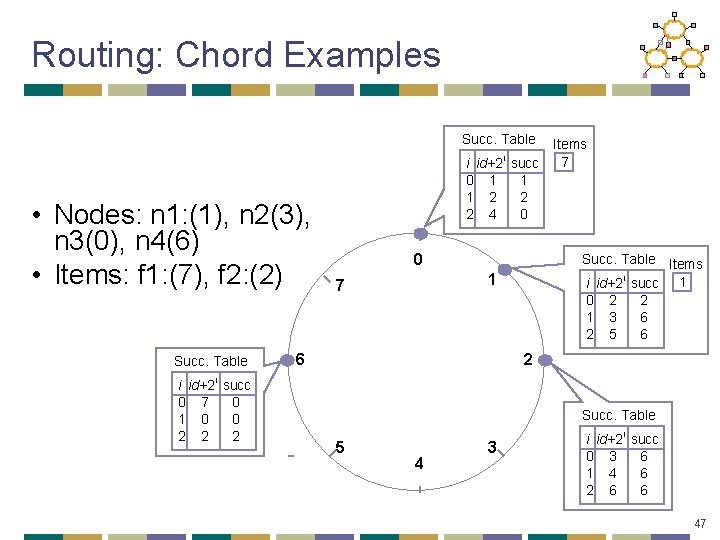

Routing: Chord Examples • Nodes: n1: (1), n2: (2), n3: (0), n4: (6) • Items: f1: (7), f2: (2) • Succ. Table of node 0 (i: id+2^i → succ): 0: 1 → 1; 1: 2 → 2; 2: 4 → 6; Items: 7 • Succ. Table of node 1: 0: 2 → 2; 1: 3 → 6; 2: 5 → 6; Items: 1 • Succ. Table of node 6: 0: 7 → 0; 1: 0 → 0; 2: 2 → 2 • Succ. Table of node 2: 0: 3 → 6; 1: 4 → 6; 2: 6 → 6 • Figure: ring with positions 0–7

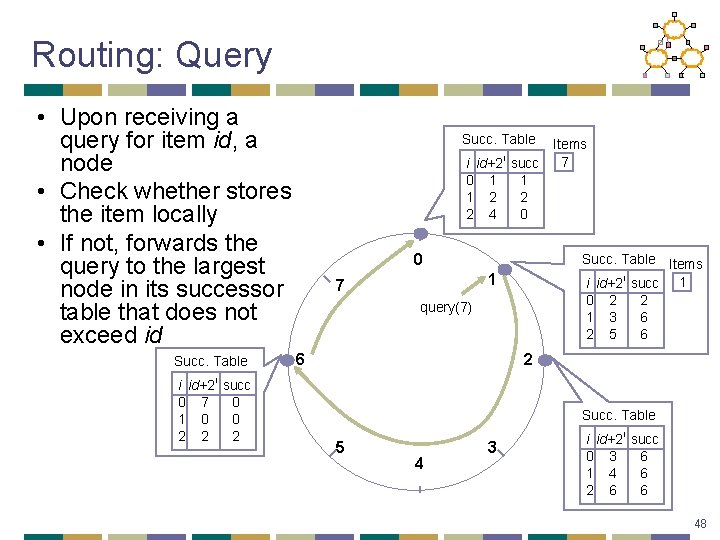

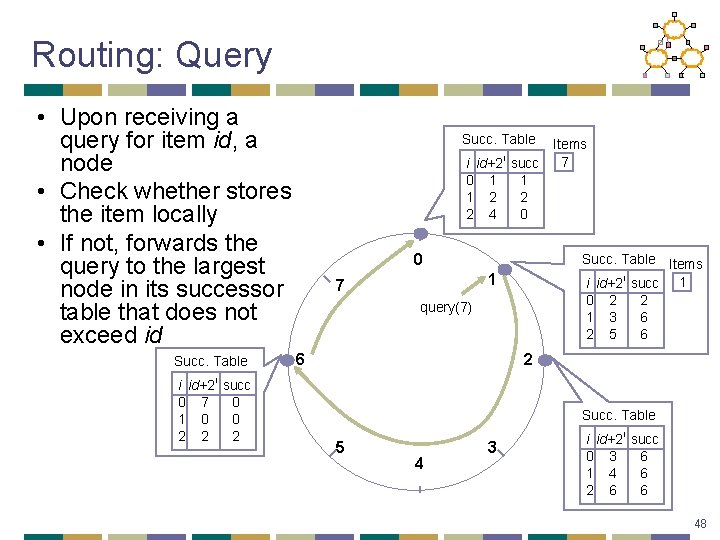

Routing: Query • Upon receiving a query for item id, a node • Checks whether it stores the item locally • If not, forwards the query to the largest node in its successor table that does not exceed id • Figure: the ring and successor tables from the previous slide, with query(7) being routed toward the node that stores item 7
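The forwarding rule can be sketched as a loop over finger tables. The code below uses the usual Chord formulation (hand off to the immediate successor when the key falls between a node and its successor, otherwise jump to the farthest finger that still precedes the key), which is a slightly more explicit version of the rule on the slide. Tables are recomputed from a global node list purely for brevity; the node set {0, 1, 2, 6}, the starting node, and all function names are assumptions for the example.

```python
def successor(ident, ring, size):
    ident %= size
    return next((n for n in ring if n >= ident), ring[0])

def in_half_open(x, a, b, size):
    """True if x lies in the ring interval (a, b], walking clockwise from a."""
    if a == b:
        return True                     # the interval wraps around the whole ring
    return 0 < (x - a) % size <= (b - a) % size

def in_open(x, a, b, size):
    """True if x lies strictly between a and b, walking clockwise from a."""
    return 0 < (x - a) % size < (b - a) % size

def lookup(start, key, nodes, m):
    """Return the list of nodes visited while routing `key` from `start`."""
    size, ring = 2 ** m, sorted(nodes)
    path, node = [start], start
    while successor(key, ring, size) != node:     # stands in for "item not stored locally"
        fingers = [successor(node + 2 ** i, ring, size) for i in range(m)]
        if in_half_open(key, node, fingers[0], size):
            node = fingers[0]                     # immediate successor owns the key
        else:
            preceding = [f for f in fingers if in_open(f, node, key, size)]
            node = max(preceding, key=lambda f: (f - node) % size)
        path.append(node)
    return path

print(lookup(1, 7, [0, 1, 2, 6], 3))   # [1, 6, 0]
```

Starting at node 1, the query for id 7 jumps to node 6 (the farthest finger before 7) and then to node 0, the successor of 7.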

What can DHTs do for us? • Distributed object lookup • Based on object ID • De-centralized file systems • CFS, PAST, Ivy • Application Layer Multicast • Scribe, Bayeux, Splitstream • Databases • PIER

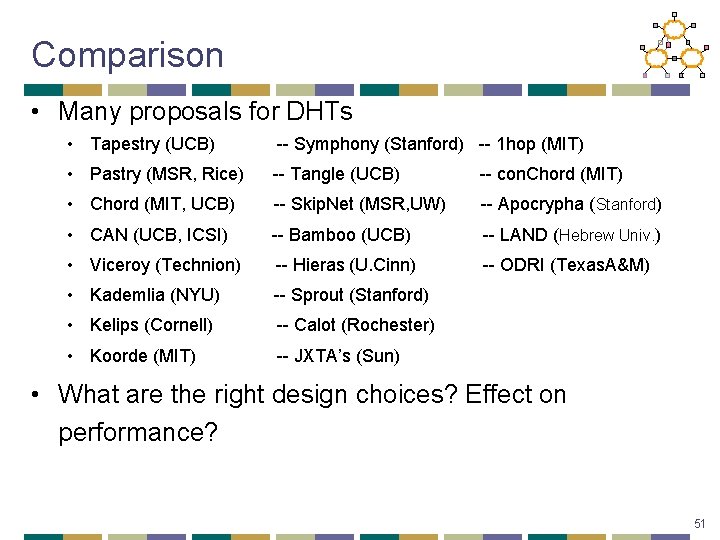

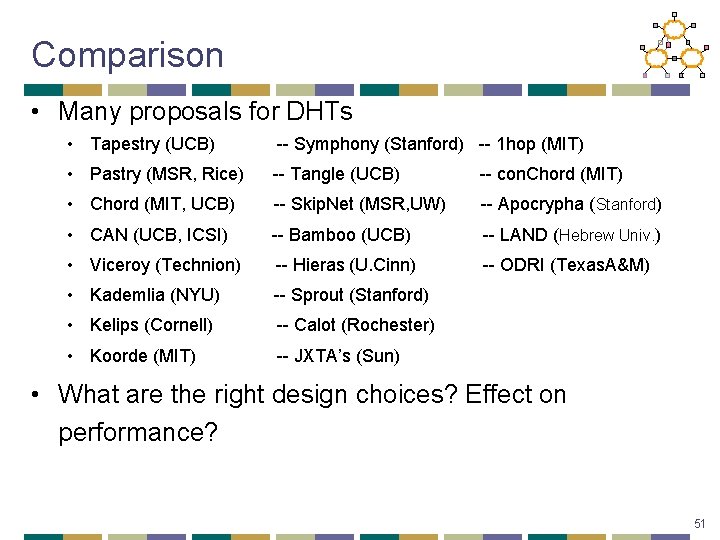

Comparison • Many proposals for DHTs • Tapestry (UCB), Pastry (MSR, Rice), Chord (MIT, UCB), CAN (UCB, ICSI), Viceroy (Technion), Kademlia (NYU), Kelips (Cornell), Koorde (MIT) • Symphony (Stanford), Tangle (UCB), SkipNet (MSR, UW), Bamboo (UCB), Hieras (U. Cinn), Sprout (Stanford), Calot (Rochester), JXTA’s (Sun) • 1 hop (MIT), conChord (MIT), Apocrypha (Stanford), LAND (Hebrew Univ.), ODRI (Texas A&M) • What are the right design choices? Effect on performance?

Deconstructing DHTs • Two observations: • 1. Common approach • N nodes; each labeled with a virtual identifier (128 bits) • define “distance” function on the identifiers • routing works to reduce the distance to the destination • 2. DHTs differ primarily in their definition of “distance” • typically derived from (loose) notion of a routing geometry

DHT Routing Geometries • Geometries: • Tree (Plaxton, Tapestry) • Ring (Chord) • Hypercube (CAN) • XOR (Kademlia) • Hybrid (Pastry) • What is the impact of geometry on routing?

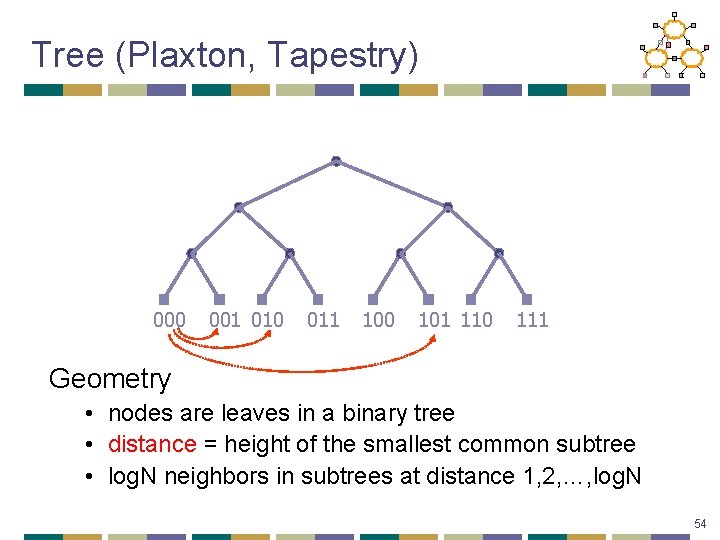

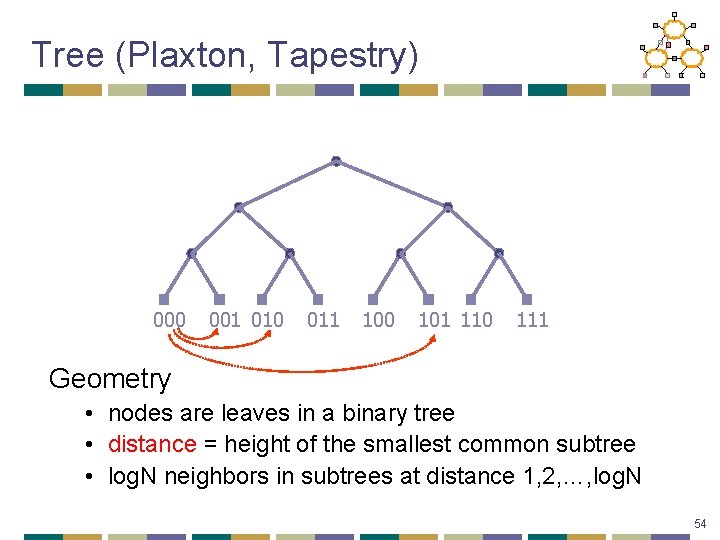

Tree (Plaxton, Tapestry) • Figure: binary tree with leaves 000, 001, 010, 011, 100, 101, 110, 111 • Geometry • nodes are leaves in a binary tree • distance = height of the smallest common subtree • log N neighbors in subtrees at distance 1, 2, …, log N

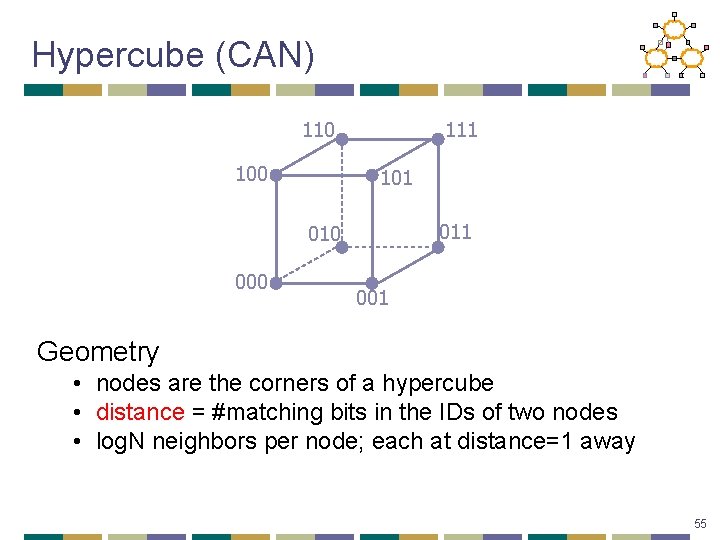

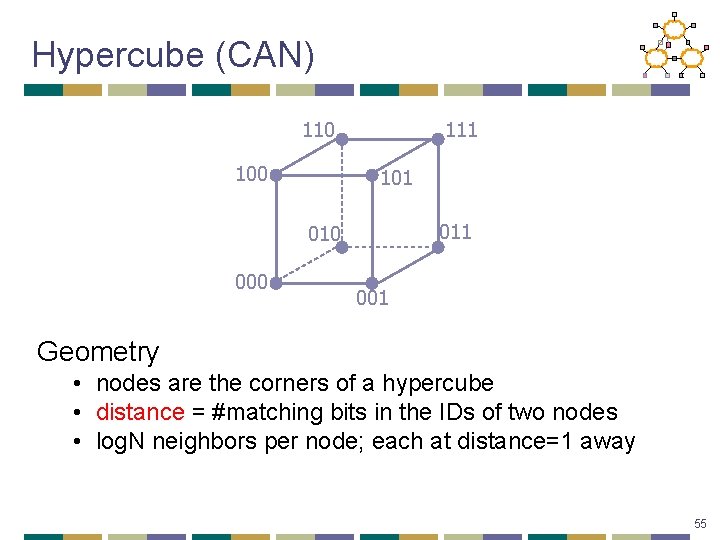

Hypercube (CAN) • Figure: cube whose corners are labeled with 3-bit IDs (110, 100, 111, 101, 010, 001, …) • Geometry • nodes are the corners of a hypercube • distance = # of differing bits in the IDs of two nodes • log N neighbors per node; each at distance = 1 away

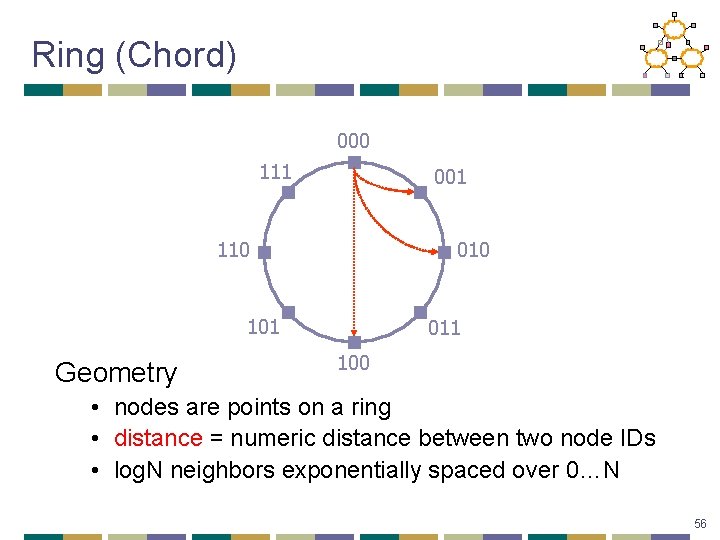

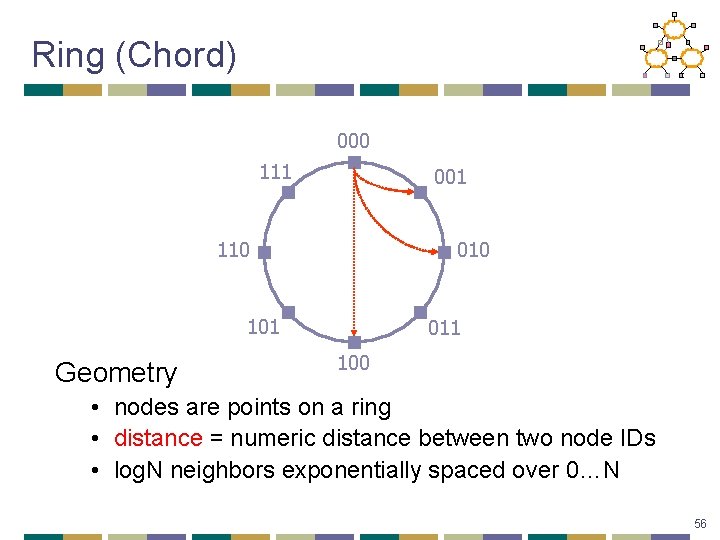

Ring (Chord) • Figure: ring with IDs 000, 001, 010, 011, 100, 101, 110, 111 • Geometry • nodes are points on a ring • distance = numeric distance between two node IDs • log N neighbors exponentially spaced over 0…N

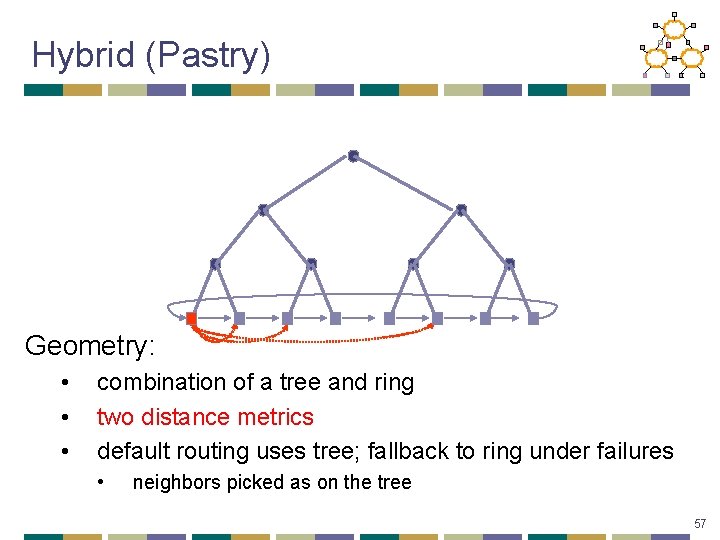

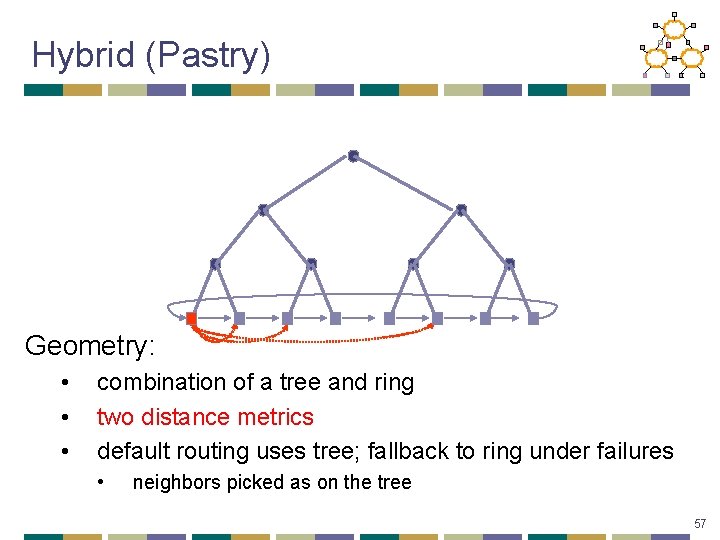

Hybrid (Pastry) • Geometry: • combination of a tree and ring • two distance metrics • default routing uses tree; fallback to ring under failures • neighbors picked as on the tree

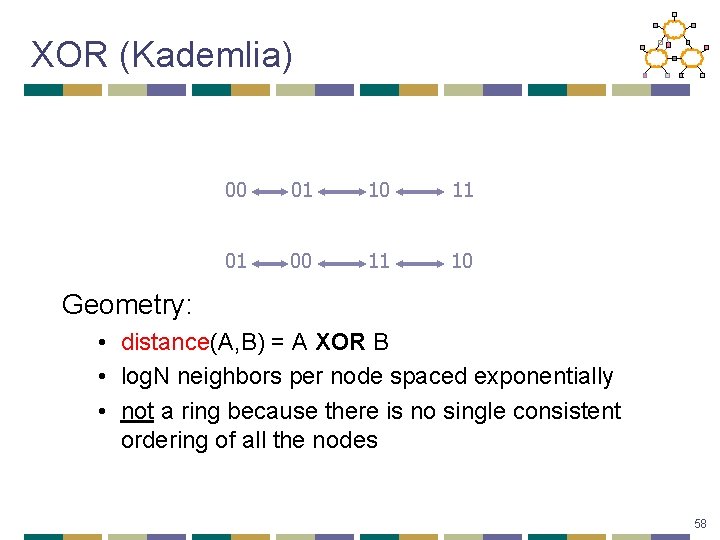

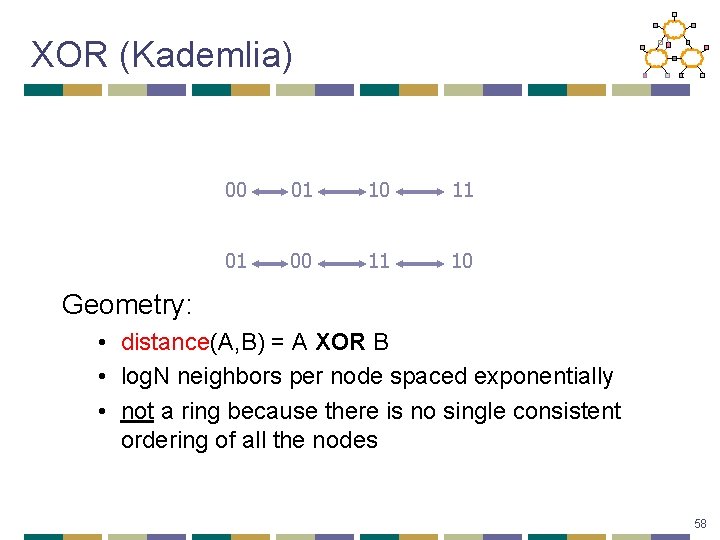

XOR (Kademlia) • Figure: IDs 00, 01, 10, 11 shown in two different orderings • Geometry: • distance(A, B) = A XOR B • log N neighbors per node spaced exponentially • not a ring because there is no single consistent ordering of all the nodes
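The four basic distance functions can be compared side by side on integer IDs. This is a sketch with hypothetical function names; it takes the hypercube distance to be the Hamming distance (number of differing bits) and the ring distance to be the clockwise numeric distance.

```python
def tree_distance(a, b):
    """Height of the smallest common subtree: position of the highest differing bit."""
    return (a ^ b).bit_length()

def hypercube_distance(a, b):
    """Hamming distance: number of bit positions in which the IDs differ."""
    return bin(a ^ b).count("1")

def ring_distance(a, b, bits):
    """Clockwise numeric distance from a to b on a ring of 2^bits IDs."""
    return (b - a) % (1 << bits)

def xor_distance(a, b):
    """Kademlia's metric: the XOR of the two IDs read as an integer."""
    return a ^ b

a, b = 0b010, 0b111
print(tree_distance(a, b))        # 3
print(hypercube_distance(a, b))   # 2
print(ring_distance(a, b, 3))     # 5
print(xor_distance(a, b))         # 5
```

XOR and tree distance are symmetric, while the clockwise ring distance is not: going from 111 back to 010 takes 3 steps rather than 5.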

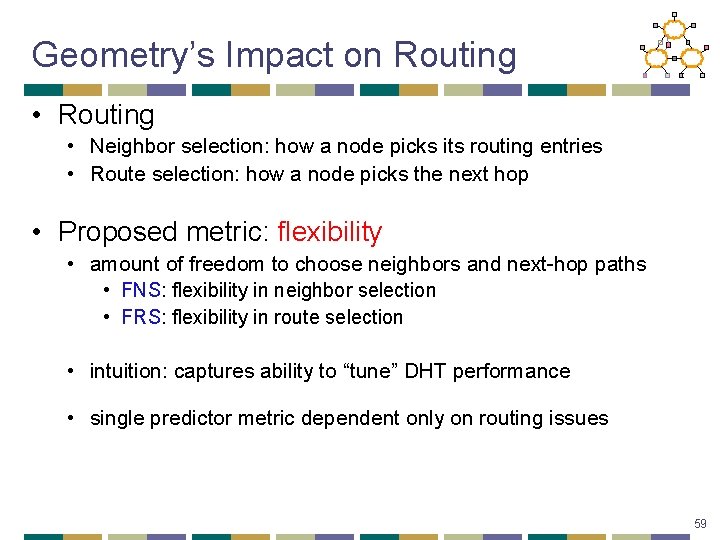

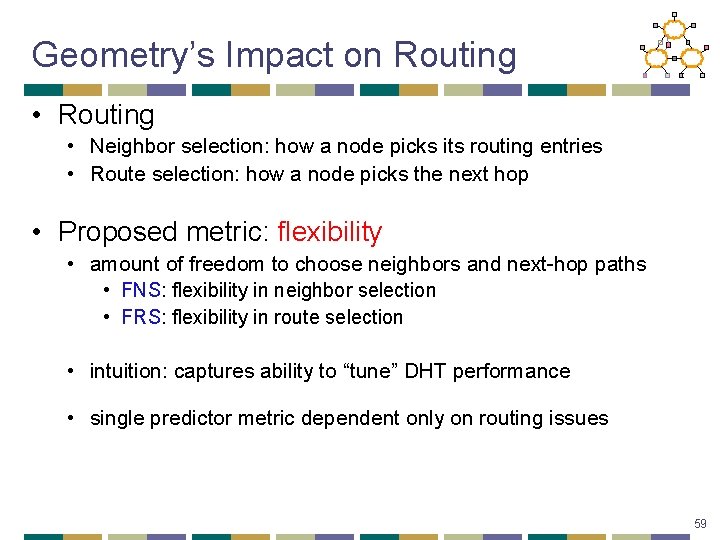

Geometry’s Impact on Routing • Neighbor selection: how a node picks its routing entries • Route selection: how a node picks the next hop • Proposed metric: flexibility • amount of freedom to choose neighbors and next-hop paths • FNS: flexibility in neighbor selection • FRS: flexibility in route selection • intuition: captures ability to “tune” DHT performance • single predictor metric dependent only on routing issues

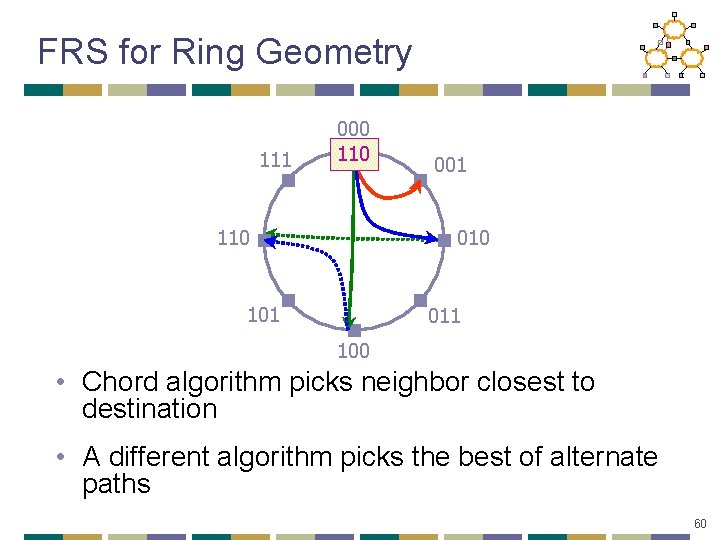

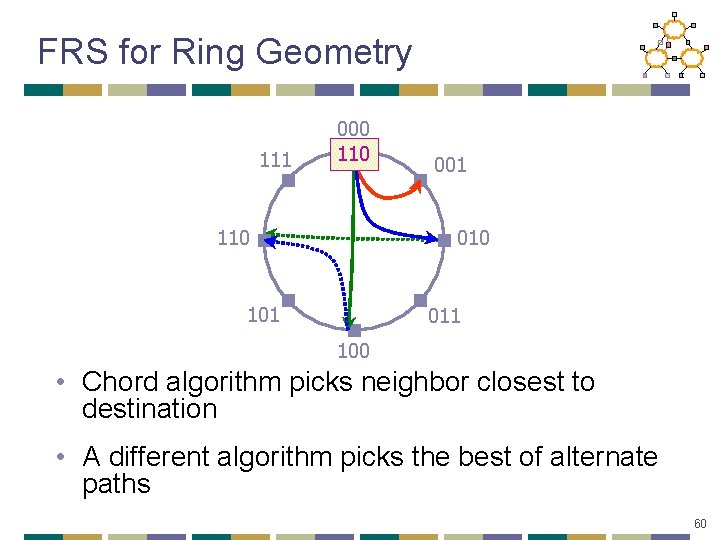

FRS for Ring Geometry • Figure: ring with IDs 000–111 • Chord algorithm picks neighbor closest to destination • A different algorithm picks the best of alternate paths

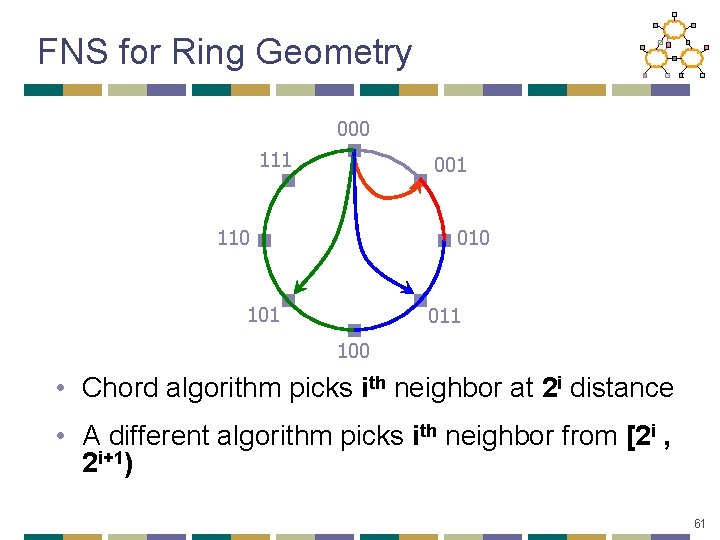

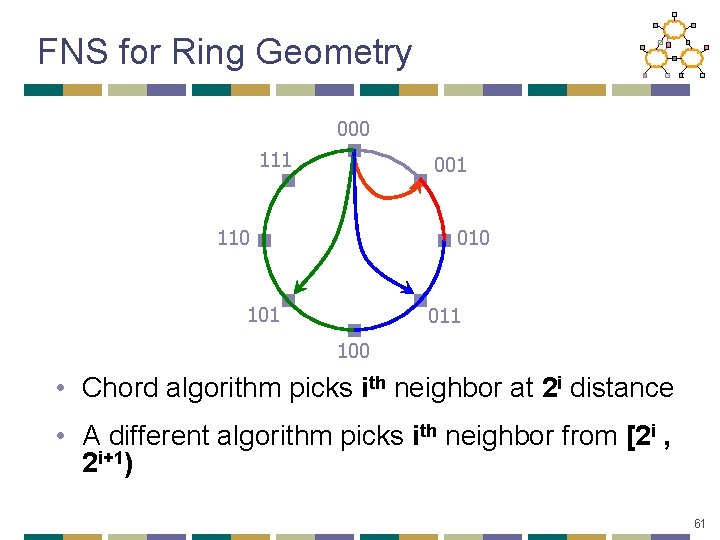

FNS for Ring Geometry • Figure: ring with IDs 000–111 • Chord algorithm picks ith neighbor at distance 2^i • A different algorithm picks ith neighbor from [2^i, 2^(i+1))

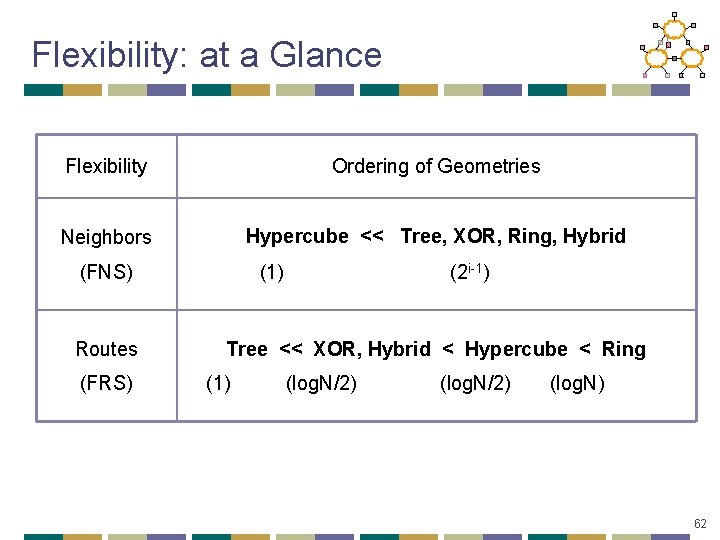

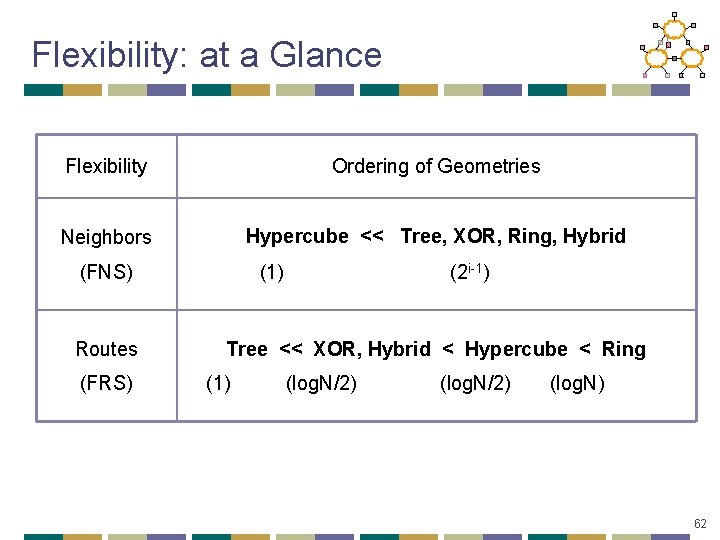

Flexibility: at a Glance • Flexibility ordering of geometries • Neighbors (FNS): Hypercube (1) << Tree, XOR, Ring, Hybrid (2^(i−1)) • Routes (FRS): Tree << XOR, Hybrid < Hypercube < Ring; annotations shown in the figure, left to right: (1), (log N/2), (log N)

Geometry → Flexibility → Performance? • Validate over three performance metrics: • 1. resilience • 2. path latency • 3. path convergence • Metrics address two typical concerns: • ability to handle node failure • ability to incorporate proximity into overlay routing

Analysis of Static Resilience • Two aspects of robust routing • Dynamic Recovery: how quickly routing state is recovered after failures • Static Resilience: how well the network routes before recovery finishes • captures how quickly recovery algorithms need to work • depends on FRS • Evaluation: • Fail a fraction of nodes, without recovering any state • Metric: % Paths Failed

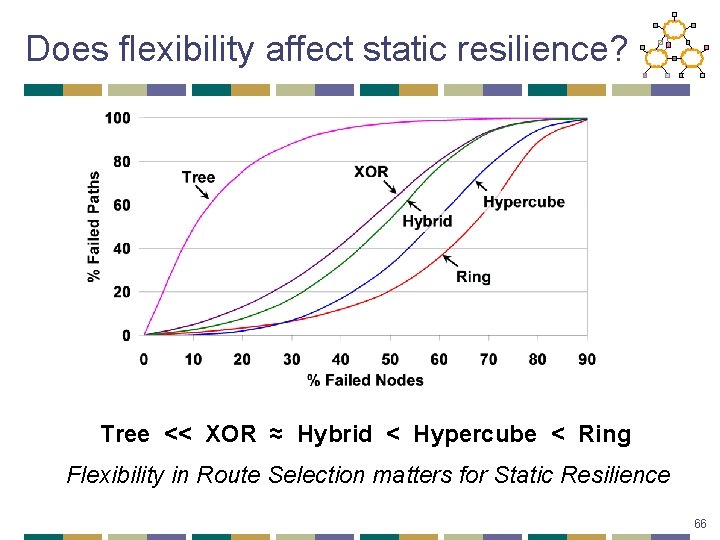

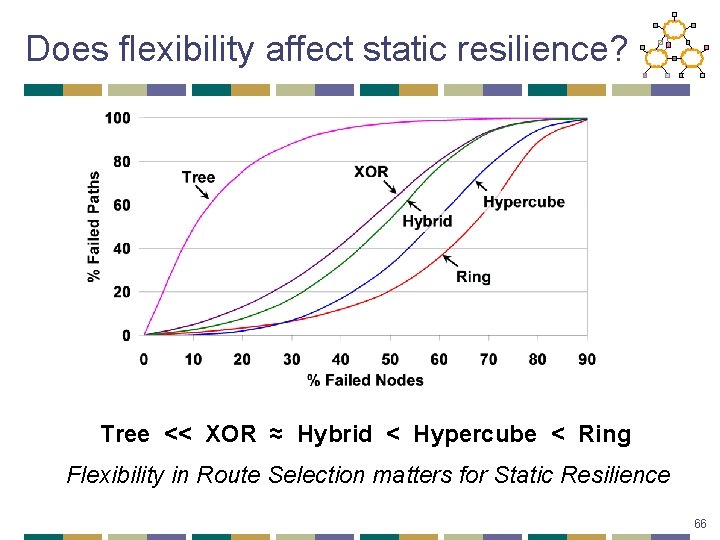

Does flexibility affect static resilience? • Tree << XOR ≈ Hybrid < Hypercube < Ring • Flexibility in Route Selection matters for Static Resilience

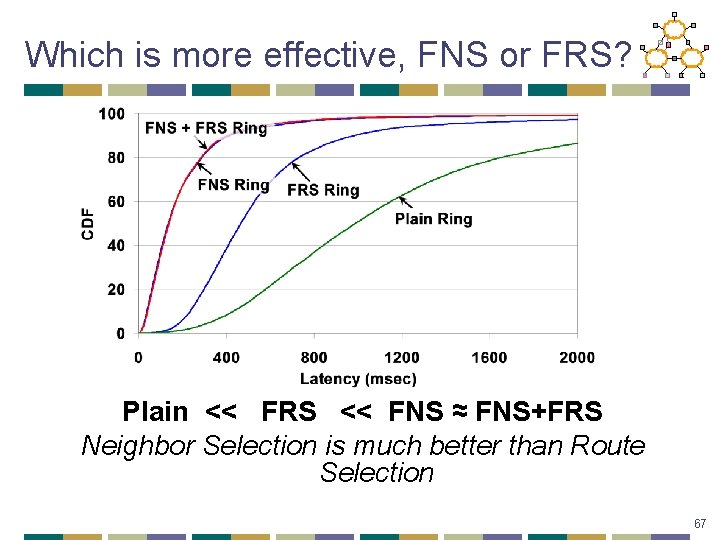

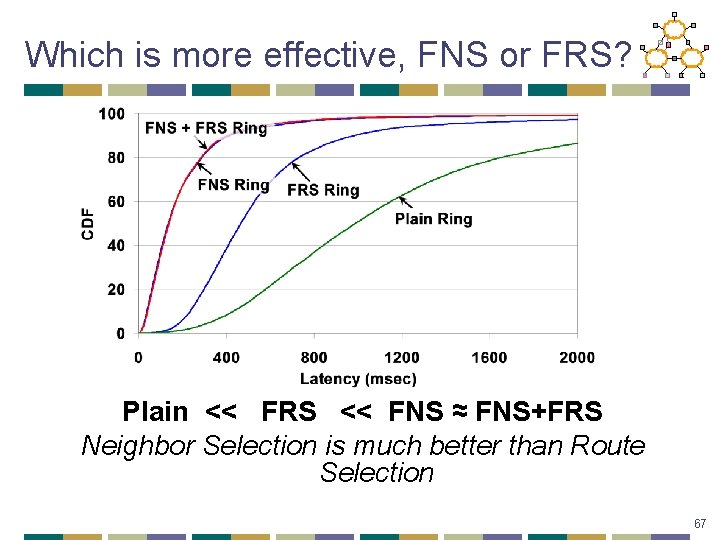

Which is more effective, FNS or FRS? • Plain << FRS << FNS ≈ FNS+FRS • Neighbor Selection is much better than Route Selection

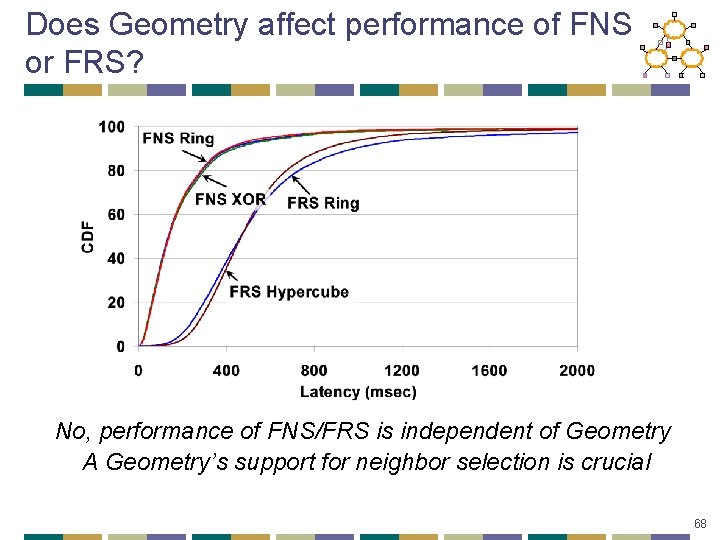

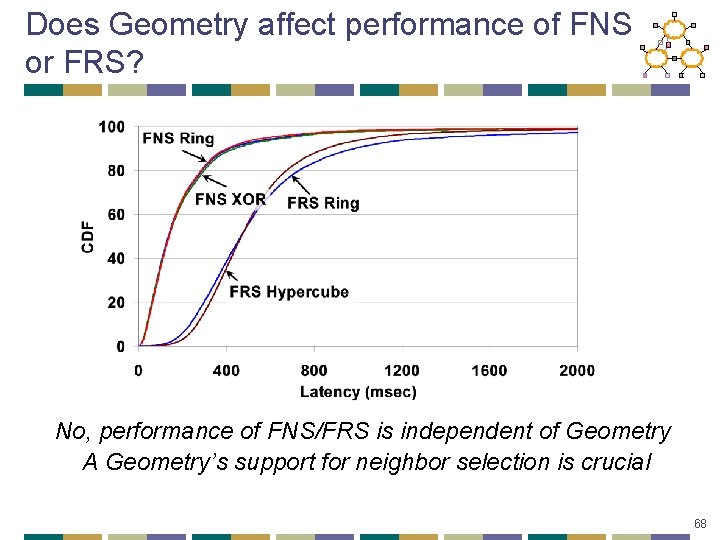

Does Geometry affect performance of FNS or FRS? • No, performance of FNS/FRS is independent of Geometry • A Geometry’s support for neighbor selection is crucial

Understanding DHT Routing: Conclusion • What makes for a “good” DHT? • one answer: a flexible routing geometry • Result: Ring is most flexible • Why not the Ring?

Next Lecture • Data-oriented networking • Required readings • Content-centric networking (read) • Redundancy elimination (skim) • Optional readings • DOT, DONA, DTN