15-744: Computer Networking L-15 Data Center Networking

- Slides: 39

15-744: Computer Networking L-15 Data Center Networking III

Announcements
• Midterm: next Wednesday 3/7
• Project meeting slots will be posted on Piazza by Wednesday
• Slots mostly during lecture time on Friday

Overview
• DCTCP
• pFabric
• RDMA

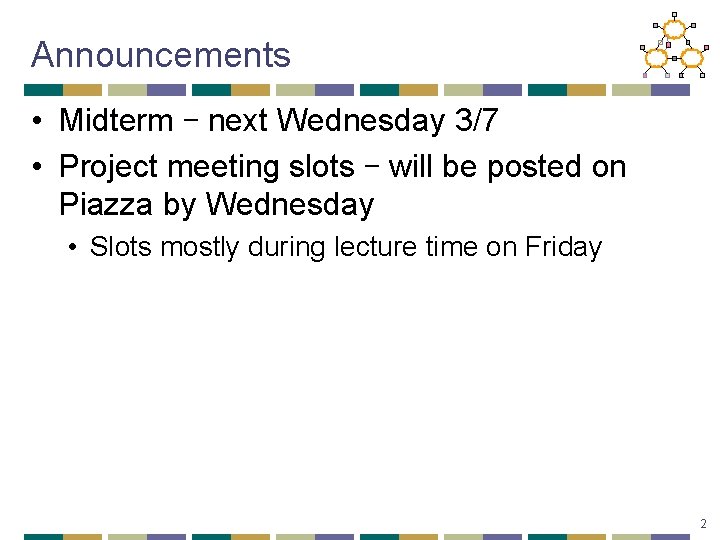

Data Center Packet Transport
• Large purpose-built DCs
• Huge investment: R&D, business
• Transport inside the DC
• TCP rules (99.9% of traffic)
• How's TCP doing?

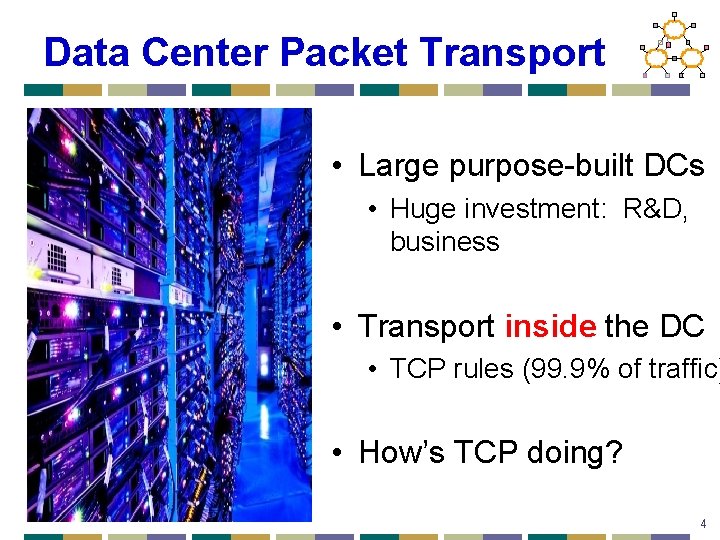

TCP in the Data Center
• We'll see TCP does not meet demands of apps.
• Suffers from bursty packet drops, Incast [SIGCOMM '09], ...
• Builds up large queues:
  Ø Adds significant latency.
  Ø Wastes precious buffers, especially bad with shallow-buffered switches.
• Operators work around TCP problems.
  ‒ Ad-hoc, inefficient, often expensive solutions
  ‒ No solid understanding of consequences, tradeoffs

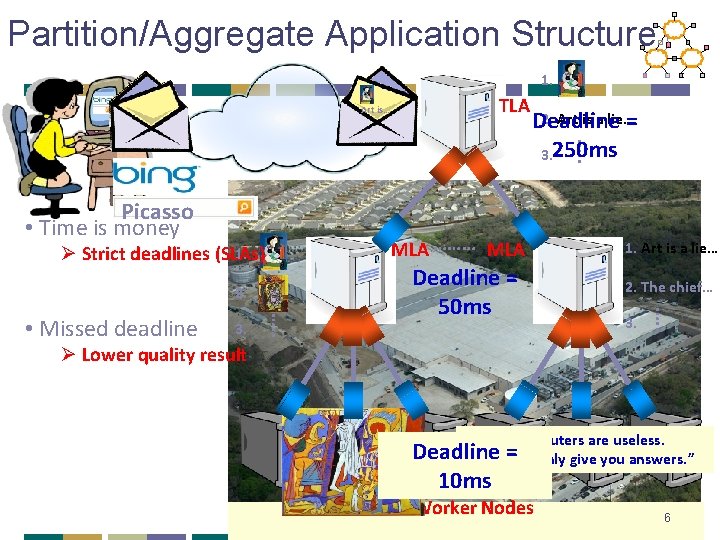

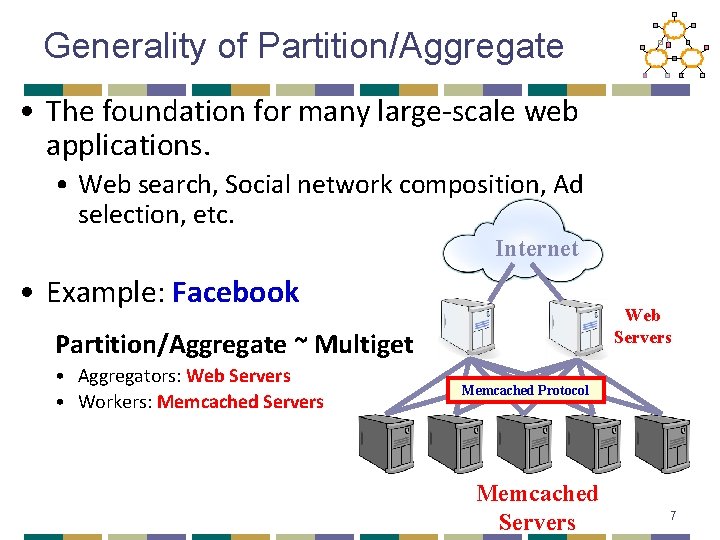

Partition/Aggregate Application Structure
[Figure: a query ("Picasso") fans out from a top-level aggregator (TLA) through mid-level aggregators (MLAs) to worker nodes, which return ranked results (Picasso quotes such as "Art is a lie…"). Deadlines: 250 ms at the TLA, 50 ms at each MLA, 10 ms at the worker nodes.]
• Time is money
  Ø Strict deadlines (SLAs)
• Missed deadline
  Ø Lower quality result

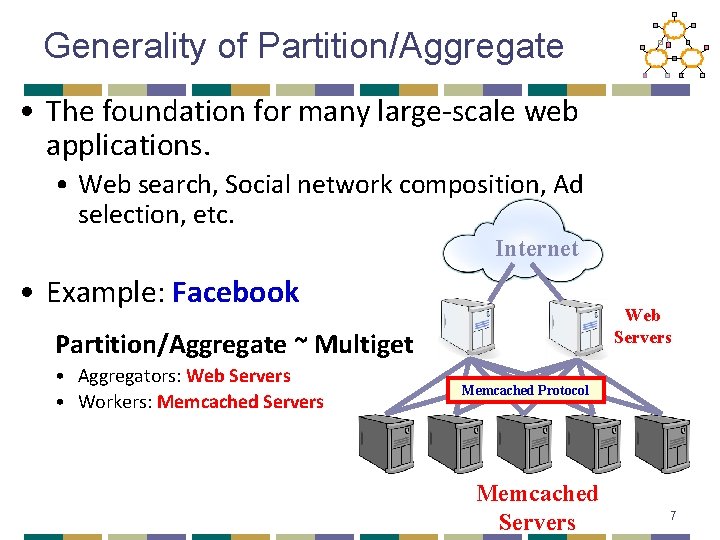

Generality of Partition/Aggregate
• The foundation for many large-scale web applications.
  Ø Web search, social network composition, ad selection, etc.
• Example: Facebook
  Ø Partition/Aggregate ~ Multiget
  Ø Aggregators: Web Servers
  Ø Workers: Memcached Servers
[Figure: Internet → Web Servers → (Memcached Protocol) → Memcached Servers]

![Workloads PartitionAggregate Query Short messages 50 KB1 MB Coordination Control state Workloads • Partition/Aggregate (Query) • Short messages [50 KB-1 MB] (Coordination, Control state) •](https://slidetodoc.com/presentation_image_h2/67d4b7fc6dfc480b119b683c03d80805/image-8.jpg)

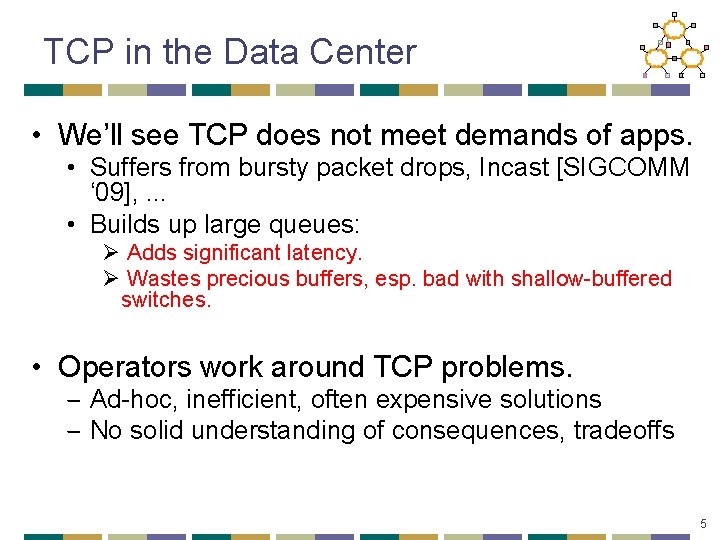

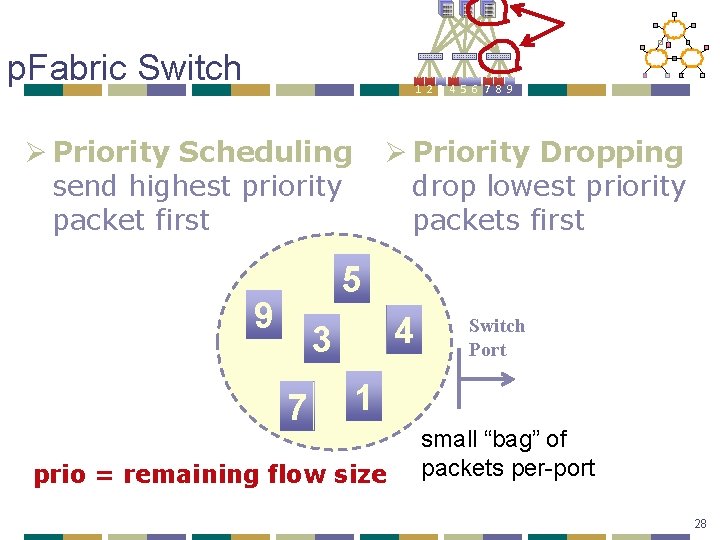

Workloads
• Partition/Aggregate (Query): delay-sensitive
• Short messages [50 KB-1 MB] (Coordination, Control state): delay-sensitive
• Large flows [1 MB-50 MB] (Data update): throughput-sensitive

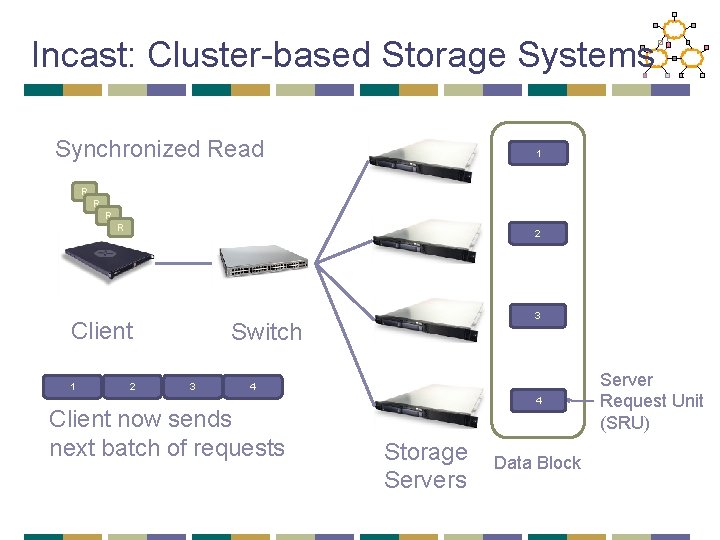

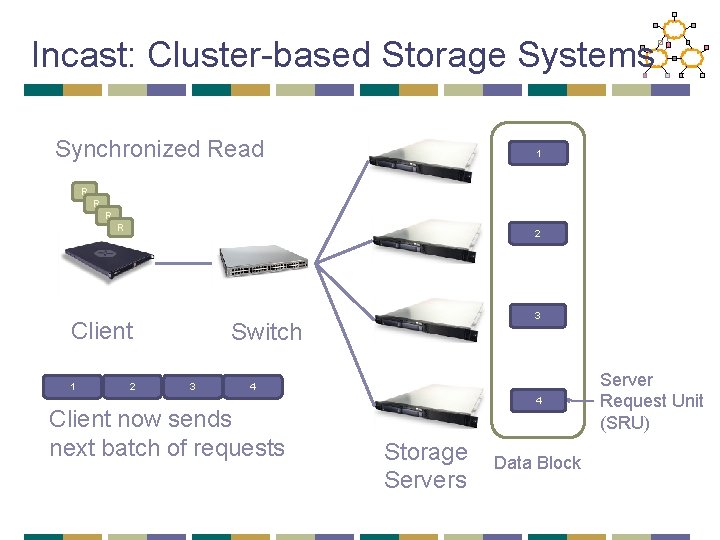

Incast: Cluster-based Storage Systems
[Figure: synchronized read. A client requests a data block through a switch from four storage servers; each server returns one Server Request Unit (SRU). After receiving SRUs 1-4, the client sends the next batch of requests.]

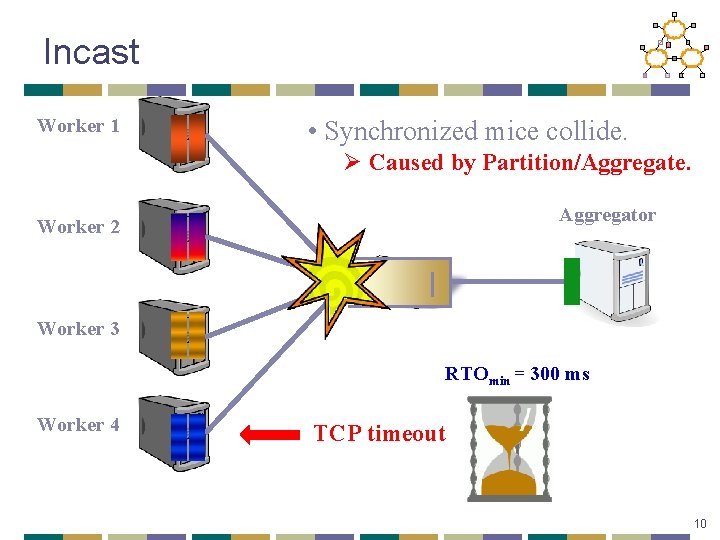

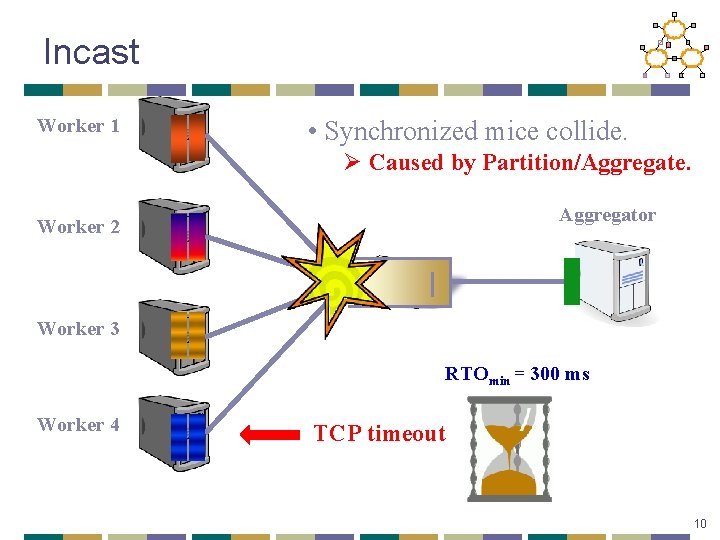

Incast
• Synchronized mice collide.
  Ø Caused by Partition/Aggregate.
[Figure: Workers 1-4 respond to one Aggregator at the same time; a lost response triggers a TCP timeout, RTOmin = 300 ms]

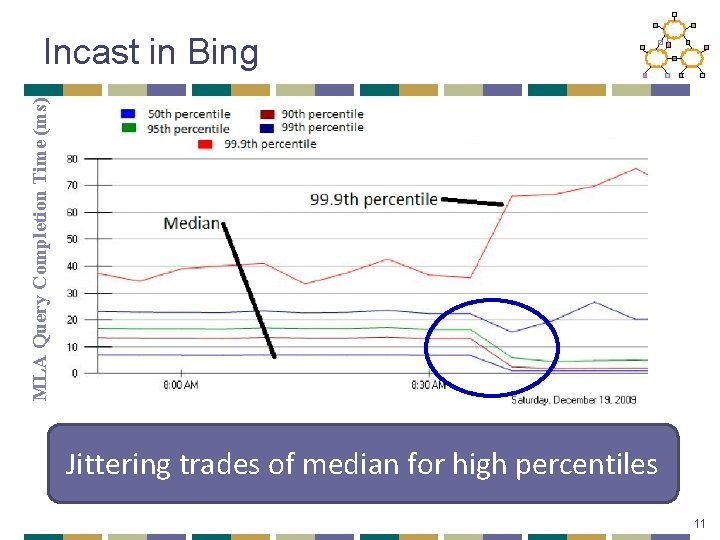

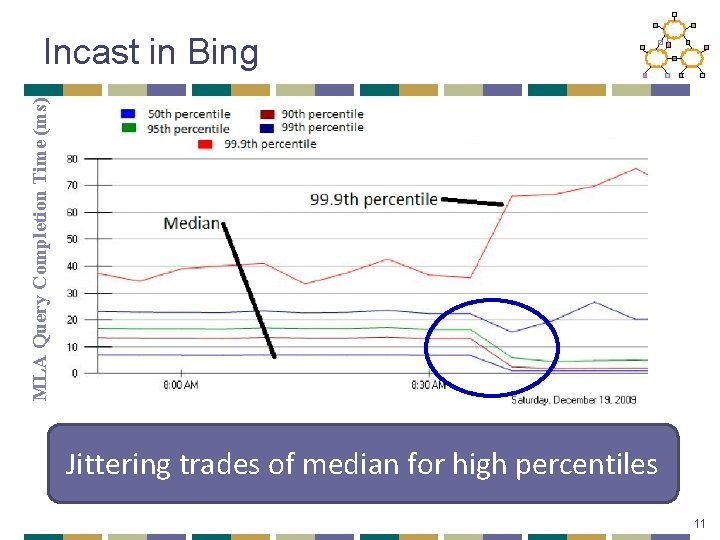

Incast in Bing
[Figure: MLA query completion time (ms)]
• Requests are jittered over a 10 ms window.
  Ø Jittering trades off median for high percentiles.
• Jittering switched off around 8:30 am.

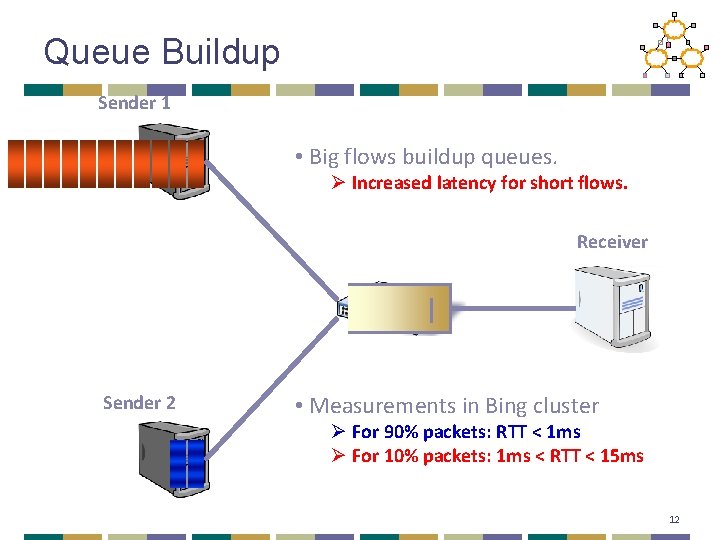

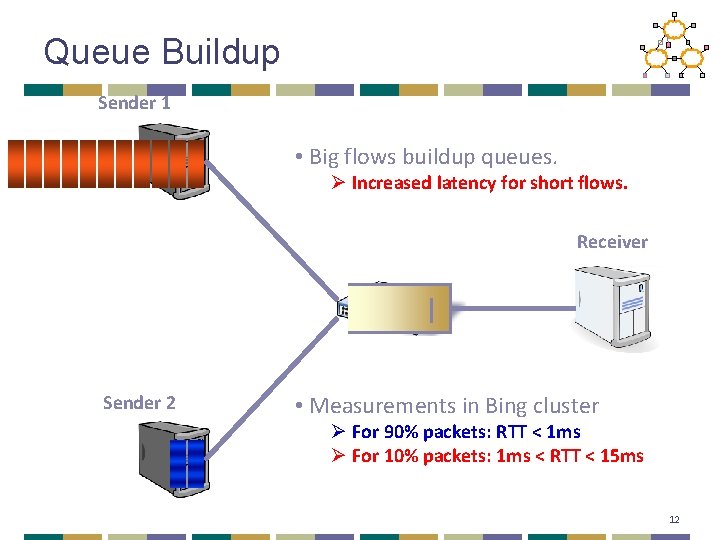

Queue Buildup
• Big flows build up queues.
  Ø Increased latency for short flows.
• Measurements in Bing cluster
  Ø For 90% of packets: RTT < 1 ms
  Ø For 10% of packets: 1 ms < RTT < 15 ms
[Figure: Sender 1 and Sender 2 share a switch queue toward one Receiver]

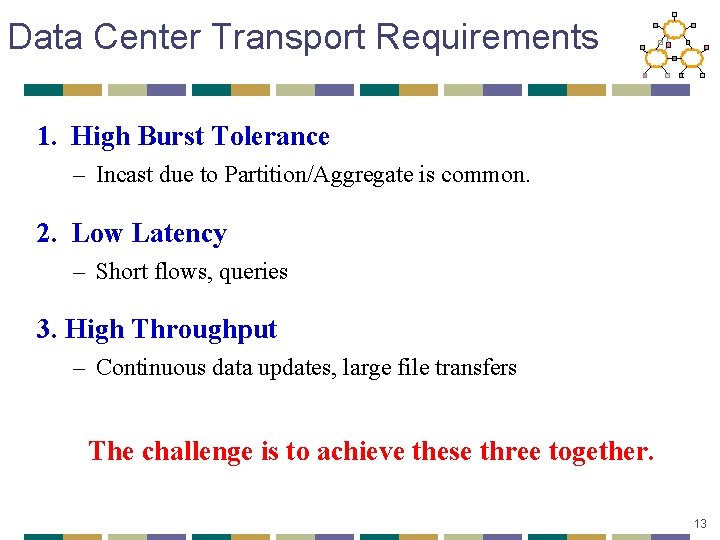

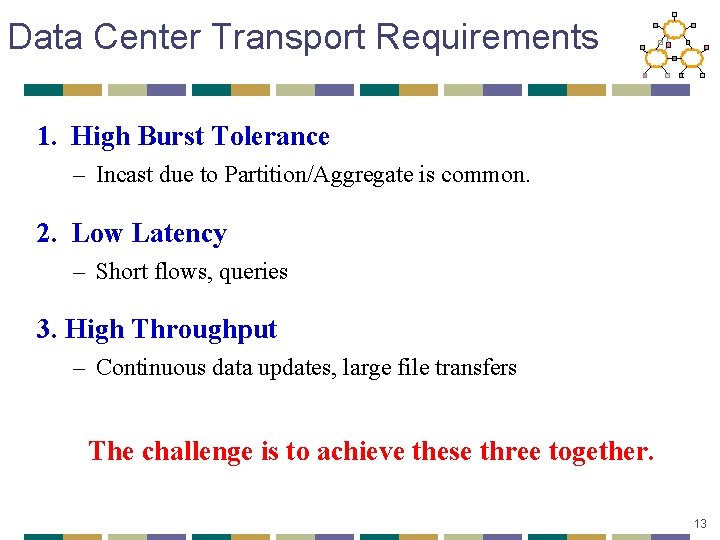

Data Center Transport Requirements
1. High Burst Tolerance: Incast due to Partition/Aggregate is common.
2. Low Latency: short flows, queries
3. High Throughput: continuous data updates, large file transfers
The challenge is to achieve these three together.

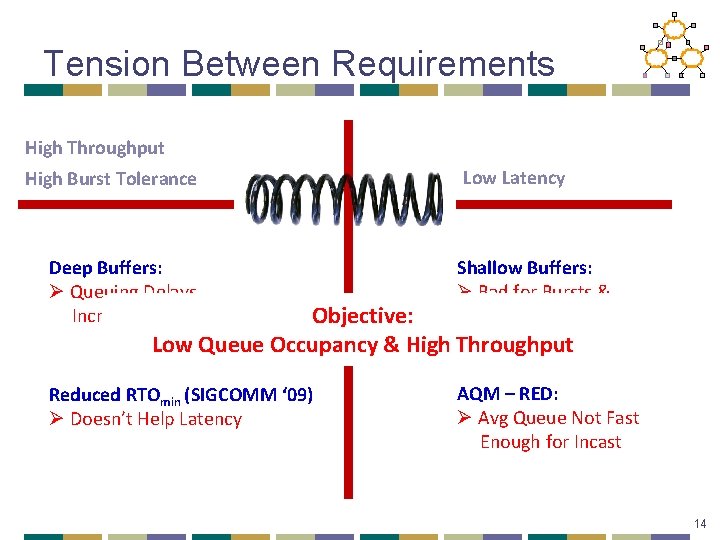

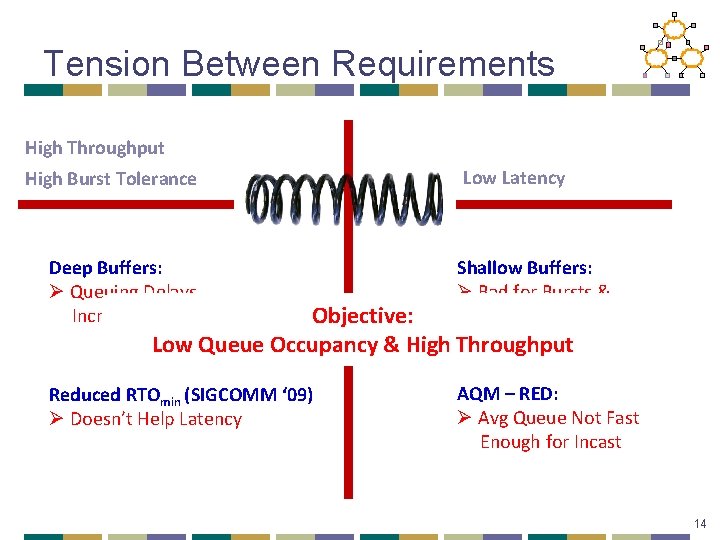

Tension Between Requirements
High Throughput, High Burst Tolerance, Low Latency
• Deep buffers:
  Ø Queuing delays increase latency
• Shallow buffers:
  Ø Bad for bursts & throughput
• Reduced RTOmin (SIGCOMM '09):
  Ø Doesn't help latency
• AQM (RED):
  Ø Average queue not fast enough for Incast
Objective: Low Queue Occupancy & High Throughput

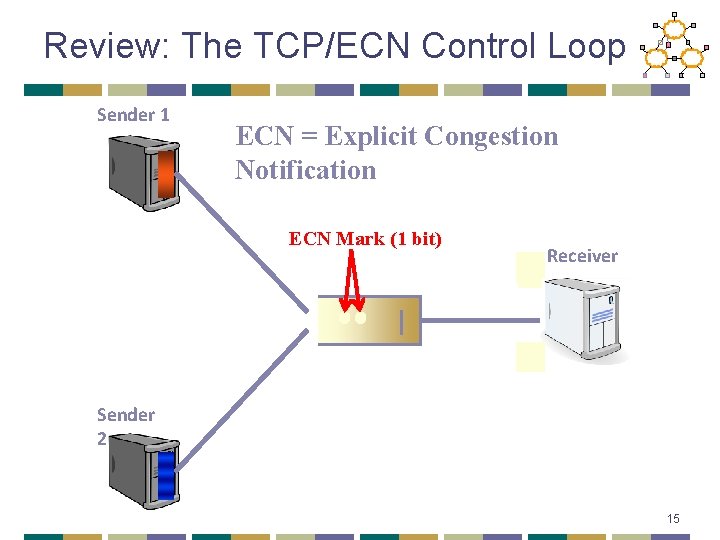

Review: The TCP/ECN Control Loop
ECN = Explicit Congestion Notification
[Figure: Sender 1 and Sender 2 send through a switch to the Receiver; the switch sets the ECN mark (1 bit) on packets]

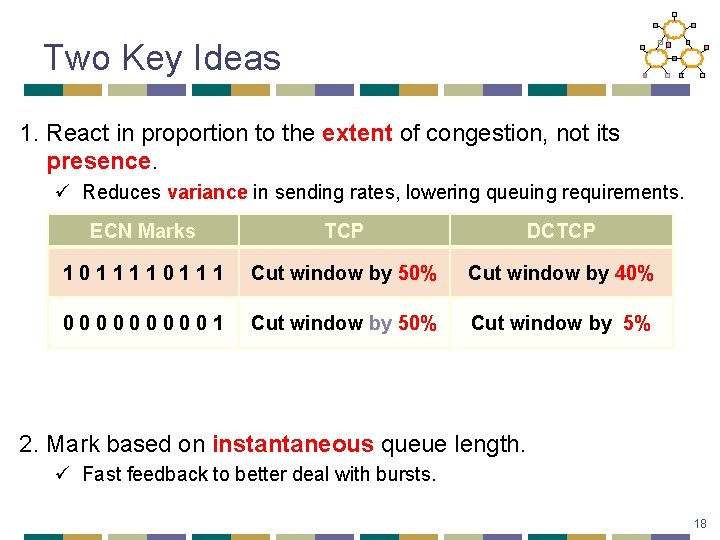

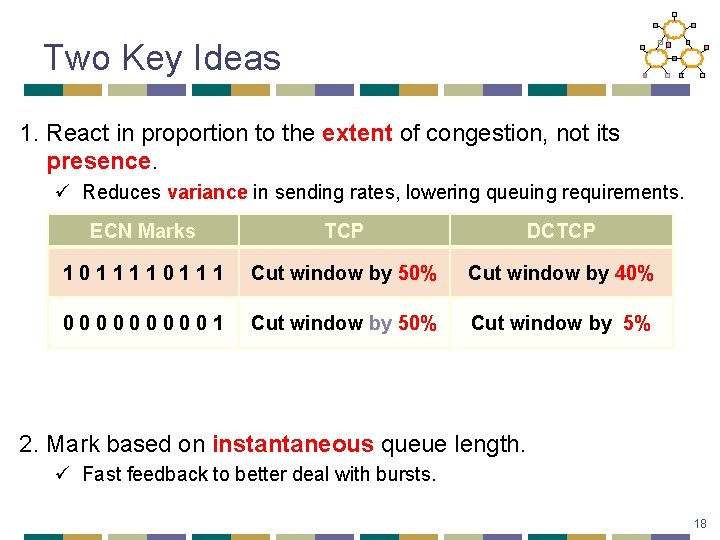

Two Key Ideas
1. React in proportion to the extent of congestion, not its presence.
   ✓ Reduces variance in sending rates, lowering queuing requirements.

| ECN Marks | TCP | DCTCP |
|---|---|---|
| 10111 | Cut window by 50% | Cut window by 40% |
| 000001 | Cut window by 50% | Cut window by 5% |

2. Mark based on instantaneous queue length.
   ✓ Fast feedback to better deal with bursts.

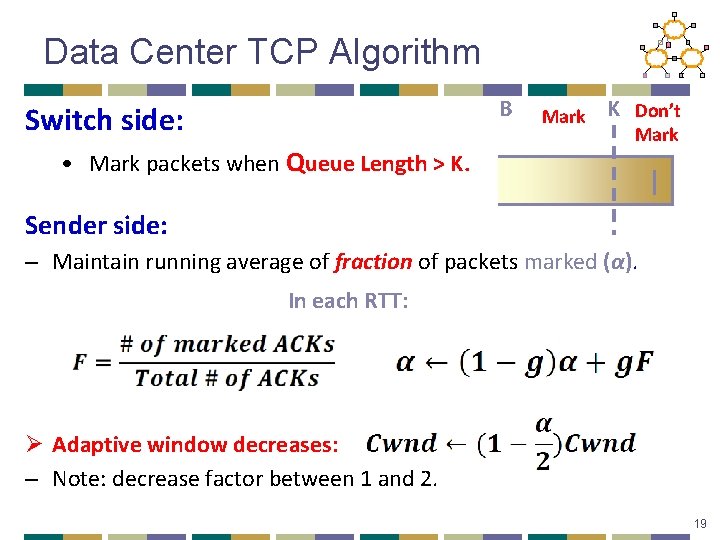

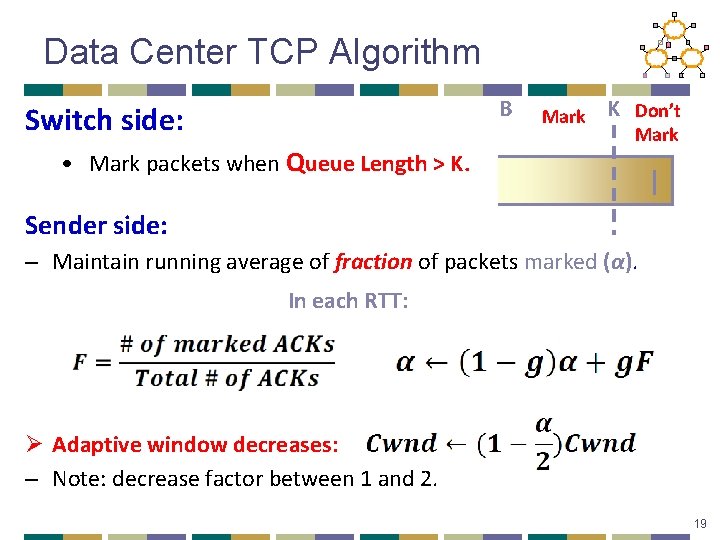

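Data Center TCP Algorithm
Switch side:
• Mark packets when Queue Length > K.
[Figure: switch buffer of size B with marking threshold K; packets arriving above K are marked, packets below K are not]
Sender side:
• Maintain a running average of the fraction of packets marked (α). In each RTT:
  $\alpha \leftarrow (1 - g)\,\alpha + g\,F$, where $F$ is the fraction of packets marked in the last RTT and $g$ is the averaging gain.
  Ø Adaptive window decrease: $\mathrm{Cwnd} \leftarrow (1 - \alpha/2)\,\mathrm{Cwnd}$
• Note: the window is divided by a factor between 1 and 2.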
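
The two sides of the algorithm above can be sketched in a few lines of Python. This is a minimal illustration, not the deployed implementation: the class and function names are made up for this example, the gain `g = 1/16` is an assumed value, and the normal TCP window increase is left out.

```python
from dataclasses import dataclass


def switch_should_mark(queue_bytes: int, k_bytes: int) -> bool:
    """Switch side: mark a packet when the instantaneous queue exceeds K."""
    return queue_bytes > k_bytes


@dataclass
class DctcpSender:
    """Sender-side state (hypothetical names, for illustration only)."""
    cwnd: float            # congestion window, in packets
    alpha: float = 0.0     # running average of the fraction of marked packets
    g: float = 1.0 / 16    # averaging gain (assumed value)

    def on_rtt_end(self, acked: int, marked: int) -> None:
        """Once per RTT: update alpha, then cut cwnd in proportion to alpha."""
        frac = marked / acked if acked else 0.0
        self.alpha = (1 - self.g) * self.alpha + self.g * frac
        if marked:
            # alpha = 1 halves the window (like TCP); small alpha barely cuts it.
            self.cwnd = max(1.0, self.cwnd * (1 - self.alpha / 2))


if __name__ == "__main__":
    sender = DctcpSender(cwnd=100.0)
    sender.on_rtt_end(acked=10, marked=1)
    print(sender.alpha, sender.cwnd)
```

With every packet marked for long enough, α approaches 1 and the cut approaches TCP's 50%; with only occasional marks, α stays small and the window is only trimmed.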

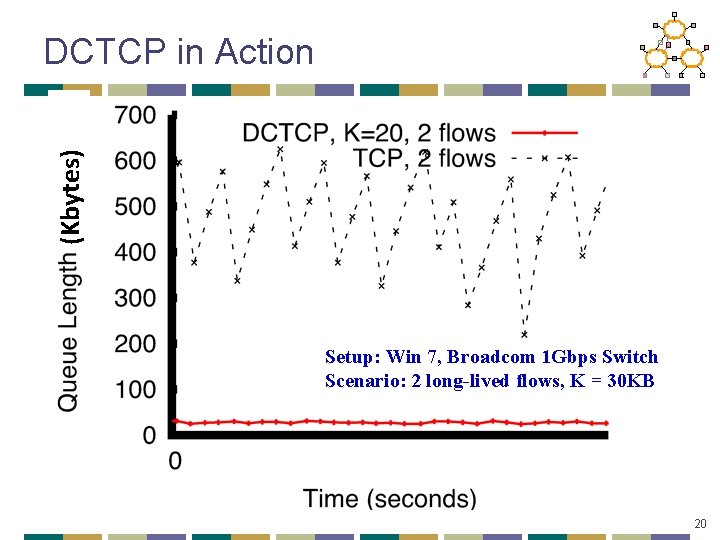

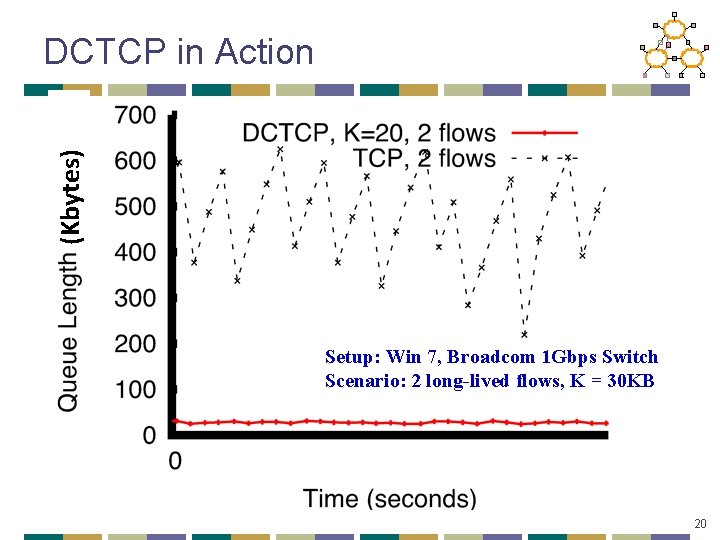

DCTCP in Action
[Figure: queue length (KBytes) over time]
Setup: Win 7, Broadcom 1 Gbps switch
Scenario: 2 long-lived flows, K = 30 KB

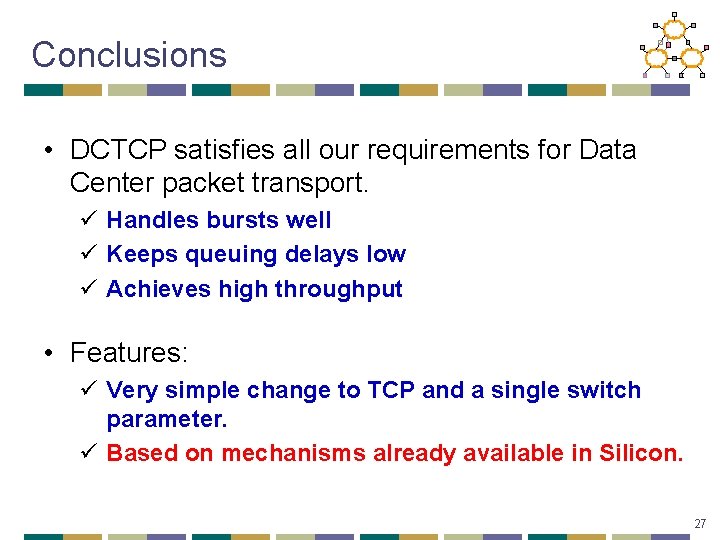

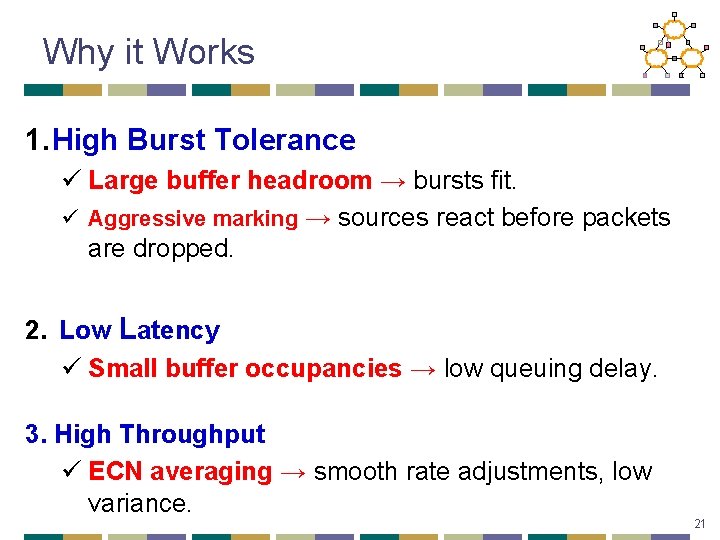

Why it Works
1. High Burst Tolerance
   ✓ Large buffer headroom → bursts fit.
   ✓ Aggressive marking → sources react before packets are dropped.
2. Low Latency
   ✓ Small buffer occupancies → low queuing delay.
3. High Throughput
   ✓ ECN averaging → smooth rate adjustments, low variance.

Conclusions
• DCTCP satisfies all our requirements for Data Center packet transport.
  ✓ Handles bursts well
  ✓ Keeps queuing delays low
  ✓ Achieves high throughput
• Features:
  ✓ Very simple change to TCP and a single switch parameter.
  ✓ Based on mechanisms already available in silicon.

Overview
• DCTCP
• pFabric
• RDMA

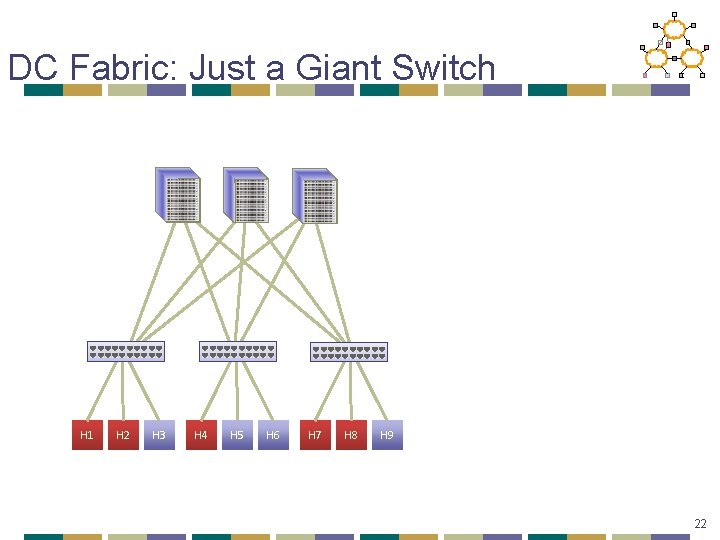

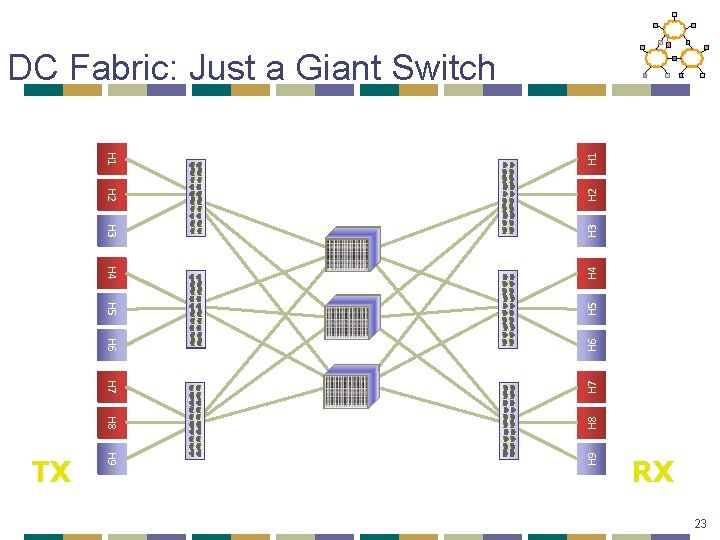

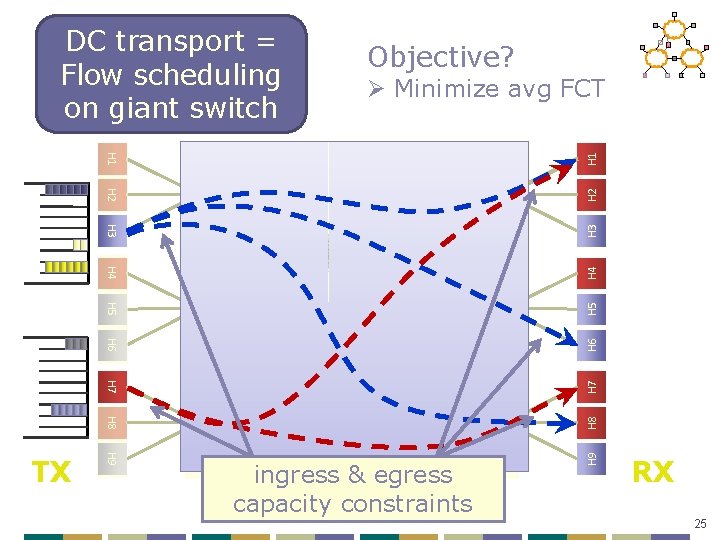

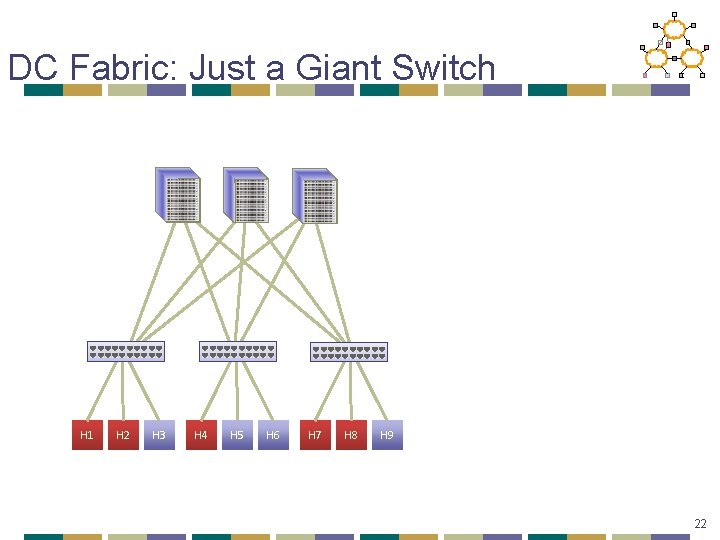

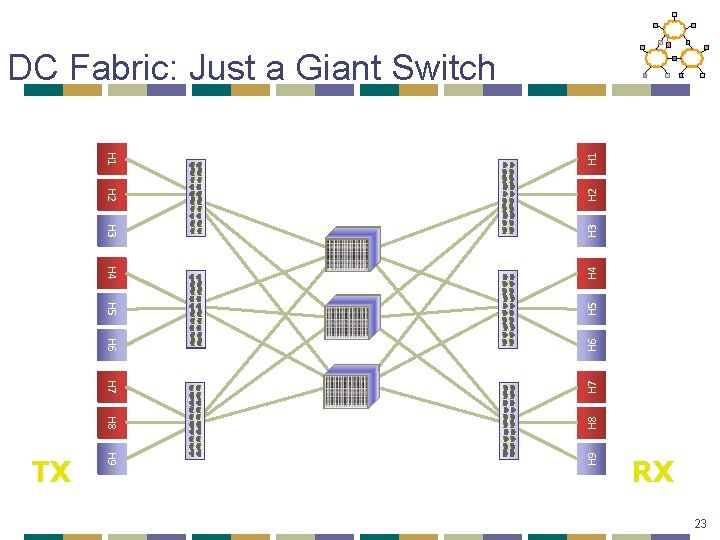

DC Fabric: Just a Giant Switch
[Figure: hosts H1-H9 attached to the data center fabric]

DC Fabric: Just a Giant Switch
[Figure: the fabric redrawn as one big switch, with hosts H1-H9 as TX ports on one side and as RX ports on the other]

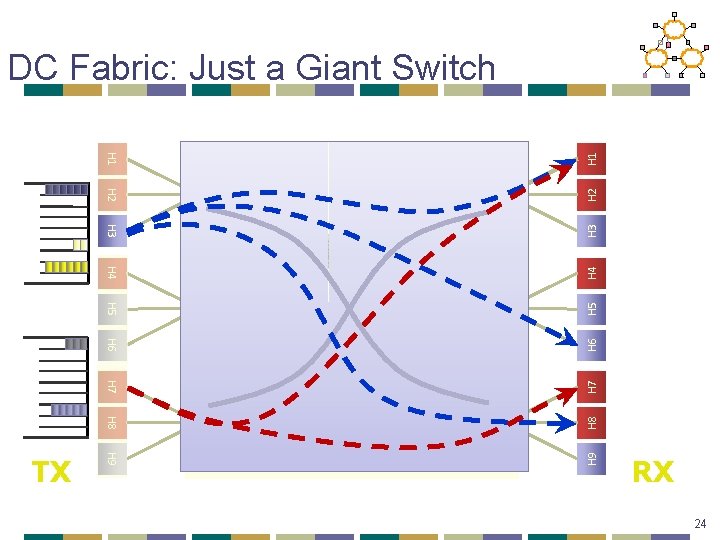

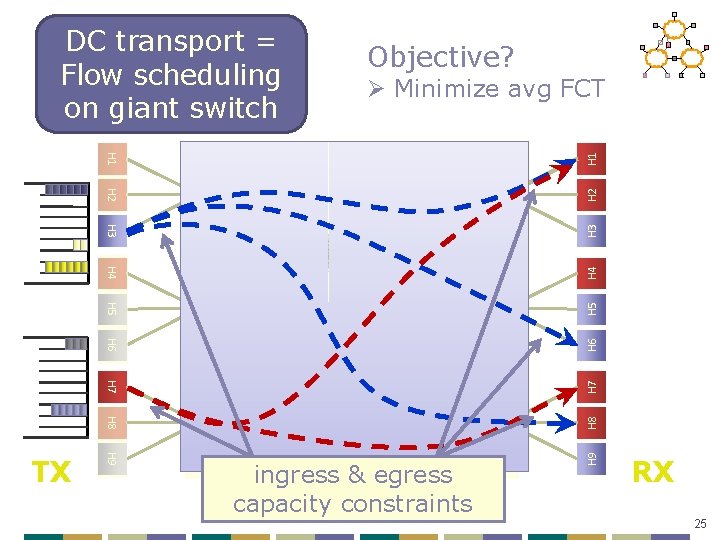

DC transport = Flow scheduling on giant switch
Objective?
  Ø Minimize average flow completion time (FCT)
[Figure: hosts H1-H9 on the TX and RX sides of the giant switch, subject to ingress & egress capacity constraints]

![Ideal Flow Scheduling Problem is NPhard BarNoy et al Simple greedy algorithm “Ideal” Flow Scheduling Problem is NP-hard [Bar-Noy et al. ] • Simple greedy algorithm:](https://slidetodoc.com/presentation_image_h2/67d4b7fc6dfc480b119b683c03d80805/image-26.jpg)

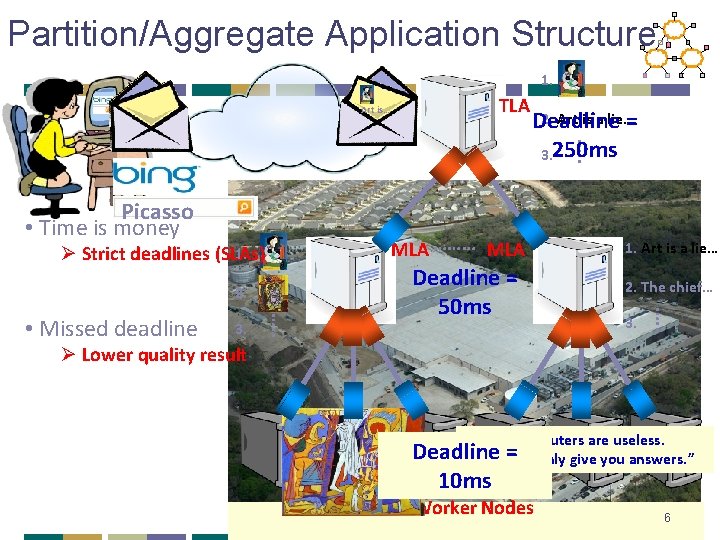

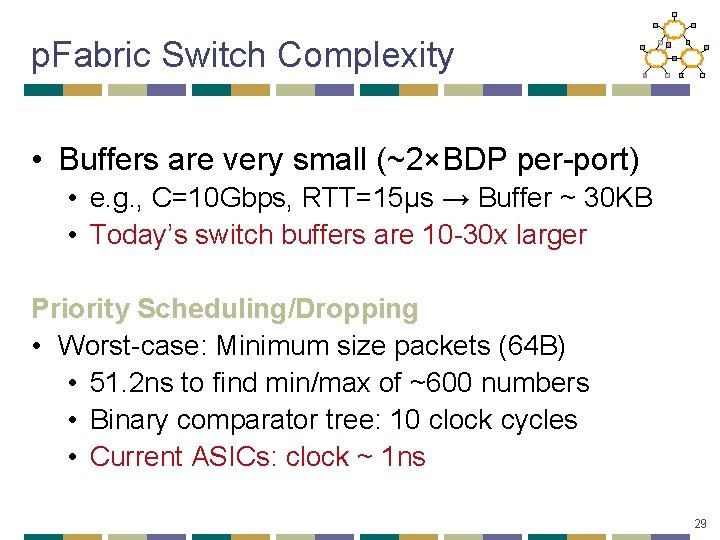

“Ideal” Flow Scheduling
Problem is NP-hard [Bar-Noy et al.]
• Simple greedy algorithm: 2-approximation
[Figure: three ingress ports (1-3) facing three egress ports (1-3)]
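
The slide names a simple greedy algorithm without spelling it out. One common reading, assumed here, is: consider flows in order of remaining size and schedule each one whose ingress and egress ports are both still free. The sketch below implements that reading; `Flow` and `greedy_schedule` are illustrative names, not taken from the lecture.

```python
from typing import List, NamedTuple, Set


class Flow(NamedTuple):
    src: str          # ingress port (sending host)
    dst: str          # egress port (receiving host)
    remaining: int    # remaining flow size, e.g. in bytes


def greedy_schedule(flows: List[Flow]) -> List[Flow]:
    """Choose the flows to serve now: smallest remaining size first,
    skipping any flow whose ingress or egress port is already in use."""
    busy_src: Set[str] = set()
    busy_dst: Set[str] = set()
    chosen: List[Flow] = []
    for flow in sorted(flows, key=lambda f: f.remaining):
        if flow.src not in busy_src and flow.dst not in busy_dst:
            chosen.append(flow)
            busy_src.add(flow.src)
            busy_dst.add(flow.dst)
    return chosen


if __name__ == "__main__":
    demo = [Flow("H1", "H2", 3), Flow("H1", "H3", 1), Flow("H4", "H2", 2)]
    for f in greedy_schedule(demo):
        print(f)
```

In the demo, the largest flow (H1 to H2) waits because its ingress port is taken by the size-1 flow and its egress port by the size-2 flow. The schedule would be recomputed whenever a flow arrives or finishes.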

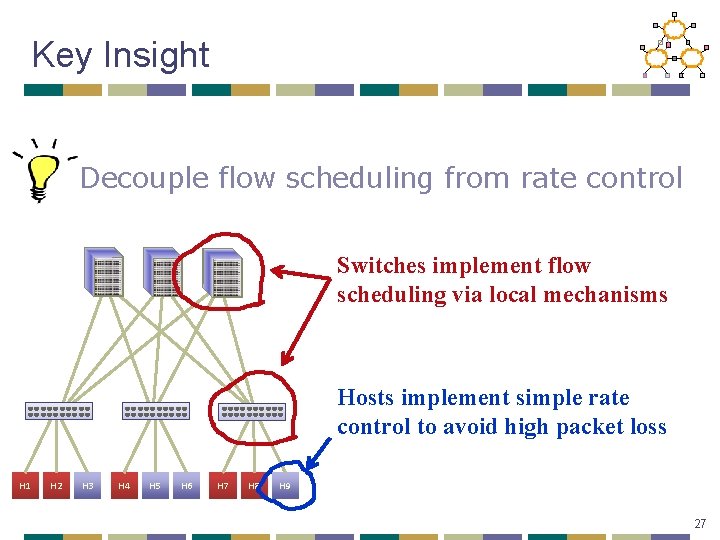

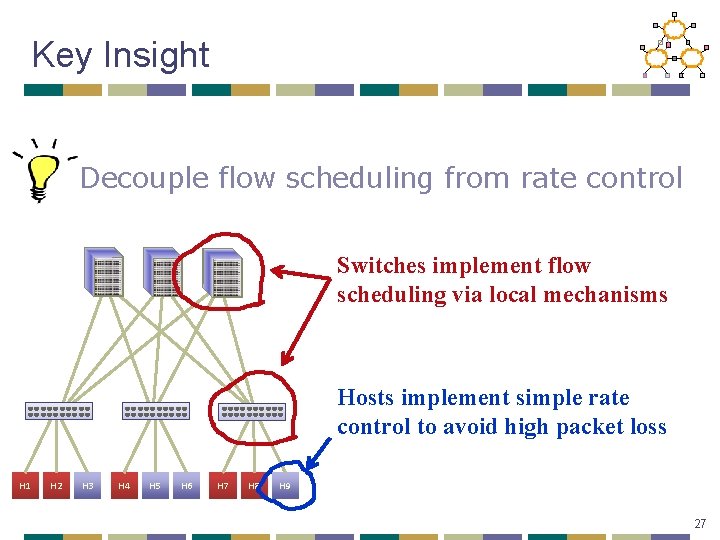

Key Insight
Decouple flow scheduling from rate control
• Switches implement flow scheduling via local mechanisms
• Hosts implement simple rate control to avoid high packet loss
[Figure: hosts H1-H9 attached to the fabric]

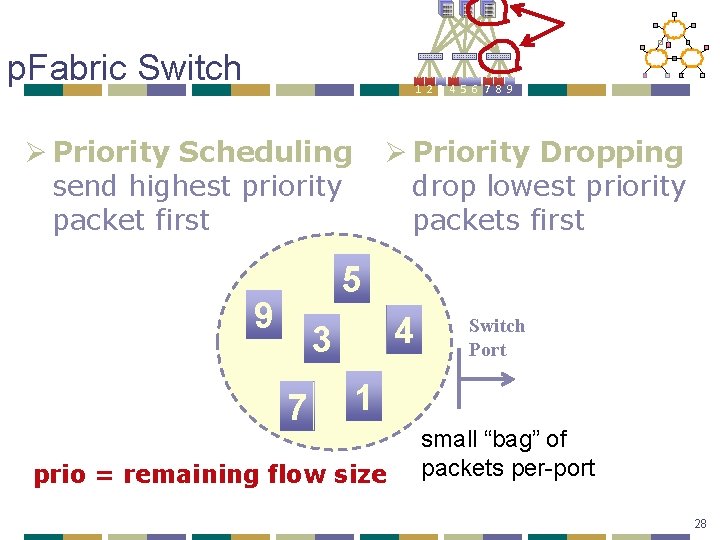

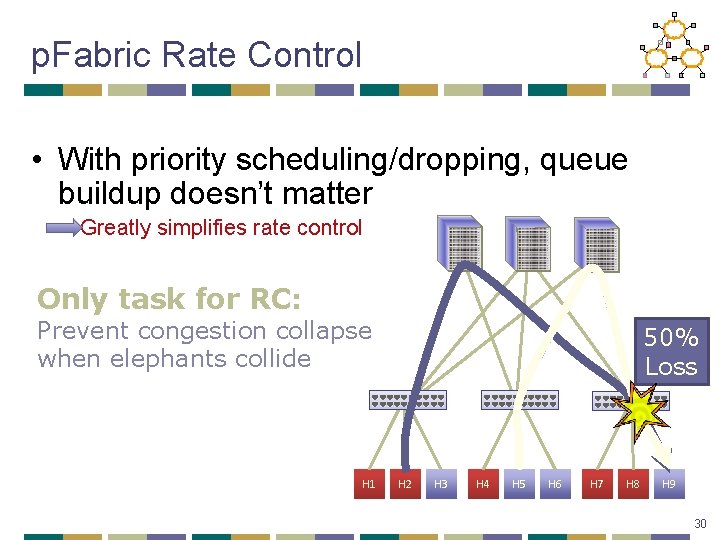

pFabric Switch
Ø Priority Scheduling: send highest priority packet first
Ø Priority Dropping: drop lowest priority packets first
prio = remaining flow size
[Figure: hosts H1-H9 attached to a switch; Switch Port 1 holds a small “bag” of packets per port, with priorities 5, 9, 4, 3, 7]
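
A minimal sketch of one such port, assuming the bag is an unordered list scanned on every operation and that a smaller `prio` number (less remaining flow) means higher priority. `Packet`, `PfabricPort`, and `capacity` are hypothetical names for this example. It also ignores packet ordering within a flow, which a real switch has to handle.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Packet:
    flow_id: int
    prio: int      # remaining flow size; smaller value = higher priority


@dataclass
class PfabricPort:
    capacity: int                                  # max packets in the bag
    bag: List[Packet] = field(default_factory=list)

    def enqueue(self, pkt: Packet) -> Optional[Packet]:
        """Priority dropping: on overflow, drop the lowest-priority packet
        (largest prio value), which may be the arriving packet itself."""
        self.bag.append(pkt)
        if len(self.bag) <= self.capacity:
            return None
        victim = max(self.bag, key=lambda p: p.prio)
        self.bag.remove(victim)
        return victim

    def dequeue(self) -> Optional[Packet]:
        """Priority scheduling: send the highest-priority packet
        (smallest prio value) first."""
        if not self.bag:
            return None
        best = min(self.bag, key=lambda p: p.prio)
        self.bag.remove(best)
        return best


if __name__ == "__main__":
    port = PfabricPort(capacity=3)
    for flow_id, prio in enumerate([5, 9, 4, 3, 7]):
        dropped = port.enqueue(Packet(flow_id, prio))
        print("arrived", prio, "dropped", dropped.prio if dropped else None)
    print("sent", port.dequeue().prio)
```

Scanning the whole bag is acceptable only because the bag is tiny, which is the point the slide makes with "small bag of packets per port".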

pFabric Switch Complexity
• Buffers are very small (~2×BDP per port)
  Ø e.g., C = 10 Gbps, RTT = 15 µs → Buffer ~ 30 KB
  Ø Today's switch buffers are 10-30× larger
• Priority Scheduling/Dropping
  Ø Worst case: minimum-size packets (64 B)
  Ø 51.2 ns to find min/max of ~600 numbers
  Ø Binary comparator tree: 10 clock cycles
  Ø Current ASICs: clock ~ 1 ns
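
The numbers on this slide can be rechecked with a short calculation. The inputs below are the slide's own (10 Gbps, 15 µs, 64 B, ~1 ns clock); the variable names are just for this example. With exactly these inputs 2×BDP works out to about 37.5 KB, so the slide's "~30 KB" should be read as a rough figure.

```python
import math

LINK_BPS = 10e9        # C = 10 Gbps
RTT_S = 15e-6          # RTT = 15 microseconds
MIN_PKT_BYTES = 64     # worst case: minimum-size packets
CLOCK_NS = 1.0         # ASIC clock period, about 1 ns

# Bandwidth-delay product and the ~2 x BDP per-port buffer, in bytes.
bdp_bytes = LINK_BPS * RTT_S / 8
buffer_bytes = 2 * bdp_bytes

# Time budget per decision: one minimum-size packet at line rate.
pkt_time_ns = MIN_PKT_BYTES * 8 / LINK_BPS * 1e9

# Worst-case number of packets (priorities) to compare in one buffer.
max_pkts = buffer_bytes / MIN_PKT_BYTES

# A binary comparator tree needs one level per halving of the candidates.
tree_depth = math.ceil(math.log2(max_pkts))
tree_time_ns = tree_depth * CLOCK_NS

print(f"BDP          = {bdp_bytes:.0f} B")
print(f"buffer       = {buffer_bytes:.0f} B")
print(f"packet time  = {pkt_time_ns:.1f} ns")
print(f"packets      = {max_pkts:.0f}")
print(f"tree depth   = {tree_depth} levels, {tree_time_ns:.0f} ns")
```

The comparator tree finishes in about 10 ns, well inside the 51.2 ns available per minimum-size packet, which is why the slide treats the min/max search as feasible.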

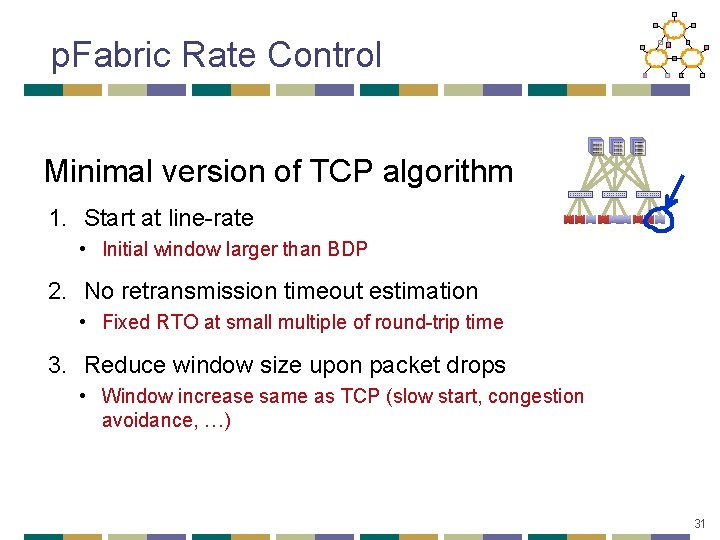

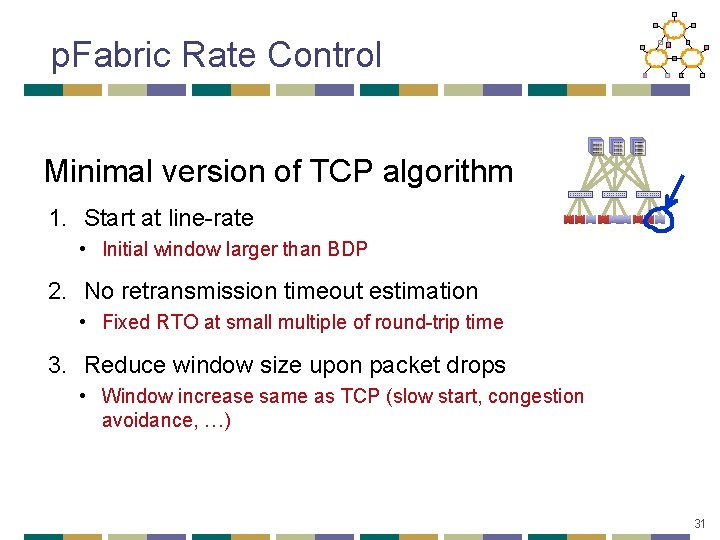

pFabric Rate Control
• With priority scheduling/dropping, queue buildup doesn't matter
  Ø Greatly simplifies rate control
• Only task for rate control (RC): prevent congestion collapse when elephants collide
[Figure: hosts H1-H9; colliding elephant flows see 50% loss]

pFabric Rate Control
Minimal version of TCP algorithm
1. Start at line rate
   • Initial window larger than BDP
2. No retransmission timeout estimation
   • Fixed RTO at small multiple of round-trip time
3. Reduce window size upon packet drops
   • Window increase same as TCP (slow start, congestion avoidance, …)
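
The three rules above could look roughly like this in code. The slide does not give constants or say how far the window is reduced, so everything numeric here is an assumption: the initial window of 1.25×BDP, the RTO of 3×RTT, and the halving on a drop are placeholders, and `PfabricRateControl` is a made-up name.

```python
from dataclasses import dataclass


@dataclass
class PfabricRateControl:
    bdp_pkts: int                    # bandwidth-delay product, in packets
    rtt_us: float                    # base round-trip time, in microseconds
    init_factor: float = 1.25        # assumed: initial window = 1.25 x BDP
    rto_multiple: float = 3.0        # assumed "small multiple" of the RTT
    cwnd: float = 0.0
    ssthresh: float = float("inf")

    def __post_init__(self) -> None:
        # Rule 1: start at line rate with a window larger than the BDP.
        self.cwnd = self.bdp_pkts * self.init_factor

    @property
    def rto_us(self) -> float:
        # Rule 2: fixed RTO, no RTT estimation.
        return self.rto_multiple * self.rtt_us

    def on_ack(self) -> None:
        # Window increase as in TCP: slow start, then congestion avoidance.
        if self.cwnd < self.ssthresh:
            self.cwnd += 1.0
        else:
            self.cwnd += 1.0 / self.cwnd

    def on_drop(self) -> None:
        # Rule 3: reduce the window on packet drops (halving is assumed).
        self.ssthresh = max(self.cwnd / 2, 1.0)
        self.cwnd = self.ssthresh


if __name__ == "__main__":
    rc = PfabricRateControl(bdp_pkts=12, rtt_us=15.0)
    print(rc.cwnd, rc.rto_us)
    rc.on_drop()
    print(rc.cwnd)
```

Note what is missing compared with a full TCP: no RTT sampling, no adaptive timer, and no attempt to keep queues short, since the switch's priority scheduling and dropping already protect short flows.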

Why does this work?
Key invariant for ideal scheduling: at any instant, have the highest priority packet (according to the ideal algorithm) available at the switch.
• Priority scheduling
  Ø High priority packets traverse the fabric as quickly as possible
• What about dropped packets?
  Ø Lowest priority → not needed till all other packets depart
  Ø Buffer > BDP → enough time (> RTT) to retransmit

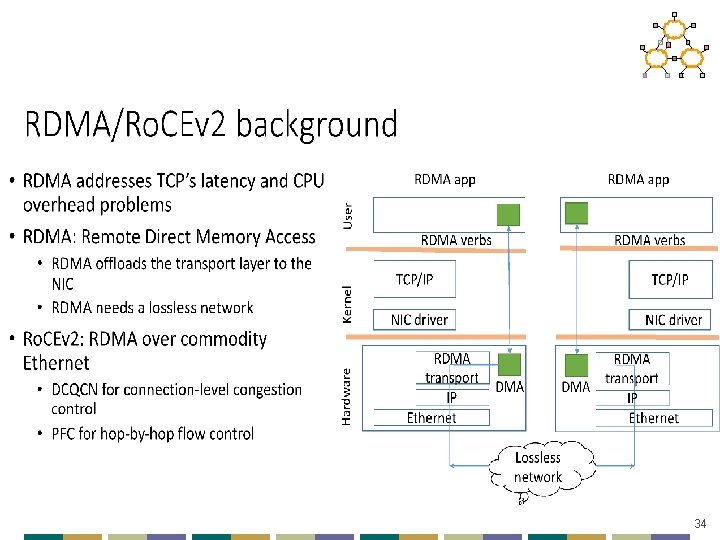

Overview
• DCTCP
• pFabric
• RDMA

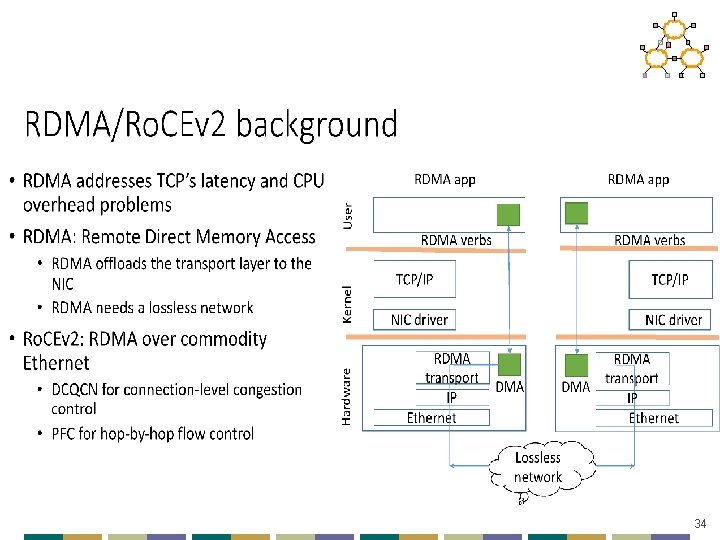

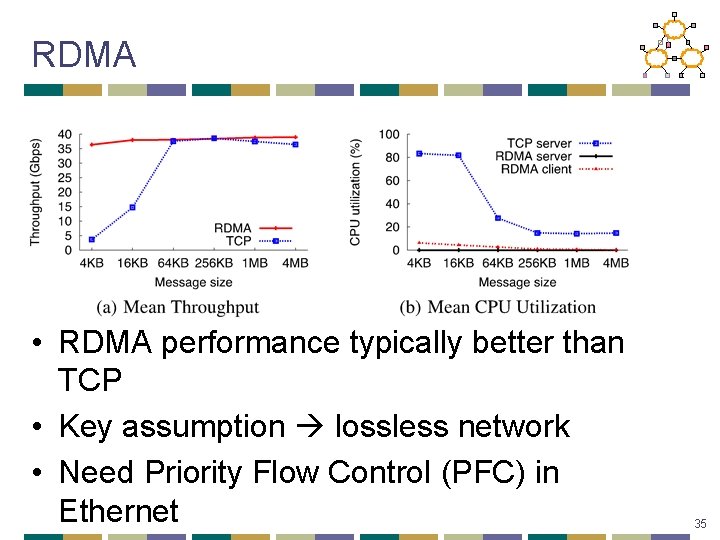

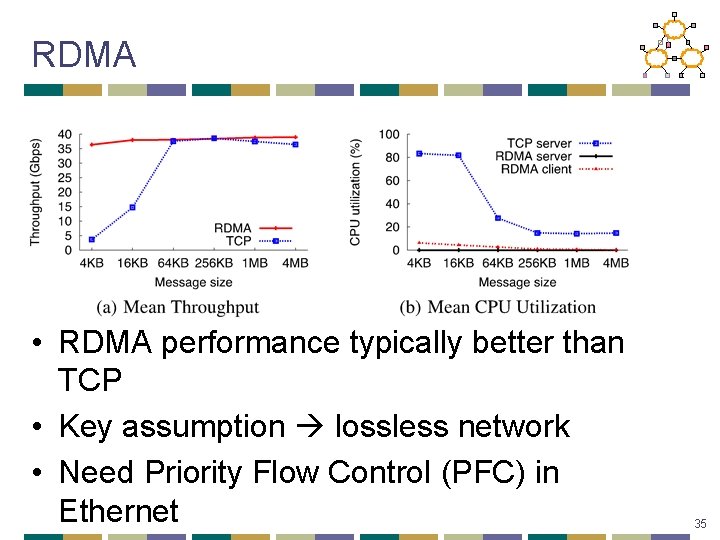

RDMA
• RDMA performance is typically better than TCP
• Key assumption: lossless network
• Needs Priority Flow Control (PFC) in Ethernet

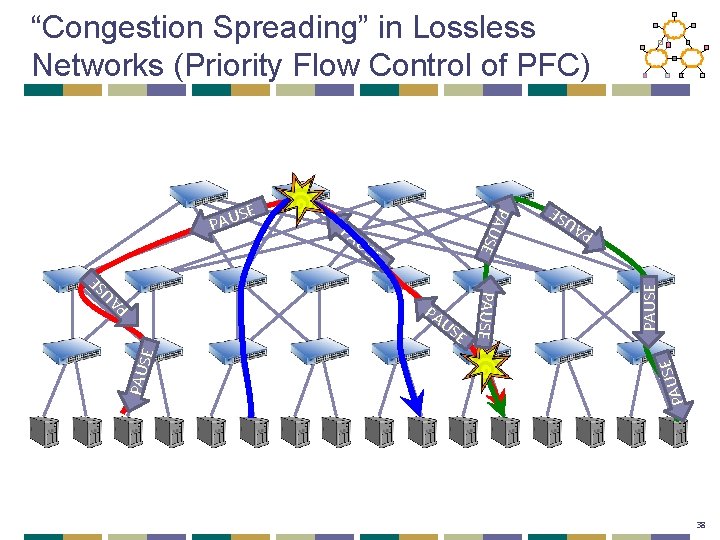

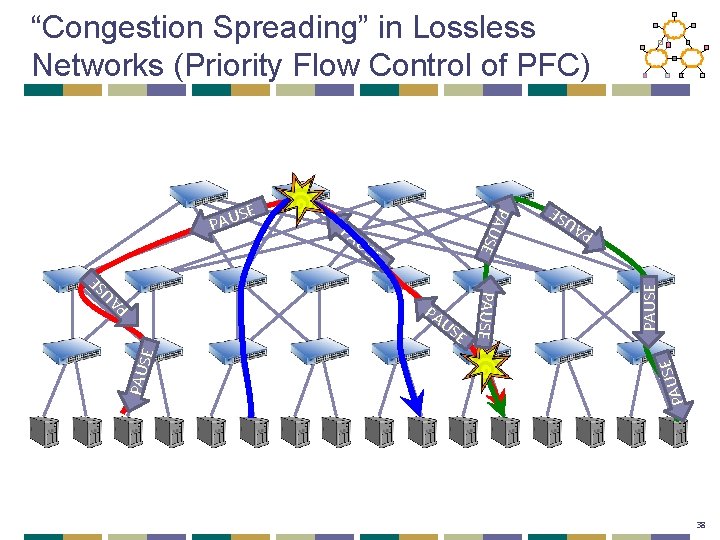

“Congestion Spreading” in Lossless Networks (Priority Flow Control, or PFC)
[Figure: PAUSE frames propagating from switch to switch across the network]

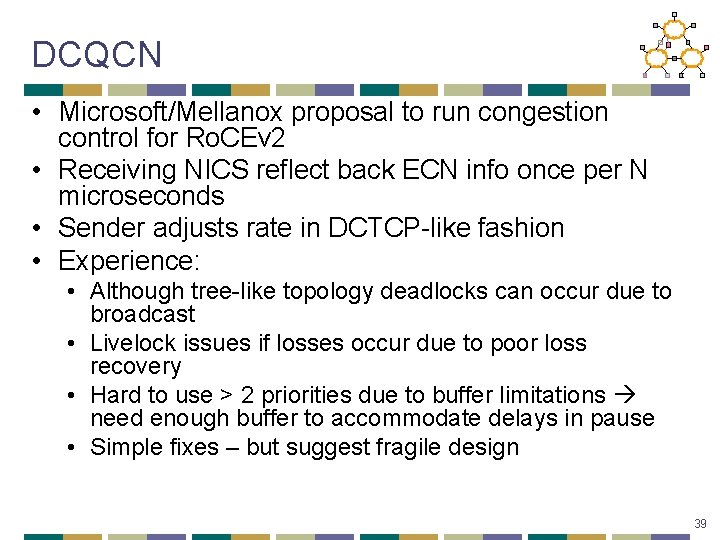

DCQCN
• Microsoft/Mellanox proposal to run congestion control for RoCEv2
• Receiving NICs reflect back ECN info once per N microseconds
• Sender adjusts rate in DCTCP-like fashion
• Experience:
  Ø Despite the tree-like topology, deadlocks can occur due to broadcast
  Ø Livelock issues if losses occur, due to poor loss recovery
  Ø Hard to use > 2 priorities due to buffer limitations: need enough buffer to accommodate delays in pause
  Ø Simple fixes, but they suggest a fragile design