
UDA IKASTAROAK / CURSOS DE VERANO / SUMMER COURSES
Teaching evaluation: unfinished business
UPV / EHU
Tools and indicators for measuring the quality of teaching activity in rankings

Outline
1. Teaching quality and its complexity
2. Regulations and incentives to promote teaching quality
3. How to measure teaching quality
4. The state of play of international rankings
5. Examples of how global rankings measure teaching quality
6. Conclusions and future trends

Basics – Teaching quality
• "Quality of teaching" lacks a clear definition:
  – quality can be regarded as an outcome, a property, or even a process
  – the concept is stakeholder-relative (students, teachers, QA agencies…)
• Top-down vs. bottom-up approaches must be considered when evaluating quality (notably when seeking to foster innovation in teaching)
• Levels to be distinguished:
  – System level
  – Institution-wide
  – Degree/discipline level
  – Individual teacher level
• Neither the size nor the specificity of an institution poses a major obstacle to developing institutional policies for promoting teaching quality

Basics – Teaching quality regulations and incentives
• Motivation: governments, students and their families, employers and funding providers increasingly demand accountability and efficiency in teaching
• Some countries promote competition among institutions
• There are national and international teaching contests with performance-based criteria (not indicators)
• Quality assurance methodologies hardly capture the complexity of teaching
• Debate: innovation vs. accreditation?
• Is the focus too input-oriented?
• Teachers might be reluctant to regard quality as "value for money"

Basics – Teaching quality in European countries
• Europe: countries report that specific and innovative ways of fostering teaching excellence are developed only at institutional level
• Some countries link funding to it; some of them even through competitive funding
• Teachers' careers: ES as an example of good practice thanks to ANECA's DOCENTIA
• In some countries student opinions affect teachers' careers: SI, BE-NL, CY and ES
  – In PL, repeated bad feedback from students can even lead to termination of contract
  – FR and MA: by law, students cannot influence teachers' careers and remuneration
• ES is an exception for teacher evaluation:
  – System level: HU, PL, DE
  – QA agencies: ES
  – Others: at institutional level

Basics – Teaching quality rankings by European countries?
• In Europe:
  – only RO reports using national teaching-quality rankings of degrees and universities, based on Ministry indicators
  – CZ: no national ranking, although it uses comparative information on public universities
  – UK: many league tables produced by newspapers, but not used by government
    • However, they have an important impact on universities' reputation
  – Other countries state that rankings play no role in understanding and developing teaching quality

Basics – Transparency in Higher Education
• Purpose: creating tools to support accountability and to evaluate quality in higher education
• These tools usually aim to cover all university missions
• Stakeholders and public bodies are especially interested in promoting them
• Tools include:
  – Education quality prizes and contests
  – Quality assurance and accreditation
  – Ratings and rankings

Basics: RANKINGS vs. RATINGS
RANKINGS
• A ranking compares universities with one another and puts them in order based on how the institutions perform across different indicators.
• The order is calculated by aggregating several aspects into a single indicator.
• Examples: Shanghai ranking, Leiden ranking.
RATINGS
• A rating assesses universities on how they perform in several areas, similar to those considered in rankings. But rather than comparing institutions against one another, it judges them against a set standard.
• Useful for institutional profiling and for identifying strengths and weaknesses.
• Examples: U-Multirank, QS Stars
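The ranking/rating distinction can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the institution names, scores, equal weights and the standard of 60 are all hypothetical, and real providers use many more indicators and their own normalisation. The ranking collapses everything into one composite and orders institutions against each other; the rating checks each area against a fixed standard and produces a profile instead of an order.

```python
# Hypothetical indicator scores on a 0-100 scale; illustrative only, not real data.
universities = {
    "Univ A": {"teaching": 70, "research": 90},
    "Univ B": {"teaching": 85, "research": 58},
    "Univ C": {"teaching": 50, "research": 55},
}


def rank(data, weights):
    """Ranking: collapse indicators into one composite and order institutions against each other."""
    composite = {
        name: sum(weights[k] * v for k, v in scores.items())
        for name, scores in data.items()
    }
    return sorted(composite, key=composite.get, reverse=True)


def rate(data, standard):
    """Rating: judge each institution, area by area, against a fixed standard (no ordering)."""
    return {
        name: {k: v >= standard for k, v in scores.items()}
        for name, scores in data.items()
    }


print(rank(universities, {"teaching": 0.5, "research": 0.5}))  # ['Univ A', 'Univ B', 'Univ C']
print(rate(universities, standard=60))
```

Note how the rating shows that Univ B is above the standard in teaching but below it in research, a strength/weakness profile that the single league-table position hides.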

Basic elements of quality of teaching
• The learning process is complex to assess since it combines:
  – Teachers:
    • their skills
    • their attitude in class
  – Students:
    • students' experience
  – Context:
    • quality of the faculty-student relationship
    • facilities (library, computers…)
• This is difficult to measure
  – Even more so if measured only through indicators!

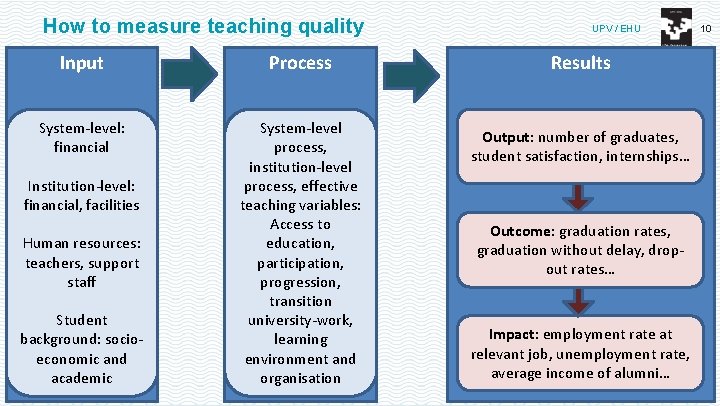

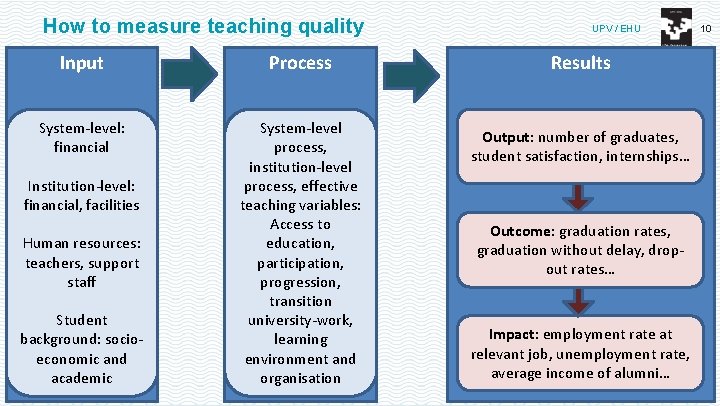

How to measure teaching quality
Input
• System level: financial
• Institution level: financial, facilities
• Human resources: teachers, support staff
• Student background: socio-economic and academic
Process
• System-level process, institution-level process, effective teaching variables: access to education, participation, progression, university-to-work transition, learning environment and organisation
Results
• Output: number of graduates, student satisfaction, internships…
• Outcome: graduation rates, graduation without delay, drop-out rates…
• Impact: employment rate in a relevant job, unemployment rate, average income of alumni…

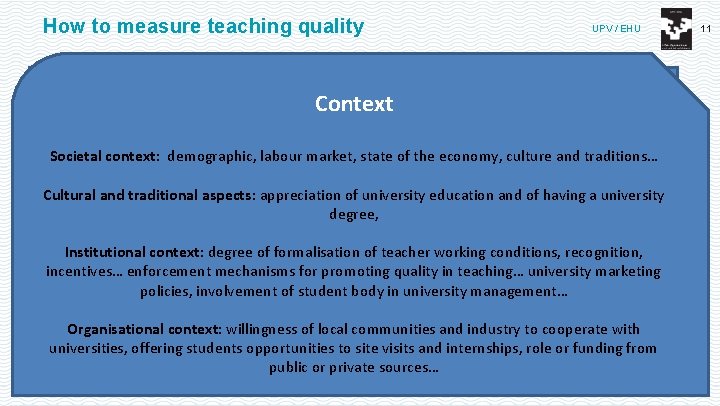

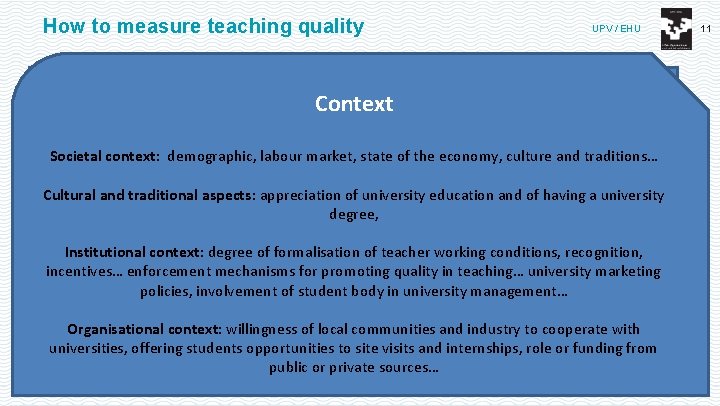

How to measure teaching quality
Context
• Societal context: demographics, labour market, state of the economy, culture and traditions…
• Cultural and traditional aspects: appreciation of university education and of having a university degree
• Institutional context: degree of formalisation of teachers' working conditions, recognition, incentives…, enforcement mechanisms for promoting quality in teaching…, university marketing policies, involvement of the student body in university management…
• Organisational context: willingness of local communities and industry to cooperate with universities, offering students opportunities for site visits and internships, role of funding from public or private sources…
Input
• System level: financial
• Institution level: financial, facilities
• Human resources: teachers, support staff
• Student background: socio-economic and academic
Process
• System-level process, institution-level process, effective teaching variables: access to education, participation, progression, university-to-work transition, learning environment and organisation
Results
• Output: number of graduates, internships…
• Outcome: graduation rates, graduation without delay, drop-out rates…
• Impact: employment rate in a relevant job, unemployment rate, average income of alumni…

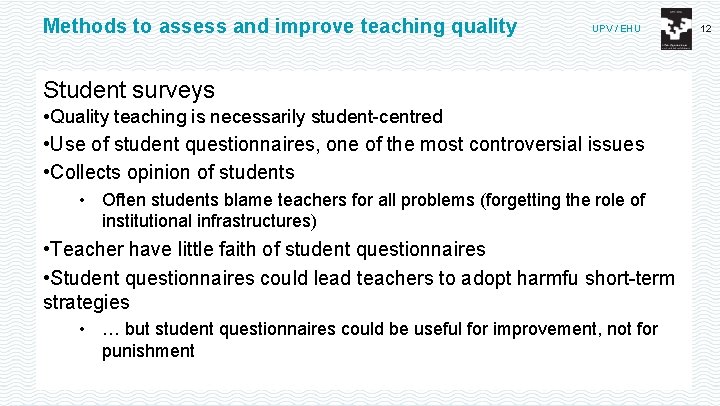

Methods to assess and improve teaching quality
Student surveys
• Quality teaching is necessarily student-centred
• The use of student questionnaires is one of the most controversial issues
• Questionnaires collect students' opinions
• Students often blame teachers for all problems (forgetting the role of institutional infrastructure)
• Teachers have little faith in student questionnaires
• Student questionnaires could lead teachers to adopt harmful short-term strategies
• … but student questionnaires can be useful for improvement, not for punishment

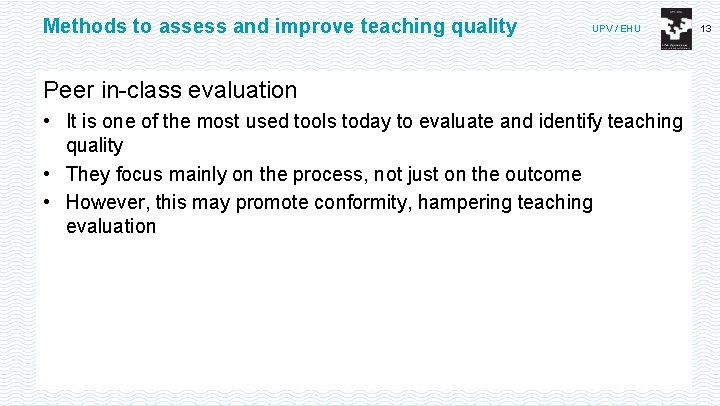

Methods to assess and improve teaching quality
Peer in-class evaluation
• It is one of the most widely used tools today to evaluate and identify teaching quality
• It focuses mainly on the process, not just on the outcome
• However, it may promote conformity, hampering teaching evaluation

Research as a proxy for teaching quality?
• Is it true that a university strong in research is also strong in teaching?
  – A bidirectional relationship?
  – In direct conflict because of how each is rewarded?
• Does the literature show a correlation between research quality and teaching quality?
  – And between indicators of research and teaching quality?
• Research-intensive institutions have difficulty raising the profile of teaching compared to research
• Shift from elite universities to mass universities
  – …universities are now increasingly expected to bridge students to the job market, to make them responsible citizens, and to take on new missions such as regional development and social inclusion
  – …this affects the reputation of universities

Can teaching quality indicators be used for international comparison?
• The importance of context
  – Three ways to use indicators:
    • As a classifier indicator (e.g. regular quantitative indicators such as income, generalist vs. polytechnic…)
    • As a performance/rating indicator
    • As a student/employer satisfaction indicator
• OECD's AHELO project on learning outcomes
• Student surveys and the influence of different cultures and outlooks (rather than loyalty)

RANKINGS – more than a single league table
Types of rankings
• Academic rankings producing league tables
  – Shanghai ranking
  – Times Higher Education World University Rankings
  – QS World University Rankings
• Rankings focusing only on research
  – Leiden ranking
  – Taiwan ranking
• Multidimensional rankings
  – CHE (Germany)
  – U-Multirank

RANKINGS – limitations
• They cover no more than 3%–5% of the world's universities
• Some indicators cover only "elite" research universities
  – Shanghai – quality of faculty: staff winning Nobel Prizes
  – QS – peer review: only covers nominations of the 30 best universities from a pre-selected list
  – QS / Times – teaching reputation: nominating the 30 best
• Indicator scores combine other indicators into a single dimension
• Ranking providers decide which factors weigh more (e.g. reputation 40%)
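Because the composite is a weighted sum, the provider's choice of weights can by itself change the order. The sketch below is a hypothetical illustration: the two institutions, their normalised scores and both weighting schemes are invented, and only the 40% reputation weight echoes the example above. Everything except the reputation weight is kept comparable so that the reordering is attributable to that one choice.

```python
# Hypothetical normalised scores (0-100); illustrative only, not real ranking data.
scores = {
    "Univ A": {"reputation": 95, "staff_student": 40, "other": 65},
    "Univ B": {"reputation": 60, "staff_student": 90, "other": 70},
}


def composite(indicators, weights):
    """Weighted sum of indicator scores; the weights are the provider's choice."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(w * indicators[k] for k, w in weights.items())


def league_table(data, weights):
    """Order institutions by descending composite score."""
    return sorted(data, key=lambda name: composite(data[name], weights), reverse=True)


# Two hypothetical weighting schemes that differ mainly in how much reputation counts.
reputation_heavy = {"reputation": 0.4, "staff_student": 0.2, "other": 0.4}
reputation_light = {"reputation": 0.1, "staff_student": 0.5, "other": 0.4}

print(league_table(scores, reputation_heavy))  # ['Univ A', 'Univ B']
print(league_table(scores, reputation_light))  # ['Univ B', 'Univ A']
```

With reputation at 40%, Univ A scores 72 against 70 for Univ B; shifting weight to the staff/student ratio gives 55.5 against 79, reversing the table without any change in the underlying data.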

RANKINGS – limitations
• Subject bias: natural sciences and medicine vs. social sciences and humanities
  – … several indicators are counted across 22 broad areas
  – Different publication and citation cultures in different fields
• English-language bias
• What about the third mission?
• Despite this, rankings are affecting universities' behaviour due to their impact on public policy discussions and funding schemes
• Audits of rankings also exist
  – IREG Ranking Audit
  – Berlin Principles on Ranking of Higher Education Institutions (adopted in 2006)

RANKINGS – the evolution of ranking providers
Rankings are gradually changing
• Shanghai's attempt to include education quality indicators
• Methodological changes: some providers completely changed their indicators between 2011 and 2013
• Tendencies:
  – Less weight on research excellence
  – Research: less weight on bibliometrics, more on networking and collaboration
  – Education: more relevance given to international cooperation, more to the social dimension (incl. diversity) and inclusion
• New services offered by ranking providers:
  – Tools for university profiling (e.g. Shanghai's benchmarking tool with 40 indicators)
  – Classification-type tools (e.g. QS Stars audit)
  – Multi-indicator rankings (e.g. Leiden's impact and collaboration per discipline)
  – Consultancy services to improve positions…

RANKINGS – Measuring teaching quality
Ranking methods
• Typically based on some combination of:
  – institutional performance (research, teaching, services…)
  – institutional characteristics (institutional mission, size, regional location…)
• Rankings usually compare institutions on performance, but generally rely much more heavily on research quality than on teaching quality:
  – The two main global university rankings (QS/Times HE ranking and Shanghai) practically do not cover teaching in their league tables
• Why is research quality more predominant in rankings than teaching?
  – International research indicators are well established and commonly accepted
  – Obtaining internationally comparable data on education is difficult, which makes creating indicators challenging and causes big differences across country results

RANKINGS – Measuring teaching quality
Usual indicators of teaching quality in international rankings
• Alumni who have been awarded a Nobel Prize
• Staff/student ratio
• Reputation surveys (academics, students, employers)
• Teaching income
• Drop-out rate
• Time to degree
• PhD/undergraduate ratio
Teaching quality is occasionally measured with statistics from student course evaluations
• Controversy: teaching quality should ideally be measured by learning outcomes, student attitudes and behavioural change
  – … but in practice it is difficult to measure learning outcomes or improvements in student competencies
• Most rankings do not use course evaluations because each institution has a different evaluation process and the data are usually not made available externally
• Instead, rankings usually rely on metrics that (they believe) are correlated with teaching quality
  – e.g. employer ("customer") surveys and student surveys, used both for accountability and for feeding data into rankings

RANKINGS – Measuring teaching quality
Data collection for international rankings
• In some countries there are nationwide student questionnaires used by rankers for comparison
  – e.g. the USA's National Survey of Student Engagement, the UK's Teaching Quality Assessments, and Australia's Course Experience Questionnaire
• European Tertiary Education Register (ETER) project

Shanghai ranking
• It has always been research-oriented, focused on Chinese needs
• In 2011 it attempted to add education quality indicators to the league table
Indicators related to teaching quality
• Quality of education: alumni winning Nobel Prizes and Fields Medals (weight 10%)
  – … including deceased ones!
• Quality of faculty:
  1) staff winning Nobel Prizes and Fields Medals (weight 20%)
  2) highly cited researchers in 21 areas (weight 20%)

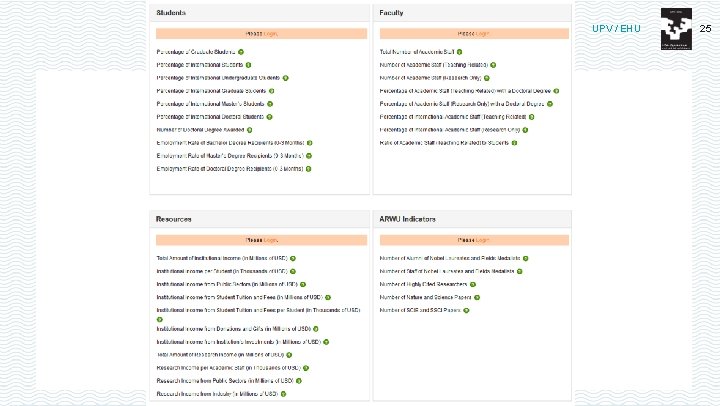

Shanghai ranking
• Data collected in separate indicators related to teaching quality
• http://www.shanghairanking.com/grup/ranking-byindicator.jsp

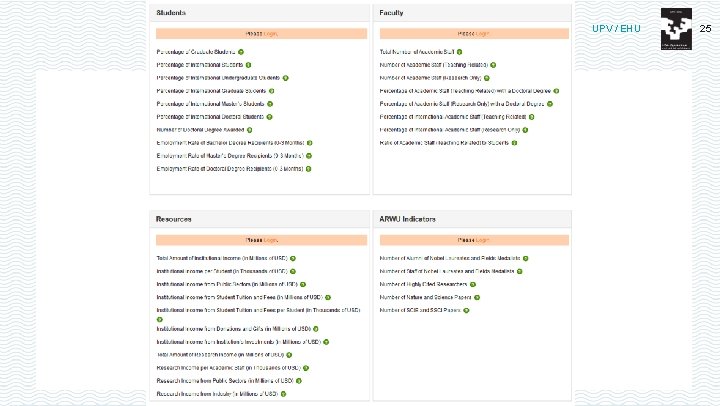


Times Higher Education (THE)
• From 2004 to 2009, THE and QS produced a ranking together. Since 2010, THE has published its own ranking (partnering with Thomson Reuters), while QS continued alone
Performance indicators are grouped into five areas:
• Teaching (the learning environment)
• Research (volume, income and reputation)
• Citations (research influence)
• International outlook (staff, students and research)
• Industry income (knowledge transfer)

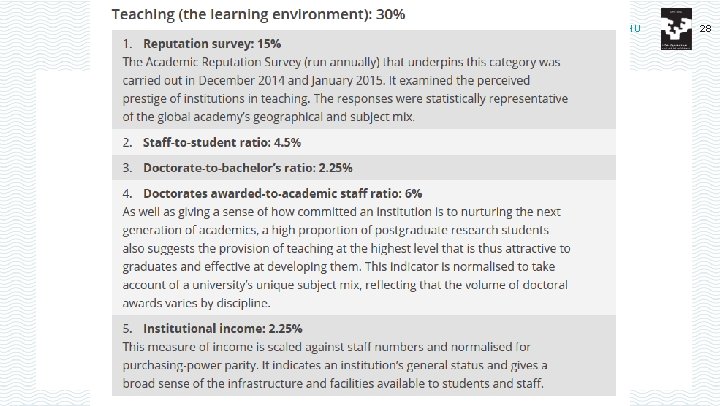

Times Higher Education (THE)
• How each area is divided into indicators
• https://www.timeshighereducation.com/news/rankingmethodology-2016

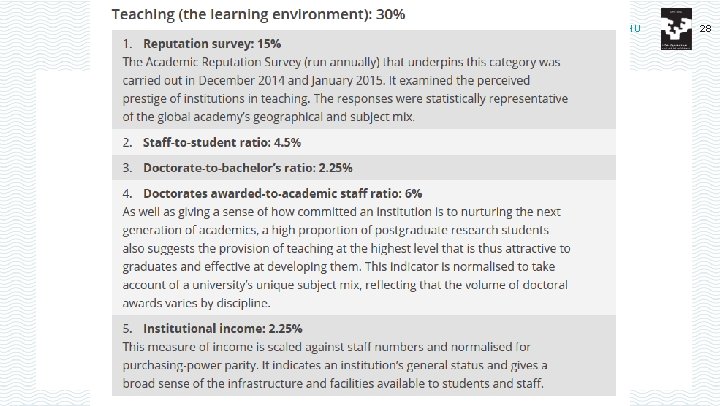


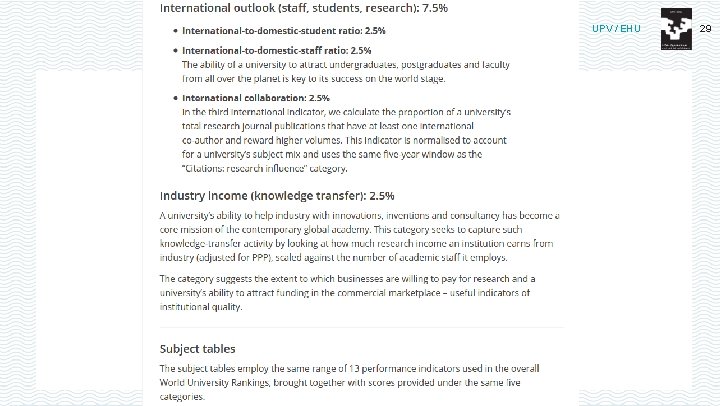

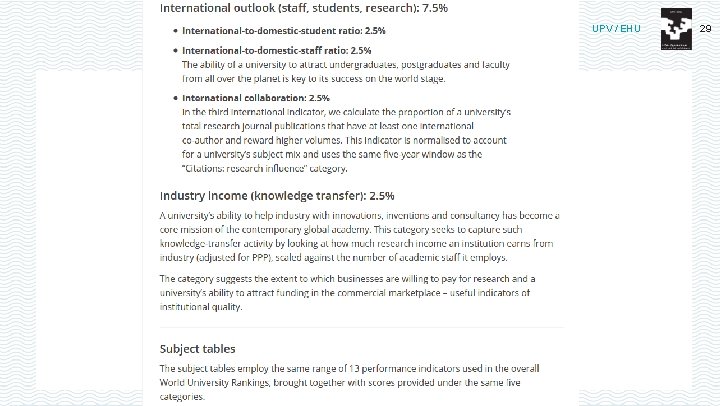


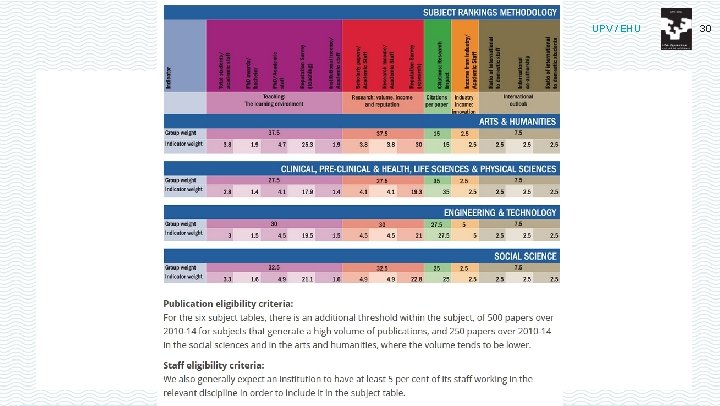

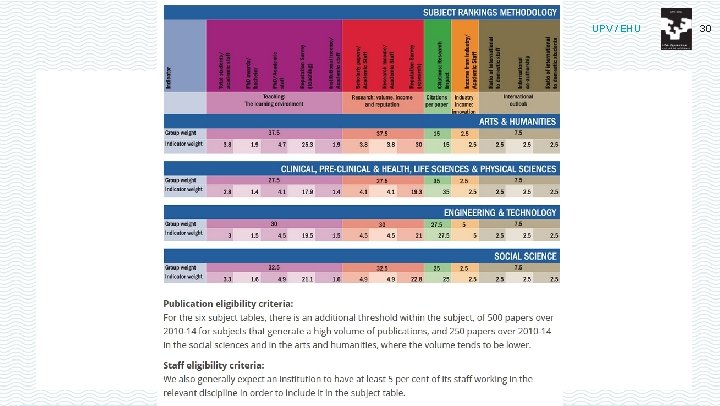


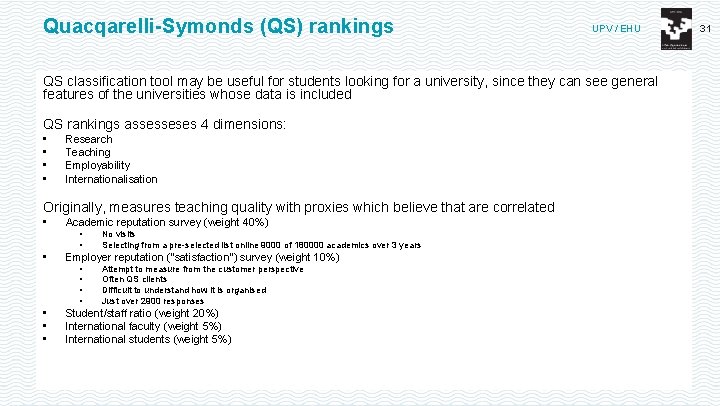

Quacquarelli Symonds (QS) rankings
• The QS classification tool may be useful for students looking for a university, since they can see general features of the universities whose data is included
• QS rankings assess 4 dimensions:
  – Research
  – Teaching
  – Employability
  – Internationalisation
• Originally, QS measures teaching quality with proxies that it believes are correlated with it:
  – Academic reputation survey (weight 40%)
    • No visits
    • Respondents select from a pre-selected list online
    • 9,000 of 180,000 academics over 3 years
  – Employer reputation ("satisfaction") survey (weight 10%)
    • An attempt to measure from the customer perspective
    • Respondents are often QS clients
    • Difficult to understand how it is organised
    • Just over 2,900 responses
  – Student/staff ratio (weight 20%)
  – International faculty (weight 5%)
  – International students (weight 5%)

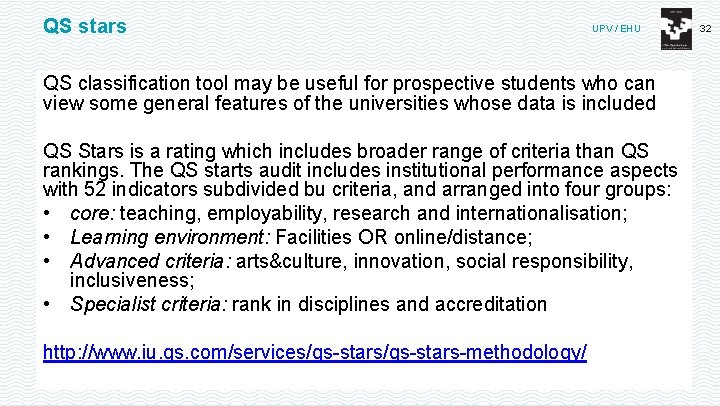

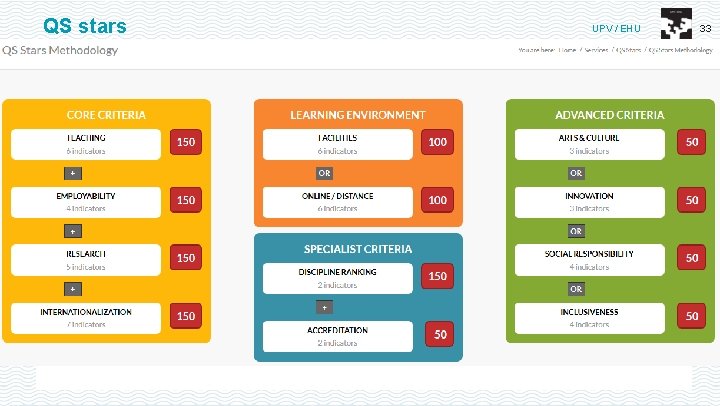

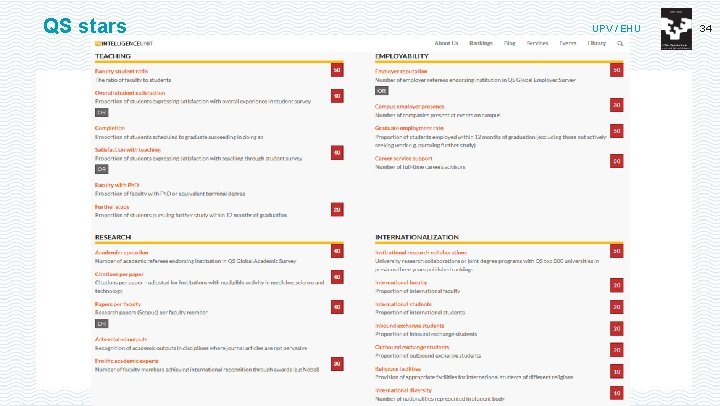

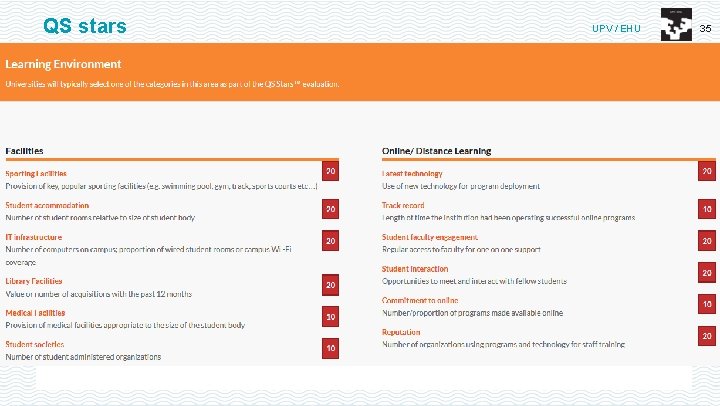

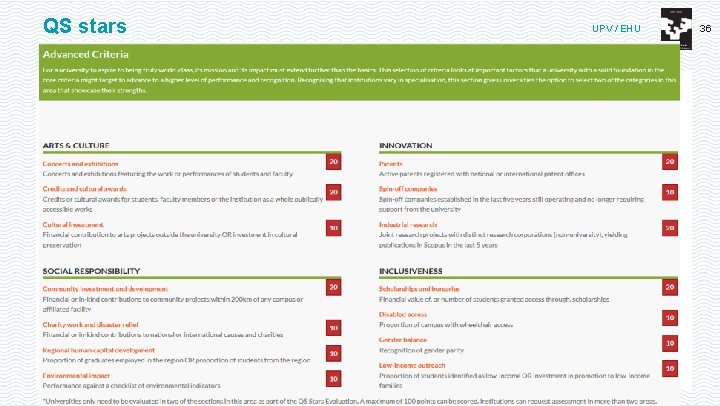

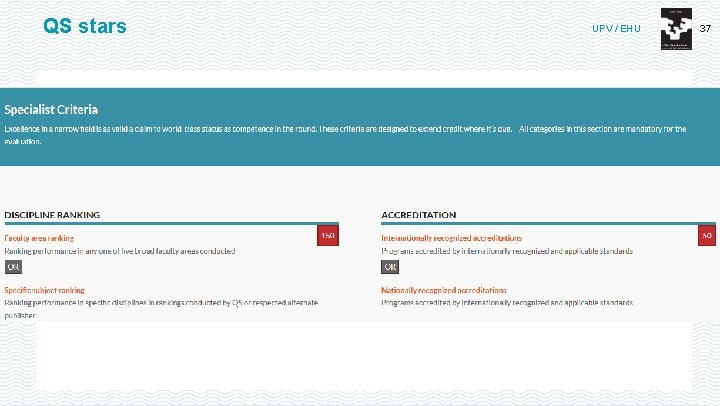

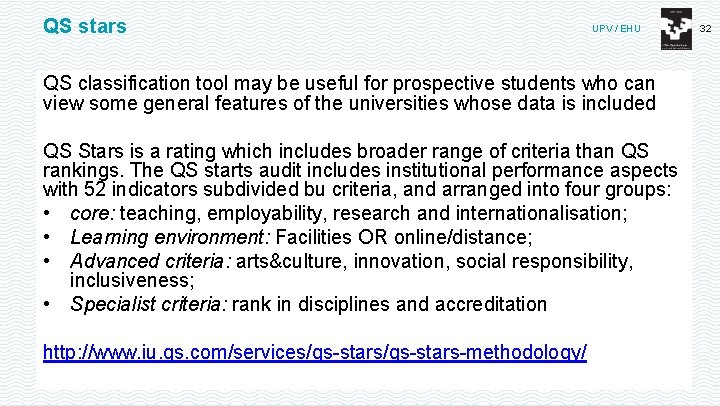

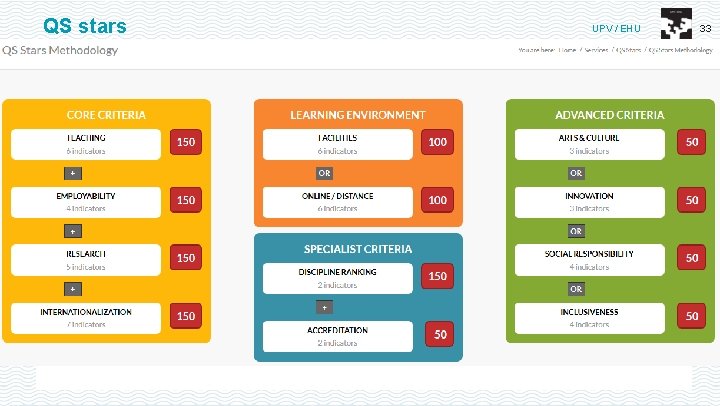

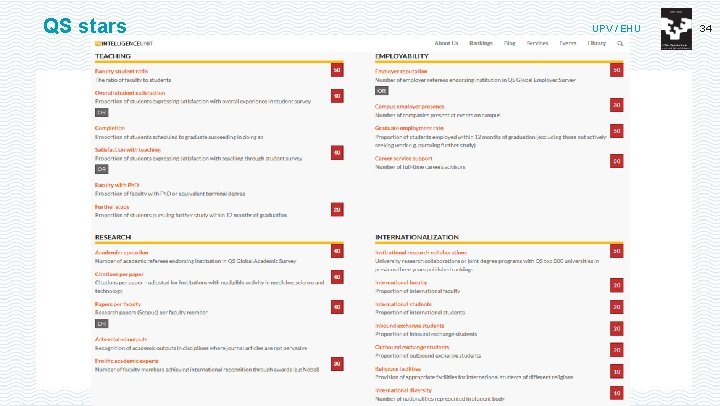

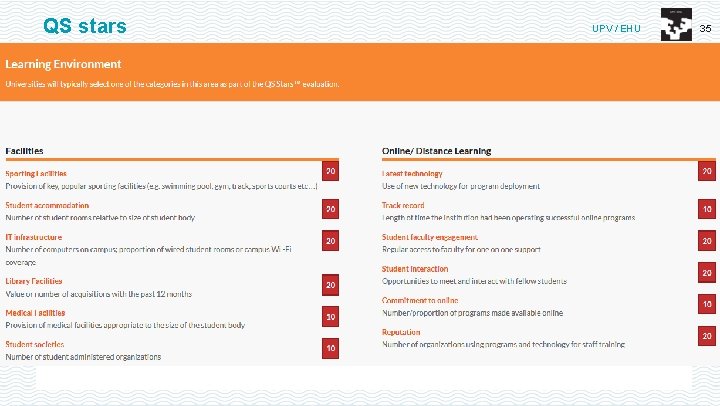

QS Stars
• The QS classification tool may be useful for prospective students, who can view some general features of the universities whose data is included
• QS Stars is a rating that includes a broader range of criteria than the QS rankings. The QS Stars audit covers institutional performance aspects with 52 indicators, subdivided by criteria and arranged into four groups:
  – Core: teaching, employability, research and internationalisation
  – Learning environment: facilities OR online/distance
  – Advanced criteria: arts & culture, innovation, social responsibility, inclusiveness
  – Specialist criteria: rank in disciplines and accreditation
• http://www.iu.qs.com/services/qs-stars-methodology/

QS Stars

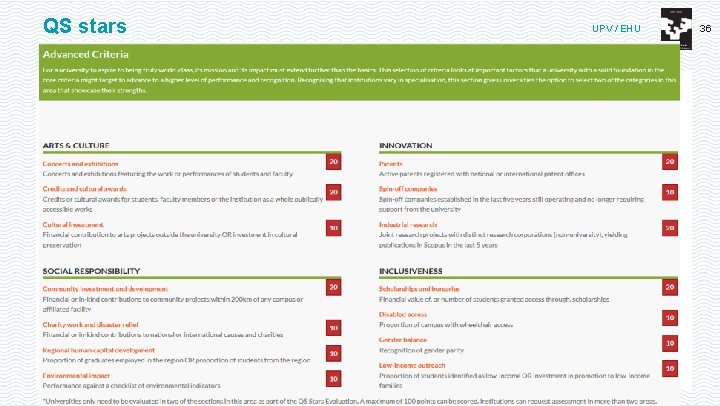

QS Stars

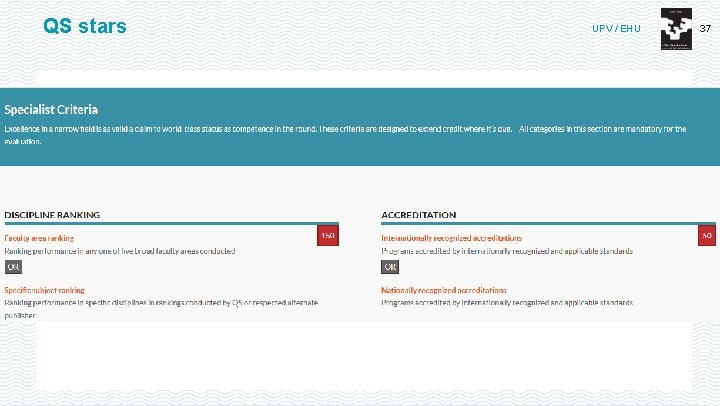

QS Stars

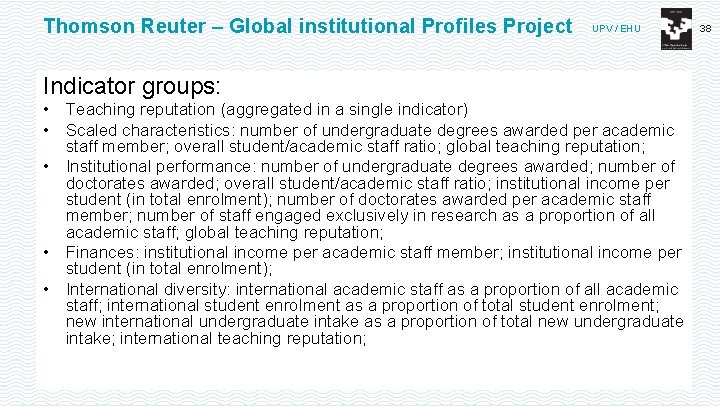

Thomson Reuters – Global Institutional Profiles Project
Indicator groups:
• Teaching reputation (aggregated in a single indicator)
• Scaled characteristics: number of undergraduate degrees awarded per academic staff member; overall student/academic staff ratio; global teaching reputation
• Institutional performance: number of undergraduate degrees awarded; number of doctorates awarded; overall student/academic staff ratio; institutional income per student (in total enrolment); number of doctorates awarded per academic staff member; number of staff engaged exclusively in research as a proportion of all academic staff; global teaching reputation
• Finances: institutional income per academic staff member; institutional income per student (in total enrolment)
• International diversity: international academic staff as a proportion of all academic staff; international student enrolment as a proportion of total student enrolment; new international undergraduate intake as a proportion of total new undergraduate intake; international teaching reputation
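Most of the indicators listed above are size-normalised ratios: a raw count divided by staff or by enrolment, so that large and small institutions can be compared. The sketch below shows that arithmetic for a few of them. It is a hypothetical illustration: the `InstitutionData` fields, their names and the example figures are invented and do not reproduce the project's actual data schema or definitions.

```python
from dataclasses import dataclass


@dataclass
class InstitutionData:
    # Hypothetical raw figures; field names are illustrative, not the project's actual schema.
    undergrad_degrees: int
    doctorates: int
    academic_staff: int
    international_staff: int
    students: int
    international_students: int
    income: float


def scaled_indicators(d: InstitutionData) -> dict:
    """Normalise raw counts by size so institutions of different scale can be compared."""
    return {
        "undergrad_degrees_per_staff": d.undergrad_degrees / d.academic_staff,
        "doctorates_per_staff": d.doctorates / d.academic_staff,
        "students_per_staff": d.students / d.academic_staff,
        "income_per_student": d.income / d.students,
        "income_per_staff": d.income / d.academic_staff,
        "international_staff_share": d.international_staff / d.academic_staff,
        "international_student_share": d.international_students / d.students,
    }


example = InstitutionData(
    undergrad_degrees=5000, doctorates=400, academic_staff=2000,
    international_staff=200, students=40000, international_students=4000,
    income=400_000_000.0,
)
print(scaled_indicators(example))
```

Dividing by staff or students removes the pure size effect, but, as the wrap-up slide notes for the student/staff ratio, such ratios say nothing about pedagogy itself.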

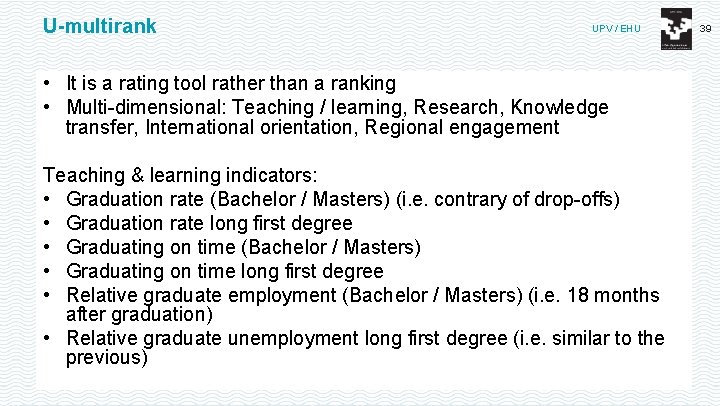

U-Multirank
• It is a rating tool rather than a ranking
• Multi-dimensional: teaching & learning, research, knowledge transfer, international orientation, regional engagement
Teaching & learning indicators:
• Graduation rate (Bachelor / Master) (i.e. the opposite of drop-outs)
• Graduation rate, long first degree
• Graduating on time (Bachelor / Master)
• Graduating on time, long first degree
• Relative graduate employment (Bachelor / Master) (i.e. 18 months after graduation)
• Relative graduate unemployment, long first degree (i.e. similar to the previous one)
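The graduation indicators above are cohort shares. The sketch below computes a graduation rate and a "graduating on time" rate for one entry cohort. The definitions are assumptions for illustration (on time taken as "within the nominal programme length"), not U-Multirank's official ones, and the cohort data are hypothetical. Here every non-graduate counts as a drop-out, which is why the graduation rate reads as the complement of drop-outs; with students still enrolled, that would no longer hold.

```python
from typing import Optional, Sequence

# Each entry: years one student of the entry cohort took to graduate, or None if they did not graduate.
Cohort = Sequence[Optional[float]]


def graduation_rate(cohort: Cohort) -> float:
    """Share of the entry cohort that obtained the degree."""
    return sum(1 for y in cohort if y is not None) / len(cohort)


def on_time_rate(cohort: Cohort, nominal_years: float) -> float:
    """Share of the cohort graduating within the nominal programme length (assumed definition)."""
    return sum(1 for y in cohort if y is not None and y <= nominal_years) / len(cohort)


cohort = [3, 4, 3, None, 5, 3, None, 4, 3, 3]  # hypothetical 3-year Bachelor cohort
print(graduation_rate(cohort))  # 0.8
print(on_time_rate(cohort, 3))  # 0.5
```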

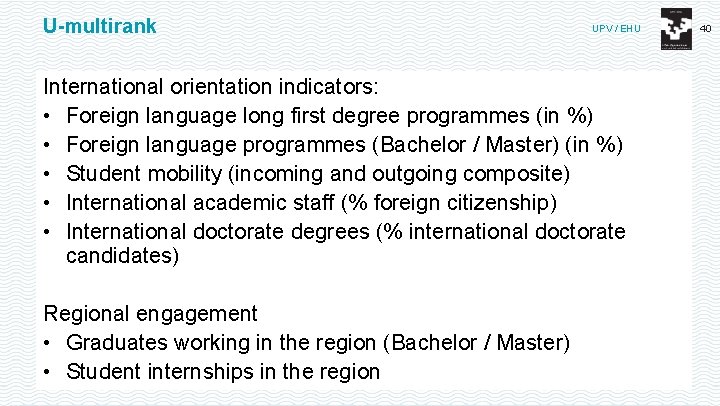

U-Multirank
International orientation indicators:
• Foreign-language long first degree programmes (in %)
• Foreign-language programmes (Bachelor / Master) (in %)
• Student mobility (incoming and outgoing composite)
• International academic staff (% foreign citizenship)
• International doctorate degrees (% international doctorate candidates)
Regional engagement:
• Graduates working in the region (Bachelor / Master)
• Student internships in the region

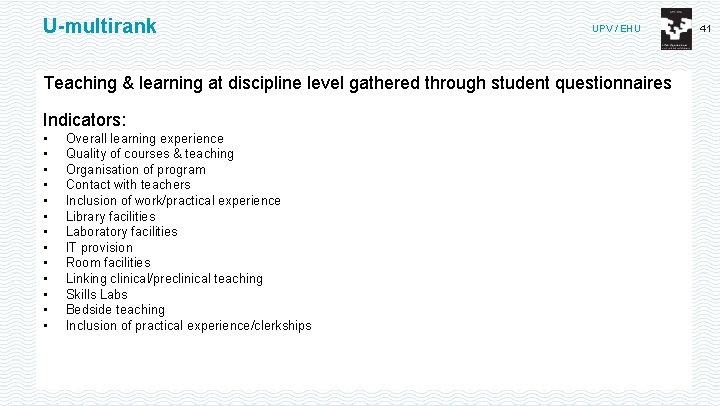

U-Multirank
Teaching & learning at discipline level, gathered through student questionnaires
Indicators:
• Overall learning experience
• Quality of courses & teaching
• Organisation of programme
• Contact with teachers
• Inclusion of work/practical experience
• Library facilities
• Laboratory facilities
• IT provision
• Room facilities
• Linking clinical/preclinical teaching
• Skills labs
• Bedside teaching
• Inclusion of practical experience/clerkships

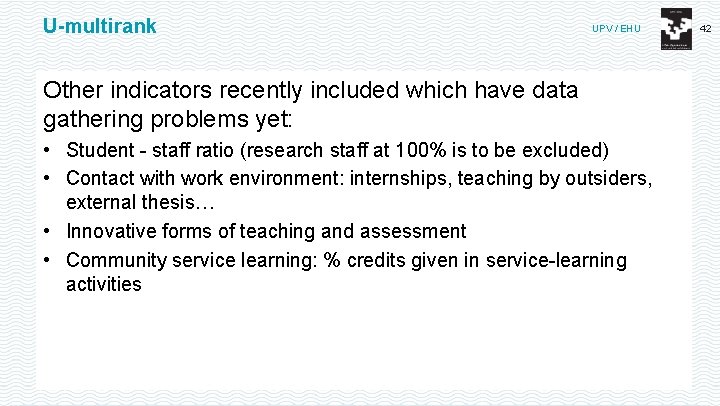

U-Multirank
Other recently included indicators that still have data-gathering problems:
• Student/staff ratio (staff working 100% in research are to be excluded)
• Contact with the work environment: internships, teaching by outsiders, external theses…
• Innovative forms of teaching and assessment
• Community service learning: % of credits given for service-learning activities

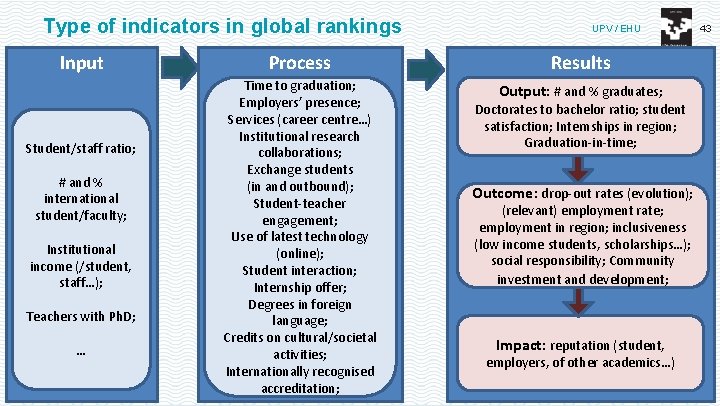

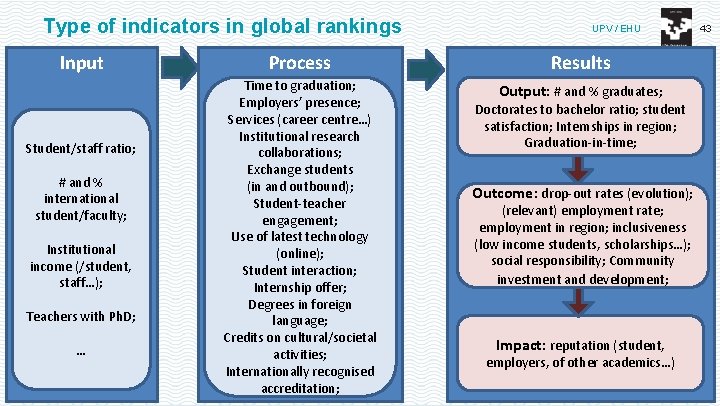

Type of indicators in global rankings
Input
• Student/staff ratio; # and % of international students/faculty; institutional income (per student, per staff…); teachers with a PhD; …
Process
• Time to graduation; employers' presence; services (career centre…); institutional research collaborations; exchange students (inbound and outbound); student-teacher engagement; use of the latest technology (online); student interaction; internship offer; degrees in a foreign language; credits for cultural/societal activities; internationally recognised accreditation
Results
• Output: # and % of graduates; doctorate-to-bachelor ratio; student satisfaction; internships in the region; graduation in time
• Outcome: drop-out rates (evolution); (relevant) employment rate; employment in the region; inclusiveness (low-income students, scholarships…); social responsibility; community investment and development
• Impact: reputation (among students, employers, other academics…)

Indicators in rankings for teaching quality – wrap-up
Current indicators do not show the whole picture
• Reputation surveys favour universities that are already well known, regardless of merit
  – Reputation surveys are not "peer reviews", despite being called that
  – Reputation is influenced by prior knowledge, not by performance
• Performance indicators are currently chosen by HEIs and rankings because they are readily quantifiable and available, not because they accurately assess the quality of teaching
• The student-staff ratio, presented as a proxy for teaching quality, fails to evaluate pedagogy or the learning environment. It could lead to superficial quality-enhancement measures
• Drop-out ratios illustrate the risk of misinterpreting results: drop-outs could be due to personal circumstances or external events, and HEIs that enrol more students from equity groups, who are more likely to drop out, will show higher rates
  – … however, the evolution of drop-outs over the years could be useful, since one reason for dropping out can be students' inability to make the transition to university life
• Employability depends heavily on the job market and, under economic crisis conditions, can be attributed to universities only to a limited extent


Rankings and teaching quality: future trends
• The assumption that good researchers are automatically good teachers is being challenged
• The student body is growing and becoming more diverse
• It is not enough to measure teaching; learning also needs to be analysed
  – A good or excellent teacher helps his/her students, but the contribution to the teaching field will be weak if discoveries are not shared with colleagues or one's own methods are not analysed
  – Relationship between teachers and students
  – Promotion of student engagement
  – Focus on learning communities
  – Fostering leadership and creating "change agents"
• The social dimension of universities is expected to appear gradually in more rankings:
  – Completion rates
  – Success of under-represented groups
  – Indicators on the social conditions of students…

Rankings and teaching quality: future trends
• System-level rankings: the U21 ranking of higher education systems
• Rankings are increasingly becoming a business
• Quality assurance & accreditation vs. rankings
• More and more universities require new teachers to prove their teaching skills and competences

Uda ikastaroak

Uda ikastaroak Uda ikastaroak

Uda ikastaroak Evaluacin

Evaluacin Evaluación financiera de proyectos

Evaluación financiera de proyectos Paradigmas de interacción

Paradigmas de interacción Evaluacion por agente evaluador

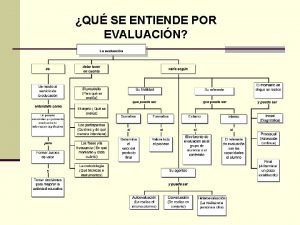

Evaluacion por agente evaluador Evaluacin

Evaluacin Principios de evaluación

Principios de evaluación Evaluacin

Evaluacin Evaluacin

Evaluacin Evaluacin

Evaluacin Fordham university visiting student

Fordham university visiting student Bvc cursos

Bvc cursos Ssa processo de ingresso

Ssa processo de ingresso Example of simple present tense negative

Example of simple present tense negative Escuela normal superior de la laguna cursos intensivos

Escuela normal superior de la laguna cursos intensivos Cep sevilla cursos

Cep sevilla cursos Uninorte intersemestrales

Uninorte intersemestrales Sofos cursos

Sofos cursos Http://evea.uh.cu

Http://evea.uh.cu Participante contreras

Participante contreras Cruz roja toledo

Cruz roja toledo Certificarm cita previa

Certificarm cita previa 033303

033303 Legale cursos jurídicos

Legale cursos jurídicos Cursos ao 2020

Cursos ao 2020 Cursos de inicial 3 años

Cursos de inicial 3 años Educatransparencia cursos

Educatransparencia cursos Fnde sife

Fnde sife Grade curricular de fisioterapia ufrn

Grade curricular de fisioterapia ufrn Ligação metálica

Ligação metálica Correo senati outlook

Correo senati outlook Escuela normal superior de la laguna cursos intensivos

Escuela normal superior de la laguna cursos intensivos Sineltepar cursos

Sineltepar cursos Segurimax cursos

Segurimax cursos Ead.sinpeem

Ead.sinpeem Bvc programacion

Bvc programacion Fundacion wiener cursos a distancia

Fundacion wiener cursos a distancia Cct mendoza cursos

Cct mendoza cursos Salud ocupacional mexico

Salud ocupacional mexico Telcel

Telcel Primavera invierno verano otoño

Primavera invierno verano otoño Características de la primavera

Características de la primavera Meses de invierno

Meses de invierno Separar en sílabas verano

Separar en sílabas verano Termina el verano

Termina el verano Y la semana pasada

Y la semana pasada Adios al verano capitulo 1 vocabulario 1

Adios al verano capitulo 1 vocabulario 1