The Pacific Research Platform: Opening Keynote Lecture, 15th Annual ON*VECTOR International Photonics Workshop

- Slides: 32

“The Pacific Research Platform,” Opening Keynote Lecture, 15th Annual ON*VECTOR International Photonics Workshop, Calit2’s Qualcomm Institute, University of California, San Diego, February 29, 2016. Dr. Larry Smarr, Director, California Institute for Telecommunications and Information Technology; Harry E. Gruber Professor, Dept. of Computer Science and Engineering, Jacobs School of Engineering, UCSD. http://lsmarr.calit2.net

Abstract: Research in data-intensive fields is increasingly multi-investigator and multi-campus, depending on ever more rapid access to ultra-large, heterogeneous, and widely distributed datasets. The Pacific Research Platform (PRP) is a multi-institutional, extensible deployment that establishes a science-driven, high-capacity, data-centric “freeway system.” The PRP spans all 10 campuses of the University of California, as well as the major California private research universities, four supercomputer centers, and several universities outside California. Fifteen multi-campus data-intensive application teams act as drivers of the PRP, providing feedback to the technical design staff over the five years of the project. These application areas include particle physics, astronomy/astrophysics, earth sciences, biomedicine, and scalable multimedia, providing models for many other applications. The PRP partnership extends the NSF-funded campus cyberinfrastructure awards to a regional model that allows high-speed, data-intensive networking. It makes it easier for researchers to move data between their labs and their collaborators’ sites, supercomputer centers, or data repositories, and it enables that data to traverse multiple heterogeneous networks without performance degradation over campus, regional, national, and international distances.

Vision: Creating a Pacific Research Platform. Use Lightpaths to Connect All Data Generators and Consumers, Creating a “Big Data” Freeway System Using CENIC/Pacific Wave as a 100 Gbps Backplane Integrated with High-Performance Global Networks: “The Bisection Bandwidth of a Cluster Interconnect, but Deployed on a 20-Campus Scale.” This Vision Has Been Building for 15 Years.
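The “bisection bandwidth” phrase can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that each campus attaches to a nonblocking backplane at the same rate (100 Gbps, matching the CENIC/Pacific Wave figure above). Under that assumption, traffic across the worst-case cut that splits the campuses into two halves is limited by the smaller half's total attachment bandwidth. Real PRP campus links vary, so treat the result as an idealized upper bound, not a measured PRP figure.

```python
def bisection_bandwidth_gbps(num_campuses: int, link_gbps: float) -> float:
    """Idealized bisection bandwidth of a nonblocking backplane.

    Assumption (not a PRP specification): every campus attaches at the
    same rate and the backplane itself is never the bottleneck. Splitting
    the campuses into two halves, cross-cut traffic is limited by the
    total attachment bandwidth of the smaller half.
    """
    if num_campuses < 2:
        raise ValueError("need at least two campuses to bisect")
    smaller_half = num_campuses // 2
    return smaller_half * link_gbps


if __name__ == "__main__":
    # 20 campuses at a hypothetical uniform 100 Gbps attachment each
    print(bisection_bandwidth_gbps(20, 100.0))  # 1000.0 (Gbps, i.e. 1 Tbps)
```

Using the smaller half (`num_campuses // 2`) keeps the formula correct for an odd number of campuses as well.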

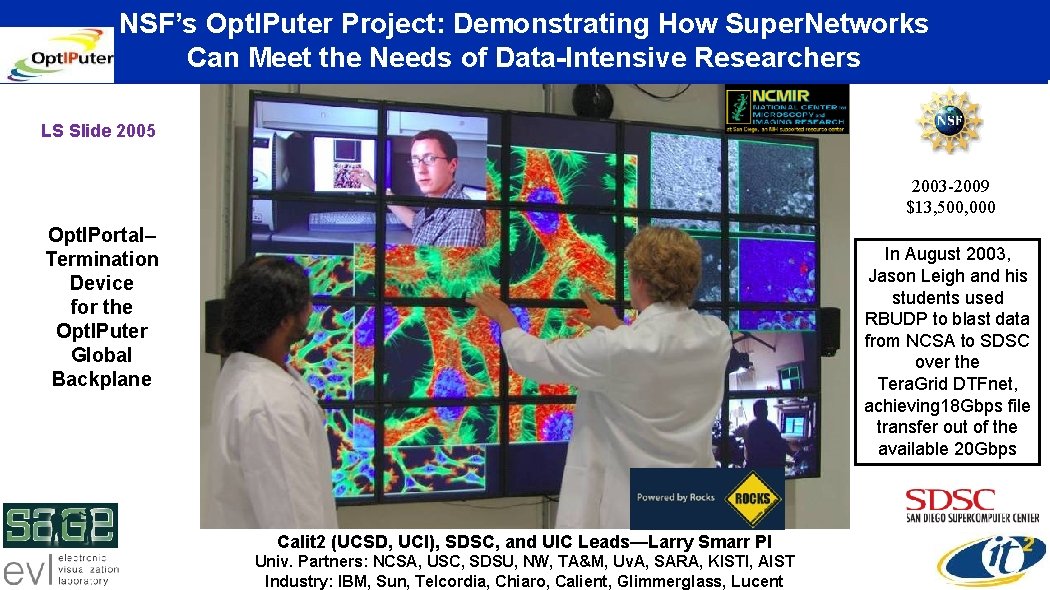

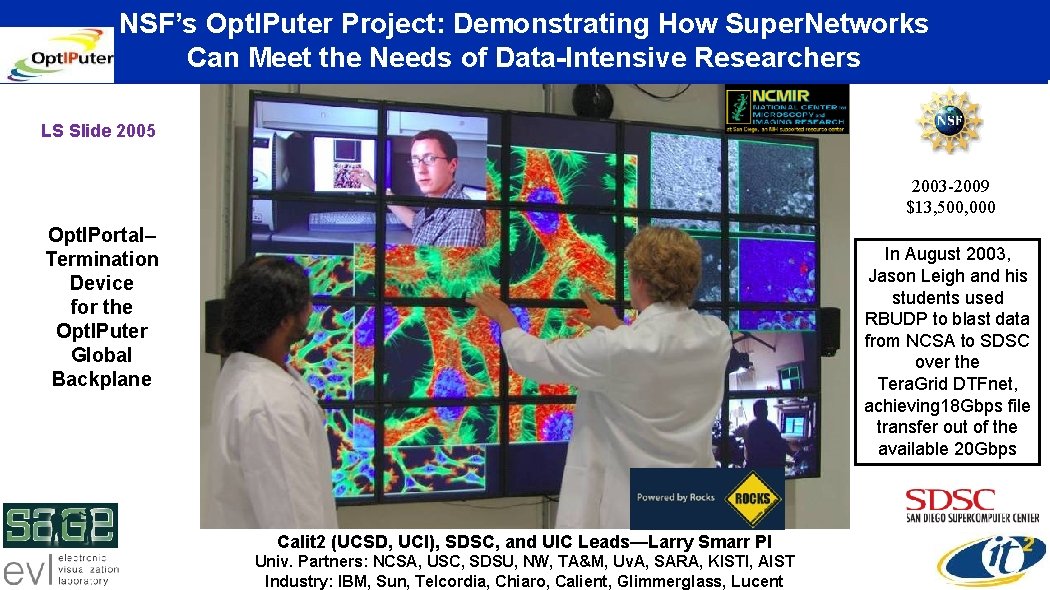

NSF’s OptIPuter Project: Demonstrating How SuperNetworks Can Meet the Needs of Data-Intensive Researchers (LS Slide 2005). 2003-2009, $13,500,000. OptIPortal: Termination Device for the OptIPuter Global Backplane. In August 2003, Jason Leigh and his students used RBUDP to blast data from NCSA to SDSC over the TeraGrid DTFnet, achieving 18 Gbps file transfer out of the available 20 Gbps. Calit2 (UCSD, UCI), SDSC, and UIC Leads; Larry Smarr, PI. Univ. Partners: NCSA, USC, SDSU, NW, TA&M, UvA, SARA, KISTI, AIST. Industry: IBM, Sun, Telcordia, Chiaro, Calient, Glimmerglass, Lucent.
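To put the 2003 RBUDP result in perspective, the sketch below computes link utilization and the idealized time to move a dataset at a sustained rate. The 1 TB dataset size is a hypothetical example, not a figure from the slide, and the calculation ignores protocol overhead, disk I/O limits, and packet loss.

```python
def utilization(achieved_gbps: float, available_gbps: float) -> float:
    """Fraction of the available link capacity actually used."""
    return achieved_gbps / available_gbps


def transfer_seconds(dataset_bytes: float, rate_gbps: float) -> float:
    """Idealized transfer time at a sustained rate (no overhead modeled)."""
    bits = dataset_bytes * 8
    return bits / (rate_gbps * 1e9)


if __name__ == "__main__":
    print(f"utilization: {utilization(18, 20):.0%}")  # utilization: 90%
    one_tb = 1e12  # hypothetical 1 TB (decimal units) dataset
    print(f"1 TB at 18 Gbps: {transfer_seconds(one_tb, 18):.0f} s")  # 444 s
```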

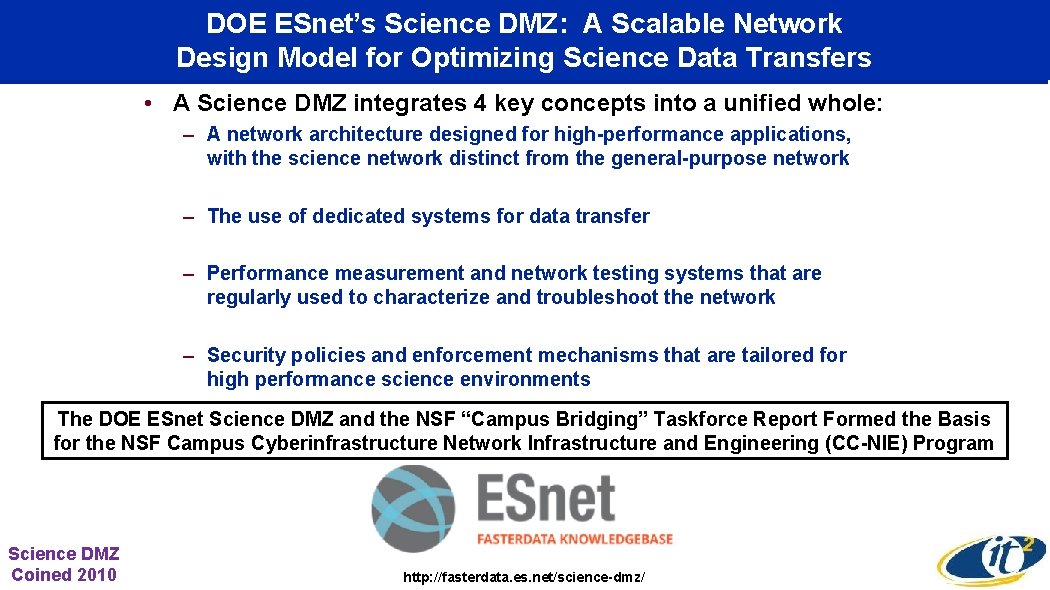

DOE ESnet’s Science DMZ: A Scalable Network Design Model for Optimizing Science Data Transfers

- A Science DMZ integrates 4 key concepts into a unified whole:
  - A network architecture designed for high-performance applications, with the science network distinct from the general-purpose network
  - The use of dedicated systems for data transfer
  - Performance measurement and network testing systems that are regularly used to characterize and troubleshoot the network
  - Security policies and enforcement mechanisms that are tailored for high-performance science environments

The DOE ESnet Science DMZ and the NSF “Campus Bridging” Taskforce Report Formed the Basis for the NSF Campus Cyberinfrastructure Network Infrastructure and Engineering (CC-NIE) Program. “Science DMZ” Coined 2010. http://fasterdata.es.net/science-dmz/

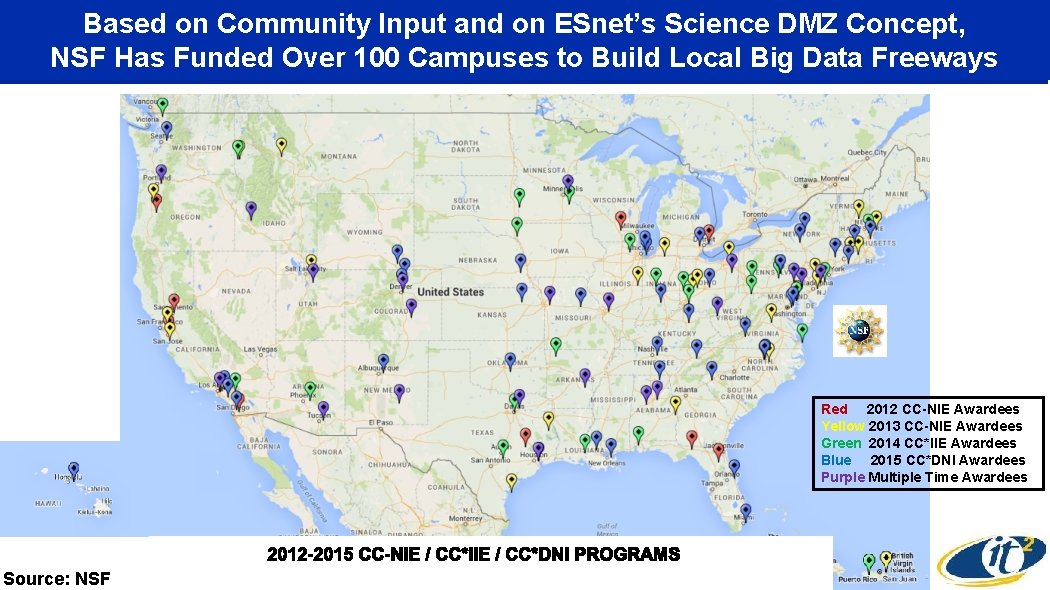

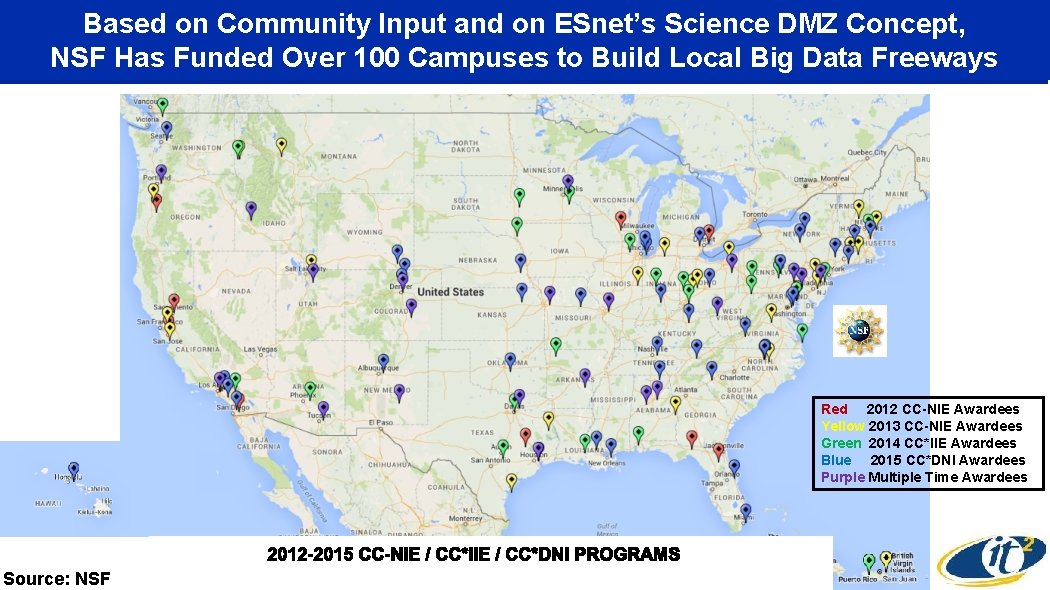

Based on Community Input and on ESnet’s Science DMZ Concept, NSF Has Funded Over 100 Campuses to Build Local Big Data Freeways. Map legend: Red, 2012 CC-NIE Awardees; Yellow, 2013 CC-NIE Awardees; Green, 2014 CC*IIE Awardees; Blue, 2015 CC*DNI Awardees; Purple, Multiple-Time Awardees. Source: NSF

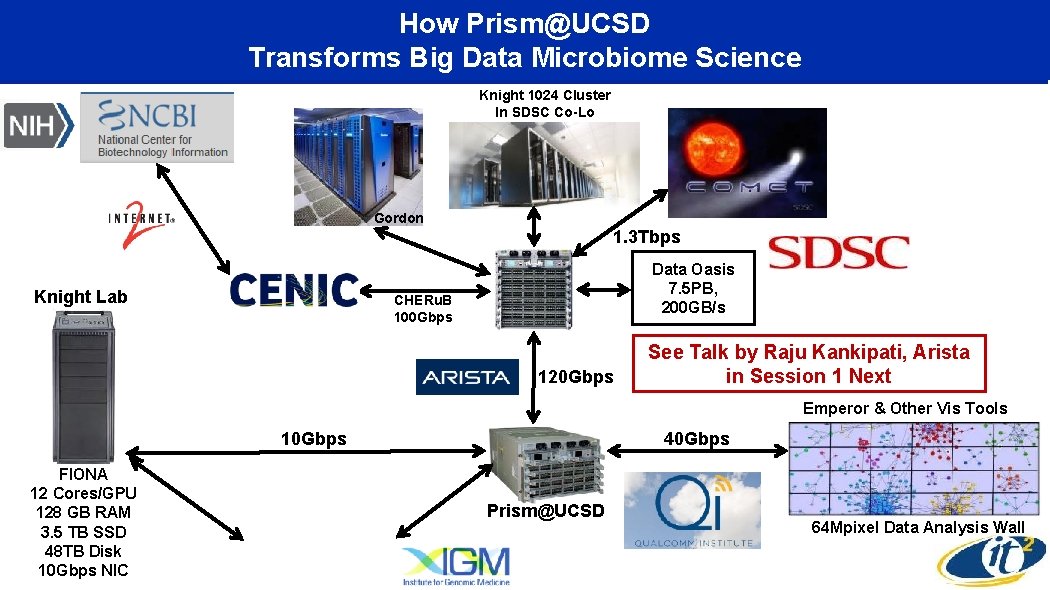

Creating a “Big Data” Freeway on Campus: NSF-Funded CC-NIE Grants Prism@UCSD and CHERuB. Prism@UCSD: Phil Papadopoulos, SDSC, Calit2, PI (2013-15). CHERuB: Mike Norman, SDSC, PI.

Prism@UCSD Has Connected the Science Drivers Reported to ON*VECTOR in 2013. “Terminating the GLIF,” 2013 ON*VECTOR: www.youtube.com/watch?v=Ar7vmMIM7q8

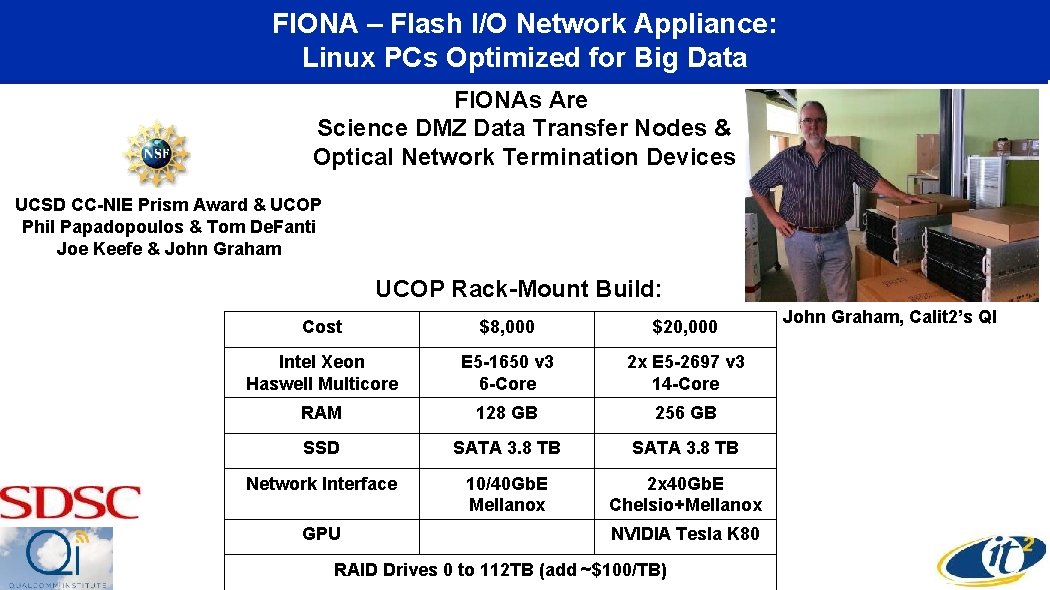

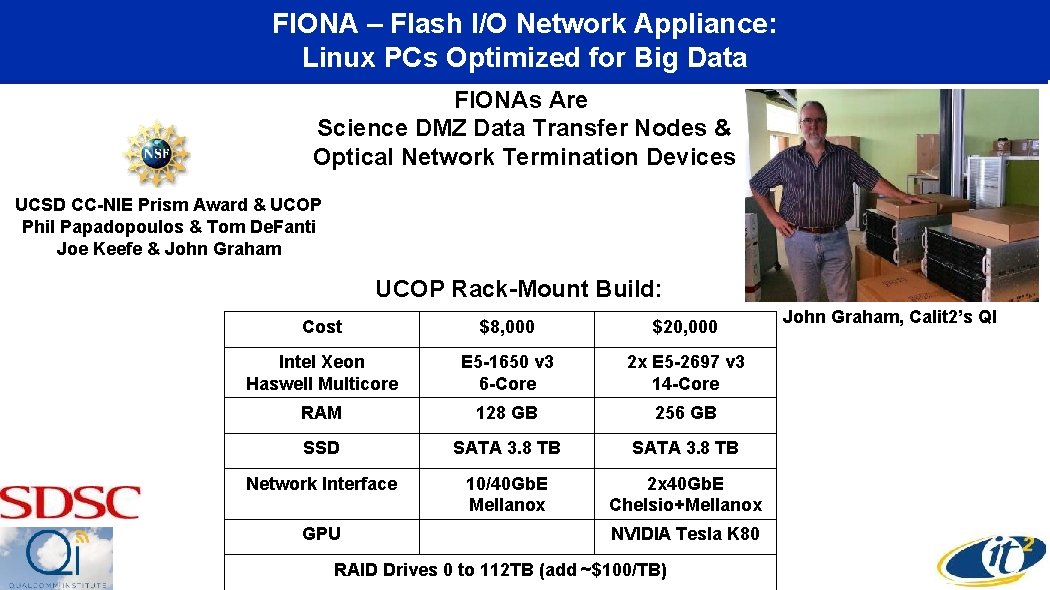

FIONA (Flash I/O Network Appliance): Linux PCs Optimized for Big Data. FIONAs Are Science DMZ Data Transfer Nodes & Optical Network Termination Devices. UCSD CC-NIE Prism Award & UCOP; Phil Papadopoulos & Tom DeFanti; Joe Keefe & John Graham.

UCOP Rack-Mount Build:

| Component | $8,000 Build | $20,000 Build |
|---|---|---|
| Intel Xeon Haswell Multicore | E5-1650 v3, 6-Core | 2x E5-2697 v3, 14-Core |
| RAM | 128 GB | 256 GB |
| Network Interface | 10/40 GbE Mellanox | 2x 40 GbE Chelsio+Mellanox |

Also listed: SSD, SATA 3.8 TB; GPU, NVIDIA Tesla K80; RAID Drives, 0 to 112 TB (add ~$100/TB).

Source: John Graham, Calit2’s QI
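The “add ~$100/TB” note suggests a simple way to estimate a configured FIONA’s price. The sketch below assumes the price scales linearly from the two base builds listed on the slide and that RAID capacity is capped at the stated 112 TB. The build labels `"8k"` and `"20k"` are hypothetical names chosen for this example, and real quotes will differ, so treat it as a rough planning aid only.

```python
# Base prices and per-TB increment as listed on the slide; the dictionary
# keys are hypothetical labels, not official build names.
BASE_BUILDS_USD = {"8k": 8_000, "20k": 20_000}
COST_PER_TB_USD = 100   # "add ~$100/TB" (approximate)
MAX_RAID_TB = 112       # "0 to 112 TB"


def fiona_cost_usd(build: str, raid_tb: int) -> int:
    """Rough FIONA price: base build plus ~$100 per TB of RAID disk.

    Assumes linear pricing, which is a simplification of real quotes.
    """
    if build not in BASE_BUILDS_USD:
        raise ValueError(f"unknown build {build!r}")
    if not 0 <= raid_tb <= MAX_RAID_TB:
        raise ValueError(f"raid_tb must be between 0 and {MAX_RAID_TB}")
    return BASE_BUILDS_USD[build] + COST_PER_TB_USD * raid_tb


if __name__ == "__main__":
    # A hypothetical $8,000 build with 48 TB of RAID disk added
    print(fiona_cost_usd("8k", 48))  # 12800
```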

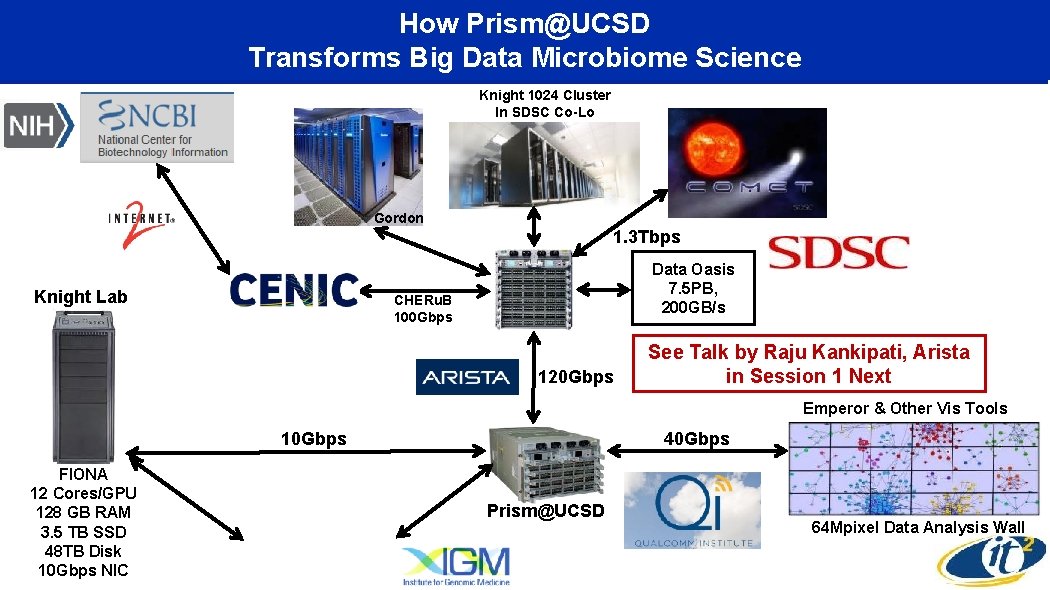

How Prism@UCSD Transforms Big Data Microbiome Science. Diagram labels: Knight 1024 Cluster in SDSC Co-Lo; Gordon; Knight Lab; Data Oasis (7.5 PB, 200 GB/s); CHERuB (100 Gbps); Prism@UCSD; Emperor & Other Vis Tools; 64 Mpixel Data Analysis Wall; FIONA (12 Cores/GPU, 128 GB RAM, 3.5 TB SSD, 48 TB Disk, 10 Gbps NIC). Link speeds shown: 1.3 Tbps, 120 Gbps, 100 Gbps, 40 Gbps, 10 Gbps. See Talk by Raju Kankipati, Arista, in Session 1 Next.

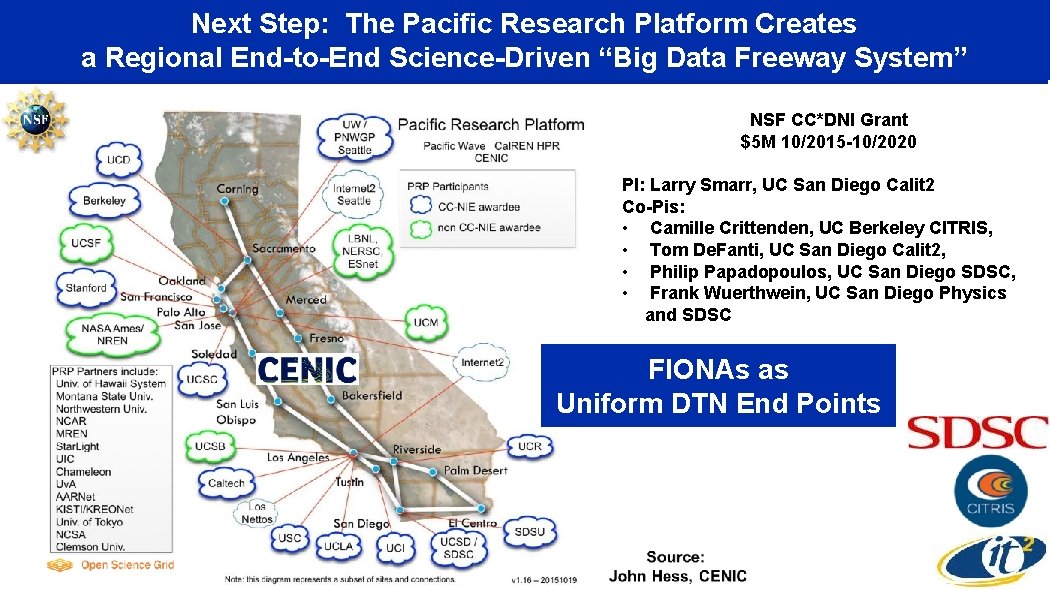

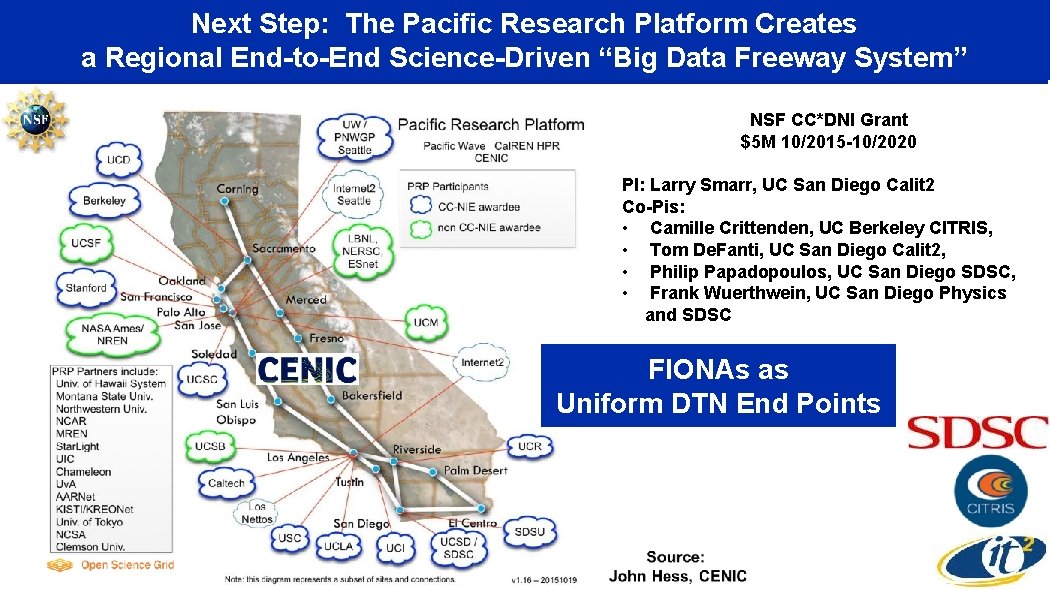

Next Step: The Pacific Research Platform Creates a Regional End-to-End Science-Driven “Big Data Freeway System.” NSF CC*DNI Grant, $5M, 10/2015-10/2020. PI: Larry Smarr, UC San Diego Calit2. Co-PIs: Camille Crittenden, UC Berkeley CITRIS; Tom DeFanti, UC San Diego Calit2; Philip Papadopoulos, UC San Diego SDSC; Frank Wuerthwein, UC San Diego Physics and SDSC. FIONAs as Uniform DTN End Points.

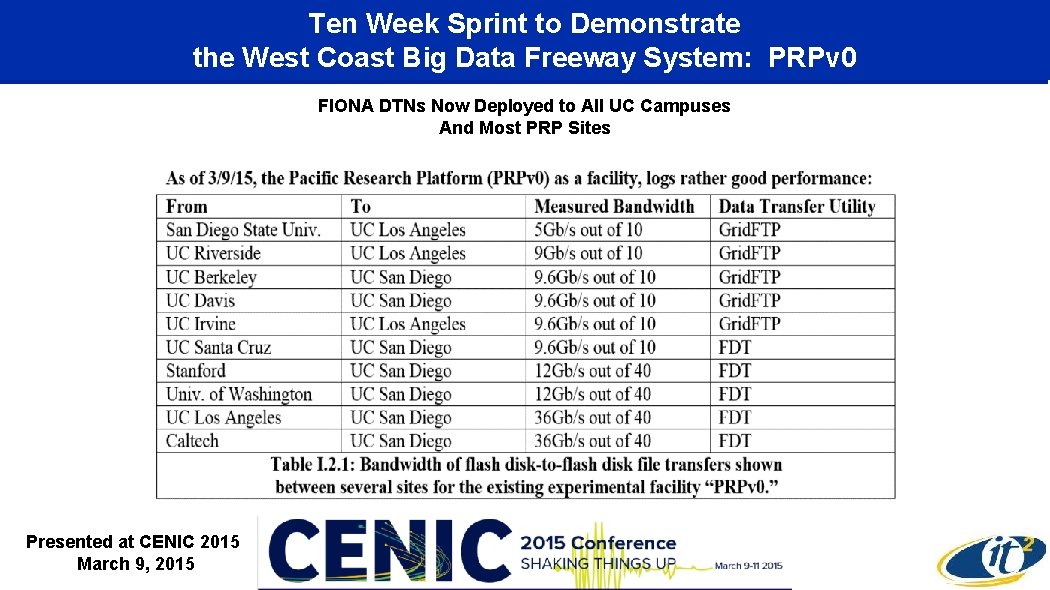

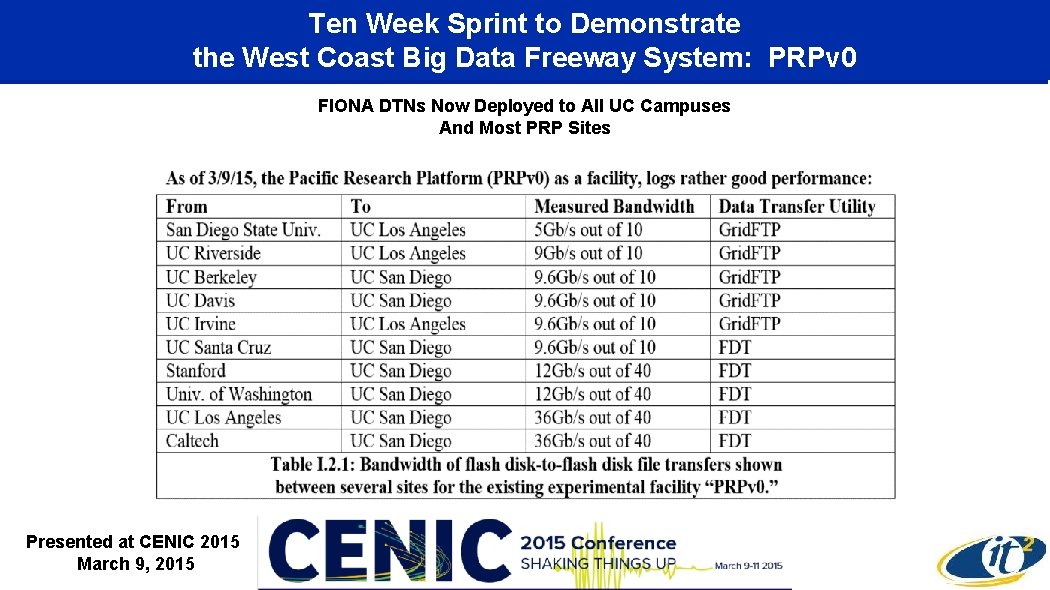

Ten-Week Sprint to Demonstrate the West Coast Big Data Freeway System: PRPv0. FIONA DTNs Now Deployed to All UC Campuses and Most PRP Sites. Presented at CENIC 2015, March 9, 2015.

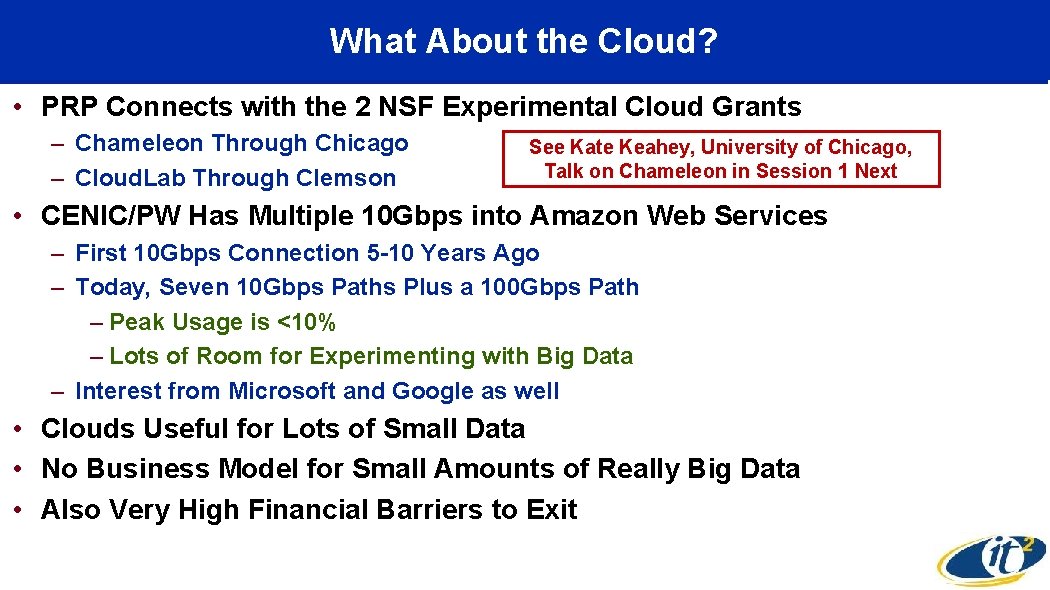

What About the Cloud?

- PRP Connects with the 2 NSF Experimental Cloud Grants:
  - Chameleon Through Chicago
  - CloudLab Through Clemson
- CENIC/PW Has Multiple 10 Gbps Paths into Amazon Web Services:
  - First 10 Gbps Connection 5-10 Years Ago
  - Today, Seven 10 Gbps Paths Plus a 100 Gbps Path
  - Peak Usage is <10%
  - Lots of Room for Experimenting with Big Data
  - Interest from Microsoft and Google as Well
- Clouds Useful for Lots of Small Data
- No Business Model for Small Amounts of Really Big Data
- Also Very High Financial Barriers to Exit

See Kate Keahey, University of Chicago, Talk on Chameleon in Session 1 Next.
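The AWS figures above imply how much headroom the CENIC/Pacific Wave connection has. The sketch simply adds the listed paths and applies the “<10%” peak figure as an upper bound. It assumes the paths can be treated as one aggregate pool, which simplifies how traffic is actually routed across them.

```python
def aggregate_gbps(path_rates_gbps: list[float]) -> float:
    """Total capacity of a set of parallel paths (assumed fully poolable)."""
    return sum(path_rates_gbps)


def headroom_gbps(total_gbps: float, peak_fraction: float) -> float:
    """Capacity left unused at the given peak utilization fraction."""
    return total_gbps * (1.0 - peak_fraction)


if __name__ == "__main__":
    paths = [10.0] * 7 + [100.0]  # seven 10 Gbps paths plus one 100 Gbps path
    total = aggregate_gbps(paths)
    print(total)  # 170.0
    # "<10%" peak usage means peak traffic stays under roughly 17 Gbps
    print(f"{headroom_gbps(total, 0.10):.0f} Gbps free at peak (at least)")
```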

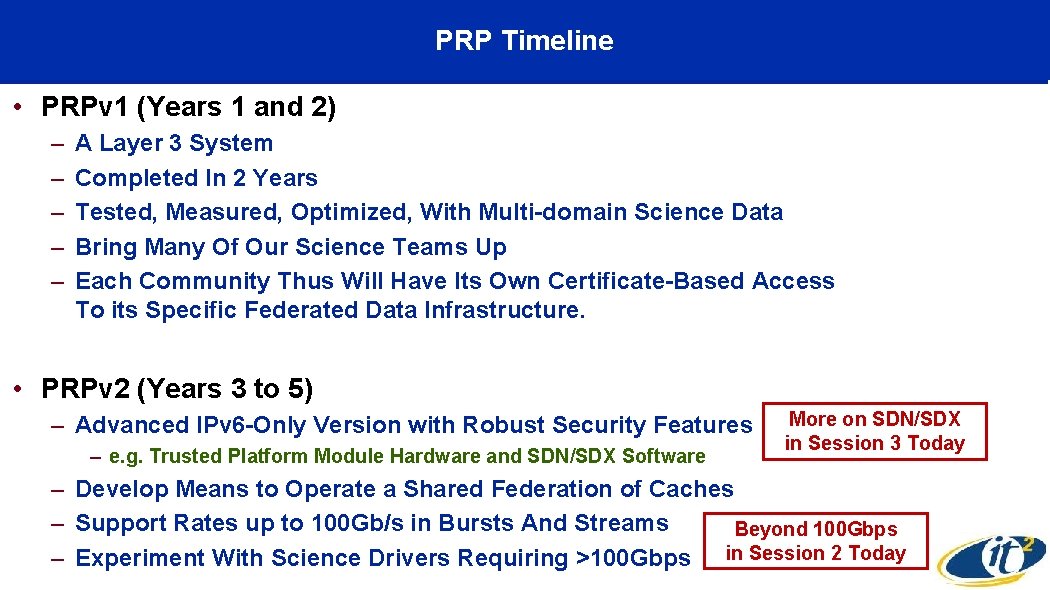

PRP Timeline

- PRPv1 (Years 1 and 2)
  - A Layer 3 System Completed in 2 Years
  - Tested, Measured, Optimized, with Multi-domain Science Data
  - Bring Many of Our Science Teams Up
  - Each Community Thus Will Have Its Own Certificate-Based Access to Its Specific Federated Data Infrastructure
- PRPv2 (Years 3 to 5)
  - Advanced IPv6-Only Version with Robust Security Features, e.g. Trusted Platform Module Hardware and SDN/SDX Software (More on SDN/SDX in Session 3 Today)
  - Develop Means to Operate a Shared Federation of Caches
  - Support Rates up to 100 Gb/s in Bursts and Streams
- Beyond 100 Gbps: Experiment with Science Drivers Requiring >100 Gbps (in Session 2 Today)

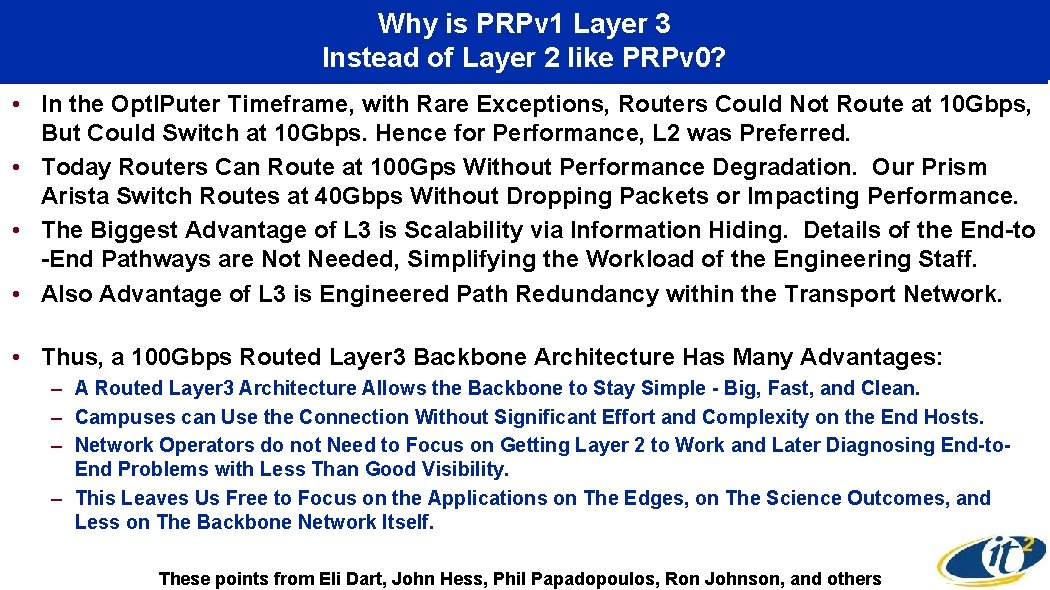

Why is PRPv1 Layer 3 Instead of Layer 2 Like PRPv0?

- In the OptIPuter Timeframe, with Rare Exceptions, Routers Could Not Route at 10 Gbps, But Could Switch at 10 Gbps. Hence, for Performance, L2 was Preferred.
- Today Routers Can Route at 100 Gbps Without Performance Degradation. Our Prism Arista Switch Routes at 40 Gbps Without Dropping Packets or Impacting Performance.
- The Biggest Advantage of L3 is Scalability via Information Hiding. Details of the End-to-End Pathways are Not Needed, Simplifying the Workload of the Engineering Staff.
- Another Advantage of L3 is Engineered Path Redundancy within the Transport Network.
- Thus, a 100 Gbps Routed Layer 3 Backbone Architecture Has Many Advantages:
  - A Routed Layer 3 Architecture Allows the Backbone to Stay Simple: Big, Fast, and Clean.
  - Campuses Can Use the Connection Without Significant Effort and Complexity on the End Hosts.
  - Network Operators Do Not Need to Focus on Getting Layer 2 to Work and Later Diagnosing End-to-End Problems with Limited Visibility.
  - This Leaves Us Free to Focus on the Applications at the Edges and on the Science Outcomes, and Less on the Backbone Network Itself.

These points are from Eli Dart, John Hess, Phil Papadopoulos, Ron Johnson, and others.

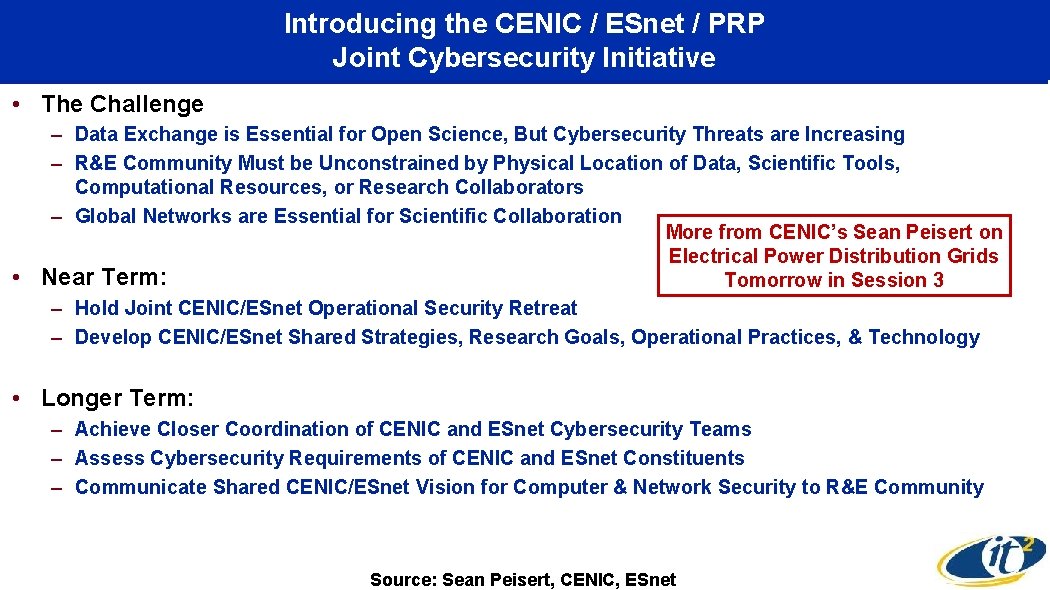

Introducing the CENIC / ESnet / PRP Joint Cybersecurity Initiative

- The Challenge:
  - Data Exchange is Essential for Open Science, But Cybersecurity Threats are Increasing
  - R&E Community Must be Unconstrained by Physical Location of Data, Scientific Tools, Computational Resources, or Research Collaborators
  - Global Networks are Essential for Scientific Collaboration
- Near Term:
  - Hold Joint CENIC/ESnet Operational Security Retreat
  - Develop CENIC/ESnet Shared Strategies, Research Goals, Operational Practices, & Technology
- Longer Term:
  - Achieve Closer Coordination of CENIC and ESnet Cybersecurity Teams
  - Assess Cybersecurity Requirements of CENIC and ESnet Constituents
  - Communicate Shared CENIC/ESnet Vision for Computer & Network Security to R&E Community

More from CENIC’s Sean Peisert on Electrical Power Distribution Grids: Tomorrow in Session 3. Source: Sean Peisert, CENIC, ESnet

Pacific Research Platform Regional Collaboration: Multi-Campus Science Driver Teams

- JupyterHub
- Particle Physics
- Astronomy and Astrophysics
  - Telescope Surveys
  - Galaxy Evolution
  - Gravitational Wave Astronomy
- Earth Sciences
  - Data Analysis and Simulation for Earthquakes and Natural Disasters
  - Climate Modeling: NCAR/UCAR
  - California/Nevada Regional Climate Data Analysis
  - Wireless Environmental Sensornets
- Biomedical
  - Cancer Genomics Hub/Browser
  - Microbiome and Integrative ‘Omics
  - Integrative Structural Biology
- Scalable Visualization, Virtual Reality, and Ultra-Resolution Video

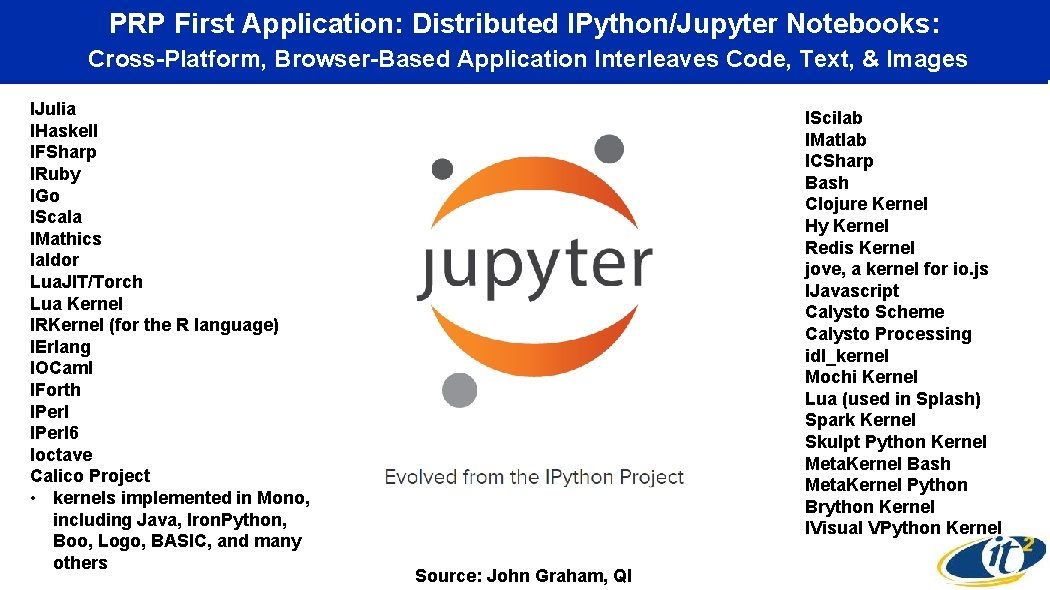

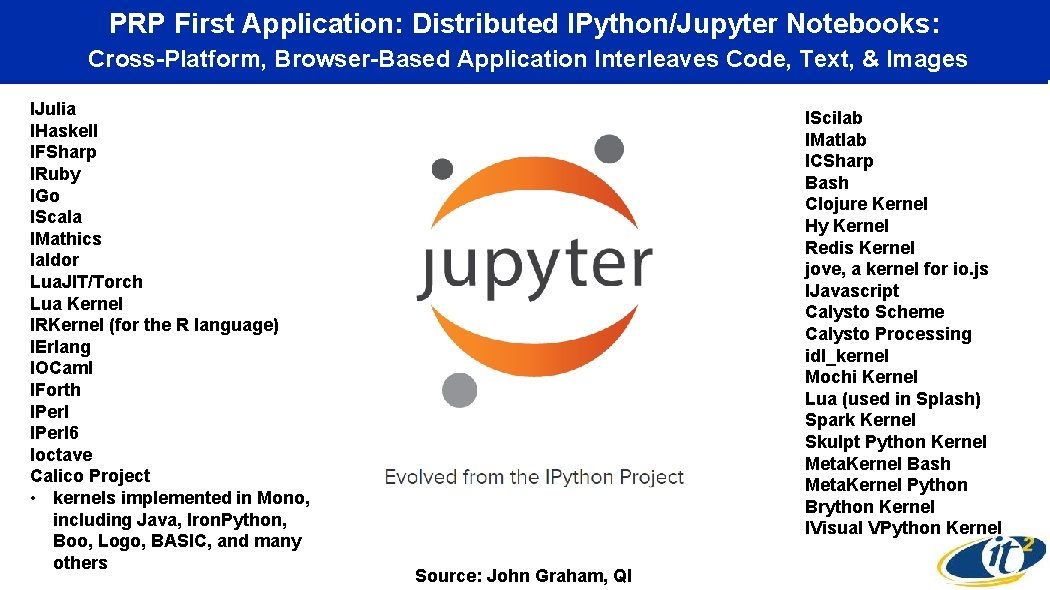

PRP First Application: Distributed IPython/Jupyter Notebooks, a Cross-Platform, Browser-Based Application That Interleaves Code, Text, & Images. Kernels include: IJulia, IHaskell, IFSharp, IRuby, IGo, IScala, IMathics, IAldor, LuaJIT/Torch, Lua Kernel, IRKernel (for the R language), IErlang, IOCaml, IForth, IPerl6, IOctave, Calico Project (kernels implemented in Mono, including Java, IronPython, Boo, Logo, BASIC, and many others), IScilab, IMatlab, ICSharp, Bash, Clojure Kernel, Hy Kernel, Redis Kernel, jove (a kernel for io.js), IJavascript, Calysto Scheme, Calysto Processing, idl_kernel, Mochi Kernel, Lua (used in Splash), Spark Kernel, Skulpt Python Kernel, MetaKernel Bash, MetaKernel Python, Brython Kernel, IVisual VPython Kernel. Source: John Graham, QI

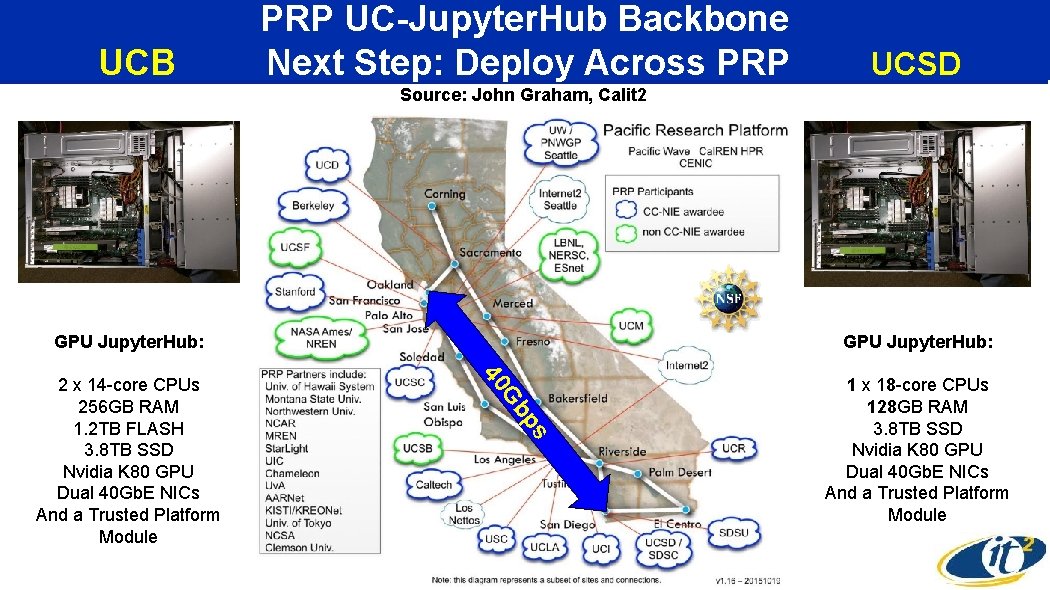

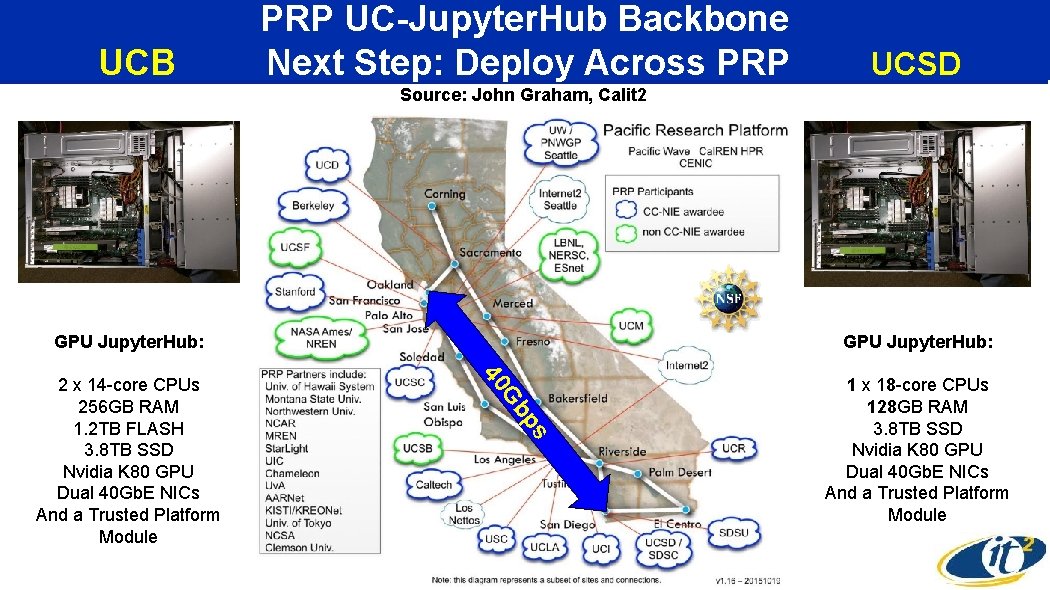

PRP UC-JupyterHub Backbone (UCB, UCSD; link label 40 Gbps). Next Step: Deploy Across PRP. The two GPU JupyterHub servers shown:

- GPU JupyterHub: 2x 14-Core CPUs, 256 GB RAM, 1.2 TB Flash, 3.8 TB SSD, Nvidia K80 GPU, Dual 40 GbE NICs, and a Trusted Platform Module
- GPU JupyterHub: 1x 18-Core CPU, 128 GB RAM, 3.8 TB SSD, Nvidia K80 GPU, Dual 40 GbE NICs, and a Trusted Platform Module

Source: John Graham, Calit2

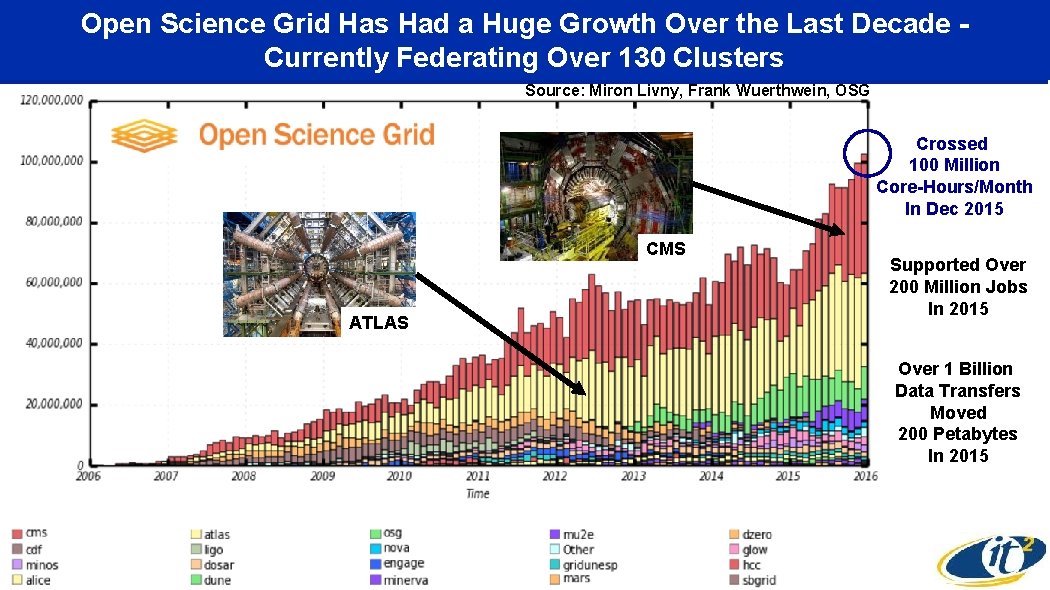

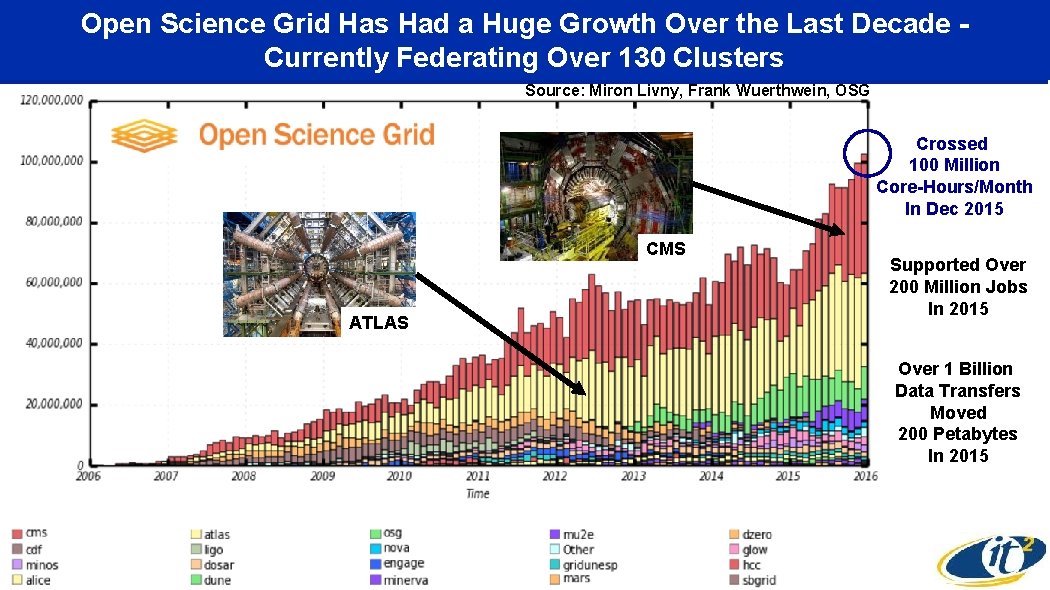

Open Science Grid Has Had Huge Growth Over the Last Decade, Currently Federating Over 130 Clusters. Crossed 100 Million Core-Hours/Month in Dec 2015. Supported Over 200 Million Jobs in 2015. Over 1 Billion Data Transfers Moved 200 Petabytes in 2015. Chart series: CMS, ATLAS. Source: Miron Livny, Frank Wuerthwein, OSG
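The OSG totals can be converted into average rates, which makes them easier to compare with the link capacities elsewhere in this talk. The sketch assumes decimal units (1 PB = 10^15 bytes), a 365-day year, and that the 100 million core-hours were accumulated over the 31 days of December 2015. Because it averages over the whole period, it says nothing about peak transfer rates or peak core counts.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600   # assumes a 365-day year
HOURS_IN_DECEMBER = 31 * 24          # assumes the month is December 2015


def average_gbps(total_bytes: float, seconds: float) -> float:
    """Average throughput needed to move total_bytes in the given time."""
    return total_bytes * 8 / seconds / 1e9


def average_busy_cores(core_hours: float, wall_hours: float) -> float:
    """Number of cores that would need to run continuously to log core_hours."""
    return core_hours / wall_hours


if __name__ == "__main__":
    moved_2015 = 200e15  # 200 PB, decimal units
    print(f"{average_gbps(moved_2015, SECONDS_PER_YEAR):.1f} Gbps")  # 50.7 Gbps
    print(f"{average_busy_cores(100e6, HOURS_IN_DECEMBER):,.0f} cores")  # 134,409 cores
```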

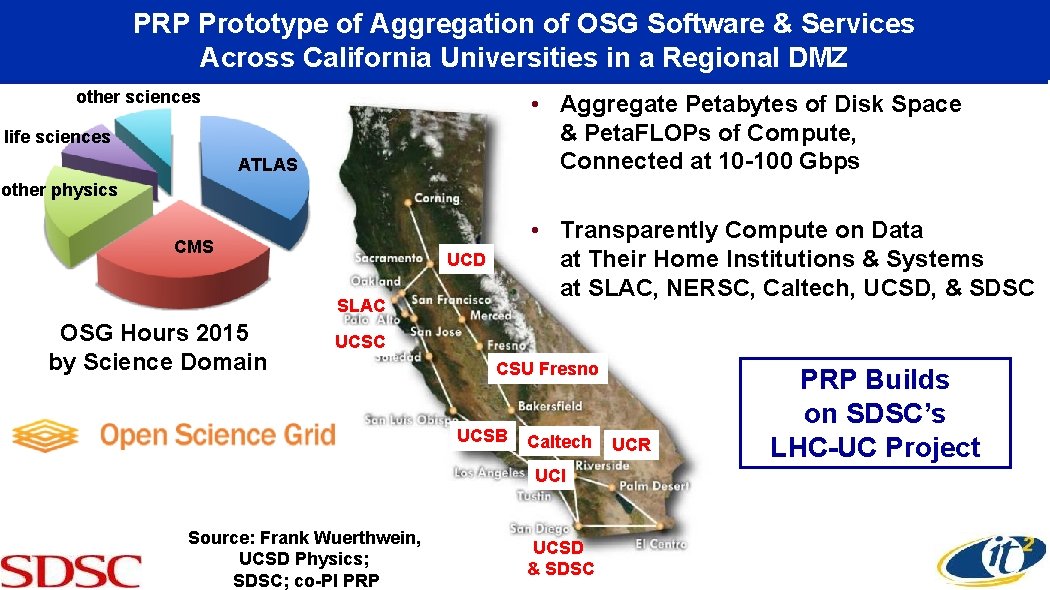

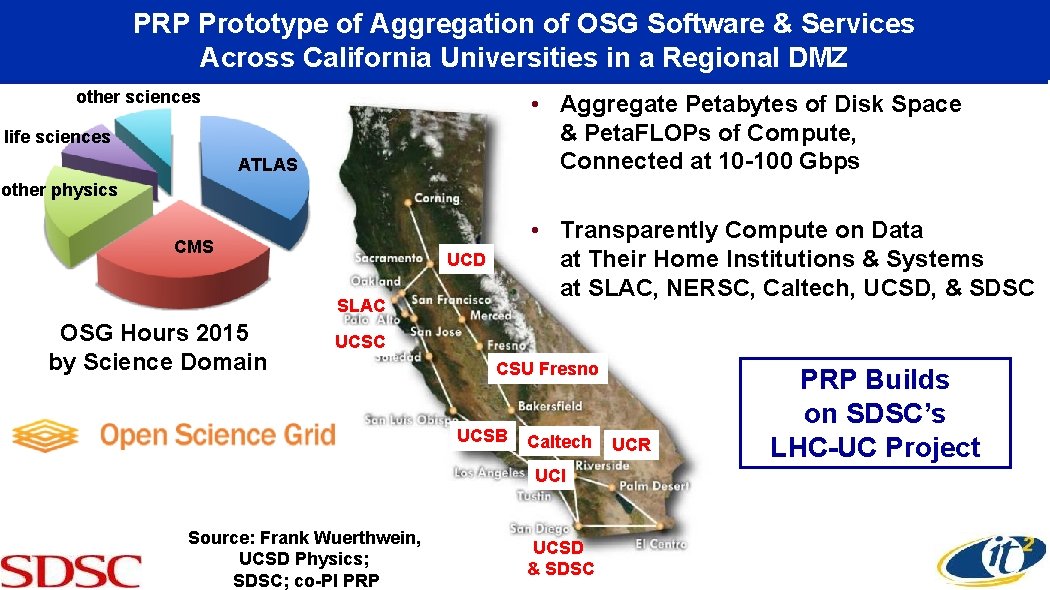

PRP Prototype of Aggregation of OSG Software & Services Across California Universities in a Regional DMZ

- Aggregate Petabytes of Disk Space & PetaFLOPs of Compute, Connected at 10-100 Gbps
- Transparently Compute on Data at Their Home Institutions & Systems at SLAC, NERSC, Caltech, UCSD, & SDSC

PRP Builds on SDSC’s LHC-UC Project. Chart: OSG Hours 2015 by Science Domain (CMS, ATLAS, other physics, life sciences, other sciences). Map sites: UCD, SLAC, UCSC, CSU Fresno, UCSB, Caltech, UCI, UCR, UCSD & SDSC. Source: Frank Wuerthwein, UCSD Physics; SDSC; co-PI PRP

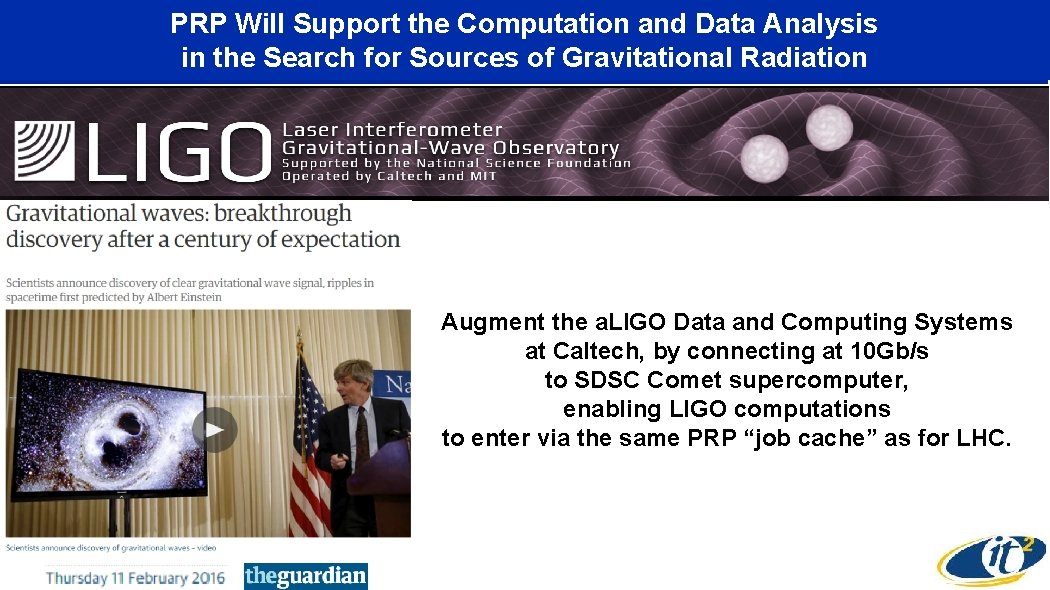

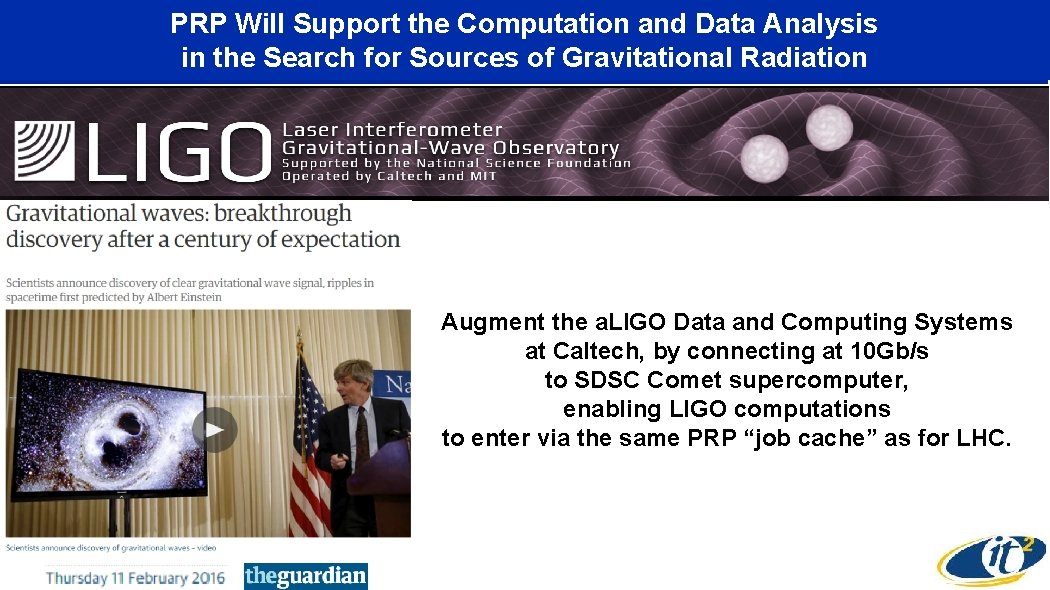

PRP Will Support the Computation and Data Analysis in the Search for Sources of Gravitational Radiation: augment the aLIGO data and computing systems at Caltech by connecting at 10 Gb/s to the SDSC Comet supercomputer, enabling LIGO computations to enter via the same PRP “job cache” as for LHC.

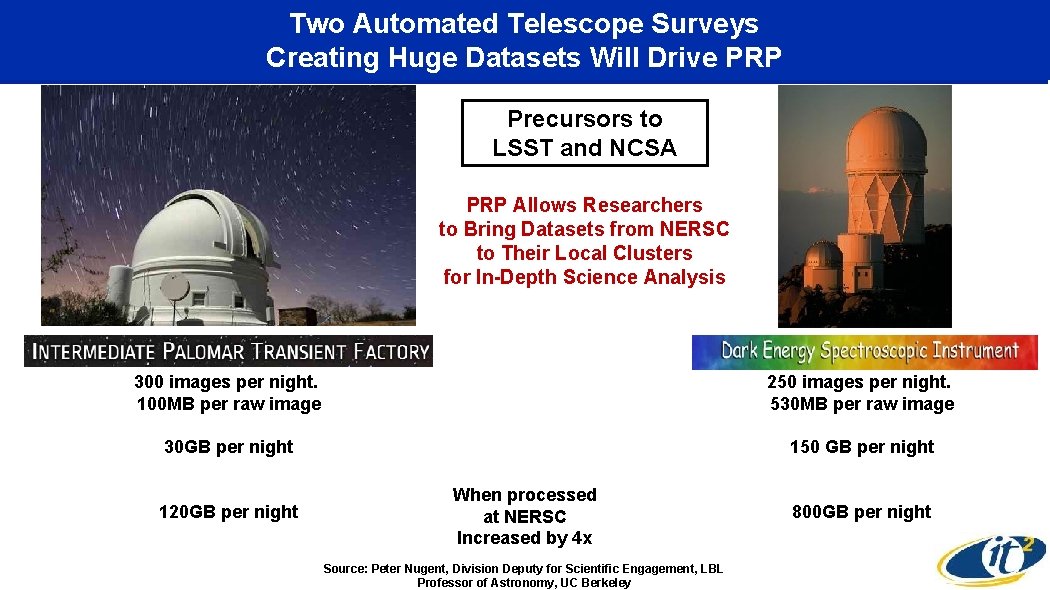

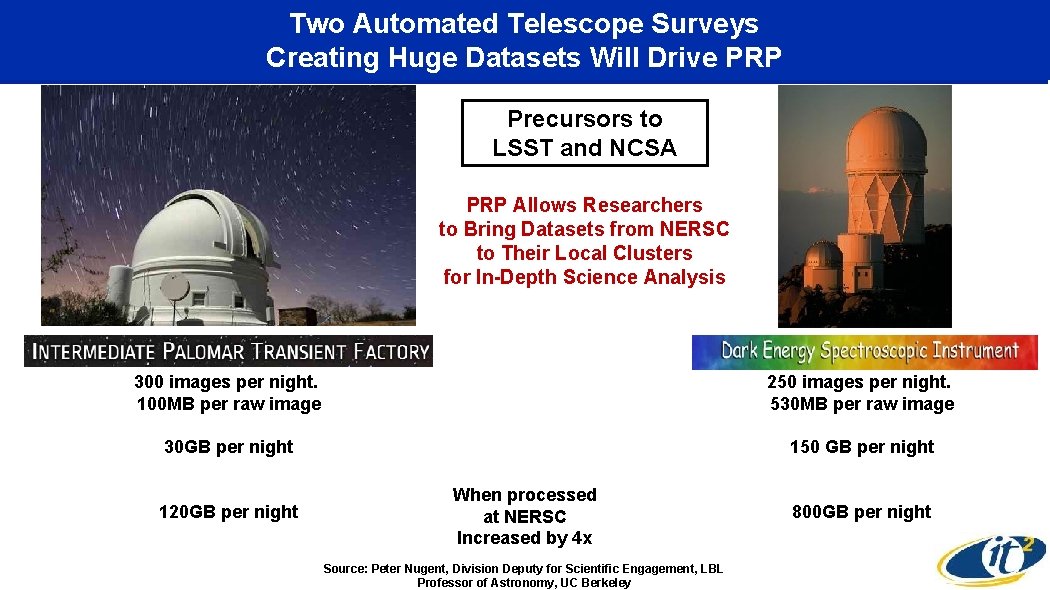

Two Automated Telescope Surveys Creating Huge Datasets Will Drive PRP. Precursors to LSST and NCSA. PRP Allows Researchers to Bring Datasets from NERSC to Their Local Clusters for In-Depth Science Analysis.

- Survey 1: 300 images per night, 100 MB per raw image; 30 GB per night raw, 120 GB per night when processed at NERSC (increased by 4x)
- Survey 2: 250 images per night, 530 MB per raw image; 150 GB per night raw, 800 GB per night processed

Source: Peter Nugent, Division Deputy for Scientific Engagement, LBL; Professor of Astronomy, UC Berkeley
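The per-night volumes above follow from simple multiplication, and the same arithmetic shows what they mean for a network link. The sketch reproduces the first survey's 30 GB raw and 120 GB processed figures (300 images at 100 MB, expanded 4x at NERSC), then estimates how long the quoted 800 GB per night would take to move at an assumed sustained 10 Gbps. Decimal units are assumed and protocol overhead is ignored, so the transfer time is an idealized lower bound.

```python
def nightly_raw_gb(images_per_night: int, mb_per_image: float) -> float:
    """Raw data volume per night in GB (decimal units: 1 GB = 1000 MB)."""
    return images_per_night * mb_per_image / 1000


def transfer_minutes(gigabytes: float, rate_gbps: float) -> float:
    """Idealized time to move a volume at a sustained rate, in minutes."""
    return gigabytes * 8 / rate_gbps / 60


if __name__ == "__main__":
    raw = nightly_raw_gb(300, 100)   # first survey on the slide
    processed = raw * 4              # "increased by 4x" when processed at NERSC
    print(raw, processed)            # 30.0 120.0
    # Hypothetical sustained 10 Gbps DTN-to-DTN transfer
    print(f"{transfer_minutes(800, 10):.1f} min for 800 GB")  # 10.7 min for 800 GB
```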

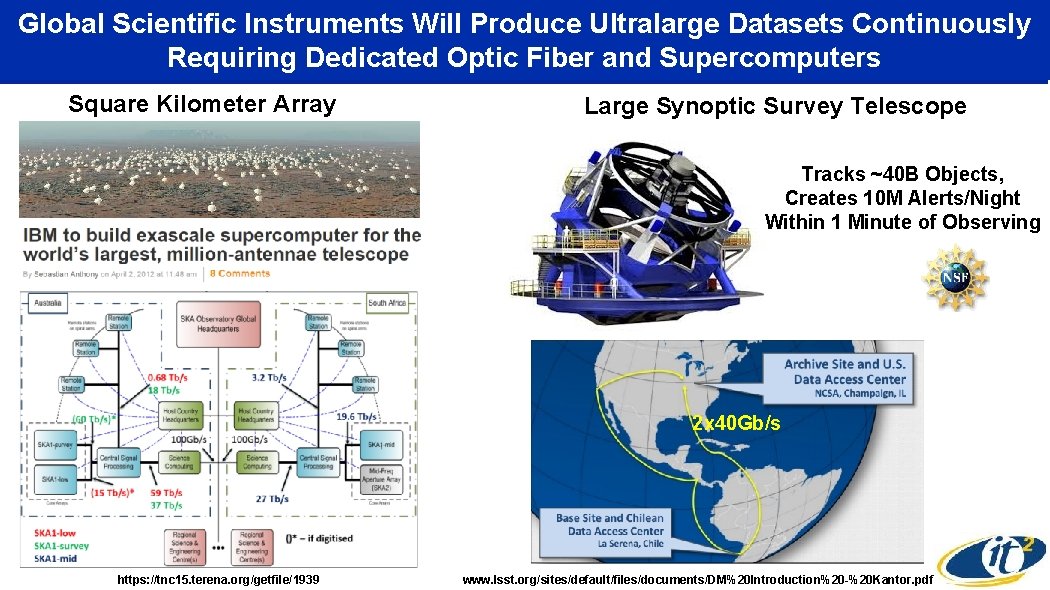

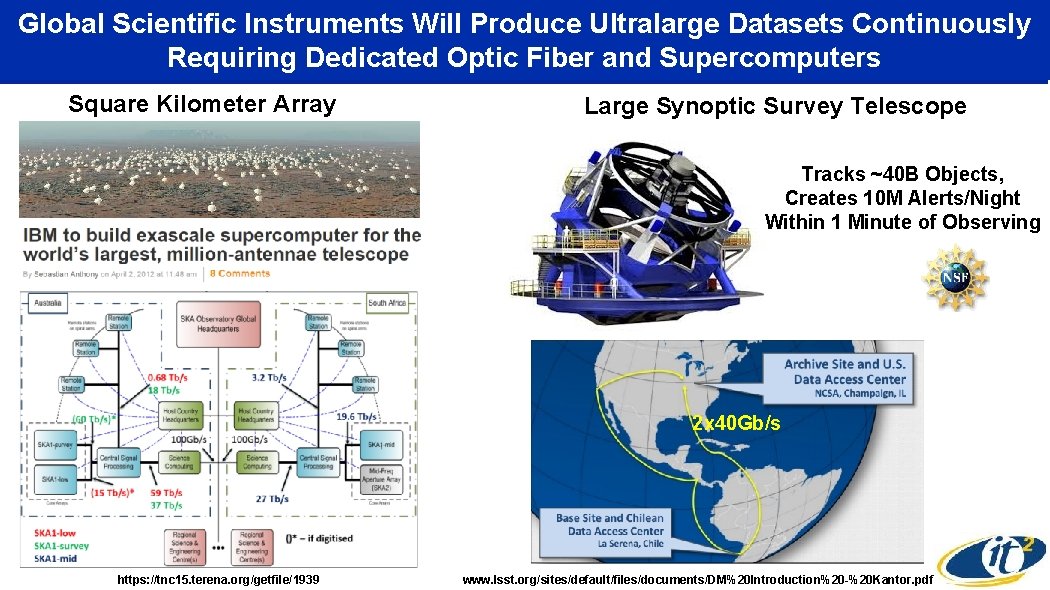

Global Scientific Instruments Will Produce Ultralarge Datasets Continuously, Requiring Dedicated Optical Fiber and Supercomputers. Square Kilometer Array. Large Synoptic Survey Telescope: Tracks ~40B Objects, Creates 10M Alerts/Night Within 1 Minute of Observing; 2x 40 Gb/s. Sources: https://tnc15.terena.org/getfile/1939; www.lsst.org/sites/default/files/documents/DM%20Introduction%20-%20Kantor.pdf

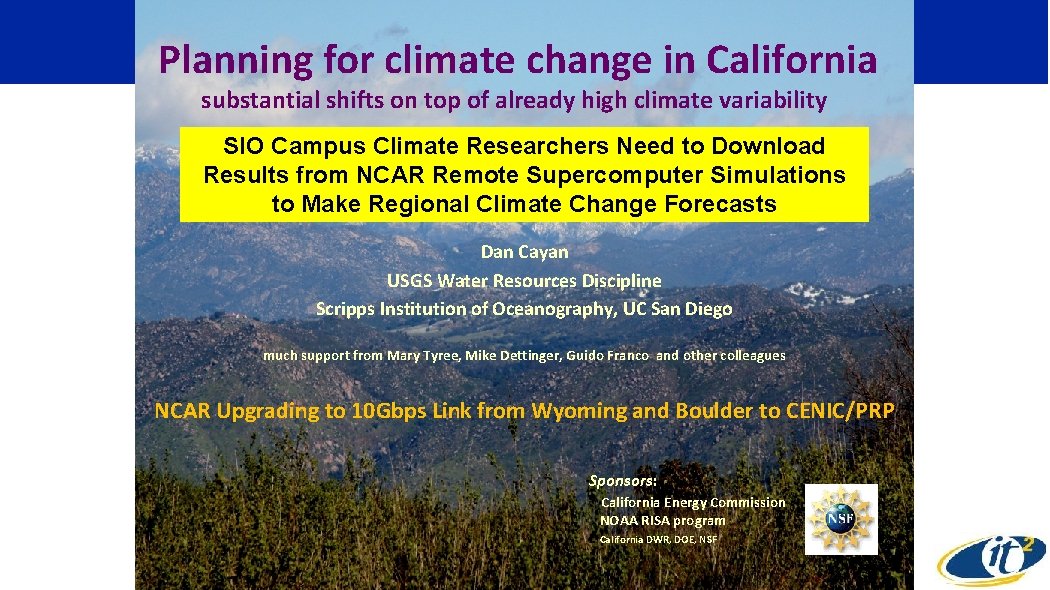

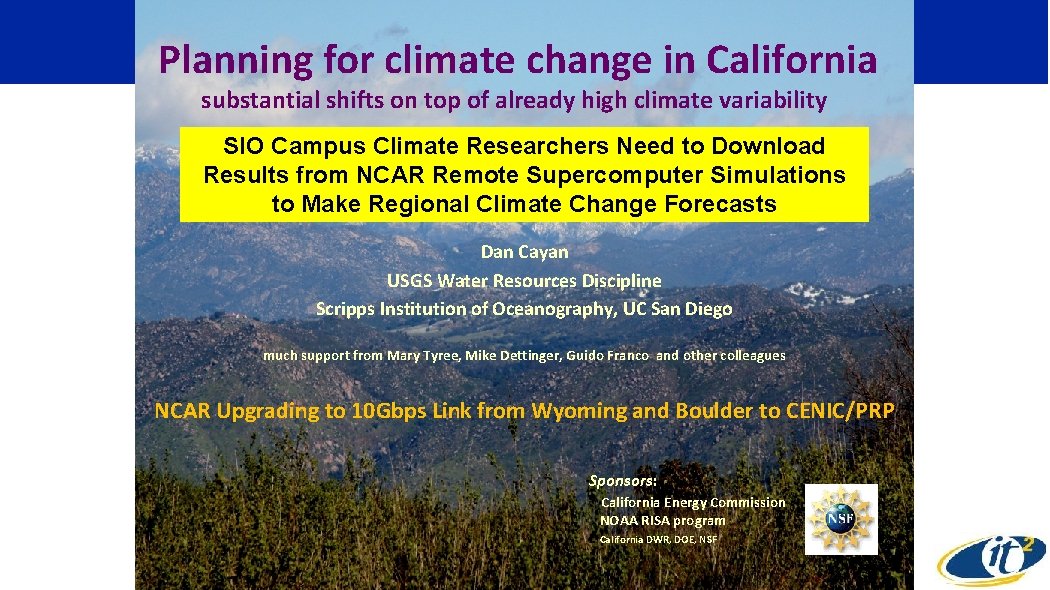

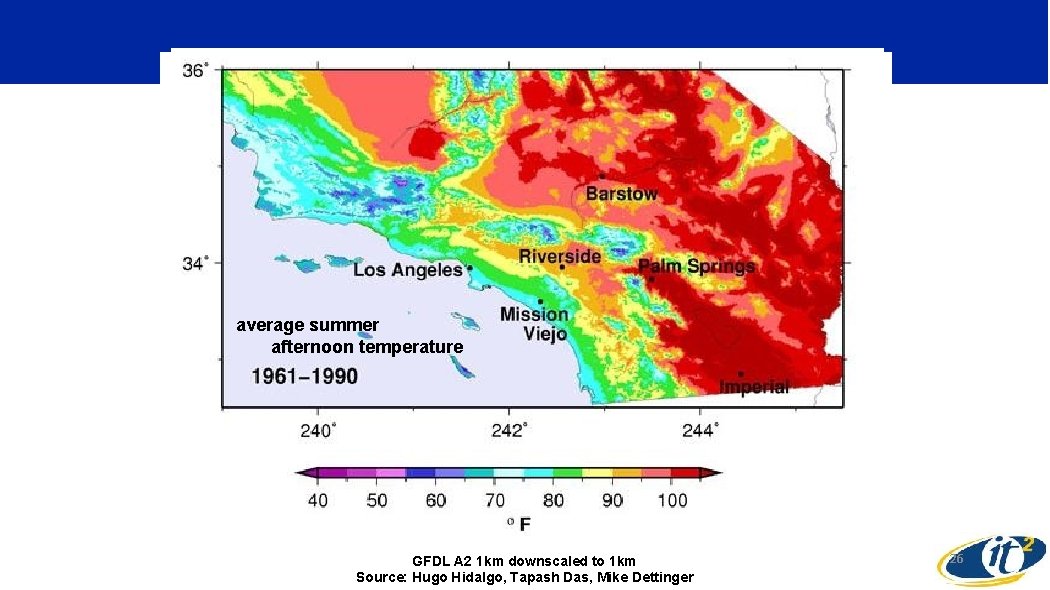

Planning for Climate Change in California: Substantial Shifts on Top of Already High Climate Variability. SIO Campus Climate Researchers Need to Download Results from NCAR Remote Supercomputer Simulations to Make Regional Climate Change Forecasts. Dan Cayan, USGS Water Resources Discipline, Scripps Institution of Oceanography, UC San Diego; much support from Mary Tyree, Mike Dettinger, Guido Franco, and other colleagues. NCAR Upgrading to 10 Gbps Link from Wyoming and Boulder to CENIC/PRP. Sponsors: California Energy Commission, NOAA RISA Program, California DWR, DOE, NSF.

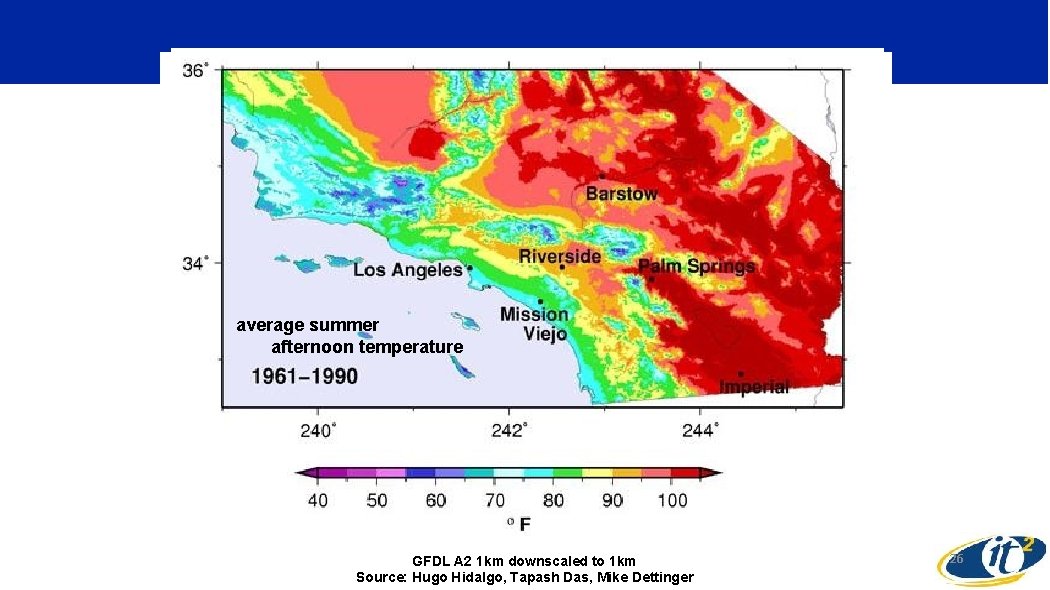

Figure: Average summer afternoon temperature, GFDL A2, downscaled to 1 km. Source: Hugo Hidalgo, Tapash Das, Mike Dettinger

High-Performance Wireless Research and Education Network (HPWREN): Real-Time Cameras on Mountains for Environmental Observations. Source: Hans-Werner Braun, HPWREN PI

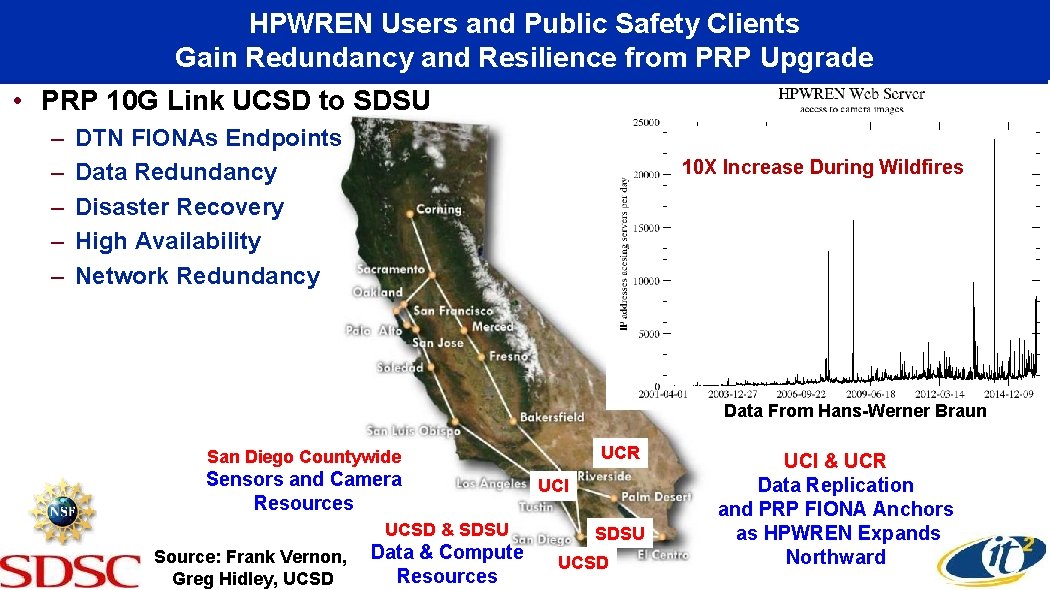

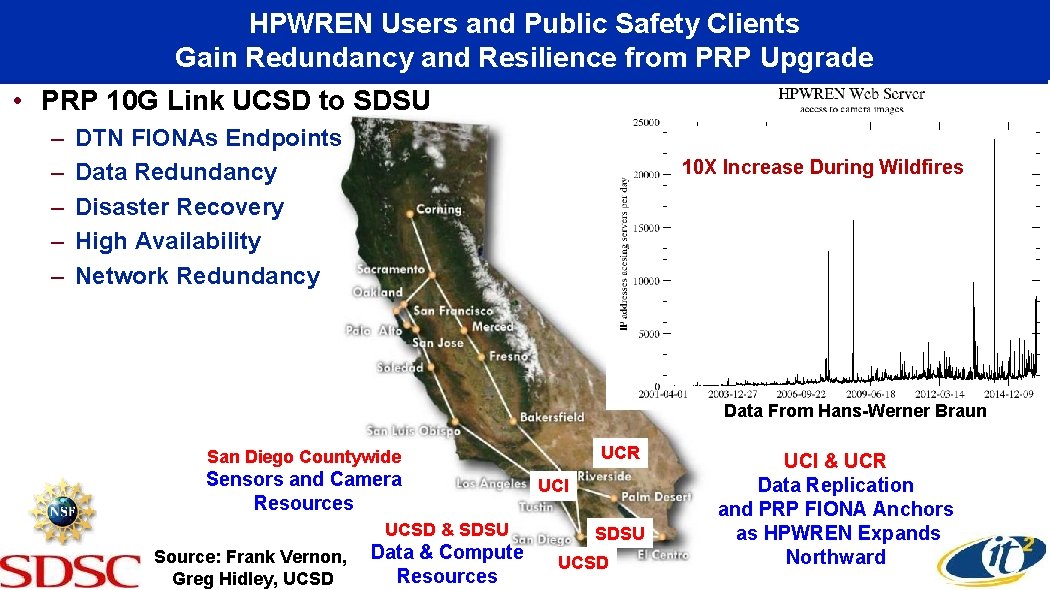

HPWREN Users and Public Safety Clients Gain Redundancy and Resilience from PRP Upgrade

- PRP 10G Link UCSD to SDSU
  - DTN FIONA Endpoints
  - Data Redundancy
  - Disaster Recovery
  - High Availability
  - Network Redundancy

Chart: 10X Increase During Wildfires (Data from Hans-Werner Braun). Diagram labels: San Diego Countywide Sensors and Camera Resources; UCSD & SDSU Data & Compute Resources; UCI & UCR Data Replication and PRP FIONA Anchors as HPWREN Expands Northward. Source: Frank Vernon, Greg Hidley, UCSD

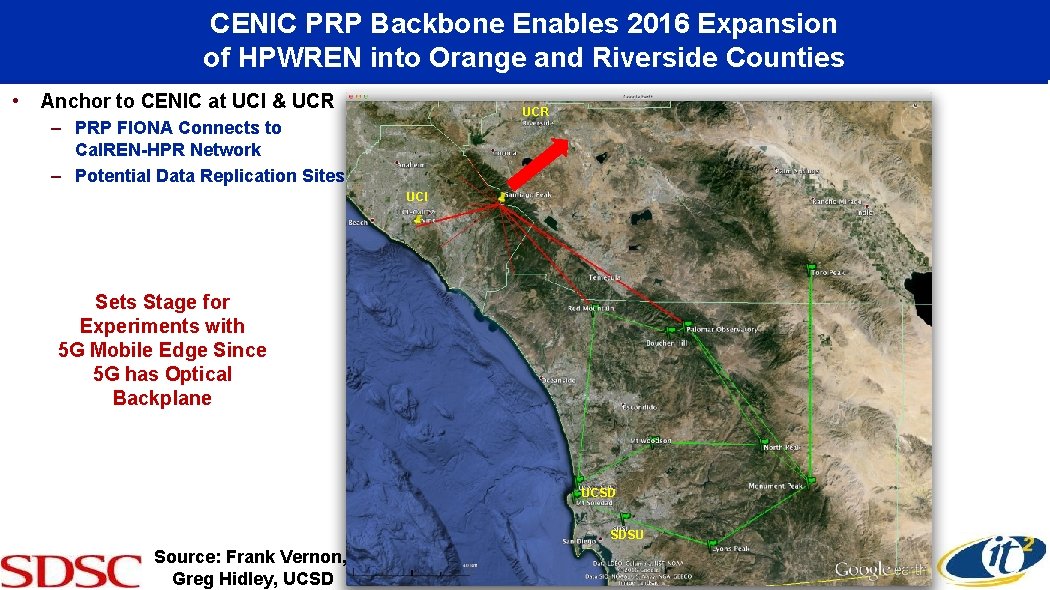

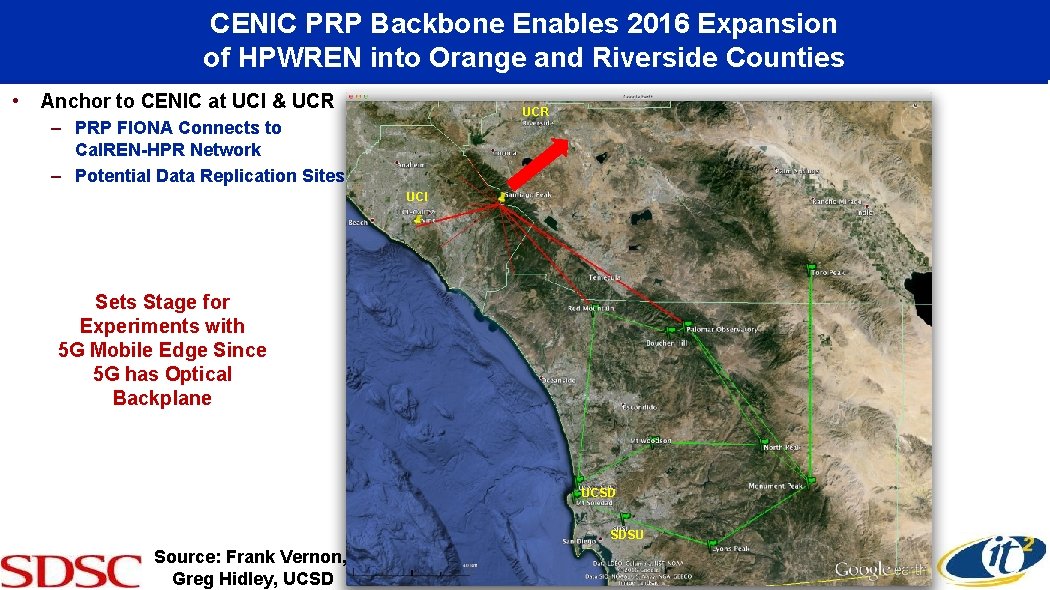

CENIC PRP Backbone Enables 2016 Expansion of HPWREN into Orange and Riverside Counties

- Anchor to CENIC at UCI & UCR
  - PRP FIONA Connects to CalREN-HPR Network
  - Potential Data Replication Sites
- UCI Sets Stage for Experiments with 5G Mobile Edge, Since 5G Has an Optical Backplane

Map labels: UCSD, SDSU. Source: Frank Vernon, Greg Hidley, UCSD

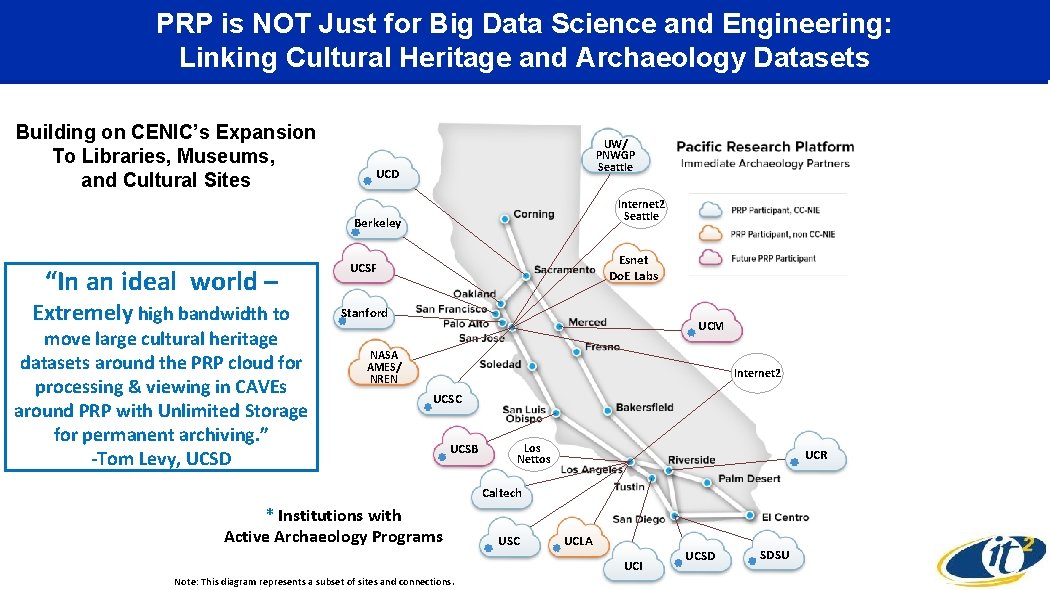

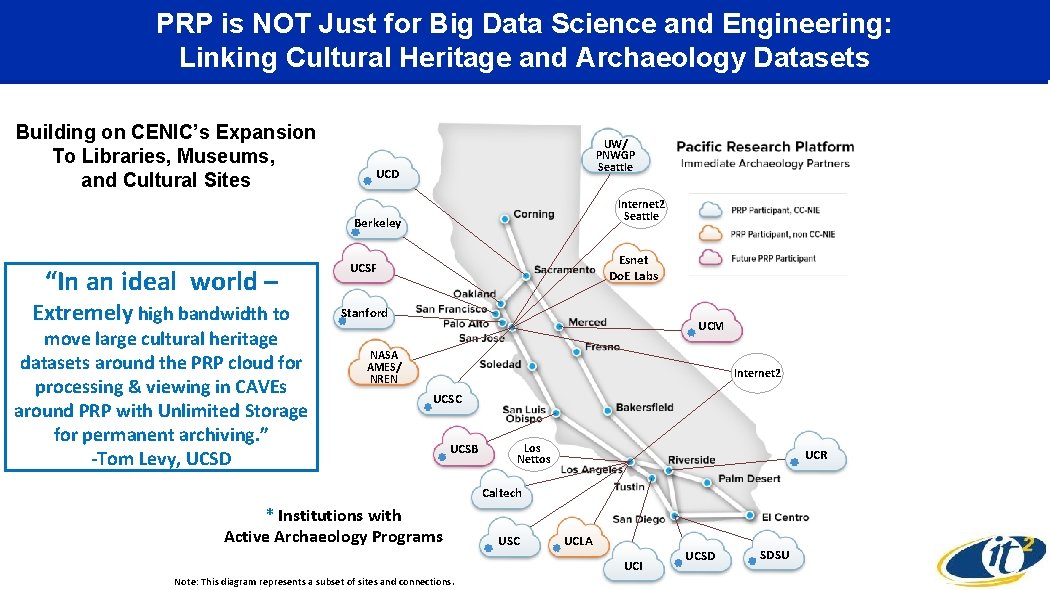

PRP is NOT Just for Big Data Science and Engineering: Linking Cultural Heritage and Archaeology Datasets, Building on CENIC’s Expansion to Libraries, Museums, and Cultural Sites. “In an ideal world: extremely high bandwidth to move large cultural heritage datasets around the PRP cloud for processing & viewing in CAVEs around PRP, with unlimited storage for permanent archiving.” (Tom Levy, UCSD). Map sites: UW/PNWGP Seattle, Internet2 Seattle, UCD, Berkeley, ESnet DoE Labs, UCSF, Stanford, UCM, NASA AMES/NREN, Internet2, UCSC, UCSB, Los Nettos, UCR, Caltech, USC, UCLA, UCI, UCSD, SDSU; * Institutions with Active Archaeology Programs. Note: This diagram represents a subset of sites and connections.

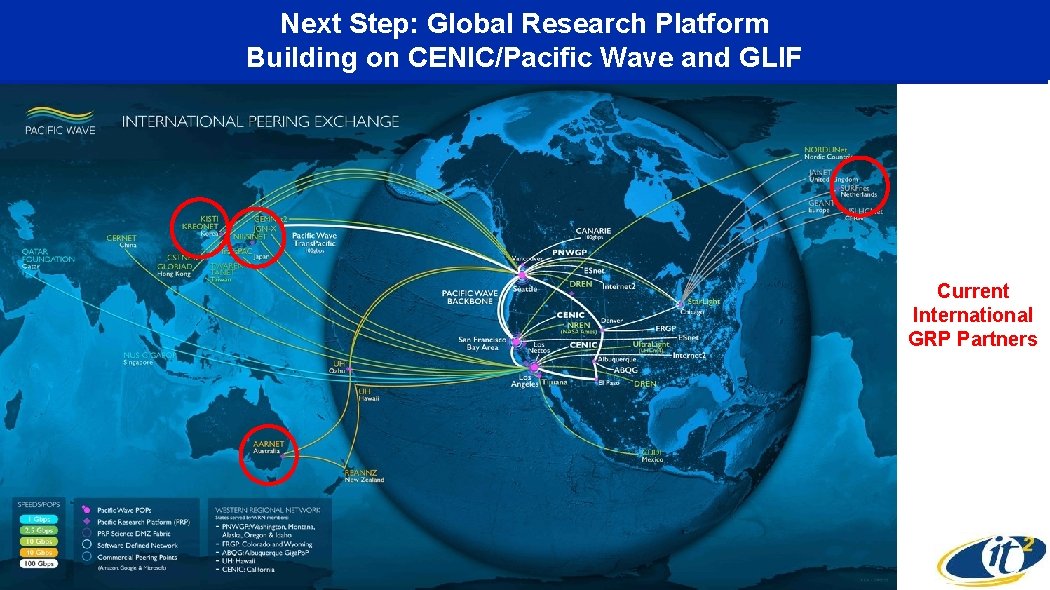

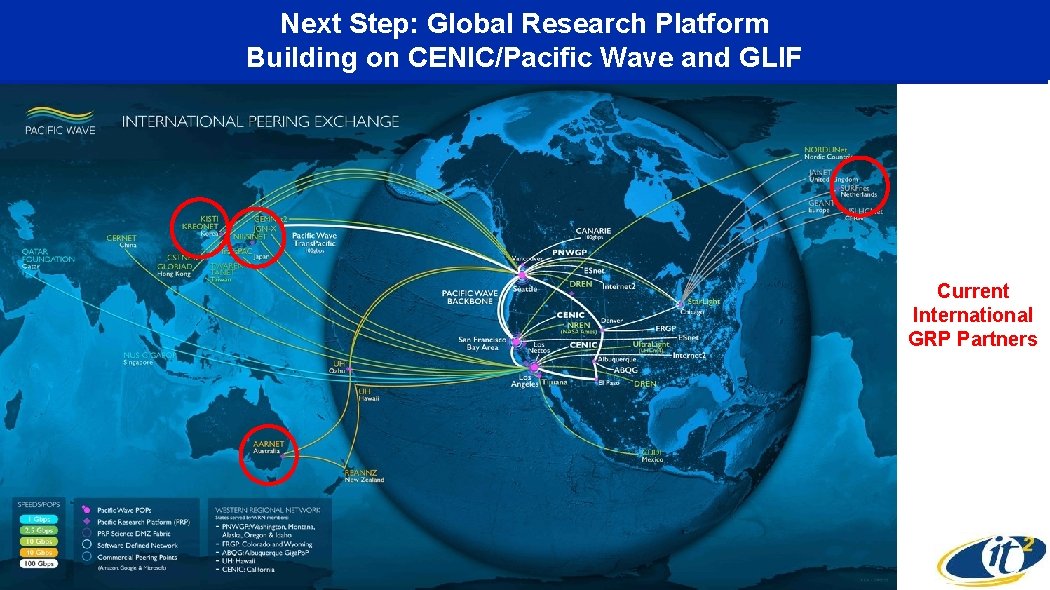

Next Step: Global Research Platform, Building on CENIC/Pacific Wave and GLIF. Map: Current International GRP Partners

New Applications of PRP-Like Networks

- Brain-Inspired Pattern Recognition: See Session 4 Later Today
- Internet of Things and the Industrial Internet: See Keynote and Sessions 1-3 Tomorrow
- Smart Cities: See Session 4 Tomorrow