
The INFN Tier-1: Farming

Davide Salomoni (Davide.Salomoni@cnaf.infn.it), INFN CNAF

D. Salomoni, INFN Tier-1 Review, March 1-2, 2006

Talk Outline
• Farm status
  – Current situation
  – Users
  – Monitoring
  – Accounting
• Operational activities
• Testing activities
• Assessment, outlook, milestones

The INFN Tier-1 Farm
• About 1000 dual-processor nodes, ranging from Pentium III @ 1 GHz to Opteron 252 @ 2.6 GHz.
• A total of about 1550 KSI2K, plus an additional 150 KSI2K in “old” decommissioned nodes, used in separate clusters for testing activities.
• Tender for an additional 800 KSI2K, delivery expected in Fall 2006.
• Farm upgrades, to be done in sync with the Tier-1 infrastructure upgrade, are planned until 2010.

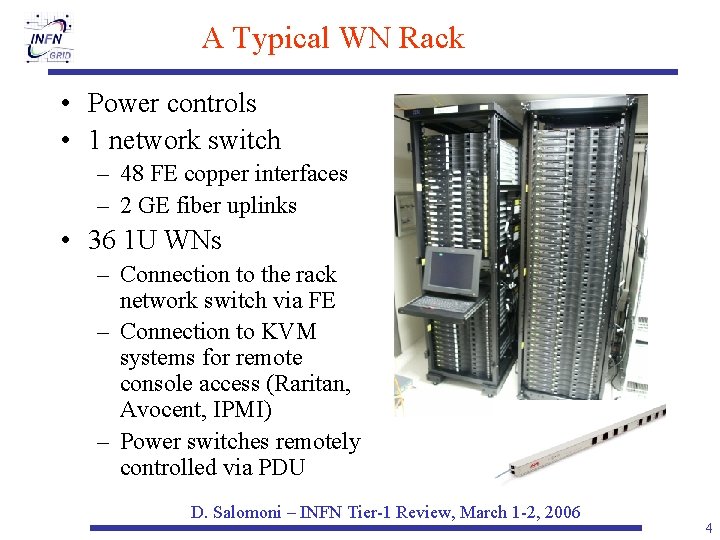

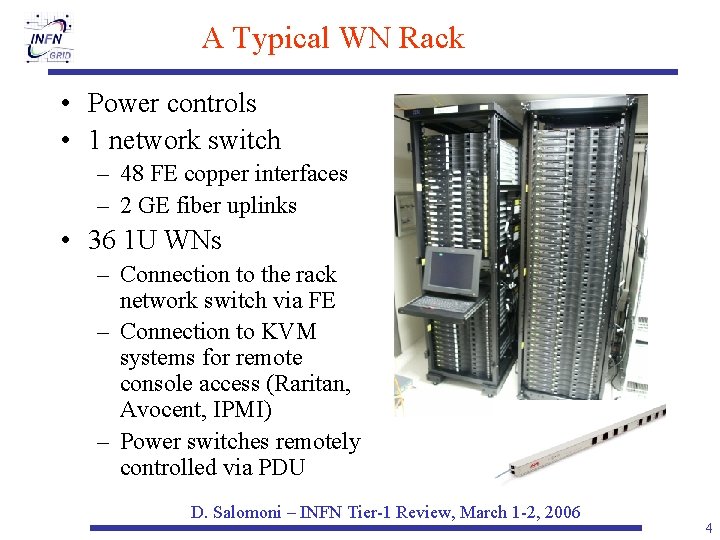

A Typical WN Rack
• Power controls
• 1 network switch
  – 48 FE copper interfaces
  – 2 GE fiber uplinks
• 36 1U WNs
  – Connection to the rack network switch via FE
  – Connection to KVM systems for remote console access (Raritan, Avocent, IPMI)
  – Power switches remotely controlled via PDU
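A quick back-of-the-envelope check of the rack layout: the sketch below computes the worst-case oversubscription of the rack switch uplinks. It assumes every WN can saturate its Fast Ethernet port (100 Mb/s) at the same time and that both GE uplinks carry traffic together (e.g. via link aggregation). Neither assumption is stated on the slide.

```python
# Worst-case uplink oversubscription for the WN rack described above.
# Assumption (not from the slide): both GE uplinks carry traffic at once.

FE_MBPS = 100    # Fast Ethernet port speed, Mb/s
GE_MBPS = 1000   # Gigabit Ethernet uplink speed, Mb/s

def oversubscription(nodes: int, node_mbps: int, uplinks: int, uplink_mbps: int) -> float:
    """Ratio of aggregate node bandwidth to aggregate uplink bandwidth."""
    return (nodes * node_mbps) / (uplinks * uplink_mbps)

ratio = oversubscription(nodes=36, node_mbps=FE_MBPS, uplinks=2, uplink_mbps=GE_MBPS)
print(f"36 x FE over 2 x GE: {ratio:.1f}:1")  # 3600 / 2000 Mb/s
```

Under these assumptions, 36 nodes give about 1.8:1. Filling all 48 switch ports would raise the ratio to 2.4:1.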

The Users
• 22 Virtual Organizations (VOs), among them:
  – The 4 LHC experiments
  – CDF, BaBar, Argo, AMS, Virgo, Pamela, INGV, etc.
• Several VOs still use the local batch system instead of accessing the grid.
  – This has negative operational implications. We are actively trying to migrate these VOs to a grid environment.

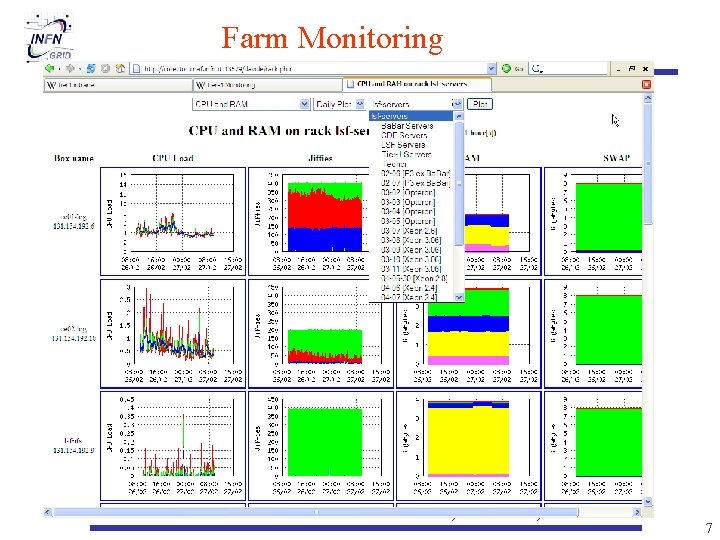

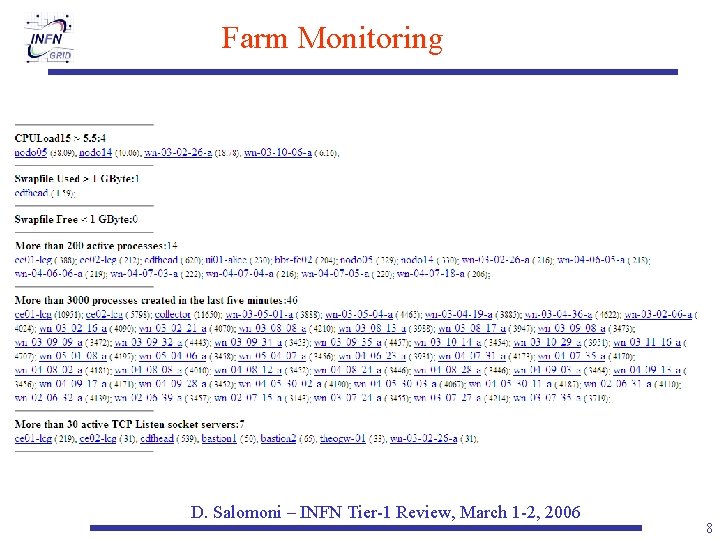

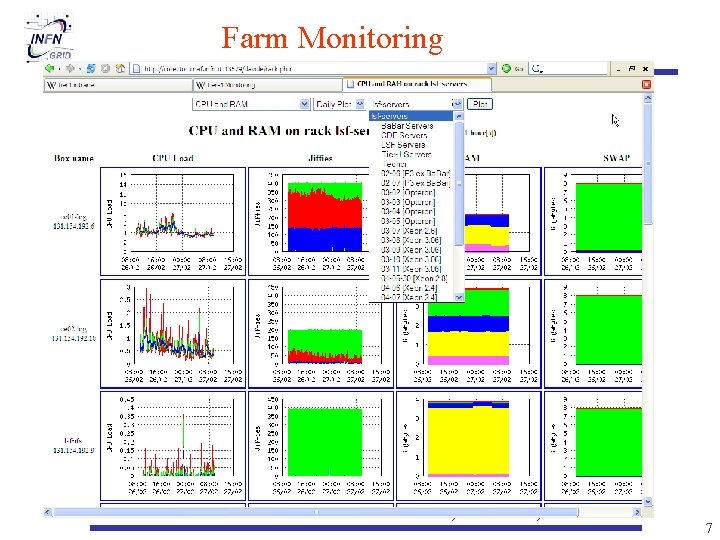

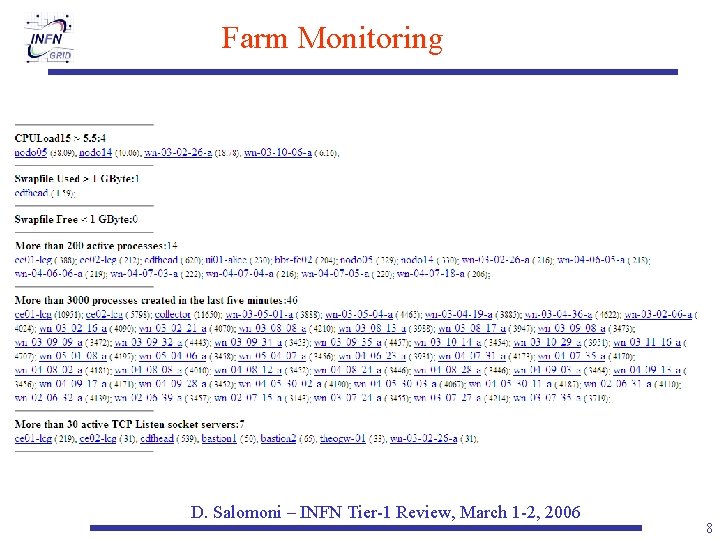

Farm Monitoring
• Farm monitoring is done via a home-made set of programs that provide basic sanity checks and alarms for anomalous conditions.
  – This solution has proven reliable and scalable. Because of the size and complexity of the Tier-1 farm, any change to the status quo is subject to extensive scrutiny.
  – There is an upgrade path toward:
    • LEMON, for farm monitoring.
    • GridICE, for integration with grid resources, e.g. real-time or quasi-real-time job monitoring.
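The sketch below shows the kind of per-node sanity check with alarms that the slide describes. The metric names, thresholds and host names are hypothetical and do not describe the Tier-1's actual tools. A real check would collect these values from each node rather than hard-code them.

```python
# Hypothetical sketch of a per-node sanity check raising alarms on anomalous
# conditions. Metric names, limits and host names are illustrative only.
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class NodeStatus:
    hostname: str
    load_avg: float      # 1-minute load average
    cpus: int            # CPU slots on the node
    free_disk_gb: float  # free space on the scratch area
    temp_c: float        # CPU temperature, Celsius

# Hypothetical limits; in practice these would be tuned per hardware batch.
MAX_LOAD_PER_CPU = 2.0
MIN_FREE_DISK_GB = 5.0
MAX_TEMP_C = 70.0

def check_node(s: NodeStatus) -> list[str]:
    """Return alarm messages for one node (empty list if it looks healthy)."""
    alarms = []
    if s.load_avg > MAX_LOAD_PER_CPU * s.cpus:
        alarms.append(f"{s.hostname}: load {s.load_avg} too high for {s.cpus} CPUs")
    if s.free_disk_gb < MIN_FREE_DISK_GB:
        alarms.append(f"{s.hostname}: only {s.free_disk_gb} GB free")
    if s.temp_c > MAX_TEMP_C:
        alarms.append(f"{s.hostname}: temperature {s.temp_c} C")
    return alarms

nodes = [
    NodeStatus("wn-001", load_avg=1.9, cpus=2, free_disk_gb=40.0, temp_c=48.0),
    NodeStatus("wn-002", load_avg=7.5, cpus=2, free_disk_gb=2.0, temp_c=55.0),
]
for node in nodes:
    for alarm in check_node(node):
        print("ALARM", alarm)
```

Checks stay simple and independent per node. This is one way such a scheme can scale to a farm of this size: nodes can be checked in parallel and alarms aggregated centrally.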

Farm Monitoring

Farm Monitoring

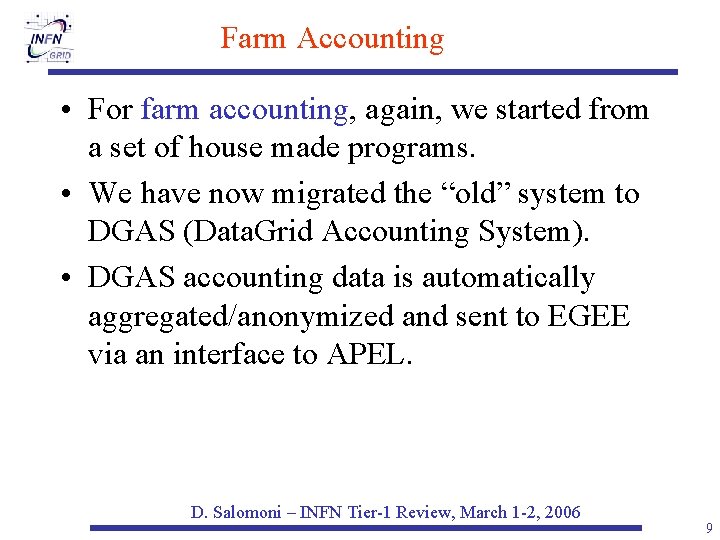

Farm Accounting
• For farm accounting, again, we started from a set of home-made programs.
• We have now migrated the “old” system to DGAS (DataGrid Accounting System).
• DGAS accounting data is automatically aggregated and anonymized, then sent to EGEE via an interface to APEL.
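The sketch below illustrates what "aggregated and anonymized" can mean in practice. It reduces per-job records to per-VO monthly totals and keeps only a count of distinct users, so no user identity leaves the site. The record fields and DNs are hypothetical. This is not the DGAS record schema or the APEL interface.

```python
# Hypothetical sketch: turn per-job accounting records into per-VO monthly
# summaries that carry no user identity. Fields are illustrative, not DGAS's.
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class JobRecord:
    user_dn: str       # grid certificate subject (personal data)
    vo: str
    month: str         # "YYYY-MM"
    cpu_hours: float
    wall_hours: float

def aggregate(records):
    """Sum usage per (VO, month); user DNs are reduced to a distinct count."""
    totals = defaultdict(
        lambda: {"jobs": 0, "cpu_hours": 0.0, "wall_hours": 0.0, "users": set()}
    )
    for r in records:
        t = totals[(r.vo, r.month)]
        t["jobs"] += 1
        t["cpu_hours"] += r.cpu_hours
        t["wall_hours"] += r.wall_hours
        t["users"].add(r.user_dn)
    # Replace each set of DNs by its size, so the summary is anonymous.
    return {key: {**t, "users": len(t["users"])} for key, t in totals.items()}

records = [
    JobRecord("/C=IT/O=INFN/CN=Alice", "cms", "2006-01", 10.0, 12.0),
    JobRecord("/C=IT/O=INFN/CN=Bob", "cms", "2006-01", 5.0, 6.0),
    JobRecord("/C=IT/O=INFN/CN=Alice", "cms", "2006-01", 1.0, 1.5),
    JobRecord("/C=IT/O=INFN/CN=Carol", "babar", "2006-01", 8.0, 9.0),
]
summary = aggregate(records)
print(summary[("cms", "2006-01")])
```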

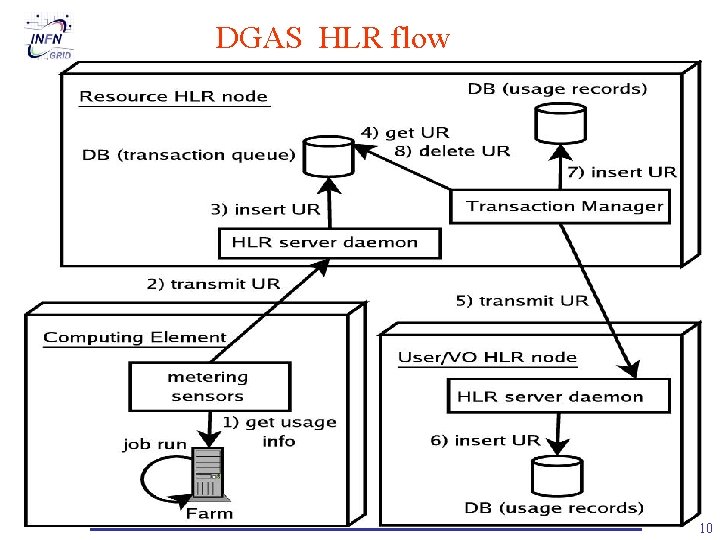

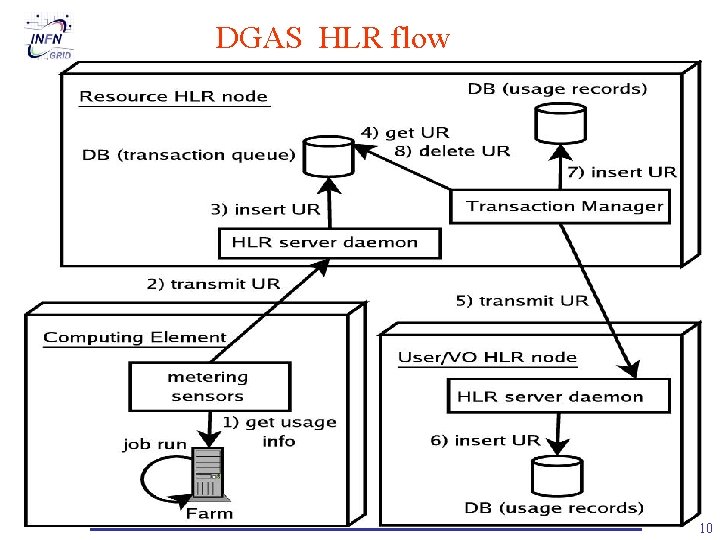

DGAS HLR flow

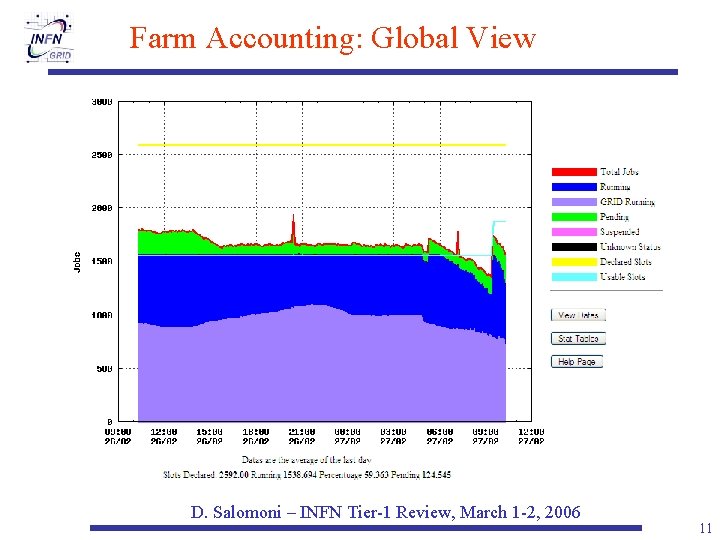

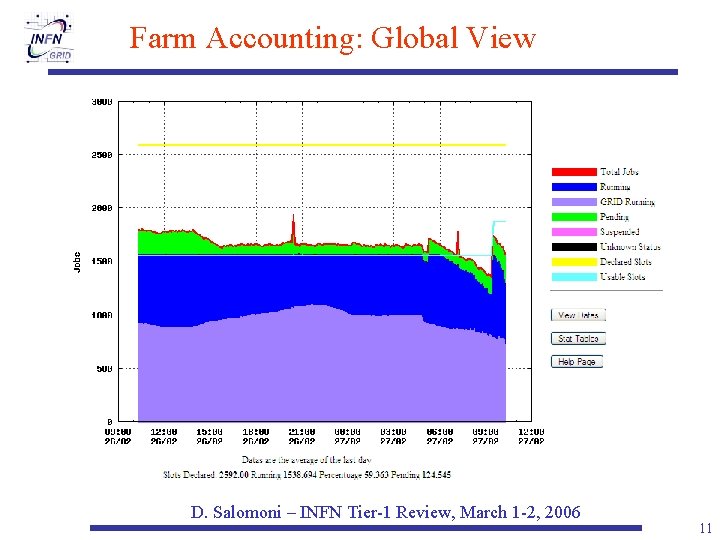

Farm Accounting: Global View

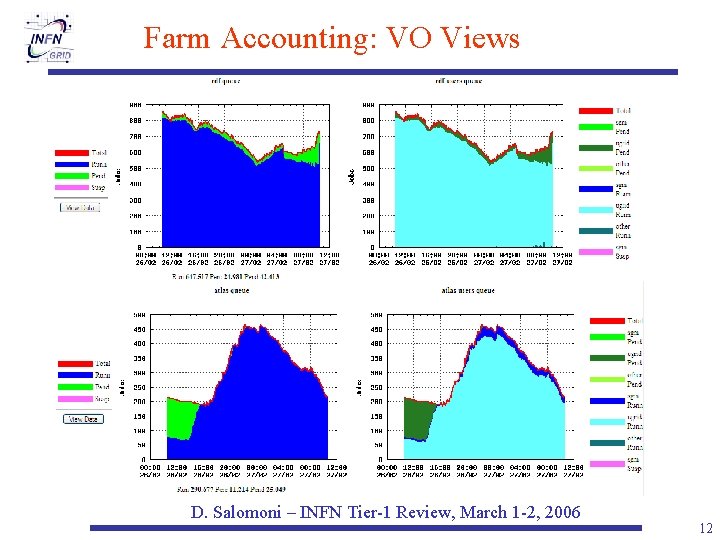

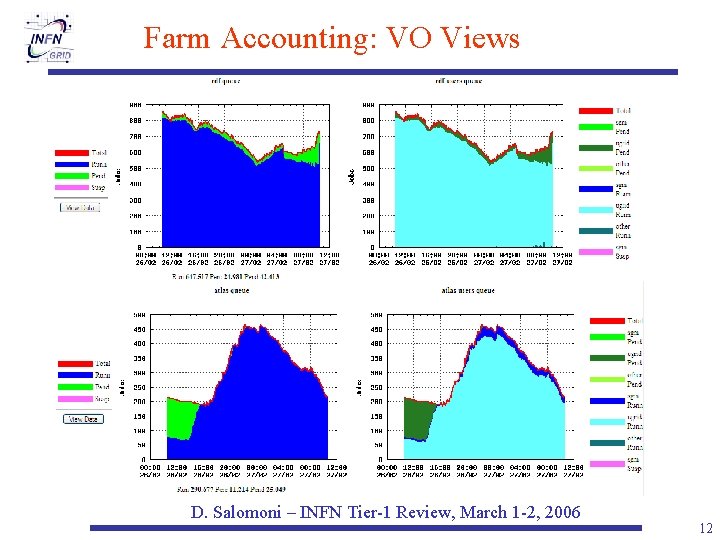

Farm Accounting: VO Views

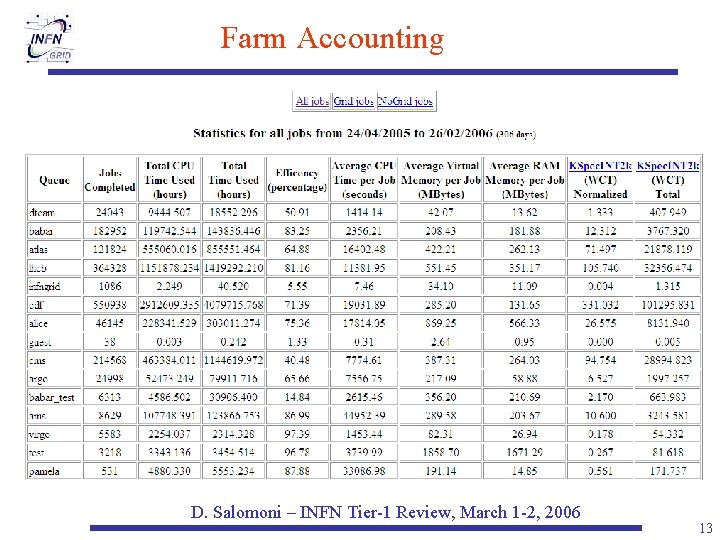

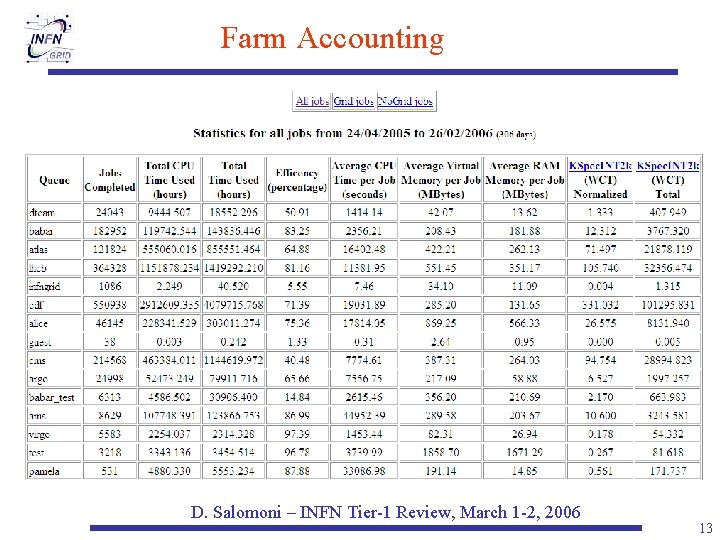

Farm Accounting

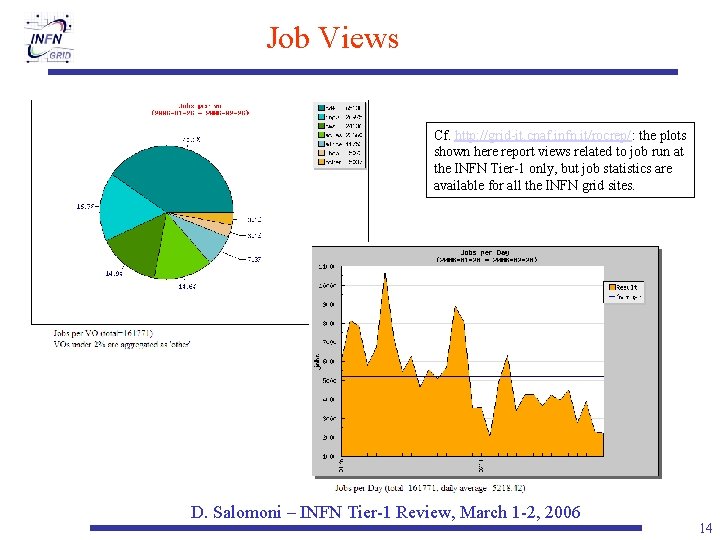

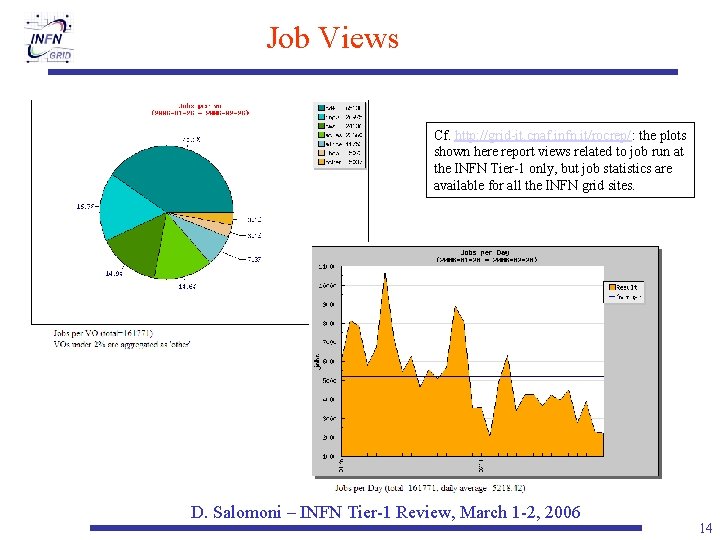

Job Views
Cf. http://grid-it.cnaf.infn.it/rocrep/. The plots shown here cover jobs run at the INFN Tier-1 only, but job statistics are available for all the INFN grid sites.

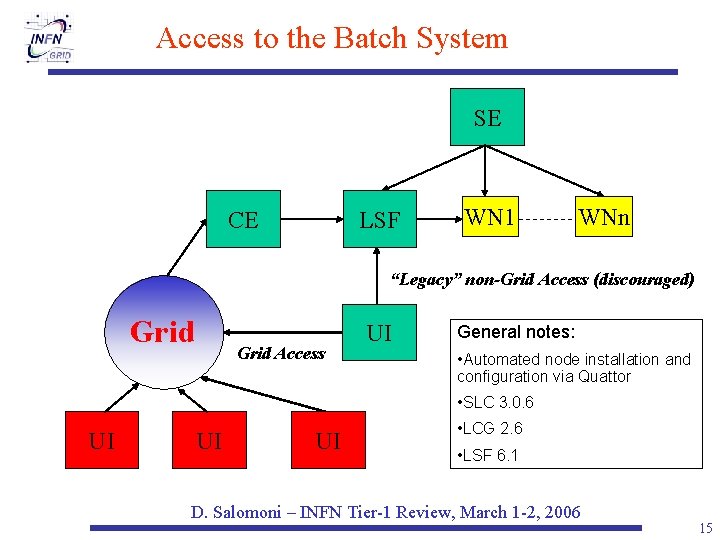

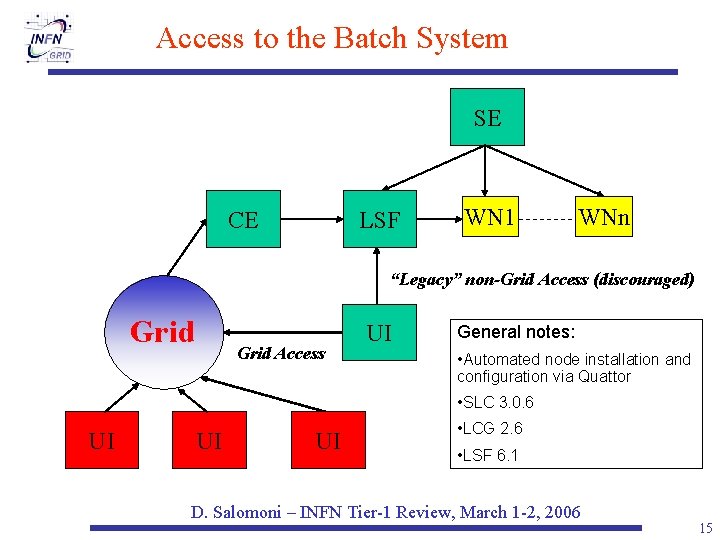

Access to the Batch System
[Figure: grid access from a UI through the CE, and “legacy” non-grid access from UIs (discouraged), both reaching LSF and the worker nodes WN 1 … WNn; an SE is also shown.]
General notes:
• Automated node installation and configuration via Quattor
• SLC 3.0.6
• LCG 2.6
• LSF 6.1

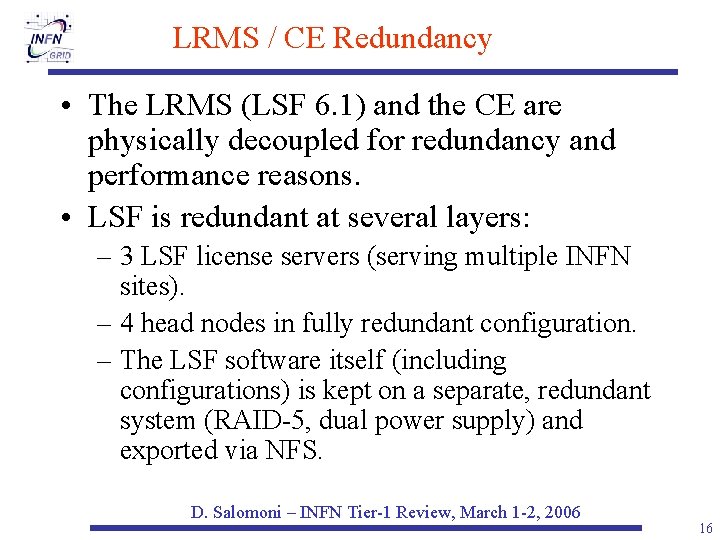

LRMS / CE Redundancy
• The LRMS (LSF 6.1) and the CE are physically decoupled for redundancy and performance reasons.
• LSF is redundant at several layers:
  – 3 LSF license servers (serving multiple INFN sites).
  – 4 head nodes in a fully redundant configuration.
  – The LSF software itself (including configurations) is kept on a separate, redundant system (RAID-5, dual power supply) and exported via NFS.

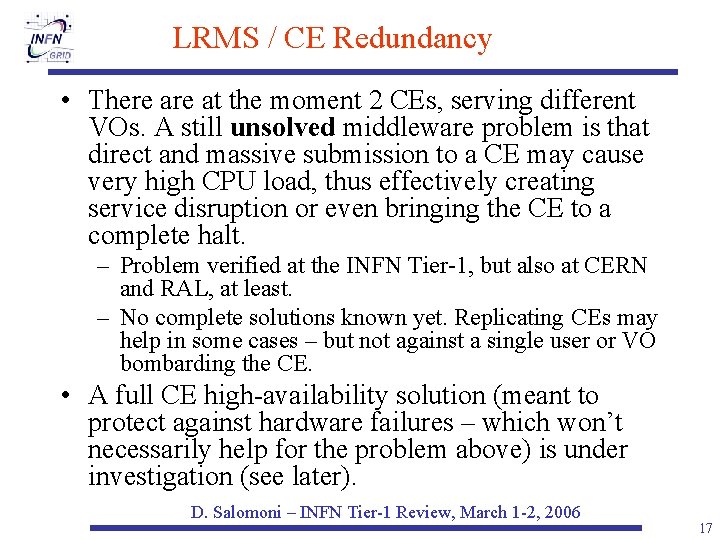

LRMS / CE Redundancy
• There are currently 2 CEs, serving different VOs. A still unsolved middleware problem is that direct, massive submission to a CE may cause very high CPU load. This can disrupt the service or even bring the CE to a complete halt.
  – The problem has been verified at the INFN Tier-1, and also at least at CERN and RAL.
  – No complete solution is known yet. Replicating CEs may help in some cases, but not against a single user or VO bombarding the CE.
• A full CE high-availability solution is under investigation (see later). It is meant to protect against hardware failures, so it will not necessarily help with the problem above.

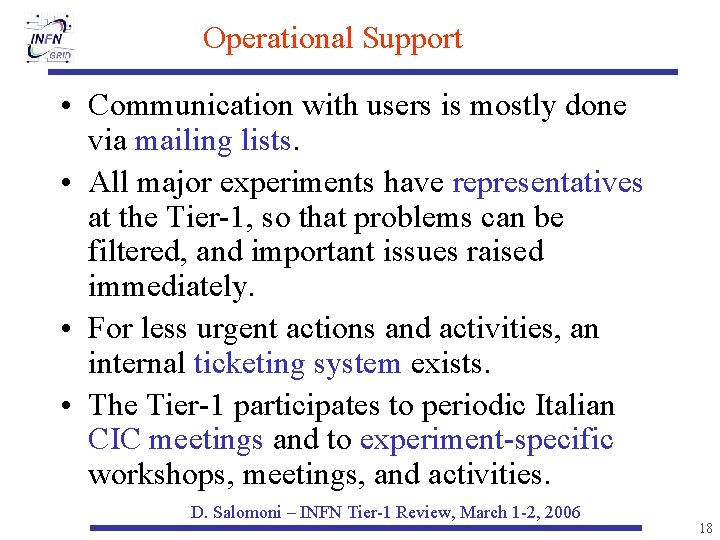

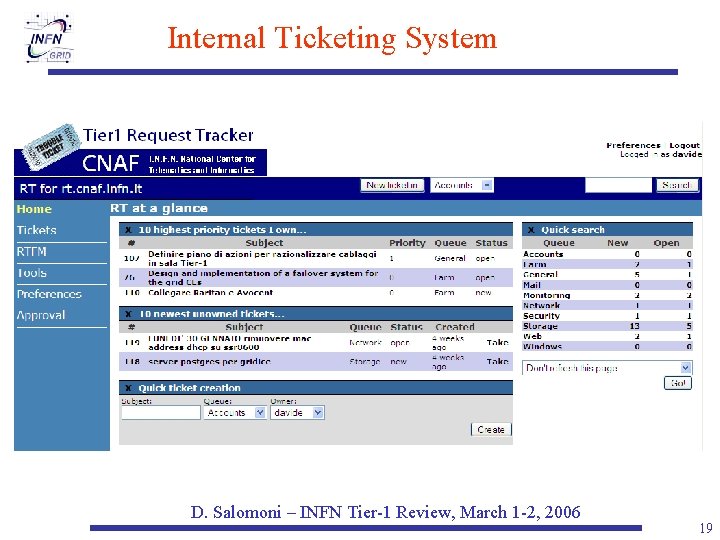

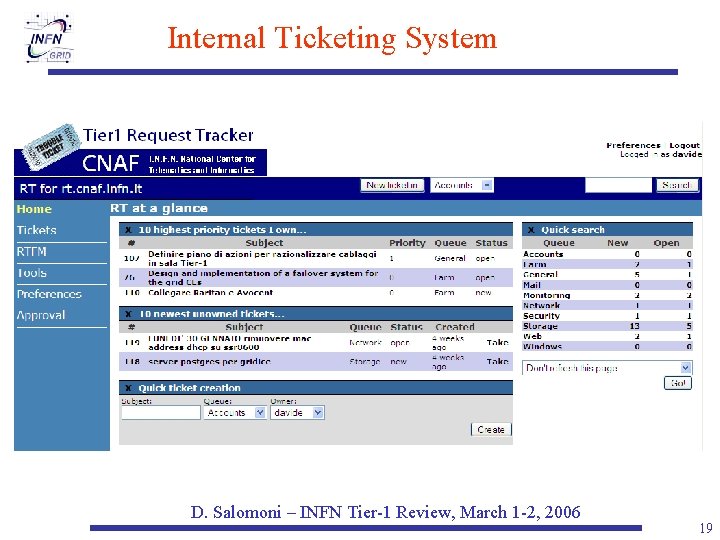

Operational Support
• Communication with users is mostly done via mailing lists.
• All major experiments have representatives at the Tier-1, so that problems can be filtered and important issues raised immediately.
• For less urgent actions and activities, an internal ticketing system exists.
• The Tier-1 participates in periodic Italian CIC meetings and in experiment-specific workshops, meetings and activities.

Internal Ticketing System

Internal Documentation
• Internal documentation is kept in an intranet-only wiki.

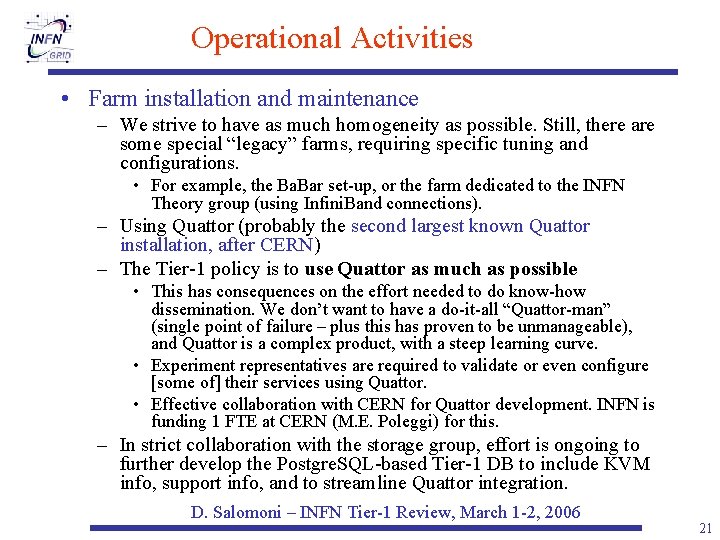

Operational Activities
• Farm installation and maintenance
  – We strive for as much homogeneity as possible. Still, there are some special “legacy” farms that require specific tuning and configurations.
    • For example, the BaBar set-up, or the farm dedicated to the INFN Theory group (using InfiniBand connections).
  – We use Quattor (probably the second largest known Quattor installation, after CERN).
  – The Tier-1 policy is to use Quattor as much as possible.
    • This affects the effort needed for know-how dissemination. We do not want a do-it-all “Quattor-man”: that would be a single point of failure, and it has also proven unmanageable. Moreover, Quattor is a complex product with a steep learning curve.
    • Experiment representatives are required to validate or even configure [some of] their services using Quattor.
    • We collaborate effectively with CERN on Quattor development. INFN is funding 1 FTE at CERN (M. E. Poleggi) for this.
  – In close collaboration with the storage group, we are further developing the PostgreSQL-based Tier-1 DB. The goals are to include KVM info and support info, and to streamline Quattor integration.
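The sketch below gives a hypothetical minimal schema that includes KVM info and support info in a Tier-1 inventory DB. Table names, column names, the vendor and the node names are all invented for illustration. Python's built-in sqlite3 module stands in for PostgreSQL so the example is self-contained; the SQL is plain enough to carry over.

```python
# Hypothetical sketch: attach KVM console and support-contract info to node
# records. sqlite3 stands in for PostgreSQL; the schema is illustrative only.
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE support_contract (
    id            INTEGER PRIMARY KEY,
    vendor        TEXT NOT NULL,
    service_level TEXT NOT NULL,   -- e.g. 'NBD', '4-hour'
    expires       TEXT NOT NULL    -- ISO date
);
CREATE TABLE node (
    hostname      TEXT PRIMARY KEY,
    rack          TEXT NOT NULL,
    kvm_system    TEXT,            -- which KVM system hosts the console
    kvm_port      INTEGER,
    contract_id   INTEGER REFERENCES support_contract(id)
);
""")
conn.execute("INSERT INTO support_contract VALUES (1, 'VendorA', 'NBD', '2007-06-30')")
conn.executemany(
    "INSERT INTO node VALUES (?, ?, ?, ?, ?)",
    [("wn-001", "R01", "kvm-a", 1, 1), ("wn-002", "R01", "kvm-a", 2, 1)],
)

# A typical operator question: where is this node's console, and what
# support level covers it?
row = conn.execute("""
    SELECT n.kvm_system, n.kvm_port, c.vendor, c.service_level, c.expires
    FROM node n JOIN support_contract c ON n.contract_id = c.id
    WHERE n.hostname = ?
""", ("wn-002",)).fetchone()
print(row)
```

Keeping this information in one relational store means a configuration tool could query it, rather than each operator maintaining separate lists. How the real Tier-1 DB feeds Quattor is not described here.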

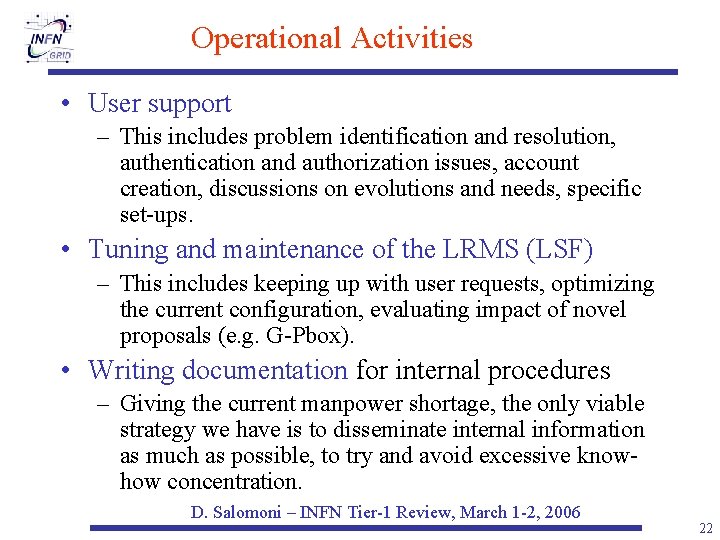

Operational Activities
• User support
  – This includes problem identification and resolution, authentication and authorization issues, account creation, discussions on evolutions and needs, and specific set-ups.
• Tuning and maintenance of the LRMS (LSF)
  – This includes keeping up with user requests, optimizing the current configuration, and evaluating the impact of novel proposals (e.g. G-Pbox).
• Writing documentation for internal procedures
  – Given the current manpower shortage, our only viable strategy is to disseminate internal information as widely as possible, to avoid excessive know-how concentration.

Testing Activities
Foreword: we strongly believe that all INFN Tier-1 groups should be directly involved in testing and study activities.
  – Proceeding otherwise would negatively affect operations and, eventually, the Tier-1 performance.
• Large-scale system installation for both the operating system and the middleware.
  – Integration with INFNGrid through Quattor and YAIM, in close collaboration with grid operational support.
• Virtual Machines
  – We are currently testing Xen as a VM platform. The eventual aim is to replicate VM configurations, either for testing purposes or for high availability.

Testing Activities
• High-Availability Issues
  – Without a generic HA platform, we will have a very hard time keeping reasonable SLAs.
    • INFN- (or Italian-) specific constraints must also be considered, e.g. the capabilities or possibilities for off-hours coverage.
  – The maximum downtime for a typical SLA of 99% (which is fairly low) is about 3.65 days/year.
  – How does this affect a site with, e.g., 80 servers?
    • Consider drives only (MTBF = $10^6$ hours), 1 drive per server. 80 drives × 8760 hours/year = 700,800 drive-hours per year, and 700,800 / $10^6$ ≈ 0.70 expected drive failures per year across the whole site. This figure already accounts for all 80 drives and corresponds to about 0.88% per drive. If the time to repair is 6 hours (an unrealistically good estimate), this gives 0.70 × 6 ≈ 4.2 hours of downtime per year for some service, and this is for drives only.
    • To obtain the total MTBF of a server, combine its subcomponents as $1/\mathrm{MTBF}_{\text{total}} = \sum_i 1/\mathrm{MTBF}_i$. Every additional component therefore shortens the expected time between failures.
  – Within INFN, we have just formed a group working on a continuum of activities. These cover lower layers such as networking (especially LAN), storage and farming, and their interaction with SC and grid middleware. This group is coordinated by
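The failure estimate can be worked through in a few lines. The sketch assumes a constant failure rate (exponential model), so that N components over a time t give N·t/MTBF expected failures. This product already counts every component and must not be multiplied by N again. The PSU and motherboard MTBFs in the last example are hypothetical values, chosen only to show how combining subcomponents raises the failure count.

```python
# Worked version of the HA estimate, assuming a constant failure rate 1/MTBF
# per component (exponential model).
HOURS_PER_YEAR = 8760

def max_downtime_days(sla: float) -> float:
    """Maximum yearly downtime allowed by an availability SLA, in days."""
    return (1.0 - sla) * 365

def expected_failures(n: int, mtbf_hours: float, hours: float = HOURS_PER_YEAR) -> float:
    """Expected failures among n identical components over `hours`."""
    return n * hours / mtbf_hours

def combined_mtbf(*mtbfs: float) -> float:
    """MTBF of a unit that fails when any part fails: 1/M = sum(1/M_i)."""
    return 1.0 / sum(1.0 / m for m in mtbfs)

downtime_days = max_downtime_days(0.99)       # about 3.65 days/year
drive_failures = expected_failures(80, 1e6)   # 80 * 8760 / 1e6
repair_hours = 6
drive_downtime_hours = drive_failures * repair_hours

# Hypothetical per-server parts: drive 1e6 h, PSU 1e5 h, motherboard 2e5 h.
server_mtbf = combined_mtbf(1e6, 1e5, 2e5)
server_failures = expected_failures(80, server_mtbf)

print(f"SLA 99%: {downtime_days:.2f} days/year allowed")
print(f"drives: {drive_failures:.2f} failures, {drive_downtime_hours:.1f} h/year")
print(f"servers (MTBF {server_mtbf:.0f} h): {server_failures:.1f} failures/year")
```

Drives alone give about 0.7 failures per year. With the hypothetical extra parts, the combined server MTBF drops to 62,500 hours and the site sees about 11 failures per year. This is why the total MTBF, not the drive MTBF alone, drives the HA requirements.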

Testing Activities
• Scalability and improvements of the DGAS accounting system
  – This builds on the experience developed at the Tier-1 with our own tools. Some of these tools have already been integrated into DGAS.
• We are currently testing LEMON as a fabric monitoring system, with a view to replacing our own custom tools.
  – On top of LEMON, GridICE is also being tested and developed.
• Experiment-specific testbeds
  – These are set up on demand, in close collaboration with the storage group, normally at the experiments' request. The goal is often for the experiments to validate their computing and operational models (e.g. Pamela, Virgo).
• Participation in, and support for, other middleware-related projects and testbeds (e.g. WMS scalability tests). These projects often require expertise in the LRMS area.

Operational Issues
• Manpower
  – The Tier-1 farming group is composed of only 1.5 staff FTE (plus myself) and 1 additional temporary FTE:
    • Alessandro Italiano, staff (shared with the multimedia group)
    • Andrea Chierici, staff (shared with local computing support)
    • Felice Rosso, temporary contract
    • Massimo Donatelli, temporary contract (shared with storage, network and local computing support)
  – Of the “real people” working in the farming group, 1 specializes in monitoring and accounting systems, 1 in system administration, 1 in LRMS topics and 1 in authentication/authorization topics.
• The requests for the farming group are 4.5 staff FTE, 3 temporary FTE and 1 FTE at CERN. See the document “Pianificazione delle risorse umane al CNAF 2005-2008” (CNAF human resources planning, 2005-2008) for more details.
  – Even in the short term, this raises important questions about the overall sustainability and operational stability of the Tier-1.
  – The real risk is twofold: on the one hand, being crushed by operational issues without being able to solve them effectively; on the other hand, being unable to keep up with developments and projects.

Operational Issues
• Massive hardware heterogeneity
  – It is difficult to keep track of all the maintenance contracts (valid, expiring, expired) and of the peculiarities of each one (e.g. the service level: NBD, 4-hour, etc.).
  – Different hardware inevitably means different configurations.
    • For example: SCSI support (e.g. hardware vs. software), IPMI (supported on the latest machines only), kernel architectures, etc.
  – Legacy solutions have yet to be completely phased out. For example, there are currently 3 KVM systems.
• Farm size
  – With $O(10^3)$ nodes, and hardware batches purchased at different times, troubleshooting is a constant activity: diagnosing hardware problems, calling maintenance support, following up repair work, re-inserting nodes into production, etc.
  – Some inertia in finding and applying new solutions is unavoidable, and actually desirable, given the need to maintain and improve the Tier-1 operational level.
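Tracking contracts as valid, expiring or expired is easy to automate once expiry dates are recorded. The sketch below classifies hypothetical contracts relative to a reference date. The batch names, dates and the 90-day warning window are assumptions for illustration only.

```python
# Hypothetical sketch: classify maintenance contracts as valid, expiring or
# expired. Batch names, dates and the warning window are assumptions.
from datetime import date, timedelta

EXPIRING_WINDOW = timedelta(days=90)

def contract_state(expires: date, today: date) -> str:
    """Return 'expired', 'expiring' (within the window) or 'valid'."""
    if expires < today:
        return "expired"
    if expires - today <= EXPIRING_WINDOW:
        return "expiring"
    return "valid"

contracts = {
    # hardware batch: (service level, expiry date)
    "batch-2003": ("NBD", date(2005, 12, 31)),
    "batch-2004": ("NBD", date(2006, 4, 30)),
    "batch-2005": ("4-hour", date(2008, 6, 30)),
}

today = date(2006, 3, 1)
for batch, (level, expires) in sorted(contracts.items()):
    print(f"{batch:12} {level:7} {expires} {contract_state(expires, today)}")
```

With the reference date set to the review date, the three example batches come out as expired, expiring and valid, respectively.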

Operational Assessment
• For the past 12 months, the farming group and the other Tier-1 groups have provided excellent best-effort support during off-hours, weekends and holidays.
  – Example: a serious unscheduled outage occurred on the morning of December 25, 2005. Tier-1 people were immediately alerted by phone, and remote diagnosis took place within 1 hour. Tier-1 staff were on site to fix the problem on December 26. (The real difficulty was then engaging maintenance support during that period!)
  – Problems occurring during office hours either go unnoticed by the user community (thanks to the redundancy of key systems) or are normally handled within 1 hour, often in collaboration with grid support people.
  – The short-term operational goal is to consolidate the current best-effort support and to include more CNAF employees in first-level support.
    • Second-level support will still be provided by people from the farming, storage and network groups.
    • The manpower shortage and national/company legislation prevent immediate implementation of this goal.
• The current state of the Tier-1 infrastructure prevents efficient exploitation of the resources.
  – This is a significant worry, especially as the warmer months approach. We are already observing excessively high environmental parameters in the Tier-1 room. This shortens component lifetime, causes outages and/or forces us to take emergency actions.

Ramping Up to 2008 and Beyond
• A major point is the infrastructure upgrade.
  – We need to work out a detailed operational plan once the engineering part is approved.
  – Until at least part of this upgrade is finalized, the Tier-1 farm will not be able to meet the planned KSI2K milestones, not even for 2006.
• Tenders for the farm upgrade are ongoing, in parallel with the infrastructure upgrade.
  – See the Director's presentation for the currently foreseen numbers.
• We need to keep following technology trends and to participate in global research and testing activities.
• We need to devise generic HA solutions, detail on-call arrangements and expand service-level monitoring.
  – This includes finalizing the first/second-level support and higher escalation procedures.
• The manpower situation is worrying, at both the senior and the junior levels.
  – We need to act at the management level to find and hire resources.
  – See the next slide for more details.

Manpower Shortage Issues
• With $O(10^3)$ nodes and $O(10^4)$ CPU slots, a large fraction of time is spent massaging the farm. Specifically, there is significant overhead in the following areas:
  – Technical maintenance;
  – Administrative duties (current contracts);
  – Acquisition processes (including market research, tender processes, equipment validation, and burn-in and commissioning phases).
• Operationally, moving beyond the best-effort paradigm requires both a bigger critical mass and effective high-level management support, especially given national and company legislation constraints.
• We need better interaction with the experiments.
  – This includes not only HEP experiments (LHC et al.), but also other activities currently supported by the INFN Tier-1 (e.g. astroparticle and nuclear physics, theoretical physics, bioinformatics, etc.).
• We need an improved user interface and better integration with other CNAF resources.
• We need more effectiveness in testing activities.
• We need to see the world (and be seen) more.
• Resource access policies are growing in complexity as we move closer to the LHC startup. This diversification of access policies grows more complex the wider the range of activities the Tier-1 is expected to serve. If we ever diverge [further] toward a “standardless grid” (a concept exemplified by the “VO-Box solution”), support will probably quickly become unfeasible. Even if we manage not to diverge, we still need [senior] people to evaluate and influence scenarios.

2006 Main Milestones
• Spring 2006: first implementation of first/second-level on-call procedures, in conjunction with the SC4 start-up.
• Spring 2006: integration of KVM systems.
• Summer 2006: first report on HA solutions.
• Summer 2006: hiring of at least 1.5 temporary FTE for the farming group.
• Summer 2006: improved (LEMON-based) farm monitoring infrastructure in production.
• Summer/Fall 2006: operational plan for the Tier-1 infrastructure upgrade finalized.
• Fall 2006: integration of the main Tier-1 monitoring platforms (environmental, farming, storage, network, grid) and user interfaces.
• Fall 2006: HA solutions implemented for the main Tier-1 service nodes.
• Fall/Winter 2006: additional 800 KSI2K commissioned (this requires at least a partial infrastructure upgrade).
• Winter 2006: review of first/second-level on-call procedures, in conjunction with the end of SC4.
