TeraGrid Data Transfer: Joint EGEE and OSG Workshop

- Slides: 42

TeraGrid Data Transfer

Joint EGEE and OSG Workshop on Data Handling in Production Grids
June 25, 2007 - Monterey, CA

Derek Simmel, dsimmel@psc.edu
Pittsburgh Supercomputing Center

June 25, 2007 - TeraGrid Data Transfer - dsimmel@psc.edu - © 2007 PSC

TeraGrid Data Transfer

- Topics
  - TeraGrid Network (June 2007)
  - TeraGrid Data Kits
  - GridFTP
  - HPN-SSH
  - WAN Filesystems
    - Lustre-WAN and GPFS-WAN
  - Advanced Solutions
    - Scheduled Data Jobs - DMOVER
    - Getting data to/from MPPs - PDIO

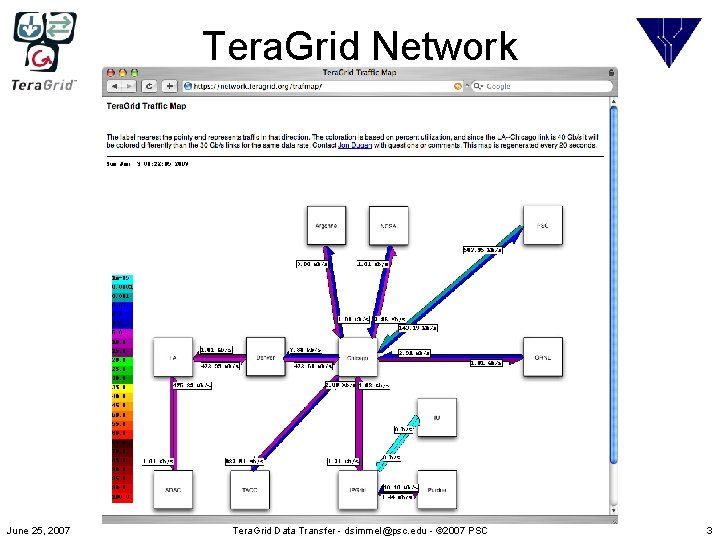

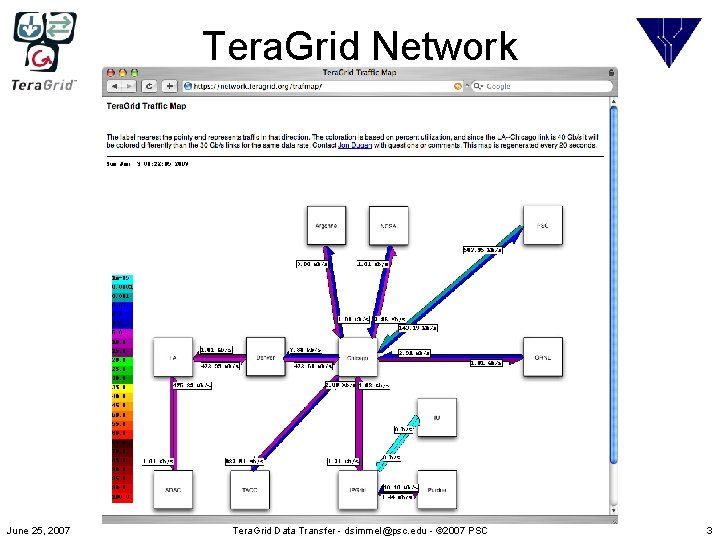

TeraGrid Network

network.teragrid.org

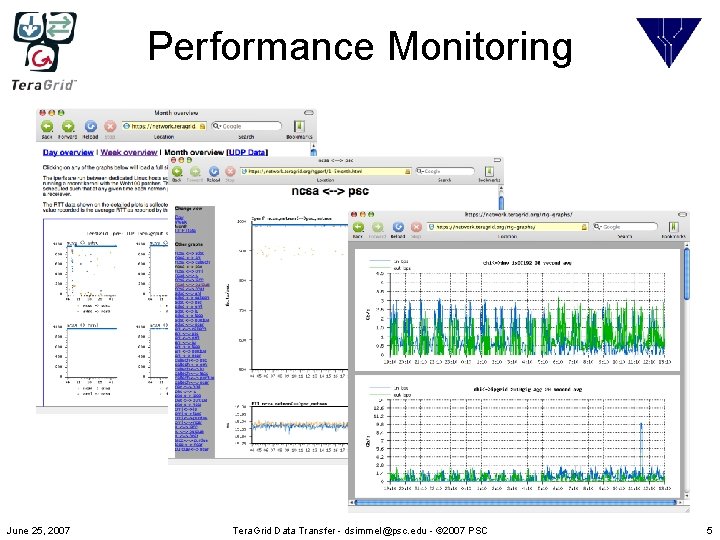

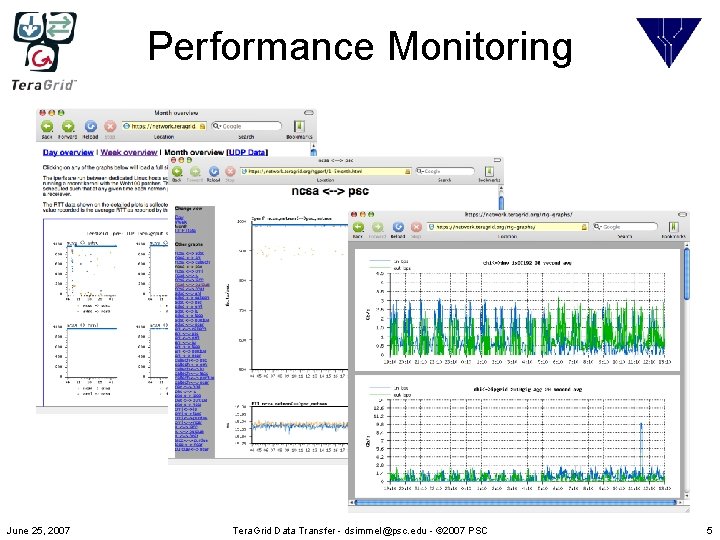

Performance Monitoring

TeraGrid Data Kits

- Data Movement
  - GridFTP, HPN-SSH
  - TeraGrid Globus deployment includes VDT-contributed improvements to the Globus toolkit
- Data Management
  - SRB support
- WAN Filesystems
  - Development: GPFS-WAN, Lustre-WAN
  - Future: pNFS with GPFS-WAN & Lustre-WAN client modules

TeraGrid GridFTP service

- Standard target names
  - gridftp.{system}.{site}.teragrid.org
- Sets of striped servers
  - Most sites have deployed multiple (4 to 12) data stripe servers per (HPC) system
  - Mix of 10 GbE and 1 GbE deployments
  - Multiple data stripe services are started on each 10 GbE GridFTP data transfer server
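As a small illustration of the naming pattern above, this sketch builds a target host name and a gsiftp:// URL from a system and site. The function names and the example values are placeholders chosen for illustration; they are not a list of deployed servers.

```python
def gridftp_host(system: str, site: str) -> str:
    """Fill in the gridftp.{system}.{site}.teragrid.org pattern."""
    return f"gridftp.{system}.{site}.teragrid.org"


def gsiftp_url(system: str, site: str, path: str) -> str:
    """Build a gsiftp:// URL for an absolute path on the target (hypothetical helper)."""
    return f"gsiftp://{gridftp_host(system, site)}/{path.lstrip('/')}"


# Placeholder system/site/path values, for illustration only.
print(gsiftp_url("bigben", "psc", "/scratch/mydata/run1.dat"))
```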

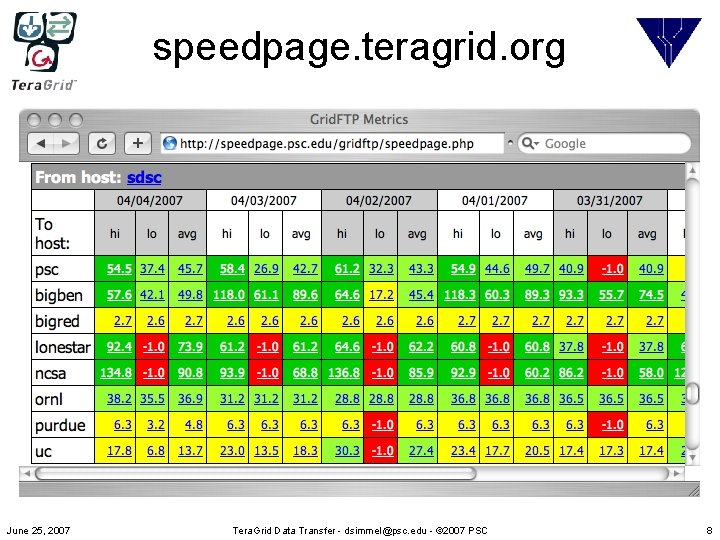

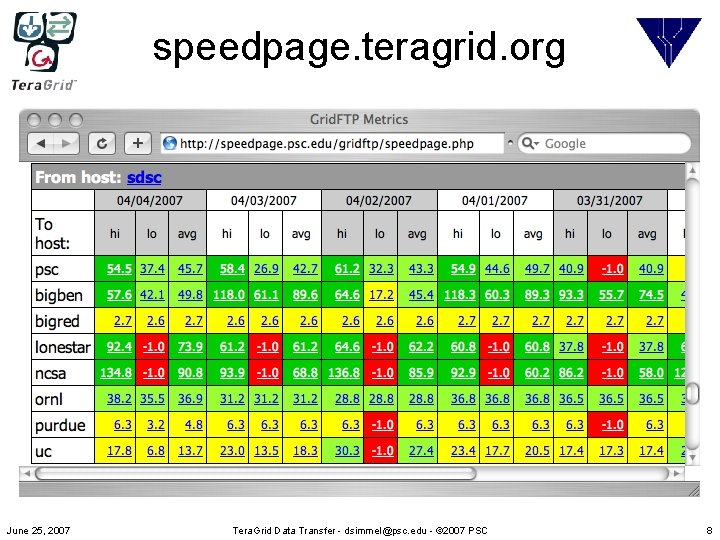

speedpage.teragrid.org

GridFTP Observations

- Specify server configuration parameters in an external file (-c option)
  - Allows updates to the configuration on the fly between invocations of the GridFTP server
  - Facilitates custom setups for dedicated user jobs
- Make the server block size parameter match the default block size of the (parallel) filesystem visible to the GridFTP server
  - How to accommodate user-configurable filesystem block sizing (e.g. Lustre)? Don't know yet...
- -vb is still broken
  - Calculate throughput using time as a wrapper instead
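The "time as a wrapper" workaround can be sketched as follows: time the whole transfer command and divide the known file size by the elapsed time. This is a minimal sketch, not the TeraGrid tooling; `timed_transfer` and `throughput_mb_per_s` are hypothetical helper names, and the command run here is a harmless stand-in so the example works without a GridFTP installation.

```python
import subprocess
import sys
import time


def throughput_mb_per_s(nbytes: int, seconds: float) -> float:
    """Average throughput in MB/s (1 MB = 10**6 bytes)."""
    return nbytes / seconds / 1e6


def timed_transfer(cmd: list[str], nbytes: int) -> float:
    """Run cmd, time it with a monotonic clock, and return MB/s for nbytes."""
    start = time.monotonic()
    subprocess.run(cmd, check=True)
    elapsed = time.monotonic() - start
    return throughput_mb_per_s(nbytes, elapsed)


# Stand-in command so the sketch runs anywhere. For a real transfer, cmd would
# be a globus-url-copy invocation and nbytes the size of the file being moved.
rate = timed_transfer([sys.executable, "-c", "pass"], nbytes=1_000_000)
print(f"{rate:.1f} MB/s")
```

Note that the measured time includes connection setup and authentication, so short transfers will understate the sustained rate.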

GridFTP server configuration

- Recommended striping for TeraGrid sites:
  - 10 GbE: 4- or 6-way striping per interface
    - Not more, since most 10 GbE interfaces are limited by the PCI-X bus
  - 1 GbE: 1 stripe each
- Factors:
  - TeraGrid network is uncongested
    - Multiple stripes/flows are not necessary to mitigate congestion-related loss
  - Mix of 10 GbE and 1 GbE
    - Striping is determined by the receiving server configuration: 8 x 1 GbE -> 2 x 10 GbE = 2 stripes, unless the latter are configured with multiple stripes each
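One reading of the receiving-side rule above is that the stripe count is the number of receiving servers times the stripes configured on each. The sketch below encodes that assumption; `effective_stripes` is a hypothetical helper, not part of GridFTP.

```python
def effective_stripes(receiving_servers: int, stripes_per_server: int = 1) -> int:
    """Stripe count as set by the receiving side (assumed: servers x stripes each)."""
    return receiving_servers * stripes_per_server


# 8 x 1 GbE senders into 2 x 10 GbE receivers with one stripe each: 2 stripes.
print(effective_stripes(2))
# The same receivers configured with 4 stripes each: 8 stripes.
print(effective_stripes(2, stripes_per_server=4))
```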

globus-url-copy -tcp-bs

- TCP Buffer Size
  - Goal is to make the buffer large enough to hold as many bytes as can typically be in flight between the source and target
    - Too small: you waste time at the source waiting for responses from the target when you could have been sending data
    - Too big: you waste time retransmitting packets that were dropped at the target because it ran out of buffer space and/or could not process them fast enough
  - TeraGrid tgcp tool uses TCP buffer size values calculated from measurements between TeraGrid sites over the TeraGrid network
  - Autotuning kernels/OSs
    - Linux kernel 2.6.9 or later
    - Microsoft Windows Vista
    - Observed superior performance at TACC and ORNL on systems with autotuning enabled
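The "bytes in flight" target is the bandwidth-delay product: link rate times round-trip time, divided by 8 to get bytes. A minimal sketch of turning that into a -tcp-bs value follows. The link rate, RTT, URLs and helper names are assumptions for illustration; tgcp uses values measured between sites, which this does not reproduce.

```python
def tcp_buffer_bytes(link_bits_per_s: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes: bits/s * (ms / 1000) / 8."""
    return link_bits_per_s * rtt_ms // 8000


def url_copy_command(src_url: str, dst_url: str,
                     link_bits_per_s: int, rtt_ms: int) -> list[str]:
    """Assemble a globus-url-copy argument list with a BDP-sized TCP buffer."""
    size = tcp_buffer_bytes(link_bits_per_s, rtt_ms)
    return ["globus-url-copy", "-tcp-bs", str(size), src_url, dst_url]


# Assumed path: 1 Gb/s with a 64 ms round trip; the URLs are placeholders.
cmd = url_copy_command("file:///scratch/mydata/run1.dat",
                       "gsiftp://gridftp.example.org/data/run1.dat",
                       1_000_000_000, 64)
print(" ".join(cmd))
```

For the assumed path the product is 8,000,000 bytes, the same order as the 8388608-byte value used in the DMOVER transfer agent later in the deck.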

Other Performance Factors

- Other TCP implementation factors that will affect network performance
  - RFC 1323 TCP extensions for high performance
    - Window scaling - you're limited to 64 KB max without this
    - Timestamps - protection against wrapped sequence numbers in high-capacity networks
  - RFC 2018 SACK (Selective ACK support)
    - Receiver sends ACKs with info about which packets it has seen, allowing the sender to resend only the missing packets, thus reducing retransmissions
  - RFC 1191 Path MTU discovery
    - Packet sizes should be maximized for the network
    - MTU=9000 bytes on TeraGrid network (mix of 1 Gb & 10 Gb i/fs)
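To make the window-scaling point concrete: the TCP header's window field is 16 bits (at most 65535 bytes), and RFC 1323 lets each side advertise a left shift of up to 14 bits. The sketch below finds the smallest shift that covers a required window; `window_scale_shift` is a hypothetical helper for illustration, not a kernel API.

```python
MAX_UNSCALED_WINDOW = 65535  # largest value of the 16-bit TCP window field
MAX_SHIFT = 14               # largest window-scale shift allowed by RFC 1323


def window_scale_shift(required_window_bytes: int) -> int:
    """Smallest shift s such that 65535 << s covers the required window."""
    for shift in range(MAX_SHIFT + 1):
        if (MAX_UNSCALED_WINDOW << shift) >= required_window_bytes:
            return shift
    raise ValueError("window exceeds the RFC 1323 maximum")


# A 12.5 MB window (1000 Mb/s at 100 ms RTT) needs a shift of 8.
print(window_scale_shift(12_500_000))
```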

Additional Tuning Resources

- TCP tuning guide:
  - http://www.psc.edu/networking/projects/tcptune/
- Autotuning:
  - Jeff Semke, Jamshid Mahdavi, Matt Mathis - 1998 autotuning paper:
    - http://www.psc.edu/networking/ftp/papers/autotune_sigcomm98.ps
  - Dunigan, Oak Ridge autotuning:
    - http://www.csm.ornl.gov/~dunigan/netperf/auto.html

GridFTP 4.1.2 Dev Release

- TeraGrid GIG Data Transfer team is investigating new GridFTP features
  - "pipeline" mode to transfer large numbers of files more efficiently
  - Automatic data stripe server failure recovery
  - sshftp:// - transfers to/from SSH servers

HPN-SSH

- So what's wrong with SSH?
  - Standard SSH is slow in wide area networks
  - Internal bottlenecks prevent SSH from using all of the network you have
- What is HPN-SSH?
  - A set of patches to greatly improve the network performance of OpenSSH
- Where do I get it?
  - http://www.psc.edu/networking/projects/hpn-ssh

(Current) Standard SSH

The Real Problem with SSH

- It is *NOT* the encryption process!
  - If it were:
    - Faster computers would give faster throughput, which doesn't happen.
    - Transfer rates would be the same in local and wide area networks, which they aren't.
- In fact, transfer rates depend on RTT: the farther away the target, the slower the transfer.
- Any time rates are strongly linked to RTT, it implies a receive buffer problem

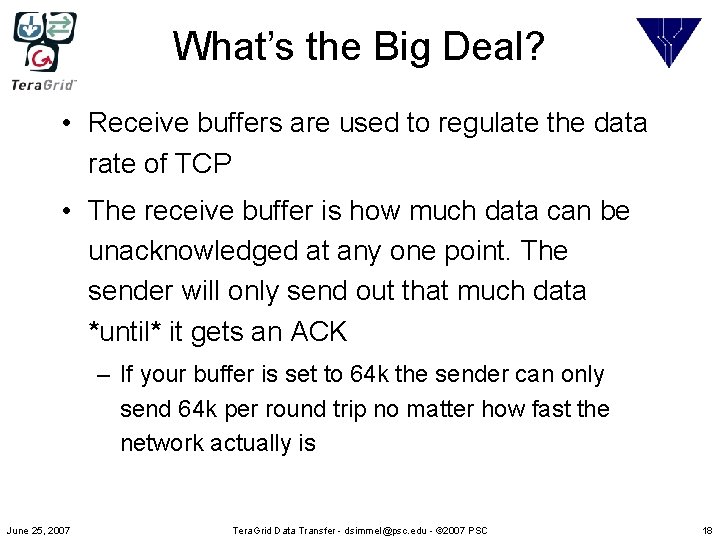

What's the Big Deal?

- Receive buffers are used to regulate the data rate of TCP
- The receive buffer determines how much data can be unacknowledged at any one point. The sender will send only that much data until it gets an ACK
  - If your buffer is set to 64 KB, the sender can send only 64 KB per round trip, no matter how fast the network actually is

How Bad Can It Be?

- Pretty bad
  - Let's say you have a 64 KB receive buffer

| RTT | Link | BDP | Utilization |
| --- | --- | --- | --- |
| 100 ms | 10 Mb/s | 125 KB | 50% |
| 100 ms | 100 Mb/s | 1.25 MB | 5% |
| 100 ms | 1000 Mb/s | 12.5 MB | 0.5% |
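The table can be reproduced with two lines of arithmetic: BDP is link rate times RTT, and utilization is the receive buffer divided by the BDP. The sketch below assumes decimal units (1 KB = 1000 bytes), which is what makes 10 Mb/s at 100 ms come out to 125 KB; the slide rounds the resulting 51.2%, 5.12% and 0.512% to 50%, 5% and 0.5%.

```python
def bdp_bytes(link_bits_per_s: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes."""
    return link_bits_per_s * rtt_ms // 8000


def utilization(window_bytes: int, bdp: int) -> float:
    """Fraction of the link a window-limited sender can fill (capped at 1.0)."""
    return min(1.0, window_bytes / bdp)


WINDOW = 64_000  # 64 KB receive buffer, decimal units assumed
RTT_MS = 100

for mbps in (10, 100, 1000):
    bdp = bdp_bytes(mbps * 1_000_000, RTT_MS)
    print(f"{mbps:>5} Mb/s  BDP {bdp:>10} bytes  utilization {utilization(WINDOW, bdp):.2%}")
```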

SSH is RWIN Limited

- Analysis of the code reveals
  - SSH Protocol V2 is multiplexed
    - Multiple channels over one TCP connection
  - Must implement a flow control mechanism per channel
    - Essentially the same as the TCP receive window
  - This application-level RWIN is effectively set to 64 KB, so the real connection RWIN is MIN(TCPrwin, SSHrwin)
    - Thus TPUTmax = 64 KB/RTT
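The MIN(TCPrwin, SSHrwin) bound is easy to check numerically. The sketch below assumes decimal units and example RTTs chosen for illustration; it shows that a 64 KB application window caps throughput regardless of how large the TCP window is.

```python
def effective_rwin(tcp_rwin: int, ssh_rwin: int) -> int:
    """The connection is limited by the smaller of the two windows."""
    return min(tcp_rwin, ssh_rwin)


def max_throughput_bytes_per_s(rwin_bytes: int, rtt_ms: int) -> float:
    """TPUTmax = RWIN / RTT: one window of data per round trip."""
    return rwin_bytes * 1000 / rtt_ms


SSH_RWIN = 64_000      # 64 KB application-level window
TCP_RWIN = 4_000_000   # assumed, well-tuned TCP window

rwin = effective_rwin(TCP_RWIN, SSH_RWIN)
for rtt_ms in (1, 10, 100):
    rate = max_throughput_bytes_per_s(rwin, rtt_ms)
    print(f"RTT {rtt_ms:>3} ms: at most {rate / 1e6:.2f} MB/s")
```

At 100 ms this gives 0.64 MB/s (about 5 Mb/s), which matches the roughly 50% utilization of a 10 Mb/s link in the preceding table.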

Solving the Problem

- Use getsockopt() to get TCP(rwin) and dynamically set SSH(rwin)
  - Performed several times throughout the transfer to handle autotuning kernels
- Results in 10x to 50x faster throughput, depending on the cipher used, on a well-tuned system
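The idea can be sketched in a few lines: ask the kernel for the socket's receive buffer size and size the application-level window from it instead of using a fixed 64 KB. This is an illustration of the technique only, not the HPN-SSH patch (which is C code inside OpenSSH); `app_window` and its floor value are assumptions.

```python
import socket


def tcp_receive_buffer(sock: socket.socket) -> int:
    """Read the kernel's current receive buffer size for this socket."""
    return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


def app_window(sock: socket.socket, floor: int = 64 * 1024) -> int:
    """Application-level window sized from the TCP buffer, never below the old default."""
    return max(floor, tcp_receive_buffer(sock))


# An unconnected socket is enough to demonstrate the query. In a long transfer
# the value would be re-read periodically, since autotuning kernels change it.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
    print("TCP receive buffer:", tcp_receive_buffer(s))
    print("application window:", app_window(s))
```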

HPN-SSH versus SSH

HPN-SSH Advantages

- Users already know how to use scp
  - Keys, ~/.ssh/config file preferences & shortcuts
- Speed is roughly comparable to single-stripe GridFTP and Kerberized FTP
- Use existing authentication infrastructure
  - GSISSH now includes HPN patches
  - Do both GSI and Kerberos authn with MechGlue
- Can be used with other applications
  - rsync, svn, SFTP, ssh port forwarding, etc.

HPN-SSH Issues

- Users are accustomed to using scp/sftp to transfer files to/from login nodes
  - Now that HPN-scp can be a bandwidth hog like GridFTP, interactive login nodes are no longer the best place for it
  - 3rd-party transfer
    - scp a:file b:file2 = (ssh a; scp a:file b:file2)
    - Tricky to configure on hosts where you don't want to give interactive ssh access?

TeraGrid HPN-SSH service

- Currently available with default SSH service on many HPC login hosts
  - Login hosts running current GSISSH include HPN-SSH patches
- Likely to move HPN-SSH to dedicated data service nodes
  - e.g. existing GridFTP data server pools

WAN Filesystems

- A common filesystem (or at least the transparent semblance of one) is one of the enhancements users most commonly request for TeraGrid
- WAN Filesystems on TeraGrid:
  - Lustre-WAN
  - GPFS-WAN

TeraGrid Lustre-WAN

- Active TeraGrid sites include PSC, Indiana Univ., and ORNL. NCAR to be added soon
- We've seen good performance across the TeraGrid network
  - As high as 977 MB/s for a single client over 10 GbE
- 2 active Lustre-WAN filesystems (PSC & IU)
- Currently experimenting with an alpha version of Lustre that supports Kerberos authentication, encryption (metadata, data) & UID mapping
  - Uses some of the NFSv4 infrastructure built by UMICH

TeraGrid GPFS-WAN

- 700 TB GPFS-WAN filesystem housed at San Diego Supercomputer Center
- Currently mounted across TeraGrid network at SDSC, NCSA, ANL and PSC
- Divided into three categories
  - Collections: 150 TB
  - Projects: 475 TB - user projects apply for space
  - Scratch: 75 TB (purged periodically)
  - Note: GPFS-WAN filesystems are not backed up

Advanced Solutions

- TeraGrid staff actively work on custom solutions to meet the needs of the NSF user community
- Examples:
  - DMOVER
  - Parallel Direct I/O (PDIO)

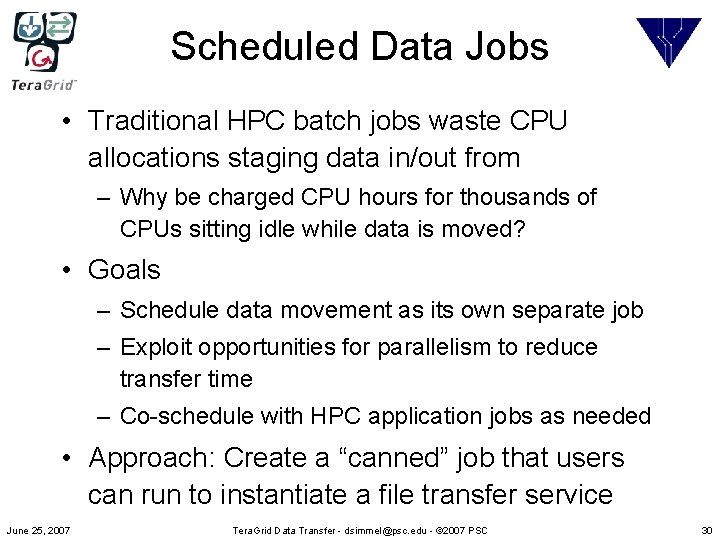

Scheduled Data Jobs

- Traditional HPC batch jobs waste CPU allocations staging data in and out
  - Why be charged CPU hours for thousands of CPUs sitting idle while data is moved?
- Goals
  - Schedule data movement as its own separate job
  - Exploit opportunities for parallelism to reduce transfer time
  - Co-schedule with HPC application jobs as needed
- Approach: Create a "canned" job that users can run to instantiate a file transfer service

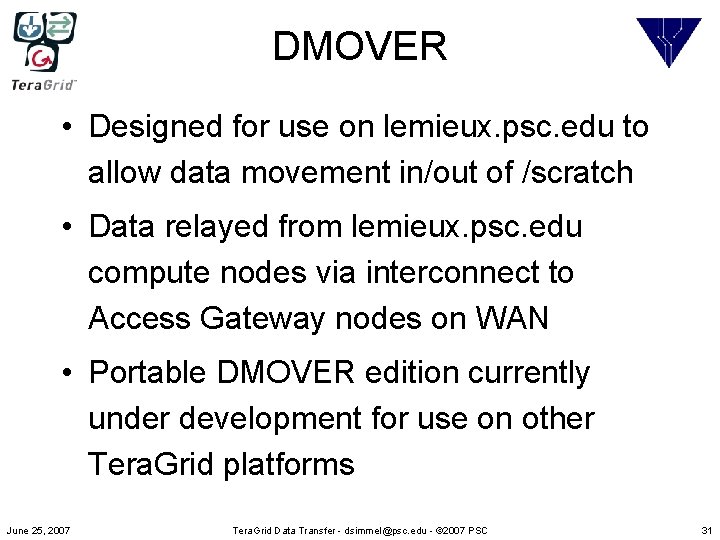

DMOVER

- Designed for use on lemieux.psc.edu to allow data movement in/out of /scratch
- Data relayed from lemieux.psc.edu compute nodes via interconnect to Access Gateway nodes on WAN
- Portable DMOVER edition currently under development for use on other TeraGrid platforms

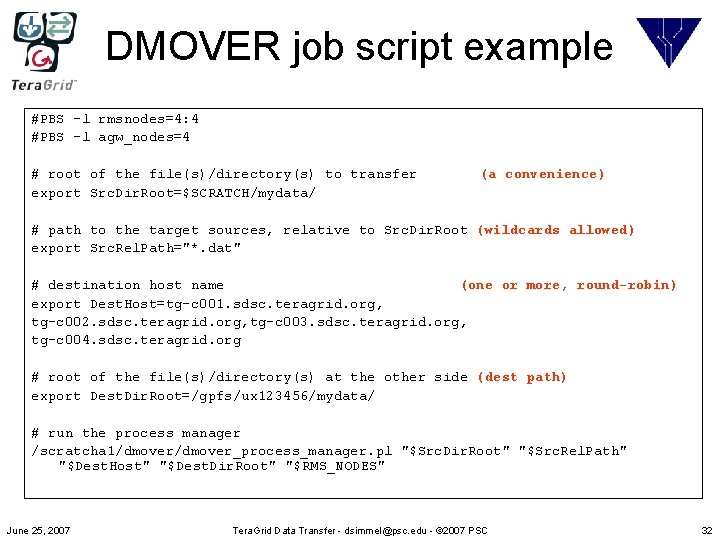

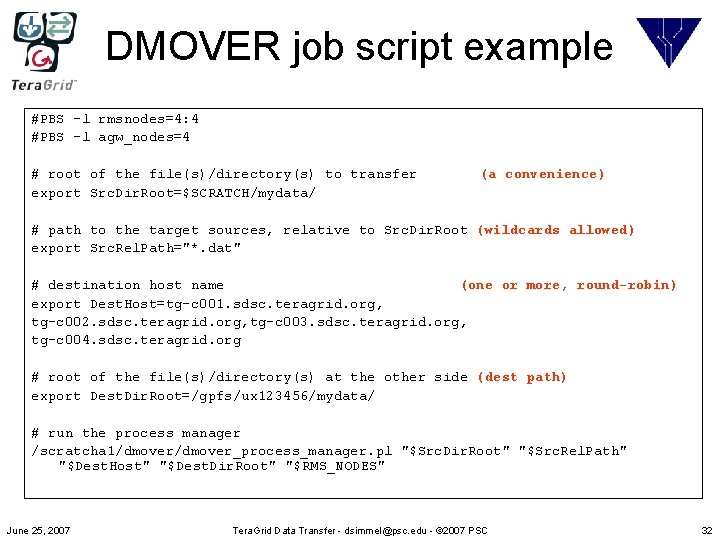

DMOVER job script example

    #PBS -l rmsnodes=4:4
    #PBS -l agw_nodes=4

    # root of the file(s)/directory(s) to transfer (a convenience)
    export SrcDirRoot=$SCRATCH/mydata/

    # path to the target sources, relative to SrcDirRoot (wildcards allowed)
    export SrcRelPath="*.dat"

    # destination host name (one or more, round-robin)
    export DestHost=tg-c001.sdsc.teragrid.org,tg-c002.sdsc.teragrid.org,tg-c003.sdsc.teragrid.org,tg-c004.sdsc.teragrid.org

    # root of the file(s)/directory(s) at the other side (dest path)
    export DestDirRoot=/gpfs/ux123456/mydata/

    # run the process manager
    /scratcha1/dmover_process_manager.pl "$SrcDirRoot" "$SrcRelPath" "$DestHost" "$DestDirRoot" "$RMS_NODES"

DMOVER Process Perl Script

    for ($i=0; $i<=$#file; $i++){
        # pick host IDs, unless we just got them from wait()
        if ($i<$nStreams){
            $shostID = $i % $ENV{'RMS_NODES'};
            $dhostID = $i % ($#host+1);
        }
        $dest=$host[$dhostID];

        # command to launch the transfer agent
        $cmd = "prun -N 1 -n 1 -B `offset2base $shostID` $DMOVERHOME/dmover_transfer.sh $SrcDirRoot $file[$i] $dest $DestDirRoot $shostID";

        $child = fork();
        if ($child){
            # parent: remember which host pair this child is using
            $cid{$child}[0] = $shostID;
            $cid{$child}[1] = $dhostID;
        }
        if (!$child){
            # child: run the transfer, then exit so it does not continue the loop
            # (the exit is not on the slide; added here as the presumed intent)
            $ret = system($cmd);
            exit($ret >> 8);
        }

        # keep the number of streams constant
        if ($nStreams<=$i+1){
            $pid = wait;
            # re-use whichever source host just finished...
            $shostID = $cid{$pid}[0];
            # re-use whichever remote host just finished...
            $dhostID = $cid{$pid}[1];
            delete($cid{$pid});
        }
    }

    # wait for the remaining transfers to finish
    while (-1 != wait){
        sleep(1);
    }
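The scheduling idea in the Perl loop (assign host pairs round-robin for the first batch, then hand each new file the host pair of whichever transfer just finished) can be sketched in Python. This is an illustration under stated assumptions, not DMOVER itself: it swaps fork()/wait() for a thread pool, and `run_transfers` and the `transfer` callback are hypothetical names.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def run_transfers(files, n_src_hosts, dest_hosts, n_streams, transfer):
    """Run transfer(name, src_id, dest) for each file with a fixed stream count."""
    results = []
    running = {}  # future -> (source host ID, destination host index)
    with ThreadPoolExecutor(max_workers=n_streams) as pool:
        for i, name in enumerate(files):
            if i < n_streams:
                # first batch: pick host IDs round-robin
                src_id, dst_id = i % n_src_hosts, i % len(dest_hosts)
            else:
                # keep the stream count constant: wait for one transfer to
                # finish and re-use the host pair it was occupying
                done, _ = wait(list(running), return_when=FIRST_COMPLETED)
                finished = next(iter(done))
                src_id, dst_id = running.pop(finished)
                results.append(finished.result())
            future = pool.submit(transfer, name, src_id, dest_hosts[dst_id])
            running[future] = (src_id, dst_id)
        # drain the transfers still in flight
        for future in running:
            results.append(future.result())
    return results


# Dummy transfer that only reports its assignment; names are placeholders.
demo = run_transfers([f"f{i}.dat" for i in range(6)], 2, ["hostA", "hostB"], 2,
                     lambda name, src_id, dest: (name, src_id, dest))
print(demo)
```

Re-using the finished pair, rather than continuing round-robin, keeps every source and destination host busy even when file sizes differ widely.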

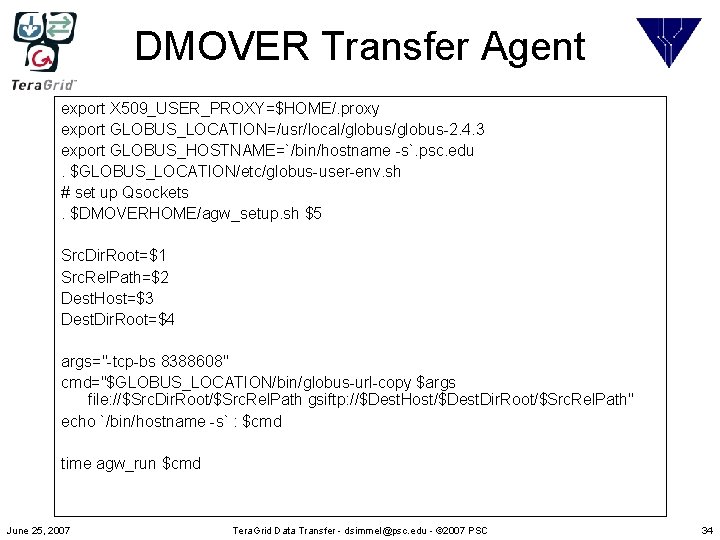

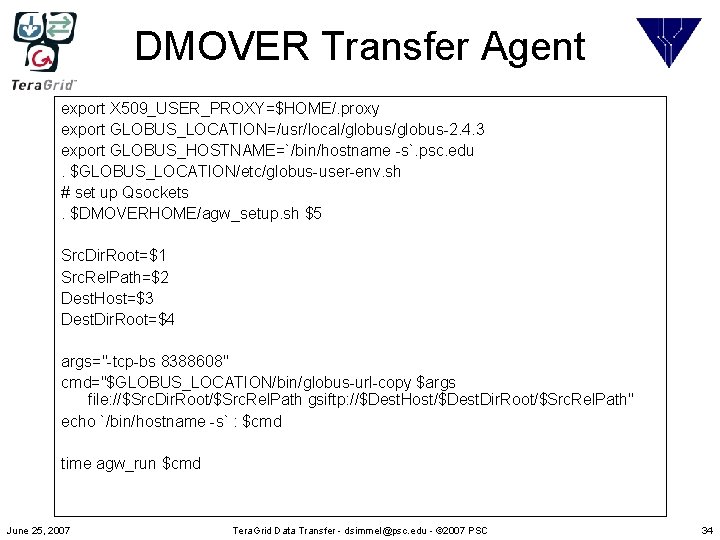

DMOVER Transfer Agent

    export X509_USER_PROXY=$HOME/.proxy
    export GLOBUS_LOCATION=/usr/local/globus-2.4.3
    export GLOBUS_HOSTNAME=`/bin/hostname -s`.psc.edu
    . $GLOBUS_LOCATION/etc/globus-user-env.sh

    # set up Qsockets
    . $DMOVERHOME/agw_setup.sh $5

    SrcDirRoot=$1
    SrcRelPath=$2
    DestHost=$3
    DestDirRoot=$4

    args="-tcp-bs 8388608"
    cmd="$GLOBUS_LOCATION/bin/globus-url-copy $args file://$SrcDirRoot/$SrcRelPath gsiftp://$DestHost/$DestDirRoot/$SrcRelPath"

    echo `/bin/hostname -s` : $cmd
    time agw_run $cmd

What about MPPs?

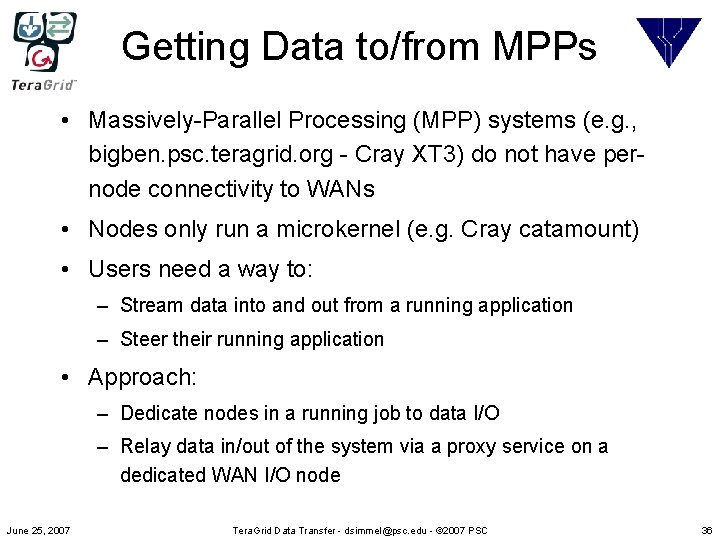

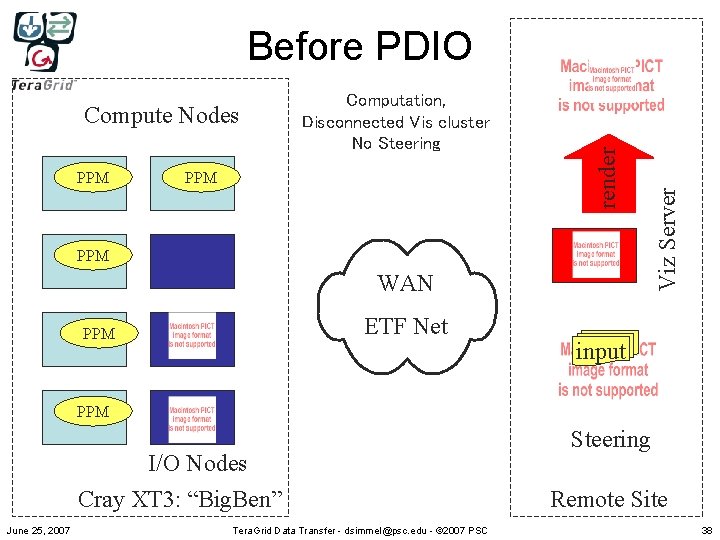

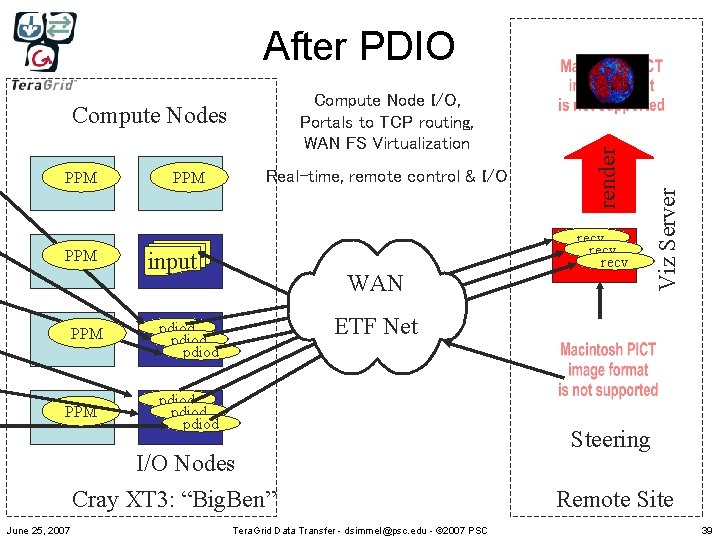

Getting Data to/from MPPs

- Massively-Parallel Processing (MPP) systems (e.g., bigben.psc.teragrid.org - Cray XT3) do not have per-node connectivity to WANs
- Nodes only run a microkernel (e.g. Cray Catamount)
- Users need a way to:
  - Stream data into and out of a running application
  - Steer their running application
- Approach:
  - Dedicate nodes in a running job to data I/O
  - Relay data in/out of the system via a proxy service on a dedicated WAN I/O node

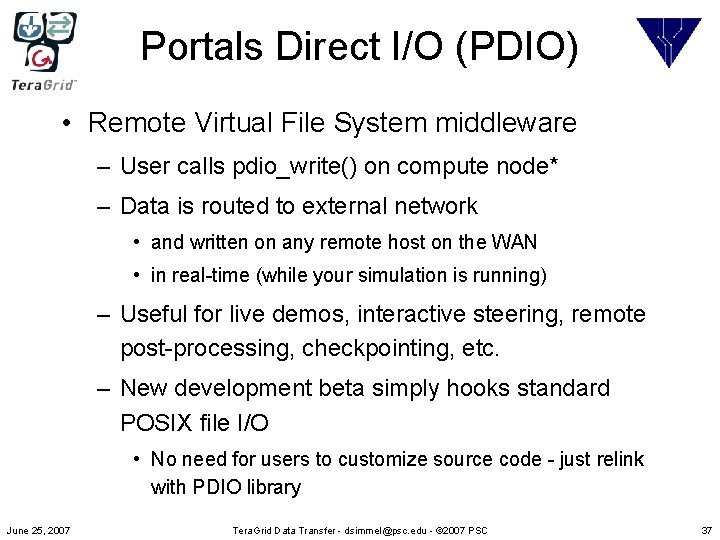

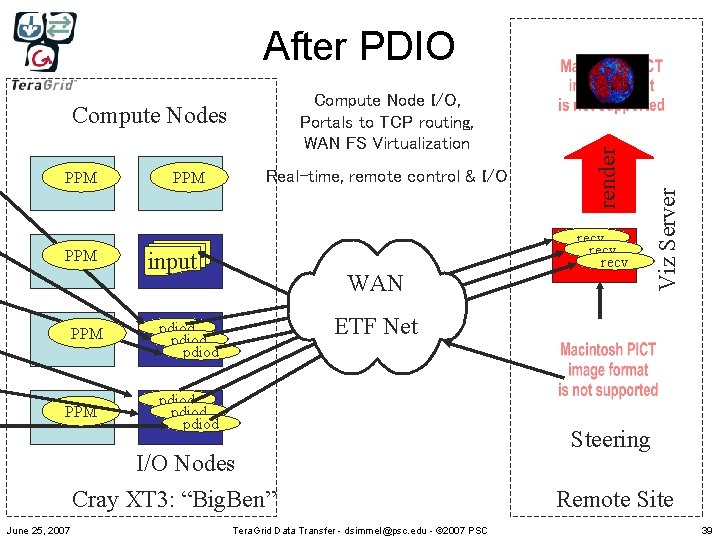

Portals Direct I/O (PDIO)

- Remote Virtual File System middleware
  - User calls pdio_write() on compute node*
  - Data is routed to external network
    - and written on any remote host on the WAN
    - in real-time (while your simulation is running)
  - Useful for live demos, interactive steering, remote post-processing, checkpointing, etc.
  - New development beta simply hooks standard POSIX file I/O
    - No need for users to customize source code - just relink with PDIO library

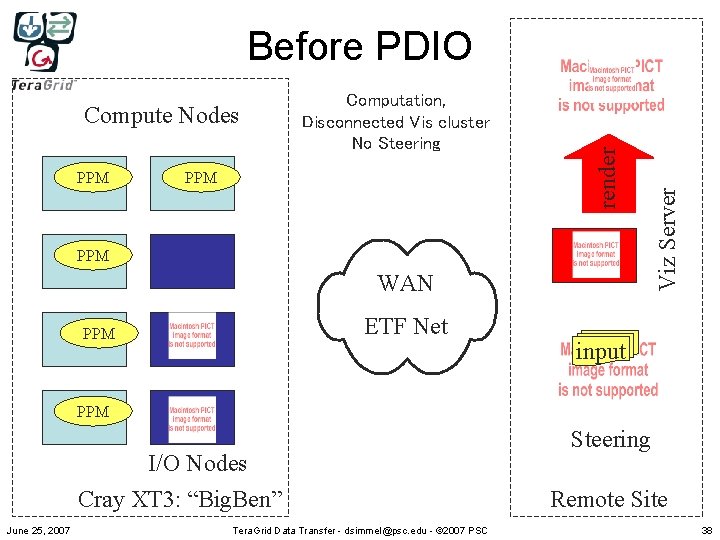

[Diagram: Before PDIO. PPM runs on the compute nodes of the Cray XT3 "BigBen" and writes through the I/O nodes. The viz server at the remote site sits across the WAN (ETF Net). Labels: computation; disconnected vis cluster; no steering; input; render; steering.]

[Diagram: After PDIO. PPM runs on the compute nodes of the Cray XT3 "BigBen"; pdiod daemons on the I/O nodes relay data across the WAN (ETF Net) to a recv process on the viz server at the remote site. Labels: real-time, remote control & I/O; compute node I/O, Portals to TCP routing, WAN FS virtualization; input; render; steering.]

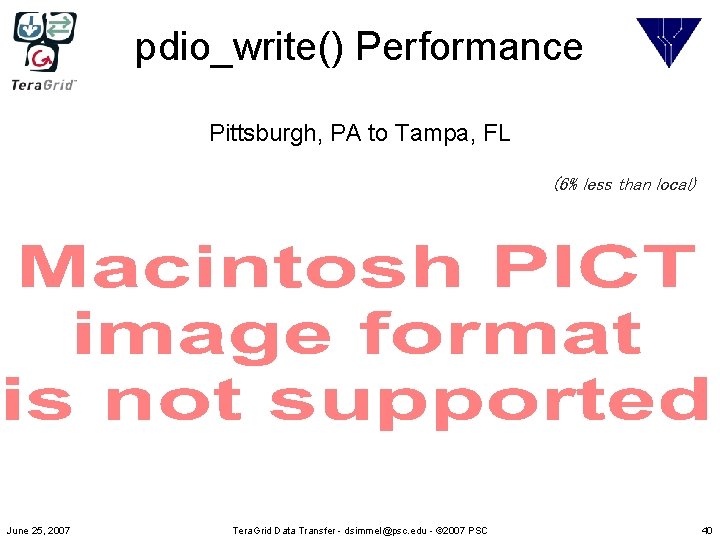

pdio_write() Performance

Pittsburgh, PA to Tampa, FL (6% less than local)

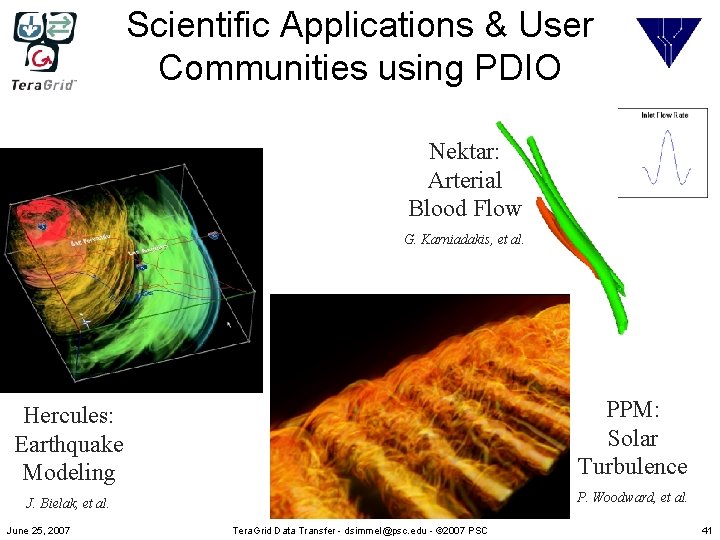

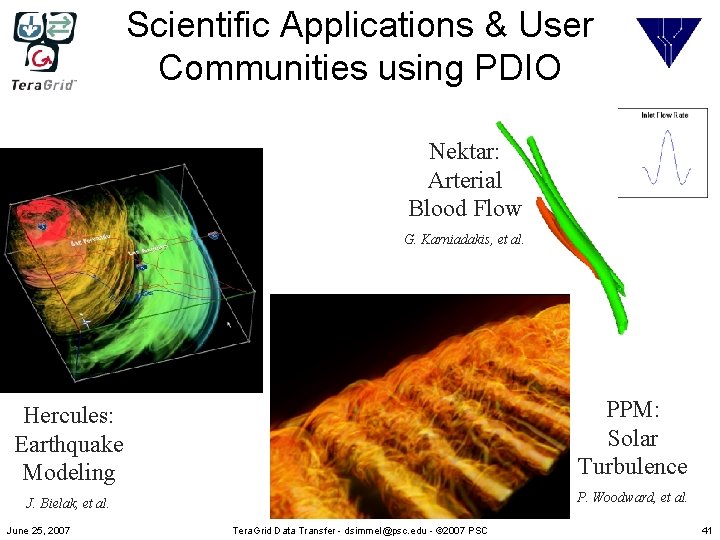

Scientific Applications & User Communities using PDIO

- Nektar: Arterial Blood Flow - G. Karniadakis, et al.
- Hercules: Earthquake Modeling - J. Bielak, et al.
- PPM: Solar Turbulence - P. Woodward, et al.

Acknowledgements

- Chris Rapier, PSC
  - HPN-SSH research, development and presentation materials
- Kathy Benninger, PSC
  - Network analysis of TeraGrid GridFTP server behavior, transfer performance and TCP tuning recommendations
- Doug Balog, PSC; Steve Simms, Indiana U.
  - Lustre-WAN
- Nathan Stone, PSC
  - DMOVER, Parallel Direct I/O