Data on OSG Frank Würthwein OSG Executive Director Professor of Physics UCSD/SDSC

The Basic Problem
F(X) -> Y
• F = some transformation of the input data X
• Y = output data delivered to Y
August 9th, 2017

Basic Solution
Have both the input and the output delivered in a controlled fashion as part of the workflow.
=> HTCondor file transfer as the default solution.
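As a rough illustration of what "HTCondor file transfer" means for a job F(X) -> Y, the sketch below builds the text of a submit description in Python. The helper `make_submit_description` and the file names `transform.sh`, `x.dat`, and `y.dat` are hypothetical; the submit commands themselves (`should_transfer_files`, `when_to_transfer_output`, `transfer_input_files`, `transfer_output_files`) are standard HTCondor ones.

```python
def make_submit_description(executable, inputs, outputs):
    """Return submit-description text that moves inputs and outputs with the job."""
    lines = [
        f"executable = {executable}",
        # Ask HTCondor to ship files itself instead of relying on a shared filesystem.
        "should_transfer_files = YES",
        "when_to_transfer_output = ON_EXIT",
        f"transfer_input_files = {', '.join(inputs)}",
        f"transfer_output_files = {', '.join(outputs)}",
        "queue",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: transform.sh reads x.dat (X) and writes y.dat (Y).
submit_text = make_submit_description("transform.sh", ["x.dat"], ["y.dat"])
print(submit_text)
```

The point of the design is that both X and Y travel with the job, so the submit host is the only place that has to hold the data.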

Benchmarking HTCondor File Transfer
(Note: a CHEP paper to document this is written.)
• Initiated by GlueX and Jefferson Lab.
• They wanted to know whether a single submit host at JLab can support GlueX's operational needs.
• The concern was primarily the IO in and out of the system.
• OSG did the test on our system, then provided instructions for deployment at JLab.
• We then repeated the test on their system, and helped debug until the expected performance was achieved.

Why do we need more?

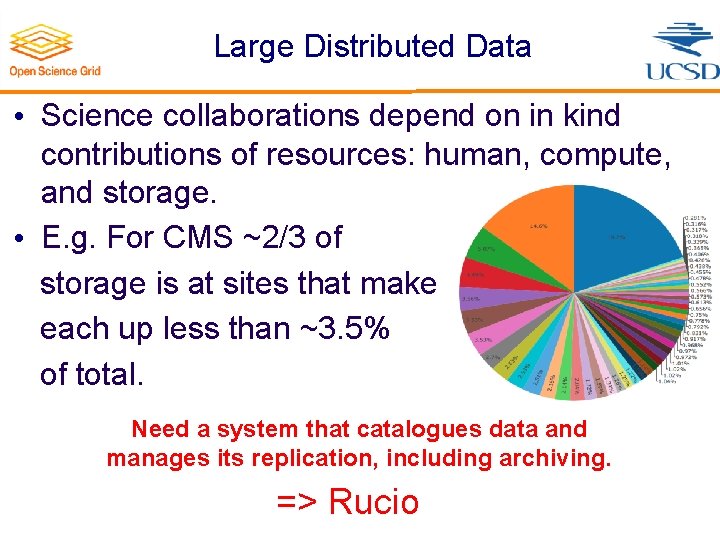

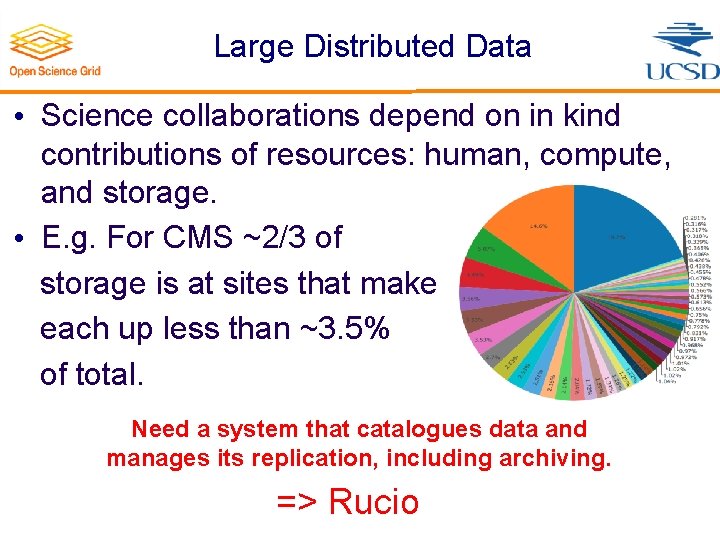

Large Distributed Data
• Science collaborations depend on in-kind contributions of resources: human, compute, and storage.
• E.g. for CMS, ~2/3 of storage is at sites that each make up less than ~3.5% of the total.
Need a system that catalogues data and manages its replication, including archiving.
=> Rucio

Sharing of data across organizations
• Hospitals tend to consider their patient data as a form of intellectual property.
  − Even after de-identification, they want to have some control over its use.
• Machine Learning Bioinformatics wants to pool de-identified patient data from as many hospitals as possible, to maximize statistics.
=> OSG Data Federation

OSG Data Federation Goals
• Have as many data sources included in the federation as possible.
  − Recruit organizations that own data and want to make it available.
  − Support a wide variety of authz across these data:
    ▪ Strictly public to all
    ▪ Public to collaborators only
    ▪ Private to only the owner's organization
  − We provide a data-rich computing environment across these organizations.
• Example science motivations:
  − Clinical bioinformatics, as described on the previous page
  − Multi-messenger astronomy/astrophysics
  − Lots of other sciences that share data from multiple independent organizations.

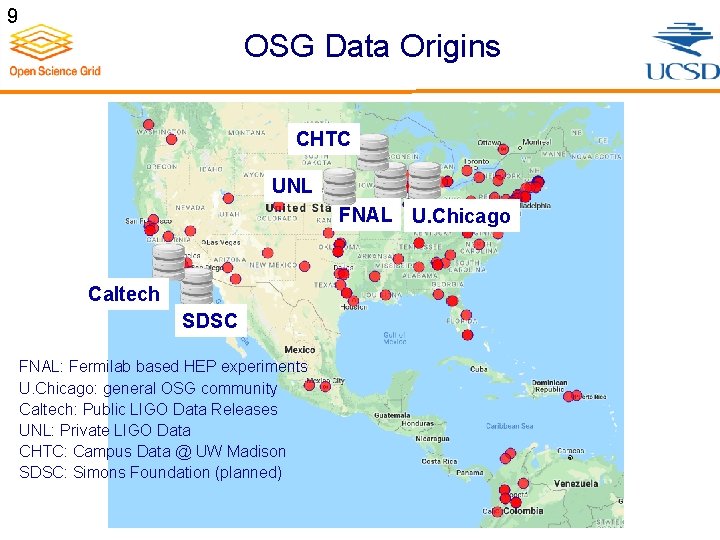

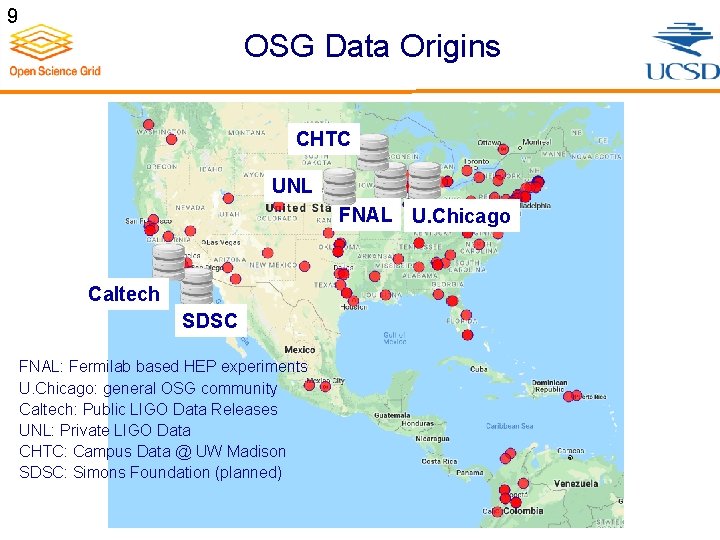

OSG Data Origins
• FNAL: Fermilab-based HEP experiments
• U. Chicago: general OSG community
• Caltech: public LIGO data releases
• UNL: private LIGO data
• CHTC: campus data @ UW Madison
• SDSC: Simons Foundation (planned)

Rucio vs Data Federation
• Rucio requires a central authority that registers all the data & endpoints.
  − Space & data are “controlled” by Rucio globally.
• Data Federation is much less restrictive regarding cohesion among the organizations providing the data.
  − Organizations need not know of each other and can still be useful to scientists for a correlated analysis.
  − OSG manages a global top-level namespace to avoid naming conflicts.
    ▪ E.g. /<organization name>/ or some such
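To make the idea of a managed top-level namespace concrete, here is a minimal sketch of a registry that hands out `/<organization name>/` prefixes and refuses a second claim on the same name. The class `TopLevelNamespace`, its methods, and the example names are hypothetical and are not taken from the OSG implementation.

```python
class TopLevelNamespace:
    """Toy registry of top-level prefixes, one owner per prefix (illustrative only)."""

    def __init__(self):
        self._owners = {}  # prefix such as "/ligo/" -> owning organization

    def claim(self, name, organization):
        prefix = f"/{name.strip('/')}/"
        owner = self._owners.get(prefix)
        if owner is not None and owner != organization:
            # This is the naming conflict the central registry exists to prevent.
            raise ValueError(f"{prefix} is already claimed by {owner}")
        self._owners[prefix] = organization
        return prefix

    def owner_of(self, path):
        parts = path.split("/")
        if len(parts) < 2 or parts[0] != "" or parts[1] == "":
            raise ValueError(f"not an absolute federation path: {path!r}")
        return self._owners[f"/{parts[1]}/"]


registry = TopLevelNamespace()
ligo_prefix = registry.claim("ligo", "LIGO")
registry.claim("des", "Dark Energy Survey")
owner = registry.owner_of("/ligo/frames/example.gwf")

try:
    registry.claim("ligo", "Some Other Org")
    conflict_detected = False
except ValueError:
    conflict_detected = True
```

Only the top level is coordinated here; everything below a prefix is left to the owning organization, which matches the loose cohesion described above.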

Aside on Replication
• The OSG Data Federation allows for replication across multiple origins by design.
  − Two origins can provide overlapping namespace.
  − However, this is a “business rule” without any enforcement by the federation infrastructure.
    ▪ You can serve two different objects under the same name and thus screw your users up royally.
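Since the federation does not enforce that overlapping names hold identical objects, an organization running two origins might check this itself. The sketch below compares checksums for names present on both origins. It assumes each origin can be represented as a mapping from path to file content; the function `conflicting_names` and the sample data are hypothetical.

```python
import hashlib


def conflicting_names(origin_a, origin_b):
    """Return paths served by both origins whose contents differ.

    Each origin is modelled as a dict of path -> bytes (an assumption made
    for illustration; a real check would read or checksum the stored files).
    """
    conflicts = []
    for path in sorted(origin_a.keys() & origin_b.keys()):
        digest_a = hashlib.sha256(origin_a[path]).hexdigest()
        digest_b = hashlib.sha256(origin_b[path]).hexdigest()
        if digest_a != digest_b:
            conflicts.append(path)
    return conflicts


origin_one = {"/org/run1.dat": b"alpha", "/org/run2.dat": b"beta"}
origin_two = {"/org/run1.dat": b"alpha", "/org/run2.dat": b"BETA", "/org/run3.dat": b"gamma"}
bad = conflicting_names(origin_one, origin_two)
print(bad)
```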

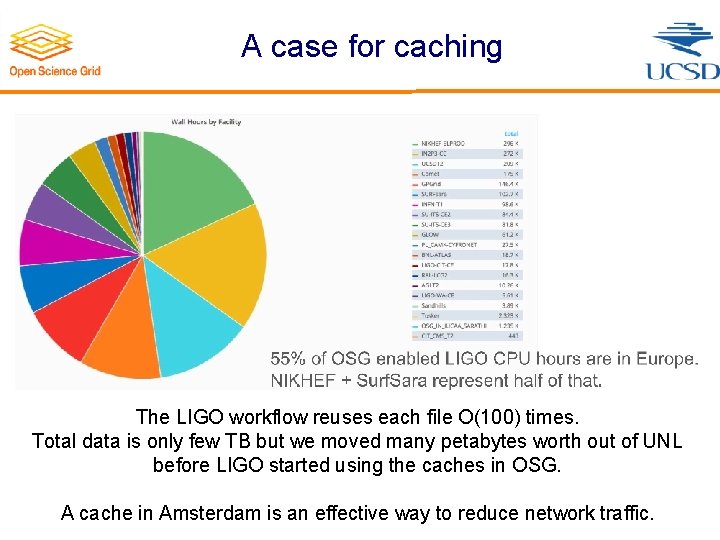

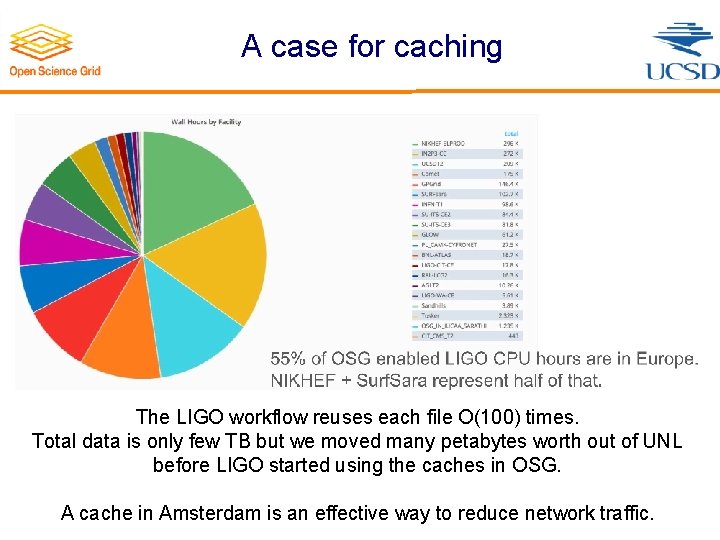

A case for caching
• The LIGO workflow reuses each file O(100) times.
• Total data is only a few TB, but we moved many petabytes' worth out of UNL before LIGO started using the caches in OSG.
• A cache in Amsterdam is an effective way to reduce network traffic.

A case against caching
• Fundamental to caching is the lack of central control over how space is used.
  − Workflows drive what gets written without any explicit scheduling control.
  − Each individual cache decides what to delete, and when, based on some algorithm.
• Caches are prone to thrash if the working set of data is larger than the cache size.
  − This is a fundamental threat to stability.
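The thrashing risk can be seen in a toy simulation. The sketch below assumes a least-recently-used (LRU) eviction policy and a workload that scans the same ten files 100 times; both are assumptions for illustration, since the slides do not say which algorithm the caches use. The function `lru_hit_rate` is hypothetical.

```python
from collections import OrderedDict


def lru_hit_rate(accesses, capacity):
    """Fraction of accesses served from an LRU cache holding `capacity` files."""
    cache = OrderedDict()
    hits = 0
    for name in accesses:
        if name in cache:
            hits += 1
            cache.move_to_end(name)        # mark as most recently used
        else:
            cache[name] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used file
    return hits / len(accesses)


files = [f"file{i}" for i in range(10)]
workload = files * 100                     # every file is reused 100 times

fits = lru_hit_rate(workload, capacity=10)    # working set fits in the cache
thrash = lru_hit_rate(workload, capacity=9)   # working set is one file too large
print(fits, thrash)
```

When the working set fits, only the first pass misses. With a cache just one file too small, the repeating scan evicts each file shortly before it is needed again, so every access misses and the cache adds write load without saving any network traffic.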

Ameliorating the bad
• Measure activity by namespace.
  − First step in separating communities from each other.
• We could implement “quotas” per namespace in caches.
  − Caches are namespace aware and can be configured to behave differently for different parts of the namespace.
• Release valve against thrashing.
  − Caches stop writing if too busy, i.e. rather than thrash, the cache becomes a proxy for remote reading.
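The three measures above can be combined in one small model. This is a sketch under stated assumptions, not how the OSG caches are implemented: the class `NamespaceAwareCache`, the `max_pending_writes` threshold used as a "too busy" signal, and the byte quotas are all hypothetical.

```python
class NamespaceAwareCache:
    """Toy cache with per-namespace accounting, quotas, and a release valve."""

    def __init__(self, quotas, max_pending_writes):
        self.quotas = dict(quotas)           # namespace -> maximum bytes to store
        self.used = {ns: 0 for ns in quotas}
        self.stored = {}                     # path -> size in bytes
        self.accesses = {}                   # namespace -> number of reads seen
        self.max_pending_writes = max_pending_writes
        self.pending_writes = 0              # would be updated by the write path

    def read(self, path, size):
        ns = "/" + path.split("/")[1] + "/"
        self.accesses[ns] = self.accesses.get(ns, 0) + 1   # measure by namespace
        if path in self.stored:
            return "hit"
        if self.pending_writes >= self.max_pending_writes:
            return "proxied"                 # release valve: too busy to write
        if self.used.get(ns, 0) + size > self.quotas.get(ns, 0):
            return "proxied"                 # namespace has no quota or is over it
        self.stored[path] = size
        self.used[ns] += size
        return "cached"


cache = NamespaceAwareCache({"/ligo/": 100}, max_pending_writes=2)
first = cache.read("/ligo/a", 60)    # fits within the quota, so it is stored
second = cache.read("/ligo/a", 60)   # served from the cache
third = cache.read("/ligo/b", 60)    # would exceed the 100-byte quota
cache.pending_writes = 2             # simulate a busy cache
fourth = cache.read("/ligo/c", 10)   # passed through without writing
```

In both "proxied" cases the client still gets its data from the origin; the cache simply declines to spend disk space or write bandwidth on it.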

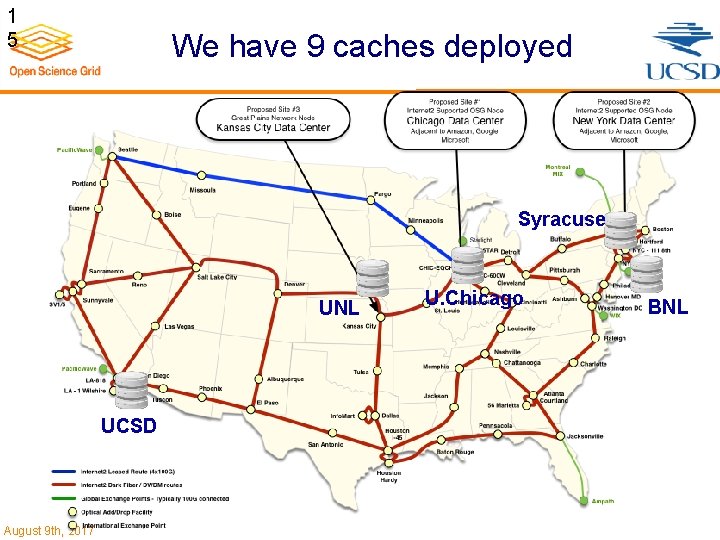

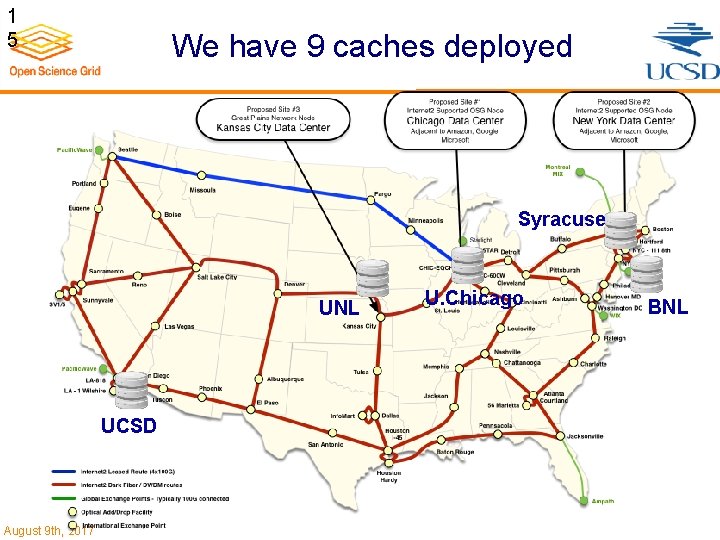

We have 9 caches deployed
Sites labeled on the slide: Syracuse, UNL, UCSD, U. Chicago, BNL

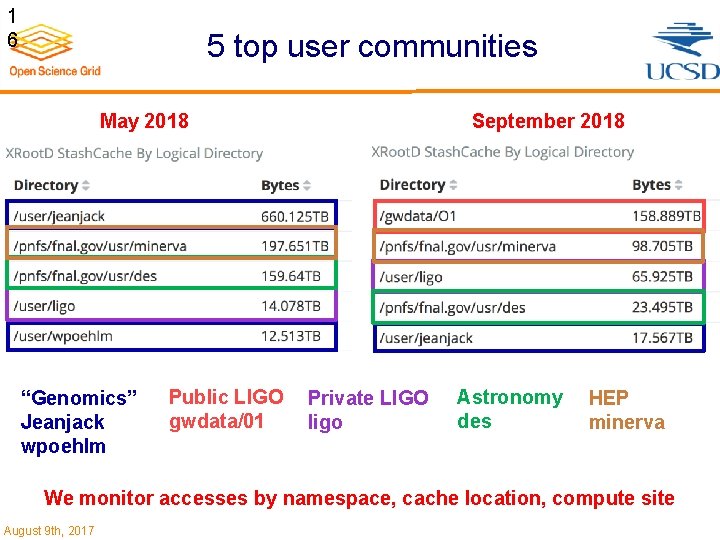

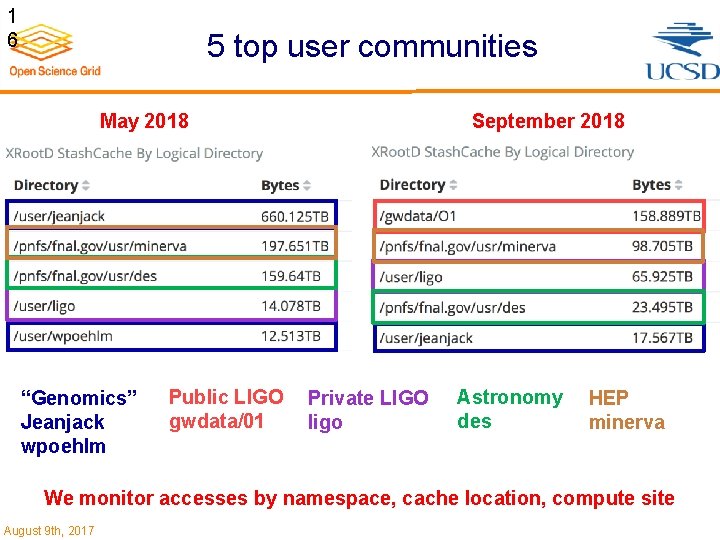

5 top user communities
• “Genomics”: Jeanjack, wpoehlm
• Public LIGO: gwdata/01
• Private LIGO: ligo
• Astronomy: des
• HEP: minerva
Plot labels: May 2018, September 2018
We monitor accesses by namespace, cache location, and compute site.

A case for legacy application support
• MINERVA transitioned to OSG in a significant way, and easily, because we provided them with the illusion of a uniform filesystem everywhere they landed.
• Latency was hidden via the caches.

Aside on IO Latency
• Stating the obvious:
  − There are plenty of applications dominated by kB reads, especially in the life sciences.
  − Those obviously need to bring their data onto the worker node they are executing on.
• The applications on OSG span the entire spectrum from CPU dominated to IO-latency dominated.
• As we migrate from a compute-rich to a data-rich compute environment, we ought to pay some attention to what jobs are doing on our infrastructure.

Aside on Strategy
• For ~10 years OSG had no storage it controlled.
• Then Rob G. donated a PB to osg-connect and called it stash.
• Now we have the opportunity to gain control of disk space at many locations around the world.
  − By “selling” the OSG Federation and its caching infrastructure.
  − By showing that it's useful to science.
• As future software innovations provide new opportunities for use of the storage we control, we can simply deploy them.

Longer Term Technology Opportunities
• User-definable groups for authz on the access to data in the federation.
• Work out the distinction between:
  − Storage hierarchy (archive vs compute-ready)
  − One-time vs multiple-use expectation
  − Managed replication vs caching
• The LHC will make up its mind on these issues because of its exabyte challenge.
• The rest of science will need people with expertise on this as well, sooner rather than later.
• We have an opportunity to engage the LHC on this with an eye towards translating what we learn to the rest of science.

Osg architecture

Osg architecture Osg walk in

Osg walk in Frank william abagnale jr. young

Frank william abagnale jr. young The text-based director, also known as the

The text-based director, also known as the Deberes de un director de escuela

Deberes de un director de escuela Director penitenciar miercurea ciuc

Director penitenciar miercurea ciuc Tanggungjawab pengarah syarikat

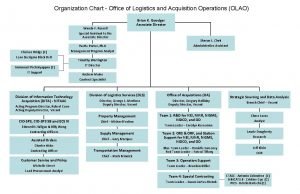

Tanggungjawab pengarah syarikat Logistics organizational chart

Logistics organizational chart Director comercial turistico tenerife

Director comercial turistico tenerife Oliver stone

Oliver stone Salaam bombay director

Salaam bombay director Role brief

Role brief Types of span of control in organization

Types of span of control in organization Director ict

Director ict Usana ranking in the world

Usana ranking in the world Floor manager hand signals

Floor manager hand signals Fashion show staff positions

Fashion show staff positions Abstract forms and shapes on a bare stage

Abstract forms and shapes on a bare stage Electron donating groups examples

Electron donating groups examples Vestuario del teatro romano

Vestuario del teatro romano El pianista director

El pianista director