Use of HPC in CMS Frank Würthwein SDSC/UCSD

- Slides: 6

Use of HPC in CMS Frank Würthwein SDSC/UCSD 3/15/16 OSG AHM 1

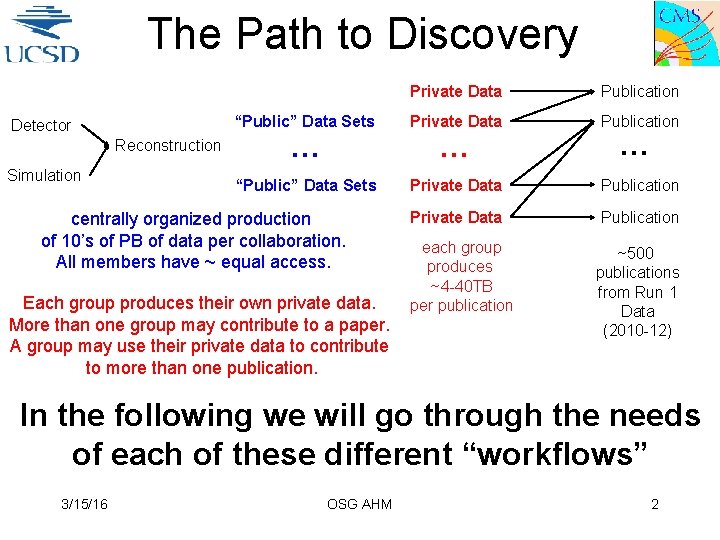

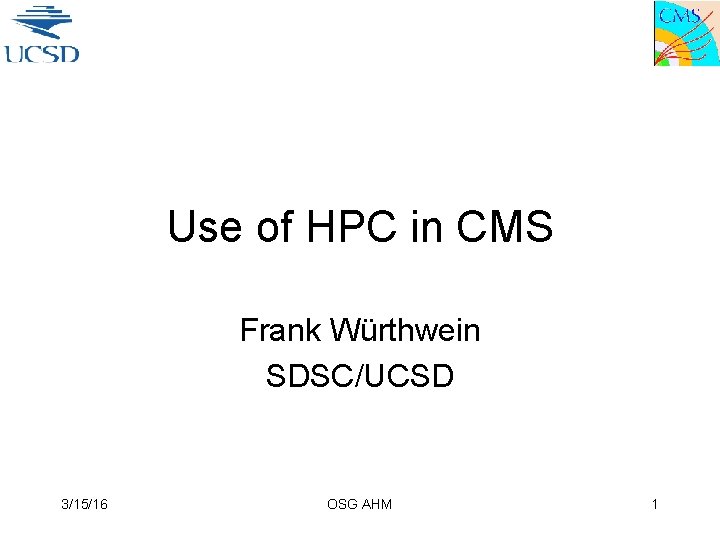

The Path to Discovery

Workflow diagram labels: Detector, Simulation, Reconstruction, “Public” Data Sets, Private Data, Publication.

- “Public” data sets: centrally organized production of 10s of PB of data per collaboration. All members have roughly equal access.
- Private data: each group produces its own, ~4-40 TB per publication.
- More than one group may contribute to a paper, and a group may use its private data to contribute to more than one publication.
- ~500 publications from Run 1 data (2010-12).

In the following we will go through the needs of each of these different “workflows”.

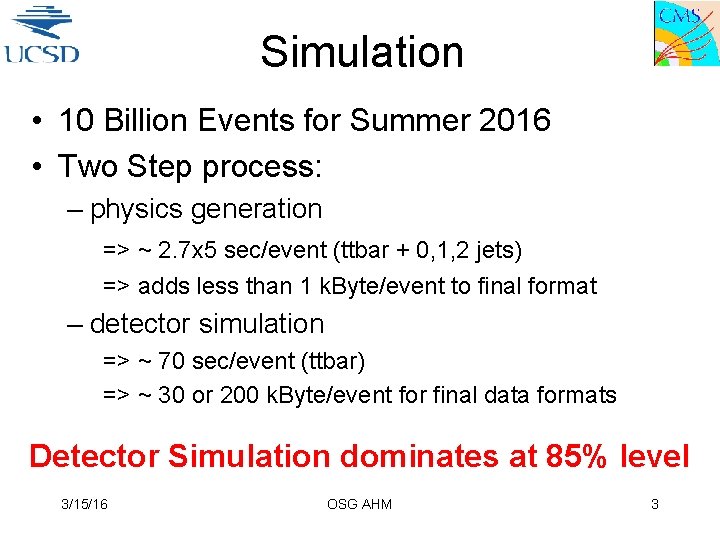

Simulation

- 10 billion events for Summer 2016
- Two-step process:
  - physics generation: ~2.7 x 5 sec/event (ttbar + 0, 1, 2 jets); adds less than 1 kByte/event to the final format
  - detector simulation: ~70 sec/event (ttbar); ~30 or 200 kByte/event for the final data formats

Detector simulation dominates at the 85% level.
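The per-event numbers on this slide can be turned into a rough CPU budget. The sketch below is a back-of-the-envelope calculation, not a CMS tool: it assumes the generation cost is the product 2.7 x 5 = 13.5 sec/event, that the ttbar benchmark is representative of all 10 billion events, and it ignores reconstruction and any inefficiency. The function and constant names are made up for illustration.

```python
# Per-event CPU costs quoted on the slide (ttbar benchmark).
GEN_SEC_PER_EVENT = 2.7 * 5      # physics generation, read here as 2.7 x 5 s (assumption)
SIM_SEC_PER_EVENT = 70.0         # detector simulation
N_EVENTS = 10_000_000_000        # 10 billion events for Summer 2016

SECONDS_PER_YEAR = 365 * 24 * 3600


def simulation_fraction(gen_sec: float, sim_sec: float) -> float:
    """Share of generation + simulation CPU time spent in detector simulation."""
    return sim_sec / (gen_sec + sim_sec)


def core_years(n_events: int, sec_per_event: float) -> float:
    """Single-core years needed to process n_events at sec_per_event."""
    return n_events * sec_per_event / SECONDS_PER_YEAR


fraction = simulation_fraction(GEN_SEC_PER_EVENT, SIM_SEC_PER_EVENT)
total = core_years(N_EVENTS, GEN_SEC_PER_EVENT + SIM_SEC_PER_EVENT)

print(f"detector simulation share: {fraction:.0%}")
print(f"generation + simulation:   {total:,.0f} core-years")
```

Under these assumptions the detector simulation share comes out at about 84%, in line with the "85% level" quoted on the slide, and the total is on the order of 26,000 core-years.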

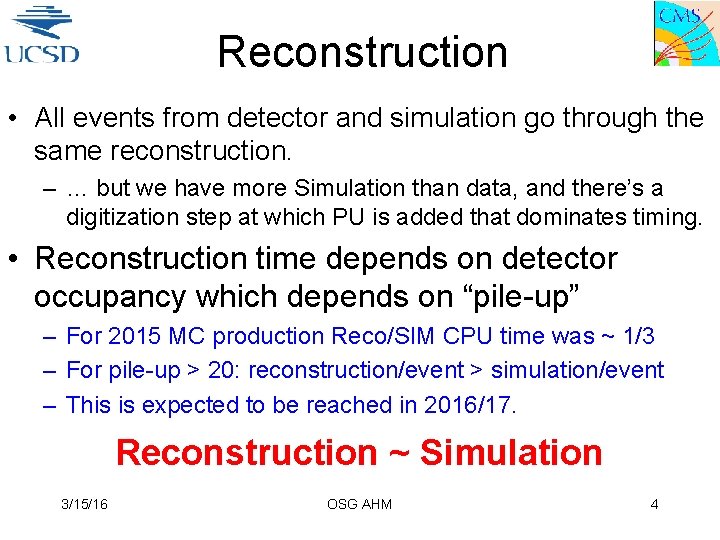

Reconstruction

- All events from detector and simulation go through the same reconstruction.
  - … but we have more simulation than data, and there’s a digitization step, at which pile-up (PU) is added, that dominates timing.
- Reconstruction time depends on detector occupancy, which depends on “pile-up”.
  - For 2015 MC production, Reco/SIM CPU time was ~1/3.
  - For pile-up > 20: reconstruction/event > simulation/event.
  - This is expected to be reached in 2016/17.

Reconstruction ~ Simulation

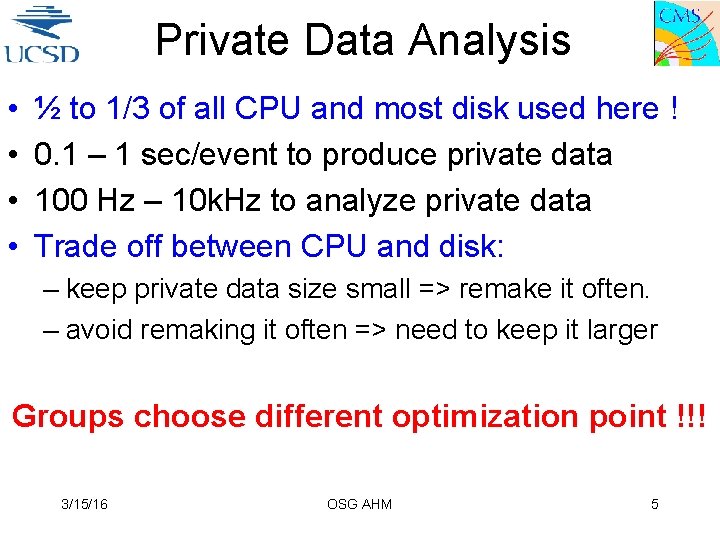

Private Data Analysis

- ½ to 1/3 of all CPU and most disk is used here!
- 0.1 – 1 sec/event to produce private data
- 100 Hz – 10 kHz to analyze private data
- Trade-off between CPU and disk:
  - keep private data size small => remake it often.
  - avoid remaking it often => need to keep it larger

Groups choose different optimization points!
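To make the CPU side of this trade-off concrete, the following sketch estimates what one remake of a private data set costs and how long one analysis pass over it takes, using the rate ranges from the slide. The sample size of 100 million events is a hypothetical value chosen for illustration, and the helper names are not from any CMS software.

```python
def remake_core_hours(n_events: int, sec_per_event: float) -> float:
    """CPU cost of (re)producing a private data set, in core-hours."""
    return n_events * sec_per_event / 3600.0


def analysis_pass_hours(n_events: int, rate_hz: float) -> float:
    """Time for one pass over the private data at a given event rate, in hours."""
    return n_events / rate_hz / 3600.0


N_EVENTS = 100_000_000  # hypothetical sample size, not from the slides

for sec_per_event in (0.1, 1.0):      # production cost range from the slide
    cost = remake_core_hours(N_EVENTS, sec_per_event)
    print(f"produce at {sec_per_event} s/event: {cost:,.0f} core-hours")

for rate_hz in (100.0, 10_000.0):     # analysis rate range from the slide
    hours = analysis_pass_hours(N_EVENTS, rate_hz)
    print(f"analyze at {rate_hz:,.0f} Hz: {hours:,.1f} hours per pass")
```

Each remake pays the production cost again, so a group that keeps its private data small and remakes it often multiplies the first number by the number of remakes, while a group that keeps a larger data set pays in disk instead.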

Where can HPC help?

- All steps except physics simulation require x86 architecture.
  - no benefit from BlueGene, GPU, MIC, …
- … but Comet (~50k cores), Cori (~50k cores), Stampede (~100k cores) and AWS are all x86 today.

Elastic scale-out into x86-based AWS & HPC for simulation & reconstruction
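For a sense of scale, the sketch below estimates how long the generation and detector simulation of 10 billion events would occupy each machine if the whole machine were available. This is an idealized upper bound on what scale-out could deliver, not a plan: it assumes the per-event costs from the Simulation slide (with generation read as 2.7 x 5 sec/event), perfect scaling, and a full-machine allocation, none of which is realistic. The `efficiency` parameter and all names are illustrative.

```python
SEC_PER_EVENT = 2.7 * 5 + 70.0   # generation + detector simulation (assumed reading)
N_EVENTS = 10_000_000_000        # 10 billion events

# Approximate core counts as quoted on the slide.
MACHINES = {"Comet": 50_000, "Cori": 50_000, "Stampede": 100_000}


def wall_days(n_events: int, sec_per_event: float, cores: int,
              efficiency: float = 1.0) -> float:
    """Wall-clock days to process n_events on `cores` cores.

    `efficiency` is a hypothetical fraction of cores doing useful work.
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return n_events * sec_per_event / (cores * efficiency) / 86400.0


for name, cores in MACHINES.items():
    days = wall_days(N_EVENTS, SEC_PER_EVENT, cores)
    print(f"{name:9s} ({cores:,} cores): {days:,.0f} days")
```

Even under these generous assumptions, a full ~50k-core machine would be busy for roughly half a year, which suggests why the slide frames HPC and AWS as an elastic addition to existing resources.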