
OSG Status

Frank Würthwein, OSG Executive Director, Professor of Physics, UCSD/SDSC

This presentation is an update to what was last presented to the OSG Council, the governing body of OSG, on October 16th, 2018.

We start with 2 slides that were shown previously.

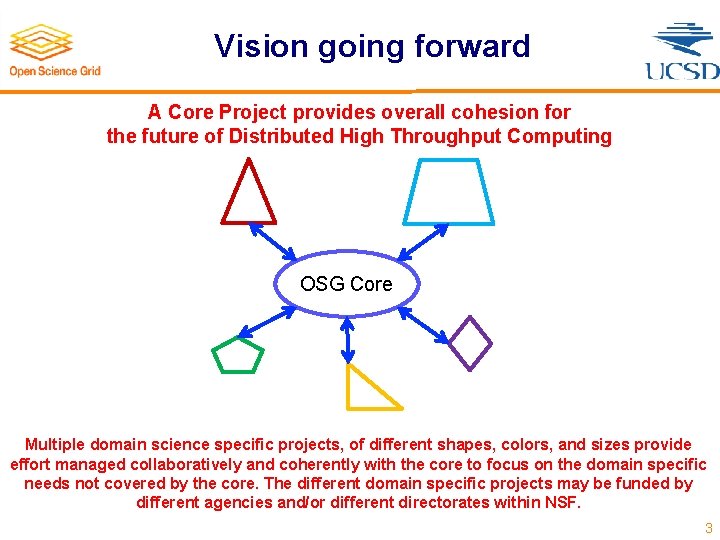

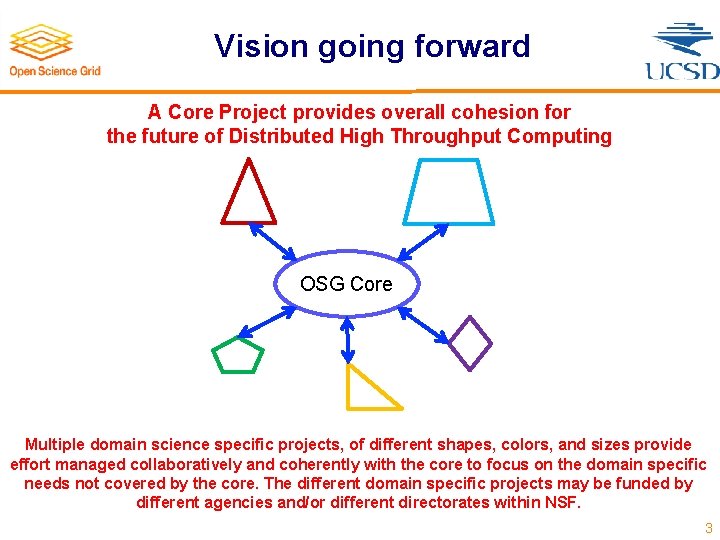

Vision going forward

A Core Project (OSG Core) provides overall cohesion for the future of Distributed High Throughput Computing.

Multiple domain-science-specific projects of different shapes, colors, and sizes provide effort that is managed collaboratively and coherently with the core, and that focuses on the domain-specific needs not covered by the core. The different domain-specific projects may be funded by different agencies and/or different directorates within NSF.

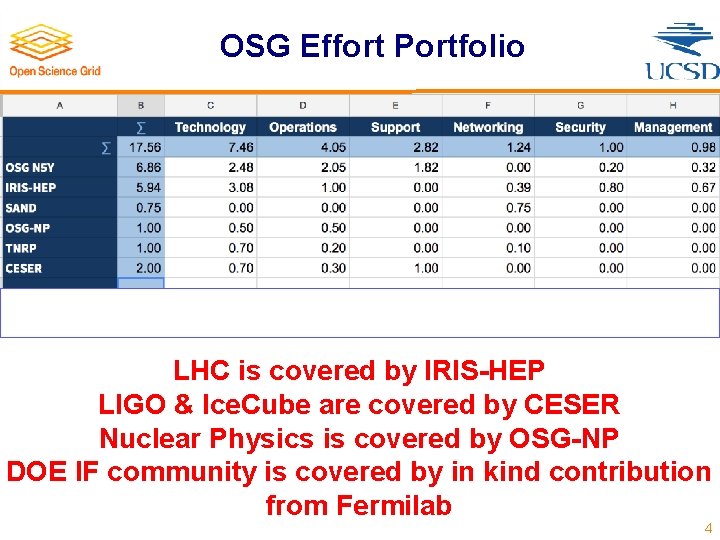

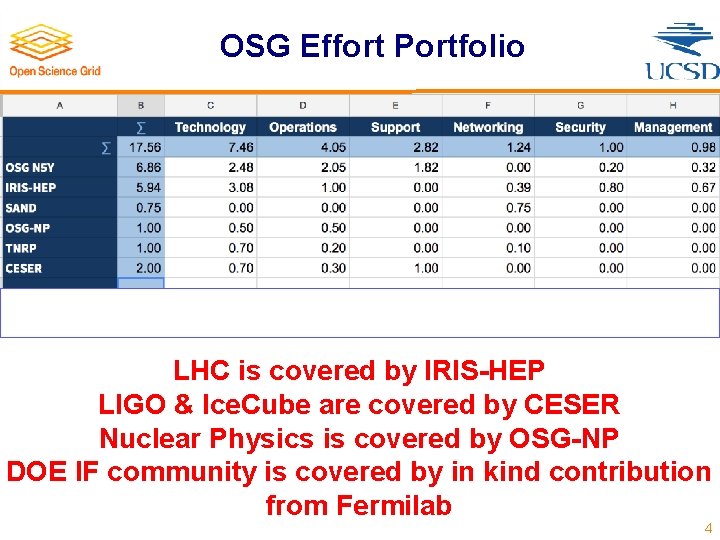

OSG Effort Portfolio

• LHC is covered by IRIS-HEP.
• LIGO & IceCube are covered by CESER.
• Nuclear Physics is covered by OSG-NP.
• The DOE IF community is covered by an in-kind contribution from Fermilab.

“Data Infrastructure for Open Science in Support of LIGO and IceCube” (NSF # 1841530, 1841487, 1841479, 1841546, 1841475)

L. Cadonati, P. Couvares, R. Gardner, H. Pfeffer, B. Riedel, M. Schultz, F. Wuerthwein

In 2015, the NSF-funded LIGO Observatory made the first-ever detections of gravitational waves, from the collision of two black holes, a discovery that was recognized by the 2017 Nobel Prize in Physics. In 2017, LIGO and its sister observatory Virgo in Italy made the first detection of gravitational waves from another extreme event in the Universe: the collision of two neutron stars. Gamma rays from the same neutron star collision were simultaneously detected by NASA’s Fermi space telescope. Meanwhile, the NSF-funded IceCube facility, located at the U.S. South Pole Station, has made the first detections of high-energy neutrinos from beyond our galaxy, giving us unobstructed views of other extreme objects in the Universe such as supermassive black holes and supernova remnants.

The revolutionary ability to observe gravitational waves, neutrinos, and optical and radio waves from the same celestial events has launched the era of “Multi-Messenger Astrophysics”, an exciting new field supported by one of NSF’s ten Big Ideas, “Windows on the Universe”. The success of Multi-Messenger Astrophysics depends on building new data infrastructure to seamlessly share, integrate, and analyze data from many large observing instruments.

We are implementing a cohesive, federated, national-scale research data infrastructure for large instruments, focused initially on LIGO and IceCube, to address the need to access, share, and combine science data, and to make the entire data processing life cycle more robust. Our novel working model is a multi-institutional collaboration comprising the LIGO and IceCube observatories, Internet2, and platform integration experts.

We will conduct a fast-track two-year effort that draws heavily on prior and concurrent NSF investments in software, computing, and data infrastructure, and on international software developments, including at CERN. Internet2 will establish data caches inside the national network backbone to optimize the LIGO data analysis. The goal is to achieve a data infrastructure platform that addresses the production needs of LIGO and IceCube while serving as an exemplar for the entire scope of Multi-Messenger Astrophysics and beyond. In the process, we are prototyping a redefinition of the role the academic internet plays in supporting science.

This project is supported by the Office of Advanced Cyberinfrastructure in the Directorate for Computer and Information Science and Engineering.

Modified Vision

Vision going forward

The Executive Director’s Office provides overall cohesion for the future of distributed High Throughput Computing (dHTC).

Diagram labels: OSG ED Office, OSG-MMA, OSG-LHC, OSG-FoCaS, OSG-NP, OSG-IF, and other projects.

Multiple domain-science-specific projects of different shapes, colors, and sizes provide effort that is managed collaboratively and coherently with the ED Office. The different domain-specific projects may be funded by different agencies and/or different directorates within NSF.

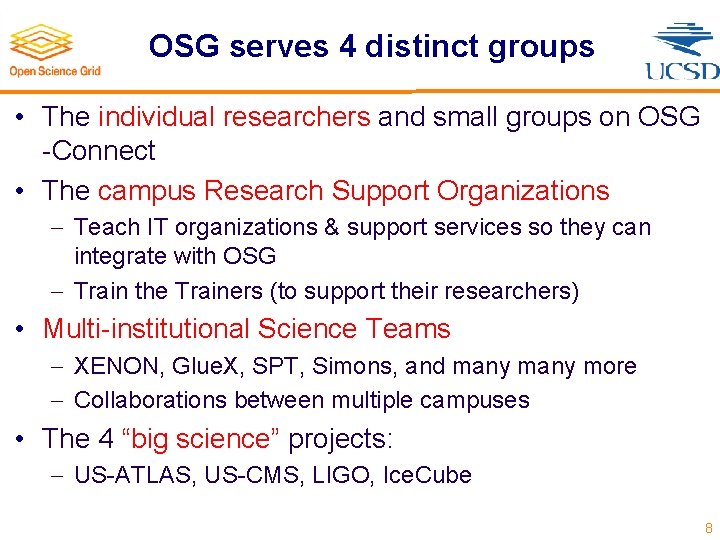

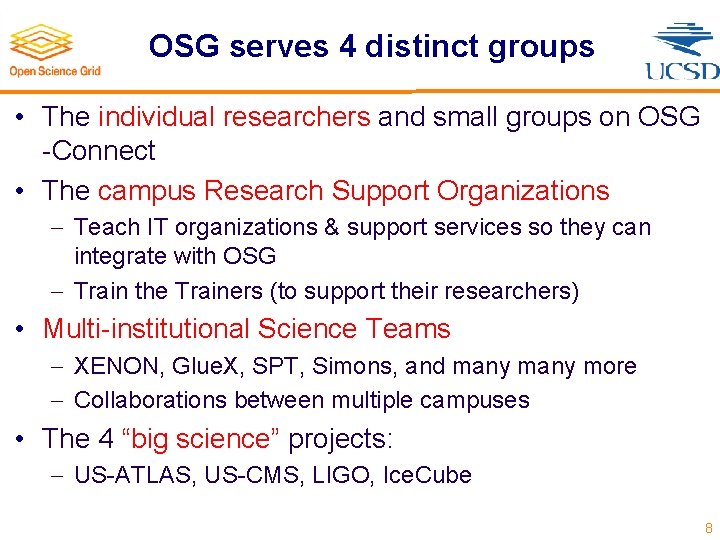

OSG serves 4 distinct groups

• The individual researchers and small groups on OSG-Connect
• The campus Research Support Organizations
  § Teach IT organizations & support services so they can integrate with OSG
  § Train the Trainers (to support their researchers)
• Multi-institutional Science Teams
  § XENON, GlueX, SPT, Simons, and many more
  § Collaborations between multiple campuses
• The 4 “big science” projects: US-ATLAS, US-CMS, LIGO, IceCube

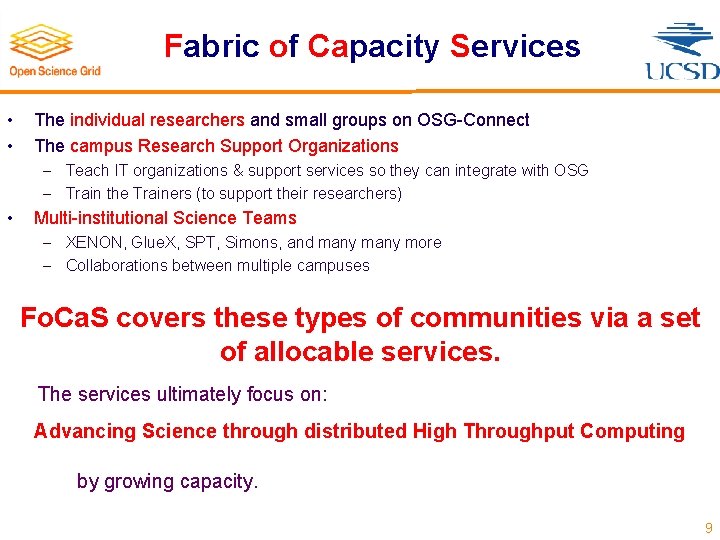

Fabric of Capacity Services

• The individual researchers and small groups on OSG-Connect
• The campus Research Support Organizations
  § Teach IT organizations & support services so they can integrate with OSG
  § Train the Trainers (to support their researchers)
• Multi-institutional Science Teams
  § XENON, GlueX, SPT, Simons, and many more
  § Collaborations between multiple campuses

FoCaS covers these types of communities via a set of allocable services. The services ultimately focus on advancing science through distributed High Throughput Computing by growing capacity.

Growing Capacity

• Advance the maximum possible dynamic range of science, groups, and institutions.
  § From individual undergraduates to international collaborations with thousands of members.
  § From small colleges, museums, and zoos to national-scale centers of open science.
• Grow capacity by integrating RAW capacity and turning it into effective capacity.

Let me give some examples.

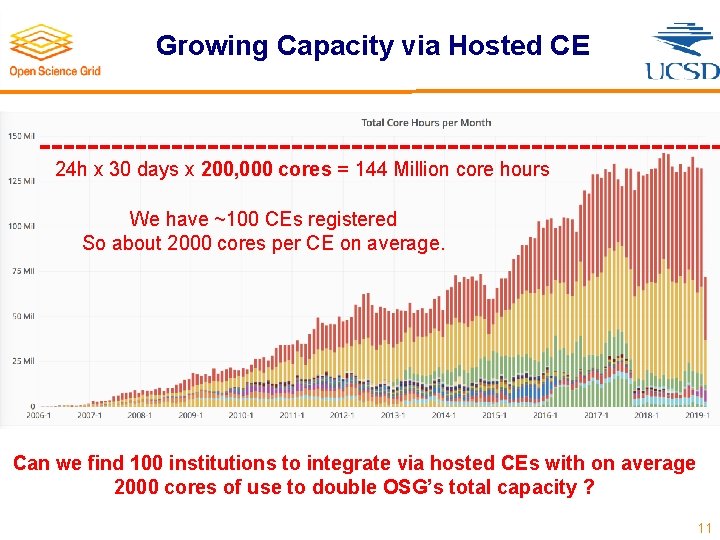

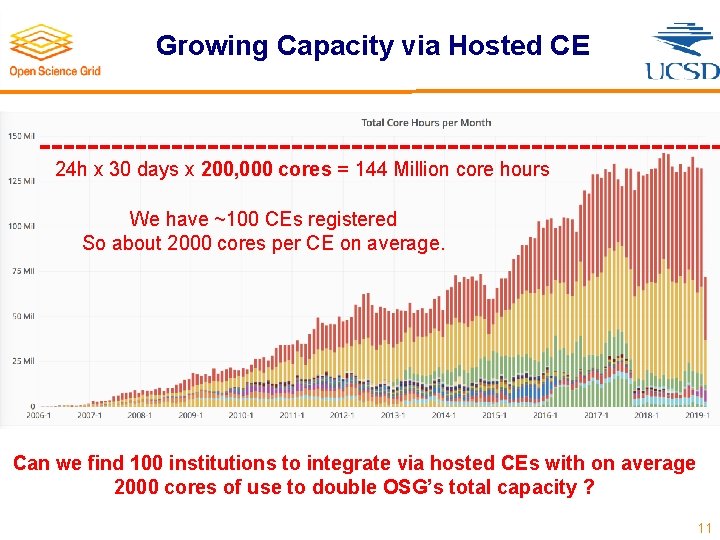

Growing Capacity via Hosted CE

24 h x 30 days x 200,000 cores = 144 million core hours.

We have ~100 CEs registered, so about 2,000 cores per CE on average.

Can we find 100 institutions to integrate via hosted CEs, with on average 2,000 cores of use each, to double OSG’s total capacity?
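The arithmetic on this slide can be checked with a few lines of Python. This is only a sketch using the figures quoted above; the variable and function names are illustrative, and the "~100 CEs" is treated as exactly 100 for simplicity.

```python
# Figures quoted on the slide; names are illustrative, not from any OSG tool.
HOURS_PER_DAY = 24
DAYS = 30
TOTAL_CORES = 200_000
REGISTERED_CES = 100   # the slide says "~100"; assumed exact here
NEW_HOSTED_CES = 100   # the number of institutions the slide asks about


def core_hours(cores: int, days: int = DAYS) -> int:
    """Core hours delivered if `cores` run continuously for `days` days."""
    return cores * HOURS_PER_DAY * days


monthly_core_hours = core_hours(TOTAL_CORES)
cores_per_ce = TOTAL_CORES // REGISTERED_CES

# Adding 100 hosted CEs at the current average size doubles the core count.
cores_after_growth = TOTAL_CORES + NEW_HOSTED_CES * cores_per_ce

print(f"{monthly_core_hours:,} core hours in {DAYS} days")
print(f"{cores_per_ce:,} cores per CE on average")
print(f"{cores_after_growth:,} cores after adding {NEW_HOSTED_CES} hosted CEs")
```

The doubling follows directly: 100 new CEs at the current average size add as many cores as the existing ~100 CEs already provide.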

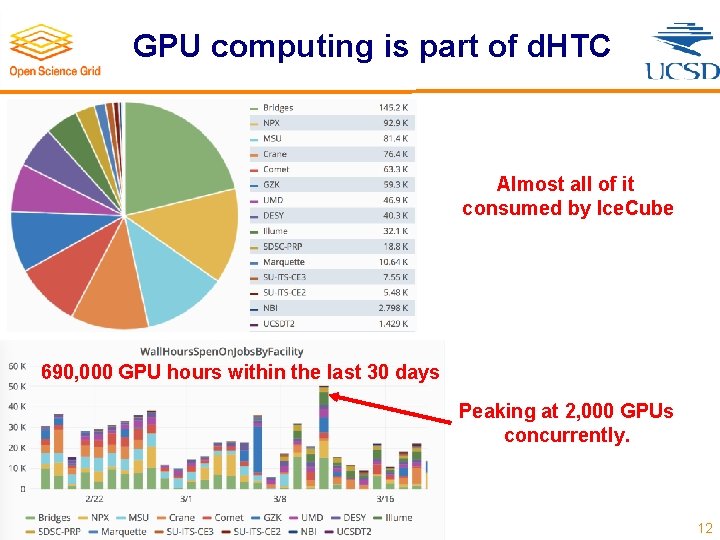

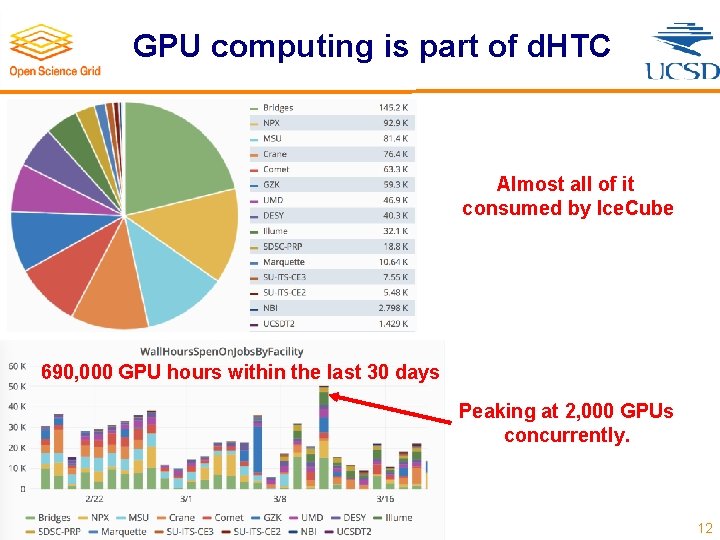

GPU computing is part of dHTC

• 690,000 GPU hours within the last 30 days
• Almost all of it consumed by IceCube
• Peaking at 2,000 GPUs concurrently
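To relate the 30-day total to the quoted peak, the GPU hours can be converted into an average number of GPUs in use. The sketch below uses only the two figures from the slide; the average and the ratio are derived values, not numbers stated in the presentation.

```python
# Figures quoted on the slide; everything derived below is illustrative.
GPU_HOURS_30_DAYS = 690_000
PEAK_CONCURRENT_GPUS = 2_000
WINDOW_HOURS = 30 * 24  # 30 days expressed in hours

# Average number of GPUs busy at any moment over the 30-day window.
average_concurrent_gpus = GPU_HOURS_30_DAYS / WINDOW_HOURS

# How the average compares with the observed peak.
average_to_peak = average_concurrent_gpus / PEAK_CONCURRENT_GPUS

print(f"average concurrent GPUs: {average_concurrent_gpus:.0f}")
print(f"average / peak: {average_to_peak:.2f}")
```

Under these figures the average usage is a little under half of the peak, which is what one would expect from opportunistic, bursty GPU availability.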

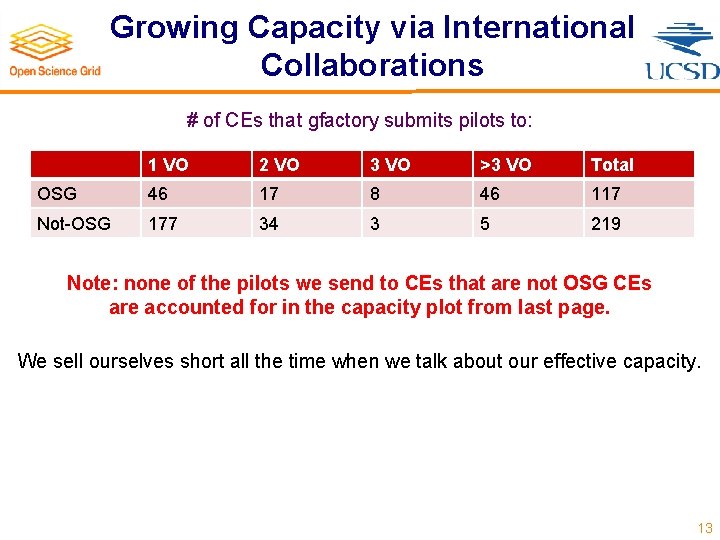

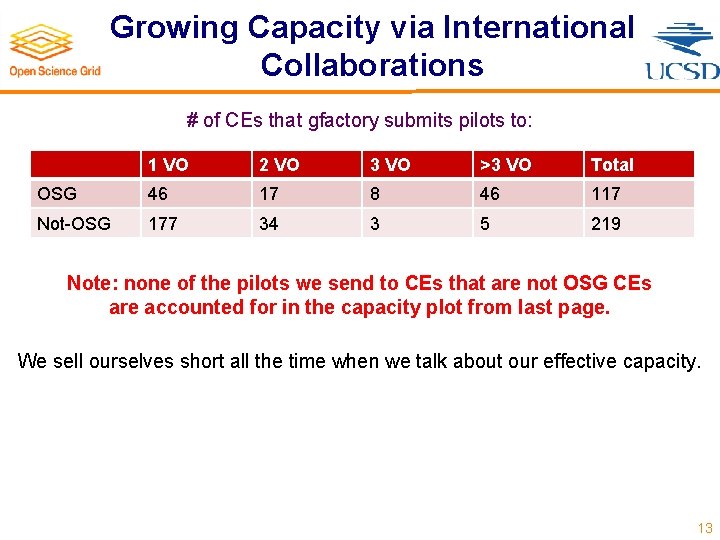

Growing Capacity via International Collaborations

Number of CEs that gfactory submits pilots to:

|         | 1 VO | 2 VO | 3 VO | >3 VO | Total |
|---------|------|------|------|-------|-------|
| OSG     | 46   | 17   | 8    | 46    | 117   |
| Not-OSG | 177  | 34   | 3    | 5     | 219   |

Note: none of the pilots we send to CEs that are not OSG CEs are accounted for in the capacity plot from the previous page.

We sell ourselves short all the time when we talk about our effective capacity.
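A quick consistency check of the CE counts, and of how much of the footprint falls outside OSG, can be sketched as follows. The counts are the ones on the slide; the dictionary layout and the derived share are illustrative additions.

```python
# CE counts from the slide, keyed by how many VOs each CE serves.
BUCKETS = ("1 VO", "2 VO", "3 VO", ">3 VO")
osg_ces = dict(zip(BUCKETS, (46, 17, 8, 46)))
non_osg_ces = dict(zip(BUCKETS, (177, 34, 3, 5)))

osg_total = sum(osg_ces.values())
non_osg_total = sum(non_osg_ces.values())

# Fraction of all CEs receiving pilots that are not OSG CEs,
# and are therefore missing from the capacity accounting.
non_osg_share = non_osg_total / (osg_total + non_osg_total)

print(f"OSG CEs: {osg_total}, non-OSG CEs: {non_osg_total}")
print(f"share of CEs outside OSG accounting: {non_osg_share:.1%}")
```

Note that this is a share of CEs, not of cores or core hours; the slide gives no capacity figures for the non-OSG CEs.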

Growing Capacity via Cloud

• The largest spot market elastic scale-out ever done in a single region in AWS is 1.1 million cores.
• The demonstrated scalability of a single HTCondor pool is 200,000 startds. (We typically run the OSG VO pool at up to 30,000 startds.) For a 72-core VM or an 8 V100 GPU VM this translates into:
  § 14 million cores, or
  § 1.6 million GPUs
• Our theoretical capacity for elastic scale-out is beyond anything anybody we know could ever afford.
• And it’s orders of magnitude larger than exascale.
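The core and GPU totals follow from multiplying the demonstrated pool size by the per-VM resources. The sketch below assumes one startd per VM, which is what the slide's numbers imply but does not state explicitly; the names are illustrative.

```python
# Figures quoted on the slide.
MAX_STARTDS_PER_POOL = 200_000   # demonstrated single-pool scalability
CORES_PER_VM = 72
GPUS_PER_VM = 8                  # an 8 x V100 VM
AWS_SINGLE_REGION_RECORD = 1_100_000  # cores, largest spot scale-out cited

# Assumption: each startd manages exactly one VM.
max_cores = MAX_STARTDS_PER_POOL * CORES_PER_VM
max_gpus = MAX_STARTDS_PER_POOL * GPUS_PER_VM

# How far the pool limit sits above the largest scale-out cited.
headroom = max_cores / AWS_SINGLE_REGION_RECORD

print(f"{max_cores:,} cores")   # the slide rounds this to 14 million
print(f"{max_gpus:,} GPUs")
print(f"pool limit is about {headroom:.0f}x the cited AWS record")
```

The point of the comparison is that the middleware limit, not the cloud provider, is the theoretical ceiling, and that ceiling is well above any scale-out demonstrated so far.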

Conclusions about capacity

• We can provide services that can grow effective capacity beyond anything the NSF or DOE could ever afford to purchase.
• The secret sauce is distributed HTC’s ingenious parallelism. We scale perfectly by definition, up to the maximum to which we can scale the middleware.
• There is more money in the total aggregate of all universities’ cloud and on-premise spending than in the NSF computing hardware budget.

How do we best communicate OSG’s capacity potential?

Summary

• We are in good shape financially for now.
• We submitted the OSG-FoCaS proposal in early March; it covers the remaining communities for the next 5 years. After this, we need to worry about:
  § Funding the ED Office.
  § Funding the MMA effort within the next 2 years.
  § Eventually, funding the IF effort when DUNE becomes a funded project.
• We can sort of see the end of the tunnel in resolving OSG funding for the next 10 years.
• We intend to prepare for OSG-FoCaS within the next year, assuming success with the proposal:
  § Much better accounting of our assets, in order to be able to operate the OSG Fabric of Capacity Services as an allocable set of services.
  § Prepare for cloud services operations.

Promotion from associate professor to professor

Promotion from associate professor to professor Professor frank kee

Professor frank kee Frank chinegwundoh

Frank chinegwundoh Osg architecture

Osg architecture Osg walk in

Osg walk in Frank william abagnale sr

Frank william abagnale sr Eft director's office

Eft director's office El trabajo del director y el proyecto de la escuela

El trabajo del director y el proyecto de la escuela Territory sales manager

Territory sales manager Six images of change

Six images of change Peranan pengarah syarikat

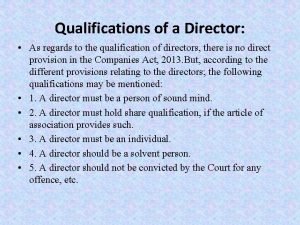

Peranan pengarah syarikat Qualification of a director

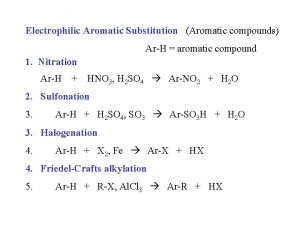

Qualification of a director Electron donating groups examples

Electron donating groups examples Auteur

Auteur Sub director

Sub director Dr sethi bhopal

Dr sethi bhopal Pla director sociosanitari

Pla director sociosanitari