Soar One-hour Tutorial, John E. Laird, University of Michigan

- Slides: 60

Soar One-hour Tutorial. John E. Laird, University of Michigan, March 2009 (http://sitemaker.umich.edu/soar, laird@umich.edu). Supported in part by DARPA and ONR.

Tutorial Outline
1. Cognitive Architecture
2. Soar History
3. Overview of Soar
4. Details of Basic Soar Processing and Syntax
   - Internal decision cycle
   - Interaction with external environments
   - Subgoals and meta-reasoning
   - Chunking
5. Recent extensions to Soar
   - Reinforcement Learning
   - Semantic Memory
   - Episodic Memory
   - Visual Imagery

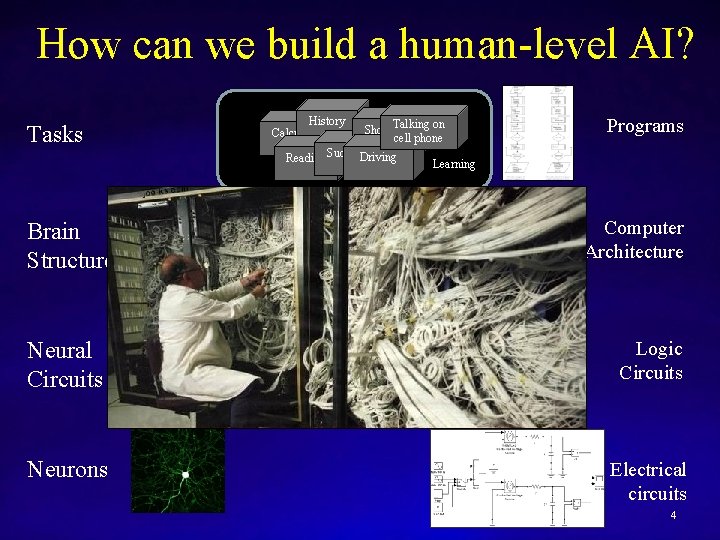

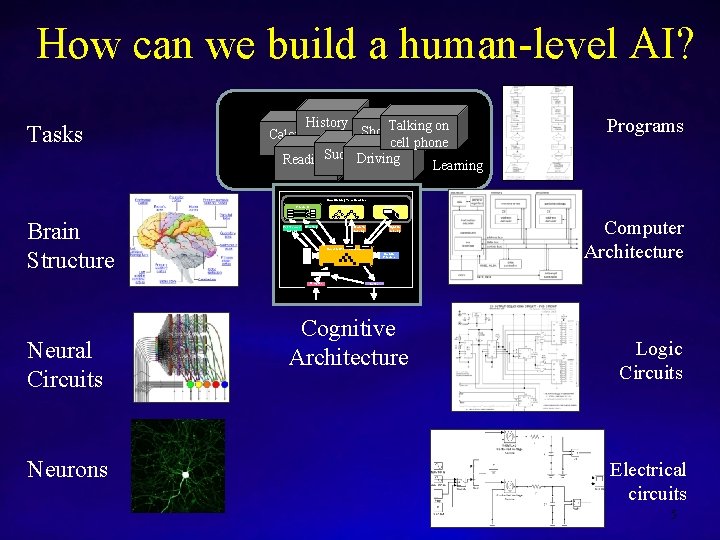

How can we build a human-level AI? (Diagram: everyday tasks such as calculus, history, shopping, talking on a cell phone, Sudoku, driving, reading, and learning, set against the levels of the brain: brain structure, neural circuits, and neurons.)

How can we build a human-level AI? (Diagram: the same tasks, with the brain's levels (brain structure, neural circuits, neurons) shown alongside a computer's levels (programs, computer architecture, logic circuits, electrical circuits).)

How can we build a human-level AI? (Diagram: a cognitive architecture is placed between the tasks and brain structure, in the position computer architecture occupies between programs and logic circuits. Its components: symbolic long-term memories (procedural, semantic, episodic) with reinforcement learning, chunking, semantic learning, and episodic learning; a symbolic short-term memory; a decision procedure; appraisals; imagery; perception; and action.)

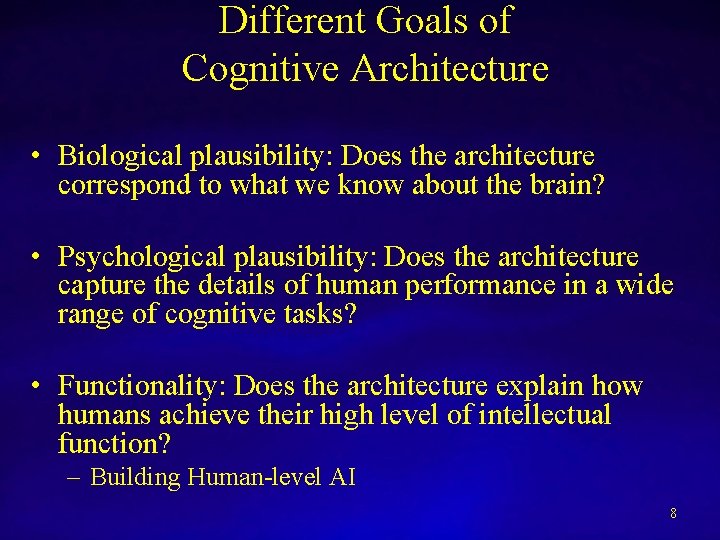

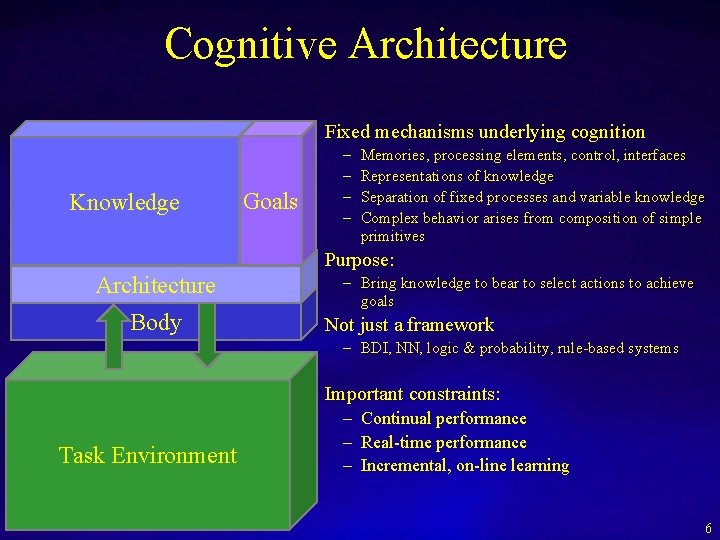

Cognitive Architecture
- Fixed mechanisms underlying cognition
  - Memories, processing elements, control, interfaces
  - Representations of knowledge
  - Separation of fixed processes and variable knowledge
  - Complex behavior arises from composition of simple primitives
- Purpose: bring knowledge to bear to select actions to achieve goals
- Not just a framework
  - BDI, NN, logic & probability, rule-based systems
- Important constraints:
  - Continual performance
  - Real-time performance
  - Incremental, on-line learning

(Diagram labels: knowledge, goals, architecture, body, task environment.)

Common Structures of Many Cognitive Architectures (Diagram: declarative long-term memory with declarative learning, procedural long-term memory with procedure learning, a short-term memory holding goals, action selection, perception, and action.)

Different Goals of Cognitive Architecture
- Biological plausibility: Does the architecture correspond to what we know about the brain?
- Psychological plausibility: Does the architecture capture the details of human performance in a wide range of cognitive tasks?
- Functionality: Does the architecture explain how humans achieve their high level of intellectual function?
  - Building human-level AI

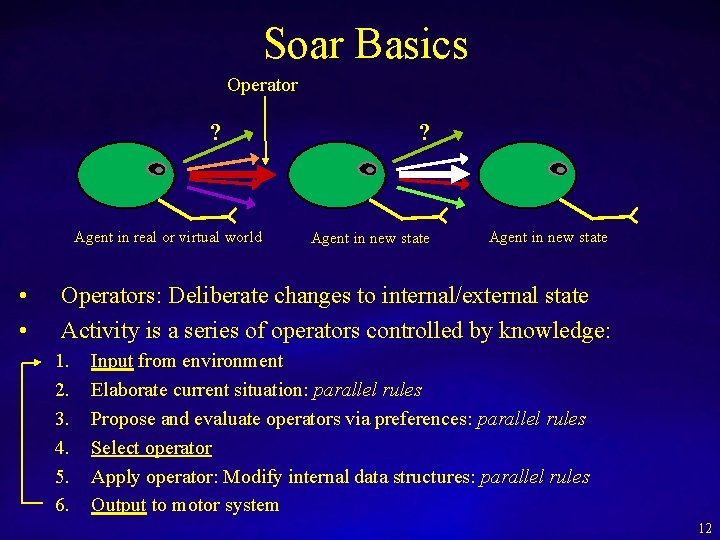

Short History of Soar (timeline, 1980 to 2005; axis labels: modeling, functionality)
- Pre-Soar: problem spaces, production systems, heuristic search
- Multi-method, multi-task problem solving; subgoaling; chunking
- UTC (Unified Theories of Cognition), natural language, HCI
- External environment integration, large bodies of knowledge, teamwork, real applications
- Virtual agents; learning from experience, observation, and instruction
- New capabilities

Distinctive Features of Soar
- Emphasis on functionality
  - Take engineering and scaling issues seriously
  - Interfaces to real-world systems
  - Can build very large systems in Soar that exist for a long time
- Integration with perception and action
  - Mental imagery and spatial reasoning
- Integrates reaction, deliberation, and meta-reasoning
  - Dynamically switching between them
- Integrated learning
  - Chunking, reinforcement learning, episodic & semantic
- Useful in cognitive modeling
  - Expanding this is the emphasis of many current projects
- Easy to integrate with other systems & environments
  - SML efficiently supports many languages, inter-process

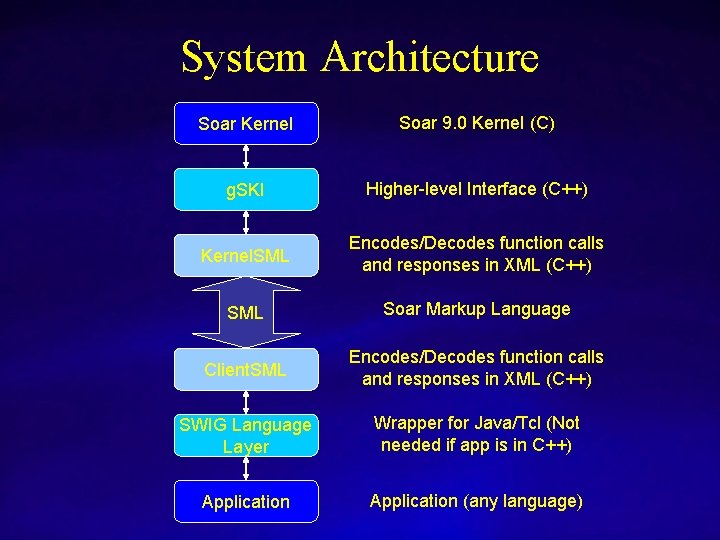

System Architecture (layers, from the kernel up to the application)
- Soar 9.0 Kernel (C)
- gSKI: higher-level interface (C++)
- KernelSML: encodes/decodes function calls and responses in XML (C++)
- SML: Soar Markup Language
- ClientSML: encodes/decodes function calls and responses in XML (C++)
- SWIG language layer: wrapper for Java/Tcl (not needed if the application is in C++)
- Application (any language)

Soar Basics (Diagram: an agent in a real or virtual world applies an operator and becomes an agent in a new state.)
- Operators: deliberate changes to internal/external state
- Activity is a series of operators controlled by knowledge:
  1. Input from environment
  2. Elaborate current situation: parallel rules
  3. Propose and evaluate operators via preferences: parallel rules
  4. Select operator
  5. Apply operator: modify internal data structures: parallel rules
  6. Output to motor system
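The six-step cycle can be sketched as a loop to make the ordering concrete. This is a minimal Python illustration under simplifying assumptions, not Soar's implementation: working memory is a set of `(identifier, attribute, value)` triples, rules are plain Python functions, and the names `elaborate` and `decision_cycle` are hypothetical. Soar selects operators using symbolic and numeric preferences; here a single numeric score per operator stands in for them.

```python
from typing import Callable, Iterable, List, Optional, Set, Tuple

WME = Tuple[str, str, object]            # (identifier, attribute, value)
RuleFn = Callable[[Set[WME]], Set[WME]]  # hypothetical: returns WMEs to add


def elaborate(wm: Set[WME], rules: List[RuleFn]) -> Set[WME]:
    """Fire all rules against the same WM ('in parallel') until quiescence."""
    while True:
        new: Set[WME] = set()
        for rule in rules:
            new |= rule(wm)
        new -= wm
        if not new:
            return wm
        wm = wm | new


def decision_cycle(
    wm: Set[WME],
    percepts: Iterable[WME],
    elaboration_rules: List[RuleFn],
    propose: Callable[[Set[WME]], List[str]],
    evaluate: Callable[[Set[WME], str], float],
    apply_op: Callable[[Set[WME], str], Set[WME]],
) -> Tuple[Set[WME], Optional[str]]:
    wm = wm | set(percepts)                    # 1. input from environment
    wm = elaborate(wm, elaboration_rules)      # 2. elaborate current situation
    candidates = propose(wm)                   # 3a. propose operators
    if not candidates:
        return wm, None                        # Soar would reach an impasse here
    scores = {op: evaluate(wm, op) for op in candidates}  # 3b. evaluate
    selected = max(candidates, key=scores.__getitem__)    # 4. select (ties: first)
    wm = apply_op(wm, selected)                # 5. apply operator
    return wm, selected                        # 6. the selected operator drives output
```

The sketch models parallel firing to quiescence only for the elaboration phase; in Soar, proposal and application rules also fire in parallel.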

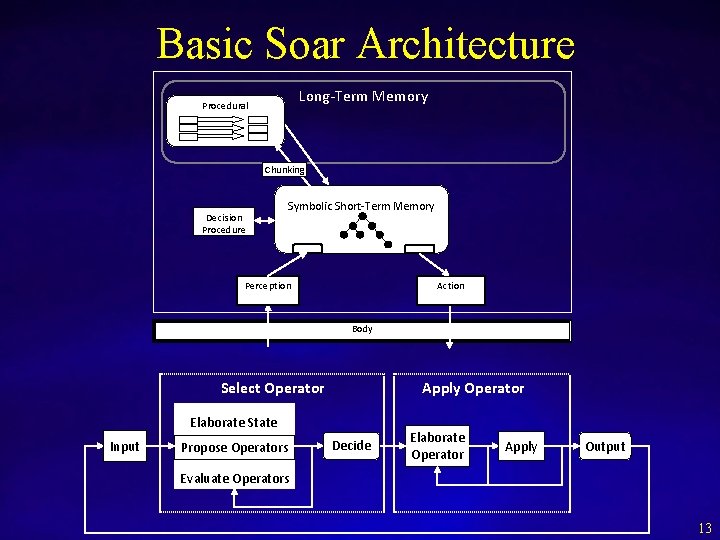

Basic Soar Architecture (Diagram: long-term procedural memory with chunking, a decision procedure, symbolic short-term memory, and perception and action connecting to the body. The processing cycle runs Input, Elaborate State, Propose Operators, Evaluate Operators, Select Operator (Decide), Elaborate Operator, Apply Operator (Apply), Output.)

Soar 101: Eaters
1. Input
2. Propose operator: `If cell in direction <d> is not a wall, --> propose operator move <d>`
3. Evaluate operators:
   - `If operator <o1> will move to an empty cell --> operator <o1> <`
   - `If operator <o1> will move to a bonus food and operator <o2> will move to a normal food, --> operator <o1> > <o2>`
   - Resulting preferences among the proposed moves East, North, and South: North > East, South > East, North = South
4. Select operator: move-direction North
5. Apply operator: `If an operator is selected to move <d> --> create output move-direction <d>`
6. Output

(Rules are stored in production memory; the selected operator and its output appear in working memory.)
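The Eaters proposal and evaluation rules can be sketched in Python. This is a hypothetical simplification: cell contents are strings, preferences are tuples, and `candidates` applies only the better (`>`) and worst (`<`) preferences shown on the slide, not Soar's full preference semantics.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical cell contents for an Eaters-like grid.
WALL, EMPTY, NORMAL, BONUS = "wall", "empty", "normalfood", "bonusfood"


def propose(cells: Dict[str, str]) -> List[str]:
    """If the cell in direction <d> is not a wall, propose move <d>."""
    return [d for d, content in cells.items() if content != WALL]


def evaluate(cells: Dict[str, str], ops: List[str]) -> Tuple[Set[Tuple[str, str]], Set[str]]:
    """Create 'better' (a > b) and 'worst' (a <) preferences."""
    better: Set[Tuple[str, str]] = set()
    worst: Set[str] = set()
    for a in ops:
        if cells[a] == EMPTY:
            worst.add(a)                      # moving to an empty cell: a <
        for b in ops:
            if cells[a] == BONUS and cells[b] == NORMAL:
                better.add((a, b))            # bonus food beats normal food: a > b
    return better, worst


def candidates(ops: List[str], better: Set[Tuple[str, str]], worst: Set[str]) -> List[str]:
    """Simplified preference resolution (not Soar's full decision procedure)."""
    undominated = [o for o in ops if not any((p, o) in better for p in ops)]
    non_worst = [o for o in undominated if o not in worst]
    # A worst preference only matters if some other candidate is left.
    return non_worst or undominated
```

With `cells = {"north": BONUS, "east": EMPTY, "south": NORMAL, "west": WALL}`, `propose` returns `['north', 'east', 'south']` and `candidates` returns `['north']`. If several candidates remain, Soar picks among them at random when they are mutually indifferent (`=`) and otherwise reaches a tie impasse.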

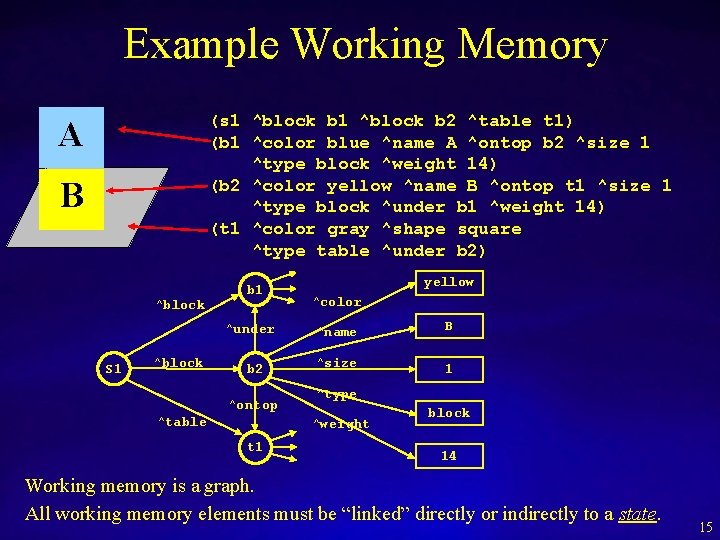

Example Working Memory
- `(s1 ^block b1 ^block b2 ^table t1)`
- `(b1 ^color blue ^name A ^ontop b2 ^size 1 ^type block ^weight 14)`
- `(b2 ^color yellow ^name B ^ontop t1 ^size 1 ^type block ^under b1 ^weight 14)`
- `(t1 ^color gray ^shape square ^type table ^under b2)`

(Diagram: the same elements drawn as a graph rooted at state S1: block A (b1) sits on block B (b2), which sits on table t1.)

Working memory is a graph. All working memory elements must be "linked" directly or indirectly to a state.
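The linkage requirement can be checked with a breadth-first search from the state. This sketch assumes working memory is a Python set of `(identifier, attribute, value)` triples and treats any value that also appears as an identifier as an edge to another node; `linked_to_state` is a hypothetical helper, not part of Soar.

```python
from collections import deque
from typing import Set, Tuple

WME = Tuple[str, str, object]  # (identifier, attribute, value)

# The example above as (identifier, attribute, value) triples.
WORKING_MEMORY: Set[WME] = {
    ("s1", "block", "b1"), ("s1", "block", "b2"), ("s1", "table", "t1"),
    ("b1", "color", "blue"), ("b1", "name", "A"), ("b1", "ontop", "b2"),
    ("b1", "size", 1), ("b1", "type", "block"), ("b1", "weight", 14),
    ("b2", "color", "yellow"), ("b2", "name", "B"), ("b2", "ontop", "t1"),
    ("b2", "size", 1), ("b2", "type", "block"), ("b2", "under", "b1"),
    ("b2", "weight", 14),
    ("t1", "color", "gray"), ("t1", "shape", "square"), ("t1", "type", "table"),
    ("t1", "under", "b2"),
}


def linked_to_state(wm: Set[WME], state: str = "s1") -> bool:
    """Check that every identifier in WM is reachable from the state node."""
    identifiers = {ident for ident, _, _ in wm}
    reachable = {state}
    frontier = deque([state])
    while frontier:
        node = frontier.popleft()
        for ident, _, value in wm:
            # A value that is itself an identifier is an edge to another node.
            if ident == node and value in identifiers and value not in reachable:
                reachable.add(value)
                frontier.append(value)
    return identifiers <= reachable
```

Adding a dangling element such as `("x9", "color", "red")` makes the check fail, because nothing links `x9` back to `s1`.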

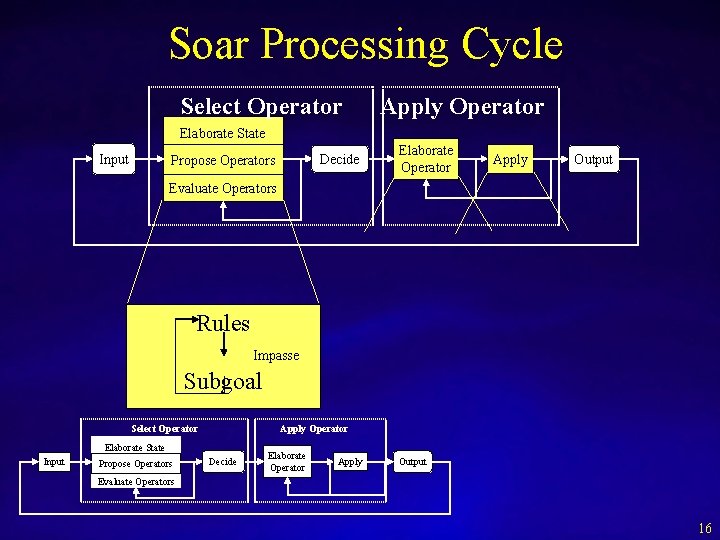

Soar Processing Cycle (Diagram: the cycle Input, Elaborate State, Propose Operators, Evaluate Operators, Select Operator (Decide), Elaborate Operator, Apply Operator (Apply), Output, with rules firing in each phase. When a decision cannot be made, an impasse creates a subgoal, which runs the same cycle.)

TankSoar (Figure labels: red tank's shield; borders (stone); walls (trees); health charger; missile pack; blue tank (Ouch!); energy charger; green tank's radar.)

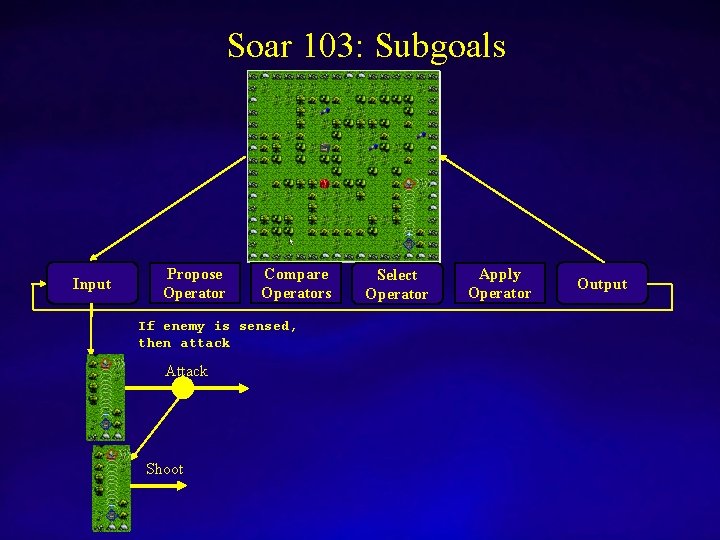

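Soar 103: Subgoals (Diagram: in the cycle Input, Propose Operator, Compare Operators, Select Operator, Apply Operator, Output, the rule "If enemy not sensed, then wander" proposes Wander, which is carried out in a subgoal by Move and Turn operators.)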

Soar 103: Subgoals (Diagram: the same cycle, where the rule "If enemy is sensed, then attack" proposes Attack, which is carried out in a subgoal by a Shoot operator.)

![TacAir-Soar (1997): controls simulated aircraft in real-time training exercises (>3000 entities)](https://slidetodoc.com/presentation_image_h/259c5a9dc00e642b829117a41ba30269/image-20.jpg)

TacAir-Soar [1997]
- Controls simulated aircraft in real-time training exercises (>3000 entities)
- Flies all U.S. air missions
- Dynamically changes missions as appropriate
- Communicates and coordinates with computer- and human-controlled planes
- Large knowledge base (8000 rules)
- No learning

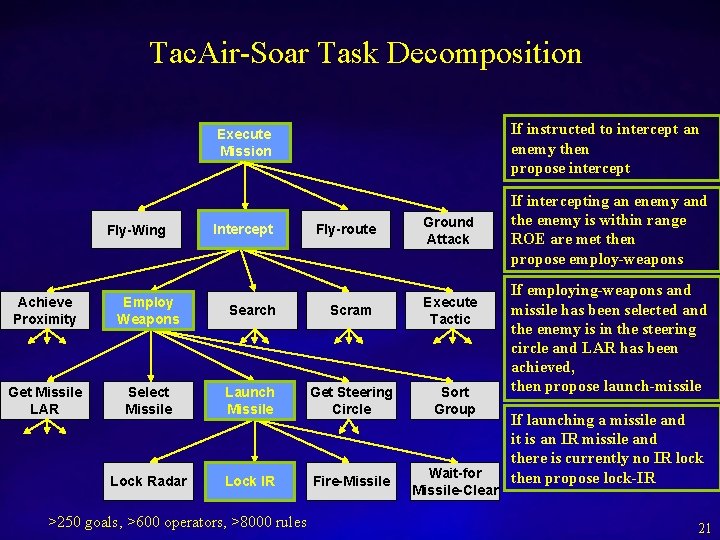

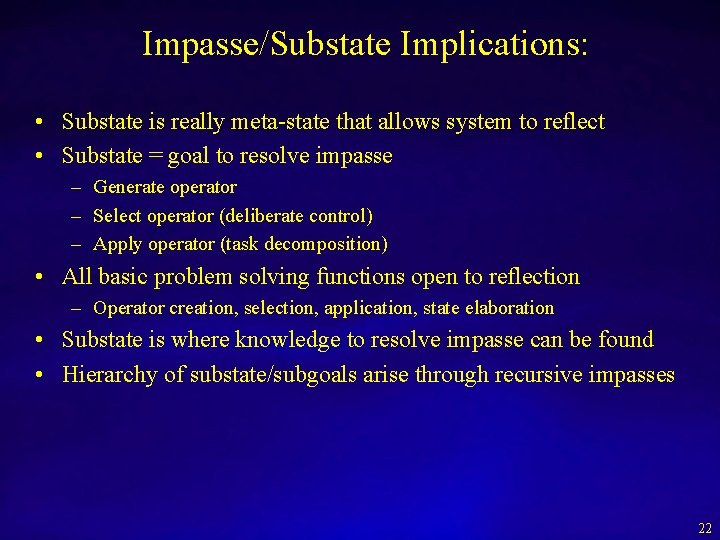

TacAir-Soar Task Decomposition (>250 goals, >600 operators, >8000 rules)
- Goals and operators shown: Fly-Wing, Execute Mission, Fly-route, Ground Attack, Execute Tactic, Achieve Proximity, Employ Weapons, Search, Scram, Get Missile LAR, Select Missile, Launch Missile, Get Steering Circle, Sort Group, Lock Radar, Lock IR, Fire-Missile, Wait-for Missile-Clear
- Example proposal rules:
  - If instructed to intercept an enemy, then propose intercept
  - If intercepting an enemy and the enemy is within range and ROE are met, then propose employ-weapons
  - If employing-weapons and a missile has been selected and the enemy is in the steering circle and LAR has been achieved, then propose launch-missile
  - If launching a missile and it is an IR missile and there is currently no IR lock, then propose lock-IR

Impasse/Substate Implications:
- The substate is really a meta-state that allows the system to reflect
- Substate = goal to resolve the impasse
  - Generate operator
  - Select operator (deliberate control)
  - Apply operator (task decomposition)
- All basic problem-solving functions are open to reflection
  - Operator creation, selection, application, state elaboration
- The substate is where knowledge to resolve the impasse can be found
- A hierarchy of substates/subgoals arises through recursive impasses
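The three kinds of substate goals map onto impasse types, and recursive impasses build a stack of states linked by superstate pointers. A hypothetical Python sketch of that bookkeeping follows; it covers only the tie and no-change impasses (Soar also has conflict and constraint-failure impasses), and `State`, `impasse_type`, and `push_substate` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class State:
    name: str
    superstate: Optional["State"] = None
    impasse: Optional[str] = None        # why this substate exists
    items: List[str] = field(default_factory=list)


def impasse_type(candidates: List[str], can_apply: bool) -> Optional[str]:
    """Simplified subset of Soar's impasse types (no conflict/constraint-failure)."""
    if not candidates:
        return "state no-change"         # nothing proposed: generate an operator
    if len(candidates) > 1:
        return "tie"                     # cannot choose: deliberate selection
    if not can_apply:
        return "operator no-change"      # chosen but not applied: decompose the task
    return None


def push_substate(stack: List[State], impasse: str, items: List[str]) -> State:
    """Create a substate (a goal to resolve the impasse) under the current state."""
    sub = State(f"s{len(stack) + 1}", superstate=stack[-1], impasse=impasse, items=items)
    stack.append(sub)
    return sub


def depth(state: State) -> int:
    # The hierarchy arises by recursion: follow superstate links to the top state.
    return 0 if state.superstate is None else 1 + depth(state.superstate)
```

For example, a tie between North and South pushes `s2`; if the evaluation operator chosen in `s2` has no rule to apply it, an operator no-change pushes `s3`, two levels below the top state.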

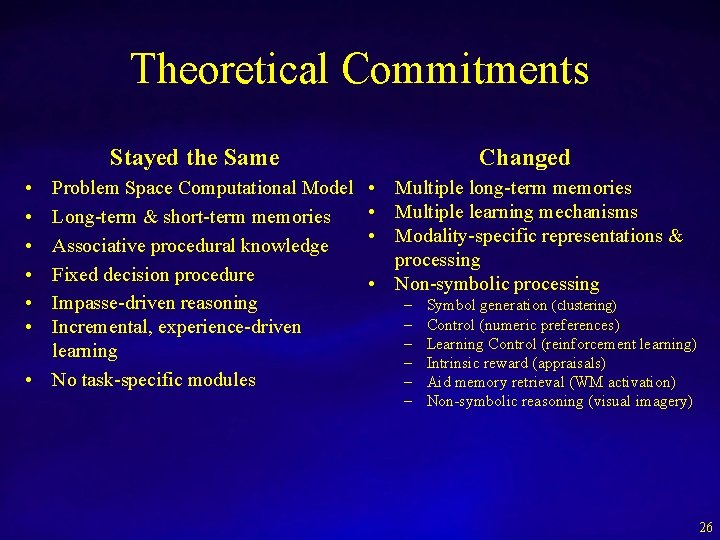

Tie Subgoals and Chunking (Diagram: in Eaters, East, North, and South are proposed, but the available preferences cannot distinguish them, so a tie impasse arises. In the substate, evaluate-operator is applied to each candidate: North = 10, South = 10, East = 5. The resulting preferences are North > East, South > East, North = South, and the cycle goes on to select and apply an operator. Chunking creates rules that apply evaluate-operator, and rules that create preferences based on what was tested.)
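A rough Python sketch of the tie-resolution loop, with chunking modeled as caching the substate's result under the conditions it tested. The names and the scoring function are hypothetical. Real chunking backtraces through the substate to include only the working-memory elements the results depended on; this sketch simply uses the whole situation as the learned rule's conditions.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

Pref = Tuple[str, str, str]                          # e.g. ("north", ">", "east")
ChunkStore = Dict[FrozenSet[Tuple[str, str]], List[Pref]]


def preferences_from_scores(scores: Dict[str, float]) -> List[Pref]:
    """Turn evaluations into better (>) and indifferent (=) preferences."""
    prefs: List[Pref] = []
    ops = sorted(scores)
    for a in ops:
        for b in ops:
            if scores[a] > scores[b]:
                prefs.append((a, ">", b))
            elif a < b and scores[a] == scores[b]:
                prefs.append((a, "=", b))      # record each indifferent pair once
    return prefs


def decide_with_chunking(situation: Dict[str, str], evaluate: Callable[[str], float],
                         chunks: ChunkStore) -> Tuple[List[Pref], bool]:
    """Return (preferences, whether a tie substate was needed).

    situation maps each tied operator to what it would move onto."""
    conditions = frozenset(situation.items())
    if conditions in chunks:
        return chunks[conditions], False       # a learned chunk fires: no impasse
    # Tie impasse: in the substate, apply evaluate-operator to each candidate.
    scores = {op: evaluate(target) for op, target in situation.items()}
    prefs = preferences_from_scores(scores)
    chunks[conditions] = prefs                 # chunking: save the result as a rule
    return prefs, True
```

With a hypothetical score of 10 for bonus food and 5 for normal food, North and South onto bonus food and East onto normal food yield North > East, South > East, and North = South. The second time the same situation occurs, the cached chunk supplies the preferences and no impasse arises.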

Chunking Analysis
- Converts deliberate reasoning/planning to reaction
- Generality of learning is based on generality of reasoning
  - Leads to many different types of learning
  - If reasoning is inductive, so is learning
- Soar only learns what it thinks about
- Chunking is impasse-driven
  - Learning arises from a lack of knowledge

Extending Soar
- Learn from internal rewards
  - Reinforcement learning
- Learn facts
  - What you know
  - Semantic memory
- Learn events
  - What you remember
  - Episodic memory
- Basic drives and …
  - Emotions, feelings, mood
- Non-symbolic reasoning
  - Mental imagery
- Learn from regularities
  - Spatial and temporal clusters

(Diagram: symbolic long-term memories (procedural, semantic, episodic) with their learning mechanisms (chunking, reinforcement learning, semantic learning, episodic learning), symbolic short-term memory, an appraisal detector, the decision procedure, clustering, perception, visual imagery, action, and body.)

Theoretical Commitments
- Stayed the same:
  - Problem Space Computational Model
  - Long-term & short-term memories
  - Associative procedural knowledge
  - Fixed decision procedure
  - Impasse-driven reasoning
  - Incremental, experience-driven learning
  - No task-specific modules
- Changed:
  - Multiple long-term memories
  - Multiple learning mechanisms
  - Modality-specific representations & processing
  - Non-symbolic processing
    - Symbol generation (clustering)
    - Control (numeric preferences)
    - Learning control (reinforcement learning)
    - Intrinsic reward (appraisals)
    - Aid memory retrieval (WM activation)
    - Non-symbolic reasoning (visual imagery)

Reinforcement Learning (Shelly Nason)

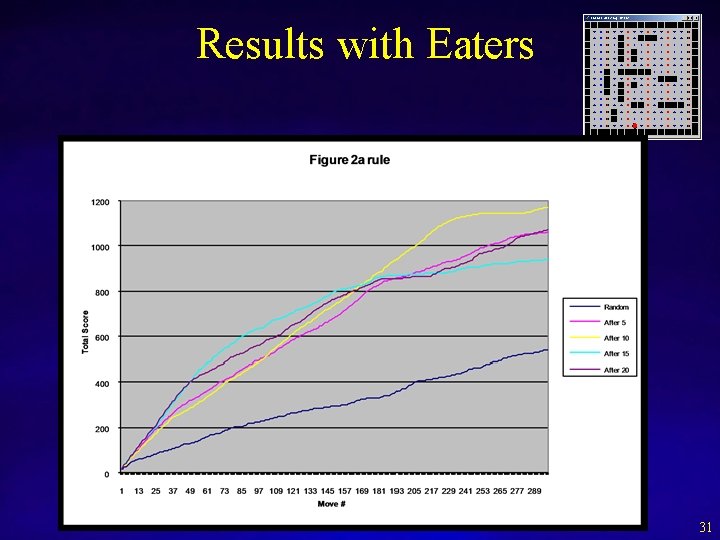

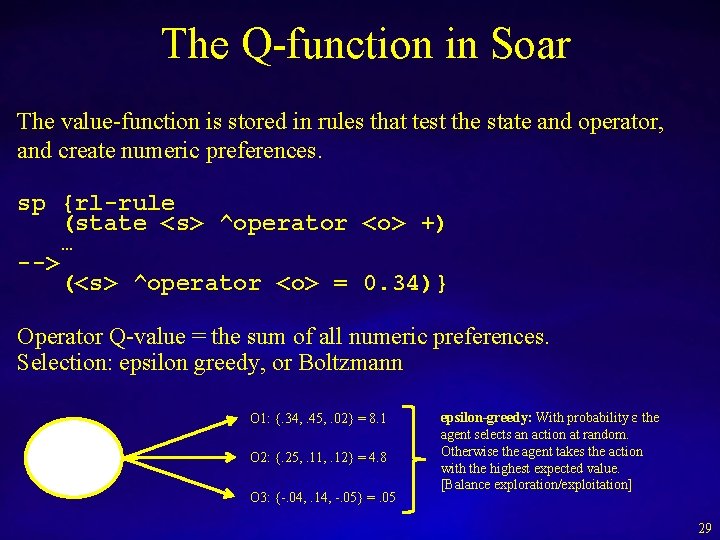

RL in Soar
1. Encode the value function as operator evaluation rules with numeric preferences.
2. Combine all numeric preferences for an operator dynamically.
3. Adjust the value of numeric preferences with experience.

(Diagram labels: perception, internal state, value function, action selection, action, reward, update value function.)

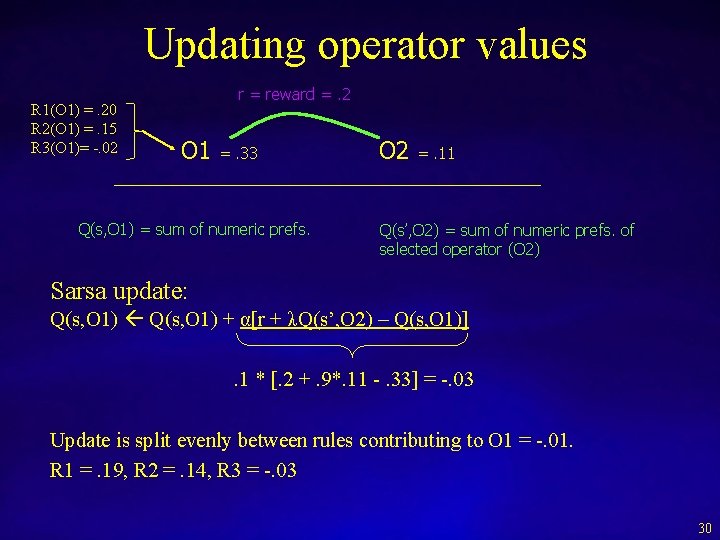

The Q-function in Soar
The value function is stored in rules that test the state and operator and create numeric preferences, for example: `sp {rl-rule (state <s> ^operator <o> +) … --> (<s> ^operator <o> = 0.34)}`
- Operator Q-value = the sum of all numeric preferences for that operator:
  - O1: {.34, .45, .02} = .81
  - O2: {.25, .11, .12} = .48
  - O3: {-.04, .14, -.05} = .05
- Selection: epsilon-greedy or Boltzmann
  - Epsilon-greedy: with probability ε the agent selects an action at random; otherwise it takes the action with the highest expected value. [Balances exploration and exploitation]
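Summing numeric preferences and choosing with epsilon-greedy or Boltzmann selection can be sketched in a few lines of Python. The function names are hypothetical and this is not Soar's code; the preference values are the ones from the slide.

```python
import math
import random
from typing import Dict, List


def q_value(numeric_prefs: List[float]) -> float:
    """An operator's Q-value is the sum of its numeric preferences."""
    return sum(numeric_prefs)


def epsilon_greedy(q: Dict[str, float], epsilon: float, rng: random.Random) -> str:
    if rng.random() < epsilon:
        return rng.choice(sorted(q))       # explore: uniformly random operator
    return max(q, key=q.__getitem__)       # exploit: highest Q-value


def boltzmann(q: Dict[str, float], temperature: float, rng: random.Random) -> str:
    ops = sorted(q)
    best = max(q.values())
    # Subtracting the max before exponentiating avoids overflow.
    weights = [math.exp((q[o] - best) / temperature) for o in ops]
    return rng.choices(ops, weights=weights, k=1)[0]


prefs = {"O1": [0.34, 0.45, 0.02], "O2": [0.25, 0.11, 0.12], "O3": [-0.04, 0.14, -0.05]}
q = {op: q_value(p) for op, p in prefs.items()}   # about .81, .48, .05
```

With `epsilon=0` the greedy choice is always O1. Boltzmann selection instead samples every operator with probability proportional to `exp(Q / temperature)`, so lower temperatures behave more greedily.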

Updating operator values
- Rules contributing to O1: R1(O1) = .20, R2(O1) = .15, R3(O1) = -.02, so Q(s, O1) = sum of numeric preferences = .33
- Reward r = .2
- The next selected operator is O2, with Q(s′, O2) = sum of its numeric preferences = .11
- Sarsa update: $Q(s, O_1) \leftarrow Q(s, O_1) + \alpha\,[\,r + \gamma\,Q(s', O_2) - Q(s, O_1)\,]$
- With learning rate α = .1 and discount rate γ = .9: .1 × [.2 + .9 × .11 - .33] = .1 × (-.031) ≈ -.003
- The update is split evenly among the three rules contributing to O1 (≈ -.001 each): R1 ≈ .199, R2 ≈ .149, R3 ≈ -.021
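A minimal Python sketch of the Sarsa step for an operator whose Q-value is spread across several rules, assuming, as on the slide, that the update is divided evenly among the contributing rules. `sarsa_update` is a hypothetical name; α = 0.1 and a discount of 0.9 are the slide's values.

```python
from typing import List


def sarsa_update(rule_values: List[float], reward: float, q_next: float,
                 alpha: float = 0.1, gamma: float = 0.9) -> List[float]:
    """One Sarsa step for an operator whose Q-value is the sum of several rules."""
    q = sum(rule_values)                         # Q(s, O1)
    td_error = reward + gamma * q_next - q       # r + gamma * Q(s', O2) - Q(s, O1)
    share = alpha * td_error / len(rule_values)  # split the update evenly
    return [v + share for v in rule_values]


new_values = sarsa_update([0.20, 0.15, -0.02], reward=0.2, q_next=0.11)
```

With the slide's numbers, the TD error is -0.031, the total update α·δ is about -0.0031, and each of the three rules moves by about -0.001.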

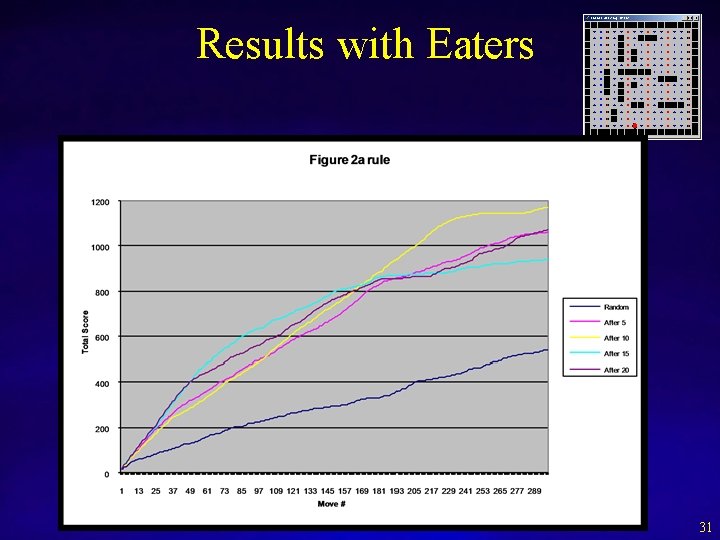

Results with Eaters

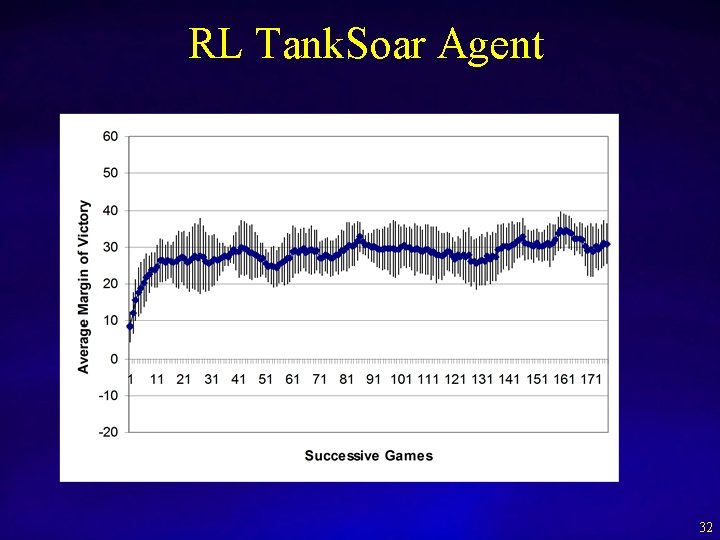

RL TankSoar Agent

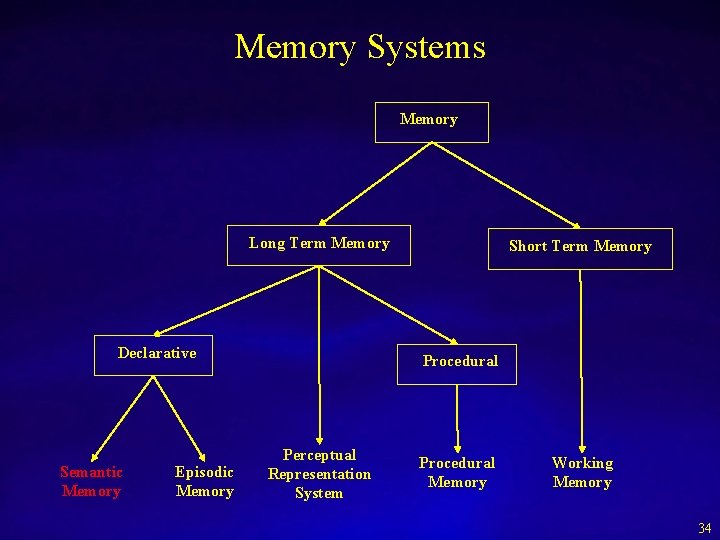

Semantic Memory (Yongjia Wang)

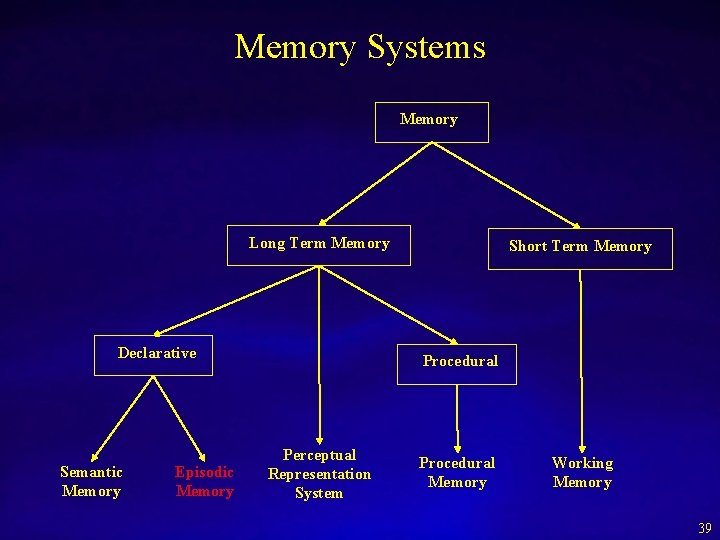

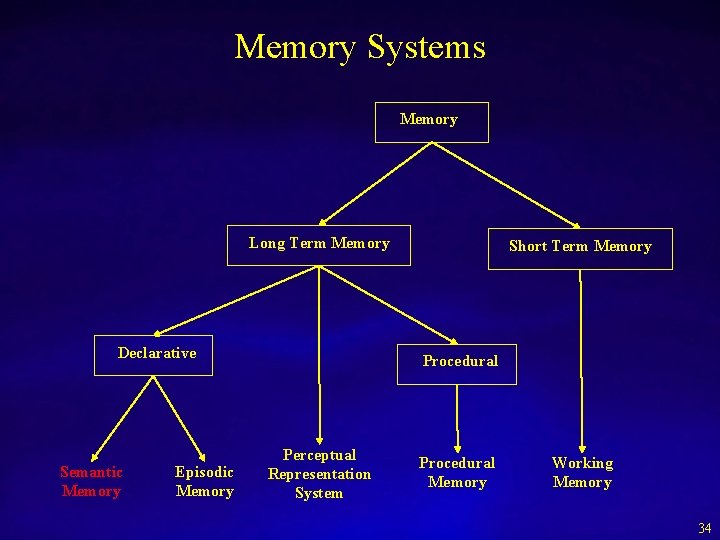

Memory Systems
- Long-term memory
  - Declarative
    - Semantic memory
    - Episodic memory
  - Procedural
    - Perceptual representation system
    - Procedural memory
- Short-term memory
  - Working memory

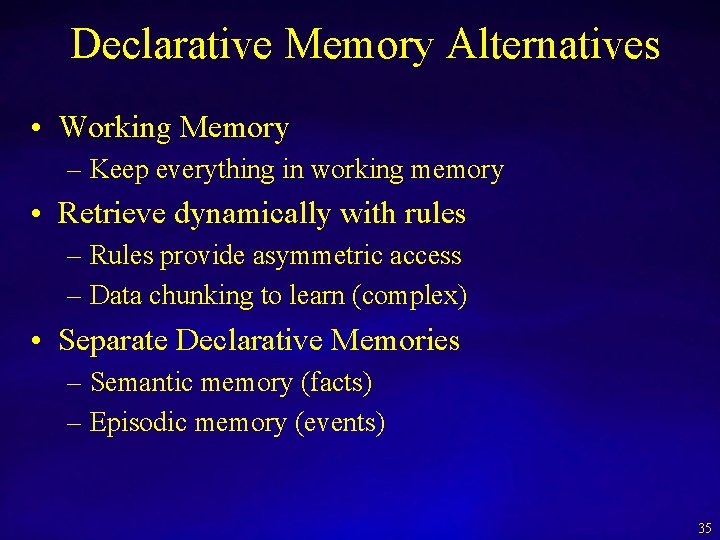

Declarative Memory Alternatives
- Working memory
  - Keep everything in working memory
- Retrieve dynamically with rules
  - Rules provide asymmetric access
  - Data chunking to learn (complex)
- Separate declarative memories
  - Semantic memory (facts)
  - Episodic memory (events)

Basic Semantic Memory Functionalities
- Encoding
  - What to save?
  - When to add a new declarative chunk?
  - How to update knowledge?
- Retrieval
  - How is the cue placed and matched?
  - What are the different types of retrieval?
- Storage
  - What are the storage structures?
  - How are they maintained?
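One way to make the encoding and retrieval questions concrete is a toy store. This Python sketch is an assumption-laden illustration, not Soar's semantic memory: chunks are flat attribute-value maps, retrieval requires every cue feature to match, failure returns `None` (standing in for NIL), and ties go to the lowest chunk id. Soar uses its own tie-breaking.

```python
from typing import Dict, Optional

Chunk = Dict[str, str]


class SemanticMemory:
    """Toy declarative store: each chunk is a set of attribute-value features."""

    def __init__(self) -> None:
        self._chunks: Dict[str, Chunk] = {}

    def save(self, chunk_id: str, features: Chunk) -> None:
        # Encoding: add a new chunk, or update an existing chunk's features.
        self._chunks.setdefault(chunk_id, {}).update(features)

    def retrieve(self, cue: Chunk) -> Optional[str]:
        # Retrieval by feature match: every cue feature must be present.
        # Returns None (standing in for NIL) on failure; ties go to the lowest id.
        for chunk_id in sorted(self._chunks):
            features = self._chunks[chunk_id]
            if all(features.get(attr) == value for attr, value in cue.items()):
                return chunk_id
        return None

    def expand(self, chunk_id: str) -> Chunk:
        # Return a copy of the full chunk, as if placing it in working memory.
        return dict(self._chunks[chunk_id])
```

For instance, after saving `{"name": "Grand Canyon", "state": "Arizona"}`, the cue `{"name": "Grand Canyon"}` retrieves that chunk, and expanding it recovers the state.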

Semantic Memory Functionalities (Diagram: structures move between working memory, linked to the state, and semantic memory. Operations shown: Save (with AutoCommit and updates using complex structures), Retrieval by feature match (returning NIL when nothing matches), Expand Cue, and Remove-No-Change.)

Episodic Memory (Andrew Nuxoll)

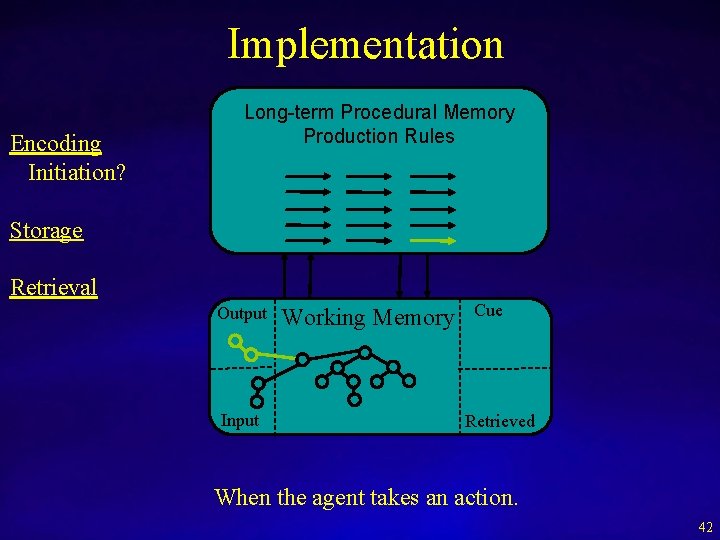

Episodic vs. Semantic Memory
- Semantic memory
  - Knowledge of what we "know"
  - Example: what state the Grand Canyon is in
- Episodic memory
  - History of specific events
  - Example: a family vacation to the Grand Canyon

Characteristics of Episodic Memory (Tulving)
- Architectural:
  - Does not compete with reasoning
  - Task-independent
- Automatic:
  - Memories are created without a deliberate decision
- Autonoetic:
  - A retrieved memory is distinguished from sensing
- Autobiographical:
  - An episode is remembered from one's own perspective
- Variable duration:
  - The time period spanned by a memory is not fixed
- Temporally indexed:
  - The rememberer has a sense of when the episode occurred

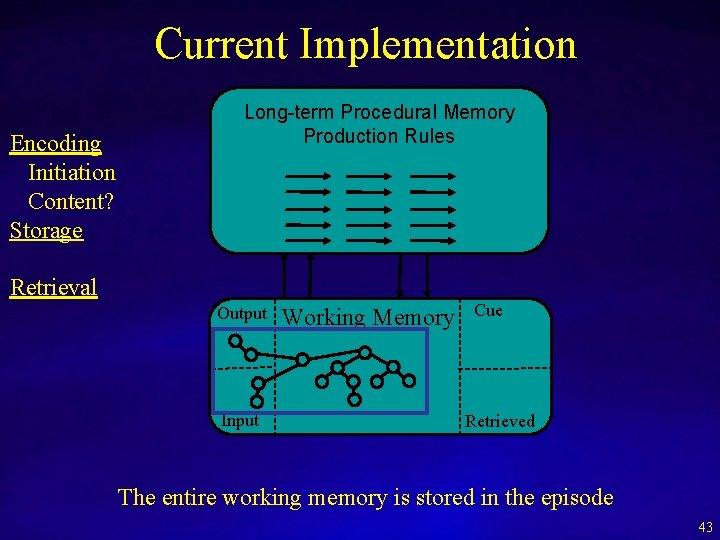

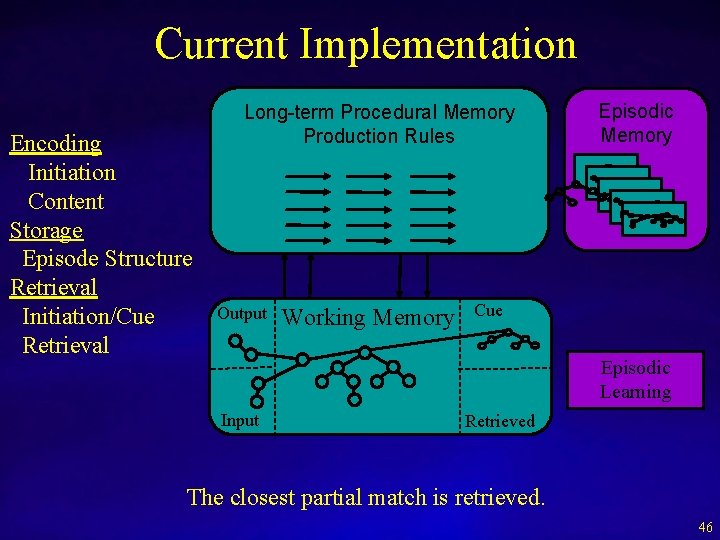

Implementation: Encoding Initiation? An episode is recorded when the agent takes an action. (Diagram: production rules in long-term procedural memory; working memory with input, output, a cue, and the retrieved episode; and the encoding, storage, and retrieval stages.)

Current Implementation: Encoding Content? The entire working memory is stored in the episode.

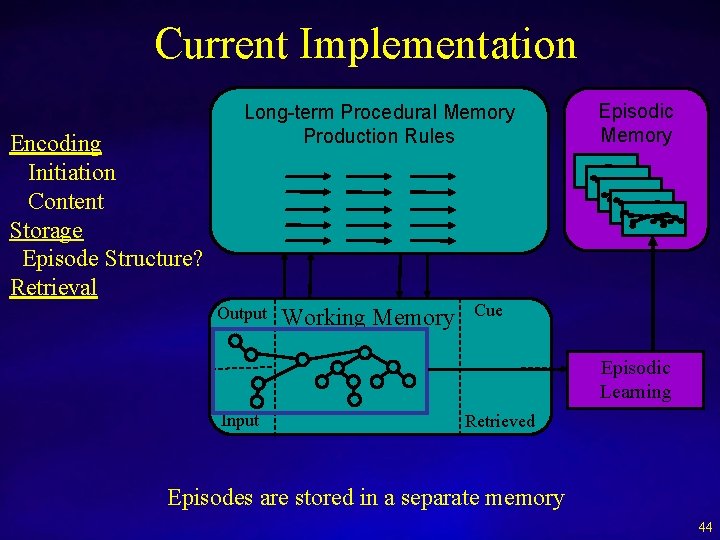

Current Implementation: Storage, Episode Structure? Episodes are stored in a separate episodic memory, filled by episodic learning.

Current Implementation: Retrieval Initiation/Cue? The cue is placed in an architecture-specific buffer.

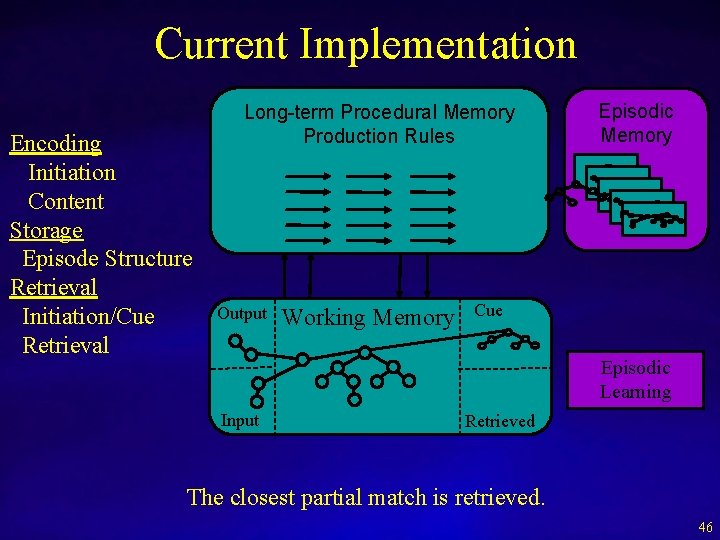

Current Implementation: Retrieval? The closest partial match is retrieved.
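The encoding, storage, and retrieval choices above can be sketched as a small Python class. The scoring rule used here (count the cue elements an episode shares, prefer the most recent episode on ties) is an assumption of the sketch; Soar's actual matching is more elaborate. `EpisodicMemory` and its methods are hypothetical names.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

WME = Tuple[str, str, object]  # (identifier, attribute, value)


class EpisodicMemory:
    def __init__(self) -> None:
        self.episodes: List[FrozenSet[WME]] = []   # separate store, in time order

    def record(self, working_memory: Set[WME]) -> int:
        """Encoding: snapshot all of working memory (call when the agent acts)."""
        self.episodes.append(frozenset(working_memory))
        return len(self.episodes) - 1

    def retrieve(self, cue: Set[WME]) -> Optional[int]:
        """Closest partial match: the episode sharing the most elements with the
        cue; ties go to the most recent episode. None if nothing matches."""
        best, best_score = None, 0
        for t, episode in enumerate(self.episodes):
            score = len(cue & episode)
            if score > 0 and score >= best_score:
                best, best_score = t, score
        return best

    def next_episode(self, t: int) -> Optional[FrozenSet[WME]]:
        """Retrieve the memory that followed episode t, if any."""
        return self.episodes[t + 1] if t + 1 < len(self.episodes) else None
```

Because retrieval returns an index, the agent can step forward in time with `next_episode`. That is the "retrieve the next memory" step used for action modeling.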

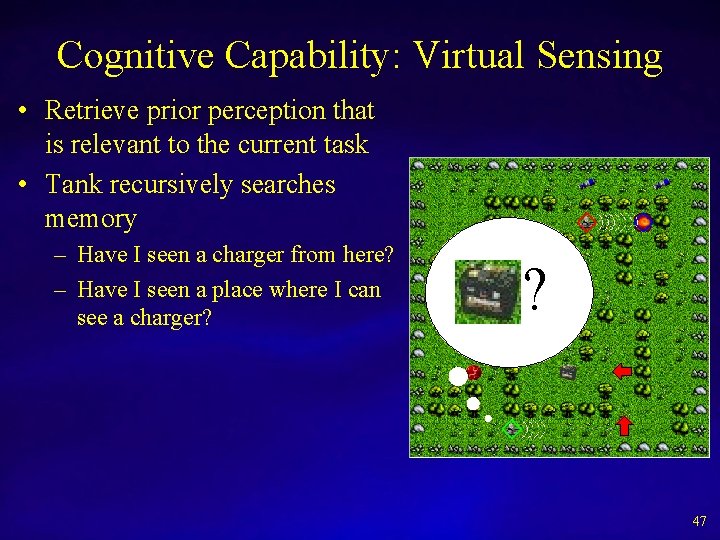

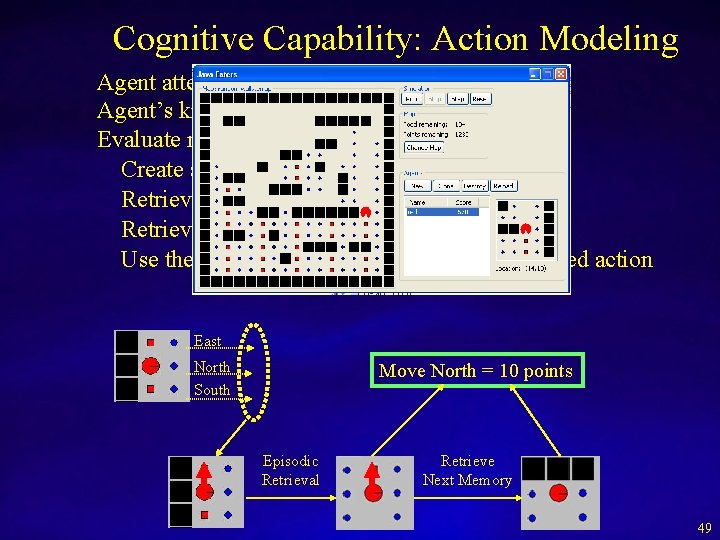

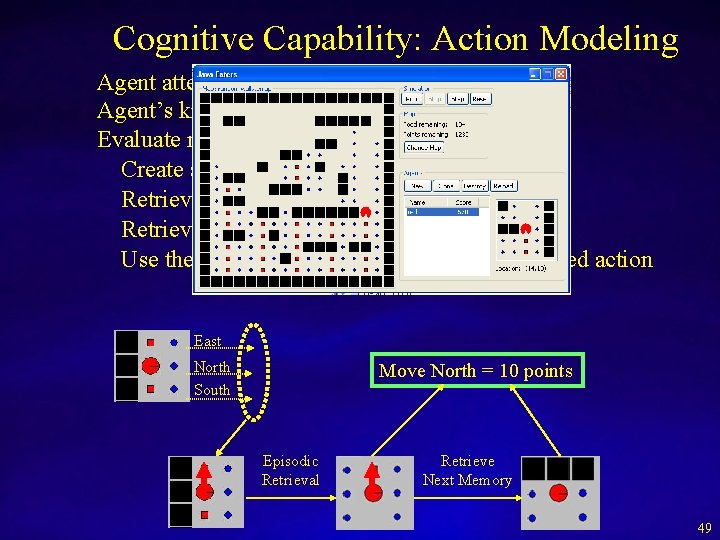

Cognitive Capability: Virtual Sensing
- Retrieve prior perception that is relevant to the current task
- The tank recursively searches memory:
  - Have I seen a charger from here?
  - Have I seen a place where I can see a charger?

Virtual Sensors Results

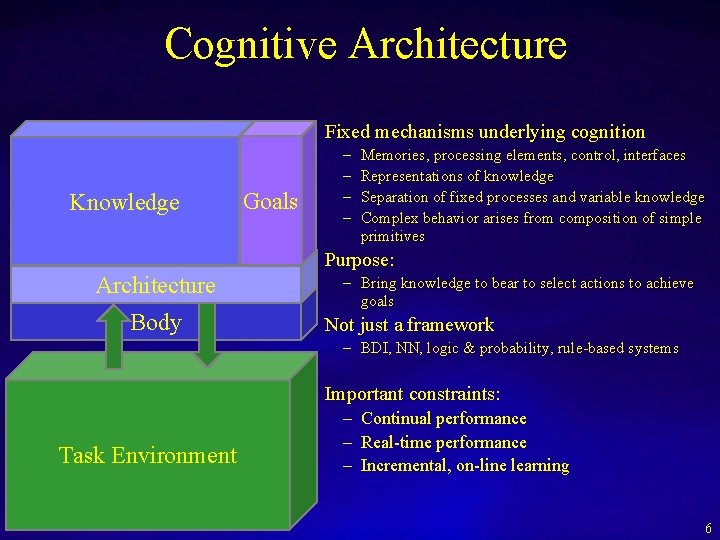

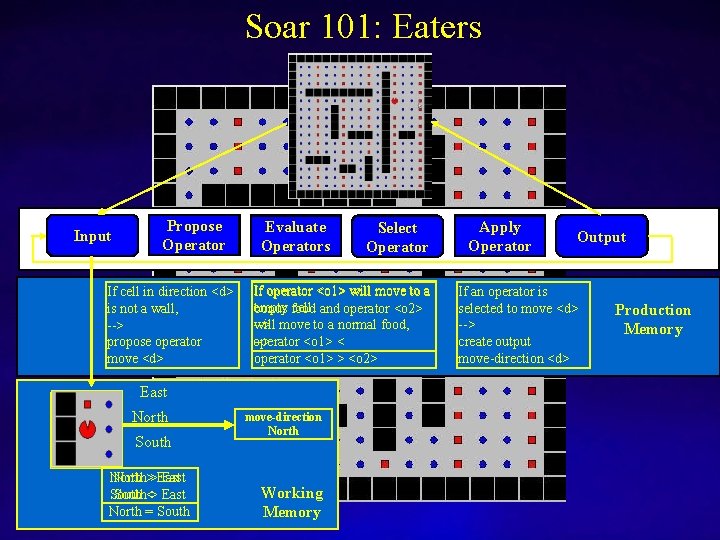

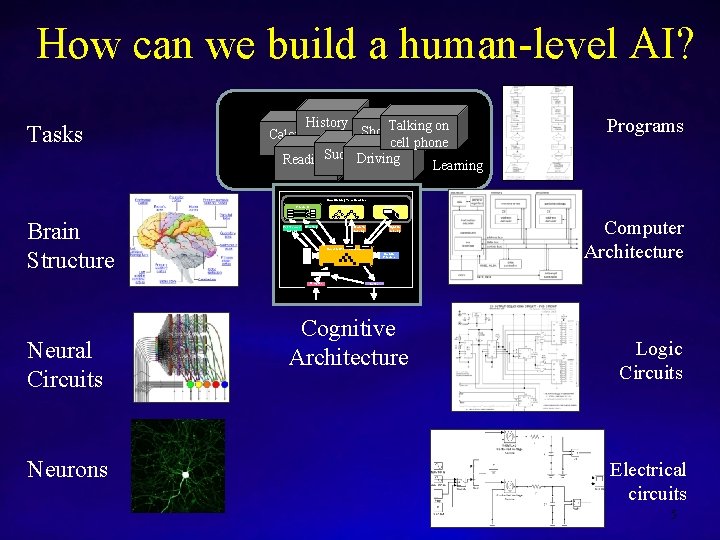

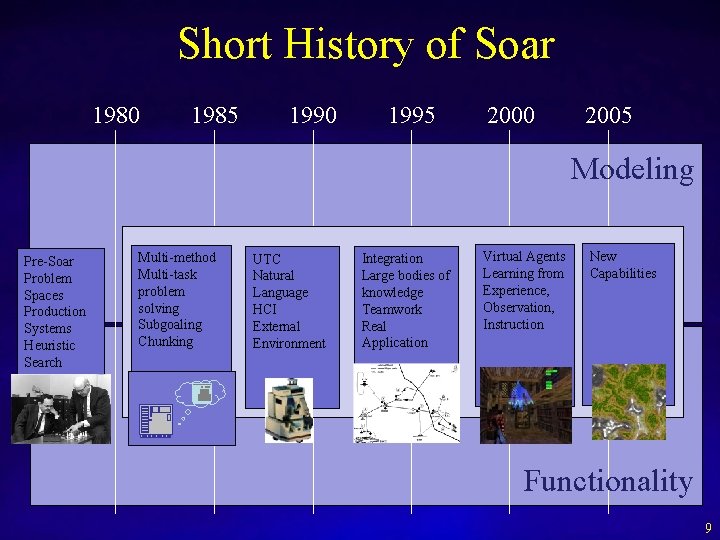

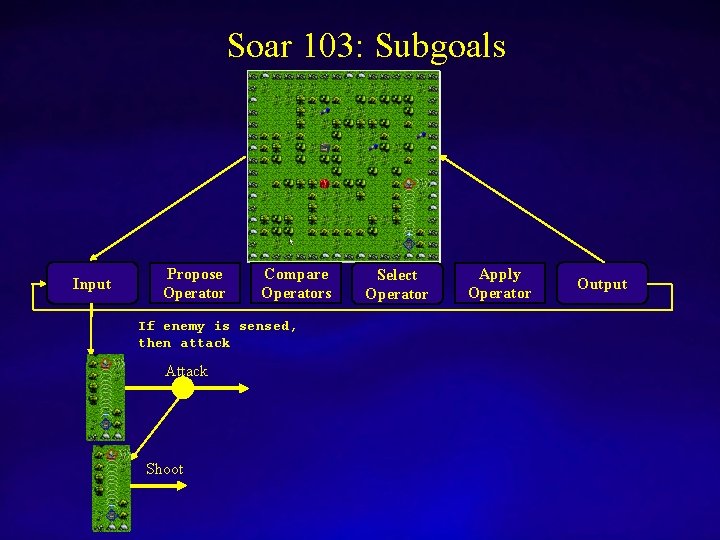

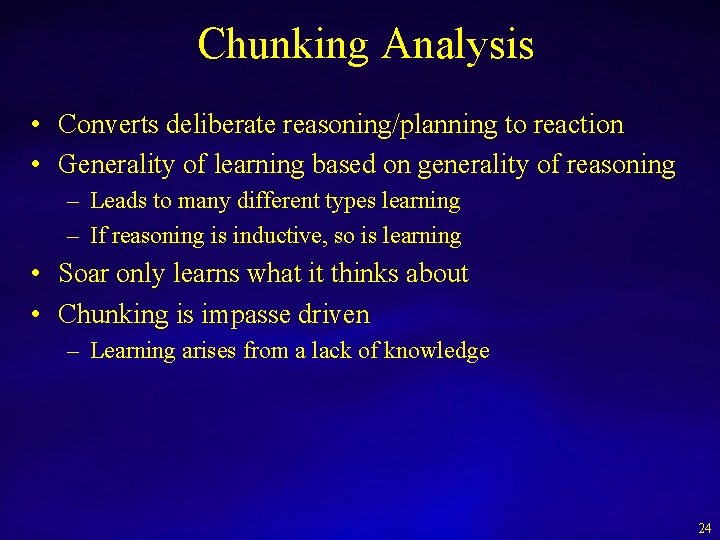

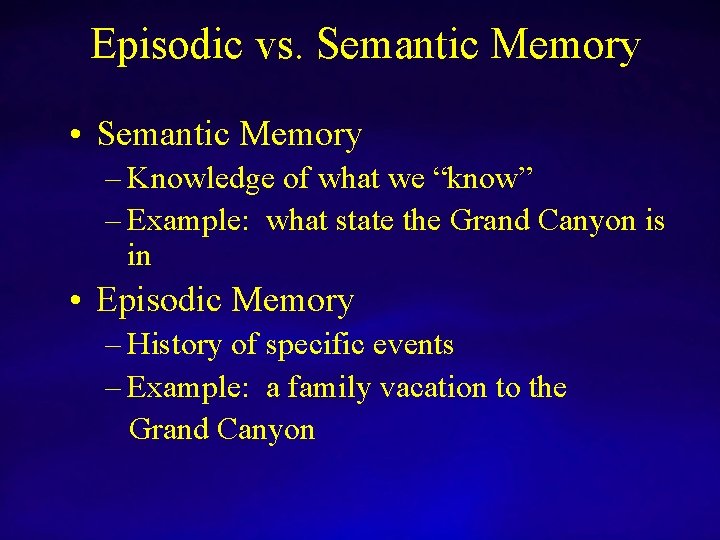

Cognitive Capability: Action Modeling
1. The agent attempts to choose a direction.
2. The agent's knowledge is insufficient, so an impasse arises.
3. The agent evaluates moving in each available direction (East, North, South):
   - Create a memory cue
   - Retrieve the best matching memory (episodic retrieval)
   - Retrieve the next memory
   - Use the change in score to evaluate the proposed action (e.g., Move North = 10 points)
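The evaluation steps can be sketched in Python over a hypothetical episode format: a list of dicts with `features`, `action`, and `score` keys, in time order. Matching counts shared features among episodes where the same action was taken, and ties go to the most recent match. Both choices are assumptions of this sketch, not Soar's episodic matcher.

```python
from typing import Any, Dict, List, Optional

Episode = Dict[str, Any]  # hypothetical keys: "features", "action", "score"


def best_match(episodes: List[Episode], features: Dict[str, str], action: str) -> Optional[int]:
    """Cue = current features + proposed action. Return the best-matching episode
    that has a successor (ties go to the most recent)."""
    best, best_overlap = None, -1
    for t, ep in enumerate(episodes[:-1]):
        if ep["action"] != action:
            continue
        overlap = sum(1 for k, v in features.items() if ep["features"].get(k) == v)
        if overlap >= best_overlap:
            best, best_overlap = t, overlap
    return best


def evaluate_action(episodes: List[Episode], features: Dict[str, str], action: str) -> Optional[int]:
    """Evaluate an action by the change in score from the retrieved memory to the next one."""
    t = best_match(episodes, features, action)
    if t is None:
        return None                      # no relevant memory: no evaluation
    return episodes[t + 1]["score"] - episodes[t]["score"]
```

The resulting numbers could then serve as evaluations that break the tie among the proposed directions, just as the evaluate-operator results do in the tie-subgoal example.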

![Episodic Memory: Multi-Step Action Projection (Andrew Nuxoll), average margin of victory](https://slidetodoc.com/presentation_image_h/259c5a9dc00e642b829117a41ba30269/image-50.jpg)

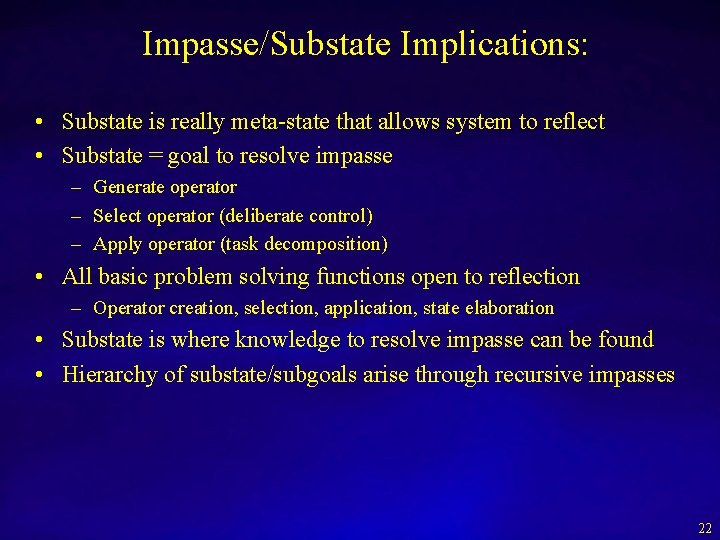

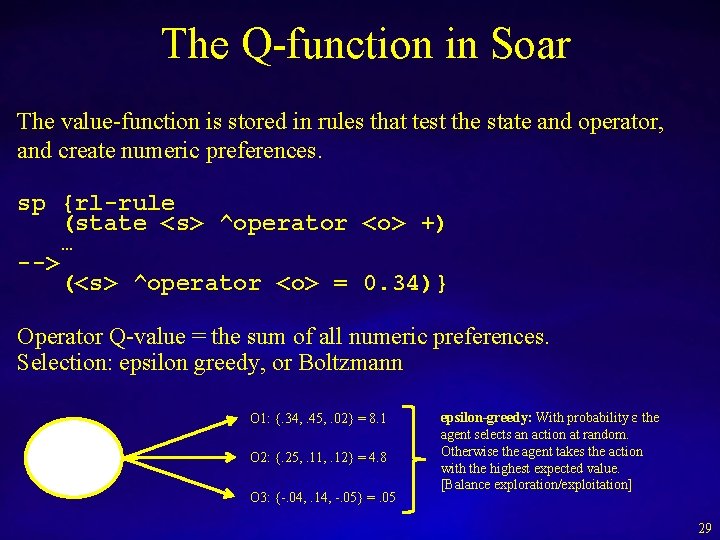

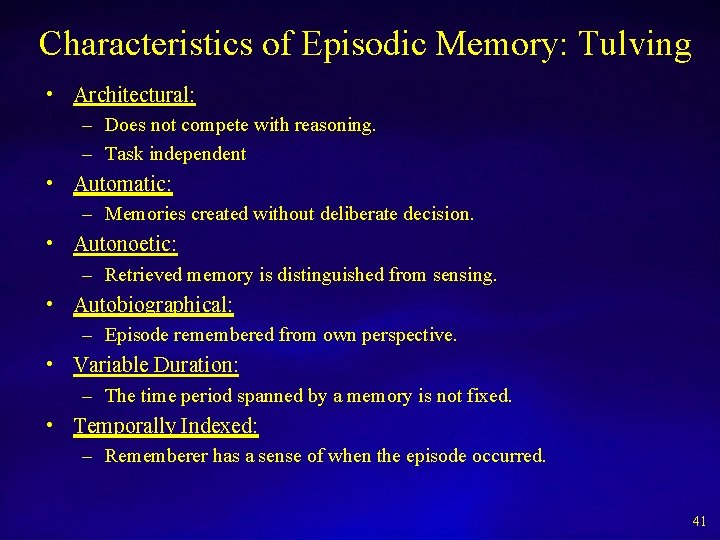

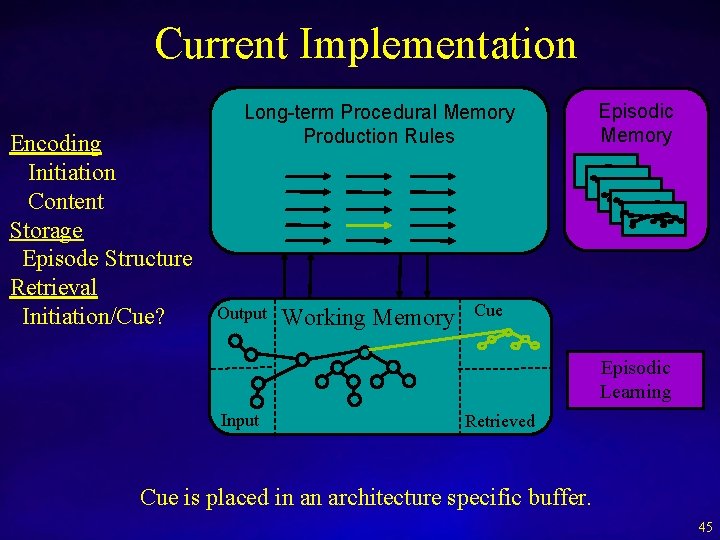

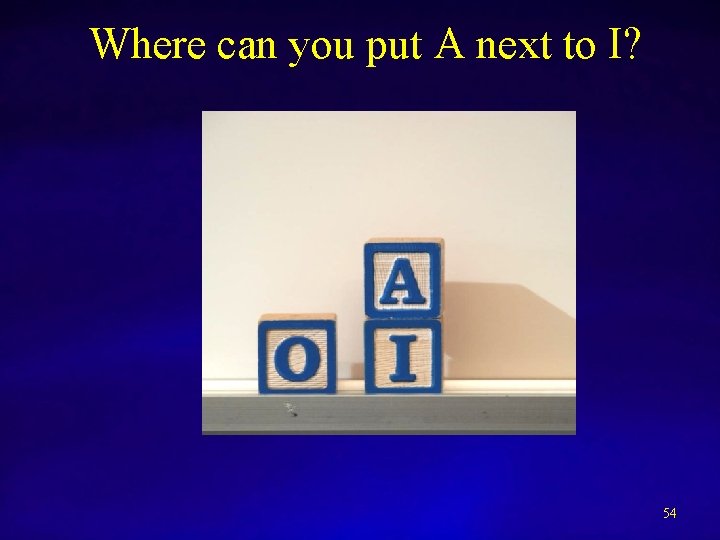

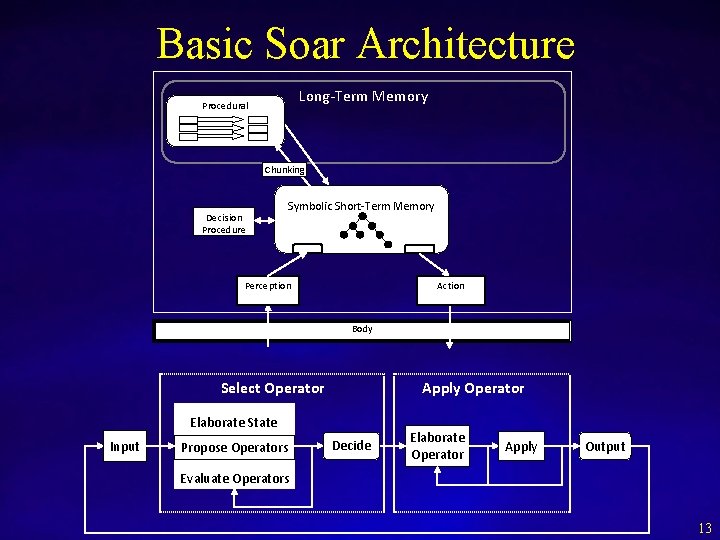

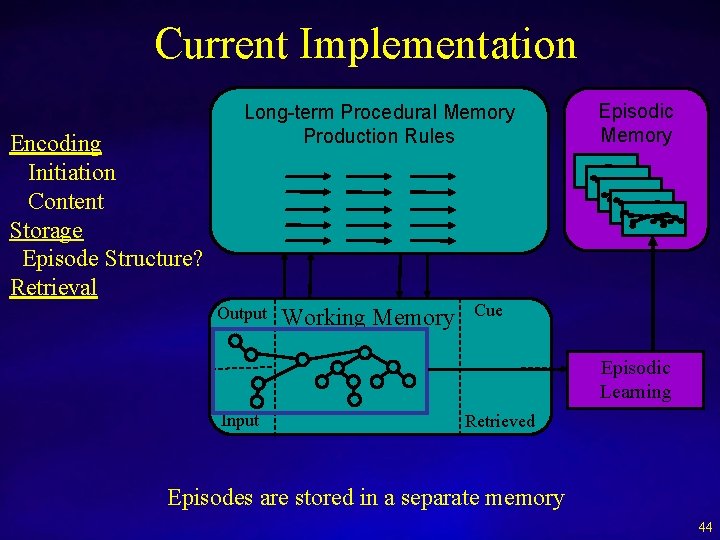

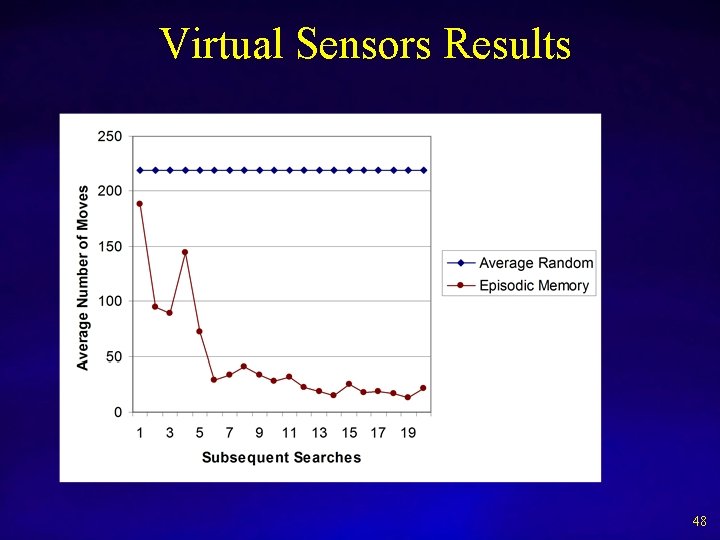

Episodic Memory: Multi-Step Action Projection [Andrew Nuxoll] (Plot: average margin of victory over successive games.)
- Learn tactics from prior success and failure
  - Fight/flight
  - Back away from enemy (and fire)
  - Dodging

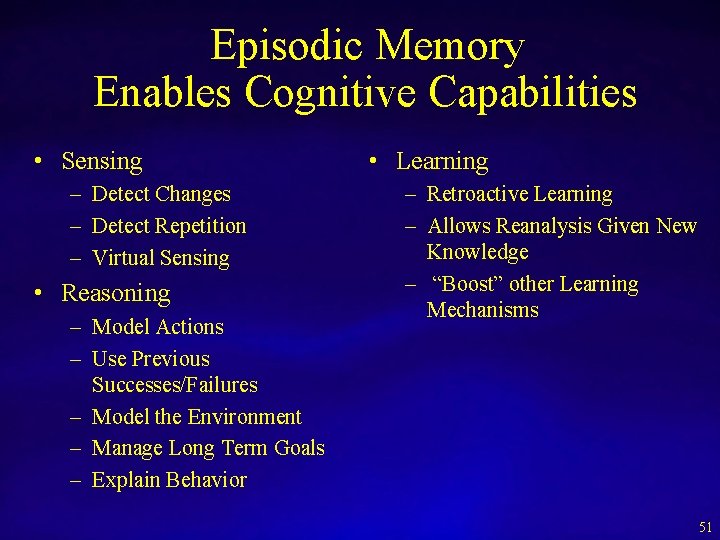

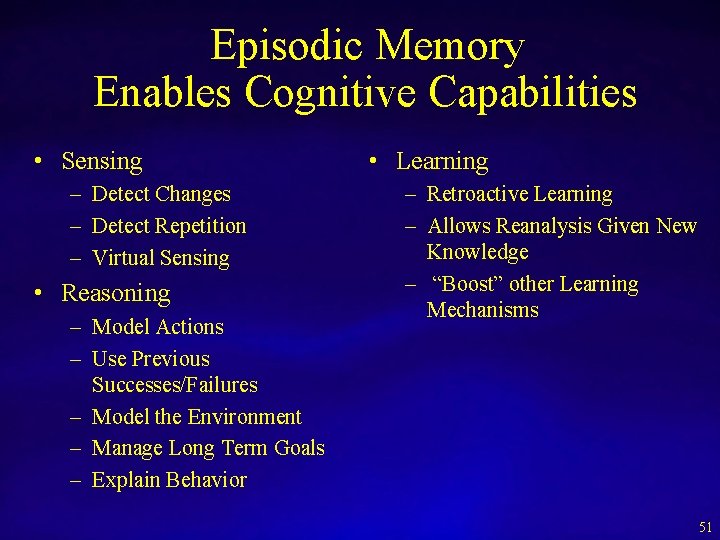

Episodic Memory Enables Cognitive Capabilities
- Sensing
  - Detect changes
  - Detect repetition
  - Virtual sensing
- Reasoning
  - Model actions
  - Use previous successes/failures
  - Model the environment
  - Manage long-term goals
  - Explain behavior
- Learning
  - Retroactive learning
  - Allows reanalysis given new knowledge
  - "Boost" other learning mechanisms

Mental Imagery and Spatial Reasoning (Scott Lathrop, Sam Wintermute; see AGI talks)

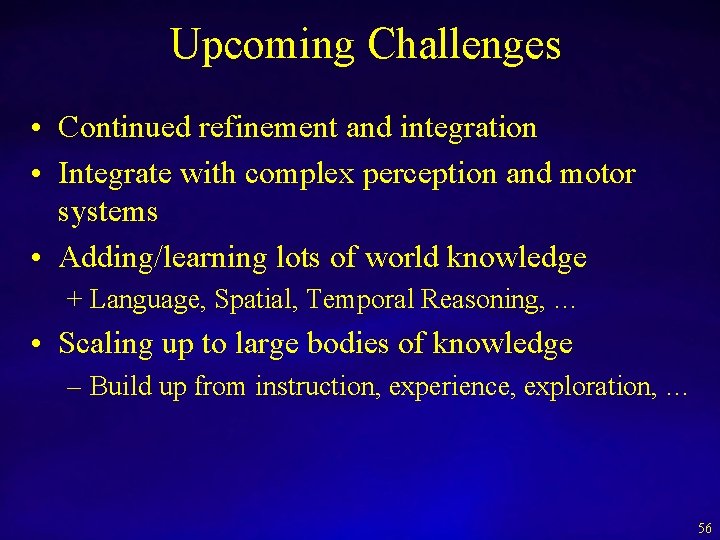

What Is Visual Imagery?

| | Visual-spatial | Visual-depictive |
|---|---|---|
| Properties | Location, orientation | Shape, color, topology, spatial properties |
| Representations | Sentential, quantitative | Depictive, pixel-based |
| Algorithms | Linear algebra and computational geometry (sentential/algebraic) | Image algebra (depictive/ordinal) |

Where can you put A next to I?

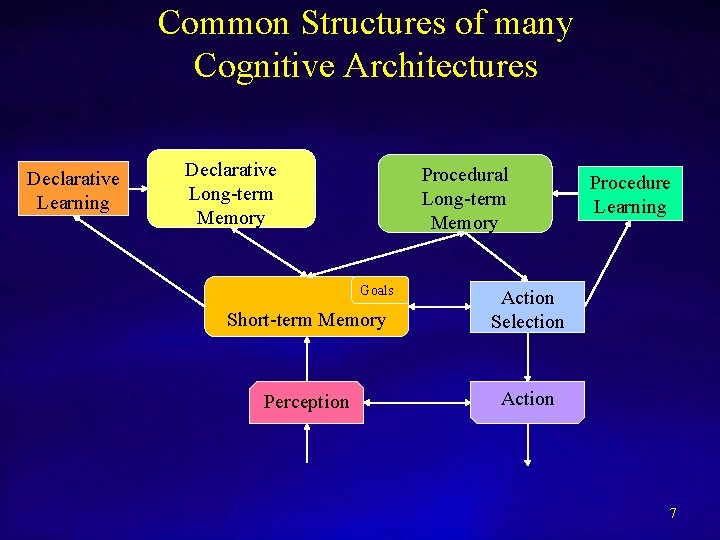

![Spatial Problem Solving with Mental Imagery (Scott Lathrop & Sam Wintermute)](https://slidetodoc.com/presentation_image_h/259c5a9dc00e642b829117a41ba30269/image-55.jpg)

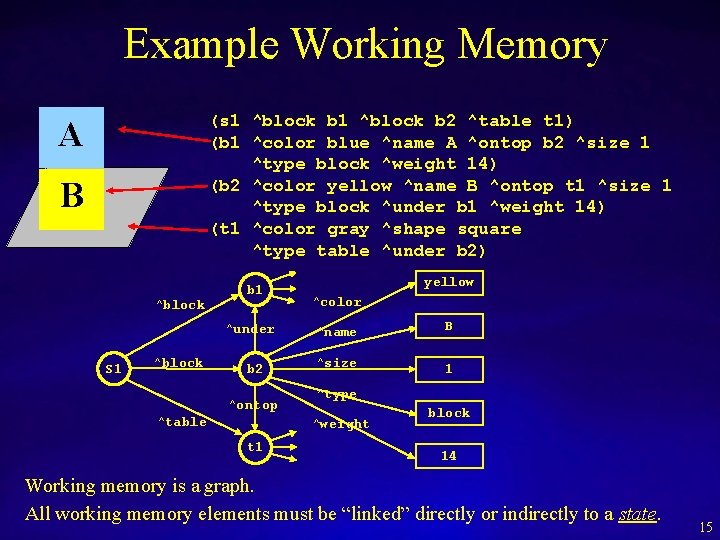

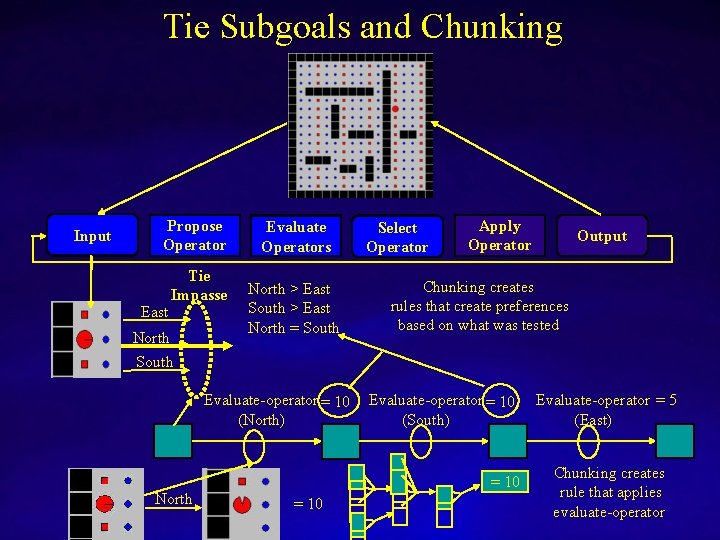

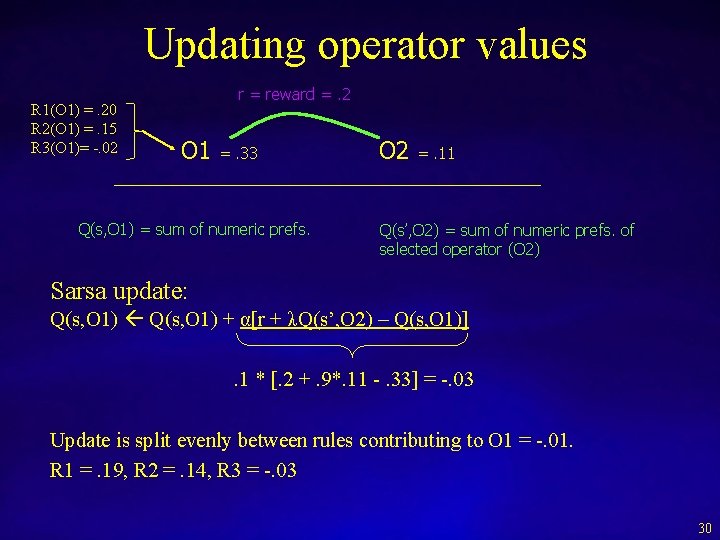

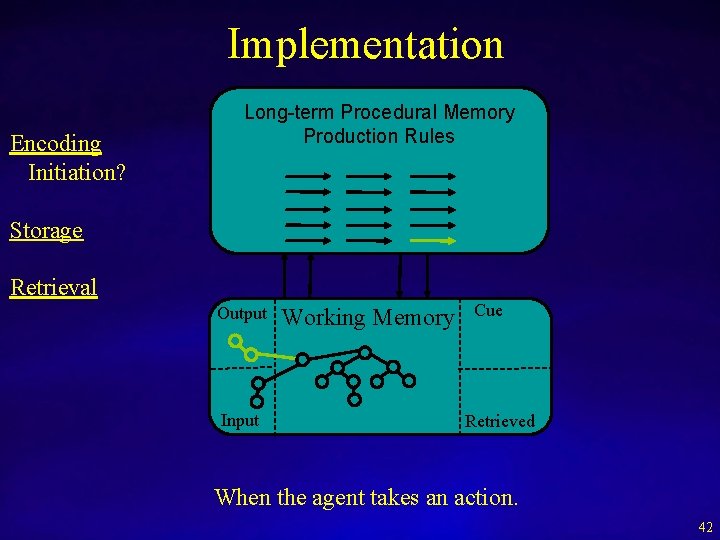

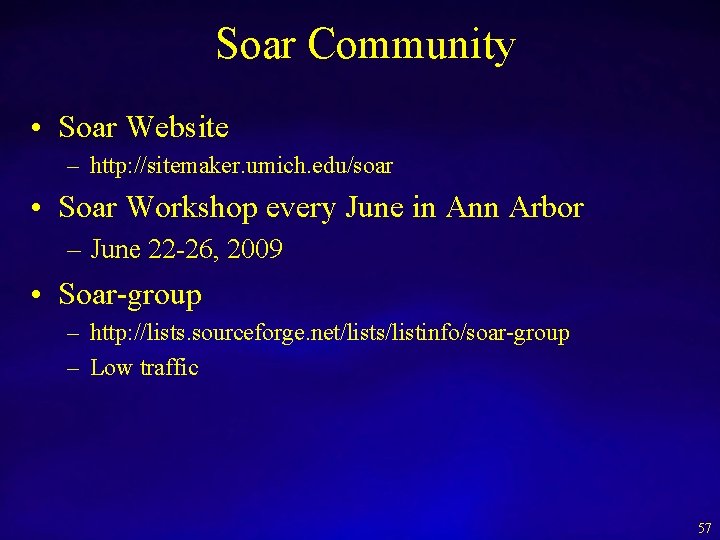

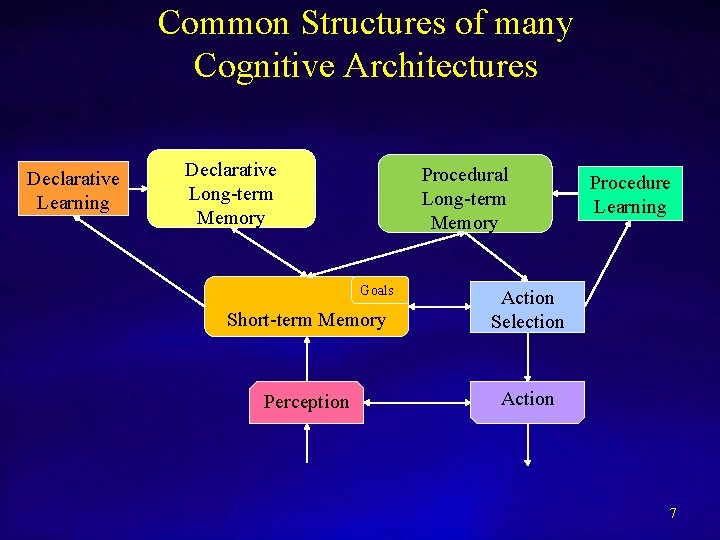

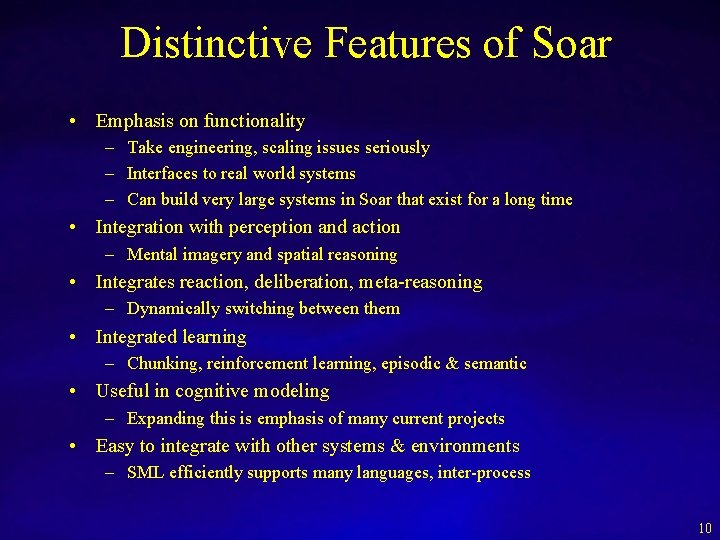

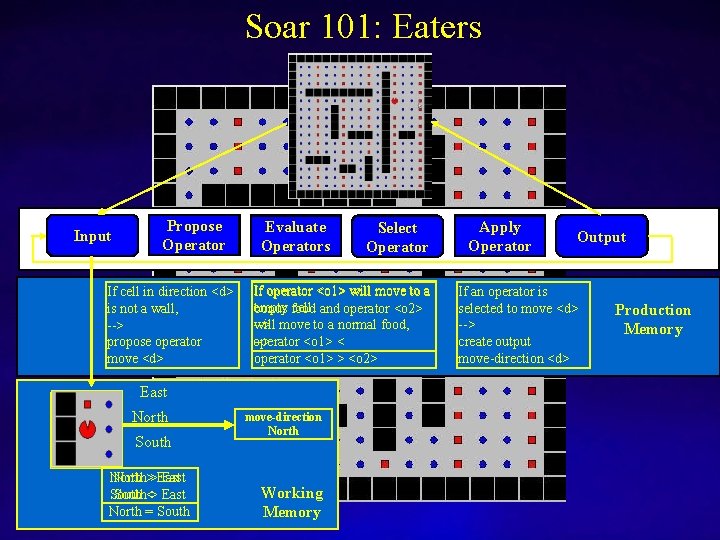

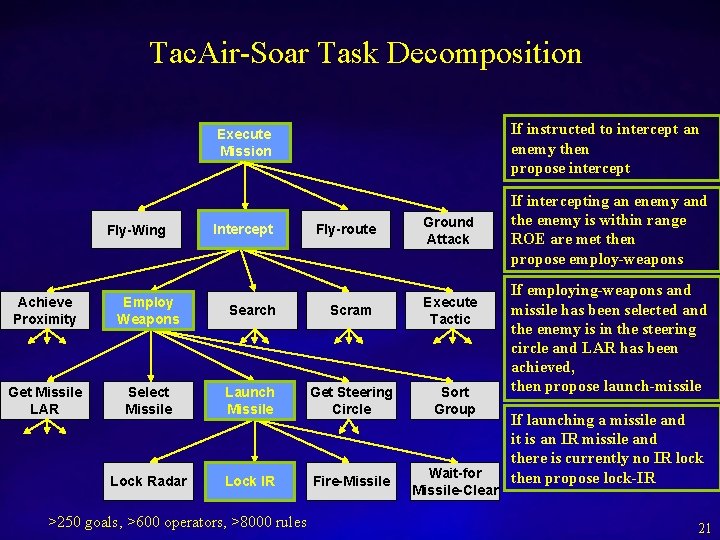

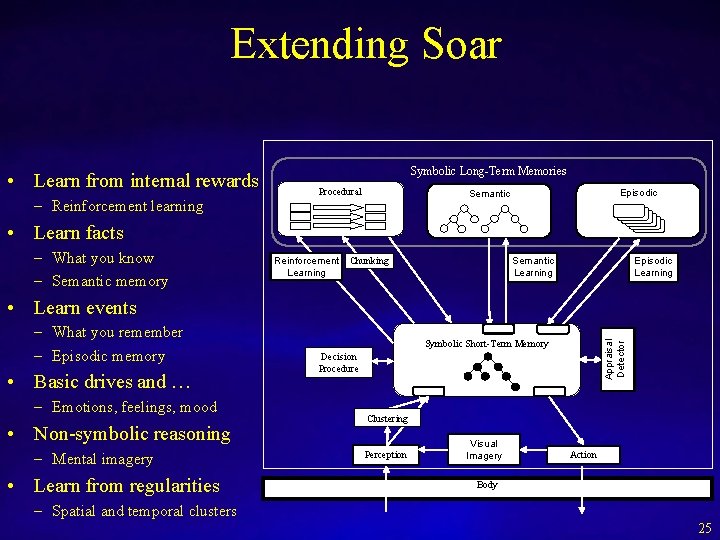

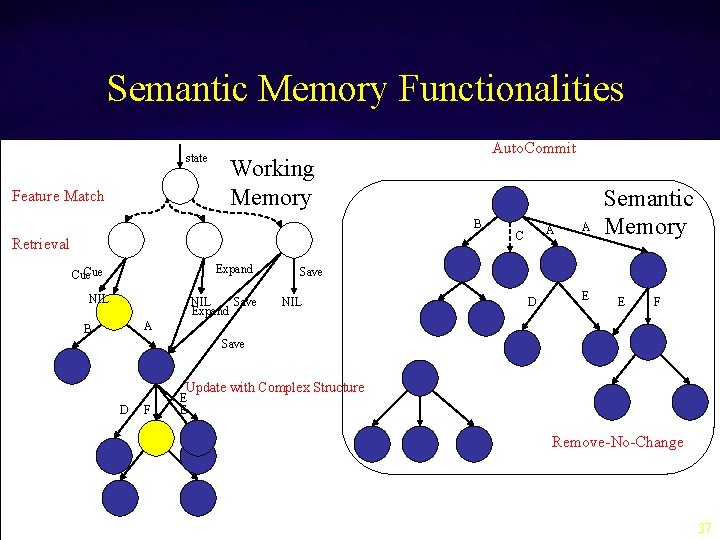

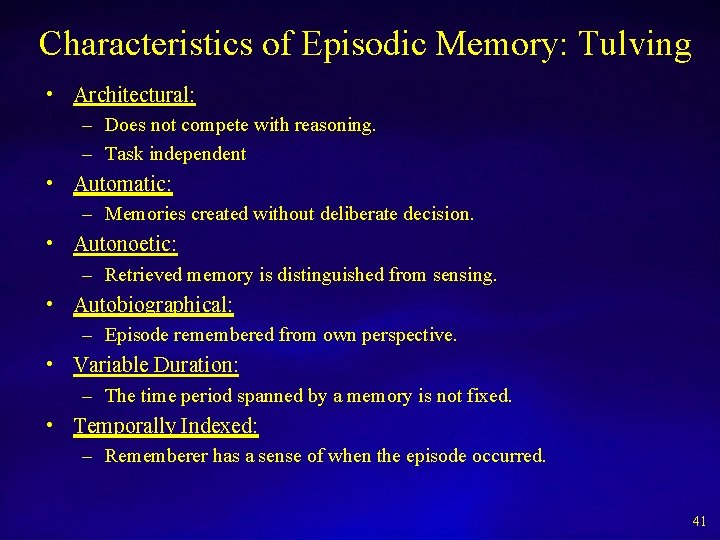

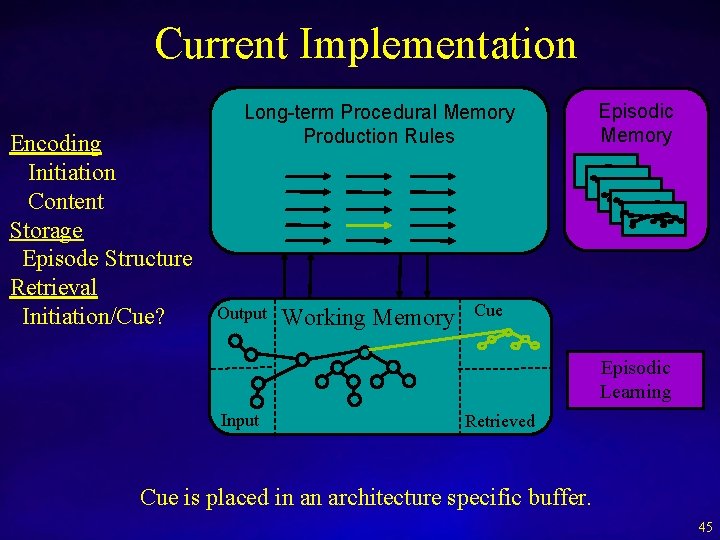

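Spatial Problem Solving with Mental Imagery [Scott Lathrop & Sam Wintermute] (Diagram: Soar exchanges qualitative descriptions with a spatial scene containing objects A, I, O, and the imagined object A′. Soar sends qualitative descriptions of new objects in relation to existing objects, such as (imagine_left_of A I), (imagine_right_of A I), and (move_right_of A I). The scene returns qualitative descriptions of object relationships, such as (on A I), (intersect A′ O), and (no_intersect A′). The spatial scene is built from quantitative descriptions of environmental objects supplied by the environment.)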
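The loop from qualitative command to quantitative scene and back to qualitative predicate can be sketched with axis-aligned rectangles. Everything here is a hypothetical stand-in: objects are `Box` rectangles, "imagining" places a copy flush against the reference object, and the intersection test uses open intervals. Only the predicate names follow the slide.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle: a quantitative description of an object."""
    x: float
    y: float
    w: float
    h: float


def intersects(a: Box, b: Box) -> bool:
    # Open-interval overlap test; boxes that only touch do not intersect.
    return a.x < b.x + b.w and b.x < a.x + a.w and a.y < b.y + b.h and b.y < a.y + a.h


def imagine_right_of(obj: Box, ref: Box) -> Box:
    """Imagined copy of obj placed flush against the right side of ref."""
    return Box(ref.x + ref.w, ref.y, obj.w, obj.h)


def imagine_left_of(obj: Box, ref: Box) -> Box:
    """Imagined copy of obj placed flush against the left side of ref."""
    return Box(ref.x - obj.w, ref.y, obj.w, obj.h)


def describe(name: str, imagined: Box, scene: Dict[str, Box]) -> List[Tuple[str, ...]]:
    """Turn the quantitative scene back into qualitative predicates for Soar."""
    hits = [("intersect", name, other) for other, box in scene.items() if intersects(imagined, box)]
    return hits or [("no_intersect", name)]
```

If an obstacle O sits just to the right of I, imagining A to the right of I yields `("intersect", "A'", "O")`, while imagining it to the left yields `("no_intersect", "A'")`. The answer to "where can you put A?" is then the left side.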

Upcoming Challenges
- Continued refinement and integration
- Integrate with complex perception and motor systems
- Adding/learning lots of world knowledge
  - Language, spatial, temporal reasoning, …
- Scaling up to large bodies of knowledge
  - Build up from instruction, experience, exploration, …

Soar Community
- Soar website
  - http://sitemaker.umich.edu/soar
- Soar Workshop every June in Ann Arbor
  - June 22-26, 2009
- Soar-group mailing list
  - http://lists.sourceforge.net/lists/listinfo/soar-group
  - Low traffic

Thanks to
- Funding agencies: NSF, DARPA, ONR
- Ph.D. students: Nate Derbinsky, Nicholas Gorski, Scott Lathrop, Robert Marinier, Andrew Nuxoll, Yongjia Wang, Samuel Wintermute, Joseph Xu
- Research programmers: Karen Coulter, Jonathan Voigt
- Continued inspiration: Allen Newell

Challenges in Cognitive Architecture Research
- Dynamic taskability
  - Pursue novel tasks
- Learning
  - Always learning, learning in unexpected and unplanned ways (wild learning)
  - Transition from programming to learning by imitation, instruction, experience, reflection, …
- Natural language
  - Active area, but much left to do
- Social behavior
  - Interaction with humans and other entities
- Connect to the real world
  - Cognitive robotics with long-term existence
- Applications
  - Expand domains and problems
  - Putting cognitive architectures to work
- Connect to unfolding research on the brain, psychology, and the rest of AI