Sequential decision analytics and modeling ORF 411 Fall

- Slides: 43

Sequential decision analytics and modeling, ORF 411, Fall 2018. Warren Powell, Princeton University. http://www.castlelab.princeton.edu © 2017 Warren B. Powell, Princeton University

Week 1 - Wednesday Machine learning © 2018 W. B. Powell

- Three core approximation architectures
  - Lookup tables
  - Parametric models
    - Linear in the parameters
    - Nonlinear (in the parameters)
  - Nonparametric

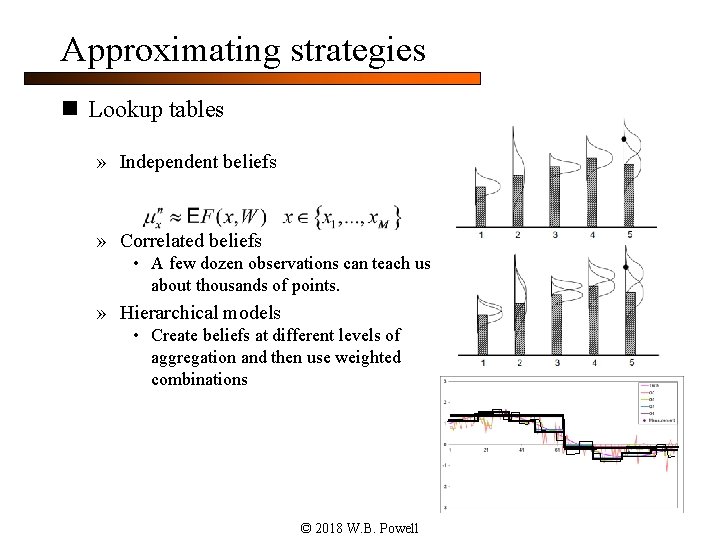

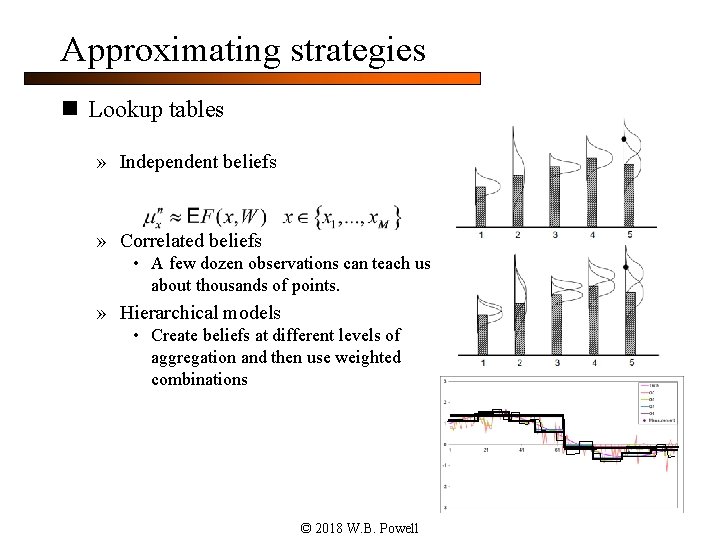

Approximating strategies
- Lookup tables
  - Independent beliefs
  - Correlated beliefs
    - A few dozen observations can teach us about thousands of points.
  - Hierarchical models
    - Create beliefs at different levels of aggregation and then use weighted combinations.

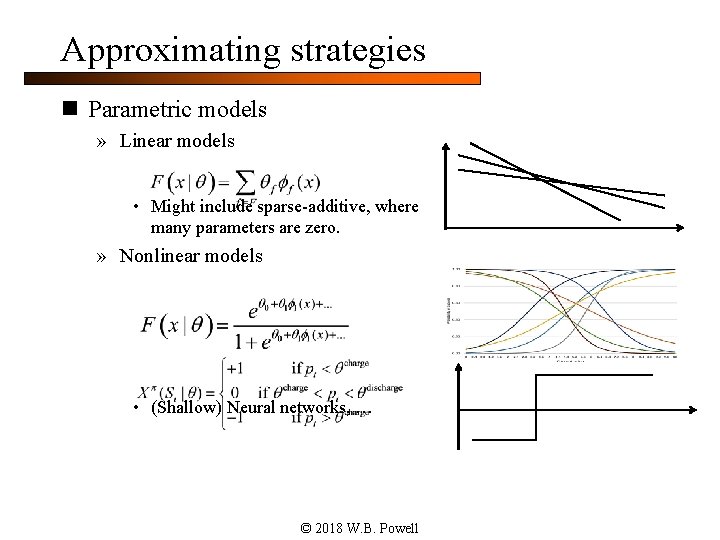

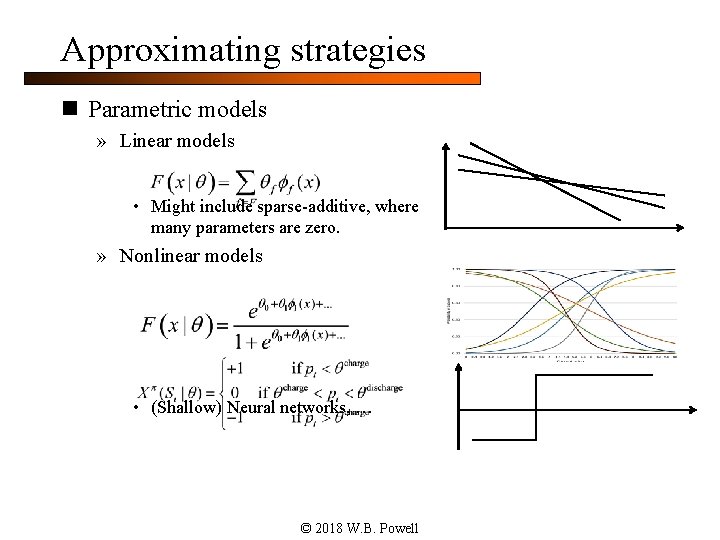

Approximating strategies
- Parametric models
  - Linear models
    - Might include sparse-additive models, where many parameters are zero.
  - Nonlinear models
    - (Shallow) neural networks, …

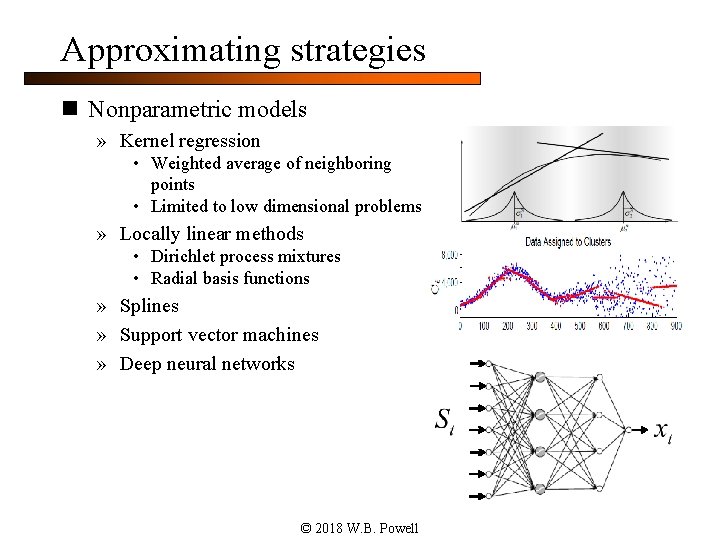

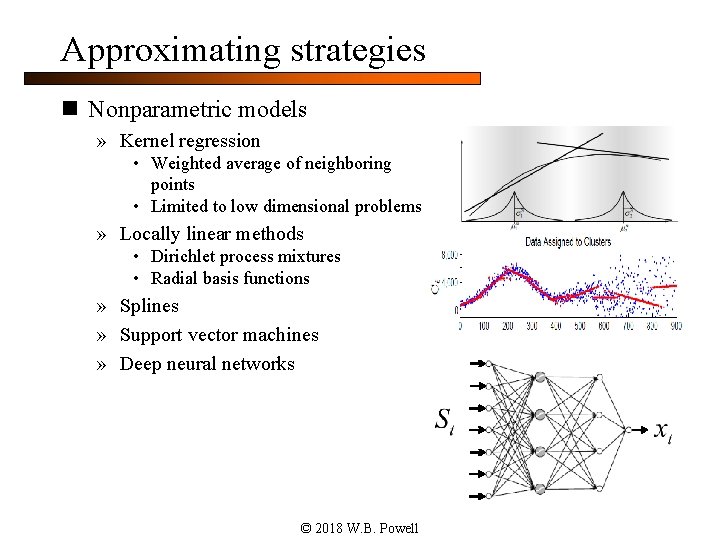

Approximating strategies
- Nonparametric models
  - Kernel regression
    - Weighted average of neighboring points
    - Limited to low-dimensional problems
  - Locally linear methods
    - Dirichlet process mixtures
    - Radial basis functions
  - Splines
  - Support vector machines
  - Deep neural networks

Lookup tables
- Frequentist
- Bayesian, independent beliefs
- Bayesian, correlated beliefs

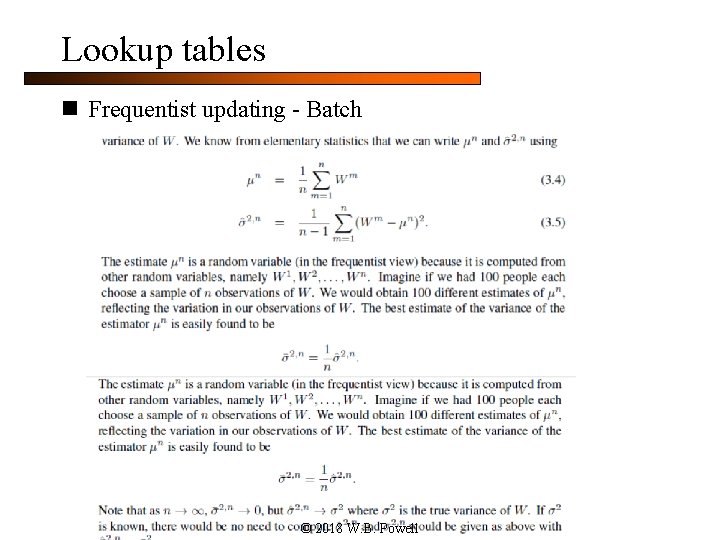

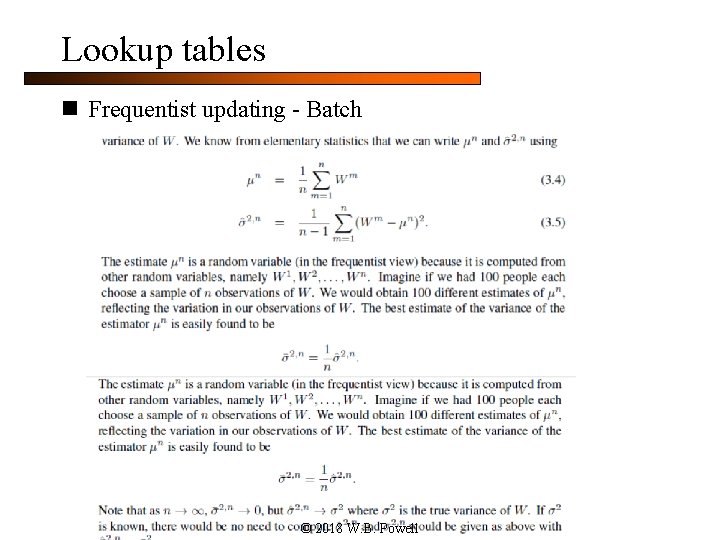

Lookup tables
- Frequentist updating: batch

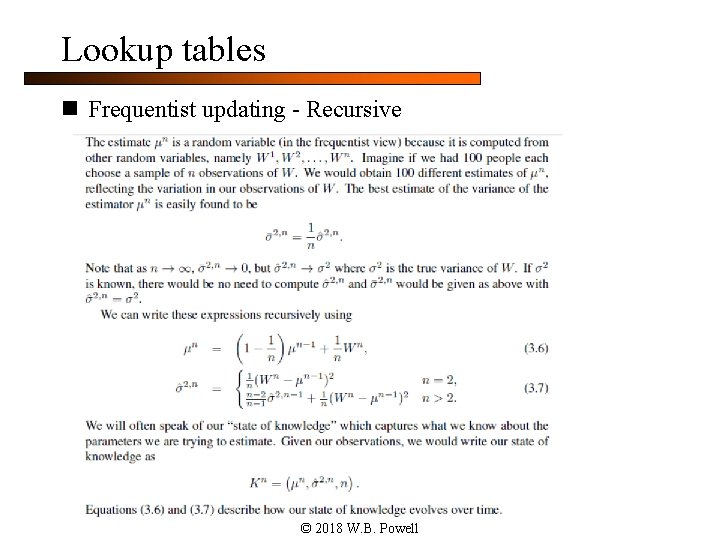

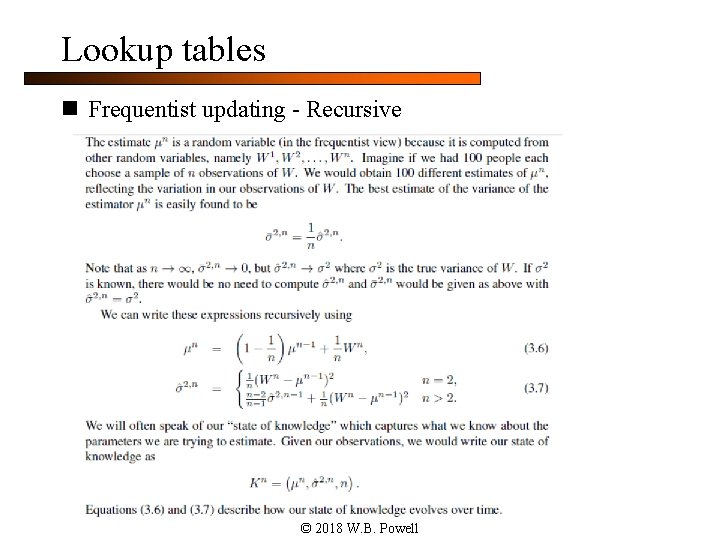

Lookup tables
- Frequentist updating: recursive

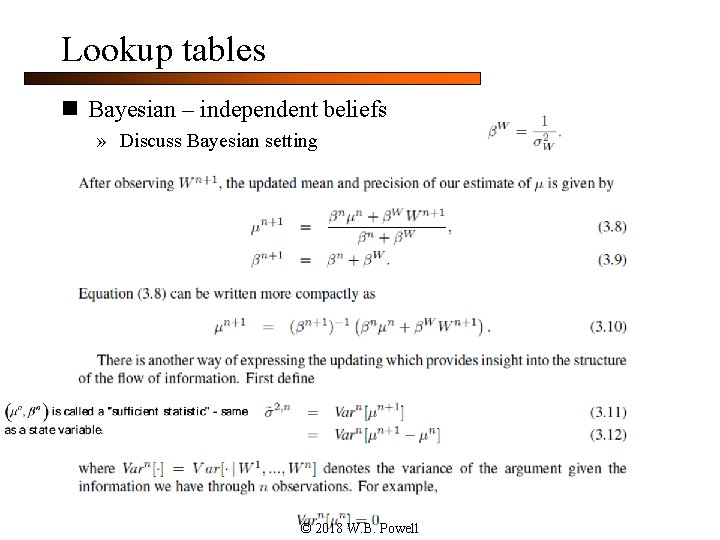

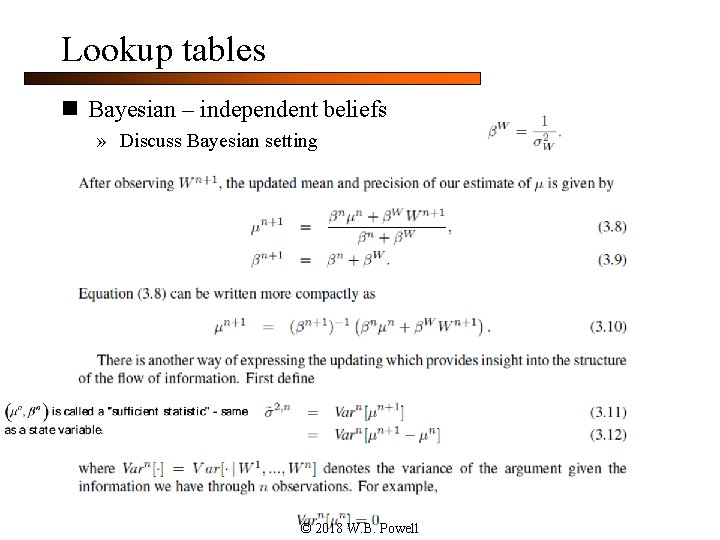

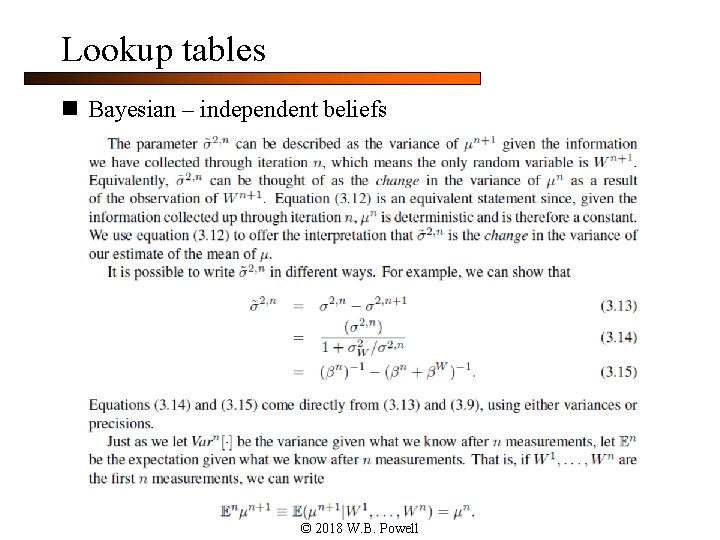
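The slide equations for recursive frequentist updating are images and did not survive extraction. As a hedged illustration only, the sketch below uses the standard recursions for the sample mean and sample variance; the function name `recursive_update` and its argument names are assumptions made for this example, not notation from the slides.

```python
def recursive_update(mean, var, n, w):
    """Fold the n-th observation w (n is 1-indexed) into running estimates.

    mean, var: sample mean and sample variance after n-1 observations.
    Returns the sample mean and sample variance after n observations.
    """
    if n == 1:
        # One observation: the mean is the observation, the variance is
        # undefined, so we report 0.0 as a placeholder.
        return w, 0.0
    new_mean = (1 - 1 / n) * mean + (1 / n) * w
    # Standard recursion for the unbiased sample variance.
    new_var = (n - 2) / (n - 1) * var + (1 / n) * (w - mean) ** 2
    return new_mean, new_var


# Usage: process observations one at a time, without storing the history.
mean, var = 0.0, 0.0
for n, w in enumerate([1.0, 2.0, 3.0], start=1):
    mean, var = recursive_update(mean, var, n, w)
```

The recursive form gives the same estimates as recomputing the batch mean and variance from scratch, but each update needs only the previous estimates and the new observation.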

Lookup tables
- Bayesian, independent beliefs
  - Discuss Bayesian setting

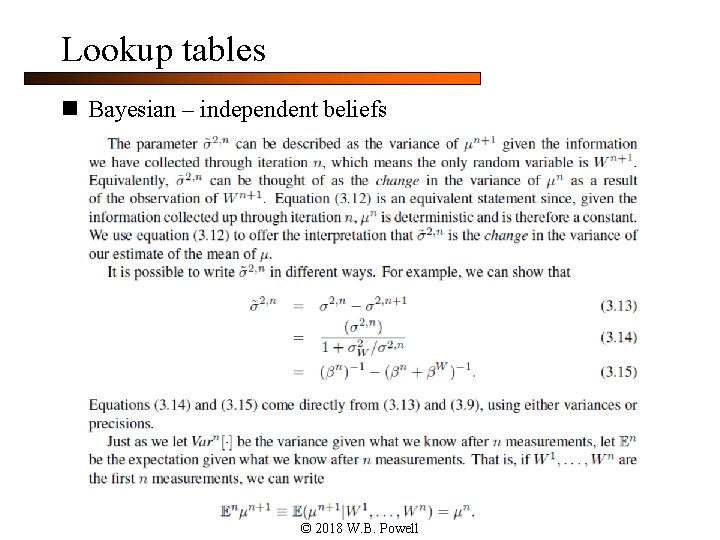

Lookup tables
- Bayesian, independent beliefs

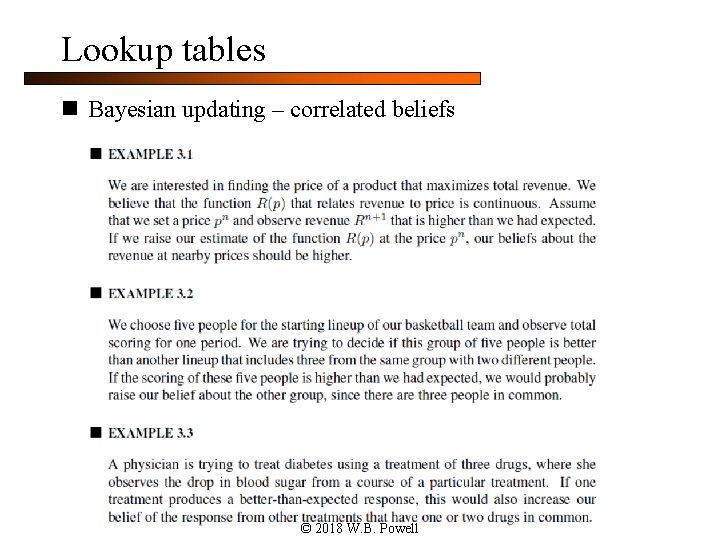

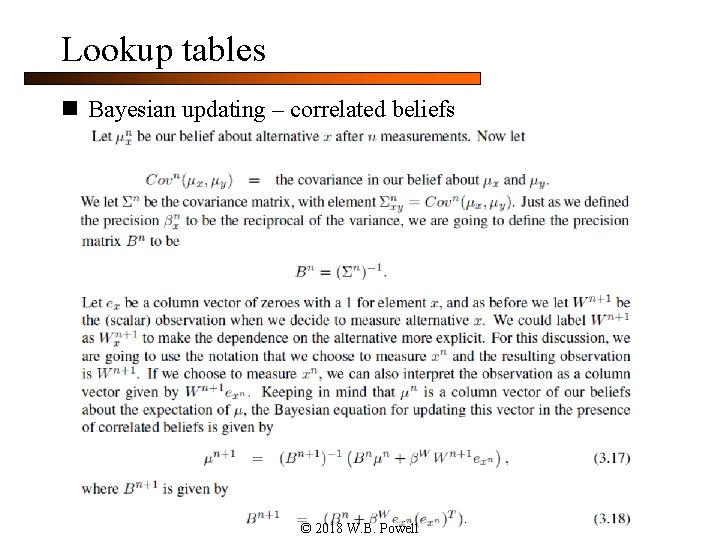

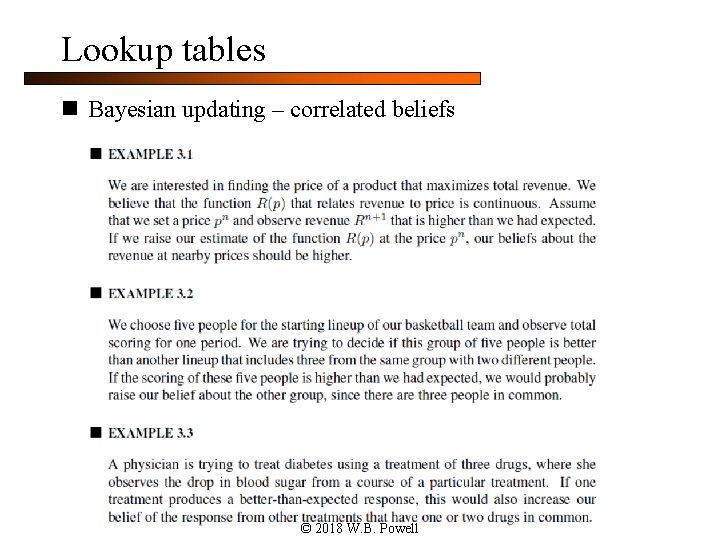

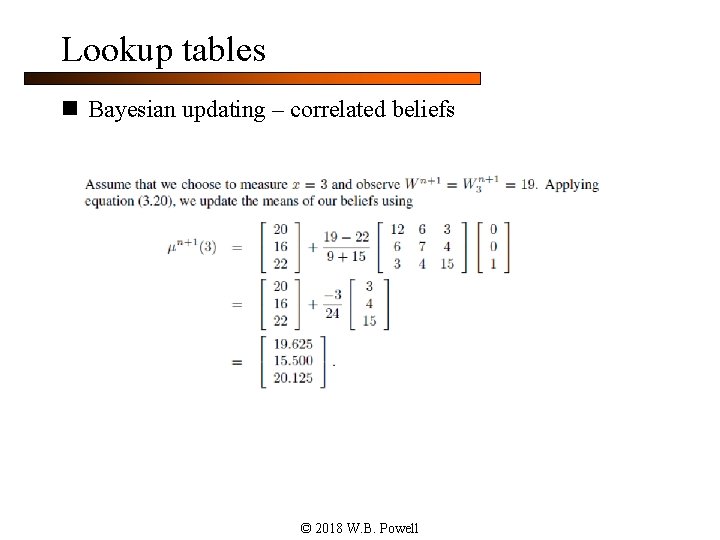

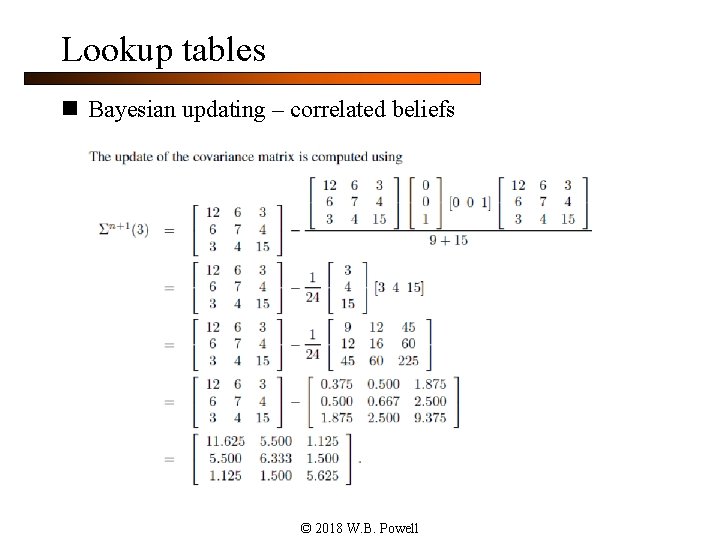
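The updating equations for independent beliefs are in the slide images. A minimal sketch, assuming a normally distributed prior and normally distributed observation noise with known variance, is the usual precision-weighted update below. The names `bayes_update`, `beta`, and `beta_w` are chosen for this example.

```python
def bayes_update(mu, beta, w, beta_w):
    """Update a normal belief about one alternative after observing w.

    mu, beta: prior mean and prior precision (1 / variance).
    w, beta_w: the observation and the precision of the observation noise.
    Returns the posterior mean and posterior precision.
    """
    beta_new = beta + beta_w                      # precisions add
    mu_new = (beta * mu + beta_w * w) / beta_new  # precision-weighted average
    return mu_new, beta_new


# Prior mean 10 with variance 4; we observe 14 with noise variance 4.
mu, beta = bayes_update(mu=10.0, beta=0.25, w=14.0, beta_w=0.25)
# mu is 12.0 and beta is 0.5: equal precisions give the midpoint.
```

With independent beliefs, observing one alternative changes only the belief about that alternative.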

Lookup tables
- Bayesian updating: correlated beliefs

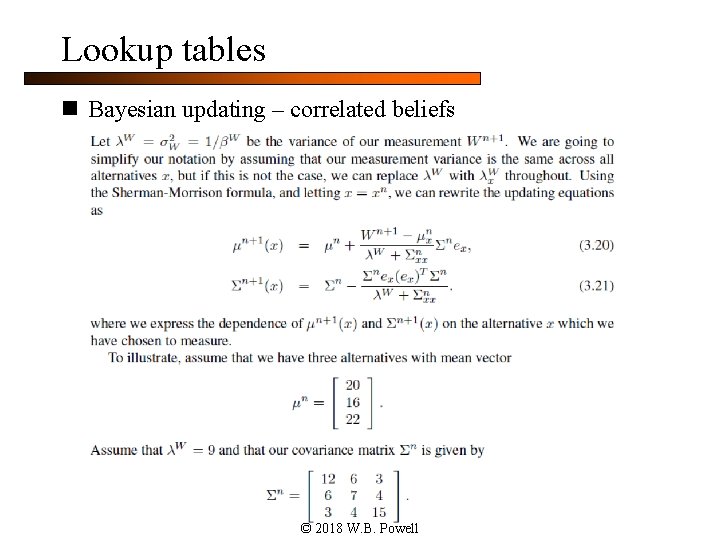

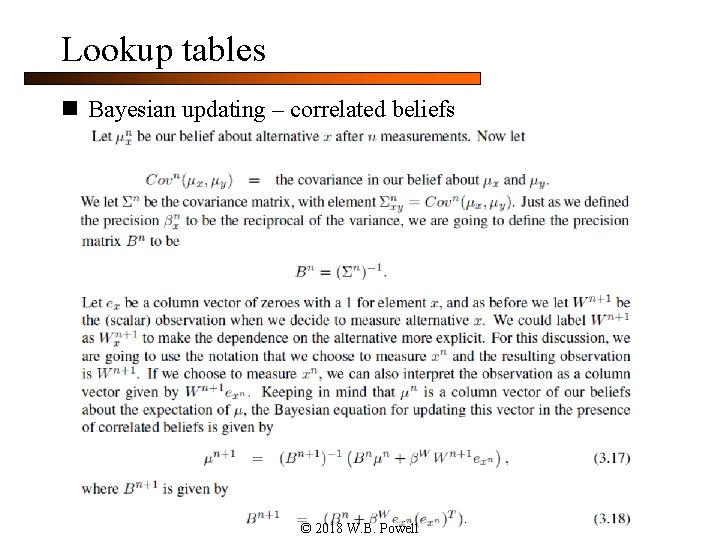

Lookup tables
- Bayesian updating: correlated beliefs

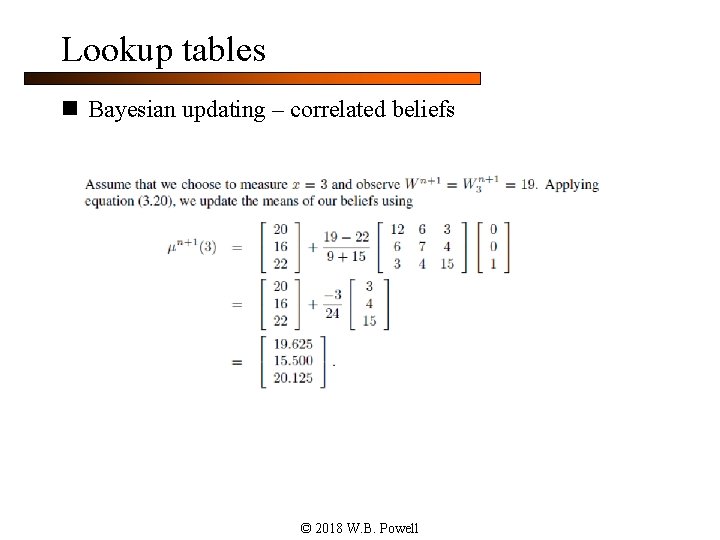

Lookup tables
- Bayesian updating: correlated beliefs

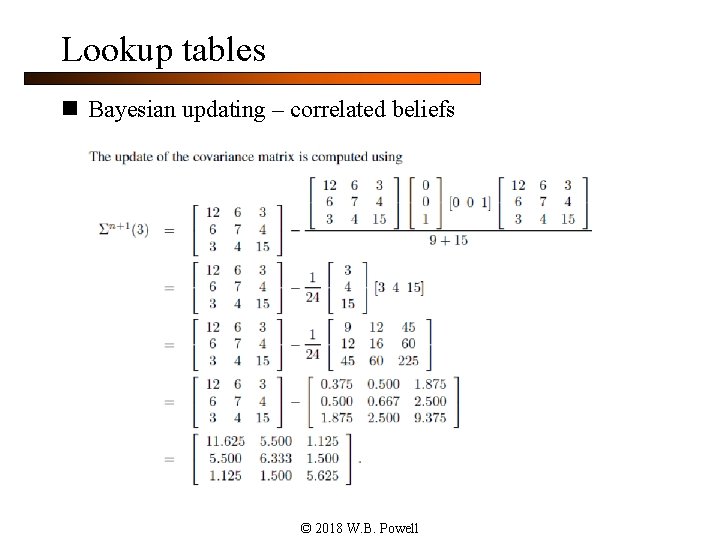

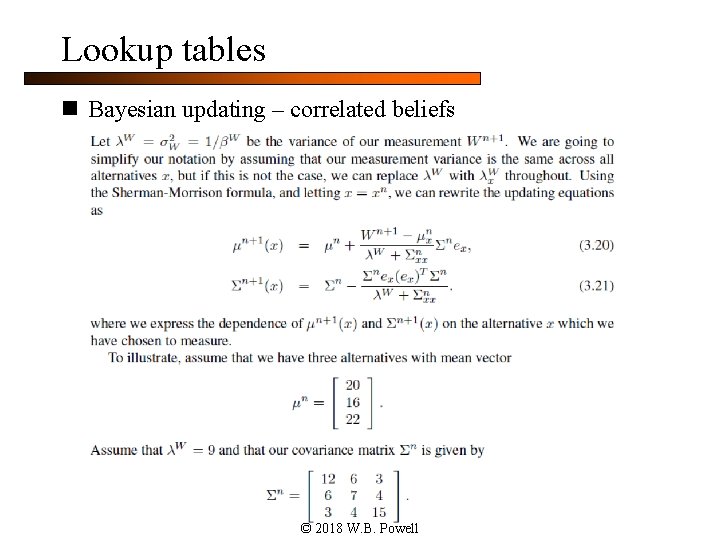

Lookup tables
- Bayesian updating: correlated beliefs

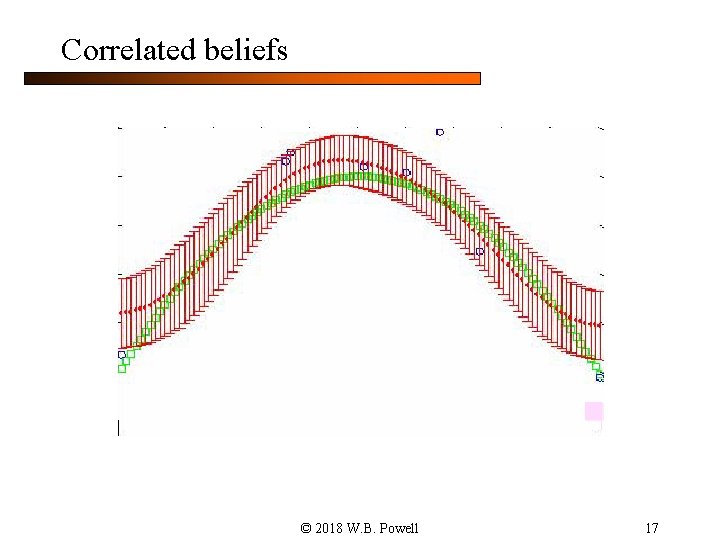

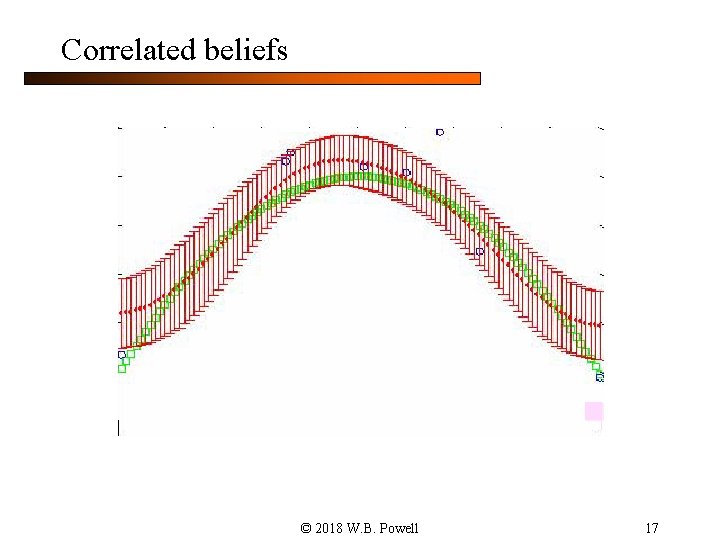
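The correlated-belief equations appear only as images. As a hedged sketch, the code below implements the standard multivariate normal update after measuring a single alternative `x` with known noise variance. The function name, the list-of-lists covariance layout, and the example numbers are assumptions made for illustration.

```python
def update_correlated(mu, sigma, x, w, noise_var):
    """Update a multivariate normal belief after observing w at index x.

    mu: list of prior means; sigma: prior covariance as a list of lists.
    noise_var: variance of the measurement noise (assumed known).
    Returns the posterior mean vector and posterior covariance matrix.
    """
    k = len(mu)
    denom = noise_var + sigma[x][x]
    gain = (w - mu[x]) / denom
    # Every alternative correlated with x moves, not just x itself.
    mu_new = [mu[i] + gain * sigma[i][x] for i in range(k)]
    sigma_new = [
        [sigma[i][j] - sigma[i][x] * sigma[x][j] / denom for j in range(k)]
        for i in range(k)
    ]
    return mu_new, sigma_new


# Two alternatives with correlation 0.5; we measure alternative 0.
mu, sigma = update_correlated(
    mu=[0.0, 0.0], sigma=[[1.0, 0.5], [0.5, 1.0]], x=0, w=2.0, noise_var=1.0
)
```

In this example the belief about alternative 1 also shifts upward and its variance shrinks, even though it was never measured. This is the mechanism by which a few observations can inform many points.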

Correlated beliefs

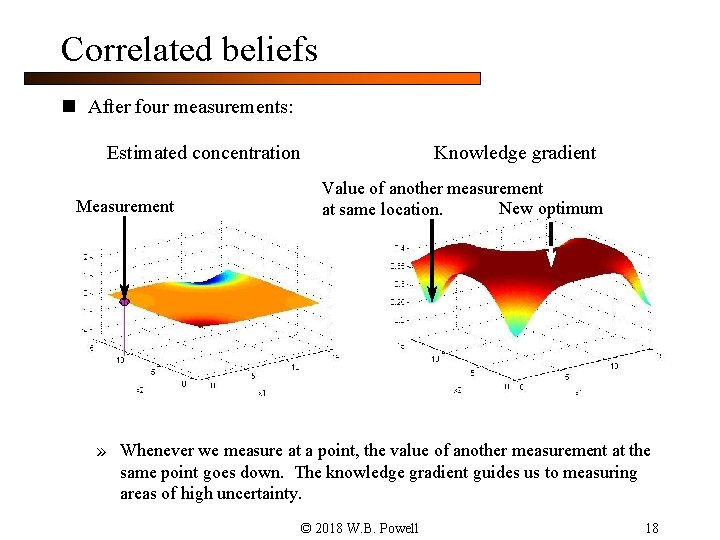

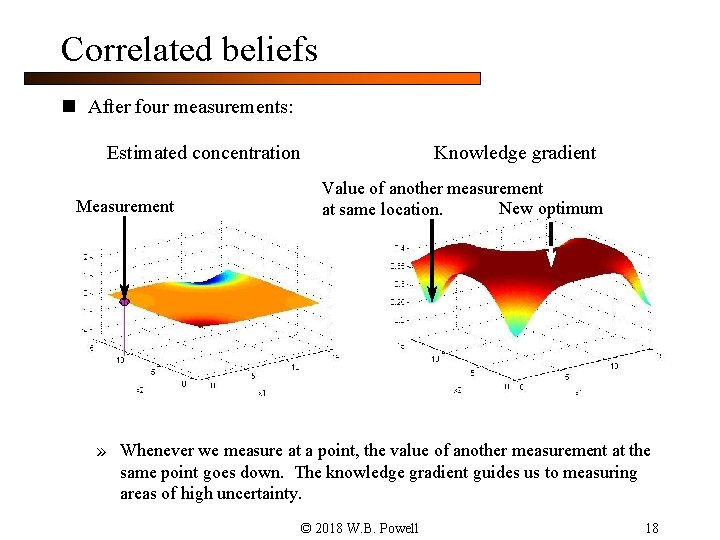

Correlated beliefs
- After four measurements:
  - Figure panels: estimated concentration (with the measurement marked; the new optimum is at the same location) and knowledge gradient (the value of another measurement).
  - Whenever we measure at a point, the value of another measurement at the same point goes down. The knowledge gradient guides us toward measuring areas of high uncertainty.

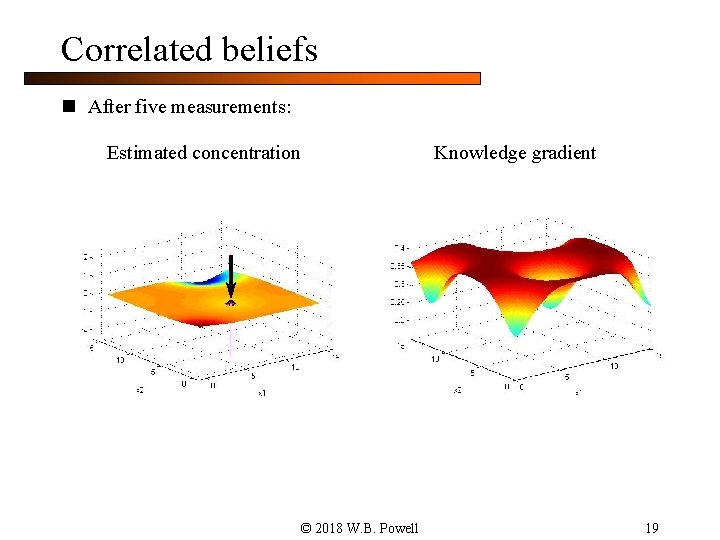

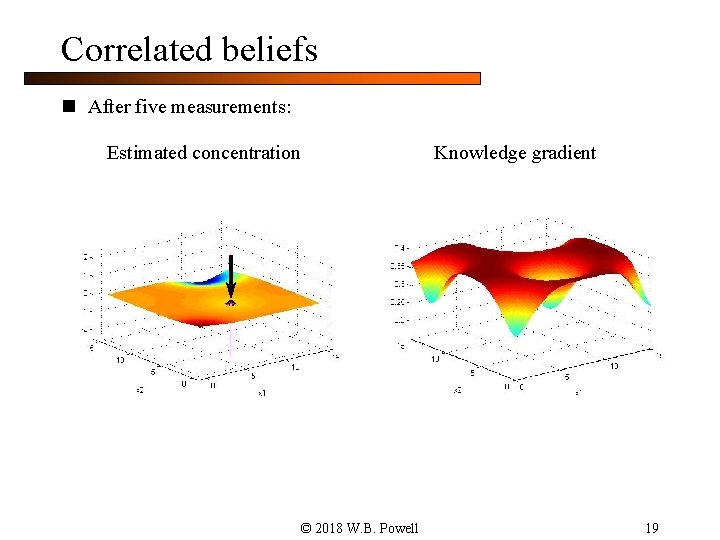

Correlated beliefs
- After five measurements:
  - Figure panels: estimated concentration and knowledge gradient.

Linear models

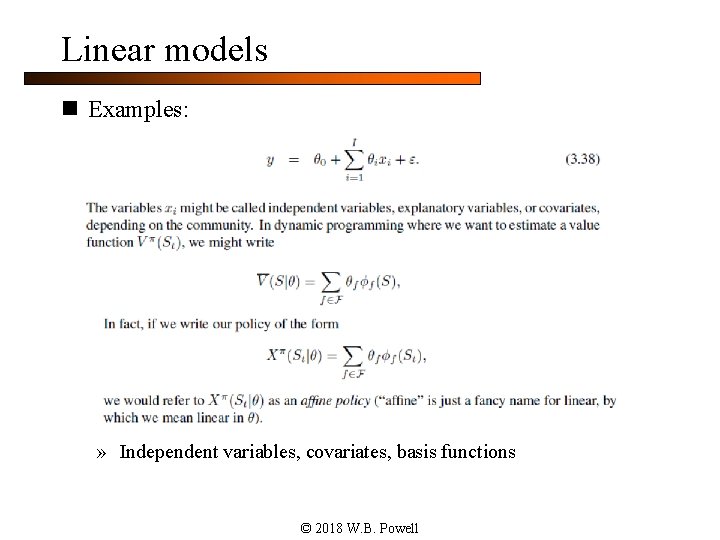

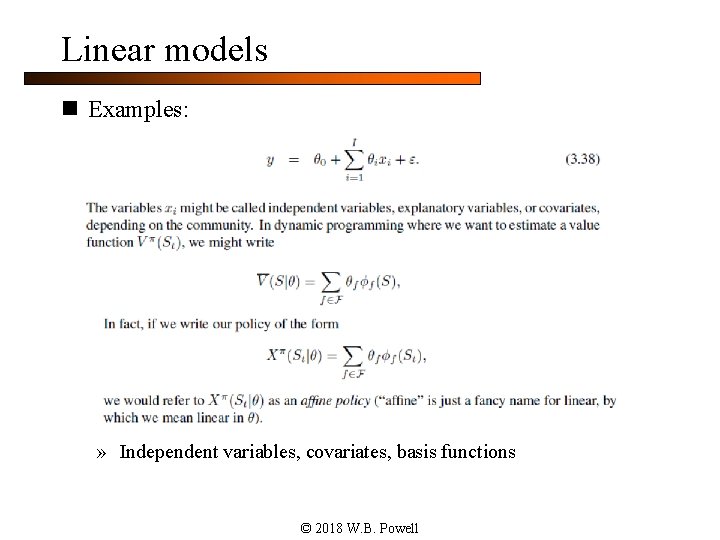

Linear models
- Examples:
  - Independent variables, covariates, basis functions

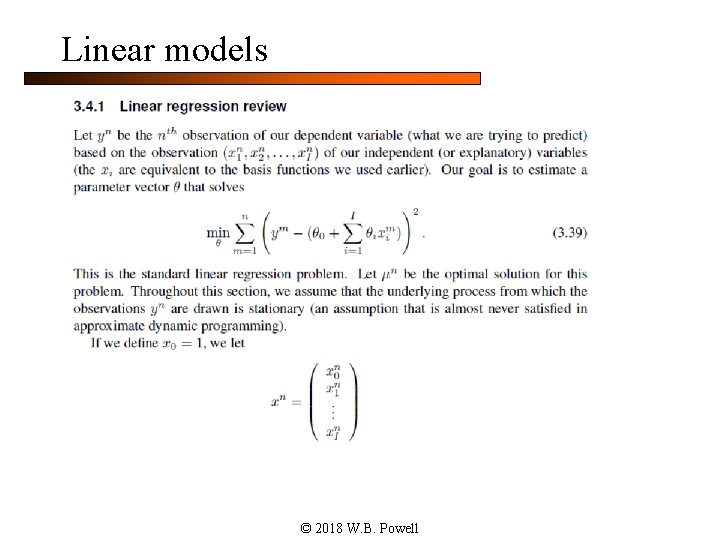

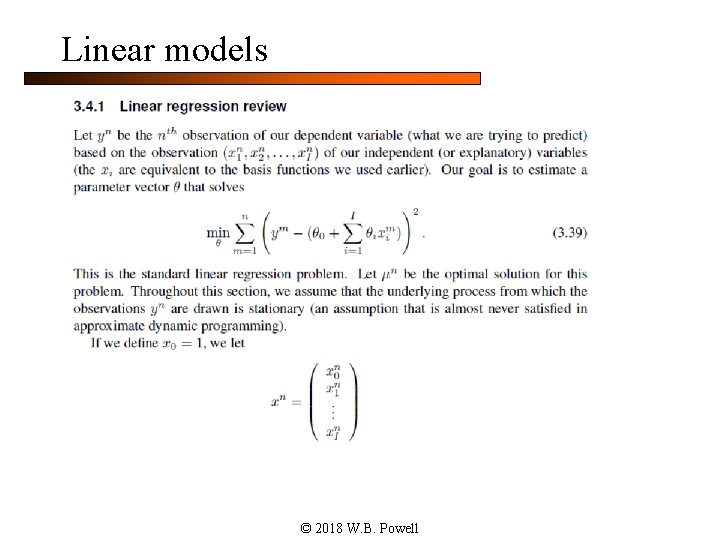

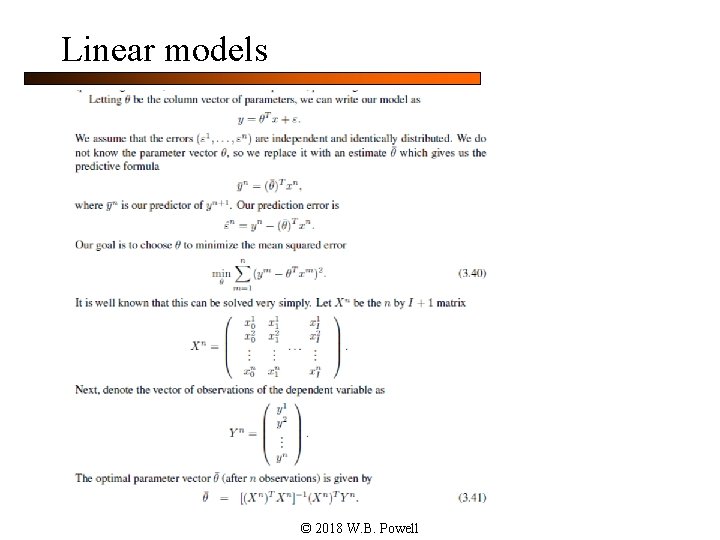

Linear models

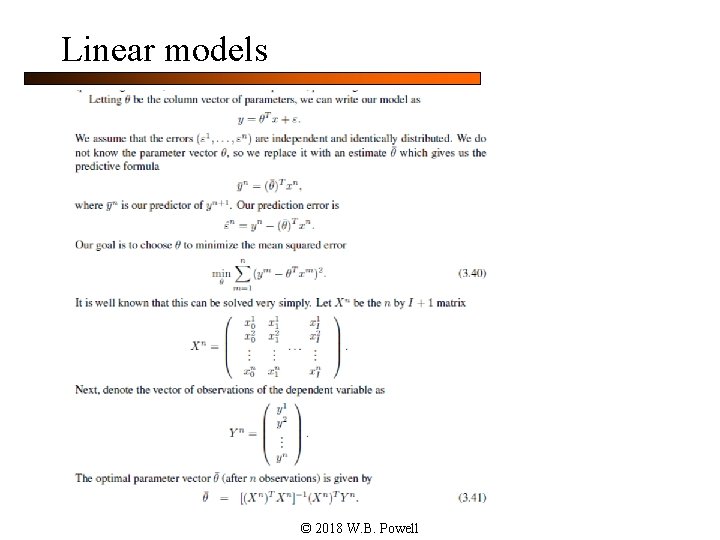

Linear models

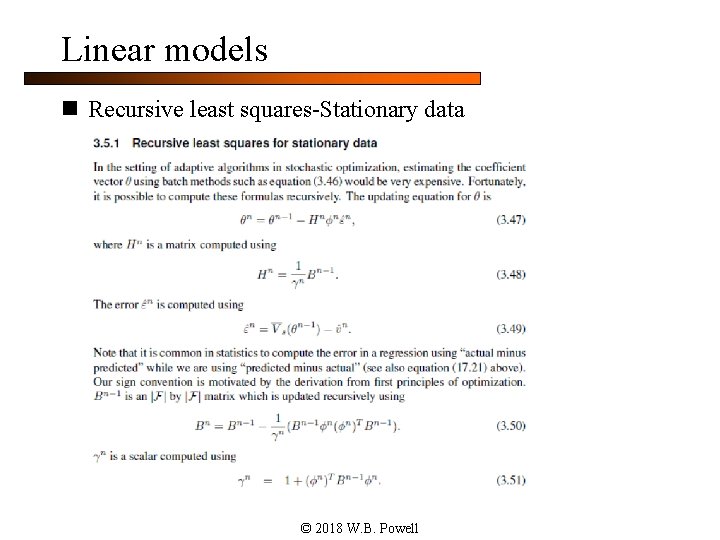

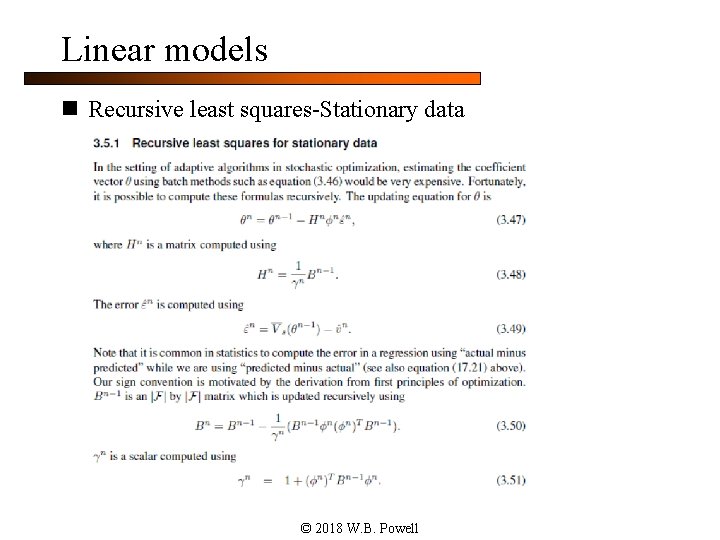

Linear models
- Recursive least squares: stationary data
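The recursive least squares equations are not in the extracted text. The sketch below is one standard form for stationary data (no forgetting factor), written with plain lists. The names `rls_update`, `theta`, `B`, and `phi`, and the choice to initialize `B` as a large multiple of the identity, are assumptions made for this example.

```python
def rls_update(theta, B, phi, y):
    """One recursive least squares step for the model y ~ theta . phi.

    theta: current coefficient estimates.
    B: current matrix playing the role of (X^T X)^{-1}; assumed symmetric.
    phi: feature vector of the new observation; y: observed response.
    """
    k = len(theta)
    B_phi = [sum(B[i][j] * phi[j] for j in range(k)) for i in range(k)]
    gamma = 1.0 + sum(phi[i] * B_phi[i] for i in range(k))
    error = sum(theta[i] * phi[i] for i in range(k)) - y  # prediction error
    theta_new = [theta[i] - B_phi[i] * error / gamma for i in range(k)]
    # Rank-one update of B; symmetry of B lets us reuse B_phi for both sides.
    B_new = [
        [B[i][j] - B_phi[i] * B_phi[j] / gamma for j in range(k)]
        for i in range(k)
    ]
    return theta_new, B_new


# Fit y = 2 + 3x from noiseless points, one observation at a time.
theta = [0.0, 0.0]
B = [[1e6, 0.0], [0.0, 1e6]]  # large initial B acts as a weak prior
for x in [0.0, 1.0, 2.0, 3.0, 4.0]:
    theta, B = rls_update(theta, B, phi=[1.0, x], y=2.0 + 3.0 * x)
```

Each step costs a few matrix-vector products instead of a full matrix inversion, which is what makes the method attractive when observations arrive sequentially.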

Nonlinear models
- Sampled nonlinear models

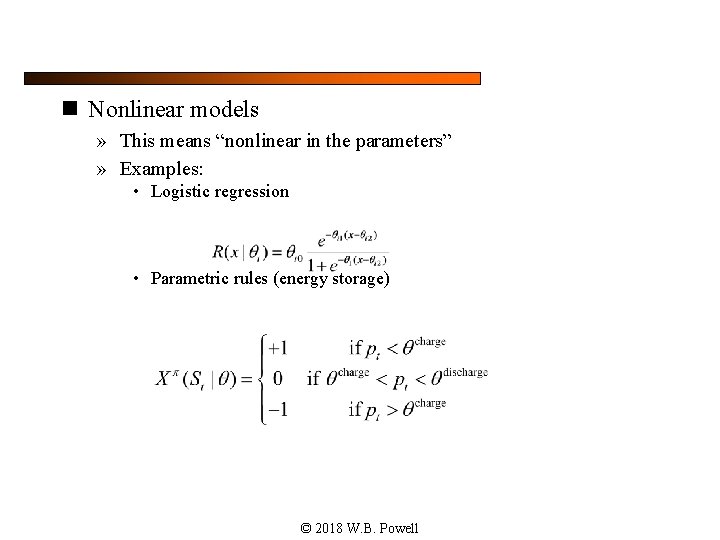

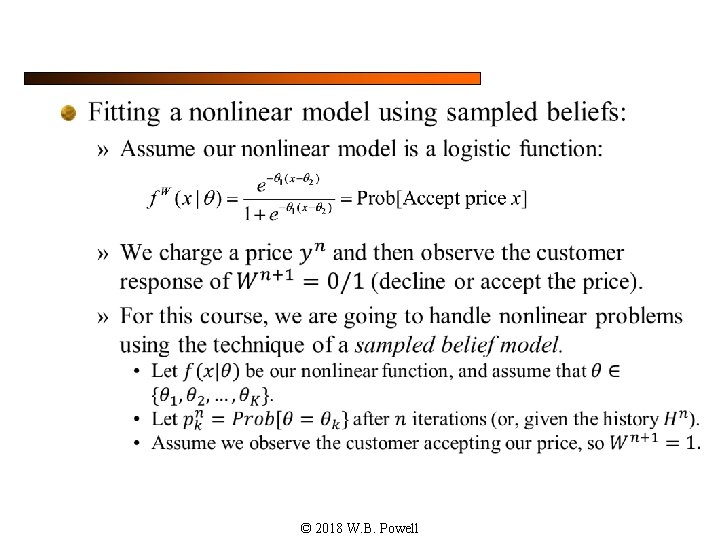

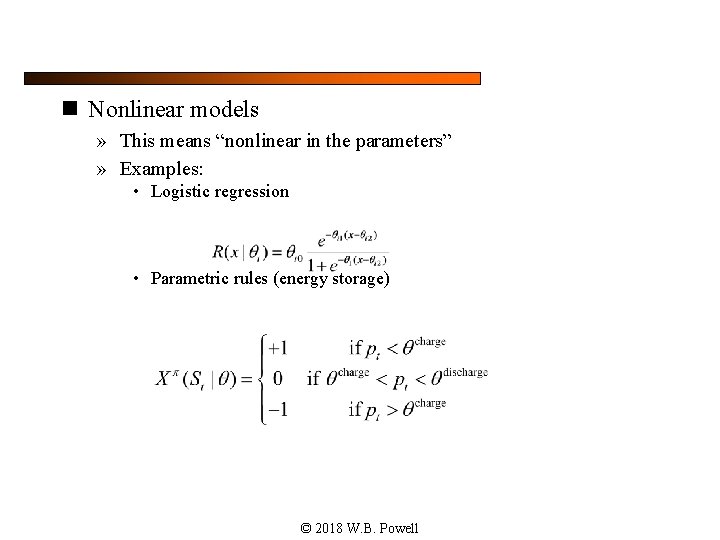

- Nonlinear models
  - This means “nonlinear in the parameters”
  - Examples:
    - Logistic regression
    - Parametric rules (energy storage)

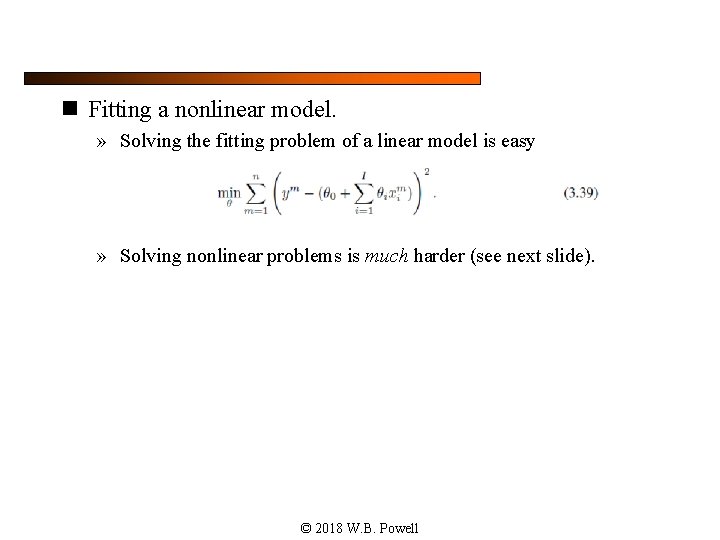

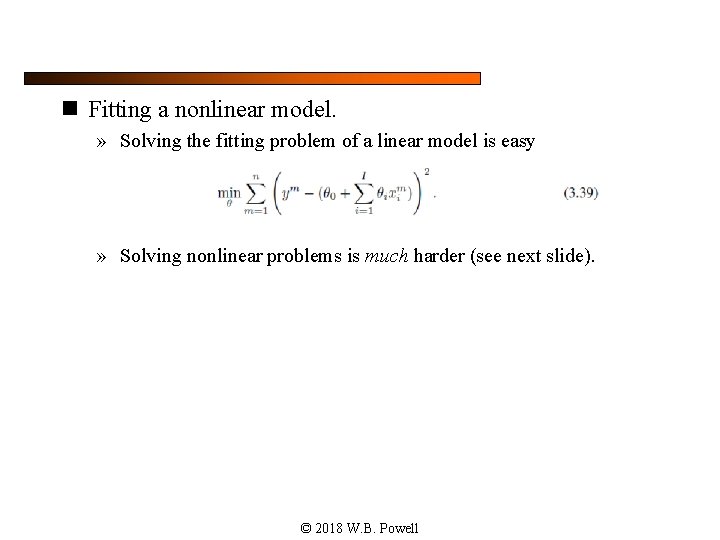

- Fitting a nonlinear model
  - Solving the fitting problem for a linear model is easy.
  - Solving nonlinear problems is much harder (see next slide).

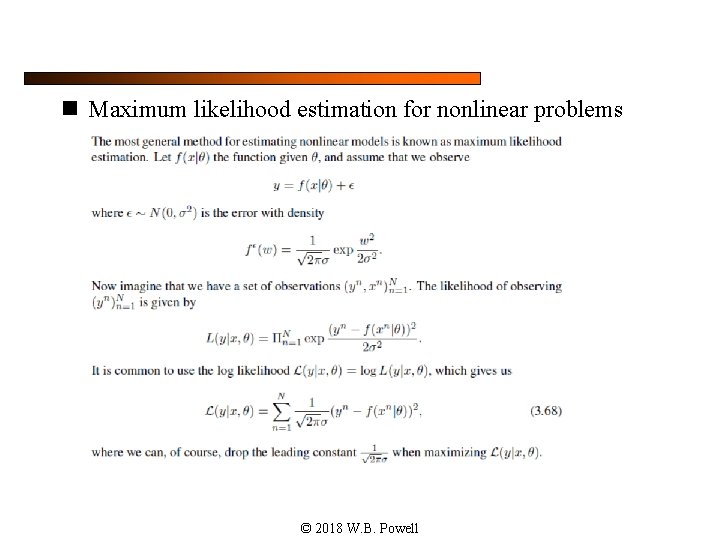

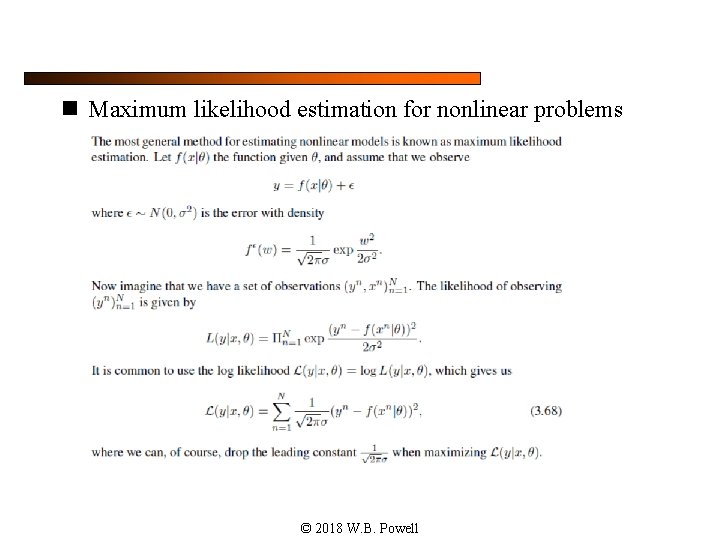

- Maximum likelihood estimation for nonlinear problems

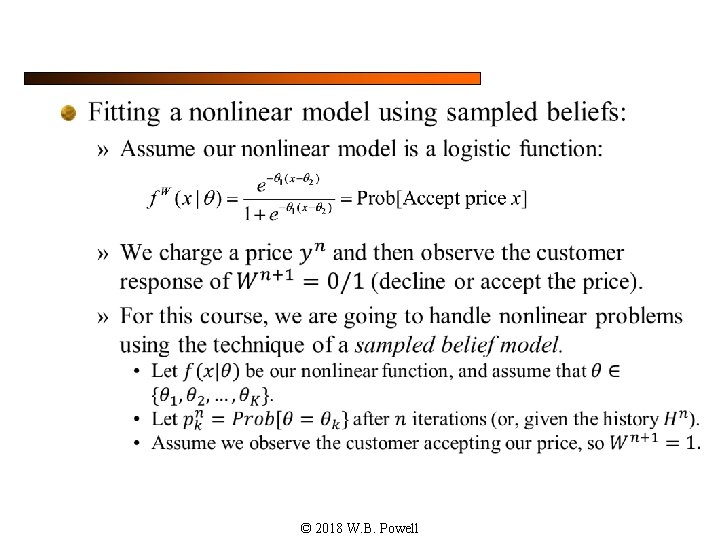
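To make maximum likelihood for a model that is nonlinear in its parameters concrete, here is a minimal sketch that fits a one-variable logistic regression by gradient ascent on the average log-likelihood. The data, step size, and iteration count are invented for illustration, and plain gradient ascent stands in for whatever solver the slides have in mind.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_likelihood(theta0, theta1, xs, ys):
    """Bernoulli log-likelihood of binary responses ys under the logistic model."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(theta0 + theta1 * x)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return total


def fit_logistic(xs, ys, step=0.1, iterations=5000):
    """Gradient ascent on the average log-likelihood (no closed form exists)."""
    theta0, theta1 = 0.0, 0.0
    n = len(xs)
    for _ in range(iterations):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            residual = y - sigmoid(theta0 + theta1 * x)
            g0 += residual
            g1 += residual * x
        theta0 += step * g0 / n
        theta1 += step * g1 / n
    return theta0, theta1


# Hypothetical, non-separable data so that a finite maximizer exists.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0, 0, 1, 0, 1, 1]
theta0, theta1 = fit_logistic(xs, ys)
```

Unlike the linear case, the estimate is reached only by iterating, and for more general nonlinear models the likelihood may have several local maxima.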

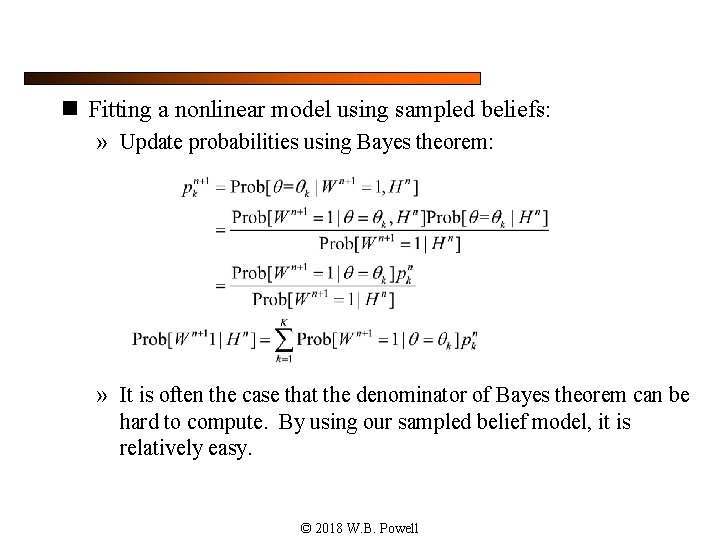

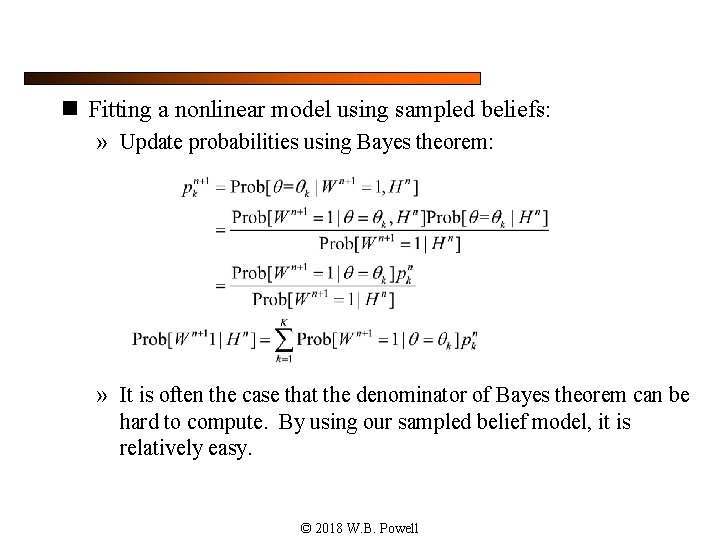

- Fitting a nonlinear model using sampled beliefs:
  - Update the probabilities using Bayes' theorem.
  - The denominator of Bayes' theorem is often hard to compute. With a sampled belief model it is relatively easy, because it reduces to a sum over a finite set of candidate parameter values.

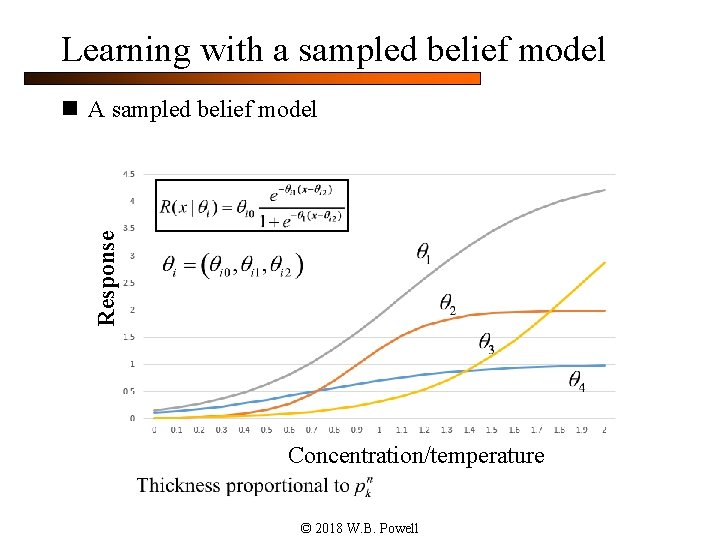

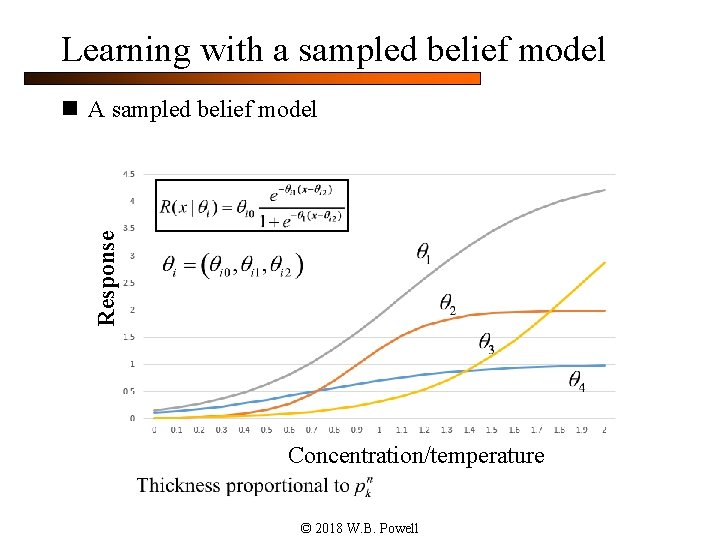
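The Bayes update for a sampled belief model can be sketched as follows, assuming a finite set of candidate curves and normally distributed observation noise with known standard deviation. The function name and the example numbers are hypothetical. The point to notice is that the denominator of Bayes' theorem is just a finite sum.

```python
import math


def update_sampled_beliefs(probs, predictions, y, noise_std):
    """Posterior probabilities over a finite set of candidate models.

    probs: prior probability of each candidate parameter vector.
    predictions: each candidate's predicted response at the tested input.
    y: the observed response; noise_std: assumed known noise std deviation.
    """
    # Gaussian likelihood of y under each candidate; the shared
    # normalizing constant cancels when we divide by the total.
    likelihoods = [
        math.exp(-0.5 * ((y - f) / noise_std) ** 2) for f in predictions
    ]
    weighted = [p * lik for p, lik in zip(probs, likelihoods)]
    total = sum(weighted)  # denominator of Bayes' theorem: a finite sum
    return [w / total for w in weighted]


# Three equally likely candidate curves predict 1.0, 2.0, 3.0; we observe 2.0.
posterior = update_sampled_beliefs(
    probs=[1 / 3, 1 / 3, 1 / 3], predictions=[1.0, 2.0, 3.0],
    y=2.0, noise_std=1.0,
)
```

Candidates whose predicted response lies closest to the observation gain probability, and the others lose it.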

Learning with a sampled belief model
- A sampled belief model (figure axes: response vs. concentration/temperature)

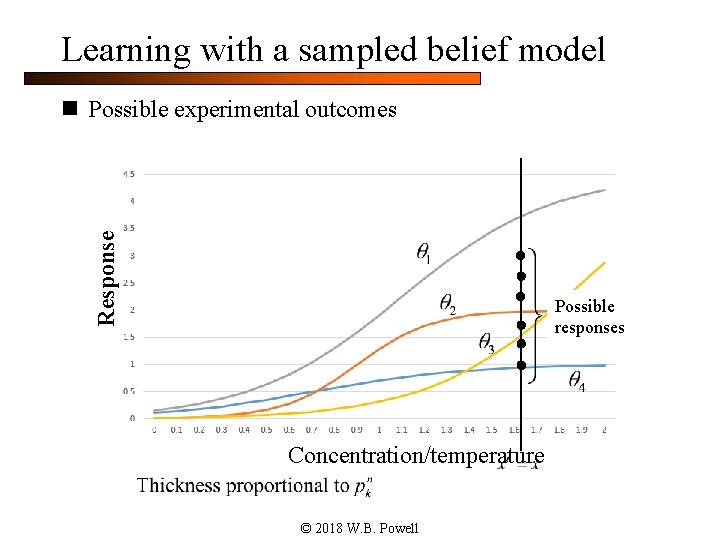

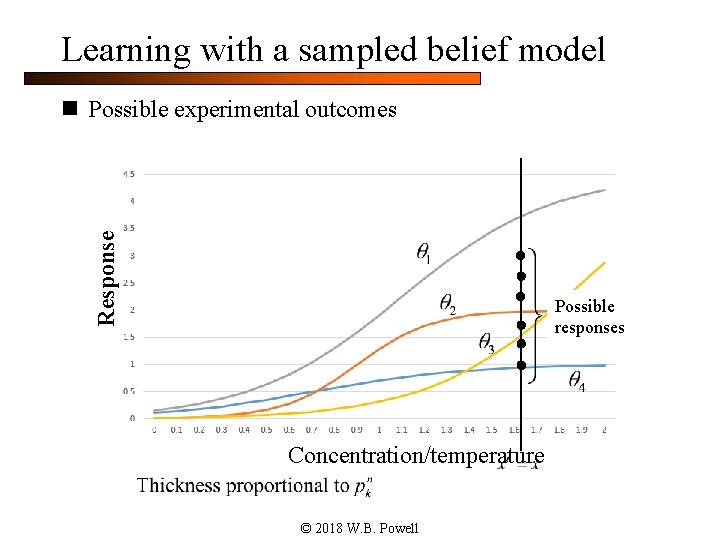

Learning with a sampled belief model
- Possible experimental outcomes (figure: possible responses vs. concentration/temperature)

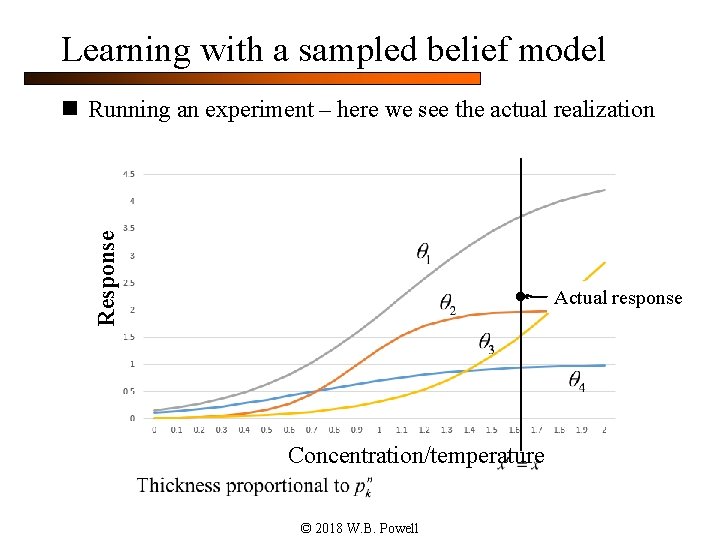

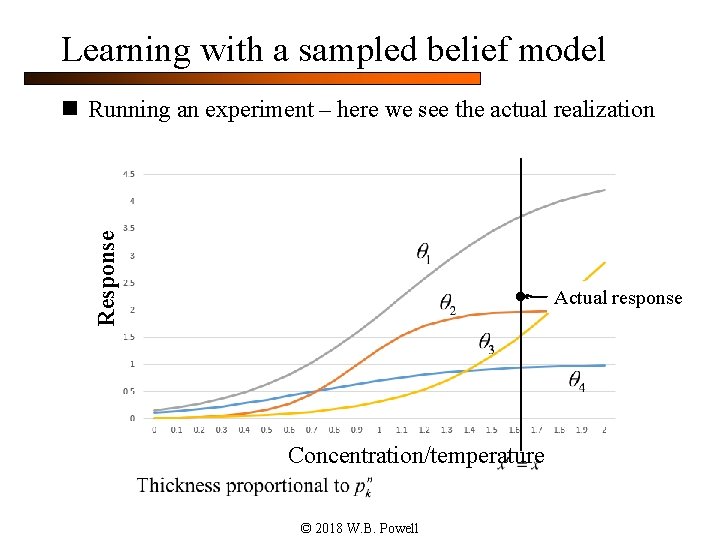

Learning with a sampled belief model
- Running an experiment: here we see the actual realization (figure: actual response vs. concentration/temperature)

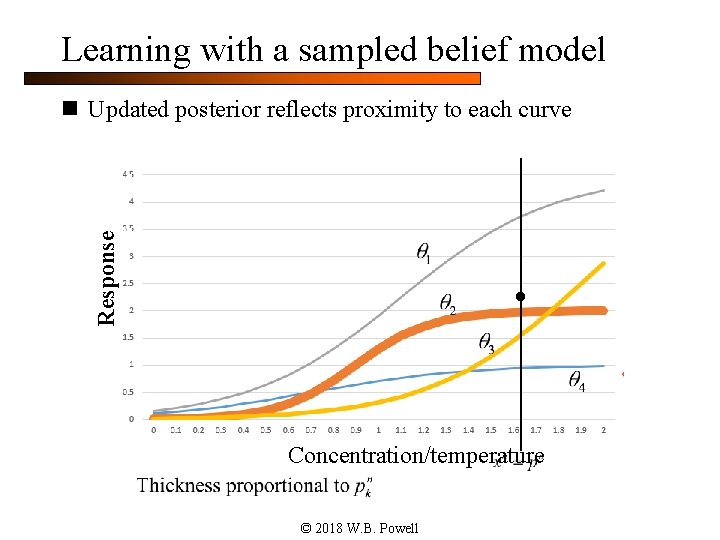

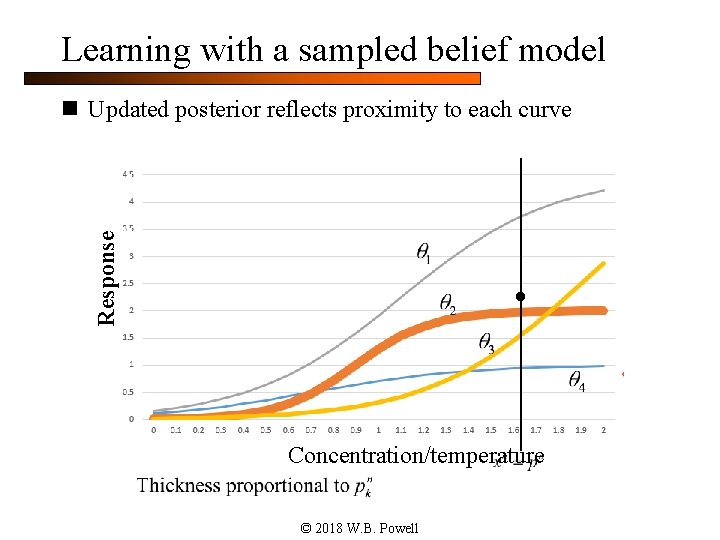

Learning with a sampled belief model
- The updated posterior reflects proximity to each curve (figure axes: response vs. concentration/temperature)

Nonparametric models

Nonparametric methods

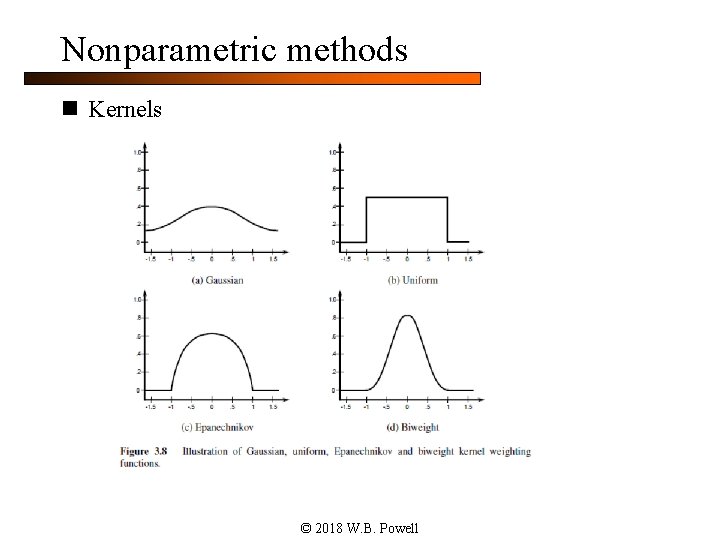

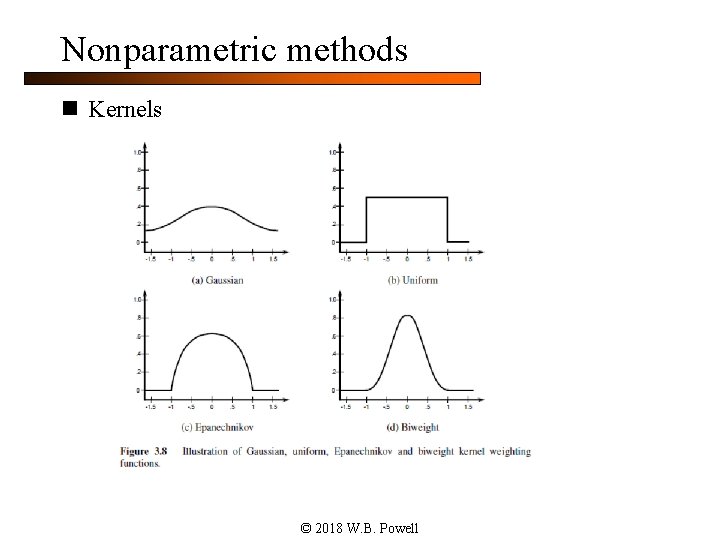

Nonparametric methods
- Kernels

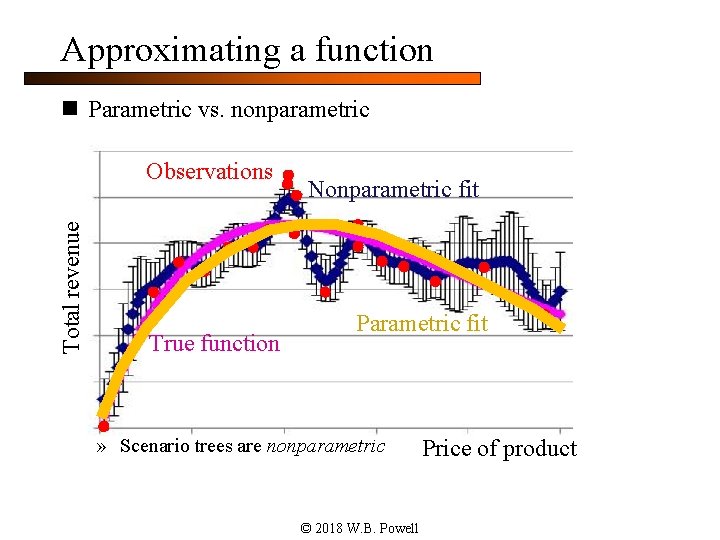

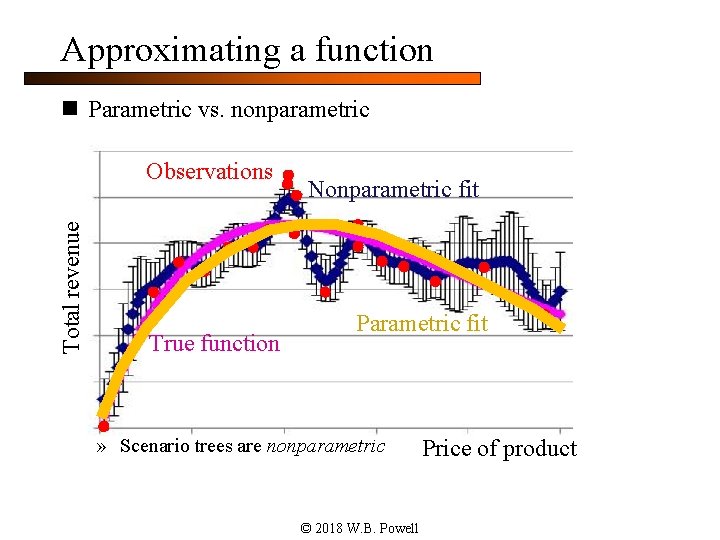
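Kernel regression was described earlier as a weighted average of neighboring points. A minimal sketch of that idea, assuming a Gaussian kernel and a one-dimensional input, is the Nadaraya-Watson estimator below. The function name and the `bandwidth` parameter are choices made for this example, and the slides may use a different kernel.

```python
import math


def kernel_estimate(x_query, xs, ys, bandwidth):
    """Nadaraya-Watson estimate at x_query from observations (xs, ys).

    Each observation is weighted by a Gaussian kernel of its distance
    from the query point; bandwidth controls how local the average is.
    """
    weights = [
        math.exp(-0.5 * ((x_query - x) / bandwidth) ** 2) for x in xs
    ]
    return sum(w * y for w, y in zip(weights, ys)) / sum(weights)


# Hypothetical observations; nearby points dominate the estimate.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 0.9, 2.2, 2.8]
estimate = kernel_estimate(1.5, xs, ys, bandwidth=0.5)
```

A small bandwidth tracks the data closely but is noisy, while a large bandwidth smooths toward the global average. No parameter vector is fit: the observations themselves are the model.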

Approximating a function
- Parametric vs. nonparametric
  - Figure: total revenue vs. price of product, showing observations, the true function, a nonparametric fit, and a parametric fit.
  - Robust CFAs are parametric
  - Scenario trees are nonparametric

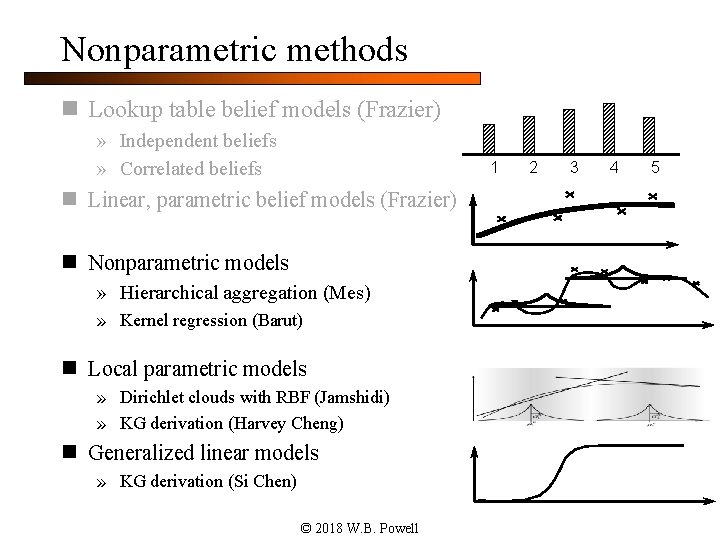

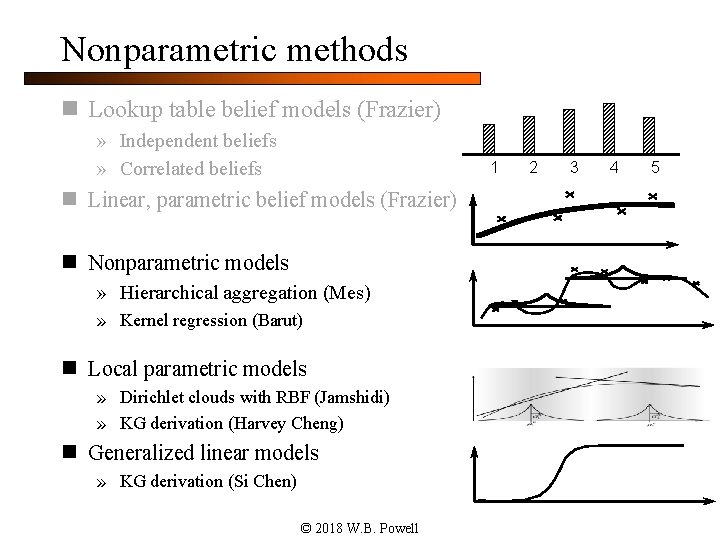

Nonparametric methods
- Lookup table belief models (Frazier)
  - Independent beliefs
  - Correlated beliefs
- Linear, parametric belief models (Frazier)
- Nonparametric models
  - Hierarchical aggregation (Mes)
  - Kernel regression (Barut)
- Local parametric models
  - Dirichlet clouds with RBF (Jamshidi)
  - KG derivation (Harvey Cheng)
- Generalized linear models
  - KG derivation (Si Chen)

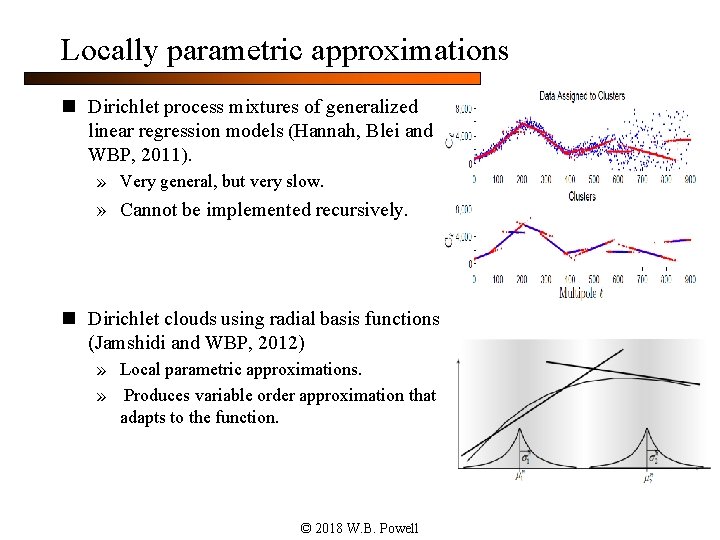

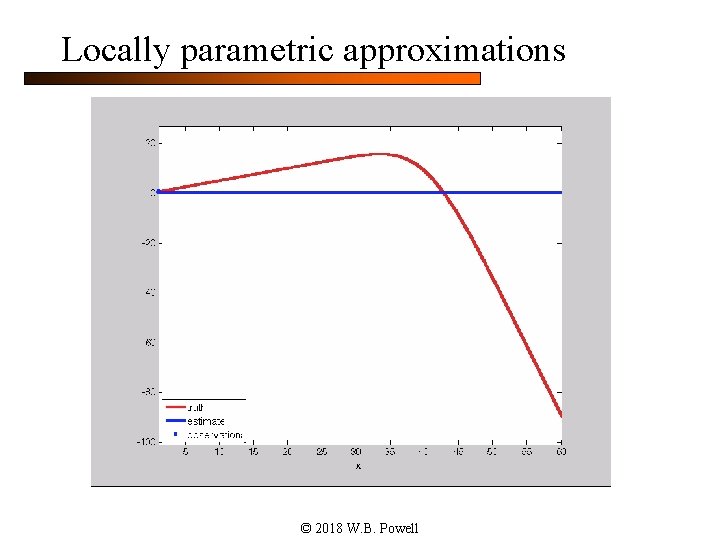

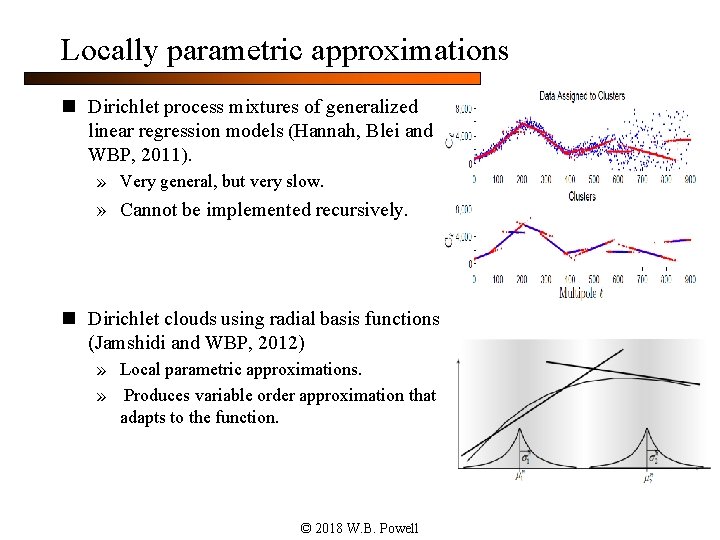

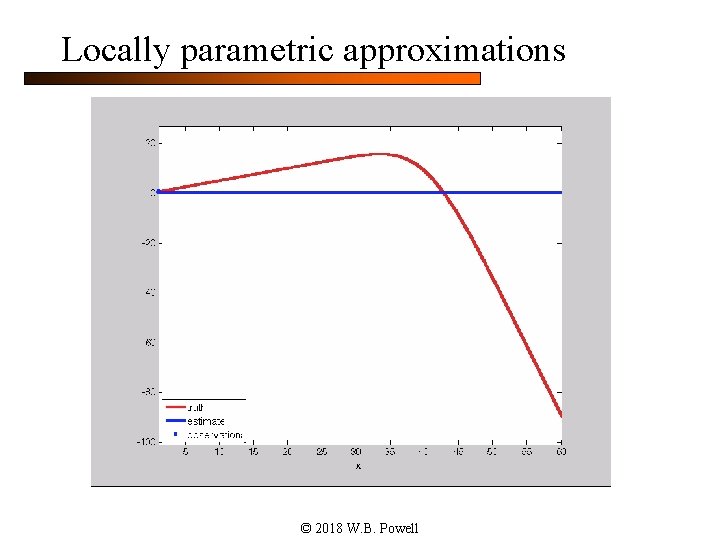

Locally parametric approximations
- Dirichlet process mixtures of generalized linear regression models (Hannah, Blei and WBP, 2011).
  - Very general, but very slow.
  - Cannot be implemented recursively.
- Dirichlet clouds using radial basis functions (Jamshidi and WBP, 2012)
  - Local parametric approximations.
  - Produces a variable-order approximation that adapts to the function.

Nonparametric methods
- Discussion:
  - Local smoothing methods struggle with the curse of dimensionality. In high dimensions, no two data points are ever “close.”
  - Nonparametric representations are very high-dimensional, which makes it hard to “store” a model.

Nonparametric methods
- More advanced methods
  - Deep neural networks
    - Before, we described neural networks as a nonlinear parametric model.
    - Deep neural networks, which have the property of being able to approximate any function, are classified as nonparametric.
    - These have proven to be very powerful in image processing and voice recognition, but not in stochastic optimization.
  - Support vector machines (classification)
  - Support vector regression (continuous)
    - We have had surprising but limited success with SVM, but considerably more empirical research is needed.