
ANALYTICS

Business Analytics (BA) Definition
• Business analytics (BA) is the practice of iterative, methodical exploration of an organization’s data, with an emphasis on statistical analysis. Business analytics is used by companies committed to data-driven decision making.

• BA is used to gain insights that inform business decisions, and it can be used to automate and optimize business processes. Data-driven companies treat their data as a corporate asset and leverage it for competitive advantage. Successful business analytics depends on data quality, on skilled analysts who understand both the technologies and the business, and on an organizational commitment to data-driven decision making.

Examples of BA uses include:
• Exploring data to find new patterns and relationships (data mining)
• Explaining why a certain result occurred (statistical analysis, quantitative analysis)
• Experimenting to test previous decisions (A/B testing, multivariate testing)
• Forecasting future results (predictive modeling, predictive analytics)

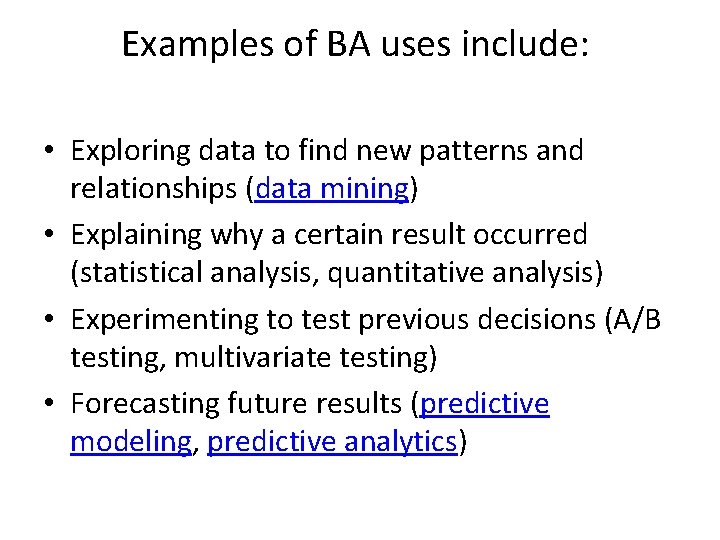

| | Business Intelligence | Business Analytics |
|---|---|---|
| Questions | What happened? Who? How many? | Why did it happen? What will happen if we change x? Will it happen again? What else does the data tell us that we never thought to ask? |
| Tools and techniques | Reporting (KPIs, metrics); Automated Monitoring/Alerting (thresholds); Dashboards; Scorecards; OLAP (Cubes, Slice & Dice, Drilling); Ad hoc query | Statistical/Quantitative Analysis; Data Mining; Predictive Modeling; Multivariate Testing |

Exploratory Data Analysis (EDA)
• Exploratory Data Analysis (EDA) is an approach/philosophy for data analysis that employs a variety of techniques (mostly graphical) to:
  – maximize insight into a data set;
  – uncover underlying structure;
  – extract important variables;
  – detect outliers and anomalies;
  – test underlying assumptions;
  – develop parsimonious models; and
  – determine optimal factor settings.

• The EDA approach is precisely that: an approach, not a set of techniques, but an attitude/philosophy about how a data analysis should be carried out.
• Most EDA techniques are graphical in nature, with a few quantitative techniques. The reason for the heavy reliance on graphics is that, by its very nature, the main role of EDA is to explore open-mindedly, and graphics give analysts unparalleled power to do so, enticing the data to reveal its structural secrets and keeping the analyst always ready to gain some new, often unsuspected, insight into the data.
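EDA is mostly graphical, but a few quantitative checks can back up what a plot shows. As one illustration of the "detect outliers and anomalies" goal, the minimal sketch below (standard-library Python) computes the five-number summary that a box plot draws and flags points outside Tukey's 1.5 × IQR fences. The `daily_sales` figures and the function names are hypothetical, and the 1.5 multiplier is a common convention rather than something the slides prescribe.

```python
import statistics

def five_number_summary(values):
    """Return (min, Q1, median, Q3, max) for a list of numbers."""
    data = sorted(values)
    # With n=4, statistics.quantiles returns the three quartile cut points.
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return data[0], q1, median, q3, data[-1]

def tukey_outliers(values, k=1.5):
    """Flag points outside [Q1 - k*IQR, Q3 + k*IQR] (box-plot fences)."""
    _, q1, _, q3, _ = five_number_summary(values)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

daily_sales = [120, 135, 128, 140, 131, 125, 980, 138, 129, 133]  # hypothetical data
print(five_number_summary(daily_sales))  # (120, 128.25, 132.0, 137.25, 980)
print(tukey_outliers(daily_sales))       # [980]
```

A flagged point is a prompt to look closer (data-entry error or genuine event?), not an automatic reason to delete it.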

Cluster analysis
• Cluster analysis is a group of multivariate techniques whose primary purpose is to group objects (e.g., respondents, products, or other entities) based on the characteristics they possess. It is a means of grouping records based on the attributes that make them similar. If plotted geometrically, objects within the same cluster will lie close together, while different clusters will lie farther apart.

• Cluster analysis, also called data segmentation, has a variety of goals. All relate to grouping or segmenting a collection of objects (also called observations, individuals, cases, or data rows) into subsets or "clusters", such that objects within each cluster are more closely related to one another than to objects assigned to different clusters. Central to all of the goals of cluster analysis is the notion of the degree of similarity (or dissimilarity) between the individual objects being clustered.
• There are two major methods of clustering: hierarchical clustering and k-means clustering.

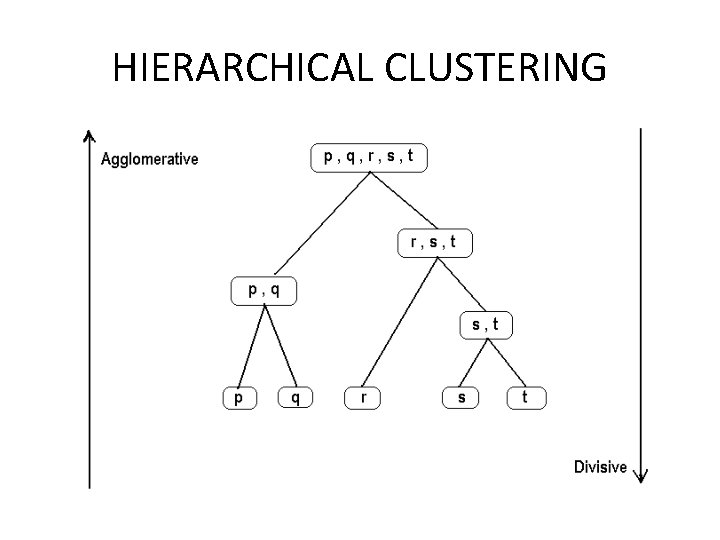

HIERARCHICAL CLUSTERING
• In hierarchical clustering, the data are not partitioned into clusters in a single step. Instead, a series of partitions takes place, which may run from a single cluster containing all objects to n clusters each containing a single object. Hierarchical clustering is subdivided into agglomerative methods, which proceed by a series of fusions of the n objects into groups, and divisive methods, which successively separate the n objects into finer groupings. Agglomerative techniques are more commonly used, and this is the method implemented in XLMiner™. Hierarchical clustering may be represented by a two-dimensional diagram known as a dendrogram, which illustrates the fusions or divisions made at each successive stage of the analysis.
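The agglomerative procedure can be sketched as follows. This is a naive illustration under stated assumptions, not XLMiner's implementation: it uses Euclidean distance, supports single linkage (closest pair of members) or complete linkage (farthest pair), and the function name `agglomerative` and the sample points are hypothetical. The returned list of merges, with the distance at which each fusion happened, is the information a dendrogram plots.

```python
import math

def agglomerative(points, linkage="single"):
    """Naive agglomerative clustering over a list of coordinate tuples.

    Starts with n singleton clusters and repeatedly fuses the two closest
    clusters until one remains. Returns the merges in order as
    (cluster_a, cluster_b, distance), with clusters as frozensets of indices.
    """
    if linkage not in ("single", "complete"):
        raise ValueError("linkage must be 'single' or 'complete'")
    clusters = [frozenset([i]) for i in range(len(points))]
    merges = []

    def cluster_distance(a, b):
        # Euclidean distances between every member of a and every member of b.
        dists = [math.dist(points[i], points[j]) for i in a for j in b]
        # Single linkage: closest pair; complete linkage: farthest pair.
        return min(dists) if linkage == "single" else max(dists)

    while len(clusters) > 1:
        best = None  # (distance, index_x, index_y) of the closest pair so far
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = cluster_distance(clusters[x], clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        d, x, y = best
        merges.append((clusters[x], clusters[y], d))
        # Replace the two fused clusters with their union.
        fused = clusters[x] | clusters[y]
        clusters = [c for i, c in enumerate(clusters) if i not in (x, y)]
        clusters.append(fused)
    return merges

points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]  # hypothetical 2-D data
merges = agglomerative(points)
for a, b, d in merges:
    print(sorted(a), "+", sorted(b), f"merged at distance {d:.2f}")
```

The nested search over all cluster pairs makes this roughly cubic in n, which is fine for a handful of points; production libraries use more efficient update schemes.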


K-Means Clustering
• k-means is one of the simplest unsupervised learning algorithms for solving the well-known clustering problem. The procedure follows a simple and easy way to partition a given data set into a certain number of clusters (say, k clusters) fixed a priori. The main idea is to define k centers, one for each cluster.

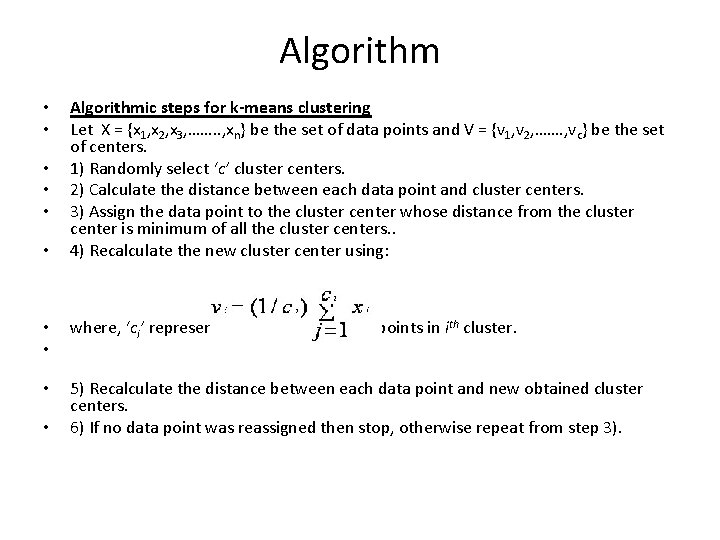

Algorithm
Algorithmic steps for k-means clustering: let $X = \{x_1, x_2, x_3, \ldots, x_n\}$ be the set of data points and $V = \{v_1, v_2, \ldots, v_c\}$ be the set of centers (here $c$ plays the role of $k$).
1) Randomly select $c$ cluster centers.
2) Calculate the distance between each data point and each cluster center.
3) Assign each data point to the cluster center nearest to it.
4) Recalculate each cluster center as the mean of the points assigned to it:
$$v_i = \frac{1}{c_i} \sum_{j=1}^{c_i} x_j,$$
where $c_i$ represents the number of data points in the $i$th cluster and the sum runs over those $c_i$ points.
5) Recalculate the distance between each data point and the newly obtained cluster centers.
6) If no data point was reassigned, stop; otherwise, repeat from step 3.
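The six steps above translate fairly directly into code. This sketch assumes Euclidean distance and, for step 1, picks $c$ distinct data points at random as the initial centers (one common choice; the slide only says "randomly select"). The names `k_means`, `max_iter`, and `seed` are hypothetical, and an empty cluster simply keeps its previous center.

```python
import math
import random

def k_means(X, c, max_iter=100, seed=0):
    """k-means on a list of coordinate tuples X with c clusters.

    Returns (V, labels): the final centers and each point's cluster index.
    """
    rng = random.Random(seed)
    # Step 1: randomly select c data points as the initial centers.
    V = [tuple(p) for p in rng.sample(X, c)]
    labels = None
    for _ in range(max_iter):
        # Steps 2-3 (step 5 on later passes): distance to every center,
        # then assign each point to its nearest center.
        new_labels = [min(range(c), key=lambda i: math.dist(x, V[i])) for x in X]
        # Step 6: stop when no point changed cluster.
        if new_labels == labels:
            break
        labels = new_labels
        # Step 4: v_i = (1 / c_i) * sum of the c_i points in cluster i.
        for i in range(c):
            members = [x for x, lab in zip(X, labels) if lab == i]
            if members:  # keep the old center if the cluster is empty
                V[i] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return V, labels

X = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]  # hypothetical data
V, labels = k_means(X, c=2)
print(V, labels)
```

Because the result depends on the initial centers, k-means is often run several times with different seeds and the clustering with the smallest within-cluster spread is kept.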

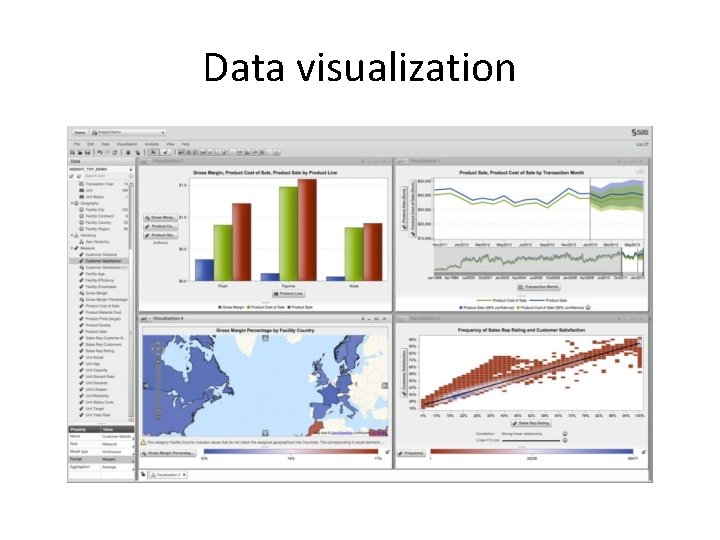

Data visualization
• Data visualization is the presentation of data in a pictorial or graphical format. For centuries, people have depended on visual representations such as charts and maps to understand information more easily and quickly.
• Interactive visualization: interactive data visualization goes a step further, moving beyond the display of static graphics and spreadsheets to using computers and mobile devices to drill down into charts and graphs for more detail and to change, interactively and immediately, what data you see and how it is processed.


Regression
• Regression is a data mining function that predicts a number. Profit, sales, mortgage rates, house values, square footage, temperature, or distance could all be predicted using regression techniques. For example, a regression model could be used to predict the value of a house based on location, number of rooms, lot size, and other factors.
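As a minimal illustration of predicting a number, the sketch below fits a simple linear regression with a single predictor (square footage) by ordinary least squares. A real house-value model would combine several factors such as location, number of rooms, and lot size; the data and the names `fit_line` and `predict` are hypothetical, and the points were chosen to lie exactly on a line so the result is easy to check.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (one predictor)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x) ** 2)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Predicted value for a new input x."""
    slope, intercept = model
    return intercept + slope * x

sqft = [1000, 1500, 2000, 2500]           # hypothetical square footage
price = [150000, 200000, 250000, 300000]  # hypothetical sale prices
model = fit_line(sqft, price)
print(model)                 # (100.0, 50000.0)
print(predict(model, 1800))  # 230000.0
```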

Data Mining
• Data mining is a process used by companies to turn raw data into useful information. By using software to look for patterns in large batches of data, businesses can learn more about their customers, develop more effective marketing strategies, increase sales, and decrease costs. Data mining depends on effective data collection and warehousing as well as on computer processing.

Application of Data Mining (DM)
• Grocery stores are well-known users of data mining techniques. Many supermarkets offer free loyalty cards that give customers access to reduced prices not available to non-members. The cards make it easy for stores to track who is buying what, when they are buying it, and at what price. After analyzing this data, the stores can use it for multiple purposes, such as offering customers coupons targeted to their buying habits and deciding when to put items on sale and when to sell them at full price.
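One common way to mine loyalty-card data of this kind is market-basket analysis, which looks for items frequently bought together. The slide does not name a specific technique, so treat this as an illustrative sketch: it counts item pairs across hypothetical baskets and reports association rules with their support (share of baskets containing both items) and confidence (share of baskets containing the first item that also contain the second). The function name `pair_rules` and the `min_support` threshold are assumptions.

```python
from collections import Counter
from itertools import combinations

def pair_rules(baskets, min_support=0.3):
    """Return (antecedent, consequent, support, confidence) for frequent item pairs."""
    n = len(baskets)
    item_counts = Counter(item for basket in baskets for item in set(basket))
    pair_counts = Counter(
        pair for basket in baskets for pair in combinations(sorted(set(basket)), 2)
    )
    rules = []
    for (a, b), both in pair_counts.items():
        support = both / n  # share of baskets containing both a and b
        if support < min_support:
            continue
        # confidence(a -> b) = baskets with a and b / baskets with a
        rules.append((a, b, support, both / item_counts[a]))
        rules.append((b, a, support, both / item_counts[b]))
    return rules

# Hypothetical loyalty-card baskets, one set of items per checkout.
baskets = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"beer", "chips"},
    {"bread", "butter"},
    {"milk", "chips"},
]
for a, b, s, c in pair_rules(baskets):
    print(f"{a} -> {b}: support {s:.2f}, confidence {c:.2f}")
```

A rule such as "butter -> bread" with high confidence is the kind of pattern a store could use to decide which coupons to print or which items to discount together.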

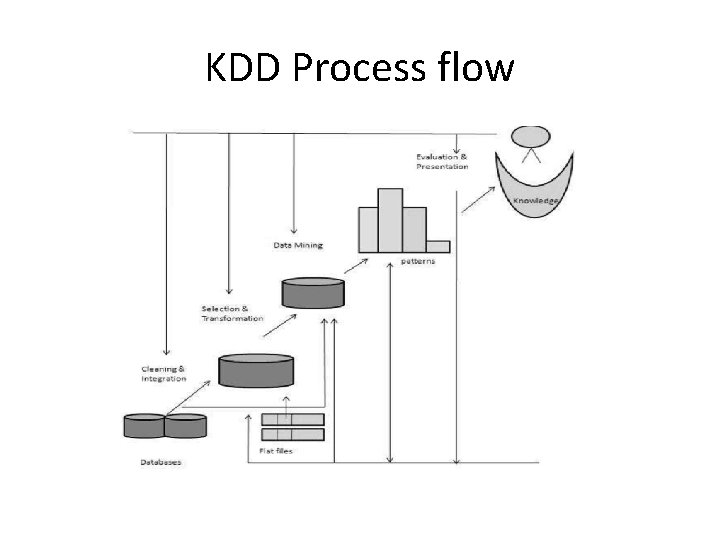

What is Knowledge Discovery?
• Some people don’t differentiate data mining from knowledge discovery, while others view data mining as an essential step in the process of knowledge discovery. The knowledge discovery process involves the following steps:
• Data Cleaning: noise and inconsistent data are removed.
• Data Integration: multiple data sources are combined.
• Data Selection: data relevant to the analysis task are retrieved from the database.
• Data Transformation: data are transformed or consolidated into forms appropriate for mining, for example by summary or aggregation operations.
• Data Mining: intelligent methods are applied to extract data patterns.
• Pattern Evaluation: the extracted patterns are evaluated.
• Knowledge Presentation: the discovered knowledge is presented.
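To make the ordering of these steps concrete, the sketch below chains one small function per step over hypothetical sales records. Every name and data value is made up for illustration, and the "mining" and "evaluation" steps are deliberately toy rules (customers spending above the mean; keep the result only if it is non-empty) standing in for real pattern-discovery algorithms.

```python
from collections import defaultdict

# Hypothetical raw records from two sources.
store_sales = [
    {"cust": "c1", "amount": "12.50", "region": "north"},
    {"cust": "c2", "amount": None, "region": "south"},  # missing value
    {"cust": "c1", "amount": "7.50", "region": "north"},
    {"cust": "c3", "amount": "40.00", "region": "south"},
]
web_sales = [{"cust": "c3", "amount": "10.00", "region": "south"}]

def clean(rows):
    """Data cleaning: drop records with missing amounts and fix the type."""
    return [{**r, "amount": float(r["amount"])} for r in rows if r["amount"] is not None]

def integrate(*sources):
    """Data integration: combine several sources into one list."""
    return [row for src in sources for row in src]

def select(rows, fields=("cust", "amount")):
    """Data selection: keep only the attributes relevant to the task."""
    return [{f: r[f] for f in fields} for r in rows]

def transform(rows):
    """Data transformation: aggregate total spend per customer."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["cust"]] += r["amount"]
    return dict(totals)

def mine(totals):
    """Data mining (toy rule): customers whose spend is above the mean."""
    mean = sum(totals.values()) / len(totals)
    return {c: t for c, t in totals.items() if t > mean}

def evaluate(patterns, min_count=1):
    """Pattern evaluation (toy rule): keep the result only if it is non-empty."""
    return patterns if len(patterns) >= min_count else {}

def present(patterns):
    """Knowledge presentation: a human-readable summary."""
    return [f"{c}: {t:.2f}" for c, t in sorted(patterns.items())]

report = present(evaluate(mine(transform(select(integrate(clean(store_sales), clean(web_sales)))))))
print(report)  # ['c3: 50.00']
```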

KDD Process flow

Text mining
• Text mining is also known as Text Data Mining (TDM) and Knowledge Discovery in Textual Databases (KDT).
• It is the process of identifying novel information in a collection of texts (also known as a corpus).

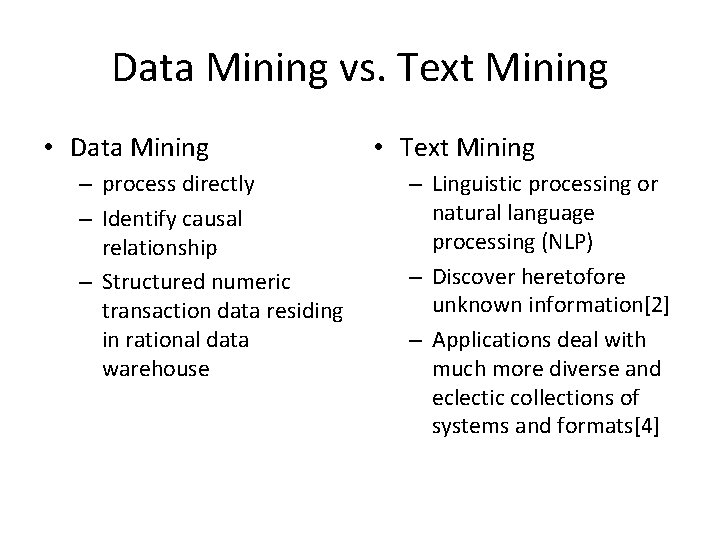

Data Mining vs. Text Mining
• Data Mining
  – Processes the data directly
  – Identifies causal relationships
  – Works on structured numeric transaction data residing in a relational data warehouse
• Text Mining
  – Relies on linguistic processing or natural language processing (NLP)
  – Discovers heretofore unknown information[2]
  – Applications deal with much more diverse and eclectic collections of systems and formats[4]

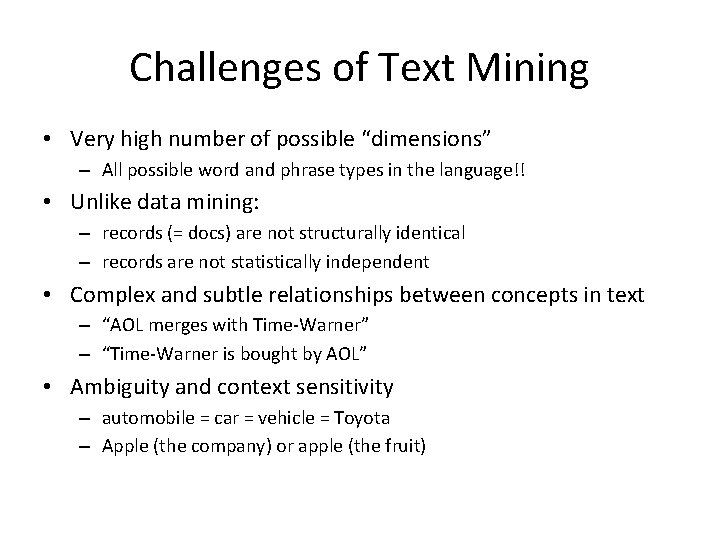

Challenges of Text Mining
• Very high number of possible “dimensions”
  – All possible word and phrase types in the language!!
• Unlike data mining:
  – records (= docs) are not structurally identical
  – records are not statistically independent
• Complex and subtle relationships between concepts in text
  – “AOL merges with Time-Warner”
  – “Time-Warner is bought by AOL”
• Ambiguity and context sensitivity
  – automobile = car = vehicle = Toyota
  – Apple (the company) or apple (the fruit)
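The first and third challenges already appear in a tiny bag-of-words representation, one common (but not the only) way to turn text into data: every distinct term becomes a dimension, and two sentences describing closely related facts can share few terms. The tokenizer below is a simplistic assumption (lower-casing and extracting letter runs, keeping hyphenated words together), and `bag_of_words` is a hypothetical name.

```python
import re
from collections import Counter

def bag_of_words(doc):
    """Lower-case the text, extract (possibly hyphenated) words, and count them."""
    return Counter(re.findall(r"[a-z]+(?:-[a-z]+)*", doc.lower()))

docs = ["AOL merges with Time-Warner", "Time-Warner is bought by AOL"]
vectors = [bag_of_words(d) for d in docs]

# Each distinct term is one dimension of the document vectors.
vocabulary = sorted(set().union(*vectors))
shared = set(vectors[0]) & set(vectors[1])
jaccard = len(shared) / len(vocabulary)  # overlap of the two term sets

print(vocabulary)
print(shared, round(jaccard, 3))
```

Only 2 of the 7 distinct terms are shared, so a purely word-based comparison rates these two sentences as fairly dissimilar even though they concern the same companies and deal.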

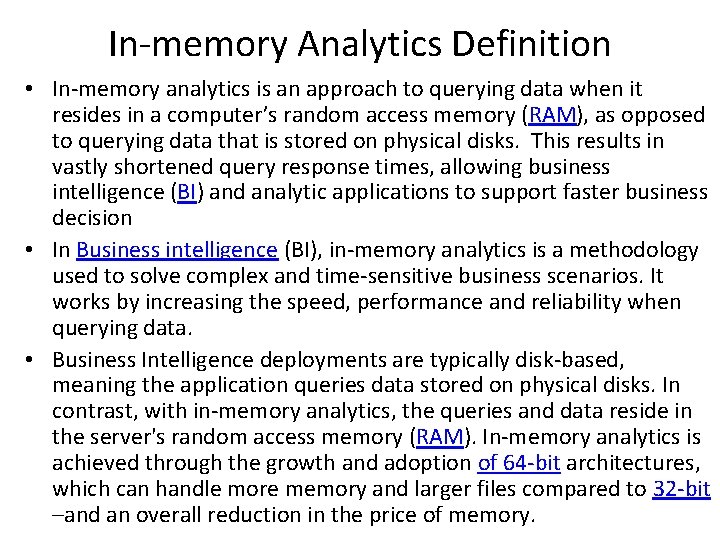

In-memory Analytics Definition
• In-memory analytics is an approach to querying data while it resides in a computer’s random access memory (RAM), as opposed to querying data that is stored on physical disks. This results in vastly shortened query response times, allowing business intelligence (BI) and analytic applications to support faster business decisions.
• In business intelligence (BI), in-memory analytics is a methodology used to solve complex and time-sensitive business scenarios. It works by increasing the speed, performance, and reliability of data queries.
• Business intelligence deployments are typically disk-based, meaning the application queries data stored on physical disks. In contrast, with in-memory analytics, the queries and data reside in the server's random access memory (RAM). In-memory analytics has been made practical by the growth and adoption of 64-bit architectures, which can address more memory and handle larger files than 32-bit architectures, and by an overall reduction in the price of memory.
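SQLite, which ships with Python, can hold an entire database in RAM via the special `:memory:` name, which gives a small, self-contained way to see the idea: the sketch below builds a disk-based database, copies it into memory with `Connection.backup`, and answers a query from the RAM copy after the disk file is gone. SQLite is only a stand-in for the in-memory BI engines described above, the `sales` table is hypothetical, and the sketch makes no performance claims.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    # A small disk-based database with a hypothetical sales table.
    disk = sqlite3.connect(os.path.join(tmp, "sales.db"))
    disk.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    disk.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("north", 120.0), ("south", 80.0), ("north", 40.0)],
    )
    disk.commit()

    # Copy the whole database into RAM; ":memory:" databases live only in memory.
    mem = sqlite3.connect(":memory:")
    disk.backup(mem)
    disk.close()

# The temporary directory (and the disk file) is deleted by now,
# so this query is answered entirely from the in-memory copy.
rows = mem.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(rows)  # [('north', 160.0), ('south', 80.0)]
```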

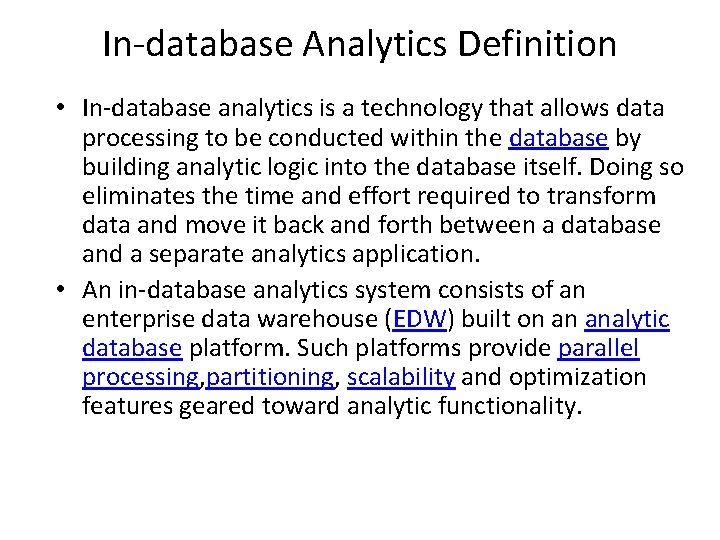

In-database Analytics Definition
• In-database analytics is a technology that allows data processing to be conducted within the database by building analytic logic into the database itself. Doing so eliminates the time and effort required to transform data and move it back and forth between a database and a separate analytics application.
• An in-database analytics system consists of an enterprise data warehouse (EDW) built on an analytic database platform. Such platforms provide parallel processing, partitioning, scalability, and optimization features geared toward analytic functionality.
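The contrast can be sketched with SQLite (an embedded database, not an enterprise data warehouse): the first approach moves every row out of the database and aggregates in the application, while the second pushes the aggregation into the database so that only the small result set crosses the boundary. The `orders` table and its contents are hypothetical.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("c1", 10.0), ("c2", 25.0), ("c1", 30.0), ("c2", 5.0), ("c3", 60.0)],
)

# Approach 1: move every row out of the database, then analyze in the application.
totals = defaultdict(float)
for customer, amount in conn.execute("SELECT customer, amount FROM orders"):
    totals[customer] += amount
outside = sorted(totals.items())

# Approach 2 (in-database): the aggregation runs inside the database engine,
# and only one row per customer is returned.
inside = conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()

print(outside == inside)  # True
```

Both approaches give the same answer; the difference that matters at warehouse scale is how much data has to be moved to get it.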

Google Analytics
