Predictively Modeling Social Text (William W. Cohen)


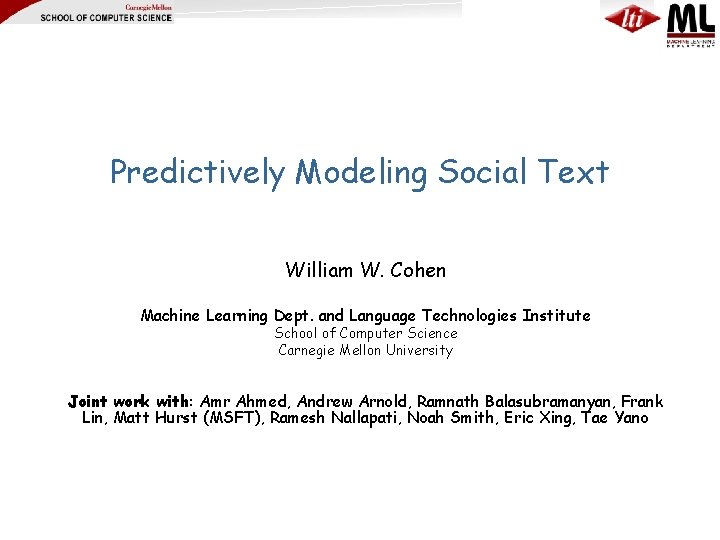

Predictively Modeling Social Text

William W. Cohen, Machine Learning Dept. and Language Technologies Institute, School of Computer Science, Carnegie Mellon University

Joint work with: Amr Ahmed, Andrew Arnold, Ramnath Balasubramanyan, Frank Lin, Matt Hurst (MSFT), Ramesh Nallapati, Noah Smith, Eric Xing, Tae Yano

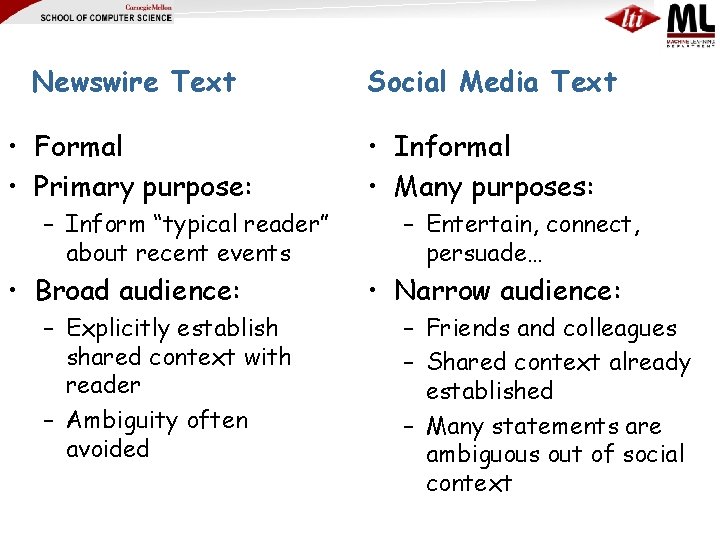

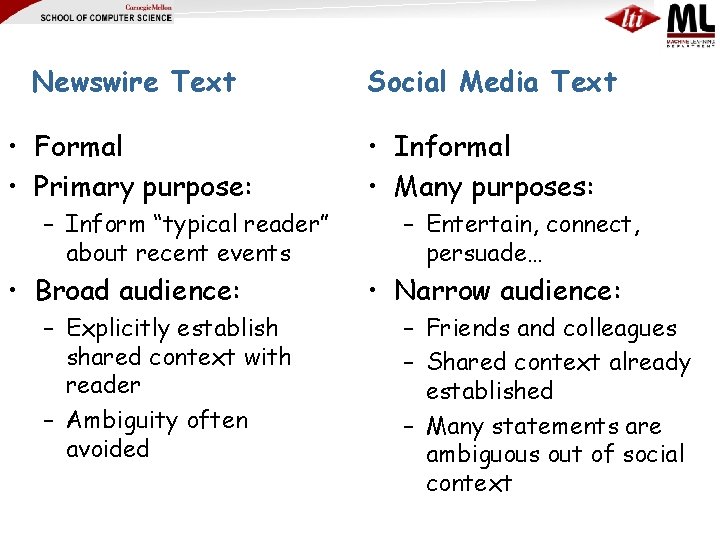

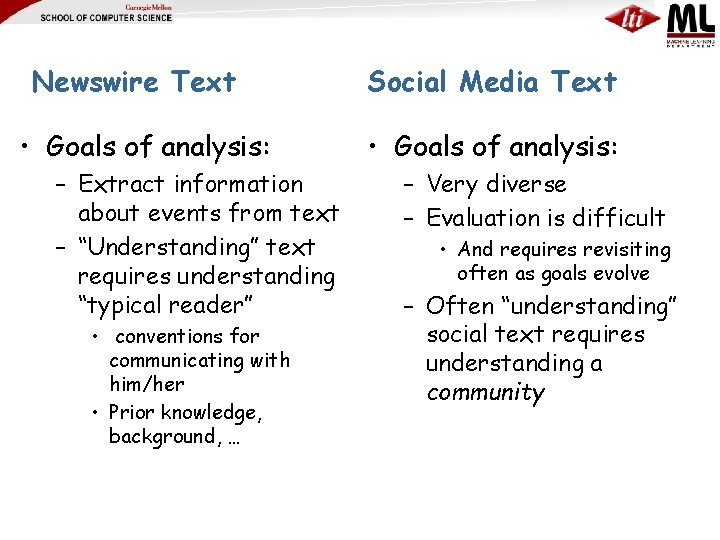

Newswire text:
- Formal
- Primary purpose: inform the “typical reader” about recent events
- Broad audience:
  - Explicitly establishes shared context with the reader
  - Ambiguity is often avoided

Social media text:
- Informal
- Many purposes: entertain, connect, persuade, …
- Narrow audience:
  - Friends and colleagues
  - Shared context is already established
  - Many statements are ambiguous out of social context

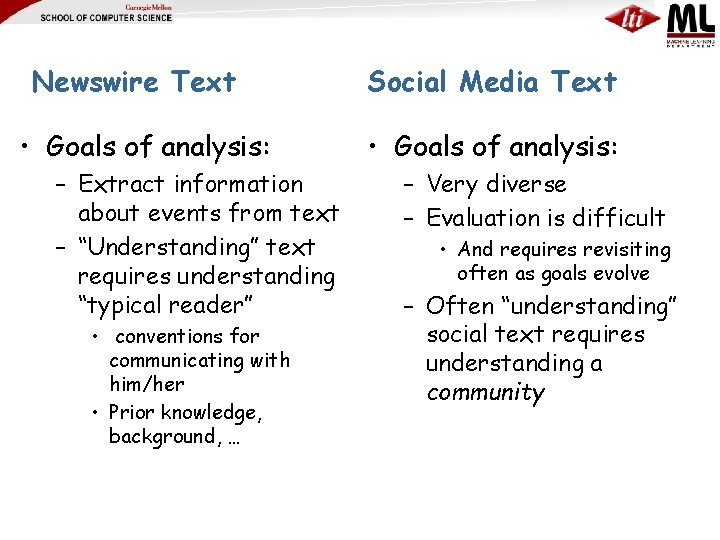

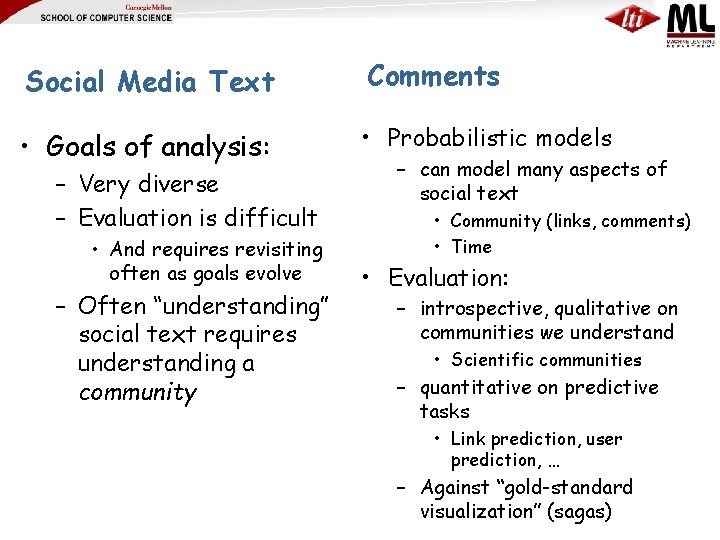

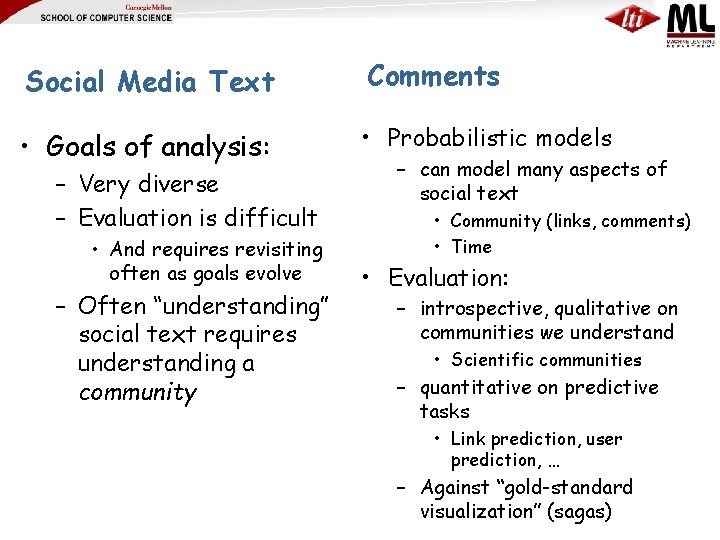

Newswire text, goals of analysis:
- Extract information about events from text
- “Understanding” text requires understanding the “typical reader”:
  - conventions for communicating with him/her
  - prior knowledge, background, …

Social media text, goals of analysis:
- Very diverse
- Evaluation is difficult, and must be revisited often as goals evolve
- Often, “understanding” social text requires understanding a community

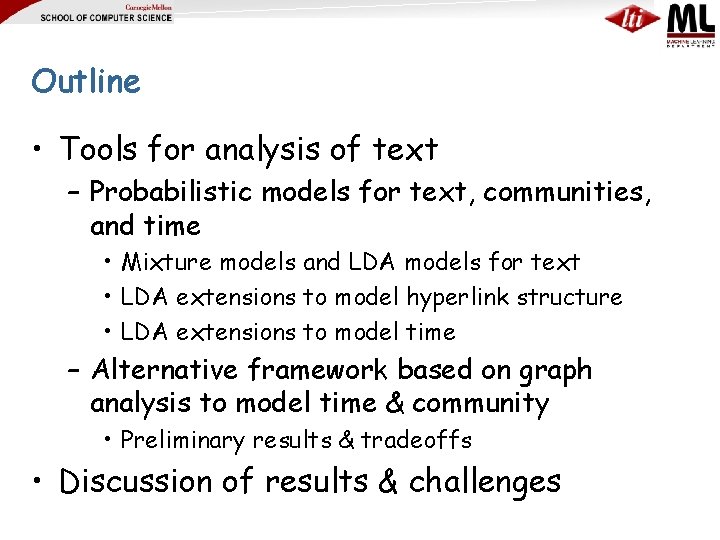

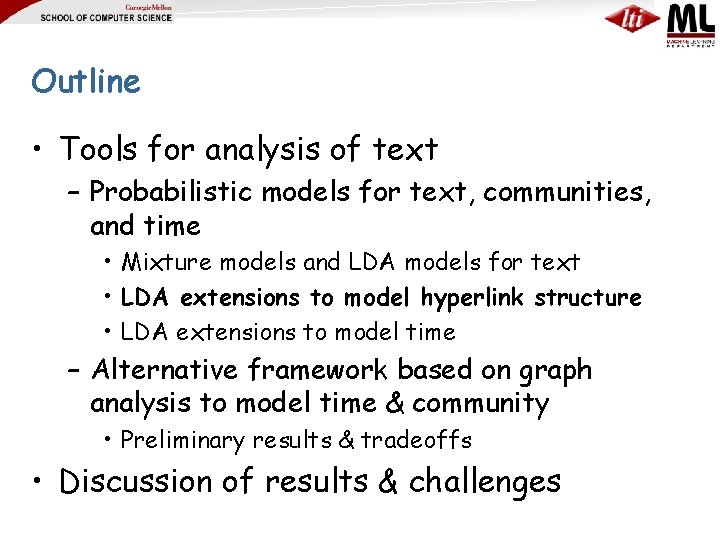

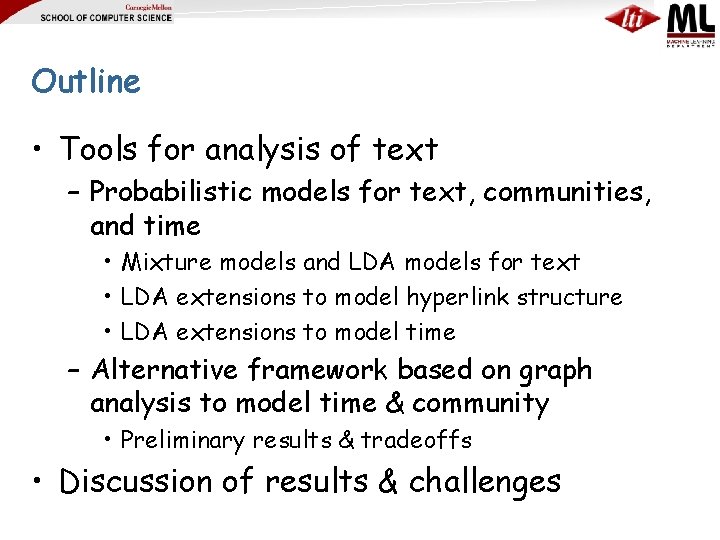

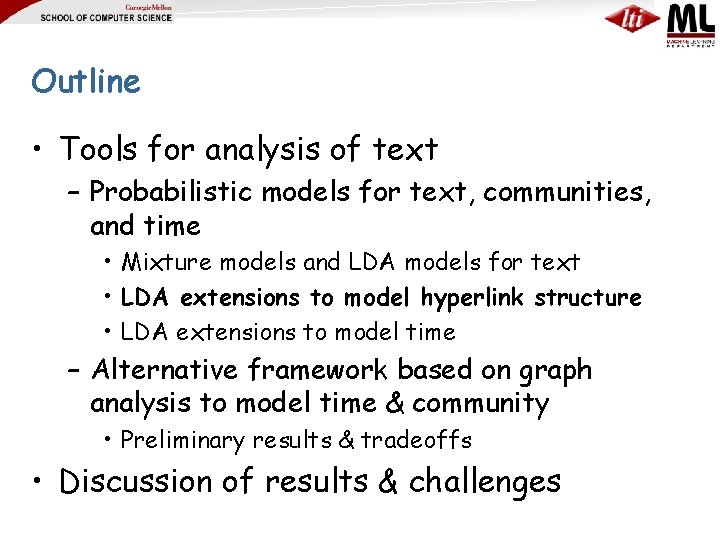

Outline
- Tools for analysis of text
  - Probabilistic models for text, communities, and time
    - Mixture models and LDA models for text
    - LDA extensions to model hyperlink structure
    - LDA extensions to model time
  - Alternative framework based on graph analysis to model time & community
- Preliminary results & tradeoffs
- Discussion of results & challenges

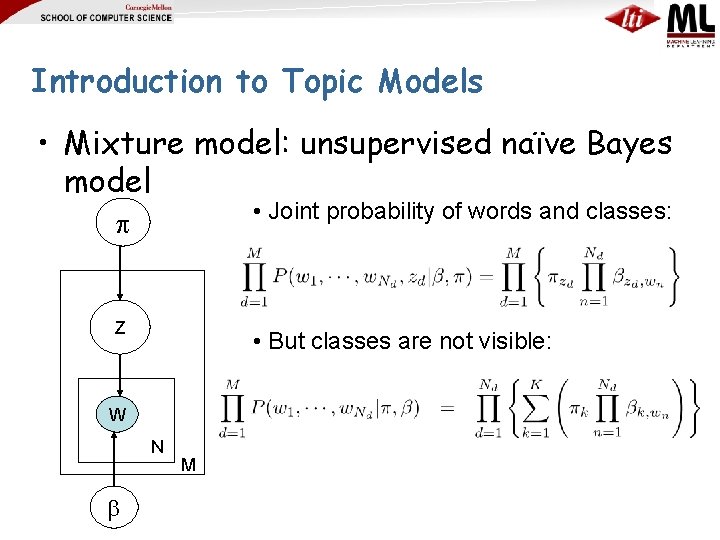

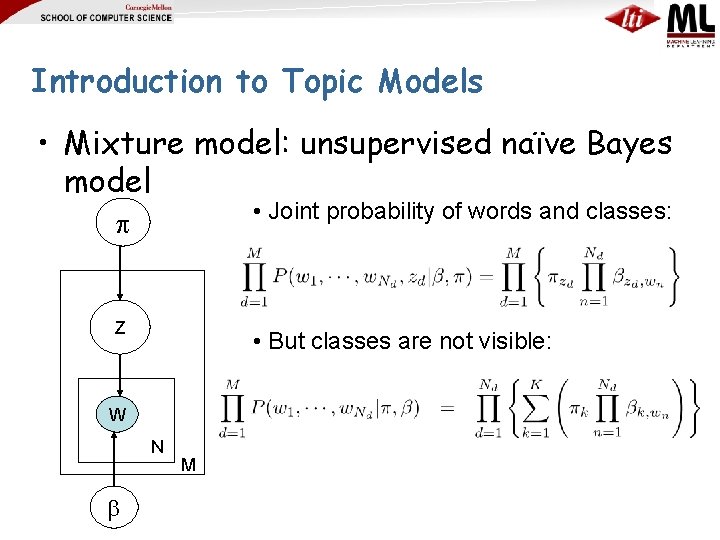

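Introduction to Topic Models
- Mixture model: an unsupervised naïve Bayes model
- Joint probability of words and classes: $P(c, w_1, \dots, w_N) = P(c)\prod_{n=1}^{N} P(w_n \mid c)$
- But classes are not visible, so we marginalize over a latent class $z$: $P(w_1, \dots, w_N) = \sum_{z} P(z)\prod_{n=1}^{N} P(w_n \mid z)$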
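The marginal likelihood of the mixture model can be computed directly once the parameters are known. A minimal sketch, assuming the class prior and per-class word distributions are given as plain lists (the function names are ours, not from the slides); it works in log space and also returns the posterior over the hidden class, which is what an EM E-step would use.

```python
import math

def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def class_log_joints(doc, prior, word_probs):
    """log P(z) + sum_n log P(w_n | z) for every class z."""
    return [
        math.log(prior[z]) + sum(math.log(word_probs[z][w]) for w in doc)
        for z in range(len(prior))
    ]

def mixture_log_likelihood(doc, prior, word_probs):
    """log P(w_1..w_N) = log sum_z P(z) prod_n P(w_n | z)."""
    return log_sum_exp(class_log_joints(doc, prior, word_probs))

def class_posterior(doc, prior, word_probs):
    """P(z | w_1..w_N): the responsibility EM needs when classes are hidden."""
    joints = class_log_joints(doc, prior, word_probs)
    total = log_sum_exp(joints)
    return [math.exp(j - total) for j in joints]

# Two classes over a two-word vocabulary; the document is word 0 twice.
prior = [0.5, 0.5]
word_probs = [[0.9, 0.1], [0.1, 0.9]]
print(math.exp(mixture_log_likelihood([0, 0], prior, word_probs)))  # ~0.41
print(class_posterior([0, 0], prior, word_probs))
```

Working in log space avoids underflow, since the product over words becomes tiny for realistic document lengths.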

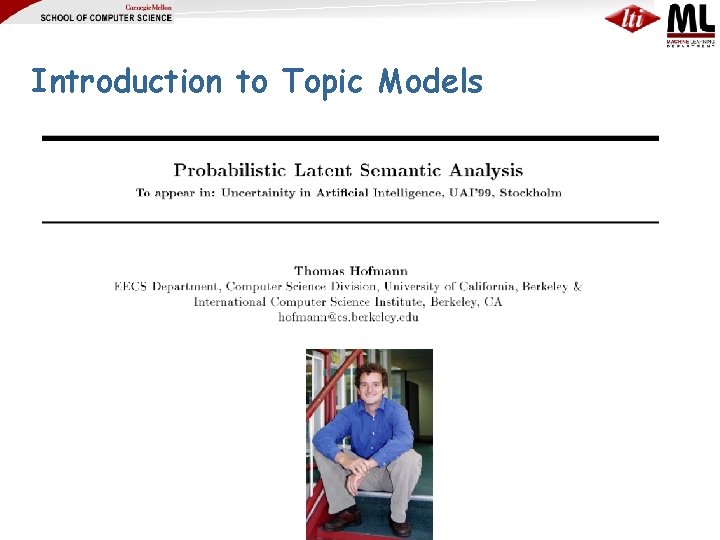

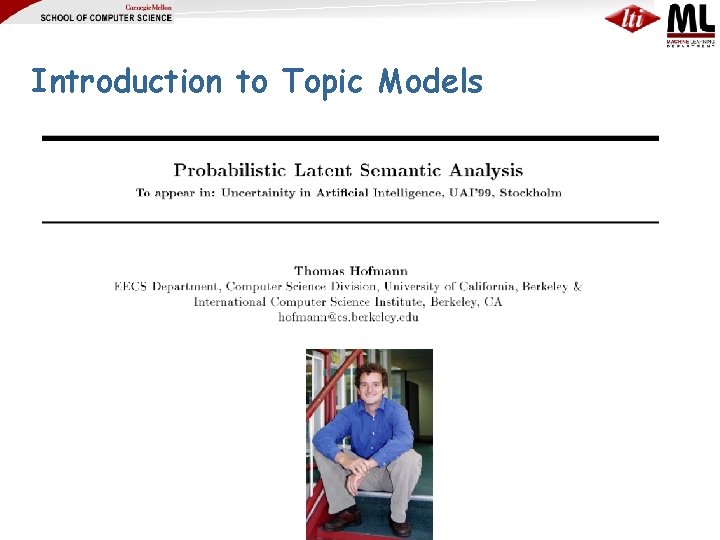

Introduction to Topic Models

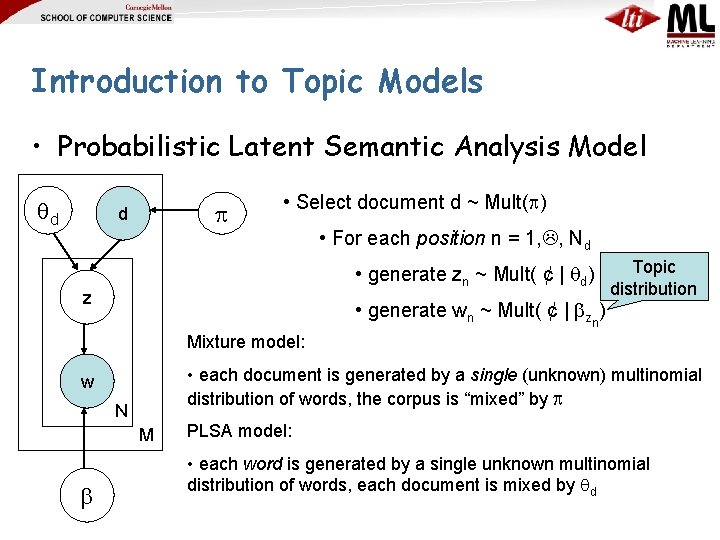

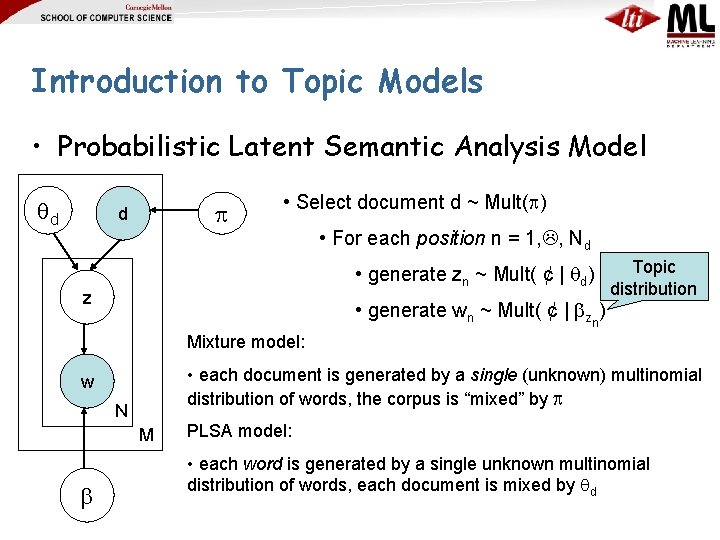

Introduction to Topic Models
- Probabilistic Latent Semantic Analysis (PLSA) model:
  - Select document $d \sim \mathrm{Mult}(\pi)$
  - For each position $n = 1, \dots, N_d$:
    - generate $z_n \sim \mathrm{Mult}(\cdot \mid \theta_d)$, where $\theta_d$ is the document's topic distribution
    - generate $w_n \sim \mathrm{Mult}(\cdot \mid \beta_{z_n})$
- Mixture model: each document is generated by a single (unknown) multinomial distribution of words; the corpus is “mixed” by the class prior.
- PLSA model: each word is generated by a single (unknown) multinomial distribution of words; each document is mixed by $\theta_d$.
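PLSA parameters are usually fit with EM. A minimal stdlib-only sketch under our own assumptions (random initialization, a fixed iteration count, no tempering); it fits $P(z \mid d)$ and $P(w \mid z)$ only, since the maximum-likelihood $P(d)$ for a fixed corpus is just proportional to document length.

```python
import math
import random

def normalize(row):
    total = sum(row)
    return [x / total for x in row]

def plsa_em(counts, num_topics, iters=50, seed=0):
    """Fit PLSA by EM on a document-word count matrix.

    counts[d][w] = n(d, w); every document needs at least one word.
    Returns (theta, beta, history): theta[d][z] = P(z | d),
    beta[z][w] = P(w | z), history = log-likelihood before each update.
    """
    rng = random.Random(seed)
    D, V, K = len(counts), len(counts[0]), num_topics
    theta = [normalize([rng.random() + 0.1 for _ in range(K)]) for _ in range(D)]
    beta = [normalize([rng.random() + 0.1 for _ in range(V)]) for _ in range(K)]
    history = []
    for _ in range(iters):
        theta_acc = [[0.0] * K for _ in range(D)]
        beta_acc = [[0.0] * V for _ in range(K)]
        loglik = 0.0
        for d in range(D):
            for w in range(V):
                n = counts[d][w]
                if n == 0:
                    continue
                # E-step: P(z | d, w) is proportional to P(w | z) P(z | d)
                joint = [theta[d][z] * beta[z][w] for z in range(K)]
                total = sum(joint)
                loglik += n * math.log(total)
                for z in range(K):
                    resp = n * joint[z] / total
                    theta_acc[d][z] += resp
                    beta_acc[z][w] += resp
        # M-step: renormalize the expected counts
        theta = [normalize(row) for row in theta_acc]
        beta = [normalize(row) for row in beta_acc]
        history.append(loglik)
    return theta, beta, history

counts = [[5, 3, 0, 0], [4, 4, 1, 0], [0, 0, 6, 5], [0, 1, 5, 4]]
theta, beta, history = plsa_em(counts, 2, iters=30)
print([[round(p, 2) for p in row] for row in theta])
```

Because this is EM, `history` should never decrease; note that `theta` exists only for the training documents, which is the PLSA limitation that LDA addresses.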

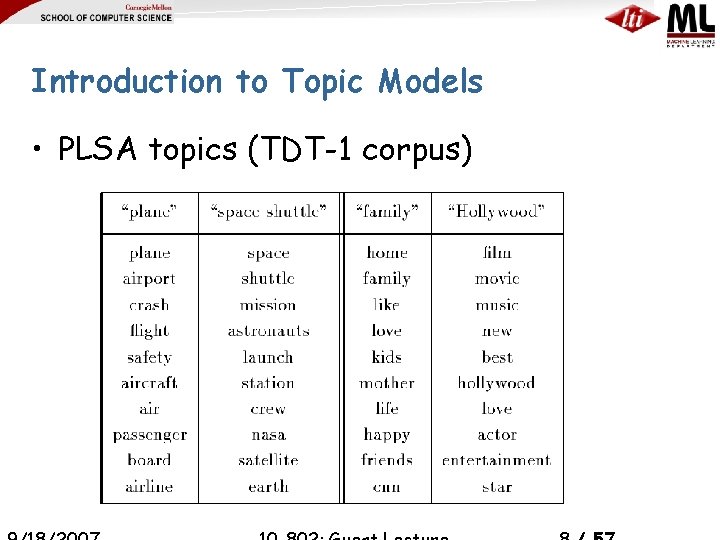

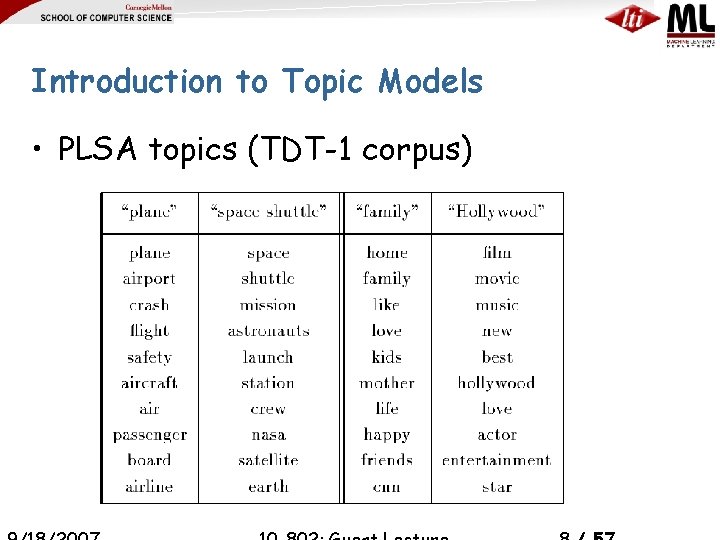

Introduction to Topic Models • PLSA topics (TDT-1 corpus)

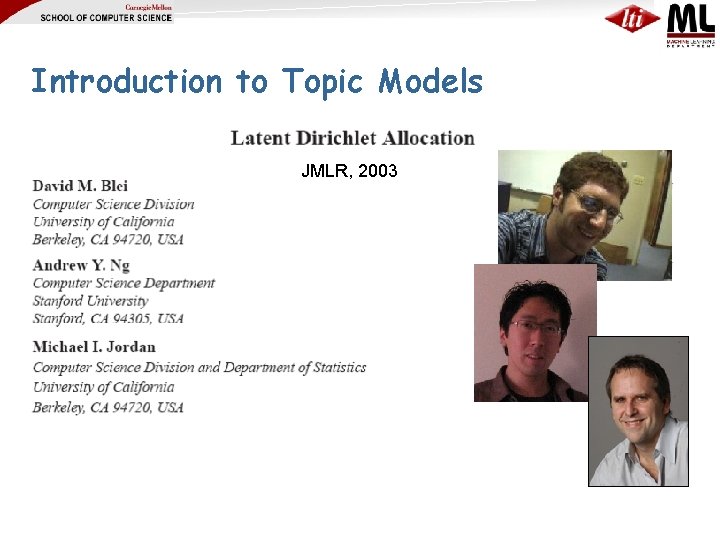

Introduction to Topic Models: Latent Dirichlet Allocation [Blei, Ng, Jordan, JMLR, 2003]

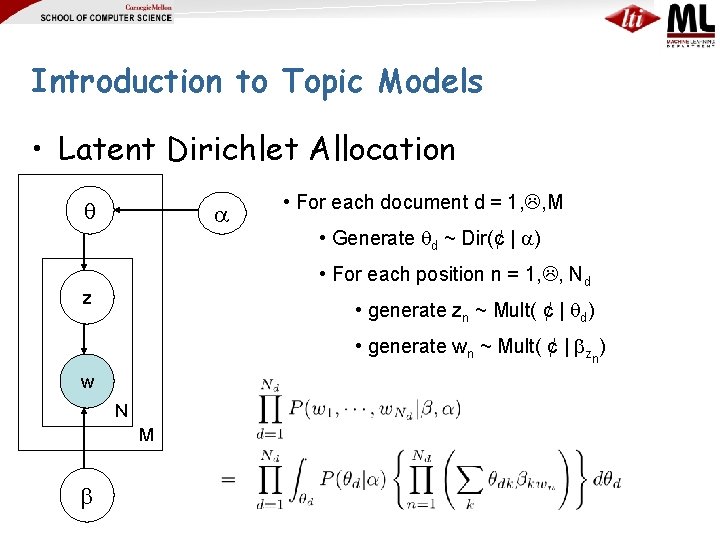

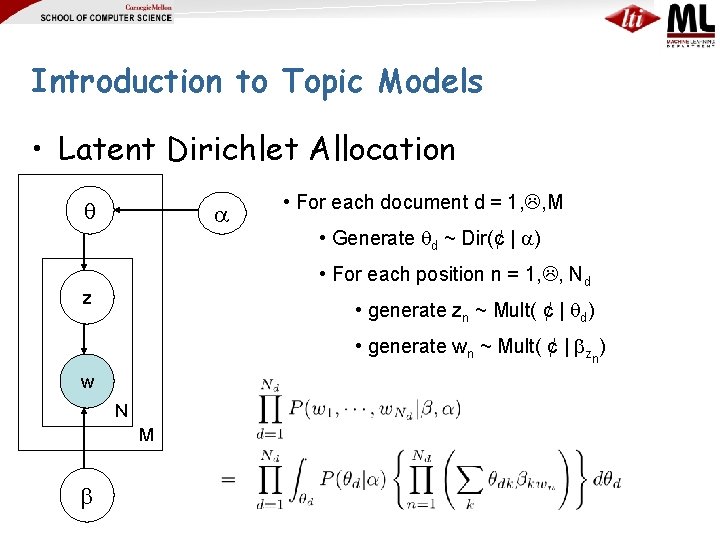

Introduction to Topic Models
- Latent Dirichlet Allocation:
  - For each document $d = 1, \dots, M$:
    - generate $\theta_d \sim \mathrm{Dir}(\cdot \mid \alpha)$
    - for each position $n = 1, \dots, N_d$:
      - generate $z_n \sim \mathrm{Mult}(\cdot \mid \theta_d)$
      - generate $w_n \sim \mathrm{Mult}(\cdot \mid \beta_{z_n})$
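The LDA generative story can be run forward to produce synthetic documents. A minimal sketch, assuming the topic-word distributions `beta` are fixed inputs (in full LDA they are parameters or have their own prior); the Dirichlet draw uses the standard normalized-Gamma construction, and all names here are ours.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw from Dir(alpha) by normalizing independent Gamma(alpha_k, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [x / total for x in draws]

def sample_index(probs, rng):
    """Draw an index from a (possibly unnormalized) discrete distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate_lda_corpus(num_docs, doc_len, alpha, beta, seed=0):
    """Sample a corpus from the LDA generative process.

    alpha: Dirichlet parameters over K topics; beta[k][w] = P(w | topic k).
    Returns a list of (theta_d, topics, words) triples.
    """
    rng = random.Random(seed)
    corpus = []
    for _ in range(num_docs):
        theta = sample_dirichlet(alpha, rng)                         # theta_d ~ Dir(alpha)
        topics = [sample_index(theta, rng) for _ in range(doc_len)]  # z_n ~ Mult(theta_d)
        words = [sample_index(beta[k], rng) for k in topics]         # w_n ~ Mult(beta_{z_n})
        corpus.append((theta, topics, words))
    return corpus

# Two topics over a four-word vocabulary: topic 0 uses words {0, 1}, topic 1 uses {2, 3}.
beta = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
for theta, topics, words in generate_lda_corpus(3, 8, [0.5, 0.5], beta):
    print([round(p, 2) for p in theta], words)
```

Unlike PLSA, every document (including a new one) gets its own $\theta_d$ drawn from the same prior, which is what makes held-out documents well defined.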

Introduction to Topic Models
- Latent Dirichlet Allocation:
  - Overcomes some technical issues with PLSA; for example, PLSA only estimates mixing parameters for the training documents
  - Parameter learning is more complicated:
    - Gibbs sampling: easy to program, often slow
    - Variational EM
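To illustrate why Gibbs sampling is called easy to program, here is a minimal collapsed Gibbs sampler for LDA. It is a sketch under our own assumptions: symmetric priors `alpha` and `beta`, estimates read from the final sample only, and no burn-in or averaging over samples.

```python
import random

def lda_gibbs(docs, K, V, alpha=0.1, beta=0.01, iters=100, seed=0):
    """Collapsed Gibbs sampling for LDA with symmetric Dirichlet priors.

    docs: list of lists of word ids in range(V).
    Returns (theta, phi) estimated from the final sample.
    """
    rng = random.Random(seed)
    ndk = [[0] * K for _ in docs]        # topic counts per document
    nkw = [[0] * V for _ in range(K)]    # word counts per topic
    nk = [0] * K                         # total tokens per topic
    z = []
    for d, doc in enumerate(docs):       # random initial assignments
        z.append([])
        for w in doc:
            k = rng.randrange(K)
            z[d].append(k)
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                ndk[d][k] -= 1           # remove token i from the counts
                nkw[k][w] -= 1
                nk[k] -= 1
                # P(z_i = t | all other assignments), up to a constant
                weights = [(ndk[d][t] + alpha) * (nkw[t][w] + beta) / (nk[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights, k=1)[0]
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
    theta = [[(ndk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    phi = [[(nkw[k][w] + beta) / (nk[k] + V * beta) for w in range(V)]
           for k in range(K)]
    return theta, phi

docs = [[0, 1, 0, 1, 0], [2, 3, 2, 3, 3], [0, 0, 1, 1], [3, 2, 2, 3]]
theta, phi = lda_gibbs(docs, K=2, V=4, iters=50)
print([[round(p, 2) for p in row] for row in theta])
```

Every sweep touches every token, which is why the method is often slow on large corpora.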

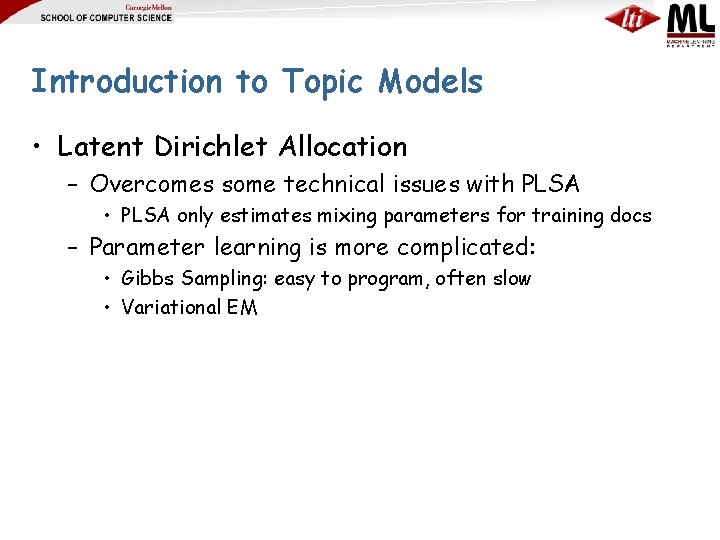

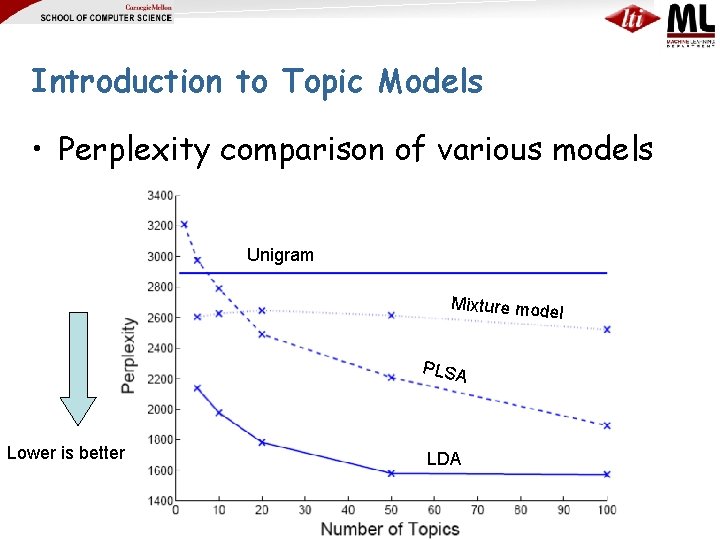

Introduction to Topic Models
- Perplexity comparison of various models: unigram, mixture model, PLSA, LDA (lower is better)
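Perplexity is the exponentiated negative average log-probability of held-out tokens. A minimal sketch for a topic model with $p(w \mid d) = \sum_z \theta_{dz}\beta_{zw}$; how $\theta$ is obtained for held-out documents (for example by folding them in) is an assumption outside this sketch, and the function names are ours.

```python
import math

def perplexity(token_log_probs):
    """exp(-(1/N) * sum of log p(w_n)) over N held-out tokens."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

def topic_model_perplexity(docs, theta, beta):
    """Perplexity with p(w | d) = sum_z theta[d][z] * beta[z][w]."""
    log_probs = []
    for d, doc in enumerate(docs):
        for w in doc:
            p = sum(theta[d][z] * beta[z][w] for z in range(len(beta)))
            log_probs.append(math.log(p))
    return perplexity(log_probs)

# With uniform word distributions over 4 words, perplexity equals the vocabulary size.
uniform = [[0.25] * 4, [0.25] * 4]
print(topic_model_perplexity([[0, 1, 2], [3]], [[0.5, 0.5], [1.0, 0.0]], uniform))  # ~4.0
```

The uniform case is a useful sanity check: a model that has learned nothing is "as confused as" a uniform choice among all $V$ words.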

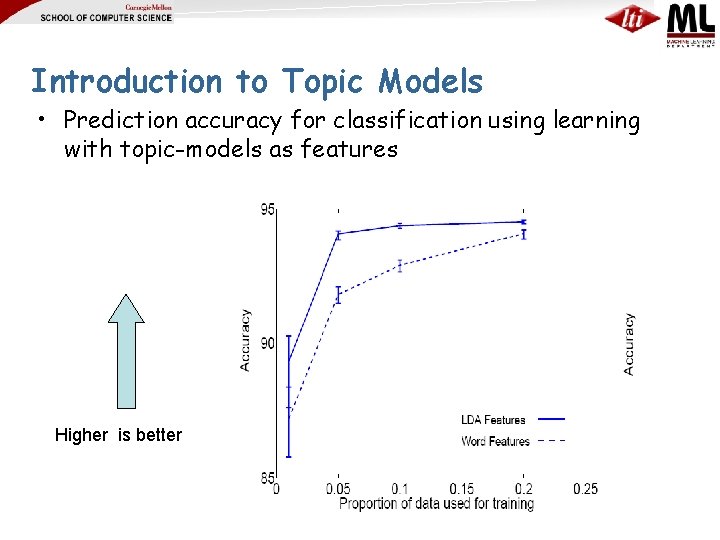

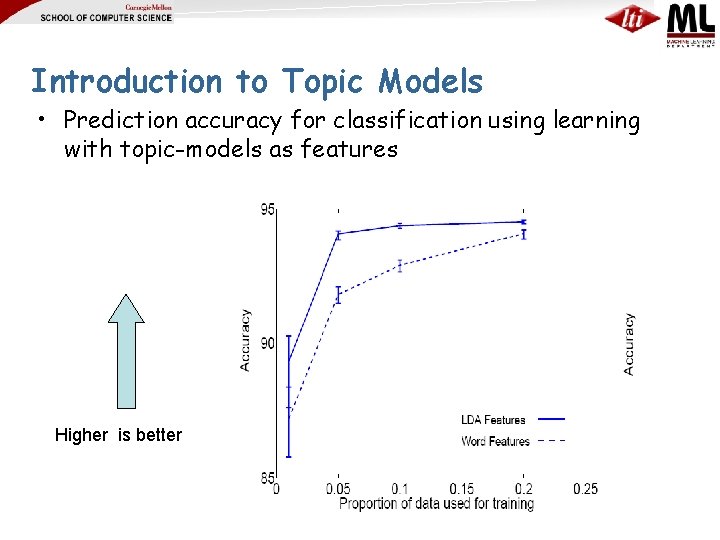

Introduction to Topic Models
- Prediction accuracy for classification, using topic-model outputs as features for a learner (higher is better)

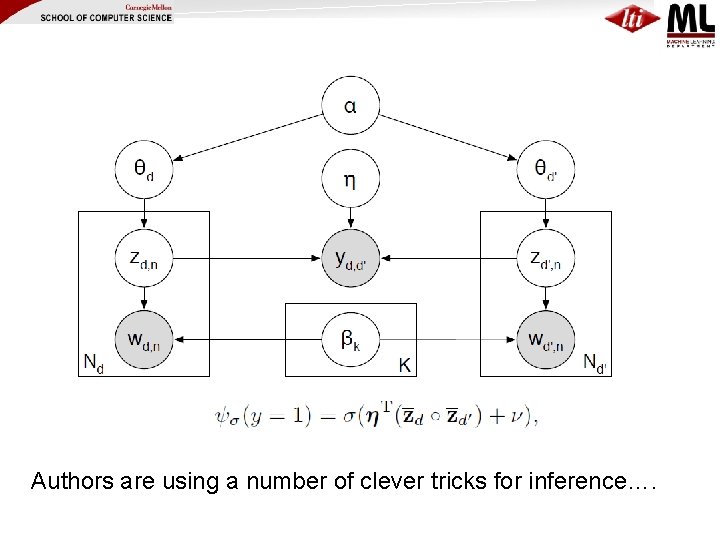


Hyperlink modeling using PLSA

![Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-16.jpg)

Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]
- Select document $d \sim \mathrm{Mult}(\pi)$
- For each position $n = 1, \dots, N_d$:
  - generate $z_n \sim \mathrm{Mult}(\cdot \mid \theta_d)$
  - generate $w_n \sim \mathrm{Mult}(\cdot \mid \beta_{z_n})$
- For each citation $j = 1, \dots, L_d$:
  - generate $z_j \sim \mathrm{Mult}(\cdot \mid \theta_d)$
  - generate $c_j \sim \mathrm{Mult}(\cdot \mid \Omega_{z_j})$

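![Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]: likelihood](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-17.jpg)

Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]
- PLSA likelihood: $\mathcal{L} = \sum_{d}\sum_{w} n(d,w)\log\sum_{z} P(w \mid z)\,P(z \mid d)$
- New likelihood: $\mathcal{L} = \sum_{d}\Big[\sum_{w} n(d,w)\log\sum_{z} P(w \mid z)\,P(z \mid d) + \sum_{c} n(d,c)\log\sum_{z} P(c \mid z)\,P(z \mid d)\Big]$
- Learning using EM

Here $n(d,w)$ counts occurrences of word $w$ in document $d$, and $n(d,c)$ counts links from $d$ to document $c$.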

![Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]: heuristic weighting](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-18.jpg)

Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]
- Heuristic: weight the content and link terms, with $n(d,w)$ and $n(d,c)$ the word and link counts:
  $\mathcal{L}_\alpha = \sum_{d}\Big[\alpha\sum_{w} n(d,w)\log\sum_{z} P(w \mid z)\,P(z \mid d) + (1-\alpha)\sum_{c} n(d,c)\log\sum_{z} P(c \mid z)\,P(z \mid d)\Big]$
- $0 \le \alpha \le 1$ determines the relative importance of content and hyperlinks

![Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]: text classification experiments](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-19.jpg)

Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]
- Experiments: text classification
- Datasets:
  - WebKB: 6000 CS department web pages with hyperlinks; 6 classes (faculty, course, student, staff, etc.)
  - Cora: 2000 machine learning abstracts with citations; 7 classes (sub-areas of machine learning)
- Methodology:
  - Learn the model on the complete data and obtain $\theta_d$ for each document
  - Classify each test document with the label of its nearest neighbor in the training set
  - Measure distance as cosine similarity in the $\theta$ space
  - Measure performance as a function of $\alpha$

![Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]: classification performance](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-20.jpg)

Hyperlink modeling using PLSA [Cohn and Hofmann, NIPS, 2001]
- Classification performance as $\alpha$ varies between the hyperlink-only and content-only extremes (figure)

Hyperlink modeling using LDA

![Hyperlink modeling using Link-LDA [Erosheva, Fienberg, Lafferty, PNAS, 2004]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-22.jpg)

Hyperlink modeling using Link-LDA [Erosheva, Fienberg, Lafferty, PNAS, 2004]
- For each document $d = 1, \dots, M$:
  - generate $\theta_d \sim \mathrm{Dir}(\cdot \mid \alpha)$
  - for each position $n = 1, \dots, N_d$:
    - generate $z_n \sim \mathrm{Mult}(\cdot \mid \theta_d)$
    - generate $w_n \sim \mathrm{Mult}(\cdot \mid \beta_{z_n})$
  - for each citation $j = 1, \dots, L_d$:
    - generate $z_j \sim \mathrm{Mult}(\cdot \mid \theta_d)$
    - generate $c_j \sim \mathrm{Mult}(\cdot \mid \Omega_{z_j})$
- Learning using variational EM

![Hyperlink modeling using LDA [Erosheva, Fienberg, Lafferty, PNAS, 2004]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-23.jpg)

Hyperlink modeling using LDA [Erosheva, Fienberg, Lafferty, PNAS, 2004]

Newswire vs. social media text, revisited: the goals of analysis are as listed earlier (extracting event information and modeling the “typical reader” for newswire; diverse, hard-to-evaluate goals that often require understanding a community for social media). Science is a good testbed for social text: an open community which we understand.

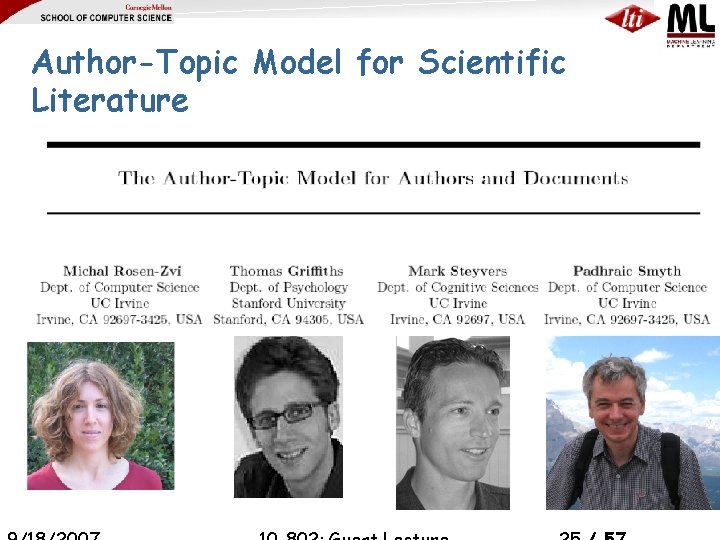

Author-Topic Model for Scientific Literature

![Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-26.jpg)

Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]
- For each author $a = 1, \dots, A$: generate $\theta_a \sim \mathrm{Dir}(\cdot \mid \alpha)$
- For each topic $k = 1, \dots, K$: generate $\phi_k \sim \mathrm{Dir}(\cdot \mid \beta)$
- For each document $d = 1, \dots, M$:
  - for each position $n = 1, \dots, N_d$:
    - generate an author $x \sim \mathrm{Unif}(\cdot \mid a_d)$ from the document's authors $a_d$
    - generate $z_n \sim \mathrm{Mult}(\cdot \mid \theta_x)$
    - generate $w_n \sim \mathrm{Mult}(\cdot \mid \phi_{z_n})$

![Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]: learning](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-27.jpg)

Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]
- Learning: Gibbs sampling

![Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]: perplexity results](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-28.jpg)

Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]
- Perplexity results

![Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]: topic-author visualization](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-29.jpg)

Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]
- Topic-author visualization

![Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]: application 1](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-30.jpg)

Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]
- Application 1: author similarity

![Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]: application 2](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-31.jpg)

Author-Topic Model for Scientific Literature [Rosen-Zvi, Griffiths, Steyvers, Smyth, UAI, 2004]
- Application 2: author entropy

![Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-32.jpg)

Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]

![Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]: Gibbs sampling](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-33.jpg)

Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]
- Learning: Gibbs sampling

![Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]: datasets](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-34.jpg)

Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]
- Datasets:
  - Enron email data: 23,488 messages between 147 users
  - McCallum’s personal email: 23,488(?) messages with 128 authors

![Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]: topic visualization, Enron](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-35.jpg)

Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]
- Topic visualization: Enron set

![Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]: topic visualization, McCallum’s data](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-36.jpg)

Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]
- Topic visualization: McCallum’s data

![Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-37.jpg)

Author-Topic-Recipient model for email data [McCallum, Corrada-Emmanuel, Wang, IJCAI’05]

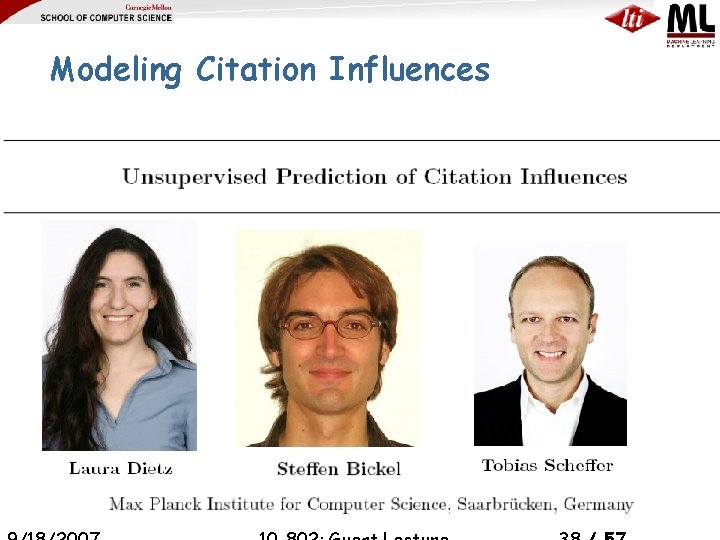

Modeling Citation Influences

![Modeling Citation Influences [Dietz, Bickel, Scheffer, ICML 2007]: copycat model](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-39.jpg)

Modeling Citation Influences [Dietz, Bickel, Scheffer, ICML 2007]
- Copycat model of citation influence:
  - LDA model for cited papers
  - Extended LDA model for citing papers
  - For each word, depending on a coin flip $c$, the citing paper may copy a word from a cited paper instead of generating it

![Modeling Citation Influences [Dietz, Bickel, Scheffer, ICML 2007]: citation influence model](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-40.jpg)

Modeling Citation Influences [Dietz, Bickel, Scheffer, ICML 2007]
- Citation influence model

![Modeling Citation Influences [Dietz, Bickel, Scheffer, ICML 2007]: citation influence graph for the LDA paper](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-41.jpg)

Modeling Citation Influences [Dietz, Bickel, Scheffer, ICML 2007]
- Citation influence graph for the LDA paper

![Models of hypertext for blogs [ICWSM 2008]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-42.jpg)

Models of hypertext for blogs [ICWSM 2008]: Ramesh Nallapati, Amr Ahmed, Eric Xing, and me

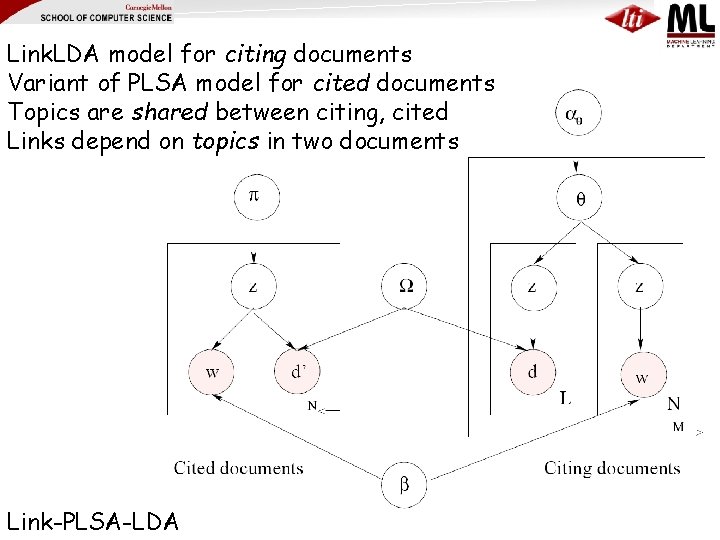

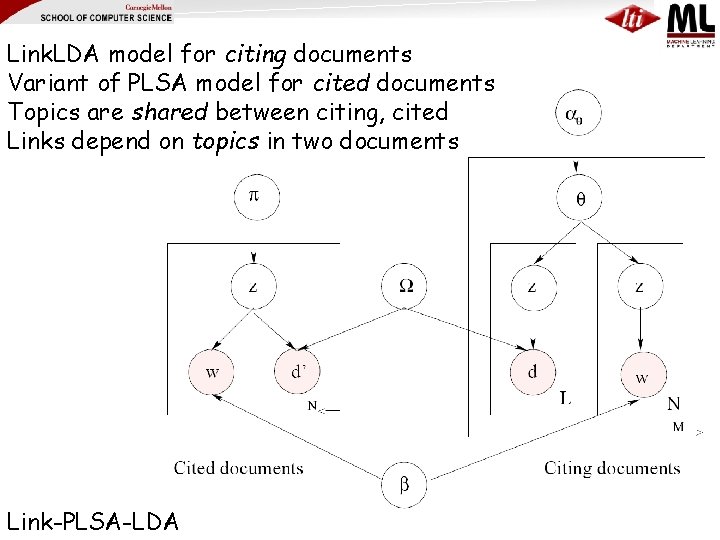

Link-PLSA-LDA
- Link-LDA model for citing documents
- Variant of the PLSA model for cited documents
- Topics are shared between citing and cited documents
- Links depend on the topics in the two documents

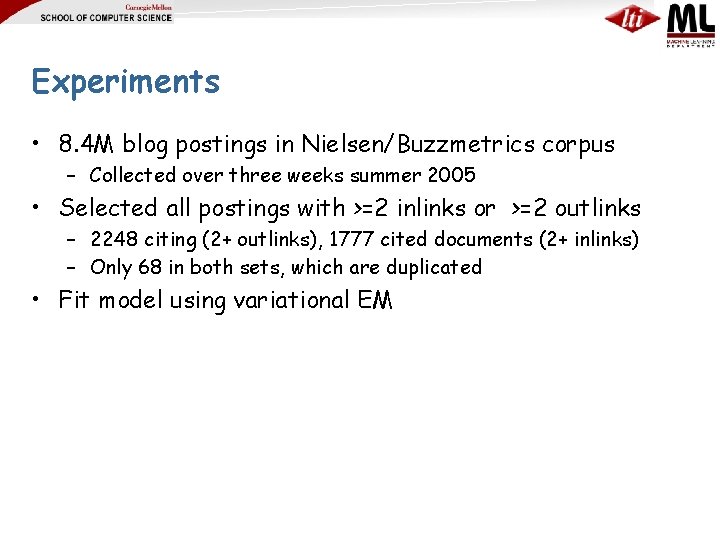

Experiments
- 8.4M blog postings in the Nielsen/BuzzMetrics corpus, collected over three weeks in summer 2005
- Selected all postings with ≥2 inlinks or ≥2 outlinks:
  - 2248 citing documents (2+ outlinks), 1777 cited documents (2+ inlinks)
  - Only 68 documents are in both sets; these are duplicated
- Fit the model using variational EM

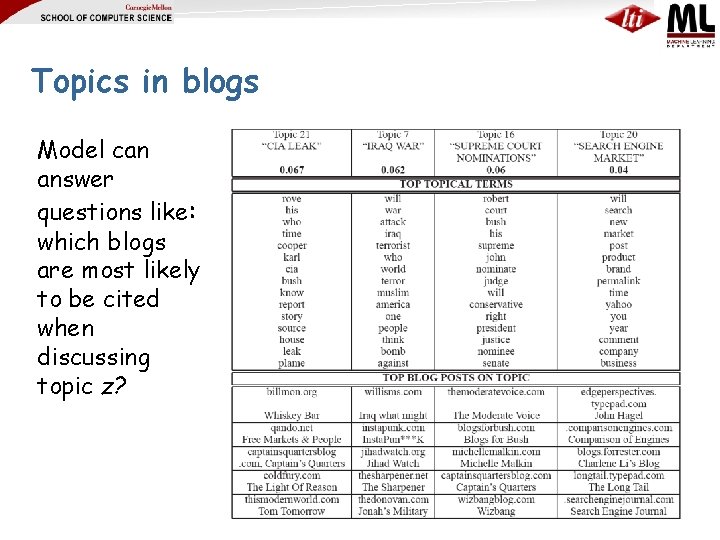

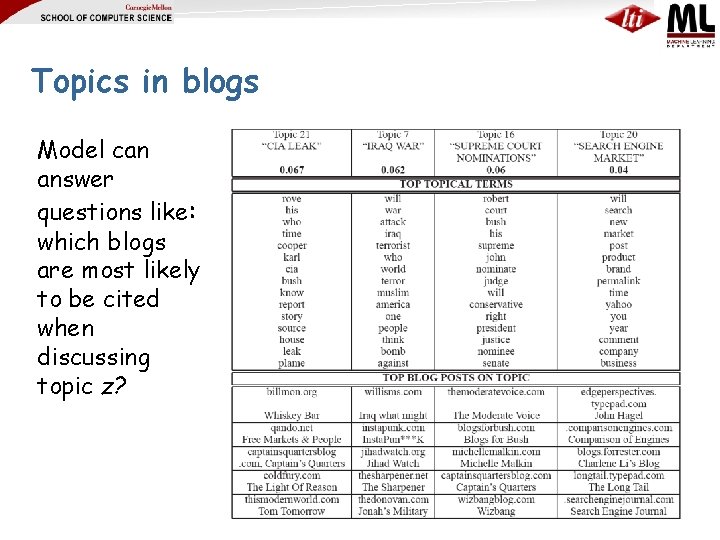

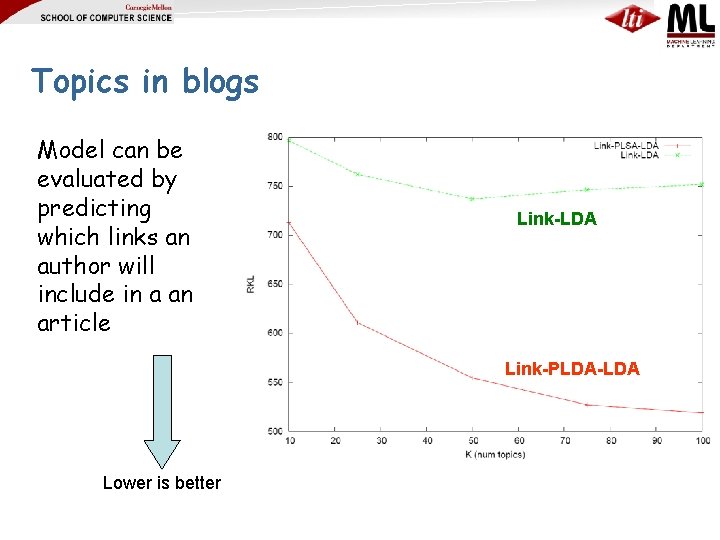

Topics in blogs: the model can answer questions like “which blogs are most likely to be cited when discussing topic $z$?”

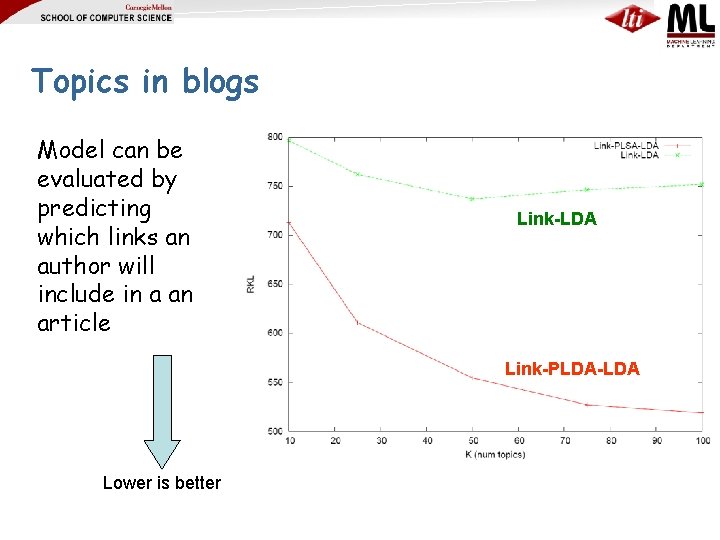

Topics in blogs: the model can be evaluated by predicting which links an author will include in an article (figure compares Link-LDA and Link-PLSA-LDA; lower is better)

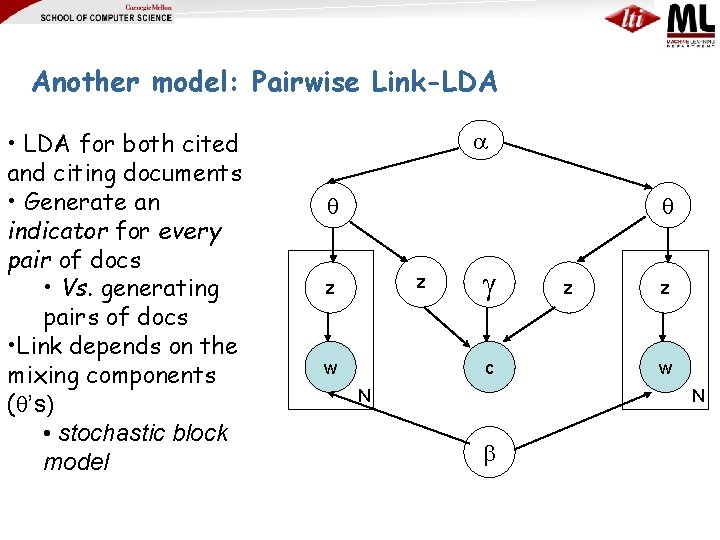

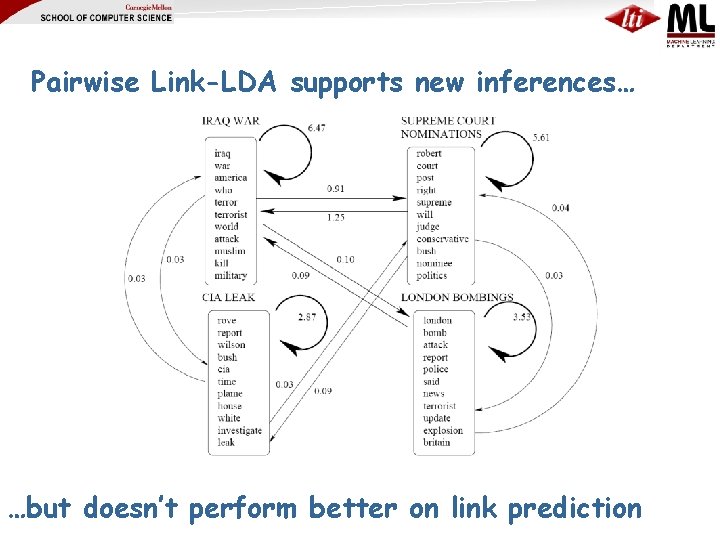

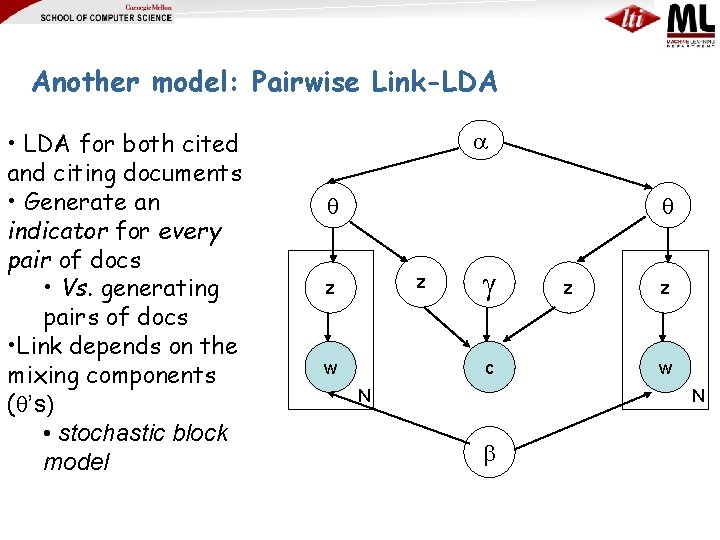

Another model: Pairwise Link-LDA
- LDA for both cited and citing documents
- Generate an indicator for every pair of documents, vs. generating pairs of documents
- A link depends on the mixing components ($\theta$'s), as in a stochastic block model
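A link indicator that depends on both documents' mixing components can be sketched in the style of a mixed-membership stochastic block model. This is our assumption about the general shape, not necessarily the exact parameterization used: each end of the pair picks a topic from its own $\theta$, and a hypothetical topic-pair matrix `eta` gives the link probability.

```python
import random

def link_probability(theta_d, theta_e, eta):
    """Marginal P(link d -> e) = sum_{k,l} theta_d[k] * eta[k][l] * theta_e[l]."""
    return sum(theta_d[k] * eta[k][l] * theta_e[l]
               for k in range(len(theta_d)) for l in range(len(theta_e)))

def sample_link(theta_d, theta_e, eta, rng):
    """Draw one link indicator: each end picks a topic, then Bernoulli(eta[k][l])."""
    k = rng.choices(range(len(theta_d)), weights=theta_d, k=1)[0]  # citing side's topic
    l = rng.choices(range(len(theta_e)), weights=theta_e, k=1)[0]  # cited side's topic
    return rng.random() < eta[k][l]

eta = [[0.9, 0.1], [0.1, 0.9]]  # hypothetical: links mostly stay within a topic
print(link_probability([1.0, 0.0], [1.0, 0.0], eta))  # 0.9
print(link_probability([1.0, 0.0], [0.0, 1.0], eta))  # 0.1
```

Generating an indicator for every pair (including the absent links) is what distinguishes this from Link-LDA, and it is also what makes inference cost grow with the number of document pairs.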

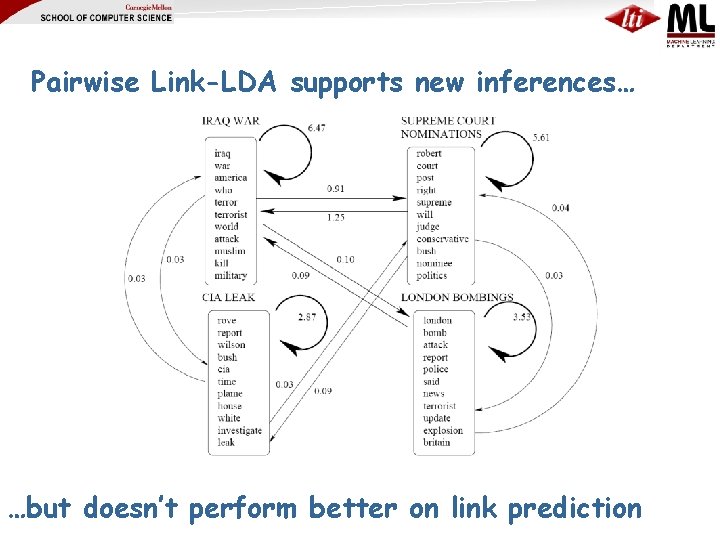

Pairwise Link-LDA supports new inferences… …but doesn’t perform better on link prediction

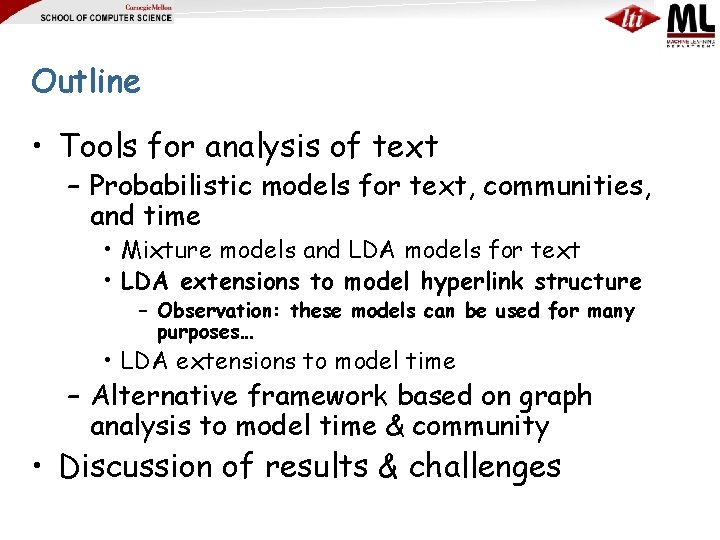

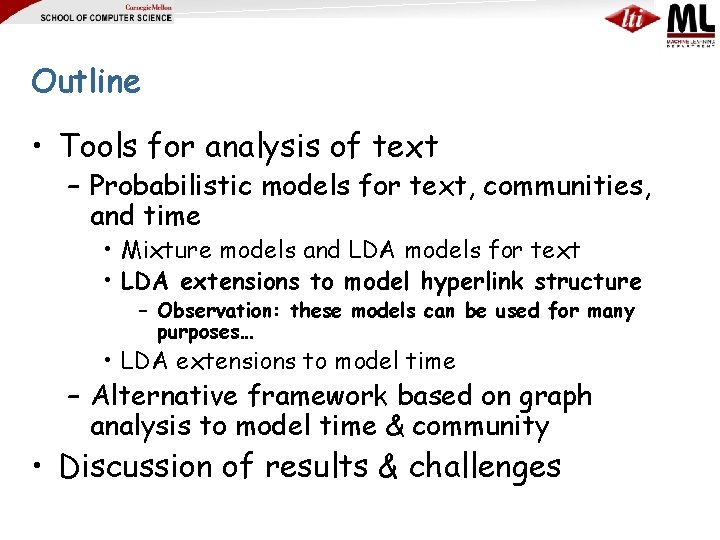

Outline
- Tools for analysis of text
  - Probabilistic models for text, communities, and time
    - Mixture models and LDA models for text
    - LDA extensions to model hyperlink structure
      - Observation: these models can be used for many purposes…
    - LDA extensions to model time
  - Alternative framework based on graph analysis to model time & community
- Discussion of results & challenges

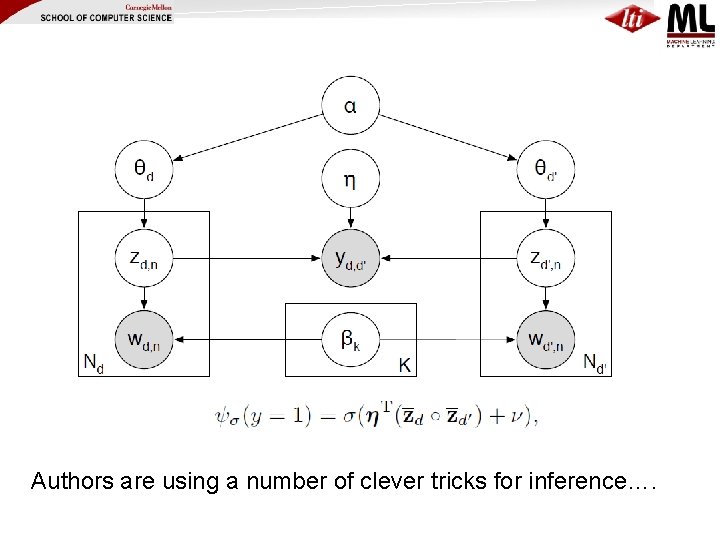

Authors are using a number of clever tricks for inference…

![Predicting Response to Political Blog Posts with Topic Models [NAACL ’09]](https://slidetodoc.com/presentation_image_h2/b8c767777efee46ee281e13d30105abb/image-55.jpg)

Predicting Response to Political Blog Posts with Topic Models [NAACL ’09]: Tae Yano, Noah Smith

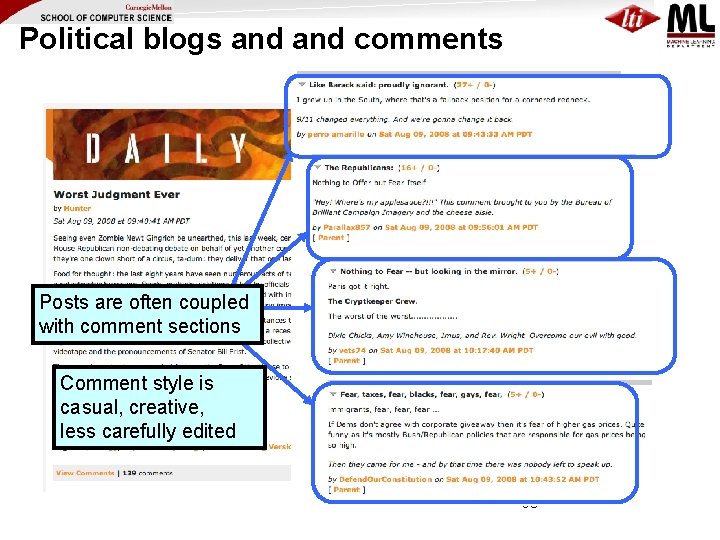

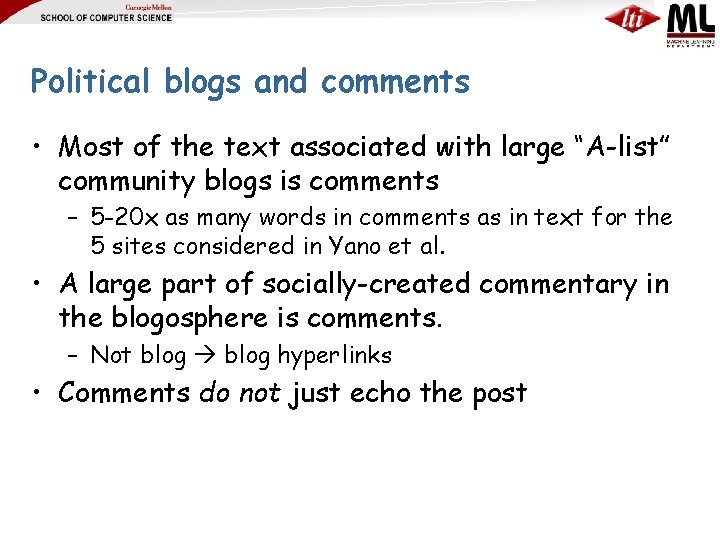

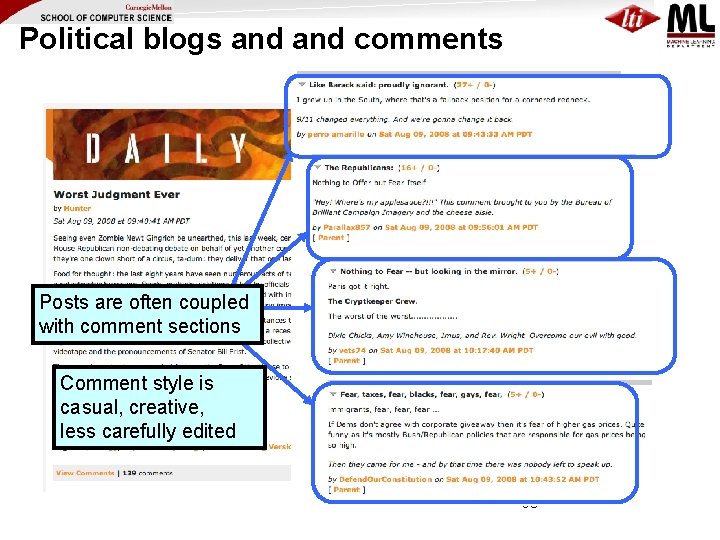

Political blogs and comments
- Posts are often coupled with comment sections
- Comment style is casual, creative, and less carefully edited

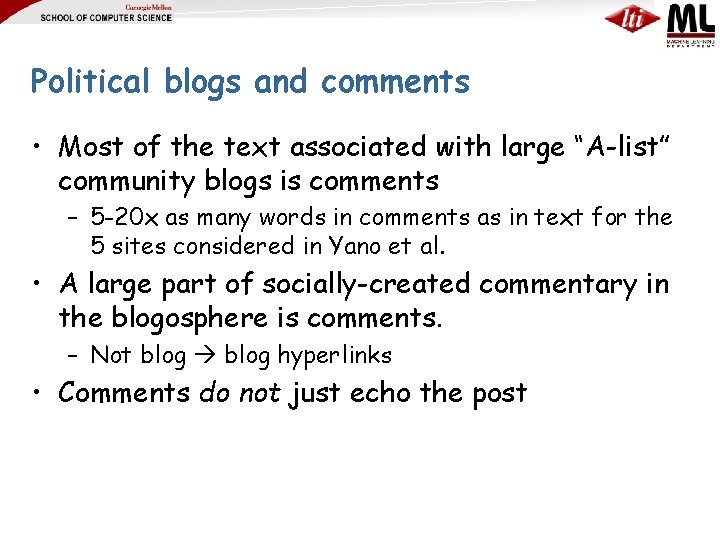

Political blogs and comments
- Most of the text associated with large “A-list” community blogs is comments: there are 5–20x as many words in comments as in post text for the 5 sites considered in Yano et al.
- A large part of socially-created commentary in the blogosphere is comments, not blog hyperlinks
- Comments do not just echo the post

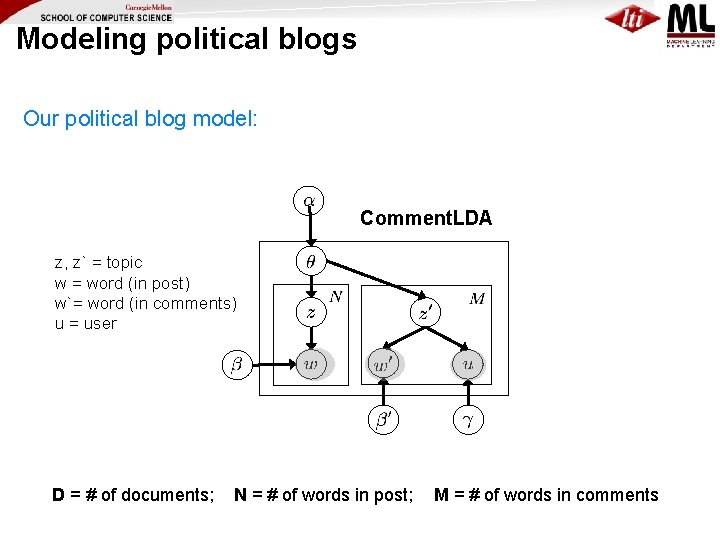

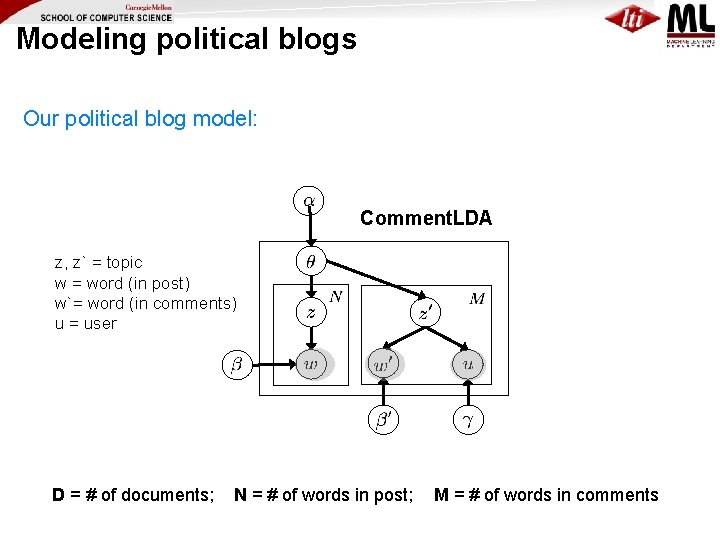

Modeling political blogs. Our political blog model: CommentLDA
- $z, z'$ = topic
- $w$ = word (in post); $w'$ = word (in comments)
- $u$ = user
- $D$ = # of documents; $N$ = # of words in post; $M$ = # of words in comments

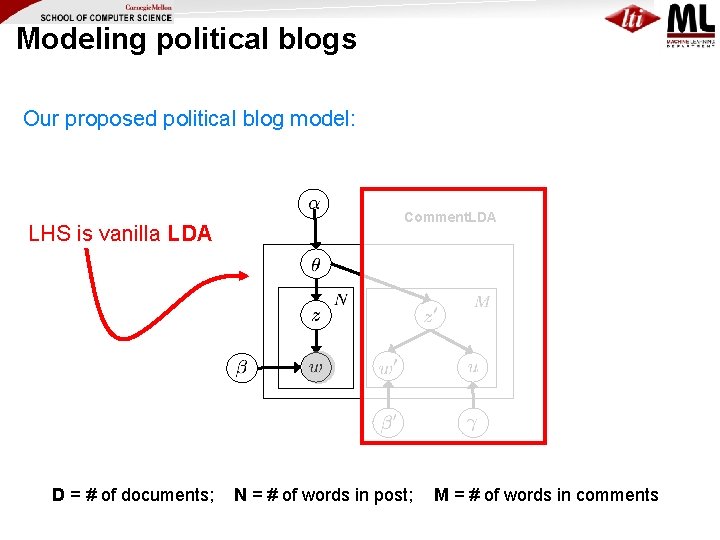

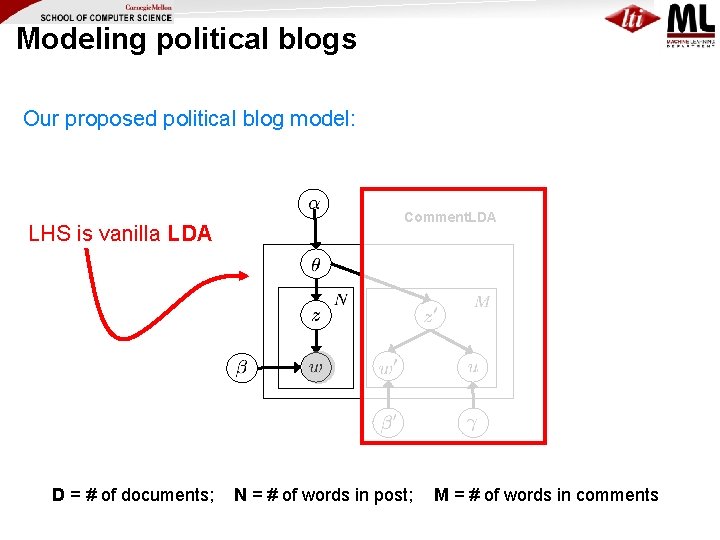

Modeling political blogs. Our proposed political blog model, CommentLDA: the left-hand side is vanilla LDA. ($D$ = # of documents; $N$ = # of words in post; $M$ = # of words in comments)

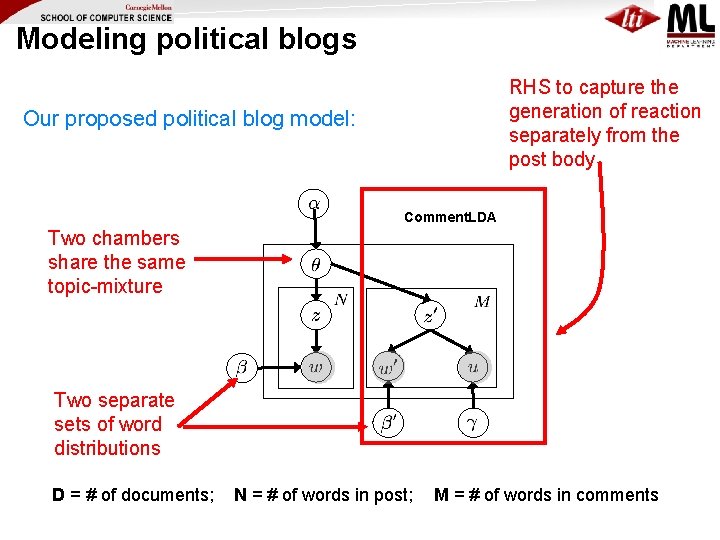

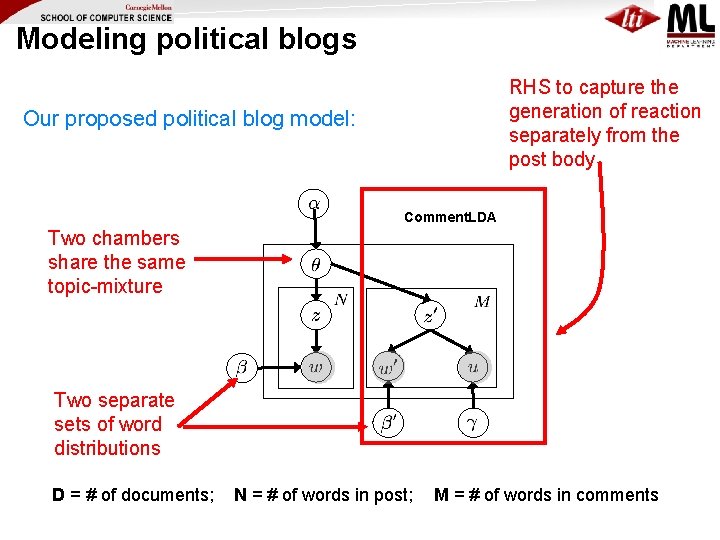

Modeling political blogs. Our proposed political blog model, CommentLDA: the right-hand side captures the generation of reactions separately from the post body.
- The two chambers share the same topic mixture
- There are two separate sets of word distributions

($D$ = # of documents; $N$ = # of words in post; $M$ = # of words in comments)

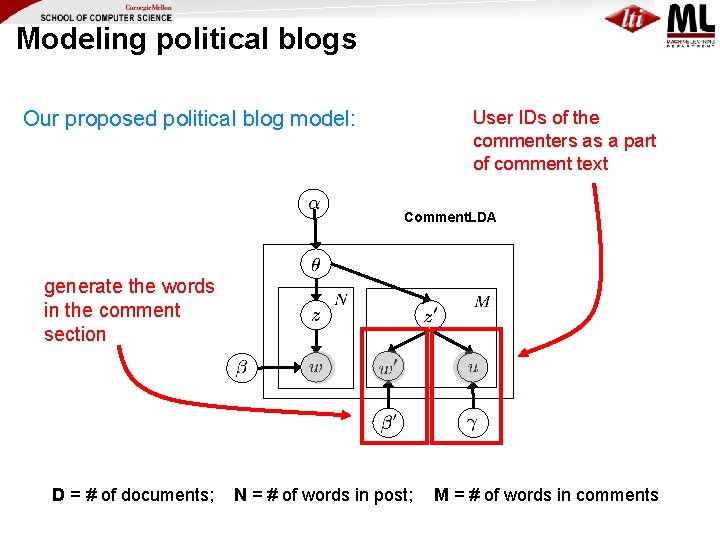

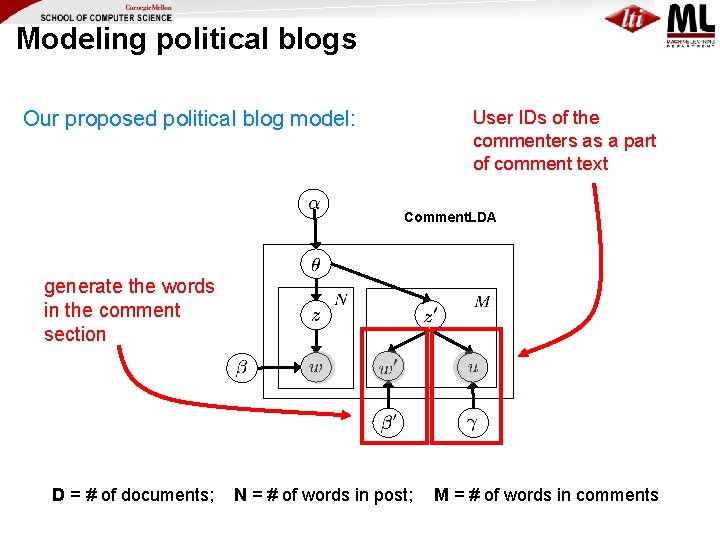

Modeling political blogs. Our proposed political blog model, CommentLDA: the user IDs of the commenters are treated as part of the comment text, and are generated along with the words in the comment section. ($D$ = # of documents; $N$ = # of words in post; $M$ = # of words in comments)
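The CommentLDA structure (one shared topic mixture, separate distributions for post words, comment words and commenters) can be sketched generatively. This is a minimal illustration with our own names; drawing a fresh topic for every token of each type is our assumption about the details.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw from Dir(alpha) by normalizing independent Gamma(alpha_k, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [x / total for x in draws]

def draw(probs, rng):
    """Draw an index from a discrete distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate_commentlda_post(n_post, n_comment, n_users, alpha,
                             beta_post, beta_comment, gamma_users, rng):
    """One blog post plus its comment section under a CommentLDA-style model.

    All three token types share theta_d, but each has its own topic-specific
    distribution: beta_post (post words), beta_comment (comment words) and
    gamma_users (commenter ids).
    """
    theta = sample_dirichlet(alpha, rng)
    post = [draw(beta_post[draw(theta, rng)], rng) for _ in range(n_post)]
    comments = [draw(beta_comment[draw(theta, rng)], rng) for _ in range(n_comment)]
    users = [draw(gamma_users[draw(theta, rng)], rng) for _ in range(n_users)]
    return theta, post, comments, users

print(generate_commentlda_post(5, 4, 3, [1.0, 1.0],
                               [[0.5, 0.5, 0.0], [0.0, 0.0, 1.0]],
                               [[1.0, 0.0], [0.0, 1.0]],
                               [[0.9, 0.1], [0.2, 0.8]],
                               random.Random(0)))
```

Setting `n_comment=0` gives the variant that ignores the comment words and generates only posts and users, which has the same shape as Link-LDA with users in place of citations.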

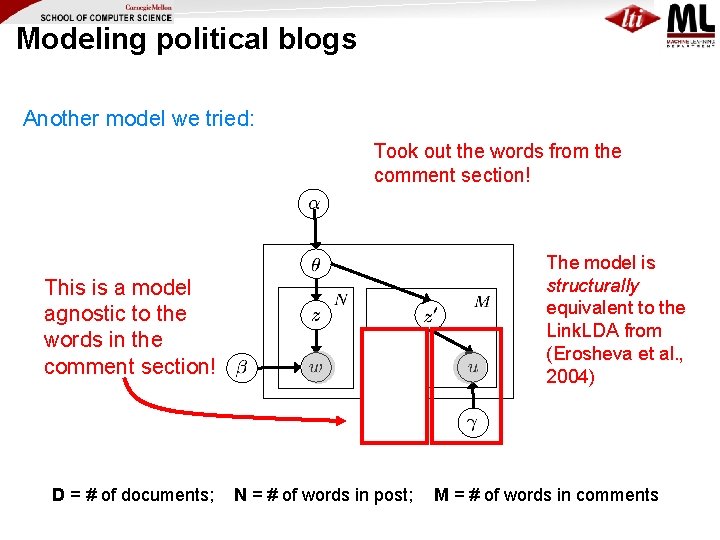

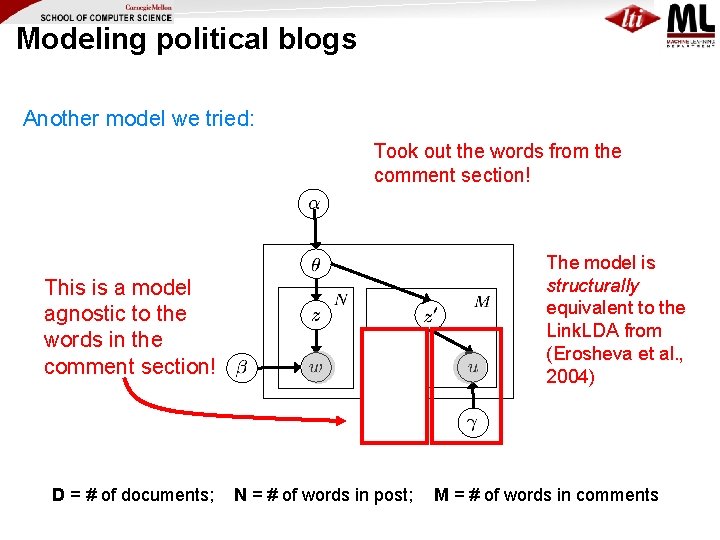

Modeling political blogs. Another model we tried: take out the words from the comment section! This model is agnostic to the words in the comment section, and is structurally equivalent to the LinkLDA model of (Erosheva et al., 2004). ($D$ = # of documents; $N$ = # of words in post; $M$ = # of words in comments)

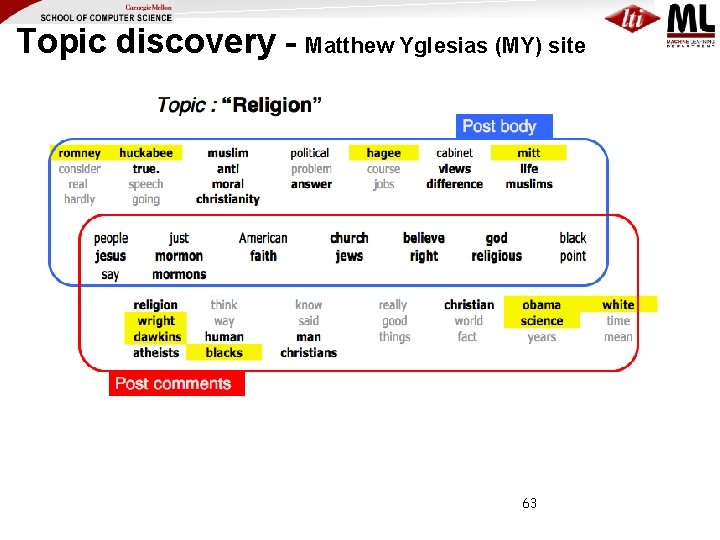

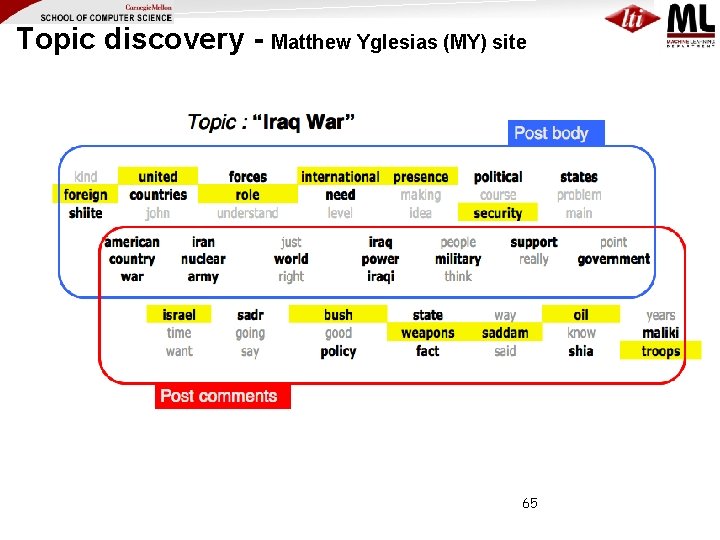

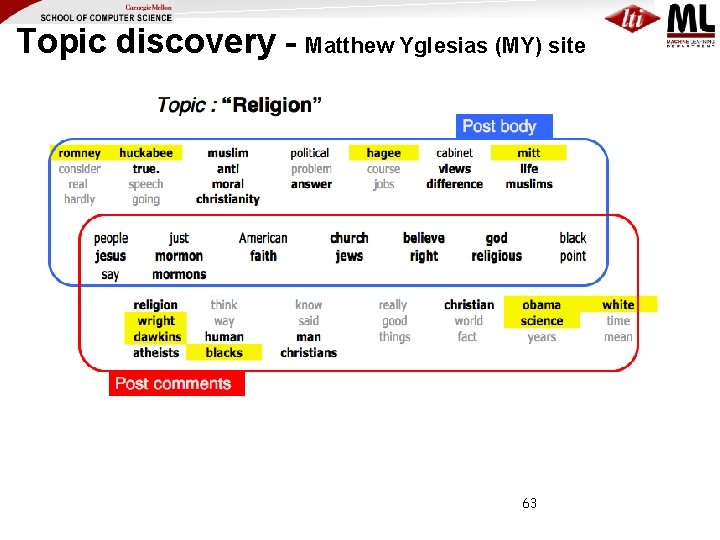

Topic discovery: Matthew Yglesias (MY) site

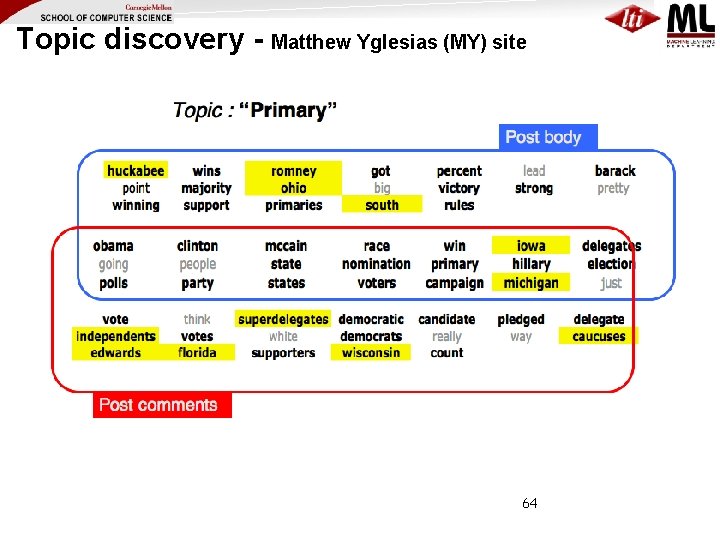

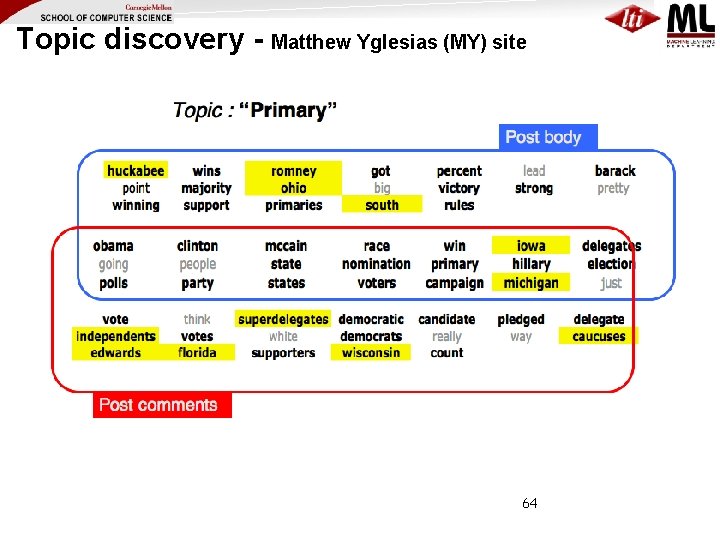

Topic discovery: Matthew Yglesias (MY) site

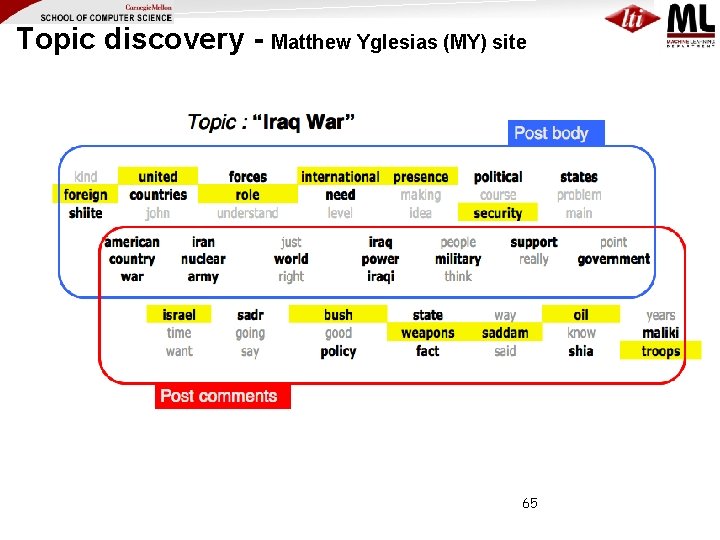

Topic discovery: Matthew Yglesias (MY) site

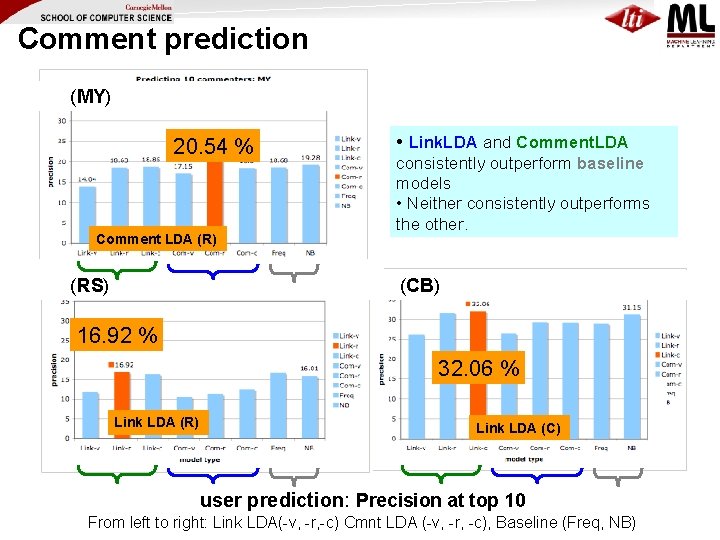

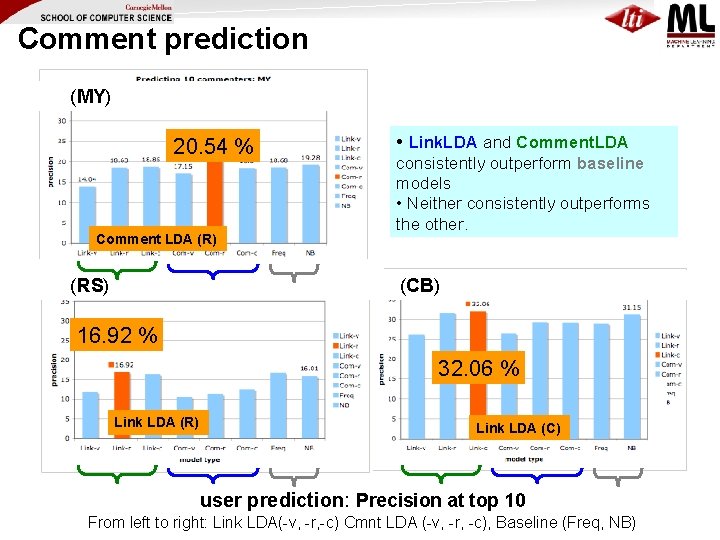

Comment prediction (MY): user prediction, precision at top 10
- LinkLDA and CommentLDA consistently outperform the baseline models
- Neither consistently outperforms the other
- Figure, from left to right: LinkLDA (-v, -r, -c), CommentLDA (-v, -r, -c), baselines (Freq, NB); values shown include 20.54%, 16.92%, and 32.06% (variant labels R, RS, CB, C)
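User prediction can be scored as precision at the top $k$ ranked users. A sketch under our own assumptions: users are ranked by $\sum_z \theta_{dz}\gamma_{zu}$, a plausible scoring rule for a model that generates user IDs from topics; whether Yano et al. rank users exactly this way is not stated on the slides.

```python
def score_users(theta_d, gamma_users):
    """Score each user id u by sum_z theta_d[z] * gamma_users[z][u]."""
    n_users = len(gamma_users[0])
    return {u: sum(theta_d[z] * gamma_users[z][u] for z in range(len(theta_d)))
            for u in range(n_users)}

def precision_at_k(scores, actual_users, k=10):
    """Fraction of the k highest-scoring users who actually commented."""
    top = sorted(scores, key=scores.get, reverse=True)[:k]
    return sum(1 for u in top if u in actual_users) / k

scores = {"a": 0.5, "b": 0.3, "c": 0.2}
print(precision_at_k(scores, {"a", "c"}, k=2))  # 0.5
```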

From Episodes to Sagas: Temporally Clustering News Via Social-Media Commentary. Ramnath Balasubramanyan, Frank Lin, Matthew Hurst, Noah Smith

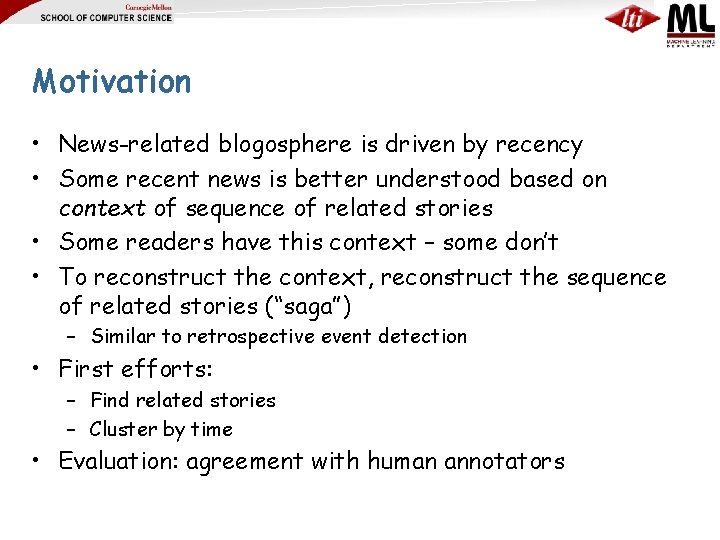

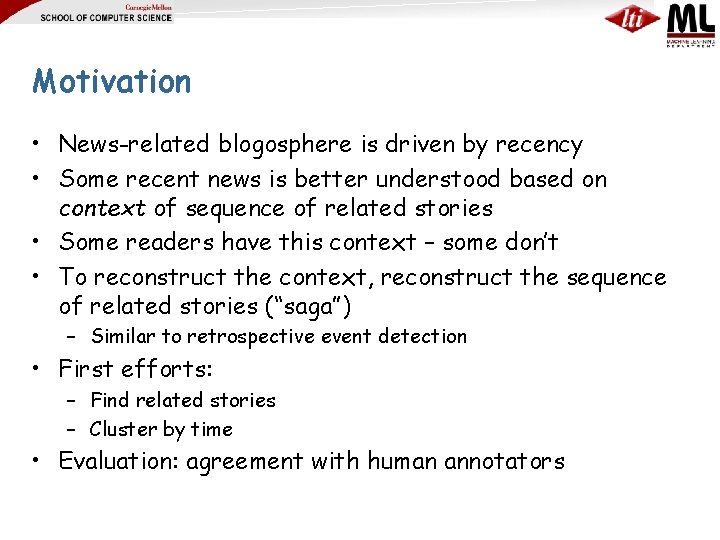

Motivation
- The news-related blogosphere is driven by recency
- Some recent news is better understood in the context of a sequence of related stories
- Some readers have this context; some don't
- To reconstruct the context, reconstruct the sequence of related stories (a “saga”), similar to retrospective event detection
- First efforts: find related stories, then cluster them by time
- Evaluation: agreement with human annotators

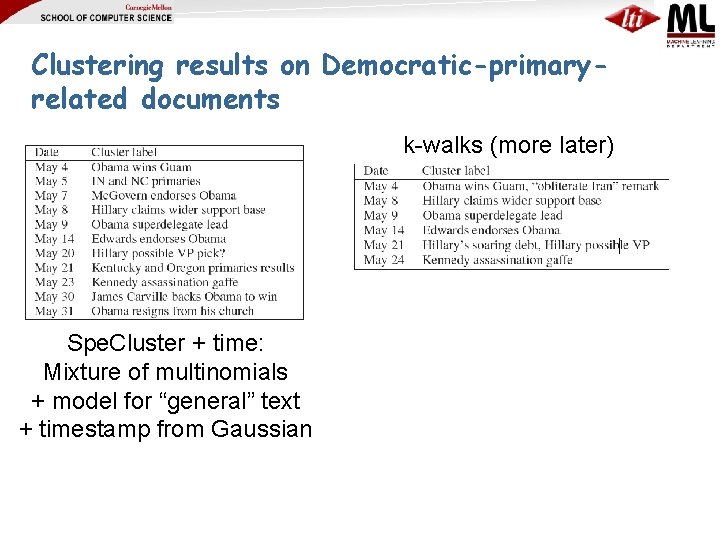

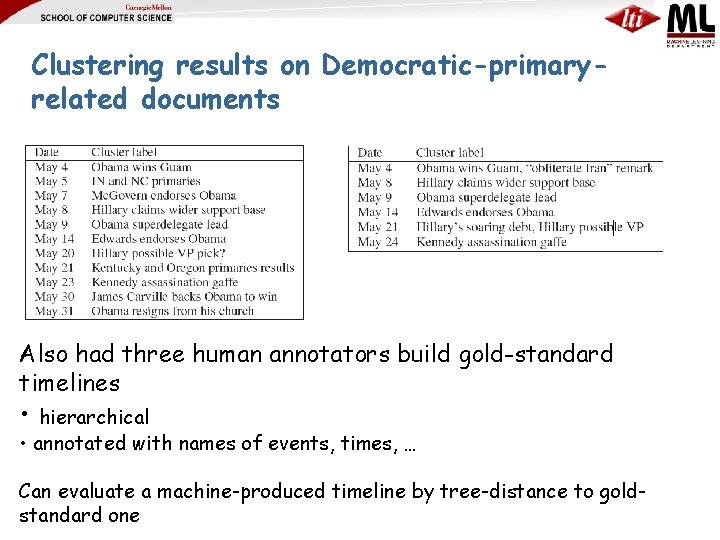

Clustering results on Democratic-primary-related documents
- k-walks (more later)
- SpeCluster + time: mixture of multinomials + model for “general” text + timestamp from a Gaussian
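To see how a timestamp term changes cluster assignments, here is a sketch of the posterior over clusters for one document. It is an illustration under our own assumptions, not SpeCluster's actual formulation: each word is mixed with a shared “general” distribution using a hypothetical weight `lam`, and each cluster has its own Gaussian over timestamps.

```python
import math

def log_gaussian(t, mu, sigma):
    """log N(t; mu, sigma^2)."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - (t - mu) ** 2 / (2 * sigma ** 2)

def cluster_posterior(doc, t, weights, word_probs, general_probs, lam, mus, sigmas):
    """P(cluster k | words, timestamp) for one document.

    Each word comes from the general-text model with probability lam,
    otherwise from cluster k's multinomial; the timestamp t ~ N(mu_k, sigma_k^2).
    """
    scores = []
    for k in range(len(weights)):
        s = math.log(weights[k]) + log_gaussian(t, mus[k], sigmas[k])
        for w in doc:
            s += math.log(lam * general_probs[w] + (1 - lam) * word_probs[k][w])
        scores.append(s)
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Same word distributions in both clusters, so only the timestamp separates them.
post = cluster_posterior([0, 1], 1.0, [0.5, 0.5], [[0.5, 0.5], [0.5, 0.5]],
                         [0.5, 0.5], 0.3, [0.0, 10.0], [1.0, 1.0])
print([round(p, 3) for p in post])
```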

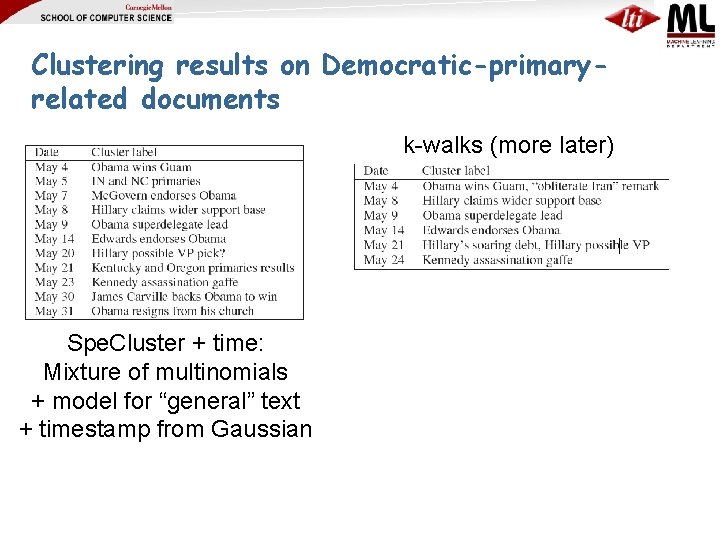

Clustering results on Democratic-primary-related documents
- We also had three human annotators build gold-standard timelines, which are:
  - hierarchical
  - annotated with names of events, times, …
- A machine-produced timeline can be evaluated by its tree distance to a gold-standard one

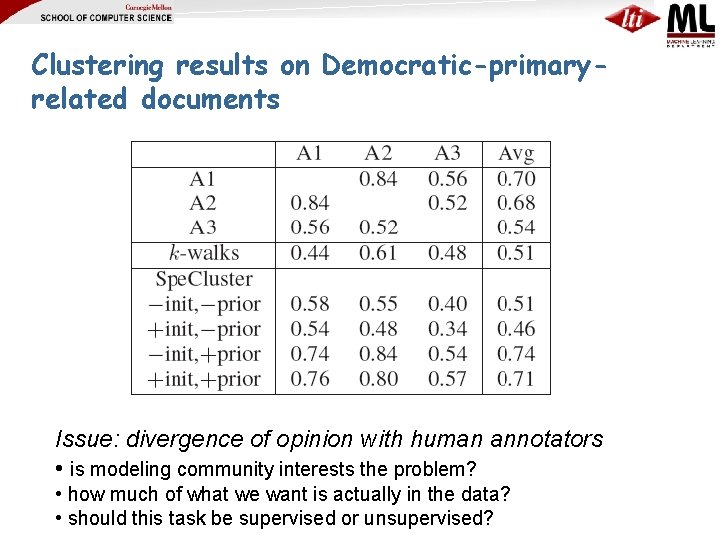

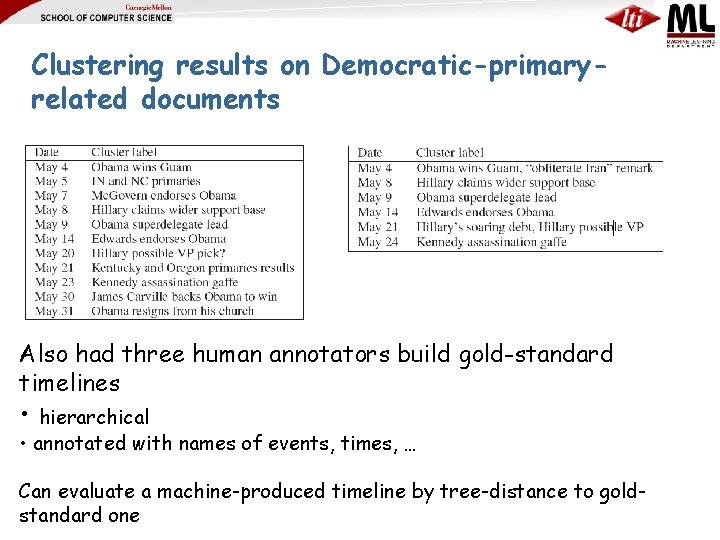

Clustering results on Democratic-primary-related documents
- Issue: divergence of opinion with the human annotators
  - Is modeling community interests the problem?
  - How much of what we want is actually in the data?
  - Should this task be supervised or unsupervised?

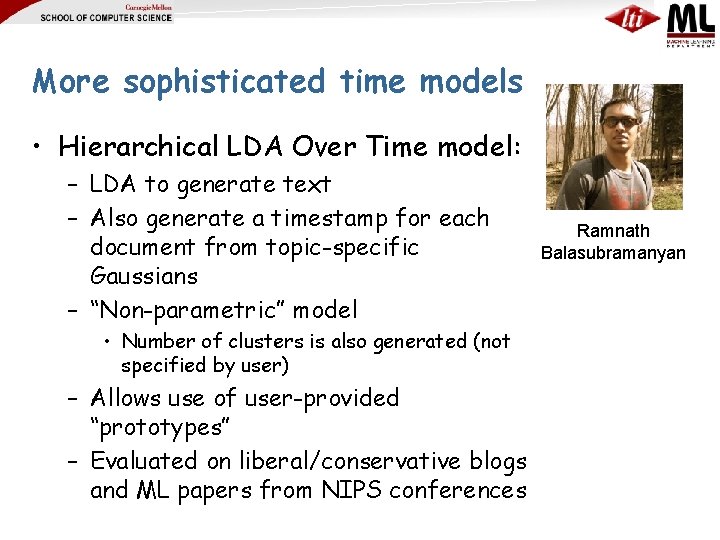

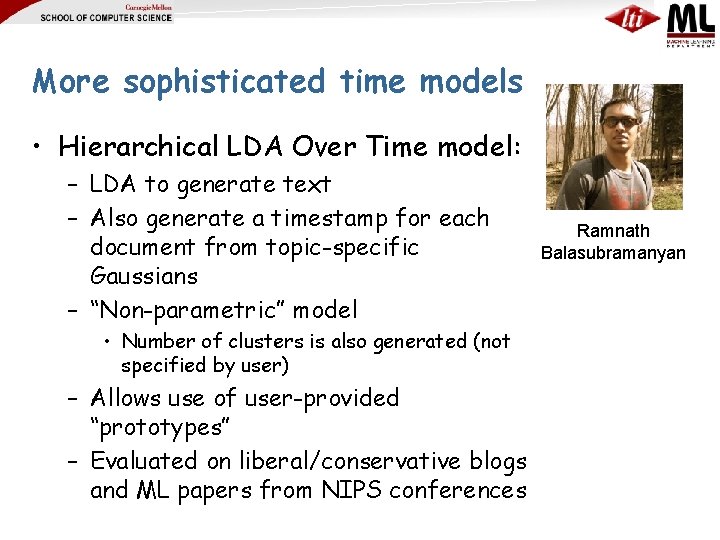

More sophisticated time models (Ramnath Balasubramanyan)
- Hierarchical LDA Over Time model:
  - LDA to generate text
  - Also generate a timestamp for each document from topic-specific Gaussians
  - “Non-parametric” model: the number of clusters is also generated (not specified by the user)
  - Allows use of user-provided “prototypes”
  - Evaluated on liberal/conservative blogs and on ML papers from NIPS conferences

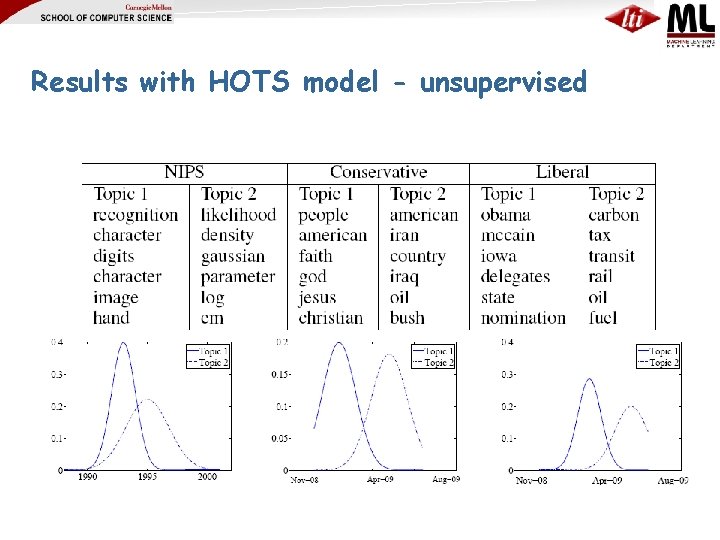

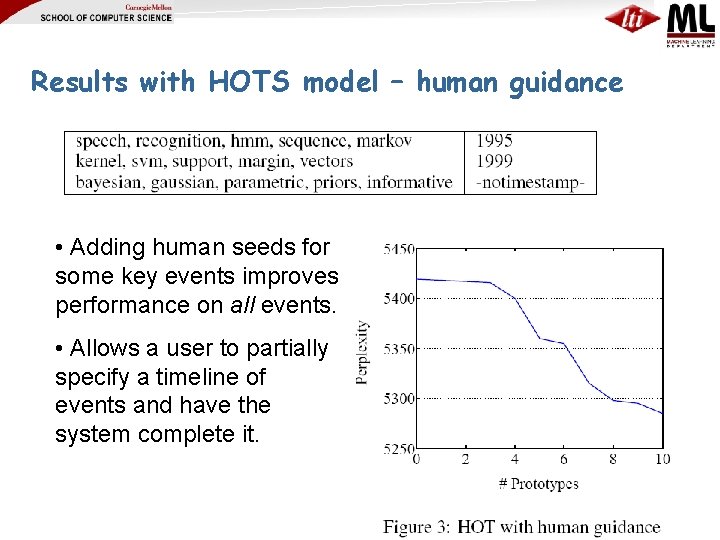

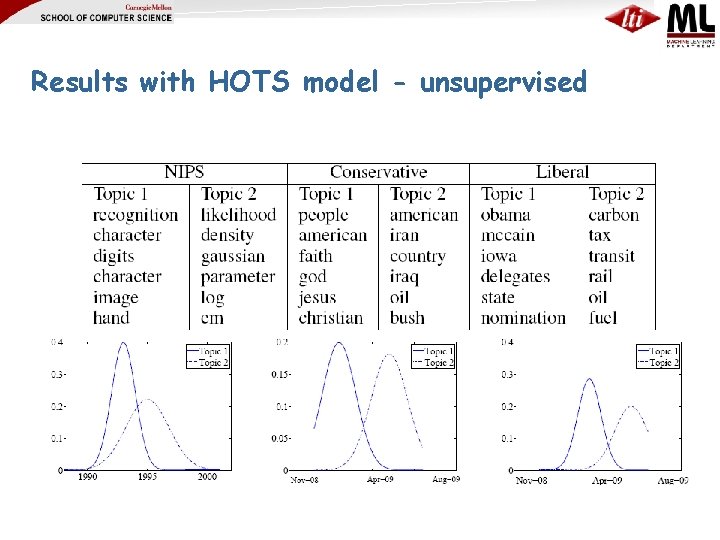

Results with HOTS model - unsupervised

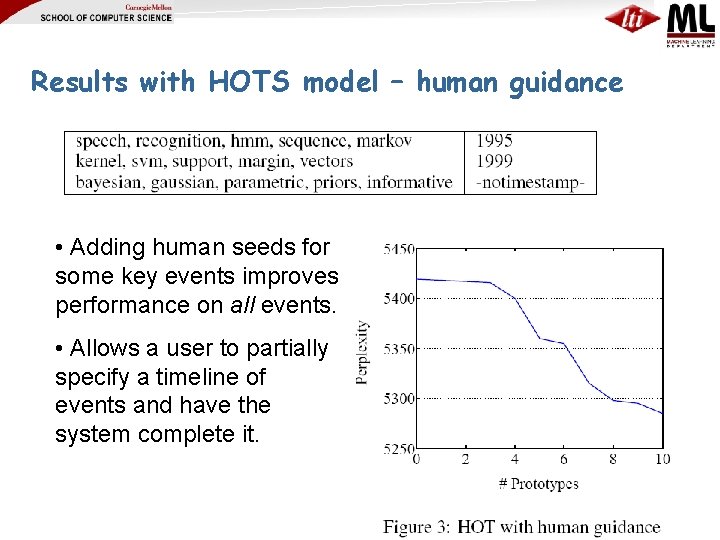

Results with HOTS model: human guidance
- Adding human seeds for some key events improves performance on all events
- This allows a user to partially specify a timeline of events and have the system complete it

Social media text, goals of analysis:
- Very diverse
- Evaluation is difficult, and must be revisited often as goals evolve
- Often, “understanding” social text requires understanding a community

Comments:
- Probabilistic models can model many aspects of social text:
  - community (links, comments)
  - time
- Evaluation:
  - introspective and qualitative, on communities we understand (scientific communities)
  - quantitative, on predictive tasks (link prediction, user prediction, …)
  - against a “gold-standard visualization” (sagas)