Statistical Evaluation of Data (Chapter 15)

- Slides: 52


Descriptive / inferential
• Descriptive statistics are methods that help researchers organize, summarize, and simplify the results obtained from research studies.
• Inferential statistics are methods that use the results obtained from samples to help make generalizations about populations.

Statistic / parameter
• A summary value that describes a sample is called a statistic (e.g., M = 25, s = 2).
• A summary value that describes a population is called a parameter (e.g., µ = 25, σ = 2).

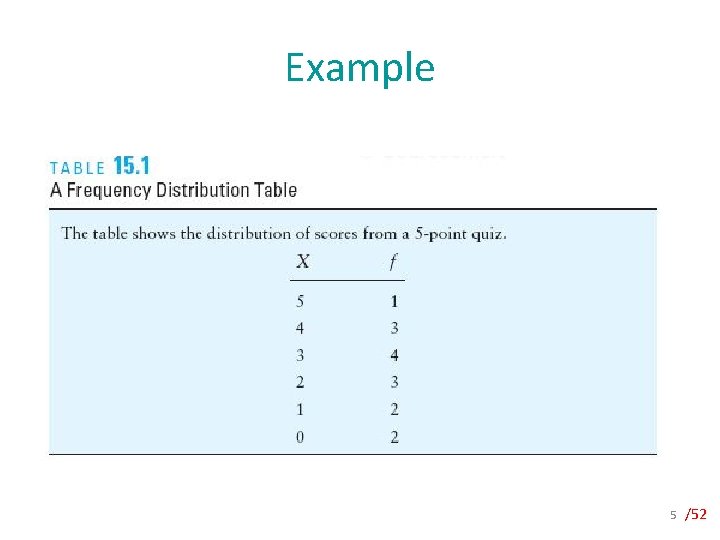

Frequency Distributions
One method of simplifying and organizing a set of scores is to group them into an organized display that shows the entire set.
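As a minimal sketch, a frequency distribution table can be built by counting how many times each score occurs and listing the scores from highest to lowest. The scores below are hypothetical, and `frequency_distribution` is an illustrative helper name, not part of any library.

```python
from collections import Counter

def frequency_distribution(scores):
    """Return (score, frequency) pairs ordered from the highest score to the lowest."""
    counts = Counter(scores)                  # score -> number of occurrences
    return sorted(counts.items(), reverse=True)

# Hypothetical quiz scores, used only for illustration.
scores = [8, 9, 8, 7, 10, 9, 6, 4, 9, 8, 7, 8, 10, 9, 8, 6, 9, 7, 8, 8]

print(" X | f")
for score, freq in frequency_distribution(scores):
    print(f"{score:>2} | {freq}")
```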

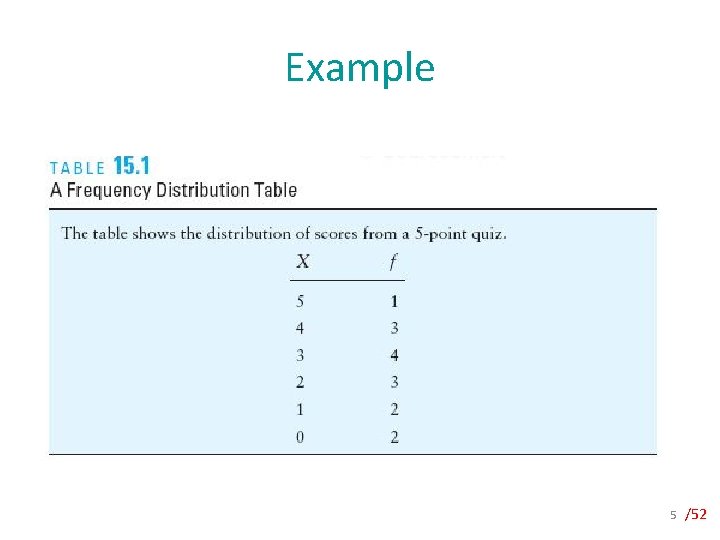

Example

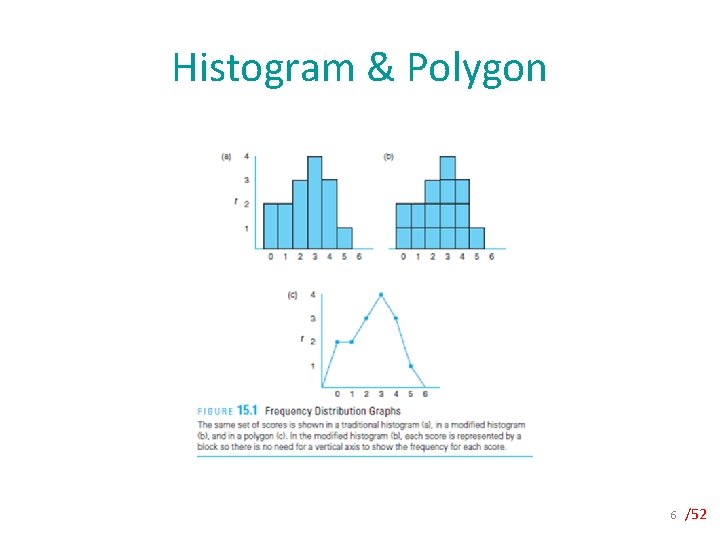

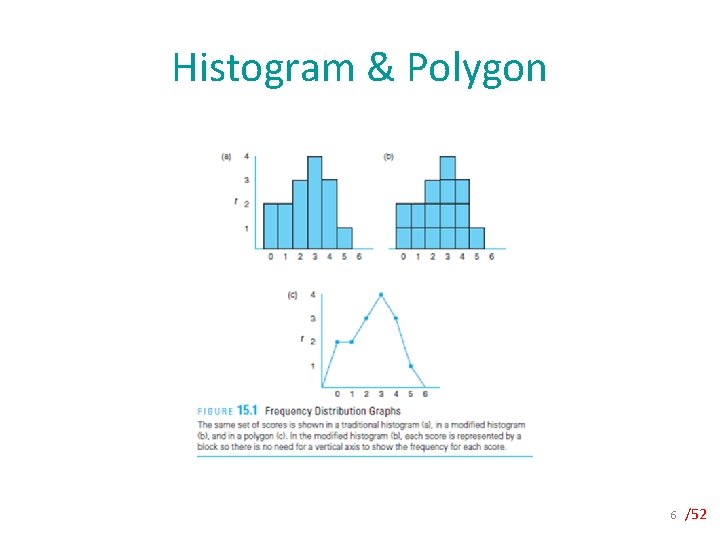

Histogram & Polygon

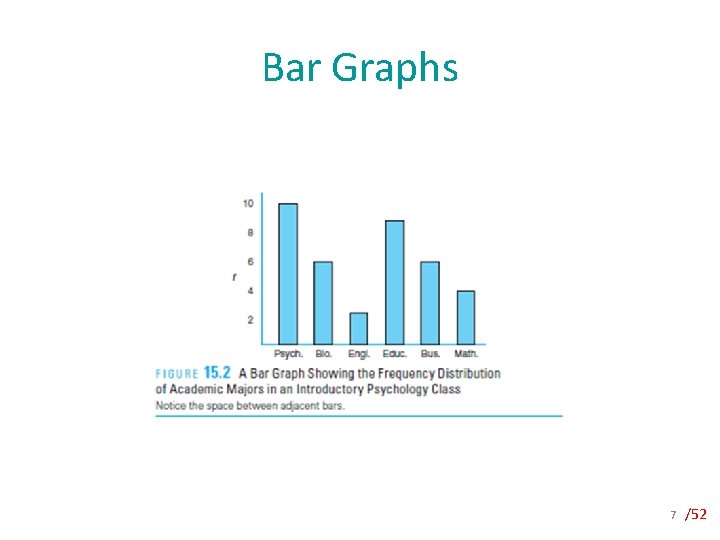

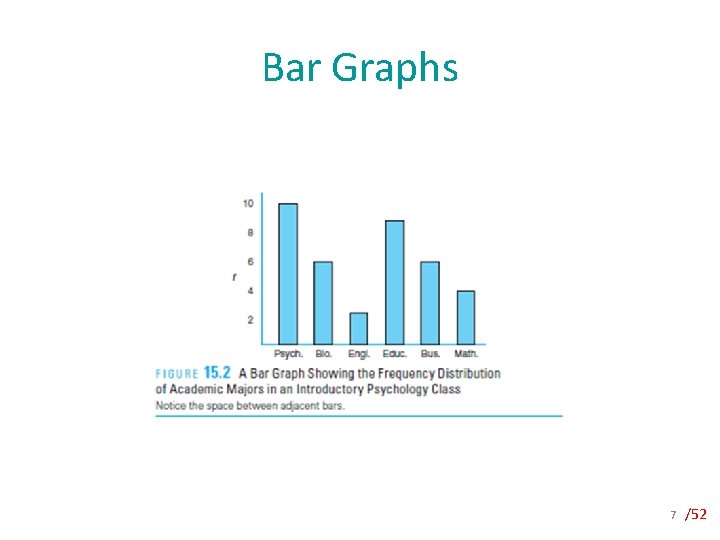

Bar Graphs

Central tendency
The goal of central tendency is to identify the value that is most typical or most representative of the entire group.

Central tendency
• The mean is the arithmetic average.
• The median measures central tendency by identifying the score that divides the distribution in half.
• The mode is the most frequently occurring score in the distribution.
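The three measures can be checked with Python's standard `statistics` module. This sketch reuses the ten scores from the variance example in this chapter (10, 7, 9, 8, 7, 6, 5, 4, 3, 1). Note that `statistics.mode` returns a single most common value, so a distribution with several equally common scores would need `statistics.multimode` instead.

```python
import statistics

scores = [10, 7, 9, 8, 7, 6, 5, 4, 3, 1]

mean = statistics.mean(scores)      # arithmetic average: 60 / 10
median = statistics.median(scores)  # midpoint of the sorted scores (between 6 and 7 here)
mode = statistics.mode(scores)      # most frequently occurring score

print(mean, median, mode)
```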

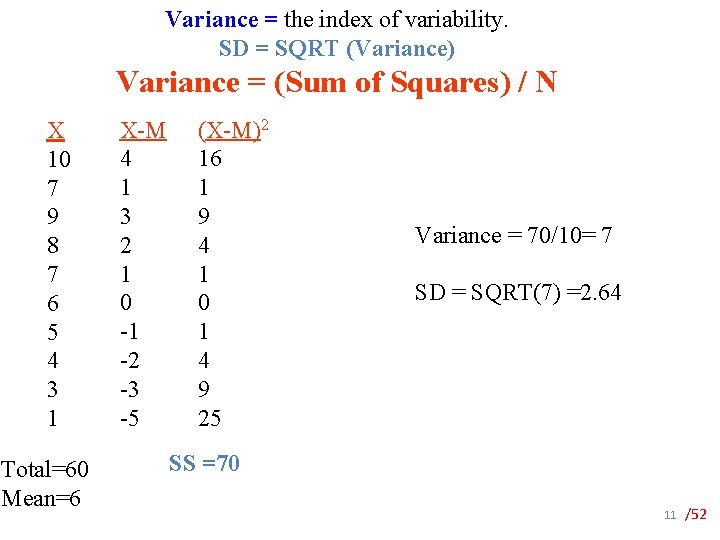

Variability is a measure of the spread of scores in a distribution.
1. Range: the difference between the maximum and minimum scores.
2. Standard deviation: describes the standard, or typical, distance of scores from the mean (it is the square root of the variance).
3. Variance: measures variability by computing the average squared distance from the mean.

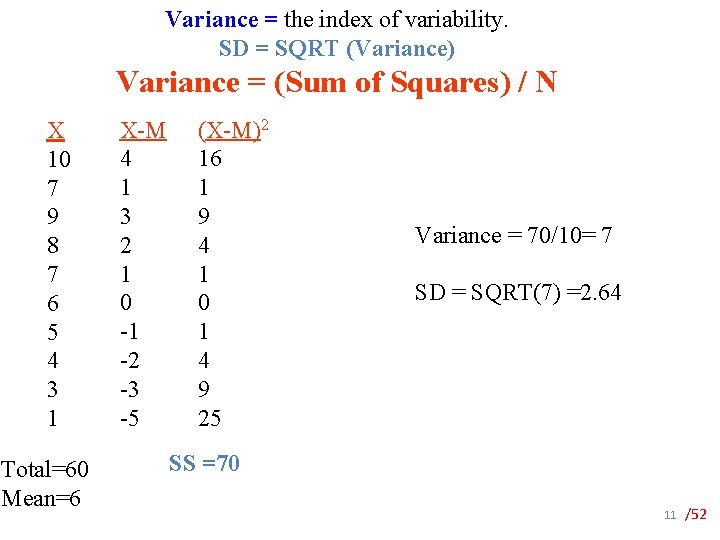

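Variance is the index of variability; the standard deviation (SD) is its square root.
$\text{Variance} = SS/N$, where $SS = \sum (X - M)^2$ is the sum of squares, and $SD = \sqrt{\text{Variance}}$.

| X | X - M | (X - M)² |
|---|---|---|
| 10 | 4 | 16 |
| 7 | 1 | 1 |
| 9 | 3 | 9 |
| 8 | 2 | 4 |
| 7 | 1 | 1 |
| 6 | 0 | 0 |
| 5 | -1 | 1 |
| 4 | -2 | 4 |
| 3 | -3 | 9 |
| 1 | -5 | 25 |
| Total = 60 (M = 6) | | SS = 70 |

Variance = 70/10 = 7; SD = √7 ≈ 2.65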
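The worked table can be reproduced in a few lines. This is a minimal sketch: it divides SS by N, as the slide does. When a sample is used to estimate a population's variance, the usual formula divides by n - 1 instead, which the `sample_variance` line shows for comparison.

```python
import math

scores = [10, 7, 9, 8, 7, 6, 5, 4, 3, 1]

n = len(scores)
mean = sum(scores) / n                     # M = 60 / 10 = 6
deviations = [x - mean for x in scores]    # X - M for each score
ss = sum(d ** 2 for d in deviations)       # SS = sum of (X - M)^2 = 70
variance = ss / n                          # 70 / 10 = 7 (divide by N, as on the slide)
sd = math.sqrt(variance)                   # square root of 7, about 2.65

sample_variance = ss / (n - 1)             # n - 1 version: 70 / 9

print(f"SS = {ss}, variance = {variance}, SD = {sd:.2f}, sample variance = {sample_variance:.2f}")
```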

Non-numerical Data
For non-numerical data, report the proportion or percentage in each category. For example,
• 43% prefer the Democratic candidate,
• 28% prefer the Republican candidate,
• 29% are undecided.
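Converting category counts to percentages takes one division per category. The counts below are hypothetical (200 respondents), chosen only so that the result matches the percentages on the slide; `percentages` is an illustrative helper.

```python
def percentages(counts):
    """Convert category counts into percentages of the total."""
    total = sum(counts.values())
    return {category: 100 * n / total for category, n in counts.items()}

# Hypothetical poll of 200 respondents.
poll = {"Democratic": 86, "Republican": 56, "Undecided": 58}

for category, pct in percentages(poll).items():
    print(f"{category}: {pct:.0f}%")
```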

Correlations
A correlation is a statistical value that measures and describes the direction and degree of relationship between two variables.
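For two quantitative variables, the Pearson correlation is the sum of products of deviations (SP) divided by the square root of the product of the two sums of squares: $r = SP/\sqrt{SS_X SS_Y}$. The sign of r gives the direction and its magnitude gives the degree. A minimal sketch with hypothetical data:

```python
import math

def pearson_r(x, y):
    """Pearson correlation computed from deviation scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sp = sum((a - mx) * (b - my) for a, b in zip(x, y))  # sum of products of deviations
    ss_x = sum((a - mx) ** 2 for a in x)                 # sum of squares for X
    ss_y = sum((b - my) ** 2 for b in y)                 # sum of squares for Y
    return sp / math.sqrt(ss_x * ss_y)

# Hypothetical paired scores.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
print(round(pearson_r(x, y), 3))          # positive direction
print(pearson_r([1, 2, 3], [3, 2, 1]))    # perfect negative relationship
```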

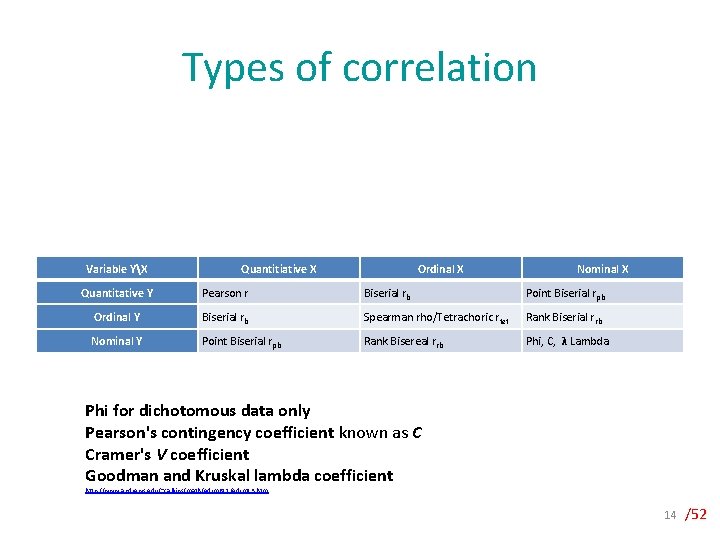

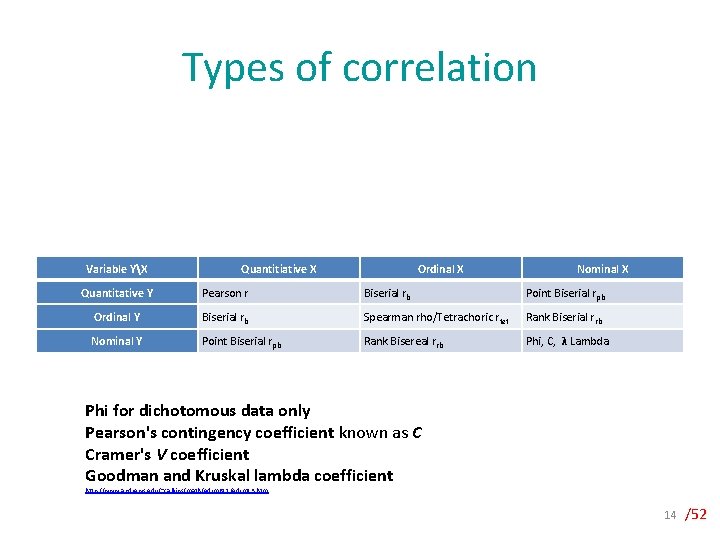

Types of correlation

| Variable | Quantitative X | Ordinal X | Nominal X |
|---|---|---|---|
| Quantitative Y | Pearson r | Biserial $r_b$ | Point-biserial $r_{pb}$ |
| Ordinal Y | Biserial $r_b$ | Spearman rho / Tetrachoric $r_{tet}$ | Rank-biserial $r_{rb}$ |
| Nominal Y | Point-biserial $r_{pb}$ | Rank-biserial $r_{rb}$ | Phi, C, λ (lambda) |

• Phi: for dichotomous data only
• C: Pearson's contingency coefficient
• Cramér's V coefficient
• λ: Goodman and Kruskal's lambda coefficient

Source: http://www.andrews.edu/~calkins/math/edrm611/edrm13.htm
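Of the coefficients in the table, the Spearman correlation is the easiest to illustrate: it is the Pearson correlation computed on ranks, with tied scores given the average of the ranks they occupy. A minimal sketch; the helper names are illustrative and the data are hypothetical.

```python
import math

def ranks(values):
    """Rank scores from 1 to n; tied scores share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1                          # extend the run of tied scores
        avg_rank = (i + j) / 2 + 1          # average of 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            result[order[pos]] = avg_rank
        i = j + 1
    return result

def pearson_r(x, y):
    """Pearson correlation computed from deviation scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sp = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sp / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def spearman_rho(x, y):
    """Spearman correlation: Pearson r applied to the ranks."""
    return pearson_r(ranks(x), ranks(y))

x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]     # hypothetical: consistent (monotonic) but not linear
print(f"Pearson r = {pearson_r(x, y):.3f}, Spearman rho = {spearman_rho(x, y):.3f}")
```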

Regression

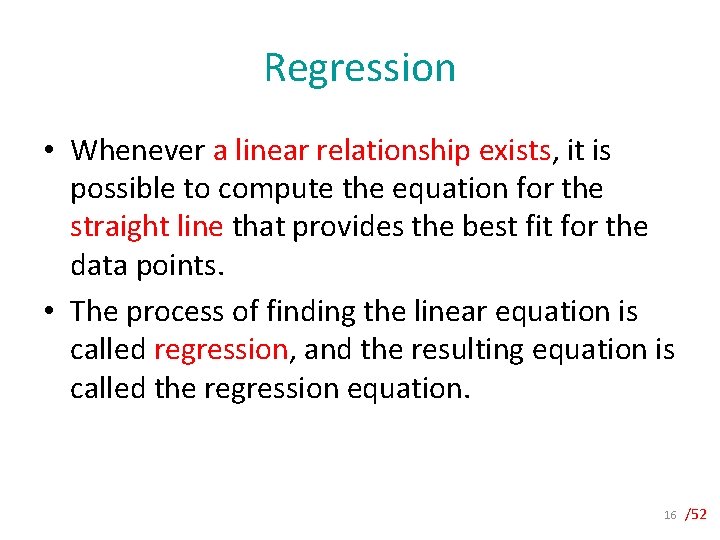

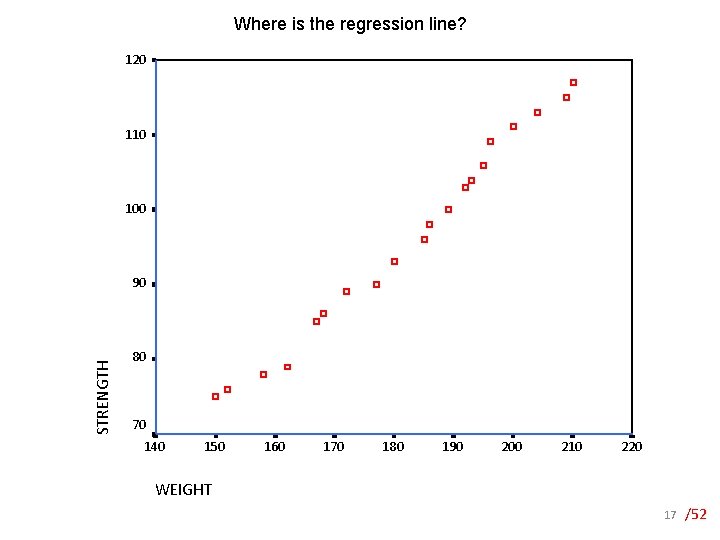

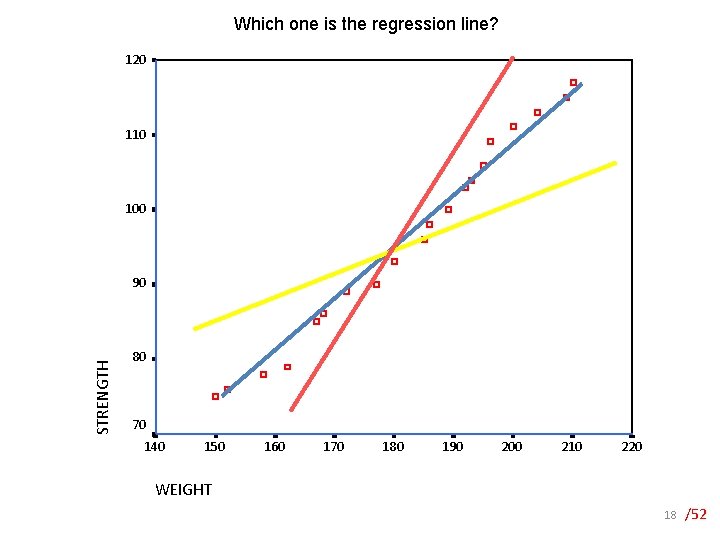

Regression
• Whenever a linear relationship exists, it is possible to compute the equation for the straight line that provides the best fit for the data points.
• The process of finding the linear equation is called regression, and the resulting equation is called the regression equation.

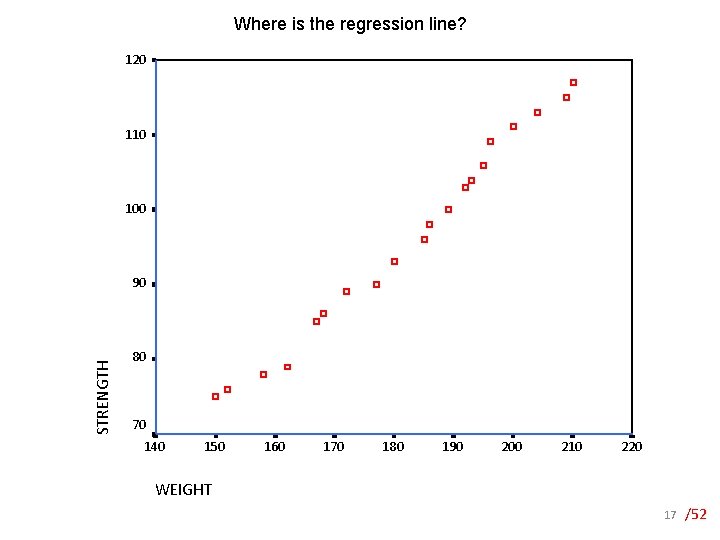

Where is the regression line?
(Scatter plot: STRENGTH on the Y-axis, WEIGHT on the X-axis)

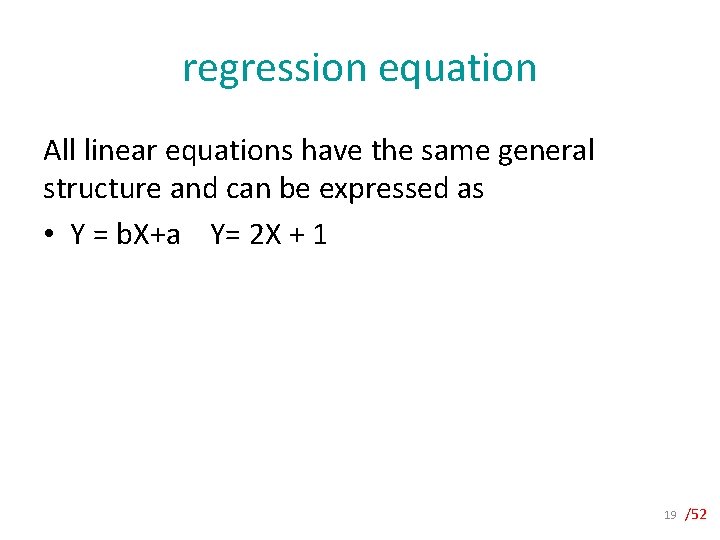

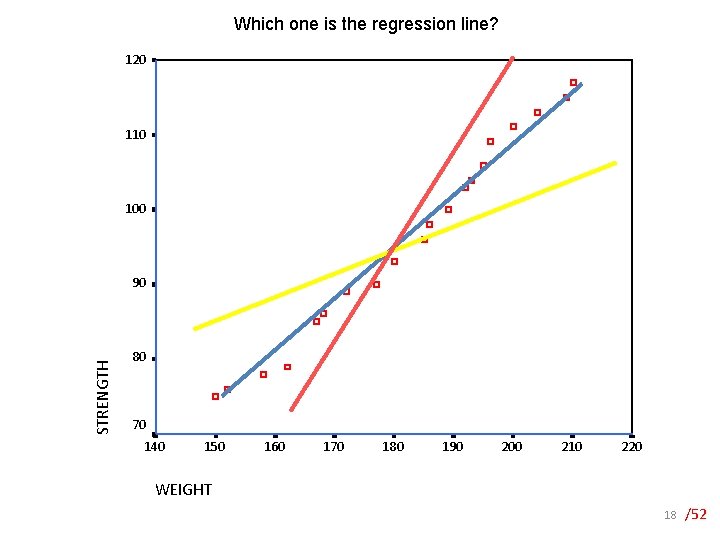

Which one is the regression line?
(Scatter plot: STRENGTH on the Y-axis, WEIGHT on the X-axis)

Regression equation
All linear equations have the same general structure and can be expressed as
• $Y = bX + a$, where b is the slope and a is the Y-intercept
• For example, $Y = 2X + 1$
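The best-fitting line is usually found by least squares, which gives the slope $b = SP/SS_X$ and the intercept $a = M_Y - bM_X$. A minimal sketch; the points below are hypothetical and lie exactly on $Y = 2X + 1$, so the fit recovers b = 2 and a = 1.

```python
def regression_line(x, y):
    """Least-squares slope b and intercept a for the line Y = bX + a."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sp = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))   # sum of products
    ss_x = sum((xi - mx) ** 2 for xi in x)                    # sum of squares for X
    b = sp / ss_x
    a = my - b * mx
    return b, a

x = [0, 1, 2, 3]
y = [1, 3, 5, 7]          # hypothetical points on Y = 2X + 1
b, a = regression_line(x, y)
print(f"Y = {b}X + {a}")
print("Predicted Y for X = 10:", b * 10 + a)
```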

Standardized form
• Often the regression equation is reported in standardized form, which means that the original X and Y scores were standardized, or transformed into z-scores, before the equation was computed: $z_Y = \beta z_X$
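With a single predictor, the standardized slope β equals the Pearson correlation between X and Y, and the intercept drops out because z-scores have a mean of 0. A minimal sketch that standardizes hypothetical data and then fits the line. It uses the population SD (dividing by n) for the z-scores; any consistent choice for both variables gives the same β.

```python
import math

def z_scores(values):
    """Transform raw scores into z-scores (population SD, dividing by n)."""
    n = len(values)
    m = sum(values) / n
    sd = math.sqrt(sum((v - m) ** 2 for v in values) / n)
    return [(v - m) / sd for v in values]

def slope(x, y):
    """Least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sp = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sp / sum((xi - mx) ** 2 for xi in x)

x = [1, 2, 3, 4, 5]      # hypothetical data
y = [2, 4, 5, 4, 5]
beta = slope(z_scores(x), z_scores(y))
print(f"z_Y = {beta:.3f} z_X")   # beta equals Pearson r for these data
```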

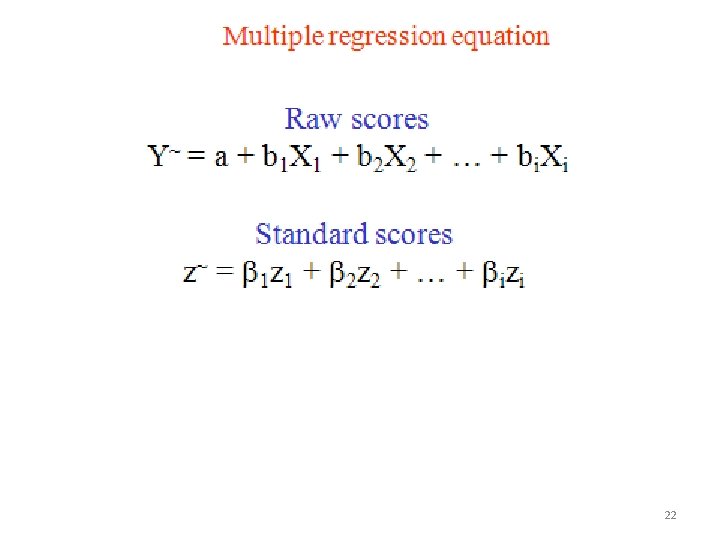

Multiple Regression

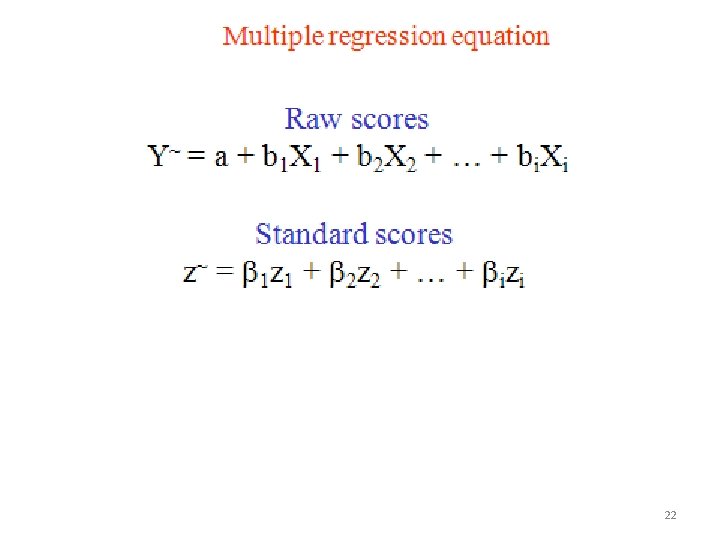


INFERENTIAL STATISTICS

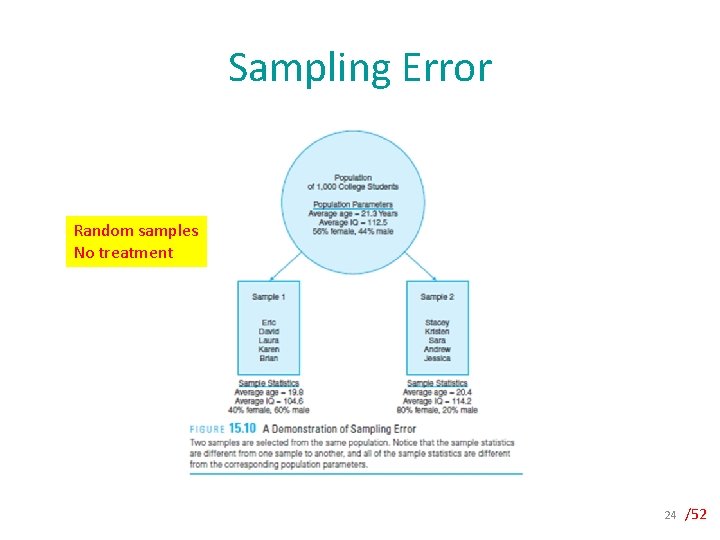

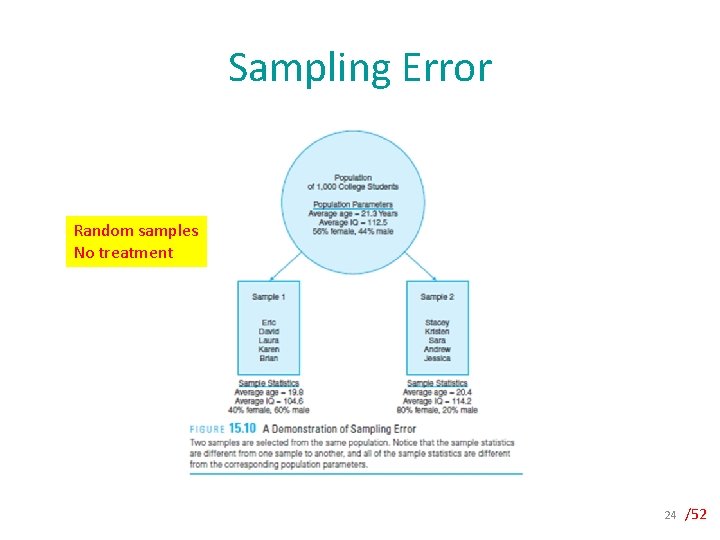

Sampling Error
(Figure: random samples, no treatment)

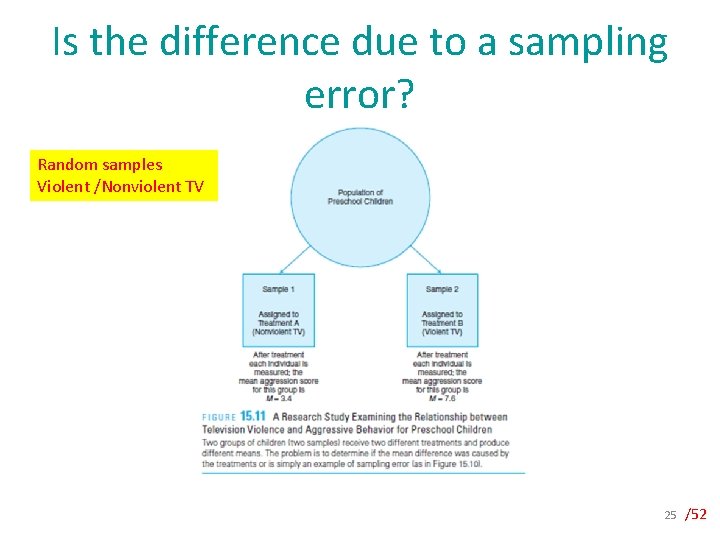

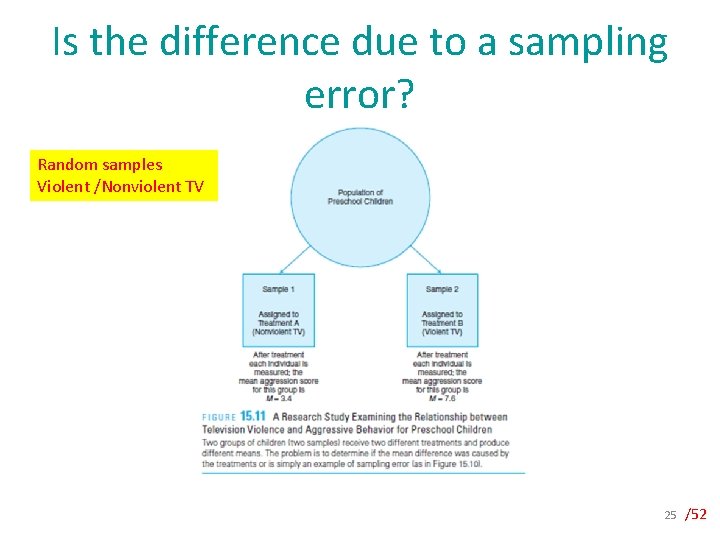

Is the difference due to a sampling error?
(Figure: random samples; violent vs. nonviolent TV)

Is the difference due to a sampling error?
• Sampling error is the naturally occurring difference between a sample statistic and the corresponding population parameter.
• The problem for the researcher is to decide whether the 4-point difference was caused by the treatments (the different television programs) or is just a case of sampling error.
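Sampling error can be seen directly in a simulation: two random samples drawn from the same population, with no treatment at all, still have different means. The normal population (µ = 50, σ = 10) and sample size (n = 25) below are hypothetical; the spread of many sample means comes out close to σ/√n.

```python
import random
import statistics

random.seed(1)                  # fixed seed so the run is repeatable
MU, SIGMA, N = 50, 10, 25       # hypothetical population and sample size

def sample_mean(n=N):
    """Mean of one random sample drawn from the hypothetical normal population."""
    return statistics.fmean(random.gauss(MU, SIGMA) for _ in range(n))

m1, m2 = sample_mean(), sample_mean()
print(f"Two untreated samples: M1 = {m1:.2f}, M2 = {m2:.2f}, difference = {m1 - m2:.2f}")

means = [sample_mean() for _ in range(2000)]
print(f"SD of 2000 sample means = {statistics.stdev(means):.2f}; sigma/sqrt(n) = {SIGMA / N ** 0.5:.2f}")
```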

Hypothesis testing
• A hypothesis test is a statistical procedure that uses sample data to evaluate the credibility of a hypothesis about a population.

5 elements of a hypothesis test
1. The Null Hypothesis: The null hypothesis is a statement about the population, or populations, being examined, and always says that there is no effect, no change, or no relationship.
2. The Sample Statistic: The data from the research study are used to compute the sample statistic.

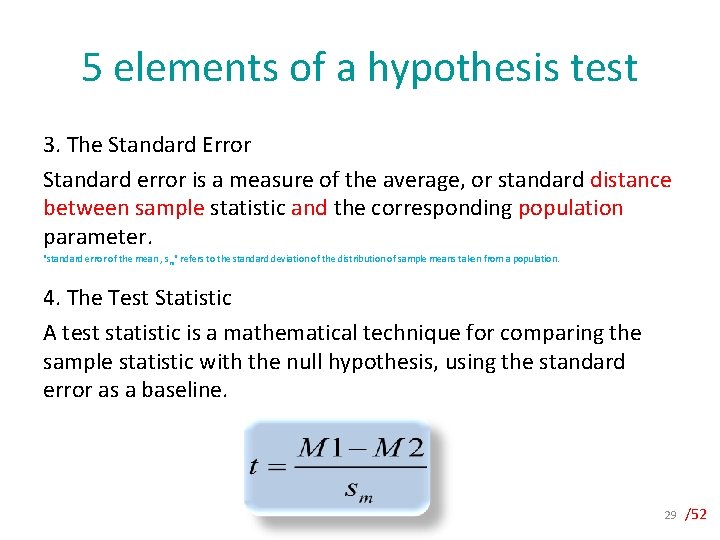

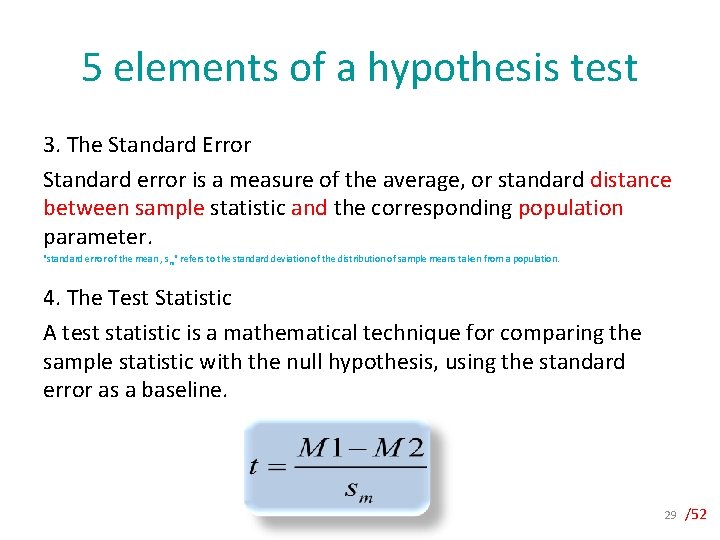

5 elements of a hypothesis test
3. The Standard Error: Standard error is a measure of the average, or standard, distance between a sample statistic and the corresponding population parameter. The standard error of the mean, $s_M$, refers to the standard deviation of the distribution of sample means taken from a population.
4. The Test Statistic: A test statistic is a mathematical technique for comparing the sample statistic with the null hypothesis, using the standard error as a baseline.
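For a single sample mean, the estimated standard error is $s_M = s/\sqrt{n}$, and a t statistic compares the sample mean with the value stated by the null hypothesis: $t = (M - \mu)/s_M$. A minimal sketch with hypothetical scores and a hypothetical null value of µ = 50:

```python
import math
import statistics

def one_sample_t(scores, mu_null):
    """Return the t statistic and the estimated standard error for one sample mean."""
    n = len(scores)
    m = statistics.fmean(scores)           # sample mean M
    s = statistics.stdev(scores)           # sample SD (n - 1 in the denominator)
    s_m = s / math.sqrt(n)                 # estimated standard error of the mean
    return (m - mu_null) / s_m, s_m

scores = [52, 55, 49, 58, 54, 51, 57, 56]  # hypothetical sample, M = 54
t, s_m = one_sample_t(scores, mu_null=50)  # hypothetical null hypothesis: mu = 50
print(f"s_M = {s_m:.2f}, t = {t:.2f}")
```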

5 elements of a hypothesis test
5. The Alpha Level (Level of Significance): The alpha level, or level of significance, for a hypothesis test is the maximum probability, set by the researcher, that a result judged significant was obtained simply by chance, that is, when the null hypothesis is actually true. A hypothesis test with an alpha level of .05, for example, demands that there be less than a 5% (.05) probability that the results occurred only by chance.

Reporting Results from a Hypothesis Test
• In the literature, significance levels are reported as p values. For example, a research paper may report a significant difference between two treatments with p < .05. The expression p < .05 simply means that there is less than a .05 probability that a result this large would occur by chance alone (that is, if the null hypothesis were true).
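The decision rule is simply p compared with α. As a minimal sketch, the code below assumes a z test (population σ known), for which the two-tailed p value can be computed with the complementary error function: $p = \operatorname{erfc}(|z|/\sqrt{2})$. The z values are hypothetical.

```python
import math

def two_tailed_p(z):
    """Two-tailed p value for a standard normal test statistic z."""
    return math.erfc(abs(z) / math.sqrt(2))

ALPHA = 0.05
for z in (1.5, 2.5, 3.0):          # hypothetical test statistics
    p = two_tailed_p(z)
    decision = "significant" if p < ALPHA else "not significant"
    print(f"z = {z}: p = {p:.3f} -> {decision} at alpha = {ALPHA}")
```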

Errors in Hypothesis Testing
If a researcher is misled by the results from the sample, it is likely that the researcher will reach an incorrect conclusion. Two kinds of errors can be made in hypothesis testing.

Type I Errors
• A Type I error occurs when a researcher finds evidence for a significant result when, in fact, there is no effect (no relationship) in the population.
• The error occurs because the researcher has, by chance, selected an extreme sample that appears to show the existence of an effect when there is none.
• The consequence of a Type I error is a false report. This is a serious mistake.
• Fortunately, the likelihood of a Type I error is small: it is limited by the alpha level, which is stated in the research report, so everyone who reads the report knows the maximum risk of this kind of mistake.
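The link between α and Type I errors can be checked by simulation: if the null hypothesis is true and the test uses α = .05, about 5% of samples still produce a "significant" result. This sketch assumes a two-tailed z test with known σ (critical value ±1.96) and a hypothetical population (µ = 100, σ = 15).

```python
import math
import random

random.seed(2)                        # fixed seed so the run is repeatable
MU, SIGMA, N = 100, 15, 30            # hypothetical population; the null hypothesis is true
Z_CRIT = 1.96                         # two-tailed critical z for alpha = .05

def rejects_null():
    """Draw one sample from the null population and run a two-tailed z test."""
    sample = [random.gauss(MU, SIGMA) for _ in range(N)]
    m = sum(sample) / N
    z = (m - MU) / (SIGMA / math.sqrt(N))
    return abs(z) > Z_CRIT

trials = 10_000
rate = sum(rejects_null() for _ in range(trials)) / trials
print(f"Proportion of Type I errors: {rate:.3f}")
```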

Type II error
• A Type II error occurs when sample data do not show evidence of a significant effect when, in fact, a real effect does exist in the population.
• This often occurs when the effect is so small that it does not show up in the sample.

Factors that Influence the Outcome of a Hypothesis Test
1. The Sample Size: A difference found with a large sample is more likely to be significant than the same result found with a small sample.
2. The Size of the Variance: When the variance is small, the data show a clear mean difference between the two treatments, so the result is more likely to be significant; a large variance can obscure the same mean difference.
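Both factors enter the t statistic through the standard error. The sketch below computes an independent-measures t from summary statistics with a pooled variance, holding a hypothetical 4-point mean difference fixed while changing either the sample size or the standard deviation.

```python
import math

def independent_t(m1, m2, s1, s2, n1, n2):
    """Independent-measures t from summary statistics, using the pooled variance."""
    df = (n1 - 1) + (n2 - 1)
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var / n1 + pooled_var / n2)   # estimated standard error of M1 - M2
    return (m1 - m2) / se

# The same hypothetical 4-point mean difference (54 vs. 50) under three conditions.
small_n = independent_t(54, 50, 8, 8, 10, 10)   # n = 10 per group, SD = 8
large_n = independent_t(54, 50, 8, 8, 40, 40)   # larger samples
low_var = independent_t(54, 50, 4, 4, 10, 10)   # smaller variance
print(f"small n: t = {small_n:.2f}; large n: t = {large_n:.2f}; small variance: t = {low_var:.2f}")
```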

Effect Size
• Knowing that a difference is significant is not enough, because even a very small effect can be significant when the sample is large. We also need to know the size of the effect.

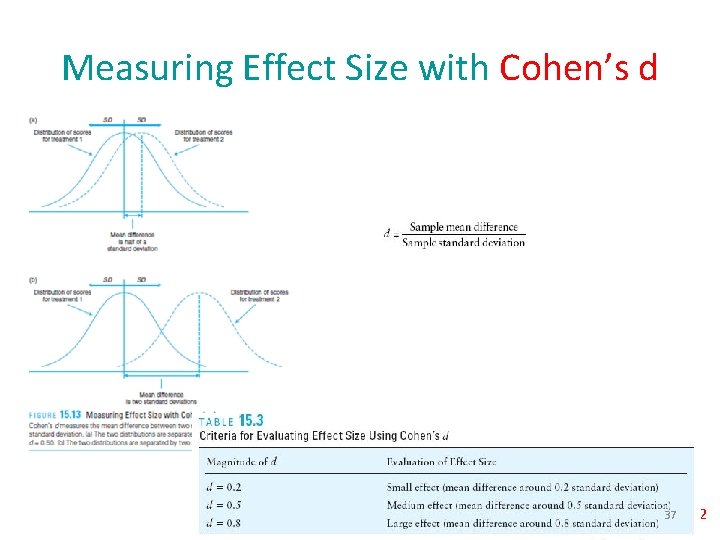

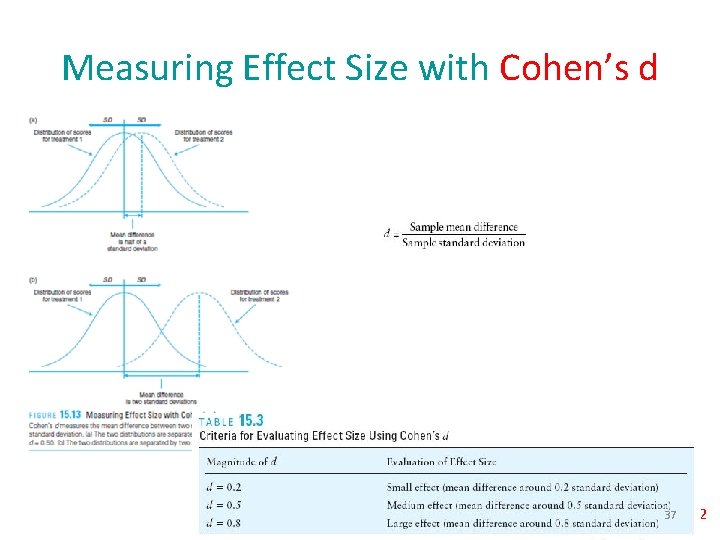

Measuring Effect Size with Cohen’s d
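Cohen's d expresses the mean difference in standard deviation units. This sketch assumes the two-group case with a pooled sample standard deviation as the denominator (the slide's own formula is in the figure, not the text). With hypothetical summary statistics, it shows that, unlike t, d does not change when only the sample size grows.

```python
import math

def cohens_d(m1, m2, s1, s2, n1, n2):
    """Estimated Cohen's d: mean difference divided by the pooled standard deviation."""
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / ((n1 - 1) + (n2 - 1))
    return (m1 - m2) / math.sqrt(pooled_var)

# Hypothetical summary statistics: same means and SDs, different sample sizes.
d_small = cohens_d(54, 50, 8, 8, 10, 10)
d_large = cohens_d(54, 50, 8, 8, 40, 40)
print(d_small, d_large)   # the 4-point difference is half a standard deviation
```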

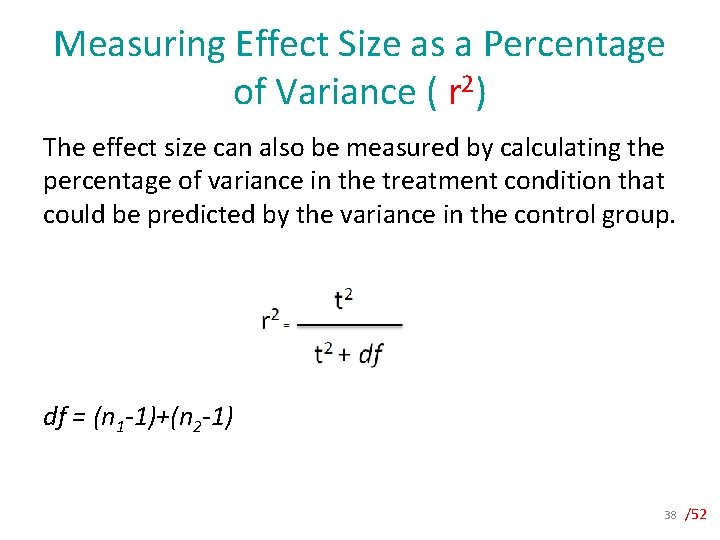

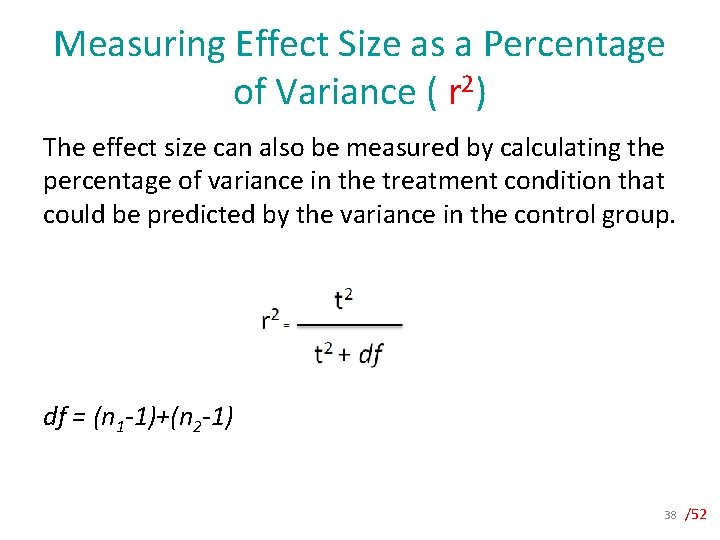

Measuring Effect Size as a Percentage of Variance ($r^2$)
The effect size can also be measured by calculating the percentage of variance in the scores that is accounted for (explained) by the treatment. For a t test, $r^2 = t^2/(t^2 + df)$, where $df = (n_1 - 1) + (n_2 - 1)$.
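The r² formula for a t test takes the t statistic and its degrees of freedom. A minimal sketch with hypothetical group sizes and a hypothetical t value:

```python
def r_squared(t, df):
    """Proportion of variance accounted for by the treatment, from t and df."""
    return t ** 2 / (t ** 2 + df)

n1, n2 = 10, 10                 # hypothetical group sizes
df = (n1 - 1) + (n2 - 1)        # 18
t = 2.0                         # hypothetical t statistic
print(f"r^2 = {r_squared(t, df):.3f} ({100 * r_squared(t, df):.1f}% of variance)")
```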

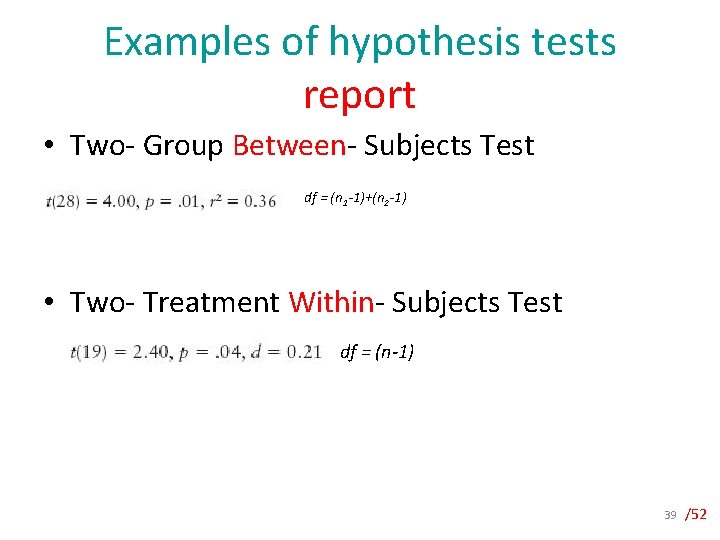

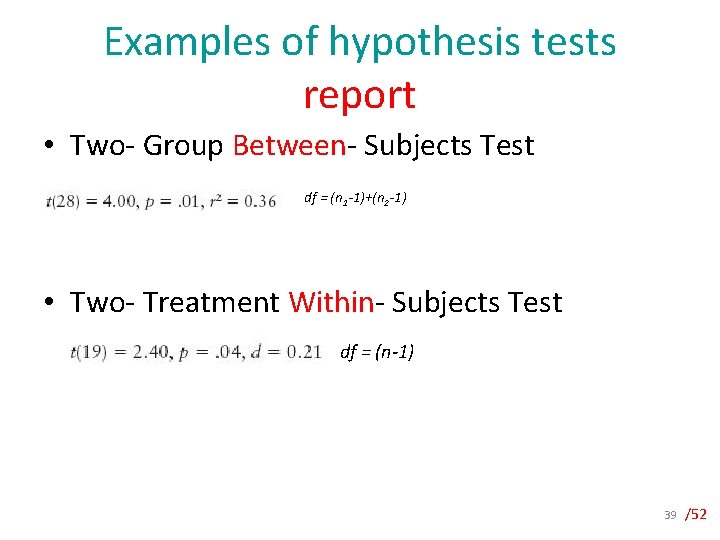

Examples of hypothesis test reports
• Two-group between-subjects test: $df = (n_1 - 1) + (n_2 - 1)$
• Two-treatment within-subjects test: $df = n - 1$
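For the within-subjects case, the test works on each participant's difference score, which is why df = n - 1. A minimal sketch with hypothetical scores for five participants measured under two treatments:

```python
import math
import statistics

def repeated_measures_t(before, after):
    """t statistic and df for a two-treatment within-subjects design."""
    diffs = [post - pre for pre, post in zip(before, after)]   # difference scores D
    n = len(diffs)
    mean_d = statistics.fmean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)                # standard error of mean D
    return mean_d / se, n - 1

before = [10, 12, 9, 11, 13]     # hypothetical scores, treatment 1
after = [13, 14, 12, 12, 16]     # same participants, treatment 2
t, df = repeated_measures_t(before, after)
print(f"t({df}) = {t:.2f}")
```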

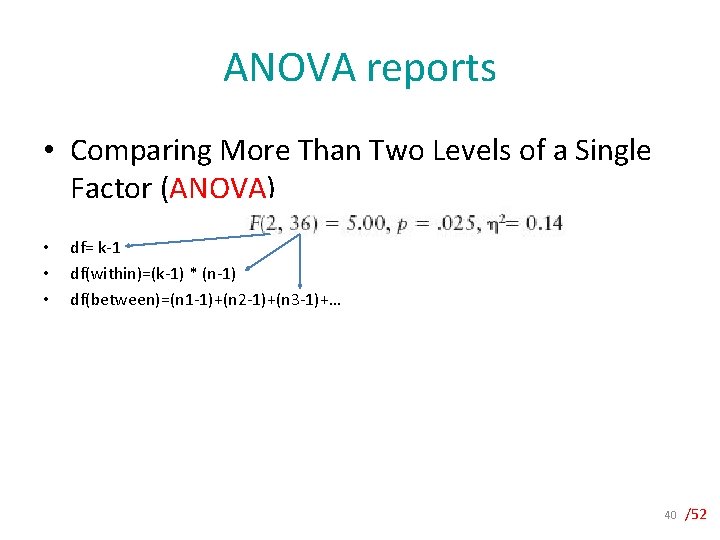

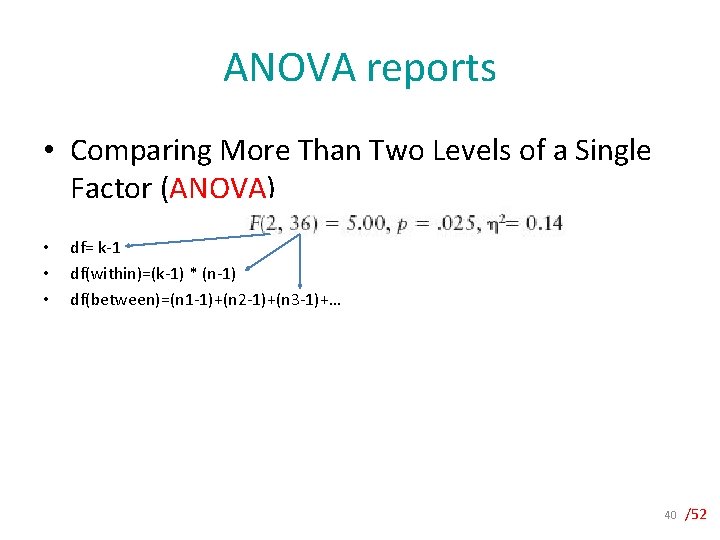

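ANOVA reports
• Comparing more than two levels of a single factor (ANOVA)
• df(between) = k - 1, where k is the number of treatment conditions
• df(within) = (n1 - 1) + (n2 - 1) + (n3 - 1) + … for an independent-measures design
• For a repeated-measures design, the error term has df = (k - 1)(n - 1)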
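A minimal one-way ANOVA sketch for independent groups, computing df(between) = k - 1, df(within) = (n1 - 1) + (n2 - 1) + …, and the F-ratio (MS between divided by MS within). The three groups are hypothetical, and the function does not compute a p value.

```python
def one_way_anova(*groups):
    """F-ratio and df for a one-way, independent-measures ANOVA."""
    k = len(groups)
    all_scores = [x for g in groups for x in g]
    grand_mean = sum(all_scores) / len(all_scores)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = k - 1
    df_within = sum(len(g) - 1 for g in groups)        # (n1 - 1) + (n2 - 1) + ...
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within

f_ratio, df_b, df_w = one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9])  # hypothetical groups
print(f"F({df_b}, {df_w}) = {f_ratio:.2f}")
```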

Post Hoc Tests
Post hoc tests are necessary because the original ANOVA simply establishes that mean differences exist but does not identify exactly which means are significantly different and which are not.

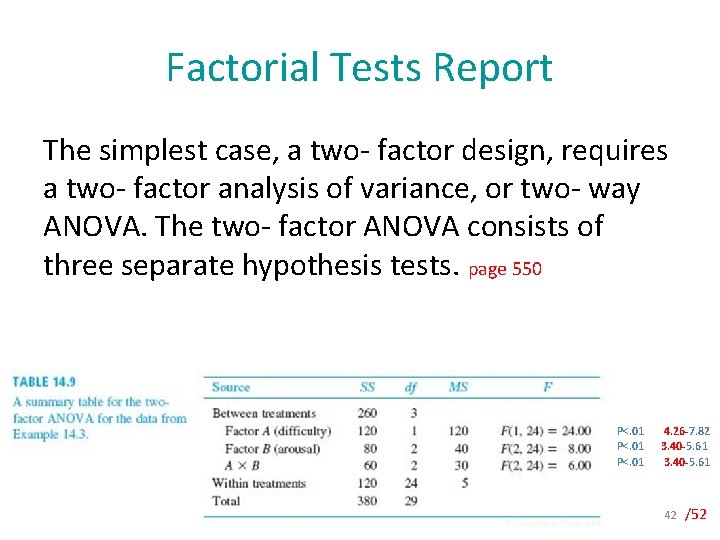

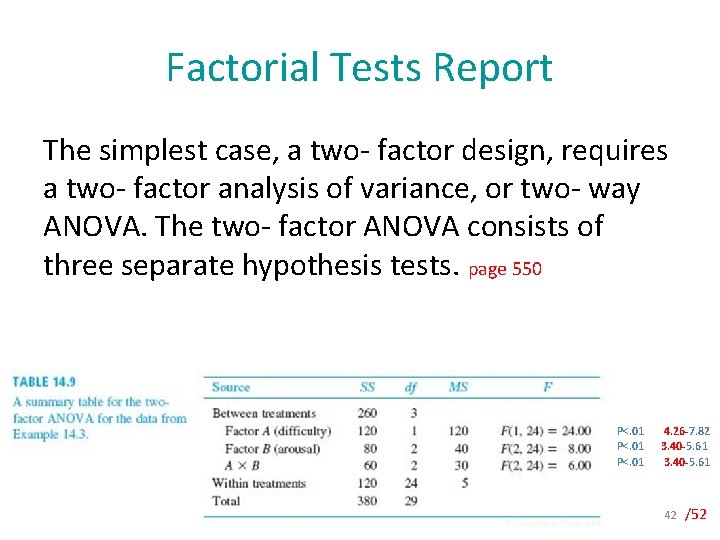

Factorial Tests Report
The simplest case, a two-factor design, requires a two-factor analysis of variance, or two-way ANOVA. The two-factor ANOVA consists of three separate hypothesis tests: one for the main effect of each factor and one for the interaction between the two factors.
(Example report: page 550; p < .01; values shown: 4.26, 7.82, 3.40, 5.61)

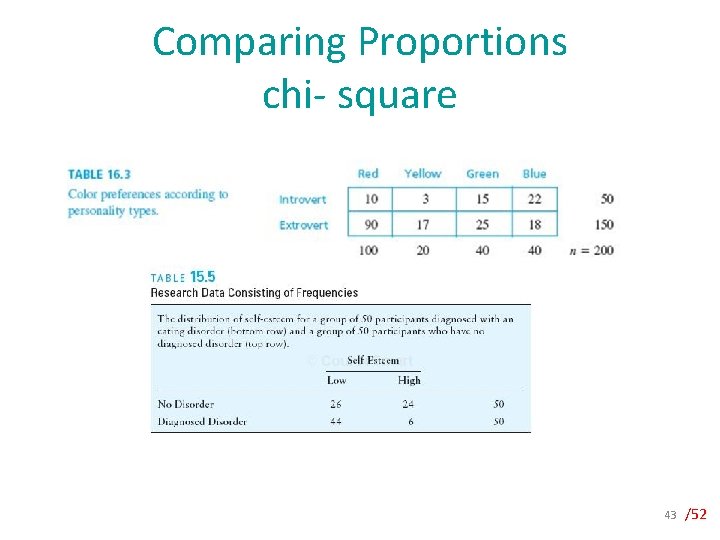

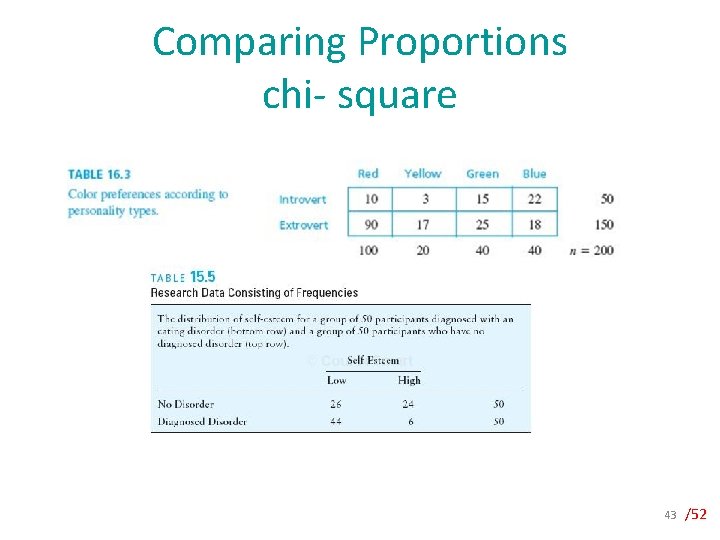

Comparing Proportions: Chi-Square

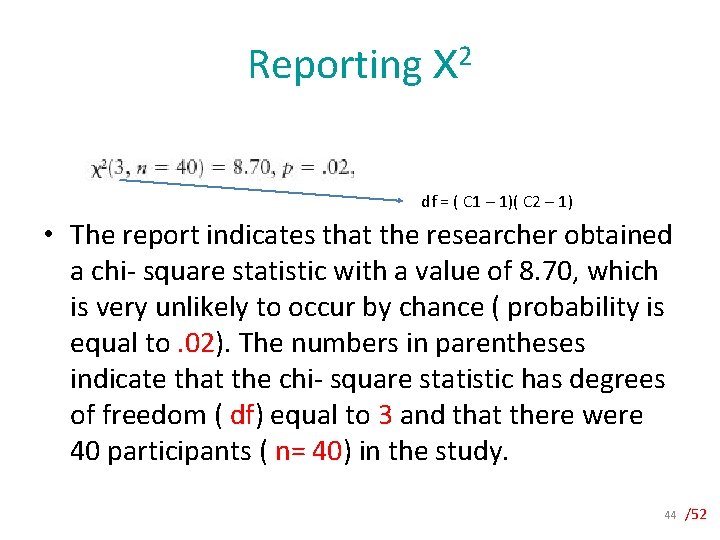

Reporting $\chi^2$
$df = (C_1 - 1)(C_2 - 1)$, where $C_1$ and $C_2$ are the numbers of categories of the two variables.
• The report indicates that the researcher obtained a chi-square statistic with a value of 8.70, which is very unlikely to occur by chance alone (probability equal to .02). The numbers in parentheses indicate that the chi-square statistic has degrees of freedom (df) equal to 3 and that there were 40 participants (n = 40) in the study.
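A minimal sketch of the chi-square test for independence: each expected frequency comes from the row and column totals, and χ² sums $(f_o - f_e)^2/f_e$ over all cells. The 2 × 4 table of counts below is hypothetical, chosen so that n = 40 and df = (2 - 1)(4 - 1) = 3 as in the report described above. The function computes the statistic and df but not the p value.

```python
def chi_square_independence(table):
    """Chi-square statistic, df, and n for a table of observed frequencies."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n     # fe = (row total)(column total) / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)               # (C1 - 1)(C2 - 1)
    return chi2, df, n

observed = [[8, 6, 4, 2],     # hypothetical counts: 2 groups x 4 response categories
            [2, 4, 6, 8]]
chi2, df, n = chi_square_independence(observed)
print(f"chi-square({df}, n = {n}) = {chi2:.2f}")
```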

Evaluating Correlations
• r = 0.65, n = 40, p < .01
• The report indicates that the sample correlation is r = 0.65 for a group of n = 40 participants, which is very unlikely to have occurred if the population correlation is zero (probability less than .01).
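One common way to test whether a correlation differs from zero converts r to a t statistic with df = n - 2: $t = r\sqrt{n-2}/\sqrt{1-r^2}$. A minimal sketch applied to the values in this report:

```python
import math

def t_for_correlation(r, n):
    """t statistic (df = n - 2) for testing whether the population correlation is zero."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1 - r ** 2), df

t, df = t_for_correlation(0.65, 40)
print(f"t({df}) = {t:.2f}")
```

This gives t(38) of about 5.27, well beyond the two-tailed .01 critical value of about 2.71 for 38 degrees of freedom, which is consistent with p < .01.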

Reliability & Validity
• Reliability refers to the consistency of measurement; it is evaluated through the relationship (correlation) between two sets of measurements of the same individuals.

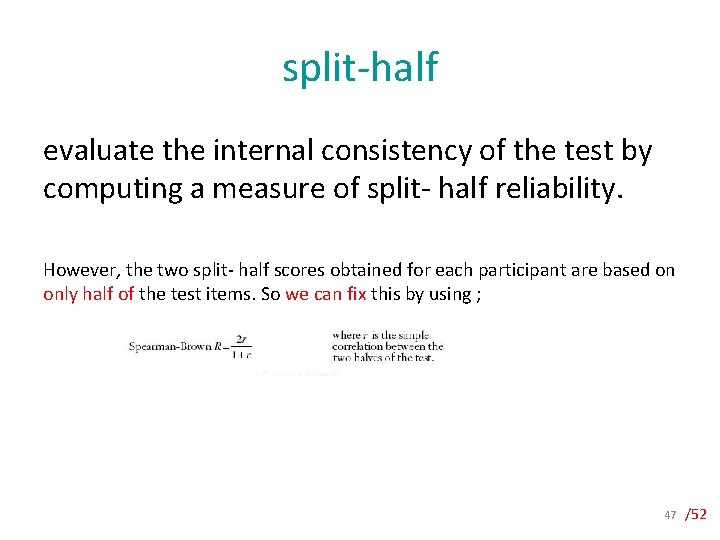

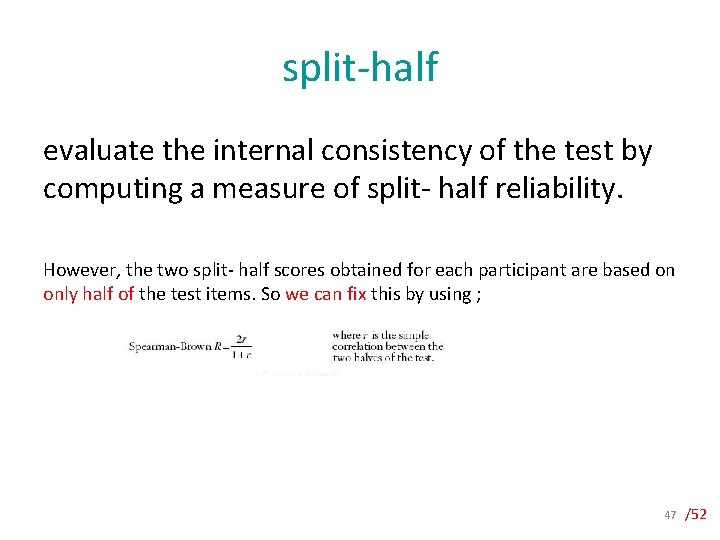

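Split-half reliability
We can evaluate the internal consistency of the test by computing a measure of split-half reliability: each participant's score on one half of the items is correlated with their score on the other half. However, the two split-half scores obtained for each participant are based on only half of the test items, so the correlation underestimates the reliability of the full test. We can fix this by using the Spearman-Brown formula, $r_{SB} = 2r/(1 + r)$, where r is the split-half correlation.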
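A minimal sketch of the split-half procedure, assuming an odd/even split of the items (one common choice), followed by the Spearman-Brown correction. The answer matrix is hypothetical, and the helper names are illustrative.

```python
import math

def pearson_r(x, y):
    """Pearson correlation computed from deviation scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sp = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sp / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def spearman_brown(r_half):
    """Estimate full-test reliability from the correlation between two half-tests."""
    return 2 * r_half / (1 + r_half)

# Hypothetical right/wrong (1/0) answers: one row per participant, six items.
items = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 1],
    [0, 1, 0, 1, 1, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0, 0],
]
odd_half = [sum(row[0::2]) for row in items]    # items 1, 3, 5
even_half = [sum(row[1::2]) for row in items]   # items 2, 4, 6

r_half = pearson_r(odd_half, even_half)
r_full = spearman_brown(r_half)
print(f"split-half r = {r_half:.2f}, Spearman-Brown corrected = {r_full:.2f}")
```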

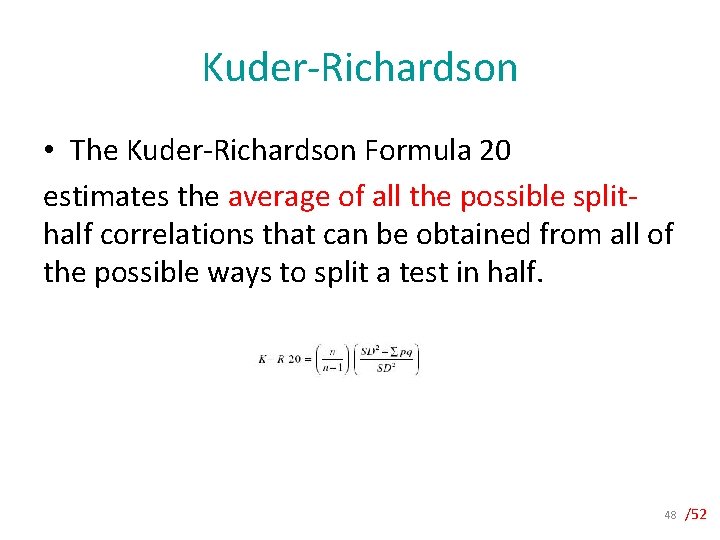

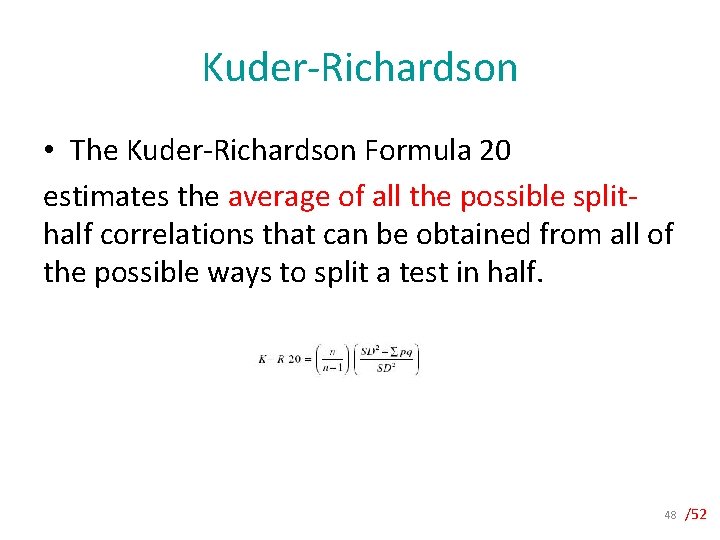

Kuder-Richardson
• The Kuder-Richardson Formula 20 (K-R 20) estimates the average of all the possible split-half correlations that can be obtained from all of the possible ways to split a test in half.
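K-R 20 is computed as $\frac{k}{k-1}\left(1 - \frac{\sum pq}{\sigma^2}\right)$, where k is the number of items, p and q are the proportions passing and failing each item, and σ² is the variance of the total scores. A minimal sketch with a hypothetical matrix of right/wrong (1/0) answers; it uses the population variance (dividing by n) for the totals, which is one common convention.

```python
def kr20(items):
    """K-R 20 for items scored 1 (right) or 0 (wrong); one list per participant."""
    n = len(items)
    k = len(items[0])
    totals = [sum(row) for row in items]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n   # population variance of totals
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in items) / n    # proportion answering item j correctly
        sum_pq += p * (1 - p)                   # p * q for item j
    return (k / (k - 1)) * (1 - sum_pq / var_total)

# Hypothetical answers: six participants, six items.
items = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 1],
    [0, 1, 0, 1, 1, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0, 0],
]
print(f"K-R 20 = {kr20(items):.3f}")
```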

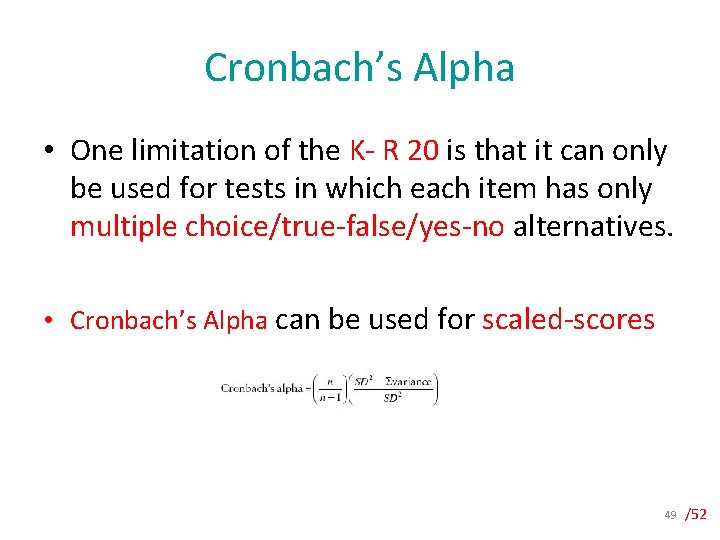

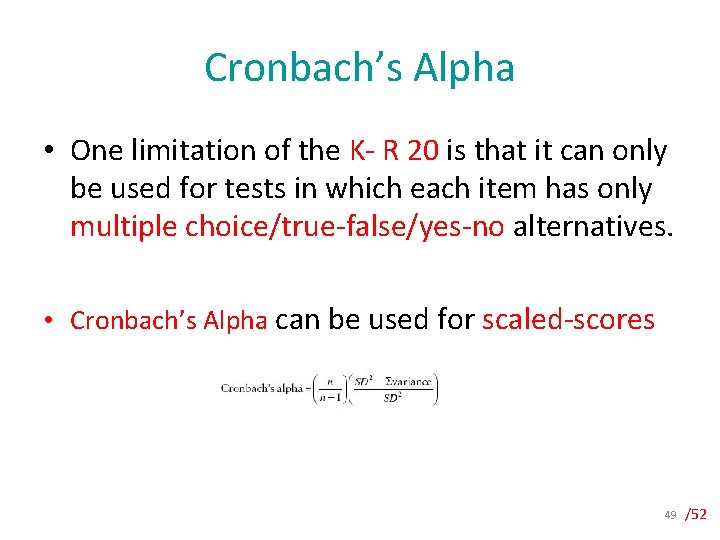

Cronbach’s Alpha
• One limitation of the K-R 20 is that it can only be used for tests in which each item is scored with only two alternatives (for example, right/wrong, true/false, or yes/no).
• Cronbach’s alpha can also be used for items scored on a scale with more than two values, such as rating scales.
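Cronbach's alpha replaces Σpq with the sum of the item variances: $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum \sigma_i^2}{\sigma_{total}^2}\right)$. With items scored 0/1, each item variance equals pq, so alpha gives the same value as K-R 20. A minimal sketch with hypothetical 1-5 rating-scale data, using population variances throughout:

```python
import statistics

def cronbach_alpha(items):
    """Cronbach's alpha; items holds one list per participant, one score per item."""
    k = len(items[0])
    item_vars = [statistics.pvariance([row[j] for row in items]) for j in range(k)]
    total_var = statistics.pvariance([sum(row) for row in items])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Hypothetical ratings: 5 participants x 4 items on a 1-5 scale.
ratings = [
    [5, 4, 5, 4],
    [4, 4, 4, 5],
    [3, 3, 2, 3],
    [2, 2, 3, 2],
    [1, 2, 1, 1],
]
print(f"alpha (rating scale) = {cronbach_alpha(ratings):.3f}")

# Hypothetical 0/1 items: here alpha matches K-R 20.
binary = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 1],
    [0, 1, 0, 1, 1, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0, 0],
]
print(f"alpha (0/1 items) = {cronbach_alpha(binary):.3f}")
```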

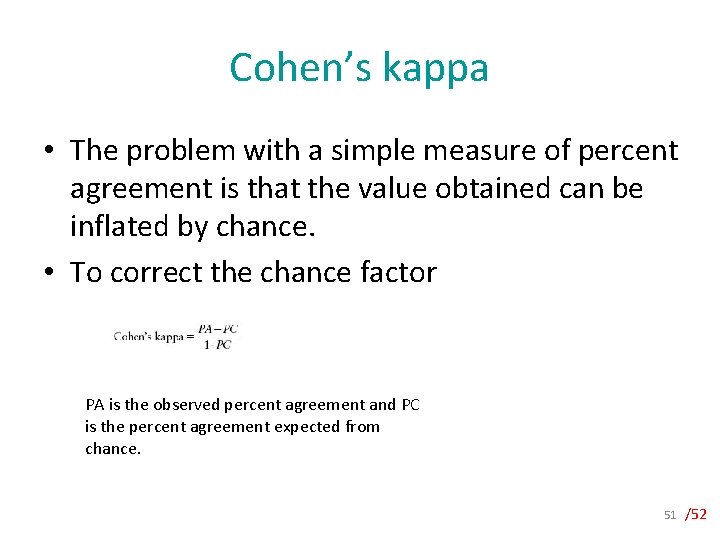

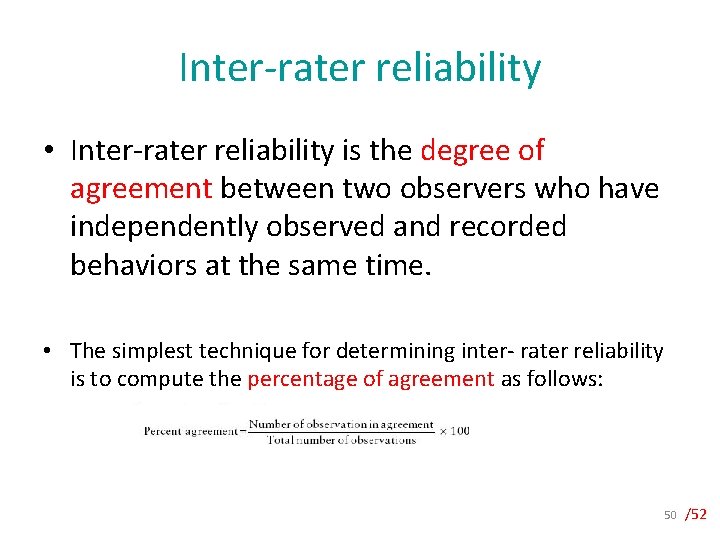

Inter-rater reliability
• Inter-rater reliability is the degree of agreement between two observers who have independently observed and recorded behaviors at the same time.
• The simplest technique for determining inter-rater reliability is to compute the percentage of agreement: (number of agreements / number of observations) × 100.
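A minimal sketch of percent agreement for two observers who each code the same ten observation intervals. The "on-task"/"off-task" categories and the codes themselves are hypothetical.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of observations on which the two raters recorded the same category."""
    agreements = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100 * agreements / len(rater_a)

# Hypothetical codes for ten observation intervals.
rater_a = ["on", "on", "off", "on", "off", "off", "on", "on", "off", "on"]
rater_b = ["on", "off", "off", "on", "off", "on", "on", "on", "off", "on"]
print(f"{percent_agreement(rater_a, rater_b):.0f}% agreement")
```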

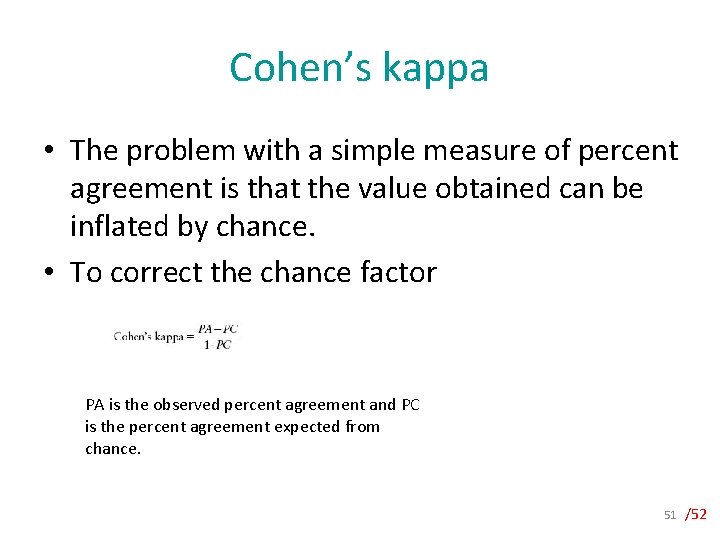

Cohen’s kappa
• The problem with a simple measure of percent agreement is that the value obtained can be inflated by agreements that occur by chance.
• To correct for chance, Cohen's kappa is computed as $\kappa = (P_A - P_C)/(1 - P_C)$, where $P_A$ is the observed agreement and $P_C$ is the agreement expected from chance, both expressed as proportions.
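A minimal sketch of Cohen's kappa, assuming the usual chance estimate: for each category, multiply the two raters' proportions of use, then sum over categories. Agreement values are proportions (0 to 1), and the ratings are hypothetical.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one category per observation."""
    n = len(rater_a)
    pa = sum(a == b for a, b in zip(rater_a, rater_b)) / n       # observed agreement P_A
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = set(count_a) | set(count_b)
    pc = sum((count_a[c] / n) * (count_b[c] / n) for c in categories)  # chance agreement P_C
    return (pa - pc) / (1 - pc)

# Hypothetical codes for ten observation intervals (80% raw agreement).
rater_a = ["on", "on", "off", "on", "off", "off", "on", "on", "off", "on"]
rater_b = ["on", "off", "off", "on", "off", "on", "on", "on", "off", "on"]
print(f"kappa = {cohens_kappa(rater_a, rater_b):.3f}")
```

Here the raw agreement is .80, but both raters use "on" 60% of the time, so .52 agreement is expected by chance and kappa is noticeably lower than the raw figure.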

Group Discussion
• Identify the two basic concerns with using a correlation to measure split-half reliability and explain how these concerns are addressed by Spearman-Brown, K-R 20, and Cronbach’s alpha.
• Identify the basic concern with using the percentage of agreement as a measure of inter-rater reliability and explain how this concern is addressed by Cohen’s kappa.