
- Slides: 31

Statistical Power

First: Effect Size

- The size of the distance between two means in standardized units (not inferential).
- A measure of the impact of an intervention based on the distributions of the samples in your study.
- We use two measures of effect size:
  - Cohen’s d
  - Eta squared (η²)

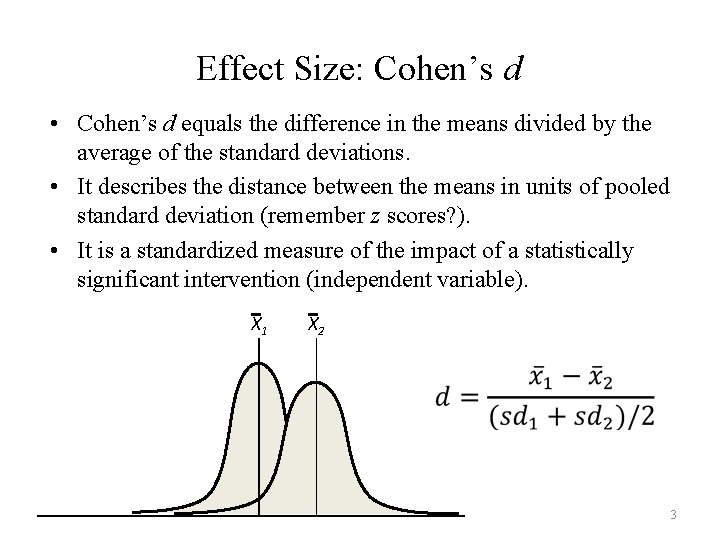

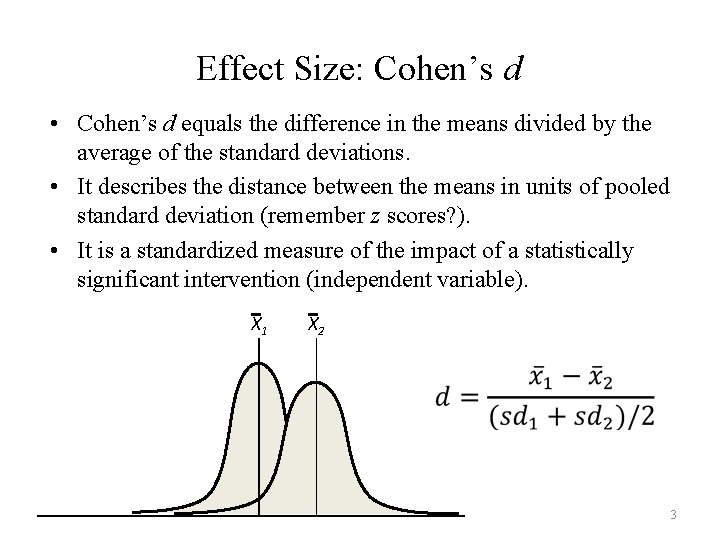

Effect Size: Cohen’s d

- Cohen’s d equals the difference between the two group means divided by the pooled standard deviation of the two groups.
- It describes the distance between the means in units of pooled standard deviation (remember z scores?).
- It is a standardized measure of the impact of a statistically significant intervention (independent variable).

[Figure labels: X₁, X₂]
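
The calculation of Cohen’s d can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the course: the function name `cohens_d` and the two small score lists are made up for the example, and the pooled standard deviation is the usual version weighted by degrees of freedom.

```python
import math
import statistics


def cohens_d(group1, group2):
    """Difference between the group means in units of pooled standard deviation."""
    n1, n2 = len(group1), len(group2)
    var1 = statistics.variance(group1)  # sample variance (n - 1 denominator)
    var2 = statistics.variance(group2)
    # Pool the two variances, weighting each by its degrees of freedom.
    pooled_sd = math.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2))
    return (statistics.mean(group1) - statistics.mean(group2)) / pooled_sd


# Hypothetical scores, invented for illustration only.
treatment = [14, 15, 16, 17, 18]
control = [12, 13, 14, 15, 16]

d = cohens_d(treatment, control)
print(f"Cohen's d = {d:.2f}")
```

Here the means differ by 2 points and the pooled standard deviation is about 1.58, so the groups sit a little more than one pooled standard deviation apart.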

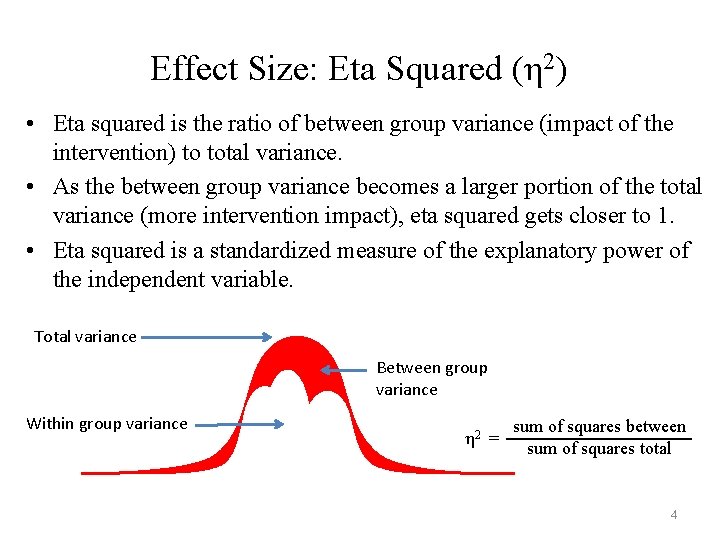

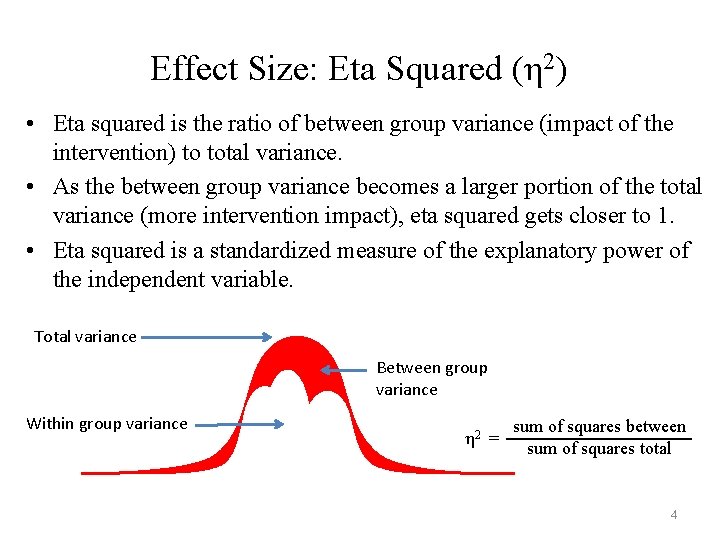

Effect Size: Eta Squared (η²)

- Eta squared is the ratio of between group variance (impact of the intervention) to total variance.
- As the between group variance becomes a larger portion of the total variance (more intervention impact), eta squared gets closer to 1.
- Eta squared is a standardized measure of the explanatory power of the independent variable.

$$\eta^2 = \frac{\text{sum of squares between}}{\text{sum of squares total}}$$

[Figure labels: Total variance, Between group variance, Within group variance]
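
The ratio of sums of squares can be computed directly. The sketch below assumes a simple one-factor design; the function name `eta_squared` and the scores are hypothetical and only serve to show the arithmetic.

```python
import statistics


def eta_squared(groups):
    """Sum of squares between groups divided by the total sum of squares."""
    all_scores = [score for group in groups for score in group]
    grand_mean = statistics.mean(all_scores)
    # Between: how far each group mean sits from the grand mean, weighted by group size.
    ss_between = sum(
        len(group) * (statistics.mean(group) - grand_mean) ** 2 for group in groups
    )
    # Total: how far every individual score sits from the grand mean.
    ss_total = sum((score - grand_mean) ** 2 for score in all_scores)
    return ss_between / ss_total


# Hypothetical scores, invented for illustration only.
groups = [[14, 15, 16, 17, 18], [12, 13, 14, 15, 16]]

eta2 = eta_squared(groups)
print(f"eta squared = {eta2:.3f}")
```

With these numbers the between-group sum of squares is 10 and the total is 30, so one third of the total variability is associated with group membership.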

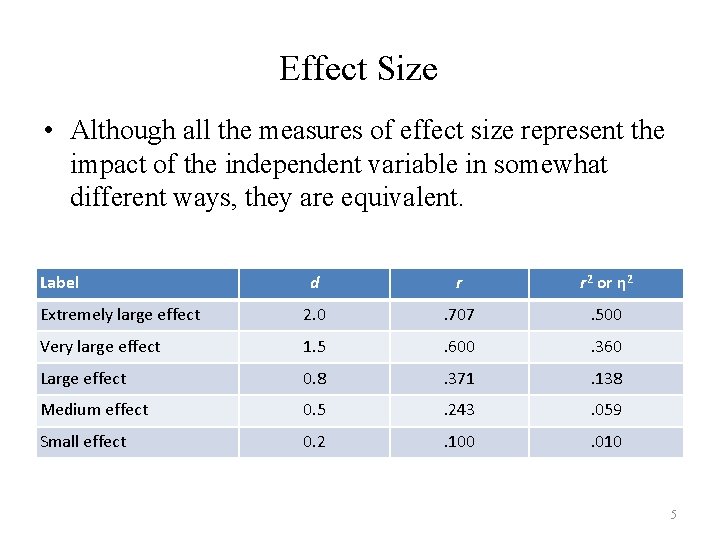

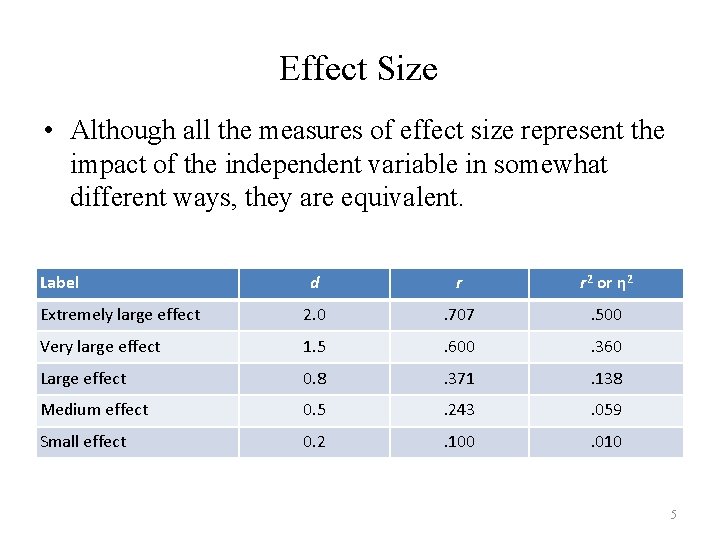

Effect Size

- Although all the measures of effect size represent the impact of the independent variable in somewhat different ways, they are equivalent.

| Label | d | r | r² or η² |
|---|---|---|---|
| Extremely large effect | 2.0 | .707 | .500 |
| Very large effect | 1.5 | .600 | .360 |
| Large effect | 0.8 | .371 | .138 |
| Medium effect | 0.5 | .243 | .059 |
| Small effect | 0.2 | .100 | .010 |
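
The r and r² columns in this table are consistent with the common conversion r = d / √(d² + 4), which assumes two groups of equal size. That formula is not stated on the slide, so the sketch below should be read as one plausible way to move between the measures rather than as the source of the table.

```python
import math


def d_to_r(d):
    """Convert Cohen's d to r, assuming two groups of equal size."""
    return d / math.sqrt(d ** 2 + 4)


labels = [
    ("Extremely large effect", 2.0),
    ("Very large effect", 1.5),
    ("Large effect", 0.8),
    ("Medium effect", 0.5),
    ("Small effect", 0.2),
]

# Each row: (label, d, r, r squared), rounded to three decimals like the slide.
table = [
    (label, d, round(d_to_r(d), 3), round(d_to_r(d) ** 2, 3)) for label, d in labels
]

for label, d, r, r2 in table:
    print(f"{label:<24} d={d:<4} r={r:<6} r2={r2}")
```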

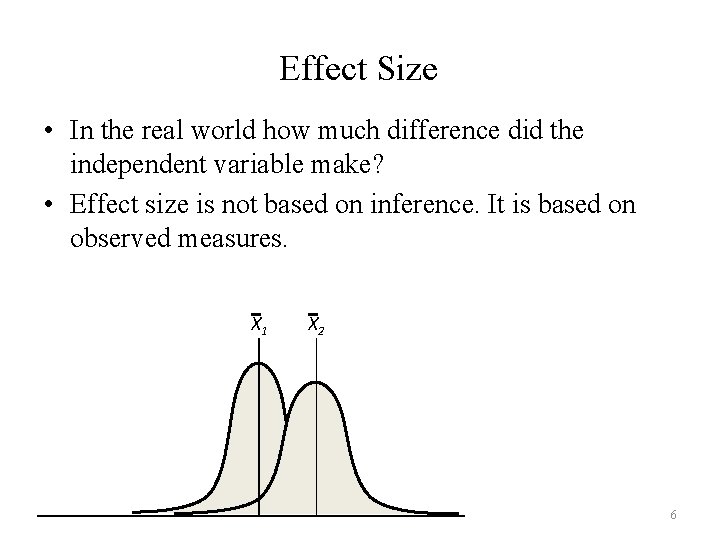

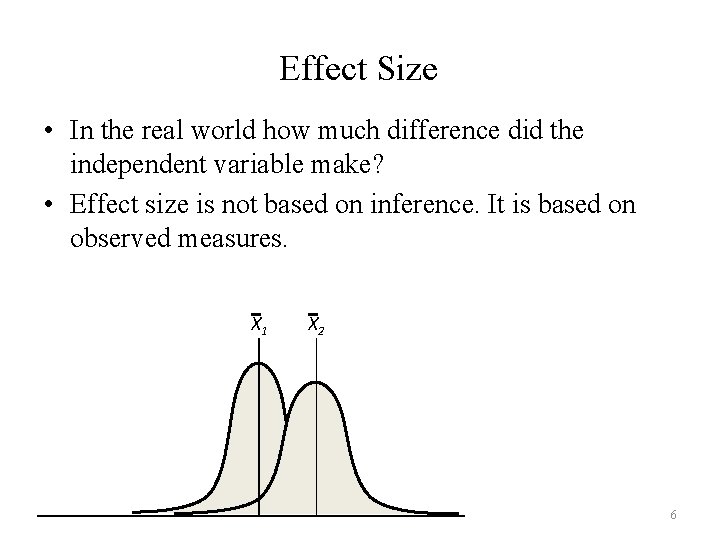

Effect Size

- In the real world, how much difference did the independent variable make?
- Effect size is not based on inference. It is based on observed measures.

[Figure labels: X₁, X₂]

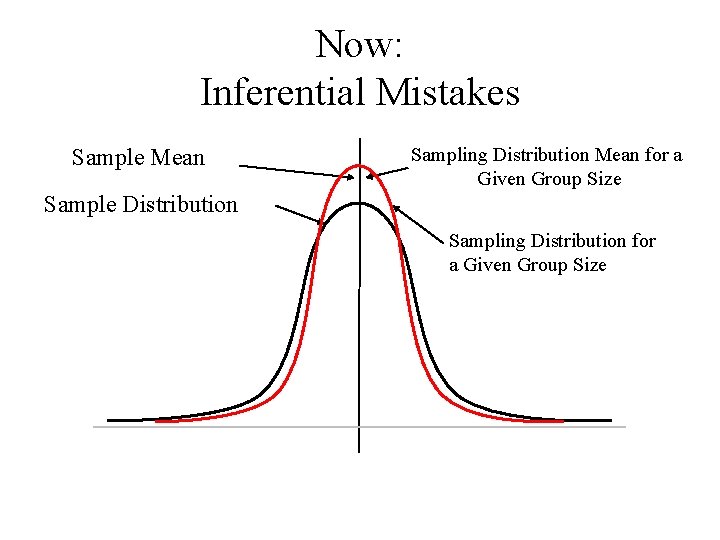

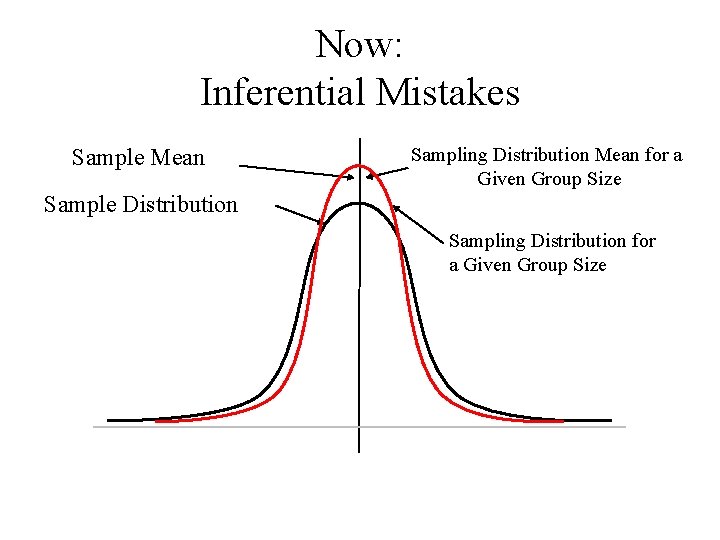

Now: Inferential Mistakes

[Figure labels: Sample Mean; Sampling Distribution Mean for a Given Group Size; Sample Distribution; Sampling Distribution for a Given Group Size]

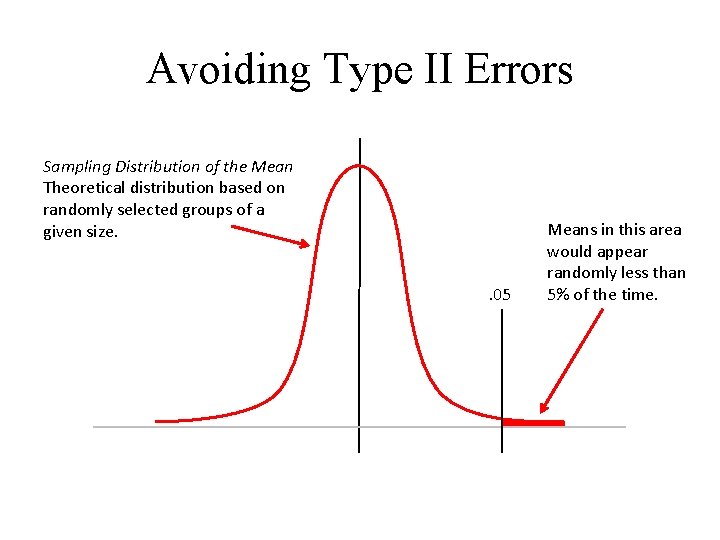

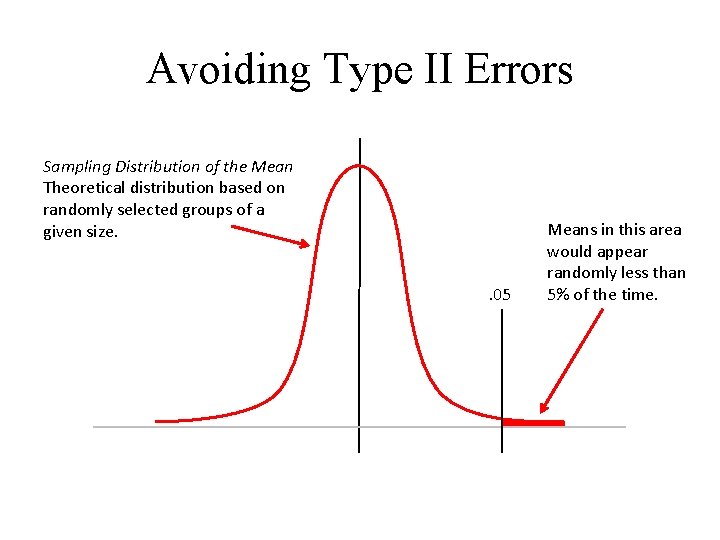

Avoiding Type II Errors

Sampling Distribution of the Mean: a theoretical distribution based on randomly selected groups of a given size.

Means in the .05 area would appear randomly less than 5% of the time.

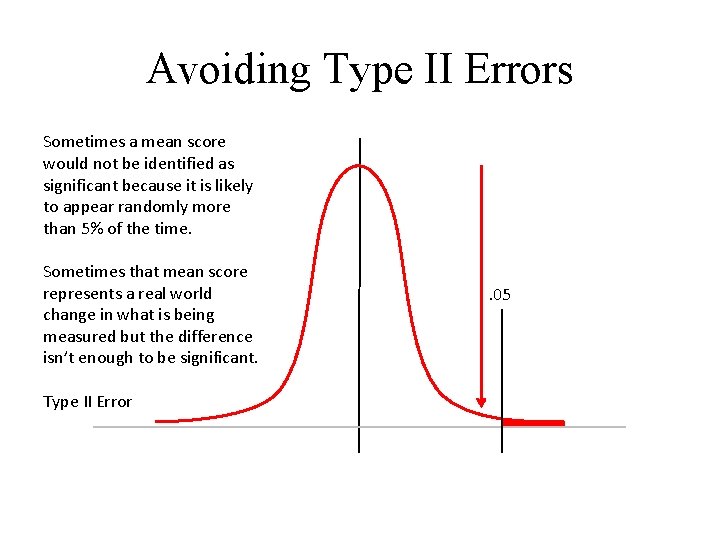

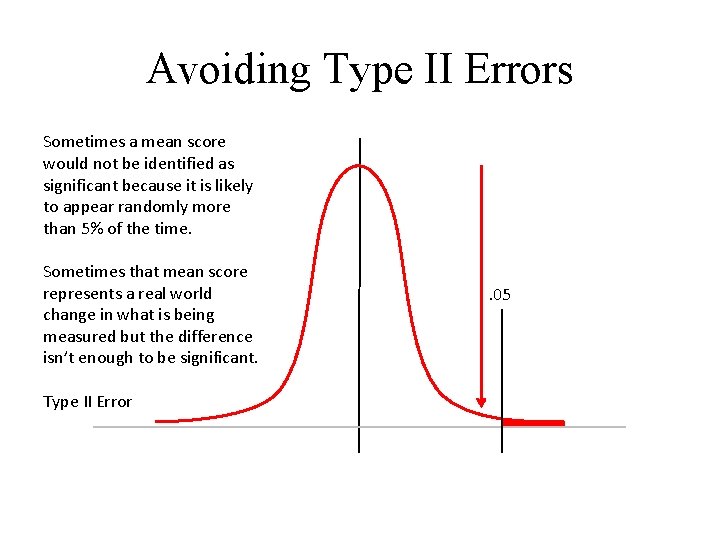

Avoiding Type II Errors

Sometimes a mean score is not identified as significant because it is likely to appear randomly more than 5% of the time. Sometimes that mean score represents a real-world change in what is being measured, but the difference isn’t large enough to be significant.

[Figure labels: Type II Error, .05]

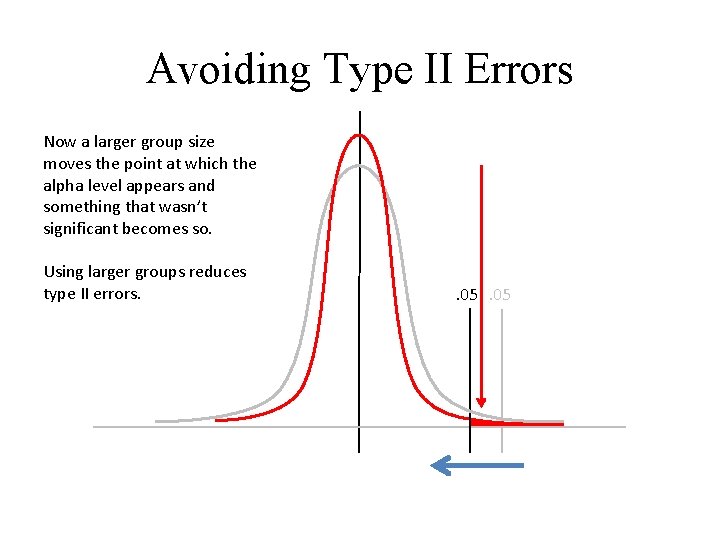

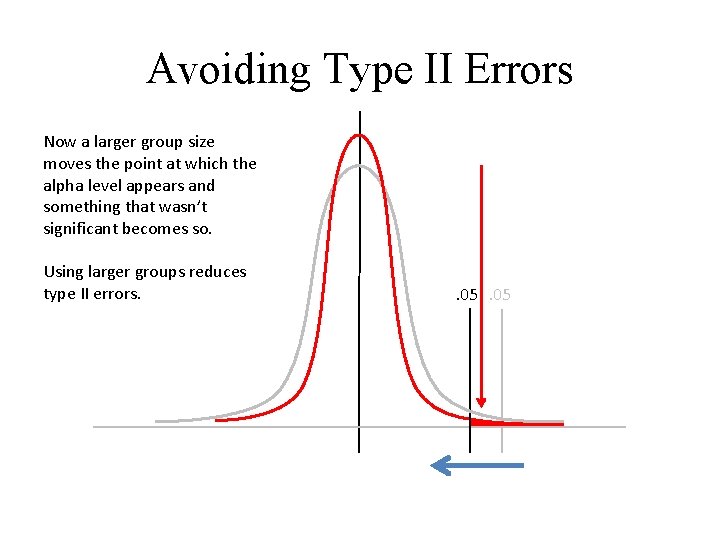

Avoiding Type II Errors

With a larger group size the sampling distribution of the mean becomes narrower, so the point at which the alpha level appears moves, and something that wasn’t significant becomes so. Using larger groups reduces Type II errors.

[Figure label: .05]

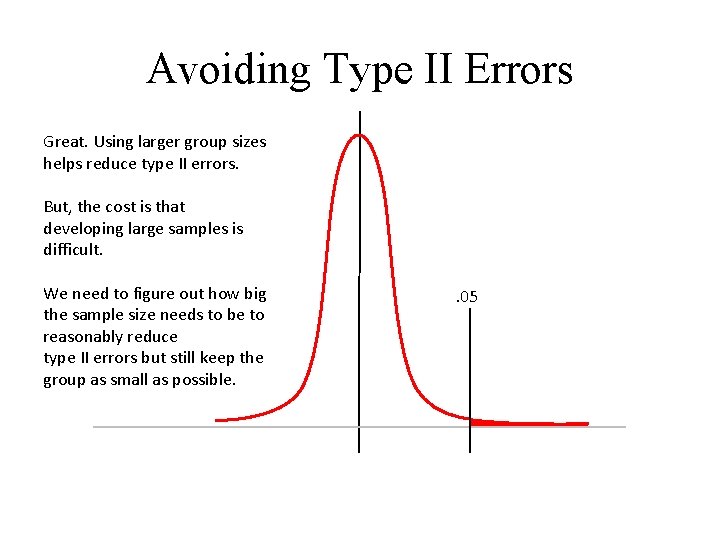

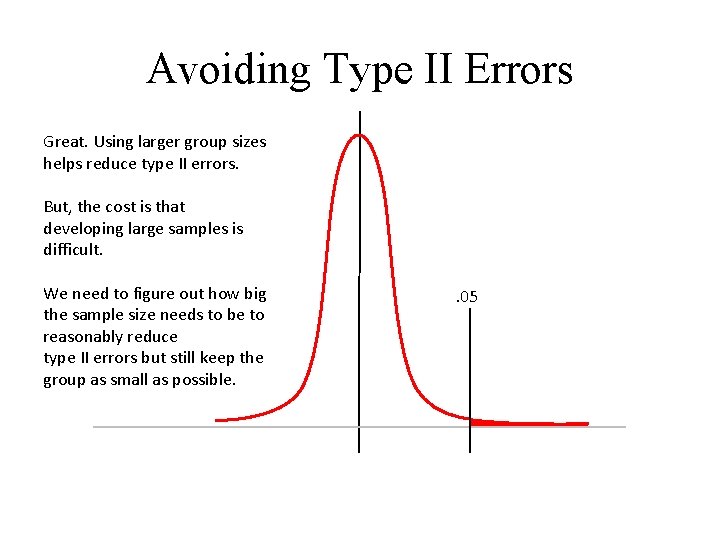

Avoiding Type II Errors

Great. Using larger group sizes helps reduce Type II errors. But the cost is that developing large samples is difficult. We need to figure out how big the sample needs to be to reasonably reduce Type II errors while still keeping the group as small as possible.

Power

- Power is defined as the probability of finding significance when a real effect exists (that is, avoiding a Type II error).
- Eighty percent (.80) is accepted as a reasonable target power.
- If non-random change occurs, it has an 80% probability of being observed.
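
One way to see what power means is to simulate many studies in which a real effect exists and count how often the test comes out significant. The sketch below is a simplification with assumptions that are not in the slides: scores are normally distributed with a known standard deviation of 1, the test is a two-tailed z test on the difference between two group means, and the function name `simulated_power` is made up.

```python
import random
from statistics import NormalDist


def simulated_power(d, n_per_group, alpha=0.05, trials=2000, seed=1):
    """Share of simulated studies that reach significance when the true effect is d."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-tailed critical value
    se = (2 / n_per_group) ** 0.5  # standard error of the mean difference, sigma = 1
    significant = 0
    for _ in range(trials):
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        treated = [rng.gauss(d, 1) for _ in range(n_per_group)]
        diff = sum(treated) / n_per_group - sum(control) / n_per_group
        if abs(diff / se) > z_crit:
            significant += 1
    return significant / trials


# Same true effect (d = 0.5), two different group sizes.
power_small = simulated_power(0.5, 10)
power_large = simulated_power(0.5, 64)
print(f"n = 10 per group: power is roughly {power_small:.2f}")
print(f"n = 64 per group: power is roughly {power_large:.2f}")
```

The effect is identical in both runs. Only the group size changes, and with it the share of studies that miss the effect (Type II errors).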

Power

- There are 2 ways to use power calculations.
- First, they can be used to figure out appropriate sample sizes for a study.
- Second, they can be used to evaluate the use of a specific sample size after a study has been completed.

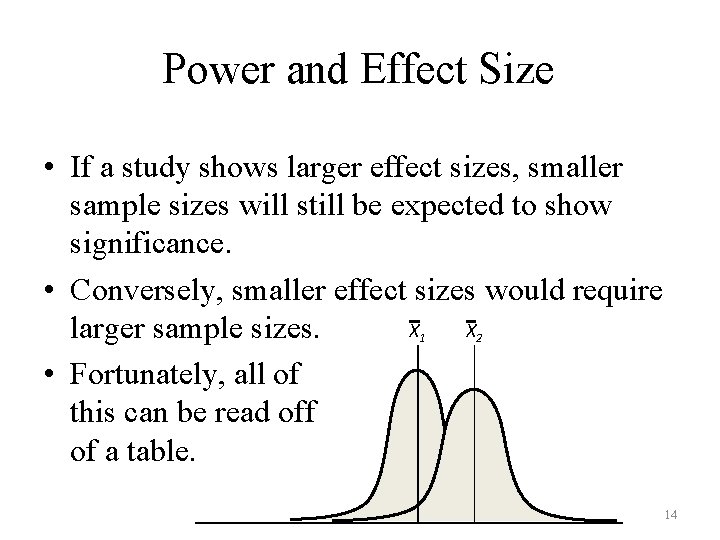

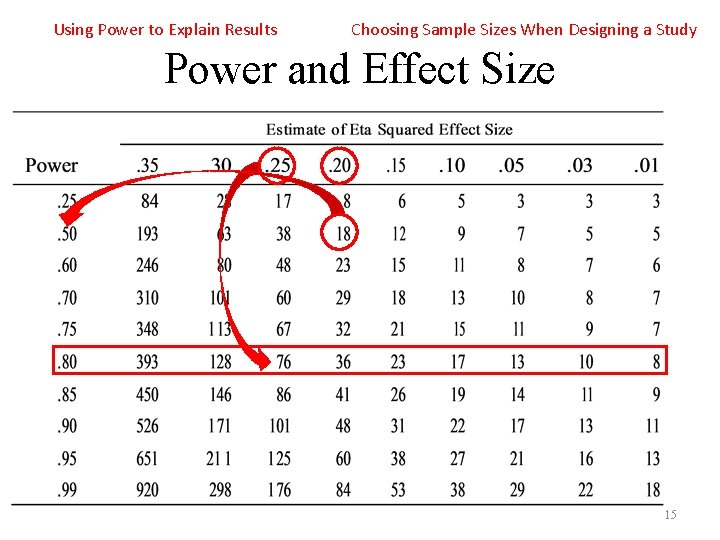

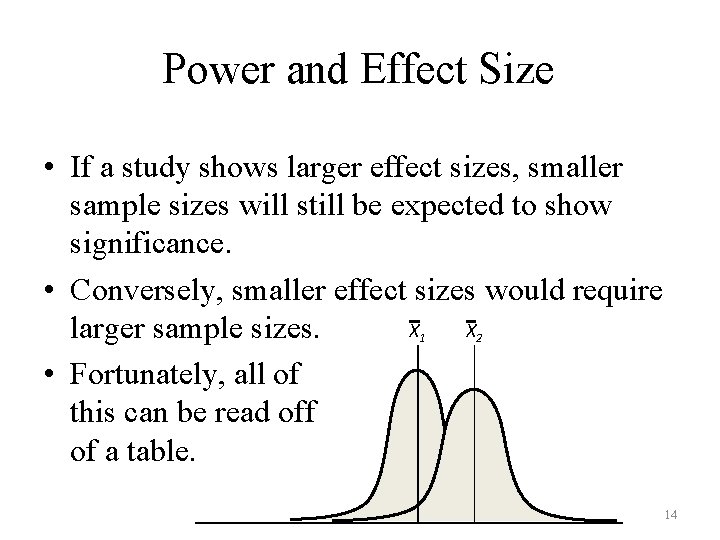

Power and Effect Size

- If a study shows larger effect sizes, smaller sample sizes will still be expected to show significance.
- Conversely, smaller effect sizes would require larger sample sizes.
- Fortunately, all of this can be read off of a table.

[Figure labels: X₁, X₂]
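
The trade-off between effect size and sample size can also be approximated with a formula instead of a table. The sketch below uses the standard normal approximation for comparing two equal-sized groups with a two-tailed test. It is not taken from the slides, the function name `n_per_group` is hypothetical, and published power tables based on the t distribution usually give values one or two participants higher.

```python
import math
from statistics import NormalDist


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate participants needed in each of two groups to detect effect size d."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_power = z.inv_cdf(power)  # z score matching the target power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


effect_sizes = {"small": 0.2, "medium": 0.5, "large": 0.8}
required = {label: n_per_group(d) for label, d in effect_sizes.items()}

for label, n in required.items():
    print(f"{label:<6} effect: about {n} per group for .80 power")
```

Because d is squared in the denominator, halving the effect size roughly quadruples the required group size.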

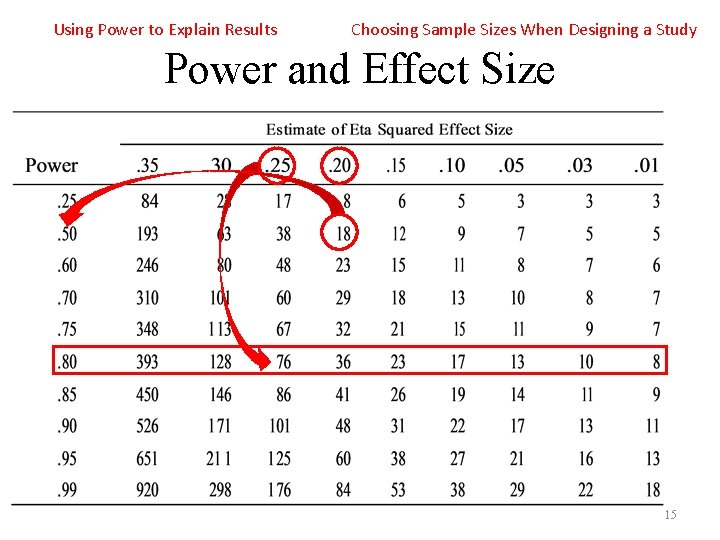

Power and Effect Size

[Slide labels: Using Power to Explain Results; Choosing Sample Sizes When Designing a Study]

While we are here …

- Remember that we talked about inferential errors in which something appears significant but really isn’t?
- Those are Type I errors.

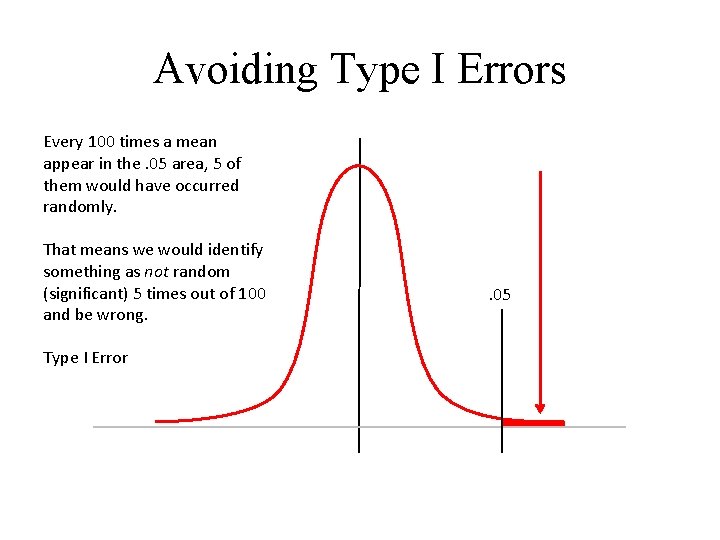

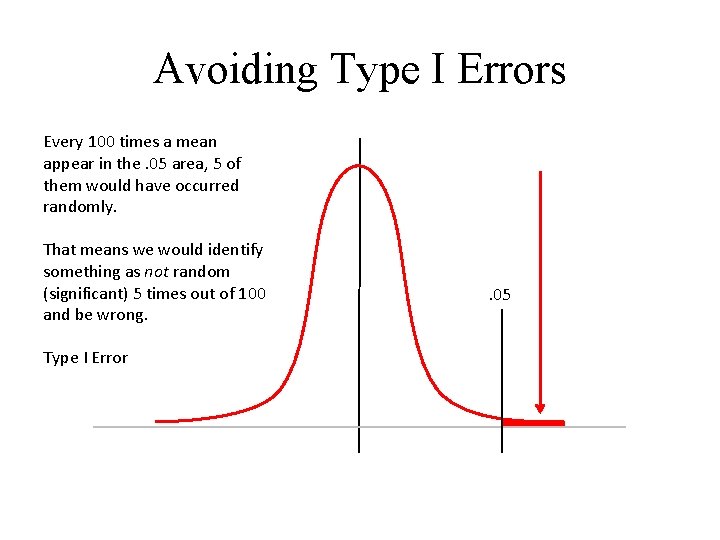

Avoiding Type I Errors

When nothing but chance is at work, 5 out of every 100 means will still appear in the .05 area. That means we would identify something as not random (significant) 5 times out of 100 and be wrong.

[Figure labels: Type I Error, .05]

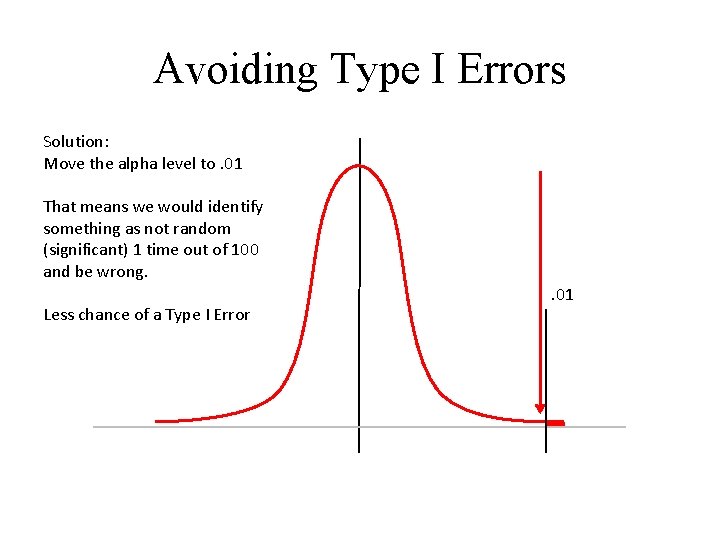

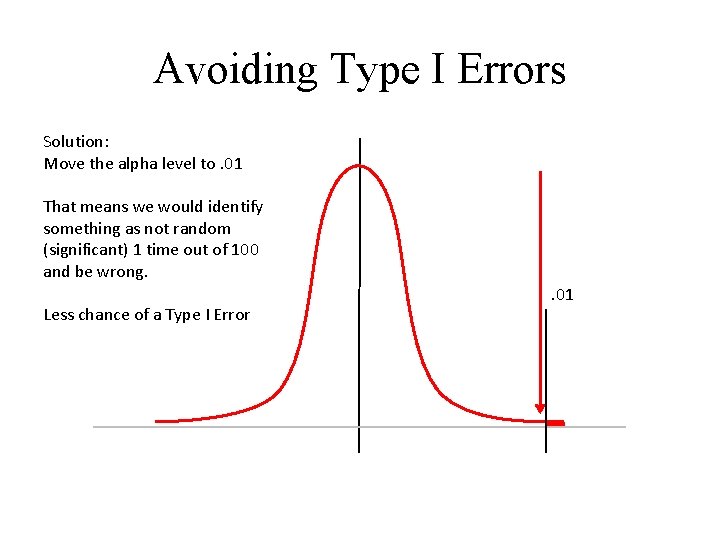

Avoiding Type I Errors

Solution: Move the alpha level to .01. That means we would identify something as not random (significant) 1 time out of 100 and be wrong.

[Figure labels: Less chance of a Type I Error, .01]
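
The effect of moving alpha from .05 to .01 can be checked by simulating studies in which the intervention does nothing at all, so that every significant result is a Type I error. As with any such sketch, the setup is an assumption: normally distributed scores with a known standard deviation of 1, a two-tailed z test, and a made-up function name `false_positive_rate`.

```python
import random
from statistics import NormalDist


def false_positive_rate(alpha, n_per_group=20, trials=4000, seed=7):
    """Share of no-effect studies that are (wrongly) declared significant."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-tailed critical value
    se = (2 / n_per_group) ** 0.5  # standard error of the mean difference, sigma = 1
    false_positives = 0
    for _ in range(trials):
        # Both groups come from the same population: there is no real effect.
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        diff = sum(group_a) / n_per_group - sum(group_b) / n_per_group
        if abs(diff / se) > z_crit:
            false_positives += 1
    return false_positives / trials


rate_05 = false_positive_rate(0.05)
rate_01 = false_positive_rate(0.01)
print(f"alpha = .05: false positive rate is roughly {rate_05:.3f}")
print(f"alpha = .01: false positive rate is roughly {rate_01:.3f}")
```

Both calls use the same seed and therefore the same simulated studies, so the only thing that differs is the cutoff applied to them.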

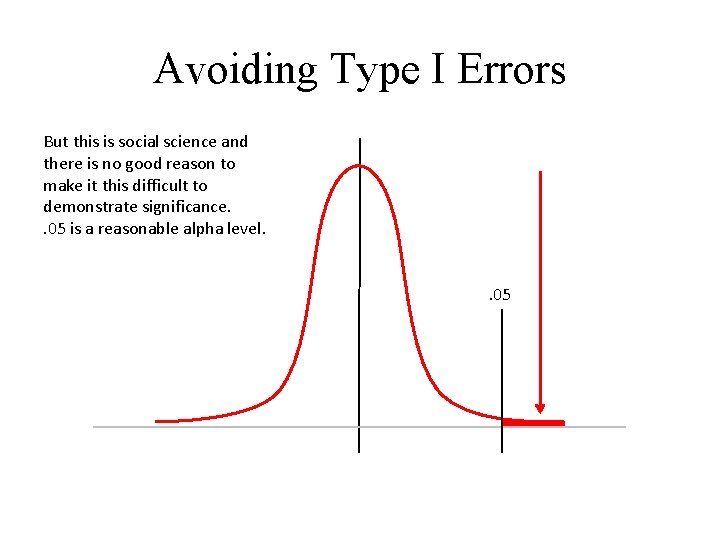

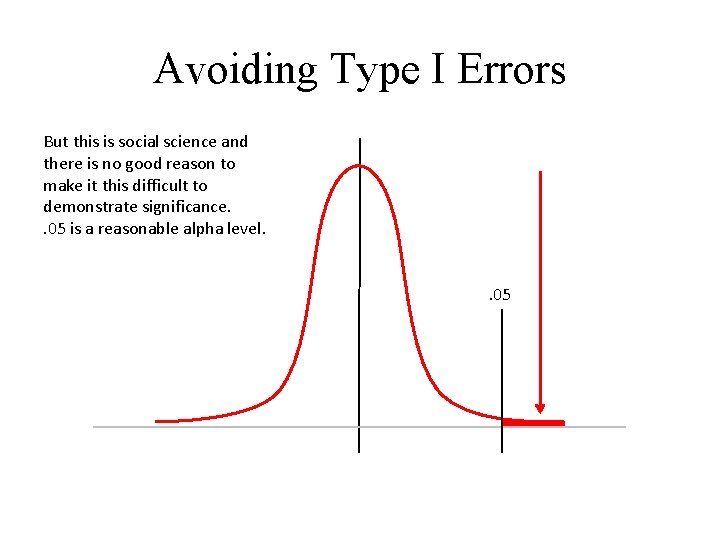

Avoiding Type I Errors

But this is social science, and there is no good reason to make it this difficult to demonstrate significance. .05 is a reasonable alpha level.

[Figure label: .05]

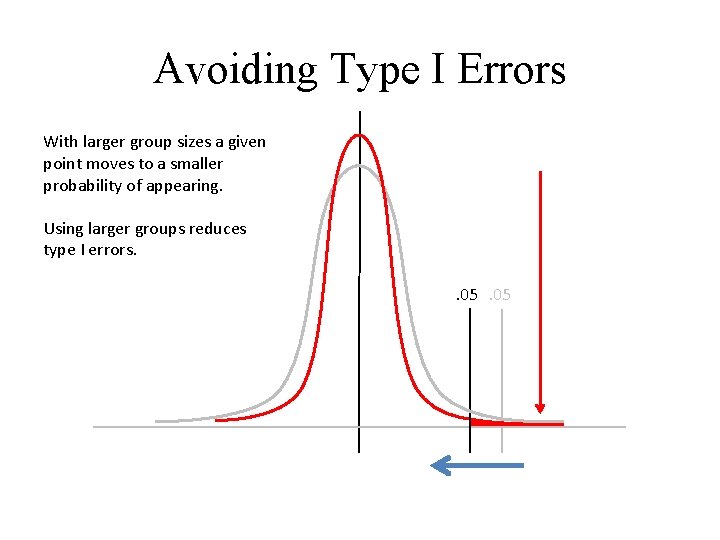

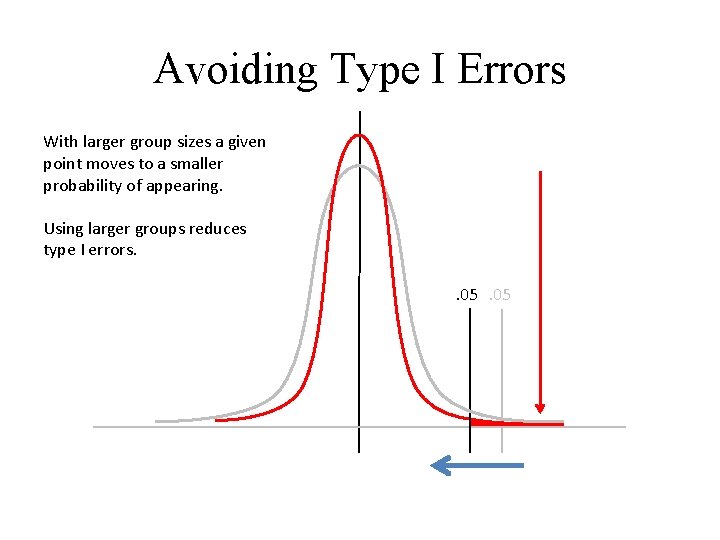

Avoiding Type I Errors

With larger group sizes, a given point moves to a smaller probability of appearing. Using larger groups reduces Type I errors.

[Figure label: .05]

Sample Sizes

- We know that using larger sample sizes is statistically powerful, but life isn’t that simple.
- Whenever possible, use Power Analysis to help you be more confident you will find something if it is there.
- When things don’t appear to be significant, at least Power Analysis now gives you something else to talk about to suggest what might be done to improve the quality of your data.

Examples

1. Most of the studies in your lit review are showing large effects around 0.8 Cohen’s d. You want to be 90% sure you find non-random effects if they are there. Approximately how big does your sample need to be?
2. In your study you showed mean differences of 1.0 Cohen’s d, but groups were not significantly different. Your sample size was 18. What was the probability of finding significant differences if they were there?
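
As a rough check on these two questions, the normal approximation for a two-tailed comparison of two equal-sized groups at alpha = .05 can be worked by hand. This approximation is an assumption added here, not the method on the slides, and a power table may give slightly different values. For the first question, with d = 0.8 and a target power of .90:

$$
n_{\text{per group}} \approx 2\left(\frac{z_{1-\alpha/2} + z_{\text{power}}}{d}\right)^2 = 2\left(\frac{1.960 + 1.282}{0.8}\right)^2 \approx 32.8
$$

That rounds up to about 33 participants per group. For the second question the same approximation gives power from the effect size and the per-group size n:

$$
\text{power} \approx \Phi\left(d\sqrt{n/2} - z_{1-\alpha/2}\right)
$$

The question does not say whether 18 is the total sample or the size of each group, so both readings are shown. With 9 per group, power ≈ Φ(1.0 · √4.5 − 1.960) = Φ(0.161) ≈ .56. With 18 per group, power ≈ Φ(1.0 · √9 − 1.960) = Φ(1.04) ≈ .85.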

Power and Effect Size

[Slide label: Central Limit Theorem Constraints]

Analysis

Inferential Statistics

- Assumptions
  - Dependent variable is an interval measure of one characteristic of a group.
  - Tests are based on knowing or assuming the distribution of a population.
  - Statistics demonstrate if comparison samples are from the same population.

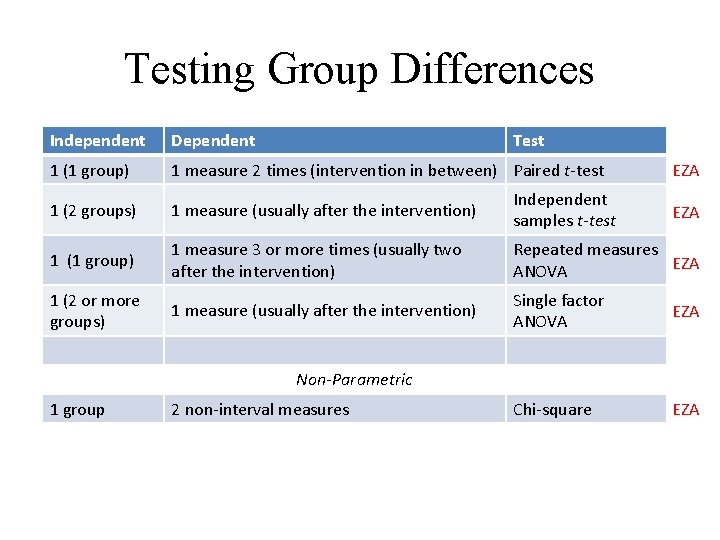

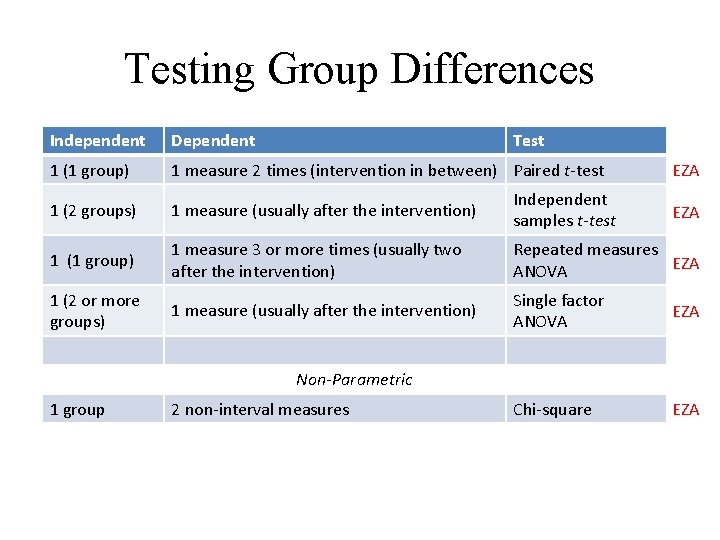

Testing Group Differences

| Independent | Dependent | Test |
|---|---|---|
| 1 (1 group) | 1 measure, 2 times (intervention in between) | Paired t-test |
| 1 (2 groups) | 1 measure (usually after the intervention) | Independent samples t-test |
| 1 (1 group) | 1 measure, 3 or more times (usually two after the intervention) | Repeated measures ANOVA |
| 1 (2 or more groups) | 1 measure (usually after the intervention) | Single factor ANOVA |
| Non-Parametric: 1 group | 2 non-interval measures | Chi-square |

[Slide annotations: EZA]

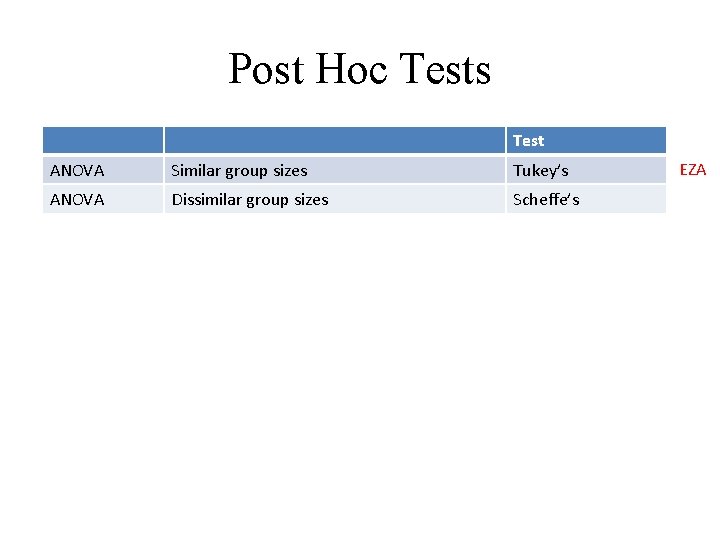

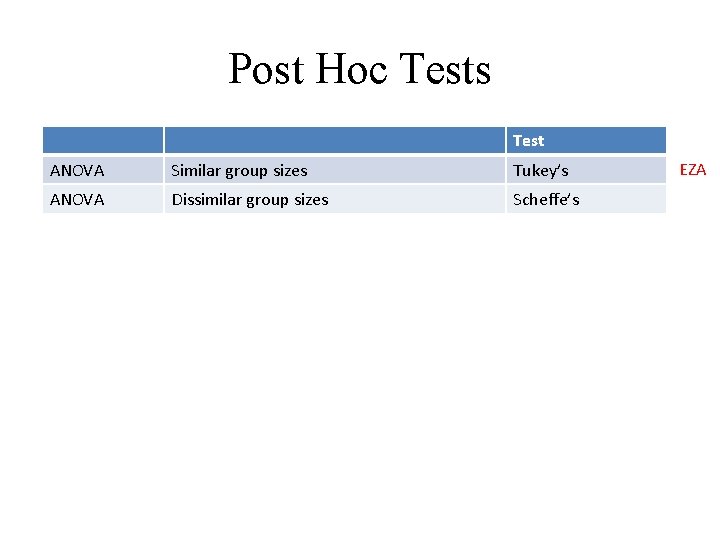

Post Hoc Tests

| Situation | Test |
|---|---|
| ANOVA, similar group sizes | Tukey’s |
| ANOVA, dissimilar group sizes | Scheffe’s |

[Slide annotations: EZA]

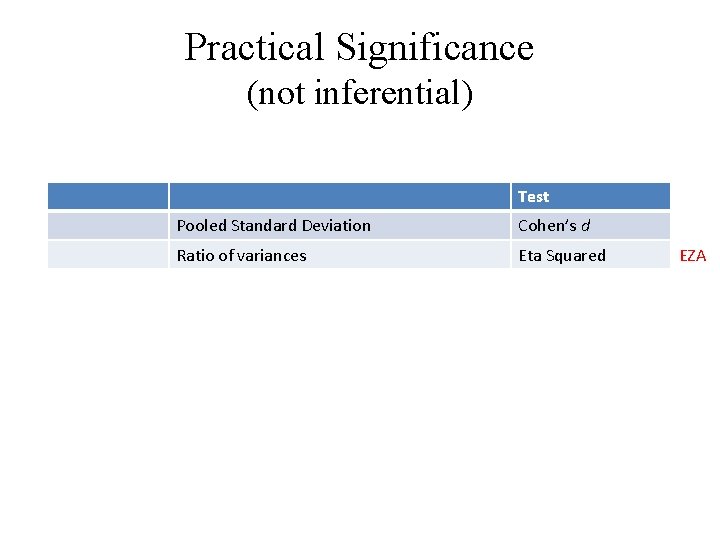

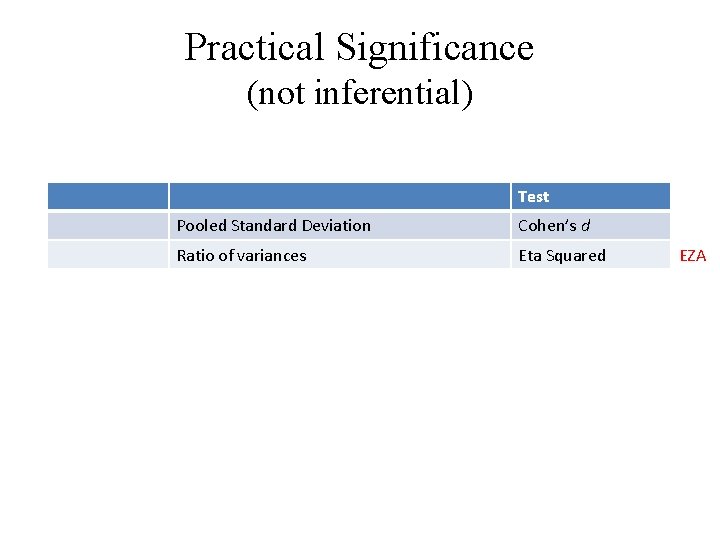

Practical Significance (not inferential)

| Basis | Test |
|---|---|
| Pooled standard deviation | Cohen’s d |
| Ratio of variances | Eta Squared |

[Slide annotations: EZA]

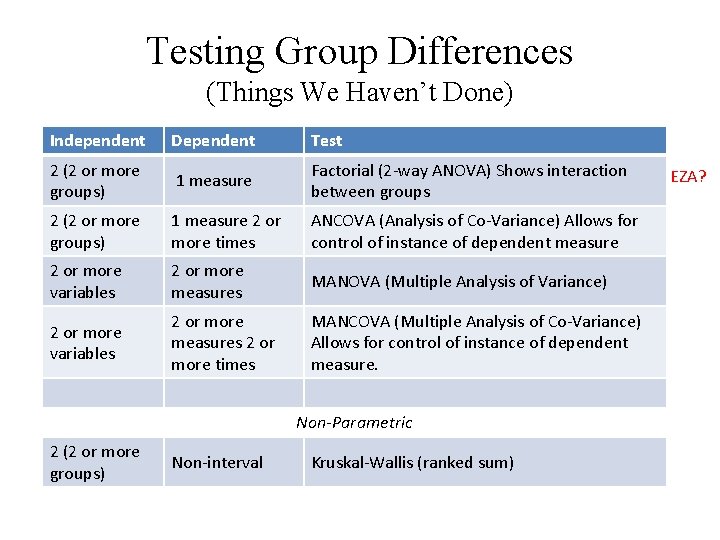

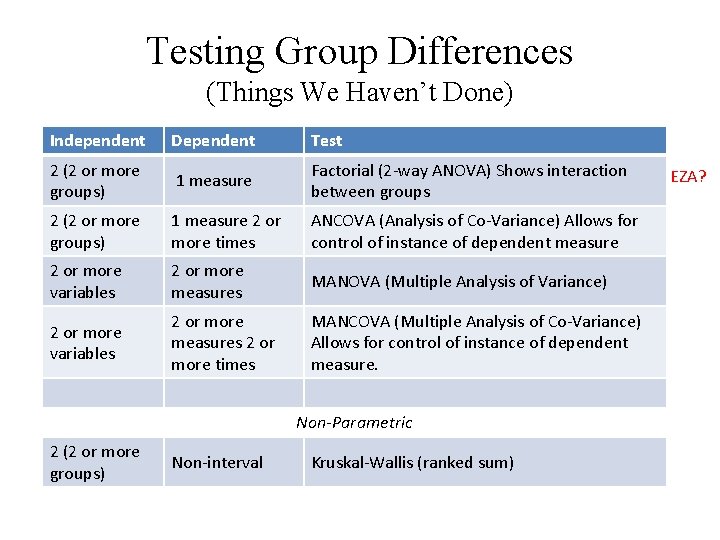

Testing Group Differences (Things We Haven’t Done)

| Independent | Dependent | Test |
|---|---|---|
| 2 (2 or more groups) | 1 measure | Factorial (2-way ANOVA). Shows interaction between groups. |
| 2 (2 or more groups) | 1 measure, 2 or more times | ANCOVA (Analysis of Covariance). Allows for control of instance of dependent measure. |
| 2 or more variables | 2 or more measures | MANOVA (Multivariate Analysis of Variance) |
| 2 or more variables | 2 or more measures, 2 or more times | MANCOVA (Multivariate Analysis of Covariance). Allows for control of instance of dependent measure. |
| Non-Parametric: 2 (2 or more groups) | Non-interval | Kruskal-Wallis (ranked sum) |

[Slide annotations: EZA?]

Correlational Statistics

- Assumptions
  - The relationship among the measures of two characteristics is linear.
  - Compared measures come from individuals in the same population.
  - Correlations are not causal.

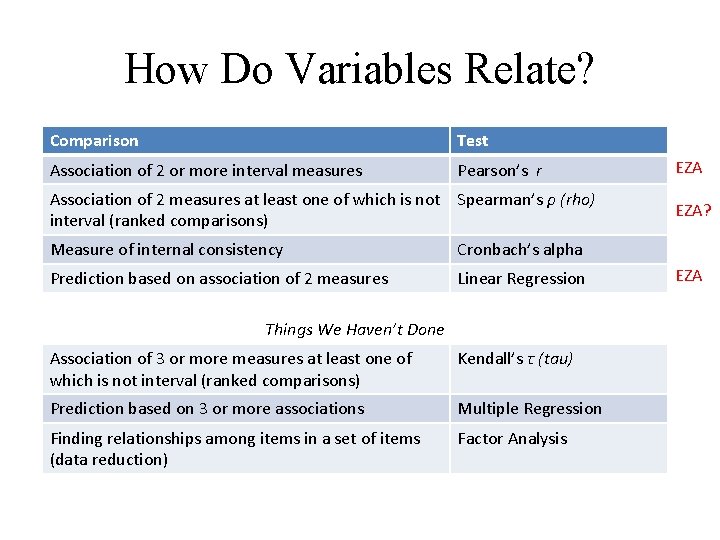

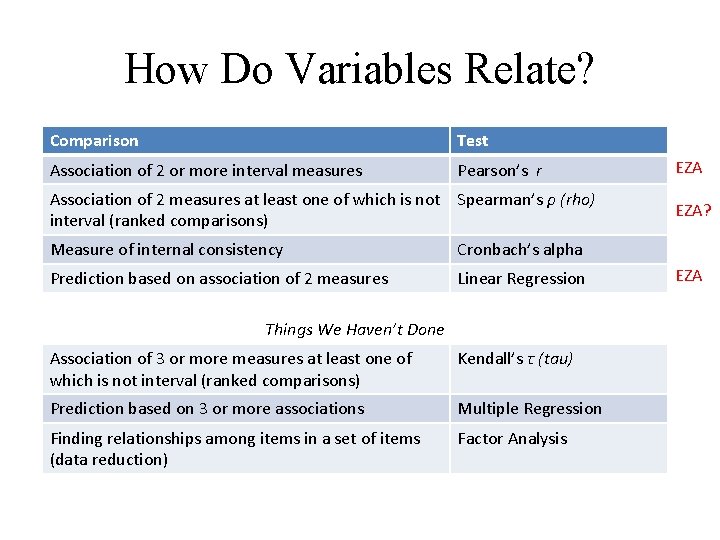

How Do Variables Relate?

| Comparison | Test |
|---|---|
| Association of 2 or more interval measures | Pearson’s r |
| Association of 2 measures, at least one of which is not interval (ranked comparisons) | Spearman’s ρ (rho) |
| Measure of internal consistency | Cronbach’s alpha |
| Prediction based on association of 2 measures | Linear Regression |

Things We Haven’t Done

| Comparison | Test |
|---|---|
| Association of 3 or more measures, at least one of which is not interval (ranked comparisons) | Kendall’s τ (tau) |
| Prediction based on 3 or more associations | Multiple Regression |
| Finding relationships among items in a set of items (data reduction) | Factor Analysis |

[Slide annotations: EZA?, EZA]