Natural Language Processing: Computational Discourse

- Slides: 71

Outline
• Reference
  – Kinds of reference phenomena
  – Constraints on co-reference
  – Preferences for co-reference
  – The Lappin-Leass algorithm for coreference
• Coherence
  – Hobbs coherence relations
  – Rhetorical Structure Theory

Note
• Reference resolution and discourse processing are very difficult
• There are no fully successful approaches
• But they are needed
• Today, the first goal is for you to become aware of how you, as a human, resolve pronouns. You need to understand the task before you can develop a method
• Then we will look at a concrete algorithm for pronoun resolution
• Finally, we’ll look at discourse relations

Part I: Reference Resolution
• John went to Bill’s car dealership to check out an Acura Integra. He looked at it for half an hour
• I’d like to get from Boston to San Francisco, on either December 5th or December 6th. It’s ok if it stops in another city along the way

Some terminology
• John went to Bill’s car dealership to check out an Acura Integra. He looked at it for half an hour
• Reference: the process by which speakers use expressions such as John and he to denote a particular person
  – Referring expression: John, he
  – Referent: the actual entity (as a shorthand, we might call “John” the referent)
  – John and he “corefer”
  – Antecedent: John
  – Anaphor: he

Discourse Model
• Model of the entities the discourse is about
• A referent is first evoked into the model; later it is accessed from the model
• Figure: “John” evokes the referent, “He” accesses it, and the two expressions corefer

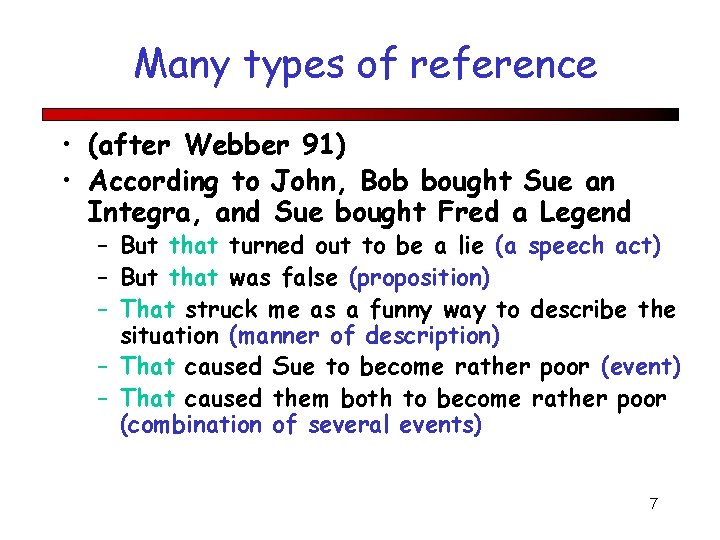
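The evoke/access distinction can be sketched as a small data structure. This is only an illustration: the class and method names (`DiscourseModel`, `evoke`, `access`) and the referent key `"JOHN"` are assumptions made for the example, not part of any standard library or of the slides.

```python
from dataclasses import dataclass, field


@dataclass
class Referent:
    """One entity in the discourse model (illustrative structure)."""
    name: str
    mentions: list = field(default_factory=list)


class DiscourseModel:
    """Keeps track of the entities the discourse is about."""

    def __init__(self):
        self.referents = {}

    def evoke(self, name, expression):
        """First mention: add a new referent to the model."""
        referent = Referent(name, [expression])
        self.referents[name] = referent
        return referent

    def access(self, name, expression):
        """Later mention: look up an existing referent and record the expression."""
        referent = self.referents[name]
        referent.mentions.append(expression)
        return referent


model = DiscourseModel()
model.evoke("JOHN", "John")   # "John went to Bill's car dealership ..."
model.access("JOHN", "He")    # "He looked at it ..."
```

After these two calls, “John” and “He” are stored under the same referent, which is what it means for them to corefer.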

Many types of reference
• (after Webber 91)
• According to John, Bob bought Sue an Integra, and Sue bought Fred a Legend
  – But that turned out to be a lie (a speech act)
  – But that was false (proposition)
  – That struck me as a funny way to describe the situation (manner of description)
  – That caused Sue to become rather poor (event)
  – That caused them both to become rather poor (combination of several events)

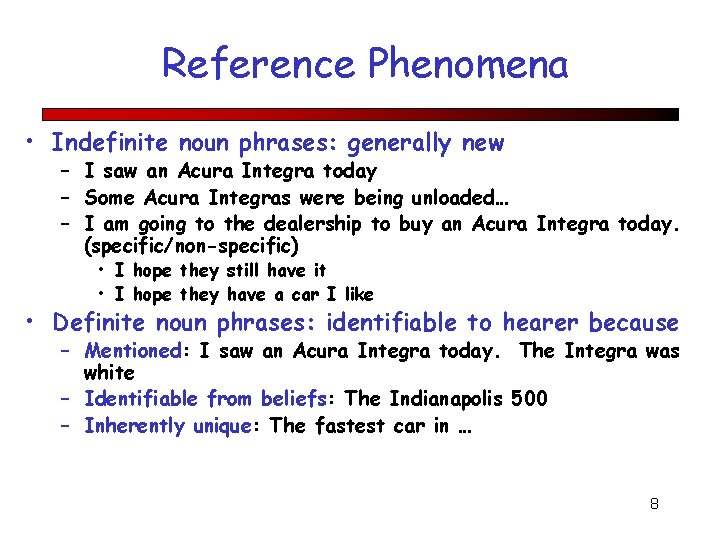

Reference Phenomena
• Indefinite noun phrases: generally new to the hearer
  – I saw an Acura Integra today
  – Some Acura Integras were being unloaded…
  – I am going to the dealership to buy an Acura Integra today. (ambiguous between a specific and a non-specific reading)
    • Specific: I hope they still have it
    • Non-specific: I hope they have a car I like
• Definite noun phrases: identifiable to the hearer because they are
  – Mentioned: I saw an Acura Integra today. The Integra was white
  – Identifiable from beliefs: The Indianapolis 500
  – Inherently unique: The fastest car in …

Reference Phenomena: Pronouns
• I saw an Acura Integra today. It was white
• Compared to definite noun phrases, pronouns require a more salient referent
  – John went to Bob’s party, and parked next to a beautiful Acura Integra.
  – He got out and talked to Bob, the owner, for more than an hour.
  – Bob told him that he recently got engaged and that they are moving into a new home on Main Street.
  – ?? He also said that he bought it yesterday.
  – He also said that he bought the Acura yesterday.

Salience Via Structural Recency
• E: So, you have the engine assembly finished. Now attach the rope. By the way, did you buy the gas can today?
• A: Yes
• E: Did it cost much?
• A: No
• E: Good. Ok, have you got it attached yet?
• The middle part is a subdialog; once it is over, we resume with the discourse model of the first two sentences, so the final “it” is the rope, not the more recently mentioned gas can. “By the way” signals the beginning of the subdialog and “Ok” signals the end of it.

More on Pronouns • Cataphora: pronoun appears before referent: – Before he bought it, John checked over the Integra very carefully. 11

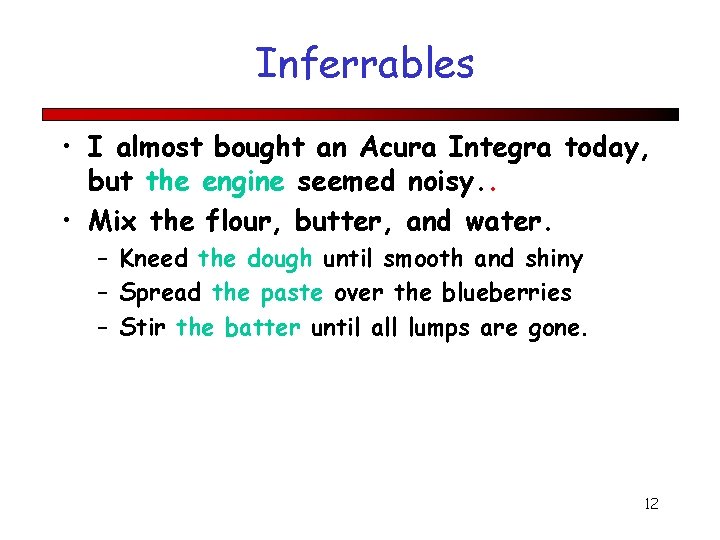

Inferrables
• I almost bought an Acura Integra today, but the engine seemed noisy.
  – This works even though the engine wasn’t mentioned before: it can be inferred from the Integra.
• Mix the flour, butter, and water.
  – Knead the dough until smooth and shiny
  – Spread the paste over the blueberries
  – Stir the batter until all lumps are gone.
  – The same holds for these: the dough, the paste, and the batter were never mentioned, but each can be inferred from the mixing step.

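Discontinuous sets
• John has an Acura and Mary has a Subaru. They drive them all the time.
• “They” = John and Mary; “them” = the Acura and the Subaru. Each pronoun refers to a set whose members were introduced separately.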

Generics • I saw no less than 6 Acura Integras today. They are the coolest cars. • “They” refers to the general class, not to the 6 specific cars 16

Pronominal Reference Resolution
• Given a pronoun, find the referent (either in the text or as an entity in the world)
• We will approach this today in 3 steps
  – Hard constraints on reference
  – Soft constraints on reference
  – An algorithm that uses these constraints
• But first, let’s think about what influences pronominal reference resolution

What influences pronoun resolution? • Syntax • Semantics/world knowledge 18

Syntax matters • John kicked Bill. Mary told him to go home. • Bill was kicked by John. Mary told him to go home. • John kicked Bill. Mary punched him. 19

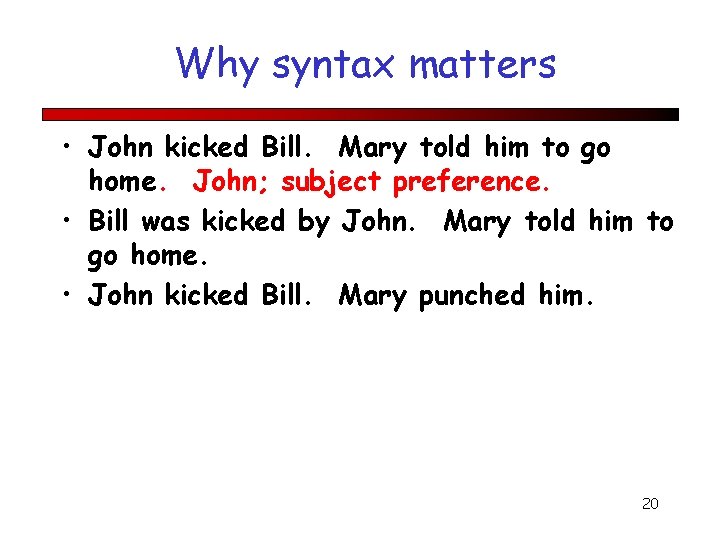

Why syntax matters
• John kicked Bill. Mary told him to go home.
  – him = John: subject preference
• Bill was kicked by John. Mary told him to go home.
• John kicked Bill. Mary punched him.

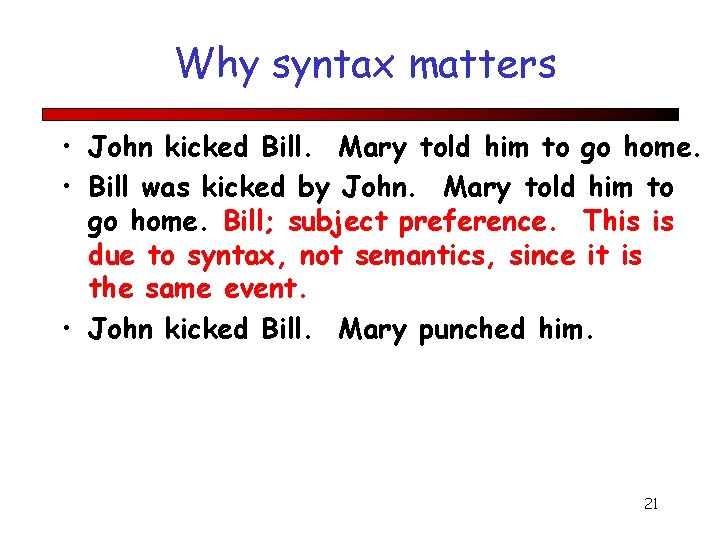

Why syntax matters
• John kicked Bill. Mary told him to go home.
• Bill was kicked by John. Mary told him to go home.
  – him = Bill: subject preference. This is due to syntax, not semantics, since it is the same event.
• John kicked Bill. Mary punched him.

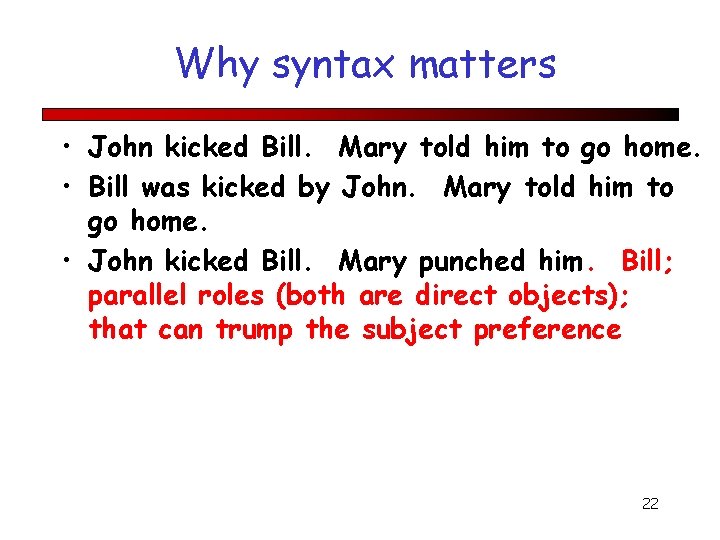

Why syntax matters
• John kicked Bill. Mary told him to go home.
• Bill was kicked by John. Mary told him to go home.
• John kicked Bill. Mary punched him.
  – him = Bill: parallel roles (both are direct objects), which can trump the subject preference

Why syntax matters
• John kicked Bill. Mary told him to go home.
• Bill was kicked by John. Mary told him to go home.
  – The first two examples: grammatical role hierarchy
• John kicked Bill. Mary punched him.
  – The third example: grammatical role parallelism

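Why semantics matters
• The city council denied the demonstrators a permit because they {feared|advocated} violence.
  – With “feared”, they = the city council; with “advocated”, they = the demonstrators. The syntax is identical, so only the meaning of the verb decides.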

Why knowledge matters • John hit Bill. He was severely injured. • Even though John is the subject, our knowledge tells us “He” is probably Bill, overriding the syntactic preference 29

Why Knowledge Matters
• Margaret Thatcher admires Hillary Clinton, and George W. Bush absolutely worships her.
• Remember: George W. Bush is positive toward Thatcher and negative toward H. Clinton, so we reject “her” being H. Clinton, even though syntactic parallelism suggests it is Clinton. (Or you consider sarcasm, that the speaker is wrong, etc.)

Pronoun Resolution: State of the Art
• Not very good
• There are no successful methods that take care of all the different cases we’ve seen in these notes (e.g., those that require knowledge, detecting subdialogs, detecting sarcasm, etc.)
• There are approaches that exploit hard and soft syntactic and semantic constraints
• In general, we need reference resolution to succeed to get to the “next level” of NLP systems. Hopefully, there will be a breakthrough …

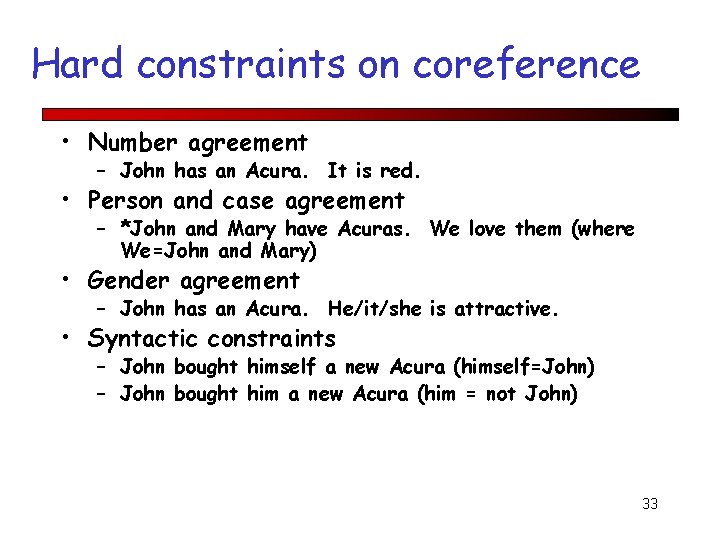

Hard constraints on coreference
• Number agreement
  – John has an Acura. It is red.
• Person and case agreement
  – *John and Mary have Acuras. We love them (where We = John and Mary)
• Gender agreement
  – John has an Acura. He/it/she is attractive.
• Syntactic constraints
  – John bought himself a new Acura (himself = John)
  – John bought him a new Acura (him ≠ John)
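Number and gender agreement can be applied as a simple filter over candidate antecedents. The sketch below is a minimal illustration under stated assumptions: the `Mention` record, the tiny `PRONOUNS` lexicon, and the feature labels are all hypothetical, and person, case, and the syntactic (reflexive) constraints are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mention:
    text: str
    number: str   # "sg" or "pl"
    gender: str   # "masc", "fem", or "neut"


# Hypothetical mini-lexicon: pronoun -> (number, gender); None means "any gender".
PRONOUNS = {
    "he": ("sg", "masc"), "him": ("sg", "masc"),
    "she": ("sg", "fem"), "her": ("sg", "fem"),
    "it": ("sg", "neut"),
    "they": ("pl", None), "them": ("pl", None),
}


def agrees(pronoun, candidate):
    """True if the candidate matches the pronoun in number and gender."""
    number, gender = PRONOUNS[pronoun.lower()]
    if candidate.number != number:
        return False
    return gender is None or candidate.gender == gender


def filter_candidates(pronoun, candidates):
    """Keep only the candidates that pass the hard agreement constraints."""
    return [c for c in candidates if agrees(pronoun, c)]


# "John has an Acura. It is red."
candidates = [Mention("John", "sg", "masc"), Mention("an Acura", "sg", "neut")]
survivors = filter_candidates("It", candidates)
```

Here only “an Acura” survives for “It”, and only “John” would survive for “He”. Hard constraints remove candidates outright; the preferences on the following slides only rank whatever is left.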

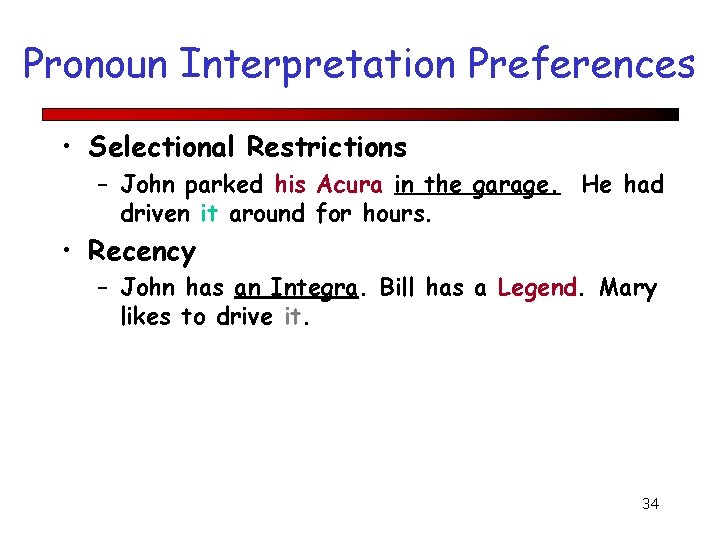

Pronoun Interpretation Preferences
• Selectional Restrictions
  – John parked his Acura in the garage. He had driven it around for hours. (it = the Acura: you drive a car, not a garage)
• Recency
  – John has an Integra. Bill has a Legend. Mary likes to drive it. (it = the Legend, the more recent mention)

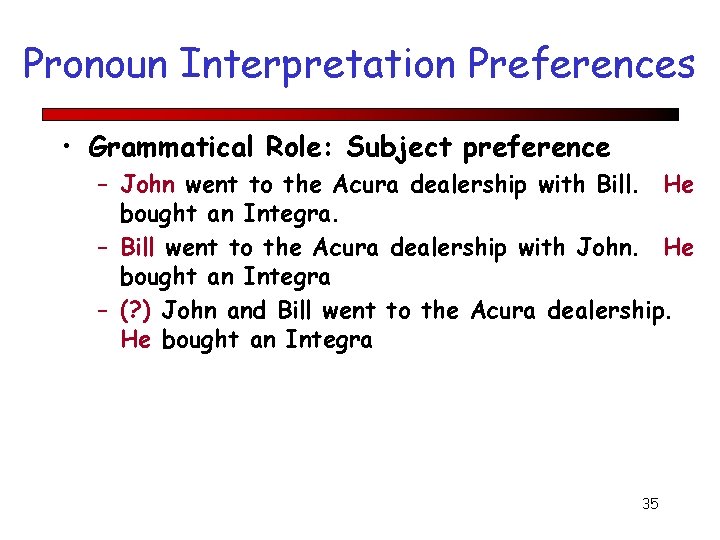

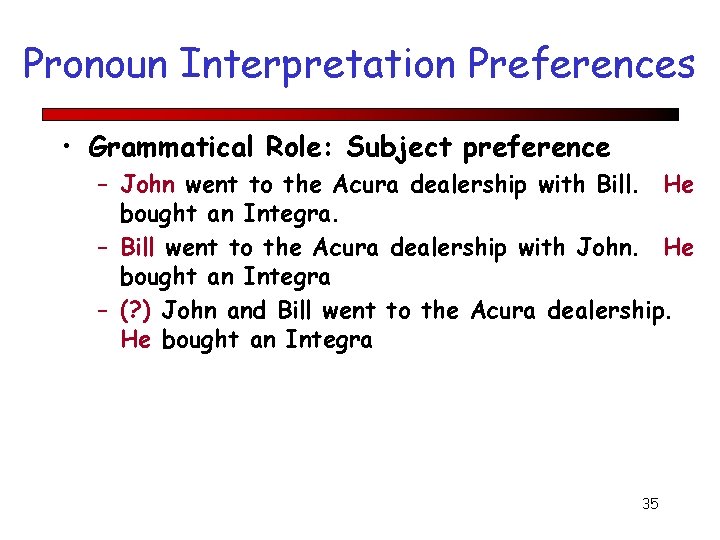

Pronoun Interpretation Preferences
• Grammatical Role: Subject preference
  – John went to the Acura dealership with Bill. He bought an Integra. (He = John)
  – Bill went to the Acura dealership with John. He bought an Integra. (He = Bill)
  – (?) John and Bill went to the Acura dealership. He bought an Integra. (no clear preference)

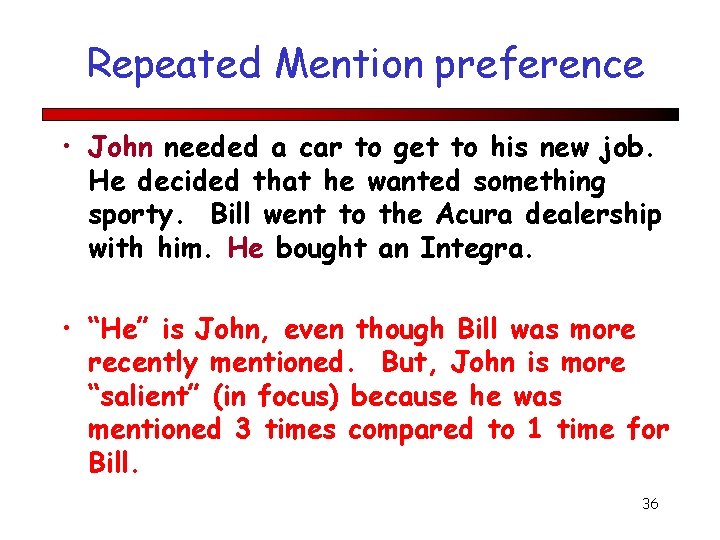

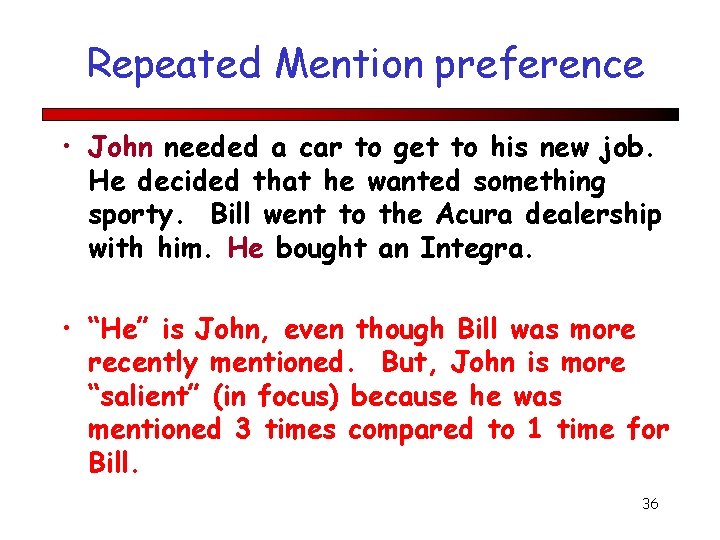

Repeated Mention preference • John needed a car to get to his new job. He decided that he wanted something sporty. Bill went to the Acura dealership with him. He bought an Integra. • “He” is John, even though Bill was more recently mentioned. But, John is more “salient” (in focus) because he was mentioned 3 times compared to 1 time for Bill. 36

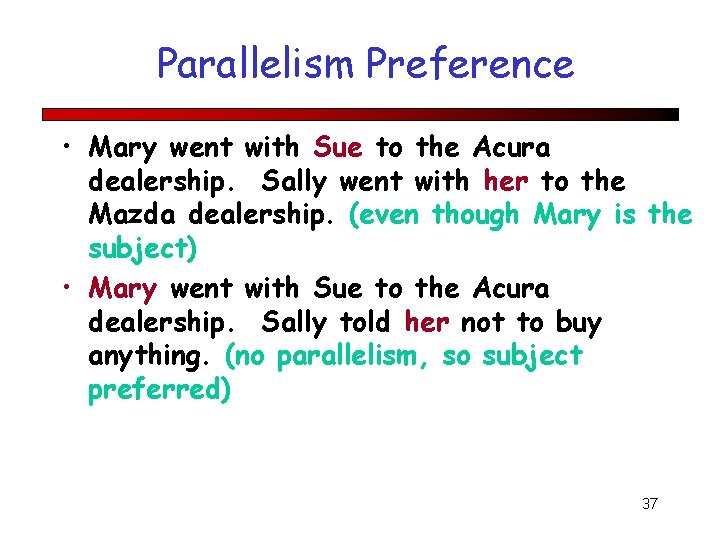

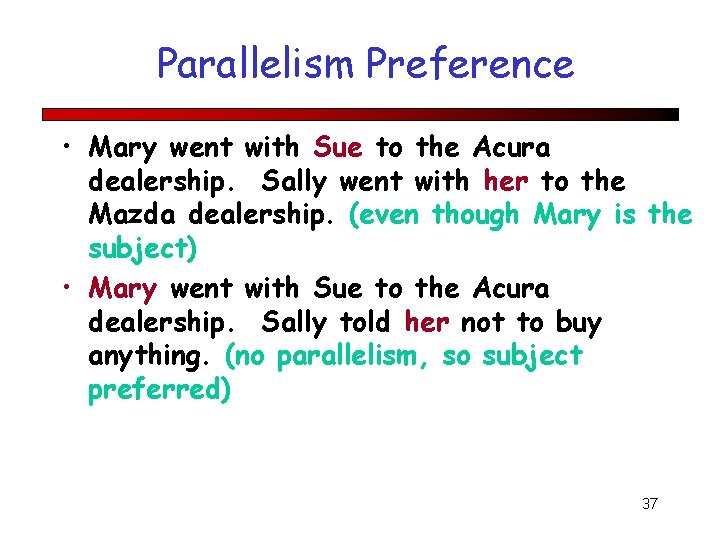

Parallelism Preference
• Mary went with Sue to the Acura dealership. Sally went with her to the Mazda dealership. (her = Sue, even though Mary is the subject)
• Mary went with Sue to the Acura dealership. Sally told her not to buy anything. (no parallelism, so the subject, Mary, is preferred)

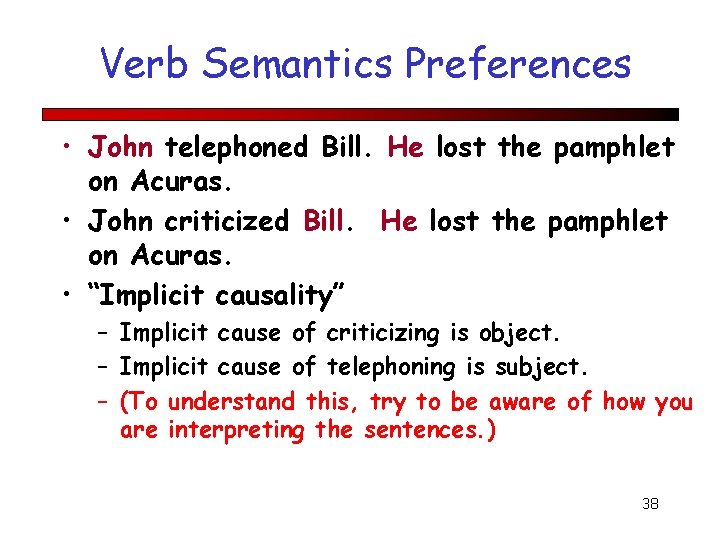

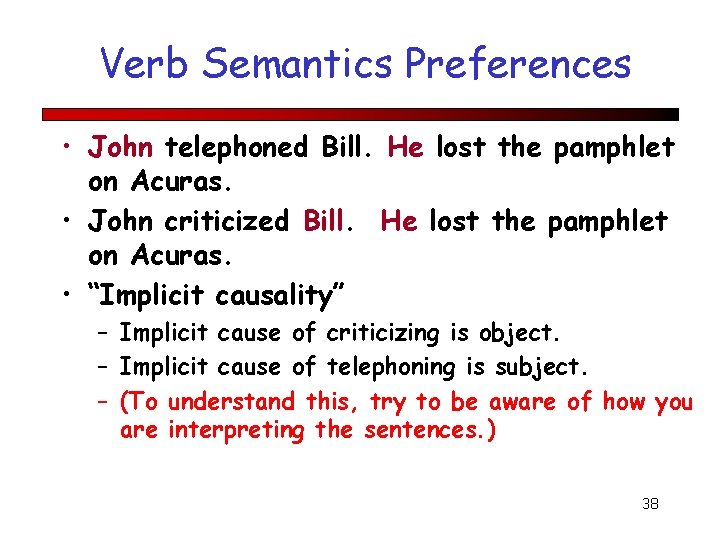

Verb Semantics Preferences
• John telephoned Bill. He lost the pamphlet on Acuras. (He = John)
• John criticized Bill. He lost the pamphlet on Acuras. (He = Bill)
• “Implicit causality”
  – Implicit cause of criticizing is object.
  – Implicit cause of telephoning is subject.
  – (To understand this, try to be aware of how you are interpreting the sentences.)

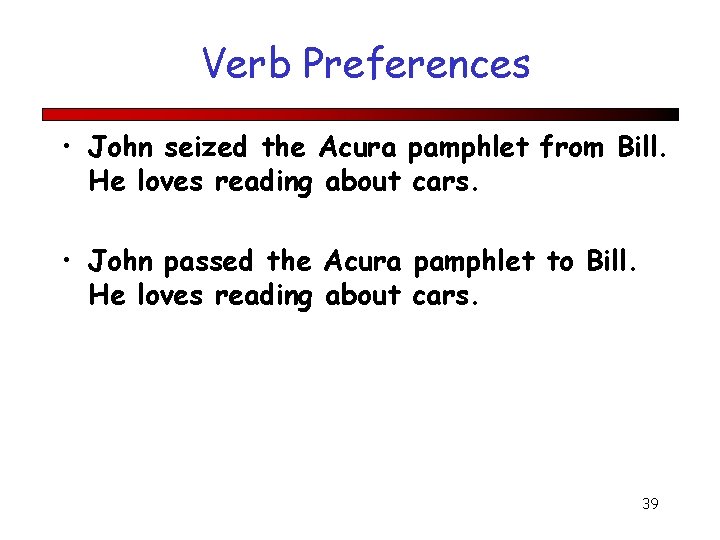

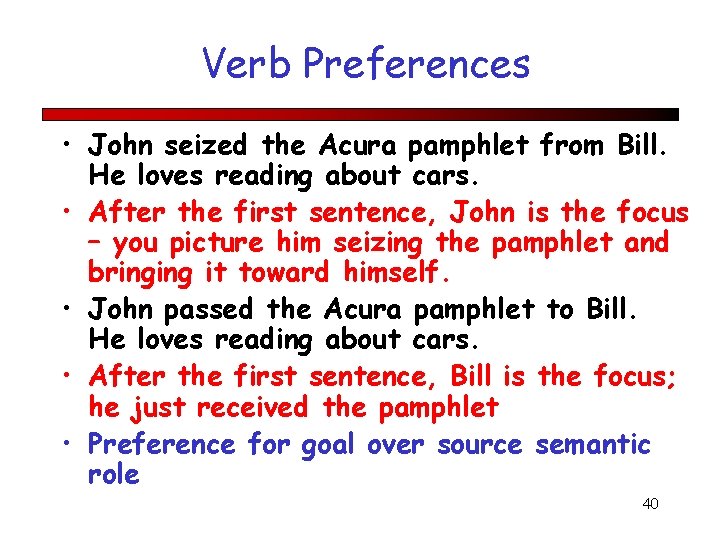

Verb Preferences
• John seized the Acura pamphlet from Bill. He loves reading about cars.
  – After the first sentence, John is the focus: you picture him seizing the pamphlet and bringing it toward himself.
• John passed the Acura pamphlet to Bill. He loves reading about cars.
  – After the first sentence, Bill is the focus; he just received the pamphlet.
• Preference for goal over source semantic role

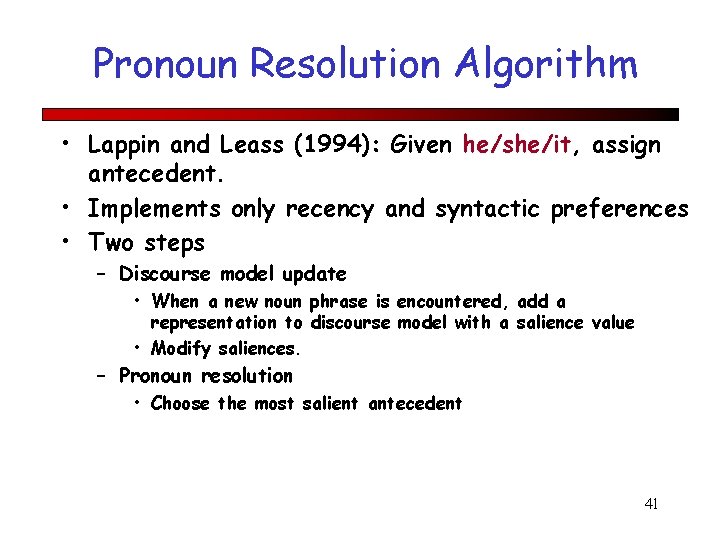

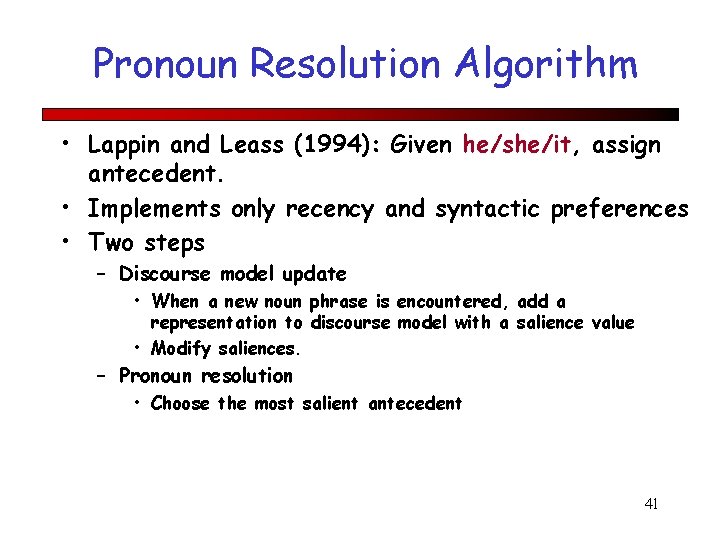

Pronoun Resolution Algorithm
• Lappin and Leass (1994): Given he/she/it, assign antecedent.
• Implements only recency and syntactic preferences
• Two steps
  – Discourse model update
    • When a new noun phrase is encountered, add a representation to the discourse model with a salience value
    • Modify saliences.
  – Pronoun resolution
    • Choose the most salient antecedent

Salience Factors and Weights
• From Lappin and Leass

| Salience factor | Weight |
|---|---|
| Sentence recency | 100 |
| Subject emphasis | 80 |
| Existential emphasis | 70 |
| Accusative (direct object) emphasis | 50 |
| Indirect object and oblique emphasis | 40 |
| Non-adverbial emphasis | 50 |
| Head noun emphasis | 80 |
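The weights listed above can be written as a lookup table, with the salience of a single mention being the sum of the factors that apply to it. The dictionary keys and the function name `mention_salience` are labels chosen for this sketch, not terminology from Lappin and Leass.

```python
# Salience weights as listed on the slide (Lappin and Leass 1994).
# The key names are illustrative labels chosen for this sketch.
SALIENCE_WEIGHTS = {
    "recency": 100,
    "subject": 80,
    "existential": 70,
    "object": 50,
    "indirect_object_or_oblique": 40,
    "non_adverbial": 50,
    "head_noun": 80,
}


def mention_salience(factors):
    """Sum the weights of all factors that apply to one mention."""
    return sum(SALIENCE_WEIGHTS[factor] for factor in factors)


# A subject in the current sentence that is not inside an adverbial PP
# and is the head noun of its NP:
subject_value = mention_salience(["recency", "subject", "non_adverbial", "head_noun"])

# A direct object with the same other properties:
object_value = mention_salience(["recency", "object", "non_adverbial", "head_noun"])
```

With these weights a subject mention scores 310 and a direct object scores 280, which is where the subject preference comes from.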

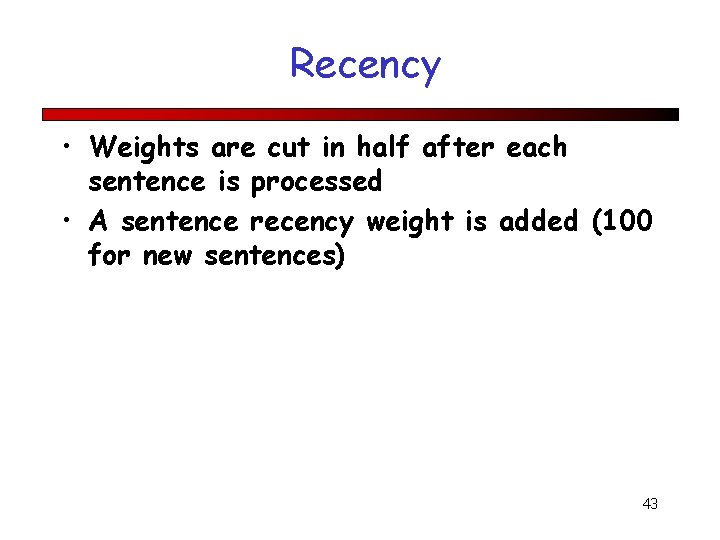

Recency • Weights are cut in half after each sentence is processed • A sentence recency weight is added (100 for new sentences) 43

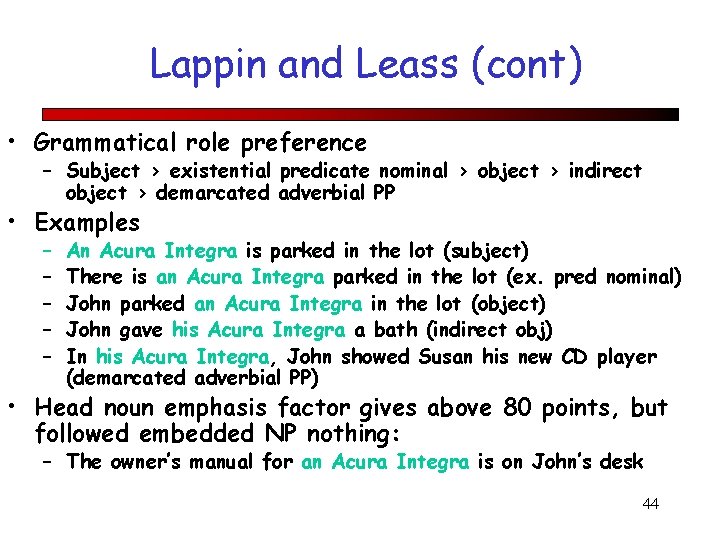

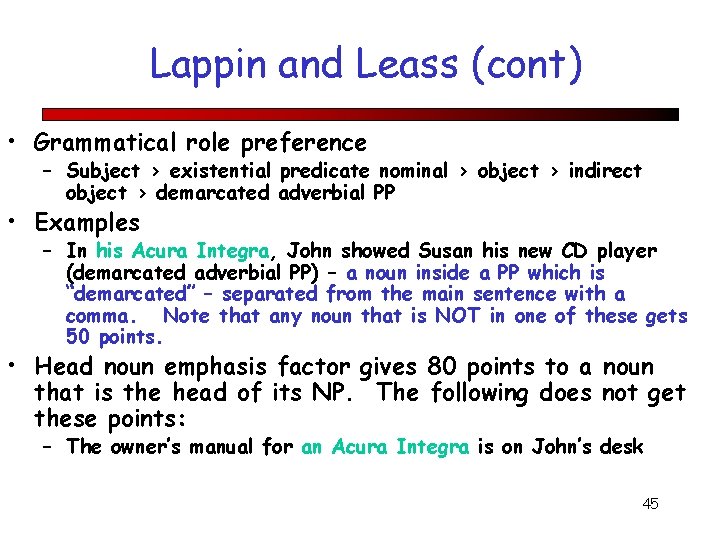

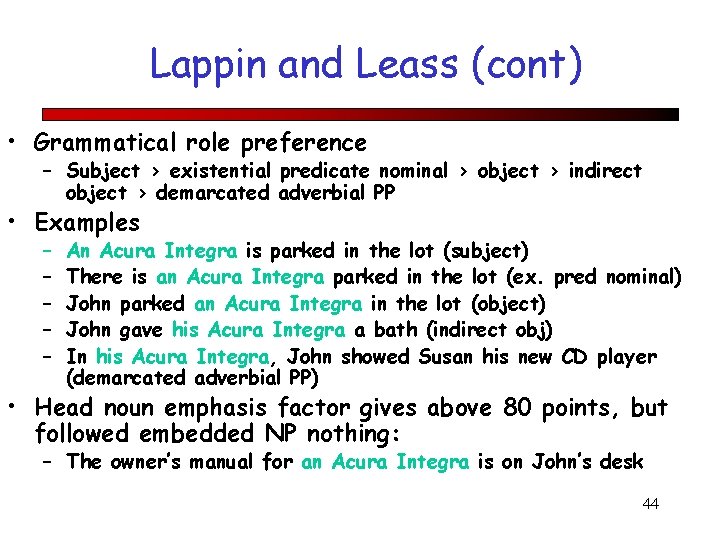

Lappin and Leass (cont)
• Grammatical role preference
  – Subject > existential predicate nominal > object > indirect object > demarcated adverbial PP
• Examples
  – An Acura Integra is parked in the lot (subject)
  – There is an Acura Integra parked in the lot (existential predicate nominal)
  – John parked an Acura Integra in the lot (object)
  – John gave his Acura Integra a bath (indirect object)
  – In his Acura Integra, John showed Susan his new CD player (demarcated adverbial PP)
• The head noun emphasis factor gives 80 points to the Integra in each example above, but an NP embedded inside another NP, as in the following, gets nothing:
  – The owner’s manual for an Acura Integra is on John’s desk

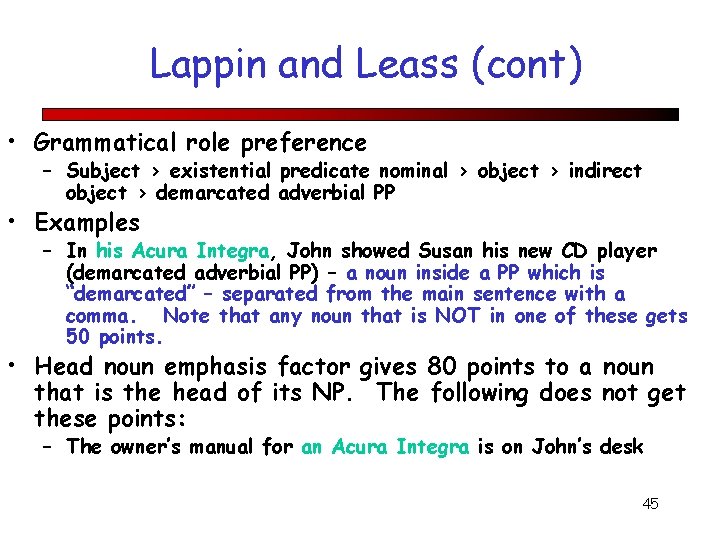

Lappin and Leass (cont)
• Grammatical role preference
  – Subject > existential predicate nominal > object > indirect object > demarcated adverbial PP
• Examples
  – In his Acura Integra, John showed Susan his new CD player (demarcated adverbial PP)
  – Here the Integra is a noun inside a PP that is “demarcated”, i.e. separated from the main sentence by a comma. Any noun that is NOT inside such a PP gets the 50 points for non-adverbial emphasis.
• The head noun emphasis factor gives 80 points to a noun that heads a top-level NP, i.e. one that is not embedded inside a larger NP. The Integra in the following sentence does not get these points:
  – The owner’s manual for an Acura Integra is on John’s desk

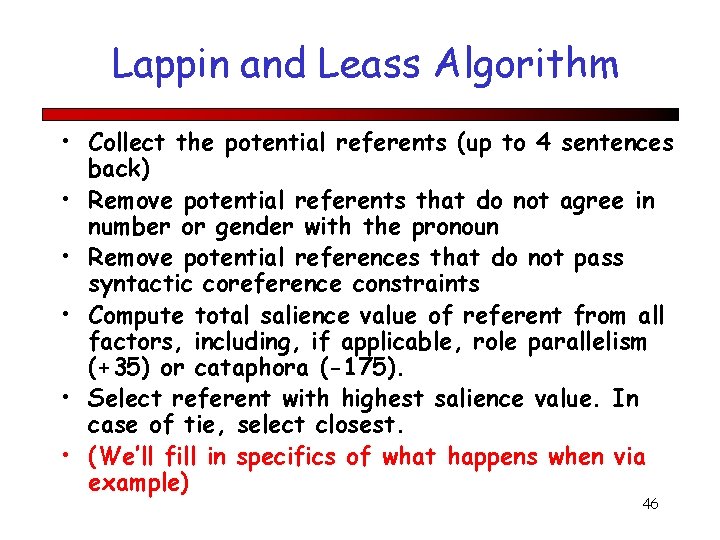

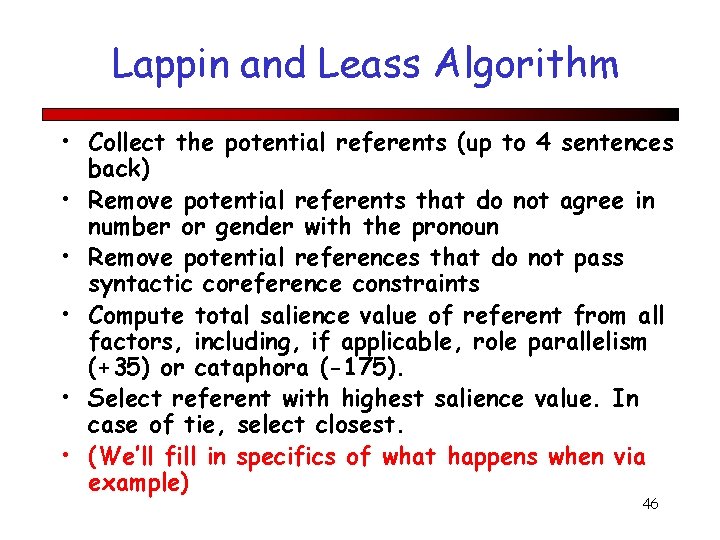

Lappin and Leass Algorithm
• Collect the potential referents (up to 4 sentences back)
• Remove potential referents that do not agree in number or gender with the pronoun
• Remove potential referents that do not pass syntactic coreference constraints
• Compute the total salience value of each referent from all factors, including, if applicable, role parallelism (+35) or cataphora (-175)
• Select the referent with the highest salience value. In case of a tie, select the closest.
• (We’ll fill in the specifics of what happens when via an example)
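The resolution steps above can be sketched as one function. This is a simplified illustration, not the original implementation: the `Candidate` record and its field names are assumptions, the syntactic coreference check is reduced to a precomputed hypothetical flag (`blocked_by_syntax`), and "closest" is represented by a hand-supplied `distance` value.

```python
from dataclasses import dataclass

ROLE_PARALLELISM = 35    # bonus when candidate and pronoun share a grammatical role
CATAPHORA = -175         # penalty when the candidate follows the pronoun
MAX_SENTENCES_BACK = 4


@dataclass
class Candidate:
    name: str
    salience: float                  # accumulated salience from the discourse model
    number: str                      # "sg" or "pl"
    gender: str                      # "masc", "fem", or "neut"
    role: str                        # grammatical role of the latest mention
    distance: int                    # smaller means closer to the pronoun
    sentences_back: int = 0
    cataphoric: bool = False
    blocked_by_syntax: bool = False  # hypothetical flag set by a syntactic filter


def resolve(number, gender, role, candidates):
    """Return the best antecedent for a pronoun, or None if nothing survives."""
    pool = [
        c for c in candidates
        if c.sentences_back <= MAX_SENTENCES_BACK
        and c.number == number
        and c.gender == gender
        and not c.blocked_by_syntax
    ]

    def total(c):
        score = c.salience
        if c.role == role:
            score += ROLE_PARALLELISM
        if c.cataphoric:
            score += CATAPHORA
        return score

    # Highest total salience wins; ties go to the closest candidate.
    return min(pool, key=lambda c: (-total(c), c.distance), default=None)


# Resolving "it" in "He showed it to Bob", using the salience values
# from the worked example (John 465, Integra 140, dealership 115).
candidates = [
    Candidate("John", 465, "sg", "masc", "subject", distance=1),
    Candidate("Integra", 140, "sg", "neut", "object", distance=3, sentences_back=1),
    Candidate("dealership", 115, "sg", "neut", "other", distance=2, sentences_back=1),
]
best = resolve("sg", "neut", "object", candidates)
```

John is removed by gender agreement even though he has the highest salience; Integra then beats dealership, 140 + 35 = 175 against 115.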

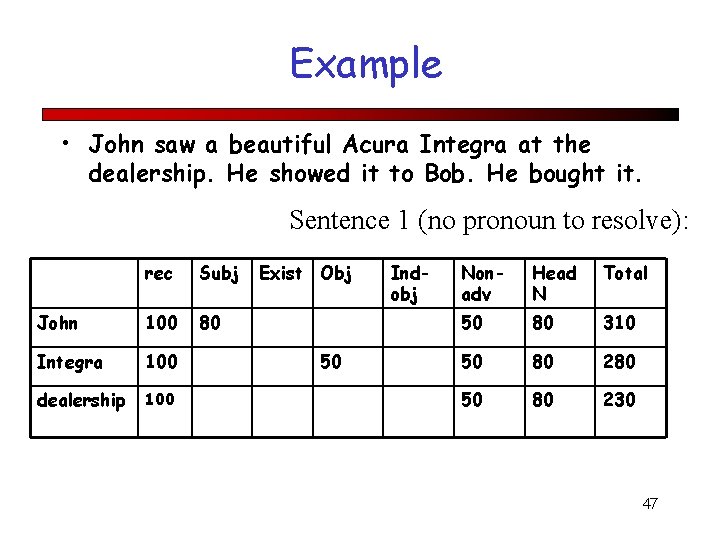

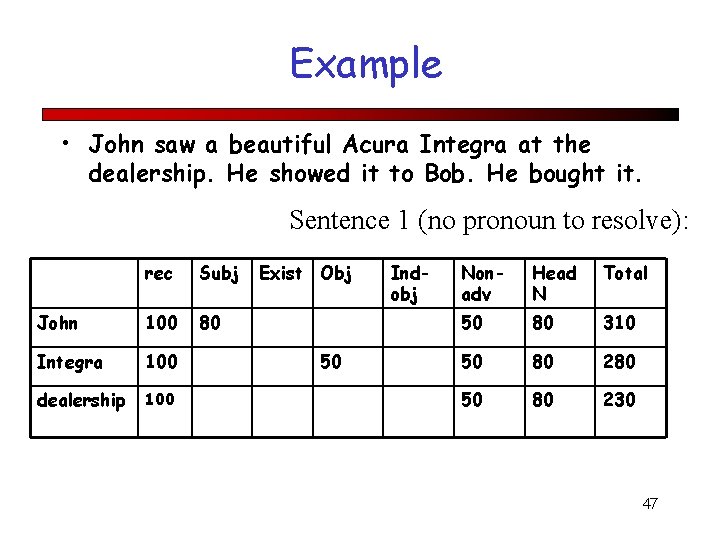

Example
• John saw a beautiful Acura Integra at the dealership. He showed it to Bob. He bought it.
• Sentence 1 (no pronoun to resolve):

| Referent | Rec | Subj | Exist | Obj | IndObj | NonAdv | HeadN | Total |
|---|---|---|---|---|---|---|---|---|
| John | 100 | 80 | | | | 50 | 80 | 310 |
| Integra | 100 | | | 50 | | 50 | 80 | 280 |
| dealership | 100 | | | | | 50 | 80 | 230 |

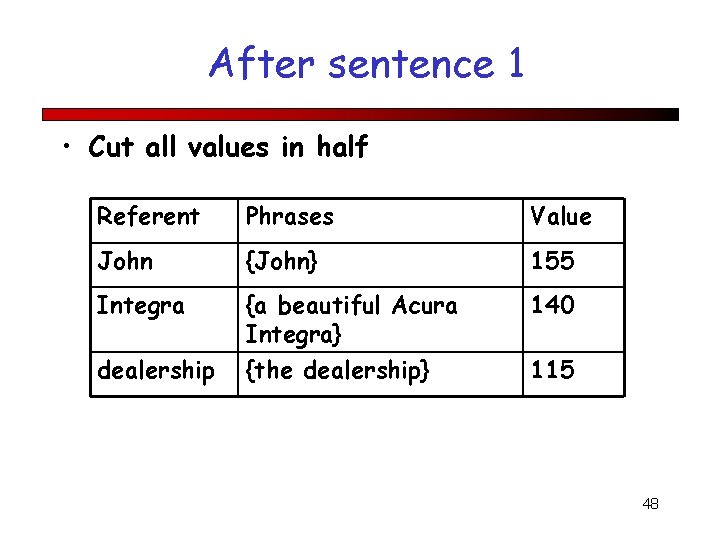

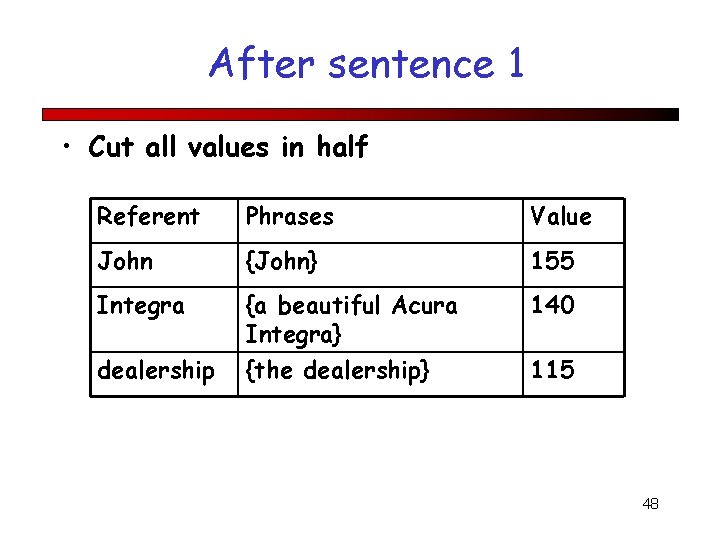

After sentence 1
• Cut all values in half

| Referent | Phrases | Value |
|---|---|---|
| John | {John} | 155 |
| Integra | {a beautiful Acura Integra} | 140 |
| dealership | {the dealership} | 115 |

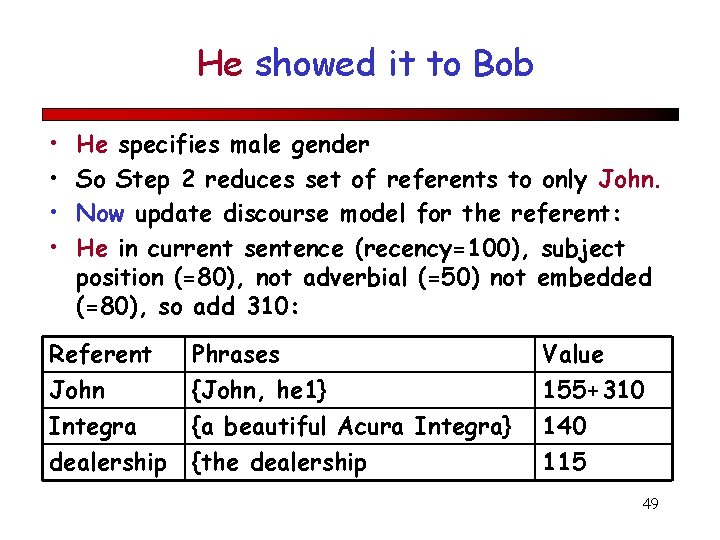

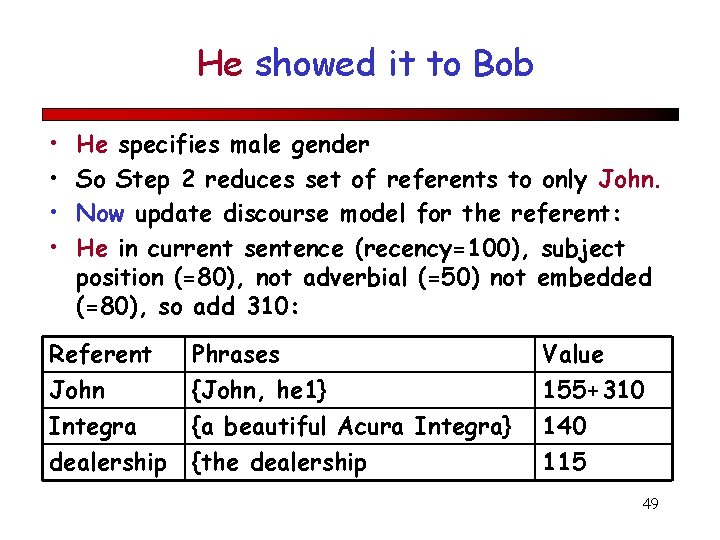

He showed it to Bob
• He specifies male gender, so Step 2 reduces the set of referents to only John.
• Now update the discourse model for the referent: He is in the current sentence (recency = 100), in subject position (= 80), not adverbial (= 50), and not embedded (= 80), so add 310:

| Referent | Phrases | Value |
|---|---|---|
| John | {John, he1} | 155 + 310 = 465 |
| Integra | {a beautiful Acura Integra} | 140 |
| dealership | {the dealership} | 115 |

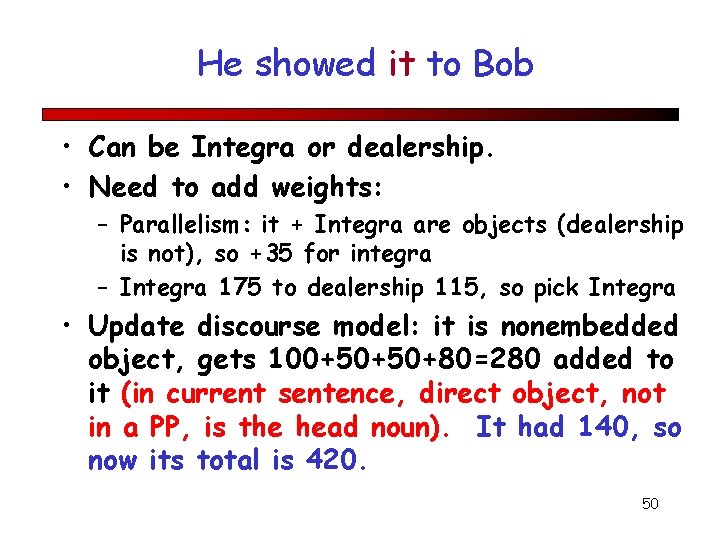

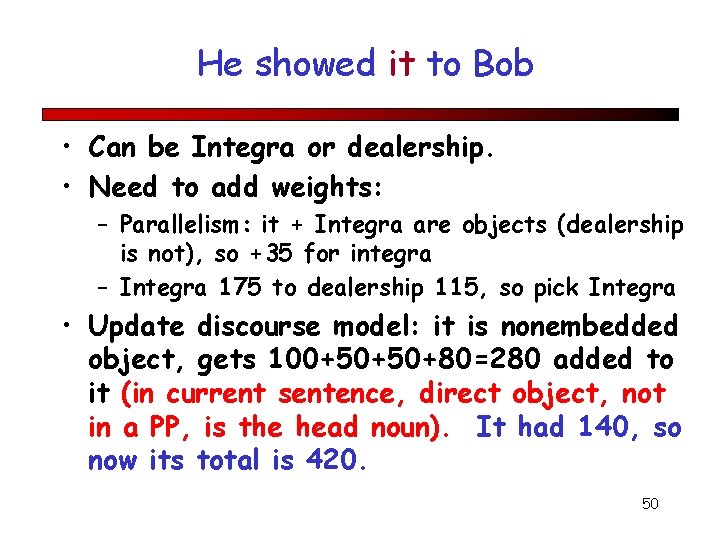

He showed it to Bob
• “it” can be Integra or dealership.
• Need to add weights:
  – Parallelism: it and Integra are both objects (dealership is not), so +35 for Integra
  – Integra 140 + 35 = 175 versus dealership 115, so pick Integra
• Update the discourse model: it is a non-embedded object, so it gets 100 + 50 + 50 + 80 = 280 added (in current sentence, direct object, not in a PP, is the head noun). Integra had 140, so its total is now 420.

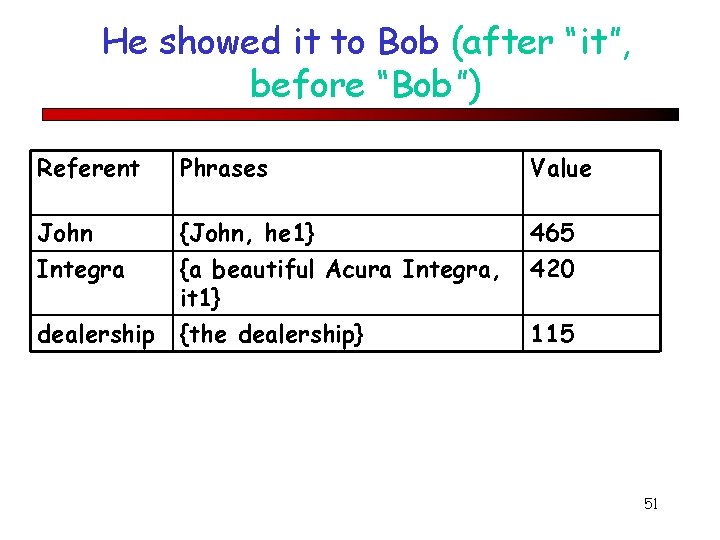

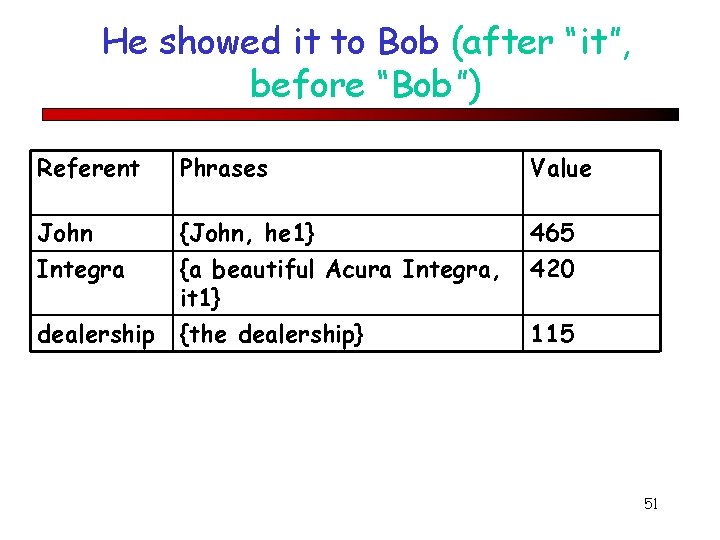

He showed it to Bob (after “it”, before “Bob”)

| Referent | Phrases | Value |
|---|---|---|
| John | {John, he1} | 465 |
| Integra | {a beautiful Acura Integra, it1} | 420 |
| dealership | {the dealership} | 115 |

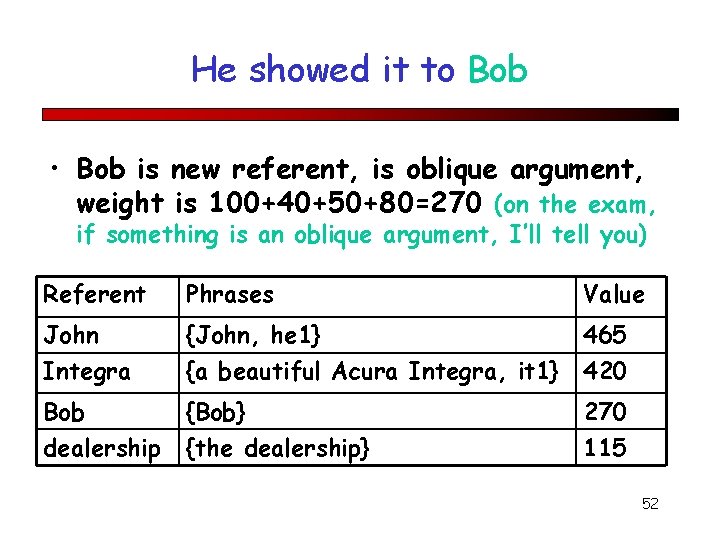

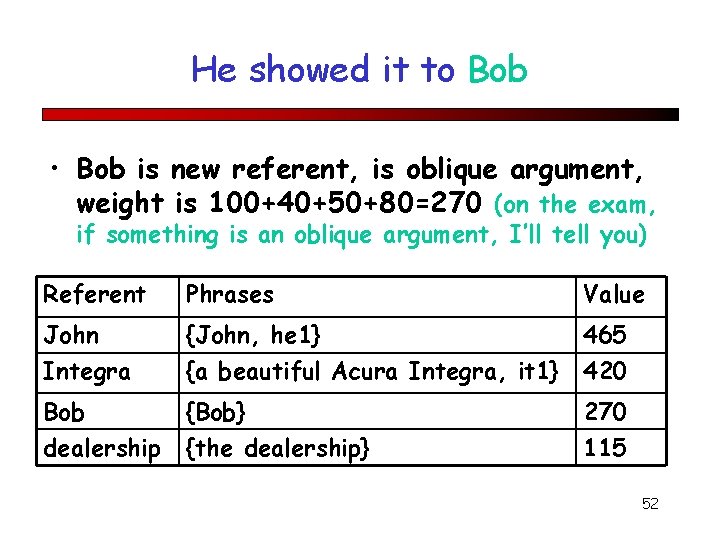

He showed it to Bob
• Bob is a new referent and an oblique argument, so its weight is 100 + 40 + 50 + 80 = 270 (on the exam, if something is an oblique argument, I’ll tell you)

| Referent | Phrases | Value |
|---|---|---|
| John | {John, he1} | 465 |
| Integra | {a beautiful Acura Integra, it1} | 420 |
| Bob | {Bob} | 270 |
| dealership | {the dealership} | 115 |

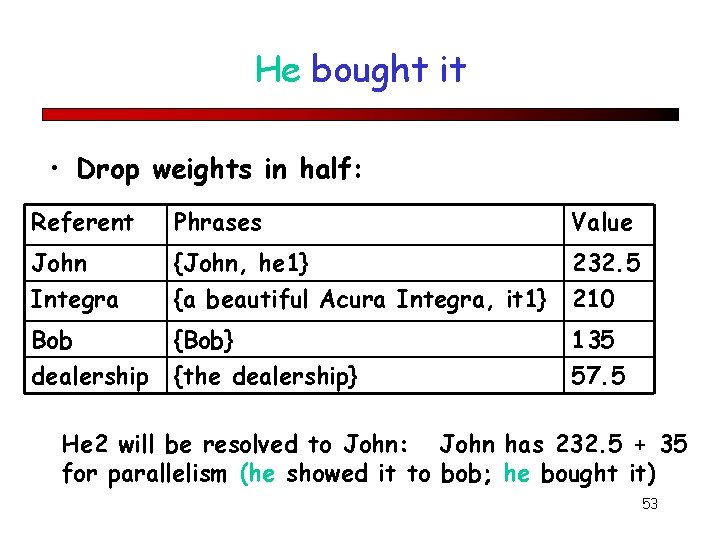

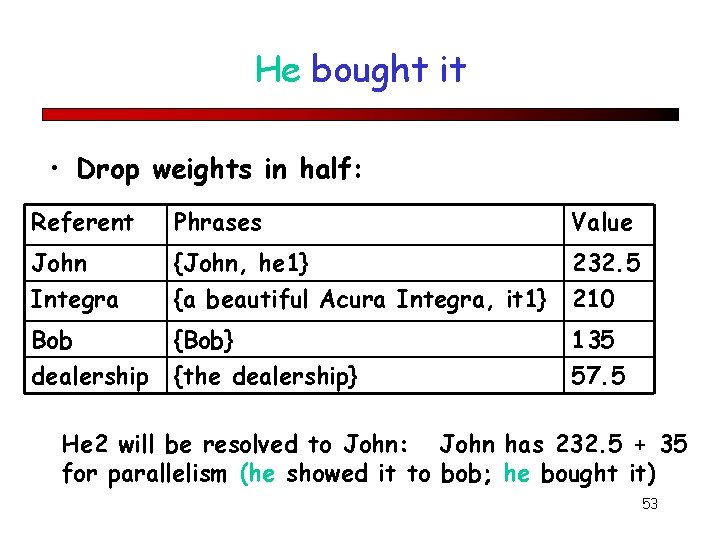

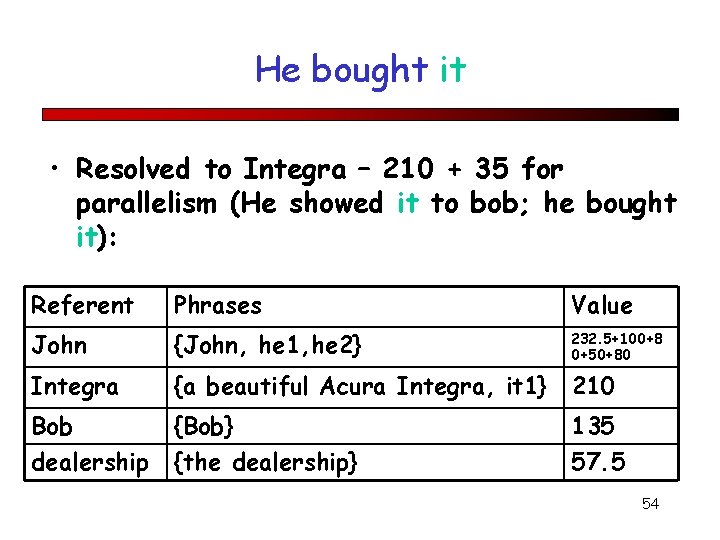

He bought it
• Cut the weights in half:

| Referent | Phrases | Value |
|---|---|---|
| John | {John, he1} | 232.5 |
| Integra | {a beautiful Acura Integra, it1} | 210 |
| Bob | {Bob} | 135 |
| dealership | {the dealership} | 57.5 |

• He2 will be resolved to John: John has 232.5 + 35 = 267.5 with parallelism (He showed it to Bob; He bought it), versus 135 for Bob

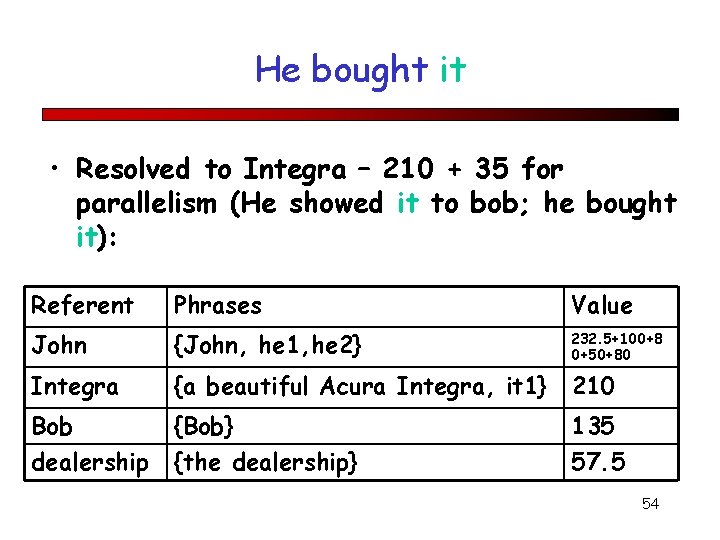

He bought it
• “it” is resolved to Integra: 210 + 35 for parallelism (He showed it to Bob; He bought it)
• Values after adding the weights for he2:

| Referent | Phrases | Value |
|---|---|---|
| John | {John, he1, he2} | 232.5 + 100 + 80 + 50 + 80 = 542.5 |
| Integra | {a beautiful Acura Integra, it1} | 210 |
| Bob | {Bob} | 135 |
| dealership | {the dealership} | 57.5 |

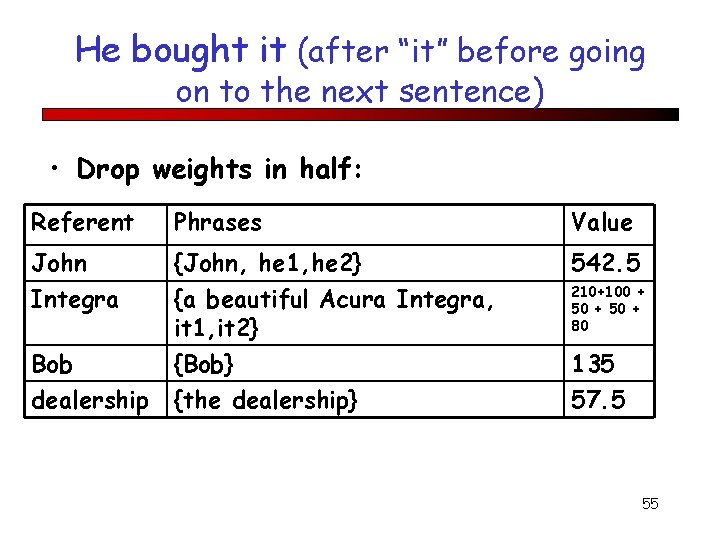

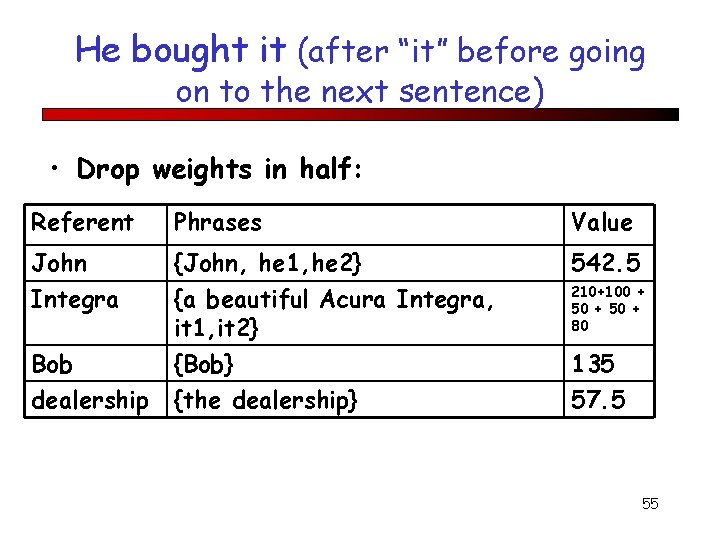

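He bought it (after “it”, before going on to the next sentence)
• Values after adding the weights for it2 (recency 100, direct object 50, non-adverbial 50, head noun 80); they are cut in half again when the next sentence starts:

| Referent | Phrases | Value |
|---|---|---|
| John | {John, he1, he2} | 542.5 |
| Integra | {a beautiful Acura Integra, it1, it2} | 210 + 100 + 50 + 50 + 80 = 490 |
| Bob | {Bob} | 135 |
| dealership | {the dealership} | 57.5 |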
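The whole worked example can be replayed in a few lines to check the arithmetic. This is a simplified trace, not the full algorithm: the helper names (`mention`, `end_sentence`, `resolve`) are made up for the sketch, every mention is assumed to be a non-adverbial head noun as in the example, and the agreement filter is applied by hand through the candidate lists passed to `resolve`.

```python
ROLE_WEIGHTS = {"subj": 80, "exist": 70, "obj": 50, "indobj": 40}
RECENCY, NON_ADVERBIAL, HEAD_NOUN, PARALLELISM = 100, 50, 80, 35

salience = {}    # referent -> accumulated salience value
last_role = {}   # referent -> grammatical role of its latest mention


def mention(referent, role=None):
    """Add the weights for one new mention of a referent."""
    value = RECENCY + ROLE_WEIGHTS.get(role, 0) + NON_ADVERBIAL + HEAD_NOUN
    salience[referent] = salience.get(referent, 0) + value
    last_role[referent] = role


def end_sentence():
    """Cut every salience value in half at a sentence boundary."""
    for referent in salience:
        salience[referent] /= 2


def resolve(candidates, role):
    """Pick the most salient candidate, adding the role parallelism bonus."""
    def score(referent):
        bonus = PARALLELISM if last_role[referent] == role else 0
        return salience[referent] + bonus
    return max(candidates, key=score)


# Sentence 1: John saw a beautiful Acura Integra at the dealership.
mention("John", "subj")
mention("Integra", "obj")
mention("dealership")          # no grammatical role weight
end_sentence()                 # John 155, Integra 140, dealership 115

# Sentence 2: He showed it to Bob.
he1 = resolve(["John"], "subj")                   # only masculine candidate
mention(he1, "subj")                              # John 465
it1 = resolve(["Integra", "dealership"], "obj")   # 175 vs 115
mention(it1, "obj")                               # Integra 420
mention("Bob", "indobj")                          # oblique argument: 270
end_sentence()                 # John 232.5, Integra 210, Bob 135, dealership 57.5

# Sentence 3: He bought it.
he2 = resolve(["John", "Bob"], "subj")            # 267.5 vs 135
mention(he2, "subj")                              # John 542.5
it2 = resolve(["Integra", "dealership"], "obj")   # 245 vs 57.5
mention(it2, "obj")                               # Integra 490
```

The final value for Integra uses the same four factors as the first “it” (100 + 50 + 50 + 80 = 280), giving 210 + 280 = 490.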

Reference Resolution
• Many other algorithms and other constraints
  – Centering theory: constraints which focus on discourse state, and focus.
  – Hobbs: reference resolution as a by-product of general reasoning
  – Machine learning (e.g., Mitkov et al.)

Part II: Text Coherence 57

What Makes a Discourse Coherent? The reason is that these utterances, when juxtaposed, will not exhibit coherence. Almost certainly not. Do you have a discourse? Assume that you have collected an arbitrary set of wellformed and independently interpretable utterances, for instance, by randomly selecting one sentence from each of the previous chapters of this book. 58

Better? Assume that you have collected an arbitrary set of well-formed and independently interpretable utterances, for instance, by randomly selecting one sentence from each of the previous chapters of this book. Do you have a discourse? Almost certainly not. The reason is that these utterances, when juxtaposed, will not exhibit coherence. 59

Coherence
• John hid Bill’s car keys. He was drunk
• ?? John hid Bill’s car keys. He likes spinach

What makes a text coherent?
• Appropriate use of coherence relations between subparts of the discourse -- rhetorical structure
• Appropriate sequencing of subparts of the discourse -- discourse/topic structure
• Appropriate use of referring expressions

Hobbs 1979 Coherence Relations
• Result
  – Infer that the state or event asserted by S0 causes or could cause the state or event asserted by S1.
  – John bought an Acura. His father went ballistic.

Hobbs: “Explanation”
• Infer that the state or event asserted by S1 causes or could cause the state or event asserted by S0
• John hid Bill’s car keys. He was drunk

Hobbs: “Parallel”
• Infer p(a1, a2, ...) from the assertion of S0 and p(b1, b2, ...) from the assertion of S1, where ai and bi are similar, for all i.
• John bought an Acura. Bill leased a BMW.

Hobbs: “Elaboration”
• Infer the same proposition P from the assertions of S0 and S1:
• John bought an Acura this weekend. He purchased a beautiful new Integra for 20 thousand dollars at Bill’s dealership on Saturday afternoon.

An Inference-Based Algorithm
• Hobbs: make the choices concerning coherence relations and references that give you the most probable overall interpretation
• John hid Bill’s car keys. He was drunk
• He = John; Sentence 2 is an explanation of sentence 1.

Rhetorical Structure Theory
• One theory of discourse structure, based on identifying relations between segments of the text
  – Nucleus/satellite notion encodes asymmetry
  – Some rhetorical relations:
    • Elaboration (set/member, class/instance, whole/part…)
    • Contrast: multinuclear
    • Condition: Sat presents precondition for N
    • Purpose: Sat presents goal of the activity in N
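The nucleus/satellite asymmetry can be represented as a small tree. The sketch below is one possible encoding, assuming hypothetical class names (`Segment`, `Relation`) and a helper `nucleus_text`; it is not a standard RST toolkit API. The example sentence is “Use rm to delete files”, analysed as a Purpose relation.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """A leaf of the tree: one span of text."""
    text: str


@dataclass
class Relation:
    """An internal node: a rhetorical relation over smaller units."""
    name: str
    nuclei: list                              # one nucleus, or several if multinuclear
    satellites: list = field(default_factory=list)


def nucleus_text(node):
    """Collect the text reached by following only nuclei down to the leaves."""
    if isinstance(node, Segment):
        return [node.text]
    collected = []
    for nucleus in node.nuclei:
        collected.extend(nucleus_text(nucleus))
    return collected


# Purpose: the satellite presents the goal of the activity in the nucleus.
purpose = Relation(
    "Purpose",
    nuclei=[Segment("Use rm")],
    satellites=[Segment("to delete files")],
)
core = nucleus_text(purpose)
```

A multinuclear relation such as Contrast would simply list two or more nuclei and no satellite. Following only the nuclei gives the part of the text that the rest depends on, which is the asymmetry the nucleus/satellite notion is meant to capture.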

Automatic Rhetorical Structure Labeling • Supervised machine learning – Get a group of annotators to assign a set of RST relations to a text – Extract a set of surface features from the text that might signal the presence of the rhetorical relations in that text – Train a supervised ML system based on the training set 68

Features
• Explicit markers: because, however, therefore, then, etc.
• Tendency of certain syntactic structures to signal certain relations: infinitives are often used to signal purpose relations, as in “Use rm to delete files.”
• Ordering
• Tense/aspect
• Intonation
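A first pass at surface feature extraction might look like the following. It is a rough sketch under stated assumptions: the feature names and the function `surface_features` are invented for the example, the marker list is just the four markers named above, and the infinitive cue is a crude token test ("to" followed by any word) that will also fire on prepositional "to". Ordering, tense/aspect, and intonation are not covered.

```python
import re

# The explicit markers named on the slide; a real system would use a longer list.
EXPLICIT_MARKERS = ("because", "however", "therefore", "then")


def surface_features(segment):
    """Map a text segment to a dictionary of simple surface features."""
    tokens = re.findall(r"[a-z']+", segment.lower())
    features = {f"has_{marker}": marker in tokens for marker in EXPLICIT_MARKERS}
    # Crude cue for an infinitival clause: the token "to" followed by another word.
    features["has_to_plus_word"] = any(
        token == "to" and i + 1 < len(tokens) for i, token in enumerate(tokens)
    )
    features["num_tokens"] = len(tokens)
    return features


purpose_example = surface_features("Use rm to delete files.")
marker_example = surface_features("He stayed home because he was ill.")
```

Dictionaries like these could then be paired with the annotators' relation labels and passed to any supervised learner; which learner to use is left open here.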

Some Problems with RST • How many Rhetorical Relations are there? • How can we use RST in dialogue as well as monologue? • RST forces an artificial tree structure on discourses • Difficult to get annotators to agree on labeling the same texts 70

Summary
• Reference
  – Kinds of reference phenomena
  – Constraints on co-reference
  – Preferences for co-reference
  – The Lappin-Leass algorithm for coreference
• Coherence
  – Hobbs coherence relations
  – Rhetorical Structure Theory