ITCS 6010 Natural Language Understanding: Natural Language Processing

- Slides: 16


Natural Language Processing
- What is it?
  - Studies the problems inherent in the processing and manipulation of natural language, and in natural language understanding, which is devoted to making computers "understand" statements written in human languages
  - Subset of AI and linguistics
- Has several categories
  - Open-domain question answering
  - Natural language interfaces to databases
  - Text-based natural language research
  - Dialogue-based natural language research

Text-based Research
- Performed with respect to text-based applications, e.g. magazines, newspapers, email messages
  - Information extraction and comprehension
  - Document retrieval
  - Translation
  - Summarization

Dialogue-based Research
- Performed with respect to dialogue-based applications, e.g. question-answering systems, automated help centers
- Difficult because dialogue-based applications must manage a naturally flowing dialogue between the user and the interface

Natural Language Interface to Databases (NLIDB)
- Allows users to access information from a database using natural language queries
- Removes the user’s need to know the structure of the database and details about the data
- Examples: RENDEZVOUS, ASK and LANGUAGEACCESS

Architecture of NLIDB
- General architecture
  - Linguistic front-end
  - Database back-end
- Front-end
  - Accepts a natural language question as input
  - Translates the question into a meaning representation language (MRL)

Architecture of NLIDB (cont’d)
- Back-end
  - Accepts MRL
  - Translates MRL into the supported database language
  - Executes the query
  - Results typically presented in a subset of natural language
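The front-end/back-end split can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the one-pattern "grammar", the dictionary used as the MRL, the `country` table, and the function names `front_end` and `back_end` are hypothetical and not taken from any of the systems named in these slides.

```python
import re
import sqlite3

# Hypothetical whitelist of columns the toy front-end may ask about.
ALLOWED_COLUMNS = {"capital", "continent"}


def front_end(question):
    """Linguistic front-end: map a question onto a tiny MRL (a dict)."""
    match = re.match(r"what is the (\w+) of (\w+)\??$", question.strip().lower())
    if match is None:
        raise ValueError("question not covered by the toy grammar")
    return {"select": match.group(1), "entity": match.group(2)}


def back_end(mrl, conn):
    """Database back-end: translate the MRL to SQL, execute it, phrase the result."""
    column = mrl["select"]
    if column not in ALLOWED_COLUMNS:
        raise ValueError("unknown attribute: " + column)
    # The column name comes from the whitelist; the entity is bound as a parameter.
    sql = f"SELECT {column} FROM country WHERE lower(name) = ?"
    row = conn.execute(sql, (mrl["entity"],)).fetchone()
    if row is None:
        return f"I have no {column} on record for {mrl['entity'].title()}."
    # The result is presented in a small subset of natural language.
    return f"The {column} of {mrl['entity'].title()} is {row[0]}."


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE country (name TEXT, capital TEXT, continent TEXT)")
    conn.execute("INSERT INTO country VALUES ('France', 'Paris', 'Europe')")
    return back_end(front_end("What is the capital of France?"), conn)
```

Calling `demo()` runs the whole path: question, MRL, SQL, and a templated sentence as the answer. The user never sees the table or column layout, which is the point of the architecture.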

Natural Language Question Answering (NLQA)
- Process of retrieving answers to questions
- Questions posed in natural language
- Precise answer presented in natural language

Question Answering Using Statistical Model (QASM)
- Converts natural language questions into search-engine-specific queries
- Premise:
  - There exists a best possible operator to apply to a natural language question

QASM (cont’d)
- How it works:
  - Classifier determines the best operator to apply to an NL question
  - Operator produces a new query that improves upon the original
  - Operator matched to question-answer pair
  - Expectation maximization (EM) algorithm stabilizes missing data, i.e. paraphrased questions
    - Iteratively maximizes the likelihood estimate

Probabilistic Phrase Reranking (PPR)
- PPR
  - A process that goes through a set of subtasks to retrieve the most relevant answer to a posed question
- Subtasks:
  - Query modulation
    - Question converted to an appropriate query
  - Question type recognition
    - Queries organized according to the question type, i.e. location, definition, person, etc.
  - Document retrieval
    - The most relevant units of information, e.g. documents, are returned in this stage, i.e. the units with the highest probability of containing the answer

Probabilistic Phrase Reranking (PPR) cont’d
- Subtasks (cont’d):
  - Passage/sentence retrieval
    - Sentences, phrases or textual units that contain answers are identified from the information units returned in the previous task
  - Answer extraction
    - Chosen textual units are split into phrases
    - Each is a potential answer
  - Phrase/answer reranking
    - Phrases generated are ranked
    - Top of the list: the phrase with the highest probability of containing the correct answer
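The chain of subtasks can be sketched in Python as below. This is a toy under stated assumptions, not the PPR system itself: the question-type cue table, the stop-word list, the comma-based phrase splitting, and the term-overlap score (a crude stand-in for the probabilities the slides refer to) are all hypothetical.

```python
import re

# Hypothetical cue words; a real system would use a trained recognizer.
QUESTION_TYPES = {"where": "location", "who": "person", "what": "definition"}
STOP_WORDS = {"what", "where", "who", "is", "the", "a", "an", "of", "in", "was"}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def modulate_query(question):
    """Query modulation: keep only the content words of the question."""
    return [w for w in tokenize(question) if w not in STOP_WORDS]


def recognize_type(question):
    """Question type recognition from the leading question word."""
    tokens = tokenize(question)
    return QUESTION_TYPES.get(tokens[0], "other") if tokens else "other"


def overlap(query, text):
    """Fraction of query terms found in text (stand-in for a probability)."""
    words = set(tokenize(text))
    return sum(w in words for w in query) / len(query) if query else 0.0


def answer(question, documents, top_docs=1):
    query = modulate_query(question)
    qtype = recognize_type(question)
    # Document retrieval: keep the units most likely to contain the answer.
    docs = sorted(documents, key=lambda d: overlap(query, d), reverse=True)[:top_docs]
    # Passage/sentence retrieval: keep sentences that mention a query term.
    sentences = [s.strip() for d in docs for s in re.split(r"[.!?]", d) if s.strip()]
    sentences = [s for s in sentences if overlap(query, s) > 0]
    # Answer extraction: split sentences into candidate phrases at commas.
    phrases = [(p.strip(), overlap(query, s))
               for s in sentences for p in s.split(",") if p.strip()]
    # Phrase/answer reranking: best-scoring candidate first.
    ranked = sorted(phrases, key=lambda pair: pair[1], reverse=True)
    return qtype, ranked
```

For example, `answer("Where is the Eiffel Tower?", docs)` over a small list of document strings returns the recognized question type together with the candidate phrases, best first.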

Bayesian Approach
- Uses a probabilistic IR model and Bayes’ Rule
- Goal of probabilistic model:
  - Estimate the probability that a document, $d_k$, is relevant ($R$) to a query, $q$, i.e. $P_q(R \mid d_k)$
  - Each document represented by a set of words
  - Words stemmed
    - Suffixes and prefixes removed
    - Now known as index terms

Bayesian Approach (cont’d)
- Each document represented by a vector, $t = (t_1, t_2, \ldots, t_p)$, where $p$ is the number of index terms
- Bayes’ Rule applied to the model to express the probability that a document is relevant to a specific query, $q$:
  - $P_q(R \mid t) \propto P_q(t \mid R) \, P_q(R)$
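The step from raw text to a vector over index terms might look like the following Python sketch. The suffix list is a deliberately crude, hypothetical stand-in for a real stemmer, and the choice of a binary (present/absent) vector is an assumption; the slides do not say how each component of the vector is valued.

```python
import re

# Hypothetical suffix list, far cruder than a real stemming algorithm.
SUFFIXES = ("ing", "ed", "es", "s")


def stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def index_terms(text):
    """Set of stemmed words (index terms) occurring in the text."""
    return {stem(w) for w in re.findall(r"[a-z]+", text.lower())}


def build_vocabulary(documents):
    """Sorted list of all index terms; its length plays the role of p."""
    return sorted(set().union(*(index_terms(d) for d in documents)))


def to_vector(text, vocabulary):
    """Binary vector: component i is 1 if index term i occurs in the text."""
    terms = index_terms(text)
    return [1 if term in terms else 0 for term in vocabulary]
```

With two short documents such as "Parsing parsed sentences" and "Ranking documents", `build_vocabulary` collapses "parsing" and "parsed" into one index term, so both word forms set the same component of the vector.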

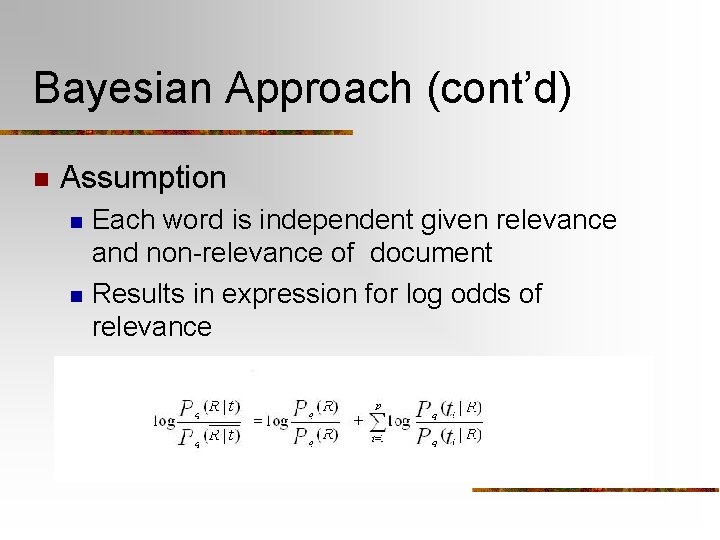

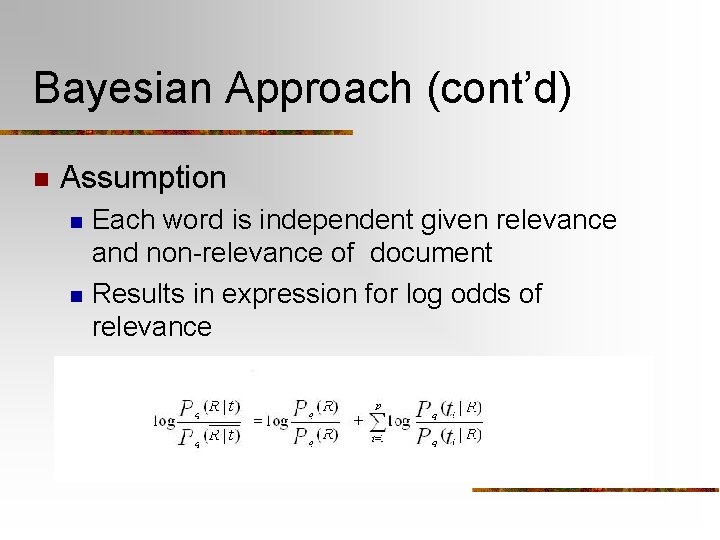

Bayesian Approach (cont’d)
- Assumption
  - Each word is independent given relevance and non-relevance of the document
  - Results in an expression for the log odds of relevance
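For reference, the textbook form of that log-odds expression is sketched below. It is not a transcription of the slide's own formula; it follows from applying Bayes' Rule to both relevance and non-relevance and then using the independence assumption. Writing $\bar{R}$ for non-relevance is a notational assumption.

```latex
\log \frac{P_q(R \mid t)}{P_q(\bar{R} \mid t)}
  = \log \frac{P_q(t \mid R)\, P_q(R)}{P_q(t \mid \bar{R})\, P_q(\bar{R})}
  = \log \frac{P_q(R)}{P_q(\bar{R})}
    + \sum_{i=1}^{p} \log \frac{P_q(t_i \mid R)}{P_q(t_i \mid \bar{R})}
```

The normalizing term $P_q(t)$ cancels in the ratio, and independence turns $P_q(t \mid R)$ into the product $\prod_{i} P_q(t_i \mid R)$, which becomes a sum under the logarithm.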

Bayesian Approach (cont’d)
- Document is relevant if:
  - User’s needs satisfied
- Frequency of terms in relevant and non-relevant documents retrieved
- Initial status of documents unknown
  - Ad hoc estimation of probabilistic model parameters used to determine an initially ranked list of documents
- Strengths of approach:
  - Provides an initial document ranking not based on ad hoc considerations
  - Provides an automatic mechanism for learning and incorporating relevance information from other queries
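One way to picture how relevance information could feed a ranking is the Python sketch below. It estimates per-term probabilities from documents already judged relevant or non-relevant and scores new documents by their log odds of relevance under the word-independence assumption. The binary document vectors, the add-0.5 smoothing, the default prior, and the function names are all assumptions for illustration, not the method described in the slides.

```python
import math


def estimate(vectors, p):
    """Smoothed estimate of P(t_i = 1 | class) from binary document vectors."""
    n = len(vectors)
    # Adding 0.5 to the count and 1 to the total keeps every estimate in (0, 1).
    return [(sum(v[i] for v in vectors) + 0.5) / (n + 1.0) for i in range(p)]


def log_odds(t, prior_relevant, p_rel, p_non):
    """Log odds of relevance for one document vector, assuming independent terms."""
    score = math.log(prior_relevant / (1.0 - prior_relevant))
    for t_i, r_i, n_i in zip(t, p_rel, p_non):
        if t_i:
            score += math.log(r_i / n_i)
        else:
            score += math.log((1.0 - r_i) / (1.0 - n_i))
    return score


def rank(candidates, relevant, non_relevant, prior_relevant=0.5):
    """Return (score, vector) pairs for the candidates, most relevant first."""
    p = len(candidates[0])  # number of index terms; candidates must be non-empty
    p_rel = estimate(relevant, p)
    p_non = estimate(non_relevant, p)
    scored = [(log_odds(t, prior_relevant, p_rel, p_non), t) for t in candidates]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)
```

A positive score means the document looks more like the judged-relevant set than the non-relevant one. Each additional relevance judgment changes the estimates, so the ranking shifts as that information accumulates.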