Natural Language Processing In Games

Michael Mateas and Andrew Stern • Georgia Institute of Technology, College of Computing & LCC • InteractiveStory.net (www.interactivestory.net) • egl.gatech.edu • grandtextauto.org

Natural language processing (NLP) and games • We’ll explore why you’d want NLP in games • We’ll discuss the issues involved with NLP in games, using a case study of the NLP in the interactive drama Façade (to be released next month!) • We’ll suggest future applications and research directions for NLP in games

Immediate potential uses of NLP in games • NPC conversations in RPG / MMORPG / adventure games • Avoid lockstep, menu-based dialog trees • There is already a lot of texting in MMORPGs • In FPS games • Communication with NPC comrades • There is already a lot of texting in multiplayer FPS games • In RTS games • High-level commands to units

Medium-term uses of NLP in games • In MMORPGs, listen in on player-to-player conversation • Robust rule-based parsing and statistical parsing • But this needs a game master AI that shapes world events • Mobile phone games (e.g. the Vivienne virtual girlfriend) • New genres, e.g. interactive drama

The technologies of NLP • Natural language understanding (NLU) – given natural language input, extract meaning • Involves syntax, semantics, pragmatics • May include added step of speech recognition • Conversation management – given an ongoing conversation, figure out what state the conversation is in and where it is going • Tracking the conversational state & expectations • Deciding what to say next • Natural language generation (NLG) – given a meaning, generate text that expresses that meaning • Turning a formal meaning representation into character-specific dialog • Generating facial expressions, gestures, etc. that accompany dialog

(Rare) examples of game NLP • Text adventures – (brittle) parser maps text to verbs • Seaman, Babyz, Furby – relatively simple stimulus/response conversations, simple word association • Lifeline, Rebel Moon Revolution – commands • Constructive dialog interface

Game designers and NLP • Game designers are (rightly) suspicious of NLU • Text-based adventure games: “I don’t understand” • AI-complete problem – do you need the entirety of common sense to understand language? • Language input (even speech) may break up the action • Dialog management is typically accomplished with finite state machines and dialog trees • For example, the buying and selling dialogs of shopkeepers • Natural language generation has rarely (never?) been tried • Dialog is canned – enormous effort goes into writing thousands of lines of character dialog • But the dialog is (hopefully) well written – complete authorial control

Problems of open-ended NL input • NLU is a notoriously difficult, AI-complete problem • There will be NLU failures, so why do it? • Why not use dialog menus, menus of story actions, or physical action? • Text input • Requires a keyboard • Speech input • Requires training your voice to work well at all • User-independent, emotional speech recognition technology is not there yet

Why do NLU in games? • Explicit choices (menus)… • Foreground the boundaries of the experience • Weight all choices equally • Become unmanageable for broad action spaces • Are not natural! • Far more expressiveness for the player • When characters respond to what I said, they finally seem alive • But risky – when they don’t respond appropriately, they seem more mechanical • Deeper, more personal games / stories require language

Why conversation management? • Conversation doesn’t just stay on one track • Actually combines multiple simultaneous conversations / levels • Even simple conversations should have this • Shopkeepers, for example, currently force you to walk through lockstep, modal interactions • Trees and state machines don’t scale for representing complex, multi-threaded conversations

Why natural language generation? • Unwieldy to pre-write all responses • Tension between authorial burden and authorial control • But it’s the farthest away • Personality-specific, natural-sounding dialog generation • Speech synthesis with emotion

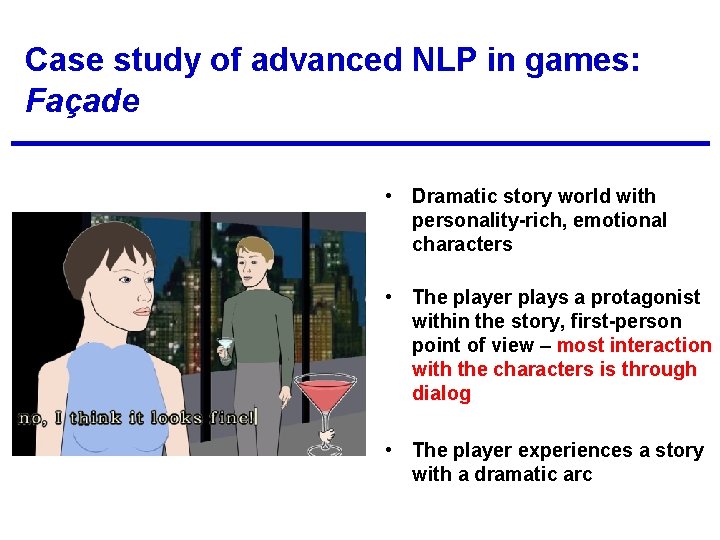

Case study of advanced NLP in games: Façade • Dramatic story world with personality-rich, emotional characters • The player plays a protagonist within the story, first-person point of view – most interaction with the characters is through dialog • The player experiences a story with a dramatic arc

Façade NLP requirements • Support broad range of language relevant to story domain • Not a narrow range of language specific to a task domain • Extract interesting player intentions • Not distinguish “correct” and “incorrect” utterances • Understanding sensitive to story & character context • ABL characters have their own internal lives • Not the same as a chatterbot

Code support for NLP requirements • Rule language for matching surface text features • Semantic parsing • Support deep and shallow rules existing side-by-side • Reaction selection framework supports conversation management • Includes ABL meta-behaviors for incorporating reactions into the current character goals

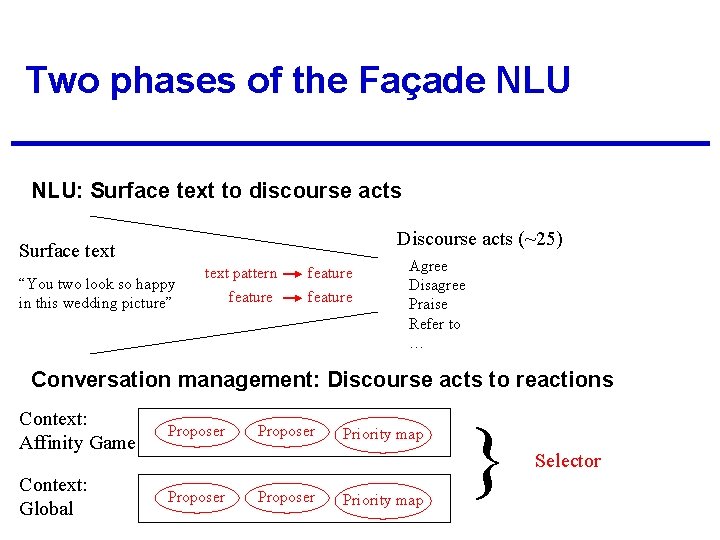

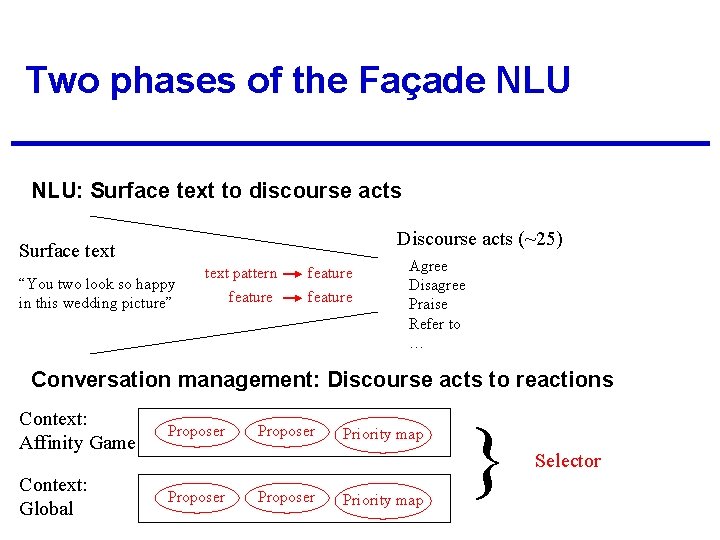

Two phases of the Façade NLU (diagram) • Phase 1, surface text to discourse acts: surface text such as “You two look so happy in this wedding picture” is matched by text pattern features and mapped to discourse acts (~25 types: Agree, Disagree, Praise, Refer to …) • Phase 2, conversation management, discourse acts to reactions: proposers in the active contexts (e.g. the Affinity Game context and the Global context) propose reactions, which pass through a priority map to a selector
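As a rough illustration of the two-phase split, here is a minimal Python sketch. It is not Façade's code, which uses Jess rules and ABL behaviors. The keyword patterns, the three discourse acts chosen, and the reaction names (`grace_accepts_compliment` etc.) are hypothetical. What it shows is that phase 1 collapses many phrasings onto a small vocabulary of discourse acts, so phase 2 only has to reason about acts, not about raw text.

```python
import re

# Phase 1 (hypothetical keyword rules standing in for Façade's Jess template
# rules): many surface phrasings collapse onto a small set of discourse acts.
PHASE1_RULES = [
    (re.compile(r"\b(happy|lovely|beautiful)\b"), "Praise"),
    (re.compile(r"\b(yes|sure|certainly)\b"), "Agree"),
    (re.compile(r"\b(no way|fat chance|get real)\b"), "Disagree"),
]


def phase1(text: str) -> list[str]:
    """Map surface text to every discourse act whose pattern matches."""
    lowered = text.lower()
    return [act for pattern, act in PHASE1_RULES if pattern.search(lowered)]


# Phase 2 (hypothetical reaction names): conversation management maps each
# discourse act to a reaction; the characters decide how to perform it.
PHASE2_TABLE = {
    "Praise": "grace_accepts_compliment",
    "Agree": "trip_continues_topic",
    "Disagree": "grace_gets_defensive",
}


def phase2(acts: list[str]) -> list[str]:
    """Look up a reaction for each recognized discourse act."""
    return [PHASE2_TABLE[act] for act in acts if act in PHASE2_TABLE]


acts = phase1("You two look so happy in this wedding picture")
print(acts)          # ['Praise']
print(phase2(acts))  # ['grace_accepts_compliment']
```

In this sketch a single utterance can yield several acts (e.g. "Sure, no way!" gives both Agree and Disagree), which is why a real phase 2 needs a way to choose among reactions.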

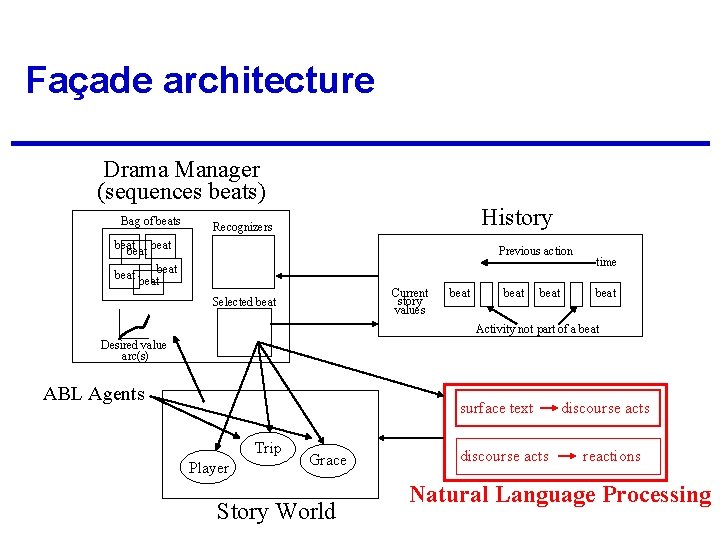

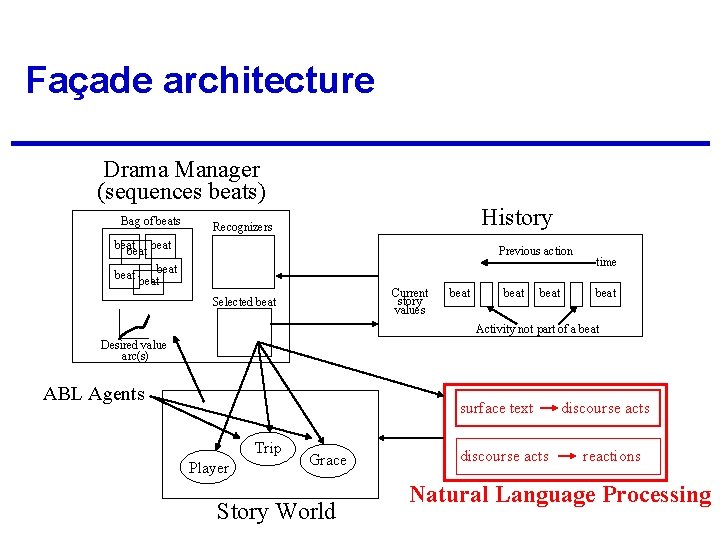

Façade architecture (diagram) • Drama Manager (sequences beats over time): bag of beats, beat history, recognizers, previous action, current story values, desired value arc(s), selected beat, activity not part of a beat • Story world: ABL agents Trip and Grace, and the Player • Natural Language Processing: surface text → discourse acts → reactions

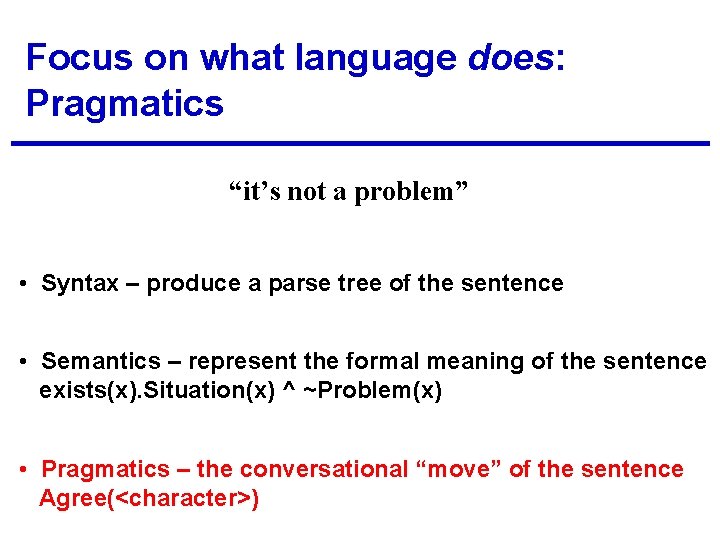

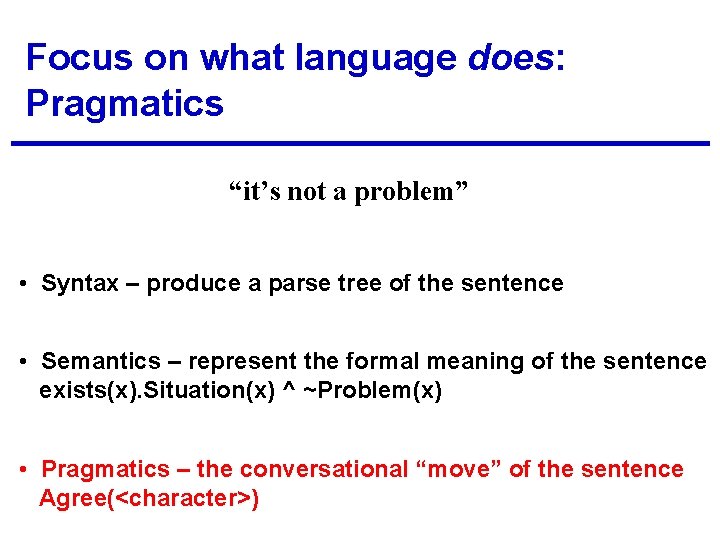

Focus on what language does: pragmatics • Example sentence: “it’s not a problem” • Syntax – produce a parse tree of the sentence • Semantics – represent the formal meaning of the sentence: $\exists x.\ \mathrm{Situation}(x) \wedge \neg \mathrm{Problem}(x)$ • Pragmatics – the conversational “move” of the sentence: Agree(<character>)

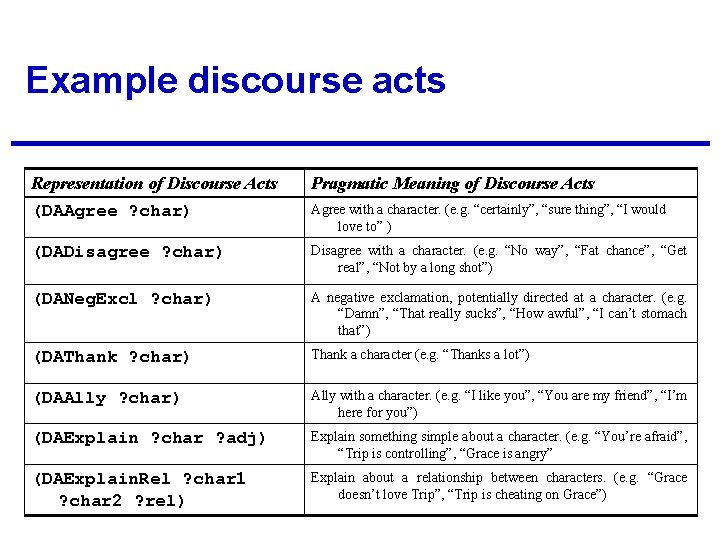

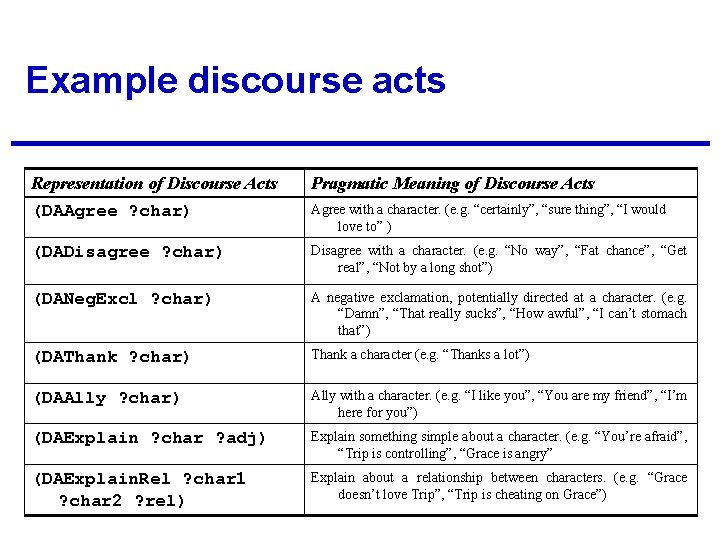

Example discourse acts

| Representation of discourse act | Pragmatic meaning of discourse act |
|---|---|
| (DAAgree ?char) | Agree with a character (e.g. “certainly”, “sure thing”, “I would love to”) |
| (DADisagree ?char) | Disagree with a character (e.g. “No way”, “Fat chance”, “Get real”, “Not by a long shot”) |
| (DANegExcl ?char) | A negative exclamation, potentially directed at a character (e.g. “Damn”, “That really sucks”, “How awful”, “I can’t stomach that”) |
| (DAThank ?char) | Thank a character (e.g. “Thanks a lot”) |
| (DAAlly ?char) | Ally with a character (e.g. “I like you”, “You are my friend”, “I’m here for you”) |
| (DAExplain ?char ?adj) | Explain something simple about a character (e.g. “You’re afraid”, “Trip is controlling”, “Grace is angry”) |
| (DAExplainRel ?char1 ?char2 ?rel) | Explain a relationship between characters (e.g. “Grace doesn’t love Trip”, “Trip is cheating on Grace”) |
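A discourse act is essentially a small tagged tuple: an act name plus the variables it binds. The sketch below mirrors that as a hypothetical Python `DiscourseAct` value that can render itself back into the Jess-style notation used on the slide. The class name and the `ExplainRel` argument binding are illustrative assumptions, not Façade's representation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiscourseAct:
    """Hypothetical Python mirror of a fact such as (DAAgree ?char).

    `name` omits the "DA" prefix; `args` holds the bound variables
    (a character, an adjective, a relationship, ...). Frozen, so acts are
    hashable and can be collected in sets or used as dictionary keys.
    """
    name: str
    args: tuple[str, ...] = ()

    def to_fact(self) -> str:
        # Render in the Jess-style notation used on the slide.
        return "(" + " ".join(["DA" + self.name, *self.args]) + ")"


agree = DiscourseAct("Agree", ("grace",))
rel = DiscourseAct("ExplainRel", ("trip", "grace", "cheating-on"))
print(agree.to_fact())  # (DAAgree grace)
print(rel.to_fact())    # (DAExplainRel trip grace cheating-on)
```

An act that is directed at no character, such as a bare `DiscourseAct("Thank")`, simply has empty `args` and renders as `(DAThank)`.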

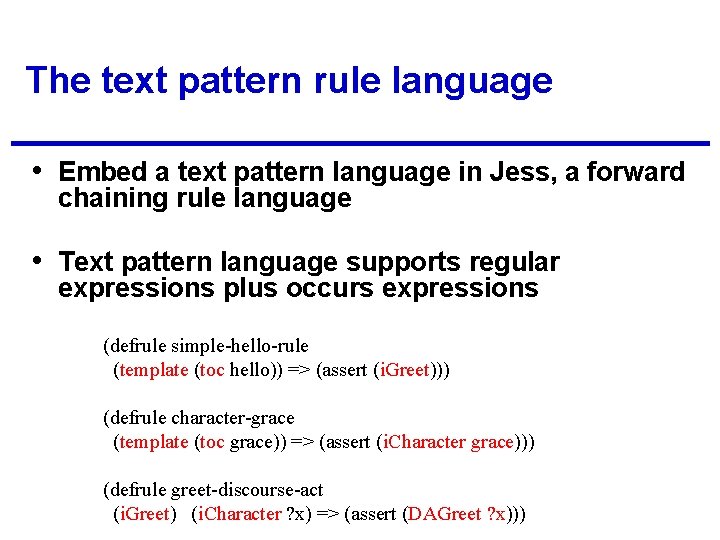

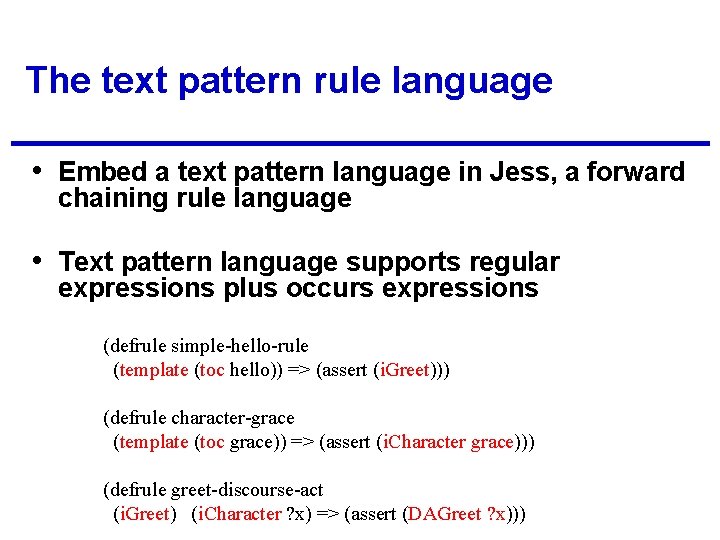

The text pattern rule language • Embed a text pattern language in Jess, a forward-chaining rule language • The text pattern language supports regular expressions plus occurs expressions
(defrule simple-hello-rule (template (toc hello)) => (assert (iGreet)))
(defrule character-grace (template (toc grace)) => (assert (iCharacter grace)))
(defrule greet-discourse-act (iGreet) (iCharacter ?x) => (assert (DAGreet ?x)))
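To make the chaining concrete, the three rules can be mimicked by a naive forward chainer in Python. This is a sketch, not Jess. `toc` is assumed to mean "the word occurs anywhere in the input", facts are plain tuples, and the loop simply re-runs every rule until no new fact appears. Jess itself uses a Rete network rather than this brute-force loop.

```python
import re

Fact = tuple[str, ...]


def toc(term: str, text: str) -> bool:
    """Assumed reading of (toc term): the word occurs anywhere in the text."""
    return re.search(rf"\b{re.escape(term)}\b", text.lower()) is not None


def simple_hello_rule(text: str, facts: set[Fact]):
    if toc("hello", text):
        yield ("iGreet",)


def character_grace(text: str, facts: set[Fact]):
    if toc("grace", text):
        yield ("iCharacter", "grace")


def greet_discourse_act(text: str, facts: set[Fact]):
    # Matches on intermediate facts rather than on text: rule chaining.
    if ("iGreet",) in facts:
        for fact in list(facts):
            if fact[0] == "iCharacter":
                yield ("DAGreet", fact[1])


# Deliberately listed "out of order": forward chaining still reaches the
# same result because the loop repeats until nothing new is asserted.
RULES = [greet_discourse_act, simple_hello_rule, character_grace]


def run(text: str) -> set[Fact]:
    facts: set[Fact] = set()
    changed = True
    while changed:
        changed = False
        for rule in RULES:
            for fact in list(rule(text, facts)):
                if fact not in facts:
                    facts.add(fact)
                    changed = True
    return facts


print(sorted(run("Hello, Grace!")))
# [('DAGreet', 'grace'), ('iCharacter', 'grace'), ('iGreet',)]
```

The intermediate `iGreet` and `iCharacter` facts let one discourse act rule combine evidence from independent text patterns, rather than needing a separate pattern for every greeting-plus-name phrasing.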

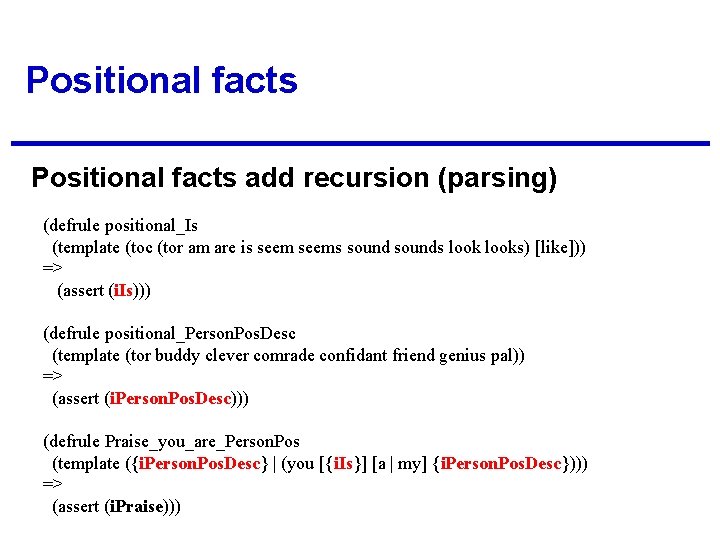

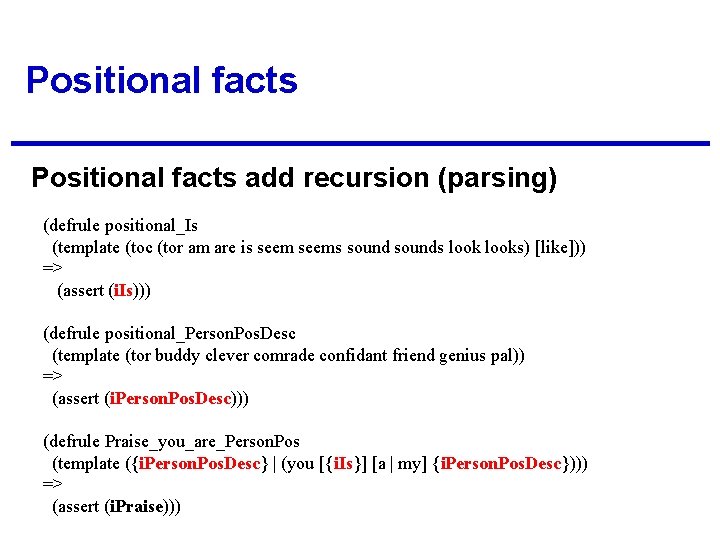

Positional facts add recursion (parsing)
(defrule positional_Is (template (toc (tor am are is seems sounds looks) [like])) => (assert (iIs)))
(defrule positional_PersonPosDesc (template (tor buddy clever comrade confidant friend genius pal)) => (assert (iPersonPosDesc)))
(defrule Praise_you_are_PersonPos (template ({iPersonPosDesc} | (you [{iIs}] [a | my] {iPersonPosDesc}))) => (assert (iPraise)))
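One way to read positional facts is that each records the span of input it matched, so a later template can treat that span as a single element. The Python sketch below assumes exactly that (facts are `(name, start, end)` token spans). It further assumes the `Praise_you_are_PersonPos` template must cover the whole utterance. Both are interpretations for illustration, not documented Jess or Façade behavior.

```python
import re

IS_WORDS = {"am", "are", "is", "seems", "sounds", "looks"}
POS_DESC = {"buddy", "clever", "comrade", "confidant", "friend", "genius", "pal"}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def positional_facts(tokens: list[str]) -> set[tuple[str, int, int]]:
    """Assert (name, start, end) facts; end is exclusive."""
    facts = set()
    for i, tok in enumerate(tokens):
        if tok in IS_WORDS:
            facts.add(("iIs", i, i + 1))
            if i + 1 < len(tokens) and tokens[i + 1] == "like":  # optional [like]
                facts.add(("iIs", i, i + 2))
        if tok in POS_DESC:
            facts.add(("iPersonPosDesc", i, i + 1))
    return facts


def ends(facts, name: str, start: int) -> list[int]:
    """End positions of every `name` fact that starts at `start`."""
    return [e for (n, s, e) in facts if n == name and s == start]


def praise(text: str) -> bool:
    """({iPersonPosDesc} | (you [{iIs}] [a | my] {iPersonPosDesc})),
    assumed here to match the whole utterance."""
    tokens = tokenize(text)
    facts = positional_facts(tokens)
    n = len(tokens)
    if n in ends(facts, "iPersonPosDesc", 0):       # first alternative
        return True
    if not tokens or tokens[0] != "you":
        return False
    for p in [1] + ends(facts, "iIs", 1):           # [{iIs}] is optional
        after_det = [p]
        if p < n and tokens[p] in ("a", "my"):      # [a | my] is optional
            after_det.append(p + 1)
        for q in after_det:
            if n in ends(facts, "iPersonPosDesc", q):
                return True
    return False


for s in ["You are my friend", "pal", "you sounds buddy", "you are mean"]:
    print(s, "->", praise(s))
```

Note that "you sounds buddy" is accepted, matching the permissive behavior of template rules on ungrammatical input.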

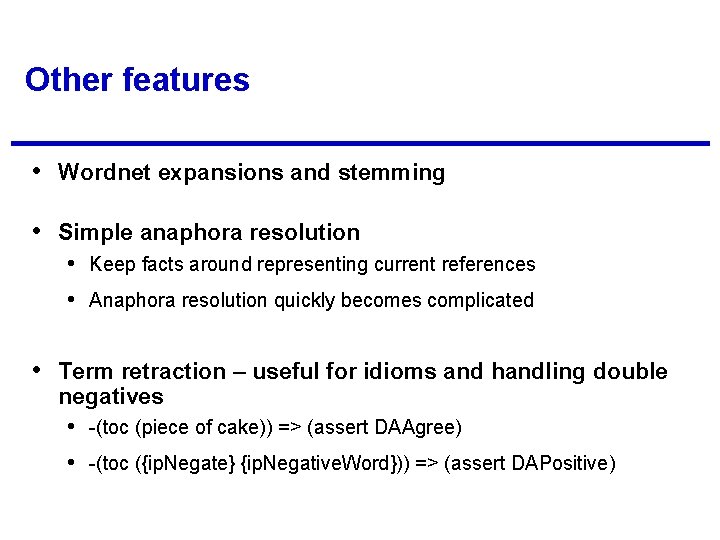

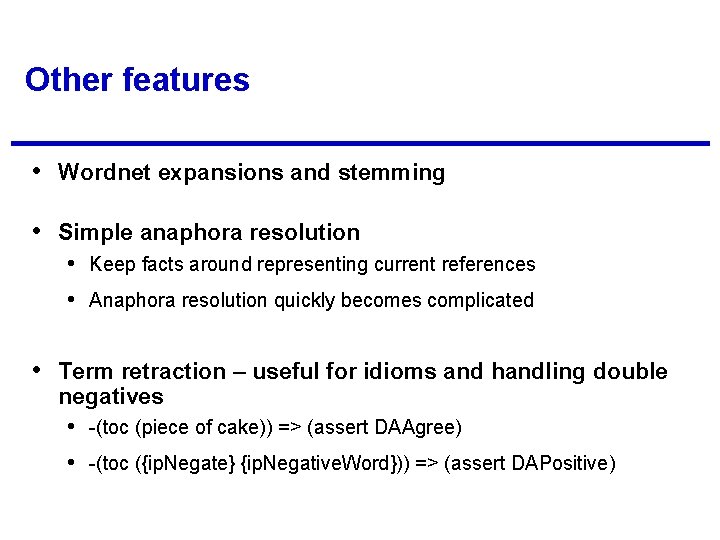

Other features • WordNet expansions and stemming • Simple anaphora resolution • Keep facts around representing current references • Anaphora resolution quickly becomes complicated • Term retraction – useful for idioms and handling double negatives • -(toc (piece of cake)) => (assert (DAAgree)) • -(toc ({ipNegate} {ipNegativeWord})) => (assert (DAPositive))
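The "keep facts around representing current references" idea can be sketched as a tiny Python tracker. It remembers the last mentioned object and hands it back when the player says "it" or "that". The object list, the anaphor list, and the `ReferenceTracker` class are hypothetical. Façade keeps such references as Jess facts.

```python
import re
from typing import Optional

# Hypothetical vocabulary of referable objects in the apartment.
OBJECTS = {"couch", "drink", "painting", "picture"}
ANAPHORS = {"it", "it's", "that", "that's", "this"}


class ReferenceTracker:
    """Keeps a 'current reference' fact around between player turns."""

    def __init__(self) -> None:
        self.current_object: Optional[str] = None

    def resolve(self, text: str) -> Optional[str]:
        tokens = re.findall(r"[a-z']+", text.lower())
        mentioned = [t for t in tokens if t in OBJECTS]
        if mentioned:
            # An explicit mention wins and becomes the new current reference.
            self.current_object = mentioned[-1]
            return self.current_object
        if any(t in ANAPHORS for t in tokens):
            return self.current_object
        return None


t = ReferenceTracker()
print(t.resolve("I love the painting"))    # painting
print(t.resolve("Where did you buy it?"))  # painting
print(t.resolve("It's late"))              # painting
```

The last call shows why anaphora resolution quickly becomes complicated: the "it" in "it's late" does not refer to anything, yet it is resolved to the painting.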

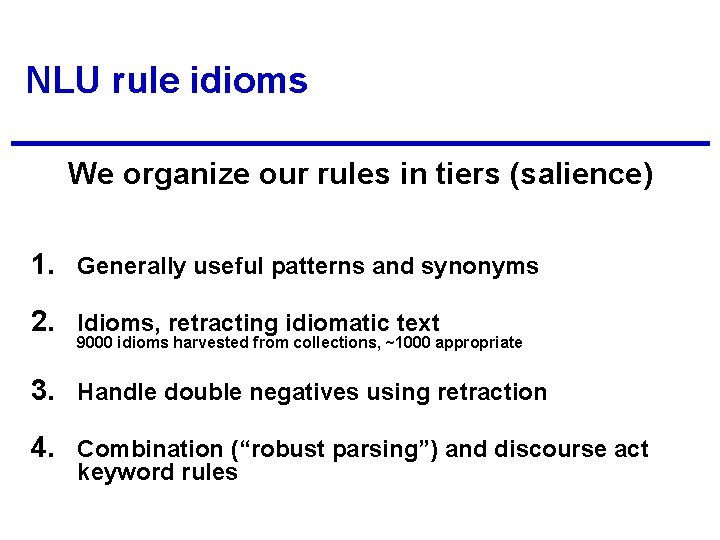

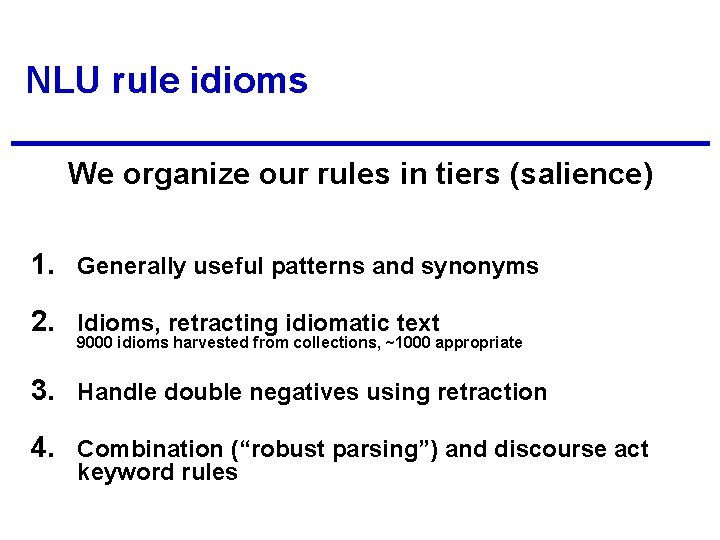

NLU rule idioms • We organize our rules in tiers (by salience):
1. Generally useful patterns and synonyms
2. Idioms, retracting the idiomatic text (9000 idioms harvested from collections, ~1000 of them appropriate)
3. Double negatives, handled using retraction
4. Combination (“robust parsing”) and discourse act keyword rules
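The tier ordering can be illustrated with a simplified Python runner in which each tier is a rule with a salience, run once from highest to lowest. This only approximates Jess, whose agenda fires individual rule activations by salience rather than sweeping whole tiers. The word lists, the salience values, and the mapping of tier 1 to the highest salience are assumptions. Retraction is modeled as deleting the matched tokens, and each tier handles only its first match.

```python
import re
from dataclasses import dataclass
from typing import Callable

Tokens = list[str]


@dataclass
class Rule:
    name: str
    salience: int                              # higher salience runs earlier
    fire: Callable[[Tokens, set], Tokens]      # may assert facts and retract tokens


def synonyms(tokens: Tokens, facts: set) -> Tokens:          # tier 1
    canon = {"yeah": "yes", "yep": "yes", "sure": "yes"}
    return [canon.get(t, t) for t in tokens]


def idioms(tokens: Tokens, facts: set) -> Tokens:            # tier 2
    # Retract "piece of cake" so later tiers never see the literal words.
    for i in range(len(tokens) - 2):
        if tokens[i:i + 3] == ["piece", "of", "cake"]:
            facts.add("DAAgree")
            return tokens[:i] + tokens[i + 3:]
    return tokens


def double_negatives(tokens: Tokens, facts: set) -> Tokens:  # tier 3
    for i in range(len(tokens) - 1):
        if tokens[i] in {"not", "never"} and tokens[i + 1] in {"bad", "awful"}:
            facts.add("DAPositive")
            return tokens[:i] + tokens[i + 2:]
    return tokens


def keywords(tokens: Tokens, facts: set) -> Tokens:          # tier 4
    if "yes" in tokens:
        facts.add("DAAgree")
    if any(t in {"bad", "awful"} for t in tokens):
        facts.add("DANegExcl")
    return tokens


RULES = [
    Rule("keywords", 10, keywords),
    Rule("synonyms", 40, synonyms),
    Rule("double_negatives", 20, double_negatives),
    Rule("idioms", 30, idioms),
]


def run_tiers(text: str) -> set:
    tokens = re.findall(r"[a-z']+", text.lower())
    facts: set = set()
    for rule in sorted(RULES, key=lambda r: r.salience, reverse=True):
        tokens = rule.fire(tokens, facts)
    return facts


print(sorted(run_tiers("Yep, not bad")))       # ['DAAgree', 'DAPositive']
print(sorted(run_tiers("That sounds awful")))  # ['DANegExcl']
```

If the keyword tier ran first, it would see "bad" in "not bad" and assert DANegExcl, which is exactly what the double-negative tier exists to prevent.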

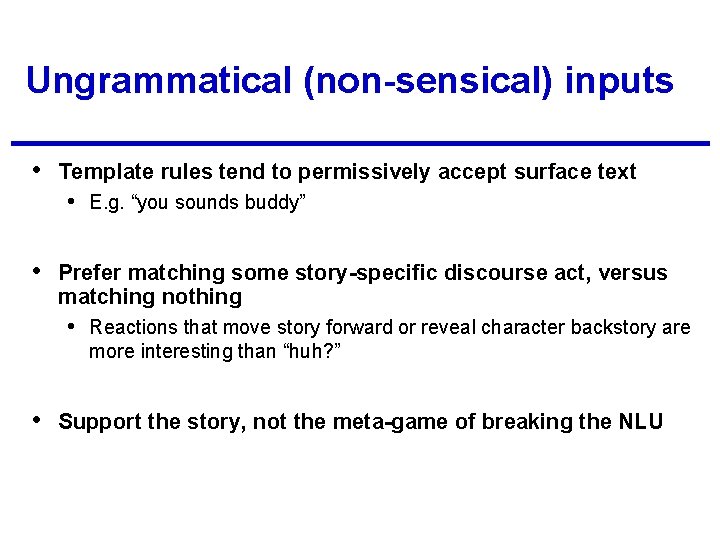

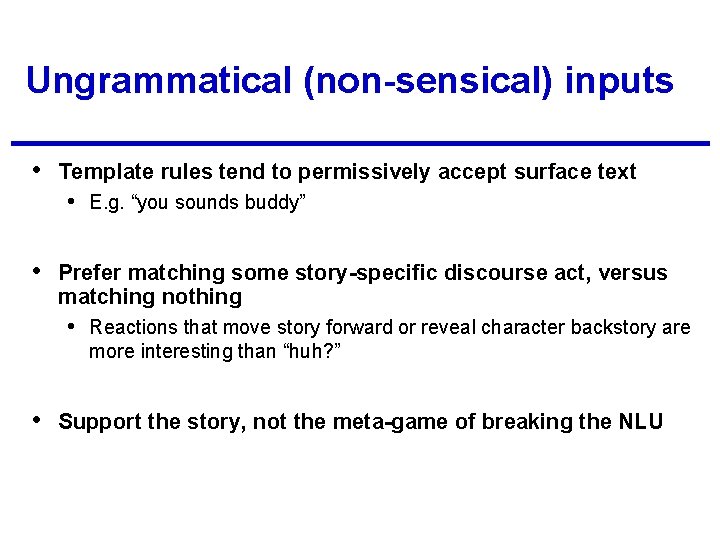

Ungrammatical (nonsensical) inputs • Template rules tend to permissively accept surface text • e.g. “you sounds buddy” • Prefer matching some story-specific discourse act over matching nothing • Reactions that move the story forward or reveal character backstory are more interesting than “huh?” • Support the story, not the meta-game of breaking the NLU

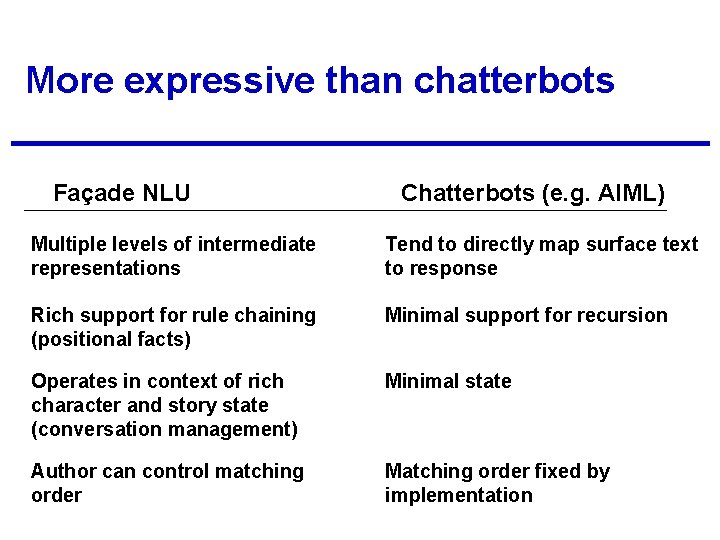

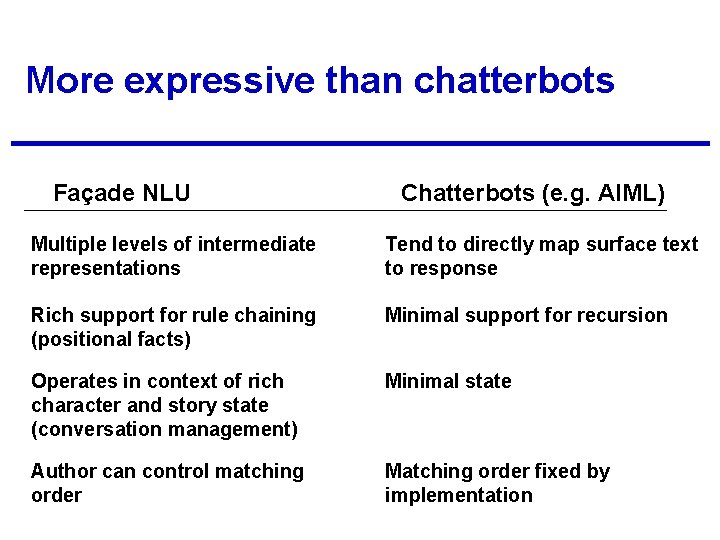

More expressive than chatterbots

| Façade NLU | Chatterbots (e.g. AIML) |
|---|---|
| Multiple levels of intermediate representations | Tend to directly map surface text to response |
| Rich support for rule chaining (positional facts) | Minimal support for recursion |
| Operates in context of rich character and story state (conversation management) | Minimal state |
| Author can control matching order | Matching order fixed by implementation |

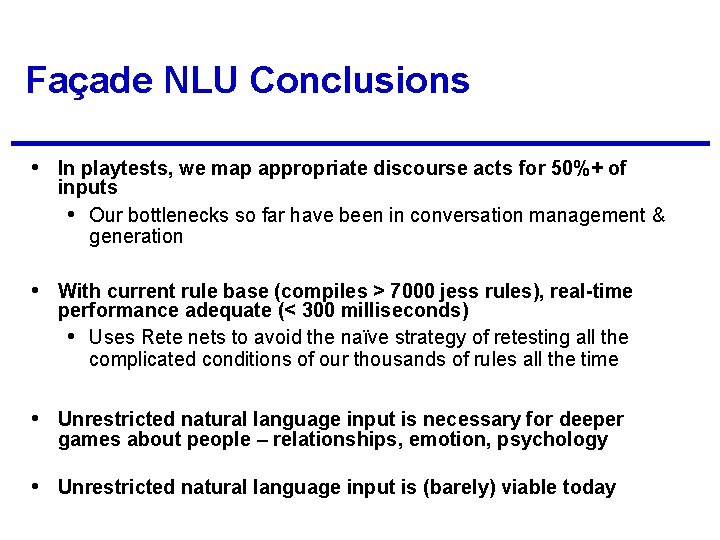

Façade NLU Conclusions • In playtests, we map appropriate discourse acts for 50%+ of inputs • Our bottlenecks so far have been in conversation management and generation • With the current rule base (which compiles to >7000 Jess rules), real-time performance is adequate (<300 milliseconds) • Jess uses Rete networks to avoid the naïve strategy of retesting all the complicated conditions of our thousands of rules all the time • Unrestricted natural language input is necessary for deeper games about people – relationships, emotion, psychology • Unrestricted natural language input is (barely) viable today

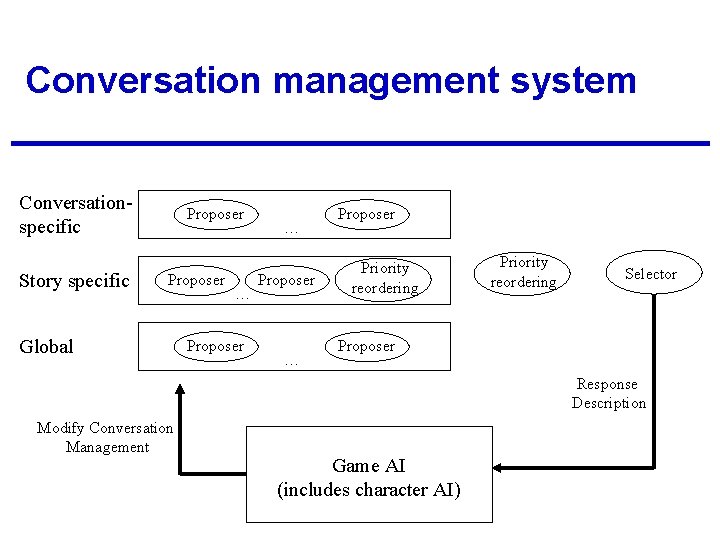

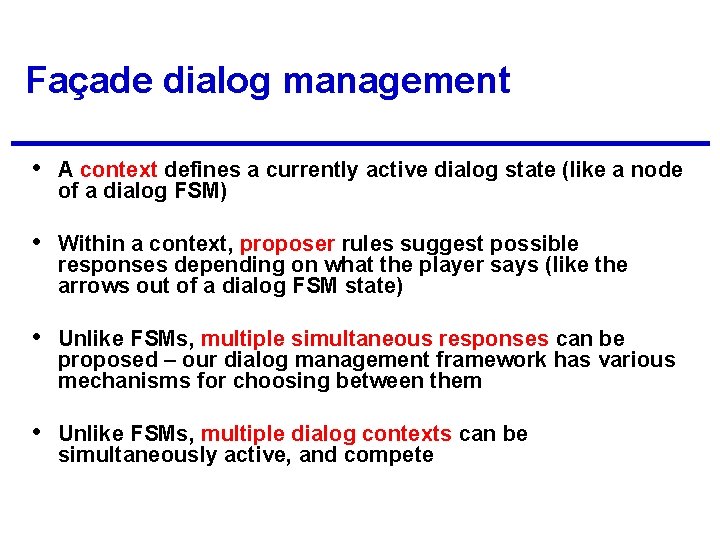

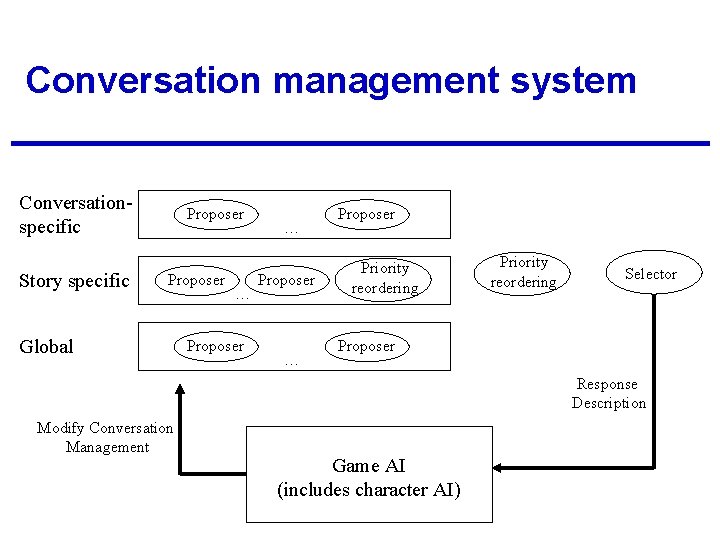

Façade dialog management • A context defines a currently active dialog state (like a node of a dialog FSM) • Within a context, proposer rules suggest possible responses depending on what the player says (like the arrows out of a dialog FSM state) • Unlike FSMs, multiple simultaneous responses can be proposed – our dialog management framework has various mechanisms for choosing between them • Unlike FSMs, multiple dialog contexts can be simultaneously active, and compete
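A minimal Python sketch of contexts and proposers follows. The names `Context`, `DialogManager`, and `Proposal`, the reaction names, and the highest-priority-wins selection are assumptions for illustration; the slide only says Façade has "various mechanisms" for choosing. The sketch shows the two departures from an FSM: several proposals for one discourse act, and several contexts active at once.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A proposer inspects one discourse act and may return (reaction, priority).
Proposer = Callable[[str], Optional[tuple[str, int]]]


@dataclass
class Proposal:
    reaction: str
    priority: int
    context: str


@dataclass
class Context:
    name: str
    proposers: list[Proposer] = field(default_factory=list)


class DialogManager:
    def __init__(self) -> None:
        self.active: dict[str, Context] = {}

    def activate(self, ctx: Context) -> None:
        self.active[ctx.name] = ctx

    def deactivate(self, name: str) -> None:
        self.active.pop(name, None)

    def propose(self, act: str) -> list[Proposal]:
        # Unlike the arrows out of one FSM state, every proposer in every
        # active context is consulted, so one act can yield many proposals.
        proposals = []
        for ctx in self.active.values():
            for proposer in ctx.proposers:
                result = proposer(act)
                if result is not None:
                    proposals.append(Proposal(result[0], result[1], ctx.name))
        return proposals

    def select(self, act: str) -> Optional[Proposal]:
        # Simplest possible arbitration (an assumption): highest priority wins.
        return max(self.propose(act), key=lambda p: p.priority, default=None)


redecorating = Context("DiscussRedecorating", [
    lambda act: ("grace_explains_couch", 40) if act == "PraiseObject" else None,
])
global_ctx = Context("Global", [
    lambda act: ("trip_thanks_player", 10) if act in ("Praise", "PraiseObject") else None,
])

dm = DialogManager()
dm.activate(redecorating)
dm.activate(global_ctx)
print(dm.select("PraiseObject").reaction)  # grace_explains_couch
dm.deactivate("DiscussRedecorating")
print(dm.select("PraiseObject").reaction)  # trip_thanks_player
```

Deactivating the redecorating context changes the reaction to the same discourse act, which is one way an author can vary responses over the course of the experience.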

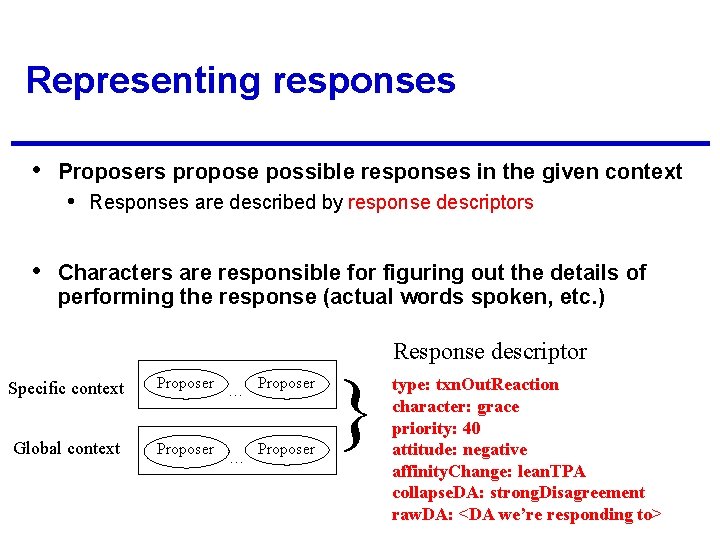

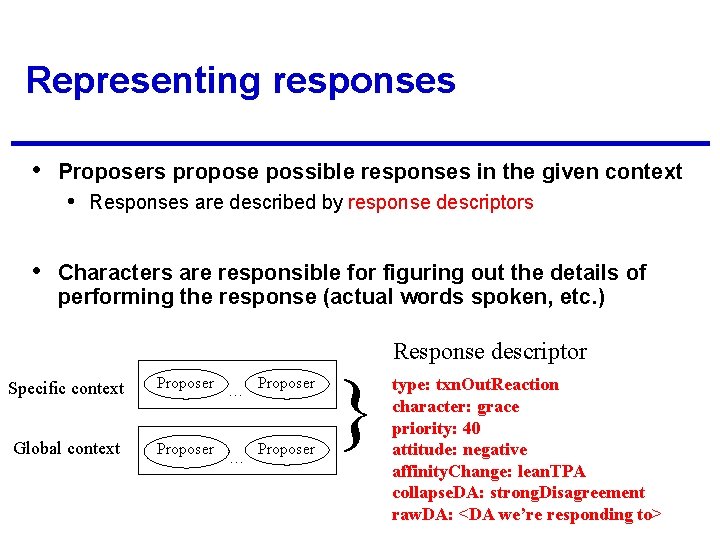

Representing responses • Proposers propose possible responses in the given context • Responses are described by response descriptors • Characters are responsible for figuring out the details of performing the response (actual words spoken, etc.) • Diagram: proposers in a specific context and in the global context each emit response descriptors, for example: Response descriptor – type: txnOutReaction; character: grace; priority: 40; attitude: negative; affinityChange: leanTPA; collapseDA: strongDisagreement; rawDA: <DA we’re responding to>

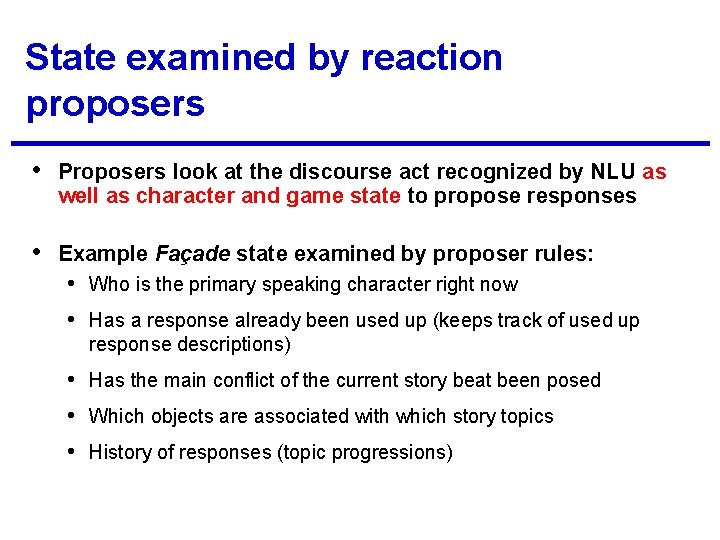

State examined by reaction proposers • Proposers look at the discourse act recognized by NLU as well as character and game state to propose responses • Example Façade state examined by proposer rules: • Who is the primary speaking character right now • Has a response already been used up (keeps track of used up response descriptions) • Has the main conflict of the current story beat been posed • Which objects are associated with which story topics • History of responses (topic progressions)

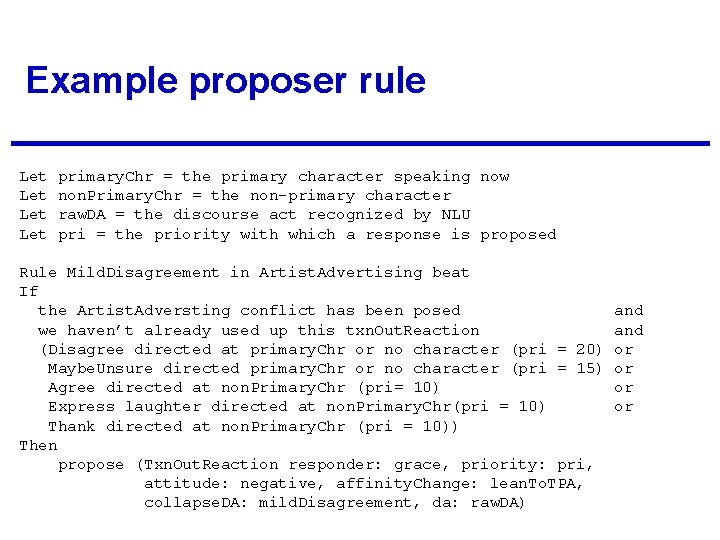

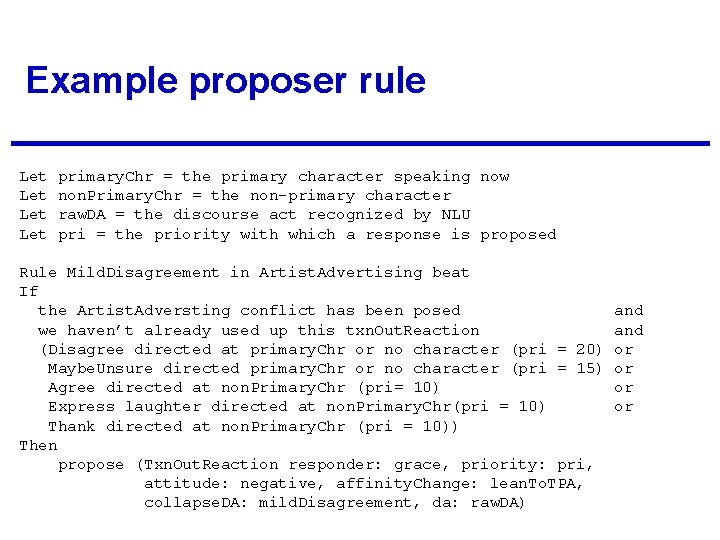

Example proposer rule
Let primaryChr = the primary character speaking now; nonPrimaryChr = the non-primary character; rawDA = the discourse act recognized by NLU; pri = the priority with which a response is proposed
Rule MildDisagreement in ArtistAdvertising beat
If the ArtistAdvertising conflict has been posed
and we haven’t already used up this txnOutReaction
and (Disagree directed at primaryChr or no character (pri = 20)
or MaybeUnsure directed at primaryChr or no character (pri = 15)
or Agree directed at nonPrimaryChr (pri = 10)
or Express laughter directed at nonPrimaryChr (pri = 10)
or Thank directed at nonPrimaryChr (pri = 10))
Then propose (TxnOutReaction responder: grace, priority: pri, attitude: negative, affinityChange: leanToTPA, collapseDA: mildDisagreement, da: rawDA)
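Translated into Python, the MildDisagreement rule might look like the following sketch. The function and class names, the `used_up` set, and the spelling `ExpressLaughter` for "Express laughter" are hypothetical. The priorities and the descriptor values are taken from the slide.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DA:
    kind: str                    # e.g. "Disagree", "MaybeUnsure", "Thank"
    char: Optional[str] = None   # character the act is directed at, if any


@dataclass
class ResponseDescriptor:
    type: str
    responder: str
    priority: int
    attitude: str
    affinity_change: str
    collapse_da: str
    da: DA


def mild_disagreement_artist_advertising(
    raw_da: DA,
    primary_chr: str,
    non_primary_chr: str,
    conflict_posed: bool,
    used_up: set[str],
) -> Optional[ResponseDescriptor]:
    # Both guard conditions must hold before any discourse act is considered.
    if not conflict_posed or "txnOutReaction" in used_up:
        return None
    at_primary_or_none = raw_da.char in (primary_chr, None)
    at_non_primary = raw_da.char == non_primary_chr
    if raw_da.kind == "Disagree" and at_primary_or_none:
        pri = 20
    elif raw_da.kind == "MaybeUnsure" and at_primary_or_none:
        pri = 15
    elif raw_da.kind in ("Agree", "ExpressLaughter", "Thank") and at_non_primary:
        pri = 10
    else:
        return None
    return ResponseDescriptor("txnOutReaction", "grace", pri, "negative",
                              "leanToTPA", "mildDisagreement", raw_da)


r = mild_disagreement_artist_advertising(DA("Disagree", "trip"), "trip", "grace", True, set())
print(r.priority)  # 20
```

Agreeing with the non-primary character counts as mild disagreement with the primary one, which is why Agree, laughter, and Thank appear here with the lowest priority.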

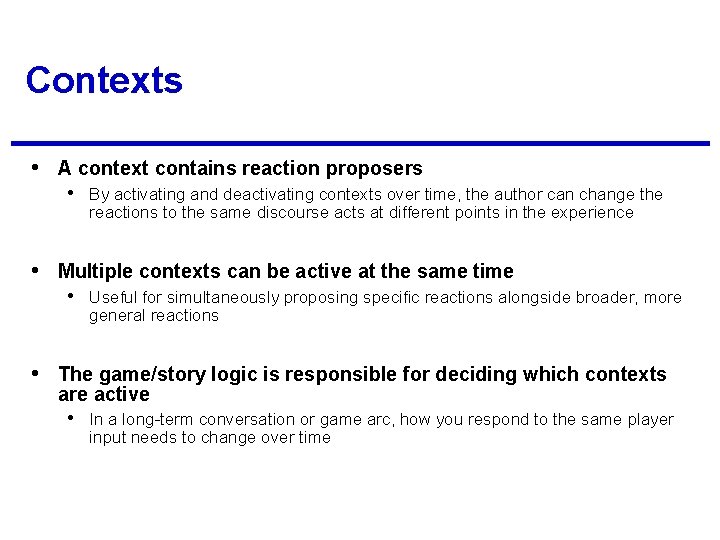

Contexts • A context contains reaction proposers • By activating and deactivating contexts over time, the author can change the reactions to the same discourse acts at different points in the experience • Multiple contexts can be active at the same time • Useful for simultaneously proposing specific reactions alongside broader, more general reactions • The game/story logic is responsible for deciding which contexts are active • In a long-term conversation or game arc, how you respond to the same player input needs to change over time

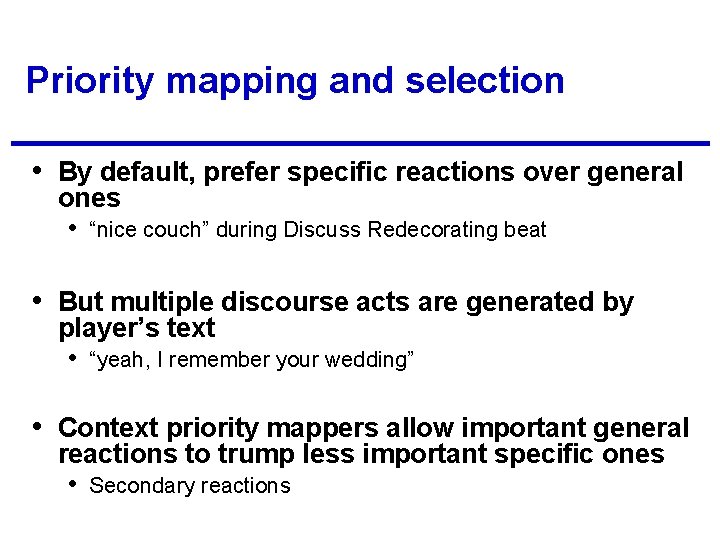

Priority mapping and selection • By default, prefer specific reactions over general ones • e.g. “nice couch” during the Discuss Redecorating beat • But the player’s text may generate multiple discourse acts • e.g. “yeah, I remember your wedding” • Context priority mappers allow important general reactions to trump less important specific ones • Secondary reactions
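The following Python sketch illustrates one possible priority mapping. Assumptions: proposals carry a plain `context` tag, the default mapper adds a fixed `SPECIFIC_BOOST` to specific-context proposals, a "secondary reaction" is read as the best proposal answering a different discourse act, and `ReferToWedding` is a hypothetical act name.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Proposal:
    reaction: str
    priority: int
    context: str  # "specific" or "global" in this sketch
    da: str       # the discourse act this reaction responds to


SPECIFIC_BOOST = 100  # assumption: lifts every specific proposal above global ones


def default_map(p: Proposal) -> int:
    return p.priority + (SPECIFIC_BOOST if p.context == "specific" else 0)


def select(proposals: list[Proposal],
           mapper: Callable[[Proposal], int] = default_map,
           ) -> tuple[Optional[Proposal], Optional[Proposal]]:
    ranked = sorted(proposals, key=mapper, reverse=True)
    if not ranked:
        return None, None
    primary = ranked[0]
    # Secondary reaction: best-ranked proposal answering a different discourse act.
    secondary = next((p for p in ranked if p.da != primary.da), None)
    return primary, secondary


# "yeah, I remember your wedding" yields two discourse acts.
proposals = [
    Proposal("trip_nods_along", 30, "specific", "Agree"),
    Proposal("grace_wedding_memory", 60, "global", "ReferToWedding"),
]
primary, secondary = select(proposals)
print(primary.reaction, secondary.reaction)  # trip_nods_along grace_wedding_memory


def wedding_matters(p: Proposal) -> int:
    # A context priority mapper letting an important general reaction trump.
    return 500 if p.da == "ReferToWedding" else default_map(p)


primary, secondary = select(proposals, wedding_matters)
print(primary.reaction, secondary.reaction)  # grace_wedding_memory trip_nods_along
```

Keeping the mapping as a swappable function means each context can decide how its proposals rank against others without touching the proposers themselves.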

Conversation management conclusions • Rule-based architecture avoids manually unwinding finite state machines • Can handle multiple simultaneous contexts and multiple simultaneous interpretations • A loop runs between the game logic and phase 2 (for us, this loop is managed by beats; the handlers are really part of the narrative intelligence) • Combined with character dialog logic, this creates the experience of an ongoing conversation • Interesting alternative – have the system combine multiple simple conversation graphs into a larger multithreaded conversation • Rob Zubek’s work at Northwestern

Live NLP demo

Natural language generation • Currently, there are no examples of NLG in games • Academic NLG • Symbolic approaches to individual sentences and sentence planning • Statistical approaches to summarization and translation • But little work on rich, personality-specific, emotion-laden dialog • NLG requires deep(er) formal knowledge representation

NLG conclusions • Won't be able to do NLG for game characters without a focused 5+ year research effort • Situation is far too complex / rich • Game research that pushes NLG will need to use simpler situation + simpler language • Examples: children on playground, cavemen, …

For more info (including these slides) • interactivestory.net – Façade project site • egl.gatech.edu – Experimental Game Lab (NLP research should be starting up soon) • grandtextauto.org – Group blog on games, interactive drama, IF, new media

Conversation management system (diagram) • Conversation-specific, story-specific, and global proposers emit response descriptions • Priority reordering, then a selector • Modify: link between Conversation Management and the Game AI (includes character AI)