Lecture 2: N-gram, Kai-Wei Chang, CS @ University of Virginia

- Slides: 45

Lecture 2: N-gram

- Kai-Wei Chang, CS @ University of Virginia, kw@kwchang.net
- Course webpage: http://kwchang.net/teaching/NLP16
- CS 6501: Natural Language Processing

This lecture

- Language models
  - What are N-gram models?
- How to use probabilities
  - What does P(Y|X) mean?
  - How can I manipulate it?
  - How can I estimate its value in practice?

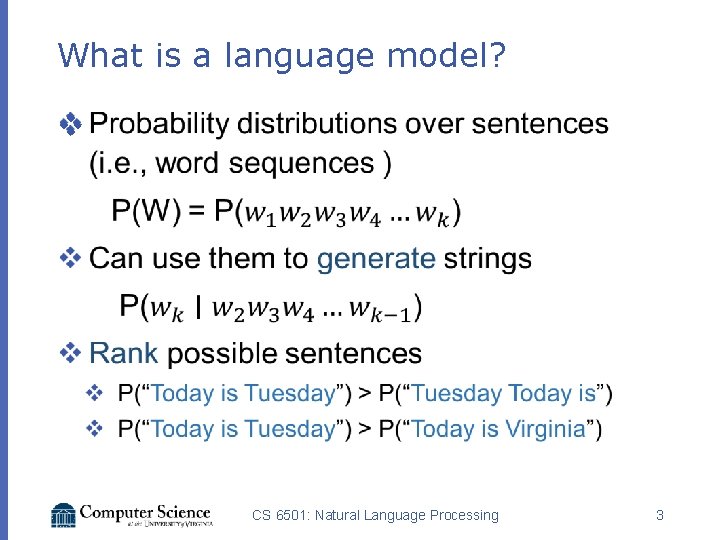

What is a language model?

Language model applications: context-sensitive spelling correction

Language model applications: autocomplete

Language model applications: Smart Reply

Language model applications: language generation (https://pdos.csail.mit.edu/archive/scigen/)

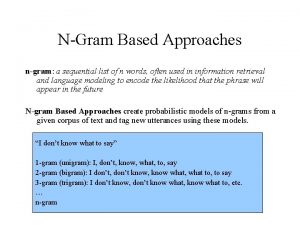

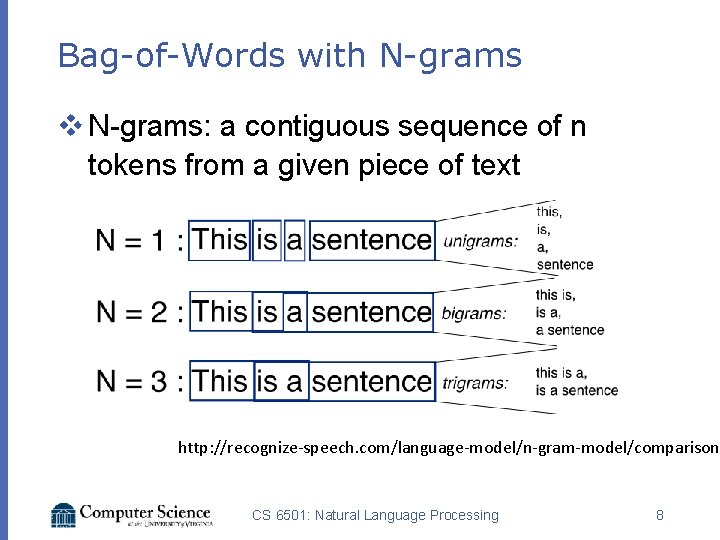

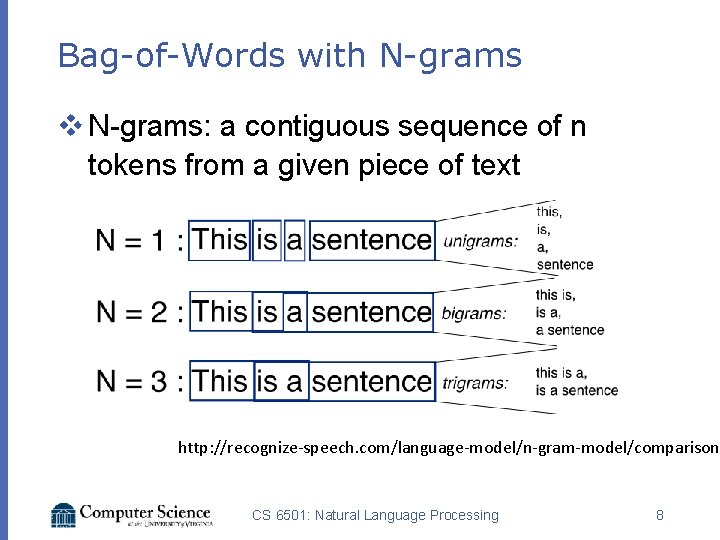

Bag-of-Words with N-grams

- N-grams: a contiguous sequence of n tokens from a given piece of text
- http://recognize-speech.com/language-model/n-gram-model/comparison
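The definition above can be illustrated with a minimal sketch. The function name `ngrams` and the example sentence are assumptions made for illustration, and tokenization is simplified to whitespace splitting.

```python
def ngrams(tokens, n):
    """Return every contiguous window of n tokens, in order of appearance."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence of length L has L - n + 1 windows (none if L < n).
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Illustrative sentence (not from the slides), split on whitespace.
tokens = "the cat sat on the mat".split()
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)
print(bigrams)
print(trigrams)
```

A bag-of-words representation with n-grams would then count how often each tuple occurs, for example with `collections.Counter(bigrams)`.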

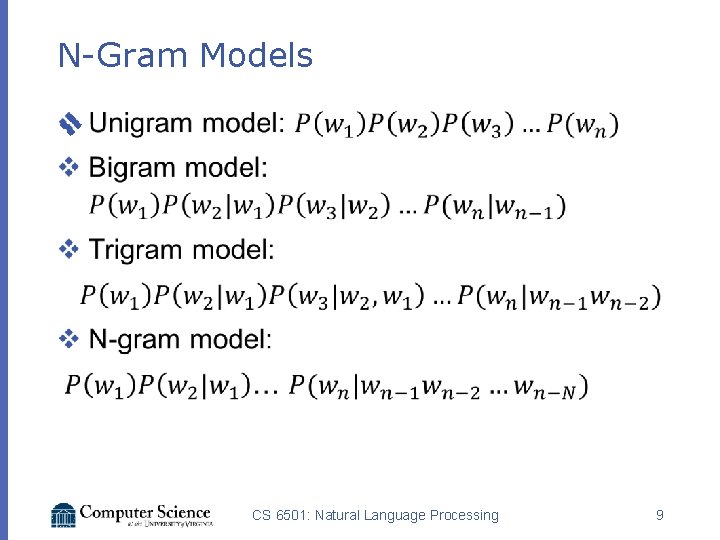

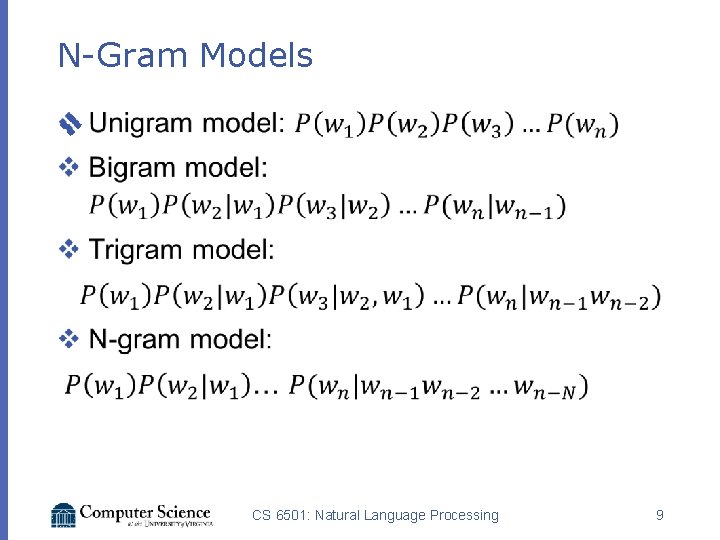

N-Gram Models

Random language via n-gram

- http://www.cs.jhu.edu/~jason/465/PowerPoint/lect01,3tr-ngram-gen.pdf
- Behind the scenes: probability theory

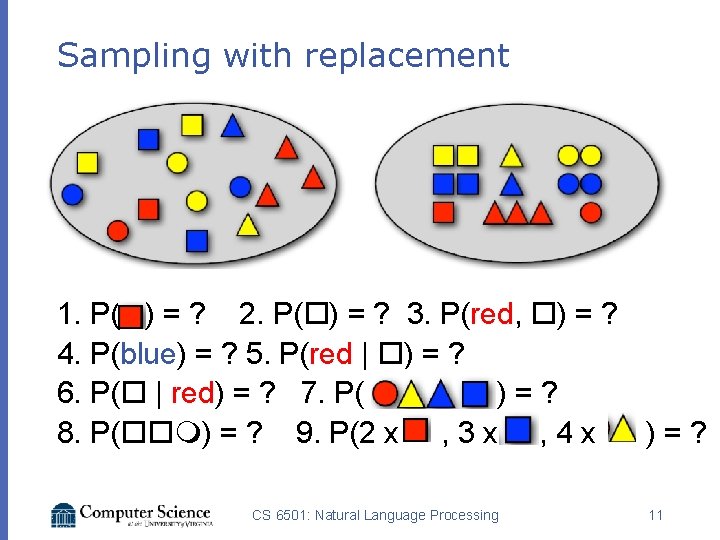

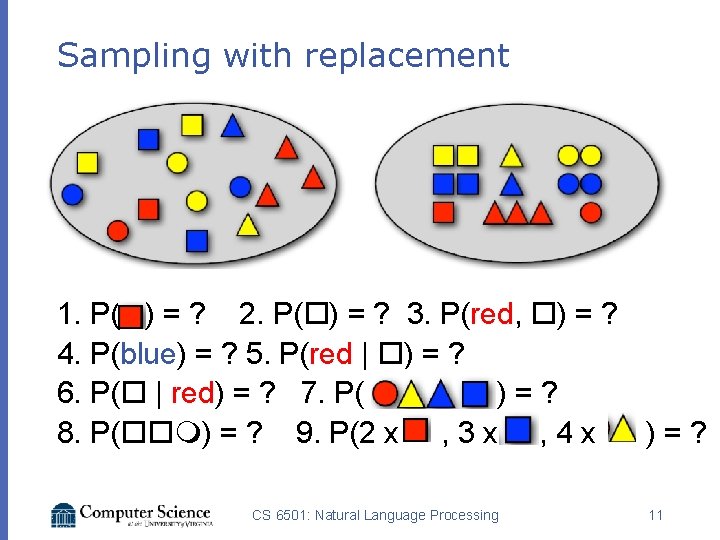

Sampling with replacement

1. P( ) = ?
2. P( ) = ?
3. P(red, ) = ?
4. P(blue) = ?
5. P(red | ) = ?
6. P( | red) = ?
7. P( ) = ?
8. P( ) = ?
9. P(2 x , 3 x , 4 x ) = ?

Sampling words with replacement

- Example from Julia Hockenmaier, Intro to NLP

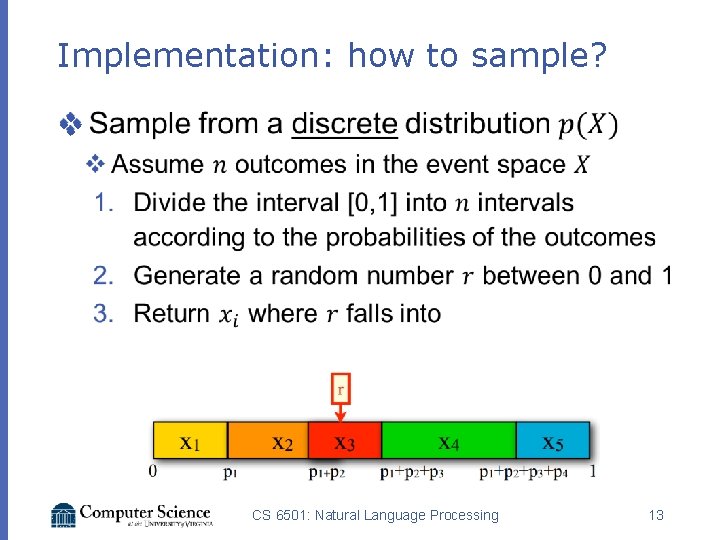

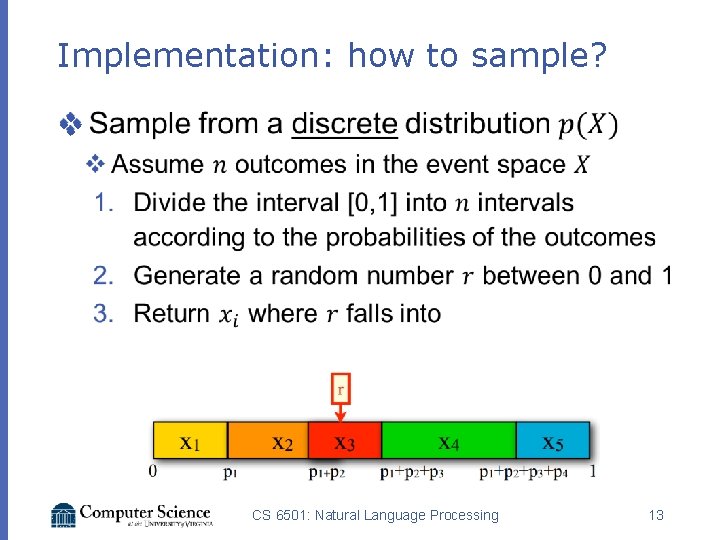

Implementation: how to sample?
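One common way to sample a word from a discrete distribution is inverse-CDF sampling: draw a uniform random number and find the first word whose cumulative probability exceeds it. The sketch below is one possible implementation and not necessarily the one shown on the slide; the function name `sample` and the toy distribution are assumptions for illustration.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def sample(dist, rng=random):
    """Draw one key from dist (a mapping word -> probability) by inverse-CDF sampling."""
    words = list(dist)
    cdf = list(accumulate(dist[w] for w in words))  # running totals
    u = rng.random() * cdf[-1]                       # uniform in [0, total mass)
    idx = bisect_right(cdf, u)                       # first index with cdf[idx] > u
    return words[min(idx, len(words) - 1)]           # guard against float rounding


# Hypothetical unigram distribution, for illustration only.
toy_dist = {"the": 0.5, "cat": 0.3, "sat": 0.2}
print([sample(toy_dist) for _ in range(5)])
```

Because every draw uses the same distribution, this is sampling with replacement. The standard library's `random.choices(words, weights=...)` does the same job in one call.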

Conditional on the previous word

- Example from Julia Hockenmaier, Intro to NLP

Conditional on the previous word

- Example from Julia Hockenmaier, Intro to NLP
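Generating text conditional on the previous word can be sketched as a loop that repeatedly samples the next word from P(next | previous) until an end marker is drawn. The probability table below is hypothetical (it is not the example from the slide), and `generate` is an illustrative name.

```python
import random

# Hypothetical table of P(next | previous); "<S>" and "</S>" mark sentence boundaries.
bigram = {
    "<S>": {"I": 0.6, "Sam": 0.4},
    "I": {"am": 1.0},
    "am": {"Sam": 0.5, "</S>": 0.5},
    "Sam": {"I": 0.3, "</S>": 0.7},
}


def generate(table, rng, max_len=20):
    """Sample words one at a time, each conditioned only on the previous word."""
    word, out = "<S>", []
    for _ in range(max_len):
        nxt = table[word]
        word = rng.choices(list(nxt), weights=list(nxt.values()))[0]
        if word == "</S>":  # end-of-sentence marker: stop generating
            break
        out.append(word)
    return out


print(" ".join(generate(bigram, random.Random())))
```

The `max_len` cap is a design choice to guarantee termination even when the table rarely produces the end marker.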

Recap: probability theory

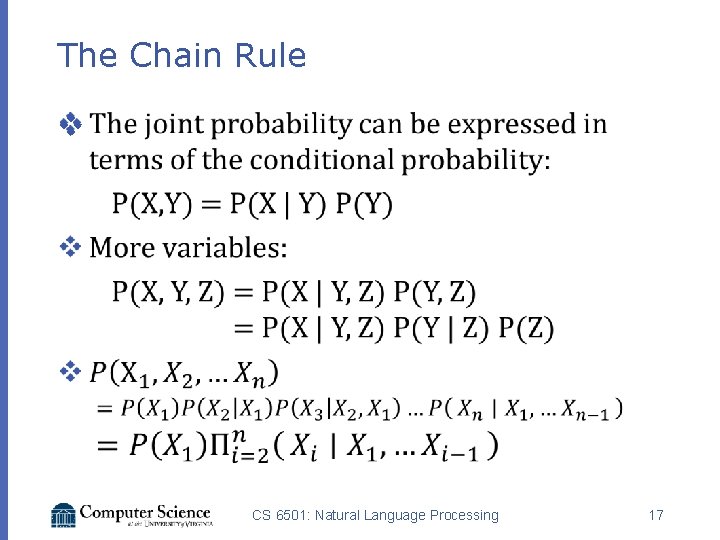

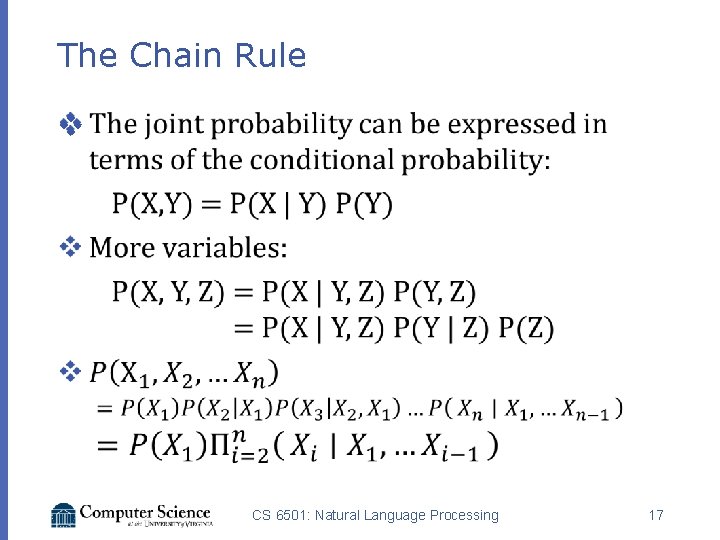

The Chain Rule
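For reference, the chain rule in its standard form follows from the definition of conditional probability, P(A, B) = P(A) P(B | A), applied repeatedly. The notation w_1, ..., w_n for a word sequence is an assumption here; the slide's own formula is in the image.

$$
P(w_1, w_2, \dots, w_n)
  = P(w_1)\, P(w_2 \mid w_1)\, P(w_3 \mid w_1, w_2) \cdots P(w_n \mid w_1, \dots, w_{n-1})
  = \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1})
$$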

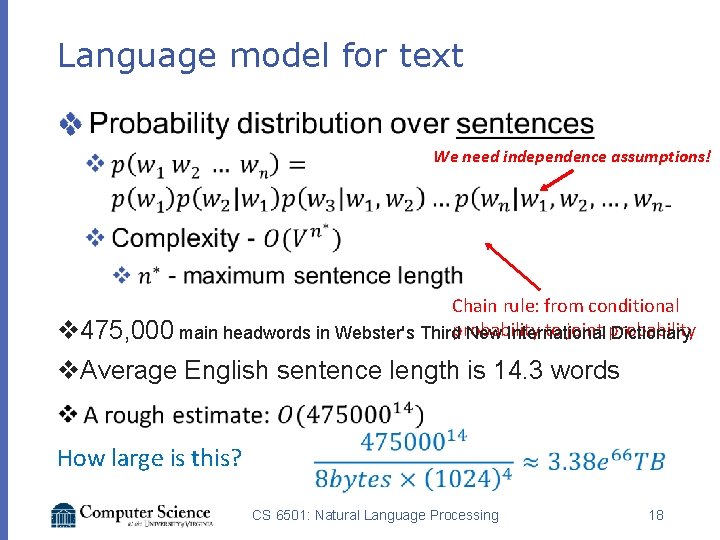

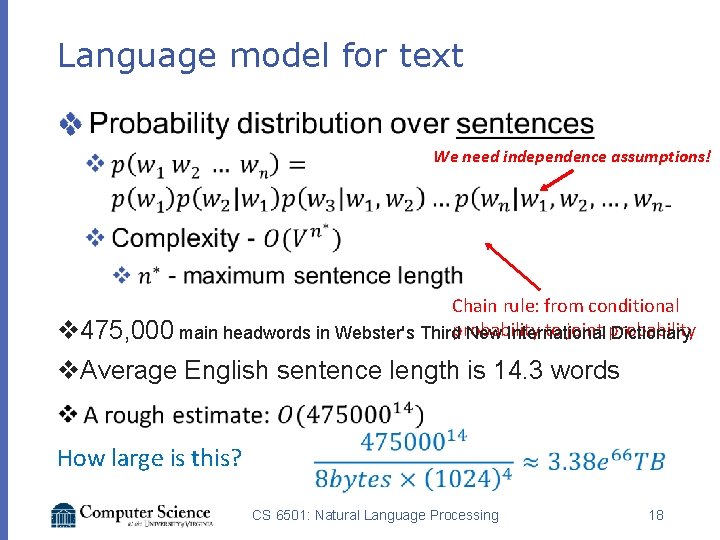

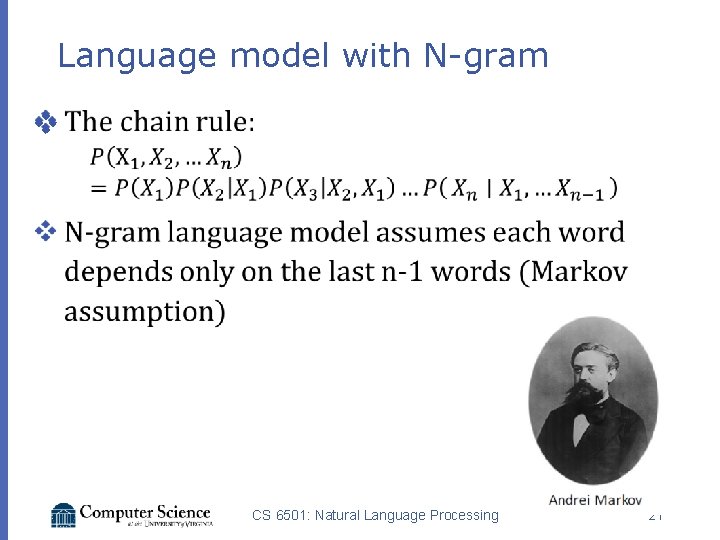

Language model for text

- Chain rule: from conditional probability to joint probability
- 475,000 main headwords in Webster's Third New International Dictionary
- Average English sentence length is 14.3 words
- How large is this?
- We need independence assumptions!
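A rough sense of "how large" can be had by counting the distinct word sequences of average length over that vocabulary, which is the scale of the table a model without independence assumptions would need. This is a back-of-the-envelope sketch using only the two figures on the slide; the variable names are illustrative.

```python
import math

vocab_size = 475_000  # main headwords (figure from the slide)
avg_length = 14.3     # average English sentence length in words (figure from the slide)

# vocab_size ** avg_length is unwieldy to read directly, so work with its base-10 logarithm.
log10_sequences = avg_length * math.log10(vocab_size)
print(f"about 10^{log10_sequences:.1f} possible word sequences")
```

The result is on the order of 10^81, which is why the full chain-rule model cannot be estimated directly from data.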

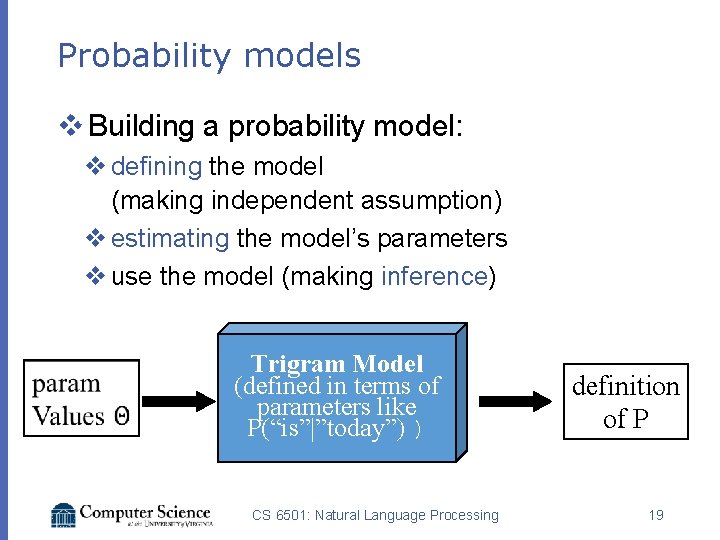

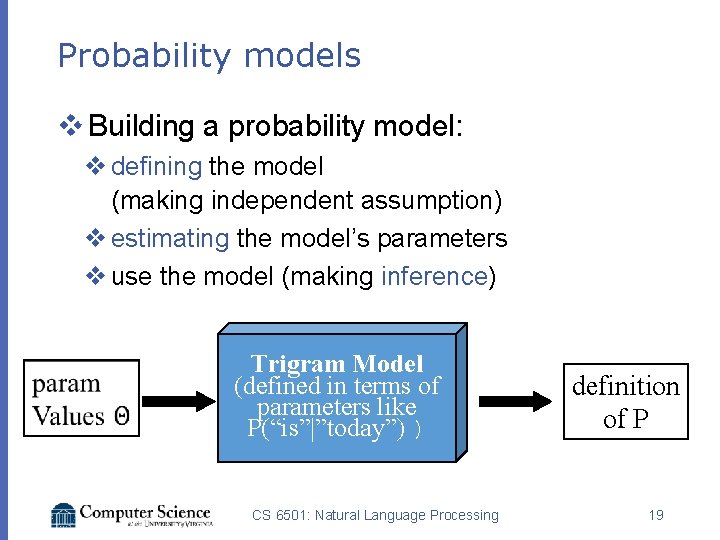

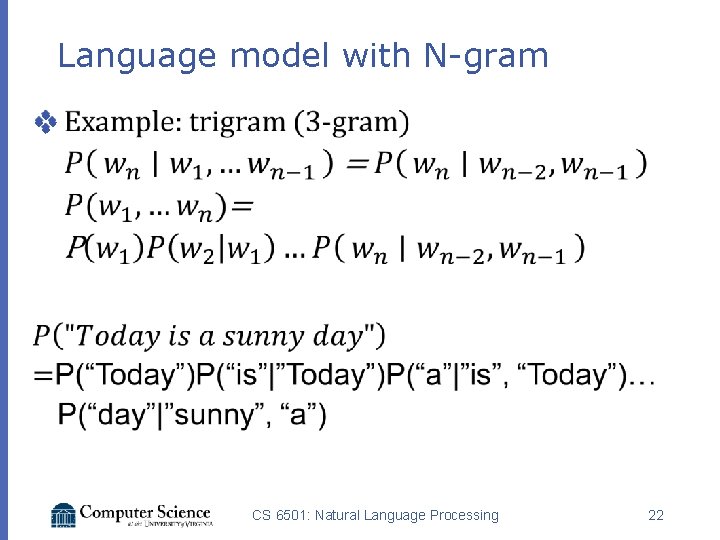

Probability models

- Building a probability model:
  - defining the model (making independence assumptions)
  - estimating the model's parameters
  - using the model (making inference)
- Trigram model (defined in terms of parameters like P("is" | "today"))

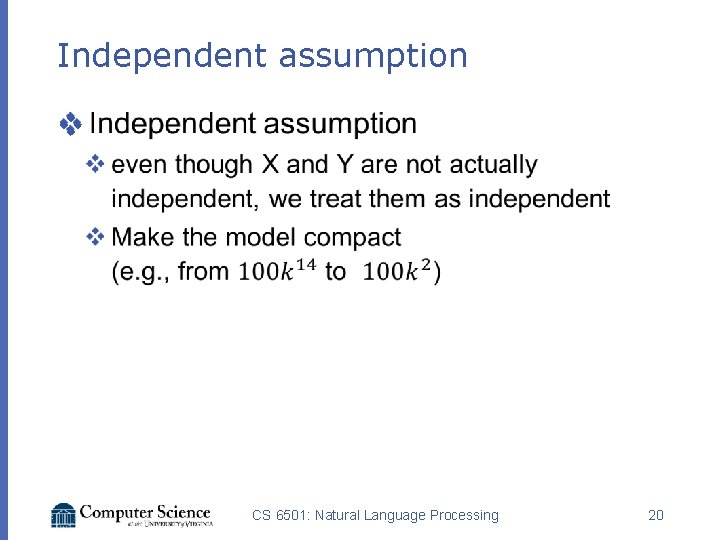

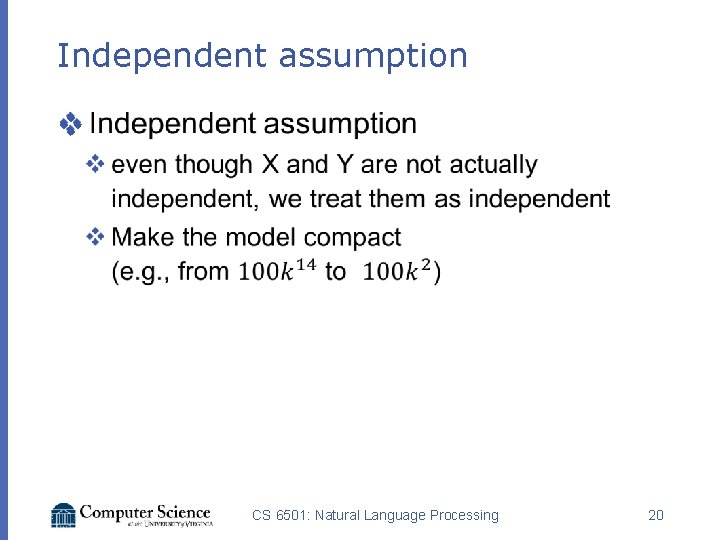

Independence assumption

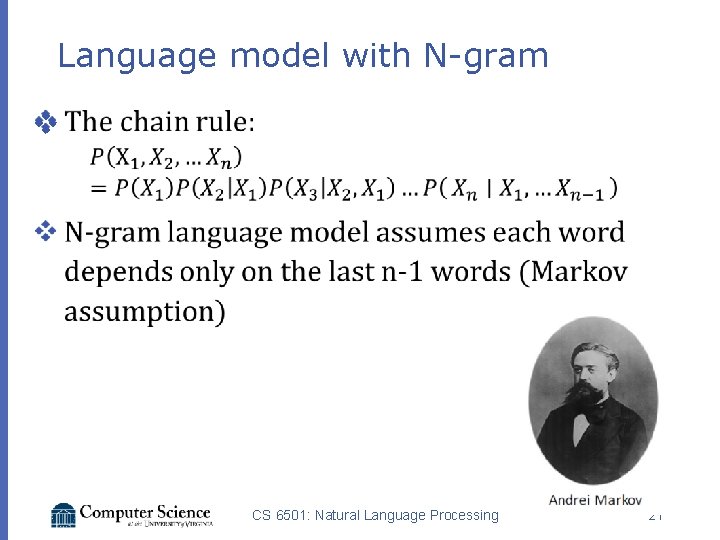

Language model with N-gram

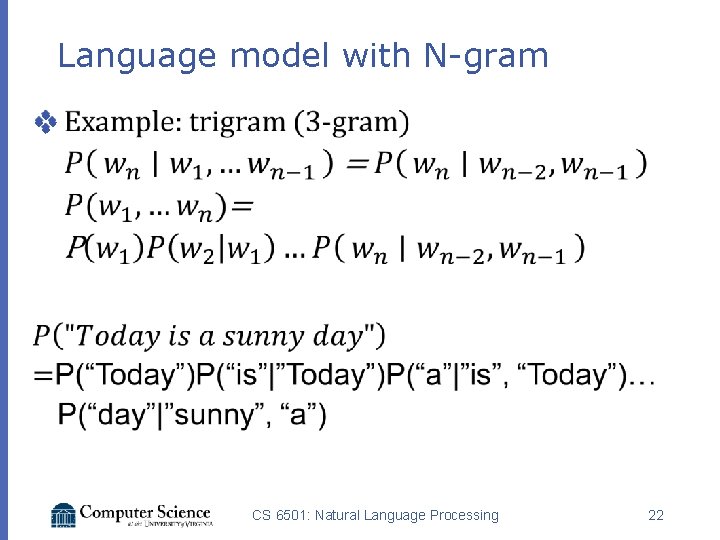

Language model with N-gram
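In standard notation (an assumption here, since the slide's formulas are in the images), an N-gram model approximates each chain-rule factor by conditioning only on the previous N-1 words:

$$
P(w_i \mid w_1, \dots, w_{i-1}) \approx P(w_i \mid w_{i-N+1}, \dots, w_{i-1})
\quad\Longrightarrow\quad
P(w_1, \dots, w_n) \approx \prod_{i=1}^{n} P(w_i \mid w_{i-N+1}, \dots, w_{i-1})
$$

The two smallest cases are the unigram model (N = 1), with factors P(w_i), and the bigram model (N = 2), with factors P(w_i | w_{i-1}).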

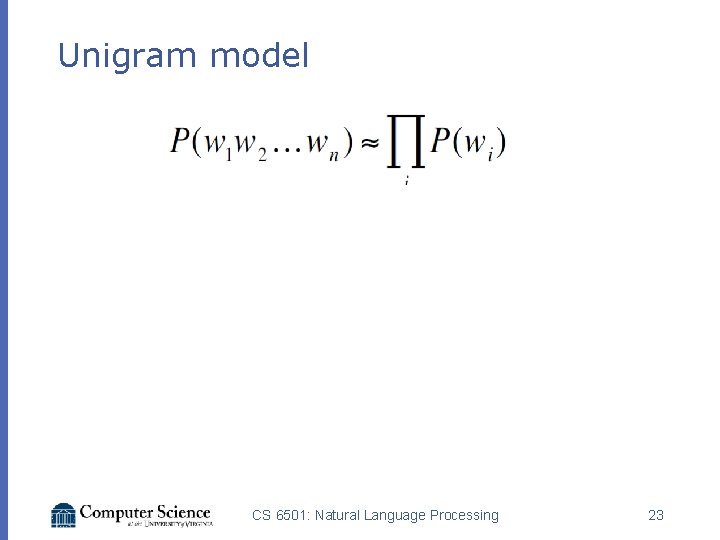

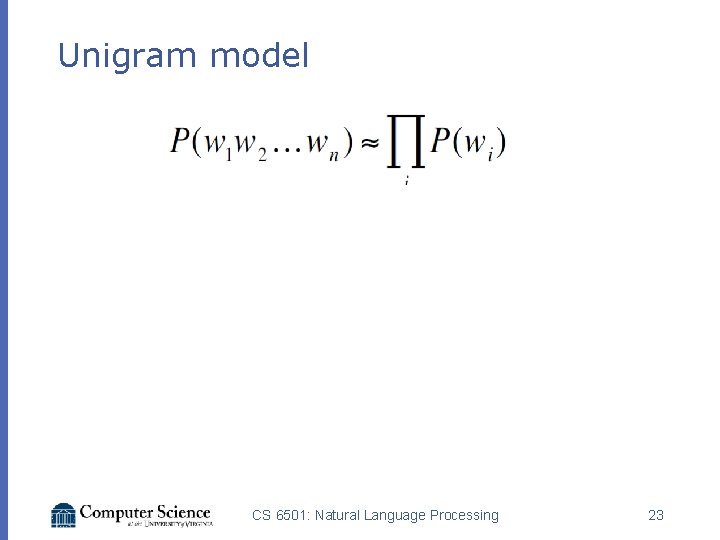

Unigram model

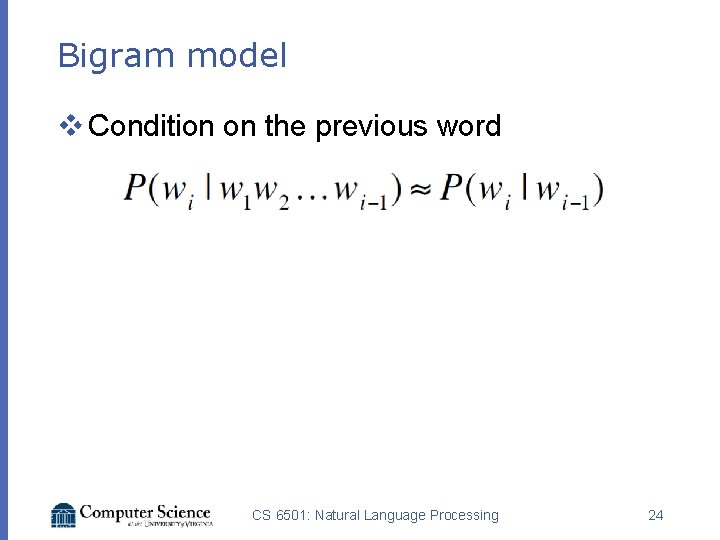

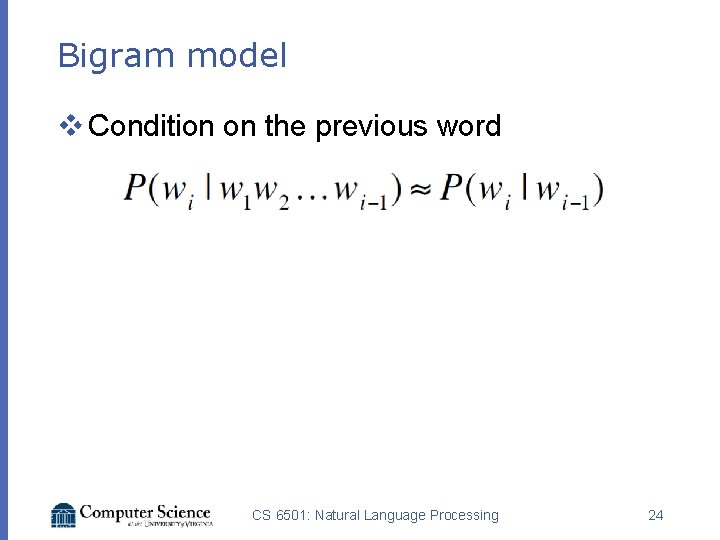

Bigram model

- Condition on the previous word

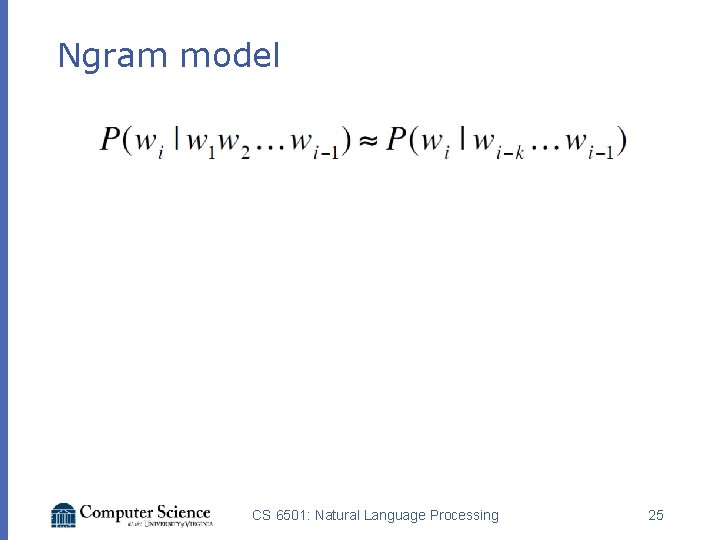

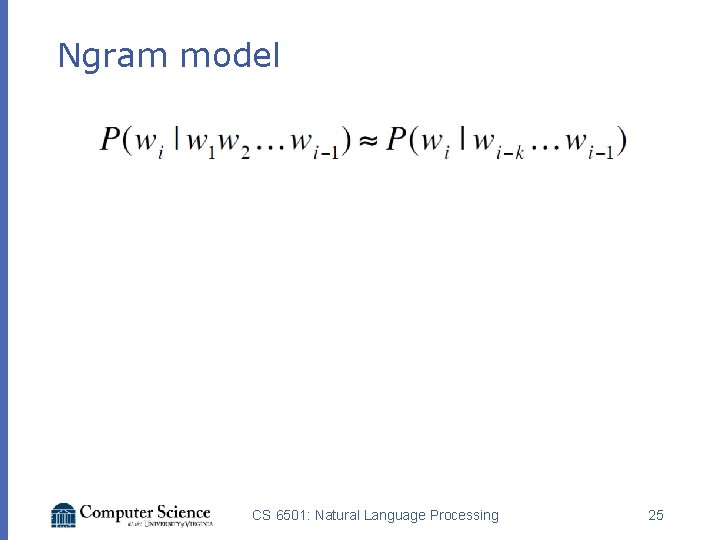

N-gram model

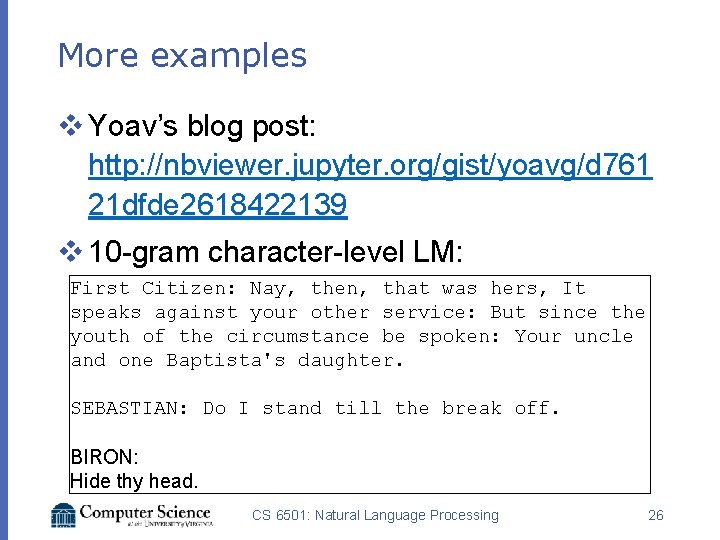

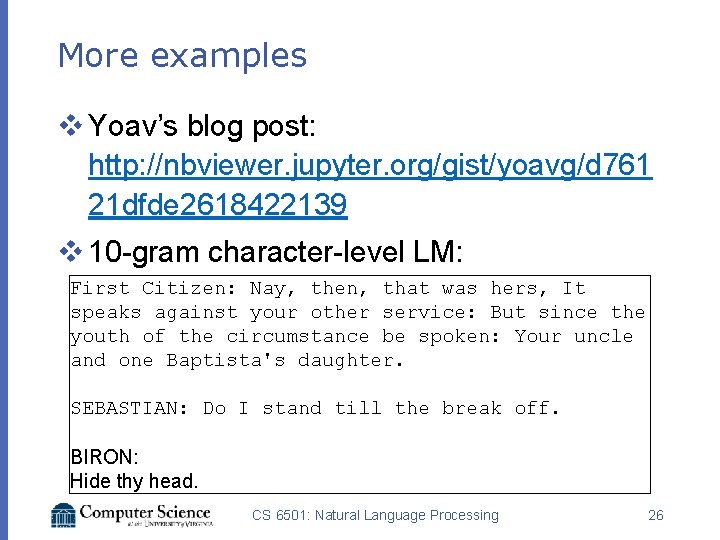

More examples

- Yoav's blog post: http://nbviewer.jupyter.org/gist/yoavg/d76121dfde2618422139
- 10-gram character-level LM:

First Citizen: Nay, then, that was hers, It speaks against your other service: But since the youth of the circumstance be spoken: Your uncle and one Baptista's daughter. SEBASTIAN: Do I stand till the break off. BIRON: Hide thy head.

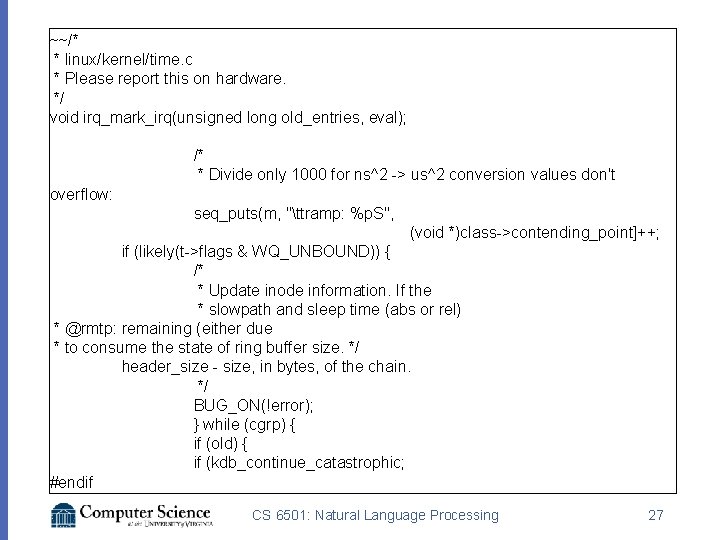

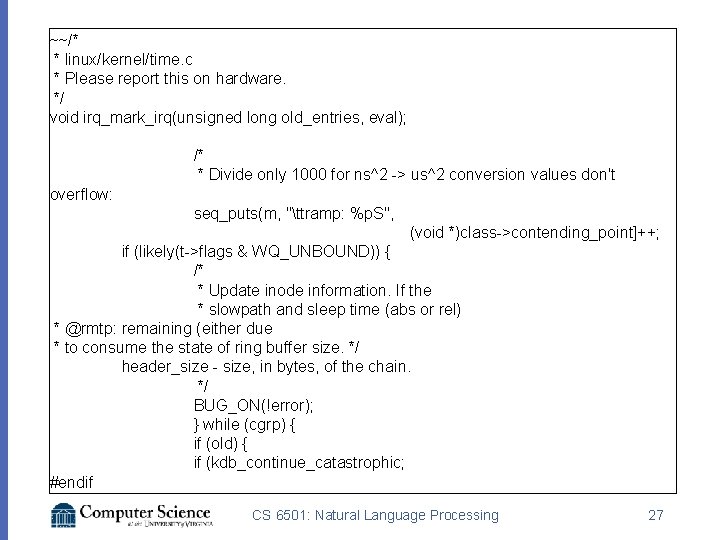

More examples

- Yoav's blog post: http://nbviewer.jupyter.org/gist/yoavg/d76121dfde2618422139
- 10-gram character-level LM:

`/* * linux/kernel/time.c * Please report this on hardware. */ void irq_mark_irq(unsigned long old_entries, eval); /* * Divide only 1000 for ns^2 -> us^2 conversion values don't overflow: seq_puts(m, "ttramp: %pS", (void *)class->contending_point]++; if (likely(t->flags & WQ_UNBOUND)) { /* * Update inode information. If the * slowpath and sleep time (abs or rel) * @rmtp: remaining (either due * to consume the state of ring buffer size. */ header_size - size, in bytes, of the chain. */ BUG_ON(!error); } while (cgrp) { if (old) { if (kdb_continue_catastrophic; #endif`

Questions?

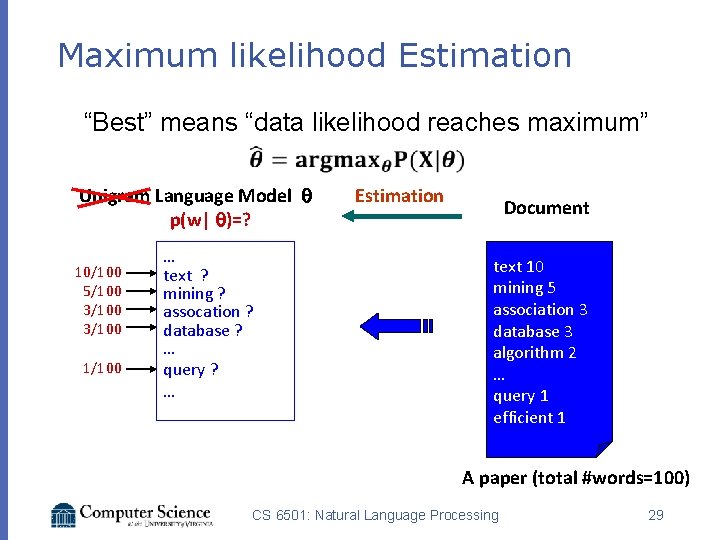

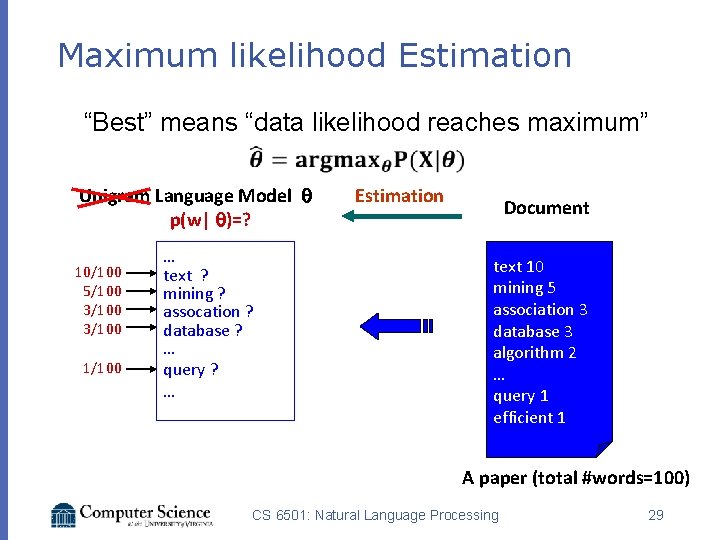

Maximum likelihood estimation

- "Best" means "data likelihood reaches maximum"
- Document: a paper (total #words = 100)
- Unigram language model: p(w | θ) = ?

| Word | Count in document |
|---|---|
| text | 10 |
| mining | 5 |
| association | 3 |
| database | 3 |
| algorithm | 2 |
| … | … |
| query | 1 |
| efficient | 1 |

- Estimation: p(text | θ) = 10/100, p(mining | θ) = 5/100, p(association | θ) = 3/100, …, p(query | θ) = 1/100
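The estimates on this slide are relative frequencies: each word's count divided by the document length. A minimal sketch, using only the counts listed on the slide (the words elided by "…" are not filled in, so the listed counts do not sum to 100):

```python
from fractions import Fraction

# Counts shown on the slide; the document's other words are elided there and omitted here.
counts = {
    "text": 10, "mining": 5, "association": 3, "database": 3,
    "algorithm": 2, "query": 1, "efficient": 1,
}
total_words = 100  # document length stated on the slide

# Maximum likelihood estimate for a unigram model: p(w) = count(w) / total_words.
p = {word: Fraction(count, total_words) for word, count in counts.items()}

for word, prob in p.items():
    print(f"p({word}) = {counts[word]}/{total_words} = {float(prob):.2f}")
```

`Fraction` is used only to keep the arithmetic exact; plain floating-point division would work equally well.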

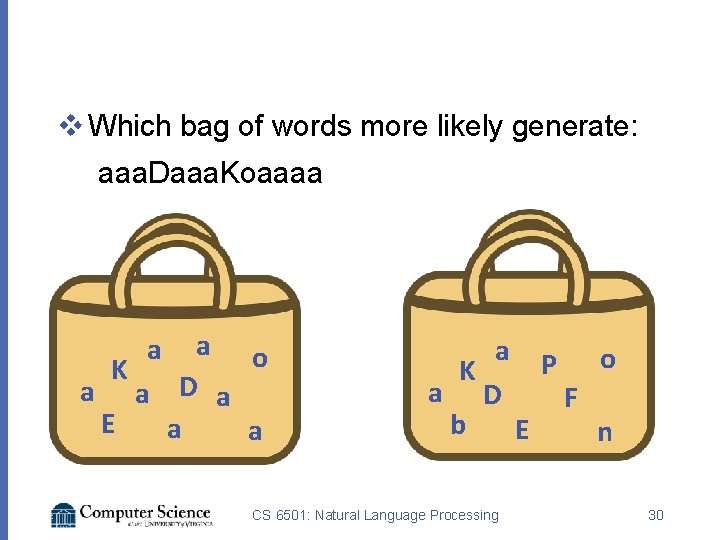

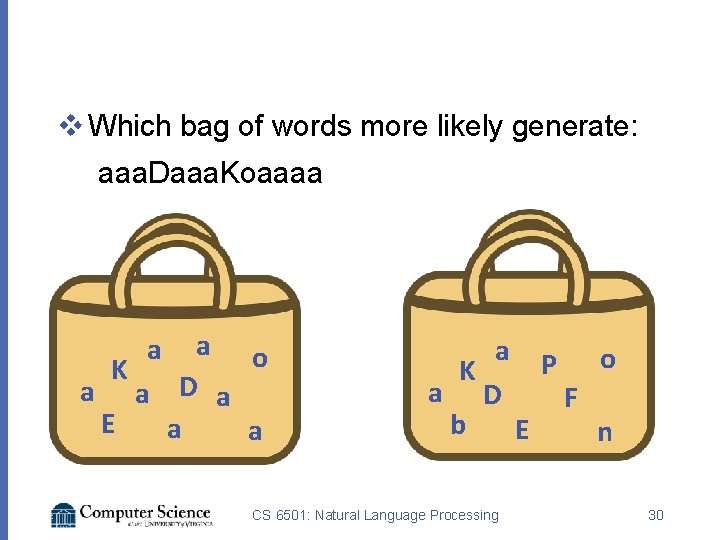

- Which bag of words is more likely to generate: aaaDaaaKoaaaa
- a a o K a a D a E a a a P o K a D F b E n

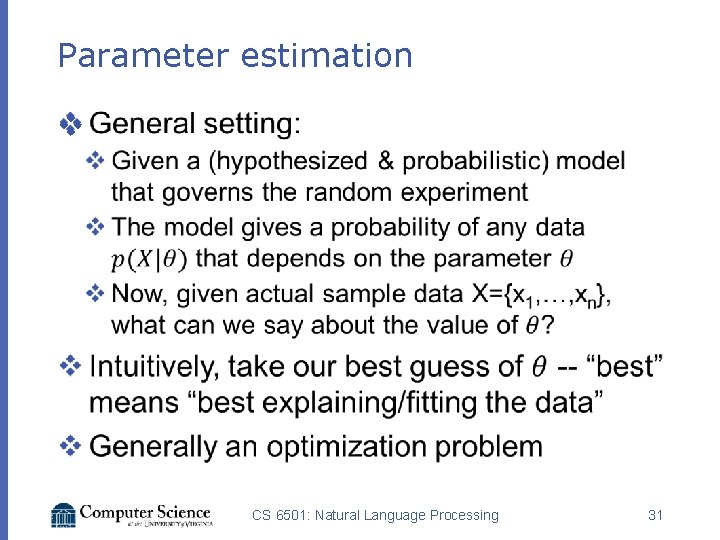

Parameter estimation

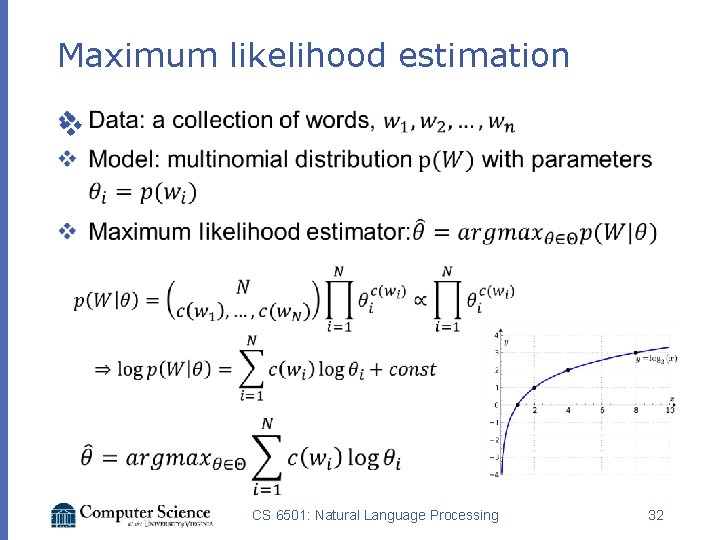

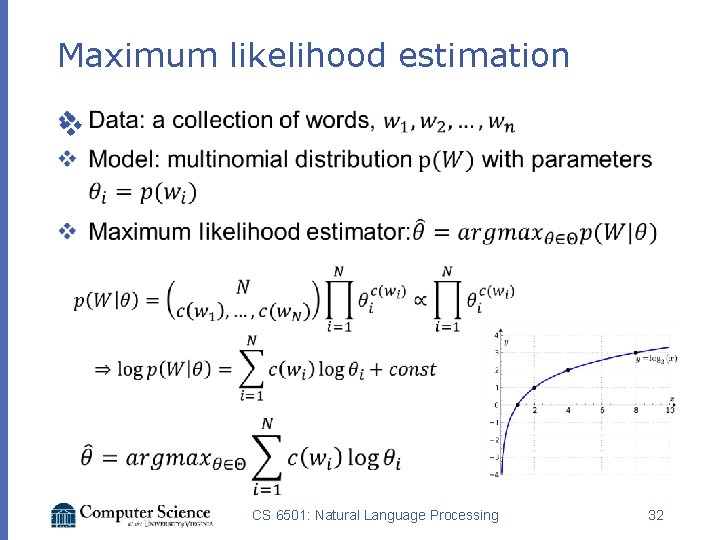

Maximum likelihood estimation

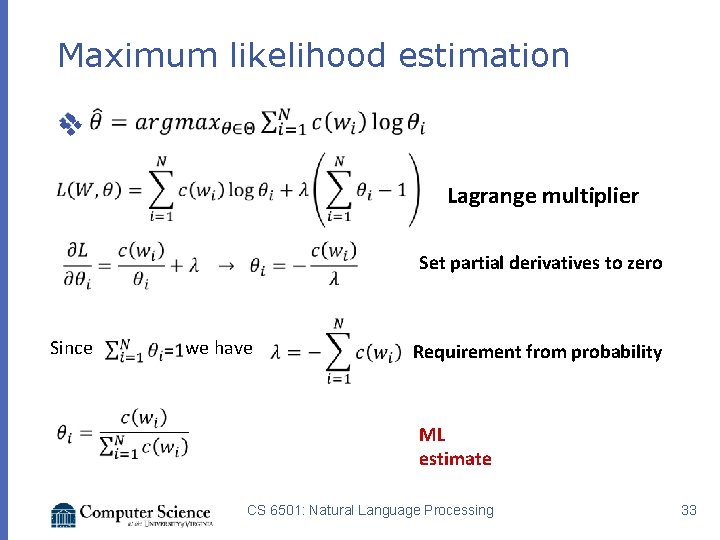

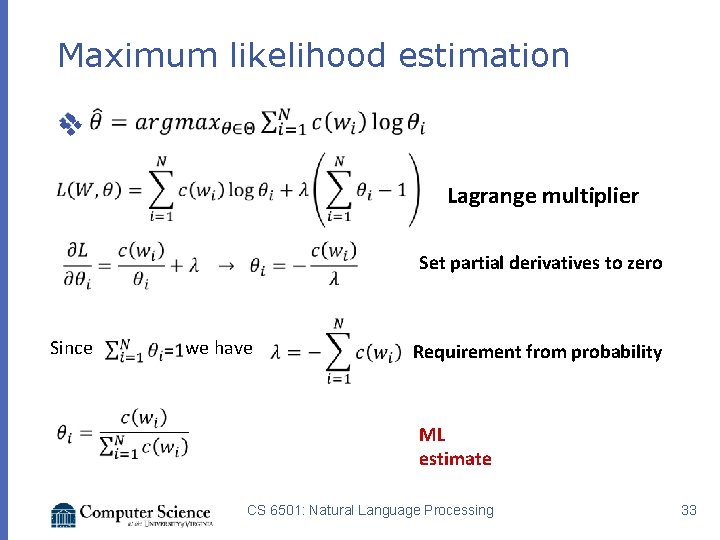

Maximum likelihood estimation

- Lagrange multiplier
- Set partial derivatives to zero
- Requirement from probability: since ..., we have ...
- ML estimate
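The steps named on this slide correspond to the standard derivation of the unigram maximum likelihood estimate. The version below is a reconstruction under assumed notation, since the slide's formulas are in the images: θ_i is the probability of word w_i, c(w_i) is its count, and N is the total number of words.

$$
\begin{aligned}
\mathcal{L}(\theta, \lambda) &= \sum_{i} c(w_i) \log \theta_i + \lambda \Big( \sum_{i} \theta_i - 1 \Big)
  && \text{(Lagrange multiplier)} \\
\frac{\partial \mathcal{L}}{\partial \theta_i} &= \frac{c(w_i)}{\theta_i} + \lambda = 0
  \;\Rightarrow\; \theta_i = -\frac{c(w_i)}{\lambda}
  && \text{(set partial derivatives to zero)} \\
\sum_{i} \theta_i = 1 &\;\Rightarrow\; \lambda = -\sum_{i} c(w_i) = -N
  && \text{(requirement from probability)} \\
\theta_i &= \frac{c(w_i)}{N}
  && \text{(ML estimate)}
\end{aligned}
$$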

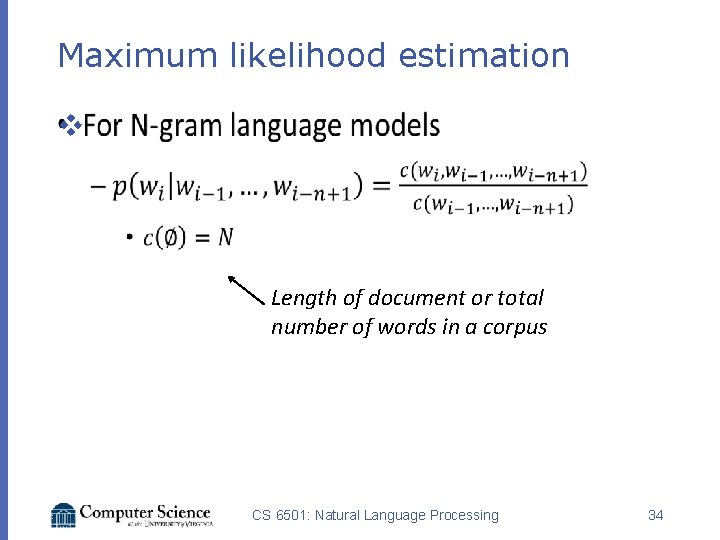

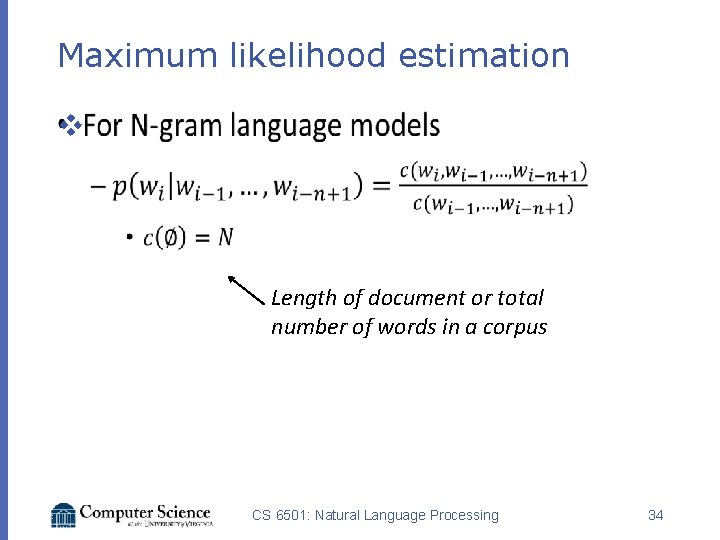

Maximum likelihood estimation

- Length of document or total number of words in a corpus

A bi-gram example

- Training corpus:
  - `<S> I am Sam </S>`
  - `<S> I am legend </S>`
  - `<S> Sam I am </S>`
- P(I | `<S>`) = ?
- P(am | I) = ?
- P(Sam | am) = ?
- P(`</S>` | Sam) = ?
- P(`<S> I am Sam </S>` | bigram model) = ?
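The questions above can be worked through with a short sketch that counts bigrams in the three training sentences and applies the maximum likelihood estimate P(word | prev) = count(prev, word) / count(prev). The helper names `p` and `sentence_prob` are illustrative.

```python
from collections import Counter
from fractions import Fraction

corpus = ["<S> I am Sam </S>", "<S> I am legend </S>", "<S> Sam I am </S>"]

context_counts = Counter()  # how often each word appears as the first word of a bigram
bigram_counts = Counter()   # how often each (prev, word) pair appears
for sentence in corpus:
    tokens = sentence.split()
    for prev, word in zip(tokens, tokens[1:]):
        context_counts[prev] += 1
        bigram_counts[(prev, word)] += 1


def p(word, prev):
    """MLE of P(word | prev); raises ZeroDivisionError if prev was never seen as a context."""
    return Fraction(bigram_counts[(prev, word)], context_counts[prev])


def sentence_prob(sentence):
    """Probability of a sentence as the product of its bigram probabilities."""
    tokens = sentence.split()
    prob = Fraction(1)
    for prev, word in zip(tokens, tokens[1:]):
        prob *= p(word, prev)
    return prob


print(p("I", "<S>"), p("am", "I"), p("Sam", "am"), p("</S>", "Sam"))
print(sentence_prob("<S> I am Sam </S>"))
```

This gives P(I | `<S>`) = 2/3, P(am | I) = 1, P(Sam | am) = 1/3, P(`</S>` | Sam) = 1/2, and a sentence probability of 2/3 × 1 × 1/3 × 1/2 = 1/9.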

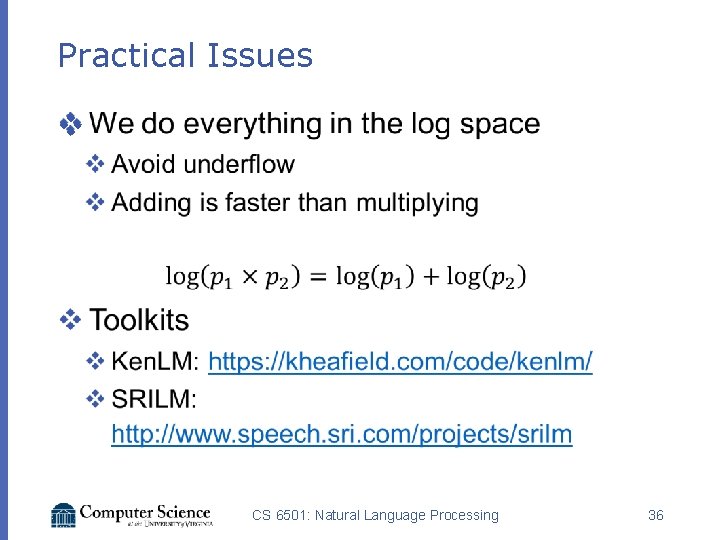

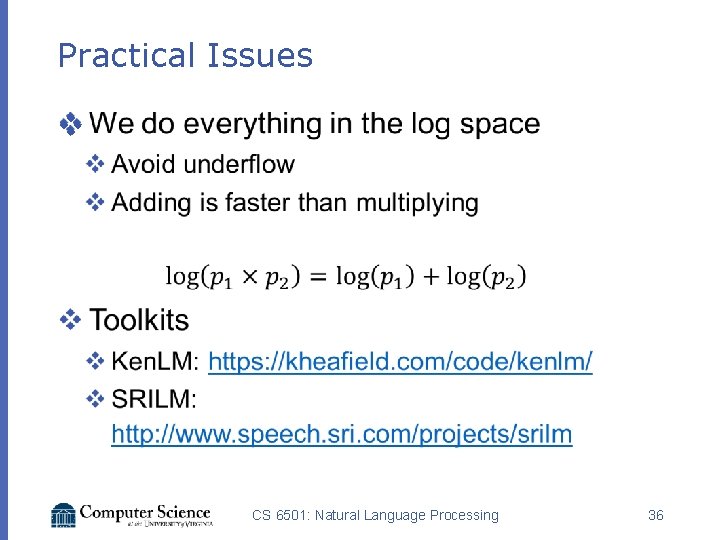

Practical Issues

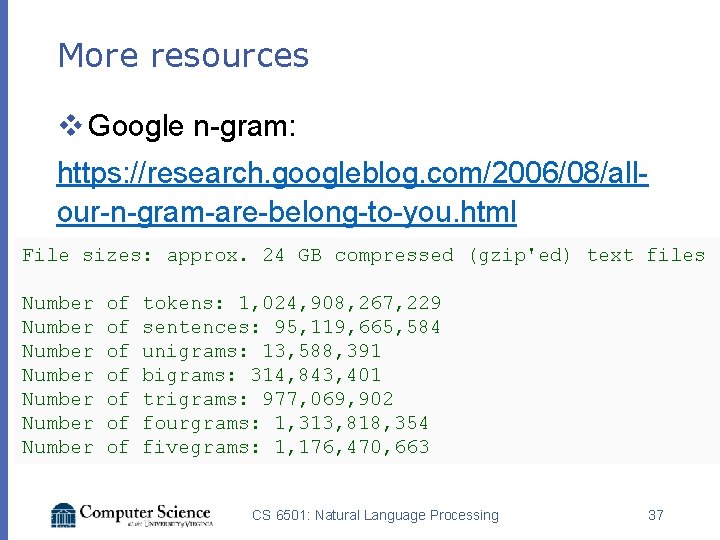

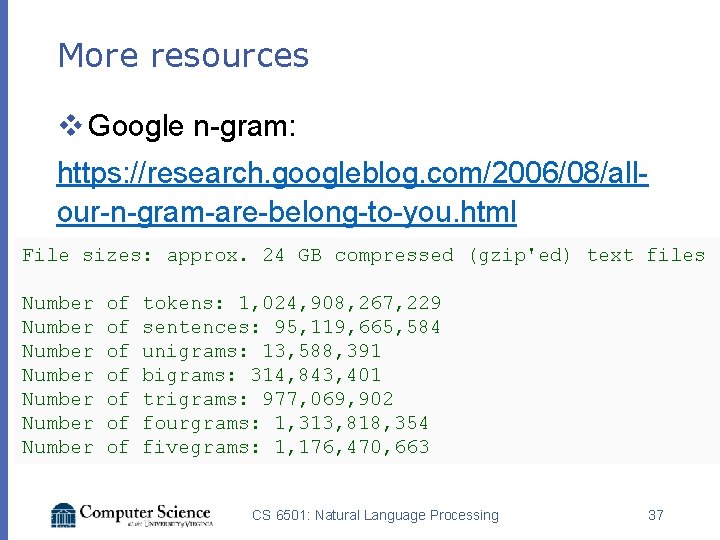

More resources

- Google n-gram: https://research.googleblog.com/2006/08/all-our-n-gram-are-belong-to-you.html
- File sizes: approx. 24 GB compressed (gzip'ed) text files
- Number of tokens: 1,024,908,267,229
- Number of sentences: 95,119,665,584
- Number of unigrams: 13,588,391
- Number of bigrams: 314,843,401
- Number of trigrams: 977,069,902
- Number of fourgrams: 1,313,818,354
- Number of fivegrams: 1,176,470,663

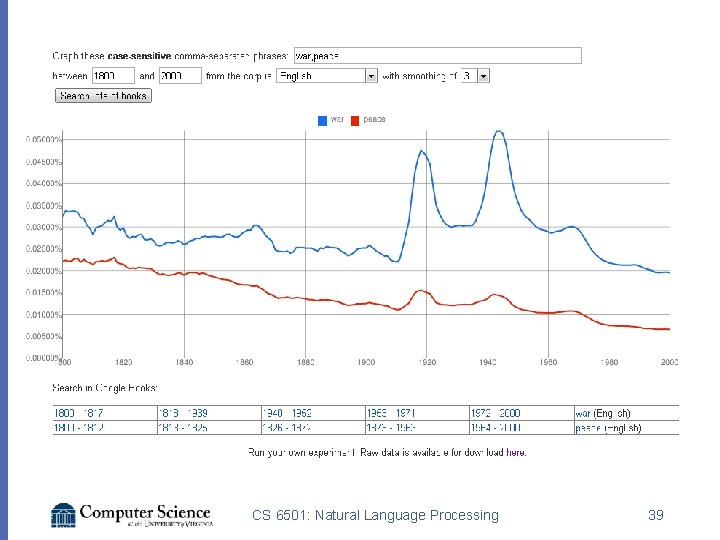

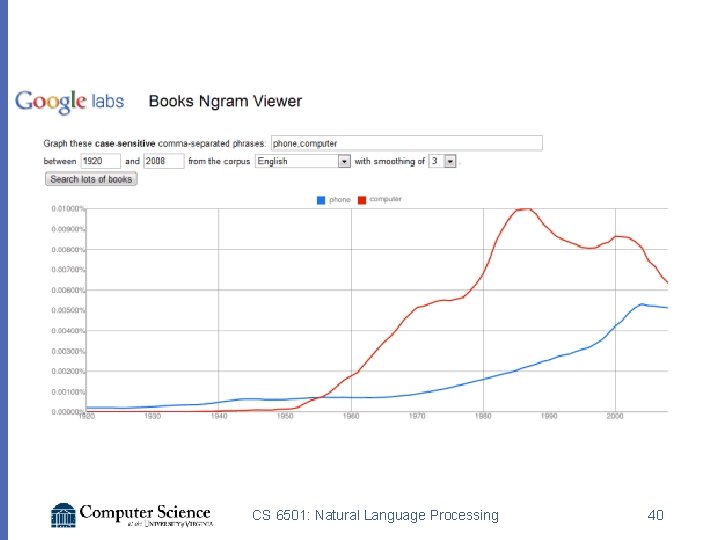

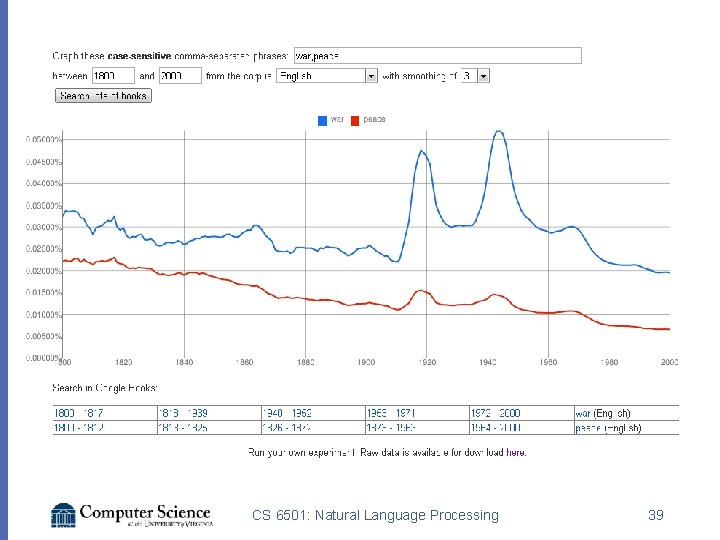

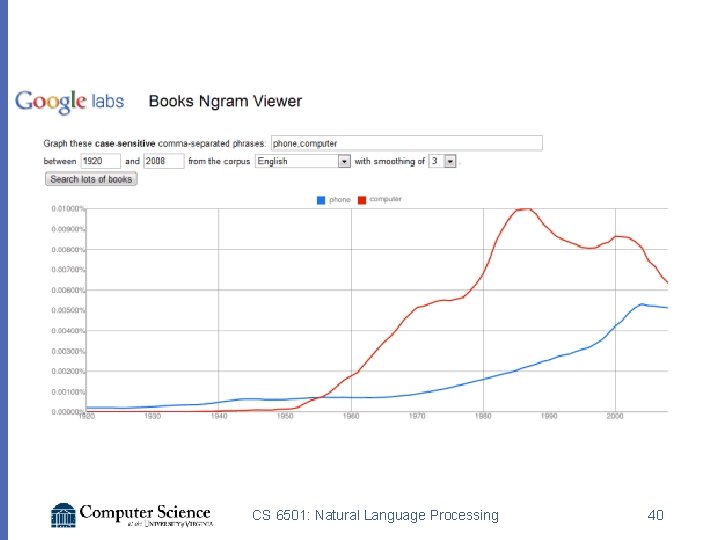

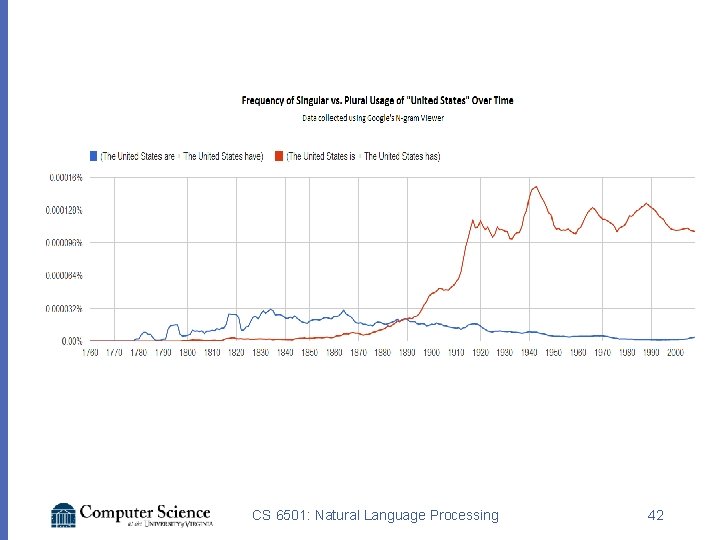

More resources

- Google n-gram viewer: https://books.google.com/ngrams/
- Data: http://storage.googleapis.com/books/ngrams/books/datasetsv2.html
- Example records:
  - circumvallate 1978 335 91
  - circumvallate 1979 261 91
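The two example records can be read as four fields each. The sketch below assumes the field order n-gram, year, match count, volume count, as described for version 2 of the dataset; the names `NgramRecord` and `parse_record` are illustrative and not part of any Google tooling.

```python
from typing import NamedTuple


class NgramRecord(NamedTuple):
    ngram: str
    year: int
    match_count: int   # assumed meaning: occurrences of the n-gram in that year
    volume_count: int  # assumed meaning: distinct books containing it that year


def parse_record(line):
    """Split one record from the right, so n-grams containing spaces stay intact."""
    ngram, year, match_count, volume_count = line.strip().rsplit(None, 3)
    return NgramRecord(ngram, int(year), int(match_count), int(volume_count))


for line in ["circumvallate 1978 335 91", "circumvallate 1979 261 91"]:
    print(parse_record(line))
```

Under that reading, "circumvallate" occurred 335 times in 1978, across 91 distinct books.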


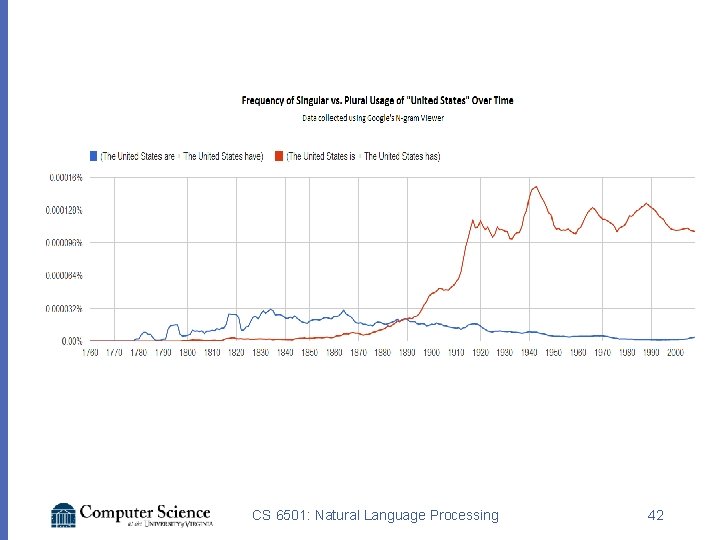


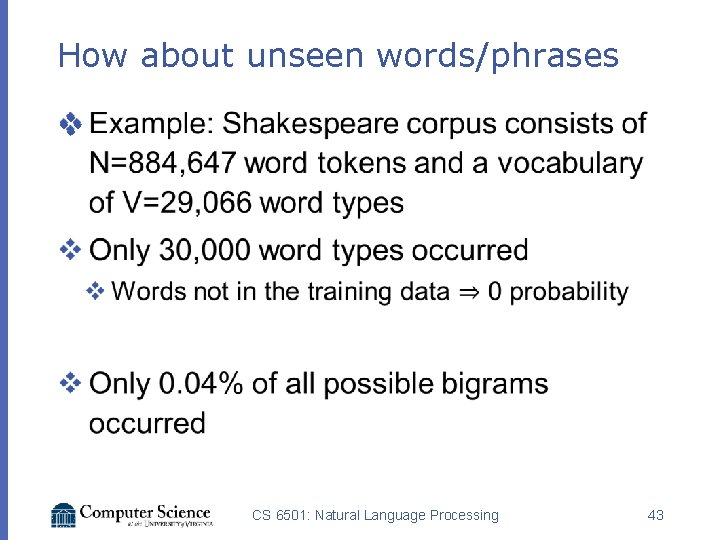

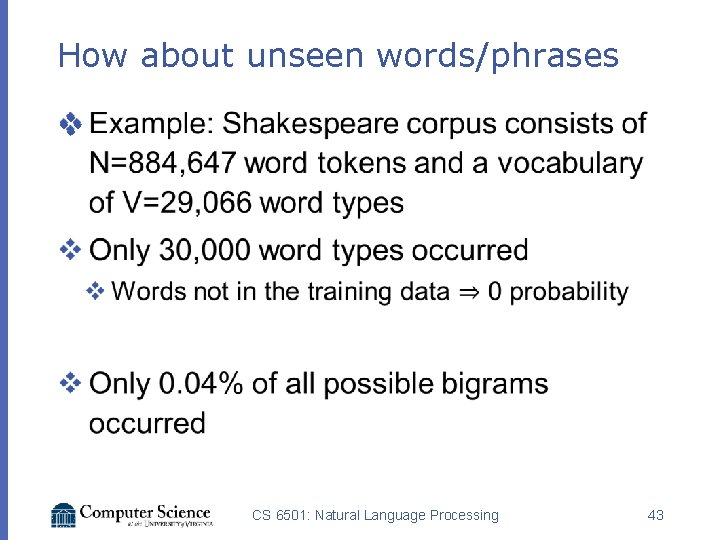

How about unseen words/phrases?

Next lecture

- Dealing with unseen n-grams
- Key idea: reserve some probability mass for events that don't occur in the training data
- How much probability mass should we reserve?

Recap

- N-gram language models
- How to generate text from a language model
- How to estimate a language model
- Reading: Speech and Language Processing, Chapter 4: N-Grams
