Natural Language Processing Language Model Markov Process 2

- Slides: 21

Natural Language Processing: Language Model & Markov Process. 2nd Class, 8/6/2018. Dr. Isma Farah Siddiqui (isma.farah@faculty.muet.edu.pk)

Language Model

Language Modeling

- A language model is a probabilistic mechanism for generating text.
- Language models estimate the probability distribution of various natural language phenomena: sentences, utterances, queries, ...

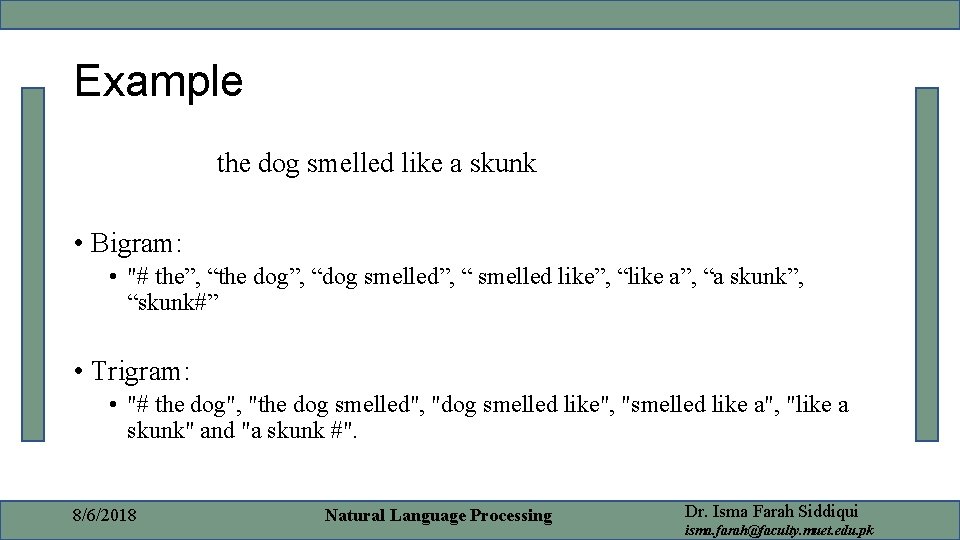

What is an N-Gram?

- A subsequence of n items from a given sequence
  - Unigram: n-gram of size 1
  - Bigram: n-gram of size 2
  - Trigram: n-gram of size 3
- Items: phonemes, syllables, letters, words
- Number of items: unigram, bigram, trigram, ...

Example: "the dog smelled like a skunk" (# marks the sentence boundary)

- Bigrams: "# the", "the dog", "dog smelled", "smelled like", "like a", "a skunk", "skunk #"
- Trigrams: "# the dog", "the dog smelled", "dog smelled like", "smelled like a", "like a skunk", "a skunk #"
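The sliding-window extraction in this example can be sketched in Python. This is a minimal sketch, assuming whitespace tokenization and a single `#` boundary marker on each side of the sentence, as in the example; the function name `ngrams` is chosen here for illustration only.

```python
def ngrams(sentence, n, boundary="#"):
    """Return the word n-grams of `sentence`, padded with one boundary marker per side."""
    tokens = [boundary] + sentence.split() + [boundary]
    # Slide a window of width n over the padded token list.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


sentence = "the dog smelled like a skunk"
bigrams = ngrams(sentence, 2)
trigrams = ngrams(sentence, 3)
print(bigrams)
print(trigrams)
```

With one marker per side, the sentence yields seven bigrams and six trigrams, matching the lists in the example.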

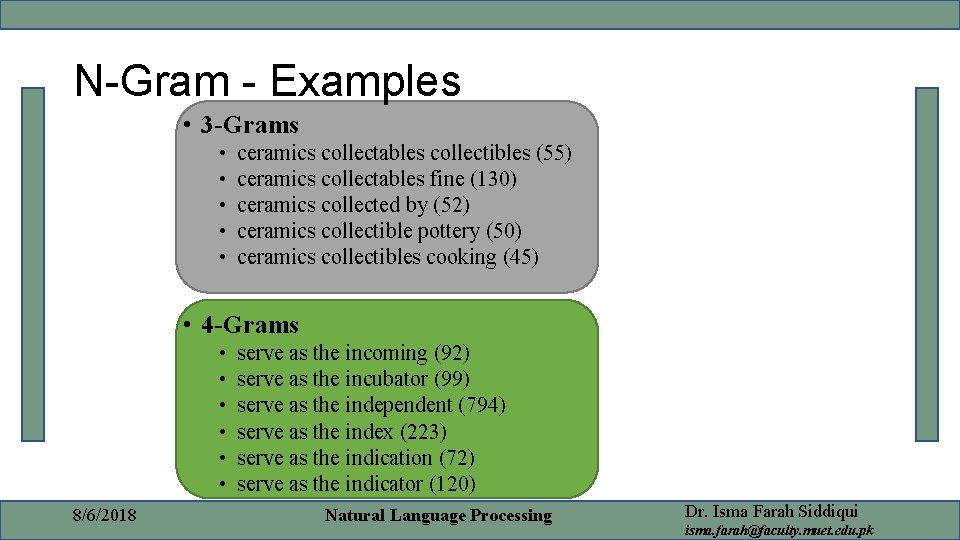

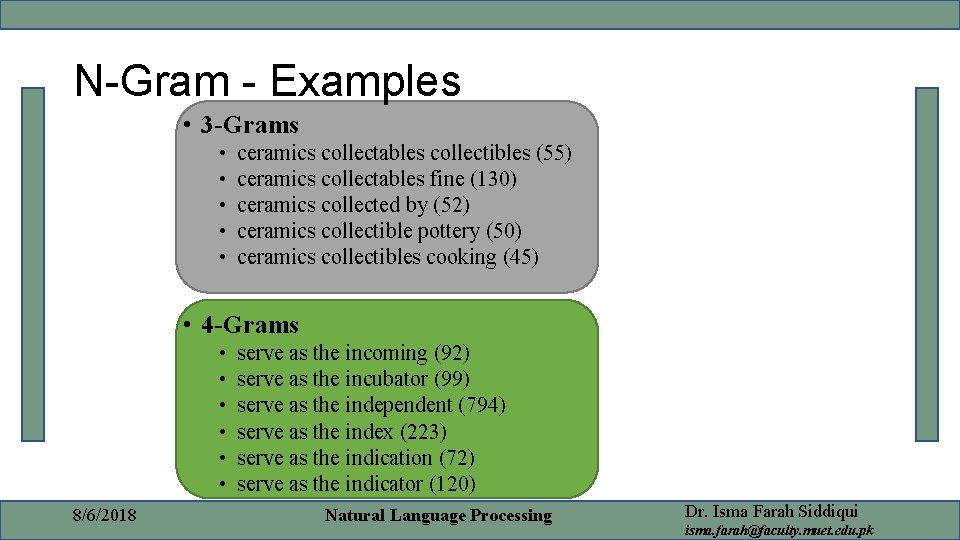

N-Gram Examples

- 3-grams:
  - ceramics collectables collectibles (55)
  - ceramics collectables fine (130)
  - ceramics collected by (52)
  - ceramics collectible pottery (50)
  - ceramics collectibles cooking (45)
- 4-grams:
  - serve as the incoming (92)
  - serve as the incubator (99)
  - serve as the independent (794)
  - serve as the index (223)
  - serve as the indication (72)
  - serve as the indicator (120)

N-Gram Model

- A probabilistic model for predicting the next item in a sequence.
- Why do we want to predict words?
  - Chatbots
  - Speech recognition
  - Handwriting recognition / OCR
  - Spelling correction
  - Author attribution
  - Plagiarism detection
  - ...

N-Gram Model

- Models sequences, especially natural language, using the statistical properties of n-grams.
- Idea (Shannon): given a sequence of letters (e.g. "for ex"), what is the likelihood of the next letter?
- From training data, derive a probability distribution for the next letter given a history of size n.
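Shannon's idea can be illustrated with a small letter-level model. The sketch below assumes relative-frequency (count-based) estimates and uses a made-up toy training string; the function names and the data are illustrative, not taken from the slides.

```python
from collections import Counter, defaultdict


def train_next_letter(text, n):
    """Count which letter follows each history of n characters in `text`."""
    counts = defaultdict(Counter)
    for i in range(n, len(text)):
        counts[text[i - n:i]][text[i]] += 1
    return counts


def next_letter_distribution(counts, history):
    """Relative-frequency estimate of P(next letter | history)."""
    followers = counts[history]
    total = sum(followers.values())
    return {letter: c / total for letter, c in followers.items()} if total else {}


# Toy training data, invented for illustration.
training_text = "for example, for exams, for extra credit"
model = train_next_letter(training_text, n=6)
dist = next_letter_distribution(model, "for ex")
print(dist)
```

In this toy text the history "for ex" is followed twice by "a" and once by "t", so the estimate puts two thirds of the probability on "a". A history never seen in training returns an empty distribution, which is the data-sparseness problem in miniature.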

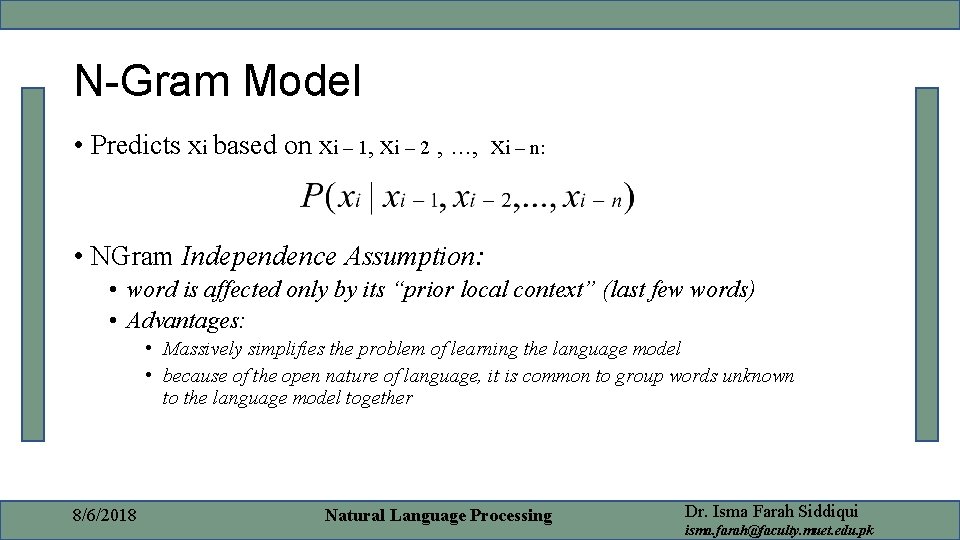

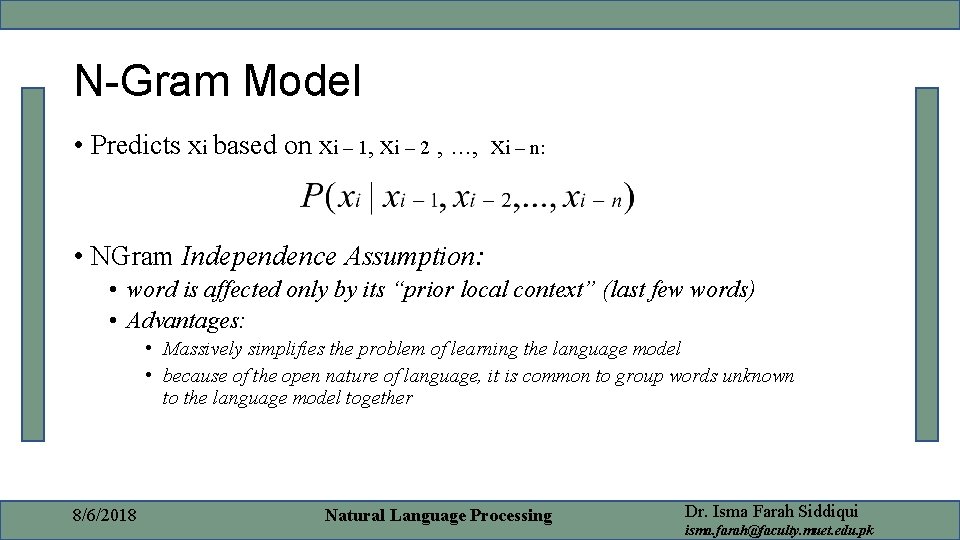

N-Gram Model

- Predicts $x_i$ based on $x_{i-1}, x_{i-2}, \dots, x_{i-n}$.
- N-gram independence assumption: a word is affected only by its "prior local context" (the last few words).
- Advantages:
  - Massively simplifies the problem of learning the language model.
  - Because of the open nature of language, it is common to group words unknown to the language model together.
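Written out, the independence assumption replaces the full history with the last n items. The block below is one standard way to state it, using the slide's indexing (a history of n items) and assuming a sequence of length m; the second line follows from the chain rule of probability.

```latex
\begin{align}
P(x_i \mid x_1, \dots, x_{i-1}) &\approx P(x_i \mid x_{i-n}, \dots, x_{i-1}) \\
P(x_1, \dots, x_m) = \prod_{i=1}^{m} P(x_i \mid x_1, \dots, x_{i-1}) &\approx \prod_{i=1}^{m} P(x_i \mid x_{i-n}, \dots, x_{i-1})
\end{align}
```

Positions before the start of the sequence are usually filled with a boundary marker such as `#`.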

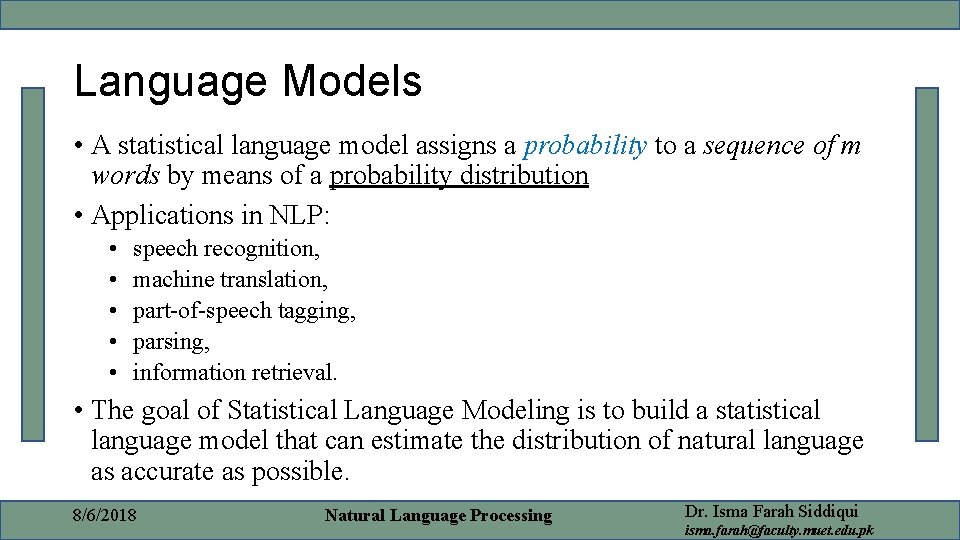

Language Models

- A statistical language model assigns a probability to a sequence of m words by means of a probability distribution.
- Applications in NLP: speech recognition, machine translation, part-of-speech tagging, parsing, information retrieval.
- The goal of statistical language modeling is to build a model that estimates the distribution of natural language as accurately as possible.

Markov Process

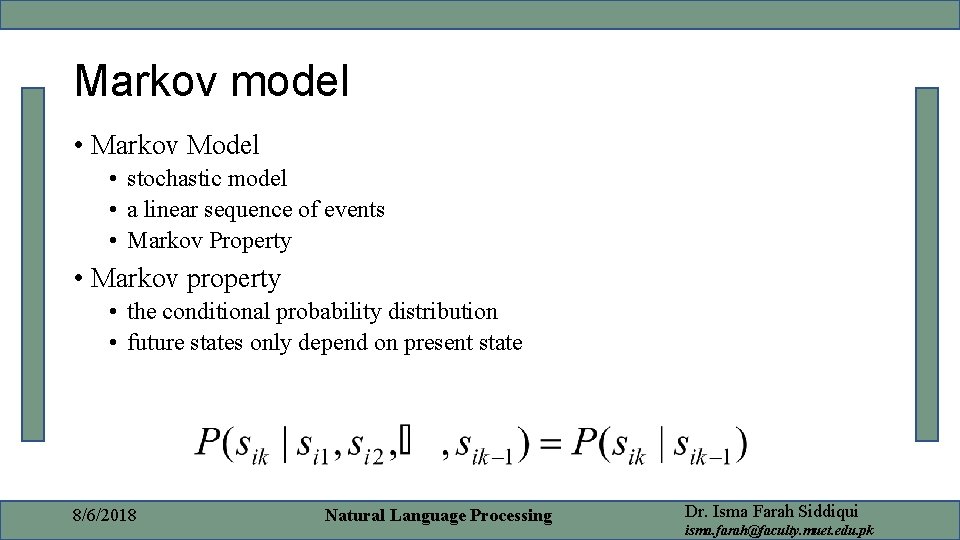

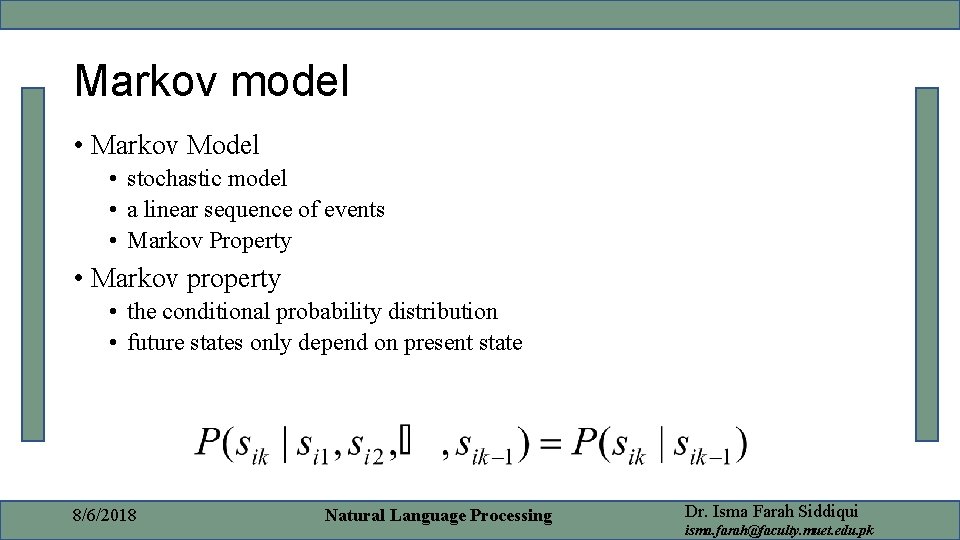

Markov Model

- Markov model: a stochastic model of a linear sequence of events.
- Markov property: the conditional probability distribution of future states depends only on the present state.

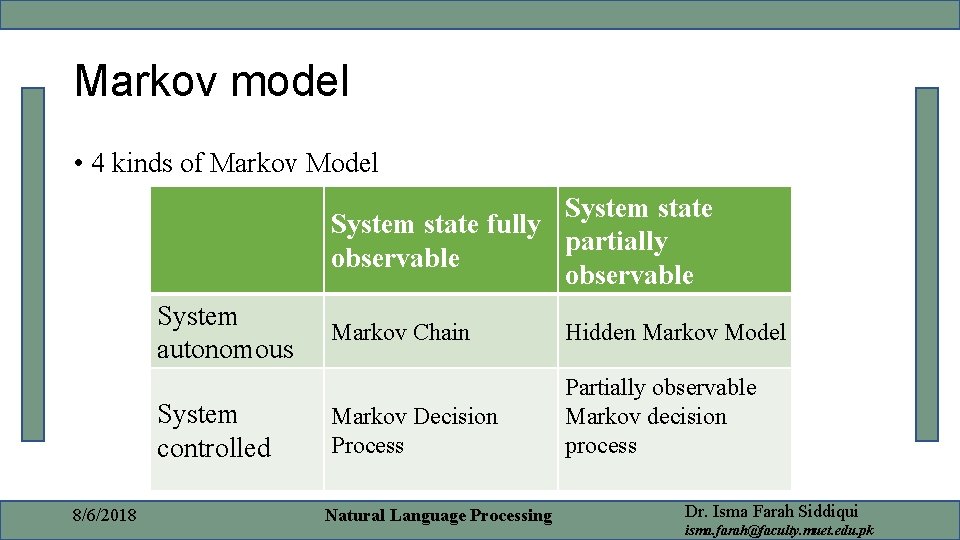

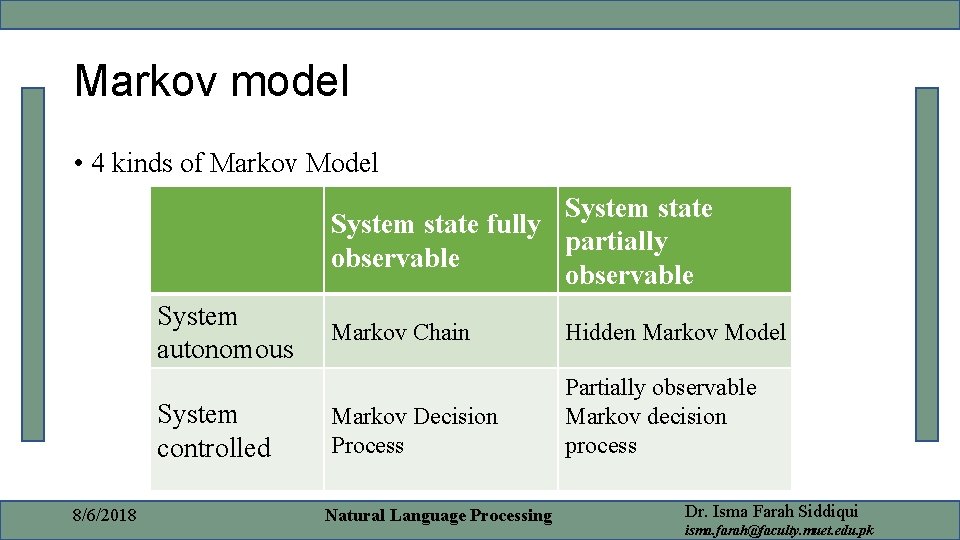

Markov Model: 4 Kinds of Markov Model

| | System state fully observable | System state partially observable |
|---|---|---|
| System autonomous | Markov chain | Hidden Markov model |
| System controlled | Markov decision process | Partially observable Markov decision process |

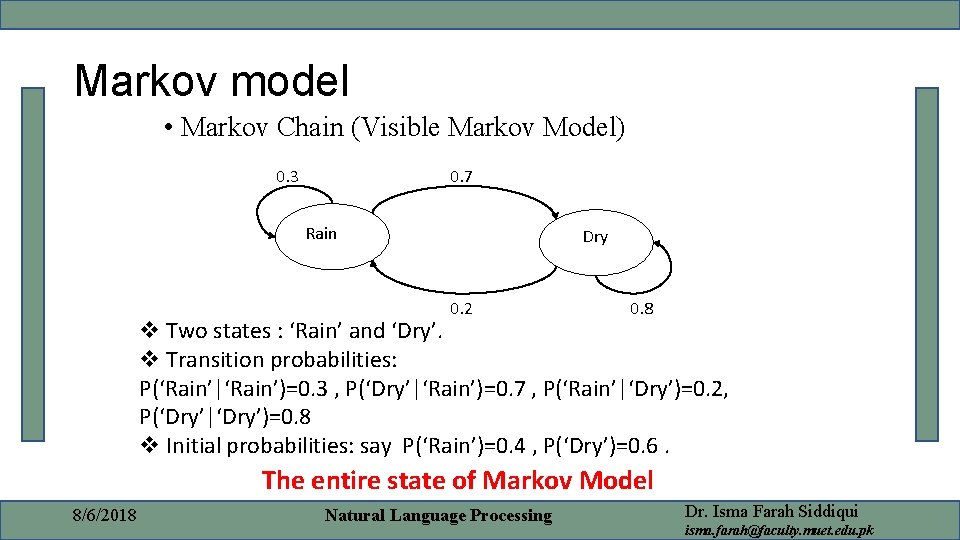

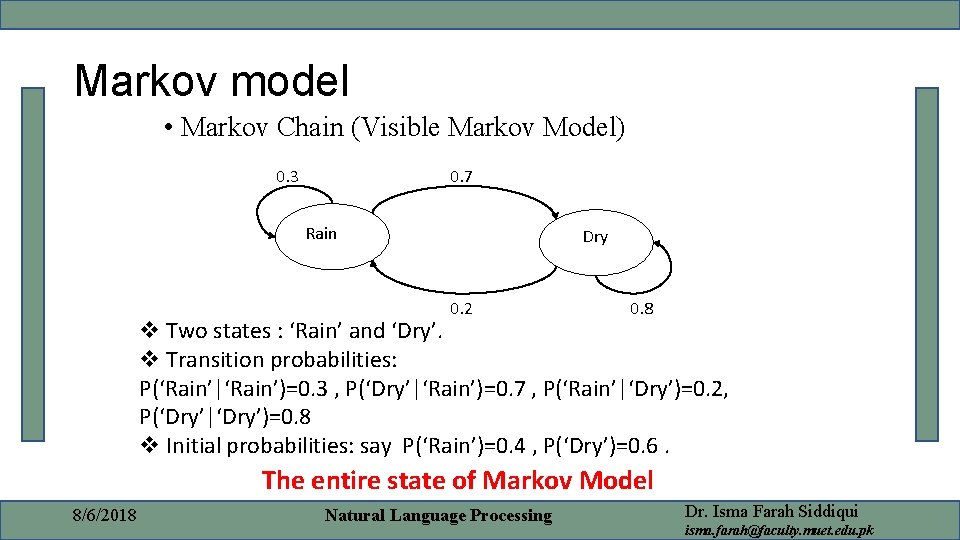

Markov Model: Markov Chain (Visible Markov Model)

- Two states: 'Rain' and 'Dry'.
- Transition probabilities: P('Rain'|'Rain') = 0.3, P('Dry'|'Rain') = 0.7, P('Rain'|'Dry') = 0.2, P('Dry'|'Dry') = 0.8.
- Initial probabilities: say P('Rain') = 0.4, P('Dry') = 0.6.
- The entire state of the Markov model is observable.
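With these numbers, the probability of any state sequence is the initial probability of the first state times the transition probabilities along the sequence. A minimal Python sketch follows; the dictionary layout and the function name `sequence_probability` are illustrative choices, and the example sequence is picked arbitrarily.

```python
# Parameters of the Rain/Dry chain as given on the slide.
initial = {"Rain": 0.4, "Dry": 0.6}
transition = {  # transition[current][next] = P(next | current)
    "Rain": {"Rain": 0.3, "Dry": 0.7},
    "Dry": {"Rain": 0.2, "Dry": 0.8},
}


def sequence_probability(states):
    """P(s_1, ..., s_T) = P(s_1) * product of P(s_t | s_{t-1})."""
    prob = initial[states[0]]
    for prev, nxt in zip(states, states[1:]):
        prob *= transition[prev][nxt]
    return prob


p = sequence_probability(["Dry", "Dry", "Rain", "Rain"])
print(p)  # 0.6 * 0.8 * 0.2 * 0.3
```

For the sequence Dry, Dry, Rain, Rain the product is 0.6 × 0.8 × 0.2 × 0.3 = 0.0288.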

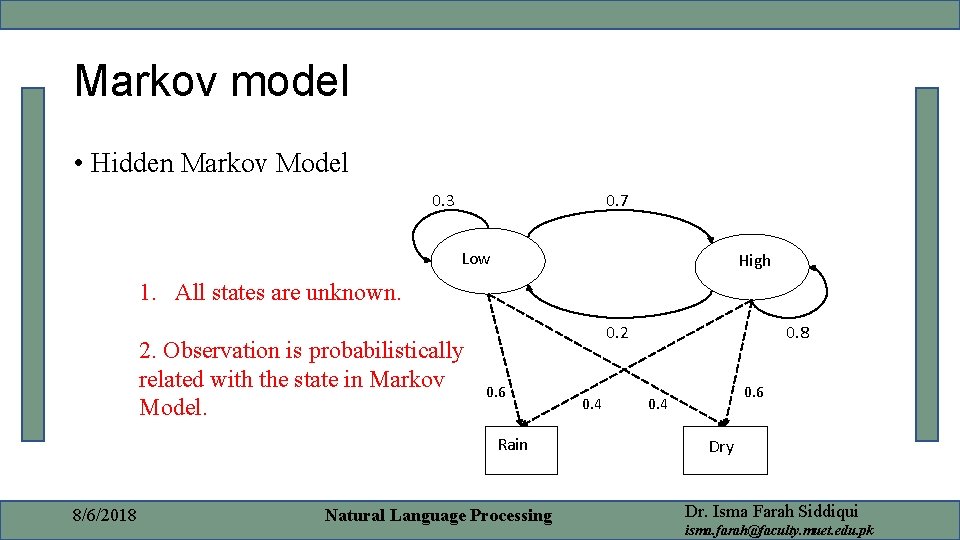

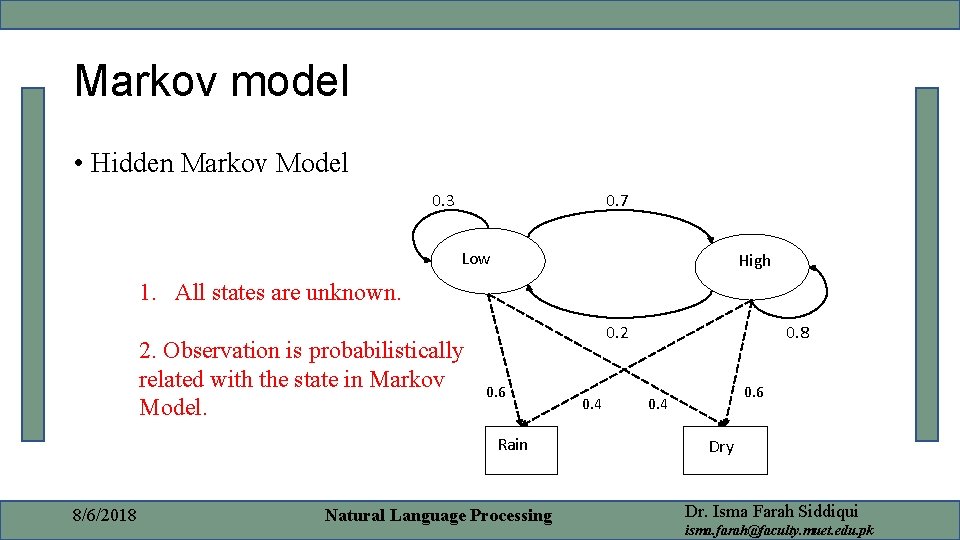

Markov Model: Hidden Markov Model

1. All states are unknown (hidden).
2. Each observation is probabilistically related to the state of the Markov model.

Figure: hidden states 'Low' and 'High', observations 'Rain' and 'Dry'; edge labels 0.3, 0.7, 0.2, 0.6, 0.4, 0.8, 0.6, 0.4.

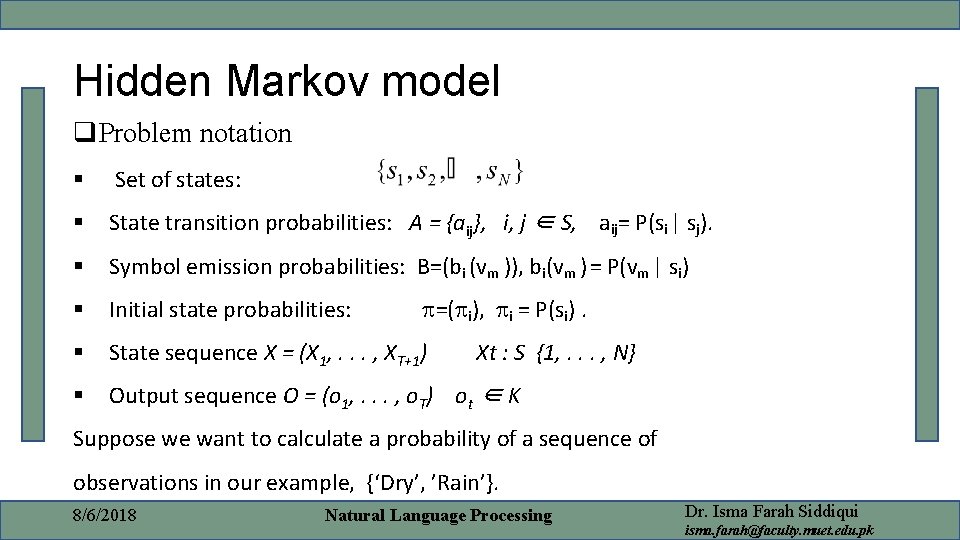

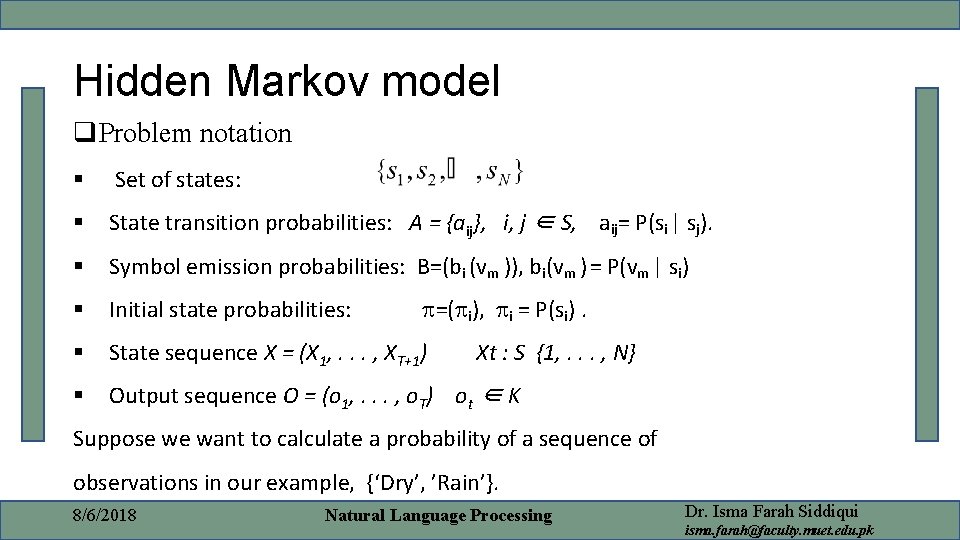

Hidden Markov Model: Problem Notation

- Set of states: $S = \{s_1, \dots, s_N\}$
- State transition probabilities: $A = \{a_{ij}\}$, $i, j \in S$, $a_{ij} = P(s_j \mid s_i)$, the probability of moving from state $s_i$ to state $s_j$
- Symbol emission probabilities: $B = (b_i(v_m))$, $b_i(v_m) = P(v_m \mid s_i)$
- Initial state probabilities: $\pi = (\pi_i)$, $\pi_i = P(s_i)$
- State sequence: $X = (X_1, \dots, X_{T+1})$, $X_t : S \to \{1, \dots, N\}$
- Output sequence: $O = (o_1, \dots, o_T)$, $o_t \in K$ (the output alphabet)

Suppose we want to calculate the probability of a sequence of observations in our example, {'Dry', 'Rain'}.

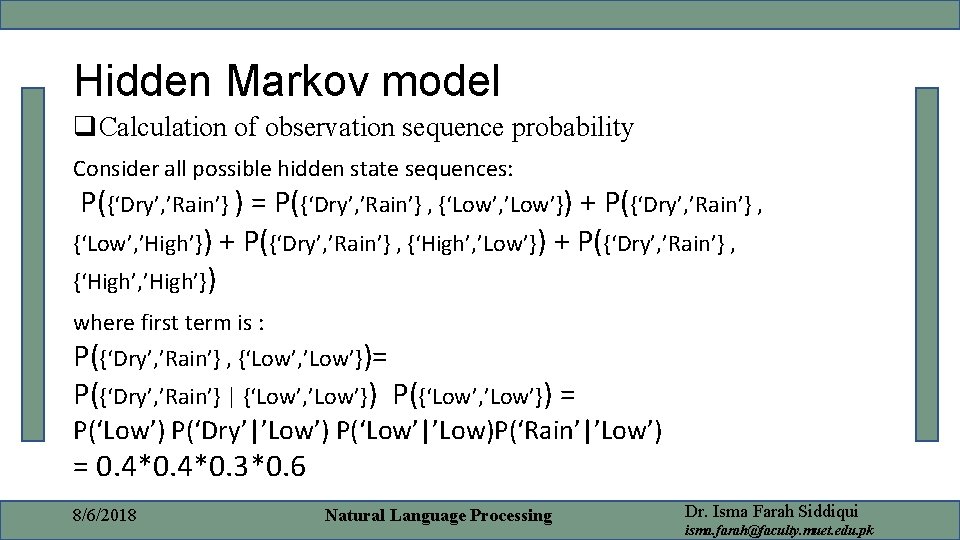

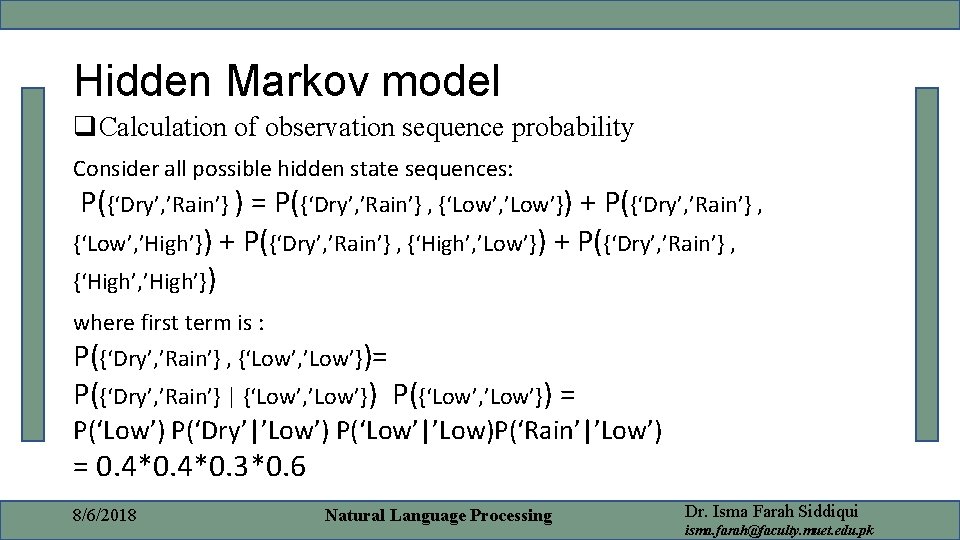

Hidden Markov Model: Calculation of Observation Sequence Probability

Consider all possible hidden state sequences:

P({'Dry','Rain'}) = P({'Dry','Rain'}, {'Low','Low'}) + P({'Dry','Rain'}, {'Low','High'}) + P({'Dry','Rain'}, {'High','Low'}) + P({'Dry','Rain'}, {'High','High'})

where the first term is:

P({'Dry','Rain'}, {'Low','Low'}) = P({'Dry','Rain'} | {'Low','Low'}) P({'Low','Low'}) = P('Low') P('Dry'|'Low') P('Low'|'Low') P('Rain'|'Low') = 0.4 * 0.4 * 0.3 * 0.6

(with P('Dry'|'Low') = 1 - P('Rain'|'Low') = 0.4).
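The sum over hidden state sequences can be checked with a short Python sketch. The parameter values below are an assumed reading of the Low/High example: the transition and emission numbers are taken from the edge labels of the diagram, and the initial probabilities P('Low') = 0.4, P('High') = 0.6 are inferred from the worked term, since the text does not spell them out. The forward recursion is added for comparison; it is the usual way to avoid enumerating every sequence.

```python
from itertools import product

# Assumed parameters for the Low/High pressure example (see lead-in).
states = ["Low", "High"]
initial = {"Low": 0.4, "High": 0.6}
transition = {"Low": {"Low": 0.3, "High": 0.7},
              "High": {"Low": 0.2, "High": 0.8}}
emission = {"Low": {"Rain": 0.6, "Dry": 0.4},
            "High": {"Rain": 0.4, "Dry": 0.6}}


def joint_probability(observations, hidden):
    """P(observations, hidden) for one specific hidden state sequence."""
    prob = initial[hidden[0]] * emission[hidden[0]][observations[0]]
    for t in range(1, len(observations)):
        prob *= transition[hidden[t - 1]][hidden[t]]
        prob *= emission[hidden[t]][observations[t]]
    return prob


def observation_probability(observations):
    """Sum the joint probability over every possible hidden sequence."""
    return sum(joint_probability(observations, hidden)
               for hidden in product(states, repeat=len(observations)))


def forward_probability(observations):
    """Same quantity via the forward recursion, linear in sequence length."""
    alpha = {s: initial[s] * emission[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {s: sum(alpha[r] * transition[r][s] for r in states)
                    * emission[s][obs]
                 for s in states}
    return sum(alpha.values())


obs = ["Dry", "Rain"]
first_term = joint_probability(obs, ["Low", "Low"])
total = observation_probability(obs)
print(first_term, total, forward_probability(obs))
```

Under these assumed values the four terms are 0.0288, 0.0448, 0.0432 and 0.1152, which sum to 0.232; the forward recursion gives the same total.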

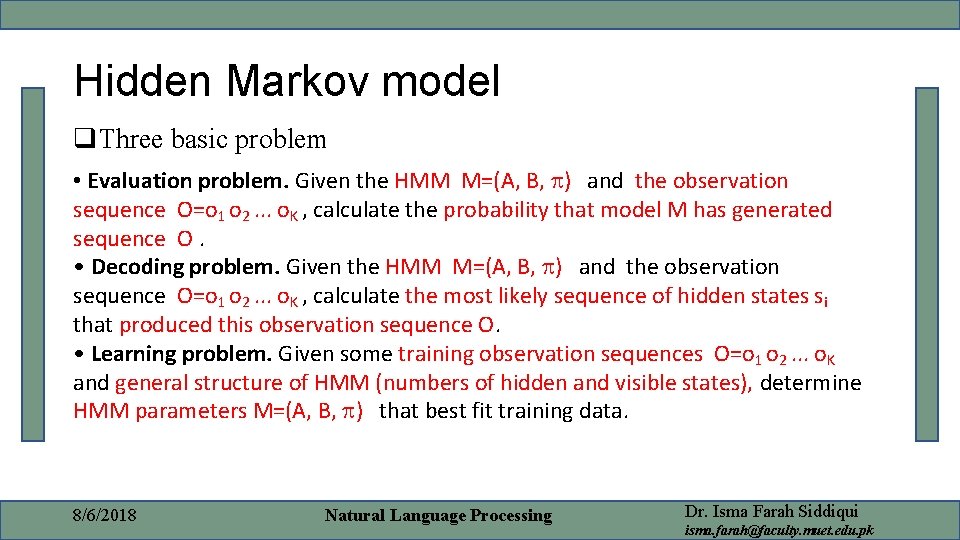

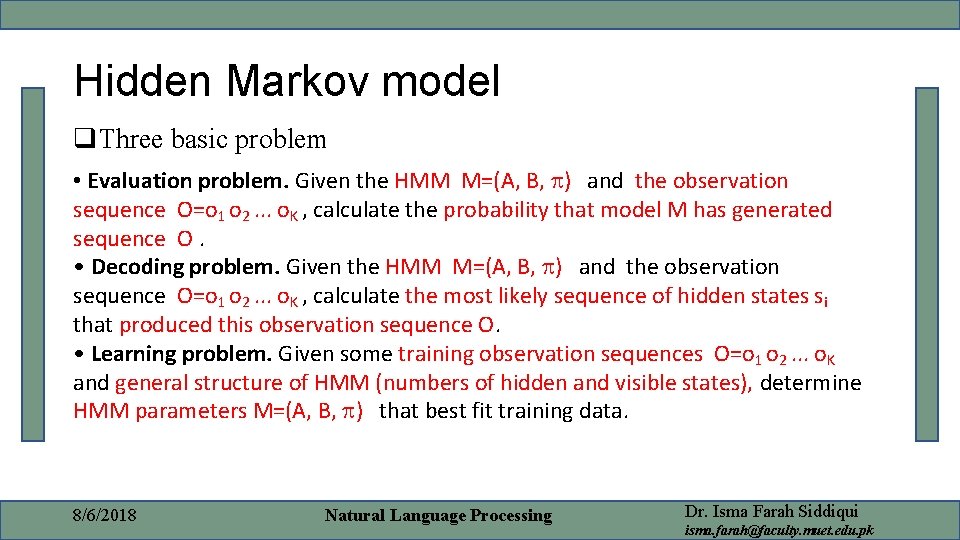

Hidden Markov Model: Three Basic Problems

- Evaluation problem. Given the HMM $M = (A, B, \pi)$ and the observation sequence $O = o_1 o_2 \dots o_K$, calculate the probability that model M has generated sequence O.
- Decoding problem. Given the HMM $M = (A, B, \pi)$ and the observation sequence $O = o_1 o_2 \dots o_K$, calculate the most likely sequence of hidden states $s_i$ that produced this observation sequence O.
- Learning problem. Given some training observation sequences $O = o_1 o_2 \dots o_K$ and the general structure of the HMM (numbers of hidden and visible states), determine the HMM parameters $M = (A, B, \pi)$ that best fit the training data.
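For the decoding problem, a common approach is the Viterbi algorithm, which is not named on the slide and is shown here only as one possible technique. The sketch reuses the Low/High example with assumed parameter values: the initial and emission probabilities are a reading of the example's diagram and worked term, not values stated in the text.

```python
# Assumed parameters for the Low/High pressure example (see lead-in).
states = ["Low", "High"]
initial = {"Low": 0.4, "High": 0.6}
transition = {"Low": {"Low": 0.3, "High": 0.7},
              "High": {"Low": 0.2, "High": 0.8}}
emission = {"Low": {"Rain": 0.6, "Dry": 0.4},
            "High": {"Rain": 0.4, "Dry": 0.6}}


def viterbi(observations):
    """Return (most likely hidden state sequence, its joint probability)."""
    # delta[s]: probability of the best path ending in state s so far.
    delta = {s: initial[s] * emission[s][observations[0]] for s in states}
    paths = {s: [s] for s in states}
    for obs in observations[1:]:
        new_delta, new_paths = {}, {}
        for s in states:
            # Best predecessor state for arriving in s at this step.
            best_prev = max(states, key=lambda r: delta[r] * transition[r][s])
            new_delta[s] = delta[best_prev] * transition[best_prev][s] * emission[s][obs]
            new_paths[s] = paths[best_prev] + [s]
        delta, paths = new_delta, new_paths
    best_last = max(states, key=lambda s: delta[s])
    return paths[best_last], delta[best_last]


best_path, best_prob = viterbi(["Dry", "Rain"])
print(best_path, best_prob)
```

Under these assumed values, the most likely hidden sequence for the observations Dry, Rain is High, High, with joint probability 0.6 × 0.6 × 0.8 × 0.4 = 0.1152.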

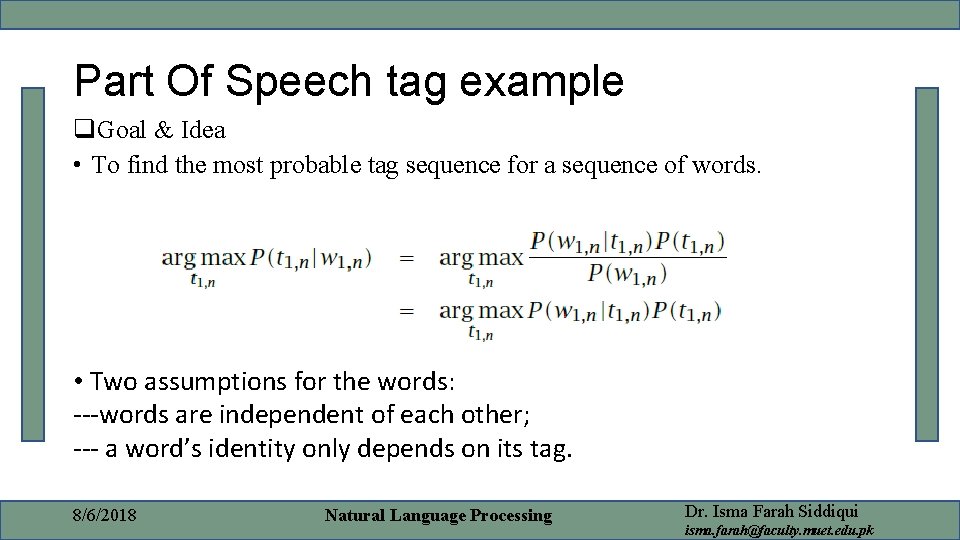

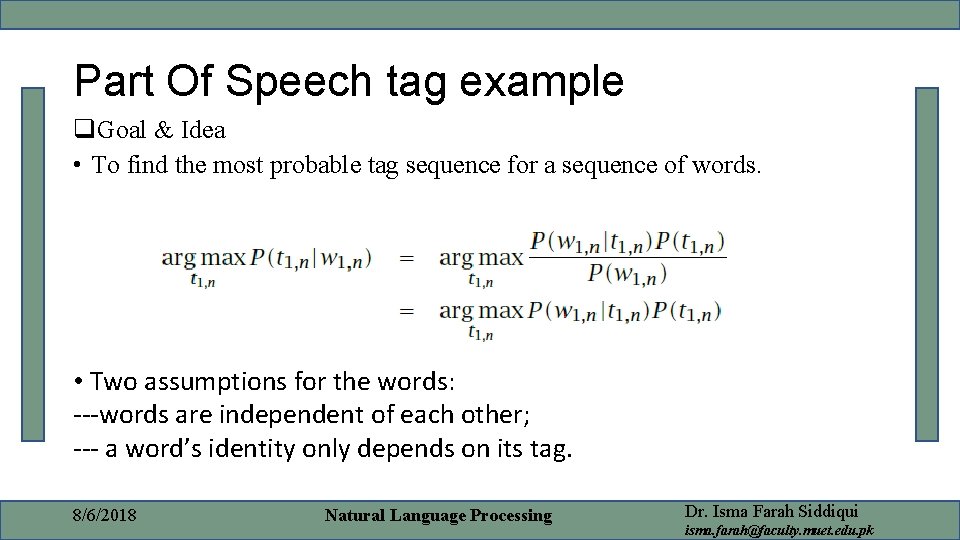

Part-of-Speech Tagging Example: Goal & Idea

- Goal: find the most probable tag sequence for a sequence of words.
- Two assumptions about the words:
  - words are independent of each other;
  - a word's identity depends only on its tag.

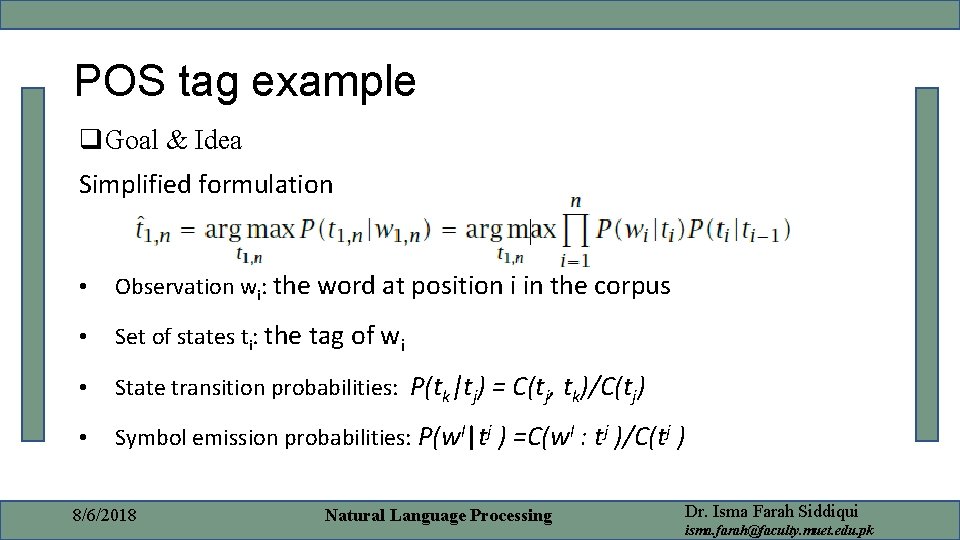

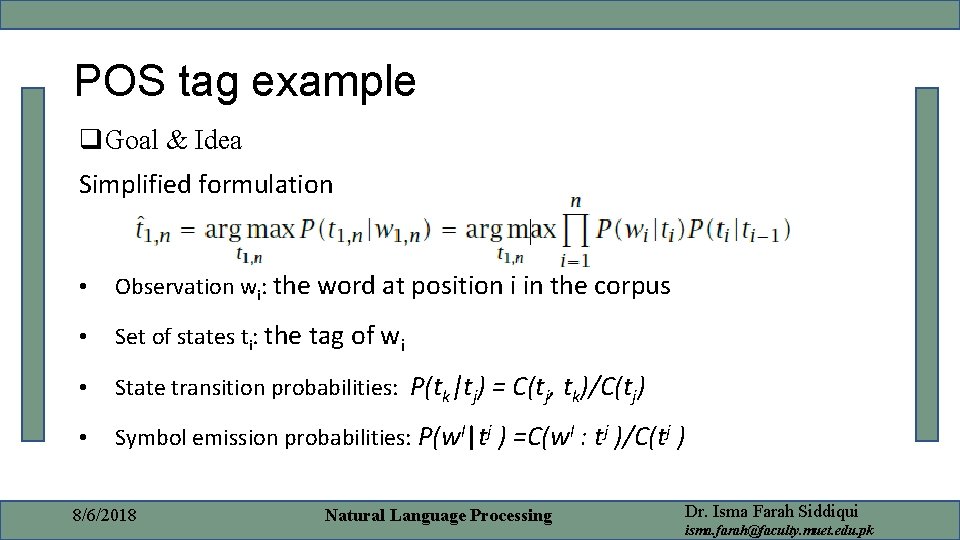

POS Tagging Example: Simplified Formulation

- Observation $w_i$: the word at position i in the corpus
- Set of states $t_i$: the tag of $w_i$
- State transition probabilities: $P(t^k \mid t^j) = C(t^j, t^k) / C(t^j)$
- Symbol emission probabilities: $P(w^l \mid t^j) = C(w^l : t^j) / C(t^j)$

Here $C(t^j)$ is the number of occurrences of tag $t^j$ in the training corpus, $C(t^j, t^k)$ the number of times $t^j$ is followed by $t^k$, and $C(w^l : t^j)$ the number of times word $w^l$ is tagged $t^j$.
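These count-based estimates can be computed directly from a tagged corpus. The sketch below uses a tiny made-up corpus and tag set purely for illustration; the function names are hypothetical, and no smoothing is applied, so unseen pairs get probability zero.

```python
from collections import Counter

# Toy tagged corpus, invented for illustration: (word, tag) pairs in order.
tagged_corpus = [
    ("the", "DET"), ("dog", "NOUN"), ("barks", "VERB"),
    ("the", "DET"), ("cat", "NOUN"), ("sleeps", "VERB"),
]

tags = [tag for _, tag in tagged_corpus]
tag_count = Counter(tags)                        # C(tj)
tag_bigram_count = Counter(zip(tags, tags[1:]))  # C(tj, tk): tj followed by tk
word_tag_count = Counter(tagged_corpus)          # C(wl : tj): wl tagged as tj


def transition_prob(tj, tk):
    """P(tk | tj) = C(tj, tk) / C(tj)."""
    return tag_bigram_count[(tj, tk)] / tag_count[tj]


def emission_prob(word, tj):
    """P(word | tj) = C(word : tj) / C(tj)."""
    return word_tag_count[(word, tj)] / tag_count[tj]


print(transition_prob("DET", "NOUN"))  # every DET is followed by NOUN
print(emission_prob("dog", "NOUN"))    # one of the two NOUN tokens is "dog"
```

In this toy corpus every DET is followed by a NOUN, so P(NOUN | DET) = 1.0, while "dog" accounts for one of the two NOUN tokens, so P(dog | NOUN) = 0.5. Any word or tag pair absent from the corpus gets probability zero, which is the data-sparseness issue.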

POS Tagging Example: Points

- Bigram model: $P(t^k \mid t^j)$
- Data sparseness: unseen and rare words in the corpus
- Training corpus and computational complexity
- A combination of HMM and visible Markov model

References:

- Manning, C. D., & Schütze, H. (1999). Foundations of Statistical Natural Language Processing. MIT Press.
- David D. (2003). Introduction to Hidden Markov Models [PowerPoint slides]. Retrieved from www.cedar.buffalo.edu/~govind/CS661/Lec12.ppt