Natural Language Processing (NLP): Introduction


Natural Language Processing

Introduction • Natural Language Processing (NLP) is a branch of artificial intelligence that deals with the interaction between computers and humans using natural language. • The ultimate objective of NLP is to read, decipher, understand, and make sense of human languages in a manner that is valuable. • Most NLP techniques rely on machine learning to derive meaning from human languages.

Syntactic Analysis • Syntactic analysis and semantic analysis are the main techniques used to complete Natural Language Processing tasks. • Syntax refers to the arrangement of words in a sentence such that they make grammatical sense. In NLP, syntactic analysis is used to assess how the natural language aligns with the grammatical rules. • Semantics refers to the meaning that is conveyed by a text. Semantic analysis is one of the most difficult aspects of Natural Language Processing and has not yet been fully resolved.

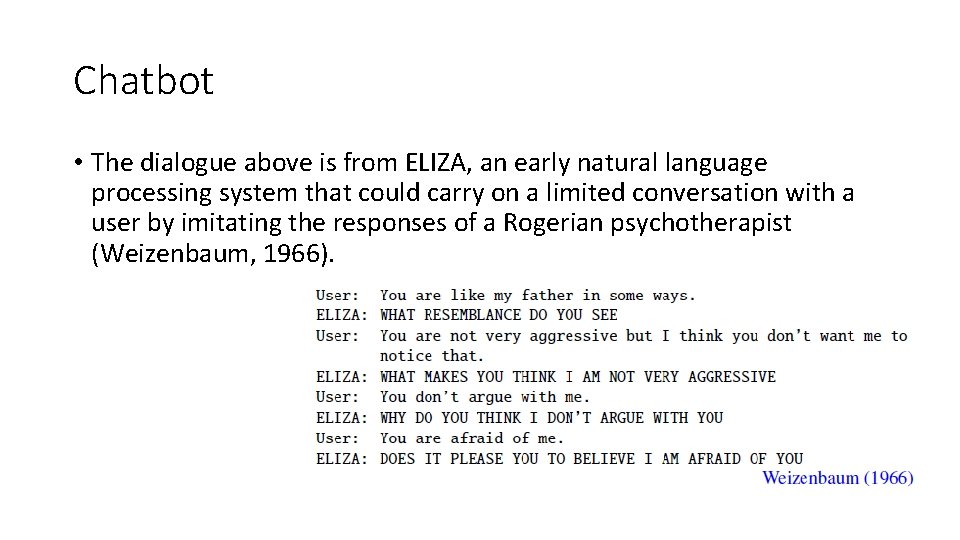

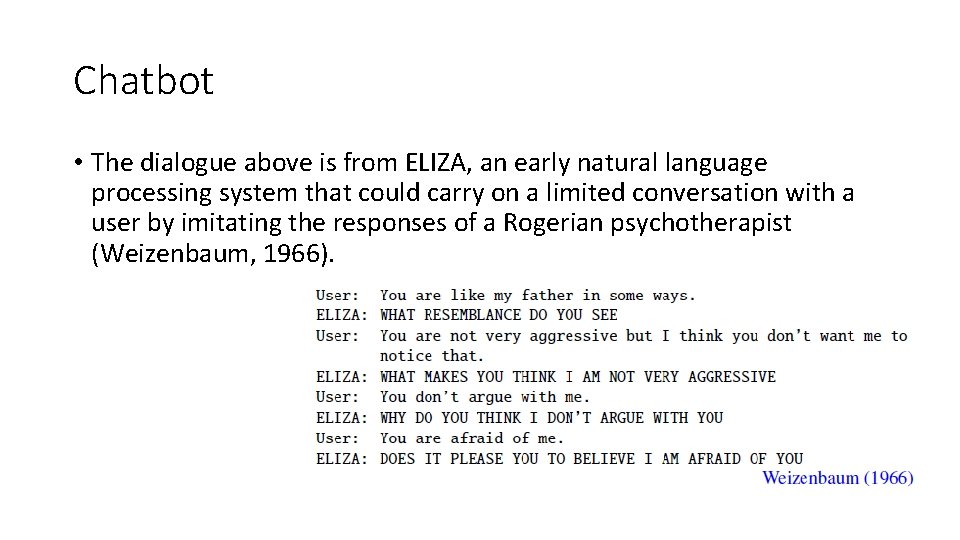

Chatbot • The dialogue above is from ELIZA, an early natural language processing system that could carry on a limited conversation with a user by imitating the responses of a Rogerian psychotherapist (Weizenbaum, 1966).

ELIZA • ELIZA is a surprisingly simple program that uses pattern matching to recognize phrases like “You are X” and translate them into suitable outputs like “What makes you think I am X?”. This simple technique succeeds in this domain because ELIZA doesn’t actually need to know anything to mimic a Rogerian psychotherapist.
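The pattern-matching idea can be sketched with Python's `re` module. This is a minimal sketch, not Weizenbaum's original script: the three rules and the fallback reply are hypothetical, and the real ELIZA also ranked keywords and swapped pronouns (my → your), which this toy omits.

```python
import re

# Hypothetical ELIZA-style rules: (pattern, response template).
# The real ELIZA script had many more rules plus keyword ranking.
RULES = [
    (re.compile(r"\byou are (.*)", re.IGNORECASE), "What makes you think I am {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

def respond(sentence: str) -> str:
    """Return the response of the first rule whose pattern matches."""
    text = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Copy the captured phrase X into the response template
            return template.format(*match.groups())
    # Fallback when nothing matches, in the spirit of a Rogerian therapist
    return "Please go on."

print(respond("You are very helpful."))  # What makes you think I am very helpful?
print(respond("My mother hates me"))     # Tell me more about your mother.
```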

Algorithms in NLP • Computer algorithms are used to apply grammatical rules to a group of words and derive meaning from them. Here are some syntax techniques that can be used: • Lemmatization: reducing the various inflected forms of a word to a single form (its dictionary form, or lemma) for easier analysis. • Morphological segmentation: dividing words into individual units called morphemes. • Word segmentation: dividing a large piece of continuous text into distinct units such as words. • Part-of-speech tagging: identifying the part of speech of every word. • Parsing: performing a grammatical analysis of a given sentence.

Algorithms in NLP (continued) • Sentence breaking: placing sentence boundaries in a large piece of text. • Stemming: cutting inflected words down to their root form (stem).
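Three of the techniques listed above (sentence breaking, word segmentation, and stemming) can be sketched with regular expressions. This is a deliberately naive sketch: the splitting rules and the suffix list are hypothetical, and a real stemmer such as Porter's uses far more careful rules. Lemmatization, by contrast, needs a dictionary to map a form like "were" to "be", which suffix stripping cannot do.

```python
import re

def break_sentences(text: str) -> list[str]:
    # Naive rule: a sentence ends at ., ! or ? followed by whitespace.
    # Real sentence breakers must also handle abbreviations such as "Dr." or "e.g."
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def segment_words(sentence: str) -> list[str]:
    # Word segmentation for space-delimited languages: runs of letters, digits, apostrophes
    return re.findall(r"[A-Za-z0-9']+", sentence)

# Hypothetical suffix list for a crude English stemmer (much simpler than Porter's algorithm)
SUFFIXES = ("ing", "ed", "es", "s")

def crude_stem(word: str) -> str:
    word = word.lower()
    for suffix in SUFFIXES:
        # Strip the first matching suffix, but only if a stem of 3+ letters remains
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

text = "The cats were jumping. Dogs barked loudly!"
for sentence in break_sentences(text):
    print([crude_stem(w) for w in segment_words(sentence)])
# ['the', 'cat', 'were', 'jump']
# ['dog', 'bark', 'loudly']
```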

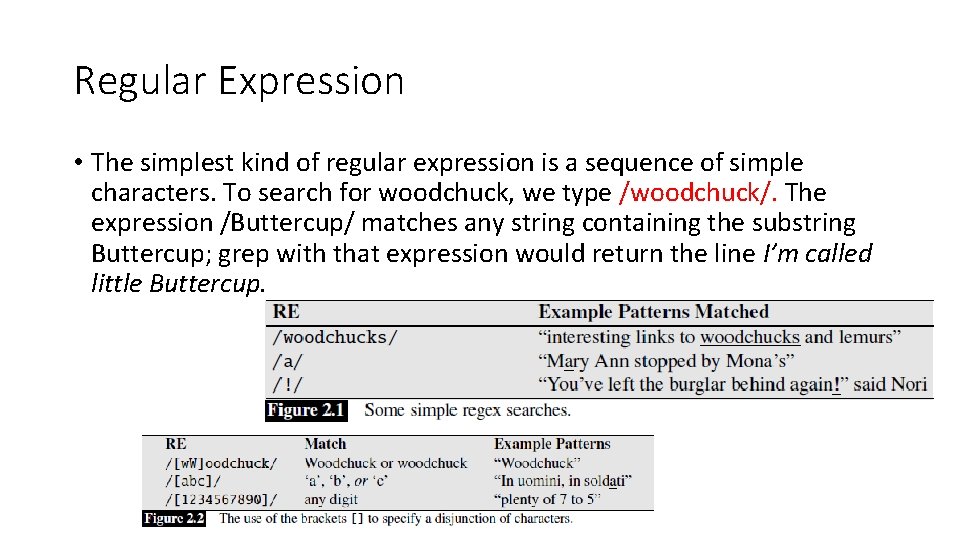

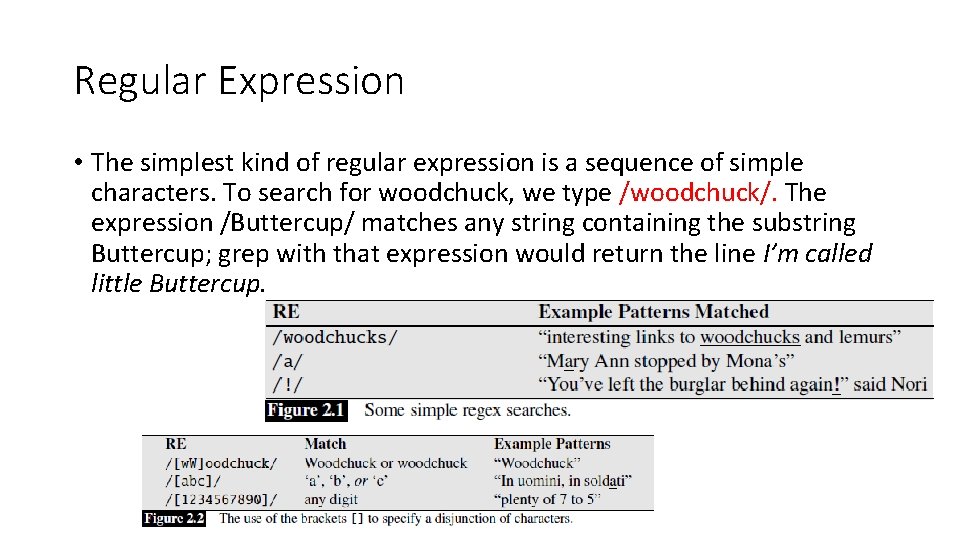

Regular Expression • The simplest kind of regular expression is a sequence of simple characters. To search for woodchuck, we type /woodchuck/. The expression /Buttercup/ matches any string containing the substring Buttercup; grep with that expression would return the line I’m called little Buttercup.
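In Python the same search can be done with the standard `re` module. The sample lines below are made up for illustration; note that, like grep, the pattern is case-sensitive by default.

```python
import re

lines = [
    "How much wood would a woodchuck chuck?",
    "I'm called little Buttercup",
    "buttercups are yellow",
]

# /Buttercup/ is a plain sequence of characters: it matches any line
# containing that exact substring, much as grep does
pattern = re.compile(r"Buttercup")
matches = [line for line in lines if pattern.search(line)]
print(matches)  # ["I'm called little Buttercup"]
```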

Exercise • Install Python. • Install Sastrawi, a stemming library for the Indonesian language. • Write a simple program that takes a paragraph of Indonesian text and displays the result of stemming it.

N-gram Language Models • Probabilities are essential in any task in which we have to identify words in noisy, ambiguous input, like speech recognition or handwriting recognition. • In the movie Take the Money and Run, Woody Allen tries to rob a bank with a sloppily written hold-up note that the teller incorrectly reads as “I have a gub”. As Russell and Norvig (2002) point out, a language processing system could avoid making this mistake by using the knowledge that the sequence “I have a gun” is far more probable than the non-word “I have a gub” or even “I have a gull”.

N-gram • In spelling correction, we need to find and correct spelling errors like Their are two midterms in this class, in which There was mistyped as Their. A sentence starting with the phrase There are will be much more probable than one starting with Their are, allowing a spellchecker to both detect and correct these errors. • Models that assign probabilities to sequences of words are called language models, or LMs. We introduce the simplest model that assigns probabilities to sentences and sequences of words: the n-gram.

N-gram • An n-gram is a sequence of n words: a 2-gram (or bigram) is a two-word sequence of words like “please turn”, “turn your”, or “your homework”, and a 3-gram (or trigram) is a three-word sequence of words like “please turn your” or “turn your homework”. • Let’s begin with the task of computing P(w|h), the probability of a word w given some history h. Suppose the history h is “its water is so transparent that” and we want to know the probability that the next word is the: P(the|its water is so transparent that).

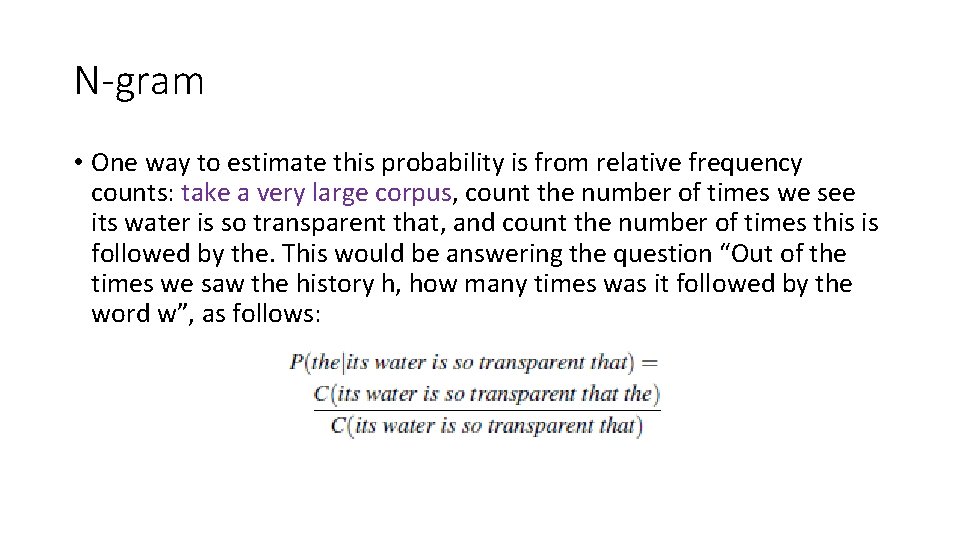

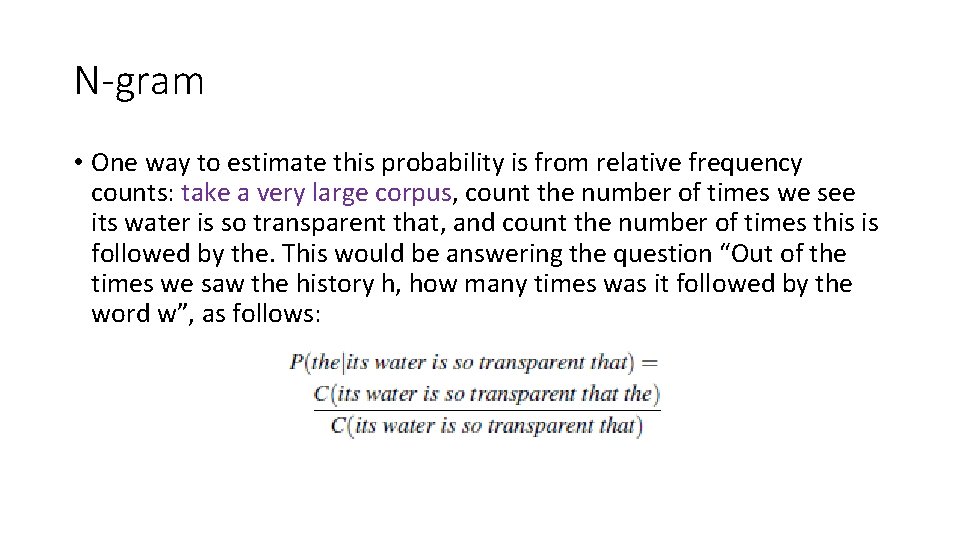
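Extracting the n-grams of a tokenized sentence takes only a list comprehension. A minimal sketch, assuming the sentence has already been split into words on whitespace:

```python
def ngrams(words: list[str], n: int) -> list[tuple[str, ...]]:
    """Return every contiguous sequence of n words (empty if n > len(words))."""
    return [tuple(words[i : i + n]) for i in range(len(words) - n + 1)]

tokens = "please turn your homework".split()
print(ngrams(tokens, 2))  # [('please', 'turn'), ('turn', 'your'), ('your', 'homework')]
print(ngrams(tokens, 3))  # [('please', 'turn', 'your'), ('turn', 'your', 'homework')]
```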

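N-gram • One way to estimate this probability is from relative frequency counts: take a very large corpus, count the number of times we see its water is so transparent that, and count the number of times this is followed by the. This answers the question “Out of the times we saw the history h, how many times was it followed by the word w?”, as follows, where C(·) is the number of times a sequence occurs in the corpus:

$$P(\text{the} \mid \text{its water is so transparent that}) = \frac{C(\text{its water is so transparent that the})}{C(\text{its water is so transparent that})}$$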
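The relative-frequency estimate can be computed directly from counts. In this sketch the three-sentence corpus is invented for illustration; a real estimate would need a very large corpus, since most long histories never occur even once.

```python
def relative_frequency(corpus_tokens: list[str], history: list[str], word: str) -> float:
    """Estimate P(word | history) as C(history followed by word) / C(history)."""
    n = len(history)
    history_count = 0       # C(history)
    continuation_count = 0  # C(history followed by word)
    for i in range(len(corpus_tokens) - n + 1):
        if corpus_tokens[i : i + n] == history:
            history_count += 1
            if i + n < len(corpus_tokens) and corpus_tokens[i + n] == word:
                continuation_count += 1
    return continuation_count / history_count if history_count else 0.0

# Hypothetical toy corpus (real estimates need far more text)
corpus = (
    "its water is so transparent that the fish are visible "
    "its water is so transparent that you can see the bottom "
    "its water is so transparent that the sand shines"
).split()
history = "its water is so transparent that".split()
print(relative_frequency(corpus, history, "the"))  # 2 of the 3 occurrences
```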

Evaluation Model • Since our evaluation metric is based on test set probability, it’s important not to let the test sentences into the training set. Suppose we are trying to compute the probability of a particular “test” sentence. If our test sentence is part of the training corpus, we will mistakenly assign it an artificially high probability when it occurs in the test set. We call this situation training on the test set. Training on the test set introduces a bias that makes the probabilities all look too high, and causes huge inaccuracies in perplexity, the probability-based metric.

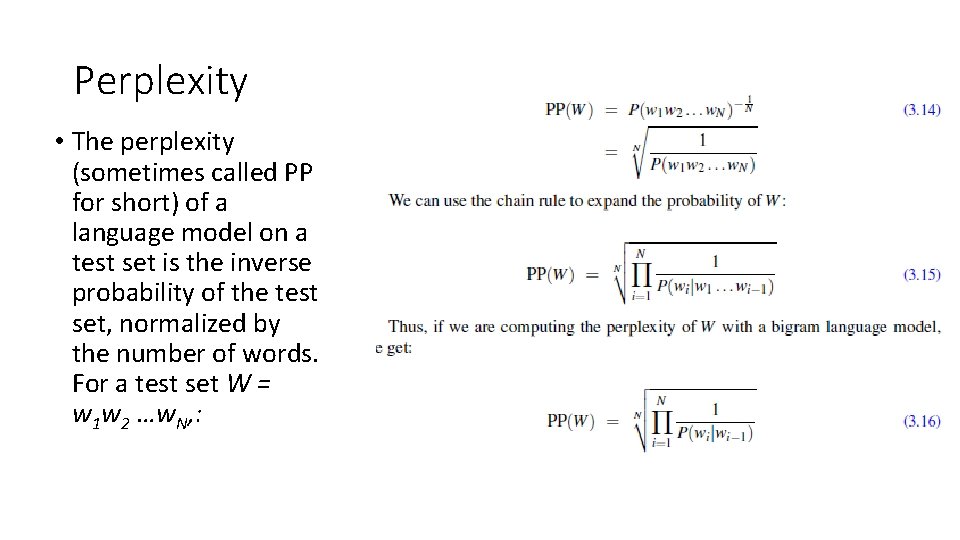

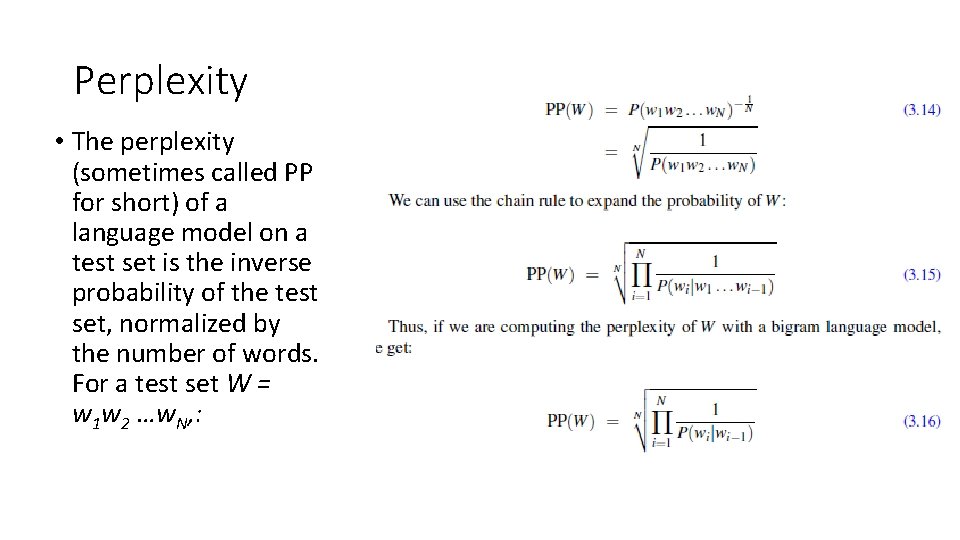

Perplexity • The perplexity (sometimes called PP for short) of a language model on a test set is the inverse probability of the test set, normalized by the number of words. For a test set $W = w_1 w_2 \ldots w_N$:

$$PP(W) = P(w_1 w_2 \ldots w_N)^{-\frac{1}{N}} = \sqrt[N]{\frac{1}{P(w_1 w_2 \ldots w_N)}}$$

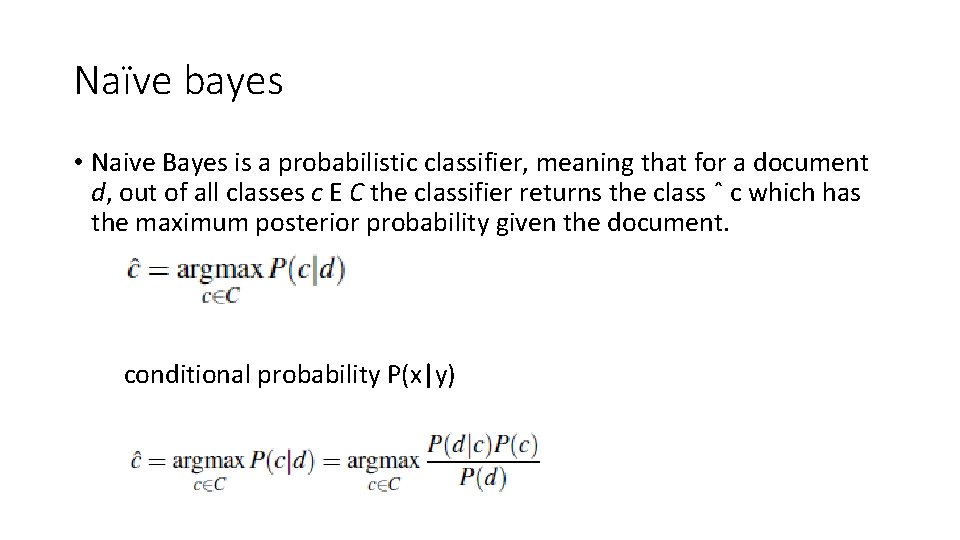

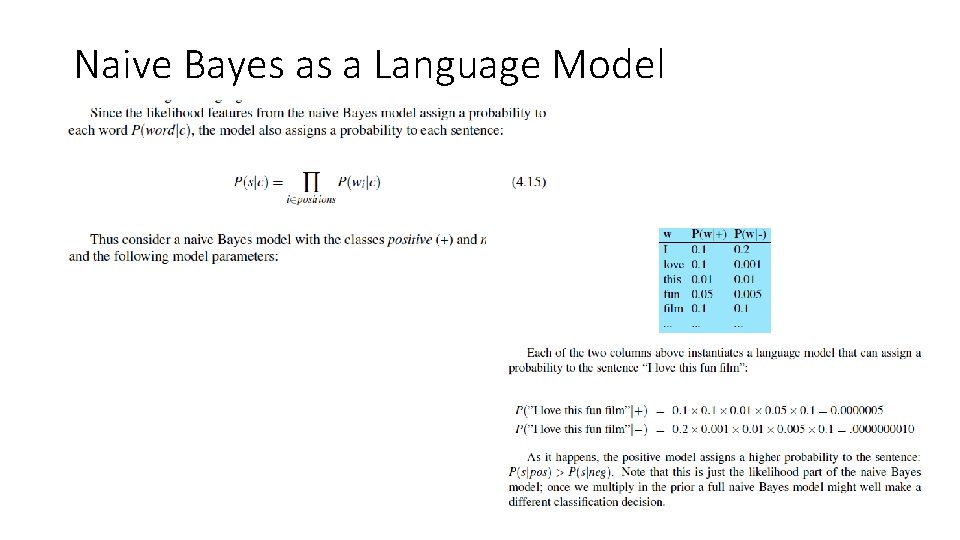

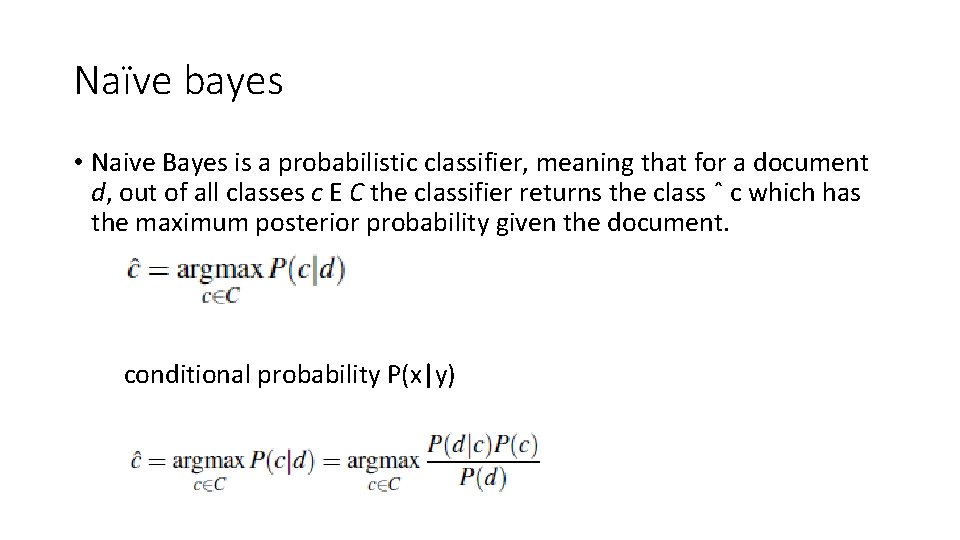
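Perplexity is usually computed in log space, because the product of many small probabilities underflows. The sketch below assumes a bigram model with add-one (Laplace) smoothing, which is not described on the slide but keeps unseen bigrams from receiving probability 0 (which would make the perplexity infinite); the training and test sentences are made up.

```python
import math
from collections import Counter

def train_bigram(tokens: list[str]):
    """Count histories, bigrams, and the vocabulary of a training token list."""
    histories = Counter(tokens[:-1])  # every token except the last has a successor
    bigrams = Counter(zip(tokens, tokens[1:]))
    vocab = set(tokens)
    return histories, bigrams, vocab

def bigram_prob(prev: str, word: str, histories, bigrams, vocab) -> float:
    # Add-one (Laplace) smoothing: unseen bigrams get a small nonzero probability
    return (bigrams[(prev, word)] + 1) / (histories[prev] + len(vocab))

def perplexity(test_tokens: list[str], histories, bigrams, vocab) -> float:
    """PP(W) = P(w_1 ... w_N) ** (-1/N), computed as exp(-(1/N) * sum of log probs).

    The first test token only serves as context, so N counts the predicted tokens.
    """
    log_prob = 0.0
    n = 0
    for prev, word in zip(test_tokens, test_tokens[1:]):
        log_prob += math.log(bigram_prob(prev, word, histories, bigrams, vocab))
        n += 1
    return math.exp(-log_prob / n)

model = train_bigram("the cat sat on the mat the dog sat on the log".split())
print(perplexity("the cat sat on the log".split(), *model))
```

Lower perplexity means the model finds the test text less surprising; a model that assigned probability 1 to every observed word would reach the minimum value of 1.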

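Naïve Bayes • Naive Bayes is a probabilistic classifier, meaning that for a document d, out of all classes $c \in C$ the classifier returns the class $\hat{c}$ that has the maximum posterior probability given the document: $\hat{c} = \arg\max_{c \in C} P(c \mid d)$. Here $P(c \mid d)$ is a conditional probability, written in general as $P(x \mid y)$: the probability of x given y.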
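Applying Bayes' rule, $P(c \mid d) \propto P(d \mid c)\,P(c)$, together with the naive assumption that words are independent given the class, reduces the classifier to counting. The following is a minimal multinomial Naive Bayes sketch with add-one smoothing; the four training documents and the spam/ham labels are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs: list[tuple[str, str]]):
    """docs is a list of (text, class) pairs; returns log priors, log likelihoods, vocabulary."""
    class_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)  # class -> word -> count
    vocab = set()
    for text, label in docs:
        words = text.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    log_prior = {c: math.log(n / len(docs)) for c, n in class_counts.items()}
    log_likelihood = {}
    for c in class_counts:
        total = sum(word_counts[c].values())
        # Add-one smoothing so a word unseen in class c does not zero out P(d | c)
        log_likelihood[c] = {
            w: math.log((word_counts[c][w] + 1) / (total + len(vocab))) for w in vocab
        }
    return log_prior, log_likelihood, vocab

def classify(text: str, log_prior, log_likelihood, vocab) -> str:
    """Return c_hat = argmax_c [log P(c) + sum_i log P(w_i | c)]; unknown words are skipped."""
    words = [w for w in text.lower().split() if w in vocab]
    scores = {
        c: log_prior[c] + sum(log_likelihood[c][w] for w in words) for c in log_prior
    }
    return max(scores, key=scores.get)

# Hypothetical training data
train = [
    ("win money now", "spam"),
    ("cheap money offer", "spam"),
    ("meeting schedule for monday", "ham"),
    ("project meeting notes", "ham"),
]
model = train_naive_bayes(train)
print(classify("cheap money", *model))    # spam
print(classify("meeting notes", *model))  # ham
```

Working with log probabilities turns the product over words into a sum, which avoids numerical underflow on long documents.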

Exercise • Apply an N-gram (3-gram) model in a chatbot application to answer questions. References: http://www.albertauyeung.com/post/generating-ngrams-python/ https://stackabuse.com/python-for-nlp-developing-an-automatic-textfiller-using-n-grams/
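One possible starting point for the exercise of applying a 3-gram model in a chatbot is a trigram table that maps each pair of words to the words that follow it, used to complete a user's prompt. This is a hypothetical sketch, not the approach of the referenced tutorials: the toy corpus is invented, and generation is greedy (always the most frequent next word) rather than sampled.

```python
from collections import Counter, defaultdict

def build_trigram_model(text: str):
    """Map each word pair (w1, w2) to a Counter of the words that follow it."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for w1, w2, w3 in zip(words, words[1:], words[2:]):
        model[(w1, w2)][w3] += 1
    return model

def continue_text(model, prompt: str, max_words: int = 10) -> str:
    """Greedily append the most frequent next word given the last two words."""
    words = prompt.lower().split()
    if len(words) < 2:
        return " ".join(words)  # a trigram model needs two words of context
    for _ in range(max_words):
        followers = model.get((words[-2], words[-1]))
        if not followers:
            break  # context never seen in the corpus: stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical answer corpus for a library chatbot
corpus = (
    "the library opens at eight in the morning . "
    "the library opens at eight on weekdays . "
    "the library closes at nine in the evening ."
)
model = build_trigram_model(corpus)
print(continue_text(model, "The library", max_words=3))  # the library opens at eight
```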

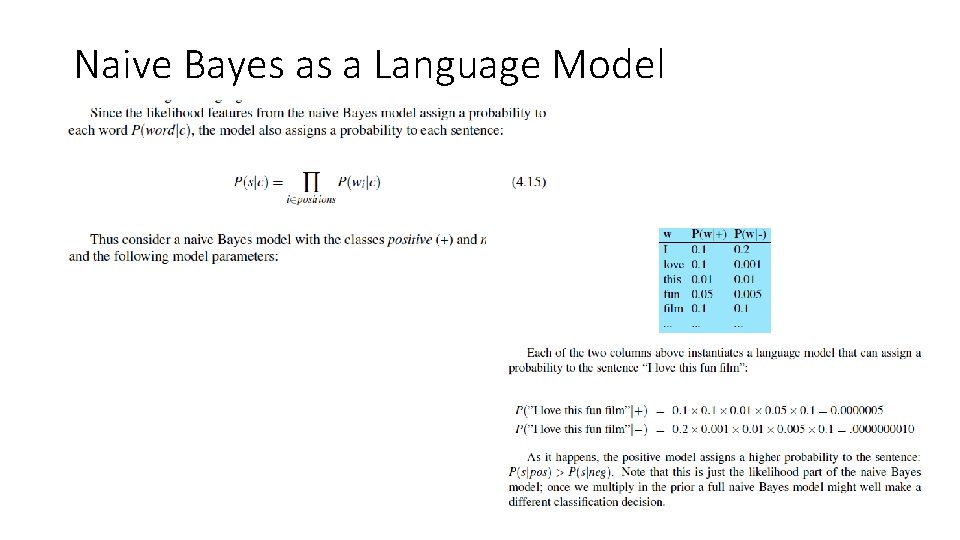

Naive Bayes as a Language Model

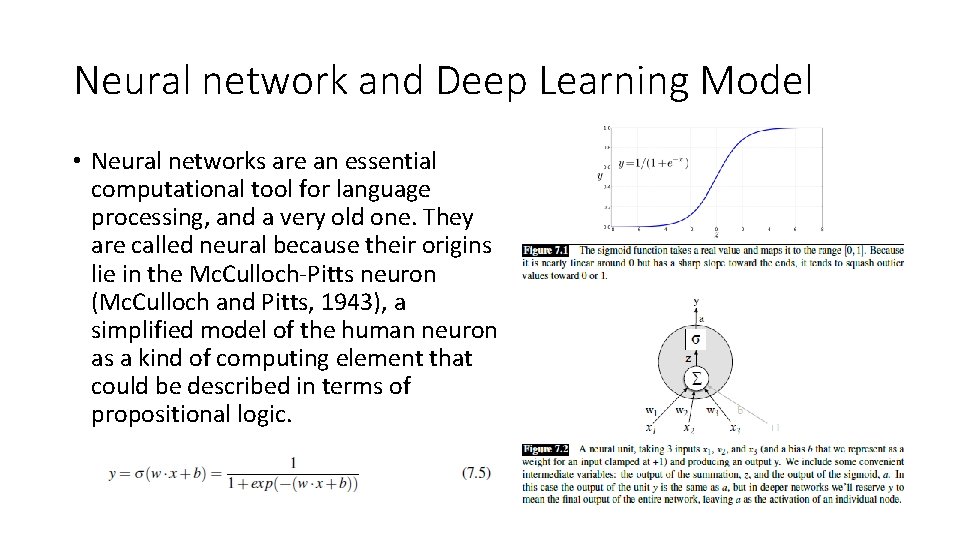

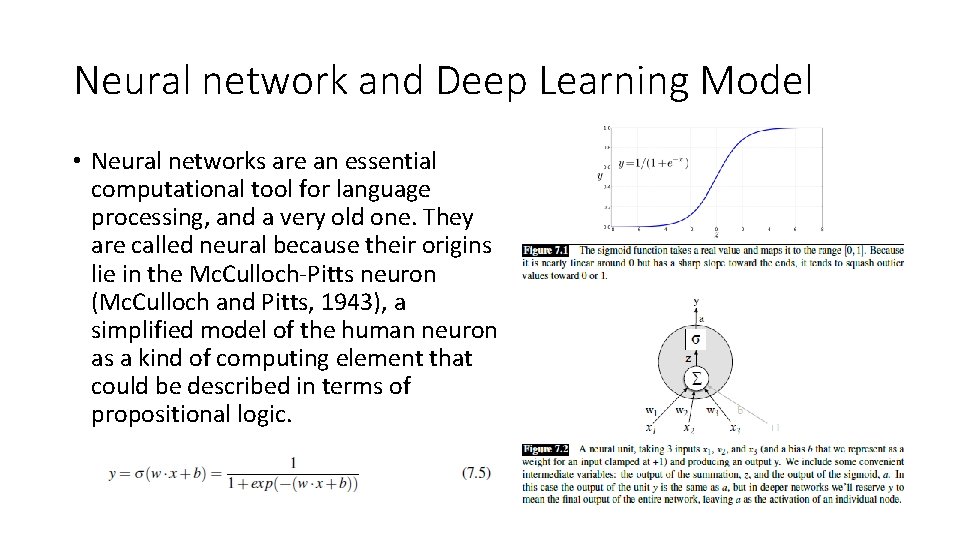

Neural Networks and Deep Learning Models • Neural networks are an essential computational tool for language processing, and a very old one. They are called neural because their origins lie in the McCulloch-Pitts neuron (McCulloch and Pitts, 1943), a simplified model of the human neuron as a kind of computing element that could be described in terms of propositional logic.

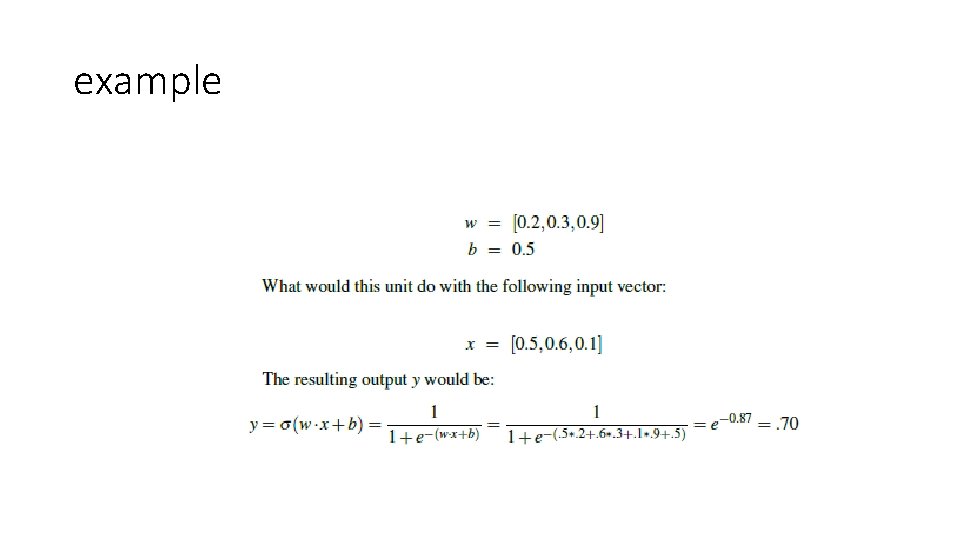

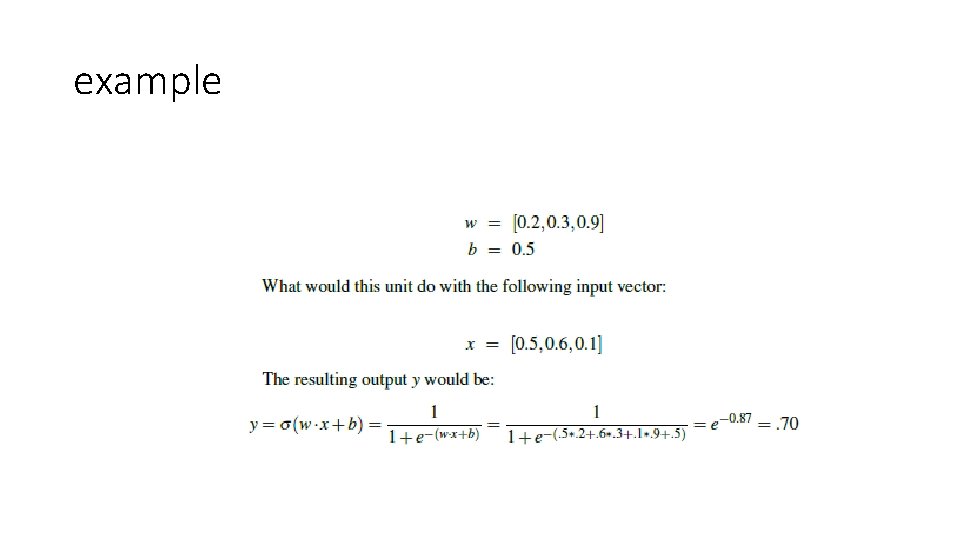
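A McCulloch-Pitts unit outputs 1 when the weighted sum of its binary inputs reaches a threshold, which is enough to implement propositional connectives. This sketch uses integer weights for convenience; the 1943 formulation used excitatory and inhibitory inputs rather than arbitrary weights.

```python
def mcculloch_pitts(inputs: list[int], weights: list[int], threshold: int) -> int:
    """Fire (return 1) iff the weighted sum of the binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# Unit weights with threshold 2 give AND; threshold 1 gives OR.
# A single inhibitory input (weight -1) with threshold 0 gives NOT.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", mcculloch_pitts([a, b], [1, 1], 2),
              "OR:", mcculloch_pitts([a, b], [1, 1], 1))
print("NOT 1:", mcculloch_pitts([1], [-1], 0))
```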

example

Introduction • There are over a trillion pages of information on the Web, almost all of it in natural language. An agent that wants to do knowledge acquisition needs to understand (at least partially) the ambiguous, messy languages that humans use. • We examine the problem from the point of view of specific information-seeking tasks: text classification, information retrieval, and information extraction. One common factor in addressing these tasks is the use of language models: models that predict the probability distribution of language expressions.

Language Model • Formal languages, such as the programming languages Java or Python, have precisely defined language models. A language can be defined as a set of strings; for example, “print(2 + 2)” is a legal program in the language Python, whereas “2)+(2 print” is not. • Since there are an infinite number of legal programs, they cannot be enumerated; instead they are specified by a set of rules called a grammar. • Formal languages also have rules that define the meaning or semantics of a program; for example, the rules say that the “meaning” of “2+2” is 4, and the meaning of “1/0” is that an error is signaled.
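Python's own grammar and semantics can illustrate both points: `ast.parse` accepts exactly the strings that Python's grammar allows, and evaluating a legal expression yields its meaning. A small sketch (using `eval` only on fixed, trusted strings, never on user input):

```python
import ast

def is_legal_python(source: str) -> bool:
    """A string belongs to the language Python iff Python's parser accepts it."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False

print(is_legal_python("print(2 + 2)"))  # True
print(is_legal_python("2)+(2 print"))   # False

# Semantics: the language's rules give "2+2" the meaning 4 ...
print(eval("2+2"))  # 4
# ... and give "1/0" the meaning "an error is signaled"
try:
    eval("1/0")
except ZeroDivisionError as err:
    print("error signaled:", err)
```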

N-gram Character Model • Ultimately, a written text is composed of characters (letters, digits, punctuation, and spaces in English, and more exotic characters in some other languages). Thus, one of the simplest language models is a probability distribution over sequences of characters. • We write $P(c_{1:N})$ for the probability of a sequence of N characters, $c_1$ through $c_N$. In one Web collection, P(“the”) = 0.027 and P(“zgq”) = 0.00002. • A sequence of written symbols of length n is called an n-gram (from the Greek root for writing or letters), with the special cases “unigram” for 1-gram, “bigram” for 2-gram, and “trigram” for 3-gram. A model of the probability distribution of n-letter sequences is thus called an n-gram model.
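A character n-gram model can be estimated by counting overlapping n-letter sequences. The sample text below is made up, so its probabilities say nothing about the Web collection figures quoted on the slide.

```python
from collections import Counter

def char_ngrams(text: str, n: int) -> list[str]:
    """All overlapping sequences of n characters, spaces included."""
    return [text[i : i + n] for i in range(len(text) - n + 1)]

def char_ngram_model(text: str, n: int) -> dict[str, float]:
    """Estimate the probability of each n-letter sequence by its relative frequency."""
    counts = Counter(char_ngrams(text, n))
    total = sum(counts.values())
    return {gram: count / total for gram, count in counts.items()}

sample = "the cat and the hat"  # made-up text
probs = char_ngram_model(sample, 3)
print(probs["the"])           # 2 of the 17 trigrams
print(probs.get("zgq", 0.0))  # 0.0: never seen
```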

Text Classification • We now consider in depth the task of text classification, also known as categorization: given a text of some kind, decide which of a predefined set of classes it belongs to. Language identification and genre classification are examples of text classification, as are sentiment analysis (classifying a movie or product review as positive or negative) and spam detection (classifying an email message as spam or not-spam). Since “not-spam” is awkward, researchers have coined the term ham for not-spam.

Information extraction • Information extraction is the process of acquiring knowledge by skimming a text and looking for occurrences of a particular class of object and for relationships among objects. A typical task is to extract instances of addresses from Web pages, with database fields for street, city, state, and zip code; or instances of storms from weather reports, with fields for temperature, wind speed, and precipitation. • In a limited domain, this can be done with high accuracy. As the domain gets more general, more complex linguistic models and more complex learning techniques are necessary.
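In a narrow domain, a single hand-written regular expression can already fill database fields. This sketch assumes one hypothetical US address format (number, capitalized street name, St/Ave/Rd/Blvd, city, two-letter state, five-digit zip); real Web pages would need many more patterns or a learned model.

```python
import re

# Hypothetical pattern for one narrow address format:
# "<number> <Street Name> <St|Ave|Rd|Blvd>, <City>, <ST> <zip>"
ADDRESS = re.compile(
    r"(?P<street>\d+(?: [A-Z][a-z]+)+ (?:St|Ave|Rd|Blvd))\.?, "
    r"(?P<city>[A-Z][a-z]+(?: [A-Z][a-z]+)*), "
    r"(?P<state>[A-Z]{2}) "
    r"(?P<zip>\d{5})"
)

def extract_addresses(text: str) -> list[dict[str, str]]:
    """Fill one database-style record (street, city, state, zip) per match."""
    return [m.groupdict() for m in ADDRESS.finditer(text)]

page = "Our office moved to 123 Main St, Springfield, IL 62704 last year. Call before visiting."
print(extract_addresses(page))
# [{'street': '123 Main St', 'city': 'Springfield', 'state': 'IL', 'zip': '62704'}]
```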

Information Retrieval • Information retrieval is the task of finding documents that are relevant to a query, where the query may be a question, or just a topic area or concept. • Question answering is a somewhat different task, in which the query really is a question, and the answer is not a ranked list of documents but rather a short response: a sentence, or even just a phrase. There have been question-answering NLP (natural language processing) systems since the 1960s, but only since 2001 have such systems used Web information retrieval to radically increase their breadth of coverage. • The PageRank algorithm was one of the two original ideas that set Google’s search apart from other Web search engines when it was introduced in 1997.

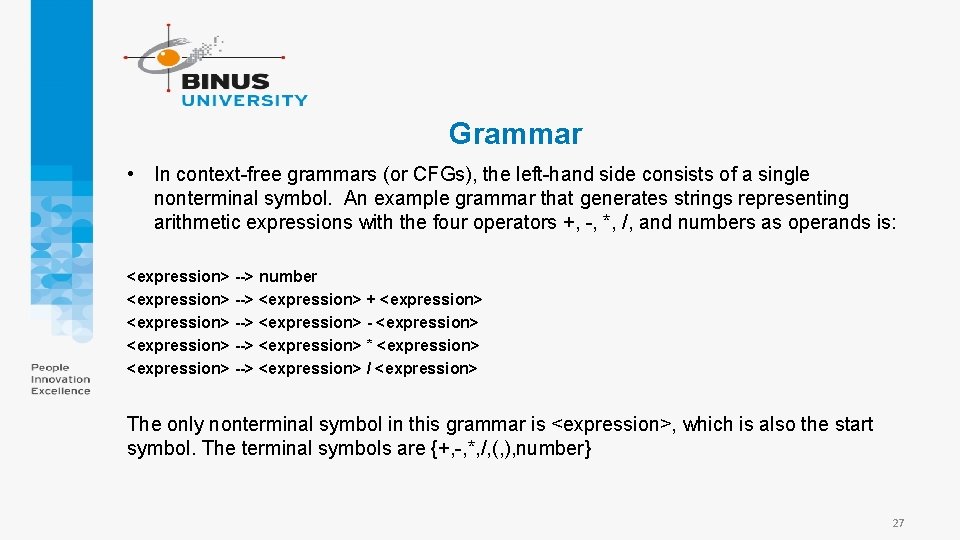
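PageRank can be computed by power iteration. This sketch uses the commonly cited normalized form $PR(p) = \frac{1-d}{N} + d \sum_{q \to p} \frac{PR(q)}{\mathrm{out}(q)}$ with damping factor d = 0.85 (an assumed, conventional value); the three-page link graph is invented, and pages with no outgoing links are assumed to spread their rank evenly over all pages.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85, iterations: int = 50) -> dict[str, float]:
    """Power iteration on a link graph; every link target must also be a key of `links`."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for q, outlinks in links.items():
            if outlinks:
                # q passes an equal share of its rank to each page it links to
                share = damping * rank[q] / len(outlinks)
                for p in outlinks:
                    new_rank[p] += share
            else:
                # Dangling page: spread its rank evenly over all pages
                for p in pages:
                    new_rank[p] += damping * rank[q] / n
        rank = new_rank
    return rank

links = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}  # hypothetical link graph
ranks = pagerank(links)
print(sorted(ranks, key=ranks.get, reverse=True))  # ['C', 'A', 'B']
```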

Grammar • In context-free grammars (or CFGs), the left-hand side of every rule consists of a single nonterminal symbol. An example grammar that generates strings representing arithmetic expressions with the four operators +, -, *, /, and numbers as operands is: • <expression> --> number • <expression> --> ( <expression> ) • <expression> --> <expression> + <expression> • <expression> --> <expression> - <expression> • <expression> --> <expression> * <expression> • <expression> --> <expression> / <expression> • The only nonterminal symbol in this grammar is <expression>, which is also the start symbol. The terminal symbols are {+, -, *, /, (, ), number}.

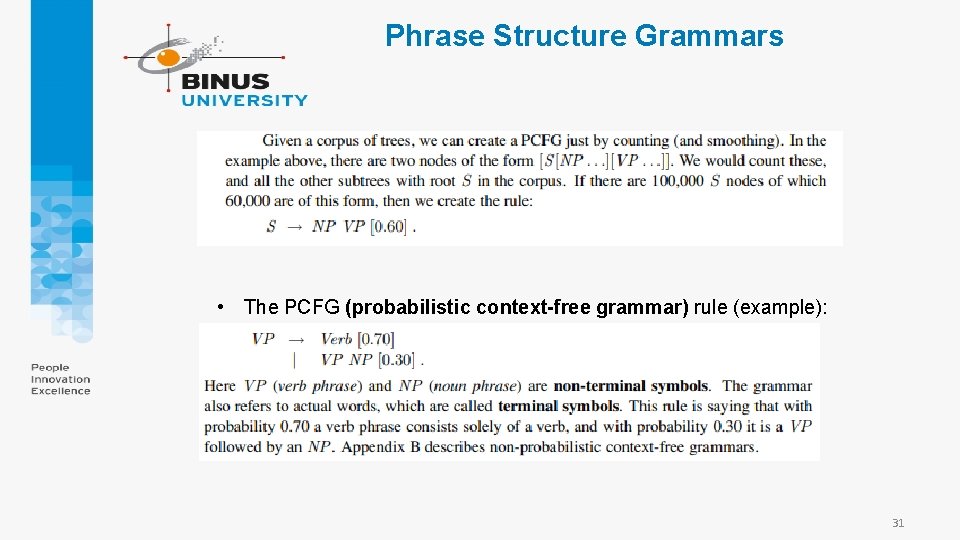

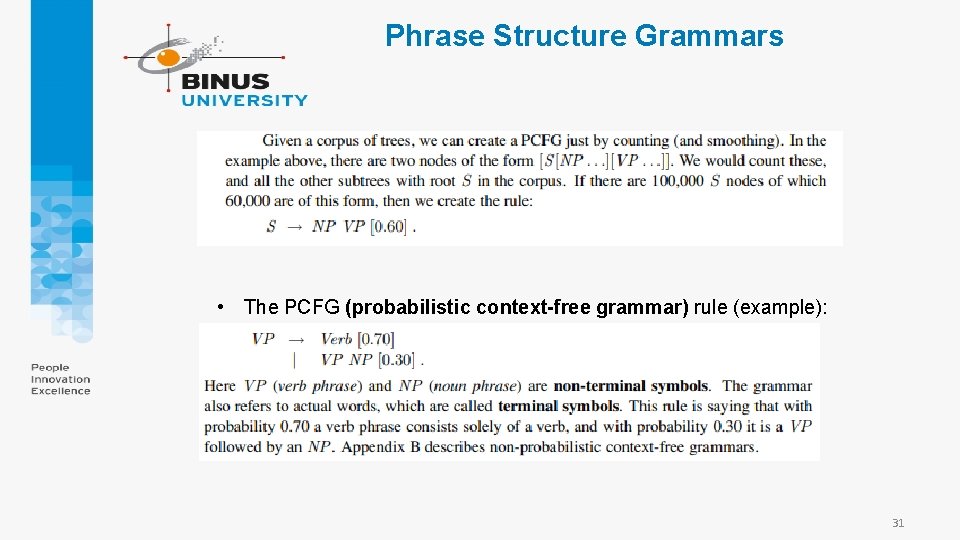
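A context-free grammar can be used generatively: repeatedly rewrite a nonterminal with one of its right-hand sides until only terminals remain. In this sketch the arithmetic-expression grammar is written as a Python dictionary; the rule `( <expression> )` is assumed from the terminal set {(, )}, and the depth limit is an added device to guarantee that random expansion stops.

```python
import random

# The arithmetic-expression grammar: nonterminal -> list of right-hand sides
GRAMMAR = {
    "<expression>": [
        ["number"],
        ["(", "<expression>", ")"],
        ["<expression>", "+", "<expression>"],
        ["<expression>", "-", "<expression>"],
        ["<expression>", "*", "<expression>"],
        ["<expression>", "/", "<expression>"],
    ]
}
TERMINALS = {"+", "-", "*", "/", "(", ")", "number"}

def generate(symbol: str = "<expression>", depth: int = 0, max_depth: int = 4) -> list[str]:
    """Expand `symbol` by a random derivation until only terminal symbols remain."""
    if symbol not in GRAMMAR:
        return [symbol]  # terminal symbol: emit as-is
    rules = GRAMMAR[symbol]
    # At max_depth, force the non-recursive rule (number) so the derivation terminates
    rhs = rules[0] if depth >= max_depth else random.choice(rules)
    result = []
    for child in rhs:
        result.extend(generate(child, depth + 1, max_depth))
    return result

random.seed(0)
print(" ".join(generate()))
```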

Phrase Structure Grammars • There have been many competing language models based on the idea of phrase structure; we will describe a popular model called the probabilistic context-free grammar, or PCFG. • A grammar is a collection of rules that defines a language as a set of allowable strings of words.
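In a PCFG each rule carries a probability, and the probability of a parse tree is the product of the probabilities of the rules used to build it. The rule probabilities below are illustrative values for a tiny fragment covering "every wumpus smells"; they are not taken from the slides' figures.

```python
# Hypothetical PCFG fragment: (left-hand side, right-hand side) -> probability
RULE_PROBS = {
    ("S", ("NP", "VP")): 0.90,
    ("NP", ("Article", "Noun")): 0.25,
    ("VP", ("Verb",)): 0.40,
    ("Article", ("every",)): 0.05,
    ("Noun", ("wumpus",)): 0.15,
    ("Verb", ("smells",)): 0.10,
}

# A parse tree as nested tuples (label, child, child, ...); words are plain strings
TREE = ("S", ("NP", ("Article", "every"), ("Noun", "wumpus")), ("VP", ("Verb", "smells")))

def tree_probability(tree) -> float:
    """P(tree) = product of the probabilities of every rule used in the tree."""
    if isinstance(tree, str):
        return 1.0  # a word contributes no rule of its own
    label, *children = tree
    rhs = tuple(c if isinstance(c, str) else c[0] for c in children)
    prob = RULE_PROBS[(label, rhs)]
    for child in children:
        prob *= tree_probability(child)
    return prob

print(tree_probability(TREE))  # 0.90 * 0.25 * 0.05 * 0.15 * 0.40 * 0.10, about 6.75e-05
```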

Phrases • A formal language is defined as a set of strings, where each string is a sequence of symbols taken from a finite set called the terminal symbols. For English, the terminal symbols include words like a, aardvark, abacus, and about 400,000 more. • Strings are composed of substrings called phrases. • For example, the phrases "the wumpus," "the king," and "the agent in the corner" are all examples of the category noun phrase (or NP for short).

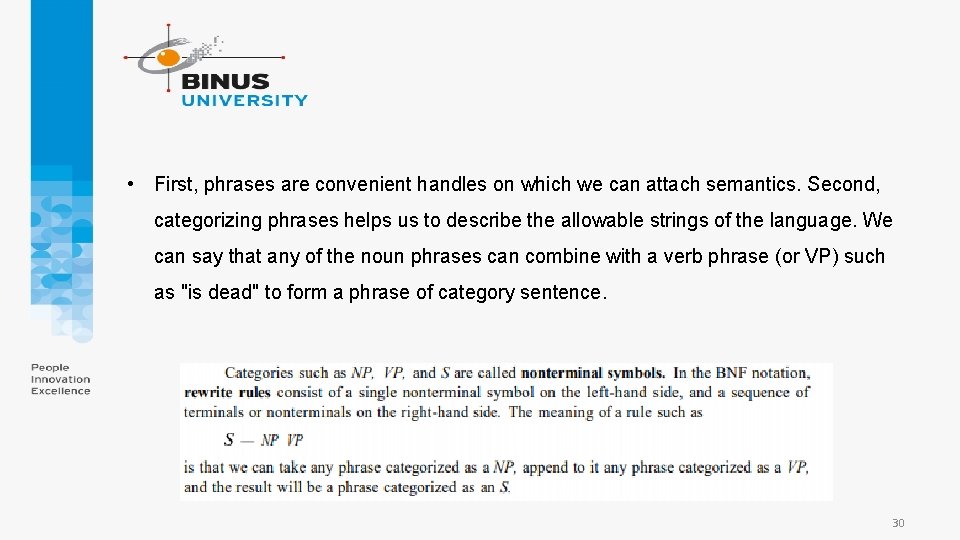

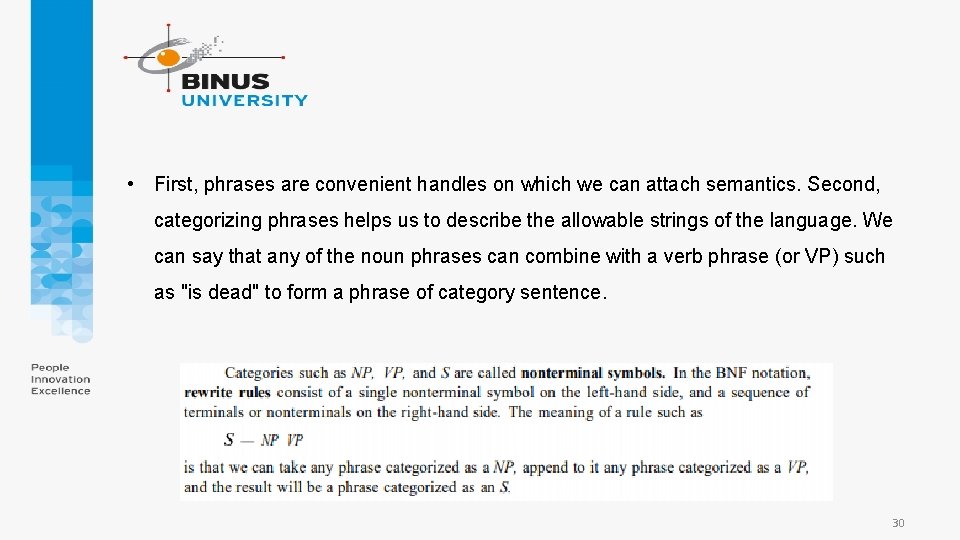

• First, phrases are convenient handles on which we can attach semantics. Second, categorizing phrases helps us to describe the allowable strings of the language. We can say that any of the noun phrases can combine with a verb phrase (or VP) such as "is dead" to form a phrase of category sentence.

Phrase Structure Grammars • An example of a PCFG (probabilistic context-free grammar) rule:

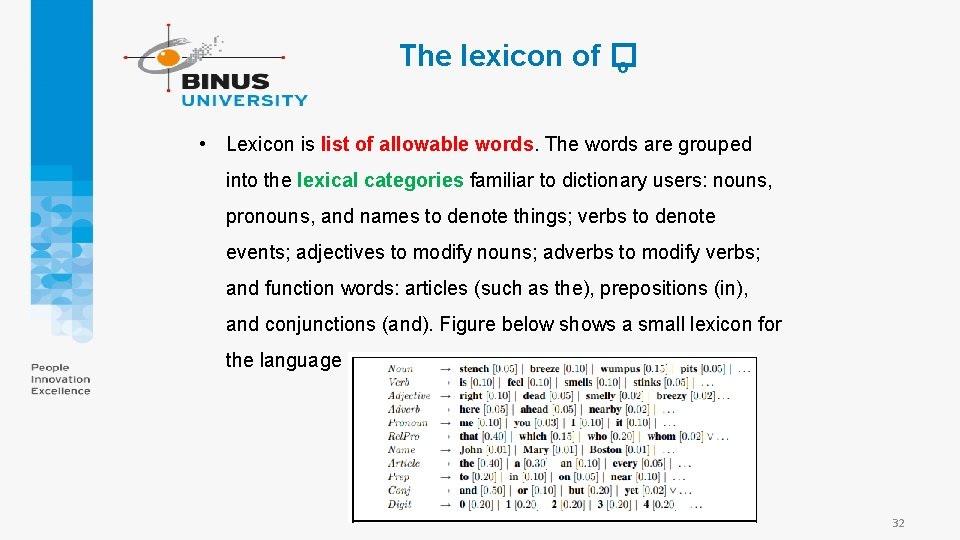

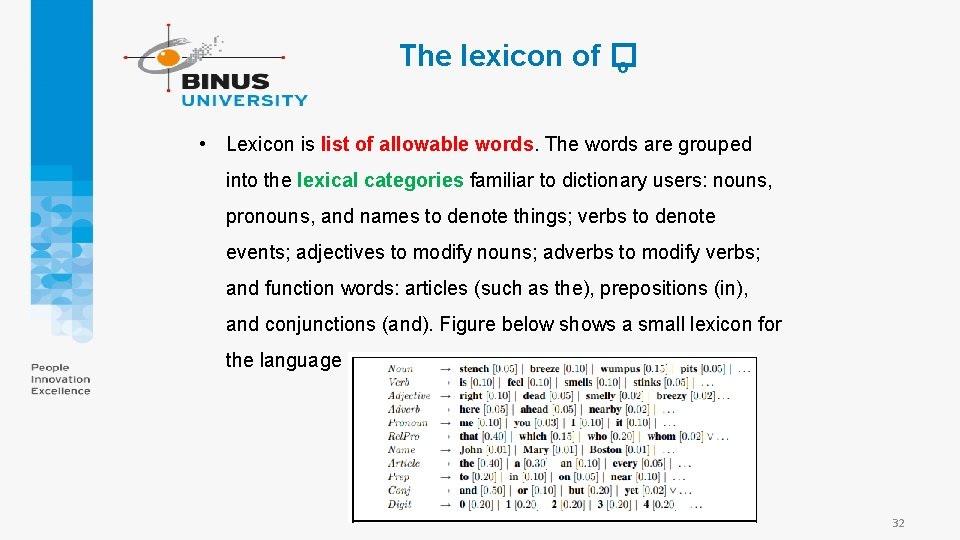

The lexicon of $\mathcal{E}_0$ • The lexicon is the list of allowable words. The words are grouped into the lexical categories familiar to dictionary users: nouns, pronouns, and names to denote things; verbs to denote events; adjectives to modify nouns; adverbs to modify verbs; and function words: articles (such as the), prepositions (in), and conjunctions (and). The figure below shows a small lexicon for the language $\mathcal{E}_0$.

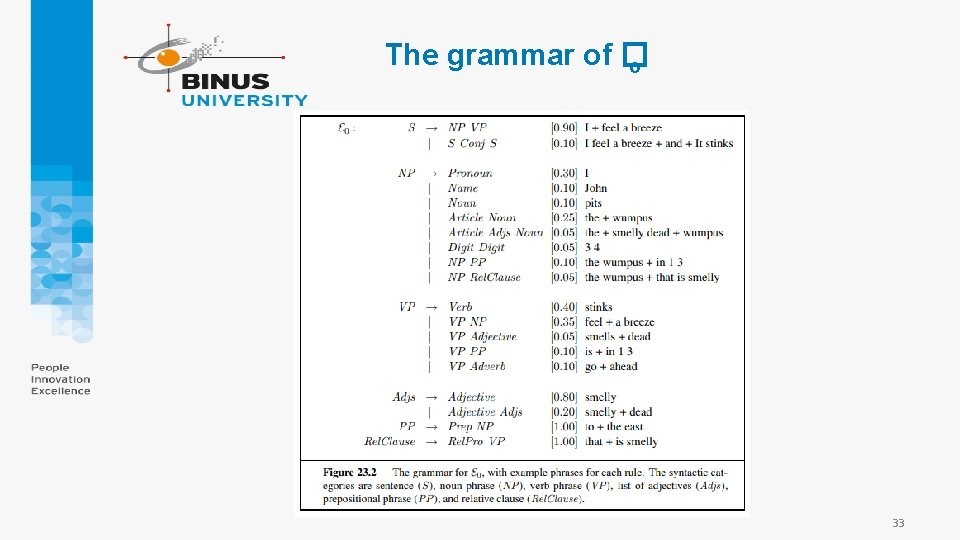

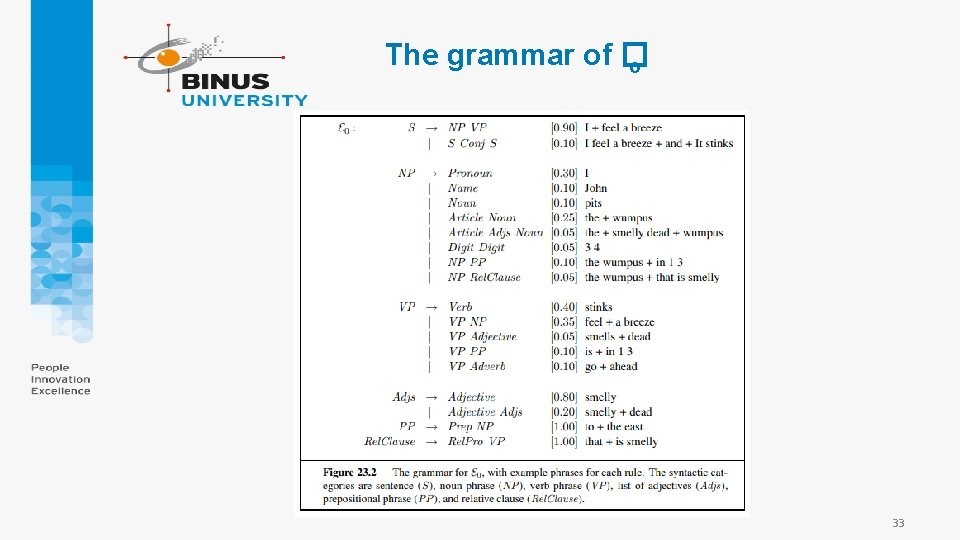

The grammar of $\mathcal{E}_0$

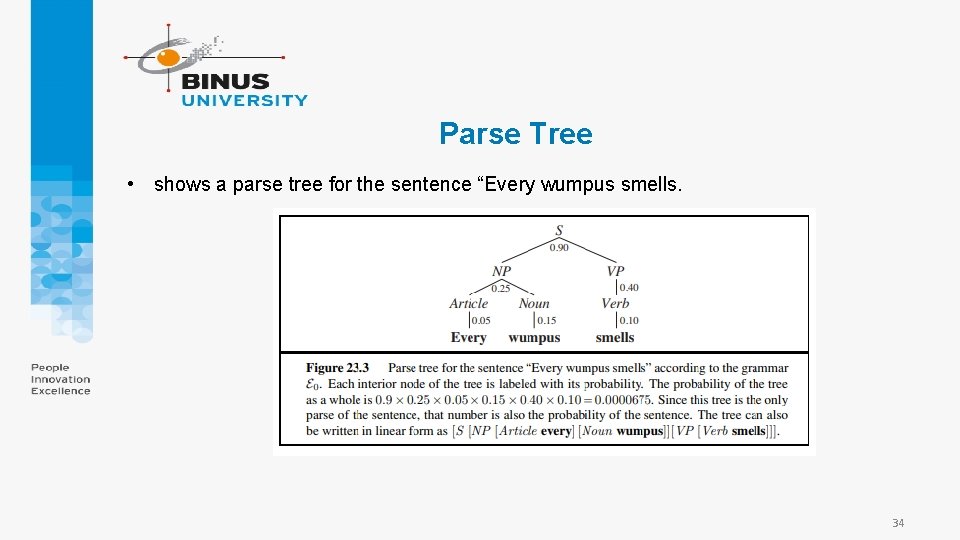

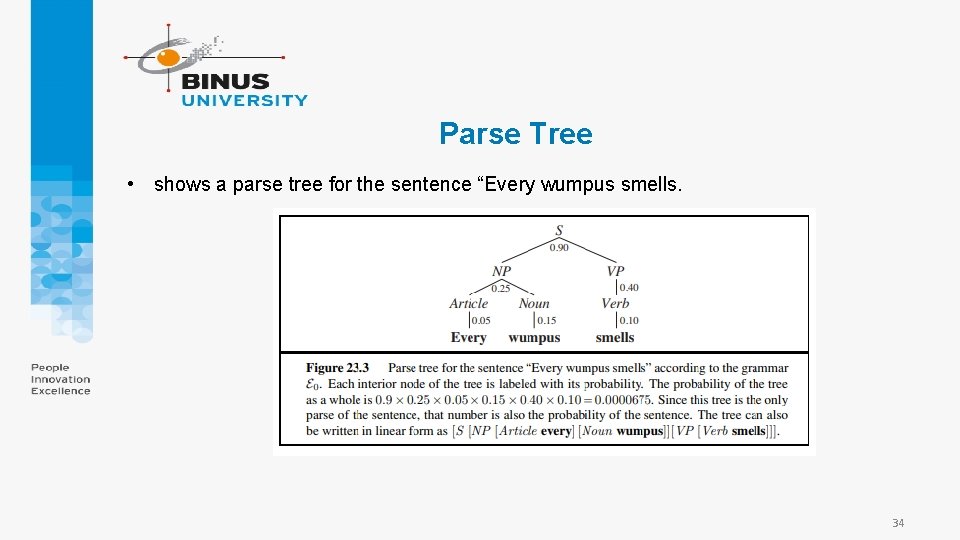

Parse Tree • A parse tree for the sentence “Every wumpus smells.”

Parse Tree

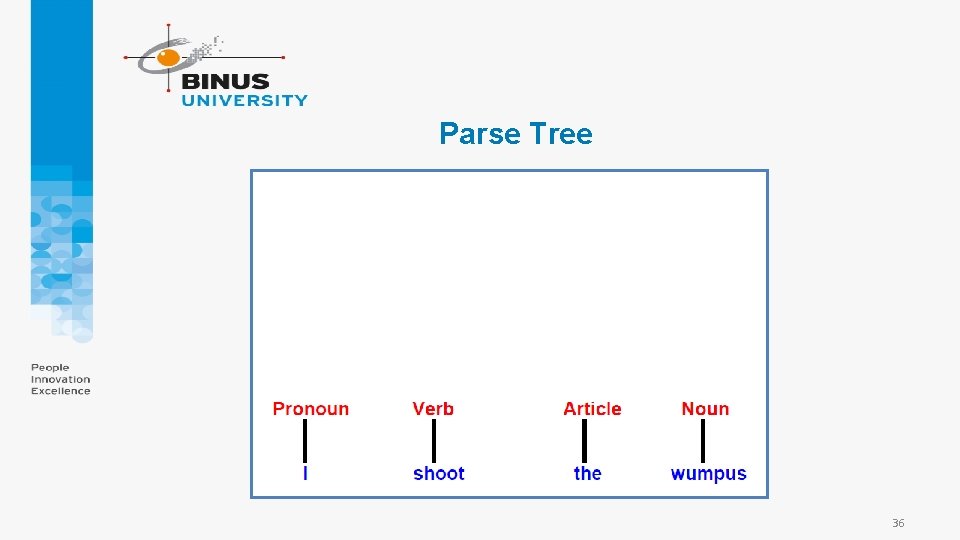

Parse Tree

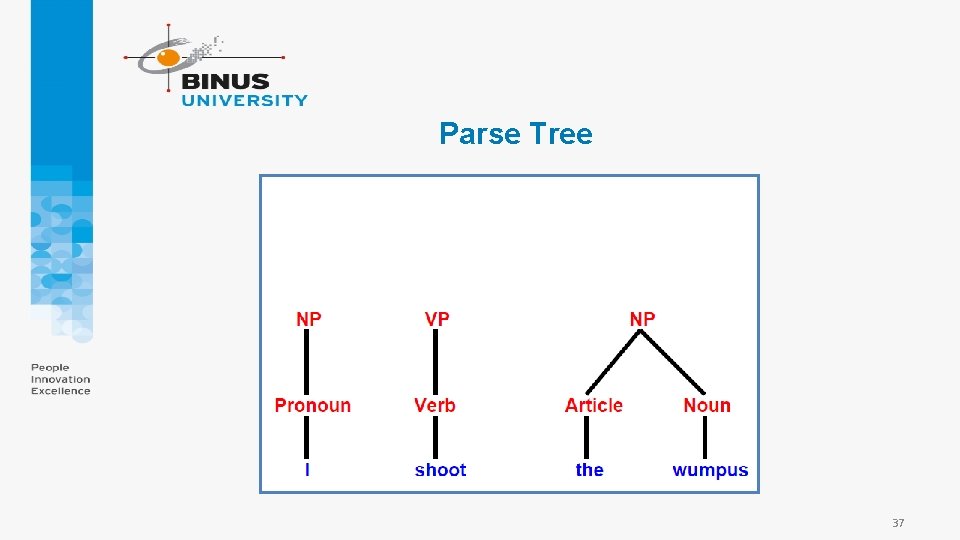

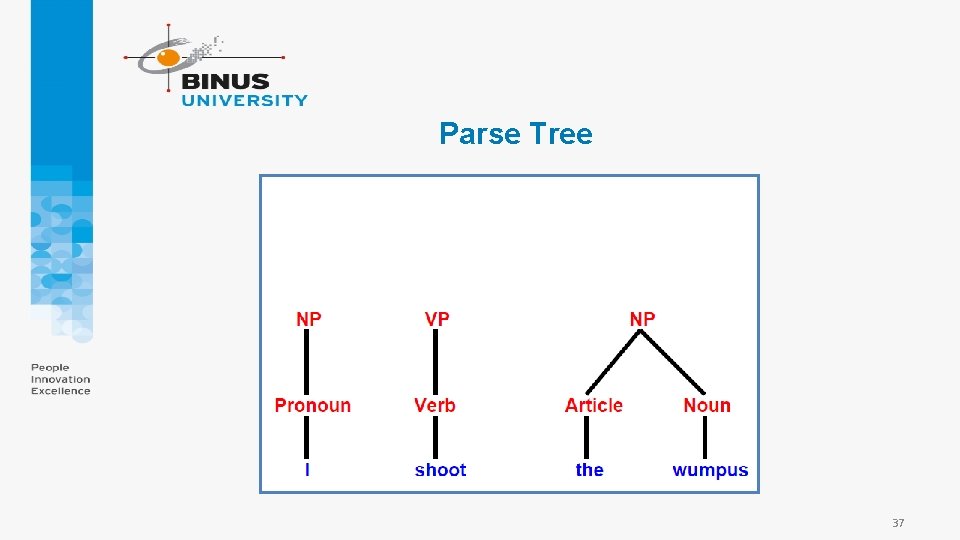

Parse Tree

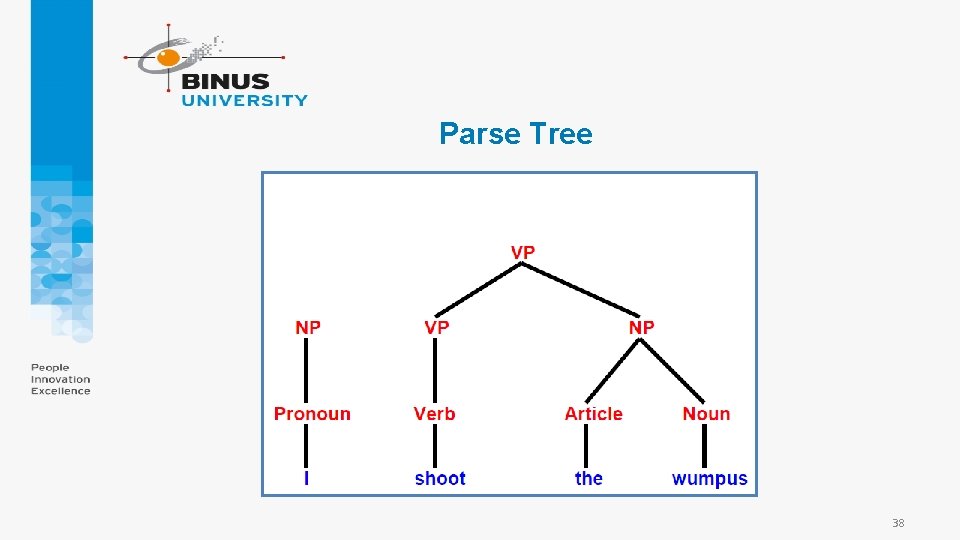

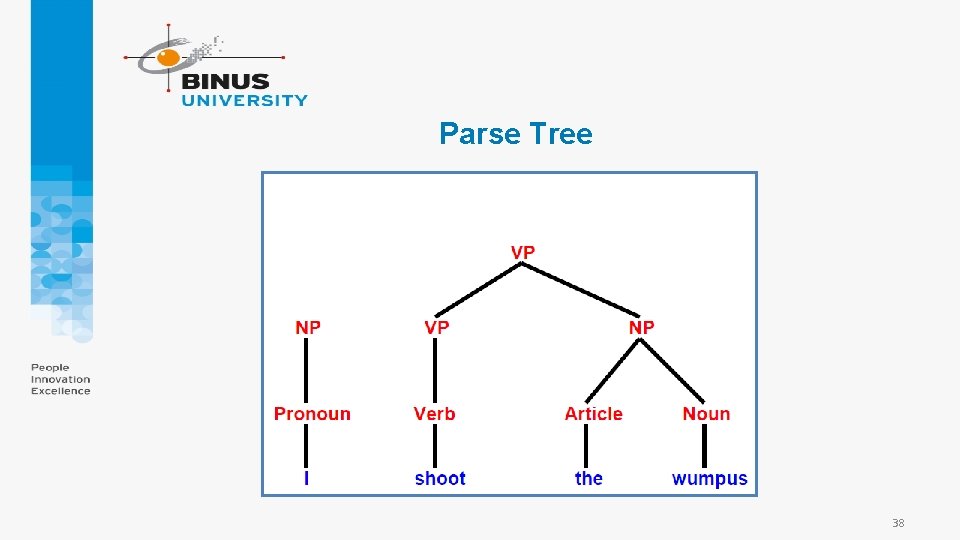

Parse Tree

Parse Tree

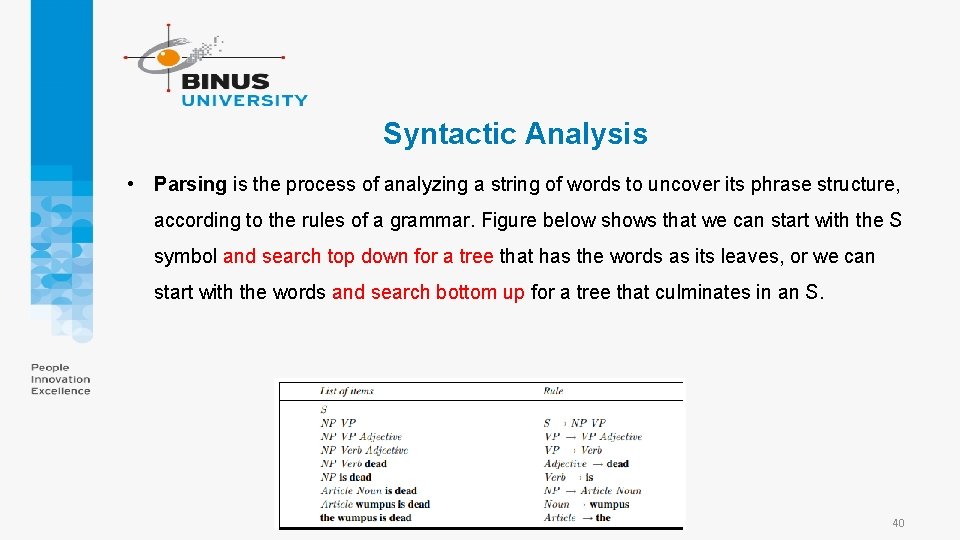

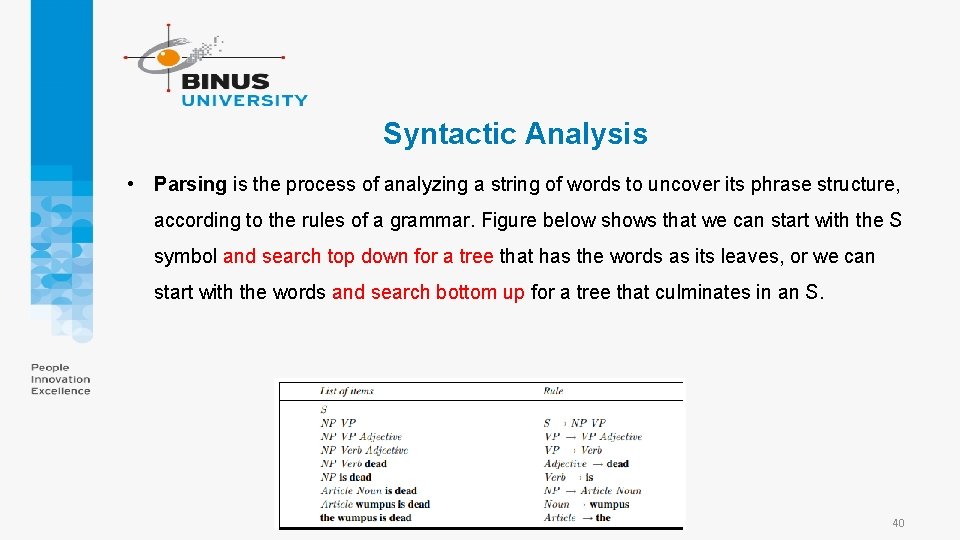

Syntactic Analysis • Parsing is the process of analyzing a string of words to uncover its phrase structure, according to the rules of a grammar. As the figure below shows, we can start with the S symbol and search top down for a tree that has the words as its leaves, or we can start with the words and search bottom up for a tree that culminates in an S.

Syntactic Analysis • Both top-down and bottom-up parsing can be inefficient, however, because they can end up repeating effort in areas of the search space that lead to dead ends. Consider the following two sentences: • Have the students in section 2 of Computer Science 101 take the exam. • Have the students in section 2 of Computer Science 101 taken the exam? Even though they share the first 10 words, these sentences have very different parses, because the first is a command and the second is a question. A left-to-right parsing algorithm would have to guess whether the first word is part of a command or a question and will not be able to tell if the guess is correct until at least the eleventh word, take or taken.

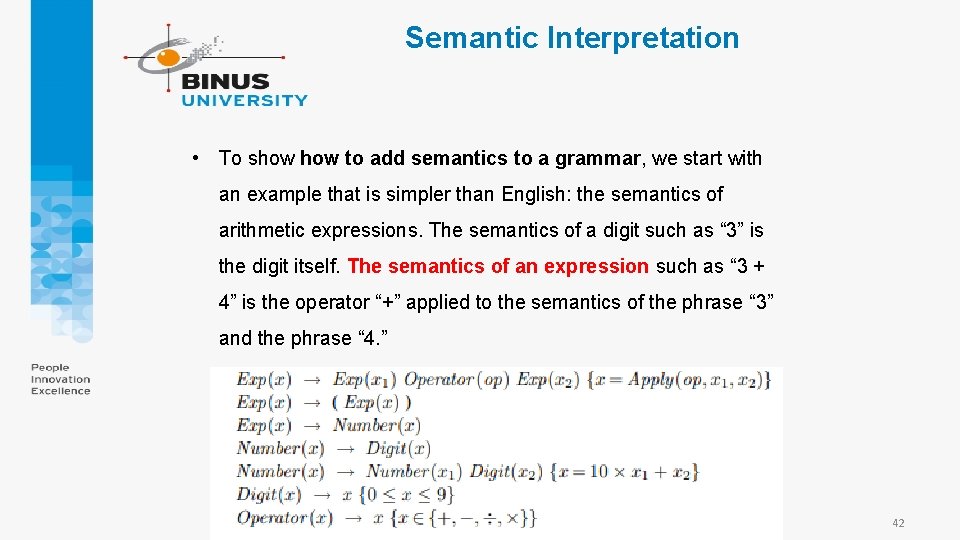

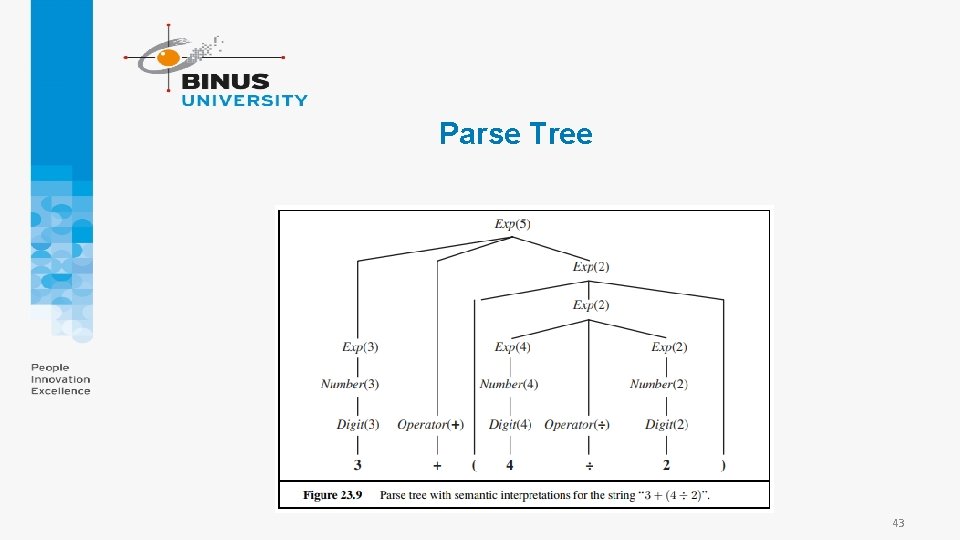

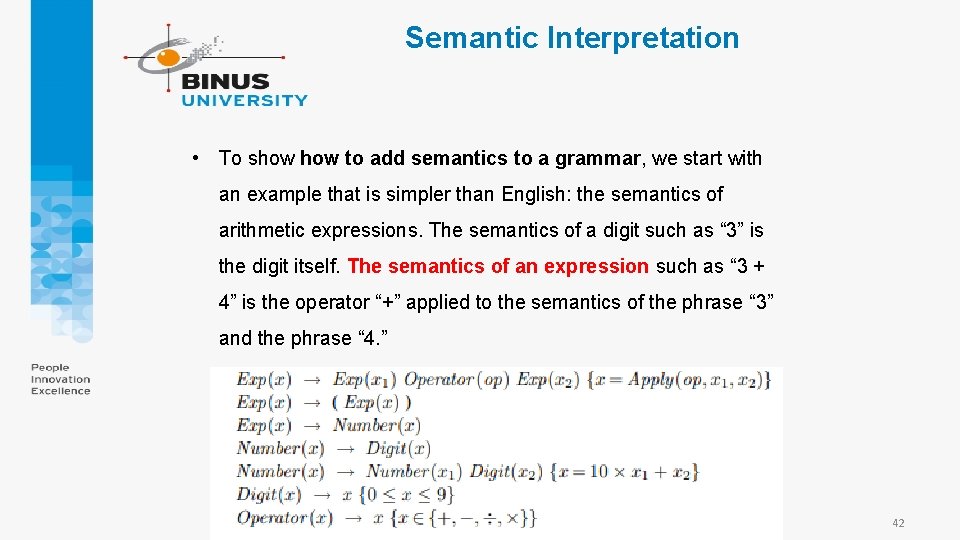

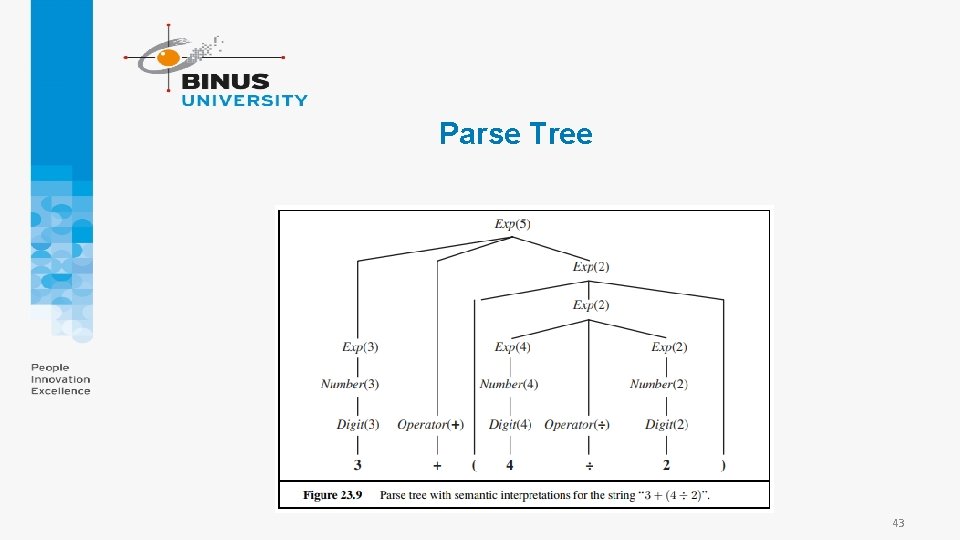
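One standard remedy for this repeated effort is chart parsing, which stores the analysis of every substring so that each is computed only once. The sketch below is a CYK recognizer, a bottom-up chart parser, for a small hypothetical grammar in Chomsky normal form; it is not the full grammar shown in the slides' figures.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form:
# binary rules A -> B C, plus lexical rules A -> word
BINARY = {
    ("NP", "VP"): {"S"},
    ("Article", "Noun"): {"NP"},
    ("Verb", "NP"): {"VP"},
}
LEXICON = {
    "the": {"Article"}, "every": {"Article"},
    "wumpus": {"Noun"}, "agent": {"Noun"},
    "smells": {"Verb", "VP"},  # VP -> smells covers the intransitive use
    "sees": {"Verb"},
}

def cyk_recognize(words: list[str], start: str = "S") -> bool:
    n = len(words)
    # table[i][j] holds every category that can span words[i..j]
    table = [[set() for _ in range(n)] for _ in range(n)]
    for i, w in enumerate(words):
        table[i][i] = set(LEXICON.get(w, set()))
    for length in range(2, n + 1):       # span length
        for i in range(n - length + 1):  # span start
            j = i + length - 1           # span end
            for k in range(i, j):        # split point: words[i..k] + words[k+1..j]
                for b, c in product(table[i][k], table[k + 1][j]):
                    table[i][j] |= BINARY.get((b, c), set())
    return n > 0 and start in table[0][n - 1]

print(cyk_recognize("every wumpus smells".split()))        # True
print(cyk_recognize("the agent sees the wumpus".split()))  # True
print(cyk_recognize("wumpus every smells".split()))        # False
```

Because each cell is filled once from smaller cells, the work is polynomial (cubic in sentence length) instead of re-exploring the same substrings after every wrong guess.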

Semantic Interpretation • To show how to add semantics to a grammar, we start with an example that is simpler than English: the semantics of arithmetic expressions. The semantics of a digit such as “3” is the digit itself. The semantics of an expression such as “3 + 4” is the operator “+” applied to the semantics of the phrase “3” and the phrase “4.”

Parse Tree

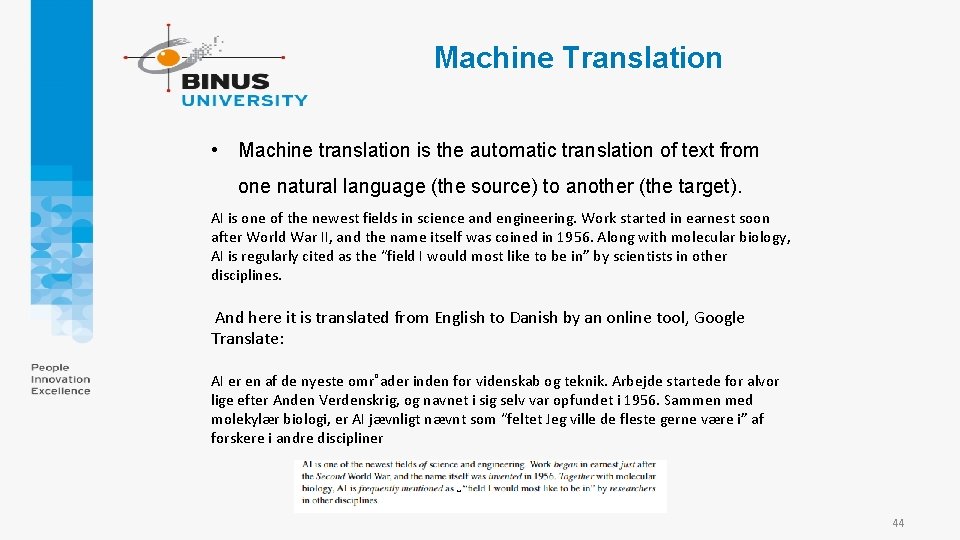
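The compositional rule can be written as a recursive function over the parse tree. This sketch represents trees as nested tuples `(operator, left, right)` with numbers at the leaves, a representation chosen here for brevity.

```python
import operator

# The meaning of each operator symbol
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def meaning(tree):
    """A number means itself; (op, left, right) means op applied to the meanings of its parts."""
    if isinstance(tree, (int, float)):
        return tree
    op, left, right = tree
    return OPS[op](meaning(left), meaning(right))

print(meaning(("+", 3, 4)))            # 7
print(meaning(("+", 3, ("/", 4, 2))))  # 5.0
```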

Machine Translation • Machine translation is the automatic translation of text from one natural language (the source) to another (the target). For example, consider this English text: “AI is one of the newest fields in science and engineering. Work started in earnest soon after World War II, and the name itself was coined in 1956. Along with molecular biology, AI is regularly cited as the ‘field I would most like to be in’ by scientists in other disciplines.” And here it is translated from English to Danish by an online tool, Google Translate: “AI er en af de nyeste områder inden for videnskab og teknik. Arbejde startede for alvor lige efter Anden Verdenskrig, og navnet i sig selv var opfundet i 1956. Sammen med molekylær biologi, er AI jævnligt nævnt som ‘feltet Jeg ville de fleste gerne være i’ af forskere i andre discipliner.”

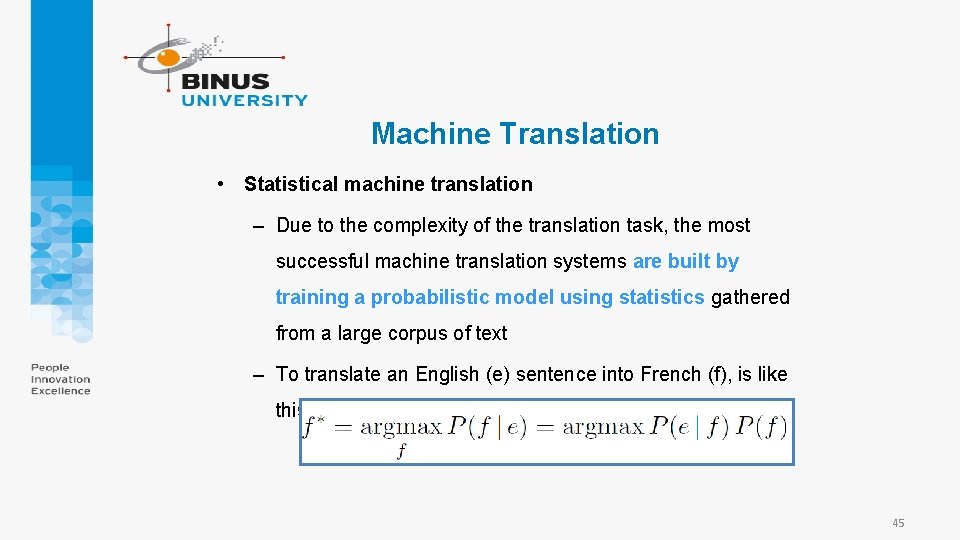

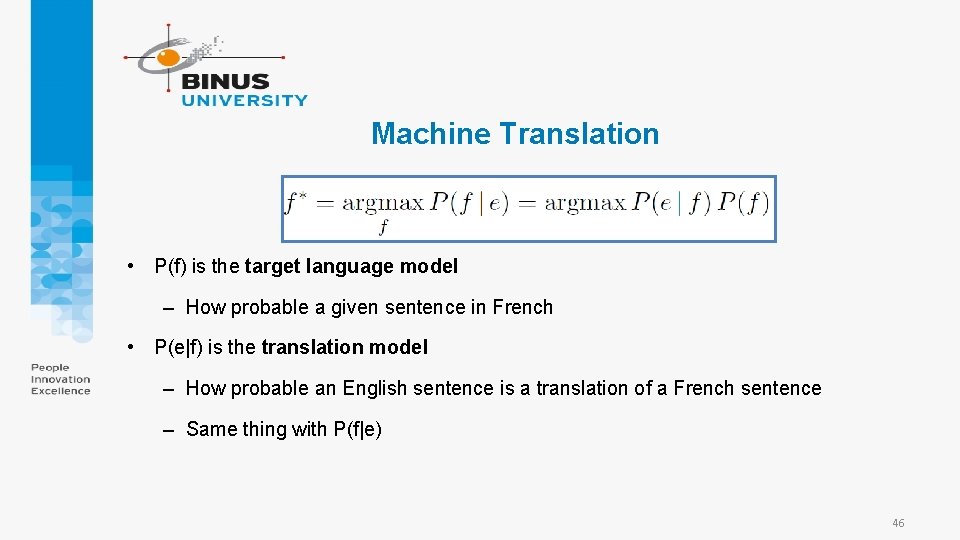

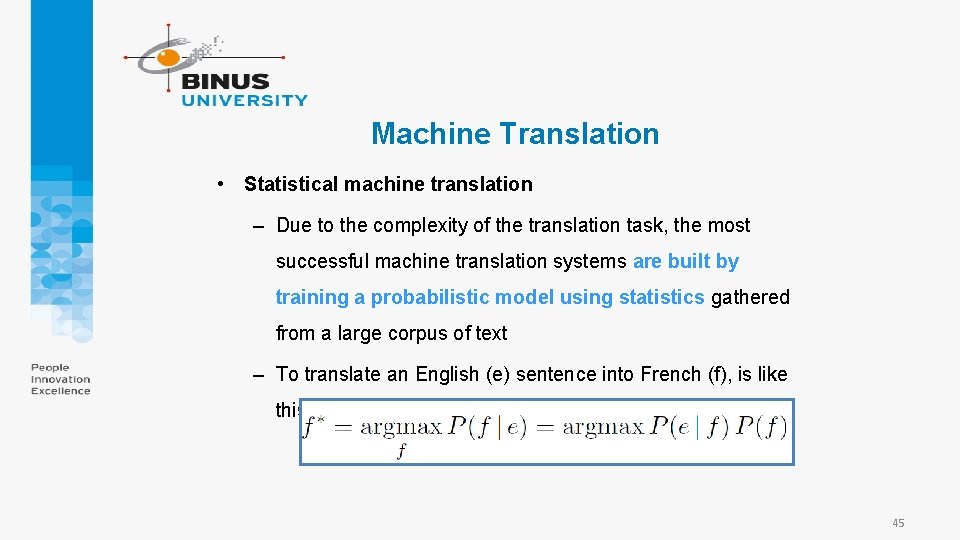

Machine Translation • Statistical machine translation – Due to the complexity of the translation task, the most successful machine translation systems are built by training a probabilistic model using statistics gathered from a large corpus of text – To translate an English sentence (e) into French (f), we look for the most probable French sentence: $f^* = \arg\max_f P(f \mid e) = \arg\max_f P(e \mid f)\,P(f)$

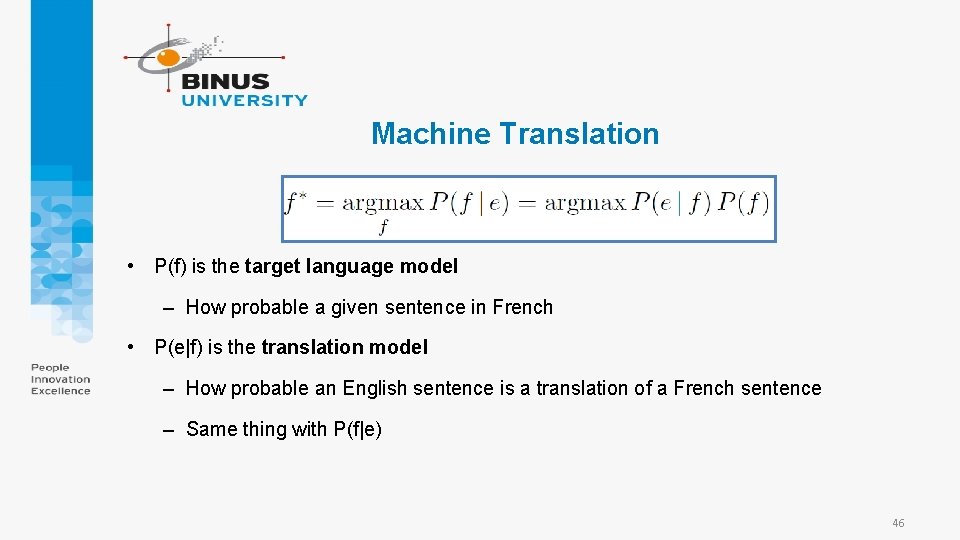

Machine Translation • P(f) is the target language model – how probable a given sentence is in French • P(e|f) is the translation model – how probable it is that an English sentence is a translation of a given French sentence – P(f|e) is defined analogously, in the other direction

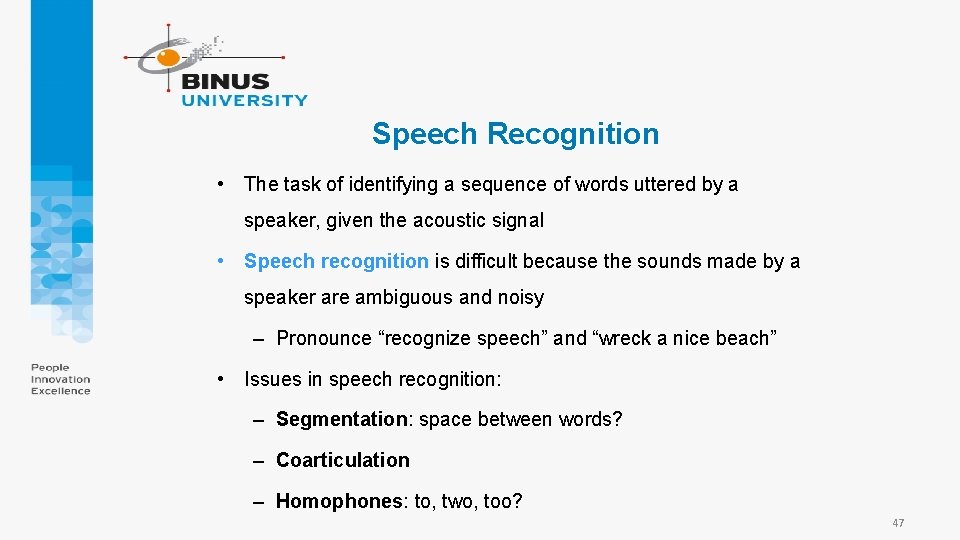
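Choosing the translation $f^* = \arg\max_f P(f \mid e)$ and applying Bayes' rule gives $P(e \mid f)\,P(f) / P(e)$; since $P(e)$ is the same for every candidate, decoding reduces to maximizing $P(e \mid f)\,P(f)$. The sketch below scores three candidate translations of the English sentence "the house is small" with hypothetical probabilities; a real decoder searches an enormous candidate space rather than a fixed list.

```python
# Hypothetical scores for translating the English sentence e = "the house is small".
# Each French candidate f maps to (translation model P(e | f), language model P(f)).
candidates = {
    "la maison est petite": (0.20, 0.0010),
    "la maison petite est": (0.20, 0.00001),  # right words, unlikely French word order
    "le maison est petit": (0.15, 0.0002),    # wrong gender agreement
}

def decode(cands: dict[str, tuple[float, float]]) -> str:
    """f* = argmax_f P(e | f) * P(f); P(e) is the same for every f, so it is dropped."""
    return max(cands, key=lambda f: cands[f][0] * cands[f][1])

print(decode(candidates))  # la maison est petite
```

The split of labor is the point of the design: the translation model checks that the words carry the right content, while the language model prefers fluent target-language word order.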

Speech Recognition • The task of identifying a sequence of words uttered by a speaker, given the acoustic signal • Speech recognition is difficult because the sounds made by a speaker are ambiguous and noisy – Try pronouncing “recognize speech” and “wreck a nice beach”: they sound almost the same • Issues in speech recognition: – Segmentation: where are the spaces between words? – Coarticulation: each sound is influenced by the sounds around it – Homophones: to, two, too?

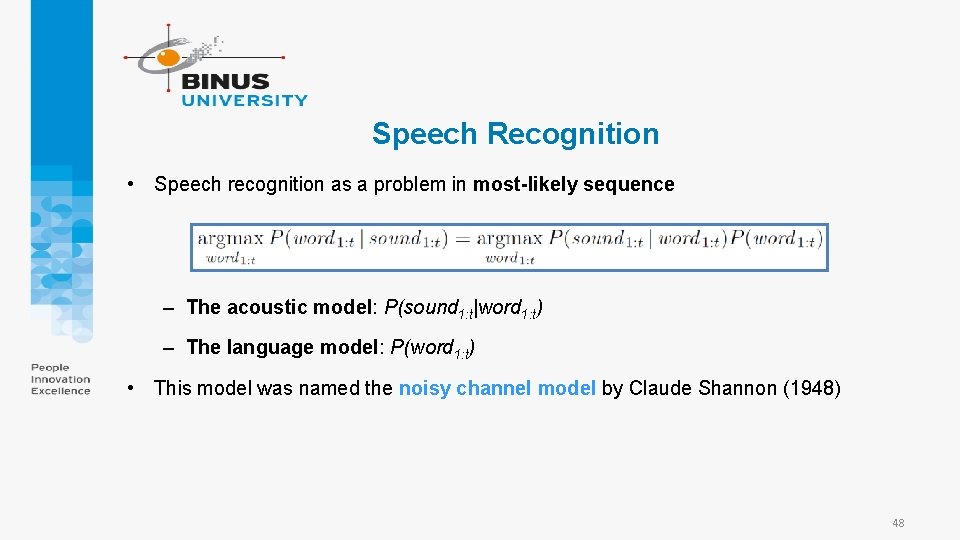

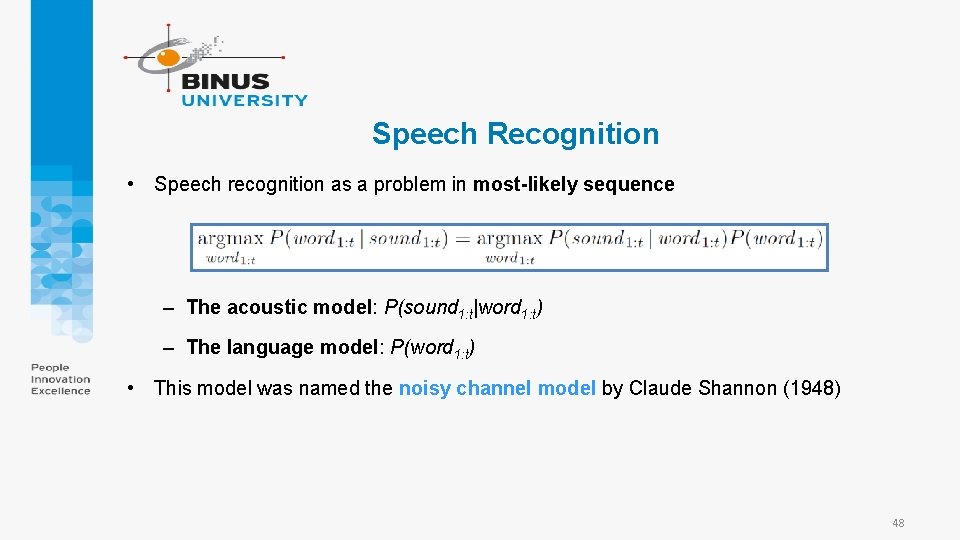

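Speech Recognition • Speech recognition as a most-likely-sequence problem: $\arg\max_{word_{1:t}} P(word_{1:t} \mid sound_{1:t}) = \arg\max_{word_{1:t}} P(sound_{1:t} \mid word_{1:t})\,P(word_{1:t})$ – The acoustic model: $P(sound_{1:t} \mid word_{1:t})$ – The language model: $P(word_{1:t})$ • This model was named the noisy channel model by Claude Shannon (1948).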

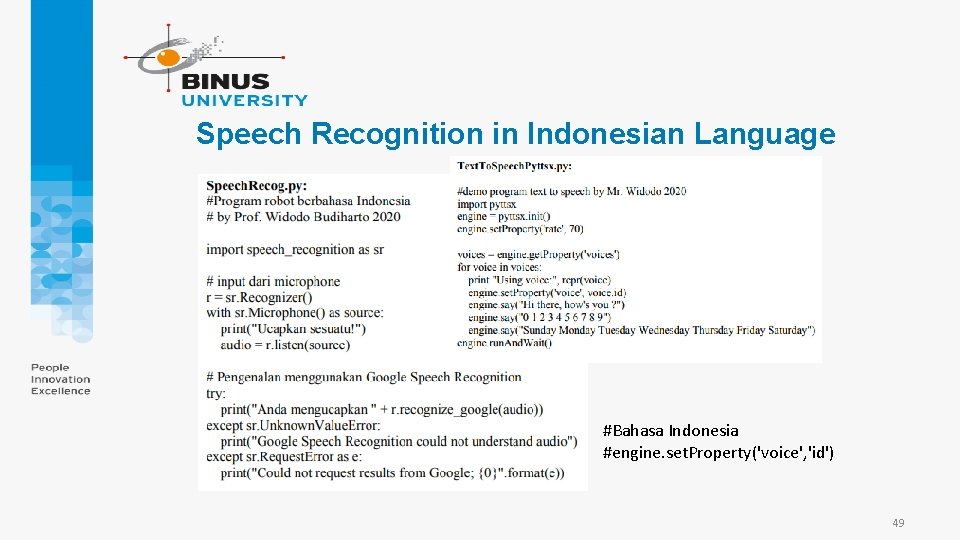

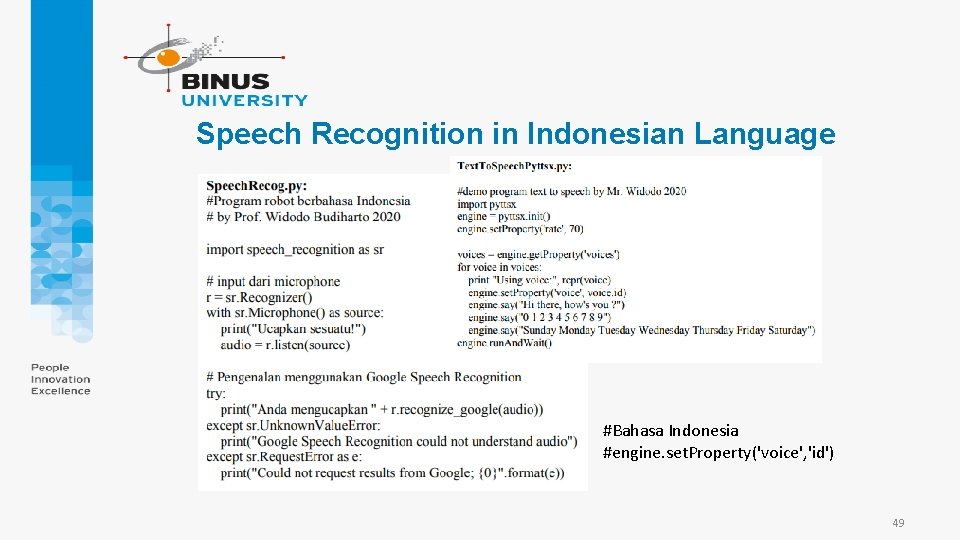

Speech Recognition in Indonesian Language • Selecting the Indonesian (Bahasa Indonesia) voice: `engine.setProperty('voice', 'id')`

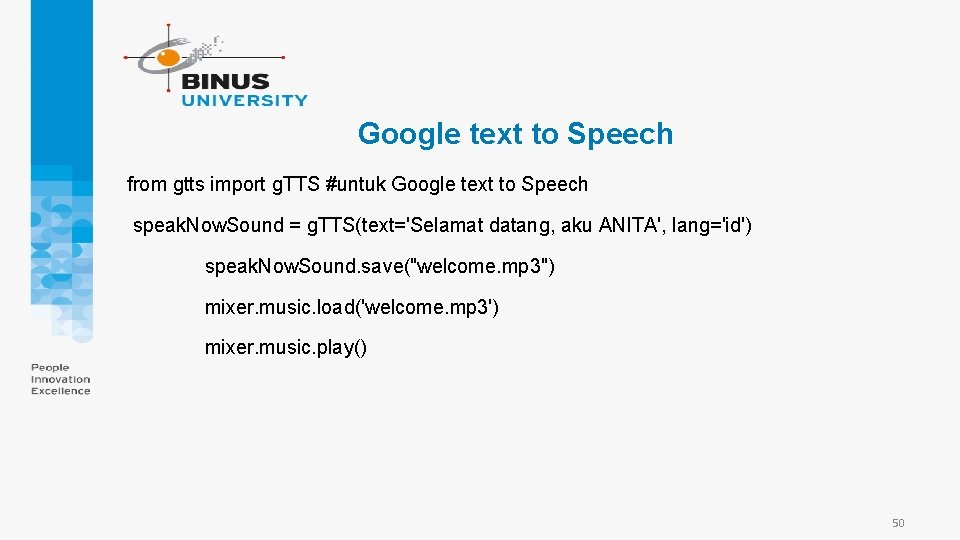

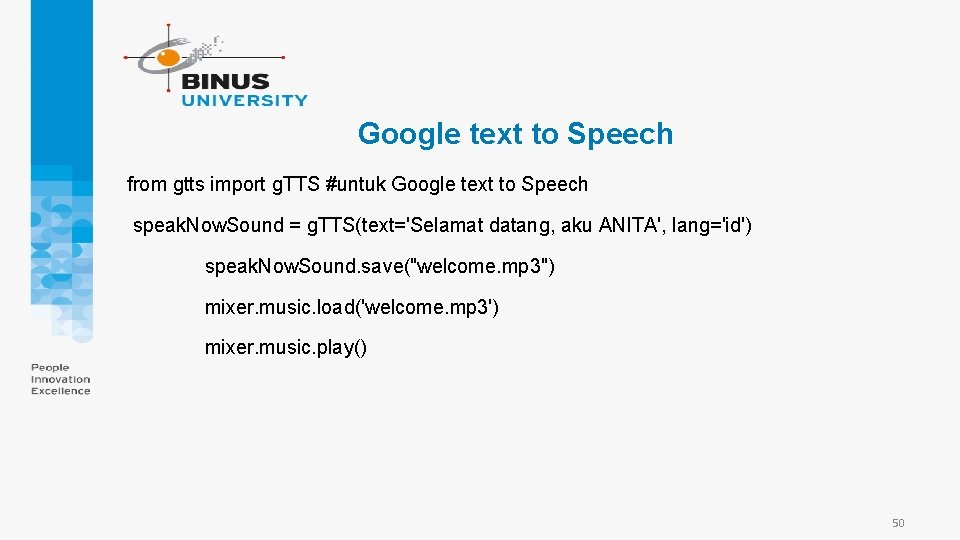

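Google Text-to-Speech

```python
import time

from gtts import gTTS  # Google Text-to-Speech
from pygame import mixer

speakNowSound = gTTS(text='Selamat datang, aku ANITA', lang='id')  # "Welcome, I am ANITA"
speakNowSound.save("welcome.mp3")

mixer.init()  # the mixer must be initialized before loading audio
mixer.music.load('welcome.mp3')
mixer.music.play()
while mixer.music.get_busy():  # keep the script alive until playback ends
    time.sleep(0.1)
```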

Case Study • Text summarization in the Indonesian language. Reference: https://www.analyticsvidhya.com/blog/2019/06/comprehensive-guide-text-summarization-using-deep-learning-python/ Please try this tutorial using Indonesian text.
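The referenced tutorial builds a summarizer with deep learning. As a much simpler point of comparison, the sketch below is a frequency-based extractive summarizer (a different, classical technique) that scores each sentence by the average frequency of its non-stop-words and keeps the best ones in their original order; the short Indonesian stop-word list and the example text are hypothetical.

```python
import re
from collections import Counter

# A tiny, hypothetical Indonesian stop-word list; a real one would be much longer
STOPWORDS = {"yang", "dan", "di", "ke", "dari", "ini", "itu", "adalah", "untuk", "dengan"}

def content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS]

def summarize(text: str, num_sentences: int = 2) -> str:
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        # Average document frequency of the sentence's content words
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    # Keep the chosen sentences in their original order
    return " ".join(s for s in sentences if s in top)

text = ("Pemerintah membangun jalan baru di desa. "
        "Jalan baru itu menghubungkan desa dengan kota. "
        "Cuaca hari ini cerah.")
print(summarize(text, 2))
```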