
Natural Language Processing using Wikipedia Rada Mihalcea University of North Texas

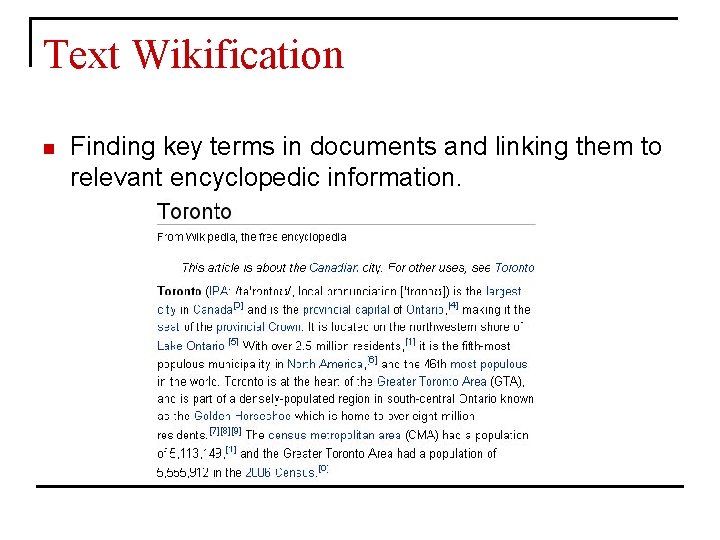

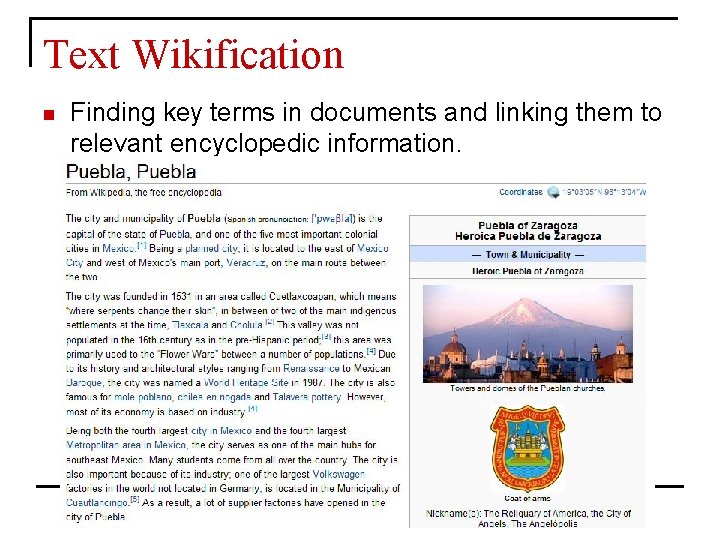

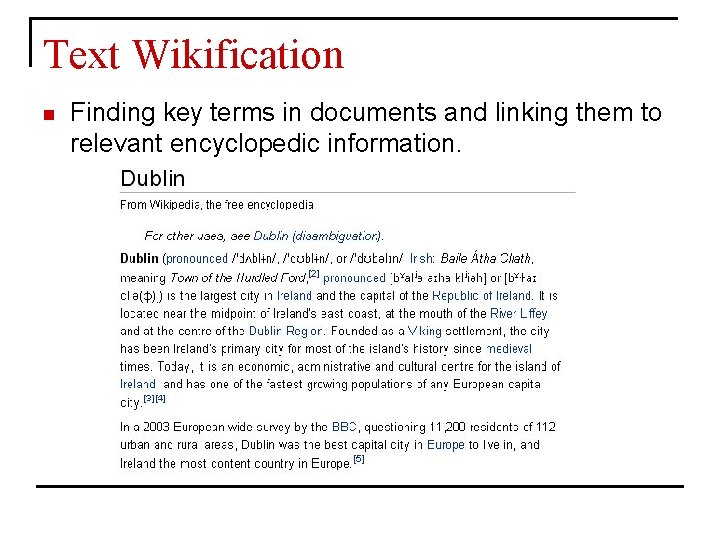

Text Wikification

- Finding key terms in documents and linking them to relevant encyclopedic information.

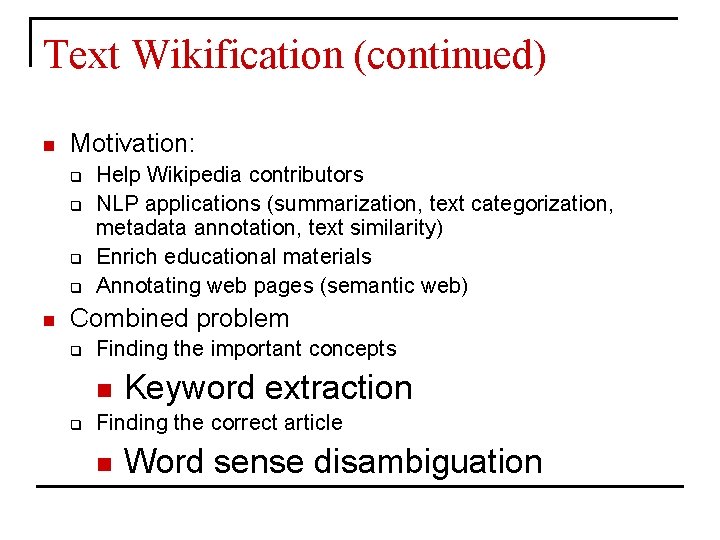

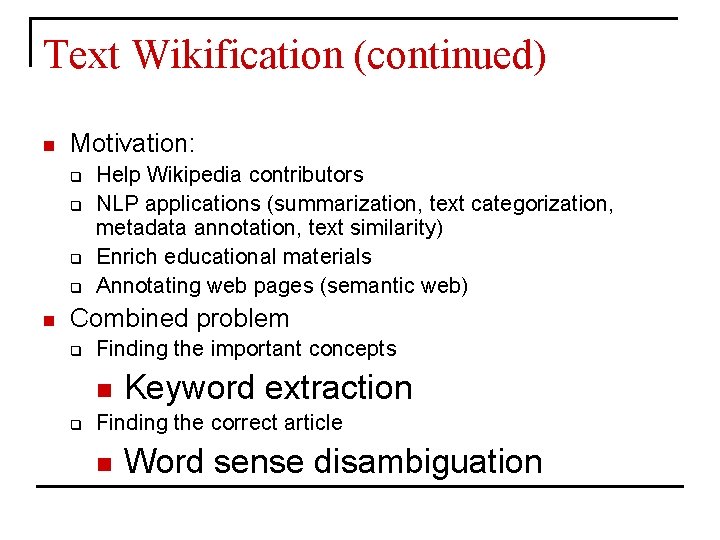

Text Wikification (continued)

- Motivation:
  - Help Wikipedia contributors
  - NLP applications (summarization, text categorization, metadata annotation, text similarity)
  - Enrich educational materials
  - Annotating web pages (semantic web)
- Combined problem
  - Finding the important concepts: keyword extraction
  - Finding the correct article: word sense disambiguation

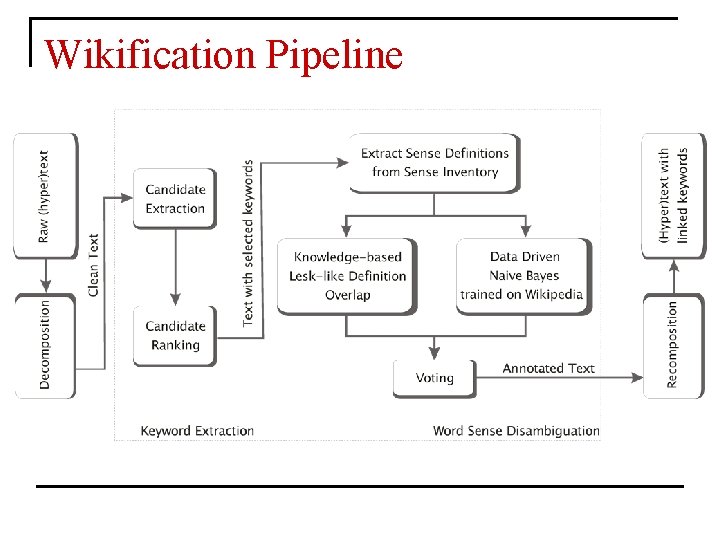

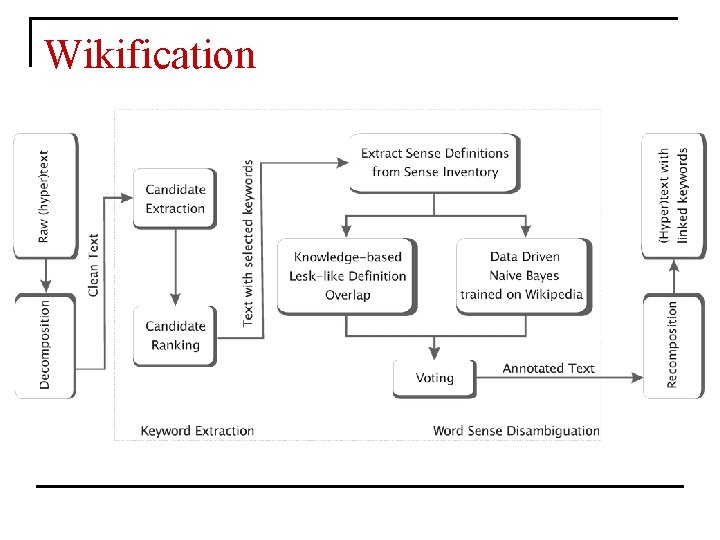

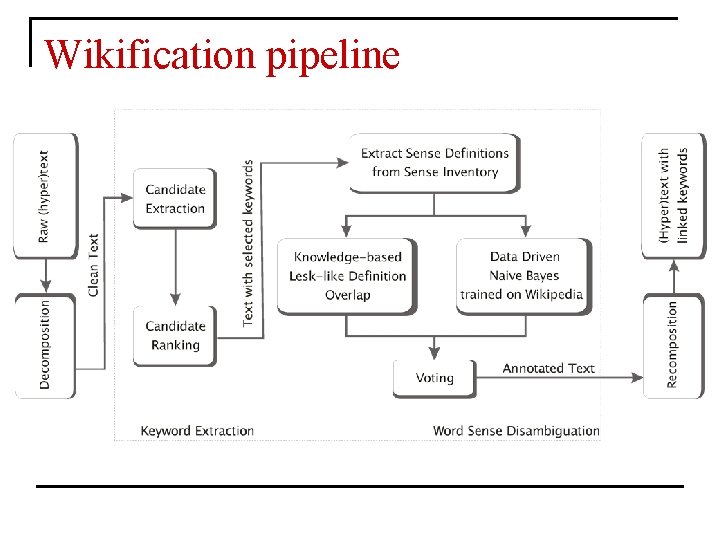

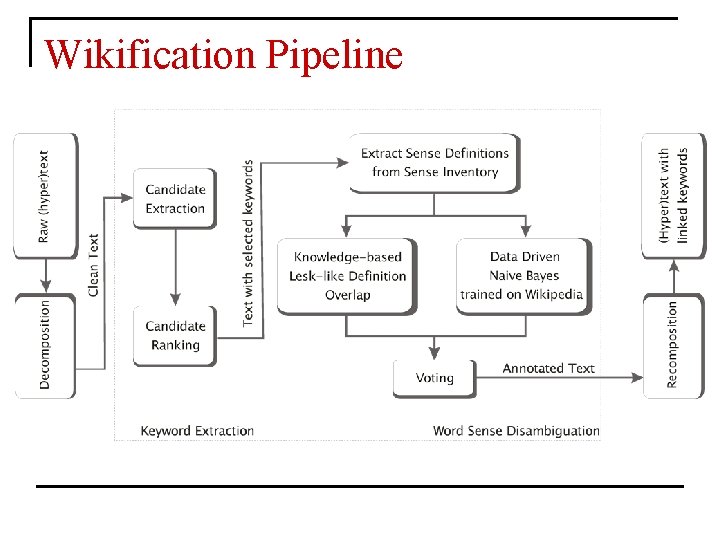

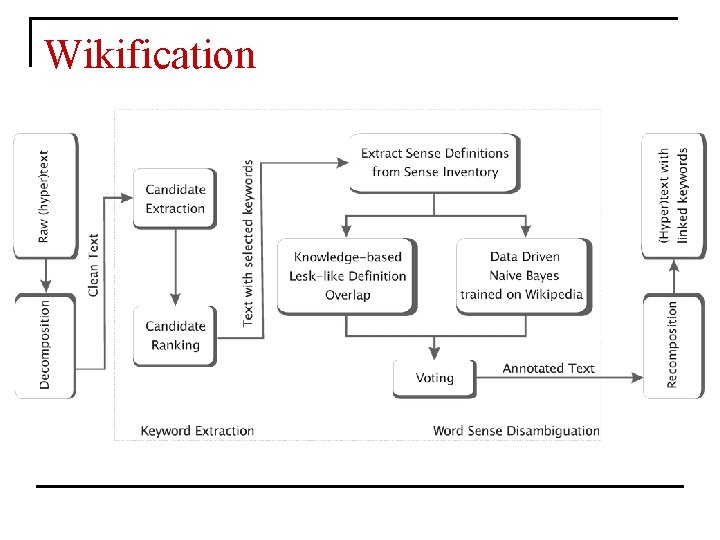

Wikification pipeline

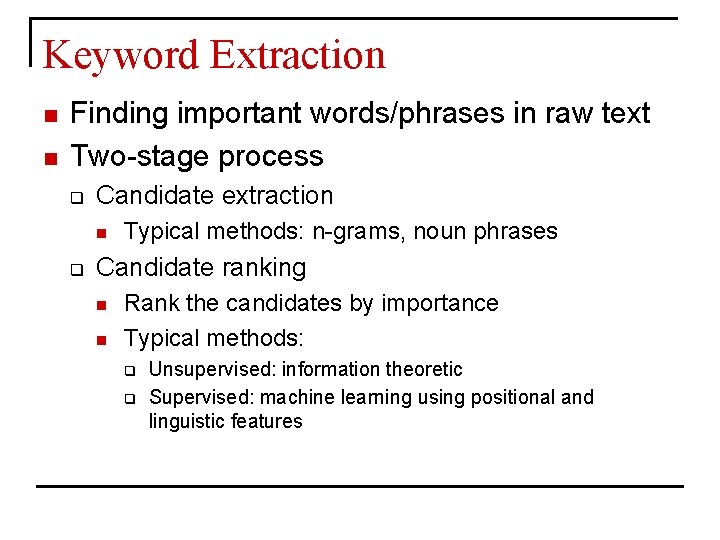

Keyword Extraction

- Finding important words/phrases in raw text
- Two-stage process
  - Candidate extraction
    - Typical methods: n-grams, noun phrases
  - Candidate ranking
    - Rank the candidates by importance
    - Typical methods:
      - Unsupervised: information theoretic
      - Supervised: machine learning using positional and linguistic features

Keyword Extraction using Wikipedia: 1. Candidate extraction

- Semi-controlled vocabulary
  - Wikipedia article titles and anchor texts (surface forms)
    - E.g. “USA”, “U.S.” = “United States of America”
  - More than 2,000 terms/phrases
  - Vocabulary is broad (e.g., "the", "a" are included)
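As a rough illustration of candidate extraction against a controlled vocabulary, the sketch below looks up every n-gram of a text in a dictionary of surface forms. The `VOCAB` dictionary, the function name, and the whitespace tokenization are assumptions made for this example; they are not taken from the Wikify! system.

```python
from typing import Dict, List, Tuple

# Hypothetical toy vocabulary: lowercased surface form -> article title.
VOCAB: Dict[str, str] = {
    "usa": "United States of America",
    "u.s.": "United States of America",
    "united states of america": "United States of America",
    "federal district": "Federal district",
}


def extract_candidates(
    text: str, vocab: Dict[str, str], max_len: int = 4
) -> List[Tuple[str, str]]:
    """Return (surface form, article title) for every n-gram found in vocab."""
    tokens = text.lower().split()  # naive tokenization, punctuation is not handled
    found = []
    for start in range(len(tokens)):
        for n in range(1, max_len + 1):
            if start + n > len(tokens):
                break
            ngram = " ".join(tokens[start:start + n])
            if ngram in vocab:
                found.append((ngram, vocab[ngram]))
    return found


print(extract_candidates("The USA has a federal district", VOCAB))
```

Because the vocabulary is broad, a lookup like this over-generates on purpose; the ranking step is what filters the candidates.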

Keyword Extraction using Wikipedia: 2. Candidate ranking

- tf * idf
  - Wikipedia articles as document collection
- Chi-squared independence of phrase and text
  - The degree to which it appeared more times than expected by chance
- Keyphraseness:
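The keyphraseness formula itself sits in a slide image that is not recoverable here. The sketch below assumes the usual definition: the probability that a term is selected as a keyword (link), estimated as the number of documents where it is linked divided by the number of documents where it appears at all. The function names and the counts are hypothetical.

```python
from typing import Dict, List, Tuple


def keyphraseness(docs_as_keyword: int, docs_containing: int) -> float:
    """Estimate P(keyword | term) as count(D_key) / count(D_W)."""
    if docs_containing == 0:
        return 0.0
    return docs_as_keyword / docs_containing


def rank_by_keyphraseness(counts: Dict[str, Tuple[int, int]]) -> List[str]:
    """counts maps term -> (docs where it is a link, docs where it appears)."""
    scores = {t: keyphraseness(k, w) for t, (k, w) in counts.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical counts: a function word is almost never linked.
toy_counts = {"the": (5, 1000), "cold war": (300, 600)}
print(rank_by_keyphraseness(toy_counts))
```

A score like this is one way a broad vocabulary that includes words such as "the" can still yield sensible keywords.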

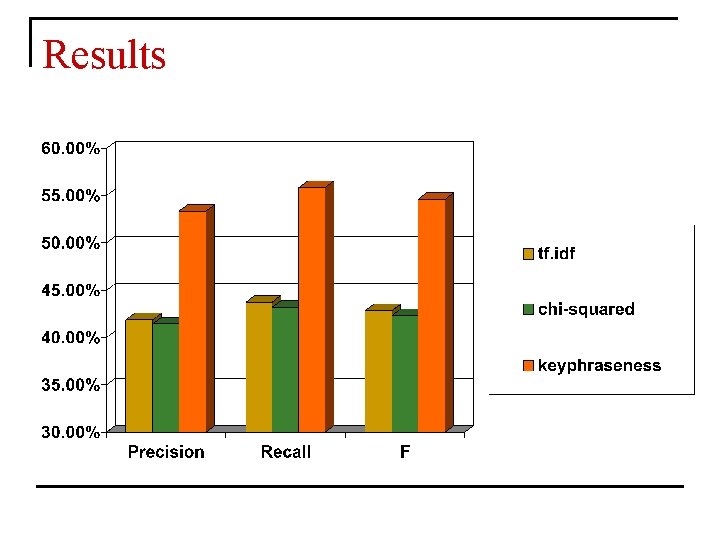

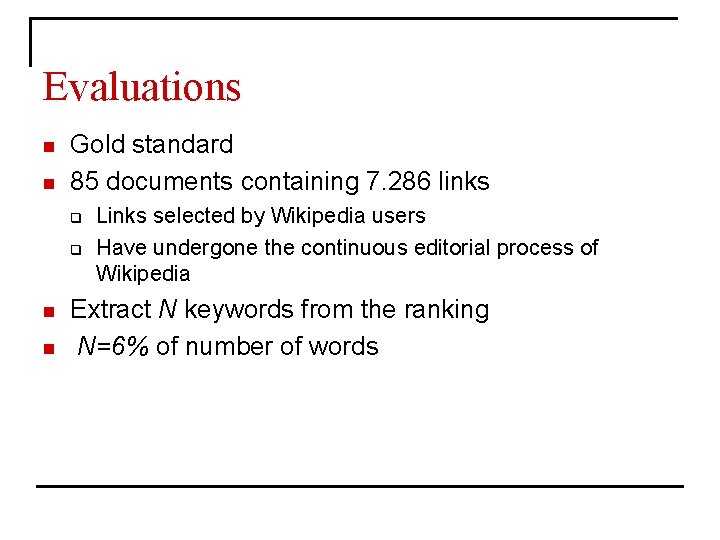

Evaluations

- Gold standard: 85 documents containing 7,286 links
  - Links selected by Wikipedia users
  - Have undergone the continuous editorial process of Wikipedia
- Extract N keywords from the ranking
  - N = 6% of number of words
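A minimal sketch of this evaluation setup: keep the top N ranked candidates, with N set to 6% of the document length, and compare them with the gold-standard links. Precision and recall are assumed here as the comparison metrics, since the slide's results table is an image; the function names and toy data are illustrative only.

```python
from typing import Iterable, List, Tuple


def select_top_keywords(
    ranked_terms: List[str], num_words: int, ratio: float = 0.06
) -> List[str]:
    """Keep the top N ranked candidates, with N = ratio * number of words."""
    n = round(num_words * ratio)
    return ranked_terms[:n]


def precision_recall(
    predicted: Iterable[str], gold: Iterable[str]
) -> Tuple[float, float]:
    """Compare predicted keywords with the gold-standard links."""
    predicted, gold = set(predicted), set(gold)
    hits = len(predicted & gold)
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(gold) if gold else 0.0
    return precision, recall


ranked = ["cold war", "united states", "the", "reagan"]
top = select_top_keywords(ranked, num_words=50)  # 6% of 50 words = 3 keywords
print(top, precision_recall(top, {"cold war", "united states", "reagan"}))
```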

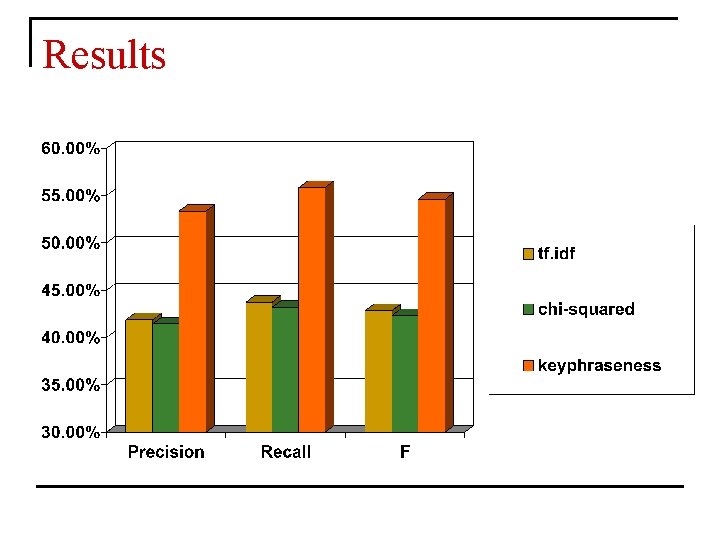

Results

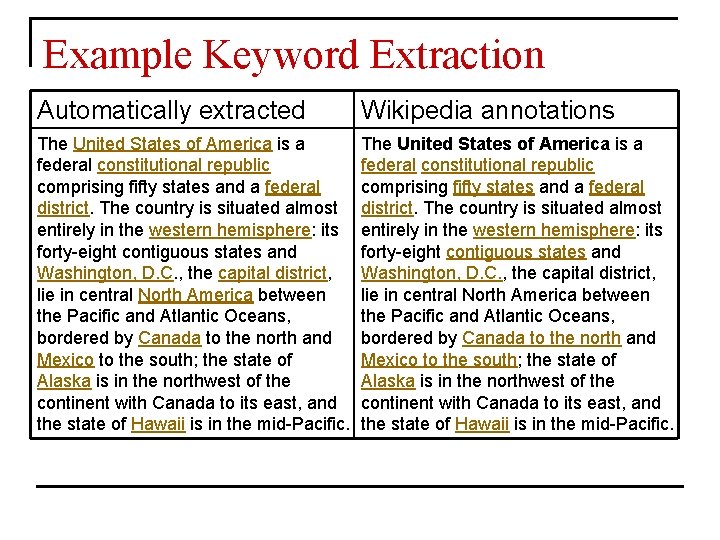

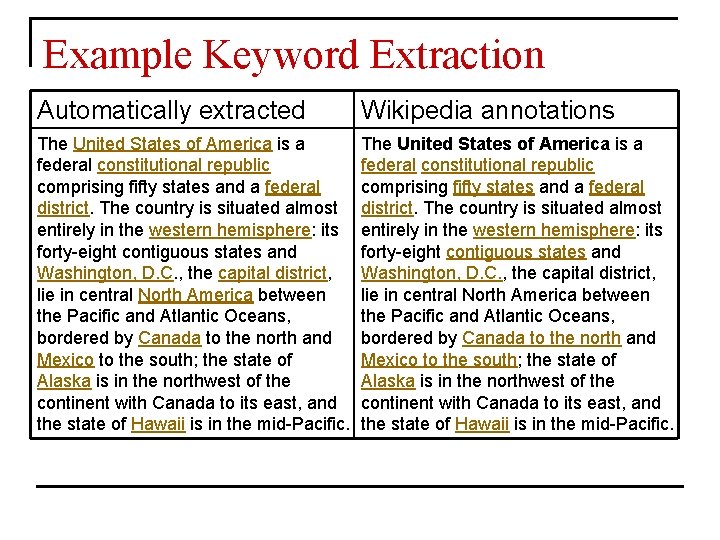

Example Keyword Extraction

Automatically extracted Wikipedia annotations:

The United States of America is a federal constitutional republic comprising fifty states and a federal district. The country is situated almost entirely in the western hemisphere: its forty-eight contiguous states and Washington, D.C., the capital district, lie in central North America between the Pacific and Atlantic Oceans, bordered by Canada to the north and Mexico to the south; the state of Alaska is in the northwest of the continent with Canada to its east, and the state of Hawaii is in the mid-Pacific.

Wikification Pipeline

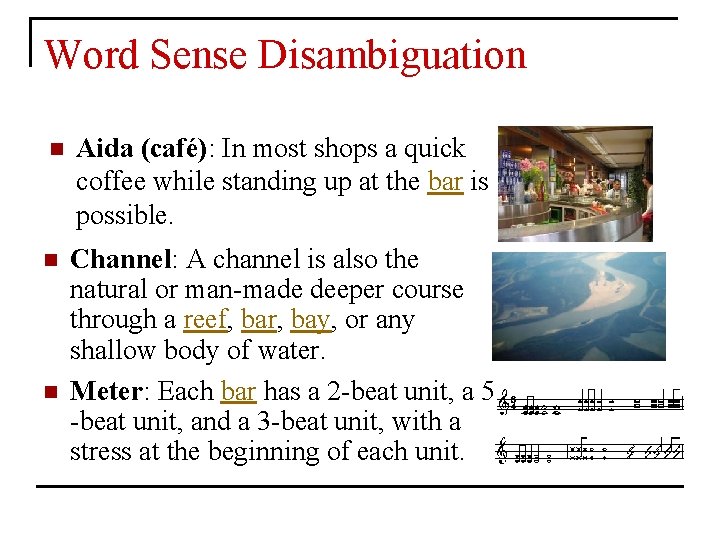

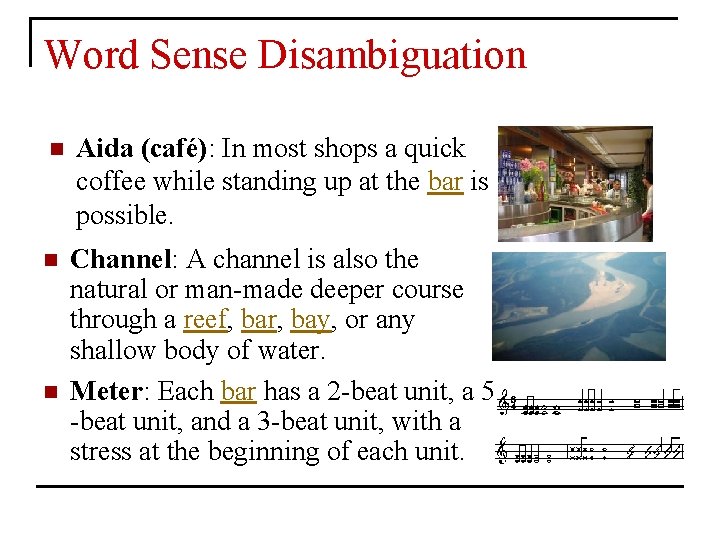

Word Sense Disambiguation

- Aida (café): In most shops a quick coffee while standing up at the bar is possible.
- Channel: A channel is also the natural or man-made deeper course through a reef, bar, bay, or any shallow body of water.
- Meter: Each bar has a 2-beat unit, a 5-beat unit, and a 3-beat unit, with a stress at the beginning of each unit.

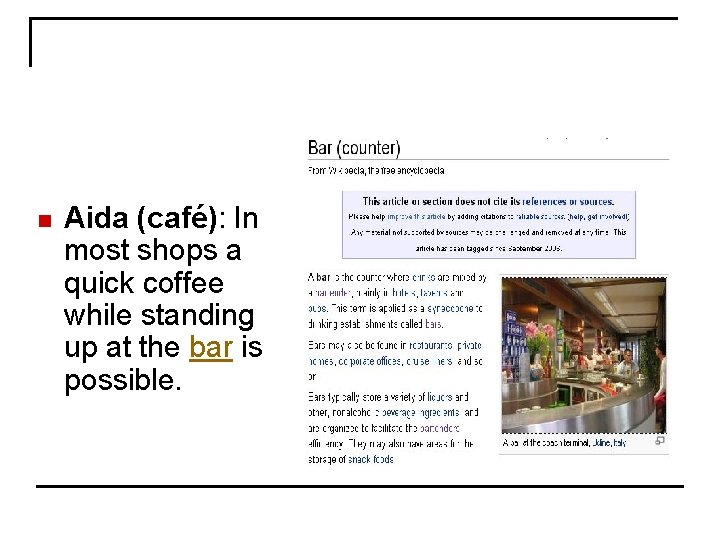


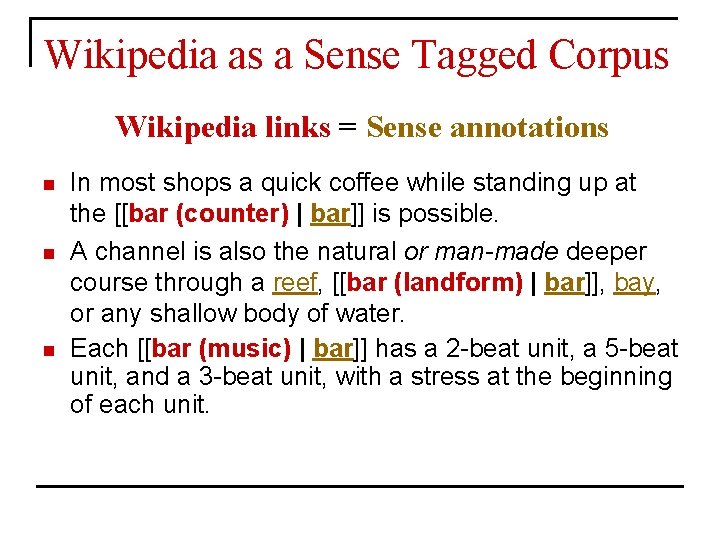

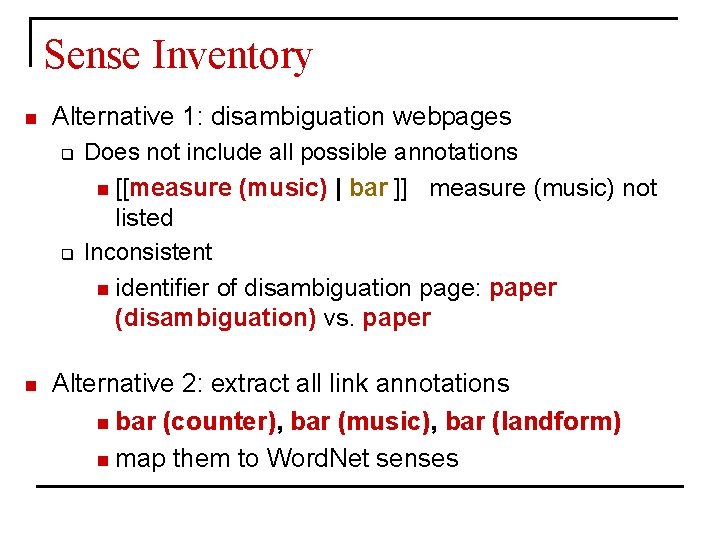

Wikipedia as a Sense Tagged Corpus

Wikipedia links = Sense annotations

- In most shops a quick coffee while standing up at the [[bar (counter) | bar]] is possible.
- A channel is also the natural or man-made deeper course through a reef, [[bar (landform) | bar]], bay, or any shallow body of water.
- Each [[bar (music) | bar]] has a 2-beat unit, a 5-beat unit, and a 3-beat unit, with a stress at the beginning of each unit.

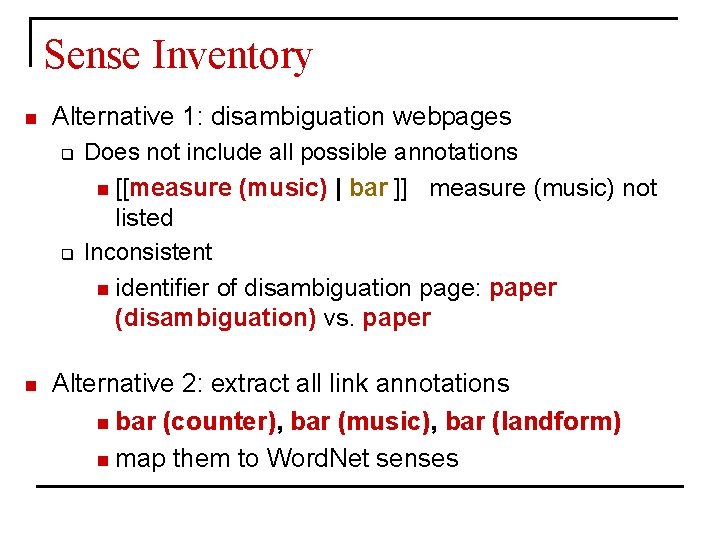

Sense Inventory

- Alternative 1: disambiguation webpages
  - Does not include all possible annotations
    - [[measure (music) | bar]]: measure (music) not listed
  - Inconsistent
    - identifier of disambiguation page: paper (disambiguation) vs. paper
- Alternative 2: extract all link annotations
  - bar (counter), bar (music), bar (landform)
  - map them to WordNet senses

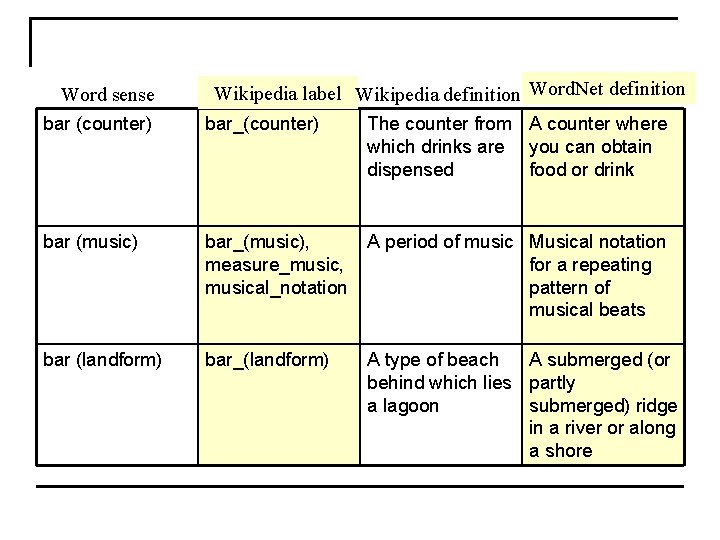

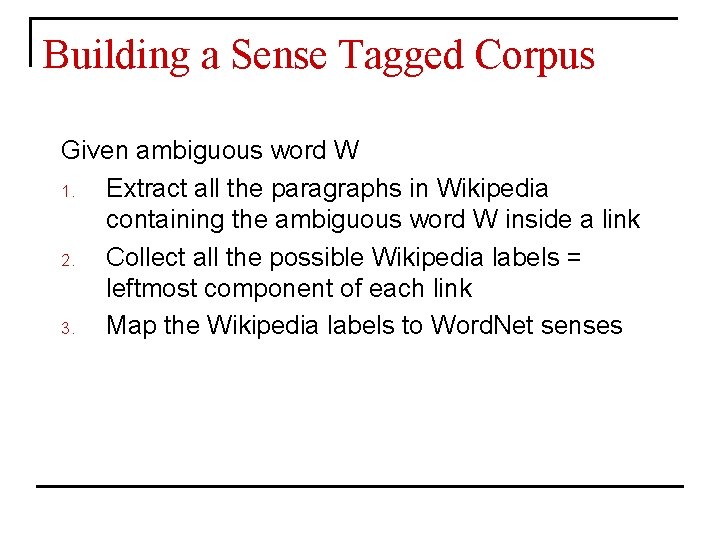

Building a Sense Tagged Corpus

Given ambiguous word W:

1. Extract all the paragraphs in Wikipedia containing the ambiguous word W inside a link
2. Collect all the possible Wikipedia labels = leftmost component of each link
3. Map the Wikipedia labels to WordNet senses

An Example

Given ambiguous word W = BAR:

1. Extract all the paragraphs in Wikipedia containing the ambiguous word W inside a link
   - 1,217 paragraphs
   - remove examples with [[bar]] (ambiguous): 1,108 examples
2. Collect all the possible Wikipedia labels = leftmost component of each link
   - 40 Wikipedia labels
   - bar (music); measure music; musical notation
3. Map the Wikipedia labels to WordNet senses
   - 9 WordNet senses
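The first two steps can be sketched with a regular expression over wiki markup. This is an illustrative approximation, not the author's extraction code: the regex, the function name, and the sample paragraphs are assumptions, and the mapping from labels to WordNet senses (step 3) is not covered.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Matches [[label | anchor]] and [[label]]; the label is the leftmost component.
LINK_RE = re.compile(r"\[\[([^\[\]|]+?)\s*(?:\|\s*([^\[\]]+?))?\s*\]\]")


def collect_sense_examples(paragraphs: List[str], word: str) -> Dict[str, List[str]]:
    """Group paragraphs by the Wikipedia label linked from the ambiguous word."""
    examples = defaultdict(list)
    for para in paragraphs:
        for match in LINK_RE.finditer(para):
            label = match.group(1).strip()
            anchor = (match.group(2) or label).strip()
            if anchor.lower() != word:
                continue  # the link text is not the target word
            if label.lower() == word:
                continue  # [[bar]] is itself ambiguous, so skip it
            examples[label].append(para)
    return dict(examples)


paragraphs = [
    "In most shops a quick coffee while standing up at the [[bar (counter) | bar]] is possible.",
    "Each [[bar (music) | bar]] has a 2-beat unit.",
    "Meet me at the [[bar]].",
]
print(sorted(collect_sense_examples(paragraphs, "bar")))
```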

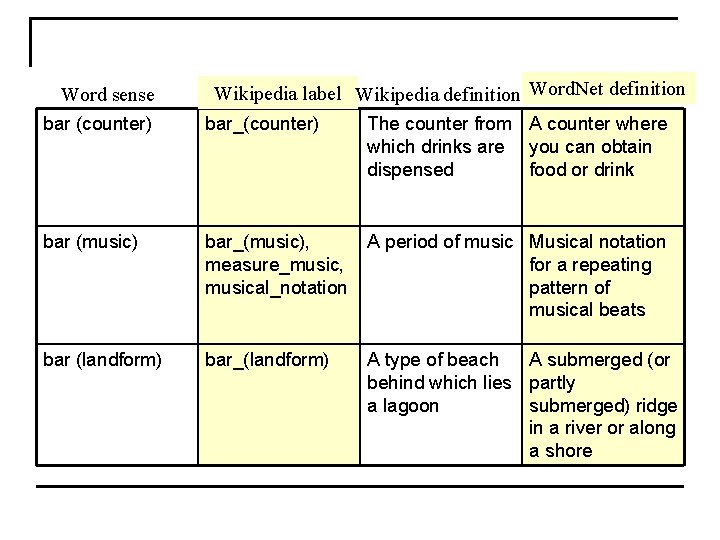

| Word sense | Wikipedia label | Wikipedia definition | WordNet definition |
| --- | --- | --- | --- |
| bar (counter) | bar_(counter) | The counter from which drinks are dispensed | A counter where you can obtain food or drink |
| bar (music) | bar_(music), measure_music, musical_notation | A period of music | Musical notation for a repeating pattern of musical beats |
| bar (landform) | bar_(landform) | A type of beach behind which lies a lagoon | A submerged (or partly submerged) ridge in a river or along a shore |

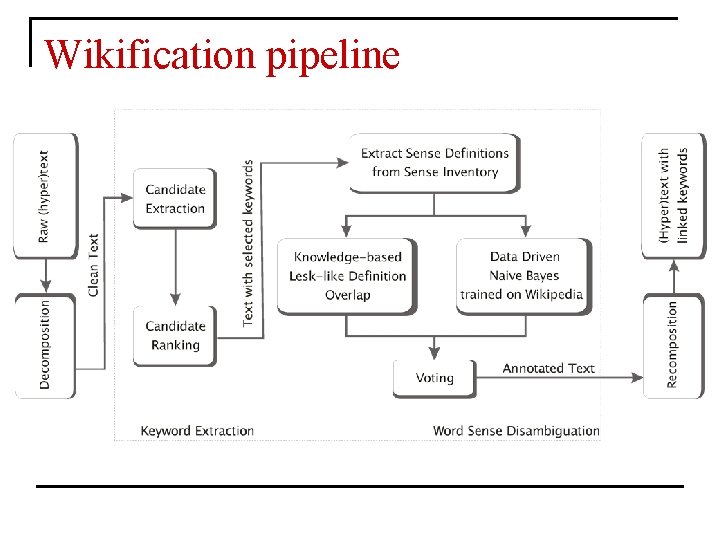

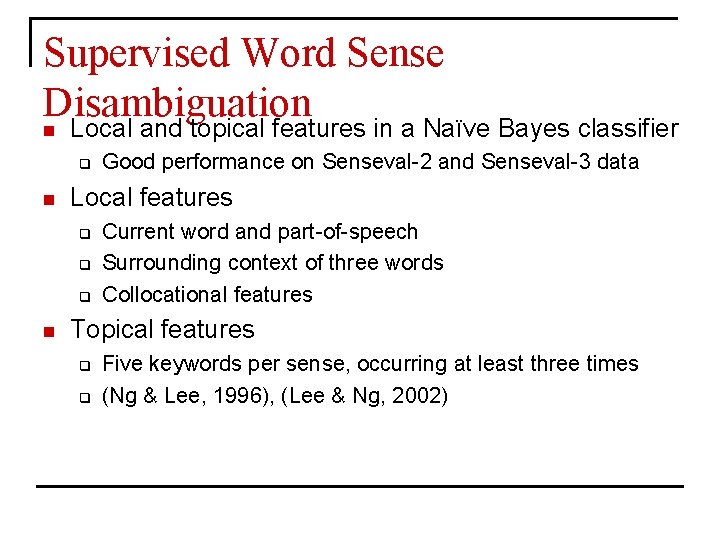

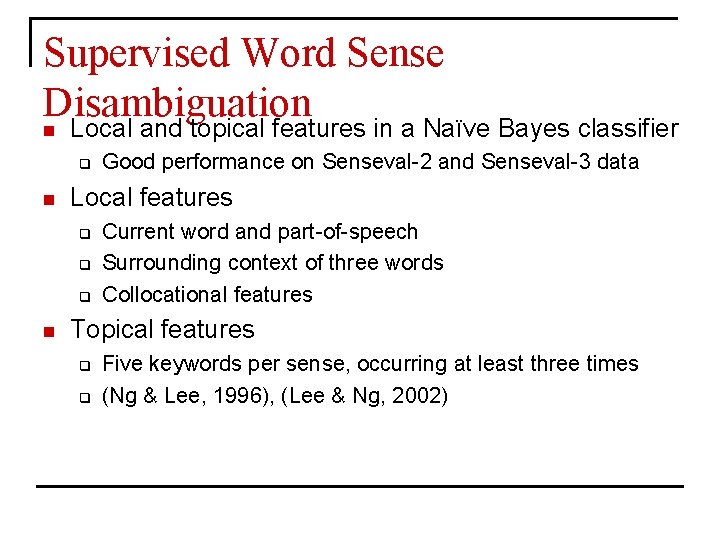

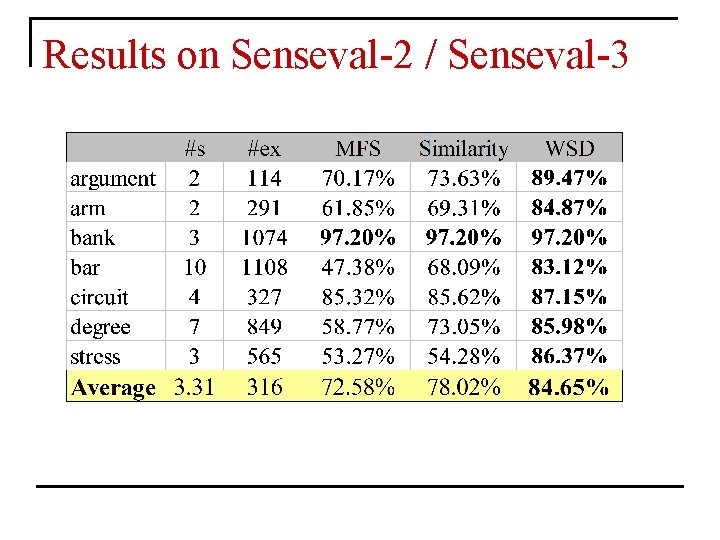

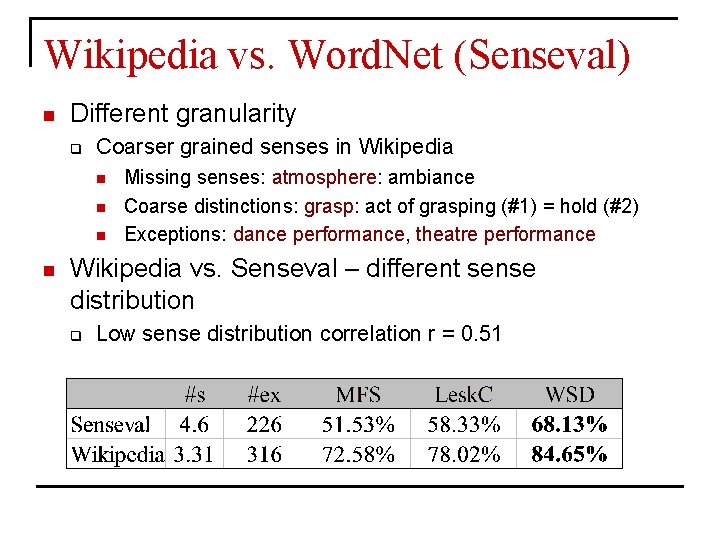

Supervised Word Sense Disambiguation

- Local and topical features in a Naïve Bayes classifier
  - Good performance on Senseval-2 and Senseval-3 data
- Local features
  - Current word and part-of-speech
  - Surrounding context of three words
  - Collocational features
- Topical features
  - Five keywords per sense, occurring at least three times
- (Ng & Lee, 1996), (Lee & Ng, 2002)
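To make the classifier concrete, here is a minimal Naïve Bayes sketch over local context features with add-one smoothing. It is a simplification under stated assumptions: the window is taken as three words on each side, part-of-speech, collocational, and topical features are omitted, and the class and function names are hypothetical rather than the system's own.

```python
import math
from collections import Counter, defaultdict
from typing import Iterable, List, Tuple


def local_features(tokens: List[str], i: int, window: int = 3) -> List[str]:
    """Current word plus position-tagged words within `window` of index i."""
    feats = [f"w0={tokens[i].lower()}"]
    for off in range(-window, window + 1):
        j = i + off
        if off != 0 and 0 <= j < len(tokens):
            feats.append(f"w{off:+d}={tokens[j].lower()}")
    return feats


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.sense_counts = Counter()
        self.feat_counts = defaultdict(Counter)
        self.vocab = set()

    def train(self, examples: Iterable[Tuple[List[str], str]]) -> None:
        for feats, sense in examples:
            self.sense_counts[sense] += 1
            for f in feats:
                self.feat_counts[sense][f] += 1
                self.vocab.add(f)

    def predict(self, feats: List[str]) -> str:
        total = sum(self.sense_counts.values())
        best, best_lp = None, -math.inf
        for sense, n in self.sense_counts.items():
            lp = math.log(n / total)  # log prior of the sense
            denom = sum(self.feat_counts[sense].values()) + len(self.vocab)
            for f in feats:
                lp += math.log((self.feat_counts[sense][f] + 1) / denom)
            if lp > best_lp:
                best, best_lp = sense, lp
        return best


clf = NaiveBayes()
clf.train([
    (local_features("standing up at the bar is possible".split(), 4), "bar (counter)"),
    (local_features("each bar has a 2-beat unit".split(), 1), "bar (music)"),
])
print(clf.predict(local_features("coffee at the bar is nice".split(), 3)))
```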

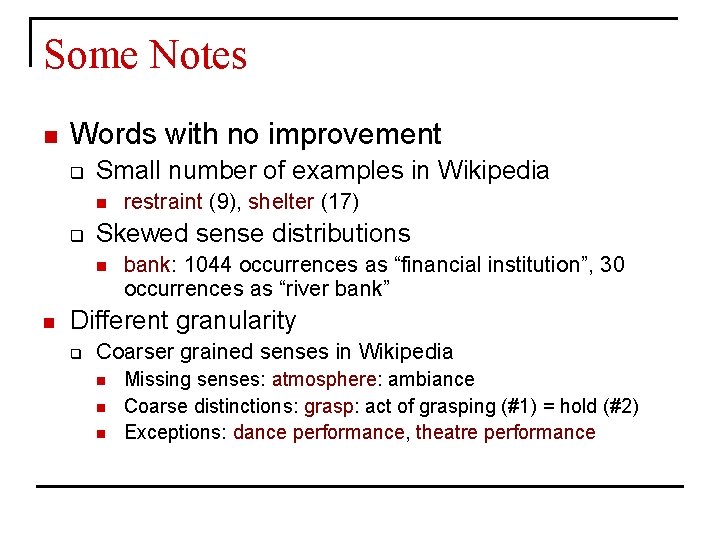

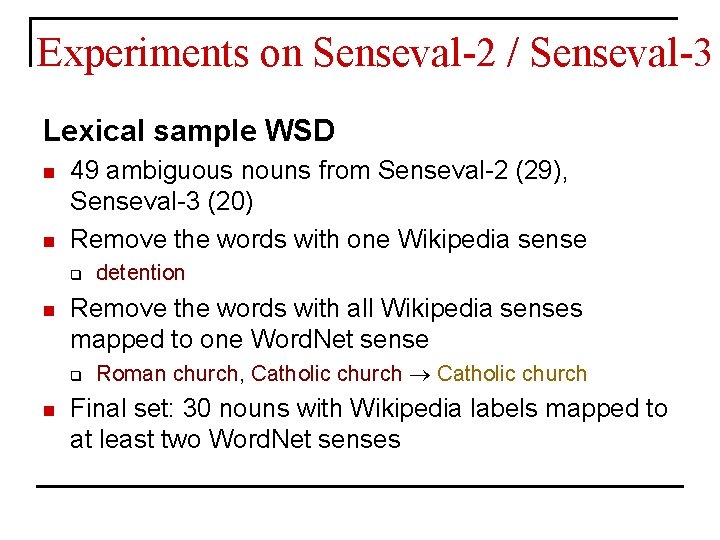

Experiments on Senseval-2 / Senseval-3: Lexical sample WSD

- 49 ambiguous nouns from Senseval-2 (29), Senseval-3 (20)
- Remove the words with one Wikipedia sense
  - detention
- Remove the words with all Wikipedia senses mapped to one WordNet sense
  - Roman church, Catholic church
- Final set: 30 nouns with Wikipedia labels mapped to at least two WordNet senses

![Tenfold cross validations n WSD Supervised word sense disambiguation on Wikipedia sense tagged corpora Ten-fold cross validations n [WSD] Supervised word sense disambiguation on Wikipedia sense tagged corpora](https://slidetodoc.com/presentation_image_h/9d8e3fec7641e700ca1f6cf7b2847e4f/image-21.jpg)

Ten-fold cross validations

- [WSD] Supervised word sense disambiguation on Wikipedia sense tagged corpora
- [MFS] Most frequent sense: choose the most frequent sense by default
- [Similarity] Similarity between current example and training data available for each sense
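The MFS baseline under ten-fold cross validation can be sketched as follows. The fold assignment (every tenth example, no shuffling) and the function names are assumptions for illustration, and the sketch expects at least as many examples as folds.

```python
from collections import Counter
from typing import Iterator, List, Tuple


def most_frequent_sense(train_labels: List[str]) -> str:
    """Return the sense that occurs most often in the training labels."""
    return Counter(train_labels).most_common(1)[0][0]


def k_fold_indices(n: int, k: int = 10) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists; fold f holds every k-th example."""
    for f in range(k):
        test = [i for i in range(n) if i % k == f]
        train = [i for i in range(n) if i % k != f]
        yield train, test


def mfs_cross_validation(labels: List[str], k: int = 10) -> float:
    """Accuracy of always predicting the training fold's most frequent sense."""
    correct = 0
    for train, test in k_fold_indices(len(labels), k):
        mfs = most_frequent_sense([labels[i] for i in train])
        correct += sum(1 for i in test if labels[i] == mfs)
    return correct / len(labels)


# Hypothetical skewed distribution: 90 examples of one sense, 10 of another.
labels = ["financial institution"] * 90 + ["river bank"] * 10
print(mfs_cross_validation(labels))
```

With a skewed sense distribution like this one, the baseline is already high, which is why it serves as a reference point for the supervised system.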

Results on Senseval-2 / Senseval-3

Some Notes

- Words with no improvement
  - Small number of examples in Wikipedia
    - restraint (9), shelter (17)
  - Skewed sense distributions
    - bank: 1044 occurrences as “financial institution”, 30 occurrences as “river bank”
- Different granularity
  - Coarser grained senses in Wikipedia
    - Missing senses: atmosphere: ambiance
    - Coarse distinctions: grasp: act of grasping (#1) = hold (#2)
    - Exceptions: dance performance, theatre performance

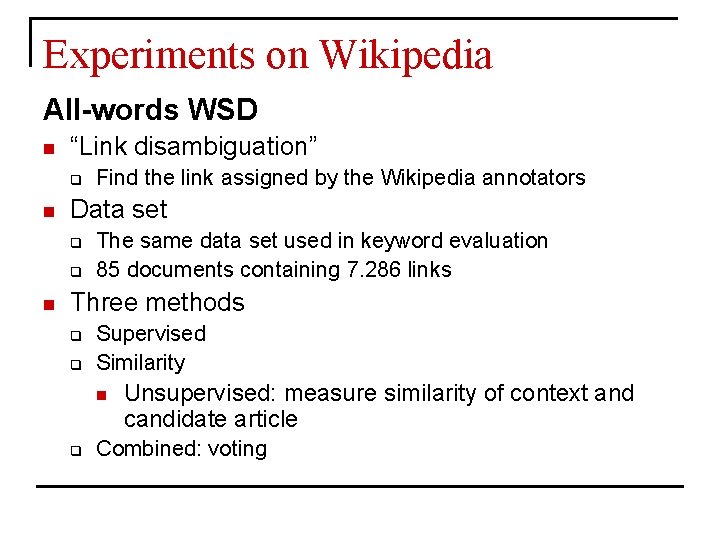

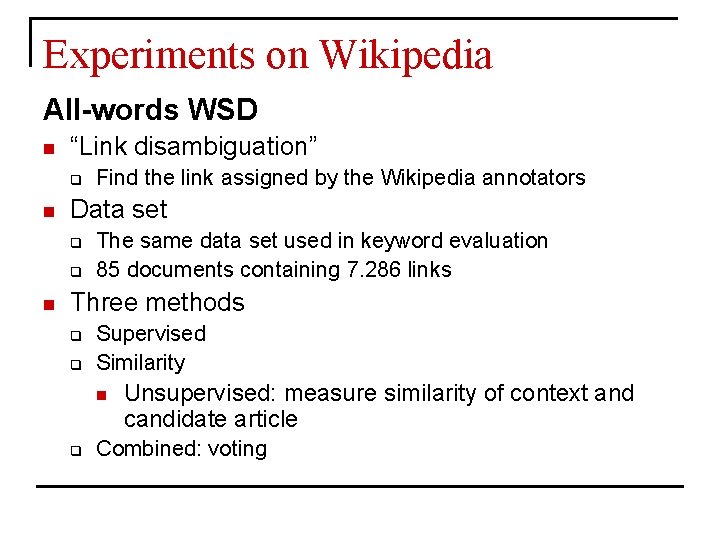

Experiments on Wikipedia: All-words WSD

- “Link disambiguation”
  - Find the link assigned by the Wikipedia annotators
- Data set
  - The same data set used in keyword evaluation
  - 85 documents containing 7,286 links
- Three methods
  - Supervised
  - Similarity
    - Unsupervised: measure similarity of context and candidate article
  - Combined: voting
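A rough sketch of the similarity method and the combination step. The slide does not specify the similarity measure or the voting rule, so both are assumptions here: similarity is a plain word-overlap count between the context and each candidate article, and voting keeps a label only when the two methods agree. All names and data are hypothetical.

```python
from typing import Dict, List, Optional


def overlap_similarity(context_tokens: List[str], article_tokens: List[str]) -> int:
    """Number of distinct word types shared by the context and the article."""
    return len(set(context_tokens) & set(article_tokens))


def similarity_choice(context_tokens: List[str], candidates: Dict[str, List[str]]) -> str:
    """Pick the candidate article whose text overlaps most with the context."""
    return max(
        candidates,
        key=lambda title: overlap_similarity(context_tokens, candidates[title]),
    )


def vote(supervised_label: str, similarity_label: str) -> Optional[str]:
    """Assumed voting rule: annotate only when both methods agree."""
    return supervised_label if supervised_label == similarity_label else None


context = "a quick coffee at the bar".split()
candidates = {
    "bar (counter)": "counter where drinks and coffee are served".split(),
    "bar (music)": "segment of time in musical notation".split(),
}
choice = similarity_choice(context, candidates)
print(choice, vote("bar (counter)", choice), vote("bar (music)", choice))
```

An agreement rule like this trades coverage for precision: disagreements produce no annotation at all.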

Results

Wikification

Wikify! system (http://lit.csci.unt.edu/~wikify/ or www.wikifyer.com)

Overall System Evaluation

- Turing-like test
- Annotation of educational materials

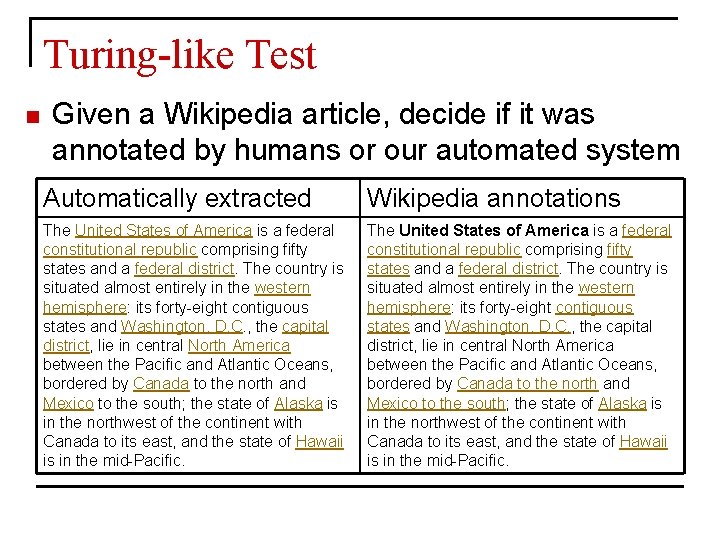

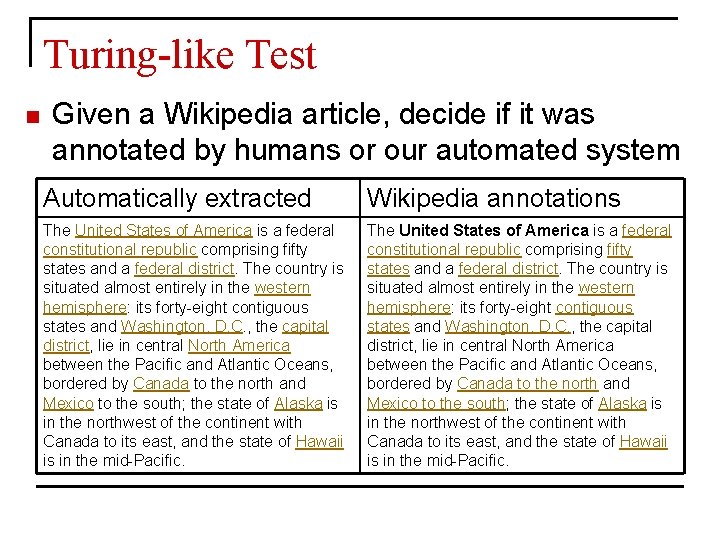

Turing-like Test

- Given a Wikipedia article, decide if it was annotated by humans or our automated system

Automatically extracted Wikipedia annotations:

The United States of America is a federal constitutional republic comprising fifty states and a federal district. The country is situated almost entirely in the western hemisphere: its forty-eight contiguous states and Washington, D.C., the capital district, lie in central North America between the Pacific and Atlantic Oceans, bordered by Canada to the north and Mexico to the south; the state of Alaska is in the northwest of the continent with Canada to its east, and the state of Hawaii is in the mid-Pacific.

Turing-like Test

- 20 test subjects (mixed background)
- 10 document pairs for each subject (side by side)
- Average accuracy: 57%
- Ideal case = 50% success rate (total confusion)

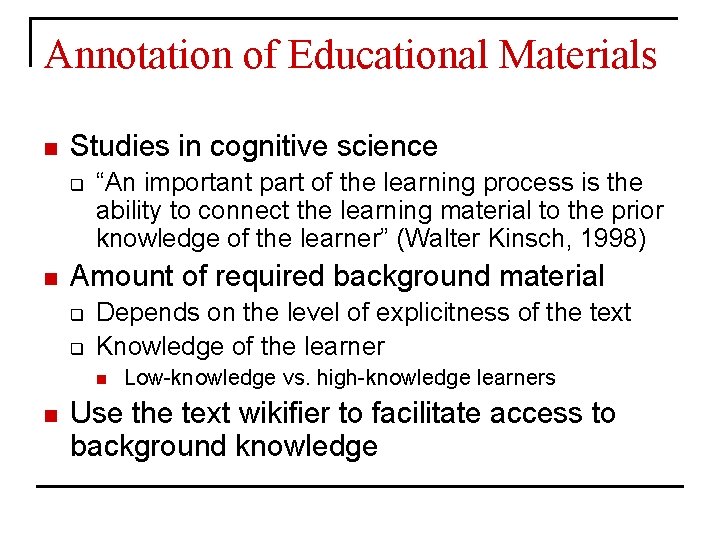

Annotation of Educational Materials

- Studies in cognitive science
  - “An important part of the learning process is the ability to connect the learning material to the prior knowledge of the learner” (Walter Kintsch, 1998)
- Amount of required background material
  - Depends on the level of explicitness of the text
  - Knowledge of the learner
    - Low-knowledge vs. high-knowledge learners
- Use the text wikifier to facilitate access to background knowledge

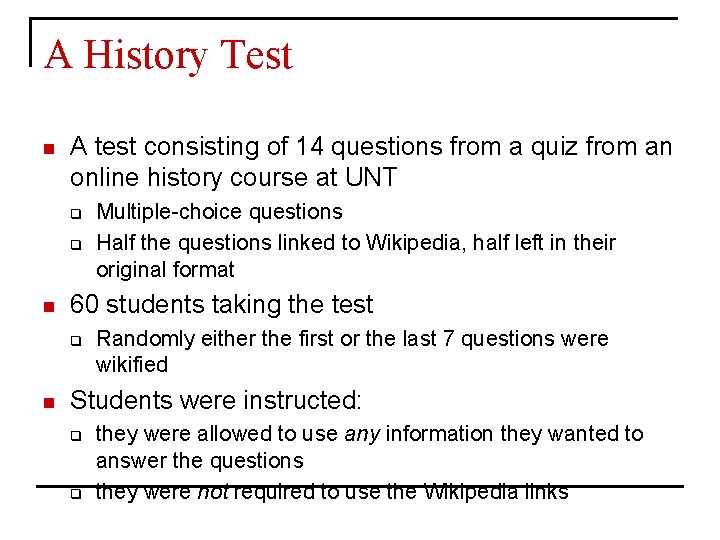

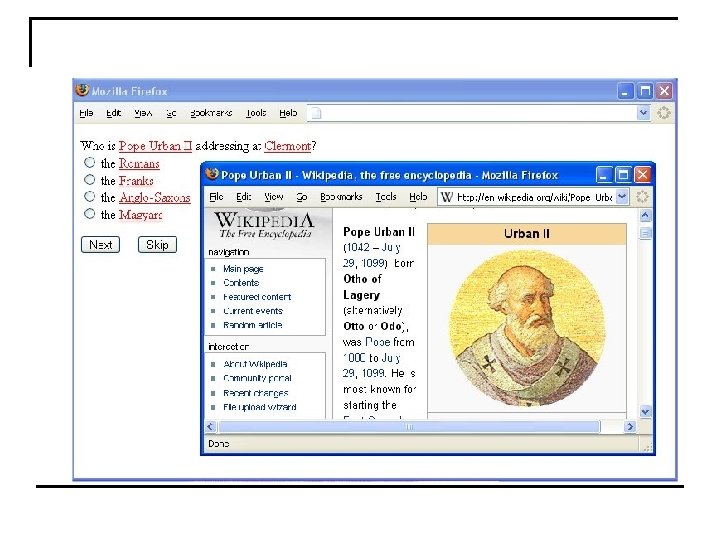

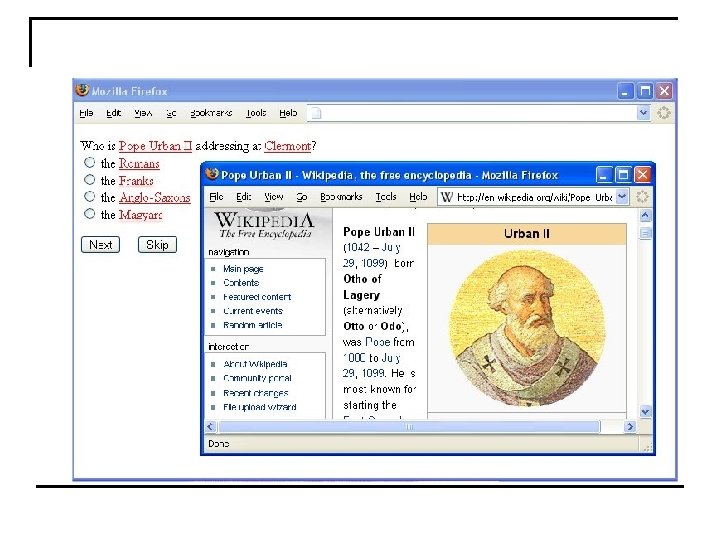

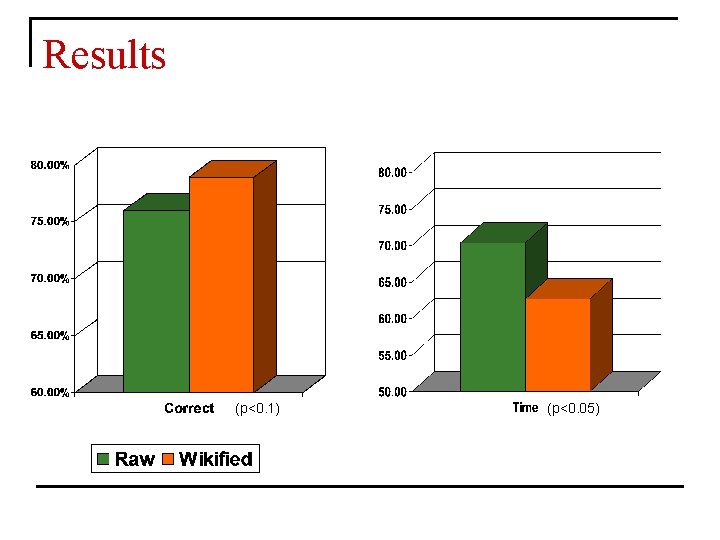

A History Test

- A test consisting of 14 questions from a quiz from an online history course at UNT
  - Multiple-choice questions
  - Half the questions linked to Wikipedia, half left in their original format
- 60 students taking the test
  - Randomly either the first or the last 7 questions were wikified
- Students were instructed:
  - they were allowed to use any information they wanted to answer the questions
  - they were not required to use the Wikipedia links

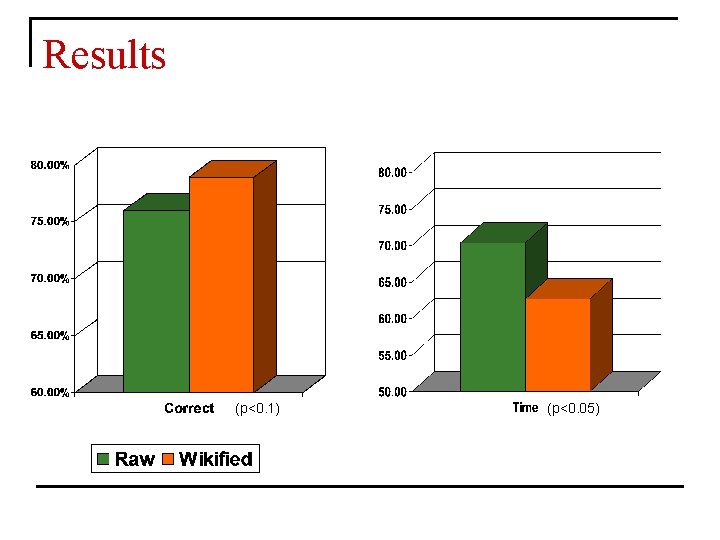

Results (p<0.1) (p<0.05)

Lessons Learned

- Wikipedia can be used as a source of evidence for text processing tasks
  - Keyword extraction
  - Word sense disambiguation
- Text wikification: linking documents to encyclopedic knowledge
  - Enrich educational materials
  - Annotation of web pages (semantic web)
  - NLP applications
    - summarization, information retrieval, text categorization
    - text adaptation, topic identification, multilingual semantic networks

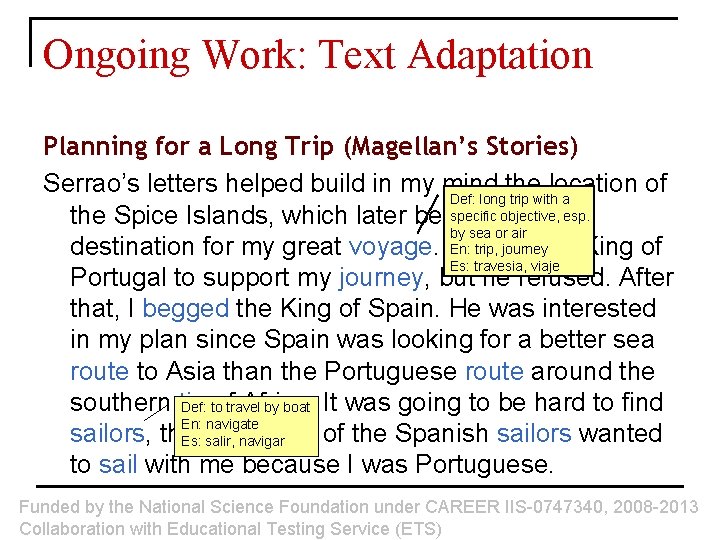

Ongoing Work: Text Adaptation

Planning for a Long Trip (Magellan’s Stories)

Serrao’s letters helped build in my mind the location of the Spice Islands, which later became the destination for my great voyage. I asked the King of Portugal to support my journey, but he refused. After that, I begged the King of Spain. He was interested in my plan since Spain was looking for a better sea route to Asia than the Portuguese route around the southern tip of Africa. It was going to be hard to find sailors, though. None of the Spanish sailors wanted to sail with me because I was Portuguese.

Pop-up glosses shown on the slide:

- Def: long trip with a specific objective, esp. by sea or air. En: trip, journey. Es: travesia, viaje
- Def: to travel by boat. En: navigate. Es: salir, navigar

Funded by the National Science Foundation under CAREER IIS-0747340, 2008-2013

Collaboration with Educational Testing Service (ETS)

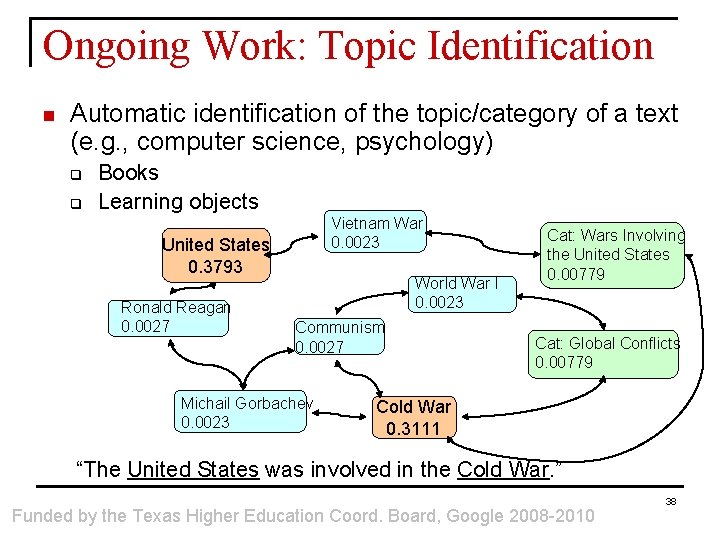

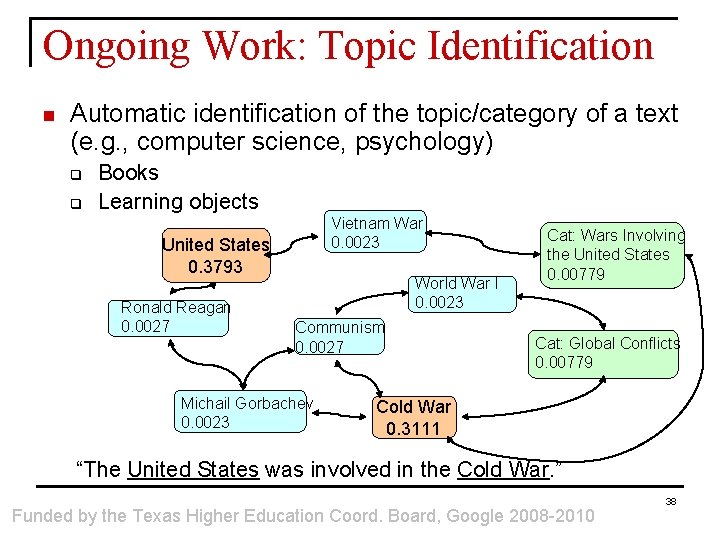

Ongoing Work: Topic Identification

- Automatic identification of the topic/category of a text (e.g., computer science, psychology)
  - Books
  - Learning objects

Example input: “The United States was involved in the Cold War.”

Scores shown in the example graph:

- Vietnam War: 0.0023
- United States: 0.3793
- Ronald Reagan: 0.0027
- World War I: 0.0023
- Communism: 0.0027
- Michail Gorbachev: 0.0023
- Cat: Wars Involving the United States: 0.00779
- Cat: Global Conflicts: 0.00779
- Cold War: 0.3111

Funded by the Texas Higher Education Coord. Board, Google 2008-2010

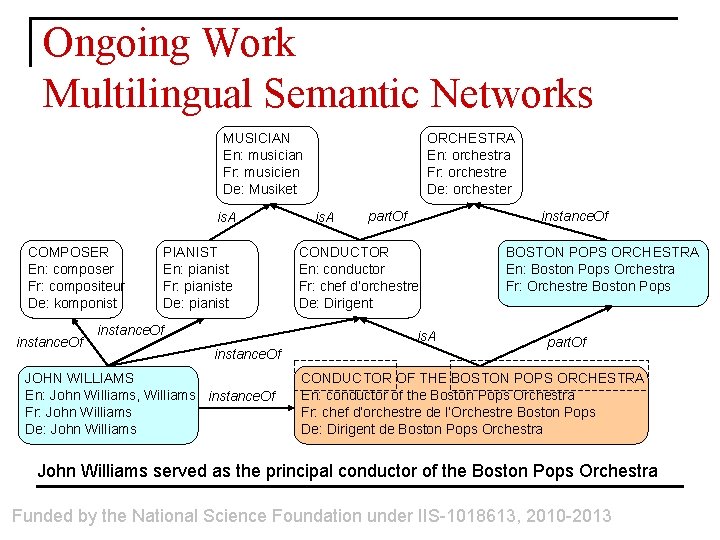

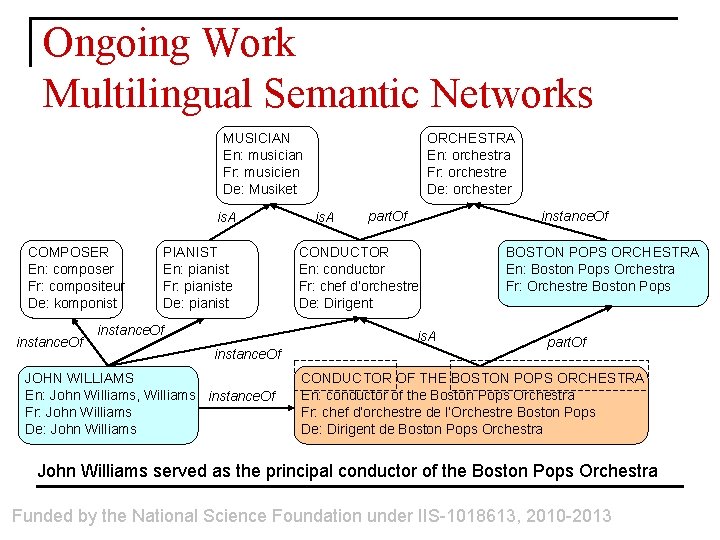

Ongoing Work: Multilingual Semantic Networks

Example sentence: John Williams served as the principal conductor of the Boston Pops Orchestra

Concept nodes (linked in the original diagram by isA, instanceOf, and partOf relations):

- MUSICIAN. En: musician; Fr: musicien; De: Musiker
- COMPOSER. En: composer; Fr: compositeur; De: komponist
- PIANIST. En: pianist; Fr: pianiste; De: pianist
- ORCHESTRA. En: orchestra; Fr: orchestre; De: orchester
- CONDUCTOR. En: conductor; Fr: chef d’orchestre; De: Dirigent
- JOHN WILLIAMS. En: John Williams, Williams; Fr: John Williams; De: John Williams
- BOSTON POPS ORCHESTRA. En: Boston Pops Orchestra; Fr: Orchestre Boston Pops
- CONDUCTOR OF THE BOSTON POPS ORCHESTRA. En: conductor of the Boston Pops Orchestra; Fr: chef d’orchestre de l’Orchestre Boston Pops; De: Dirigent de Boston Pops Orchestra

Funded by the National Science Foundation under IIS-1018613, 2010-2013

Thank You! Questions?

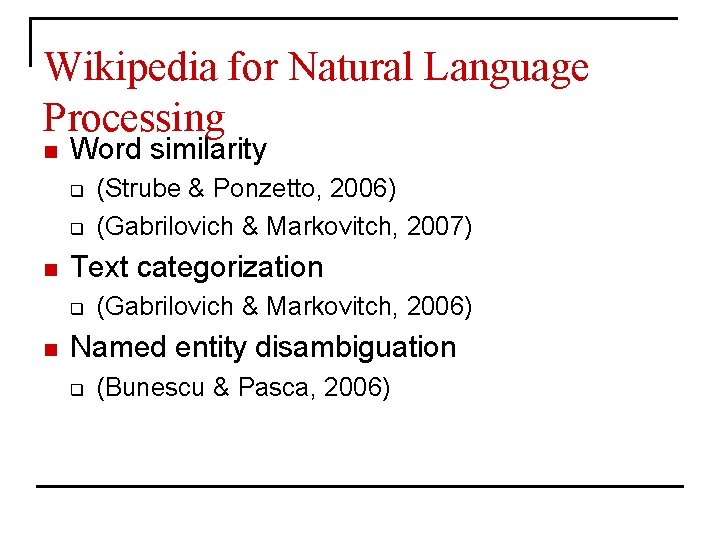

Wikipedia for Natural Language Processing

- Word similarity
  - (Strube & Ponzetto, 2006)
  - (Gabrilovich & Markovitch, 2007)
- Text categorization
  - (Gabrilovich & Markovitch, 2006)
- Named entity disambiguation
  - (Bunescu & Pasca, 2006)

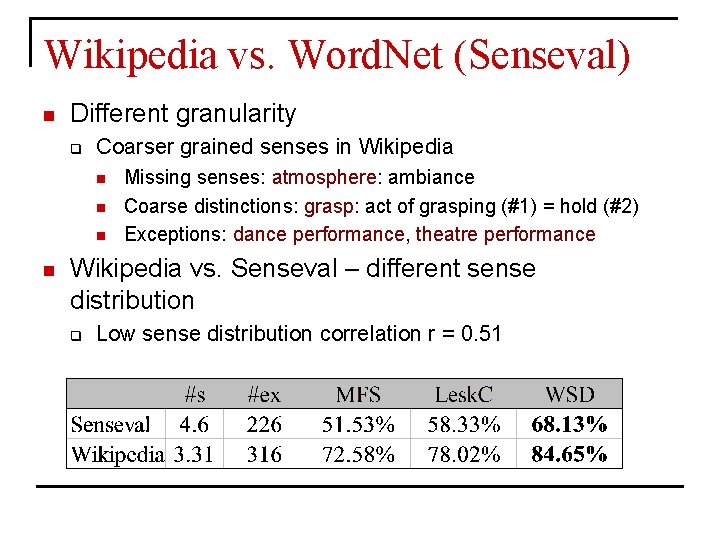

Wikipedia vs. WordNet (Senseval)

- Different granularity
  - Coarser grained senses in Wikipedia
    - Missing senses: atmosphere: ambiance
    - Coarse distinctions: grasp: act of grasping (#1) = hold (#2)
    - Exceptions: dance performance, theatre performance
- Wikipedia vs. Senseval: different sense distribution
  - Low sense distribution correlation r = 0.51

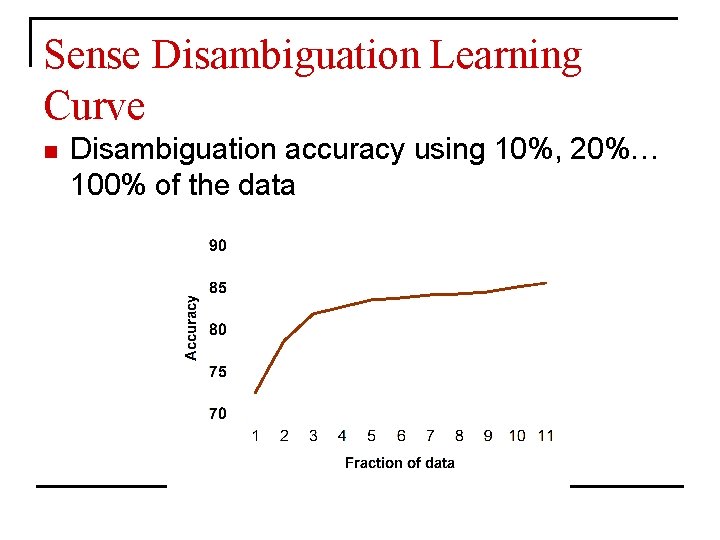

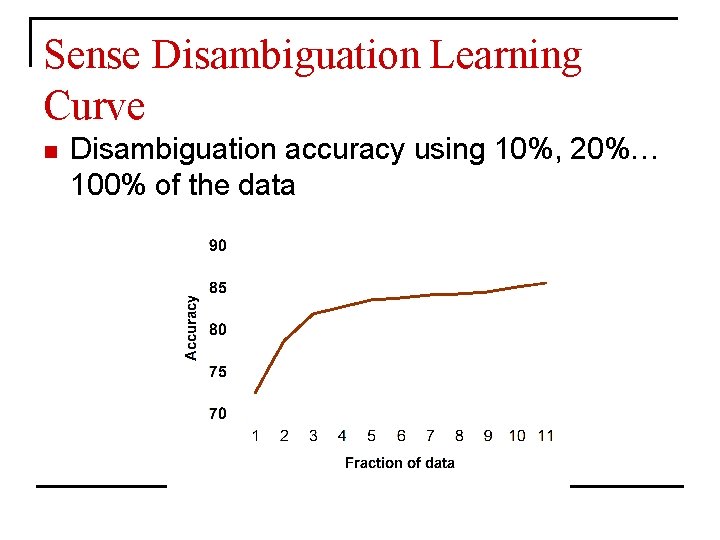

Sense Disambiguation Learning Curve

- Disambiguation accuracy using 10%, 20%, ..., 100% of the data


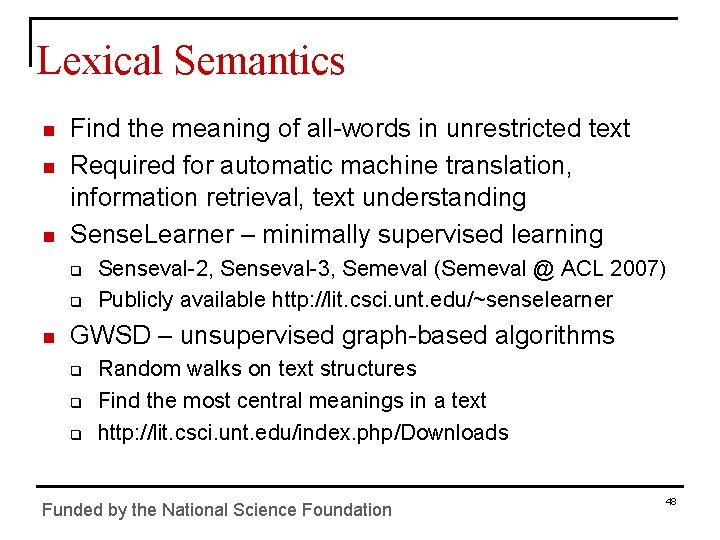

Lexical Semantics

- Find the meaning of all words in unrestricted text
- Required for automatic machine translation, information retrieval, text understanding
- SenseLearner: minimally supervised learning
  - Senseval-2, Senseval-3, Semeval (Semeval @ ACL 2007)
  - Publicly available: http://lit.csci.unt.edu/~senselearner
- GWSD: unsupervised graph-based algorithms
  - Random walks on text structures
  - Find the most central meanings in a text
  - http://lit.csci.unt.edu/index.php/Downloads

Funded by the National Science Foundation

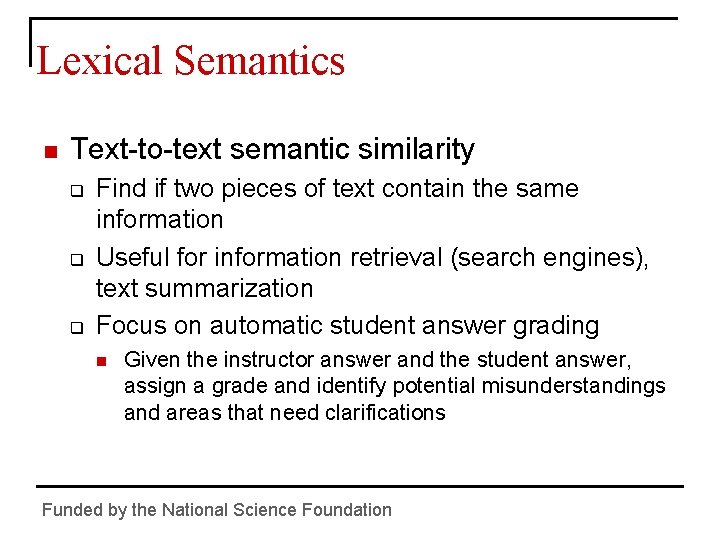

Lexical Semantics

- Lexical substitution: SubFinder
  - Find semantically-equivalent substitutes for a target word in a given context
  - Combine corpus-based and knowledge-based approaches
  - Combine monolingual and multilingual resources
    - WordNet, Encarta, bilingual dictionaries, large corpora
  - Fared well in the Semeval 2007 lexical substitution task
- TransFinder
  - Find the translation of a target word in a given context
  - Assist Hispanic students with the understanding of English texts
  - Task at Semeval 2010

Funded by the National Science Foundation

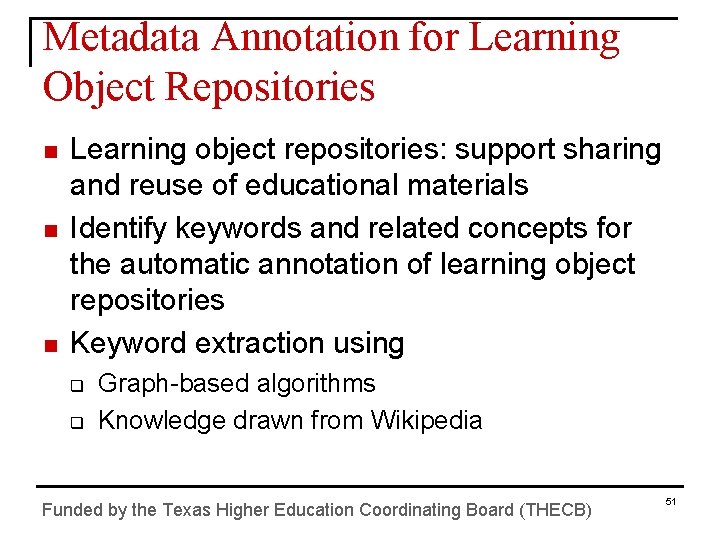

Lexical Semantics

- Text-to-text semantic similarity
  - Find if two pieces of text contain the same information
  - Useful for information retrieval (search engines), text summarization
  - Focus on automatic student answer grading
    - Given the instructor answer and the student answer, assign a grade and identify potential misunderstandings and areas that need clarifications

Funded by the National Science Foundation

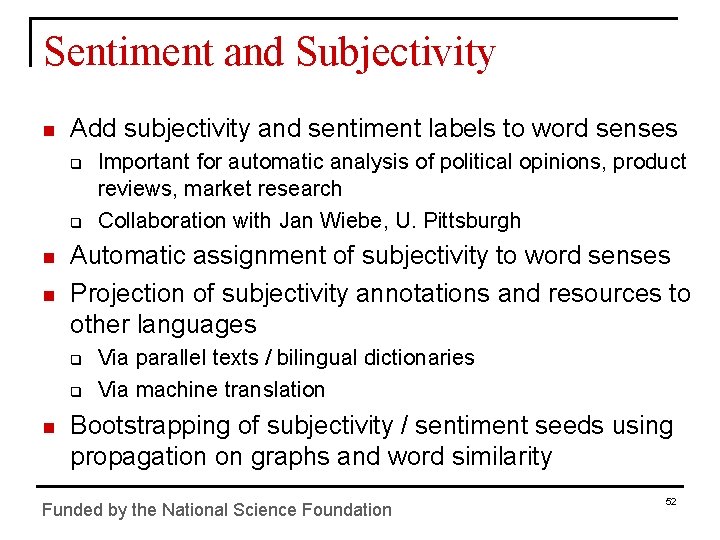

Metadata Annotation for Learning Object Repositories

- Learning object repositories: support sharing and reuse of educational materials
- Identify keywords and related concepts for the automatic annotation of learning object repositories
- Keyword extraction using
  - Graph-based algorithms
  - Knowledge drawn from Wikipedia

Funded by the Texas Higher Education Coordinating Board (THECB)

Sentiment and Subjectivity

- Add subjectivity and sentiment labels to word senses
  - Important for automatic analysis of political opinions, product reviews, market research
  - Collaboration with Jan Wiebe, U. Pittsburgh
- Automatic assignment of subjectivity to word senses
- Projection of subjectivity annotations and resources to other languages
  - Via parallel texts / bilingual dictionaries
  - Via machine translation
- Bootstrapping of subjectivity / sentiment seeds using propagation on graphs and word similarity

Funded by the National Science Foundation

Sentiment and Subjectivity

- Affective text
  - Automatic annotation of emotions in text
  - Anger, disgust, fear, joy, sadness, surprise
  - Collaboration with Carlo Strapparava, IRST
  - Large data sets constructed
- Computational humour
  - Learning to recognize humour
  - Identification of connections with other linguistic properties: affect, valence, semantic classes

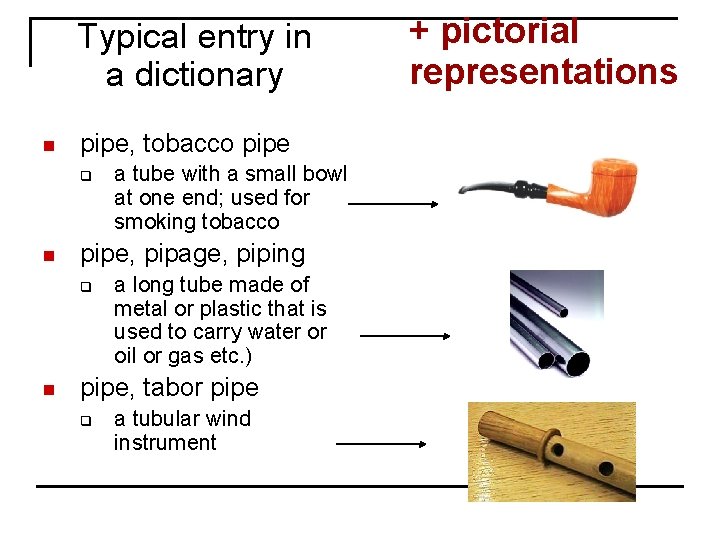

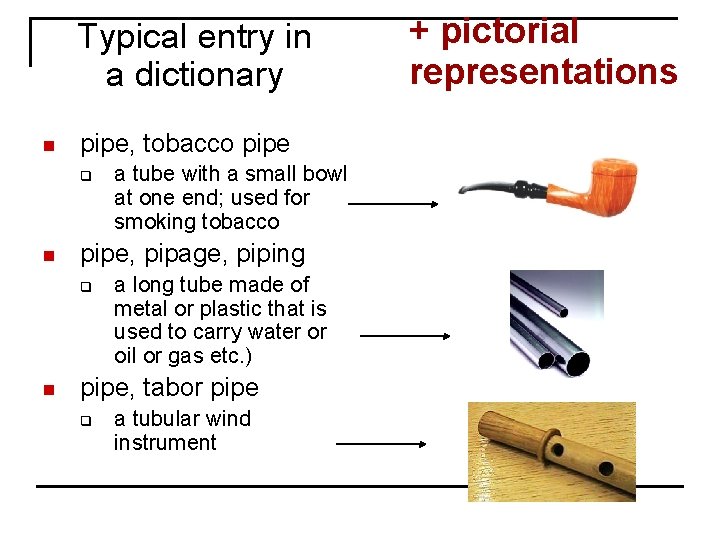

Text-to-image Synthesis

- Language learning
  - Children
  - Second (foreign) language
  - People with language disorders
- International language-independent knowledge base
  - Pictures are transparent to languages
- Applications
  - Pictorial translations (“Letters to my cousin”)
- Bridge the gap between research in image and text processing
  - Image retrieval/classification, natural language

Typical entry in a dictionary

- pipe, tobacco pipe
  - a tube with a small bowl at one end; used for smoking tobacco
- pipe, pipage, piping
  - a long tube made of metal or plastic that is used to carry water or oil or gas etc.
- pipe, tabor pipe
  - a tubular wind instrument

\+ pictorial representations