Lookahead pathology in real-time pathfinding

- Mitja Luštrek, Jožef Stefan Institute, Department of Intelligent Systems
- Vadim Bulitko, University of Alberta, Department of Computer Science

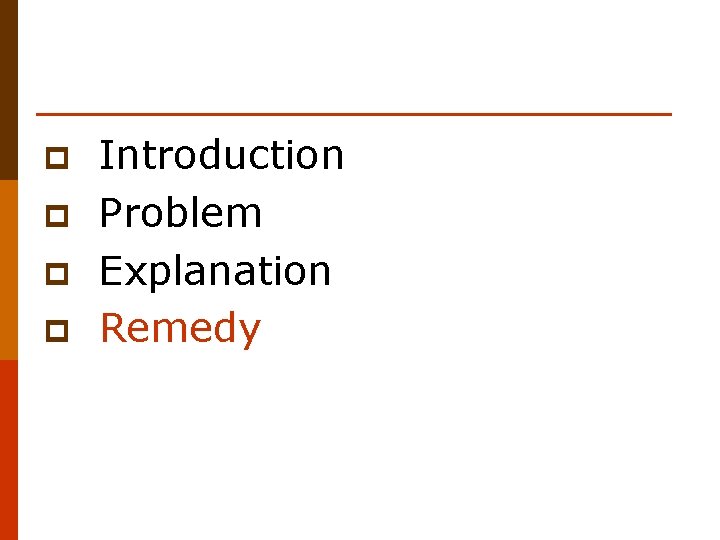

- Introduction
- Problem
- Explanation
- Remedy

Real-time single-agent heuristic search

- Task: find a path from a start state to a goal state
- Complete search:
  - plan the whole path to the goal state
  - execute the plan
  - example: A* [Hart et al. 68]
  - good: given an admissible heuristic, the path is optimal
  - bad: the delay before the first move can be large

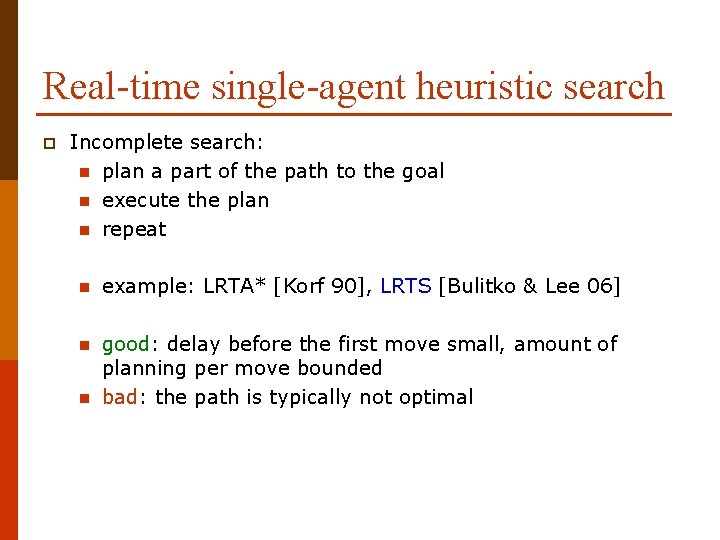

Real-time single-agent heuristic search

- Incomplete search:
  - plan a part of the path to the goal
  - execute the plan
  - repeat
  - example: LRTA* [Korf 90], LRTS [Bulitko & Lee 06]
  - good: the delay before the first move is small and the amount of planning per move is bounded
  - bad: the path is typically not optimal

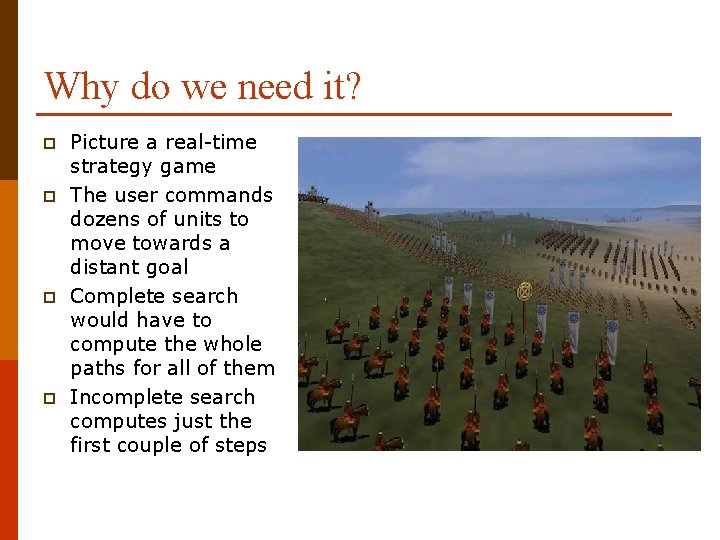

Why do we need it?

- Picture a real-time strategy game
- The user commands dozens of units to move towards a distant goal
- Complete search would have to compute the whole paths for all of them
- Incomplete search computes just the first couple of steps

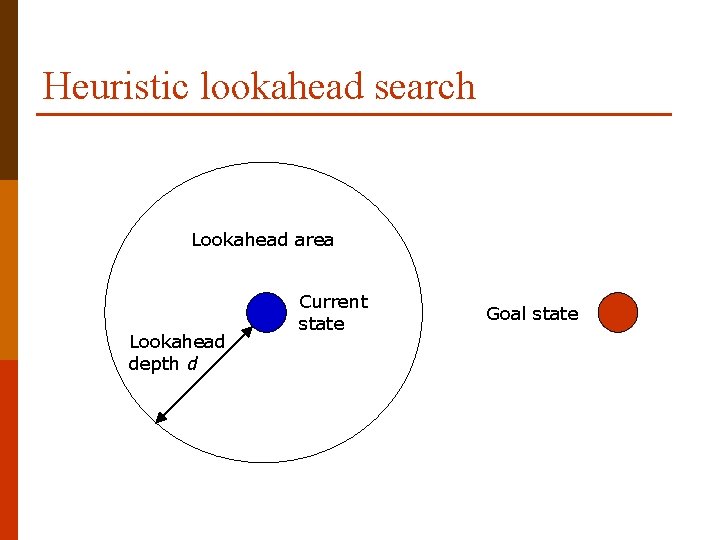

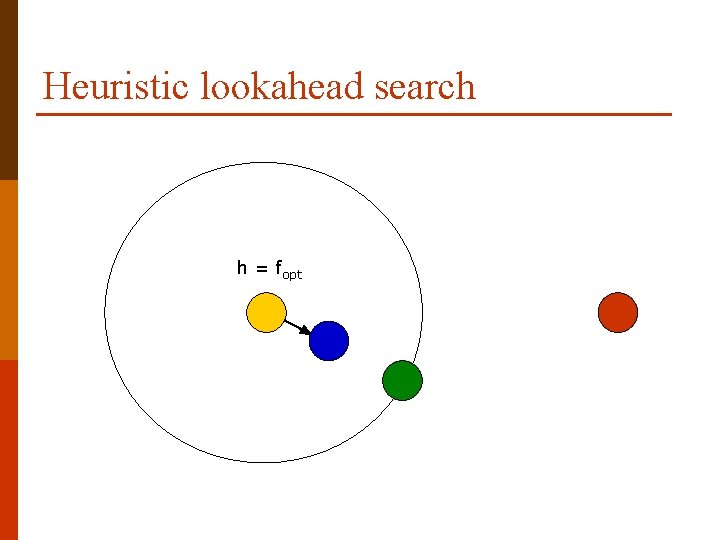

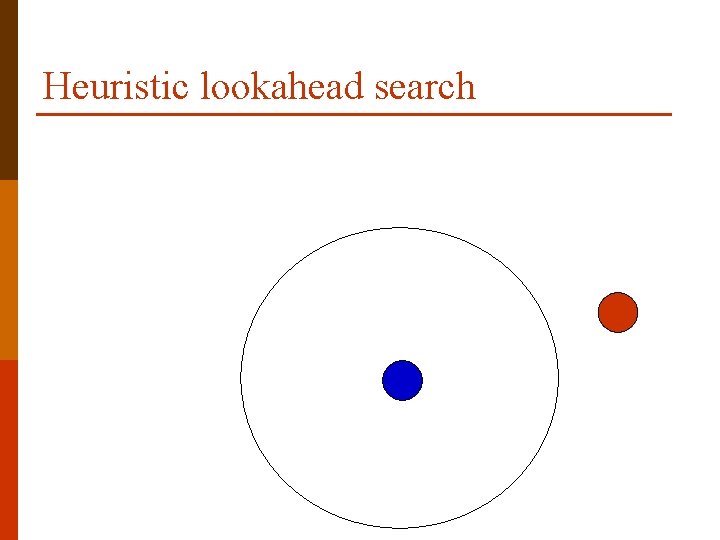

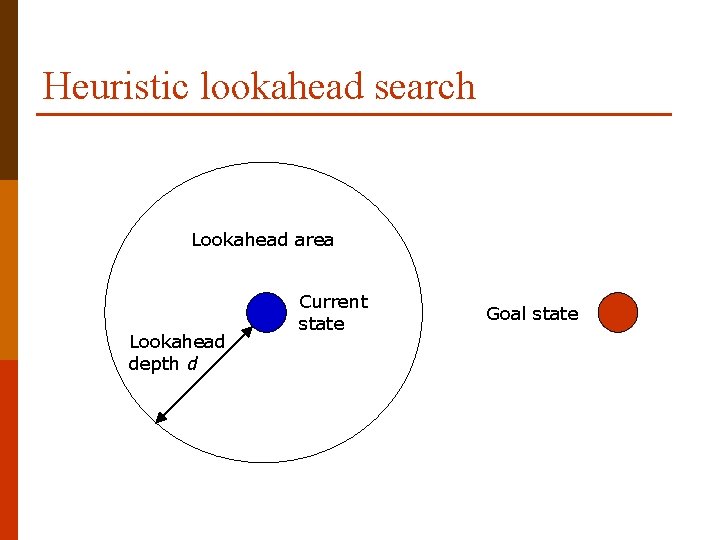

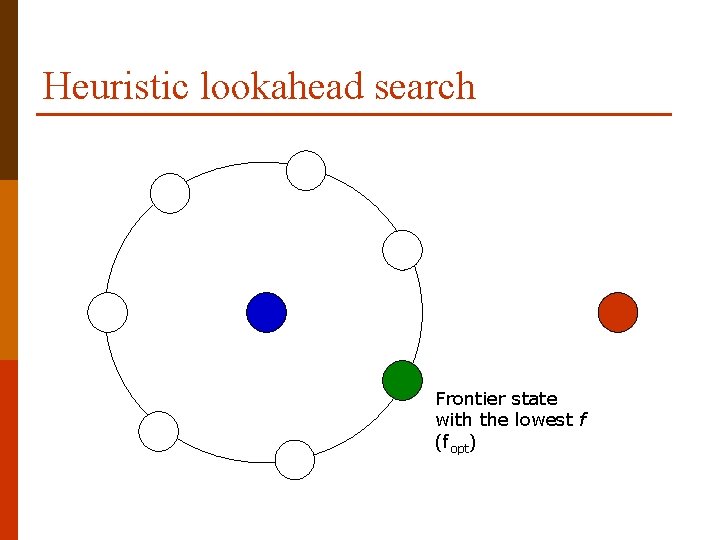

Heuristic lookahead search

Figure labels: lookahead area, lookahead depth d, current state, goal state

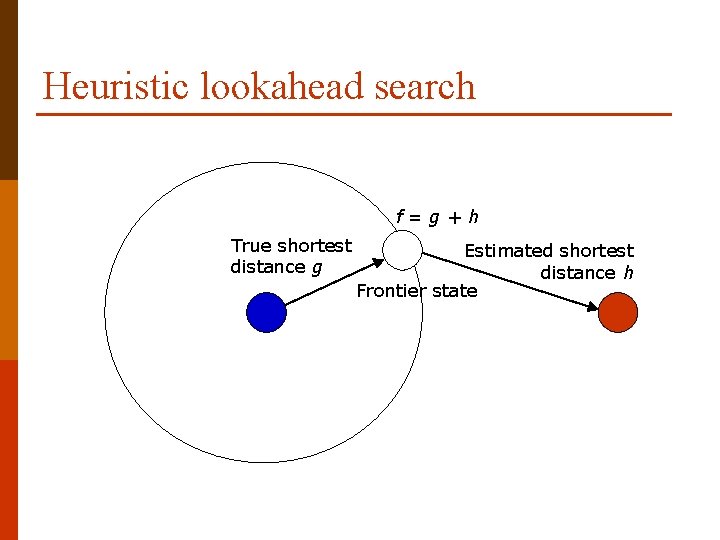

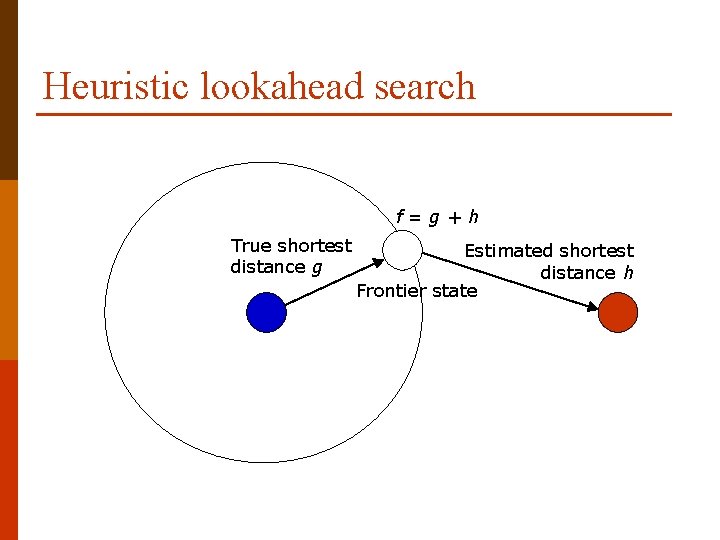

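Heuristic lookahead search

- For each frontier state: f = g + h
- g: true shortest distance from the current state to the frontier state
- h: estimated shortest distance from the frontier state to the goal state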

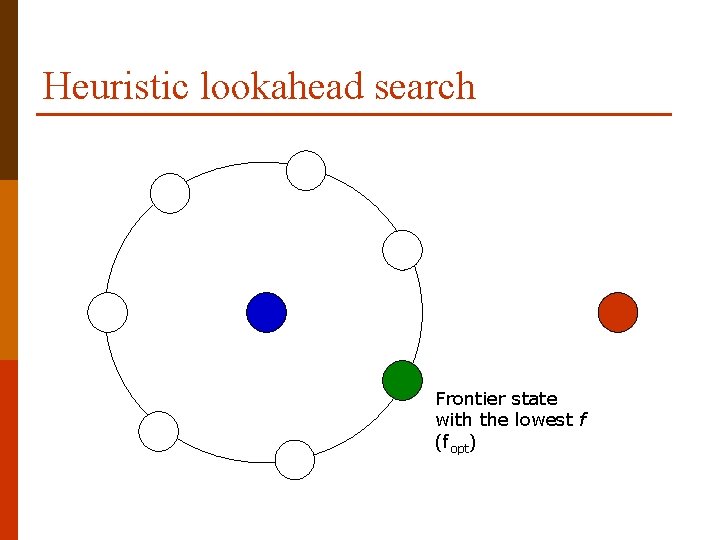

Heuristic lookahead search

- Select the frontier state with the lowest f (f_opt)

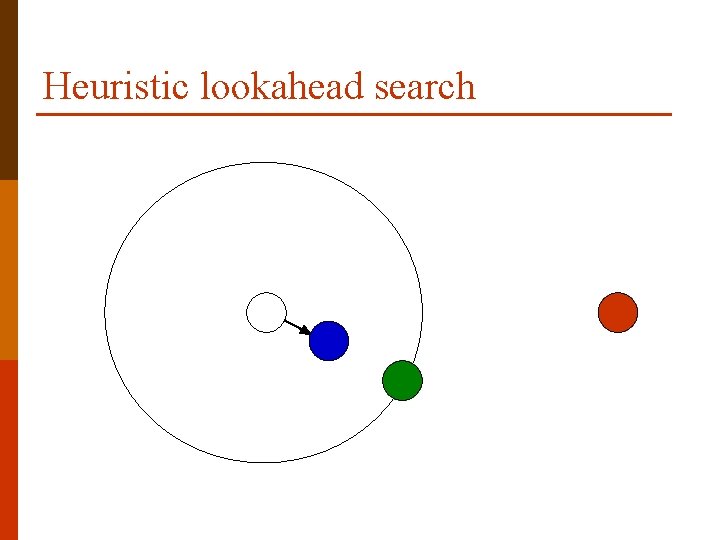

Heuristic lookahead search

- Update the heuristic of the current state: h = f_opt
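The lookahead step on the preceding slides can be sketched roughly as follows. This is a simplified illustration, not the LRTS implementation used in the talk: the function name `lookahead_step`, the `neighbors` callback, and the split into an initial heuristic `h0` plus a `learned` dictionary are all assumptions made for the example. The cost g is accumulated layer by layer, which is exact for unit move costs and only an approximation otherwise.

```python
from typing import Callable, Dict, Hashable, Iterable, Tuple

State = Hashable


def lookahead_step(
    current: State,
    goal: State,
    neighbors: Callable[[State], Iterable[Tuple[State, float]]],
    h0: Callable[[State], float],
    learned: Dict[State, float],
    depth: int,
) -> Tuple[State, float]:
    """Expand `depth` layers around `current`, pick the frontier state with
    the lowest f = g + h, and store f_opt as the new h of `current`."""

    def h(s: State) -> float:
        # Learned values override the initial heuristic.
        return learned.get(s, h0(s))

    g = {current: 0.0}   # cheapest cost found so far from `current`
    layer = [current]    # states first reached in the latest layer
    for _ in range(depth):
        next_layer = []
        for s in layer:
            if s == goal:
                continue  # never expand past the goal
            for t, cost in neighbors(s):
                if t not in g:
                    g[t] = g[s] + cost
                    next_layer.append(t)
                else:
                    g[t] = min(g[t], g[s] + cost)
        layer = next_layer

    # Frontier: states first reached at exactly `depth` moves, plus the goal
    # if it was reached earlier.
    frontier = list(layer)
    if goal in g and goal not in frontier:
        frontier.append(goal)
    if not frontier:
        raise ValueError("lookahead area has no frontier state")

    best = min(frontier, key=lambda s: g[s] + h(s))
    f_opt = g[best] + h(best)
    learned[current] = f_opt  # the update h = f_opt from the slide
    return best, f_opt
```

On a corridor of states 0 to 5 with unit moves, goal 5 and h0(s) = 5 - s, a call with depth 2 from state 0 selects frontier state 2 and sets the heuristic of state 0 to 5.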

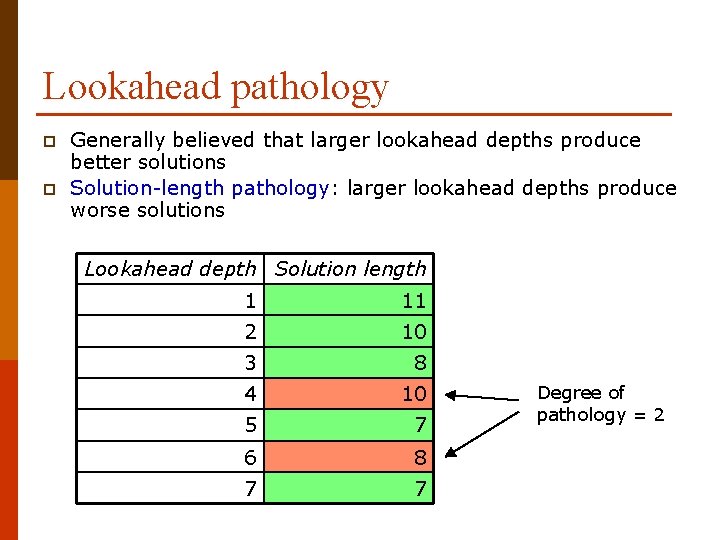

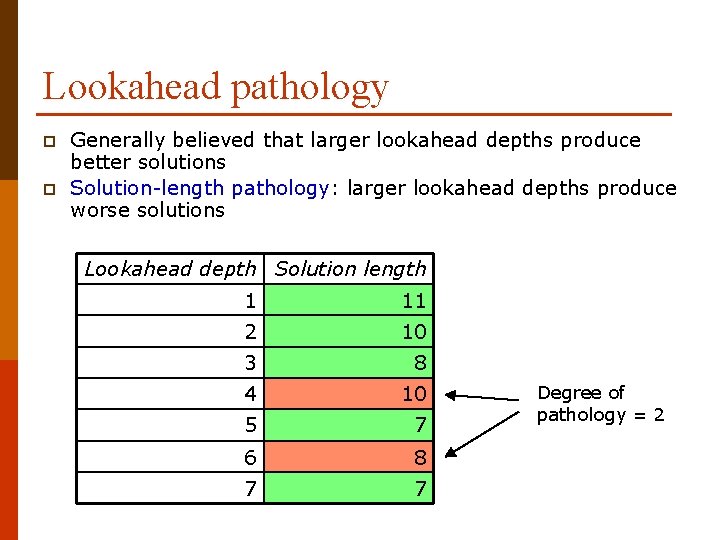

Lookahead pathology

- It is generally believed that larger lookahead depths produce better solutions
- Solution-length pathology: larger lookahead depths produce worse solutions

| Lookahead depth | Solution length |
|---|---|
| 1 | 11 |
| 2 | 10 |
| 3 | 8 |
| 4 | 10 |
| 5 | 7 |
| 6 | 8 |
| 7 | 7 |

Degree of pathology = 2
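The slide does not spell out how the degree of pathology is computed. One reading that reproduces the value 2 for the table above is to count how many times moving to the next larger depth makes the solution longer. The function name below is hypothetical and the definition is that assumed reading, not a quotation from the authors.

```python
from typing import Sequence


def degree_of_pathology(values: Sequence[float]) -> int:
    """Count the steps to the next larger depth at which the value gets worse.

    `values[i]` is the solution length (or error) at lookahead depth i + 1;
    larger values are worse. Assumed definition, see the lead-in.
    """
    return sum(1 for smaller, larger in zip(values, values[1:]) if larger > smaller)


# Solution lengths for depths 1..7, taken from the slide.
lengths = [11, 10, 8, 10, 7, 8, 7]
print(degree_of_pathology(lengths))
```

For the slide's data the two worsening steps are depth 3 to 4 (8 to 10) and depth 5 to 6 (7 to 8).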

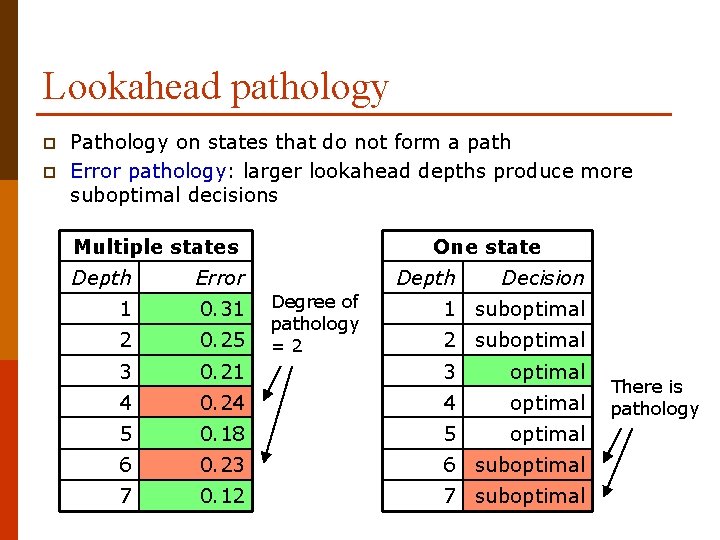

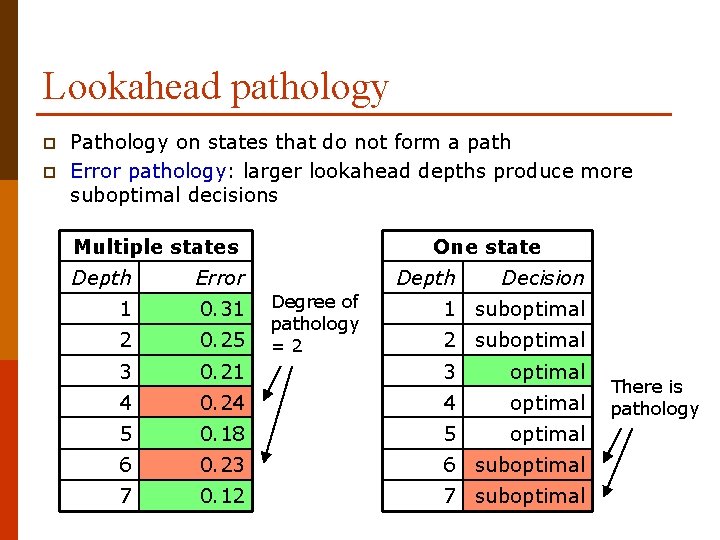

Lookahead pathology

- Pathology on states that do not form a path
- Error pathology: larger lookahead depths produce more suboptimal decisions

Multiple states:

| Depth | Error |
|---|---|
| 1 | 0.31 |
| 2 | 0.25 |
| 3 | 0.21 |
| 4 | 0.24 |
| 5 | 0.18 |
| 6 | 0.23 |
| 7 | 0.12 |

Degree of pathology = 2

One state:

| Depth | Decision |
|---|---|
| 1 | suboptimal |
| 2 | suboptimal |
| 3 | optimal |
| 4 | optimal |
| 5 | optimal |
| 6 | suboptimal |
| 7 | suboptimal |

There is pathology

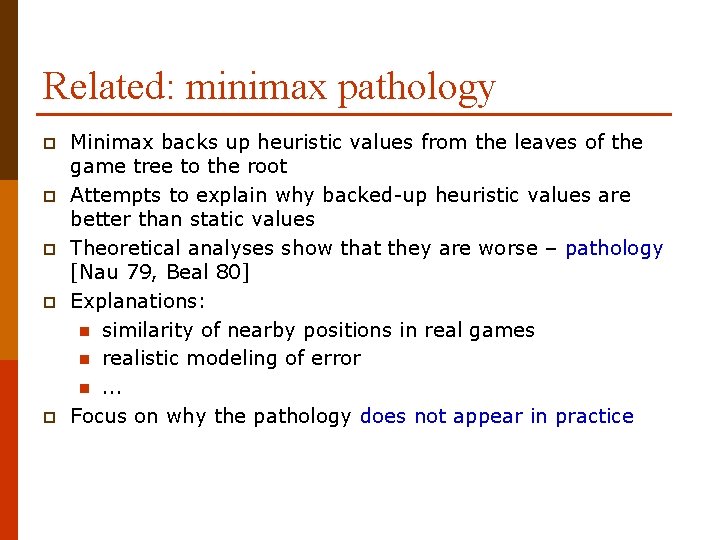

Related: minimax pathology

- Minimax backs up heuristic values from the leaves of the game tree to the root
- Attempts to explain why backed-up heuristic values are better than static values
- Theoretical analyses show that they are worse: pathology [Nau 79, Beal 80]
- Explanations:
  - similarity of nearby positions in real games
  - realistic modeling of error
  - ...
- Focus on why the pathology does not appear in practice

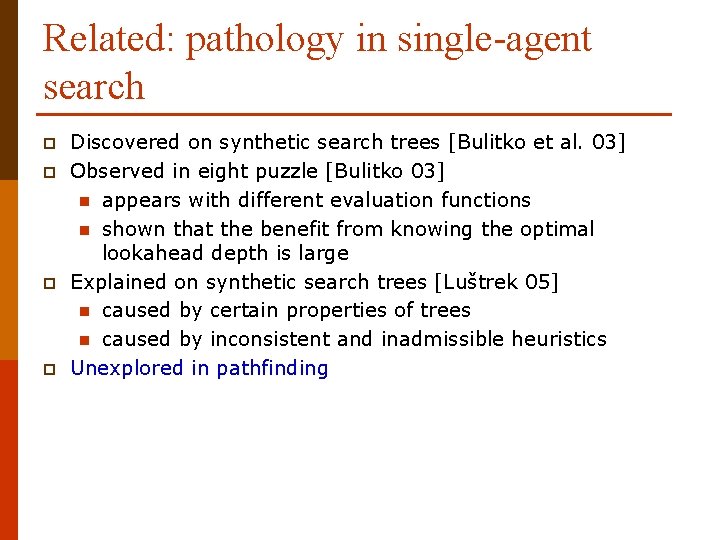

Related: pathology in single-agent search

- Discovered on synthetic search trees [Bulitko et al. 03]
- Observed in the eight puzzle [Bulitko 03]
  - appears with different evaluation functions
  - shown that the benefit from knowing the optimal lookahead depth is large
- Explained on synthetic search trees [Luštrek 05]
  - caused by certain properties of trees
  - caused by inconsistent and inadmissible heuristics
- Unexplored in pathfinding

- Introduction
- Problem
- Explanation
- Remedy

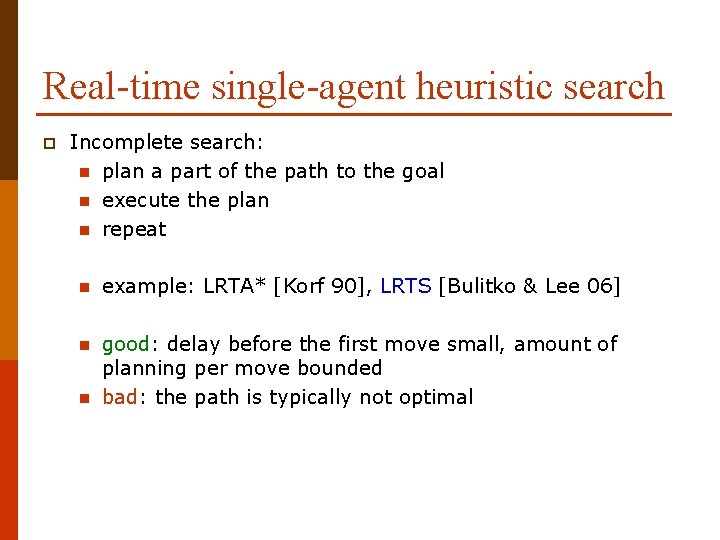

Our setting

- HOG (Hierarchical Open Graph) [Sturtevant et al.]
- Maps from commercial computer games (Baldur's Gate, Warcraft III)
- Initial heuristic: octile distance (true distance assuming an empty map)
- 1,000 problems (map, start state, goal state)
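For reference, octile distance on an eight-connected grid can be computed as below. The sketch assumes the usual move costs of 1 for straight moves and √2 for diagonal moves, which the slide does not state explicitly; the function name and the (x, y) tuple representation are illustrative.

```python
import math
from typing import Tuple

Cell = Tuple[int, int]


def octile_distance(a: Cell, b: Cell) -> float:
    """Shortest distance between two cells on an empty eight-connected grid.

    Assumes straight moves cost 1 and diagonal moves cost sqrt(2).
    """
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    # Take min(dx, dy) diagonal moves, then cover the rest with straight moves.
    return max(dx, dy) + (math.sqrt(2) - 1) * min(dx, dy)
```

For example, going from (0, 0) to (5, 2) takes two diagonal moves and three straight ones, for a distance of 3 + 2√2.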

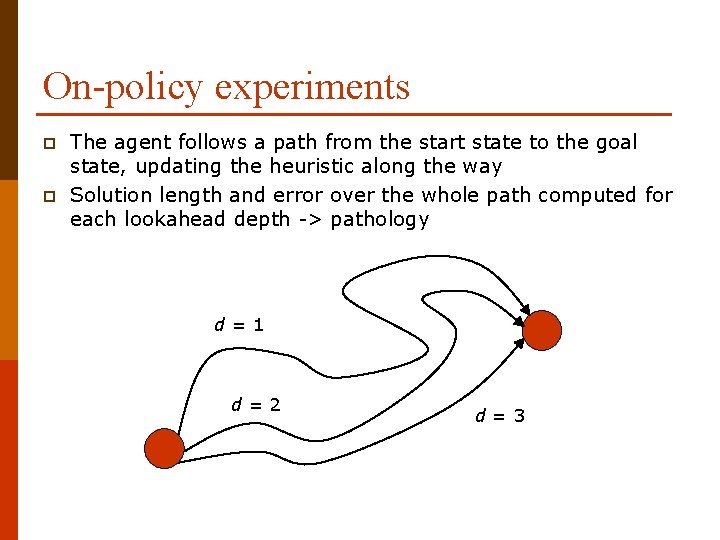

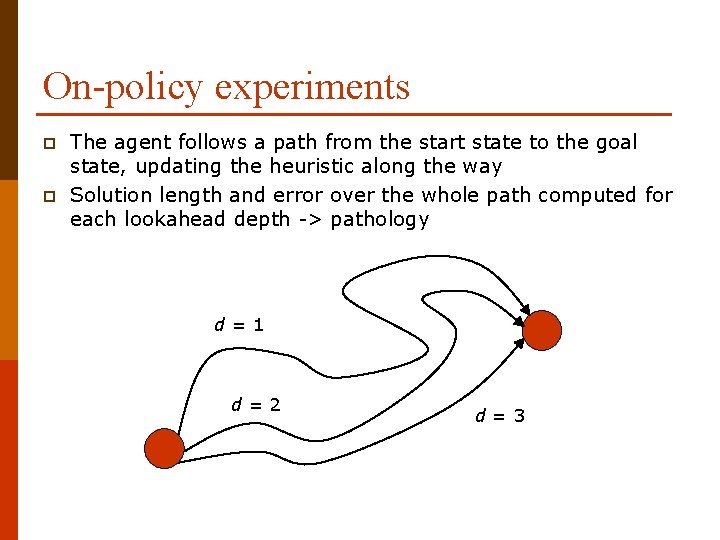

On-policy experiments

- The agent follows a path from the start state to the goal state, updating the heuristic along the way
- Solution length and error over the whole path are computed for each lookahead depth -> pathology

Figure labels: d = 1, d = 2, d = 3

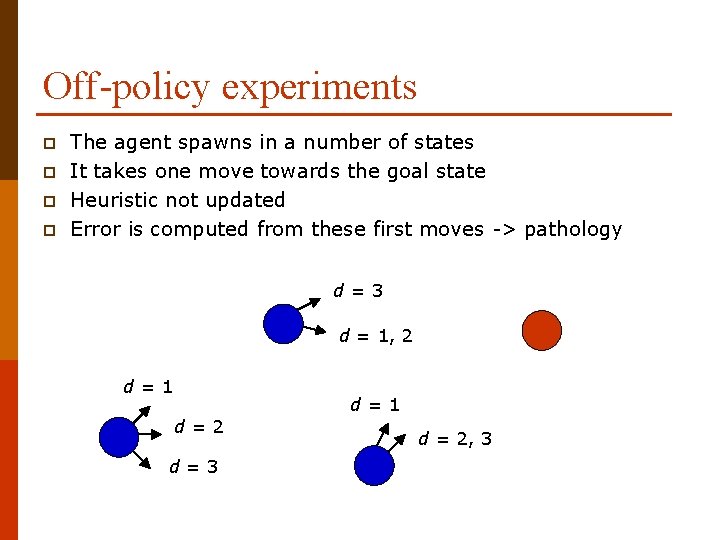

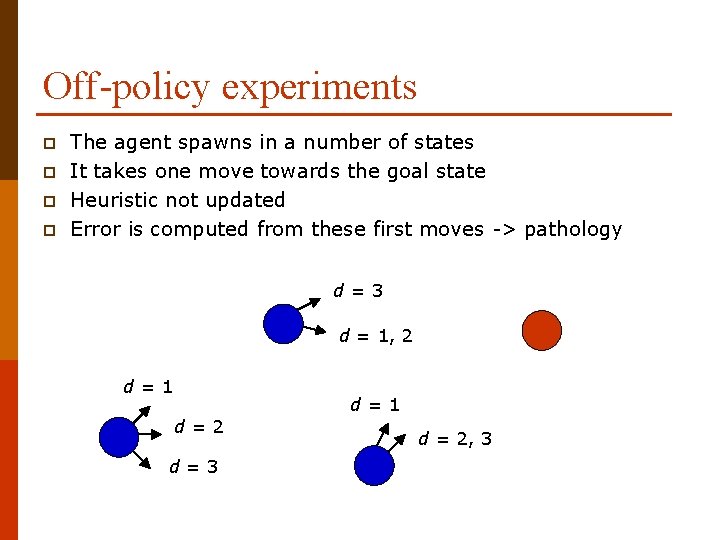

Off-policy experiments

- The agent spawns in a number of states
- It takes one move towards the goal state
- The heuristic is not updated
- Error is computed from these first moves -> pathology

Figure labels: d = 1, d = 2, d = 3, d = 1, 2, d = 2, 3

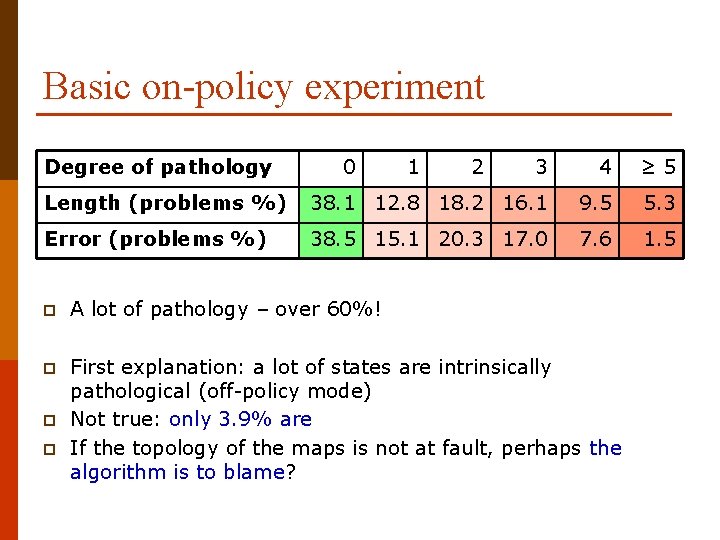

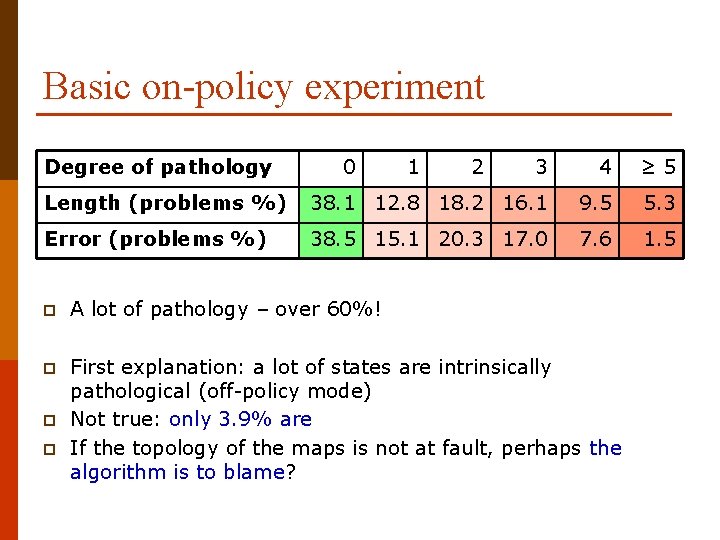

Basic on-policy experiment

| Degree of pathology | 0 | 1 | 2 | 3 | 4 | ≥ 5 |
|---|---|---|---|---|---|---|
| Length (problems %) | 38.1 | 12.8 | 18.2 | 16.1 | 9.5 | 5.3 |
| Error (problems %) | 38.5 | 15.1 | 20.3 | 17.0 | 7.6 | 1.5 |

- A lot of pathology: over 60% of problems
- First explanation: a lot of states are intrinsically pathological (off-policy mode)
- Not true: only 3.9% are
- If the topology of the maps is not at fault, perhaps the algorithm is to blame?

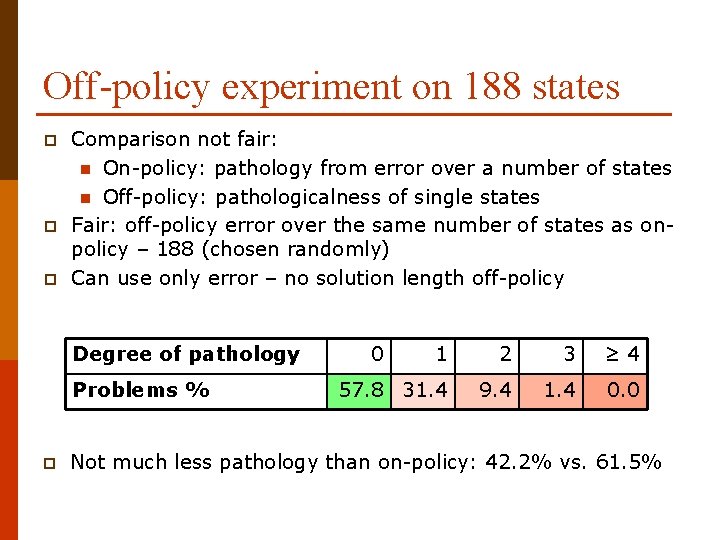

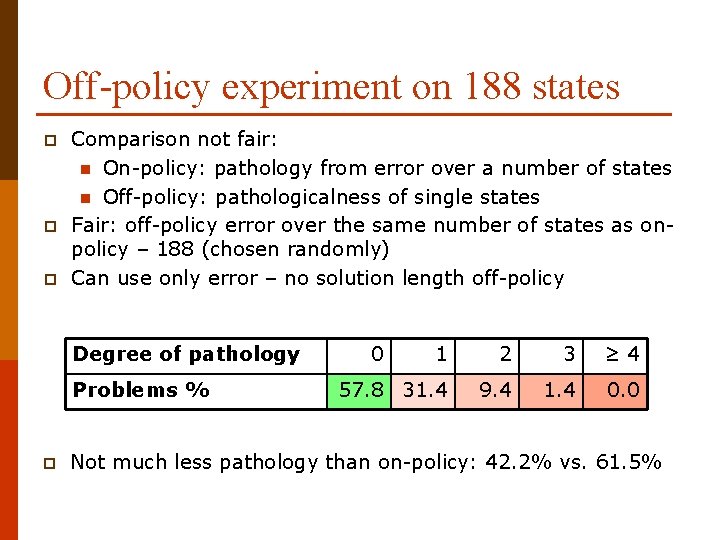

Off-policy experiment on 188 states

- Comparison not fair:
  - On-policy: pathology from error over a number of states
  - Off-policy: pathologicalness of single states
- Fair: off-policy error over the same number of states as on-policy, 188 (chosen randomly)
- Can use only error: there is no solution length off-policy

| Degree of pathology | 0 | 1 | 2 | 3 | ≥ 4 |
|---|---|---|---|---|---|
| Problems % | 57.8 | 31.4 | 9.4 | 1.4 | 0.0 |

- Not much less pathology than on-policy: 42.2% vs. 61.5%

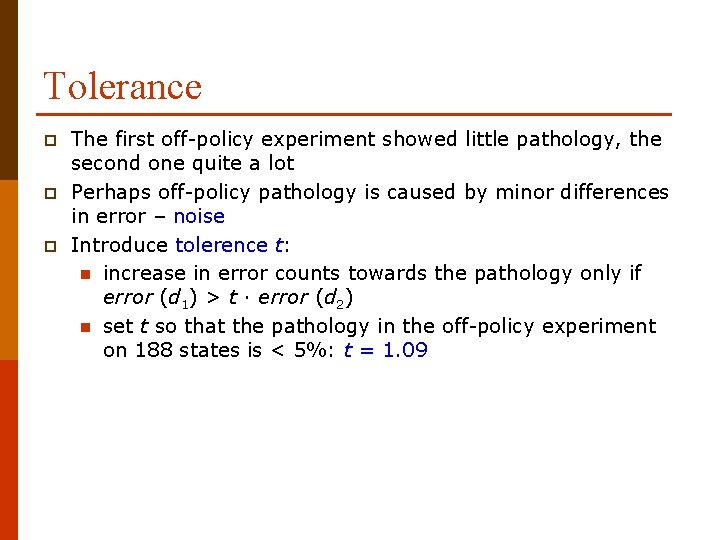

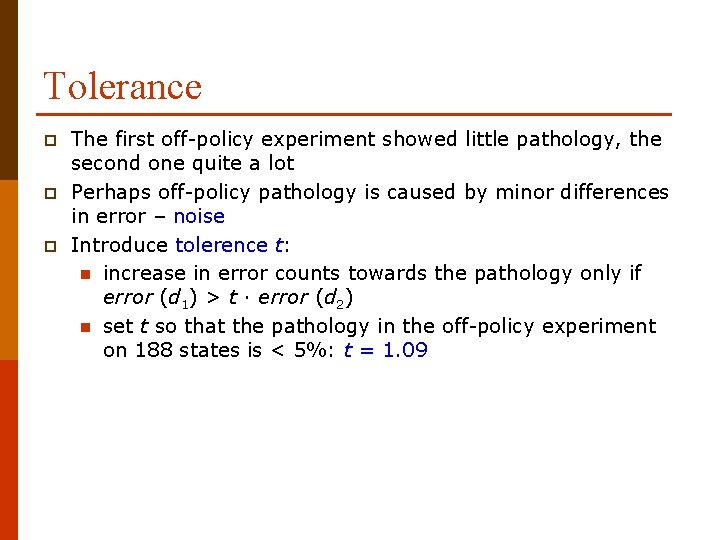

Tolerance

- The first off-policy experiment showed little pathology, the second one quite a lot
- Perhaps off-policy pathology is caused by minor differences in error, i.e. noise
- Introduce tolerance t:
  - an increase in error counts towards the pathology only if error(d1) > t · error(d2)
  - set t so that the pathology in the off-policy experiment on 188 states is < 5%: t = 1.09
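A minimal sketch of the tolerance rule, under two assumptions the slide leaves implicit: d1 is the larger of two consecutive depths and d2 the smaller, and the degree of pathology counts the qualifying increases. The function name is hypothetical; the sample errors are the "multiple states" values from the earlier error-pathology slide.

```python
from typing import Sequence


def degree_with_tolerance(errors: Sequence[float], t: float = 1.0) -> int:
    """Count error increases between consecutive depths that exceed tolerance t.

    `errors[i]` is the error at lookahead depth i + 1. An increase counts only
    if error(larger depth) > t * error(smaller depth); t = 1 means no tolerance.
    """
    return sum(
        1
        for smaller_depth_err, larger_depth_err in zip(errors, errors[1:])
        if larger_depth_err > t * smaller_depth_err
    )


errors = [0.31, 0.25, 0.21, 0.24, 0.18, 0.23, 0.12]
print(degree_with_tolerance(errors, t=1.0))
print(degree_with_tolerance(errors, t=1.09))
print(degree_with_tolerance(errors, t=1.2))
```

With these sample errors both increases survive t = 1.09, while a hypothetical t = 1.2 filters out the smaller one (0.21 to 0.24), which shows how the threshold suppresses small fluctuations.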

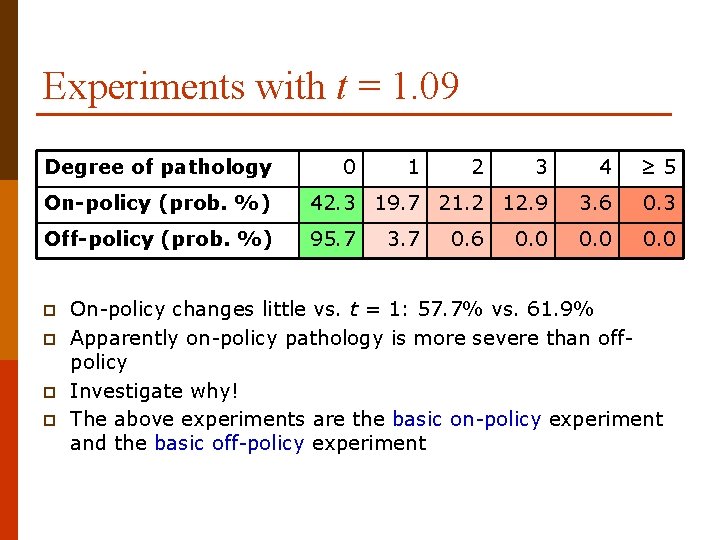

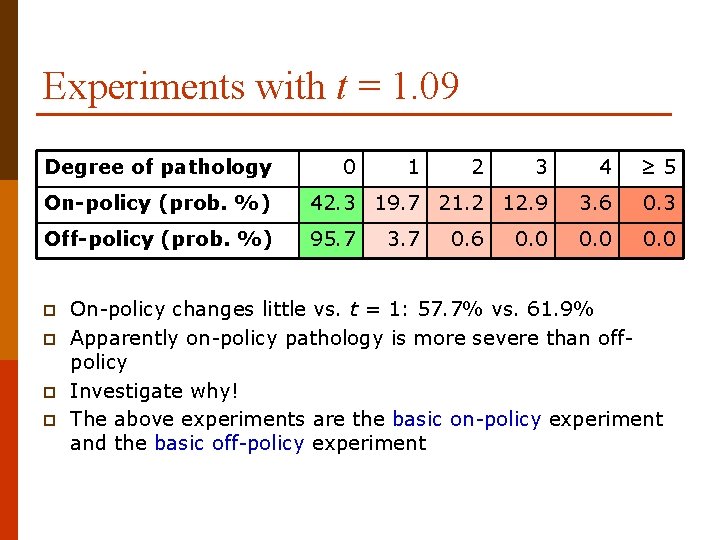

Experiments with t = 1.09

| Degree of pathology | 0 | 1 | 2 | 3 | 4 | ≥ 5 |
|---|---|---|---|---|---|---|
| On-policy (prob. %) | 42.3 | 19.7 | 21.2 | 12.9 | 3.6 | 0.3 |
| Off-policy (prob. %) | 95.7 | 3.7 | 0.6 | 0.0 | 0.0 | 0.0 |

- On-policy changes little vs. t = 1: 57.7% vs. 61.9%
- Apparently on-policy pathology is more severe than off-policy
- Investigate why!
- The above experiments are the basic on-policy experiment and the basic off-policy experiment

- Introduction
- Problem
- Explanation
- Remedy

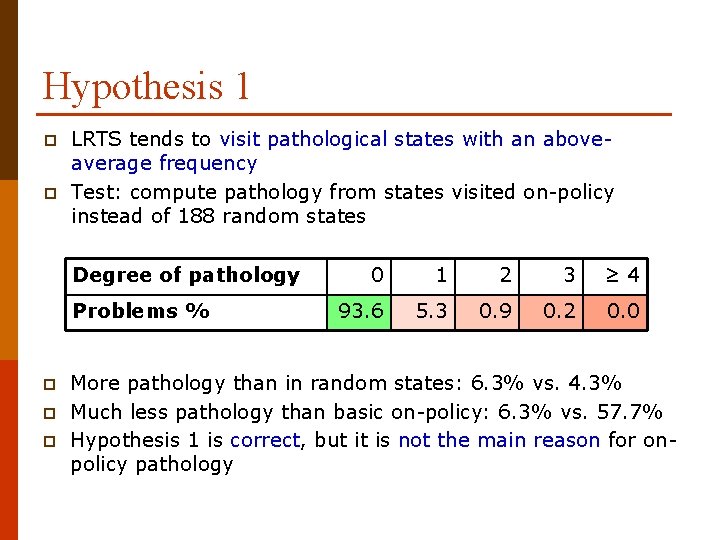

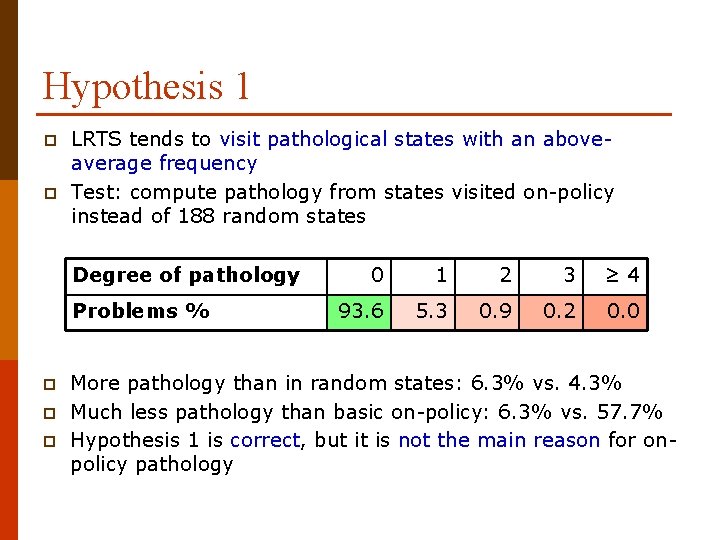

Hypothesis 1

- LRTS tends to visit pathological states with an above-average frequency
- Test: compute pathology from states visited on-policy instead of 188 random states

| Degree of pathology | 0 | 1 | 2 | 3 | ≥ 4 |
|---|---|---|---|---|---|
| Problems % | 93.6 | 5.3 | 0.9 | 0.2 | 0.0 |

- More pathology than in random states: 6.3% vs. 4.3%
- Much less pathology than basic on-policy: 6.3% vs. 57.7%
- Hypothesis 1 is correct, but it is not the main reason for on-policy pathology

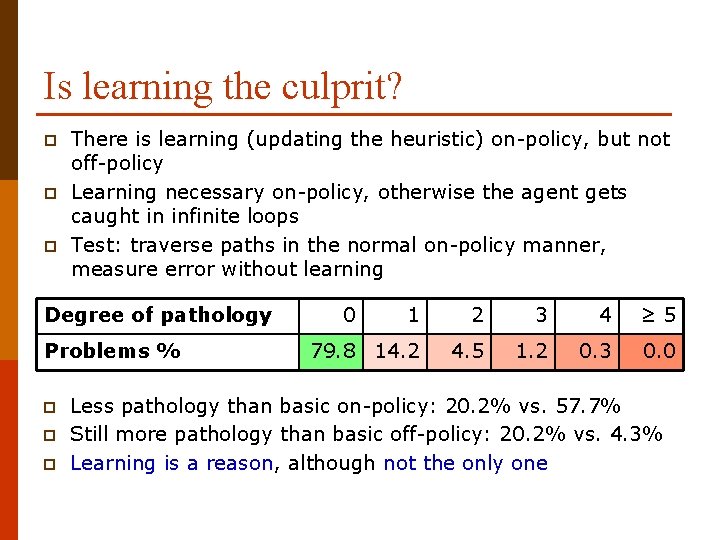

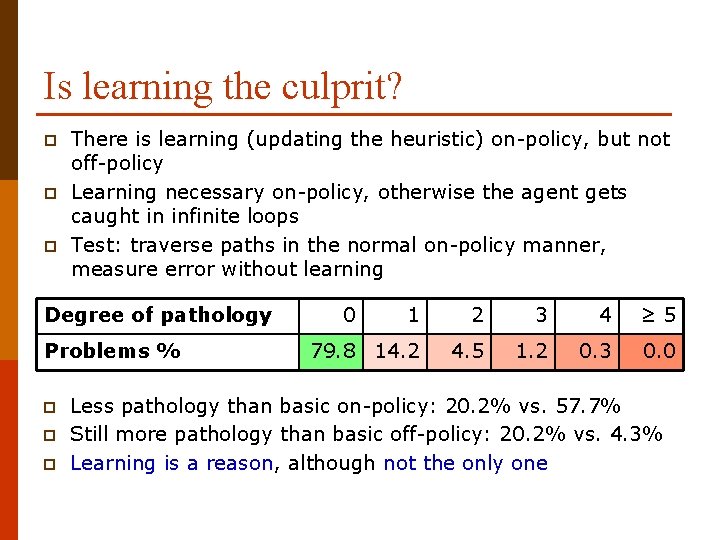

Is learning the culprit?

- There is learning (updating the heuristic) on-policy, but not off-policy
- Learning is necessary on-policy, otherwise the agent gets caught in infinite loops
- Test: traverse paths in the normal on-policy manner, measure error without learning

| Degree of pathology | 0 | 1 | 2 | 3 | 4 | ≥ 5 |
|---|---|---|---|---|---|---|
| Problems % | 79.8 | 14.2 | 4.5 | 1.2 | 0.3 | 0.0 |

- Less pathology than basic on-policy: 20.2% vs. 57.7%
- Still more pathology than basic off-policy: 20.2% vs. 4.3%
- Learning is a reason, although not the only one

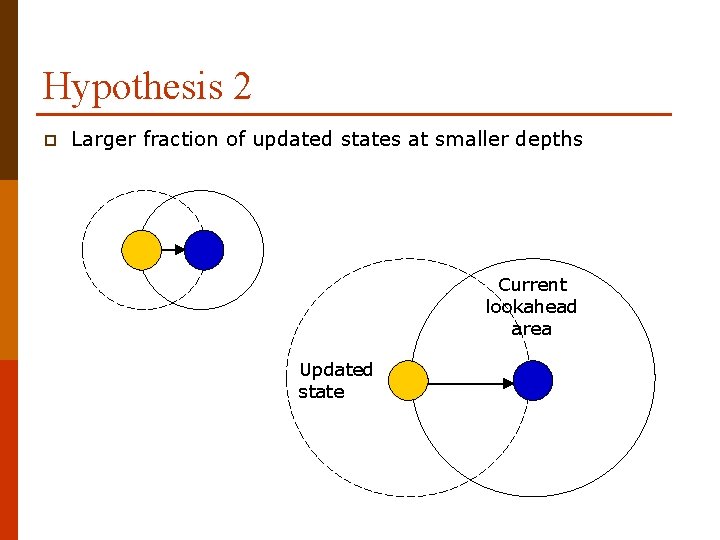

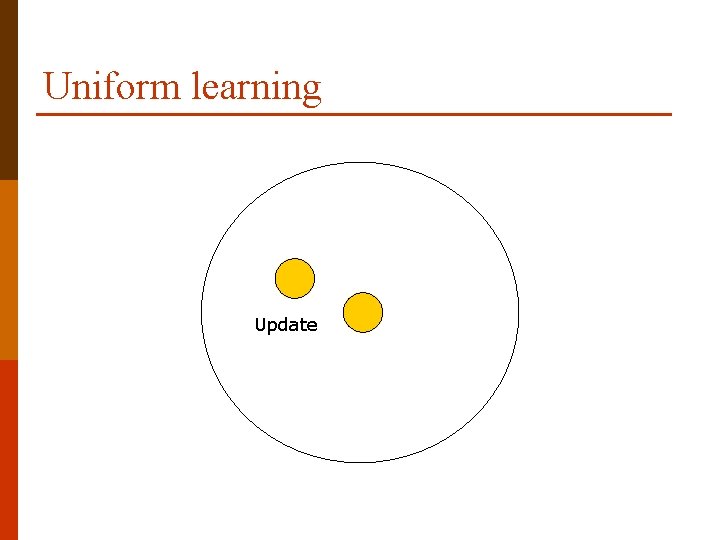

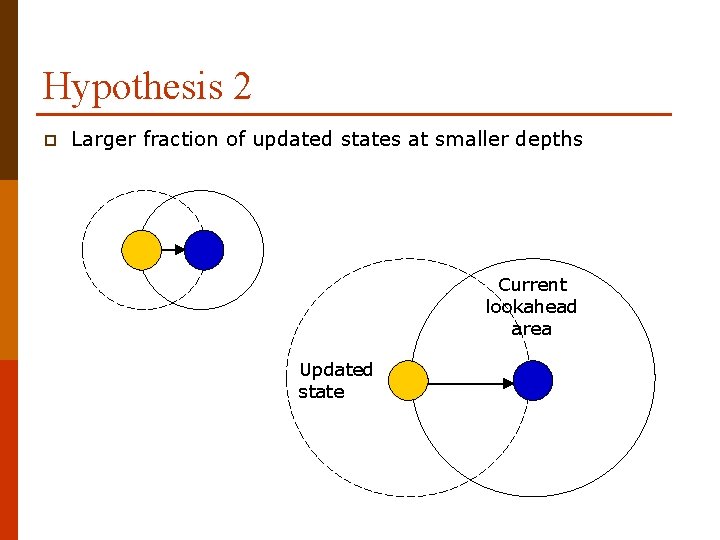

Hypothesis 2

- Larger fraction of updated states at smaller depths

Figure labels: current lookahead area, updated state

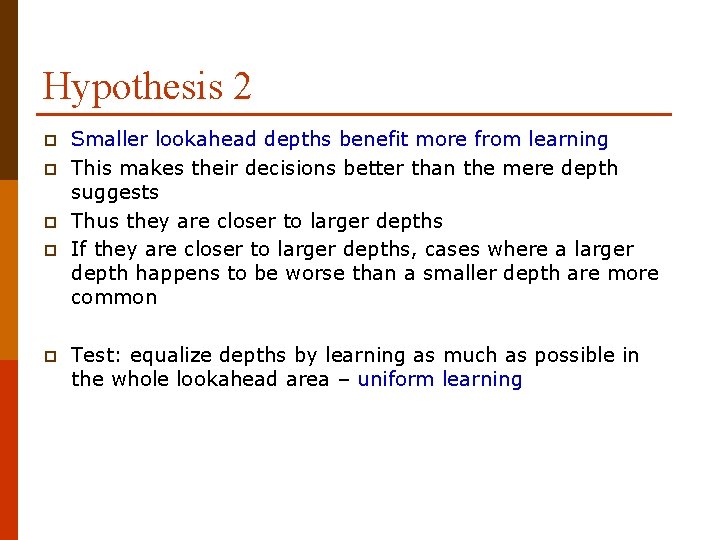

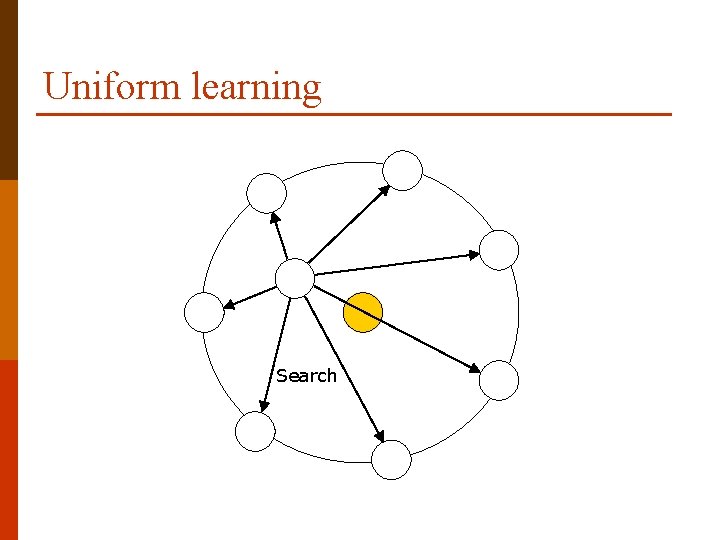

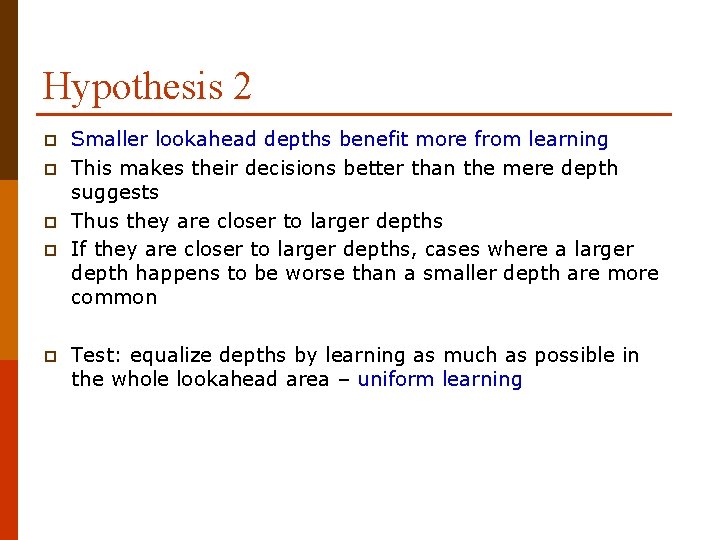

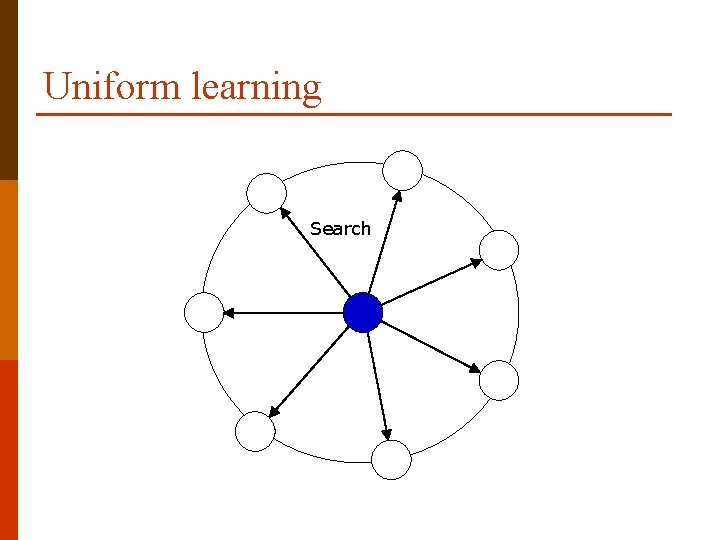

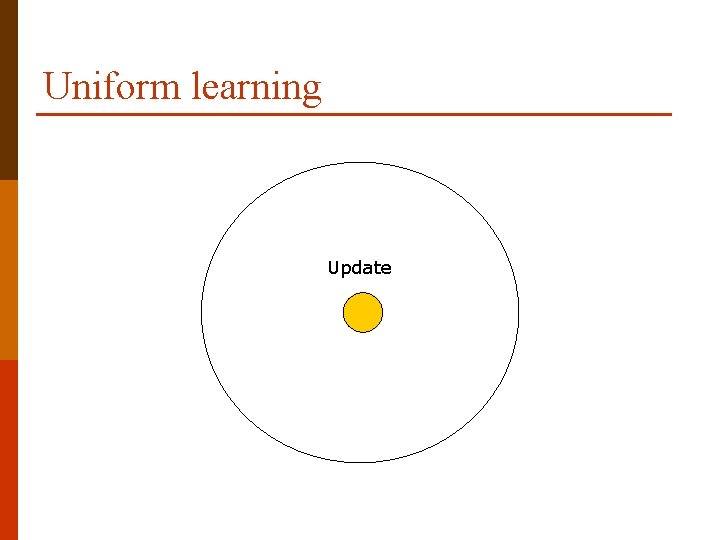

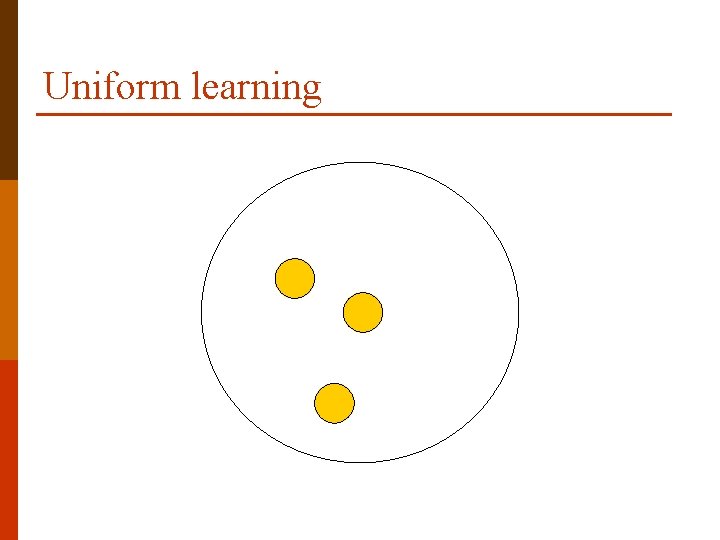

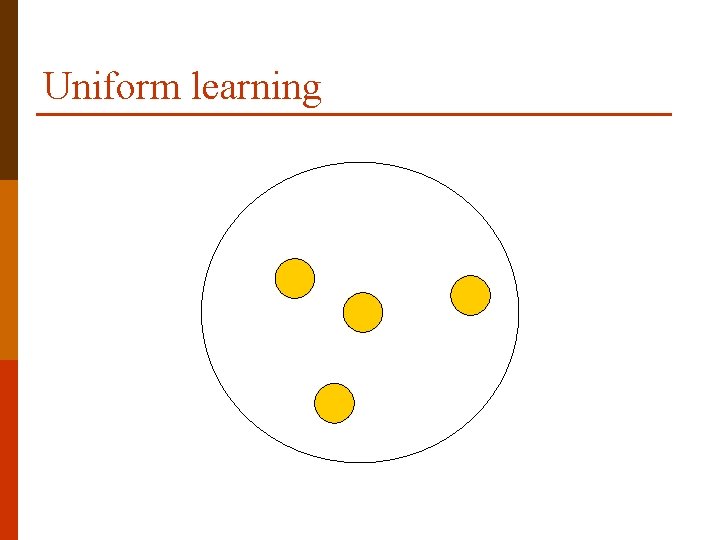

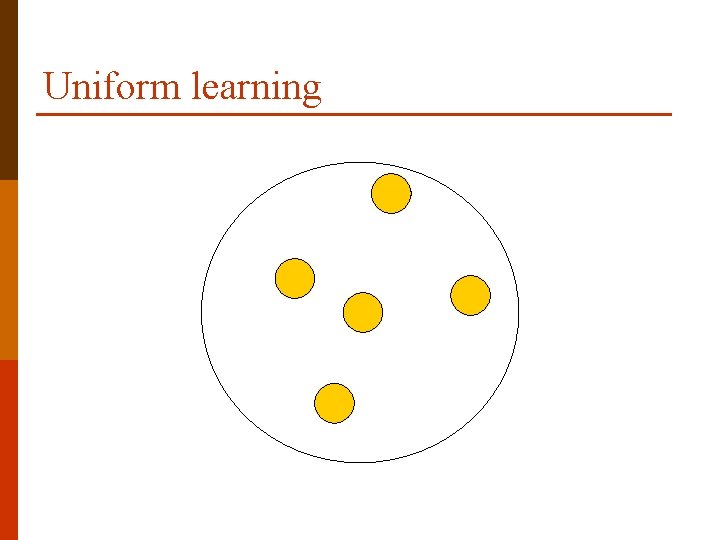

Hypothesis 2

- Smaller lookahead depths benefit more from learning
- This makes their decisions better than the mere depth suggests
- Thus they are closer to larger depths
- If they are closer to larger depths, cases where a larger depth happens to be worse than a smaller depth are more common
- Test: equalize depths by learning as much as possible in the whole lookahead area (uniform learning)

Uniform learning

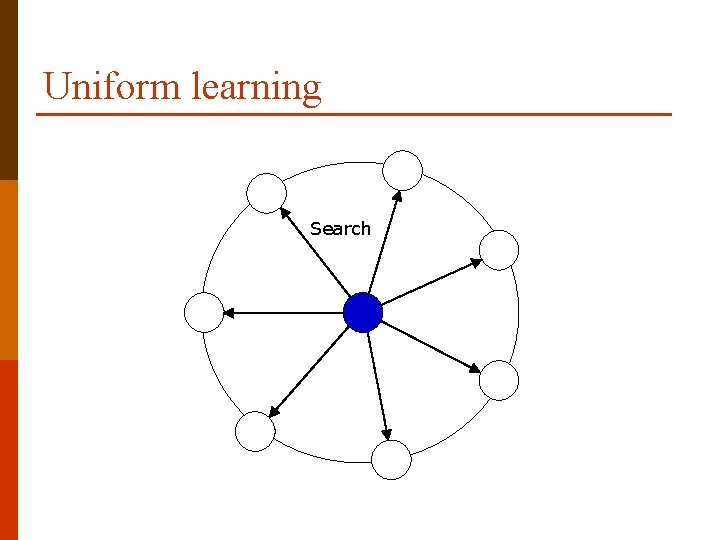

Uniform learning (animation frames): Search, Update, Search, Update

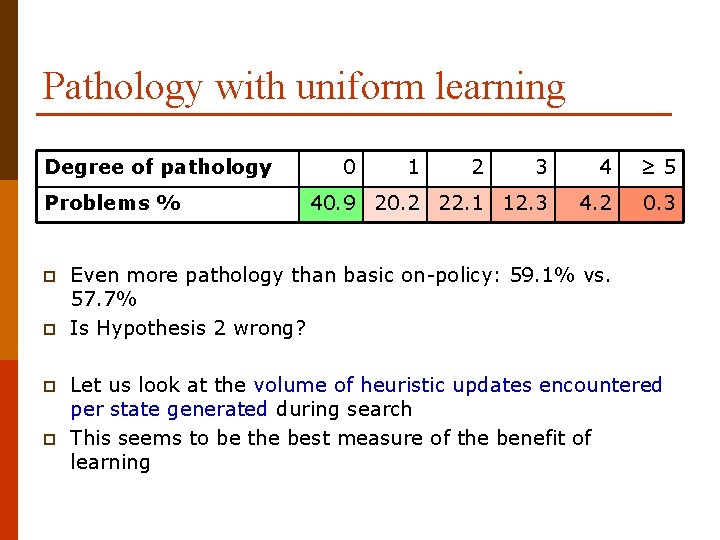

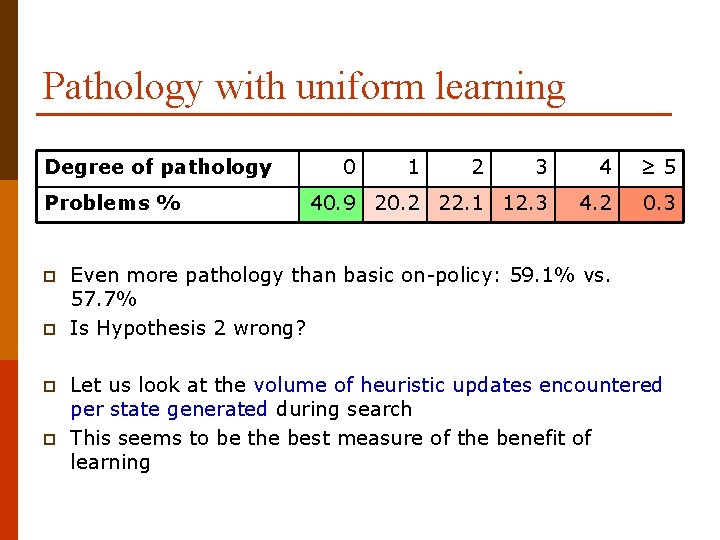

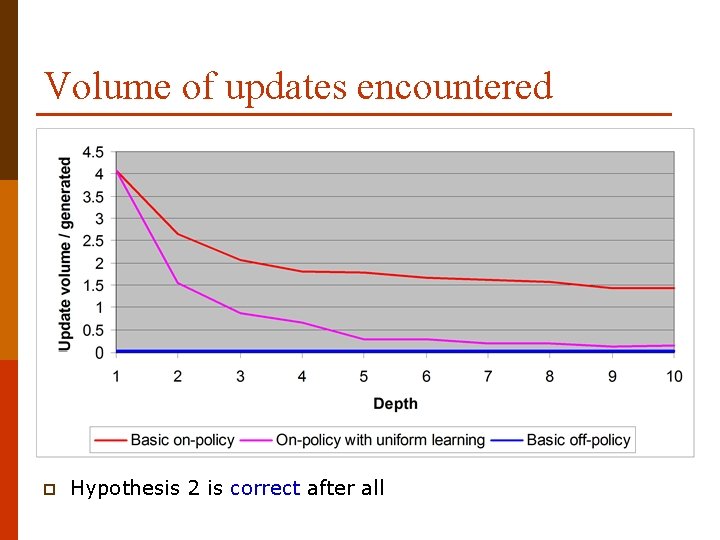

Pathology with uniform learning

| Degree of pathology | 0 | 1 | 2 | 3 | 4 | ≥ 5 |
|---|---|---|---|---|---|---|
| Problems % | 40.9 | 20.2 | 22.1 | 12.3 | 4.2 | 0.3 |

- Even more pathology than basic on-policy: 59.1% vs. 57.7%
- Is Hypothesis 2 wrong?
- Let us look at the volume of heuristic updates encountered per state generated during search
- This seems to be the best measure of the benefit of learning

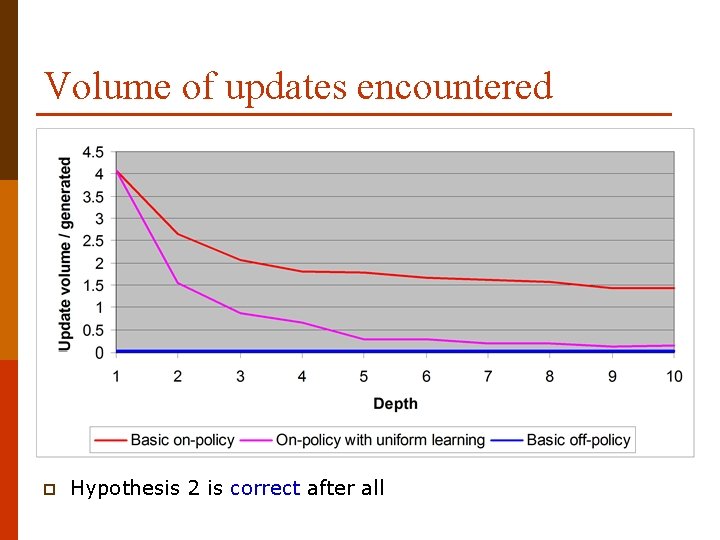

Volume of updates encountered

- Hypothesis 2 is correct after all

Consistency

- The initial heuristic is consistent:
  - the difference in heuristic value between two states does not exceed the actual shortest distance between them
- Updates make it inconsistent
- Research on synthetic trees showed that inconsistency causes pathology [Luštrek 05]
- Uniform learning preserves consistency
- It is more pathological than regular learning
- Consistency is not a problem in our case
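The consistency condition above can be checked mechanically. The sketch below tests it on every single move, which is sufficient: summing the per-move bound along a shortest path gives the bound for any pair of states. The function name, the edge-list representation, and the example corridor with its perturbed value are assumptions for illustration only.

```python
from typing import Dict, Hashable, Iterable, Tuple

State = Hashable
Edge = Tuple[State, State, float]  # (state a, state b, move cost)


def is_consistent(edges: Iterable[Edge], h: Dict[State, float], tol: float = 1e-9) -> bool:
    """Return True if |h(a) - h(b)| <= cost(a, b) holds for every move."""
    return all(abs(h[a] - h[b]) <= cost + tol for a, b, cost in edges)


# Corridor 0-1-2-3-4-5 with unit moves; the goal is state 5.
edges = [(i, i + 1, 1.0) for i in range(5)]
h = {s: float(5 - s) for s in range(6)}  # exact distances, hence consistent
print(is_consistent(edges, h))

# Hypothetical update that raises one value far above its neighbour's.
raised = dict(h)
raised[0] = 7.0
print(is_consistent(edges, raised))
```

The first check passes and the second fails, because the raised value differs from its neighbour's by 3 across a move of cost 1.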

Hypothesis 3

- On-policy: one search every d moves, so fewer searches at larger depths
- Off-policy: one search every move

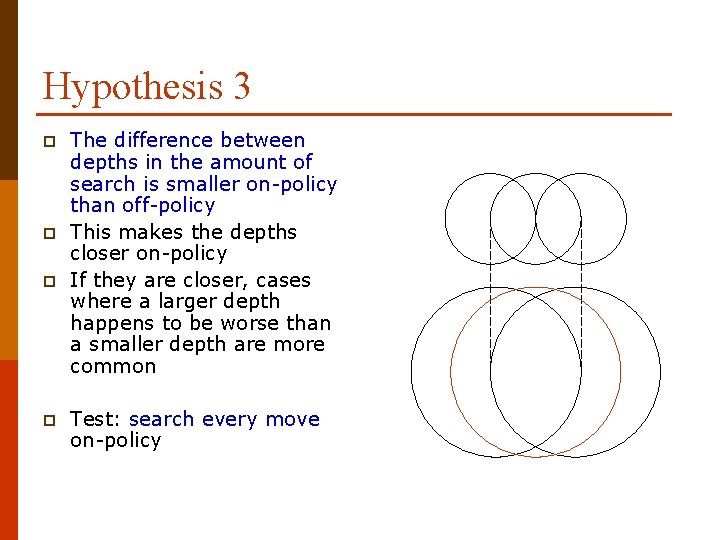

Hypothesis 3

- The difference between depths in the amount of search is smaller on-policy than off-policy
- This makes the depths closer on-policy
- If they are closer, cases where a larger depth happens to be worse than a smaller depth are more common
- Test: search every move on-policy

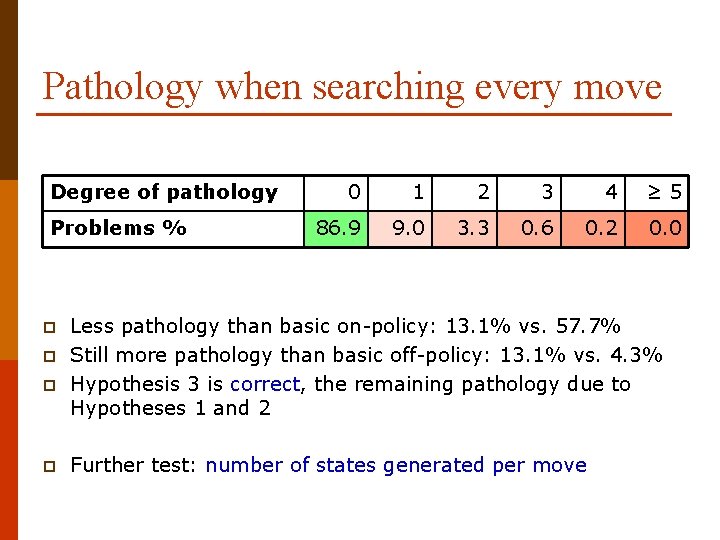

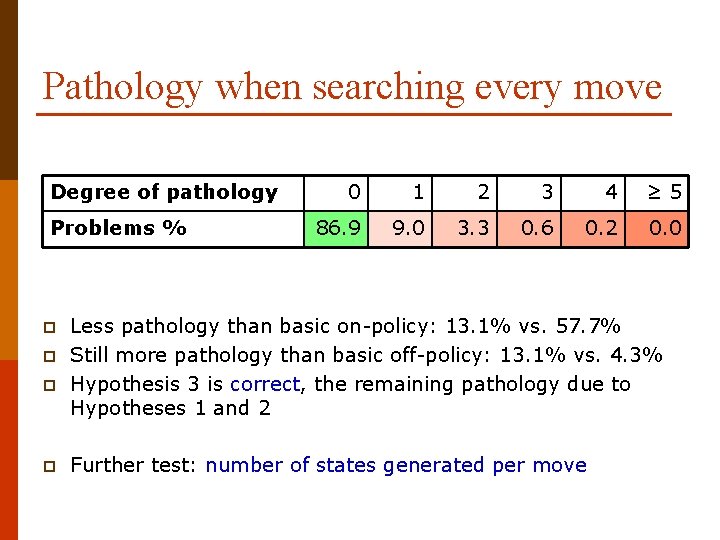

Pathology when searching every move

| Degree of pathology | 0 | 1 | 2 | 3 | 4 | ≥ 5 |
|---|---|---|---|---|---|---|
| Problems % | 86.9 | 9.0 | 3.3 | 0.6 | 0.2 | 0.0 |

- Less pathology than basic on-policy: 13.1% vs. 57.7%
- Still more pathology than basic off-policy: 13.1% vs. 4.3%
- Hypothesis 3 is correct; the remaining pathology is due to Hypotheses 1 and 2
- Further test: number of states generated per move

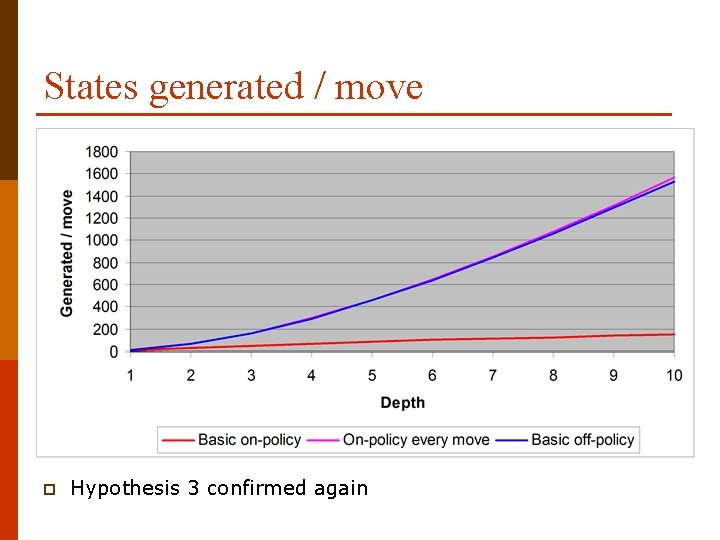

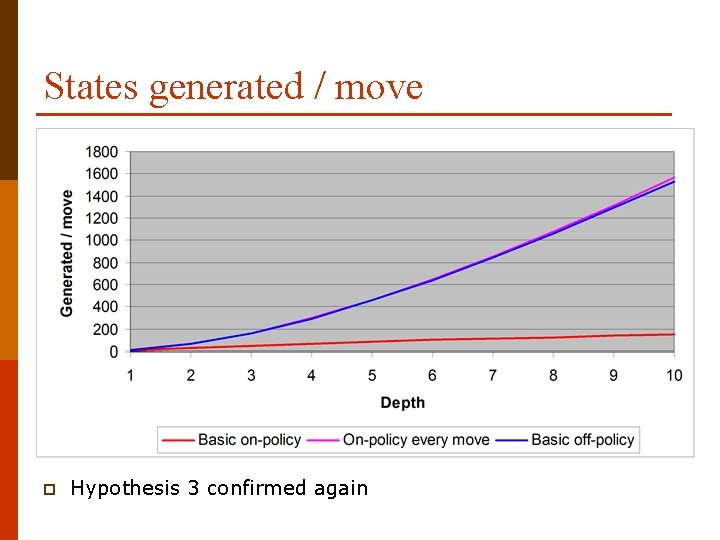

States generated / move

- Hypothesis 3 confirmed again

Summary of explanation

- On-policy pathology is caused by different lookahead depths being closer to each other in terms of the quality of decisions than the mere depths would suggest:
  - due to the volume of heuristic updates encountered per state generated
  - due to the number of states generated per move
- LRTS tends to visit pathological states with an above-average frequency

- Introduction
- Problem
- Explanation
- Remedy

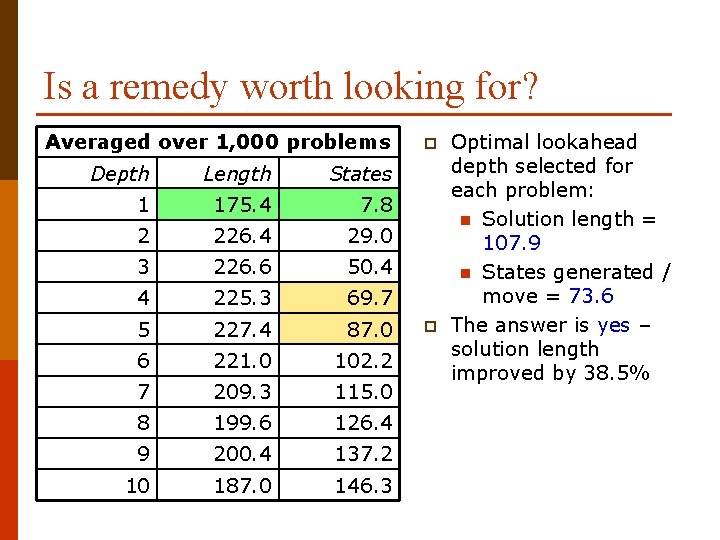

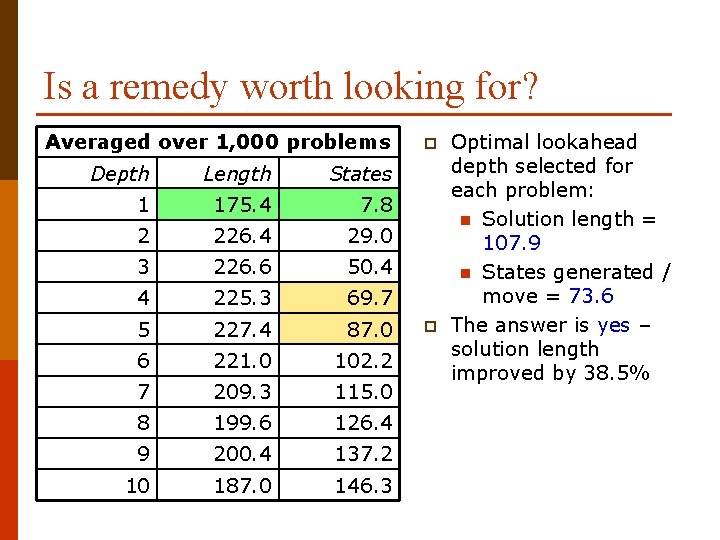

Is a remedy worth looking for?

Averaged over 1,000 problems:

| Depth | Length | States generated / move |
|---|---|---|
| 1 | 175.4 | 7.8 |
| 2 | 226.4 | 29.0 |
| 3 | 226.6 | 50.4 |
| 4 | 225.3 | 69.7 |
| 5 | 227.4 | 87.0 |
| 6 | 221.0 | 102.2 |
| 7 | 209.3 | 115.0 |
| 8 | 199.6 | 126.4 |
| 9 | 200.4 | 137.2 |
| 10 | 187.0 | 146.3 |

- Optimal lookahead depth selected for each problem:
  - Solution length = 107.9
  - States generated / move = 73.6
- The answer is yes: solution length improved by 38.5%
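The 38.5% figure is reproduced if the baseline is taken to be the best fixed depth in the table (depth 1, length 175.4). The slide does not name the baseline, so this is an assumed reading:

$$
\frac{175.4 - 107.9}{175.4} = \frac{67.5}{175.4} \approx 0.385
$$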

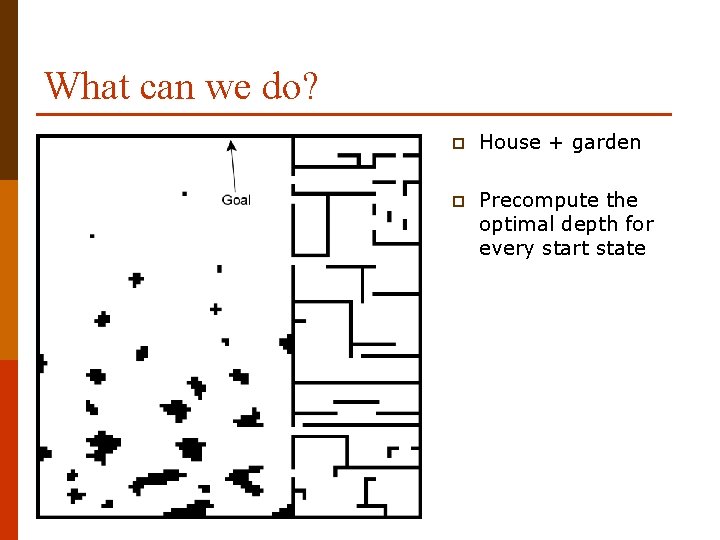

What can we do?

- Map: House + garden
- Precompute the optimal depth for every start state

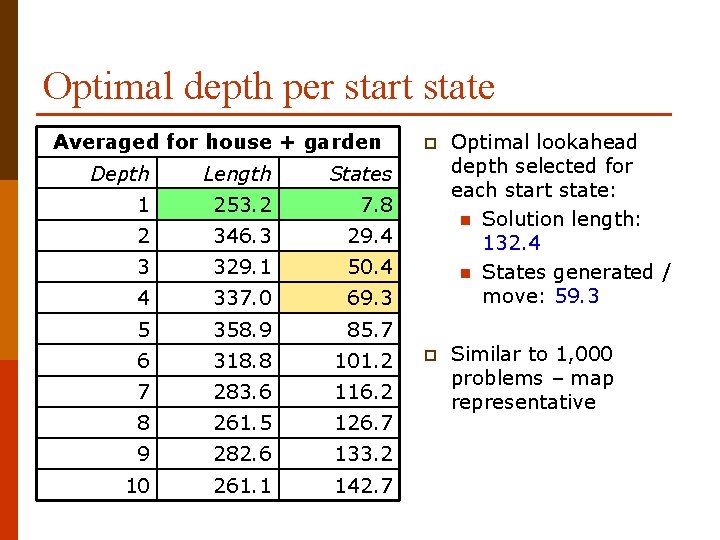

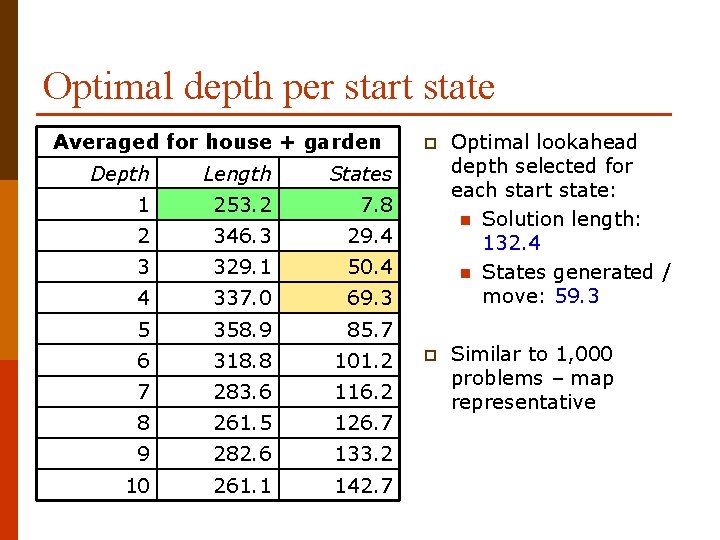

Optimal depth per start state

Averaged for House + garden:

| Depth | Length | States generated / move |
|---|---|---|
| 1 | 253.2 | 7.8 |
| 2 | 346.3 | 29.4 |
| 3 | 329.1 | 50.4 |
| 4 | 337.0 | 69.3 |
| 5 | 358.9 | 85.7 |
| 6 | 318.8 | 101.2 |
| 7 | 283.6 | 116.2 |
| 8 | 261.5 | 126.7 |
| 9 | 282.6 | 133.2 |
| 10 | 261.1 | 142.7 |

- Optimal lookahead depth selected for each start state:
  - Solution length: 132.4
  - States generated / move: 59.3
- Similar to the 1,000 problems, so the map is representative

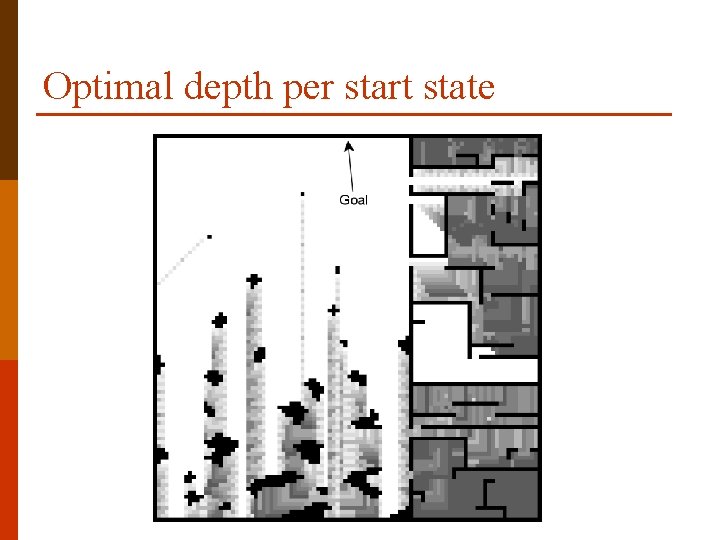

Optimal depth per start state

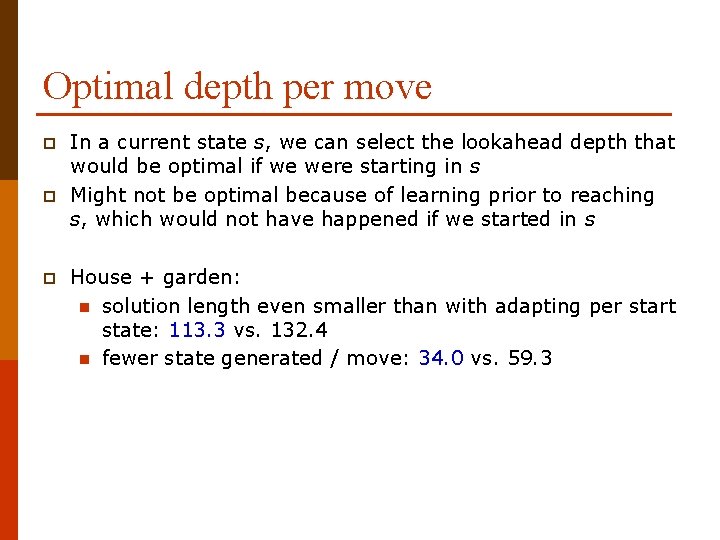

Optimal depth per move

- In a current state s, we can select the lookahead depth that would be optimal if we were starting in s
- It might not be optimal because of learning prior to reaching s, which would not have happened if we had started in s
- House + garden:
  - solution length even smaller than with adapting per start state: 113.3 vs. 132.4
  - fewer states generated / move: 34.0 vs. 59.3

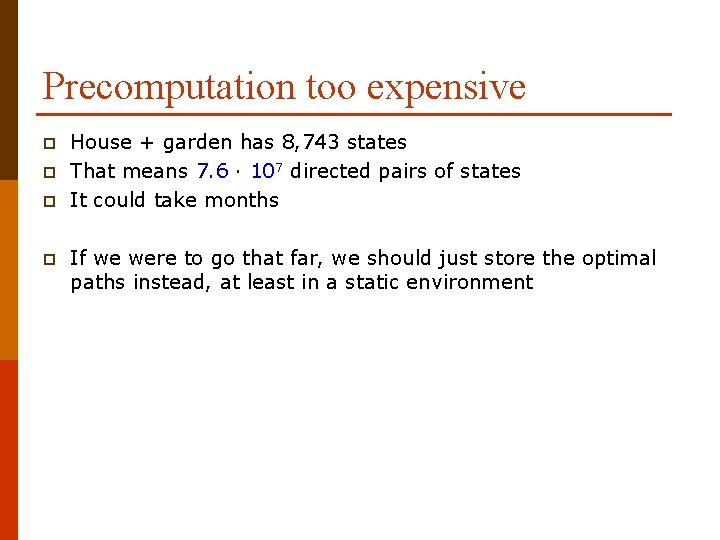

Precomputation too expensive

- House + garden has 8,743 states
- That means 7.6 × 10⁷ directed pairs of states
- It could take months
- If we were to go that far, we should just store the optimal paths instead, at least in a static environment

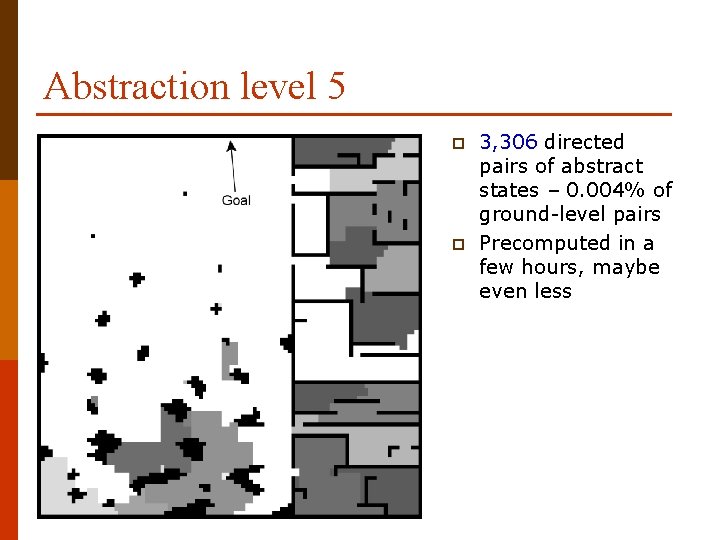

State abstraction

- Clique abstraction [Sturtevant, Bulitko et al. 05]
- Compute the optimal lookahead depth for the central ground-level state under each abstract state
- Use the depth in all ground-level states under that abstract state
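At run time, the scheme above amounts to a table lookup. The sketch below assumes the depths are stored per directed pair of abstract states (current, goal), as the pair counts elsewhere in the talk suggest. The names `abstract_of`, `depth_table` and `select_depth`, and the fallback depth of 1, are hypothetical.

```python
from typing import Dict, Hashable, Tuple

State = Hashable
AbstractState = Hashable


def select_depth(
    state: State,
    goal: State,
    abstract_of: Dict[State, AbstractState],
    depth_table: Dict[Tuple[AbstractState, AbstractState], int],
    default_depth: int = 1,
) -> int:
    """Look up the precomputed lookahead depth for a ground-level state.

    Every ground-level state shares the depth computed for the central state
    of its abstract state; pairs missing from the table use `default_depth`.
    """
    key = (abstract_of[state], abstract_of[goal])
    return depth_table.get(key, default_depth)


# Toy example: ground states "a" and "b" belong to abstract state 0, "c" to 1.
abstract_of = {"a": 0, "b": 0, "c": 1}
depth_table = {(0, 1): 4}
print(select_depth("a", "c", abstract_of, depth_table))
print(select_depth("b", "c", abstract_of, depth_table))
```

Both calls return the same depth because "a" and "b" sit under the same abstract state, which is where the saving in precomputation comes from.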

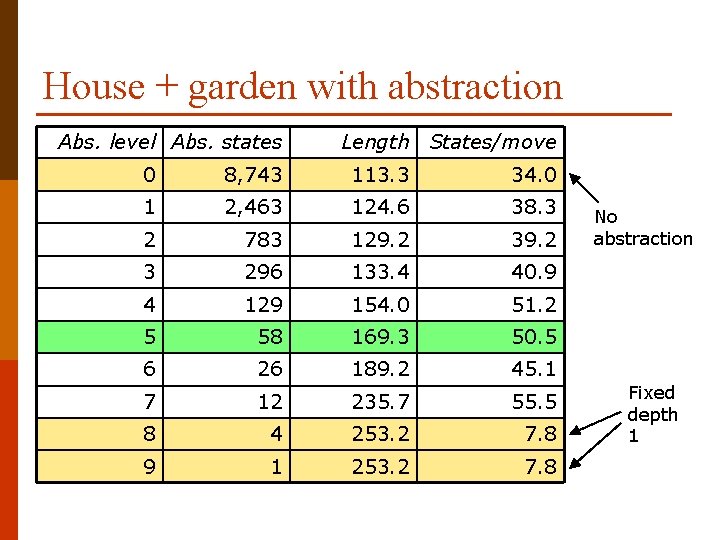

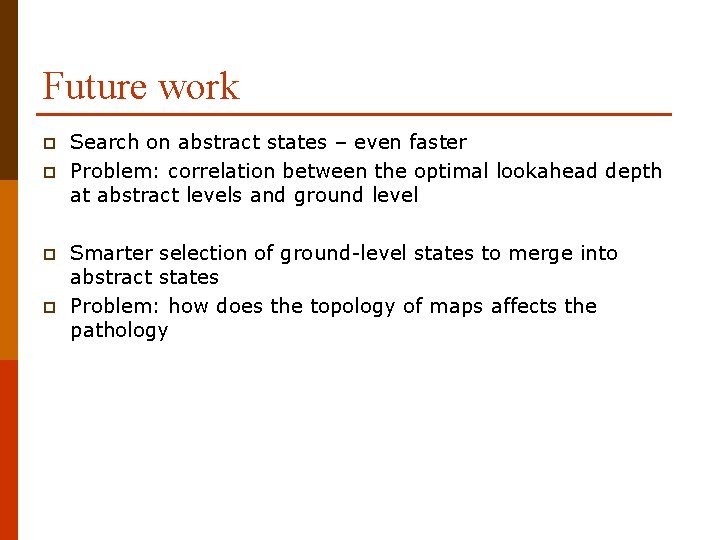

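House + garden with abstraction

| Abs. level | Abs. states | Length | States / move | Note |
|---|---|---|---|---|
| 0 | 8,743 | 113.3 | 34.0 | No abstraction |
| 1 | 2,463 | 124.6 | 38.3 | |
| 2 | 783 | 129.2 | | |
| 3 | 296 | 133.4 | 40.9 | |
| 4 | 129 | 154.0 | 51.2 | |
| 5 | 58 | 169.3 | 50.5 | |
| 6 | 26 | 189.2 | 45.1 | |
| 7 | 12 | 235.7 | 55.5 | |
| 8 | 4 | 253.2 | 7.8 | Fixed depth 1 |
| 9 | 1 | 253.2 | 7.8 | Fixed depth 1 |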

Abstraction level 5

- 3,306 directed pairs of abstract states: 0.004% of ground-level pairs
- Precomputed in a few hours, maybe even less
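The pair counts quoted for House + garden follow from n · (n − 1) directed pairs among n states. A quick check, using the state counts from the slides (the function name is illustrative):

```python
def directed_pairs(n: int) -> int:
    """Number of ordered (start, goal) pairs of distinct states."""
    return n * (n - 1)


ground_pairs = directed_pairs(8743)   # ground level: 8,743 states
abstract_pairs = directed_pairs(58)   # abstraction level 5: 58 abstract states
share_percent = 100 * abstract_pairs / ground_pairs

print(ground_pairs)             # 76431306, i.e. about 7.6e7
print(abstract_pairs)           # 3306
print(round(share_percent, 3))  # 0.004
```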

Future work

- Search on abstract states: even faster
- Problem: correlation between the optimal lookahead depth at abstract levels and ground level
- Smarter selection of ground-level states to merge into abstract states
- Problem: how does the topology of maps affect the pathology?

Thank you. Questions?