Intro to Program Evaluation

- Slides: 65

Intro to Program Evaluation: Defining Terms

Defining Evaluation

- Evaluation is the systematic investigation of the merit, worth, or significance of any “object.” (Michael Scriven)
- A program is any organized action or activity implemented to achieve some result.

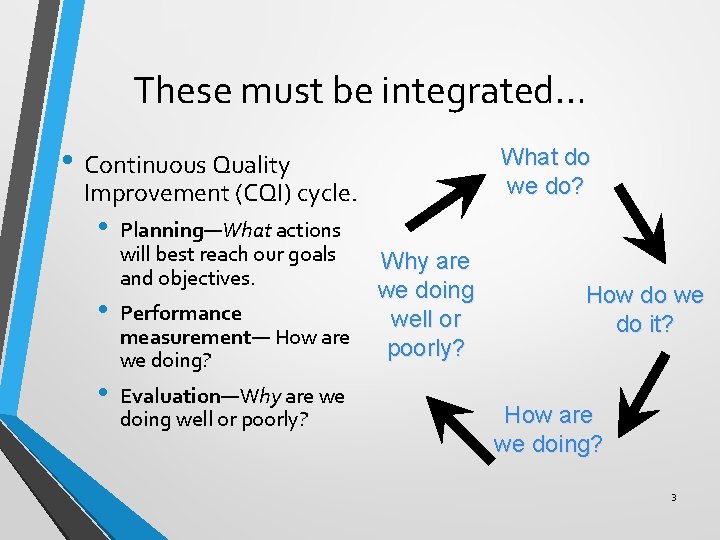

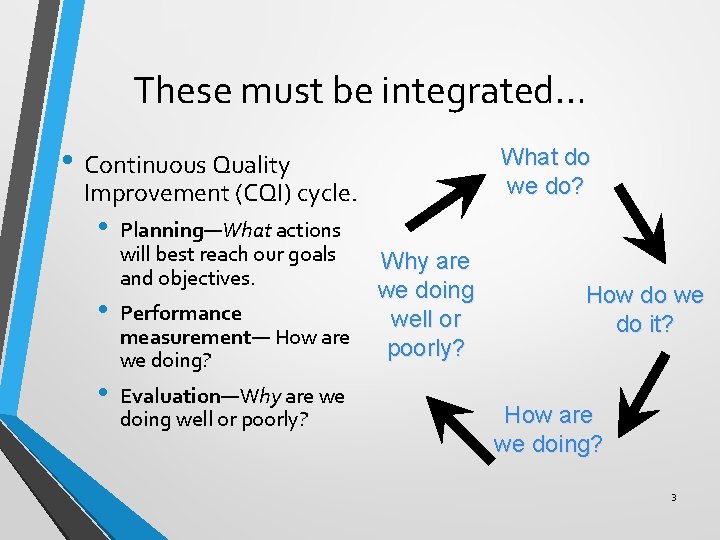

These Must Be Integrated…

The Continuous Quality Improvement (CQI) cycle:

- Planning: What actions will best reach our goals and objectives?
- Performance measurement: How are we doing?
- Evaluation: Why are we doing well or poorly?

[Cycle diagram labels: What do we do? How do we do it? How are we doing?]

Introduction to Program Evaluation: CDC’s Evaluation Framework

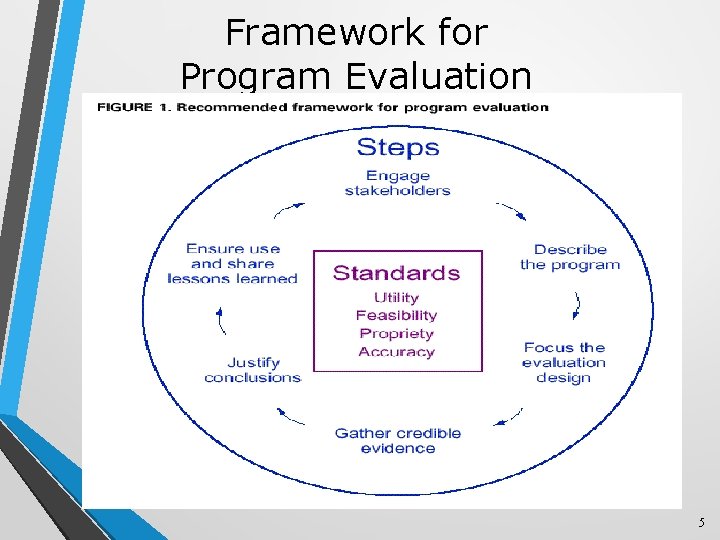

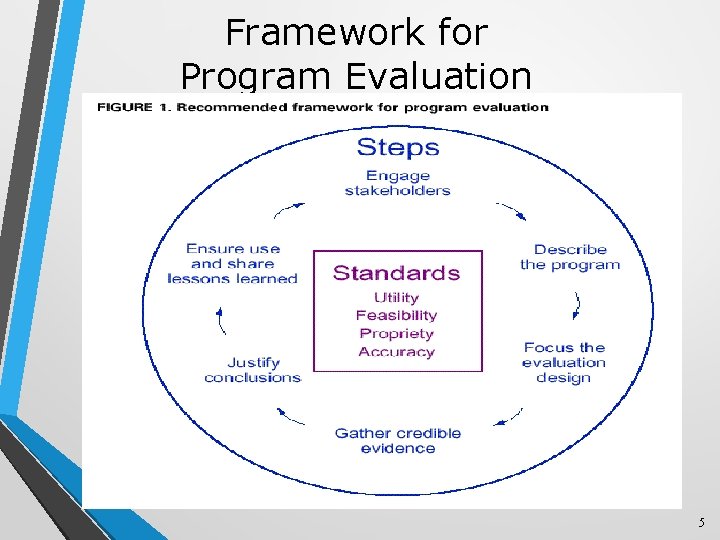

Framework for Program Evaluation

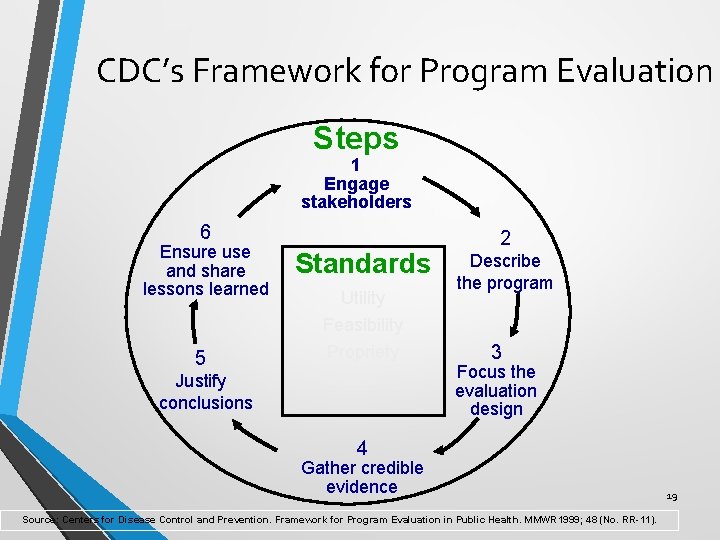

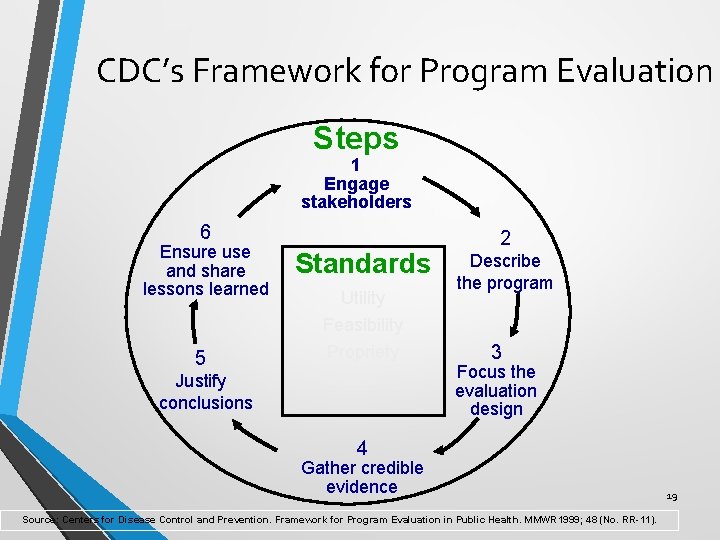

Step-by-Step

1. Engage stakeholders: Decide who needs to be part of the design and implementation of the evaluation for it to make a difference.
2. Describe the program: Draw a “soup to nuts” picture of the program, covering its activities and all intended outcomes.
3. Focus the evaluation: Decide which evaluation questions are the key ones.

Step-by-Step

Seeds planted in Steps 1-3 are harvested later:

4. Gather credible evidence: Write indicators, and choose and implement data collection sources and methods.
5. Justify conclusions: Review and interpret data and evidence to determine success or failure.
6. Use lessons learned: Use evaluation results in a meaningful way.

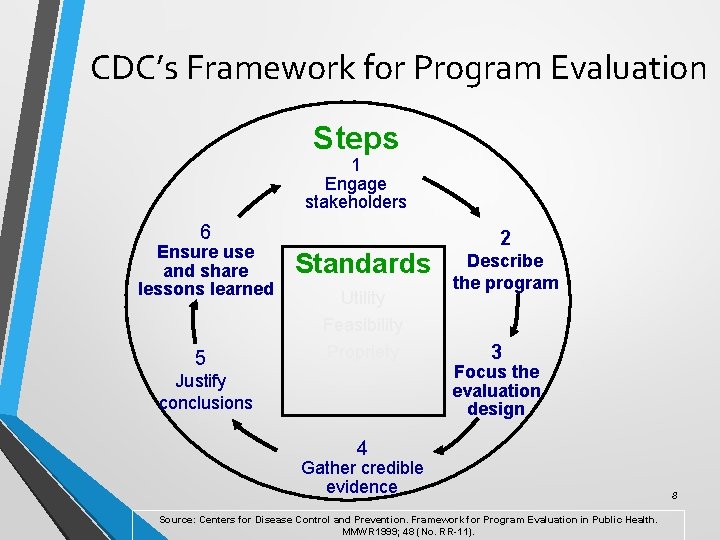

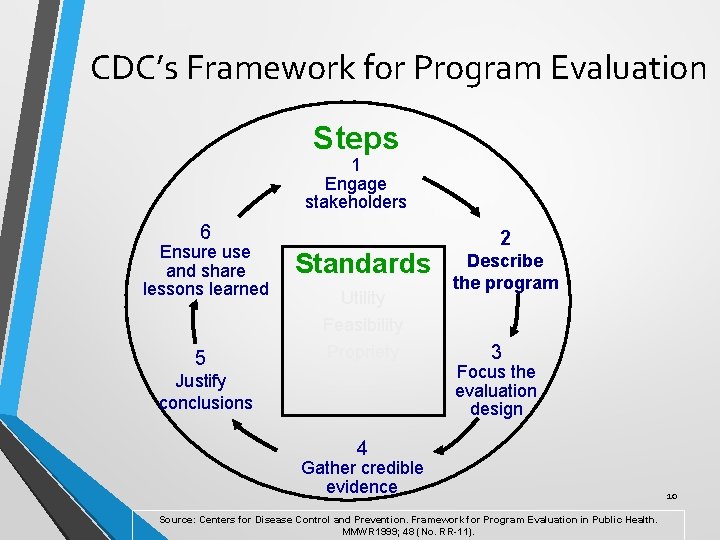

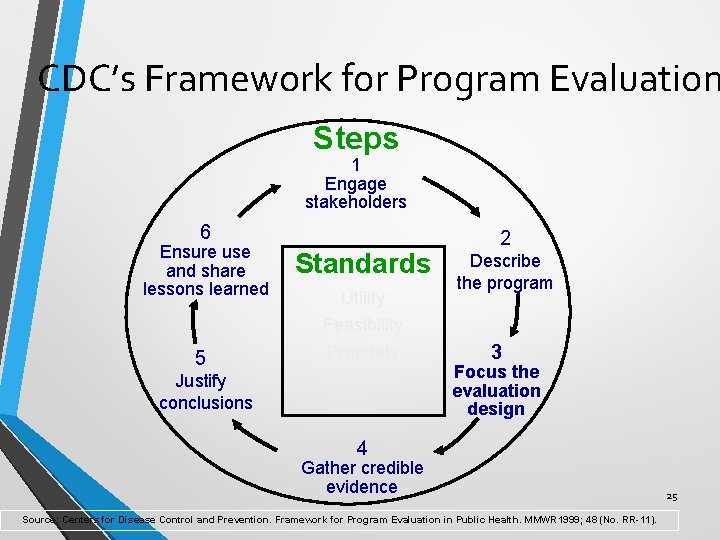

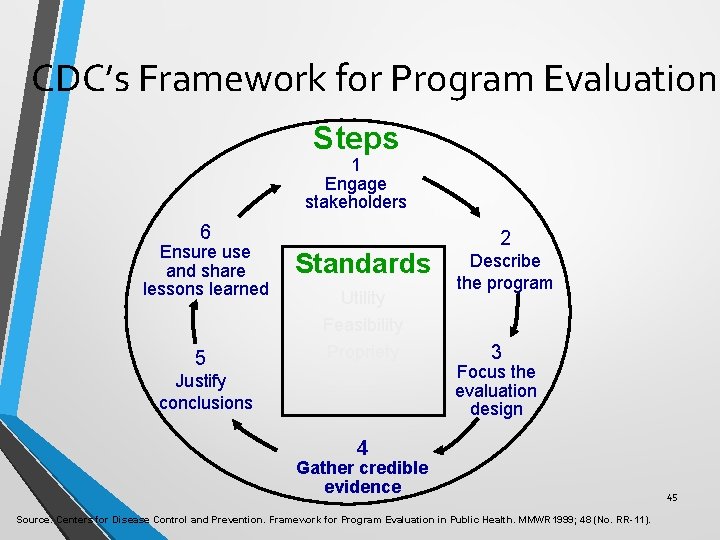

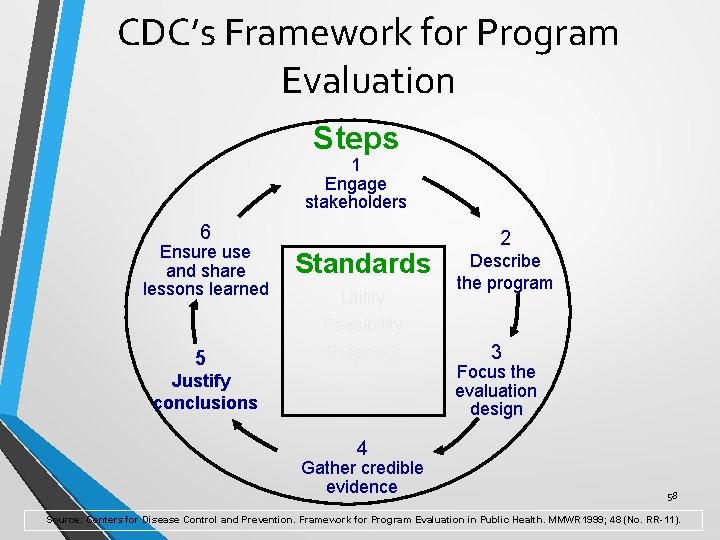

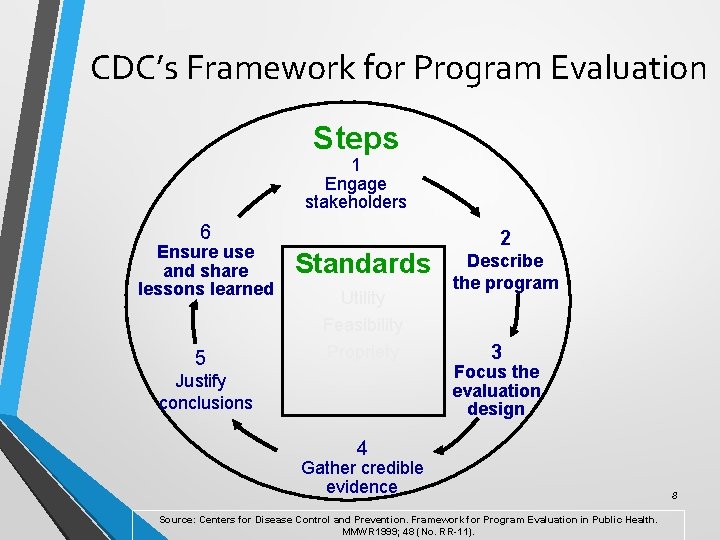

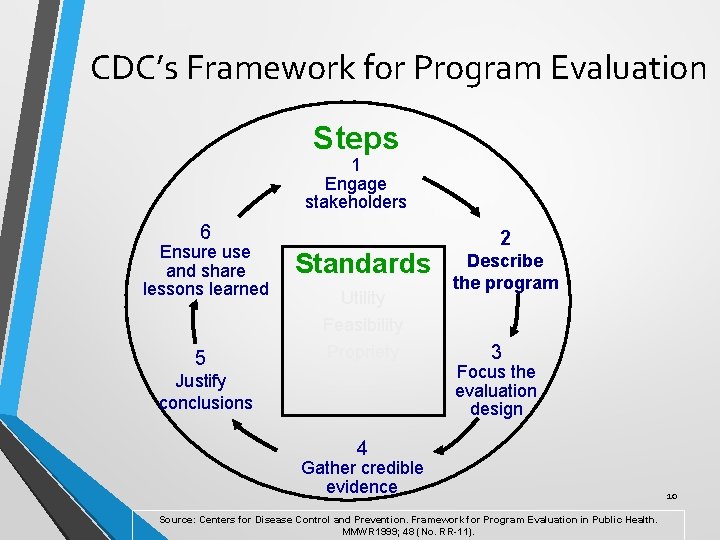

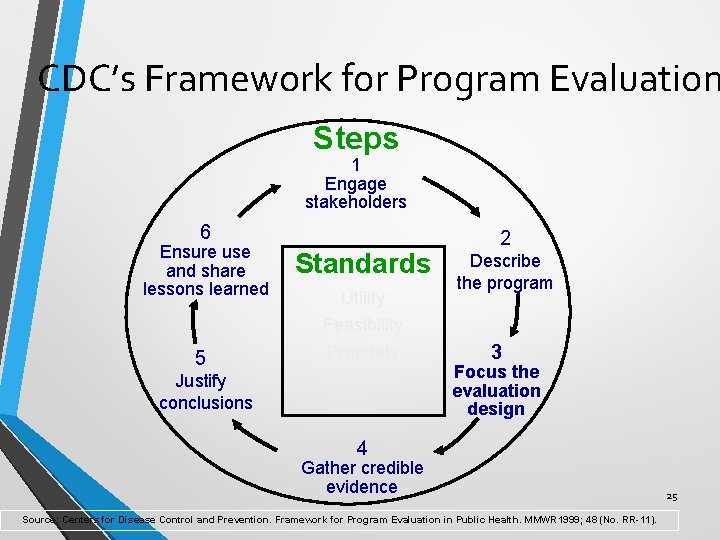

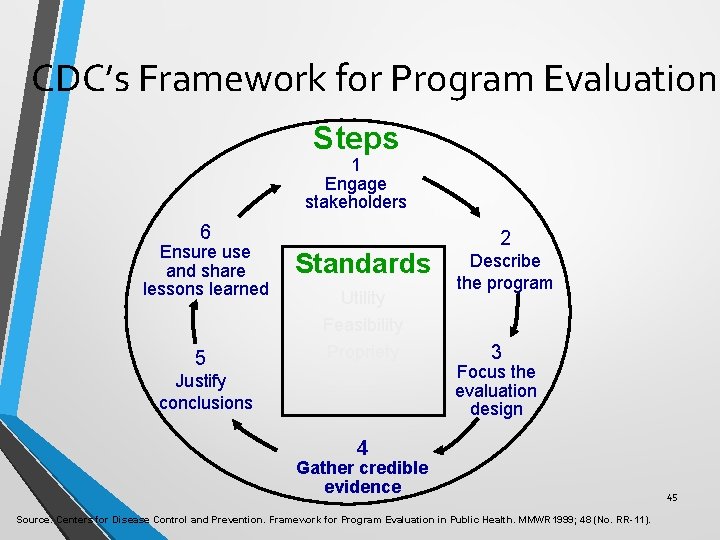

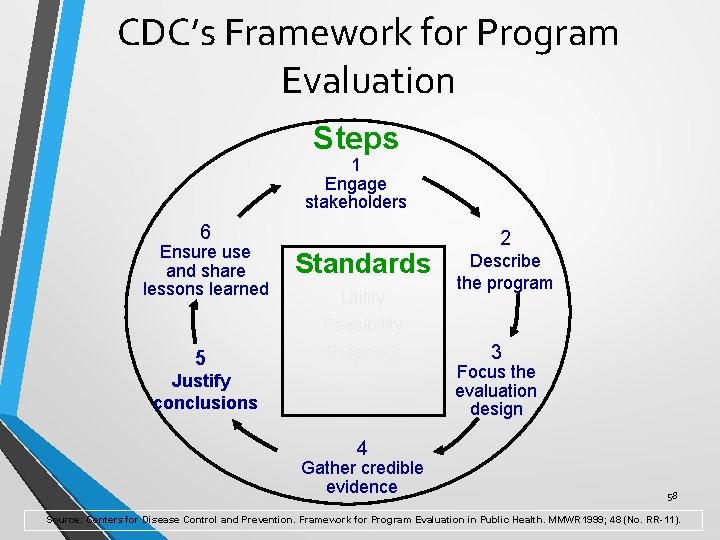

CDC’s Framework for Program Evaluation

Steps:

1. Engage stakeholders
2. Describe the program
3. Focus the evaluation design
4. Gather credible evidence
5. Justify conclusions
6. Ensure use and share lessons learned

Standards: Utility, Feasibility, Propriety, Accuracy

Source: Centers for Disease Control and Prevention. Framework for Program Evaluation in Public Health. MMWR 1999; 48 (No. RR-11).

Introduction to Program Evaluation: Step 2. Describing the Program


You Don’t Ever Need a Logic Model, BUT You Always Need a Program Description

Don’t jump into planning or evaluation without clarity on:

- The big “need” your program is to address
- The key target group(s) who need to take action
- The kinds of actions they need to take (your intended outcomes or objectives)
- Activities needed to meet those outcomes
- “Causal” relationships between activities and outcomes

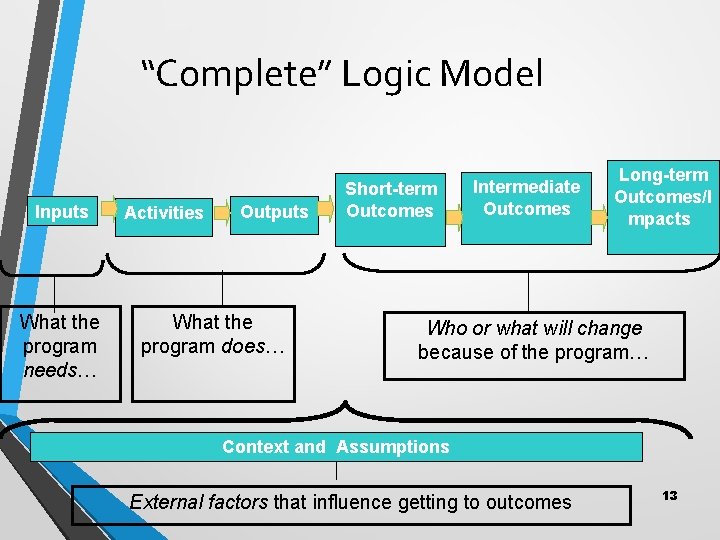

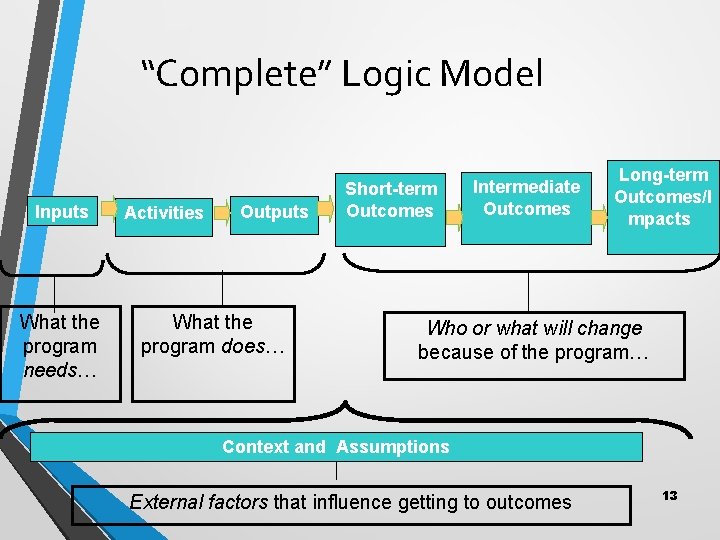

Logic Models and Program Description

- Logic models: Graphic depictions of the relationship between your program’s activities and its intended effects

“Complete” Logic Model

- Inputs: What the program needs…
- Activities and Outputs: What the program does…
- Short-term Outcomes, Intermediate Outcomes, and Long-term Outcomes/Impacts: Who or what will change because of the program…
- Context and Assumptions: External factors that influence getting to outcomes

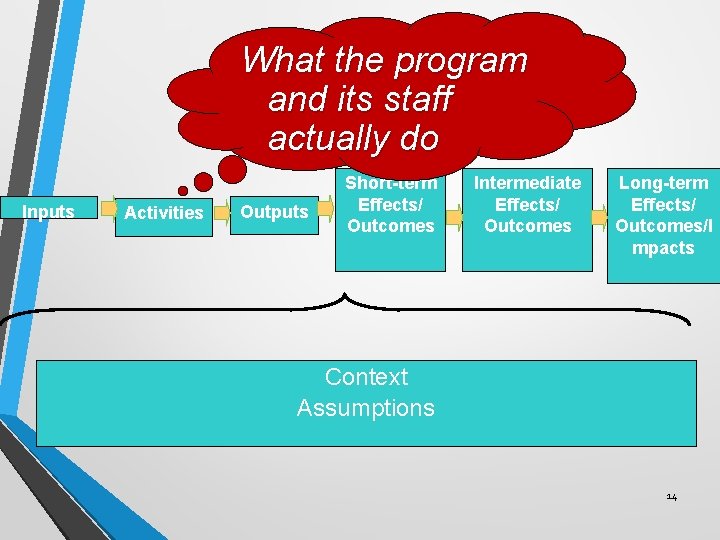

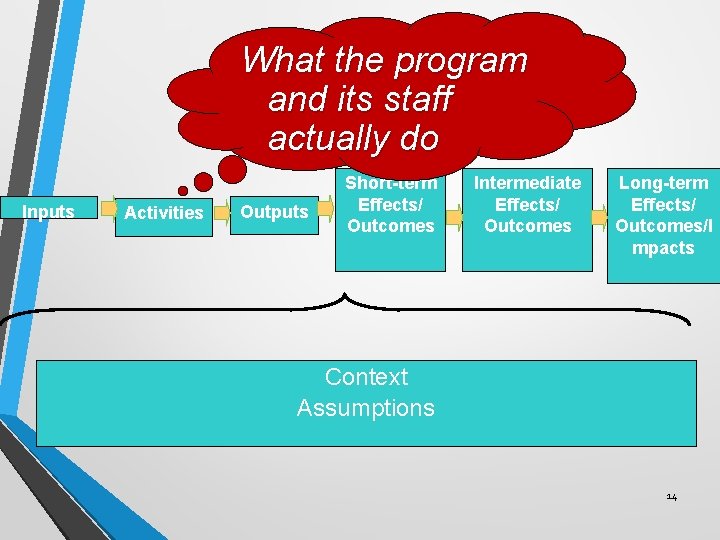

Activities: What the program and its staff actually do

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes/Impacts, with Context and Assumptions]

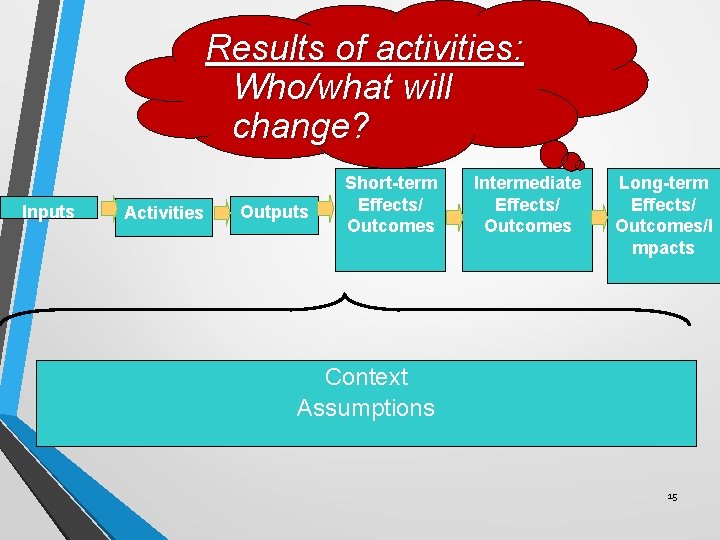

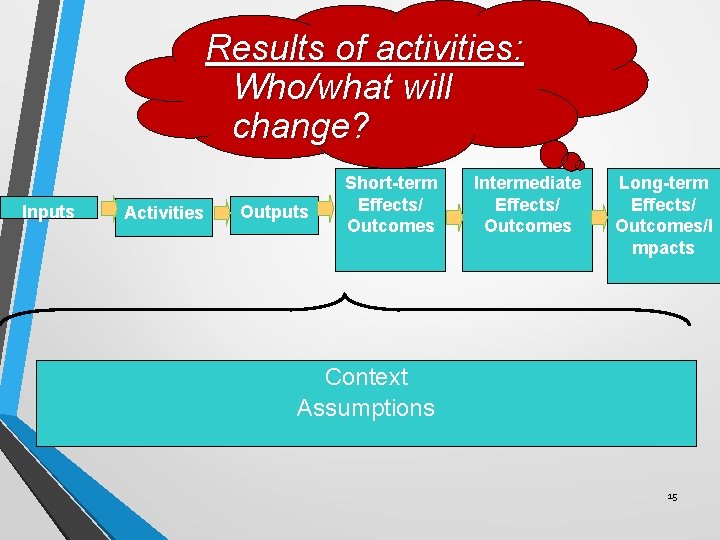

Outcomes: Results of activities. Who or what will change?

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes/Impacts, with Context and Assumptions]

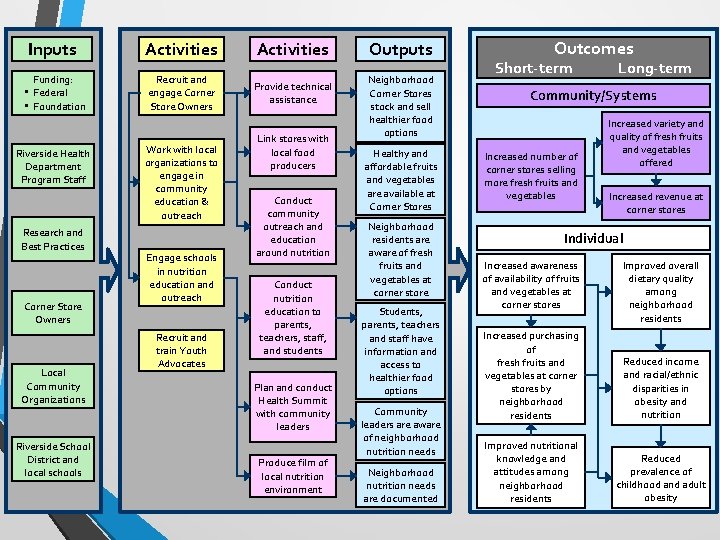

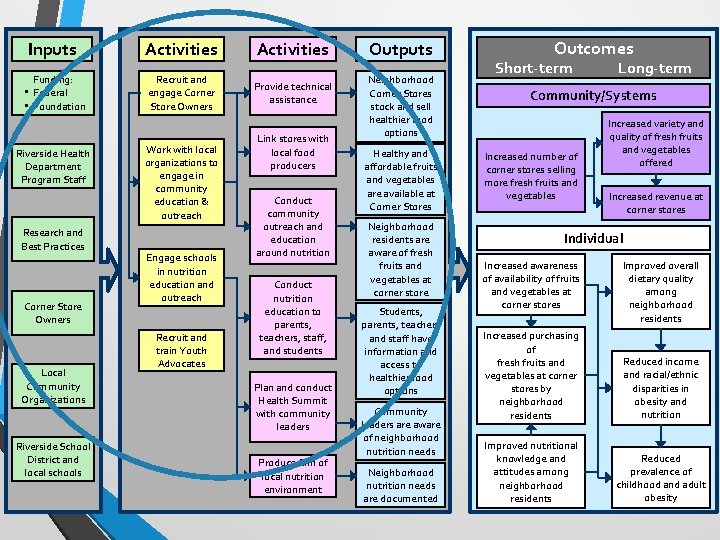

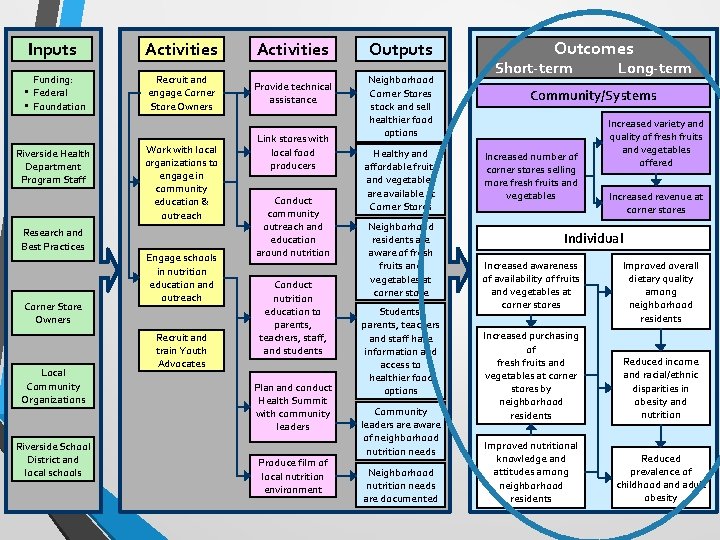

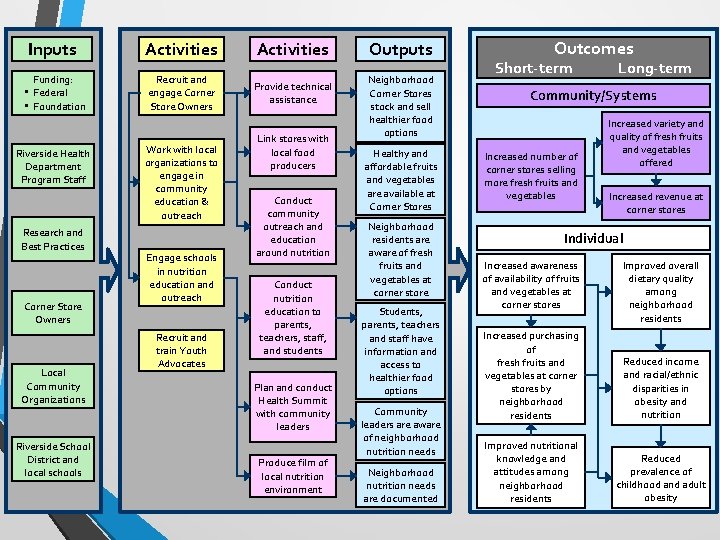

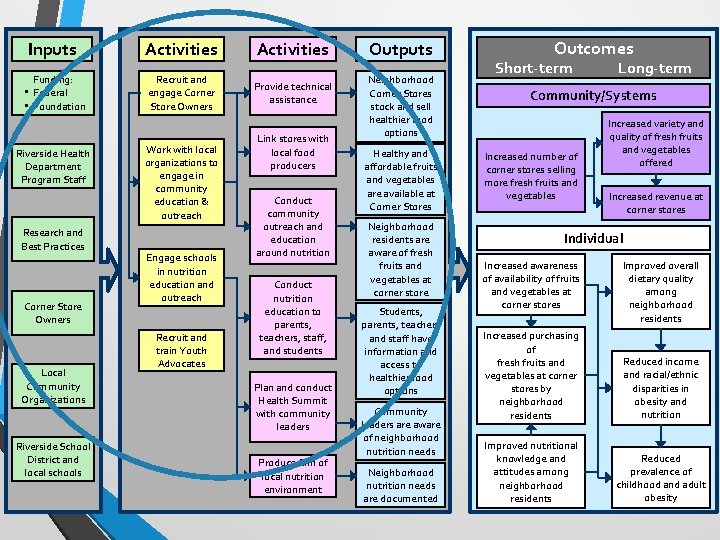

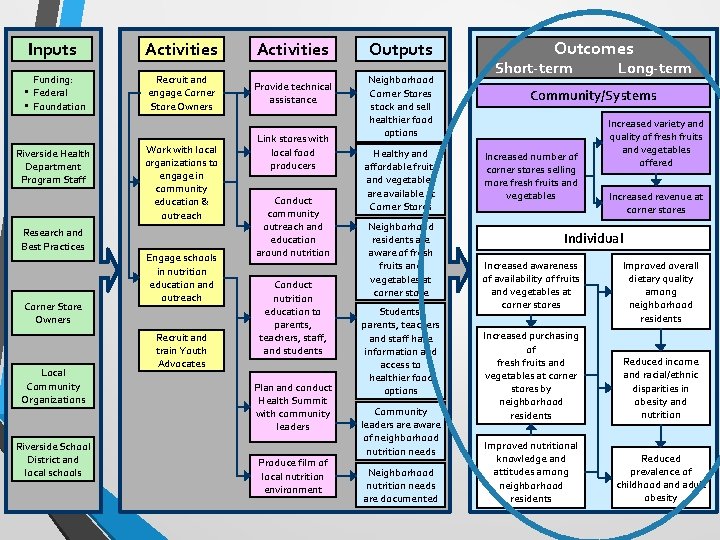

Inputs

- Funding: Federal; Foundation
- Riverside Health Department Program Staff
- Research and Best Practices
- Corner Store Owners
- Local Community Organizations
- Riverside School District and local schools

Activities

- Recruit and engage Corner Store Owners
- Work with local organizations to engage in community education & outreach
- Engage schools in nutrition education and outreach
- Recruit and train Youth Advocates
- Provide technical assistance
- Link stores with local food producers
- Conduct community outreach and education around nutrition
- Conduct nutrition education for parents, teachers, staff, and students
- Plan and conduct a Health Summit with community leaders
- Produce a film of the local nutrition environment

Outputs

- Neighborhood Corner Stores stock and sell healthier food options
- Healthy and affordable fruits and vegetables are available at Corner Stores
- Neighborhood residents are aware of fresh fruits and vegetables at corner stores
- Students, parents, teachers, and staff have information about and access to healthier food options
- Community leaders are aware of neighborhood nutrition needs
- Neighborhood nutrition needs are documented

Outcomes (short-term and long-term)

Community/Systems:

- Increased number of corner stores selling more fresh fruits and vegetables
- Increased variety and quality of fresh fruits and vegetables offered
- Increased revenue at corner stores

Individual:

- Increased awareness of availability of fruits and vegetables at corner stores
- Improved nutritional knowledge and attitudes among neighborhood residents
- Increased purchasing of fresh fruits and vegetables at corner stores by neighborhood residents
- Improved overall dietary quality among neighborhood residents
- Reduced prevalence of childhood and adult obesity
- Reduced income and racial/ethnic disparities in obesity and nutrition

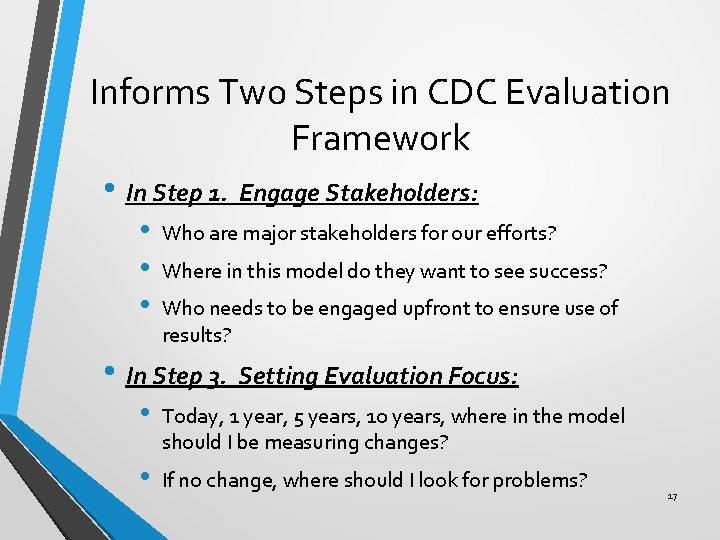

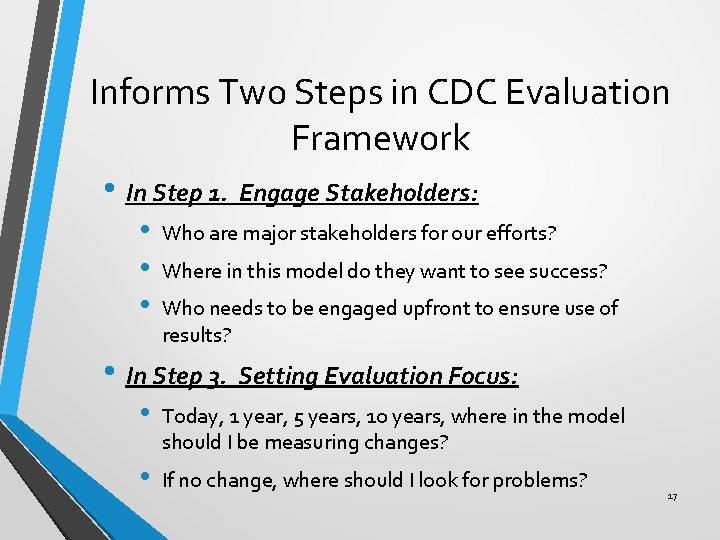

Informs Two Steps in the CDC Evaluation Framework

- In Step 1, Engage Stakeholders:
  - Who are the major stakeholders for our efforts?
  - Where in this model do they want to see success?
  - Who needs to be engaged up front to ensure use of results?
- In Step 3, Setting the Evaluation Focus:
  - Today, in 1 year, 5 years, 10 years: where in the model should I be measuring changes?
  - If there is no change, where should I look for problems?

Intro to Program Evaluation: Step 1. Engaging Stakeholders


Who Are Stakeholders?

Three major groups:

- Those served or affected by the program
- Those involved in program operations
- Primary intended users of the evaluation findings

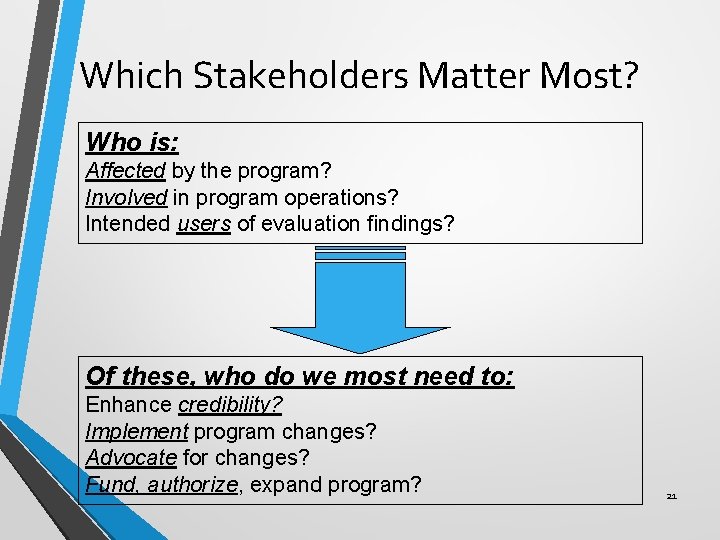

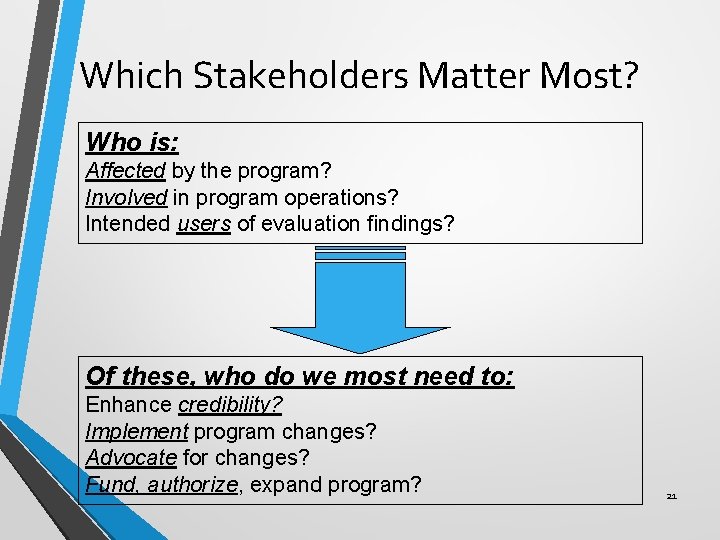

Which Stakeholders Matter Most?

Who is:

- Affected by the program?
- Involved in program operations?
- An intended user of evaluation findings?

Of these, who do we most need to:

- Enhance credibility?
- Implement program changes?
- Advocate for changes?
- Fund, authorize, or expand the program?

Knowing Your Audience

- What actions do you want them to take?
- What do they need or want to know?
- Why should they care?
- What is the most convincing way to communicate with them?

Triggering the Audience’s Emotions

- Fairness: Communities are unfairly burdened
- Ingenuity: Communities can and do work together
- Prevention: Responsibility to prevent problems

Intro to Program Evaluation: Step 3. Setting the Evaluation Focus


Evaluation Can Be About Anything

- Evaluation can focus on any or all parts of the logic model.
- Evaluation questions can pertain to:
  - Boxes: Did this component occur as expected?
  - Arrows: What was the relationship between components?
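The boxes-versus-arrows distinction can be made concrete by treating a logic model as a small directed graph: each component is a box, each causal link is an arrow, and each yields its own kind of evaluation question. This is a minimal Python sketch; the `LogicModel` class and its methods are hypothetical, and the component names are borrowed loosely from the corner store example.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Hypothetical minimal logic model: components ("boxes") and directed links ("arrows")."""
    components: list[str] = field(default_factory=list)
    links: list[tuple[str, str]] = field(default_factory=list)

    def add_link(self, source: str, target: str) -> None:
        # Register both ends as components (once each), then record the arrow between them
        for name in (source, target):
            if name not in self.components:
                self.components.append(name)
        self.links.append((source, target))

    def box_questions(self) -> list[str]:
        # Boxes: did this component occur as expected?
        return [f"Did '{c}' occur as expected?" for c in self.components]

    def arrow_questions(self) -> list[str]:
        # Arrows: what was the relationship between two components?
        return [f"What was the relationship between '{s}' and '{t}'?" for s, t in self.links]


model = LogicModel()
model.add_link("Provide technical assistance", "Corner stores stock healthier options")
model.add_link("Corner stores stock healthier options", "Increased purchasing of fruits and vegetables")

for question in model.box_questions() + model.arrow_questions():
    print(question)
```

Three components produce three box questions, and the two links produce two arrow questions; an evaluation can pick any subset of them.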

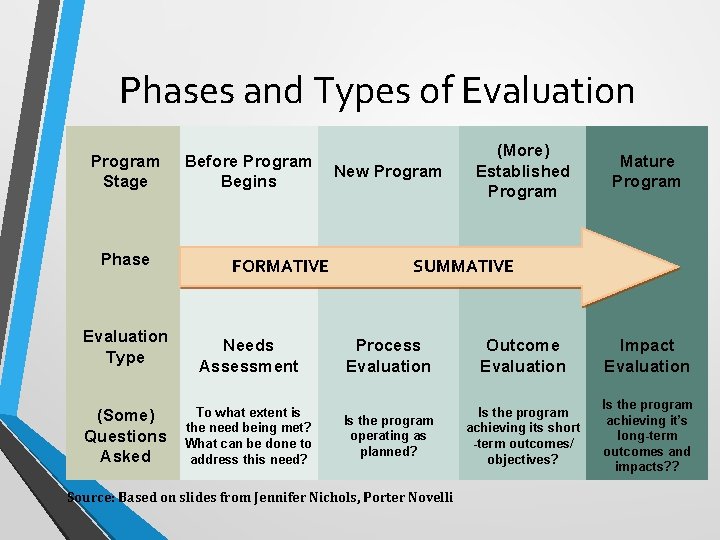

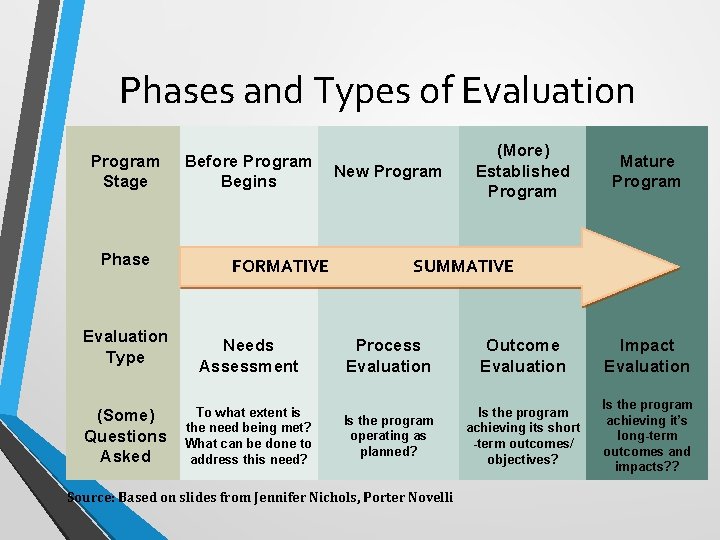

Phases and Types of Evaluation

Phase runs from FORMATIVE (before the program begins) to SUMMATIVE (mature program).

| Program stage | Evaluation type | (Some) questions asked |
|---|---|---|
| Before program begins | Needs assessment | To what extent is the need being met? What can be done to address this need? |
| New program | Process evaluation | Is the program operating as planned? |
| (More) established program | Outcome evaluation | Is the program achieving its short-term outcomes/objectives? |
| Mature program | Impact evaluation | Is the program achieving its long-term outcomes and impacts? |

Source: Based on slides from Jennifer Nichols, Porter Novelli

(Some) Potential Purposes

- Test program implementation
- Show accountability
- “Continuous” program improvement
- Increase the knowledge base
- Other…



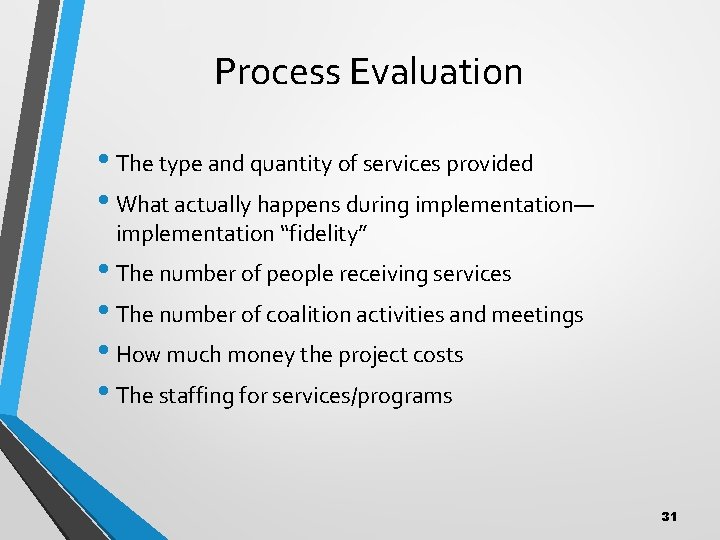

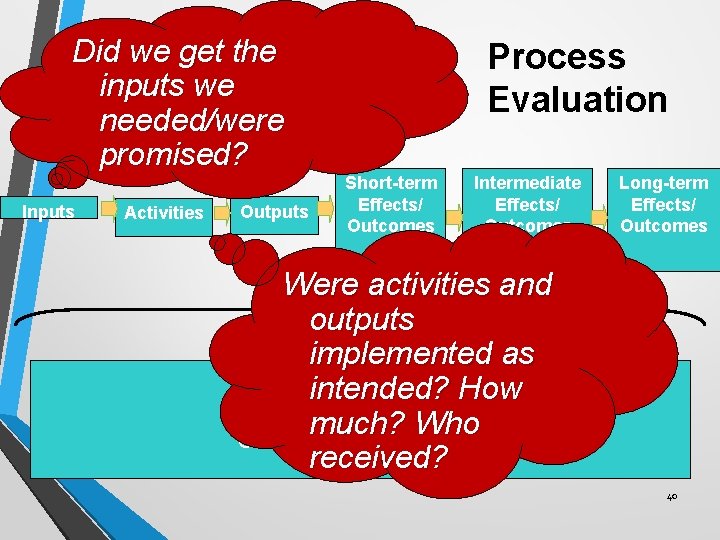

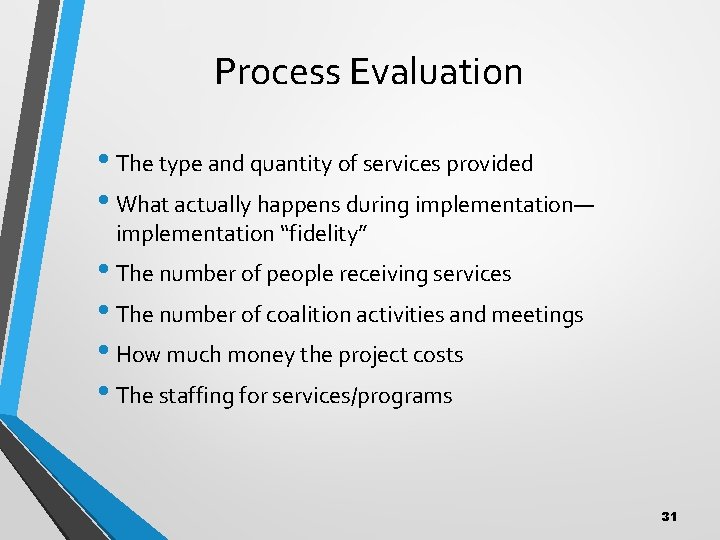

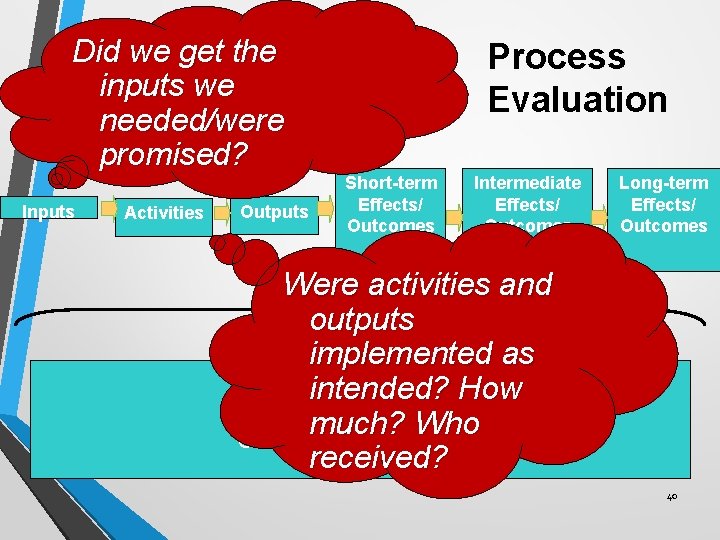

Process Evaluation

- The type and quantity of services provided
- What actually happens during implementation: implementation “fidelity”
- The number of people receiving services
- The number of coalition activities and meetings
- How much money the project costs
- The staffing for services/programs
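Several of these process measures (type and quantity of services, number of people served, cost) can be tallied directly from a routine activity log. Here is a minimal Python sketch, assuming a hypothetical log with one record per service delivered and illustrative field names (`service`, `participant`, `cost`):

```python
from collections import Counter

# Hypothetical activity log: one record per service delivered (field names are illustrative)
activity_log = [
    {"service": "technical assistance", "participant": "store_01", "cost": 150.0},
    {"service": "technical assistance", "participant": "store_02", "cost": 150.0},
    {"service": "nutrition class", "participant": "parent_07", "cost": 40.0},
    {"service": "nutrition class", "participant": "parent_07", "cost": 40.0},
    {"service": "nutrition class", "participant": "teacher_03", "cost": 40.0},
]


def summarize_process(log):
    """Return basic process measures: services by type, unique people served, and total cost."""
    services_by_type = Counter(record["service"] for record in log)
    unique_participants = {record["participant"] for record in log}
    total_cost = sum(record["cost"] for record in log)
    return {
        "services_by_type": dict(services_by_type),
        "people_served": len(unique_participants),
        "total_cost": total_cost,
    }


summary = summarize_process(activity_log)
print(summary)
```

Counting unique participants separately from services matters: here five services reached four people, because one parent attended twice.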

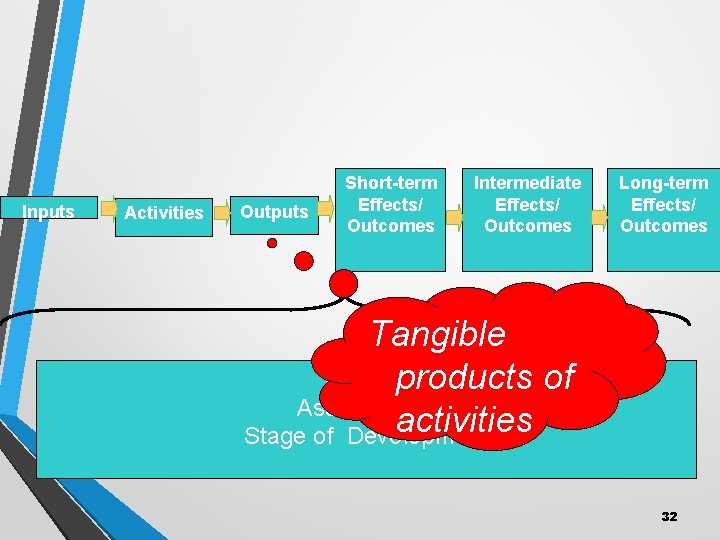

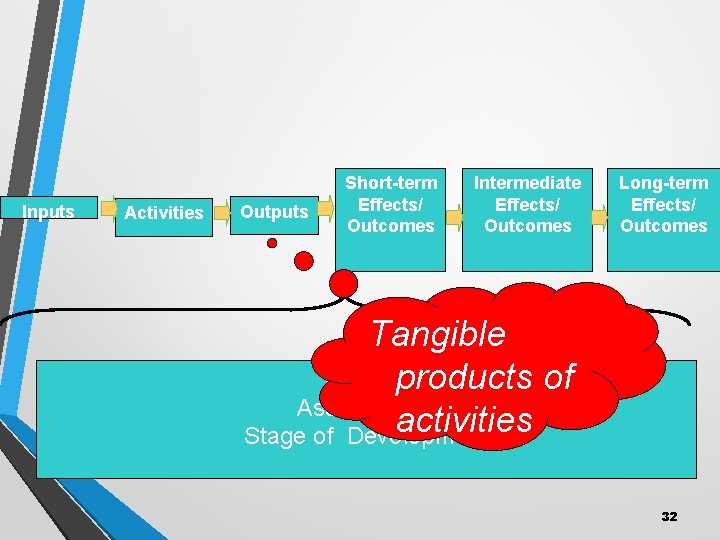

Outputs: Tangible products of activities

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context, Assumptions, and Stage of Development]

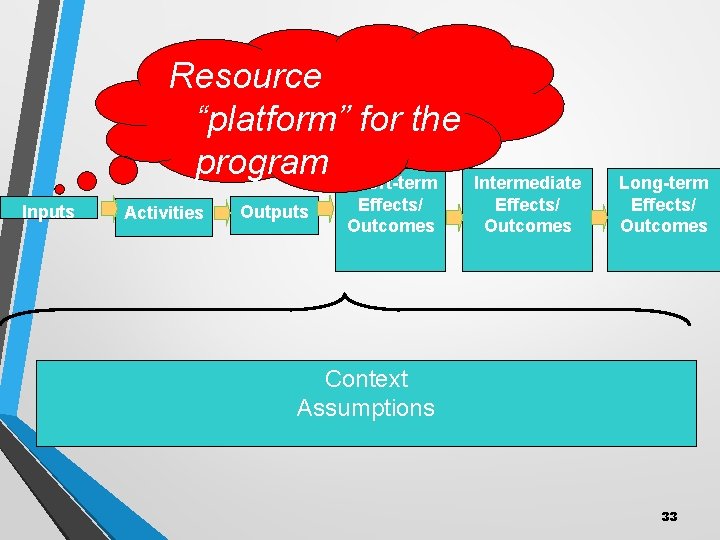

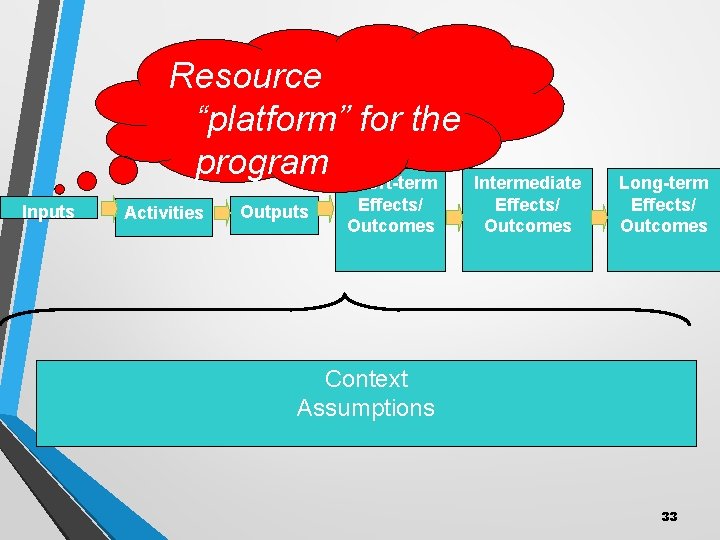

Inputs: Resource “platform” for the program

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context and Assumptions]


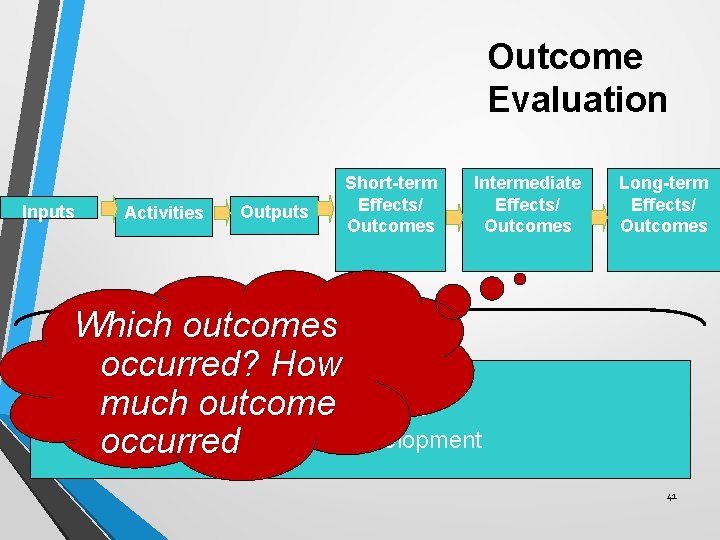

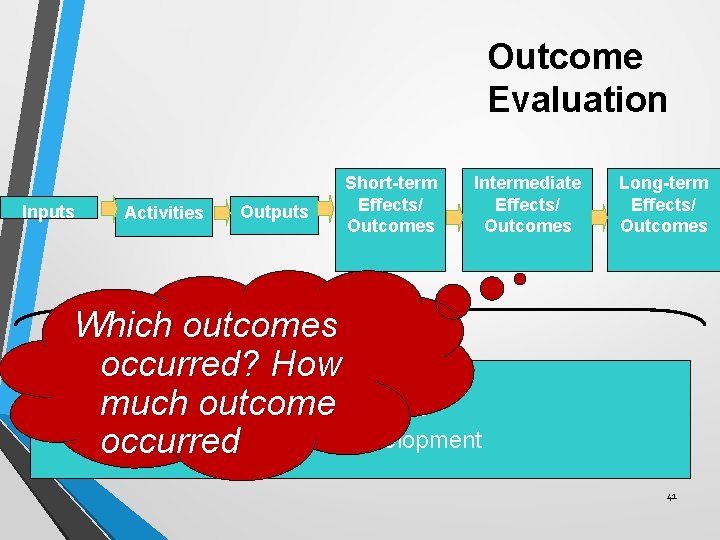

Outcome Evaluation

- Results of program services
- Changes in individuals:
  - Knowledge/awareness
  - Attitudes
  - Beliefs
- Changes in the environment
- Changes in behaviors
- Changes in disease trends
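A common way to look at changes in individuals, such as knowledge, is to compare the same people before and after the program. This minimal Python sketch assumes hypothetical paired knowledge scores on a 0-10 scale; the function names are illustrative. It measures change only, not whether the program caused that change.

```python
from statistics import mean

# Hypothetical paired knowledge scores (0-10) for the same participants before and after
pre_scores = {"p1": 4, "p2": 6, "p3": 5, "p4": 7}
post_scores = {"p1": 7, "p2": 6, "p3": 8, "p4": 9}


def mean_change(pre, post):
    """Mean within-person change, using only participants measured at both time points."""
    matched = pre.keys() & post.keys()
    return mean(post[pid] - pre[pid] for pid in matched)


def share_improved(pre, post):
    """Proportion of matched participants whose score went up."""
    matched = pre.keys() & post.keys()
    return sum(post[pid] > pre[pid] for pid in matched) / len(matched)


print(mean_change(pre_scores, post_scores))     # average gain of 2 points
print(share_improved(pre_scores, post_scores))  # 0.75
```

Restricting to participants present at both time points avoids mixing different groups of people into the comparison.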


“Reality Checking” the Focus

Based on the “feasibility” standard:

- Stage of development: How long has the program been in existence?
- Program intensity: How intense is the program? How much impact is reasonable to expect?
- Resources: How much time, money, and expertise are available?

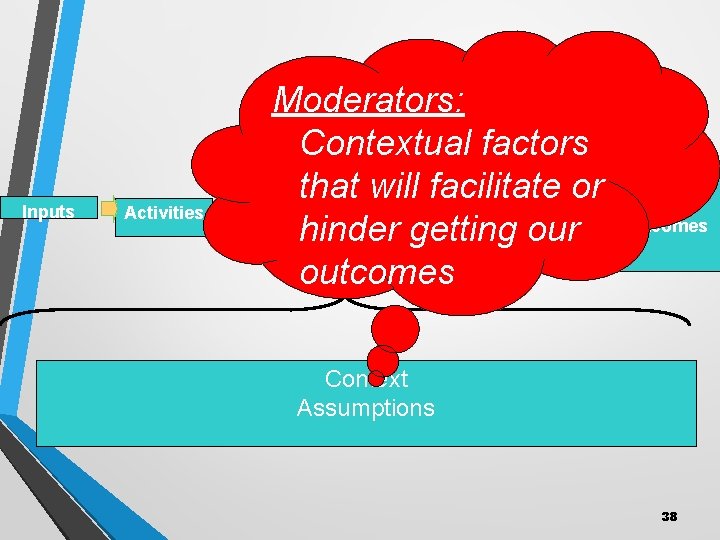

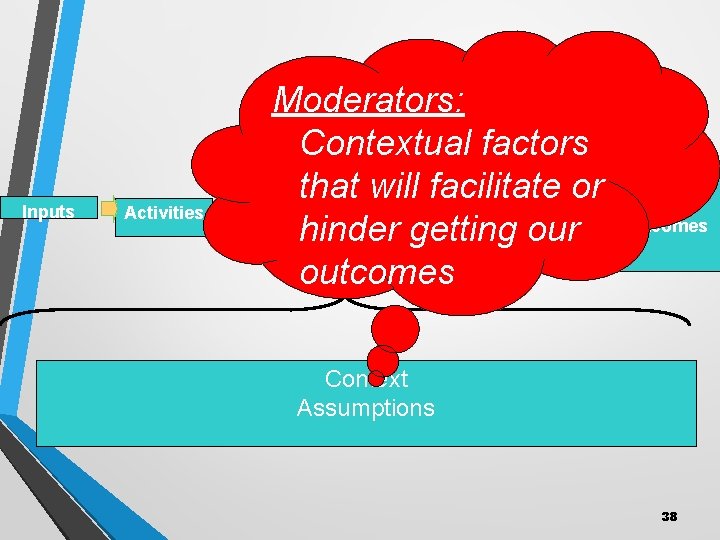

Moderators: Contextual factors that will facilitate or hinder getting to our outcomes

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context and Assumptions]

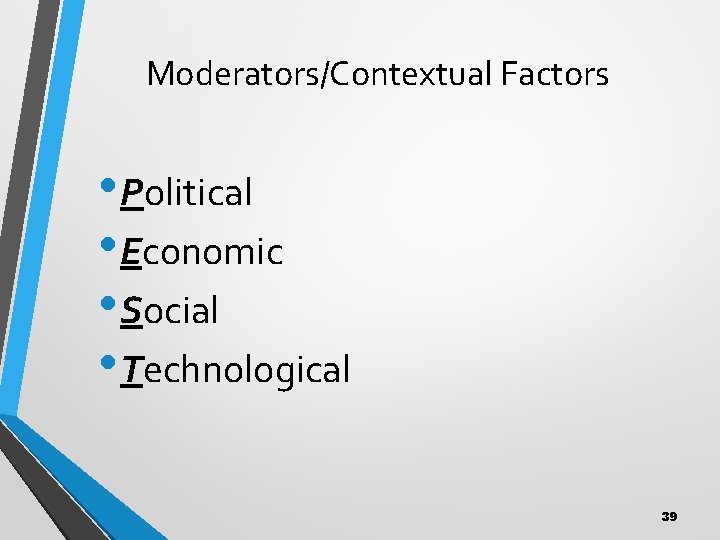

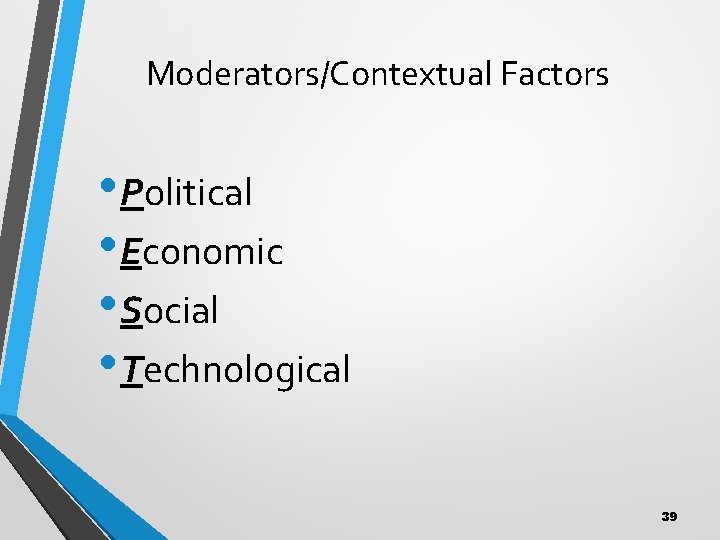

Moderators/Contextual Factors

- Political
- Economic
- Social
- Technological

Process Evaluation

- Did we get the inputs we needed or were promised?
- Were activities and outputs implemented as intended? How much? Who received them?

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context, Assumptions, and Stage of Development]

Outcome Evaluation

- Which outcomes occurred? How much outcome occurred?

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context, Assumptions, and Stage of Development]

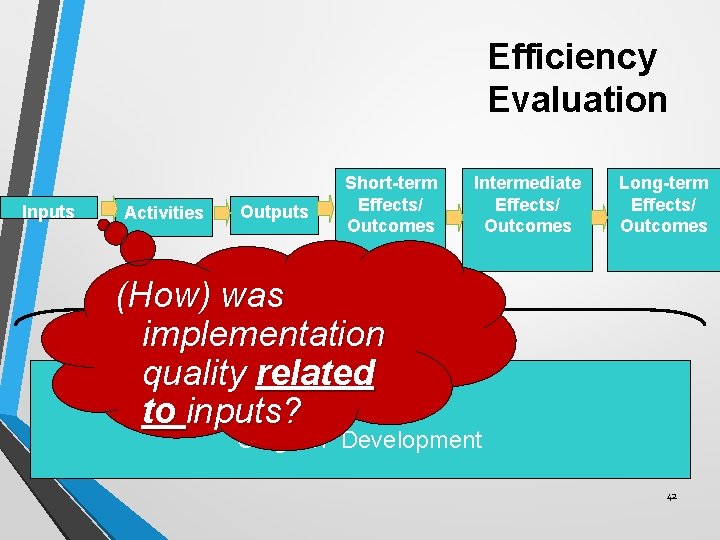

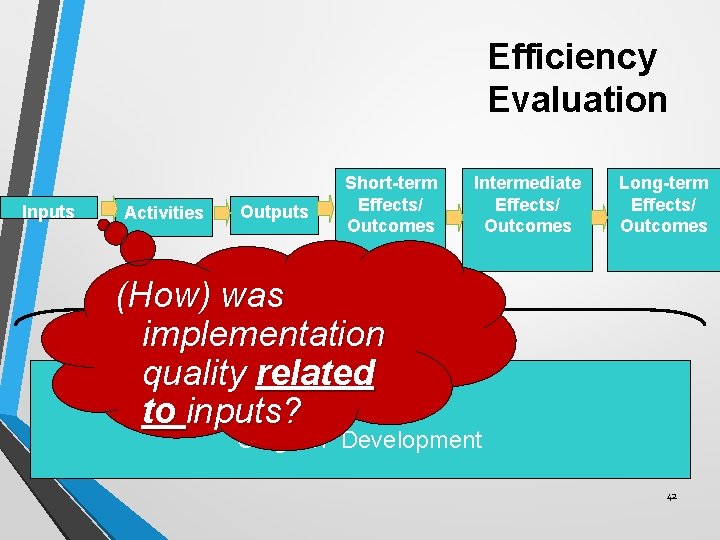

Efficiency Evaluation

- (How) was implementation quality related to inputs?

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context, Assumptions, and Stage of Development]
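One simple way to relate inputs to what was delivered is a cost-per-unit ratio. This is a minimal Python sketch; `cost_per_unit` is a hypothetical helper, and the dollar figures and reach counts are invented for illustration.

```python
def cost_per_unit(total_cost: float, units_delivered: int) -> float:
    """Simple efficiency ratio: inputs (cost) divided by outputs (units delivered)."""
    if units_delivered <= 0:
        raise ValueError("units_delivered must be positive")
    return total_cost / units_delivered


# Hypothetical figures for two delivery approaches
school_sessions = cost_per_unit(total_cost=12_000.0, units_delivered=300)  # 40.0 per student reached
community_events = cost_per_unit(total_cost=9_000.0, units_delivered=150)  # 60.0 per resident reached
```

A lower ratio is not automatically better. If the cheaper approach is delivered with less fidelity, the efficiency question becomes whether the savings are worth the drop in implementation quality.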

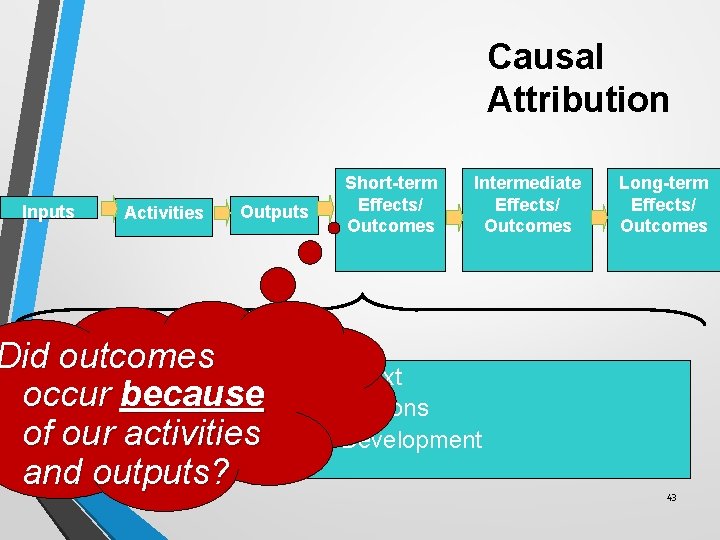

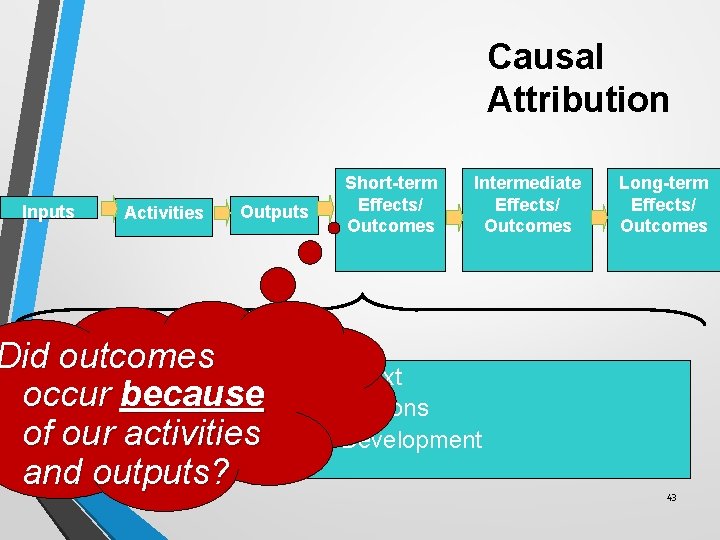

Causal Attribution

- Did outcomes occur because of our activities and outputs?

[Logic model diagram: Inputs → Activities → Outputs → Short-term, Intermediate, and Long-term Effects/Outcomes, with Context, Assumptions, and Stage of Development]
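The slide does not name a design, but one widely used approach to attribution is to compare the program group with a comparison group that did not get the program, using a difference-in-differences estimate. This is a minimal Python sketch with invented numbers. It assumes both groups would have changed at the same rate without the program (the "parallel trends" assumption).

```python
def difference_in_differences(treat_pre, treat_post, comp_pre, comp_post):
    """Change in the program group minus change in the comparison group."""
    return (treat_post - treat_pre) - (comp_post - comp_pre)


# Hypothetical mean weekly fruit and vegetable purchases per store
effect = difference_in_differences(treat_pre=20.0, treat_post=32.0, comp_pre=21.0, comp_post=25.0)
print(effect)  # 8.0: program stores gained 12, comparison stores gained 4
```

Subtracting the comparison group's change removes the part of the improvement that would likely have happened anyway, for example from a citywide trend.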

Intro to Program Evaluation: Step 4. Gathering Credible Evidence


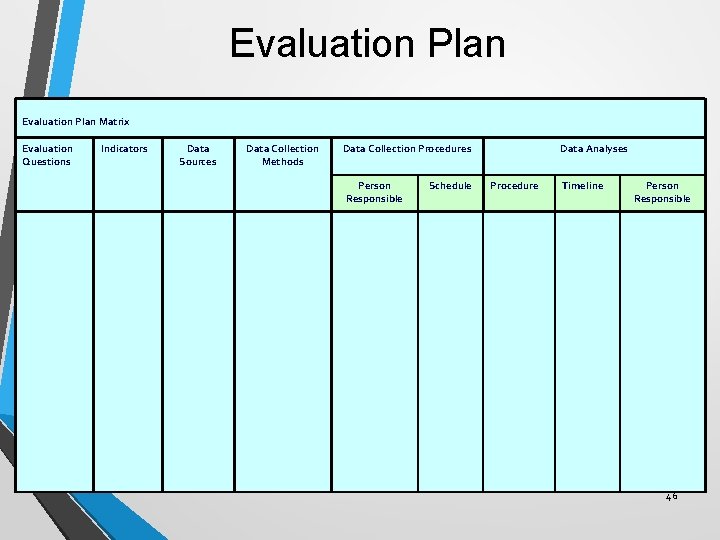

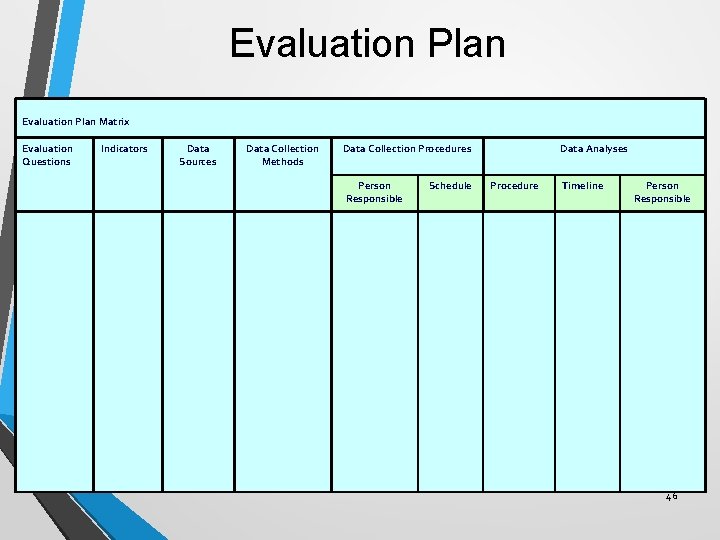

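Evaluation Plan Matrix

| Evaluation questions | Indicators | Data sources | Data collection methods | Data collection procedures: person responsible | Data collection procedures: schedule | Data analyses: procedure | Data analyses: timeline | Data analyses: person responsible |
|---|---|---|---|---|---|---|---|---|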
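Each row of the matrix links one evaluation question to its indicators, data sources, methods, and the people and schedule behind collection and analysis. A minimal Python sketch of a single row follows. The `EvaluationPlanRow` class and its field names are hypothetical, loosely mirroring the column headings above. The `missing_fields` helper flags blank cells so gaps show up before data collection begins.

```python
from dataclasses import dataclass, fields


@dataclass
class EvaluationPlanRow:
    """One row of an evaluation plan matrix (hypothetical field names based on the column headings)."""
    evaluation_question: str
    indicators: list[str]
    data_sources: list[str]
    collection_methods: list[str]
    collection_person_responsible: str = ""
    collection_schedule: str = ""
    analysis_procedure: str = ""
    analysis_timeline: str = ""
    analysis_person_responsible: str = ""

    def missing_fields(self) -> list[str]:
        """Return the names of columns still left blank, in column order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


row = EvaluationPlanRow(
    evaluation_question="Are corner stores stocking more fresh fruits and vegetables?",
    indicators=["Number of participating stores stocking fresh produce"],
    data_sources=["Corner store owners"],
    collection_methods=["Store observation checklist"],
    collection_person_responsible="Program staff",
    collection_schedule="Quarterly",
)
print(row.missing_fields())
# ['analysis_procedure', 'analysis_timeline', 'analysis_person_responsible']
```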

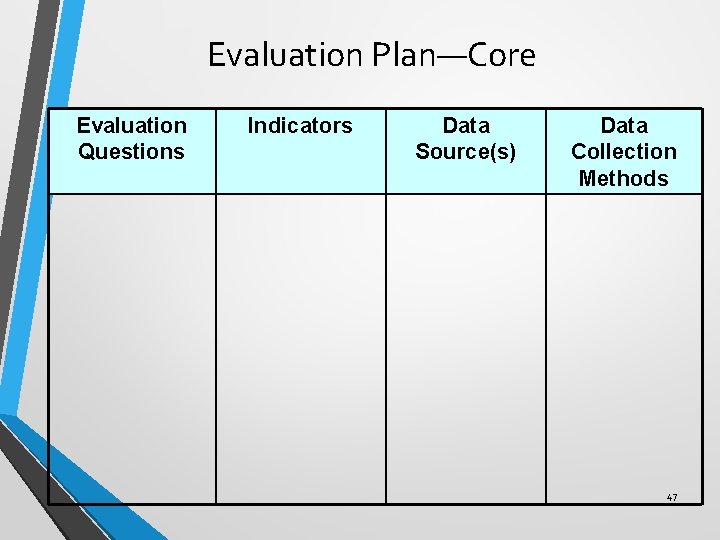

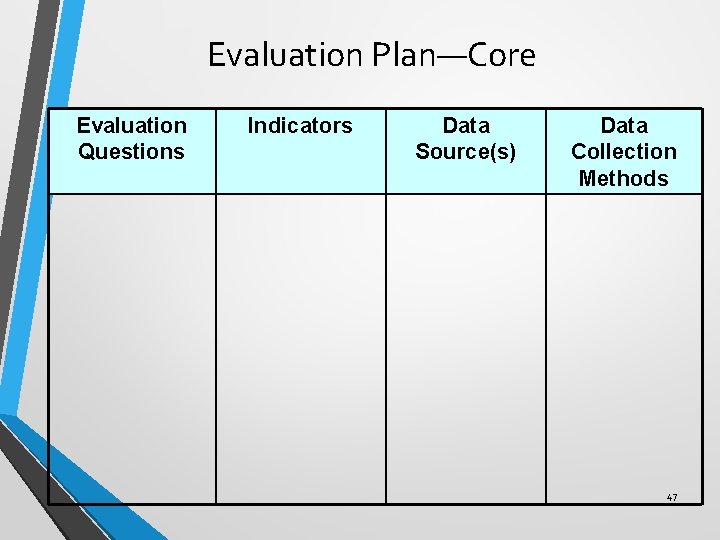

Evaluation Plan: Core

| Evaluation questions | Indicators | Data source(s) | Data collection methods |
|---|---|---|---|

What Is an Indicator?

- Specific, observable, and measurable characteristics that show progress toward a specified activity or outcome.
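Because an indicator is measurable, progress can be reported as the share of the distance from a starting value to a target. This minimal Python sketch assumes each indicator has a baseline and a target. That is a common practice, but the slide does not specify it, and the `Indicator` class and its numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """Hypothetical indicator with a baseline, a target, and the latest observed value."""
    description: str
    baseline: float
    target: float
    current: float

    def progress(self) -> float:
        """Share of the baseline-to-target distance covered so far (can exceed 1.0 or go negative)."""
        if self.target == self.baseline:
            raise ValueError("target must differ from baseline")
        return (self.current - self.baseline) / (self.target - self.baseline)


stores = Indicator(
    description="Number of corner stores stocking fresh fruits and vegetables",
    baseline=5,
    target=25,
    current=15,
)
print(f"{stores.progress():.0%}")  # 50%
```

Measuring against the baseline rather than against zero keeps credit for stores that were already stocking produce before the program started from being counted as progress.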

Not “Collect Data” BUT “Gather Credible Evidence”

Narrowing from hundreds of ways to collect data:

- Utility: Who is going to use the data, and for what?
- Feasibility: How many resources are available?
- Propriety: What are the ethical constraints?
- Accuracy: How “accurate” do the data need to be?

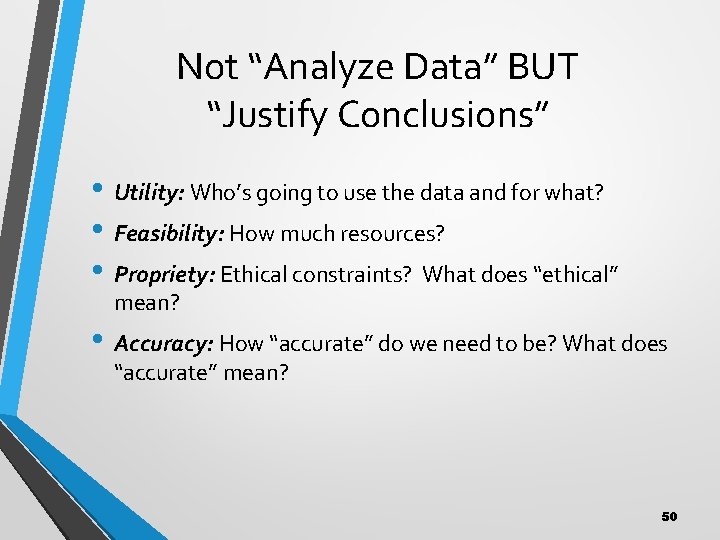

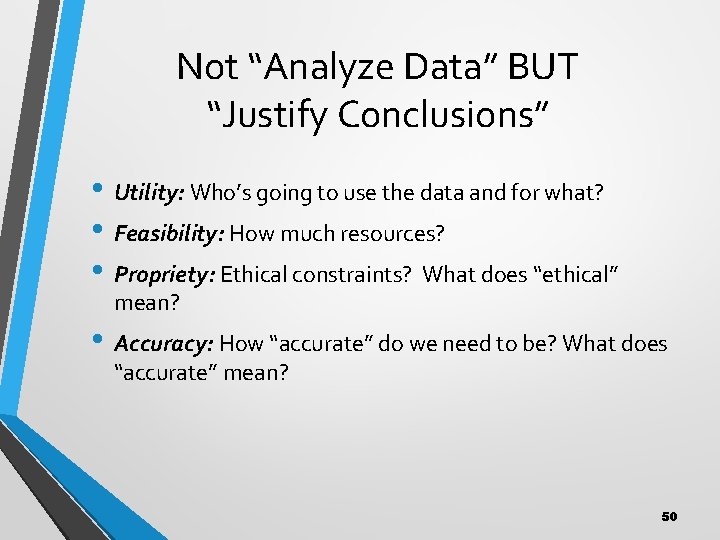

Not “Analyze Data” BUT “Justify Conclusions”

- Utility: Who is going to use the data, and for what?
- Feasibility: How many resources are available?
- Propriety: What are the ethical constraints? What does “ethical” mean?
- Accuracy: How “accurate” do we need to be? What does “accurate” mean?

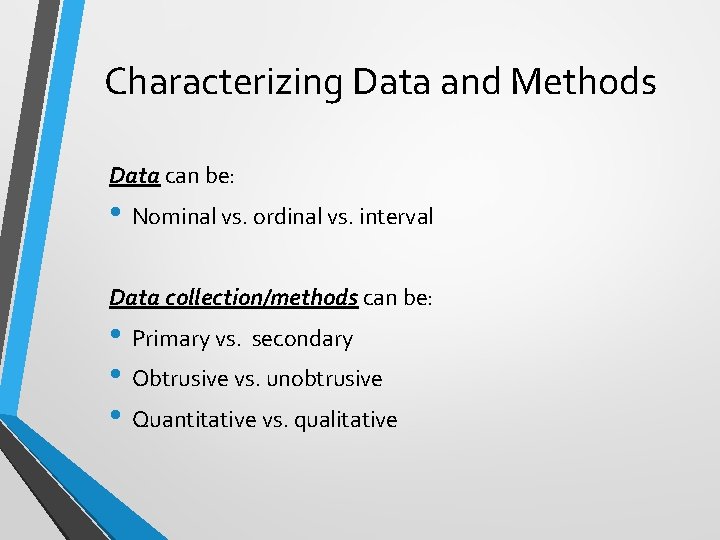

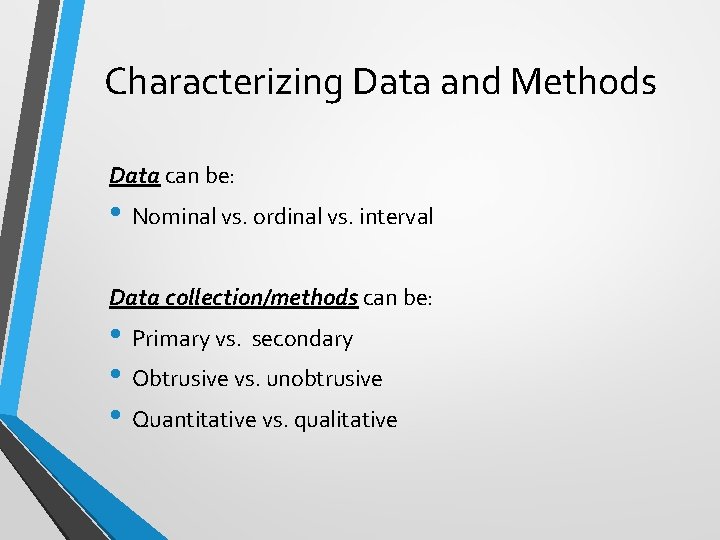

Characterizing Data and Methods

Data can be:

- Nominal vs. ordinal vs. interval

Data collection methods can be:

- Primary vs. secondary
- Obtrusive vs. unobtrusive
- Quantitative vs. qualitative
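The level of measurement limits which summaries make sense. Nominal categories have no order, so only the most frequent value is meaningful. Ordinal values have an order but no fixed spacing, so a middle value works. Interval data support an arithmetic mean. This is a minimal Python sketch using the standard `statistics` module; the function name and example values are hypothetical.

```python
from statistics import mean, median_low, mode


def central_tendency(values, level):
    """Return a measure of central tendency suited to the level of measurement."""
    if level == "nominal":
        return mode(values)        # unordered categories: only "most frequent" is meaningful
    if level == "ordinal":
        return median_low(values)  # ranked categories: middle value, always one actually observed
    if level == "interval":
        return mean(values)        # equal spacing between values: the arithmetic mean is meaningful
    raise ValueError(f"unknown level of measurement: {level!r}")


store_type = central_tendency(["corner store", "supermarket", "corner store"], "nominal")  # 'corner store'
rating = central_tendency([2, 4, 5, 1], "ordinal")    # 2 on a hypothetical 1-5 agreement scale
score = central_tendency([3.0, 4.5, 6.0], "interval")  # 4.5
```

`median_low` is used instead of `median` so that, with an even number of ordinal responses, the result is an actual scale point rather than an average of two ranks.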

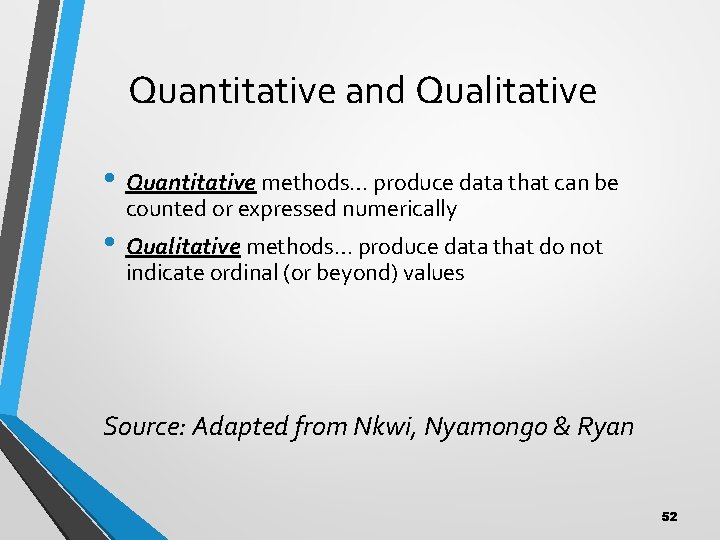

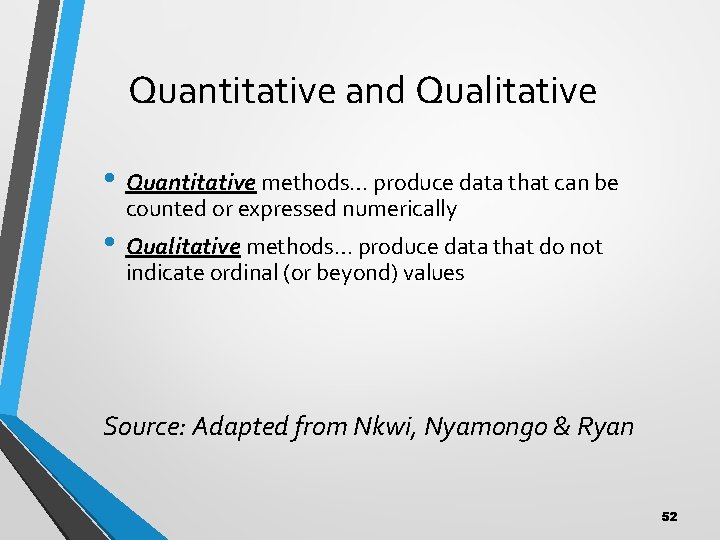

Quantitative and Qualitative

- Quantitative methods… produce data that can be counted or expressed numerically
- Qualitative methods… produce data that do not indicate ordinal (or beyond) values

Source: Adapted from Nkwi, Nyamongo & Ryan

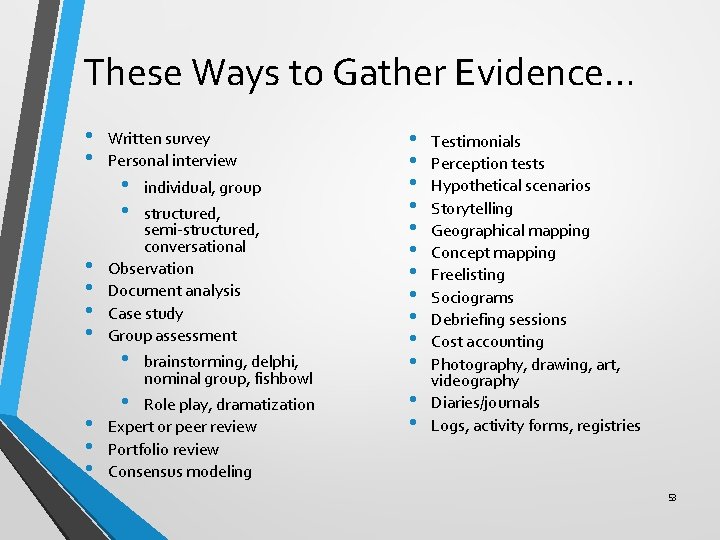

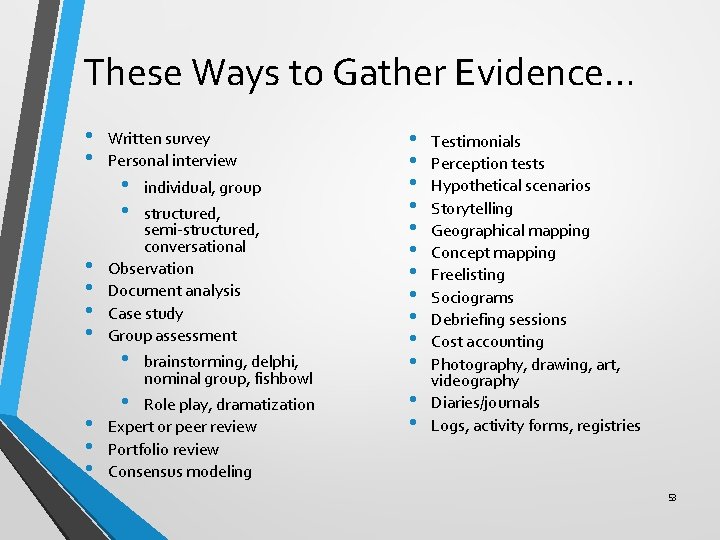

These Ways to Gather Evidence…

- Written survey
- Personal interview (individual or group; structured, semi-structured, or conversational)
- Group assessment (brainstorming, Delphi, nominal group, fishbowl)
- Role play, dramatization
- Observation
- Document analysis
- Case study
- Expert or peer review
- Portfolio review
- Consensus modeling
- Testimonials
- Perception tests
- Hypothetical scenarios
- Storytelling
- Geographical mapping
- Concept mapping
- Freelisting
- Sociograms
- Debriefing sessions
- Cost accounting
- Photography, drawing, art, videography
- Diaries/journals
- Logs, activity forms, registries

Cluster Into These Six Categories…

- Surveys
- Interviews
- Focus groups
- Document review
- Observation
- Secondary data analysis

Choosing Data Collection Methods

- Function of context:
  - Time
  - Cost
  - Ethics
- Function of content to be measured:
  - Sensitivity of the issue
  - “Hawthorne effect”
  - Validity
  - Reliability

Why Mixed Methods?

- Corroboration: understanding more defensibly, validly, and credibly (“triangulation”)
- Clarification: understanding more comprehensively or completely
- Explanation: understanding more clearly; understanding the “why” behind the “what”
- Exploration: understanding more insightfully, open to new ideas and insights that may lead down new paths (“induction”)

Intro to Program Evaluation: Step 5. Justifying Conclusions


Now That I Have This Data, What Do I Do with It?

- Create a data management system
- Analyze your data:
  - Quantitative
  - Qualitative
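For the qualitative side, a first pass often groups open-ended responses into themes and counts how often each theme appears. This minimal Python sketch uses a hypothetical keyword codebook as a rough stand-in for human coding. Substring matching is crude (for example, "close" would also match "closed"), so automatic codes like these should be checked by a person before conclusions are drawn.

```python
from collections import Counter

# Hypothetical codebook: theme -> keywords that signal it
CODEBOOK = {
    "price": ["price", "expensive", "cheap", "afford"],
    "quality": ["fresh", "quality", "spoiled"],
    "access": ["close", "nearby", "walk"],
}


def code_responses(responses, codebook):
    """Count how many responses mention each theme at least once."""
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for theme, keywords in codebook.items():
            if any(word in lowered for word in keywords):
                counts[theme] += 1
    return counts


responses = [
    "The fruit is fresh but too expensive.",
    "I like that the store is close to home.",
    "Prices went down, so I can afford more vegetables.",
]
print(code_responses(responses, CODEBOOK))
```

Counting each theme at most once per response keeps one talkative respondent from dominating the tally.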

Justifying Conclusions

“It is not the facts that are of chief importance, but the light thrown upon them, the meaning in which they are dressed, the conclusions which are drawn from them, and the judgements delivered upon them.” (Mark Twain)

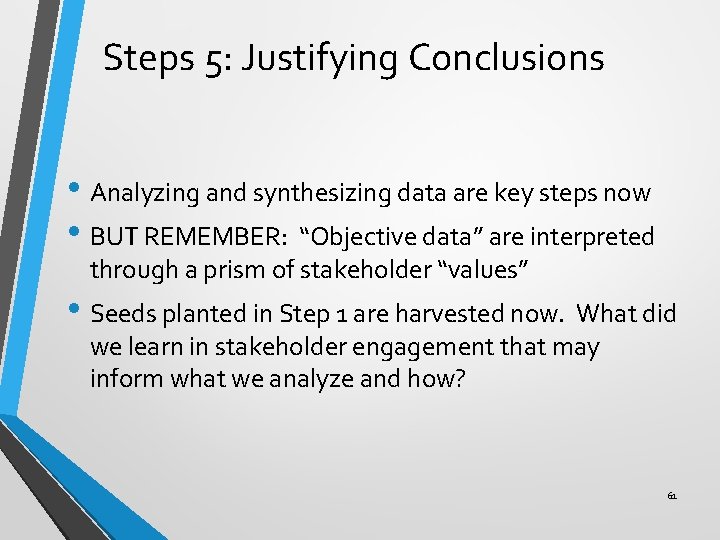

Step 5: Justifying Conclusions

- Analyzing and synthesizing data are the key steps now.
- BUT REMEMBER: “Objective data” are interpreted through a prism of stakeholder “values.”
- Seeds planted in Step 1 are harvested now. What did we learn in stakeholder engagement that may inform what we analyze and how?

Developing Recommendations

Recommendations should be:

- Linked with the original purpose of your evaluation
- Based on answers to your evaluation questions
- Linked to findings from your evaluation
- Tailored to the users of the evaluation results to increase ownership and motivation to act

Intro to Program Evaluation: Step 6. Using Lessons Learned

Step 6: Using Lessons Learned

- The ultimate payoff
- Enhanced by work done in early steps!

Ensure Use and Share Lessons Learned

- Share the results and lessons learned from the evaluation with stakeholders and others
- Use your evaluation findings to modify, strengthen, and improve your program