GoogLeNet: Christian Szegedy, Wei Liu, Google

- Slides: 72

GoogLeNet

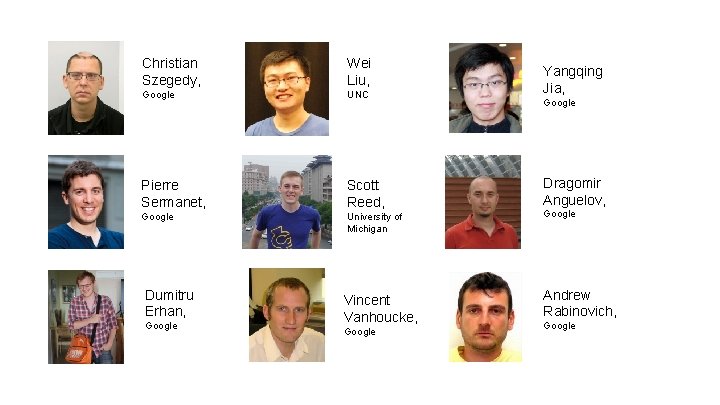

Christian Szegedy (Google), Wei Liu (UNC), Yangqing Jia (Google), Pierre Sermanet (Google), Scott Reed (University of Michigan), Dragomir Anguelov (Google), Dumitru Erhan (Google), Vincent Vanhoucke (Google), Andrew Rabinovich (Google)

Deep Convolutional Networks
Revolutionizing computer vision since 1989

Deep Convolutional Networks
Revolutionizing computer vision since 1989 (slide overlay: 2012)

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
?

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
Szegedy, C., Toshev, A., & Erhan, D. (2013). Deep neural networks for object detection. In Advances in Neural Information Processing Systems 2013 (pp. 2553-2561).
Then state-of-the-art performance using a training set of ~10K images for object detection on 20 classes of VOC, without pretraining on ImageNet.

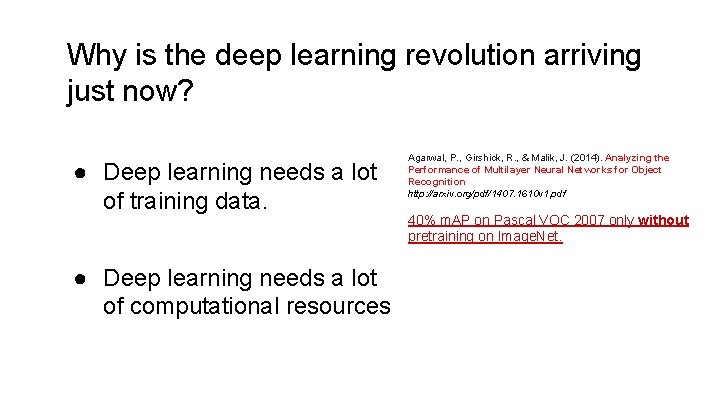

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
Agrawal, P., Girshick, R., & Malik, J. (2014). Analyzing the Performance of Multilayer Neural Networks for Object Recognition. http://arxiv.org/pdf/1407.1610v1.pdf
40% mAP on Pascal VOC 2007 only, without pretraining on ImageNet.

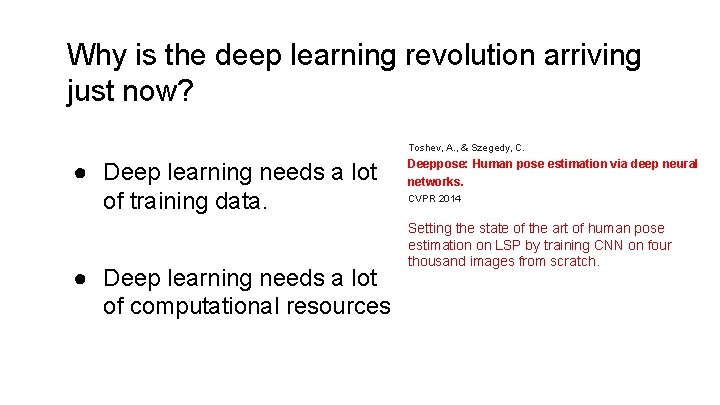

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
Toshev, A., & Szegedy, C. DeepPose: Human pose estimation via deep neural networks. CVPR 2014
Setting the state of the art of human pose estimation on LSP by training a CNN on four thousand images from scratch.

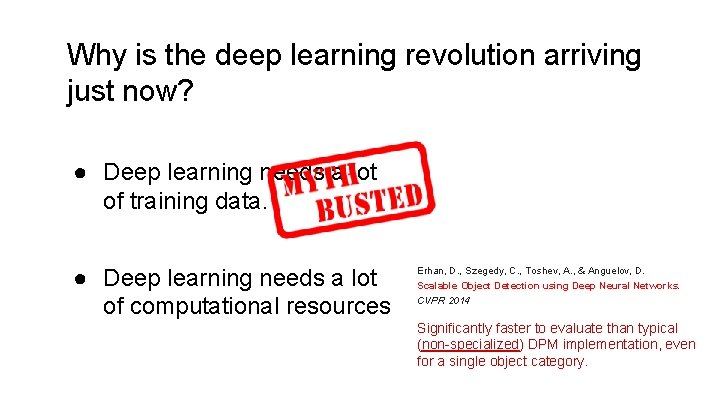

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
Erhan, D., Szegedy, C., Toshev, A., & Anguelov, D. Scalable Object Detection using Deep Neural Networks. CVPR 2014
Significantly faster to evaluate than a typical (non-specialized) DPM implementation, even for a single object category.

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
Large-scale distributed multigrid solvers since the 1990s.
MapReduce since 2004 (Jeff Dean et al.)
Scientific computing has been solving large-scale, complex numerical problems at scale via distributed systems for decades.

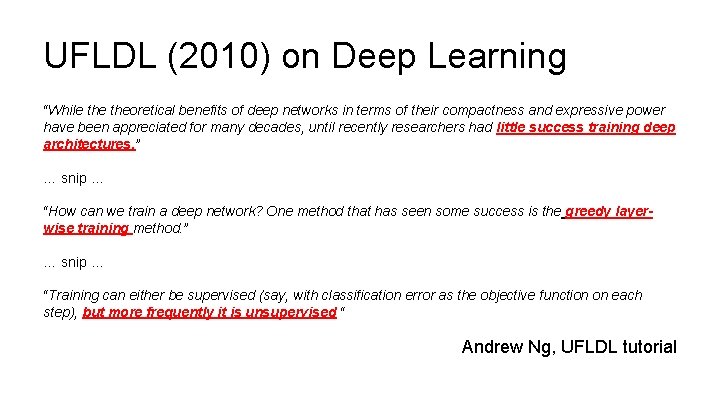

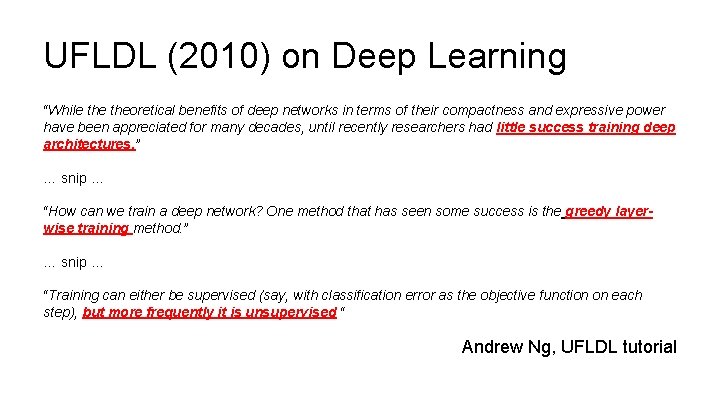

UFLDL (2010) on Deep Learning
“While theoretical benefits of deep networks in terms of their compactness and expressive power have been appreciated for many decades, until recently researchers had little success training deep architectures.”
… snip …
“How can we train a deep network? One method that has seen some success is the greedy layerwise training method.”
… snip …
“Training can either be supervised (say, with classification error as the objective function on each step), but more frequently it is unsupervised.”
Andrew Ng, UFLDL tutorial

Why is the deep learning revolution arriving just now?
● Deep learning needs a lot of training data.
● Deep learning needs a lot of computational resources.
???

Why is the deep learning revolution arriving just now?

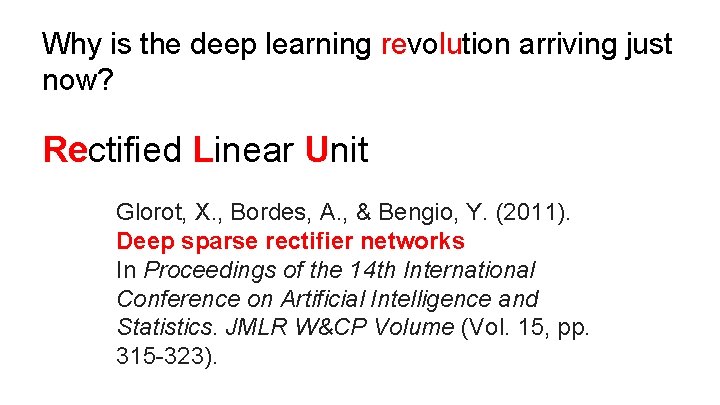

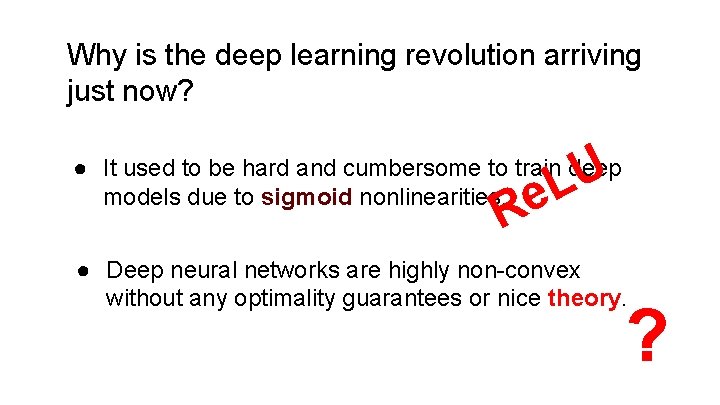

Why is the deep learning revolution arriving just now?
Rectified Linear Unit
Glorot, X., Bordes, A., & Bengio, Y. (2011). Deep sparse rectifier networks. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics. JMLR W&CP (Vol. 15, pp. 315-323).
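A rough illustration of why the rectified linear unit matters for depth: backpropagation multiplies local derivatives layer by layer, and the sigmoid derivative never exceeds 0.25, while the ReLU derivative is exactly 1 for active units. The sketch below ignores weights entirely and uses a hypothetical depth of 20, so it only shows the trend and is not a model of any real network.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # Derivative of the sigmoid; its maximum value is 0.25, reached at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative of ReLU: 1 for active units, 0 otherwise.
    return 1.0 if x > 0 else 0.0


def chained_grad(grad_fn, pre_activation: float, depth: int) -> float:
    """Product of `depth` identical local derivatives (weights ignored)."""
    return grad_fn(pre_activation) ** depth


DEPTH = 20  # hypothetical depth, chosen only for illustration

# Best case for the sigmoid (x = 0) versus an active ReLU unit (x = 1).
sigmoid_signal = chained_grad(sigmoid_grad, 0.0, DEPTH)
relu_signal = chained_grad(relu_grad, 1.0, DEPTH)

print(f"sigmoid: {sigmoid_signal:.3e}  relu: {relu_signal:.1f}")
```

Even in the sigmoid's best case the chained derivative shrinks geometrically with depth, whereas an active ReLU path passes the gradient through unchanged.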

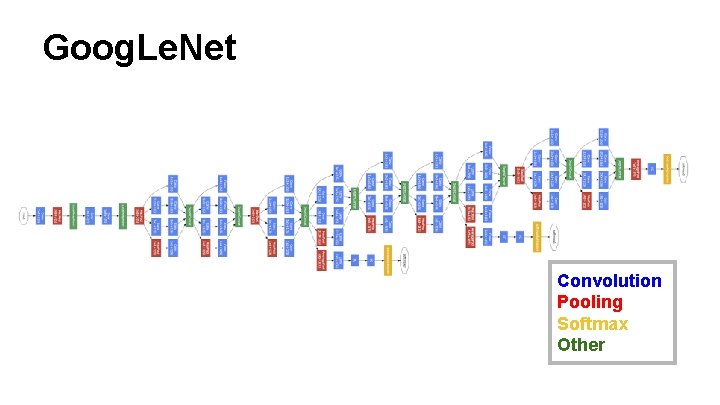

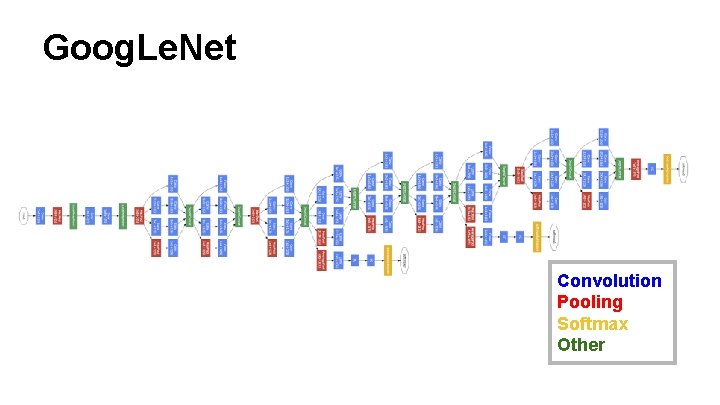

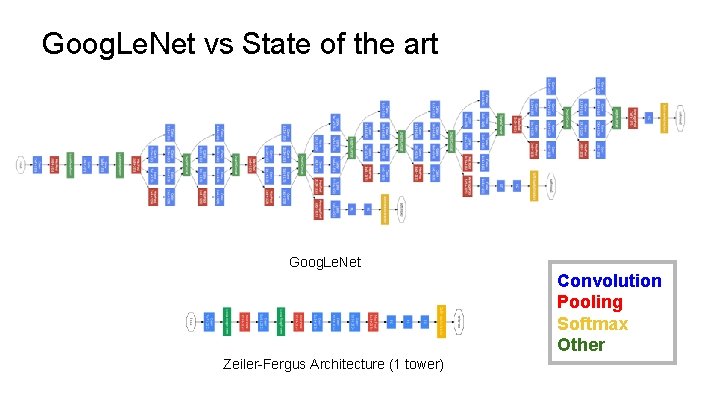

GoogLeNet
Legend: Convolution, Pooling, Softmax, Other

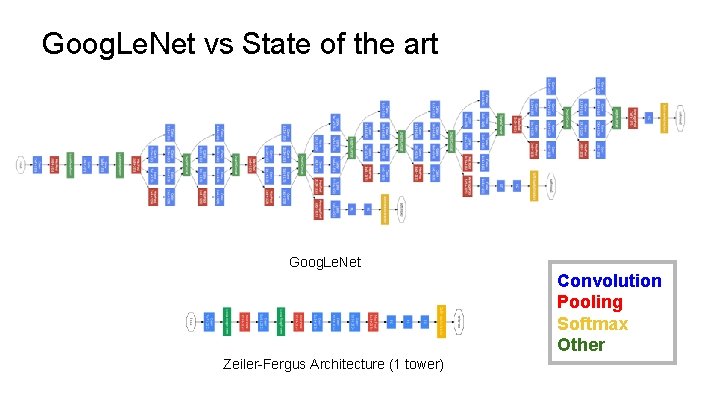

GoogLeNet vs State of the art
GoogLeNet (legend: Convolution, Pooling, Softmax, Other)
Zeiler-Fergus Architecture (1 tower)

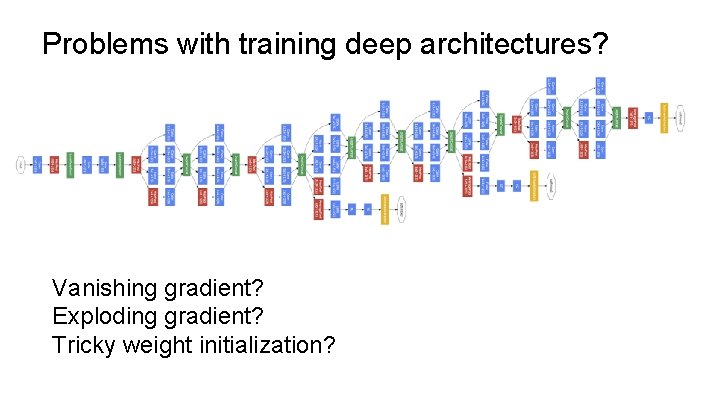

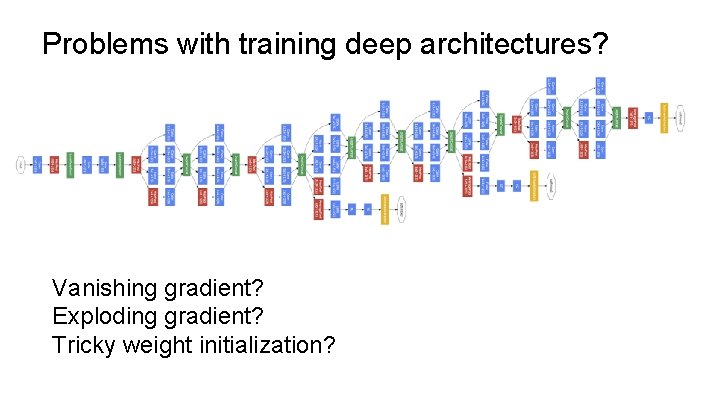

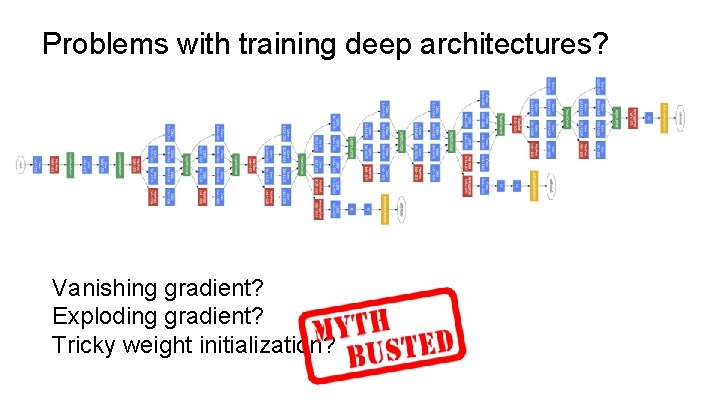

Problems with training deep architectures?
Vanishing gradient?
Exploding gradient?
Tricky weight initialization?

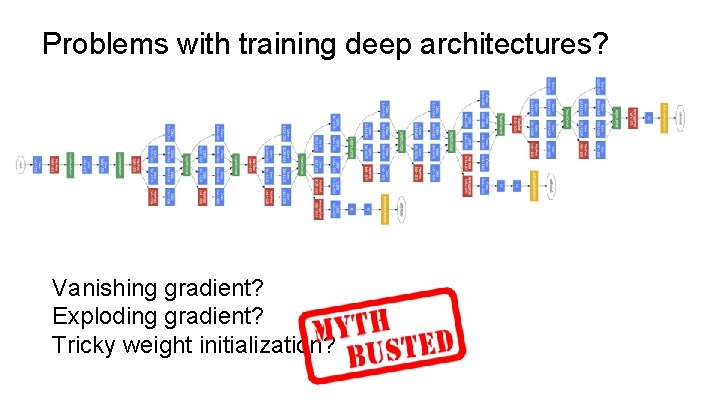

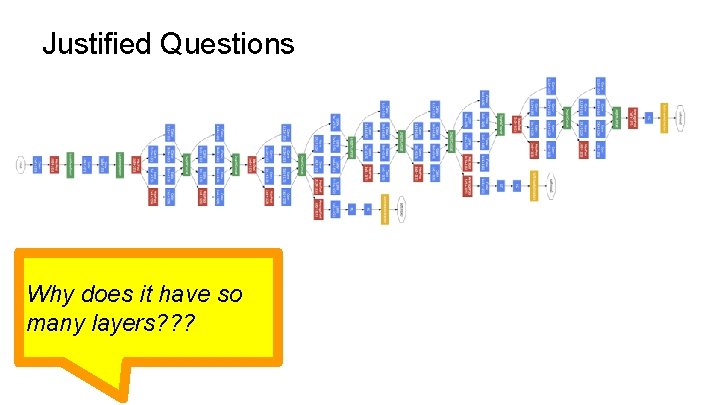

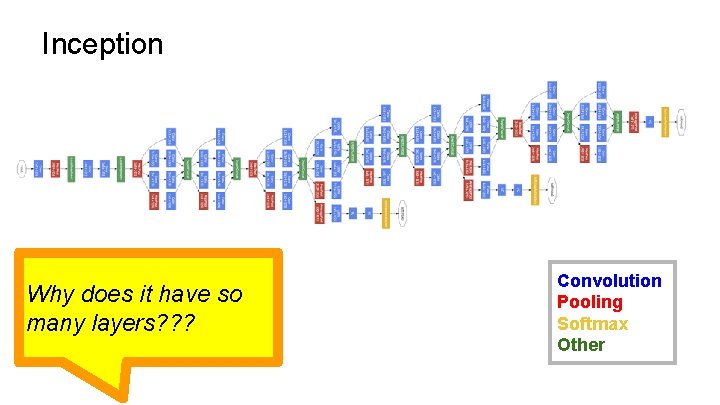

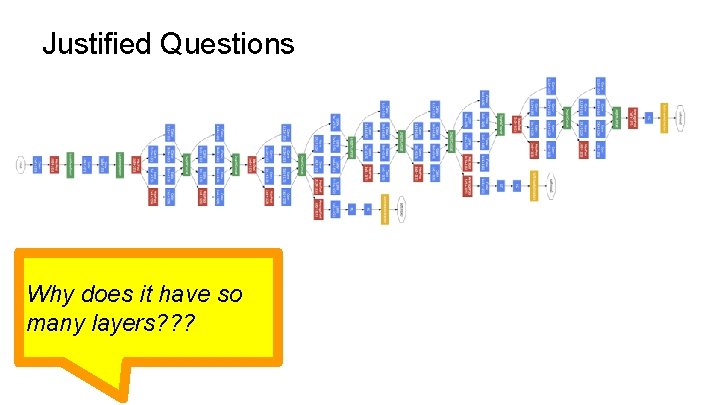

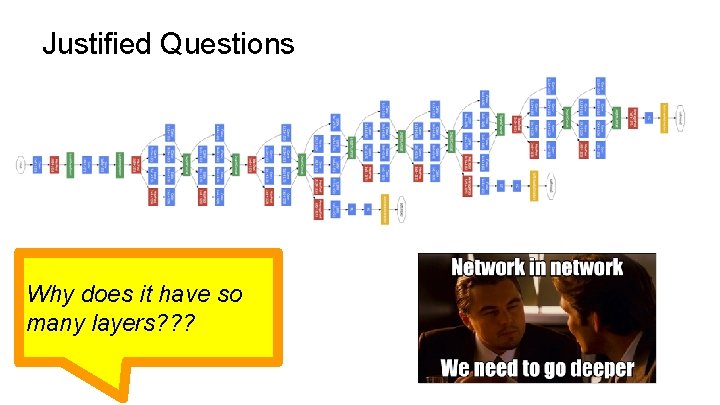

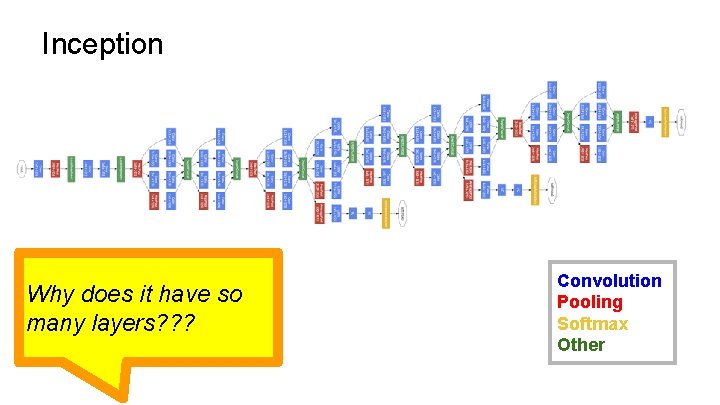

Justified Questions
Why does it have so many layers???

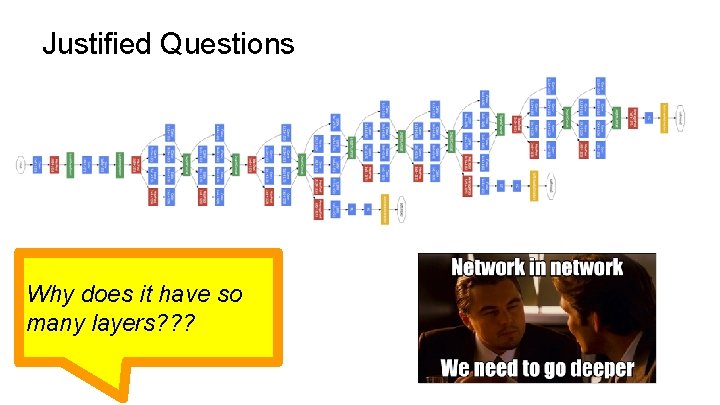

Why is the deep learning revolution arriving just now?
● It used to be hard and cumbersome to train deep models due to sigmoid nonlinearities. (slide overlay: ReLU)
● Deep neural networks are highly non-convex without any obvious optimality guarantees or nice theory. (slide overlay: ?)

Theoretical breakthroughs
Arora, S., Bhaskara, A., Ge, R., & Ma, T. Provable bounds for learning some deep representations. ICML 2014
Even non-convex ones!

Hebbian Principle Input

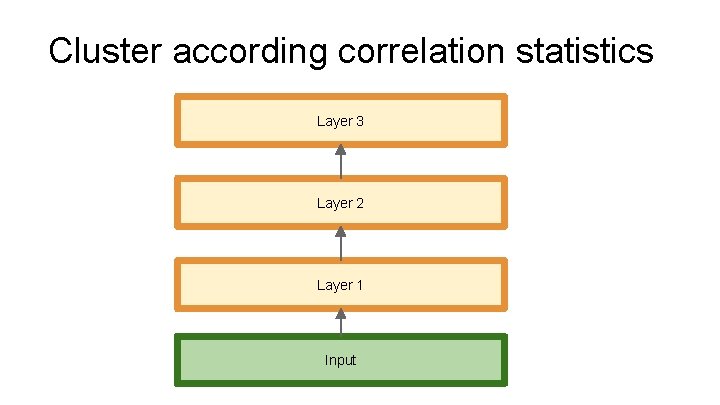

Cluster according to activation statistics
Layer 1
Input

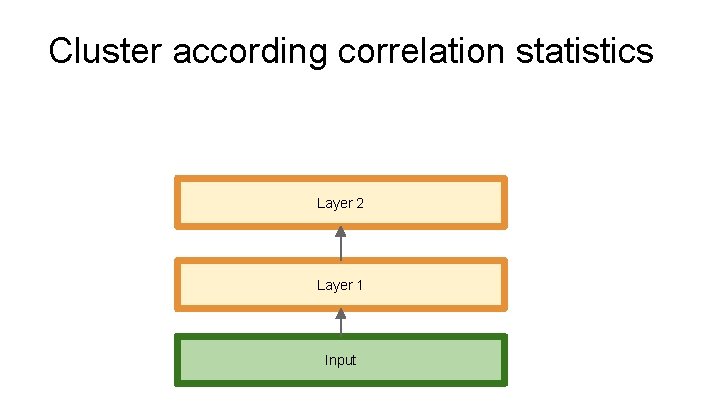

Cluster according to correlation statistics
Layer 2
Layer 1
Input

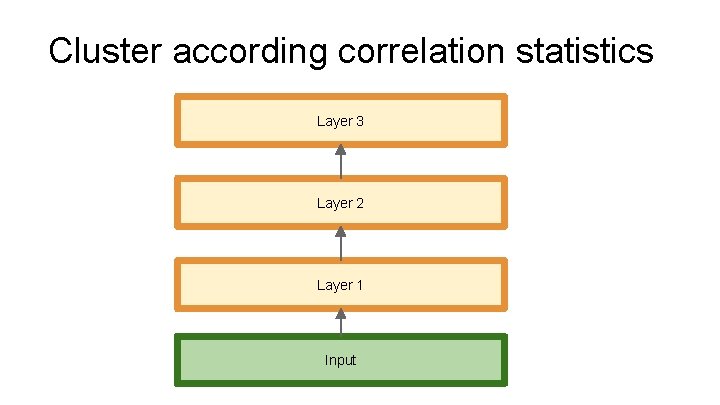

Cluster according to correlation statistics
Layer 3
Layer 2
Layer 1
Input
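The layer-by-layer clustering idea can be sketched on toy data: units whose activations are strongly correlated across samples are grouped together, and each group would feed one unit of the next layer. The code below is only an illustration under assumptions; the function names, the 0.9 threshold, and the union-find grouping are hypothetical choices, not the procedure from the cited paper.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equally long activation sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # a constant unit carries no correlation information
    return cov / math.sqrt(vx * vy)


def cluster_units(activations, threshold=0.9):
    """Group units whose pairwise correlation is at least `threshold`.

    activations[u] holds the responses of unit u over all samples.
    Returns a sorted list of clusters, each a list of unit indices.
    """
    n = len(activations)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if pearson(activations[i], activations[j]) >= threshold:
                parent[find(j)] = find(i)

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Toy activations: units 0 and 1 fire together, as do units 2 and 3.
toy = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [2.0, 4.0, 6.0, 8.0, 10.5],
    [5.0, 1.0, 4.0, 2.0, 3.0],
    [10.0, 2.0, 8.0, 4.0, 6.2],
]
clusters = cluster_units(toy)
print(clusters)
```

On this toy input the two correlated pairs end up in separate clusters, which is the structure the slides draw between consecutive layers.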

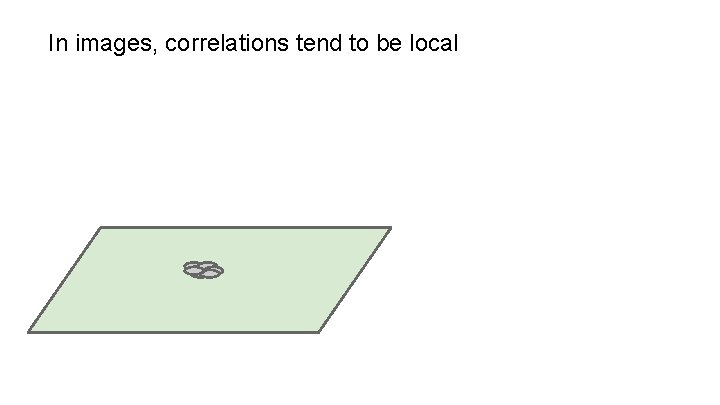

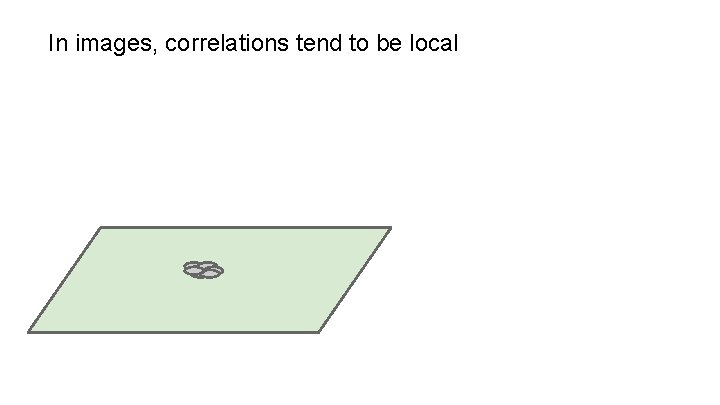

In images, correlations tend to be local

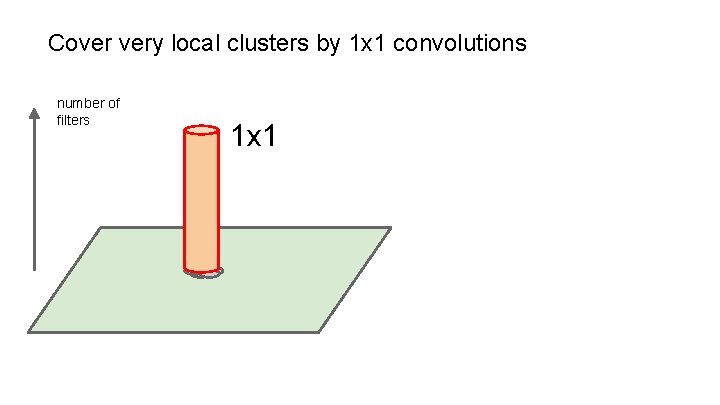

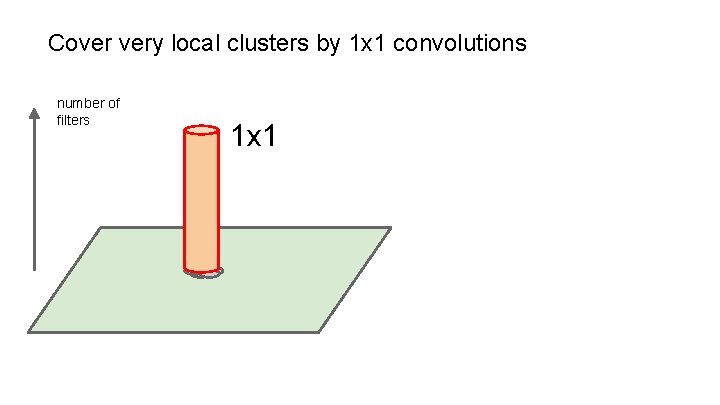

Cover very local clusters by 1x1 convolutions
Chart axis: number of filters; filter sizes: 1x1

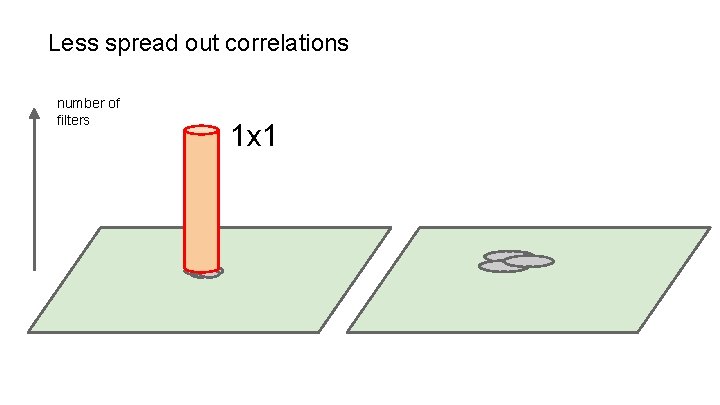

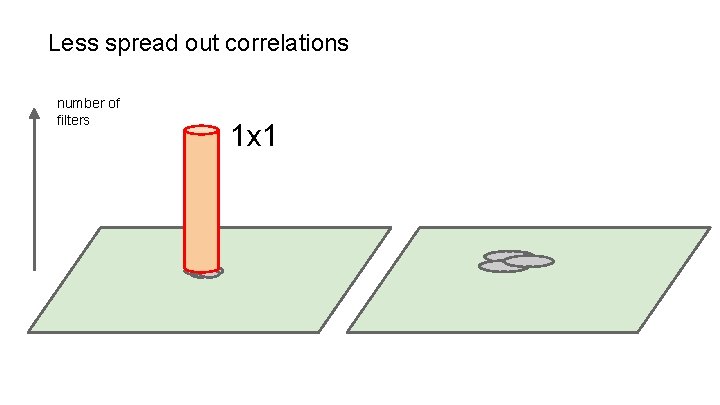

Less spread out correlations
Chart axis: number of filters; filter sizes: 1x1

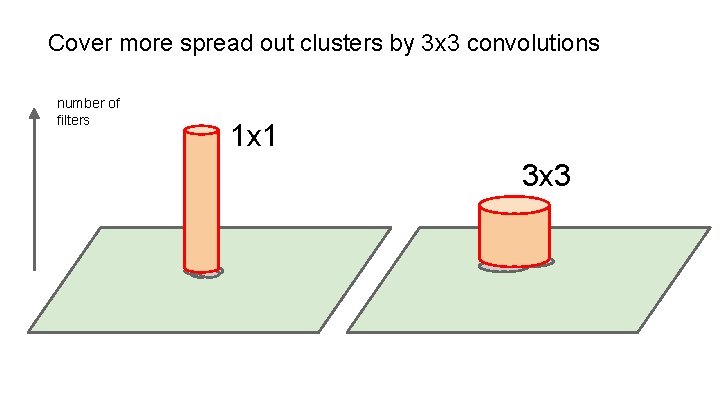

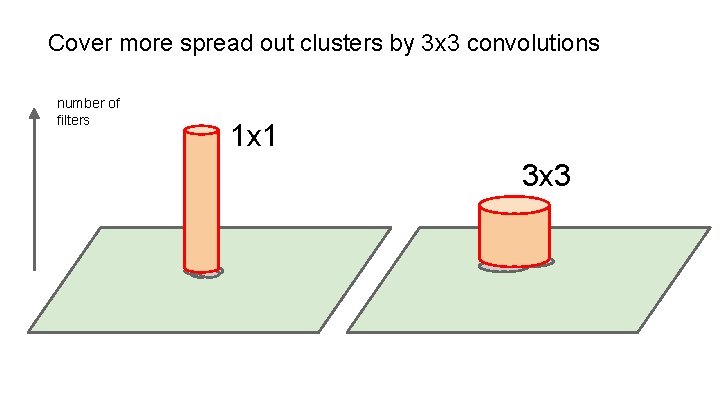

Cover more spread out clusters by 3x3 convolutions
Chart axis: number of filters; filter sizes: 1x1, 3x3

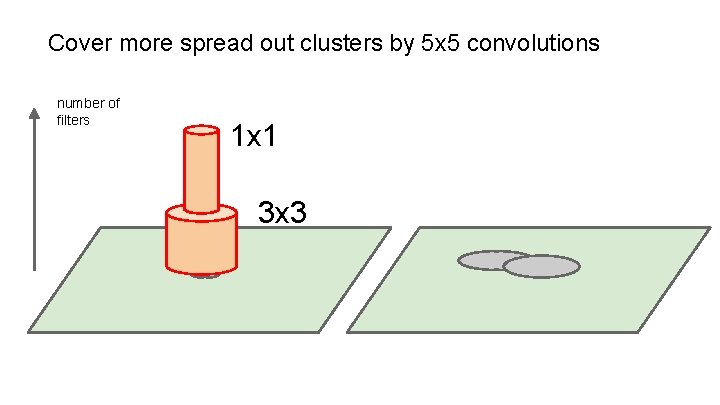

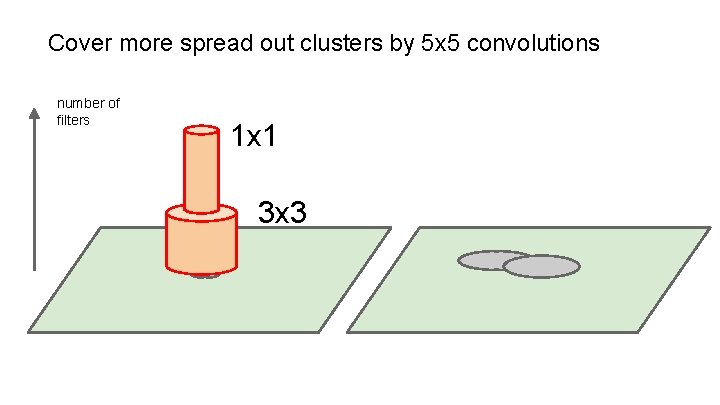

Cover more spread out clusters by 5x5 convolutions
Chart axis: number of filters; filter sizes: 1x1, 3x3

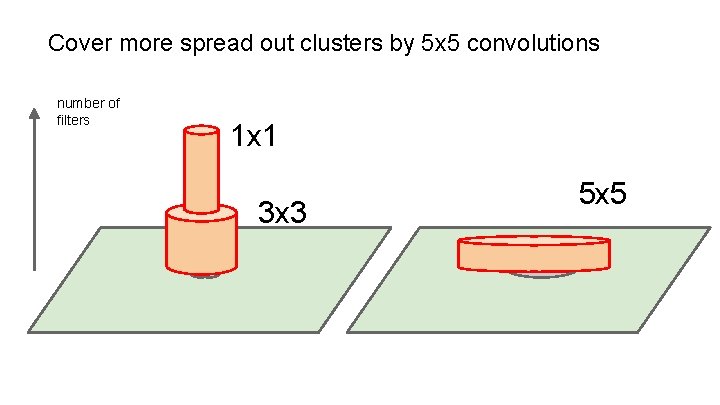

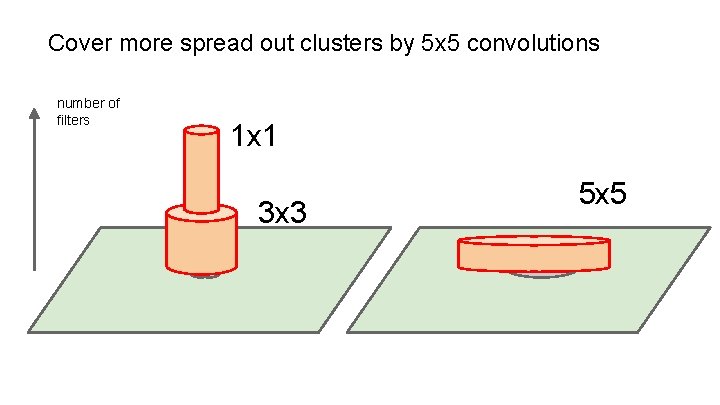

Cover more spread out clusters by 5x5 convolutions
Chart axis: number of filters; filter sizes: 1x1, 3x3, 5x5

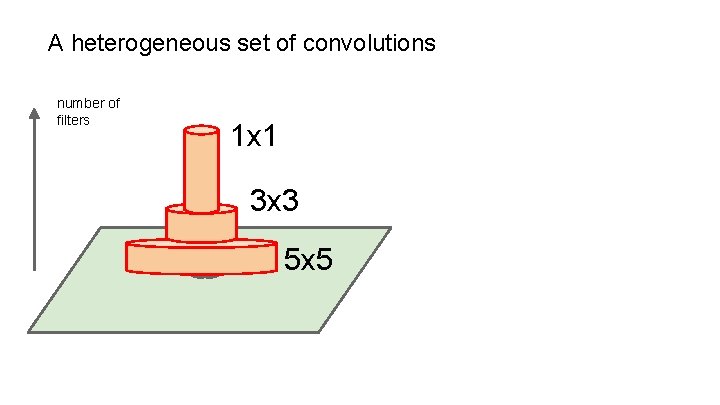

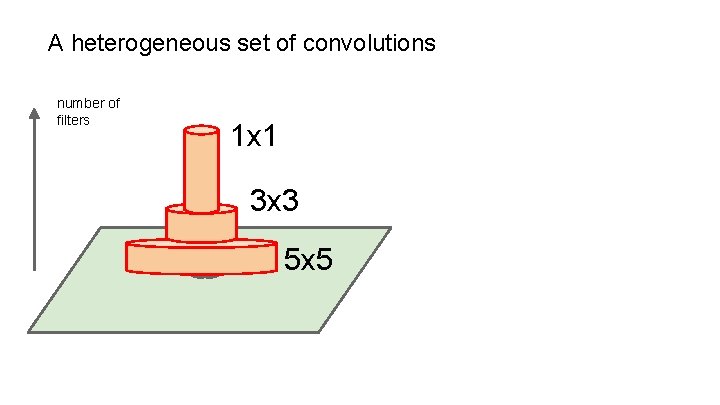

A heterogeneous set of convolutions
Chart axis: number of filters; filter sizes: 1x1, 3x3, 5x5

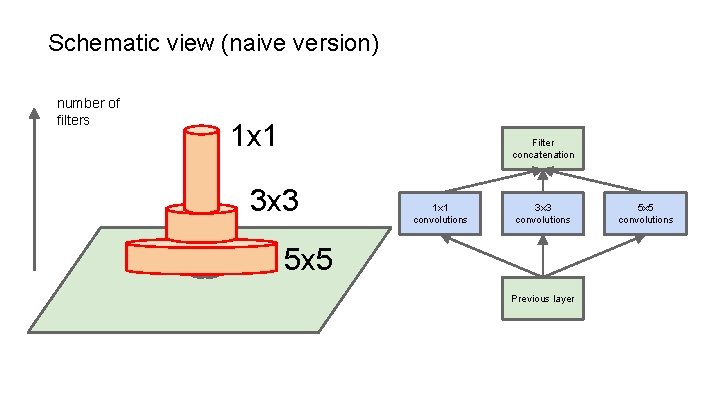

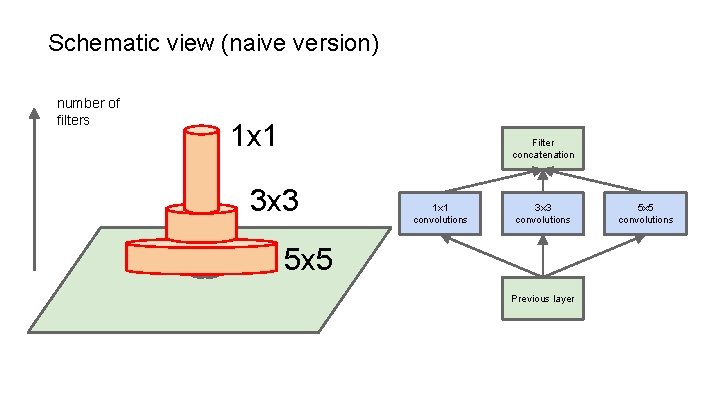

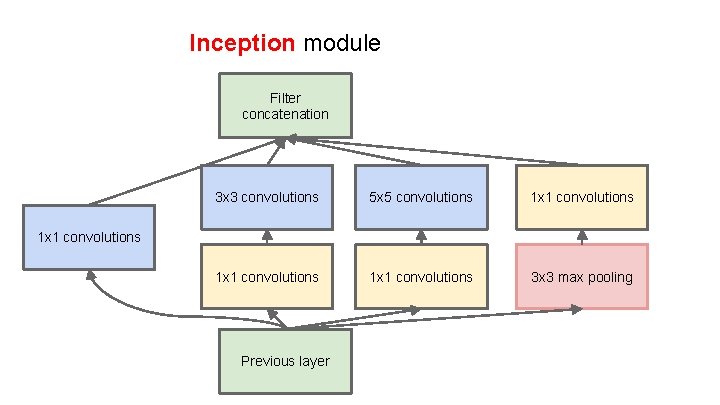

Schematic view (naive version)
Chart axis: number of filters; filter sizes: 1x1, 3x3, 5x5
Diagram: Previous layer → 1x1 convolutions, 3x3 convolutions, 5x5 convolutions → Filter concatenation

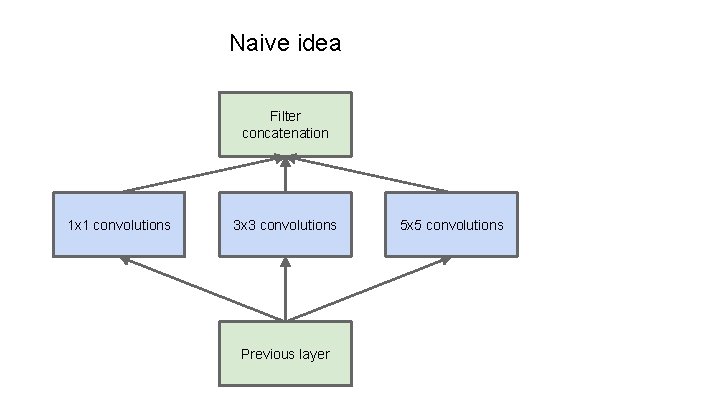

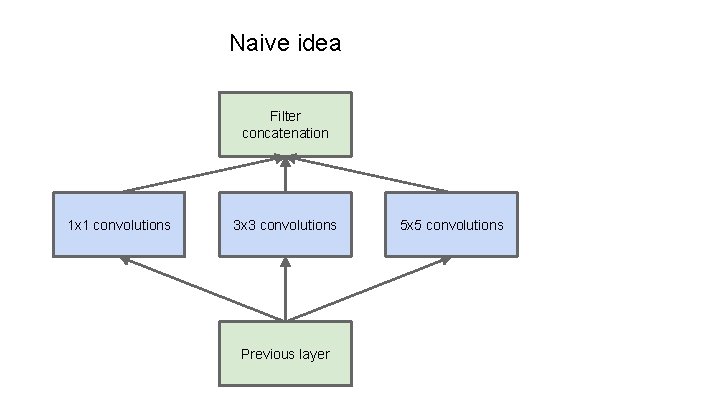

Naive idea
Diagram: Previous layer → 1x1 convolutions, 3x3 convolutions, 5x5 convolutions → Filter concatenation

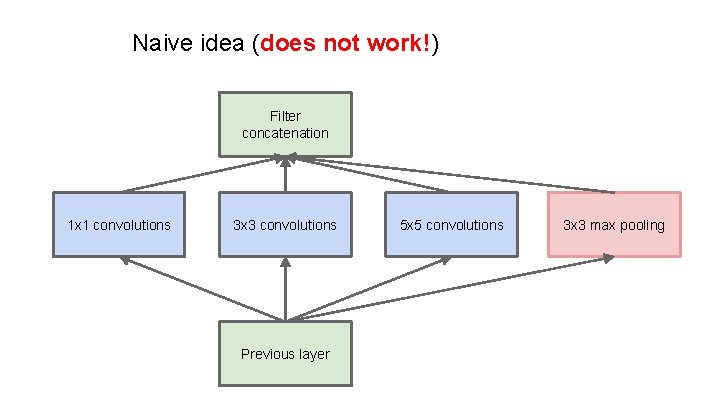

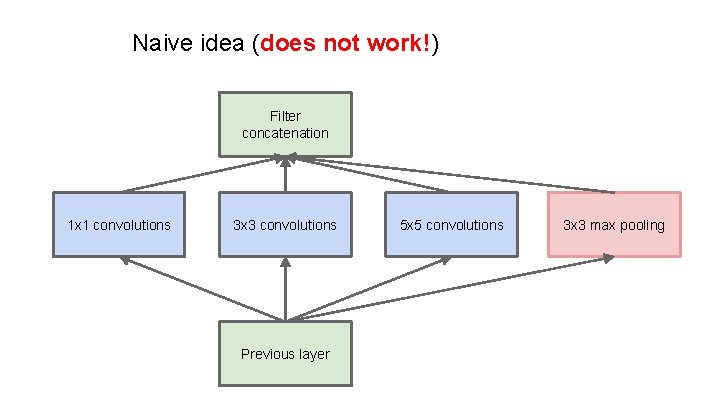

Naive idea (does not work!)
Diagram: Previous layer → 1x1 convolutions, 3x3 convolutions, 5x5 convolutions, 3x3 max pooling → Filter concatenation

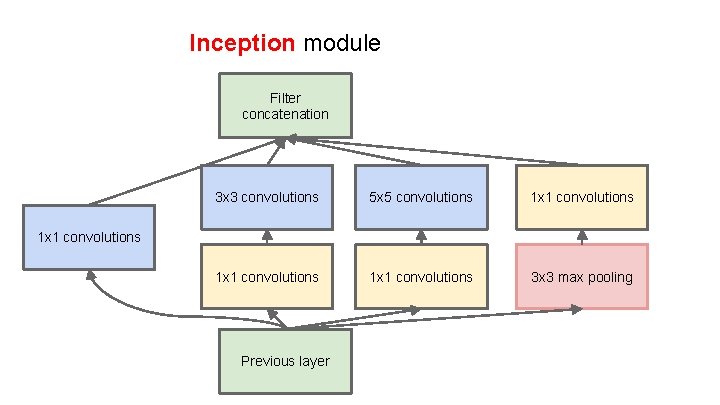

Inception module
Diagram labels: Filter concatenation; 3x3 convolutions; 5x5 convolutions; 1x1 convolutions; 3x3 max pooling; 1x1 convolutions; Previous layer
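A back-of-the-envelope count shows why the extra 1x1 convolutions help, assuming they act as channel reductions placed before the larger filters. All sizes below (28x28 feature map, 192 input channels, branch widths 64/128/32/32, a 16-channel reduction before the 5x5 branch) are illustrative assumptions; the slide itself shows only the diagram.

```python
def conv_macs(height: int, width: int, c_in: int, c_out: int, kernel: int) -> int:
    """Multiply-accumulates of a stride-1, same-padded kernel x kernel convolution."""
    return height * width * c_in * c_out * kernel * kernel


# Assumed, illustrative sizes (not taken from the slides).
H = W = 28
C_IN = 192
N_5X5 = 32        # output filters of the 5x5 branch
REDUCE_5X5 = 16   # channels after the assumed 1x1 reduction

# Naive branch: the 5x5 filters see all 192 input channels.
naive_5x5 = conv_macs(H, W, C_IN, N_5X5, 5)

# Reduced branch: a 1x1 convolution shrinks the input to 16 channels first.
reduced_5x5 = conv_macs(H, W, C_IN, REDUCE_5X5, 1) + conv_macs(H, W, REDUCE_5X5, N_5X5, 5)

# Width of the concatenated output: 1x1 + 3x3 + 5x5 + pooling projection.
module_width = 64 + 128 + 32 + 32

print(naive_5x5, reduced_5x5, round(naive_5x5 / reduced_5x5, 2), module_width)
```

With these assumed sizes the reduced branch needs roughly a tenth of the multiply-accumulates of the naive 5x5 branch, and the concatenated output is 256 channels wide.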

Inception
Why does it have so many layers???
Legend: Convolution, Pooling, Softmax, Other

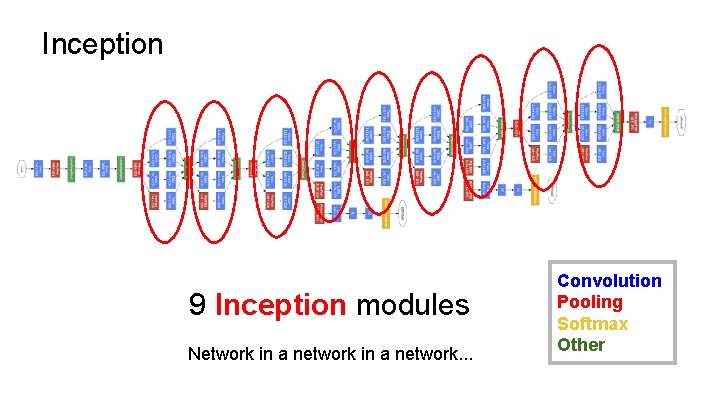

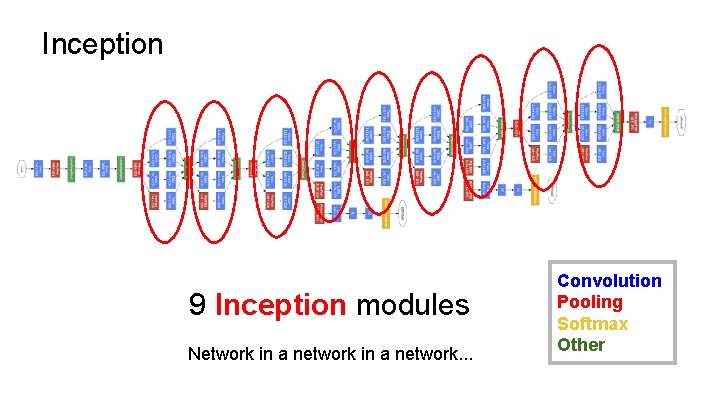

Inception
9 Inception modules
Network in a network...
Legend: Convolution, Pooling, Softmax, Other

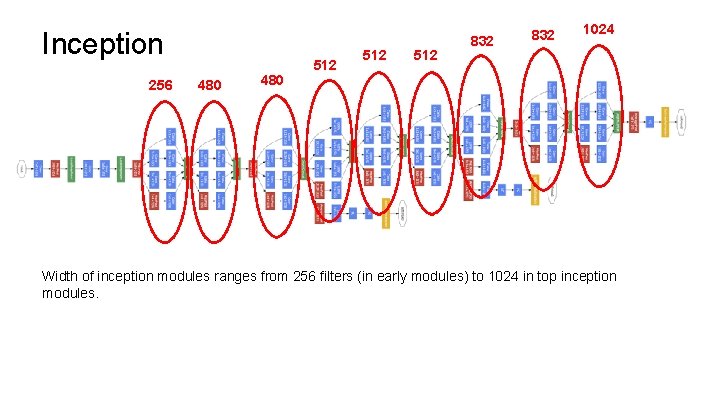

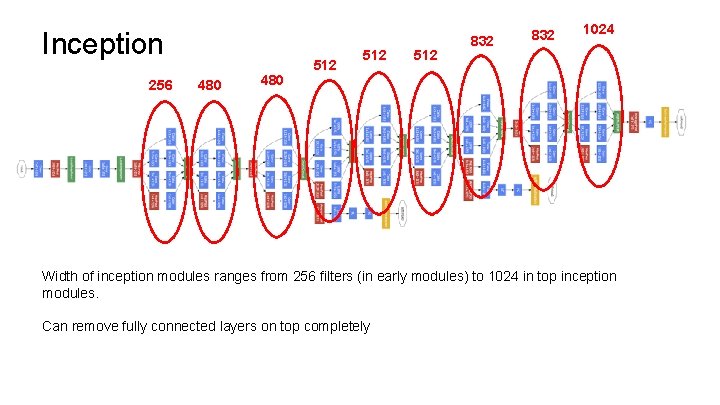

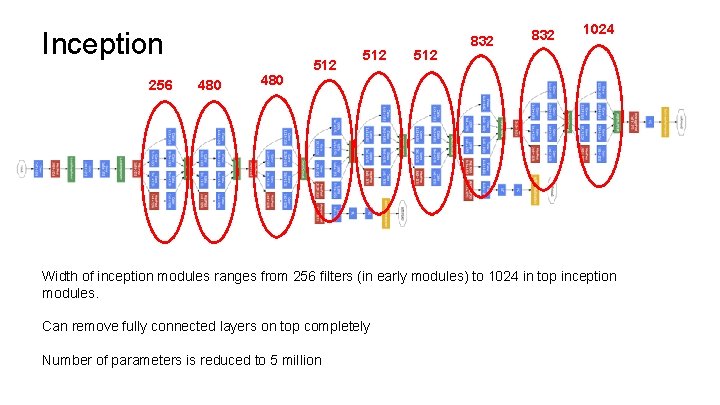

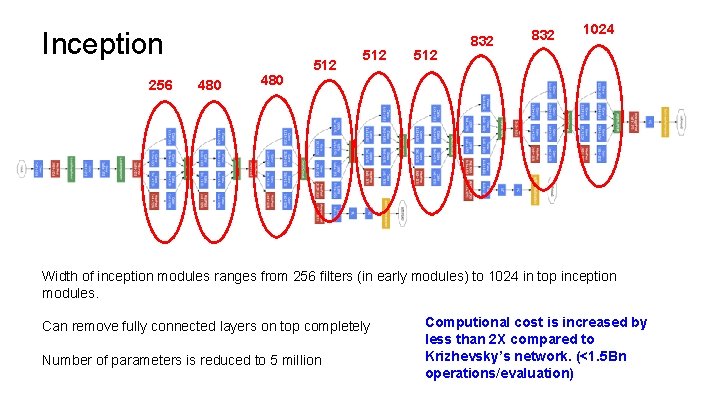

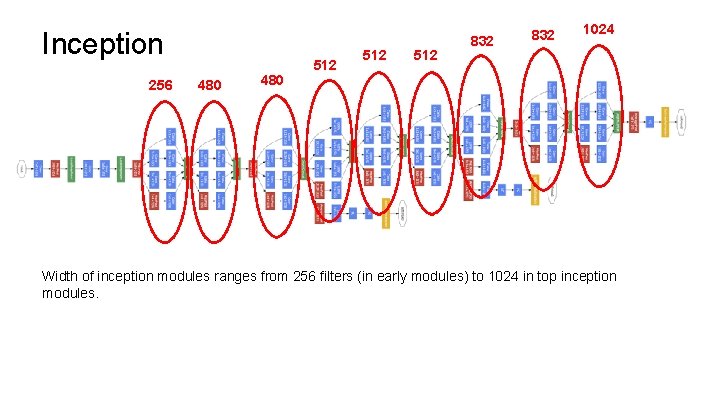

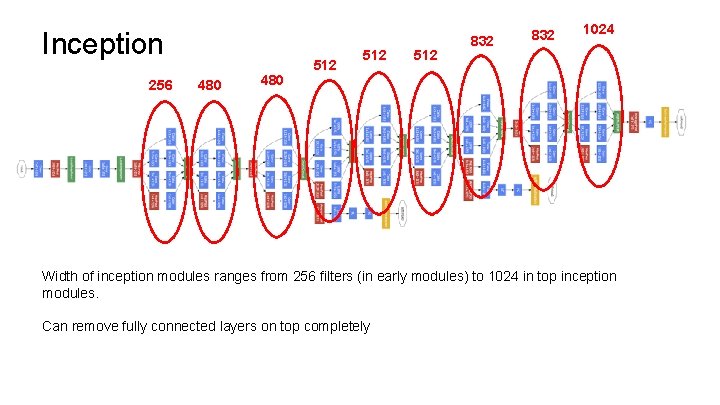

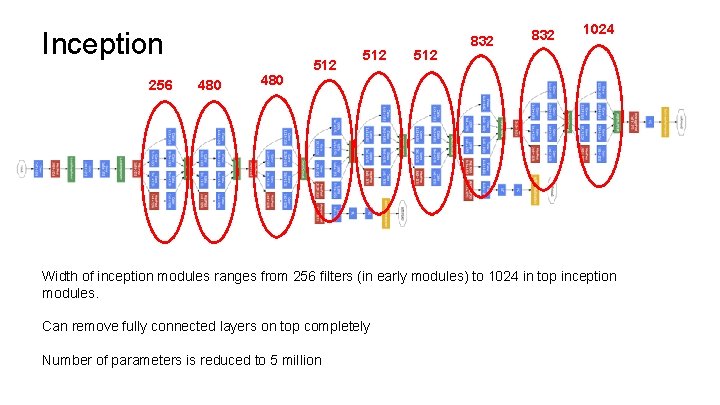

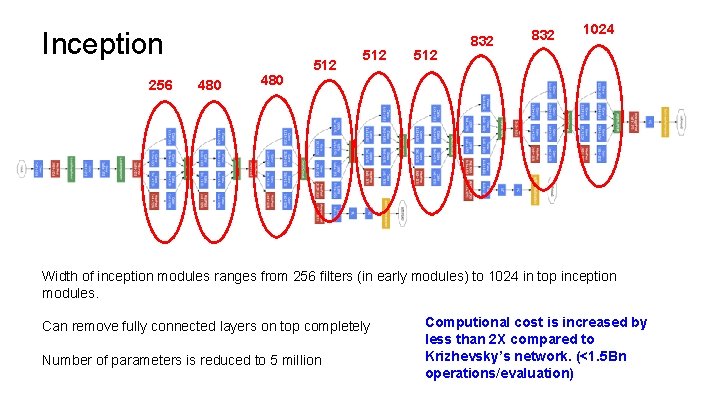

Inception
Figure labels (module widths): 256, 512, 480, 512, 832, 1024, 480
● Width of inception modules ranges from 256 filters (in early modules) to 1024 in top inception modules.
● Can remove fully connected layers on top completely.
● Number of parameters is reduced to 5 million.
● Computational cost is increased by less than 2X compared to Krizhevsky’s network (<1.5 Bn operations/evaluation).
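To see why dropping the fully connected layers saves so many parameters, compare a fully connected head with a single classifier on top of averaged features. The layer sizes below are assumptions for illustration: a 6x6x256 feature map feeding 4096-4096-1000 dense layers (typical of Krizhevsky-style networks), versus one 1024-to-1000 linear layer after average pooling, where 1024 is the top module width from the slide.

```python
def dense_params(n_in: int, n_out: int, bias: bool = True) -> int:
    """Number of weights (plus optional biases) in a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


# Assumed fully connected head: 6x6x256 features -> 4096 -> 4096 -> 1000 classes.
fc_head = (
    dense_params(6 * 6 * 256, 4096)
    + dense_params(4096, 4096)
    + dense_params(4096, 1000)
)

# Assumed pooled head: averaged 1024-channel features -> 1000 classes.
pooled_head = dense_params(1024, 1000)

print(f"fully connected head: {fc_head:,} parameters")
print(f"pooled head:          {pooled_head:,} parameters")
```

Under these assumptions the dense head alone holds tens of millions of parameters, far more than the roughly 5 million quoted for the whole network on the slide.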

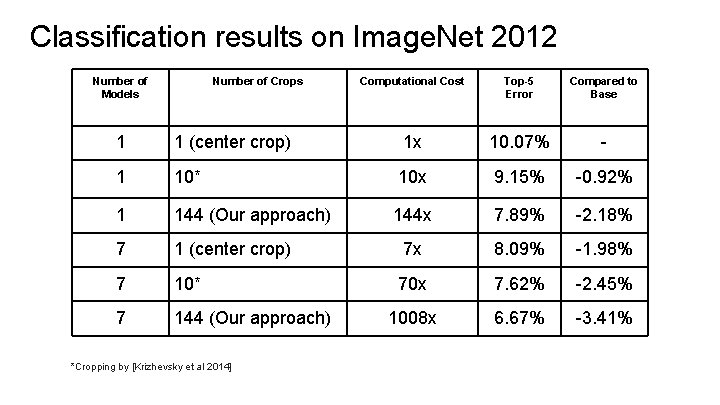

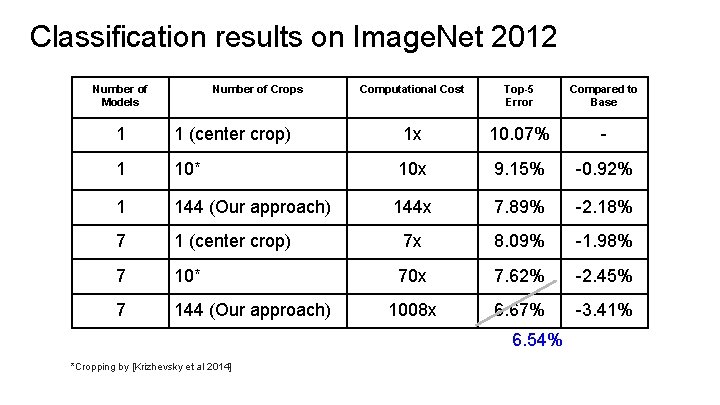

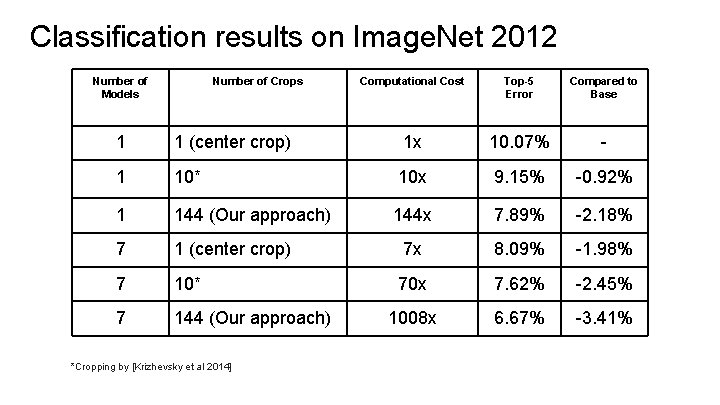

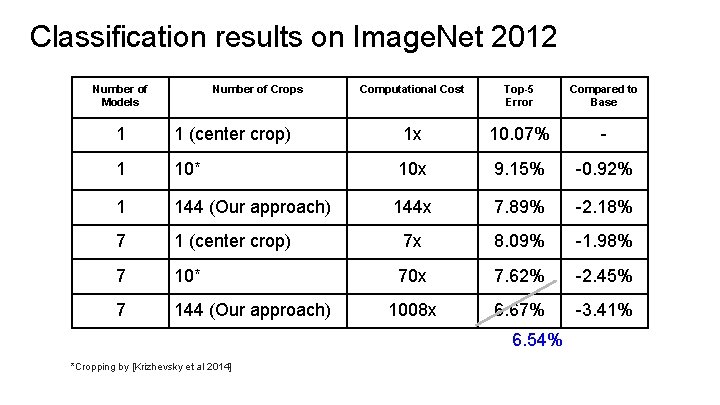

Classification results on ImageNet 2012

| Number of Models | Number of Crops | Computational Cost | Top-5 Error | Compared to Base |
|---|---|---|---|---|
| 1 | 1 (center crop) | 1x | 10.07% | - |
| 1 | 10* | 10x | 9.15% | -0.92% |
| 1 | 144 (Our approach) | 144x | 7.89% | -2.18% |
| 7 | 1 (center crop) | 7x | 8.09% | -1.98% |
| 7 | 10* | 70x | 7.62% | -2.45% |
| 7 | 144 (Our approach) | 1008x | 6.67% | -3.41% |

Slide overlay: 6.54%
*Cropping by [Krizhevsky et al 2014]
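The cost and improvement columns follow directly from the other columns: relative cost is models times crops, and the improvement is the top-5 error minus the single-model, single-crop base. A small sketch that recomputes them from the values on the slide is shown below; the variable and function names are illustrative. Note that the last row recomputes to -3.40 from the rounded errors, while the slide shows -3.41%, presumably because the slide used unrounded values.

```python
BASE_ERROR = 10.07  # top-5 error (%) of 1 model with 1 center crop

# (number of models, number of crops, top-5 error in %), as listed on the slide
rows = [
    (1, 1, 10.07),
    (1, 10, 9.15),
    (1, 144, 7.89),
    (7, 1, 8.09),
    (7, 10, 7.62),
    (7, 144, 6.67),
]


def relative_cost(models: int, crops: int) -> int:
    """Evaluation cost relative to one model on one crop."""
    return models * crops


summary = [
    (m, c, relative_cost(m, c), round(err - BASE_ERROR, 2))
    for m, c, err in rows
]

for models, crops, cost, delta in summary:
    print(f"{models} model(s), {crops:>3} crop(s): {cost:>4}x cost, {delta:+.2f} points")
```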

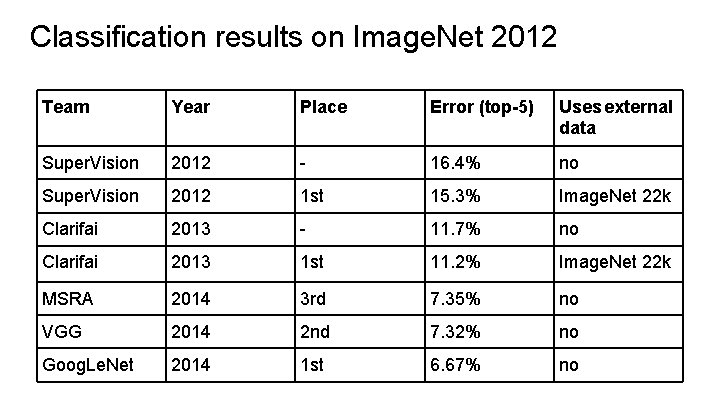

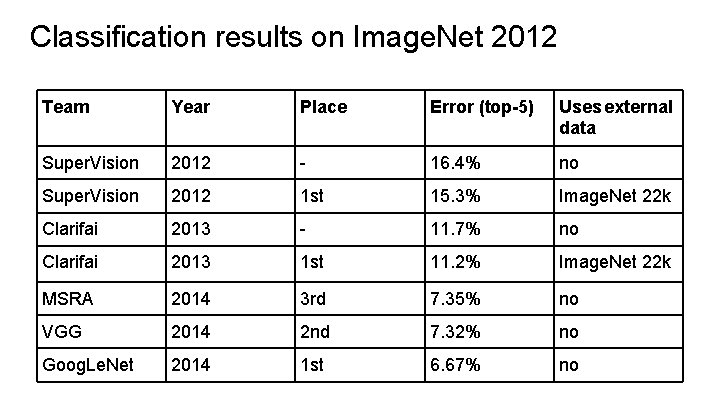

Classification results on ImageNet 2012

| Team | Year | Place | Error (top-5) | Uses external data |
|---|---|---|---|---|
| SuperVision | 2012 | - | 16.4% | no |
| SuperVision | 2012 | 1st | 15.3% | ImageNet 22k |
| Clarifai | 2013 | - | 11.7% | no |
| Clarifai | 2013 | 1st | 11.2% | ImageNet 22k |
| MSRA | 2014 | 3rd | 7.35% | no |
| VGG | 2014 | 2nd | 7.32% | no |
| GoogLeNet | 2014 | 1st | 6.67% | no |

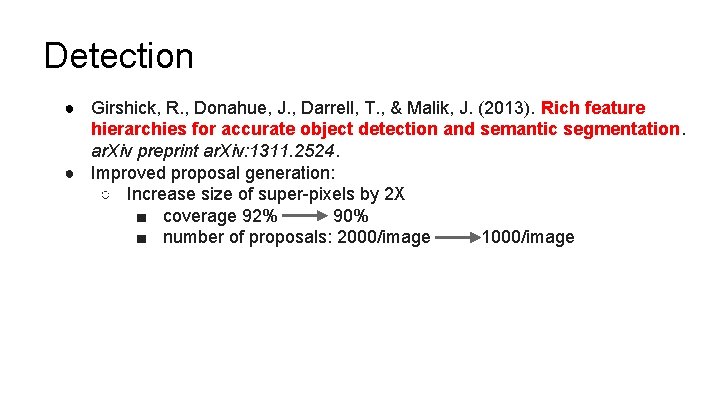

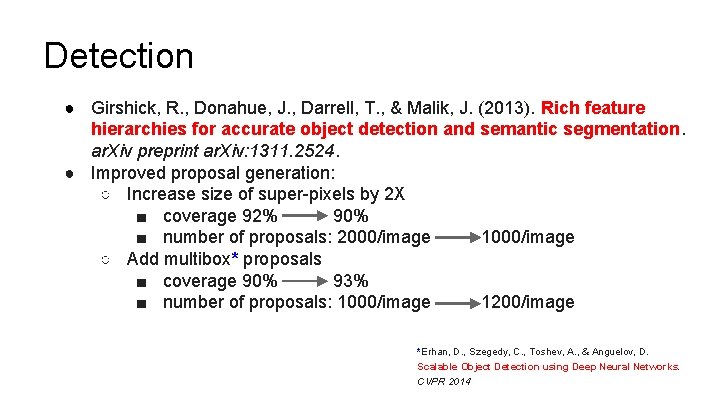

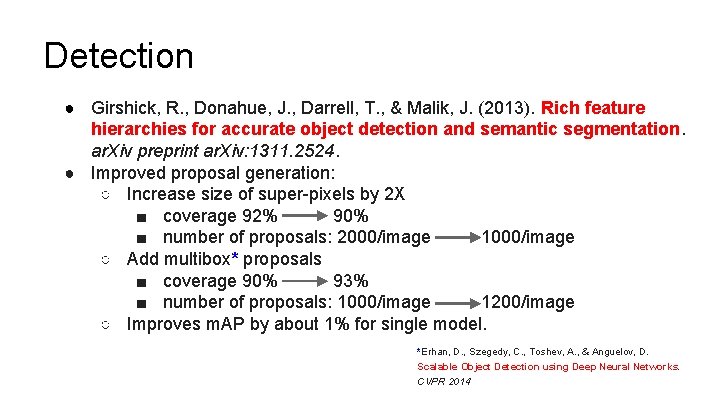

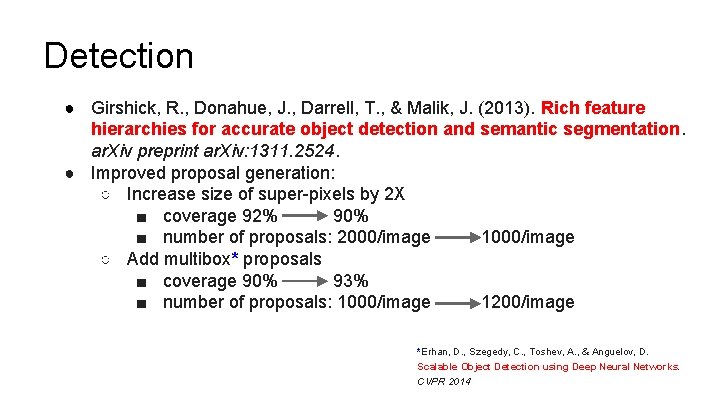

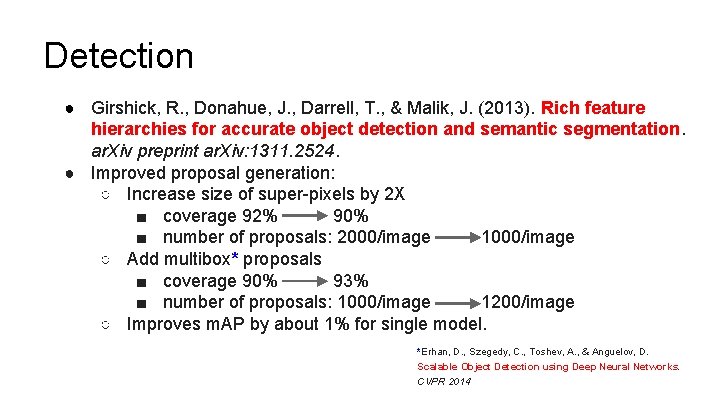

Detection
● Girshick, R., Donahue, J., Darrell, T., & Malik, J. (2013). Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv preprint arXiv:1311.2524.
● Improved proposal generation:
○ Increase size of super-pixels by 2X
■ coverage: 92% → 90%
■ number of proposals: 2000/image → 1000/image
○ Add multibox* proposals
■ coverage: 90% → 93%
■ number of proposals: 1000/image → 1200/image
○ Improves mAP by about 1% for single model.
*Erhan, D., Szegedy, C., Toshev, A., & Anguelov, D. Scalable Object Detection using Deep Neural Networks. CVPR 2014
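The slides do not define "coverage". One common reading, assumed here, is the fraction of ground-truth boxes that overlap at least one proposal with intersection over union (IoU) of 0.5 or more. The sketch below uses that assumption; the box format, the threshold, and the toy boxes are all hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def coverage(ground_truth, proposals, threshold=0.5):
    """Fraction of ground-truth boxes matched by at least one proposal."""
    if not ground_truth:
        return 0.0
    hits = sum(
        1 for g in ground_truth
        if any(iou(g, p) >= threshold for p in proposals)
    )
    return hits / len(ground_truth)


# Toy example: adding one extra proposal covers the second object.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
first_proposals = [(1, 1, 10, 10)]
combined_proposals = first_proposals + [(20, 20, 30, 28)]

print(coverage(ground_truth, first_proposals))     # one of two objects covered
print(coverage(ground_truth, combined_proposals))  # both objects covered
```

This mirrors the trade-off on the slide: a second proposal source can raise coverage while adding only a modest number of boxes per image.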

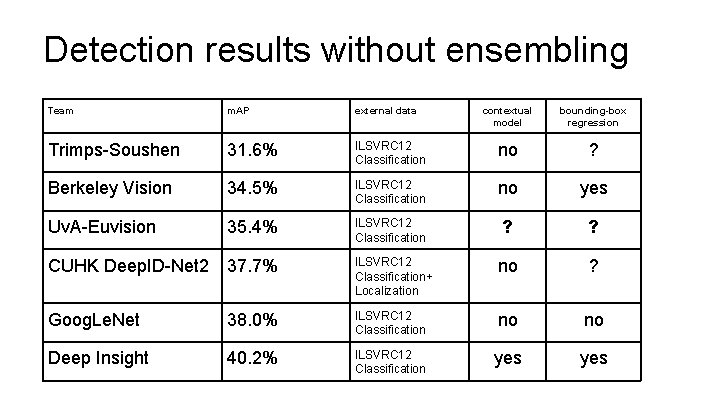

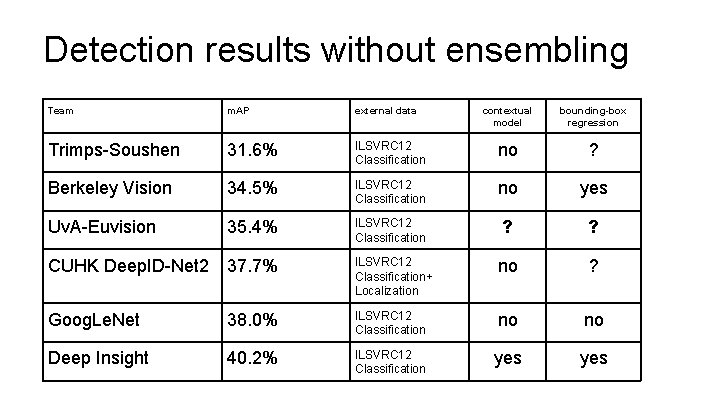

Detection results without ensembling

| Team | mAP | external data | contextual model | bounding-box regression |
|---|---|---|---|---|
| Trimps-Soushen | 31.6% | ILSVRC12 Classification | no | ? |
| Berkeley Vision | 34.5% | ILSVRC12 Classification | no | yes |
| UvA-Euvision | 35.4% | ILSVRC12 Classification | ? | ? |
| CUHK DeepID-Net2 | 37.7% | ILSVRC12 Classification + Localization | no | ? |
| GoogLeNet | 38.0% | ILSVRC12 Classification | no | no |
| Deep Insight | 40.2% | ILSVRC12 Classification | yes | |

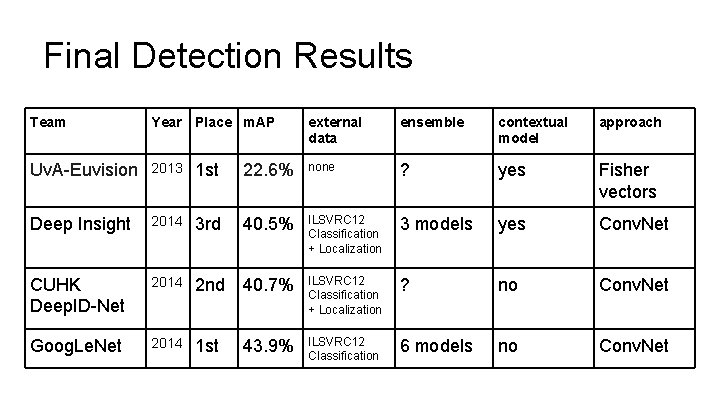

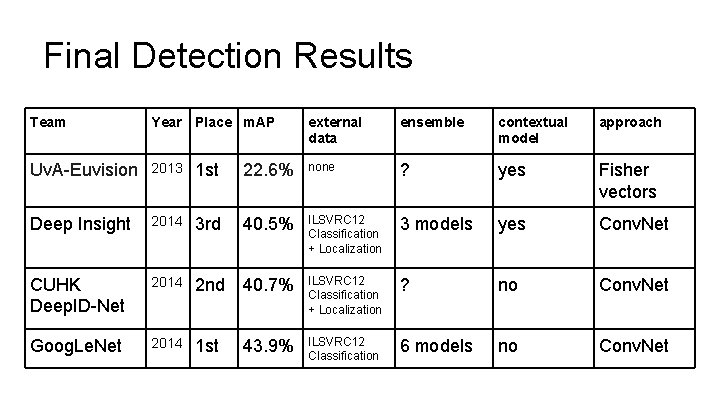

Final Detection Results

| Team | Year | Place | mAP | external data | ensemble | contextual model | approach |
|---|---|---|---|---|---|---|---|
| UvA-Euvision | 2013 | 1st | 22.6% | none | ? | yes | Fisher vectors |
| Deep Insight | 2014 | 3rd | 40.5% | ILSVRC12 Classification + Localization | 3 models | yes | ConvNet |
| CUHK DeepID-Net | 2014 | 2nd | 40.7% | ILSVRC12 Classification + Localization | ? | no | ConvNet |
| GoogLeNet | 2014 | 1st | 43.9% | ILSVRC12 Classification | 6 models | no | ConvNet |

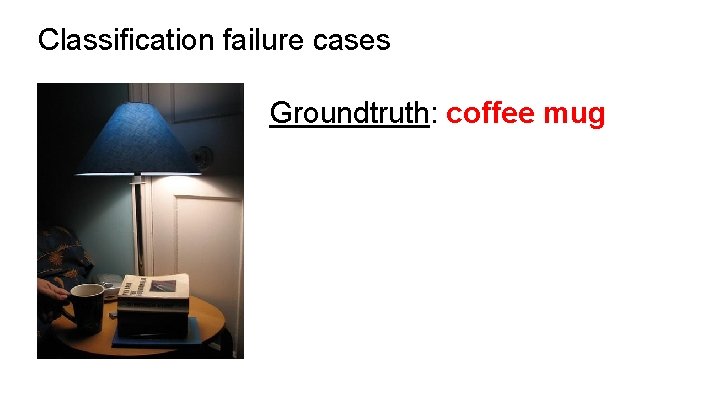

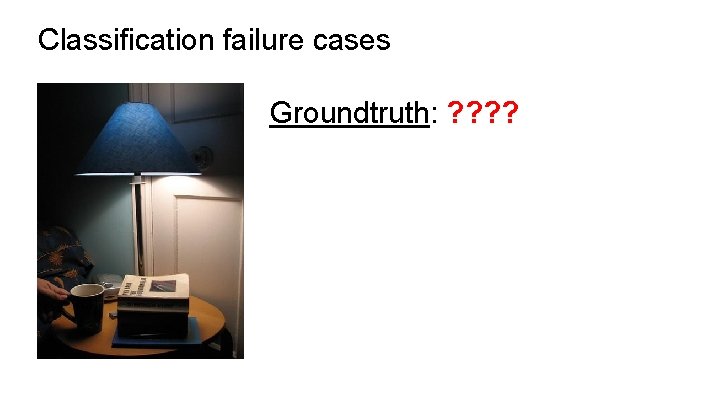

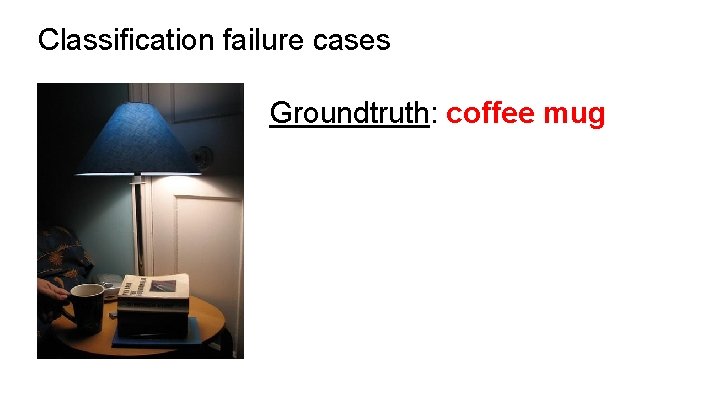

Classification failure cases Groundtruth: ? ?

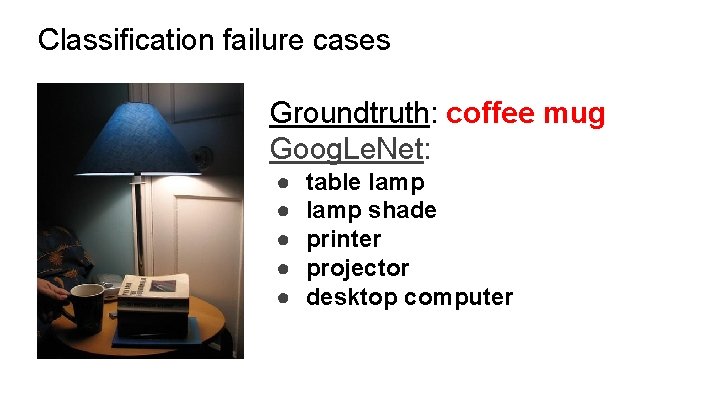

Classification failure cases
Groundtruth: coffee mug
GoogLeNet: table lamp shade, printer, projector, desktop computer

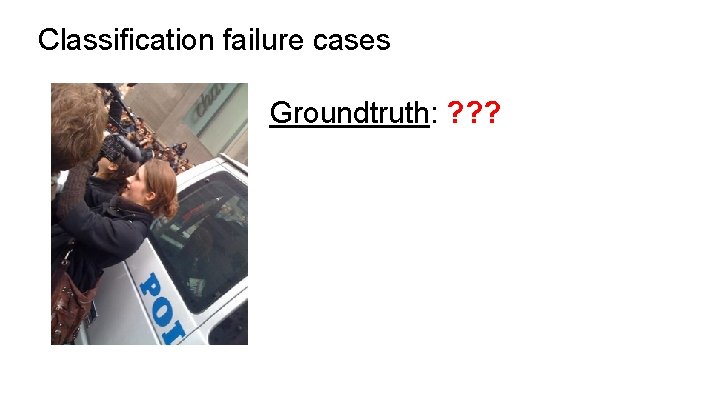

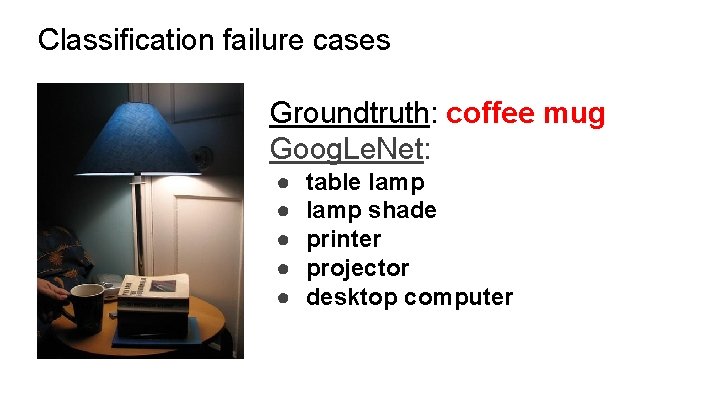

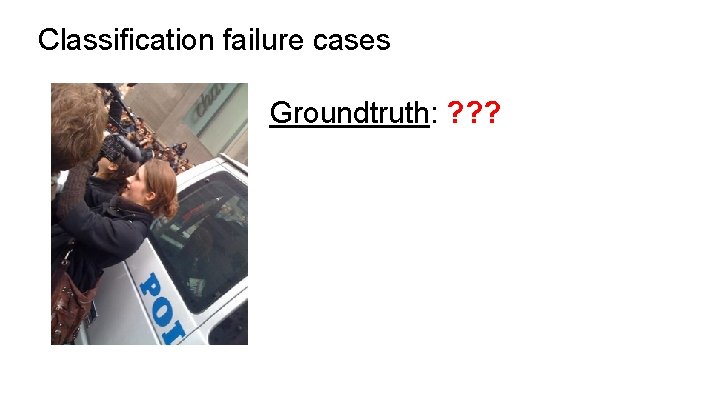

Classification failure cases Groundtruth: ? ? ?

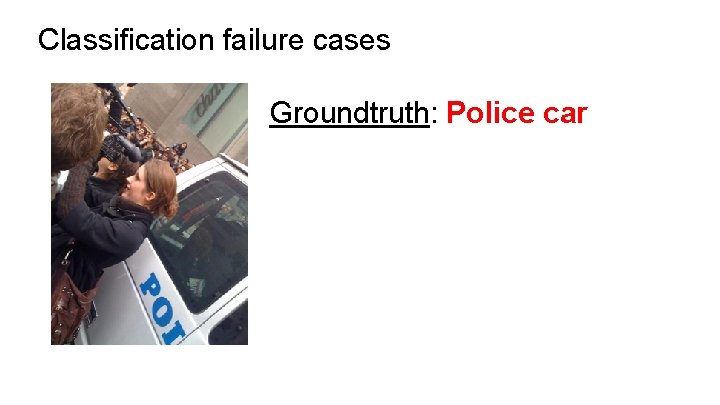

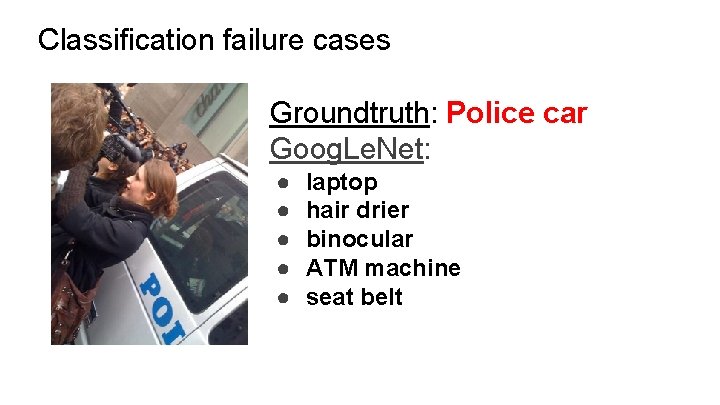

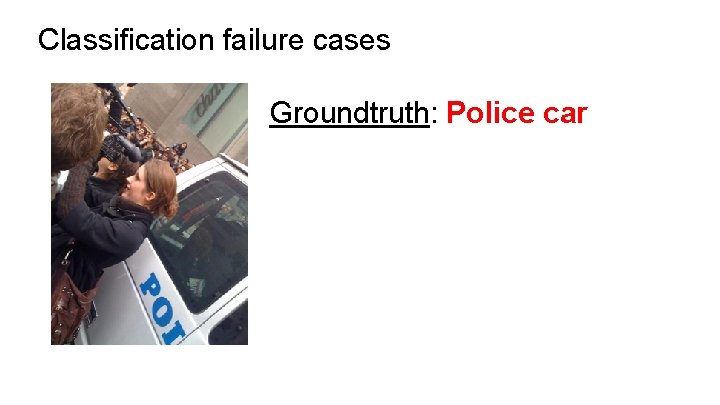

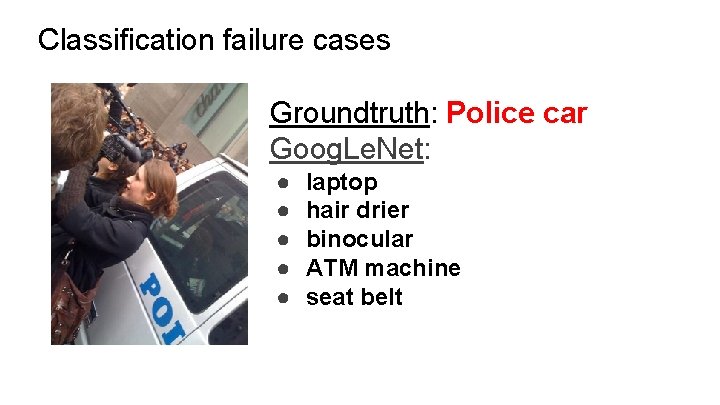

Classification failure cases
Groundtruth: Police car
GoogLeNet:
● laptop
● hair drier
● binocular
● ATM machine
● seat belt

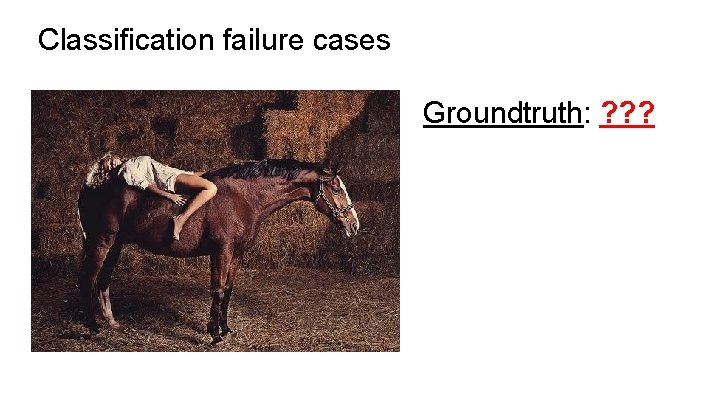

Classification failure cases Groundtruth: ? ? ?

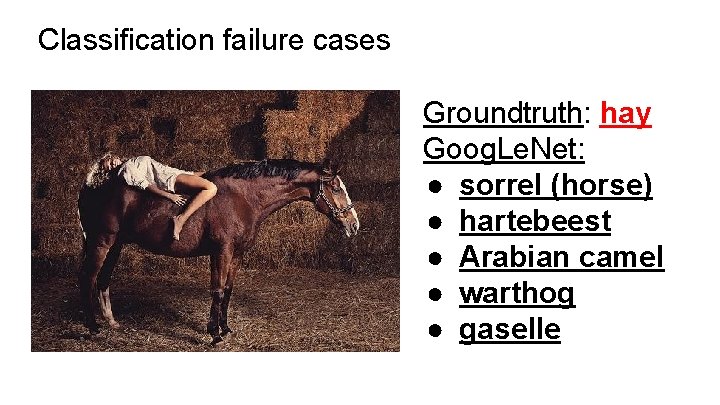

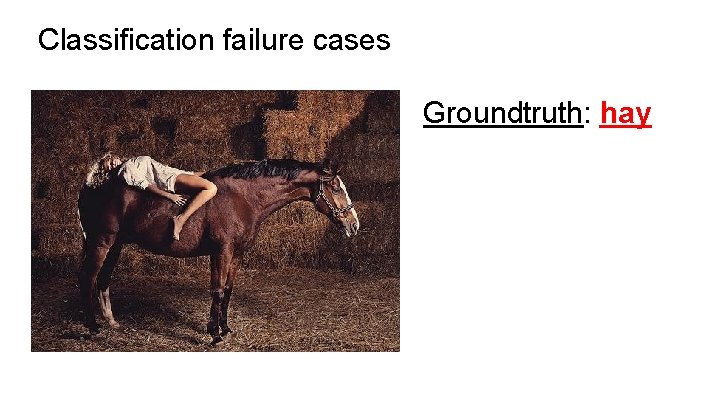

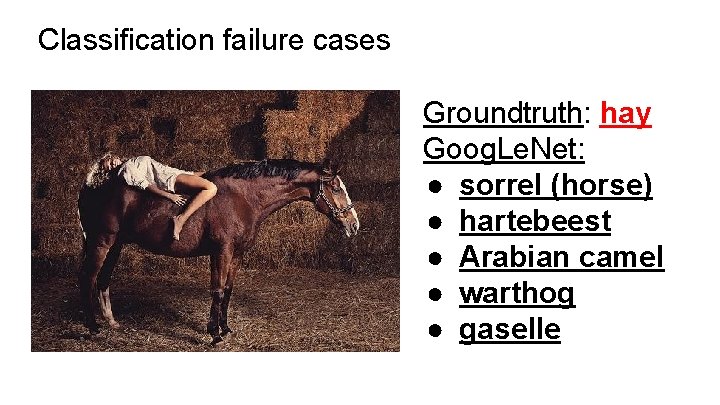

Classification failure cases
Groundtruth: hay
GoogLeNet:
● sorrel (horse)
● hartebeest
● Arabian camel
● warthog
● gazelle

Acknowledgments
We would like to thank: Chuck Rosenberg, Hartwig Adam, Alex Toshev, Tom Duerig, Ning Ye, Rajat Monga, Jon Shlens, Alex Krizhevsky, Sudheendra Vijayanarasimhan, Jeff Dean, Ilya Sutskever, Andrea Frome
… and check out our poster!

Christian szegedy

Christian szegedy Alex liu cecilia liu

Alex liu cecilia liu Líu líu lo lo ta ca hát say sưa

Líu líu lo lo ta ca hát say sưa System design architecture

System design architecture Goog;e

Goog;e Ucsd eecs

Ucsd eecs Goog elmaps

Goog elmaps Gmailgmailgmailgmail

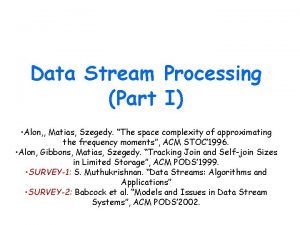

Gmailgmailgmailgmail Alon matias szegedy

Alon matias szegedy Mario szegedy

Mario szegedy Netalex

Netalex Lj wei harvard

Lj wei harvard Shen, wei-min

Shen, wei-min Li yi wei

Li yi wei Liyi wei

Liyi wei Liyi wei

Liyi wei Chua wei yang

Chua wei yang Wei cheng lee

Wei cheng lee Wong koh wei

Wong koh wei Roger clemmons dvm

Roger clemmons dvm Jim wei

Jim wei Li-yi wei

Li-yi wei Wei bin

Wei bin Wei yu taiwan host

Wei yu taiwan host Mark liberman

Mark liberman Wei ni er huo

Wei ni er huo Wer wei

Wer wei Wo men de tian fu

Wo men de tian fu Cao wei

Cao wei Zhewei wei

Zhewei wei Mostafa mortezaie

Mostafa mortezaie Tzu chieh wei

Tzu chieh wei Yichen wei

Yichen wei Ting wei ya meaning

Ting wei ya meaning Sheng wei moffitt

Sheng wei moffitt Ooi wei tsang

Ooi wei tsang Yaxing wei

Yaxing wei Ming-wei chang

Ming-wei chang Wei pollub

Wei pollub Wei pollub

Wei pollub Kaiwei chang

Kaiwei chang Fisheye state routing

Fisheye state routing Ermin wei

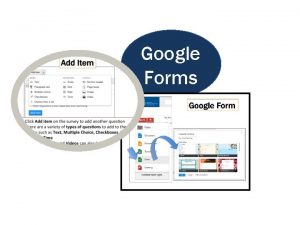

Ermin wei Google forms to calendar

Google forms to calendar Konichiwa prijevod

Konichiwa prijevod Google acadmico

Google acadmico Google docshttps://mail.google.com/mail/u/0/#inbox

Google docshttps://mail.google.com/mail/u/0/#inbox Achmed lach net ich krieg mein tach net

Achmed lach net ich krieg mein tach net Ado.net vb.net

Ado.net vb.net Diane liu md

Diane liu md Chiu-chu melissa liu

Chiu-chu melissa liu Boy or girl slinger

Boy or girl slinger Chee wee liu

Chee wee liu Yang liu stanford

Yang liu stanford Tongping liu

Tongping liu Jingchen liu

Jingchen liu Karin björsten liu

Karin björsten liu Sissi liu

Sissi liu Chim đậu trên cành chim hót líu lo

Chim đậu trên cành chim hót líu lo J liu

J liu Bing

Bing Jun s. liu

Jun s. liu Webreg liu

Webreg liu Ni jia you ji ge ren

Ni jia you ji ge ren Jasmine pai

Jasmine pai J liu

J liu Hypergene liu

Hypergene liu Kevin liu toto

Kevin liu toto Liu xiang weightlifter

Liu xiang weightlifter Yang liu stanford

Yang liu stanford Peter liu seneca

Peter liu seneca Zaijia liu

Zaijia liu Changbin liu

Changbin liu