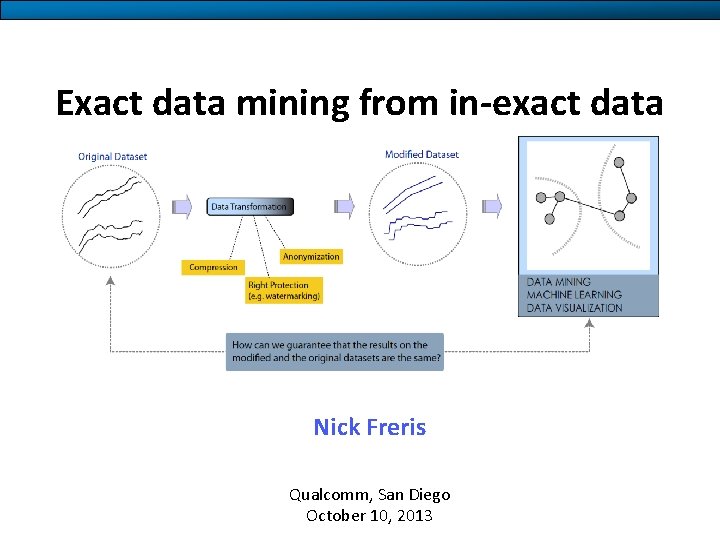

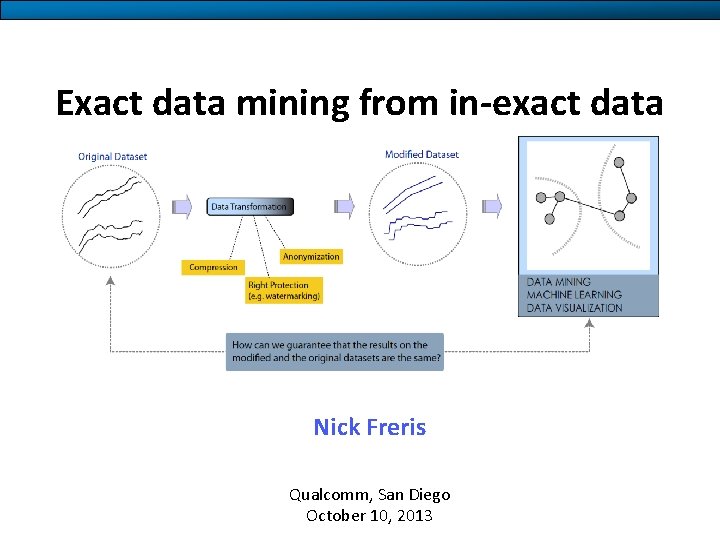

Exact data mining from inexact data

Nick Freris, Qualcomm, San Diego, October 10, 2013

- Slides: 66

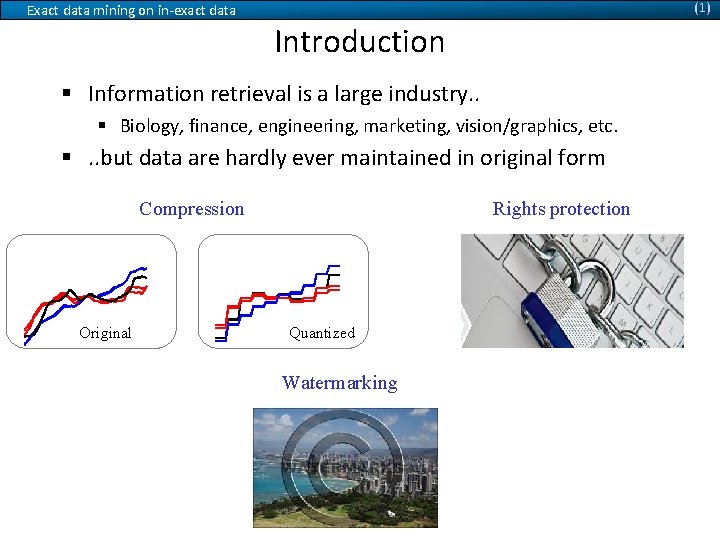

(1) Exact data mining on in-exact data: Introduction

- Information retrieval is a large industry: biology, finance, engineering, marketing, vision/graphics, etc.
- ...but data are hardly ever maintained in their original form.

[Figure labels: Original, Compression, Rights protection, Quantized, Watermarking]

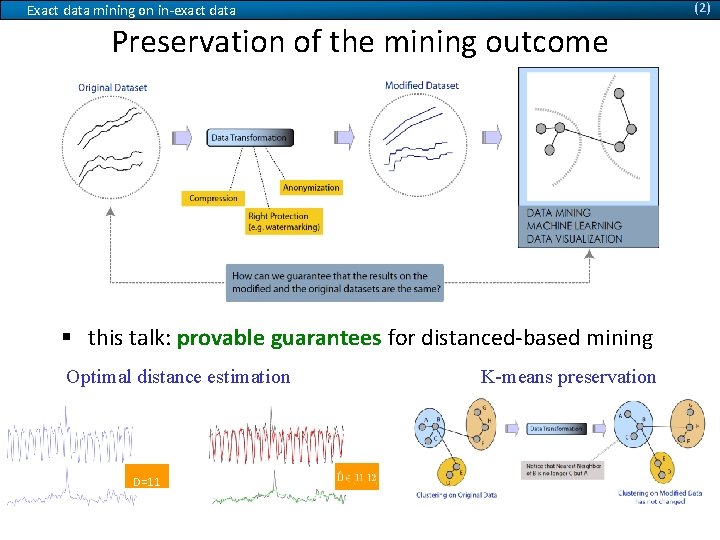

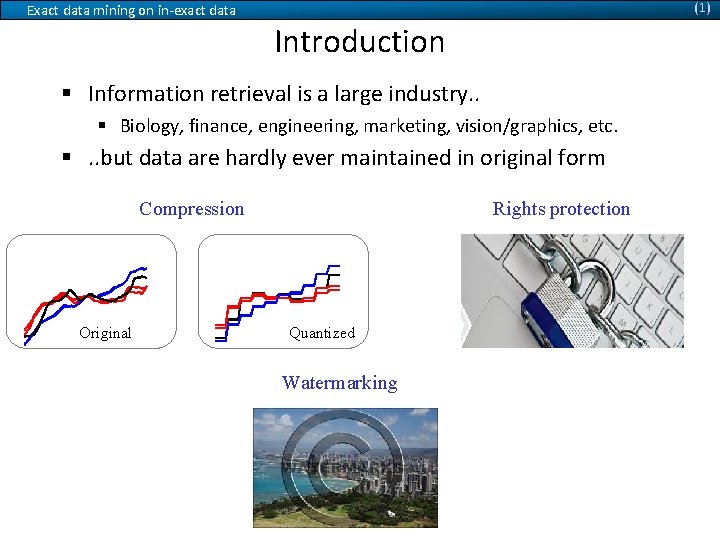

(2) Exact data mining on in-exact data: Preservation of the mining outcome

This talk: provable guarantees for distance-based mining.

- Optimal distance estimation (figure label: D = 11)
- K-means preservation

(3) Optimal distance estimation

This work presents optimal estimation of the Euclidean distance in the compressed domain. The result cannot be improved further. (Figure label: D = 11.5)

- Our approach applies to any orthonormal data compression basis (Fourier, wavelets, Chebyshev, PCA, etc.).
- Our method allows up to:
  - 57% better distance estimation
  - 80% less computation effort
  - 128:1 compression efficiency

(4) Motivation/Benefits

Time-series data are customarily compressed in order to:

- Save storage space
- Reduce transmission bandwidth
- Achieve faster processing / data analysis
- Remove noise

Distance estimation has various data analytics applications:

- Clustering / classification
- Anomaly detection
- Similarity search

Now we can do all this very efficiently, directly on the compressed data!

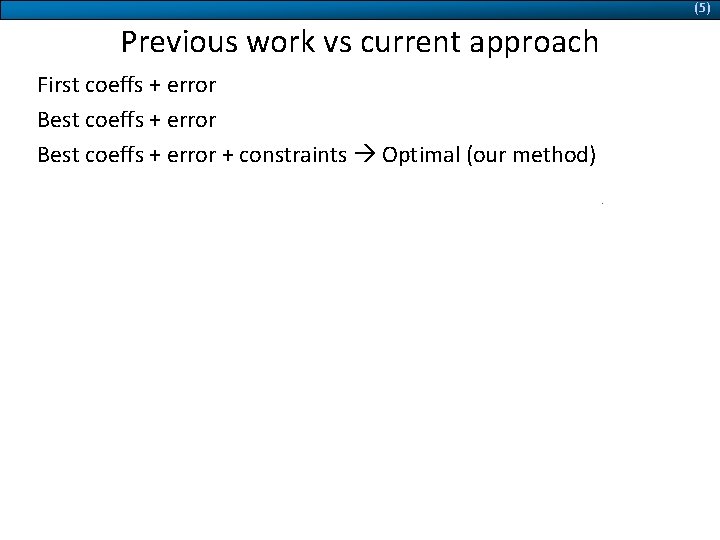

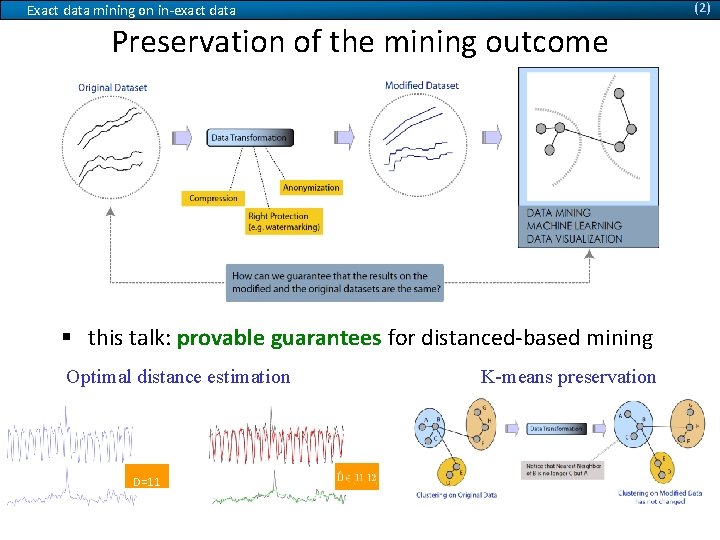

(5) Previous work vs current approach

- Approach 1: first coeffs + error
- Approach 2 (only one compressed): best coeffs + error + constraints
- Our approach: optimal (our method)

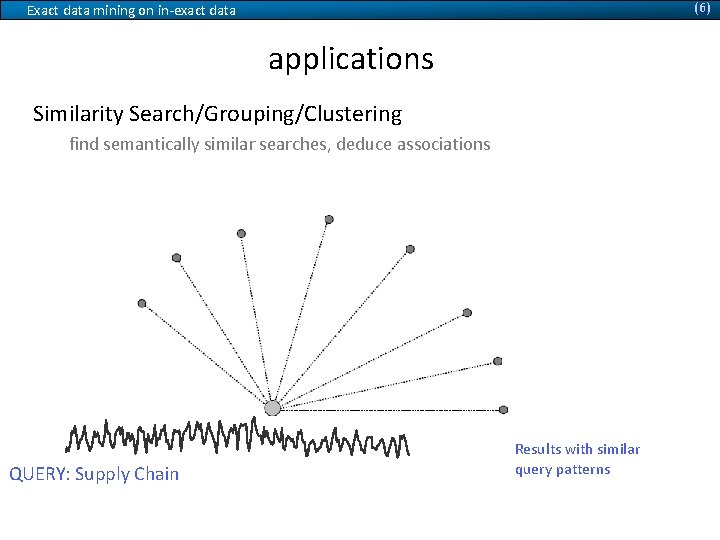

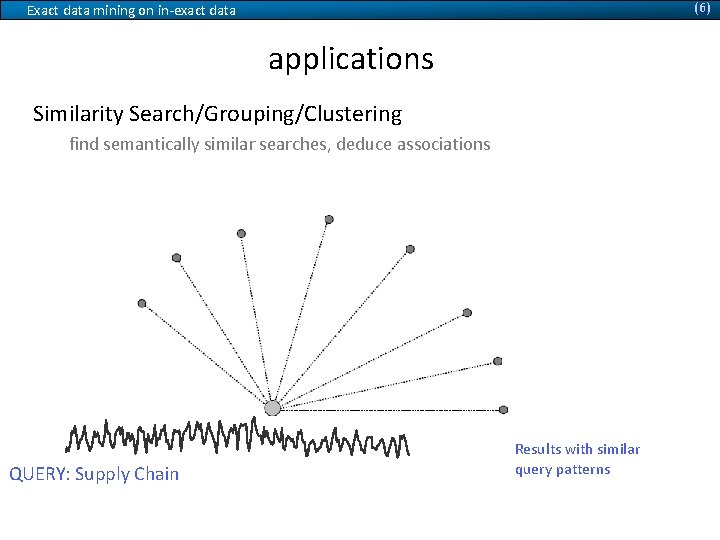

(6) Exact data mining on in-exact data: applications

Similarity Search/Grouping/Clustering: find semantically similar searches, deduce associations.

- QUERY: Supply Chain
- Results with similar query patterns: Five key supply chain areas dynamic inventory optimization Chief supply chain officer IBM’s dynamic inventory integrated supply chain Smarter supply chain Retail Supply Management Business consultant supply chain management

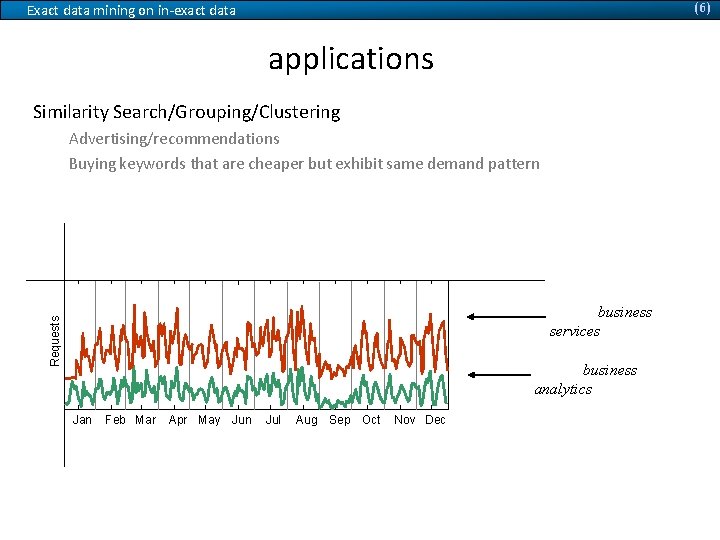

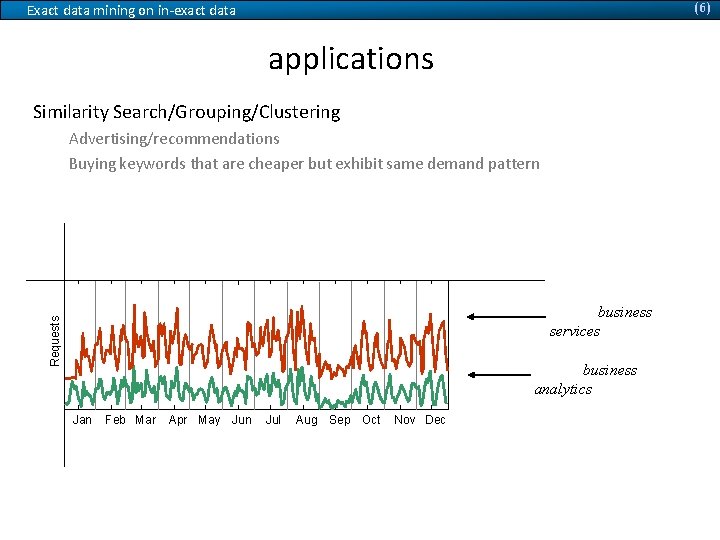

(6) Exact data mining on in-exact data: applications

Similarity Search/Grouping/Clustering: advertising/recommendations. Buying keywords that are cheaper but exhibit the same demand pattern.

[Figure: requests per month, Jan to Dec, for "Query: business services" and "Query: business analytics"]

(6) Exact data mining on in-exact data: applications

Burst Detection: discovery of important events.

[Figure: requests per month, Jan to Dec, for "Query: Acquisition". Annotated bursts: Feb '10, Intelliden Inc. acquisition; May '10, Sterling Commerce acquisition (1.4 B)]

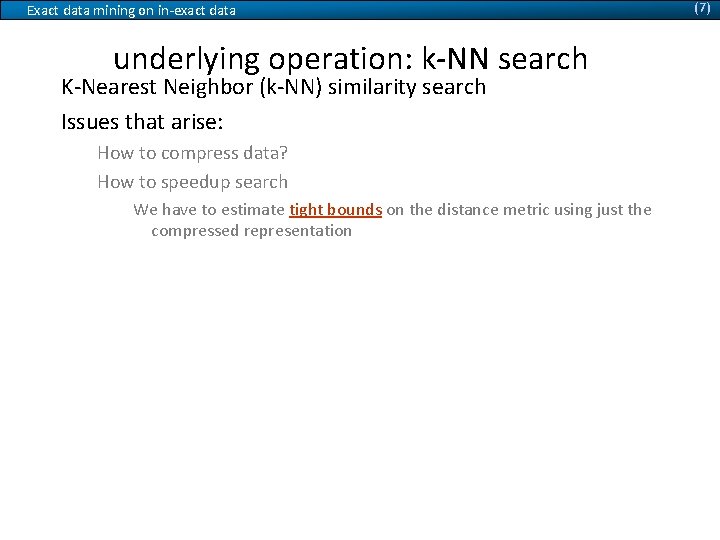

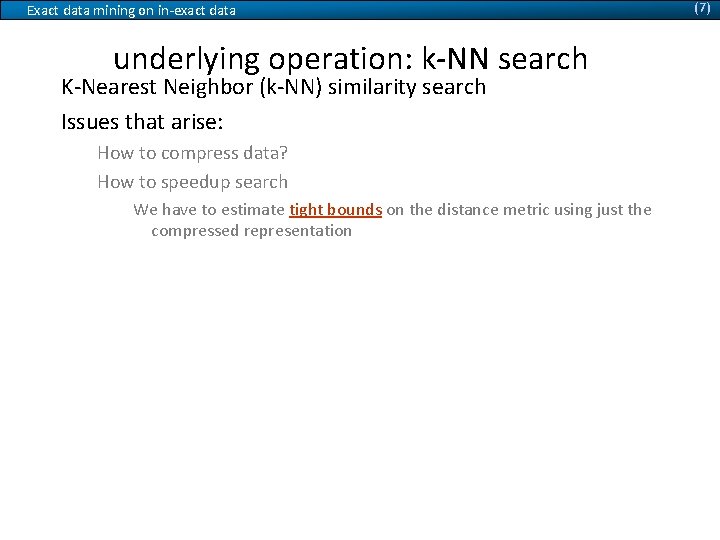

(7) Exact data mining on in-exact data: the underlying operation is k-NN search

K-Nearest Neighbor (k-NN) similarity search. Issues that arise:

- How to compress the data?
- How to speed up the search?

We have to estimate tight bounds on the distance metric using just the compressed representation.

(8) Exact data mining on in-exact data: similarity search problem

Linear scan. Objective: compare the query with all sequences in the DB and return the k most similar sequences to the query.

[Figure: a distance query against the database sequences, with D = 7.3, D = 10.2, D = 11.8, D = 17, D = 22]
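The linear scan described on this slide can be sketched in a few lines. This is a minimal illustration, not the speaker's code: the function names `euclidean` and `knn_linear_scan` and the toy database are assumptions made for the example.

```python
import heapq
import math

def euclidean(a, b):
    """Euclidean (L2) distance between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_linear_scan(query, database, k):
    """Compare the query with every sequence; return the k closest as (distance, index)."""
    scored = ((euclidean(query, seq), i) for i, seq in enumerate(database))
    return heapq.nsmallest(k, scored)

# Hypothetical toy database of 2-point "sequences".
db = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.5, 0.0]]
nearest = knn_linear_scan([0.0, 0.0], db, k=2)
```

Every query touches every stored sequence, which is the cost that the bound-based pruning on the following slides tries to avoid.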

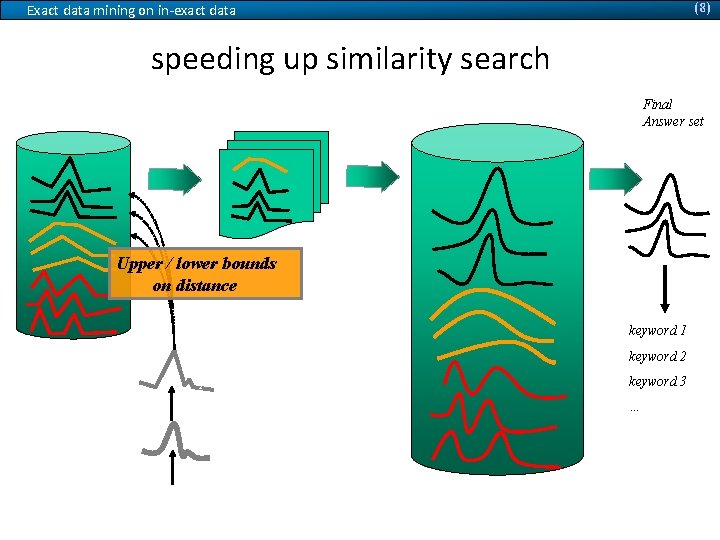

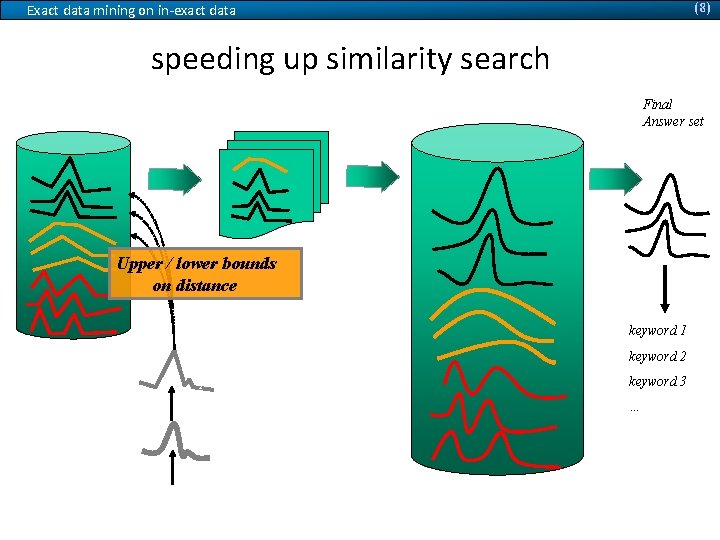

(8) Exact data mining on in-exact data: speeding up similarity search

[Figure: the simplified query (keyword 1, keyword 2, keyword 3, …) is matched against the simplified DB using upper/lower bounds on the distance, giving a candidate superset; the candidates are verified against the original DB to obtain the final answer set.]
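One common way to turn lower and upper bounds into a candidate superset is a filter-and-refine loop, sketched below. It assumes that a `(lower, upper)` pair is already available for every database sequence; the name `knn_filter_refine` and the bound values are hypothetical, and the slide does not specify this exact procedure.

```python
import heapq
import math

def euclidean(a, b):
    """Exact Euclidean distance, used only in the verification step."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_filter_refine(query, originals, bounds, k):
    """bounds[i] = (lower, upper) bound on the distance from query to originals[i]."""
    # At least k sequences lie within the k-th smallest upper bound.
    tau = heapq.nsmallest(k, (ub for _, ub in bounds))[-1]
    # Anything whose lower bound exceeds tau cannot be among the k nearest.
    candidates = [i for i, (lb, _) in enumerate(bounds) if lb <= tau]
    # Verify only the surviving candidates against the original data.
    exact = ((euclidean(query, originals[i]), i) for i in candidates)
    return heapq.nsmallest(k, exact), candidates

originals = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.5, 0.0]]
bounds = [(0.0, 0.2), (1.0, 2.0), (6.0, 8.0), (0.3, 0.9)]   # hypothetical bounds
answer, candidates = knn_filter_refine([0.0, 0.0], originals, bounds, k=2)
```

The tighter the bounds, the smaller the candidate set, so fewer original sequences have to be fetched and compared.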

(9) Exact data mining on in-exact data: compressing weblog data

- Use the Euclidean distance to match time-series. But how should we compress the data?
- The data are highly periodic, so we can use Fourier decomposition.
- Instead of using the first Fourier coefficients, we can use the best ones.

[Figure: 1-year span of requests for Query: “analytics and optimization” and Query: “consulting services”]

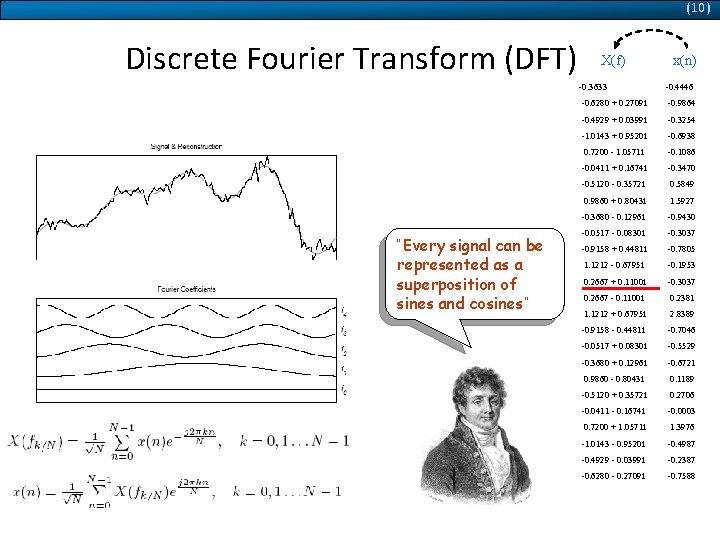

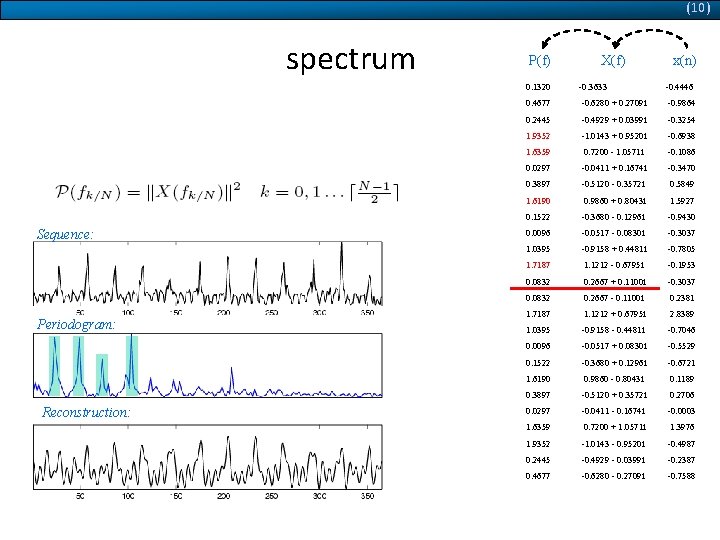

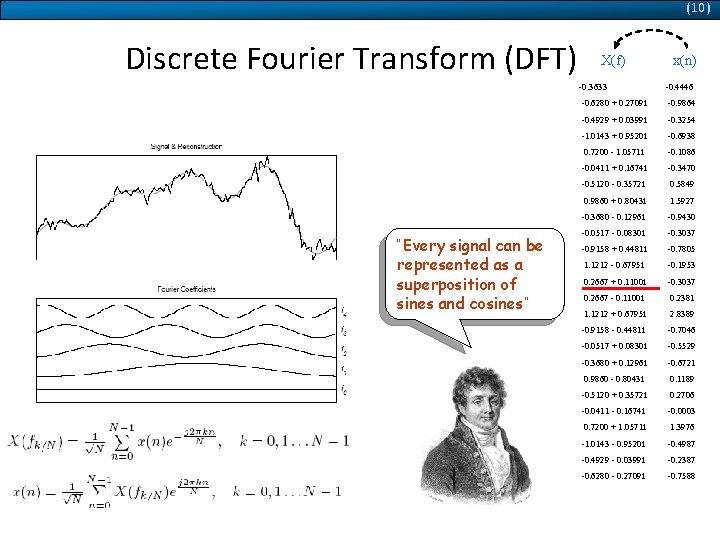

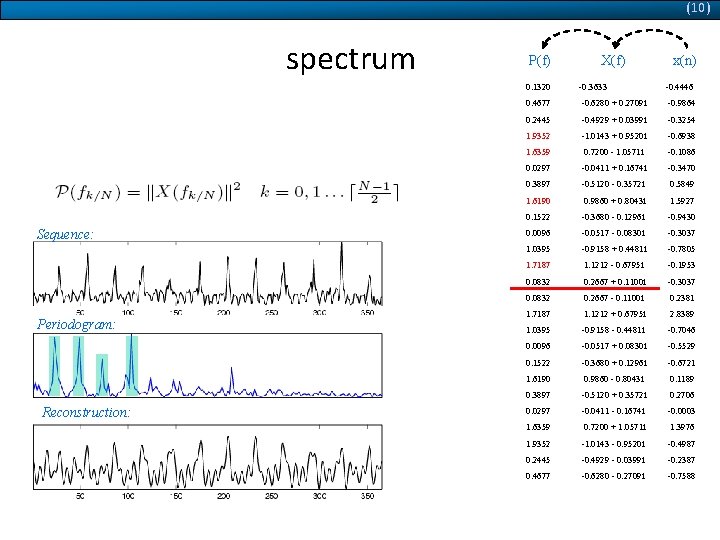

(10) Discrete Fourier Transform (DFT): decomposition of time-series into sinusoids

“Every signal can be represented as a superposition of sines and cosines.” Jean Baptiste Fourier (1768-1830)

| X(f) | x(n) |
|---|---|
| -0.3633 | -0.4446 |
| -0.6280 + 0.2709i | -0.9864 |
| -0.4929 + 0.0399i | -0.3254 |
| -1.0143 + 0.9520i | -0.6938 |
| 0.7200 - 1.0571i | -0.1086 |
| -0.0411 + 0.1674i | -0.3470 |
| -0.5120 - 0.3572i | 0.5849 |
| 0.9860 + 0.8043i | 1.5927 |
| -0.3680 - 0.1296i | -0.9430 |
| -0.0517 - 0.0830i | -0.3037 |
| -0.9158 + 0.4481i | -0.7805 |
| 1.1212 - 0.6795i | -0.1953 |
| 0.2667 + 0.1100i | -0.3037 |
| 0.2667 - 0.1100i | 0.2381 |
| 1.1212 + 0.6795i | 2.8389 |
| -0.9158 - 0.4481i | -0.7046 |
| -0.0517 + 0.0830i | -0.5529 |
| -0.3680 + 0.1296i | -0.6721 |
| 0.9860 - 0.8043i | 0.1189 |
| -0.5120 + 0.3572i | 0.2706 |
| -0.0411 - 0.1674i | -0.0003 |
| 0.7200 + 1.0571i | 1.3976 |
| -1.0143 - 0.9520i | -0.4987 |
| -0.4929 - 0.0399i | -0.2387 |
| -0.6280 - 0.2709i | -0.7588 |

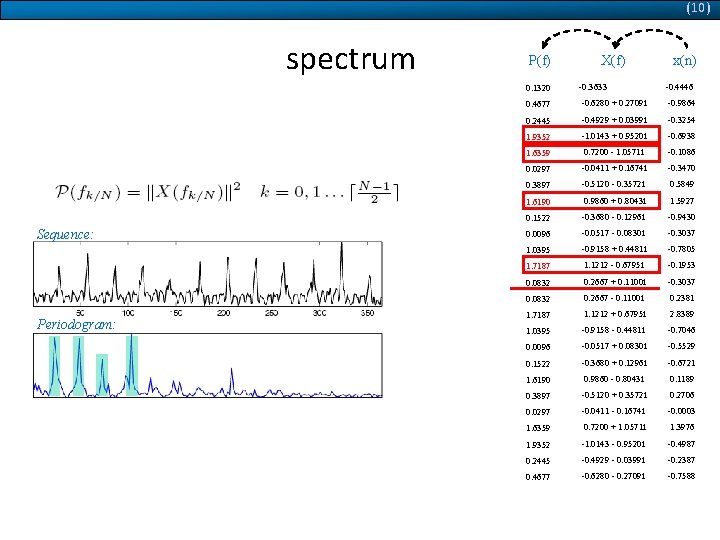

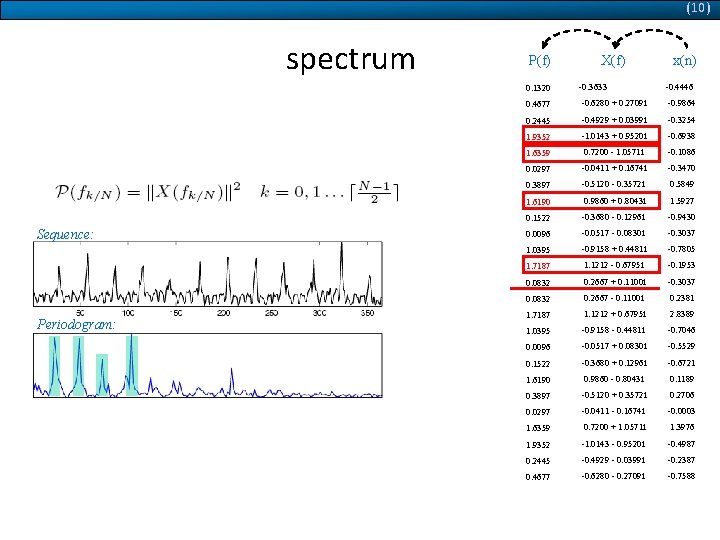

(10) spectrum: keep just some frequencies of the periodogram

[Figure panels: Sequence; Periodogram (axis f)]

| P(f) | X(f) | x(n) |
|---|---|---|
| 0.1320 | -0.3633 | -0.4446 |
| 0.4677 | -0.6280 + 0.2709i | -0.9864 |
| 0.2445 | -0.4929 + 0.0399i | -0.3254 |
| 1.9352 | -1.0143 + 0.9520i | -0.6938 |
| 1.6359 | 0.7200 - 1.0571i | -0.1086 |
| 0.0297 | -0.0411 + 0.1674i | -0.3470 |
| 0.3897 | -0.5120 - 0.3572i | 0.5849 |
| 1.6190 | 0.9860 + 0.8043i | 1.5927 |
| 0.1522 | -0.3680 - 0.1296i | -0.9430 |
| 0.0096 | -0.0517 - 0.0830i | -0.3037 |
| 1.0395 | -0.9158 + 0.4481i | -0.7805 |
| 1.7187 | 1.1212 - 0.6795i | -0.1953 |
| 0.0832 | 0.2667 + 0.1100i | -0.3037 |
| 0.0832 | 0.2667 - 0.1100i | 0.2381 |
| 1.7187 | 1.1212 + 0.6795i | 2.8389 |
| 1.0395 | -0.9158 - 0.4481i | -0.7046 |
| 0.0096 | -0.0517 + 0.0830i | -0.5529 |
| 0.1522 | -0.3680 + 0.1296i | -0.6721 |
| 1.6190 | 0.9860 - 0.8043i | 0.1189 |
| 0.3897 | -0.5120 + 0.3572i | 0.2706 |
| 0.0297 | -0.0411 - 0.1674i | -0.0003 |
| 1.6359 | 0.7200 + 1.0571i | 1.3976 |
| 1.9352 | -1.0143 - 0.9520i | -0.4987 |
| 0.2445 | -0.4929 - 0.0399i | -0.2387 |
| 0.4677 | -0.6280 - 0.2709i | -0.7588 |

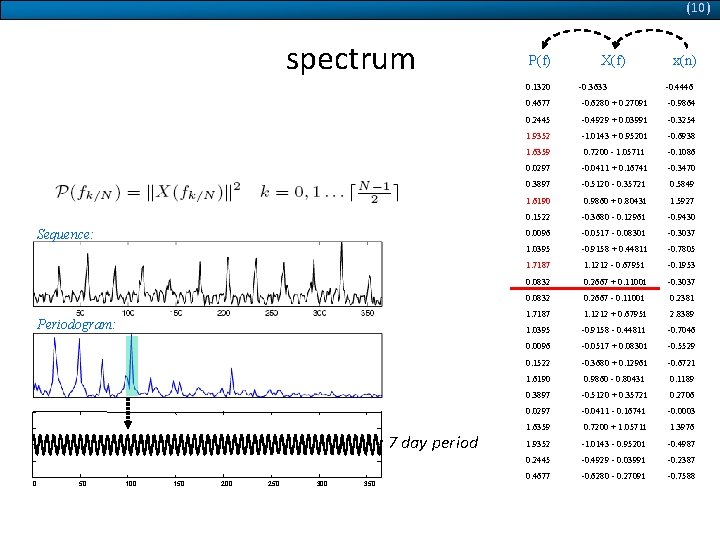

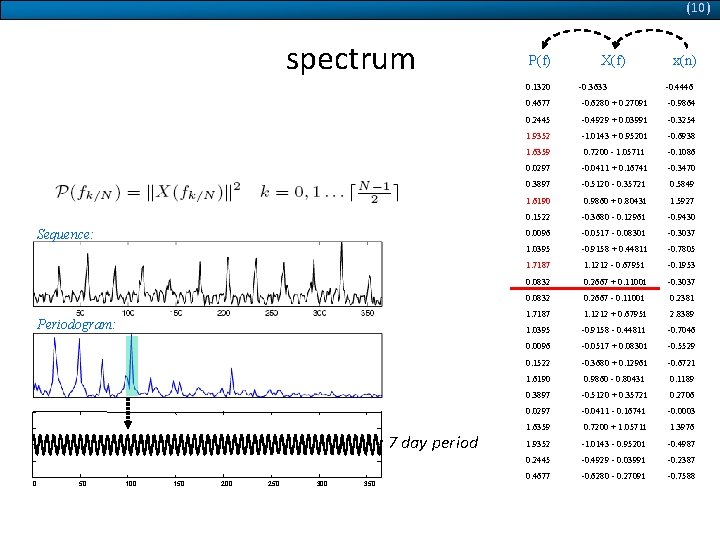

(10) spectrum: keep just some frequencies of the periodogram

[Figure panels: Sequence; Periodogram (axis f, ticks 0 to 350), with the 7-day period marked. The P(f), X(f), x(n) value table is the same as on the preceding spectrum slide.]

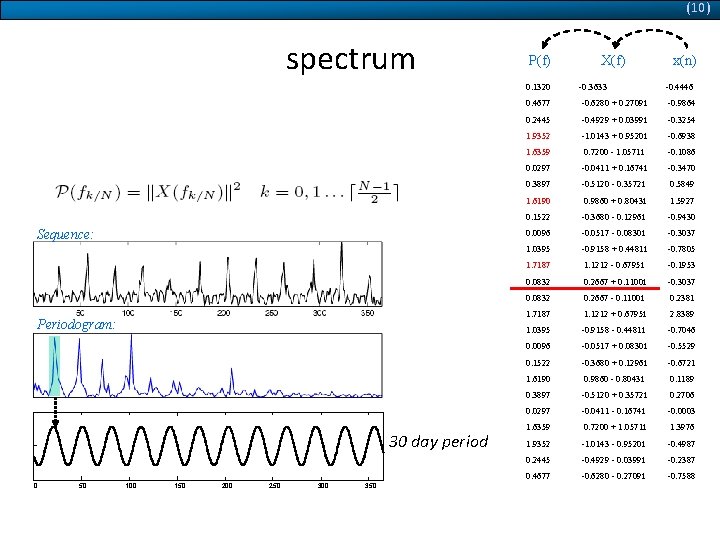

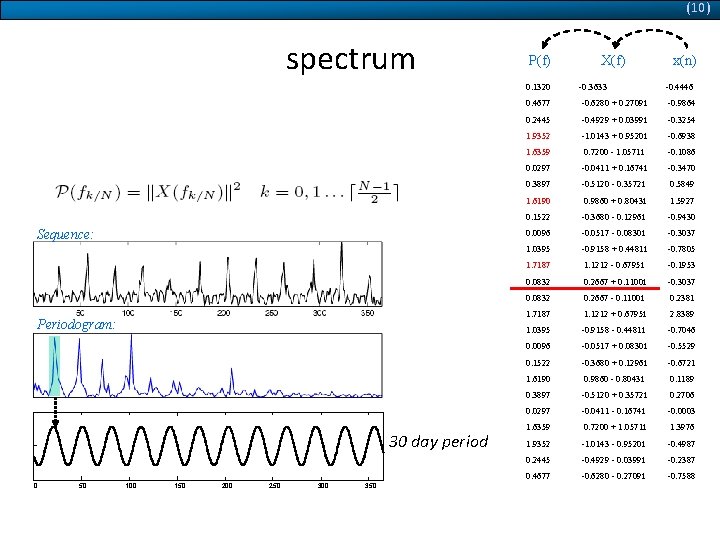

(10) spectrum: keep just some frequencies of the periodogram

[Figure panels: Sequence; Periodogram (axis f, ticks 0 to 350), with the 30-day period marked. The P(f), X(f), x(n) value table is the same as on the preceding spectrum slides.]

(10) spectrum: keep just some frequencies of the periodogram

[Figure panels: Sequence; Periodogram (axis f); Reconstruction. The P(f), X(f), x(n) value table is the same as on the preceding spectrum slides.]
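The sequence, periodogram and reconstruction panels on these slides can be reproduced in miniature. The sketch below makes several assumptions: the DFT is scaled by 1/sqrt(N) (orthonormal), which may differ from the scaling behind the slide values; the 28-day series is made up; and `dft`, `idft` and `keep_best` are illustrative names.

```python
import cmath
import math

def dft(x):
    """Orthonormal DFT (scaled by 1/sqrt(N)), so signal energy is preserved."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * f * t / n) for t in range(n)) / math.sqrt(n)
        for f in range(n)
    ]

def idft(X):
    """Inverse of dft() above."""
    n = len(X)
    return [
        sum(X[f] * cmath.exp(2j * math.pi * f * t / n) for f in range(n)) / math.sqrt(n)
        for t in range(n)
    ]

def keep_best(X, k):
    """Zero every coefficient except the k with the largest magnitude."""
    best = set(sorted(range(len(X)), key=lambda f: abs(X[f]), reverse=True)[:k])
    return [c if f in best else 0j for f, c in enumerate(X)]

# Hypothetical 28-day series: a weekly cycle plus a weaker two-week cycle.
n = 28
x = [math.sin(2 * math.pi * t / 7) + 0.2 * math.cos(2 * math.pi * t / 14) for t in range(n)]

X = dft(x)
periodogram = [abs(c) ** 2 for c in X]
peak = max(range(1, n // 2 + 1), key=lambda f: periodogram[f])
period_days = n / peak                                # dominant period in days

x_hat = [c.real for c in idft(keep_best(X, 2))]       # keep one conjugate pair
err2 = sum((a - b) ** 2 for a, b in zip(x, x_hat))    # energy lost by compression
```

Keeping the two largest coefficients (one conjugate pair) retains the weekly cycle and discards only the weaker component, which is the motivation for preferring the best coefficients over the first ones.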

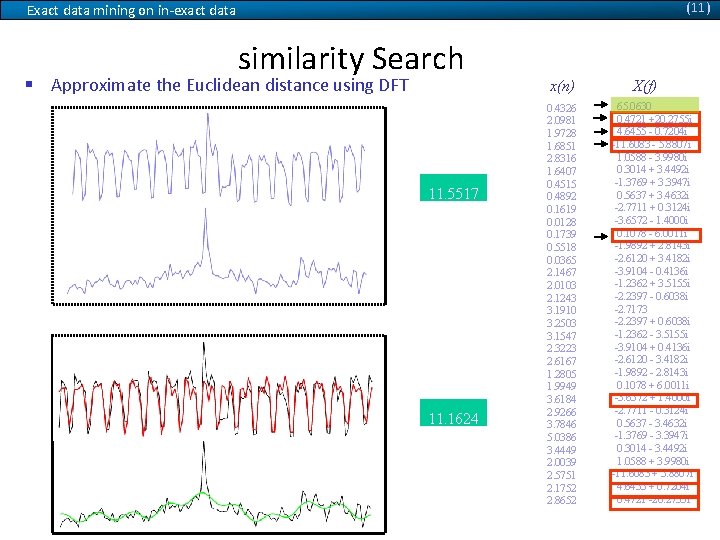

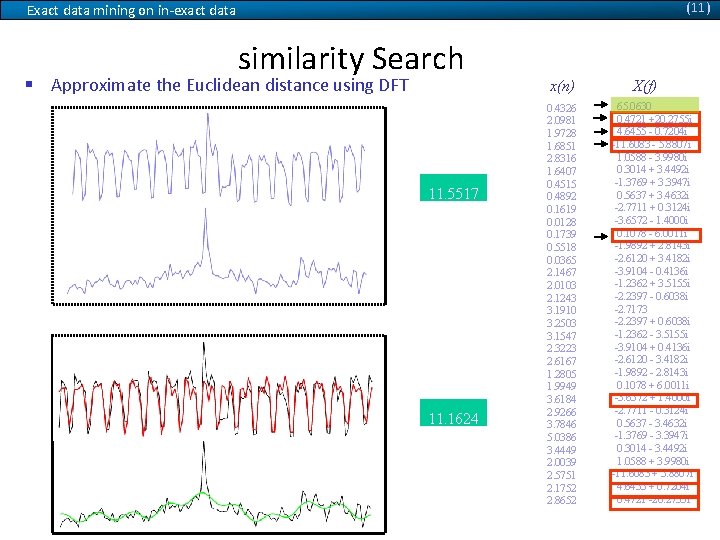

(11) Exact data mining on in-exact data: similarity search

- Approximate the Euclidean distance using the DFT.
- Exact distance: 11.5517. First 5 coefficients + symmetric ones: 7.9234.

x(n): 0.4326, 2.0981, 1.9728, 1.6851, 2.8316, 1.6407, 0.4515, 0.4892, 0.1619, 0.0128, 0.1739, 0.5518, 0.0365, 2.1467, 2.0103, 2.1243, 3.1910, 3.2503, 3.1547, 2.3223, 2.6167, 1.2805, 1.9949, 3.6184, 2.9266, 3.7846, 5.0386, 3.4449, 2.0039, 2.5751, 2.1752, 2.8652

X(f): 65.0630, 0.4721 + 20.2755i, 4.6455 - 0.7204i, -11.6083 - 5.8807i, 1.0588 - 3.9980i, 0.3014 + 3.4492i, -1.3769 + 3.3947i, 0.5637 + 3.4632i, -2.7711 + 0.3124i, -3.6572 - 1.4000i, 0.1078 - 6.0011i, -1.9892 + 2.8143i, -2.6120 + 3.4182i, -3.9104 - 0.4136i, -1.2362 + 3.5155i, -2.2397 - 0.6038i, -2.7173, -2.2397 + 0.6038i, -1.2362 - 3.5155i, -3.9104 + 0.4136i, -2.6120 - 3.4182i, -1.9892 - 2.8143i, 0.1078 + 6.0011i, -3.6572 + 1.4000i, -2.7711 - 0.3124i, 0.5637 - 3.4632i, -1.3769 - 3.3947i, 0.3014 - 3.4492i, 1.0588 + 3.9980i, -11.6083 + 5.8807i, 4.6455 + 0.7204i, 0.4721 - 20.2755i

(11) Exact data mining on in-exact data: similarity search

- Approximate the Euclidean distance using the DFT.
- Exact distance: 11.5517. Best 5 coefficients + symmetric ones: 11.1624.

The x(n) and X(f) values are the same as on the preceding slide.
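Both estimates on these slides fall below the exact distance, which is what an orthonormal transform guarantees: by Parseval's identity the full set of coefficients reproduces the distance, and dropping coefficients can only remove non-negative terms. The sketch below checks this on made-up data; the orthonormal scaling, the two sequences and the name `partial_distance` are assumptions for illustration.

```python
import cmath
import math

def dft(x):
    """Orthonormal DFT (scaled by 1/sqrt(N))."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * f * t / n) for t in range(n)) / math.sqrt(n)
        for f in range(n)
    ]

def partial_distance(X, Q, positions):
    """Distance computed only over the given coefficient positions."""
    return math.sqrt(sum(abs(X[f] - Q[f]) ** 2 for f in positions))

# Hypothetical sequences.
x = [0.0, 1.0, 3.0, 2.0, 0.5, -1.0, -2.0, 0.0]
q = [1.0, 0.0, 2.0, 2.5, 1.5, -0.5, -1.0, 1.0]
X, Q = dft(x), dft(q)
n = len(x)

exact = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, q)))
full = partial_distance(X, Q, range(n))       # all coefficients: equals `exact`
first = partial_distance(X, Q, range(3))      # first 3 coefficients
best_pos = sorted(range(n), key=lambda f: abs(X[f]), reverse=True)[:3]
best = partial_distance(X, Q, best_pos)       # 3 largest-magnitude coefficients of X
```

Any subset gives a valid lower bound; how close it gets to the exact value depends on how much of the difference energy the chosen positions capture.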

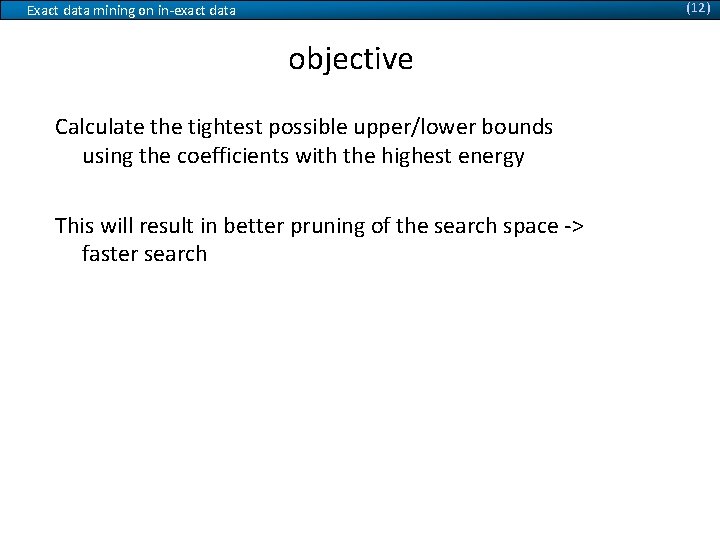

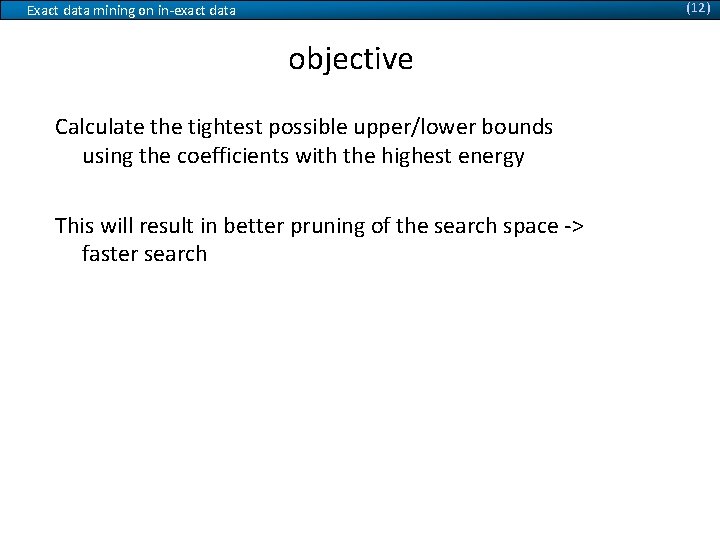

(12) Exact data mining on in-exact data: objective

Calculate the tightest possible upper/lower bounds using the coefficients with the highest energy. This results in better pruning of the search space and therefore faster search.

(13) Exact data mining on in-exact data

Time-domain sequences x and q with their coefficient vectors X and Q:

- x: -1.7313, -0.7221, -1.2267, -0.4194, -0.0158, -0.8231, -1.2267, -0.3185, -0.1167, -0.5203, 0.0851, 0.6906, 0.1861, 0.7915, 1.2961, 2.3052, 1.9016, 0.1861, -0.0158, 0.5897, 0.8924, 0.1861, -1.7313, -0.3185, -0.9240, -0.5203, -0.4194, -0.3185, 2.2043
- X: 0.0000, -12.0861 + 4.4812i, 7.0708 - 3.3545i, 0.8045 + 4.2567i, 0.2386 + 2.0592i, -1.6003 + 6.7154i, -2.6539 + 0.8595i, 5.8845 + 3.7689i, -2.0182 + 3.9356i, -3.9484 + 0.2234i, -1.2440 + 2.4538i, -1.6268 + 1.0393i, -1.0458 + 4.0774i, -4.2436 + 0.5988i, -6.4020 - 0.9529i, -3.3663 - 1.0825i, -2.9264, -3.3663 + 1.0825i, -6.4020 + 0.9529i, -4.2436 - 0.5988i, -1.0458 - 4.0774i, -1.6268 - 1.0393i, -1.2440 - 2.4538i, -3.9484 - 0.2234i, -2.0182 - 3.9356i, 5.8845 - 3.7689i, -2.6539 - 0.8595i, -1.6003 - 6.7154i, 0.2386 - 2.0592i, 0.8045 - 4.2567i, 7.0708 + 3.3545i, -12.0861 - 4.4812i
- Q: 0.0000, -2.4756 + 1.7973i, -2.8455 - 0.8510i, -5.5245 - 2.4452i, -2.4342 - 1.9897i, -2.1082 + 1.6303i, -3.6438 - 1.9424i, -1.5989 - 0.1514i, 1.6186 - 2.3544i, 15.2057 - 6.0515i, -3.6746 + 0.3413i, -0.0532 + 0.6724i, -1.8331 - 0.6000i, 0.1642 + 1.3907i, -7.3632 - 5.7938i, -2.2362 - 1.7287i, -5.1339, -2.2362 + 1.7287i, -7.3632 + 5.7938i, 0.1642 - 1.3907i, -1.8331 + 0.6000i, -0.0532 - 0.6724i, -3.6746 - 0.3413i, 15.2057 + 6.0515i, 1.6186 + 2.3544i, -1.5989 + 0.1514i, -3.6438 + 1.9424i, -2.1082 - 1.6303i, -2.4342 + 1.9897i, -5.5245 + 2.4452i, -2.8455 + 0.8510i, -2.4756 - 1.7973i
- q: -1.3356, 0.6182, -1.6135, 1.0842, 0.7981, 0.2667, -1.2048, 0.7654, 0.6182, -1.4500, -0.2892, 0.6264, 0.2503, -1.0740, 0.7163, 0.7654, -1.5073, 1.1987, 0.7735, 0.1277, -1.2865, 0.6509, 0.5283, -1.0413, 0.9207, 1.2150, 0.4302, -1.2211, 1.0678, 1.0351, -1.4337, -1.0004

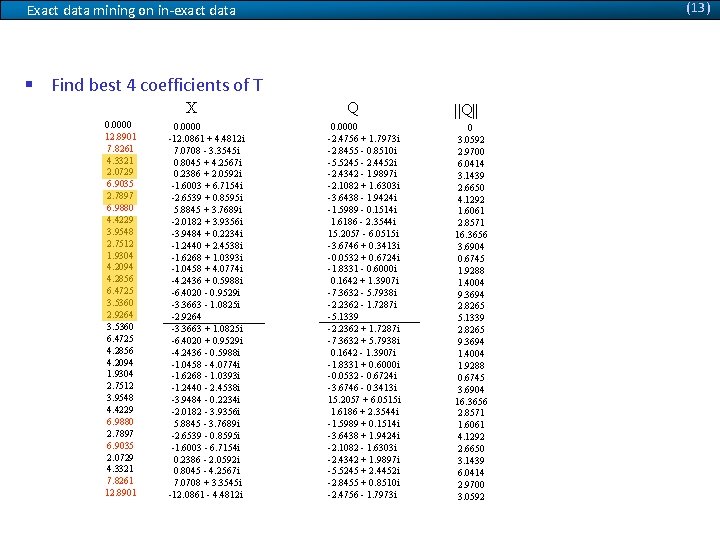

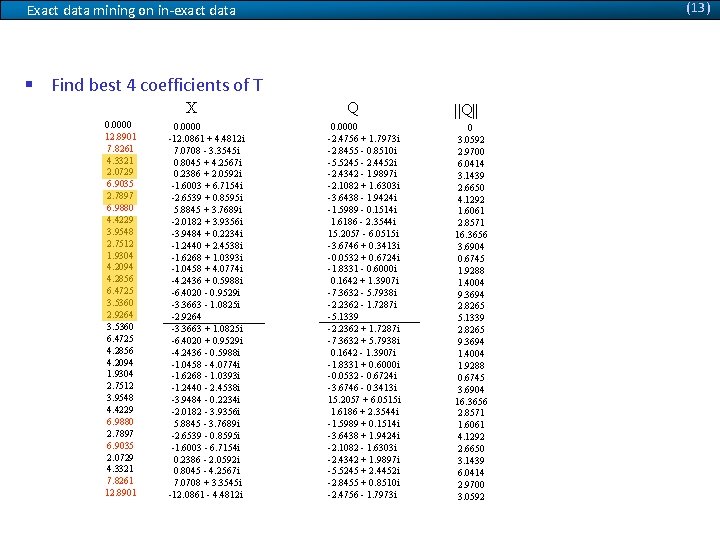

(13) Exact data mining on in-exact data

- Find the best k coefficients of X (k = 4).

Magnitude vectors (X and Q themselves are the same as on the preceding slide):

- ||X||: 0.0000, 12.8901, 7.8261, 4.3321, 2.0729, 6.9035, 2.7897, 6.9880, 4.4229, 3.9548, 2.7512, 1.9304, 4.2094, 4.2856, 6.4725, 3.5360, 2.9264, 3.5360, 6.4725, 4.2856, 4.2094, 1.9304, 2.7512, 3.9548, 4.4229, 6.9880, 2.7897, 6.9035, 2.0729, 4.3321, 7.8261, 12.8901
- ||Q||: 0, 3.0592, 2.9700, 6.0414, 3.1439, 2.6650, 4.1292, 1.6061, 2.8571, 16.3656, 3.6904, 0.6745, 1.9288, 1.4004, 9.3694, 2.8265, 5.1339, 2.8265, 9.3694, 1.4004, 1.9288, 0.6745, 3.6904, 16.3656, 2.8571, 1.6061, 4.1292, 2.6650, 3.1439, 6.0414, 2.9700, 3.0592

(13) Exact data mining on in-exact data

- Find the best 4 coefficients of X.

The ||X||, X, Q and ||Q|| values are the same as on the preceding slide.

(13) Exact data mining on in-exact data

- Identify the smallest magnitude (power) among the kept coefficients of ||X||: minPower = 6.9035.

The ||X||, X, Q and ||Q|| values are the same as on the preceding slides.

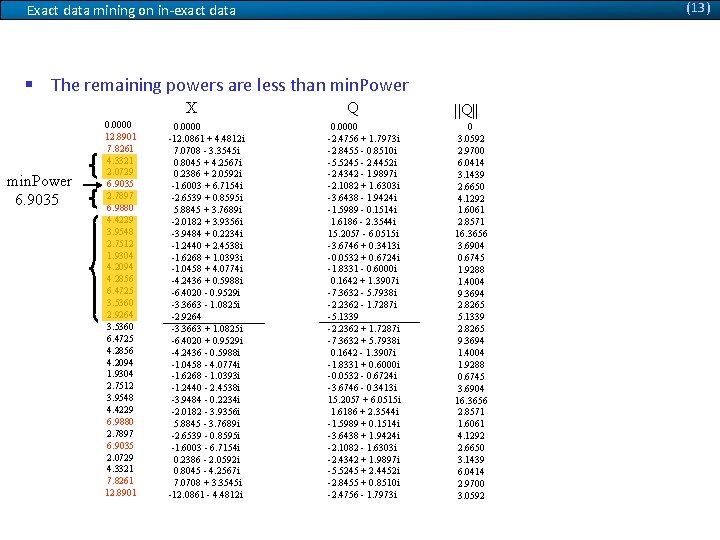

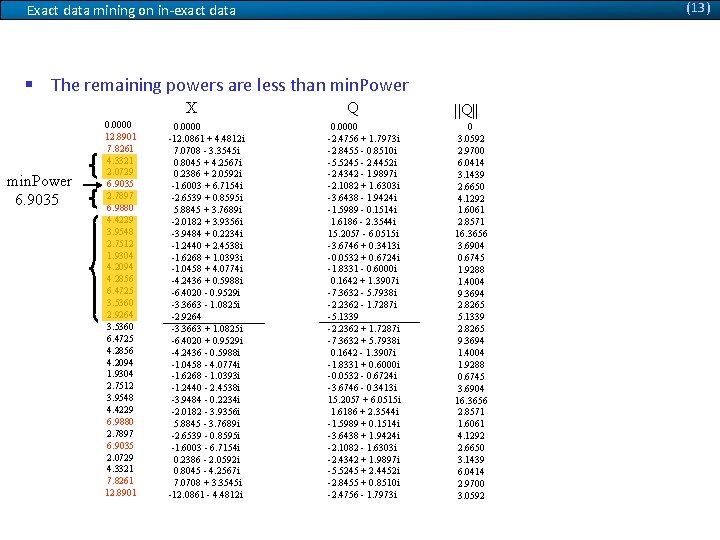

(13) Exact data mining on in-exact data

- The remaining powers of ||X|| are less than minPower (6.9035).

The ||X||, X, Q and ||Q|| values are the same as on the preceding slides.

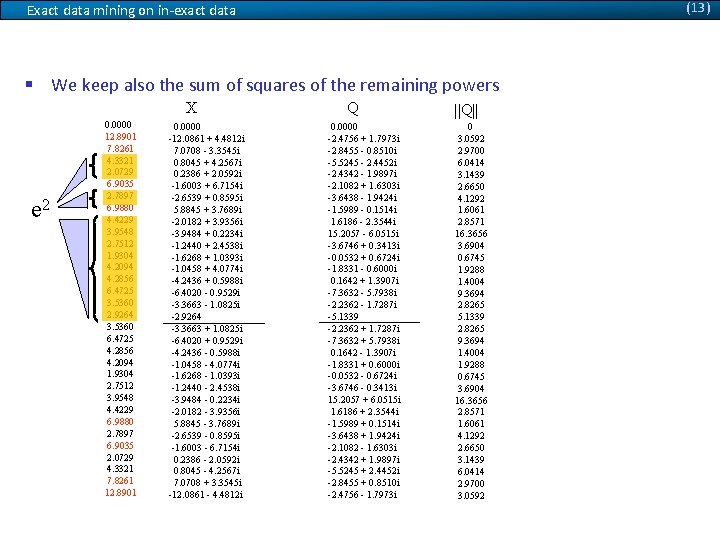

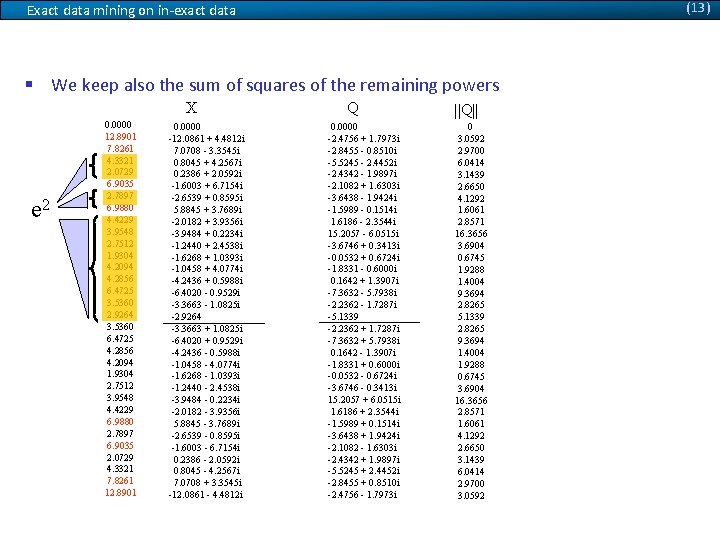

(13) Exact data mining on in-exact data

- We also keep the sum of squares of the remaining powers of ||X||, denoted $e^2$.

The ||X||, X, Q and ||Q|| values are the same as on the preceding slides.
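The quantities stored by the compression steps on these slides (the best k coefficients, minPower, and the energy of the discarded coefficients) can be collected as follows. This is a sketch under assumptions: `compress` is a hypothetical helper, and it is run on the first 17 magnitudes of ||X|| from the slide (one half of the symmetric spectrum), which reproduces the slide's minPower of 6.9035 for k = 4.

```python
def compress(coeffs, k):
    """Keep the k largest-magnitude coefficients plus two summary numbers."""
    order = sorted(range(len(coeffs)), key=lambda f: abs(coeffs[f]), reverse=True)
    kept = {f: coeffs[f] for f in order[:k]}                       # position -> value
    min_power = min(abs(c) for c in kept.values())                 # smallest kept magnitude
    residual_energy = sum(abs(coeffs[f]) ** 2 for f in order[k:])  # sum of squares of the rest
    return kept, min_power, residual_energy

# First 17 entries of ||X|| from the slide (positions 0..16).
magnitudes = [0.0000, 12.8901, 7.8261, 4.3321, 2.0729, 6.9035, 2.7897, 6.9880,
              4.4229, 3.9548, 2.7512, 1.9304, 4.2094, 4.2856, 6.4725, 3.5360, 2.9264]

kept, min_power, e2 = compress(magnitudes, k=4)
```

Every discarded magnitude is at most `min_power`, and together they carry exactly `e2` of energy; these two facts are the only constraints available on the unknown coefficients when bounding the distance.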

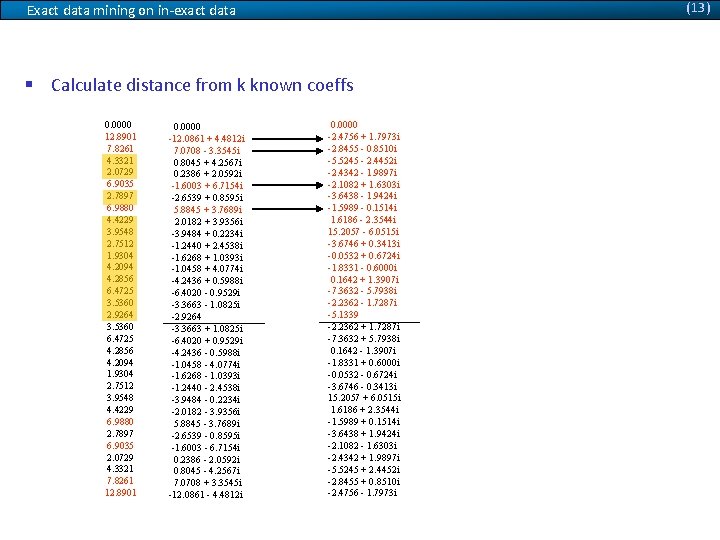

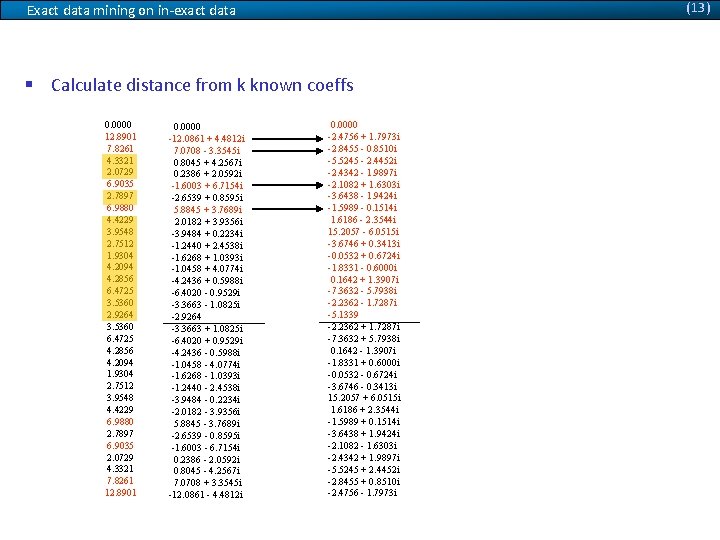

(13) Exact data mining on in-exact data

- Calculate the distance from the k known coefficients.

The ||X||, X, Q and ||Q|| values are the same as on the preceding slides.

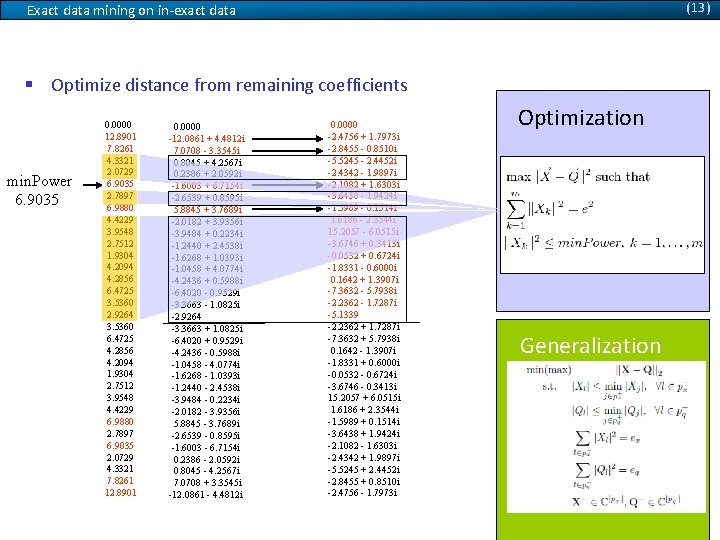

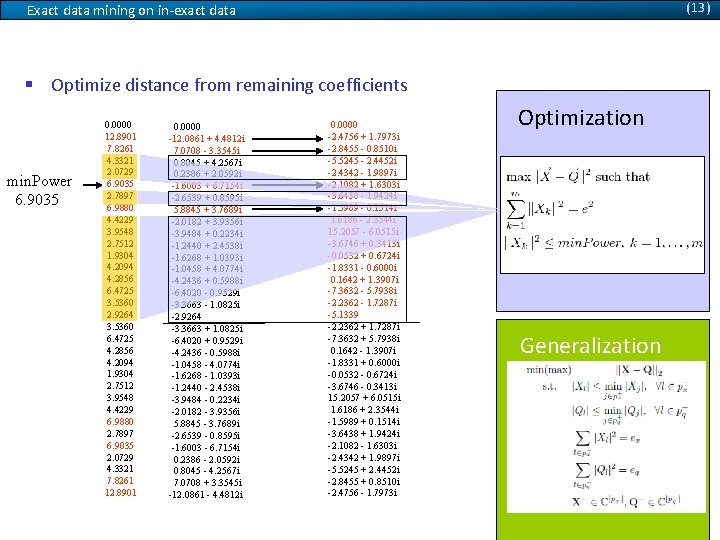

(13) Exact data mining on in-exact data

- Optimize the distance from the remaining coefficients (minPower = 6.9035).

The ||X||, X, Q and ||Q|| values are the same as on the preceding slides.

[Slide labels: Optimization; Generalization]

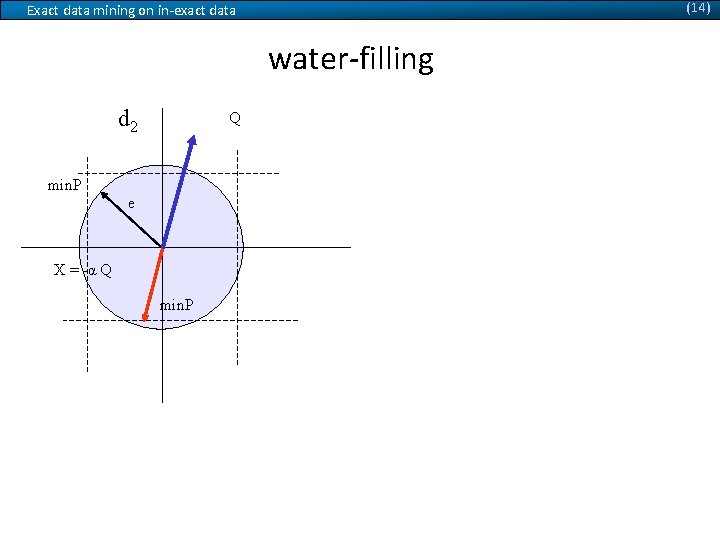

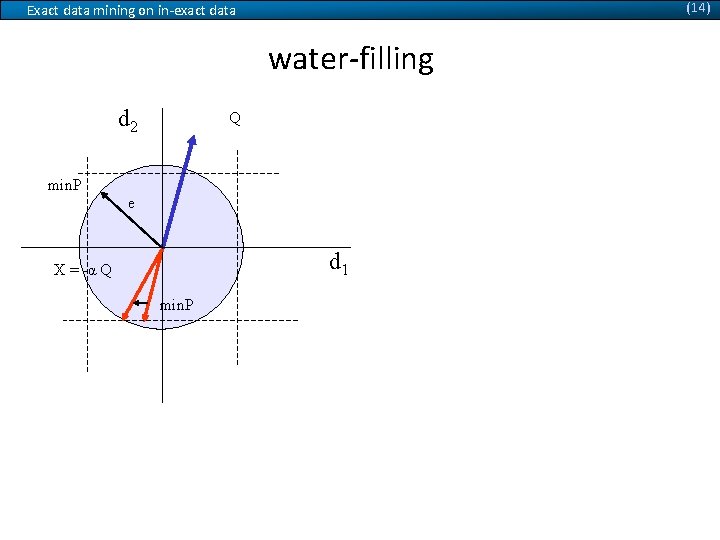

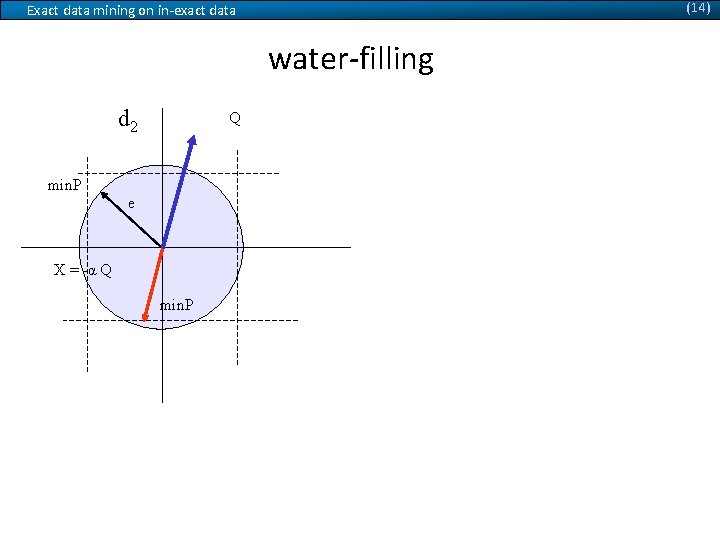

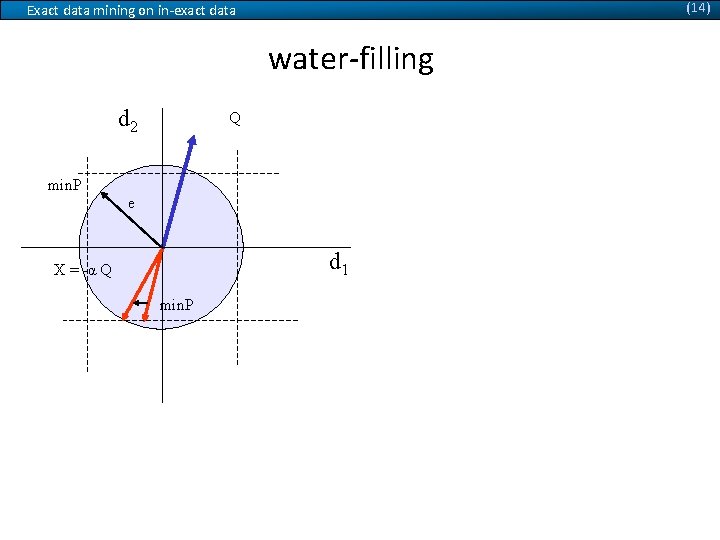

(14) Exact data mining on in-exact data: water-filling

[Figure, built up over five frames: a 2-D sketch with axes $d_1$ and $d_2$, the query $Q$, the direction $X = -\alpha Q$, the energy radius $e$ and the minPower limits (minP).]

1. Setup: axes $d_1$ and $d_2$, the query $Q$ and the direction $X = -\alpha Q$.
2. Boundary condition on total energy: $d_1^2 + d_2^2 < e^2$.
3. Boundary condition on each dimension: $d_1 < \text{minPower}$, $d_2 < \text{minPower}$.
4. We hit one of our constraints.
5. We start moving along the other dimension until we use all our energy.
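The water-filling steps can be sketched for the simpler case where only X is compressed and the query Q is known in full. This is an illustration under stated assumptions, not the talk's implementation: coefficients are treated as independent positions in an orthonormal basis, the discarded coefficients of X are assumed to carry exactly the stored energy `e2` with each magnitude at most `min_power`, and the names `max_correlation` and `distance_bounds` are hypothetical.

```python
import math

def max_correlation(b, energy, cap):
    """Maximize sum(a_i * b_i) subject to sum(a_i^2) <= energy and 0 <= a_i <= cap."""
    b = sorted(b, reverse=True)
    remaining = energy                 # energy still to be distributed
    tail = sum(v * v for v in b)       # energy of Q on coordinates not yet capped
    total = 0.0
    for v in b:
        if remaining <= 0 or tail <= 0:
            break
        gamma = math.sqrt(remaining / tail)
        if gamma * v <= cap:
            # No further constraint is hit: spend the rest proportionally to Q.
            return total + gamma * tail
        # This coordinate hits the cap: fix it there and move on to the others.
        total += cap * v
        remaining -= cap * cap
        tail -= v * v
    return total

def distance_bounds(kept_x, min_power, e2, Q):
    """Lower and upper bounds on ||X - Q|| from the compressed form of X."""
    known = sum(abs(c - Q[f]) ** 2 for f, c in kept_x.items())
    b = [abs(Q[f]) for f in range(len(Q)) if f not in kept_x]
    q_energy = sum(v * v for v in b)
    c = max_correlation(b, e2, min_power)
    lower = math.sqrt(max(0.0, known + e2 + q_energy - 2 * c))
    upper = math.sqrt(known + e2 + q_energy + 2 * c)
    return lower, upper

# Hypothetical real-valued coefficient vectors.
X = [5.0, -3.0, 1.0, 0.5, -0.5]
Q = [4.0, 1.0, 2.0, -1.0, 0.0]
kept = {0: 5.0, 1: -3.0}               # best 2 coefficients of X
lower, upper = distance_bounds(kept, min_power=3.0, e2=1.5, Q=Q)
exact = math.sqrt(sum((a - b) ** 2 for a, b in zip(X, Q)))
```

The lower bound aligns the unknown part of X with Q as far as the constraints allow, and the upper bound opposes it, which corresponds to the direction $X = -\alpha Q$ drawn on the slide.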

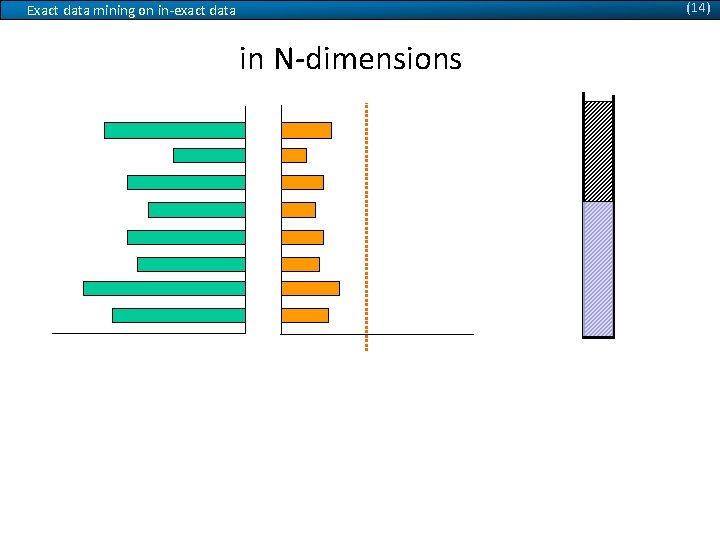

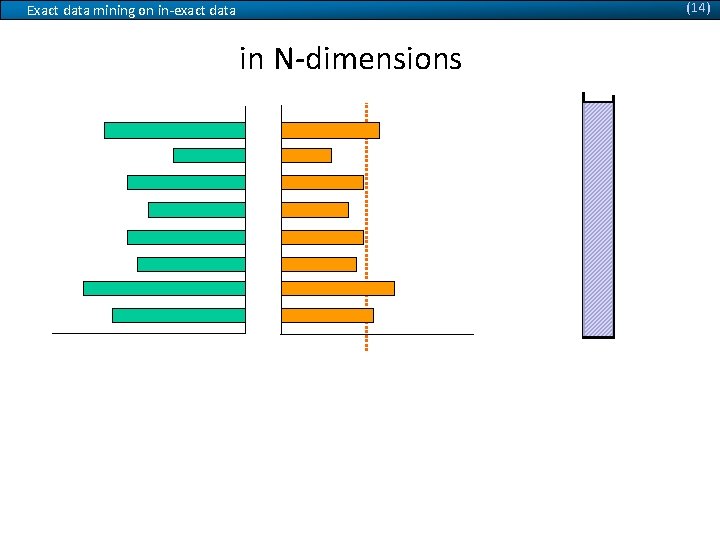

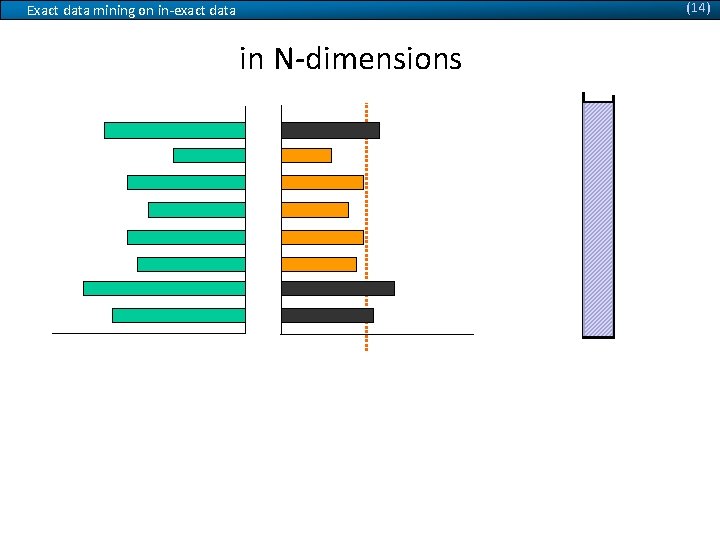

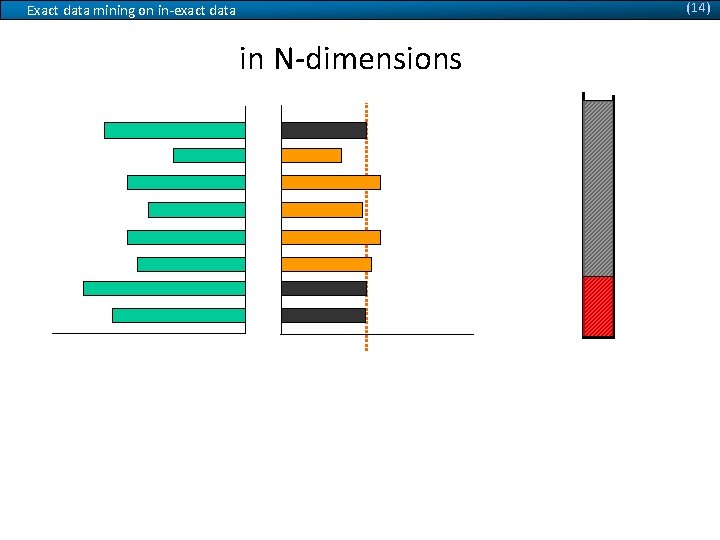

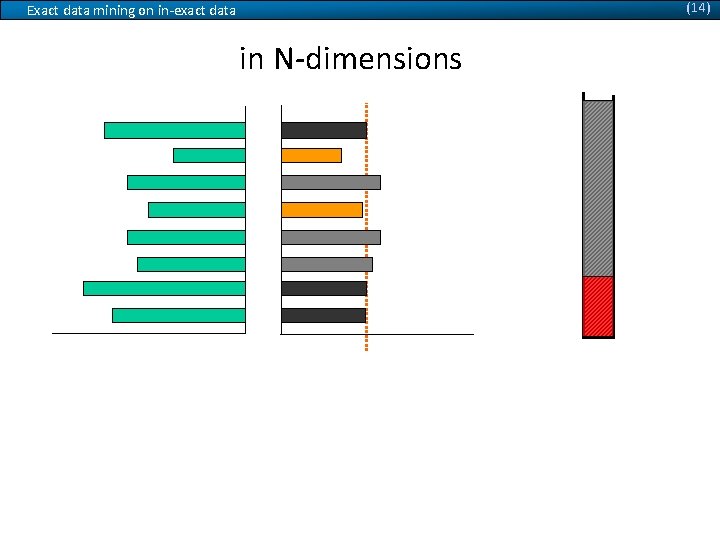

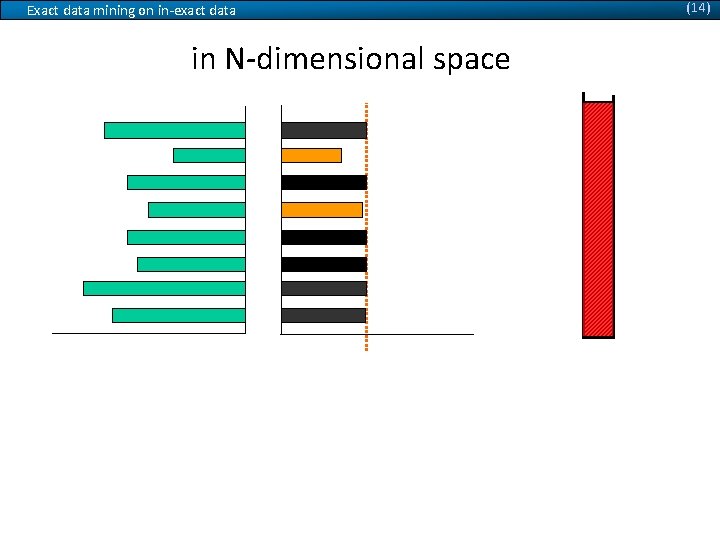

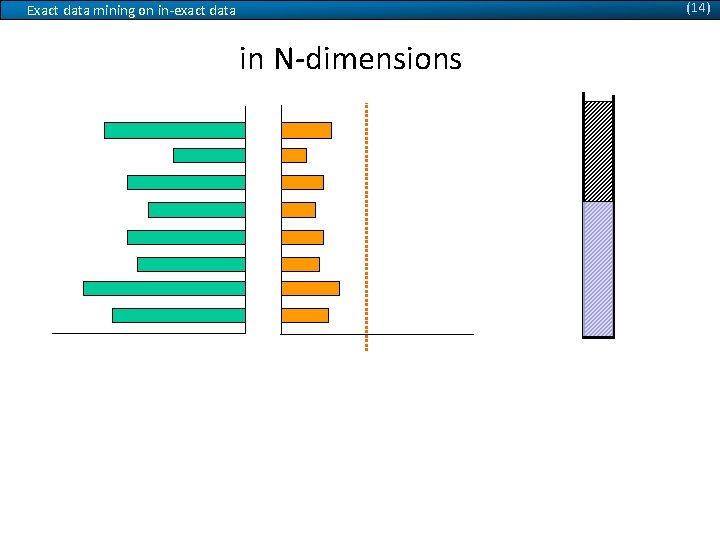

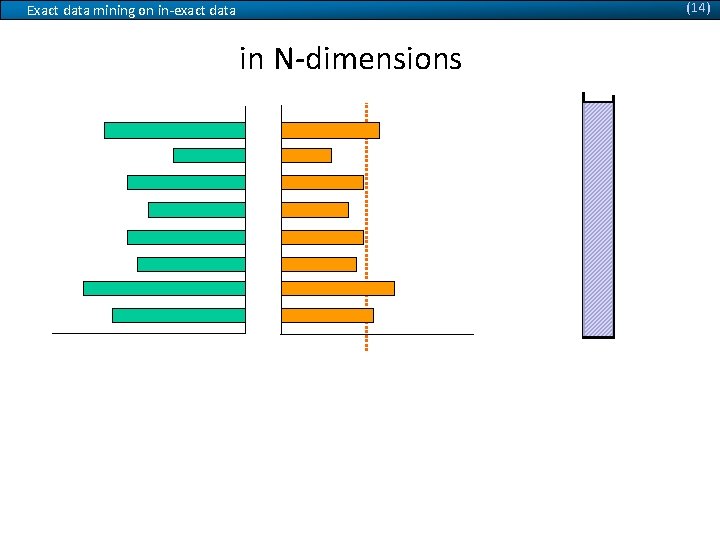

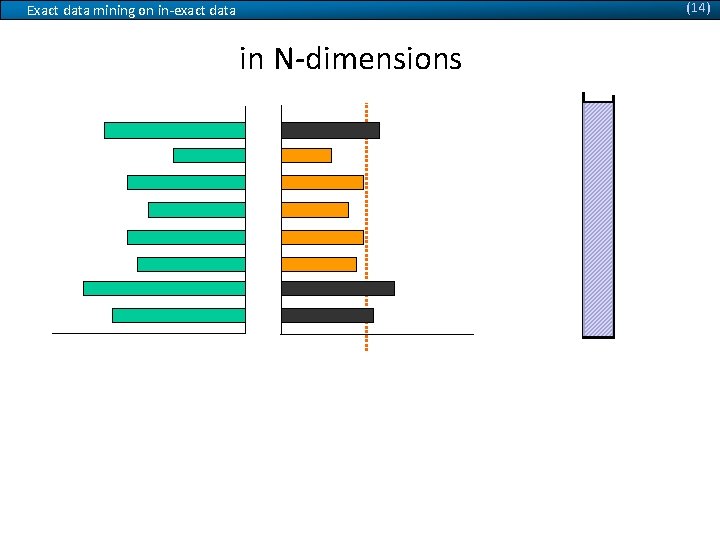

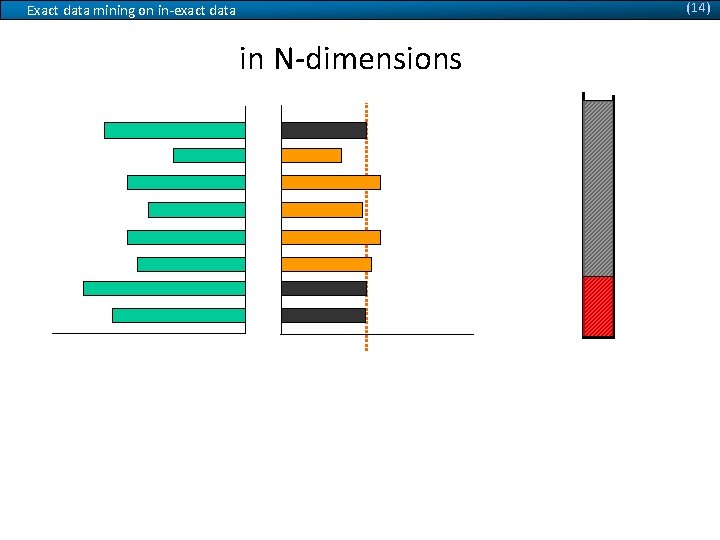

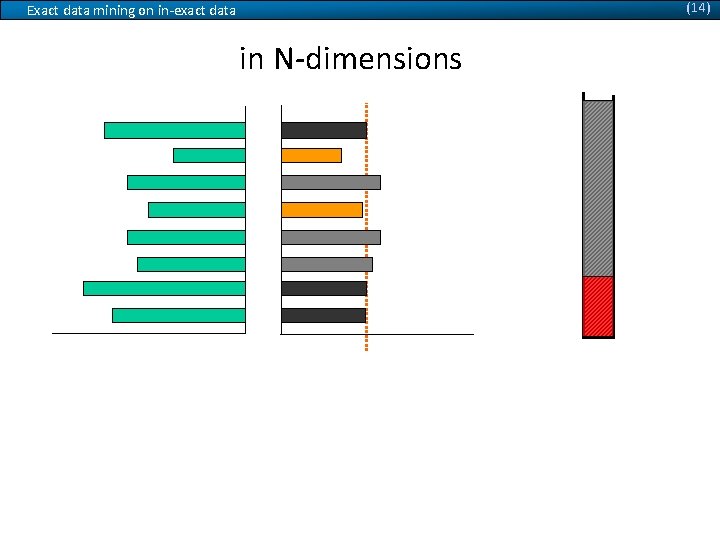

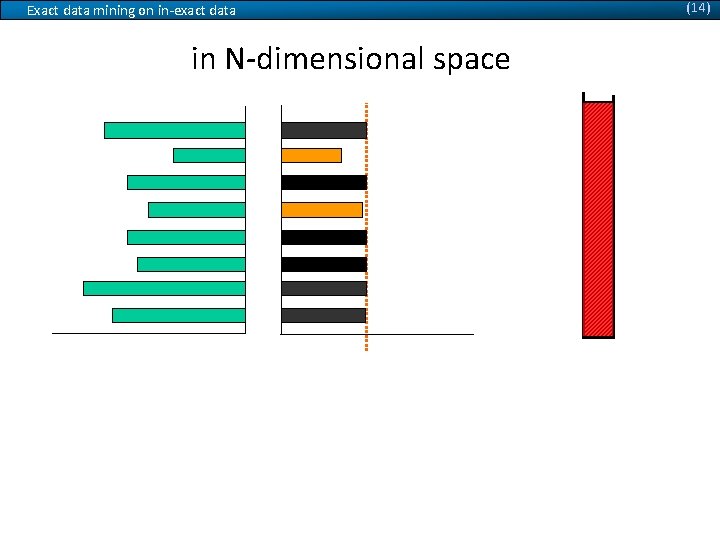

(14) Exact data mining on in-exact data: in N dimensions

[Figure, animated over several frames: Q and X per coefficient, the minPower level, and the available energy]


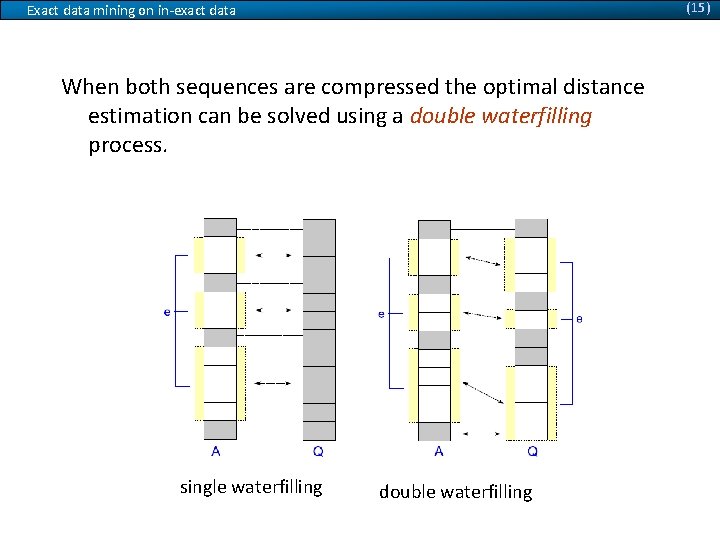

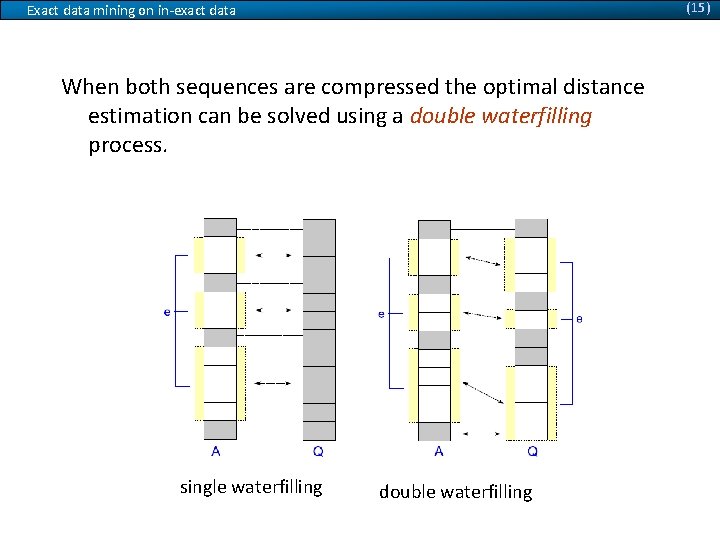

(15) Exact data mining on in-exact data

When both sequences are compressed, the optimal distance estimation can be solved using a double water-filling process.

[Figure panels: single water-filling; double water-filling]

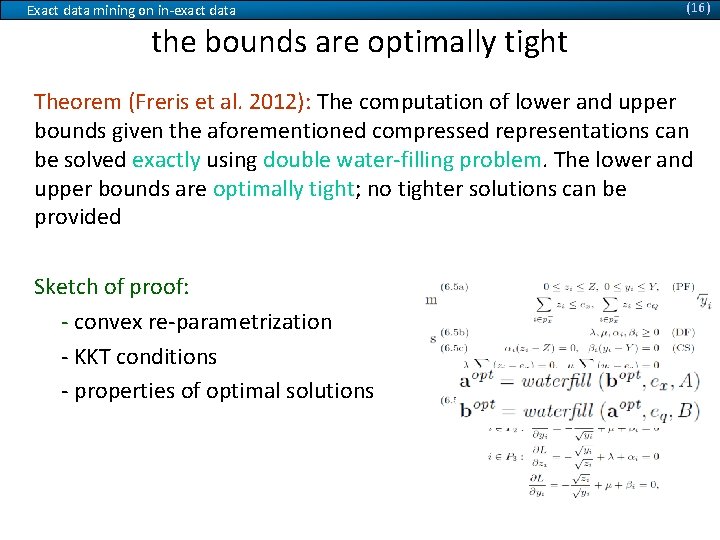

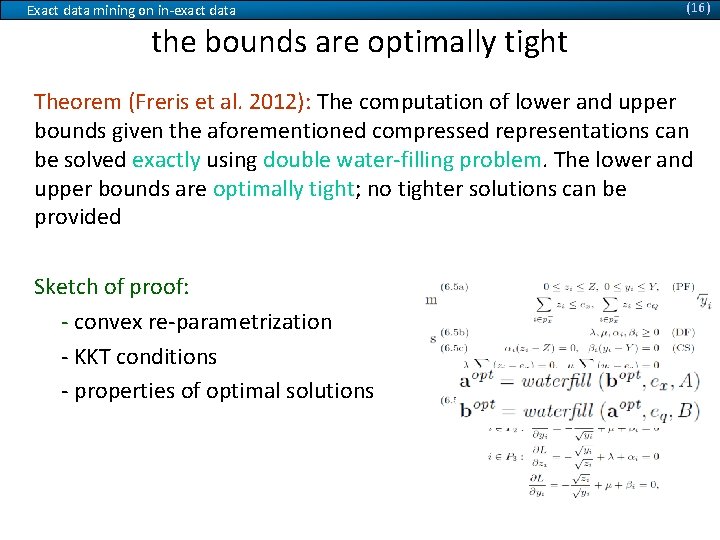

(16) Exact data mining on in-exact data: the bounds are optimally tight

Theorem (Freris et al. 2012): The computation of lower and upper bounds given the aforementioned compressed representations can be solved exactly as a double water-filling problem. The lower and upper bounds are optimally tight; no tighter bounds can be provided.

Sketch of proof:

- convex re-parametrization
- KKT conditions
- properties of optimal solutions

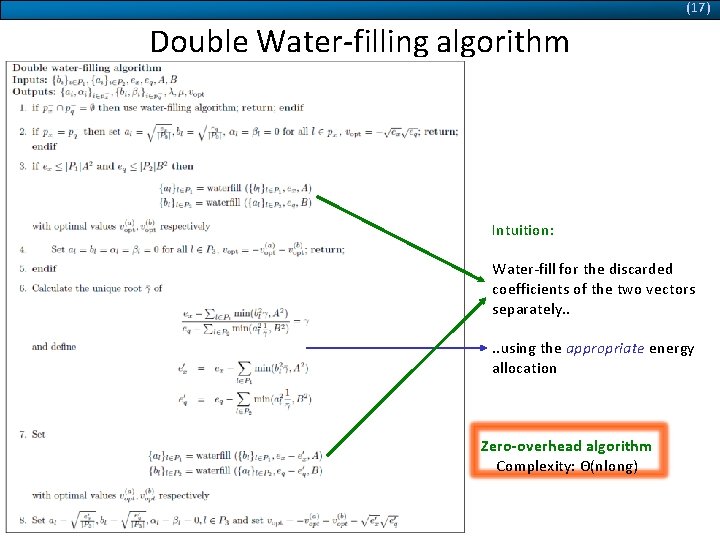

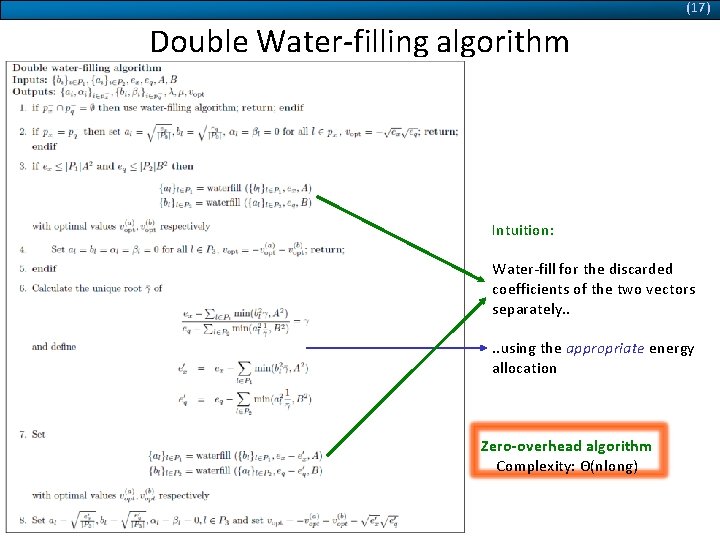

(17) Double water-filling algorithm

- Intuition: water-fill for the discarded coefficients of the two vectors separately, using the appropriate energy allocation.
- Zero-overhead algorithm
- Complexity: $\Theta(n \log n)$

(18) Exact data mining on in-exact data: Experiments

Unica database: IBM web traffic for the year 2010.

- Marketing/Adwords recommendation
  - Analysis/storage of weblog queries (1 TB of data per month)
  - GBS: scheduling advertising campaigns / pricing

[Figure: sample weblog queries: BUSINESS DYNAMICS IBM YIN YANG OF FINANCIAL DISRUPTION EINSURANCE CUSTOMER EXPERIENCE. IBM GLOBAL BUSINESS ANDREW STEVENS BUSINESS CONSULTING GLENN FINCH IBM AMERICA MEDIA PLAYER INDUSTRY STRATEGIE ENTREPRISE RENTABILIT]

(19) Exact data mining on in-exact data: our analytic solution is 300x faster than convex optimizers

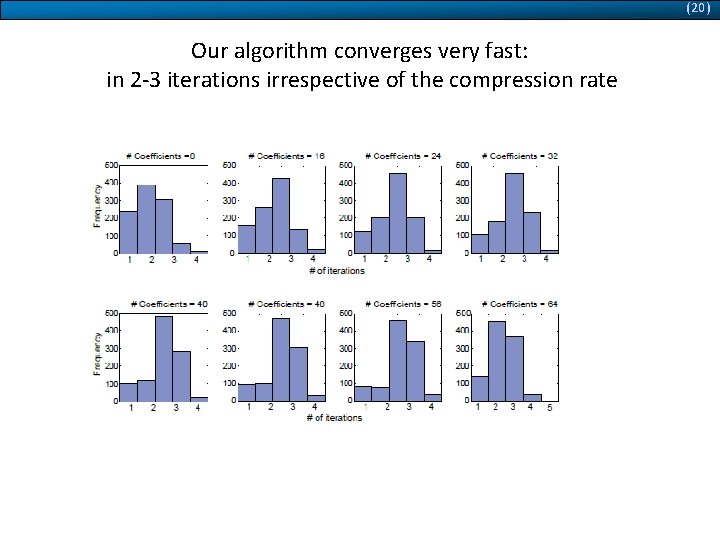

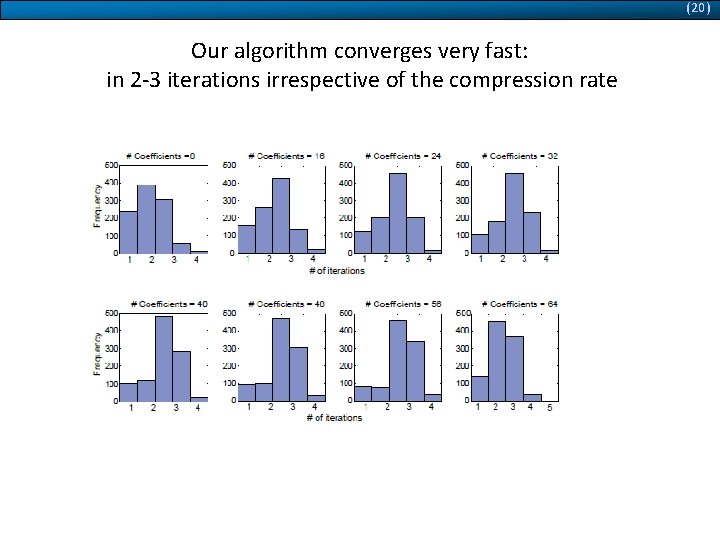

(20) Our algorithm converges very fast: in 2-3 iterations, irrespective of the compression rate

(21) Exact data mining on in-exact data: the derived LB/UB are 20% tighter than the state of the art

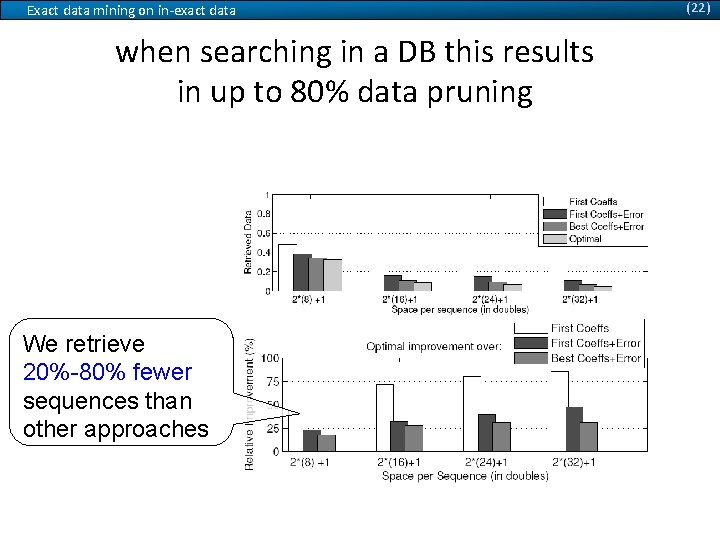

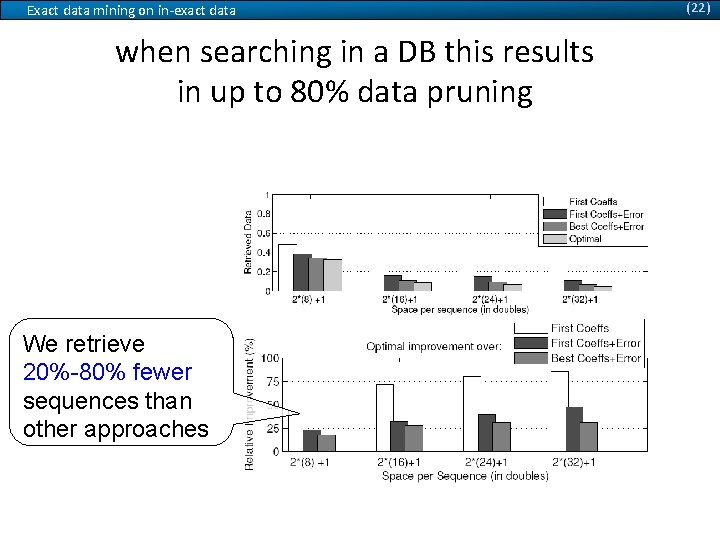

(22) Exact data mining on in-exact data: when searching in a DB this results in up to 80% data pruning

- The previous (10-20%) improvement in distance estimation can significantly reduce the search space when searching for k-NN results.
- We retrieve 20%-80% fewer sequences than other approaches.

(23) Exact data mining on in-exact data: Conclusions

- Increasing data sizes are a perennial problem for data analysis.
- It is important to support mining directly on the compressed data.
- We have shown an exact algorithm for obtaining optimally tight Euclidean bounds on compressed representations.
- Mining directly on compressed data with provable guarantees.

Many generalizations:

- Cosine similarity (text documents): $\cos(x, y) = 1 - L_2(x, y)^2/2$
- Correlation (financial analysis): $\mathrm{corr}(x, y) = 1 - L_2(x, y)^2/2$ (for normalized signals $x$, $y$)
- Dynamic Time Warping (flexible similarity metric):
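The cosine identity listed among the generalizations follows from expanding the squared Euclidean distance. The short derivation below assumes that $x$ and $y$ are scaled to unit norm, a condition the slide states explicitly only for the correlation case:

$$
L_2(x, y)^2 = \|x - y\|^2 = \|x\|^2 + \|y\|^2 - 2\,x^\top y = 2 - 2\cos(x, y)
\quad\Longrightarrow\quad
\cos(x, y) = 1 - \frac{L_2(x, y)^2}{2}.
$$

If the signals are additionally zero-mean, $x^\top y$ is their correlation coefficient, which gives the same formula for $\mathrm{corr}(x, y)$. Bounds on the Euclidean distance therefore translate directly into bounds on these two similarity measures.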

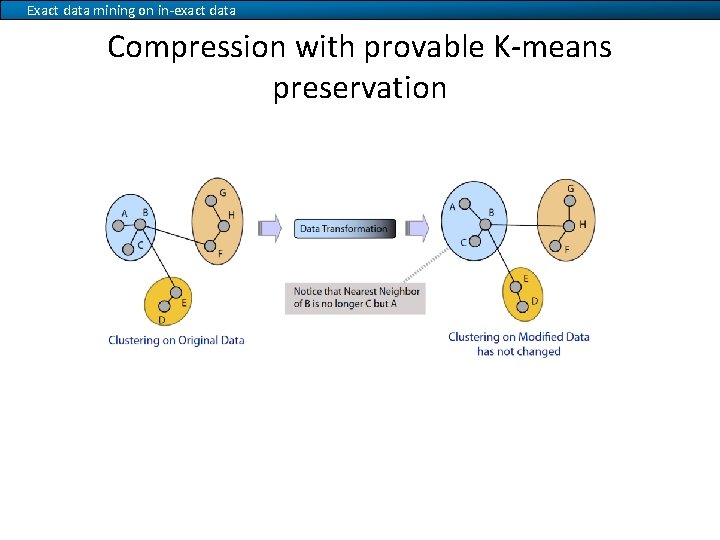

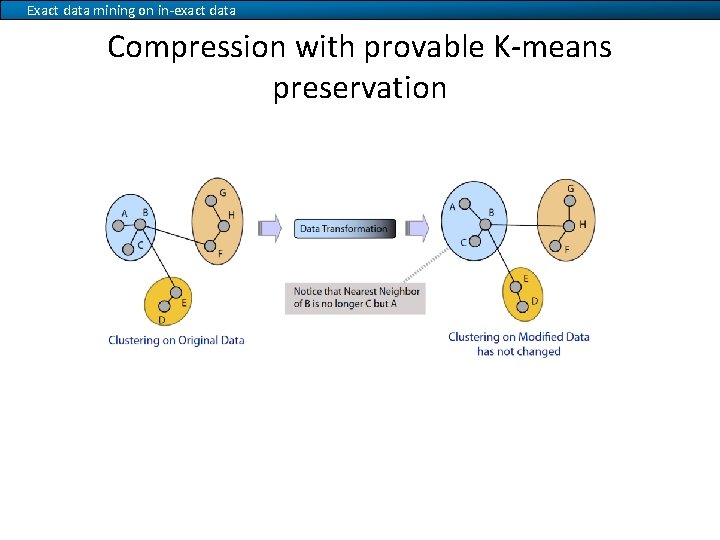
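
The cosine and correlation identities from the conclusions slide can be checked numerically. The sketch below assumes "normalized" means unit Euclidean norm for cosine similarity, and mean-centered with unit norm for correlation; all helper names are illustrative. It also shows how bounds on the Euclidean distance translate into bounds on the similarity.

```python
import math

def l2(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def unit_norm(v):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]

def center_unit_norm(v):
    mean = sum(v) / len(v)
    return unit_norm([a - mean for a in v])

def cosine(x, y):
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)

def pearson(x, y):
    # Pearson correlation is the cosine of the mean-centered vectors.
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    return cosine([a - mean_x for a in x], [b - mean_y for b in y])

def similarity_bounds(dist_lower, dist_upper):
    # 1 - d^2/2 is decreasing in d, so the two bounds swap roles.
    return 1 - dist_upper ** 2 / 2, 1 - dist_lower ** 2 / 2

x, y = [1.0, 2.0, 3.0, 4.0], [2.0, 1.0, 4.0, 3.0]
print(cosine(x, y), 1 - l2(unit_norm(x), unit_norm(y)) ** 2 / 2)
print(pearson(x, y), 1 - l2(center_unit_norm(x), center_unit_norm(y)) ** 2 / 2)
```

Each printed pair should agree up to floating-point rounding.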

Compression with provable K-means preservation

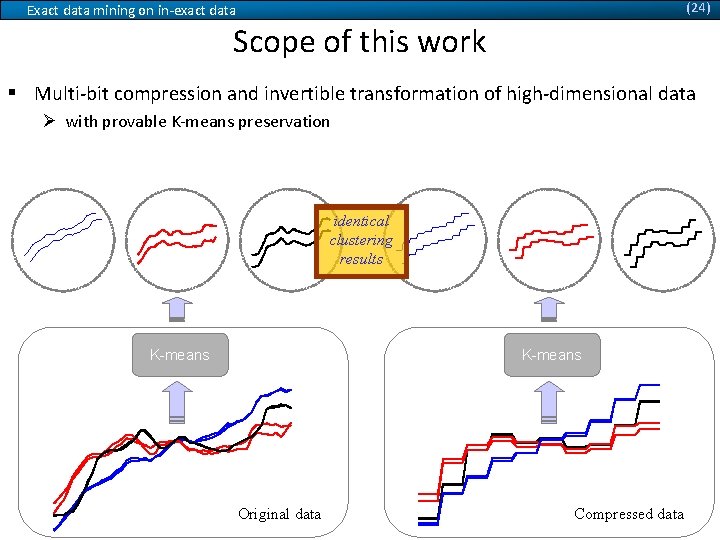

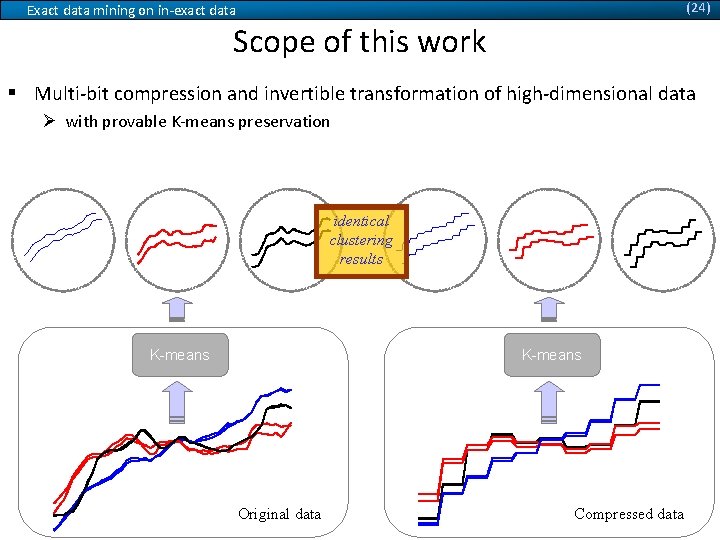

(24) Scope of this work
§ Multi-bit compression and invertible transformation of high-dimensional data
– with provable K-means preservation
[Figure: K-means on the original data and K-means on the quantized (compressed) data give identical clustering results (cluster 1, cluster 2, cluster 3)]

(25) Applications
§ Reduced storage requirements
§ Reduced processing time
§ Data hiding / anonymization
– Original values are hidden via an invertible cluster contraction
– Encoder-decoder scheme
§ Shape preservation
– High-quality data reconstruction
– Extends applicability to other mining operations such as nearest-neighbor search, data visualization, etc.
§ Tunable compression level
– based on the number of allocated bits
– in order to achieve a good storage/quality trade-off

(26) Overview
1. Pre-clustering
2. Quantization per cluster and dimension (one or multiple bits)
3. Data contraction transformation
4. Post-quantization clustering
5. Decoding using key (contraction parameter)

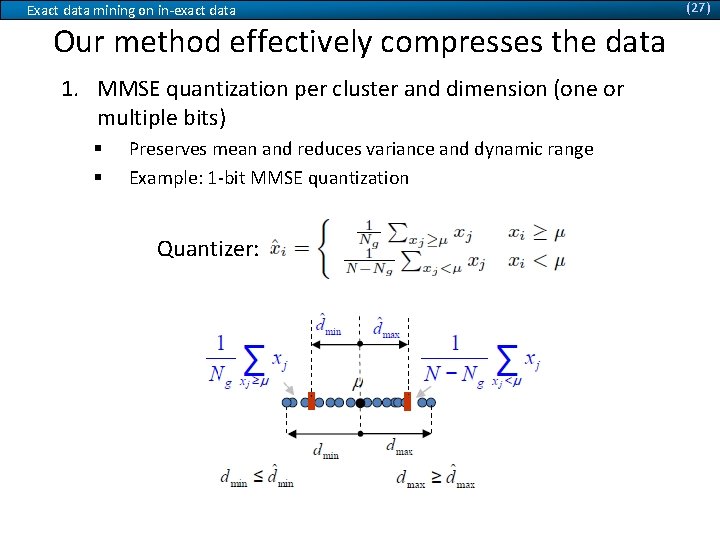

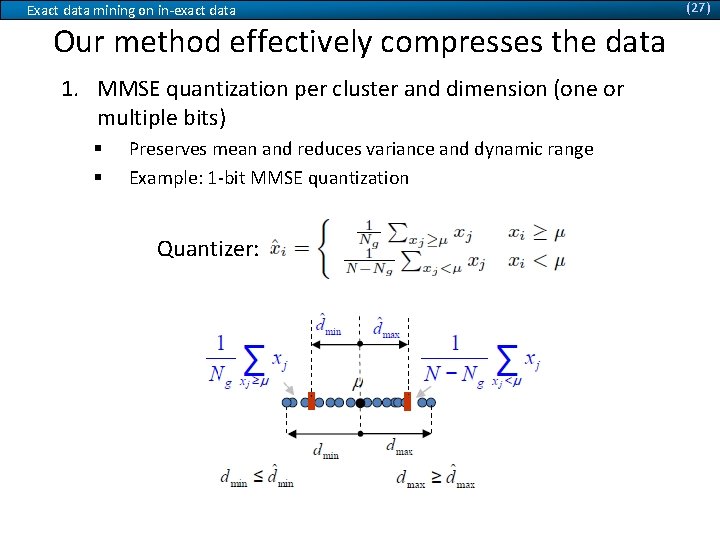

(27) Our method effectively compresses the data
1. MMSE quantization per cluster and dimension (one or multiple bits)
§ Preserves mean and reduces variance and dynamic range
§ Example: 1-bit MMSE quantization
Quantizer:

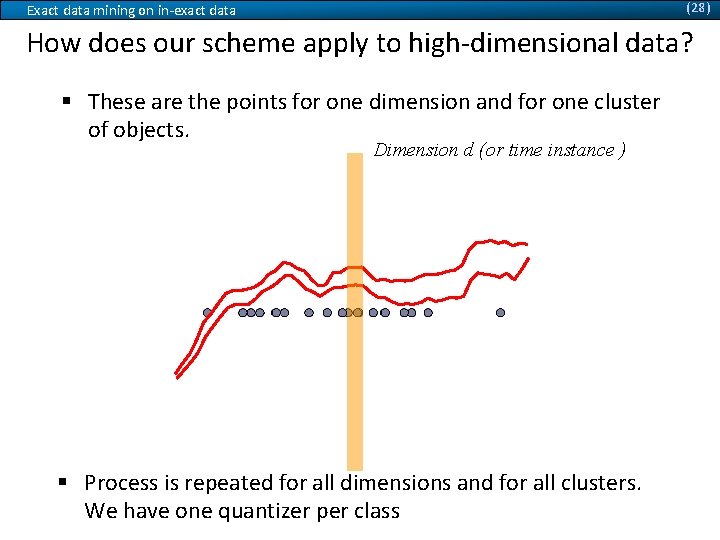

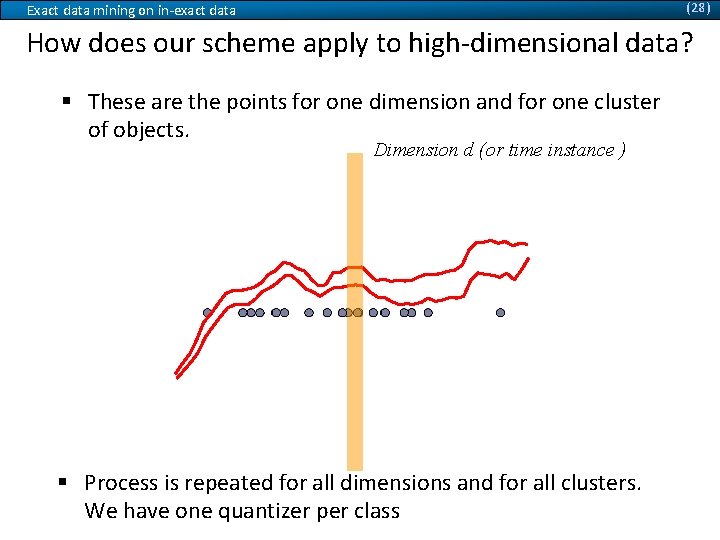
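
The quantizer formula on the slide is only available as an image. A minimal sketch of a 1-bit quantizer for the values of one cluster along one dimension is given below, assuming the threshold is the sample mean and each side is reconstructed at its own conditional mean (the MMSE reconstruction level for a fixed threshold). The threshold choice and the function names are assumptions, not taken from the slides.

```python
def one_bit_quantize(values):
    """Quantize the values of one cluster along one dimension to 1 bit each.

    Assumed rule: bit = 1 if the value is at or above the sample mean.
    Returns the bits and the two reconstruction levels (low, high).
    """
    mean = sum(values) / len(values)
    low = [v for v in values if v < mean]
    high = [v for v in values if v >= mean]  # never empty: max(values) >= mean
    low_level = sum(low) / len(low) if low else mean
    high_level = sum(high) / len(high)
    bits = [1 if v >= mean else 0 for v in values]
    return bits, (low_level, high_level)

def dequantize(bits, levels):
    return [levels[b] for b in bits]

bits, levels = one_bit_quantize([1.0, 2.0, 3.0, 10.0])
reconstructed = dequantize(bits, levels)  # [2.0, 2.0, 2.0, 10.0]
```

With this rule the reconstructed values keep the sample mean (4.0 in the example), while their variance and dynamic range cannot exceed those of the input.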

(28) How does our scheme apply to high-dimensional data?
§ These are the points for one dimension d (or time instance d) and for one cluster of objects
§ The process is repeated for all dimensions and for all clusters
§ We have one quantizer per class

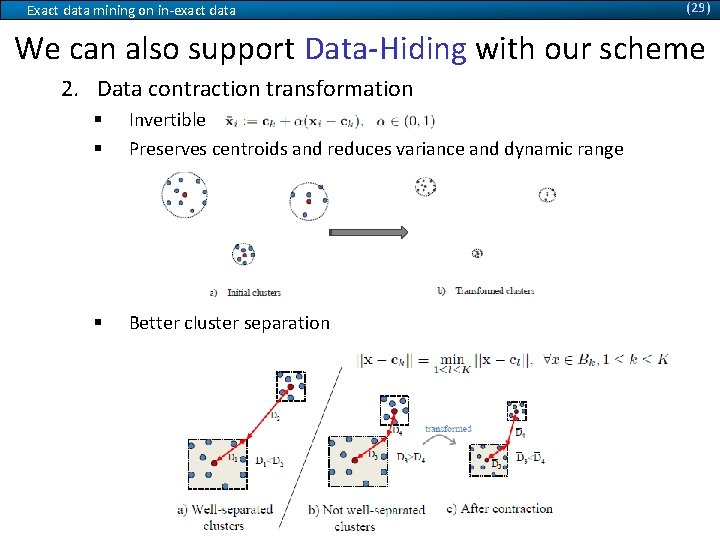

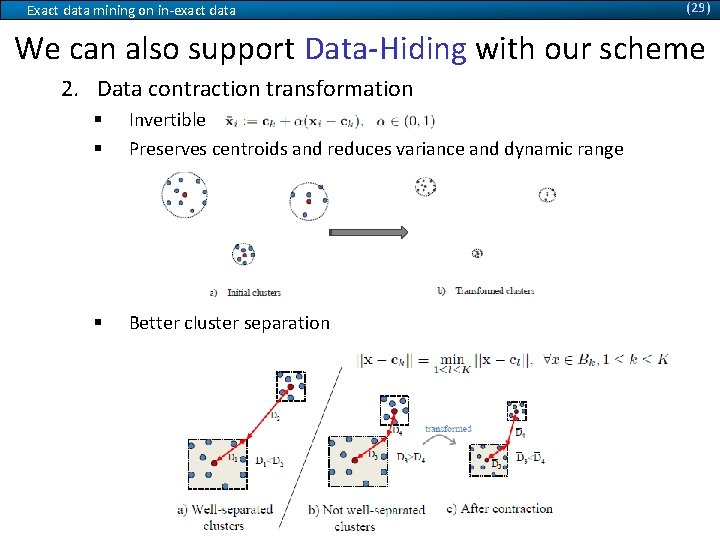

(29) We can also support data hiding with our scheme
2. Data contraction transformation
§ Invertible
§ Preserves centroids and reduces variance and dynamic range
§ Better cluster separation

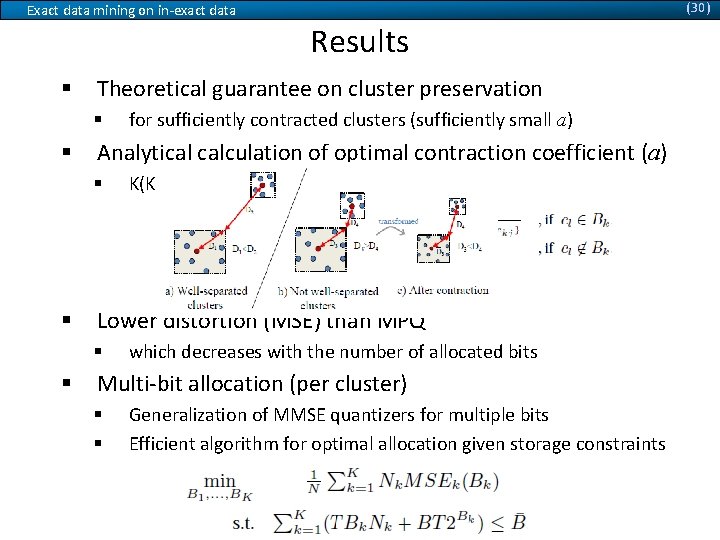

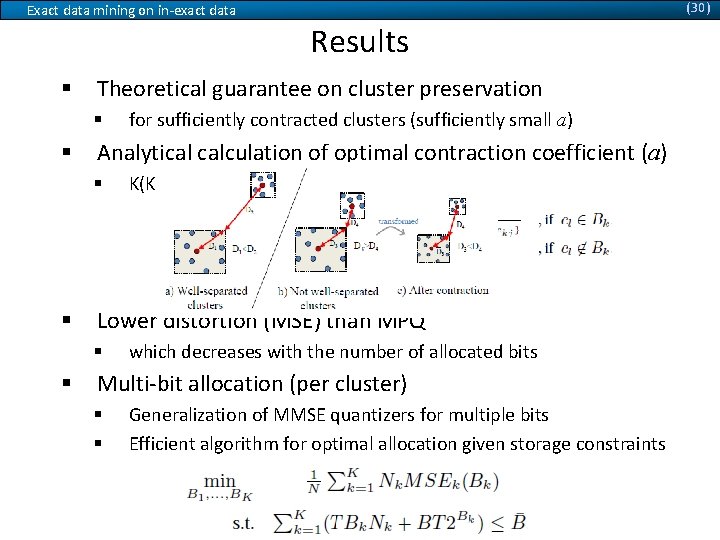
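
One transformation with the three listed properties is a linear shrink of every point toward its cluster centroid. The sketch below assumes this form, x' = c + a(x - c) with 0 < a <= 1, where a plays the role of the contraction parameter (the decoding key); the slides do not spell out the exact formula, so treat this as an illustration only.

```python
def centroid_of(points):
    dims = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dims)]

def contract(points, centroid, a):
    """Shrink each point toward the centroid by a factor a (0 < a <= 1)."""
    return [[c + a * (x - c) for x, c in zip(p, centroid)] for p in points]

def expand(points, centroid, a):
    """Inverse of contract: recover the original points given the key a."""
    return [[c + (x - c) / a for x, c in zip(p, centroid)] for p in points]

cluster = [[0.0, 0.0], [2.0, 0.0], [1.0, 3.0]]
center = centroid_of(cluster)               # [1.0, 1.0]
hidden = contract(cluster, center, 0.5)     # centroid unchanged
restored = expand(hidden, center, 0.5)      # equals the original cluster
```

Under this assumed form the centroid is unchanged, the per-dimension variance scales by a squared, and the clusters become better separated because each one occupies less space around the same center.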

(30) Results
§ Theoretical guarantee on cluster preservation
§ Analytical calculation of optimal contraction coefficient (a)
– K(K-1) calculations, K: number of clusters
§ Lower distortion (MSE) than MPQ
– for sufficiently contracted clusters (sufficiently small a)
– which decreases with the number of allocated bits
§ Multi-bit allocation (per cluster)
– Generalization of MMSE quantizers for multiple bits
– Efficient algorithm for optimal allocation given storage constraints

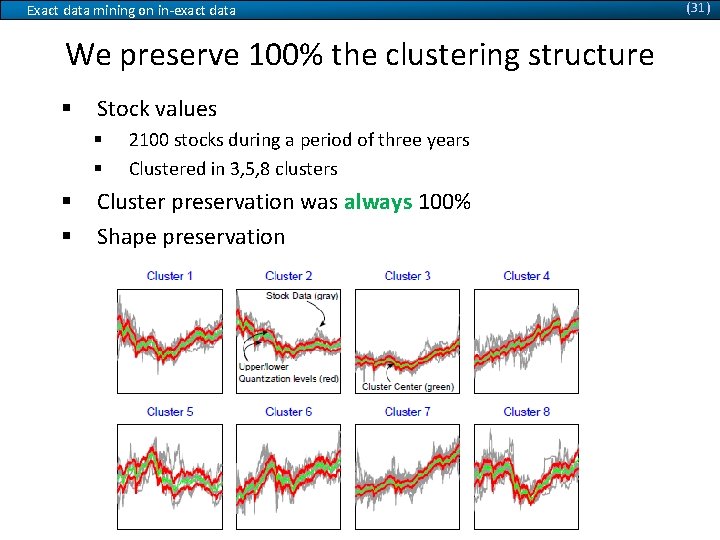

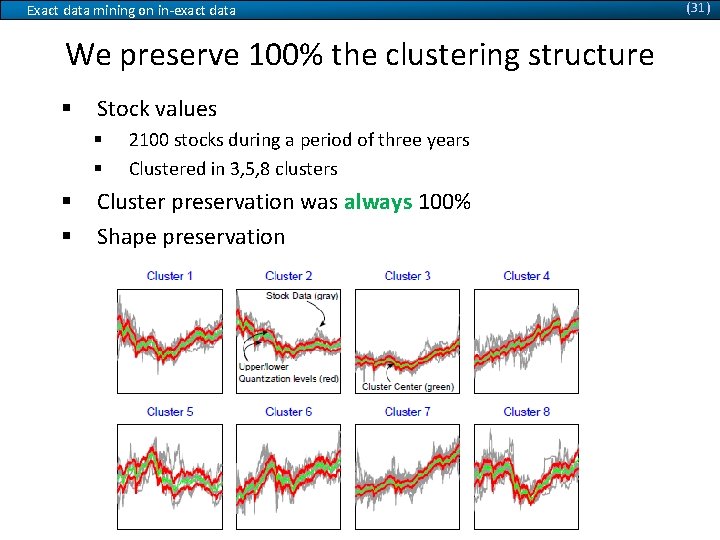

(31) We preserve 100% of the clustering structure
§ Stock values
– 2100 stocks during a period of three years
– Clustered in 3, 5, 8 clusters
§ Cluster preservation was always 100%
§ Shape preservation
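
A claim of 100% cluster preservation can be checked by comparing the cluster assignments obtained on the original and on the compressed data. Cluster IDs from two K-means runs need not match, so the comparison has to hold up to a relabeling. A minimal sketch, with a hypothetical function name:

```python
def same_partition(labels_a, labels_b):
    """True if the two labelings group the objects identically,
    allowing the cluster IDs themselves to differ."""
    if len(labels_a) != len(labels_b):
        return False
    a_to_b, b_to_a = {}, {}
    for a, b in zip(labels_a, labels_b):
        # Each label must map to exactly one label in the other run.
        if a_to_b.setdefault(a, b) != b or b_to_a.setdefault(b, a) != a:
            return False
    return True

print(same_partition([0, 0, 1, 2], [2, 2, 0, 1]))  # True: same groups, renamed
print(same_partition([0, 0, 1, 1], [0, 1, 1, 1]))  # False: one object moved
```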

(32) Multi-bit quantization improves quality up to 96%!
§ MSE due to quantization
– decreases with number of bits and number of clusters
– 37%, 70% and 96% over MPQ using MMSE with 1, 2, 4 bits
– 76%, 84% using 5, 8 clusters over 3 clusters

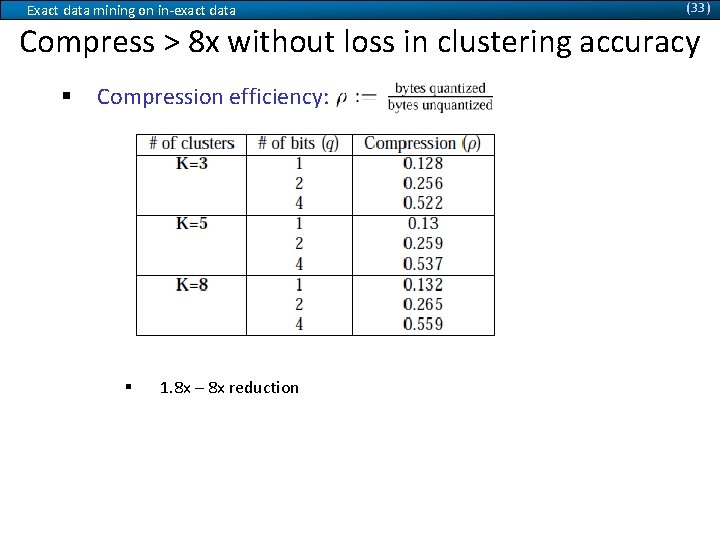

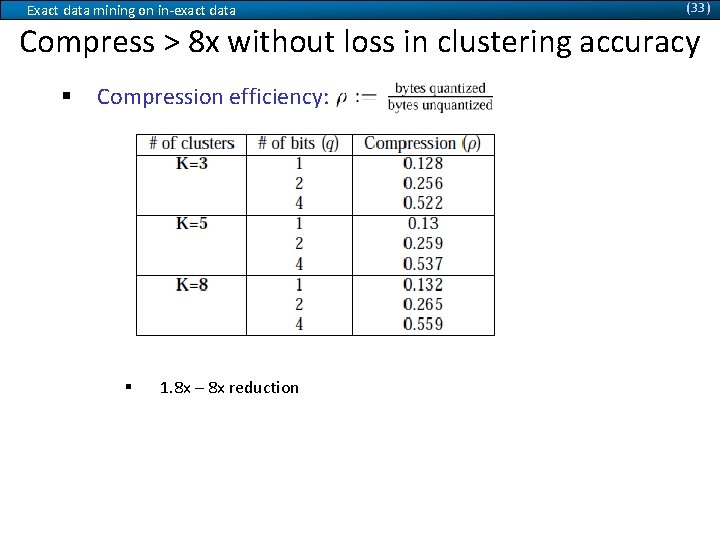

(33) Compress > 8x without loss in clustering accuracy
§ Compression efficiency:
– 1.8x to 8x reduction

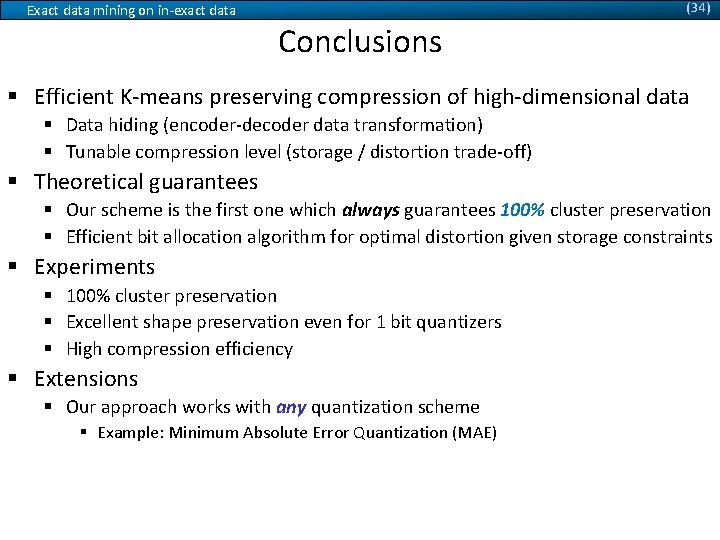

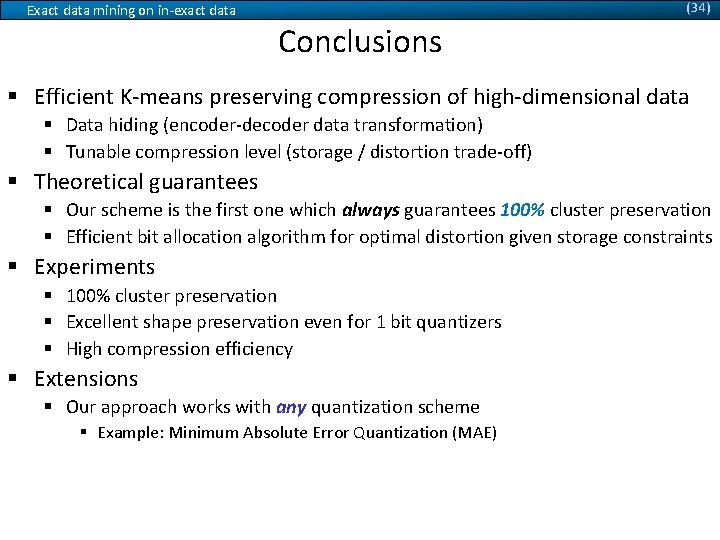

(34) Conclusions
§ Efficient K-means preserving compression of high-dimensional data
– Data hiding (encoder-decoder data transformation)
– Tunable compression level (storage / distortion trade-off)
§ Theoretical guarantees
– Our scheme is the first one which always guarantees 100% cluster preservation
– Efficient bit allocation algorithm for optimal distortion given storage constraints
§ Experiments
– 100% cluster preservation
– Excellent shape preservation even for 1-bit quantizers
– High compression efficiency
§ Extensions
– Our approach works with any quantization scheme
– Example: Minimum Absolute Error Quantization (MAE)

Thank you. Questions…