Decision-Making under Uncertainty: ONR MURI Uncertain Multiagent Systems

- Slides: 43

Decision-Making under Uncertainty. ONR MURI Uncertain Multiagent Systems: Games and Learning. July 17, 2002. H. Jin Kim, Songhwai Oh and Shankar Sastry, University of California, Berkeley.

Outline

- Hierarchical architecture for multiagent operations
- Partial observation Markov games (POMGame)
- Berkeley pursuit-evasion game (PEG) setup
- From PEG to unmanned dynamic battlefield
  - Model predictive techniques for dynamic replanning
  - Multi-target tracking (detect, identify, track)
  - Dynamic model selection for estimating adversarial intent

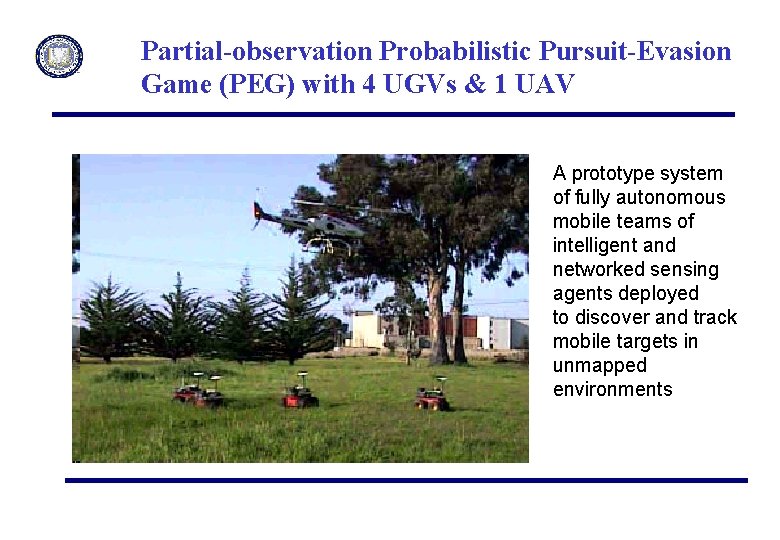

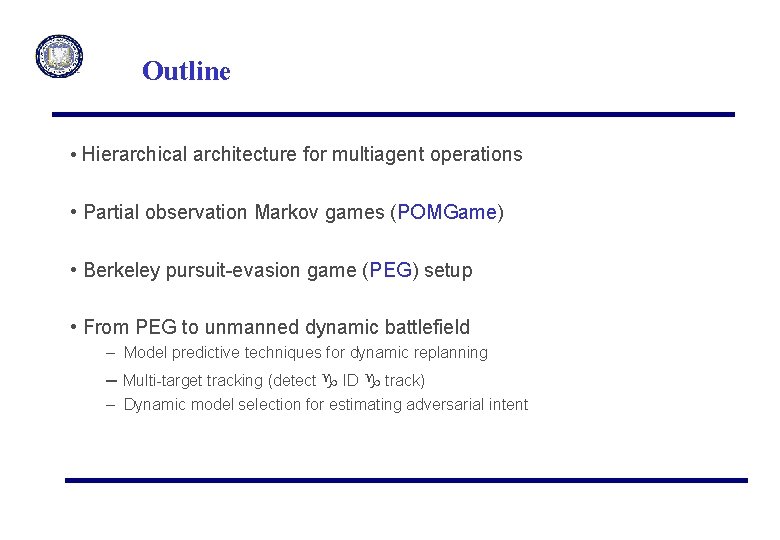

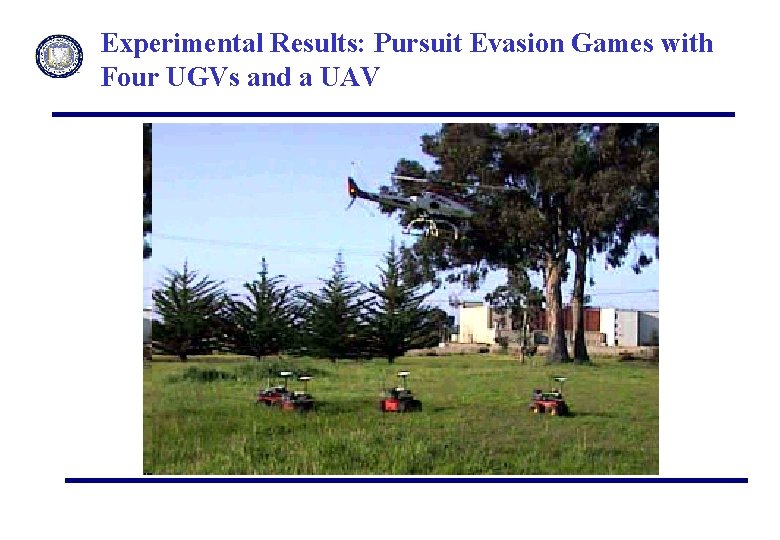

Partial-observation Probabilistic Pursuit-Evasion Game (PEG) with 4 UGVs & 1 UAV

A prototype system of fully autonomous mobile teams of intelligent, networked sensing agents deployed to discover and track mobile targets in unmapped environments.

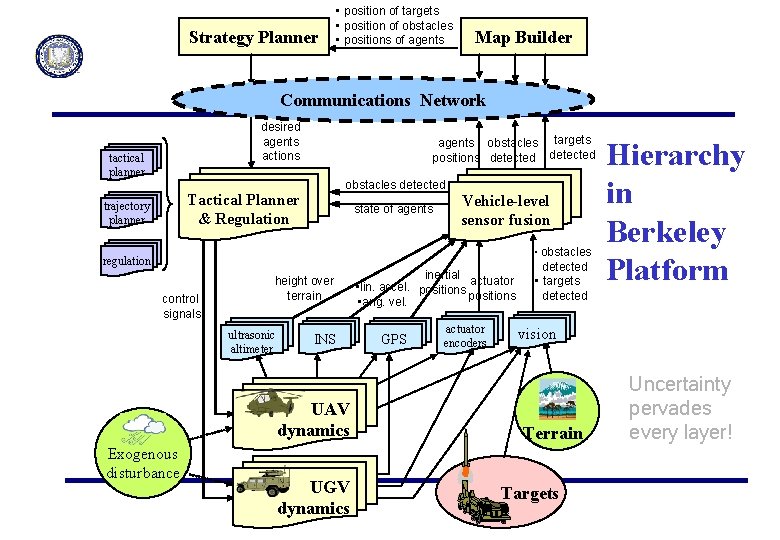

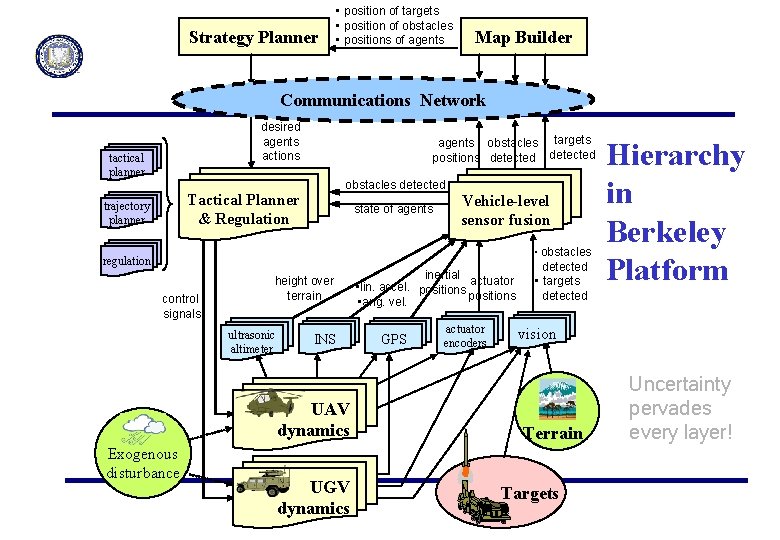

Figure: Hierarchy in Berkeley Platform. Blocks: Strategy Planner, Map Builder, Communications Network, Tactical Planner & Regulation (trajectory planner, regulation), vehicle-level sensor fusion, UAV dynamics, UGV dynamics. Signals: positions of targets, obstacles and agents; desired agent actions; state of agents; control signals; detected targets and obstacles. Sensors: vision, GPS, INS (linear acceleration, angular velocity), ultrasonic altimeter (height over terrain), actuator encoders. External inputs: exogenous disturbance, terrain, targets. Uncertainty pervades every layer!

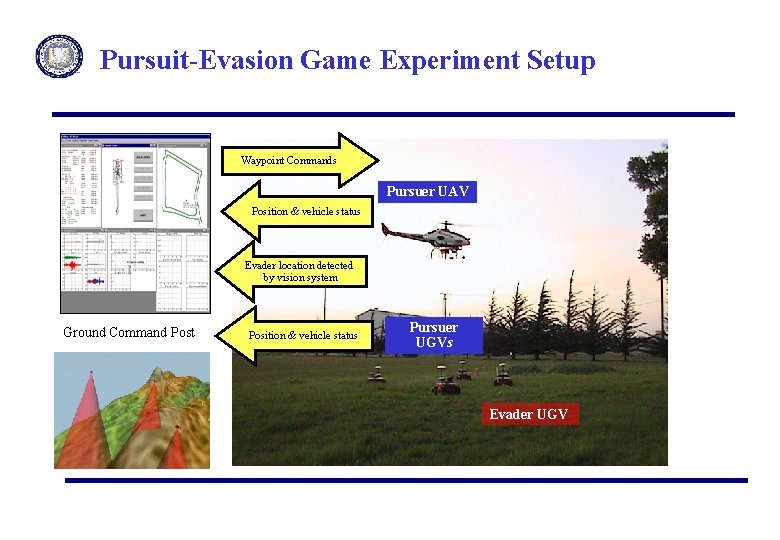

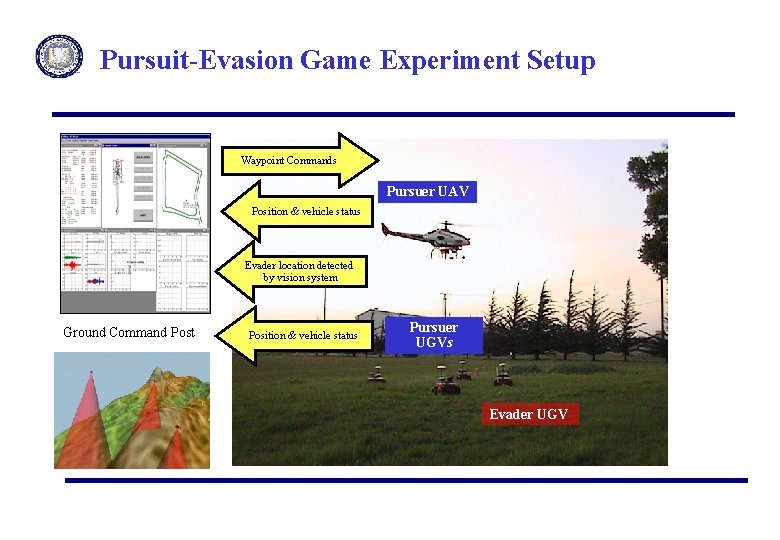

Figure: Pursuit-Evasion Game Experiment Setup. The ground command post exchanges waypoint commands and position & vehicle status with the pursuer UAV and the pursuer UGVs; the evader UGV's location is detected by the vision system.

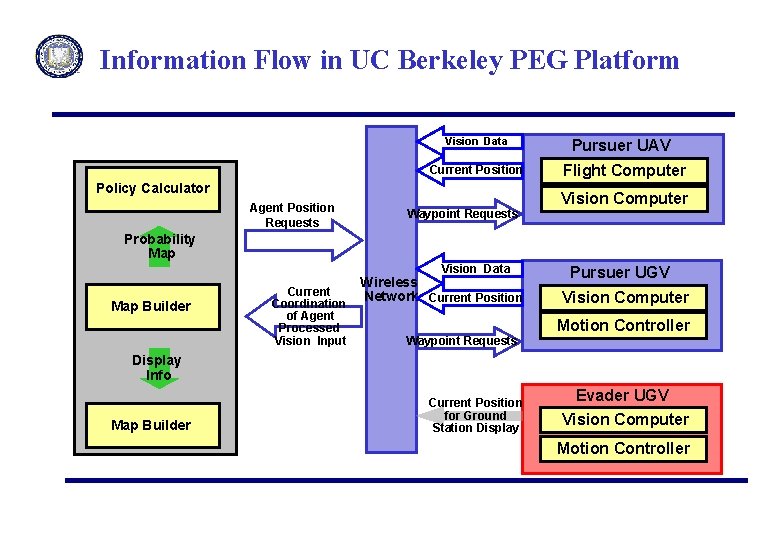

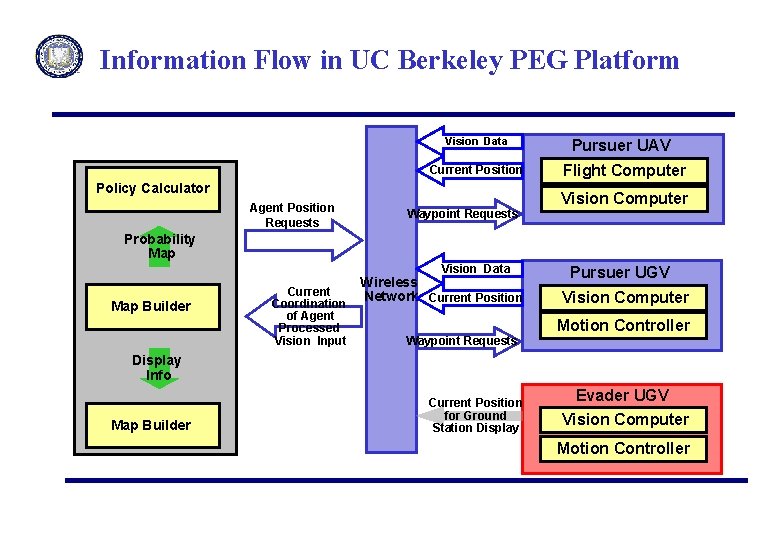

Figure: Information Flow in UC Berkeley PEG Platform. Components: ground-based policy calculator (strategy planner, map builder), pursuer UAV (flight computer, vision computer), pursuer UGVs and evader UGV (vision computer, motion controller), wireless network, ground station display. Messages: vision data, processed vision input, current positions, agent position requests, waypoint requests, probability map, coordination of agents, display info.

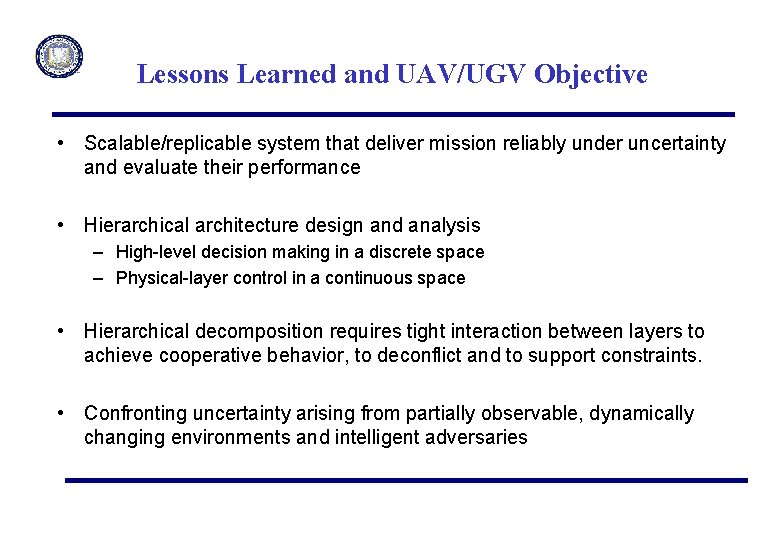

Lessons Learned and UAV/UGV Objective

- Scalable, replicable systems that deliver missions reliably under uncertainty and evaluate their own performance
- Hierarchical architecture design and analysis
  - High-level decision making in a discrete space
  - Physical-layer control in a continuous space
- Hierarchical decomposition requires tight interaction between layers to achieve cooperative behavior, to deconflict, and to support constraints.
- Confronting uncertainty arising from partially observable, dynamically changing environments and intelligent adversaries

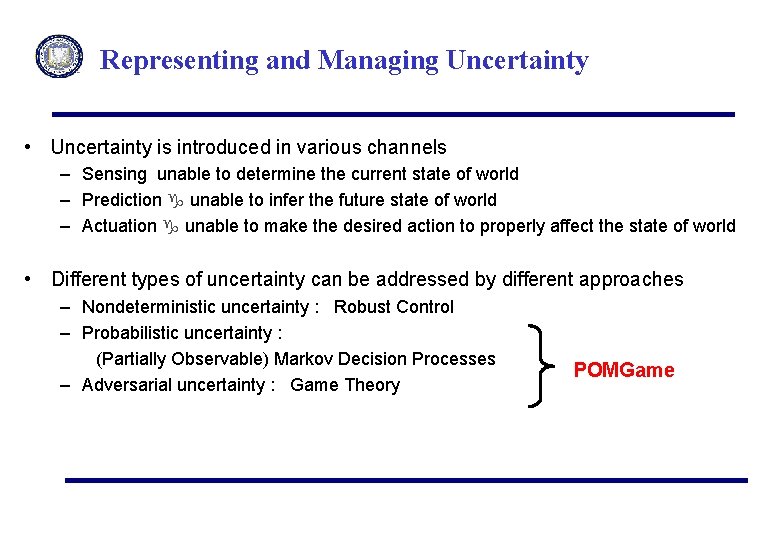

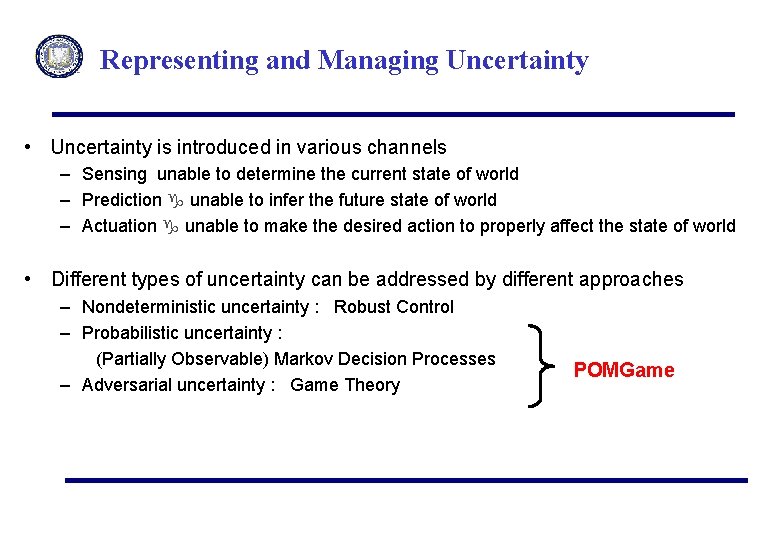

Representing and Managing Uncertainty

- Uncertainty is introduced through various channels:
  - Sensing: unable to determine the current state of the world
  - Prediction: unable to infer the future state of the world
  - Actuation: unable to take the desired action to properly affect the state of the world
- Different types of uncertainty can be addressed by different approaches:
  - Nondeterministic uncertainty: robust control
  - Probabilistic uncertainty: (partially observable) Markov decision processes
  - Adversarial uncertainty: game theory (POMGame)

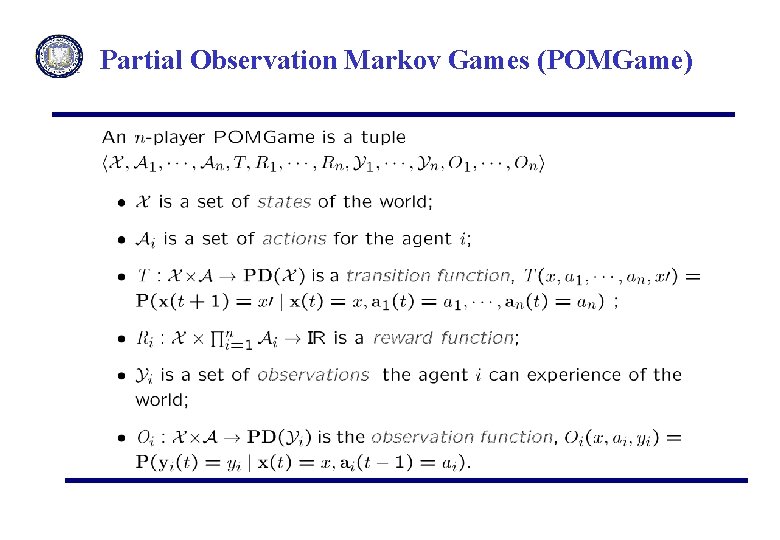

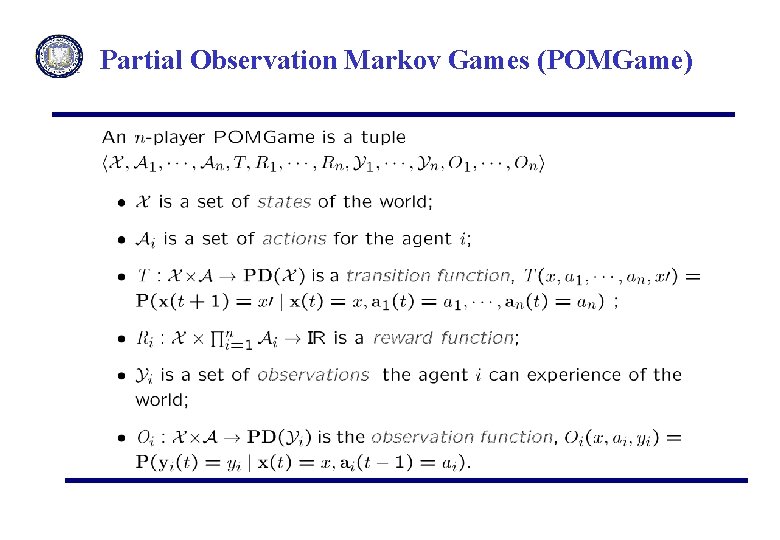

Partial Observation Markov Games (POMGame)

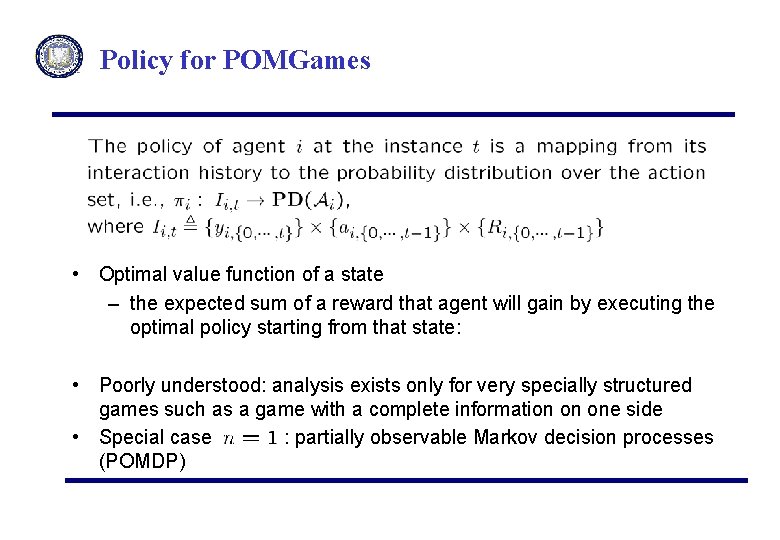

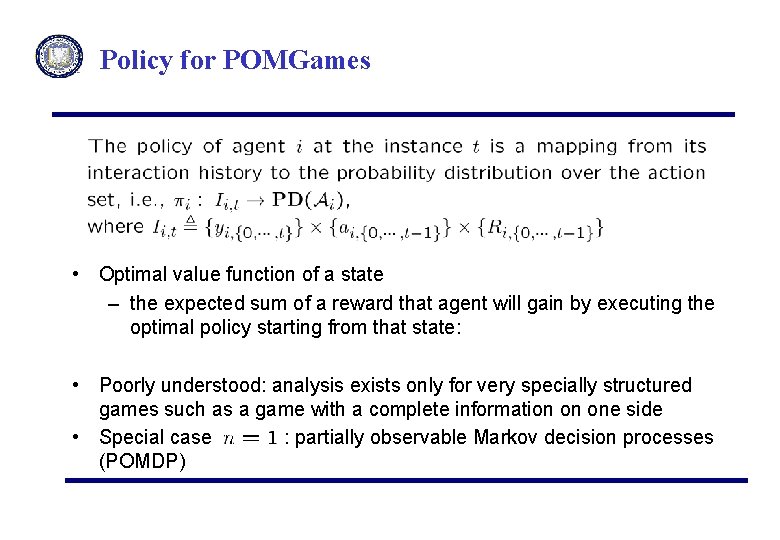

Policy for POMGames

- Optimal value function of a state: the expected sum of rewards that an agent will gain by executing the optimal policy starting from that state
- Poorly understood: analysis exists only for very specially structured games, such as games with complete information on one side
- Special case: partially observable Markov decision processes (POMDPs)
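For the single-agent special case (a POMDP, or a game in which the other players' policies are held fixed), the value function described above is commonly written as below. This is a sketch in standard discounted notation; the discount factor $\gamma$ and per-step reward $r_t$ are assumptions, since the slide's own expression survives only in the image.

$$
V^{*}(s) \;=\; \max_{\pi}\; \mathbb{E}\!\left[\,\sum_{t=0}^{\infty} \gamma^{t}\, r_t \;\middle|\; s_0 = s,\ \pi \right], \qquad 0 \le \gamma < 1
$$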

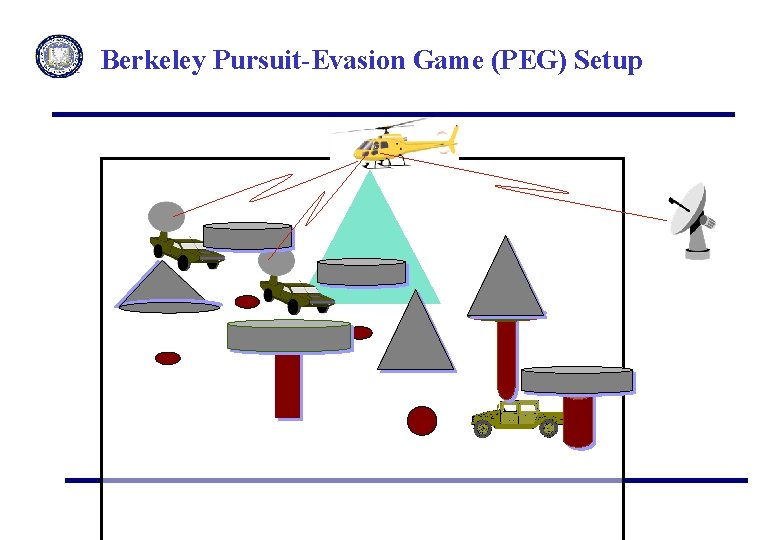

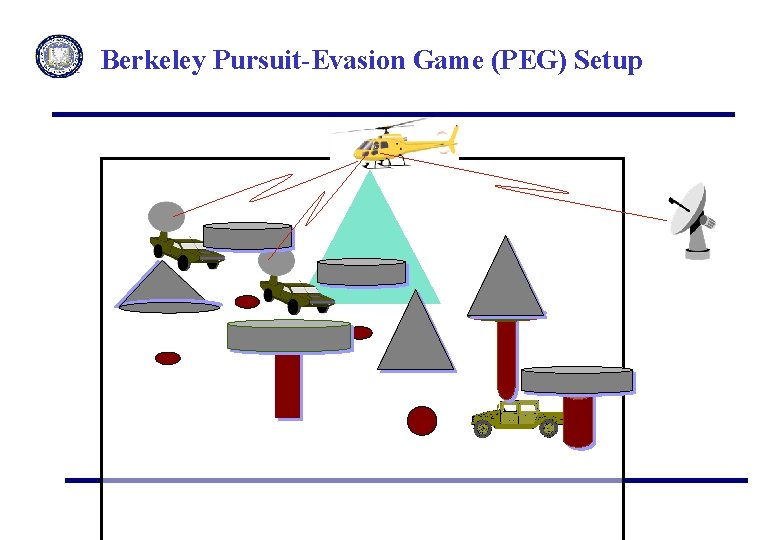

Berkeley Pursuit-Evasion Game (PEG) Setup

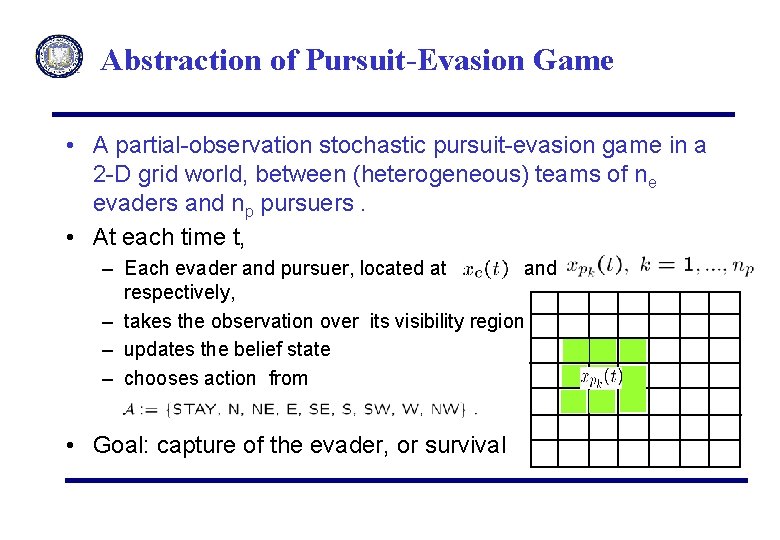

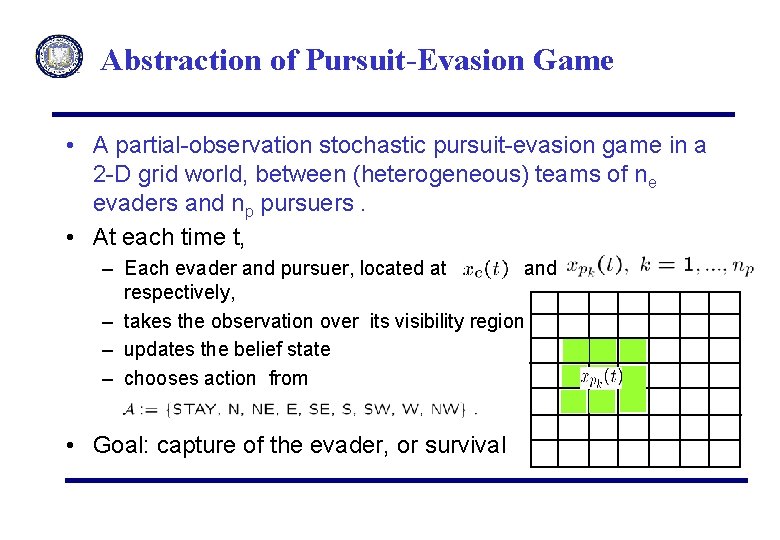

Abstraction of Pursuit-Evasion Game

- A partial-observation stochastic pursuit-evasion game in a 2-D grid world, between (heterogeneous) teams of $n_e$ evaders and $n_p$ pursuers.
- At each time $t$, each evader and each pursuer, located at its current cell:
  - takes an observation over its visibility region,
  - updates its belief state, and
  - chooses an action from its set of admissible actions.
- Goal: capture of the evader (pursuers), or survival (evaders).

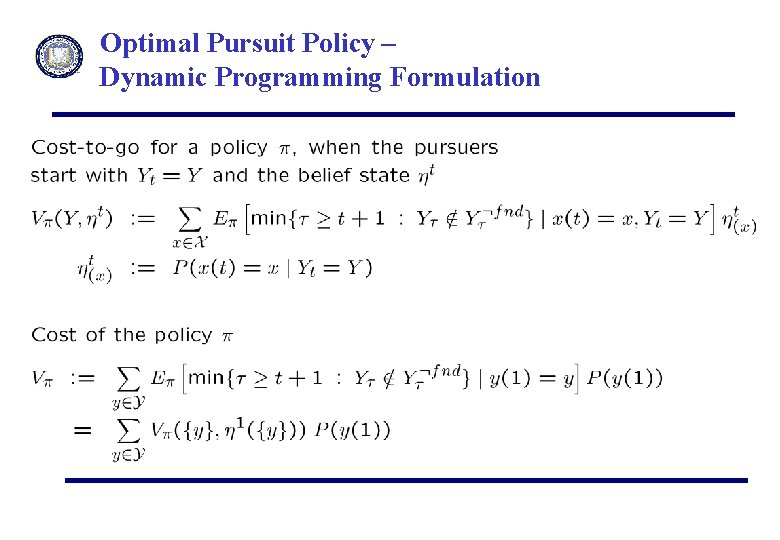

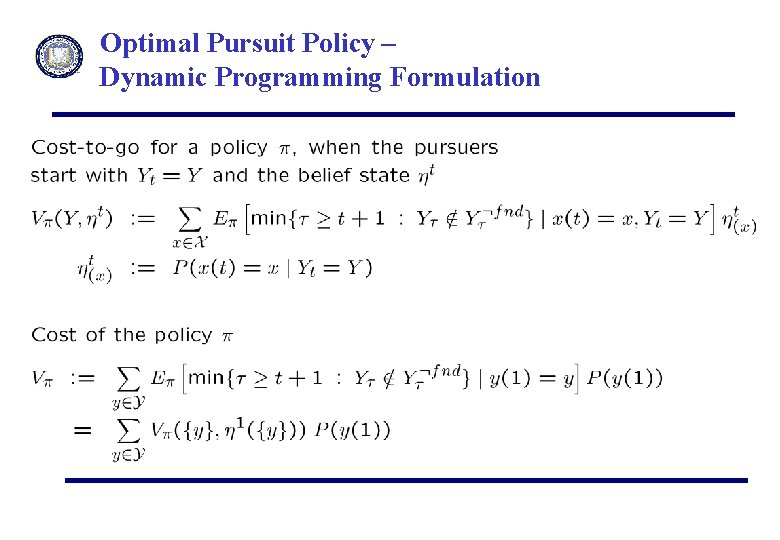

Optimal Pursuit Policy

- Performance measure: capture time
- The optimal policy minimizes the cost (the expected capture time)
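Written out, the objective can be sketched as follows. The notation is hypothetical ($x_p^{i}(t)$ for pursuer $i$'s cell, $x_e(t)$ for the evader's cell), and capture is assumed to mean a pursuer occupying the evader's cell, since the text does not state the exact capture condition:

$$
T^{*} = \min\{\, t \ge 0 : x_p^{i}(t) = x_e(t) \text{ for some pursuer } i \,\}, \qquad \pi^{*} = \arg\min_{\pi}\ \mathbb{E}_{\pi}\!\left[T^{*}\right]
$$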

Optimal Pursuit Policy – Dynamic Programming Formulation

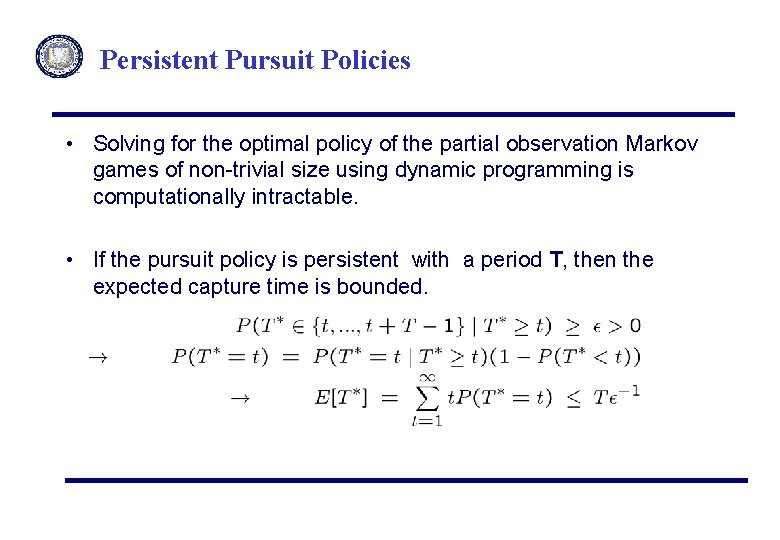

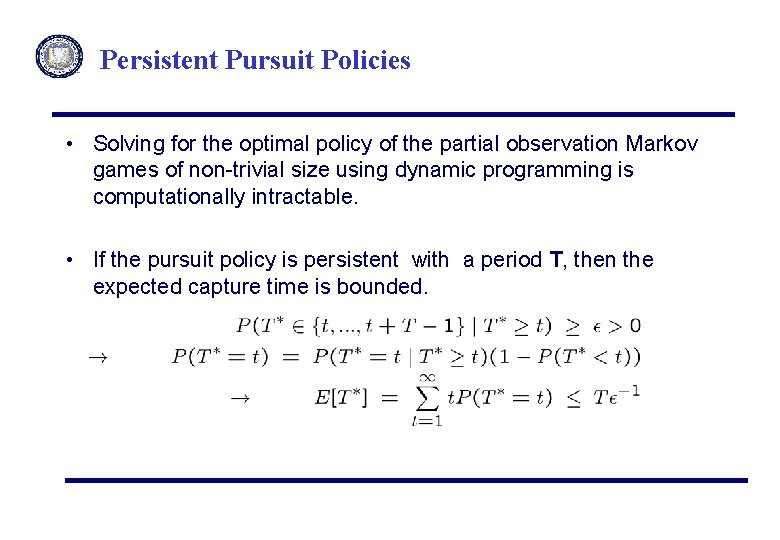

Persistent Pursuit Policies

- Solving for the optimal policy of a partial observation Markov game of non-trivial size by dynamic programming is computationally intractable.
- If the pursuit policy is persistent with period T, then the expected capture time is bounded.
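Where the bound comes from can be sketched in one line, assuming "persistent with period T" means that, whatever has happened so far, the pursuers capture the evader within the next T steps with probability at least $\epsilon > 0$ ($\epsilon$ is our notation). Then $P(T^* > kT) \le (1-\epsilon)^k$, so $\mathbb{E}[T^*] = \sum_{t \ge 0} P(T^* > t) \le T \sum_{k \ge 0} (1-\epsilon)^k = T/\epsilon$. The toy simulation below checks this numerically on a made-up capture process; it is not the PEG dynamics.

```python
import random


def expected_capture_bound(period: int, eps: float) -> float:
    """Bound T / eps on the expected capture time of a policy that is
    persistent with period T and per-period capture probability >= eps."""
    return period / eps


def simulate_capture_time(period: int, eps: float, rng: random.Random) -> int:
    """Toy capture process (hypothetical, not the PEG dynamics): each step
    captures independently with probability p, chosen so that the chance
    of capture within any window of `period` steps is exactly eps."""
    p = 1.0 - (1.0 - eps) ** (1.0 / period)
    t = 0
    while True:
        t += 1
        if rng.random() < p:
            return t


rng = random.Random(0)
T, eps = 5, 0.2
samples = [simulate_capture_time(T, eps, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)
print(f"empirical mean {mean:.1f} <= bound {expected_capture_bound(T, eps):.1f}")
```

The exact mean of this toy process is $1/p \approx 22.9$, under the bound of 25. A process that can capture only at the last step of each window would sit right at $T/\epsilon$, which is why the bound cannot be tightened without further assumptions.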

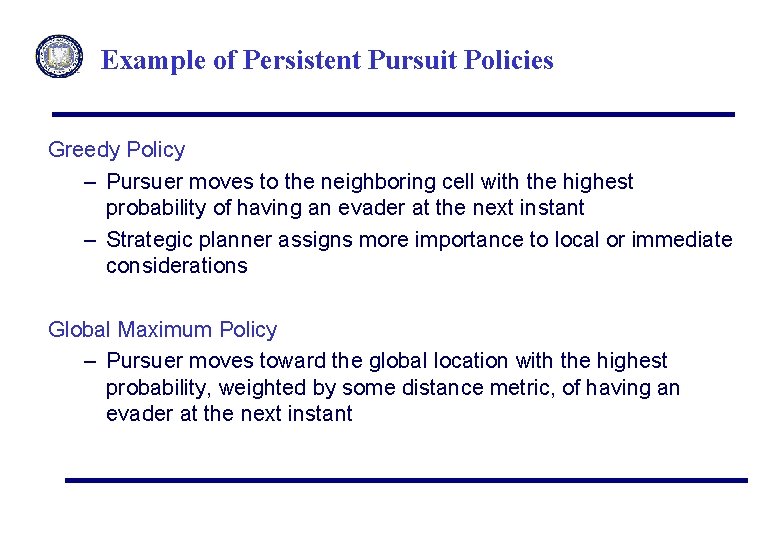

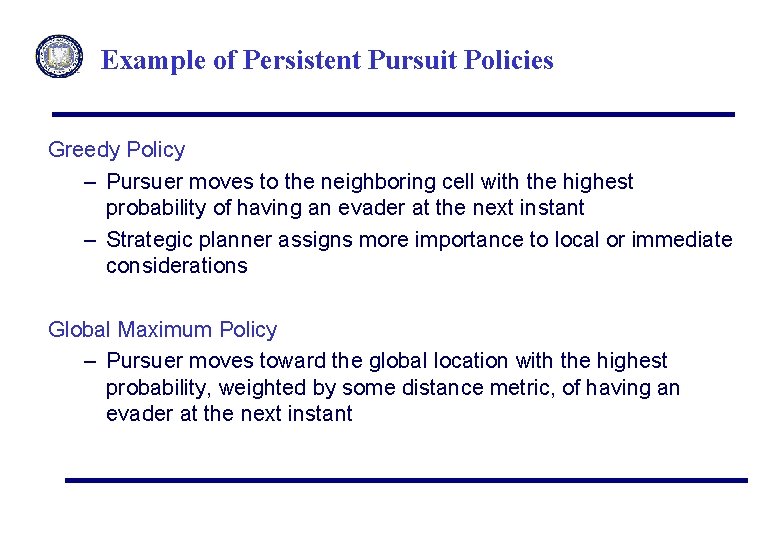

Examples of Persistent Pursuit Policies

- Greedy policy
  - The pursuer moves to the neighboring cell with the highest probability of containing an evader at the next instant.
  - The strategic planner assigns more importance to local or immediate considerations.
- Global maximum policy
  - The pursuer moves toward the global location with the highest probability, weighted by some distance metric, of containing an evader at the next instant.
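The two policies can be contrasted on a probability map of the evader's next position. This is a minimal sketch under stated assumptions: the move set (stay or 4-connected step), the Manhattan distance, and the `prob / (1 + distance)` weighting are all hypothetical choices standing in for "some distance metric".

```python
from typing import List, Tuple

Cell = Tuple[int, int]
Grid = List[List[float]]


def neighbors(cell: Cell, rows: int, cols: int) -> List[Cell]:
    """Cells reachable in one step (assumed): stay, or a 4-connected move."""
    r, c = cell
    moves = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]
    return [(r + dr, c + dc) for dr, dc in moves
            if 0 <= r + dr < rows and 0 <= c + dc < cols]


def manhattan(a: Cell, b: Cell) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def greedy_move(pos: Cell, prob: Grid) -> Cell:
    """Greedy: step to the reachable cell with the highest evader probability."""
    rows, cols = len(prob), len(prob[0])
    return max(neighbors(pos, rows, cols), key=lambda cell: prob[cell[0]][cell[1]])


def global_max_move(pos: Cell, prob: Grid) -> Cell:
    """Global max: pick the cell maximizing prob / (1 + distance) over the whole
    grid (hypothetical weighting), then take one step toward it."""
    rows, cols = len(prob), len(prob[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    target = max(cells, key=lambda cell: prob[cell[0]][cell[1]] / (1 + manhattan(pos, cell)))
    return min(neighbors(pos, rows, cols), key=lambda cell: manhattan(cell, target))


# Hypothetical 5x5 evader map: a little mass next to the pursuer, most of it far away.
prob = [[0.0] * 5 for _ in range(5)]
prob[0][1] = 0.1
prob[4][4] = 0.9
print(greedy_move((0, 0), prob))      # (0, 1): chases the nearby, low-probability cell
print(global_max_move((0, 0), prob))  # (1, 0): heads toward the far, high-probability corner
```

The example shows the local-versus-global trade-off in miniature: the greedy pursuer is drawn to the adjacent cell, while the global-max pursuer starts toward the corner that holds most of the probability mass.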

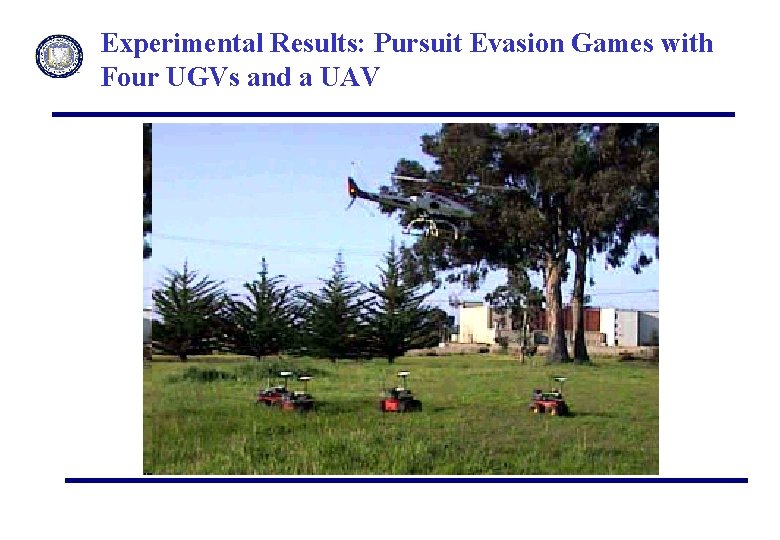

Experimental Results: Pursuit Evasion Games with Four UGVs and a UAV

Game-theoretic Policy Search Paradigm

- A large number of variables affect the solution.
- Many interesting games, including pursuit-evasion, are large games with partial information, and finding optimal solutions is well outside the capability of current algorithms.
- An approximate solution is not necessarily bad: there may be simple policies with satisfactory performance.
  - Choose a good policy from a restricted class of policies!
- We can find approximately optimal solutions within restricted classes, using sparse sampling and a provably convergent policy search algorithm.

Constructing a Policy Class

- Given a mission with specific goals, we
  - decompose the problem in terms of the functions that must be achieved for success and the means that are available,
  - analyze how a human team would solve the problem, and
  - determine a list of important factors that complicate task performance, such as safety or physical constraints.
- Example factors:
  - Maximize aerial coverage
  - Stay within communications range
  - Penalize actions that lead an agent into a danger zone
  - Maximize the explored region
  - Minimize fuel usage
  - …

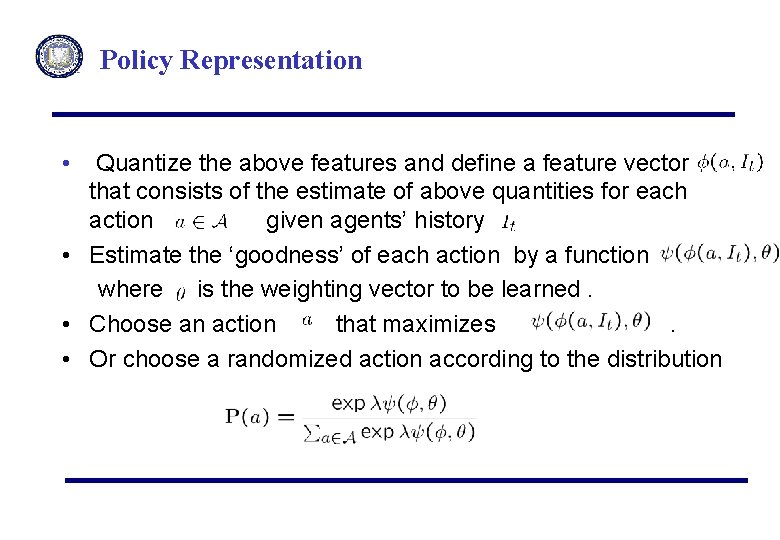

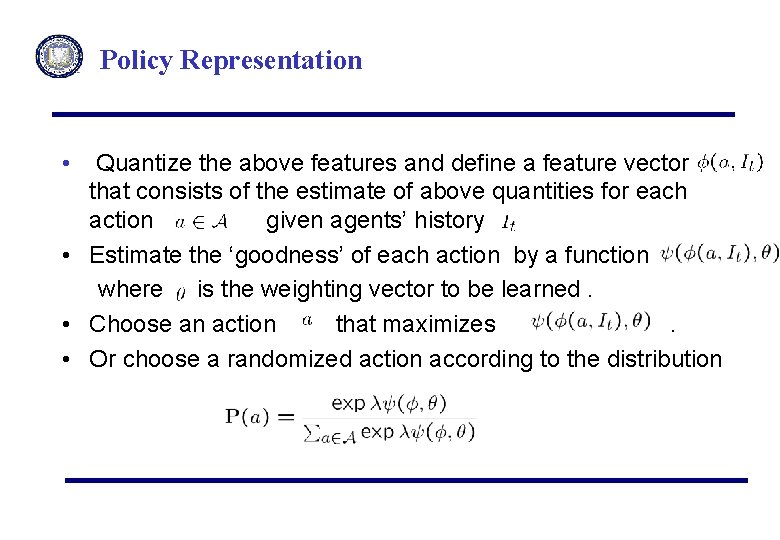

Policy Representation

- Quantize the above features and define a feature vector consisting of the estimates of these quantities for each action, given the agents' history.
- Estimate the 'goodness' of each action with a function of its feature vector, parameterized by a weighting vector to be learned.
- Choose the action that maximizes this function,
- or choose a randomized action according to a distribution over actions derived from it.
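A minimal sketch of both choice rules, assuming a linear goodness function $f(a) = \theta^\top \phi(a)$ and a Boltzmann (softmax) distribution for the randomized choice. Both forms are assumptions, since the slide's own formulas appear only in the image; the feature values and weights below are made up.

```python
import math
import random
from typing import Dict, List, Sequence

Features = Dict[str, List[float]]


def goodness(theta: Sequence[float], phi: Sequence[float]) -> float:
    """Assumed linear form f(a) = theta . phi(a)."""
    return sum(t * p for t, p in zip(theta, phi))


def best_action(theta: Sequence[float], features: Features) -> str:
    """Deterministic choice: the action with the largest goodness."""
    return max(features, key=lambda a: goodness(theta, features[a]))


def action_distribution(theta: Sequence[float], features: Features,
                        temperature: float = 1.0) -> Dict[str, float]:
    """Randomized choice: softmax of goodness / temperature (assumed form).
    The max score is subtracted before exponentiating for numerical stability."""
    scores = {a: goodness(theta, phi) / temperature for a, phi in features.items()}
    top = max(scores.values())
    weights = {a: math.exp(s - top) for a, s in scores.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# Hypothetical features per action: [coverage, frontier value]; hypothetical weights.
features = {"north": [0.8, 0.1], "east": [0.2, 0.9], "stay": [0.0, 0.0]}
theta = [1.0, 0.5]
dist = action_distribution(theta, features)
print(best_action(theta, features))  # north
rng = random.Random(1)
sampled = rng.choices(list(dist), weights=list(dist.values()))[0]
print(sampled)
```

The temperature is an illustrative knob: as it shrinks, the randomized policy approaches the deterministic maximizer; as it grows, the choice approaches uniform.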

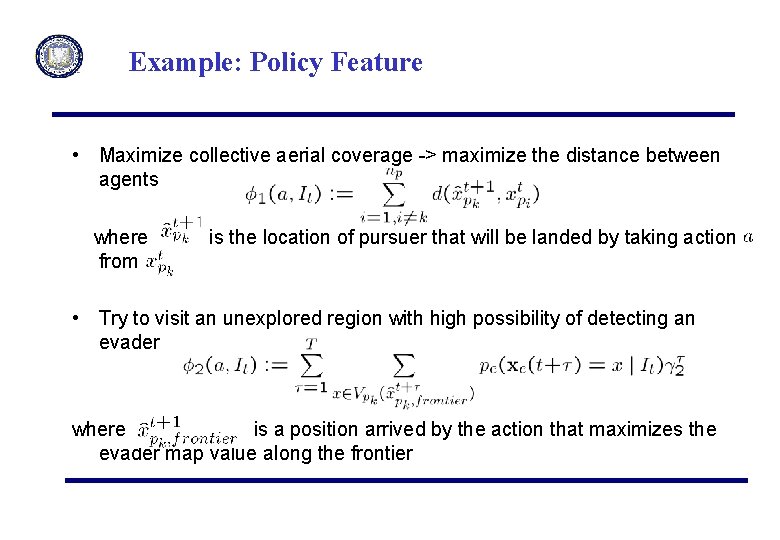

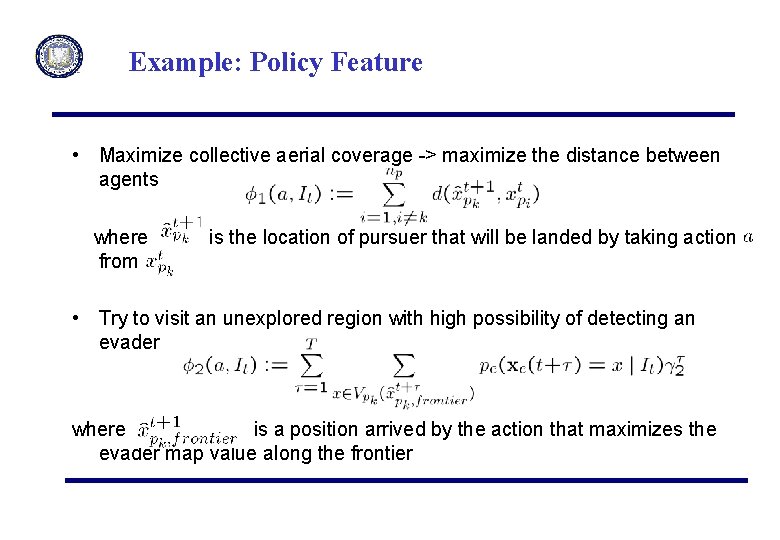

Example: Policy Feature

- Maximize collective aerial coverage → maximize the distance between agents, measured from the location the pursuer will reach by taking the action.
- Try to visit an unexplored region with a high probability of detecting an evader; the feature is computed from the position reached by the action that maximizes the evader map value along the frontier.
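The coverage feature can be sketched as follows. The action set, the Euclidean metric, and summing distances over the other pursuers are hypothetical choices; the slide's exact expression is only in the image.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical action set: unit moves on the grid, plus staying put.
MOVES: Dict[str, Point] = {
    "north": (0.0, 1.0), "south": (0.0, -1.0),
    "east": (1.0, 0.0), "west": (-1.0, 0.0), "stay": (0.0, 0.0),
}


def landing_position(pos: Point, action: str) -> Point:
    """Position the pursuer will reach by taking `action` from `pos`."""
    dx, dy = MOVES[action]
    return (pos[0] + dx, pos[1] + dy)


def coverage_feature(agent: int, positions: List[Point], action: str) -> float:
    """Sum of distances from the agent's landing position to every other pursuer
    (a sum is one possible choice; a minimum distance would also be reasonable)."""
    new_pos = landing_position(positions[agent], action)
    return sum(math.dist(new_pos, other)
               for j, other in enumerate(positions) if j != agent)


positions = [(0.0, 0.0), (1.0, 0.0)]  # two pursuers side by side
scores = {a: coverage_feature(0, positions, a) for a in MOVES}
best = max(scores, key=scores.get)
print(best, scores[best])  # west 2.0
```

Pursuer 0 prefers to step away from its teammate, which is the spreading behavior the coverage feature is meant to reward.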

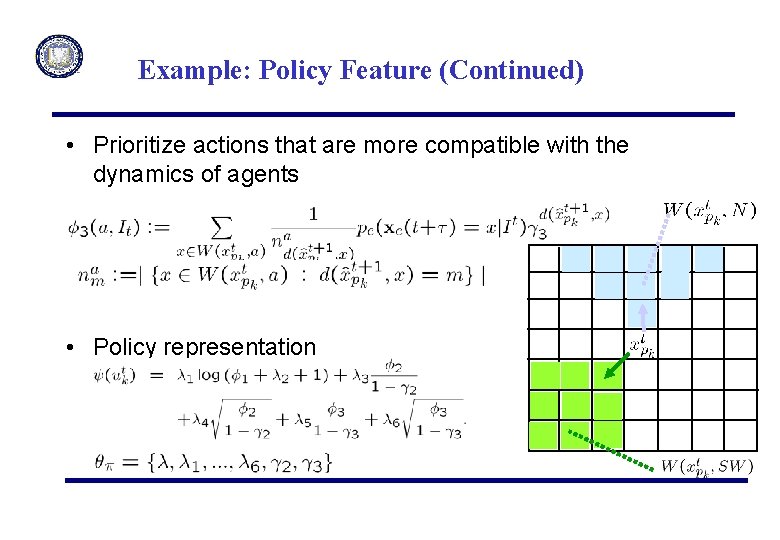

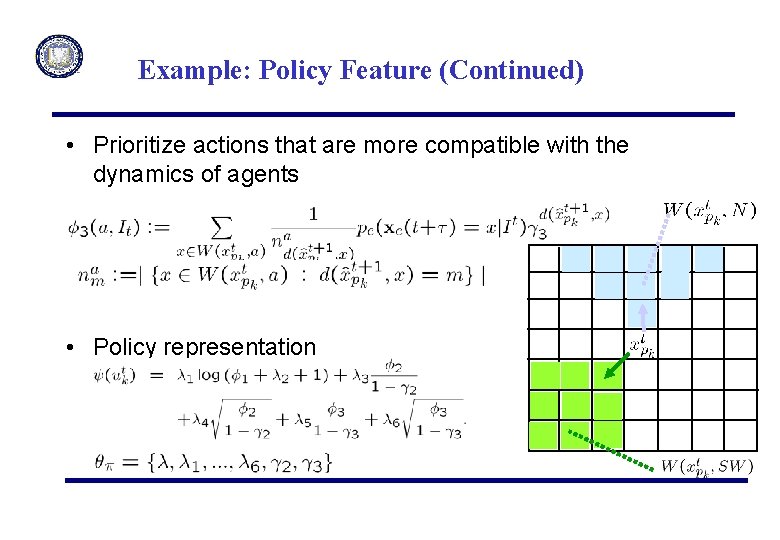

Example: Policy Feature (Continued)

- Prioritize actions that are more compatible with the agents' dynamics
- Policy representation

Benchmarking Experiments

- Performance of two pursuit policies, compared in terms of capture time.
- Experiment 1: two pursuers against an evader who moves greedily with respect to the pursuers' locations.

| Grid size | 1-Greedy pursuers | Optimized pursuers |
|---|---|---|
| 10 by 10 | (7.3, 4.8) | (5.1, 2.7) |
| 20 by 20 | (42.3, 19.2) | (12.3, 4.3) |

Entries are (mean, standard deviation) of capture time.

- Experiment 2: when the evader's position at each step is detected by the sensor network with only 10% accuracy, two optimized pursuers took 24.1 steps on average to capture the evader in a 30 by 30 grid, while the one-step greedy pursuers took over 146 steps.

Why General-sum Games?

"All too often in OR dealing with military problems, war is viewed as a zero-sum two-person game with perfect information. Here I must state as forcibly as I know that war is not a zero-sum two-person game with perfect information. Anybody who sincerely believes it is a fool. Anybody who reaches conclusions based on such an assumption and then tries to peddle these conclusions without revealing the quicksand they are constructed on is a charlatan. … There is, in short, an urgent need to develop positive-sum game theory and to urge the acceptance of its precepts upon our leaders throughout the world."

Joseph H. Engel, Retiring Presidential Address to the Operations Research Society of America, October 1969

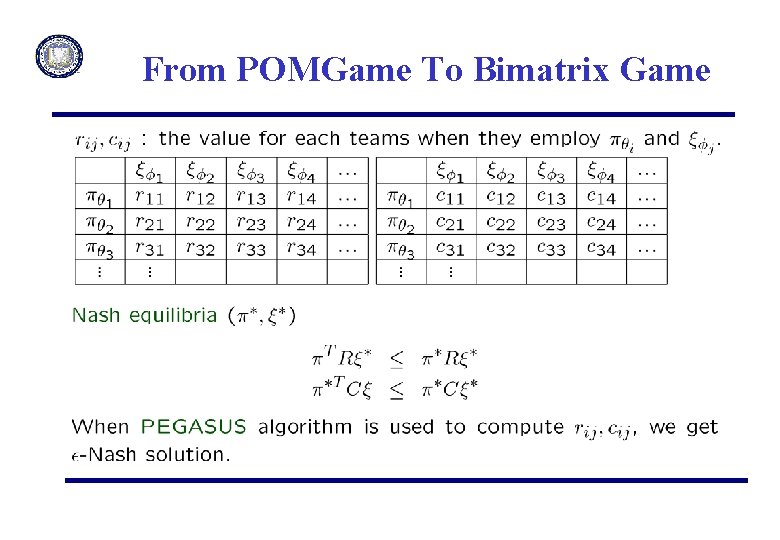

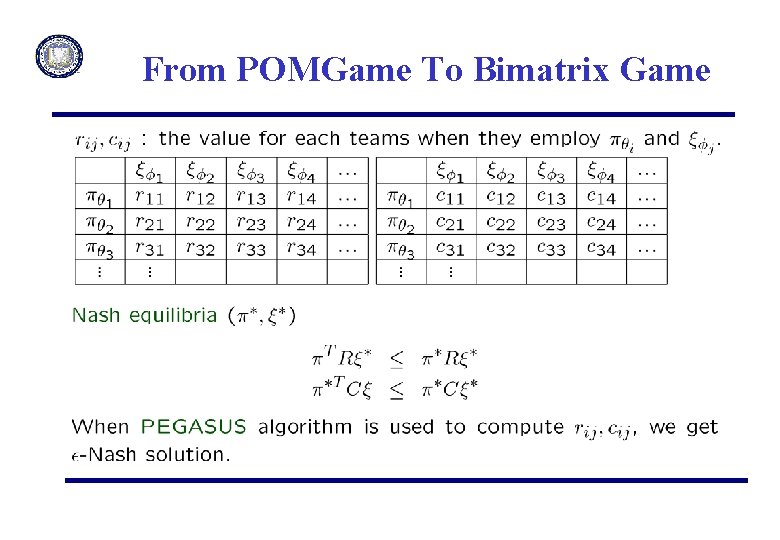

General-sum Games

- Depending on the cooperation between the players:
  - Noncooperative
  - Cooperative
- Depending on the least expected payoff that a player is willing to accept: Nash's special/general bargaining solution
- By restricting the blue and red policy classes to be finite, we reduce the POMGame to a bimatrix game.
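Once each side is limited to finitely many candidate policies, the game reduces to two payoff matrices: `A[i][j]` for blue and `B[i][j]` for red when blue plays policy `i` and red plays policy `j`. A minimal sketch that enumerates pure-strategy Nash equilibria follows; the payoffs are hypothetical. Mixed-strategy equilibria, which every finite bimatrix game has, would need a method such as Lemke-Howson or support enumeration, not shown here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def pure_nash_equilibria(A: Matrix, B: Matrix) -> List[Tuple[int, int]]:
    """All (i, j) such that blue's policy i is a best response to red's j
    (payoffs A) and red's j is a best response to blue's i (payoffs B)."""
    rows, cols = len(A), len(A[0])
    equilibria = []
    for i in range(rows):
        for j in range(cols):
            blue_ok = all(A[i][j] >= A[k][j] for k in range(rows))
            red_ok = all(B[i][j] >= B[i][m] for m in range(cols))
            if blue_ok and red_ok:
                equilibria.append((i, j))
    return equilibria


# Hypothetical general-sum payoffs (rows: blue policies, columns: red policies).
A = [[3, 0], [5, 1]]
B = [[3, 5], [0, 1]]
print(pure_nash_equilibria(A, B))  # [(1, 1)]
```

These payoffs have a prisoner's-dilemma structure: the only equilibrium, (1, 1), leaves both sides worse off than (0, 0). A zero-sum model could not represent that outcome.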

From PEG to Combat Scenarios

- Adversarial attack: reds do not just evade but also attack, so blues cannot blindly pursue reds.
- Unknown number/capability of adversaries: dynamic selection of the relevant red model from unstructured observations
- Deconfliction between layers and teams
- Increasing the number of features: diversify possible solutions when uncertainty is high

From POMGame To Bimatrix Game

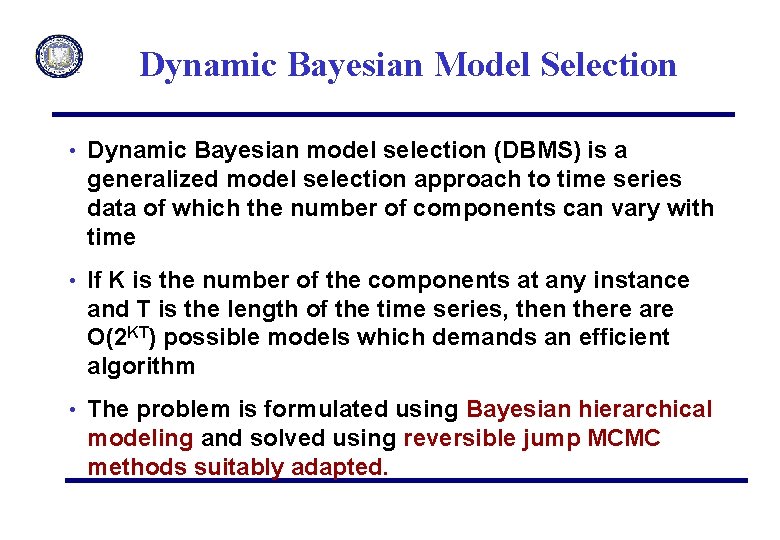

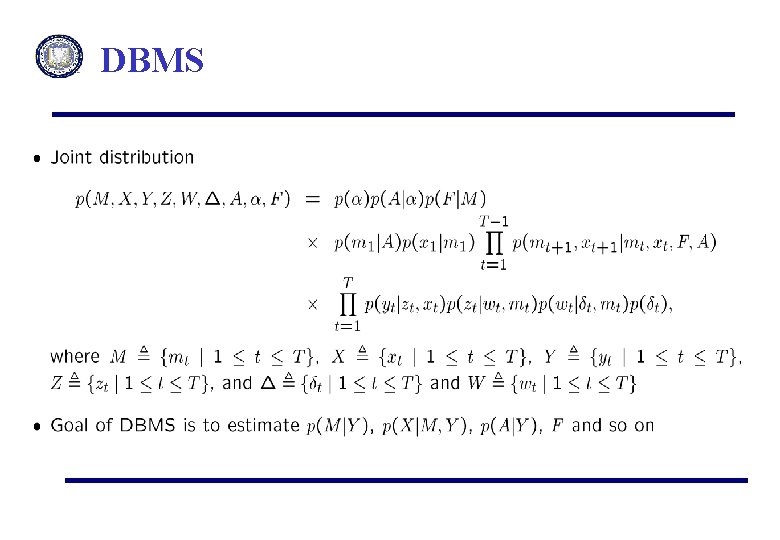

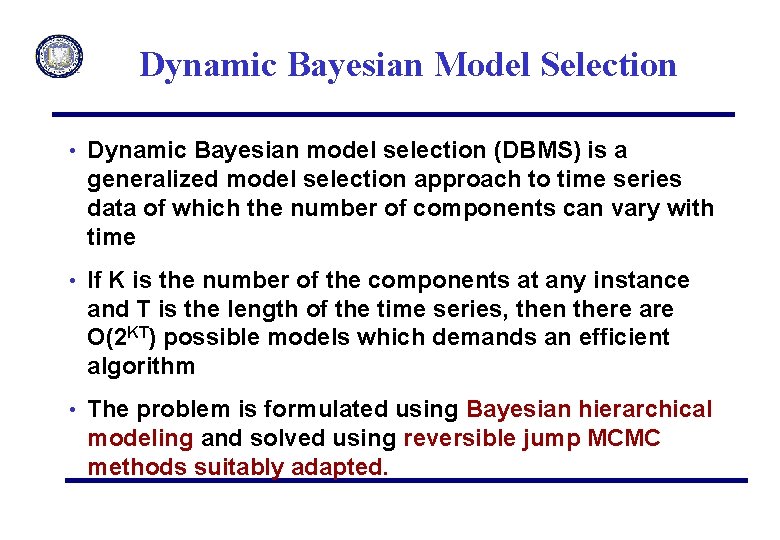

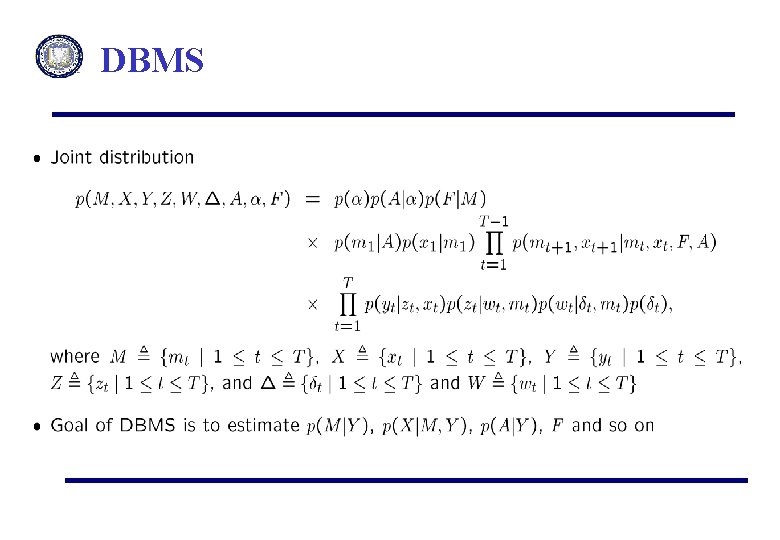

Dynamic Bayesian Model Selection

- Dynamic Bayesian model selection (DBMS) is a generalized model selection approach for time-series data in which the number of components can vary with time.
- If K is the number of components at any instant and T is the length of the time series, there are $O(2^{KT})$ possible models, which demands an efficient algorithm.
- The problem is formulated using Bayesian hierarchical modeling and solved using suitably adapted reversible jump MCMC methods.
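The $2^{KT}$ count follows if each of the K components can independently be present or absent at each of the T instants (our reading of where the bound comes from). A brute-force check for tiny cases, plus the count for a modest problem, shows why exhaustive model comparison is out of reach:

```python
from itertools import product


def count_models_brute_force(K: int, T: int) -> int:
    """Enumerate every presence/absence pattern of K components over T steps."""
    return sum(1 for _ in product((0, 1), repeat=K * T))


for K, T in [(1, 4), (2, 3), (3, 4)]:
    assert count_models_brute_force(K, T) == 2 ** (K * T)

print(2 ** (3 * 10))  # 1073741824 models for K = 3 components over T = 10 steps
```

Even three components over ten steps give about a billion candidate models, which motivates sampling the model space (as reversible jump MCMC does) instead of enumerating it.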

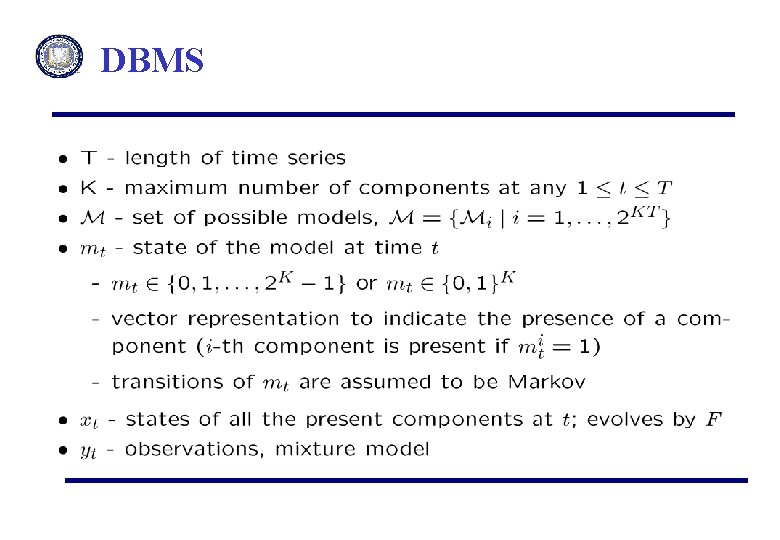

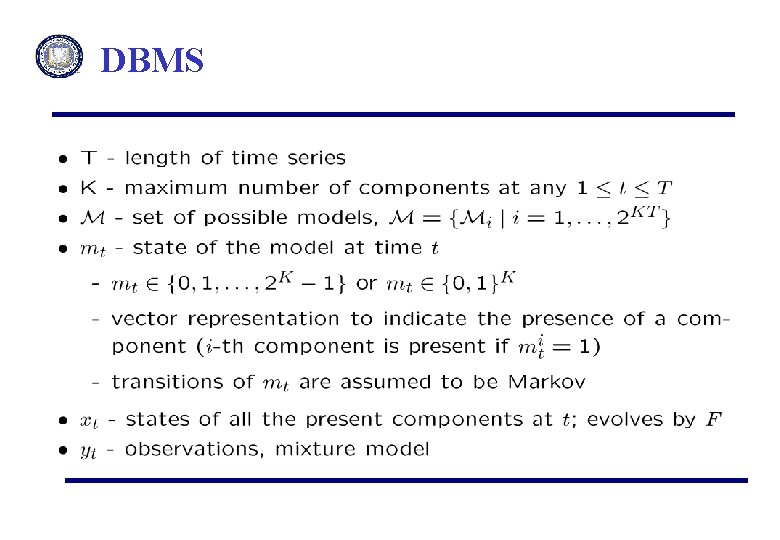

DBMS

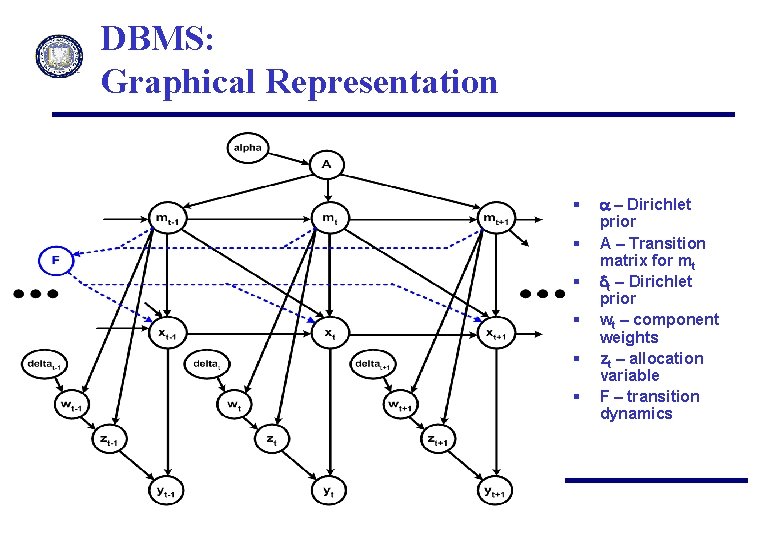

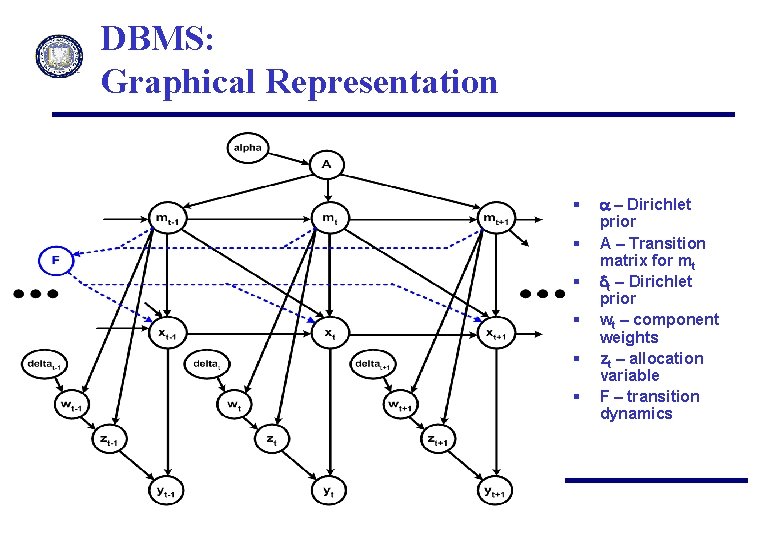

DBMS: Graphical Representation

- $a$: Dirichlet prior
- $A$: transition matrix for $m_t$
- $d_t$: Dirichlet prior
- $w_t$: component weights
- $z_t$: allocation variable
- $F$: transition dynamics

DBMS

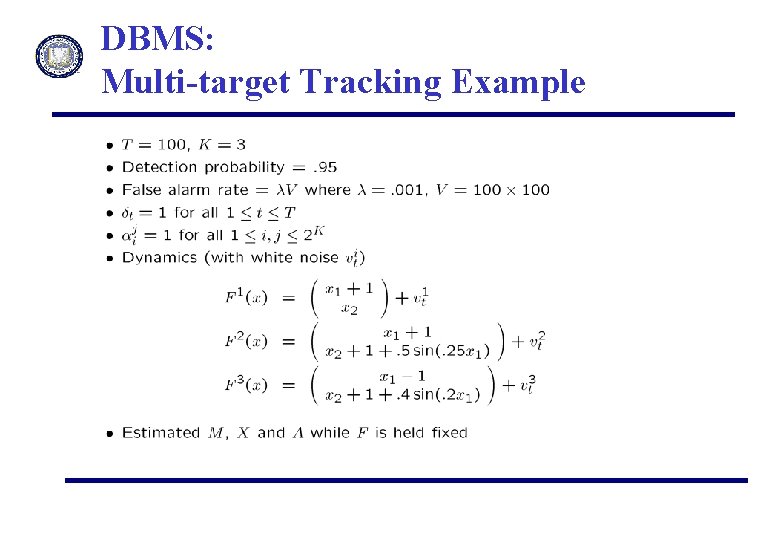

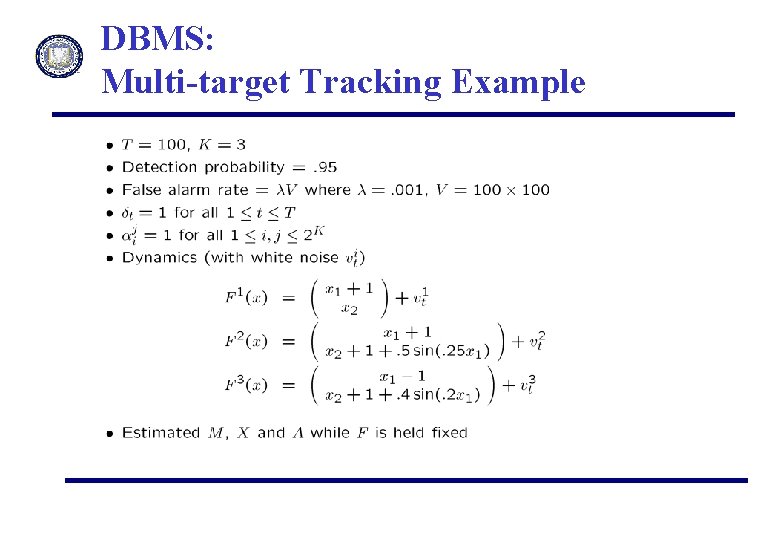

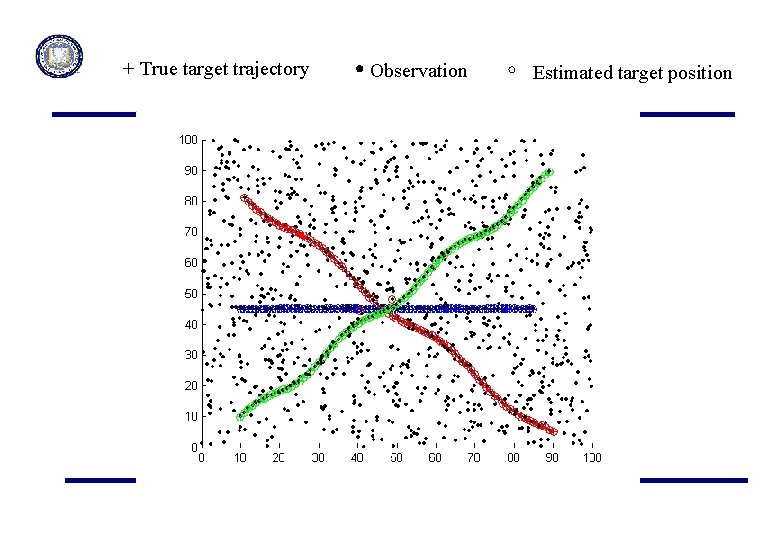

DBMS: Multi-target Tracking Example

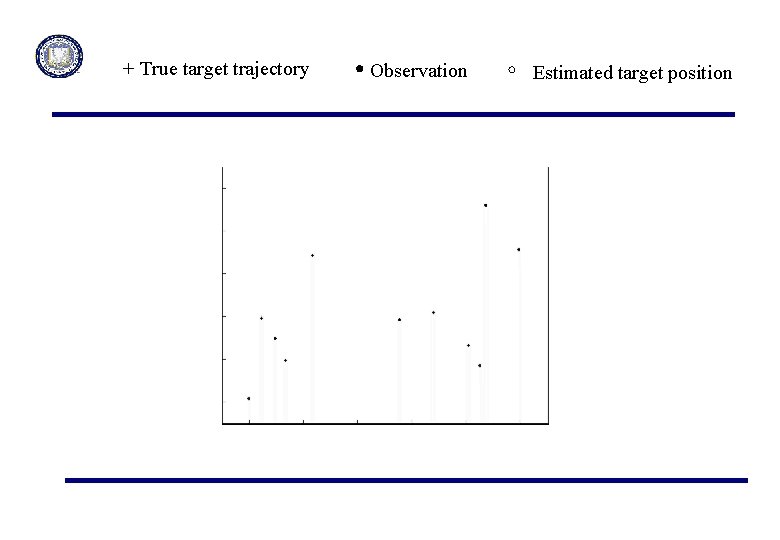

Legend: + true target trajectory; observations; estimated target positions.

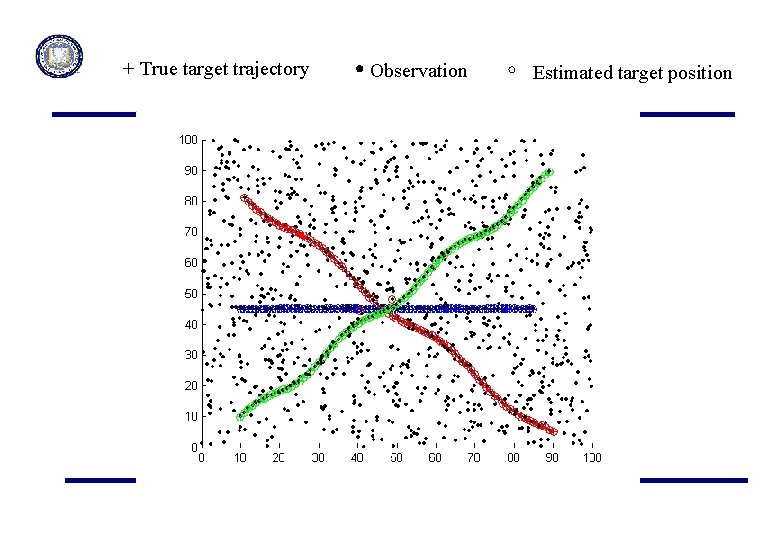


Summary

- Decomposition of complex multiagent operation problems requires tighter interaction between subsystems and human intervention.
- Partial observation Markov games provide a mathematical representation of a hierarchical multiagent system operating under adversarial and environmental uncertainty.
- The policy class framework provides a setup for including human experience.
- Policy search methods and sparse sampling produce computationally tractable algorithms that generate approximate solutions to partially observable Markov games.
- Model predictive (receding horizon) techniques can be used for dynamic replanning to deconflict and coordinate between vehicles, layers, or subtasks.

THE END

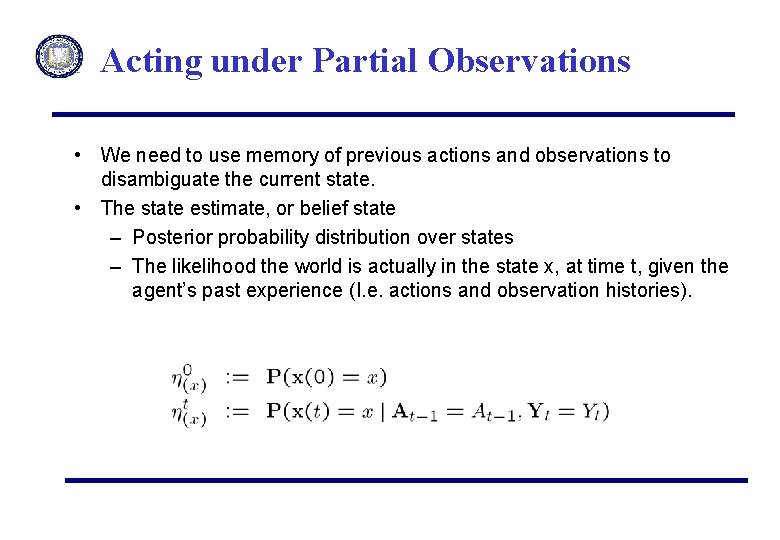

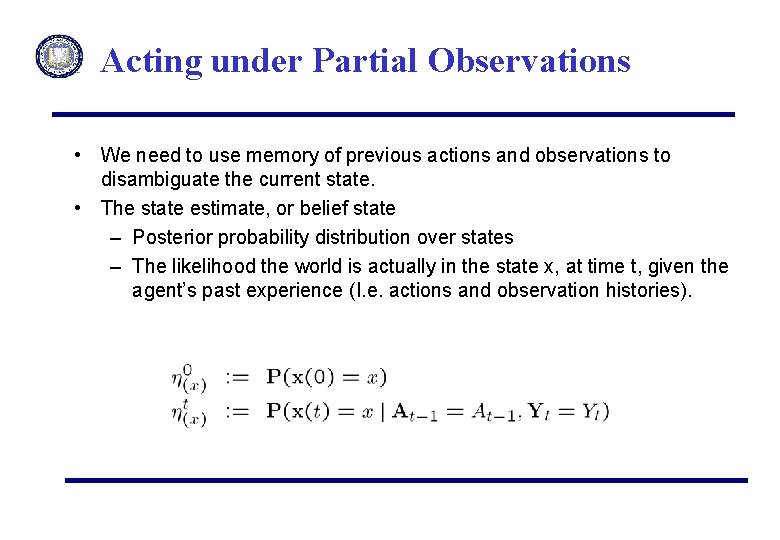

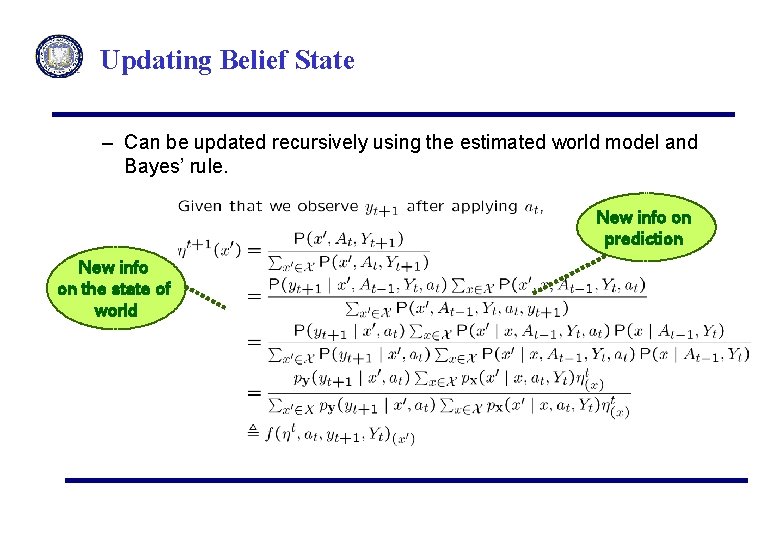

Acting under Partial Observations

- We need to use memory of previous actions and observations to disambiguate the current state.
- The state estimate, or belief state:
  - the posterior probability distribution over states;
  - the likelihood that the world is actually in state x at time t, given the agent's past experience (i.e., its action and observation histories).

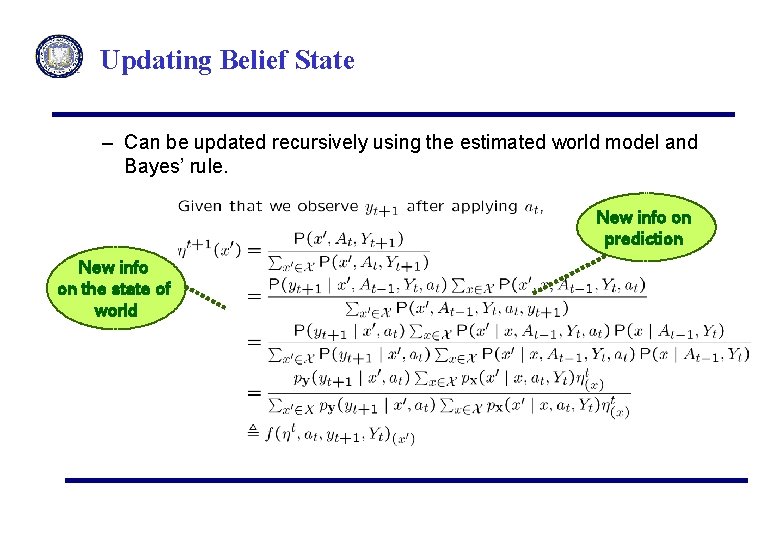

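Updating the Belief State

- The belief state can be updated recursively using the estimated world model and Bayes' rule, combining new information from the prediction (how the state evolves under the chosen action) with new information on the state of the world (the latest observation).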
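For a discrete state space this recursion is the standard discrete Bayes filter (the notation here is ours): $b_{t+1}(x') = \eta\, P(o_{t+1} \mid x') \sum_{x} P(x' \mid x, a_t)\, b_t(x)$, where the sum is the prediction term, the likelihood factor carries the new information on the state of the world, and $\eta$ normalizes. A minimal sketch, with a made-up three-cell corridor and a hypothetical sensor that misses an evader in the viewed cell 10% of the time:

```python
from typing import List


def belief_update(belief: List[float], transition: List[List[float]],
                  likelihood: List[float]) -> List[float]:
    """One step of a discrete Bayes filter.
    transition[x][x2] = P(x2 | x, a) for the action taken;
    likelihood[x2] = P(o | x2) for the observation received."""
    n = len(belief)
    # Prediction: push the current belief through the transition model.
    predicted = [sum(belief[x] * transition[x][x2] for x in range(n)) for x2 in range(n)]
    # Correction: weight by the observation likelihood, then renormalize.
    unnormalized = [likelihood[x2] * predicted[x2] for x2 in range(n)]
    z = sum(unnormalized)
    if z == 0:
        raise ValueError("observation has zero probability under the predicted belief")
    return [u / z for u in unnormalized]


belief = [1 / 3, 1 / 3, 1 / 3]            # evader equally likely in each cell
transition = [[0.5, 0.5, 0.0],            # evader stays with prob. 0.5,
              [0.25, 0.5, 0.25],          # otherwise moves to a neighbour
              [0.0, 0.5, 0.5]]
likelihood = [0.1, 1.0, 1.0]              # pursuer looked at cell 0 and saw nothing
posterior = belief_update(belief, transition, likelihood)
print([round(p, 4) for p in posterior])  # [0.0323, 0.6452, 0.3226]
```

A negative observation still carries information: seeing nothing in cell 0 shifts probability mass to the unobserved cells.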

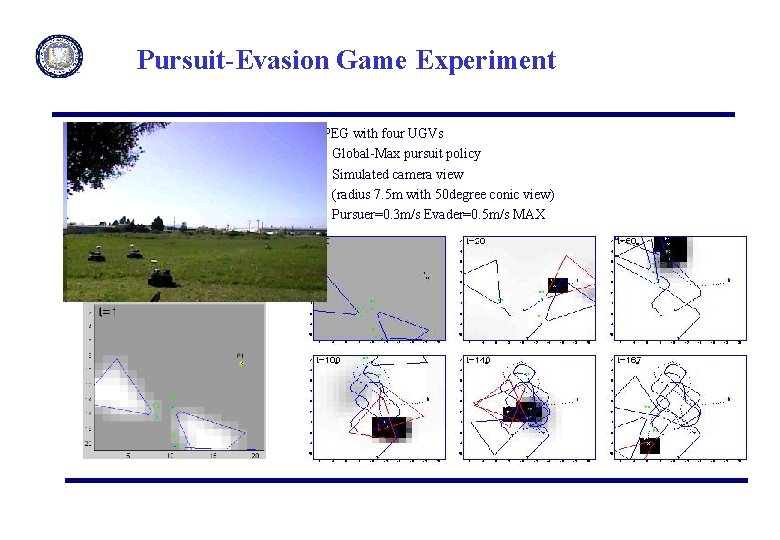

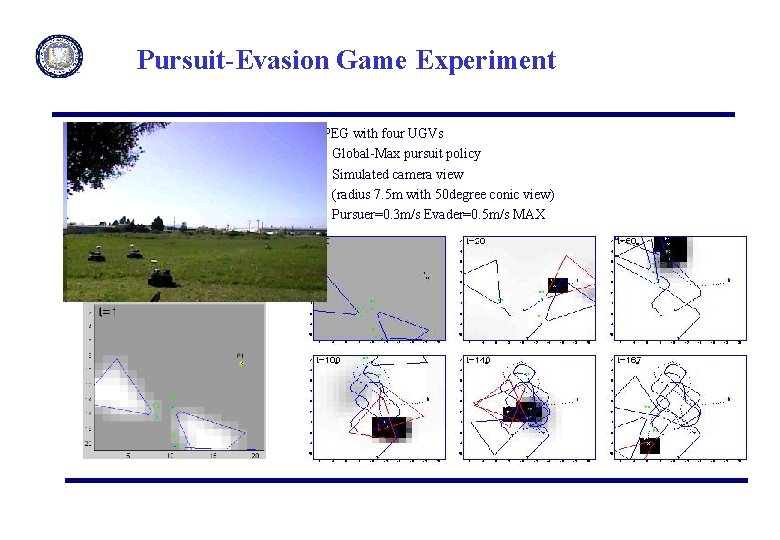

Pursuit-Evasion Game Experiment: PEG with four UGVs

- Global-max pursuit policy
- Simulated camera view (radius 7.5 m, 50° conic view)
- Maximum speeds: pursuer 0.3 m/s, evader 0.5 m/s

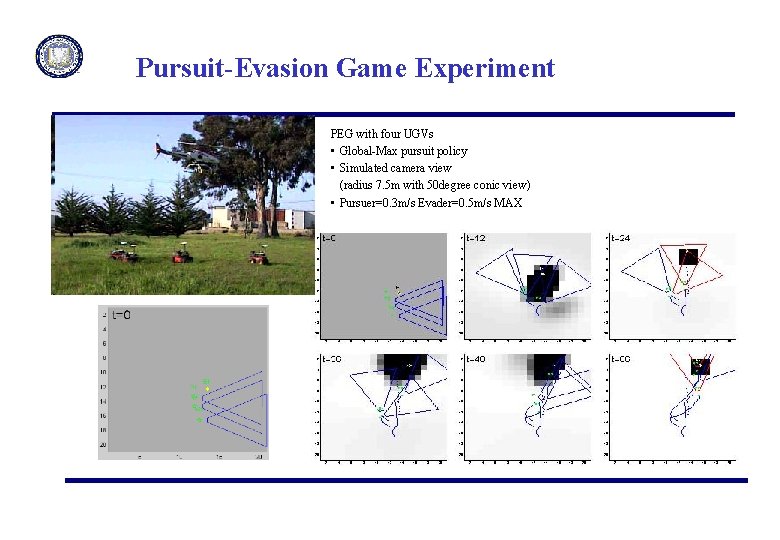

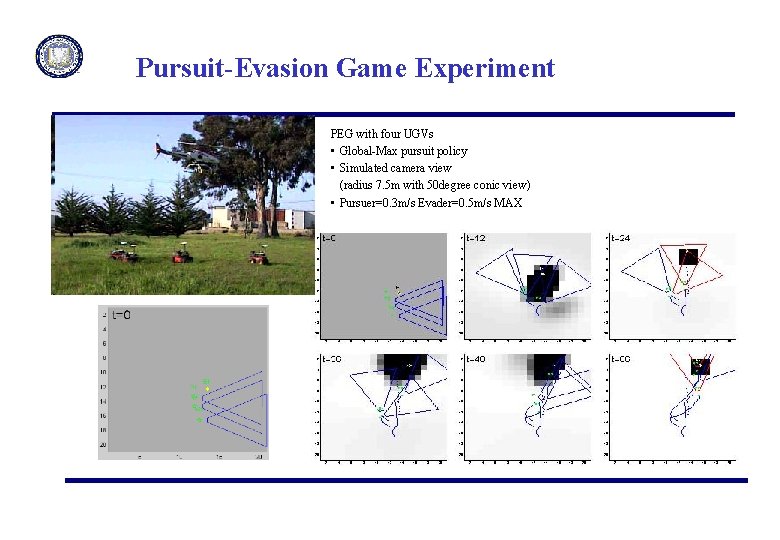


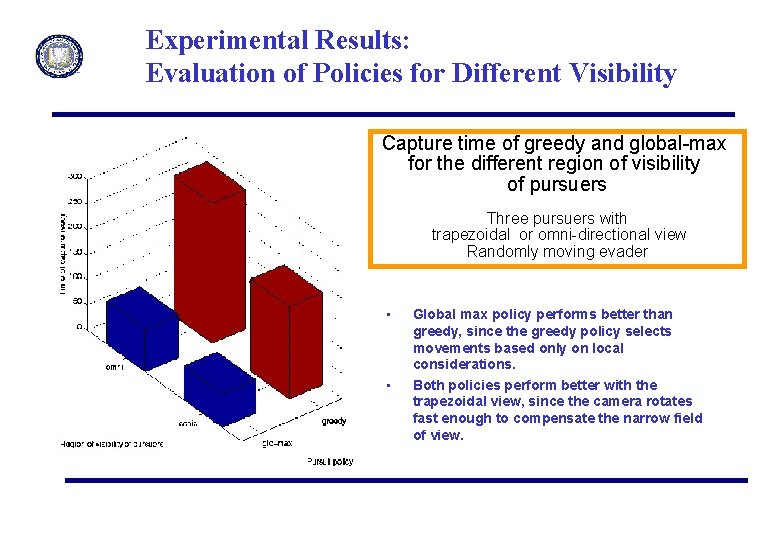

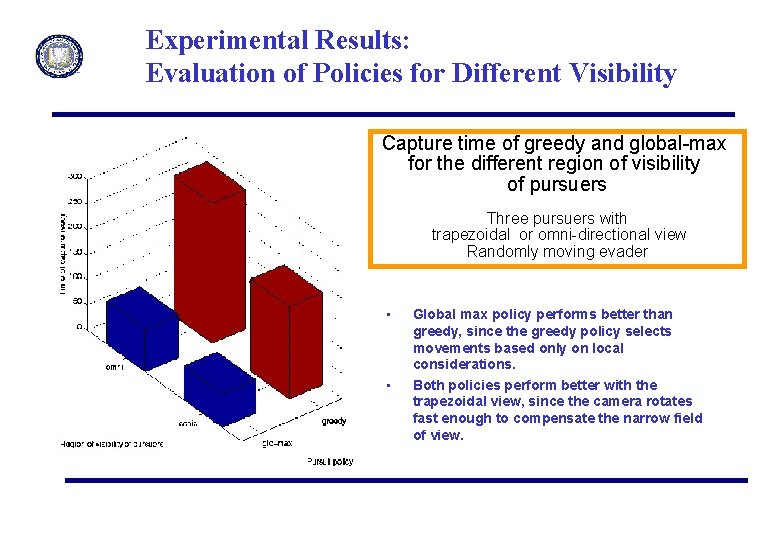

Experimental Results: Evaluation of Policies for Different Visibility

Capture time of the greedy and global-max policies for different visibility regions of the pursuers: three pursuers with a trapezoidal or omnidirectional view, against a randomly moving evader.

- The global-max policy performs better than the greedy policy, since the greedy policy selects movements based only on local considerations.
- Both policies perform better with the trapezoidal view, since the camera rotates fast enough to compensate for the narrow field of view.

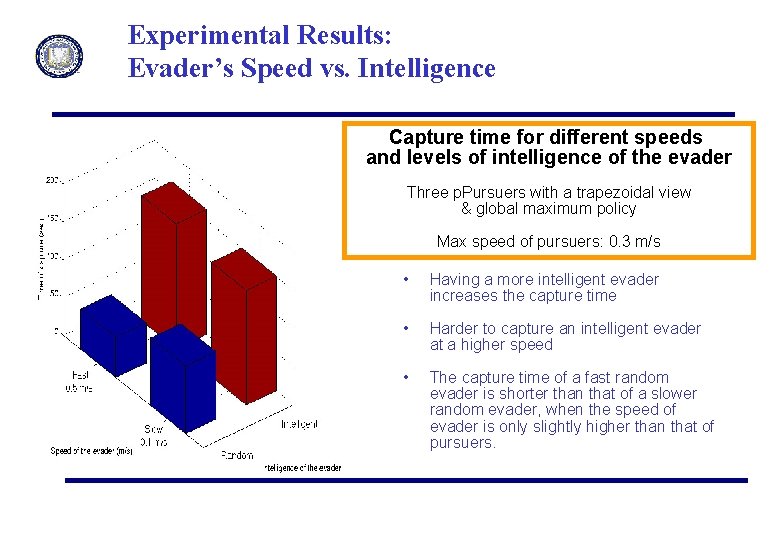

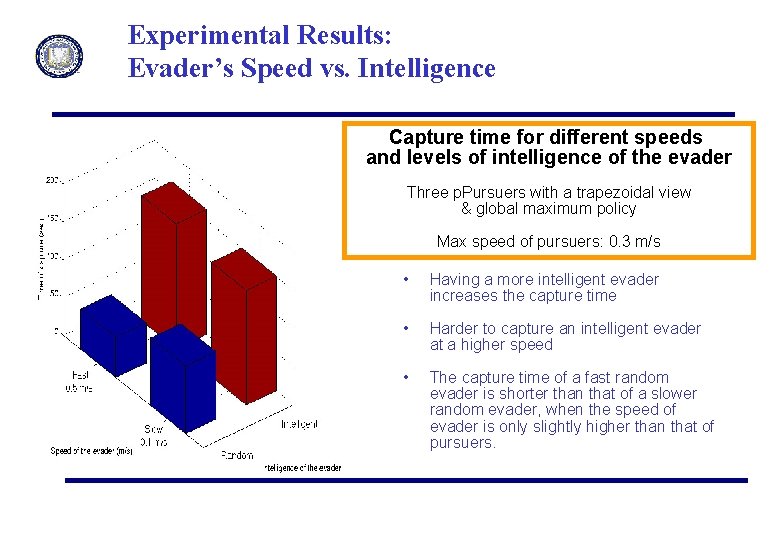

Experimental Results: Evader's Speed vs. Intelligence

Capture time for different speeds and levels of intelligence of the evader: three pursuers with a trapezoidal view and the global-max policy; maximum pursuer speed 0.3 m/s.

- Having a more intelligent evader increases the capture time.
- It is harder to capture an intelligent evader at a higher speed.
- The capture time of a fast random evader is shorter than that of a slower random evader when the evader's speed is only slightly higher than the pursuers'.

Coordination under Multiple Sources of Commands

- When different agents or layers specify multiple, possibly conflicting goals or actions, how can the system prioritize or resolve them?
  - A priori assignment of degrees of authority
  - Surge in coordination demand when the situation deviates from textbook cases: can the overall system adapt in real time?
- Intermediate, cooperative modes of interaction between layers, agents and the human operator, based on anticipatory reasoning, are desirable.