- Slides: 65

DECISION-MAKING UNDER UNCERTAINTY

OVERVIEW

Two models of decision-making under uncertainty:

- Nondeterministic uncertainty
- Probabilistic uncertainty

In particular, what is the rational action in the presence of repeated states?

GAME PLAYING REVIEW

Game playing with an adversary:

- Nondeterministic uncertainty => set of possible future states
- Need a plan for all states that guarantees a win will be reached

Minimax works with no repeated states.

ACTION UNCERTAINTY

Each action representation is of the form:

Action: a(s) -> {s_1, …, s_r}

where each s_i, i = 1, ..., r, describes one possible effect of the action in a state s.

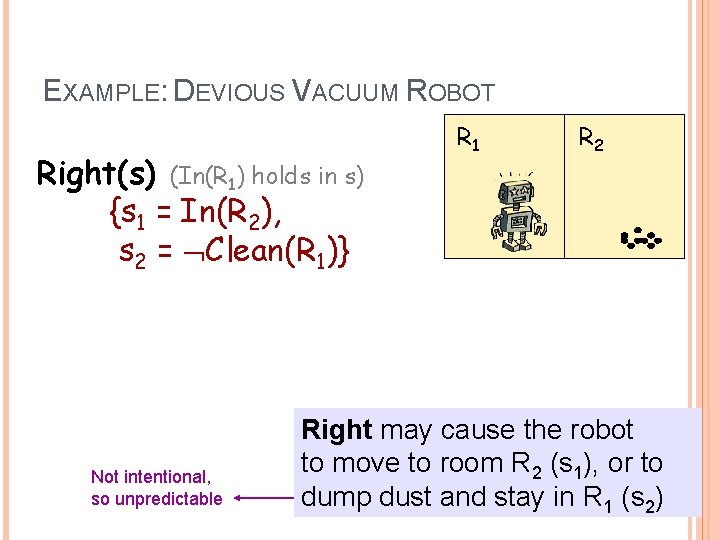

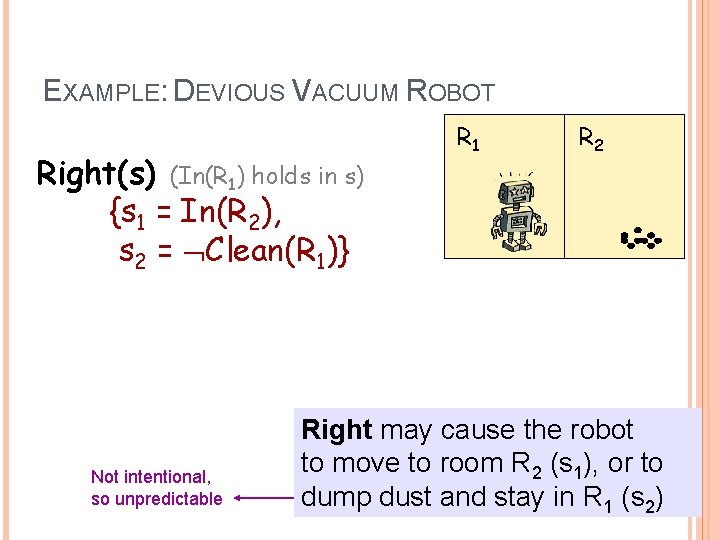

EXAMPLE: DEVIOUS VACUUM ROBOT

Right(s), applicable if In(R1) holds in s: {s_1 = In(R2), s_2 = ¬Clean(R1)}

Right may cause the robot to move to room R2 (s_1), or to dump dust and stay in R1 (s_2). The misbehavior is not intentional, so it is unpredictable.

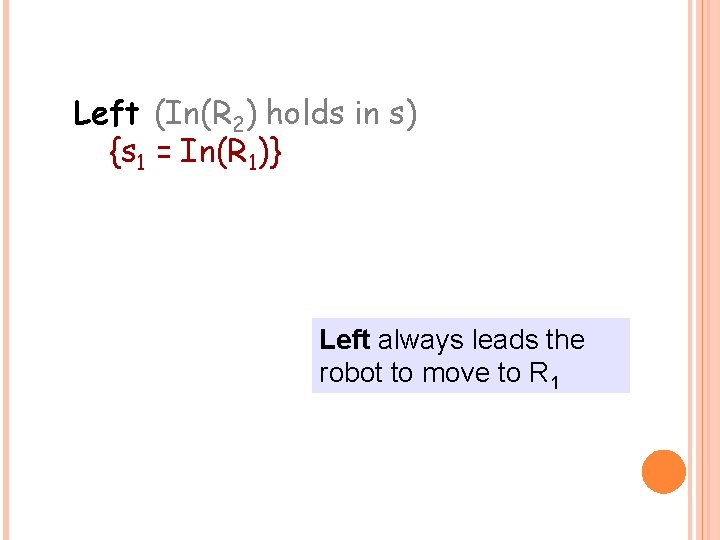

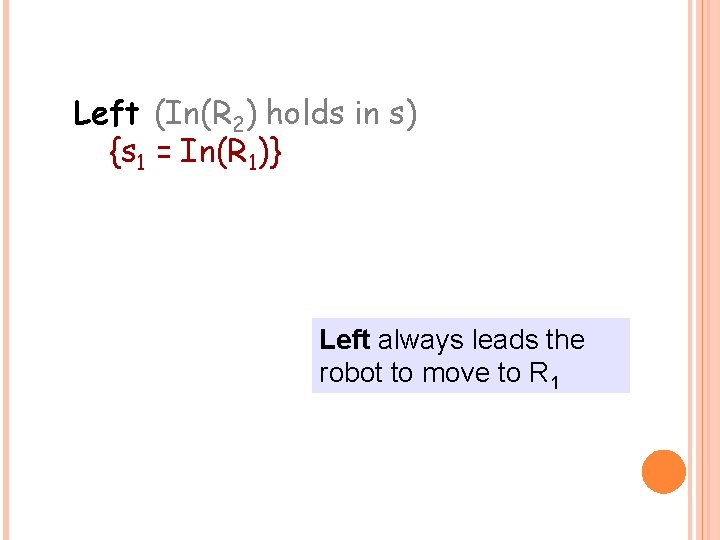

Left(s), applicable if In(R2) holds in s: {s_1 = In(R1)}

Left always leads the robot to move to R1.

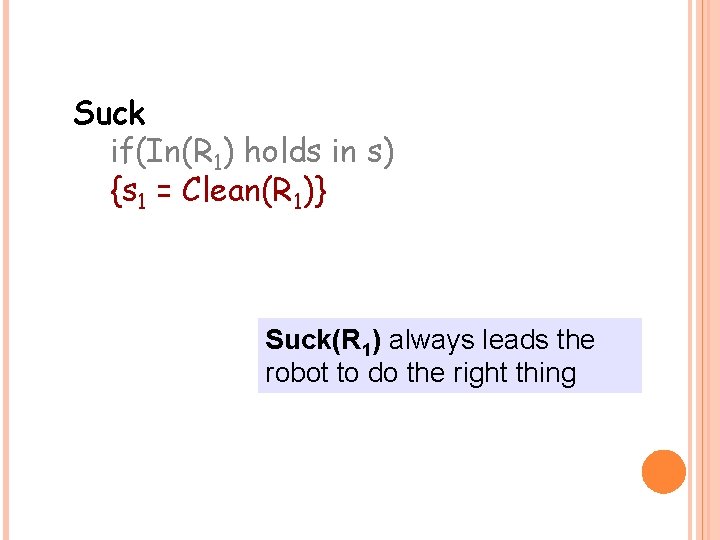

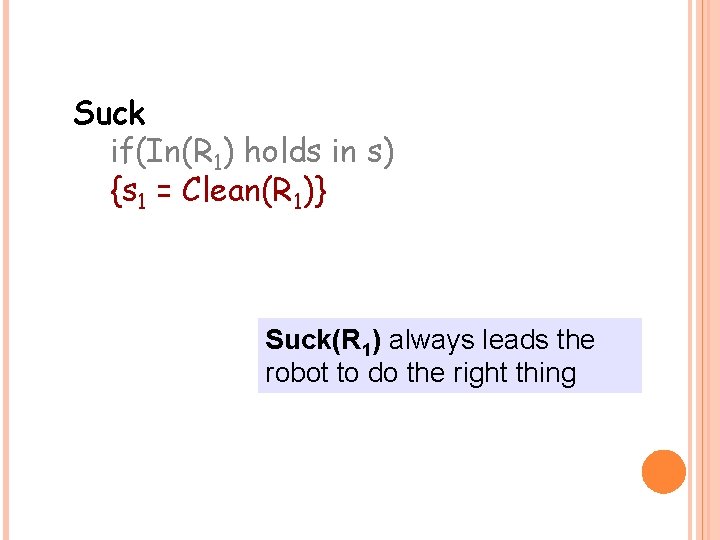

Suck(s): if In(R1) holds in s, then {s_1 = Clean(R1)}

Suck(R1) always leads the robot to do the right thing.

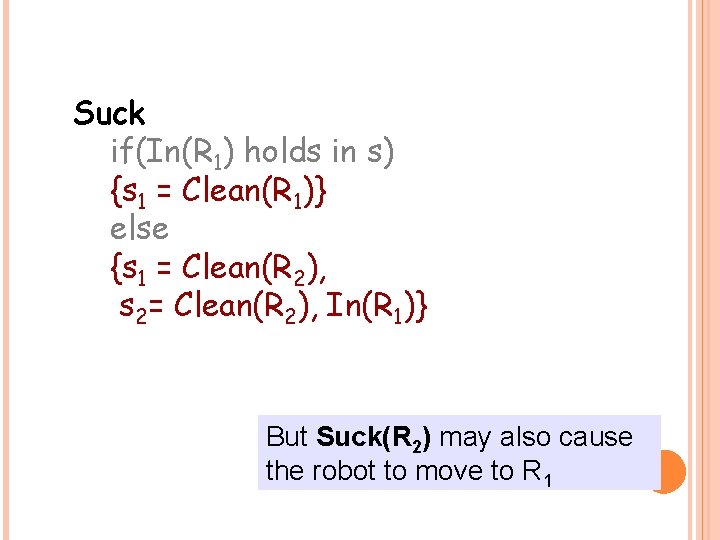

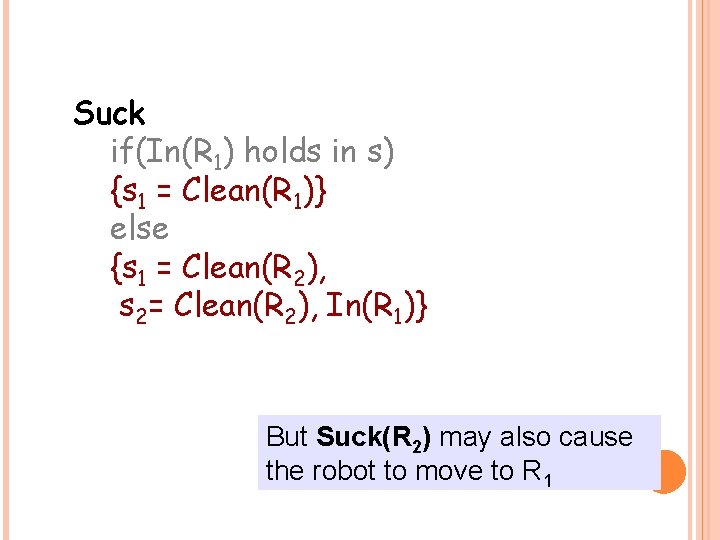

Suck(s): if In(R1) holds in s, then {s_1 = Clean(R1)}, else {s_1 = Clean(R2), s_2 = Clean(R2) ∧ In(R1)}

But Suck(R2) may also cause the robot to move to R1.
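The action model above can be sketched as functions that return the set of possible successor states. This is only an illustration: the `State` tuple, its field names, and the function names are assumptions, not part of the slides.

```python
from typing import FrozenSet, NamedTuple


class State(NamedTuple):
    room: str        # "R1" or "R2" (hypothetical encoding of In(...))
    clean_r1: bool   # Clean(R1)
    clean_r2: bool   # Clean(R2)


def right(s: State) -> FrozenSet[State]:
    """Right in R1: move to R2, or dump dust and stay in R1."""
    if s.room != "R1":
        raise ValueError("Right is only described for In(R1)")
    return frozenset({s._replace(room="R2"), s._replace(clean_r1=False)})


def left(s: State) -> FrozenSet[State]:
    """Left in R2: always move to R1."""
    if s.room != "R2":
        raise ValueError("Left is only described for In(R2)")
    return frozenset({s._replace(room="R1")})


def suck(s: State) -> FrozenSet[State]:
    """Suck: reliable in R1; in R2 it cleans R2 but may also move to R1."""
    if s.room == "R1":
        return frozenset({s._replace(clean_r1=True)})
    cleaned = s._replace(clean_r2=True)
    return frozenset({cleaned, cleaned._replace(room="R1")})
```

Each function returns every state the world might choose, which is exactly what the action nodes of an AND/OR tree branch over.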

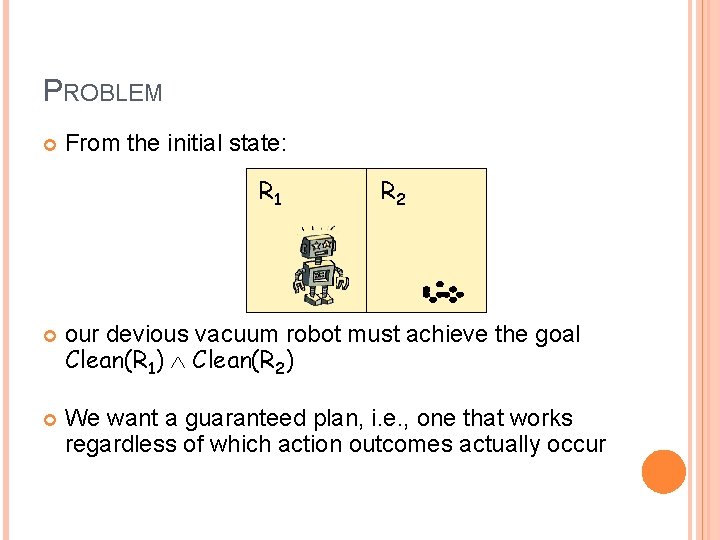

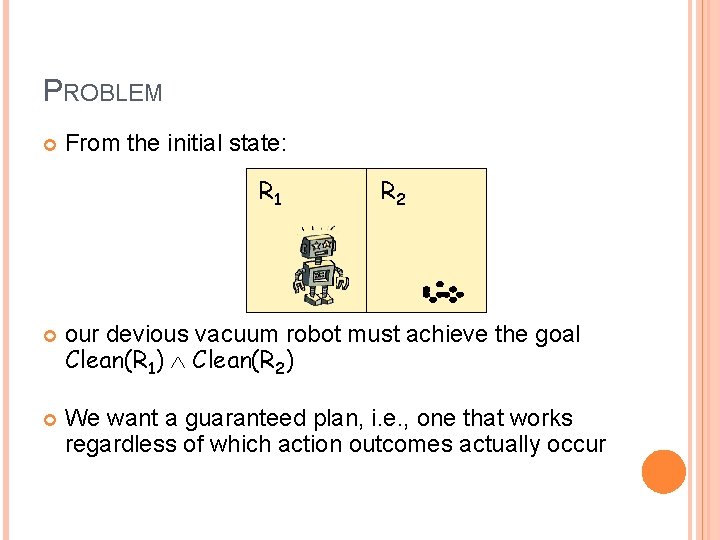

PROBLEM

From the initial state (shown in the figure), our devious vacuum robot must achieve the goal Clean(R1) ∧ Clean(R2).

We want a guaranteed plan, i.e., one that works regardless of which action outcomes actually occur.

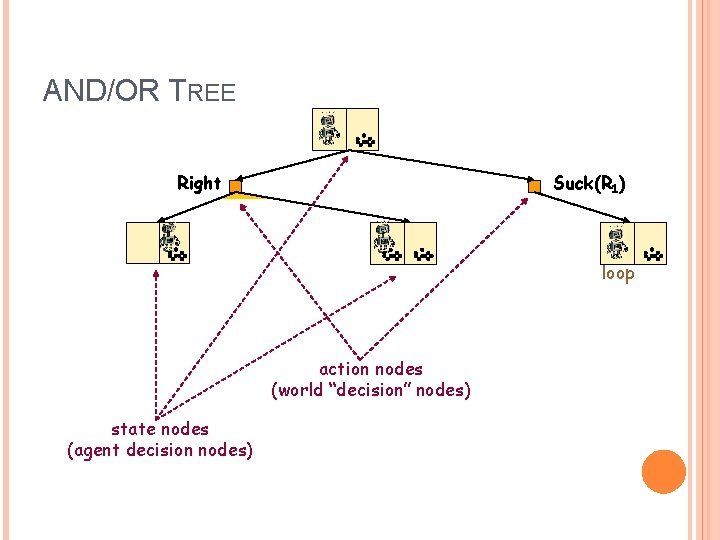

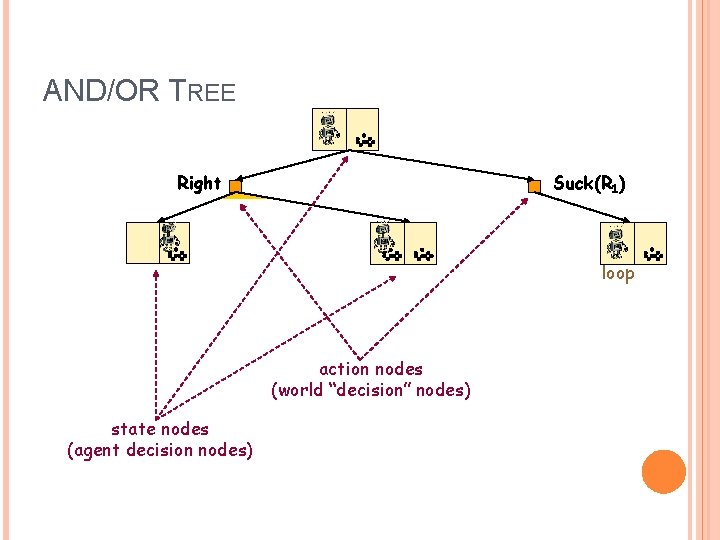

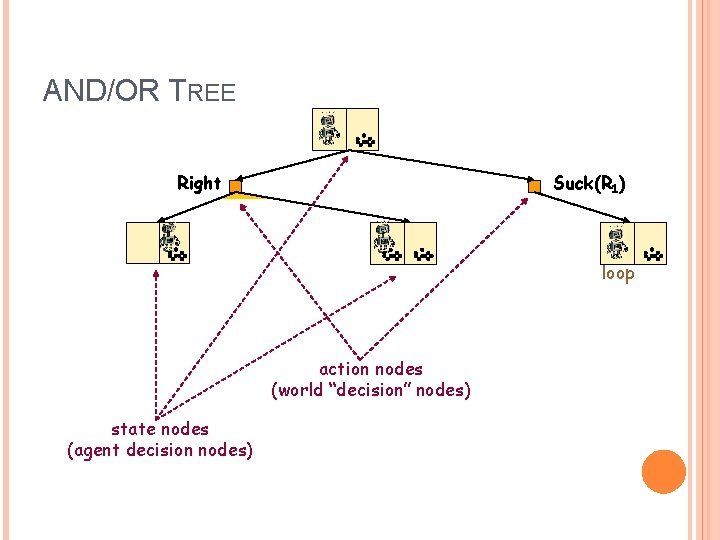

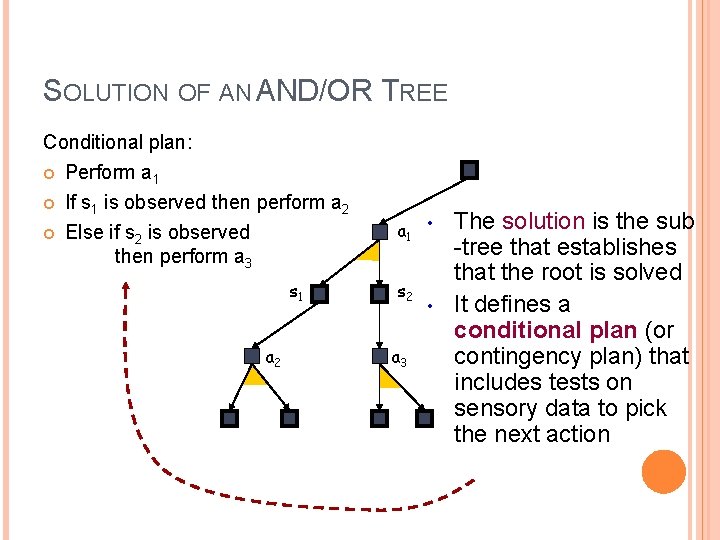

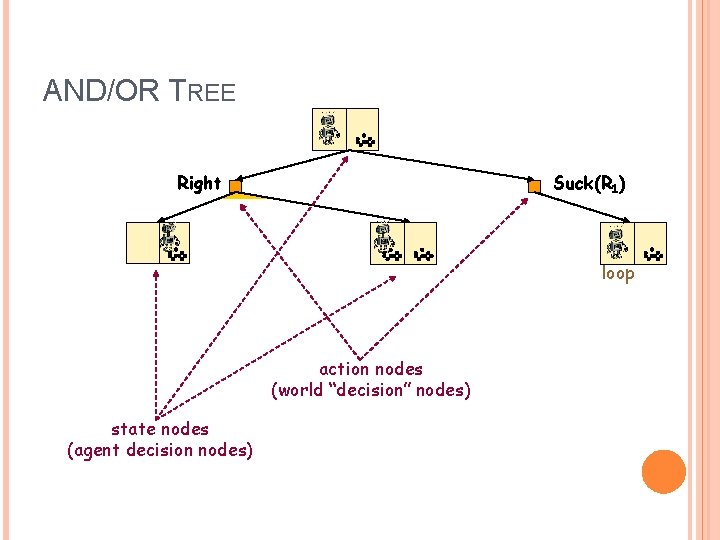

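AND/OR TREE

[Figure: AND/OR tree rooted at the initial state, with actions Right and Suck(R1); one outcome is a loop back to an earlier state.]

- Action nodes: the world’s “decision” nodes
- State nodes: the agent’s decision nodes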

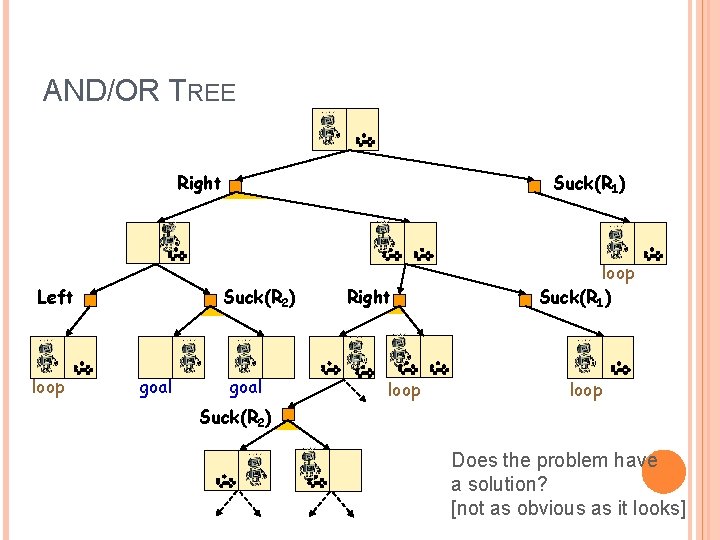

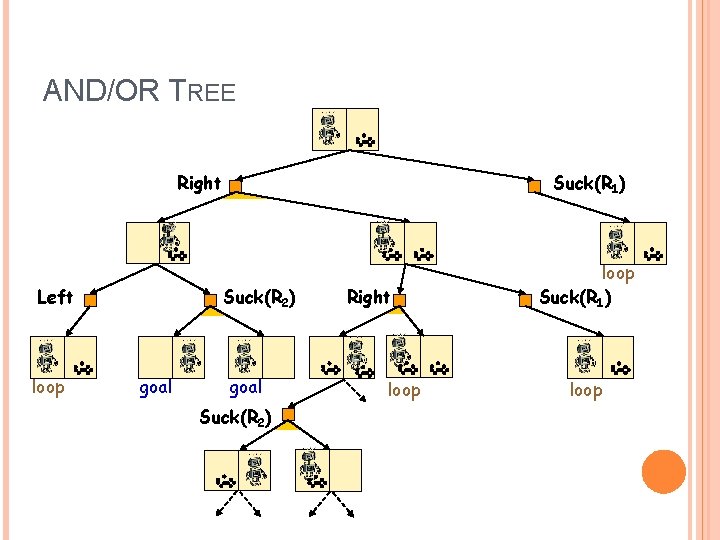

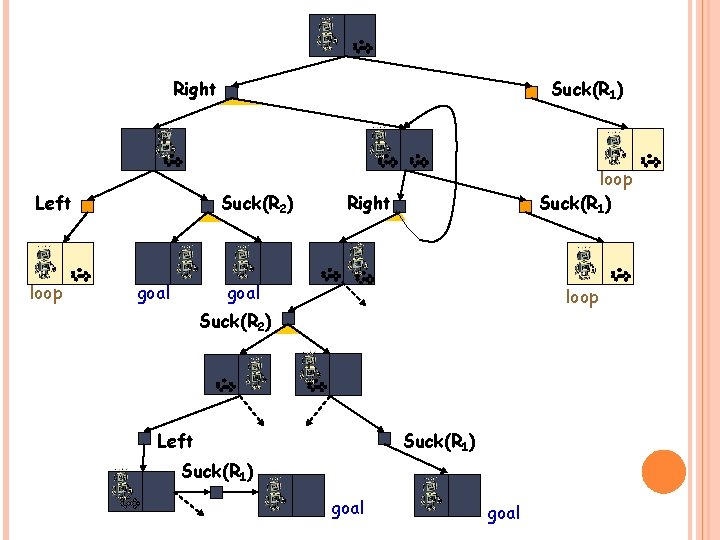

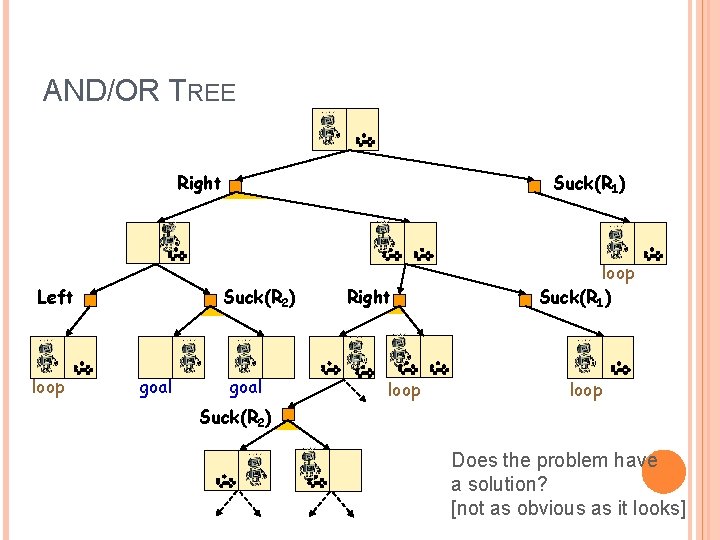

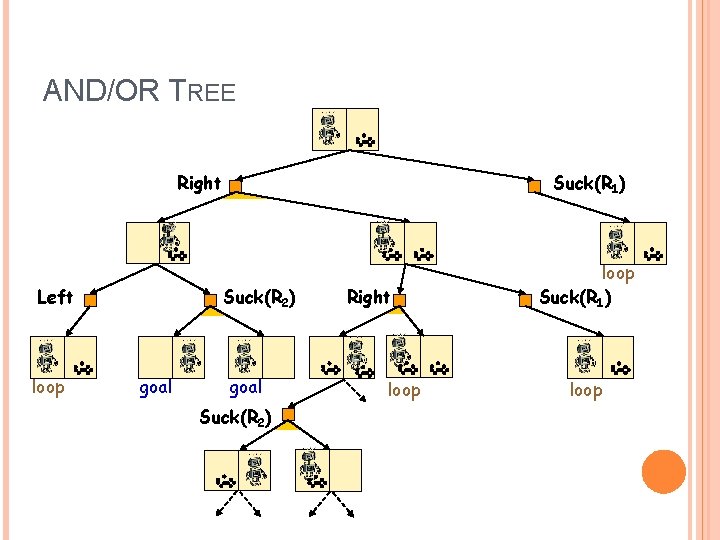

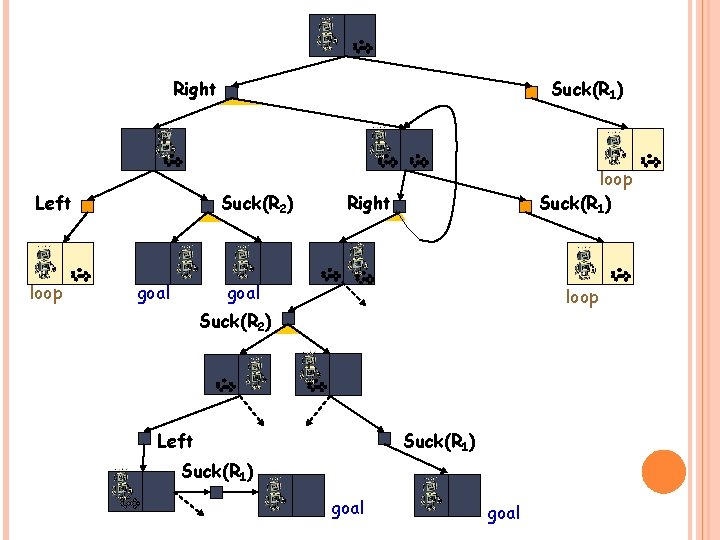

AND/OR TREE

[Figure: the expanded AND/OR tree, with actions Right, Left, Suck(R1), and Suck(R2), several loop nodes, and a goal node.]

Does the problem have a solution? [not as obvious as it looks]

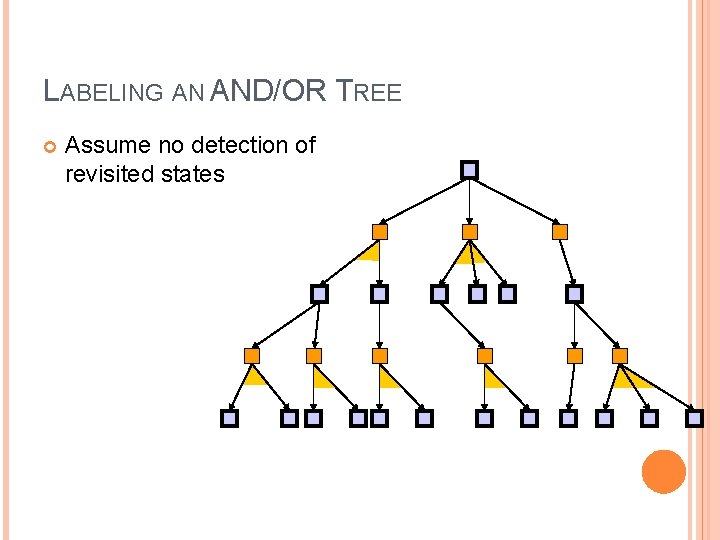

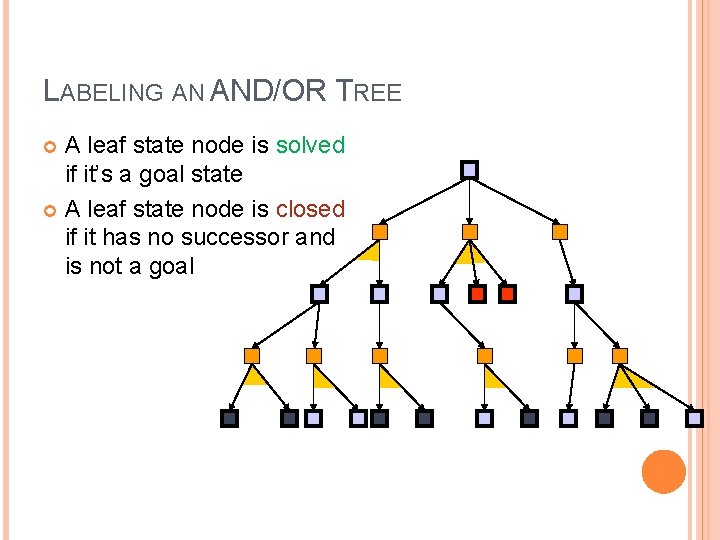

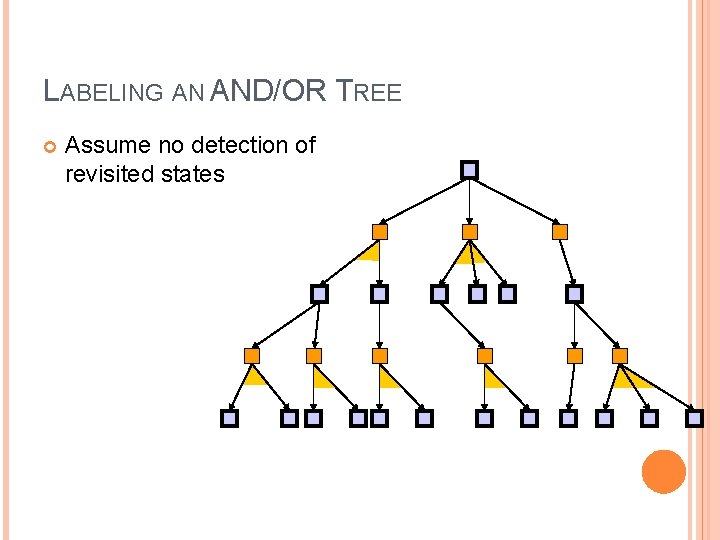

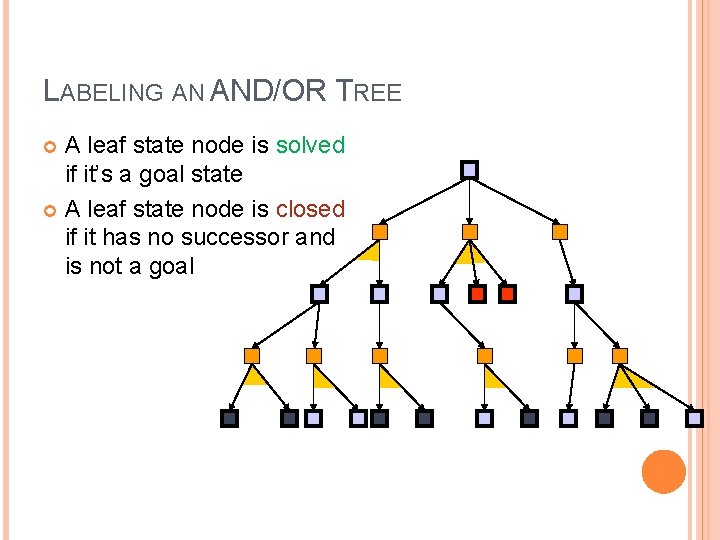

LABELING AN AND/OR TREE Assume no detection of revisited states

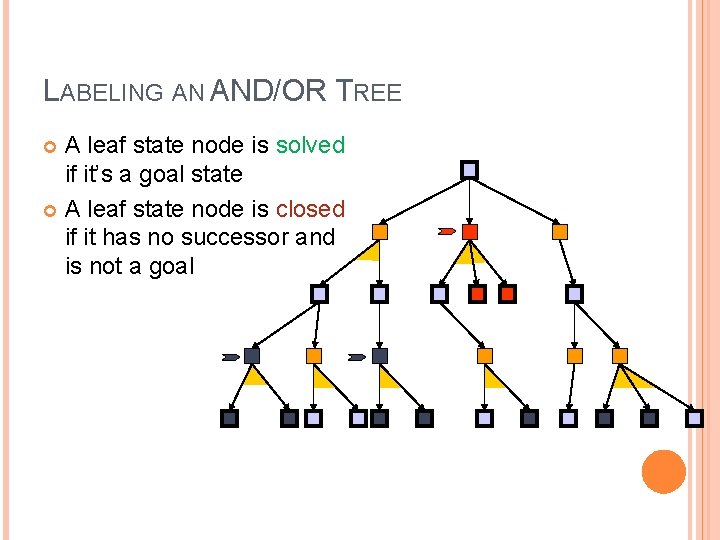

LABELING AN AND/OR TREE

- A leaf state node is solved if it’s a goal state
- A leaf state node is closed if it has no successor and is not a goal

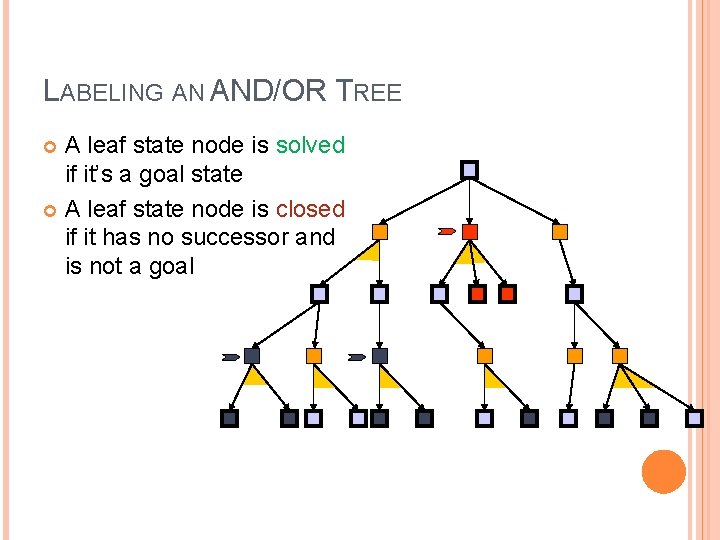

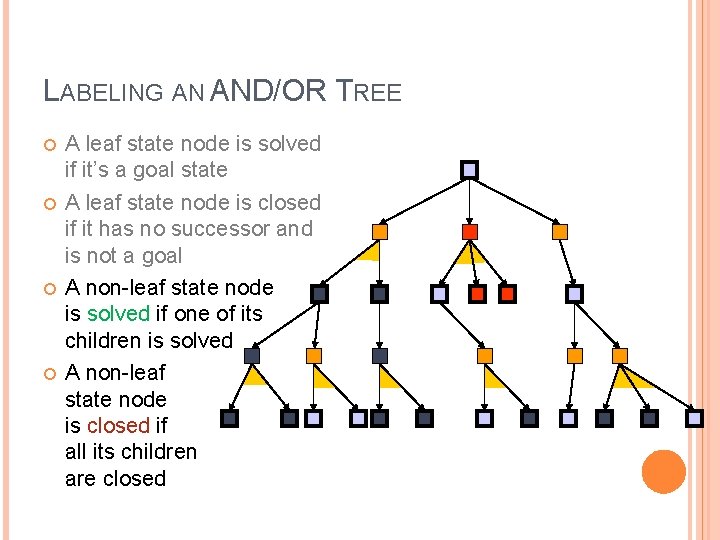

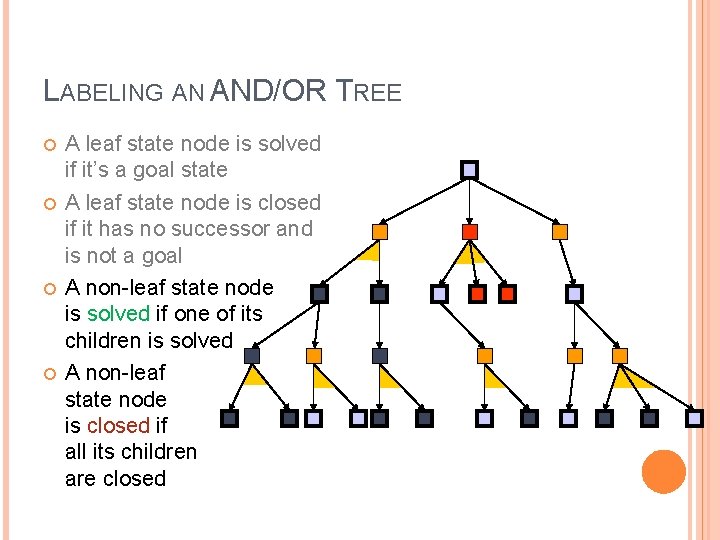

LABELING AN AND/OR TREE

- A non-leaf state node is solved if one of its children is solved
- A non-leaf state node is closed if all its children are closed
- Correspondingly, an action node is solved if all its children are solved, and closed if one of its children is closed

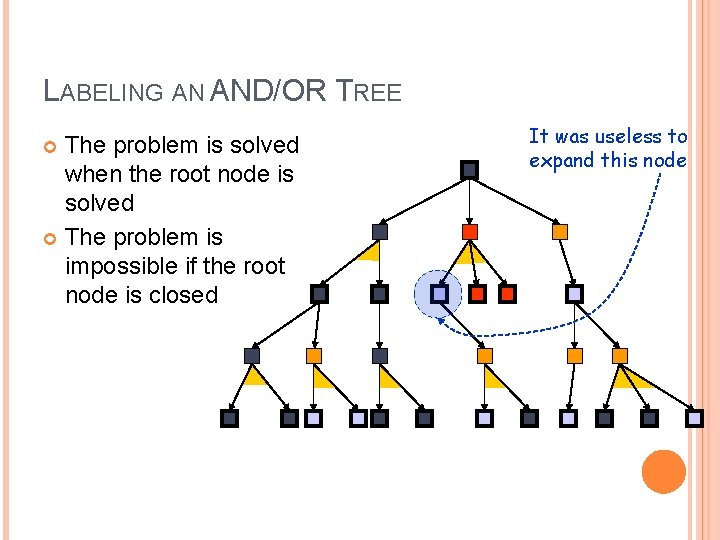

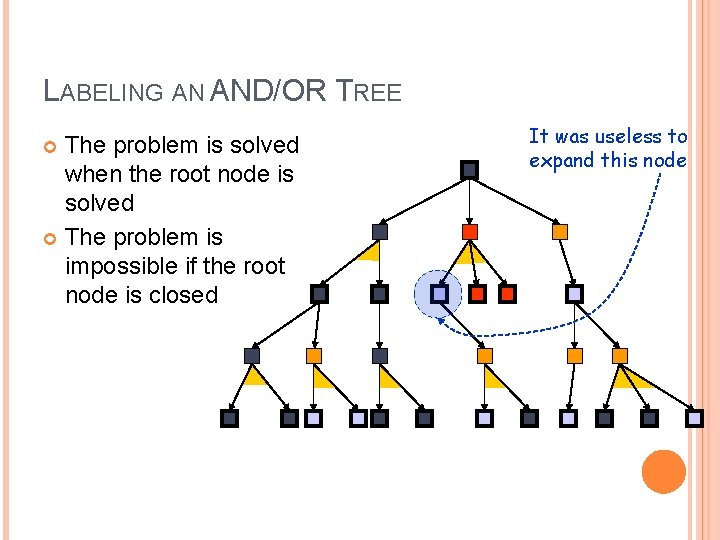

LABELING AN AND/OR TREE

- The problem is solved when the root node is solved
- The problem is impossible if the root node is closed

[Figure annotation: it was useless to expand the marked node.]
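The labeling rules can be sketched as a recursive function. The `Node` class and the `expanded` flag are assumptions made for illustration; the rule for action nodes (solved if all children are solved, closed if any child is closed) is the standard AND-node rule and is not spelled out in the slide text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    kind: str                 # "state" (agent chooses) or "action" (world chooses)
    is_goal: bool = False     # meaningful for state nodes only
    expanded: bool = True     # False for a state leaf that was not expanded yet
    children: List["Node"] = field(default_factory=list)


def label(node: Node) -> str:
    """Return "solved", "closed", or "pending" for the given node."""
    if node.kind == "state":
        if node.is_goal:
            return "solved"
        if not node.children:
            return "closed" if node.expanded else "pending"
        child_labels = [label(c) for c in node.children]
        if "solved" in child_labels:          # one good action suffices
            return "solved"
        if all(l == "closed" for l in child_labels):
            return "closed"
        return "pending"
    # Action node: every possible outcome must be solved.
    child_labels = [label(c) for c in node.children]
    if child_labels and all(l == "solved" for l in child_labels):
        return "solved"
    if "closed" in child_labels:
        return "closed"
    return "pending"


# Tiny example: one risky action (may hit a dead end) and one safe action.
risky = Node("action", children=[Node("state", is_goal=True), Node("state")])
safe = Node("action", children=[Node("state", is_goal=True)])
root = Node("state", children=[risky, safe])
```

Here `label(root)` is "solved" because the safe action alone guarantees the goal, even though the risky action is closed.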

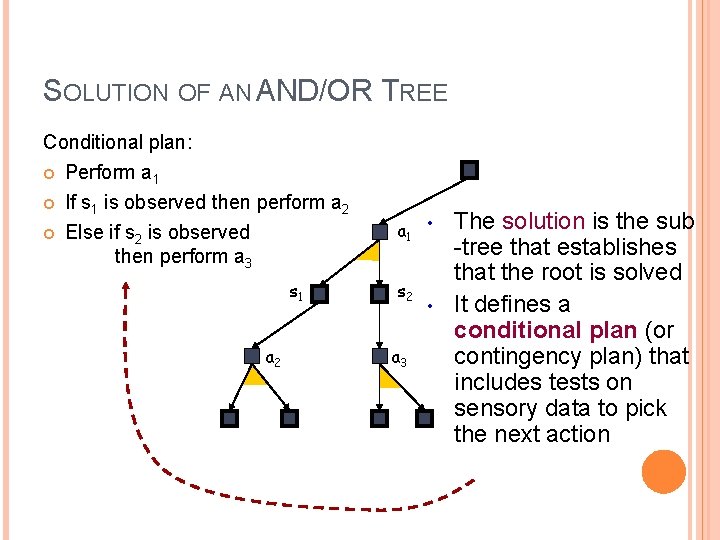

SOLUTION OF AN AND/OR TREE

The solution is the sub-tree that establishes that the root is solved. It defines a conditional plan (or contingency plan) that includes tests on sensory data to pick the next action.

Conditional plan:

- Perform a_1
- If s_1 is observed, then perform a_2
- Else if s_2 is observed, then perform a_3
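A conditional plan of this shape can be represented as nested data and executed against observations. The sketch below is illustrative only; the tuple encoding and the `observe` callback are assumptions.

```python
# A plan is (action, branches); branches maps an observed state to the next plan.
# An empty branches dict means the plan ends after the action.
PLAN = ("a1", {"s1": ("a2", {}), "s2": ("a3", {})})


def execute(plan, observe):
    """Run a conditional plan; observe(action) returns the state seen afterwards."""
    trace = []
    while plan is not None:
        action, branches = plan
        trace.append(action)
        # Test the sensory data to pick the next sub-plan, if there is one.
        plan = branches[observe(action)] if branches else None
    return trace
```

For instance, `execute(PLAN, lambda a: "s2")` performs a1 and then a3.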

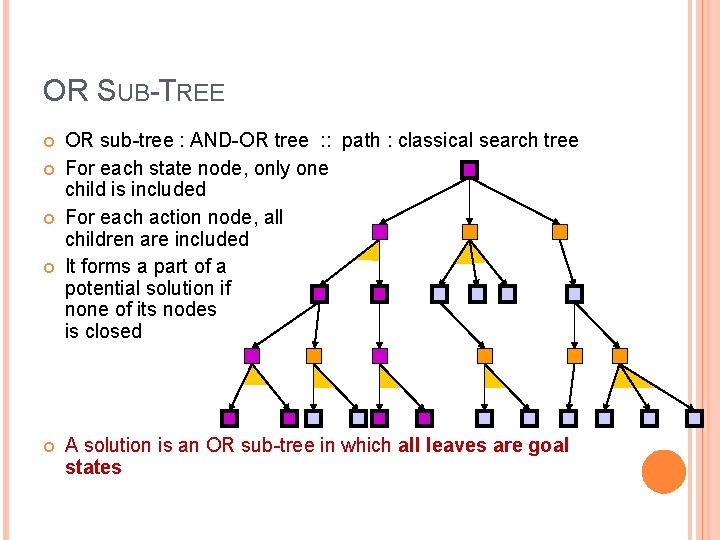

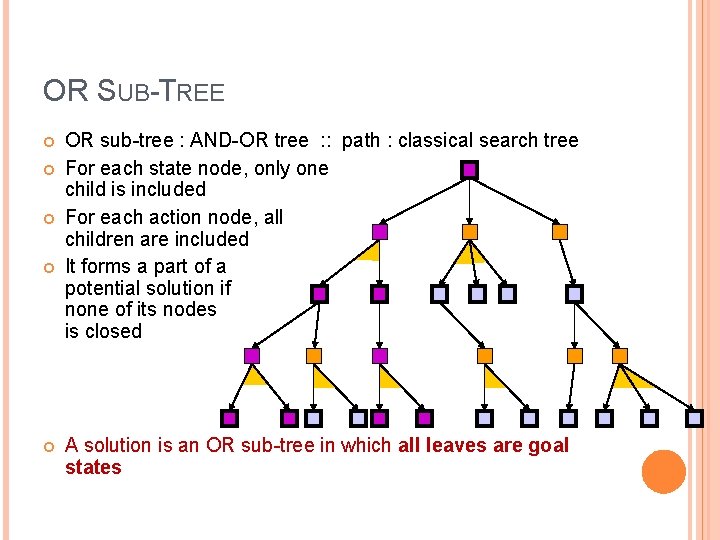

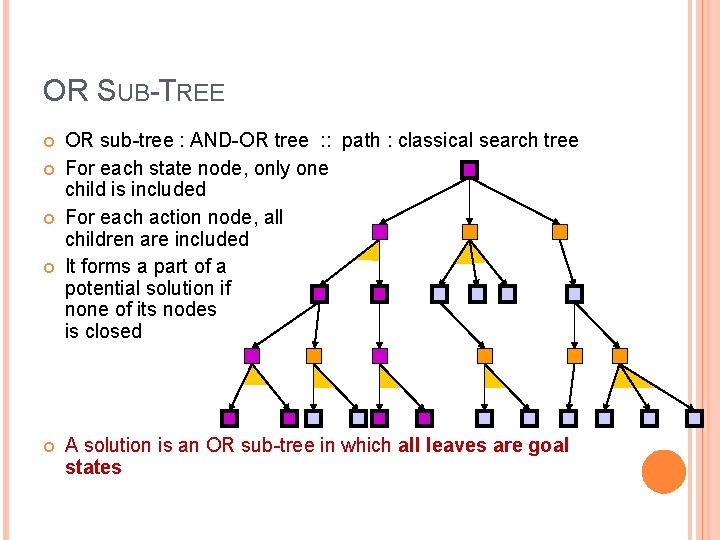

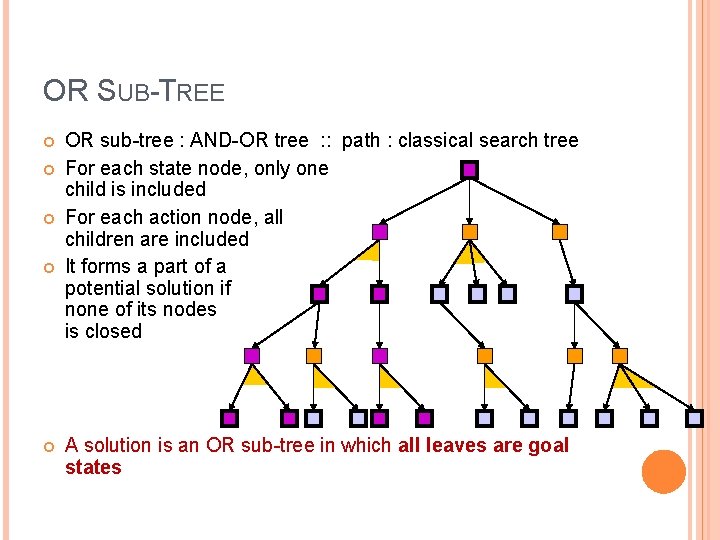

OR SUB-TREE

An OR sub-tree is to an AND/OR tree what a path is to a classical search tree.

- For each state node, only one child is included
- For each action node, all children are included

It forms a part of a potential solution if none of its nodes is closed. A solution is an OR sub-tree in which all leaves are goal states.

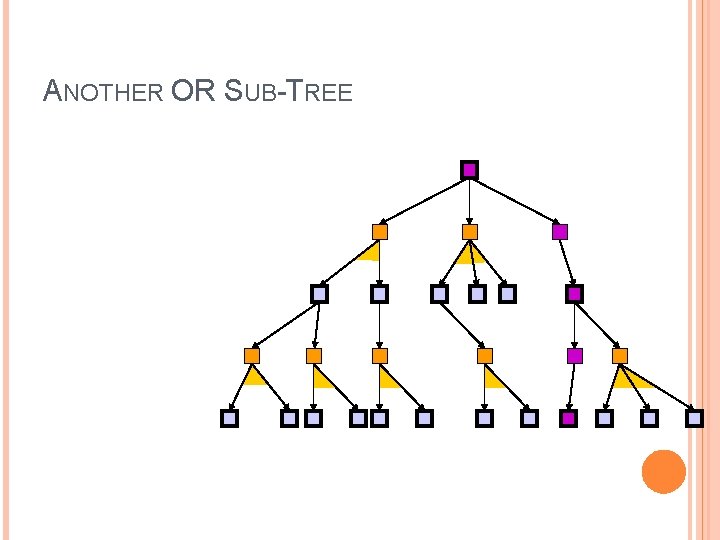

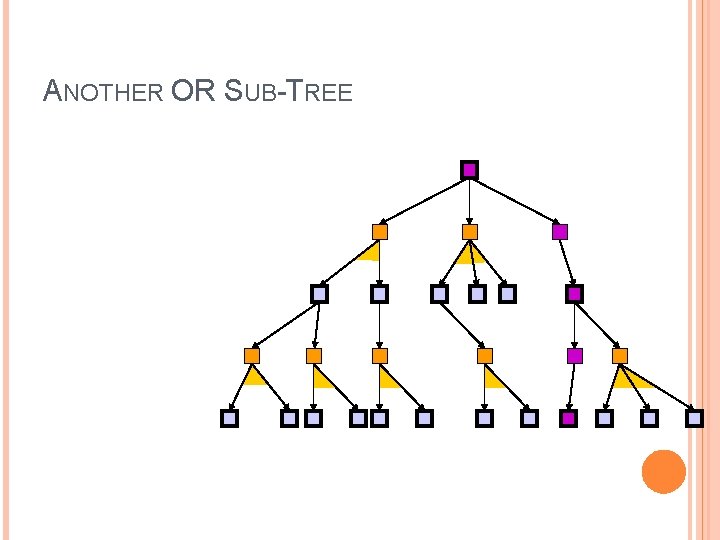

ANOTHER OR SUB-TREE

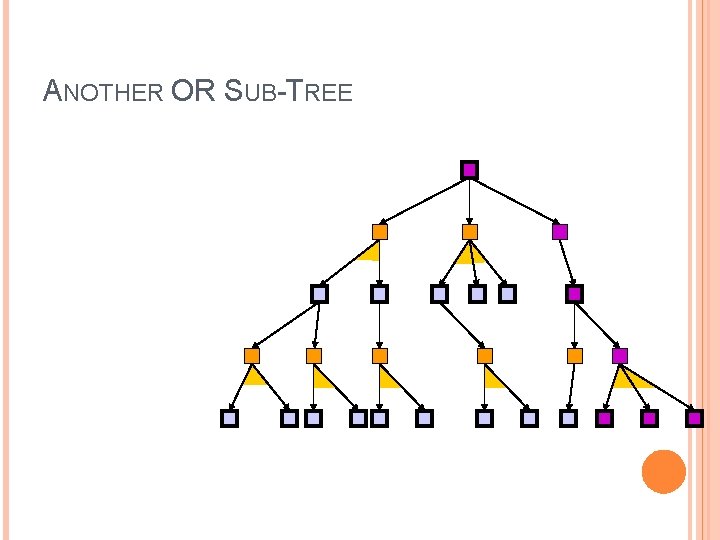

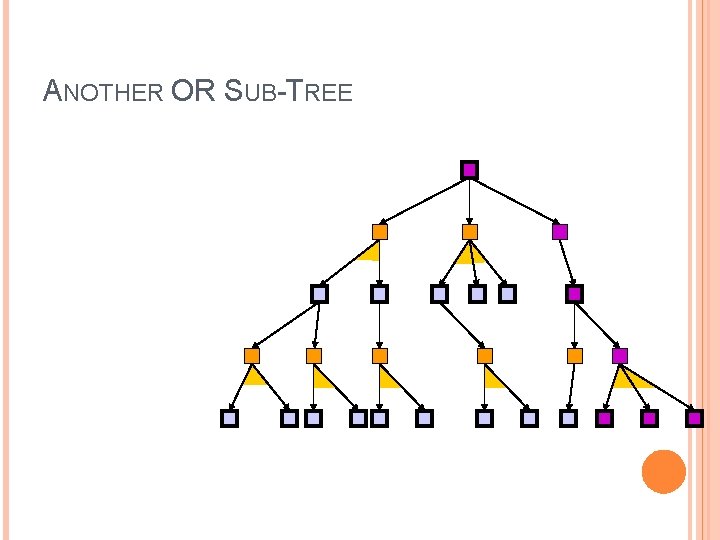

ANOTHER OR SUB-TREE

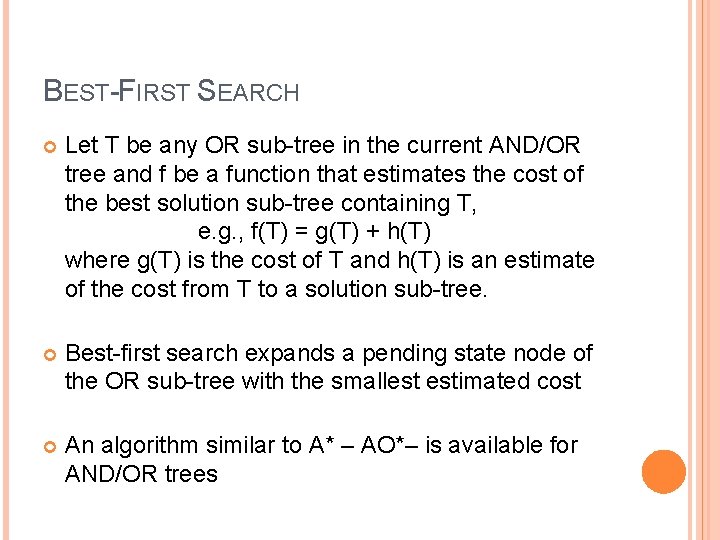

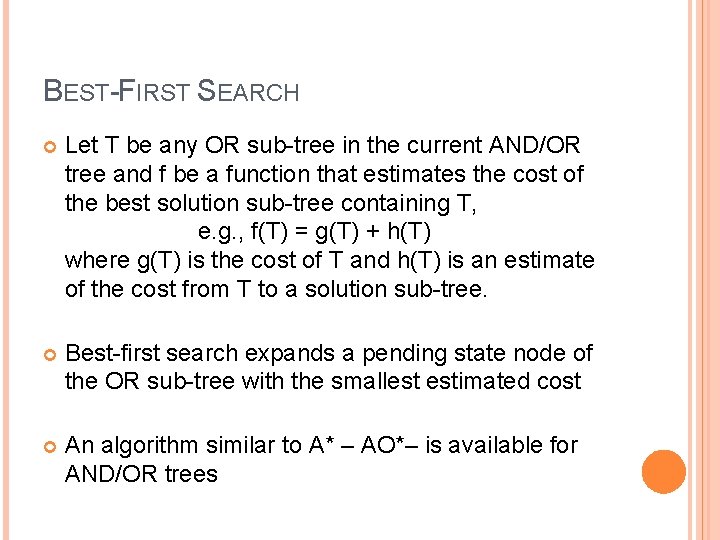

BEST-FIRST SEARCH

Let T be any OR sub-tree in the current AND/OR tree, and let f be a function that estimates the cost of the best solution sub-tree containing T, e.g., f(T) = g(T) + h(T), where g(T) is the cost of T and h(T) is an estimate of the cost from T to a solution sub-tree.

Best-first search expands a pending state node of the OR sub-tree with the smallest estimated cost.

An algorithm similar to A*, called AO*, is available for AND/OR trees.
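The selection step can be sketched with a priority queue keyed on f(T) = g(T) + h(T). This is not AO* itself, only the choice of which OR sub-tree to work on next. The dictionary layout and the choice of h(T) as the sum of per-state estimates over pending nodes are assumptions for illustration.

```python
import heapq


def pick_subtree(subtrees, h_state):
    """Return (name, f) of the OR sub-tree with the smallest f = g + h."""
    heap = []
    for index, tree in enumerate(subtrees):
        g = tree["cost"]                                   # cost of T so far
        h = sum(h_state(s) for s in tree["pending"])       # assumed estimate
        heapq.heappush(heap, (g + h, index, tree["name"]))  # index breaks ties
    f, _, name = heapq.heappop(heap)
    return name, f


# Hypothetical candidates and heuristic values.
candidates = [
    {"name": "T1", "cost": 2, "pending": ["s1", "s2"]},
    {"name": "T2", "cost": 3, "pending": ["s3"]},
]
estimates = {"s1": 2, "s2": 2, "s3": 1}
best = pick_subtree(candidates, estimates.get)
```

With these numbers, T1 has f = 6 and T2 has f = 4, so a pending state node of T2 would be expanded next.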

DEALING WITH REVISITED STATES

- Solution #1: Do not test for revisited states. This duplicates sub-trees. [The tree may grow arbitrarily large even if the state space is finite]
- Solution #2: Test for revisited states and avoid expanding nodes with revisited states

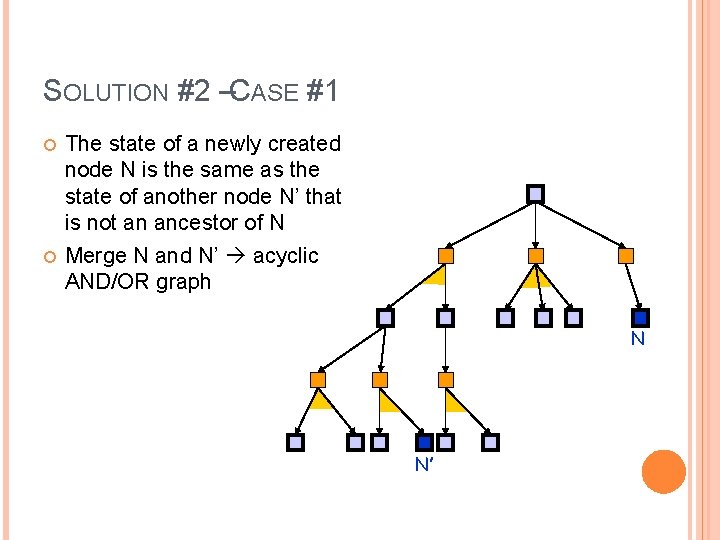

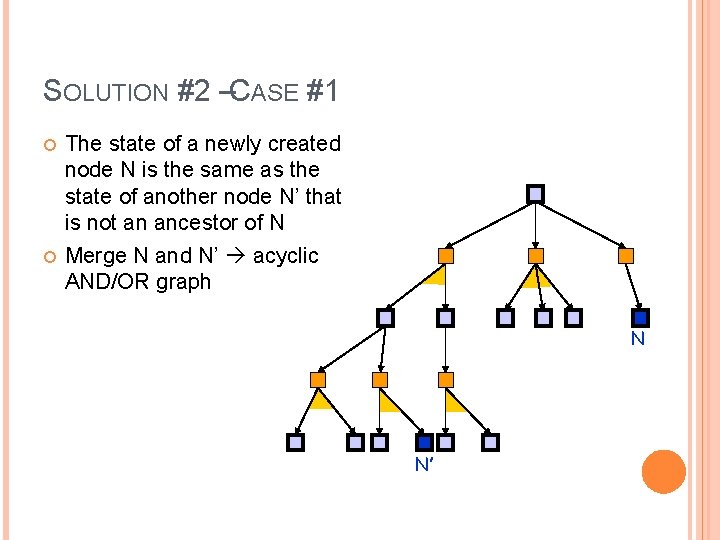

SOLUTION #2 – CASE #1

The state of a newly created node N is the same as the state of another node N’ that is not an ancestor of N.

Merge N and N’: the result is an acyclic AND/OR graph.

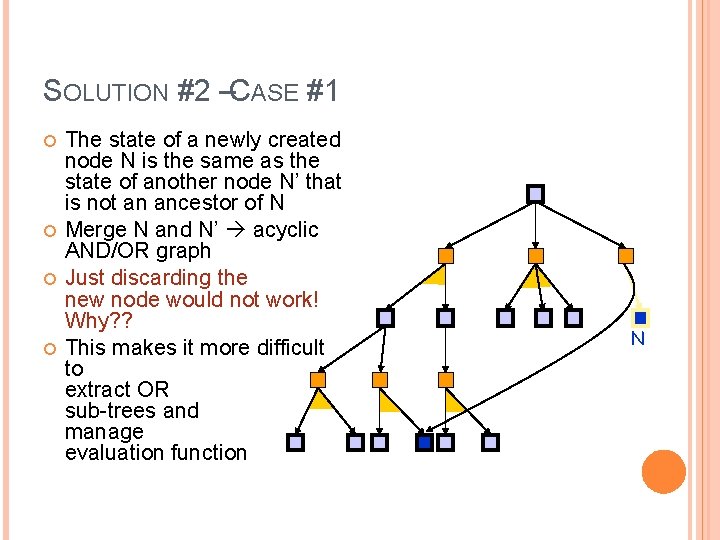

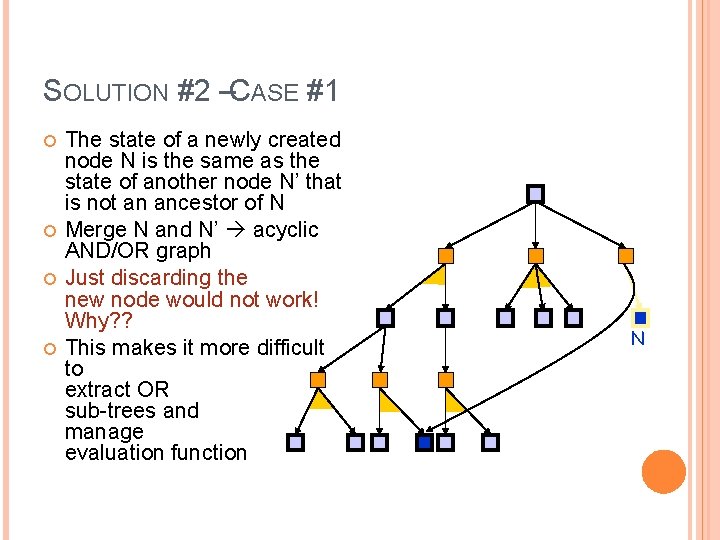

SOLUTION #2 – CASE #1

When N has the same state as a node N’ that is not an ancestor of N, merging N and N’ yields an acyclic AND/OR graph.

- Just discarding the new node would not work! Why?
- Working with a graph makes it more difficult to extract OR sub-trees and to manage the evaluation function

SOLUTION #2 – CASE #2

The state of a newly created node N is the same as the state of an ancestor of N.

Two possible choices:

- Mark N closed
- Mark N solved

In either case, the search tree will remain finite if the state space is finite.

If N is marked solved, the conditional plan may include loops. What does this mean?

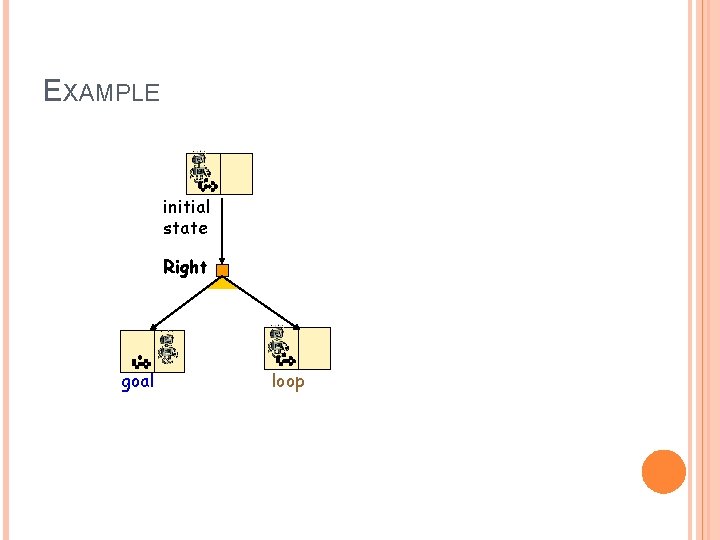

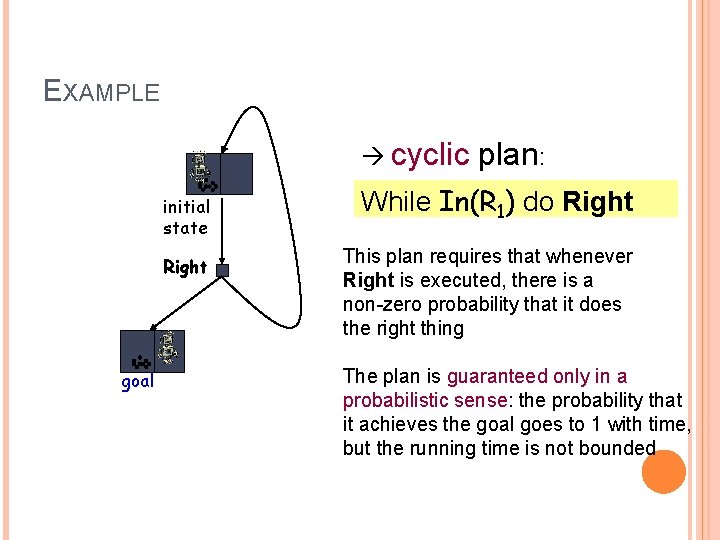

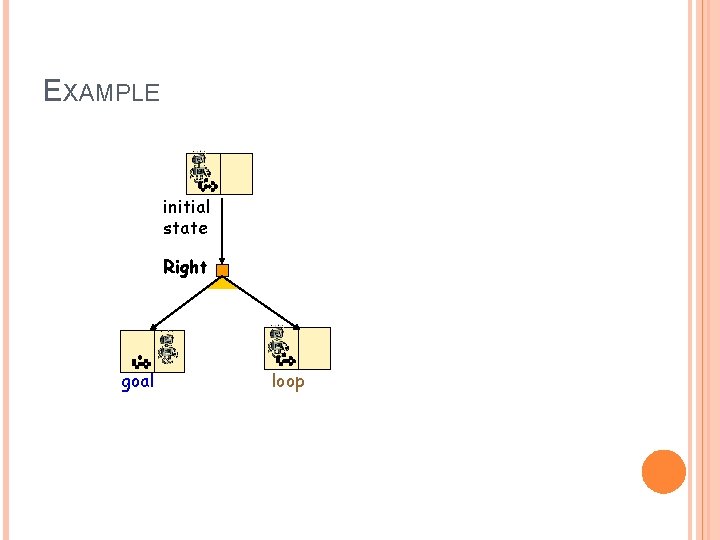

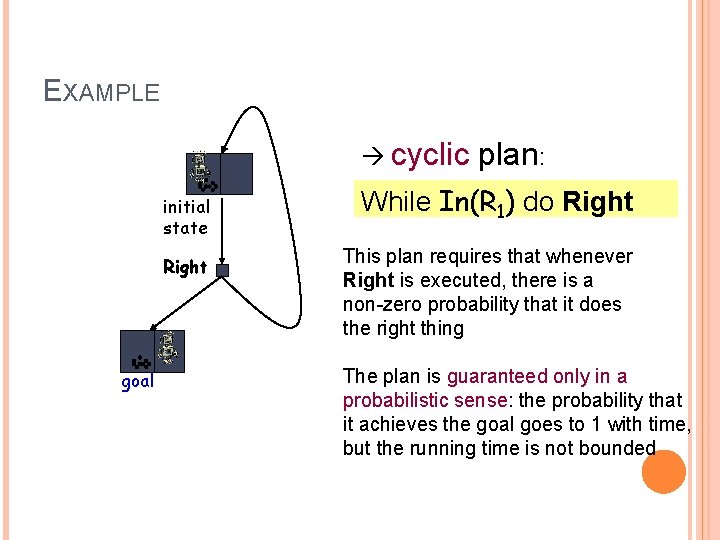

EXAMPLE

[Figure: from the initial state, Right leads either to the goal or back to the initial state (loop).]

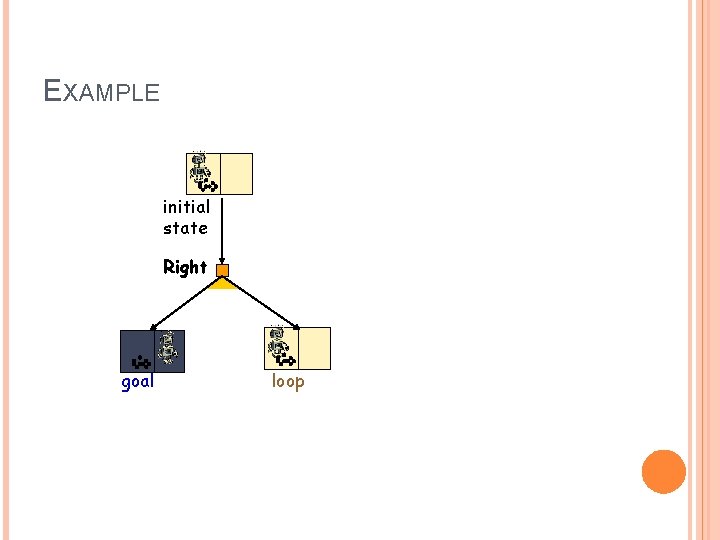

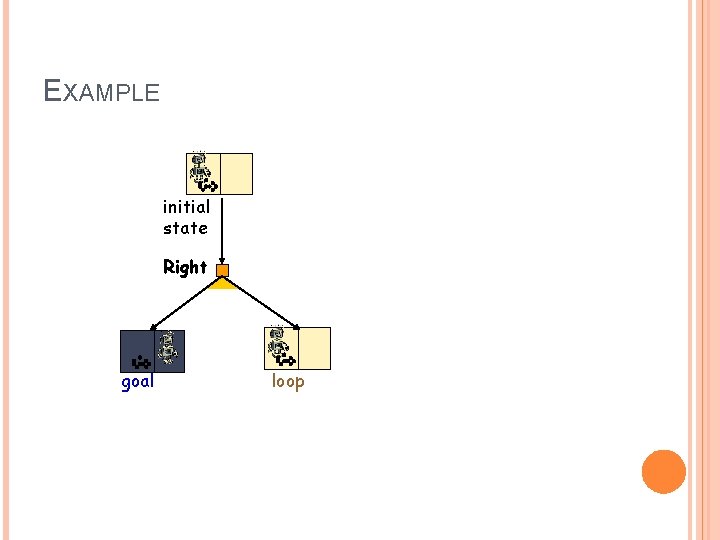

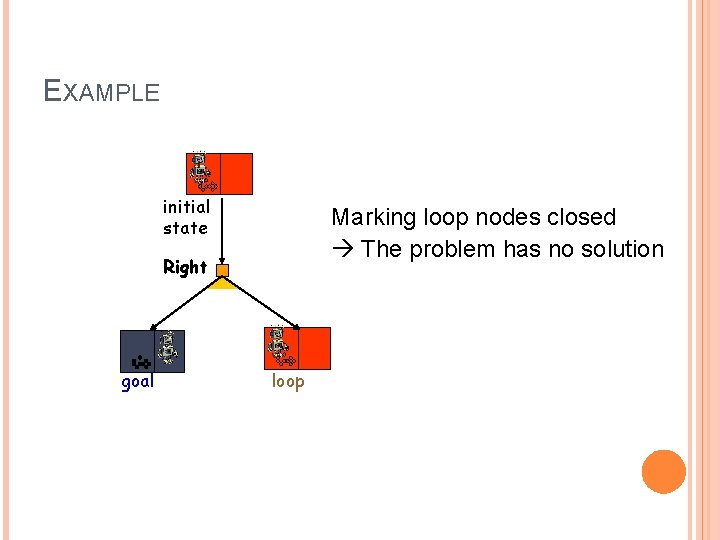

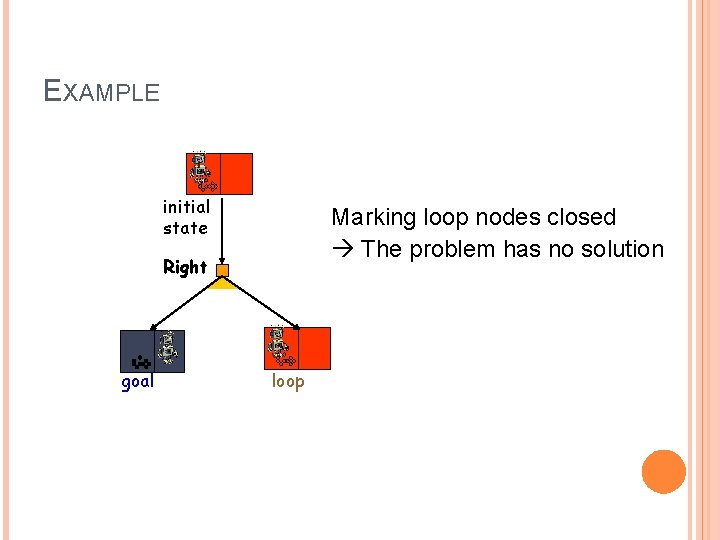

EXAMPLE

Marking loop nodes closed: the problem has no solution.

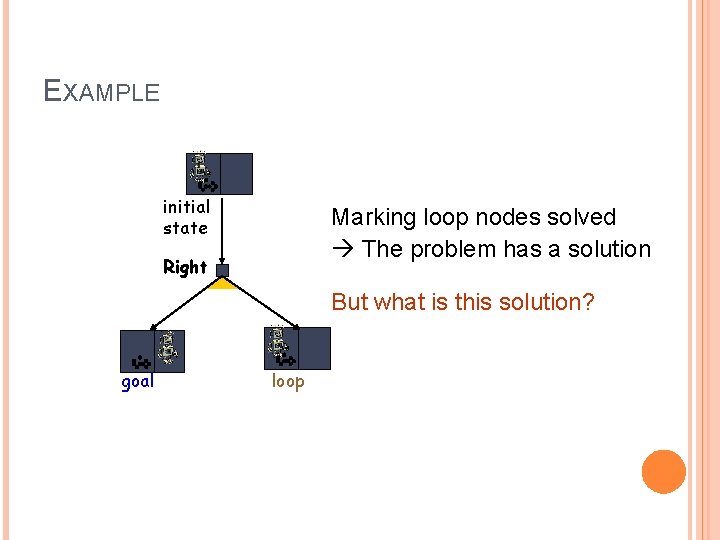

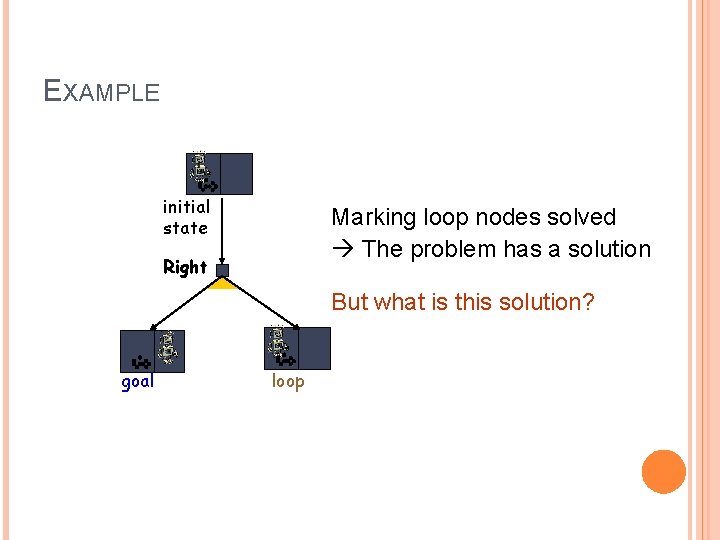

EXAMPLE

Marking loop nodes solved: the problem has a solution. But what is this solution?

EXAMPLE

Cyclic plan: While In(R1) do Right

This plan requires that whenever Right is executed, there is a non-zero probability that it does the right thing.

The plan is guaranteed only in a probabilistic sense: the probability that it achieves the goal goes to 1 with time, but the running time is not bounded.
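The probabilistic guarantee can be made concrete with a small simulation. It assumes, purely for illustration, that each execution of Right succeeds independently with a fixed probability `p_success`; the slides only require that this probability be non-zero. Under that assumption, the chance of still being in R1 after n attempts is (1 - p)^n, which goes to 0 as n grows.

```python
import random


def run_cyclic_plan(p_success, rng, max_steps=10_000):
    """Execute 'While In(R1) do Right'; return the final room and attempt count."""
    room, attempts = "R1", 0
    while room == "R1" and attempts < max_steps:   # max_steps is a safety cap only
        attempts += 1
        if rng.random() < p_success:               # Right does the right thing
            room = "R2"
    return room, attempts


def prob_still_in_r1(p_success, n):
    """Probability that n independent attempts of Right all fail."""
    return (1.0 - p_success) ** n


room, attempts = run_cyclic_plan(0.3, random.Random(0))
```

No finite bound on `attempts` holds for every run, which is why the plan is correct only in the probabilistic sense described above.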

In the presence of uncertainty, it’s often the case that things don’t work the first time as one would like them to work; one must try again.

Without allowing cyclic plans, many problems would have no solution.

So, dealing properly with repeated states in case #2 is much more than just a matter of search efficiency!

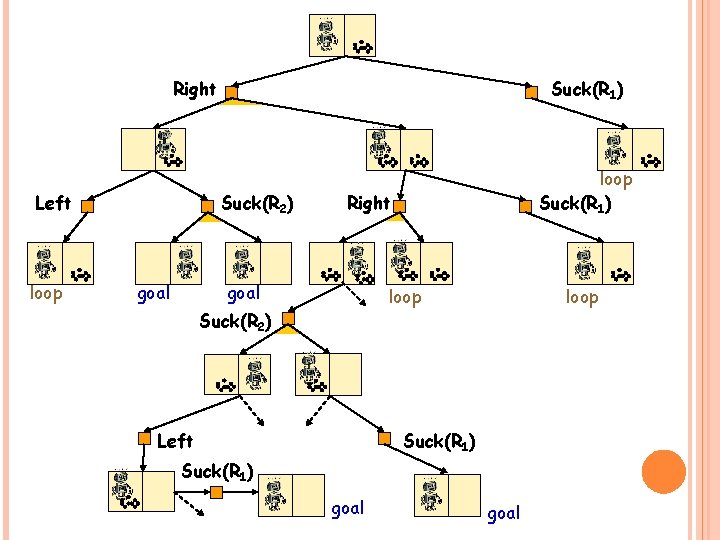

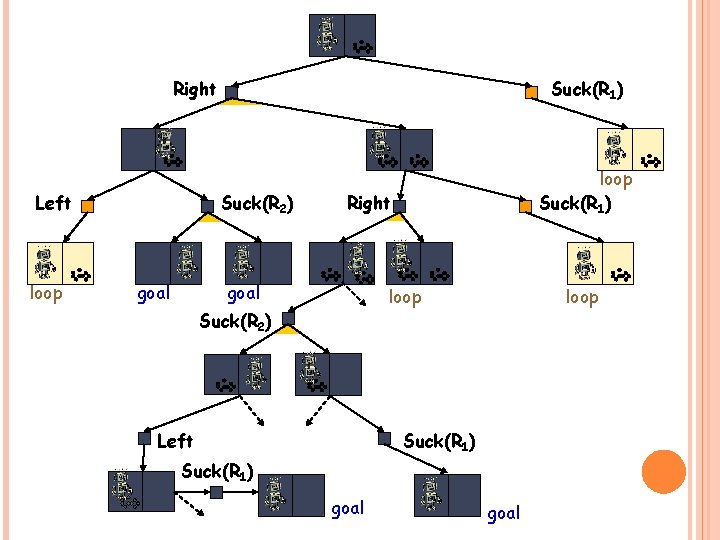

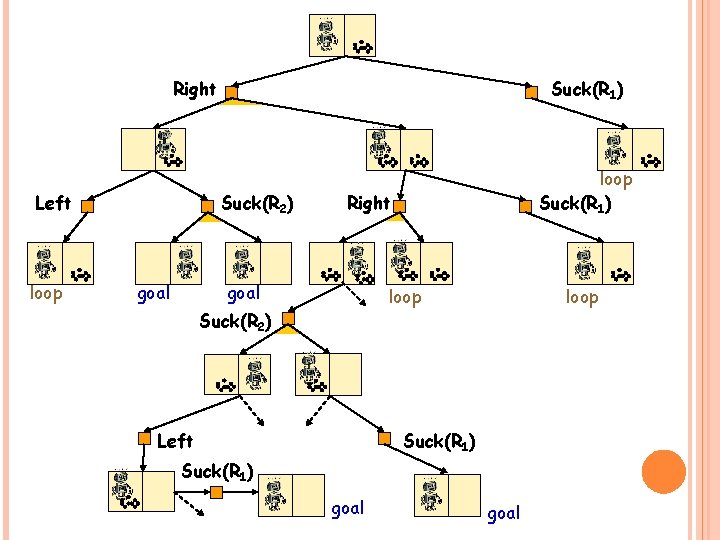

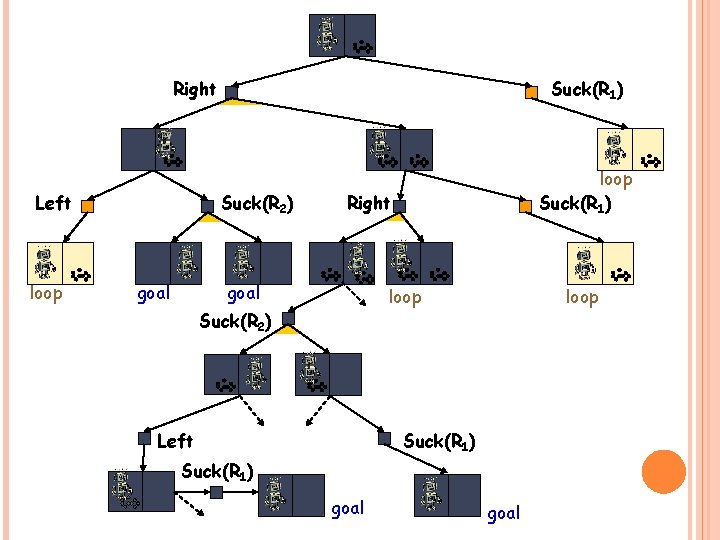

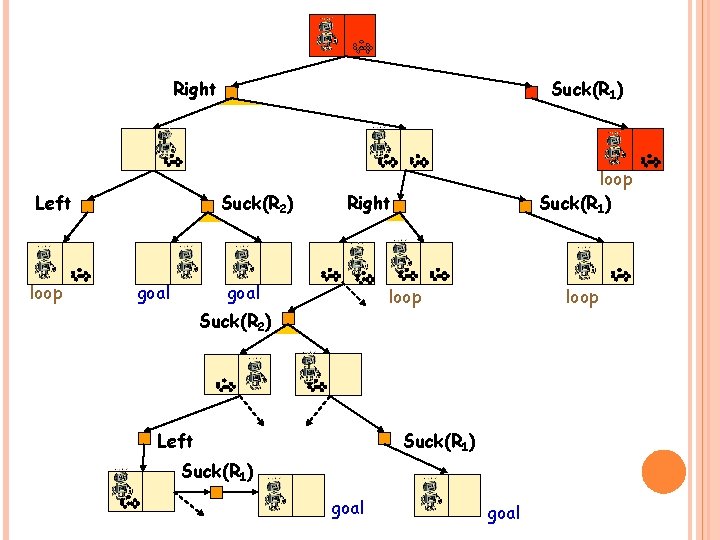

[Figure: AND/OR tree for the vacuum robot problem, with actions Right, Left, Suck(R1), and Suck(R2); loop nodes and goal nodes are marked.]

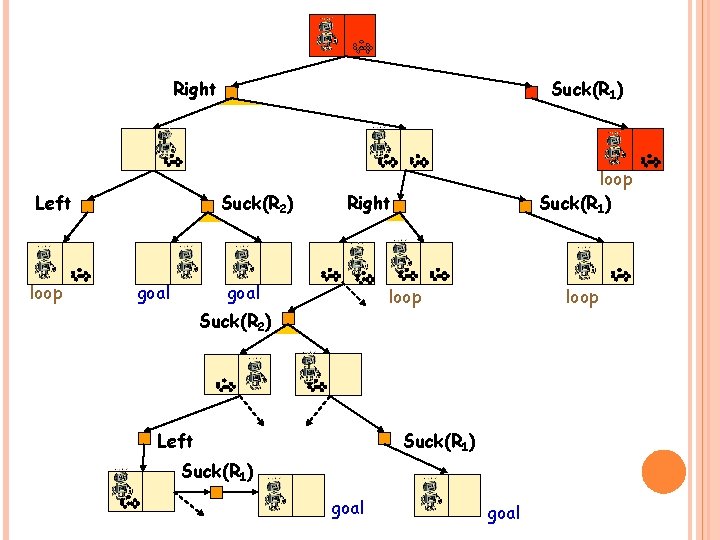

DOES THIS ALWAYS WORK?

No! We must be more careful.

For a cyclic plan to be correct, it should be possible to reach a goal node from every non-goal node in the plan.

DOES THIS ALWAYS WORK?

No! For a cyclic plan to be correct, it should be possible to reach a goal node from every non-goal node in the plan.

The node labeling algorithm must be slightly modified.
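The correctness condition for a cyclic plan can be checked with a backward reachability search from the goal nodes. The sketch below assumes the plan is given as a dictionary from each plan node to the set of nodes that may follow it; this encoding is an assumption, not the slides' data structure.

```python
def cyclic_plan_is_correct(successors, goals):
    """True if a goal node is reachable from every node of the plan graph."""
    # Build reverse edges so we can search backwards from the goals.
    predecessors = {}
    all_nodes = set(successors)
    for node, nexts in successors.items():
        for nxt in nexts:
            all_nodes.add(nxt)
            predecessors.setdefault(nxt, set()).add(node)

    reached = set(goals)
    stack = list(goals)
    while stack:
        node = stack.pop()
        for pred in predecessors.get(node, ()):
            if pred not in reached:
                reached.add(pred)
                stack.append(pred)
    return all_nodes <= reached


# "While In(R1) do Right": the start node can always still reach the goal.
good_plan = {"start": {"start", "goal"}, "goal": set()}
# A plan with a trap: once in "trap", the goal can never be reached.
bad_plan = {"start": {"trap", "goal"}, "trap": {"trap"}, "goal": set()}
```

The first plan passes the check; the second fails because its loop has no exit toward a goal.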

PLANNING WITH UNCERTAINTY IN SENSING AND ACTION

SENSING FEEDBACK

So far, we assumed that the state of the world was available to the agent after every step (feedback), and that uncertainty lay only in the future state of the world: “playing a game against nature”.

Sensing uncertainty demands a fundamental change to the problem setup.

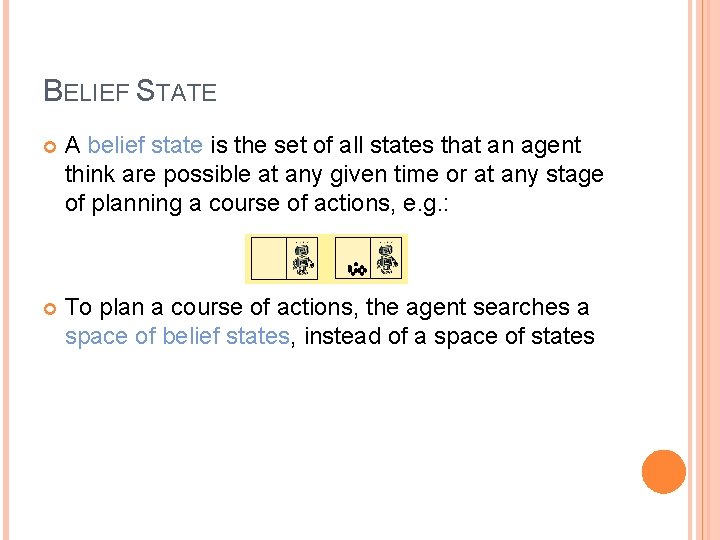

BELIEF STATE

A belief state is the set of all states that an agent thinks are possible at any given time or at any stage of planning a course of actions (see the example in the figure).

To plan a course of actions, the agent searches a space of belief states, instead of a space of states.

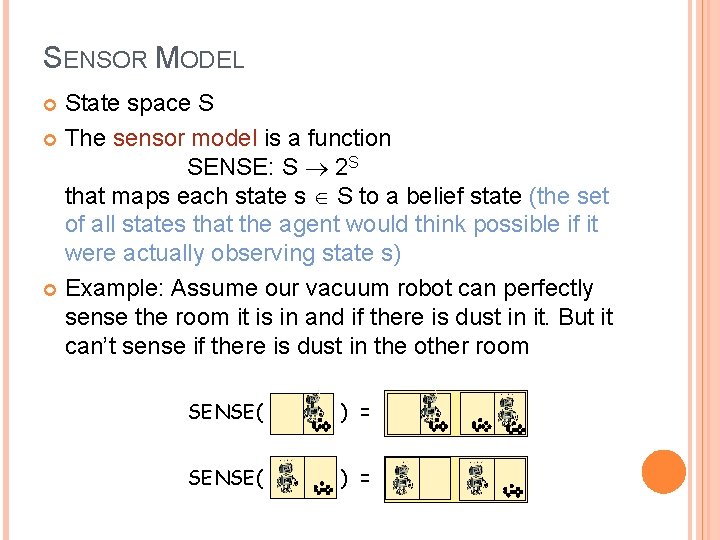

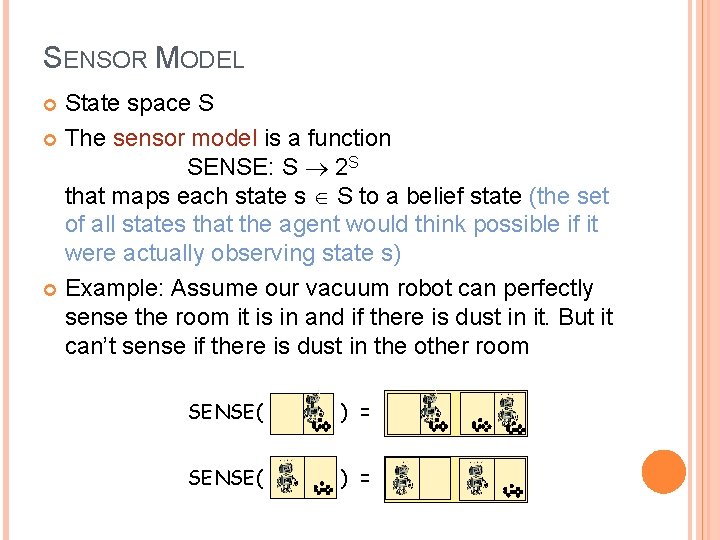

SENSOR MODEL

State space: S

The sensor model is a function SENSE: S → 2^S that maps each state s ∈ S to a belief state (the set of all states that the agent would think possible if it were actually observing state s).

Example: Assume our vacuum robot can perfectly sense the room it is in and whether there is dust in it, but it can’t sense whether there is dust in the other room. The figure shows SENSE applied to one state and the resulting belief state.
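The sensor model of the example can be sketched as a function from a state to the set of states that produce the same observation. The tuple encoding `(room, clean_r1, clean_r2)` and the helper names are assumptions made for illustration.

```python
from itertools import product

# Every state of the two-room world: (room, clean_r1, clean_r2).
ALL_STATES = [
    (room, clean_r1, clean_r2)
    for room, clean_r1, clean_r2 in product(("R1", "R2"), (False, True), (False, True))
]


def observation(state):
    """What the robot perceives: its room and whether that room is clean."""
    room, clean_r1, clean_r2 = state
    return (room, clean_r1 if room == "R1" else clean_r2)


def sense(state):
    """SENSE(s): all states indistinguishable from s given the observation."""
    return frozenset(t for t in ALL_STATES if observation(t) == observation(state))
```

For a robot in a dirty R1, `sense` returns two states that differ only in whether R2 is clean.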

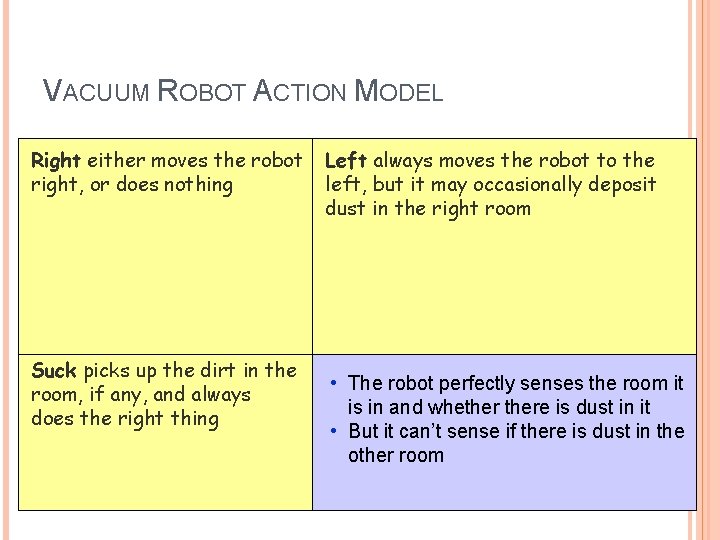

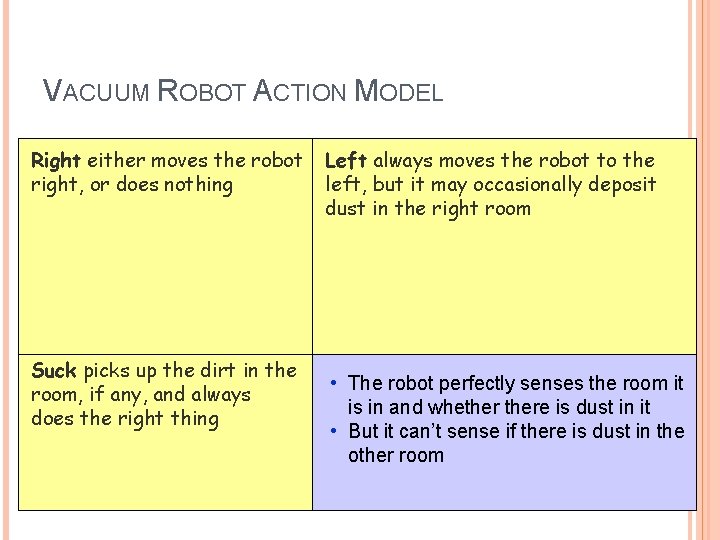

VACUUM ROBOT ACTION MODEL

- Right either moves the robot right, or does nothing
- Suck picks up the dirt in the room, if any, and always does the right thing
- Left always moves the robot to the left, but it may occasionally deposit dust in the right room

Sensing:

- The robot perfectly senses the room it is in and whether there is dust in it
- But it can’t sense if there is dust in the other room

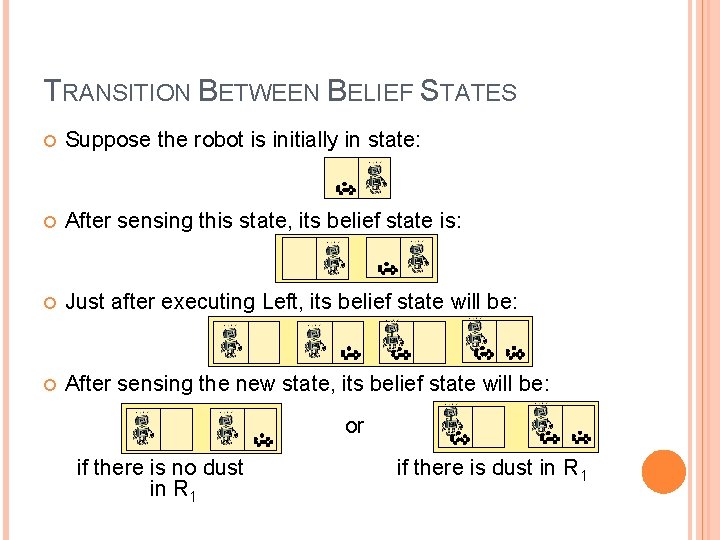

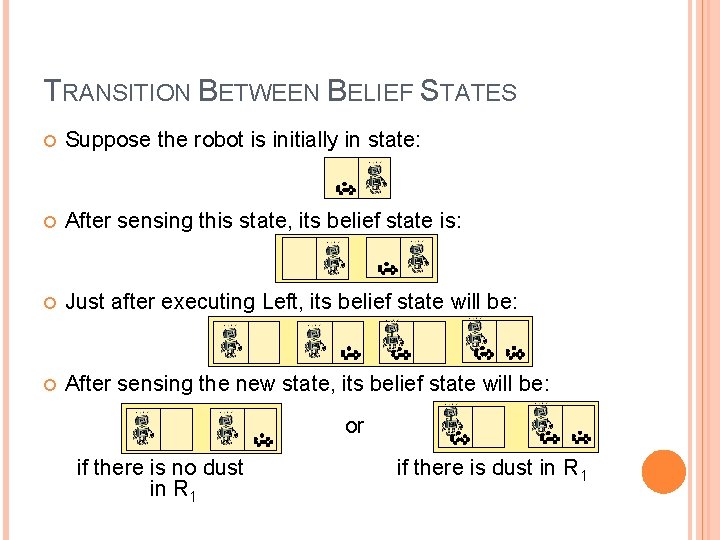

TRANSITION BETWEEN BELIEF STATES

The figures show each step:

- Suppose the robot is initially in a given state
- After sensing this state, the robot has a first belief state
- Just after executing Left, it has a new, larger belief state
- After sensing the new state, its belief state will be one of two sets: one if there is no dust in R1, the other if there is dust in R1

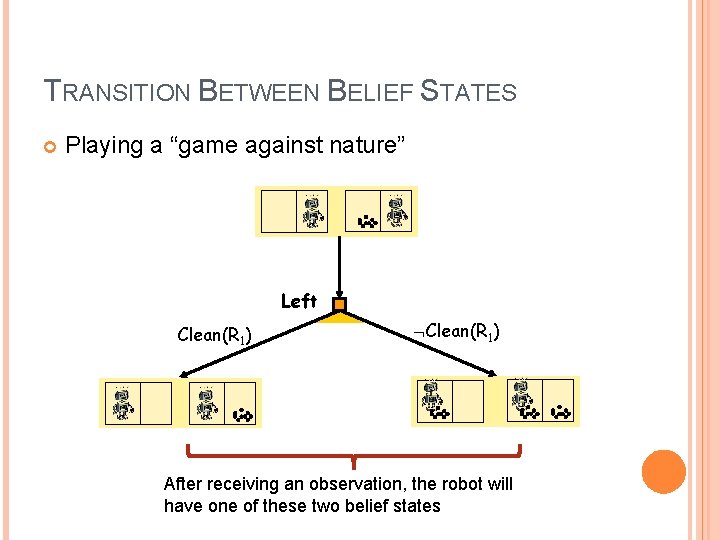

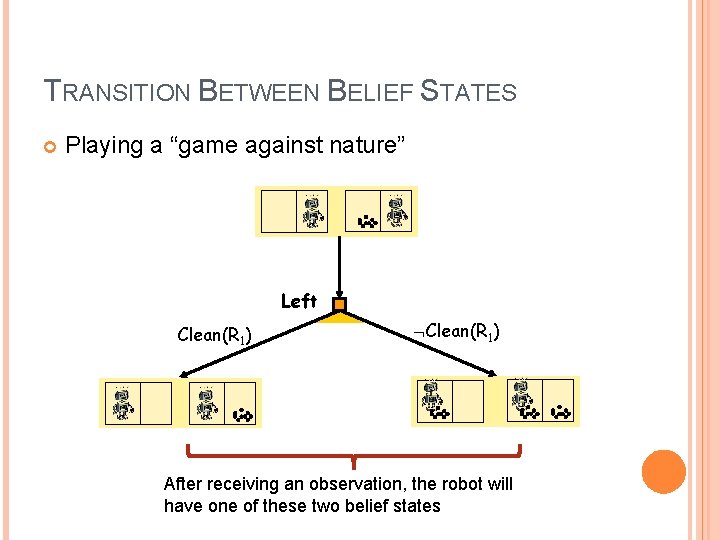

TRANSITION BETWEEN BELIEF STATES

Playing a “game against nature”: after executing Left and receiving an observation, the robot will have one of these two belief states.

[Figure: the action Left followed by two observation branches on Clean(R1).]
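The two-step transition (predict through the action, then split by observation) can be sketched as follows. The initial belief state is an assumption, since the original figure is not available: the robot is taken to be in a clean R2 without knowing whether R1 is clean. States are encoded as `(room, clean_r1, clean_r2)`.

```python
from collections import defaultdict


def left(state):
    """Left always moves to R1 but may deposit dust in the right room (R2)."""
    room, clean_r1, clean_r2 = state
    return {("R1", clean_r1, clean_r2), ("R1", clean_r1, False)}


def observe(state):
    """The robot senses its room and whether that room is clean."""
    room, clean_r1, clean_r2 = state
    return (room, clean_r1 if room == "R1" else clean_r2)


def predict(belief, action):
    """Belief state just after executing the action, before sensing."""
    return frozenset(t for s in belief for t in action(s))


def update(belief):
    """Split a belief state into one belief state per possible observation."""
    groups = defaultdict(set)
    for s in belief:
        groups[observe(s)].add(s)
    return {obs: frozenset(states) for obs, states in groups.items()}


belief = frozenset({("R2", False, True), ("R2", True, True)})   # assumed start
after_left = predict(belief, left)
branches = update(after_left)
```

`after_left` contains four states, and `branches` has one entry for "R1 is dirty" and one for "R1 is clean". These are the two children of the Left action node in the AND/OR tree of belief states.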

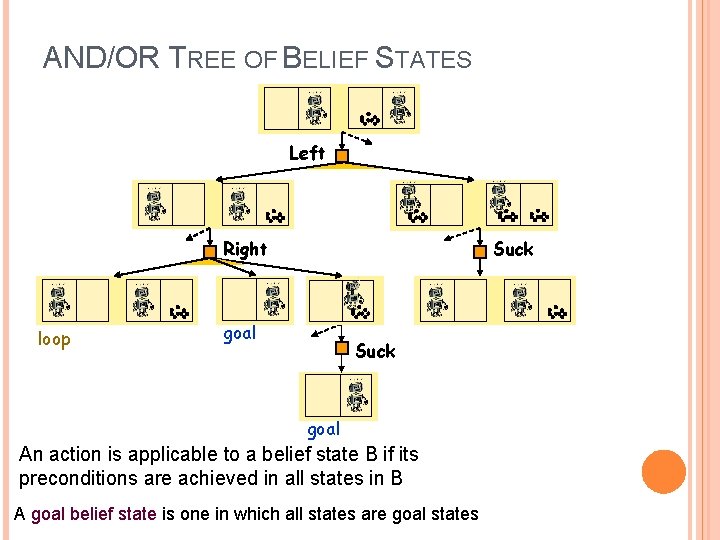

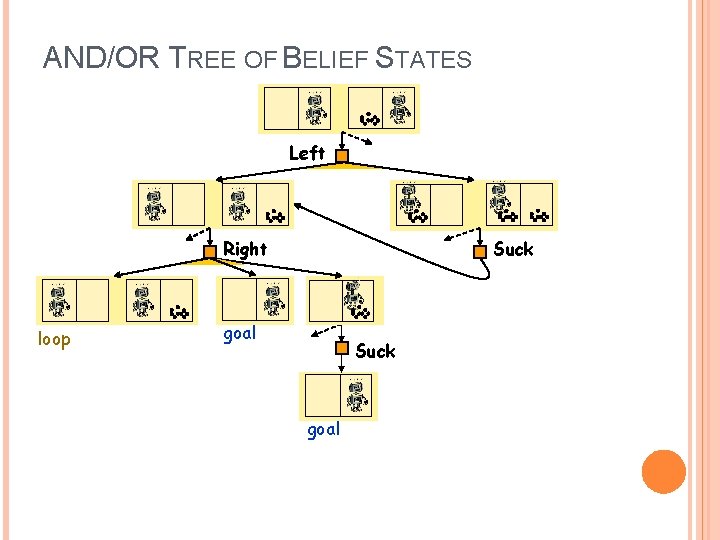

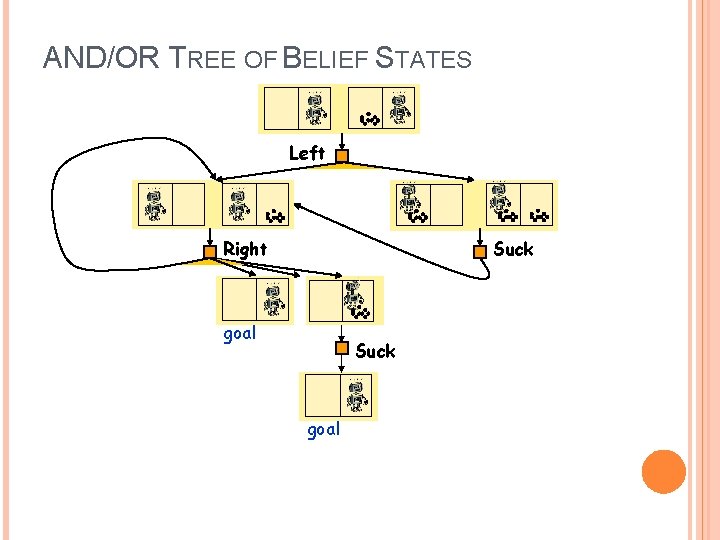

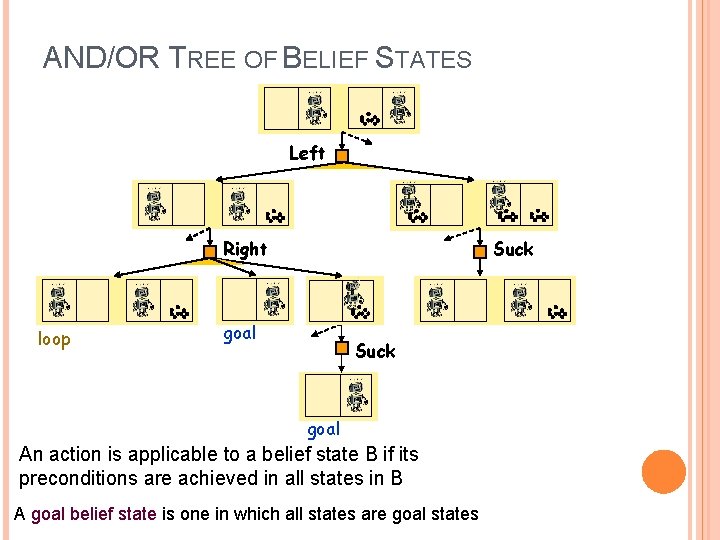

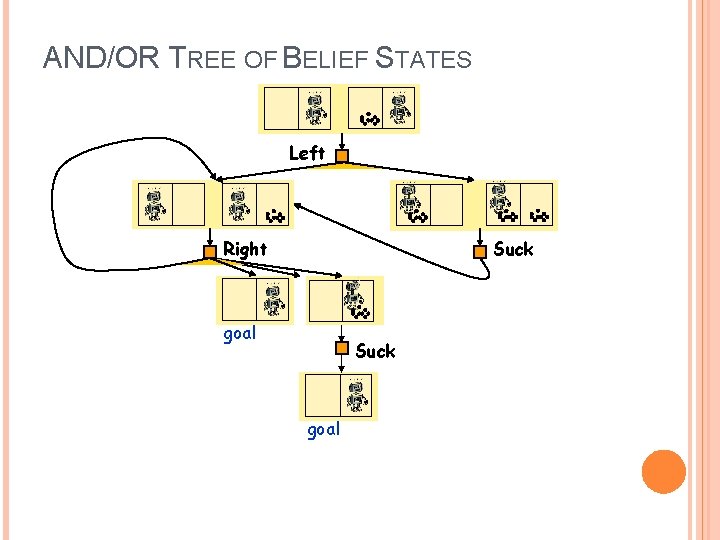

AND/OR TREE OF BELIEF STATES

- An action is applicable to a belief state B if its preconditions are achieved in all states in B
- A goal belief state is one in which all states are goal states

[Figure: AND/OR tree of belief states with actions Left, Suck, and Right, a loop node, and goal nodes.]
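Both definitions quantify over every state in the belief state, which a short sketch makes explicit. The predicate arguments and the `(room, clean_r1, clean_r2)` encoding are assumptions for illustration.

```python
def applicable(precondition, belief):
    """An action is applicable if its precondition holds in every state of B."""
    return bool(belief) and all(precondition(s) for s in belief)


def is_goal_belief(belief, is_goal):
    """A belief state is a goal only if every state in it is a goal state."""
    return bool(belief) and all(is_goal(s) for s in belief)


def in_r2(state):
    return state[0] == "R2"            # hypothetical precondition In(R2)


def both_clean(state):
    return state[1] and state[2]       # goal Clean(R1) and Clean(R2)


unsure = frozenset({("R1", True, True), ("R1", True, False)})
done = frozenset({("R1", True, True), ("R2", True, True)})
```

The belief state `unsure` is not a goal even though one of its states is, because the agent cannot rule out the other one.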

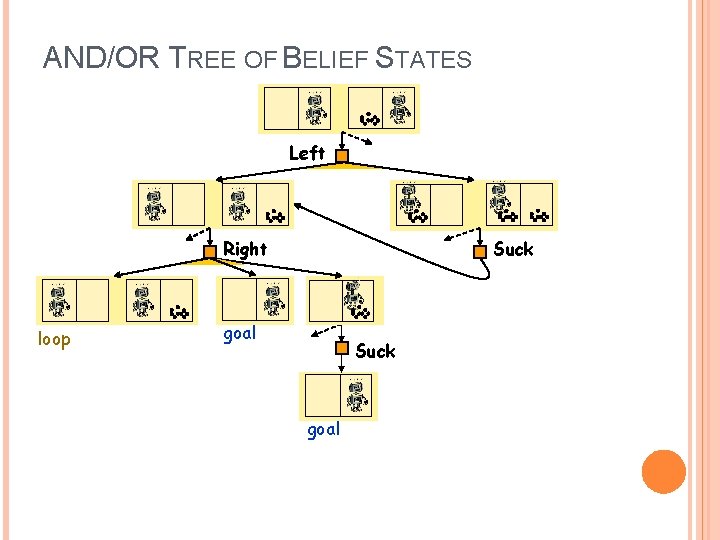

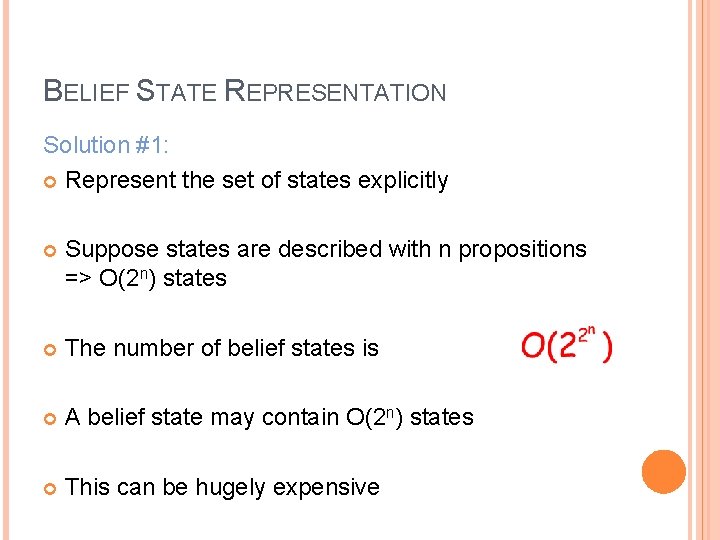

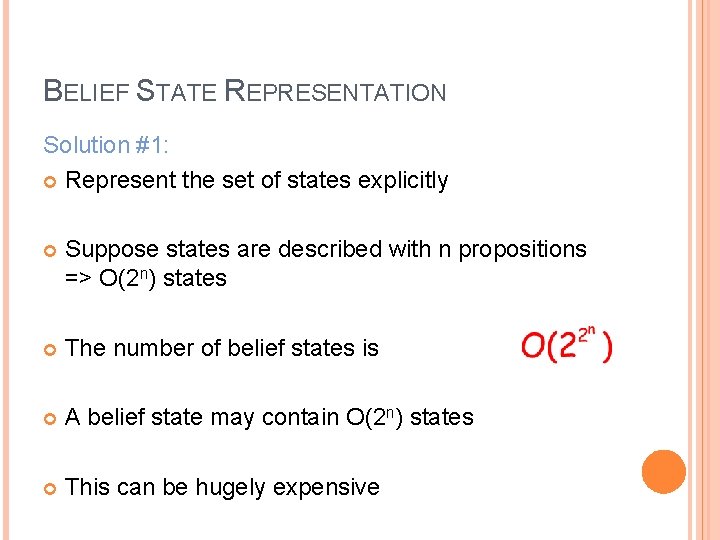

BELIEF STATE REPRESENTATION

Solution #1: Represent the set of states explicitly

- Suppose states are described with n propositions => O(2^n) states
- The number of belief states is 2^(2^n), one per subset of the state space
- A belief state may contain O(2^n) states
- This can be hugely expensive

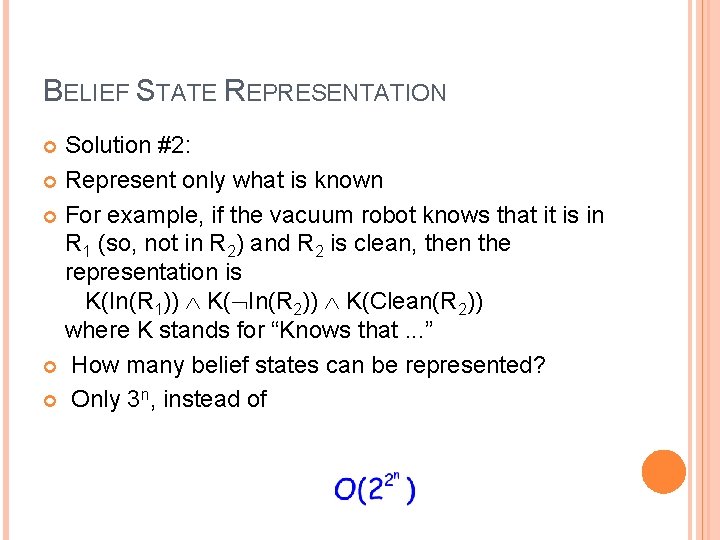

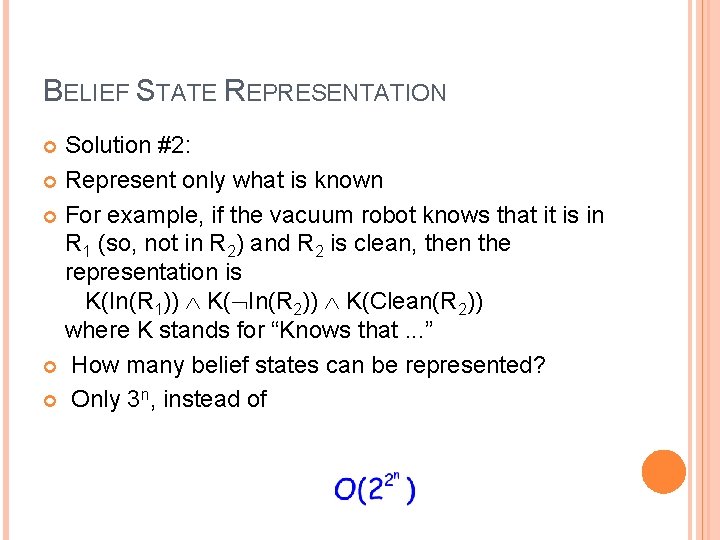

BELIEF STATE REPRESENTATION

Solution #2: Represent only what is known

For example, if the vacuum robot knows that it is in R1 (so, not in R2) and R2 is clean, then the representation is

K(In(R1)) ∧ K(¬In(R2)) ∧ K(Clean(R2))

where K stands for “Knows that...”

How many belief states can be represented? Only 3^n (each proposition is known true, known false, or unknown), instead of 2^(2^n).
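The gap between the two representations grows very quickly with n, as a short calculation shows. The function name is illustrative only.

```python
def representation_sizes(n):
    """Return (#states, #explicit belief states, #knowledge-based belief states)."""
    states = 2 ** n              # truth assignments to n propositions
    explicit = 2 ** states       # every subset of the state space
    knowledge = 3 ** n           # each proposition: known true, known false, unknown
    return states, explicit, knowledge


sizes_for_3 = representation_sizes(3)
```

For n = 3 this gives 8 states, 256 explicit belief states, and 27 knowledge-based belief states. The knowledge-based representation is smaller because it cannot express every subset of states.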

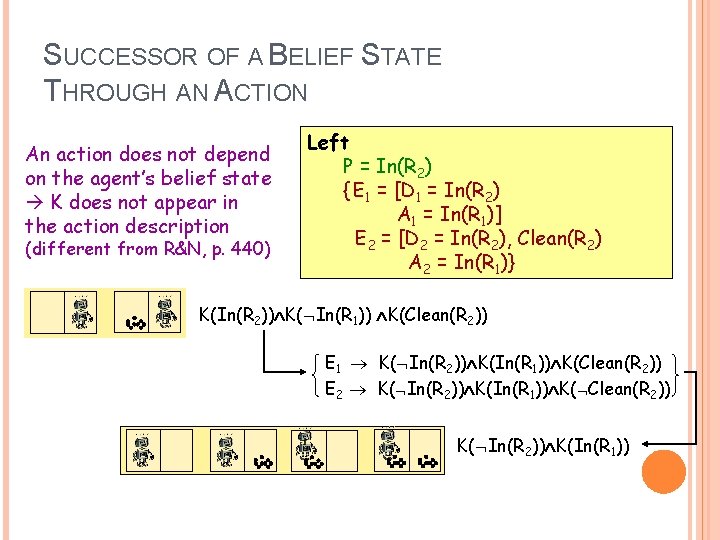

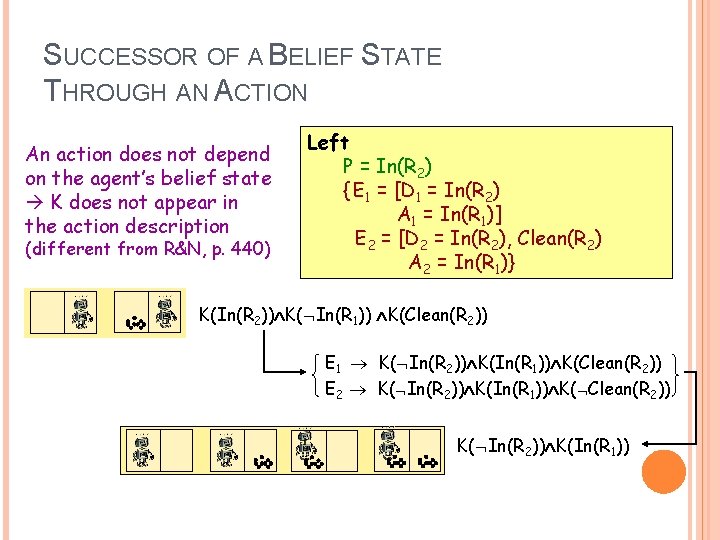

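SUCCESSOR OF A BELIEF STATE THROUGH AN ACTION

An action does not depend on the agent’s belief state, so K does not appear in the action description (different from R&N, p. 440).

Left: P = In(R2)
{ E1 = [D1 = In(R2); A1 = In(R1)],
  E2 = [D2 = In(R2), Clean(R2); A2 = In(R1)] }

Here P is the precondition, and each possible effect E_i has a delete list D_i and an add list A_i.

Starting from the belief state K(In(R2)) ∧ K(¬In(R1)) ∧ K(Clean(R2)):

- E1 gives K(¬In(R2)) ∧ K(In(R1)) ∧ K(Clean(R2))
- E2 gives K(¬In(R2)) ∧ K(In(R1)) ∧ K(¬Clean(R2))

The successor belief state keeps only what is known under both effects: K(¬In(R2)) ∧ K(In(R1))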
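The Left example can be reproduced with a small sketch in which a knowledge state is a dictionary from propositions to known truth values, and unknown propositions are simply absent. This encoding, and the rule that a deleted proposition becomes known false within that outcome, are assumptions made for illustration.

```python
def apply_effect(known, delete, add):
    """Progress a knowledge state through one possible effect of an action."""
    result = dict(known)
    for prop in delete:
        result[prop] = False      # within this outcome, prop is known false
    for prop in add:
        result[prop] = True       # within this outcome, prop is known true
    return result


def successor(known, effects):
    """Keep only the facts that hold under every possible effect."""
    outcomes = [apply_effect(known, delete, add) for delete, add in effects]
    first = outcomes[0]
    return {p: v for p, v in first.items() if all(o.get(p) == v for o in outcomes)}


belief = {"In(R2)": True, "In(R1)": False, "Clean(R2)": True}
left_effects = [
    (["In(R2)"], ["In(R1)"]),                  # E1
    (["In(R2)", "Clean(R2)"], ["In(R1)"]),     # E2
]
after_left = successor(belief, left_effects)
```

The result knows the robot's new location but no longer knows whether R2 is clean, matching the slide.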

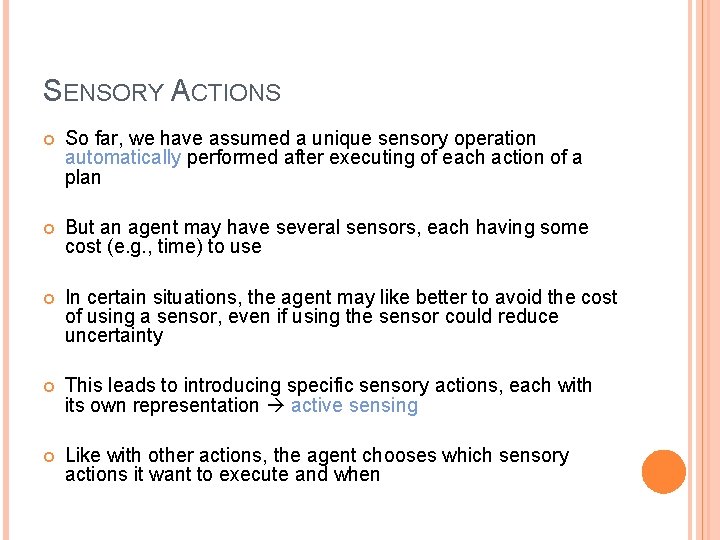

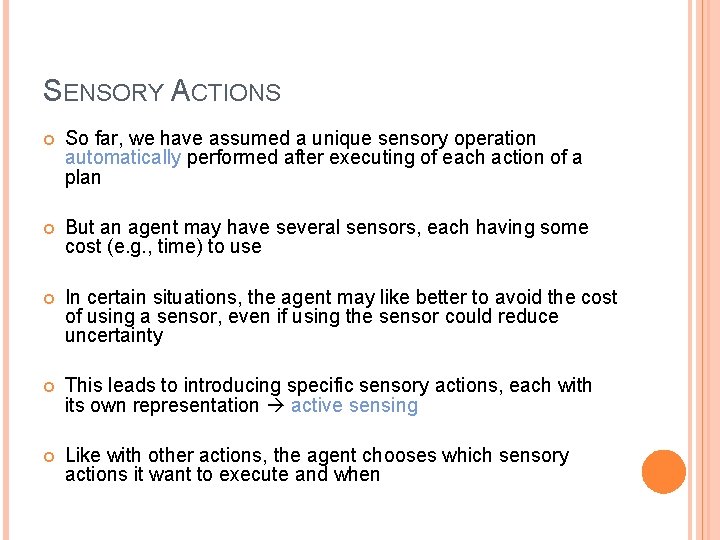

SENSORY ACTIONS

So far, we have assumed a unique sensory operation, automatically performed after the execution of each action of a plan.

But an agent may have several sensors, each having some cost (e.g., time) to use. In certain situations, the agent may prefer to avoid the cost of using a sensor, even if using the sensor could reduce uncertainty.

This leads to introducing specific sensory actions, each with its own representation (active sensing). As with other actions, the agent chooses which sensory actions it wants to execute and when.

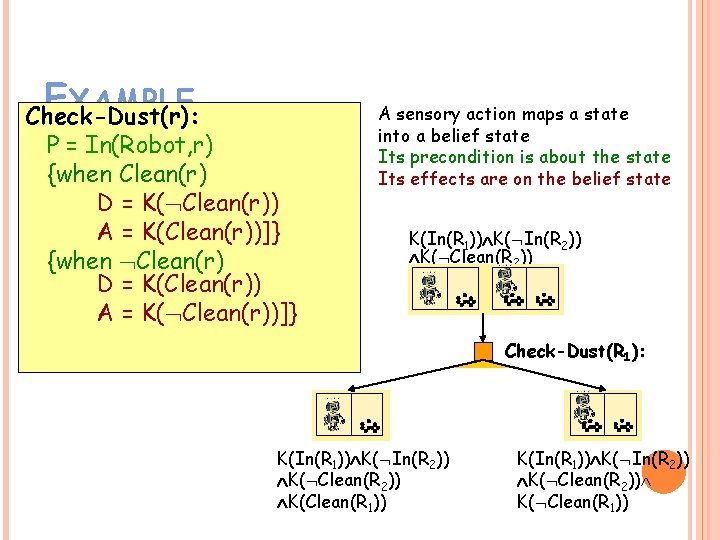

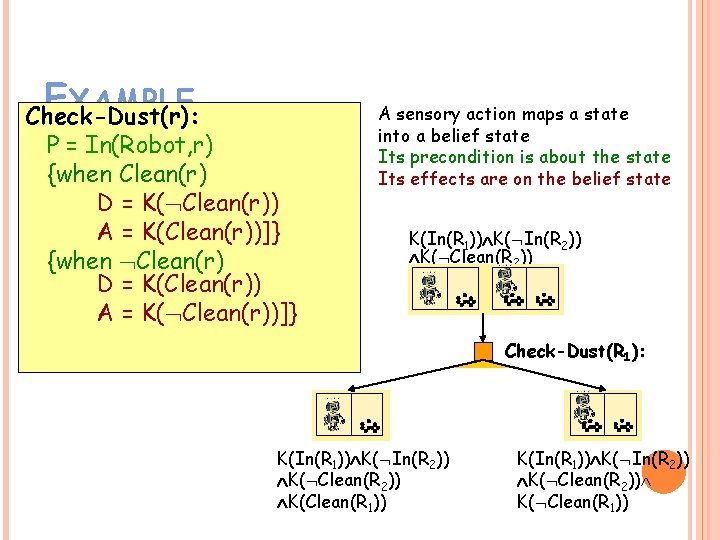

EXAMPLE

Check-Dust(r): P = In(Robot, r)
{ when Clean(r): D = K(¬Clean(r)), A = K(Clean(r)) }
{ when ¬Clean(r): D = K(Clean(r)), A = K(¬Clean(r)) }

A sensory action maps a state into a belief state. Its precondition is about the state; its effects are on the belief state.

Starting from K(In(R1)) ∧ K(¬In(R2)) ∧ K(¬Clean(R2)), Check-Dust(R1) leads to one of:

- K(In(R1)) ∧ K(¬In(R2)) ∧ K(¬Clean(R2)) ∧ K(Clean(R1))
- K(In(R1)) ∧ K(¬In(R2)) ∧ K(¬Clean(R2)) ∧ K(¬Clean(R1))
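A sensory action such as Check-Dust can be sketched as a function that returns the possible knowledge states after sensing, one per possible reading. The dictionary encoding is an assumption. Checking the precondition against what the agent knows is a simplification, since the slide states the precondition on the actual state.

```python
def check_dust(known, room):
    """Return the knowledge states that may result from Check-Dust(room)."""
    if known.get(f"In({room})") is not True:
        raise ValueError("precondition In(Robot, r) is not known to hold")
    prop = f"Clean({room})"
    if prop in known:
        return [dict(known)]                 # nothing new to learn
    # One outcome per possible sensor reading; other knowledge is untouched.
    return [{**known, prop: True}, {**known, prop: False}]


before = {"In(R1)": True, "In(R2)": False, "Clean(R2)": False}
outcomes = check_dust(before, "R1")
```

The planner does not choose between the two outcomes, so a sensory action also creates an action node with several children in the AND/OR tree.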

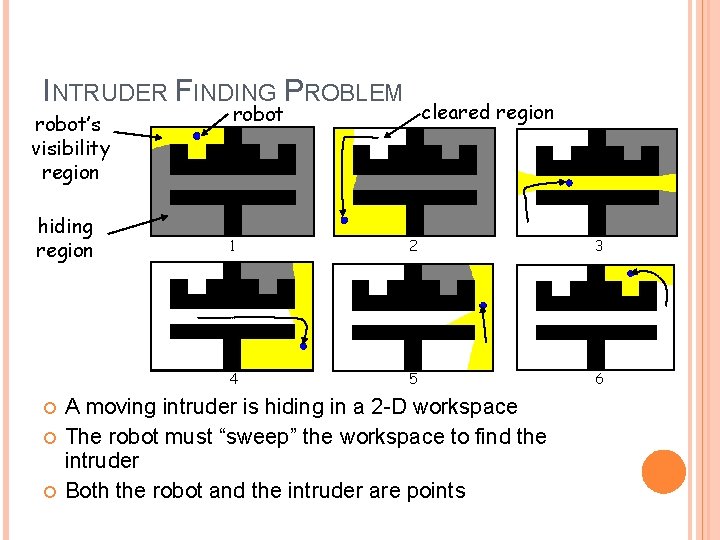

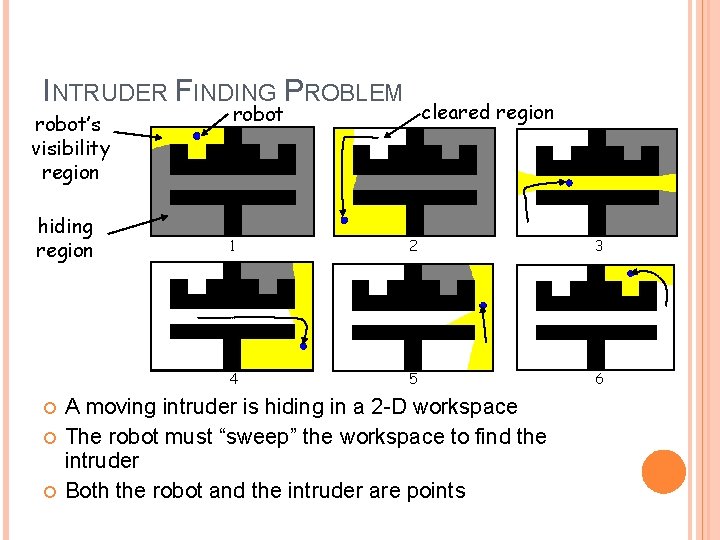

INTRUDER FINDING PROBLEM

- A moving intruder is hiding in a 2-D workspace
- The robot must “sweep” the workspace to find the intruder
- Both the robot and the intruder are points

[Figure: panels numbered 1 to 6 showing the robot, the robot’s visibility region, the hiding region, and the cleared region.]

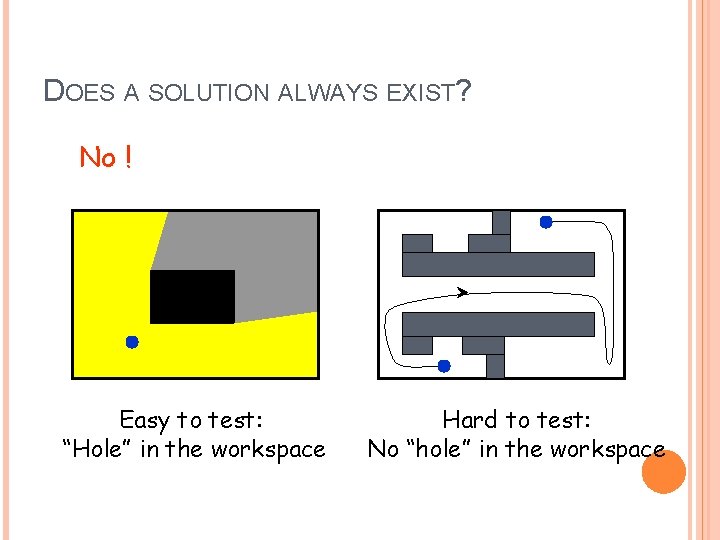

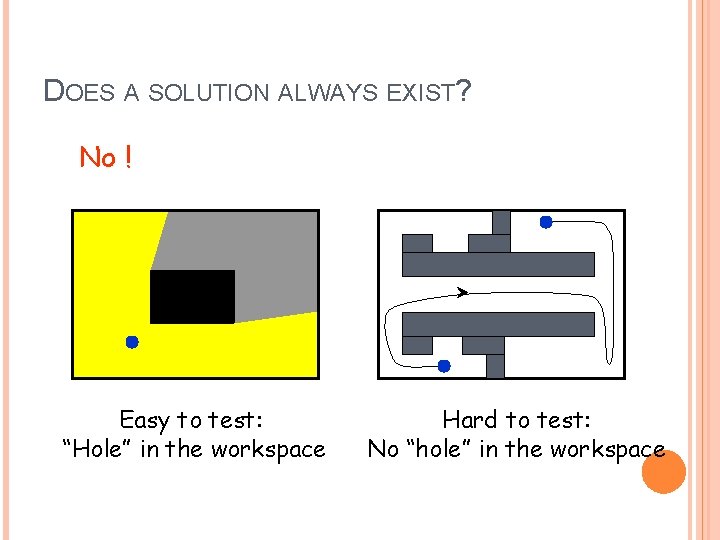

DOES A SOLUTION ALWAYS EXIST?

No!

- Easy to test: “hole” in the workspace
- Hard to test: no “hole” in the workspace

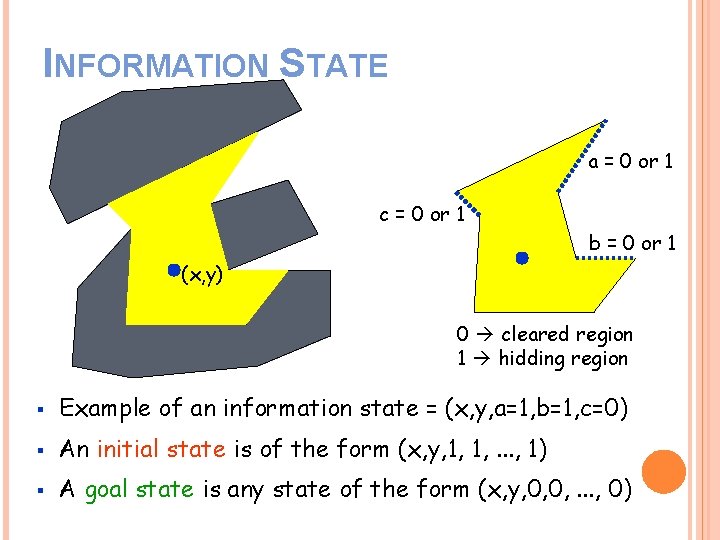

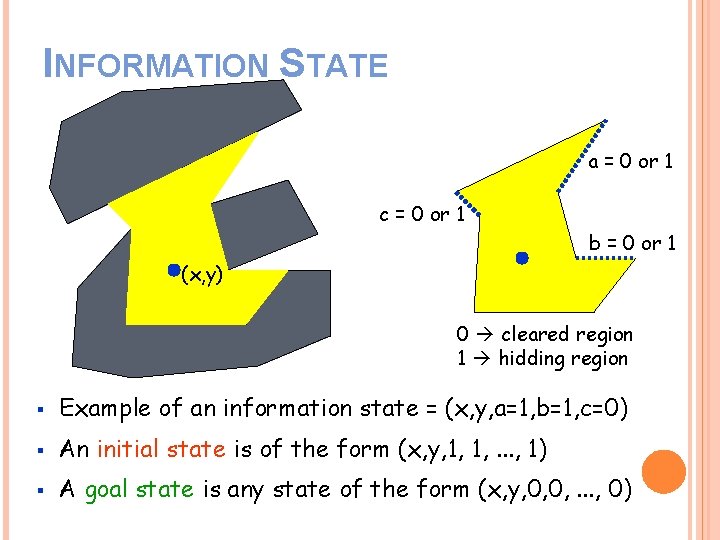

INFORMATION STATE

[Figure: robot at position (x, y) with region labels a, b, and c, each equal to 0 or 1.]

Label values:

- 0: cleared region
- 1: hiding region

Information states:

- Example of an information state: (x, y, a=1, b=1, c=0)
- An initial state is of the form (x, y, 1, 1, ..., 1)
- A goal state is any state of the form (x, y, 0, 0, ..., 0)
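An information state can be sketched as the robot position plus one bit per region outside the robot's view. The class and function names are illustrative assumptions.

```python
from typing import NamedTuple, Tuple


class InfoState(NamedTuple):
    x: float
    y: float
    labels: Tuple[int, ...]    # one bit per region: 1 = hiding, 0 = cleared


def initial_state(x, y, num_regions):
    """Initially the intruder may hide in every region."""
    return InfoState(x, y, (1,) * num_regions)


def is_goal(state):
    """Goal: every region is cleared, wherever the robot is."""
    return not any(state.labels)


example = InfoState(2.0, 3.0, (1, 1, 0))    # (x, y, a=1, b=1, c=0)
```

The goal test ignores (x, y), in line with the slide's "any state of the form (x, y, 0, 0, ..., 0)".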

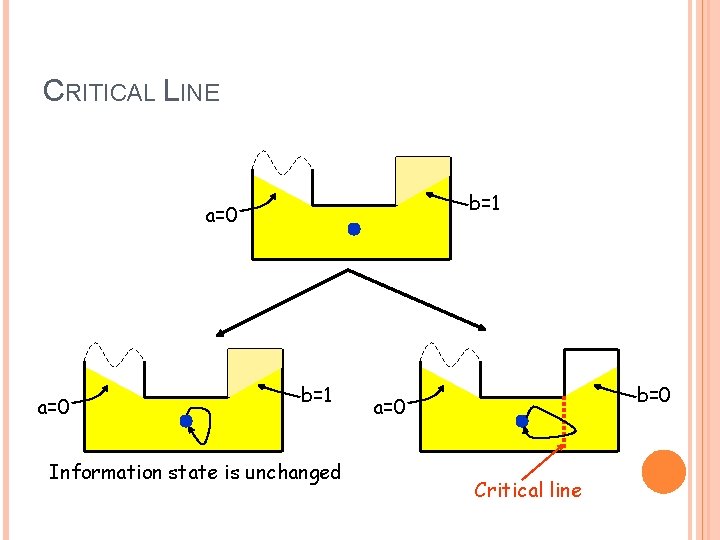

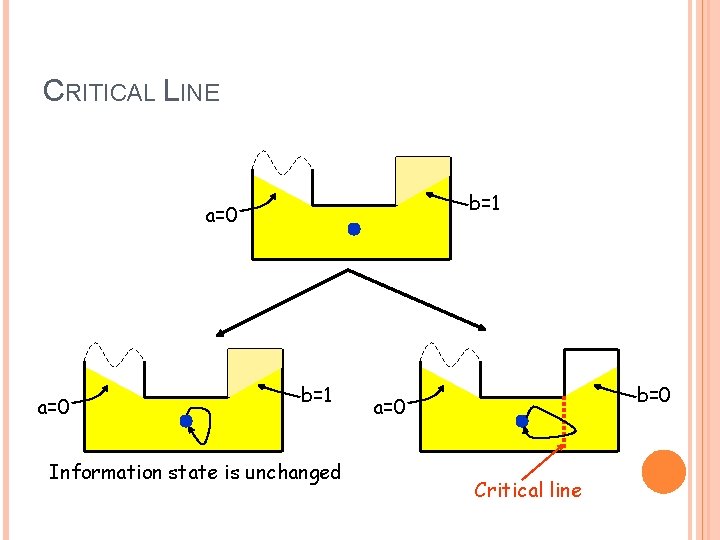

CRITICAL LINE

[Figure: robot positions labeled with a=0, b=1 (information state is unchanged) and a=0, b=0, separated by a critical line.]

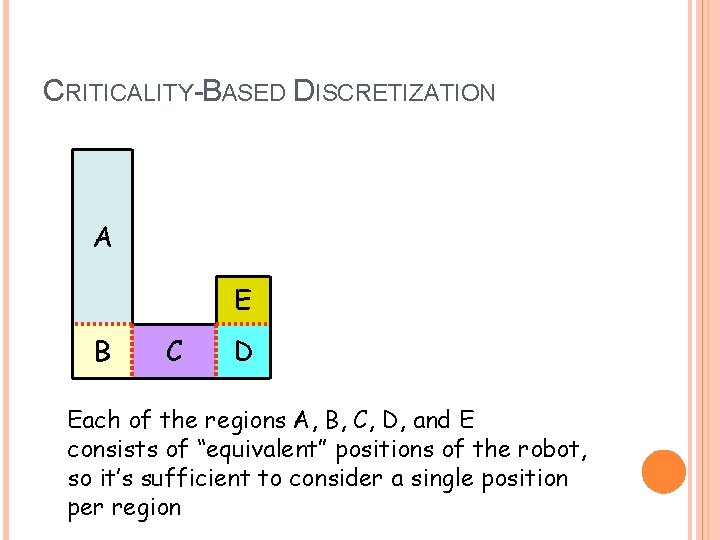

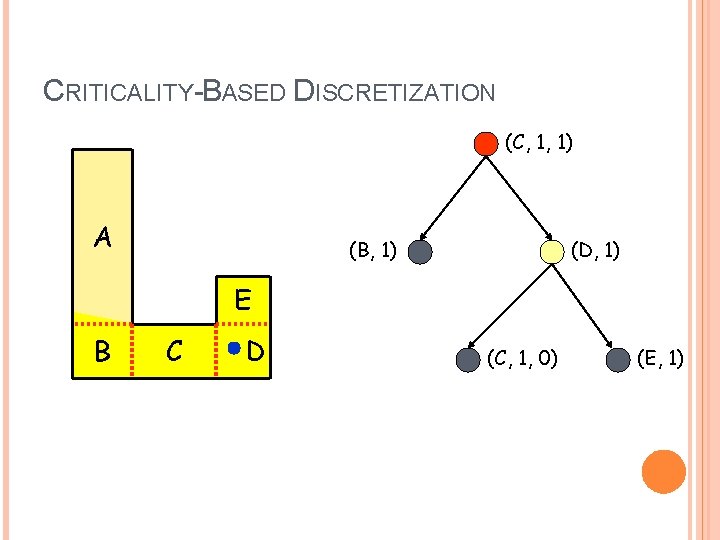

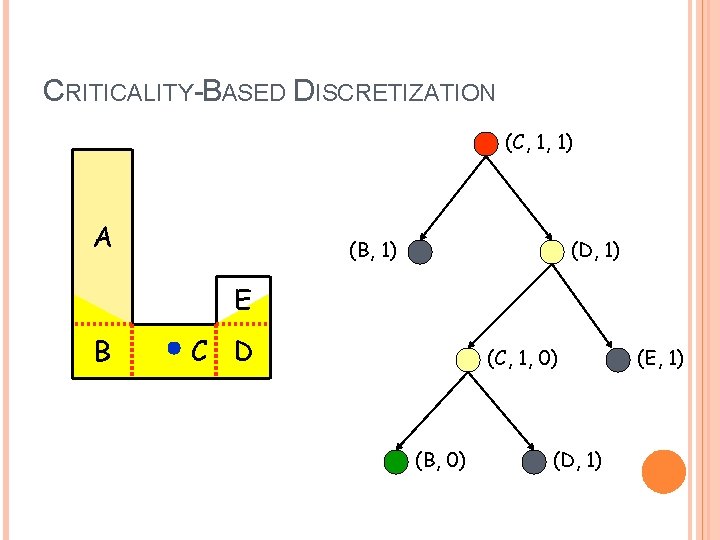

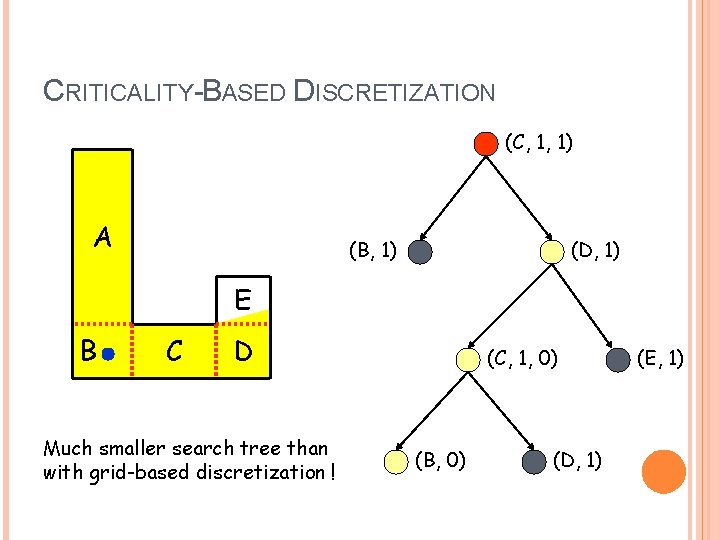

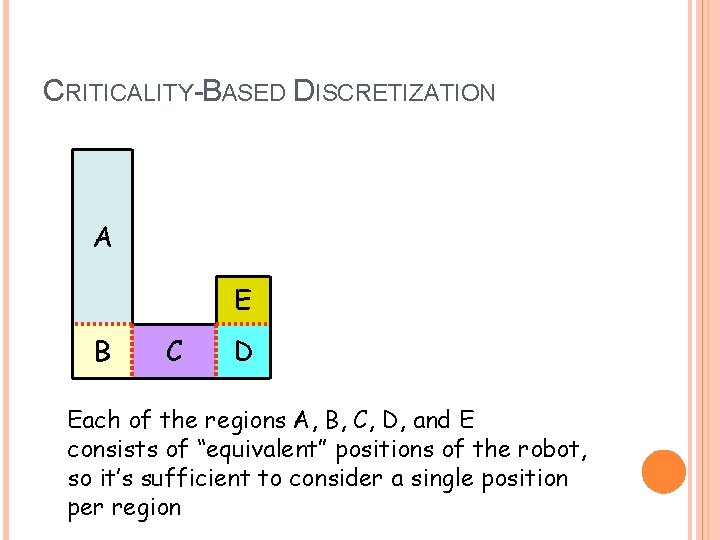

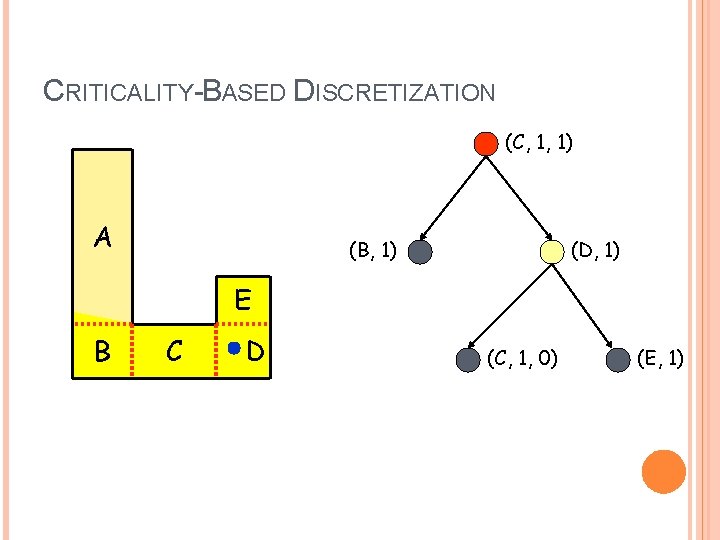

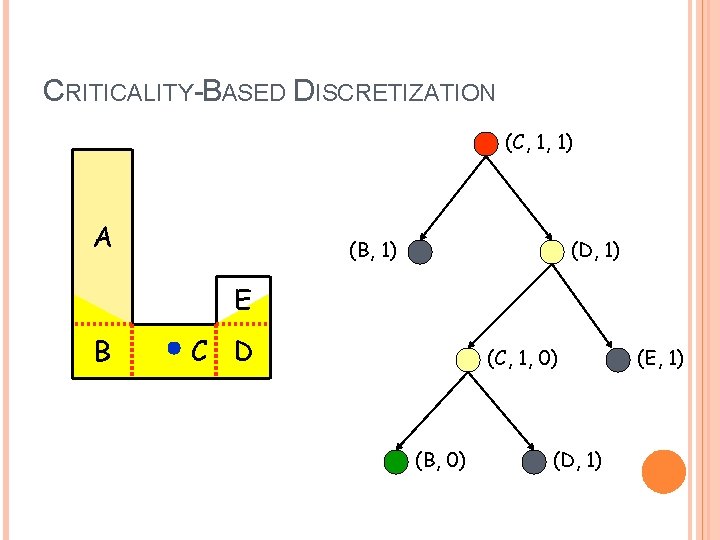

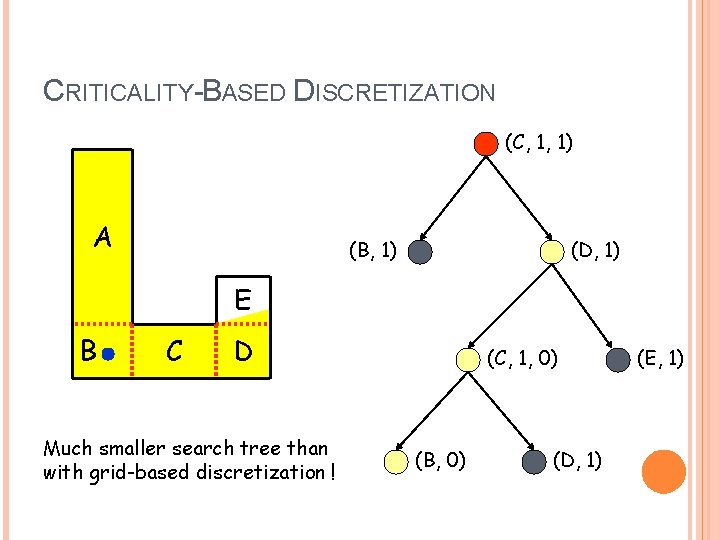

CRITICALITY-BASED DISCRETIZATION

[Figure: workspace partitioned into regions A, B, C, D, and E.]

Each of the regions A, B, C, D, and E consists of “equivalent” positions of the robot, so it’s sufficient to consider a single position per region.

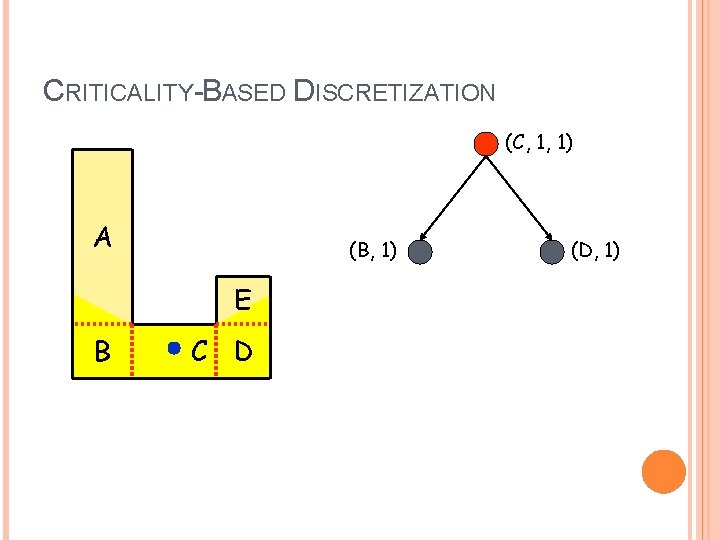

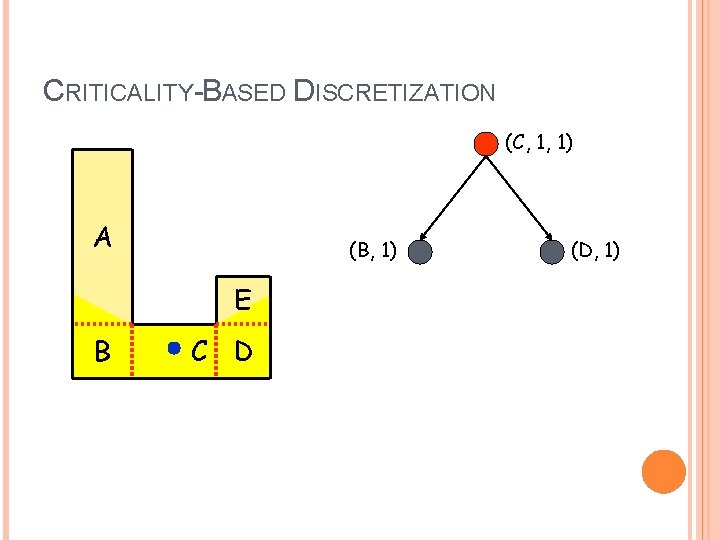

CRITICALITY-BASED DISCRETIZATION

[Figure: search tree over information states, built step by step from the root (C, 1, 1); its nodes include (B, 1), (D, 1), (C, 1, 0), (E, 1), and (B, 0).]

Much smaller search tree than with grid-based discretization!

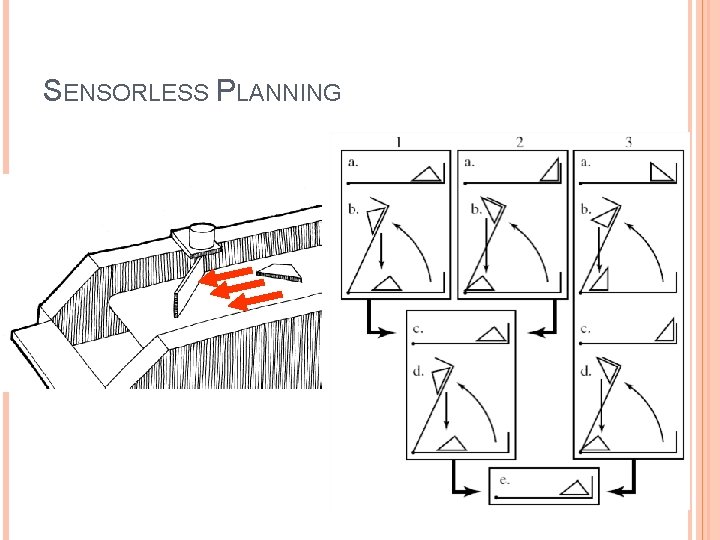

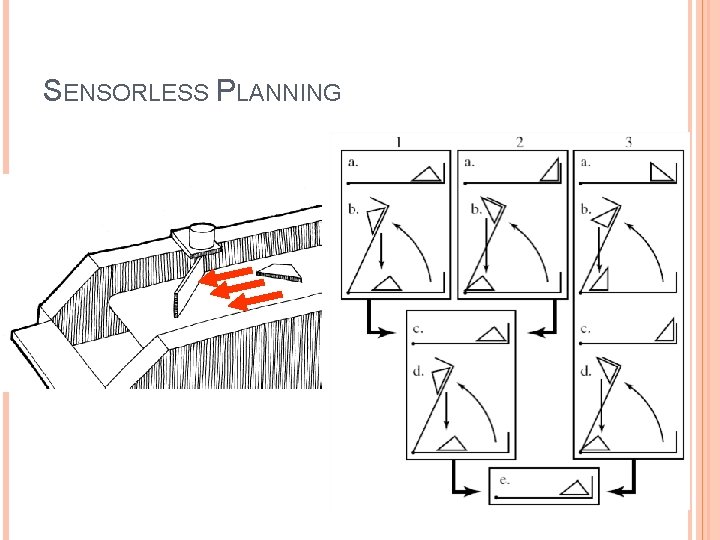

SENSORLESS PLANNING