CMU SCS Graph and Tensor Mining for fun

- Slides: 60

CMU SCS

Graph and Tensor Mining for fun and profit

Luna Dong, Christos Faloutsos, Andrey Kan, Jun Ma, Subho Mukherjee

Roadmap

- Introduction
  - Motivation
- Part#1: Graphs
- Part#2: Tensors
  - P2.1: Basics (dfn, PARAFAC)
  - P2.2: Embeddings & mining
  - P2.3: Inference
- Conclusions

KDD 2018 Dong+

‘Recipe’ Structure:

- Problem definition
- Short answer/solution
- LONG answer – details
- Conclusion/short-answer

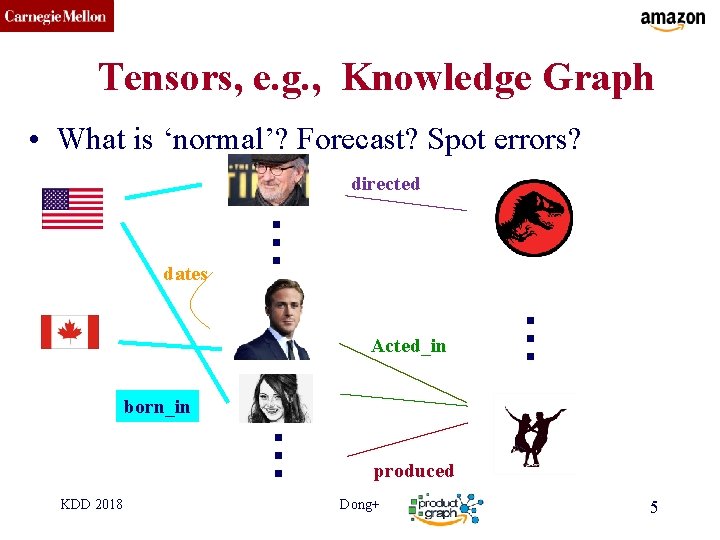

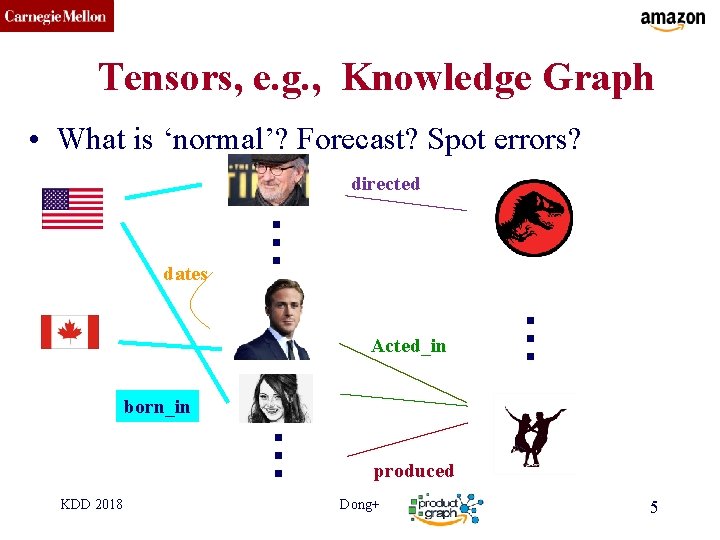

Tensors, e.g., Knowledge Graph

- What is ‘normal’? Forecast? Spot errors?

[Figure: knowledge graph with edge labels directed, dates, Acted_in, born_in, produced, …]

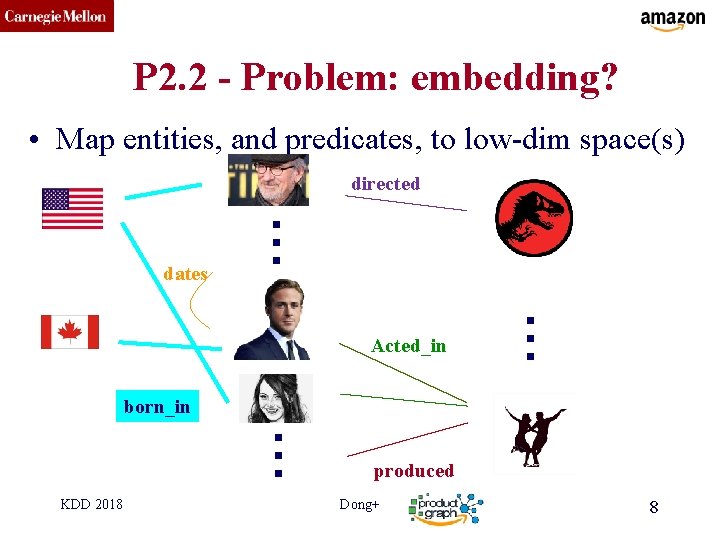

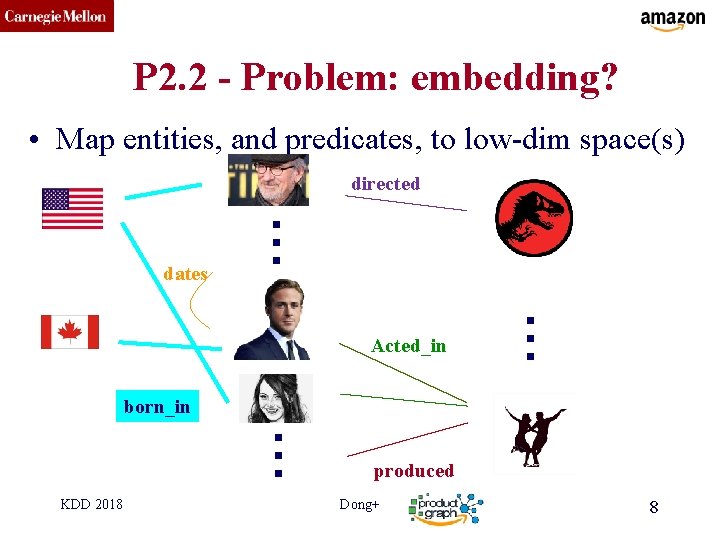

P2.2 - Problem: embedding?

- Map entities, and predicates, to low-dim space(s)

[Figure: knowledge graph with edge labels directed, dates, Acted_in, born_in, produced, …]

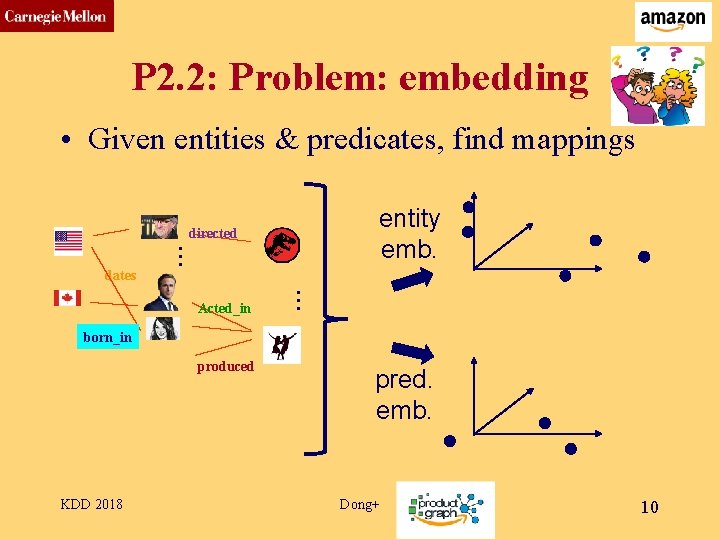

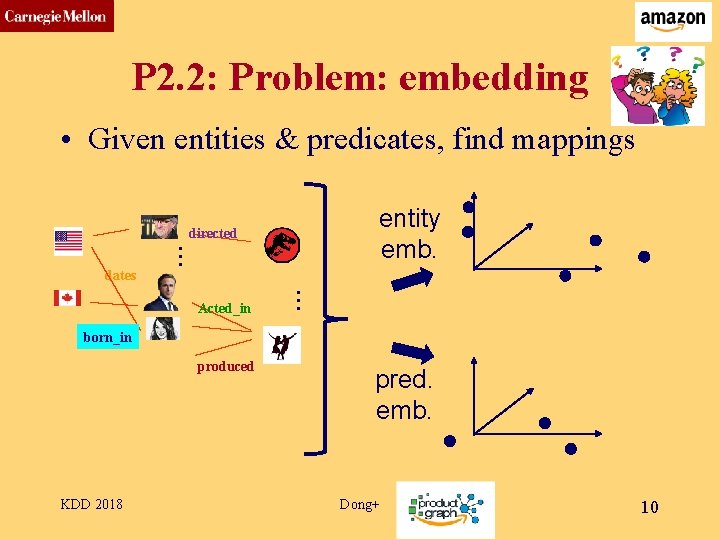

P2.2: Problem: embedding

- Given entities & predicates, find mappings

[Figure: knowledge graph (edge labels directed, dates, Acted_in, born_in, produced, …) mapped to entity embeddings (entity emb.) and predicate embeddings (pred. emb.)]

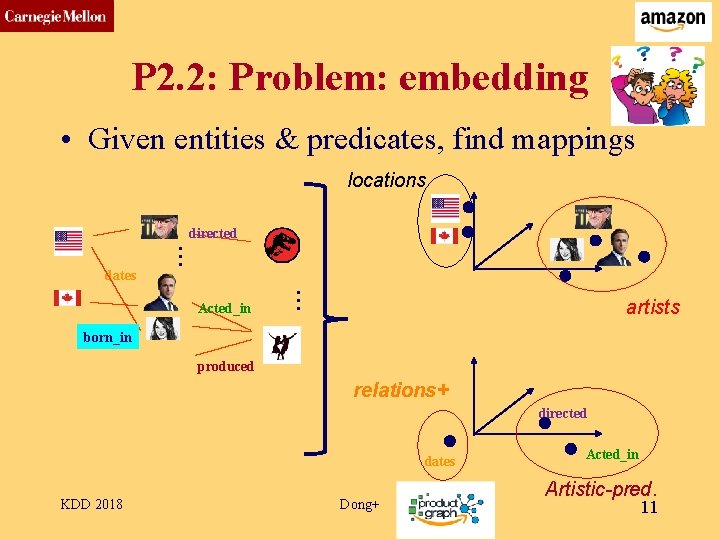

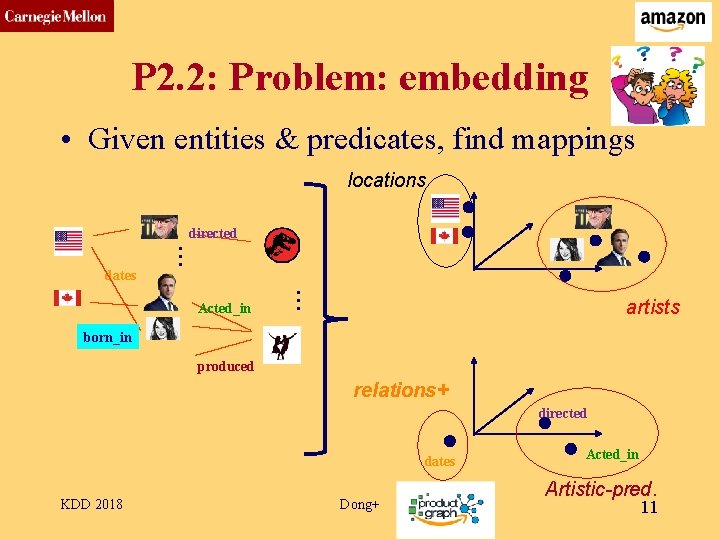

P2.2: Problem: embedding

- Given entities & predicates, find mappings

[Figure: knowledge graph (edge labels directed, dates, Acted_in, born_in, produced, …) and its embedding spaces; group labels: locations, artists, dates, relations, Artistic-pred.]

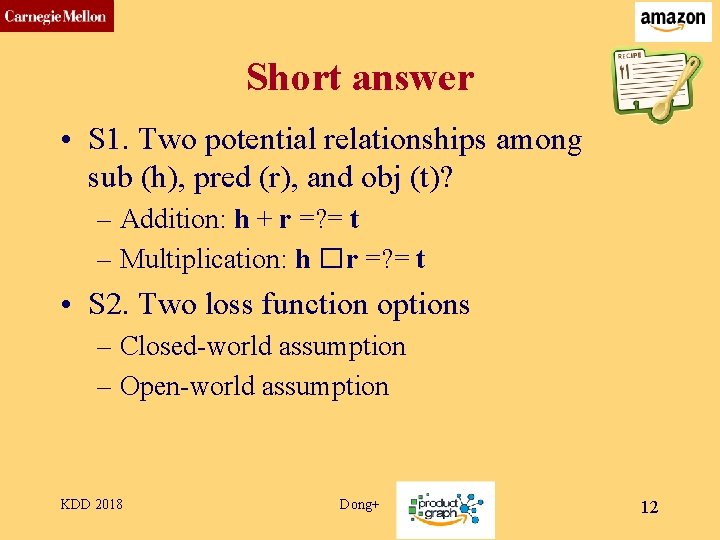

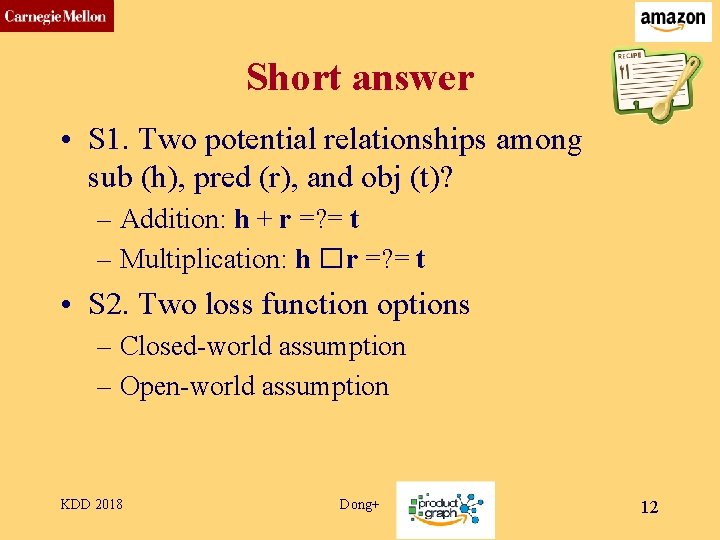

Short answer

- S1. Two potential relationships among sub (h), pred (r), and obj (t)?
  - Addition: h + r =?= t
  - Multiplication: h ∘ r =?= t
- S2. Two loss function options
  - Closed-world assumption
  - Open-world assumption

Long Answer

- ➤ Motivation
- Graph Embedding
- Tensor Embedding
- Knowledge Graph Embedding

Why embeddings (even for graphs)?

- Reason #1: easier to visualize / manipulate

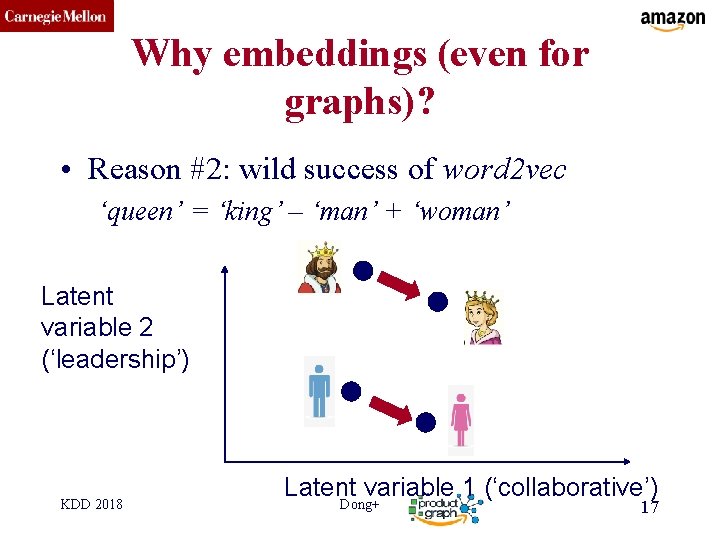

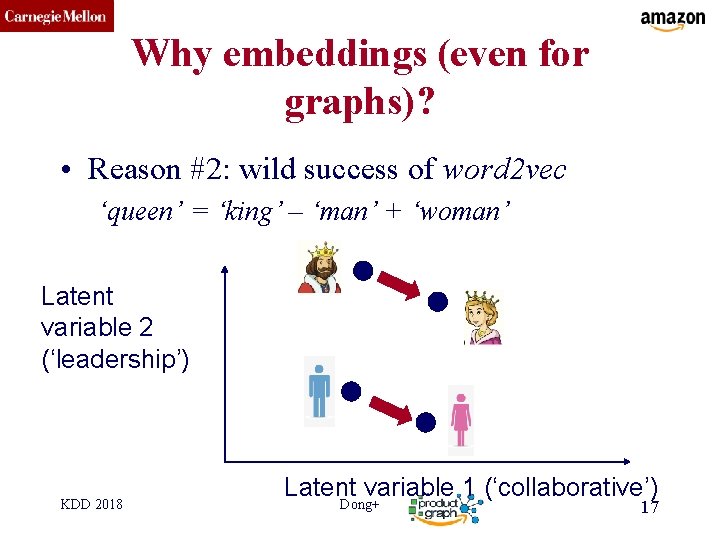

Why embeddings (even for graphs)?

- Reason #2: wild success of word2vec
  - ‘queen’ = ‘king’ – ‘man’ + ‘woman’

[Figure: 2-D embedding plot; axes: Latent variable 1 (‘collaborative’), Latent variable 2 (‘leadership’)]

Long Answer

- ✓ Motivation
- ➤ Graph Embedding
- Tensor Embedding
- Knowledge Graph Embedding

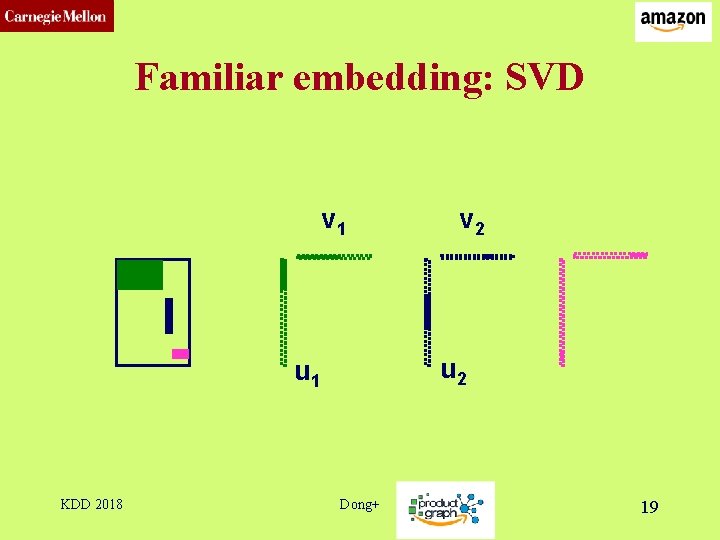

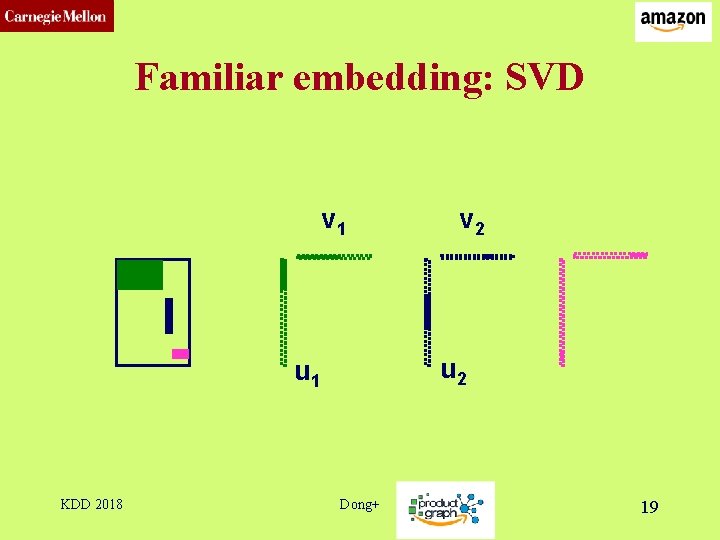

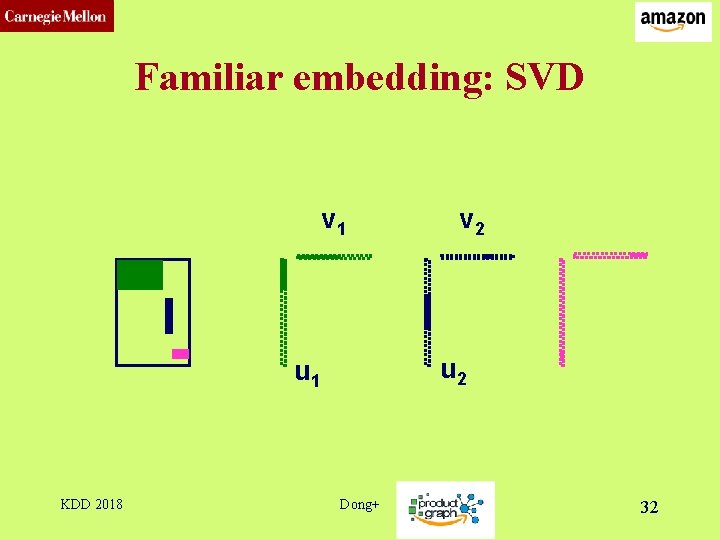

Familiar embedding: SVD

[Figure labels: u1, u2, v1, v2]

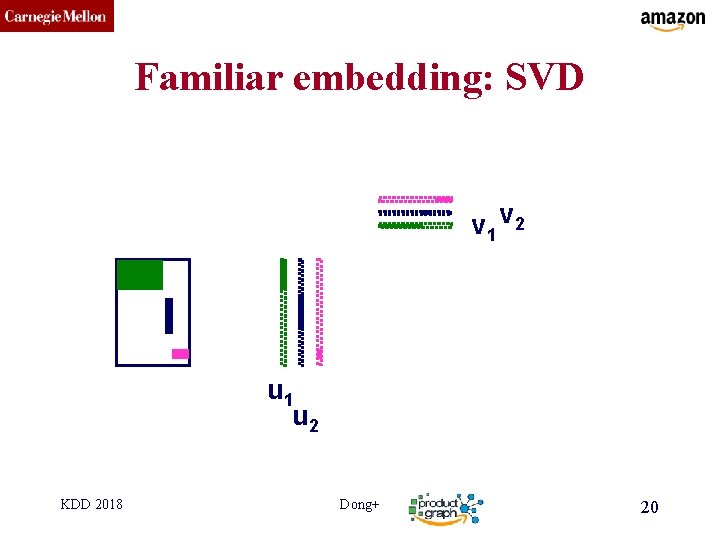

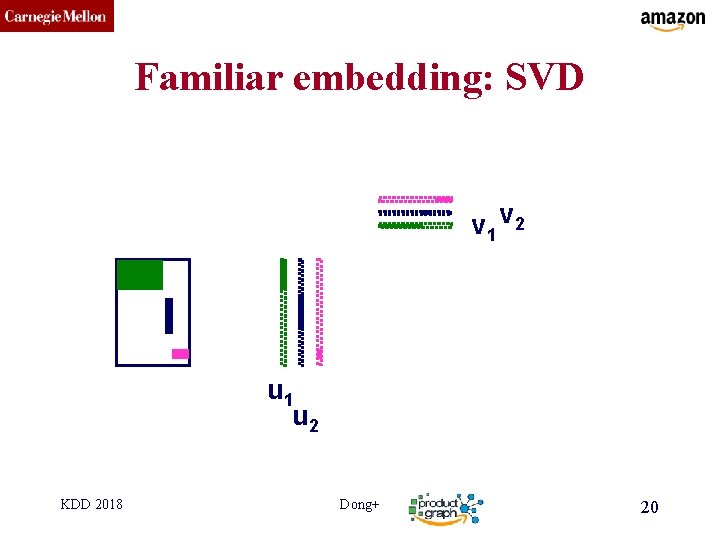

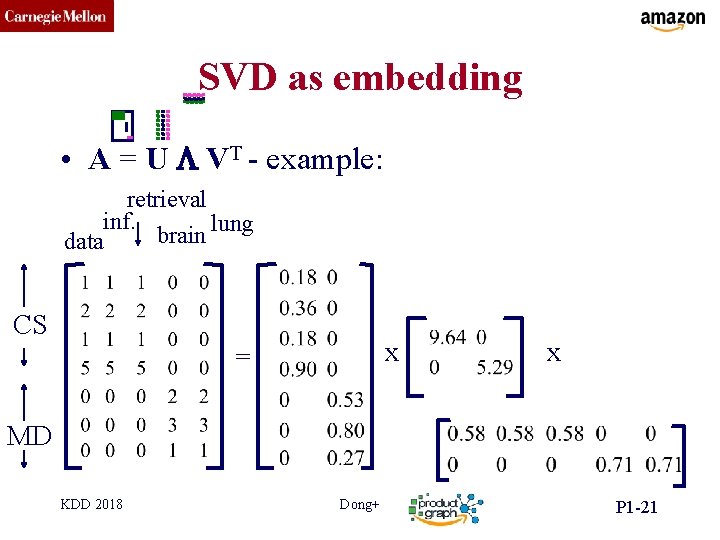

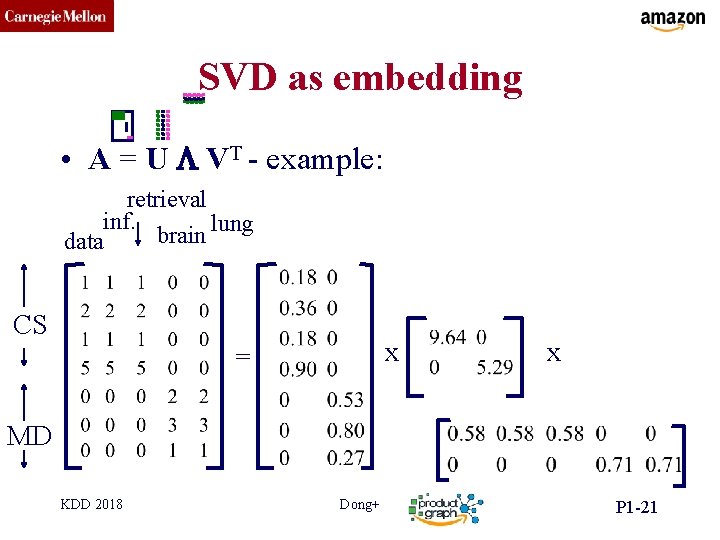

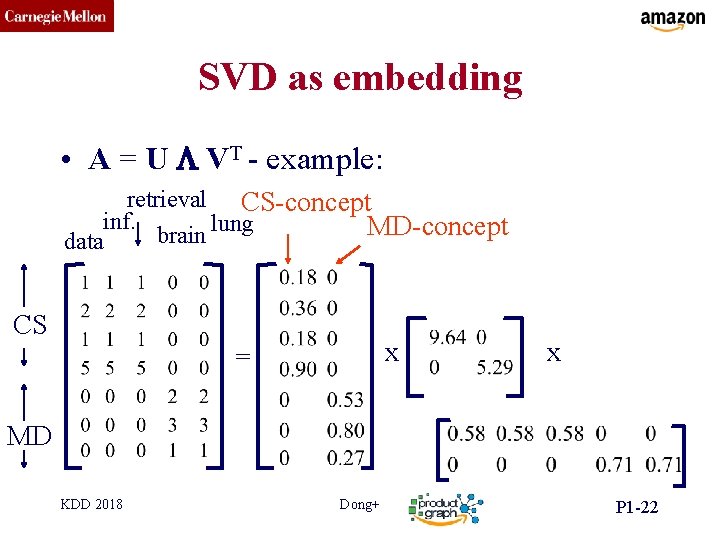

SVD as embedding

- $A = U \Lambda V^T$ - example:

[Figure: document-term matrix (document groups CS and MD; terms data, inf., retrieval, brain, lung) written as a product of three matrices]

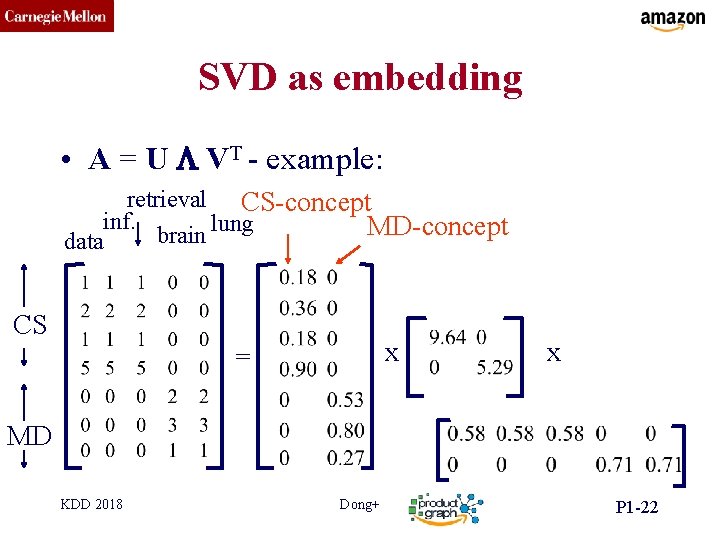

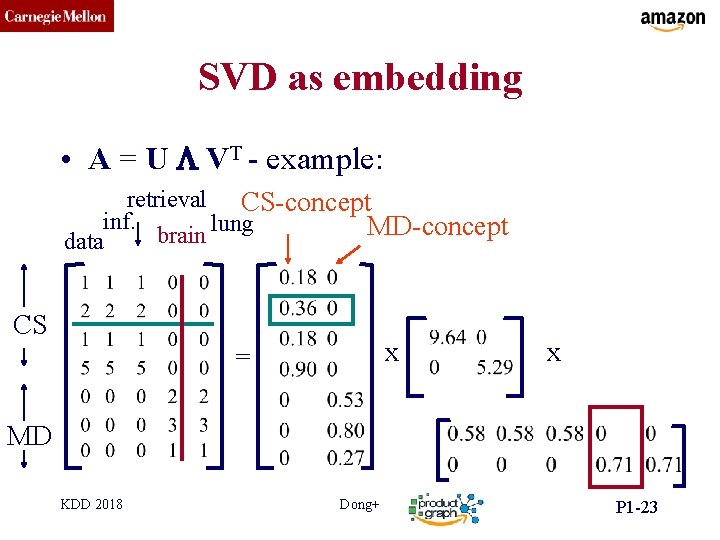

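SVD as embedding

- $A = U \Lambda V^T$ - example:

[Figure: document-term matrix (document groups CS and MD; terms data, inf., retrieval, brain, lung) written as a product of three matrices; the two latent dimensions are labeled CS-concept and MD-concept]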

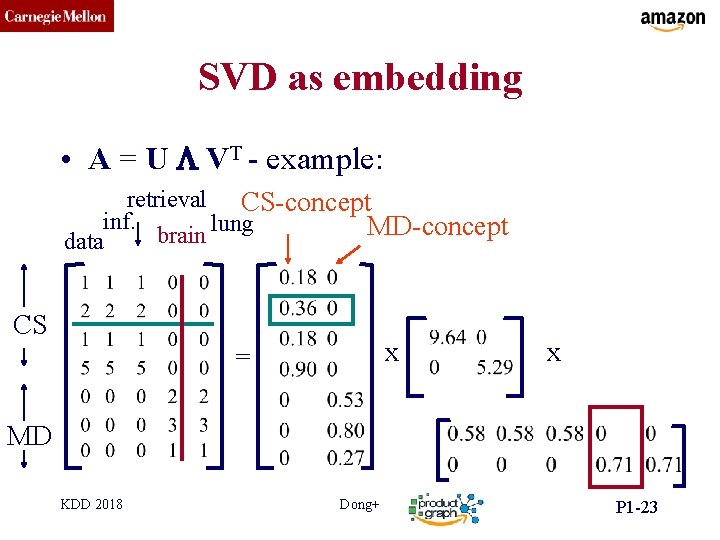

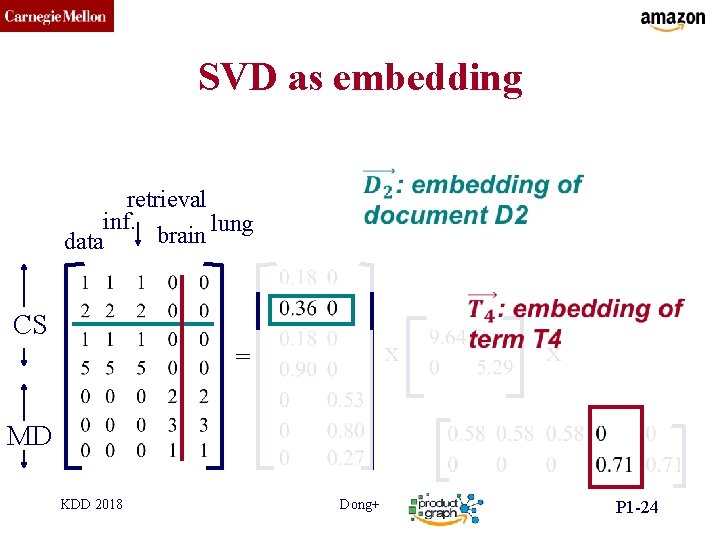

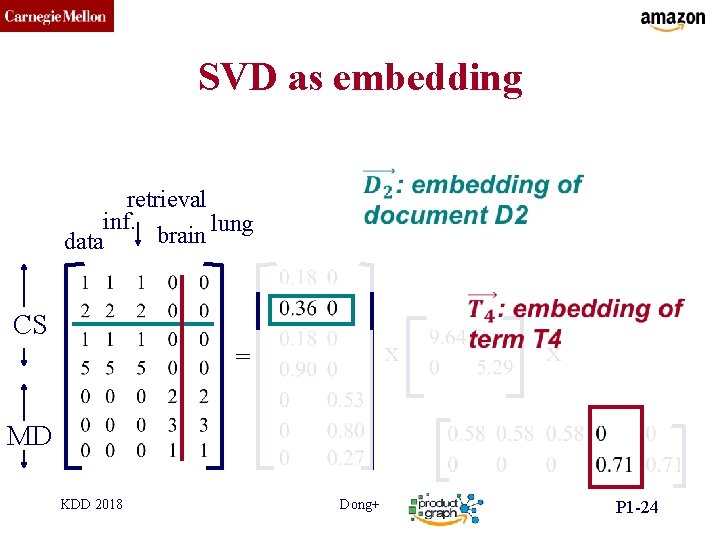

SVD as embedding

[Figure: document-term matrix (document groups CS and MD; terms data, inf., retrieval, brain, lung) written as a product of three matrices]
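
A minimal sketch of SVD used as an embedding, in NumPy. The matrix values, the column order (data, inf., retrieval, brain, lung) and all variable names are assumptions made for illustration; only the factorization $A = U \Lambda V^T$ and the CS/MD grouping come from the slides.

```python
import numpy as np

# Toy document-term matrix (assumed values, for illustration only).
# Columns: data, inf., retrieval, brain, lung
A = np.array([
    [1, 1, 1, 0, 0],   # CS documents
    [2, 2, 2, 0, 0],
    [1, 1, 1, 0, 0],
    [5, 5, 5, 0, 0],
    [0, 0, 0, 2, 2],   # MD documents
    [0, 0, 0, 3, 3],
    [0, 0, 0, 1, 1],
], dtype=float)

# Thin SVD: A = U @ diag(s) @ Vt
U, s, Vt = np.linalg.svd(A, full_matrices=False)

k = 2                         # number of latent concepts to keep
doc_emb = U[:, :k] * s[:k]    # one k-dim vector per document
term_emb = Vt[:k, :].T        # one k-dim vector per term

# Number of non-negligible singular values (numerical rank).
rank = int(np.sum(s > 1e-9))
```

With this toy matrix the two retained dimensions line up with the CS-concept and the MD-concept: CS documents have a (numerically) zero coordinate on the second dimension, and MD documents on the first.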

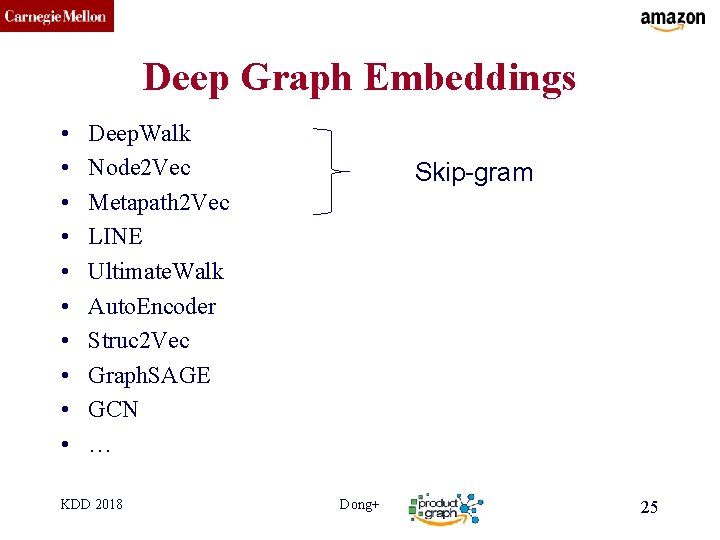

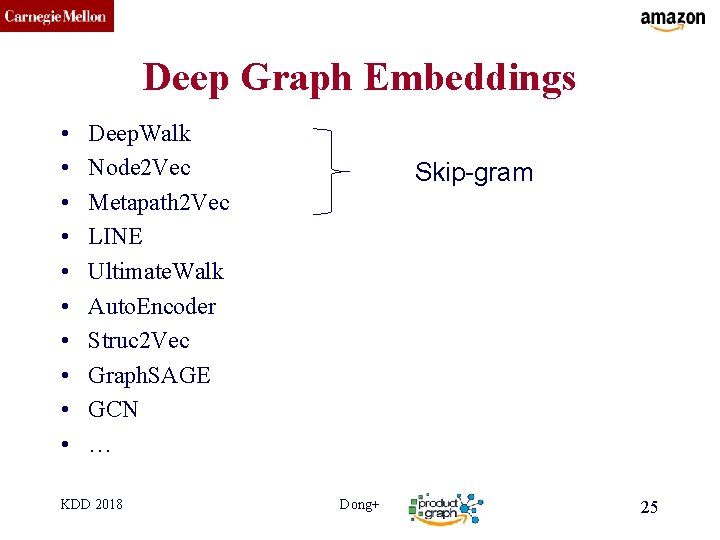

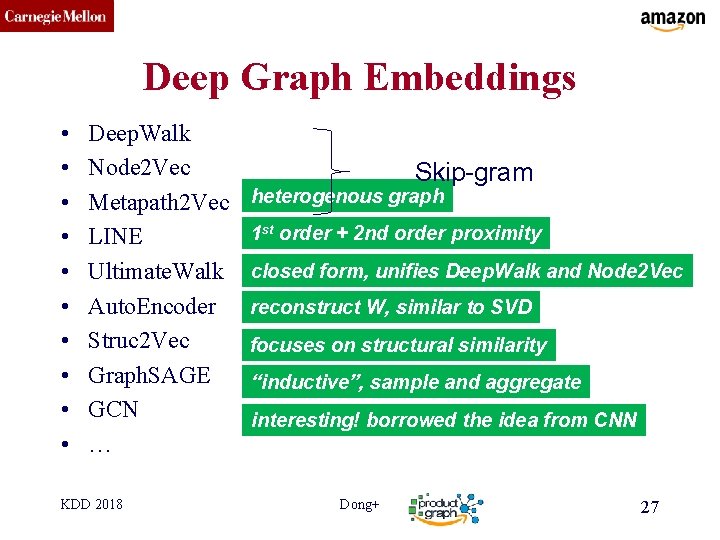

Deep Graph Embeddings

- DeepWalk
- Node2Vec
- Metapath2Vec
- LINE
- UltimateWalk
- AutoEncoder
- Struc2Vec
- GraphSAGE
- GCN
- …

[Callout: Skip-gram]

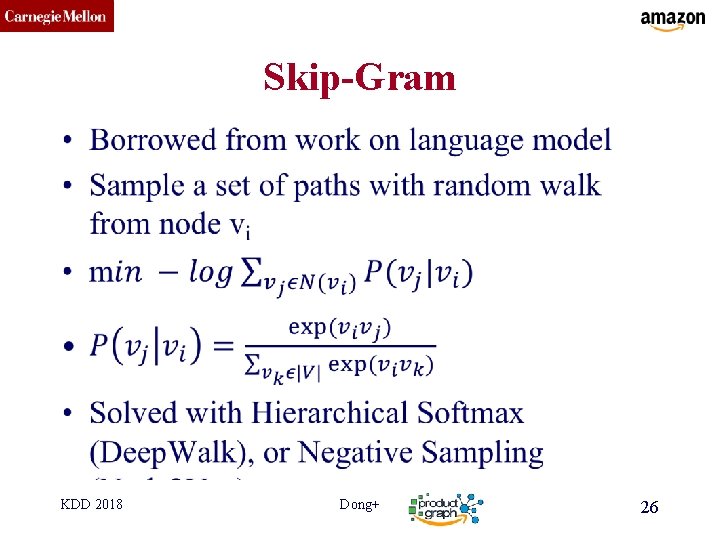

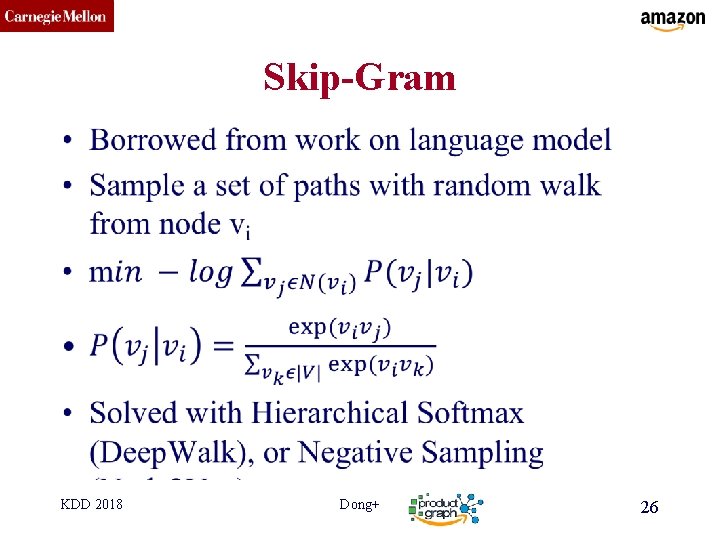

Skip-Gram

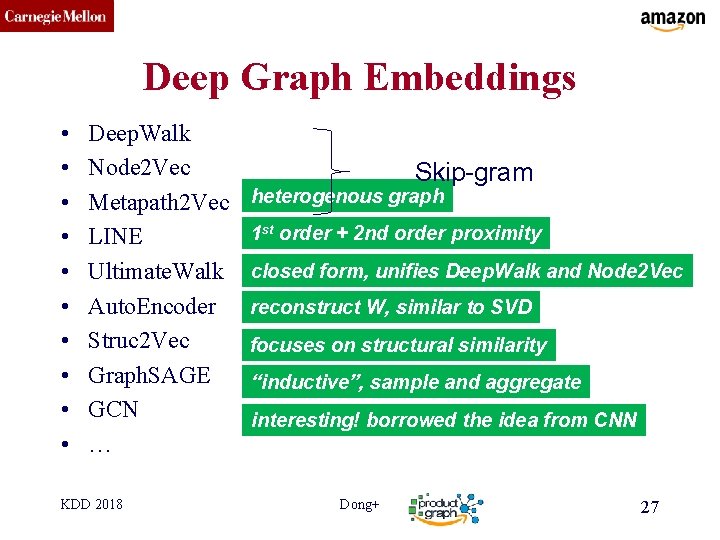

Deep Graph Embeddings

- DeepWalk, Node2Vec: Skip-gram
- Metapath2Vec: heterogeneous graph
- LINE: 1st order + 2nd order proximity
- UltimateWalk: closed form, unifies DeepWalk and Node2Vec
- AutoEncoder: reconstruct W, similar to SVD
- Struc2Vec: focuses on structural similarity
- GraphSAGE: “inductive”, sample and aggregate
- GCN: interesting! borrowed the idea from CNN
- …
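
DeepWalk-style methods turn a graph into "sentences" of nodes via random walks and then train a skip-gram model on them. The sketch below covers only that data-preparation step. The adjacency list, the uniform walk, the window size and the function names are all hypothetical choices for illustration, and the skip-gram training itself is omitted.

```python
import random

def random_walk(adj, start, length, rng):
    """Uniform random walk visiting up to `length` nodes, starting at `start`."""
    walk = [start]
    while len(walk) < length:
        neighbors = adj[walk[-1]]
        if not neighbors:          # dead end: stop early
            break
        walk.append(rng.choice(neighbors))
    return walk

def skipgram_pairs(walk, window):
    """(center, context) pairs for nodes at most `window` steps apart."""
    pairs = []
    for i, center in enumerate(walk):
        lo, hi = max(0, i - window), min(len(walk), i + window + 1)
        pairs.extend((center, walk[j]) for j in range(lo, hi) if j != i)
    return pairs

# Hypothetical toy graph as an adjacency list.
adj = {"a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b", "d"], "d": ["c"]}
rng = random.Random(0)
walk = random_walk(adj, "a", 6, rng)
pairs = skipgram_pairs(walk, window=2)
```

The resulting pairs play the role that (word, context word) pairs play in word2vec.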

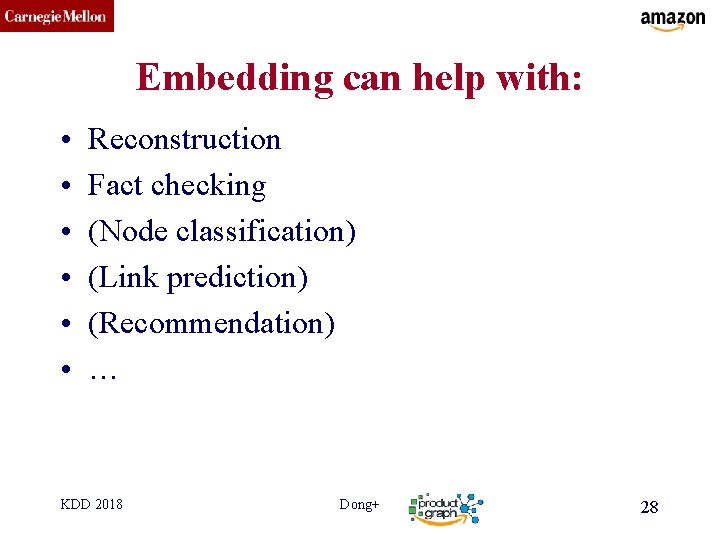

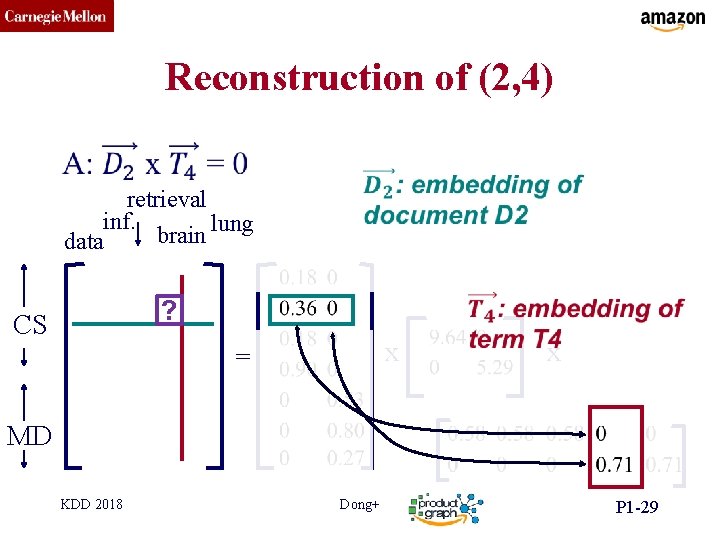

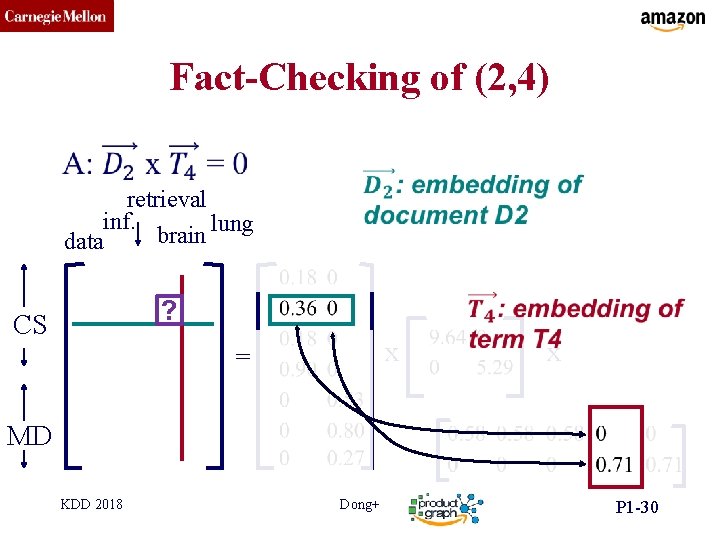

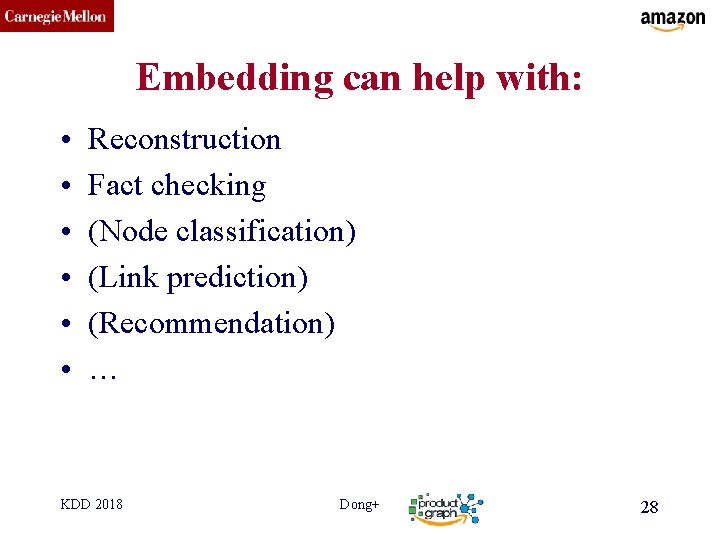

Embedding can help with:

- Reconstruction
- Fact checking
- (Node classification)
- (Link prediction)
- (Recommendation)
- …

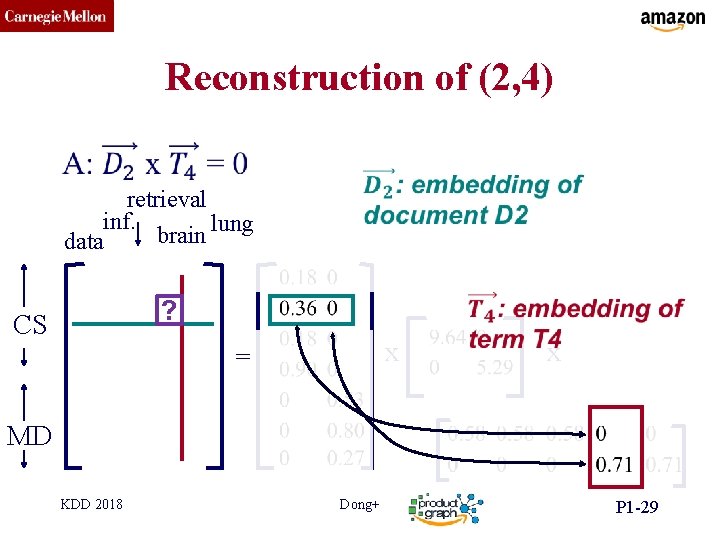

Reconstruction of (2, 4)

[Figure: document-term matrix (document groups CS and MD; terms data, inf., retrieval, brain, lung) and its three-matrix factorization, with entry (2, 4) marked ‘?’]

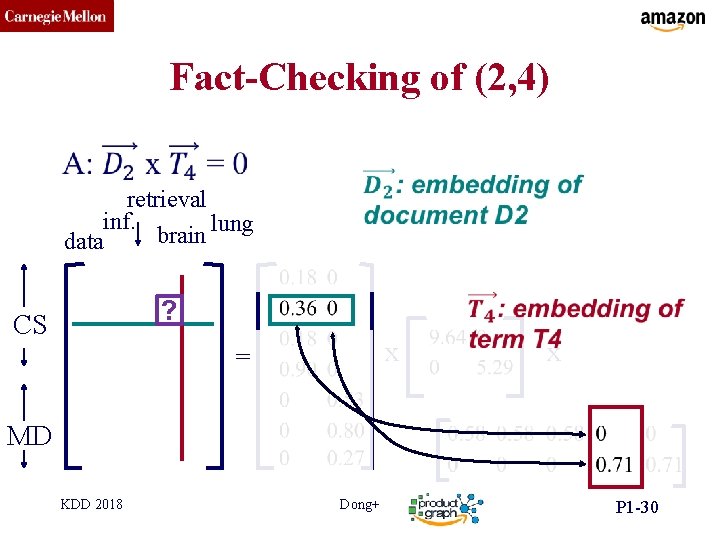

Fact-Checking of (2, 4)

[Figure: document-term matrix (document groups CS and MD; terms data, inf., retrieval, brain, lung) and its three-matrix factorization, with entry (2, 4) marked ‘?’]
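
Reconstruction and fact checking can both be read as "look up the entry in the low-rank product". The sketch below assumes a toy document-term matrix (values, column order and the helper name `low_rank_scores` are all hypothetical) and scores entry (2, 4), i.e. the 2nd document against the 4th term.

```python
import numpy as np

def low_rank_scores(A, k):
    """Rank-k reconstruction of A from its top-k singular triplets."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U[:, :k] * s[:k]) @ Vt[:k, :]

# Toy document-term matrix (assumed values).
# Columns: data, inf., retrieval, brain, lung
A = np.array([
    [1, 1, 1, 0, 0],   # CS documents
    [2, 2, 2, 0, 0],
    [1, 1, 1, 0, 0],
    [5, 5, 5, 0, 0],
    [0, 0, 0, 2, 2],   # MD documents
    [0, 0, 0, 3, 3],
    [0, 0, 0, 1, 1],
], dtype=float)

A_hat = low_rank_scores(A, k=2)

# Slide indices are 1-based: (2, 4) is row 1, column 3 in NumPy.
score_24 = A_hat[1, 3]   # 2nd (CS) document vs. 'brain'
score_21 = A_hat[1, 0]   # 2nd (CS) document vs. 'data'
```

A score near zero for (2, 4) suggests that a claimed link between that CS document and the term 'brain' is implausible under the model, whereas the entry for 'data' is reconstructed close to its observed value.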

Long Answer

- ✓ Motivation
- ✓ Graph Embedding
- ➤ Tensor Embedding
- Knowledge Graph Embedding

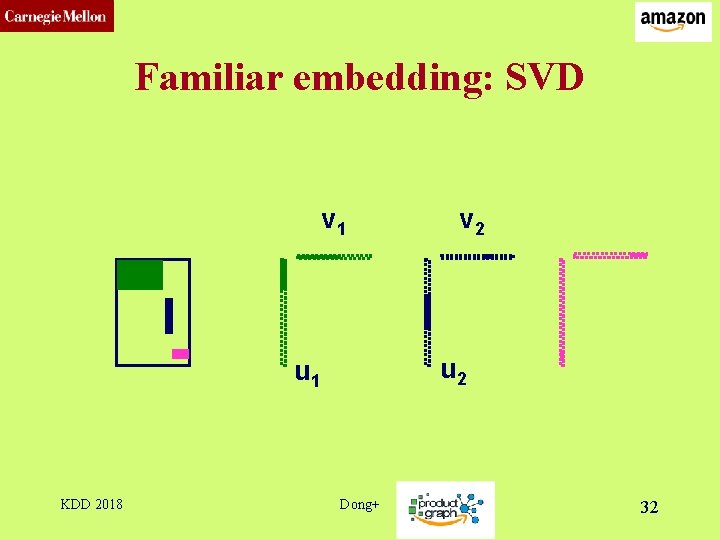

Familiar embedding: SVD

[Figure labels: u1, u2, v1, v2]

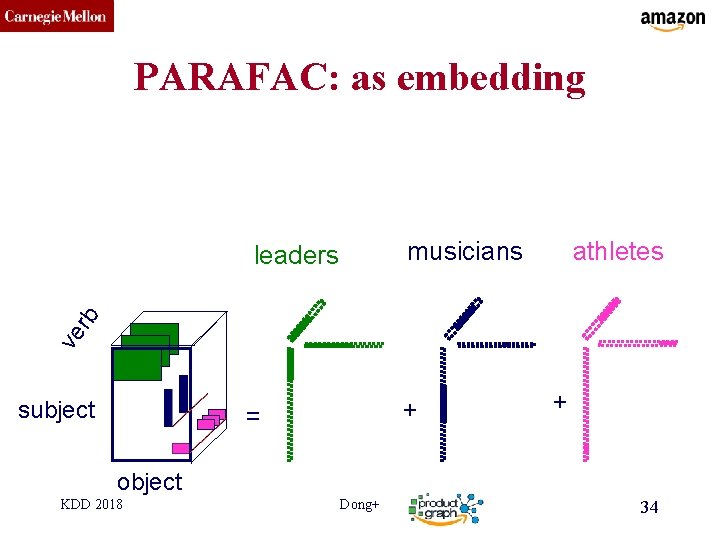

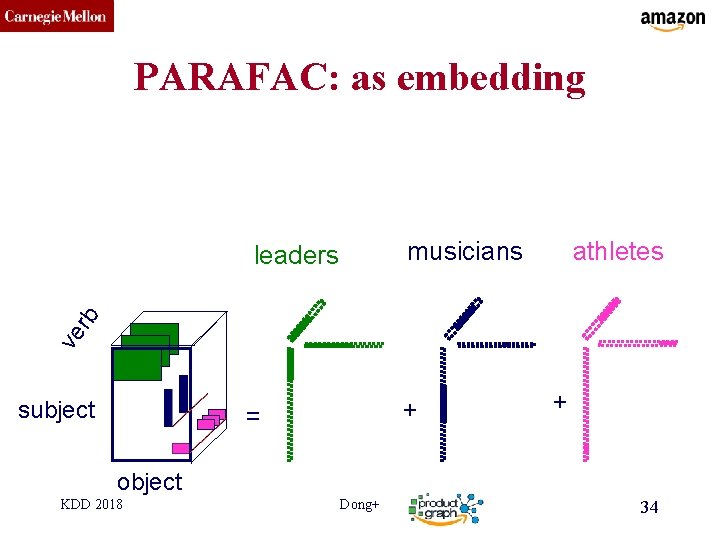

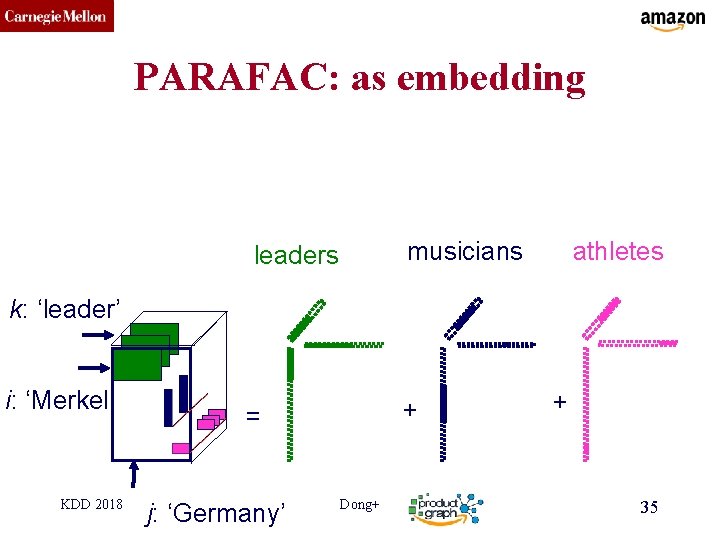

PARAFAC: as embedding

[Figure: subject × object × verb tensor = leaders + musicians + athletes (a sum of three components)]

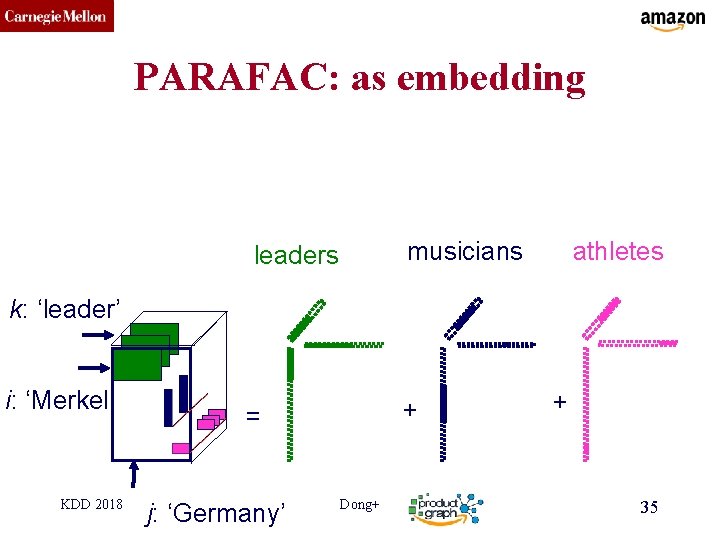

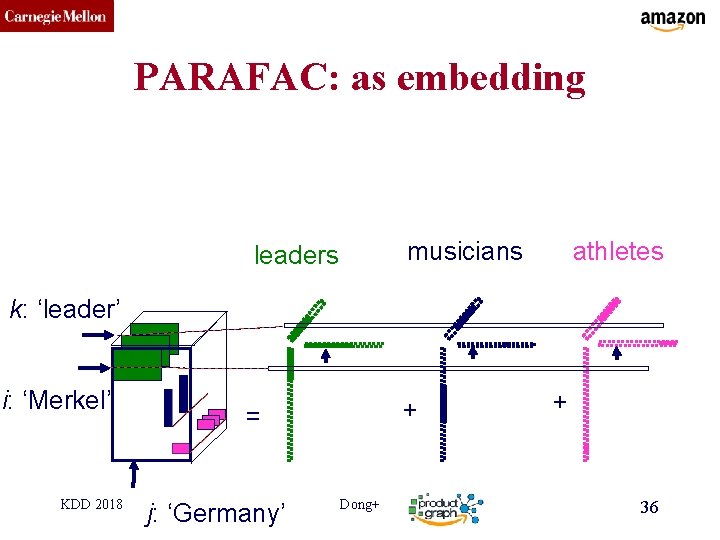

PARAFAC: as embedding

[Figure: tensor = leaders + musicians + athletes, with entry i: ‘Merkel’, j: ‘Germany’, k: ‘leader’ marked]

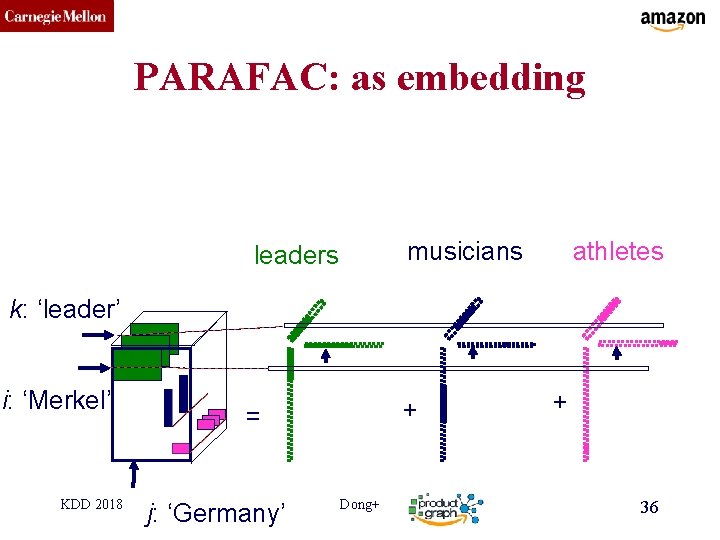

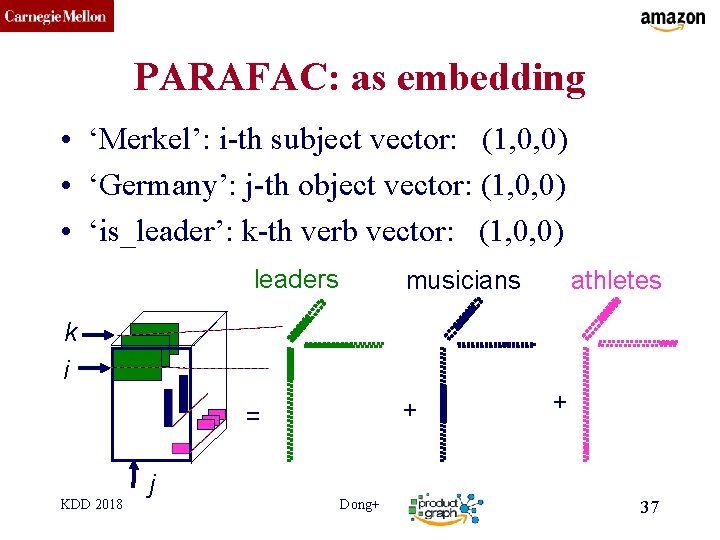

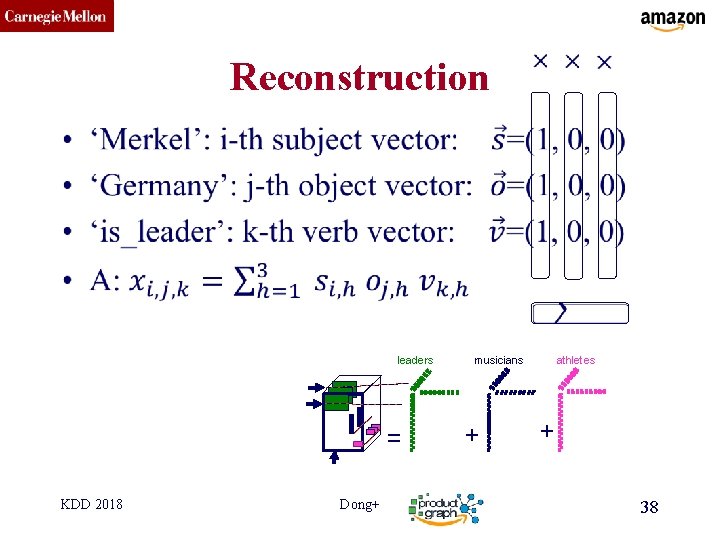

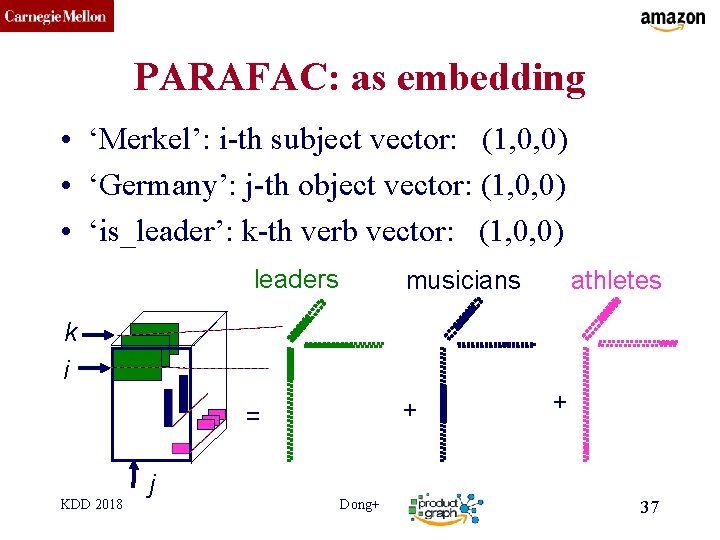

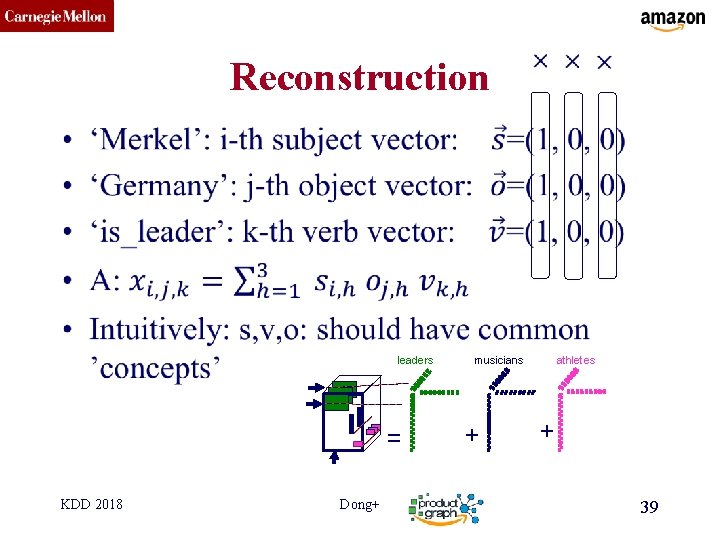

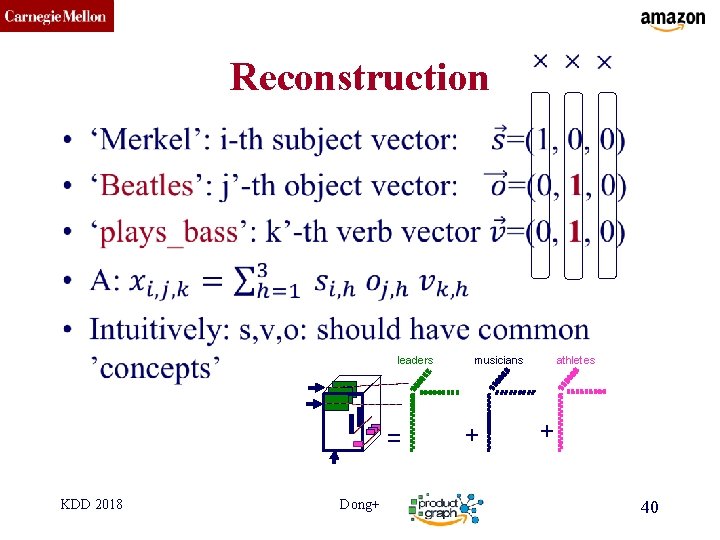

PARAFAC: as embedding

- ‘Merkel’: i-th subject vector: (1, 0, 0)
- ‘Germany’: j-th object vector: (1, 0, 0)
- ‘is_leader’: k-th verb vector: (1, 0, 0)

[Figure: tensor = leaders + musicians + athletes, with indices i, j, k marked]
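
The three vectors above can be read as rows of three factor matrices whose columns correspond to the latent groups (leaders, musicians, athletes). A minimal NumPy sketch follows; only ‘Merkel’, ‘Germany’ and ‘is_leader’ come from the slide, while the second row of each matrix and every variable name are made up for illustration.

```python
import numpy as np

# Index maps; the second entry of each is a hypothetical placeholder.
subjects = {"Merkel": 0, "hypothetical_musician": 1}
objects = {"Germany": 0, "hypothetical_band": 1}
verbs = {"is_leader": 0, "hypothetical_plays_in": 1}

# Rows are embeddings over (leaders, musicians, athletes).
A = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])  # subject factors
B = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])  # object factors
C = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])  # verb factors

# PARAFAC model: X[i, j, k] = sum_f A[i, f] * B[j, f] * C[k, f]
X = np.einsum("if,jf,kf->ijk", A, B, C)

merkel_leads_germany = X[subjects["Merkel"], objects["Germany"], verbs["is_leader"]]
```

Because all three vectors load on the same latent group, the modeled entry for (‘Merkel’, ‘is_leader’, ‘Germany’) is 1, while mixed combinations such as (‘Merkel’, ‘is_leader’, hypothetical_band) are 0.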

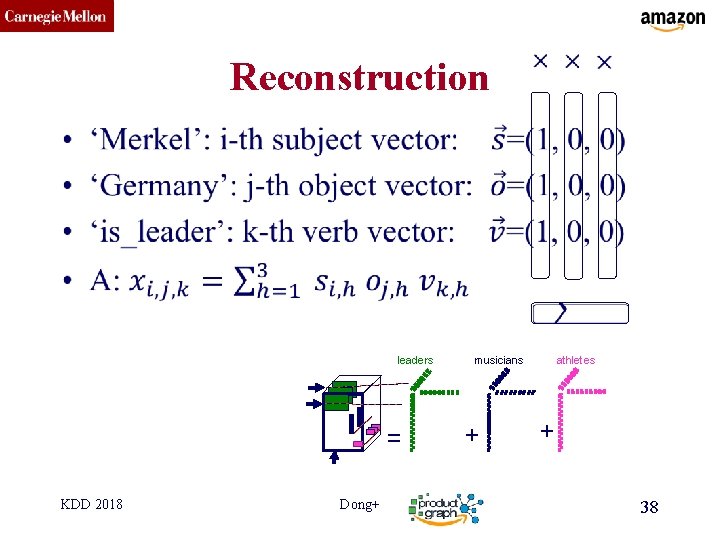

Reconstruction

[Figure: tensor = leaders + musicians + athletes]
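
As a worked equation, reconstruction under PARAFAC sums the products of matching coordinates. The symbols $a$, $b$, $c$ (subject, object and verb vectors) and $F$ (number of components, here 3) are notation assumed for this sketch; the example plugs in the (1, 0, 0) vectors given earlier for ‘Merkel’, ‘Germany’ and ‘is_leader’.

```latex
x_{ijk} \approx \sum_{f=1}^{F} a_{if}\, b_{jf}\, c_{kf}
\qquad\text{e.g.}\qquad
x_{\text{Merkel},\,\text{Germany},\,\text{is\_leader}}
  \approx 1\cdot 1\cdot 1 + 0\cdot 0\cdot 0 + 0\cdot 0\cdot 0 = 1
```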

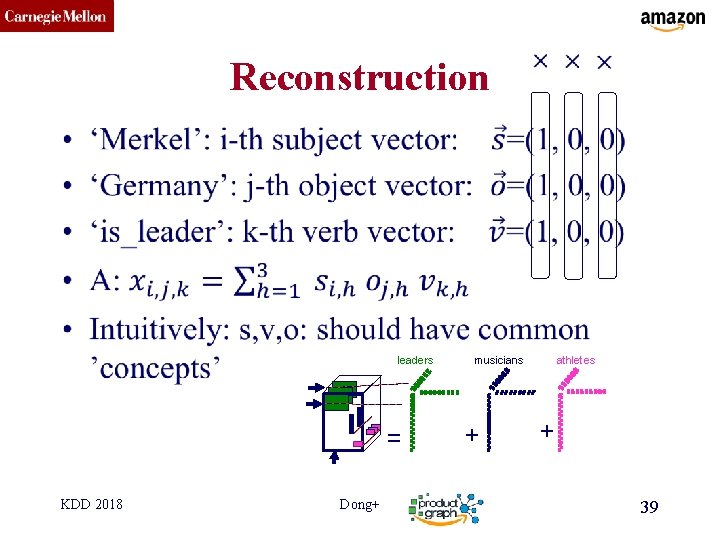

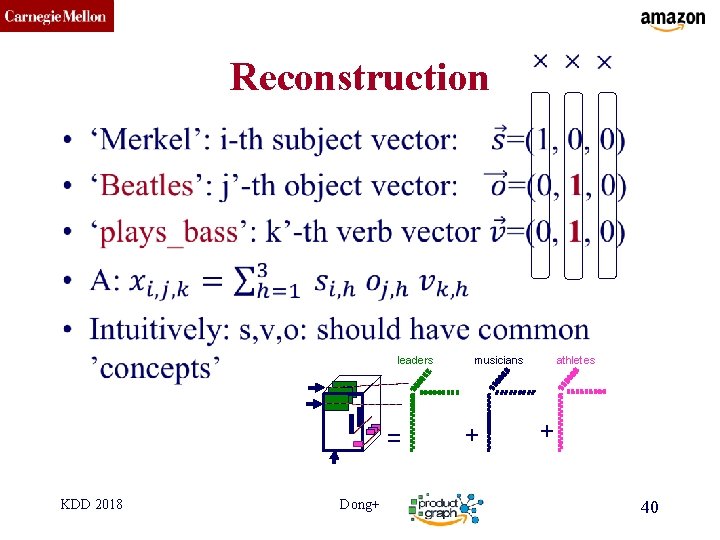

Long Answer

- ✓ Motivation
- ✓ Graph Embedding
- ✓ Tensor Embedding
- ➤ Knowledge Graph Embedding

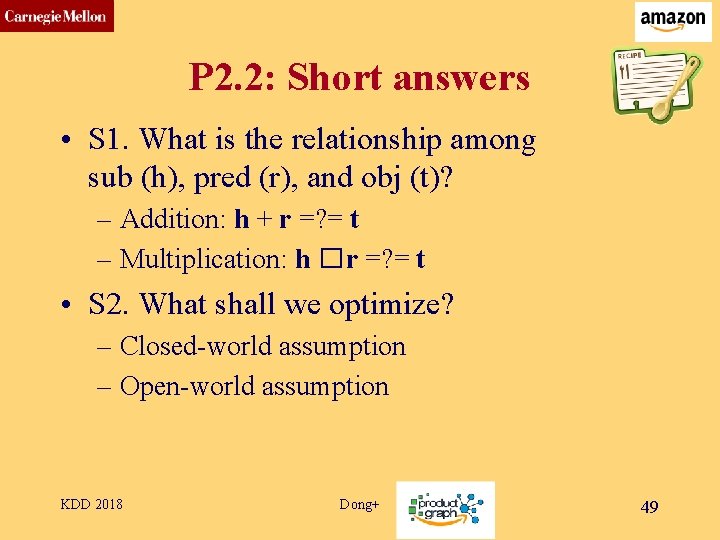

P2.2: Short answers

- S1. What is the relationship among sub (h), pred (r), and obj (t)?
  - Addition: h + r =?= t
  - Multiplication: h ∘ r =?= t
- S2. What shall we optimize?
  - Closed-world assumption
  - Open-world assumption

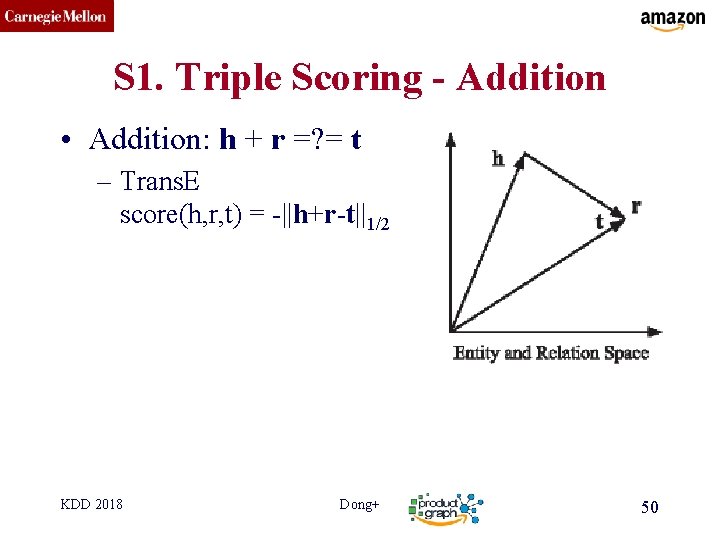

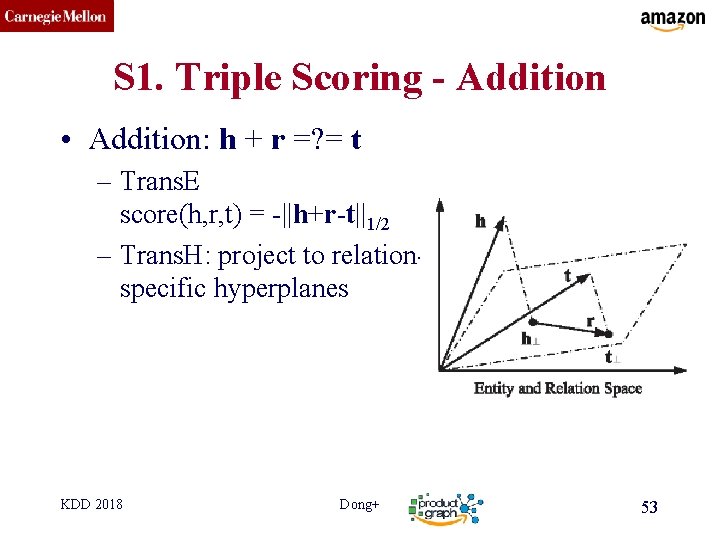

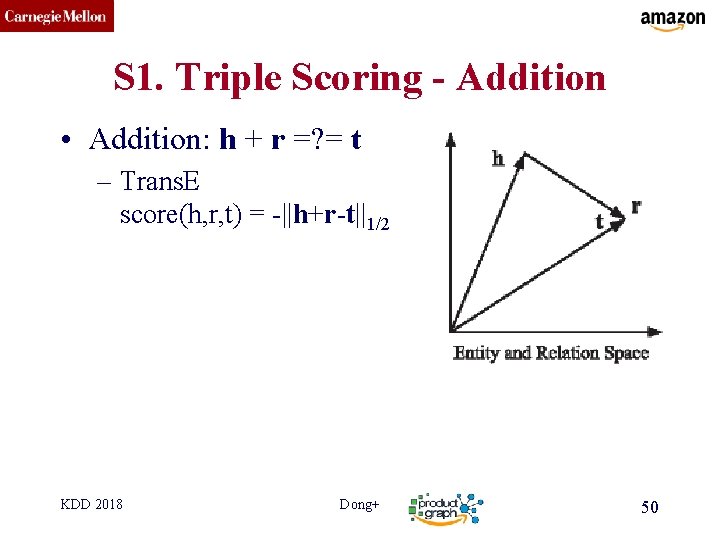

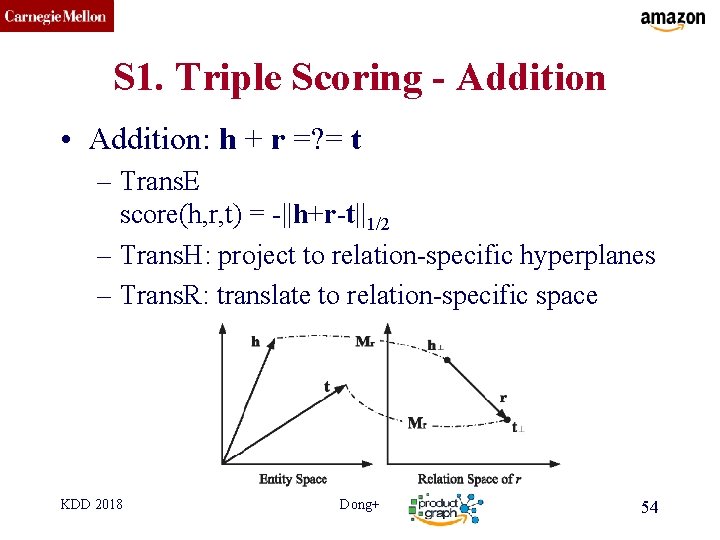

S1. Triple Scoring - Addition

- Addition: h + r =?= t
  - TransE: score(h, r, t) = $-\lVert h + r - t \rVert_{1/2}$ (L1 or L2 norm)
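
A minimal sketch of the TransE score in NumPy. The function name, the `norm` parameter and the toy 2-D vectors are assumptions for illustration; the formula itself is the one on the slide.

```python
import numpy as np

def transe_score(h, r, t, norm=1):
    """TransE plausibility: -||h + r - t||, with an L1 (norm=1) or L2 (norm=2) norm."""
    return float(-np.linalg.norm(h + r - t, ord=norm))

# Toy embeddings (made up): t_good sits exactly at h + r, t_bad does not.
h = np.array([1.0, 0.0])
r = np.array([0.0, 1.0])
t_good = np.array([1.0, 1.0])
t_bad = np.array([3.0, -1.0])

good = transe_score(h, r, t_good)        # best possible score: 0
bad_l1 = transe_score(h, r, t_bad, 1)    # -(|-2| + |2|) = -4
bad_l2 = transe_score(h, r, t_bad, 2)    # -sqrt(8)
```

Scores are at most 0, and a triple is judged more plausible the closer its score is to 0.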

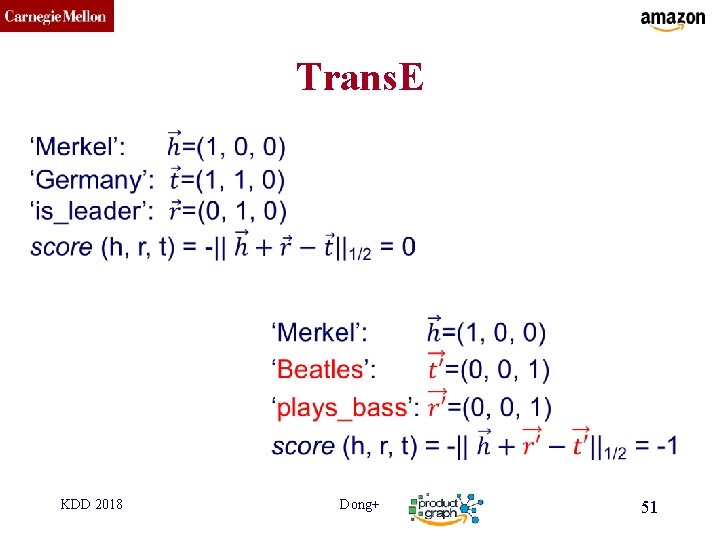

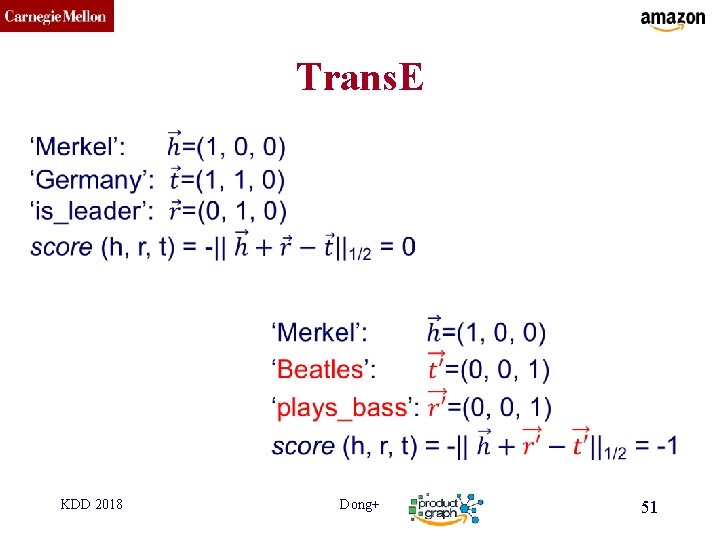

TransE

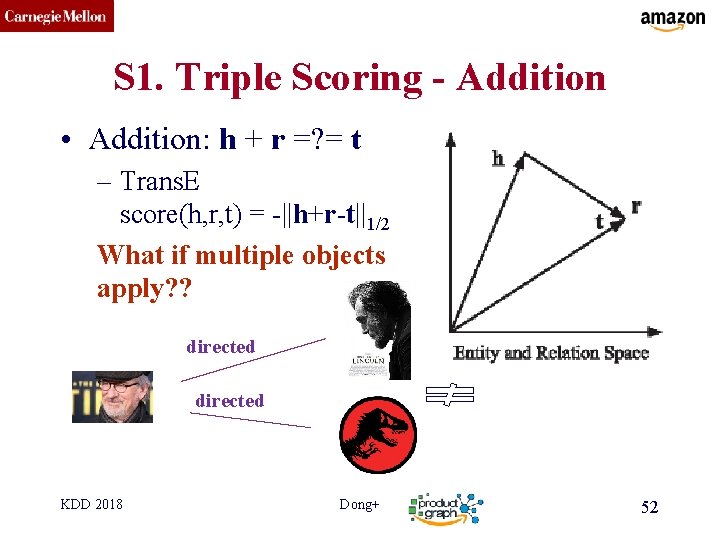

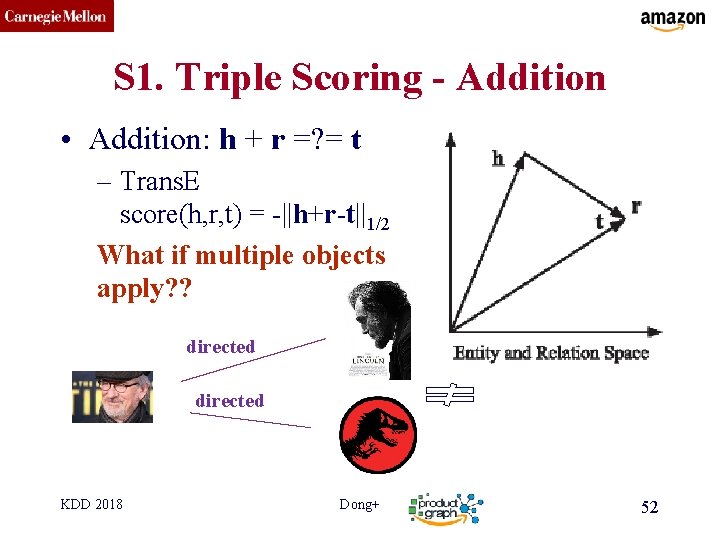

S1. Triple Scoring - Addition

- Addition: h + r =?= t
  - TransE: score(h, r, t) = $-\lVert h + r - t \rVert_{1/2}$

What if multiple objects apply?

[Figure label: directed]

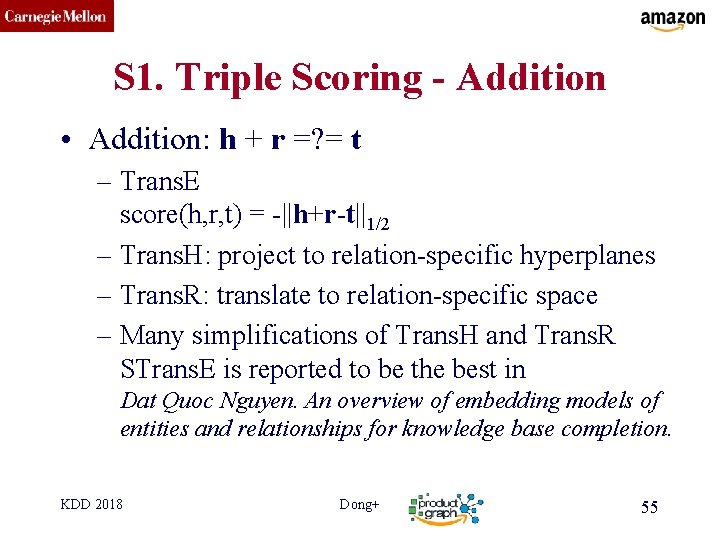

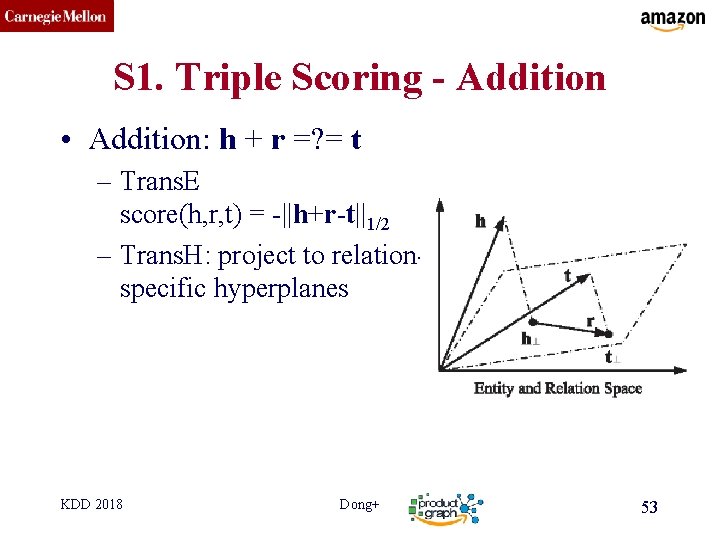

S1. Triple Scoring - Addition

- Addition: h + r =?= t
  - TransE: score(h, r, t) = $-\lVert h + r - t \rVert_{1/2}$
  - TransH: project to relation-specific hyperplanes

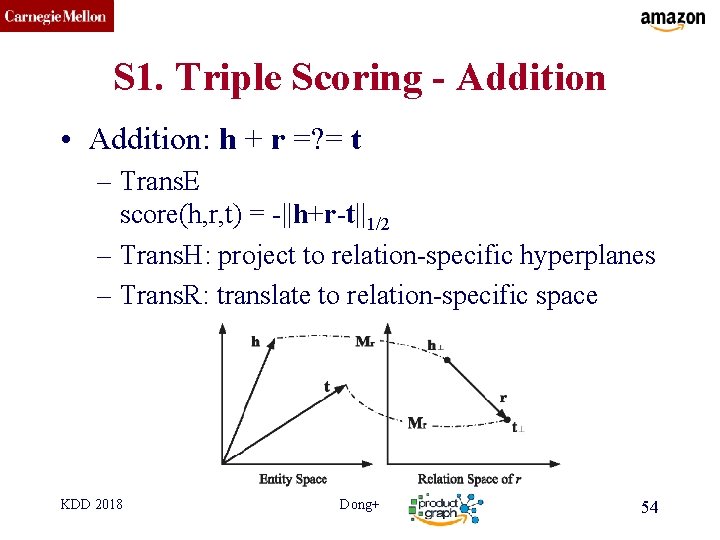

S1. Triple Scoring - Addition

- Addition: h + r =?= t
  - TransE: score(h, r, t) = $-\lVert h + r - t \rVert_{1/2}$
  - TransH: project to relation-specific hyperplanes
  - TransR: translate to relation-specific space

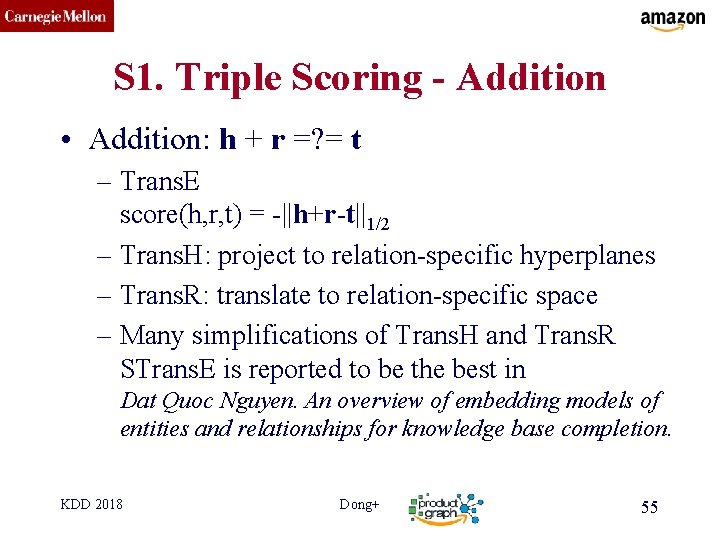

S1. Triple Scoring - Addition

- Addition: h + r =?= t
  - TransE: score(h, r, t) = $-\lVert h + r - t \rVert_{1/2}$
  - TransH: project to relation-specific hyperplanes
  - TransR: translate to relation-specific space
  - Many simplifications of TransH and TransR

STransE is reported to be the best in: Dat Quoc Nguyen, An overview of embedding models of entities and relationships for knowledge base completion.
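
A hedged sketch of how TransH and TransR modify the TransE score. It assumes the commonly published forms: TransH projects h and t onto a hyperplane with normal `w_r` before translating by `d_r`, and TransR maps entities into a relation space with a matrix `M_r`. Function names, the use of an unsquared L2 norm and the toy vectors are choices made here, not taken from the slides.

```python
import numpy as np

def transh_score(h, t, w_r, d_r):
    """TransH: project h and t onto the hyperplane with normal w_r, then translate by d_r."""
    w = w_r / np.linalg.norm(w_r)
    h_perp = h - np.dot(w, h) * w
    t_perp = t - np.dot(w, t) * w
    return float(-np.linalg.norm(h_perp + d_r - t_perp))

def transr_score(h, t, M_r, r):
    """TransR: map entities into the relation space with M_r, then translate by r."""
    return float(-np.linalg.norm(M_r @ h + r - M_r @ t))

# Toy example: two different tails that differ only along the normal w_r.
h = np.array([1.0, 0.0, 0.0])
d_r = np.array([0.0, 1.0, 0.0])
w_r = np.array([0.0, 0.0, 1.0])
t1 = np.array([1.0, 1.0, 5.0])
t2 = np.array([1.0, 1.0, -3.0])

s1 = transh_score(h, t1, w_r, d_r)
s2 = transh_score(h, t2, w_r, d_r)

# TransR with a 2x3 projection that drops the third coordinate.
M_r = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
r = np.array([0.0, 1.0])
s3 = transr_score(h, t1, M_r, r)
```

In this toy setup both tails receive the best score even though they are distinct vectors, which is one way to accommodate a subject with multiple valid objects; plain TransE with the same translation would have to place both tails at the single point h + r.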

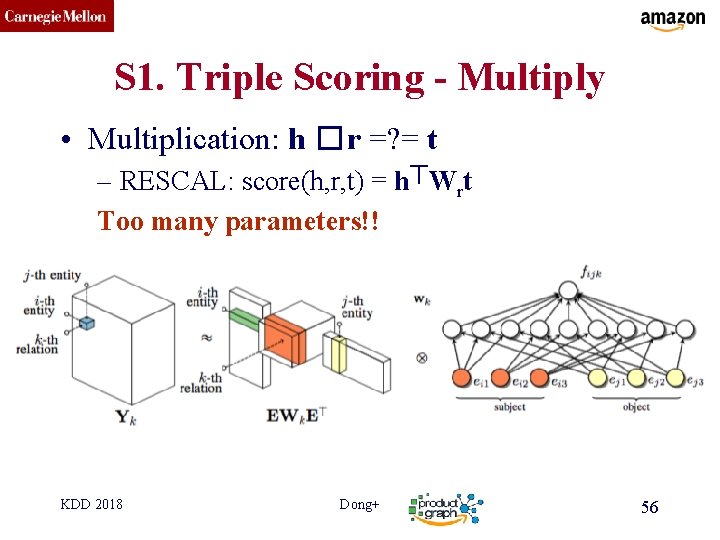

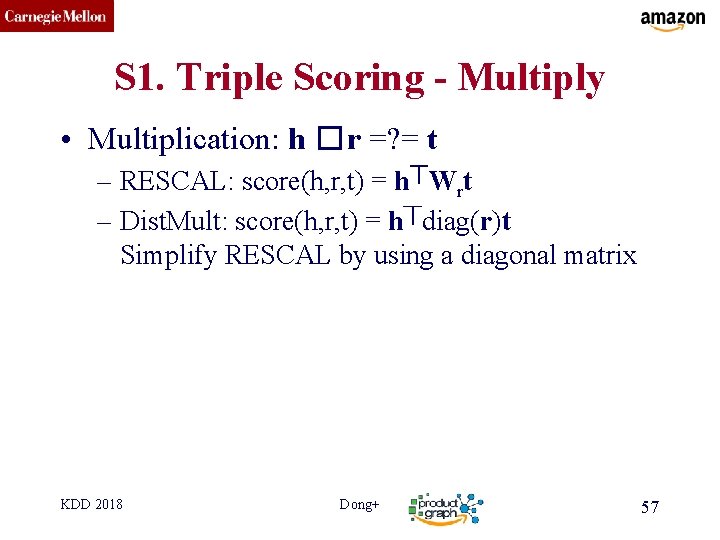

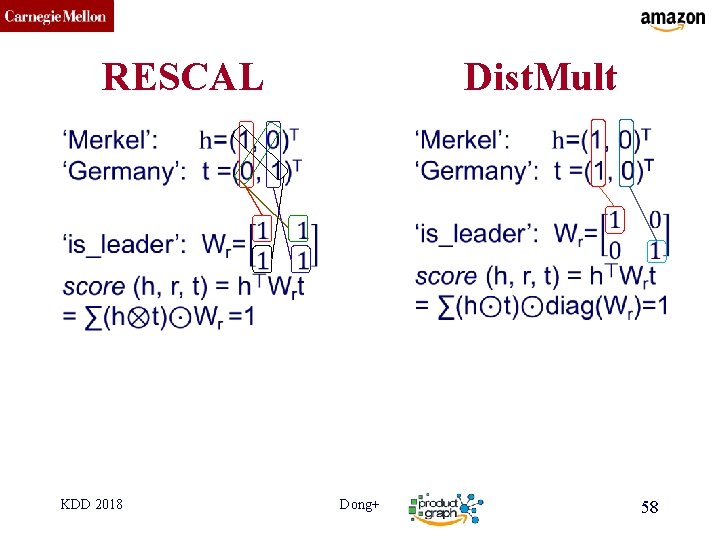

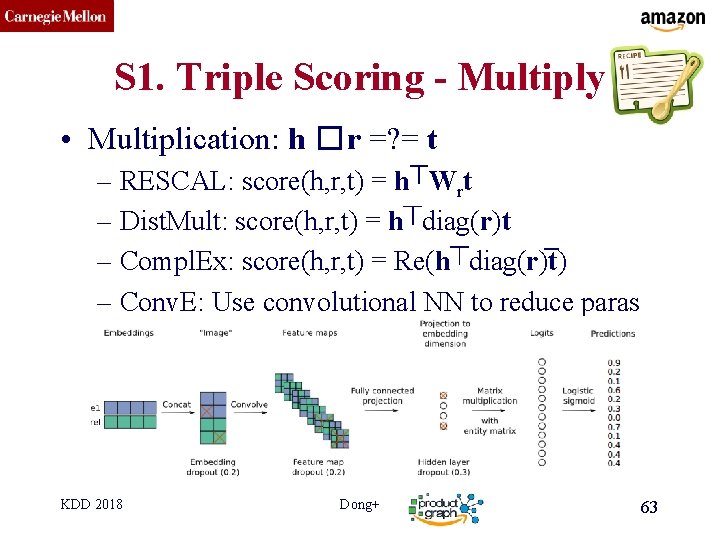

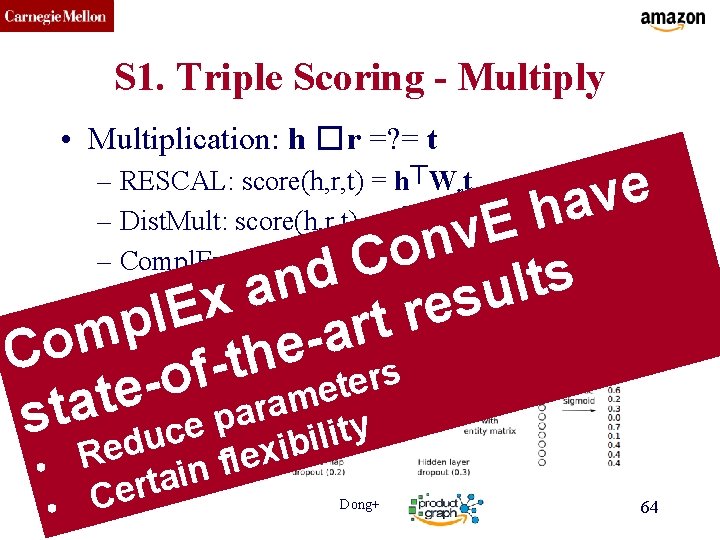

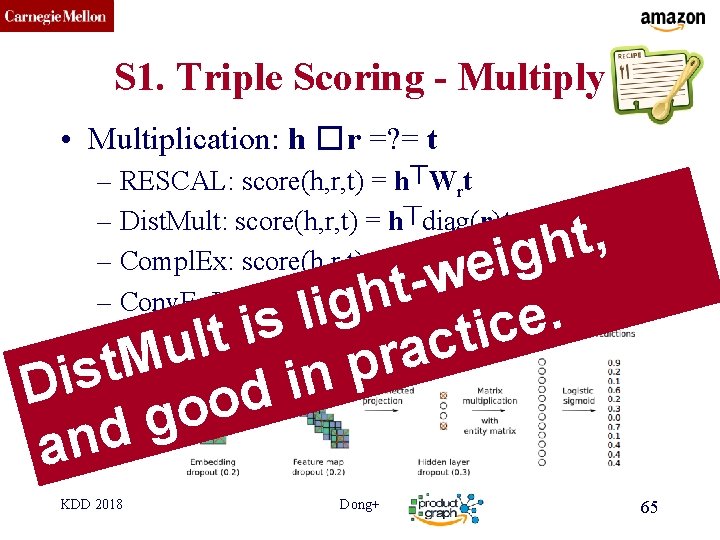

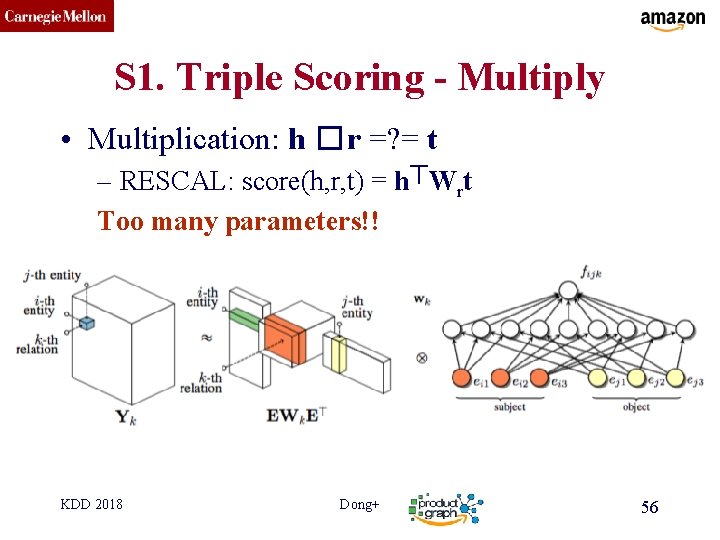

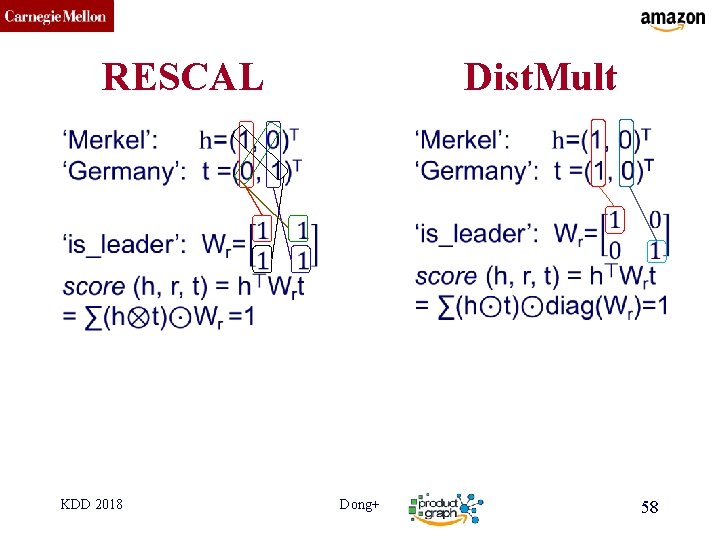

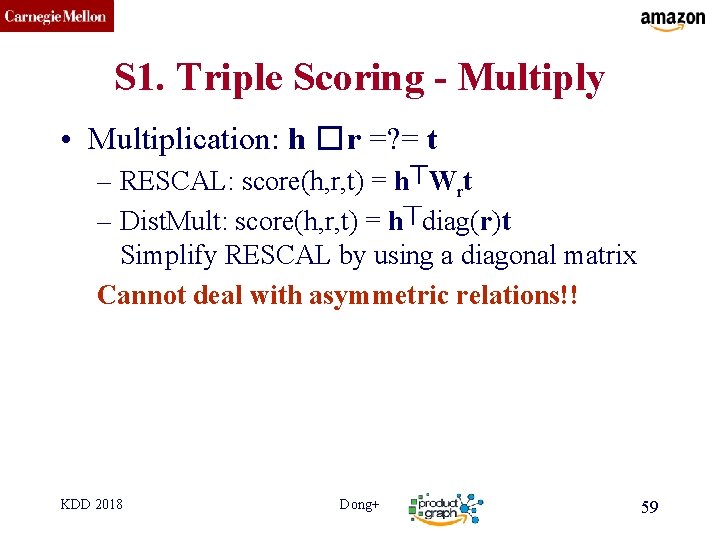

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
    - Too many parameters!!

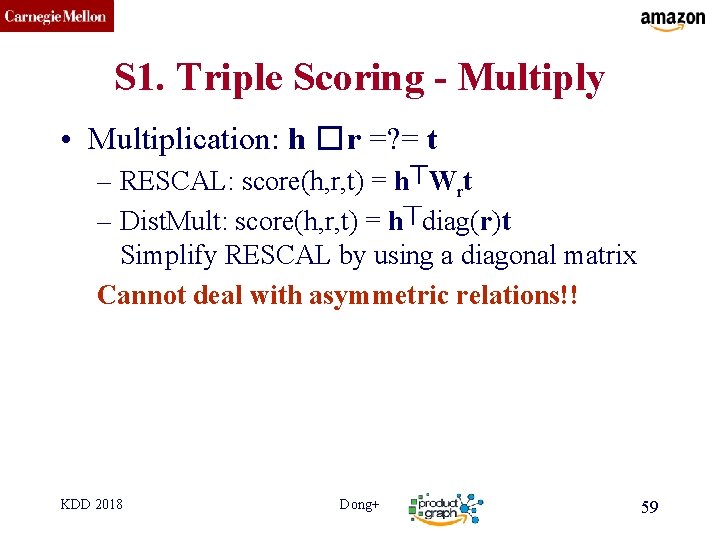

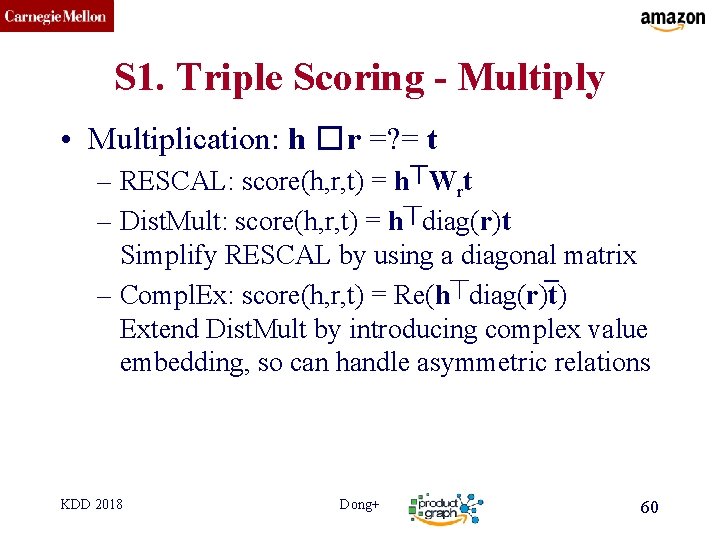

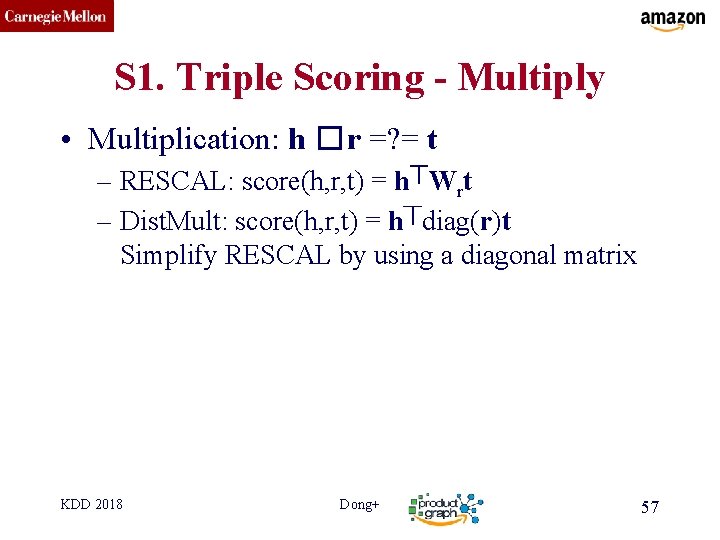

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
  - DistMult: score(h, r, t) = $h^\top \mathrm{diag}(r)\, t$
    - Simplify RESCAL by using a diagonal matrix

RESCAL and DistMult

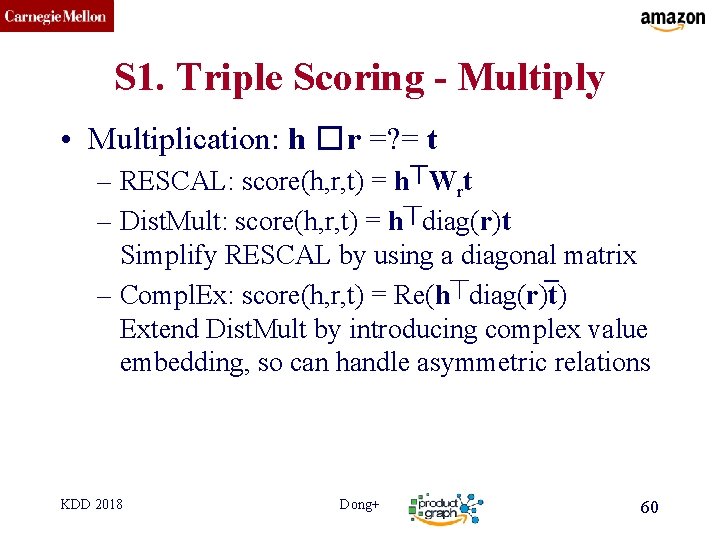

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
  - DistMult: score(h, r, t) = $h^\top \mathrm{diag}(r)\, t$
    - Simplify RESCAL by using a diagonal matrix
    - Cannot deal with asymmetric relations!!
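
A small NumPy sketch of the two bilinear scores, using random toy embeddings (the dimension, seed and function names are assumptions). It illustrates both remarks: RESCAL needs a full d × d matrix per relation, and DistMult, being diagonal, gives the same score when subject and object are swapped.

```python
import numpy as np

def rescal_score(h, W_r, t):
    """RESCAL: h^T W_r t, with a full d x d matrix per relation."""
    return float(h @ W_r @ t)

def distmult_score(h, r, t):
    """DistMult: h^T diag(r) t, i.e. a sum of element-wise products."""
    return float(np.sum(h * r * t))

rng = np.random.default_rng(0)
d = 4
h, t, r = rng.normal(size=d), rng.normal(size=d), rng.normal(size=d)
W_r = rng.normal(size=(d, d))

params_rescal = W_r.size     # d * d parameters per relation
params_distmult = r.size     # d parameters per relation

forward = distmult_score(h, r, t)
backward = distmult_score(t, r, h)   # same value: the score is symmetric in h and t
```

For a relation such as born_in, where (h, r, t) being true does not make (t, r, h) true, this symmetry is exactly the limitation noted above.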

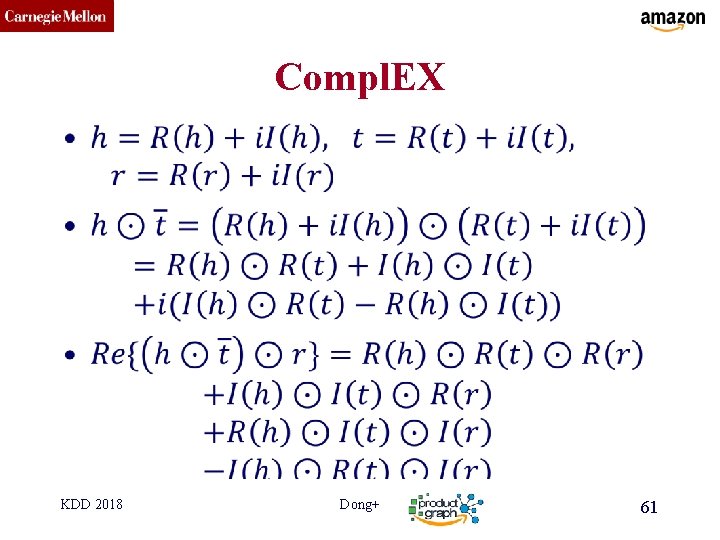

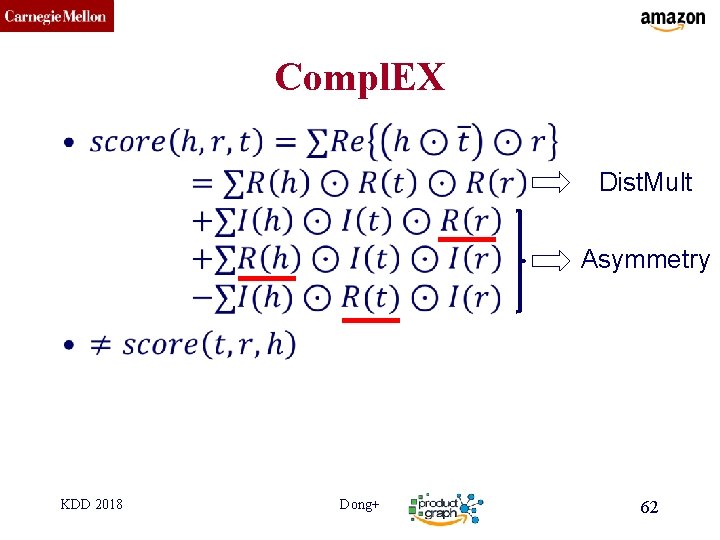

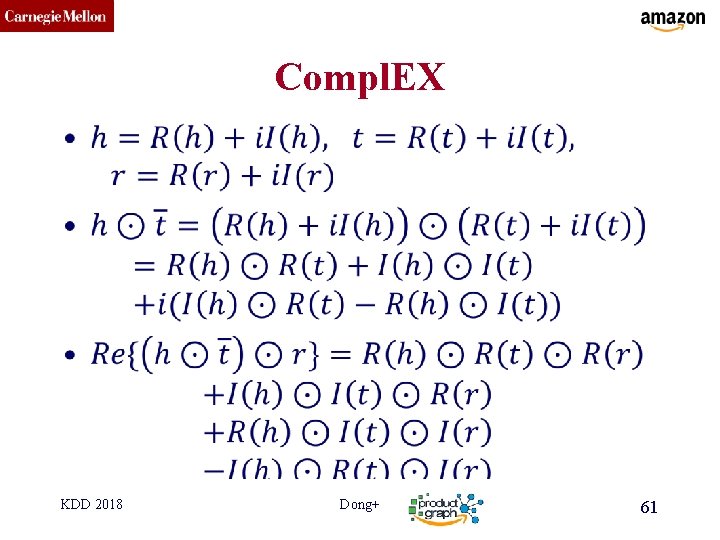

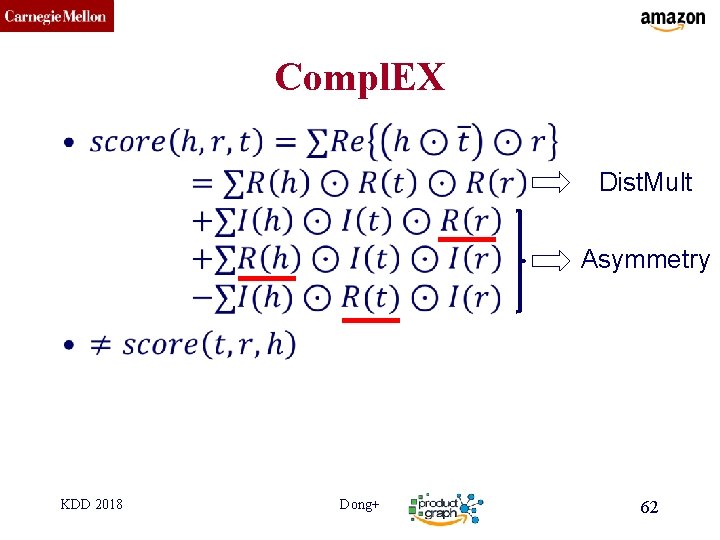

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
  - DistMult: score(h, r, t) = $h^\top \mathrm{diag}(r)\, t$
    - Simplify RESCAL by using a diagonal matrix
  - ComplEx: score(h, r, t) = $\mathrm{Re}(h^\top \mathrm{diag}(r)\, \bar{t})$
    - Extends DistMult with complex-valued embeddings, so it can handle asymmetric relations

ComplEx

ComplEx

[Figure labels: DistMult, Asymmetry]
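
A minimal sketch of the ComplEx score with complex-valued NumPy arrays, assuming the standard form in which the object embedding is conjugated. The seed, dimension and variable names are illustrative. A purely real relation vector behaves like DistMult (symmetric), while a purely imaginary one flips sign when subject and object are swapped.

```python
import numpy as np

def complex_score(h, r, t):
    """ComplEx: Re( sum_i h_i * r_i * conj(t_i) ) for complex embeddings."""
    return float(np.real(np.sum(h * r * np.conj(t))))

rng = np.random.default_rng(0)
d = 4
h = rng.normal(size=d) + 1j * rng.normal(size=d)
t = rng.normal(size=d) + 1j * rng.normal(size=d)

r_real = rng.normal(size=d) + 0j     # real part only: symmetric relation
r_imag = 1j * rng.normal(size=d)     # imaginary part only: antisymmetric relation

sym_fwd, sym_bwd = complex_score(h, r_real, t), complex_score(t, r_real, h)
asym_fwd, asym_bwd = complex_score(h, r_imag, t), complex_score(t, r_imag, h)
```

A general relation vector mixes both parts, so the model can represent symmetric, antisymmetric and in-between relations with only d complex parameters per relation.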

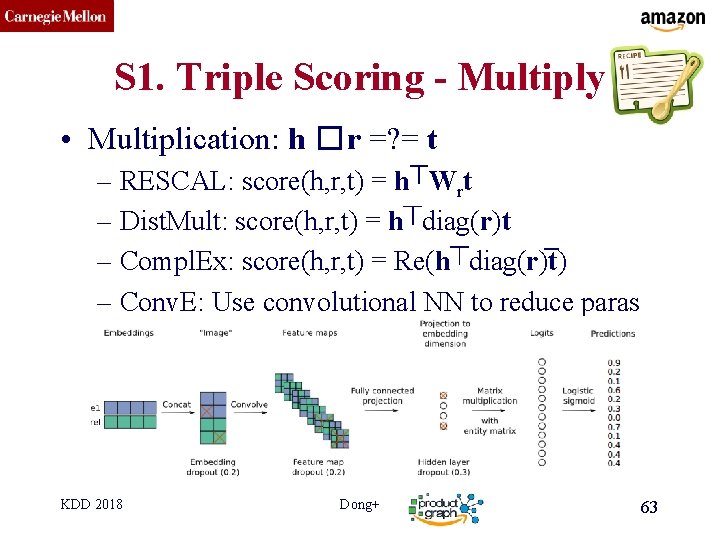

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
  - DistMult: score(h, r, t) = $h^\top \mathrm{diag}(r)\, t$
  - ComplEx: score(h, r, t) = $\mathrm{Re}(h^\top \mathrm{diag}(r)\, \bar{t})$
  - ConvE: Use convolutional NN to reduce parameters

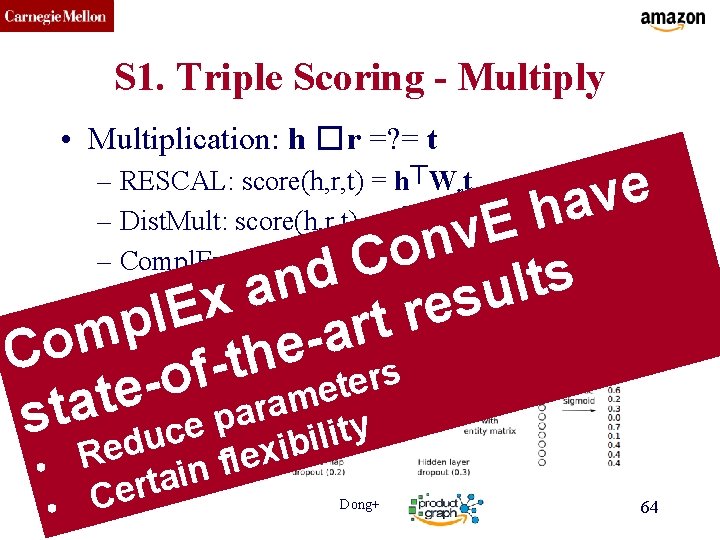

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
  - DistMult: score(h, r, t) = $h^\top \mathrm{diag}(r)\, t$
  - ComplEx: score(h, r, t) = $\mathrm{Re}(h^\top \mathrm{diag}(r)\, \bar{t})$
  - ConvE: Use convolutional NN to reduce parameters

ComplEx and ConvE have state-of-the-art results:

- Reduce parameters
- Certain flexibility

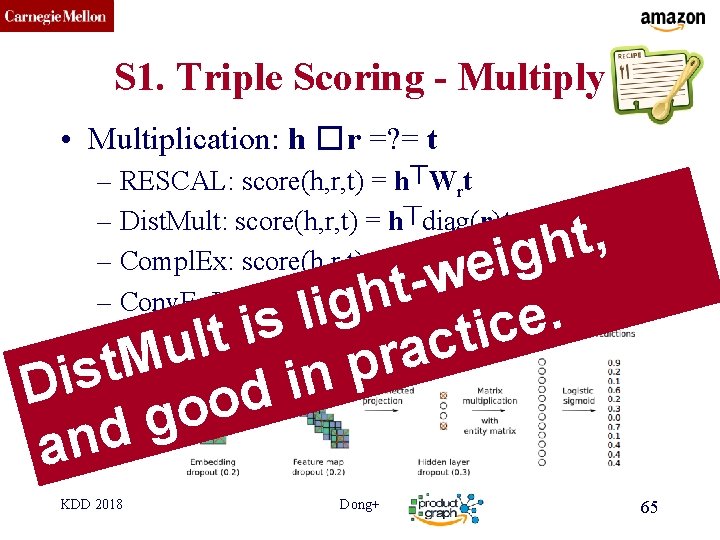

S1. Triple Scoring - Multiply

- Multiplication: h ∘ r =?= t
  - RESCAL: score(h, r, t) = $h^\top W_r t$
  - DistMult: score(h, r, t) = $h^\top \mathrm{diag}(r)\, t$
  - ComplEx: score(h, r, t) = $\mathrm{Re}(h^\top \mathrm{diag}(r)\, \bar{t})$
  - ConvE: Use convolutional NN to reduce parameters

DistMult is lightweight, and good in practice.

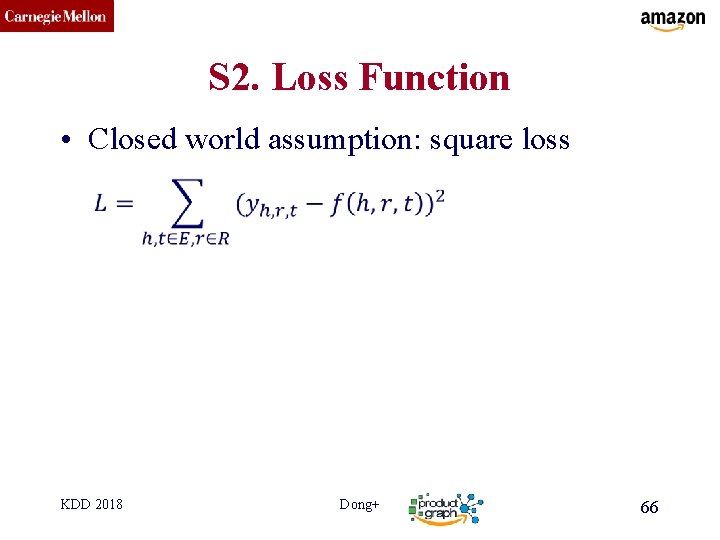

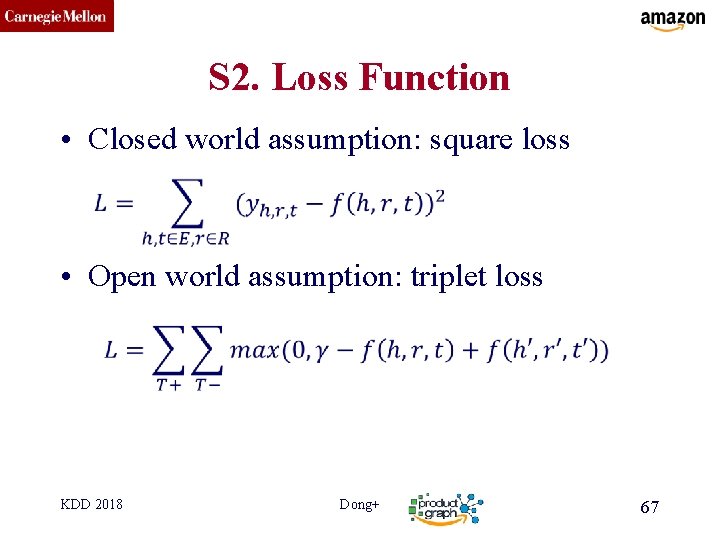

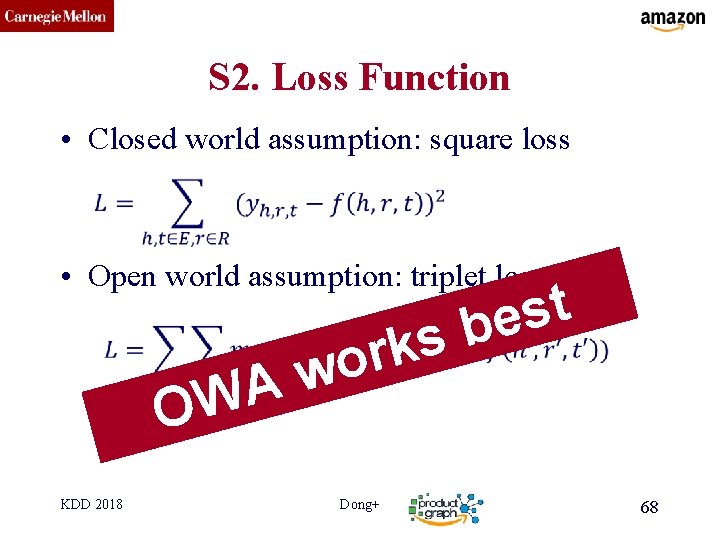

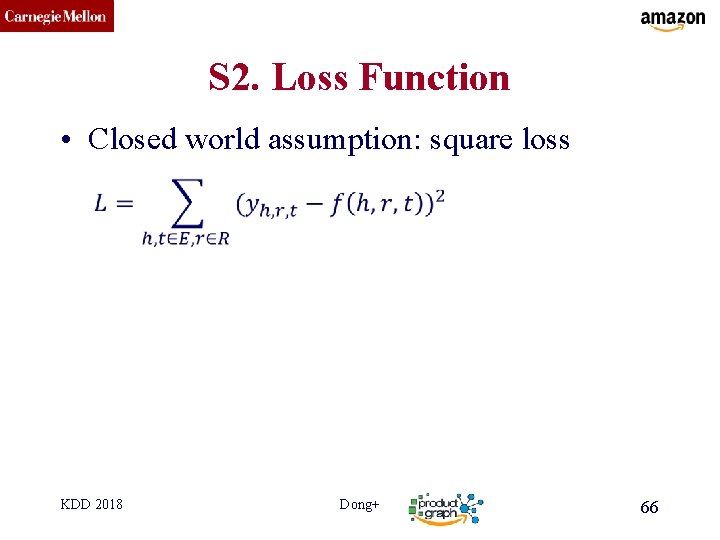

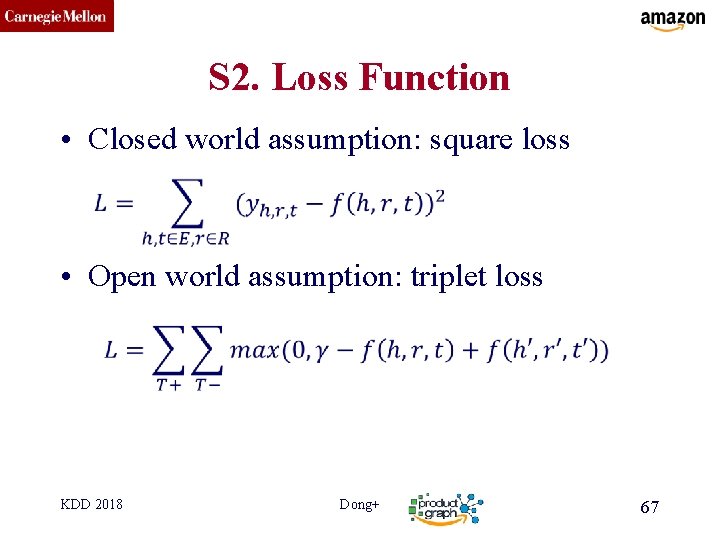

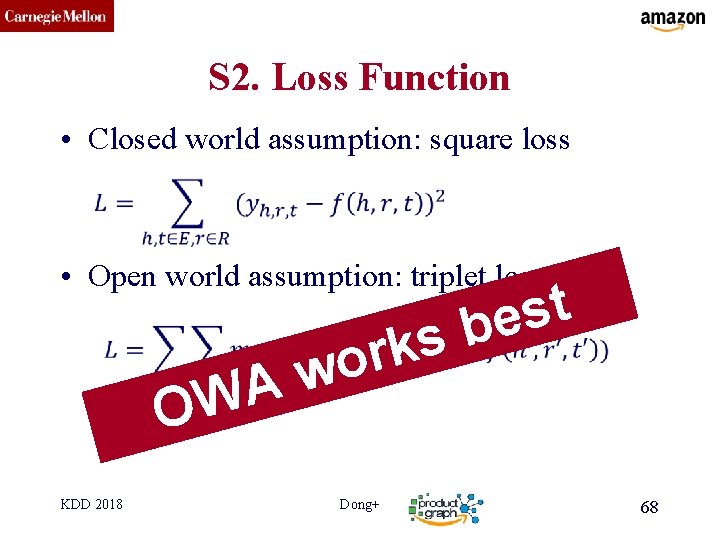

S2. Loss Function

- Closed world assumption: square loss

S2. Loss Function

- Closed world assumption: square loss
- Open world assumption: triplet loss

S2. Loss Function

- Closed world assumption: square loss
- Open world assumption: triplet loss

OWA works best
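
The two options can be sketched as follows. This is an illustration under assumptions, not the tutorial's exact formulation: the square loss treats every unobserved triple as false (label 0), the triplet loss is written in the usual margin form with an assumed margin of 1.0, and `corrupt_tail` is a hypothetical helper that builds a negative example by swapping in a different entity id.

```python
import numpy as np

def square_loss(scores, labels):
    """Closed world: fit label 1 for observed triples and 0 for all others."""
    return float(np.sum((labels - scores) ** 2))

def triplet_loss(pos_score, neg_score, margin=1.0):
    """Open world: only require an observed triple to outscore a corrupted one by `margin`."""
    return float(max(0.0, margin - pos_score + neg_score))

def corrupt_tail(triple, num_entities, rng):
    """Negative sample: replace the tail with a different random entity id."""
    h, r, t = triple
    t_neg = int(rng.integers(num_entities - 1))
    if t_neg >= t:            # skip over the true tail
        t_neg += 1
    return (h, r, t_neg)

rng = np.random.default_rng(0)
negative = corrupt_tail((0, 2, 5), num_entities=10, rng=rng)

closed = square_loss(np.array([0.9, 0.2]), np.array([1.0, 0.0]))
satisfied = triplet_loss(pos_score=2.0, neg_score=0.5)   # margin already met
violated = triplet_loss(pos_score=0.2, neg_score=0.1)    # margin not met
```

Under the open-world reading, a corrupted triple is only assumed to be less plausible than the observed one; it may in fact be a true but unrecorded fact, which is why the loss ranks rather than forcing its score to 0.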

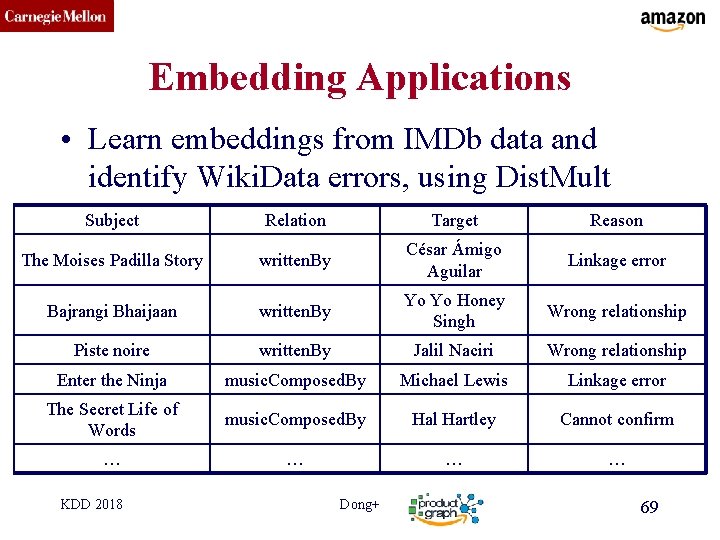

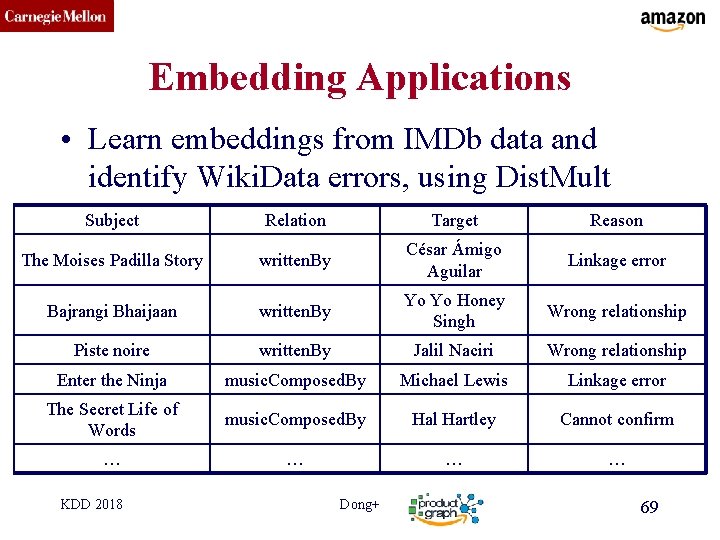

Embedding Applications

- Learn embeddings from IMDb data and identify WikiData errors, using DistMult

| Subject | Relation | Target | Reason |
| --- | --- | --- | --- |
| The Moises Padilla Story | writtenBy | César Ámigo Aguilar | Linkage error |
| Bajrangi Bhaijaan | writtenBy | Yo Yo Honey Singh | Wrong relationship |
| Piste noire | writtenBy | Jalil Naciri | Wrong relationship |
| Enter the Ninja | musicComposedBy | Michael Lewis | Linkage error |
| The Secret Life of Words | musicComposedBy | Hal Hartley | Cannot confirm |
| … | … | | |

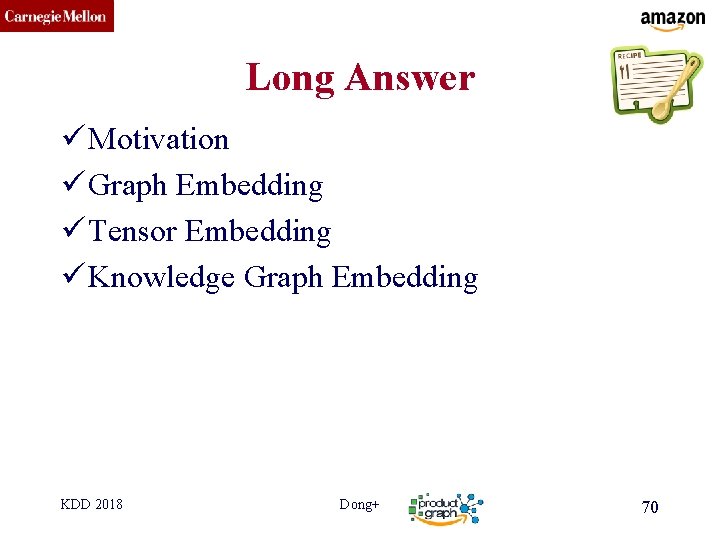

Long Answer

- ✓ Motivation
- ✓ Graph Embedding
- ✓ Tensor Embedding
- ✓ Knowledge Graph Embedding

Conclusion/Short answer

- S1. Two potential relationships among sub (h), pred (r), and obj (t)?
  - Addition: h + r =?= t
  - Multiplication: h ∘ r =?= t
- S2. Two loss function options
  - Closed-world assumption
  - Open-world assumption

Conclusion/Short answer

- S1. Two potential relationships among sub (h), pred (r), and obj (t)?
  - Addition: h + r =?= t
  - Multiplication: h ∘ r =?= t
- S2. Two loss function options
  - Closed-world assumption
  - Open-world assumption

State-of-the-art results:

- Multiplication model
- Open world assumption