Cluster Analysis: Chapter 12, Statistics for Marketing & Consumer Research

- Slides: 47

Cluster Analysis Chapter 12 Statistics for Marketing & Consumer Research Copyright © 2008 - Mario Mazzocchi 1

Cluster analysis
- It is a class of techniques used to classify cases into groups that are
  - relatively homogeneous within themselves and
  - heterogeneous with respect to one another
- Homogeneity (similarity) and heterogeneity (dissimilarity) are measured on the basis of a defined set of variables
- These groups are called clusters

Market segmentation
- Cluster analysis is especially useful for market segmentation
- Segmenting a market means dividing its potential consumers into separate sub-sets where
  - consumers in the same group are similar with respect to a given set of characteristics
  - consumers belonging to different groups are dissimilar with respect to the same set of characteristics
- This allows one to calibrate the marketing mix differently according to the target consumer group

Other uses of cluster analysis
- Product characteristics and the identification of new product opportunities
  - Clustering similar brands or products according to their characteristics allows one to identify competitors, potential market opportunities and available niches
- Data reduction
  - Factor analysis and principal component analysis reduce the number of variables
  - Cluster analysis reduces the number of observations by grouping them into homogeneous clusters
- Maps that simultaneously profile consumers and products, market opportunities and preferences, as in preference or perceptual mapping (lecture 14)

Steps to conduct a cluster analysis
- Select a distance measure
- Select a clustering algorithm
- Define the distance between two clusters
- Determine the number of clusters
- Validate the analysis

Distance measures for individual observations
- To measure the similarity between two observations, a distance measure is needed
- With a single variable, similarity is straightforward
  - Example: income. Two individuals are similar if their income levels are similar, and their dissimilarity increases as the income gap increases
- Multiple variables require an aggregate distance measure
  - With many characteristics (e.g. income, age, consumption habits, family composition, car ownership, education level, job…), it becomes more difficult to express similarity with a single value
- The best-known distance measure is the Euclidean distance, which is the concept we use in everyday life for spatial coordinates

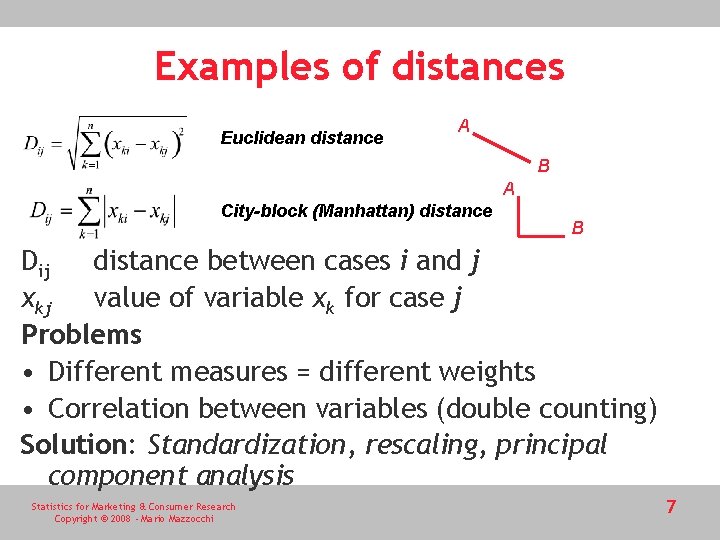

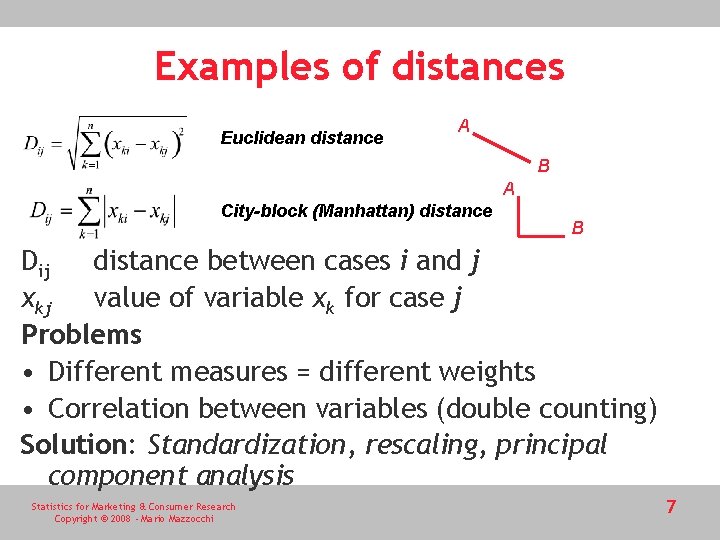

Examples of distances
- Euclidean distance between points A and B: $D_{ij} = \sqrt{\sum_k (x_{ki} - x_{kj})^2}$
- City-block (Manhattan) distance between points A and B: $D_{ij} = \sum_k |x_{ki} - x_{kj}|$
- where $D_{ij}$ is the distance between cases i and j, and $x_{kj}$ is the value of variable $x_k$ for case j

Problems
- Different measurement units imply different weights
- Correlation between variables (double counting)

Solution: standardization, rescaling, principal component analysis
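A minimal Python sketch of the two distances above, assuming two hypothetical cases described by monthly income (euros) and age (years). The helper names are illustrative. The example also shows the first problem listed: with raw units, the income gap dominates both distances.

```python
import math


def euclidean(a, b):
    """Euclidean distance: square root of the summed squared differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def city_block(a, b):
    """City-block (Manhattan) distance: sum of the absolute differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


# Hypothetical cases i and j: (monthly income in euros, age in years)
case_i = (2000.0, 30.0)
case_j = (2300.0, 60.0)

print(euclidean(case_i, case_j))   # about 301.5, almost entirely due to income
print(city_block(case_i, case_j))  # 330.0
```

A 30-year age gap contributes less than a 300-euro income gap simply because of the units, which is why standardization is listed as a solution.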

Other distance measures
- Other distance measures: Chebychev, Minkowski, Mahalanobis
- An alternative approach uses correlation measures, where correlations are computed not between variables but between observations
  - Each observation is characterized by a set of measurements (one for each variable), so bivariate correlations can be computed between two observations
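The sketch below, using hypothetical data and illustrative helper names, shows two of the listed distances (Minkowski, Chebychev) and the correlation between two observations, where the variables play the role of the paired data points. Mahalanobis distance is not shown because it needs a covariance matrix.

```python
import math


def minkowski(a, b, p):
    """Minkowski distance of order p (p=1: city-block, p=2: Euclidean)."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def chebychev(a, b):
    """Chebychev distance: the largest absolute difference on any single variable."""
    return max(abs(x - y) for x, y in zip(a, b))


def observation_correlation(a, b):
    """Pearson correlation between two observations across their variables."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Two hypothetical observations measured on the same four variables
obs_1 = [1.0, 2.0, 3.0, 4.0]
obs_2 = [2.0, 4.0, 6.0, 8.5]

print(minkowski(obs_1, obs_2, 1))             # 10.5 (city-block)
print(chebychev(obs_1, obs_2))                # 4.5
print(observation_correlation(obs_1, obs_2))  # close to 1: similar profile
```

The two observations are far apart in distance terms but almost perfectly correlated: a correlation measure groups cases by the shape of their profile rather than by its level.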

Clustering procedures
- Hierarchical procedures
  - Agglomerative (start from n clusters to get to 1 cluster)
  - Divisive (start from 1 cluster to get to n clusters)
- Non-hierarchical procedures
  - K-means clustering

Hierarchical clustering
- Agglomerative:
  - Each of the n observations constitutes a separate cluster
  - The two clusters that are most similar according to some distance rule are aggregated, so that in step 1 there are n-1 clusters
  - In the second step another cluster is formed (n-2 clusters) by nesting the two most similar clusters, and so on
  - There is a merge at each step until all observations end up in a single cluster in the final step
- Divisive:
  - All observations are initially assumed to belong to a single cluster
  - The most dissimilar observation is extracted to form a separate cluster
  - In step 1 there will be 2 clusters, in the second step three clusters and so on, until the final step produces as many clusters as there are observations
- The number of clusters determines the stopping rule for the algorithms
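The agglomerative procedure above can be sketched as a naive loop, assuming Euclidean distance and single linkage as the "distance rule" (one of the options discussed later). The function name and the small data set are hypothetical. Each step merges the two closest clusters and records a row of the resulting merge history, which is what software reports as the agglomeration schedule.

```python
import math
from itertools import combinations


def agglomerate(points):
    """Naive agglomerative clustering with single linkage (a sketch).

    Starts from n singleton clusters and merges the two closest clusters at
    each step, returning one row per step: (clusters left, merged members, distance).
    """
    clusters = [[i] for i in range(len(points))]
    schedule = []
    while len(clusters) > 1:
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            # Single linkage: closest pair of observations across the two clusters
            d = min(math.dist(points[i], points[j])
                    for i in clusters[a] for j in clusters[b])
            if best is None or d < best[0]:
                best = (d, a, b)
        d, a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # b > a, so index a is unaffected
        schedule.append((len(clusters), sorted(clusters[a]), d))
    return schedule


points = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0)]
for n_clusters, members, distance in agglomerate(points):
    print(n_clusters, members, round(distance, 3))
```

The merging distances grow as the loop proceeds (1.0, 1.0, 6.403, 7.071 here); stopping the loop at a chosen number of clusters is the stopping rule mentioned above.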

Non-hierarchical clustering
- These algorithms do not follow a hierarchy and produce a single partition
- Knowledge of the number of clusters (c) is required
- In the first step, initial cluster centres (the seeds) are determined for each of the c clusters, either by the researcher or by the software (usually the first c observations, or observations chosen at random)
- Each iteration allocates observations to each of the c clusters, based on their distance from the cluster centres
- Cluster centres are computed again and observations may be reallocated to the nearest cluster in the next iteration
- When no observations can be reallocated or a stopping rule is met, the process stops

Distance between clusters
- Algorithms vary according to the way the distance between two clusters is defined
- The most common algorithms for hierarchical methods include
  - single linkage method
  - complete linkage method
  - average linkage method
  - Ward algorithm (see the Ward algorithm slide)
  - centroid method (see the centroid method slide)

Linkage methods
- Single linkage method (nearest neighbour): the distance between two clusters is the minimum of all possible distances between observations belonging to the two clusters
- Complete linkage method (furthest neighbour): nests two clusters using as a basis the maximum distance between observations belonging to separate clusters
- Average linkage method: the distance between two clusters is the average of all distances between observations in the two clusters
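The three linkage rules above differ only in how they summarize the set of between-cluster distances (minimum, maximum or mean). A short sketch, assuming Euclidean distance and two hypothetical clusters A and B:

```python
import math


def single_linkage(c1, c2):
    """Nearest neighbour: minimum distance between members of the two clusters."""
    return min(math.dist(p, q) for p in c1 for q in c2)


def complete_linkage(c1, c2):
    """Furthest neighbour: maximum distance between members of the two clusters."""
    return max(math.dist(p, q) for p in c1 for q in c2)


def average_linkage(c1, c2):
    """Average of all distances between members of the two clusters."""
    return sum(math.dist(p, q) for p in c1 for q in c2) / (len(c1) * len(c2))


A = [(0.0, 0.0), (0.0, 2.0)]
B = [(3.0, 0.0), (6.0, 0.0)]
print(single_linkage(A, B))    # 3.0
print(complete_linkage(A, B))  # sqrt(40), about 6.325
print(average_linkage(A, B))   # about 4.733
```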

Ward algorithm
1. The sum of squared distances is computed within each cluster, considering all distances between observations within the same cluster
2. The algorithm proceeds by choosing the aggregation of two clusters that generates the smallest increase in the total sum of squared distances

- It is a computationally intensive method, because at each step all the sums of squared distances need to be computed, together with all potential increases in the total sum of squared distances for each possible aggregation of clusters
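A sketch of one Ward step, assuming the usual error-sum-of-squares (ESS) form: squared deviations of each member from its cluster mean. For a cluster of n observations, the sum of squared pairwise distances equals n times this ESS, so both views rank within-cluster spread the same way. The clusters are hypothetical, and every pair is evaluated, which is why the method is computationally intensive.

```python
from itertools import combinations


def ess(cluster):
    """Error sum of squares: squared deviations of members from the cluster mean."""
    centre = [sum(col) / len(cluster) for col in zip(*cluster)]
    return sum((x - m) ** 2 for p in cluster for x, m in zip(p, centre))


def ward_increase(c1, c2):
    """Increase in the total ESS if clusters c1 and c2 are merged."""
    return ess(c1 + c2) - ess(c1) - ess(c2)


def best_ward_merge(clusters):
    """Evaluate every possible pair and return the one with the smallest ESS increase."""
    return min(combinations(range(len(clusters)), 2),
               key=lambda ab: ward_increase(clusters[ab[0]], clusters[ab[1]]))


clusters = [[(0.0, 0.0), (0.0, 1.0)], [(0.0, 3.0)], [(10.0, 10.0)]]
print(best_ward_merge(clusters))                # (0, 1)
print(ward_increase(clusters[0], clusters[1]))  # about 4.167 (= 25/6)
```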

Centroid method
- The distance between two clusters is the distance between the two centroids
- Centroids are the cluster averages for each of the variables
  - each cluster is defined by a single set of coordinates, the averages of the coordinates of all individual observations belonging to that cluster
- Difference between the centroid and the average linkage method
  - Centroid: computes the average of the coordinates of the observations belonging to an individual cluster
  - Average linkage: computes the average of the distances between observations belonging to two separate clusters
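The difference between the two methods can be seen on a tiny hypothetical example: averaging coordinates first (centroid) and averaging distances (average linkage) give different cluster distances.

```python
import math


def centroid(cluster):
    """Cluster average for each variable."""
    return tuple(sum(col) / len(cluster) for col in zip(*cluster))


def centroid_distance(c1, c2):
    """Centroid method: distance between the two cluster centroids."""
    return math.dist(centroid(c1), centroid(c2))


def average_linkage(c1, c2):
    """Average linkage: average of all between-cluster distances."""
    return sum(math.dist(p, q) for p in c1 for q in c2) / (len(c1) * len(c2))


A = [(0.0, 0.0), (0.0, 2.0)]
B = [(4.0, 1.0)]
print(centroid_distance(A, B))  # 4.0: centroids (0, 1) and (4, 1)
print(average_linkage(A, B))    # sqrt(17), about 4.123
```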

Non-hierarchical clustering: K-means method
1. The number k of clusters is fixed
2. An initial set of k “seeds” (aggregation centres) is provided
   - first k elements
   - other seeds (randomly selected or explicitly defined)
3. Given a certain fixed threshold, all units are assigned to the nearest cluster seed
4. New seeds are computed
5. Go back to step 3 until no reclassification is necessary

Units can be reassigned in successive steps (optimising partitioning)
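A basic k-means sketch following steps 1 to 5 above, assuming Euclidean distance and, by default, the first k observations as seeds. The threshold mentioned in step 3 is not modelled: every unit simply goes to its nearest centre. The data and function name are hypothetical.

```python
import math


def k_means(points, k, seeds=None, max_iter=100):
    """Basic k-means sketch; seeds default to the first k observations."""
    centres = [tuple(s) for s in (seeds if seeds is not None else points[:k])]
    labels = None
    for _ in range(max_iter):
        # Step 3: assign every unit to the nearest current centre
        new_labels = [min(range(k), key=lambda c: math.dist(p, centres[c]))
                      for p in points]
        if new_labels == labels:  # Step 5: stop when nothing is reclassified
            break
        labels = new_labels
        # Step 4: recompute each centre as the mean of its members
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centre if a cluster is empty
                centres[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centres


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centres = k_means(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Here (0, 1) is itself the second seed, so it starts in cluster 1; once the centres are recomputed it is reassigned to cluster 0 in the second iteration, which is the reallocation that makes k-means an optimising procedure.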

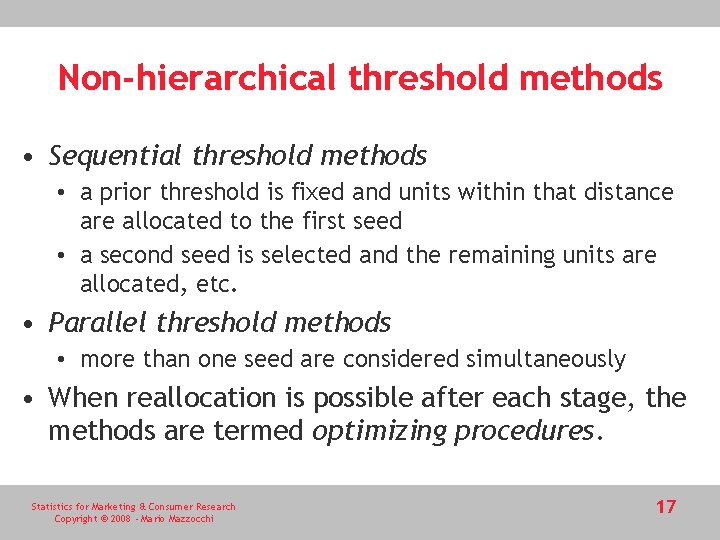

Non-hierarchical threshold methods
- Sequential threshold methods
  - a threshold is fixed in advance, and units within that distance are allocated to the first seed
  - a second seed is selected and the remaining units are allocated, etc.
- Parallel threshold methods
  - more than one seed is considered simultaneously
- When reallocation is possible after each stage, the methods are termed optimizing procedures
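A sequential threshold method can be sketched as follows, assuming (hypothetically) that each new seed is simply the first still-unassigned observation and that distances are Euclidean. There is no reallocation, so this is not an optimizing procedure.

```python
import math


def sequential_threshold(points, threshold):
    """Sequential threshold clustering (a sketch; seed choice is an assumption).

    The first unassigned unit becomes a seed; every unassigned unit within
    `threshold` of it joins its cluster; repeat until all units are assigned.
    """
    labels = [None] * len(points)
    cluster = 0
    for s, seed in enumerate(points):
        if labels[s] is not None:
            continue
        for i, p in enumerate(points):
            if labels[i] is None and math.dist(seed, p) <= threshold:
                labels[i] = cluster
        cluster += 1
    return labels


points = [(0, 0), (1, 0), (5, 0), (6, 0), (2, 0)]
print(sequential_threshold(points, 2.0))  # [0, 0, 1, 1, 0]
```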

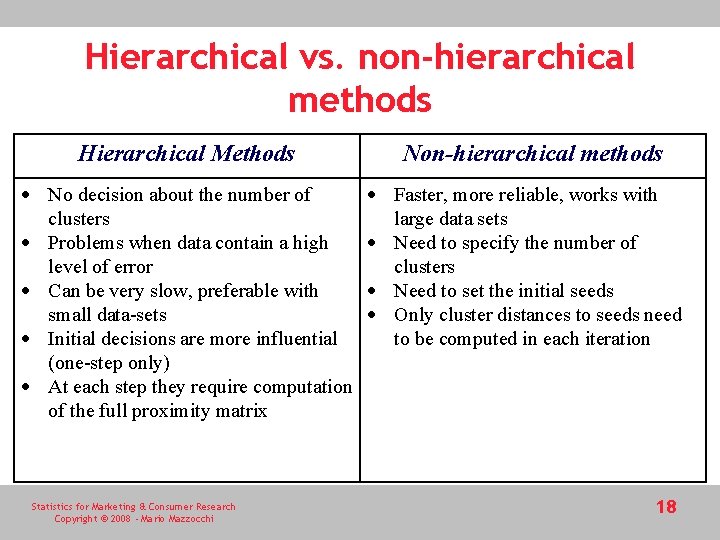

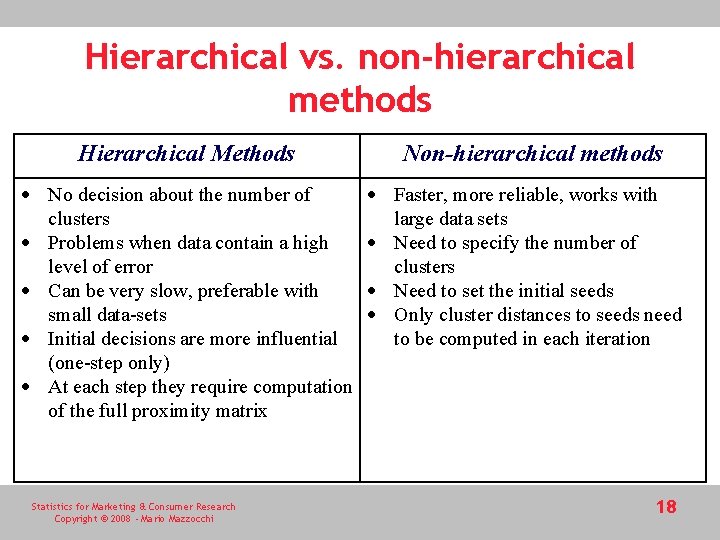

Hierarchical vs. non-hierarchical methods

Hierarchical methods
- No decision about the number of clusters
- Problems when data contain a high level of error
- Can be very slow, preferable with small data sets
- Initial decisions are more influential (one-step only)
- At each step they require computation of the full proximity matrix

Non-hierarchical methods
- Faster, more reliable, work with large data sets
- Need to specify the number of clusters
- Need to set the initial seeds
- Only cluster distances to seeds need to be computed in each iteration

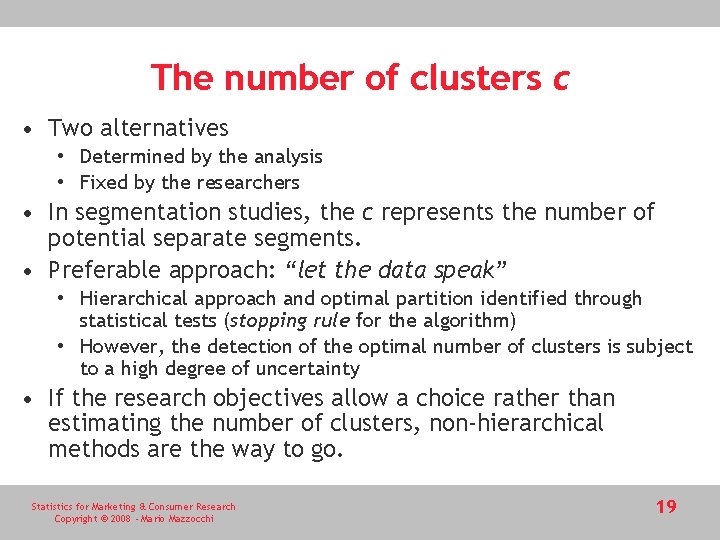

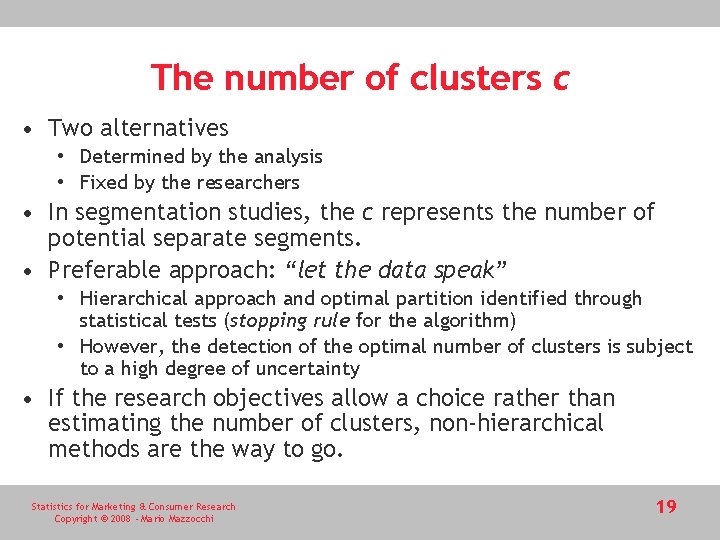

The number of clusters c
- Two alternatives
  - determined by the analysis
  - fixed by the researchers
- In segmentation studies, c represents the number of potential separate segments
- Preferable approach: “let the data speak”
  - hierarchical approach, with the optimal partition identified through statistical tests (stopping rule for the algorithm)
- However, the detection of the optimal number of clusters is subject to a high degree of uncertainty
- If the research objectives allow the number of clusters to be chosen rather than estimated, non-hierarchical methods are the way to go

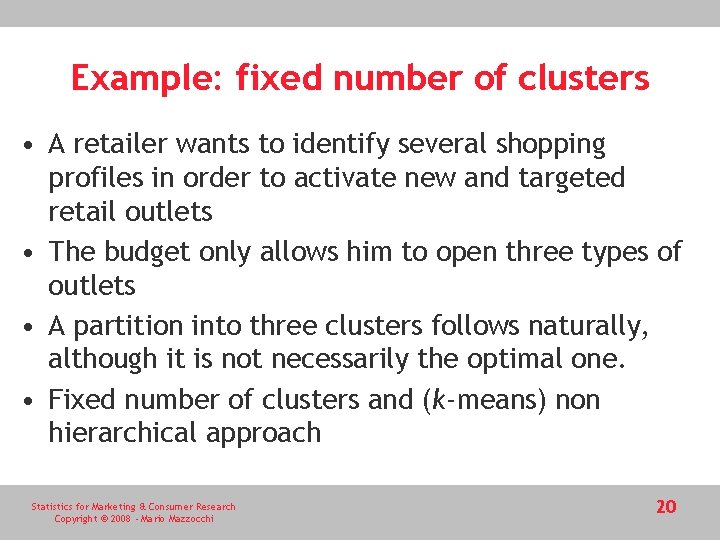

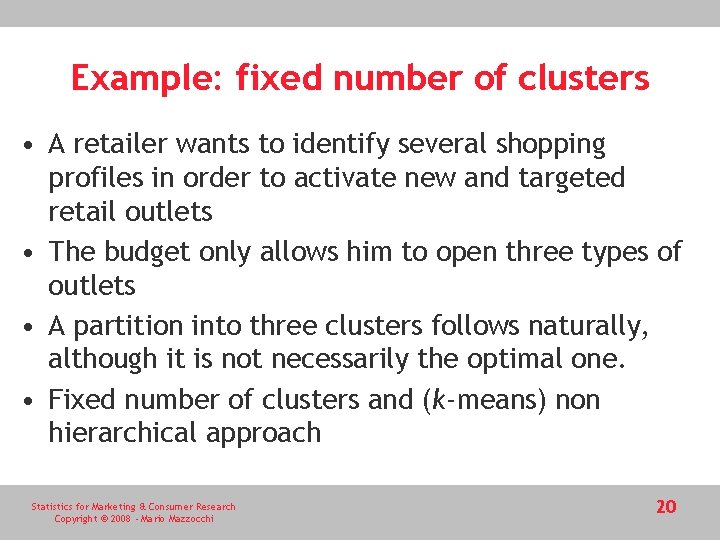

Example: fixed number of clusters
- A retailer wants to identify several shopping profiles in order to open new, targeted retail outlets
- The budget only allows him to open three types of outlets
- A partition into three clusters follows naturally, although it is not necessarily the optimal one
- Fixed number of clusters and (k-means) non-hierarchical approach

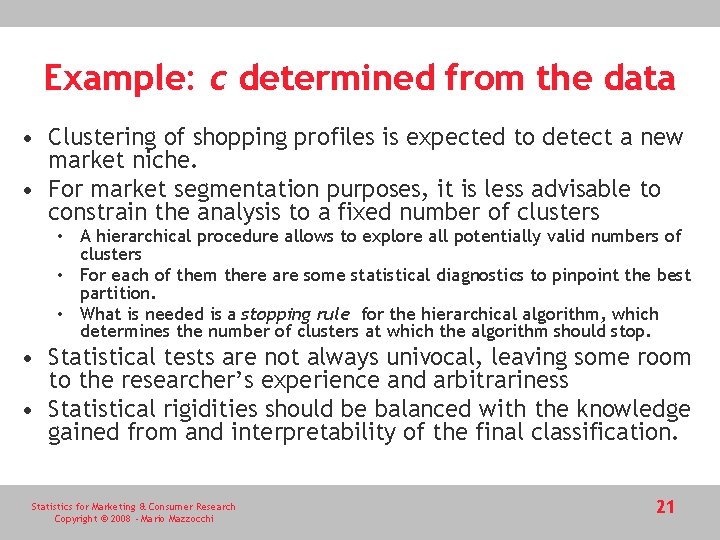

Example: c determined from the data
- Clustering of shopping profiles is expected to detect a new market niche
- For market segmentation purposes, it is less advisable to constrain the analysis to a fixed number of clusters
- A hierarchical procedure allows one to explore all potentially valid numbers of clusters
  - for each of them there are some statistical diagnostics to pinpoint the best partition
- What is needed is a stopping rule for the hierarchical algorithm, which determines the number of clusters at which the algorithm should stop
- Statistical tests are not always unambiguous, leaving some room for the researcher’s experience and arbitrariness
- The rigidity of statistical criteria should be balanced with the knowledge gained from, and the interpretability of, the final classification

Determining the optimal number of clusters from hierarchical methods
- Graphical
  - dendrogram
  - scree diagram
- Statistical
  - Arnold’s criterion
  - pseudo F statistic
  - pseudo t² statistic
  - cubic clustering criterion (CCC)

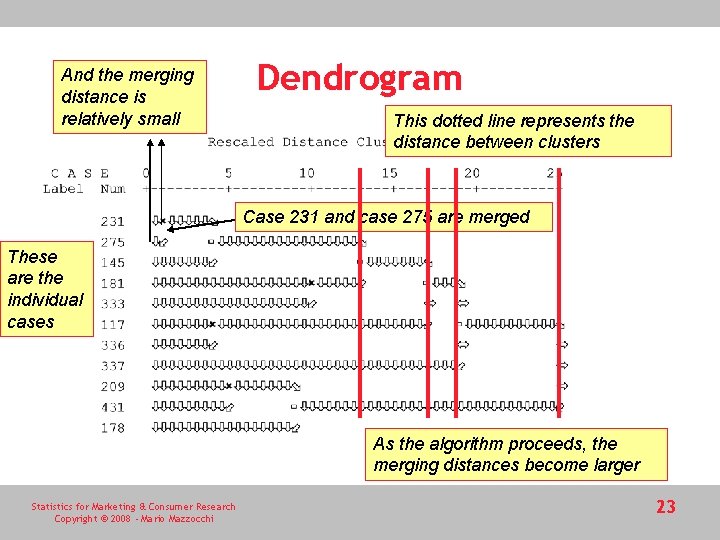

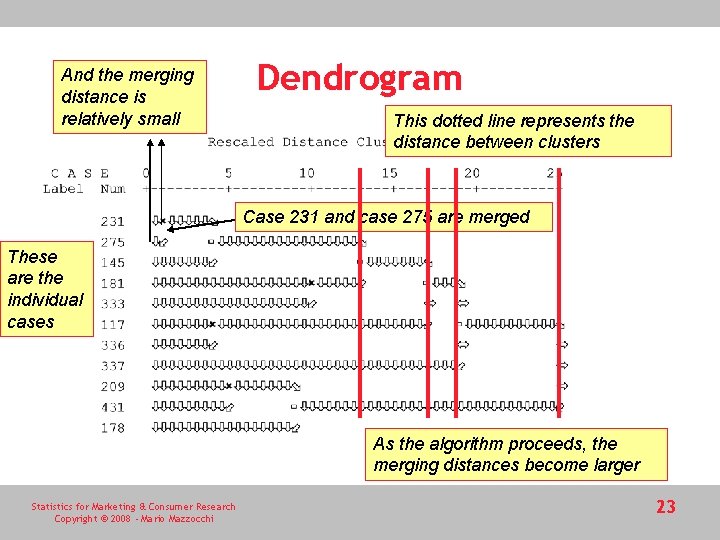

Dendrogram
- These are the individual cases
- Case 231 and case 275 are merged, and the merging distance is relatively small
- This dotted line represents the distance between clusters
- As the algorithm proceeds, the merging distances become larger

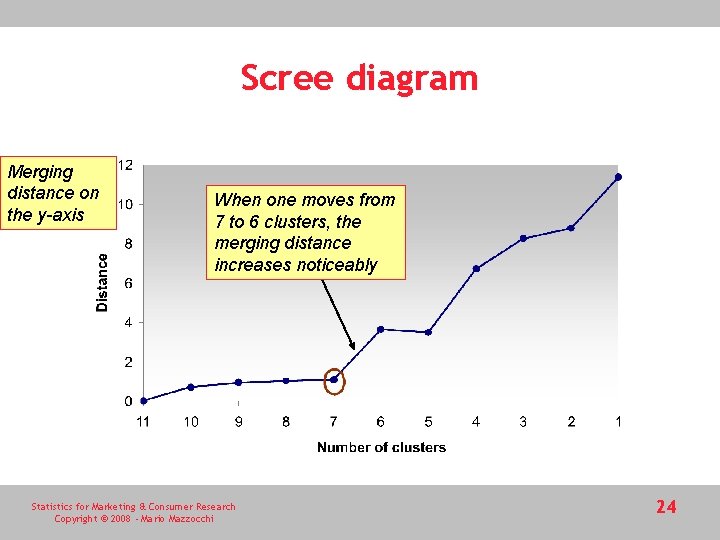

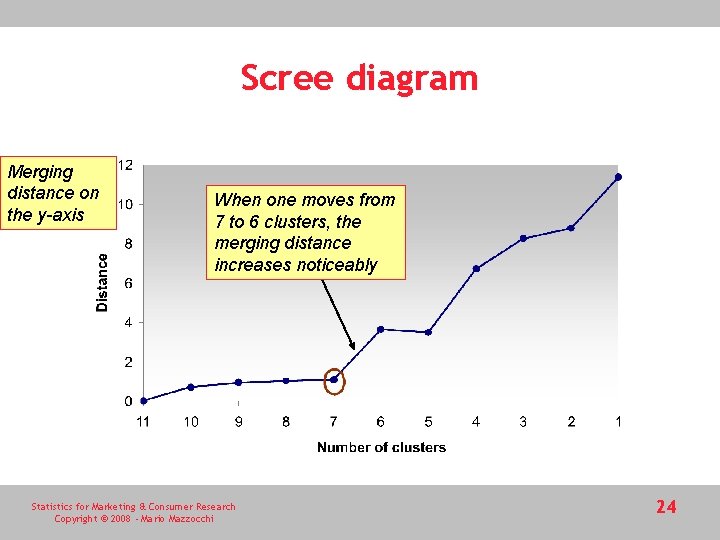

Scree diagram
- Merging distance on the y-axis
- When one moves from 7 to 6 clusters, the merging distance increases noticeably

Statistical tests
- The rationale is that in an optimal partition, variability within clusters should be as small as possible, while variability between clusters should be maximized
- This principle is similar to the ANOVA F-test
- However, since hierarchical algorithms proceed sequentially, the probability distribution of statistics relating within-cluster and between-cluster variability is unknown and differs from the F distribution

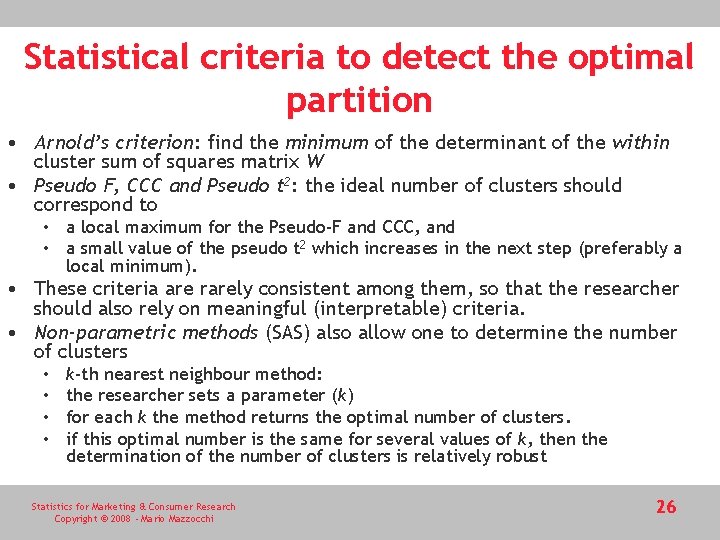

Statistical criteria to detect the optimal partition
- Arnold’s criterion: find the minimum of the determinant of the within-cluster sum of squares matrix W
- Pseudo F, CCC and pseudo t²: the ideal number of clusters should correspond to
  - a local maximum for the pseudo F and CCC, and
  - a small value of the pseudo t² which increases in the next step (preferably a local minimum)
- These criteria are rarely consistent with one another, so the researcher should also rely on meaningful (interpretable) criteria
- Non-parametric methods (SAS) also allow one to determine the number of clusters
  - k-th nearest neighbour method: the researcher sets a parameter (k), and for each k the method returns the optimal number of clusters
  - if this optimal number is the same for several values of k, then the determination of the number of clusters is relatively robust
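The within/between logic behind these criteria can be sketched for two hypothetical partitions of the same data. The pseudo F below uses the form usually attributed to Calinski and Harabasz, (between SS / (c - 1)) / (within SS / (n - c)); det(W) is the quantity that Arnold’s criterion, as stated above, seeks to minimize. CCC and pseudo t² are not shown, and all helper names are illustrative.

```python
def within_matrix(points, labels):
    """W: within-cluster sums of squares and cross-products, pooled over clusters."""
    p = len(points[0])
    W = [[0.0] * p for _ in range(p)]
    for c in set(labels):
        members = [x for x, lab in zip(points, labels) if lab == c]
        centre = [sum(col) / len(members) for col in zip(*members)]
        for x in members:
            dev = [x[j] - centre[j] for j in range(p)]
            for r in range(p):
                for s in range(p):
                    W[r][s] += dev[r] * dev[s]
    return W


def determinant(m):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in m]
    n, det = len(a), 1.0
    for i in range(n):
        piv = max(range(i, n), key=lambda r: abs(a[r][i]))
        if a[piv][i] == 0:
            return 0.0
        if piv != i:
            a[i], a[piv] = a[piv], a[i]
            det = -det
        det *= a[i][i]
        for r in range(i + 1, n):
            f = a[r][i] / a[i][i]
            for s in range(i, n):
                a[r][s] -= f * a[i][s]
    return det


def pseudo_f(points, labels):
    """Pseudo F: (between SS / (c - 1)) / (within SS / (n - c))."""
    n, c = len(points), len(set(labels))
    W = within_matrix(points, labels)
    within = sum(W[j][j] for j in range(len(W)))
    centre = [sum(col) / n for col in zip(*points)]
    total = sum((x - m) ** 2 for pt in points for x, m in zip(pt, centre))
    return ((total - within) / (c - 1)) / (within / (n - c))


points = [(0, 0), (0, 2), (2, 0), (2, 2), (10, 10), (10, 12), (12, 10), (12, 12)]
good = [0, 0, 0, 0, 1, 1, 1, 1]
poor = [0, 1, 0, 1, 0, 1, 0, 1]
for name, labels in [("good", good), ("poor", poor)]:
    print(name, pseudo_f(points, labels), determinant(within_matrix(points, labels)))
```

The well-separated partition gives a pseudo F of 150 and det(W) of 64, against roughly 0.12 and 1600 for the poor one. In practice these statistics are computed for each candidate number of clusters and their local extremes are inspected.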

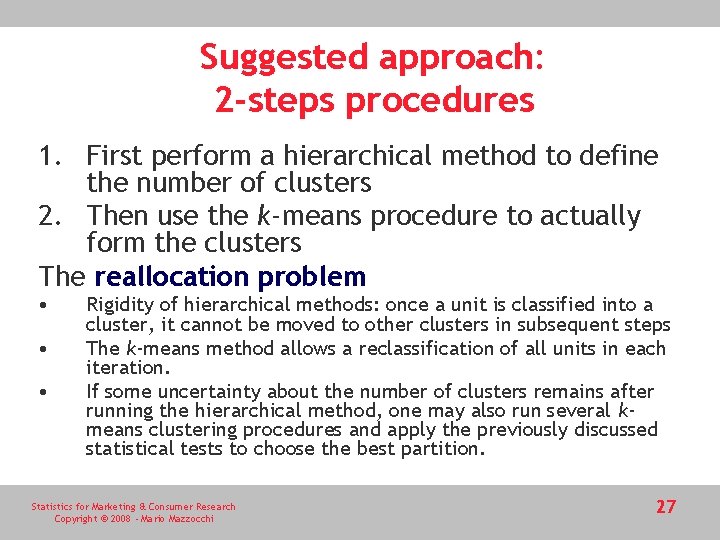

Suggested approach: 2-step procedures
1. First perform a hierarchical method to define the number of clusters
2. Then use the k-means procedure to actually form the clusters

The reallocation problem
- Rigidity of hierarchical methods: once a unit is classified into a cluster, it cannot be moved to other clusters in subsequent steps
- The k-means method allows a reclassification of all units in each iteration
- If some uncertainty about the number of clusters remains after running the hierarchical method, one may also run several k-means clustering procedures and apply the previously discussed statistical tests to choose the best partition
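A compact sketch of the suggested two-step procedure, assuming Ward as the hierarchical method (as in the example later in the chapter) and using the centroids of the hierarchical solution as k-means seeds. The number of clusters is passed in directly here; in practice it would come from the diagnostics discussed earlier. Function names and data are hypothetical.

```python
import math
from itertools import combinations


def mean_point(cluster):
    return tuple(sum(col) / len(cluster) for col in zip(*cluster))


def ward_cost(a, b):
    """Increase in within-cluster SS from merging a and b (closed form)."""
    return len(a) * len(b) / (len(a) + len(b)) * math.dist(mean_point(a), mean_point(b)) ** 2


def ward_clusters(points, c):
    """Step 1: naive Ward agglomeration, stopped when c clusters remain."""
    clusters = [[p] for p in points]
    while len(clusters) > c:
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda ij: ward_cost(clusters[ij[0]], clusters[ij[1]]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is unaffected
    return clusters


def k_means(points, seeds, max_iter=100):
    """Step 2: k-means started from the given seeds; units may be reassigned."""
    centres = list(seeds)
    labels = None
    for _ in range(max_iter):
        new = [min(range(len(centres)), key=lambda c: math.dist(p, centres[c]))
               for p in points]
        if new == labels:
            break
        labels = new
        for c in range(len(centres)):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centres[c] = mean_point(members)
    return labels, centres


def two_step(points, c):
    seeds = [mean_point(cl) for cl in ward_clusters(points, c)]
    return k_means(points, seeds)


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (20, 0), (21, 0)]
labels, centres = two_step(points, c=3)
print(labels)  # [0, 0, 0, 1, 1, 1, 2, 2]
```

The hierarchical step fixes good starting seeds, while the k-means step removes the rigidity of the hierarchy by allowing every unit to be reclassified.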

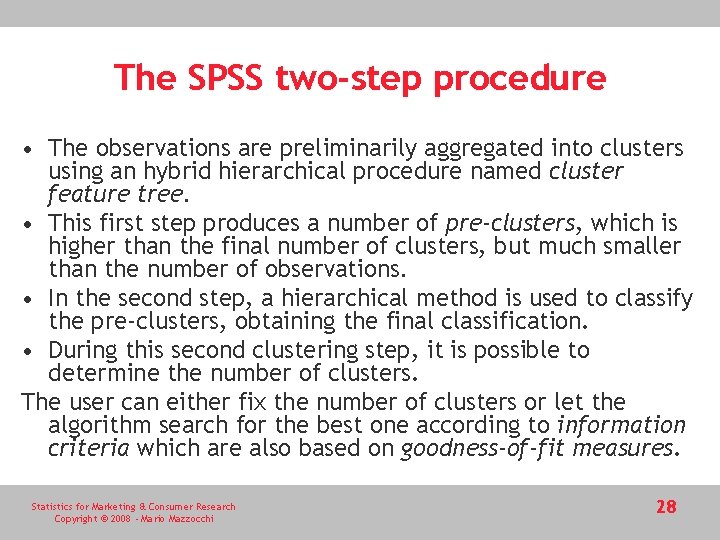

The SPSS two-step procedure
- The observations are first aggregated into clusters using a hybrid hierarchical procedure named cluster feature tree
- This first step produces a number of pre-clusters, which is higher than the final number of clusters but much smaller than the number of observations
- In the second step, a hierarchical method is used to classify the pre-clusters, obtaining the final classification
- During this second clustering step, it is possible to determine the number of clusters: the user can either fix the number of clusters or let the algorithm search for the best one according to information criteria, which are also based on goodness-of-fit measures
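The idea that makes a cluster feature tree cheap can be sketched with a BIRCH-style cluster feature: each pre-cluster is summarized by its count, linear sum and sum of squares per variable. These summaries are additive, so pre-clusters can be merged and described without revisiting the raw observations. This is an illustrative assumption about the general technique, not a reproduction of the statistics or distance measure SPSS actually uses.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class ClusterFeature:
    """Summary of a (pre-)cluster: count, linear sum and sum of squares per variable."""
    n: int
    ls: tuple
    ss: tuple

    @classmethod
    def from_point(cls, x):
        return cls(1, tuple(x), tuple(v * v for v in x))

    def merge(self, other):
        # Cluster features are additive: merging needs only the summaries
        return ClusterFeature(self.n + other.n,
                              tuple(a + b for a, b in zip(self.ls, other.ls)),
                              tuple(a + b for a, b in zip(self.ss, other.ss)))

    def mean(self):
        return tuple(v / self.n for v in self.ls)

    def variance(self):
        # Population variance per variable, recovered as SS/n - mean^2
        return tuple(s / self.n - m * m for s, m in zip(self.ss, self.mean()))


points = [(1.0, 2.0), (3.0, 4.0), (5.0, 6.0)]
cf = reduce(ClusterFeature.merge, (ClusterFeature.from_point(p) for p in points))
print(cf.n, cf.mean(), cf.variance())
```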

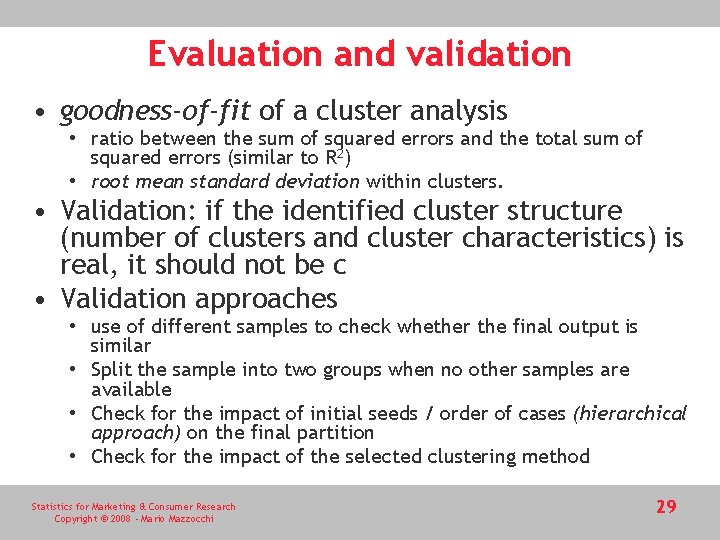

Evaluation and validation
- Goodness-of-fit of a cluster analysis
  - ratio between the within-cluster sum of squared errors and the total sum of squares (one minus this ratio is analogous to R²)
  - root-mean-square standard deviation (RMSSTD) within clusters
- Validation: if the identified cluster structure (number of clusters and cluster characteristics) is real, it should not be c…
- Validation approaches
  - use different samples to check whether the final output is similar
  - split the sample into two groups when no other samples are available
  - check for the impact of initial seeds / order of cases (hierarchical approach) on the final partition
  - check for the impact of the selected clustering method
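Both goodness-of-fit measures can be computed from the within-cluster and total sums of squares. The sketch assumes one common definition of RMSSTD: the pooled within-cluster standard deviation over all variables and clusters, that is, the square root of the within SS divided by p(n - c). The data and function name are hypothetical.

```python
import math


def cluster_fit(points, labels):
    """Return (R-squared-like ratio, RMSSTD) for a partition."""
    n, p, c = len(points), len(points[0]), len(set(labels))
    overall = [sum(col) / n for col in zip(*points)]
    total_ss = sum((x - m) ** 2 for pt in points for x, m in zip(pt, overall))
    within_ss = 0.0
    for k in set(labels):
        members = [pt for pt, lab in zip(points, labels) if lab == k]
        centre = [sum(col) / len(members) for col in zip(*members)]
        within_ss += sum((x - m) ** 2 for pt in members for x, m in zip(pt, centre))
    r_squared = 1 - within_ss / total_ss
    # Pooled within-cluster standard deviation over all variables and clusters
    rmsstd = math.sqrt(within_ss / (p * (n - c)))
    return r_squared, rmsstd


points = [(0, 0), (0, 2), (2, 0), (2, 2), (10, 10), (10, 12), (12, 10), (12, 12)]
print(cluster_fit(points, [0, 0, 0, 0, 1, 1, 1, 1]))  # about (0.962, 1.155)
```

A ratio close to 1 and a small RMSSTD indicate compact, well-separated clusters. For validation, the same measures can be compared across split samples.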

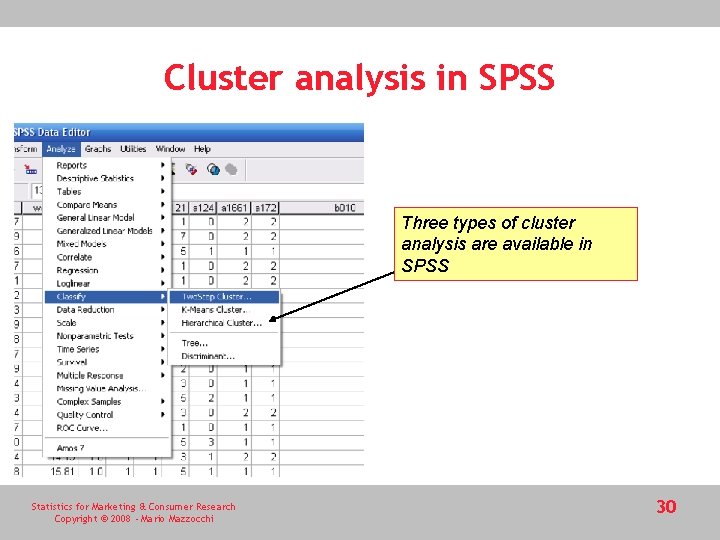

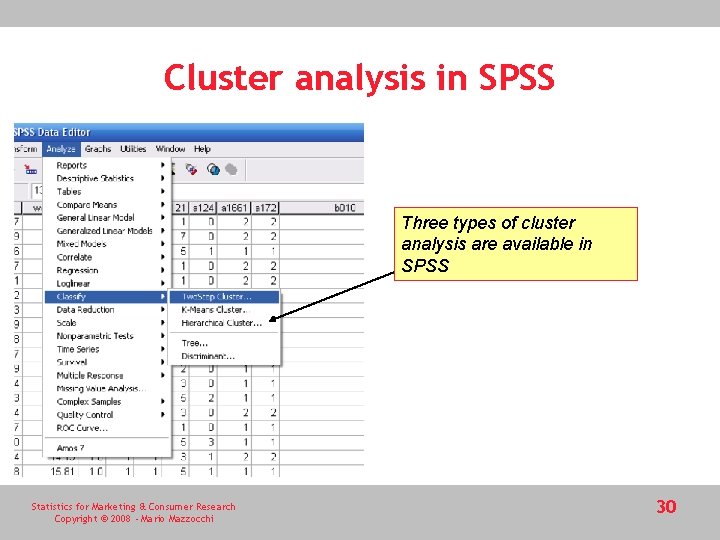

Cluster analysis in SPSS
- Three types of cluster analysis are available in SPSS

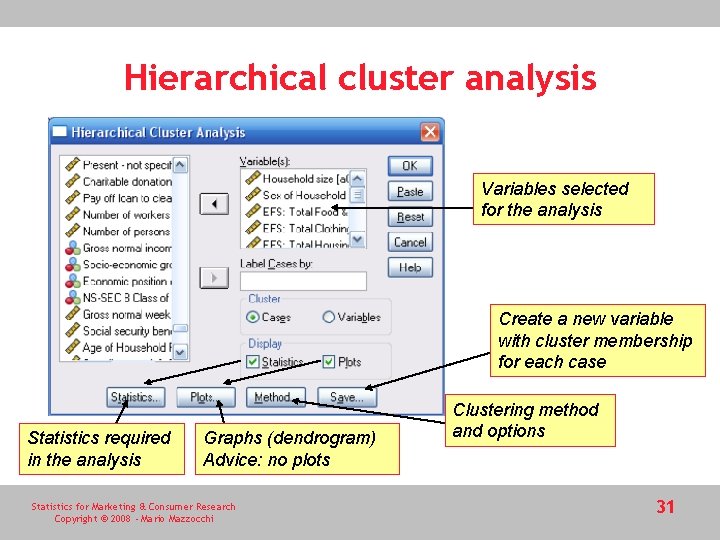

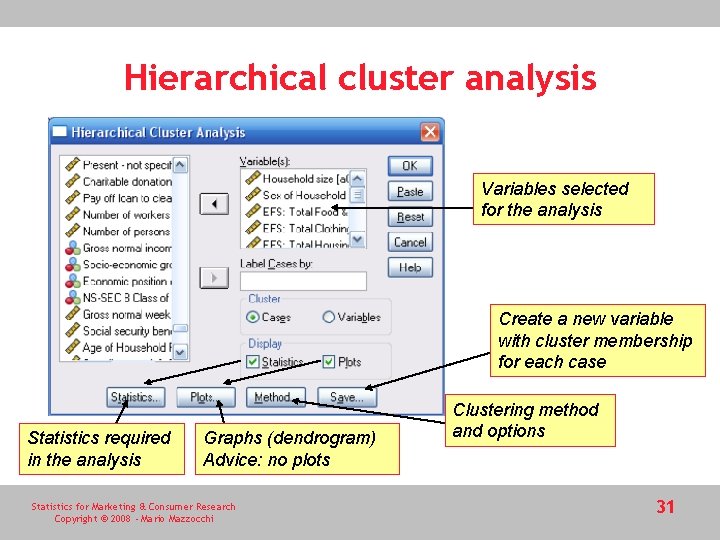

Hierarchical cluster analysis
- Variables selected for the analysis
- Create a new variable with cluster membership for each case
- Statistics required in the analysis
- Graphs (dendrogram). Advice: no plots
- Clustering method and options

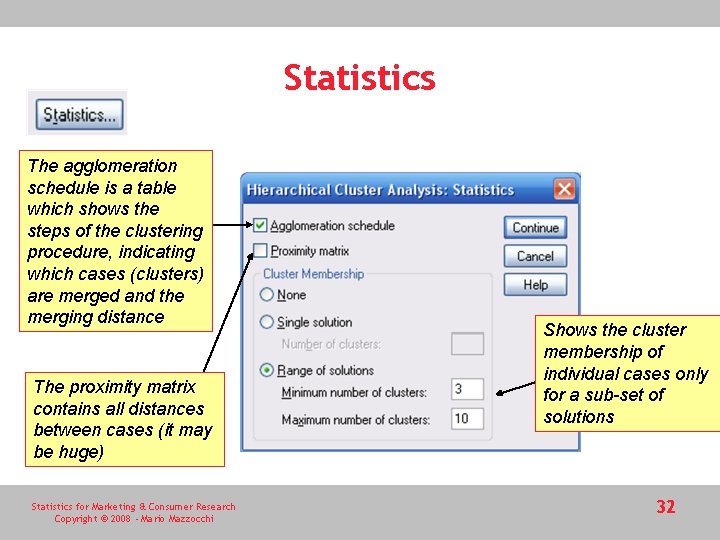

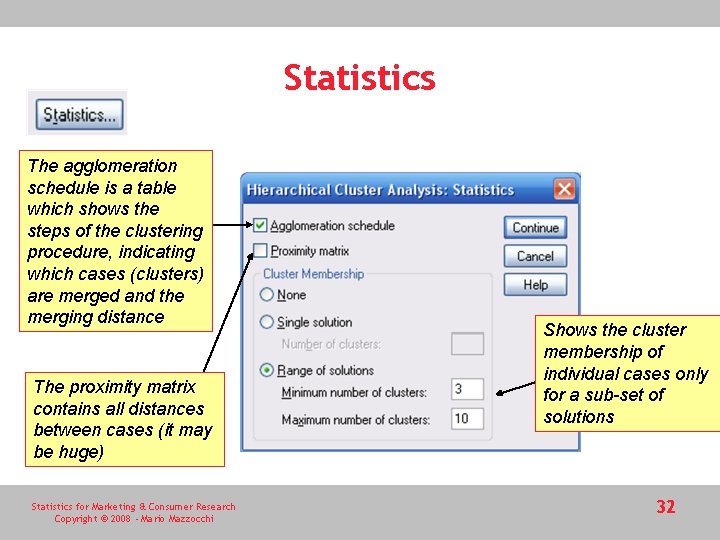

Statistics
- The agglomeration schedule is a table which shows the steps of the clustering procedure, indicating which cases (clusters) are merged and the merging distance
- The proximity matrix contains all distances between cases (it may be huge)
- Cluster membership: shows the cluster membership of individual cases, only for a sub-set of solutions

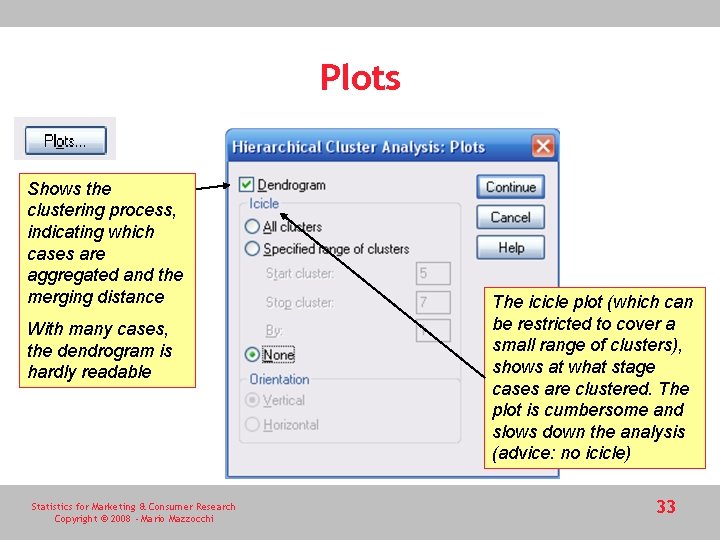

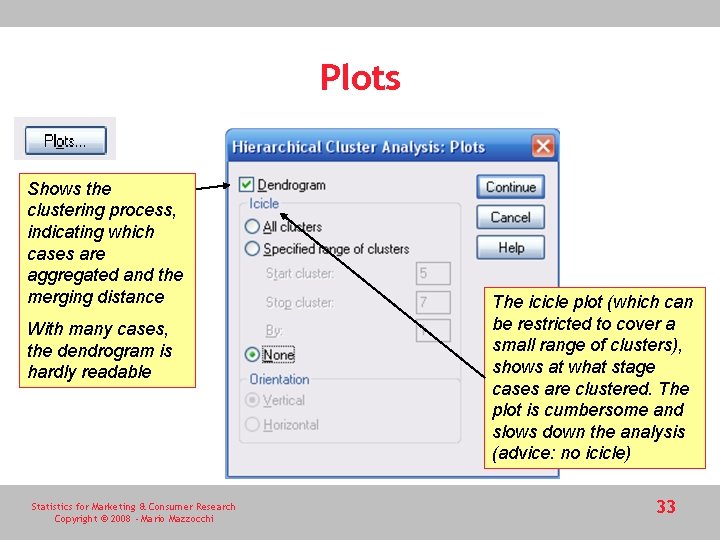

Plots
- The dendrogram shows the clustering process, indicating which cases are aggregated and the merging distance
  - With many cases, the dendrogram is hardly readable
- The icicle plot (which can be restricted to cover a small range of clusters) shows at what stage cases are clustered. The plot is cumbersome and slows down the analysis (advice: no icicle)

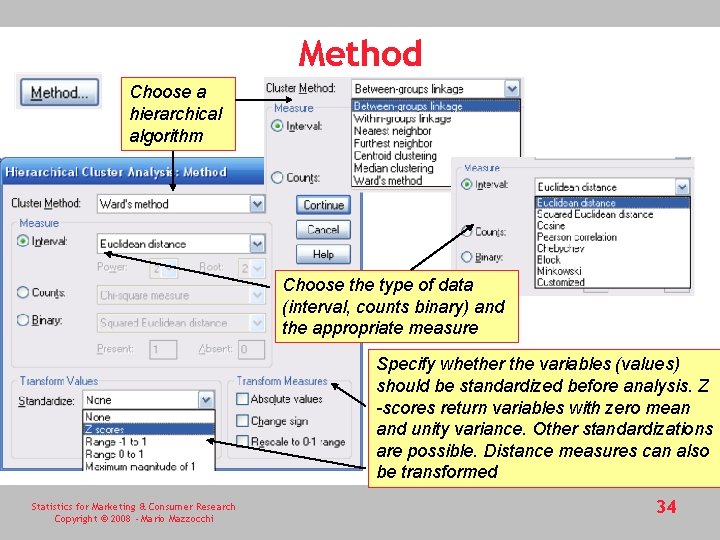

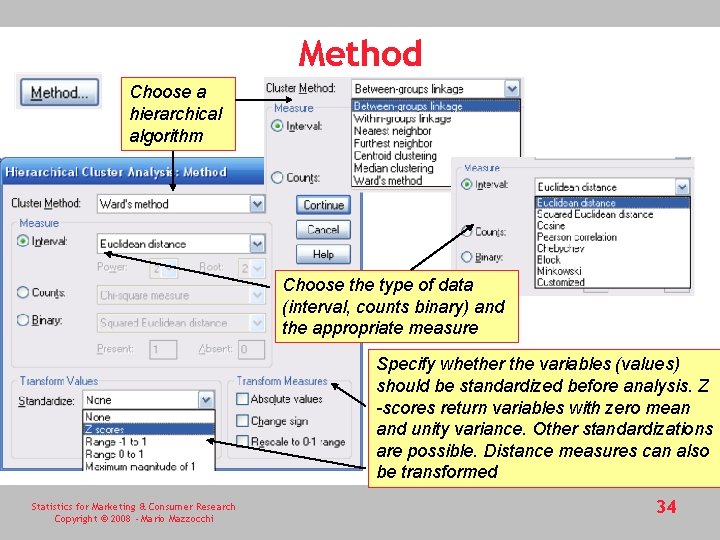

Method
- Choose a hierarchical algorithm
- Choose the type of data (interval, counts, binary) and the appropriate measure
- Specify whether the variables (values) should be standardized before the analysis. Z-scores return variables with zero mean and unit variance. Other standardizations are possible
- Distance measures can also be transformed
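A sketch of z-score standardization and its effect on a distance, with hypothetical income and age data. It assumes the sample standard deviation (n - 1 divisor) is used; the exact divisor SPSS applies is not confirmed here.

```python
import math
from statistics import mean, stdev


def z_scores(column):
    """Standardize a variable to zero mean and unit (sample) variance."""
    m, s = mean(column), stdev(column)
    return [(x - m) / s for x in column]


# Hypothetical data: income (euros) and age (years) for three cases
income = [1000.0, 2000.0, 3000.0]
age = [20.0, 40.0, 60.0]

raw = list(zip(income, age))
std = list(zip(z_scores(income), z_scores(age)))

print(math.dist(raw[0], raw[1]))  # about 1000.2: income dominates
print(math.dist(std[0], std[1]))  # about 1.414: both variables count equally
```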

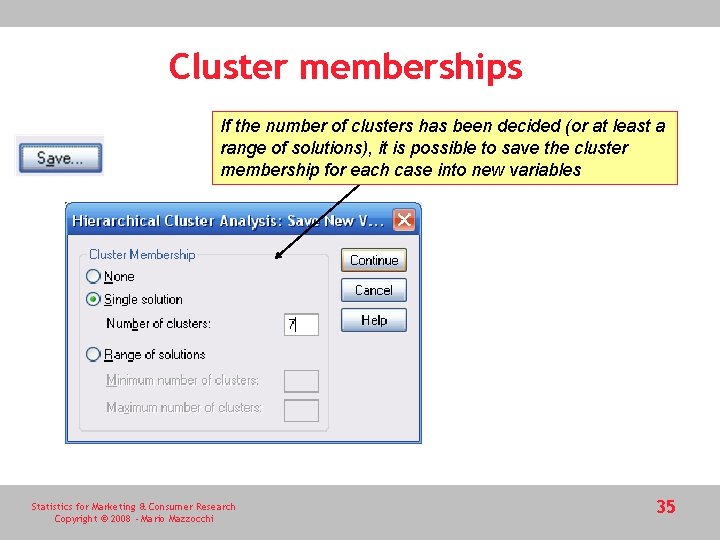

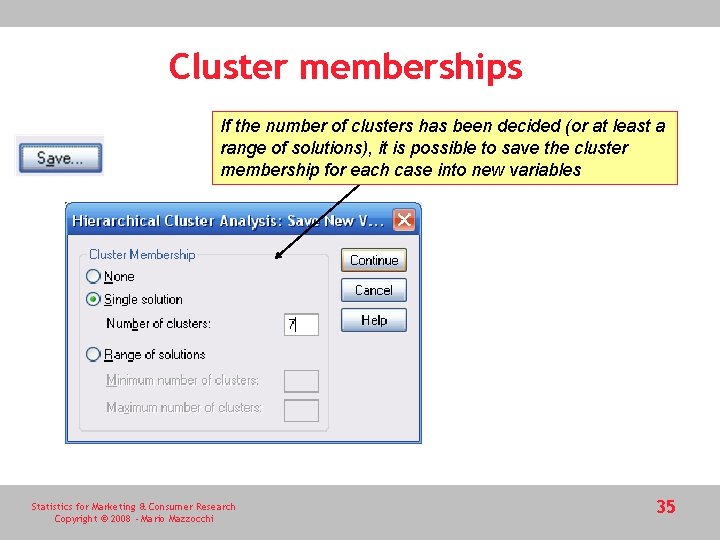

Cluster memberships
- If the number of clusters has been decided (or at least a range of solutions), it is possible to save the cluster membership for each case into new variables

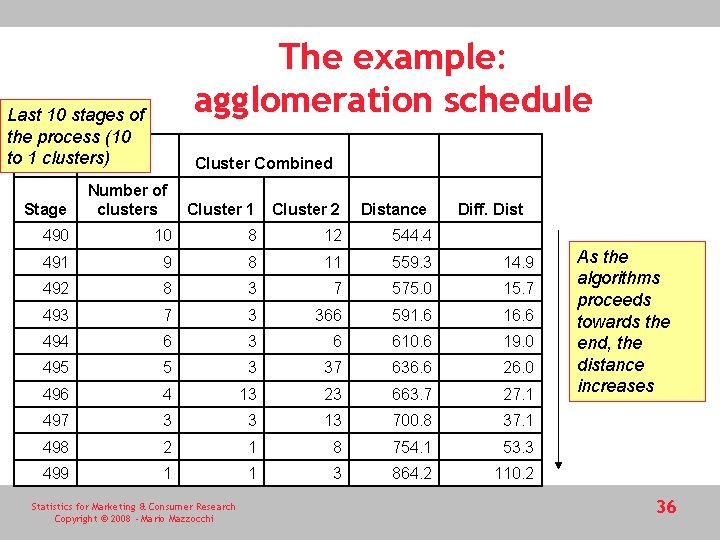

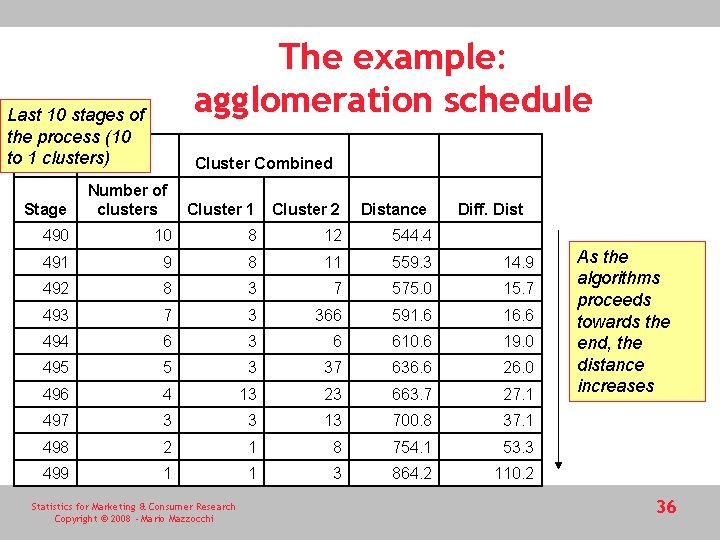

The example: agglomeration schedule

Last 10 stages of the process (10 to 1 clusters)

| Stage | Number of clusters | Cluster combined: cluster 1 | Cluster combined: cluster 2 | Distance | Diff. dist. |
|---|---|---|---|---|---|
| 490 | 10 | 8 | 12 | 544.4 | |
| 491 | 9 | 8 | 11 | 559.3 | 14.9 |
| 492 | 8 | 3 | 7 | 575.0 | 15.7 |
| 493 | 7 | 3 | 366 | 591.6 | 16.6 |
| 494 | 6 | 3 | 6 | 610.6 | 19.0 |
| 495 | 5 | 3 | 37 | 636.6 | 26.0 |
| 496 | 4 | 13 | 23 | 663.7 | 27.1 |
| 497 | 3 | 3 | 13 | 700.8 | 37.1 |
| 498 | 2 | 1 | 8 | 754.1 | 53.3 |
| 499 | 1 | 1 | 3 | 864.2 | 110.2 |

As the algorithm proceeds towards the end, the distance increases

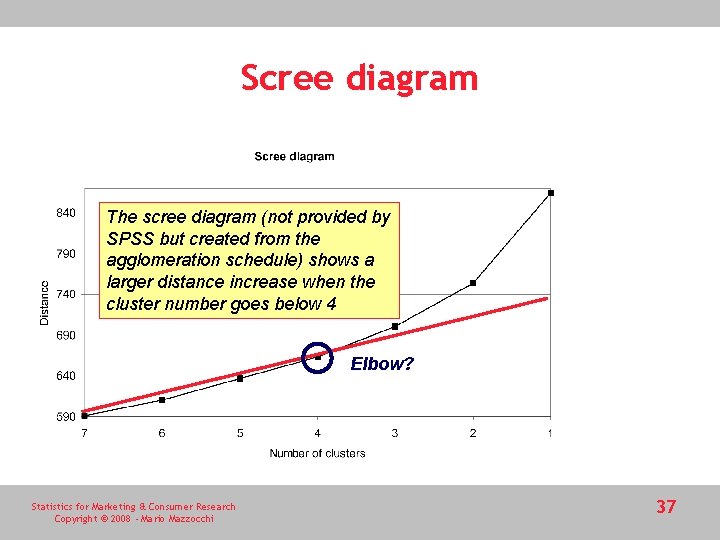

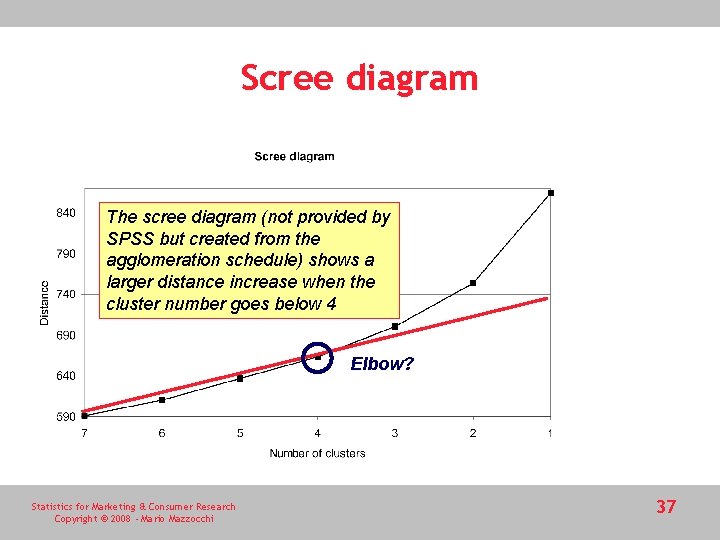

Scree diagram
- The scree diagram (not provided by SPSS but created from the agglomeration schedule) shows a larger distance increase when the cluster number goes below 4
- Elbow?
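Since SPSS does not draw the scree diagram, it can be built from the merging distances in the agglomeration schedule of this example. The sketch below recomputes the distance increases and prints a rough text-mode scree, with one bar per merge (bar length proportional to the increase).

```python
# Merging distances for the last 10 stages, keyed by the number of clusters
# left after the merge (taken from the example's agglomeration schedule)
distance = {10: 544.4, 9: 559.3, 8: 575.0, 7: 591.6, 6: 610.6,
            5: 636.6, 4: 663.7, 3: 700.8, 2: 754.1, 1: 864.2}

# Increase in merging distance when going from c + 1 to c clusters
increase = {c: round(distance[c] - distance[c + 1], 1) for c in range(1, 10)}

# Text-mode scree diagram: one bar per step, longest bar = largest jump
for c in range(9, 0, -1):
    bar = "#" * round(increase[c] / 5)
    print(f"{c + 1:>2} -> {c:>2} clusters  +{increase[c]:>5}  {bar}")
```

Because the distances in the schedule are already rounded, the last increase comes out as 110.1 rather than the reported 110.2. Judging where the elbow lies remains a visual, partly subjective call.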

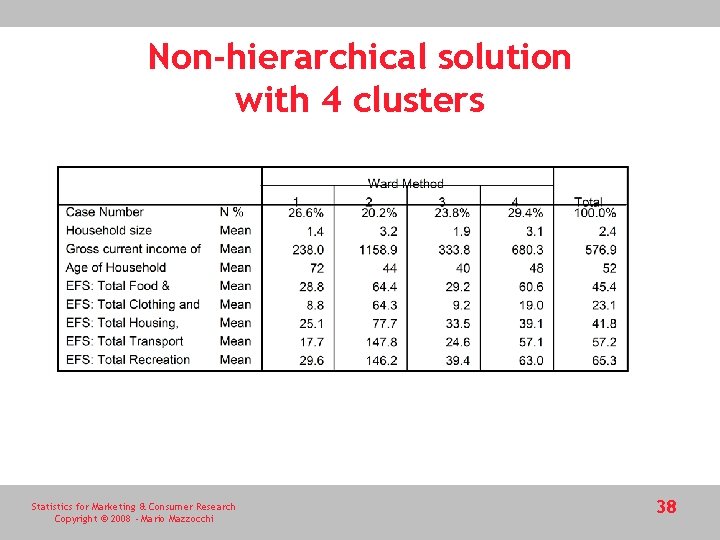

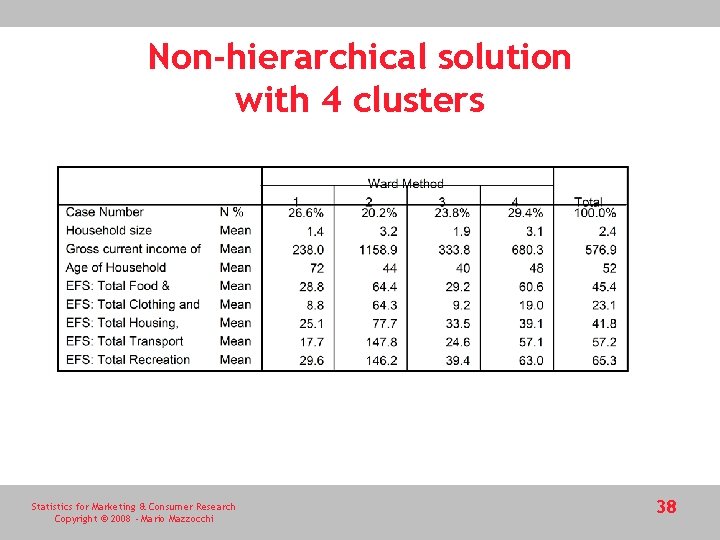

Non-hierarchical solution with 4 clusters

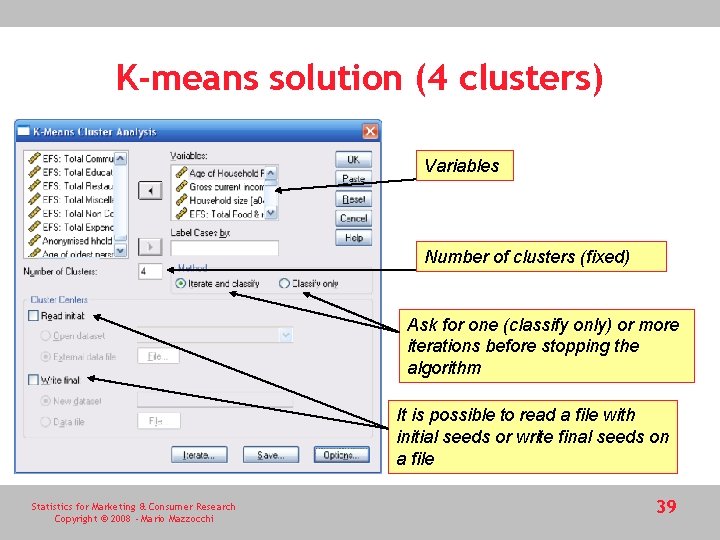

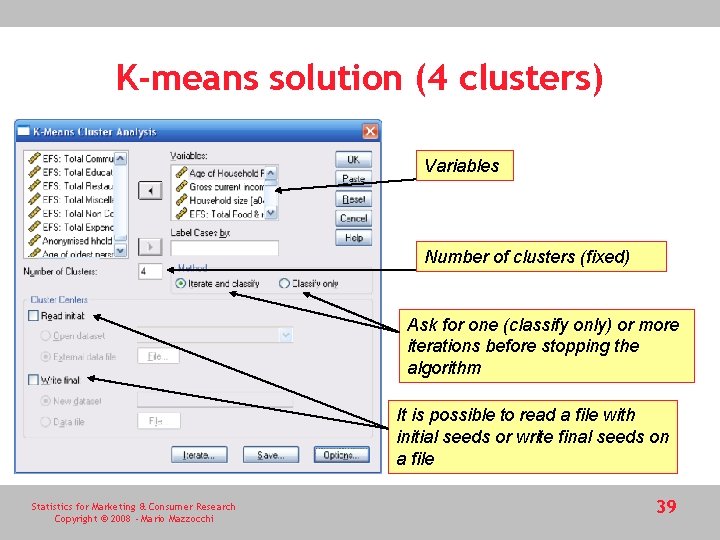

K-means solution (4 clusters)
- Variables
- Number of clusters (fixed)
- Ask for one (classify only) or more iterations before stopping the algorithm
- It is possible to read a file with initial seeds or write final seeds to a file

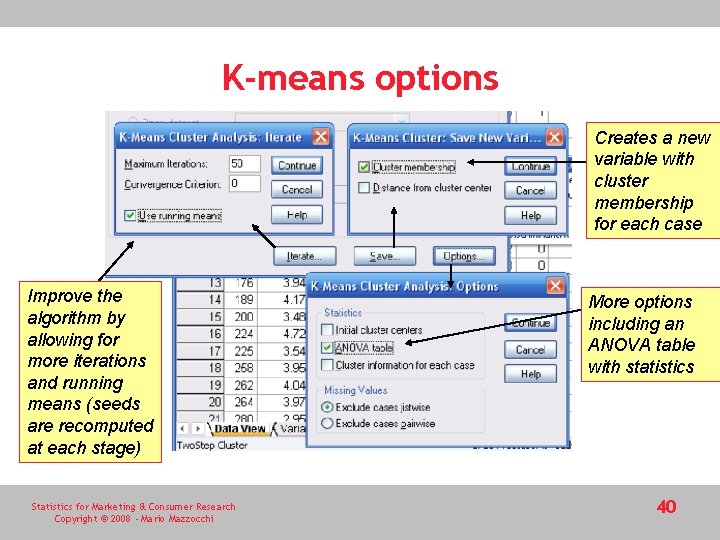

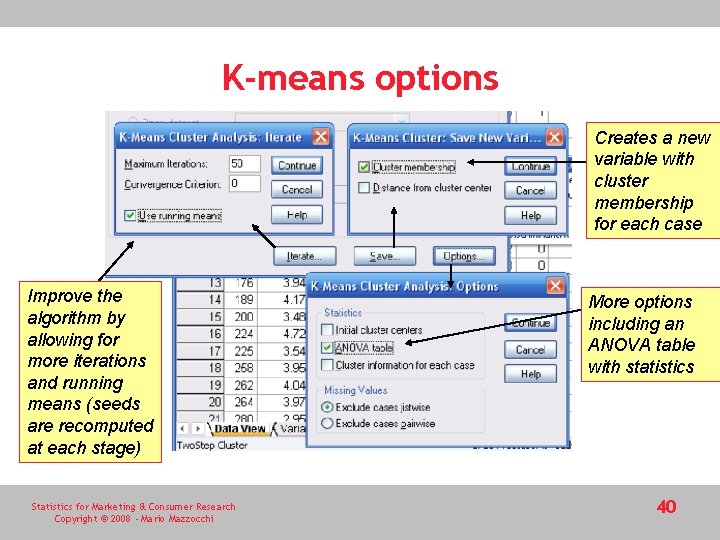

K-means options
- Creates a new variable with cluster membership for each case
- Improve the algorithm by allowing for more iterations and running means (seeds are recomputed at each stage)
- More options, including an ANOVA table with statistics

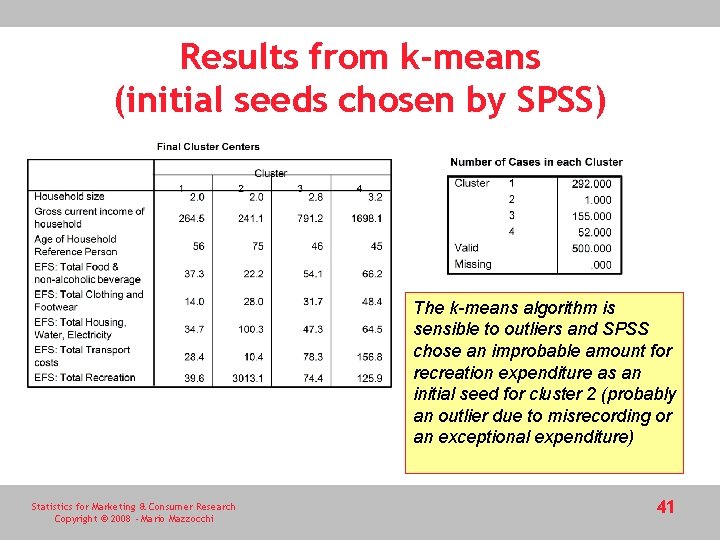

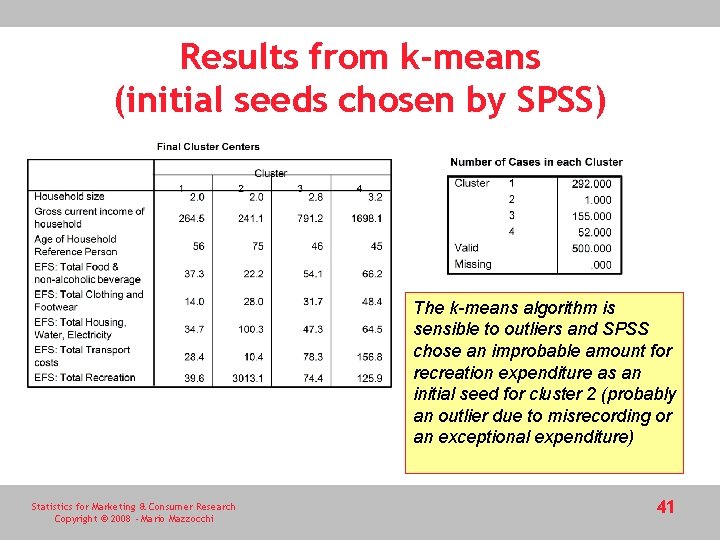

Results from k-means (initial seeds chosen by SPSS)
- The k-means algorithm is sensitive to outliers, and SPSS chose an improbable amount for recreation expenditure as an initial seed for cluster 2 (probably an outlier due to misrecording or an exceptional expenditure)

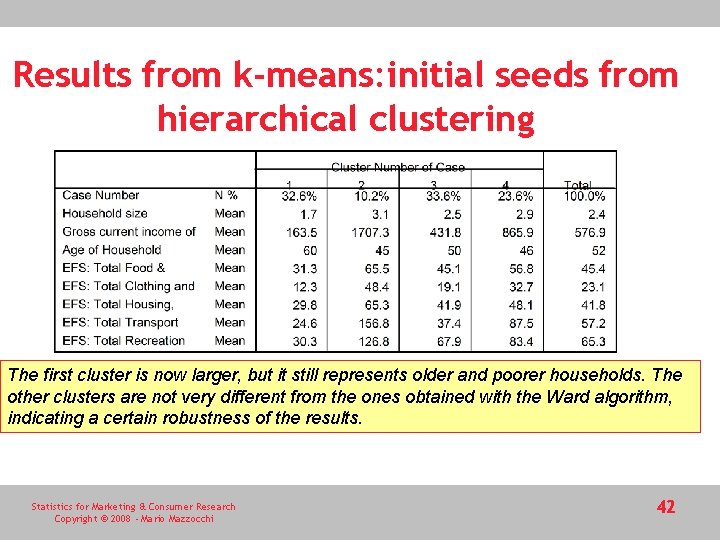

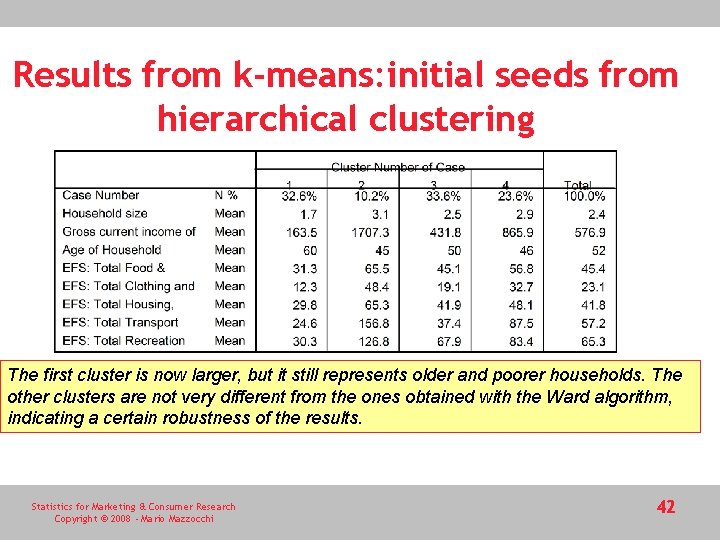

Results from k-means: initial seeds from hierarchical clustering
- The first cluster is now larger, but it still represents older and poorer households
- The other clusters are not very different from the ones obtained with the Ward algorithm, indicating a certain robustness of the results

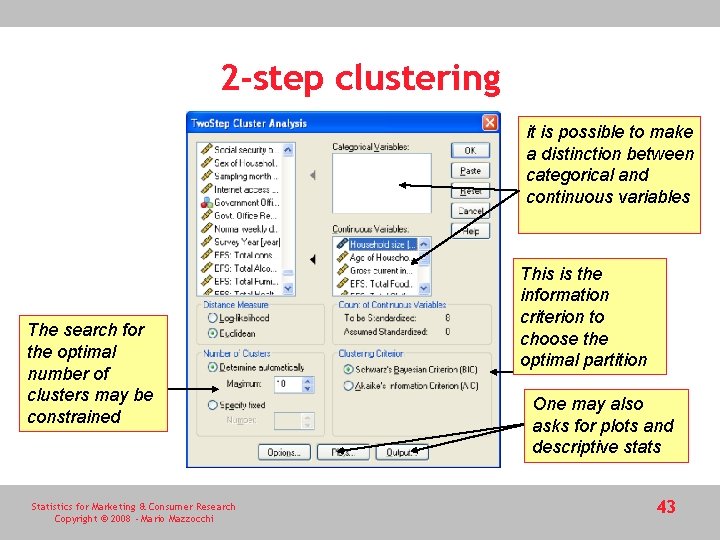

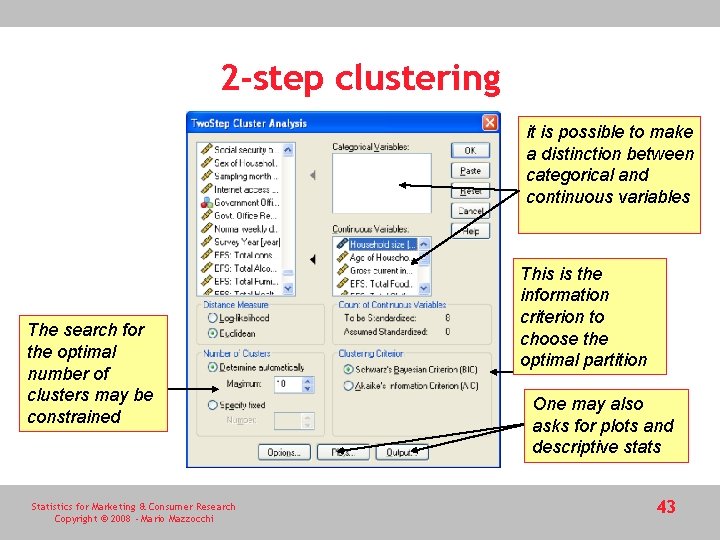

2-step clustering
- It is possible to distinguish between categorical and continuous variables
- The search for the optimal number of clusters may be constrained
- This is the information criterion used to choose the optimal partition
- One may also ask for plots and descriptive statistics

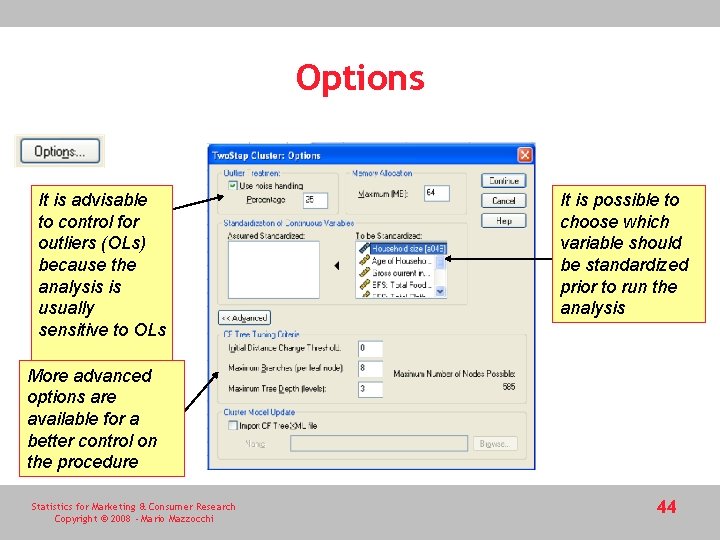

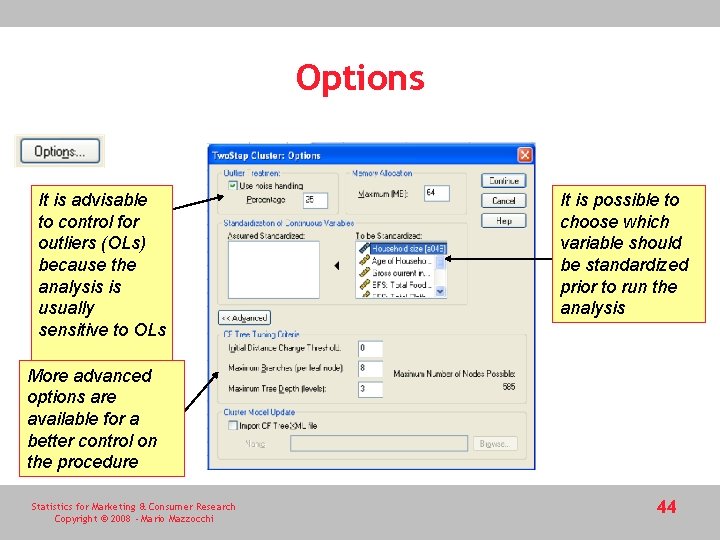

Options
- It is advisable to control for outliers (OLs), because the analysis is usually sensitive to them
- It is possible to choose which variables should be standardized prior to running the analysis
- More advanced options are available for better control of the procedure

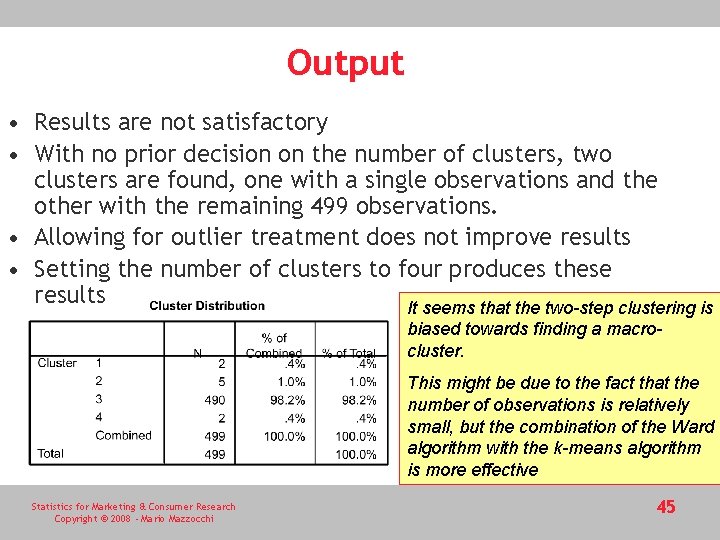

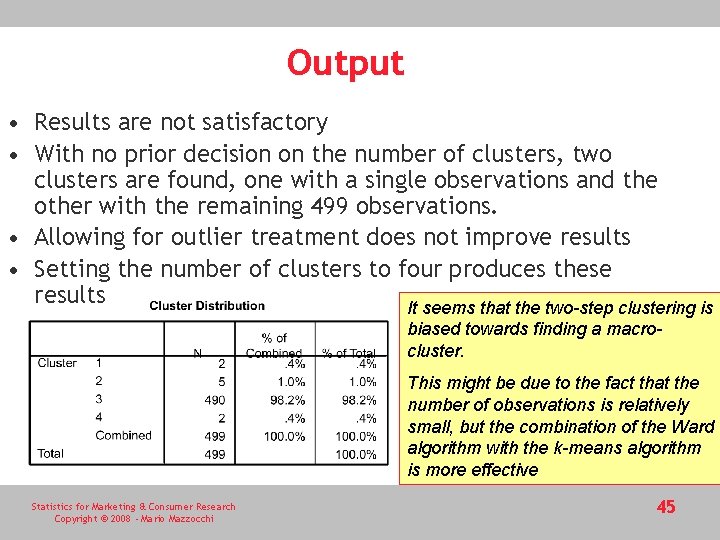

Output
- Results are not satisfactory
- With no prior decision on the number of clusters, two clusters are found, one with a single observation and the other with the remaining 499 observations
- Allowing for outlier treatment does not improve the results
- Setting the number of clusters to four produces these results

It seems that the two-step clustering is biased towards finding a macro-cluster. This might be due to the relatively small number of observations, but the combination of the Ward algorithm with the k-means algorithm is more effective

SAS cluster analysis
- Compared to SPSS, SAS provides more diagnostics and the option of non-parametric clustering through the following SAS/STAT procedures
  - the procedure CLUSTER (for hierarchical and k-th nearest neighbour methods) and VARCLUS (for clustering variables)
  - the procedure FASTCLUS (for non-hierarchical methods)
  - the procedure MODECLUS (for non-parametric methods)

Discussion
- It might seem that cluster analysis is too sensitive to the researcher’s choices
  - this is partly due to the relatively small data set and possibly to correlation between variables
- However, all outputs point to a segment of older and poorer households and another of younger and larger households with high expenditures
- By intensifying the search and adjusting some of the properties, cluster analysis does help identify homogeneous groups
- “Moral”: cluster analysis needs to be adequately validated, and it may be risky to run a single cluster analysis and take the results as truly informative, especially in the presence of outliers