CH. 3: Supervised Learning

- Slides: 32

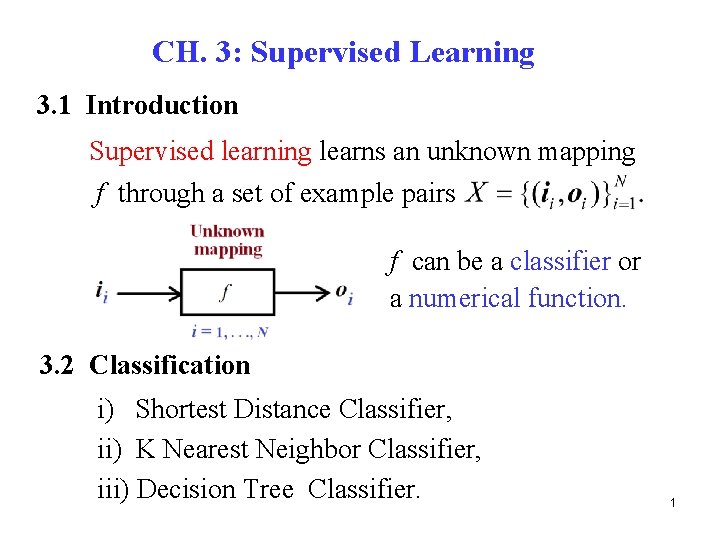

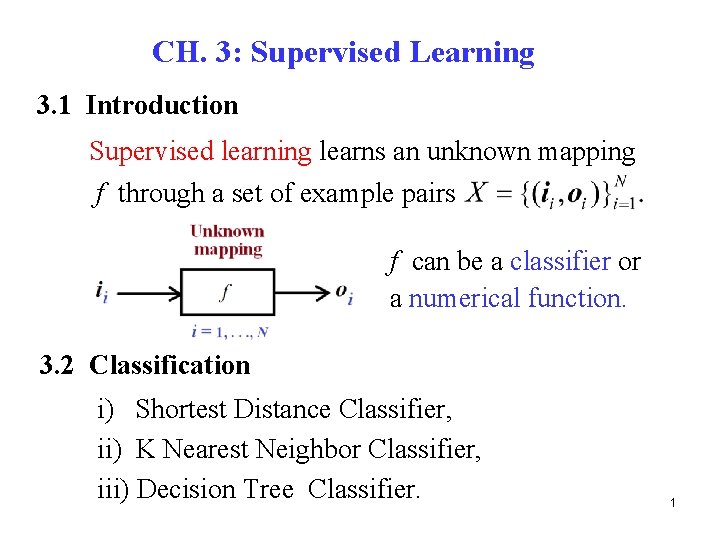

3.1 Introduction

Supervised learning learns an unknown mapping f from a set of example pairs. f can be a classifier or a numerical function.

3.2 Classification

i) Shortest Distance Classifier, ii) K Nearest Neighbor Classifier, iii) Decision Tree Classifier.

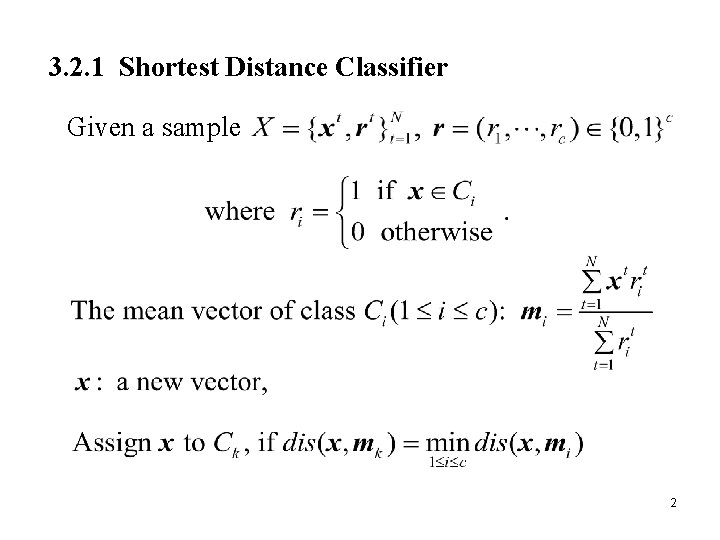

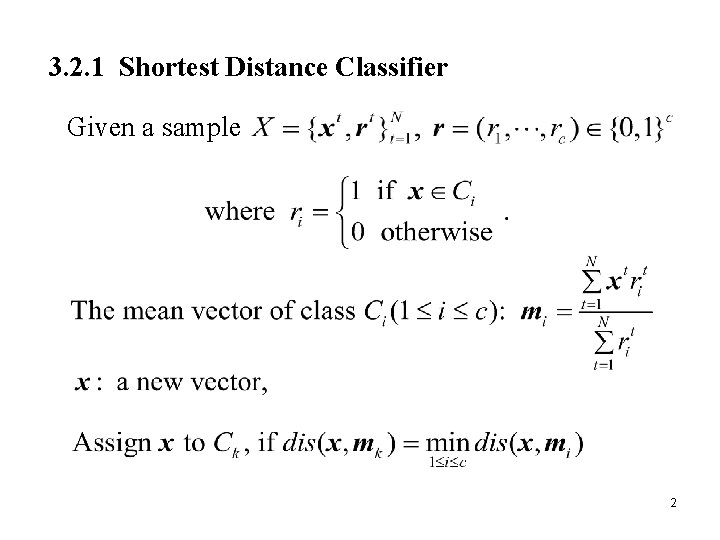

3.2.1 Shortest Distance Classifier

Given a sample x, the shortest distance (SD) classifier assigns x to the class whose mean point is nearest to x.

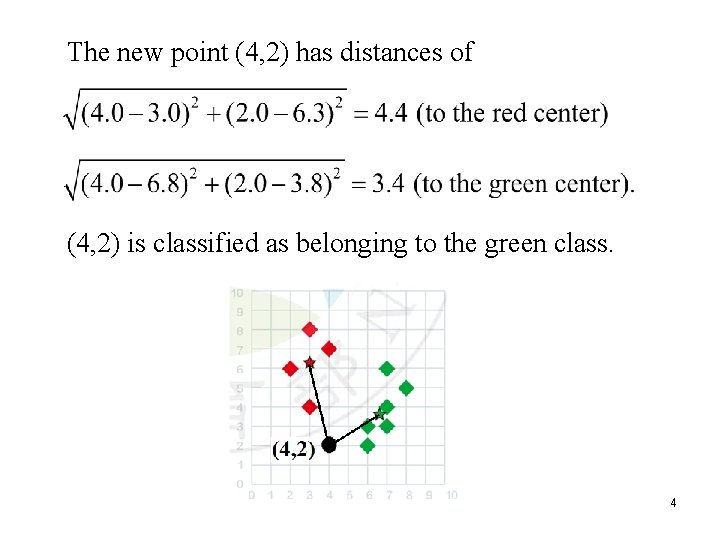

Example: 2 classes

Given two classes of data points marked by red and green colors, respectively:

Red: (2, 6), (3, 4), (3, 8), (4, 7)

Green: (6, 2), (6, 3), (7, 4), (7, 6), (8, 5)

The mean points of these two groups of data points are (3.0, 6.25) and (6.8, 4.0), respectively.

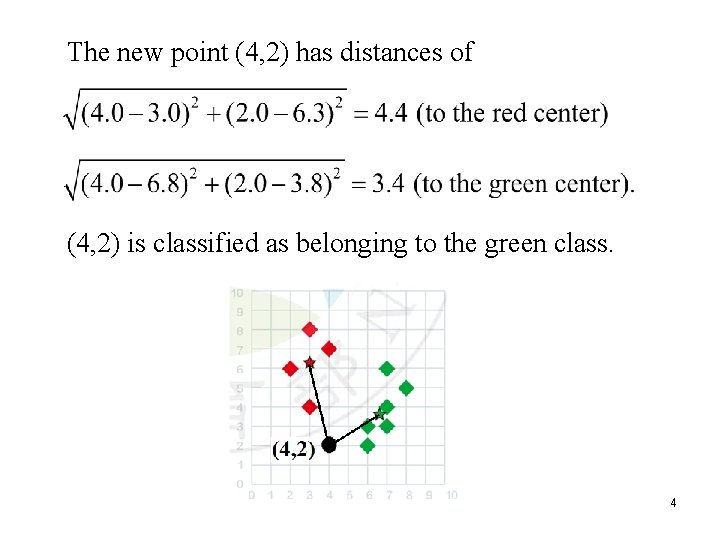

The new point (4, 2) is closer to the green mean point than to the red mean point, so (4, 2) is classified as belonging to the green class.
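The two-class example can be reproduced with a minimal sketch. It assumes Euclidean distance (the slides do not name the metric in the text), and the names `data`, `mean_point`, and `classify` are illustrative only.

```python
import math

# Training points from the two-class example above.
data = {
    "red": [(2, 6), (3, 4), (3, 8), (4, 7)],
    "green": [(6, 2), (6, 3), (7, 4), (7, 6), (8, 5)],
}


def mean_point(points):
    """Return the coordinate-wise mean of a list of 2-D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def classify(x, data):
    """Assign x to the class whose mean point is closest.

    Assumption: closeness is measured by Euclidean distance.
    """
    means = {label: mean_point(pts) for label, pts in data.items()}
    return min(means, key=lambda label: math.dist(x, means[label]))


means = {label: mean_point(pts) for label, pts in data.items()}
for label, m in means.items():
    print(label, "mean:", m, "distance to (4, 2):", round(math.dist((4, 2), m), 2))
print("(4, 2) is classified as", classify((4, 2), data))
```

Calling `classify` on any other point, for instance `(4, 3)`, applies the same nearest-mean rule.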

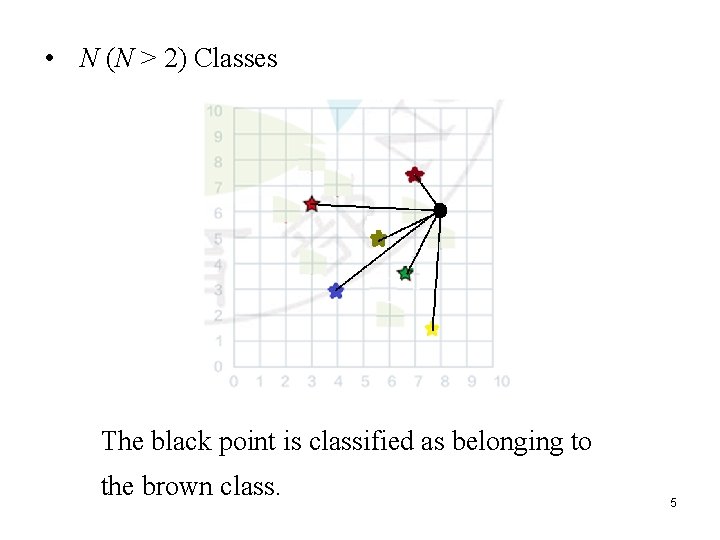

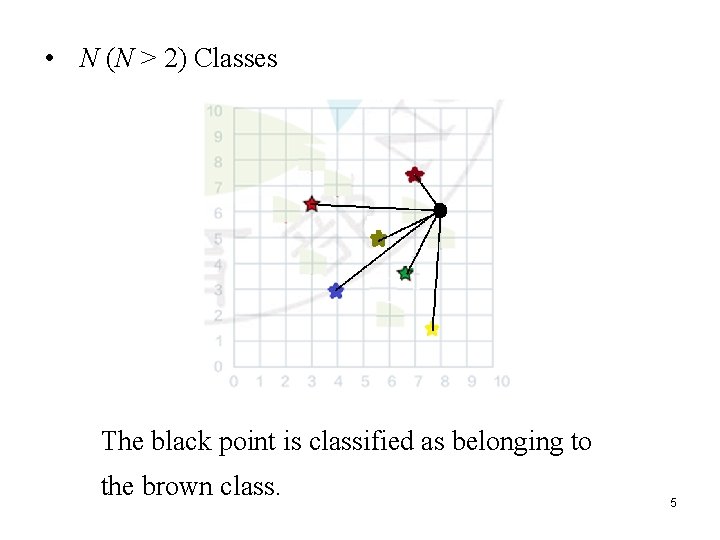

• N (N > 2) Classes

The black point is classified as belonging to the brown class.

3.2.2 K Nearest Neighbor (K-NN) Classifier

According to the SD classifier, point (4, 3) is classified as belonging to the green class. However, the nearest neighbor of (4, 3) is the red point (3, 4), so it is more desirable to classify (4, 3) as belonging to the red class.

The K-NN classifier assigns a point x the label most frequently present among its K nearest neighbors.

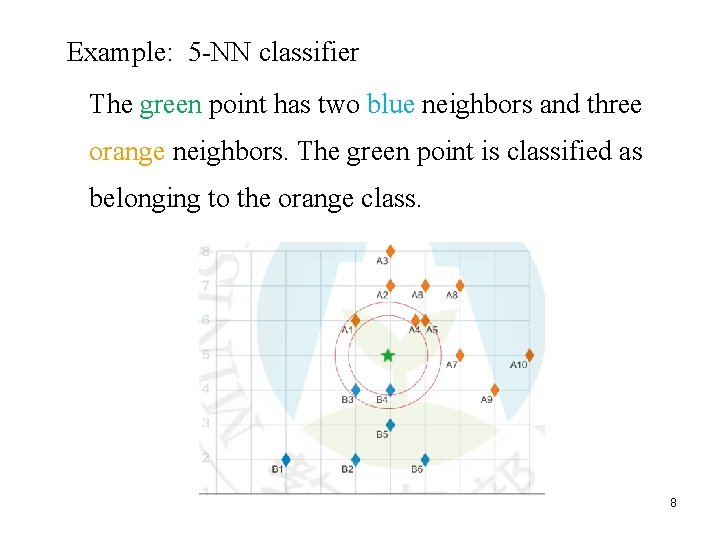

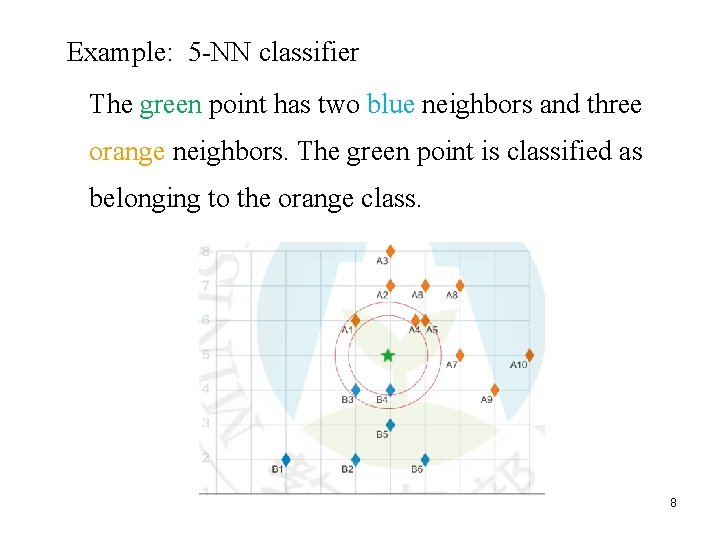

Example: 5-NN classifier

Among its five nearest neighbors, the green point has two blue neighbors and three orange neighbors, so it is classified as belonging to the orange class.
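The voting rule can be sketched as follows. The sketch reuses the red and green points from Section 3.2.1 rather than the blue and orange points of the figure, and it assumes Euclidean distance. The names `train` and `knn_classify` are illustrative.

```python
import math
from collections import Counter

# Labeled points reused from the two-class example in Section 3.2.1.
train = [
    ((2, 6), "red"), ((3, 4), "red"), ((3, 8), "red"), ((4, 7), "red"),
    ((6, 2), "green"), ((6, 3), "green"), ((7, 4), "green"),
    ((7, 6), "green"), ((8, 5), "green"),
]


def knn_classify(x, train, k):
    """Return the most frequent label among the k training points nearest to x.

    Assumption: Euclidean distance. Ties in the vote go to the label that
    appears first among the sorted neighbors, which is one possible choice.
    """
    nearest = sorted(train, key=lambda item: math.dist(x, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


for k in (1, 3):
    print(f"K={k}: (4, 3) is classified as", knn_classify((4, 3), train, k))
```

With K = 1 the point (4, 3) follows its single nearest neighbor (3, 4). A larger K can change the outcome, so K is a design choice.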

3.2.3 Decision Tree (DT) Classifier

A DT consists of internal (decision) nodes and leaf (outcome) nodes. Each decision node carries a test function. Each leaf node has a class label (for classification) or a numeric value (for regression).

Example: Decision tree

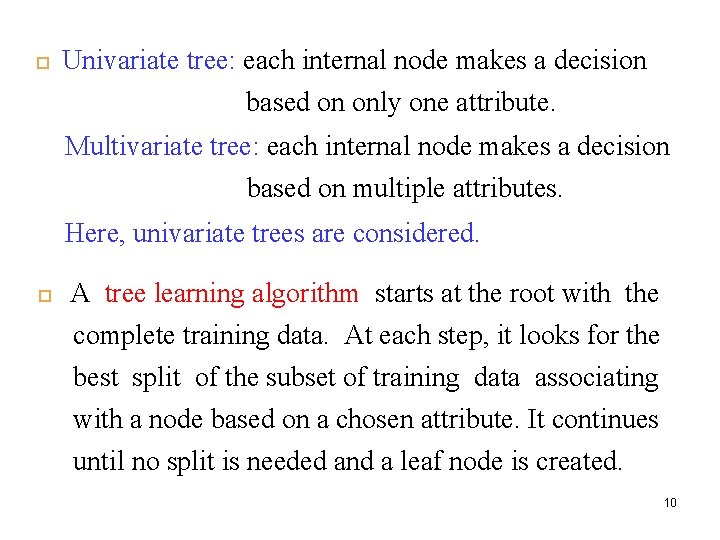

Univariate tree: each internal node makes a decision based on only one attribute.

Multivariate tree: each internal node makes a decision based on multiple attributes.

Here, univariate trees are considered.

A tree learning algorithm starts at the root with the complete training data. At each step, it looks for the best split of the subset of training data associated with a node, based on a chosen attribute. It continues until no further split is needed, at which point a leaf node is created.
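The node structure described above can be sketched as a small data structure with a traversal routine. The tree below is hypothetical: its attributes `x1` and `x2`, thresholds, and labels are made up for illustration and are not taken from the slides. The sketch also assumes binary tests.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    label: str  # class label (a numeric value would be stored for regression)


@dataclass
class Decision:
    test: Callable[[dict], bool]  # univariate test: inspects one attribute
    if_true: "Node"
    if_false: "Node"


Node = Union[Leaf, Decision]


def predict(node, sample):
    """Follow test outcomes from the root until a leaf is reached."""
    while isinstance(node, Decision):
        node = node.if_true if node.test(sample) else node.if_false
    return node.label


# Hypothetical univariate tree: every test looks at exactly one attribute.
tree = Decision(
    test=lambda s: s["x1"] > 5.0,
    if_true=Leaf("green"),
    if_false=Decision(
        test=lambda s: s["x2"] > 3.0,
        if_true=Leaf("red"),
        if_false=Leaf("green"),
    ),
)

print(predict(tree, {"x1": 3, "x2": 4}))
print(predict(tree, {"x1": 7, "x2": 6}))
```

A multivariate tree would differ only in that a test may read several attributes of `sample` at once.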

The goodness of a split is quantified by a measure, e.g., information gain.

Entropy:

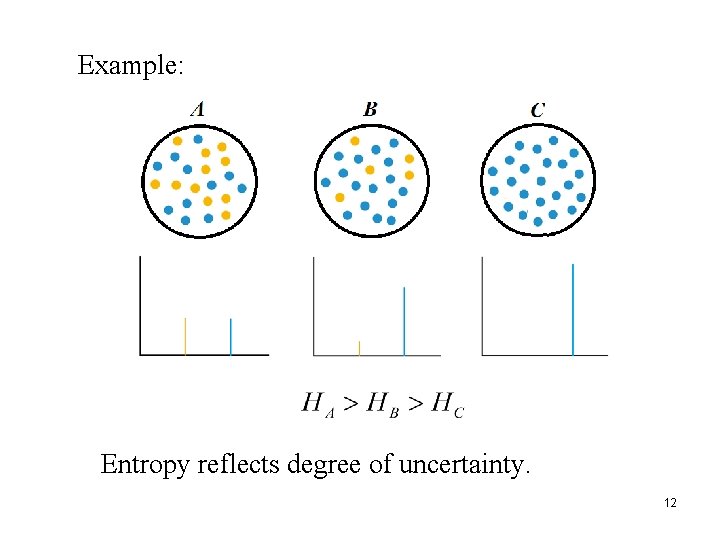

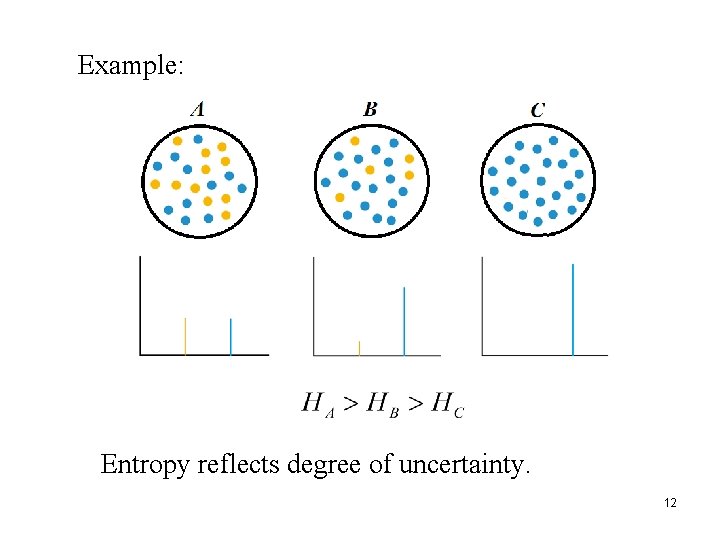

Example: entropy reflects the degree of uncertainty.
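A short sketch shows how entropy tracks uncertainty. It assumes the usual Shannon entropy with base-2 logarithms, since the formula itself appears only in the slide image. The function name `entropy` and the label lists are illustrative.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels.

    Assumption: H = -sum(p_i * log2(p_i)) over the observed class proportions.
    """
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


print(entropy(["+", "+", "+", "+"]))        # one class only: no uncertainty
print(entropy(["+", "+", "-", "-"]))        # evenly mixed: maximal for two classes
print(round(entropy(["+"] * 9 + ["-"] * 5), 3))
```

A pure set has zero entropy, and an evenly mixed two-class set has an entropy of one bit.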

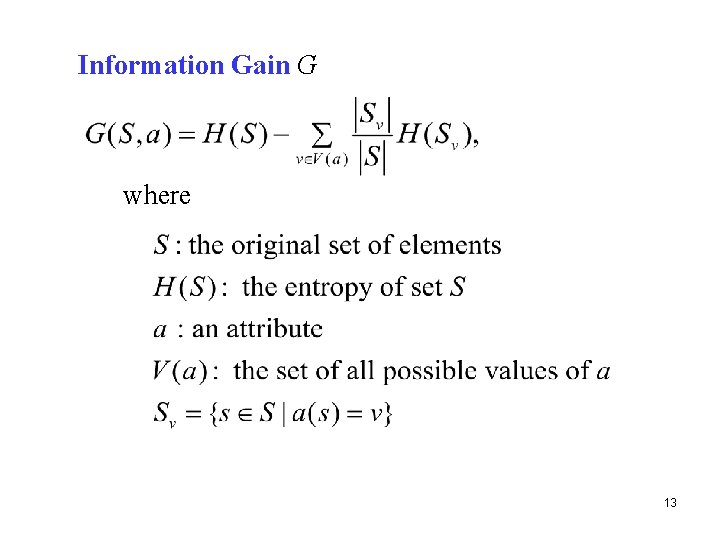

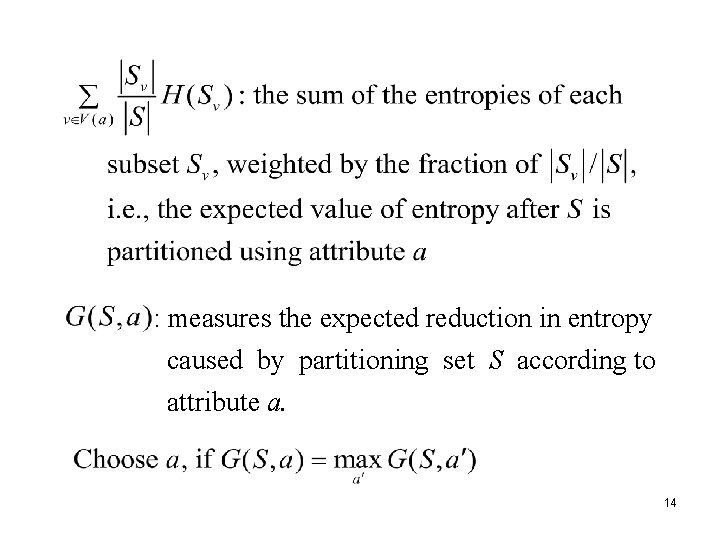

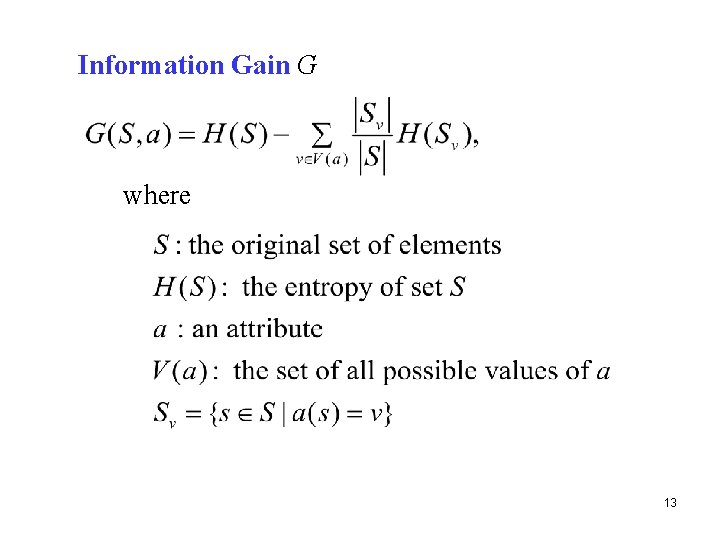

Information Gain G:

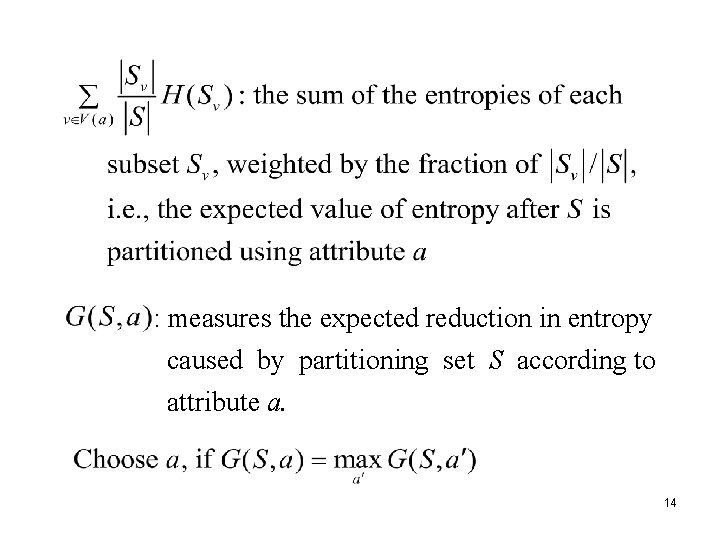

G(S, a) measures the expected reduction in entropy caused by partitioning set S according to attribute a.
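The reduction in entropy can be sketched as below. It assumes the common definition in which the gain is the entropy of S minus the size-weighted entropy of the subsets produced by attribute a. The toy rows and the attribute names `outlook` and `windy` are hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, attribute):
    """Entropy of S minus the weighted entropy after splitting on `attribute`."""
    groups = defaultdict(list)
    for features, label in rows:
        groups[features[attribute]].append(label)
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([label for _, label in rows]) - remainder


def best_attribute(rows, attributes):
    """Pick the attribute with the largest gain, as a greedy tree learner would."""
    return max(attributes, key=lambda a: information_gain(rows, a))


# Hypothetical toy set S: (attribute values, class label).
rows = [
    ({"outlook": "sunny", "windy": "no"}, "no"),
    ({"outlook": "sunny", "windy": "yes"}, "no"),
    ({"outlook": "rain", "windy": "no"}, "yes"),
    ({"outlook": "rain", "windy": "yes"}, "yes"),
]

for a in ("outlook", "windy"):
    print(a, information_gain(rows, a))
print("best split:", best_attribute(rows, ["outlook", "windy"]))
```

In this toy set, splitting on `outlook` leaves pure subsets, while splitting on `windy` leaves the class mix unchanged.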

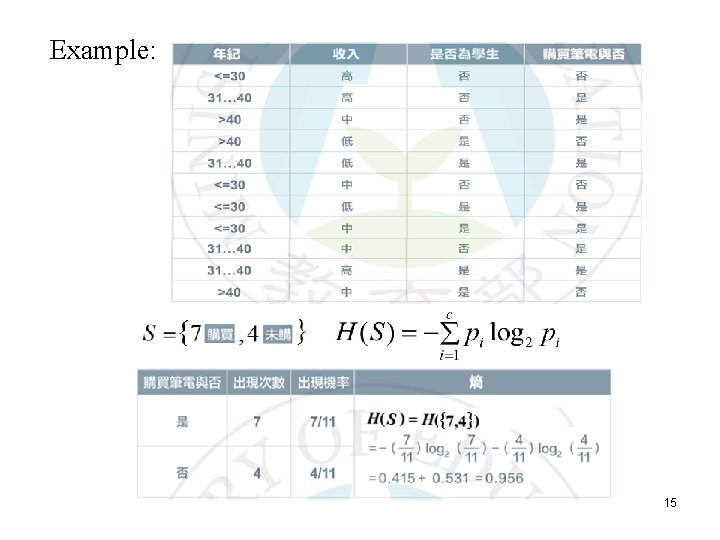

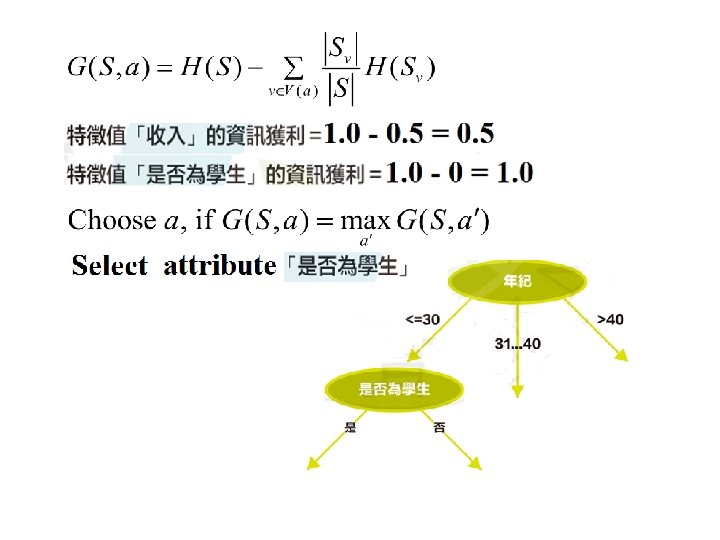

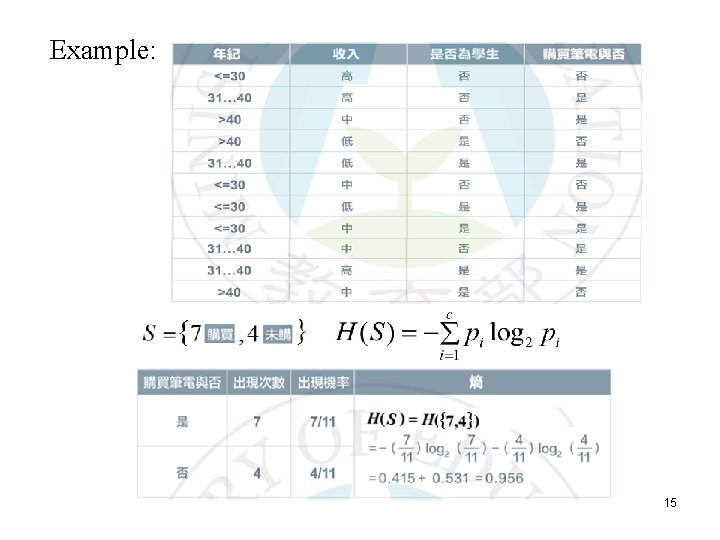

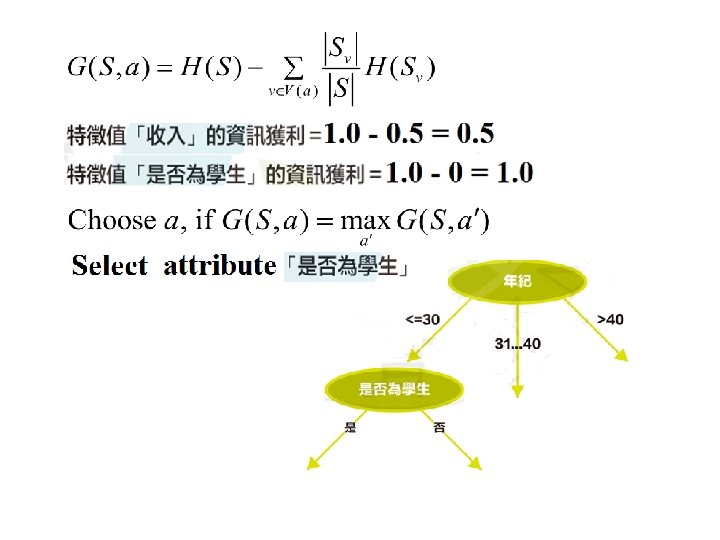

Example:

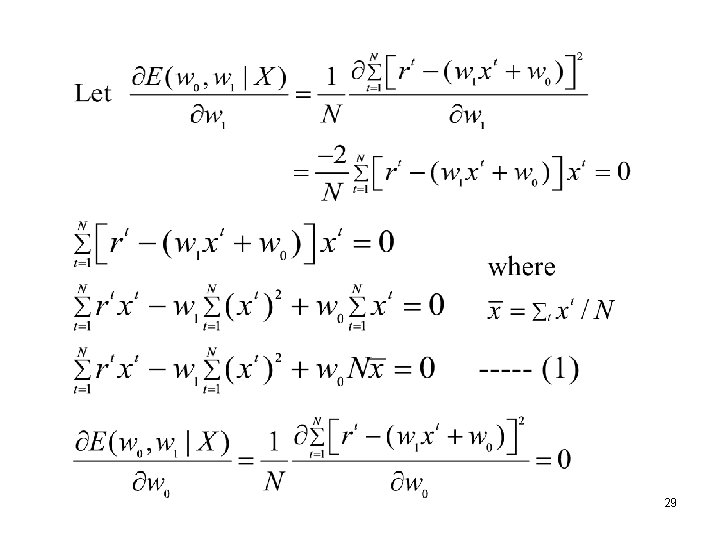

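3.3 Regression

Find a numerical function f that fits the training set. Let g be the estimate of f. The expected total error measures the mismatch between g and the training outputs. For the linear model, the problem is to choose the model coefficients that minimize this error.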

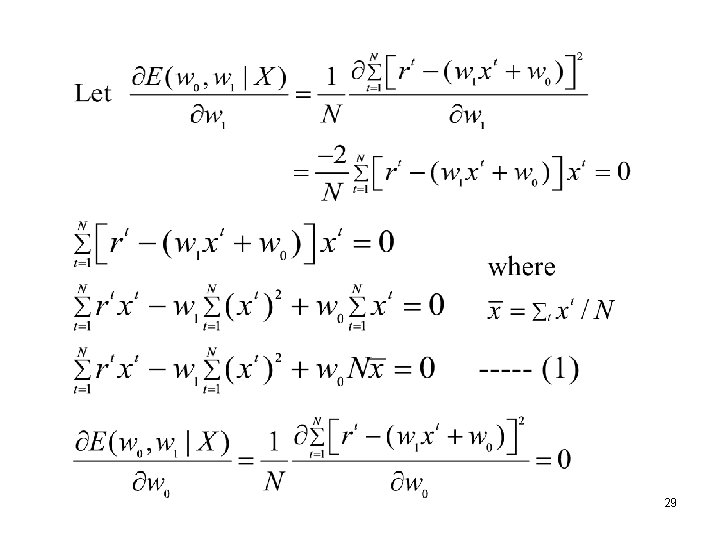


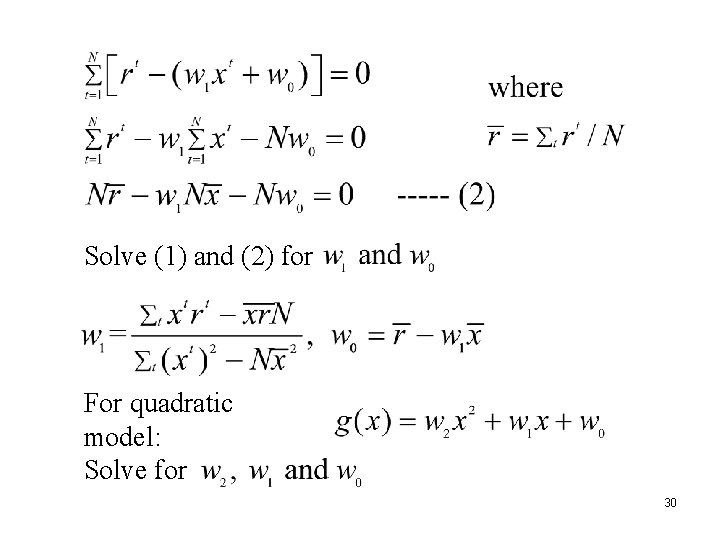

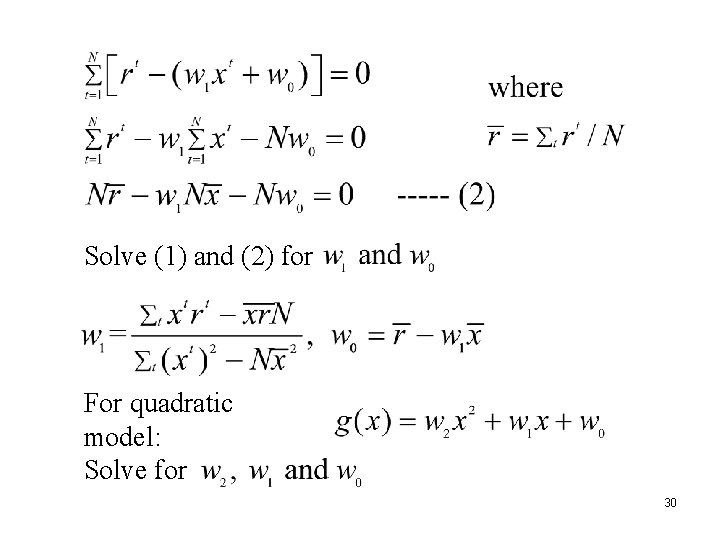

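Solve (1) and (2) for the coefficients of the linear model.

For quadratic model: solve the corresponding equations for the coefficients of the quadratic model.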
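For the linear case, a minimal sketch follows. It assumes a squared-error criterion and the model g(x) = w1·x + w0, neither of which is spelled out in the extracted text. Under that assumption, setting the two partial derivatives of the error to zero yields two linear equations in w0 and w1, which are presumably what (1) and (2) denote. The name `fit_line` and the sample data are illustrative.

```python
def fit_line(xs, ys):
    """Least-squares fit of g(x) = w1 * x + w0 (assumed squared-error criterion).

    The two equations solved here are the normal equations:
        n  * w0 + sx  * w1 = sy
        sx * w0 + sxx * w1 = sxy
    """
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("all x values are identical; the line is not unique")
    w1 = (n * sxy - sx * sy) / denom
    w0 = (sy - w1 * sx) / n
    return w0, w1


# Illustrative data lying exactly on y = 2x + 1.
w0, w1 = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print("w0 =", w0, "w1 =", w1)
```

The quadratic model adds one coefficient and one equation, so the same idea leads to a 3-by-3 linear system.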

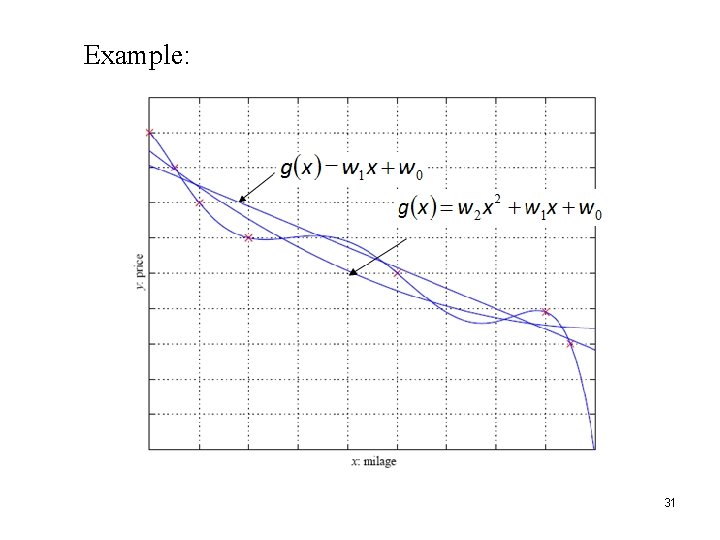

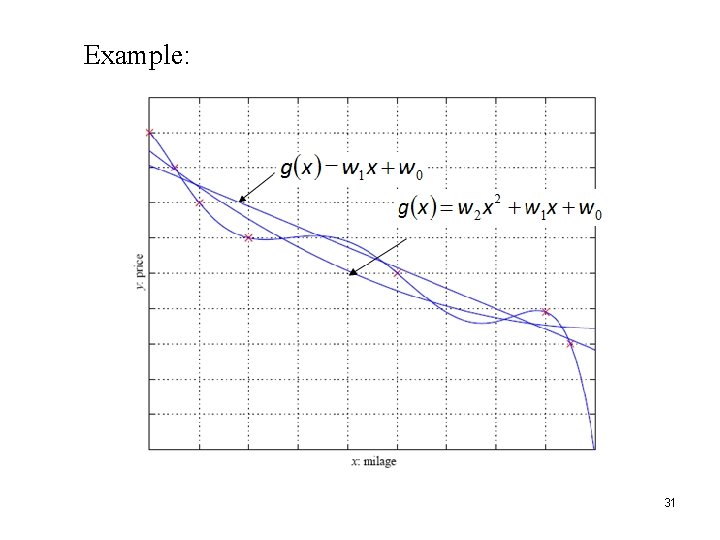

Example:

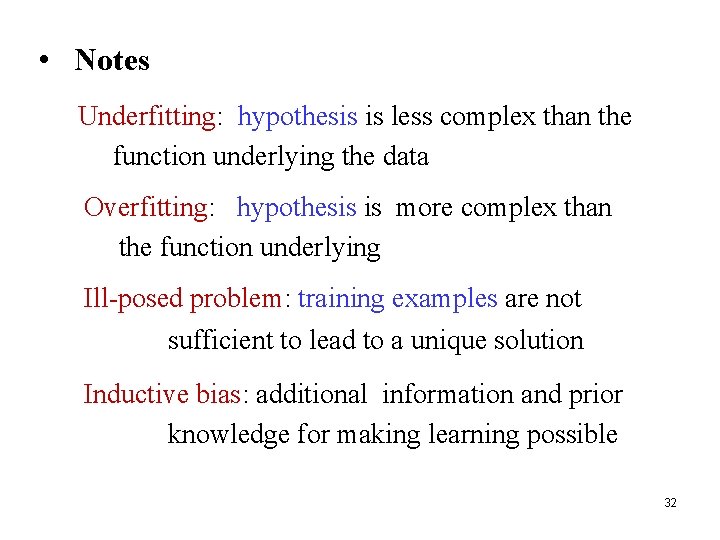

• Notes

Underfitting: the hypothesis is less complex than the function underlying the data.

Overfitting: the hypothesis is more complex than the function underlying the data.

Ill-posed problem: the training examples are not sufficient to lead to a unique solution.

Inductive bias: additional information and prior knowledge that make learning possible.
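Underfitting and overfitting can be illustrated with a hedged sketch that fits polynomials of increasing degree by least squares. The data are synthetic: a quadratic function plus small fixed perturbations standing in for noise. The helper names (`solve`, `fit_poly`, `mse`) are illustrative, and squared error is an assumption.

```python
def solve(a, b):
    """Solve the linear system a * w = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (m[i][n] - s) / m[i][i]
    return w


def fit_poly(xs, ys, degree):
    """Least-squares polynomial coefficients [w0, w1, ...] via normal equations."""
    k = degree + 1
    a = [[sum(x ** (i + j) for x in xs) for j in range(k)] for i in range(k)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(k)]
    return solve(a, b)


def mse(w, xs, ys):
    """Mean squared error of the polynomial w on the given points."""
    def g(x):
        return sum(wi * x ** i for i, wi in enumerate(w))
    return sum((y - g(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Synthetic data: quadratic function plus small fixed perturbations.
xs = [-1.0, -0.6, -0.2, 0.2, 0.6, 1.0]
noise = [0.1, -0.1, 0.05, -0.05, 0.1, -0.1]
ys = [1 + 2 * x + 3 * x * x + e for x, e in zip(xs, noise)]

errors = {d: mse(fit_poly(xs, ys, d), xs, ys) for d in (1, 2, 5)}
for d, err in errors.items():
    print(f"degree {d}: training error {err:.6f}")
```

The line (degree 1) is too simple for the quadratic data and leaves a large training error. The degree-5 polynomial passes through all six points, so it reproduces the perturbations as well as the underlying function. A low training error by itself therefore does not indicate a good hypothesis.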