ALTERNATE LAYER SPARSITY & INTERMEDIATE FINE-TUNING FOR DEEP AUTOENCODERS


- Slides: 33

ALTERNATE LAYER SPARSITY & INTERMEDIATE FINE-TUNING FOR DEEP AUTOENCODERS
- Submitted by: Ankit Bhutani (Y 9227094)
- Supervised by: Prof. Amitabha Mukerjee, Prof. K S Venkatesh

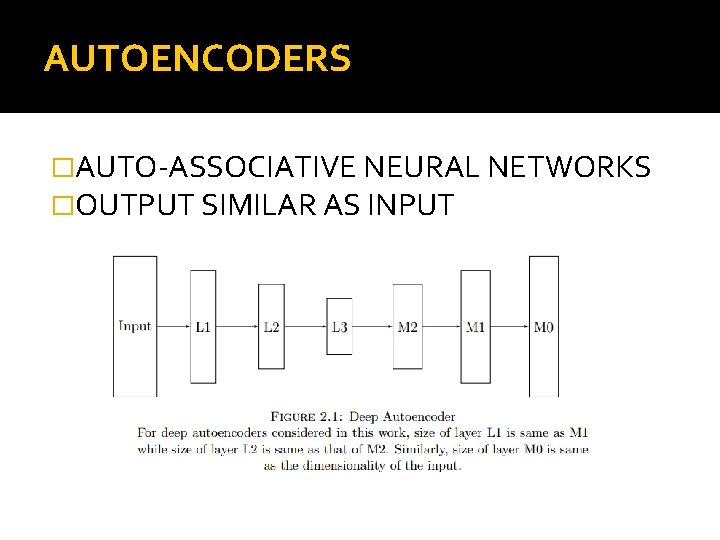

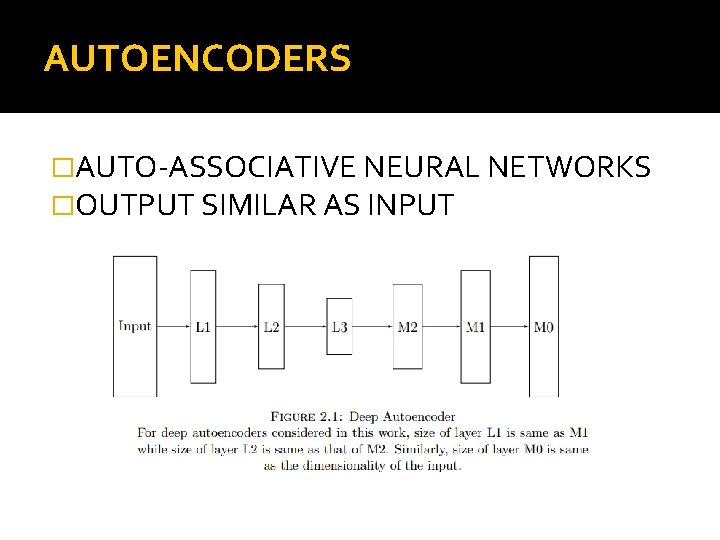

AUTOENCODERS
- AUTO-ASSOCIATIVE NEURAL NETWORKS
- OUTPUT SIMILAR TO INPUT

![Dimensionality reduction: bottleneck constraint, linear activation (PCA), non-linear PCA](https://slidetodoc.com/presentation_image_h2/4be9c16e2f82ff1264dc3ee287450027/image-3.jpg)

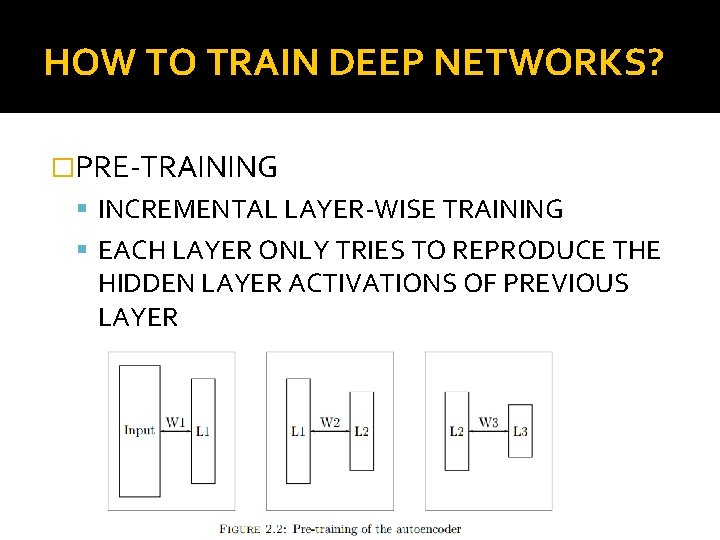

DIMENSIONALITY REDUCTION
- BOTTLENECK CONSTRAINT
- LINEAR ACTIVATION: PCA [Baldi et al., 1989]
- NON-LINEAR PCA [Kramer, 1991]: 5 layered network
  - ALTERNATE SIGMOID AND LINEAR ACTIVATION
  - EXTRACTS NON-LINEAR FACTORS
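
The link between a linear bottleneck and PCA can be checked numerically. The sketch below is an illustration only, not code from the presentation: it assumes NumPy, synthetic data, and a hypothetical helper name (`pca_reconstruct`). It projects centred data onto the top-k principal directions, which is the reconstruction that an optimal linear autoencoder with k hidden units reaches [Baldi et al., 1989].

```python
import numpy as np

def pca_reconstruct(X, k):
    """Reconstruct X from its top-k principal components (hypothetical helper)."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:k].T              # (n_features, k): acts like tied encoder/decoder weights
    code = Xc @ W             # bottleneck code, shape (n_samples, k)
    return code @ W.T + mean  # linear decoding back to input space

rng = np.random.default_rng(0)
# Synthetic data lying close to a 2-D subspace of a 10-D space (an assumption).
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 10)) + 0.01 * rng.normal(size=(200, 10))

err_k1 = np.mean((X - pca_reconstruct(X, 1)) ** 2)
err_k2 = np.mean((X - pca_reconstruct(X, 2)) ** 2)
```

With a bottleneck as wide as the true latent dimension, only the small additive noise is left unexplained; a narrower bottleneck loses real structure.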

ADVANTAGES OF NETWORKS WITH MULTIPLE LAYERS
- ABILITY TO LEARN HIGHLY COMPLEX FUNCTIONS
- TACKLE THE NON-LINEAR STRUCTURE OF UNDERLYING DATA
- HIERARCHICAL REPRESENTATION
- RESULTS FROM CIRCUIT THEORY: A SINGLE LAYERED NETWORK WOULD NEED AN EXPONENTIALLY HIGH NUMBER OF HIDDEN UNITS

PROBLEMS WITH DEEP NETWORKS
- DIFFICULTY IN TRAINING DEEP NETWORKS
  - NON-CONVEX NATURE OF OPTIMIZATION
  - GETS STUCK IN LOCAL MINIMA
  - VANISHING OF GRADIENTS DURING BACKPROPAGATION
- SOLUTION: "INITIAL WEIGHTS MUST BE CLOSE TO A GOOD SOLUTION" [Hinton et al., 2006]
  - GENERATIVE PRE-TRAINING FOLLOWED BY FINE-TUNING

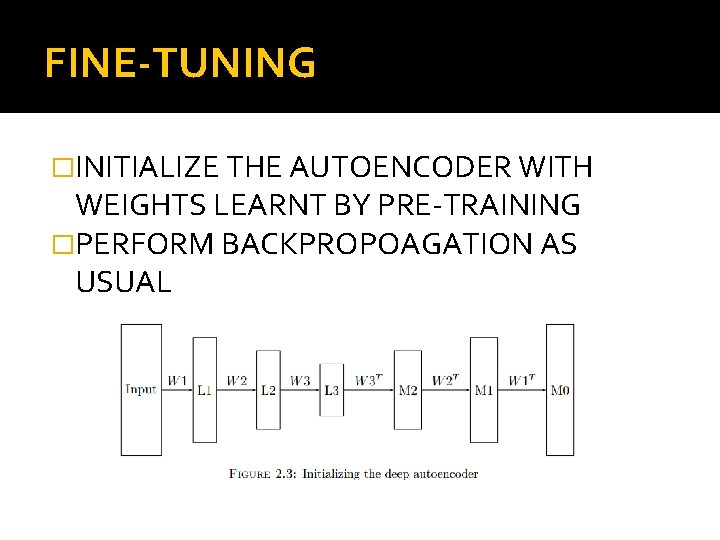

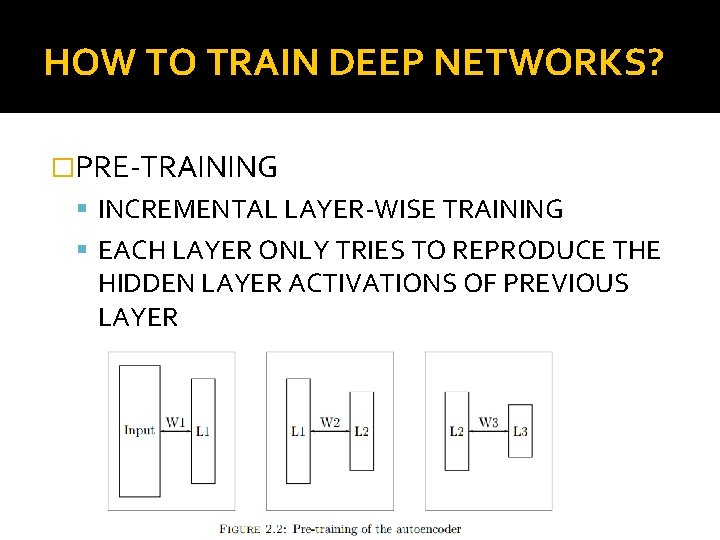

HOW TO TRAIN DEEP NETWORKS?
- PRE-TRAINING
  - INCREMENTAL LAYER-WISE TRAINING
  - EACH LAYER ONLY TRIES TO REPRODUCE THE HIDDEN LAYER ACTIVATIONS OF PREVIOUS LAYER
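
Incremental layer-wise pre-training can be sketched as follows. This is a minimal illustration under stated assumptions, not the presenter's implementation: it uses NumPy, sigmoid units, tied weights, plain batch gradient descent on a cross-entropy loss, synthetic binary data, and hypothetical function names (`train_shallow_ae`, `pretrain_stack`).

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_shallow_ae(X, n_hidden, epochs=200, lr=0.5, seed=0):
    """Train one sigmoid autoencoder with tied weights by batch gradient descent."""
    rng = np.random.default_rng(seed)
    n_in = X.shape[1]
    W = rng.normal(scale=0.1, size=(n_in, n_hidden))
    b_h, b_o = np.zeros(n_hidden), np.zeros(n_in)
    for _ in range(epochs):
        H = sigmoid(X @ W + b_h)            # encode
        Z = sigmoid(H @ W.T + b_o)          # decode with the transposed weights
        d_out = (Z - X) / len(X)            # cross-entropy gradient at output pre-activation
        d_hid = (d_out @ W) * H * (1 - H)   # backpropagated to hidden pre-activation
        W -= lr * (X.T @ d_hid + d_out.T @ H)  # encoder and decoder share W
        b_h -= lr * d_hid.sum(axis=0)
        b_o -= lr * d_out.sum(axis=0)
    return W, b_h, b_o

def pretrain_stack(X, layer_sizes):
    """Train shallow autoencoders one at a time, each on the previous layer's codes."""
    params, inp = [], X
    for n_hidden in layer_sizes:
        W, b_h, b_o = train_shallow_ae(inp, n_hidden)
        params.append((W, b_h, b_o))
        inp = sigmoid(inp @ W + b_h)   # activations become the next layer's training data
    return params

rng = np.random.default_rng(1)
X = (rng.random((100, 16)) > 0.5).astype(float)   # synthetic binary inputs
params = pretrain_stack(X, [8, 4])
```

Each shallow model sees only the activations of the layer below it, so no gradient ever has to travel through the full depth during this phase.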

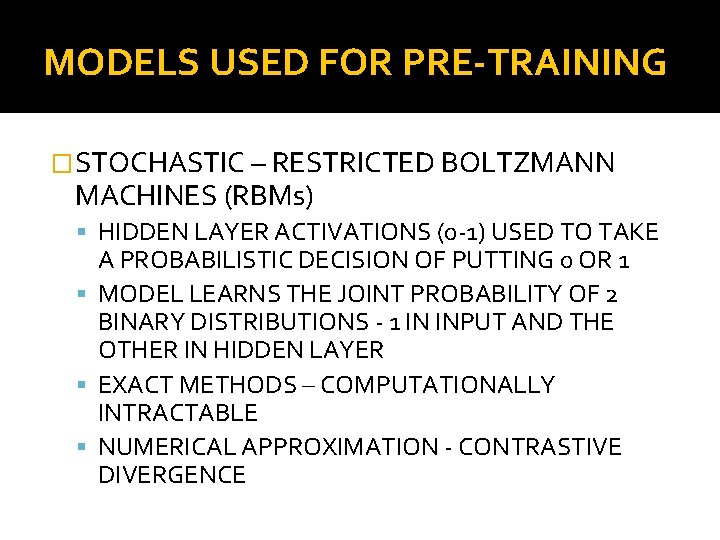

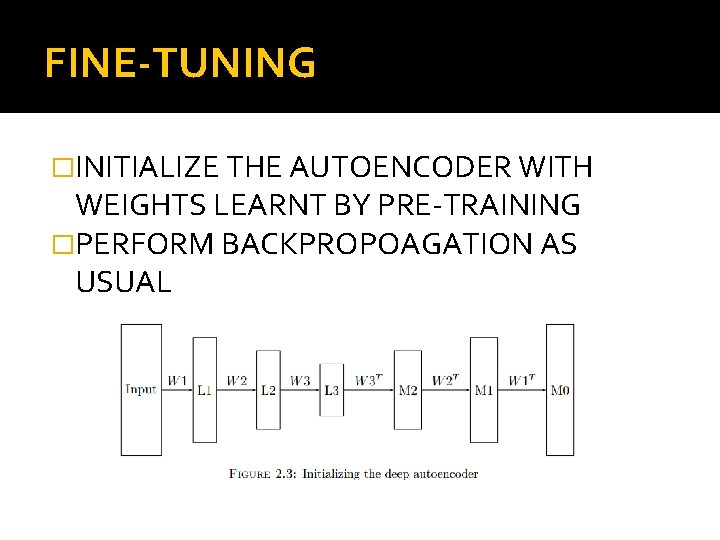

FINE-TUNING
- INITIALIZE THE AUTOENCODER WITH WEIGHTS LEARNT BY PRE-TRAINING
- PERFORM BACKPROPAGATION AS USUAL
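
The two steps above can be sketched as unrolling the pre-trained layers into one deep autoencoder and then running ordinary backpropagation through it. This is a hedged sketch, not the presenter's code: it assumes NumPy, sigmoid units, and a cross-entropy loss, the function names are hypothetical, and small random weights stand in for real pre-trained ones.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def unroll(pretrained):
    """Build deep-autoencoder layers from [(W, b_hidden, b_visible), ...]."""
    encoder = [(W.copy(), b_h.copy()) for W, b_h, _ in pretrained]
    decoder = [(W.T.copy(), b_v.copy()) for W, _, b_v in reversed(pretrained)]
    return encoder + decoder

def forward(layers, X):
    acts = [X]
    for W, b in layers:
        acts.append(sigmoid(acts[-1] @ W + b))
    return acts

def cross_entropy(X, Z, eps=1e-9):
    return -np.mean(np.sum(X * np.log(Z + eps) + (1 - X) * np.log(1 - Z + eps), axis=1))

def fine_tune(layers, X, epochs=200, lr=0.2):
    """Plain batch backpropagation through all unrolled layers."""
    for _ in range(epochs):
        acts = forward(layers, X)
        delta = (acts[-1] - X) / len(X)          # gradient at output pre-activation
        for i in reversed(range(len(layers))):
            W, b = layers[i]
            grad_W, grad_b = acts[i].T @ delta, delta.sum(axis=0)
            if i > 0:                            # propagate using the pre-update weights
                delta = (delta @ W.T) * acts[i] * (1 - acts[i])
            layers[i] = (W - lr * grad_W, b - lr * grad_b)
    return layers

rng = np.random.default_rng(0)
X = (rng.random((50, 12)) > 0.5).astype(float)   # synthetic binary inputs
sizes = [12, 6, 3]
# Stand-in for pre-trained parameters (assumption): small random weights, zero biases.
pretrained = [(rng.normal(scale=0.1, size=(a, b)), np.zeros(b), np.zeros(a))
              for a, b in zip(sizes[:-1], sizes[1:])]
layers = unroll(pretrained)
loss_before = cross_entropy(X, forward(layers, X)[-1])
layers = fine_tune(layers, X)
loss_after = cross_entropy(X, forward(layers, X)[-1])
```

After unrolling, the decoder weights start as transposes of the encoder weights but are updated independently during fine-tuning.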

MODELS USED FOR PRE-TRAINING
- STOCHASTIC: RESTRICTED BOLTZMANN MACHINES (RBMs)
  - HIDDEN LAYER ACTIVATIONS (0-1) USED TO TAKE A PROBABILISTIC DECISION OF PUTTING 0 OR 1
  - MODEL LEARNS THE JOINT PROBABILITY OF 2 BINARY DISTRIBUTIONS: 1 IN INPUT AND THE OTHER IN HIDDEN LAYER
  - EXACT METHODS: COMPUTATIONALLY INTRACTABLE
  - NUMERICAL APPROXIMATION: CONTRASTIVE DIVERGENCE
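
A single contrastive-divergence update can be sketched as below. This is a minimal CD-1 illustration for a binary RBM, assuming NumPy and synthetic data; the function name `cd1_step` is hypothetical, and using probabilities rather than samples in the negative phase is one common variant, not necessarily the one used in this work.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def cd1_step(V0, W, b_vis, b_hid, rng, lr=0.1):
    """One CD-1 parameter update for a binary RBM on a batch V0."""
    # Positive phase: hidden probabilities given the data, then a stochastic 0/1 decision.
    p_h0 = sigmoid(V0 @ W + b_hid)
    h0 = (rng.random(p_h0.shape) < p_h0).astype(float)
    # Negative phase: one Gibbs step down to the visibles and back up to the hiddens.
    p_v1 = sigmoid(h0 @ W.T + b_vis)
    p_h1 = sigmoid(p_v1 @ W + b_hid)
    n = len(V0)
    # Difference between data-driven and reconstruction-driven statistics.
    W = W + lr * (V0.T @ p_h0 - p_v1.T @ p_h1) / n
    b_vis = b_vis + lr * (V0 - p_v1).mean(axis=0)
    b_hid = b_hid + lr * (p_h0 - p_h1).mean(axis=0)
    return W, b_vis, b_hid

rng = np.random.default_rng(0)
V = (rng.random((64, 20)) > 0.5).astype(float)   # synthetic binary data
W = rng.normal(scale=0.01, size=(20, 8))
b_vis, b_hid = np.zeros(20), np.zeros(8)
for _ in range(50):
    W, b_vis, b_hid = cd1_step(V, W, b_vis, b_hid, rng)
```

The single Gibbs step replaces the intractable expectation under the model distribution with a cheap approximation.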

MODELS USED FOR PRE-TRAINING
- DETERMINISTIC: SHALLOW AUTOENCODERS
  - HIDDEN LAYER ACTIVATIONS (0-1) ARE DIRECTLY USED FOR INPUT TO NEXT LAYER
  - TRAINED BY BACKPROPAGATION
  - DENOISING AUTOENCODERS
  - CONTRACTIVE AUTOENCODERS
  - SPARSE AUTOENCODERS
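
The three shallow-autoencoder variants differ mainly in what they add to plain reconstruction. The sketch below shows one common formulation of each ingredient, assuming NumPy and sigmoid hidden units; the slides do not state which exact noise model or penalties were used, so the function names and default values here are illustrative assumptions.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def masking_noise(X, corruption, rng):
    """Denoising AE: zero a random fraction of inputs; the target stays the clean X."""
    return X * (rng.random(X.shape) >= corruption)

def kl_sparsity_penalty(H, rho=0.05, eps=1e-9):
    """Sparse AE: KL divergence between a target rate rho and the mean hidden activation."""
    rho_hat = H.mean(axis=0)
    return np.sum(rho * np.log(rho / (rho_hat + eps))
                  + (1 - rho) * np.log((1 - rho) / (1 - rho_hat + eps)))

def contractive_penalty(H, W):
    """Contractive AE: squared Frobenius norm of dH/dX, averaged over samples."""
    # For h = sigmoid(x W + b), dh_j/dx_i = h_j (1 - h_j) W_ij.
    return np.mean(np.sum((H * (1 - H)) ** 2 * np.sum(W ** 2, axis=0), axis=1))

rng = np.random.default_rng(0)
X = rng.random((32, 10))                     # synthetic inputs in [0, 1)
W = rng.normal(scale=0.1, size=(10, 4))
H = sigmoid(X @ W)                           # hidden activations
X_noisy = masking_noise(X, corruption=0.3, rng=rng)
sparsity_cost = kl_sparsity_penalty(H)
contraction_cost = contractive_penalty(H, W)
```

In training, each penalty would be added to the reconstruction loss with a weighting coefficient, while the denoising variant changes only the input fed to the encoder.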

![Classifiers & autoencoders: task vs. pre-training model](https://slidetodoc.com/presentation_image_h2/4be9c16e2f82ff1264dc3ee287450027/image-10.jpg)

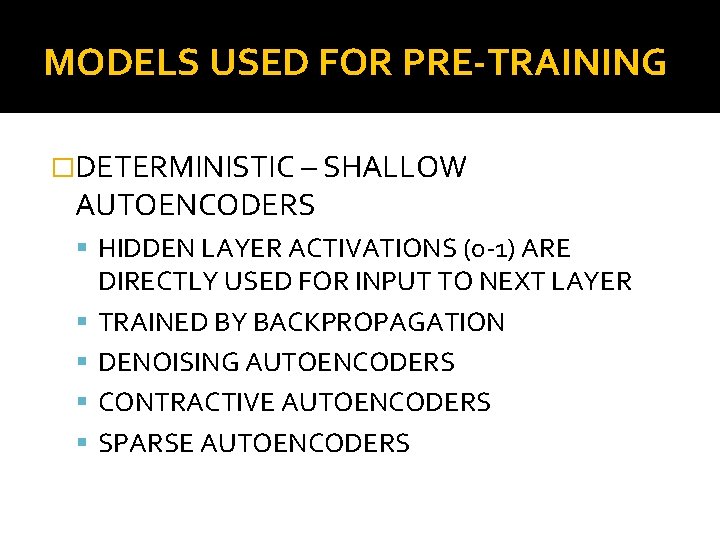

CLASSIFIERS & AUTOENCODERS

| TASK \ MODEL | RBM | SHALLOW AE |
| --- | --- | --- |
| CLASSIFIER | [Hinton et al, 2006] and many others since then | Investigated by [Bengio et al, 2007], [Ranzato et al, 2007], [Vincent et al, 2008], [Rifai et al, 2011] etc. |
| DEEP AE | [Hinton & Salakhutdinov, 2006] | No significant results reported in literature (gap) |

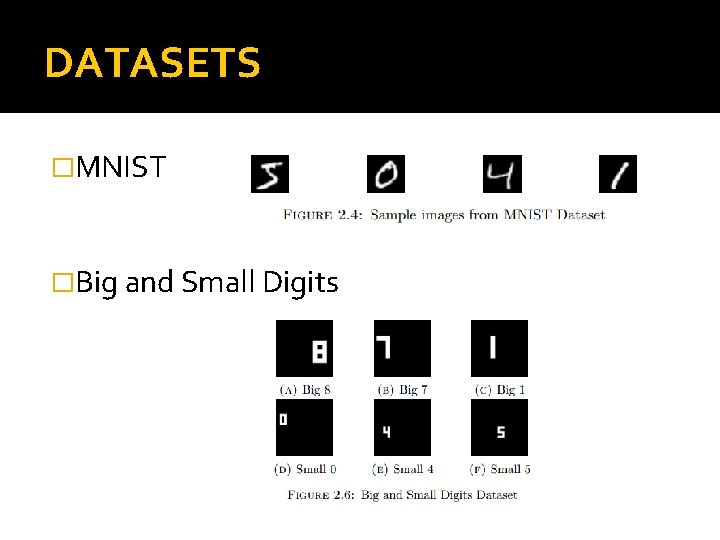

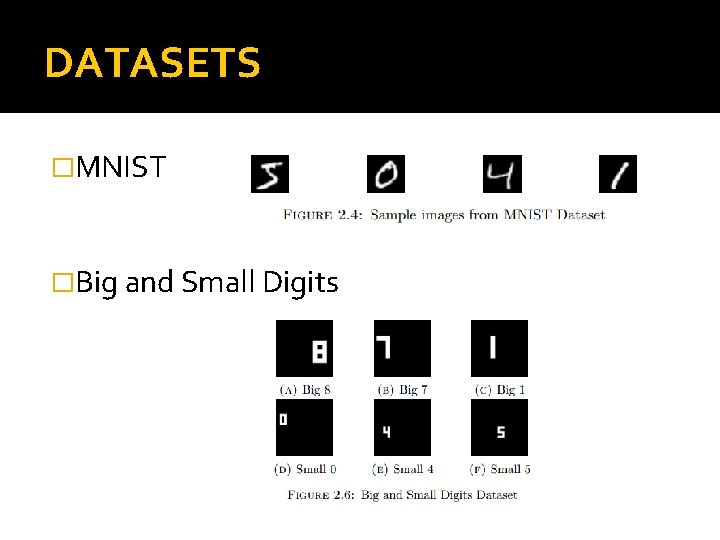

DATASETS
- MNIST
- Big and Small Digits

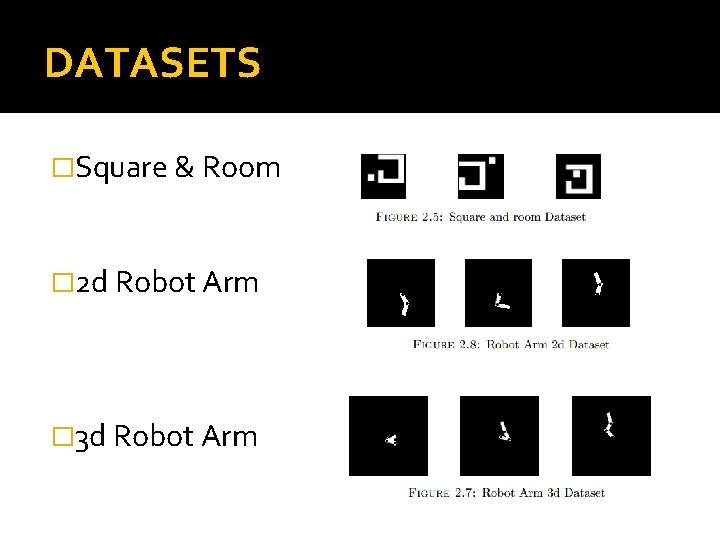

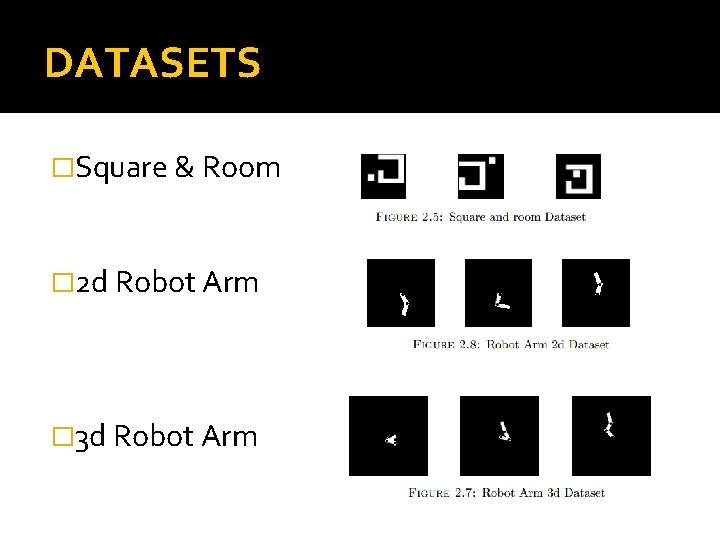

DATASETS
- Square & Room
- 2d Robot Arm
- 3d Robot Arm

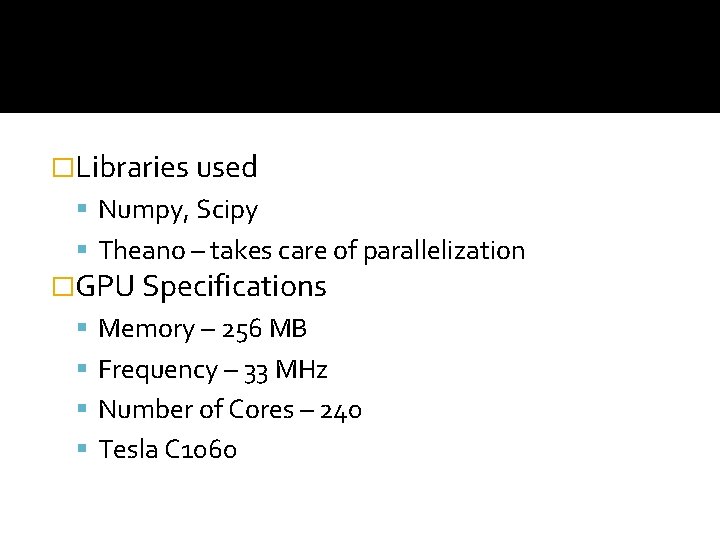

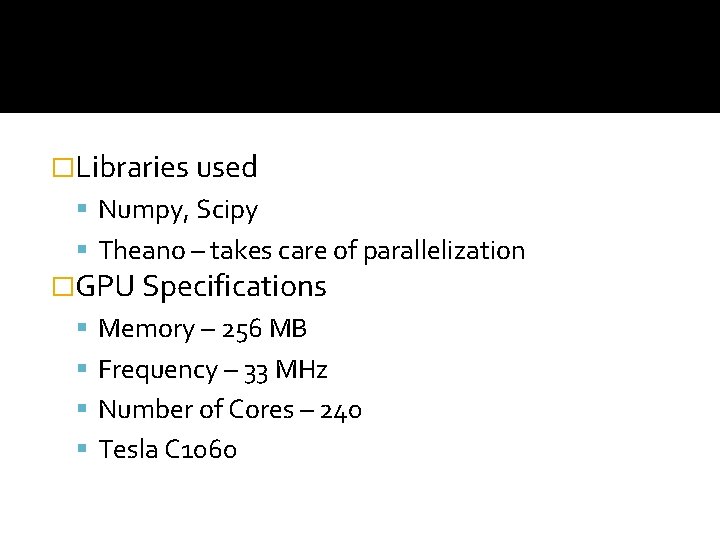

- Libraries used
  - Numpy, Scipy
  - Theano: takes care of parallelization
- GPU Specifications
  - Memory: 256 MB
  - Frequency: 33 MHz
  - Number of Cores: 240
  - Tesla C1060

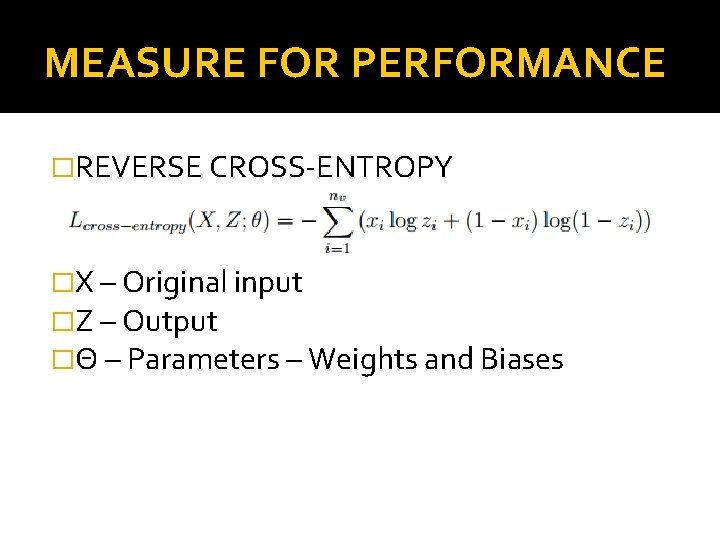

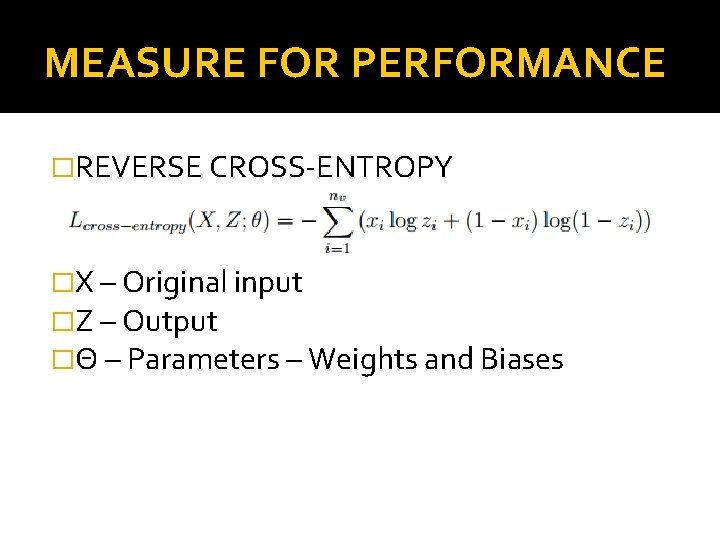

MEASURE FOR PERFORMANCE
- REVERSE CROSS-ENTROPY
- X: Original input
- Z: Output
- Θ: Parameters (Weights and Biases)
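
The formula on this slide survives only as an image. As a hedged point of reference, the standard cross-entropy reconstruction loss for an input $X$ with components $x_k \in [0, 1]$ and an output $Z$ with components $z_k$ (itself a function of $X$ and $\Theta$) is given below. The index $k$ over input dimensions is an assumption, and the "reverse" variant named on the slide may differ from this form, for example in the order of its arguments.

$$
L(X, Z; \Theta) = -\sum_{k} \left[ x_k \log z_k + (1 - x_k) \log (1 - z_k) \right]
$$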

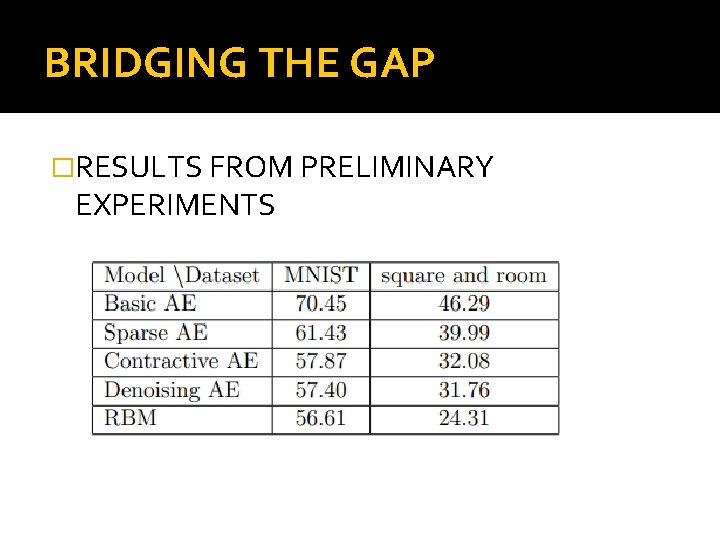

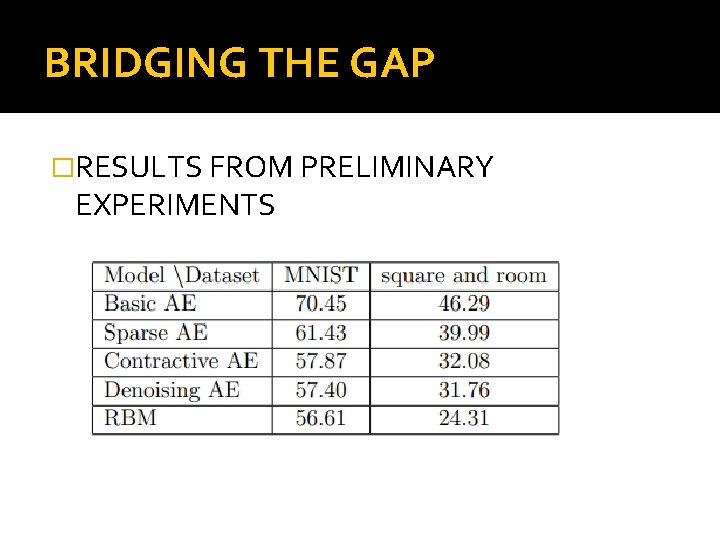

BRIDGING THE GAP
- RESULTS FROM PRELIMINARY EXPERIMENTS

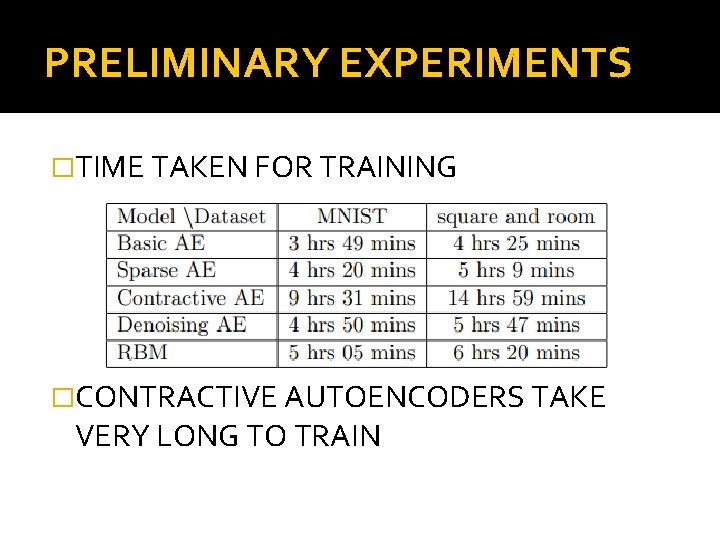

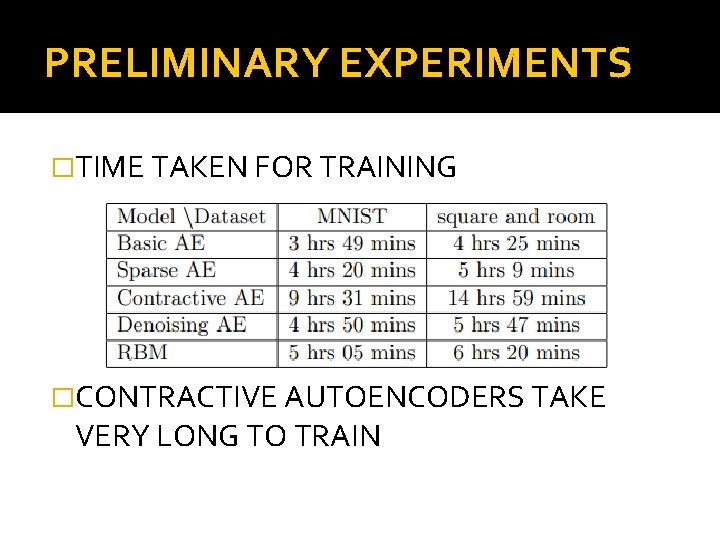

PRELIMINARY EXPERIMENTS
- TIME TAKEN FOR TRAINING
- CONTRACTIVE AUTOENCODERS TAKE VERY LONG TO TRAIN

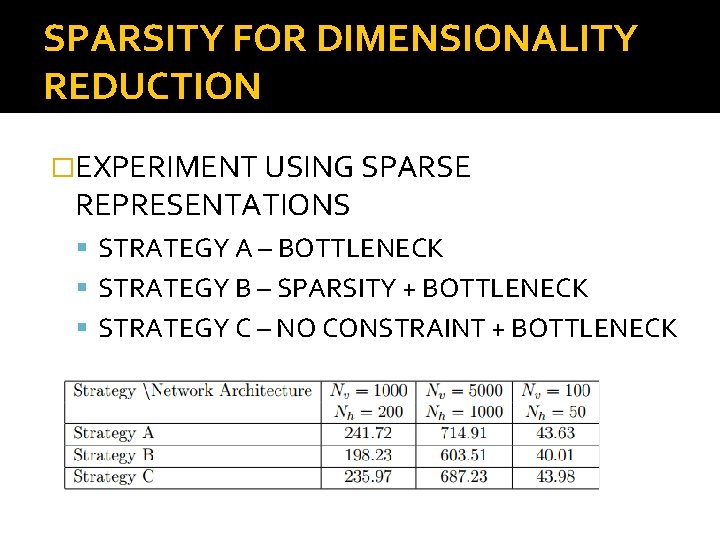

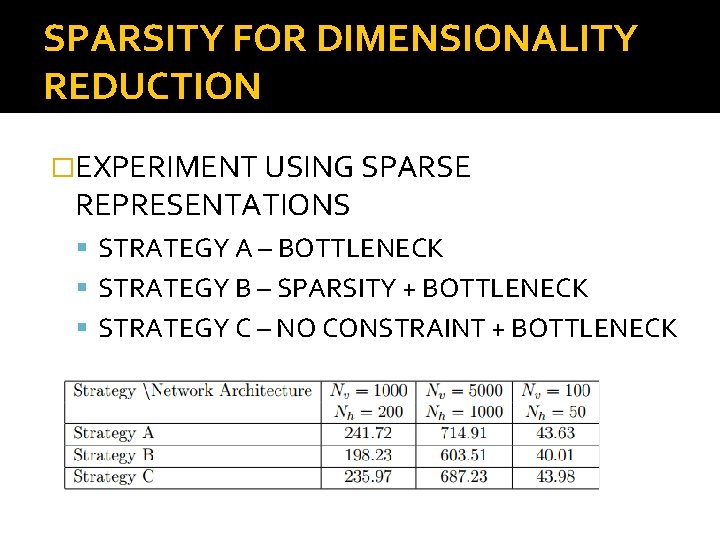

SPARSITY FOR DIMENSIONALITY REDUCTION
- EXPERIMENT USING SPARSE REPRESENTATIONS
  - STRATEGY A: BOTTLENECK
  - STRATEGY B: SPARSITY + BOTTLENECK
  - STRATEGY C: NO CONSTRAINT + BOTTLENECK

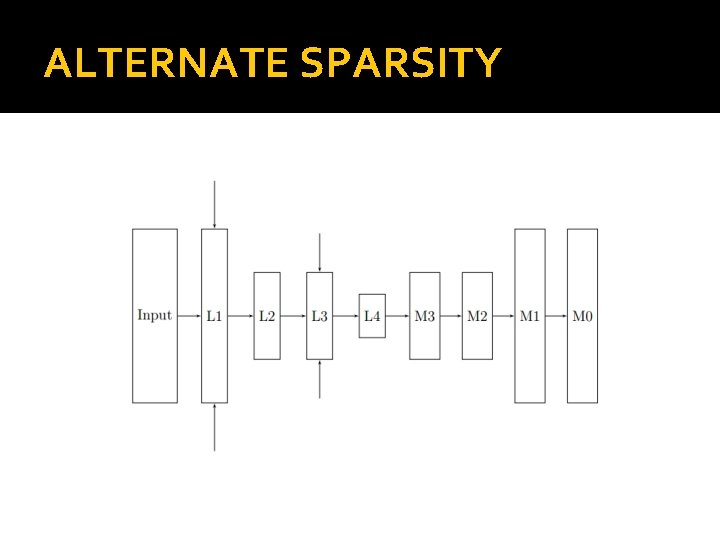

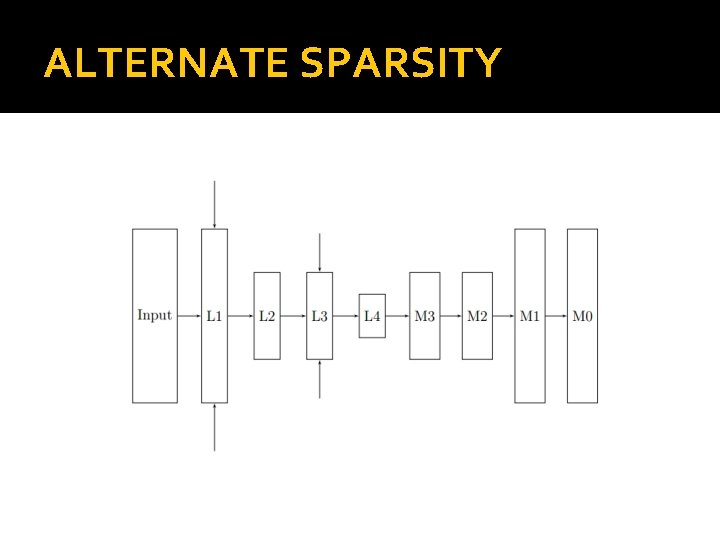

ALTERNATE SPARSITY

OTHER IMPROVEMENTS
- MOMENTUM
  - INCORPORATING THE PREVIOUS UPDATE
  - CANCELS OUT COMPONENTS IN OPPOSITE DIRECTIONS: PREVENTS OSCILLATION
  - ADDS UP COMPONENTS IN SAME DIRECTION: SPEEDS UP TRAINING
- WEIGHT DECAY
  - REGULARIZATION
  - PREVENTS OVER-FITTING
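
Both improvements fit into a single parameter-update rule. The sketch below is a minimal illustration, assuming NumPy, classical momentum, and L2 weight decay; the function name `sgd_step`, the hyperparameter values, and the toy quadratic objective are assumptions, not settings taken from the experiments.

```python
import numpy as np

def sgd_step(w, grad, velocity, lr=0.1, momentum=0.9, weight_decay=1e-4):
    """One gradient step with classical momentum and L2 weight decay."""
    # Weight decay adds the gradient of (weight_decay / 2) * ||w||^2.
    total_grad = grad + weight_decay * w
    # The velocity carries the previous update: opposing components cancel,
    # components that keep pointing the same way accumulate.
    velocity = momentum * velocity - lr * total_grad
    return w + velocity, velocity

# Toy objective 0.5 * sum(A * w**2) with very different curvature per axis.
A = np.array([1.0, 25.0])
w, velocity = np.array([1.0, 1.0]), np.zeros(2)
for _ in range(300):
    w, velocity = sgd_step(w, A * w, velocity, lr=0.02)
```

On the steep axis successive gradients flip sign and partly cancel in the velocity, while on the shallow axis they add up, which is the behaviour the bullets describe.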

COMBINING ALL
- USING ALTERNATE LAYER SPARSITY WITH MOMENTUM & WEIGHT DECAY YIELDS BEST RESULTS

INTERMEDIATE FINE-TUNING
- MOTIVATION

PROCESS

PROCESS

RESULTS

RESULTS

RESULTS

CONCLUDING REMARKS

NEURAL NETWORK BASICS

BACKPROPAGATION

RBM

RBM

AUTOENCODERS