Convolutional Neural Networks, Autoencoders and Deep Learning in General

- Slides: 24

Convolutional Neural Networks, Autoencoders and Deep Learning in General

Rishabh Sharma

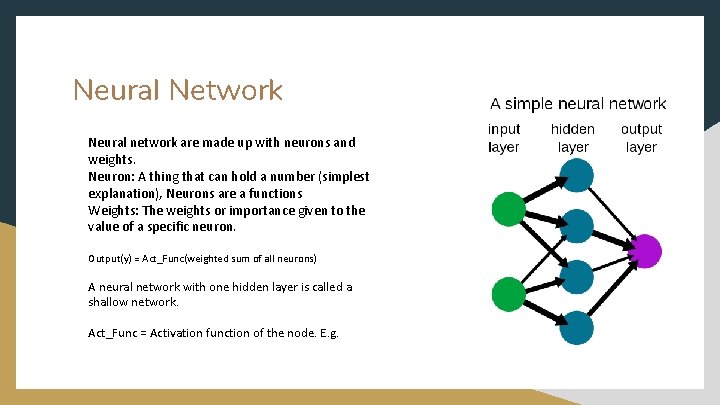

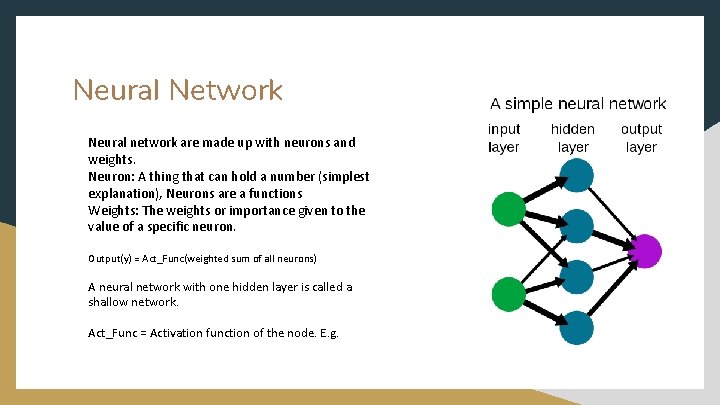

Neural Network

Neural networks are made up of neurons and weights.

- Neuron: a thing that holds a number (the simplest explanation). Neurons are functions.
- Weights: the importance given to the value of a specific neuron.

Output (y) = Act_Func(weighted sum of all neurons), where Act_Func is the activation function of the node.

A neural network with one hidden layer is called a shallow network. E.g.:

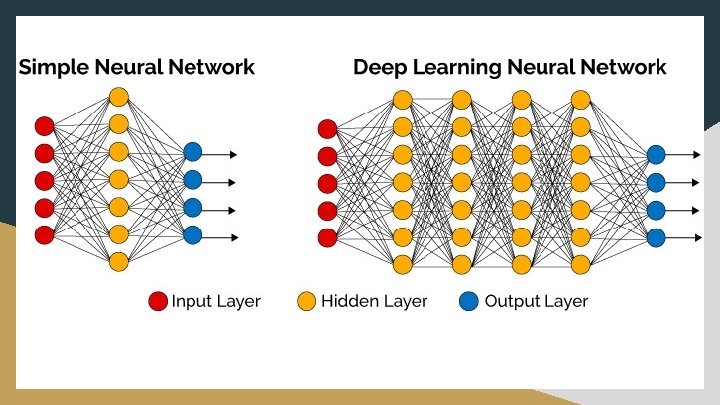

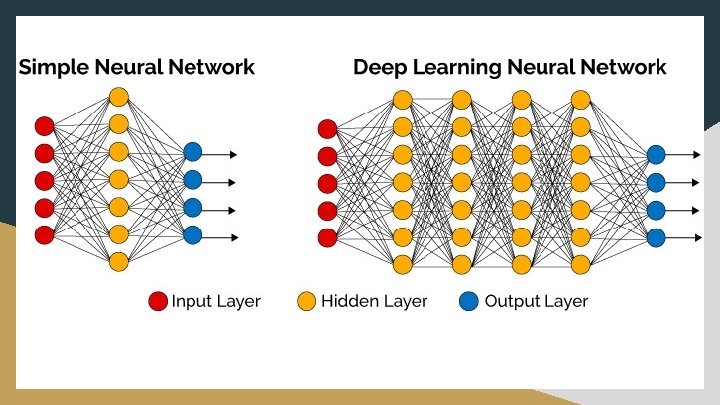
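The output formula for a single neuron can be sketched in a few lines of Python. This is a minimal illustration, assuming a sigmoid as the activation function and made-up input values and weights; the names `neuron_output`, `inputs` and `weights` are hypothetical, and no bias term is included because the formula on the slide does not mention one.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, act_func=sigmoid):
    """Return Act_Func(weighted sum of the incoming neuron values)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return act_func(weighted_sum)

# Hypothetical values: three incoming neurons and the weight given to each.
inputs = [1.0, 0.5, -1.0]
weights = [0.2, 0.4, 0.4]

# Weighted sum = 0.2 + 0.2 - 0.4 = 0.0, and sigmoid(0.0) = 0.5.
y = neuron_output(inputs, weights)
print(y)
```

Swapping `act_func` for a different function changes how the neuron responds without touching the weighted sum.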

Deep Learning

- Deep learning is a general term for machine learning algorithms that are inspired by the structure of the human brain and that use artificial neural networks.
- Deep networks are networks with two or more hidden layers.

Convolutional Neural Networks (CNN)

- Traditional image classification uses raw pixels.
- This method works and can classify images until complex variations among the images are encountered.

Image Source: Understanding CNN, Towards Data Science

Convolutional Neural Network (CNN)

- A CNN is a type of neural network model that can extract higher-level representations of the image.
- In classical image classification, you define the image features yourself. A CNN takes the image's raw pixel data, trains the model, and then extracts the features for better classification.

Image Source: Understanding CNN, Towards Data Science

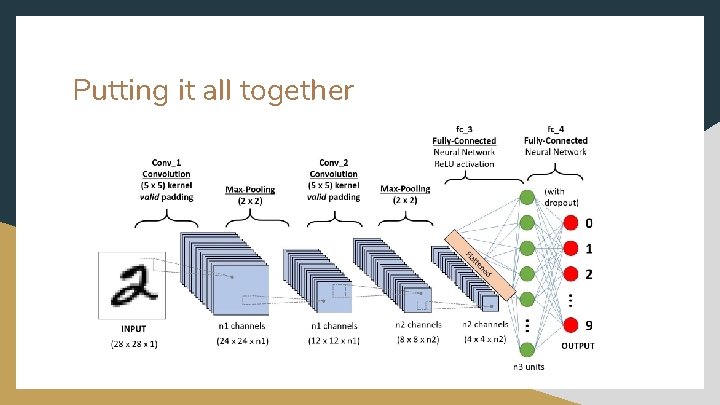

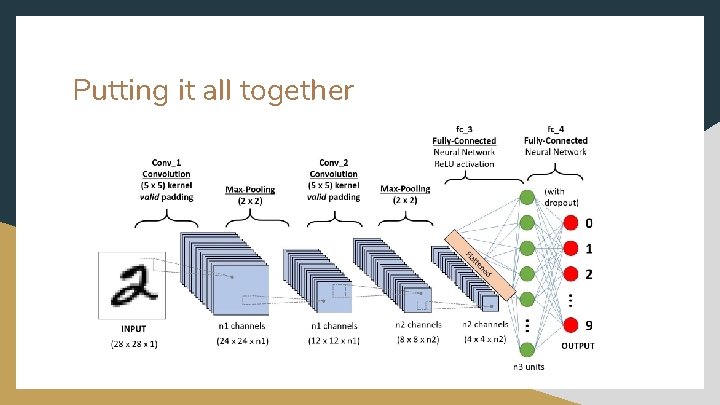

Principles of CNN

- Convolution Layer
- Pooling Layer
- Activation (ReLU and Sigmoid) Layer

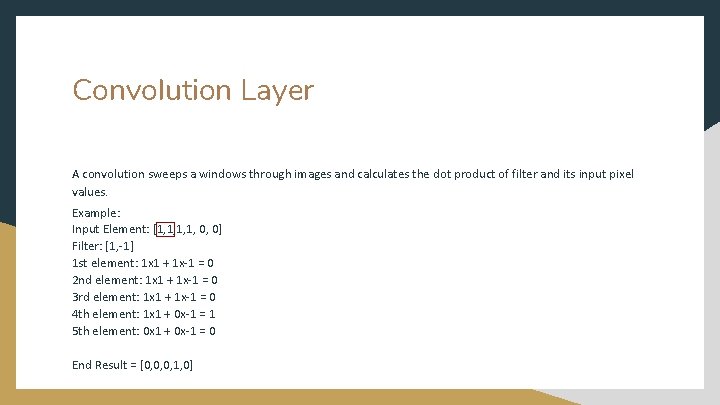

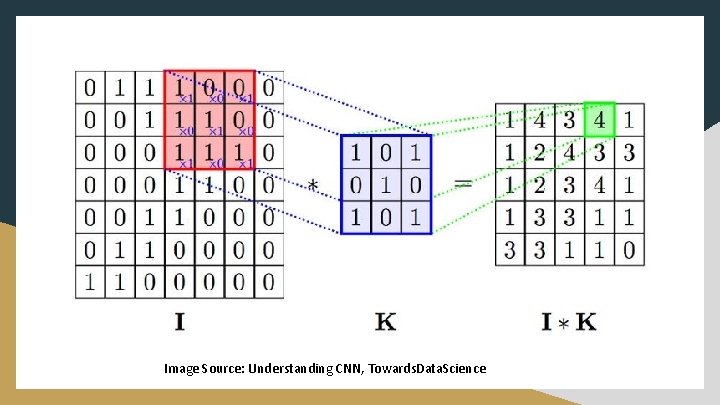

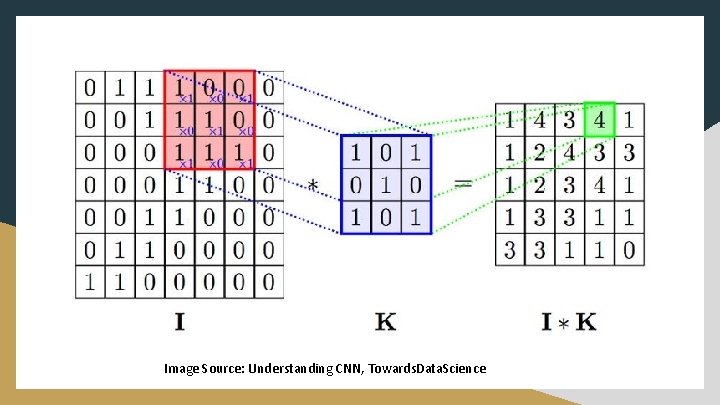

Convolution Layer

A convolution sweeps a window across the image and calculates the dot product of the filter and the input pixel values under it.

Example:

- Input: [1, 1, 1, 1, 0, 0]
- Filter: [1, -1]

- 1st element: 1 x 1 + 1 x -1 = 0
- 2nd element: 1 x 1 + 1 x -1 = 0
- 3rd element: 1 x 1 + 1 x -1 = 0
- 4th element: 1 x 1 + 0 x -1 = 1
- 5th element: 0 x 1 + 0 x -1 = 0

End Result = [0, 0, 0, 1, 0]
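The sliding dot product can be sketched for the one-dimensional case as follows. This is a minimal illustration, assuming stride 1 and no padding; the function name `convolve_1d` is hypothetical. The input used here is the six-element signal [1, 1, 1, 1, 0, 0], whose five windows reproduce the five products worked through above. As in most CNN libraries, the filter is not flipped, so strictly speaking this is a cross-correlation.

```python
def convolve_1d(signal, kernel):
    """Slide `kernel` across `signal` (stride 1, no padding) and
    return the dot product at every position."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

result = convolve_1d([1, 1, 1, 1, 0, 0], [1, -1])
print(result)  # [0, 0, 0, 1, 0]
```

The single nonzero output marks the position where the signal drops from 1 to 0, which is why a filter like [1, -1] behaves as a simple edge detector.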

Image Source: Understanding CNN, Towards Data Science

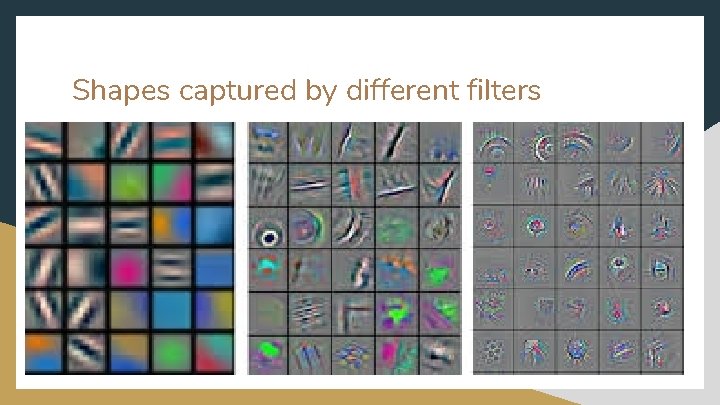

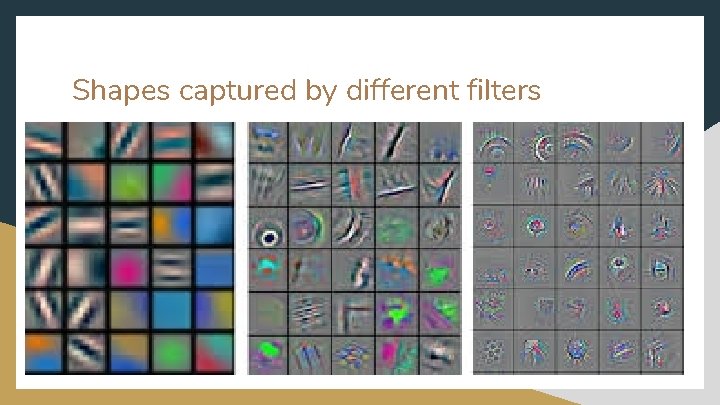

Shapes captured by different filters

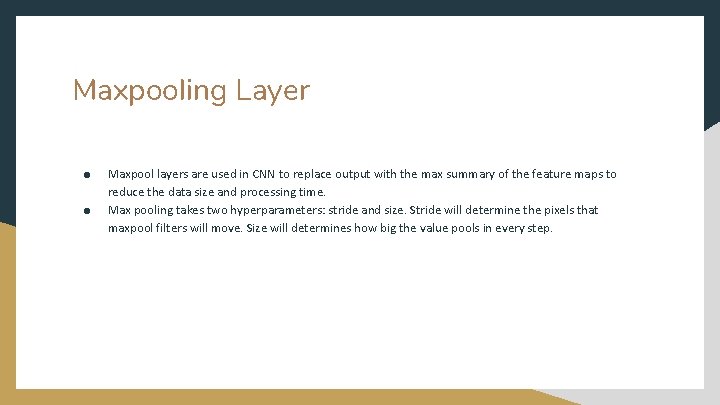

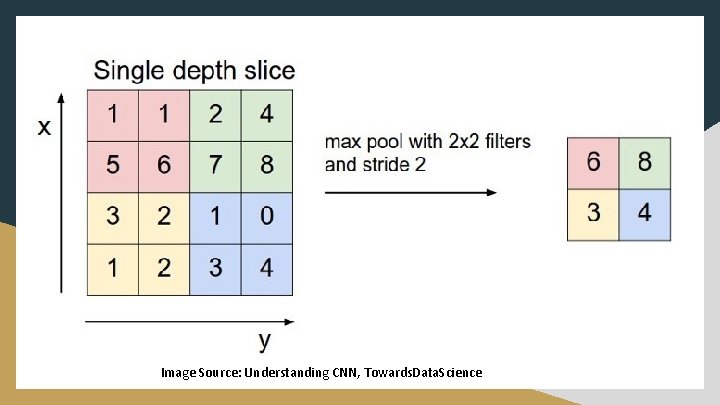

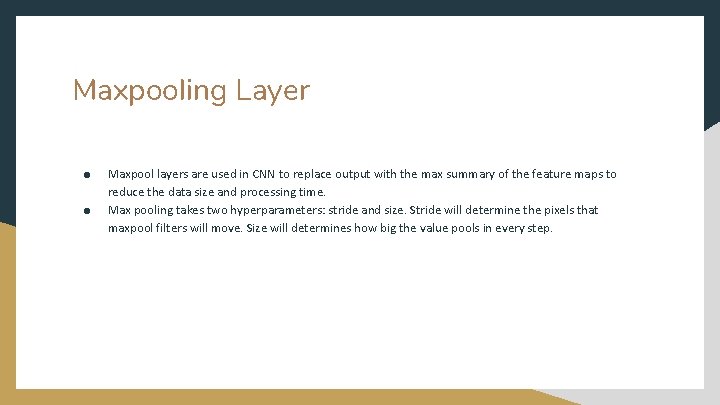

Maxpooling Layer

- Maxpool layers are used in CNNs to replace the output with a max summary of the feature maps, which reduces the data size and processing time.
- Max pooling takes two hyperparameters: stride and size. Stride determines how many pixels the maxpool filter moves at each step. Size determines how large the pooling window is at each step.
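The effect of the two hyperparameters can be seen in a small sketch. This is an illustrative implementation in plain Python, assuming a square pooling window and no padding; the function name `max_pool_2d` and the 4 x 4 feature map are made up for the example.

```python
def max_pool_2d(feature_map, size=2, stride=2):
    """Replace each `size` x `size` window with its maximum value,
    moving the window `stride` pixels at a time."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled

# Hypothetical 4 x 4 feature map.
feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
]
pooled = max_pool_2d(feature_map, size=2, stride=2)
print(pooled)  # [[6, 4], [8, 9]]
```

With size 2 and stride 2, the 16 input values are summarized by 4 outputs, which is the reduction in data size the slide refers to.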

Image Source: Understanding CNN, Towards Data Science

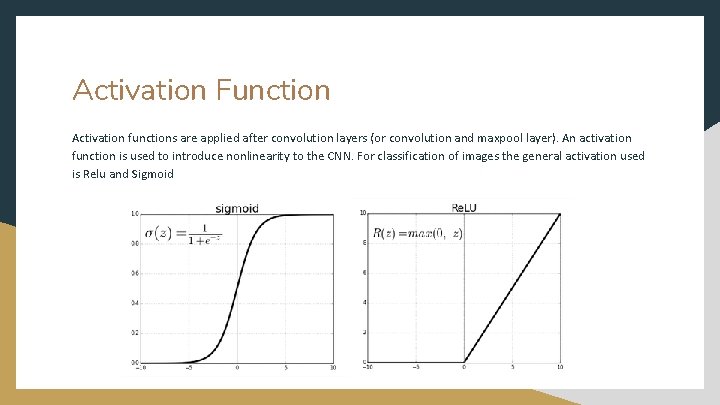

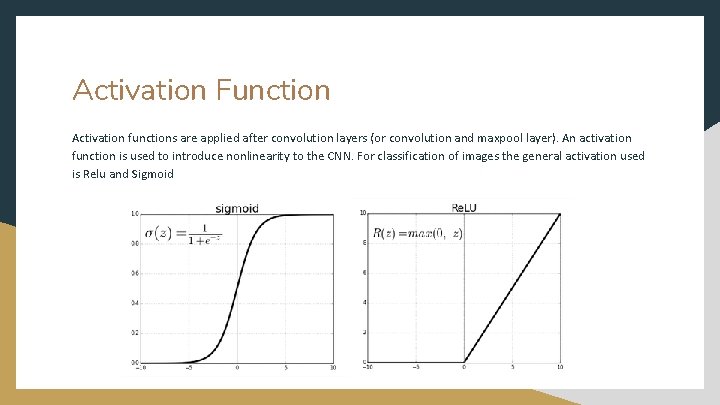

Activation Function

Activation functions are applied after convolution layers (or after a convolution and maxpool layer). An activation function introduces nonlinearity into the CNN. For image classification, the activations generally used are ReLU and sigmoid.
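Both activations are short enough to write out directly. The sketch below applies them element by element to a few hypothetical feature-map values; the variable names are illustrative only.

```python
import math

def relu(x):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return max(0.0, x)

def sigmoid(x):
    """Map any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical values coming out of a convolution layer.
feature_values = [-2.0, 0.0, 3.0]

relu_out = [relu(v) for v in feature_values]
sigmoid_out = [sigmoid(v) for v in feature_values]

print(relu_out)  # [0.0, 0.0, 3.0]
print(sigmoid_out)
```

Neither function is a straight line, which is what lets stacked layers represent more than a single linear transformation.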

Putting it all together

Image Source: Understanding CNN, Towards Data Science

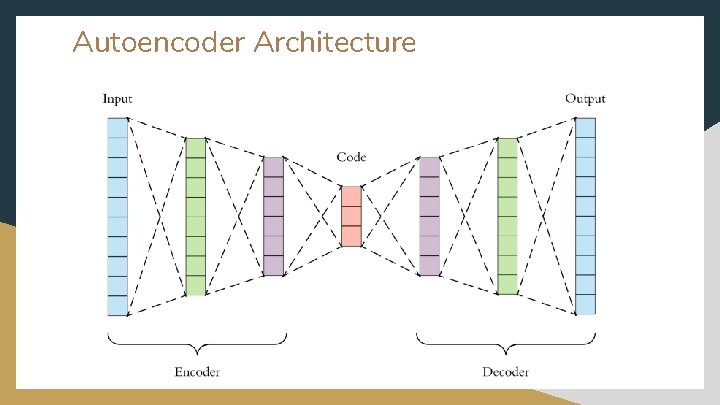

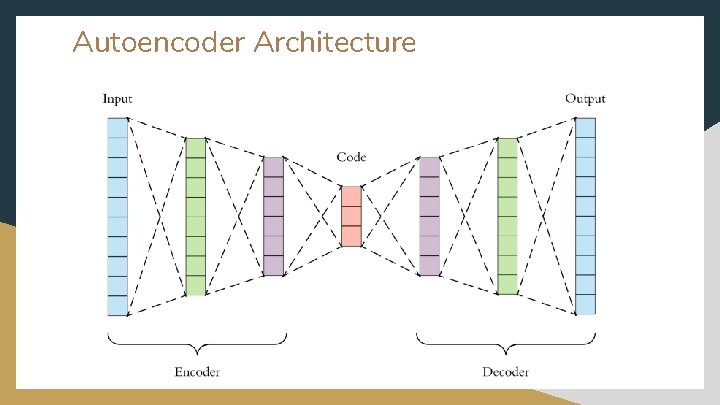

Autoencoders

An autoencoder is a neural network that is trained to attempt to copy its input to its output. Autoencoders can be supervised or unsupervised, depending on the problem being solved. They are mainly a dimensionality reduction algorithm.

Components of an autoencoder:

1. Encoder
2. Code
3. Decoder
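The three components can be seen in a very small sketch. The example below is an assumption-heavy illustration, not a practical model: it uses a purely linear encoder and decoder with no activation functions, plain Python lists instead of a deep learning library, mean squared error as the reconstruction loss, and made-up 4-dimensional data that is squeezed through a 2-number code. All names (`matvec`, `train_step`, `enc`, `dec`) are hypothetical.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

def dataset_loss(enc, dec, data):
    """Average reconstruction error of decoder(encoder(x)) over the data."""
    return sum(mse(matvec(dec, matvec(enc, x)), x) for x in data) / len(data)

def train_step(enc, dec, x, lr):
    """One gradient-descent update on a single example; the target is x itself."""
    code = matvec(enc, x)        # encoder: input -> code
    x_hat = matvec(dec, code)    # decoder: code -> reconstruction
    n, k = len(x), len(code)
    grad_out = [2.0 * (x_hat[i] - x[i]) / n for i in range(n)]
    # Gradient with respect to the code, taken before the decoder changes.
    grad_code = [sum(grad_out[i] * dec[i][j] for i in range(n)) for j in range(k)]
    for i in range(n):
        for j in range(k):
            dec[i][j] -= lr * grad_out[i] * code[j]
    for j in range(k):
        for m in range(n):
            enc[j][m] -= lr * grad_code[j] * x[m]

random.seed(0)
INPUT_SIZE, CODE_SIZE = 4, 2

# Hypothetical data: 4 numbers per example that really vary in only 2 ways.
data = []
for _ in range(20):
    a, b = random.random(), random.random()
    data.append([a, a, b, b])

enc = [[random.uniform(-0.5, 0.5) for _ in range(INPUT_SIZE)] for _ in range(CODE_SIZE)]
dec = [[random.uniform(-0.5, 0.5) for _ in range(CODE_SIZE)] for _ in range(INPUT_SIZE)]

loss_before = dataset_loss(enc, dec, data)
for _ in range(300):
    for x in data:
        train_step(enc, dec, x, lr=0.05)
loss_after = dataset_loss(enc, dec, data)

print(loss_before, loss_after)
```

No labels appear anywhere in the training loop: each input serves as its own target, and the only thing the network is asked to do is rebuild the input from the smaller code.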

Properties of Autoencoders

1. Data specific: They can only compress data similar to what they have been trained on, because they learn features specific to the training data.
2. Lossy: The output of an autoencoder will be very similar to the input, but not exactly the same.
3. Unsupervised: Autoencoders are considered unsupervised because they don't need explicit labels to train on. More precisely, they are self-supervised, because they generate their own labels from the training data.

Autoencoder Architecture

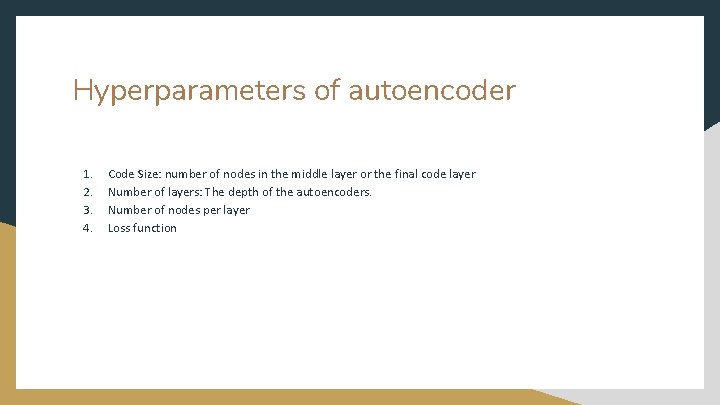

Hyperparameters of an autoencoder

1. Code size: the number of nodes in the middle layer, or final code layer.
2. Number of layers: the depth of the autoencoder.
3. Number of nodes per layer
4. Loss function
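One way to see how these four choices pin down an architecture is to collect them in a small configuration object. The sketch below is only an illustration: the class name `AutoencoderConfig`, its fields, and the example sizes (a 784-value input, as for a 28 x 28 image) are all assumptions, and the decoder is assumed to mirror the encoder, which is a common design but not the only one.

```python
from dataclasses import dataclass

@dataclass
class AutoencoderConfig:
    input_size: int         # number of values in each input example
    code_size: int          # nodes in the middle (code) layer
    encoder_layers: tuple   # nodes per hidden layer, from input side to code side
    loss: str = "mse"       # name of the reconstruction loss

    def layer_sizes(self):
        """Widths of every layer: input, encoder, code, mirrored decoder, output."""
        enc = list(self.encoder_layers)
        return [self.input_size, *enc, self.code_size, *reversed(enc), self.input_size]

# Hypothetical settings: two hidden layers on each side of a 32-node code.
config = AutoencoderConfig(input_size=784, code_size=32, encoder_layers=(128, 64))
sizes = config.layer_sizes()
print(sizes)  # [784, 128, 64, 32, 64, 128, 784]
```

Changing `code_size` controls how hard the data is squeezed, while `encoder_layers` sets both the depth and the number of nodes per layer.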

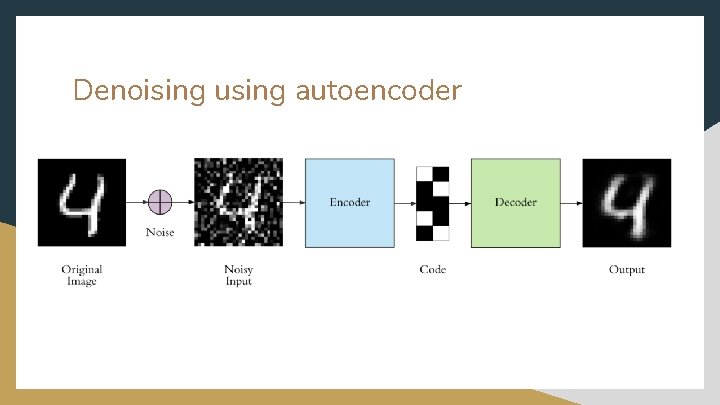

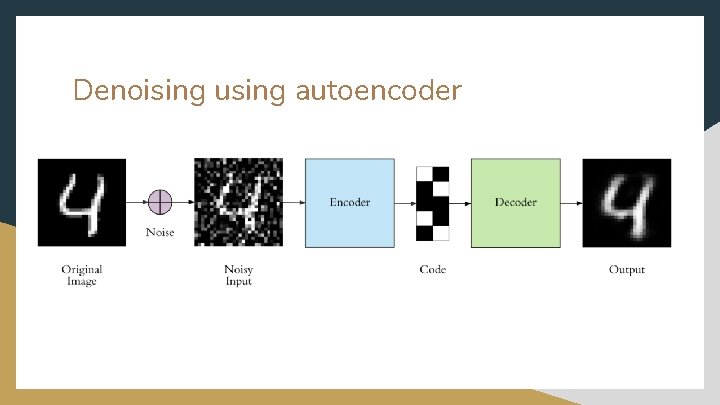

Denoising using autoencoder
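For denoising, the usual setup is to feed the network a corrupted copy of each example and ask it to reconstruct the clean original. A minimal sketch of building one such training pair is shown below, assuming Gaussian noise and pixel values scaled to the range 0 to 1; the function name `add_gaussian_noise` and the sample values are hypothetical.

```python
import random

def add_gaussian_noise(pixels, sigma, rng):
    """Return a noisy copy of `pixels`, clipped back into the range [0, 1]."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]

rng = random.Random(0)

# Hypothetical clean example: five pixel intensities between 0 and 1.
clean = [0.0, 0.25, 0.5, 0.75, 1.0]
noisy = add_gaussian_noise(clean, sigma=0.1, rng=rng)

# The autoencoder sees `noisy` as input and is trained to output `clean`.
training_pair = (noisy, clean)
print(training_pair)
```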

Uses of CNN

1. Image classification (LeNet, InceptionNet, ResNet)
2. Image segmentation (UNet, FCNN, RCNN)

Uses of Autoencoders

- Image denoising
- Anomaly detection
- Dimensionality reduction
- Feature extraction
- Image generation
- Image compression
- Sequence-to-sequence prediction
- Recommendation systems

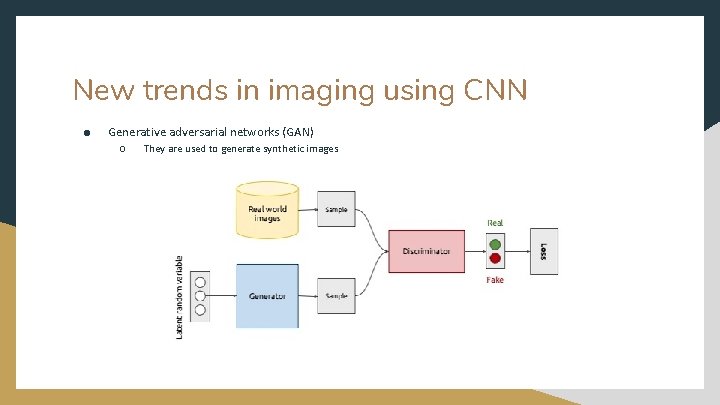

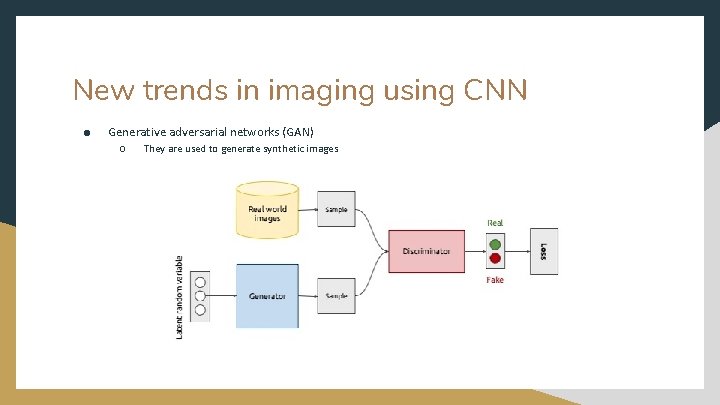

New trends in imaging using CNN

- Generative adversarial networks (GAN)
  - They are used to generate synthetic images.

Reading Material

CS231n (Stanford University): https://cs231n.github.io/

Understanding CNN:

- https://towardsdatascience.com/understanding-cnn-convolutional-neural-network-69fd626ee7d4

Understanding Autoencoders:

- https://www.jeremyjordan.me/autoencoders/
- https://medium.com/ai%C2%B3-theory-practice-business/understanding-autoencoders-part-i-116ed2272d35
- https://medium.com/ai%C2%B3-theory-practice-business/understanding-autoencoders-part-ii-41d18d3ed9c1