- Slides: 55

Advances in Transfer Learning & Multi-Task Learning Presenting: Niv Granot, Lior Yariv

Human-Brain Aspect
• We have an inherent ability to transfer knowledge across tasks:
  • Know how to throw a ball → learn how to play basketball
  • Know math and statistics → learn machine learning
• We naturally combine tasks to learn better:
  • Learn how to read and write simultaneously
  • Learn deep learning and computer vision together

Task Relations in Deep Learning: Transfer Learning and Multi-Task Learning

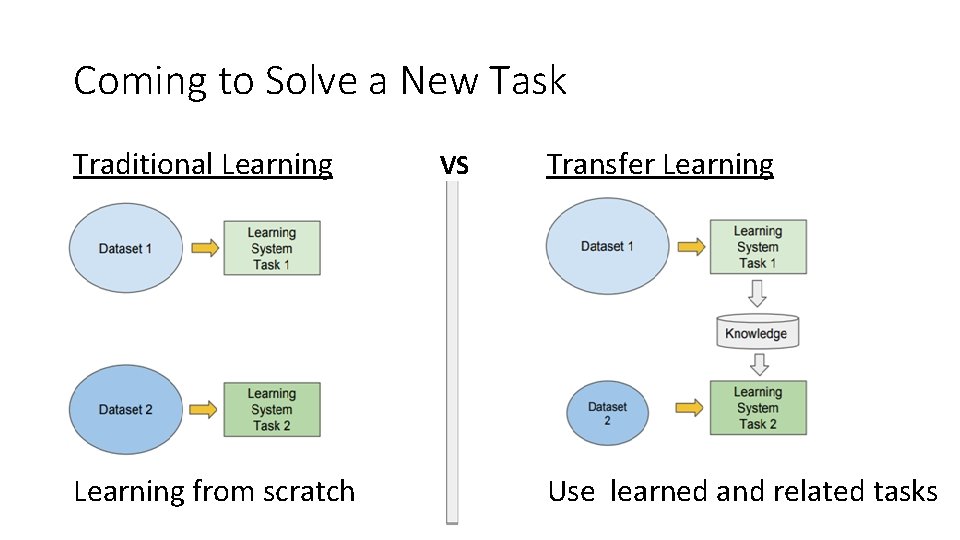

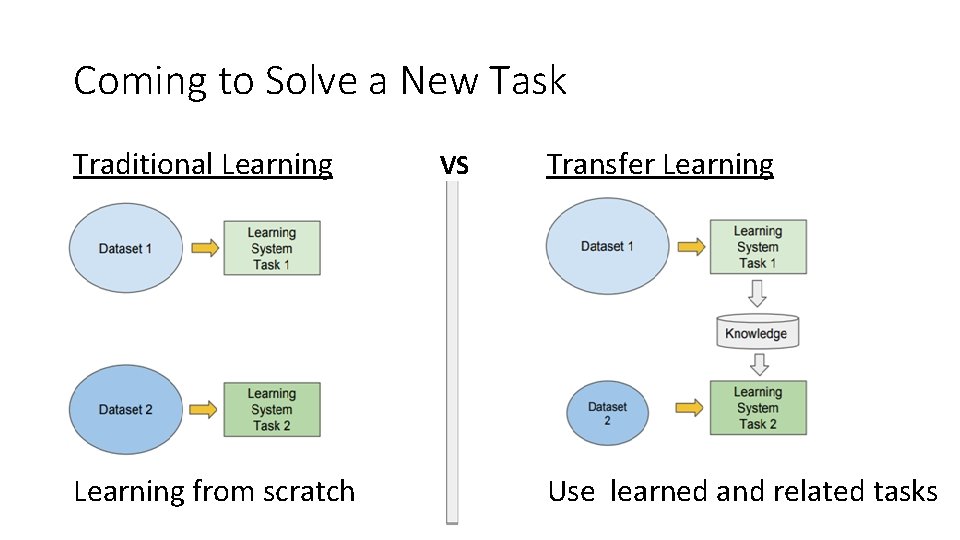

Coming to Solve a New Task
• Traditional learning: learn from scratch
• vs. Transfer learning: use learned, related tasks

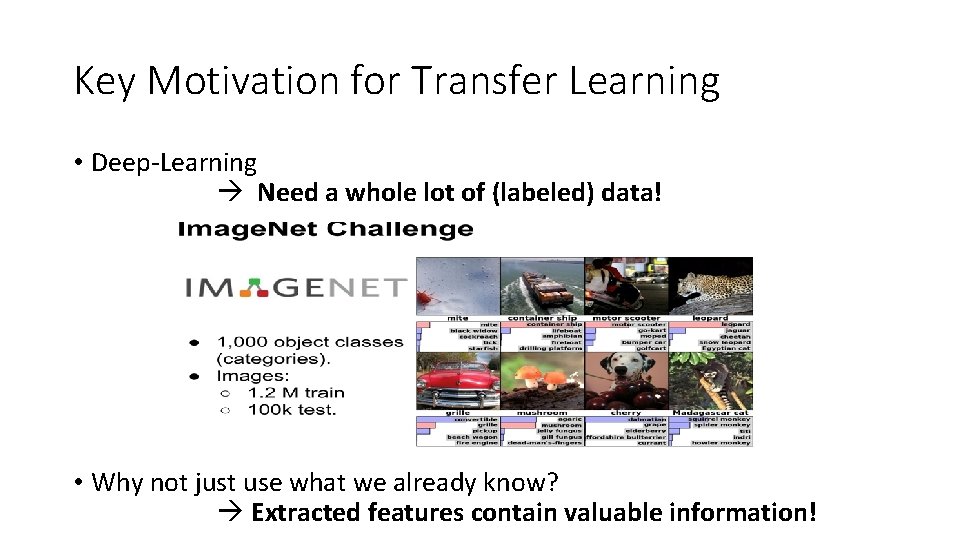

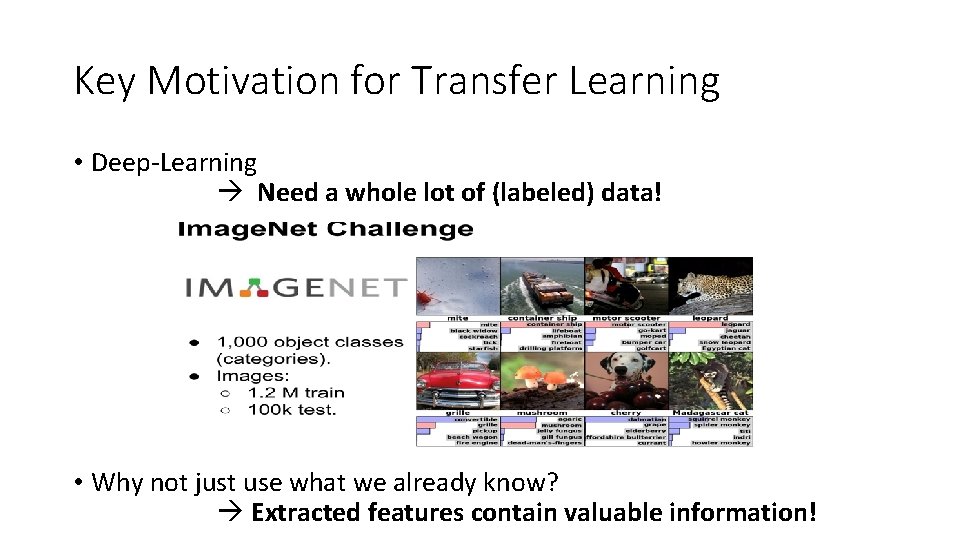

Key Motivation for Transfer Learning
• Deep learning needs a whole lot of (labeled) data!
• Why not just use what we already know? Extracted features contain valuable information!

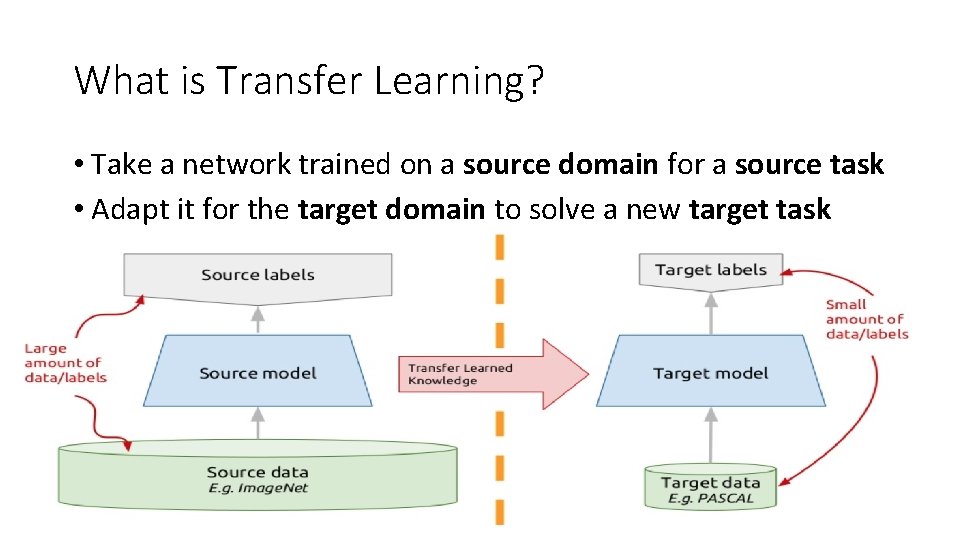

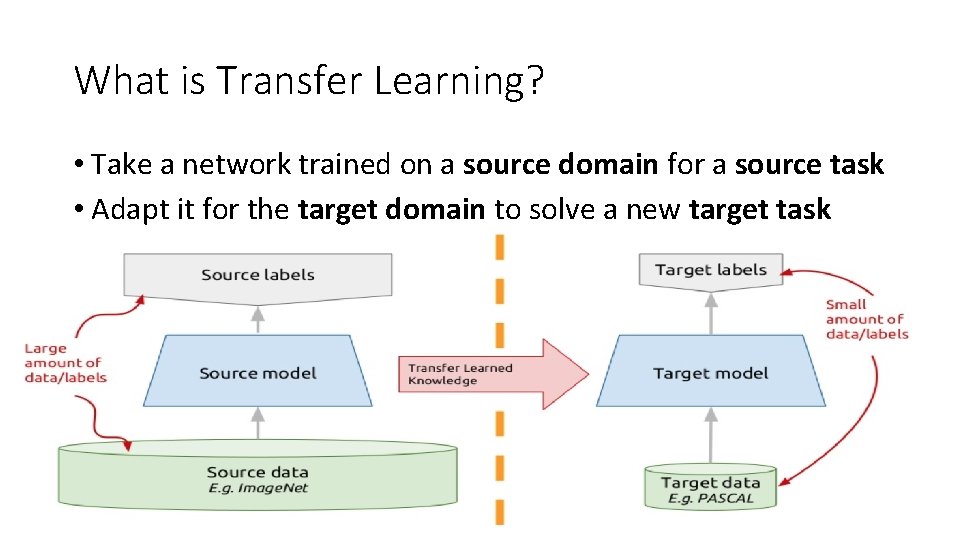

What is Transfer Learning?
• Take a network trained on a source domain for a source task
• Adapt it to the target domain to solve a new target task
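A minimal sketch of this idea in Python, assuming a toy setting: `frozen_features` is a hypothetical stand-in for an encoder already trained on the source task, and adaptation trains only a new logistic-regression head on a handful of labeled target examples. Real transfer learning would reuse a pretrained deep network and might also fine-tune some of its layers.

```python
import math

def frozen_features(x):
    # Hypothetical stand-in for a source-task encoder; its "weights" are fixed
    # and are never updated while adapting to the target task.
    return [x[0] + x[1], x[0] - x[1], 1.0]  # last entry acts as a bias feature

def train_target_head(data, epochs=200, lr=0.5):
    """Adapt to the target task by training only a new logistic-regression head."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)
            p = 1.0 / (1.0 + math.exp(-sum(wi * fi for wi, fi in zip(w, f))))
            # Gradient of the binary cross-entropy w.r.t. the head weights only.
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w

def predict(w, x):
    f = frozen_features(x)
    return int(sum(wi * fi for wi, fi in zip(w, f)) > 0)

# Small labeled target set: label is 1 when x0 + x1 > 0 (boundary points skipped).
grid = [-1.0, -0.5, 0.5, 1.0]
data = [((a, b), int(a + b > 0)) for a in grid for b in grid if a + b != 0]

w = train_target_head(data)
accuracy = sum(predict(w, x) == y for x, y in data) / len(data)
```

Only the three head weights in `w` are learned; the source features are reused as-is, which is why so few target labels suffice in this toy case.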

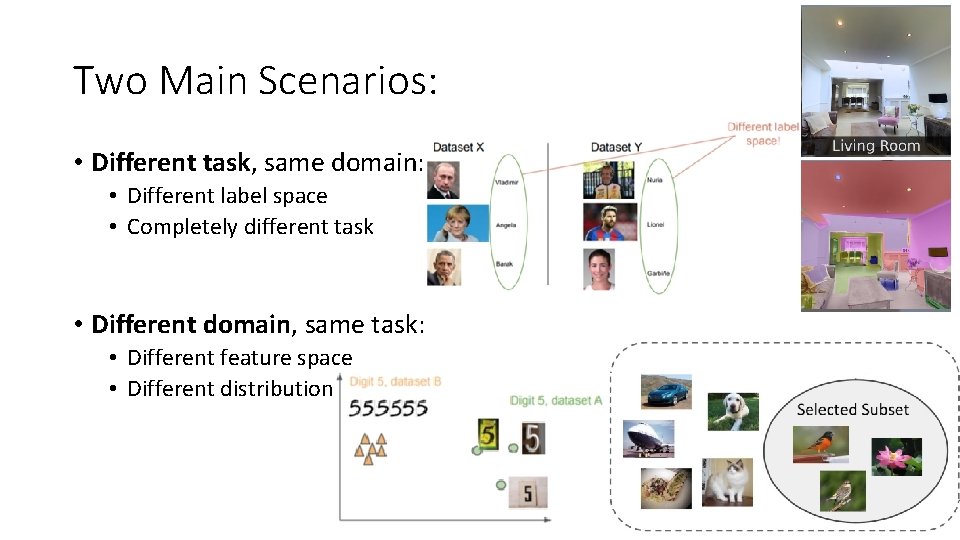

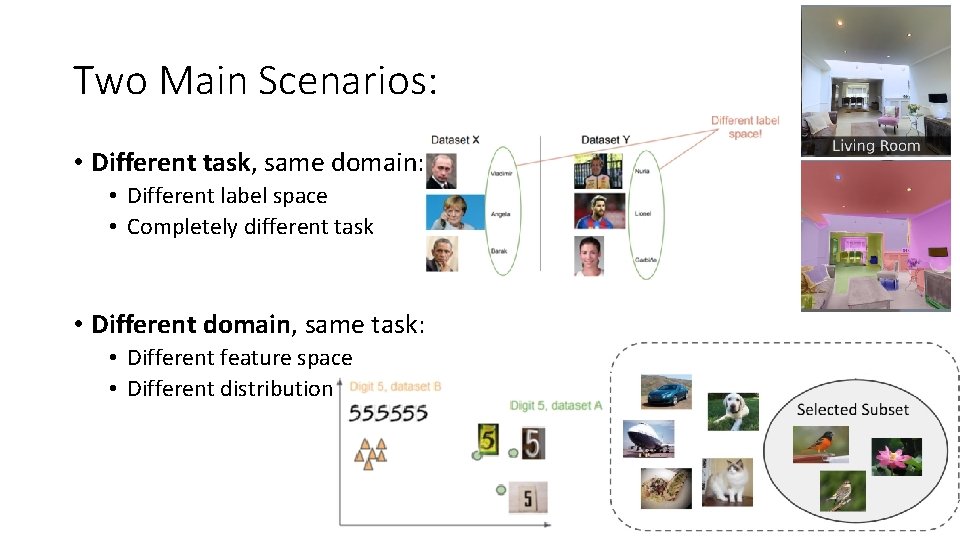

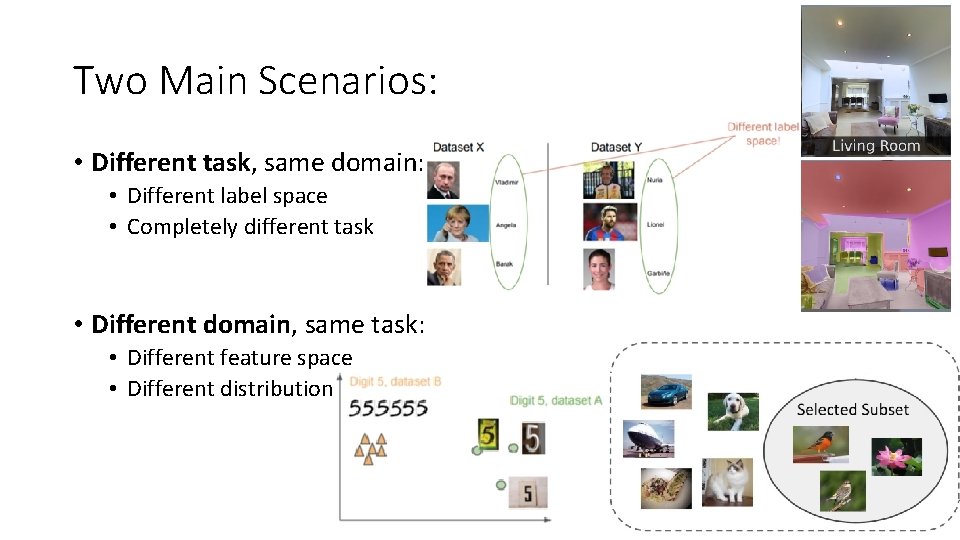

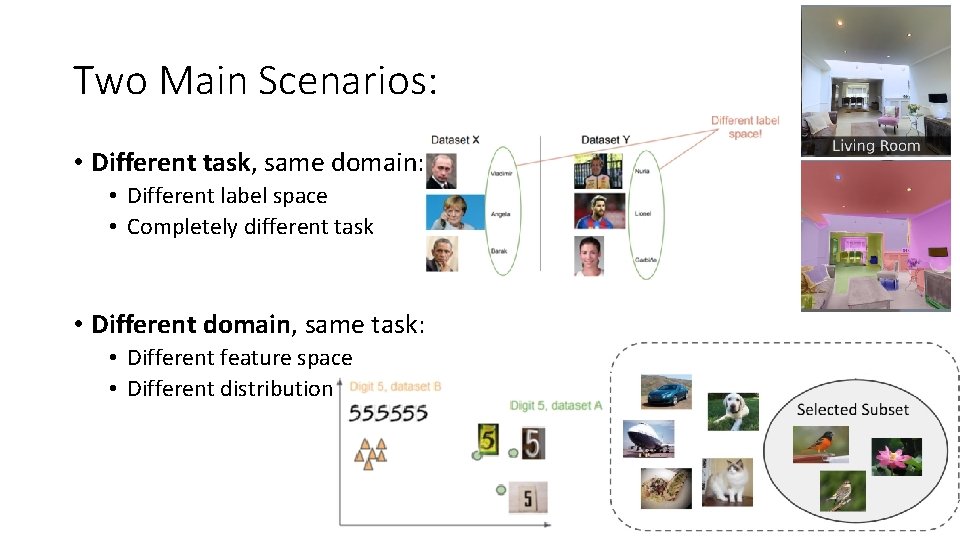

Two Main Scenarios:
• Different task, same domain:
  • Different label space
  • Completely different task
• Different domain, same task:
  • Different feature space
  • Different distribution

Taskonomy: Disentangling Task Transfer Learning
Amir Zamir, Alexander Sax, William Shen, Leonidas Guibas, Jitendra Malik, Silvio Savarese
Best Paper, CVPR 2018

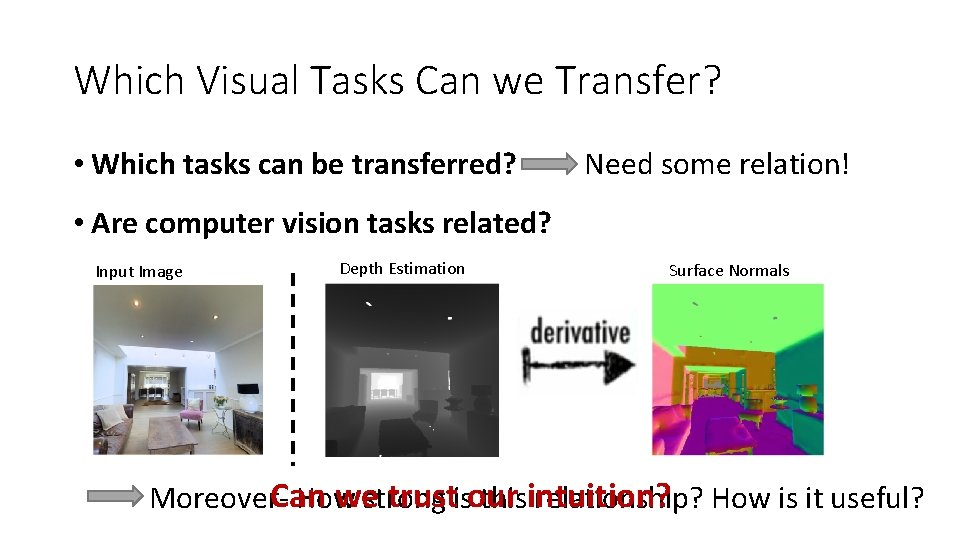

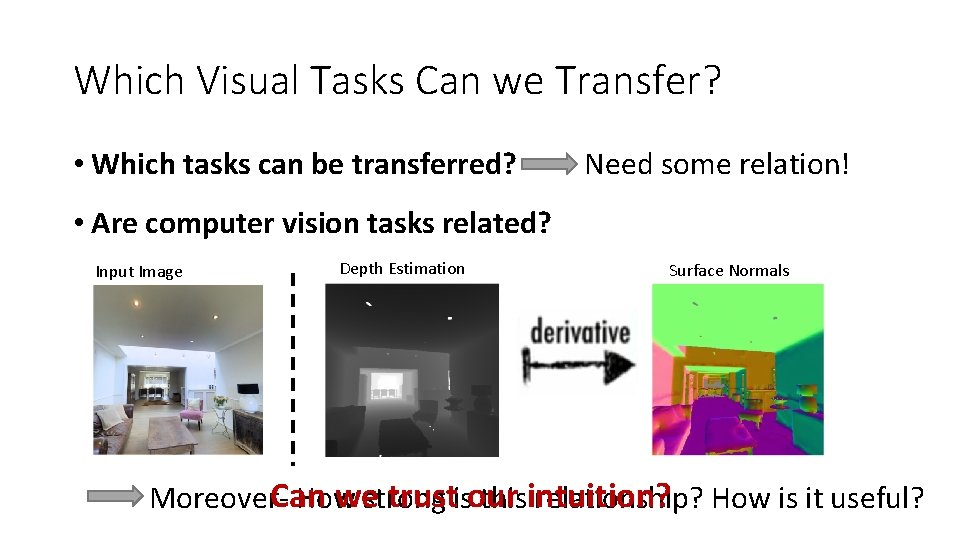

Which Visual Tasks Can We Transfer?
• Which tasks can be transferred? They need some relation!
• Are computer vision tasks related? (e.g., input image → depth estimation, surface normals)
• Can we trust our intuition? Moreover: how strong is this relationship, and how is it useful?

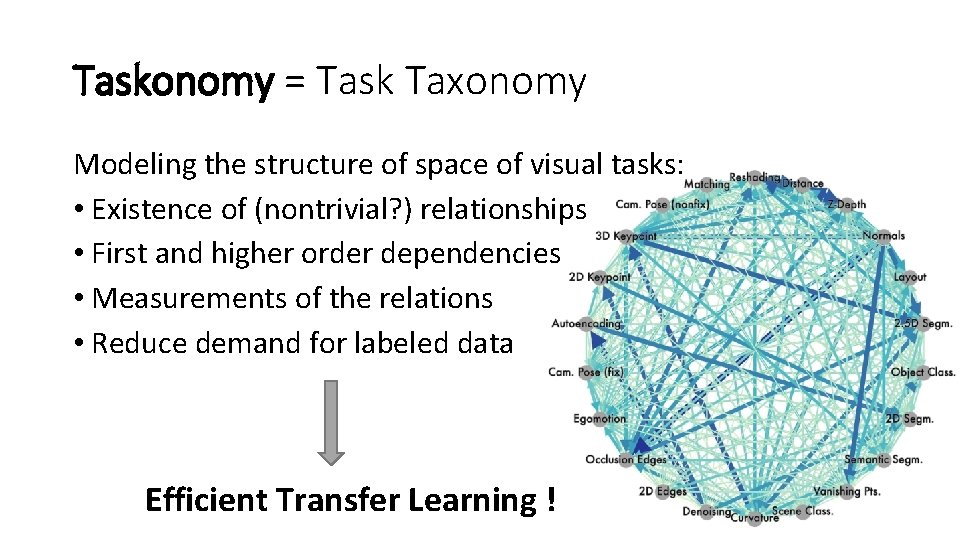

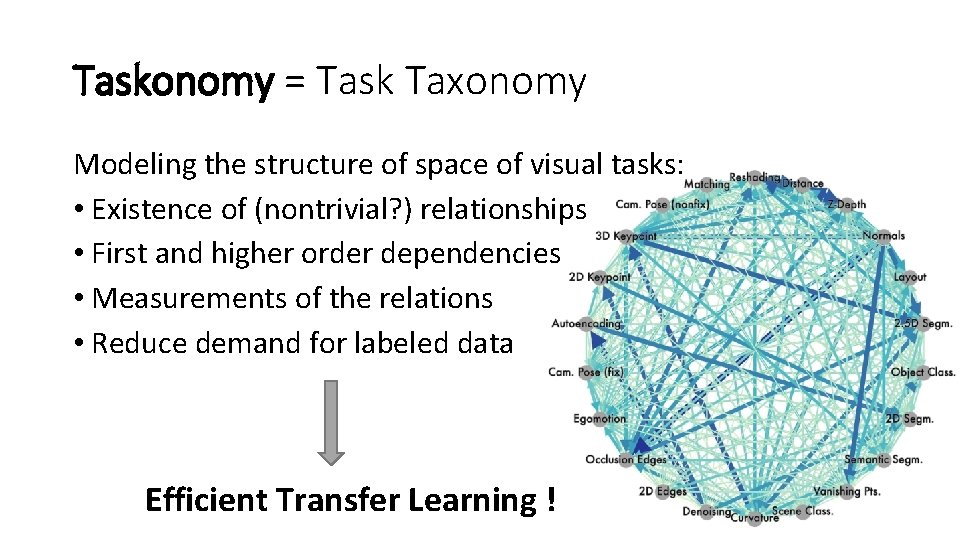

Taskonomy = Task Taxonomy
Modeling the structure of the space of visual tasks:
• Existence of (nontrivial?) relationships
• First- and higher-order dependencies
• Measurements of the relations
• Reduce the demand for labeled data → efficient transfer learning!

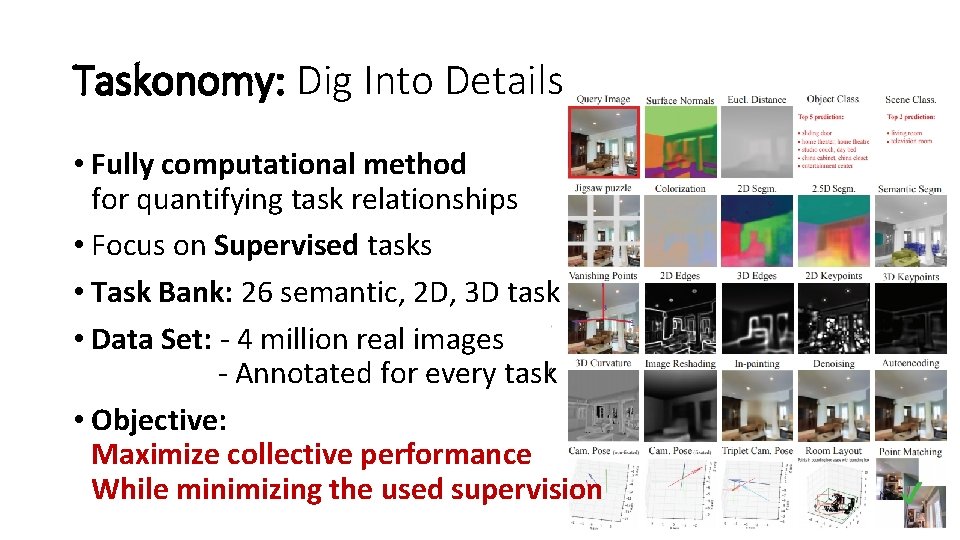

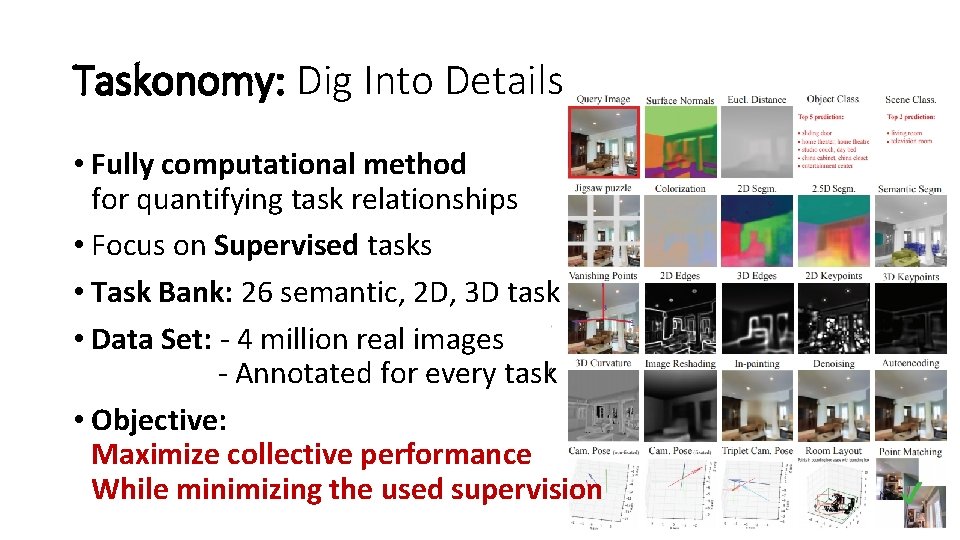

Taskonomy: Digging Into the Details
• Fully computational method for quantifying task relationships
• Focus on supervised tasks
• Task bank: 26 semantic, 2D, and 3D tasks
• Dataset:
  • 4 million real images
  • Annotated for every task
• Objective: maximize collective performance while minimizing the supervision used

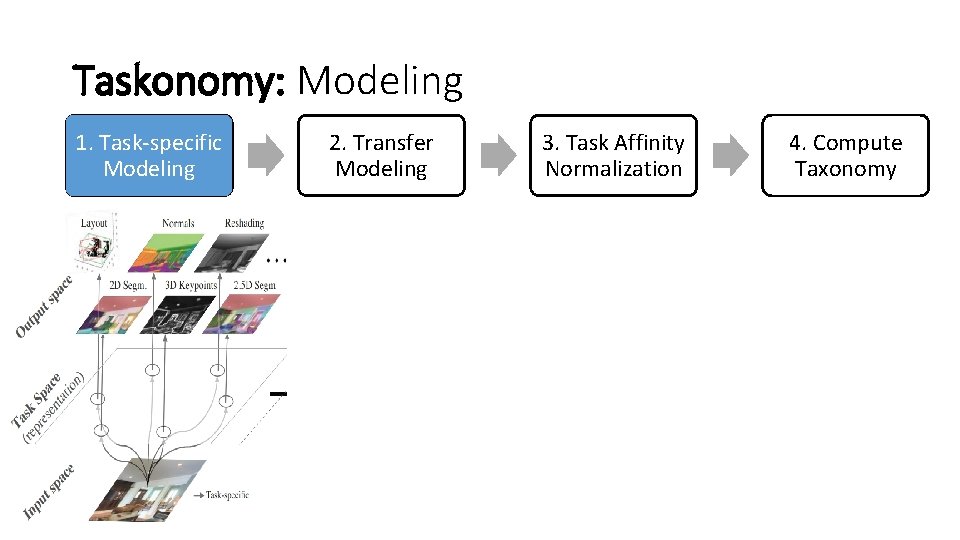

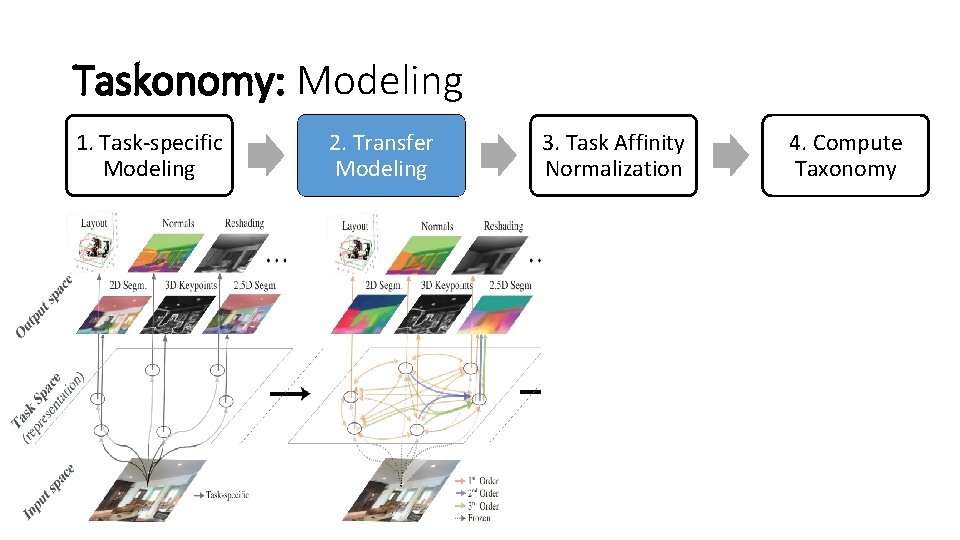

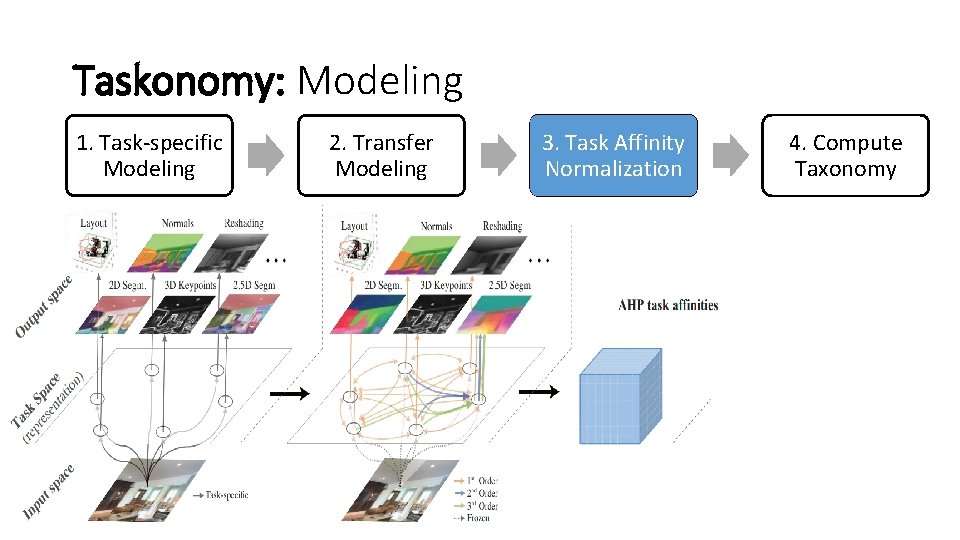

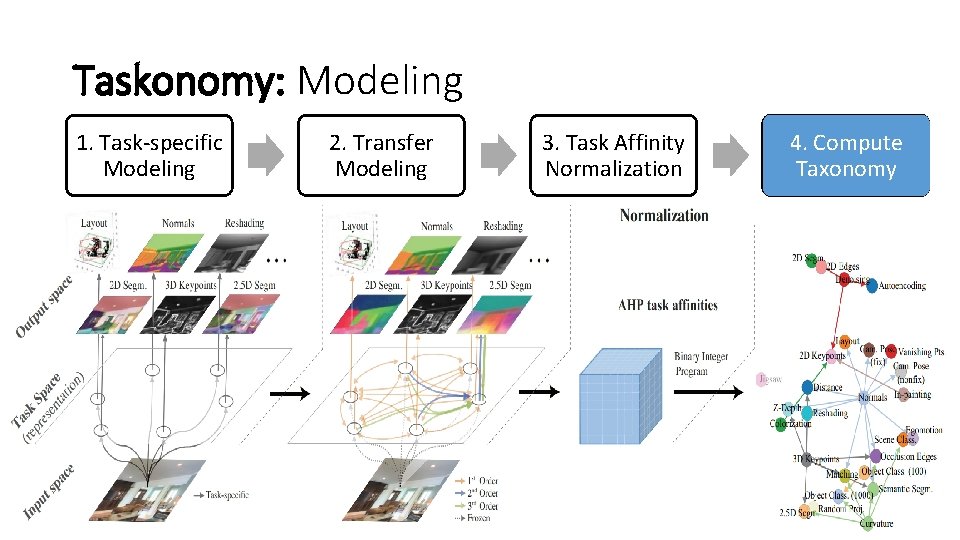

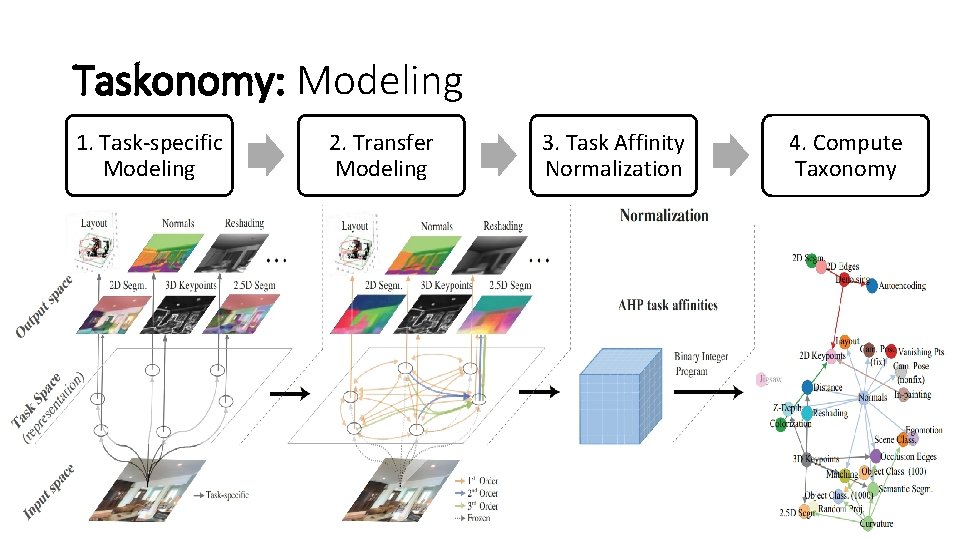

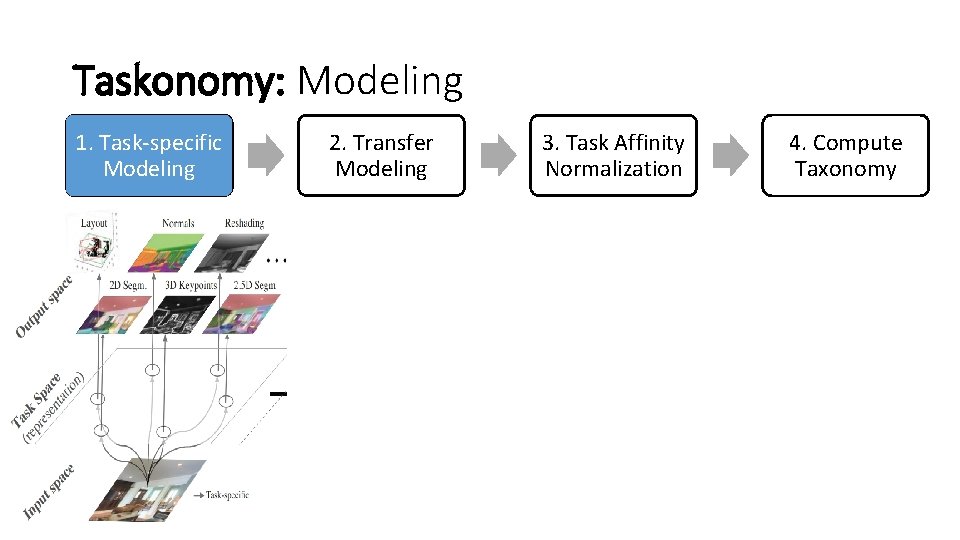

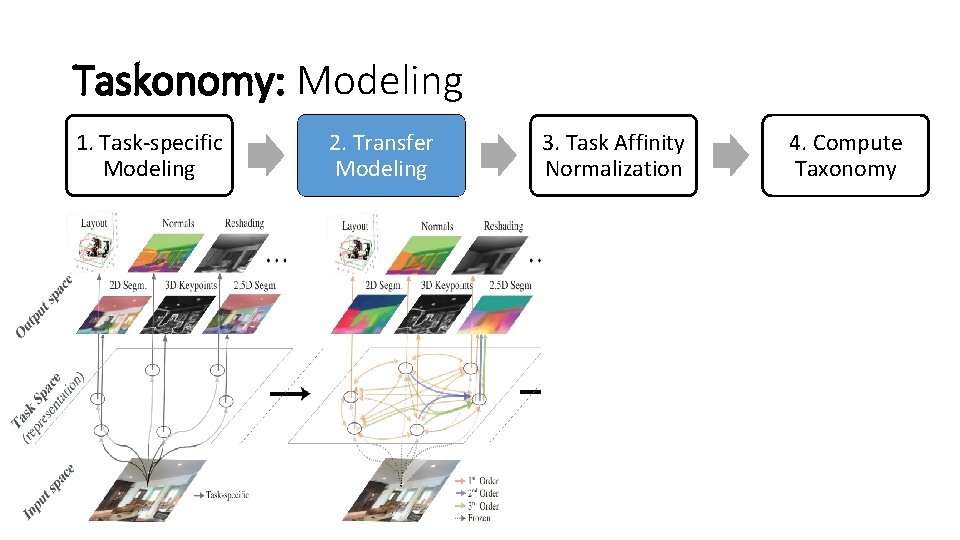

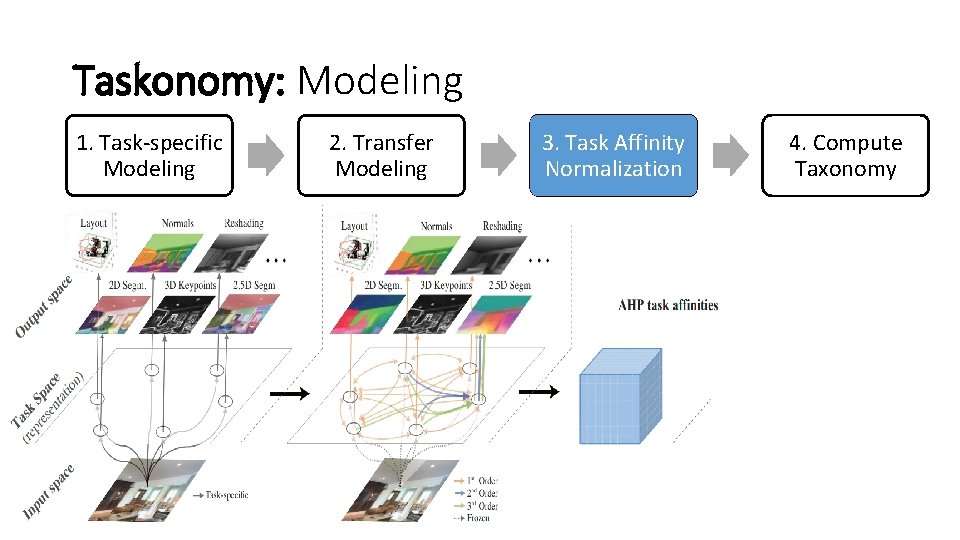

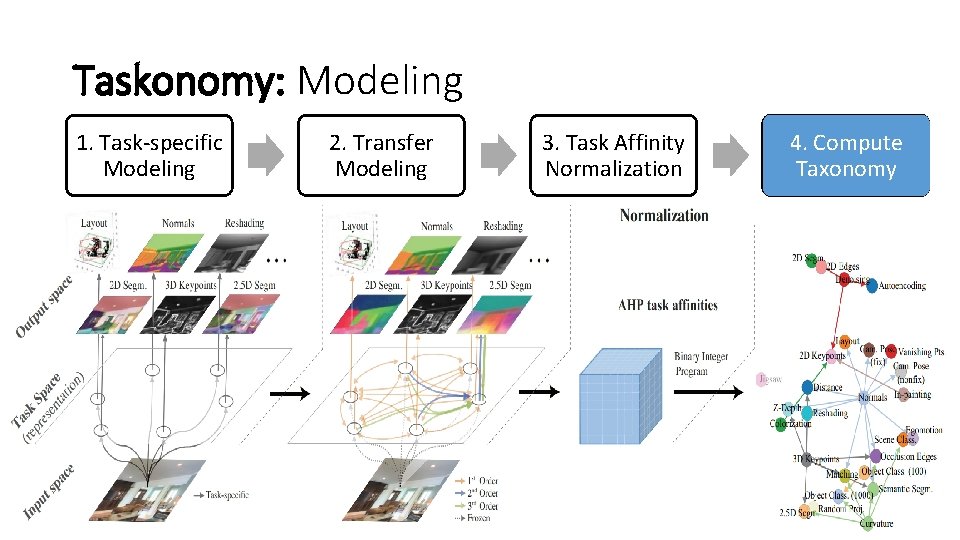

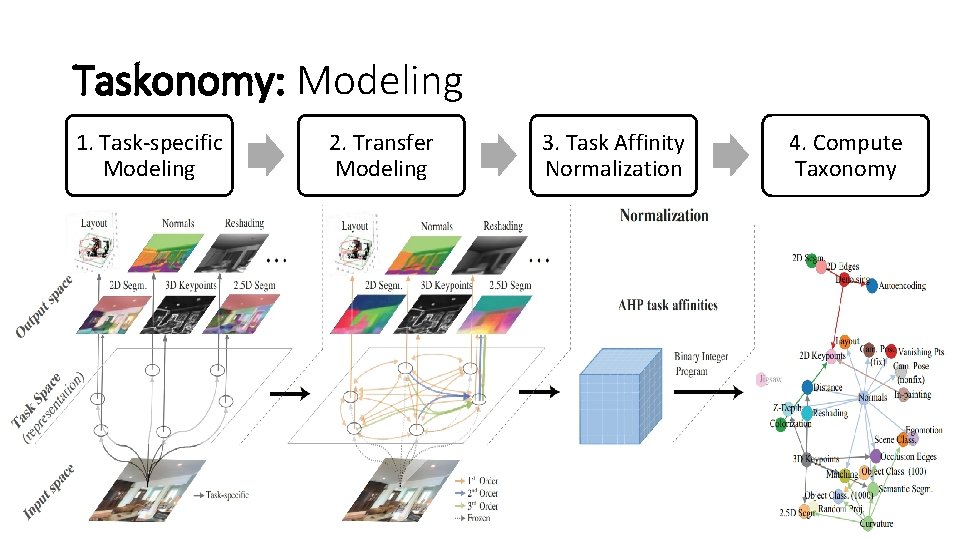

Taskonomy: Modeling
1. Task-specific modeling
2. Transfer modeling
3. Task affinity normalization
4. Compute taxonomy

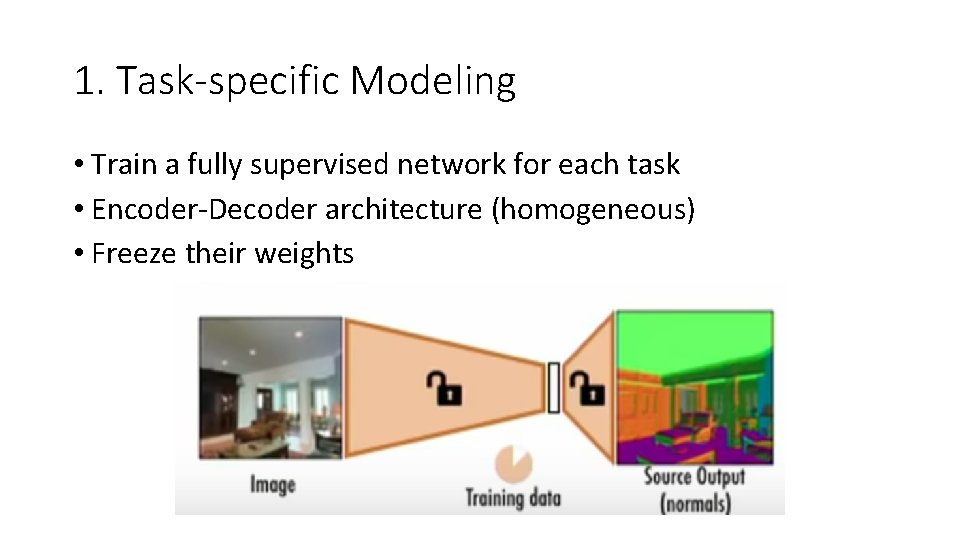

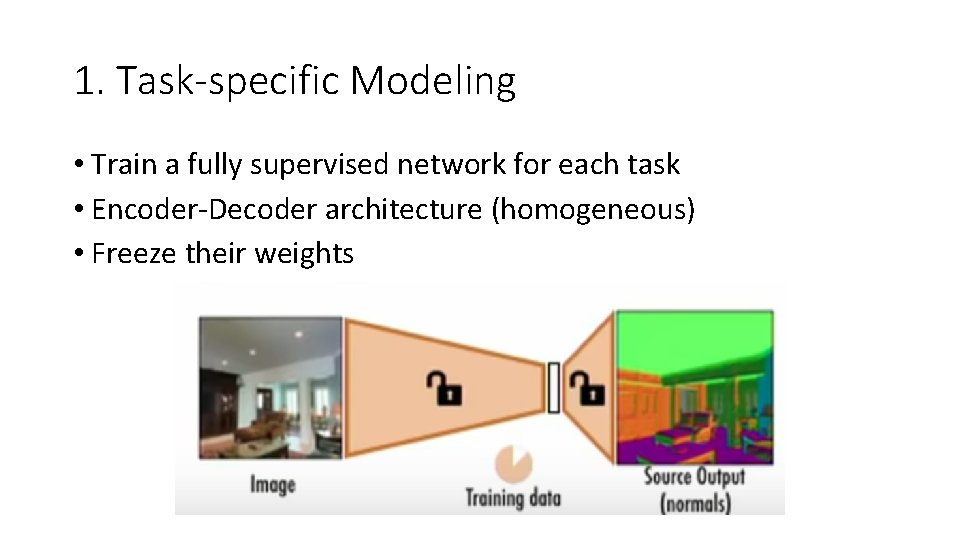

1. Task-Specific Modeling
• Train a fully supervised network for each task
• Encoder-decoder architecture (homogeneous across tasks)
• Freeze their weights

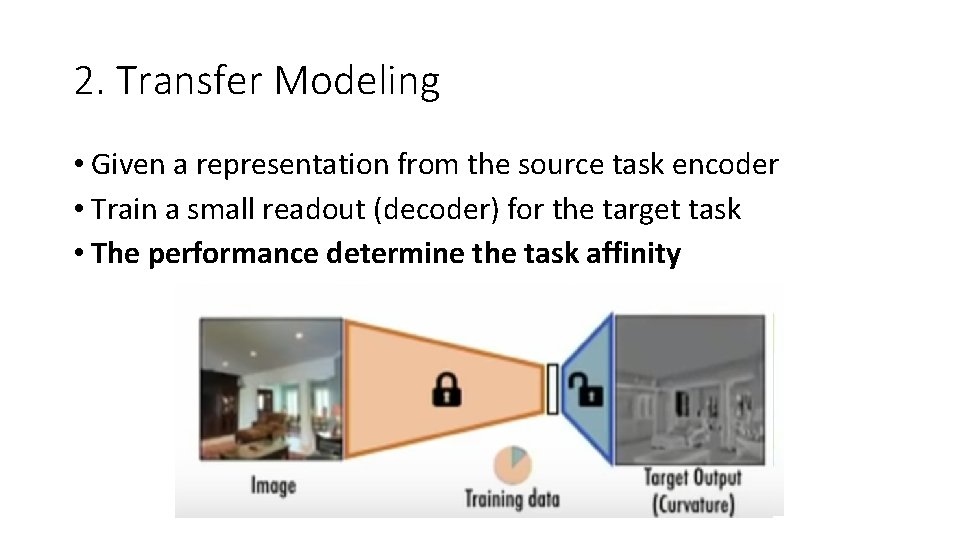

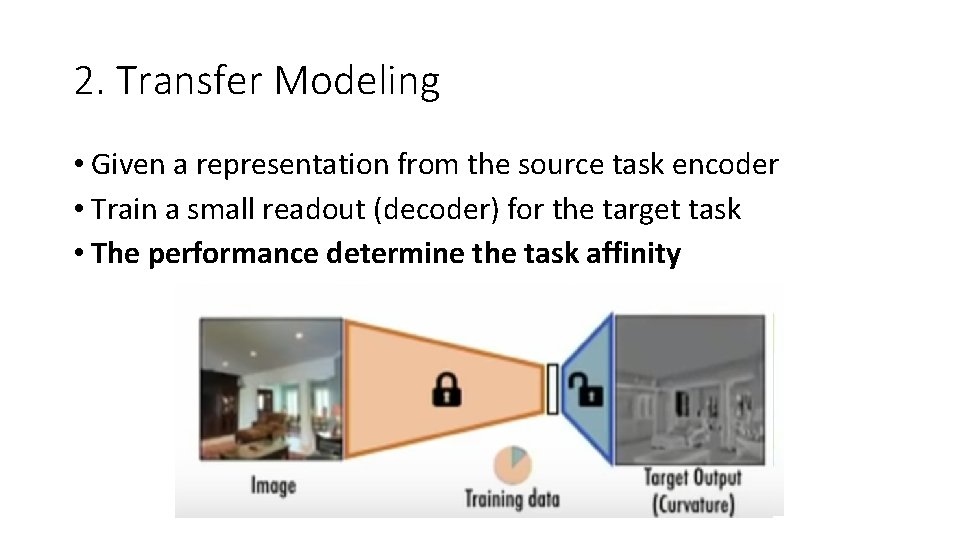

2. Transfer Modeling
• Given a representation from the source task's (frozen) encoder
• Train a small readout (decoder) for the target task
• The readout's performance determines the task affinity
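To make the readout idea concrete, here is a toy Python sketch. The two "source encoders" are hypothetical one-line functions standing in for frozen task encoders, the readout is a closed-form least-squares line rather than a small neural decoder, and, for brevity, the readout is fit and scored on the same five points; in practice affinity would be measured on held-out data.

```python
def fit_linear_readout(z, y):
    """Closed-form least-squares readout y ~ a*z + b (a tiny stand-in for a small decoder)."""
    n = len(z)
    mz, my = sum(z) / n, sum(y) / n
    var = sum((zi - mz) ** 2 for zi in z)
    cov = sum((zi - mz) * (yi - my) for zi, yi in zip(z, y))
    a = cov / var if var else 0.0
    return a, my - a * mz

def readout_loss(encoder, xs, ys):
    z = [encoder(x) for x in xs]       # frozen source representation
    a, b = fit_linear_readout(z, ys)  # only the readout is trained
    return sum((a * zi + b - yi) ** 2 for zi, yi in zip(z, ys)) / len(xs)

# Hypothetical frozen source encoders and a hypothetical target task y = 2x + 1.
sources = {"source_A": lambda x: x, "source_B": lambda x: x * x}
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [2 * x + 1 for x in xs]

losses = {name: readout_loss(enc, xs, ys) for name, enc in sources.items()}
ranking = sorted(losses, key=losses.get)  # lower readout loss = higher affinity
```

Source B's representation discards the sign of x, so no readout on it can recover the target, and its affinity comes out lower.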

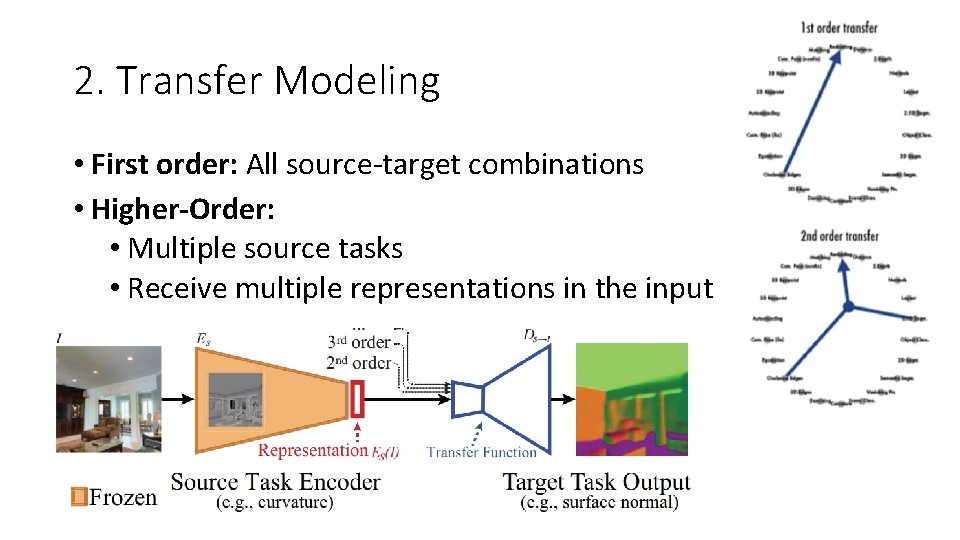

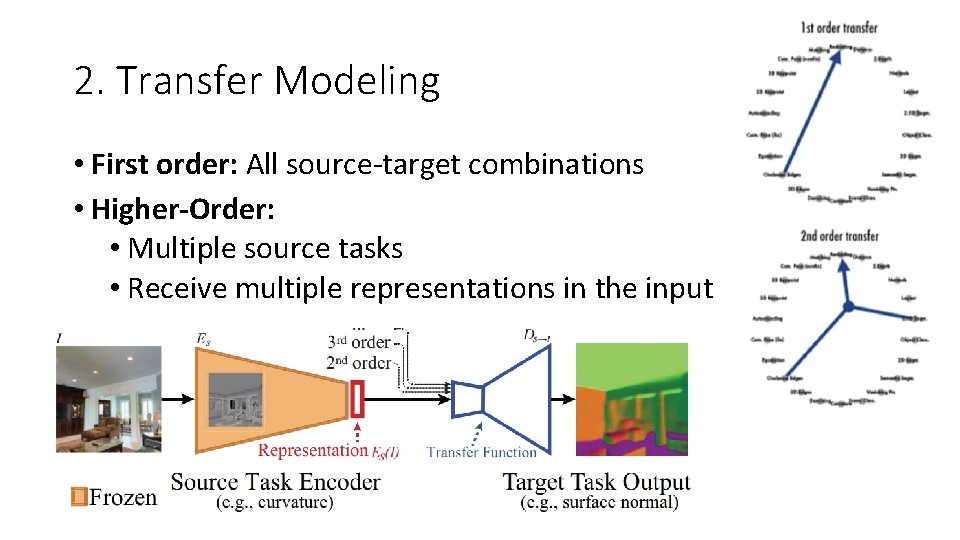

2. Transfer Modeling
• First order: all source-target combinations
• Higher order:
  • Multiple source tasks
  • The readout receives multiple representations as input
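A quick Python count shows why exhaustively training every higher-order transfer is costly. The task names are placeholders; only the number 26 comes from the task bank. The counts describe exhaustive enumeration and are not a claim about how many combinations the paper actually trained.

```python
from itertools import combinations, permutations
from math import comb

tasks = [f"task_{i}" for i in range(26)]  # placeholder names for the 26 tasks

# First order: every ordered (source, target) pair with source != target.
first_order = list(permutations(tasks, 2))

def transfers_of_order(tasks, k):
    """Yield (source set of size k, target) pairs; a target is never one of its own sources."""
    for target in tasks:
        others = [t for t in tasks if t != target]
        for sources in combinations(others, k):
            yield sources, target

def count_of_order(n, k):
    # Closed form of the enumeration above: n targets times C(n - 1, k) source sets.
    return n * comb(n - 1, k)

second_order = sum(1 for _ in transfers_of_order(tasks, 2))
```

Even at second order the exhaustive count (7,800) already exceeds the ~3000 transfer networks reported in the experiments, so higher-order transfers have to be sampled or pruned rather than fully enumerated.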

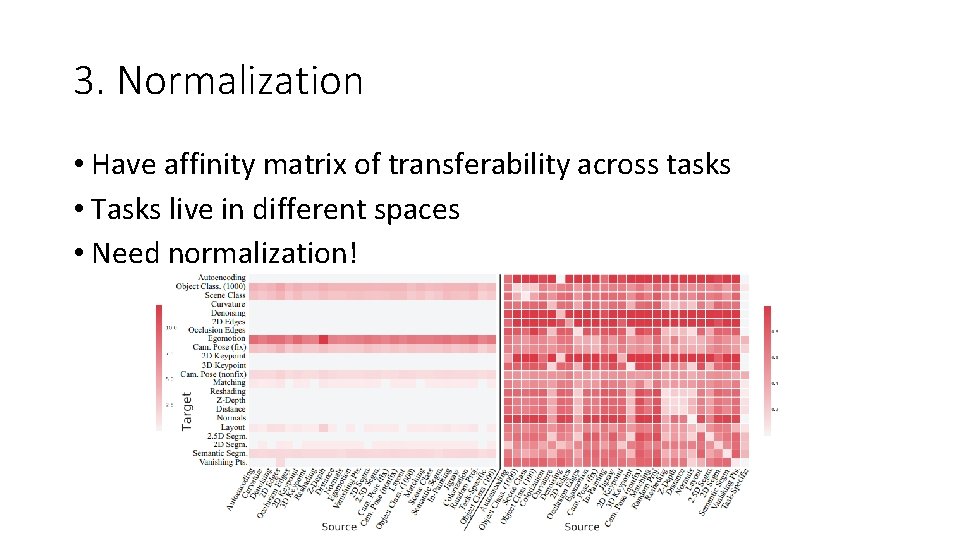

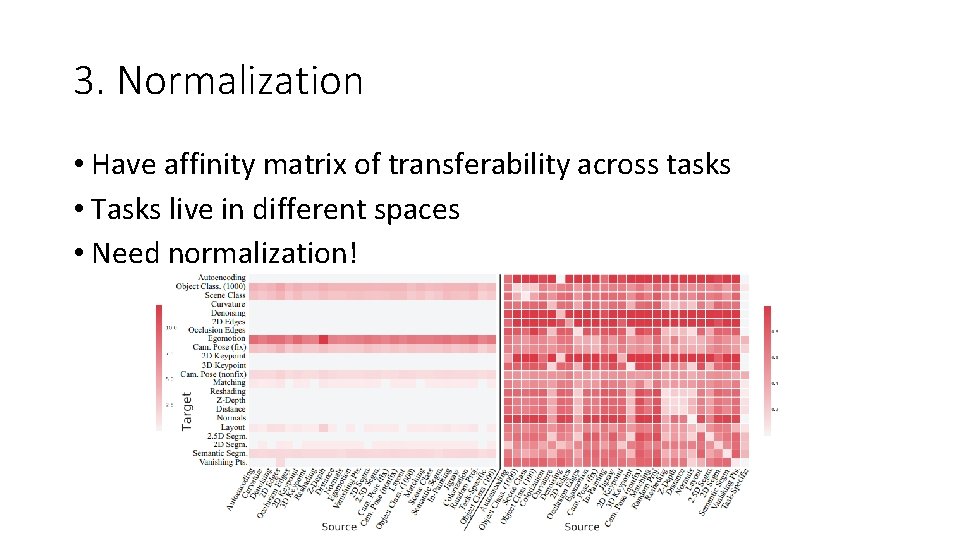

3. Normalization
• We have an affinity matrix of transferability across tasks
• But tasks live in different output spaces, so their raw losses are not directly comparable
• Need normalization!
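One way to make affinities comparable is to use only orderings, not raw loss values. The Python sketch below follows the spirit of an Analytic Hierarchy Process (AHP) style ordinal normalization: for a single target task it counts how often each source's transfer beats another's on individual test samples, turns these win rates into a pairwise ratio matrix, and takes its principal eigenvector (via power iteration) as the normalized affinity. The per-sample losses, the clipping constant `eps`, and the function names are illustrative assumptions, not the paper's exact procedure.

```python
def win_rates(per_sample_losses):
    """W[i][j] = fraction of samples on which source i has a strictly lower loss than source j."""
    names = list(per_sample_losses)
    n = len(names)
    W = [[0.5] * n for _ in range(n)]  # diagonal: a source ties with itself
    for i in range(n):
        for j in range(n):
            if i != j:
                li, lj = per_sample_losses[names[i]], per_sample_losses[names[j]]
                W[i][j] = sum(a < b for a, b in zip(li, lj)) / len(li)
    return names, W

def ahp_scores(W, eps=1e-3, iters=200):
    """Principal eigenvector of R[i][j] = W[i][j] / W[j][i], normalized to sum to 1."""
    n = len(W)
    R = [[max(W[i][j], eps) / max(W[j][i], eps) for j in range(n)] for i in range(n)]
    v = [1.0 / n] * n
    for _ in range(iters):  # power iteration; R has strictly positive entries
        u = [sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
        total = sum(u)
        v = [x / total for x in u]
    return v

# Hypothetical per-sample losses of three source-to-target transfers on the same 4 test images.
losses = {
    "src_A": [0.1, 0.2, 0.1, 0.3],
    "src_B": [0.2, 0.3, 0.2, 0.1],
    "src_C": [0.5, 0.4, 0.6, 0.5],
}
names, W = win_rates(losses)
scores = dict(zip(names, ahp_scores(W)))
```

Because only win counts enter, rescaling all of a target's losses by a positive constant (say, switching units) leaves the scores unchanged, which is the property the normalization step needs.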

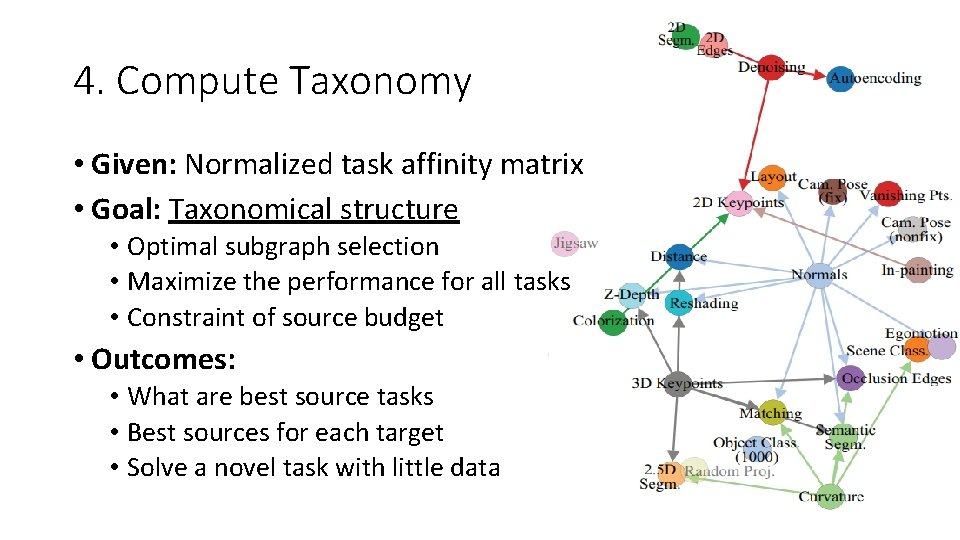

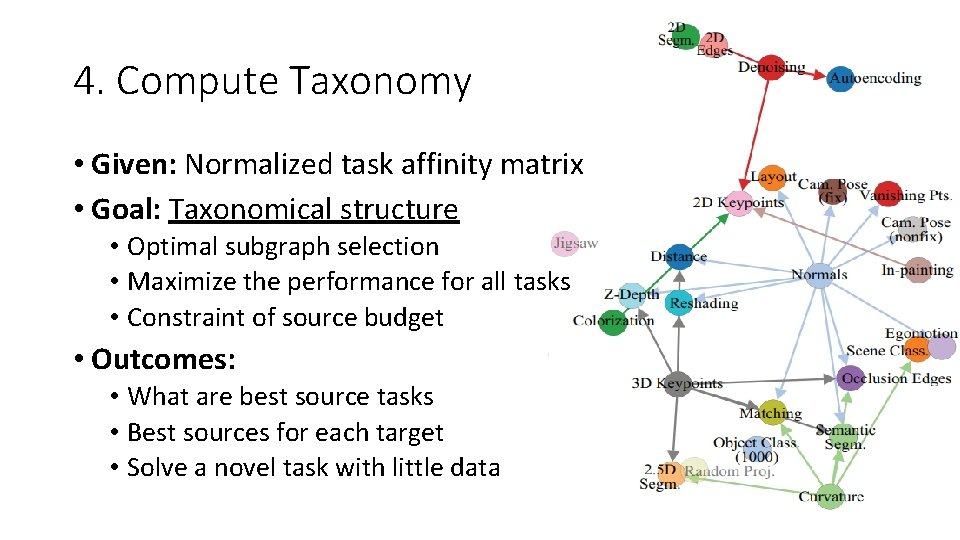

4. Compute Taxonomy
• Given: the normalized task affinity matrix
• Goal: a taxonomical structure
  • Optimal subgraph selection
  • Maximize the performance over all tasks
  • Subject to a source-budget constraint
• Outcomes:
  • Which source tasks are best overall
  • The best sources for each target
  • Solving a novel task with little data
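The selection step can be illustrated with a toy exhaustive search in Python, assuming a small hypothetical affinity table and first-order transfers only: choose at most `budget` source tasks so that the sum, over targets, of the best available affinity is maximized. Exhaustive search is a stand-in here; it scales exponentially, and a setting with dozens of tasks would need a proper optimization solver instead.

```python
from itertools import combinations

def select_sources(affinity, budget):
    """Pick at most `budget` sources maximizing the summed best affinity over all targets.

    affinity[s][t] is a (hypothetical) normalized affinity from source s to target t; higher is better.
    """
    sources = sorted(affinity)
    targets = sorted({t for row in affinity.values() for t in row})
    best_set, best_value = (), float("-inf")
    for k in range(1, budget + 1):
        for chosen in combinations(sources, k):
            value = sum(max(affinity[s].get(t, 0.0) for s in chosen) for t in targets)
            if value > best_value:
                best_set, best_value = chosen, value
    # For each target, report which chosen source serves it best.
    assignment = {t: max(best_set, key=lambda s: affinity[s].get(t, 0.0)) for t in targets}
    return best_set, best_value, assignment

affinity = {
    "src_a": {"t1": 0.9, "t2": 0.2, "t3": 0.3},
    "src_b": {"t1": 0.3, "t2": 0.8, "t3": 0.4},
    "src_c": {"t1": 0.5, "t2": 0.5, "t3": 0.6},
}
one = select_sources(affinity, budget=1)
two = select_sources(affinity, budget=2)
```

The best single source (`src_c`, a generalist) is not part of the best pair, so adding sources greedily one at a time would miss the optimum; this is why the step is framed as subgraph selection under a budget.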

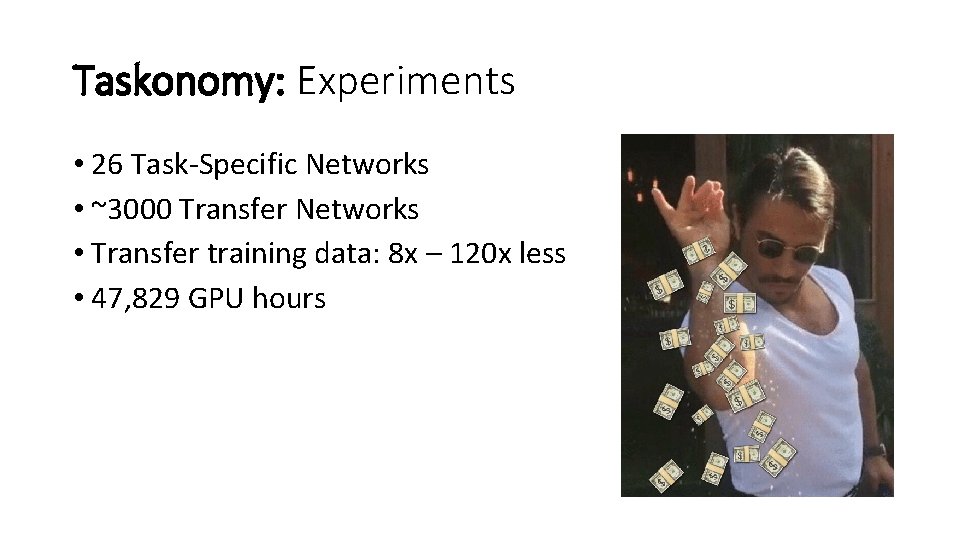

Taskonomy: Experiments
• 26 task-specific networks
• ~3000 transfer networks
• Transfer training data: 8×–120× less
• 47,829 GPU hours

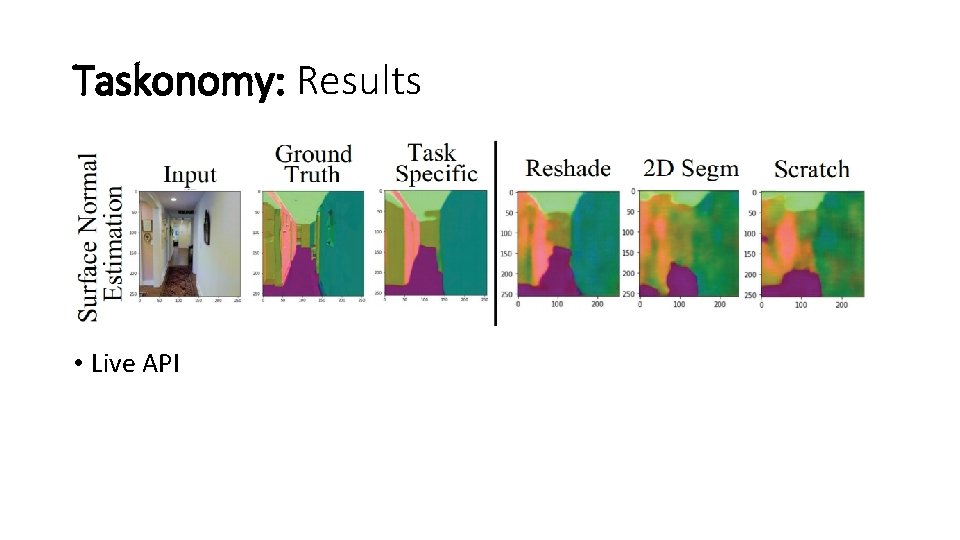

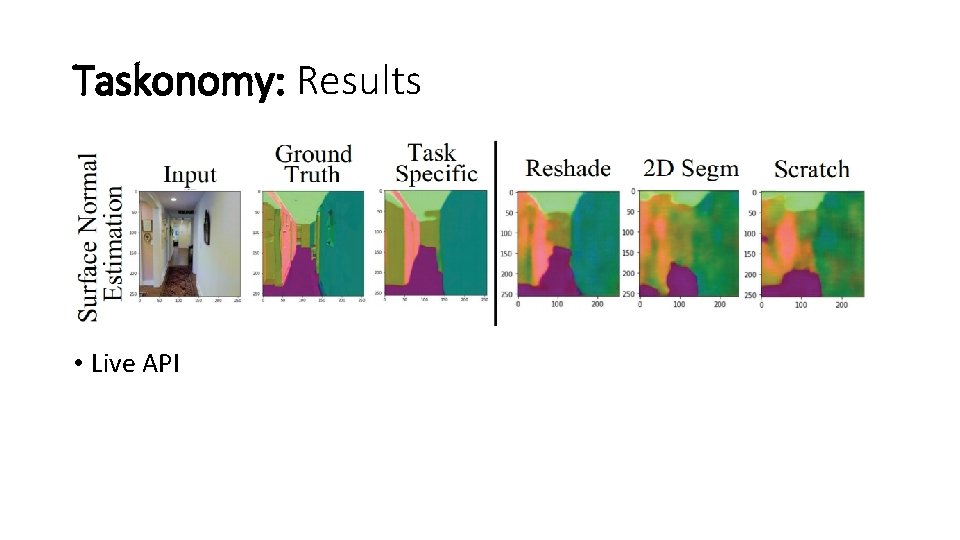

Taskonomy: Results • Live API

Break

Partial Transfer Learning with Selective Adversarial Networks
Zhangjie Cao, Mingsheng Long, Jianmin Wang, Michael I. Jordan
CVPR 2018

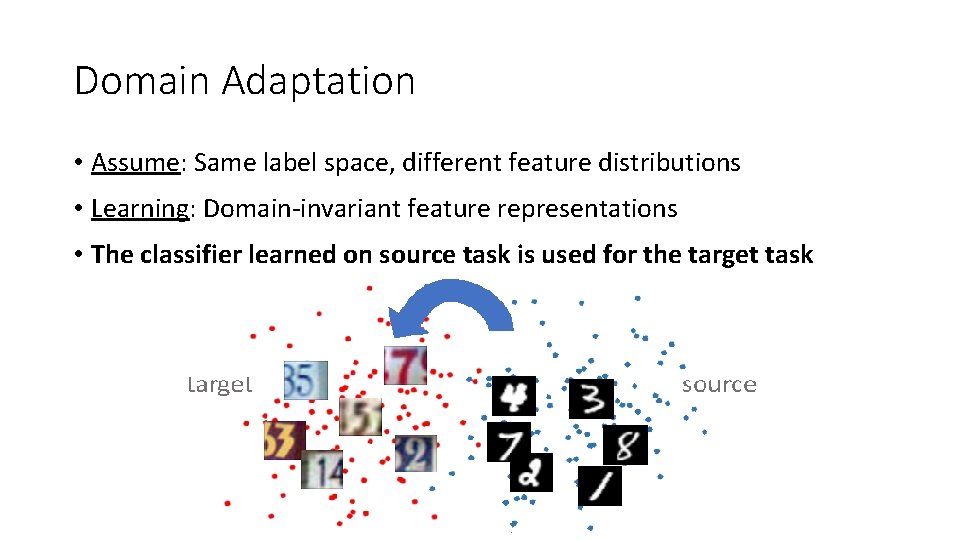

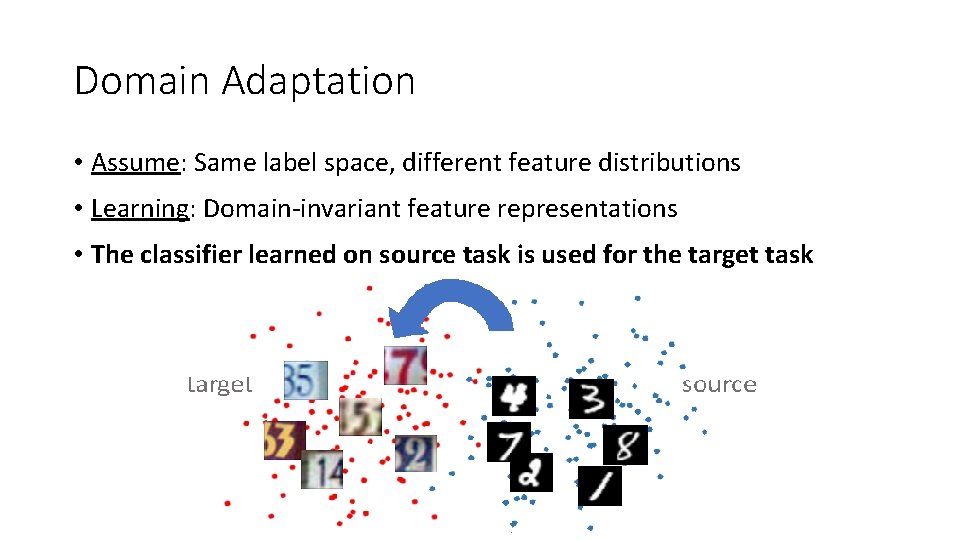

Domain Adaptation
• Assume: same label space, different feature distributions
• Learn: domain-invariant feature representations
• The classifier learned on the source task is then used for the target task

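Partial Transfer Learning
• The target label space is a subset of the source label space: some source classes (outlier classes) do not appear in the target domain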

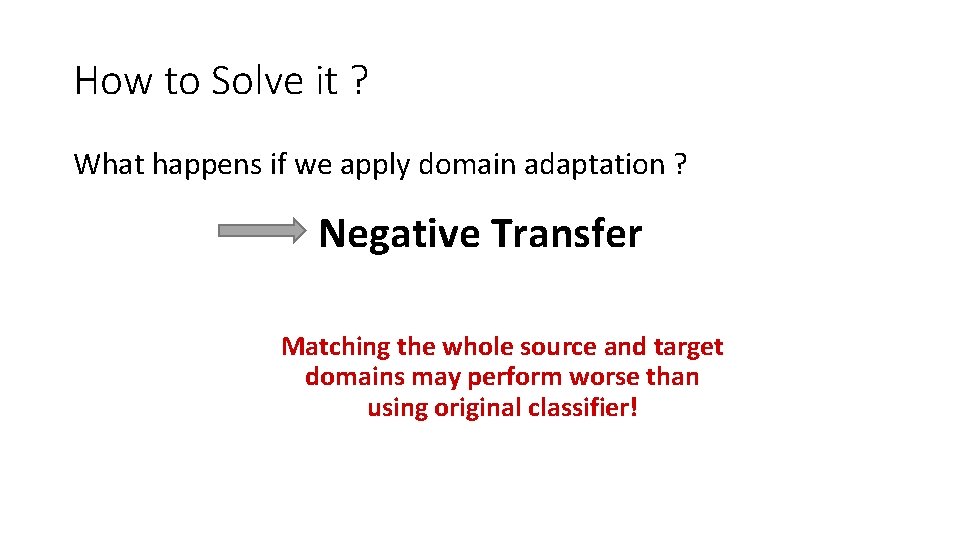

How to Solve It?
What happens if we apply standard domain adaptation?
→ Negative transfer: matching the whole source and target domains may perform worse than using the original classifier!

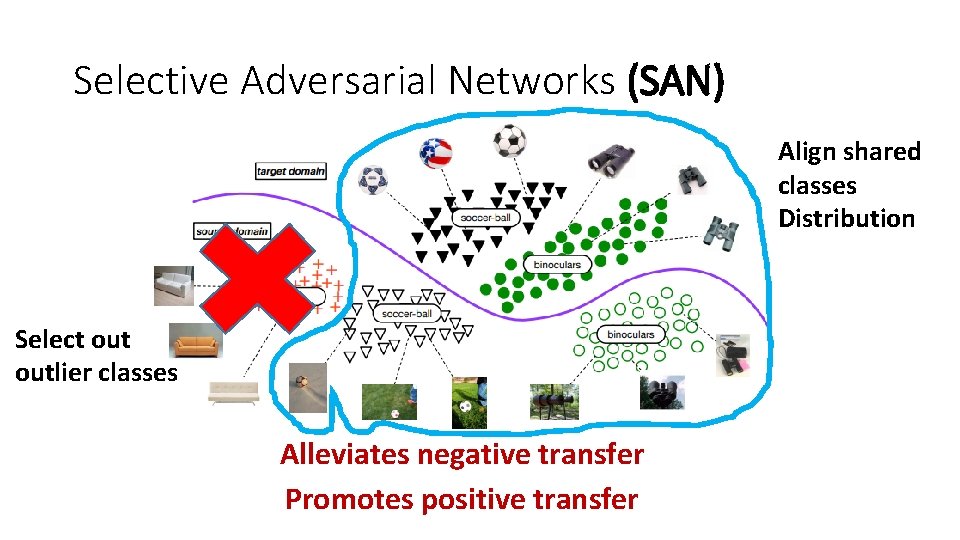

Main Goals
• Avoid negative transfer → select out the outlier classes
• Promote positive transfer → adapt the shared label space

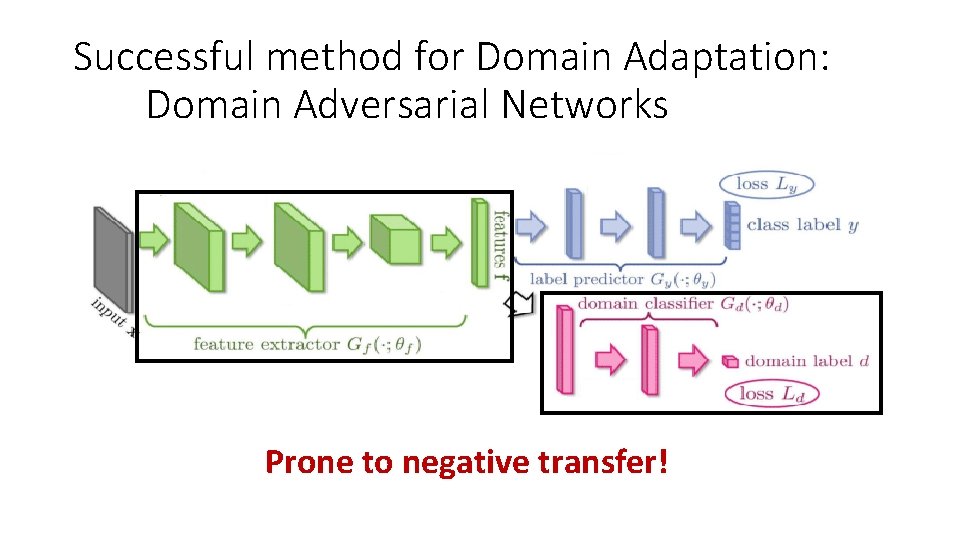

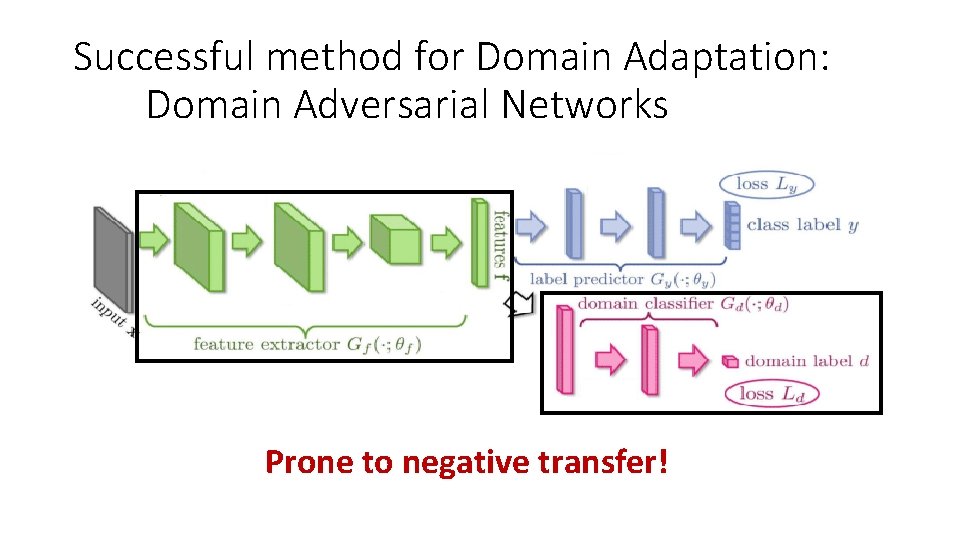

A successful method for domain adaptation: Domain Adversarial Networks
• But: prone to negative transfer!

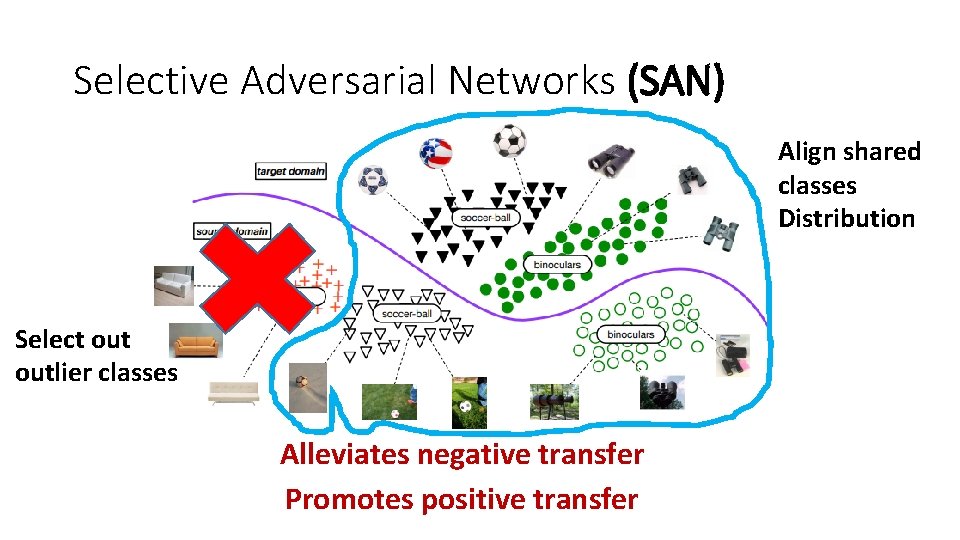

Selective Adversarial Networks (SAN)
• Select out the outlier classes → alleviates negative transfer
• Align the distributions of the shared classes → promotes positive transfer

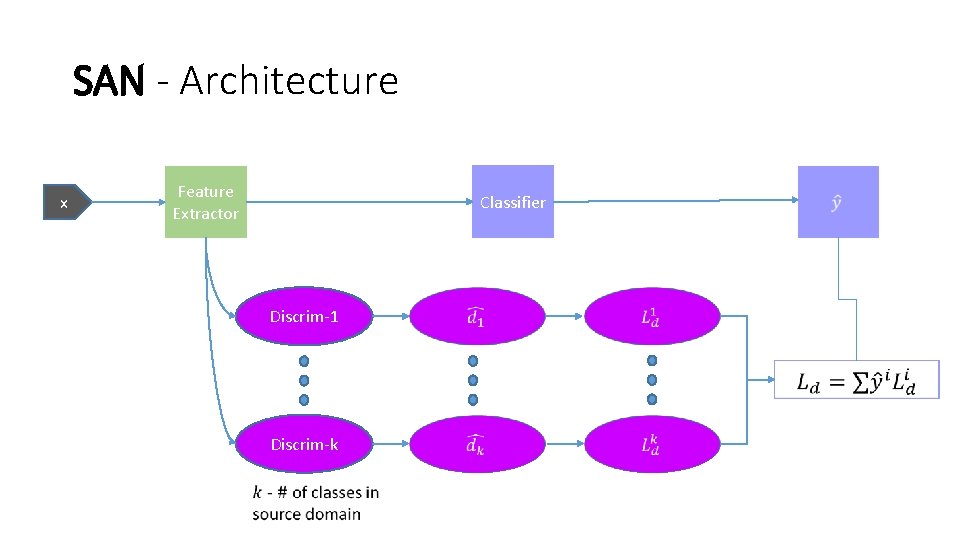

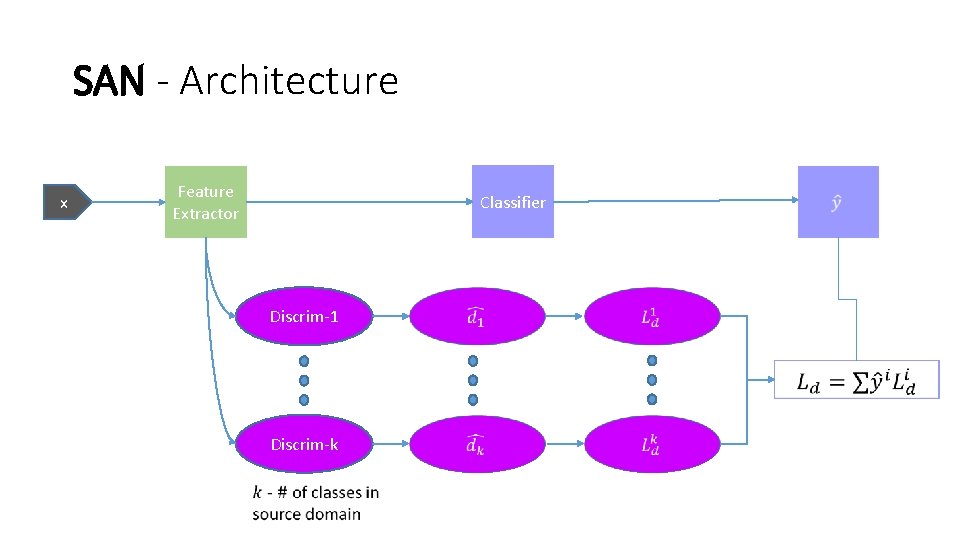

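SAN - Architecture
[Figure: input x → feature extractor → classifier, plus class-wise domain discriminators Discrim-1, …, Discrim-k (one per source class) on the extracted features]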

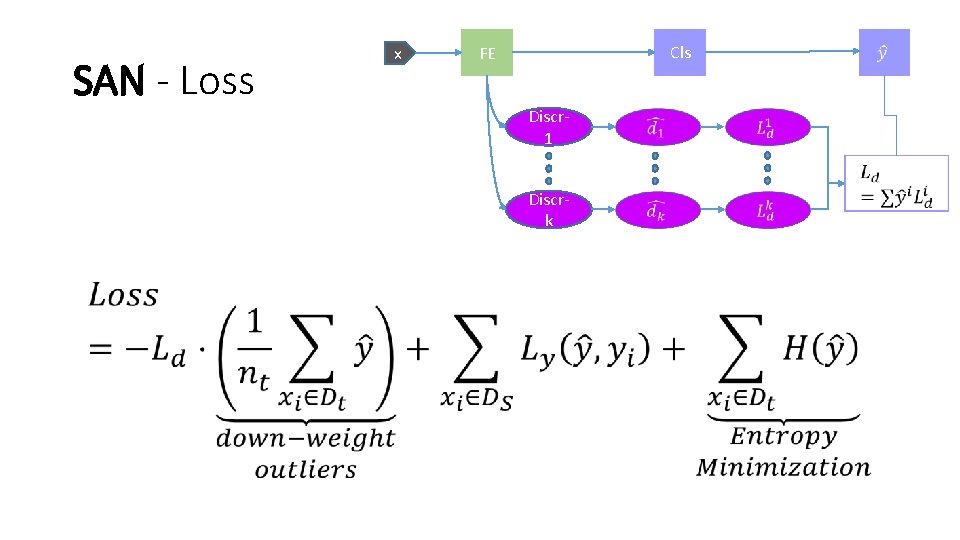

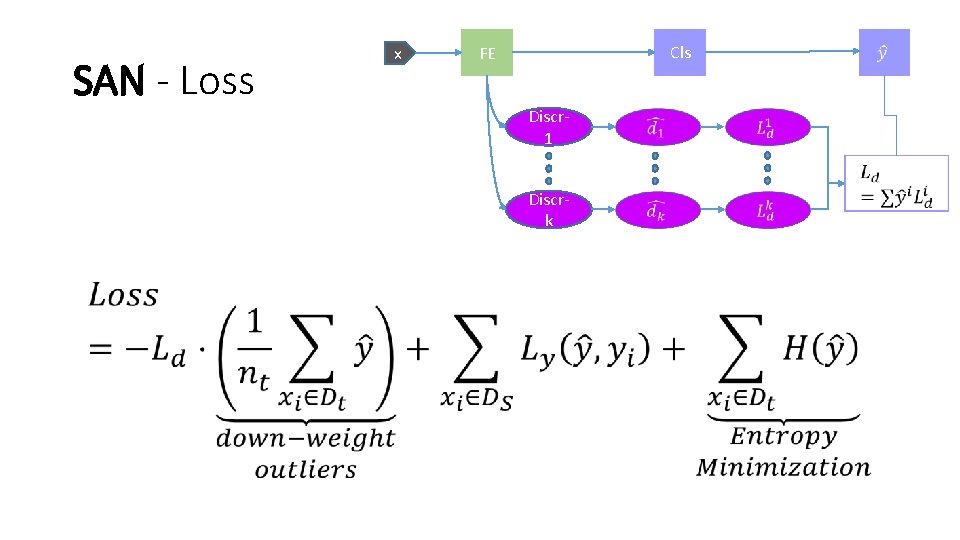

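SAN - Loss
[Equation: terms for input x involving the classifier (Cls), the feature extractor (FE), and the class-wise discriminators Discr-1, …, Discr-k]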
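The selective idea can be sketched numerically in plain Python. This is a simplified illustration under our own assumptions, not the paper's exact objective: each class-wise discriminator's domain loss is weighted by the classifier's probability for that class, class-level weights are taken as the average predicted probability over target samples (so source-only outlier classes get small weights), and an entropy term on target predictions is provided as a separate function. The domain-label convention (1 = source, 0 = target) and all numbers are hypothetical.

```python
import math

def bce(p, label, eps=1e-12):
    """Binary cross-entropy of a discriminator output p for a domain label (1 = source, 0 = target)."""
    return -(label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps))

def entropy(probs, eps=1e-12):
    """Prediction entropy; minimizing it on target data encourages confident predictions."""
    return -sum(p * math.log(p + eps) for p in probs)

def class_weights(target_probs):
    """Average predicted probability per class over target samples."""
    n = len(target_probs)
    return [sum(p[k] for p in target_probs) / n for k in range(len(target_probs[0]))]

def selective_domain_loss(probs, disc_outputs, domain, cw):
    """Domain loss for one sample: discriminator k counts in proportion to cw[k] * probs[k]."""
    return sum(cw[k] * probs[k] * bce(disc_outputs[k], domain) for k in range(len(probs)))

# Three source classes; the (hypothetical) target domain only contains classes 0 and 1.
target_probs = [[0.7, 0.25, 0.05], [0.2, 0.75, 0.05]]
cw = class_weights(target_probs)

# One target sample whose class-2 discriminator is badly wrong (it predicts "source").
probs, disc = [0.9, 0.05, 0.05], [0.5, 0.5, 0.99]
weighted = selective_domain_loss(probs, disc, domain=0, cw=cw)
unweighted = sum(bce(d, 0) for d in disc)
```

In training, the discriminators would minimize this domain loss while the feature extractor is updated to maximize it (for example through a gradient reversal layer), so that mainly the shared classes get aligned.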

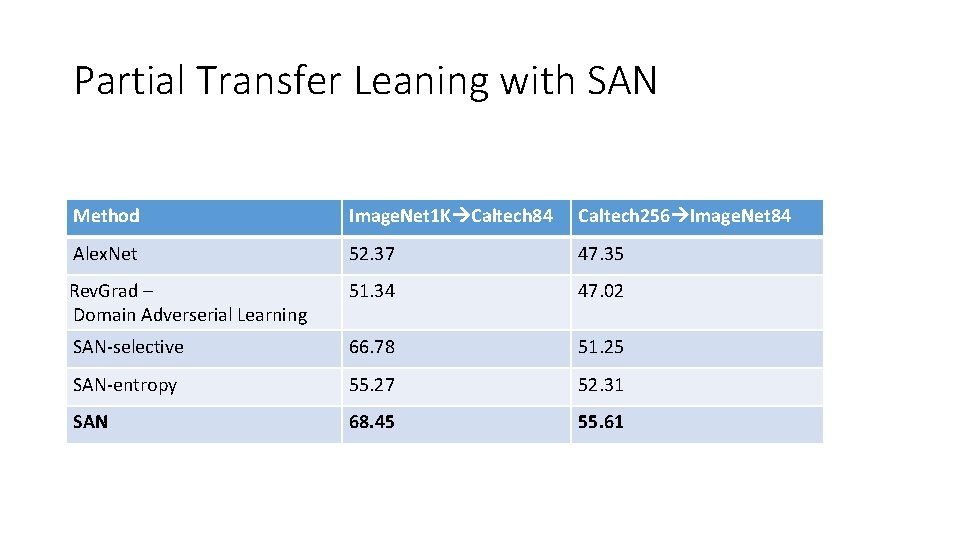

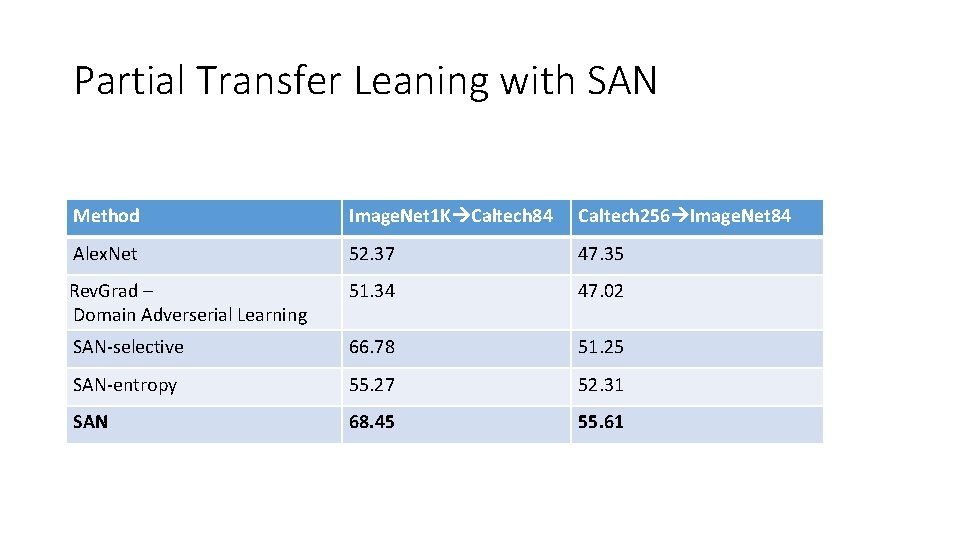

Partial Transfer Learning with SAN
Accuracy (%):

| Method | ImageNet-1K → Caltech-84 | Caltech-256 → ImageNet-84 |
|---|---|---|
| AlexNet | 52.37 | 47.35 |
| RevGrad (domain adversarial learning) | 51.34 | 47.02 |
| SAN-selective | 66.78 | 51.25 |
| SAN-entropy | 55.27 | 52.31 |
| SAN | 68.45 | 55.61 |

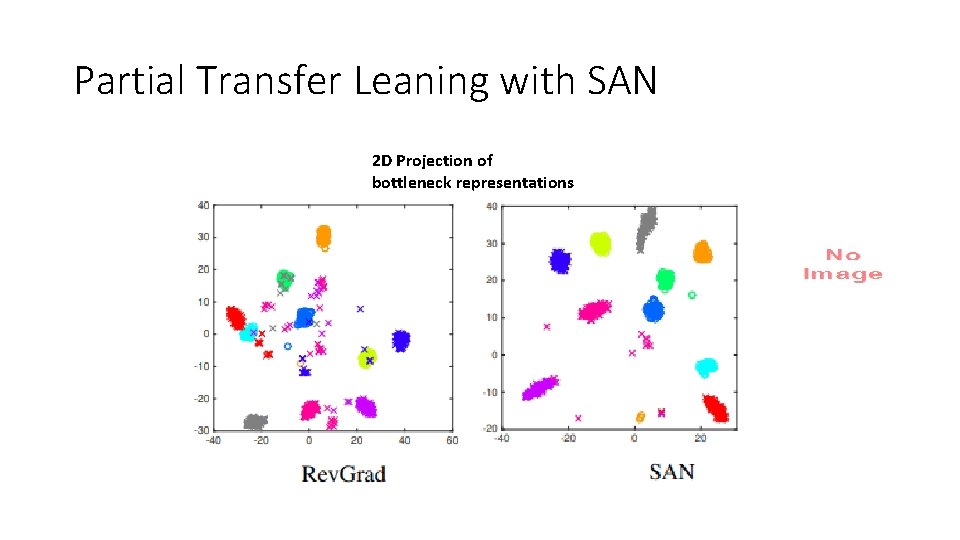

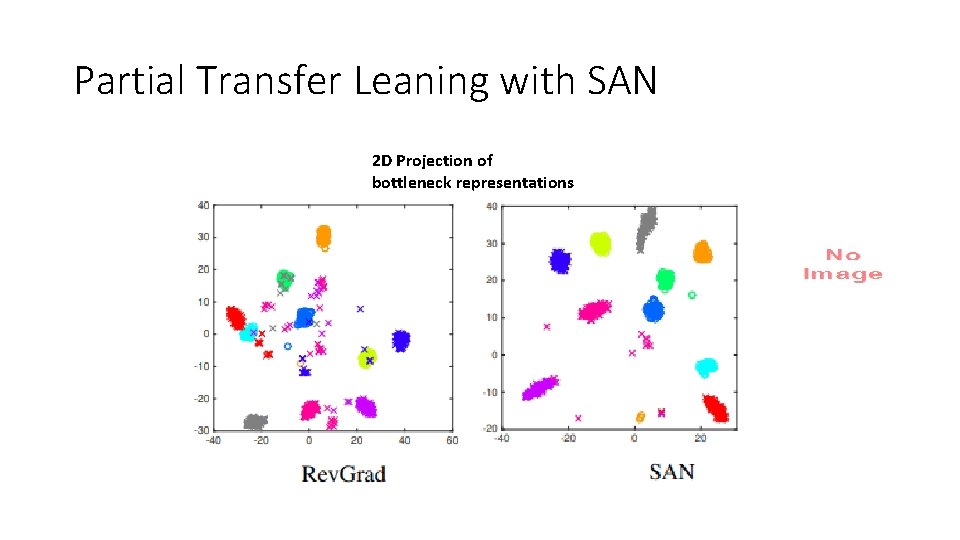

Partial Transfer Learning with SAN: 2D Projection of Bottleneck Representations
[Figure legend: source classes, target classes]

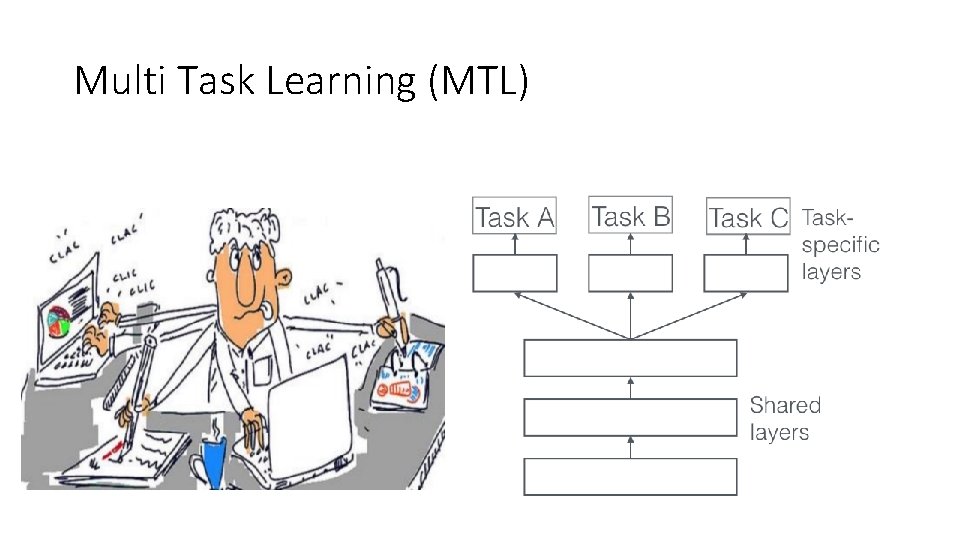

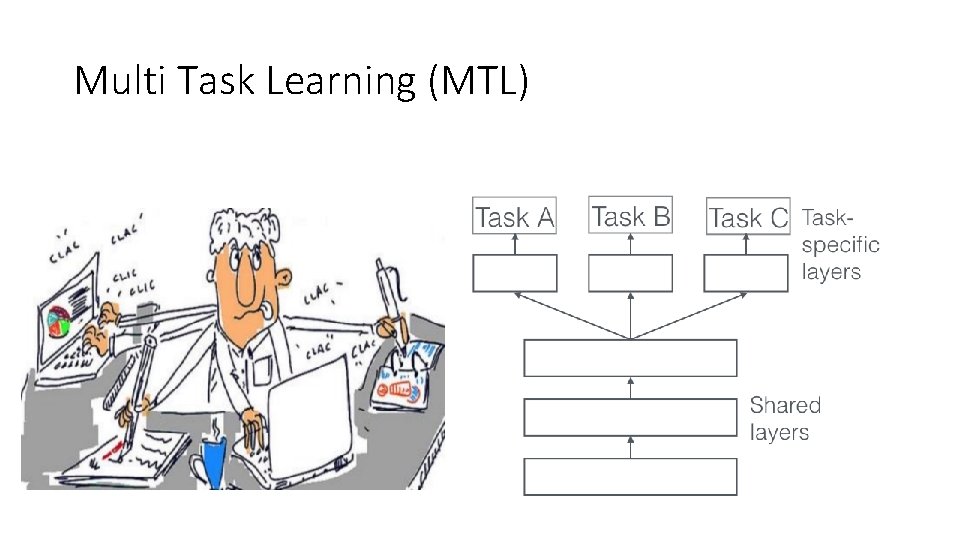

Multi-Task Learning (MTL)

Motivation – Inductive Bias

How to Solve MTL?

Multi-Task Learning as Multi-Objective Optimization
Ozan Sener, Vladlen Koltun
NIPS 2018

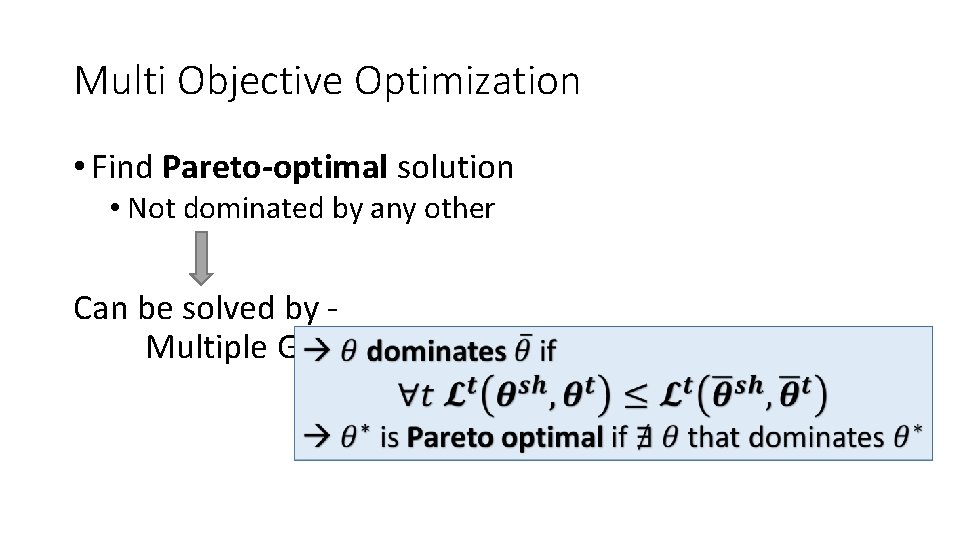

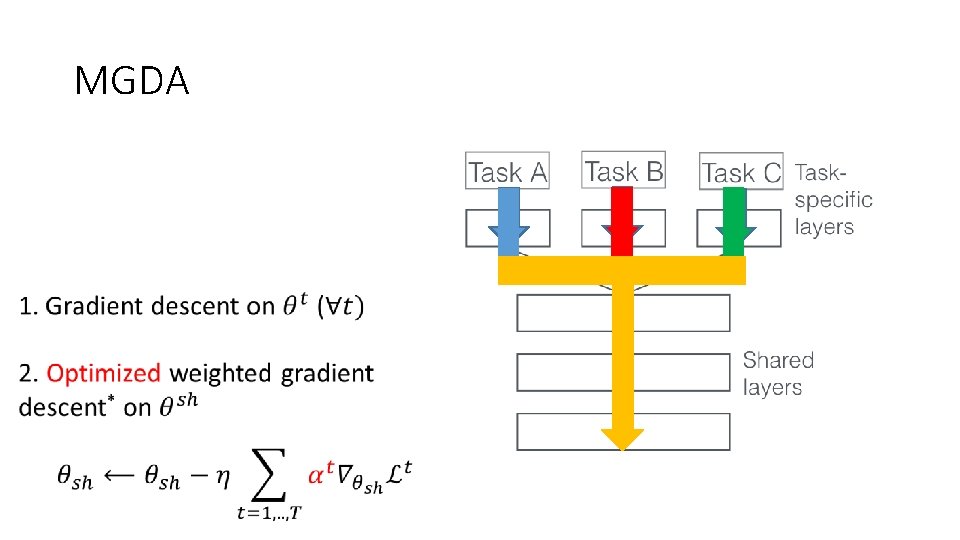

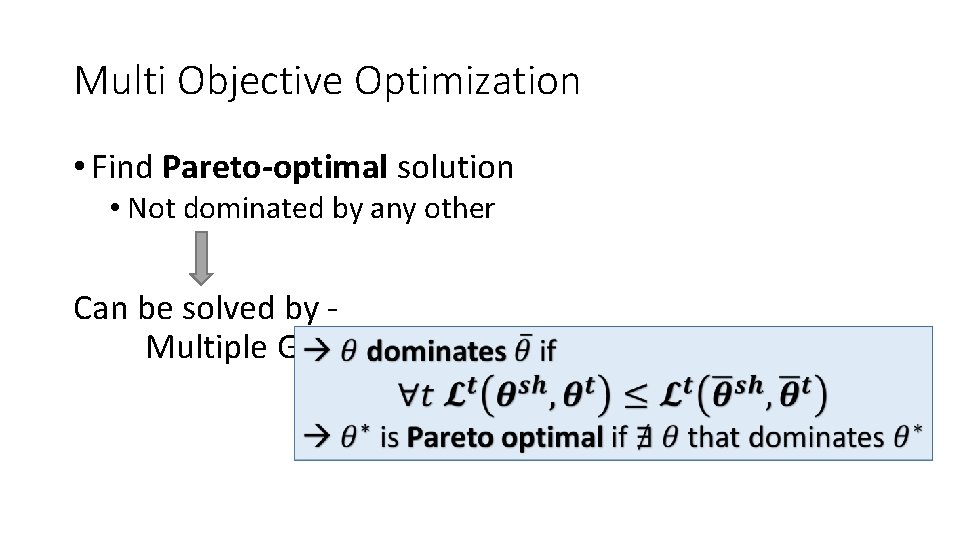

Multi-Objective Optimization
• Find a Pareto-optimal solution: one that is not dominated by any other solution
• Can be solved by the Multiple Gradient Descent Algorithm (MGDA)
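Pareto dominance is easy to state in code. The Python sketch below treats each candidate solution as a tuple of per-task losses (lower is better); the loss values are made up for illustration.

```python
def dominates(a, b):
    """True if a is no worse than b on every task and strictly better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical (loss on task 1, loss on task 2) for four trained models.
candidates = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
front = pareto_front(candidates)
```

None of the three surviving models beats another on both tasks; choosing among them is a trade-off, which is why MTL is framed here as multi-objective rather than single-objective optimization.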

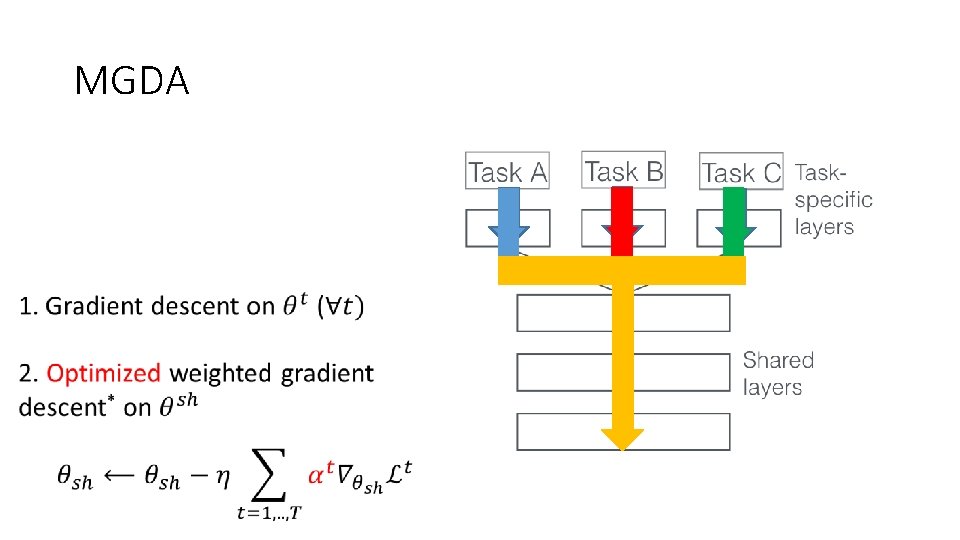

MGDA
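For two tasks, the core MGDA step has a closed form: find the minimum-norm point in the convex hull of the two task gradients (with respect to the shared parameters) and step against it. A pure-Python sketch with hypothetical gradient vectors; with more tasks, the same min-norm problem can be solved iteratively (for example with Frank-Wolfe), which is not shown here.

```python
def min_norm_two(g1, g2):
    """Return (alpha, d): alpha in [0, 1] minimizes ||alpha*g1 + (1 - alpha)*g2||^2, d is that vector."""
    diff = [a - b for a, b in zip(g1, g2)]
    denom = sum(x * x for x in diff)
    if denom == 0.0:
        alpha = 0.5  # identical gradients: every convex combination gives the same vector
    else:
        # Unconstrained minimizer of the quadratic in alpha, then clipped to [0, 1].
        alpha = sum((b - a) * b for a, b in zip(g1, g2)) / denom
        alpha = min(max(alpha, 0.0), 1.0)
    d = [alpha * a + (1 - alpha) * b for a, b in zip(g1, g2)]
    return alpha, d

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

# Orthogonal task gradients: stepping along -d decreases both losses (d . g_i > 0).
alpha, d = min_norm_two([1.0, 0.0], [0.0, 1.0])

# Directly conflicting gradients: the min-norm point is zero (Pareto-stationary).
alpha_c, d_c = min_norm_two([1.0, 0.0], [-1.0, 0.0])
```

When the min-norm vector is zero, no common descent direction exists and the current shared parameters are Pareto-stationary; otherwise the update theta ← theta − lr · d improves every task to first order.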

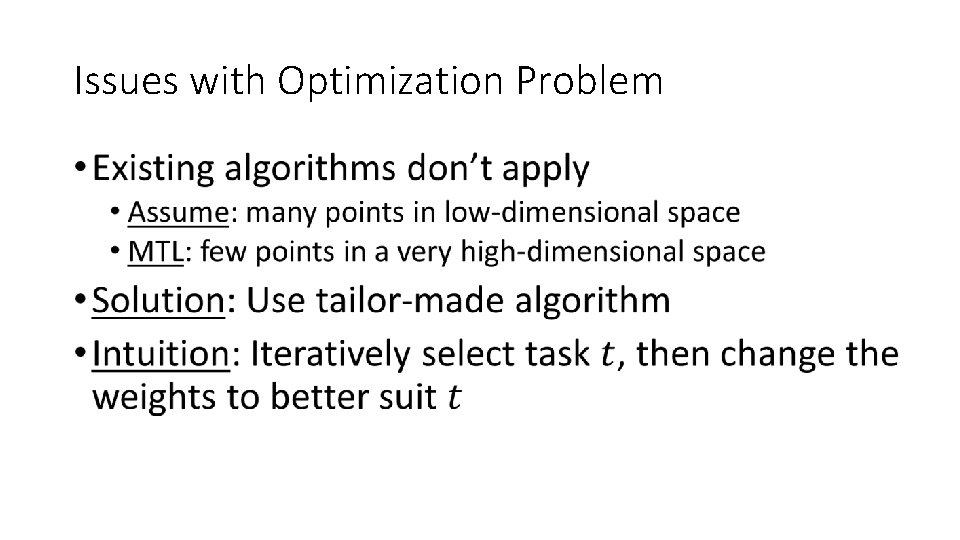

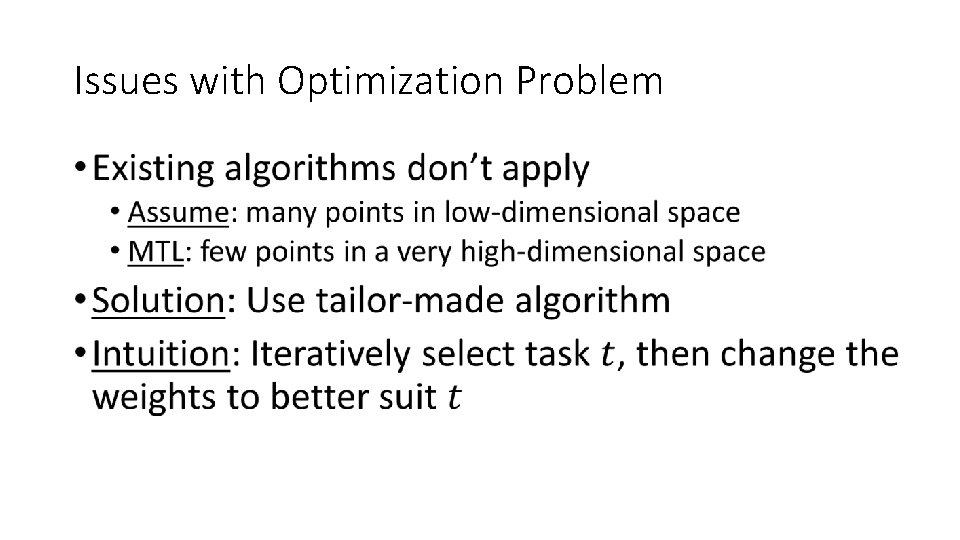

Issues with Optimization Problem

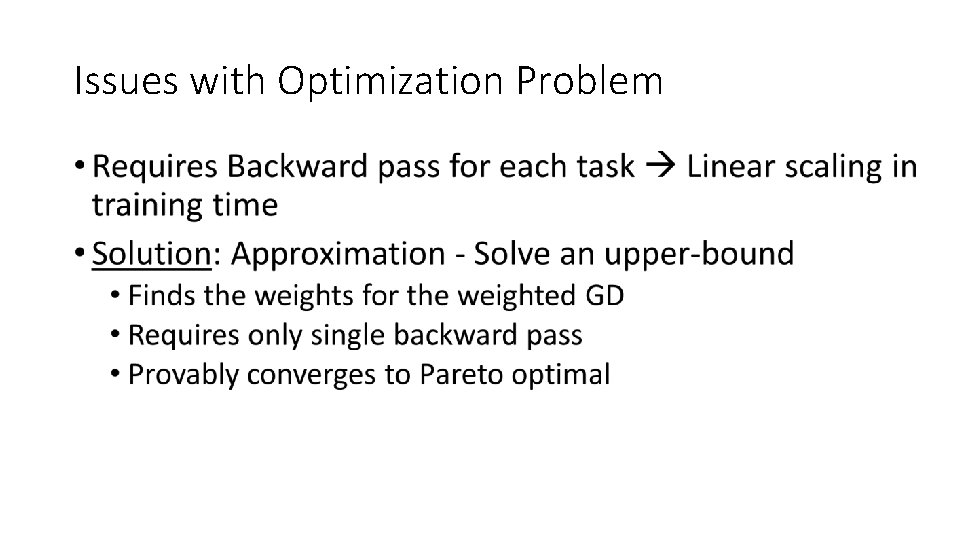

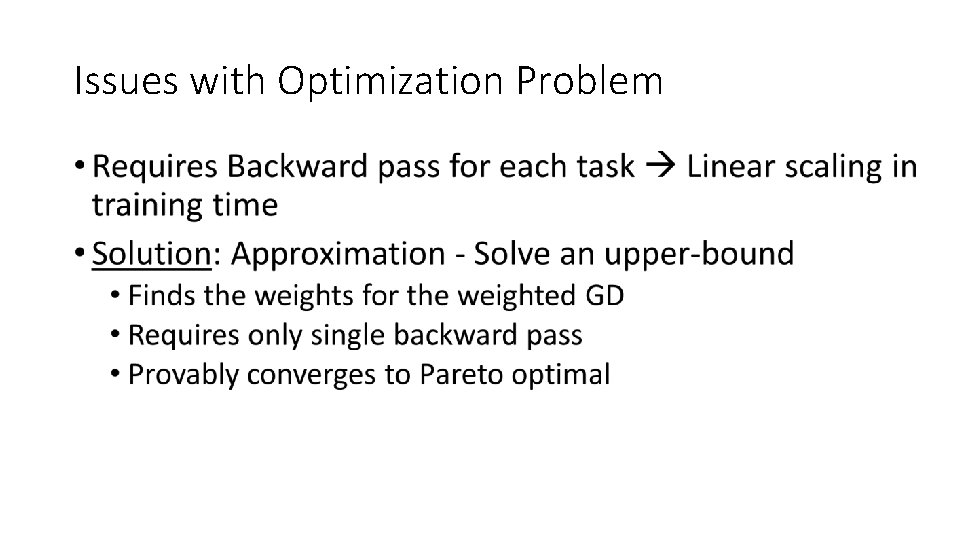

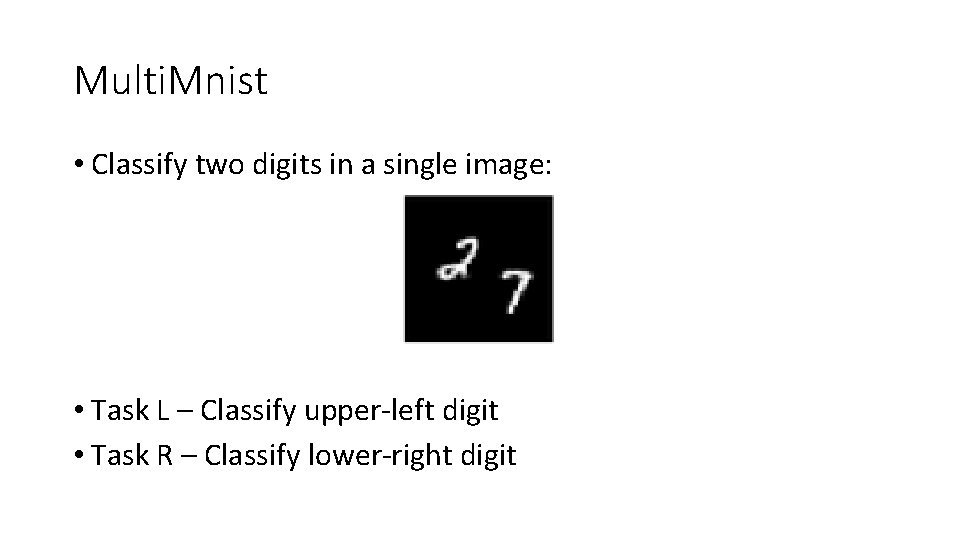

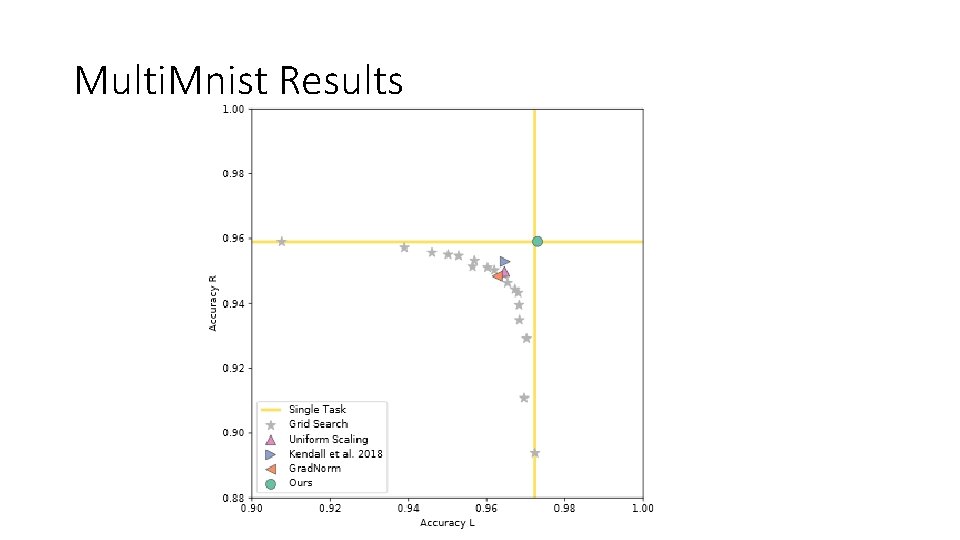

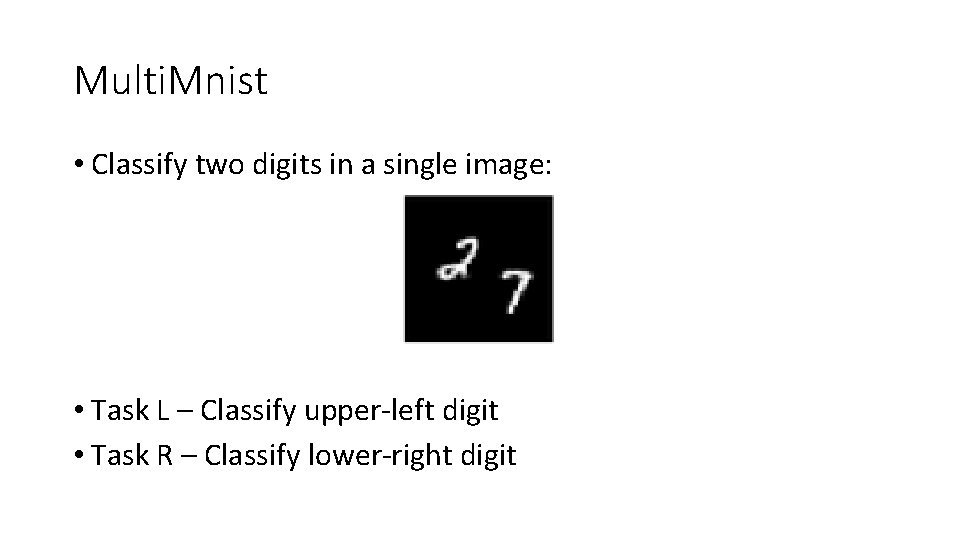

MultiMNIST
• Classify two digits in a single image:
  • Task L: classify the upper-left digit
  • Task R: classify the lower-right digit
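A MultiMNIST-style sample can be sketched in Python as one canvas holding two overlapping digits, paired with two labels. The tiny "digit" arrays, the canvas size, and the corner placement are illustrative assumptions; the exact sizes and offsets used in the paper are not given on the slide.

```python
def make_multimnist_sample(left_img, left_label, right_img, right_label, canvas=6):
    """Place one digit at the upper-left and one at the lower-right of a blank canvas."""
    out = [[0] * canvas for _ in range(canvas)]
    # Upper-left digit, anchored at the top-left corner.
    for r, row in enumerate(left_img):
        for c, v in enumerate(row):
            out[r][c] = max(out[r][c], v)
    # Lower-right digit, anchored at the bottom-right corner; overlaps keep the brighter pixel.
    r0, c0 = canvas - len(right_img), canvas - len(right_img[0])
    for r, row in enumerate(right_img):
        for c, v in enumerate(row):
            out[r0 + r][c0 + c] = max(out[r0 + r][c0 + c], v)
    # One shared input, two targets: task L and task R.
    return out, {"L": left_label, "R": right_label}

# Hypothetical 4x4 "digits" (pixel value 1 for the left digit, 2 for the right one).
left = [[1] * 4 for _ in range(4)]
right = [[2] * 4 for _ in range(4)]
image, labels = make_multimnist_sample(left, 3, right, 7)
```

Both tasks share the same input, so a shared encoder with one classification head per task is the natural MTL setup for this data.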

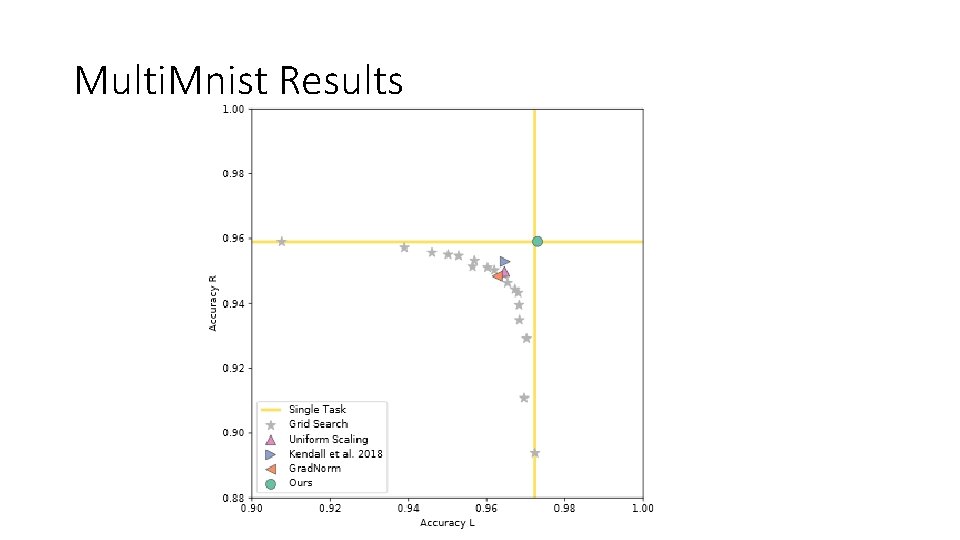

MultiMNIST Results

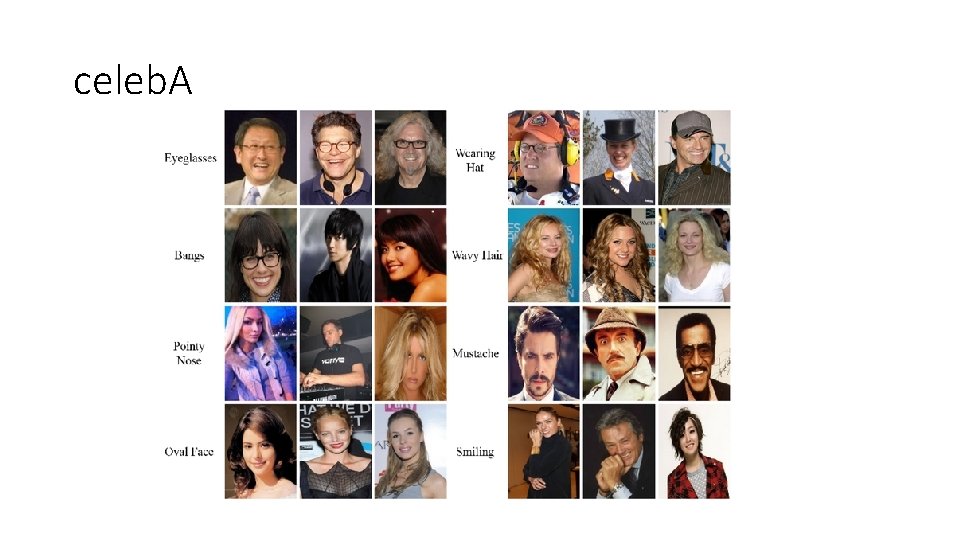

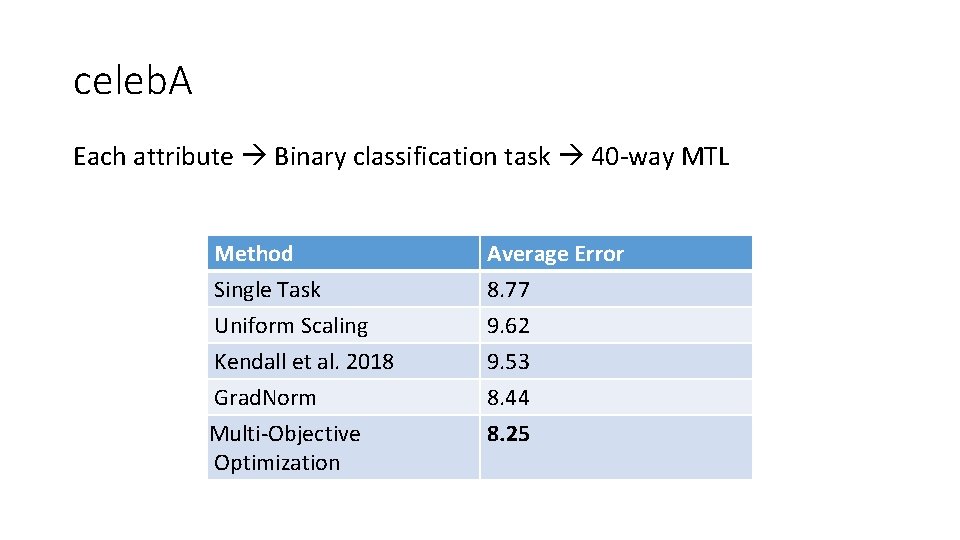

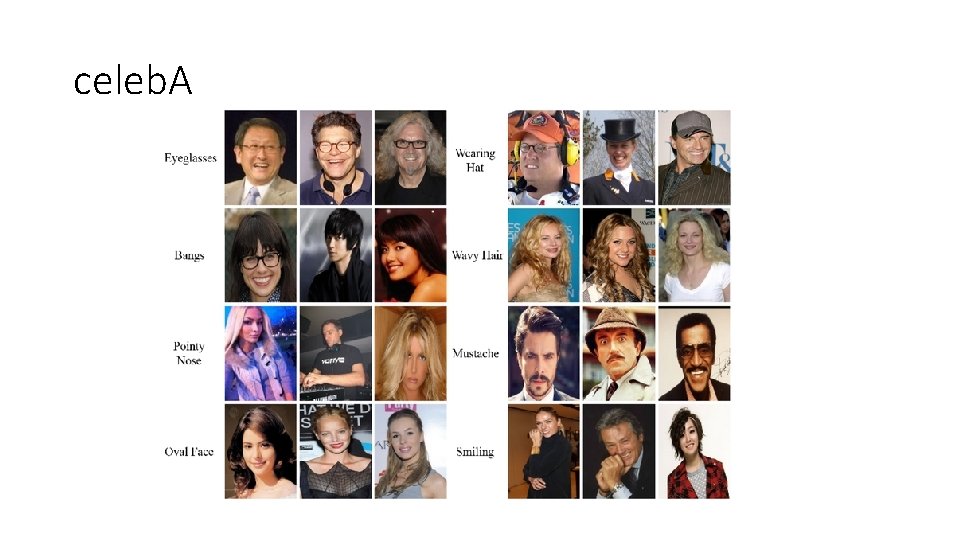

CelebA

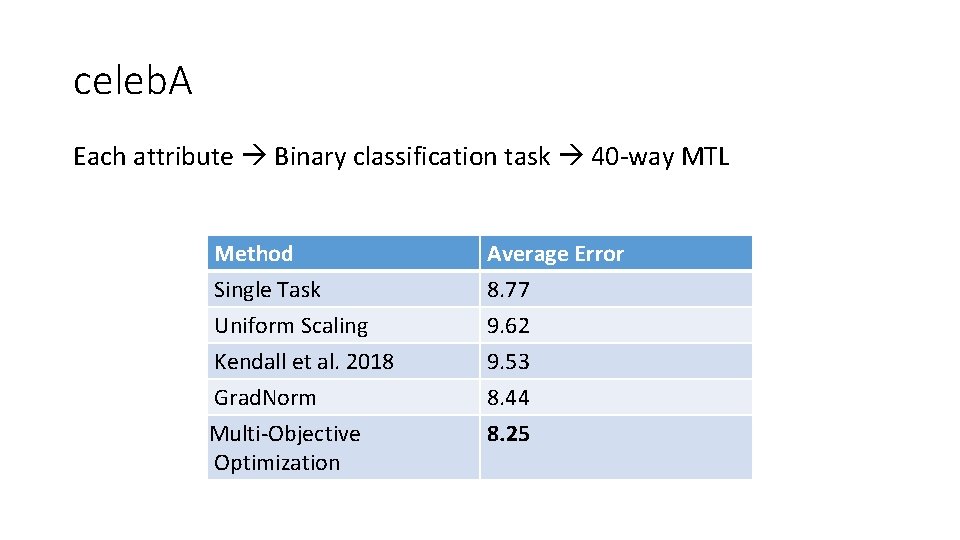

CelebA
• Each attribute is a binary classification task → 40-way MTL

| Method | Average error (%) |
|---|---|
| Single task | 8.77 |
| Uniform scaling | 9.62 |
| Kendall et al. 2018 | 9.53 |
| GradNorm | 8.44 |
| Multi-objective optimization | 8.25 |
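The two quantities behind this comparison can be sketched in Python: a uniform-scaling objective that combines per-attribute binary cross-entropies with equal weights, and an average error that averages per-attribute error rates. We assume the reported average error is the per-attribute error (%) averaged over attributes; the toy example uses 4 attributes instead of 40 and hypothetical predictions.

```python
import math

def bce(p, y, eps=1e-12):
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))

def uniform_scaling_loss(probs, labels):
    """Equal-weight (1/T) combination of the T per-attribute binary cross-entropies for one sample."""
    return sum(bce(p, y) for p, y in zip(probs, labels)) / len(labels)

def average_error(pred_probs, labels):
    """Per-attribute error rate (%) at threshold 0.5, averaged over attributes."""
    n_samples, n_tasks = len(labels), len(labels[0])
    errors = []
    for t in range(n_tasks):
        wrong = sum((pred_probs[i][t] > 0.5) != bool(labels[i][t]) for i in range(n_samples))
        errors.append(100.0 * wrong / n_samples)
    return sum(errors) / n_tasks

# Hypothetical predictions for 2 images x 4 binary attributes.
labels = [[1, 0, 1, 0], [0, 0, 1, 1]]
preds = [[0.9, 0.2, 0.8, 0.6], [0.1, 0.3, 0.4, 0.7]]
err = average_error(preds, labels)
loss = uniform_scaling_loss([0.5, 0.5], [1, 0])
```

Uniform scaling fixes all task weights in advance; the other methods listed (Kendall et al. 2018, GradNorm, multi-objective optimization) instead adapt how tasks are traded off during training.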

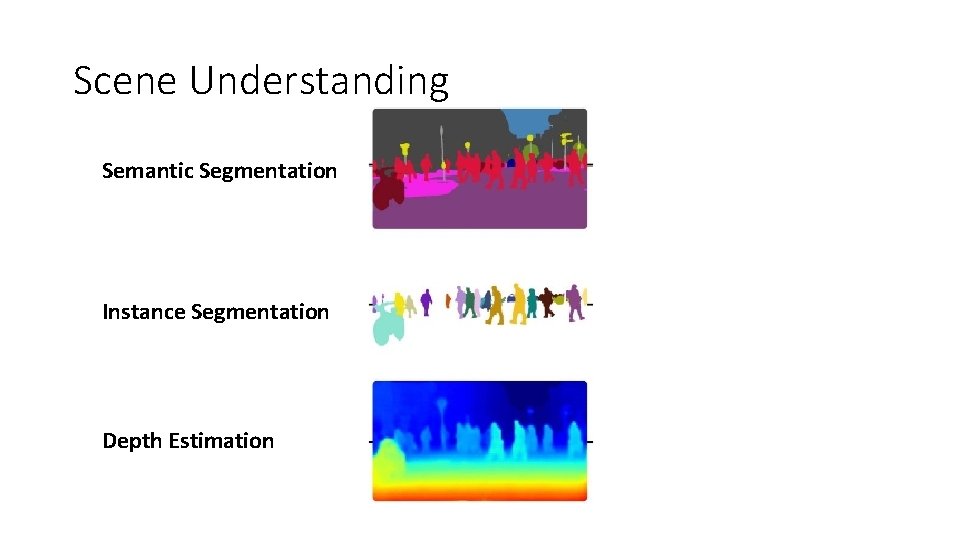

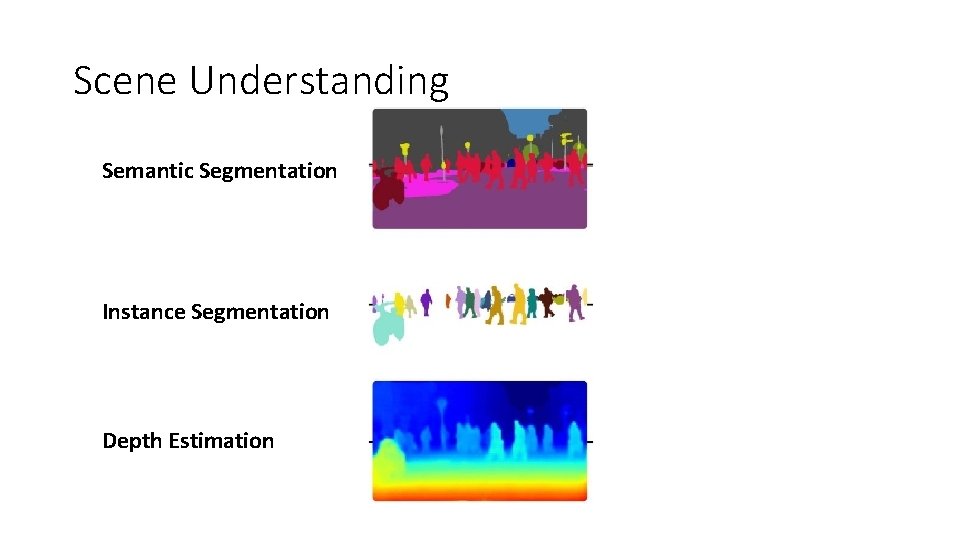

Scene Understanding
• Semantic segmentation
• Instance segmentation
• Depth estimation

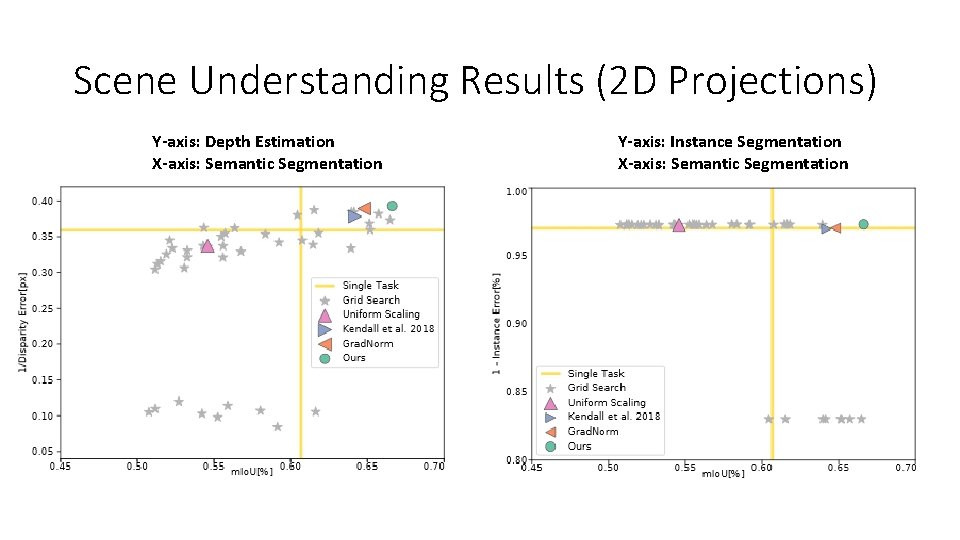

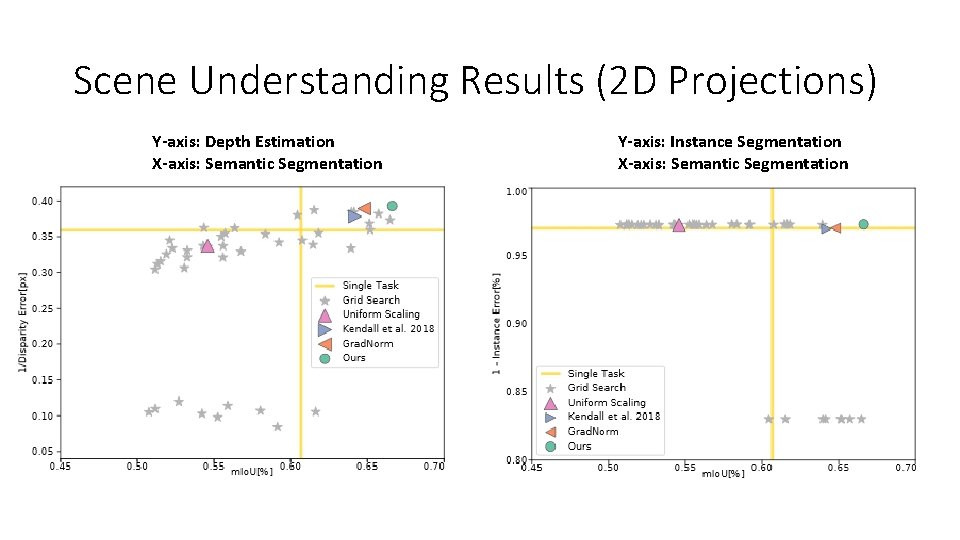

Scene Understanding Results (2D Projections)
[Figure panels: depth estimation (y-axis) vs. semantic segmentation (x-axis); instance segmentation (y-axis) vs. semantic segmentation (x-axis)]

Questions?