Towards Heterogeneous Transfer Learning

Qiang Yang
Hong Kong University of Science and Technology, Hong Kong, China
http://www.cse.ust.hk/~qyang

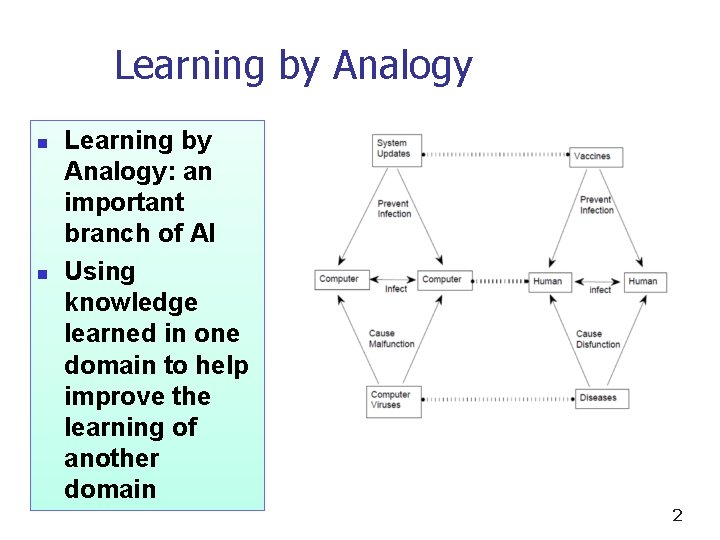

Learning by Analogy

- Learning by Analogy: an important branch of AI
- Using knowledge learned in one domain to help improve the learning of another domain

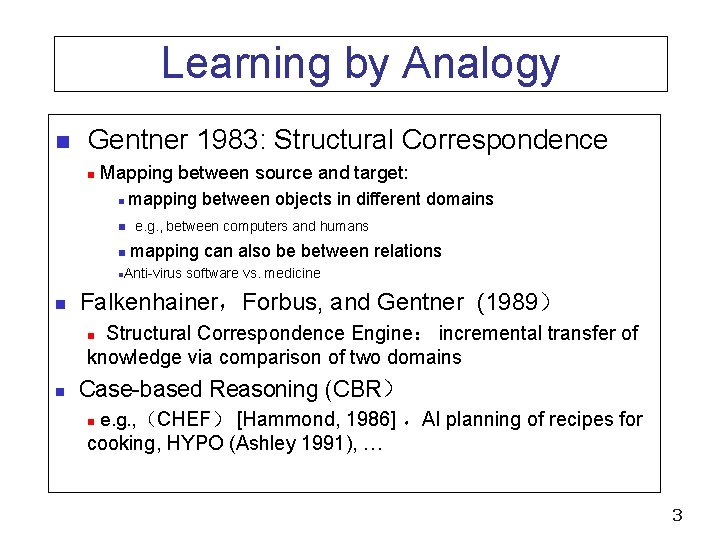

Learning by Analogy

- Gentner 1983: Structural Correspondence
  - Mapping between source and target: mapping between objects in different domains, e.g., between computers and humans
  - Mapping can also be between relations, e.g., anti-virus software vs. medicine
- Falkenhainer, Forbus, and Gentner (1989): Structural Correspondence Engine: incremental transfer of knowledge via comparison of two domains
- Case-based Reasoning (CBR), e.g., CHEF [Hammond, 1986] (AI planning of recipes for cooking), HYPO (Ashley 1991), …

Challenges with LBA

- Access, Matching and Eval:
  - ACCESS: find similar case candidates
    - How to tell similar cases?
    - Meaning of 'similarity'?
  - MATCHING: between source and target domains
    - Many possible mappings?
    - To map objects, or relations?
    - How to decide on the objective functions?
  - EVALUATION: test transferred knowledge
    - How to create objective hypothesis for target domain?
    - How to ?
- Slide annotations: decided via prior knowledge; mapping fixed
- Our problem: How to learn the similarity automatically?

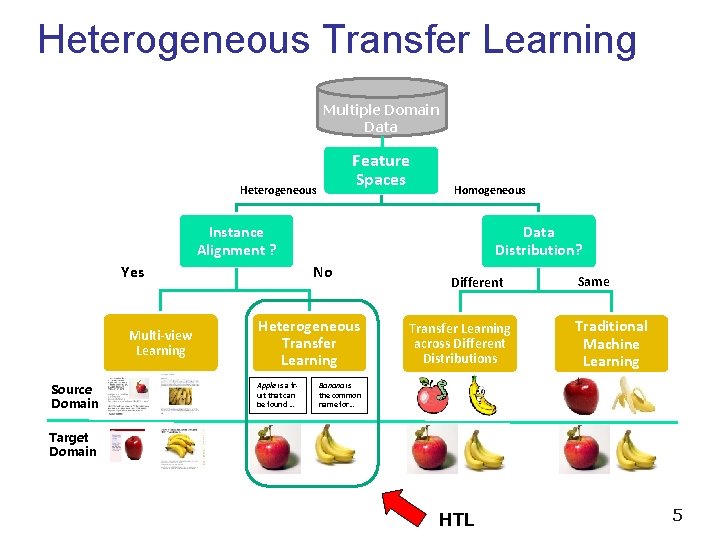

Heterogeneous Transfer Learning (HTL)

- Multiple domain data: are the feature spaces heterogeneous or homogeneous?
- Heterogeneous feature spaces: instance alignment?
  - Yes: Multi-view Learning
  - No: Heterogeneous Transfer Learning
- Homogeneous feature spaces: data distribution?
  - Different: Transfer Learning across Different Distributions
  - Same: Traditional Machine Learning
- Example source and target domain data: "Apple is a fruit that can be found …", "Banana is the common name for…"

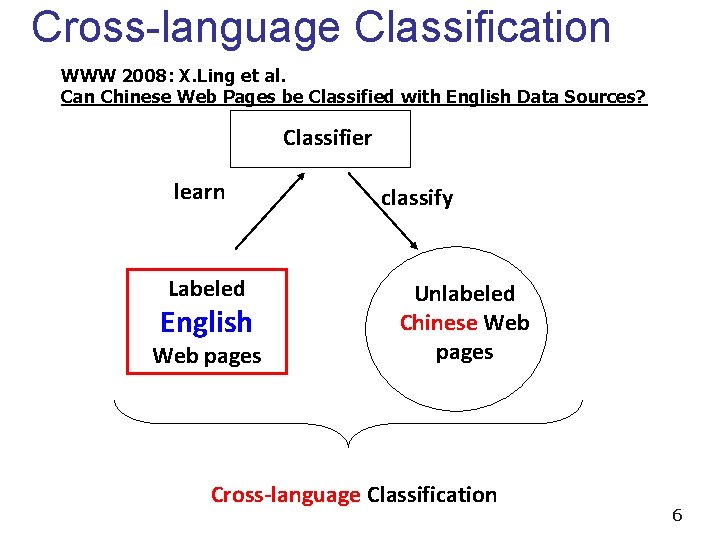

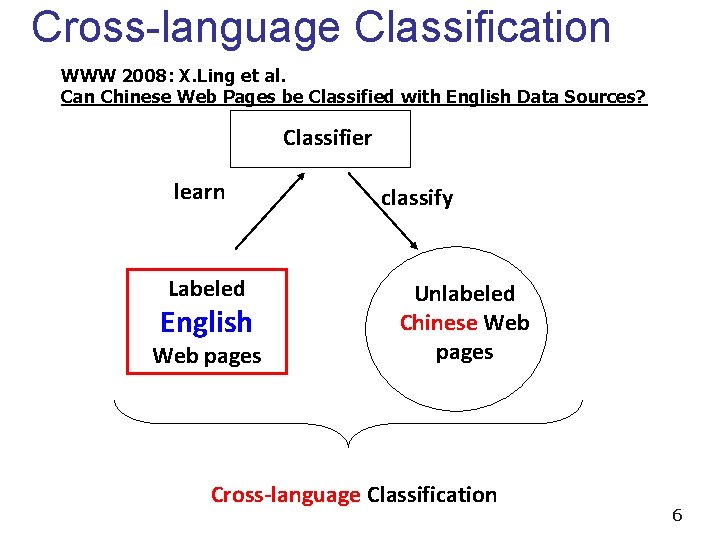

Cross-language Classification

- WWW 2008: X. Ling et al. Can Chinese Web Pages be Classified with English Data Sources?
- Learn a classifier from labeled English Web pages
- Classify unlabeled Chinese Web pages


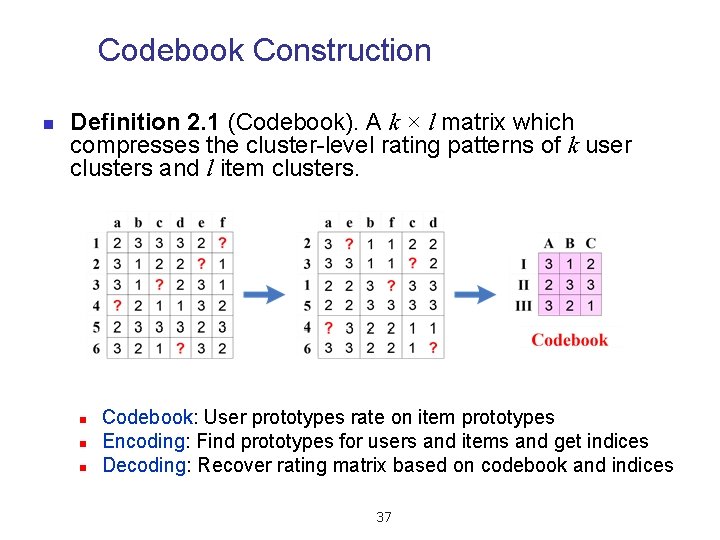

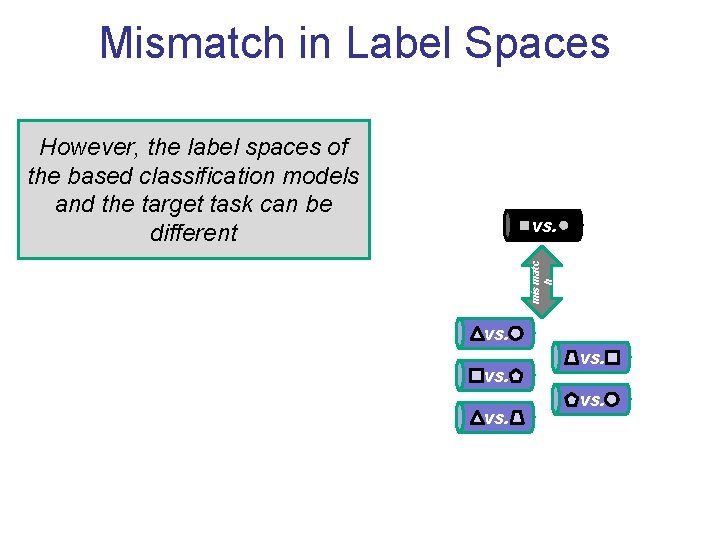

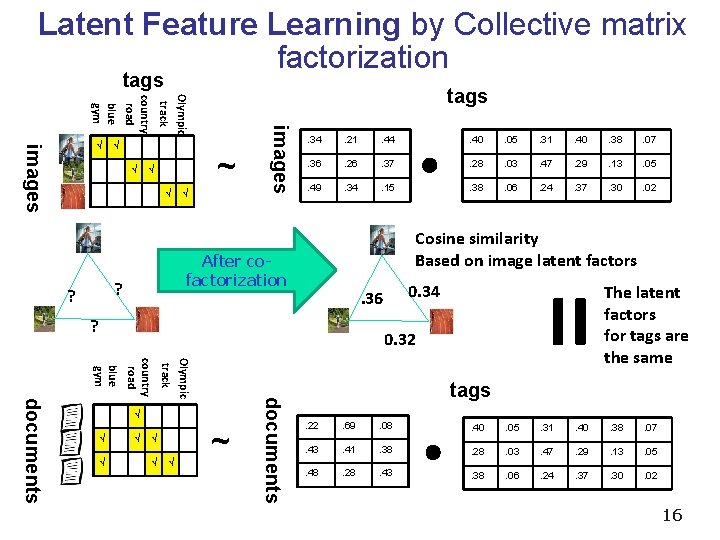

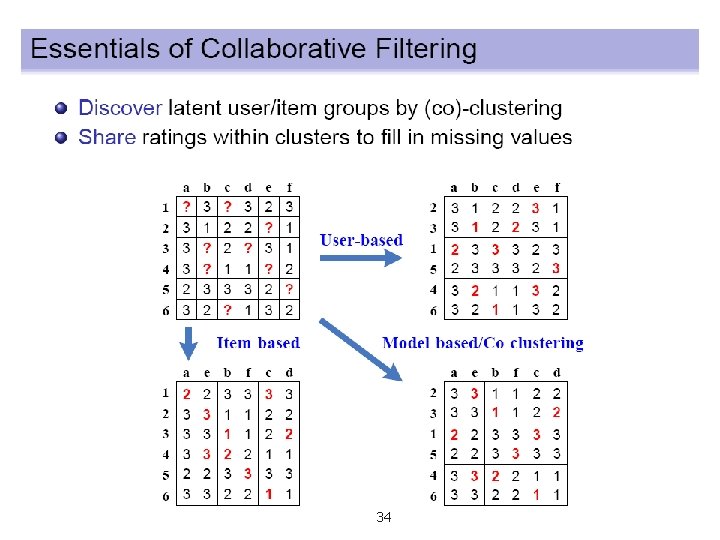

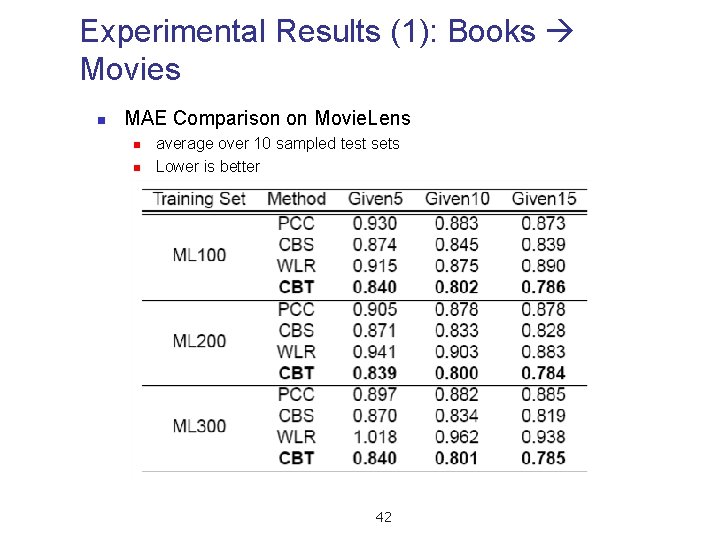

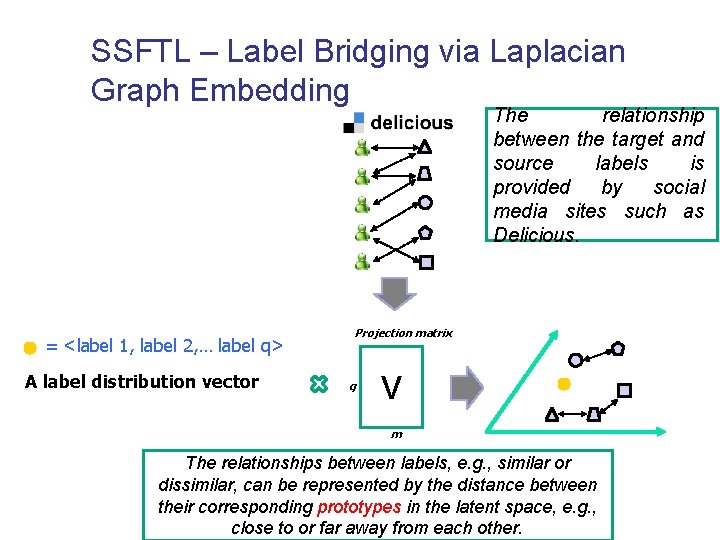

Heterogeneous Transfer Learning: with a Dictionary

[Bel, et al. ECDL 2003] [Zhu and Wang, ACL 2006] [Gliozzo and Strapparava ACL 2006]

- Labeled documents in English (abundant)
- Labeled documents in Chinese (scarce)
- DICTIONARY links the two languages, with two problems: translation error and topic drift
- TASK: Classifying documents in Chinese


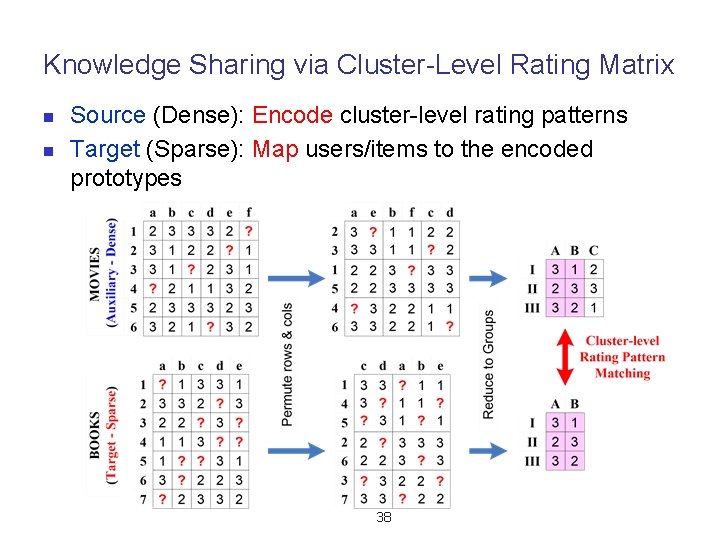

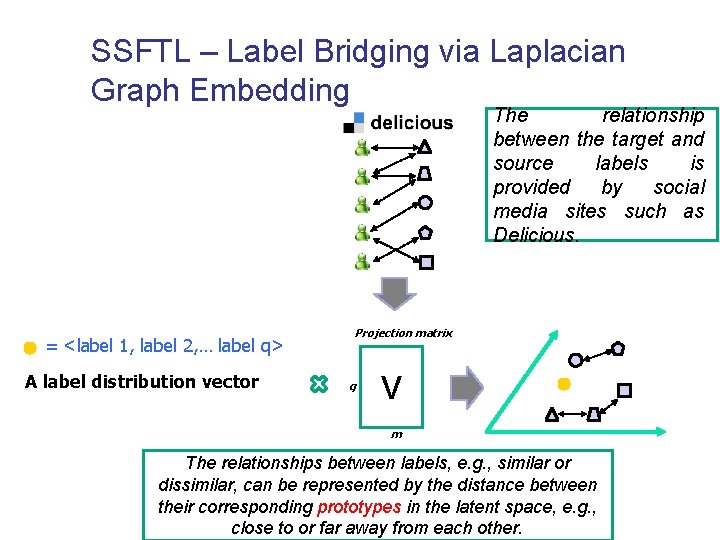

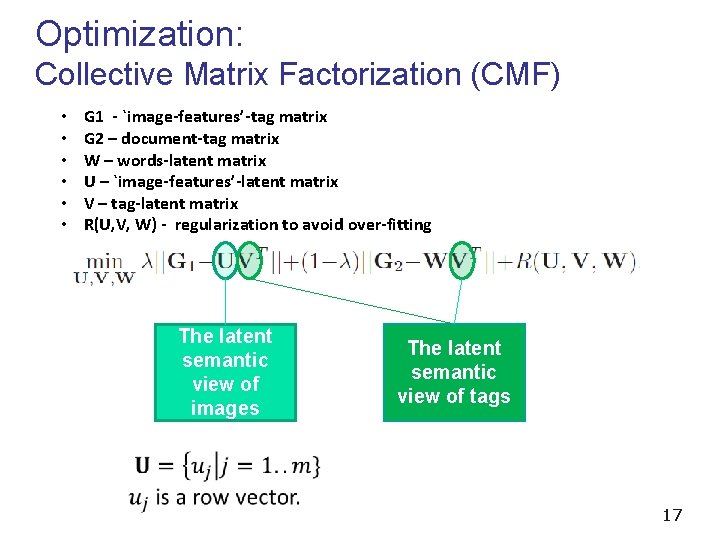

Information Bottleneck

- Improvements: over 15% [Ling, Xue, Yang et al. WWW 2008]
- Domain Adaptation

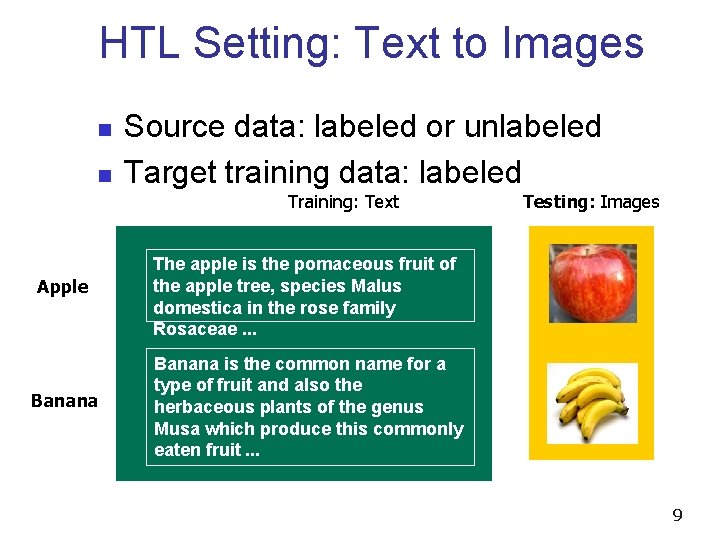

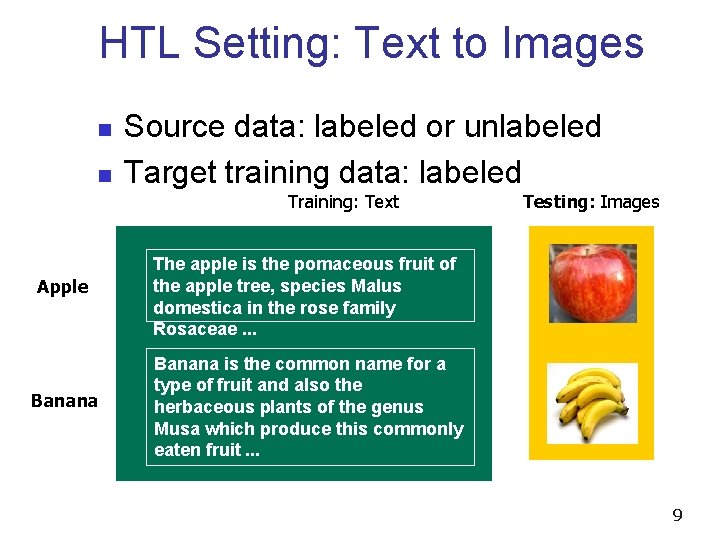

HTL Setting: Text to Images

- Source data: labeled or unlabeled
- Target training data: labeled
- Training: Text
  - Apple: "The apple is the pomaceous fruit of the apple tree, species Malus domestica in the rose family Rosaceae..."
  - Banana: "Banana is the common name for a type of fruit and also the herbaceous plants of the genus Musa which produce this commonly eaten fruit..."
- Testing: Images

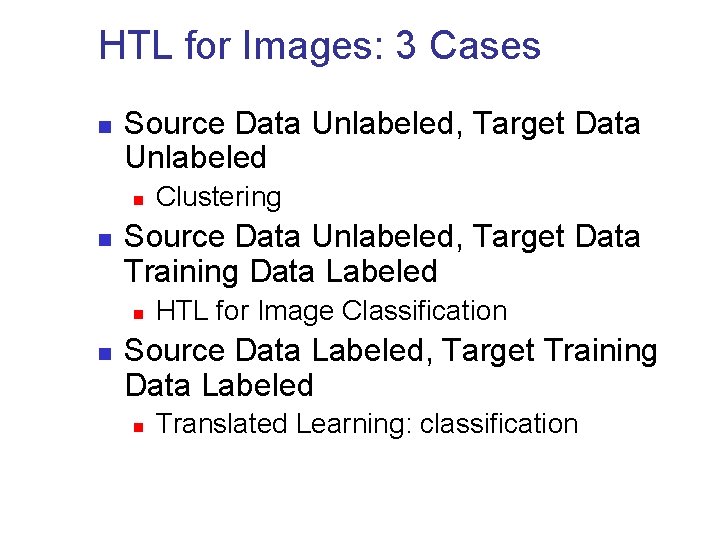

HTL for Images: 3 Cases

- Source Data Unlabeled, Target Data Unlabeled
  - Clustering
- Source Data Unlabeled, Target Training Data Labeled
  - HTL for Image Classification
- Source Data Labeled, Target Training Data Labeled
  - Translated Learning: classification

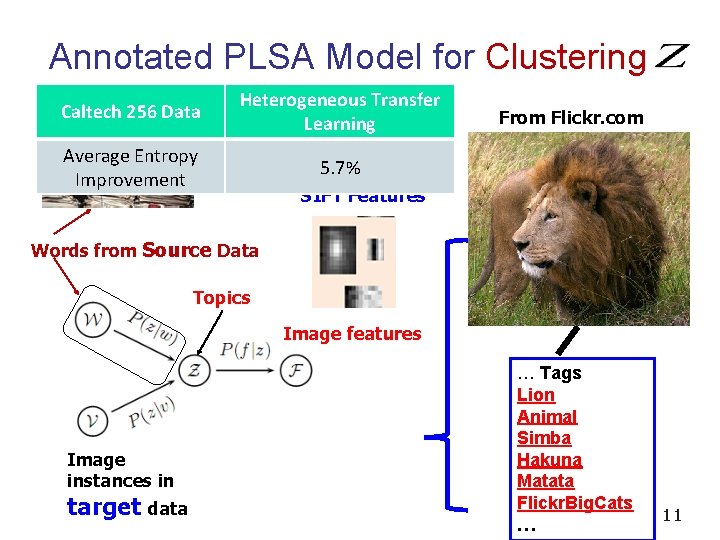

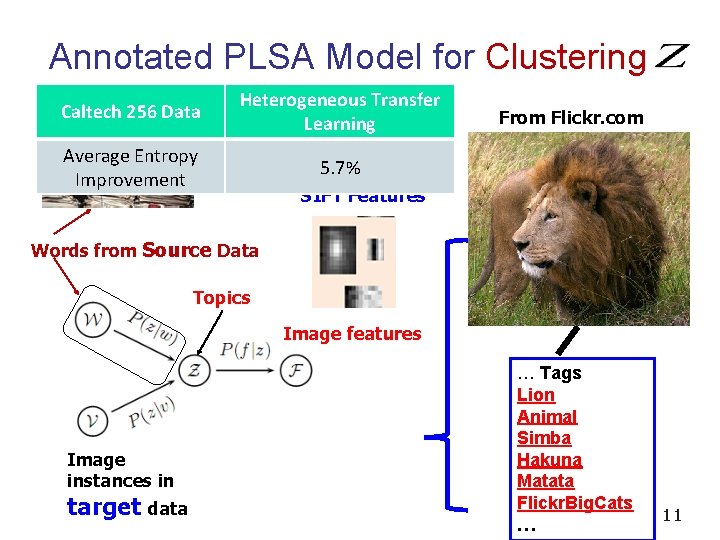

Annotated PLSA Model for Clustering

- Target data: image instances from Caltech 256, represented by image features (SIFT features)
- Source data: words (tags) from Flickr.com, e.g., Lion, Animal, Simba, Hakuna Matata, FlickrBigCats, …
- Words from the source data and image features are connected through shared topics
- Heterogeneous Transfer Learning: average entropy improvement of 5.7%

- "Heterogeneous transfer learning for image classification"
- Y. Zhu, G. Xue, Q. Yang et al.
- AAAI 2011

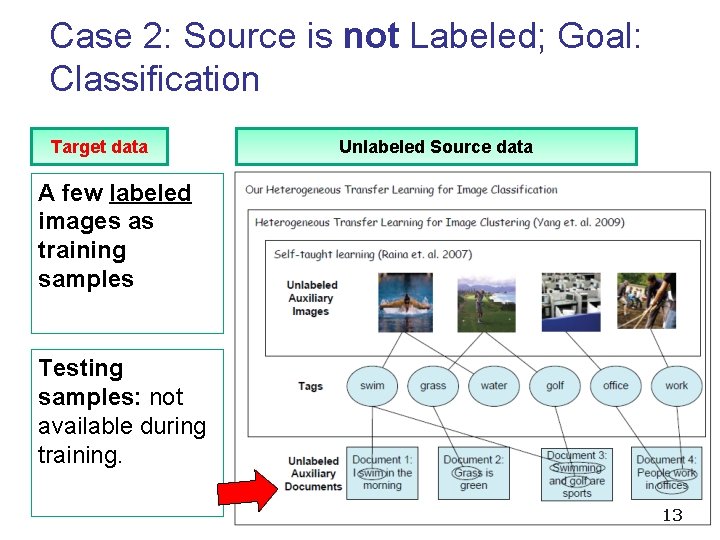

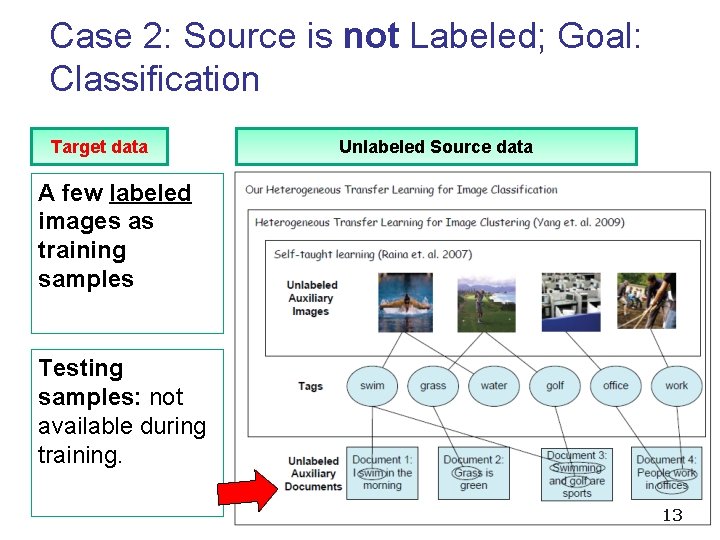

Case 2: Source is not Labeled; Goal: Classification

- Source data: unlabeled
- Target data: a few labeled images as training samples
- Testing samples: not available during training

Social Media Data as a Bridge

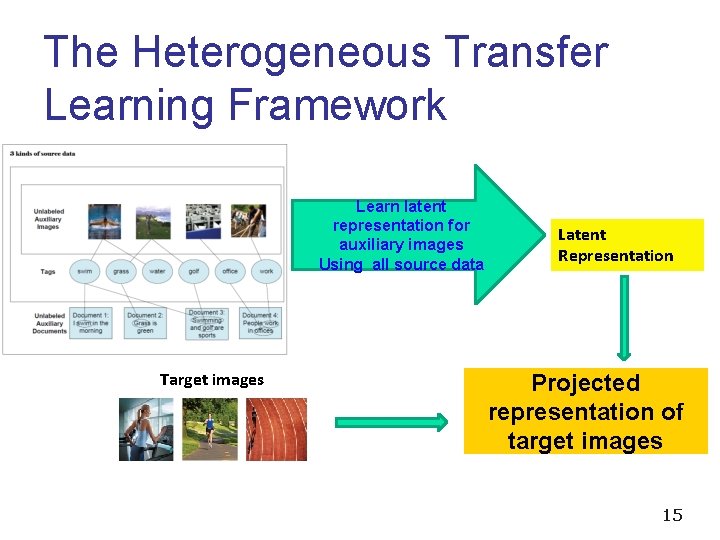

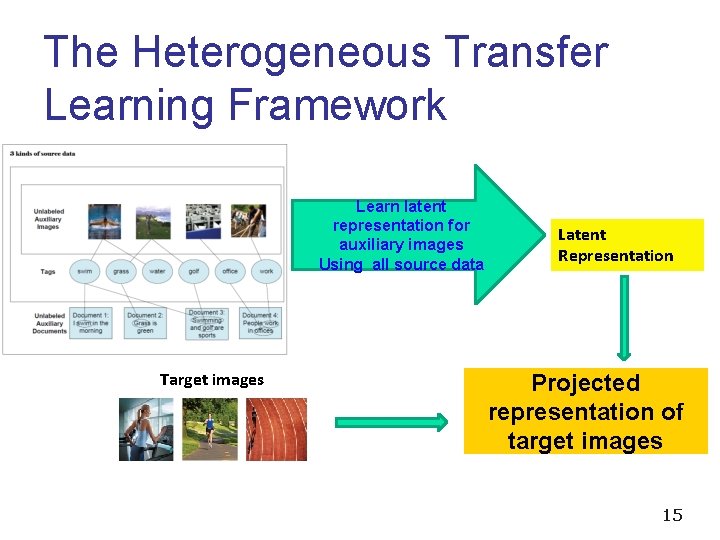

The Heterogeneous Transfer Learning Framework

- Learn a latent representation for auxiliary images, using all source data
- Project the target images onto this latent representation
- Result: projected representation of target images

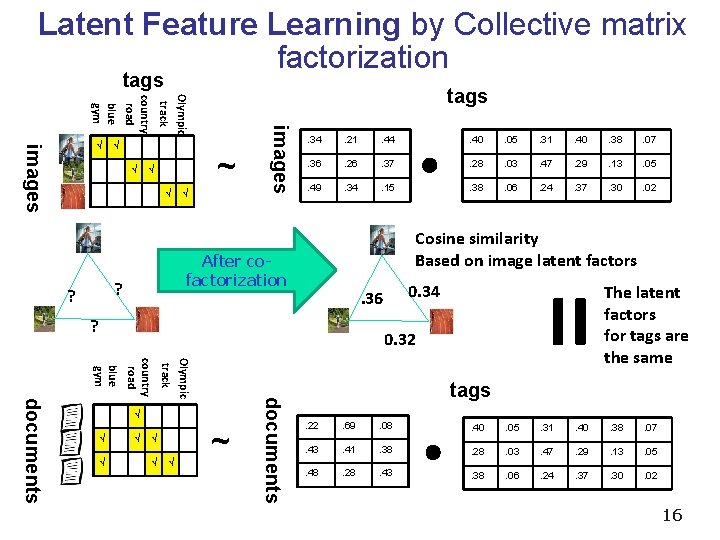

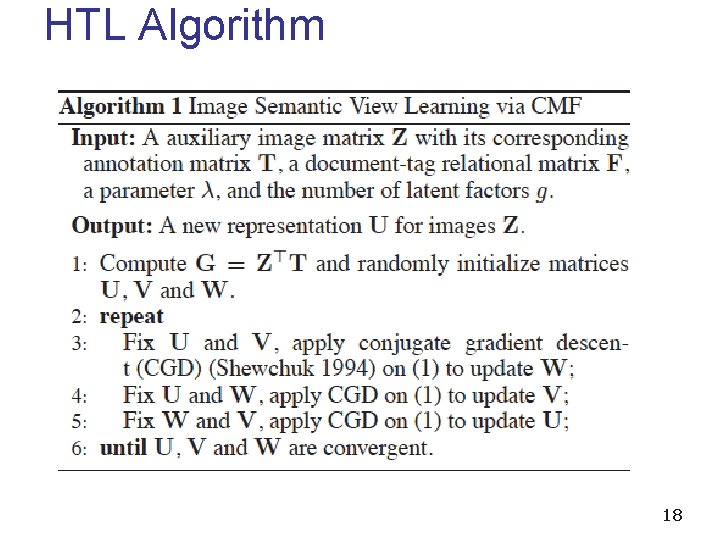

Latent Feature Learning by Collective Matrix Factorization

[Figure: an images-by-tags matrix and a documents-by-tags matrix (example tags: Olympic, track, country, road, blue, gym) are factorized jointly. The latent factors for tags are the same in both factorizations. The figure compares cosine similarity based on image latent factors before and after cofactorization.]

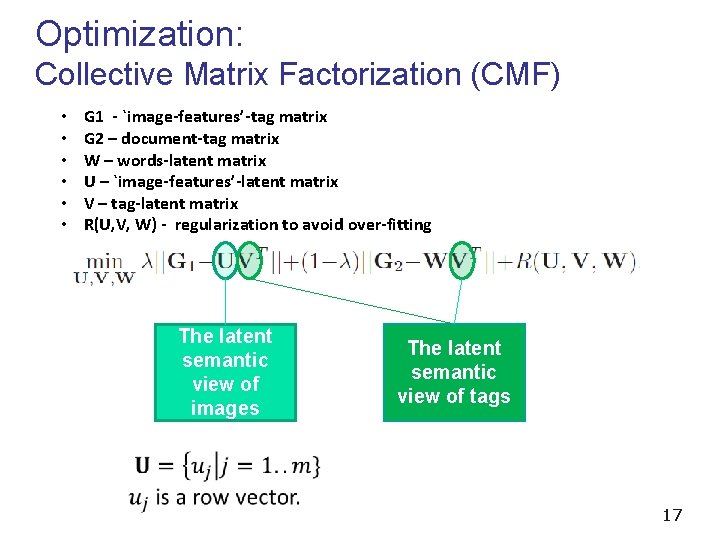

Optimization: Collective Matrix Factorization (CMF)

- G1: 'image-features'-tag matrix
- G2: document-tag matrix
- W: words-latent matrix
- U: 'image-features'-latent matrix (the latent semantic view of images)
- V: tag-latent matrix (the latent semantic view of tags)
- R(U, V, W): regularization to avoid over-fitting
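The formula on this slide did not survive extraction, so the following is only a minimal sketch of the idea. It assumes a squared-error objective of the form λ‖G1 − UVᵀ‖² + (1 − λ)‖G2 − WVᵀ‖² + R(U, V, W) with an L2 regularizer, minimized by plain gradient descent. The trade-off weight `lam`, the step size, the function names, and the choice of solver are all assumptions made for illustration. The key property it shows is that the tag factor V is shared between the two factorizations.

```python
import numpy as np

def cmf_objective(G1, G2, U, V, W, lam=0.5, reg=0.01):
    """Weighted squared reconstruction error plus an L2 penalty (assumed form)."""
    fit1 = np.sum((G1 - U @ V.T) ** 2)
    fit2 = np.sum((G2 - W @ V.T) ** 2)
    penalty = np.sum(U ** 2) + np.sum(V ** 2) + np.sum(W ** 2)
    return 0.5 * (lam * fit1 + (1 - lam) * fit2 + reg * penalty)

def collective_mf(G1, G2, k=2, lam=0.5, reg=0.01, lr=0.01, steps=2000, seed=0):
    """Factorize G1 ~ U V^T and G2 ~ W V^T jointly, sharing the tag factor V."""
    rng = np.random.default_rng(seed)
    U = 0.1 * rng.standard_normal((G1.shape[0], k))  # rows of G1 -> latent
    W = 0.1 * rng.standard_normal((G2.shape[0], k))  # rows of G2 -> latent
    V = 0.1 * rng.standard_normal((G1.shape[1], k))  # tags -> latent (shared)
    for _ in range(steps):
        E1 = U @ V.T - G1                            # residual of first matrix
        E2 = W @ V.T - G2                            # residual of second matrix
        grad_U = lam * E1 @ V + reg * U
        grad_W = (1 - lam) * E2 @ V + reg * W
        grad_V = lam * E1.T @ U + (1 - lam) * E2.T @ W + reg * V
        U -= lr * grad_U
        W -= lr * grad_W
        V -= lr * grad_V
    return U, V, W
```

Because V receives gradient from both residuals, information in the document-tag matrix shapes the latent space in which image features are represented.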

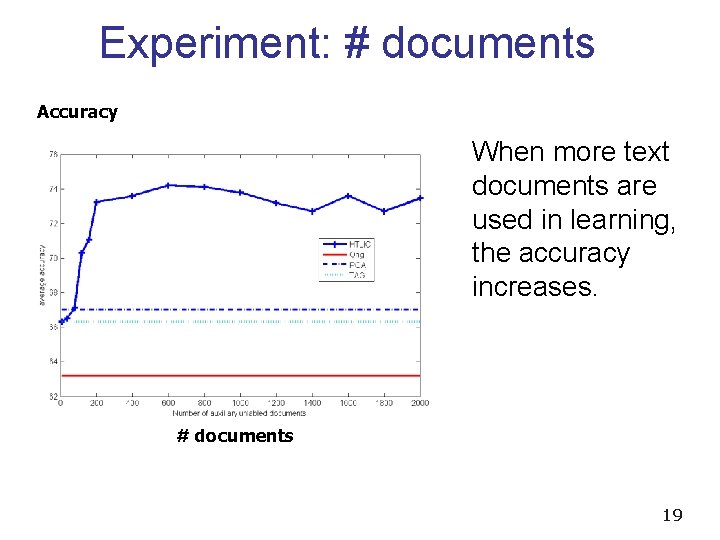

HTL Algorithm

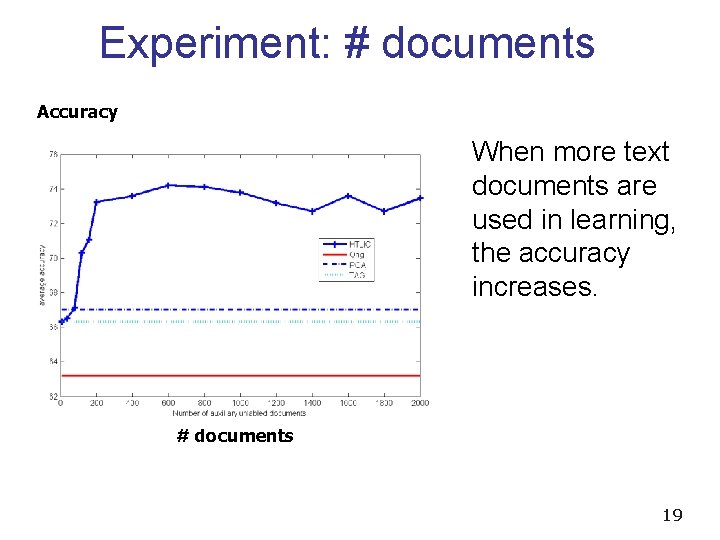

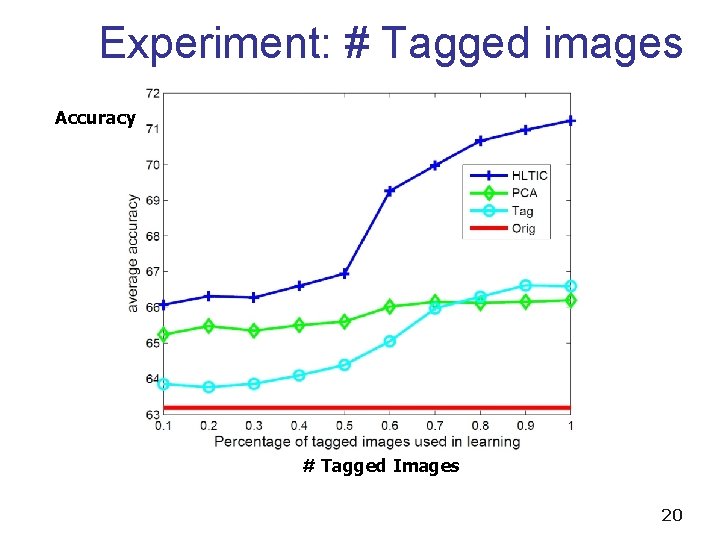

Experiment: # documents

[Plot: accuracy vs. # documents]

When more text documents are used in learning, the accuracy increases.

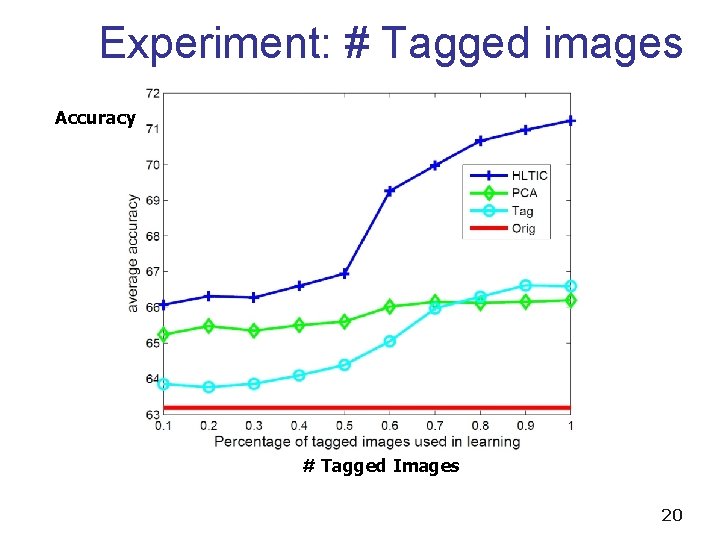

Experiment: # Tagged images

[Plot: accuracy vs. # tagged images]

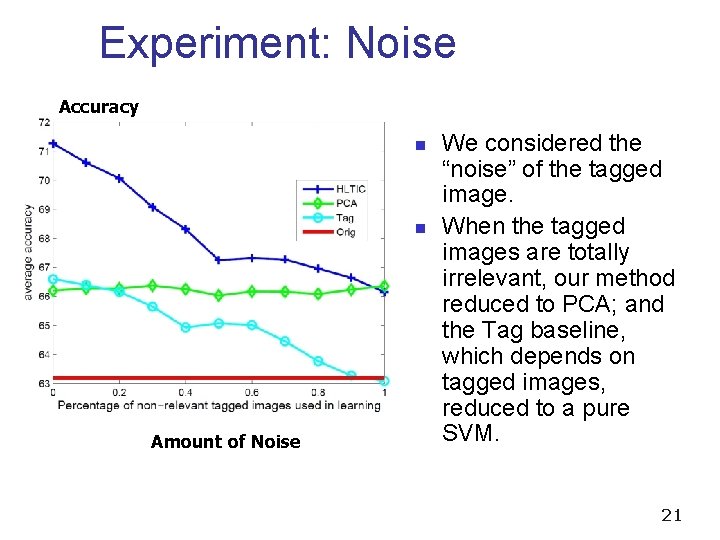

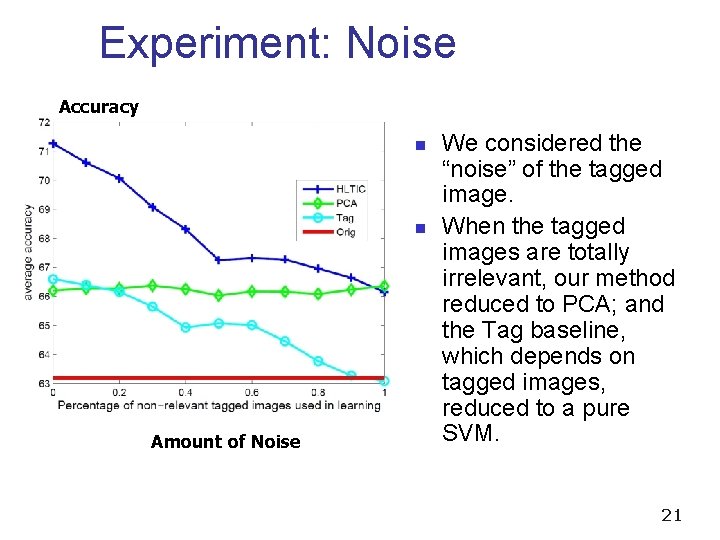

Experiment: Noise

[Plot: accuracy vs. amount of noise]

- We considered the "noise" of the tagged images.
- When the tagged images are totally irrelevant, our method reduces to PCA, and the Tag baseline, which depends on tagged images, reduces to a pure SVM.


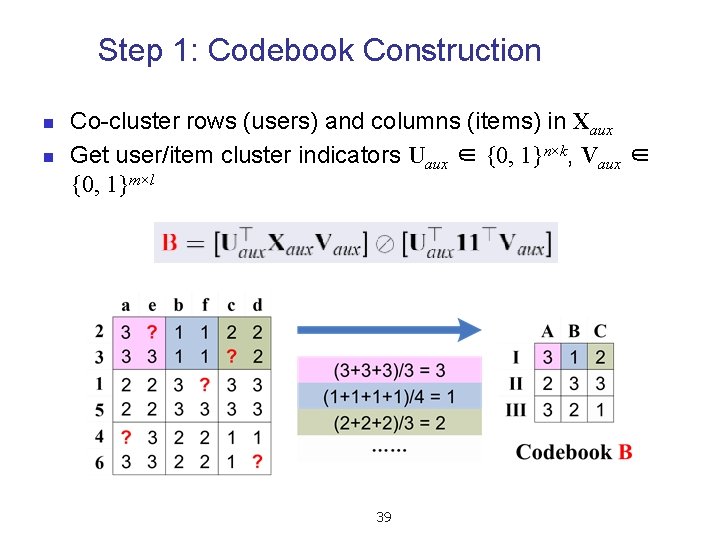

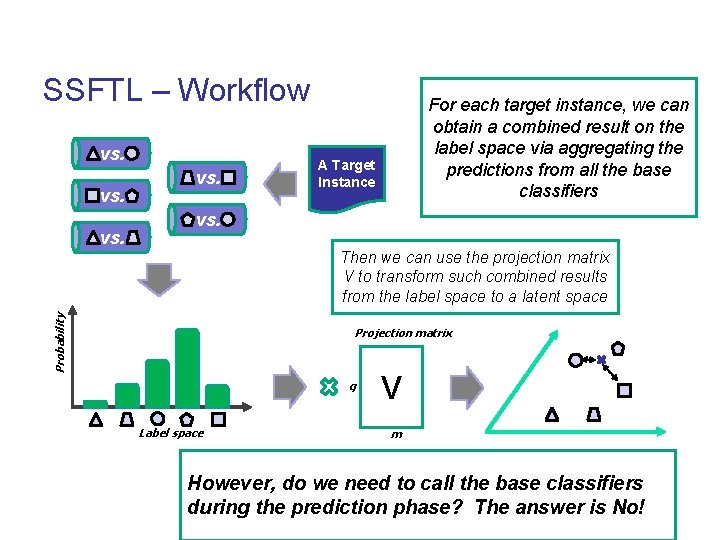

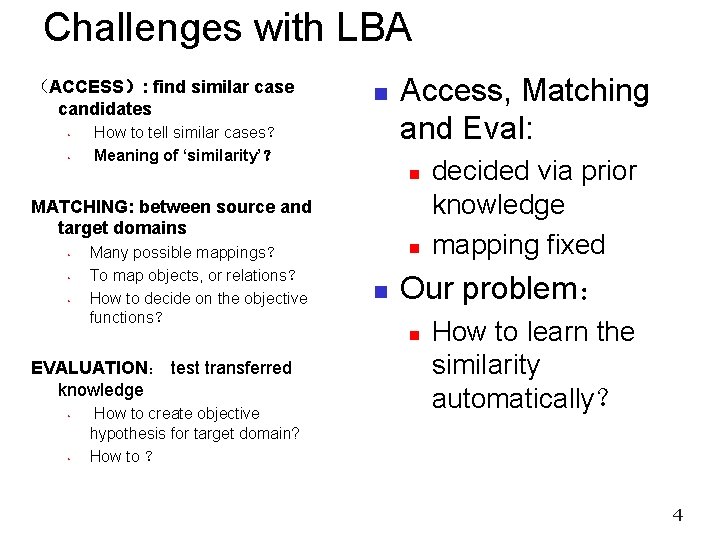

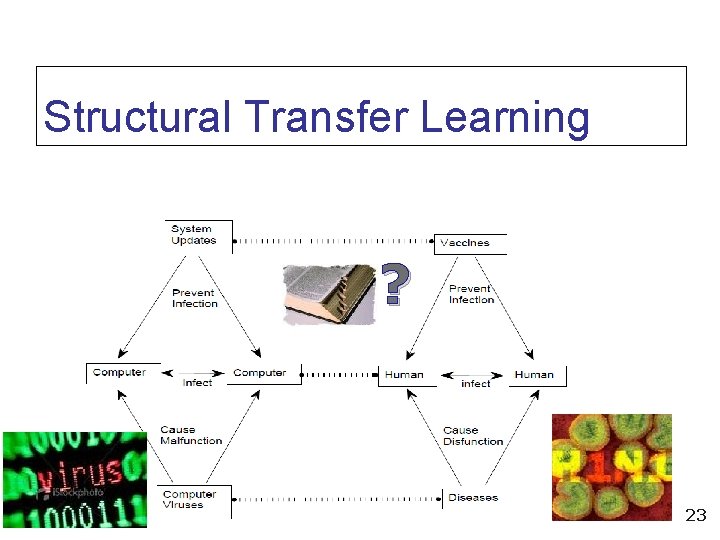

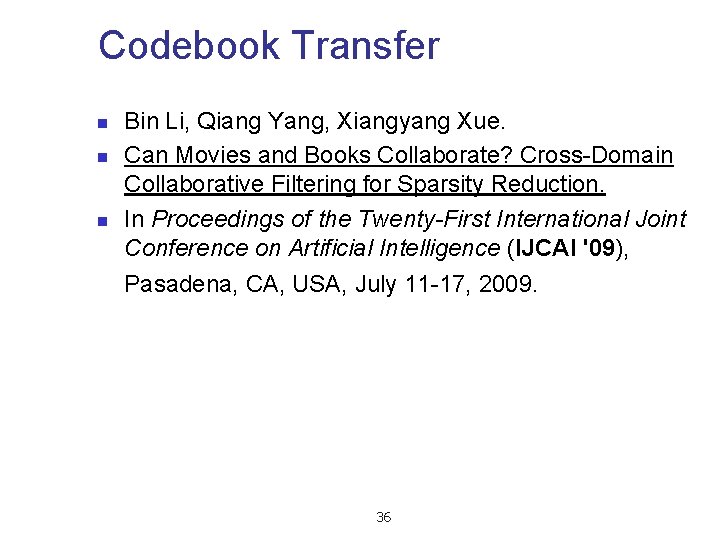

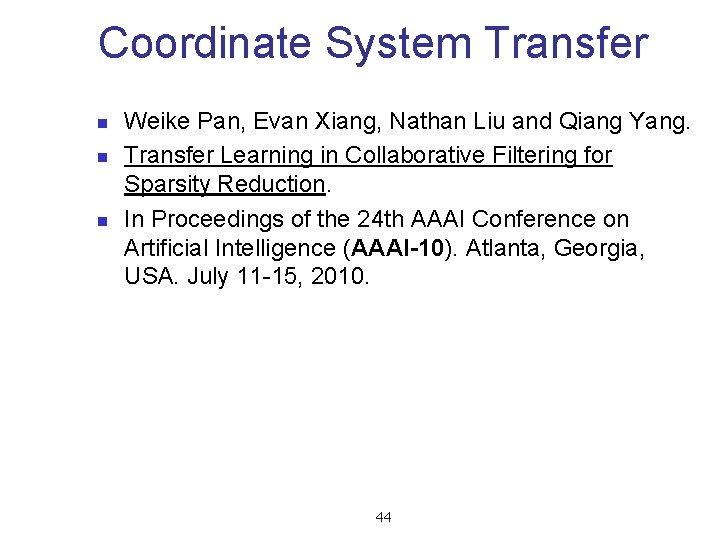

Case 3: Both Labeled: Translated Learning

[Dai, Chen, Yang et al. NIPS 2008] (the slide also cites ACL-IJCNLP 2009)

- Example source text: snippets about "Apple" (the fruit, the computer, the movie, …)
- Input: text, then text classifier, then output
- Input: image, then image classifier, then output
- Translating learning models connects the text classifier to the image classifier

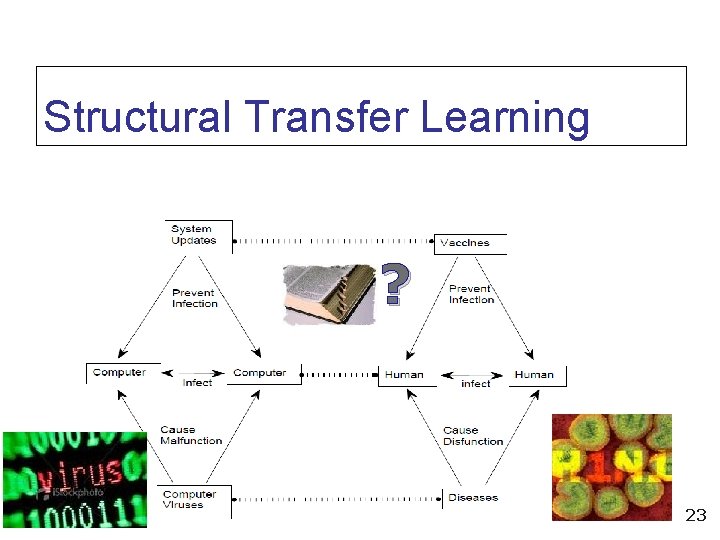

Structural Transfer Learning?

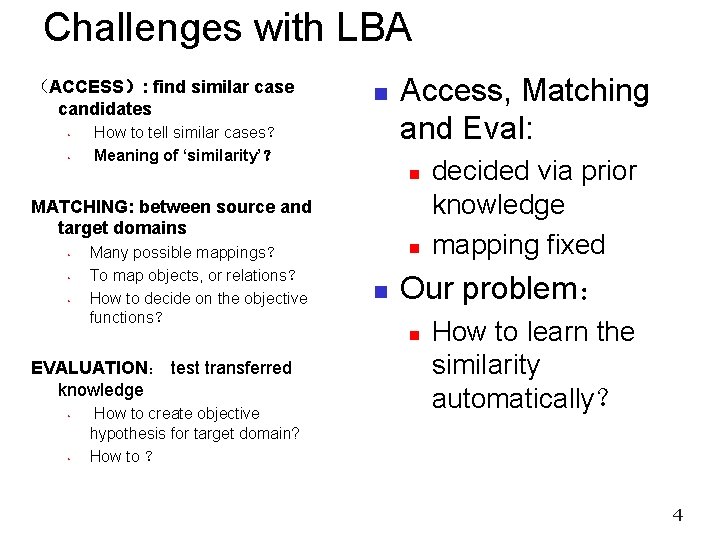

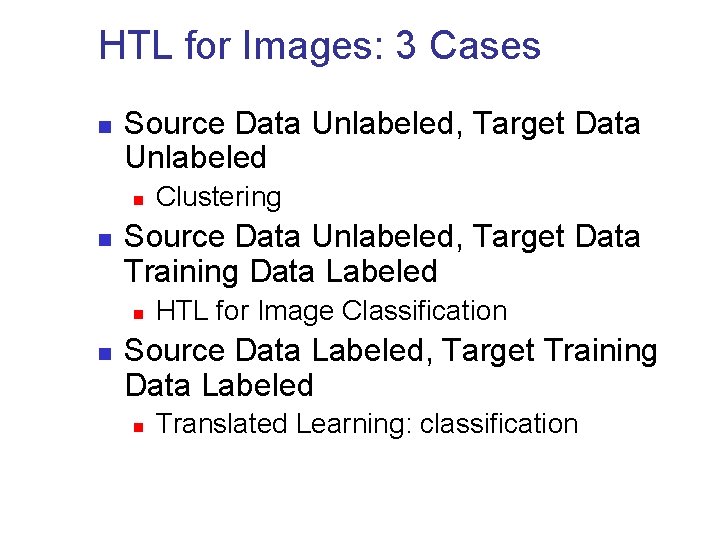

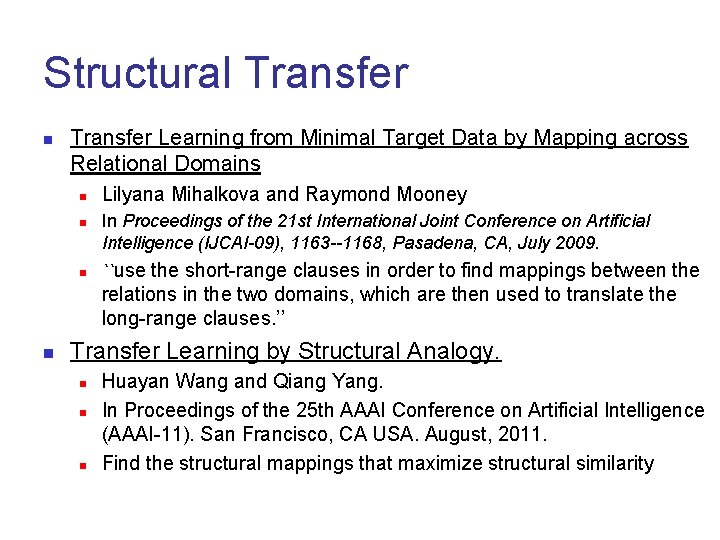

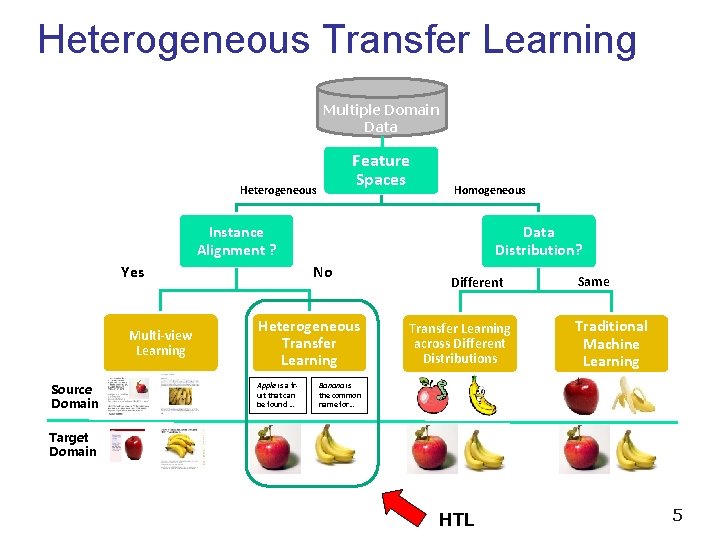

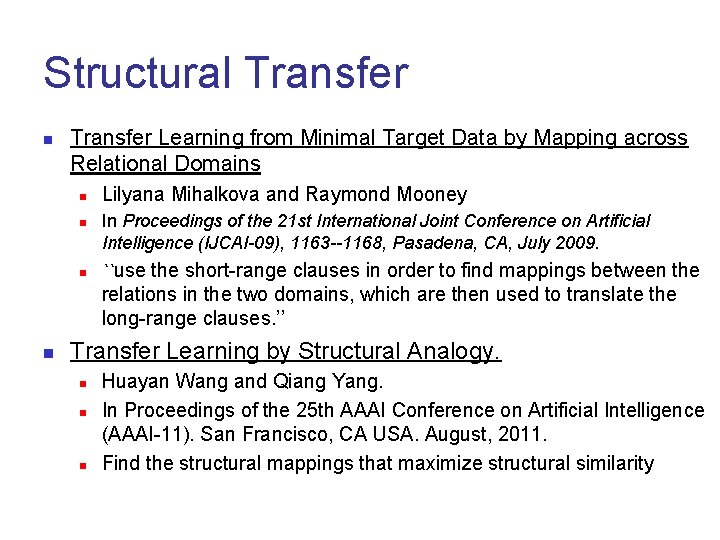

Structural Transfer

- Transfer Learning from Minimal Target Data by Mapping across Relational Domains
  - Lilyana Mihalkova and Raymond Mooney
  - In Proceedings of the 21st International Joint Conference on Artificial Intelligence (IJCAI-09), 1163-1168, Pasadena, CA, July 2009.
  - "use the short-range clauses in order to find mappings between the relations in the two domains, which are then used to translate the long-range clauses."
- Transfer Learning by Structural Analogy
  - Huayan Wang and Qiang Yang
  - In Proceedings of the 25th AAAI Conference on Artificial Intelligence (AAAI-11). San Francisco, CA, USA. August 2011.
  - Find the structural mappings that maximize structural similarity


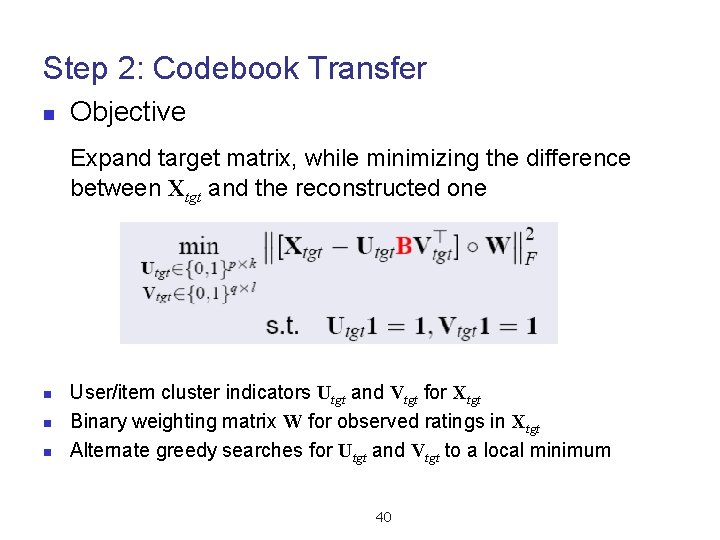

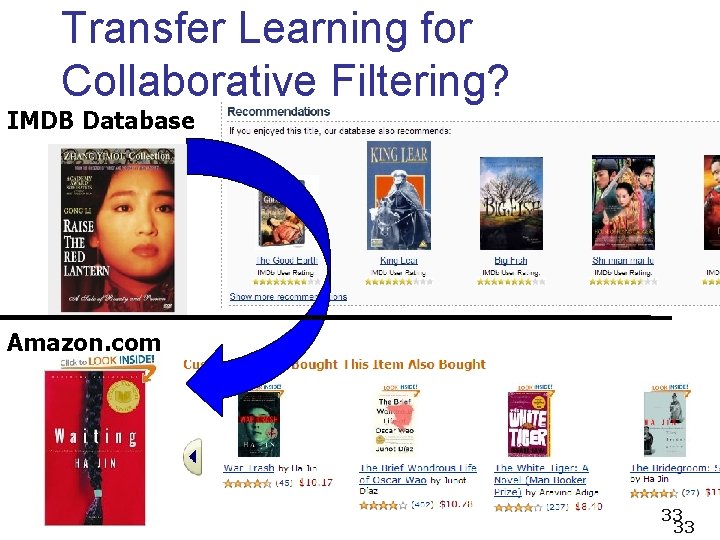

Structural Transfer [H. Wang and Q. Yang AAAI 2011]

- Goal: Learn a correspondence structure between domains
- Use the correspondence to transfer knowledge
- Example: English kinship terms (father, mother, son, daughter) and Chinese (汉语) kinship terms (父亲, 母亲, 儿子, 女儿)

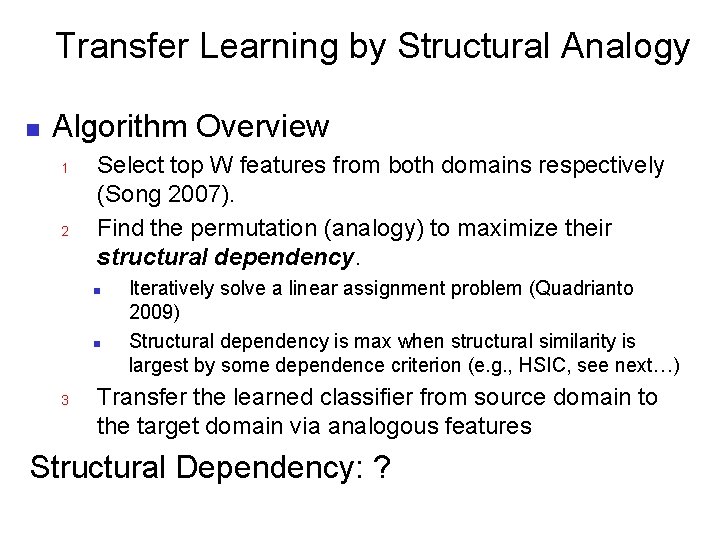

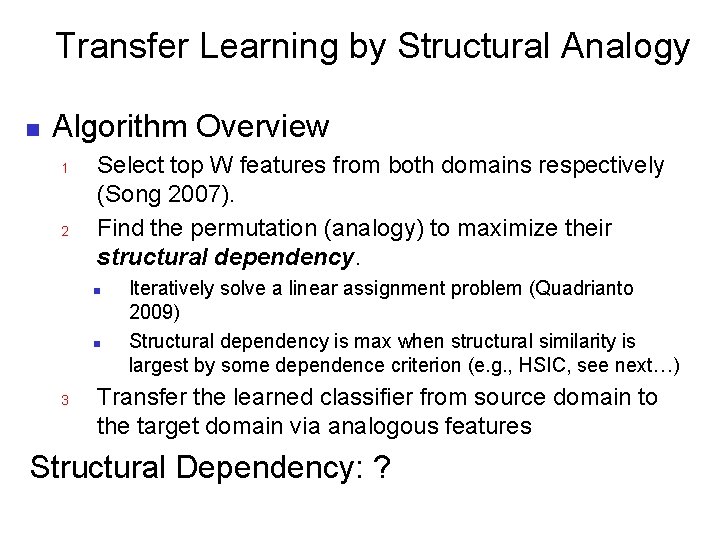

Transfer Learning by Structural Analogy

- Algorithm Overview
  1. Select top W features from both domains respectively (Song 2007).
  2. Find the permutation (analogy) to maximize their structural dependency.
     - Iteratively solve a linear assignment problem (Quadrianto 2009)
     - Structural dependency is max when structural similarity is largest by some dependence criterion (e.g., HSIC, see next…)
  3. Transfer the learned classifier from source domain to the target domain via analogous features
- Structural Dependency: ?

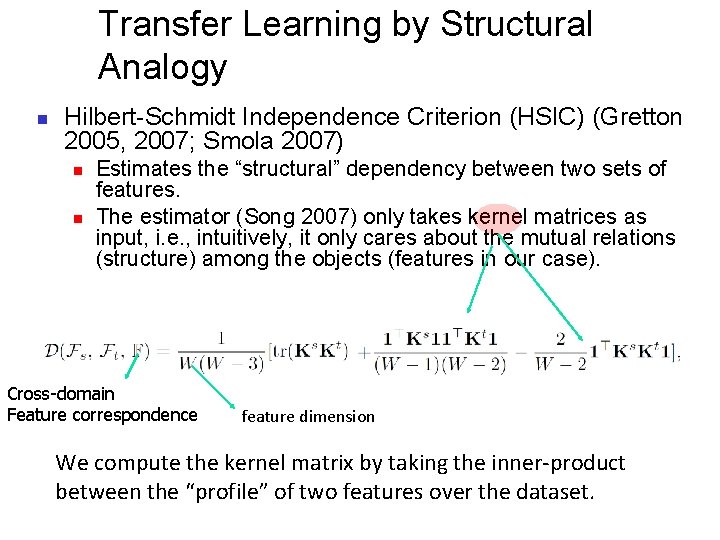

Transfer Learning by Structural Analogy

- Hilbert-Schmidt Independence Criterion (HSIC) (Gretton 2005, 2007; Smola 2007)
  - Estimates the "structural" dependency between two sets of features.
  - The estimator (Song 2007) only takes kernel matrices as input, i.e., intuitively, it only cares about the mutual relations (structure) among the objects (features in our case).
- Cross-domain feature correspondence
  - We compute the kernel matrix by taking the inner product between the "profiles" of two features over the dataset.
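As a hedged illustration of the dependence criterion, the sketch below uses the standard biased empirical HSIC estimator, tr(KHLH)/(n − 1)², where H is the centering matrix. The helper names `feature_kernel` and `hsic` are hypothetical, and a linear kernel over feature profiles is assumed. This is not necessarily the exact estimator or kernel used in the paper.

```python
import numpy as np

def feature_kernel(X):
    """X is (samples x features). Entry (i, j) is the inner product between
    the profiles (columns) of features i and j over the dataset."""
    return X.T @ X

def hsic(K, L):
    """Biased empirical HSIC between two n x n kernel matrices."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n   # centering matrix
    return np.trace(K @ H @ L @ H) / (n - 1) ** 2

# Toy check: the target domain has the same features in a shuffled order.
rng = np.random.default_rng(0)
Xs = rng.standard_normal((10, 4))
perm = np.array([2, 0, 3, 1])
Xt = Xs[:, perm]

Ks, Kt = feature_kernel(Xs), feature_kernel(Xt)
inv = np.argsort(perm)                    # permutation that undoes the shuffle
Kt_aligned = Kt[np.ix_(inv, inv)]
```

In this toy setup, `hsic(Ks, Kt_aligned)` is at least as large as `hsic(Ks, Kt)`, which is the sense in which the best permutation (analogy) maximizes structural dependency. Searching over permutations, e.g., by repeated linear assignment, is not shown here.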

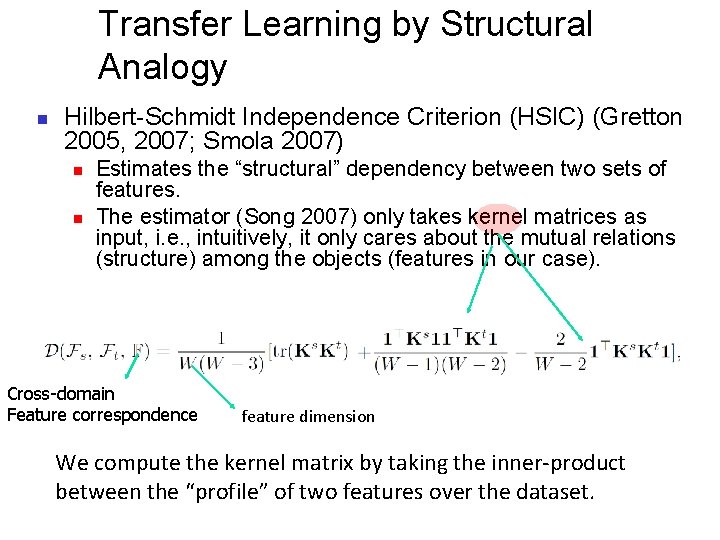

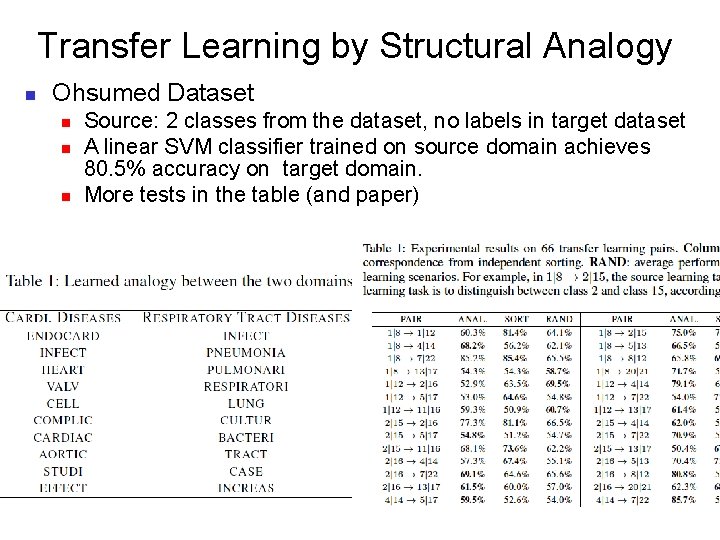

Transfer Learning by Structural Analogy

- Ohsumed Dataset
  - Source: 2 classes from the dataset, no labels in target dataset
  - A linear SVM classifier trained on source domain achieves 80.5% accuracy on target domain.
  - More tests in the table (and paper)

Topological Mapping

- Expressed Through a Codebook

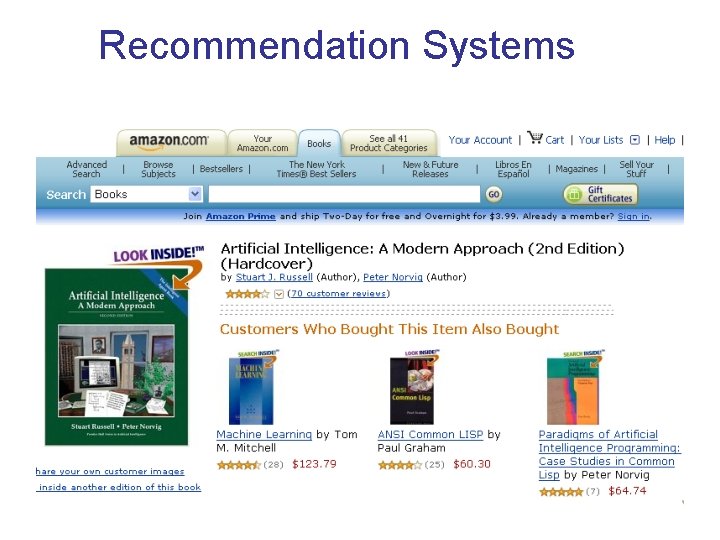

Recommendation Systems

Movie Recommendation

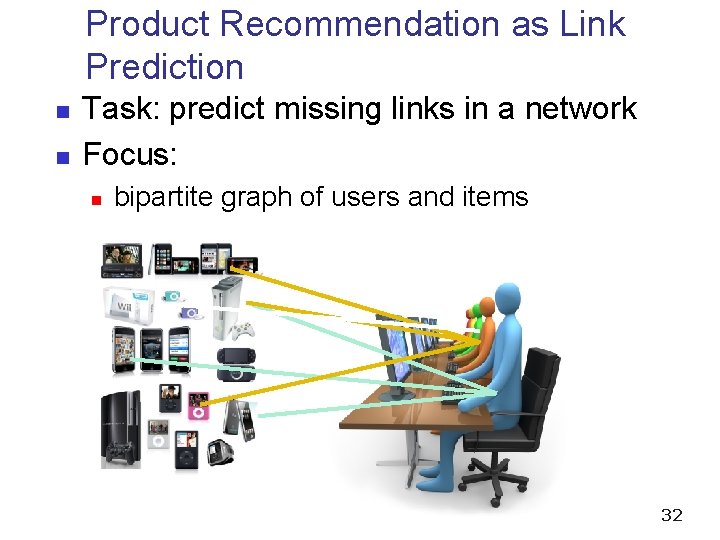

Product Recommendation as Link Prediction

- Task: predict missing links in a network
- Focus: bipartite graph of users and items

Transfer Learning for Collaborative Filtering?

- IMDB Database
- Amazon.com

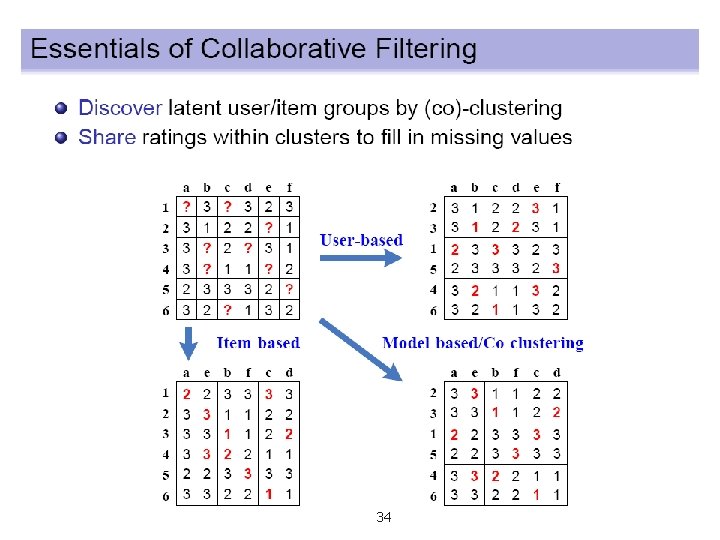

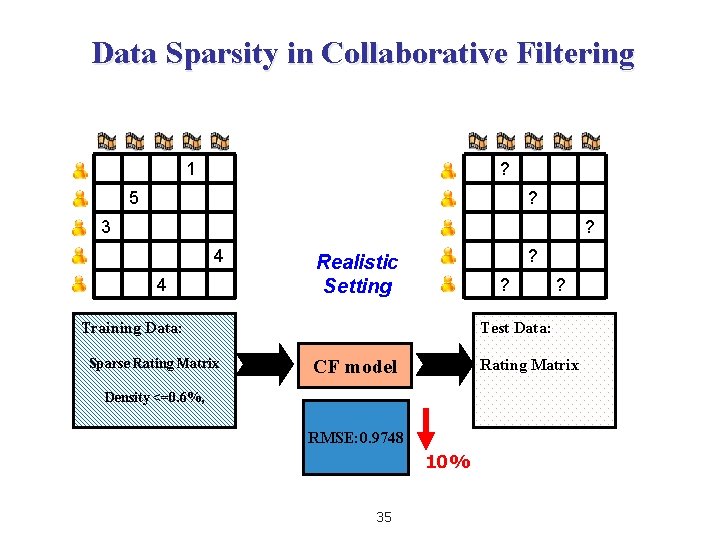


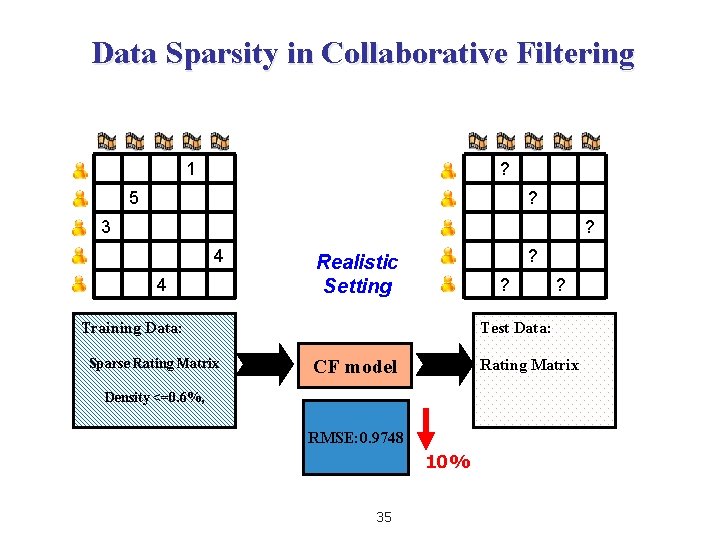

Data Sparsity in Collaborative Filtering

[Figure: a sparse rating matrix with many missing entries (?) serves as training data for a CF model, which is then evaluated on test data. Figure labels: Realistic Setting; Rating Matrix Density <= 0.6%; RMSE: 0.9748; 10%.]

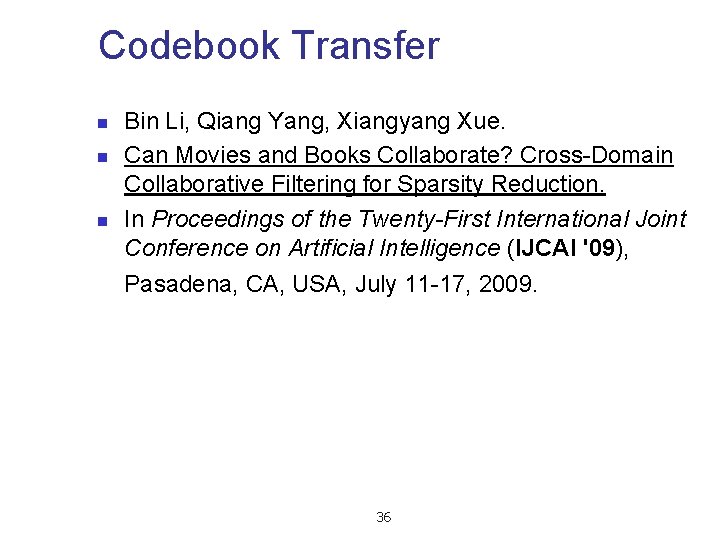

Codebook Transfer

- Bin Li, Qiang Yang, Xiangyang Xue.
- Can Movies and Books Collaborate? Cross-Domain Collaborative Filtering for Sparsity Reduction.
- In Proceedings of the Twenty-First International Joint Conference on Artificial Intelligence (IJCAI '09), Pasadena, CA, USA, July 11-17, 2009.

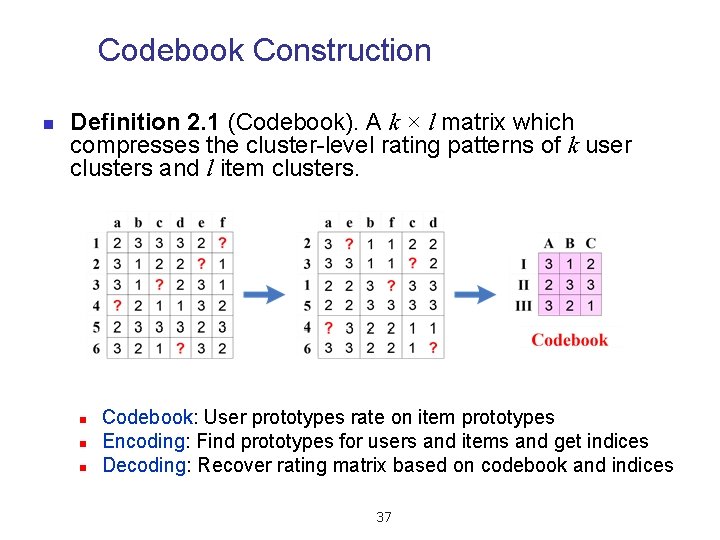

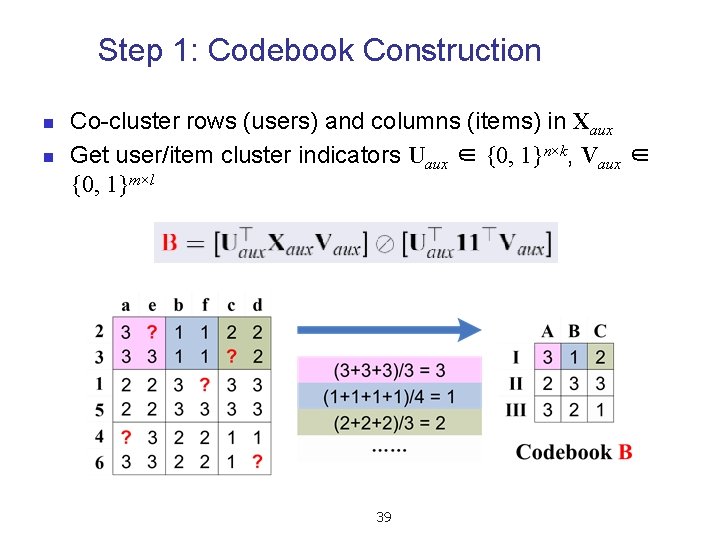

Codebook Construction

- Definition 2.1 (Codebook). A k × l matrix which compresses the cluster-level rating patterns of k user clusters and l item clusters.
  - Codebook: User prototypes rate on item prototypes
  - Encoding: Find prototypes for users and items and get indices
  - Decoding: Recover rating matrix based on codebook and indices

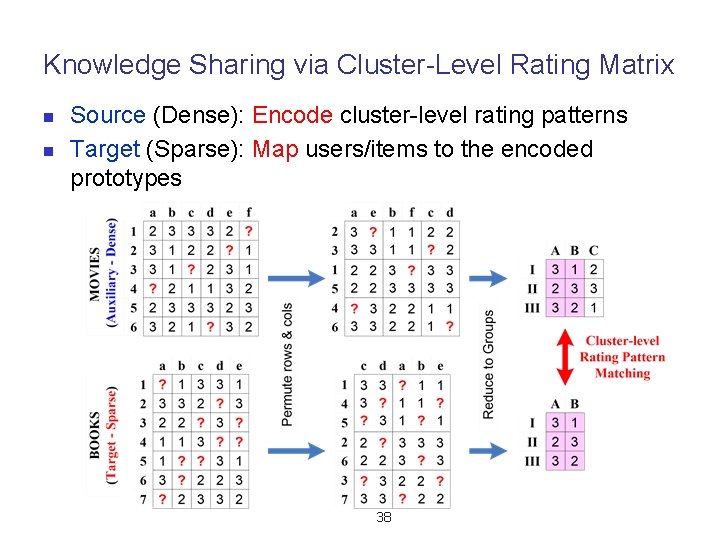

Knowledge Sharing via Cluster-Level Rating Matrix

- Source (Dense): Encode cluster-level rating patterns
- Target (Sparse): Map users/items to the encoded prototypes

Step 1: Codebook Construction

- Co-cluster rows (users) and columns (items) in $X_{aux}$
- Get user/item cluster indicators $U_{aux} \in \{0, 1\}^{n \times k}$, $V_{aux} \in \{0, 1\}^{m \times l}$
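Given the cluster indicators from Step 1, one natural way to obtain the k × l codebook is to average the ratings in each user-cluster/item-cluster block. The sketch below assumes a fully observed auxiliary matrix and takes the co-clustering result as given. The function name `build_codebook` and the toy data are hypothetical.

```python
import numpy as np

def build_codebook(X_aux, U_aux, V_aux):
    """Average rating of each (user cluster, item cluster) block.

    X_aux: (n x m) dense rating matrix
    U_aux: (n x k) one-hot user cluster indicators
    V_aux: (m x l) one-hot item cluster indicators
    """
    block_sums = U_aux.T @ X_aux @ V_aux
    block_counts = U_aux.T @ np.ones_like(X_aux) @ V_aux
    return block_sums / np.maximum(block_counts, 1)   # guard empty blocks

X_aux = np.array([[5.0, 5.0, 1.0],
                  [5.0, 5.0, 1.0],
                  [1.0, 1.0, 5.0]])
U_aux = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])   # users 0,1 | user 2
V_aux = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])   # items 0,1 | item 2

B = build_codebook(X_aux, U_aux, V_aux)
decoded = U_aux @ B @ V_aux.T   # decoding: codebook plus indices -> ratings
```

Here the codebook is `[[5, 1], [1, 5]]`, and decoding reproduces `X_aux` exactly because every block in this toy matrix is constant.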

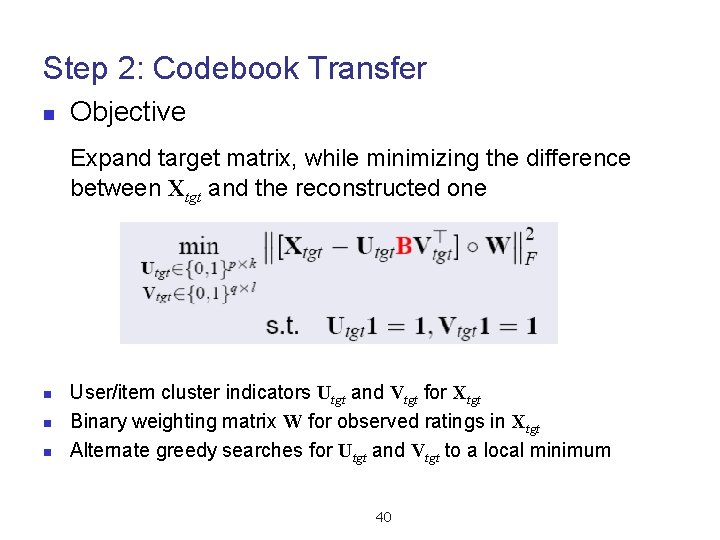

Step 2: Codebook Transfer

- Objective: Expand target matrix, while minimizing the difference between $X_{tgt}$ and the reconstructed one
  - User/item cluster indicators $U_{tgt}$ and $V_{tgt}$ for $X_{tgt}$
  - Binary weighting matrix W for observed ratings in $X_{tgt}$
  - Alternate greedy searches for $U_{tgt}$ and $V_{tgt}$ to a local minimum
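The alternating greedy search can be sketched as follows, assuming the objective is the squared error between the observed target ratings and the codebook reconstruction, masked by W. The function name, the random initialization, the fixed iteration count, and the final fill-in rule (keep observed ratings, fill the rest from the reconstruction) are assumptions made for this illustration.

```python
import numpy as np

def transfer_codebook(X_tgt, W, B, iters=10, seed=0):
    """Assign each target user/item to a codebook row/column.

    X_tgt: (n x m) target ratings, arbitrary values where unobserved
    W:     (n x m) binary mask, 1 where a rating is observed
    B:     (k x l) codebook learned on the auxiliary data
    Returns user cluster ids, item cluster ids, and the filled-in matrix.
    """
    n, m = X_tgt.shape
    k, l = B.shape
    rng = np.random.default_rng(seed)
    v = rng.integers(l, size=m)            # random initial item clusters
    u = np.zeros(n, dtype=int)
    for _ in range(iters):
        # Fix items: pick for every user the codebook row with least error.
        rows = B[:, v]                                           # (k, m)
        err_u = (W[:, None, :] * (X_tgt[:, None, :] - rows[None]) ** 2).sum(axis=2)
        u = err_u.argmin(axis=1)
        # Fix users: pick for every item the codebook column with least error.
        cols = B[u, :]                                           # (n, l)
        err_v = (W[:, :, None] * (X_tgt[:, :, None] - cols[:, None, :]) ** 2).sum(axis=0)
        v = err_v.argmin(axis=1)
    recon = B[np.ix_(u, v)]                # same as U_tgt @ B @ V_tgt.T
    filled = W * X_tgt + (1 - W) * recon   # keep observed, fill the rest
    return u, v, filled
```

Each half-step can only lower (or keep) the masked error, so the search settles in a local minimum, which may depend on the initialization.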

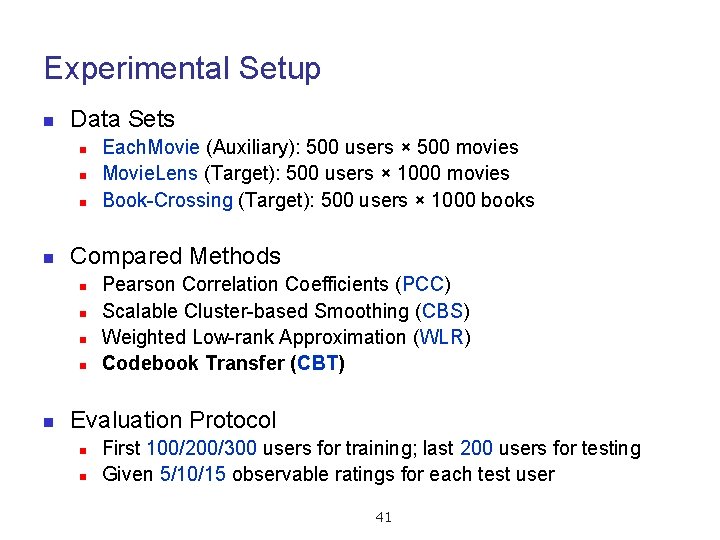

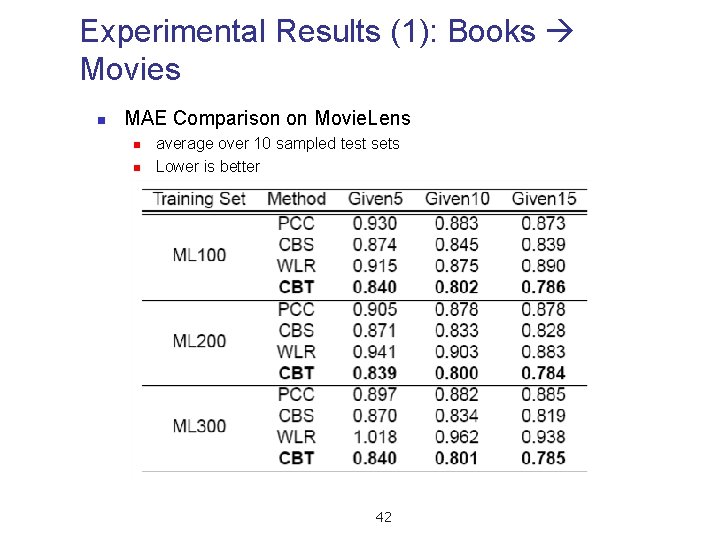

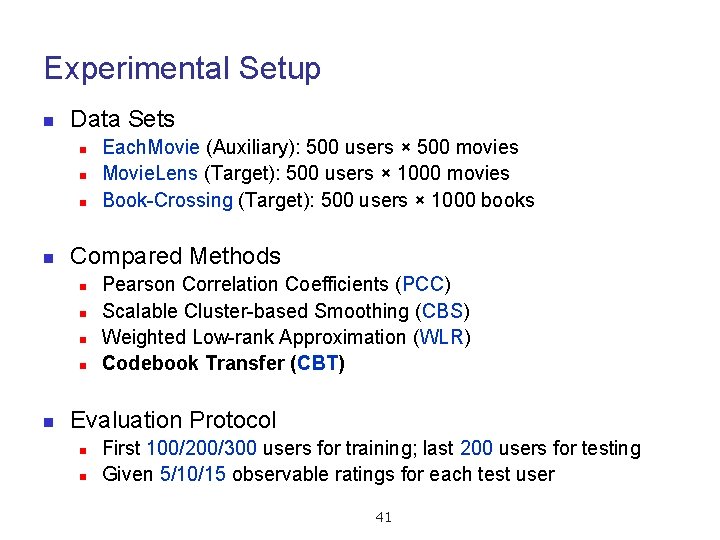

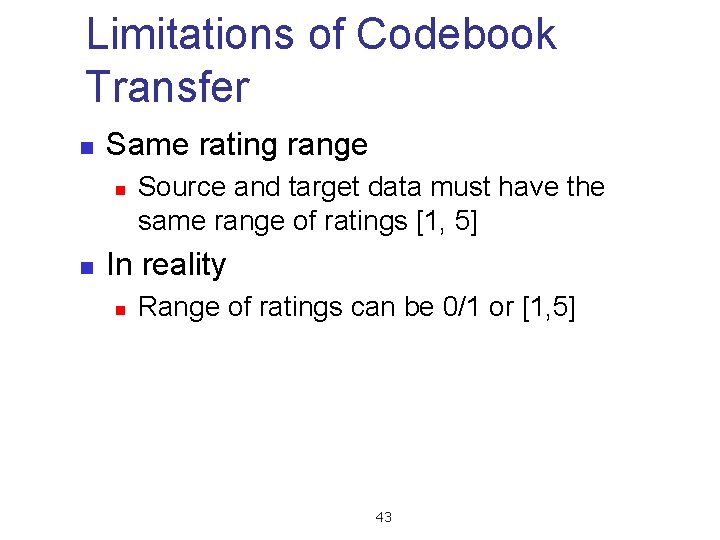

Experimental Setup

- Data Sets
  - EachMovie (Auxiliary): 500 users × 500 movies
  - MovieLens (Target): 500 users × 1000 movies
  - Book-Crossing (Target): 500 users × 1000 books
- Compared Methods
  - Pearson Correlation Coefficients (PCC)
  - Scalable Cluster-based Smoothing (CBS)
  - Weighted Low-rank Approximation (WLR)
  - Codebook Transfer (CBT)
- Evaluation Protocol
  - First 100/200/300 users for training; last 200 users for testing
  - Given 5/10/15 observable ratings for each test user

Experimental Results (1): Books Movies

- MAE Comparison on MovieLens
  - Average over 10 sampled test sets
  - Lower is better

Limitations of Codebook Transfer

- Same rating range
  - Source and target data must have the same range of ratings [1, 5]
- In reality
  - Range of ratings can be 0/1 or [1, 5]

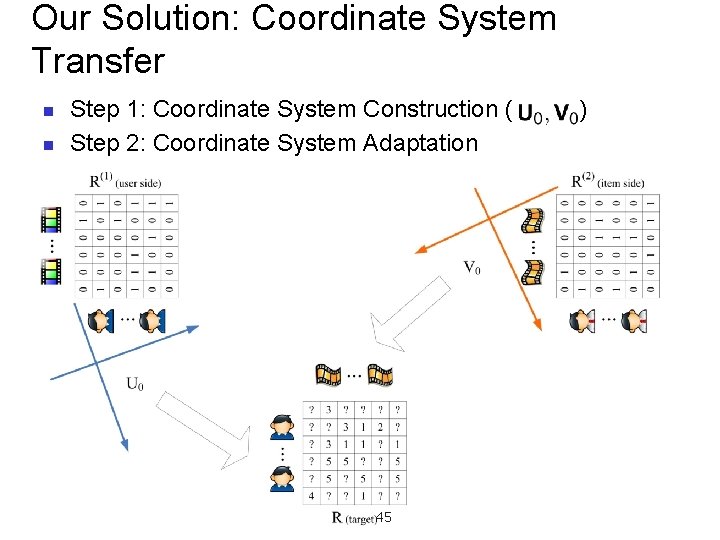

Coordinate System Transfer

- Weike Pan, Evan Xiang, Nathan Liu and Qiang Yang.
- Transfer Learning in Collaborative Filtering for Sparsity Reduction.
- In Proceedings of the 24th AAAI Conference on Artificial Intelligence (AAAI-10). Atlanta, Georgia, USA. July 11-15, 2010.

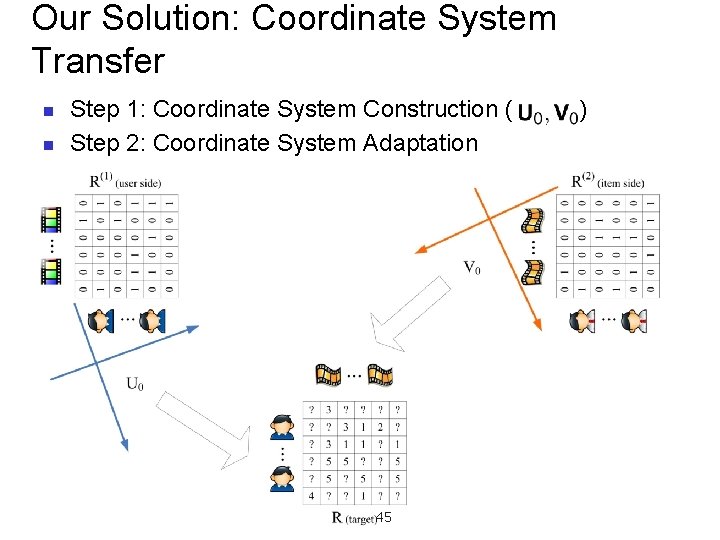

Our Solution: Coordinate System Transfer

- Step 1: Coordinate System Construction
- Step 2: Coordinate System Adaptation

Limitation of CST and CBT

- Different source domains are related to the target domain in the same way.
  - In reality:
    - Book to Movies: related
    - Food to Movies: not related
- Rating bias
  - Users tend to rate items that they like
  - Thus there are more rating = 5 than rating = 2

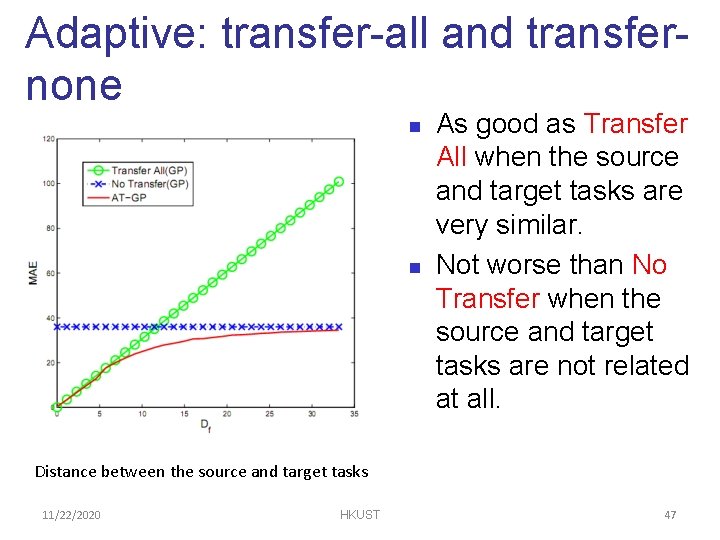

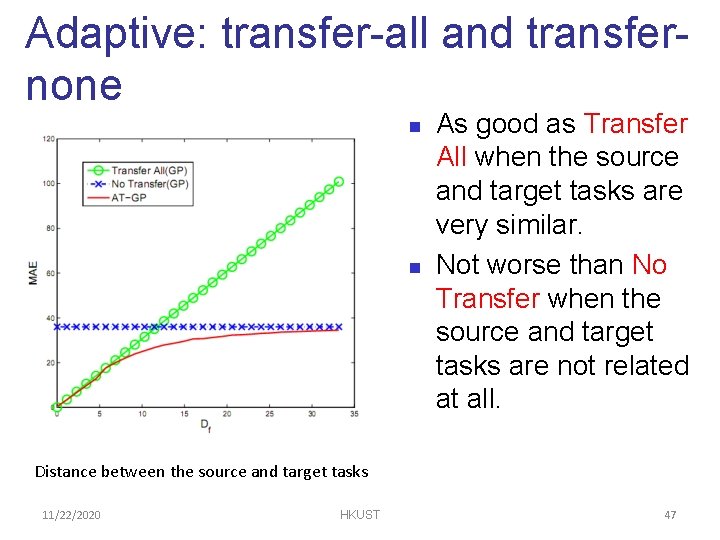

Adaptive: transfer-all and transfer-none

- As good as Transfer All when the source and target tasks are very similar.
- Not worse than No Transfer when the source and target tasks are not related at all.
- [Plot: performance vs. distance between the source and target tasks]

Adaptive Transfer Learning

- Bin Cao, Sinno Jialin Pan, Yu Zhang, Dit-Yan Yeung and Qiang Yang. Adaptive Transfer Learning. In Proceedings of the 24th AAAI Conference on Artificial Intelligence (AAAI-10). Atlanta, Georgia, USA. July 11-15, 2010.

Heterogeneous Label Spaces

- Shen et al. applied HTL to the KDDCUP 2005 dataset
  - Allowed category changes in real time
- Shi et al. used a risk-sensitive spectral partition algorithm in a multi-task learning setting to learn the label correspondence.
- Qi et al. used quadratic programming and AdaBoost to transfer knowledge from "head" (frequent) categories to "tail" (infrequent) categories
- In the remaining
  - Cross Domain Activity Recognition
  - Source-Selection Free TL

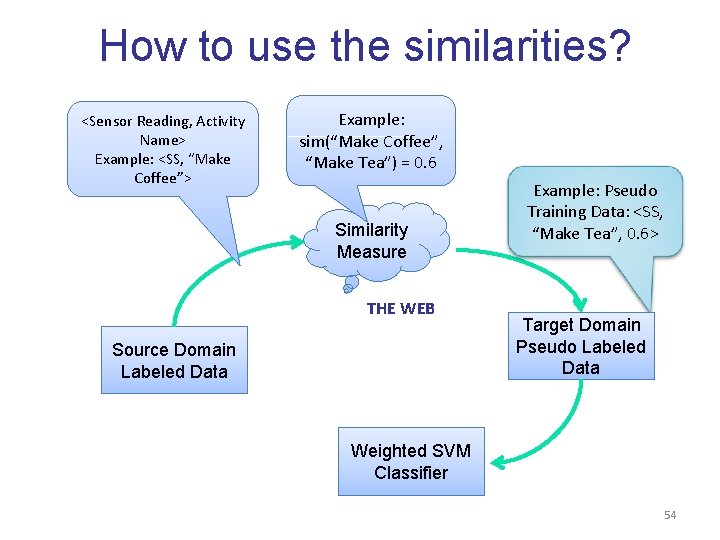

Activity Recognition

- With sensor data collected on mobile devices
  - Location: GPS, Wifi, RFID
  - Context: location, weather, etc., from GPS, RFID, Bluetooth, etc.
- Various models can be used
  - Non-sequential models: Naïve Bayes, SVM …
  - Sequential models: HMM, CRF …

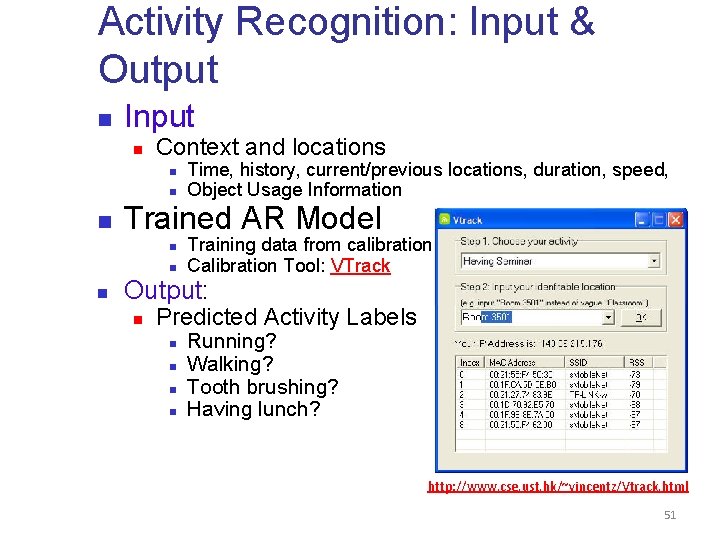

Activity Recognition: Input & Output

- Input
  - Context and locations: time, history, current/previous locations, duration, speed
  - Object usage information
- Trained AR Model
  - Training data from calibration
  - Calibration Tool: VTrack (http://www.cse.ust.hk/~vincentz/Vtrack.html)
- Output
  - Predicted activity labels: Running? Walking? Tooth brushing? Having lunch?

Datasets: MIT PlaceLab

http://architecture.mit.edu/house_n/placelab.html

- MIT PlaceLab Dataset (PLIA 2) [Intille et al. Pervasive 2005]
- Activities: Common household activities


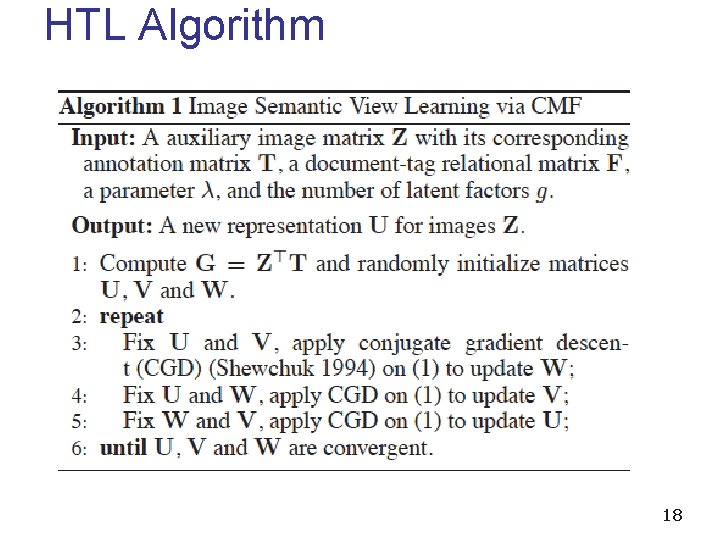

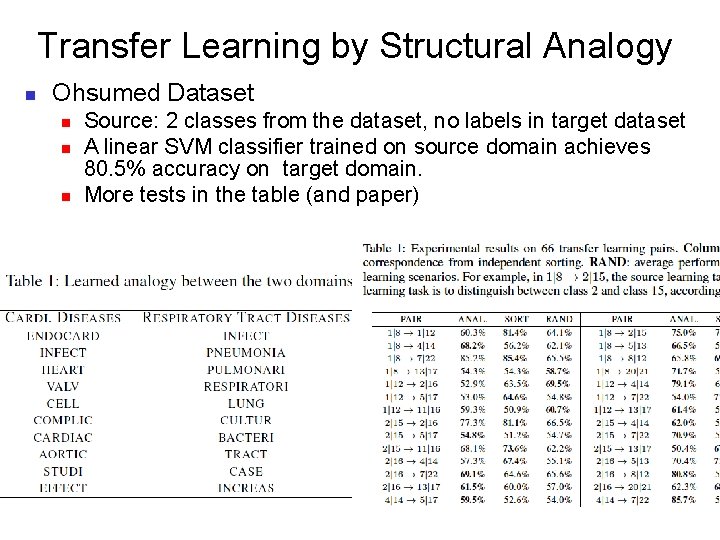

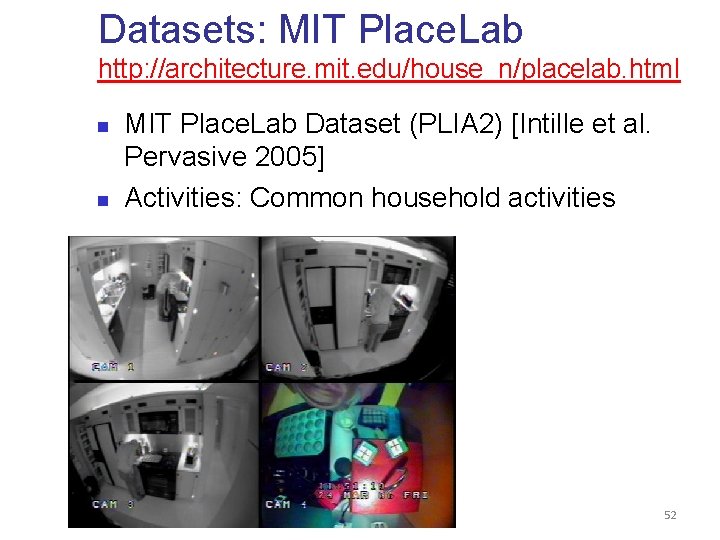

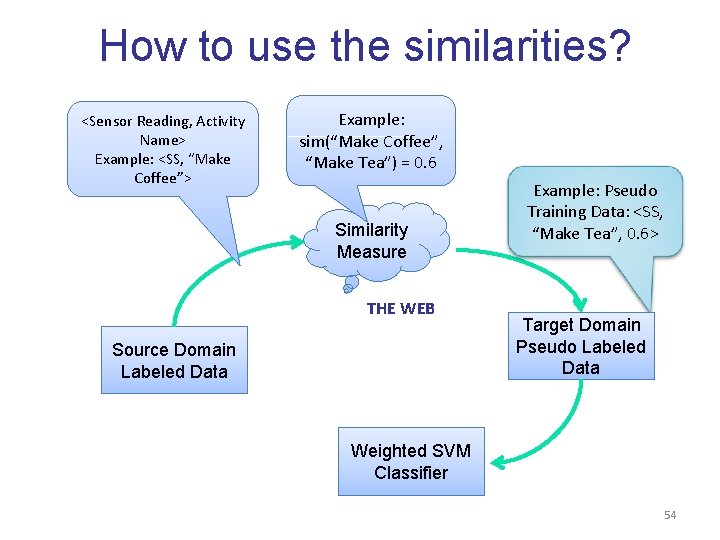

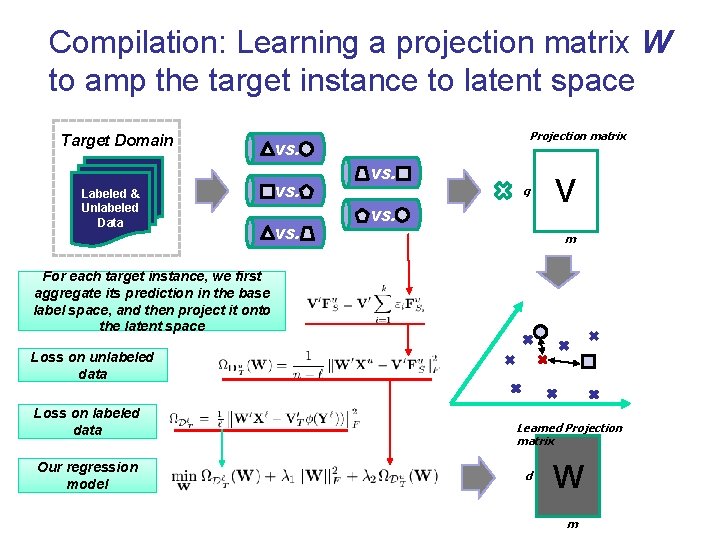

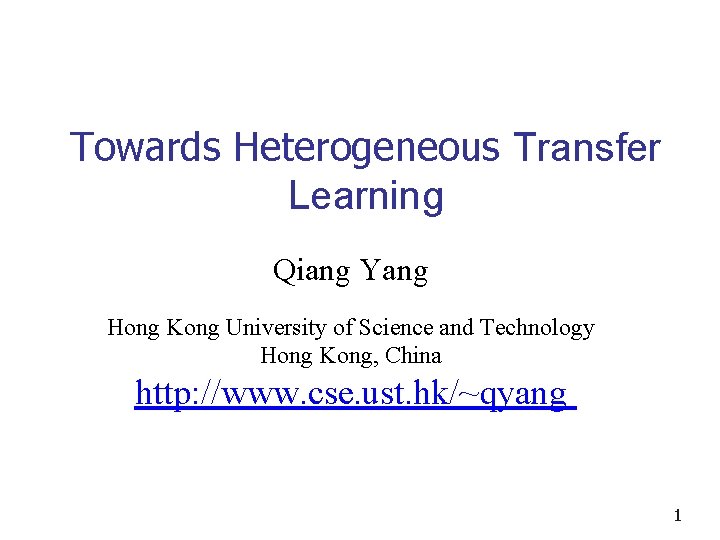

Cross Domain Activity Recognition [Zheng, Hu, Yang, Ubicomp 2009]

- Challenges: a new domain of activities without labeled data
- Cross-domain activity recognition: transfer some available labeled data from source activities to help train the recognizer for the target activities.
- Example domains: Cleaning Indoor, Laundry, Dishwashing

How to use the similarities?

- Source domain labeled data: <Sensor Reading, Activity Name>. Example: <SS, "Make Coffee">
- Similarity measure, mined from THE WEB. Example: sim("Make Coffee", "Make Tea") = 0.6
- Target domain pseudo labeled data. Example pseudo training data: <SS, "Make Tea", 0.6>
- The pseudo labeled data are used to train a weighted SVM classifier
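The relabeling step can be sketched in a few lines. This is a minimal illustration that assumes the similarity values are already available as a dictionary keyed by (source activity, target activity). The function name, the `min_sim` cutoff, and the data layout are hypothetical.

```python
def make_pseudo_training_data(source_data, similarity, target_labels, min_sim=0.0):
    """Turn <reading, source activity> pairs into weighted target examples.

    source_data:   list of (sensor_reading, source_activity) pairs
    similarity:    dict mapping (source_activity, target_activity) -> float
    target_labels: activity names of the target domain
    Returns a list of (sensor_reading, target_activity, weight) triples.
    """
    pseudo = []
    for reading, src_activity in source_data:
        for tgt_activity in target_labels:
            weight = similarity.get((src_activity, tgt_activity), 0.0)
            if weight > min_sim:           # skip unrelated activity pairs
                pseudo.append((reading, tgt_activity, weight))
    return pseudo

source = [("SS", "Make Coffee")]
sim = {("Make Coffee", "Make Tea"): 0.6}
pseudo = make_pseudo_training_data(source, sim, ["Make Tea"])
```

The resulting weights can then be passed as per-sample weights to a classifier that supports them, for example through the `sample_weight` argument of `fit` in scikit-learn's `SVC`.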

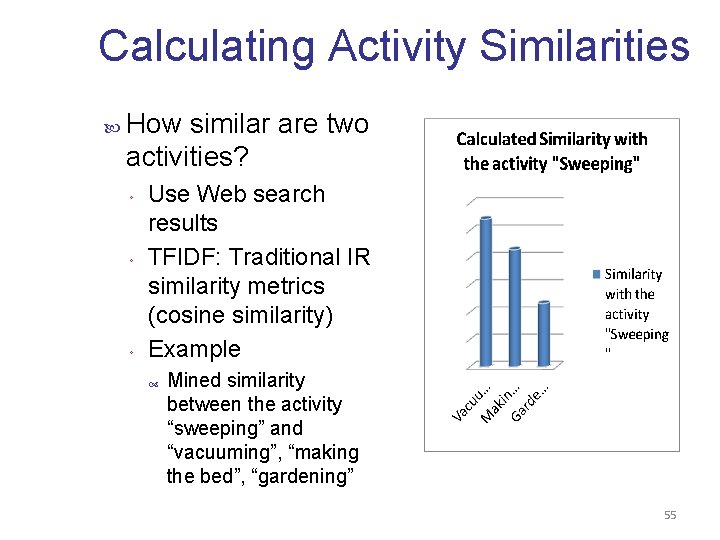

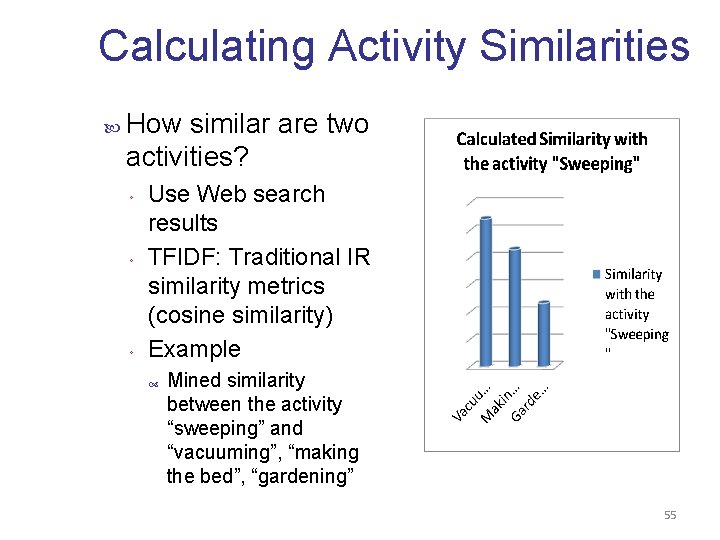

Calculating Activity Similarities

- How similar are two activities?
  - Use Web search results
  - TFIDF: traditional IR similarity metrics (cosine similarity)
- Example: mined similarity between the activity "sweeping" and "vacuuming", "making the bed", "gardening"
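A small sketch of TF-IDF weighting followed by cosine similarity, using only the standard library. The token lists stand in for text retrieved from Web search for each activity name. They are made-up toy data, and the IDF variant `log(N / df) + 1` is one common choice rather than the one confirmed by the slides.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """docs: list of token lists. Returns one sparse TF-IDF dict per document."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({term: count * (math.log(n_docs / doc_freq[term]) + 1.0)
                        for term, count in counts.items()})
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical stand-ins for Web text retrieved per activity name.
pages = {
    "sweeping": "broom floor dust clean sweep".split(),
    "vacuuming": "vacuum floor dust clean carpet".split(),
    "gardening": "plant soil water garden seed".split(),
}
names = list(pages)
vecs = dict(zip(names, tfidf_vectors([pages[name] for name in names])))
```

With this toy data, `cosine(vecs["sweeping"], vecs["vacuuming"])` is positive because the two share terms, while "sweeping" and "gardening" share none and score zero.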

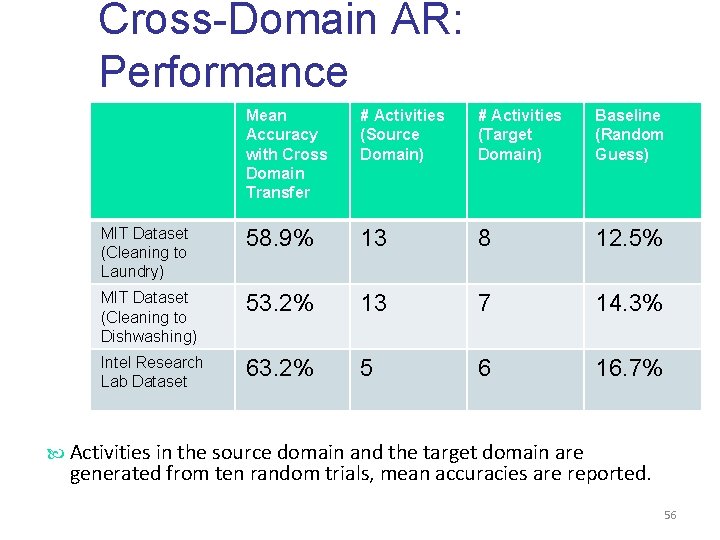

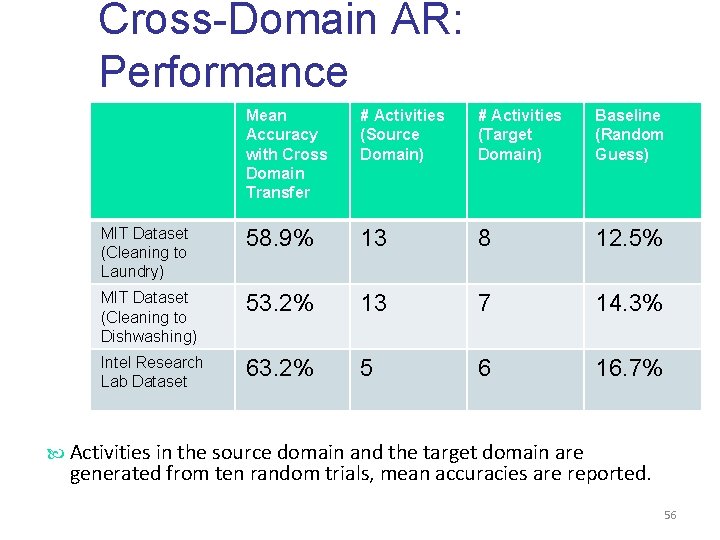

Cross-Domain AR: Performance

| Dataset | Mean Accuracy with Cross Domain Transfer | # Activities (Source Domain) | # Activities (Target Domain) | Baseline (Random Guess) |
| --- | --- | --- | --- | --- |
| MIT Dataset (Cleaning to Laundry) | 58.9% | 13 | 8 | 12.5% |
| MIT Dataset (Cleaning to Dishwashing) | 53.2% | 13 | 7 | 14.3% |
| Intel Research Lab Dataset | 63.2% | 5 | 6 | 16.7% |

Activities in the source domain and the target domain are generated from ten random trials; mean accuracies are reported.

Source-Selection-Free Transfer Learning (SSFTL)

Evan Wei Xiang, Sinno Jialin Pan, Weike Pan, Jian Su and Qiang Yang. Source-Selection-Free Transfer Learning. In Proceedings of the 22nd International Joint Conference on Artificial Intelligence (IJCAI-11), Barcelona, Spain, July 2011.

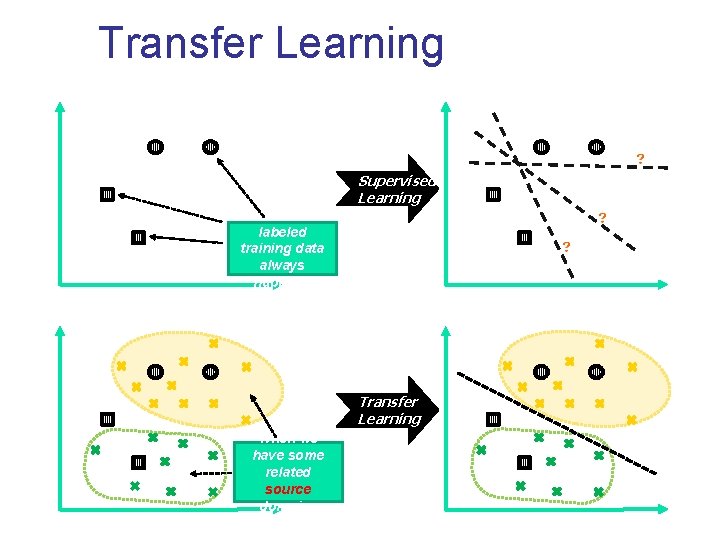

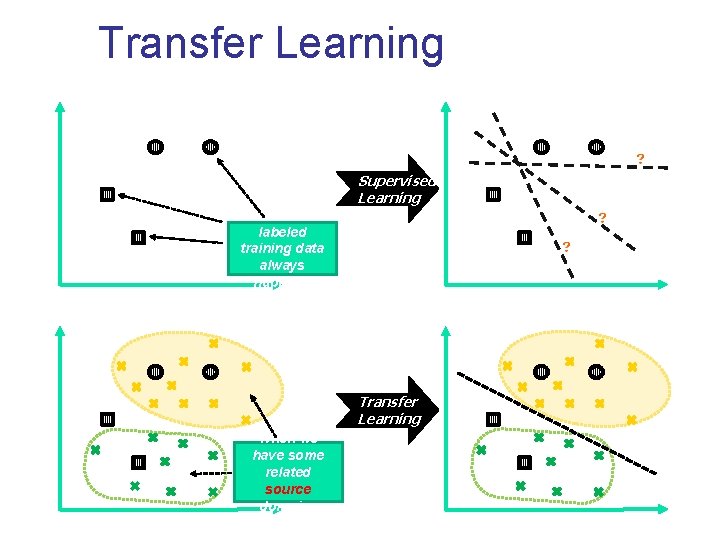

Transfer Learning

- Supervised Learning: lack of labeled training data always happens
- Transfer Learning: when we have some related source domains

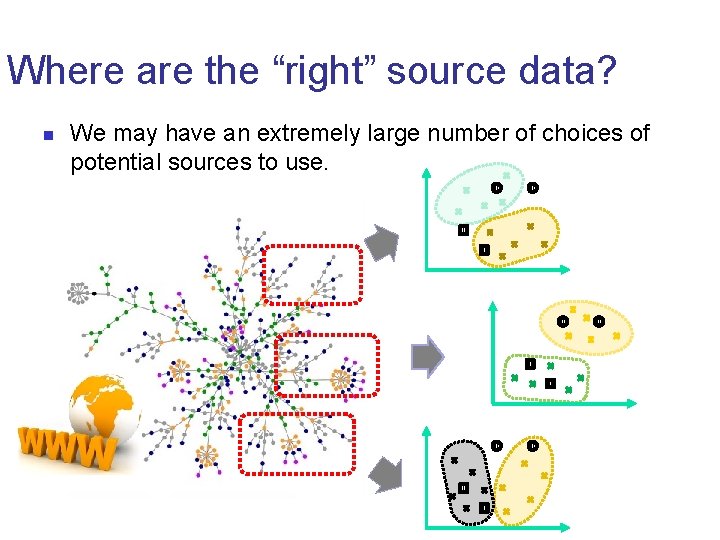

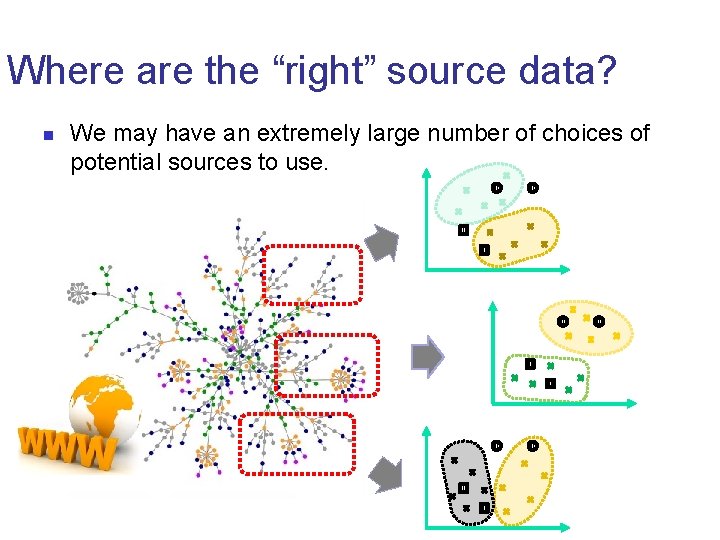

Where are the "right" source data?

- We may have an extremely large number of choices of potential sources to use.

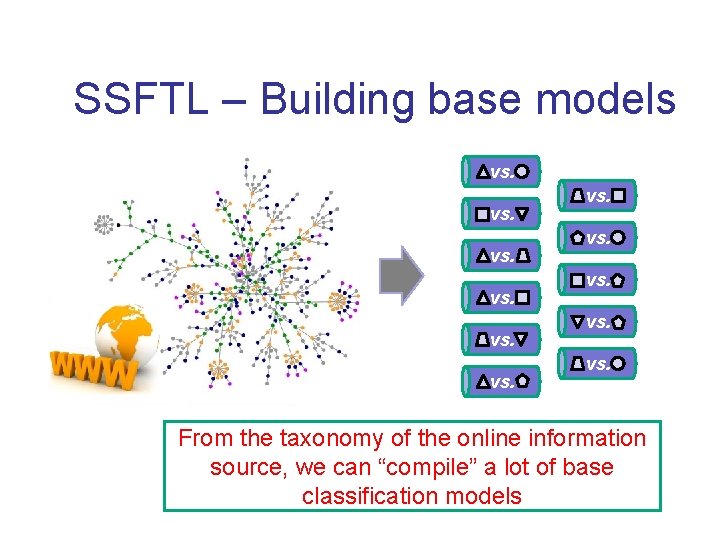

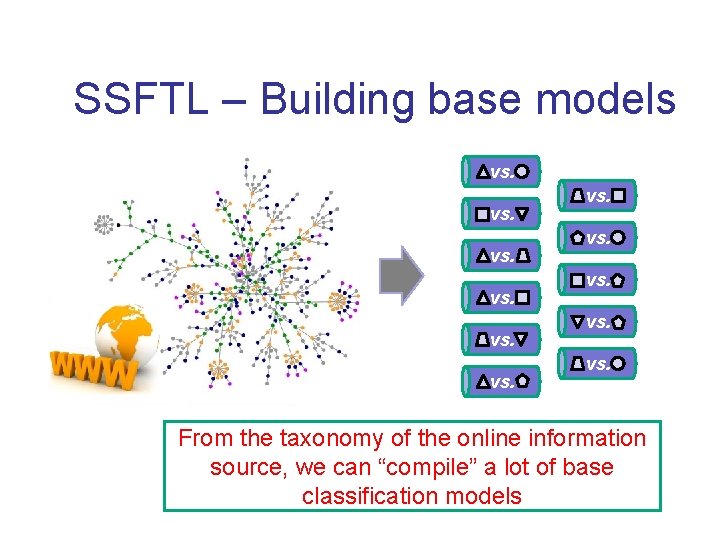

SSFTL - Building base models

- From the taxonomy of the online information source, we can "compile" a lot of base classification models (each a binary "A vs. B" classifier)

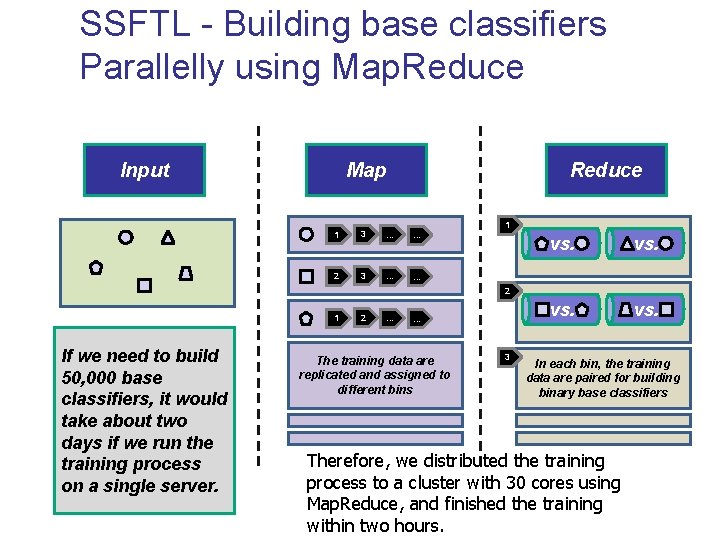

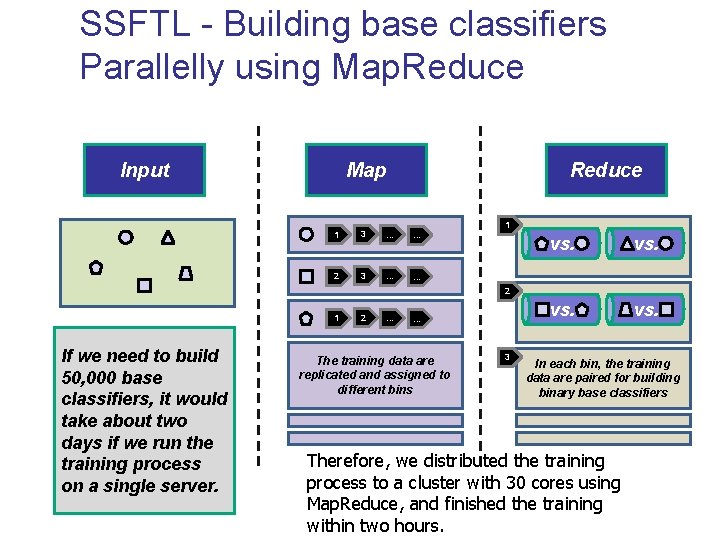

SSFTL - Building base classifiers in parallel using MapReduce

- If we need to build 50,000 base classifiers, it would take about two days if we run the training process on a single server.
- Therefore, we distributed the training process to a cluster with 30 cores using MapReduce, and finished the training within two hours.
- Map: the training data are replicated and assigned to different bins.
- Reduce: in each bin, the training data are paired (e.g., 1 vs. 2) for building binary base classifiers.

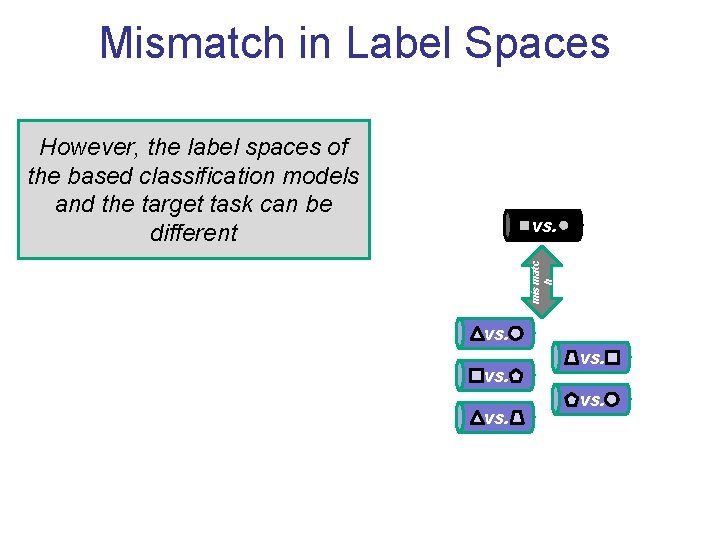

Mismatch in Label Spaces

- However, the label spaces of the base classification models and the target task can be different (mismatch).

SSFTL - Label Bridging via Laplacian Graph Embedding

- The relationship between the target and source labels is provided by social media sites such as Delicious.
- A label distribution vector: <label 1, label 2, …, label q>
- Projection matrix V (q × m)
- The relationships between labels, e.g., similar or dissimilar, can be represented by the distance between their corresponding prototypes in the latent space, e.g., close to or far away from each other.

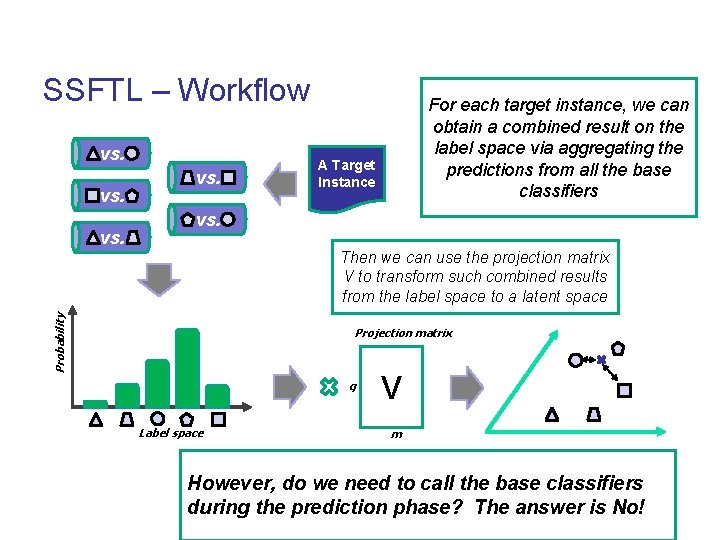

SSFTL - Workflow

- For each target instance, we can obtain a combined result (a probability for each label) on the label space by aggregating the predictions from all the base classifiers.
- Then we can use the projection matrix V (q × m) to transform such combined results from the label space to a latent space.
- However, do we need to call the base classifiers during the prediction phase? The answer is No!

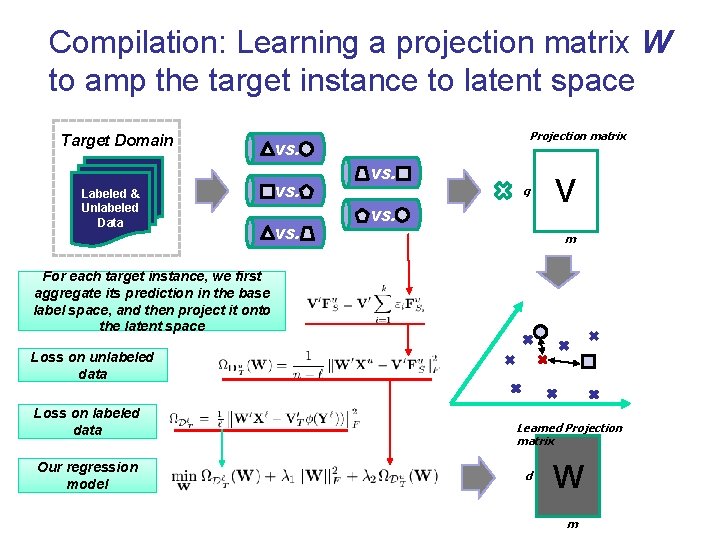

Compilation: Learning a projection matrix W to map the target instance to latent space

- Target domain: labeled and unlabeled data
- For each target instance, we first aggregate its prediction in the base label space, and then project it onto the latent space with the projection matrix V (q × m).
- Our regression model: loss on labeled data plus loss on unlabeled data
- Result: learned projection matrix W (d × m)

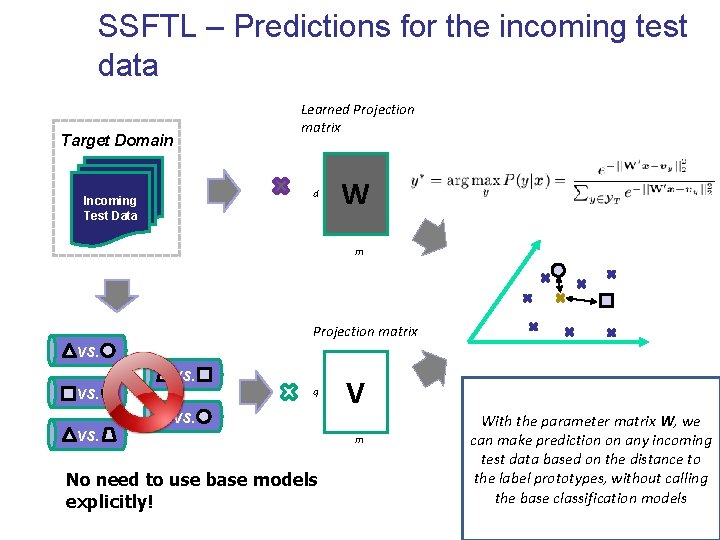

SSFTL - Predictions for the incoming test data

- The learned projection matrix W (d × m) is applied to incoming test data from the target domain.
- No need to use base models explicitly!
- With the parameter matrix W, we can make predictions on any incoming test data based on the distance to the label prototypes, without calling the base classification models.
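The prediction step described here amounts to a projection followed by a nearest-prototype lookup. The sketch below assumes a d-dimensional instance, a learned d × m matrix W, and one m-dimensional prototype per target label. Euclidean distance, the function name, and the toy values are assumptions for illustration.

```python
import numpy as np

def predict_label(x, W, prototypes, labels):
    """Project instance x into the latent space and return the nearest label.

    x:          (d,) feature vector of a test instance
    W:          (d x m) learned projection matrix
    prototypes: (c x m) latent prototypes, one row per target label
    labels:     list of c label names, aligned with the prototype rows
    """
    z = x @ W                                        # latent representation
    distances = np.linalg.norm(prototypes - z, axis=1)
    return labels[int(distances.argmin())]

# Toy example with d = m = 2 and two target labels.
W = np.eye(2)
prototypes = np.array([[1.0, 0.0], [0.0, 1.0]])
labels = ["hockey", "religion"]
prediction = predict_label(np.array([0.9, 0.1]), W, prototypes, labels)
```

No base classifier is called at this stage, which matches the point made on the slide: their knowledge has already been compiled into W.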

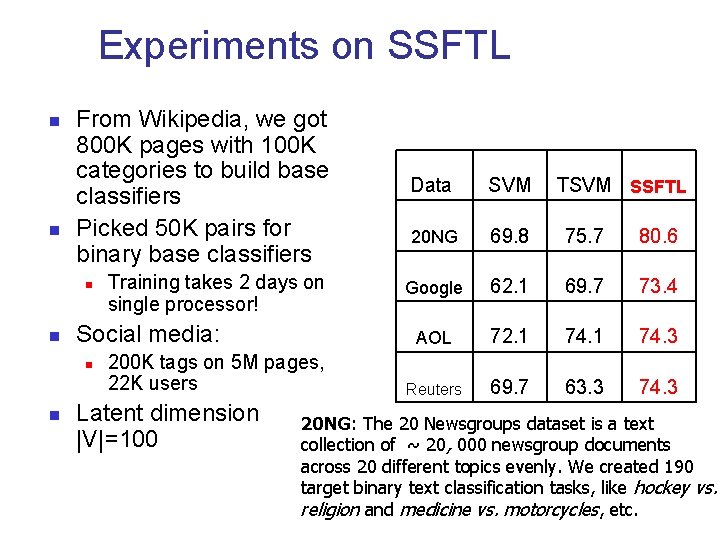

Experiments on SSFTL

- From Wikipedia, we got 800K pages with 100K categories to build base classifiers
- Picked 50K pairs for binary base classifiers
  - Training takes 2 days on a single processor!
- Social media:
  - 200K tags on 5M pages, 22K users
  - Latent dimension |V| = 100

| Data | SVM | TSVM | SSFTL |
| --- | --- | --- | --- |
| 20NG | 69.8 | 75.7 | 80.6 |
| Google | 62.1 | 69.7 | 73.4 |
| Reuters | 69.7 | 63.3 | 74.3 |

For AOL, only two values survive in the extracted text (72.1 and 74.3); their columns cannot be determined.

20NG: The 20 Newsgroups dataset is a text collection of ~20,000 newsgroup documents spread evenly across 20 different topics. We created 190 target binary text classification tasks, like hockey vs. religion and medicine vs. motorcycles, etc.

Conclusions and Future Work

- Transfer Learning
  - Instance based
  - Feature based
  - Model based
- Heterogeneous Transfer Learning
  - Translator: Translated Learning
  - No Translator: Structural Transfer Learning
- Challenges

Acknowledgements

- HKUST: Sinno J. Pan, Huayan Wang, Bin Cao, Evan Wei Xiang, Derek Hao Hu, Nathan Nan Liu, Qian Xu, Vincent Wenchen Zheng, Weike Pan, etc.
- Shanghai Jiaotong University: Wenyuan Dai, Guirong Xue, Yuqiang Chen, Prof. Yong Yu, Xiao Ling, Ou Jin.
- Visiting Students: Bin Li (Fudan U.), Xiaoxiao Shi (Zhong Shan U.), etc.