A 12-WEEK PROJECT IN Speech Coding and Recognition by Fu-Tien Hsiao and Vedrana Andersen

Overview

- An Introduction to Speech Signals (Vedrana)
- Linear Prediction Analysis (Fu)
- Speech Coding and Synthesis (Fu)
- Speech Recognition (Vedrana)

Speech Coding and Recognition AN INTRODUCTION TO SPEECH SIGNALS

AN INTRODUCTION TO SPEECH SIGNALS: Speech Production

- Flow of air from the lungs
- Vibrating vocal cords
- Speech production cavities
- Lips
- Sound wave
- Vowels (a, e, i), fricatives (f, s, z) and plosives (p, t, k)

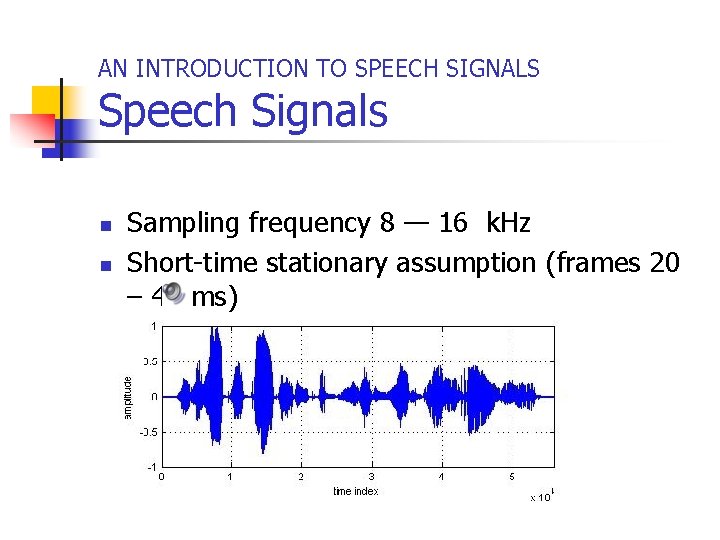

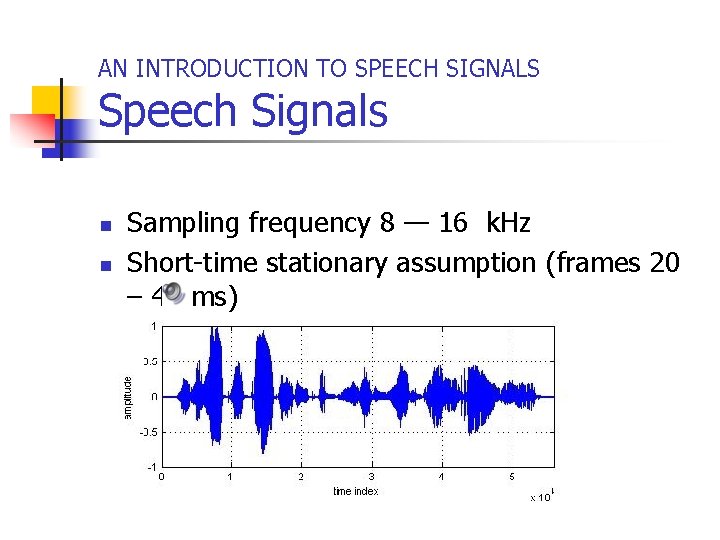

AN INTRODUCTION TO SPEECH SIGNALS: Speech Signals

- Sampling frequency: 8 to 16 kHz
- Short-time stationarity assumption (frames of 20 to 40 ms)

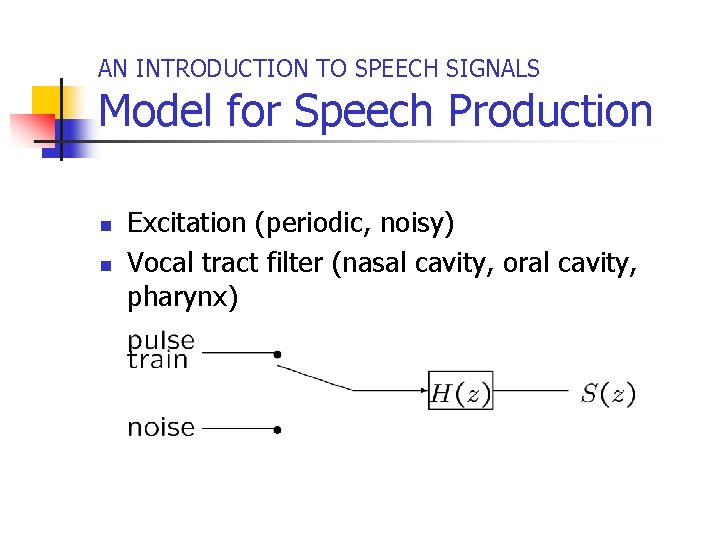

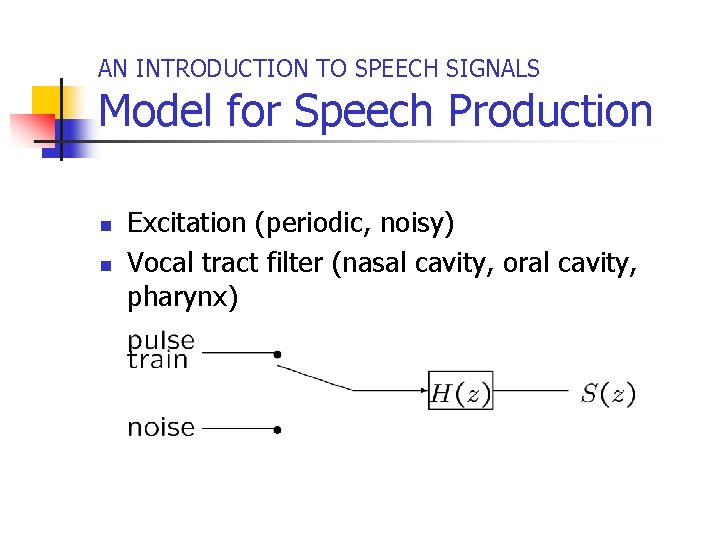
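The short-time framing mentioned on this slide can be sketched in a few lines. This is only an illustration: the function name `split_into_frames`, the 30 ms frame length and the 10 ms hop are assumptions chosen here, not values taken from the project.

```python
def split_into_frames(samples, sample_rate_hz, frame_ms=30, hop_ms=10):
    """Cut a signal into overlapping frames short enough to be treated as stationary."""
    frame_len = int(sample_rate_hz * frame_ms / 1000)
    hop_len = int(sample_rate_hz * hop_ms / 1000)  # hop size is an assumed value
    frames = []
    start = 0
    while start + frame_len <= len(samples):
        frames.append(samples[start:start + frame_len])
        start += hop_len
    return frames


# One second of a dummy signal sampled at 8 kHz.
signal = [0.0] * 8000
frames = split_into_frames(signal, sample_rate_hz=8000)
print(len(frames[0]), "samples per frame,", len(frames), "frames")
```

At 8 kHz a 30 ms frame holds 240 samples, which falls inside the 20 to 40 ms range quoted on the slide.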

AN INTRODUCTION TO SPEECH SIGNALS: Model for Speech Production

- Excitation (periodic, noisy)
- Vocal tract filter (nasal cavity, oral cavity, pharynx)

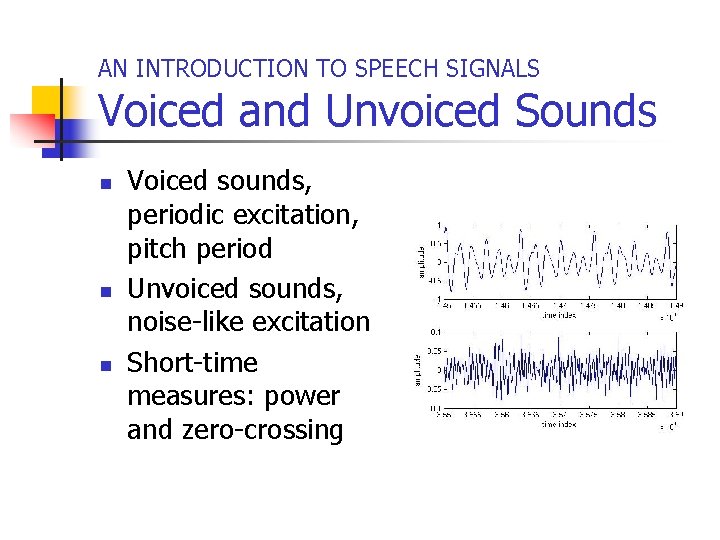

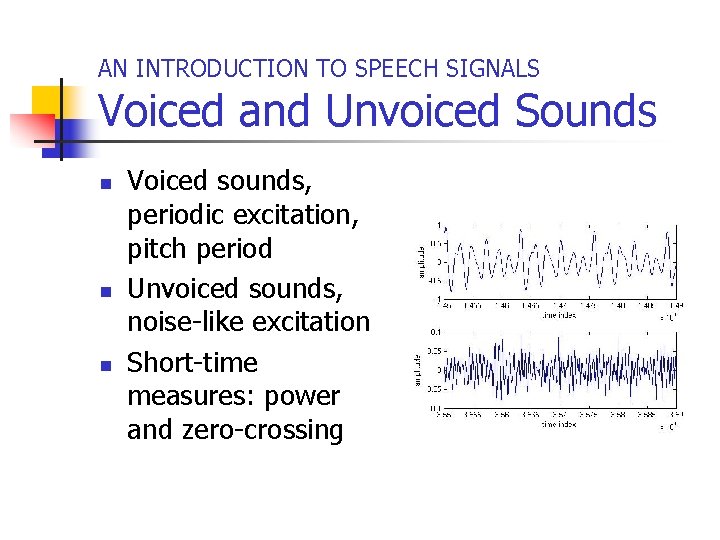

AN INTRODUCTION TO SPEECH SIGNALS: Voiced and Unvoiced Sounds

- Voiced sounds: periodic excitation, pitch period
- Unvoiced sounds: noise-like excitation
- Short-time measures: power and zero-crossing rate

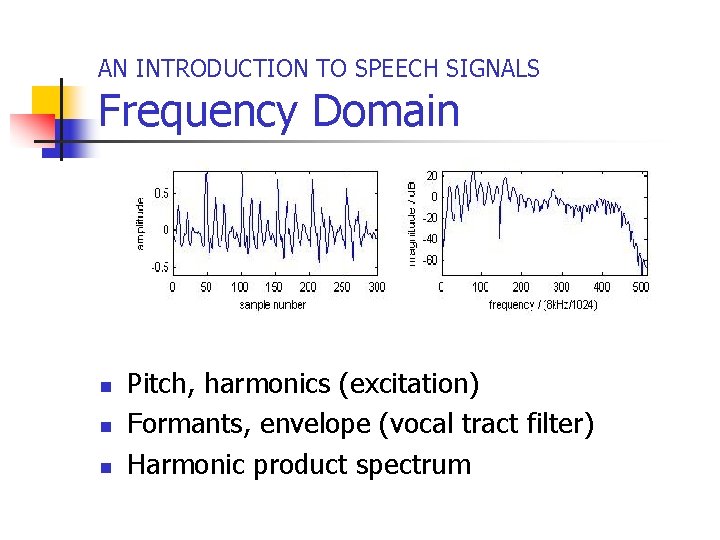

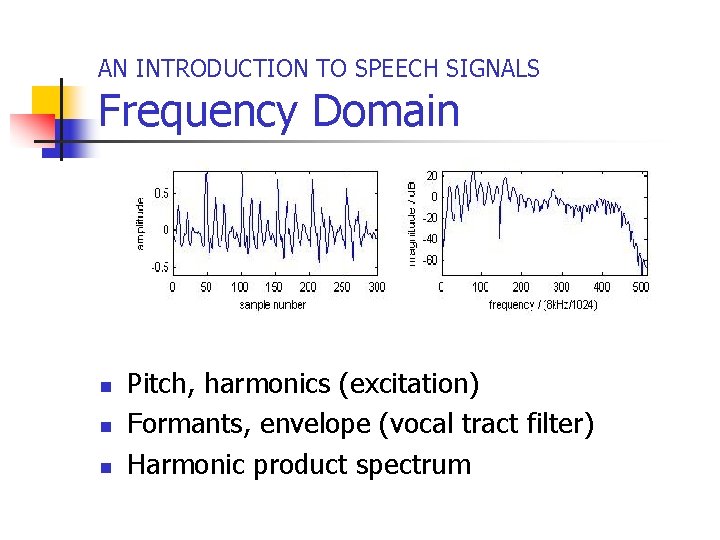
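The two short-time measures can be computed per frame as in the sketch below. The function names and the two test signals are illustrative assumptions; in particular, the alternating-sign sequence is only a deterministic stand-in for noise-like excitation.

```python
import math


def short_time_power(frame):
    """Mean squared amplitude of one frame."""
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


# Voiced-like frame: a 100 Hz sine sampled at 8 kHz (30 ms).
voiced_like = [math.sin(2 * math.pi * 100 * n / 8000) for n in range(240)]
# Unvoiced-like frame: weak, rapidly alternating samples (assumed stand-in for noise).
unvoiced_like = [0.1 * (-1) ** n for n in range(240)]

for name, frame in (("voiced-like", voiced_like), ("unvoiced-like", unvoiced_like)):
    print(name, short_time_power(frame), zero_crossing_rate(frame))
```

Voiced frames tend to show high power and a low zero-crossing rate, and unvoiced frames the opposite, which is why the pair is commonly used for a rough voiced/unvoiced decision.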

AN INTRODUCTION TO SPEECH SIGNALS: Frequency Domain

- Pitch, harmonics (excitation)
- Formants, envelope (vocal tract filter)
- Harmonic product spectrum

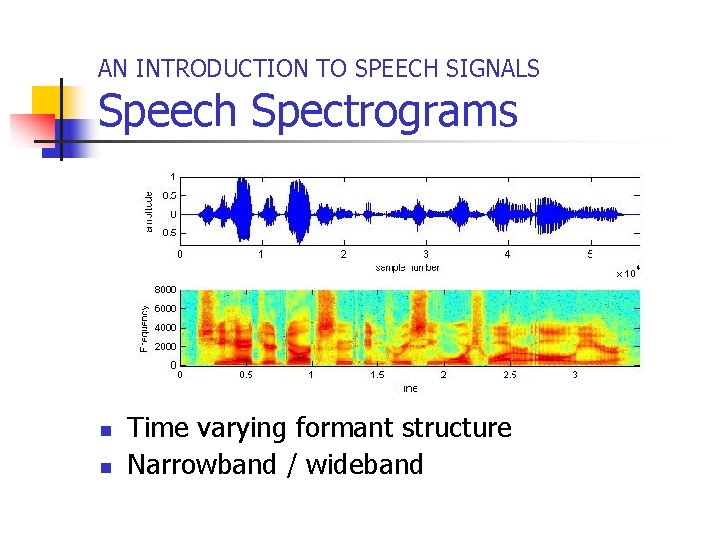

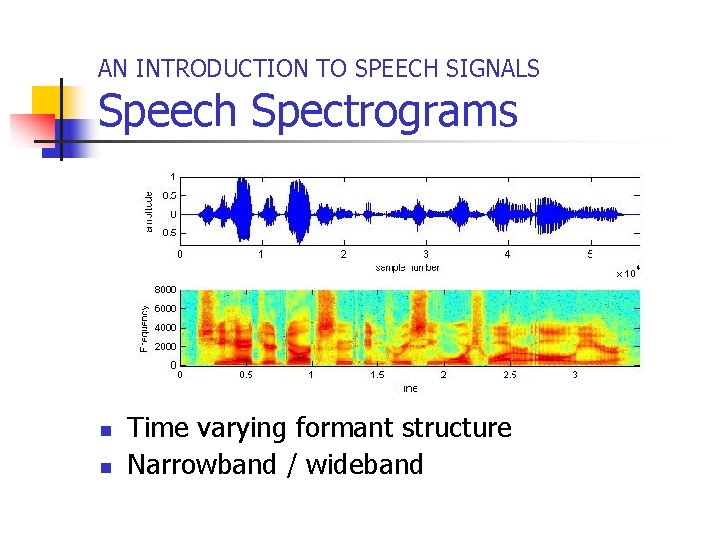
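A minimal sketch of pitch estimation with the harmonic product spectrum follows. It assumes the common formulation in which the magnitude spectrum is multiplied by copies of itself compressed by factors 2, 3, and so on; the function names, the number of harmonics and the synthetic test frame are choices made here for illustration.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Magnitude of the DFT for bins 0 .. N/2 - 1 (direct O(N^2) evaluation)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2)
    ]


def hps_pitch_hz(frame, sample_rate_hz, num_harmonics=3):
    """Pick the bin whose first few harmonics jointly carry the most energy."""
    mag = magnitude_spectrum(frame)
    best_bin, best_product = 1, -1.0
    for k in range(1, len(mag) // num_harmonics):
        product = 1.0
        for h in range(1, num_harmonics + 1):
            product *= mag[k * h]
        if product > best_product:
            best_bin, best_product = k, product
    return best_bin * sample_rate_hz / len(frame)


# Synthetic frame: 200 Hz fundamental that is weaker than its second harmonic.
fs = 8000
frame = [
    0.5 * math.sin(2 * math.pi * 200 * n / fs)
    + 1.0 * math.sin(2 * math.pi * 400 * n / fs)
    + 0.8 * math.sin(2 * math.pi * 600 * n / fs)
    for n in range(400)
]
pitch_hz = hps_pitch_hz(frame, fs)
print(pitch_hz)
```

Simply picking the largest spectral peak would report 400 Hz here; the product over harmonics favours the true fundamental.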

AN INTRODUCTION TO SPEECH SIGNALS: Speech Spectrograms

- Time-varying formant structure
- Narrowband / wideband

Speech Coding and Recognition LINEAR PREDICTION ANALYSIS

LINEAR PREDICTION ANALYSIS: Categories

- Vocal Tract Filter
- Linear Prediction Analysis
- Error Minimization
- Levinson-Durbin Recursion
- Residual sequence u(n)

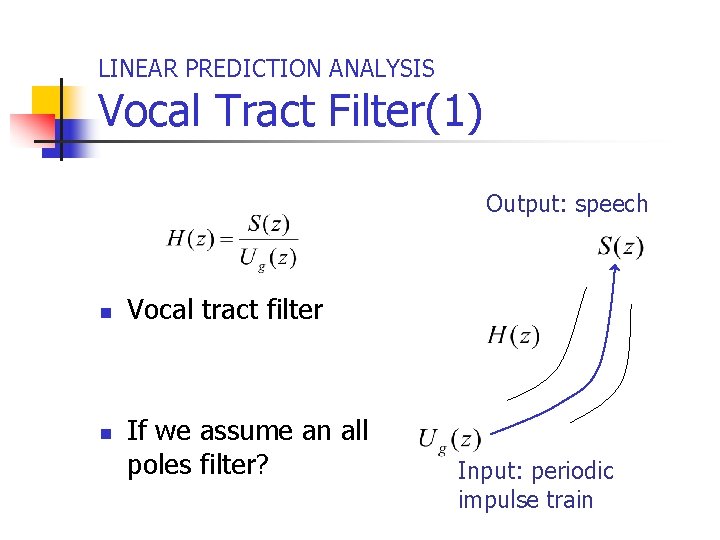

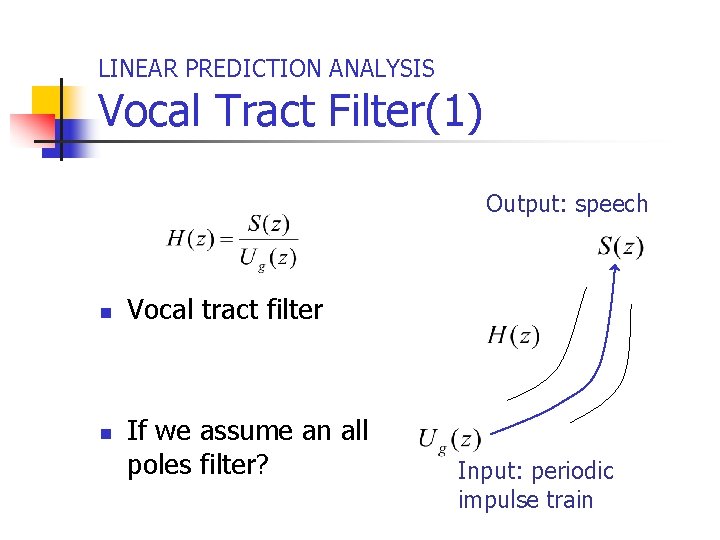

LINEAR PREDICTION ANALYSIS: Vocal Tract Filter (1)

- Input: periodic impulse train
- Vocal tract filter
- Output: speech
- What if we assume an all-pole filter?

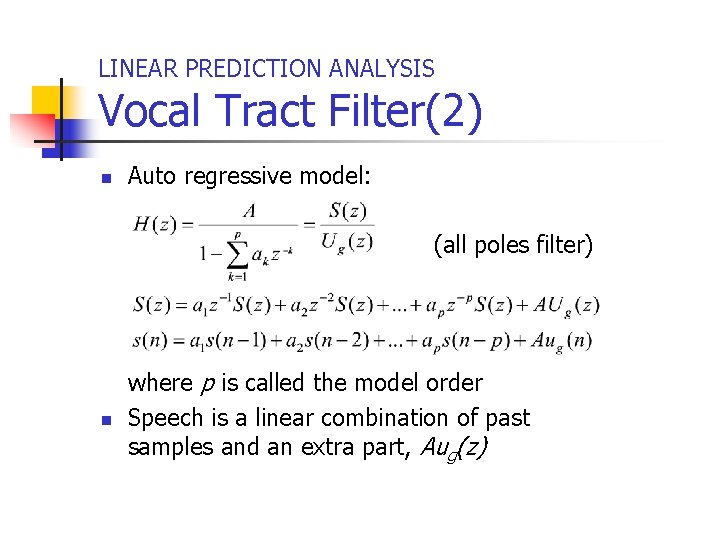

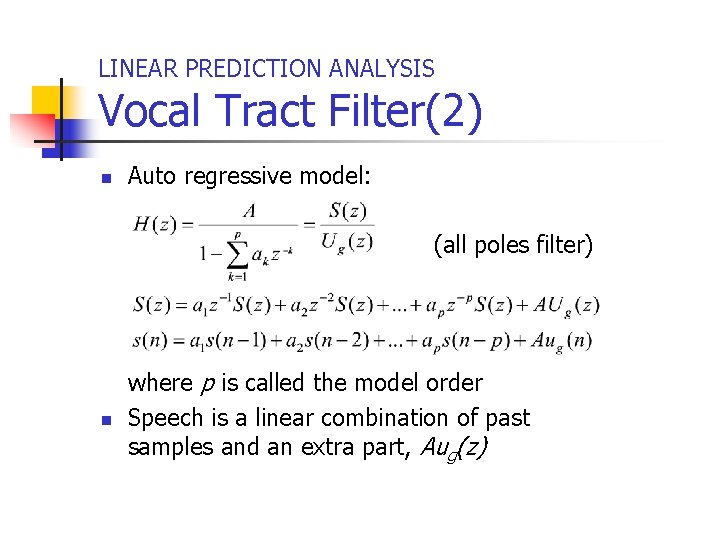

LINEAR PREDICTION ANALYSIS: Vocal Tract Filter (2)

- Autoregressive model (all-pole filter), where p is called the model order
- Speech is a linear combination of past samples plus an extra term, Aug(z)

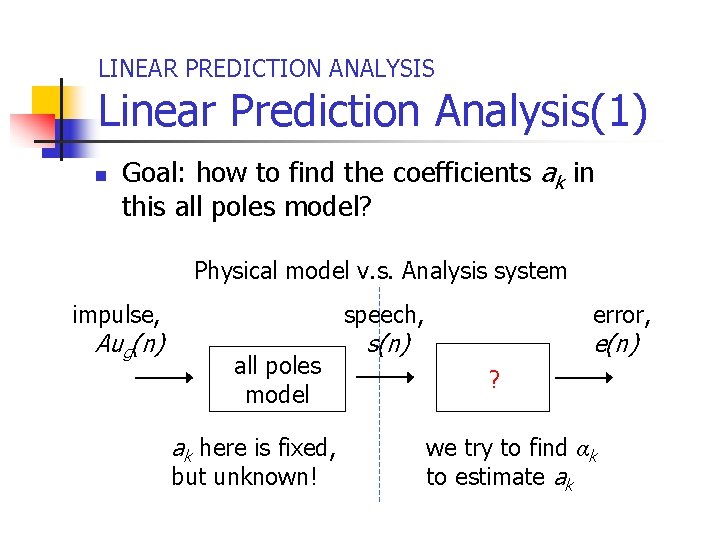

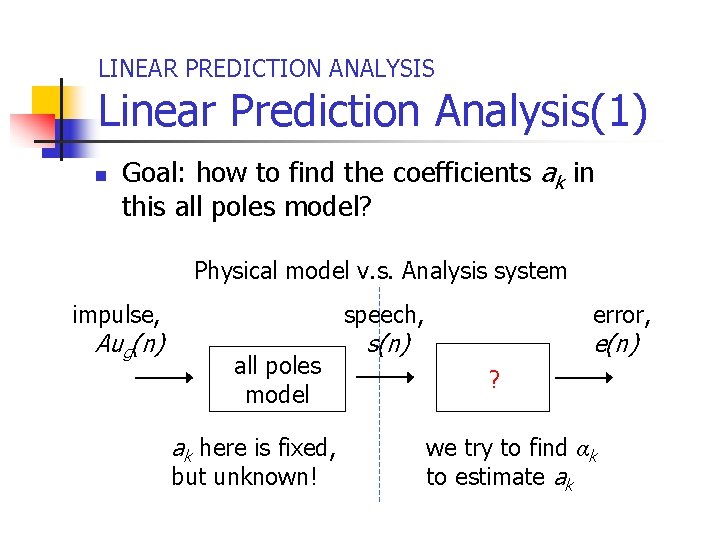
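The autoregressive model can be illustrated by running an excitation through an all-pole filter. The sketch below assumes the sign convention s(n) = a1 s(n-1) + ... + ap s(n-p) + excitation(n), where the excitation plays the role of the extra term on the slide; the function name and the coefficient value are hypothetical.

```python
def all_pole_filter(excitation, a):
    """Synthesize s(n) = sum_k a[k-1] * s(n-k) + excitation(n) with zero initial state."""
    s = []
    for n, x in enumerate(excitation):
        past = sum(a[k] * s[n - 1 - k] for k in range(len(a)) if n - 1 - k >= 0)
        s.append(past + x)
    return s


# Periodic impulse train (pitch period of 80 samples) driving a first-order model.
impulse_train = [1.0 if n % 80 == 0 else 0.0 for n in range(240)]
speech_like = all_pole_filter(impulse_train, a=[0.5])
print(speech_like[:4])
```

With a model order p the list `a` simply holds p coefficients; a first-order filter is used here only to keep the output easy to check by hand.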

LINEAR PREDICTION ANALYSIS: Linear Prediction Analysis (1)

- Goal: how do we find the coefficients ak in this all-pole model?
- Physical model vs. analysis system
- Physical model: impulse, Aug(n) → all-pole model → speech, s(n). The ak here are fixed, but unknown!
- Analysis system: speech, s(n) → ? → error, e(n). We try to find αk to estimate ak.

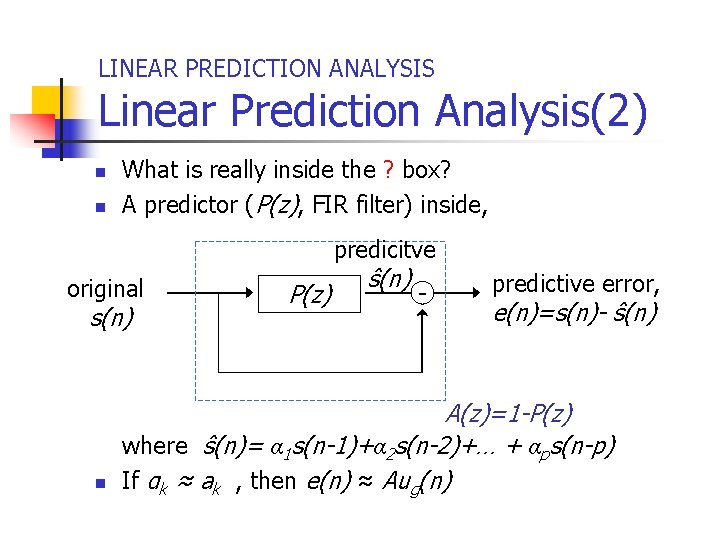

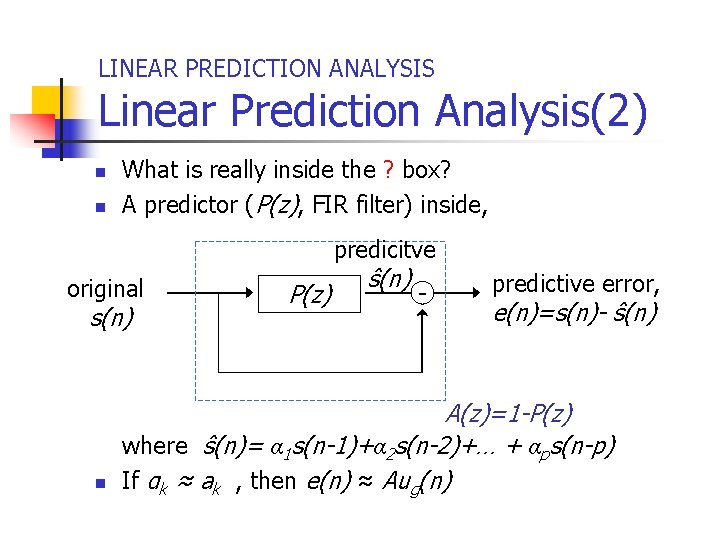

LINEAR PREDICTION ANALYSIS: Linear Prediction Analysis (2)

- What is really inside the "?" box?
- A predictor (P(z), an FIR filter): original s(n) → P(z) → predicted ŝ(n)
- Prediction error: e(n) = s(n) - ŝ(n), so A(z) = 1 - P(z)
- where ŝ(n) = α1 s(n-1) + α2 s(n-2) + … + αp s(n-p)
- If αk ≈ ak, then e(n) ≈ Aug(n)

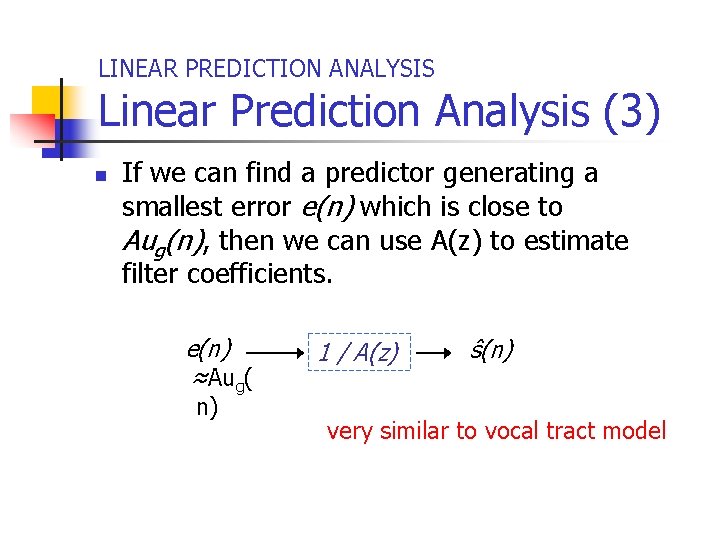

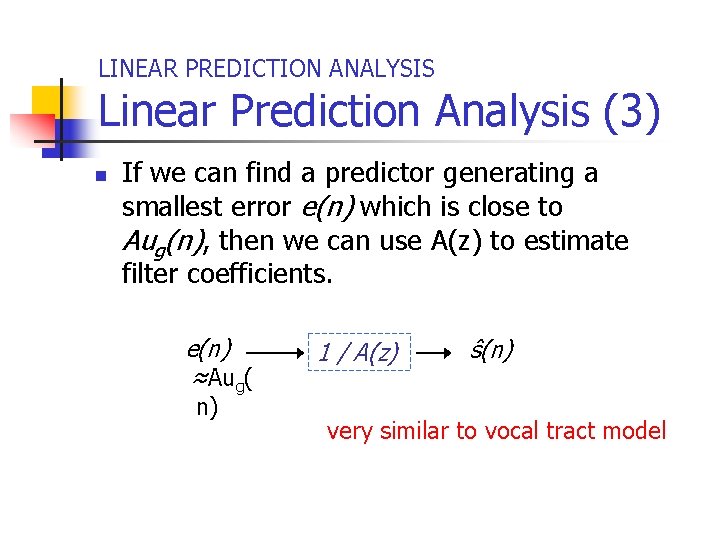

LINEAR PREDICTION ANALYSIS: Linear Prediction Analysis (3)

- If we can find a predictor that generates the smallest error e(n), which is close to Aug(n), then we can use A(z) to estimate the filter coefficients.
- e(n) ≈ Aug(n) → 1 / A(z) → ŝ(n): very similar to the vocal tract model

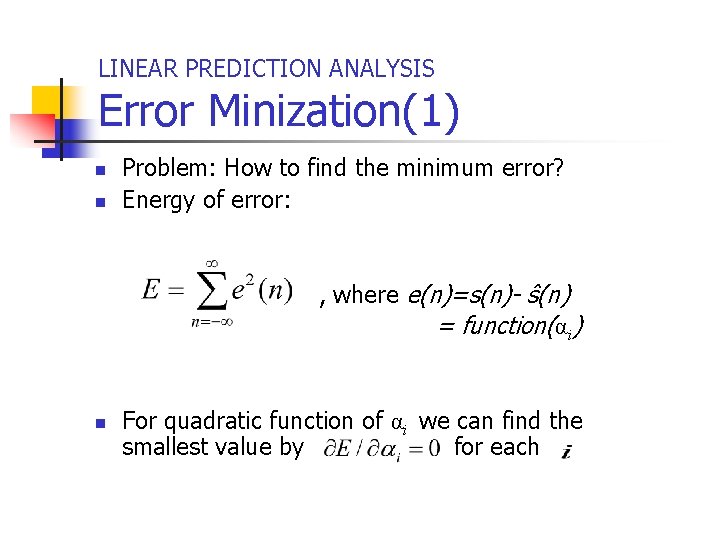

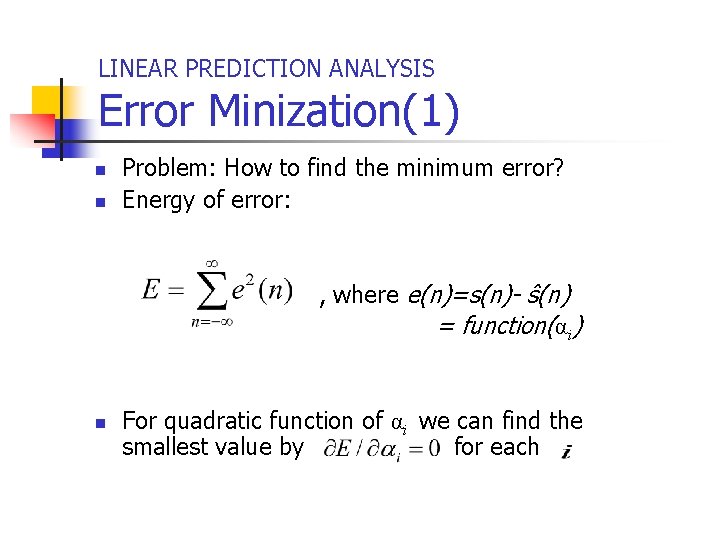

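LINEAR PREDICTION ANALYSIS: Error Minimization (1)

- Problem: how do we find the minimum error?
- Energy of the error: the sum of e(n)² over the frame, where e(n) = s(n) - ŝ(n), is a function of the αi
- For a quadratic function of the αi, we can find the smallest value by setting the partial derivative with respect to αi to zero for each i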

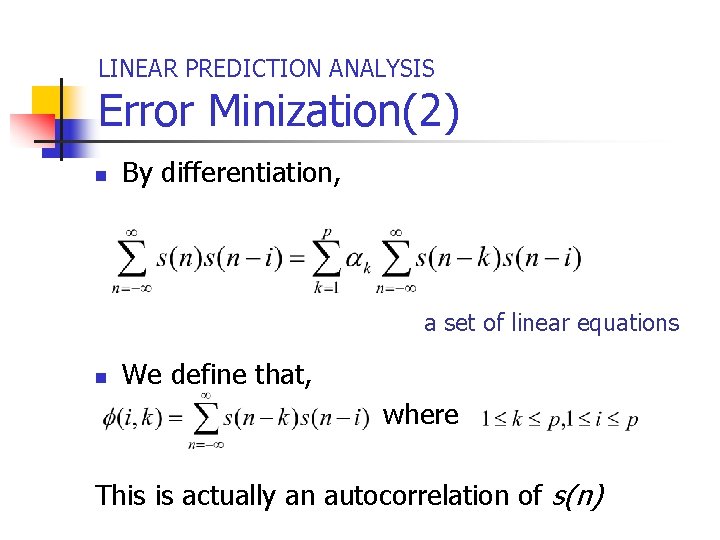

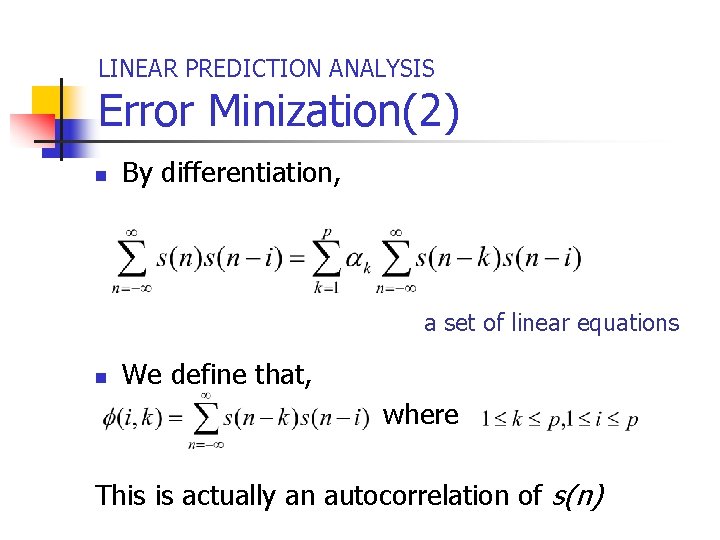

LINEAR PREDICTION ANALYSIS: Error Minimization (2)

- Differentiation gives a set of linear equations
- The sums that appear in these equations are actually an autocorrelation of s(n)

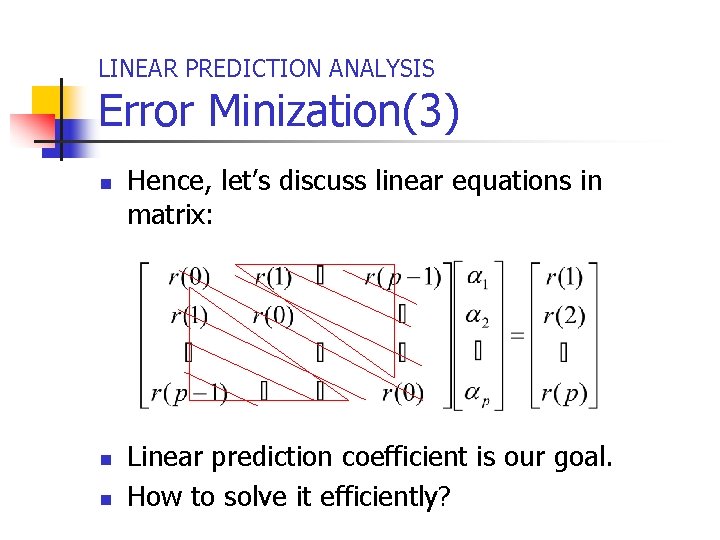

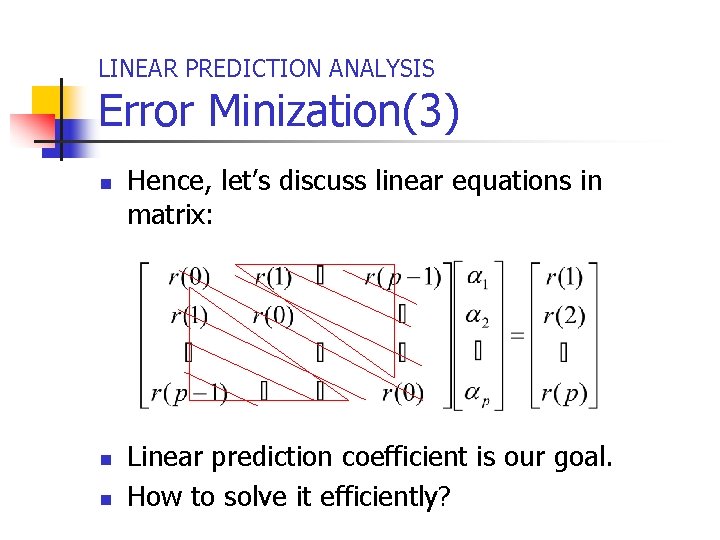
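For reference, the standard least-squares derivation behind the two error minimization steps can be written out as follows. This is a sketch under the autocorrelation-method assumption (the frame is windowed, so samples outside it are zero); the symbols E and r(i) are labels chosen here and may differ from the ones on the slides.

```latex
\begin{aligned}
E &= \sum_n e^2(n) = \sum_n \Bigl( s(n) - \sum_{k=1}^{p} \alpha_k\, s(n-k) \Bigr)^2 \\
\frac{\partial E}{\partial \alpha_i} &= 0
  \;\Rightarrow\;
  \sum_{k=1}^{p} \alpha_k \sum_n s(n-k)\, s(n-i) = \sum_n s(n)\, s(n-i),
  \qquad i = 1, \dots, p \\
r(i) &= \sum_n s(n)\, s(n-i)
  \;\Rightarrow\;
  \sum_{k=1}^{p} \alpha_k\, r(|i-k|) = r(i),
  \qquad i = 1, \dots, p
\end{aligned}
```

The last line is a system of p linear equations in the p unknowns αk, and its coefficient matrix depends only on |i - k|.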

LINEAR PREDICTION ANALYSIS: Error Minimization (3)

- Hence, let's write the linear equations in matrix form:
- The linear prediction coefficients are our goal.
- How do we solve the system efficiently?

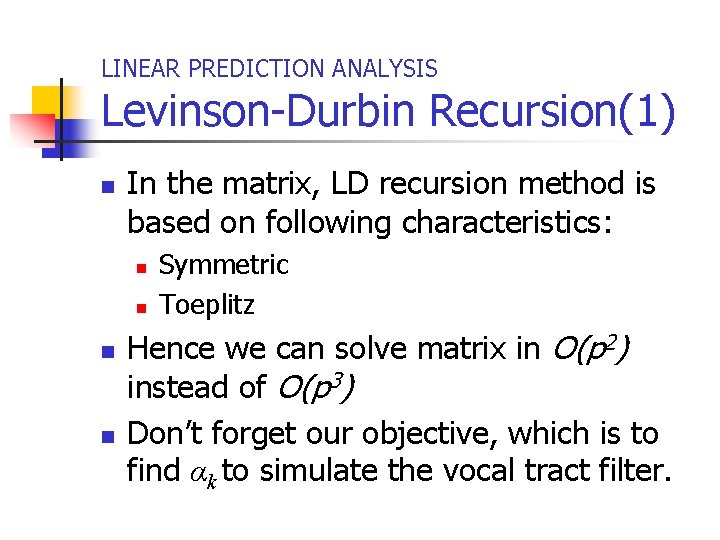

LINEAR PREDICTION ANALYSIS: Levinson-Durbin Recursion (1)

- The L-D recursion exploits two characteristics of the matrix:
  - Symmetric
  - Toeplitz
- Hence we can solve the matrix equation in O(p²) instead of O(p³)
- Don't forget our objective, which is to find αk to simulate the vocal tract filter.

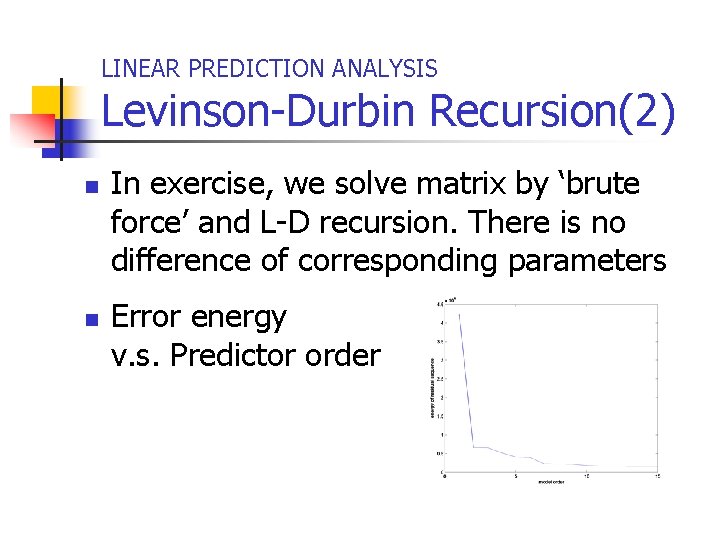

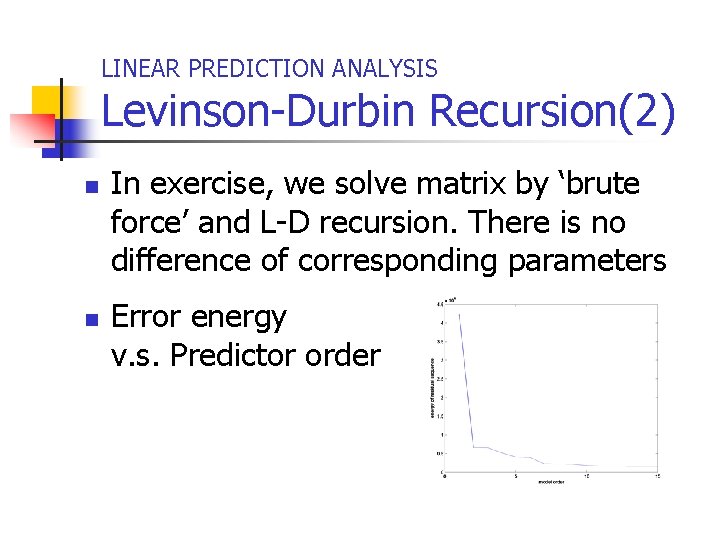
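The recursion can be sketched as below. This follows the textbook form of Levinson-Durbin for the autocorrelation method and is not necessarily the code used in the project; the names `autocorrelation` and `levinson_durbin` and the example autocorrelation values are assumptions.

```python
def autocorrelation(s, max_lag):
    """r[lag] = sum_n s(n) * s(n - lag), for lag = 0 .. max_lag."""
    return [sum(s[n] * s[n - lag] for n in range(lag, len(s))) for lag in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve sum_k alpha_k * r(|i-k|) = r(i), i = 1..order, in O(order^2) operations.

    Returns the predictor coefficients [alpha_1 .. alpha_order] and the
    final prediction error energy.
    """
    alpha = [0.0] * (order + 1)  # alpha[0] is unused padding
    error = r[0]
    for i in range(1, order + 1):
        # Reflection coefficient for stepping from order i-1 to order i.
        k = (r[i] - sum(alpha[j] * r[i - j] for j in range(1, i))) / error
        updated = alpha[:]
        updated[i] = k
        for j in range(1, i):
            updated[j] = alpha[j] - k * alpha[i - j]
        alpha = updated
        error *= 1.0 - k * k  # error energy shrinks as the order grows
    return alpha[1:], error


# Hypothetical autocorrelation values for a second-order predictor.
r = [2.0, 1.0, 0.2]
coeffs, err = levinson_durbin(r, order=2)
print(coeffs, err)
```

Because the error energy is updated at every order, the same loop also yields the "error energy vs. predictor order" curve for free.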

LINEAR PREDICTION ANALYSIS: Levinson-Durbin Recursion (2)

- In the exercise, we solved the matrix equation both by 'brute force' and by the L-D recursion.
- There is no difference between the corresponding parameters.
- Error energy vs. predictor order

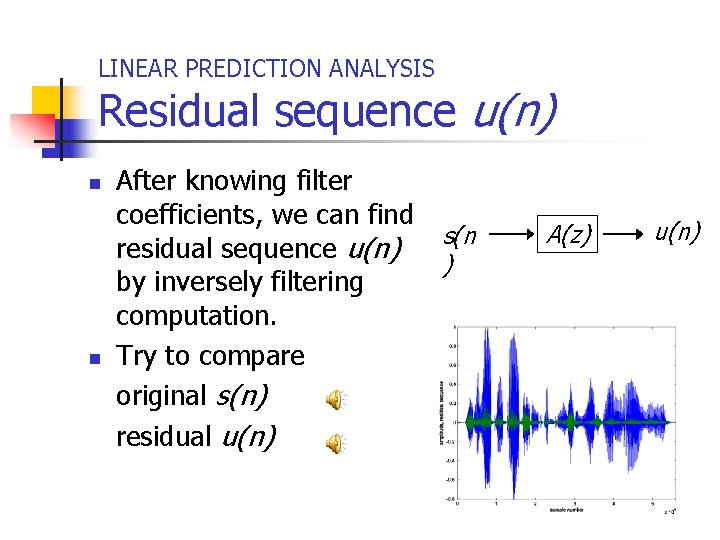

LINEAR PREDICTION ANALYSIS: Residual sequence u(n)

- Once the filter coefficients are known, we can find the residual sequence u(n) by inverse filtering: s(n) → A(z) → u(n)
- Compare the original s(n) with the residual u(n)
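Inverse filtering with A(z) = 1 - P(z) amounts to subtracting the prediction from each sample. The sketch below assumes zero initial state and uses a hypothetical first-order predictor; the function name is chosen here.

```python
def residual(s, alpha):
    """u(n) = s(n) - sum_k alpha[k-1] * s(n-k), i.e. s(n) filtered by A(z)."""
    u = []
    for n in range(len(s)):
        predicted = sum(alpha[k] * s[n - 1 - k] for k in range(len(alpha)) if n - 1 - k >= 0)
        u.append(s[n] - predicted)
    return u


# A decaying signal that a first-order predictor with alpha_1 = 0.5 models exactly.
s = [1.0, 0.5, 0.25, 0.125]
u = residual(s, alpha=[0.5])
print(u)
```

When the predictor matches the signal model, the residual collapses to the excitation, here a single impulse.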

Speech Coding and Recognition SPEECH CODING AND SYNTHESIS

SPEECH CODING AND SYNTHESIS: Categories

- Analysis-by-Synthesis
- Perceptual Weighting Filter
- Linear Predictive Coding
  - Multi-Pulse Linear Prediction
  - Code-Excited Linear Prediction (CELP)
- CELP Experiment
- Quantization

SPEECH CODING AND SYNTHESIS: Analysis-by-Synthesis (1)

- Analyze the speech by estimating an LP synthesis filter
- Compute a residual sequence to use as the excitation signal for reconstructing the signal
- Encoder/decoder: parameters such as the LP synthesis filter, gain, and pitch are coded, transmitted, and decoded

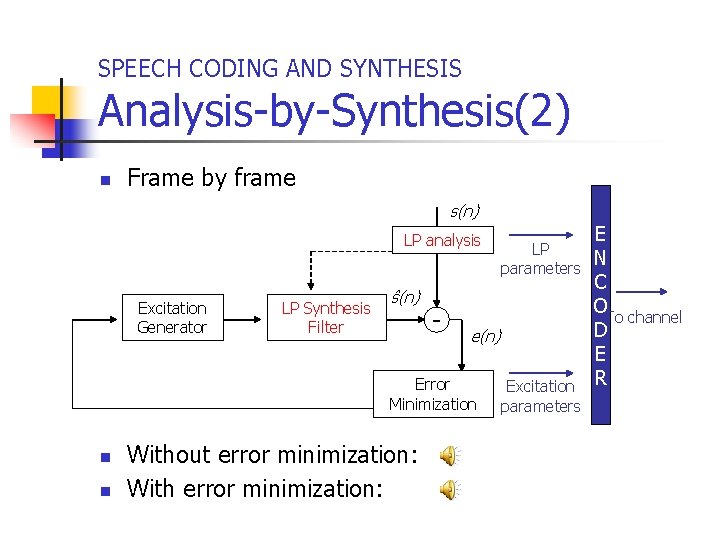

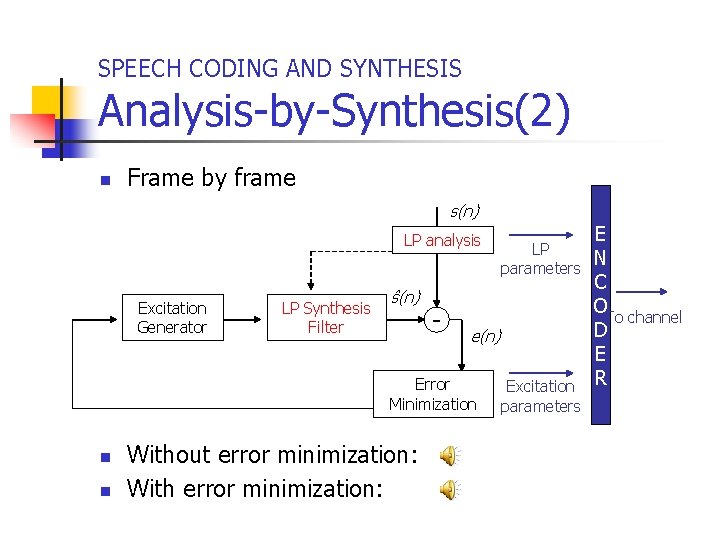

SPEECH CODING AND SYNTHESIS: Analysis-by-Synthesis (2)

- Frame by frame
- Block diagram: s(n) → LP analysis; excitation generator → LP synthesis filter → ŝ(n); e(n) = s(n) - ŝ(n) → error minimization; LP parameters and excitation parameters → ENCODER → to channel
- Cases compared: without error minimization, with error minimization

SPEECH CODING AND SYNTHESIS: Perceptual Weighting Filter (1)

- Perceptual masking effect: within the formant regions, one is less sensitive to noise
- Idea: design a filter that de-emphasizes the error in the formant regions
- Result: synthetic speech with more error near the formant peaks but less error elsewhere

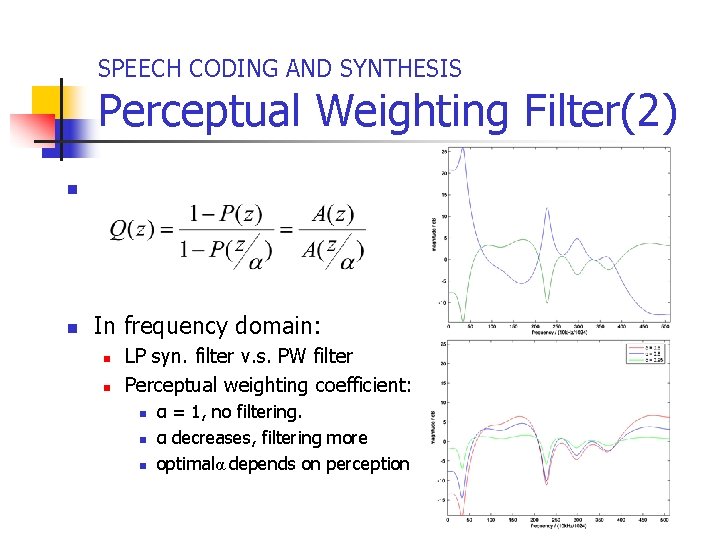

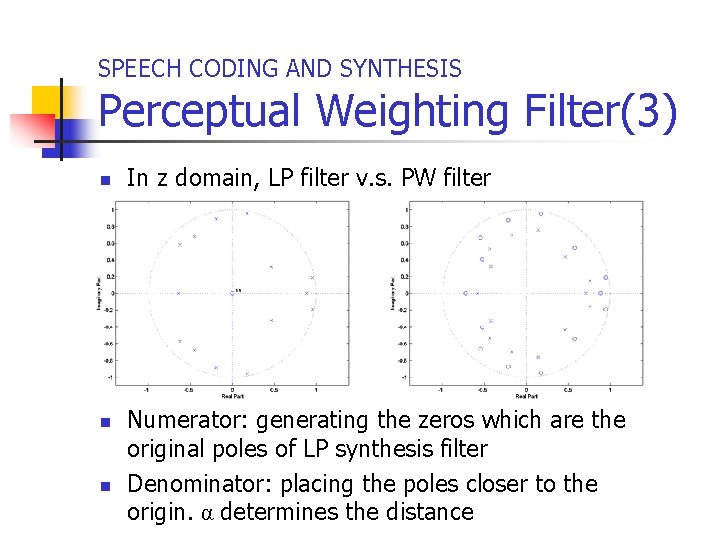

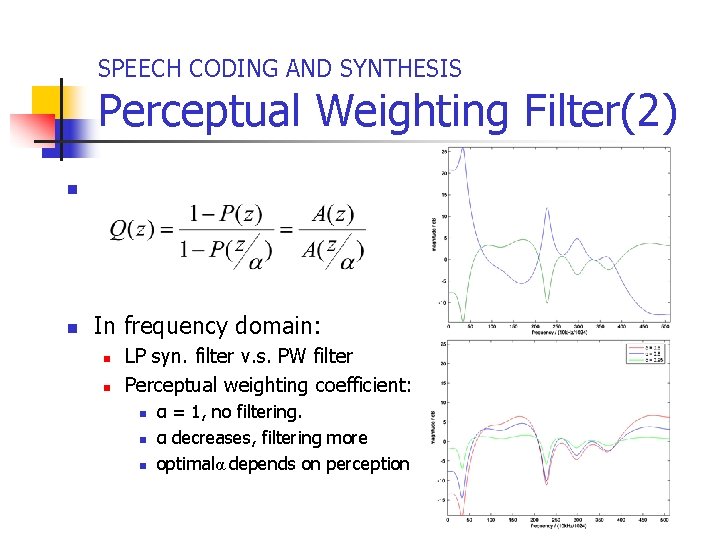

SPEECH CODING AND SYNTHESIS: Perceptual Weighting Filter (2)

- In the frequency domain: LP synthesis filter vs. PW filter
- Perceptual weighting coefficient α:
  - α = 1: no filtering
  - As α decreases, more filtering is applied
  - The optimal α depends on perception

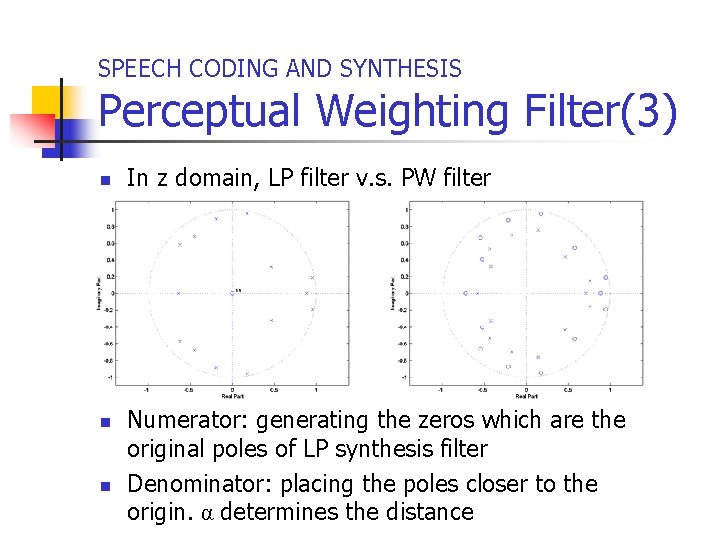

SPEECH CODING AND SYNTHESIS: Perceptual Weighting Filter (3)

- In the z-domain: LP filter vs. PW filter
- Numerator: generates zeros at the original poles of the LP synthesis filter
- Denominator: places the poles closer to the origin; α determines the distance
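The description of the numerator and denominator is consistent with the commonly used weighting filter W(z) = A(z) / A(z/α), and the sketch below assumes that form. It also assumes A(z) = 1 - Σ ak z^-k. The function name is hypothetical, and the parameter `weight` stands for the perceptual weighting coefficient α (renamed to avoid confusion with the predictor coefficients).

```python
def weighting_filter_coeffs(lp_coeffs, weight):
    """Coefficients of W(z) = A(z) / A(z / weight) in powers of z^-1.

    Replacing z by z / weight multiplies the k-th coefficient by weight**k,
    which scales every pole radius by `weight`.
    """
    numerator = [1.0] + [-a for a in lp_coeffs]
    denominator = [1.0] + [-a * weight ** (k + 1) for k, a in enumerate(lp_coeffs)]
    return numerator, denominator


# First-order example: A(z) = 1 - 0.9 z^-1, so the LP synthesis filter has a pole at 0.9.
num, den = weighting_filter_coeffs([0.9], weight=0.5)
print(num, den)  # the pole of W(z) now sits at 0.9 * 0.5

# weight = 1 leaves numerator and denominator equal, i.e. no filtering.
same_num, same_den = weighting_filter_coeffs([0.9], weight=1.0)
```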

SPEECH CODING AND SYNTHESIS: Linear Predictive Coding (1)

- Based on the methods above: the PW filter and analysis-by-synthesis
- If the excitation signal ≈ an impulse train during voicing, we get a reconstructed signal very close to the original
- More often, however, the residual is far from an impulse train

SPEECH CODING AND SYNTHESIS: Linear Predictive Coding (2)

- Hence, many coding schemes try to improve on this
- They differ primarily in the type of excitation signal
- Two kinds:
  - Multi-Pulse Linear Prediction
  - Code-Excited Linear Prediction (CELP)

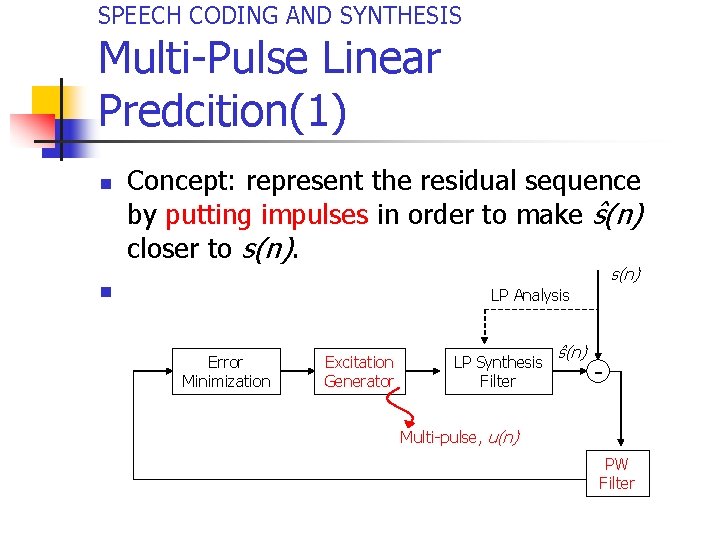

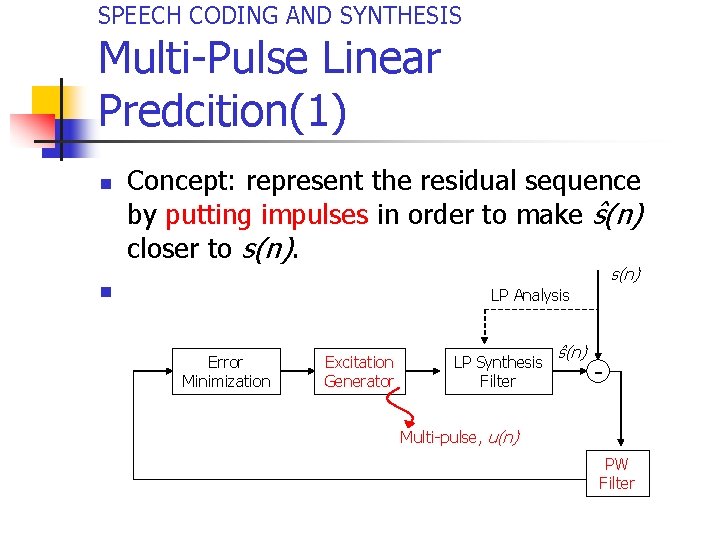

SPEECH CODING AND SYNTHESIS: Multi-Pulse Linear Prediction (1)

- Concept: represent the residual sequence by placing impulses so as to make ŝ(n) closer to s(n)
- Block diagram: s(n) → LP analysis; excitation generator → multi-pulse u(n) → LP synthesis filter → ŝ(n); s(n) - ŝ(n) → PW filter → error minimization

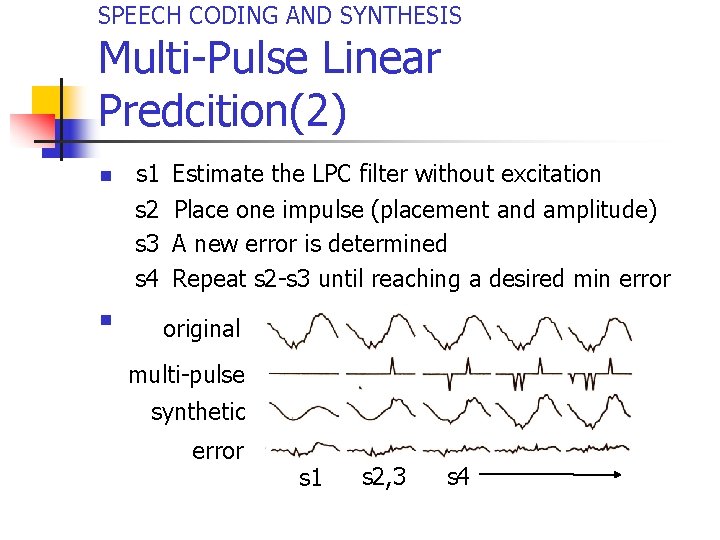

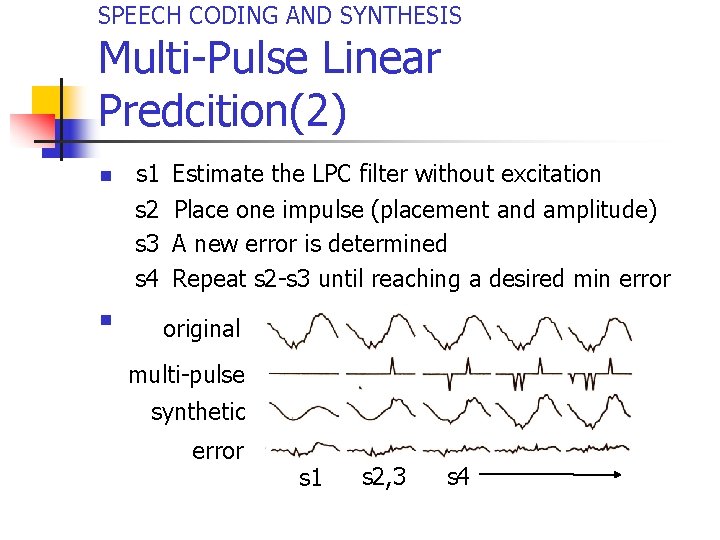

SPEECH CODING AND SYNTHESIS: Multi-Pulse Linear Prediction (2)

- s1: Estimate the LPC filter without excitation
- s2: Place one impulse (position and amplitude)
- s3: A new error is determined
- s4: Repeat s2 and s3 until the desired minimum error is reached
- Figure panels: original, multi-pulse, synthetic, error (shown for steps s1; s2, s3; s4)

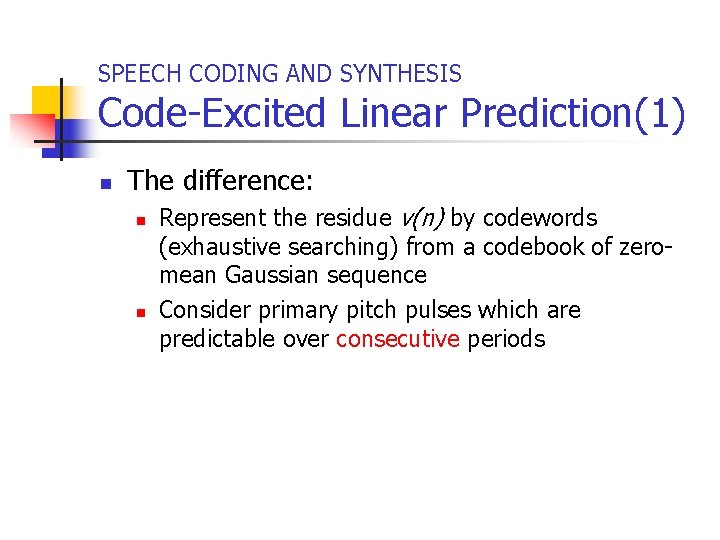

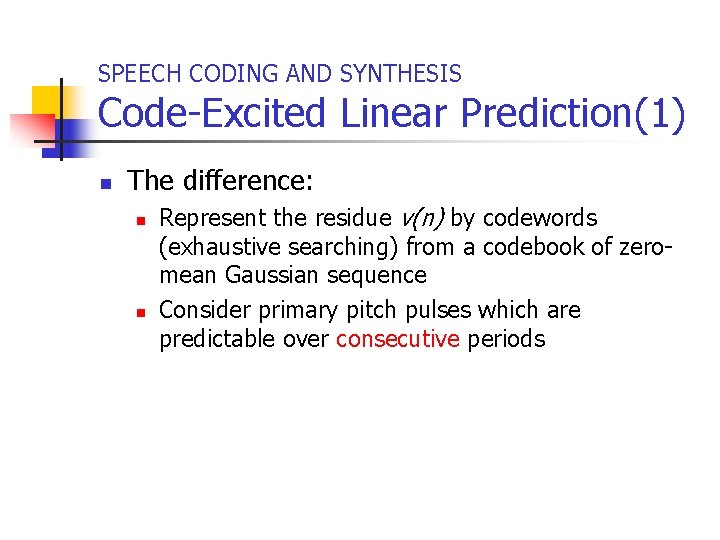
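Steps s1 to s4 can be sketched as a greedy search. This is a simplified illustration, not the project's implementation: it omits the perceptual weighting filter, stops after a fixed number of pulses instead of at an error threshold, and all function names are hypothetical.

```python
def impulse_response(alpha, length):
    """Impulse response of the all-pole synthesis filter 1/A(z)."""
    h = []
    for n in range(length):
        past = sum(alpha[k] * h[n - 1 - k] for k in range(len(alpha)) if n - 1 - k >= 0)
        h.append((1.0 if n == 0 else 0.0) + past)
    return h


def multi_pulse_search(target, alpha, num_pulses):
    """Greedily place pulses so the synthesized signal approaches `target`."""
    length = len(target)
    h = impulse_response(alpha, length)
    error = list(target)  # s1: with no excitation, the error is the target itself
    pulses = []
    for _ in range(num_pulses):  # s4: repeat s2 and s3
        best = None
        for pos in range(length):  # s2: try every pulse position
            span = length - pos
            energy = sum(h[i] * h[i] for i in range(span))
            corr = sum(error[pos + i] * h[i] for i in range(span))
            reduction = corr * corr / energy  # error energy removed by the best amplitude
            if best is None or reduction > best[0]:
                best = (reduction, pos, corr / energy)
        _, pos, amplitude = best
        pulses.append((pos, amplitude))
        for i in range(length - pos):  # s3: determine the new error
            error[pos + i] -= amplitude * h[i]
    return pulses, error


# Target produced by a single pulse of amplitude 2 at position 3.
alpha = [0.5]
h = impulse_response(alpha, 16)
target = [0.0] * 3 + [2.0 * v for v in h[:13]]
pulses, error = multi_pulse_search(target, alpha, num_pulses=1)
print(pulses, sum(e * e for e in error))
```

For each candidate position the optimal amplitude is the projection of the current error onto the shifted impulse response, so every added pulse can only lower the error energy.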

SPEECH CODING AND SYNTHESIS: Code-Excited Linear Prediction (1)

- The difference:
  - Represent the residual v(n) by codewords (found by exhaustive search) from a codebook of zero-mean Gaussian sequences
  - Consider the primary pitch pulses, which are predictable over consecutive periods

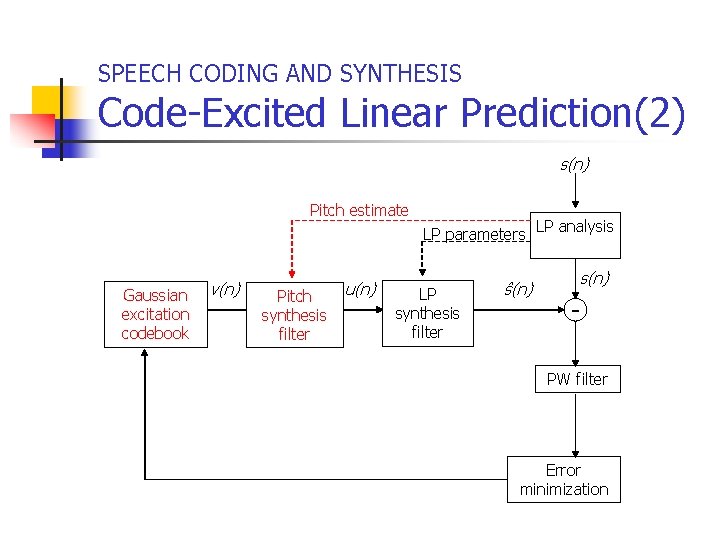

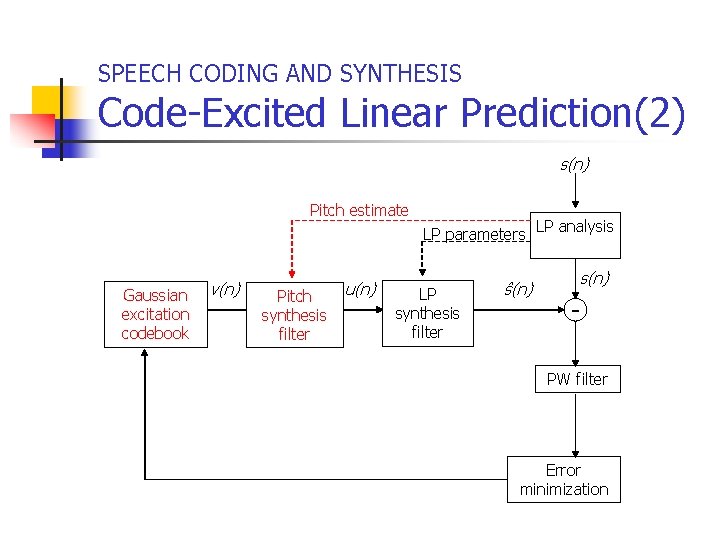
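The exhaustive codebook search can be sketched as follows. This is a simplification that leaves out the pitch synthesis filter and the perceptual weighting filter; the function names, the random seed and the first-order LP filter are assumptions. The codebook dimensions (length 40, 16 entries) mirror the small test configuration quoted in the CELP experiment.

```python
import random


def synthesize(excitation, alpha):
    """Run an excitation through the all-pole LP synthesis filter (zero initial state)."""
    s = []
    for n, x in enumerate(excitation):
        past = sum(alpha[k] * s[n - 1 - k] for k in range(len(alpha)) if n - 1 - k >= 0)
        s.append(past + x)
    return s


def make_gaussian_codebook(size, length, seed=0):
    """Codebook of zero-mean, unit-variance Gaussian sequences."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(length)] for _ in range(size)]


def search_codebook(target, codebook, alpha):
    """Try every codeword with its optimal gain and keep the lowest squared error."""
    target_energy = sum(t * t for t in target)
    best_index, best_gain, best_error = None, 0.0, float("inf")
    for index, codeword in enumerate(codebook):
        y = synthesize(codeword, alpha)
        yy = sum(v * v for v in y)
        ty = sum(t * v for t, v in zip(target, y))
        error = target_energy - ty * ty / yy  # error left after applying gain ty / yy
        if error < best_error:
            best_index, best_gain, best_error = index, ty / yy, error
    return best_index, best_gain, best_error


alpha = [0.5]
codebook = make_gaussian_codebook(size=16, length=40)
# Build a target that is exactly codeword 5 scaled by 3, then search for it.
target = [3.0 * v for v in synthesize(codebook[5], alpha)]
index, gain, error = search_codebook(target, codebook, alpha)
print(index, gain)
```

The cost grows linearly with the codebook size, which matches the observation in the experiment that the codebook size strongly influences computation time.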

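SPEECH CODING AND SYNTHESIS: Code-Excited Linear Prediction (2)

- Block diagram, analysis side: s(n) → LP analysis → LP parameters; s(n) → pitch estimate
- Block diagram, synthesis side: Gaussian codebook (in the position of the multi-pulse excitation generator) → v(n) → pitch synthesis filter → u(n) → LP synthesis filter → ŝ(n)
- Block diagram, feedback: s(n) and ŝ(n) → PW filter → error minimization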

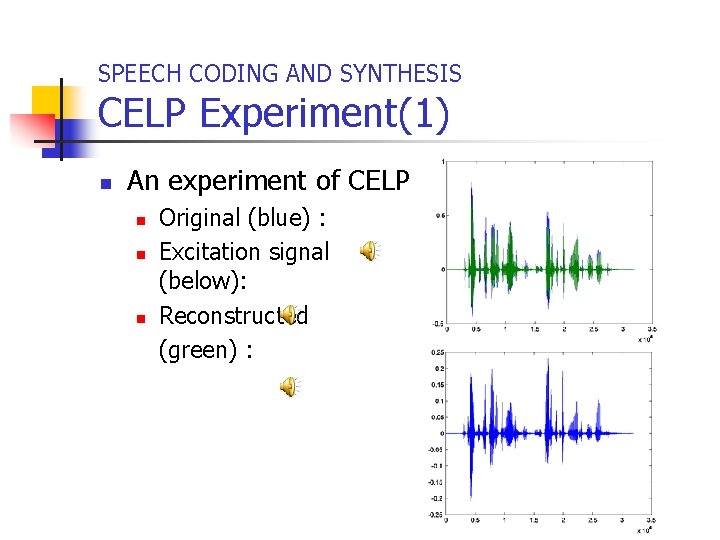

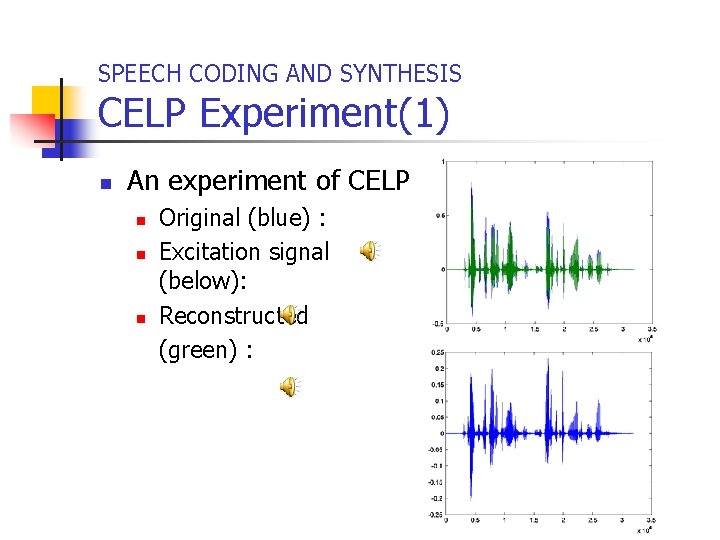

SPEECH CODING AND SYNTHESIS: CELP Experiment (1)

- An experiment with CELP:
  - Original (blue)
  - Excitation signal (below)
  - Reconstructed (green)

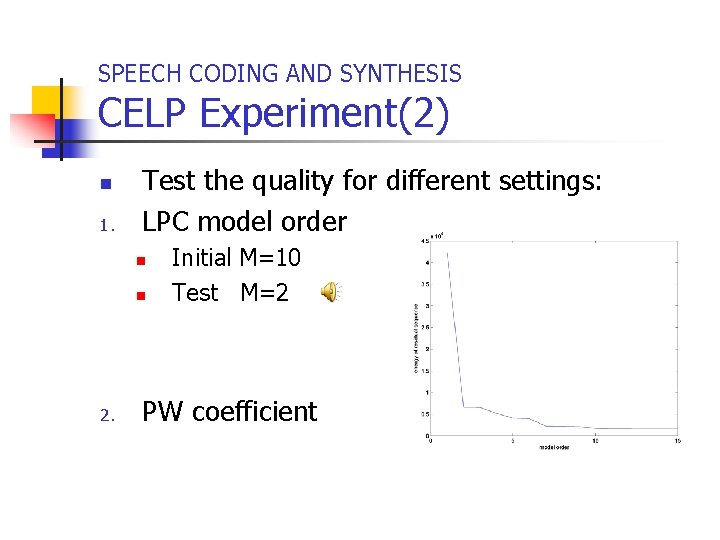

SPEECH CODING AND SYNTHESIS: CELP Experiment (2)

- Test the quality for different settings:
  1. LPC model order: initial M = 10, test M = 2
  2. PW coefficient

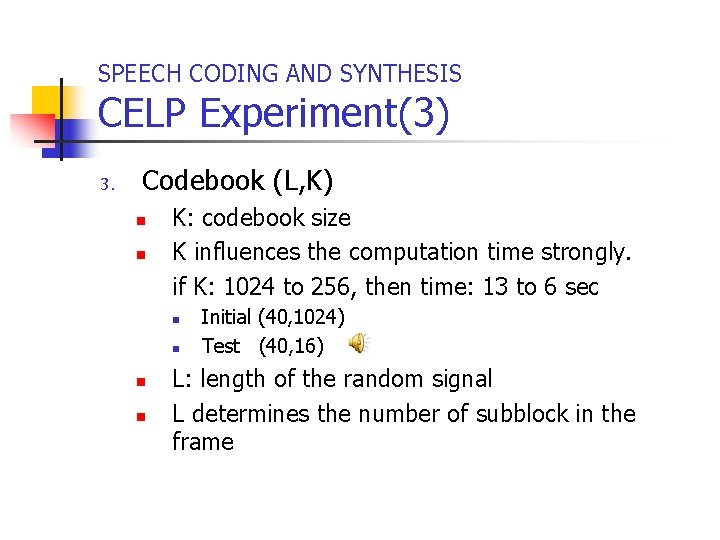

SPEECH CODING AND SYNTHESIS: CELP Experiment (3)

- Test the quality for different settings (continued):
  3. Codebook (L, K): initial (40, 1024), test (40, 16)
- K: codebook size. K strongly influences the computation time: reducing K from 1024 to 256 reduces the time from 13 to 6 s
- L: length of the random signal. L determines the number of subblocks in the frame

SPEECH CODING AND SYNTHESIS: Quantization

- With quantization:
  - 16000 bps CELP
  - 9600 bps CELP
- Trade-off: bandwidth efficiency vs. speech quality

Speech Coding and Recognition SPEECH RECOGNITION

SPEECH RECOGNITION: Dimensions of Difficulty

- Speaker dependent / independent
- Vocabulary size (small, medium, large)
- Discrete words / continuous utterance
- Quiet / noisy environment

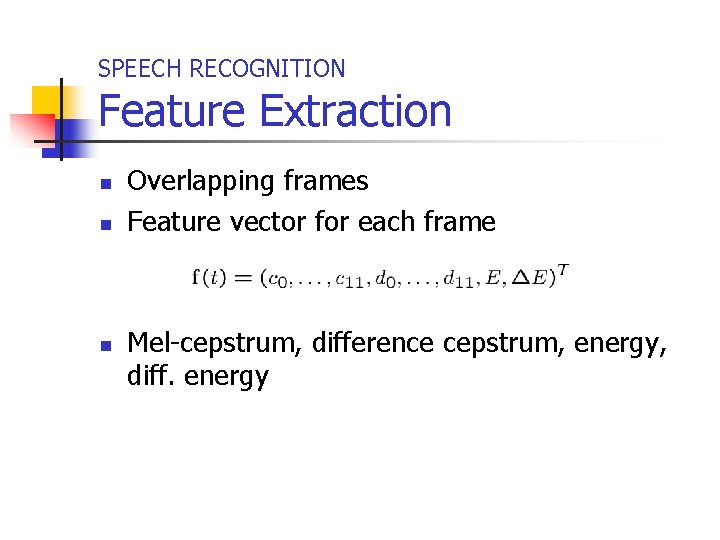

SPEECH RECOGNITION: Feature Extraction

- Overlapping frames
- Feature vector for each frame
- Mel-cepstrum, difference cepstrum, energy, difference energy

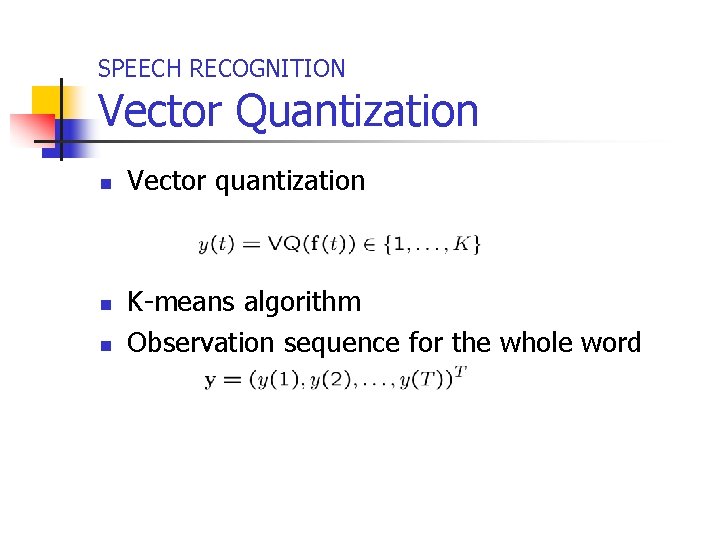

SPEECH RECOGNITION: Vector Quantization

- Vector quantization
- K-means algorithm
- Observation sequence for the whole word

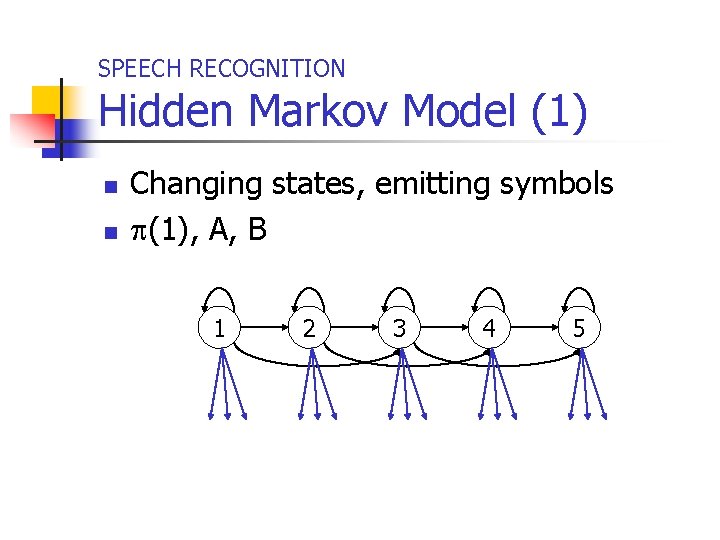

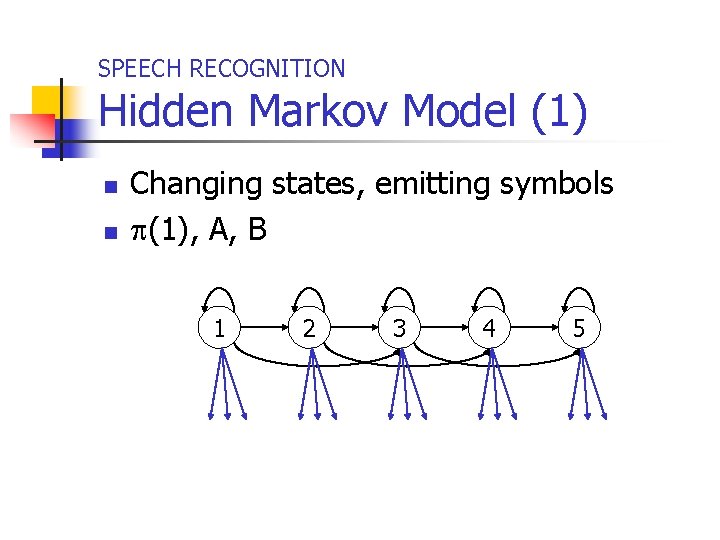
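A minimal sketch of how k-means yields a codebook and how a word becomes a sequence of discrete observation symbols is given below. The two-dimensional feature vectors, the initial codebook and the function names are made up for illustration; real feature vectors would come from the feature extraction stage.

```python
def nearest_codeword(vector, codebook):
    """Index of the codeword with the smallest squared Euclidean distance."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((v - c) ** 2 for v, c in zip(vector, codebook[i])),
    )


def k_means(vectors, initial_codebook, iterations=10):
    """Alternate between assigning vectors to codewords and re-centering the codewords."""
    codebook = [list(c) for c in initial_codebook]
    for _ in range(iterations):
        clusters = [[] for _ in codebook]
        for v in vectors:
            clusters[nearest_codeword(v, codebook)].append(v)
        for i, members in enumerate(clusters):
            if members:  # keep the old codeword if its cluster is empty
                codebook[i] = [sum(column) / len(members) for column in zip(*members)]
    return codebook


# Hypothetical feature vectors, one per frame of a word.
features = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2], [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]]
codebook = k_means(features, initial_codebook=[features[0], features[3]])

# The observation sequence is the codeword index of every frame.
observation_sequence = [nearest_codeword(v, codebook) for v in features]
print(observation_sequence)
```

The resulting list of indices is the kind of discrete symbol sequence that a discrete-observation HMM takes as input.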

SPEECH RECOGNITION: Hidden Markov Model (1)

- Changing states, emitting symbols
- Described by the state probability vector at time 1 and the matrices A and B
- Figure: chain of states 1, 2, 3, 4, 5

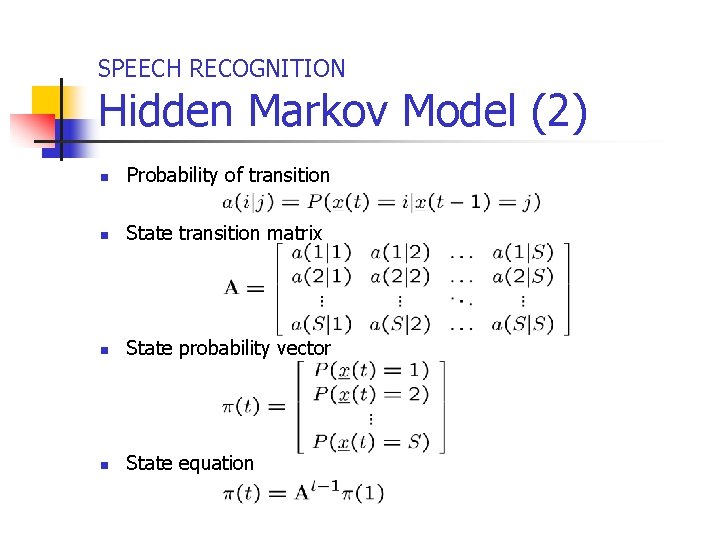

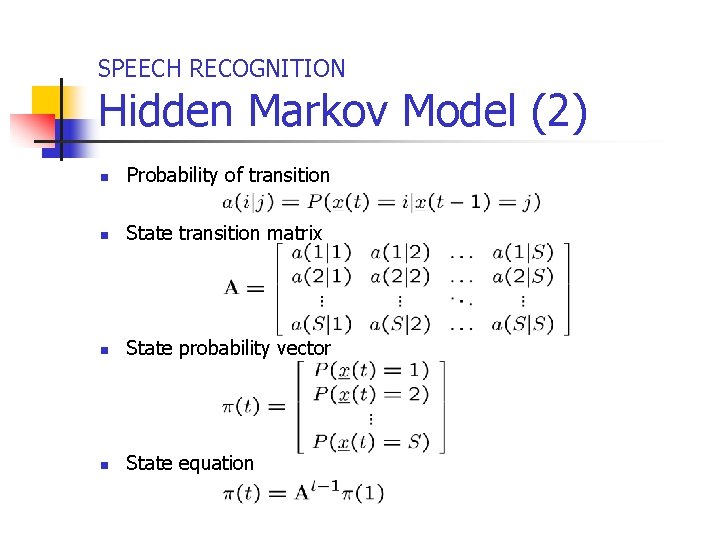

SPEECH RECOGNITION: Hidden Markov Model (2)

- Probability of transition
- State transition matrix
- State probability vector
- State equation

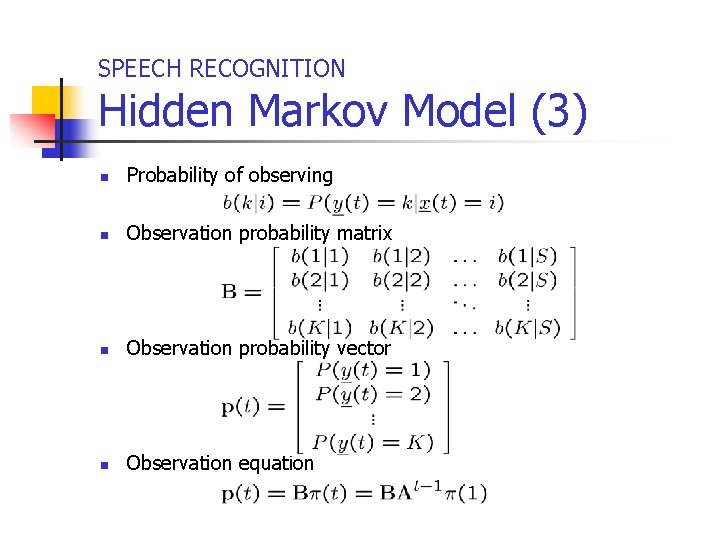

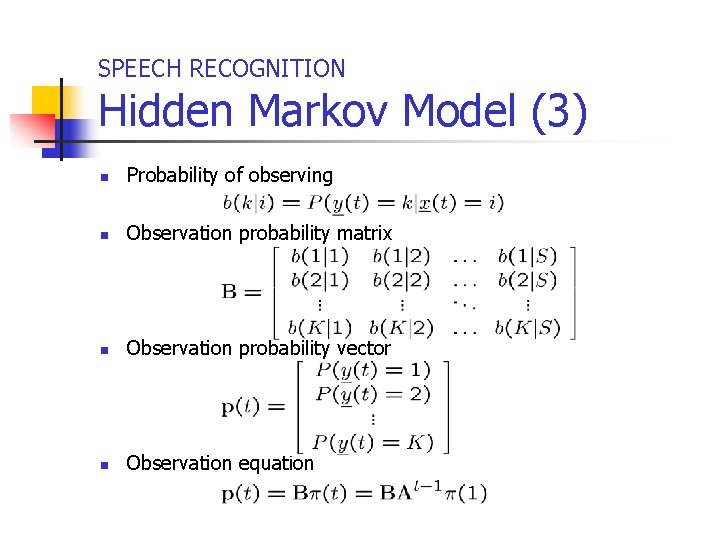

SPEECH RECOGNITION: Hidden Markov Model (3)

- Probability of observing
- Observation probability matrix
- Observation probability vector
- Observation equation

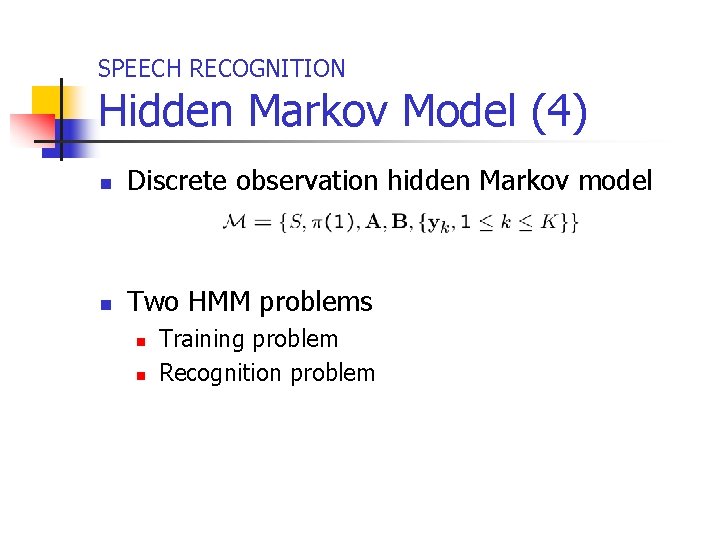

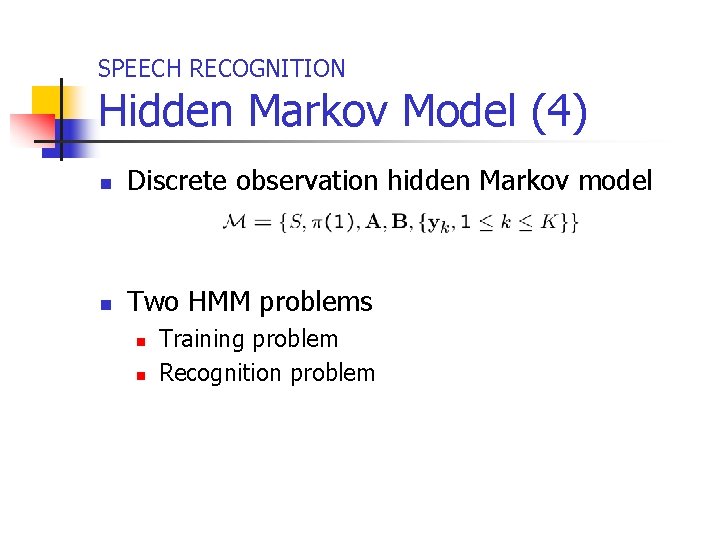
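The state equation and the observation equation can be illustrated with plain lists. The indexing convention is an assumption made here, since the slide formulas are only available as images: `A[i][j]` is the probability of moving from state i to state j, and `B[i][k]` is the probability of emitting symbol k in state i. The matrices themselves are made-up values.

```python
def next_state_probabilities(state_probs, A):
    """State equation: propagate the state probability vector one time step."""
    n = len(A)
    return [sum(state_probs[i] * A[i][j] for i in range(n)) for j in range(n)]


def observation_probabilities(state_probs, B):
    """Observation equation: probability of each symbol given the state probabilities."""
    return [
        sum(state_probs[i] * B[i][k] for i in range(len(B)))
        for k in range(len(B[0]))
    ]


# Hypothetical 3-state left-to-right model with 2 observation symbols.
A = [[0.5, 0.5, 0.0],
     [0.0, 0.5, 0.5],
     [0.0, 0.0, 1.0]]
B = [[0.9, 0.1],
     [0.5, 0.5],
     [0.1, 0.9]]

p1 = [1.0, 0.0, 0.0]                  # start in state 1 with certainty
p2 = next_state_probabilities(p1, A)
p3 = next_state_probabilities(p2, A)
symbols_at_2 = observation_probabilities(p2, B)
print(p2, p3, symbols_at_2)
```

Each row of A and of B sums to one, so both output vectors remain valid probability distributions.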

SPEECH RECOGNITION: Hidden Markov Model (4)

- Discrete observation hidden Markov model
- Two HMM problems:
  - Training problem
  - Recognition problem

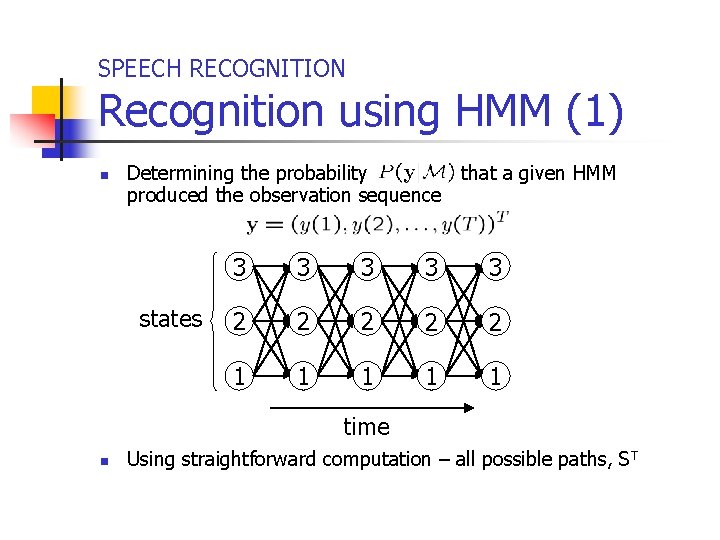

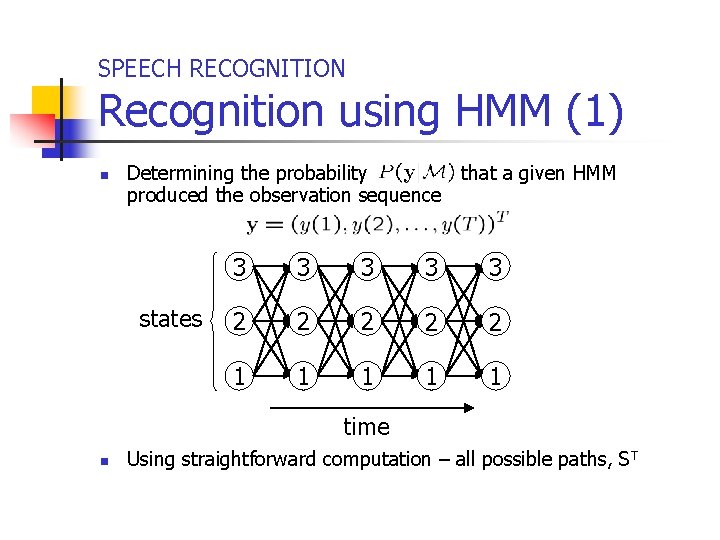

SPEECH RECOGNITION: Recognition using HMM (1)

- Determine the probability that a given HMM produced the observation sequence
- Figure: trellis of states 1 to 3 against time
- Straightforward computation: all possible paths, S^T of them for S states and T time steps

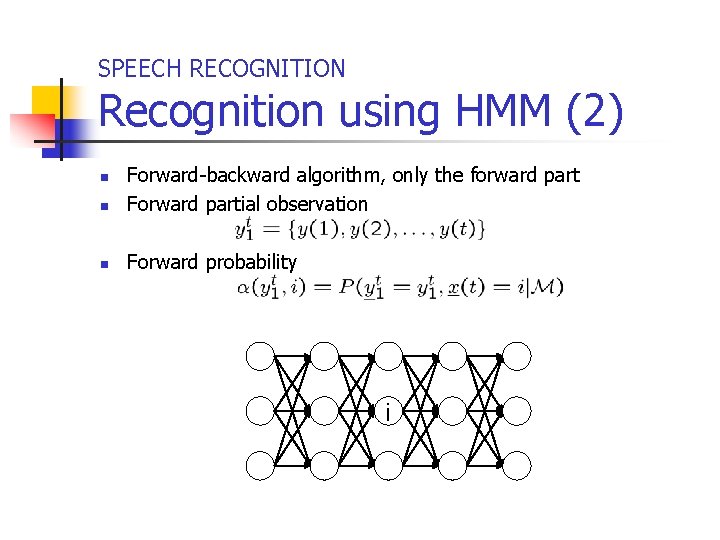

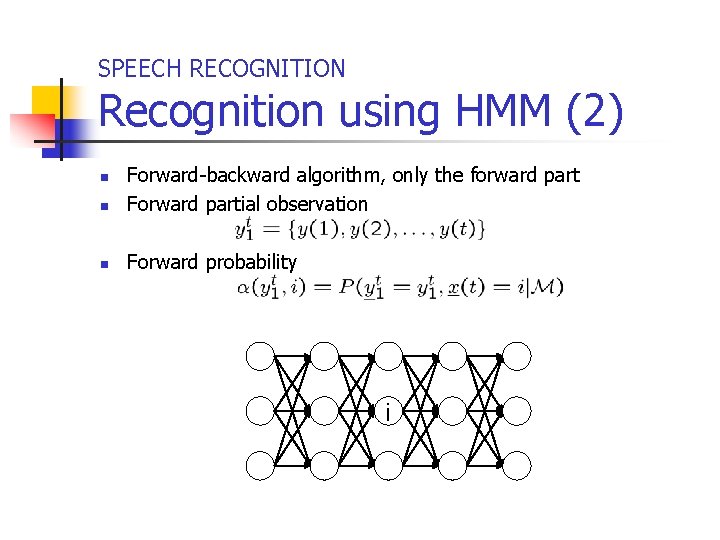

SPEECH RECOGNITION: Recognition using HMM (2)

- Forward-backward algorithm, only the forward part
- Forward partial observation
- Forward probability

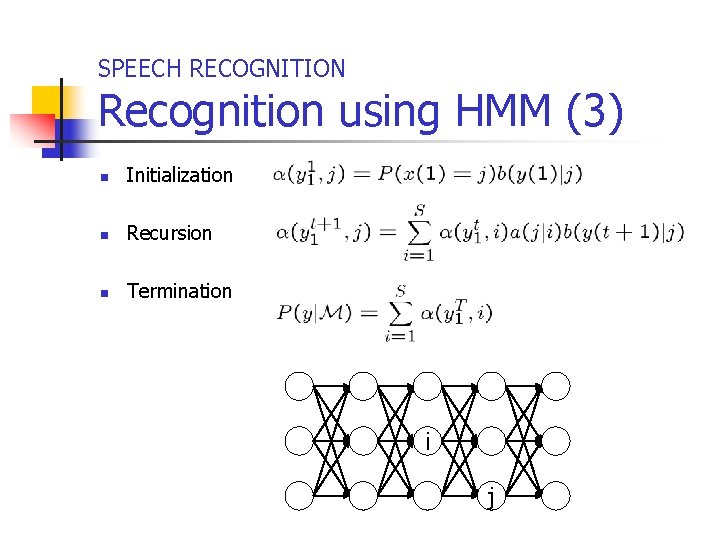

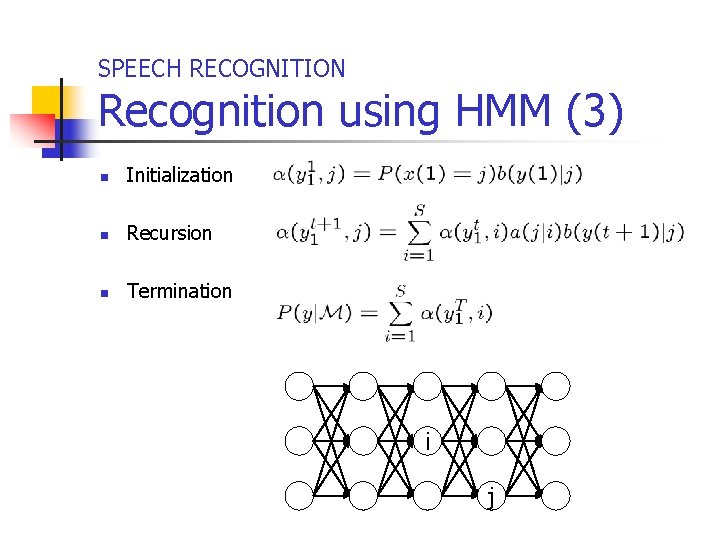

SPEECH RECOGNITION: Recognition using HMM (3)

- Initialization
- Recursion
- Termination

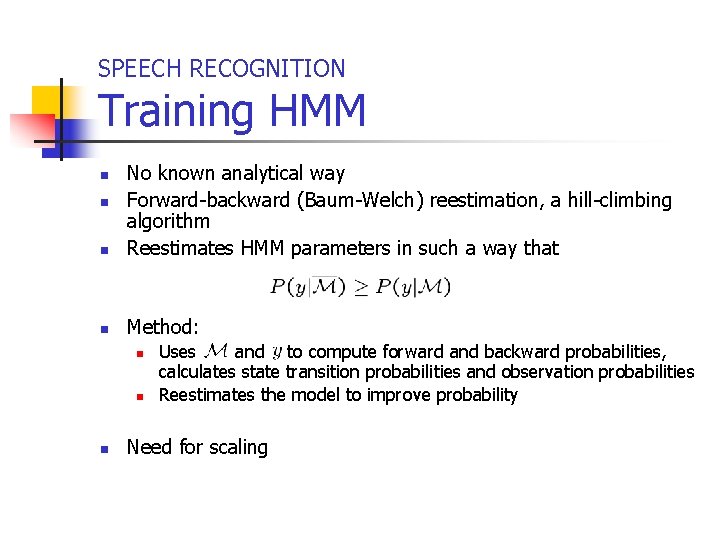

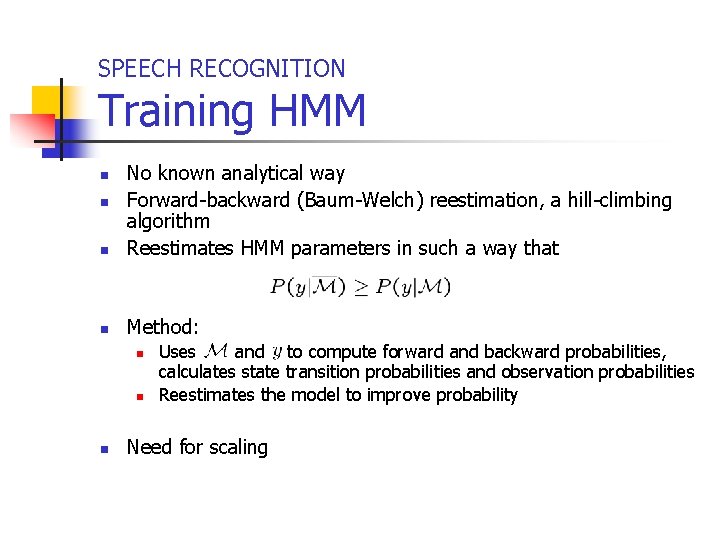
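The three steps of the forward part can be sketched next to the straightforward sum over all paths, so the two can be compared. The model values are made up, and the conventions (`A[i][j]` for the transition from i to j, `B[i][k]` for emitting symbol k in state i, symbols given as integer indices) are assumptions of this sketch.

```python
from itertools import product


def forward_probability(observations, initial, A, B):
    """Probability of the observation sequence, computed with the forward recursion."""
    n = len(initial)
    # Initialization: start in each state and emit the first symbol.
    alpha = [initial[i] * B[i][observations[0]] for i in range(n)]
    # Recursion: move to each state j and emit the next symbol.
    for symbol in observations[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n)) * B[j][symbol]
            for j in range(n)
        ]
    # Termination: sum over the final states.
    return sum(alpha)


def brute_force_probability(observations, initial, A, B):
    """Same quantity, summed explicitly over all S^T state paths."""
    n = len(initial)
    total = 0.0
    for path in product(range(n), repeat=len(observations)):
        p = initial[path[0]] * B[path[0]][observations[0]]
        for t in range(1, len(observations)):
            p *= A[path[t - 1]][path[t]] * B[path[t]][observations[t]]
        total += p
    return total


initial = [1.0, 0.0, 0.0]
A = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]]
B = [[0.9, 0.1], [0.5, 0.5], [0.1, 0.9]]
observations = [0, 0, 1, 1]

fast = forward_probability(observations, initial, A, B)
slow = brute_force_probability(observations, initial, A, B)
print(fast, slow)
```

The recursion needs on the order of S² operations per time step, whereas the explicit sum visits S^T paths. For recognition, the same observation sequence would be scored against each word model and the most probable model chosen.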

SPEECH RECOGNITION: Training HMM

- No known analytical solution
- Forward-backward (Baum-Welch) reestimation, a hill-climbing algorithm
- Reestimates the HMM parameters in such a way that the probability of the observation sequence does not decrease
- Method:
  - Computes the forward and backward probabilities, then calculates the state transition probabilities and observation probabilities
  - Reestimates the model to improve the probability
  - Need for scaling

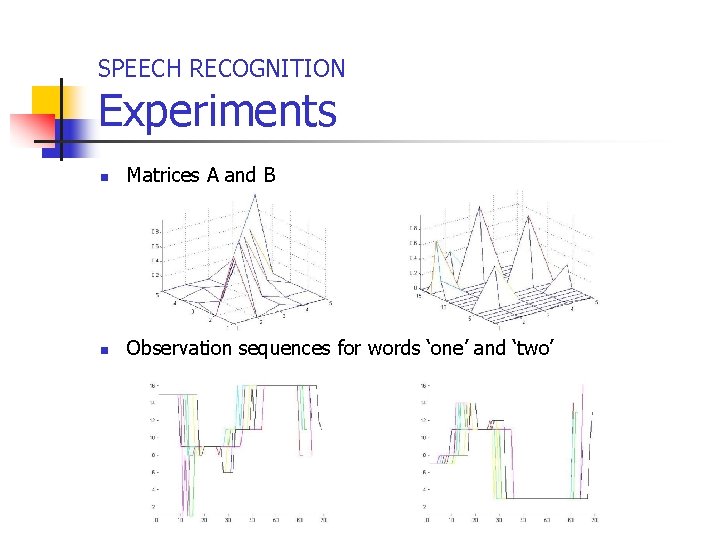

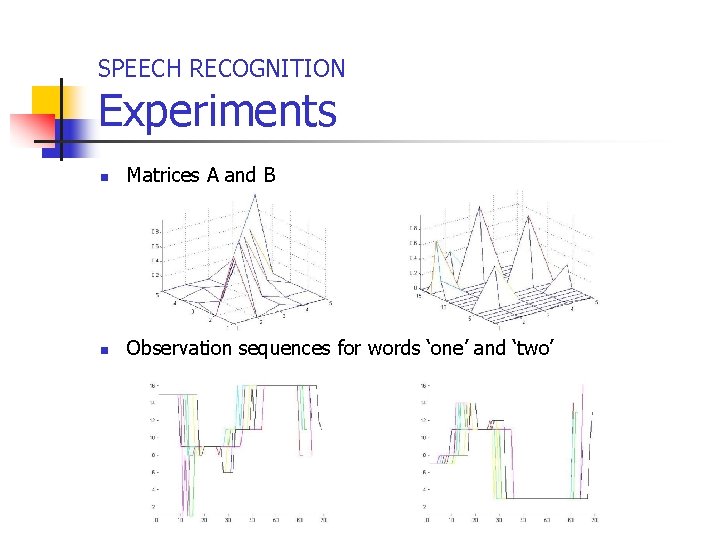
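The need for scaling can be demonstrated on the forward pass alone. The sketch below is not a full Baum-Welch implementation; it only shows a common scaling scheme, in which the forward variables are renormalized at every step and the logarithms of the scale factors are accumulated. The two-state model and the function names are made up.

```python
import math


def forward_probability(observations, initial, A, B):
    """Unscaled forward recursion; the result underflows for long sequences."""
    n = len(initial)
    alpha = [initial[i] * B[i][observations[0]] for i in range(n)]
    for symbol in observations[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][symbol] for j in range(n)]
    return sum(alpha)


def scaled_forward_log_probability(observations, initial, A, B):
    """Forward recursion with per-step normalization; returns the log-probability."""
    n = len(initial)
    alpha = [initial[i] * B[i][observations[0]] for i in range(n)]
    log_prob = 0.0
    for t in range(len(observations)):
        if t > 0:
            alpha = [
                sum(alpha[i] * A[i][j] for i in range(n)) * B[j][observations[t]]
                for j in range(n)
            ]
        scale = sum(alpha)           # probability of this symbol given the past
        log_prob += math.log(scale)
        alpha = [a / scale for a in alpha]
    return log_prob


initial = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]

short = [0, 1, 0]
long_sequence = [0, 1] * 2500        # 5000 symbols

print(forward_probability(short, initial, A, B),
      math.exp(scaled_forward_log_probability(short, initial, A, B)))
print(forward_probability(long_sequence, initial, A, B),
      scaled_forward_log_probability(long_sequence, initial, A, B))
```

For the short sequence both versions agree. For the long one, the unscaled probability falls below the range of double-precision numbers, while the scaled version still returns a usable log-probability.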

SPEECH RECOGNITION: Experiments

- Matrices A and B
- Observation sequences for the words 'one' and 'two'

Thank you!