Wordnet and word similarity Lectures 11 and 12

- Slides: 71


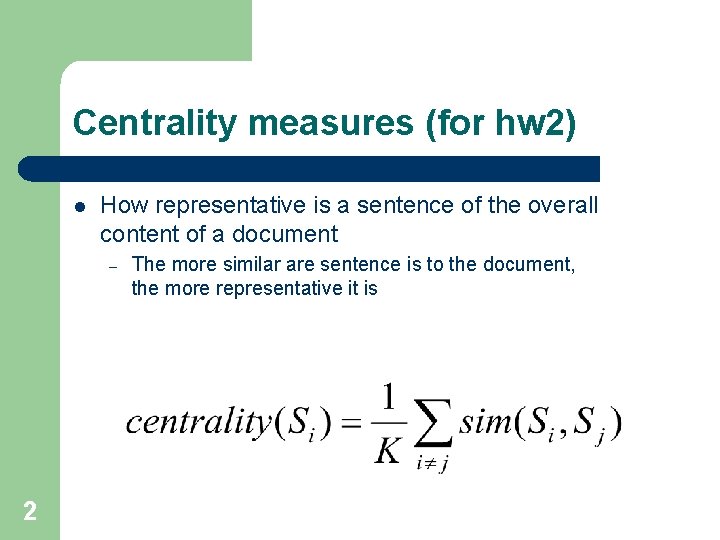

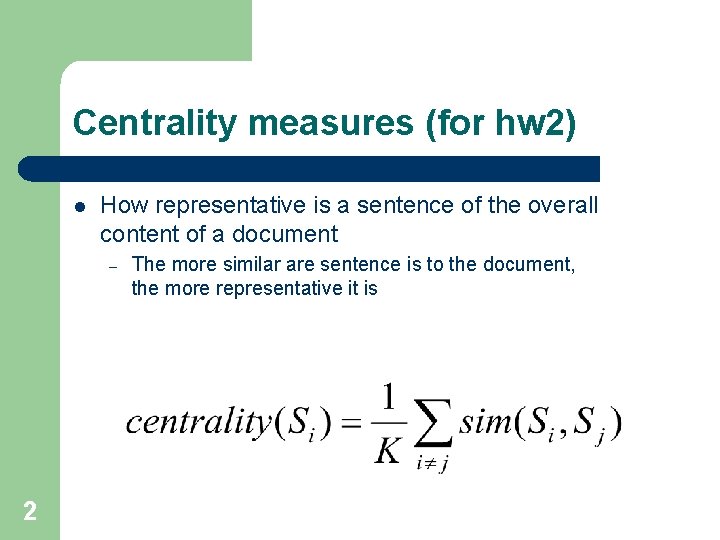

Centrality measures (for hw 2)
- How representative is a sentence of the overall content of a document?
  - The more similar a sentence is to the document, the more representative it is.
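One way to make centrality concrete is to represent the document and each sentence as bag-of-words count vectors, then score each sentence by its cosine similarity to the whole document. This is a minimal sketch under our own assumptions (lower-cased whitespace tokens, raw counts, cosine). The homework may call for a different representation or weighting, and the function names are hypothetical.

```python
import math
from collections import Counter


def bow(text):
    """Lower-cased whitespace bag-of-words (a simplifying assumption)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_by_centrality(sentences):
    """Return (score, sentence) pairs, most central (most document-like) first."""
    doc = bow(" ".join(sentences))
    return sorted(((cosine(bow(s), doc), s) for s in sentences), reverse=True)


sentences = [
    "the bank raised interest rates",
    "interest rates at the bank rose again",
    "my cat sleeps",
]
ranked = rank_by_centrality(sentences)
for score, s in ranked:
    print(f"{score:.3f}  {s}")
```

The off-topic sentence shares few words with the rest of the document, so it ends up last.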

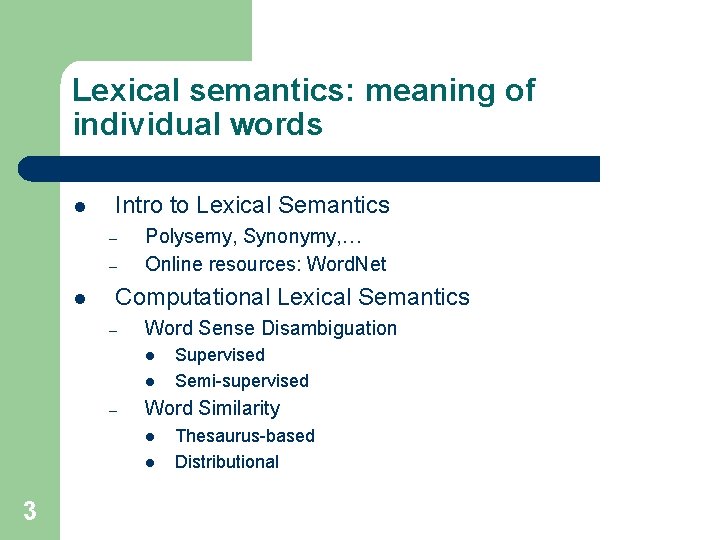

Lexical semantics: meaning of individual words
- Intro to lexical semantics
  - Polysemy, synonymy, …
  - Online resources: WordNet
- Computational lexical semantics
  - Word sense disambiguation
    - Supervised
    - Semi-supervised
  - Word similarity
    - Thesaurus-based
    - Distributional

What’s a word?
- Definitions we’ve used: types, tokens, stems, inflected forms, etc.
- Lexeme: an entry in a lexicon consisting of a pairing of a form with a single meaning representation
- A lemma or citation form is the grammatical form that is used to represent a lexeme.
  - Carpet is the lemma for carpets
- A sense is a discrete representation of one aspect of the meaning of a word
- The lemma bank has two senses:
  - Instead, a bank can hold the investments in a custodial account in the client’s name.
  - But as agriculture burgeons on the east bank, the river will shrink even more.

Relationships between word meanings
- Polysemy
- Homonymy
- Synonymy
- Antonymy
- Hypernymy
- Hyponymy

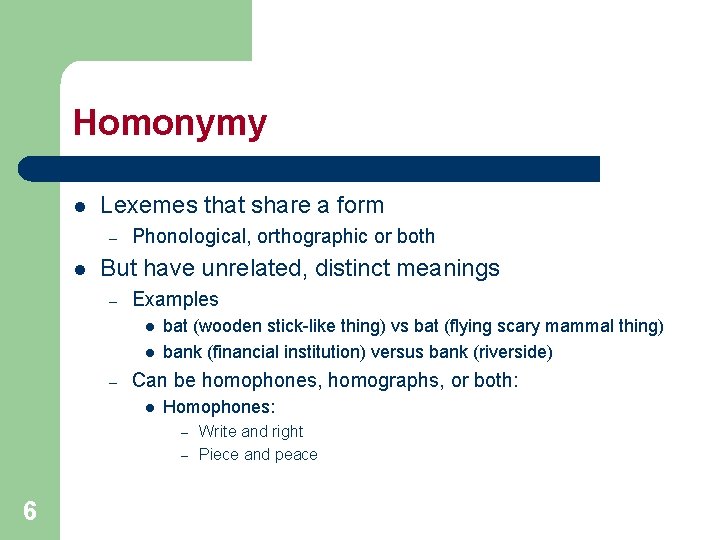

Homonymy
- Lexemes that share a form
  - Phonological, orthographic or both
- But have unrelated, distinct meanings
- Examples
  - bat (wooden stick-like thing) vs. bat (flying scary mammal thing)
  - bank (financial institution) vs. bank (riverside)
- Can be homophones, homographs, or both:
  - Homophones: write and right, piece and peace

Homonymy causes problems for NLP applications
- Text-to-speech
  - Same orthographic form but different phonological form
    - bass vs. bass
- Information retrieval
  - Different meanings, same orthographic form
    - QUERY: bat care
- Machine translation
- Speech recognition
  - Why?

Polysemy
- The bank is constructed from red brick.
- I withdrew the money from the bank.
- Are those the same sense?
  - Which sense of bank is this?
    - Is it distinct from (homonymous with) the river bank sense?
    - How about the savings bank sense?

Polysemy
- A single lexeme with multiple related meanings (bank the building, bank the financial institution)
- Most non-rare words have multiple meanings
  - The number of meanings is related to a word’s frequency
  - Verbs tend more toward polysemy
  - Distinguishing polysemy from homonymy isn’t always easy (or necessary)

Synonyms
- Words that have the same meaning in some or all contexts:
  - filbert / hazelnut
  - couch / sofa
  - big / large
  - automobile / car
  - vomit / throw up
  - water / H2O
- Two lexemes are synonyms if they can be successfully substituted for each other in all situations
  - If so, they have the same propositional meaning

But
- There are no examples of perfect synonymy
  - Why should that be?
  - Even if many aspects of meaning are identical,
  - substitution still may not preserve acceptability, based on notions of politeness, slang, register, genre, etc.
- Example:
  - water and H2O

Synonymy is a relation between senses rather than words
- Consider the words big and large
- Are they synonyms?
  - How big is that plane?
  - Would I be flying on a large or small plane?
- How about here:
  - Miss Nelson, for instance, became a kind of big sister to Benjamin.
  - ?Miss Nelson, for instance, became a kind of large sister to Benjamin.
- Why?
  - big has a sense that means being older, or grown up
  - large lacks this sense

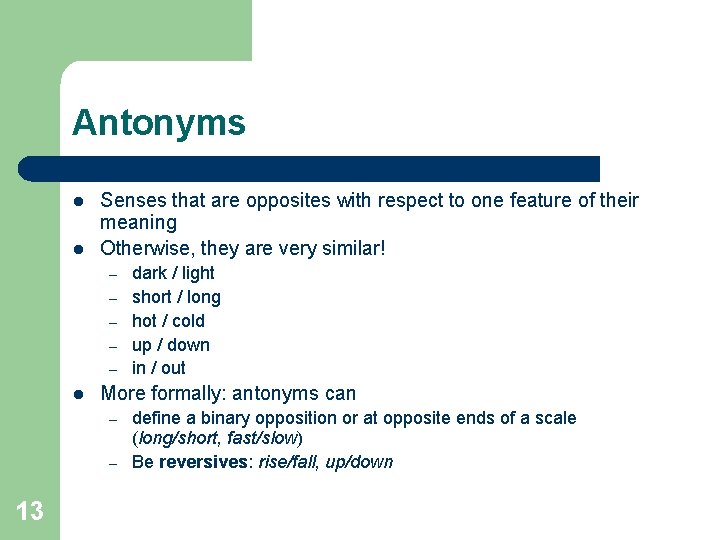

Antonyms
- Senses that are opposites with respect to one feature of their meaning
- Otherwise, they are very similar!
  - dark / light
  - short / long
  - hot / cold
  - up / down
  - in / out
- More formally, antonyms can
  - define a binary opposition or be at opposite ends of a scale (long/short, fast/slow)
  - be reversives: rise/fall, up/down

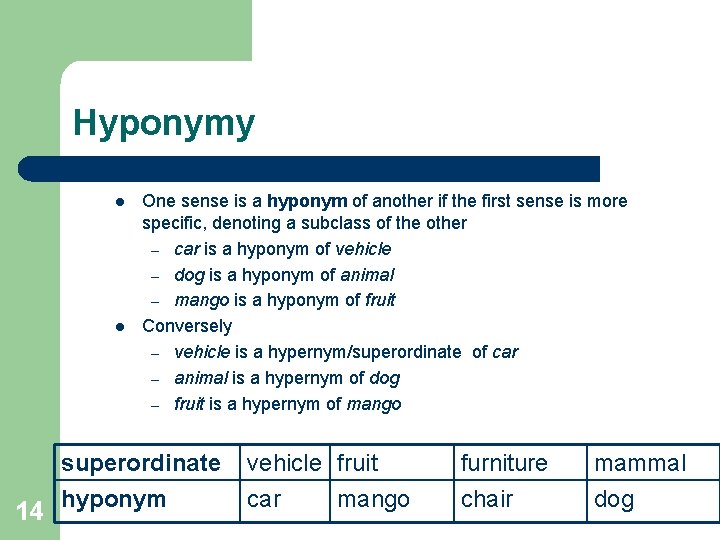

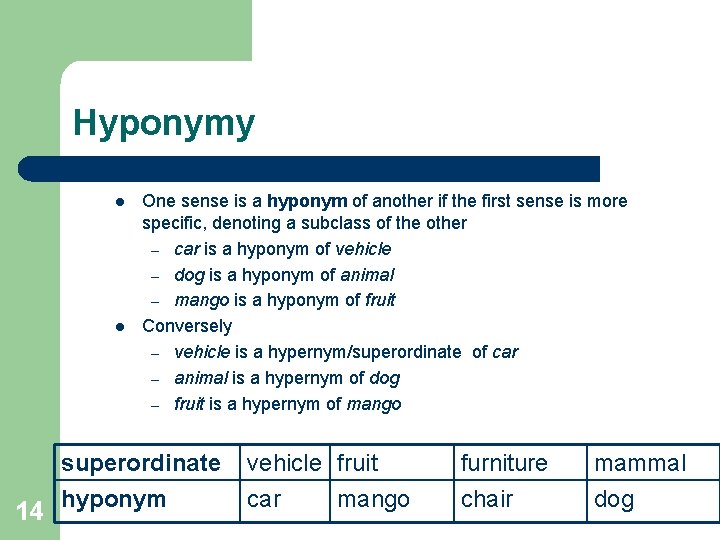

Hyponymy
- One sense is a hyponym of another if the first sense is more specific, denoting a subclass of the other
  - car is a hyponym of vehicle
  - dog is a hyponym of animal
  - mango is a hyponym of fruit
- Conversely
  - vehicle is a hypernym/superordinate of car
  - animal is a hypernym of dog
  - fruit is a hypernym of mango

| superordinate | hyponym |
|---|---|
| vehicle | car |
| fruit | mango |
| furniture | chair |
| mammal | dog |
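Hyponymy is transitive, so testing it amounts to walking up hypernym links. The sketch below uses a hypothetical single-parent toy hierarchy built from the pairs above plus one extra link we assume (mammal → animal). Real WordNet synsets can have more than one hypernym, which this sketch ignores.

```python
# Hypothetical toy hierarchy: child sense -> its (single) hypernym.
HYPERNYM = {
    "car": "vehicle",
    "mango": "fruit",
    "chair": "furniture",
    "dog": "mammal",
    "mammal": "animal",
}


def hypernym_chain(sense):
    """Follow hypernym links upward until reaching a node with no parent."""
    chain = []
    while sense in HYPERNYM:
        sense = HYPERNYM[sense]
        chain.append(sense)
    return chain


def is_hyponym_of(specific, general):
    """True if `general` lies anywhere above `specific` (hyponymy is transitive)."""
    return general in hypernym_chain(specific)


print(hypernym_chain("dog"))           # ['mammal', 'animal']
print(is_hyponym_of("dog", "animal"))  # True
print(is_hyponym_of("animal", "dog"))  # False
```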

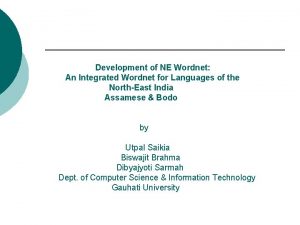

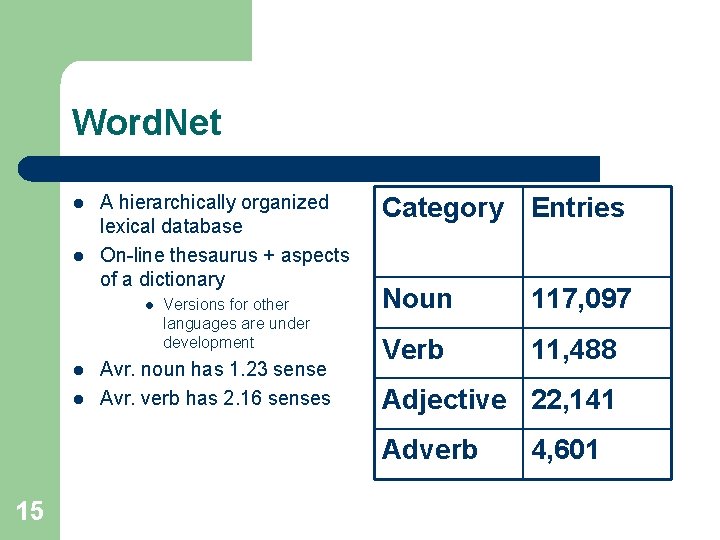

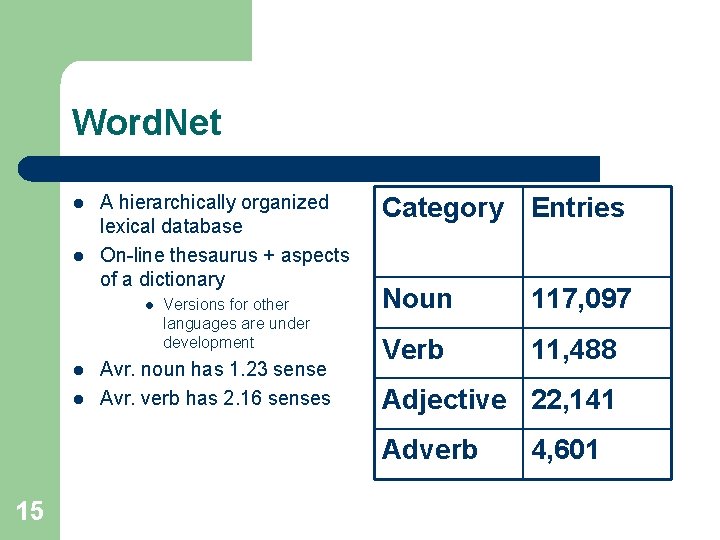

WordNet
- A hierarchically organized lexical database
- On-line thesaurus + aspects of a dictionary
  - Versions for other languages are under development
- Avg. noun has 1.23 senses
- Avg. verb has 2.16 senses

| Category | Entries |
|---|---|
| Noun | 117,097 |
| Verb | 11,488 |
| Adjective | 22,141 |
| Adverb | 4,601 |

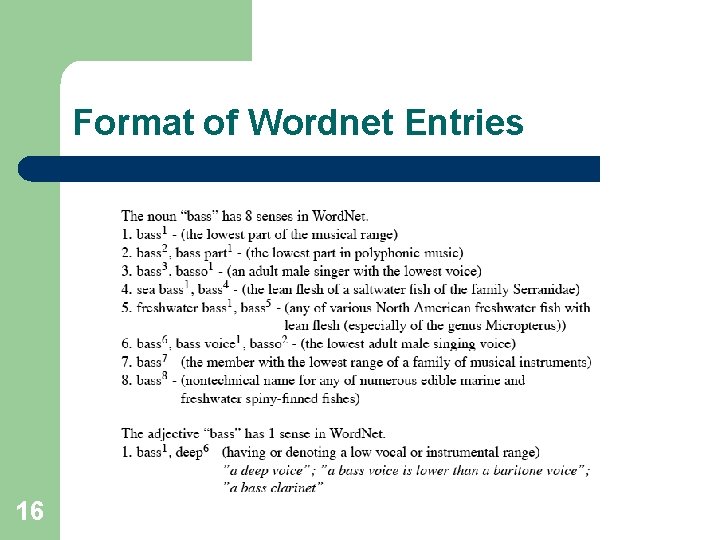

Format of WordNet Entries

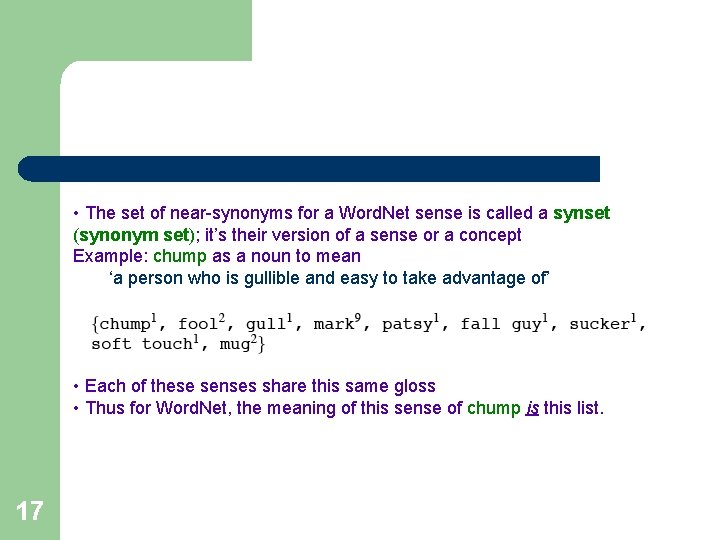

- The set of near-synonyms for a WordNet sense is called a synset (synonym set); it’s their version of a sense or a concept.
- Example: chump as a noun to mean ‘a person who is gullible and easy to take advantage of’
- Each of these senses shares this same gloss.
- Thus for WordNet, the meaning of this sense of chump is this list.

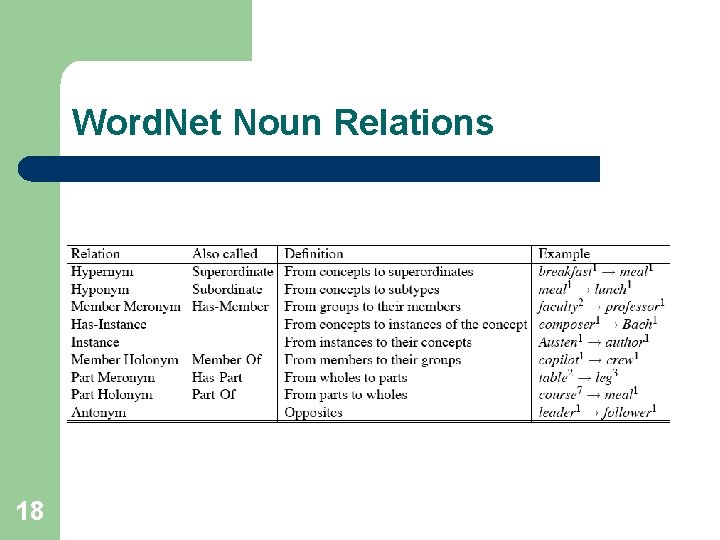

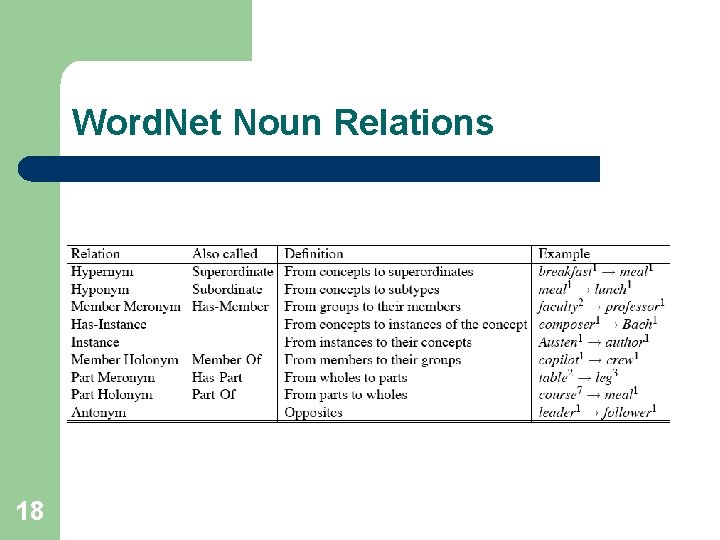

WordNet Noun Relations

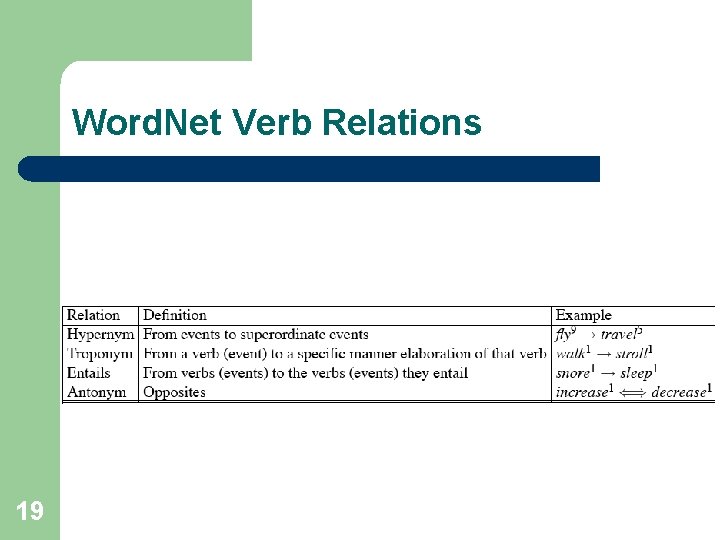

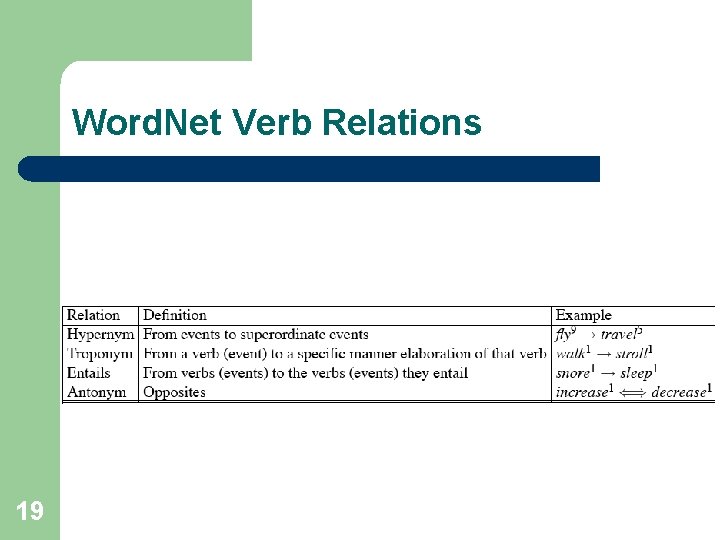

WordNet Verb Relations

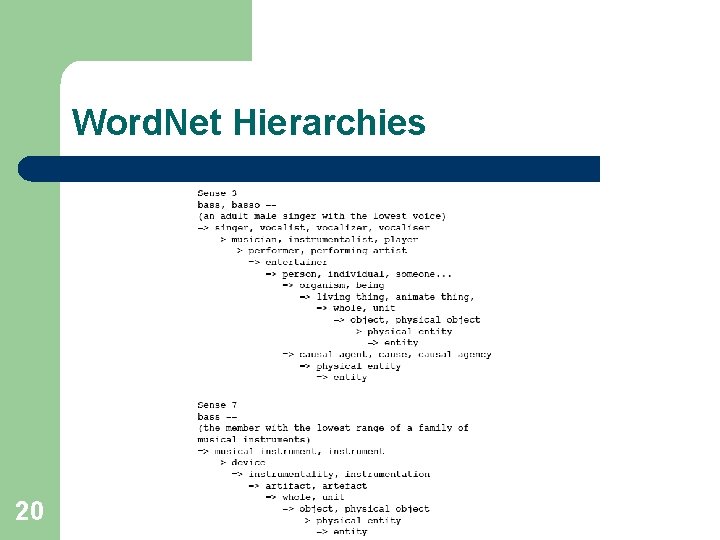

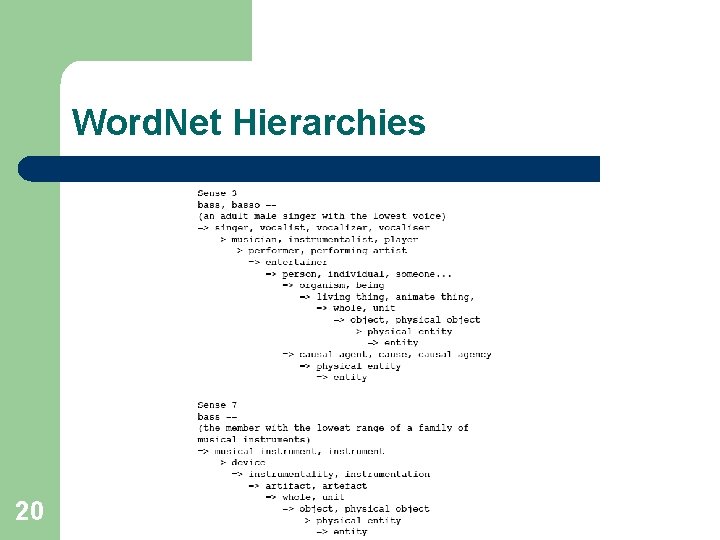

WordNet Hierarchies

Word Sense Disambiguation (WSD)
- Given
  - a word in context,
  - a fixed inventory of potential word senses,
- decide which sense of the word this is.
- Examples
  - English-to-Spanish MT
    - Inventory is the set of Spanish translations
  - Speech synthesis
    - Inventory is homographs with different pronunciations, like bass and bow

Two variants of the WSD task
- Lexical sample task
  - Small pre-selected set of target words
  - And an inventory of senses for each word
  - We’ll use supervised machine learning
  - line, interest, plant
- All-words task
  - Every word in an entire text
  - A lexicon with senses for each word
  - Sort of like part-of-speech tagging
    - Except each lemma has its own tagset

Supervised Machine Learning Approaches
- Supervised machine learning approach:
  - a training corpus of words tagged in context with their sense
  - used to train a classifier that can tag words in new text
- Summary of what we need:
  - the tag set (“sense inventory”)
  - the training corpus
  - a set of features extracted from the training corpus
  - a classifier

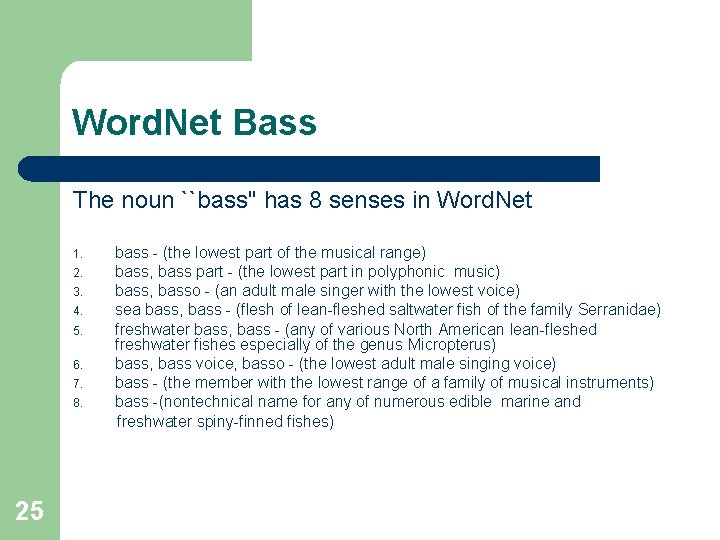

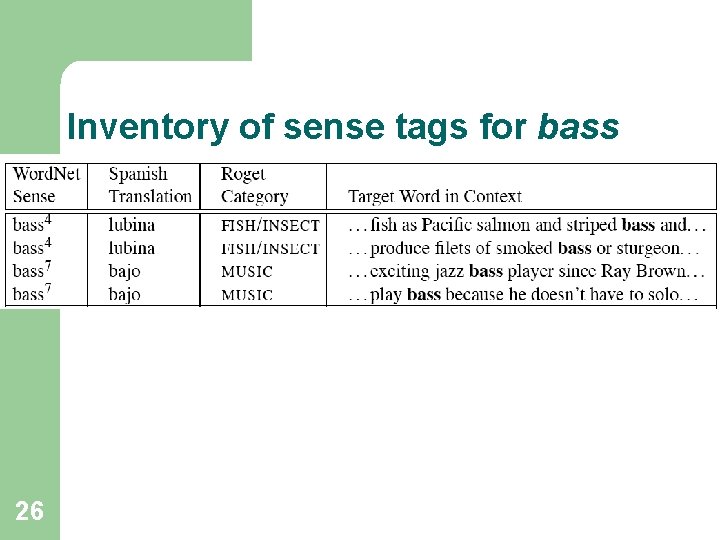

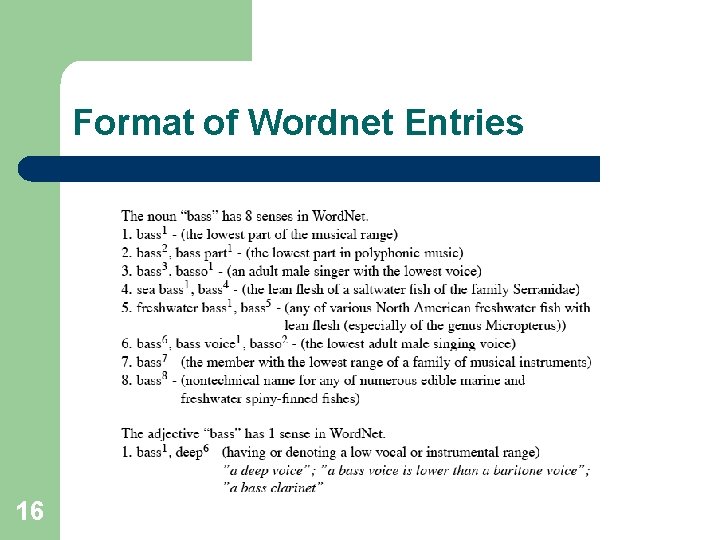

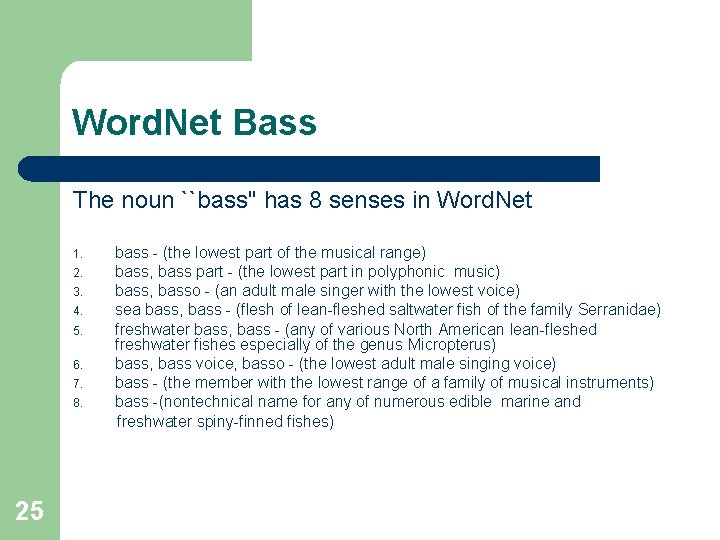

Supervised WSD 1: WSD Tags
- What’s a tag?
  - A dictionary sense?
- For example, for WordNet an instance of “bass” in a text has 8 possible tags or labels (bass1 through bass8).

WordNet Bass
The noun “bass” has 8 senses in WordNet:
1. bass - (the lowest part of the musical range)
2. bass, bass part - (the lowest part in polyphonic music)
3. bass, basso - (an adult male singer with the lowest voice)
4. sea bass, bass - (flesh of lean-fleshed saltwater fish of the family Serranidae)
5. freshwater bass, bass - (any of various North American lean-fleshed freshwater fishes especially of the genus Micropterus)
6. bass, bass voice, basso - (the lowest adult male singing voice)
7. bass - (the member with the lowest range of a family of musical instruments)
8. bass - (nontechnical name for any of numerous edible marine and freshwater spiny-finned fishes)

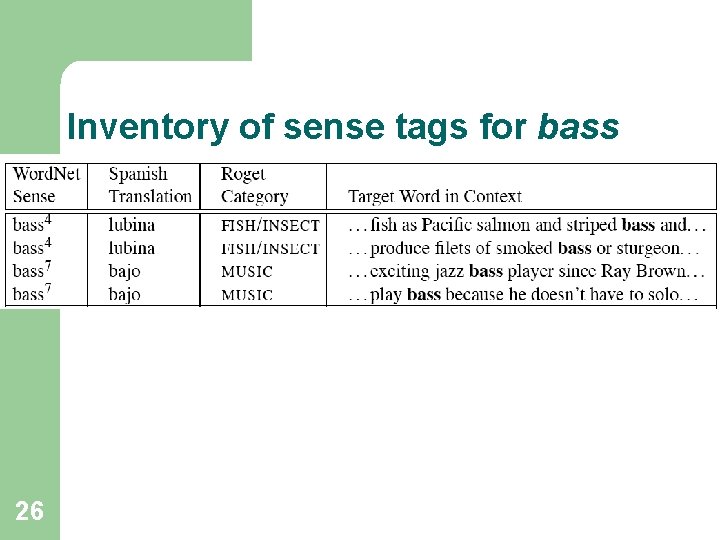

Inventory of sense tags for bass

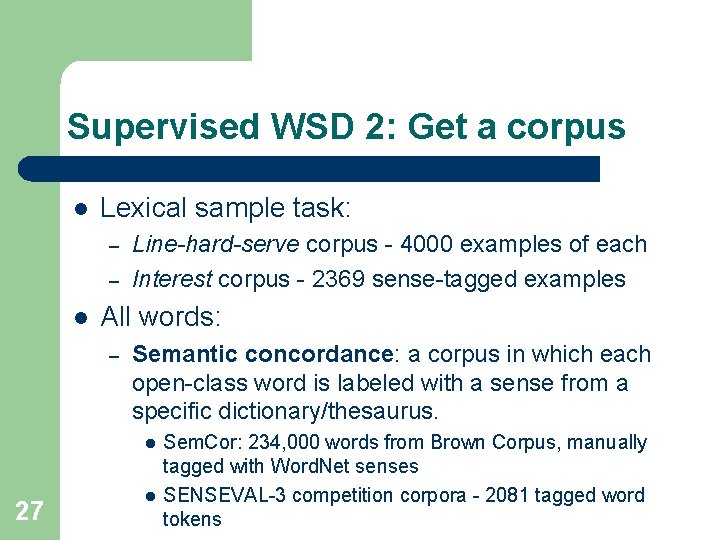

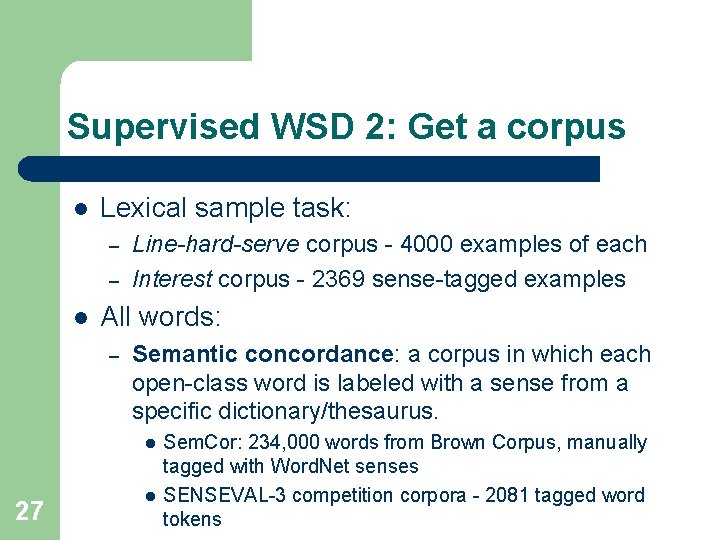

Supervised WSD 2: Get a corpus
- Lexical sample task:
  - Line-hard-serve corpus: 4,000 examples of each
  - Interest corpus: 2,369 sense-tagged examples
- All words:
  - Semantic concordance: a corpus in which each open-class word is labeled with a sense from a specific dictionary/thesaurus.
    - SemCor: 234,000 words from the Brown Corpus, manually tagged with WordNet senses
    - SENSEVAL-3 competition corpora: 2,081 tagged word tokens

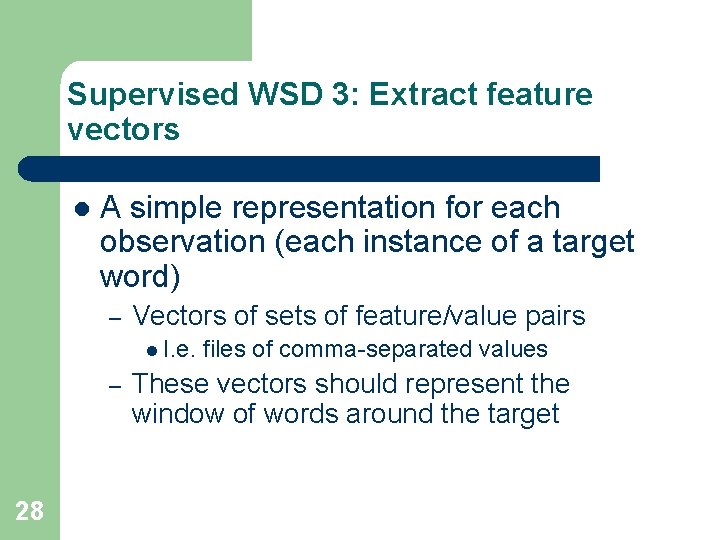

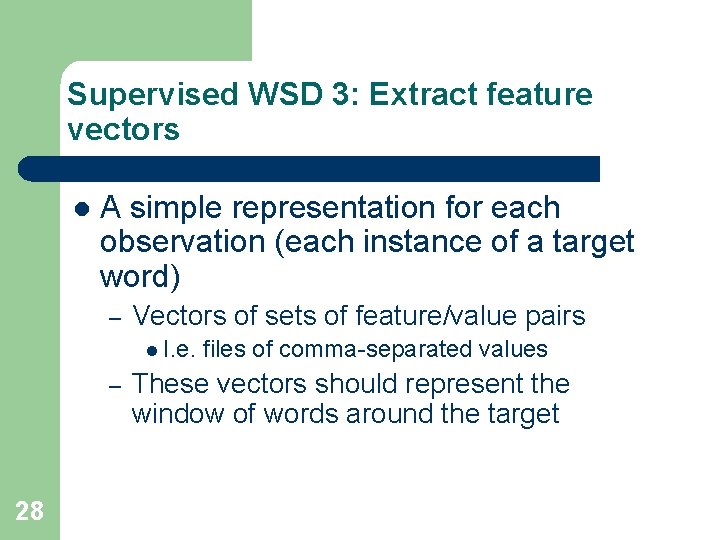

Supervised WSD 3: Extract feature vectors
- A simple representation for each observation (each instance of a target word)
  - Vectors of sets of feature/value pairs
    - I.e., files of comma-separated values
- These vectors should represent the window of words around the target

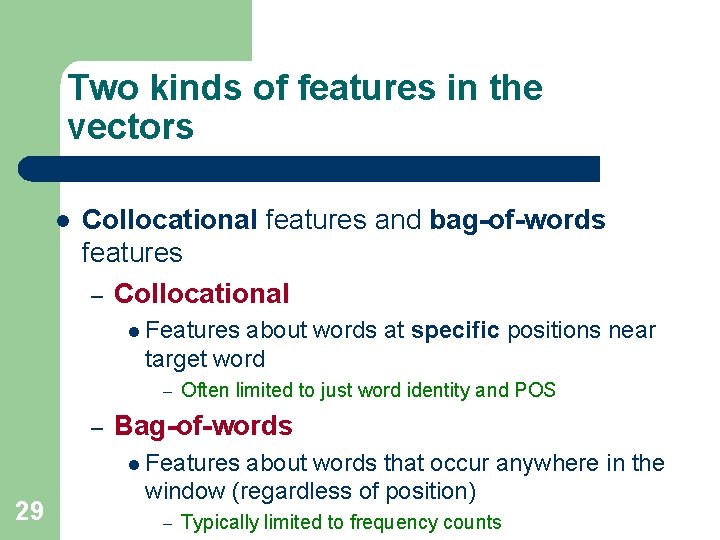

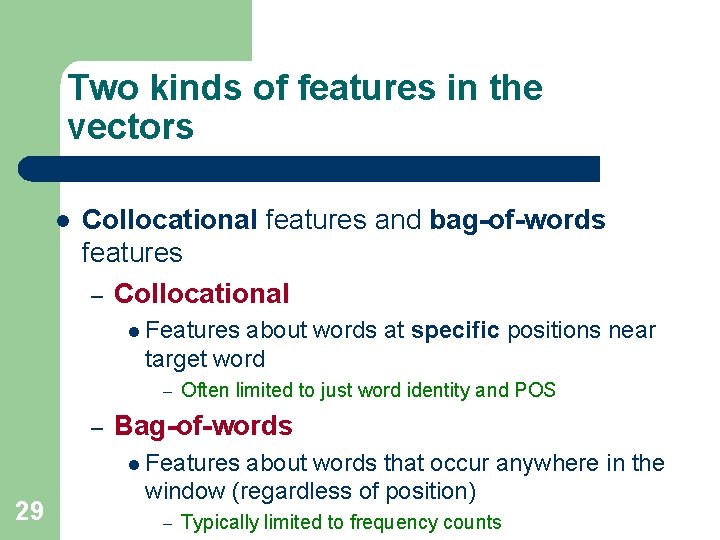

Two kinds of features in the vectors
- Collocational features and bag-of-words features
  - Collocational
    - Features about words at specific positions near the target word
      - Often limited to just word identity and POS
  - Bag-of-words
    - Features about words that occur anywhere in the window (regardless of position)
      - Typically limited to frequency counts

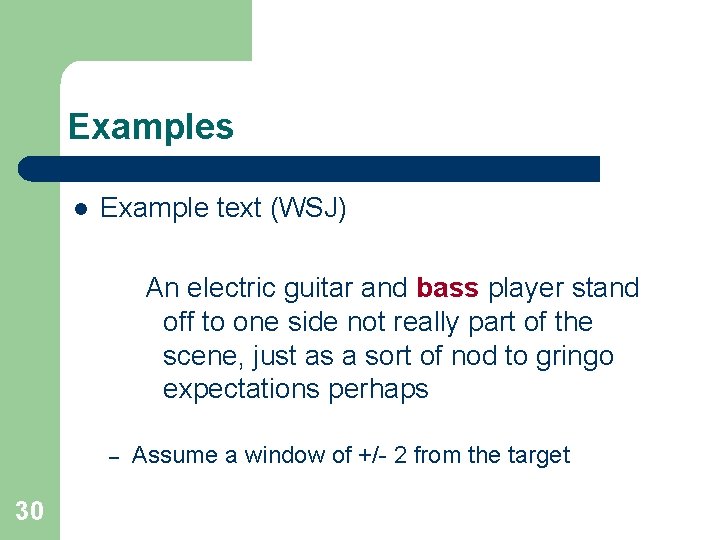

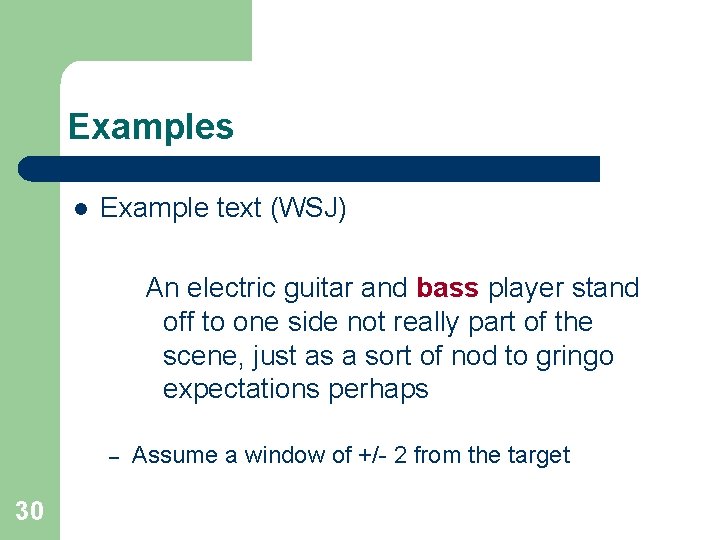

Examples
- Example text (WSJ): An electric guitar and bass player stand off to one side, not really part of the scene, just as a sort of nod to gringo expectations perhaps
  - Assume a window of +/- 2 from the target

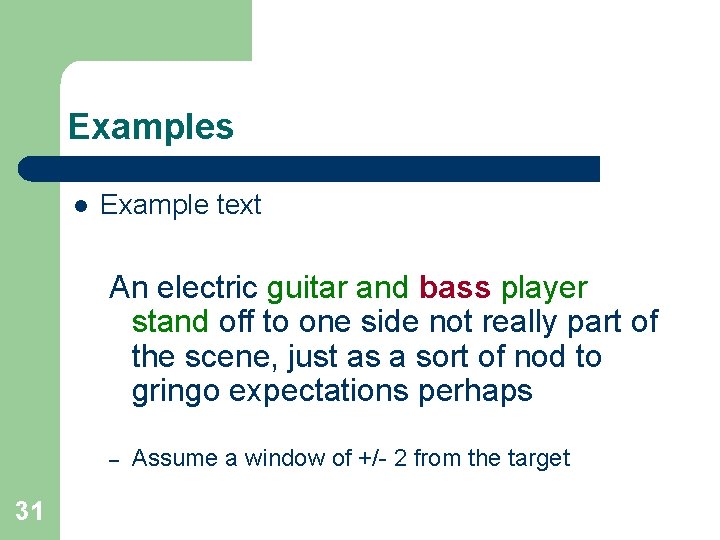

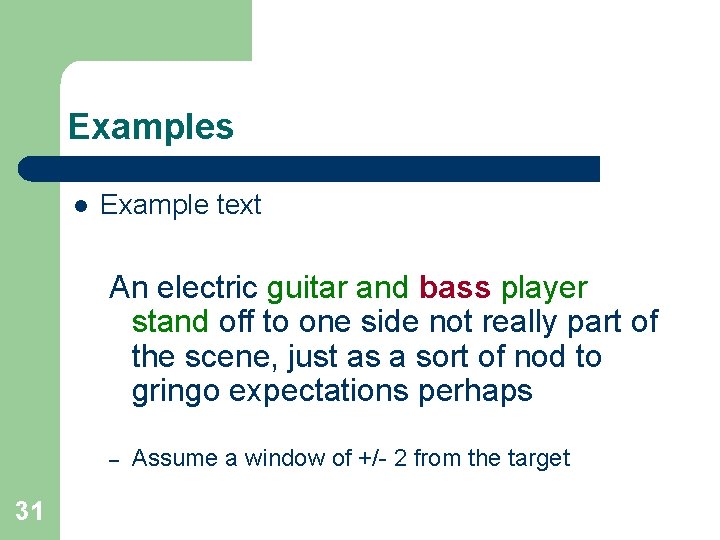


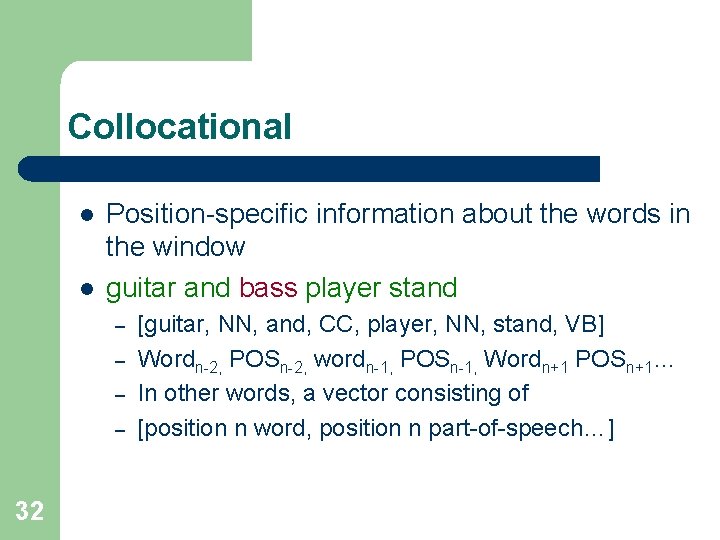

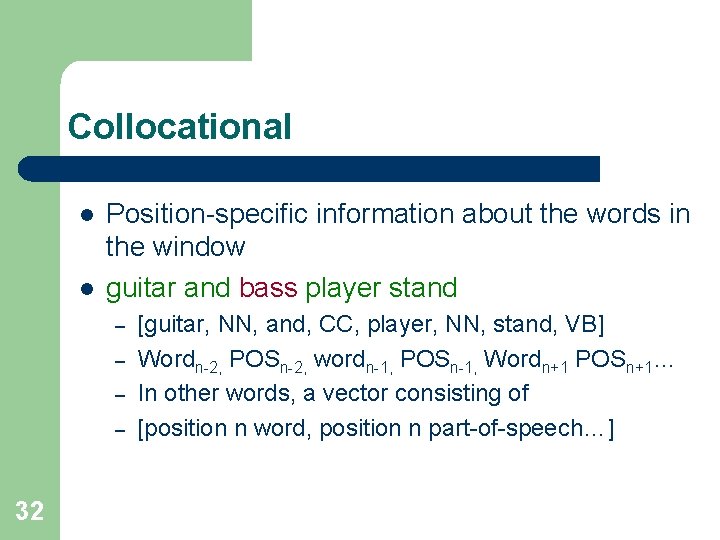

Collocational
- Position-specific information about the words in the window
- guitar and bass player stand
  - [guitar, NN, and, CC, player, NN, stand, VB]
  - $\mathrm{word}_{n-2}, \mathrm{POS}_{n-2}, \mathrm{word}_{n-1}, \mathrm{POS}_{n-1}, \mathrm{word}_{n+1}, \mathrm{POS}_{n+1}, \ldots$
  - In other words, a vector consisting of [position n word, position n part-of-speech, …]
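The collocational vector for the bass example can be produced with a few lines of Python. This sketch assumes the sentence is already POS-tagged (the tags are hand-assigned here to match the slide) and fills positions that fall outside the sentence with a hypothetical `<pad>` placeholder.

```python
def collocational_features(tagged, target_index, window=2):
    """Return [word, POS, ...] for positions -window..-1 and +1..+window around the target.

    Positions outside the sentence are filled with "<pad>" (an assumed convention).
    """
    features = []
    offsets = list(range(-window, 0)) + list(range(1, window + 1))
    for offset in offsets:
        i = target_index + offset
        if 0 <= i < len(tagged):
            word, pos = tagged[i]
        else:
            word, pos = "<pad>", "<pad>"
        features.extend([word, pos])
    return features


# POS tags hand-assigned to match the slide's vector.
tagged = [("An", "DT"), ("electric", "JJ"), ("guitar", "NN"), ("and", "CC"),
          ("bass", "NN"), ("player", "NN"), ("stand", "VB"), ("off", "RP")]
print(collocational_features(tagged, 4))
# ['guitar', 'NN', 'and', 'CC', 'player', 'NN', 'stand', 'VB']
```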

Bag-of-words
- Words that occur within the window, regardless of specific position
- First derive a set of terms to place in the vector
- Then note how often each of those terms occurs in a given window

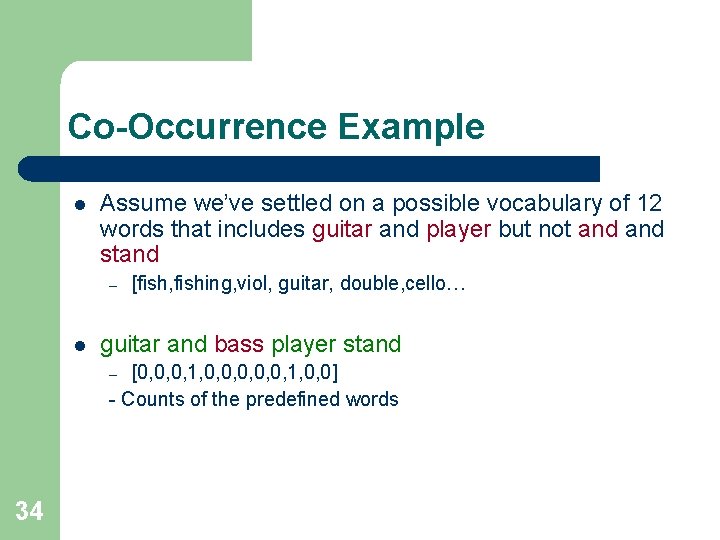

Co-Occurrence Example
- Assume we’ve settled on a possible vocabulary of 12 words that includes guitar and player but not “and” or “stand”
  - [fish, fishing, viol, guitar, double, cello, …]
- guitar and bass player stand
  - [0, 0, 0, 1, 0, 0, …]
  - Counts of the predefined words
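Here is a sketch of the bag-of-words step for the same ±2 window. The slide lists only six of the twelve vocabulary words, so this assumes a six-word vocabulary. The counting logic does not depend on that choice.

```python
from collections import Counter

# Only the six vocabulary words visible on the slide; the other six are not given.
VOCAB = ["fish", "fishing", "viol", "guitar", "double", "cello"]


def bag_of_words(tokens, target_index, vocab, window=2):
    """Count how often each vocabulary word appears within +/-window of the target."""
    lo = max(0, target_index - window)
    context = tokens[lo:target_index] + tokens[target_index + 1:target_index + window + 1]
    counts = Counter(w.lower() for w in context)
    return [counts[v] for v in vocab]


tokens = "An electric guitar and bass player stand off to one side".split()
print(bag_of_words(tokens, tokens.index("bass"), VOCAB))  # [0, 0, 0, 1, 0, 0]
```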

Naïve Bayes Test
- On a corpus of examples of uses of the word line, naïve Bayes achieved about 73% correct
- Is this a good performance?
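The slide reports the result but not the model. Below is a minimal multinomial naïve Bayes sense classifier over bag-of-words context features. It chooses the sense $s$ that maximizes $\log P(s) + \sum_j \log P(w_j \mid s)$. Add-one smoothing, skipping unseen words, and the tiny training set are our own assumptions for illustration. They are not the setup behind the 73% figure.

```python
import math
from collections import Counter, defaultdict


def train_nb(examples):
    """examples: list of (context_words, sense) pairs."""
    sense_counts = Counter(sense for _, sense in examples)
    word_counts = defaultdict(Counter)  # sense -> counts of context words
    vocab = set()
    for words, sense in examples:
        word_counts[sense].update(words)
        vocab.update(words)
    return sense_counts, word_counts, vocab


def classify_nb(words, sense_counts, word_counts, vocab):
    """argmax over senses of log P(s) + sum_j log P(w_j | s), add-one smoothed."""
    total = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense, n in sense_counts.items():
        denom = sum(word_counts[sense].values()) + len(vocab)
        score = math.log(n / total)
        for w in words:
            if w in vocab:  # words never seen in training are skipped
                score += math.log((word_counts[sense][w] + 1) / denom)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense


# Hypothetical training contexts for the target word "bass".
train = [
    (["play", "guitar", "player"], "music"),
    (["play", "band", "guitar"], "music"),
    (["caught", "fish", "lake"], "fish"),
    (["fishing", "lake", "boat"], "fish"),
]
model = train_nb(train)
print(classify_nb(["guitar", "band"], *model))  # music
print(classify_nb(["lake", "boat"], *model))    # fish
```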

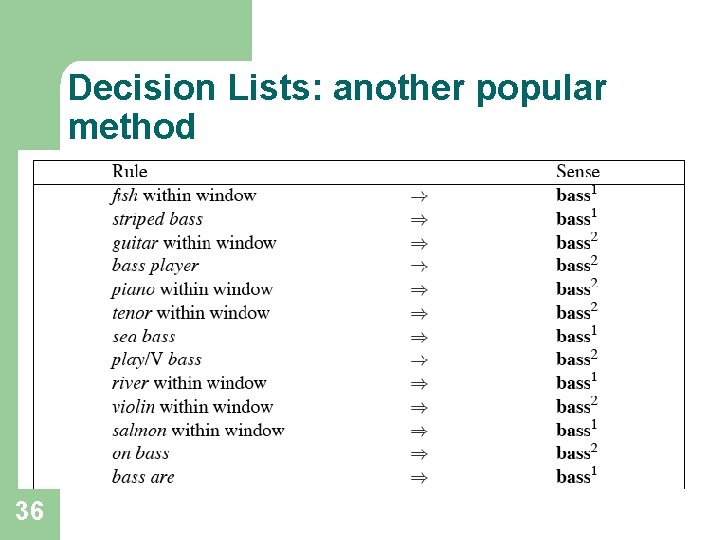

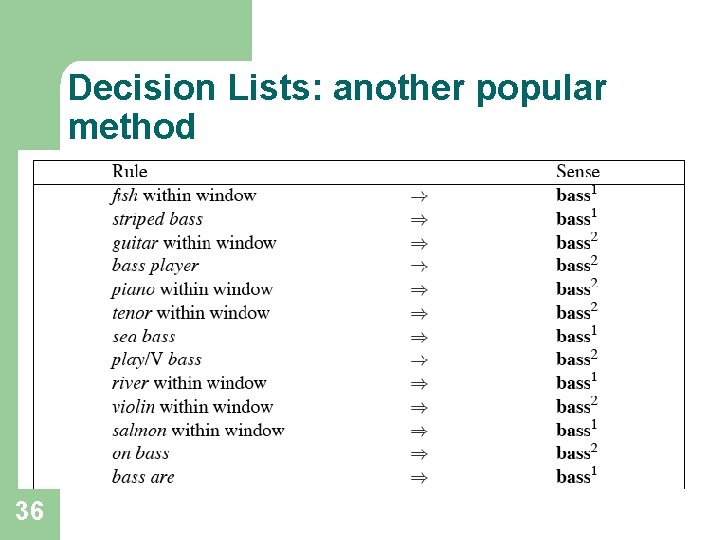

Decision Lists: another popular method
- A case statement…

Learning Decision Lists
- Restrict the lists to rules that test a single feature (1-decision-list rules)
- Evaluate each possible test and rank them based on how well they work
- Glue the top-N tests together and call that your decision list

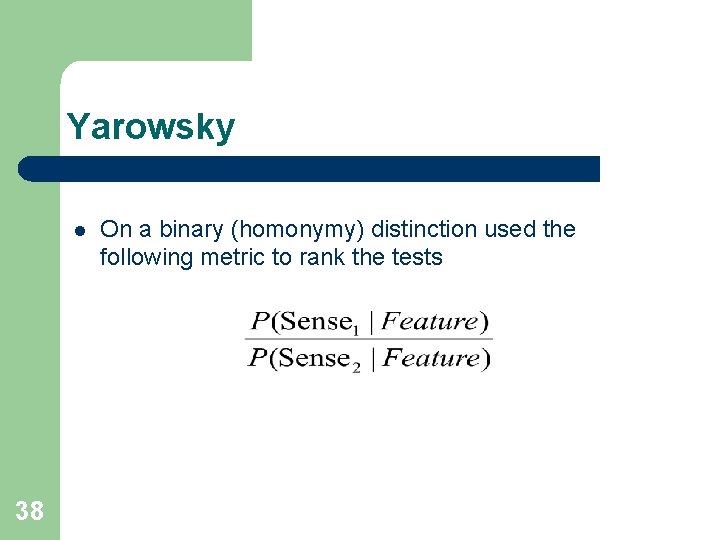

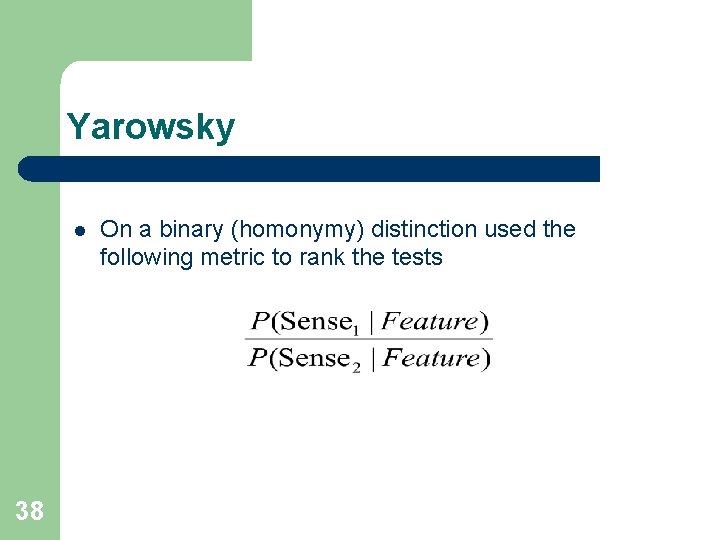

Yarowsky
- On a binary (homonymy) distinction, used the following metric to rank the tests

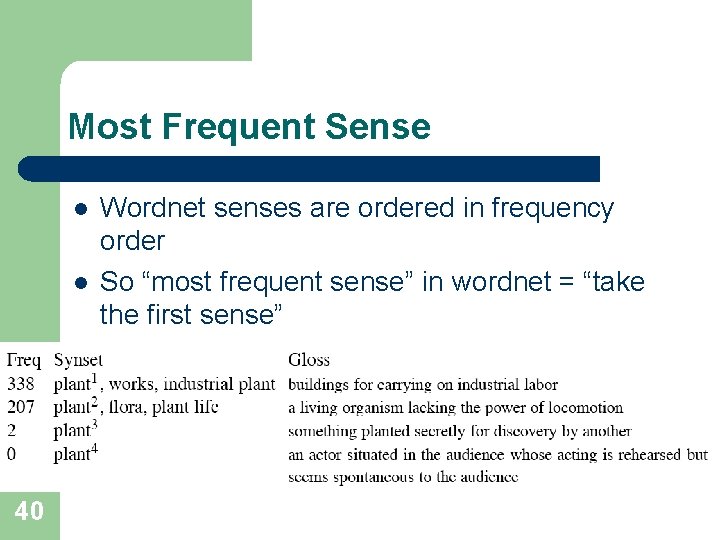
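The ranking metric appears only in the slide image. A common textbook statement of Yarowsky’s criterion (for example, in Jurafsky & Martin) ranks each single-feature test by the absolute log-likelihood ratio $\left|\log \frac{P(\mathrm{Sense}_1 \mid f)}{P(\mathrm{Sense}_2 \mid f)}\right|$. The sketch below assumes that form. It adds add-α smoothing (our choice) so that unseen feature/sense pairs do not produce log 0, and it uses hypothetical training data.

```python
import math
from collections import Counter


def learn_decision_list(examples, sense_a, sense_b, alpha=0.1):
    """examples: list of (set_of_features, sense) for a binary sense distinction.

    Each rule tests one feature; rules are ranked by |log(P(a|f) / P(b|f))|.
    """
    counts = {sense_a: Counter(), sense_b: Counter()}
    for feats, sense in examples:
        counts[sense].update(feats)
    rules = []
    for f in set(counts[sense_a]) | set(counts[sense_b]):
        a = counts[sense_a][f] + alpha
        b = counts[sense_b][f] + alpha
        # P(a|f)/P(b|f) reduces to a/b: both share the denominator count(f) + 2*alpha.
        score = abs(math.log(a / b))
        rules.append((score, f, sense_a if a > b else sense_b))
    rules.sort(reverse=True)  # strongest evidence first
    return rules


def apply_decision_list(rules, feats, default):
    """Return the sense of the first (highest-ranked) rule whose feature is present."""
    for _, f, sense in rules:
        if f in feats:
            return sense
    return default


# Hypothetical feature sets for occurrences of "bass".
data = [
    ({"play", "guitar"}, "music"),
    ({"play", "band"}, "music"),
    ({"play", "band"}, "music"),
    ({"fish", "river"}, "fish"),
    ({"fish", "lake"}, "fish"),
]
rules = learn_decision_list(data, "music", "fish")
print(rules[0][1], rules[0][2])                                 # play music
print(apply_decision_list(rules, {"lake", "boat"}, "music"))    # fish
```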

WSD Evaluations and baselines
- Exact match accuracy
  - % of words tagged identically with manual sense tags
  - Usually evaluate using held-out data from the same labeled corpus
- Baselines
  - Most frequent sense
  - Lesk algorithm: based on the shared words between the target word context and the gloss for the sense
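The Lesk baseline can be sketched using its simplified variant: pick the sense whose gloss shares the most content words with the context. The two glosses are taken from the WordNet bass senses listed earlier. The stop-word list, whitespace tokenization, and labels like `bass#1` are our assumptions.

```python
STOPWORDS = {"a", "an", "the", "of", "and", "at", "in", "to", "is", "on"}  # assumed list

GLOSSES = {
    "bass#1": "the lowest part of the musical range",
    "bass#4": "flesh of lean-fleshed saltwater fish of the family Serranidae",
}


def content_words(text):
    """Lower-cased whitespace tokens minus stop words."""
    return {w for w in text.lower().split() if w not in STOPWORDS}


def simplified_lesk(context, glosses):
    """Return the sense whose gloss shares the most content words with the context."""
    ctx = content_words(context)
    return max(glosses, key=lambda sense: len(ctx & content_words(glosses[sense])))


print(simplified_lesk("the fisherman sold saltwater fish and bass at the market", GLOSSES))  # bass#4
print(simplified_lesk("the bass line sits at the lowest part of the range", GLOSSES))        # bass#1
```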

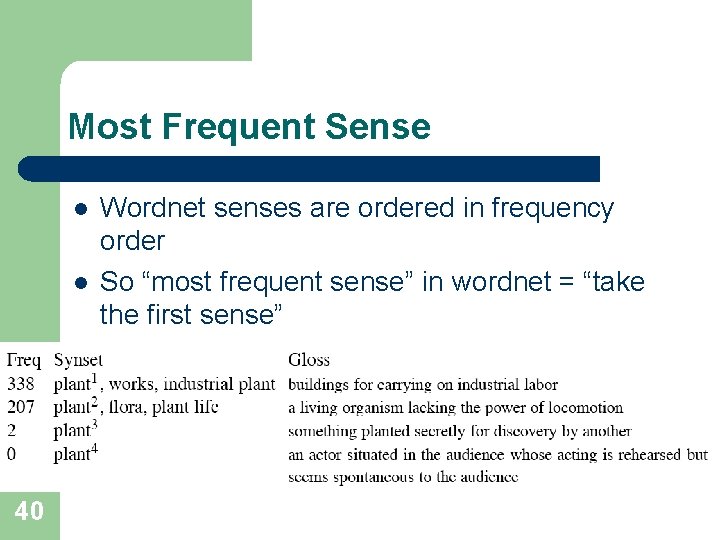

Most Frequent Sense
- WordNet senses are ordered by frequency
- So “most frequent sense” in WordNet = “take the first sense”
- Sense frequencies come from SemCor
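The most-frequent-sense baseline and exact-match accuracy are each only a few lines. The sense tags below are hypothetical. With WordNet itself, the first-listed sense would play the role of `most_frequent_sense`.

```python
from collections import Counter


def most_frequent_sense(training_tags):
    """The sense seen most often in a sense-tagged training sample."""
    return Counter(training_tags).most_common(1)[0][0]


def exact_match_accuracy(predicted, gold):
    """Fraction of instances whose predicted tag equals the manual tag."""
    assert len(predicted) == len(gold)
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


train = ["bass#7", "bass#4", "bass#7", "bass#1", "bass#7"]  # hypothetical tags
test_gold = ["bass#7", "bass#4", "bass#7", "bass#7"]        # hypothetical tags
mfs = most_frequent_sense(train)
print(mfs)                                                      # bass#7
print(exact_match_accuracy([mfs] * len(test_gold), test_gold))  # 0.75
```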

Ceiling
- Human inter-annotator agreement
  - Compare annotations of two humans
  - On the same data
  - Given the same tagging guidelines
- Human agreement on all-words corpora with WordNet-style senses
  - 75%-80%

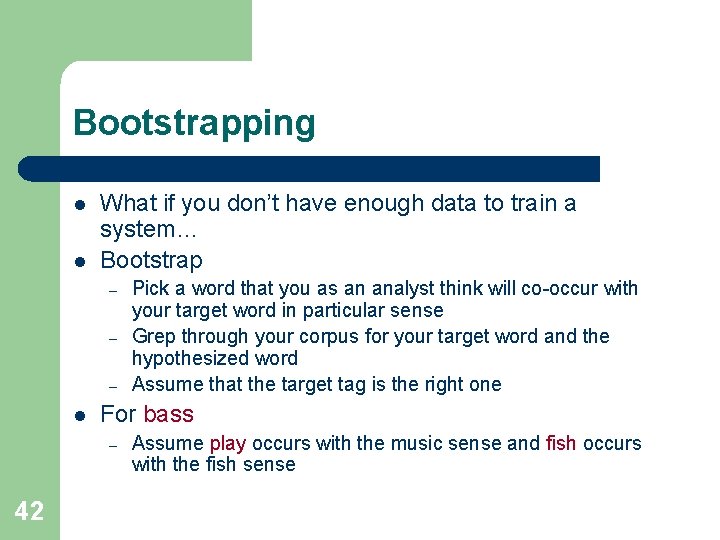

Bootstrapping
- What if you don’t have enough data to train a system…
- Bootstrap
  - Pick a word that you, as an analyst, think will co-occur with your target word in a particular sense
  - Grep through your corpus for your target word and the hypothesized word
  - Assume that the sense associated with the hypothesized word is the right tag
- For bass
  - Assume play occurs with the music sense and fish occurs with the fish sense
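The seed-labeling step can be sketched using the slide’s seeds (play → music sense, fish → fish sense). Whitespace word matching stands in for grep. Skipping sentences that contain seeds for both senses, or no seed at all, is our assumption. The labeled sentences would then serve as training data for a supervised classifier.

```python
SEEDS = {"play": "music", "fish": "fish"}  # seed collocate -> sense, from the slide


def seed_label(sentences, target, seeds):
    """Tag sentences that contain the target and seeds for exactly one sense."""
    labeled = []
    for s in sentences:
        words = set(s.lower().split())
        if target not in words:
            continue
        senses = {sense for seed, sense in seeds.items() if seed in words}
        if len(senses) == 1:  # no seed, or seeds for both senses: leave unlabeled
            labeled.append((s, senses.pop()))
    return labeled


# Hypothetical corpus sentences.
sents = [
    "we went to fish for bass in the lake",
    "she will play bass in the band",
    "the bass was too loud",
    "they play with fish and bass",
]
labeled = seed_label(sents, "bass", SEEDS)
for sentence, sense in labeled:
    print(sense, "|", sentence)
```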

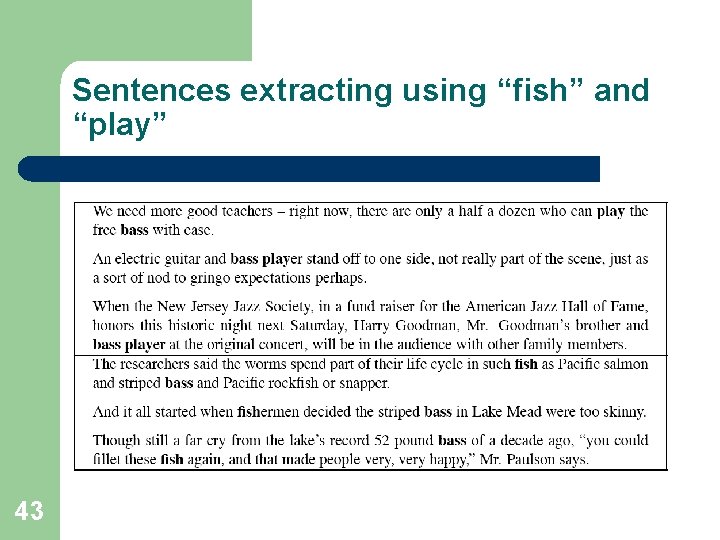

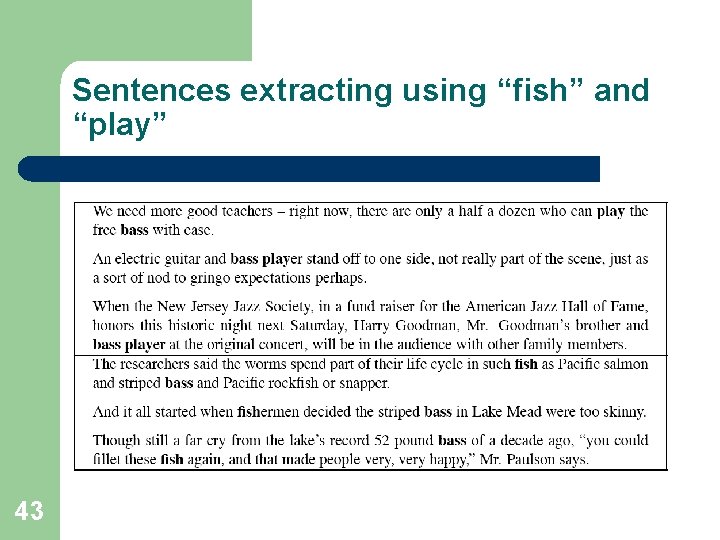

Sentences extracted using “fish” and “play”

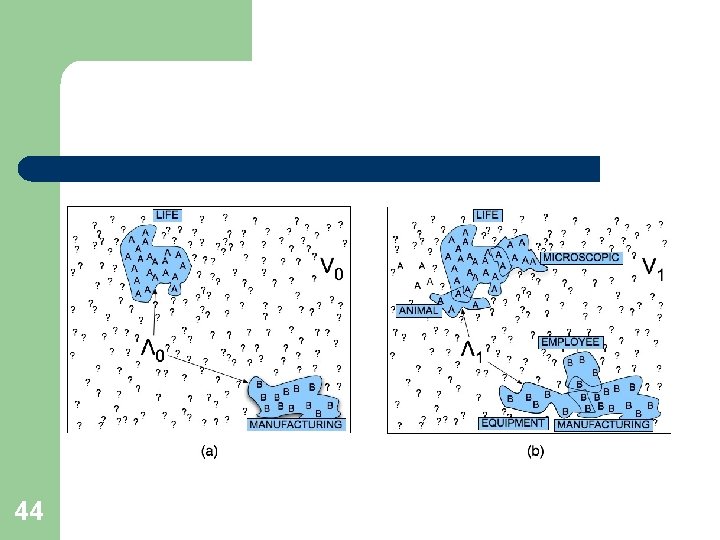

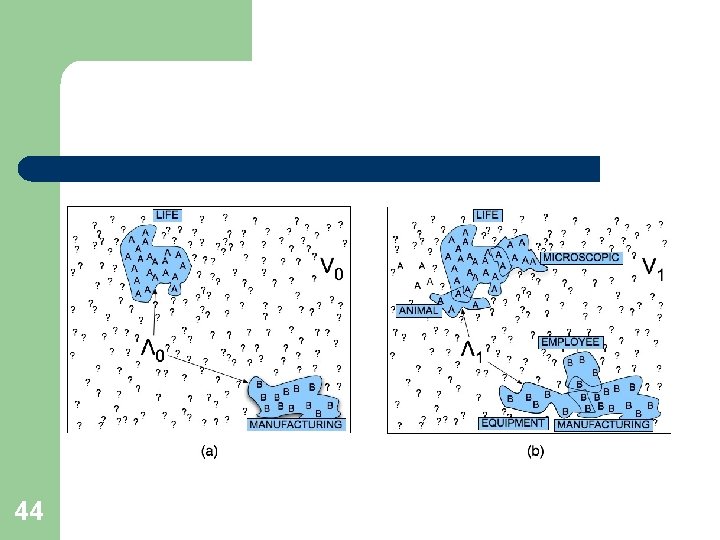


WSD Performance
- Varies widely depending on how difficult the disambiguation task is
- Accuracies of over 90% are commonly reported on some of the classic, often fairly easy, WSD tasks (pike, star, interest)
- Senseval brought careful evaluation of difficult WSD (many senses, different POS)
- Senseval 1: more fine-grained senses, wider range of types:
  - Overall: about 75% accuracy
  - Verbs: about 70% accuracy
  - Nouns: about 80% accuracy

Word similarity
- Synonymy is a binary relation
  - Two words are either synonymous or not
- We want a looser metric
  - Word similarity or word distance
- Two words are more similar
  - if they share more features of meaning
- Actually these are really relations between senses:
  - Instead of saying “bank is like fund”
  - We say
    - bank1 is similar to fund3
    - bank2 is similar to slope5
- We’ll compute them over both words and senses

Two classes of algorithms
- Thesaurus-based algorithms
  - Based on whether words are “nearby” in WordNet
- Distributional algorithms
  - By comparing words based on their context
    - I like having X for dinner
    - What are the possible values of X?

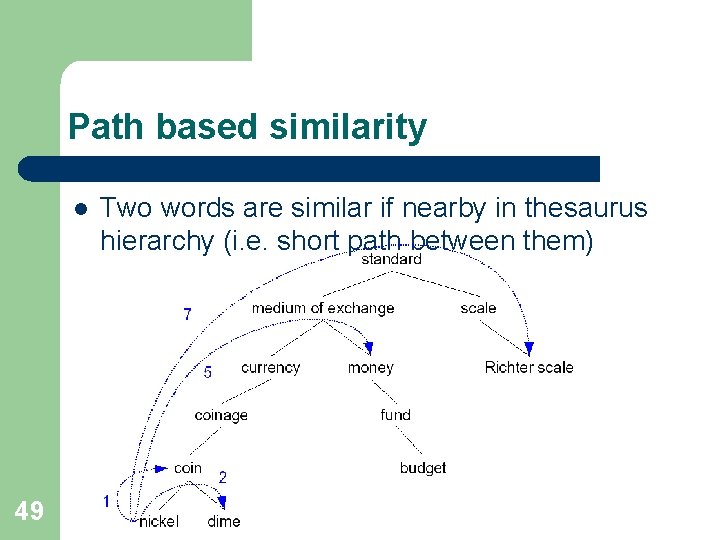

Thesaurus-based word similarity
- We could use anything in the thesaurus
  - Meronymy
  - Glosses
  - Example sentences
- In practice
  - By “thesaurus-based” we just mean
    - Using the is-a/subsumption/hypernym hierarchy
- Word similarity versus word relatedness
  - Similar words are near-synonyms
  - Related words could be related in any way
    - Car, gasoline: related, not similar
    - Car, bicycle: similar

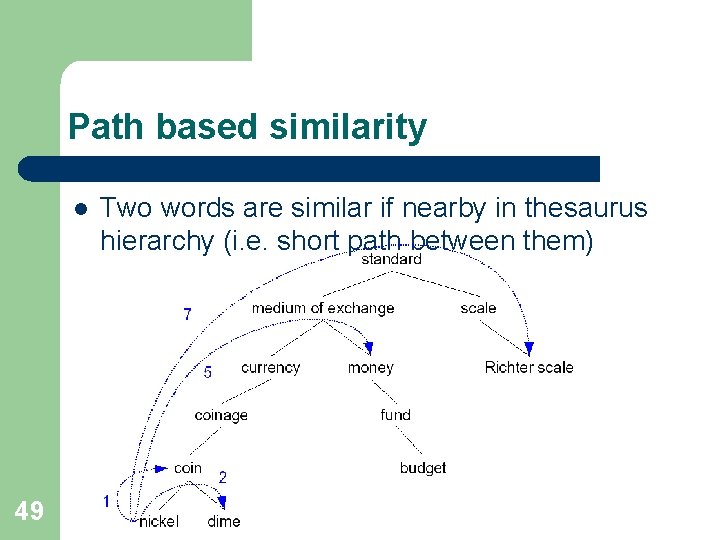

Path-based similarity
- Two words are similar if they are nearby in the thesaurus hierarchy (i.e., there is a short path between them)

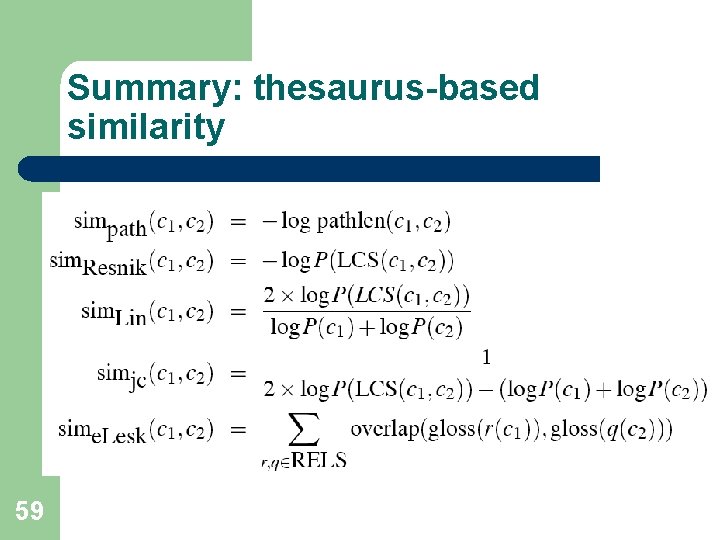

Refinements to path-based similarity
- $\mathrm{pathlen}(c_1, c_2)$ = number of edges in the shortest path between the sense nodes $c_1$ and $c_2$
- $\mathrm{sim}_{\mathrm{path}}(c_1, c_2) = -\log \mathrm{pathlen}(c_1, c_2)$
- $\mathrm{wordsim}(w_1, w_2) = \max_{c_1 \in \mathrm{senses}(w_1),\; c_2 \in \mathrm{senses}(w_2)} \mathrm{sim}(c_1, c_2)$
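Here is a sketch of pathlen, $\mathrm{sim}_{\mathrm{path}}$ and wordsim on a small hypothetical hierarchy. Node names such as `bank_account` and `riverbank` are invented for illustration. Edges are treated as undirected and pathlen is found by breadth-first search. Identical senses (pathlen 0, where $-\log$ is undefined) get $+\infty$, which is our own convention. The natural log is used; the base does not change the ranking.

```python
import math
from collections import deque

# Hypothetical toy hierarchy: child -> hypernym.
PARENT = {
    "nickel": "coin", "dime": "coin", "coin": "money",
    "bank_account": "fund", "fund": "money", "money": "entity",
    "riverbank": "slope", "slope": "landform", "landform": "entity",
}
SENSES = {"bank": ["bank_account", "riverbank"], "dime": ["dime"], "nickel": ["nickel"]}


def neighbors(node):
    """Hypernym and hyponym links, treated as undirected edges."""
    up = [PARENT[node]] if node in PARENT else []
    return up + [child for child, parent in PARENT.items() if parent == node]


def pathlen(c1, c2):
    """Number of edges on the shortest path between two sense nodes (BFS)."""
    seen, queue = {c1}, deque([(c1, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == c2:
            return dist
        for nxt in neighbors(node):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError(f"no path between {c1} and {c2}")


def sim_path(c1, c2):
    """-log pathlen; identical senses (pathlen 0) get +infinity."""
    n = pathlen(c1, c2)
    return math.inf if n == 0 else -math.log(n)


def wordsim(w1, w2):
    """Similarity of the closest pair of senses of the two words."""
    return max(sim_path(c1, c2) for c1 in SENSES[w1] for c2 in SENSES[w2])


print(pathlen("nickel", "dime"))  # 2
print(wordsim("bank", "dime"))    # uses bank_account (4 edges), not riverbank (6)
```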

Problem with basic path-based similarity
- Assumes each link represents a uniform distance
  - Nickel to money seems closer than nickel to standard
- Instead:
  - Want a metric which lets us represent the cost of each edge independently

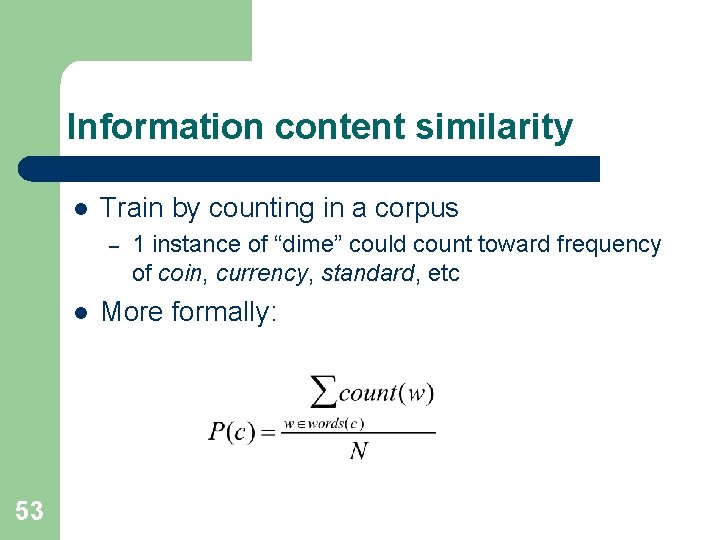

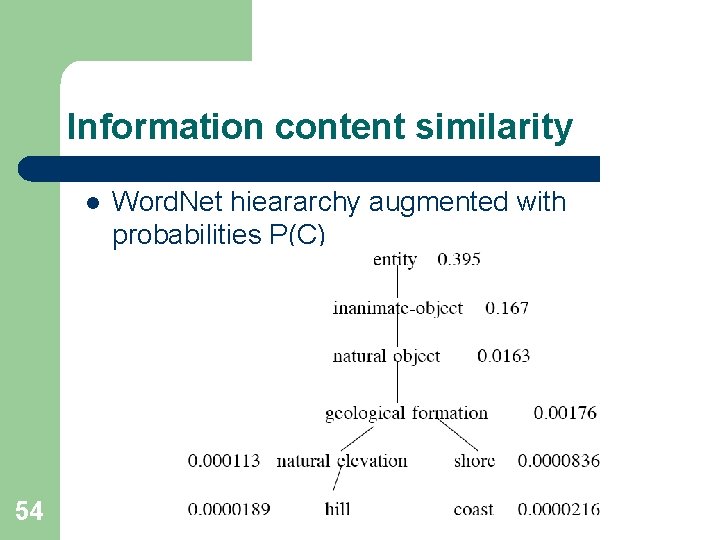

Information content similarity metrics
- Let’s define P(c) as:
  - The probability that a randomly selected word in a corpus is an instance of concept c
  - Formally: there is a distinct random variable, ranging over words, associated with each concept in the hierarchy
  - P(root) = 1
  - The lower a node in the hierarchy, the lower its probability

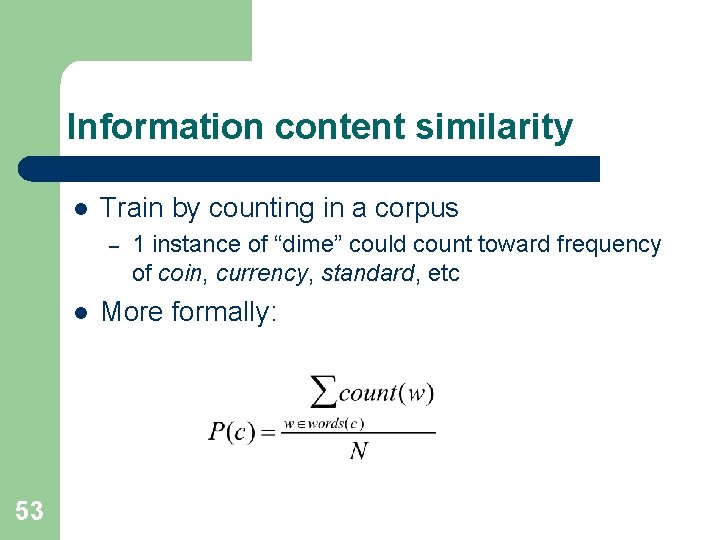

Information content similarity
- Train by counting in a corpus
  - 1 instance of “dime” could count toward the frequency of coin, currency, standard, etc.
- More formally:

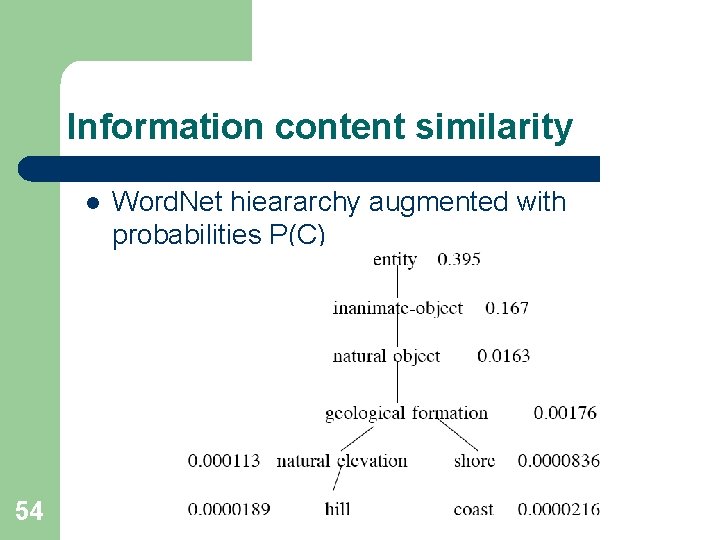
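The counting can be sketched as follows. Each word occurrence adds to the count of its own concept and of every concept above it. P(c) is that total divided by the number N of counted words, so the root gets probability 1. The hierarchy and counts are hypothetical, and each word is assumed to map to a single concept node.

```python
from collections import Counter

# Hypothetical toy hierarchy: child -> hypernym.
PARENT = {
    "dime": "coin", "nickel": "coin", "coin": "currency",
    "currency": "standard", "standard": "entity",
    "hill": "landform", "landform": "entity",
}
COUNTS = {"dime": 3, "nickel": 1, "hill": 4}  # hypothetical corpus counts


def ancestors_and_self(c):
    """The concept itself followed by all of its hypernyms up to the root."""
    chain = [c]
    while c in PARENT:
        c = PARENT[c]
        chain.append(c)
    return chain


def concept_probabilities(word_counts):
    """P(c) = (sum of counts of words subsumed by c) / N."""
    n = sum(word_counts.values())
    totals = Counter()
    for word, k in word_counts.items():
        for c in ancestors_and_self(word):
            totals[c] += k
    return {c: totals[c] / n for c in totals}


p = concept_probabilities(COUNTS)
print(p["dime"], p["coin"], p["entity"])  # 0.375 0.5 1.0
```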

Information content similarity
- WordNet hierarchy augmented with probabilities P(c)

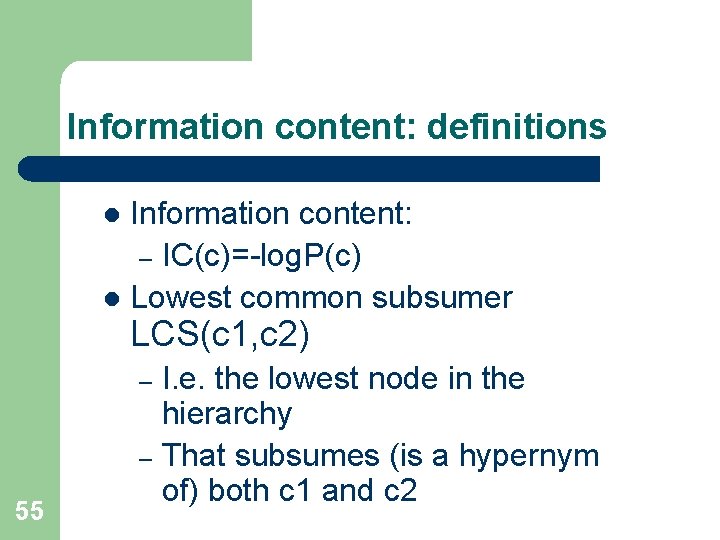

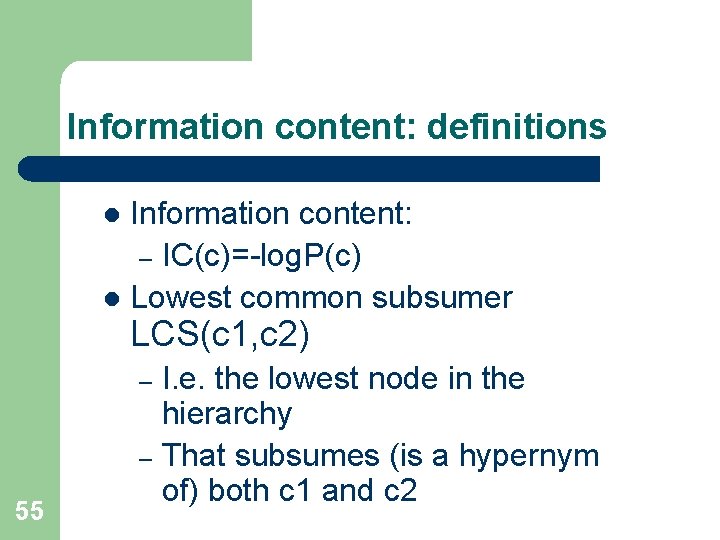

Information content: definitions
- Information content:
  - $\mathrm{IC}(c) = -\log P(c)$
- Lowest common subsumer $\mathrm{LCS}(c_1, c_2)$
  - i.e., the lowest node in the hierarchy that subsumes (is a hypernym of) both $c_1$ and $c_2$

Resnik method
- The similarity between two words is related to their common information
- The more two words have in common, the more similar they are
- Resnik: measure the common information as:
  - The information content of the lowest common subsumer of the two nodes
  - $\mathrm{sim}_{\mathrm{resnik}}(c_1, c_2) = -\log P(\mathrm{LCS}(c_1, c_2))$
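Here is a sketch of IC, LCS and Resnik similarity on a hypothetical toy hierarchy with made-up probabilities. In a tree, the first ancestor of $c_1$ (walking upward) that also subsumes $c_2$ is the lowest common subsumer. WordNet’s multiple inheritance would require comparing all candidate subsumers, which this sketch does not do.

```python
import math

# Hypothetical toy hierarchy (child -> hypernym) and made-up concept probabilities.
PARENT = {
    "dime": "coin", "nickel": "coin", "coin": "currency",
    "currency": "standard", "standard": "entity",
    "hill": "landform", "landform": "entity",
}
P = {"entity": 1.0, "standard": 0.5, "currency": 0.5, "coin": 0.5,
     "dime": 0.375, "nickel": 0.125, "landform": 0.5, "hill": 0.5}


def ancestors_and_self(c):
    """The concept itself followed by all of its hypernyms up to the root."""
    chain = [c]
    while c in PARENT:
        c = PARENT[c]
        chain.append(c)
    return chain


def lcs(c1, c2):
    """Lowest common subsumer in a tree: first ancestor of c1 that also subsumes c2."""
    above_c2 = set(ancestors_and_self(c2))
    for c in ancestors_and_self(c1):
        if c in above_c2:
            return c
    raise ValueError("no common subsumer")


def ic(c):
    """Information content: -log P(c)."""
    return -math.log(P[c])


def sim_resnik(c1, c2):
    """Information content of the lowest common subsumer."""
    return ic(lcs(c1, c2))


print(lcs("dime", "nickel"), sim_resnik("dime", "nickel"))  # coin, about 0.693
print(lcs("dime", "hill"), sim_resnik("dime", "hill"))      # entity, 0 (only the root is shared)
```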

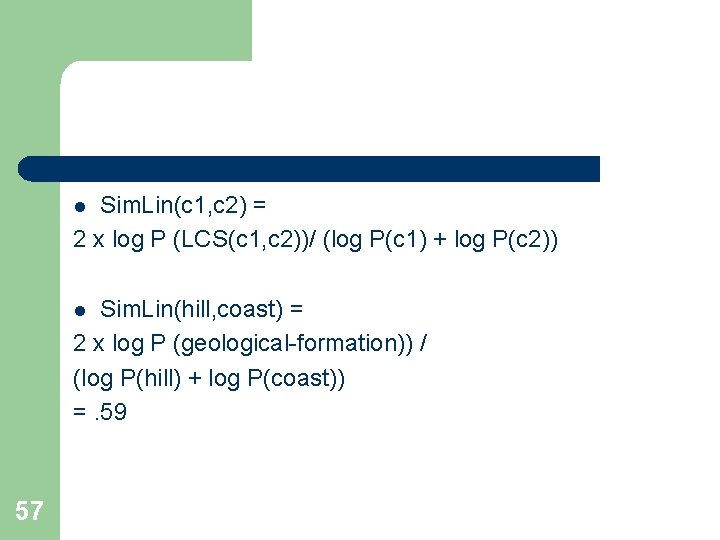

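- $\mathrm{sim}_{\mathrm{Lin}}(c_1, c_2) = \frac{2 \log P(\mathrm{LCS}(c_1, c_2))}{\log P(c_1) + \log P(c_2)}$
- $\mathrm{sim}_{\mathrm{Lin}}(\textit{hill}, \textit{coast}) = \frac{2 \log P(\textit{geological-formation})}{\log P(\textit{hill}) + \log P(\textit{coast})} = 0.59$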
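The hill/coast figure can be checked numerically. The probabilities below are not stated in the slide text. They are assumed to match the Jurafsky & Martin example hierarchy that this slide appears to follow: P(hill) ≈ 0.0000189, P(coast) ≈ 0.0000216, P(geological-formation) ≈ 0.00176.

```python
import math

# Assumed probabilities (taken to match the textbook example hierarchy).
P = {"hill": 0.0000189, "coast": 0.0000216, "geological-formation": 0.00176}


def sim_lin(p_c1, p_c2, p_lcs):
    """2 log P(LCS) / (log P(c1) + log P(c2)); the log base cancels out."""
    return 2 * math.log(p_lcs) / (math.log(p_c1) + math.log(p_c2))


print(round(sim_lin(P["hill"], P["coast"], P["geological-formation"]), 2))  # 0.59
```

Because the log base appears in both numerator and denominator, natural log and log base 2 give the same value.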

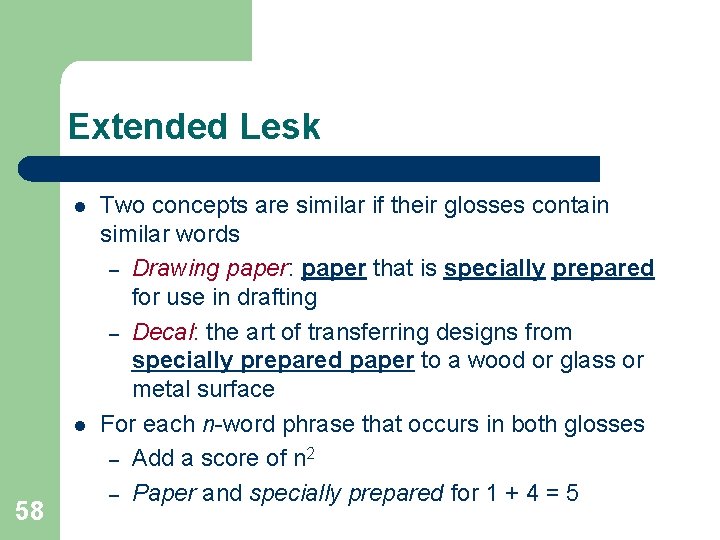

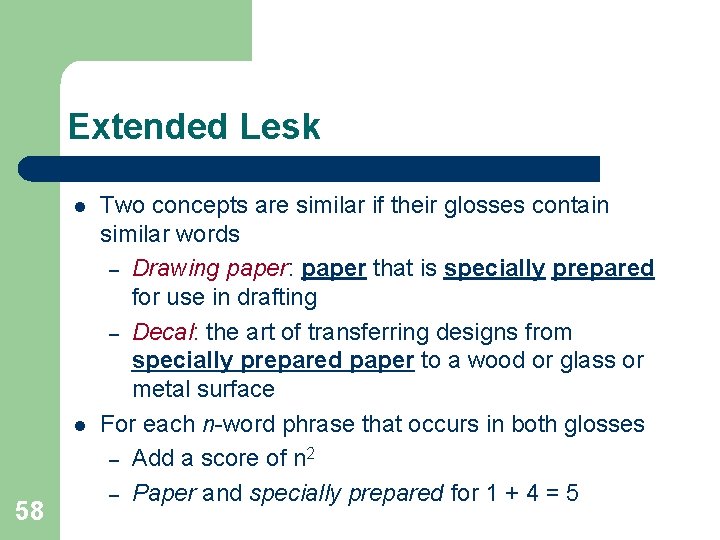

Extended Lesk
- Two concepts are similar if their glosses contain similar words
  - Drawing paper: paper that is specially prepared for use in drafting
  - Decal: the art of transferring designs from specially prepared paper to a wood or glass or metal surface
- For each n-word phrase that occurs in both glosses
  - Add a score of n²
  - “paper” and “specially prepared”: 1 + 4 = 5
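The overlap score can be sketched as a greedy loop. Repeatedly take the longest phrase shared by the two glosses, add n², and block those words from matching again. This greedy longest-first procedure and the lack of a stop-word filter are simplifications on our part. Published versions of extended Lesk often ignore overlaps made up only of function words.

```python
def longest_common_phrase(a, b):
    """Return (length, start_a, start_b) of the longest shared contiguous word run."""
    best = (0, 0, 0)
    # dp[i][j] = length of the common run ending at a[i-1] and b[j-1]
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
                if dp[i][j] > best[0]:
                    best = (dp[i][j], i - dp[i][j], j - dp[i][j])
    return best


def extended_lesk_overlap(gloss1, gloss2):
    """Sum n**2 over shared n-word phrases, longest first; each word is used once."""
    a, b = gloss1.lower().split(), gloss2.lower().split()
    score = 0
    while True:
        n, i, j = longest_common_phrase(a, b)
        if n == 0:
            return score
        score += n * n
        # Replace matched words with distinct placeholders so they cannot match again.
        a[i:i + n] = [("used-a", k) for k in range(i, i + n)]
        b[j:j + n] = [("used-b", k) for k in range(j, j + n)]


DRAWING_PAPER = "paper that is specially prepared for use in drafting"
DECAL = "the art of transferring designs from specially prepared paper to a wood or glass or metal surface"
print(extended_lesk_overlap(DRAWING_PAPER, DECAL))  # 5  ("specially prepared" = 4, "paper" = 1)
```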

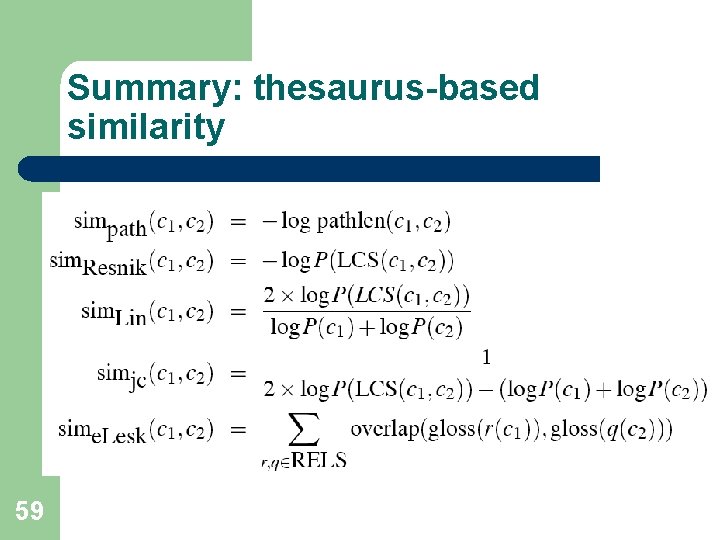

Summary: thesaurus-based similarity

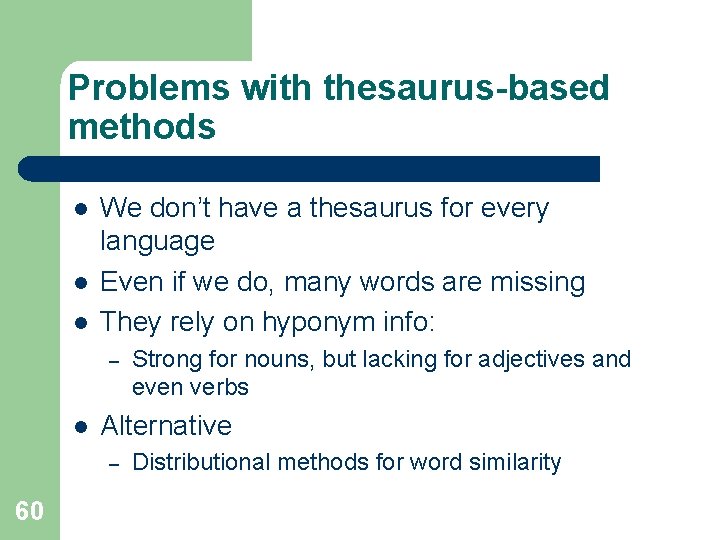

Problems with thesaurus-based methods
- We don’t have a thesaurus for every language
- Even if we do, many words are missing
- They rely on hyponym info:
  - Strong for nouns, but lacking for adjectives and even verbs
- Alternative
  - Distributional methods for word similarity

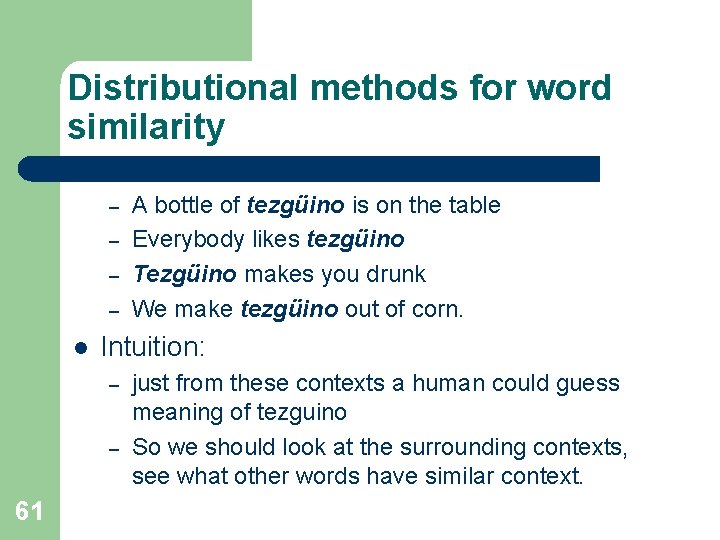

Distributional methods for word similarity
- A bottle of tezgüino is on the table
- Everybody likes tezgüino
- Tezgüino makes you drunk
- We make tezgüino out of corn.
- Intuition:
  - Just from these contexts, a human could guess the meaning of tezgüino
  - So we should look at the surrounding contexts and see what other words have similar contexts.

Context vector
- Consider a target word $w$
- Suppose we had one binary feature $f_i$ for each of the $N$ words $v_i$ in the lexicon
  - $f_i$ means “word $v_i$ occurs in the neighborhood of $w$”
- $w = (f_1, f_2, f_3, \ldots, f_N)$
- If $w$ = tezgüino, $v_1$ = bottle, $v_2$ = drunk, $v_3$ = matrix:
  - $w = (1, 1, 0, \ldots)$
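Here is how the binary vector for the tezgüino example can be built. This sketch assumes the “neighborhood” of $w$ is any sentence that contains it, and it uses only the three lexicon words named on the slide.

```python
CONTEXTS = [
    "A bottle of tezgüino is on the table",
    "Everybody likes tezgüino",
    "Tezgüino makes you drunk",
    "We make tezgüino out of corn",
]
LEXICON = ["bottle", "drunk", "matrix"]  # v1, v2, v3 from the slide


def binary_context_vector(target, contexts, lexicon):
    """f_i = 1 if lexicon word v_i occurs in any sentence containing the target."""
    seen = set()
    for sentence in contexts:
        words = sentence.lower().split()
        if target in words:
            seen.update(words)
    return tuple(int(v in seen) for v in lexicon)


print(binary_context_vector("tezgüino", CONTEXTS, LEXICON))  # (1, 1, 0)
```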

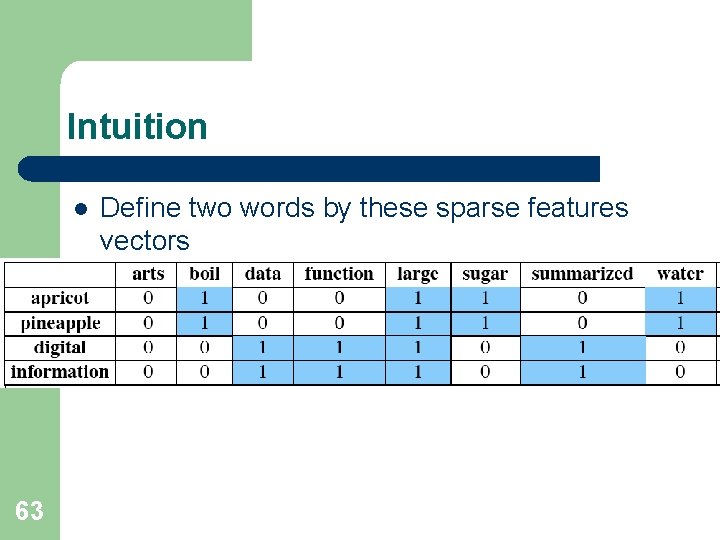

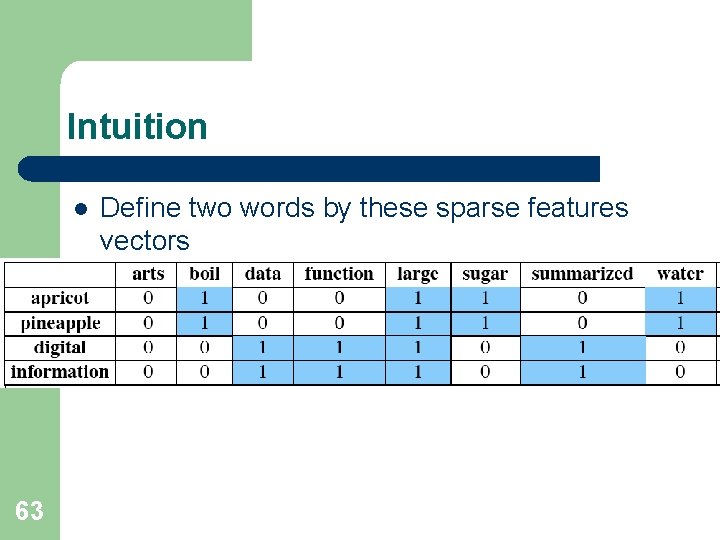

Intuition
- Define two words by these sparse feature vectors
- Apply a vector distance metric
- Say that two words are similar if their vectors are similar

Distributional similarity
- So we just need to specify 3 things:
  1. How the co-occurrence terms are defined
  2. How terms are weighted (frequency? logs? mutual information?)
  3. What vector distance metric to use (cosine? Euclidean distance?)
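The choice of metric matters. With raw counts, Euclidean distance is sensitive to how frequent a word is, while cosine compares only the direction of the vectors. The counts below are hypothetical: “wine” has the same context profile as “beer” but four times the frequency.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two vectors (insensitive to overall length)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def euclidean(u, v):
    """Straight-line distance (sensitive to overall frequency)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Hypothetical co-occurrence counts with the context words [drink, bottle, drive, road].
beer = [10, 6, 0, 1]
wine = [40, 24, 0, 4]  # same profile as beer, four times as frequent
car = [0, 1, 9, 8]

print(round(cosine(beer, wine), 3), round(cosine(beer, car), 3))        # 1.0 0.099
print(round(euclidean(beer, wine), 1), round(euclidean(beer, car), 1))  # 35.1 16.0
```

Euclidean distance puts beer closer to car, while cosine correctly groups beer with wine. This is one reason cosine is often preferred for count-based vectors.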

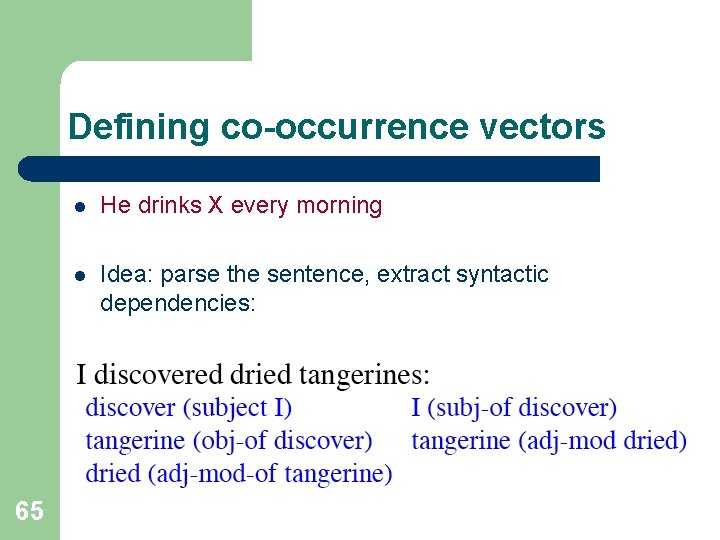

Defining co-occurrence vectors
- He drinks X every morning
- Idea: parse the sentence, extract syntactic dependencies:

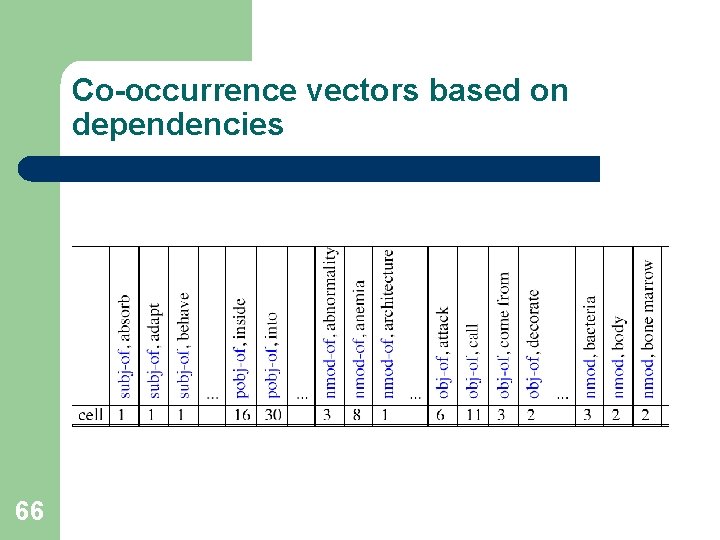

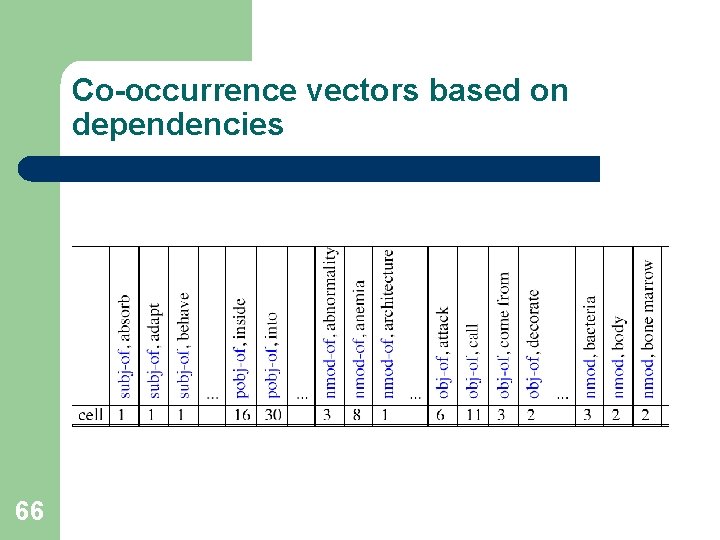

Co-occurrence vectors based on dependencies

Measures of association with context
- We have been using the frequency of some feature as its weight or value
- But we could use any function of this frequency
- Let’s consider one feature $f = (r, w') = (\textit{obj-of}, \textit{attack})$
- $P(f \mid w) = \mathrm{count}(f, w) / \mathrm{count}(w)$
- $\mathrm{assoc}_{\mathrm{prob}}(w, f) = P(f \mid w)$
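Here is a sketch of $\mathrm{assoc}_{\mathrm{prob}}$ over dependency features. The triples are hypothetical parser output. count(w) is taken as the total number of features observed for w, which is one reasonable reading of the formula rather than the only one.

```python
from collections import Counter

# Hypothetical (word, relation, other_word) triples from a dependency parser.
TRIPLES = [
    ("city", "obj-of", "attack"),
    ("city", "obj-of", "attack"),
    ("city", "obj-of", "visit"),
    ("city", "subj-of", "grow"),
    ("village", "obj-of", "attack"),
]


def assoc_prob(triples, w, f):
    """P(f | w) = count(f, w) / count(w), with count(w) = features observed for w."""
    feats = Counter((rel, other) for word, rel, other in triples if word == w)
    return feats[f] / sum(feats.values())


print(assoc_prob(TRIPLES, "city", ("obj-of", "attack")))  # 0.5
```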

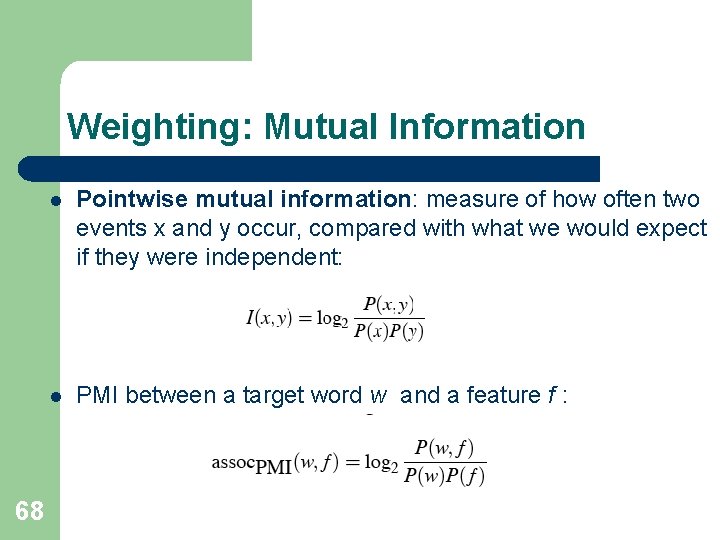

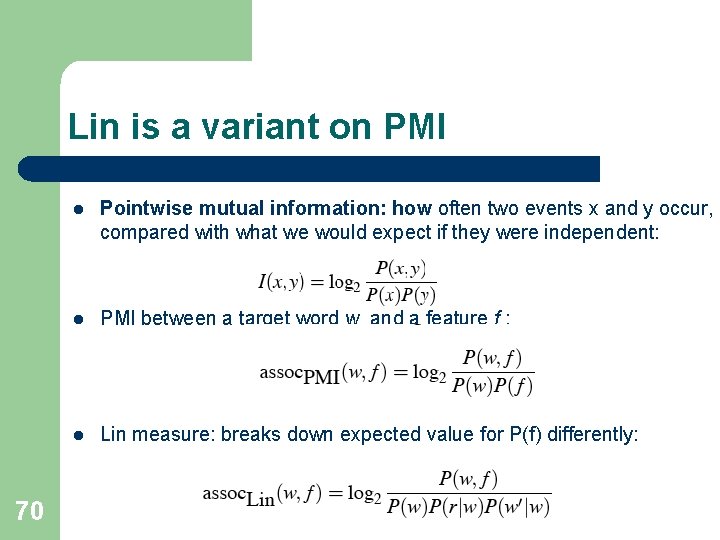

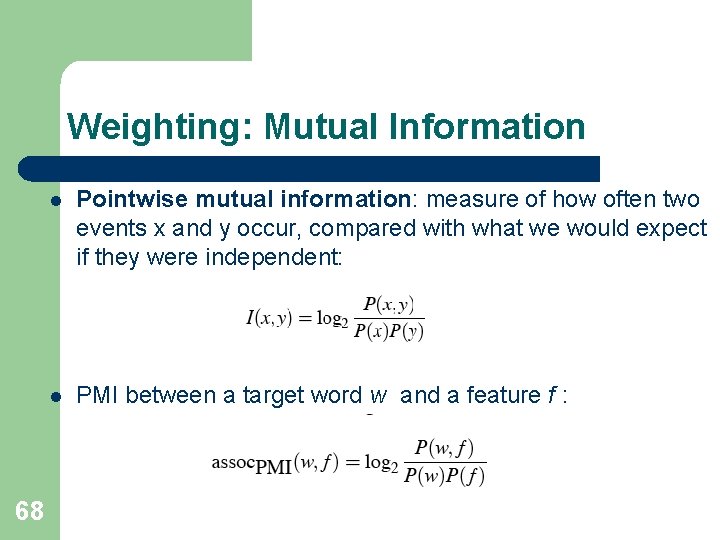

Weighting: Mutual Information
- Pointwise mutual information: a measure of how often two events x and y occur, compared with what we would expect if they were independent:
- PMI between a target word w and a feature f:

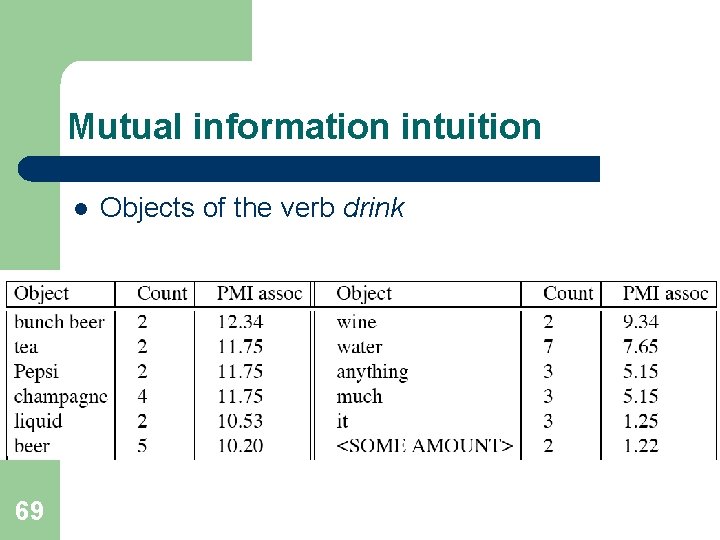

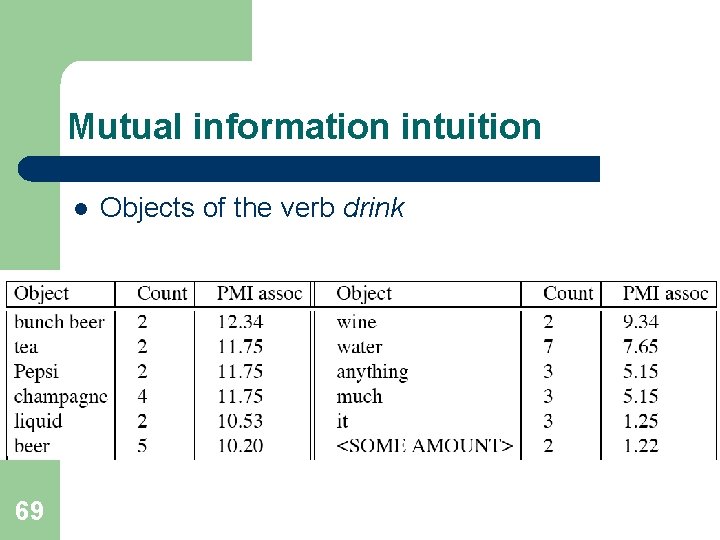
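The PMI formula is shown in the slide image. The standard definition is $\mathrm{PMI}(w, f) = \log_2 \frac{P(w, f)}{P(w)\,P(f)}$, with all probabilities estimated from co-occurrence counts. The sketch assumes that definition and uses hypothetical (word, feature) counts.

```python
import math
from collections import Counter

# Hypothetical (word, feature) co-occurrence counts.
COUNTS = Counter({
    ("tea", "obj-of:drink"): 4,
    ("tea", "obj-of:buy"): 1,
    ("car", "obj-of:buy"): 4,
    ("car", "obj-of:drive"): 3,
})


def pmi(counts, w, f):
    """log2 P(w, f) / (P(w) P(f)), maximum-likelihood estimates; undefined if (w, f) unseen."""
    n = sum(counts.values())
    p_wf = counts[(w, f)] / n
    p_w = sum(c for (w2, _), c in counts.items() if w2 == w) / n
    p_f = sum(c for (_, f2), c in counts.items() if f2 == f) / n
    return math.log2(p_wf / (p_w * p_f))


print(round(pmi(COUNTS, "tea", "obj-of:drink"), 3))  # positive: more often than chance
print(round(pmi(COUNTS, "tea", "obj-of:buy"), 3))    # negative: less often than chance
```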

Mutual information intuition
- Objects of the verb drink

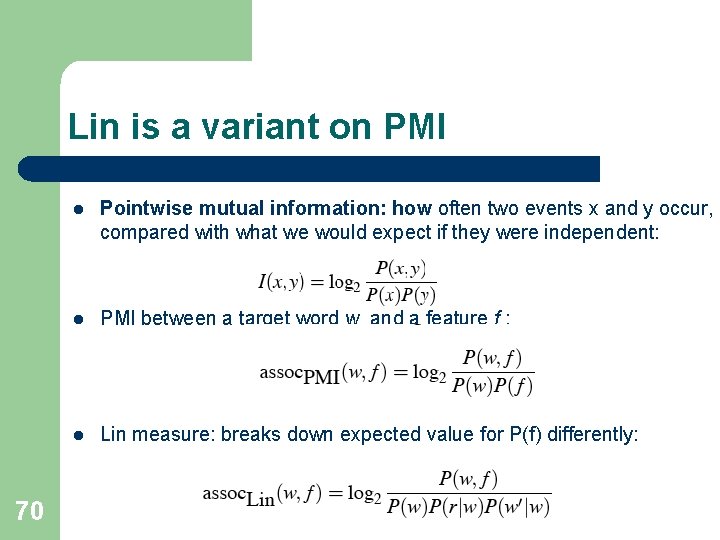

Lin is a variant on PMI
- Pointwise mutual information: how often two events x and y occur, compared with what we would expect if they were independent:
- PMI between a target word w and a feature f:
- Lin measure: breaks down the expected value for P(f) differently:

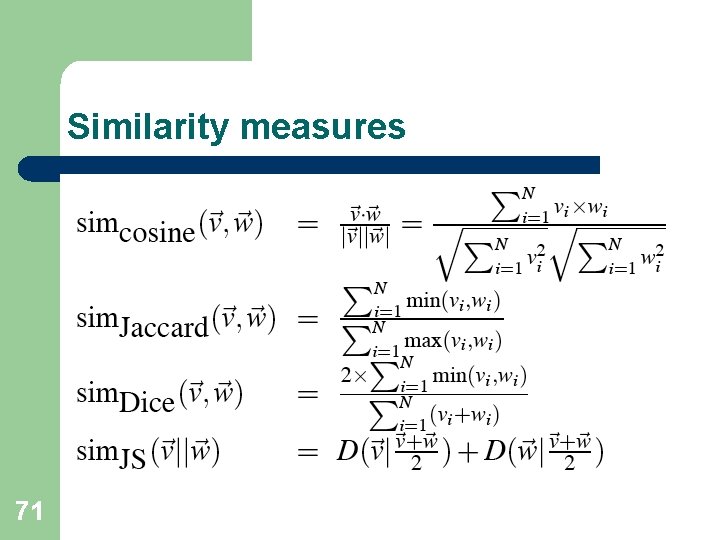

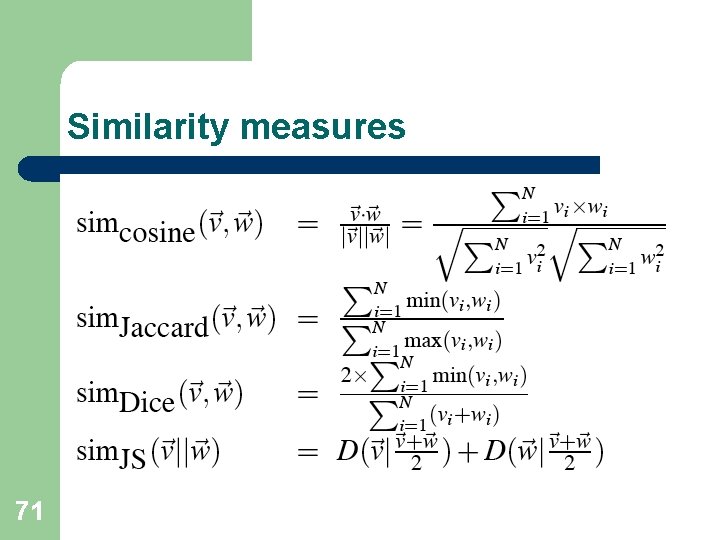

Similarity measures

Arabic wordnet python

Arabic wordnet python What is wordnet

What is wordnet Sanskrit wordnet

Sanskrit wordnet Wordnet demo

Wordnet demo Utilities and energy lecture

Utilities and energy lecture Theory of translation lectures

Theory of translation lectures Molecular biology lecture

Molecular biology lecture Rick trebino

Rick trebino Lectures paediatrics

Lectures paediatrics Data mining lectures

Data mining lectures Medicinal chemistry lectures

Medicinal chemistry lectures Uva ppt template

Uva ppt template Ludic space

Ludic space Activity identification approaches in spm

Activity identification approaches in spm Radio astronomy lectures

Radio astronomy lectures Dr sohail lectures

Dr sohail lectures Introduction to web engineering

Introduction to web engineering How to get the most out of lectures

How to get the most out of lectures Frcr physics lectures

Frcr physics lectures Frcr physics lectures

Frcr physics lectures Introduction to recursion

Introduction to recursion Blood physiology guyton

Blood physiology guyton Aerodynamics lectures

Aerodynamics lectures Theory of translation lectures

Theory of translation lectures Power system lectures

Power system lectures Theory of translation lectures

Theory of translation lectures Digital logic design lectures

Digital logic design lectures Compsci 453

Compsci 453 Philosophy of fine arts

Philosophy of fine arts Nuclear medicine lectures

Nuclear medicine lectures Recursive fractals c++

Recursive fractals c++ Cdeep lectures

Cdeep lectures Oral communication 3 lectures text

Oral communication 3 lectures text C programming and numerical analysis an introduction

C programming and numerical analysis an introduction Haematology lectures

Haematology lectures Bureau of lectures

Bureau of lectures Trend lectures

Trend lectures Theory of translation lectures

Theory of translation lectures Reinforcement learning lectures

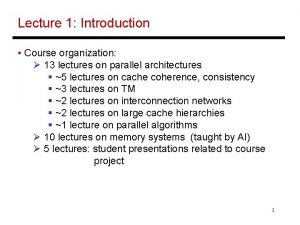

Reinforcement learning lectures 13 lectures

13 lectures Reinforcement learning lectures

Reinforcement learning lectures Bba lectures

Bba lectures Medical emergency student lectures

Medical emergency student lectures Medicine hematology student lectures

Medicine hematology student lectures Rcog associate

Rcog associate Bhadeshia lectures

Bhadeshia lectures Yelena bogdan

Yelena bogdan Comsats virtual campus lectures

Comsats virtual campus lectures Hugh blair lectures on rhetoric

Hugh blair lectures on rhetoric Cern summer student lectures

Cern summer student lectures Pathology lectures for medical students

Pathology lectures for medical students Dr asim lectures

Dr asim lectures Pab ankle fracture

Pab ankle fracture Anatomy lectures powerpoint

Anatomy lectures powerpoint Cern summer school lectures

Cern summer school lectures Triangle similarity aa

Triangle similarity aa Similarities of offspring to parents

Similarities of offspring to parents 7-6 dilations and similarity in the coordinate plane

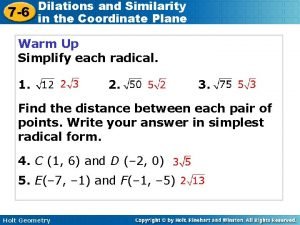

7-6 dilations and similarity in the coordinate plane Means and extremes examples

Means and extremes examples Chapter 5 competitive rivalry and competitive dynamics

Chapter 5 competitive rivalry and competitive dynamics 7-6 dilations and similarity in the coordinate plane

7-6 dilations and similarity in the coordinate plane 7-3 triangle similarity

7-3 triangle similarity 7-2 similarity and transformations

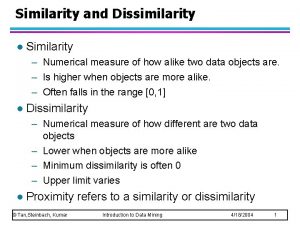

7-2 similarity and transformations Similarity and dissimilarity measures in data mining

Similarity and dissimilarity measures in data mining Rstuv

Rstuv Vedf

Vedf Similarity between prokaryotic and eukaryotic cells

Similarity between prokaryotic and eukaryotic cells What is one similarity between ghana and mali

What is one similarity between ghana and mali Similarity: aa, sss, sas worksheet answers

Similarity: aa, sss, sas worksheet answers Proving triangles are similar

Proving triangles are similar Geometry chapter 7 proportions and similarity answers

Geometry chapter 7 proportions and similarity answers Difference between scalar and vector

Difference between scalar and vector