
- Slides: 57

Split-Level I/O Scheduling

Suli Yang, Tyler Harter, Nishant Agrawal, Samer Al-Kiswany, Salini Selvaraj Kowsalya, Anand Krishnamurthy, Rini T Kaushik, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau

…yet another I/O scheduling paper?

CFQ (2003), mClock (2011), BFQ (2010), pClock (2007), YFQ (1999), Deadline (2002), Facade (2003), Fahrrad (2008), Token-Bucket (2008), Libra (2014)

Some mistakes we have been making for decades… (in trying to build better schedulers)

Problem
- Current frameworks are fundamentally limited
  - CFQ, Deadline, Token-Bucket
- Important policies cannot be realized
  - Fairness, latency guarantees, isolation
- Effort is wasted trying to build new schedulers without fixing the framework

Can we design a simple and effective framework that lets us build schedulers that correctly realize important I/O policies?

Solution: Split-Level Framework
- Control: allow scheduling at multiple levels
  - Block level
  - System-call level
  - Page-cache level
- Information: tag requests to identify their origin
- Simplicity: a small set of hooks at key junctions within the storage stack

Results
- Three distinct policies implemented
  - Priority, Deadline, Isolation
- Large performance improvements
  - Fairness: 12×
  - Tail latency: 4×
  - Isolation: 6×
- Good foundation for applications
  - Reduce transaction latency for databases
  - Improve isolation for virtual machines
  - Effective rate limiting for HDFS

Overview
- How I/O scheduling frameworks work
- Split-Level Scheduling Framework: Design
- Split-Level Scheduler Case Study
- Conclusion

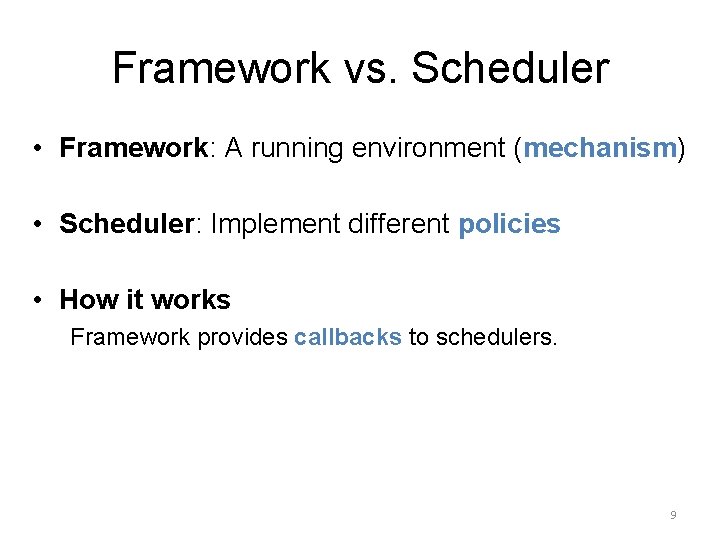

Framework vs. Scheduler
- Framework: the running environment (the mechanism)
- Scheduler: implements a particular policy
- How it works: the framework defines callbacks (hooks) that each scheduler implements, and invokes them as requests flow through the stack
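To make the split concrete, here is a minimal C sketch, assuming a Linux-style table of function pointers: the framework owns the mechanism (feeding requests in and driving the device loop) and calls whatever hooks the scheduler supplies. The hook names follow the slides (`add_req`, `dispatch_req`, `complete_req`); `struct sched_ops`, `framework_run`, and the FIFO policy are hypothetical illustrations, not the paper's or the kernel's actual interface.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical request type: just an id and a link for queueing. */
struct request {
    int id;
    struct request *next;
};

/* The hooks a scheduler implements and the framework calls
 * (names from the slides; the struct itself is illustrative). */
struct sched_ops {
    void (*add_req)(void *sched, struct request *r);
    struct request *(*dispatch_req)(void *sched);
    void (*complete_req)(void *sched, struct request *r);
};

/* Policy: a trivial first-in, first-out scheduler. */
struct fifo_sched {
    struct request *head, *tail;
    int completed;
};

static void fifo_add(void *s, struct request *r) {
    struct fifo_sched *f = s;
    r->next = NULL;
    if (f->tail)
        f->tail->next = r;
    else
        f->head = r;
    f->tail = r;
}

static struct request *fifo_dispatch(void *s) {
    struct fifo_sched *f = s;
    struct request *r = f->head;
    if (r) {
        f->head = r->next;
        if (!f->head)
            f->tail = NULL;
    }
    return r;
}

static void fifo_complete(void *s, struct request *r) {
    struct fifo_sched *f = s;
    assert(r != NULL);
    f->completed++;   /* a real scheduler would clean up per-request state */
}

static const struct sched_ops fifo_ops = { fifo_add, fifo_dispatch, fifo_complete };

/* Mechanism: feed requests to the policy, ask it what to dispatch next,
 * and report completions. Returns the number serviced and records the
 * service order in `order`. */
static int framework_run(const struct sched_ops *ops, void *sched,
                         struct request *reqs, int n, int *order) {
    for (int i = 0; i < n; i++)
        ops->add_req(sched, &reqs[i]);
    int done = 0;
    struct request *r;
    while ((r = ops->dispatch_req(sched)) != NULL) {
        order[done++] = r->id;   /* stand-in for sending r to the device */
        ops->complete_req(sched, r);
    }
    return done;
}
```

Swapping in a different policy means supplying a different `struct sched_ops`; `framework_run` itself does not change.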

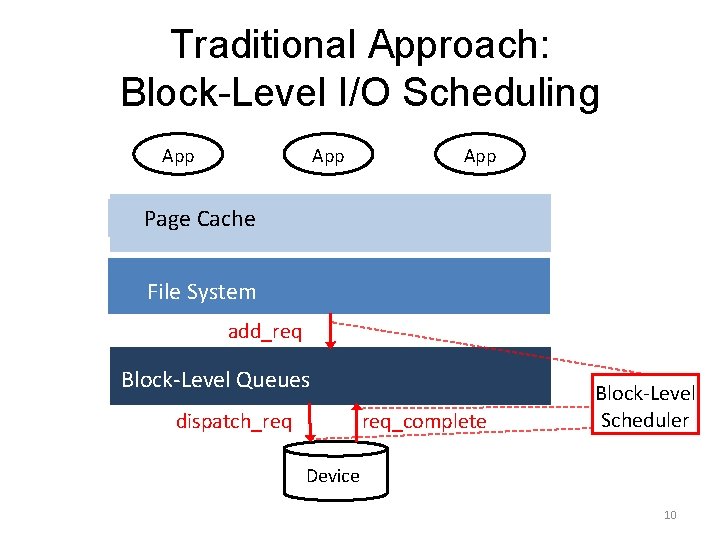

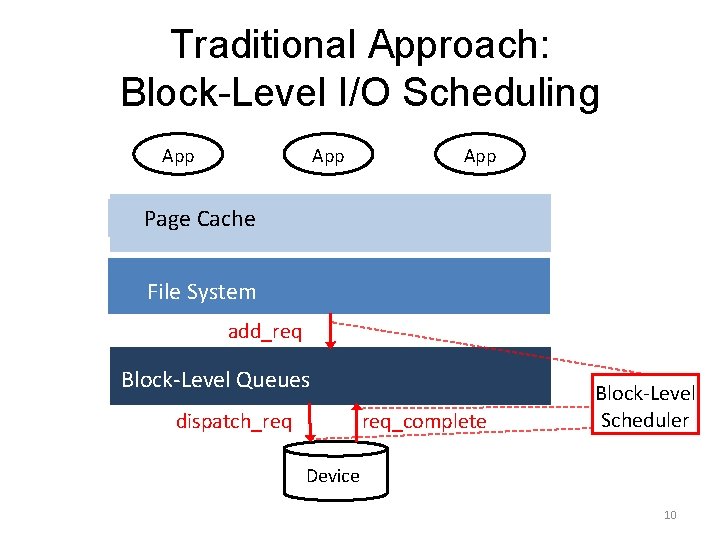

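Traditional Approach: Block-Level I/O Scheduling

[Figure: apps issue I/O through the page cache and file system into block-level queues; a block-level scheduler, attached through the add_req, dispatch_req, and req_complete hooks, dispatches requests to the device.]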

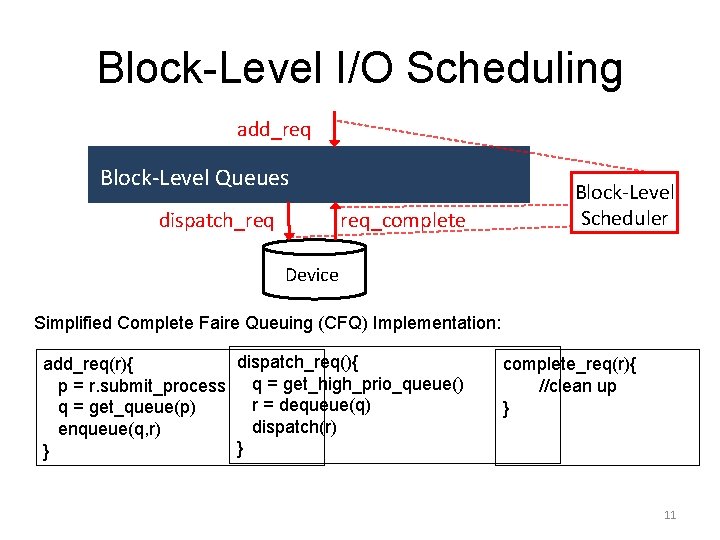

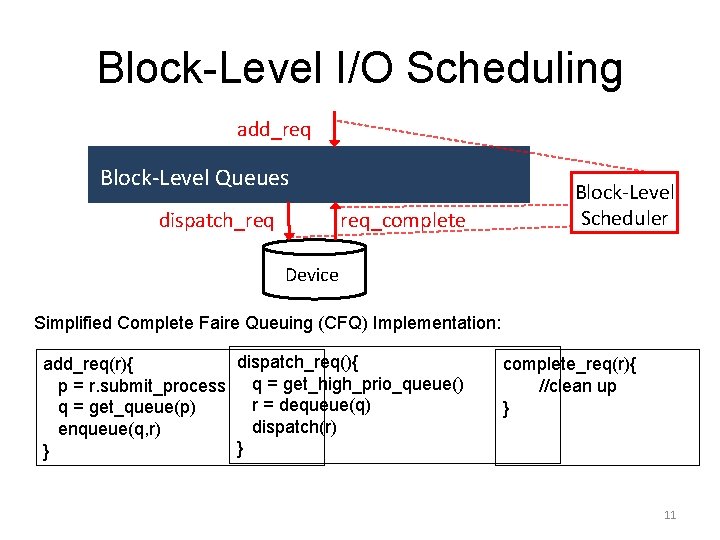

Block-Level I/O Scheduling

[Figure: the block-level scheduler manages the block-level queues through add_req, dispatch_req, and req_complete, and dispatches requests to the device.]

Simplified Completely Fair Queuing (CFQ) implementation:

```
add_req(r) {
    p = r.submit_process;
    q = get_queue(p);
    enqueue(q, r);
}

dispatch_req() {
    q = get_high_prio_queue();
    r = dequeue(q);
    dispatch(r);
}

complete_req(r) {
    // clean up
}
```
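A runnable C rendering of the slide's simplified CFQ pseudocode follows. It is a sketch under stated assumptions: processes are numbered 0–7, each queue is a fixed-size ring buffer, priority 0 is the highest, and dispatch strictly serves the highest-priority non-empty queue (real CFQ uses time slices rather than strict priority). `struct cfq`, `QCAP`, and `MAX_PROCS` are hypothetical; `dispatch_req` returns the request instead of calling `dispatch(r)`.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PROCS 8   /* hypothetical: processes are numbered 0..7 */
#define QCAP 16       /* hypothetical per-queue capacity */

struct request {
    int id;
    int submit_process;   /* process that handed r to the block layer */
};

struct queue {
    struct request *items[QCAP];   /* ring buffer */
    int head, len;
};

struct cfq {
    struct queue q[MAX_PROCS];   /* one queue per process */
    int prio[MAX_PROCS];         /* 0 = highest priority */
};

static struct queue *get_queue(struct cfq *s, int p) {
    assert(p >= 0 && p < MAX_PROCS);
    return &s->q[p];
}

static void enqueue(struct queue *q, struct request *r) {
    assert(q->len < QCAP);
    q->items[(q->head + q->len) % QCAP] = r;
    q->len++;
}

static struct request *dequeue(struct queue *q) {
    if (q->len == 0)
        return NULL;
    struct request *r = q->items[q->head];
    q->head = (q->head + 1) % QCAP;
    q->len--;
    return r;
}

/* Non-empty queue whose owner has the best (lowest) priority value;
 * ties go to the lower process number. */
static struct queue *get_high_prio_queue(struct cfq *s) {
    struct queue *best = NULL;
    int best_prio = 0;
    for (int p = 0; p < MAX_PROCS; p++) {
        if (s->q[p].len > 0 && (best == NULL || s->prio[p] < best_prio)) {
            best = &s->q[p];
            best_prio = s->prio[p];
        }
    }
    return best;
}

static void add_req(struct cfq *s, struct request *r) {
    int p = r->submit_process;
    enqueue(get_queue(s, p), r);
}

/* Returns the next request to send to the device, or NULL if idle. */
static struct request *dispatch_req(struct cfq *s) {
    struct queue *q = get_high_prio_queue(s);
    return q ? dequeue(q) : NULL;
}
```

Note that `add_req` keys the queue on `submit_process`, the process that handed the request to the block layer. That is a reasonable choice only as long as the submitter is also the process that caused the I/O.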

Overview
- What an I/O scheduling framework is
- Split-Level Scheduling Framework: Design
  - The reordering problem
  - The cause-mapping problem
  - The cost-estimation problem
- Split-Level Scheduler Case Study
- Conclusion

Reordering

Scheduling is just reordering I/O requests.

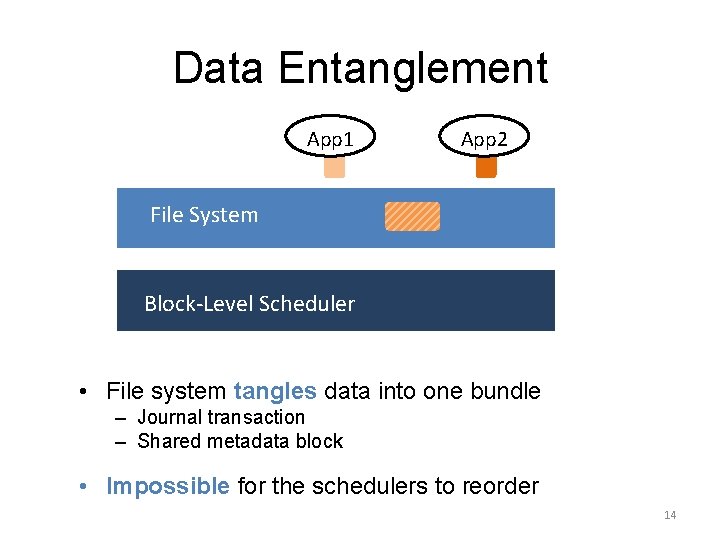

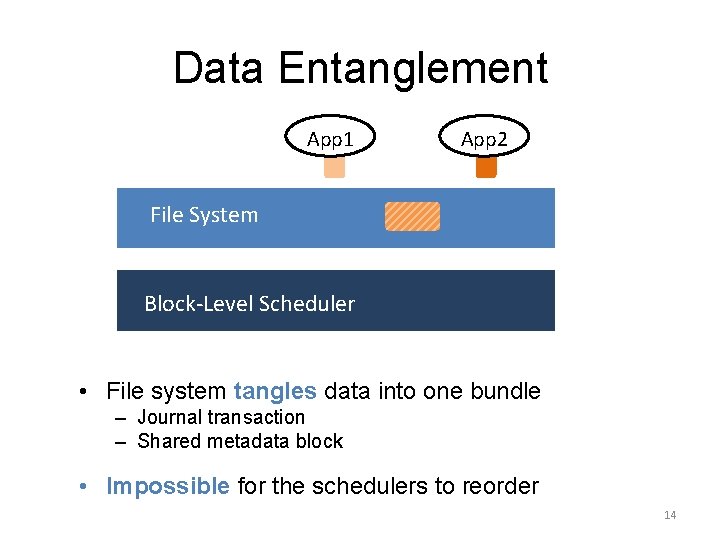

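Data Entanglement

[Figure: data from App 1 and App 2 pass through the file system before reaching the block-level scheduler.]

- The file system tangles data from different applications into one bundle
  - Journal transaction
  - Shared metadata block
- The scheduler cannot reorder what is inside the bundle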

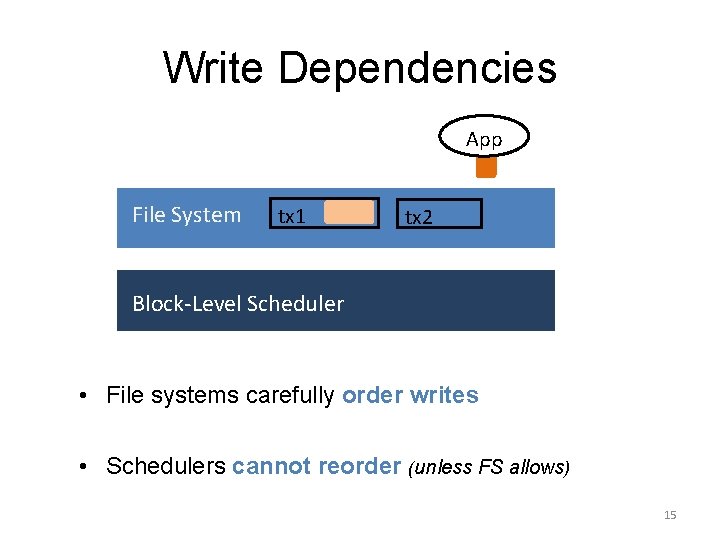

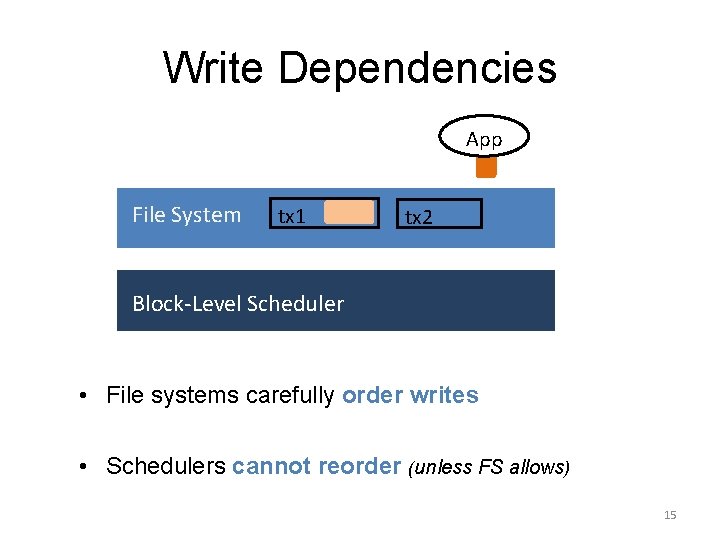

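Write Dependencies

[Figure: an app's writes enter the file system and leave it as ordered transactions tx 1 and tx 2 before reaching the block-level scheduler.]

- File systems carefully order writes
- Schedulers cannot reorder them (unless the file system allows it)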

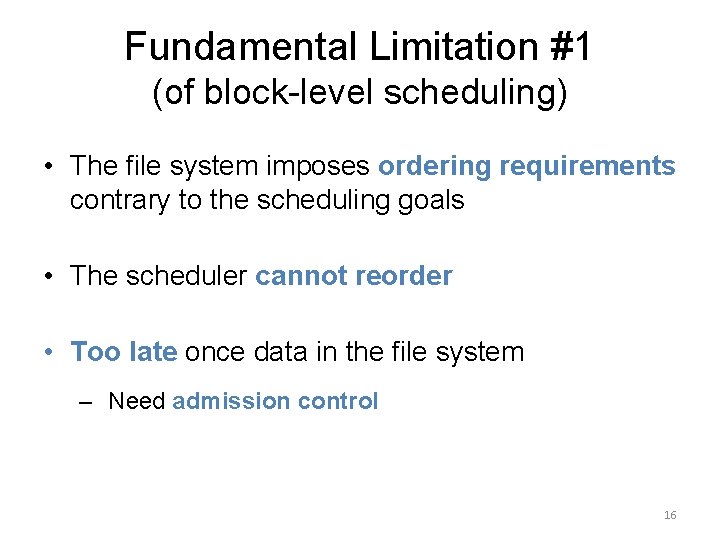

Fundamental Limitation #1 (of block-level scheduling)
- The file system imposes ordering requirements contrary to the scheduling goals
- The scheduler cannot reorder
- It is too late once data is in the file system
  - Admission control is needed
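A toy cost model shows why write ordering hurts a latency-sensitive fsync(). It assumes, hypothetically, that an fsync() of data in transaction `target` returns only after that transaction and all earlier ones are on disk, and that the disk writes at a fixed rate. `fsync_latency_ms` and every number below are illustrative, not measurements from the paper.

```c
#include <assert.h>

/* Toy model of data entanglement plus write ordering: fsync() on data in
 * transaction `target` returns only after that transaction and every
 * earlier one are on disk, regardless of whose data they carry.
 * Sizes in KB, bandwidth in KB per millisecond. */
static double fsync_latency_ms(const double *tx_kb, int n_tx, int target,
                               double disk_kb_per_ms) {
    assert(target >= 0 && target < n_tx && disk_kb_per_ms > 0);
    double kb = 0;
    for (int i = 0; i <= target; i++)
        kb += tx_kb[i];   /* tx i must commit before tx i + 1 */
    return kb / disk_kb_per_ms;
}
```

With 96,000 KB of background data in tx 1 and 4,000 KB in tx 2 (of which the app's fsync() needs only 4 KB), at an assumed 100 KB/ms (about 100 MB/s), the fsync() waits (96000 + 4000) / 100 = 1000 ms, versus about 0.04 ms if the 4 KB were flushed alone. A block-level scheduler sees only the already-bundled transactions, so it cannot recover that difference.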

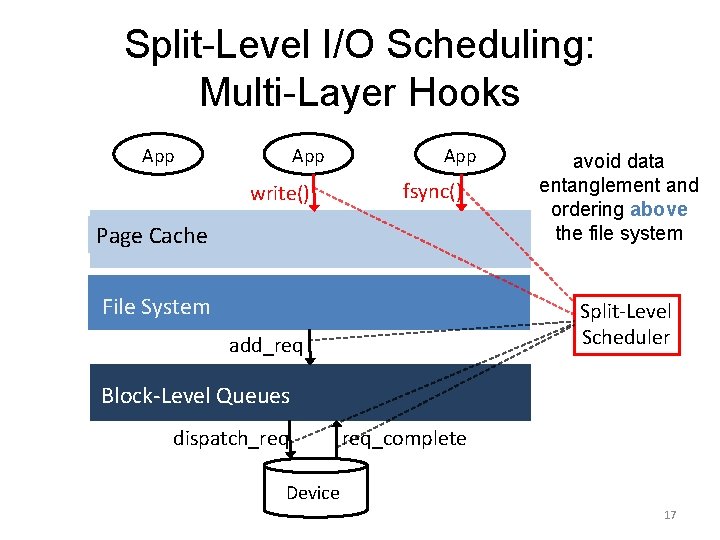

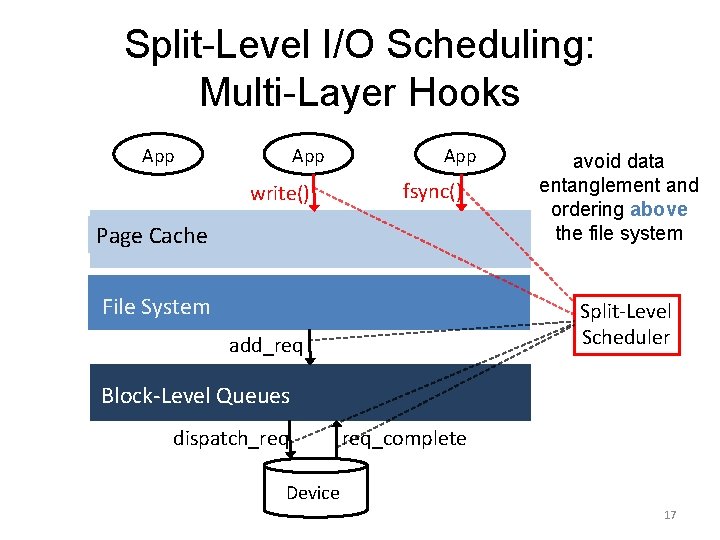

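Split-Level I/O Scheduling: Multi-Layer Hooks

[Figure: the split-level scheduler intercepts apps' write() and fsync() calls above the page cache and file system, and also uses the add_req, dispatch_req, and req_complete hooks at the block-level queues in front of the device.]

- Scheduling above the file system avoids data entanglement and ordering constraints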

Cause Mapping

A scheduler needs to map an I/O request to the originating application.

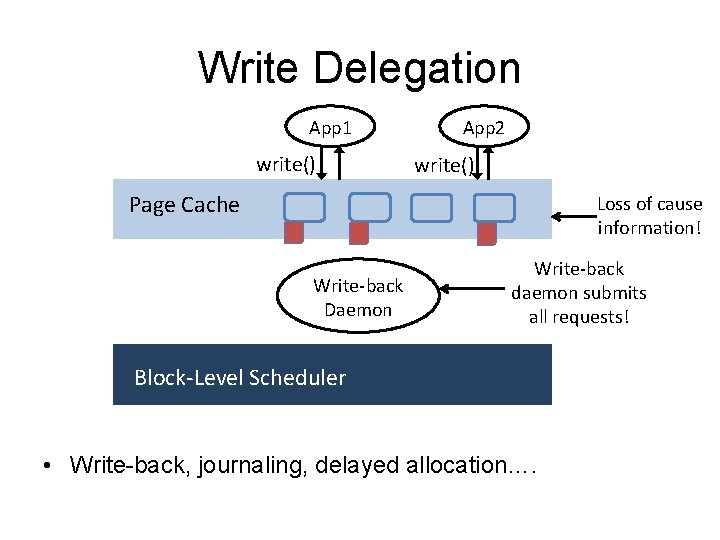

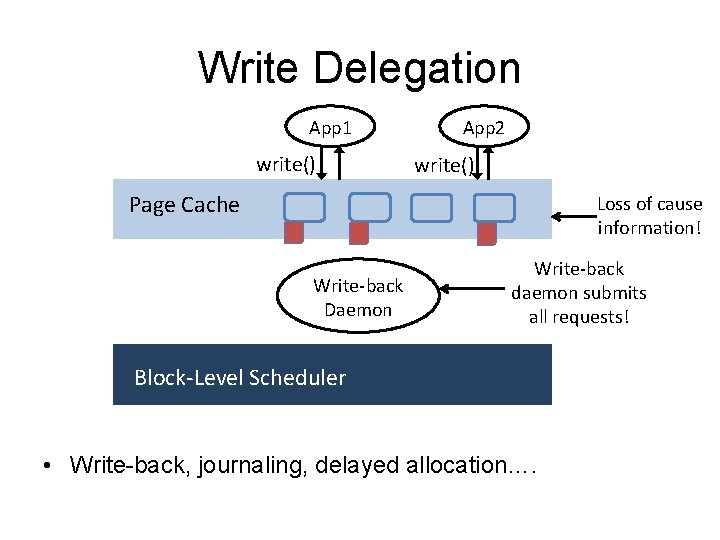

Write Delegation

[Figure: App 1 and App 2 call write() into the page cache; the write-back daemon later submits all of their requests to the block-level scheduler.]

- Loss of cause information: the write-back daemon submits all requests!
- Write-back, journaling, delayed allocation…

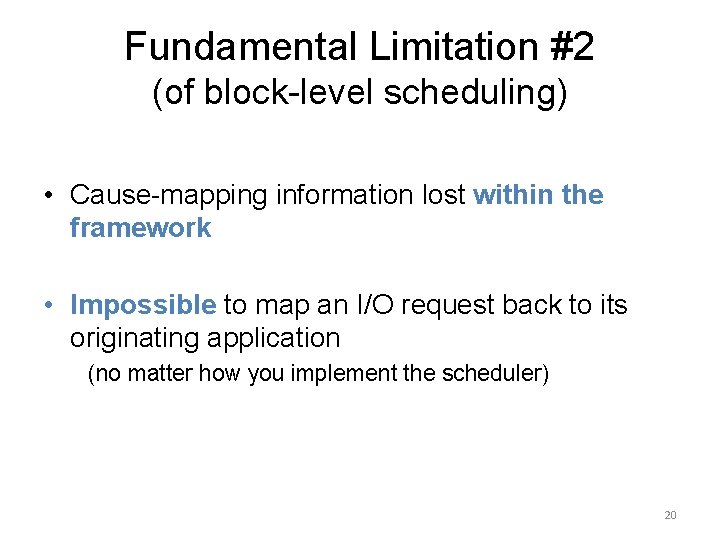

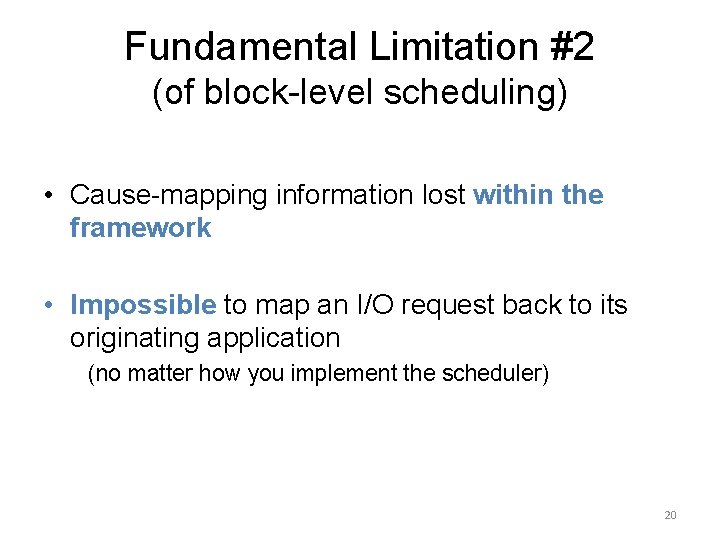

Fundamental Limitation #2 (of block-level scheduling)
- Cause-mapping information is lost within the framework
- It is impossible to map an I/O request back to its originating application (no matter how you implement the scheduler)

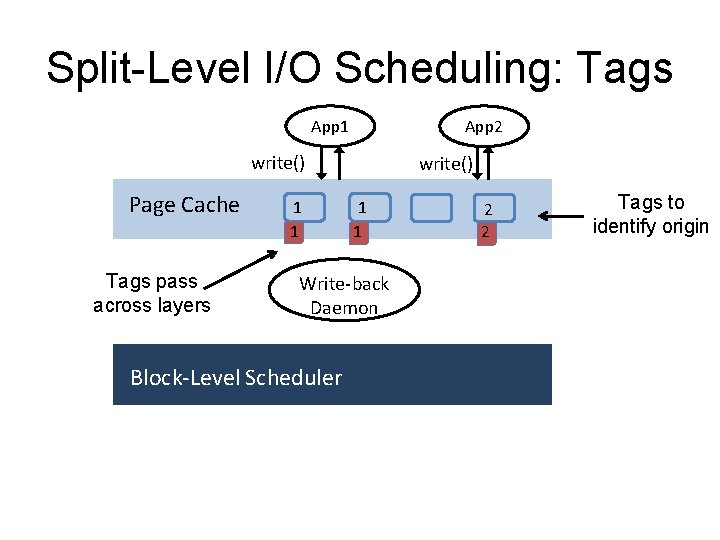

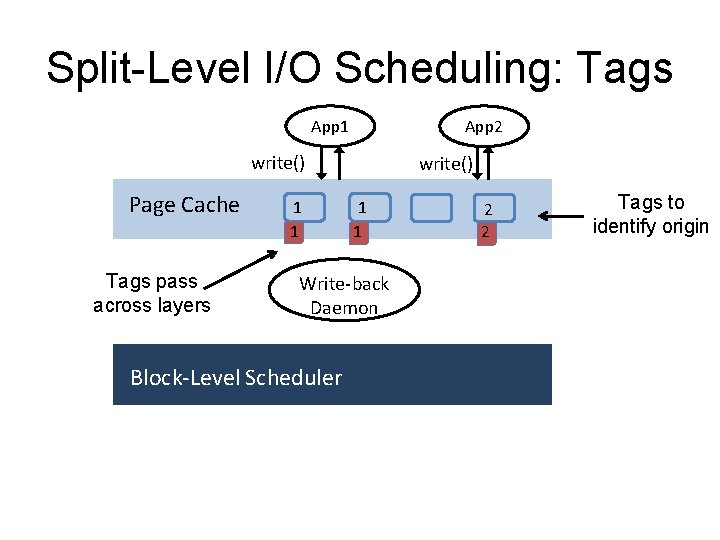

Split-Level I/O Scheduling: Tags

[Figure: App 1 and App 2 write() into the page cache; each page carries a tag (1 or 2) that travels with it through the write-back daemon to the block-level scheduler.]

- Tags pass across layers
- Tags identify the origin of each request
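A minimal C sketch of cross-layer tagging, assuming a tag is a bitmask of process IDs (so it can name a set of processes, not just one) and that PIDs fit in 0–63. `struct page`, `tag_on_write`, and `make_writeback_request` are hypothetical names, not the paper's kernel code: a page records each process that dirties it, and a request built by the write-back daemon carries the union of its pages' tags.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical cause set: one bit per process ID (0..63). */
typedef uint64_t cause_set;

#define CAUSE(pid) ((cause_set)1 << (pid))

/* A cached page remembers every process that dirtied it. */
struct page {
    cause_set tag;
};

/* Memory level: called when process `pid` dirties the page via write(). */
static void tag_on_write(struct page *pg, int pid) {
    assert(pid >= 0 && pid < 64);
    pg->tag |= CAUSE(pid);
}

/* A block request built by the write-back daemon from several pages. */
struct request {
    int submit_process;   /* what a block-level scheduler sees today */
    cause_set tag;        /* who actually caused the I/O */
};

/* Block level: the request inherits the union of its pages' tags, so the
 * scheduler sees the original writers, not just the daemon. */
static struct request make_writeback_request(int daemon_pid,
                                             const struct page *pages, int n) {
    struct request r = { daemon_pid, 0 };
    for (int i = 0; i < n; i++)
        r.tag |= pages[i].tag;
    return r;
}
```

The resulting request still names the daemon as `submit_process`, but a scheduler that reads `tag` can charge the work to the processes that dirtied the pages.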

Cost Estimation

A scheduler needs to estimate the cost of I/O:
- Memory-level notification for a timely estimate
- Block-level notification for an accurate estimate
- Details in the paper
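One way the two notifications could be combined, as a hedged C sketch: charge an estimate to the responsible process as soon as pages are dirtied (memory level), then replace it with the amount actually written when the I/O completes (block level), which can differ because of journaling, metadata, or overwrites. `struct cost_account` and the handler names are hypothetical; see the paper for the actual estimation method.

```c
#include <assert.h>

#define NCAUSES 8   /* hypothetical number of tracked processes */

/* Per-cause accounting, in bytes. */
struct cost_account {
    long estimated[NCAUSES];   /* charged early, when pages are dirtied */
    long actual[NCAUSES];      /* charged later, when the device finishes */
};

/* Memory-level notification: timely, but only an estimate. */
static void on_buffer_dirty(struct cost_account *a, int cause, long bytes) {
    assert(cause >= 0 && cause < NCAUSES);
    a->estimated[cause] += bytes;
}

/* Block-level notification: accurate, but late. `est_bytes` is the earlier
 * estimate this I/O covers; `io_bytes` is what actually reached the disk. */
static void on_block_complete(struct cost_account *a, int cause,
                              long est_bytes, long io_bytes) {
    assert(cause >= 0 && cause < NCAUSES);
    a->estimated[cause] -= est_bytes;
    a->actual[cause] += io_bytes;
}

/* Cost the scheduler uses right now: settled cost plus outstanding estimate. */
static long current_cost(const struct cost_account *a, int cause) {
    assert(cause >= 0 && cause < NCAUSES);
    return a->actual[cause] + a->estimated[cause];
}
```

The scheduler can act on a 4 KB write immediately, and if journaling later doubles the real cost to 8 KB, the account is corrected when the block-level completion arrives.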

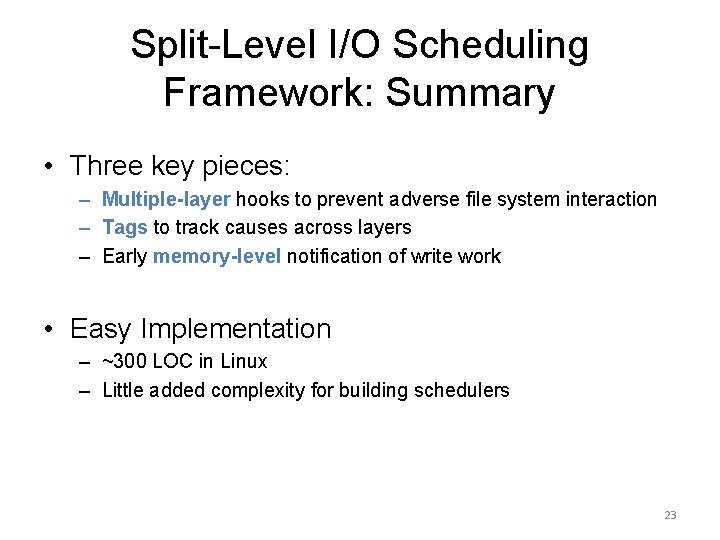

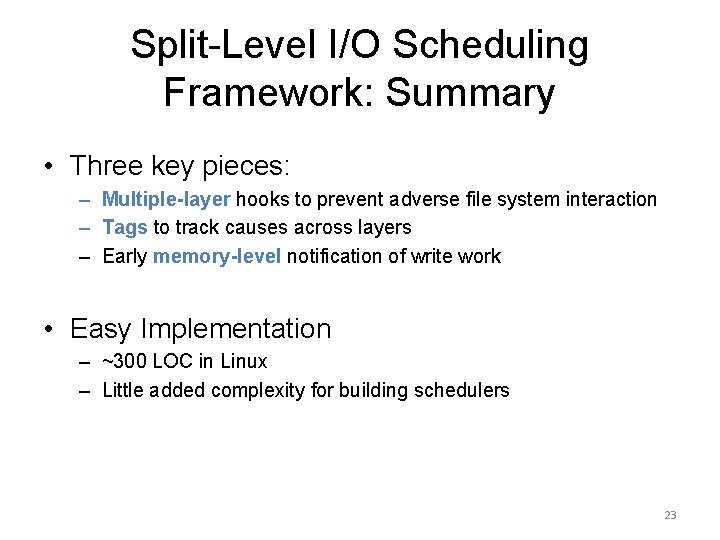

Split-Level I/O Scheduling Framework: Summary
- Three key pieces:
  - Multi-layer hooks to prevent adverse file-system interactions
  - Tags to track causes across layers
  - Early memory-level notification of write work
- Easy implementation
  - ~300 LOC in Linux
  - Little added complexity for building schedulers

Overview
- How I/O scheduling frameworks work
- Split-Level Scheduling Framework: Design
- Split-Level Scheduler Case Study
- Conclusion

Challenge #1: Priority Scheduler

Fairly allocate I/O resources based on process priorities.

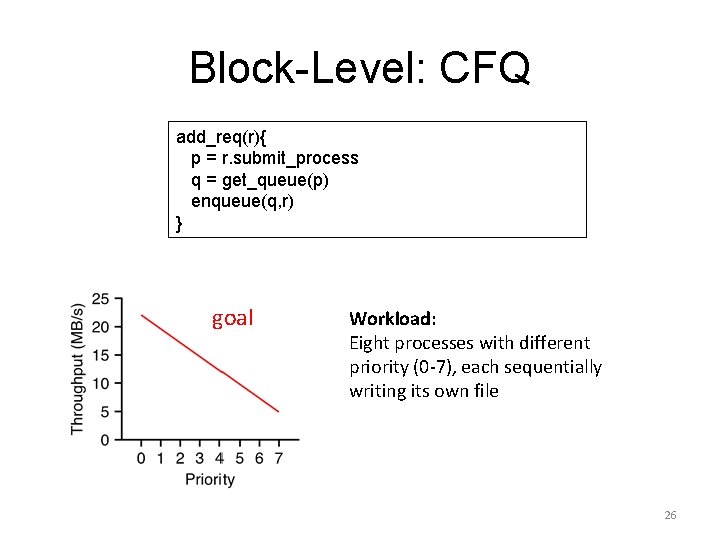

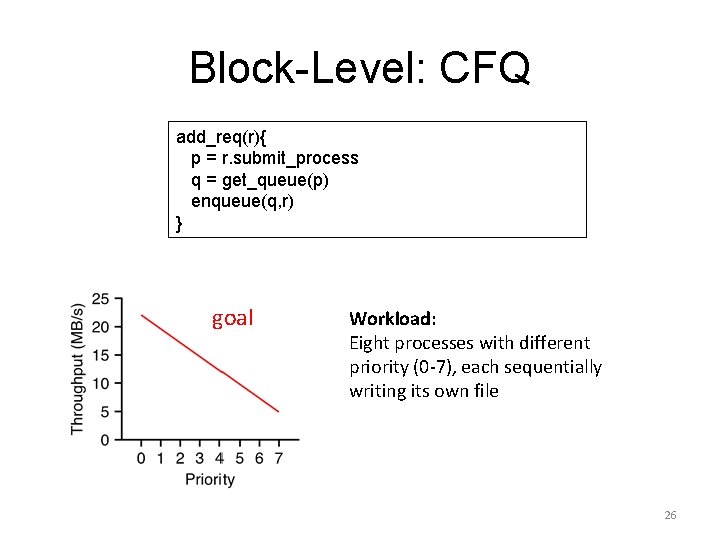

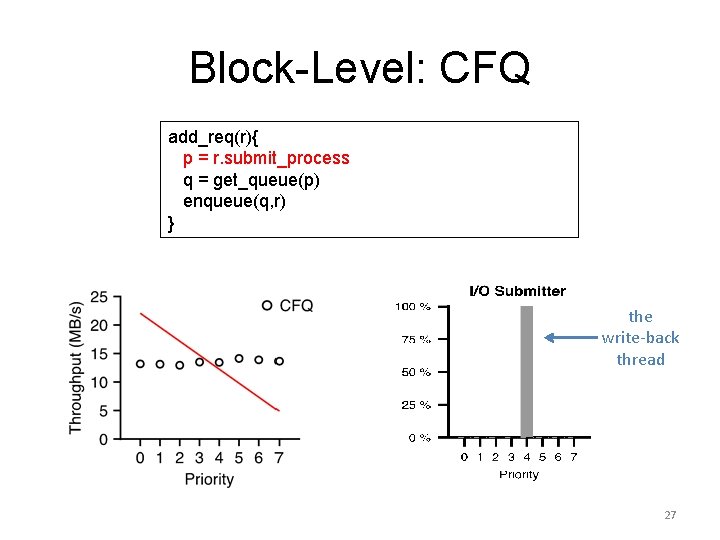

Block-Level: CFQ

```
add_req(r) {
    p = r.submit_process;
    q = get_queue(p);
    enqueue(q, r);
}
```

Workload: eight processes with different priorities (0–7), each sequentially writing its own file.

[Figure: CFQ's resulting allocation compared with the goal.]

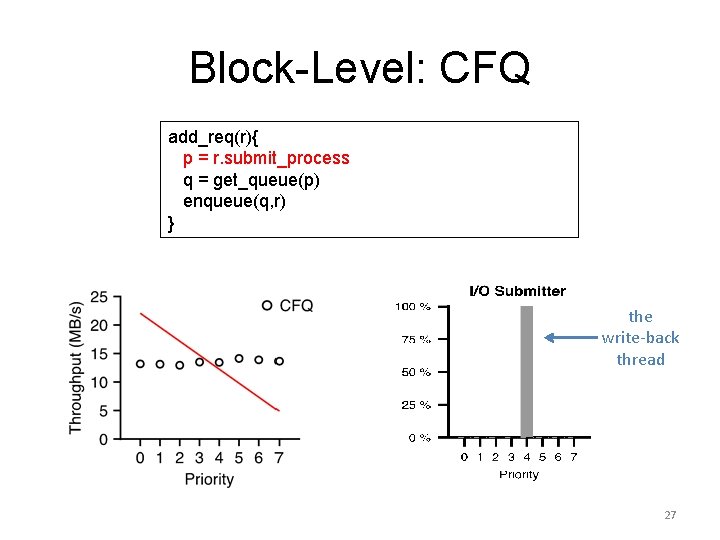

Block-Level: CFQ

```
add_req(r) {
    p = r.submit_process;   // the write-back thread!
    q = get_queue(p);
    enqueue(q, r);
}
```

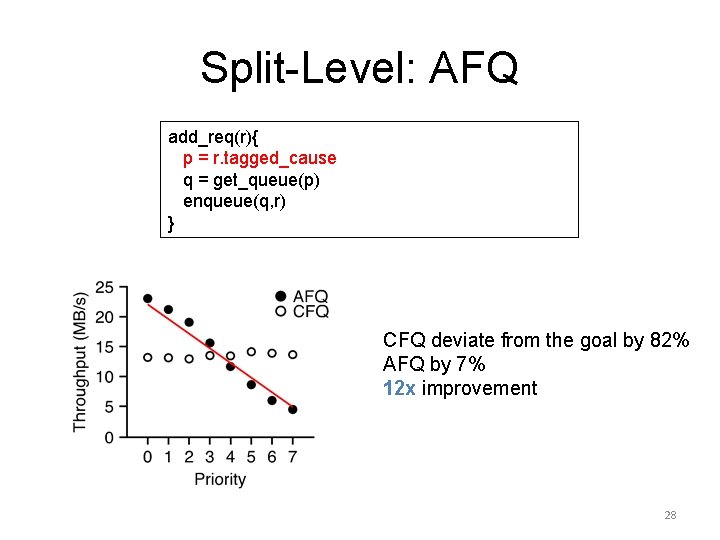

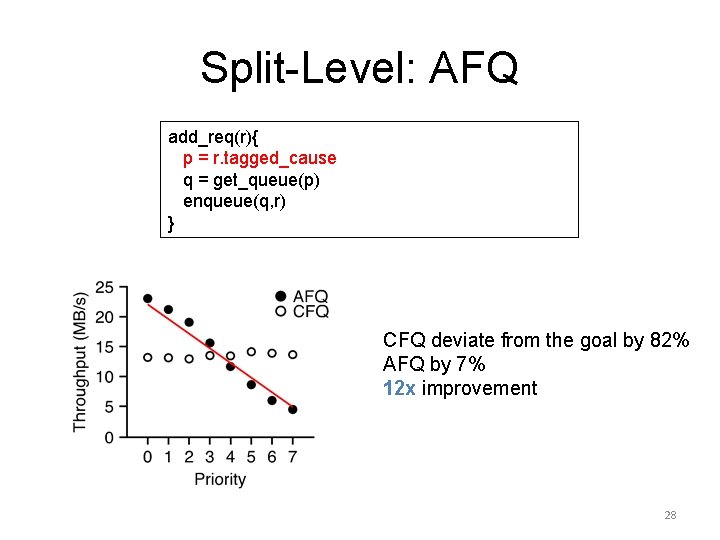

Split-Level: AFQ

```
add_req(r) {
    p = r.tagged_cause;
    q = get_queue(p);
    enqueue(q, r);
}
```

- CFQ deviates from the goal by 82%; AFQ deviates by only 7%
- About a 12× improvement
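The one-line difference between the two `add_req` versions matters because, under write-back, every request's `submit_process` is the write-back thread. This C sketch reduces queues to counters and uses PID 100 to stand in for a hypothetical write-back thread; it assumes each request has a single tagged cause, and all names are illustrative. It counts how many distinct queues each policy fills for eight writers.

```c
#include <assert.h>

#define NQUEUES 128   /* hypothetical: one queue slot per PID in 0..127 */

struct request {
    int submit_process;   /* who handed the request to the block layer */
    int tagged_cause;     /* who originally wrote the data (from tags) */
};

struct sched {
    int qlen[NQUEUES];    /* queue lengths only; contents omitted */
};

/* Block-level CFQ: attribute the request to whoever submitted it. */
static void cfq_add_req(struct sched *s, const struct request *r) {
    assert(r->submit_process >= 0 && r->submit_process < NQUEUES);
    s->qlen[r->submit_process]++;
}

/* Split-level AFQ: attribute the request to its tagged originator. */
static void afq_add_req(struct sched *s, const struct request *r) {
    assert(r->tagged_cause >= 0 && r->tagged_cause < NQUEUES);
    s->qlen[r->tagged_cause]++;
}

static int nonempty_queues(const struct sched *s) {
    int n = 0;
    for (int i = 0; i < NQUEUES; i++)
        n += s->qlen[i] > 0;
    return n;
}
```

When eight writers' data is flushed by the same thread, CFQ collapses them into one queue and has nothing left to prioritize among, while AFQ keeps eight separate queues.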

Challenge #2: Deadline Scheduler

Provide guaranteed latency for I/O requests.

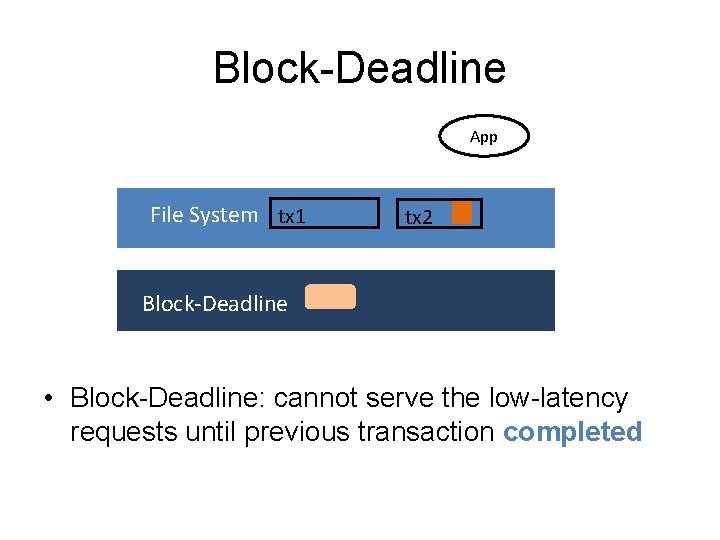

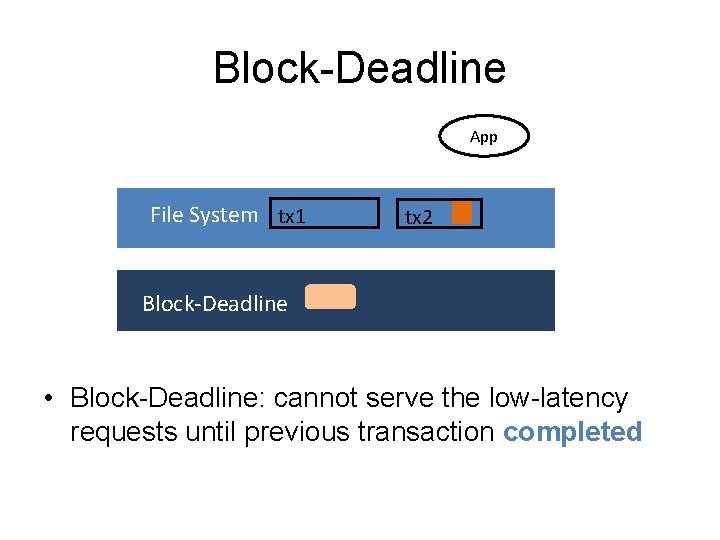

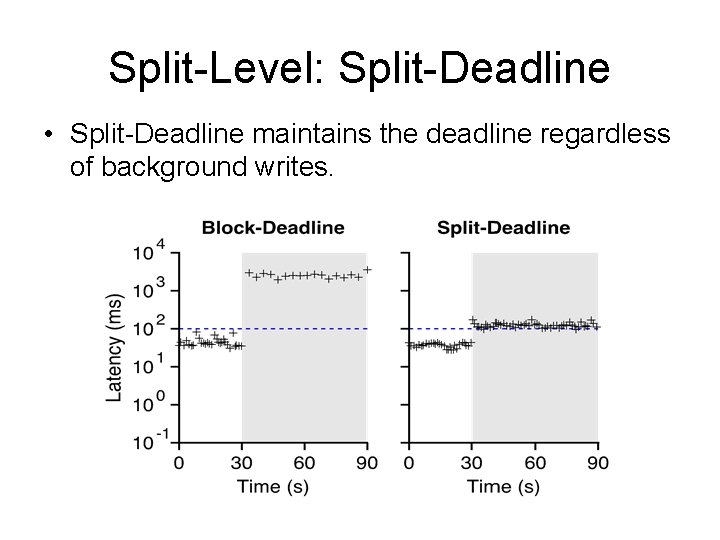

Block-Deadline

[Figure: an app's data passes through the file system as ordered transactions tx 1 and tx 2 before reaching Block-Deadline.]

- Block-Deadline cannot serve low-latency requests until the previous transaction has completed

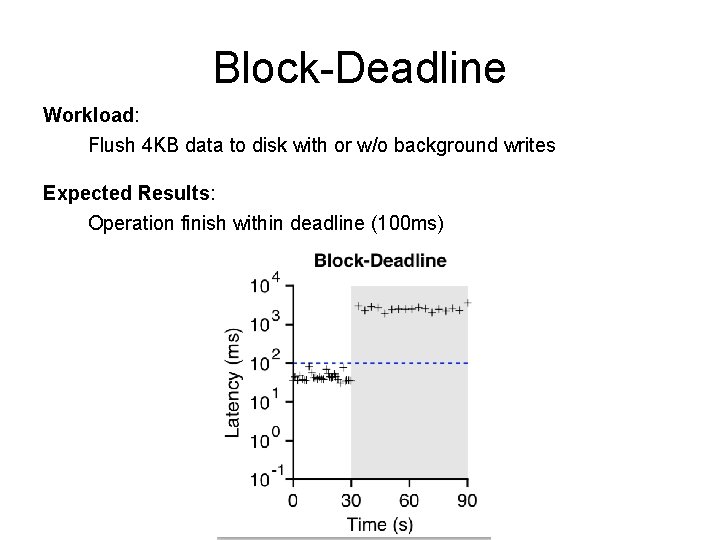

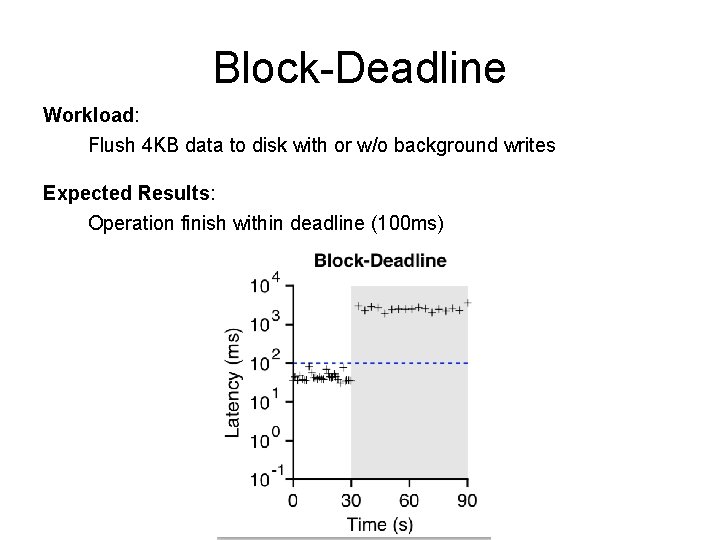

Block-Deadline

Workload: flush 4 KB of data to disk, with or without background writes.

Expected results: the operation finishes within the deadline (100 ms).

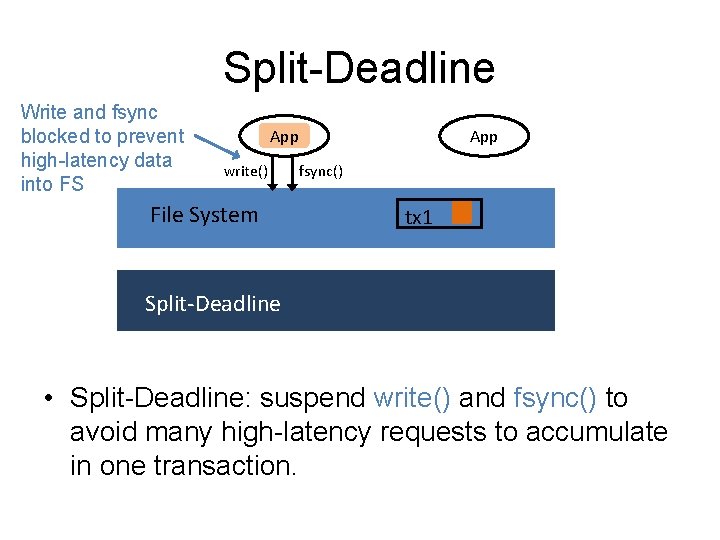

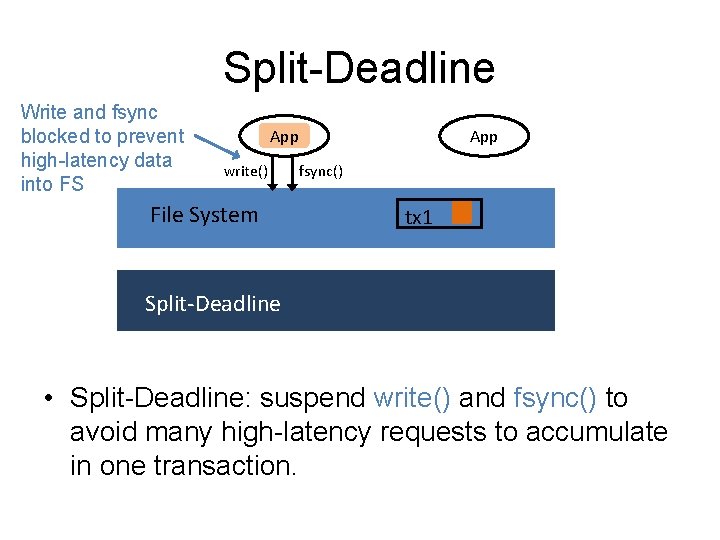

Split-Deadline

[Figure: Split-Deadline sits between the apps' write() and fsync() calls and the file system, in front of transaction tx 1.]

- write() and fsync() are blocked to keep high-latency data out of the file system
- Split-Deadline suspends write() and fsync() so that many high-latency requests cannot accumulate in one transaction
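A hedged C sketch of the admission-control idea: before a write() enters the file system, check whether the data already admitted plus this write could still be flushed within the fsync() deadline, and suspend the caller if not. The budget rule (deadline × bandwidth), the fixed device bandwidth, and all names are assumptions for illustration, not Split-Deadline's actual algorithm.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical Split-Deadline-style admission control at the write() hook. */
struct split_deadline {
    long deadline_ms;      /* tightest fsync() deadline to meet */
    long disk_kb_per_ms;   /* assumed sustained device bandwidth */
    long dirty_kb;         /* data already admitted into the file system */
};

/* Most data that can be flushed within the deadline. */
static long budget_kb(const struct split_deadline *s) {
    return s->deadline_ms * s->disk_kb_per_ms;
}

/* Called on write() entry: true = let it into the file system now,
 * false = suspend the caller (it would bloat the next transaction). */
static bool admit_write(struct split_deadline *s, long kb) {
    assert(kb >= 0);
    if (s->dirty_kb + kb > budget_kb(s))
        return false;
    s->dirty_kb += kb;
    return true;
}

/* Called when write-back finishes flushing `kb` of admitted data. */
static void on_flushed(struct split_deadline *s, long kb) {
    assert(kb >= 0 && kb <= s->dirty_kb);
    s->dirty_kb -= kb;
}
```

With the 100 ms deadline from the workload and an assumed 100 KB/ms (about 100 MB/s) device, the budget is 10,000 KB: an 8,000 KB write is admitted, and a following 4,000 KB write is suspended until write-back drains the first.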

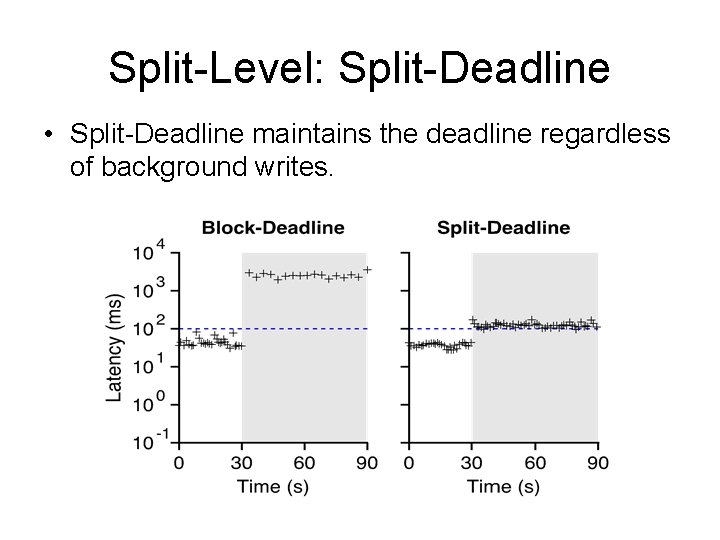

Split-Level: Split-Deadline
- Split-Deadline maintains the deadline regardless of background writes

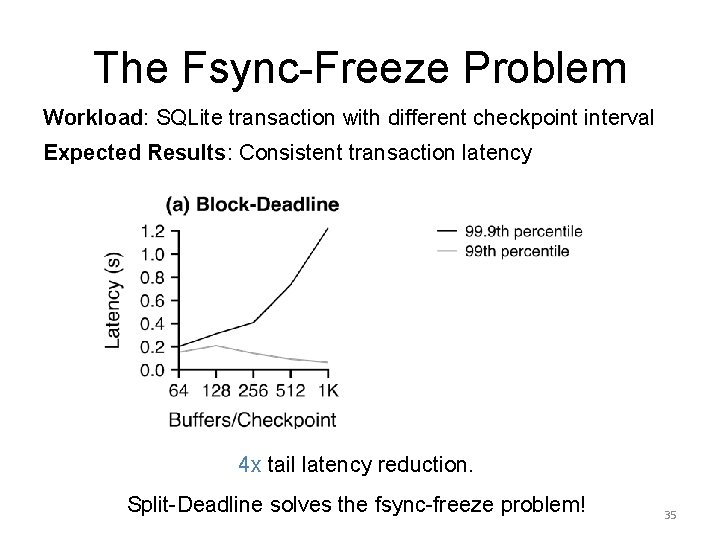

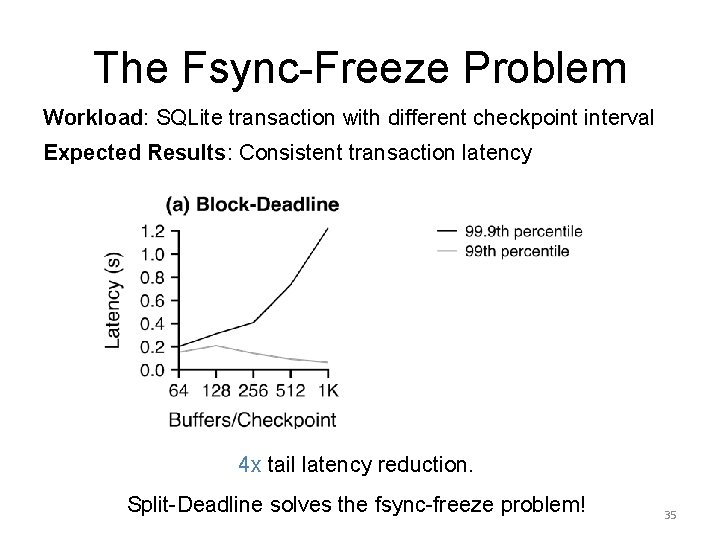

The Fsync-Freeze Problem

"During checkpointing, the system begins writing out the data that need to be fsync()'d so aggressively that the service time for I/O requests from other processes goes through the roof." (Robert Haas, PostgreSQL)

The Fsync-Freeze Problem

Workload: SQLite transactions with different checkpoint intervals.

Expected results: consistent transaction latency.

- 4× tail-latency reduction
- Split-Deadline solves the fsync-freeze problem!

Other Evaluation Results
- Low overhead
  - <1% runtime overhead
  - <50 MB memory overhead
- Other schedulers
  - Token-bucket for performance isolation
- Other applications
  - PostgreSQL: latency guarantees for TPC-B workloads
  - QEMU: isolation across VMs
  - HDFS: effective I/O rate limiting
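For the token-bucket scheduler, a generic token bucket in C gives the flavor: tokens (bytes) accrue at a fixed rate up to a burst limit, and an I/O may proceed only when enough tokens are available. This is the textbook algorithm with hypothetical names, not the paper's split-level token-bucket implementation.

```c
#include <assert.h>
#include <stdbool.h>

/* Generic token bucket; all names are illustrative. */
struct token_bucket {
    double rate;     /* bytes per second, e.g. 10e6 for 10 MB/s */
    double burst;    /* bucket capacity in bytes */
    double tokens;   /* currently available bytes */
    double last;     /* time of the last refill, in seconds */
};

/* Add the tokens accrued since the last refill, capped at `burst`. */
static void tb_refill(struct token_bucket *tb, double now) {
    assert(now >= tb->last);
    tb->tokens += (now - tb->last) * tb->rate;
    if (tb->tokens > tb->burst)
        tb->tokens = tb->burst;
    tb->last = now;
}

/* Returns true and consumes tokens if `bytes` may be issued at time `now`;
 * otherwise the caller must wait and retry. */
static bool tb_try_consume(struct token_bucket *tb, double bytes, double now) {
    tb_refill(tb, now);
    if (tb->tokens < bytes)
        return false;
    tb->tokens -= bytes;
    return true;
}
```

A process throttled to 10 MB/s with a 1 MB burst can issue 1 MB immediately, is refused another 1 MB 50 ms later (only 0.5 MB has accrued), and is allowed again at 200 ms.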

Overview
- What an I/O scheduling framework is and how it works
- Split-Level Scheduling Framework: Design
- Split-Level Scheduler Case Study
- Conclusion

Conclusion
- For decades, people have been trying to build better block-level schedulers
  - Bound to fail without appropriate framework support
- The split-level framework enables correct scheduler implementation
  - Cross-layer tags
  - Multi-level hooks
  - Memory-level notification

Source code and more information: http://research.cs.wisc.edu/adsl/Software/split/

BACKUP SLIDES

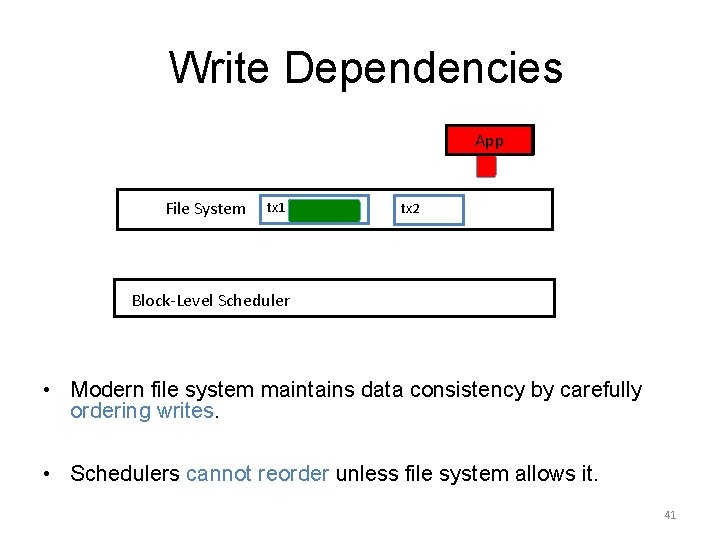

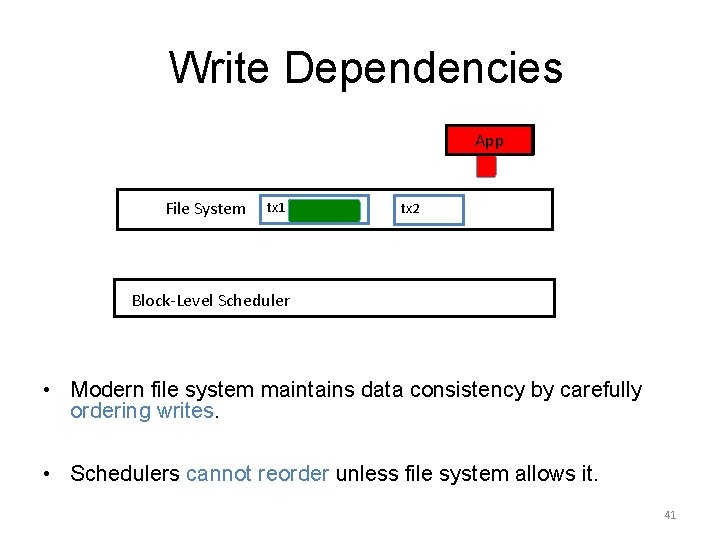

Write Dependencies

[Figure: an app's writes enter the file system and leave it as ordered transactions tx 1 and tx 2 before reaching the block-level scheduler.]

- Modern file systems maintain data consistency by carefully ordering writes
- Schedulers cannot reorder unless the file system allows it

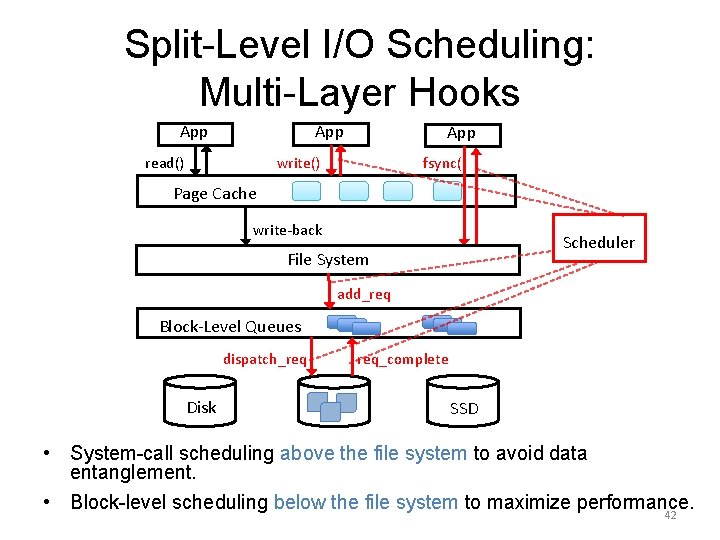

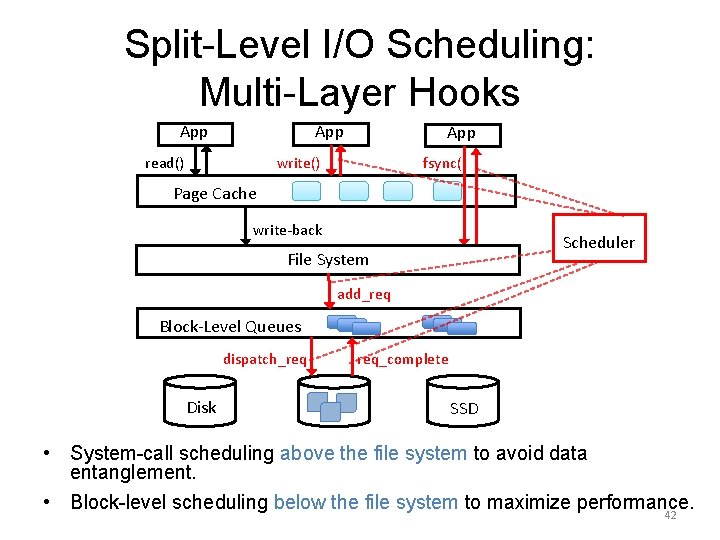

Split-Level I/O Scheduling: Multi-Layer Hooks

[Figure: the scheduler hooks apps' read(), write(), and fsync() calls and page-cache write-back above the file system, plus add_req, dispatch_req, and req_complete at the block-level queues in front of a disk or SSD.]

- System-call scheduling above the file system to avoid data entanglement
- Block-level scheduling below the file system to maximize performance

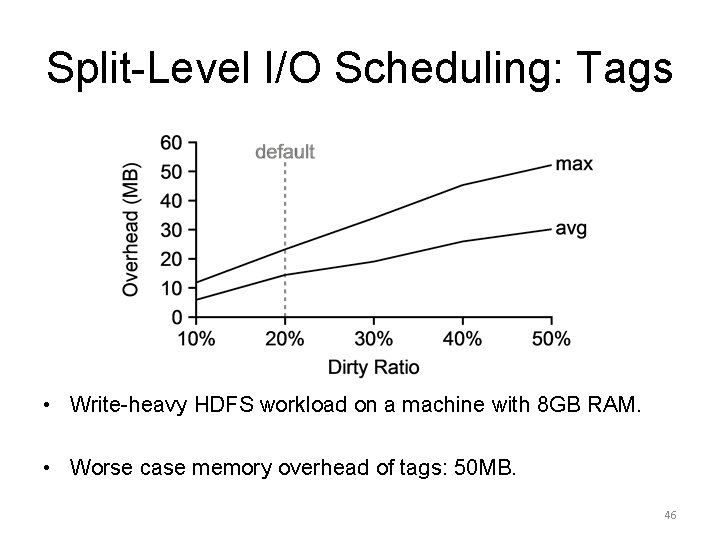

Split-Level I/O Scheduling: Tags
- Write-heavy HDFS workload on a machine with 8 GB of RAM

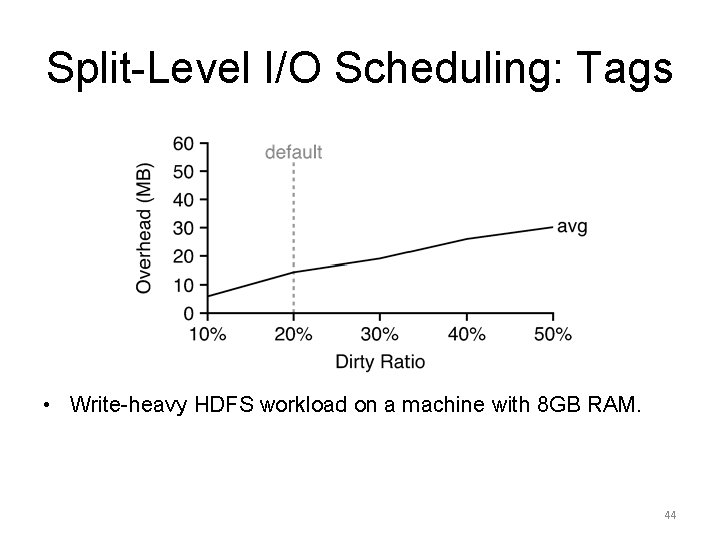

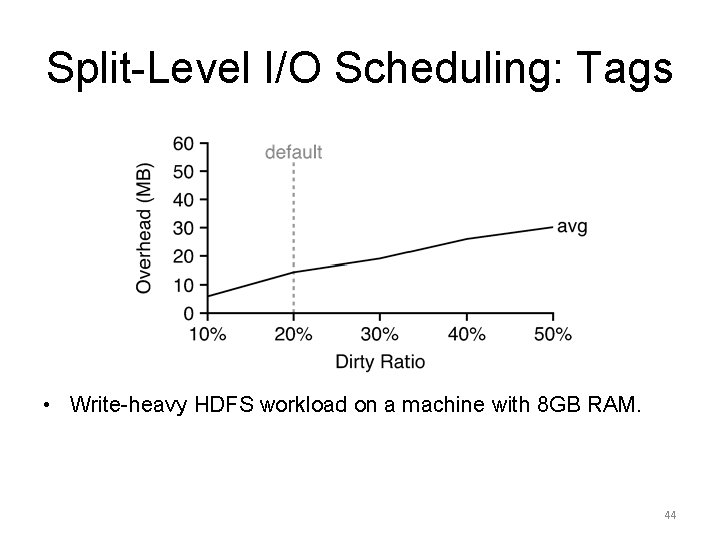


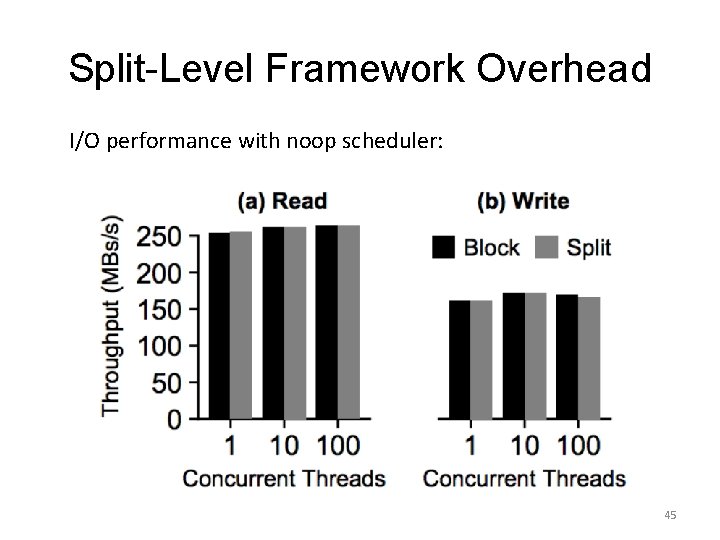

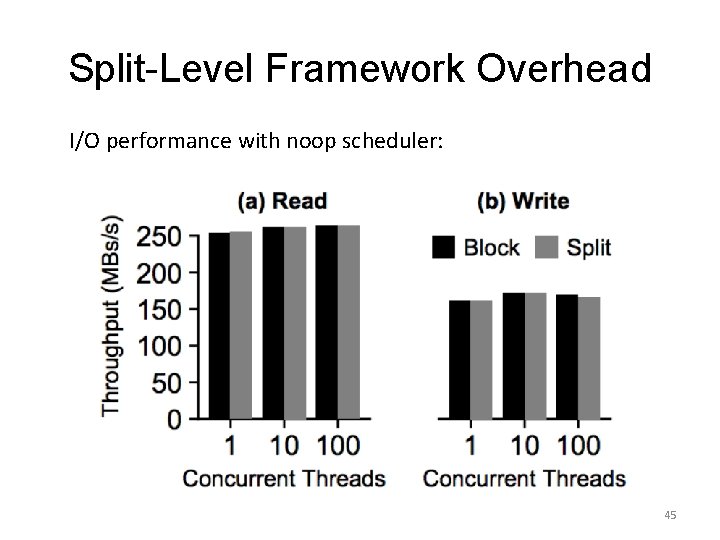

Split-Level Framework Overhead

I/O performance with the noop scheduler:

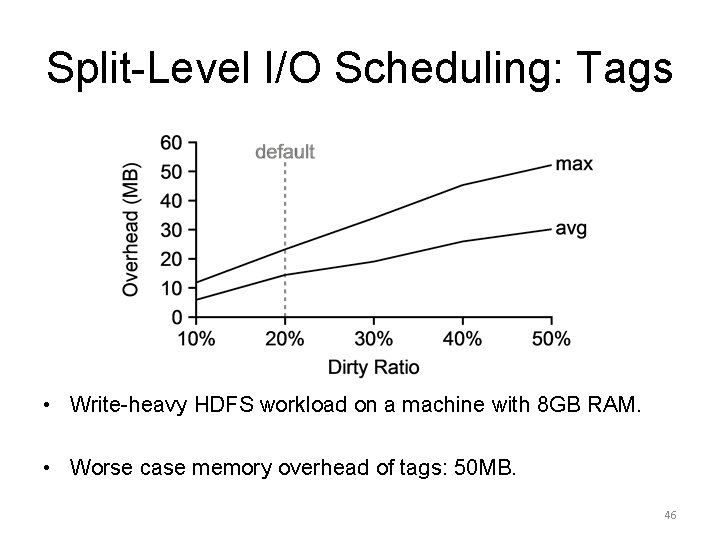

Split-Level I/O Scheduling: Tags
- Write-heavy HDFS workload on a machine with 8 GB of RAM
- Worst-case memory overhead of tags: 50 MB

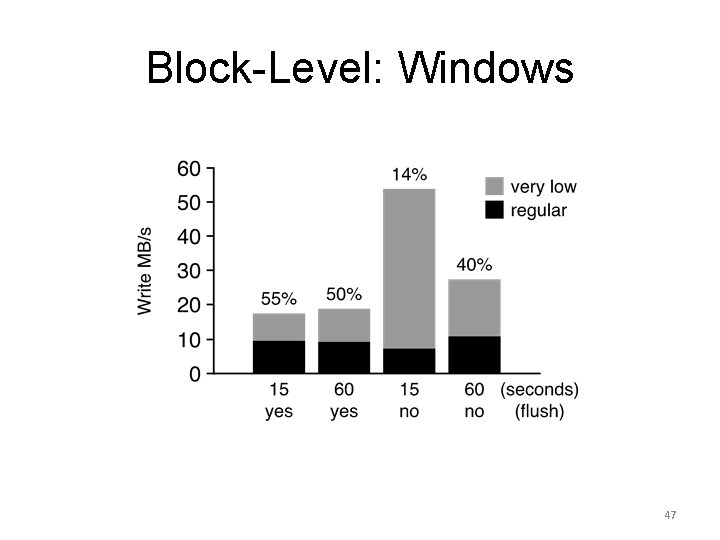

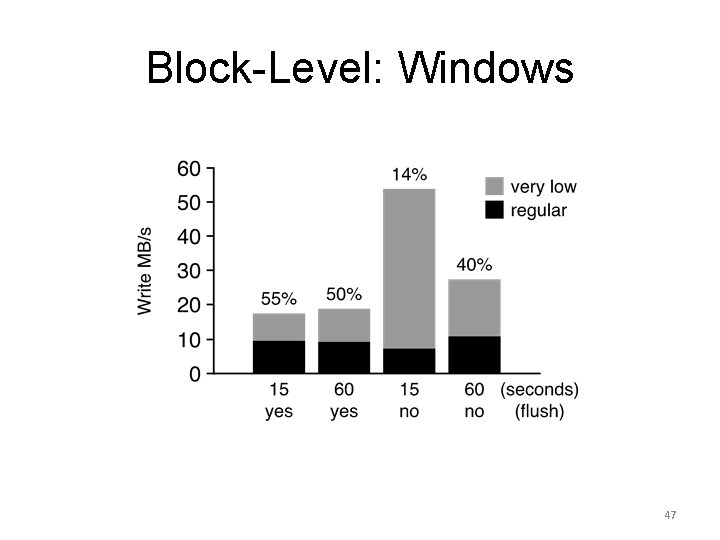

Block-Level: Windows

Performance Isolation
- A: sequential reader, unthrottled
- B: throttled to 10 MB/s

Real Applications

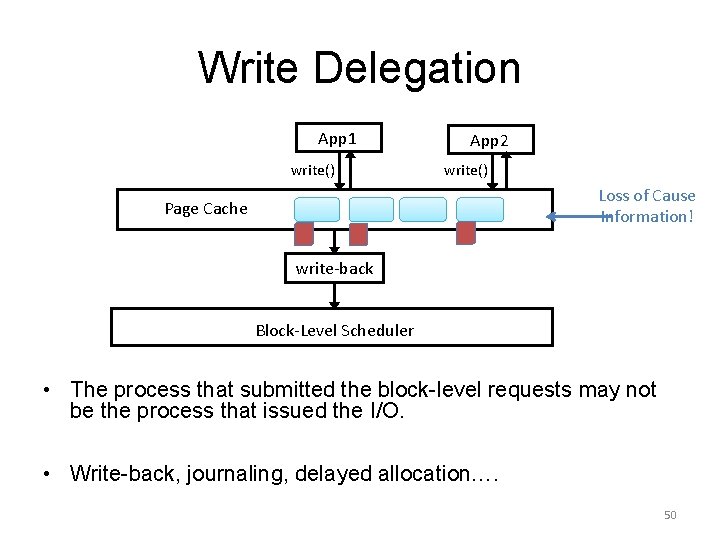

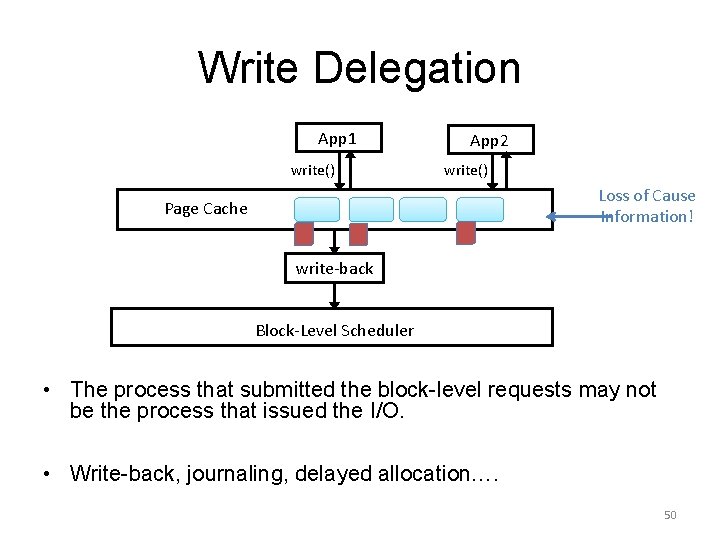

Write Delegation

[Figure: App 1 and App 2 write() into the page cache; write-back later submits their data to the block-level scheduler.]

- Loss of cause information: the process that submits a block-level request may not be the process that issued the I/O
- Write-back, journaling, delayed allocation…

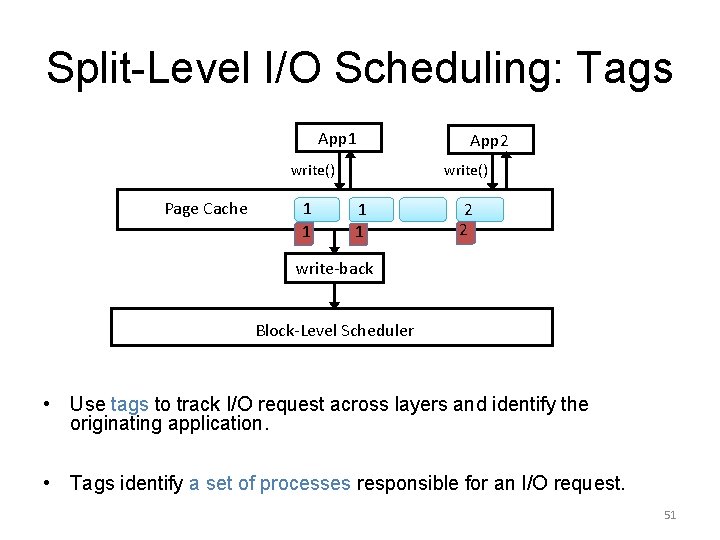

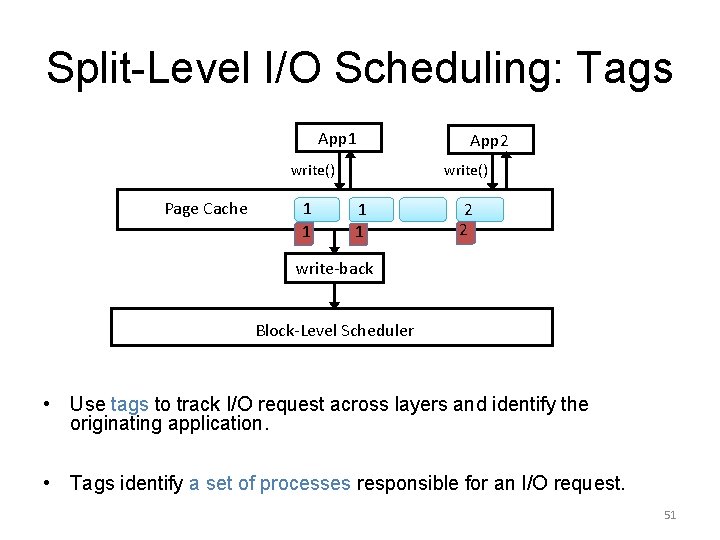

Split-Level I/O Scheduling: Tags

[Figure: pages written by App 1 and App 2 carry tags 1 and 2 through the page cache and write-back to the block-level scheduler.]

- Tags track I/O requests across layers and identify the originating application
- A tag identifies the set of processes responsible for an I/O request

Myth #1 in I/O Scheduling: I don't have to care about I/O scheduling. It is someone else's problem…

Why Is I/O Scheduling Relevant (to You)
- A bottleneck for many systems, from phones to servers
  - […our servers appear to freeze for tens of seconds during disk writes…]
- The foundation of performance isolation
  - […the interference as a result of competing I/Os remains problematic in a virtualized environment…]
- A pain point for databases, hypervisors, key-value stores, and more
  - […one customer reported that just changing cfq to noop solved their InnoDB IO problems…]

Myth #1 in I/O Scheduling: I don't have to care about I/O scheduling. It is someone else's problem…

Fact #1: If you care about performance, you should care about I/O scheduling.

Myth #2 in I/O Scheduling: Can't the disk (or SSD) handle all I/O scheduling? (Do I still need I/O scheduling in the era of SSDs?)

Why Should the OS Do I/O Scheduling
- The device is powerless when handed the "wrong" requests by the OS
  - The file system may withhold requests
- Devices would have to rely on OS-provided information
  - Current interfaces lack such mechanisms
- Other common reasons:
  - More contextual information
  - The OS-level isolation unit
  - Multi-device I/O scheduling

Myth #2 in I/O Scheduling: Shouldn't the disk (or SSD) handle all the I/O scheduling?

Fact #2: The OS has to issue the right request at the right time.