Differentiated IO services in virtualized environments Tyler Harter

- Slides: 44

Differentiated I/O services in virtualized environments
Tyler Harter, Salini SK & Anand Krishnamurthy

Overview
• Provide differentiated I/O services for applications in guest operating systems running in virtual machines
• Applications in virtual machines tag their I/O requests
• The hypervisor's I/O scheduler uses these tags to provide quality of I/O service

Motivation
• Clouds host diverse applications with different I/O requirements
• I/O scheduling that is agnostic of the semantics of each request is not optimal

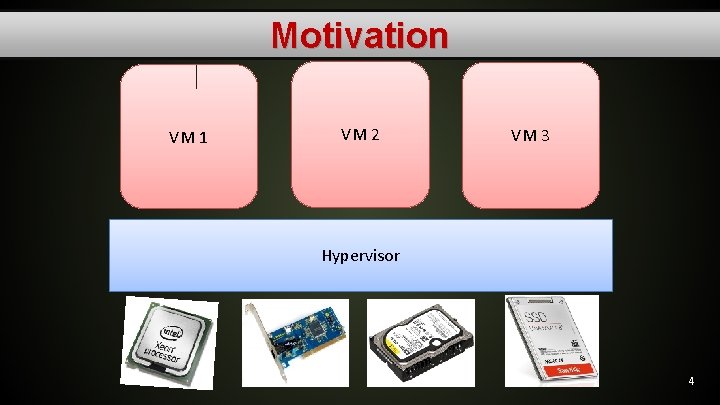

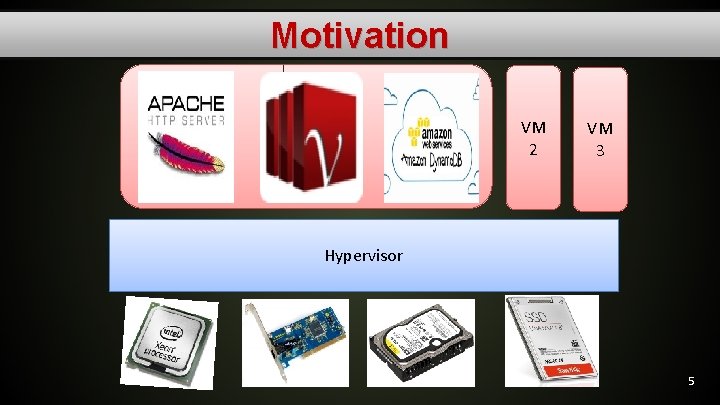

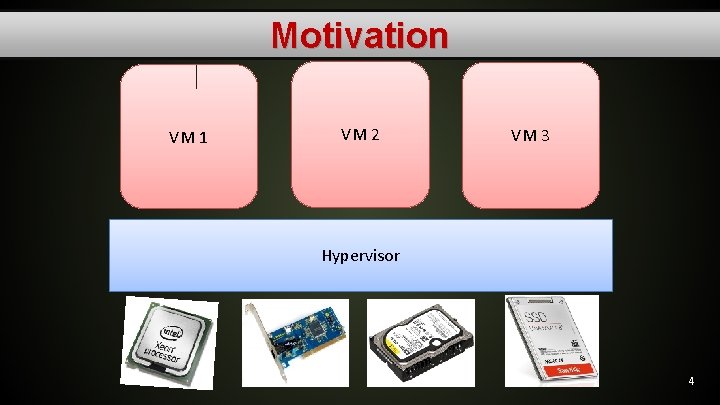

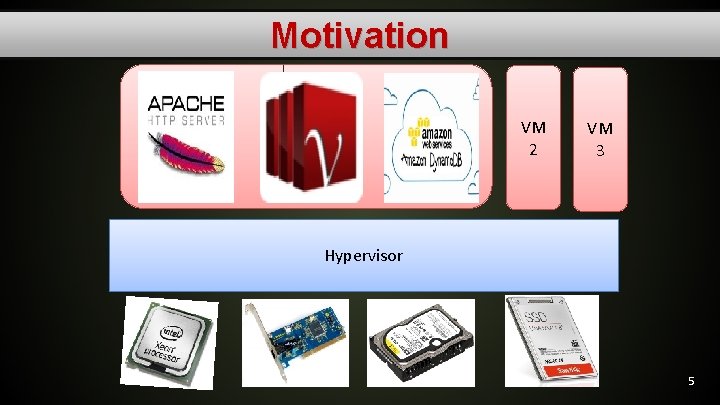

Motivation (figure: VM 1, VM 2, and VM 3 on a hypervisor)

Motivation (figure: VM 2 and VM 3 on a hypervisor)

Motivation
• We want high- and low-priority processes to get correctly differentiated service, both within a VM and between VMs
• Can my webserver/DHT log pusher’s I/O be served differently from my webserver/DHT’s I/O?

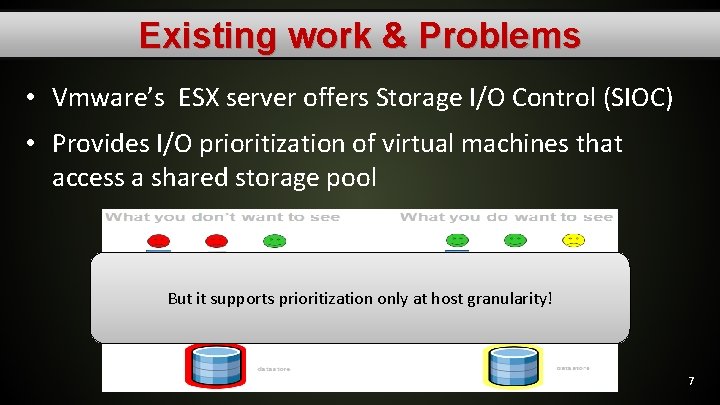

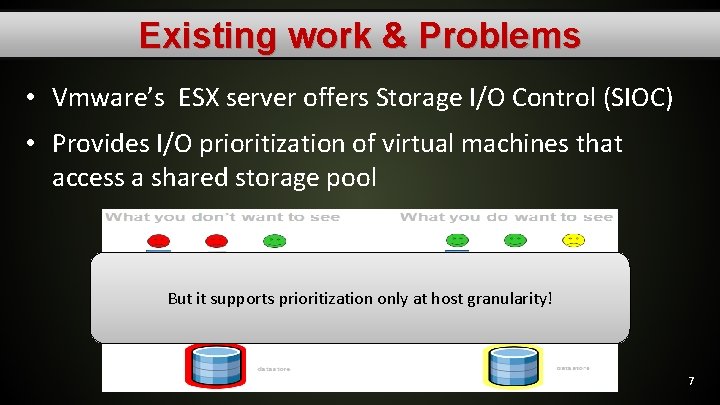

Existing work & Problems
• VMware’s ESX Server offers Storage I/O Control (SIOC)
• Provides I/O prioritization of virtual machines that access a shared storage pool
• But it supports prioritization only at host granularity!

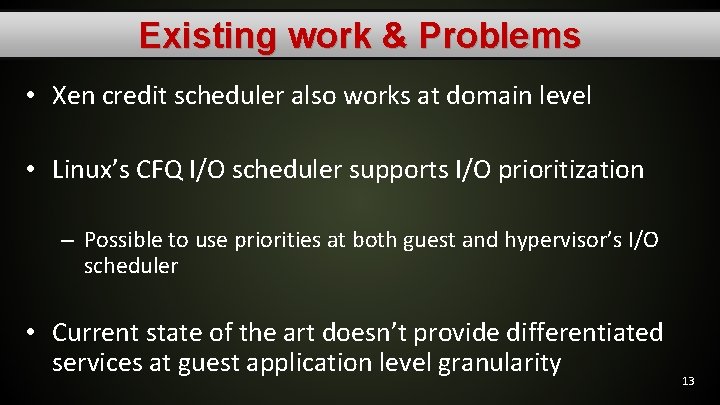

Existing work & Problems
• Xen’s credit scheduler also works at the domain level
• Linux’s CFQ I/O scheduler supports I/O prioritization
– Possible to use priorities at both the guest’s and the hypervisor’s I/O scheduler

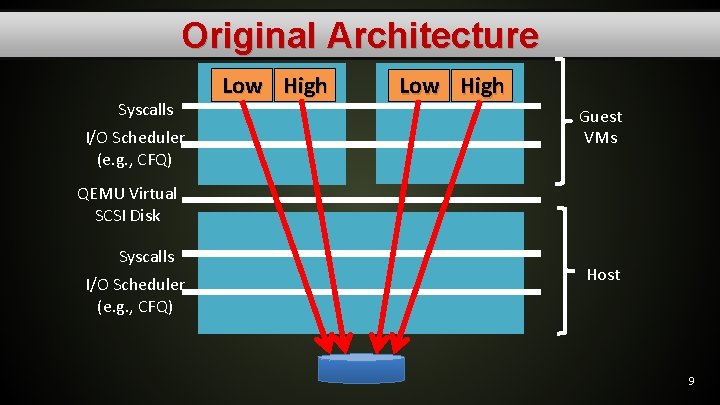

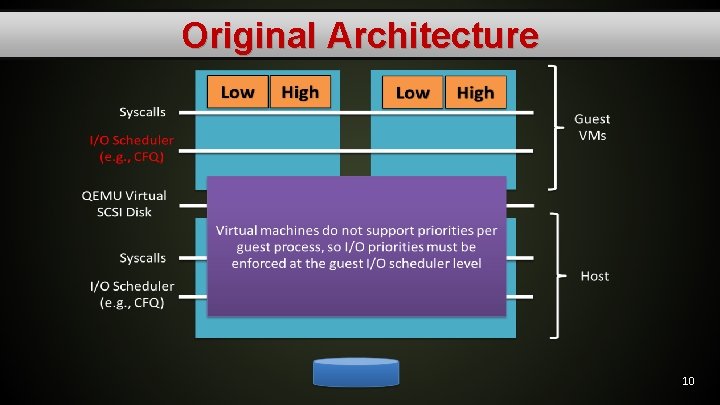

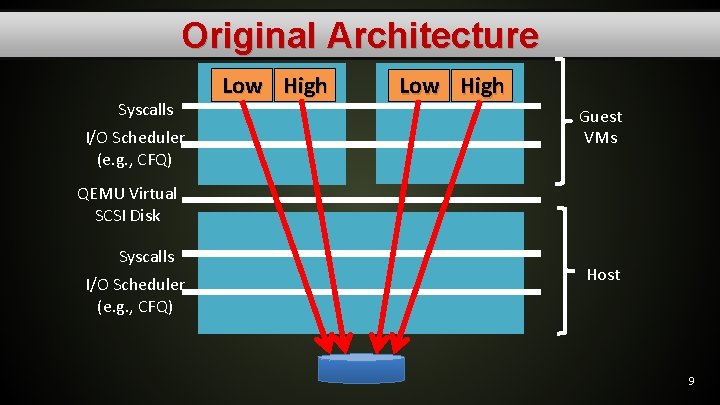

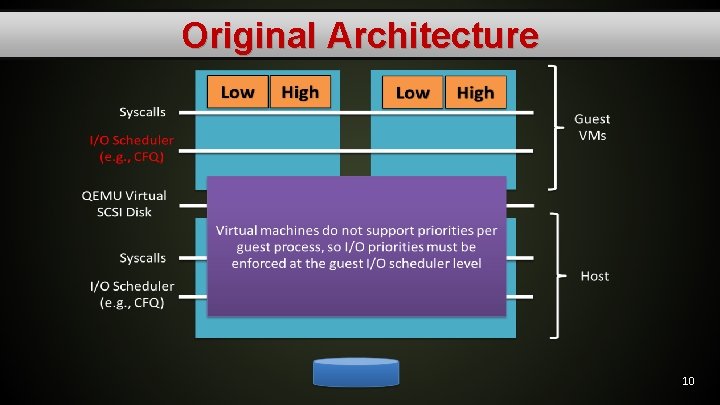

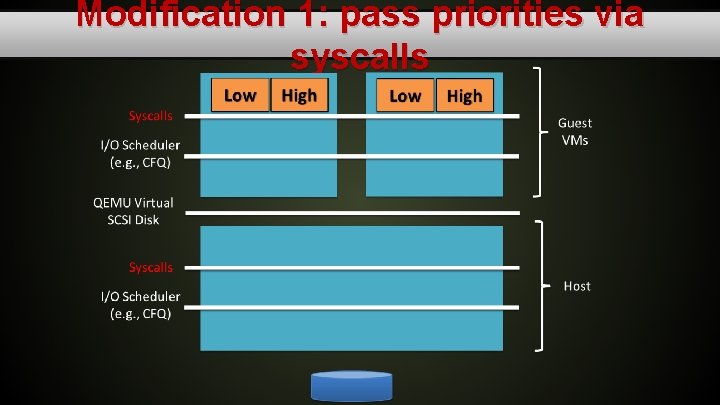

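Original Architecture (figure: guest VMs, each with Low and High processes issuing syscalls to a guest I/O scheduler (e.g., CFQ) that sends requests to a QEMU virtual SCSI disk; on the host, QEMU issues syscalls to the host I/O scheduler (e.g., CFQ))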

Original Architecture

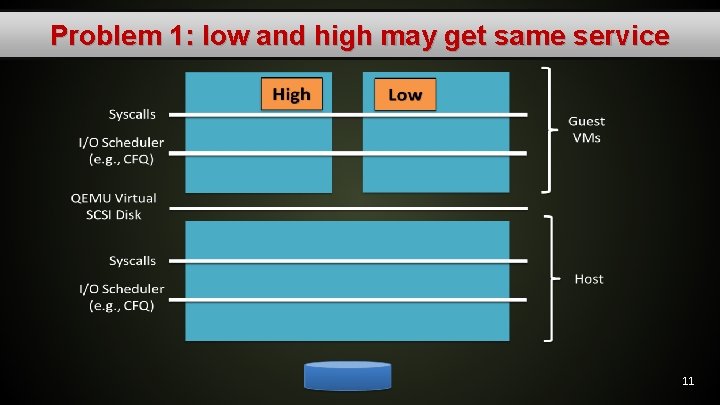

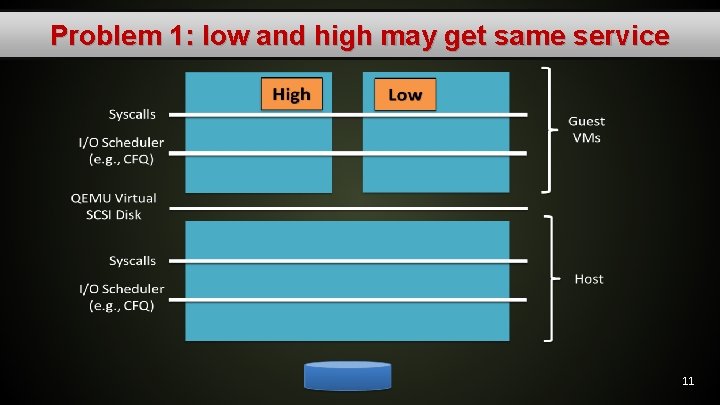

Problem 1: low- and high-priority processes may get the same service
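Problem 1 can be illustrated with a simplified model. It assumes that the host scheduler (e.g., CFQ) groups requests into queues keyed by the submitting host process. It also assumes that every request from a guest reaches the host through that VM's single QEMU process. The names `GuestRequest`, `QEMU_PID`, and `host_queues`, and the PIDs, are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class GuestRequest:
    vm: str          # guest VM that issued the request
    guest_pid: int   # process inside the guest
    guest_prio: str  # "high" or "low", as set inside the guest

# Hypothetical mapping: each VM is served by one QEMU process on the host.
QEMU_PID = {"vm1": 4001, "vm2": 4002}

def host_queues(requests):
    """Group requests the way a per-process host scheduler would see them.

    The host only observes the QEMU process that submitted each request,
    so the guest-level priority is not part of the grouping key.
    """
    queues = defaultdict(list)
    for req in requests:
        queues[QEMU_PID[req.vm]].append(req)
    return dict(queues)

reqs = [
    GuestRequest("vm1", 10, "high"),  # e.g. the webserver
    GuestRequest("vm1", 11, "low"),   # e.g. its log pusher
]
queues = host_queues(reqs)
print(len(queues))  # 1: both requests share one host queue
```

Both requests end up in the same host queue, so the host scheduler has no basis for serving them differently.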

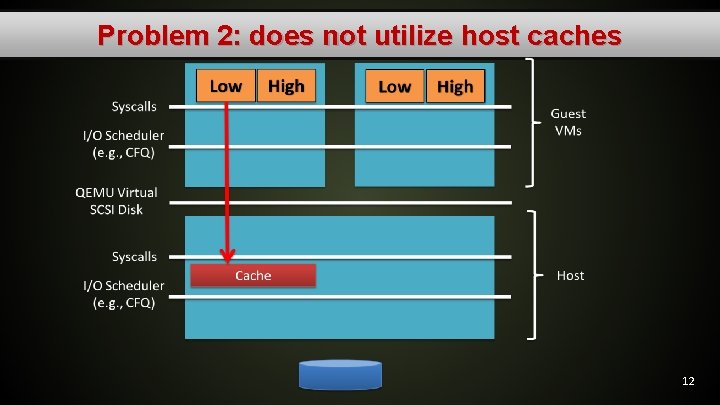

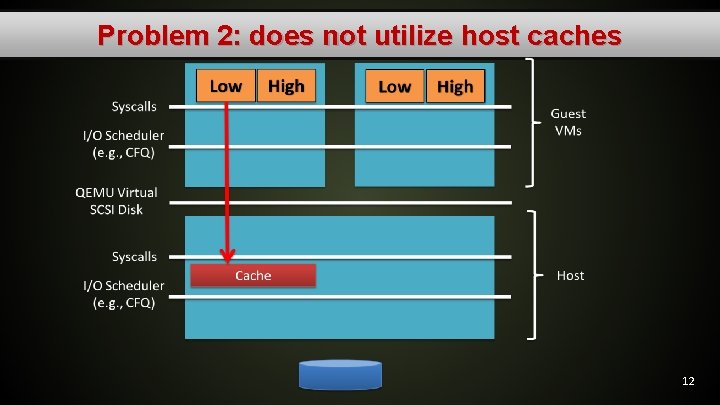

Problem 2: does not utilize host caches

Existing work & Problems
• Xen’s credit scheduler also works at the domain level
• Linux’s CFQ I/O scheduler supports I/O prioritization
– Possible to use priorities at both the guest’s and the hypervisor’s I/O scheduler
• The current state of the art doesn’t provide differentiated services at guest-application granularity

Solution: tag I/O and prioritize it in the hypervisor

Outline
• KVM/Qemu, a brief intro
• KVM/Qemu I/O stack
• Multi-level I/O tagging
• I/O scheduling algorithms
• Evaluation
• Summary

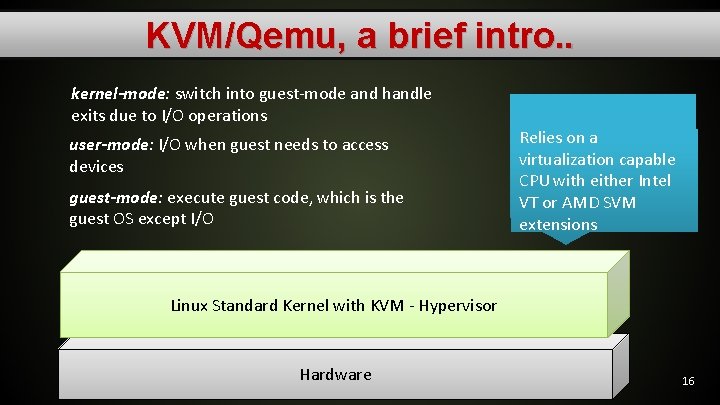

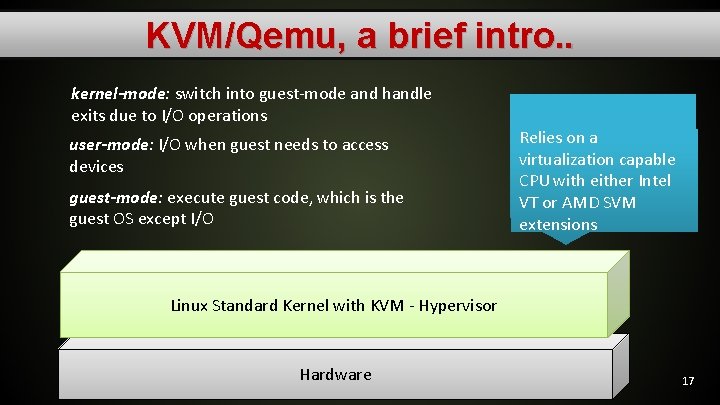

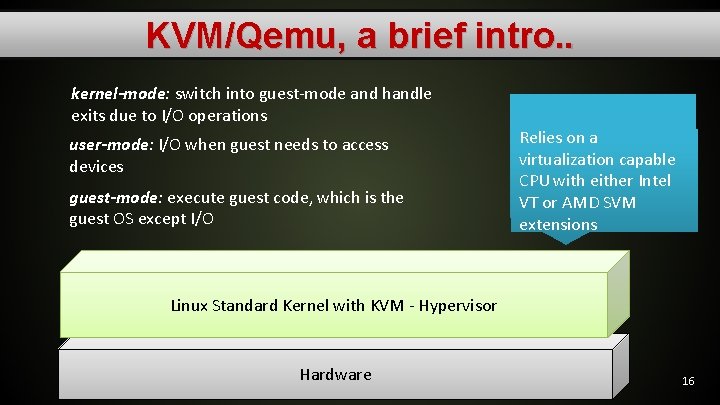

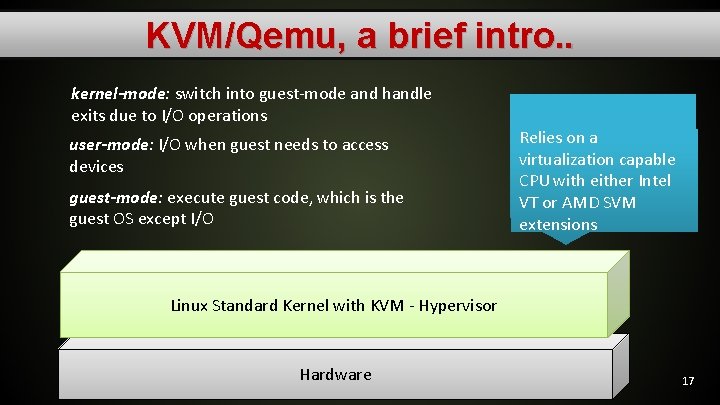

KVM/Qemu, a brief intro
• The KVM module has been part of the Linux kernel since version 2.6
• Linux has all the mechanisms a VMM needs to operate several VMs
• Relies on a virtualization-capable CPU with either Intel VT or AMD SVM extensions
• Has 3 modes: kernel, user, guest
– kernel-mode: switch into guest-mode and handle exits due to I/O operations
– user-mode: perform I/O when the guest needs to access devices
– guest-mode: execute guest code, which is the guest OS except I/O
(figure: Linux standard kernel with KVM as the hypervisor, running on the hardware)


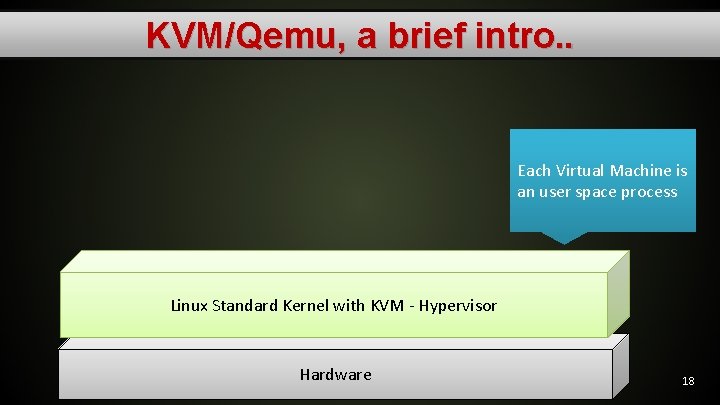

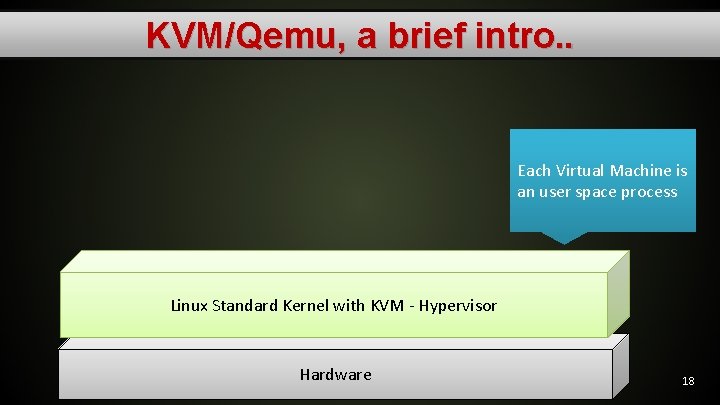

KVM/Qemu, a brief intro
• Each virtual machine is a user-space process
(figure: Linux standard kernel with KVM as the hypervisor, running on the hardware)

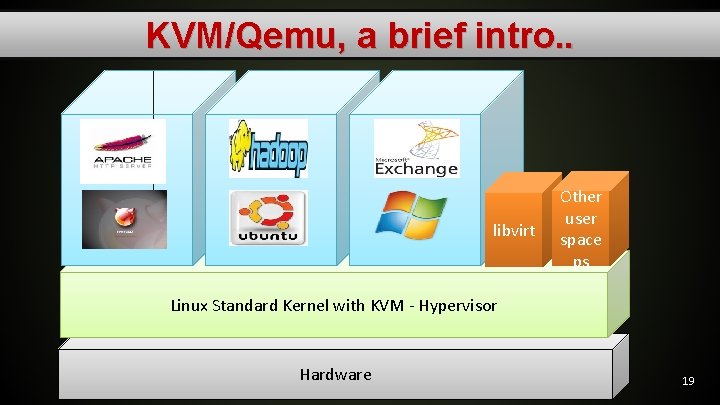

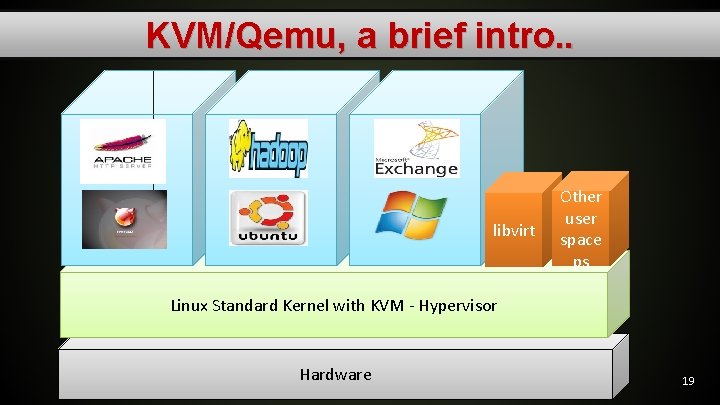

KVM/Qemu, a brief intro (figure: libvirt and other user-space processes alongside the VMs, on the Linux standard kernel with KVM as the hypervisor, running on the hardware)

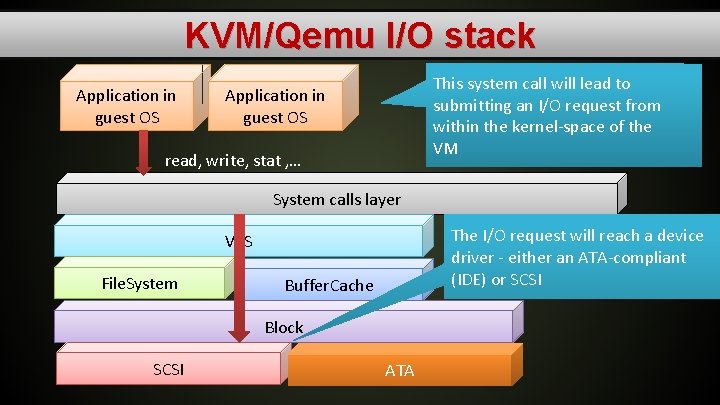

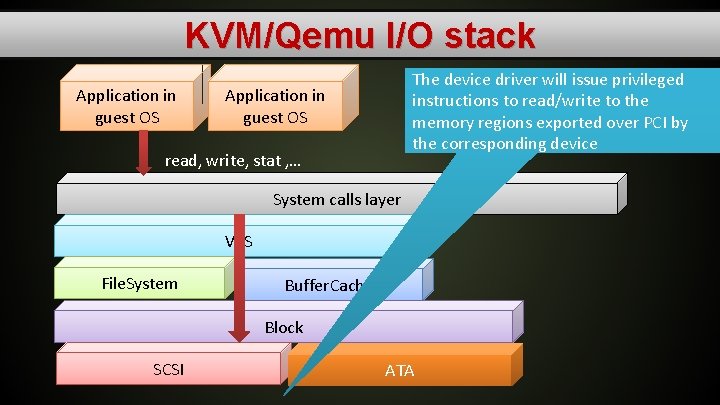

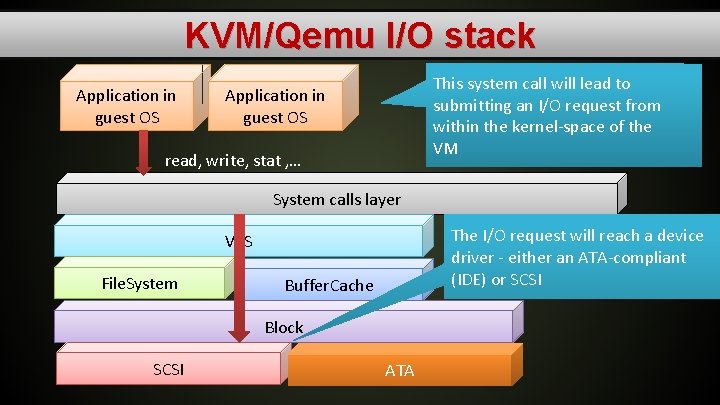

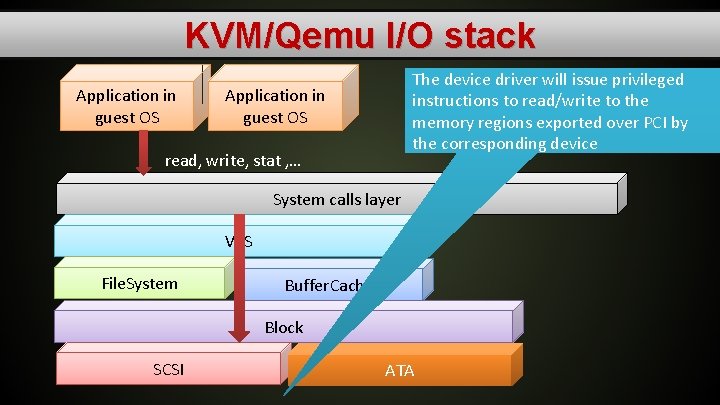

KVM/Qemu I/O stack
• An application in the guest OS issues an I/O-related system call (e.g., read(), write(), stat()) within a user-space context of the VM
• This system call leads to submitting an I/O request from within the kernel space of the VM
• The I/O request reaches a device driver: either an ATA-compliant (IDE) or a SCSI driver
(figure: guest I/O stack: application (read, write, stat, …) → system calls layer → VFS → file system and buffer cache → block layer → SCSI / ATA)

KVM/Qemu I/O stack
• The device driver issues privileged instructions to read from and write to the memory regions exported over PCI by the corresponding device
(figure: guest I/O stack: application (read, write, stat, …) → system calls layer → VFS → file system and buffer cache → block layer → SCSI / ATA)

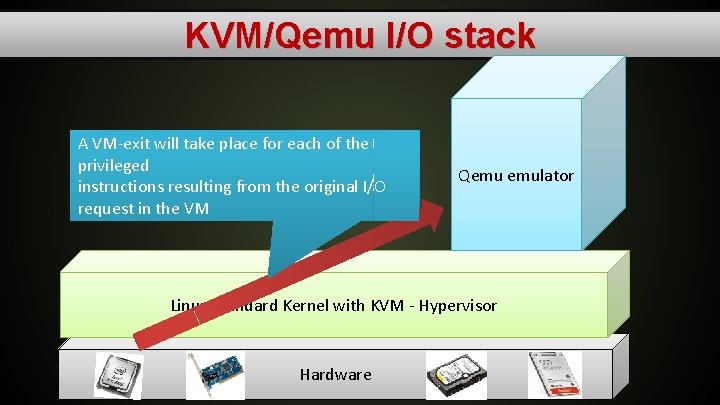

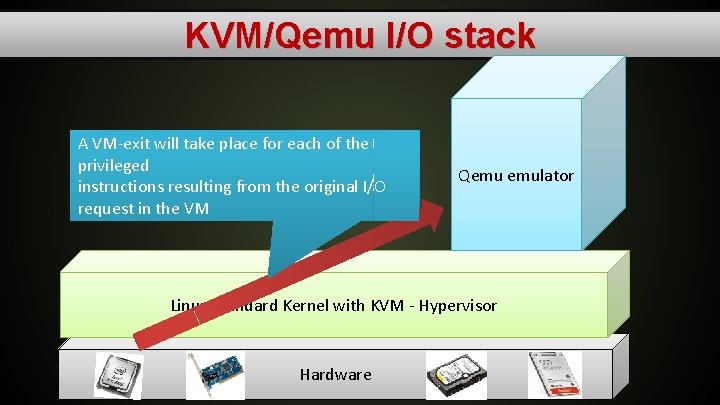

KVM/Qemu I/O stack
• These privileged instructions trigger VM-exits, which are handled by the core KVM module within the host’s kernel space
• A VM-exit takes place for each of the I/O-related privileged instructions resulting from the original I/O request in the VM context
• The hypervisor passes these privileged I/O-related instructions to the QEMU machine emulator
(figure: QEMU emulator above the Linux standard kernel with KVM as the hypervisor, running on the hardware)

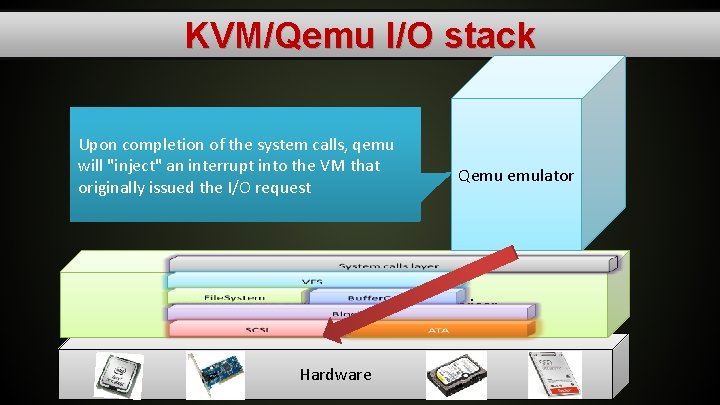

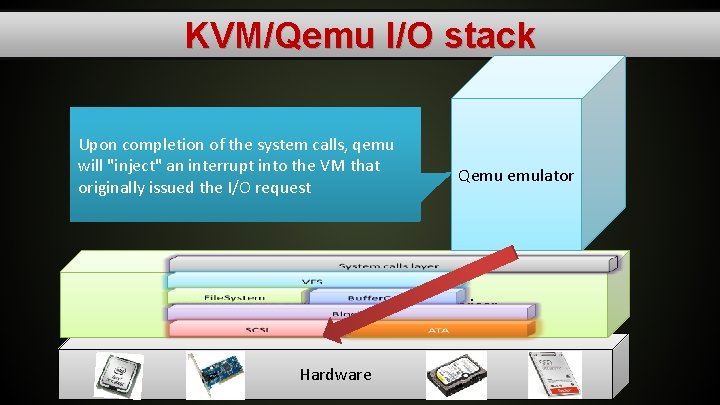

KVM/Qemu I/O stack
• These instructions are then emulated by device-controller emulation modules within QEMU (either as ATA or as SCSI commands)
• QEMU generates block-access I/O requests in a special block-device emulation module
• Thus the original I/O request generates I/O requests to the kernel space of the host
• Upon completion of the system calls, QEMU "injects" an interrupt into the VM that originally issued the I/O request
(figure: QEMU emulator above the Linux standard kernel with KVM as the hypervisor, running on the hardware)

Multi-level I/O tagging modifications

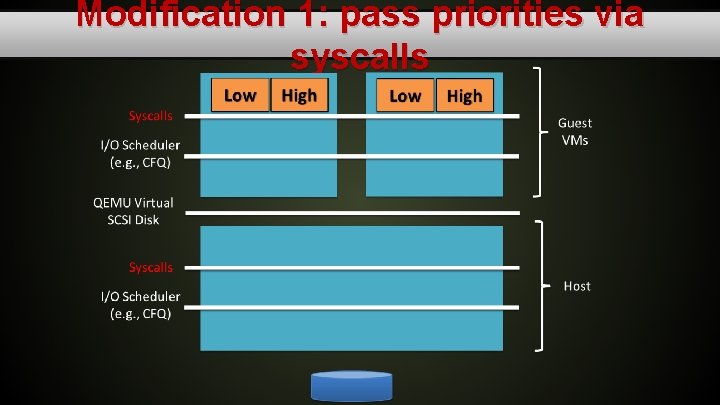

Modification 1: pass priorities via syscalls
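The slide does not say which interface carries the priority. For reference, stock Linux already lets a process attach an I/O priority to itself through the `ioprio_set(2)` system call. That call takes a packed value built with the `IOPRIO_PRIO_VALUE(class, data)` macro from `<linux/ioprio.h>`. The sketch below reproduces the long-standing encoding in Python. It is an assumption that the authors' modified syscalls reuse this encoding. The helper names `ioprio_value`, `ioprio_class`, and `ioprio_level` are illustrative.

```python
# Constants mirroring the long-standing layout in <linux/ioprio.h>.
IOPRIO_CLASS_SHIFT = 13
IOPRIO_PRIO_MASK = (1 << IOPRIO_CLASS_SHIFT) - 1

IOPRIO_CLASS_NONE = 0
IOPRIO_CLASS_RT = 1    # real-time
IOPRIO_CLASS_BE = 2    # best-effort
IOPRIO_CLASS_IDLE = 3  # served only when the disk is otherwise idle

def ioprio_value(io_class: int, level: int) -> int:
    """Pack a class and a level (0 = highest, 7 = lowest) into one value."""
    if not 0 <= level <= 7:
        raise ValueError("level must be in 0..7")
    return (io_class << IOPRIO_CLASS_SHIFT) | level

def ioprio_class(value: int) -> int:
    """Recover the scheduling class from a packed value."""
    return value >> IOPRIO_CLASS_SHIFT

def ioprio_level(value: int) -> int:
    """Recover the level within the class from a packed value."""
    return value & IOPRIO_PRIO_MASK

high = ioprio_value(IOPRIO_CLASS_BE, 0)  # high-priority best-effort
low = ioprio_value(IOPRIO_CLASS_BE, 7)   # low-priority best-effort
print(high, low)  # 16384 16391
```

A tagging scheme built on this value would need to carry these bits down through every layer, since the host never sees the guest's per-process settings directly.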

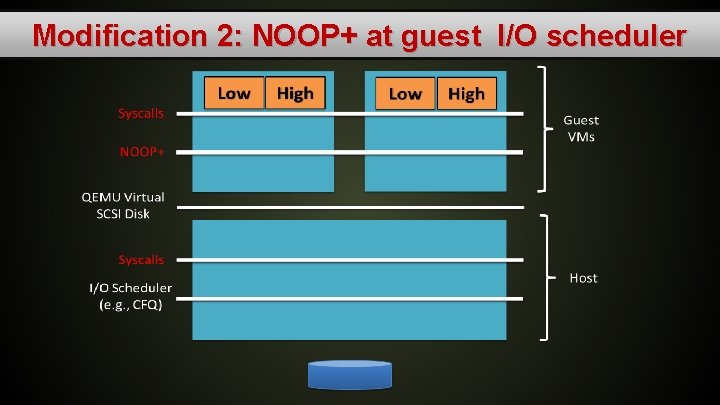

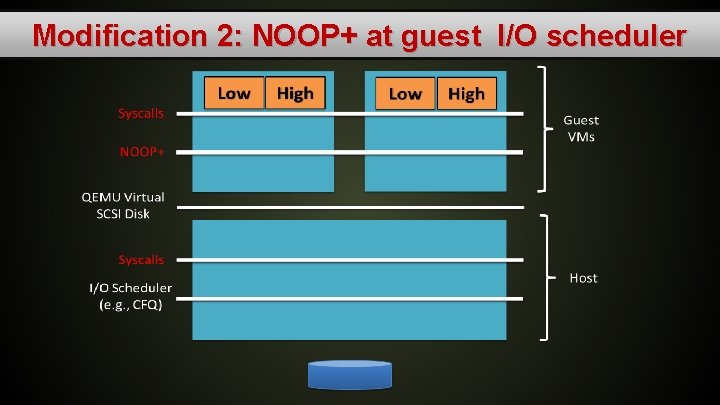

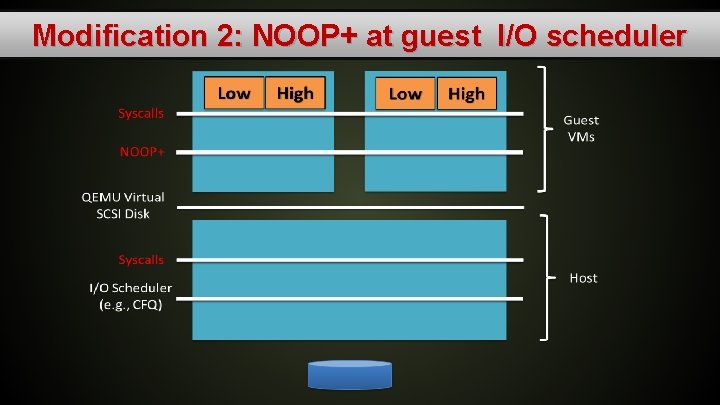

Modification 2: NOOP+ at guest I/O scheduler
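NOOP+ is only named on the slide. One plausible reading follows, stated as an assumption. Like the stock noop elevator, the guest scheduler dispatches in FIFO order and does not enforce priorities itself. However, it keeps each request's priority tag attached so that a lower layer can act on it. The classes `Request` and `NoopPlus` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

@dataclass
class Request:
    sector: int
    prio: int  # tag from the issuing process (lower = more important)

class NoopPlus:
    """Hypothetical guest scheduler: FIFO order, priority tag preserved."""

    def __init__(self) -> None:
        self._fifo = deque()

    def add(self, req: Request) -> None:
        self._fifo.append(req)

    def dispatch(self) -> Optional[Request]:
        """Return the oldest request unchanged (tag included), or None."""
        return self._fifo.popleft() if self._fifo else None

sched = NoopPlus()
sched.add(Request(sector=100, prio=7))
sched.add(Request(sector=200, prio=0))
out = [sched.dispatch(), sched.dispatch()]
print([(r.sector, r.prio) for r in out])  # [(100, 7), (200, 0)]
```

Under this reading, the guest does not reorder requests, so the host scheduler is the only component that acts on the tag. That is consistent with the summary's observation that scheduling at the lowermost layer yields better differentiation.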

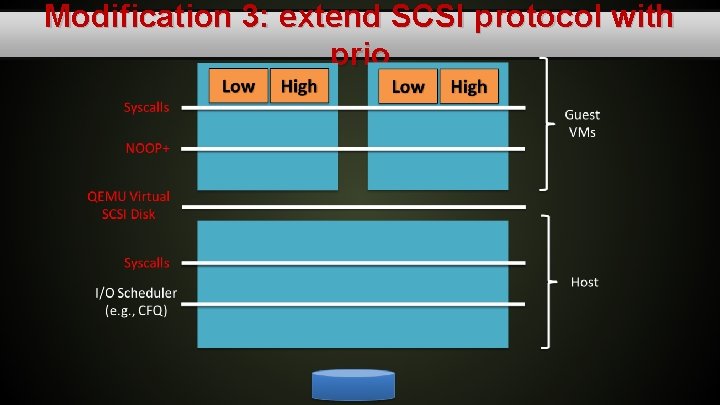

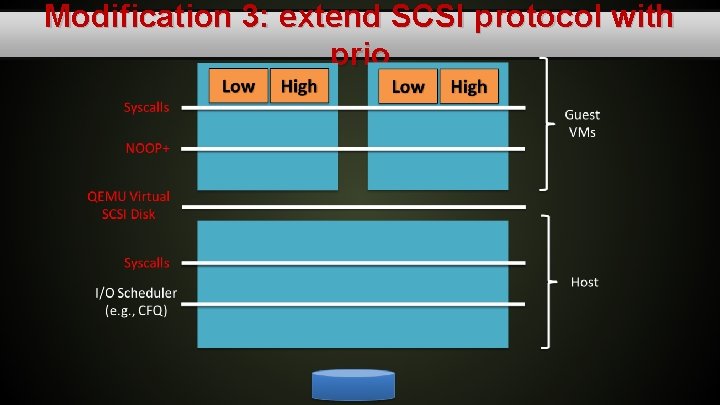

Modification 3: extend SCSI protocol with prio
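The sketch below shows what "extending SCSI with a priority" could mean on the wire. The READ(10) command descriptor block (opcode 0x28) built by `read10_cdb` follows the standard 10-byte layout. Appending one priority byte after it, as `add_prio` does, is a **hypothetical** extension invented for illustration. It is not part of the SCSI standard and not necessarily the authors' format.

```python
import struct

READ_10 = 0x28  # standard SCSI READ(10) operation code

def read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a standard 10-byte READ(10) CDB (flags, group, control = 0)."""
    # Big-endian: opcode, flags, 4-byte LBA, group number,
    # 2-byte transfer length, control byte.
    return struct.pack(">BBIBHB", READ_10, 0, lba, 0, blocks, 0)

def add_prio(cdb: bytes, prio: int) -> bytes:
    """HYPOTHETICAL: append one priority byte after the CDB."""
    return cdb + bytes([prio])

def split_prio(msg: bytes):
    """Inverse of add_prio, as the QEMU side might perform it."""
    return msg[:-1], msg[-1]

msg = add_prio(read10_cdb(lba=2048, blocks=8), prio=7)
cdb, prio = split_prio(msg)
print(len(cdb), cdb[0] == READ_10, prio)  # 10 True 7
```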

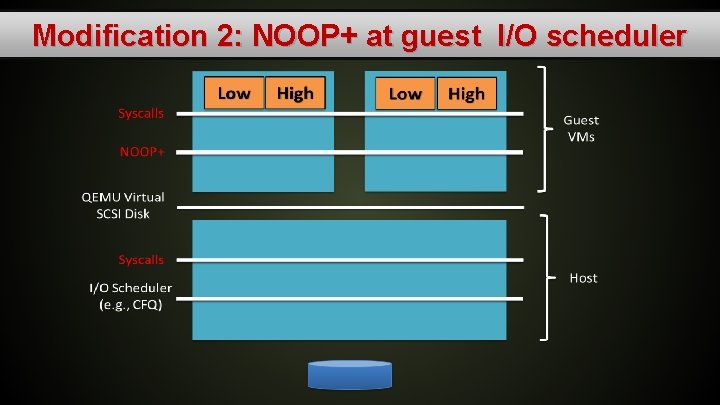

Modification 2: NOOP+ at guest I/O scheduler

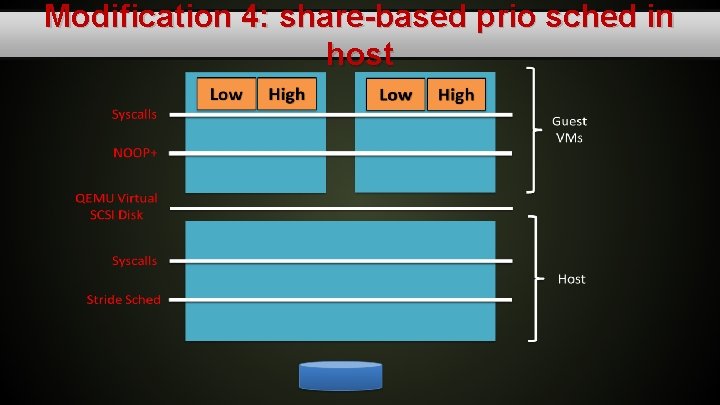

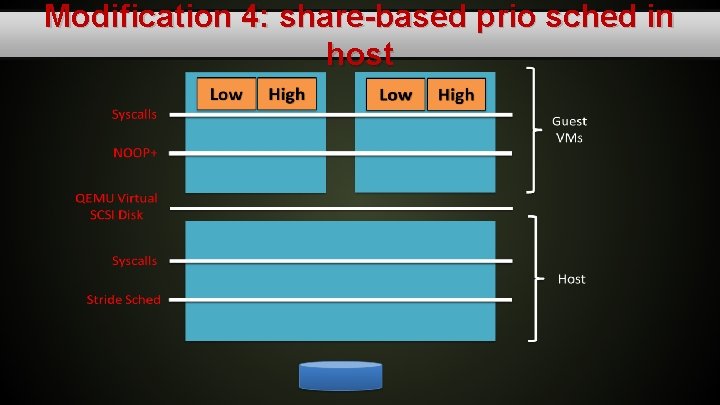

Modification 4: share-based prio sched in host

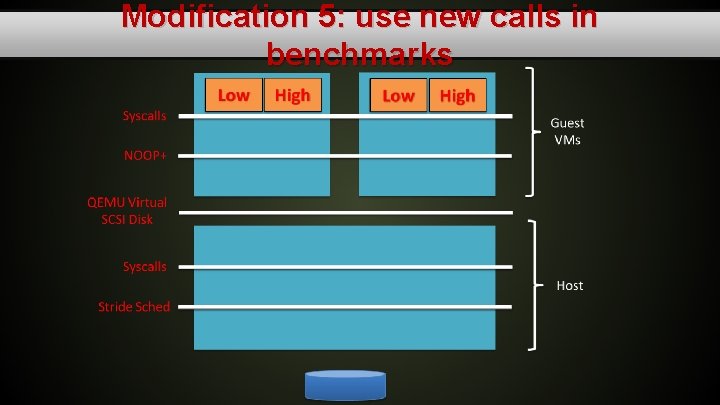

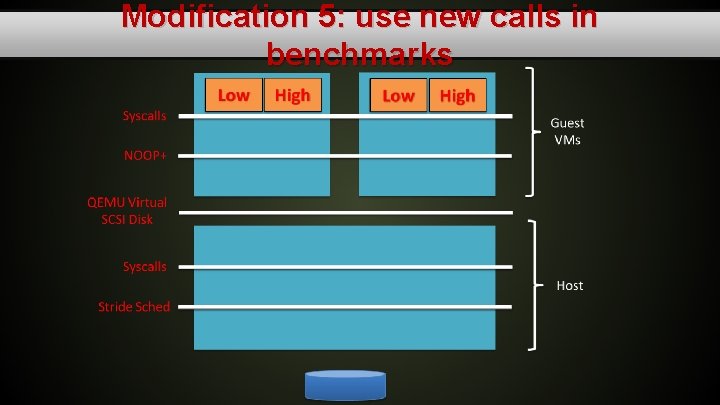

Modification 5: use new calls in benchmarks

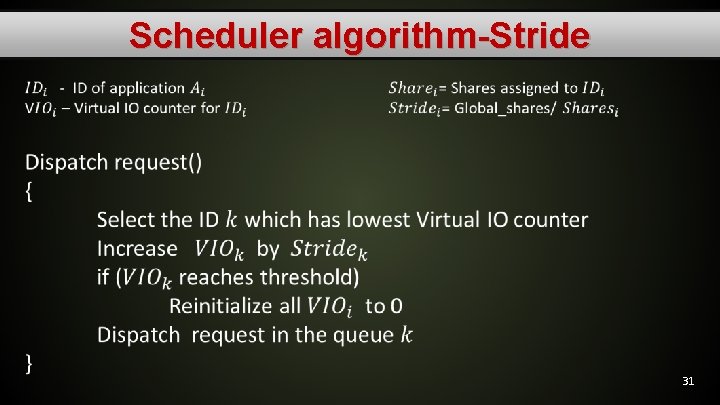

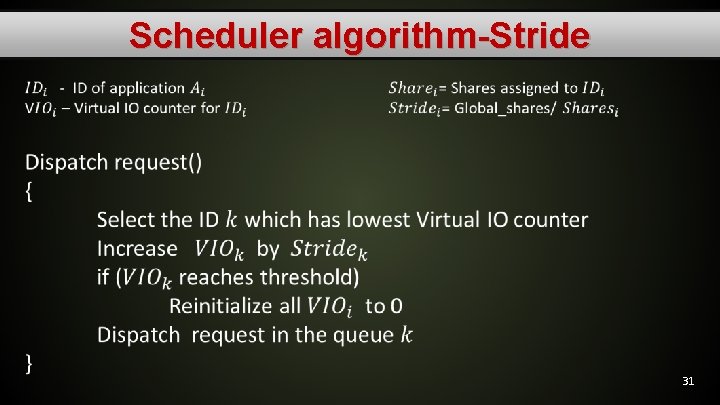

Scheduler algorithm: Stride

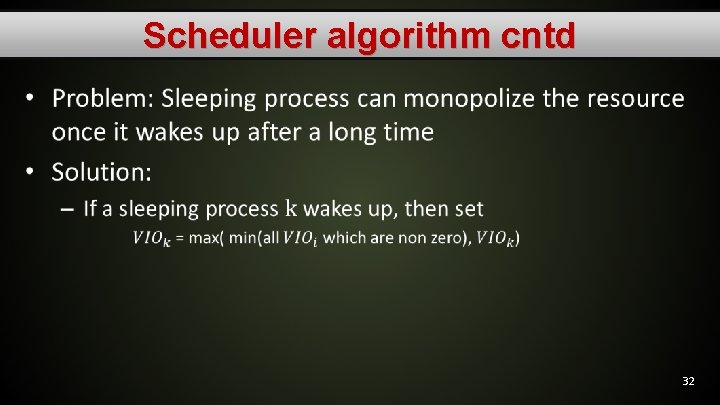

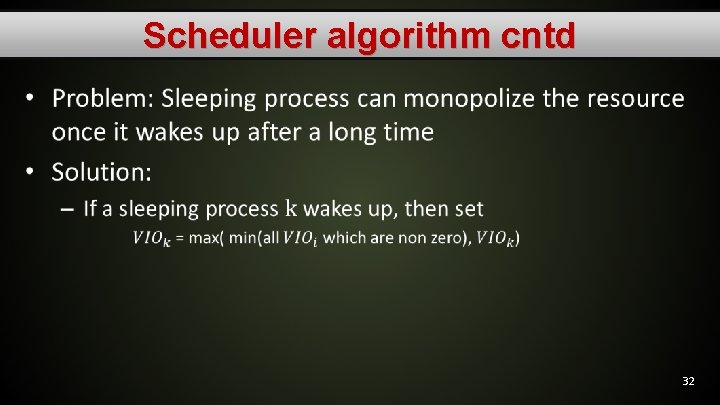

Scheduler algorithm (continued)
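The slides name stride scheduling as the host's share-based algorithm. In its textbook form, each client gets a stride inversely proportional to its shares, and the scheduler always serves the client with the smallest accumulated "pass" value. The sketch below makes several assumptions. Each priority class is a client whose shares come from the tags. One pick dispatches one request. Every client always has pending requests; a real I/O scheduler would also skip idle clients and reset the pass of a client that becomes active again. The constant `STRIDE1`, the class `StrideScheduler`, and the share values are illustrative, not the authors' code.

```python
import heapq

STRIDE1 = 1 << 20  # large constant so integer strides stay precise

class StrideScheduler:
    def __init__(self, shares: dict) -> None:
        # stride is inversely proportional to shares
        self.stride = {c: STRIDE1 // s for c, s in shares.items()}
        # heap of (pass value, client); each pass starts at one stride
        self.heap = [(self.stride[c], c) for c in shares]
        heapq.heapify(self.heap)

    def pick(self) -> str:
        """Serve the client with the minimum pass, then advance its pass."""
        pass_value, client = heapq.heappop(self.heap)
        heapq.heappush(self.heap, (pass_value + self.stride[client], client))
        return client

sched = StrideScheduler({"high": 3, "low": 1})
picks = [sched.pick() for _ in range(8)]
print(picks.count("high"), picks.count("low"))  # 6 2
```

With 3:1 shares, the high class receives three dispatches for every one of the low class, and the two are interleaved rather than served in bursts.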

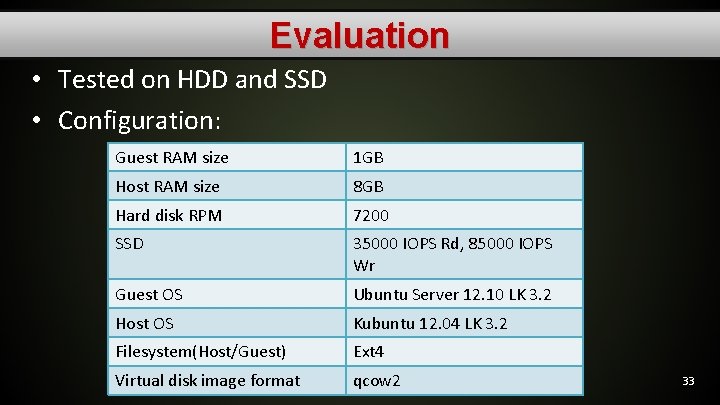

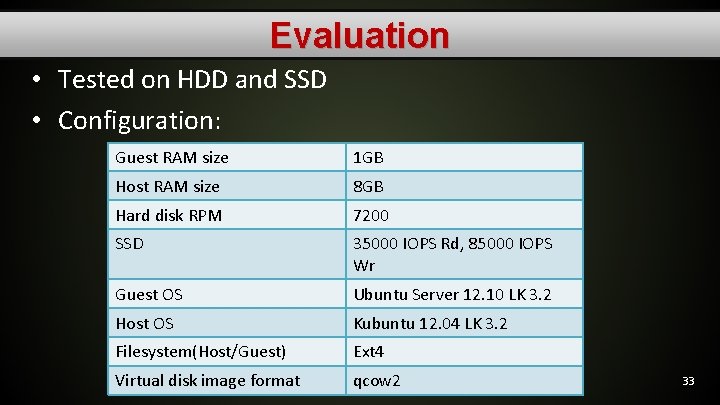

Evaluation
• Tested on HDD and SSD
• Configuration:

| Parameter | Value |
|---|---|
| Guest RAM size | 1 GB |
| Host RAM size | 8 GB |
| Hard disk RPM | 7200 |
| SSD | 35000 IOPS read, 85000 IOPS write |
| Guest OS | Ubuntu Server 12.10, Linux kernel 3.2 |
| Host OS | Kubuntu 12.04, Linux kernel 3.2 |
| Filesystem (host/guest) | ext4 |
| Virtual disk image format | qcow2 |

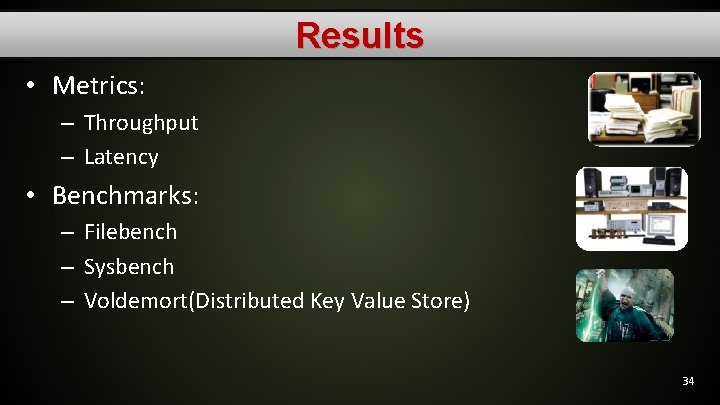

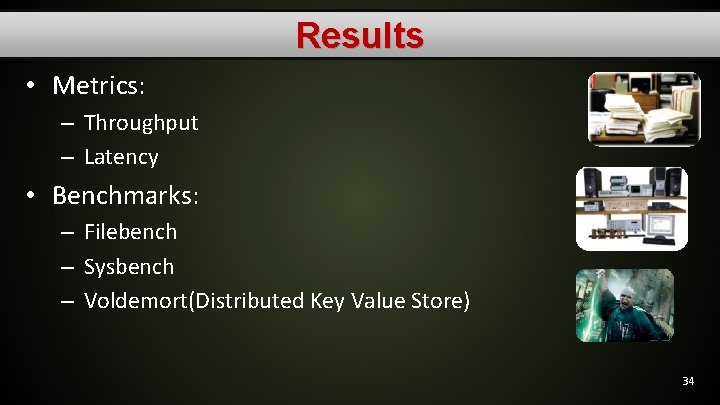

Results
• Metrics:
– Throughput
– Latency
• Benchmarks:
– Filebench
– Sysbench
– Voldemort (distributed key-value store)

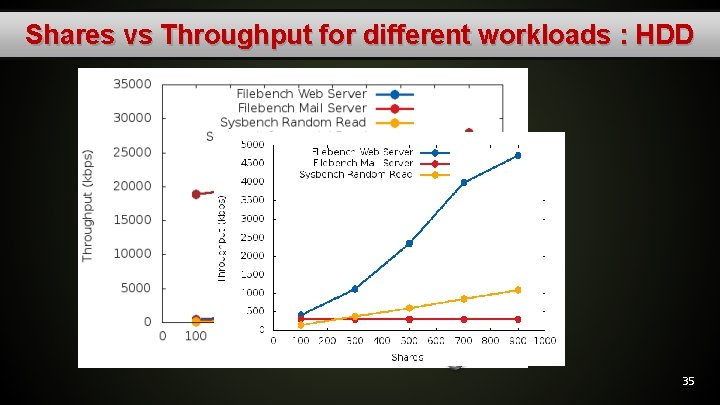

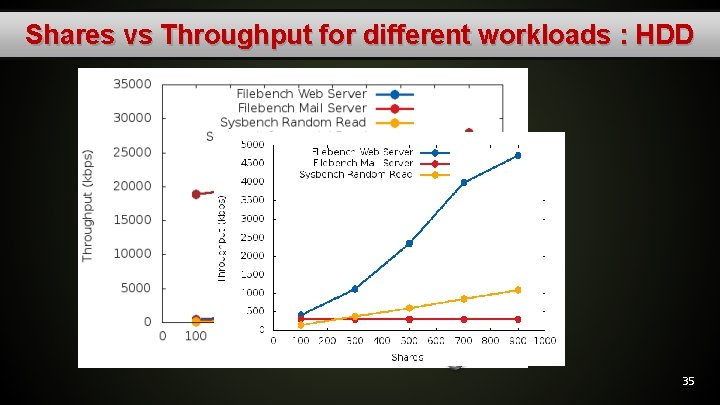

Shares vs. throughput for different workloads: HDD

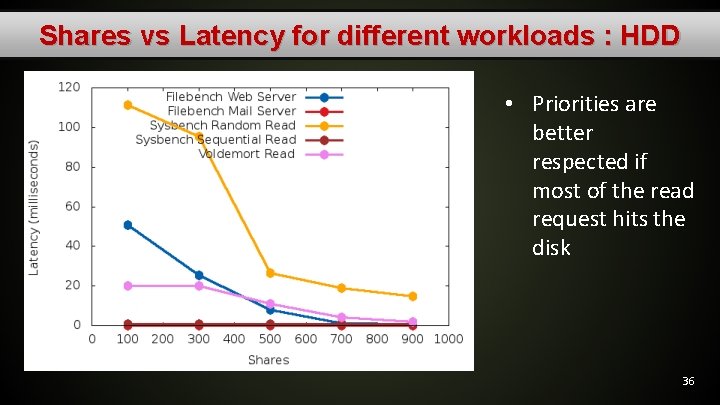

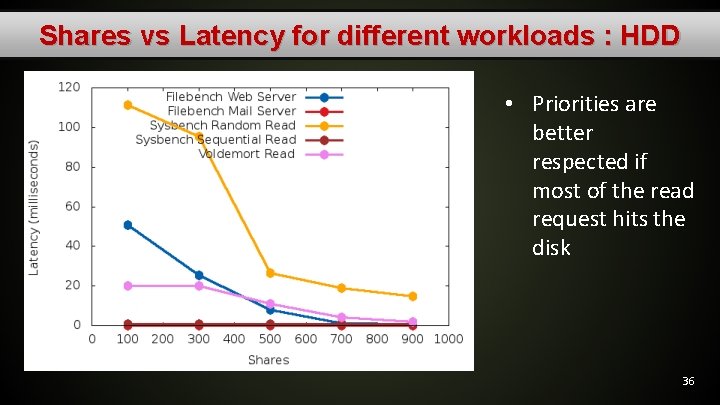

Shares vs. latency for different workloads: HDD
• Priorities are better respected when most read requests hit the disk

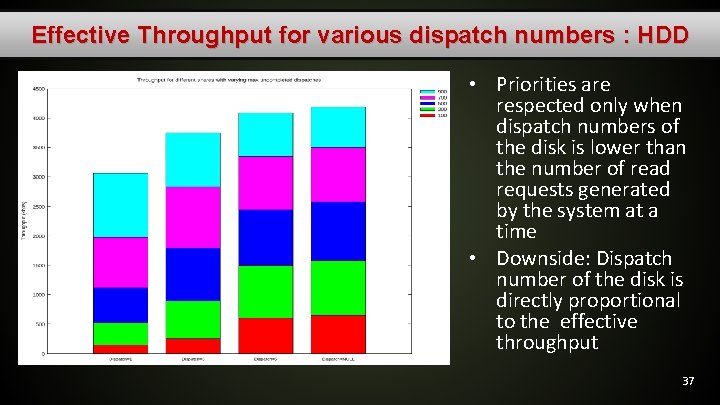

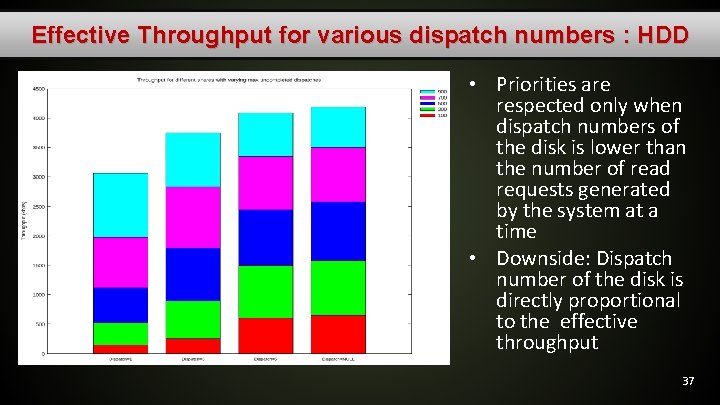

Effective throughput for various dispatch numbers: HDD
• Priorities are respected only when the disk’s dispatch number is lower than the number of read requests the system generates at a time
• Downside: effective throughput is directly proportional to the disk’s dispatch number, so lowering it to enforce priorities costs throughput
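Why the dispatch number matters can be shown with a toy simulation under stated assumptions. All requests are outstanding at once. The host scheduler always hands the disk its highest-priority requests first. The disk then serves whatever it holds in ascending sector order, ignoring priority, which stands in for the drive's internal reordering. The names `Req` and `completion_order` are hypothetical, and the request mix is made up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Req:
    prio: int    # 0 = high, 1 = low
    sector: int

def completion_order(pending, dispatch_number):
    """Simulate a priority scheduler feeding a disk that reorders by sector."""
    pending = sorted(pending, key=lambda r: r.prio)  # scheduler's preference
    device, done = [], []
    while pending or device:
        # The scheduler keeps up to dispatch_number requests in the disk.
        while pending and len(device) < dispatch_number:
            device.append(pending.pop(0))
        nxt = min(device, key=lambda r: r.sector)  # disk's own choice
        device.remove(nxt)
        done.append(nxt)
    return done

reqs = [Req(1, s) for s in range(4)] + [Req(0, 10 + s) for s in range(4)]
for d in (1, 8):
    print(d, [r.prio for r in completion_order(reqs, d)])
# 1 [0, 0, 0, 0, 1, 1, 1, 1]  -> priorities respected
# 8 [1, 1, 1, 1, 0, 0, 0, 0]  -> disk order wins, priorities ignored
```

Once the disk can hold every outstanding request, the host scheduler no longer decides the order. A small dispatch number restores control but also gives the disk fewer requests to reorder, which matches the throughput downside on the slide.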

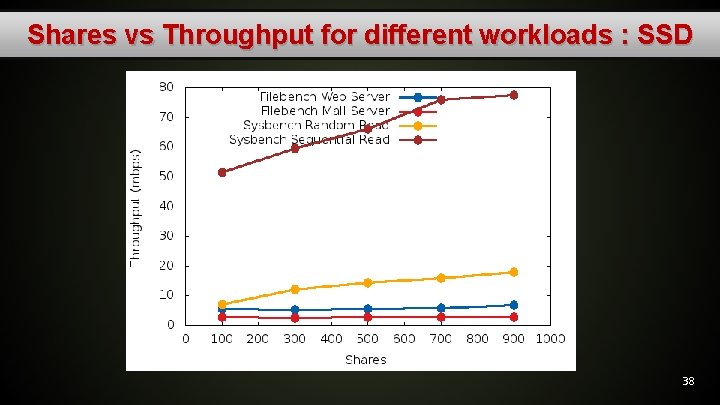

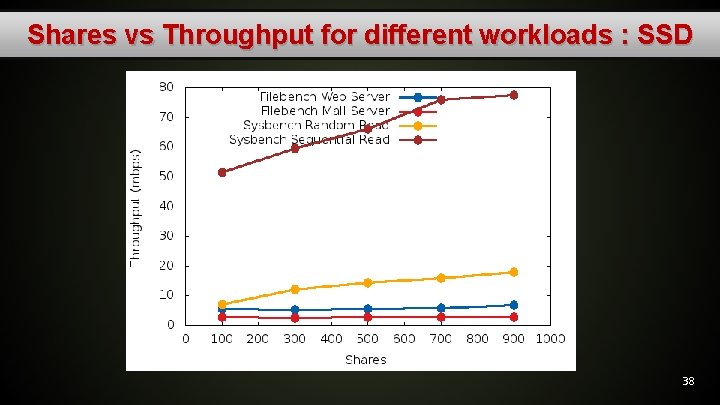

Shares vs. throughput for different workloads: SSD

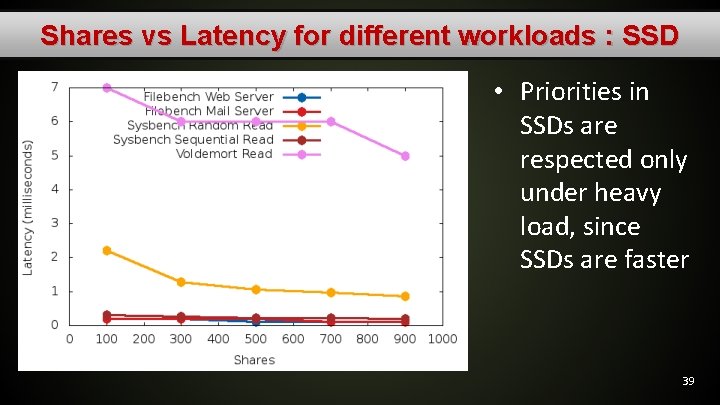

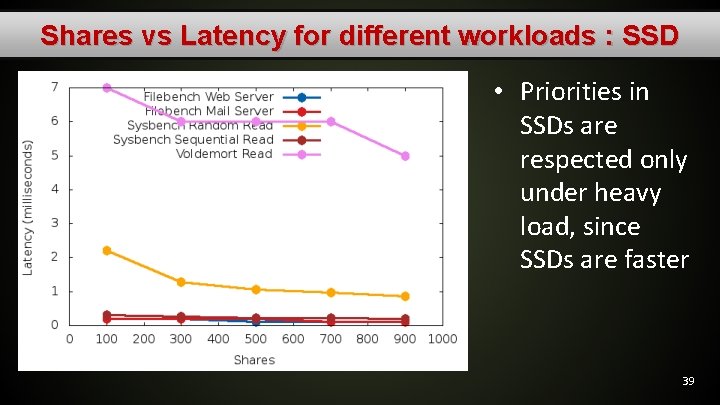

Shares vs. latency for different workloads: SSD
• On SSDs, priorities are respected only under heavy load: because SSDs are fast, requests queue up long enough for the scheduler’s ordering to matter only when the load is heavy

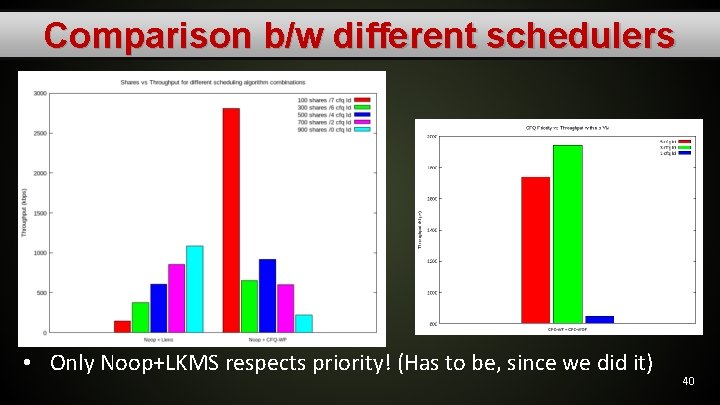

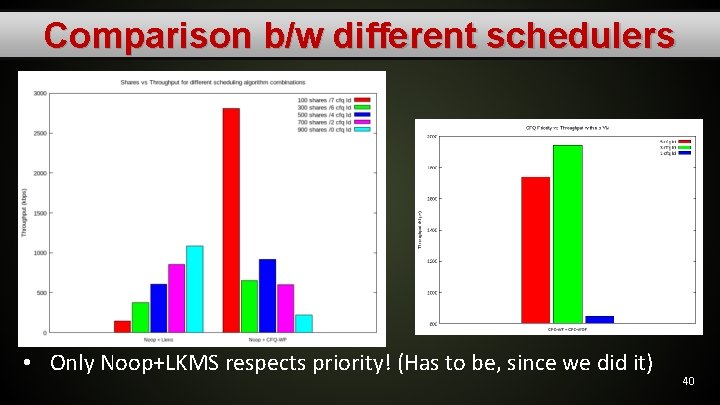

Comparison between different schedulers
• Only Noop+LKMS respects priority! (As expected, since it is the scheduler we built)

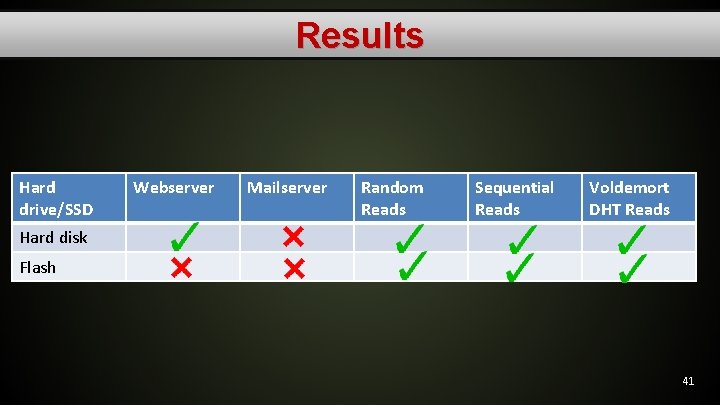

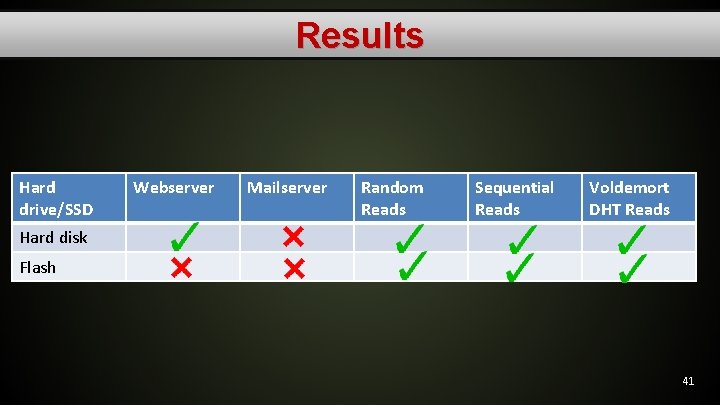

Results (figure: hard disk and flash results for the Webserver, Mailserver, Random Reads, Sequential Reads, and Voldemort DHT Reads workloads)

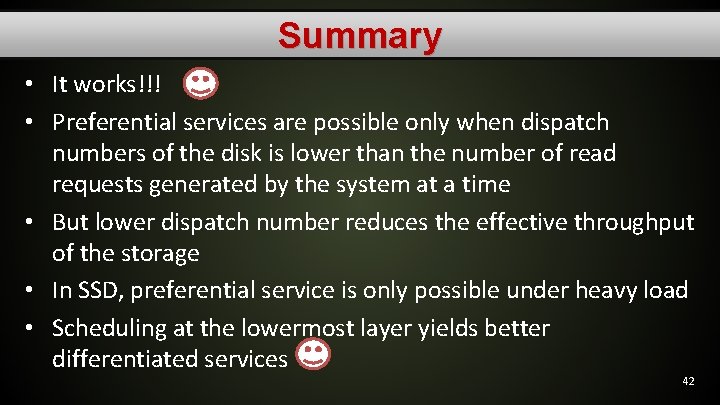

Summary
• It works!!!
• Preferential service is possible only when the disk’s dispatch number is lower than the number of read requests the system generates at a time
• But a lower dispatch number reduces the effective throughput of the storage
• On SSDs, preferential service is possible only under heavy load
• Scheduling at the lowermost layer yields better differentiated services

Future work
• Get it working for writes
• Evaluate VMware ESX SIOC and compare it with our results
