Pattern Decomposition Algorithm for Data Mining Frequent Patterns


Pattern Decomposition Algorithm for Data Mining Frequent Patterns Qinghua Zou Advisor: Dr. Wesley Chu Department of Computer Science University of California—Los Angeles PDA 2/19/2002 Qinghua Zou

Outline

1. The problem
2. Importance of mining frequent sets
3. Related work
4. PDA, an efficient approach
5. Performance analysis
6. Conclusion

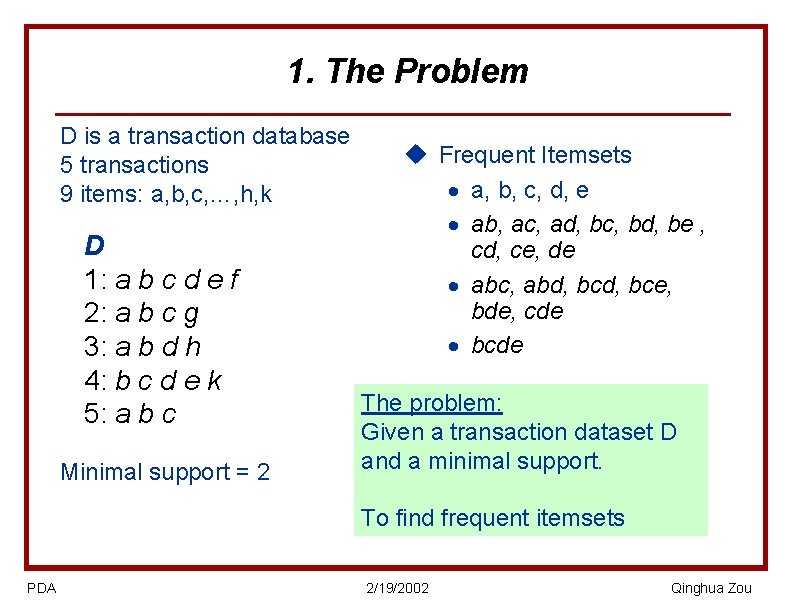

1. The Problem

D is a transaction database with 5 transactions over 9 items (a, b, c, …, h, k):

| TID | Items |
|---|---|
| 1 | a b c d e f |
| 2 | a b c g |
| 3 | a b d h |
| 4 | b c d e k |
| 5 | a b c |

Minimal support = 2

Frequent itemsets:

- 1-item: a, b, c, d, e
- 2-item: ab, ac, ad, bc, bd, be, cd, ce, de
- 3-item: abc, abd, bcd, bce, bde, cde
- 4-item: bcde

The problem: given a transaction data set D and a minimal support, find all frequent itemsets.

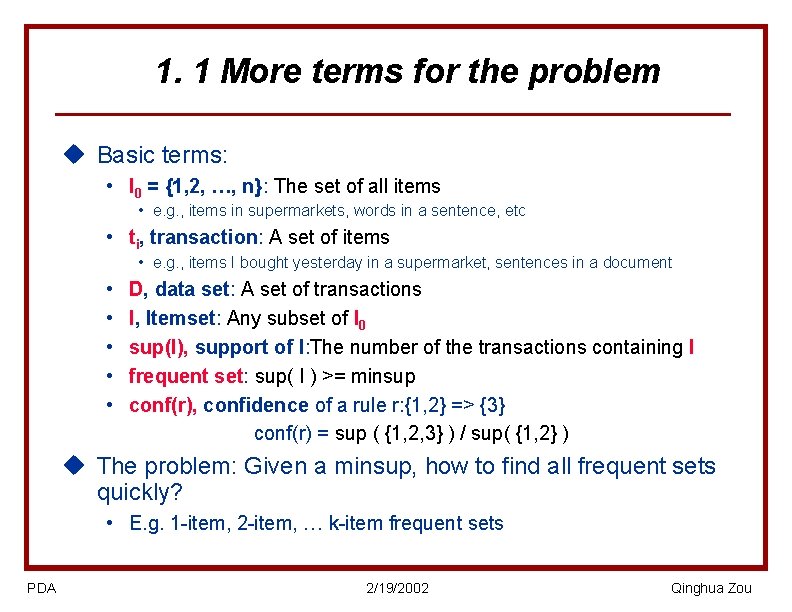

1.1 More terms for the problem

Basic terms:

- I0 = {1, 2, …, n}: the set of all items, e.g., items in a supermarket or words in a sentence.
- ti, transaction: a set of items, e.g., the items I bought yesterday in a supermarket, or a sentence in a document.
- D, data set: a set of transactions.
- I, itemset: any subset of I0.
- sup(I), support of I: the number of transactions containing I.
- Frequent set: an itemset I with sup(I) >= minsup.
- conf(r), confidence of a rule r: for r = {1, 2} => {3}, conf(r) = sup({1, 2, 3}) / sup({1, 2}).

The problem: given a minsup, how can all frequent sets be found quickly? E.g., the 1-item, 2-item, …, k-item frequent sets.
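The definitions of sup(I) and conf(r) can be checked directly on the five-transaction example database. The following is a minimal Python sketch, not part of the original slides; the function names `sup` and `conf` mirror the slide notation, and the single-letter item encoding is an assumption made for brevity.

```python
# Example database D from the slides; each transaction is a set of single-letter items.
D = [set("abcdef"), set("abcg"), set("abdh"), set("bcdek"), set("abc")]

def sup(itemset, transactions):
    """Support: number of transactions that contain every item of `itemset`."""
    items = set(itemset)
    return sum(1 for t in transactions if items <= t)

def conf(antecedent, consequent, transactions):
    """Confidence of the rule antecedent => consequent."""
    both = set(antecedent) | set(consequent)
    return sup(both, transactions) / sup(antecedent, transactions)

minsup = 2
print(sup("bcde", D))           # 2, so bcde is frequent
print(sup("ae", D) >= minsup)   # False: ae occurs only in transaction 1
print(conf("ab", "c", D))       # sup(abc) / sup(ab) = 3 / 4 = 0.75
```

Counting support this way rescans every transaction for every itemset, which is exactly the cost that the algorithms in the following slides try to reduce.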

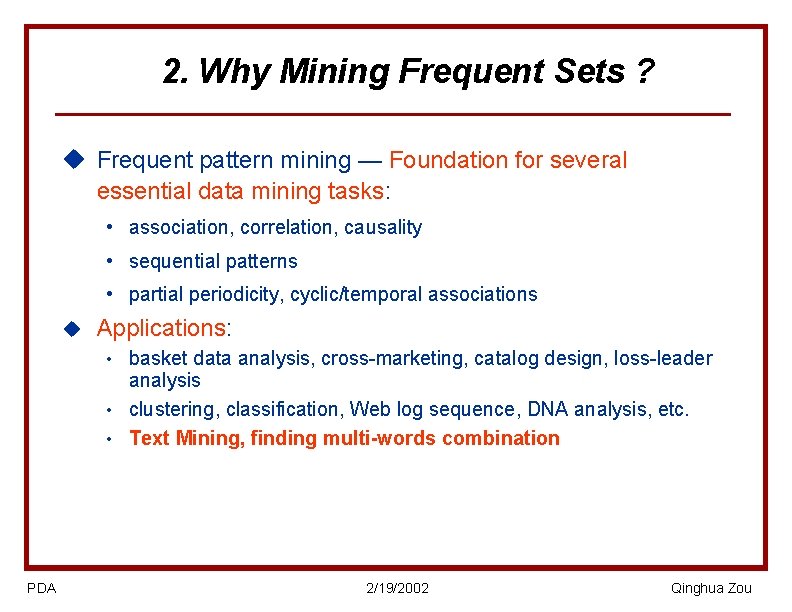

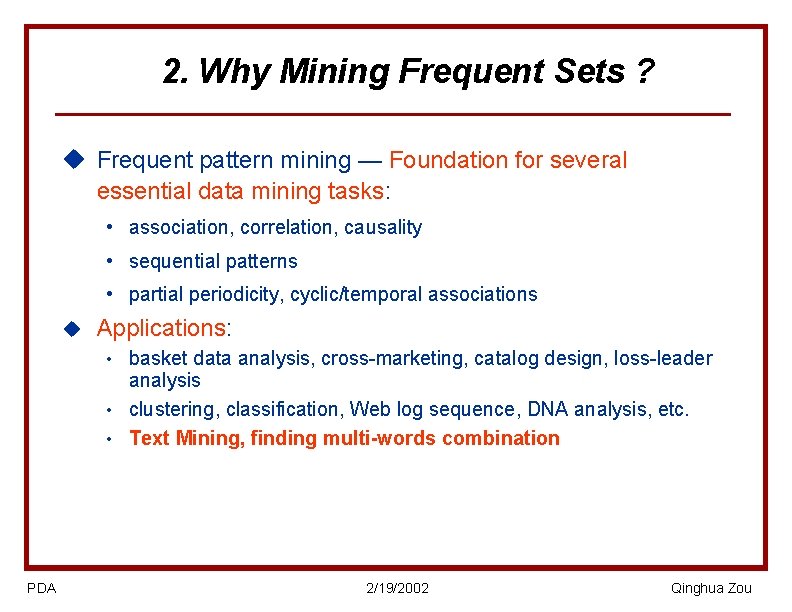

2. Why Mining Frequent Sets?

Frequent pattern mining is the foundation for several essential data mining tasks:

- association, correlation, causality
- sequential patterns
- partial periodicity, cyclic/temporal associations

Applications:

- basket data analysis, cross-marketing, catalog design, loss-leader analysis
- clustering, classification, Web log sequences, DNA analysis, etc.
- text mining, finding multi-word combinations

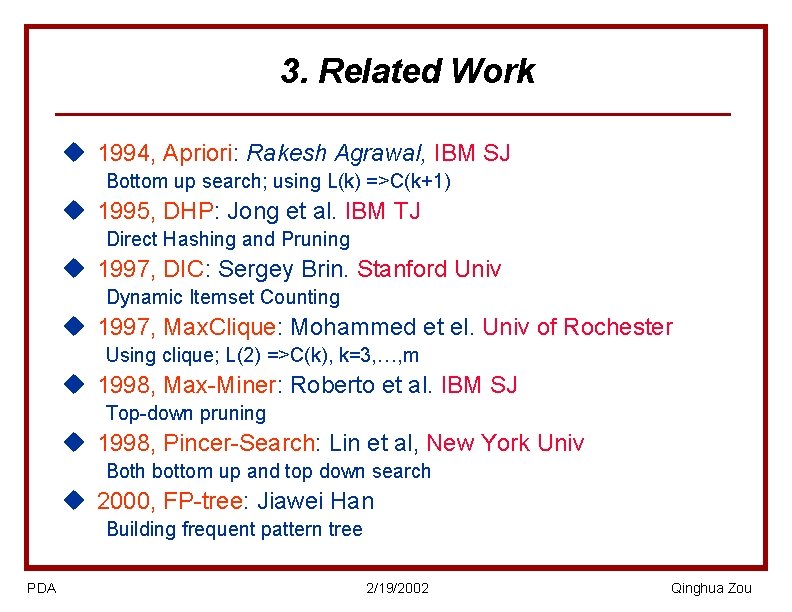

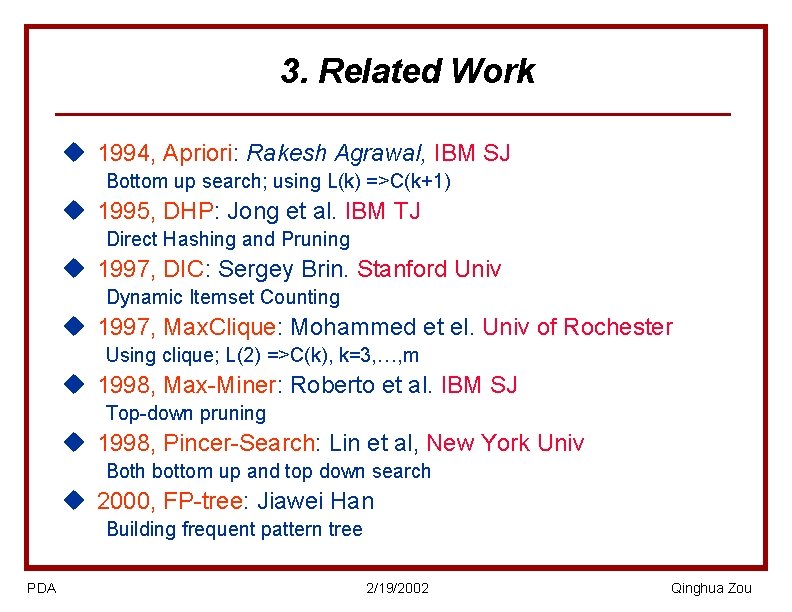

3. Related Work

- 1994, Apriori: Rakesh Agrawal, IBM SJ. Bottom-up search; using L(k) => C(k+1).
- 1995, DHP: Jong et al., IBM TJ. Direct Hashing and Pruning.
- 1997, DIC: Sergey Brin, Stanford Univ. Dynamic Itemset Counting.
- 1997, MaxClique: Mohammed et al., Univ. of Rochester. Using cliques; L(2) => C(k), k = 3, …, m.
- 1998, Max-Miner: Roberto et al., IBM SJ. Top-down pruning.
- 1998, Pincer-Search: Lin et al., New York Univ. Both bottom-up and top-down search.
- 2000, FP-tree: Jiawei Han. Building a frequent pattern tree.

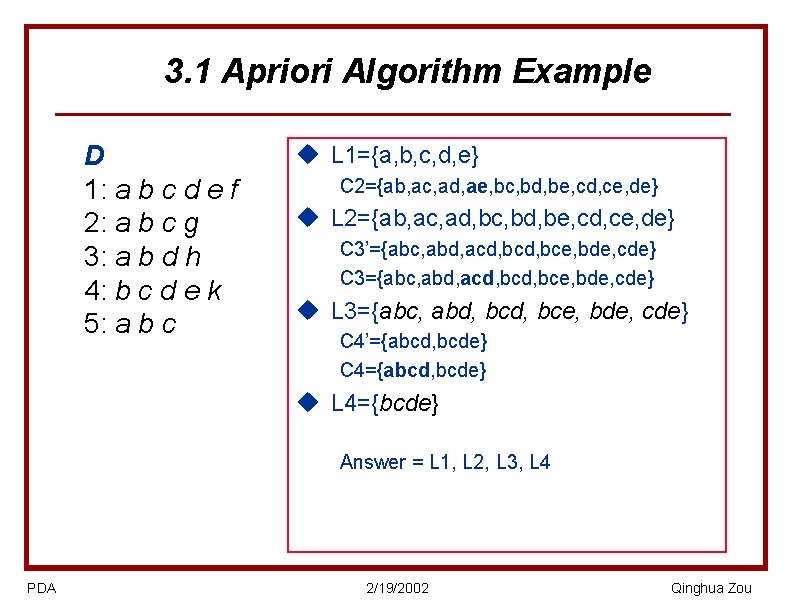

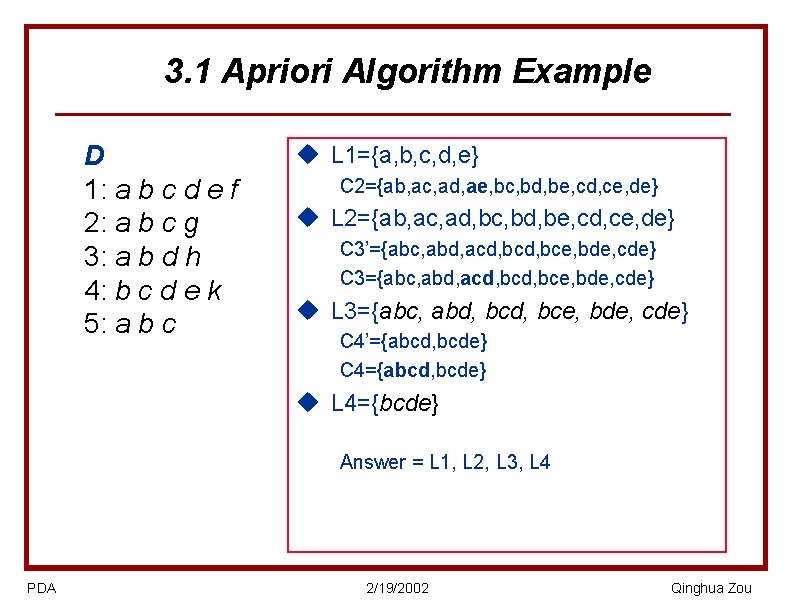

3.1 Apriori Algorithm Example

D: 1: a b c d e f; 2: a b c g; 3: a b d h; 4: b c d e k; 5: a b c

Ck' is the candidate set after the join step, Ck the set after pruning.

- L1 = {a, b, c, d, e}; C2 = {ab, ac, ad, ae, bc, bd, be, cd, ce, de}
- L2 = {ab, ac, ad, bc, bd, be, cd, ce, de}; C3' = {abc, abd, acd, bcd, bce, bde, cde}; C3 = {abc, abd, acd, bcd, bce, bde, cde}
- L3 = {abc, abd, bcd, bce, bde, cde}; C4' = {abcd, bcde}; C4 = {bcde} (abcd is pruned because its subset acd is not in L3)
- L4 = {bcde}

Answer = L1 ∪ L2 ∪ L3 ∪ L4

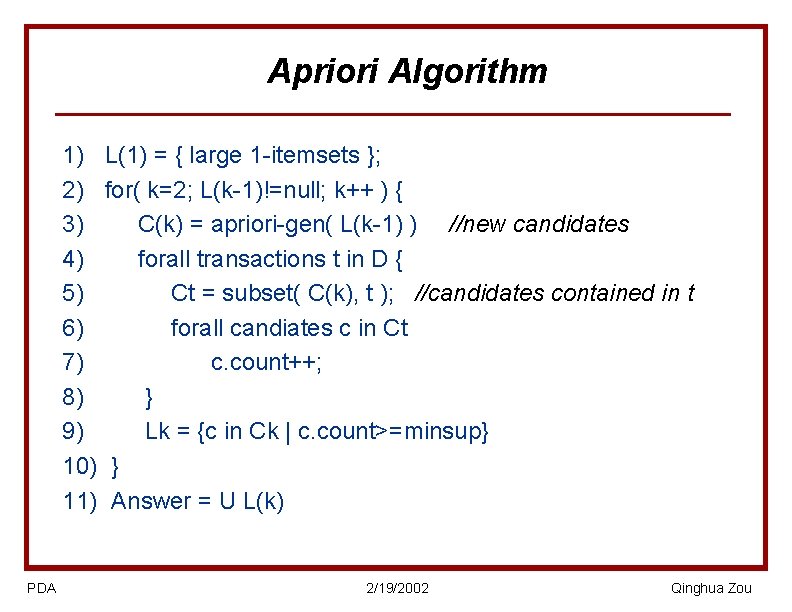

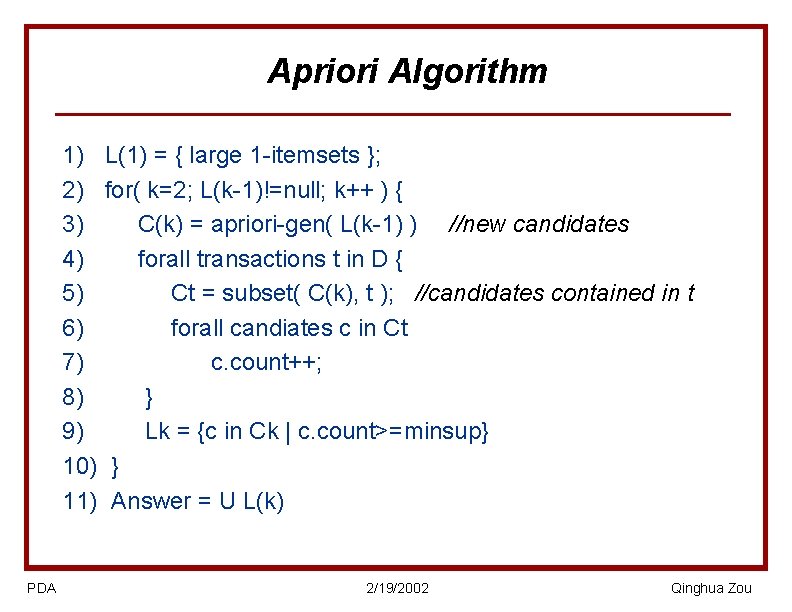

Apriori Algorithm

    L(1) = { large 1-itemsets };
    for ( k = 2; L(k-1) != null; k++ ) {
        C(k) = apriori-gen( L(k-1) );       // new candidates
        forall transactions t in D {
            Ct = subset( C(k), t );         // candidates contained in t
            forall candidates c in Ct
                c.count++;
        }
        L(k) = { c in C(k) | c.count >= minsup };
    }
    Answer = ∪ L(k)
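The pseudocode above can be turned into a short runnable sketch. The version below is an illustration under stated assumptions, not the reference implementation: `apriori_gen` joins any two (k-1)-itemsets whose union has k items (simpler than the prefix-based join of the original Apriori paper, but yielding the same candidates after pruning), and candidate counting is a plain subset test instead of a hash tree.

```python
from itertools import combinations

def apriori_gen(prev_level, k):
    """Join frequent (k-1)-itemsets into k-item candidates, then prune:
    a candidate survives only if all of its (k-1)-subsets are frequent."""
    prev = set(prev_level)
    candidates = set()
    for a in prev:
        for b in prev:
            union = a | b
            if len(union) == k and all(
                frozenset(s) in prev for s in combinations(union, k - 1)
            ):
                candidates.add(union)
    return candidates

def apriori(transactions, minsup):
    """Return {k: set of frequent k-itemsets} for every non-empty level."""
    transactions = [frozenset(t) for t in transactions]
    items = {i for t in transactions for i in t}
    level = {frozenset([i]) for i in items
             if sum(1 for t in transactions if i in t) >= minsup}
    answer, k = {}, 1
    while level:
        answer[k] = level
        k += 1
        counts = {c: 0 for c in apriori_gen(level, k)}
        for t in transactions:           # one database pass per level
            for c in counts:
                if c <= t:
                    counts[c] += 1
        level = {c for c, n in counts.items() if n >= minsup}
    return answer

D = ["abcdef", "abcg", "abdh", "bcdek", "abc"]
levels = apriori(D, minsup=2)
print(sorted("".join(sorted(s)) for s in levels[3]))
# ['abc', 'abd', 'bcd', 'bce', 'bde', 'cde']
print(sorted("".join(sorted(s)) for s in levels[4]))
# ['bcde']
```

Note that bcd appears among the frequent 3-itemsets: transactions 1 and 4 both contain it, and it is a subset of the frequent set bcde.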

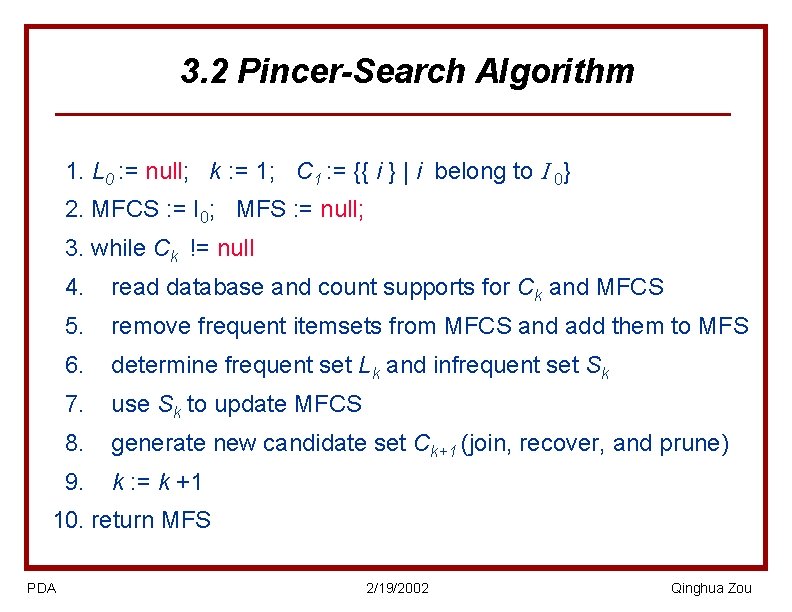

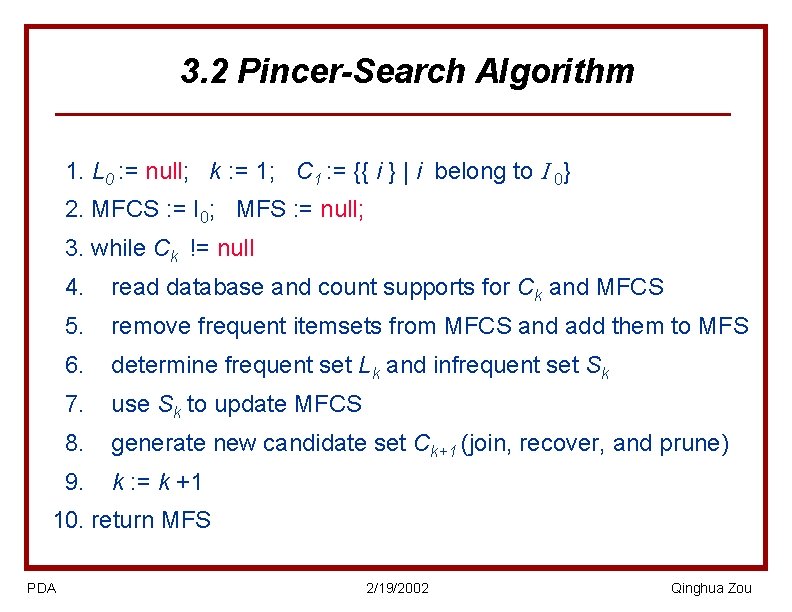

3.2 Pincer-Search Algorithm

    L0 := null; k := 1; C1 := {{i} | i ∈ I0}
    MFCS := {I0}; MFS := null;
    while Ck != null
        read database and count supports for Ck and MFCS
        remove frequent itemsets from MFCS and add them to MFS
        determine frequent set Lk and infrequent set Sk
        use Sk to update MFCS
        generate new candidate set Ck+1 (join, recover, and prune)
        k := k + 1
    return MFS

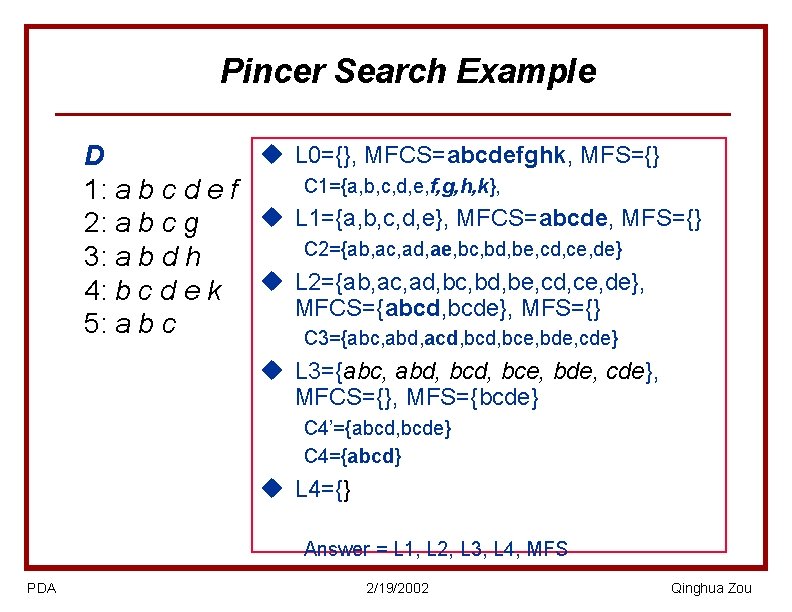

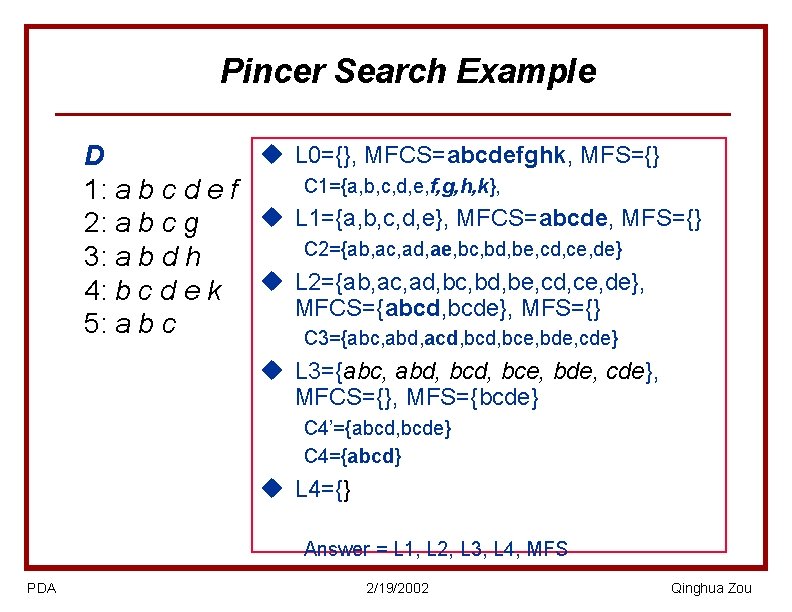

Pincer Search Example

D: 1: a b c d e f; 2: a b c g; 3: a b d h; 4: b c d e k; 5: a b c

- L0 = {}, MFCS = {abcdefghk}, MFS = {}; C1 = {a, b, c, d, e, f, g, h, k}
- L1 = {a, b, c, d, e}, MFCS = {abcde}, MFS = {}; C2 = {ab, ac, ad, ae, bc, bd, be, cd, ce, de}
- L2 = {ab, ac, ad, bc, bd, be, cd, ce, de}, MFCS = {abcd, bcde}, MFS = {}; C3 = {abc, abd, acd, bcd, bce, bde, cde}
- L3 = {abc, abd, bcd, bce, bde, cde}, MFCS = {}, MFS = {bcde}; C4' = {abcd, bcde}, C4 = {abcd}
- L4 = {}

Answer = L1, L2, L3, L4, MFS

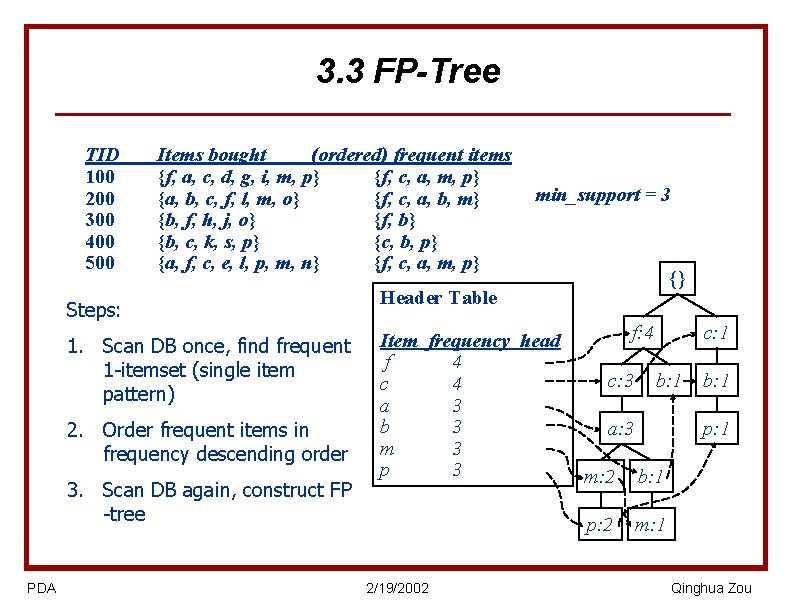

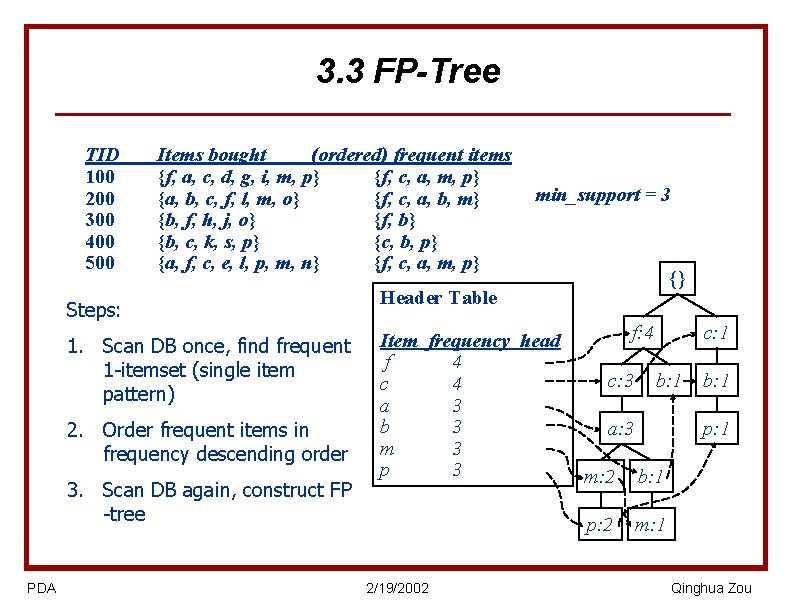

3.3 FP-Tree

min_support = 3

| TID | Items bought | (Ordered) frequent items |
|---|---|---|
| 100 | {f, a, c, d, g, i, m, p} | {f, c, a, m, p} |
| 200 | {a, b, c, f, l, m, o} | {f, c, a, b, m} |
| 300 | {b, f, h, j, o} | {f, b} |
| 400 | {b, c, k, s, p} | {c, b, p} |
| 500 | {a, f, c, e, l, p, m, n} | {f, c, a, m, p} |

Steps:

1. Scan DB once, find the frequent 1-itemsets (single-item patterns).
2. Order frequent items in descending order of frequency.
3. Scan DB again, construct the FP-tree.

Header table:

| Item | Frequency |
|---|---|
| f | 4 |
| c | 4 |
| a | 3 |
| b | 3 |
| m | 3 |
| p | 3 |

FP-tree (item: count, children indented):

- {}
  - f: 4
    - c: 3
      - a: 3
        - m: 2
          - p: 2
        - b: 1
          - m: 1
    - b: 1
  - c: 1
    - b: 1
      - p: 1
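The three construction steps can be sketched in Python as follows. This is an illustrative sketch, not the FP-growth authors' code: the `Node` class, the `build_fp_tree` function, and its `order` parameter are assumptions introduced here. Items with equal frequency can be ordered in more than one way, so the example passes the slide's order f, c, a, b, m, p explicitly; when `order` is omitted, ties are broken alphabetically, which may give a differently shaped tree.

```python
from collections import Counter

class Node:
    """One FP-tree node: an item, its count, and its children keyed by item."""
    def __init__(self, item, parent=None):
        self.item, self.count, self.parent = item, 0, parent
        self.children = {}

def build_fp_tree(transactions, min_support, order=None):
    # Step 1: first scan, count each item once per transaction.
    freq = Counter(i for t in transactions for i in set(t))
    freq = {i: n for i, n in freq.items() if n >= min_support}
    # Step 2: fix a global item order (descending frequency by default).
    # If `order` is given, it is assumed to list every frequent item.
    if order is None:
        order = sorted(freq, key=lambda i: (-freq[i], i))
    rank = {item: r for r, item in enumerate(order)}
    # Step 3: second scan, insert each ordered transaction as a path.
    root = Node(None)
    header = {item: [] for item in order}   # item -> list of its tree nodes
    for t in transactions:
        node = root
        for item in sorted((i for i in set(t) if i in freq), key=rank.__getitem__):
            child = node.children.get(item)
            if child is None:
                child = Node(item, node)
                node.children[item] = child
                header[item].append(child)
            child.count += 1
            node = child
    return root, header, freq

DB = ["facdgimp", "abcflmo", "bfhjo", "bcksp", "afcelpmn"]
root, header, freq = build_fp_tree(DB, min_support=3, order="fcabmp")
print({item: child.count for item, child in root.children.items()})
# two branches under the root: f with count 4 and c with count 1
```

The `header` dictionary plays the role of the header table: summing the counts of an item's nodes gives that item's support.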

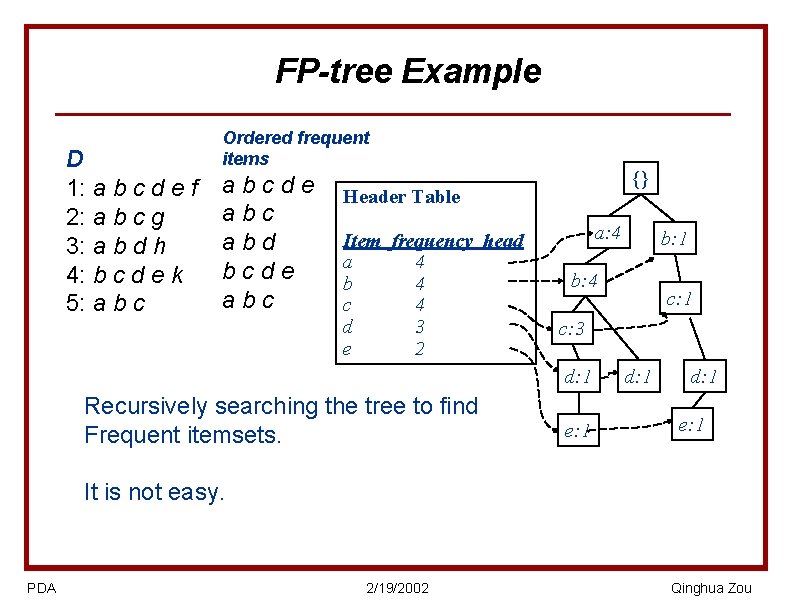

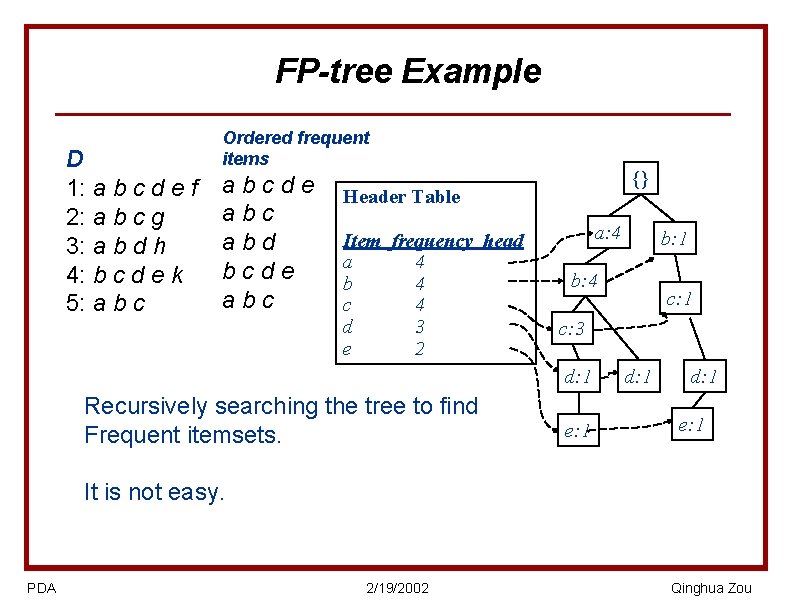

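FP-tree Example

| TID | Items | Ordered frequent items (order a, b, c, d, e) |
|---|---|---|
| 1 | a b c d e f | abcde |
| 2 | a b c g | abc |
| 3 | a b d h | abd |
| 4 | b c d e k | bcde |
| 5 | a b c | abc |

Header table:

| Item | Frequency |
|---|---|
| a | 4 |
| b | 5 |
| c | 4 |
| d | 3 |
| e | 2 |

FP-tree (item: count, children indented):

- {}
  - a: 4
    - b: 4
      - c: 3
        - d: 1
          - e: 1
      - d: 1
  - b: 1
    - c: 1
      - d: 1
        - e: 1

Finding the frequent itemsets requires recursively searching the tree, which is not easy.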

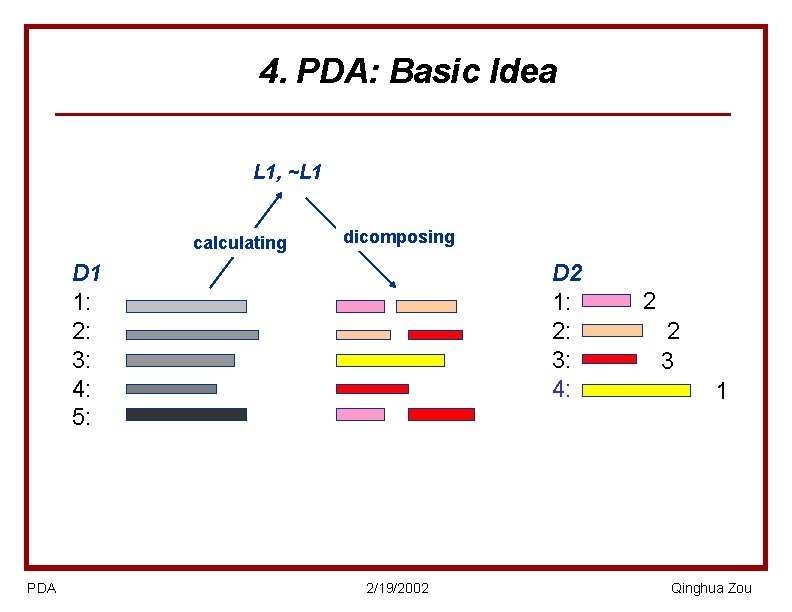

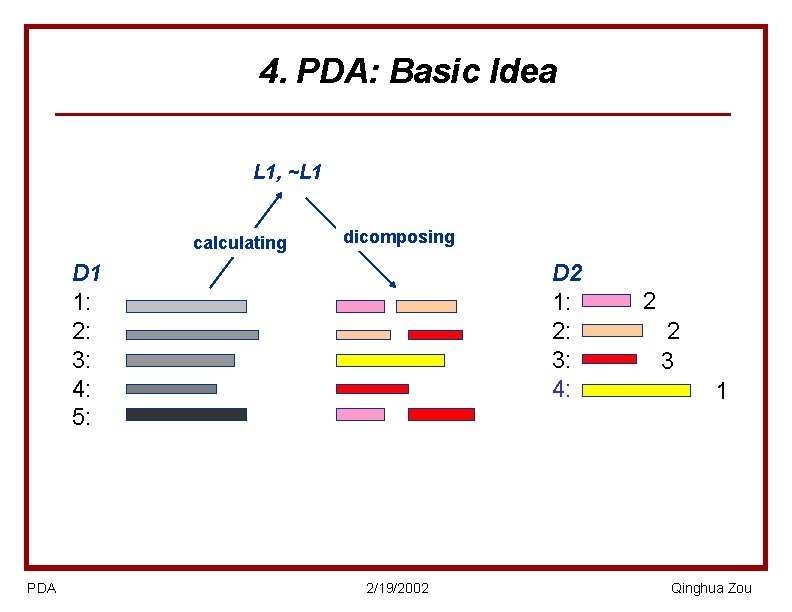

4. PDA: Basic Idea

[Figure: data set D1 (5 transactions) is used for calculating L1 and ~L1, and is then decomposed into the smaller data set D2 (4 transactions).]

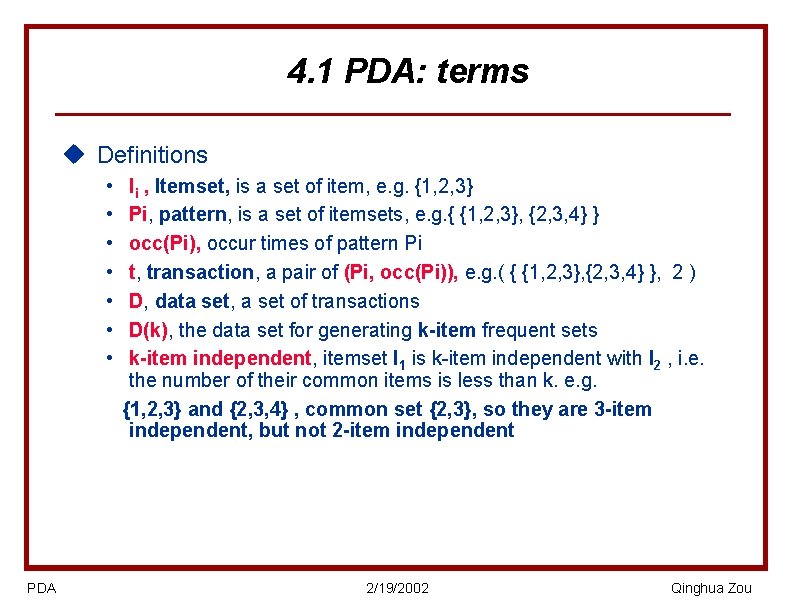

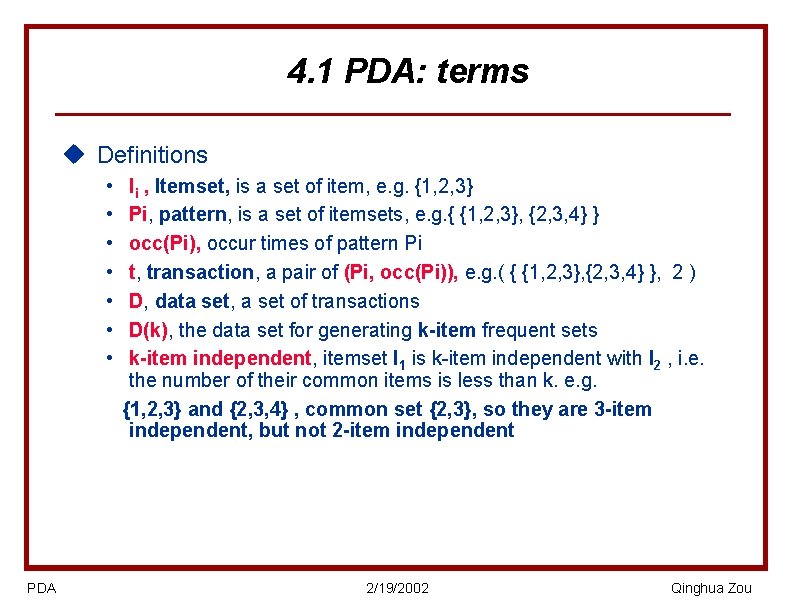

4.1 PDA: terms

Definitions:

- Ii, itemset: a set of items, e.g., {1, 2, 3}.
- Pi, pattern: a set of itemsets, e.g., {{1, 2, 3}, {2, 3, 4}}.
- occ(Pi): the number of occurrences of pattern Pi.
- t, transaction: a pair (Pi, occ(Pi)), e.g., ({{1, 2, 3}, {2, 3, 4}}, 2).
- D, data set: a set of transactions.
- D(k): the data set for generating k-item frequent sets.
- k-item independent: itemset I1 is k-item independent of I2 if the number of their common items is less than k. E.g., {1, 2, 3} and {2, 3, 4} have the common set {2, 3}, so they are 3-item independent but not 2-item independent.
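The k-item independence test is a one-line set operation. A minimal sketch follows; the function name `is_k_item_independent` is chosen here for illustration and does not come from the slides.

```python
def is_k_item_independent(i1, i2, k):
    """True if itemsets i1 and i2 share fewer than k items."""
    return len(set(i1) & set(i2)) < k

a, b = {1, 2, 3}, {2, 3, 4}                # common set {2, 3}
print(is_k_item_independent(a, b, 3))      # True: 2 common items < 3
print(is_k_item_independent(a, b, 2))      # False: 2 common items is not < 2
```

Intuitively, two k-item independent itemsets cannot both contain the same k-itemset, so their k-itemsets can be counted separately.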

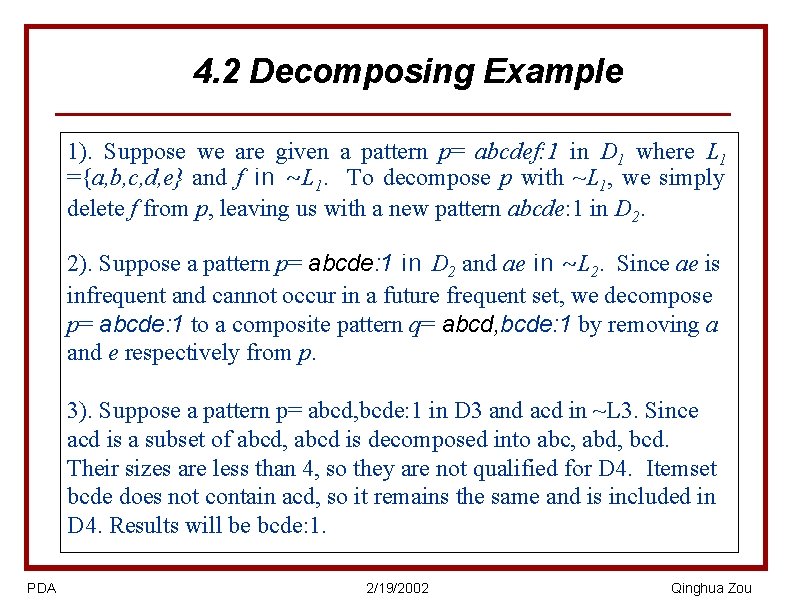

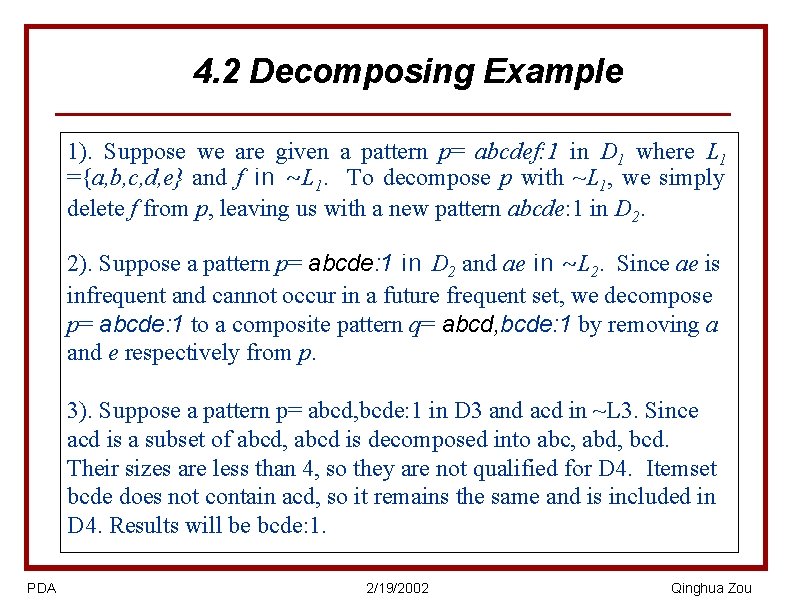

4.2 Decomposing Example

1. Suppose we are given a pattern p = abcdef: 1 in D1, where L1 = {a, b, c, d, e} and f is in ~L1. To decompose p with ~L1, we simply delete f from p, leaving us with a new pattern abcde: 1 in D2.
2. Suppose a pattern p = abcde: 1 is in D2 and ae is in ~L2. Since ae is infrequent and cannot occur in a future frequent set, we decompose p = abcde: 1 into a composite pattern q = abcd, bcde: 1 by removing a and e respectively from p.
3. Suppose a pattern p = abcd, bcde: 1 is in D3 and acd is in ~L3. Since acd is a subset of abcd, abcd is decomposed into abc, abd, bcd. Their sizes are less than 4, so they do not qualify for D4. Itemset bcde does not contain acd, so it remains the same and is included in D4. The result is bcde: 1.
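The three decomposition cases above follow one rule: if an itemset contains the infrequent set, replace it by the itemsets obtained by deleting one item of the infrequent set at a time. The sketch below illustrates that rule; the function name `decompose`, the `min_size` parameter (modelling "does not qualify for the next data set"), and the final filtering of non-maximal itemsets are assumptions made here, not details taken from the slides.

```python
def decompose(pattern, infrequent, min_size=1):
    """Decompose a pattern (a collection of itemsets) by one infrequent itemset."""
    bad = frozenset(infrequent)
    pieces = set()
    for itemset in map(frozenset, pattern):
        if bad <= itemset:
            # Delete each item of the infrequent set in turn.
            pieces.update(itemset - {x} for x in bad)
        else:
            pieces.add(itemset)        # does not contain the infrequent set
    # Drop itemsets that are too short for the next pass.
    pieces = {p for p in pieces if len(p) >= min_size}
    # Keep only maximal itemsets (assumption: subsets add no information).
    return {p for p in pieces if not any(p < q for q in pieces)}

def show(pieces):
    return sorted("".join(sorted(p)) for p in pieces)

print(show(decompose(["abcdef"], "f")))                      # ['abcde']
print(show(decompose(["abcde"], "ae")))                      # ['abcd', 'bcde']
print(show(decompose(["abcd", "bcde"], "acd", min_size=4)))  # ['bcde']
```

The same helper reproduces the split of {1, …, 8} by the infrequent set 156 into three 7-item sets, since each result omits exactly one of the items 1, 5, 6.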

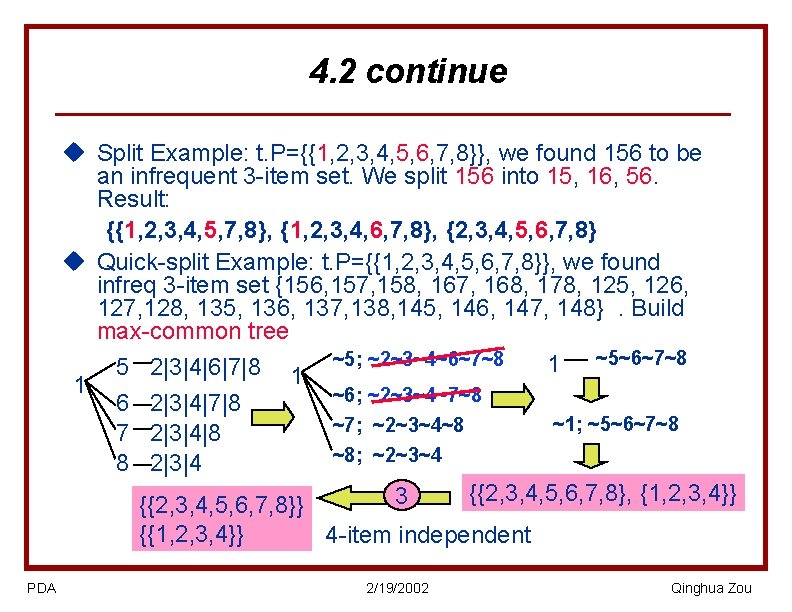

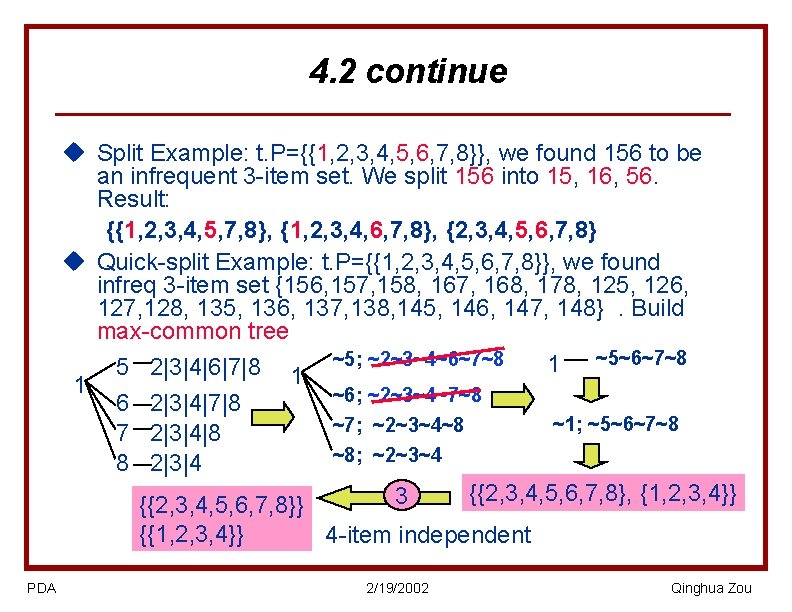

4.2 (continued)

Split example: t.P = {{1, 2, 3, 4, 5, 6, 7, 8}}, and 156 is found to be an infrequent 3-item set. We split 156 into 15, 16, 56. Result: {{1, 2, 3, 4, 5, 7, 8}, {1, 2, 3, 4, 6, 7, 8}, {2, 3, 4, 5, 6, 7, 8}}.

Quick-split example: t.P = {{1, 2, 3, 4, 5, 6, 7, 8}}, and the infrequent 3-item sets are {156, 157, 158, 167, 168, 178, 125, 126, 127, 128, 135, 136, 137, 138, 145, 146, 147, 148}. Build the max-common tree:

[Figure: max-common tree over items 1, 5, 6, 7, 8, whose leaves are {{2, 3, 4, 5, 6, 7, 8}} and {{1, 2, 3, 4}}.]

Result: {{2, 3, 4, 5, 6, 7, 8}, {1, 2, 3, 4}}, which are 4-item independent.

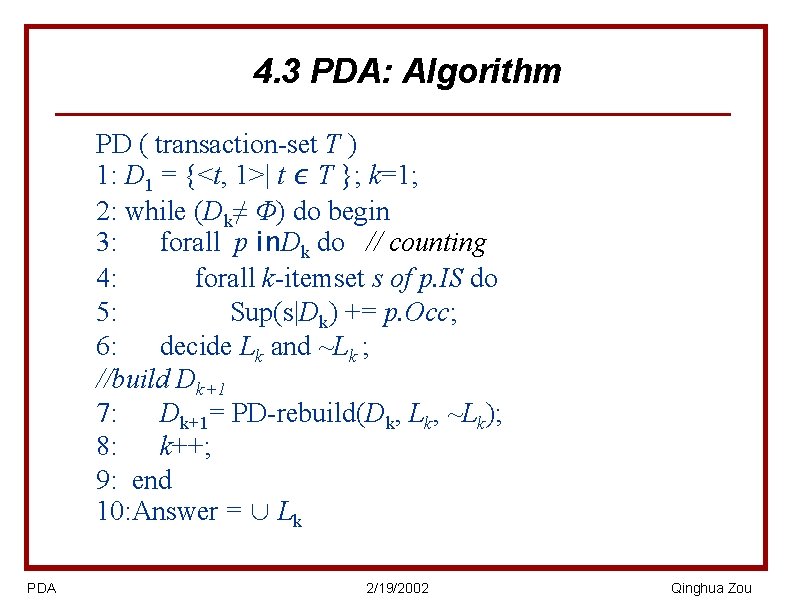

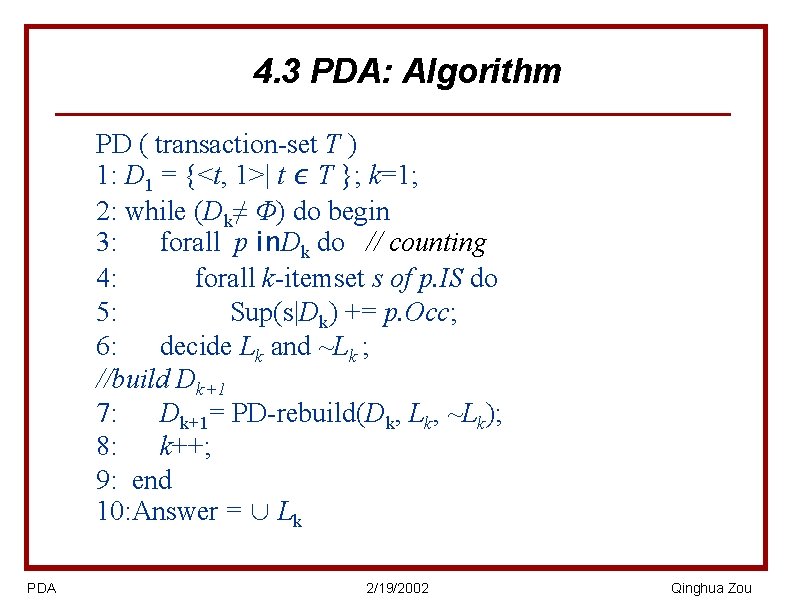

4.3 PDA: Algorithm

PD ( transaction-set T )

    D1 = { <t, 1> | t ∈ T }; k = 1;
    while (Dk ≠ Φ) do begin
        forall p in Dk do                   // counting
            forall k-itemset s of p.IS do
                Sup(s|Dk) += p.Occ;
        decide Lk and ~Lk;
        Dk+1 = PD-rebuild(Dk, Lk, ~Lk);     // build Dk+1
        k++;
    end
    Answer = ∪ Lk
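The counting step of PD (the two nested `forall` loops plus "decide Lk and ~Lk") can be sketched as below. This covers only the counting pass, not PD-rebuild; the representation of a transaction as a pair `(itemsets, occ)` and the function names are assumptions chosen for the illustration. A k-itemset that occurs in several itemsets of the same composite pattern is counted once per pattern.

```python
from collections import Counter
from itertools import combinations

def count_k_itemsets(Dk, k):
    """Dk: list of (itemsets, occ) pairs. Returns the support of every k-itemset."""
    sup = Counter()
    for itemsets, occ in Dk:
        seen = set()                       # k-itemsets of this pattern, deduplicated
        for itemset in itemsets:
            seen.update(frozenset(c) for c in combinations(sorted(itemset), k))
        for s in seen:
            sup[s] += occ                  # Sup(s|Dk) += p.Occ
    return sup

def decide(sup, minsup):
    """Split counted itemsets into Lk (frequent) and ~Lk (infrequent)."""
    Lk = {s: n for s, n in sup.items() if n >= minsup}
    not_Lk = {s: n for s, n in sup.items() if n < minsup}
    return Lk, not_Lk

# D3 of the running example: one composite pattern and three simple ones.
D3 = [(["abcd", "bcde"], 1), (["abc"], 2), (["abd"], 1), (["bcde"], 1)]
L3, not_L3 = decide(count_k_itemsets(D3, 3), minsup=2)
print({"".join(sorted(s)): n for s, n in sorted(L3.items(), key=lambda x: sorted(x[0]))})
# {'abc': 3, 'abd': 2, 'bcd': 2, 'bce': 2, 'bde': 2, 'cde': 2}
print({"".join(sorted(s)): n for s, n in not_L3.items()})
# {'acd': 1}
```

Because each pattern carries its occurrence count, identical transactions are counted with a single addition rather than one pass per copy.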

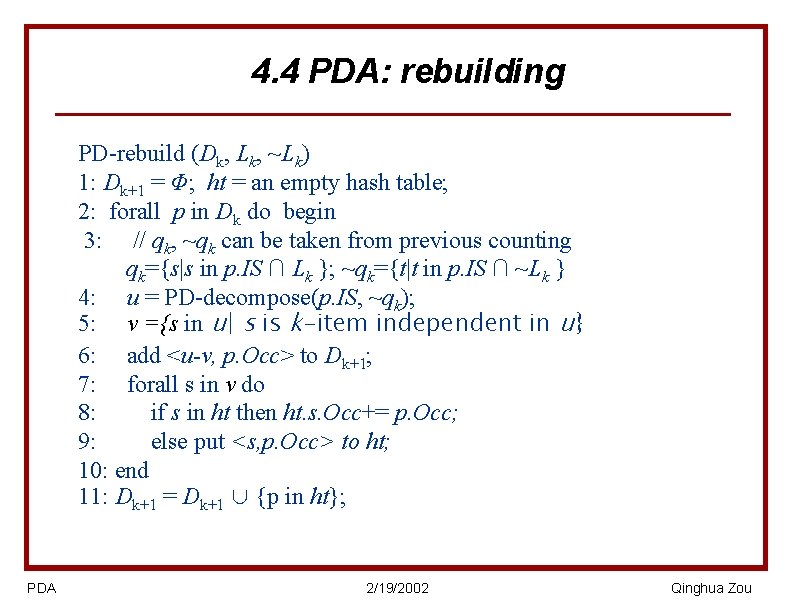

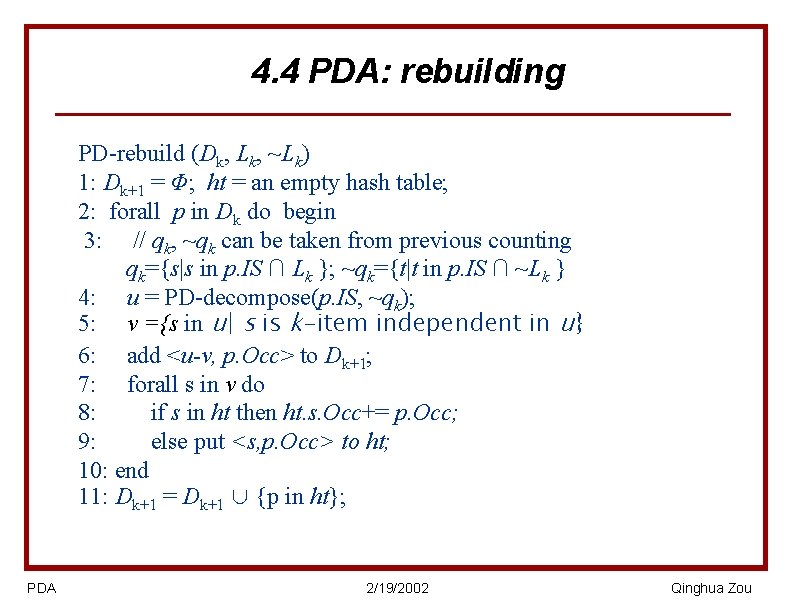

4.4 PDA: rebuilding

PD-rebuild (Dk, Lk, ~Lk)

    Dk+1 = Φ; ht = an empty hash table;
    forall p in Dk do begin
        // qk, ~qk can be taken from previous counting
        qk = { s | s in p.IS ∩ Lk }; ~qk = { t | t in p.IS ∩ ~Lk };
        u = PD-decompose(p.IS, ~qk);
        v = { s in u | s is k-item independent in u };
        add <u-v, p.Occ> to Dk+1;
        forall s in v do
            if s in ht then ht.s.Occ += p.Occ;
            else put <s, p.Occ> to ht;
    end
    Dk+1 = Dk+1 ∪ { p in ht };

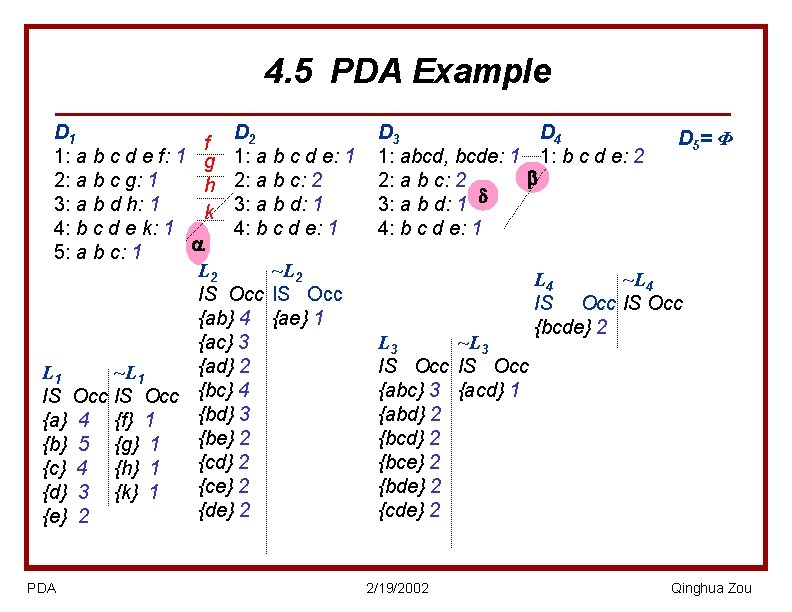

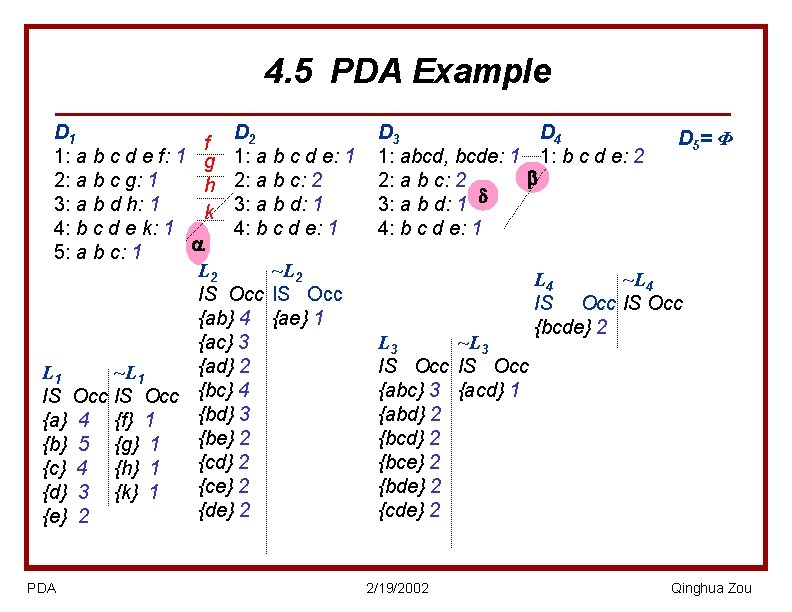

4.5 PDA Example

| k | Dk (pattern: Occ) | Lk (IS: Occ) | ~Lk (IS: Occ) |
|---|---|---|---|
| 1 | abcdef: 1; abcg: 1; abdh: 1; bcdek: 1; abc: 1 | {a}: 4, {b}: 5, {c}: 4, {d}: 3, {e}: 2 | {f}: 1, {g}: 1, {h}: 1, {k}: 1 |
| 2 | abcde: 1; abc: 2; abd: 1; bcde: 1 | {ab}: 4, {ac}: 3, {ad}: 2, {bc}: 4, {bd}: 3, {be}: 2, {cd}: 2, {ce}: 2, {de}: 2 | {ae}: 1 |
| 3 | abcd, bcde: 1; abc: 2; abd: 1; bcde: 1 | {abc}: 3, {abd}: 2, {bcd}: 2, {bce}: 2, {bde}: 2, {cde}: 2 | {acd}: 1 |
| 4 | bcde: 2 | {bcde}: 2 | (none) |

D5 = Φ

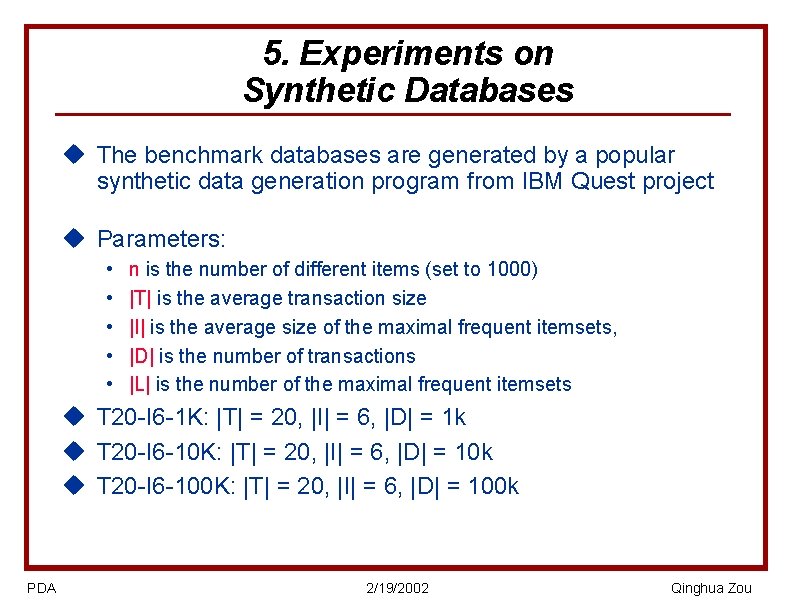

5. Experiments on Synthetic Databases

The benchmark databases are generated by a popular synthetic data generation program from the IBM Quest project.

Parameters:

- n: the number of different items (set to 1000)
- |T|: the average transaction size
- |I|: the average size of the maximal frequent itemsets
- |D|: the number of transactions
- |L|: the number of the maximal frequent itemsets

Databases:

- T20-I6-1K: |T| = 20, |I| = 6, |D| = 1K
- T20-I6-10K: |T| = 20, |I| = 6, |D| = 10K
- T20-I6-100K: |T| = 20, |I| = 6, |D| = 100K

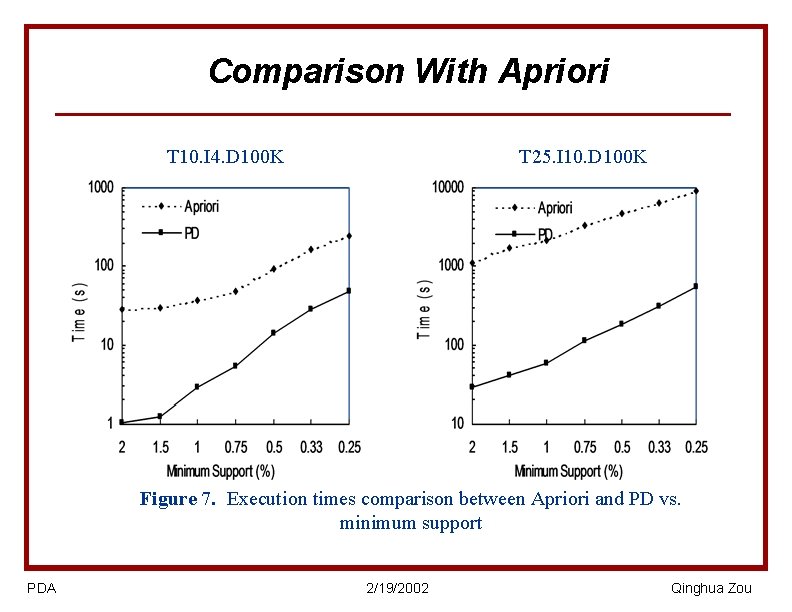

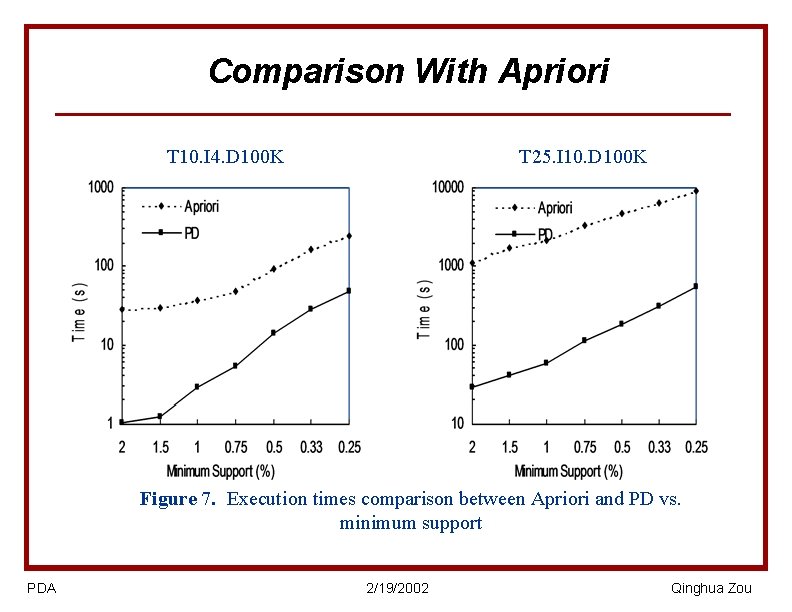

Comparison With Apriori

Figure 7. Execution time comparison between Apriori and PD vs. minimum support (panels: T10.I4.D100K and T25.I10.D100K).

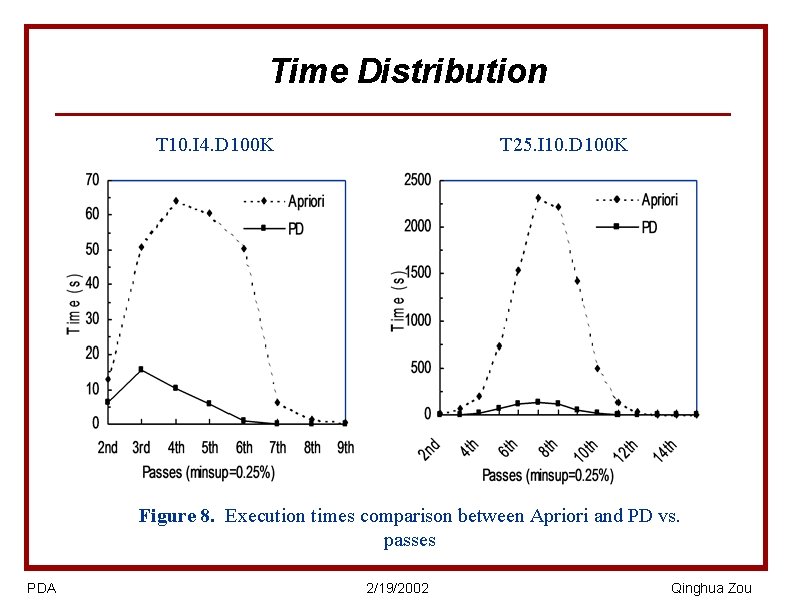

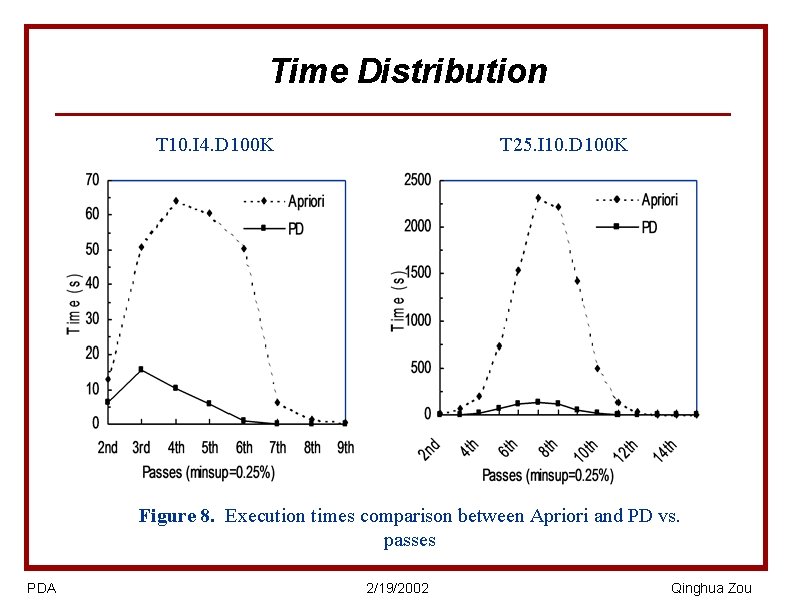

Time Distribution

Figure 8. Execution time comparison between Apriori and PD vs. passes (panels: T10.I4.D100K and T25.I10.D100K).

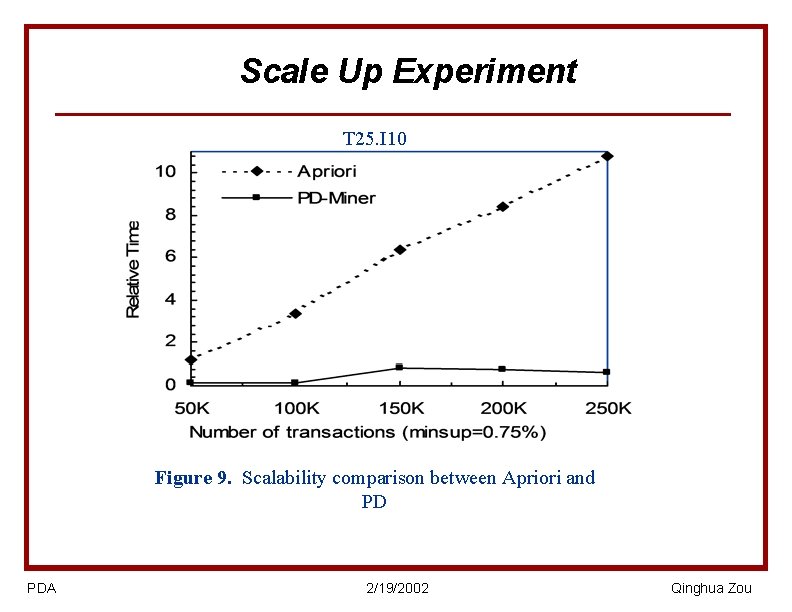

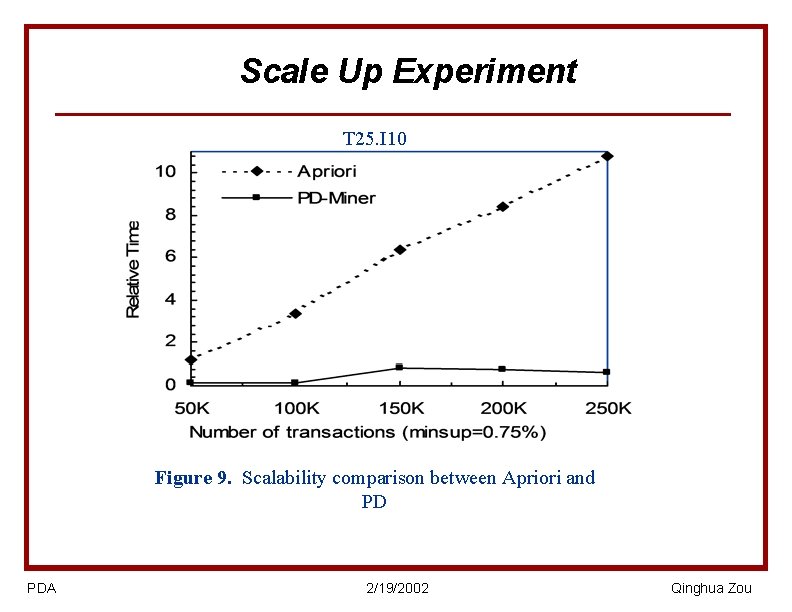

Scale Up Experiment

Figure 9. Scalability comparison between Apriori and PD (T25.I10).

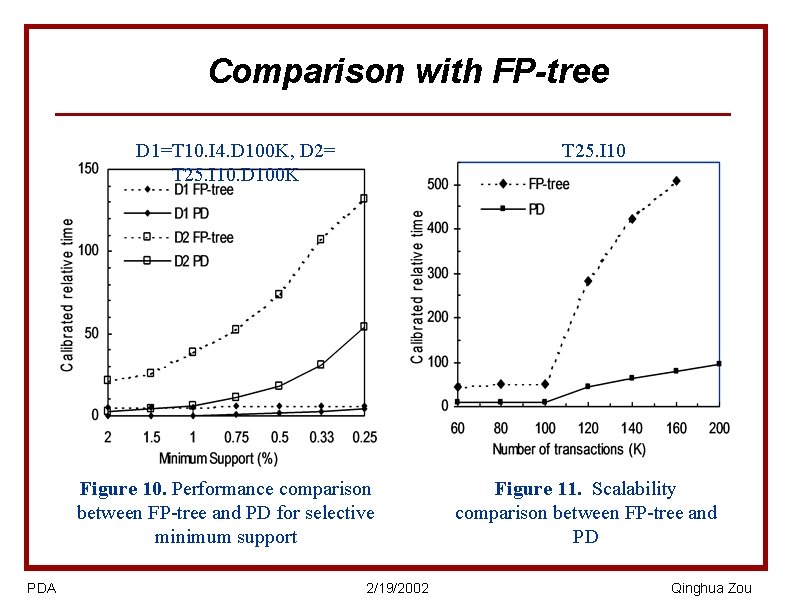

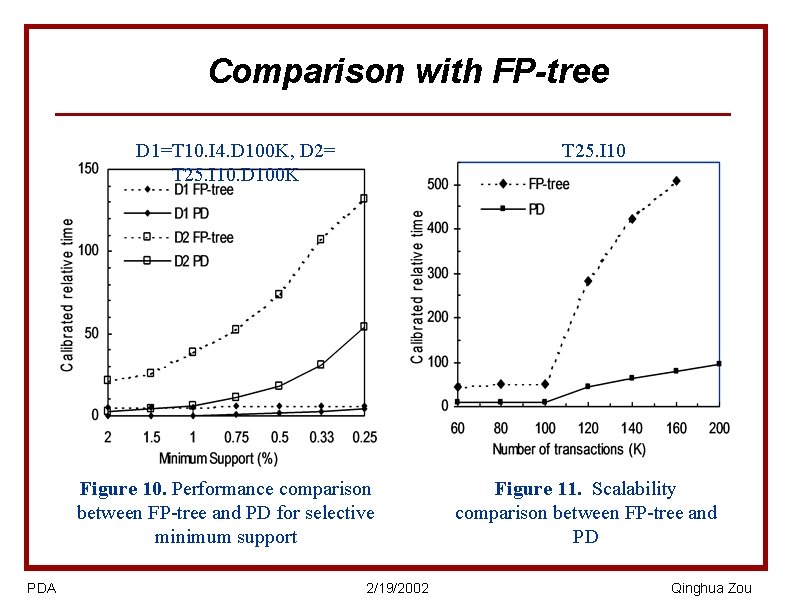

Comparison with FP-tree

Figure 10. Performance comparison between FP-tree and PD for selected minimum supports (D1 = T10.I4.D100K, D2 = T25.I10.D100K).

Figure 11. Scalability comparison between FP-tree and PD (T25.I10).

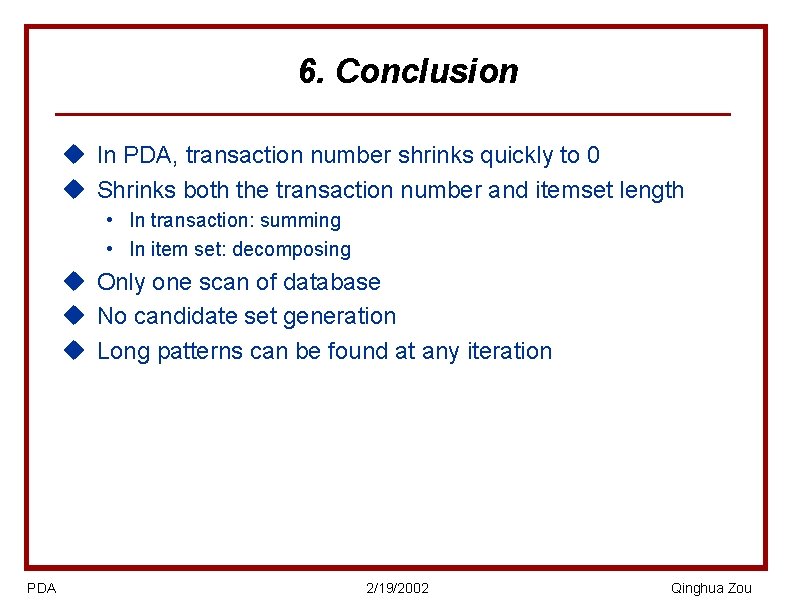

6. Conclusion

- In PDA, the number of transactions shrinks quickly to 0.
- PDA shrinks both the number of transactions and the itemset length:
  - in transactions: by summing
  - in itemsets: by decomposing
- Only one scan of the database.
- No candidate set generation.
- Long patterns can be found at any iteration.


References

- [1] R. Agrawal and R. Srikant. Fast algorithms for mining association rules. In VLDB'94, pp. 487-499.
- [2] R. J. Bayardo. Efficiently mining long patterns from databases. In SIGMOD'98, pp. 85-93.
- [3] Zaki, M. J.; Parthasarathy, S.; Ogihara, M.; and Li, W. 1997. New Algorithms for Fast Discovery of Association Rules. In Proc. of the Third Int'l Conf. on Knowledge Discovery in Databases and Data Mining, pp. 283-286.
- [4] Lin, D.-I and Kedem, Z. M. 1998. Pincer-Search: A New Algorithm for Discovering the Maximum Frequent Set. In Proc. of the Sixth European Conf. on Extending Database Technology.
- [5] Park, J. S.; Chen, M.-S.; and Yu, P. S. 1996. An Effective Hash Based Algorithm for Mining Association Rules. In Proc. of the 1995 ACM-SIGMOD Conf. on Management of Data, pp. 175-186.
- [6] Brin, S.; Motwani, R.; Ullman, J.; and Tsur, S. 1997. Dynamic Itemset Counting and Implication Rules for Market Basket Data. In Proc. of the 1997 ACM-SIGMOD Conf. on Management of Data, pp. 255-264.
- [7] J. Pei, J. Han, and R. Mao. CLOSET: An Efficient Algorithm for Mining Frequent Closed Itemsets. Proc. 2000 ACM-SIGMOD Int. Workshop on Data Mining and Knowledge Discovery (DMKD'00), Dallas, TX, May 2000.
- [8] J. Han, J. Pei, and Y. Yin. Mining Frequent Patterns without Candidate Generation. Proc. 2000 ACM-SIGMOD Int. Conf. on Management of Data (SIGMOD'00), Dallas, TX, May 2000.
- [9] Bomze, I. M.; Budinich, M.; Pardalos, P. M.; and Pelillo, M. The maximum clique problem. In D.-Z. Du and P. M. Pardalos (eds.), Handbook of Combinatorial Optimization (Supplement Volume A). Kluwer Academic Publishers, Boston, MA, 1999.
- [10] C. Bron and J. Kerbosch. Finding all cliques of an undirected graph. Communications of the ACM, 16(9): 575-577, Sept. 1973.
- [11] Johnson D. B., Chu W. W., Dionisio J. D. N., Taira R. K., Kangarloo H. Creating and Indexing Teaching Files from Free-text Patient Reports. Proc. AMIA Symp 1999; pp. 814-818.
- [12] Johnson D. B., Chu W. W. Using n-word combinations for domain specific information retrieval. Proceedings of the Second International Conference on Information Fusion (FUSION'99), San Jose, CA, July 6-9, 1999.
- [13] A. Savasere, E. Omiecinski, and S. Navathe. An Efficient Algorithm for Mining Association Rules in Large Databases. In Proceedings of the 21st VLDB Conference, 1995.
- [14] Heikki Mannila, Hannu Toivonen, and A. Inkeri Verkamo. Efficient algorithms for discovering association rules. In Usama M. Fayyad and Ramasamy Uthurusamy, editors, Proc. of the AAAI Workshop on Knowledge Discovery in Databases, pp. 181-192, Seattle, Washington, July 1994.
- [15] H. Toivonen. Sampling Large Databases for Association Rules. In Proceedings of the 22nd International Conference on Very Large Data Bases, Bombay, India, September 1996.